ORIGINAL RESEARCH article

Front. Physiol. , 25 February 2025

Sec. Computational Physiology and Medicine

Volume 16 - 2025 | https://doi.org/10.3389/fphys.2025.1486763

Objective: This study aims to employ physiological model simulation to systematically analyze the frequency-domain components of PPG signals and extract their key features. The efficacy of these frequency-domain features in effectively distinguishing emotional states will also be investigated.

Methods: A dual windkessel model was employed to analyze PPG signal frequency components and extract distinctive features. Experimental data collection encompassed both physiological (PPG) and psychological measurements, with subsequent analysis involving distribution patterns and statistical testing (U-tests) to examine feature-emotion relationships. The study implemented support vector machine (SVM) classification to evaluate feature effectiveness, complemented by comparative analysis using pulse rate variability (PRV) features, morphological features, and the DEAP dataset.

Results: The results demonstrate significant differentiation in PPG frequency-domain feature responses to arousal and valence variations, achieving classification accuracies of 87.5% and 81.4%, respectively. Validation on the DEAP dataset yielded consistent patterns with accuracies of 73.5% (arousal) and 71.5% (valence). Feature fusion incorporating the proposed frequency-domain features enhanced classification performance, surpassing 90% accuracy.

Conclusion: This study uses physiological modeling to analyze PPG signal frequency components and extract key features. We evaluate their effectiveness in emotion recognition and reveal relationships among physiological parameters, frequency features, and emotional states.

Significance: These findings advance understanding of emotion recognition mechanisms and provide a foundation for future research.

Emotions represent a complex array of psychological and physiological reactions that individuals experience in response to specific stimuli (Shu et al., 2018). The spectrum of emotional states can exert varying influences on an individual’s physical and mental wellbeing, potentially precipitating severe health conditions (Ong et al., 2006). For instance, chronic exposure to negative emotional states has been linked to the etiology of mood disorders such as depression and anxiety (Button et al., 2012; Gou et al., 2023). Consequently, the accurate identification of emotions has emerged as a pivotal area of inquiry within the realm of psychological research.

The method of emotion assessment based on physiological signals stands out for its capability to collect data autonomously and discern emotional states (Maria et al., 2019), offering a significant advantage over traditional approaches that rely on subjective emotional scales (Bradley and Lang, 1994) and physical cues (Anthony and Patil, 2023; Harms et al., 2010; Hasan et al., 2019). Unlike these, physiological signals, which are inherently spontaneous and less prone to subjective influences, provide a more objective measure of emotional responses (Jang et al., 2014). The activation of emotions is inherently linked to the central nervous system’s regulatory functions. This has prompted a multitude of studies to extract multifaceted features from electroencephalogram (EEG) signals (Alvarez-Jimenez et al., 2024; Issa et al., 2021; Li et al., 2022; Sarma and Barma, 2021), aiming to construct models for emotion identification or to investigate effective methods through the application of deep learning algorithms (Dhara et al., 2023; Joshi and Ghongade, 2021). Beyond EEG, the realm of emotion identification research has also incorporated a range of other physiological signals. These include electrocardiogram (ECG) (Hsu et al., 2020; Sarkar et al., 2022), which captures the heart’s electrical activity; electromyography (EMG) (Kulke et al., 2020; Sato et al., 2008), which measures muscle electrical activity; and galvanic skin response (GSR) (Goshvarpour et al., 2017; Wen et al., 2014), which reflects the body’s sweat gland activity in response to emotional stimuli. Each of these modalities contributes unique insights into the complex interplay between physiological responses and emotional experiences.

The burgeoning ubiquity of portable devices has catapulted photoplethysmography (PPG) into the spotlight of research communities, thanks to its notable benefits such as ease of acquisition, operational simplicity, and minimal equipment costs. Concurrently, the existing body of research has established that a plethora of physiological changes triggered by emotional stimuli are modulated by the autonomic nervous system (ANS) (Gordan et al., 2015; Rainville et al., 2006), impacting vital organs like the heart, blood vessels, and muscles (Harris and Matthews, 2004; Kleiger et al., 2005). These physiological shifts are vividly reflected in PPG signals, serving as a tangible indicator of the body’s response to emotions (Chakraborty et al., 2020). For instance, the emotion of fear can induce vasoconstriction and tachycardia (Johnstone, 1971), while anger may lead to vasodilation in facial blood vessels, resulting in blushing and arrhythmia (Drummond, 1999). The spectrum of human emotions elicits a diverse array of effects on PPG signals (Davydov et al., 2011; Krumhansl, 1997), each offering a unique perspective on the intricate relationship between emotional states and physiological responses (Nummenmaa et al., 2014).

To date, the body of research leveraging photoplethysmography (PPG) signals for precise emotion recognition remains modest. Notable contributions include the work of Paul et al. (2024), in which a novel time-domain feature was extracted from the DEAP dataset (Koelstra et al., 2012) to discern various emotional states. Beckmann et al. (2019) employed dual sensors to capture pulse transit time (PTT) features from PPG and applied them to wearable device-based emotion recognition. Li et al. (2017) acquired both PPG morphological and PRV features to differentiate between states of sadness and happiness. Furthermore, Wang and Yu (2021) and Lee et al. (2020) applied deep learning methods to the analysis of PPG signals for emotion recognition, showcasing the potential of these techniques.

Previous studies have made significant progress in the field of emotion recognition using PPG signals, yet further exploration remains warranted. Current research predominantly focuses on PRV and morphological features, with limited exploration of frequency-domain analysis of PPG waveforms. Given that the frequency domain of signals often contains substantial valuable information, this study aims to employ physiological model simulation to systematically analyze the frequency-domain components of PPG signals and extract their key features. Furthermore, this research will investigate the efficacy of these frequency-domain features in effectively distinguishing emotional states, thereby contributing to the advancement of emotion recognition methodologies.

The schematic diagram in Figure 1 outlines the emotion recognition methodology based on frequency-domain features derived from photoplethysmography (PPG) signals. The process comprises five steps: 1) constructing physiological simulation models, conducting frequency-domain analysis, and extracting key features; 2) designing experiments to collect PPG signals and preprocessing them; 3) recognizing emotional states from the extracted features; 4) extracting PRV and morphological features for comparison; and 5) verifying the generality of the recognition approach on PPG signals from the DEAP dataset.

The development of PPG simulation models is of paramount importance for investigating the influence of different physiological factors on PPG signals. Among the available cardiovascular system models, the dual windkessel model proposed by Goldwyn and Watt (1967) stands out as one of the most frequently utilized frameworks. This model, along with its equivalent circuit representation, is depicted in Figure 2, providing a visual and theoretical foundation for understanding the complex dynamics of the cardiovascular system as they relate to PPG signal generation. This study built a Simulink simulation based on this model, with the 7 variable parameters shown in Table 1.
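The article's Simulink design is not reproduced in this text, so the following is only a rough sketch of how a two-compartment windkessel of this kind could be simulated in Python. Several things here are assumptions rather than the authors' method: the state equations (two compliances C1 and C2 joined by an inertance L, with a terminal resistance R), the half-sine inflow shape, the reading of Ts and Td as systolic and diastolic durations and of Q0 as peak inflow, and the use of the distal pressure as a PPG surrogate. The parameter values are the left-side values listed in the Figure 3 notes.

```python
import math
import numpy as np
from scipy.integrate import solve_ivp

def simulate_windkessel(R, C1, C2, L, Td, Ts, Q0, n_beats=20, fs=125.0):
    """Integrate an assumed dual windkessel circuit and return (time, distal pressure)."""
    period = Ts + Td  # assumed: one beat = systole + diastole

    def inflow(t):
        # Assumed inflow: half-sine ejection during systole, zero during diastole.
        tau = t % period
        return Q0 * math.sin(math.pi * tau / Ts) if tau < Ts else 0.0

    def rhs(t, state):
        p1, q, p2 = state                 # proximal pressure, inertance flow, distal pressure
        dp1 = (inflow(t) - q) / C1        # proximal compliance charges with inflow minus outflow
        dq = (p1 - p2) / L                # inertance accelerates flow along the pressure drop
        dp2 = (q - p2 / R) / C2           # distal compliance drains through the resistance
        return [dp1, dq, dp2]

    t_end = n_beats * period
    t_eval = np.arange(int(t_end * fs)) / fs   # sample times strictly inside [0, t_end)
    sol = solve_ivp(rhs, (0.0, t_end), [0.0, 0.0, 0.0], t_eval=t_eval, max_step=1e-3)
    return sol.t, sol.y[2]

# Left-side parameter set from the Figure 3 notes.
t_sim, ppg_sim = simulate_windkessel(R=0.8, C1=0.8, C2=0.18, L=0.008,
                                     Td=0.77, Ts=0.28, Q0=395)
```

Starting from rest, the first few beats are a transient; only the later beats approximate a periodic waveform, so any spectral analysis of such a simulation would reasonably discard the initial seconds.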

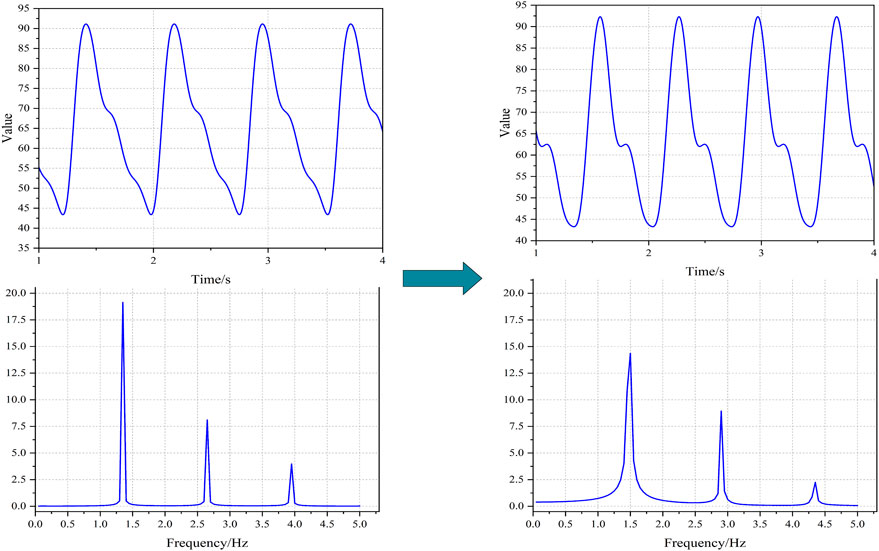

By systematically modulating the aforementioned variable parameters, significant alterations were observed in both the simulated PPG waveforms and their corresponding frequency spectra. As illustrated in Figure 3, the morphological state of the PPG waveforms exhibited visually discernible variations. Furthermore, distinct differences were identified in the spectral peaks of the frequency domain representation, demonstrating the sensitivity of these spectral components to parameter variations.

Figure 3. The PPGs under different states based on simulation. Notes: The physiological parameters were configured as follows: Left-side: R = 0.8, C₁ = 0.8, C₂ = 0.18, L = 0.008, Td = 0.77, Ts = 0.28, Q₀ = 395; Right-side: R = 1.2, C₁ = 1.2, C₂ = 0.20, L = 0.012, Td = 0.67, Ts = 0.25, Q₀ = 450.

Our analysis reveals that the frequency-domain information of the photoplethysmography (PPG) signal is predominantly characterized by its fundamental frequency and two harmonic frequency bands. Consequently, we derived multiple power-related features and ratio-based features from these three frequency bands as shown in Figure 4. The specific features and their corresponding variations in response to parameter changes during the simulation are comprehensively presented in Table 2. The computation of these features was performed through the following procedure: First, the average heart rate (HR) was obtained through preliminary data processing. Subsequently, Fast Fourier Transform (FFT) analysis was applied to the data segment. The power spectral density within the frequency bands of ±0.2 Hz centered at the fundamental HR frequency was identified as the Basic Frequency component (BF). Similarly, the power within ±0.2 Hz bands centered at twice and three times the HR frequency were designated as the First Harmonic Frequency (FHF) and Second Harmonic Frequency (SHF) components, respectively. The computational methodology for these ratio-based features is further detailed in Table 2.
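The band-power procedure described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name, the periodogram scaling, and the synthetic test signal are assumptions, and the ratio-based features are omitted because their definitions live in Table 2, which is not reproduced here.

```python
import numpy as np

def harmonic_band_powers(segment, fs, hr_hz, half_width=0.2):
    """Sum spectral power in +/- half_width Hz bands centered at HR, 2*HR and 3*HR."""
    x = np.asarray(segment, dtype=float)
    x = x - x.mean()                                   # drop the DC offset before the FFT
    psd = np.abs(np.fft.rfft(x)) ** 2 / (fs * x.size)  # simple periodogram estimate
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)

    def band_power(center):
        mask = (freqs >= center - half_width) & (freqs <= center + half_width)
        return float(psd[mask].sum())

    return {
        "BF": band_power(hr_hz),        # fundamental (heart-rate) band
        "FHF": band_power(2 * hr_hz),   # first harmonic band
        "SHF": band_power(3 * hr_hz),   # second harmonic band
    }

# Synthetic 20-s segment at 125 Hz with a 1.2 Hz fundamental and two weaker harmonics.
fs = 125
t = np.arange(20 * fs) / fs
hr_hz = 1.2
ppg = (1.0 * np.sin(2 * np.pi * hr_hz * t)
       + 0.5 * np.sin(2 * np.pi * 2 * hr_hz * t)
       + 0.25 * np.sin(2 * np.pi * 3 * hr_hz * t))
powers = harmonic_band_powers(ppg, fs, hr_hz)
```

With a 20-s segment the frequency resolution is 0.05 Hz, so each ±0.2 Hz band spans roughly nine FFT bins. In the synthetic example the band powers scale with the squared component amplitudes.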

The analysis demonstrates distinct patterns in frequency features corresponding to variations in hemodynamic parameters: (1) Increased peripheral vascular resistance leads to attenuation of the fundamental frequency while enhancing harmonic frequencies. (2) Elevated vascular compliance results in amplification of the first harmonic frequency, accompanied by attenuation of both the fundamental frequency and the second harmonic frequency. (3) Augmented blood flow inertia induces enhancement of both the fundamental frequency and the first harmonic frequency, with the latter exhibiting more pronounced amplification, while simultaneously causing attenuation of the second harmonic frequency. Furthermore, other physiological parameters also exert significant influences on these features.

This dataset is anchored in Ekman and Friesen (1971) theory of discrete emotions, which posits that emotions are distinct, universally identified mental states. In alignment with this theoretical framework and to ensure the selection of authentic and impactful emotion-inducing materials, our research team referred to authoritative emotion databases. Notably, we took cues from the DECAF database (Abadi et al., 2015) in curating a selection of video materials designed to elicit a variety of emotional responses. Our team has conducted extensive prior research to thoroughly assess and validate the efficacy of these selected materials in inducing the intended emotions during emotion induction experiments (Wang et al., 2022). The specific materials chosen for this study are detailed in Table 3, where each entry corresponds to a particular emotional state aimed to be induced.

The experimental protocol for this dataset was granted approval by the Medical Ethics Committee of the Department of Psychology and Behavioral Sciences at Zhejiang University, as evidenced by the ethical review document (Zhejiang University Psychological Ethics Review [2022] No. 059). A total of 192 students from Zhejiang University were initially recruited to partake in the study. Eligibility criteria for participants included having normal vision, hearing, and perception abilities, as well as being free from any physical or psychological conditions that could potentially influence emotional responses. Throughout the experimental process, stringent quality control measures were implemented. Regrettably, the dataset was compromised due to several factors: (1) instrumental operational errors resulted in the unsuccessful acquisition of 12 cases; (2) 4 cases were excluded due to participants’ personal reasons; and (3) preliminary quality assessment led to the elimination of 19 cases owing to suboptimal physiological signal acquisition. This data attrition, while regrettable, was necessary to maintain the integrity and reliability of the study. Consequently, the dataset was refined to include data and relevant information from 157 participants who met the criteria, comprising 96 females and 61 males.

The comprehensive experimental protocol for each participant was conducted in a dimly lit room, ensuring minimal interference from ambient light sources, with the exception of the computer display screen, as depicted in Figure 5. Prior to the commencement of the experiment, participants were provided with a comprehensive briefing on the experimental procedures and necessary precautions. They were required to sign an informed consent form, signifying their voluntary agreement to participate in the study. Subsequently, each participant was outfitted with a photoelectric sensor on their left index finger for the acquisition of PPG signals, as well as electrodes to capture additional physiological signals. The PPG signals were recorded using a physiological signal monitor (ePM-12M, Mindray, China), which operated at a sampling rate of 125 Hz. The video stimuli were presented on a computer screen positioned at a distance ranging from 0.5 to 1 m from the participant, allowing them to view the content from their most comfortable seated position. Throughout the experiment, each participant was exposed to a total of 8 distinct video materials designed to elicit various emotional responses. Upon completion of each video segment, participants were prompted to complete the Self-Assessment Manikin (SAM) Emotion Scale, followed by a brief respite. The sequence of video presentation and scale assessment was orchestrated by a specially designed experimental program, enabling participants to independently execute all steps of the experimental process until its conclusion.

PPG signals are among the most accessible physiological signals for collection; however, they are susceptible to various sources of noise and interference that can complicate the acquisition process. Despite the implementation of hardware-level denoising techniques, the integrity of PPG signals can still be compromised by factors such as respiration, bodily movement, and issues related to data transmission. To ensure the acquisition of high-fidelity PPG signals, it is imperative to employ additional processing measures. In the context of this study, all PPG recordings underwent a series of processing techniques aimed at enhancing signal quality.

First, a 1–20 Hz bandpass filter was used to remove most of the noise and interference caused by breathing, body movements, and other factors, thereby focusing the signal on high-density information regions. Because high-frequency disturbances remained, each PPG record y was then smoothed using Formula 1, and the result was denoted y1, where xi is the sampling time of each point, i is the sequence number of the data point (3 ≤ i ≤ n-1), and n is the number of data points in the record.
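A 1–20 Hz bandpass at the 125 Hz sampling rate could be implemented with SciPy roughly as below. The filter family (Butterworth), its order, and the zero-phase forward-backward application are assumptions, since the article does not specify the filter design; the smoothing of Formula 1 is not included because the formula is not reproduced in this text.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def bandpass_ppg(raw, fs=125.0, low_hz=1.0, high_hz=20.0, order=4):
    """Zero-phase Butterworth bandpass (assumed design) for a raw PPG record."""
    sos = butter(order, [low_hz, high_hz], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, raw)   # forward-backward filtering avoids phase distortion

# Synthetic check: a 1.5 Hz pulse component riding on a slow 0.1 Hz drift.
fs = 125.0
t = np.arange(int(20 * fs)) / fs
pulse = np.sin(2 * np.pi * 1.5 * t)
drift = 2.0 * np.sin(2 * np.pi * 0.1 * t)
filtered = bandpass_ppg(pulse + drift, fs=fs)
```

Second-order sections are used here because they tend to be numerically better behaved than transfer-function coefficients when the low cutoff is small relative to the sampling rate.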

Then, a method named “moving-pane” was used to identify the fiducial points of each PPG record. This method created a pane of a specific width and moved it along the timeline of the PPG record. All maximum points in the pane, and the minimum points between every two consecutive maximum points, were recorded during the movement. A maximum point Pi was then excluded if it met either of the following conditions, as shown in Figure 6A (P denotes maximum points and T denotes minimum points): 1) the distance between Pi and Pi-1 was less than 0.6 s; 2) the amplitude difference between Pi and Ti-1 was less than 0.5 times the amplitude difference between Pi-1 and Ti-2, or less than 0.5 times the amplitude difference between Pi+1 and Ti.
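The “moving-pane” procedure is not specified in enough detail here to reproduce exactly, so the sketch below substitutes SciPy's `find_peaks` as a stand-in. It covers only the 0.6 s spacing condition through the `distance` argument (which keeps the larger of two peaks that are too close, a slightly different policy from the rule in the text) and does not implement the amplitude-ratio condition. The function name and the synthetic signal are assumptions.

```python
import numpy as np
from scipy.signal import find_peaks

def find_fiducials(ppg, fs=125.0, min_gap_s=0.6):
    """Locate pulse peaks at least min_gap_s apart and the trough between each pair."""
    ppg = np.asarray(ppg, dtype=float)
    peaks, _ = find_peaks(ppg, distance=int(round(min_gap_s * fs)))
    # Trough = lowest sample between two consecutive peaks.
    troughs = np.array(
        [a + int(np.argmin(ppg[a:b])) for a, b in zip(peaks[:-1], peaks[1:])],
        dtype=int,
    )
    return peaks, troughs

# Synthetic pulse train: 1.25 Hz fundamental (0.8 s beats) plus a weaker harmonic.
fs = 125.0
t = np.arange(int(20 * fs)) / fs
ppg = np.sin(2 * np.pi * 1.25 * t) + 0.3 * np.sin(2 * np.pi * 2.5 * t)
peaks, troughs = find_fiducials(ppg, fs=fs)
```

As in the article, automatically detected points would still merit a manual review before being used as fiducial markers.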

Following the application of the “moving-pane” method, a meticulous manual review was performed to identify and retain the extremum points within the PPG record, which correspond to the peaks and troughs that serve as the fiducial markers of the waveform. This process is crucial for the accurate characterization of the PPG signal’s morphology. Subsequently, to address the issue of baseline drift that can distort the PPG signal, the method illustrated in Figure 6B and encapsulated by Formula 2 was employed. This technique effectively removes the baseline wander, ensuring that the origin of all individual PPG waveforms is recalibrated to zero. This normalization is essential for the consistent analysis and comparison of PPG signals across different recordings.
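Formula 2 and Figure 6B are not reproduced in this text, so the exact baseline-removal rule is unknown. One common way to bring the start of every pulse back to zero, shown here purely as an assumed illustration, is to interpolate linearly through the trough points and subtract that line from the signal. The function name and the synthetic record are hypothetical.

```python
import numpy as np

def remove_baseline(ppg, trough_idx):
    """Subtract a piecewise-linear baseline drawn through the trough samples (assumed method)."""
    ppg = np.asarray(ppg, dtype=float)
    trough_idx = np.asarray(trough_idx, dtype=int)
    samples = np.arange(ppg.size)
    # np.interp holds the first/last trough value constant outside the trough range.
    baseline = np.interp(samples, trough_idx, ppg[trough_idx])
    return ppg - baseline

# Synthetic record: 1 Hz pulses starting from zero, plus a linear upward drift.
fs = 125
t = np.arange(4 * fs) / fs
pulse = 1.0 - np.cos(2 * np.pi * t)           # zero at t = 0, 1, 2, 3 s
drift = 0.5 * t
known_troughs = np.array([0, 125, 250, 375])  # pulse onsets, supplied by hand for the demo
corrected = remove_baseline(pulse + drift, known_troughs)
```

After subtraction each pulse starts from zero, and between troughs the drift-free waveform is recovered whenever the drift is linear over a beat.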

In the concluding phase of signal processing, the z-score normalization technique was applied to eliminate the variability in the scale of each PPG record. This standardization procedure ensures that all records are brought onto a common scale, facilitating a unified analysis. The aforementioned processing methodologies were executed using a combination of self-devised algorithms and the scipy package (Virtanen et al., 2020), which is a fundamental component of the Python ecosystem for scientific computing. Post-processing, each PPG record was meticulously segmented in accordance with the distinct emotional stimuli that served as the triggers. Specifically, the records were divided into segments every 20 s, with each segment annotated to reflect the predominant emotional state during that interval.

After preprocessing, segmenting the recordings by emotional stimulus and dividing them into 20-s epochs, each annotated with its emotional label, yielded a total of 2,474 epochs for low arousal states, 5,560 epochs for high arousal states, 3,229 epochs for low valence states, and 4,805 epochs for high valence states, thereby establishing a comprehensive dataset for emotional state classification.
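The record-level z-score normalization and the 20-s epoching can be sketched as below. The helper names are hypothetical, and dropping a trailing partial epoch is an assumption; the article does not say how incomplete final segments were handled.

```python
import numpy as np

def zscore(record):
    """Scale a record to zero mean and unit standard deviation."""
    record = np.asarray(record, dtype=float)
    return (record - record.mean()) / record.std()

def split_epochs(record, fs=125.0, epoch_s=20.0):
    """Cut a record into consecutive fixed-length epochs, discarding any remainder."""
    record = np.asarray(record, dtype=float)
    n = int(round(fs * epoch_s))            # samples per epoch (2,500 at 125 Hz)
    n_epochs = record.size // n
    return record[: n_epochs * n].reshape(n_epochs, n)

# A 65-s synthetic record gives three full 20-s epochs; the last 5 s are dropped.
rng = np.random.default_rng(0)
record = zscore(rng.normal(5.0, 2.0, size=int(65 * 125)))
epochs = split_epochs(record)
```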

For each 20-s epoch, nine frequency-domain features (as specified in Table 2) were extracted. To account for inter-individual variability and other potential confounding factors, feature normalization was performed using a z-score-like transformation according to Equation 3. This standardization procedure ensures comparability across different subjects while preserving the relative distribution characteristics of the extracted features.

In Equation 3, for a specific feature F and a given subject S, Fmean and Fstd are the average and standard deviation of feature F over all sections of subject S, Femotion is the value of feature F before processing for a specific emotional section of subject S, and Fremove is the processed feature value.
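Equation 3 itself is not reproduced in this text. Based on the symbol definitions and the description of the transformation as z-score-like, one plausible reading (an assumption, not confirmed by the source) is:

```latex
F_{\mathrm{remove}} = \frac{F_{\mathrm{emotion}} - F_{\mathrm{mean}}}{F_{\mathrm{std}}}
```

Under this reading, each feature is centered and scaled within a subject, so a value of 0 corresponds to that subject's own average across all emotional sections.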

The Mann–Whitney U-test (Rosner and Grove, 1999), a non-parametric statistical method, serves as a robust tool for assessing significant differences between two independent datasets, especially when the data do not meet the assumptions of parametric tests. In this study, the U-test, as implemented in Python’s SciPy library (Virtanen et al., 2020), was employed to examine the variability in the newly extracted PPG frequency-domain features across different emotional dimensions, specifically comparing the high and low arousal states, as well as the high and low valence states. The preliminary evaluation of the emotion-discriminative capability of the extracted PPG frequency-domain features was conducted through two complementary approaches: (1) statistical analysis using p-values derived from the two-tailed U-test, and (2) comparative examination of feature distribution patterns across different emotional states. This dual-method assessment framework provides robust evidence for evaluating the effectiveness of the proposed features in emotion differentiation.
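A two-tailed U-test on one feature can be run with `scipy.stats.mannwhitneyu`, as in the sketch below. The data are synthetic stand-ins for a normalized feature under low and high arousal, and the `significance_stars` helper (including its "n.s." label) is hypothetical; its thresholds follow the p < 0.05, p < 0.01, and p < 0.001 levels used in the figure annotations.

```python
import numpy as np
from scipy.stats import mannwhitneyu

def significance_stars(p):
    """Map a p-value to the star notation used for the feature distributions."""
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return "n.s."   # hypothetical label for non-significant results

# Synthetic feature values: the high-arousal group is shifted upward by 0.5.
rng = np.random.default_rng(0)
low_arousal = rng.normal(0.0, 1.0, size=200)
high_arousal = rng.normal(0.5, 1.0, size=200)

u_stat, p_value = mannwhitneyu(high_arousal, low_arousal, alternative="two-sided")
label = significance_stars(p_value)
```

With thousands of epochs per class, even small distribution shifts reach significance, which is one reason to read the p-values alongside the distribution plots rather than on their own.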

Subsequently, based on the preliminary analysis, the identified emotion-discriminative features were utilized to construct a machine learning model for emotion recognition using Support Vector Machines (SVM). In this study, the SVM was implemented using the Scikit-learn algorithm package in Python (Fabian et al., 2011). To effectively partition the dataset, the train_test_split function from the Scikit-learn package was utilized, segregating the feature set into training and testing subsets with a ratio of 7:3. Given the modest size of the dataset, traditional cross-validation could potentially result in overfitting. Consequently, the study opted for a leave-one-point-out method for model training, a technique that iteratively excludes a single data point from the training process. This approach was iterated 100 times, ensuring a comprehensive assessment of the model’s performance (Ma et al., 2023). The Area Under the Curve (AUC) of the Receiver Operating Characteristic (ROC) curve was computed for each iteration. The median AUC value, derived from the 100 models, was adopted as the representative performance metric for the SVM model.
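One way to read the evaluation described above is as 100 repeated random 7:3 splits, each training a fresh SVM and scoring it by AUC, with the median reported. The sketch below follows that reading, which is an interpretation rather than the authors' confirmed procedure. The stratified splitting, the seeds, the default `SVC` settings, and the synthetic nine-feature data are all assumptions.

```python
import numpy as np
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

def median_auc(X, y, n_repeats=100, test_size=0.3, seed=0):
    """Median test-set AUC of an SVM over repeated random train/test splits."""
    aucs = []
    for i in range(n_repeats):
        X_tr, X_te, y_tr, y_te = train_test_split(
            X, y, test_size=test_size, stratify=y, random_state=seed + i
        )
        model = SVC()                               # default RBF kernel and parameters
        model.fit(X_tr, y_tr)
        scores = model.decision_function(X_te)      # signed distance to the boundary
        aucs.append(roc_auc_score(y_te, scores))
    return float(np.median(aucs))

# Synthetic stand-in for nine normalized features in two emotional classes.
rng = np.random.default_rng(0)
n_per_class = 150
X = np.vstack([
    rng.normal(0.0, 1.0, size=(n_per_class, 9)),
    rng.normal(1.0, 1.0, size=(n_per_class, 9)),
])
y = np.array([0] * n_per_class + [1] * n_per_class)
demo_auc = median_auc(X, y, n_repeats=20)
```

Reporting the median over many splits reduces the influence of any single lucky or unlucky partition. Note that epochs from the same participant can land in both the training and test subsets under a purely random split, which is worth keeping in mind when interpreting the resulting scores.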

ROC curves (Rodellar-Biarge et al., 2015) were employed to evaluate and visualize the discriminatory power of the features in identifying distinct emotional states. The model’s predictive accuracy, the AUCs for the ROC curves, and the precision metric were computed to quantitatively assess the model’s efficacy in recognizing emotions.

To further validate the effectiveness of the extracted PPG frequency-domain features while maintaining the exclusive use of PPG as the sole physiological signal, we additionally extracted two well-established feature sets that have been extensively validated by numerous researchers for emotion recognition: pulse rate variability (PRV) features and PPG morphological features, as detailed in Table 4. Following the same analytical protocol applied to the frequency-domain features, these comparative features underwent preliminary screening before being utilized to construct SVM-based machine learning models, thereby obtaining their respective emotion recognition accuracy metrics for systematic comparison. Furthermore, to investigate the underlying relationships among different feature sets, we conducted correlation analysis between the PPG frequency-domain features and the two additional feature groups. This analysis facilitated the development of an integrated feature set through optimal feature fusion, potentially enhancing the overall emotion recognition performance.

To ascertain the efficacy and generalizability of the methodologies and features delineated in this research, an expanded dataset of PPG recordings was sourced from the DEAP database, which is publicly accessible. The DEAP dataset (Koelstra et al., 2012) serves as a multimodal repository for affective analysis, encompassing physiological signal recordings from 32 participants exposed to 40 distinct video stimuli. For the purpose of this analysis, PPG recordings were exclusively selected. Consistent with the methodology applied to the aforementioned dataset, participants were prompted to rate the arousal, valence, and additional pertinent attributes of each video stimulus. The models established in the preceding section were then applied to discern the emotional states associated with the DEAP dataset entries. Subsequently, the derived accuracy metrics were utilized to assess the models’ performance in emotion recognition and their adaptability across different datasets.

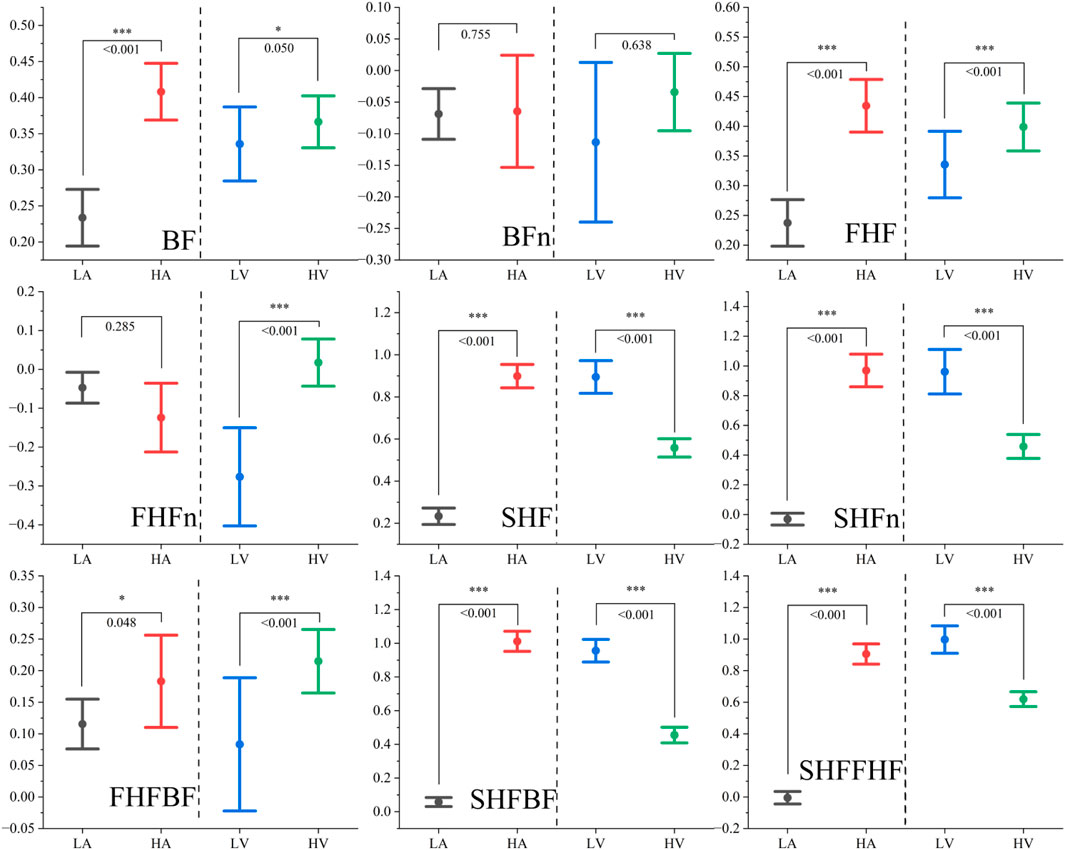

Figure 7 presents the distribution patterns of the nine extracted PPG frequency-domain features across different emotional states, categorized by high/low arousal and high/low valence. The corresponding p-values derived from U-tests, which indicate the statistical significance of differences between high and low arousal states as well as between high and low valence states, are also displayed. The analysis reveals distinct patterns of feature variations in response to different emotional dimensions.

Figure 7. The distribution of Peaks-based frequency-domain features under different emotional states. Note: * represents p < 0.05, ** represents p < 0.01, *** represents p < 0.001.

Regarding arousal levels, we observe synchronous increases in both the fundamental frequency and the first harmonic frequency, while the ratio-based features remain relatively stable. Notably, features associated with the second harmonic frequency demonstrate significant enhancement, indirectly reflecting the overall increase in total power during heightened arousal states. This observed phenomenon can be primarily attributed to the physiological correlates of increased peripheral vascular resistance and enhanced blood flow intensity. These physiological changes are consistent with the characteristic manifestations of heightened arousal states, which typically involve muscle tension, vasoconstriction, and intensified cardiac activity resulting from emotional excitation (Shu et al., 2018).

In contrast, valence levels exhibit a different pattern of influence: while both the fundamental frequency and the first harmonic frequency show moderate increases (with the first harmonic demonstrating more pronounced enhancement), the second harmonic frequency displays a marked decrease. Interestingly, the total power remains relatively unaffected by changes in valence. This observation is strongly associated with the fundamental nature of valence as a psychophysiological dimension that primarily reflects the distinction between positive and negative affective states (Gendolla and Krusken, 2001; Fairclough et al., 2014).

The analysis clearly demonstrates that the PPG frequency-domain features exhibit significant sensitivity to both arousal and valence variations, as evidenced by their systematic changes corresponding to different emotional states. To further evaluate the effectiveness of these features in emotion recognition, we conducted a comparative analysis with two well-established feature sets: PRV features and PPG morphological features. Figure 8 presents the results of intra-group and inter-group correlation analyses among these three feature sets.

The correlation matrix reveals several important patterns: (1) The PPG frequency-domain features maintain relatively low intra-group correlations, suggesting their complementary nature in capturing different aspects of emotional states. (2) Moderate correlations between frequency-domain features and morphological features indicate that the spectral information partially reflects certain morphological characteristics of PPG signals. (3) Both PRV and morphological feature sets exhibit substantially higher intra-group correlations compared to the frequency-domain features, indicating greater redundancy within these conventional feature sets. This comparative analysis suggests that the proposed frequency-domain features offer a more diverse and potentially more efficient representation of emotional states.

The comparative results of feature performance are presented in Table 5 and Figure 9. Among the three feature sets, the proposed PPG frequency-domain features demonstrated superior performance in machine learning models, achieving an accuracy of 87.5% in arousal classification and 81.4% in valence classification. The higher accuracy in arousal classification aligns with the more pronounced feature distribution differences observed in arousal states, as previously discussed. The PPG morphological features also showed reasonable discriminative capability, while the PRV features exhibited relatively poor performance. The suboptimal performance of PRV features may be attributed to two potential factors: (1) the exclusion of traditionally effective features such as LF and HF components, which could not be accurately computed due to the short duration (20 s) of individual epochs; and (2) the high intra-group correlation within the PRV feature set, which results in substantial redundancy despite the large number of features and effectively reduces the dimensionality of useful information.

To further validate the generalizability of our methodology, we replicated the analytical procedure on PPG signals from the DEAP dataset, maintaining identical processing pipelines and model construction approaches. The comparative results, as presented in Table 5, demonstrate remarkable consistency with our proprietary dataset findings, though with marginally reduced classification accuracy in the DEAP dataset. This performance variation can be attributed to multiple factors, including the use of default SVM parameters without specific optimization and inherent differences in emotional elicitation protocols between studies.

Capitalizing on the observed low inter-group feature correlations, we implemented a comprehensive feature fusion strategy. This integrated approach yielded exceptional emotion recognition performance, achieving accuracy rates surpassing 90% on our proprietary dataset. These findings not only confirm the standalone efficacy of the proposed PPG frequency-domain features in emotion recognition but also establish their crucial role as a fundamental component in advanced emotion recognition systems. The features’ unique complementary characteristics make them an indispensable element in the pursuit of enhanced recognition performance, serving as a critical piece in the development of more sophisticated emotion classification frameworks.

This study employs a physiological model-based simulation approach to systematically analyze the frequency-domain components of PPG signals and extract their essential characteristics. Through this comprehensive investigation, we examine the efficacy of these frequency-domain features in effectively discriminating emotional states. Furthermore, the research elucidates the intricate relationships between physiological parameters and emotional states, as well as the connections between PPG frequency-domain features and emotional responses, thereby providing a deeper understanding of the psychophysiological mechanisms underlying emotion recognition.

Through comprehensive investigation of PPG frequency-domain features, we have identified significant correlations between these features and various physiological parameters, including peripheral vascular resistance, blood flow inertia, and vascular compliance. Moreover, these features demonstrate remarkable sensitivity to variations in both arousal and valence levels, thereby establishing a crucial tripartite relationship among physiological parameters, PPG frequency-domain characteristics, and emotional states. These findings provide valuable psychophysiological foundations and references for subsequent emotion recognition analyses based on PPG frequency-domain features.

In both our own dataset and the DEAP dataset, the PPG frequency-domain features were more sensitive to arousal than to valence. A possible explanation is that emotional intensity (arousal) produces more pronounced cardiovascular changes than emotional polarity (positive or negative valence), so the autonomic nervous system's response to arousal may be larger and easier to detect through PPG analysis.
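One way to compare how strongly a feature separates two groups is to pair the Mann-Whitney U test with a rank-biserial effect size. The sketch below does this with SciPy on synthetic samples. The group sizes and mean shifts are invented purely to show the calculation; they do not reproduce any result from this study, and the helper `u_test_effect` is a hypothetical name.

```python
import numpy as np
from scipy.stats import mannwhitneyu


def u_test_effect(x, y):
    """Two-sided Mann-Whitney U test with rank-biserial effect magnitude.

    Returns (p_value, |r|), where r = 2U / (n_x * n_y) - 1.
    """
    result = mannwhitneyu(x, y, alternative="two-sided")
    r = 2.0 * result.statistic / (len(x) * len(y)) - 1.0
    return result.pvalue, abs(r)


rng = np.random.default_rng(1)

# Synthetic values of one hypothetical frequency-domain feature.
# The larger shift for the arousal split is an arbitrary choice.
low_arousal = rng.normal(0.0, 1.0, size=40)
high_arousal = rng.normal(1.0, 1.0, size=40)
low_valence = rng.normal(0.0, 1.0, size=40)
high_valence = rng.normal(0.4, 1.0, size=40)

p_arousal, r_arousal = u_test_effect(low_arousal, high_arousal)
p_valence, r_valence = u_test_effect(low_valence, high_valence)

print(f"arousal: p={p_arousal:.4f}, |r|={r_arousal:.2f}")
print(f"valence: p={p_valence:.4f}, |r|={r_valence:.2f}")
```

Reporting the effect magnitude alongside the p-value lets features be ranked by how well they separate the groups, independent of sample size.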

As summarized in Table 6, research using PPG signals for emotion recognition remains relatively scarce, and even fewer studies use PPG as the primary or only physiological modality. Allowing for differences in emotion elicitation materials and analytical focus across studies, our emotion recognition accuracy is competitive and falls within the upper-middle range of the existing literature. Findings from other researchers also corroborate our observations that PRV features perform poorly and that morphological features perform relatively better. What distinguishes our study is a theoretical framework that links physiological parameters, PPG frequency-domain features, and emotional states. This tripartite model provides psychophysiological evidence supporting the use of PPG frequency-domain features for emotion recognition and offers a theoretical foundation for future research in this domain.

Nevertheless, this study has several limitations. First, although we established a tripartite framework connecting physiological parameters, PPG frequency-domain features, and emotional states, the current analysis demonstrates strong associations among them rather than precise quantitative relationships. More sophisticated modeling approaches are needed to quantify these relationships. Second, the selection of emotion elicitation materials presents inherent challenges. The characteristics of a specific material sometimes influence the extracted features more than the emotional state itself. In addition, the duration of the stimulus material significantly affects feature selection and interpretation, a methodological concern shared by many studies in this field. Finally, our research focused on feature analysis and interpretation and placed less emphasis on optimizing classification accuracy. This orientation provides insight into feature characteristics, but the resulting classification performance, although respectable, leaves room for improvement. Future studies should strike a better balance between feature exploration and recognition performance.

This study employs a simulation approach based on a physiological model to systematically analyze the frequency-domain components of PPG signals and extract their key features. We examine how effectively these frequency-domain features discriminate emotional states. The research also clarifies the relationships among physiological parameters, frequency-domain features, and emotional states, providing deeper insight into the psychophysiological mechanisms underlying emotion recognition. These findings establish a theoretical foundation and offer a reference for subsequent research in this field.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

This study was performed in accordance with the Nuremberg Code. The studies involving humans were approved by the Zhejiang University Psychological Ethics Review (approval: [2022] No. 059). The studies were conducted in accordance with the local legislation and institutional requirements. All adult participants provided written informed consent to participate in this study.

ZZ: Conceptualization, Methodology, Resources, Software, Writing–original draft. XW: Conceptualization, Investigation, Methodology, Validation, Writing–original draft. YX: Formal Analysis, Validation, Writing–review and editing. WC: Resources, Validation, Writing–review and editing. JZ: Supervision, Validation, Writing–review and editing. SC: Conceptualization, Supervision, Writing–review and editing. HC: Project administration, Resources, Supervision, Writing–review and editing.

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

The authors would like to thank Yimin Shen, laboratory leader at the College of Biomedical Engineering and Instrument Sciences at Zhejiang University (ZJU, China), for kind help with this work.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fphys.2025.1486763/full#supplementary-material

Abadi M. K., Subramanian R., Kia S. M., Avesani P., Patras I., Sebe N. (2015). DECAF: MEG-based multimodal database for decoding affective physiological responses. IEEE T. Affect. Comput. 6, 209–222. doi:10.1109/TAFFC.2015.2392932

Alvarez-Jimenez M., Calle-Jimenez T., Hernandez-Alvarez M. (2024). A comprehensive evaluation of features and simple machine learning algorithms for electroencephalographic-based emotion recognition. Appl. Sci. 14, 2228. doi:10.3390/app14062228

Anthony A. A., Patil C. M. (2023). Speech emotion recognition systems: a comprehensive review on different methodologies. Wirel. Pers. Commun. 130, 515–525. doi:10.1007/s11277-023-10296-5

Beckmann N., Viga R., Doganguen A., Grabmaier A. (2019). Measurement and analysis of local pulse transit time for emotion recognition. IEEE Sens. J. 19, 7683–7692. doi:10.1109/JSEN.2019.2915529

Bradley M. M., Lang P. J. (1994). Measuring emotion: the self-assessment Manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry 25, 49–59. doi:10.1016/0005-7916(94)90063-9

Button K. S., Lewis G., Munafo M. R. (2012). Understanding emotion: lessons from anxiety. Behav. Brain Sci. 35, 145. doi:10.1017/S0140525X11001464

Chakraborty A., Sadhukhan D., Pal S., Mitra M. (2020). PPG-Based automated estimation of blood pressure using patient-specific neural network modeling. J. Mech. Med. Biol. 20, 2050037. doi:10.1142/S0219519420500372

Choi E. J., Kim D. K. (2018). Arousal and valence classification model based on long short-term memory and DEAP data for mental healthcare management. Healthc. Inform. Res. 24 (4), 309–316. doi:10.4258/hir.2018.24.4.309

Davydov D. M., Zech E., Luminet O. (2011). Affective context of sadness and physiological response patterns. J. Psychophysiol. 25, 67–80. doi:10.1027/0269-8803/a000031

Dhara T., Singh P. K., Mahmud M. (2023). A fuzzy ensemble-based deep learning model for EEG-based emotion recognition. Cogn. Comput. 16, 1364–1378. doi:10.1007/s12559-023-10171-2

Drummond P. D. (1999). Facial flushing during provocation in women. Psychophysiology 36, 325–332. doi:10.1017/S0048577299980344

Ekman P., Friesen W. V. (1971). Constants across cultures in the face and emotion. J. Pers. Soc. Psychol. 17, 124–129. doi:10.1037/h0030377

Fabian P., Gaël V., Alexandre G., Vincent M., Bertrand T., Olivier G., et al. (2011). Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830. Available at: https://scikit-learn.org/stable/

Fairclough S. H., van der Zwaag M., Spiridon E., Westerink J. (2014). Effects of mood induction via music on cardiovascular measures of negative emotion during simulated driving. Physiol. Behav. 129, 173–180. doi:10.1016/j.physbeh.2014.02.049

Gendolla G., Krusken J. (2001). Mood state and cardiovascular response in active coping with an affect-regulative challenge. Int. J. Psychophysiol. 41, 169–180. doi:10.1016/S0167-8760(01)00130-1

Goldwyn R. M., Watt T. B. (1967). Arterial pressure pulse contour analysis via a mathematical model for clinical quantification of human vascular properties. IEEE T. Bio.-Med. Eng. BM14, 11. doi:10.1109/TBME.1967.4502455

Gordan R., Gwathmey J. K., Xie L. H. (2015). Autonomic and endocrine control of cardiovascular function. World J. Cardiol. 7, 204–214. doi:10.4330/wjc.v7.i4.204

Goshvarpour A., Abbasi A., Goshvarpour A. (2017). An accurate emotion recognition system using ECG and GSR signals and matching pursuit method. Biomed. J. 40, 355–368. doi:10.1016/j.bj.2017.11.001

Gou X. Y., Li Y. X., Guo L. X., Zhao J., Zhong D. L., Liu X. B., et al. (2023). The conscious processing of emotion in depression disorder: a meta-analysis of neuroimaging studies. Front. Psychiatry 14, 1099426. doi:10.3389/fpsyt.2023.1099426

Harms M. B., Martin A., Wallace G. L. (2010). Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychol. Rev. 20, 290–322. doi:10.1007/s11065-010-9138-6

Harris K. F., Matthews K. A. (2004). Interactions between autonomic nervous system activity and endothelial function: a model for the development of cardiovascular disease. Psychosom. Med. 66, 153–164. doi:10.1097/01.psy.0000116719.95524.e2

Hasan M., Rundensteiner E., Agu E. (2019). Automatic emotion detection in text streams by analyzing Twitter data. Int. J. Data Sci. Anal. 7, 35–51. doi:10.1007/s41060-018-0096-z

Hsu Y., Wang J., Chiang W., Hung C. (2020). Automatic ECG-based emotion recognition in music listening. IEEE T. Affect. Comput. 11, 85–99. doi:10.1109/TAFFC.2017.2781732

Issa S., Peng Q., You X. (2021). Emotion classification using EEG brain signals and the broad learning system. IEEE T. Syst. Man. Cy.-S. 51, 7382–7391. doi:10.1109/TSMC.2020.2969686

Jang E., Park B., Kim S., Chung M., Park M., Sohn J. (2014). Data from: emotion classification based on bio-signals emotion recognition using machine learning algorithms. 1373, 1376. doi:10.1109/InfoSEEE.2014.6946144

Johnstone M. (1971). The effects of oral sedatives on the vasoconstrictive reaction to fear. Brit. J. Anaesth. 43, 365–379. doi:10.1093/bja/43.4.365-a

Joshi V. M., Ghongade R. B. (2021). EEG based emotion detection using fourth order spectral moment and deep learning. Biomed. Signal Proces. 68, 102755. doi:10.1016/j.bspc.2021.102755

Kang D., Kim D. (2022). 1D convolutional autoencoder-based PPG and GSR signals for real-time emotion classification. IEEE Access 10, 91332–91345. doi:10.1109/ACCESS.2022.3201342

Kleiger R. E., Stein P. K., Bigger J. J. (2005). Heart rate variability: measurement and clinical utility. Ann. Noninvasive Electrocardiol. 10, 88–101. doi:10.1111/j.1542-474X.2005.10101.x

Koelstra S., Muhl C., Soleymani M., Lee J., Yazdani A., Ebrahimi T., et al. (2012). DEAP: a database for emotion analysis using physiological signals. IEEE T. Affect. Comput. 3, 18–31. doi:10.1109/T-AFFC.2011.15

Krumhansl C. L. (1997). An exploratory study of musical emotions and psychophysiology. Can. J. Exp. Psychol. 51, 336–353. doi:10.1037/1196-1961.51.4.336

Kulke L., Feyerabend D., Schacht A. (2020). A comparison of the affectiva iMotions facial expression analysis software with EMG for identifying facial expressions of emotion. Front. Psychol. 11, 329. doi:10.3389/fpsyg.2020.00329

Lee M., Lee Y. K., Lim M., Kang T. (2020). Emotion recognition using convolutional neural network with selected statistical photoplethysmogram features. Appl. Sci. 10, 3501. doi:10.3390/app10103501

Lee M. S., Lee Y. K., Pae D. S., Lim M. T., Kim D. W., Kang T. K., et al. (2019). Fast emotion recognition based on single pulse PPG signal with convolutional neural network. Appl. Sci. 9 (16), 3355. doi:10.3390/app9163355

Li D., Xie L., Chai B., Wang Z. (2022). A feature-based on potential and differential entropy information for electroencephalogram emotion recognition. Electron. Lett. 58, 174–177. doi:10.1049/ell2.12388

Li F., Yang L., Shi H., Liu C. (2017). Differences in photoplethysmography morphological features and feature time series between two opposite emotions: happiness and sadness. Artery Res. 18, 7. doi:10.1016/j.artres.2017.02.003

Ma H., Zhang D., Wang Y., Ding Y., Yang J., Li K. (2023). Prediction of early improvement of major depressive disorder to antidepressant medication in adolescents with radiomics analysis after ComBat harmonization based on multiscale structural MRI. BMC Psychiatry 23, 466. doi:10.1186/s12888-023-04966-8

Maria E., Matthias L., Sten H. (2019). Emotion recognition from physiological signal analysis: a review. Electron. Notes Theor. Comput. Sci. 343, 35–55. doi:10.1016/j.entcs.2019.04.009

Nummenmaa L., Glerean E., Hari R., Hietanen J. K. (2014). Bodily maps of emotions. Proc. Natl. Acad. Sci. U. S. A. 111, 646–651. doi:10.1073/pnas.1321664111

Ong A. D., Bergeman C. S., Bisconti T. L., Wallace K. A. (2006). Psychological resilience, positive emotions, and successful adaptation to stress in later life. J. Pers. Soc. Psychol. 91, 730–749. doi:10.1037/0022-3514.91.4.730

Paul A., Chakraborty A., Sadhukhan D., Pal S., Mitra M. (2024). A simplified PPG based approach for automated recognition of five distinct emotional states. Multimed. Tools Appl. 83, 30697–30718. doi:10.1007/s11042-023-16744-5

Rainville P., Bechara A., Naqvi N., Damasio A. R. (2006). Basic emotions are associated with distinct patterns of cardiorespiratory activity. Int. J. Psychophysiol. 61, 5–18. doi:10.1016/j.ijpsycho.2005.10.024

Rodellar-Biarge V., Palacios-Alonso D., Nieto-Lluis V., Gomez-Vilda P. (2015). Towards the search of detection in speech-relevant features for stress. Expert Syst. 32, 710–718. doi:10.1111/exsy.12109

Rosner B., Grove D. (1999). Use of the Mann-Whitney U-test for clustered data. Stat. Med. 18, 1387–1400. doi:10.1002/(sici)1097-0258(19990615)18:11<1387::aid-sim126>3.0.co;2-v

Sarkar P., Etemad A. (2022). Self-supervised ECG representation learning for emotion recognition. IEEE T. Affect. Comput. 13, 1541–1554. doi:10.1109/TAFFC.2020.3014842

Sarma P., Barma S. (2021). Emotion recognition by distinguishing appropriate EEG segments based on random matrix theory. Biomed. Signal Proces. 70, 102991. doi:10.1016/j.bspc.2021.102991

Sato W., Fujimura T., Suzuki N. (2008). Enhanced facial EMG activity in response to dynamic facial expressions. Int. J. Psychophysiol. 70, 70–74. doi:10.1016/j.ijpsycho.2008.06.001

Shu L., Xie J., Yang M., Li Z., Li Z., Liao D., et al. (2018). A review of emotion recognition using physiological signals. Sensors-Basel 18, 2074. doi:10.3390/s18072074

Virtanen P., Gommers R., Oliphant T. E., Haberland M., Reddy T., Cournapeau D., et al. (2020). SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272. doi:10.1038/s41592-019-0686-2

Wang S., Yu S. (2021). “Emotion recognition based on photoplethysmography using ResNet and BiLSTM networks,” in 2021 international conference on E-health and bioengineering (EHB 2021). 9TH EDITION. doi:10.1109/EHB52898.2021.9657742

Wang X. Y., Zhou H. L., Xue W. C., Zhu Z. B., Jiang W. N., Feng J. W., et al. (2022). The hybrid discrete-dimensional frame method for emotional film selection. Curr. Psychol. 42, 30077–30092. doi:10.1007/s12144-022-04038-2

Wen W., Liu G., Cheng N., Wei J., Shangguan P., Huang W. (2014). Emotion recognition based on multi-variant correlation of physiological signals. IEEE T. Affect. Comput. 5, 126–140. doi:10.1109/TAFFC.2014.2327617

Keywords: photoplethysmography (PPG), emotion recognition, support vector machine (SVM), PPG frequency-domain analysis, dual windkessel model

Citation: Zhu Z, Wang X, Xu Y, Chen W, Zheng J, Chen S and Chen H (2025) An emotion recognition method based on frequency-domain features of PPG. Front. Physiol. 16:1486763. doi: 10.3389/fphys.2025.1486763

Received: 29 August 2024; Accepted: 29 January 2025;

Published: 25 February 2025.

Edited by:

Rajesh Kumar Tripathy, Birla Institute of Technology and Science, India

Reviewed by:

Bingmei M. Fu, City College of New York (CUNY), United States

Copyright © 2025 Zhu, Wang, Xu, Chen, Zheng, Chen and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hang Chen
