- 1College of Energy Engineering, Zhejiang University, Hangzhou, China

- 2State Key Laboratory of Clean Energy Utilization, Zhejiang University, Hangzhou, China

Accurate particle detection is a common challenge in particle field characterization with digital holography, especially for gel secondary breakup, which produces dense, complex, multi-scale particles and filaments against strong background noise. This study proposes a deep learning method called Mo-U-net, which combines U-net with Mobilenetv2, and demonstrates its application to segmenting the dense filament-droplet field of a gel drop. Specifically, a pruning method is applied to Mo-U-net that cuts off about two-thirds of its deep layers to reduce training time while retaining high segmentation accuracy. Segmentation performance is quantitatively evaluated by three indices: the positive intersection over union (PIOU), the average square symmetric boundary distance (ASBD) and the diameter-based prediction statistics (DBPS). The experimental results show that the area prediction accuracy (PIOU) of Mo-U-net reaches 83.3%, which is about 5% higher than that of the adaptive-threshold method (ATM). The boundary prediction error (ASBD) of Mo-U-net is only about one pixel length, which is one third of that of ATM. Mo-U-net also predicts a droplet size distribution (DBPS) that is consistent with the ground truth. These results demonstrate the high accuracy of Mo-U-net in dense filament-droplet field recognition and its capability of providing accurate statistical data in a variety of holographic particle diagnostics. Public model address: https://github.com/Wu-Tong-Hearted/Recognition-of-multiscale-dense-gel-filament-droplet-field-in-digital-holography-with-Mo-U-net.

1 Introduction

Gel propellant, with the merits of high specific impulse and density impulse [1, 2], smooth ignition [3], stable combustion [4] and good storage condition [5, 6], shows great potential as a rocket propellant. In recent years, studies on gel propellant have covered formation [7], atomization [8, 9], flow characterization [10, 11] and combustion [12–14]. In practice, gel propellant is atomized in a crossing flow and then combusts, and secondary atomization downstream in particular can dramatically influence the mixing and combustion efficiency [15]. Thus, the spatial-temporal evolution of gel droplet breakup, including morphology, droplet and fragment size, and velocity, is of essential significance. The breakup process of a Newtonian droplet varies with the Weber number (We = ρυ²ι/σ, where ρ is the fluid density, υ is the characteristic velocity, ι is the characteristic length and σ is the surface tension coefficient of the fluid) and the Ohnesorge number (Oh), and can be divided into five modes (vibrational, bag, multi-mode, sheet thinning and catastrophic breakup), with distinctive transitions in the shape and size of the broken droplet fragments [16]. As a typical non-Newtonian fluid, a gel droplet undergoes a secondary breakup that differs from that of a Newtonian one. Moreover, the extreme breakup condition of a gel propellant droplet in the high-speed internal flow of a rocket, with We even exceeding one thousand, makes the breakup complicated and its characterization correspondingly more challenging.

To quantify the droplet breakup process, several advanced optical particle instruments have been applied to measure the droplets, e.g., the phase Doppler analyzer (PDA) [17, 18] and the Malvern particle size analyzer (MPSA). PDA can only deal with spherical particles, and MPSA can only measure a one-dimensional particle descriptor, that is, equivalent size. However, the breakup of a gel droplet generates a large number of irregular fragments, filaments and nonspherical droplets, and their morphological characterization, especially in 3D, is vitally important for gaining insight into the mechanism of the gel droplet breakup process. Digital in-line holography (DIH), as a real 3D imaging technique, can measure geometric parameters of particles, including 3D position, diameter and morphology, as well as 3D velocity when combined with a particle imaging/tracking velocimetry (PIV/PTV) strategy, and is thus well suited to droplet dynamics characterization. Based on DIH, Radhakrishna, et al. [19] obtained the size and velocity of droplets with We ranging from 30 to 120, explored the breakup pattern and thus gave the percent of required minimum reflux velocity. Prasad, et al. [20] explored the impact of density on cloud dynamics, examined two particle clouds with similar granularity and morphology, and thus provided a measurement of velocity and concentration before ignition. Liebel, et al. [21] combined DIH with transient microscopy and successfully demonstrated the time-resolved spectroscopy of gold nanoparticles, laying a foundation for single-shot three-dimensional microscopic imaging on any timescale. One of the common challenges in filament-droplet field characterization during secondary breakup is accurately detecting and segmenting the objects from the background. Nowadays, threshold algorithms [22], especially adaptive-threshold methods (ATM) [23–25], are widely employed. The threshold value is calculated from models originally derived for images taken by direct photography, whereas the reconstructed slice image in DIH has intrinsic twin-image noise. Besides, liquid filaments of various morphologies and multi-scale droplets pose an additional challenge to segmentation. These factors deteriorate the performance of threshold-based algorithms.

In essence, the segmentation of the reconstructed holographic image is equivalent to semantic segmentation in the field of deep learning. The fully convolutional network (FCN) [26] opened the door of deep learning to semantic segmentation, and several models, e.g., Deeplab [27], Segnet [28], E-net [29] and U-net [30], were subsequently proposed. Among them, U-net is a classic network for semantic segmentation, and it is also embedded in various popular large models as a backbone. In particular, U-net has been widely used in the semantic segmentation of medical images owing to its mixture of low-level and high-level features [30]. Tuning the loss function and augmenting the data by cropping and rotating the initial images can increase the accuracy and robustness of the deep neural network prediction. The application scenario of this method has been extended to DIH, where it has become a feasible approach to segmenting reconstructed holographic images. Altman, et al. [31] characterized and tracked holograms with a deep convolutional network to recognize and locate colloidal particles and thus estimated their properties. Midtvedt, et al. [32] developed a weighted average convolutional network to analyze the hologram of a single suspended nanoparticle and quantified the size and refractive index of a single subwavelength particle. Until now, however, these works have mainly focused on relatively large [33, 34] or spherical objects [35, 36], sparse small particle fields [37–39] or other objects (e.g., fiber internal structure [40], cell identification [41]), and few have addressed dense particle fields consisting of liquid droplets and filaments with various morphological shapes, such as the gel atomization field. The combination of digital holography and deep learning has also been extended to other particle-like objects. Belashov, et al. [42] combined holographic microscopy with a machine-learning cell segmentation algorithm to characterize the dynamic process of apoptosis, achieving an accuracy of 95.5%, and Wang, et al. [43] segmented terahertz images of a gear wheel and used average structural similarity to select the best results, which were shown to be better than those of some traditional segmentation algorithms.

This study combines a semantic segmentation model based on a deep neural network with the holographic images of the gel secondary atomization field and proposes Mo-U-net to deal with this segmentation task. Experimental results show that, across the tested conditions, Mo-U-net outperforms ATM in overall segmentation, local boundary prediction, internal structure prediction and the retrieval of particles of different scales. Mo-U-net can therefore lay a solid foundation for the later mechanism analysis of droplet breakup, and it also provides a feasible approach to segmenting other kinds of holograms.

2 Experimental Setup

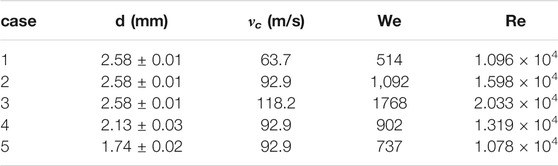

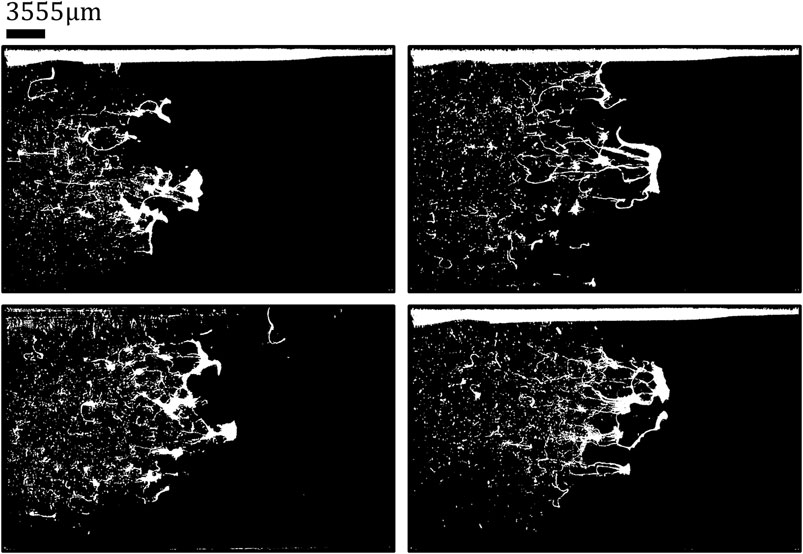

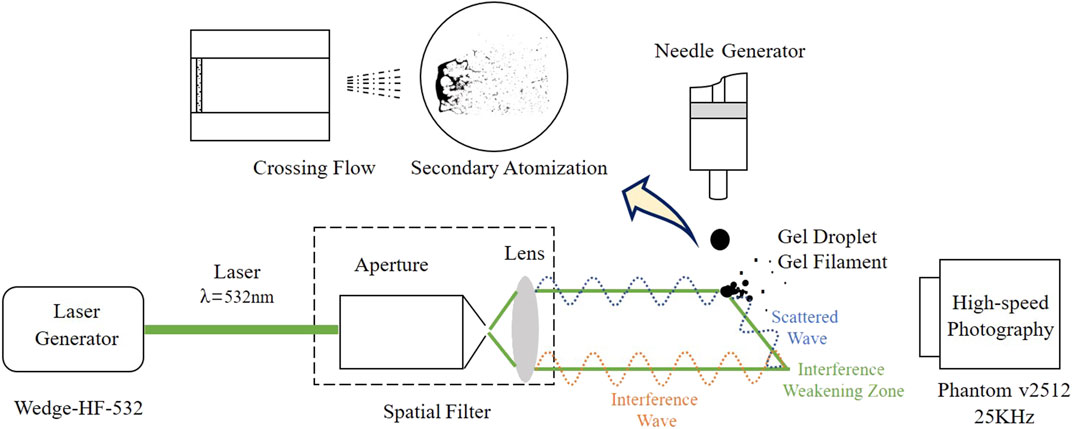

The experimental setup for gel droplet breakup in a crossing flow measured with DIH is shown in Figure 1. A gel droplet with a size ranging from 1.74 to 2.58 mm was produced by a needle generator and then fell into a high-speed crossing flow with a velocity ranging from 63.7 m/s to 118.2 m/s. All gel samples were prepared on the same day, with a density of 1,356.8 kg/m³ and a surface tension of 24.56 mN/m. The droplet was then accelerated and broken up into filaments and small droplets, with We spanning from 514 to 1768 and the Reynolds number (Re) from 1.078 × 10⁴ to 2.033 × 10⁴. The experiments were carried out under five conditions, as shown in Table 1. In this table, d is the droplet diameter, νc is the central velocity in the flow field, and Re is the Reynolds number (Re = ρVL/μ, where ρ and μ are the density and dynamic viscosity coefficient of the fluid, respectively, and V and L are the characteristic velocity and length of the flow field). The breakup processes of the gel droplets were visualized by a 25 kHz high-speed DIH system. A laser beam of wavelength λ = 532 nm was first generated by a picosecond pulsed laser, passed through a spatial filter, and then collimated into a plane wave. The plane wave traveled through the test region and illuminated the filament-droplet field. Part of the beam was scattered as the object wave and interfered with the unscattered reference wave to generate a hologram with a size of M × N = 1,280 × 800 and equivalent horizontal and vertical pixel sizes Δx and Δy of 28 μm in this study. The holograms obtained in this study are shown in Figure 2A.
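As a sanity check on the reported Weber range, the definition We = ρυ²ι/σ can be evaluated directly. The sketch below assumes that ρ is the density of the surrounding air (taken here as 1.2 kg/m³, a value not stated in the text) and pairs the largest droplet diameter with both velocity bounds, which is also an assumption because Table 1 is not reproduced here. Under those assumptions the results land near the reported 514 to 1768 range.

```python
def weber_number(density, velocity, length, surface_tension):
    """We = rho * v**2 * l / sigma, with all quantities in SI units."""
    return density * velocity ** 2 * length / surface_tension


AIR_DENSITY = 1.2           # kg/m^3, assumed (not given in the text)
SURFACE_TENSION = 24.56e-3  # N/m, from the text
DIAMETER = 2.58e-3          # m, largest droplet diameter in the text

# Pairing the largest diameter with each velocity bound is an assumption.
we_low = weber_number(AIR_DENSITY, 63.7, DIAMETER, SURFACE_TENSION)
we_high = weber_number(AIR_DENSITY, 118.2, DIAMETER, SURFACE_TENSION)
print(f"We at 63.7 m/s: {we_low:.0f}, We at 118.2 m/s: {we_high:.0f}")
```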

FIGURE 1. Schematic of the high-speed DIH system for measuring crossing flow atomization of gel droplet.

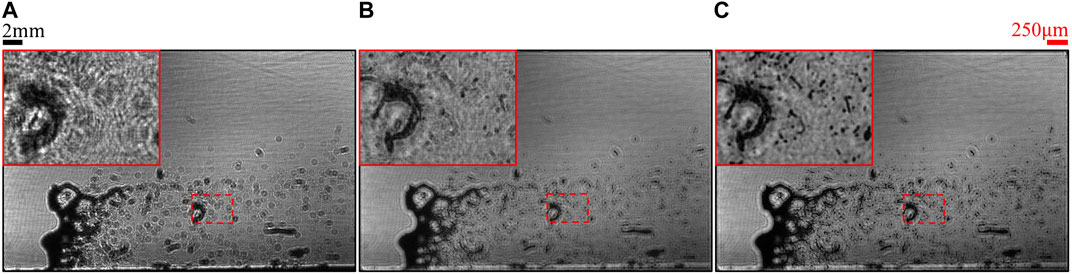

FIGURE 2. Experimental data for crossing-flow atomization of gel particles. Images with a black boundary are the original images. Images with a red boundary on the upper left are enlargements of the regions framed by the red dotted boxes. Subgraph (A) is the raw hologram obtained by the high-speed camera. Subgraph (B) is one of the slices from a reconstructed hologram. Subgraph (C) is the EFI generated from all reconstructed slices.

The recorded hologram noted as Ih(m, n) was then reconstructed by angular spectrum method [44] as follows
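The equation itself is not reproduced in this text. A standard form of the angular spectrum reconstruction, written with the symbols defined in the next paragraph, would be the following, where the spatial frequencies f_x and f_y and the imaginary unit j are notation assumed here rather than taken from the source:

```latex
E_r(k, l, z_r) = \mathcal{F}^{-1}\left\{ \mathcal{F}\left\{ I_h(m, n)\, R \right\}
\cdot \exp\left( j\,\frac{2\pi z_r}{\lambda}
\sqrt{1 - (\lambda f_x)^2 - (\lambda f_y)^2} \right) \right\}
```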

where Er (k, l, zr) denotes the complex amplitude of the reconstructed image, and zr and (k, l) denote the reconstruction distance from the recording plane and the pixel coordinates of the reconstructed image, respectively. R = 1 denotes the reference light for the reconstruction of DIH. Ih(m, n) is the hologram, and (m, n) is the pixel coordinate in the hologram.
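A minimal NumPy sketch of angular spectrum reconstruction for a single plane is given below. It is a generic textbook implementation under the parameters stated in the text (λ = 532 nm, 28 μm pixels, R = 1), not the authors' code; the function name and the handling of evanescent components are assumptions.

```python
import numpy as np


def angular_spectrum_reconstruct(hologram, z, wavelength=532e-9,
                                 dx=28e-6, dy=28e-6):
    """Propagate a hologram by distance z (metres) with a unit reference wave."""
    n_rows, n_cols = hologram.shape
    fx = np.fft.fftfreq(n_cols, d=dx)   # horizontal spatial frequencies (1/m)
    fy = np.fft.fftfreq(n_rows, d=dy)   # vertical spatial frequencies (1/m)
    FX, FY = np.meshgrid(fx, fy)        # both of shape (n_rows, n_cols)

    arg = 1.0 - (wavelength * FX) ** 2 - (wavelength * FY) ** 2
    propagating = arg > 0               # evanescent components are discarded
    kz = 2 * np.pi / wavelength * np.sqrt(np.where(propagating, arg, 0.0))
    transfer = np.where(propagating, np.exp(1j * kz * z), 0.0)

    spectrum = np.fft.fft2(hologram * 1.0)   # reference wave R = 1
    return np.fft.ifft2(spectrum * transfer)  # complex amplitude E_r


# Usage on a small synthetic hologram; the intensity is |E_r|**2.
demo = np.ones((8, 10))
field = angular_spectrum_reconstruct(demo, z=0.21)
intensity = np.abs(field) ** 2
```

Calling the function once per reconstruction distance yields the stack of slices from which an extended focus image can later be fused.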

In this study, 301 sections between 210 and 240 mm along the Z axis were reconstructed with 0.1 mm spacing in order to obtain full 3D droplet field information while keeping a relatively high reconstruction speed. The depth of field (DoF) of the holographic imaging system is calculated to be about 290 μm [45]. As long as the section spacing is shorter than the DoF, every particle in the experimental space is in focus on some section, whereas if the spacing is too small, the reconstruction time may become extremely long. Due to the limited depth of field of the reconstructed image, each reconstructed slice had only a few droplets in focus, as shown in Figure 2B, so it is necessary to reconstruct multiple sections of the particle field. To facilitate and speed up the subsequent droplet recognition and locating, the whole reconstructed 3D particle field was fused into one image called the extended focus image (EFI), as shown in Figure 2C, using a region-based image depth-of-field extension algorithm [46]. The gel filament-droplet field in the EFIs has the following characteristics. 1) Due to the nature of digital in-line holography, twin-images of the foreground are generated in the background of the EFI, accompanied by bright and dark background stripes. They interfere with the gray distribution of the local foreground and produce noise in the final recognition result. 2) In a complex dense filament-droplet field, the twin-images generated by the foreground of the EFI interfere with each other and form complex, changeable and irregular noise, which cripples the segmentation performance of classical threshold methods based on gray statistics. 3) The shapes of gel filaments in EFIs are variable, and the scale span of gel filaments is very large (hundreds of microns to a few centimeters). These two factors make general processing methods based on geometry and morphology unfeasible and increase the difficulty of threshold segmentation.

Thus, in this study, the EFIs will be processed with a deep neural network called Mo-U-net to overcome the difficulties mentioned above.

3 Methods

3.1 Model Setup

U-net consists of a shrinking path named the encoder and an expanding path named the decoder. The shrinking path extracts the context information of the image, while the expanding path uses this information to reconstruct the foreground and the background of the image precisely. The two symmetrical paths form a "U"-shaped structure, for which the network is called "U-net" [30]. Because of the complex segmentation scene and the fine segmentation requirements, U-net adopts skip connections, which fuse the low-level information extracted by the encoder with the corresponding high-level feature map from the decoder layer by layer to make up for the features lost in encoding.

As shown in Figure 3, the Mo-U-net used in this study is based on the structure of U-net and is improved in two aspects. 1) The original four down-sampling blocks are replaced with a network named Mobilenetv2 [47] as the new encoder. Mobilenetv2 completely discards the pooling layer to retain more low-level features in down-sampling and replaces normal convolution with depth-wise separable convolution and point-wise convolution, which enables a more precise and faster segmentation. This is also consistent with the segmentation characteristics of the gel filament-droplet field in EFIs. 2) Blocks 7 to 14 of Mobilenetv2 are removed to simplify the encoder, and only two up-sampling and feature-fusing steps are kept to simplify the decoder, in view of the timeliness of image processing and the finite computing resources. Since the features of gel droplets and filaments in this study are relatively simple, a network with relatively small capacity and few skip connections is sufficient to extract adequate semantic information for fine segmentation, while its fewer parameters save a considerable amount of training time.
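The parameter saving from depth-wise separable convolution can be illustrated with simple arithmetic. The sketch below counts the weights of one standard convolution and of its depth-wise plus point-wise replacement; biases are ignored, and the kernel size and channel counts are illustrative values, not taken from the Mo-U-net configuration.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights of a standard kernel x kernel convolution (bias ignored)."""
    return kernel * kernel * c_in * c_out


def separable_conv_params(kernel, c_in, c_out):
    """Weights of a depth-wise convolution followed by a 1x1 point-wise one."""
    depthwise = kernel * kernel * c_in   # one kernel x kernel filter per channel
    pointwise = c_in * c_out             # 1x1 convolution mixing the channels
    return depthwise + pointwise


# Illustrative layer: 3x3 kernel, 32 input channels, 64 output channels.
standard = standard_conv_params(3, 32, 64)
separable = separable_conv_params(3, 32, 64)
print(standard, separable, round(standard / separable, 1))  # 18432 2336 7.9
```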

FIGURE 3. The structure of Mo-U-net. The blocks and arrows marked as gray areas denote the parts that are pruned in actual use.

3.2 Dataset Establishment and Data Augmentation

The dataset for neural network training generally includes input images and true values, which are also called ground truth in the image segmentation field. In this study, the dataset is established in two steps. In the first step, the ATM [48] is used to preprocess a batch of EFIs to obtain a relatively rough ground truth. Then, the obviously defective labels are ruled out, while the rest are regarded as the ground truth for training and testing. The reasons for this are as follows. 1) Current image processing methods cannot produce pixel-wise ground truth, and manual calibration costs a lot of time, which is unfeasible for subsequent experiments and industrial applications. 2) Unlike traditional threshold and regression algorithms, a deep neural network shows great robustness in noisy learning (learning from a dataset that contains some erroneous samples) owing to gradient back propagation and the batch processing mechanism, which has been verified in many experiments [49]. A total of 2,167 images were collected in the experiment. After filtering the ATM results, a total of 2,021 images with rough ground truth were obtained, as shown in Figure 4.

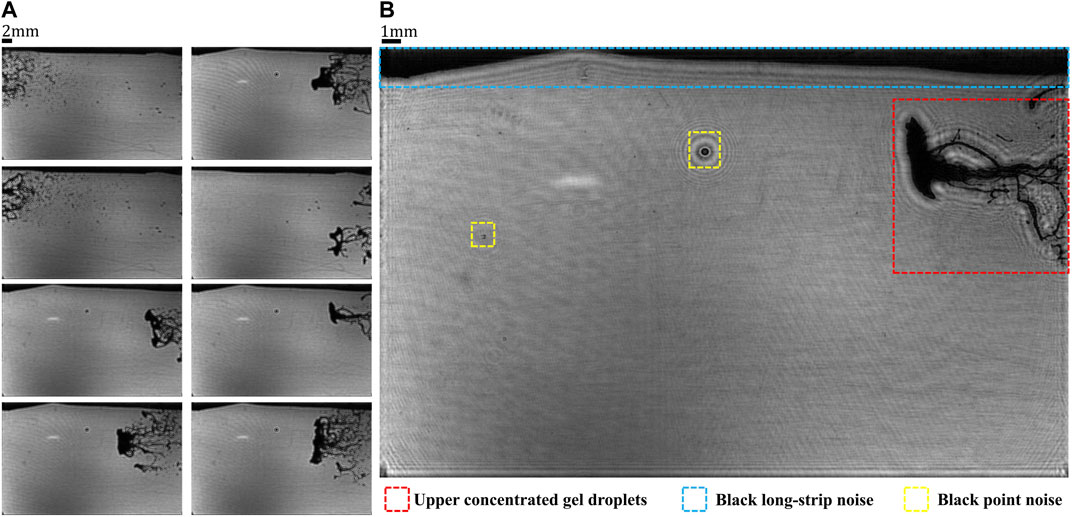

The second step is data augmentation. The EFIs obtained in the experiment have some defects, which fall mainly into three categories. 1) Uneven spatial distribution. As the red box in Figure 5B shows, the spatial distribution of the droplet particle field is highly uneven. Under the experimental conditions, most of the gel droplet particle field is concentrated in the upper and right parts of the image, which may introduce a positional bias into the network during training and thus affect its generalization ability. 2) Black long-strip noise. The blue box in Figure 5B shows that, due to the limited range of the lens, black noise with a long strip shape appears on the edge of the image. Although it is not a droplet, the ATM mistakes it for foreground, which is harmful to feature learning. 3) Dot-like black noise. As the yellow box in Figure 5B shows, static dot-like black noise appears because some particles attach to the cavity wall after being blown away during the experiment. Although such noise does not belong to the dynamic particle field, its characteristics are the same as those of real particles in the EFIs. It therefore does not affect the learning of the characteristics of gel filaments and particles in the EFIs, and no extra calibration is needed.

FIGURE 5. Defective dataset. The subgraphs in column (A) are images chosen randomly from the dataset with the same defects. Subfigure (B) shows the specific defects. The red frame marks the uneven spatial distribution of droplets, the blue frame marks the black noise with a long strip shape, and the yellow frame marks the black noise with a dot-like shape.

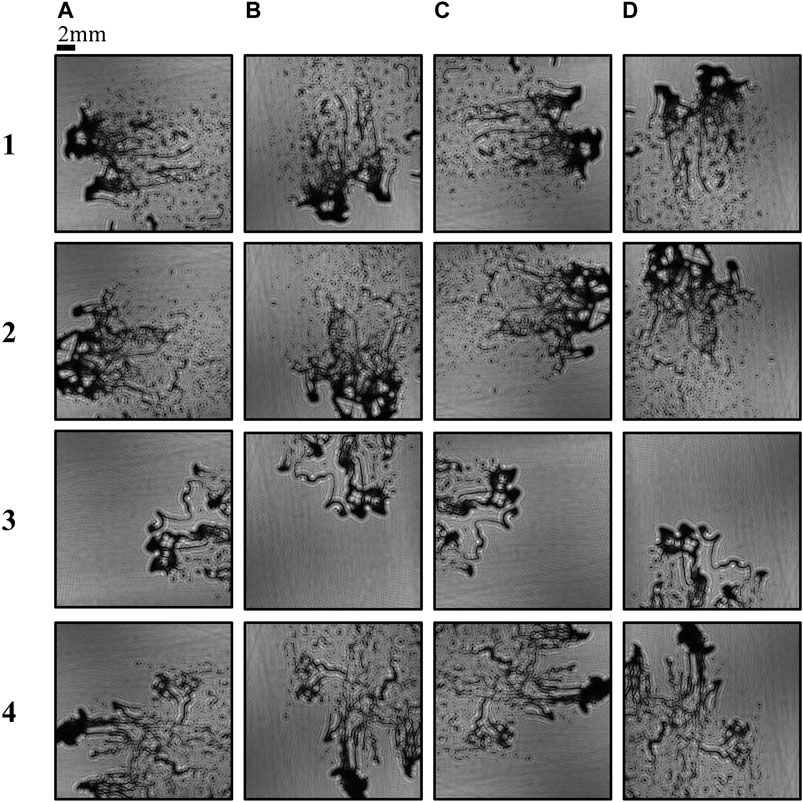

To deal with the first two defects, the original dataset is augmented in two corresponding ways. 1) The images are cropped from 1,280 × 800 to 672 × 672 to remove the unqualified boundary. 2) The images are rotated by 90°, 180° and 270°, and the rotated copies are added to the dataset, so that the spatial distribution of the dataset is normalized. Finally, a dataset containing 8,084 images with 672 × 672 resolution is obtained, as shown in Figure 6. Although the images are cropped in the experiment, the trained model does not strictly limit the input image size. As long as the input is square and its side length is an integer multiple of 224, the model can adapt to it after fine-tuning at a very small cost.
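The two augmentation steps can be sketched with NumPy as follows. The crop offsets are hypothetical (a centered window is used here purely for illustration, since the text does not state which region is kept), and the label mask is transformed together with the image so that the pair stays aligned.

```python
import numpy as np

CROP = 672  # side length of the cropped images, from the text


def crop_and_rotate(image, mask, top=0, left=0):
    """Crop an EFI and its label, then return the 0/90/180/270 degree copies."""
    img = image[top:top + CROP, left:left + CROP]
    msk = mask[top:top + CROP, left:left + CROP]
    if img.shape[:2] != (CROP, CROP):
        raise ValueError("crop window falls outside the image")
    # np.rot90 rotates counter-clockwise by k * 90 degrees.
    return [(np.rot90(img, k), np.rot90(msk, k)) for k in range(4)]


# Placeholder arrays with the hologram size (800 rows x 1,280 columns).
efi = np.zeros((800, 1280), dtype=np.uint8)
label = np.zeros((800, 1280), dtype=np.uint8)
pairs = crop_and_rotate(efi, label, top=64, left=304)  # hypothetical offsets
print(len(pairs), pairs[1][0].shape, 2021 * len(pairs))  # 4 (672, 672) 8084
```

Each source image yields four samples, which is consistent with 2,021 filtered images growing to 8,084.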

FIGURE 6. Dataset with data augmentation. The subgraphs (1) to (4) in column (A) are original images chosen randomly from the dataset after cropping to 672 × 672. Columns (B) to (D) show the augmented images generated by rotating the original images by 90°, 180° and 270°, respectively.

3.3 Train Setting

In order to achieve good segmentation performance, the training strategy is set as follows. Pre-trained weights are first used to accelerate model convergence and improve segmentation accuracy. The weights obtained from the well-known image dataset "ImageNet" [50] are used as the pre-trained weights of Mobilenetv2 in the experiment. A transfer learning strategy is then used for fine-tuning. In detail, the initial learning rate of the model is set to 0.001 during training, and the encoder layers with pre-trained weights are frozen to improve model performance and speed up the training process. Besides, the cross entropy loss (CEL) function and the Adam optimizer [51] are selected to accelerate model convergence. An early stopping strategy (training stops when the loss no longer decreases) and a stepwise learning-rate reduction strategy (when the loss has not decreased for a patience of three epochs, the learning rate is multiplied by 0.1) are employed to bring the model closer to the optimal solution and to avoid over-fitting late in training. Finally, the network is trained for 100 epochs to ensure adequate training.
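The learning-rate reduction rule can be made concrete with a small framework-free sketch. It assumes that the monitored quantity is a per-epoch loss and that "no longer decreased" means no improvement over the best value seen so far; both are interpretations, and the function name is hypothetical.

```python
def plateau_learning_rates(losses, initial_lr=1e-3, patience=3, factor=0.1):
    """Return the learning rate used in each epoch under a reduce-on-plateau rule."""
    lr = initial_lr
    best = float("inf")
    wait = 0                      # epochs since the last improvement
    used = []
    for loss in losses:
        used.append(lr)           # rate in effect during this epoch
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:  # three stagnant epochs: shrink the rate
                lr *= factor
                wait = 0
    return used


# The loss stalls at 0.8 for three epochs, so the rate drops before epoch 6.
rates = plateau_learning_rates([1.0, 0.8, 0.8, 0.8, 0.8, 0.7])
print(rates)
```

In a Keras training loop the same behaviour is normally obtained with built-in callbacks rather than hand-written logic; the loop above only spells out the rule.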

4 Results and Discussions

4.1 Training and Validation

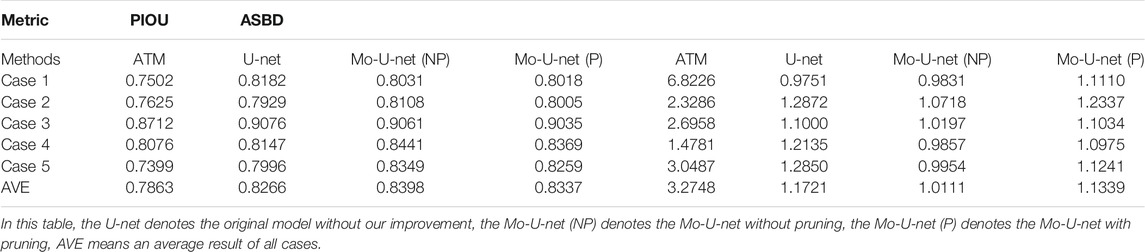

The dataset described in Section 3.2 is divided into a training set and a validation set at a ratio of 8:2 to train the model and validate its performance, respectively. The Mo-U-net is trained in a tensorflow2.4.0 environment with two Tesla P100-PCIE-16 GPUs. A Mo-U-net without pruning is also trained under the same conditions to evaluate the effect of the improvements described in Section 3.1. On average, Mo-U-net with pruning took 8.5 min per epoch to train, while Mo-U-net without pruning took 14.6 min per epoch, so pruning saves roughly 42% of the training time ((14.6 − 8.5)/14.6). The test results in Section 4.3 also show that pruning does not degrade the performance of the model in this study. To show the improvement brought by Mobilenetv2, an original U-net is also trained under the same conditions. The results in Section 4.3 show that the original U-net also achieved excellent performance, with a PIOU of 82.66% and an ASBD of 1.1721, exceeding ATM in all five working conditions. This shows that the deep learning method has considerable advantages for the segmentation of the complex, dense, mixed droplet-filament field in EFIs. However, it can still be improved. On this basis, the Mo-U-net proposed in this study achieves better PIOU (area segmentation accuracy) and ASBD (boundary segmentation error) than the original U-net, especially in the extremely complex catastrophic breakup of gel droplets (working conditions 4 and 5). This is because Mobilenetv2 replaces the four basic convolutional encoding blocks of the original U-net. With this change, Mo-U-net obtains a deeper encoding path and a stronger representation capacity, so it can extract higher-level semantic information. It therefore has better generalization ability and robustness when segmenting complex scenes.

4.2 Morphological Analysis

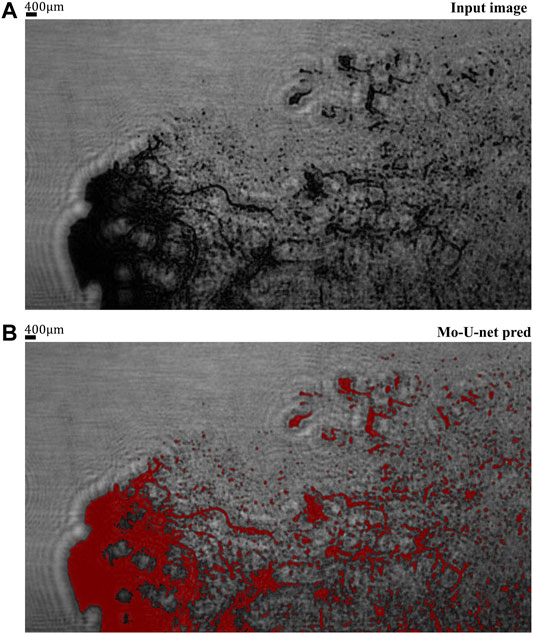

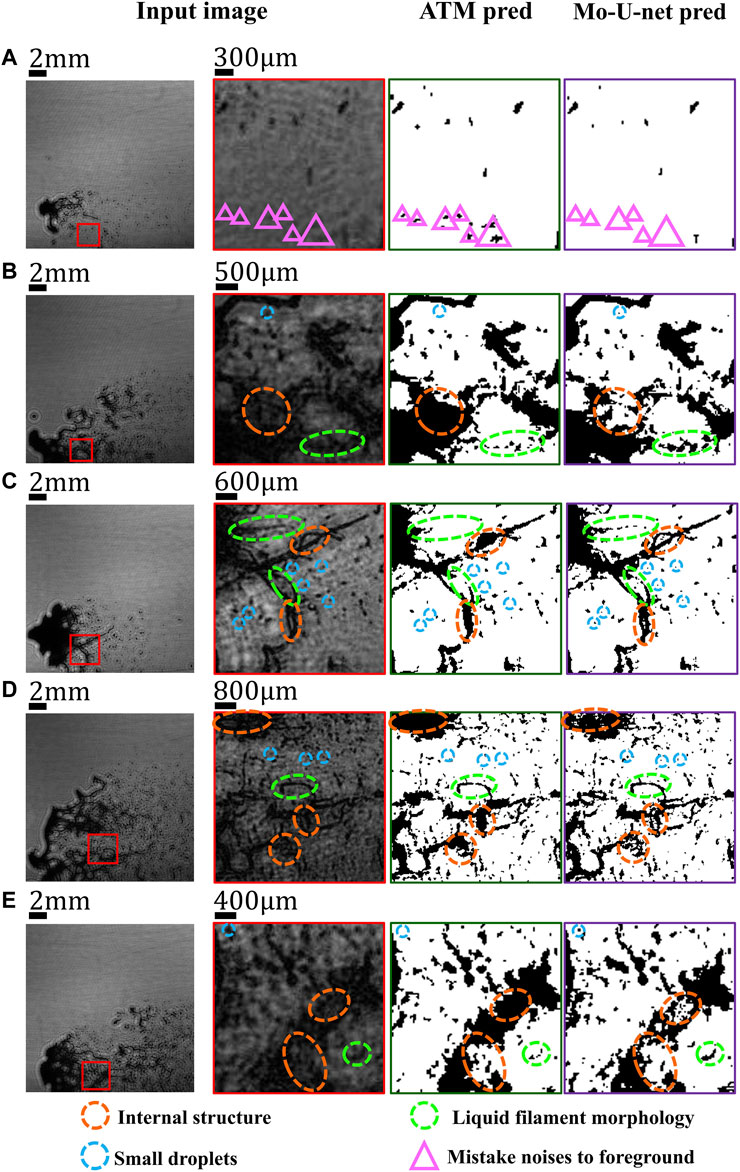

As shown in Figure 7, Mo-U-net is able to segment the foreground and background of the EFIs after training. To assess the performance of Mo-U-net against ATM more clearly, a comparison is conducted on the validation set. The experimental results show that Mo-U-net outperforms ATM in area, boundary and multi-scale droplet recognition.

1) In the prediction of inner structure, Mo-U-net outperforms ATM. As the orange circles in Figure 8 show, under the experimental conditions Mo-U-net can precisely recognize a large lump of gel droplets and its internal structure while ATM cannot; e.g., ATM lost the internal structures of eight areas in just five small blocks in Figure 8. This shortcoming is caused by its segmentation mechanism. ATM mainly segments the image according to the gray values in its selected region. However, in the EFIs of the gel atomization field, the dense diffraction fringes of twin-images decrease the gray values of the droplets and filaments with hollow parts inside, which leads to an incorrect threshold. Besides, Figures 8B–E contain many inner structures of various scales. Even though a nearly identical inner structure can be obtained by adjusting the size of the processing block, extending such precise results to the whole image is far more difficult. Such a complex problem is, however, an easy task for Mo-U-net. In Figure 8, the inner structures of the gel droplets were well predicted whatever their scale. Fundamentally, the way Mo-U-net forms its segmentation criteria determines its superior performance. The criteria come from context information at the scale of the whole image, which is wider and deeper than the gray-value statistics used by ATM. Thus, Mo-U-net can use not only the gray-value features of a single region or image but also the overall distribution features to judge whether a pixel is foreground or not.

2) In predicting complex liquid filament morphology, Mo-U-net shows an outstanding performance. As the green circles in Figure 8 show, ATM is quite sensitive to brightness changes when segmenting continuous, slender liquid filaments. In detail, wherever there is a bright spot, ATM will "cut off" the filament at that position (e.g., Figure 8E). Moreover, noise in the vicinity harms the ATM segmentation and brings about a contracted boundary (e.g., Figures 8A,C,D) or even total loss of the filament (e.g., Figure 8B). These weaknesses can also be overcome by Mo-U-net. Assisted by the global information mentioned in point 1) above, it can locate most of the liquid filaments, reject the interference from noise and ignore the tiny brightness changes along liquid filaments. The last two strengths account for its continuous and complete liquid filament prediction.

3) Mo-U-net shows a distinct advantage in predicting small droplets. ATM usually performs poorly when segmenting droplets of only a few pixels. In particular, in the EFIs of a dense droplet and filament field, a tiny droplet has low gray contrast and is disturbed by noise. For these two reasons, ATM can easily miss tiny droplets in the selected regions. As the blue circles in Figure 8 show, ATM lost a large number of small droplets in the dense field, whereas Mo-U-net performs well and makes up for this shortcoming by using information, including gray-level changes and particle distribution, learned from other EFIs in the same training set.

4) Besides, Mo-U-net performs as well in sparse regions as it does in the dense field. As the purple box in Figure 8 shows, ATM has a high probability of mistaking background for foreground. In a sparse region, the gray values of the background are the main factor in choosing the threshold. If there is no droplet in the chosen region and the gray values of the background are low, ATM is more likely to mistake low-gray-value background areas for foreground. Mo-U-net, however, distinguishes particles from both bright and dark backgrounds better, because its criterion for judgement comes from the global information learned during training, which provides hundreds of samples containing different kinds of background situations. Mo-U-net can therefore make full use of this information and give a more accurate prediction.

FIGURE 7. The overview of Mo-U-net predicting performance. Subgraph (A) shows the input image of filament-droplet field in case 5. Subgraph (B) is the superposition of the Mo-U-net prediction and input image, in which the predicted pixels are painted red.

FIGURE 8. Morphological comparison. The prediction of the adaptive-threshold method is called ATM pred, and the prediction of Mo-U-net is called Mo-U-net pred. The subgraphs (A) to (E) belong to case 1 to case 5, respectively. The subgraphs with a red boundary are enlargements of the regions framed by the red boxes; the blackish green refers to ATM pred and the purple denotes Mo-U-net pred.

The comparison of Mo-U-net and ATM demonstrates that Mo-U-net is well qualified for the segmentation of the dense filament-droplet field in the EFIs. The predictions of Mo-U-net are closer to reality for large droplets, filament morphology and multi-scale particles, which ATM handles relatively poorly.

4.3 Quantitative Analysis

4.3.1 Test Dataset

In order to evaluate the performance of Mo-U-net and ATM objectively and precisely, a batch of data that never participated in the model training process is selected from the five working conditions mentioned in Section 2 as a test set. Meanwhile, to guarantee the reliability of the evaluation metrics and the validity of the subsequent analysis, the ground truths previously produced by ATM are examined and corrected manually. The test set consists of 25 images with 672 × 672 resolution.

4.3.2 Evaluating Metrics

In this study, the quantitative evaluation of the model segmentation performance is divided into three parts: 1) the Positive Intersection over Union (PIOU), 2) the Average Square Symmetric Boundary Distance (ASBD), and 3) the Diameter-Based Prediction Statistics (DBPS). These three metrics judge the performance from global, local and multi-scale perspectives so as to comprehensively demonstrate the advantages and disadvantages of the Mo-U-net segmentation results.

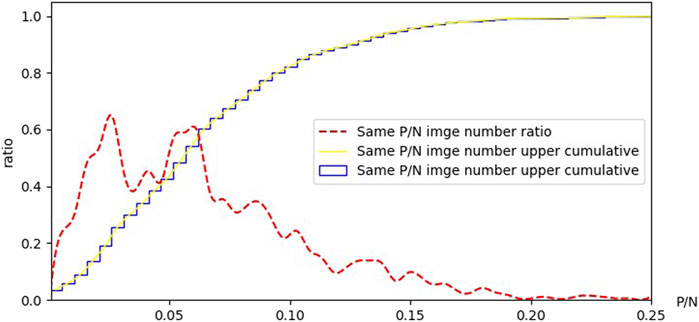

1) The prototype of PIOU used in this study is MIOU, a metric proposed to measure the similarity between two sets. MIOU is defined as follows
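The equation is not reproduced in this text. The standard mean intersection over union, written with the symbols defined in the next paragraph, would be the following; expressing the per-class label count T_i as a sum over p_{ij} is an assumption about how the original formula was written:

```latex
\mathrm{MIOU} = \frac{1}{k_c} \sum_{i=1}^{k_c}
\frac{p_{ii}}{T_i + \sum_{j \neq i} p_{ji}},
\qquad T_i = \sum_{j=1}^{k_c} p_{ij}
```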

In this formula, kc denotes the number of category, in this situation kc is two, pii means the number of pixels which are marked as the category i in both label and predicted output, pij is the number of pixels which are marked as the category i in label while marked as other category j in predicted output, i and Ti denote the number of all pixels and the number of pixels of category i in labels respectively.

In fact, the EFIs in this experiment have an extreme sample imbalance, which means that the number of samples in one category is far larger than that in the other. In this study, a sample denotes a pixel. As shown in Figure 9, the average ratio of positive to negative samples is 0.065. In some specific circumstances, the ratio is as low as 0.01 (negative samples outnumber positive ones by a factor of 100). In such a condition, the normal MIOU performs poorly because it takes both the foreground (droplet) and the background into consideration. Consequently, whether the segmentation of the foreground, which is what actually matters, is right or not makes nearly no contribution to the result. Therefore, we propose PIOU to deal with the problem. PIOU is a special form of MIOU, which only considers the intersection over union of the required class by calculating the area of positive samples in the predicted results and in the ground truth. In this study, PIOU can be defined as follows
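Reading the description above as PIOU = |P ∩ G| / |P ∪ G|, where P and G are the sets of positive pixels in the prediction and in the ground truth (an interpretation, since the equation is not reproduced here), the effect of sample imbalance can be demonstrated with a small NumPy sketch. The function names and the synthetic masks are illustrative.

```python
import numpy as np


def class_iou(pred, truth, cls):
    """Intersection over union of one class between two label maps."""
    p, t = (pred == cls), (truth == cls)
    union = np.logical_or(p, t).sum()
    return np.logical_and(p, t).sum() / union if union else 1.0


def miou(pred, truth, classes=(0, 1)):
    """Mean IoU over background (0) and foreground (1)."""
    return float(np.mean([class_iou(pred, truth, c) for c in classes]))


def piou(pred, truth):
    """IoU of the positive (droplet) class only."""
    return float(class_iou(pred, truth, 1))


truth = np.zeros((100, 100), dtype=int)
truth[:10, :10] = 1      # 100 positive pixels, 1% of the image
pred = np.zeros_like(truth)
pred[:10, :5] = 1        # only half of the droplet is recovered

print(round(miou(pred, truth), 3), round(piou(pred, truth), 3))  # 0.747 0.5
```

Even though half of the droplet is missed, the background term keeps MIOU near 0.75, while PIOU reports the foreground overlap of 0.5 directly.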

2) The Average Square Symmetric Boundary Distance (ASBD) serves as a powerful evaluation metric for measuring the consistency of the boundary between the prediction and the ground truth. In this study, we use the ASBD to assess the boundary segmentation performance of our model, that is, its ability to capture fine-scale characteristics. The smaller the value is, the more accurate the predicted boundary is. The ASBD is defined as follows

where A and B denote the foreground and the background, respectively, SA and SB denote predicted boundary pixels in the foreground and the background, respectively, S(A) and S(B) denote all true boundary pixels in the foreground and the background, and the function d (⋅) calculates the shortest distance between an input pixel and the corresponding true set of that pixel. In this study, a distance of one pixel width in the EFIs corresponds to 28 μm in reality.
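Because the ASBD equation is not reproduced in this text, the sketch below implements only one plausible reading of the description: the plain mean of the shortest distances from every predicted boundary pixel to the true boundary of the same class, pooled over foreground and background. It does not include any squaring, the normalization is an assumption, and the brute-force distance search is meant for small examples where both masks contain foreground and background.

```python
import numpy as np

PIXEL_SIZE_UM = 28.0  # one pixel width in the EFIs, from the text


def boundary_pixels(region):
    """Coordinates of pixels in `region` with a 4-neighbour outside it."""
    region = region.astype(bool)
    # Padding with True keeps the image border from counting as a boundary.
    padded = np.pad(region, 1, constant_values=True)
    enclosed = (padded[:-2, 1:-1] & padded[2:, 1:-1]
                & padded[1:-1, :-2] & padded[1:-1, 2:])
    return np.argwhere(region & ~enclosed)


def nearest_distances(points, targets):
    """Shortest Euclidean distance from each point to the target set."""
    diff = points[:, None, :] - targets[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)


def mean_boundary_distance(pred, truth):
    """Mean boundary error in pixels, pooled over foreground and background."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    d_fg = nearest_distances(boundary_pixels(pred), boundary_pixels(truth))
    d_bg = nearest_distances(boundary_pixels(~pred), boundary_pixels(~truth))
    return float(np.concatenate([d_fg, d_bg]).mean())


truth = np.zeros((10, 10), dtype=bool)
truth[2:6, 2:6] = True
shifted = np.zeros((10, 10), dtype=bool)
shifted[2:6, 3:7] = True   # same square moved one pixel to the right

error_px = mean_boundary_distance(shifted, truth)
print(error_px, error_px * PIXEL_SIZE_UM)  # error in pixels and in micrometres
```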

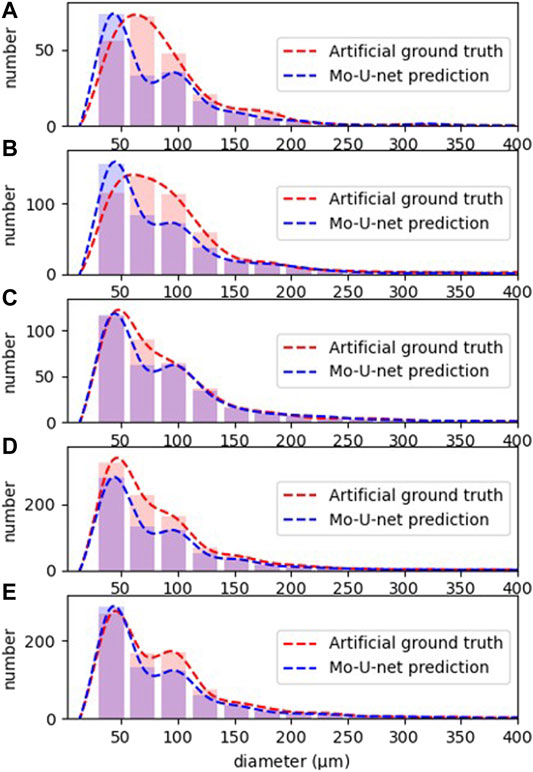

3) In this study, the Diameter-Based Prediction Statistics (DBPS) is used to measure the segmentation performance of the model for gel filaments and particles at different scales. When testing, the droplets in the Mo-U-net prediction and in the manually corrected ground truth are first counted according to their diameter. Then two histograms of droplet number versus diameter are drawn to show the distributions of the Mo-U-net predictions and of the manually corrected ground truths. The closer the fitting curves of the two histograms are, the better the model prediction is.
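A diameter histogram of this kind can be sketched in plain Python as follows. The text does not state how the diameter of a segmented region is defined, so the area-equivalent diameter of each 4-connected region is used here as an assumption, and the bin width is an illustrative choice.

```python
import math
from collections import deque

PIXEL_SIZE_UM = 28.0  # one pixel width in the EFIs, from the text


def equivalent_diameters(mask):
    """Area-equivalent diameters (um) of the 4-connected foreground regions."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    diameters = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            area, queue = 0, deque([(r, c)])   # breadth-first flood fill
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                area += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            diameters.append(PIXEL_SIZE_UM * math.sqrt(4 * area / math.pi))
    return diameters


def diameter_histogram(diameters, bin_width_um=50.0):
    """Count droplets per diameter bin; keys are bin indices."""
    counts = {}
    for d in diameters:
        index = int(d // bin_width_um)
        counts[index] = counts.get(index, 0) + 1
    return counts


mask = [[1, 1, 0, 0],
        [1, 1, 0, 1],
        [0, 0, 0, 0]]
sizes = equivalent_diameters(mask)   # one 4-pixel region and one 1-pixel region
print([round(s, 1) for s in sizes], diameter_histogram(sizes))
```

Running the same two functions on the prediction and on the ground truth gives the two histograms to be compared.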

FIGURE 9. Statistical histogram of positive and negative samples of dataset. In this study, a pixel from a droplet is called a positive sample, and a pixel from the background is called a negative sample. Same P/N means images have the same ratio of positive to negative samples.
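As a minimal sketch of how the PIOU and ASBD described in 1) and 2) might be computed from binary masks, consider the Python code below. It rests on assumptions that are not confirmed by the text: PIOU is taken as the intersection over union of the positive (droplet) pixels only, and ASBD follows the commonly used symmetric boundary-distance form between predicted and true boundary pixels, which may differ in detail from the formula used in this study. The function names, the erosion-based boundary extraction and the handling of empty masks are hypothetical choices and are not taken from the released model code.

```python
import numpy as np
from scipy import ndimage


def piou(pred, truth):
    """IoU of the positive (droplet) class only; inputs are binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        # Assumption: two empty masks count as perfect agreement.
        return 1.0
    return float(np.logical_and(pred, truth).sum()) / float(union)


def boundary(mask):
    """Foreground pixels removed by one erosion step (assumed boundary definition)."""
    mask = np.asarray(mask, dtype=bool)
    return mask & ~ndimage.binary_erosion(mask)


def asbd(pred, truth, pixel_size=1.0):
    """Mean distance between the two boundaries, averaged over both directions."""
    bp, bt = boundary(pred), boundary(truth)
    if not bp.any() or not bt.any():
        raise ValueError("both masks need at least one foreground pixel")
    # The distance transform of the complement gives, for every pixel,
    # the Euclidean distance to the nearest boundary pixel.
    dist_to_truth = ndimage.distance_transform_edt(~bt)
    dist_to_pred = ndimage.distance_transform_edt(~bp)
    total = dist_to_truth[bp].sum() + dist_to_pred[bt].sum()
    return pixel_size * total / (bp.sum() + bt.sum())


# Toy example: a 5x5 droplet whose prediction is shifted by one pixel column.
truth = np.zeros((20, 20), dtype=bool)
truth[5:10, 5:10] = True
pred = np.zeros_like(truth)
pred[5:10, 6:11] = True
print("PIOU:", piou(pred, truth))
print("ASBD (pixels):", asbd(pred, truth))
```

Passing `pixel_size=28.0` would express the ASBD in micrometres, following the pixel scale stated above. Because `piou` ignores pixels that are background in both masks, its value is not inflated by the large number of negative samples.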

4.3.3 Metrics Analysis

Based on the three evaluation metrics in Section 4.3.2, our model and ATM can be compared comprehensively from the global, local and multi-scale perspectives when segmenting the gel atomization field in the EFIs. Mo-U-net and ATM are each evaluated on the test set to obtain the statistical results. The results of PIOU and ASBD are shown in Table 2, while the result of DBPS is shown in Figure 10.

Table 2 clearly shows that Mo-U-net outperforms ATM in both PIOU and ASBD under every working condition. In terms of the overall segmentation quality (PIOU), both methods achieve around 80%, but Mo-U-net surpasses ATM by nearly 5%, which is consistent with observations 1), 3) and 4) in Section 4.2. When judging ambiguous pixels, ATM can only make use of the gray values extracted within its processing block, whereas Mo-U-net can draw on the features learned from the whole training set. With this fuller understanding of the underlying distribution, Mo-U-net performs better than ATM.

As Table 2 shows, the ASBD of Mo-U-net is over 60% lower than that of ATM. This means that with Mo-U-net the boundary error of the segmentation decreases from nearly a droplet width (a small droplet in the EFIs always contains four or more pixels) to about one pixel width. This metric reflects the ability of the two methods to segment fine structures, e.g., the inner structure and the boundary of the filaments in Section 4.2. The reason is twofold. On the one hand, whether ATM classifies a pixel as foreground or background depends entirely on whether its gray value exceeds the threshold, which accounts for the rough edges with serrated areas, skin needing and boundary defects. On the other hand, Mo-U-net considers not only the gray value of a pixel but also the adjacent edges and the regional shape, so it segments the boundary more smoothly and predicts the inner structure more precisely than ATM.
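To illustrate why a purely gray-value-based decision tends to give ragged boundaries, the sketch below thresholds each pixel against its local mean, in the spirit of local-mean adaptive thresholding such as [48]. It is an illustration under stated assumptions, not the ATM implementation used in this study: the function name, the window size, the sensitivity `t`, and the convention that droplets appear darker than the background are all hypothetical.

```python
import numpy as np
from scipy import ndimage


def local_mean_threshold(image, window=15, t=0.15):
    """Mark a pixel as foreground if it is darker than (1 - t) times its local mean.

    `window` and `t` are hypothetical parameters chosen for illustration only.
    """
    image = np.asarray(image, dtype=float)
    local_mean = ndimage.uniform_filter(image, size=window, mode="nearest")
    # Each pixel is classified on its own gray value; neighbouring edges and
    # the regional shape play no role in the decision.
    return image < (1.0 - t) * local_mean


# Toy example: a dark 3x3 droplet on a uniform bright background.
image = np.ones((31, 31))
image[14:17, 14:17] = 0.2
mask = local_mean_threshold(image)
print("foreground pixels:", int(mask.sum()))
```

Since the decision for each pixel depends only on its gray value relative to a local statistic, noise near the threshold can flip individual boundary pixels independently of their neighbours, which is one plausible source of the serrated edges described above.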

As shown in Figure 10, under five different conditions the size distribution of multi-scale droplets predicted by Mo-U-net is very similar to that of the artificial ground truth. Specifically, in most experimental conditions they share a peak around 50 μm and nearly the same decreasing trend from 100 μm onward. However, in the 50–100 μm diameter range there is a discrepancy in particle number between the Mo-U-net prediction and the artificial ground truth. In general, the number of droplets of about 75 μm diameter predicted by Mo-U-net is lower than in the artificial ground truth (more than a dozen droplets are lost). In particular, in case 1 and case 2 the number of droplets of about 50 μm diameter predicted by Mo-U-net simultaneously exceeds that of the artificial ground truth. These phenomena may be related to the low image resolution. In the EFIs, 50 μm corresponds to only two pixel widths and 75 μm to only three. That is, if Mo-U-net misjudges a single pixel when predicting a droplet three pixels wide, that droplet is reduced to one two pixels wide. This is why the number of droplets around 75 μm is relatively low compared with the artificial ground truth, while that of droplets around 50 μm is relatively high in case 1 and case 2. This explanation is also consistent with the ASBD result in Table 2, which shows that the boundary discrepancy between the Mo-U-net prediction and the artificial ground truth is around one pixel width. Besides, as mentioned in the morphological analysis in Section 4.2 and in the metrics analysis above, ATM, as a “teacher”, suffers from low accuracy and causes unexpected defects in micro-droplet segmentation. Therefore, trained with the ground truth produced by ATM, Mo-U-net can hardly learn to segment micro-droplets perfectly.

Generally speaking, the prediction provided by Mo-U-net is basically consistent with the real size distribution of the dense gel filament-droplet field in the EFIs, and can therefore offer relatively accurate size-distribution statistics for subsequent research and analysis of droplets.
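The diameter statistics and the one-pixel effect discussed above can be illustrated with a short sketch. It assumes that each droplet is a 4-connected component of the binary mask and that its diameter is the equivalent circular diameter of its area; neither assumption is confirmed by the text. The function names, the `um_per_pixel` parameter and the two coarse bin edges are hypothetical and chosen only to make the effect visible.

```python
import numpy as np
from scipy import ndimage


def droplet_diameters(mask, um_per_pixel):
    """Equivalent circular diameter of every connected droplet (assumed definition)."""
    labels, count = ndimage.label(np.asarray(mask, dtype=bool))
    if count == 0:
        return np.empty(0)
    # Pixel count per droplet; index 0 of bincount is the background label.
    areas = np.bincount(labels.ravel())[1:]
    return um_per_pixel * 2.0 * np.sqrt(areas / np.pi)


def diameter_histogram(mask, um_per_pixel, bin_edges):
    """Number of droplets falling into each diameter bin."""
    counts, _ = np.histogram(droplet_diameters(mask, um_per_pixel), bins=bin_edges)
    return counts


# Toy ground truth: one droplet three pixels wide and one two pixels wide.
truth = np.zeros((12, 12), dtype=bool)
truth[2:5, 2:5] = True
truth[7:9, 7:9] = True

# Toy prediction: the larger droplet loses one boundary column.
pred = truth.copy()
pred[2:5, 4] = False

edges = np.array([0.0, 80.0, 160.0])  # hypothetical bin edges in micrometres
print("ground truth:", diameter_histogram(truth, 28.0, edges))
print("prediction:  ", diameter_histogram(pred, 28.0, edges))
```

In this toy case a boundary error of a single pixel column is enough to move the larger droplet into the lower bin, which mirrors the shift in droplet counts between neighbouring size classes described above.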

Across all three metrics, Mo-U-net performs better than ATM in area prediction and boundary segmentation, and its size distribution of multi-scale droplets agrees with the artificial ground truth. These results are consistent with the morphological analysis in Section 4.2 and indicate that Mo-U-net can automatically learn the characteristics provided by a large number of positive samples while ignoring a small amount of noise in the ground truth. In the final test, the model performs better than the ground truth produced by ATM, which is strong evidence that a neural network can be “better than its teacher”.

5 Conclusion

This work has investigated a deep neural network called Mo-U-net and its application to the segmentation of the dense gel filament-droplet field in digital holography. The main contributions are as follows.

• A Mo-U-net model is proposed to segment the dense filament-droplet field. The model is based mainly on U-net and modified in two steps. First, the original four down-sampling blocks of U-net are replaced with Mobilenetv2 to retain more low-level features when encoding. Second, the model is pruned by cutting off all the down-sampling blocks below the sixth block and retaining only the two corresponding up-sampling blocks, which reduces the number of parameters. As a result, Mo-U-net achieves high segmentation accuracy while saving 41% of the training time compared with the unpruned Mo-U-net.

• A morphological comparison is conducted on the validation set to assess the segmentation performance of Mo-U-net against ATM. The experimental results show that Mo-U-net achieves finer boundary segmentation of large droplets, more precise prediction of the internal structure of filaments with complex morphology, and a stronger ability to recognize multi-scale particles than ATM.

• Three metrics, namely PIOU, ASBD and DBPS, are proposed for a quantitative comparison between Mo-U-net and ATM. The test results show that the area prediction accuracy (PIOU) of Mo-U-net reaches 83.3%, which is about 5% higher than that of the adaptive-threshold method (ATM). The boundary prediction error (ASBD) of Mo-U-net is only about one pixel width, which is about one third of that of ATM. Mo-U-net also predicts a size distribution of droplet diameters (DBPS) that is consistent with reality.

In EFIs, complex background noise, the changeable shape of filaments and the wide span of geometric sizes are the main obstacles that classical algorithms face in the particle detection task. The proposed model, however, adapts well to them. 1) Mo-U-net, as a neural network, is a data-driven model and therefore avoids the difficulty of judging from first principles whether a pixel belongs to the foreground. It learns the underlying distribution from the data itself and can thus handle problems that cannot be solved by morphology alone. 2) Mo-U-net extracts various kinds of information from the EFI by convolution, including multi-dimensional global information, shape and spatial position, which enriches its basis for segmentation, suppresses the influence of background noise and ultimately overcomes the limitations of traditional threshold methods based on gray-level statistics. 3) Mo-U-net produces the predicted foreground-background segmentation image through deconvolution, skip connections and feature fusion. The combination of multi-level semantic information enables it to predict the internal structure satisfactorily.

There are many other similar scenarios. For example, in swirl atomization [52] there are vertical stripe noise caused by the spatial modulation of the reference light and the transmitted laser, interference between the reference light and the light diffracted by droplets, and irregular spots produced by the superposition of a large number of droplets and the surrounding interference fringes. The model can be expected to perform well in these tasks. At the same time, the wide range of information that Mo-U-net draws on means it is not confined to the segmentation and recognition of holographic images of particles and liquid filaments. It can also be extended to medical imaging, biological cells, material defects and other fields involving small, dense objects. As long as a reasonably good dataset can be obtained, robust performance can be expected after fine-tuning.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://github.com/Wu-Tong-Hearted/Recognition-of-multiscale-dense-gel-filament-droplet-field-in-digital-holography-with-Mo-U-net.

Author Contributions

ZP conceived the idea and conducted the experiments. HZ participated in the experiments. YW participated in the experiments. LZ handled the raw experimental data and provided gel materials. XW and YW supervised the project. All the authors contributed to the discussion of the results for this manuscript.

Funding

This project was supported by the National Natural Science Foundation of China (52006193), the Zhejiang Provincial Natural Science Foundation of China (Grant No. LQ19E060010), and the National Key R&D Program of China (Grant Nos. 2020YFA0405700 and 2020YFB0606200).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Haddad A, Natan B, Arieli R. The Performance of a boron-loaded Gel-Fuel Ramjet. Prog propulsion Phys (2011) 2:499–518. doi:10.1051/eucass/201102499

2. Natan B, Rahimi S. The Status of Gel Propellants in Year 2000. Combustion Energetic Mater (2002) 5(1-6):172–94. doi:10.1615/intjenergeticmaterialschemprop.v5.i1-6.200

3. Cabeal J. System Analysis of Gelled Space Storable Propellants. 6th Propulsion Joint Specialist Conference (1970). 609.

4. Ciezki HK, Naumann KW. Some Aspects on Safety and Environmental Impact of the German green Gel Propulsion Technology. Propellants, Explosives, Pyrotechnics (2016) 41:539–47. doi:10.1002/prep.201600039

5. Varma M, Pein R. Optimisation of Processing Conditions for Gel Propellant Production. Int J Energetic Mater Chem Prop (2009) 8:501–13. doi:10.1615/intjenergeticmaterialschemprop.v8.i6.30

6. Pinto PC, Hopfe N, Ramsel J, Naumann W, Thumann A, Kurth G. Scalability of Gelled Propellant Rocket Motors. Milan, Italy: 7th European conference for aeronautics and space sciences (EUCASS) (2017).

7. Padwal MB, Mishra DP. Synthesis of Jet A1 Gel Fuel and its Characterization for Propulsion Applications. Fuel Process Tech (2013) 106:359–65. doi:10.1016/j.fuproc.2012.08.023

8. Padwal MB, Mishra DP. Characteristics of Gelled Jet A1 Sprays Formed by Internal Impingement of Micro Air Jets. Fuel (2016) 185:599–611. doi:10.1016/j.fuel.2016.08.012

9. Ma Y-c., Bai F-q., Chang Q, Yi J-m., Jiao K, Du Q. An Experimental Study on the Atomization Characteristics of Impinging Jets of Power Law Fluid. J Non-Newtonian Fluid Mech (2015) 217:49–57. doi:10.1016/j.jnnfm.2015.01.001

10. Baek G, Kim C. Rheological Properties of Carbopol Containing Nanoparticles. J Rheology (2011) 55:313–30. doi:10.1122/1.3538092

11. Fernández-Barbero JE, Galindo-Moreno P, Avila-Ortiz G, Caba O, Sánchez-Fernández E, Wang HL. Flow Cytometric and Morphological Characterization of Platelet-Rich Plasma Gel. Clin Oral Implants Res (2010) 17:687–93. doi:10.1111/j.1600-0501.2006.01179.x

12. Mishra DP, Patyal A, Padhwal M. Effects of Gellant Concentration on the Burning and Flame Structure of Organic Gel Propellant Droplets. Fuel (2011) 90:1805–10. doi:10.1016/j.fuel.2010.12.021

13. Jyoti BVS, Naseem MS, Baek SW. Hypergolicity and Ignition Delay Study of Pure and Energized Ethanol Gel Fuel with Hydrogen Peroxide. Combustion and Flame (2017) 183:101–112. doi:10.1016/j.combustflame.2016.11.018

14. Botchu V, Siva J, Muhammad S, Seung WB, Hyung JL, Sung-june C, et al. Hypergolicity and Ignition Delay Study of Gelled Ethanolamine Fuel (2017).

15. Brandenburg JE, Fox MD, Garcia RH. Ethanol Based Gel Fuel for a Hybrid Rocket Engine. US Patent 8,101,032 (2012). doi:10.2514/6.2007-5361

16. Pilch M, Erdman CA. Use of Breakup Time Data and Velocity History Data to Predict the Maximum Size of Stable Fragments for Acceleration-Induced Breakup of a Liquid Drop. Int J Multiphase Flow (1987) 13:741–57. doi:10.1016/0301-9322(87)90063-2

17. Snyder SE. Spatially Resolved Characteristics and Analytical Modeling of Elastic Non-newtonian Secondary Breakup, Heidelberg, Germany. Thesis (2015).

19. Radhakrishna V, Shang W, Yao L, Chen J, Sojka PE. Experimental Characterization of Secondary Atomization at High Ohnesorge Numbers. Int J Multiphase Flow (2021) 138:103591. ARTN 103591. doi:10.1016/j.ijmultiphaseflow.2021.103591

20. Prasad S, Schweizer C, Bagaria P, Saini A, Kulatilaka WD, Mashuga CV. Investigation of Particle Density on Dust Cloud Dynamics in a Minimum Ignition Energy Apparatus Using Digital In-Line Holography. Powder Tech (2021) 384:297–303. doi:10.1016/j.powtec.2021.02.026

21. Liebel M, Camargo FVA, Cerullo G, van Hulst NF. Ultrafast Transient Holographic Microscopy. Nano Lett (2021) 21:1666–71. doi:10.1021/acs.nanolett.0c04416

22. Guildenbecher DR, Gao J, Reu PL, Chen J. Digital Holography Simulations and Experiments to Quantify the Accuracy of 3d Particle Location and 2d Sizing Using a Proposed Hybrid Method. Appl Opt (2013) 52:3790–801. doi:10.1364/Ao.52.003790

23. Singh DK, Panigrahi PK. Automatic Threshold Technique for Holographic Particle Field Characterization. Appl Opt (2012) 51:3874–87. doi:10.1364/Ao.51.003874

24. Yao L, Wu X, Wu Y, Yang J, Gao X, Chen L, et al. Characterization of Atomization and Breakup of Acoustically Levitated Drops with Digital Holography. Appl Opt (2015) 54:A23–A31. doi:10.1364/Ao.54.000a23

25. Luo ZX, Li Z-R, Li Z-Y, Ye Y. An Automatic Segmenting Method for the Reconstructed Image of High Speed Particle Field (2007).

26. Long J, Shelhamer E, Darrell T. Fully Convolutional Networks for Semantic Segmentation. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Boston, MA (2015). p. 3431–40. doi:10.1109/cvpr.2015.7298965

27. Chen LC, Papandreou G, Kokkinos I, Murphy K, Yuille AL. Deeplab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans Pattern Anal Machine Intelligence (2018) 40:834–48. doi:10.1109/Tpami.2017.2699184

28. Badrinarayanan V, Kendall A, Cipolla R. Segnet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans Pattern Anal Machine Intelligence (2017) 39:2481–95. doi:10.1109/Tpami.2016.2644615

29. Paszke A, Chaurasia A, Kim S, Culurciello E. Enet: A Deep Neural Network Architecture for Real-Time Semantic Segmentation (2016).

30. Ronneberger O, Fischer P, Brox T. U-net: Convolutional Networks for Biomedical Image Segmentation. Medical Image Computing And Computer-Assisted Intervention. Pt Iii (2015) 9351:234–41. doi:10.1007/978-3-319-24574-4_28

31. Altman LE, Grier DG. Catch: Characterizing and Tracking Colloids Holographically Using Deep Neural Networks. J Phys Chem B (2020) 124:1602–10. doi:10.1021/acs.jpcb.9b10463

32. Midtvedt B, Olsen E, Eklund F, Hook F, Adiels CB, Volpe G, et al. Fast and Accurate Nanoparticle Characterization Using Deep-Learning-Enhanced Off-Axis Holography. ACS Nano (2021) 15:2240–50. doi:10.1021/acsnano.0c06902

33. Ye H, Han H, Zhu L, Duan Q. Vegetable Pest Image Recognition Method Based on Improved VGG Convolution Neural Network. J Phys Conf Ser (2019) 1237:032018. doi:10.1088/1742-6596/1237/3/032018

34. Wang J, Li YN, Feng HL, Ren LJ, Du XC, Wu J. Common Pests Image Recognition Based on Deep Convolutional Neural Network. Comput Elect Agric (2020) 179:105834. doi:10.1016/j.compag.2020.105834

35. Yi FL, Moon I, Javidi B. Automated Red Blood Cells Extraction from Holographic Images Using Fully Convolutional Neural Networks. Biomed Opt Express (2017) 8:4466–79. doi:10.1364/Boe.8.004466

36. Nguyen T, Bui V, Lam V, Raub CB, Chang LC, Nehmetallah G. Automatic Phase Aberration Compensation for Digital Holographic Microscopy Based on Deep Learning Background Detection. Opt Express (2017) 25:15043–57. doi:10.1364/Oe.25.015043

37. Li DY, Wang Z, Zhang HL. Coal Dust Image Recognition Based on Improved VGG Convolution Network. Int Symp Artif Intelligence Robotics (2020) 11574:115740O. doi:10.1117/12.2576974

38. Wu X, Li X, Yao L, Wu Y, Lin X, Chen L, et al. Accurate Detection of Small Particles in Digital Holography Using Fully Convolutional Networks. Appl Opt (2019) 58:G332–G344. doi:10.1364/AO.58.00G332

39. Zhu Y, Yeung CH, Lam EY. Digital Holographic Imaging and Classification of Microplastics Using Deep Transfer Learning. Appl Opt (2021) 60:A38–A47. doi:10.1364/AO.403366

40. Di J, Han W, Liu S, Wang K, Tang J, Zhao J. Sparse-view Imaging of a Fiber Internal Structure in Holographic Diffraction Tomography via a Convolutional Neural Network. Appl Opt (2021) 60:A234–A242. doi:10.1364/ao.404276

41. O’Connor T, Anand A, Andemariam B, Javidi B. Deep Learning-Based Cell Identification and Disease Diagnosis Using Spatio-Temporal Cellular Dynamics in Compact Digital Holographic Microscopy. Biomed Opt Express (2020) 11:4491–508. doi:10.1364/BOE.399020

42. Belashov A, Zhikhoreva A, Belyaeva T, Kornilova E, Salova A, Semenova I, et al. In Vitro Monitoring of Photoinduced Necrosis in HeLa Cells Using Digital Holographic Microscopy and Machine Learning. J Opt Soc Am A Opt Image Sci Vis (2020) 37:346–52. doi:10.1364/JOSAA.382135

43. Wang Y, Li Q, Wang Y. Research on BP Neural Network for Terahertz Image Segmentation. In: Eleventh International Conference on Information Optics and Photonics, Xi'an, China. CIOP (2019). doi:10.1117/12.2547541

44. Kreis T. Handbook Of Holographic Interferometry (Handbook of Holographic Interferometry: Optical and Digital Methods) (2005).

45. Meinhart CD, Wereley ST, Gray M. Volume Illumination for Two-Dimensional Particle Image Velocimetry. Meas Sci Tech (2000) 11:809–14. doi:10.1088/0957-0233/11/6/326

46. Wu YC, Wu XC, Yang J, Wang ZH, Gao X, Zhou BW, et al. Wavelet-based Depth-Of-Field Extension, Accurate Autofocusing, and Particle Pairing for Digital Inline Particle Holography. Appl Opt (2014) 53:556–64. doi:10.1364/Ao.53.000556

47. Howard A, Zhmoginov A, Chen LC, Sandler M, Zhu M. Inverted Residuals and Linear Bottlenecks: Mobile Networks for Classification, Detection and Segmentation (2018).

48. Bradley D, Roth G. Adaptive Thresholding Using the Integral Image. J Graphics Tools (2007) 12:13–21. doi:10.1080/2151237x.2007.10129236

49. Algan G, Ulusoy I. Image Classification with Deep Learning in the Presence of Noisy Labels: A Survey. Knowledge-Based Syst (2021) 215:106771. doi:10.1016/j.knosys.2021.106771

50. Deng J, Dong W, Socher R, Li L-J, Li K, Fei-Fei L. Imagenet: A Large-Scale Hierarchical Image Database. In: IEEE Conference on Computer Vision and Pattern Recognition (2009). p. 248–55.

Keywords: gel droplet, secondary breakup, digital holography, deep learning, image segmentation

Citation: Pang Z, Zhang H, Wang Y, Zhang L, Wu Y and Wu X (2021) Recognition of Multiscale Dense Gel Filament-Droplet Field in Digital Holography With Mo-U-Net. Front. Phys. 9:742296. doi: 10.3389/fphy.2021.742296

Received: 16 July 2021; Accepted: 27 August 2021;

Published: 16 September 2021.

Edited by:

Jianglei Di, Guangdong University of Technology, China

Reviewed by:

Shengjia Wang, Harbin Engineering University, China

Lu Qieni, Tianjin University, China

Wenjing Zhou, Shanghai University, China

Copyright © 2021 Pang, Zhang, Wang, Zhang, Wu and Wu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yingchun Wu
