
ORIGINAL RESEARCH article

Front. Nutr., 20 October 2021

Sec. Nutrition and Food Science Technology

Volume 8 - 2021 | https://doi.org/10.3389/fnut.2021.755007

This article is part of the Research Topic: The Future Food Analysis

Classification of beef cuts is important for the food industry and for authentication purposes. Traditional analytical methods are time-consuming and incompatible with the modern food industry. Being rapid and nondestructive, multispectral imaging (MSI) has been widely applied to obtain a precise characterization of food and agricultural products. This study aims at developing a beef cut classification model using MSI and machine learning classifiers. Beef samples are imaged with a snapshot multi-spectroscopic camera within a range of 500–800 nm. Single- and multiple-modality feature sets are used to develop classification models with different machine learning-based classifiers, namely, linear discriminant analysis (LDA), support vector machine (SVM), and random forest (RF) algorithms. The results demonstrate that the optimized LDA classifier achieved a prediction accuracy of over 90% with multiple-modality feature fusion. By combining machine learning and feature fusion, the other classification models also achieved a satisfactory accuracy. Furthermore, this study demonstrates the potential of machine learning and feature fusion for meat classification using multispectral imaging in future agricultural applications.

Beef plays an important role in daily diet and the food industry because it contains essential nutrients with high biological value (1). The great variability of beef cuts often leads to highly variable qualities, such as tenderness, juiciness, and flavor, which are important for consumers' evaluation of beef quality and purchase decisions (2). However, the price of high-quality beef cuts, such as sirloin, is much higher than that of low-preference cuts, such as shank and flank. The growing demand for beef leads to fraud in the retail or supply chain, namely the substitution of high-class beef cuts with low-class ones. In this sense, beef quality management is needed to identify fraud and prevent any potential hazard. Consequently, beef cut classification becomes essential to meet the demands of consumers and food safety regulators.

Among analytical techniques, spectroscopy has proved to have great potential for meat analysis because it is rapid, nondestructive, and requires minimal preprocessing (3–5). The basic components of beef, such as water, proteins, myoglobin, fatty acids, and lipids, possess functional groups with certain chemical bonds (O-H, C-H, N-H, etc.) that cause the deviation of a spectrum at a particular wavelength (6). Based on vibrational spectroscopy, chemometric methods could be applied to extract reliable information from the spectrum and analyze beef cuts qualitatively and quantitatively. However, the spectroscopy approach also suffers from a small sampling area and a lack of spatial information, which limit its application.

The multispectral imaging (MSI) system could capture spectral information at each pixel of a two-dimensional array (7). The three-dimensional hypercube with both spatial and spectral information may potentially make a classification model more detailed (8). Consequently, the resulting high data dimensionality also requires feature extraction and fusion to build a reliable classification model (9). In recent years, MSI has been widely applied for quality evaluation and variety classification of food products (10–12). Jiang et al. extracted spectral features and textural features from hyperspectral data of chicken breasts, and then fused them for classification (13). The results demonstrated that the fused features led to more accurate classification than single features. Pu et al. also showed that the combination of spectral and textural information in the modeling process could improve the classification accuracy of different states of pork (14).

Because different types of beef cut might have similar composition, they are difficult to identify with only spectral information. Meanwhile, the scattering and textural properties of beef are also related to sarcomere length and collagen content. The accuracy of a classification model depends on the feature extraction, the fusion method, and the classifier. Feature extraction is recognized as an important approach to obtain informative features from high-dimensional data. For MSI measurements, feature fusion incorporating both spatial and spectral information has been investigated intensively in remote sensing to improve classification accuracy (15). Recently, machine learning (ML) has been applied to the processing of spectral and multiple-modality data (16–18).

The objective of this study is to develop a classification method by MSI in combination with machine learning-based classifiers. Based on multispectral images of beef cuts, spectral and textural features were extracted to reduce the dimension of data. Three machine learning-based classifiers were used to establish classification models with different feature sets. After the performance of single and multiple-modality features was compared, the best models were put forward. The results confirmed the feasibility of classifying beef cuts with a satisfactory accuracy. Using multiple-modality features, the optimally developed LDA classifier achieved a prediction accuracy of over 90%. Therefore, the proposed approach would provide an effective and empirical reference for meat classification in similar future research and application.

As shown in Figure 1, the MSI system consists of a light source (HL-2000-FHSA; Ocean Optics, Dunedin, FL, United States) and an adjustable focus lens (Nikon, Tokyo, Japan) coupled with a multi-channel spectroscopic camera (miniCAM5; QHYCCD, China). The spectroscopic camera has one TE-cooled, 16-bit scientific CCD camera and a six-position filter wheel, in which dichroic bandpass filters are mounted. With an adjustable focus lens, the system achieves high-resolution imaging of 1,290 × 960 pixels with six bands, centered at 500, 530, 570, 680, 760, and 808 nm. In this approach, each band covers a relatively wide range of wavelengths (about 16 nm in full-width half-maximum, FWHM), which typically yields a signal strong enough for fast imaging. MSI measurements were performed on fresh beef cuts bought from a local market. The spectra of the beef cuts were also measured with a visible near-infrared (VIS-NIR) spectrometer (BTC611E; BWTEK, United States).

In order to reduce the error caused by the configuration of the MSI system and avoid the oversaturation of the charge-coupled device (CCD) sensor, each image was normalized as a pointwise ratio of the dark-noise-corrected intensity from the beef cuts to that from the diffuse reflectance standard (20% reflection; Labsphere Inc., United States), as follows:

$$I_R = \frac{I_{raw} - I_{dark}}{I_{ref} - I_{dark}}$$

where $I_R$ is the calibrated reflectance, $I_{raw}$ is the raw intensity measured from the test sample, $I_{dark}$ is the intensity of the dark response, and $I_{ref}$ is the intensity of the 20% standard reference. This procedure could also compensate for inhomogeneous illumination fields and serve as a relative intensity correction.
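The calibration described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `calibrate_reflectance`, the `eps` guard, and the toy intensity values are hypothetical and not taken from the authors' script. The result is a reflectance relative to the 20% standard, not an absolute reflectance.

```python
import numpy as np

def calibrate_reflectance(raw, dark, ref, eps=1e-12):
    """Pointwise ratio of dark-corrected sample and reference intensities."""
    raw = np.asarray(raw, dtype=float)
    dark = np.asarray(dark, dtype=float)
    ref = np.asarray(ref, dtype=float)
    denom = ref - dark
    # Hypothetical guard: avoid dividing by zero where the reference
    # image equals the dark response.
    denom = np.where(np.abs(denom) < eps, eps, denom)
    return (raw - dark) / denom

# Toy 2 x 2 "images" for one band (made-up intensities).
raw = np.array([[120.0, 220.0], [320.0, 420.0]])
dark = np.full((2, 2), 20.0)
ref = np.full((2, 2), 520.0)

reflectance = calibrate_reflectance(raw, dark, ref)
print(reflectance)
```

For a six-band image, the same function can be applied to arrays of shape (bands, rows, columns), since the arithmetic is elementwise.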

All the beef cuts were purchased from the Ming Yuan abattoir. The bulls were slaughtered according to standard procedure. The samples were sent to the laboratory within 20 min after purchase, sliced immediately, bagged separately, and stored in a freezing room at −18°C. Before data collection, the samples were thawed in a cold storage room at 6°C for 12 h. The processing steps of all the samples are generally the same, and the time interval of the experiment is short, which guarantees the reliability of the sampling process. To evaluate the representativeness of the samples, emphasis was placed on collecting diverse data with expected variations in color, size, and shape from different sites.

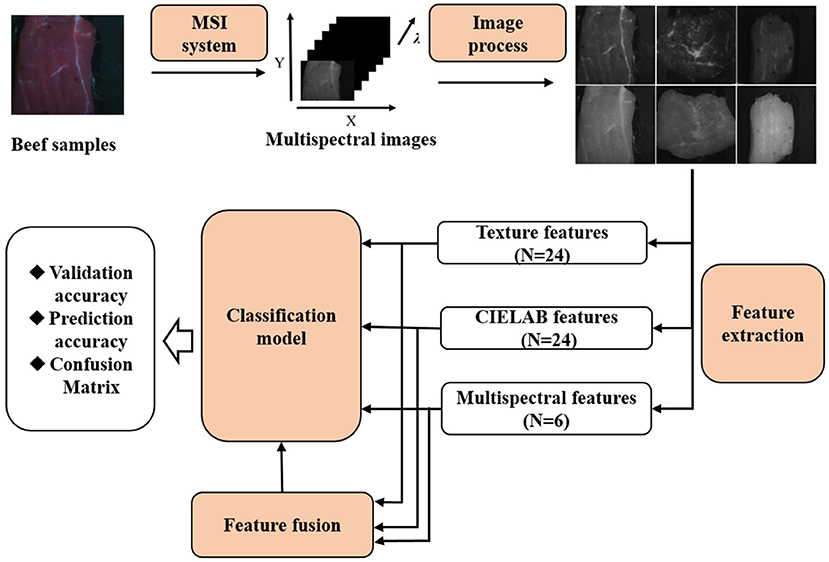

As shown in Figure 2, the basic steps of the classification model generally involved preprocessing, feature extraction, fusion, and classification. First, the spectral and textural features of the multispectral images were extracted and used as single-modality feature sets. After normalization, feature fusion was introduced by concatenating the spectral and textural features into a single array, which represents the multiple-modality fusion feature set. Linear discriminant analysis (LDA), support vector machine (SVM), and random forest (RF) algorithms were applied to establish the classification models based on the single-modality or multiple-modality feature sets. The classification accuracies of the different classifiers and feature sets were further compared. Details of every step are described in the following sections.

Figure 2. Flow chart of main steps in data acquisition and analysis using multispectral images combined with machine learning for classification of beef cuts.

We implemented all the algorithms, i.e., feature extraction, feature fusion, and classifiers, in a custom Python script. The LDA, SVM, and RF implementations from the sklearn library were used to build the classification models.

The multispectral images contain abundant information, with two spatial dimensions and one spectral dimension, which gives more details of the samples. However, the curse of dimensionality associated with such high-dimensional data also makes the classification model more complex. Feature extraction is required to obtain relevant information to make the classification model more accurate and robust.

Considering the anisotropic muscles of beef, the textural features could be extracted with the gray-level co-occurrence matrix (GLCM), which represents the probability that a pixel of specific gray level appears in a specified direction and distance from its neighboring pixels (19). In this study, four statistical textural features were calculated from GLCM, namely, homogeneity, contrast, energy, and correlation, as follows:

where g(i,j), the (i,j)th entry in the gray-tone spatial dependence matrix, denotes the probability that two pixels with the gray levels i and j co-occur in the corresponding texture image. In general, homogeneity assesses the prevalence of gray-tone transitions; contrast quantifies the local variation in an image; energy reflects the uniformity of the image gray distribution; correlation measures its gray-tone linear dependencies. The four statistical features were obtained from the images of each band and finally formed the textural feature set with 24 variables (four features for each of the six bands).
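As an illustration of the four GLCM statistics named above, the following NumPy sketch builds a normalized, symmetric co-occurrence matrix and evaluates commonly used definitions of homogeneity, contrast, energy, and correlation. The exact variants used in the study are not recoverable from the text, so the formulas in the comments are assumptions (for example, homogeneity is sometimes defined with (i − j)² instead of |i − j| in the denominator). The function names, the pixel offset, the number of gray levels, and the toy image are also hypothetical.

```python
import numpy as np

def glcm(image, levels, dx=1, dy=0):
    """Normalized, symmetric co-occurrence matrix for the offset (dy, dx)."""
    image = np.asarray(image)
    rows, cols = image.shape
    counts = np.zeros((levels, levels), dtype=float)
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r, c], image[r2, c2]] += 1
    counts = counts + counts.T      # count every pair in both directions
    return counts / counts.sum()    # entries g(i, j) sum to 1

def glcm_features(g):
    """Assumed definitions; correlation is undefined for a constant image."""
    i, j = np.indices(g.shape)
    mu_i, mu_j = (i * g).sum(), (j * g).sum()
    sd_i = np.sqrt((((i - mu_i) ** 2) * g).sum())
    sd_j = np.sqrt((((j - mu_j) ** 2) * g).sum())
    return {
        "homogeneity": (g / (1.0 + np.abs(i - j))).sum(),
        "contrast": (((i - j) ** 2) * g).sum(),
        "energy": (g ** 2).sum(),
        "correlation": ((i - mu_i) * (j - mu_j) * g).sum() / (sd_i * sd_j),
    }

# Toy 4 x 4 image quantized to four gray levels.
image = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 2, 2, 2],
                  [2, 2, 3, 3]])
g = glcm(image, levels=4)
features = glcm_features(g)

# Four features per band, six bands: a 24-element textural feature vector.
cube = np.stack([image] * 6)  # stand-in for six band images
texture_vector = [v for band in cube
                  for v in glcm_features(glcm(band, levels=4)).values()]
print(features, len(texture_vector))
```

In practice the band images would first be quantized to a fixed number of gray levels, and several offsets or directions may be averaged; neither choice is specified here.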

To obtain the spectral features, the multispectral images were converted into the CIELAB color space, and the values in this color space represent the information of the partial band intensity values of the multi-spectrum (20). First, the multispectral images were converted into the CIEXYZ space as follows:

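where R, G, and B are the values of RGB channels and X, Y, and Z are the values in the CIEXYZ space. Then, the values in the CIEXYZ space were converted into the CIELAB space by the standard CIE 1976 relations:

$$L^* = 116\, f\!\left(\frac{Y}{Y_n}\right) - 16, \qquad a^* = 500\left[ f\!\left(\frac{X}{X_n}\right) - f\!\left(\frac{Y}{Y_n}\right) \right], \qquad b^* = 200\left[ f\!\left(\frac{Y}{Y_n}\right) - f\!\left(\frac{Z}{Z_n}\right) \right]$$

where:

$$f(t) = \begin{cases} t^{1/3}, & t > \left(\frac{6}{29}\right)^3 \\ \dfrac{t}{3\left(\frac{6}{29}\right)^2} + \dfrac{4}{29}, & \text{otherwise} \end{cases}$$

and $X_n$, $Y_n$, and $Z_n$ are the CIEXYZ values of the reference white.

Finally, the L*, a*, and b* values of the CIELAB space were used as the spectral features.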
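The color-space conversion can be sketched as follows. The CIEXYZ-to-CIELAB step uses the standard CIE 1976 definition; the RGB-to-CIEXYZ matrix, however, is an assumption (linear sRGB primaries with a D65 white point), because the matrix used in the study and the way the six bands were mapped to R, G, and B channels are not given in the text. The function names are hypothetical.

```python
import numpy as np

# ASSUMPTION: linear sRGB primaries, D65 white point. The matrix used by
# the authors is not stated in the text.
RGB_TO_XYZ = np.array([
    [0.4124, 0.3576, 0.1805],
    [0.2126, 0.7152, 0.0722],
    [0.0193, 0.1192, 0.9505],
])
# Reference white taken as the XYZ value of R = G = B = 1.
WHITE = RGB_TO_XYZ @ np.ones(3)

def xyz_to_lab(xyz, white=WHITE):
    """CIE 1976 L*a*b* from XYZ; the last axis holds (X, Y, Z)."""
    t = np.asarray(xyz, dtype=float) / white
    delta = 6.0 / 29.0
    f = np.where(t > delta ** 3, np.cbrt(t), t / (3 * delta ** 2) + 4.0 / 29.0)
    lightness = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return np.stack([lightness, a, b], axis=-1)

def rgb_to_lab(rgb):
    """Linear RGB (last axis of length 3, values in [0, 1]) to L*a*b*."""
    xyz = np.asarray(rgb, dtype=float) @ RGB_TO_XYZ.T
    return xyz_to_lab(xyz)

white_lab = rgb_to_lab([1.0, 1.0, 1.0])
black_lab = rgb_to_lab([0.0, 0.0, 0.0])
red_lab = rgb_to_lab([1.0, 0.0, 0.0])
print(white_lab, black_lab, red_lab)
```

Because the conversion operates on the last axis, an image of shape (rows, columns, 3) can be passed directly; the per-sample spectral features could then be, for instance, the mean L*, a*, and b* over the region of interest, which is again an assumption about the aggregation step.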

The spectral and textural features could be used as an input for the classification separately, and are referred to as single-modality feature sets. For multimodal measurement, feature fusion could combine the information obtained by different modalities and generate a composite representation containing a more informative description than a single-modality feature set could provide. In feature-level fusion, the spectral and textural features were concatenated into a single high-dimensional vector used for classification. In order to eliminate the effect of the differing scales of the feature values, the features were normalized by subtracting the mean and dividing by the standard deviation of each feature vector.
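A minimal sketch of this feature-level fusion, assuming one row per sample and one column per feature: each feature is z-score normalized and the two normalized blocks are concatenated. The function names and the random stand-in data are hypothetical.

```python
import numpy as np

def zscore(features, eps=1e-12):
    """Subtract the per-feature mean and divide by the per-feature std."""
    features = np.asarray(features, dtype=float)
    mean = features.mean(axis=0)
    std = features.std(axis=0)
    # Hypothetical guard: leave constant features at zero instead of
    # dividing by zero.
    return (features - mean) / np.where(std < eps, 1.0, std)

def fuse(spectral, textural):
    """Normalize each modality, then concatenate along the feature axis."""
    return np.hstack([zscore(spectral), zscore(textural)])

# Random stand-in data: 8 samples, 3 spectral and 24 textural features.
rng = np.random.default_rng(0)
spectral = rng.normal(50.0, 10.0, size=(8, 3))   # e.g., L*, a*, b*
textural = rng.normal(0.5, 0.1, size=(8, 24))    # 4 GLCM features x 6 bands
fused = fuse(spectral, textural)
print(fused.shape)
```

When a separate prediction set is used, the means and standard deviations would normally be estimated on the calibration set only and then reused for the prediction set; the sketch omits this for brevity.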

In this study, three supervised machine learning algorithms, LDA, SVM, and RF, were used to build the classification model for identifying three kinds of beef cuts. LDA is a generalization of Fisher's linear discriminant, which finds a linear combination of features that characterizes or separates two or more classes. It projects the input variables onto a lower-dimensional space so as to maximize the ratio of between-class scatter to within-class scatter. SVM separates different types of samples by mapping the low-dimensional space to a high-dimensional space with a kernel function (21). The linear kernel is often a good choice that works with high speed and efficiency. For multi-class tasks, multiple binary classifiers can be constructed and combined by voting through a “one-vs.-one” strategy. RF generates an ensemble of decision trees, each grown by choosing the splits with the highest information gain, and classifies a sample by majority vote over all the trees (22). Because the prediction is aggregated over the ensemble, a single tree does not dominate the overall prediction, and RF also provides an estimate of which variables are important in the classification. Different trees may give different results for the same classification task, and the most popular result is selected as the final result.

Considering the dimensionality of the features, we chose “singular value decomposition” as the solver for LDA, which is good at handling high-dimensional features. The kernel function and the penalty factor C were the key parameters of the SVM model. In this study, we used a linear kernel because of its high efficiency. The penalty factor was optimized using a grid search method, giving C = 0.6. An RF classifier was constructed using the square root of the number of features as the maximum number of features considered at each split, and 1 as the minimum number of samples in a leaf node, to achieve high classification accuracy and efficiency. We tested the proposed model with various numbers of trees and found the best RF performance when the number of trees was 160.
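The hyperparameters stated in this paragraph map onto scikit-learn estimators roughly as sketched below. This is an illustrative configuration, not the authors' script: the helper name `build_classifiers`, the `random_state`, and the synthetic 27-feature data are assumptions.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.ensemble import RandomForestClassifier
from sklearn.svm import SVC

def build_classifiers(random_state=0):
    """Three classifiers configured with the values quoted in the text."""
    return {
        # Singular value decomposition solver for LDA.
        "LDA": LinearDiscriminantAnalysis(solver="svd"),
        # Linear kernel with penalty factor C = 0.6; SVC handles
        # multi-class problems with a one-vs.-one scheme internally.
        "SVM": SVC(kernel="linear", C=0.6),
        # 160 trees, sqrt(n_features) candidates per split, leaf size 1.
        "RF": RandomForestClassifier(
            n_estimators=160,
            max_features="sqrt",
            min_samples_leaf=1,
            random_state=random_state,
        ),
    }

# Synthetic stand-in for fused features: 3 classes, 27 features.
rng = np.random.default_rng(0)
centers = rng.normal(0.0, 5.0, size=(3, 27))
y = np.repeat([0, 1, 2], 40)
X = centers[y] + rng.normal(0.0, 1.0, size=(120, 27))

scores = {}
for name, model in build_classifiers().items():
    model.fit(X[::2], y[::2])                       # even rows: training
    scores[name] = model.score(X[1::2], y[1::2])    # odd rows: testing
print(scores)
```

A grid search over C, as mentioned in the text, could be layered on top with `sklearn.model_selection.GridSearchCV`; the candidate grid itself is not given in the source.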

The experiments were carried out on 555 samples, including 200 cuts of sirloin, 195 cuts of shank, and 160 cuts of flank. All the samples were divided manually into two subsets, namely, a calibration set (445 samples) and a prediction set (110 samples). The samples in the calibration set were used to establish the classification model. A stratified 10-fold cross-validation was performed on the calibration set to verify the performance of the model.

Cross-validation is an effective and widely used technique to evaluate classification models by partitioning the original sample into a training set to train the models, and a test set to evaluate them. In 10-fold cross-validation, the original sample is randomly partitioned into 10 subsamples of equal size. Of the 10 subsamples, a single subsample is retained as the validation data for testing the model, and the remaining nine are used as training data. The cross-validation process is then repeated 10 times (the folds) with every subsample used exactly once as the validation data. For classification problems, stratifying the folds has the advantage that each fold contains roughly the same proportions of class labels.
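The fold construction described above can be sketched with the standard library alone. The sketch assigns samples to folds class by class so that class proportions stay similar across folds. The function names are hypothetical, and the per-class breakdown of the 445 calibration samples used below is made up for illustration, since the source gives only the total.

```python
import random
from collections import defaultdict

def stratified_folds(labels, n_folds=10, seed=0):
    """Assign sample indices to folds, keeping class proportions similar."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    folds = [[] for _ in range(n_folds)]
    offset = 0
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Deal the shuffled indices round-robin, continuing where the
        # previous class stopped so fold sizes stay balanced.
        for k, index in enumerate(indices):
            folds[(offset + k) % n_folds].append(index)
        offset += len(indices)
    return folds

def cross_validation_splits(labels, n_folds=10, seed=0):
    """Yield (training indices, validation indices) once per fold."""
    folds = stratified_folds(labels, n_folds, seed)
    for k in range(n_folds):
        training = [i for j, fold in enumerate(folds) if j != k for i in fold]
        yield training, folds[k]

# Hypothetical class breakdown of a 445-sample calibration set.
labels = ["sirloin"] * 160 + ["shank"] * 156 + ["flank"] * 129
splits = list(cross_validation_splits(labels))
for training, validation in splits[:2]:
    print(len(training), len(validation))
```

With scikit-learn available, `sklearn.model_selection.StratifiedKFold` provides the same kind of split and would be the more usual choice.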

The performance of the classification models was evaluated in terms of accuracy, sensitivity, and specificity, which were calculated as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

$$\text{Specificity} = \frac{TN}{TN + FP}$$

where TP is the number of samples correctly classified as positive; TN is the number of samples correctly classified as negative; FP is the number of samples incorrectly classified as positive; FN is the number of samples incorrectly classified as negative. Based on these metrics, confusion matrices were also calculated to explore detailed classification performance.
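For a three-class problem, TP, TN, FP, and FN are obtained per class by treating that class as positive and the other two as negative. The sketch below derives the three metrics from a confusion matrix in this one-vs.-rest fashion. The function names are hypothetical and the confusion matrix is made up for illustration; it is not a result from this study.

```python
def per_class_metrics(confusion):
    """confusion[i][j]: samples of true class i predicted as class j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    metrics = []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                          # class k missed
        fp = sum(confusion[i][k] for i in range(n)) - tp     # others called k
        tn = total - tp - fn - fp
        metrics.append({
            "accuracy": (tp + tn) / total,
            "sensitivity": tp / (tp + fn),
            "specificity": tn / (tn + fp),
        })
    return metrics

def overall_accuracy(confusion):
    """Fraction of samples on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in confusion)
    return sum(confusion[k][k] for k in range(len(confusion))) / total

# Made-up 3 x 3 confusion matrix (rows: true class, columns: predicted).
confusion = [
    [38, 1, 1],
    [2, 36, 2],
    [4, 6, 20],
]
metrics = per_class_metrics(confusion)
accuracy = overall_accuracy(confusion)
print(metrics[0], accuracy)
```

The sensitivity of each class is the corresponding diagonal entry divided by its row sum, which is why row-normalized confusion matrices show sensitivities on the diagonal.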

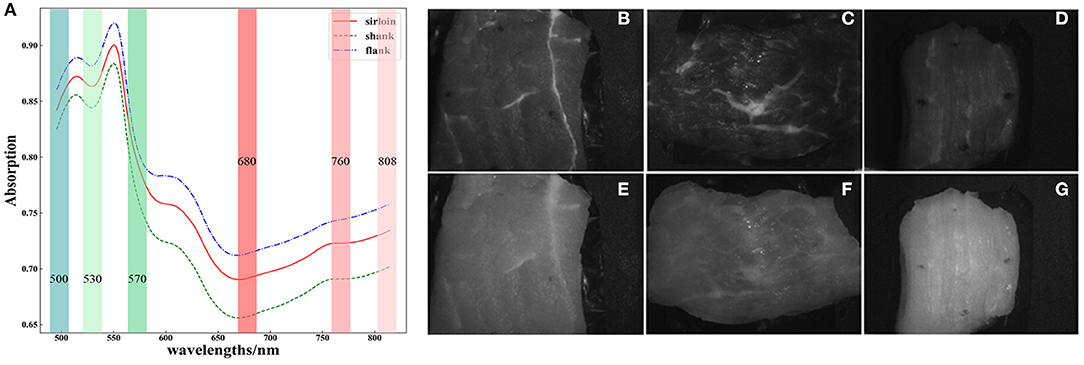

As shown in Figure 3A, the reflectance spectra of the three types of beef cut show different intensity and absorption features in the range of 500–800 nm. There are obvious absorption peaks centered at 510, 550, and 640 nm. The absorbance at 510 nm corresponds to light absorption by muscle pigments, while the absorption band at 550 nm is related to oxymyoglobin absorption (23, 24). Red meats also show an absorption band at 630 nm, which has been attributed to sulfmyoglobin (24). The weak absorption peak centered at 760 nm corresponds to the third overtone region of O-H or an absorption band produced by deoxymyoglobin (23, 25). Because sirloin contains more water and deoxymyoglobin, the absorption peak around 760 nm is obvious. The main component of flank and shank is muscle, which contains less water and deoxymyoglobin, resulting in less absorption at 760 nm. These compositions also determine the color and reflectance intensity of the beef, which makes the absorption differ between beef cuts. Among these three kinds of beef cuts, the shank cuts have lower absorption, because they have more white tendons and lipids.

Figure 3. Visible near-infrared (VIS-NIR) spectrum of (A) three kinds of beef cut and corresponding multispectral images at 500 nm of (B) sirloin, (C) flank, (D) shank; and multispectral images at 760 nm of (E) sirloin, (F) flank, and (G) shank.

The multispectral images of the beef cuts also show heterogeneity in the arrangement of protein fibers and the presence of intramuscular fat and other tissues, as shown in Figures 3B-G. However, the texture properties also differed with spectral bands. The textural feature also reflects the main components of the beef cuts. Due to the high absorption of myoglobin in muscle, all the three types of beef cuts show lower reflectance at 500 nm, but the heterogeneity of beef was more detailed in this band.

As mentioned above, the three classification models established were LSVM (linear support vector machine), LDA (linear discriminant analysis), and RF (random forest). In order to prevent overfitting, the regularization parameter in SVM needs to be set properly, and it was finally set to 1. The solver in LDA was set to singular value decomposition, which is good at handling high-dimensional features. As for RF, the number of trees needs to be set reasonably to achieve high classification accuracy and good computing efficiency. In our trials, it was found that after the number of trees reached 10, the accuracy of the model no longer improved significantly. With the model parameters set, model building and prediction were completed using the sklearn library in Python 3.

As shown in Table 1, the performance of the classification models based on single-modality features was validated by 10-fold cross-validation and a prediction test. Generally, classification models based on textural features achieve a better accuracy than those based on spectral features. There was not much difference in the results between the two linear classifiers with textural features. The SVM and LDA classifiers achieved a prediction accuracy of 70.91 and 72.73%, respectively. With the spectral features, the RF classifier achieved a cross-validation accuracy of 68.07% and a prediction accuracy of 64.55%. However, the prediction results of the models with spectral features were always lower than those of the models with textural features.

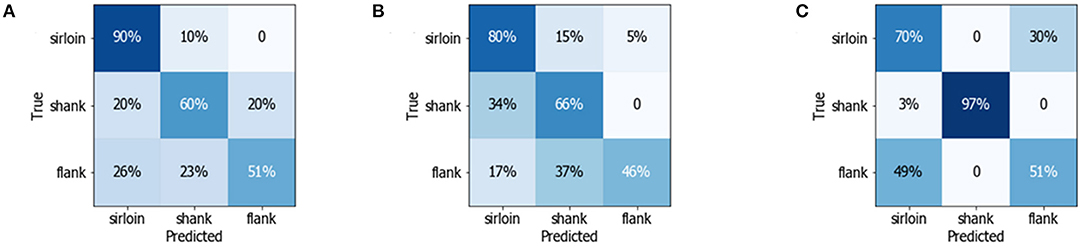

The confusion matrix was calculated to present the sensitivity (recall) of each class, providing insights into the classification capability of the models. As shown in Figure 4, the correctly classified results were located on the diagonal, which also indicated the sensitivity of the model. The results demonstrated that most of the sirloin cuts could be correctly classified based on the spectral features. Meanwhile, 97% of the shank cuts could be identified by the LDA classifier with textural features, because the shank cuts have more textural features related to strips of white fascia and unique muscle fiber directions, which can be easily captured as textural features. However, the sensitivity of the flank cuts is below 60% with the three classification models, indicating that the flank samples are more difficult to distinguish with single-modality features. According to the confusion matrix, the classification accuracy of different beef cuts varied with the feature set. In that sense, the classification model could be further improved by multiple-modality feature fusion.

Figure 4. Confusion matrix detailing the multiclass discrimination results of three different beef cuts using (A) random forest (RF) model with multispectral data, (B) linear discriminant analysis (LDA) model with CIELAB data, and (C) LDA model with texture data.

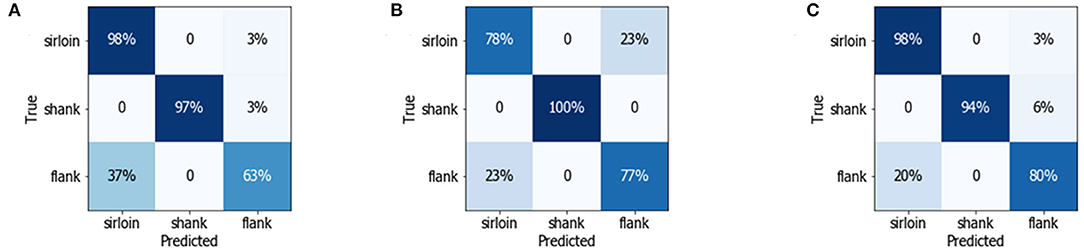

Table 2 summarizes the performance of the classification models with multiple-modality fusion feature sets. The classification accuracy was improved by merging the spectral and textural features. All three classifiers performed better with the multiple-modality fusion feature set MS-CIELAB-texture. With feature fusion, the optimally developed LDA classifier shows the biggest improvement, achieving a prediction accuracy of over 90%. The RF classifier did not improve as much as the two linear classifiers, because a nonlinear classifier needs a larger number of training samples to find a good classification criterion.

As shown in Figure 5, the classification accuracy of the linear classifiers is significantly improved with multiple-modality feature fusion sets. Compared with the single textural feature set, the classification accuracy of flank was greatly improved with the MS-CIELAB-texture set, achieving a sensitivity of 80% with the LDA classifier, as shown in Figure 5C. Meanwhile, the classification accuracy of sirloin and shank was also improved. The sensitivity of the LDA classifier with the MS-texture feature set was 98% for sirloin cuts, while the sensitivity for shank reached 100% with the combination of CIELAB and textural features.

Figure 5. Confusion matrix detailing the multiclass discrimination results of three different beef cuts using (A) the linear support vector machine (LSVM) classifier with MS-texture fusion features, (B) the LDA classifier with CIELAB-texture fusion features, and (C) the LDA classifier with MS-CIELAB-texture fusion features.

All the results demonstrate that multiple-modality features could efficiently improve the accuracy of the classification model through the synergy of the modalities. Feature fusion combines the most sensitive features of each modality, so that the resulting classifier performs better. However, the number of features will also affect the performance of a classifier, such as error tolerance, number of iterations, and training time. Therefore, all aspects of the model should be considered comprehensively when selecting the feature set and classification algorithm.

To date, multimodality approaches have shown a considerable capacity to improve the performance of classification and analysis applications. However, most studies have merged redundant information based only on different vibrational spectroscopy techniques. Beyond improving the classification accuracy, this study tries to provide a better interpretation of the chemometric model and machine vision method.

Recently, portable multispectral devices were developed to meet the increasing requirements of food analysis. This study utilized portable equipment with simplified snapshot measurement, providing the potential to develop a low-cost instrument for online detection. By taking advantage of high-efficiency data acquisition and processing, the MSI approach could be widely applied for many tasks with a low budget. There is also a large potential for combining molecular information and machine vision in data fusion models for applications to different matrices, such as food authenticity and storage.

In this study, a classification method was proposed to identify different beef cuts with MSI and machine learning-based classifiers. With feature extraction and fusion strategy, multispectral images were converted into single-modality and multiple-modality feature sets and used further as input of machine learning-based classifiers. The results demonstrated that the classification model with multiple-modality fusion feature set performed better than the model with a single feature set. The performance of the LDA classifier was greatly improved with multiple-modality feature fusion, achieving a prediction accuracy of above 90%. This study also provided a better interpretation of the MSI approach, which should be considered for use in routine, nondestructive analyses of the food industry.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

AL: conceptualization, methodology, software, and writing—original draft. CL: conceptualization, methodology, formal analysis, writing—review and editing, and funding acquisition. MG: software and visualization. RL: validation, formal analysis, resources, and funding acquisition. WC: validation, resources, and funding acquisition. KX: formal analysis, resources, funding acquisition, and project administration. All authors contributed to the article and approved the submitted version.

The authors acknowledge the financial support provided by the National Natural Science Foundation of China (Grant Nos: 81871396, 81971657, and 81671727) and the Tianjin Natural Science Foundation (Grant Nos: 19JCYBJC29100 and 20JCZDJC00630).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Wu GY. Important roles of dietary taurine, creatine, carnosine, anserine and 4-hydroxyproline in human nutrition and health. Amino Acids. (2020) 52:329–60. doi: 10.1007/s00726-020-02823-6

2. Henchion M, McCarthy M, Resconi VC, Troy D. Meat consumption: Trends and quality matters. Meat Sci. (2014) 98:561–8. doi: 10.1016/j.meatsci.2014.06.007

3. Abdel-Nour N, Ngadi M, Prasher S, Karimi Y. Prediction of egg freshness and albumen quality using visible/near infrared spectroscopy. Food and Bioprocess Technology. (2011) 4:731–6. doi: 10.1007/s11947-009-0265-0

4. Wang WX, Peng YK, Sun HW, Zheng XC, Wei WS. Spectral detection techniques for non-destructively monitoring the quality, safety, and classification of fresh red meat. Food Anal Methods. (2018) 11:2707–30. doi: 10.1007/s12161-018-1256-4

5. Li JB, Huang WQ, Zhao CJ, Zhang BH. A comparative study for the quantitative determination of soluble solids content, pH and firmness of pears by Vis/NIR spectroscopy. J Food Eng. (2013) 116:324–32. doi: 10.1016/j.jfoodeng.2012.11.007

6. Monago-Marana O, Wold JP, Rodbotten R, Dankel KR, Afseth NK. Raman, near-infrared and fluorescence spectroscopy for determination of collagen content in ground meat and poultry by-products. LWT - Food Science and Technology. (2021) 140:8. doi: 10.1016/j.lwt.2020.110592

7. Gomez-Sanchis J, Gomez-Chova L, Aleixos N, Camps-Valls G, Montesinos-Herrero C, Molto E, et al. Hyperspectral system for early detection of rottenness caused by Penicillium digitatum in mandarins. J Food Eng. (2008) 89:80–6. doi: 10.1016/j.jfoodeng.2008.04.009

8. Liu YW, Pu HB, Sun DW. Hyperspectral imaging technique for evaluating food quality and safety during various processes: A review of recent applications. Trends in Food Science & Technology. (2017) 69:25–35. doi: 10.1016/j.tifs.2017.08.013

9. Li ST, Song WW, Fang LY, Chen YS, Ghamisi P, Benediktsson JA. Deep learning for hyperspectral image classification: an overview. IEEE Transactions on Geoscience and Remote Sensing. (2019) 57:6690–709. doi: 10.1109/tgrs.2019.2907932

10. Qin JW, Chao KL, Kim MS, Lu RF, Burks TF. Hyperspectral and multispectral imaging for evaluating food safety and quality. J Food Eng. (2013) 118:157–71. doi: 10.1016/j.jfoodeng.2013.04.001

11. Gowen AA, O'Donnell CP, Cullen PJ, Downey G, Frias JM. Hyperspectral imaging - an emerging process analytical tool for food quality and safety control. Trends in Food Science & Technology. (2007) 18:590–8. doi: 10.1016/j.tifs.2007.06.001

12. Gowen AA, O'Donnell CP, Taghizadeh M, Cullen PJ, Frias JM, Downey G. Hyperspectral imaging combined with principal component analysis for bruise damage detection on white mushrooms (Agaricus bisporus). J Chemom. (2008) 22:259–67. doi: 10.1002/cem.1127

13. Jiang HZ, Yoon SC, Zhuang H, Wang W, Li YF, Yang Y. Integration of spectral and textural features of visible and near-infrared hyperspectral imaging for differentiating between normal and white striping broiler breast meat. Spectrochimica Acta Part A: Molecular and Biomolecular Spectroscopy. (2019) 213:118–26. doi: 10.1016/j.saa.2019.01.052

14. Pu H, Sun DW, Ma J, Cheng JH. Classification of fresh and frozen-thawed pork muscles using visible and near infrared hyperspectral imaging and textural analysis. Meat Sci. (2014) 99:81–8. doi: 10.1016/j.meatsci.2014.09.001

15. Imani M, Ghassemian H. An overview on spectral and spatial information fusion for hyperspectral image classification: Current trends and challenges. Information Fusion. (2020) 59:59–83. doi: 10.1016/j.inffus.2020.01.007

16. Parastar H, van Kollenburg G, Weesepoel Y, van den Doel A, Buydens L, Jansen J. Integration of handheld NIR and machine learning to “Measure & Monitor” chicken meat authenticity. Food Control. (2020) 112:11. doi: 10.1016/j.foodcont.2020.107149

17. Cheng JH, Sun DW, Qu JH, Pu HB, Zhang XC, Song ZX, et al. Developing a multispectral imaging for simultaneous prediction of freshness indicators during chemical spoilage of grass carp fish fillet. J Food Eng. (2016) 182:9–17. doi: 10.1016/j.jfoodeng.2016.02.004

18. Danneskiold-Samsoe NB, Barros H, Santos R, Bicas JL, Cazarin CBB, Madsen L, et al. Interplay between food and gut microbiota in health and disease. Food Research International. (2019) 115:23–31. doi: 10.1016/j.foodres.2018.07.043

19. Zhu FL, Zhang DR, He Y, Liu F, Sun DW. Application of visible and near infrared hyperspectral imaging to differentiate between fresh and frozen-thawed fish fillets. Food and Bioprocess Technology. (2013) 6:2931–7. doi: 10.1007/s11947-012-0825-6

20. Uthoff RD, Song BF, Maarouf M, Shi V, Liang RG. Point-of-care, multispectral, smartphone-based dermascopes for dermal lesion screening and erythema monitoring. J Biomed Opt. (2020) 25:21. doi: 10.1117/1.Jbo.25.6.066004

21. Chang CC, Lin CJ. LIBSVM: A Library for support vector machines. ACM Transactions on Intelligent Systems and Technology. (2011) 2:1–27. doi: 10.1145/1961189.1961199

22. Svetnik V, Liaw A, Tong C, Culberson JC, Sheridan RP, Feuston BP. Random forest: a classification and regression tool for compound classification and QSAR modeling. J Chem Inf Comput Sci. (2003) 43:1947–58. doi: 10.1021/ci034160g

23. Cozzolino D, Murray I. Identification of animal meat muscles by visible and near infrared reflectance spectroscopy. LWT - Food Science and Technology. (2004) 37:447–52. doi: 10.1016/j.lwt.2003.10.013

24. Arnalds T, McElhinney J, Fearn T, Downey G. A hierarchical discriminant analysis for species identification in raw meat by visible and near infrared spectroscopy. J Near Infrared Spectroscopy. (2004) 12:183–8. doi: 10.1255/jnirs.425

25. Zhao HT, Feng YZ, Chen W, Jia GF. Application of invasive weed optimization and least square support vector machine for prediction of beef adulteration with spoiled beef based on visible near-infrared (Vis-NIR) hyperspectral imaging. Meat Sci. (2019) 151:75–81. doi: 10.1016/j.meatsci.2019.01.010

Keywords: multi-spectral imaging, machine learning, beef cuts, classification, feature fusion

Citation: Li A, Li C, Gao M, Yang S, Liu R, Chen W and Xu K (2021) Beef Cut Classification Using Multispectral Imaging and Machine Learning Method. Front. Nutr. 8:755007. doi: 10.3389/fnut.2021.755007

Received: 07 August 2021; Accepted: 17 September 2021;

Published: 20 October 2021.

Edited by:

Tao Pan, Jinan University, China

Reviewed by:

Lian Li, Shandong University, China

Copyright © 2021 Li, Li, Gao, Yang, Liu, Chen and Xu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rong Liu, rongliu@tju.edu.cn

†These authors have contributed equally to this work

