HYPOTHESIS AND THEORY article

Front. Comput. Neurosci., 18 February 2025

Volume 19 - 2025 | https://doi.org/10.3389/fncom.2025.1540532

This article is part of the Research Topic: Biophysical Models and Neural Mechanisms of Sequence Processing

Janet M. Baker1* Peter Cariani2

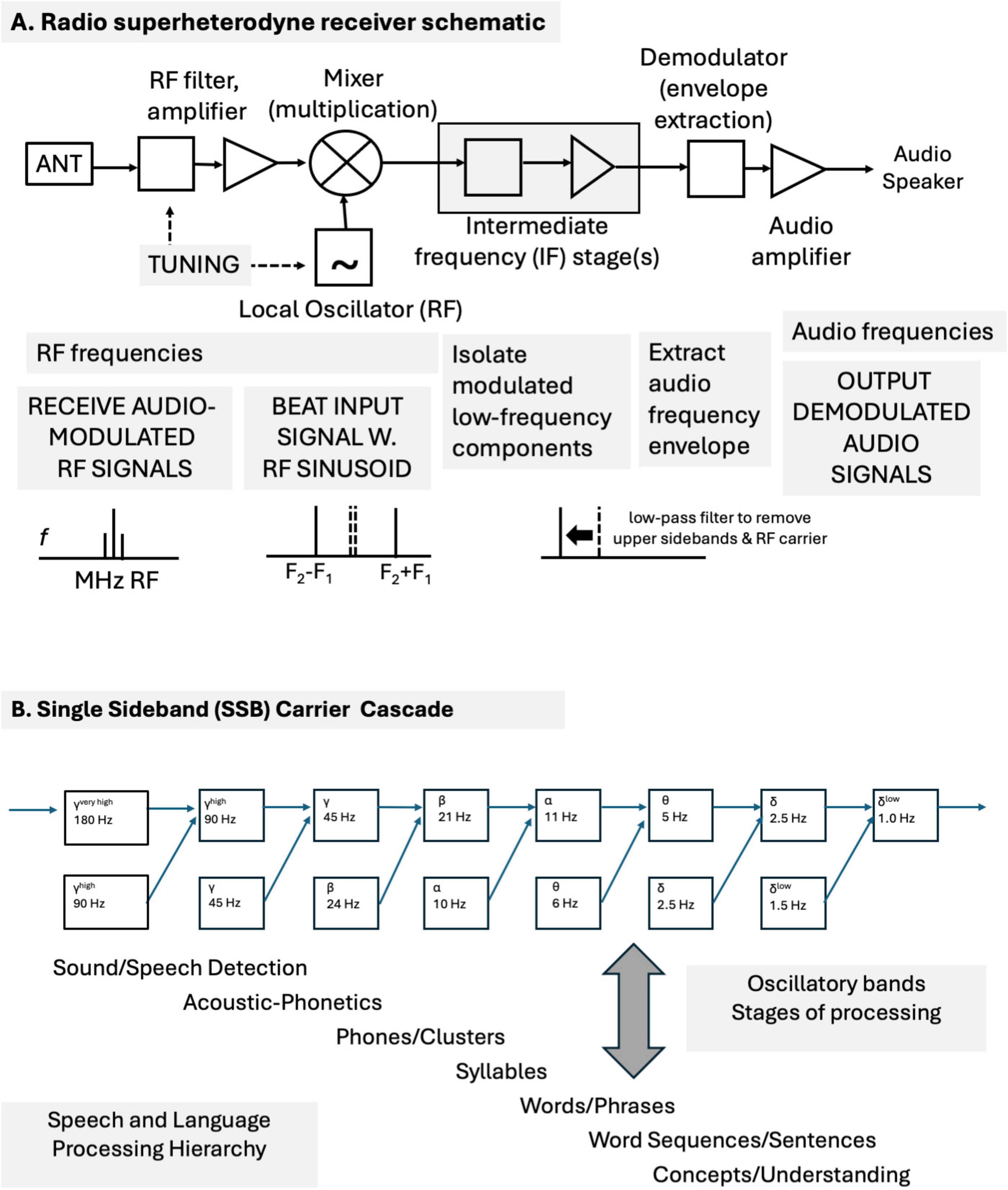

Time is essential for understanding the brain. A temporal theory for realizing major brain functions (e.g., sensation, cognition, motivation, attention, memory, learning, and motor action) is proposed that uses temporal codes, time-domain neural networks, correlation-based binding processes and signal dynamics. It adopts a signal-centric perspective in which neural assemblies produce circulating and propagating characteristic temporally patterned signals for each attribute (feature). Temporal precision is essential for temporal coding and processing. The characteristic spike patterns that constitute the signals enable general-purpose, multimodal, multidimensional vectorial representations of objects, events, situations, and procedures. Signals are broadcast and interact with each other in spreading activation time-delay networks to mutually reinforce, compete, and create new composite patterns. Sequences of events are directly encoded in the relative timings of event onsets. New temporal patterns are created through nonlinear multiplicative and thresholding signal interactions, such as mixing operations found in radio communications systems and wave interference patterns. The newly created patterns then become markers for bindings of specific combinations of signals and attributes (e.g., perceptual symbols, semantic pointers, and tags for cognitive nodes). Correlation operations enable both bottom-up productions of new composite signals and top-down recovery of constituent signals. Memory operates using the same principles: nonlocal, distributed, temporally coded memory traces, signal interactions and amplifications, and content-addressable access and retrieval. A short-term temporary store is based on circulating temporal spike patterns in reverberatory, spike-timing-facilitated circuits. A long-term store is based on synaptic modifications and neural resonances that select specific delay-paths to produce temporally patterned signals.
Holographic principles of nonlocal representation, storage, and retrieval can be applied to temporal patterns as well as spatial patterns. These can automatically generate pattern recognition (wavefront reconstruction) capabilities, ranging from objects to concepts, for distributed associative memory applications. The evolution of proposed neural implementations of holograph-like signal processing and associative content-addressable memory mechanisms is discussed. These can be based on temporal correlations, convolutions, simple linear and nonlinear operations, wave interference patterns, and oscillatory interactions. The proposed mechanisms preserve high resolution temporal, phase, and amplitude information. These are essential for establishing high phase coherency and determining phase relationships, for binding/coupling, synchronization, and other operations. Interacting waves can sum constructively for amplification, or destructively, for suppression, or partially. Temporal precision, phase-locking, phase-dependent coding, phase-coherence, synchrony are discussed within the context of wave interference patterns and oscillatory interactions. Sequences of mixed neural oscillations are compared with a cascade of sequential mixing stages in a single-sideband carrier suppressed (SSBCS) radio communications system model. This mechanism suggests a manner by which multiple neural oscillation bands could interact to produce new emergent information-bearing oscillation bands, as well as to abolish previously generated bands. A hypothetical example illustrates how a succession of different oscillation carriers (gamma, beta, alpha, theta, and delta) could communicate and propagate (broadcast) information sequentially through a neural hierarchy of speech and language processing stages. Based on standard signal mixing principles, each stage emergently generates the next. 
The sequence of oscillatory bands generated in the mixing cascade model is consistent with neurophysiological observations. This sequence corresponds to stages of speech-language processing (sound/speech detection, acoustic-phonetics, phone/clusters, syllables, words/phrases, word sequences/sentences, and concepts/understanding). The oscillatory SSBCS cascade model makes specific predictions for oscillatory band frequencies that can be empirically tested. The principles postulated here may apply broadly for local and global oscillation interactions across the cortex. Sequences of oscillatory interactions can serve many functions, e.g., to regulate the flow and interaction of bottom-up, gamma-mediated and top-down, beta-mediated neural signals, to enable cross-frequency coupling. Some specific guidelines are offered as to how the general time-domain theory might be empirically tested. Neural signals need to be sampled and analyzed with high temporal resolution, without destructive windowing or filtering. Our intent is to suggest what we think is possible, and to widen both the scope of brain theory and experimental inquiry into brain mechanisms, functions, and behaviors.

This article outlines a general-purpose time domain framework for information processing in the brain based on temporal codes, representations, and correlation operations. In contrast to the bulk of existing theories of brain functions, which are based on complex patterns of which neural channels are activated at a given time (channel codes), here neural information processing is conceived in terms of interactions of complex patterns of spikes (temporal codes).

Its long-term goal is to understand how brains work as informational systems, to reverse-engineer brains, for both scientific and engineering ends. Neurocomputational theories of information processing are neurally grounded functional models in that they attempt to explicate the kinds of functional organizations and operating principles that brains use to produce appropriate, effective behaviors. In contrast to, and complementary with, low-level detailed purely molecular, structural, dynamical, and high-level symbolic and behavioral approaches, neurocomputational theories are middle-level approaches that focus on how information processing might be realized in biological neural systems (Cariani, 2001b; Carandini, 2012; Cariani and Baker, 2022).

The time-domain perspective presented here arises from our work in both science and engineering on the perception and pattern recognition of sound, music, and speech. In the auditory domain, stimulus time structure and neural temporal codes are highly evident in the neurophysiology. Time-domain processing mechanisms appear compelling from artificial and biological signal processing perspectives. It has also been inspired by technologies of holography and radio communications.

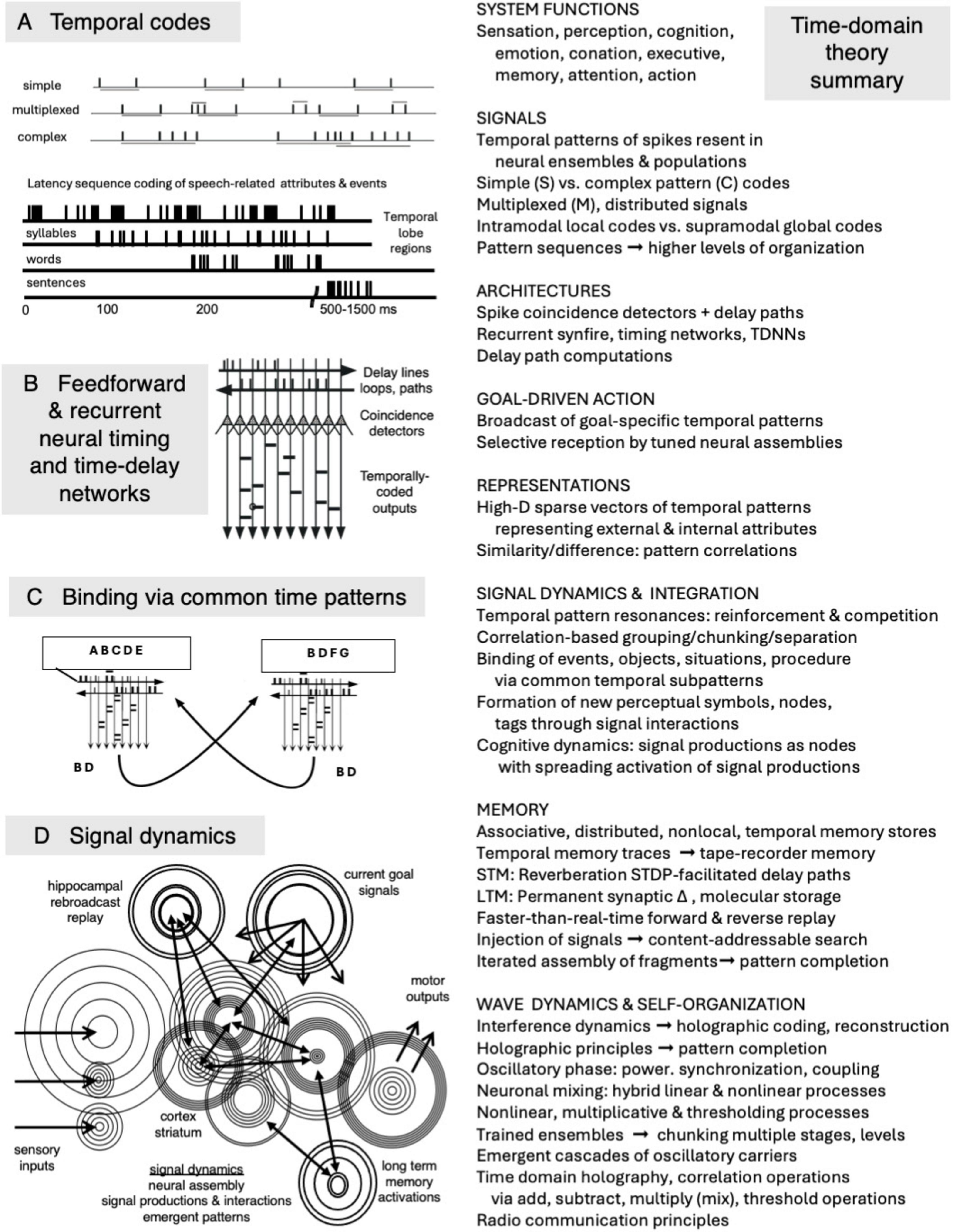

The major assertions of the theory are that peripheral and multiplexed central temporal codes and neural temporal processing architectures may subserve (1) many, possibly all, informational distinctions, and central neural representations, (2) many, possibly all, and major informational functionalities of brains [see section “2 Basic brain functionalities (what is to be explained),” Table 1, e.g., sensation, perception, cognition, emotion, motivation, attention, executive functions, orchestration of action, and motor programs], (3) correlation-based mechanisms of segmentation and binding, (4) associative memory representations and operations, (5) multidimensional cognitive dynamics via spreading activations of temporally coded neural signals, (6) emergence of new signals via nonlinear and multiplicative signal interactions, and (7) temporal coding of the multidimensional contents of memory traces. We further posit (see section “4 Time-domain waveforms, signals, and systems: common signal operations, holography, radio communications, and the brain”) that time-domain representations and operations can be effected using temporal correlations, time-domain holographic-like processing, wave interference patterns, and/or oscillatory cascades. These points are also outlined in Figure 1.

Figure 1. Summary of a signal-centric time-domain theory of brain function. (Left) Temporal codes, time-domain neural nets, correlation-based binding processes, and signal dynamics. (Right) Major conceptual components of the theory. Diagrams adapted from Cariani (1997, 2015) and Cariani and Baker (2022).

To our knowledge, no other such comprehensive, pan-temporal theory of brain function has ever been proposed. Perhaps the closest would be theories of event timing sequences mediated by hippocampal replay and time cells (Eichenbaum, 2013; Howard et al., 2014), where timings of time cell responses and/or which time cells are activated can encode event sequences on coarse timescales, but these theories do not propose that the attributes of events, i.e., what different attributes distinguish one event from another, are also themselves temporally coded. There also exist mathematical models of representations of time that rely on temporal receptive fields of time cells (Lindeberg, 2023; Howard et al., 2024). Many functional parallels with Grossberg’s (2021) adaptive resonance theory can be drawn, except that in this time domain theory the pattern resonances involve signals consisting of temporal patterns of spikes, whereas in his theory the functional states consist of patterns of firing rates among neural channels.

We do not by any means claim that the theory is correct in all respects, but, given the present, relatively rudimentary state of brain theory, we do firmly believe that this possibility needs to be on the table for discussion, consideration, and investigation. The theory is open to empirical test by neurophysiological and neuropsychological experiments, some of which are outlined in section “5 Discussion – testing the time-domain theory.” Confirmation or dismissal of the theory will require solving the neural coding problem in central brain circuits, i.e., identifying the specific neural correlates of the specific informational contents of the attributes encoded in neural representations. Higher temporal resolutions and concerted efforts to identify temporal correlations and embedded patterns amongst spikes will be needed. Observation of nonlinear wave interactions and oscillatory cascades will require high resolution multi-region recordings and methods of analysis of neural responses that, in contrast to windowed Fourier analyses, do not smear out fine temporal structure.

Although many of the formative ideas that constitute parts of the temporal theory can be found in our earlier writings, this is the first time that we have attempted to incorporate them into a common framework. These elements began with time-domain analyses of speech (Baker, 1975), temporal coding of pitch (Cariani and Delgutte, 1996) and surveys of evidence for temporal coding of sensory information (Cariani, 1995, 2001c). Neural timing nets and time-domain correlation operations for matching common attributes of different stimuli, such as pitch, timbre, and rhythm were proposed (Cariani, 2001a; Cariani, 2002). Separating instruments and voices with different F0-pitches (auditory scene analysis and cocktail party problem) was demonstrated using correlation-based operations implemented via recurrent timing nets (Cariani, 2004). Work on the neural correlates of sequential hierarchical stages of speech and language processing has informed the cascade model presented here (Baker et al., 2011; Chan et al., 2011). Our previous paper (Cariani and Baker, 2022) focused on possible types of central temporal codes, processing architectures, and connections to wave dynamics, holography, and oscillatory cascades, but did not attempt to relate these in any systematic way to the gamut of major brain functions. The present paper attempts to account for all these functions within a unified temporal representation and processing rubric. A strength of the study is that it offers an entirely new perspective on brain function. A limitation is that the theory is in early stages of development such that many parts of it have not been formalized. As best we know, this theory is not contradicted by available evidence.

What is novel about this theory? Time-domain approaches break with longstanding assumptions that regard brains as connectionist neural networks. In mainstream theories, neural functional states are described in terms of levels of activation (e.g., firing rates) amongst a set of elements (i.e., individual neurons, ensembles, and populations). In contrast, the time-domain conception regards brains as temporal correlation machines in which information is temporally coded. It begins with the alternative assumption that the signals of the system themselves (mostly) consist of temporal patterns of spikes. In the standard view, the functional informational states of brains are large vectors that represent across-neuron profiles of firing rates. These vectors are also called rate-place patterns, place being a neuron’s position within the network, its network connectivity. However, it is also possible that these functional states are instead vectors consisting of different temporal patterns of spikes that circulate in network delay paths.
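The contrast between the two kinds of functional state can be made concrete with a toy example. The following is only an illustrative sketch, not part of the theory's formal apparatus: the spike times, the function names, and the choice of an all-order interspike-interval histogram as the temporal readout are assumptions made for the illustration. Two spike trains with identical spike counts in a window are indistinguishable to a rate-based readout, yet their interval patterns differ.

```python
from collections import Counter

def spike_count(train):
    """Rate-style readout: number of spikes in the analysis window."""
    return len(train)

def interval_histogram(train):
    """Temporal readout: all-order interspike intervals (ms) between
    every earlier/later pair of spikes in the train."""
    return Counter(later - earlier
                   for i, earlier in enumerate(train)
                   for later in train[i + 1:])

# Two hypothetical spike trains (spike times in ms) in the same window.
train_a = [0, 10, 20, 30, 40, 50]   # regular 10 ms pattern
train_b = [0, 4, 20, 24, 40, 44]    # 4 ms doublets repeating every 20 ms

same_rate = spike_count(train_a) == spike_count(train_b)
hist_a = interval_histogram(train_a)
hist_b = interval_histogram(train_b)

print("same spike count:", same_rate)
print("10 ms intervals:", hist_a[10], "vs", hist_b[10])
print("4 ms intervals:", hist_a[4], "vs", hist_b[4])
```

In this toy case the two trains carry the same count but different interval structure, which is the kind of distinction that a moving-average rate measure would discard.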

Rather than a channel-centric theory of neuronal representations and information processing architectures, an alternative, time-domain, signal-centric theory is proposed. In the signal-centric view, different sets of neural signal productions switch and organize the action of the system to produce different behaviors. This involves a paradigmatic change of perspective in which most of the action is in the neural signals and their interactions rather than in which subsets of neurons happen to be most active.

Figure 1 illustrates the basic elements of the theory: a neural coding framework that includes simple, complex, multiplexed, local and global temporal codes, time-domain neural processing architectures, grouping operations based on common temporal patterns, and signal dynamics that support signal competition and mutual reinforcement, spreading activation, and content-accessible activation of memory traces. The theory draws on mathematical and technological principles of correlation operations, holography, and radio communications technology for potential means by which distributed, associative, content-addressable memory storage and access might be implemented using time-domain neural signals and mechanisms.

In a nutshell, temporally patterned spike trains arriving from sensory surfaces interact with each other and with those produced by existing, trained central neural assemblies to form regenerated sets of signals that circulate in reverberating short-term memory delay paths, including echoic and working memory. These signals in turn interact with those associated with current goals, affective states, and long-term memories to prepare the system for appropriate action and to orchestrate corresponding motoric temporal sequences.

The temporal theory requires a conceptual shift from digital to analog modes of signal representation and processing. Historically the dominant theories of brain function have assumed channel-based neural architectures that rely on inputs from arrays of dedicated tuned, feature-sensitive neural elements (i.e., “feature detectors”). These theories paralleled the sequential-hierarchical organization of information processing in digital electronic computers. Patterns of activation amongst arrays of these neural feature detectors or filters were traditionally assumed to be the default mode of representation in the central nervous system. Additionally, it was assumed that each neural element was sensitive to one attribute (feature), i.e., one neuron and one attribute. Their output signals were assumed to be one dimensional, scalar time-series signals in the form of moving averages of firing rates or spiking probabilities over tens to hundreds of milliseconds. Here for neurons with very low firing rates, a single spike can be significant. The basic assumption of coding based on moving time-averaged rates ruled out of hand any concurrent or interleaved multiplexing of different types of information. Ensembles of these scalar neural signals were thought to be subsequently processed using switchboards with highly specific interneural connectivities, synaptic weightings, and tunings (John, 1972; Eichenbaum, 2018).

Instead, the temporal theory proposes time-domain representations and operations based on temporal correlations of spikes. Major central neurocomputational structures (cerebral cortex, striatum, thalamus, hippocampus, and cerebellum) are re-envisioned in terms of arrays of neural delays and coincidence detectors that process temporally structured spike patterns, i.e., as temporal correlation machines.
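How an array of delays and coincidence detectors can compute a temporal correlation may be sketched as follows. This is a minimal illustration under simplifying assumptions (integer millisecond spike times, exact coincidences, hypothetical function and variable names), not a model of any particular circuit: each delay line shifts one spike train, and a coincidence detector counts how many delayed spikes line up with spikes in the other train.

```python
def coincidence_counts(train_x, train_y, delays):
    """For each delay d (ms), count spikes of train_x that, after being
    delayed by d, coincide exactly with a spike of train_y. The result is
    a spike cross-correlation sampled at the given delays."""
    y_times = set(train_y)
    return {d: sum((t + d) in y_times for t in train_x) for d in delays}

# Hypothetical spike times (ms); train_y is the same pattern 3 ms later.
train_x = [0, 7, 15, 22, 30]
train_y = [t + 3 for t in train_x]

counts = coincidence_counts(train_x, train_y, range(0, 8))
best_delay = max(counts, key=counts.get)

print(counts)
print("delay channel with most coincidences:", best_delay)
```

Only the delay channel matching the relative timing of the two patterns accumulates coincidences, so the identity of the most active channel reports the temporal relation between the inputs.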

As used here, time-delay networks encompass two types of neural networks: those that dynamically self-organize to form temporary reverberating short-term representations and expectancies (e.g., synfire chains, neural timing nets, and wave interference networks), and those that have been organized through many repeated rewarded and/or salient unrewarded input patterns to form quasi-permanent, selective neural assemblies (e.g., classical time-delay neural networks, TDNNs). In the temporal view, dynamic and permanent changes in synaptic efficacies enable adaptive facilitation and selection of delay paths, permitting these two modes of self-organization and learning. Whereas both modes are based on spike temporal correlations, the dynamic changes are mediated by short-term spike timing dependent facilitations, whereas more permanent changes are mediated by long-term synaptic and molecular modifications.

The reverberatory networks that subserve short-term memory are erasable tabula rasas that have no permanent structure, making them capable of temporarily holding any contents, including novel input patterns that the system has never before encountered. In contrast, the permanent neural assemblies are tuned through accumulated experience to selectively respond to and produce particular temporal, spatial, or spatiotemporal patterns of spikes. In neural network terms, the reverberating networks can be regarded in terms of (unsupervised) synfire chains/cycles, neural timing nets, and oscillator networks, whereas the more permanent, long-term neural assemblies can be regarded in terms of (supervised and unsupervised) trainable TDNNs, oscillator networks, and central temporal pattern generators. All of these alternative network types are potentially capable of analyzing and producing temporally patterned neural spike train signals.
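The idea of a reverberating store with no permanent structure can be illustrated with an idealized recurrent delay loop. The sketch below is an assumption-laden toy (binary spikes, a single loop delay, no decay, no spike-timing-dependent facilitation, hypothetical names): any pattern shorter than the loop delay, including one never seen before, simply recirculates after the input has ended.

```python
def reverberate(input_spikes, loop_delay, duration):
    """Idealized binary delay loop. Every spike at the loop node re-enters
    the loop and reappears loop_delay ms later, so an input pattern keeps
    recirculating after the input stops. Returns sorted spike times (ms)."""
    active = {t for t in input_spikes if t < duration}
    for t in range(duration):
        if t in active and t + loop_delay < duration:
            active.add(t + loop_delay)
    return sorted(active)

# A hypothetical novel input pattern (ms), held in a 20 ms loop for 60 ms.
pattern = [0, 3, 5]
held = reverberate(pattern, loop_delay=20, duration=60)
print(held)
```

The loop itself is pattern-agnostic: its contents are determined entirely by what was last fed in, which is the sense in which such a store is erasable.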

In this time-domain theory, reverberatory networks support labile, temporary short-term memory, whereas permanent synaptic modifications in neural assemblies, perhaps aided by molecular mechanisms (Cariani, 2017), support non-labile long-term memory. For both short- and long-term memory, the time-domain theory postulates a “tape recorder-like” process in which memory traces consist of temporal patterns of pulses. Memories associated with particular objects, events, situations, and episodes may reside in collections of related local and global temporal memory fragments that reside in different neural populations. The fragments are assembled and bound through an iterative, spreading activation process based on shared temporal subpatterns. This mode of distributed, content-accessible storage bears many similarities to wave interference dynamics, radio communications and holography (see section “4 Time-domain waveforms, signals, and systems: common signal operations, holography, radio communications, and the brain”), albeit in their less common correlation-based and time-domain variants.
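Content-accessible retrieval based on shared temporal subpatterns can be sketched in the same spirit. The example below is purely illustrative: the stored traces, the cue, and the use of shared all-order interspike intervals as the matching criterion are assumptions introduced here, standing in for whatever correlation operation neural circuits might actually perform. A cue fragment presented at an arbitrary absolute time selects the stored trace with which it shares the most interval structure.

```python
from collections import Counter

def intervals(train):
    """All-order interspike intervals (ms) of a spike train."""
    return Counter(b - a for i, a in enumerate(train) for b in train[i + 1:])

def match_score(cue, trace):
    """Number of intervals shared by cue and trace (multiset overlap).
    Intervals are invariant to when the cue occurs in absolute time."""
    return sum((intervals(cue) & intervals(trace)).values())

# Hypothetical stored temporal memory traces (spike times in ms).
memory = {
    "trace_A": [0, 5, 12, 30, 35, 42],
    "trace_B": [0, 8, 11, 30, 38, 41],
}
cue = [100, 105, 112]   # a fragment of trace_A's pattern, shifted in time

scores = {name: match_score(cue, trace) for name, trace in memory.items()}
recalled = max(scores, key=scores.get)
print(scores, "->", recalled)
```

No address or index is used: the cue's own temporal structure determines which trace responds.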

For the most part, this present theory is framed in terms of temporal codes and neural timing nets, but hybrid combinations of temporal- and channel-based networks (e.g., TDNNs) are by no means ruled out of hand. The temporal approach questions many unexamined, default coding assumptions. Because stimulus- and situation-specific differences in firing rates amongst neurons that are widely observed may co-occur with differences in spike latencies, orders-of-firings, and temporal patternings, it is important to disambiguate these alternative coding possibilities by examining how well each candidate code predicts some specific function, be it a percept, emotional or cognitive state or overt behavior. Activation of specific sets of neurons can trigger the subsequent production of temporal patterns of spikes downstream (as in central pattern generators), or vice-versa. In all cases, correlations and causal linkages between patterns of firing rates and spike correlations and behavioral functions need to be carefully explored. Many of these neural coding questions may be eventually resolved through fine-grained spike train analyses and targeted interventions, such as electrical, magnetic, and optogenetic driving, of individual neurons and local ensembles.

Formulating and proposing a new, tentative theory based on time-domain signals and their dynamics is a daunting task. Such a theory needs to be consistent with available experimental neuroanatomical, neurophysiological, and neuropsychological evidence. In the past, most neuroscience textbooks have assumed that temporal coding is limited to sensory peripheries in specific modalities such as audition, mechanoreception, and electroreception, where phase-locked spikes are ubiquitous and obvious and spike timing information can reliably predict percepts with high precision (Cariani, 2001c; Cariani and Baker, 2025, under review)1. However, if one looks deeper into the literature, evidence for temporal coding can be found for virtually all modalities, including vision, the chemical senses, and pain (Perkell and Bullock, 1968; Cariani, 2001c). Because stimulus-related spike timing information is less obvious as one ascends sensory pathways, most theorists have adopted as a default assumption that temporal codes must be converted to channel-codes by the time spikes coursing through these pathways reach the cortex. However, the present state of understanding of neural coding in most central stations (e.g., cerebral cortex, striatum, hippocampus, and cerebellum) is still quite rudimentary, with many unresolved questions and confounds. Neural coding in these places has proven to be an extremely difficult problem to solve, such that there are few examples where even basic sensory attributes, such as shape, color, texture, pitch, timbre, phonetic distinctions, smell, and taste can now be reliably predicted with any high precision from cortical or hippocampal spike train data. Temporal patterns of spikes may exist interleaved with other patterns or as temporal correlations across neurons. These are forms of order that are notoriously hard to detect, unless concerted directed efforts are made to search for them using well-defined, interpretable stimuli. 
Even with the massive neural datasets now available, it is still difficult to rule out spike correlation codes.

There are many potential advantages of central time-domain neural architectures that use temporal codes, in whole or in part, and these drive interest in developing a general time-domain theory. They include the precision, robustness, and invariance of temporal coding of sensory distinctions; simplicity of encoding temporal relations between events; a common coding framework for all types of primitive features; combinations of temporal patterns for multidimensional representations of objects, features, situations, and internal procedures; perceptual grouping by common temporal subpatterns; signal multiplexing in spike trains of individual neurons and ensembles; multivalent neurons that are sensitive to multiple stimulus types and attributes; selective reception of specific temporal patterns; broadcast-based communication and control; content-addressable memory; nonlocal and distributed temporal patterns; temporal memory traces; tape-recorder-like memory storage and readout; compositionality; creation of new temporal patterns through nonlinear thresholding and multiplicative operations; and wave interference and holographic-like distributed storage in the time domain.

A general time-domain theory proposes that temporally coded neural spike patterns carry specific perceptual, cognitive, emotional, motivational, mnemonic, and motoric distinctions. Behavior is produced through competitive and cooperative signal dynamics in which the various signals interact to mutually reinforce, suppress, separate, or combine. In terms of metaphors, brains appear to be more like analog radio communication-control systems and holograms than discrete switchboards and conventional connectionist networks.
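The radio analogy rests on a standard property of multiplicative mixing: the product of two sinusoids contains components at the sum and difference of their frequencies, and none at the original frequencies. The sketch below checks this numerically. The 40 Hz and 10 Hz inputs, the sampling rate, and the helper name are arbitrary assumptions chosen for illustration, and a plain multiplier yields both sidebands, whereas a single-sideband system would retain only one of them.

```python
import cmath
import math

SAMPLE_RATE = 1000   # samples per second (assumed)
N = 1000             # one second of signal

def amplitude_at(signal, freq_hz):
    """Amplitude of the sinusoidal component at freq_hz, obtained by
    correlating the signal with a complex exponential (one DFT bin)."""
    acc = sum(x * cmath.exp(-2j * math.pi * freq_hz * k / SAMPLE_RATE)
              for k, x in enumerate(signal))
    return 2 * abs(acc) / len(signal)

F1, F2 = 40, 10      # hypothetical oscillation frequencies in Hz

# Multiplicative mixing of two unit-amplitude cosines.
mixed = [math.cos(2 * math.pi * F1 * k / SAMPLE_RATE)
         * math.cos(2 * math.pi * F2 * k / SAMPLE_RATE)
         for k in range(N)]

spectrum = {f: round(amplitude_at(mixed, f), 6)
            for f in (F2, F1 - F2, F1, F1 + F2)}
print(spectrum)
```

The mixed signal carries energy at 30 Hz and 50 Hz only, which is the elementary sense in which a nonlinear interaction of two signals creates new temporal patterns that neither input contained.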

The paper first outlines the basic informational functionalities that need to be accounted for in any general neurocomputational theory of brain function [see section “2 Basic brain functionalities (what is to be explained)”]. Then the neural architectures, representations, and operations needed to carry out these functionalities (temporal neural codes, time-domain neural networks, multidimensional representations, binding processes, and signal dynamics) are discussed (see section “3 Proposed time-domain operations and mechanisms”). Prospective functional principles taken from wave dynamics, holography, and radio communications systems are then considered (see section “4 Time-domain waveforms, signals, and systems: common signal operations, holography, radio communications, and the brain”).

A great deal of evidence from psychology and neuroscience strongly suggests to us that neural systems operate on multiplexed signals in the time domain. However, this theory is in initial stages of formulation, and far from complete, so this presentation should be taken as only a rough sketch rather than a fleshed-out, finished neurocomputational model. The final section (see section “5 Discussion – testing the time-domain theory”) discusses how the theory might be tested.

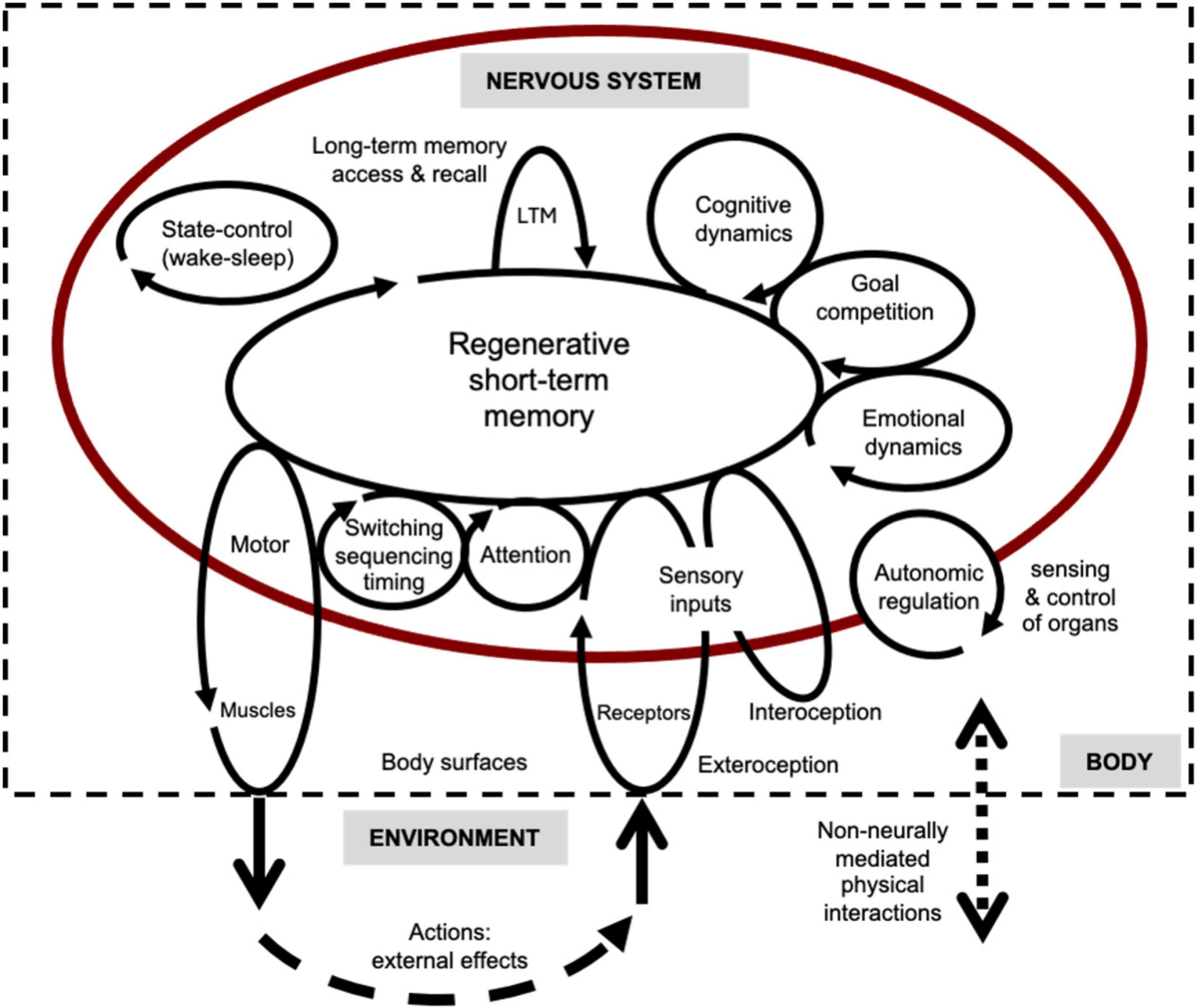

A general theory of how brains work seeks to explain how all the various basic, essential modes of internal information processing – mind-brain functionalities – can be realized by specific types of neural networks operating on specific types of neural signals. The schematic of Figure 2 depicts most of these functionalities in terms of recurrent internal processes embedded in goal-directed percept-coordination-action loops. These sets of loops are involved with interactions of the nervous system with the rest of the body (interoception and autonomic functions) and its external environment (exteroception and action). The functionalities are intended as a general framework for explaining animal behavior.

Figure 2. Functional schematic of brains as networks of circular-causal processes. Process loops consist of sets of circulating neural signals that link the three realms of environment, body, and nervous system. Each process loop in the diagram is related to a different basic functionality (shown) and its corresponding underlying neural circuits (not shown). Signals build up, compete, persist, and decay in the circuits. This is an updated version of an earlier figure.

The basic functionalities of Figure 2 are listed in more detail in Table 1 and in following sections. These include:

• Regulation of bodily functions – Neural management of cells, tissues, and organs for maintaining system integrity (homeostasis).

• Modal control – Switching of system-wide functional states, such as wake-sleep cycles, levels of arousal, hypnotic and trance states, sleep stages, and stereotyped behavioral modes.

• Sensation – Interaction of sensory organs with the body (interoception) and world external to it (exteroception) to provide information regarding their current states.

• Perception – Organized sensations, neural representations, invariances/similarities/differences, object and event formation/grouping/separation, pre-attentive non-acquired, built-in expectancies, bottom-up depending on current and recent sensory signals, and modulated by top-down attentional, emotional, cognitive, and mnemonic contexts.

• Cognition – Pattern recognition; categorical perception; conceptual representations, operations, and dynamics; language understanding and production; and internal models and acquired expectancies.

• Emotion – Readout of the global state of the organism (e.g., anger, fear, interest, and affection) associated with current dominant mode of prospective action, e.g., fight, flee, explore, play, approach, and avoid/hide. Emotion-related neural signals, hypothesized to be temporally coded, are broadcast widely, biasing behavioral response modes and priming circuits related to all the other functionalities.

• Purposes – Internal systemic goals (conation and motivation) that organize neural circuits to steer behavior so as to bring about attainment (satisfaction) of current goal states. Goal signals are postulated to be temporally coded and broadcast widely.

• Executive functions – Deciding which individual goals to act upon, and in what order, and choosing which actions to take to attain them. These functions include decision-making and action selection: recognizing affordances (goals that are attainable within current situations), weighing priorities and urgencies of competing goals, assessing their respective current likelihoods of attainment, and planning appropriate action sequences. Through competitive, mutually suppressive, winner-take-all processes one goal or set of related goals tends to drive behavior at any given moment. Neural signals for different goals compete (see section “3.5 Signal dynamics: mutual reinforcement, competition, and spreading activation”) with the emergent, currently dominant goal-signals being widely broadcast throughout the brain, where they facilitate task-relevant attention and action preparation.

• Attention – Selective enhancement of specific neural channels, circuits, and/or signals. Attentional processes amplify signals relevant to current goals (voluntary attention) and/or to unexpected, perceptually salient events (involuntary attention). Signal-to-noise ratios of relevant signals can be improved by suppressing irrelevant channels and signals. Attentional facilitation for detecting particular patterns can also be realized by injecting matched signals from memory that are similar to the attributes of the object being sought (e.g., by imagining the object’s appearance). These top-down signals then reinforce similar bottom-up signals coming from sensory pathways.

• Action preparation and orchestration – Priming of motor programs and circuits relevant for achieving current goals and suppression of competing programs. Implementing sequencing and timing of procedures within motor programs.

• Action – Activity by effectors (muscles and secretory organs) that alter the states of the rest of the body and the external world.

• Evaluative feedback – Following an action or action sequence, positive and negative reward signals related to its efficacy in satisfying specific goals are fed back to decision processes. Reward signals within dopamine-mediated circuits encode an evaluation of success or failure that is then widely broadcast to the rest of the brain. These signals facilitate retention of recent rewarded action sequences in short- and long-term memory and promote adaptive reorganization of neural circuits to bias future action sequences to favor successful ones (reinforcement learning).

• Memory – Storage and later retrieval of representations of internal neural events for guiding future behavior. Internal neural events can be related to unrewarded recurring perceptual patterns (causal models, expectancies, and unsupervised learning) or to rewarded percept-action sequences (supervised and reinforcement learning).

• Learning – Adaptive adjustment of neural circuits that modify internal functional states, procedures, and external behaviors on the basis of previous rewarded and unrewarded histories of predictions and actions. In their broadest senses, learning can be regarded as a kind of memory, and memory as a kind of learning.

The time-domain theory seeks to explain all these basic, essential psychological, mental functionalities [see section “2 Basic brain functionalities (what is to be explained)”] in terms of neural temporal processing architectures (see section “3.2 Neural architectures for temporal processing”) that operate on temporally coded signals (see section “3.2”).

Omitted from explicit mention in the schematic and the table are dysfunctional, pathological states (modes of system failure), and social-psychological dynamics. So too is conscious awareness, which may be an inherent aspect of organized neuronal activity in minds and brains rather than an evolved, naturally selected function. In accord with global neural workspace theories (e.g., Dehaene, 2014), the temporal theory posits that the basic state of awareness depends on coherent regeneration of neural signals in global and local recurrent loops. Because signals determine the functional organization of the system, this is a form of organizational closure and provisional stability that constitutes the most fundamental requisite for any conscious, experiential state, i.e., an essential component of the “neural correlates of consciousness” (NCCs). The multi-modal, phenomenal contents of awareness are held to be the sets of particular neural signals currently circulating in global and local circuits. These signals constitute the “neural correlates of (the various possible) contents of consciousness” (NCCCs).

Brains function to regulate internal bodily processes and to coordinate perception and action in a manner that enhances survival. Brains are purposive control systems that analyze neural activity patterns associated with sensory, emotional, motivational, cognitive, and motoric states to produce highly structured neural activity patterns that subserve complex behaviors. They are representational systems that enable complex combinations of attributes to be held in short- and long-term memory stores. They are internal communications systems that allow information to be widely broadcast and selectively received.

To understand fully how brains work as informational systems, one must understand the nature of the signals of the system, the neural architectures that produce and process them, the representational frameworks that encode the attributes and consequences of objects, events, situations, and actions, and the mnemonic processes that permit stored records of past experience to inform prospective action.

Neural codes are the signals of the system, i.e., those aspects of neural activity that carry informational distinctions that are relevant to the functioning of the system and its subsequent survival. As with the genetic code, our working hypothesis is that there is one common neural coding framework within which all types of information related to all brain functions can be encoded, transmitted, stored, retrieved, and used in service of preparation and action. This universal framework needs to be able to account for all the many, diverse distinctions that the biological brains of a given species can make. It must have dimensional structure and informational capacity to support the many neural activity patterns that distinguish different sensory modalities, cognitive categories, emotional and motivational states, and possible motoric actions.

What kinds of specific distinctions are encoded in this framework? A distinction here is any difference in neural activity that switches internal functional states or resultant behaviors. These include all of the sensed properties (“attributes”) of external objects, events, and situations as well as those related to internal neural states (internal attributes, such as those related to current goals and rewards, thoughts, emotions, and internal procedures related to actions). Only a small subset of the distinctions made by the nervous system are ever consciously experienced.

Temporal codes are neural codes that convey informational distinctions through simple and complex patterns of spike timings. Temporal coding is found widely throughout sensory systems, and it has the potential to be a universal strategy for encoding most, if not all, types of sensory distinctions (Cariani, 1995, 1999, 2001c).

Temporal codes are fundamentally correlation-based codes in that they depend on the joint occurrences of spiking events that have particular timing relations in the form of time durations between events. They are mixed digital-analog signals in that the signals of the system consist of discrete pulsatile events (action potentials and spikes), but the various durations between spiking events can take on continuous sets of values, as in an analog signal.

Temporal codes can be further subdivided into temporal pattern vs. relative spike timing codes. Temporal pattern codes rely on specific temporal patterns of spikes within the same neural channels such that they produce auto-correlation-like representations (e.g., for pitch, tempo, and echo delay) whereas relative spike timings across different channels produce temporal cross-correlation-like representations (e.g., for localization of sound direction, location of a stimulus on a body surface, and visual motion detection).

At the lowest level of neural coding, interspike interval codes are the simplest temporal pattern codes. Here the time durations between spikes convey information (Figure 1A). These can involve durations between pairs of consecutive spikes (first-order intervals) or non-consecutive spikes (all-order intervals). Higher order interval sequence codes can involve characteristic temporal sequences or combinations involving many more than two spikes. Extended temporal patterns can also encode sequences of events, including relative event timings and, potentially, all of each event’s attributes. Such a coding scheme was proposed in Cariani and Baker (2022), and is schematized in the bottom-most set of spike trains in Figure 1A.
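The distinction between first-order and all-order intervals can be made concrete with a minimal sketch. The function names and the example spike train are hypothetical illustrations, not taken from any published analysis code.

```python
from itertools import combinations

def first_order_intervals(spike_times):
    """Durations between consecutive spikes only."""
    t = sorted(spike_times)
    return [b - a for a, b in zip(t, t[1:])]

def all_order_intervals(spike_times, max_interval=None):
    """Durations between every pair of spikes, consecutive or not."""
    t = sorted(spike_times)
    intervals = [b - a for a, b in combinations(t, 2)]
    if max_interval is not None:
        intervals = [d for d in intervals if d <= max_interval]
    return sorted(intervals)

# Hypothetical spike train, times in ms
spikes_ms = [0, 5, 10, 20]
print(first_order_intervals(spikes_ms))  # [5, 5, 10]
print(all_order_intervals(spikes_ms))    # [5, 5, 10, 10, 15, 20]
```

A histogram of the all-order intervals is an autocorrelation-like summary of the spike train, which is why interval codes of this kind are described as correlation-based.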

Temporal codes may be at least as fundamental and phylogenetically primitive as channel-based codes. Although rate-channel coding, with its tuned elements and selective receptive fields, has been by far the dominant assumption in neuroscience, evidence for temporal coding exists throughout the nervous system, often in quite unexpected places, such as in vision, pain, and the chemical senses.

Temporal codes and channel codes are by no means mutually exclusive – they can exist in combination as hybrid and joint codes (Cariani, 1999, neural coding taxonomy). For example, the Jeffress time-delay neural net model of binaural direction relies on channel-specific encodings of interaural time differences that combine a spike relative latency code with a channel-coded output. Activations of specific neuronal channels can produce temporally patterned outputs, e.g., via TDNNs, temporal pattern generators, oscillator networks, and rhythm assimilating neurons (Morrell, 1967).
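As a rough illustration of such a hybrid code, the sketch below implements a toy array of coincidence detectors in the spirit of the Jeffress model. Each detector applies a different internal delay to the left input, and the identity of the most active detector (a channel code) reports the interaural time difference (a relative latency code). The spike times, candidate delays, and coincidence window are assumed values chosen for clarity.

```python
def coincidences(left_us, right_us, delay_us, window_us):
    """Count left/right spike pairs that coincide once the left input is delayed."""
    return sum(
        1
        for l in left_us
        for r in right_us
        if abs(l + delay_us - r) <= window_us
    )

def best_delay_channel(left_us, right_us, delays_us, window_us=50):
    """Return the delay channel with the most coincidences, plus all counts."""
    counts = {d: coincidences(left_us, right_us, d, window_us) for d in delays_us}
    best = max(counts, key=counts.get)
    return best, counts

# Hypothetical inputs in microseconds: the right ear lags the left by 300 us
left_us = [1000, 3500, 7200, 12900, 18400]
right_us = [t + 300 for t in left_us]
delays_us = list(range(-500, 501, 100))  # one detector per candidate delay

best, counts = best_delay_channel(left_us, right_us, delays_us)
print(best)          # 300
print(counts[best])  # 5
```

Only the detector whose internal delay offsets the interaural delay receives coincident inputs, so a timing difference at the input becomes a place of maximal activation at the output.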

Temporal codes in sensory systems can also be classified in terms of their relationship to the structure of the stimuli that they encode, whether their time structure comes from time-locking to a stimulus or from stimulus-triggered responses whose time structure is generated by characteristic neuronal interactions.

• Stimulus-locked (“phase-locked”) codes. Virtually all neurons fire preferentially in response to positive phases of stimuli that drive their excitatory inputs. In many sensory modalities, such as audition, vision, mechanoreception (touch), and electroreception, the timings of spikes produced by primary sensory neurons follow the time-structures of their respective stimuli. These stimulus-driven time-locked spikings, widely known as “phase-locking,” reflect the internal time structure of stimuli. Stimuli impress their time structure on that of the spike trains to produce iconic neural time-domain representations that resemble stimulus waveforms.

The temporal structures of the spike trains produced contain information directly related to specific perceptual attributes. In the first stages of neural representation of sounds in the auditory system, as a direct consequence of phase-locking, all-order interspike interval codes provide autocorrelation-like temporal representations of acoustic stimuli, up to the frequency limits of phase-locking (effectively up to ∼ 4 kHz). These provide robust and precise representations of perceptual qualities related to stimulus periodicities (pitch and rhythm) and low- and mid-frequency spectra (aspects of timbre, such as vowel quality) (Cariani, 1999). Analogously, on body surfaces interval codes enable flutter-vibration discrimination and relative spike timings enable localizations in tactile perception. For transient stimulus patterns (onset amplitude, frequency, and phase dynamics of sounds), spatiotemporal patterns of spike timings across characteristic frequency (CF) channels can provide cross-correlation-like representations of instrument timbres and consonant phonetic categories.

Usable phase-locking extends at least to ∼4 kHz (the highest note on a piano) in most human listeners, enabling temporal representation of many attributes related to speech, music, and environmental sounds: periodicity (pitch), power spectrum (timbre and vowels), and sound direction and distance (echo delay, echolocation). In the auditory periphery, interspike interval representations of pitch and timbre are highly level-invariant, with precisions that improve at higher sound levels, like perception but unlike the behavior of rate-place codes (Cariani, 1999; Heinz et al., 2001). Attributes that can be represented temporally in this way include periodicity (auditory pitch and cutaneous flutter-vibration frequency), spectrum (vowel timbres), stimulus direction (auditory and cutaneous localization, and electroreception), and distance (echolocation).

In vision, spikes phase-lock to temporal modulations of luminance as moving sinusoidal gratings travel across receptive fields, providing a precise, interspike interval-based representation of spatial frequency (Cariani, 2004) and motion (Reichardt, 1961). Many other parallels exist between auditory and visual systems: coarse temporal modulation tunings of neural elements, autocorrelation-like representations of timbre and texture, perception of missing spatial and temporal fundamentals (Reitboeck et al., 1988; Ando, 2009), as well as analogous scene analysis principles. Analogous autocorrelation and cross-correlation models for visual form based on spatial intervals (Uttal, 1975, 1988) and spatial correlation patterns (Kabrisky, 1966), respectively have also been proposed, but these rely on spatial rate-place patterns rather than spatial patterns of temporally correlated phase-locked spikes, as in Reichardt’s (1961) motion detectors or the texture scanning model of Reitboeck et al. (1988).

• Stimulus-triggered temporal codes for stimulus qualities are possible even in the absence of phase-locking of receptors. In sensory systems, such as the chemical senses and color vision, where there is no time locking of spikes to the stimulus quality being encoded, an adequate stimulus may generate characteristic temporal patterns of neuronal response in early sensory pathways that do not mimic the time structure of the stimulus quality itself (e.g., Kozak and Reitboeck, 1974; Di Lorenzo et al., 2009).

Codes and representations can involve patterns of neural activity in spike trains of individual neurons or be distributed across ensembles, sub-populations, and populations. Purely temporal codes with high reliability and precision can exist at the level of whole neural populations. Codes based on temporal patterns of spikes can exist within spike trains of single neurons, within spike volley patterns produced by neural ensembles, and within the statistics of temporally correlated spikes within neural populations.

A strong example of a population-wide temporal representation for pitch and timbre exists in the mass statistics of interspike intervals (“population-interval distributions”) taken across the whole population of tens of thousands of auditory nerve fibers (Cariani and Delgutte, 1996; Cariani, 1999; Cariani, 2019). Here almost every neuron’s response carries some partial information regarding acoustic attributes, including pitch, timbre, and loudness such that the information is widely distributed across this population. In this representation, the identities of the neural channels that produce the respective temporal intervals are not needed, such that one can discard all cochlear place information and still have highly precise, robust, level-invariant representations of these attributes (e.g., F0-pitches and timbres of different single and double vowels; Cariani, 1995; Palmer, 1990). Being based on interspike intervals within individual neurons, precise synchronization of spike timing across neurons in the population is also not required. Here is an existence proof that temporal, non-place, asynchronous, and population-wide neural representations are possible.
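The logic of a population-interval distribution can be sketched with a toy simulation. This is a deliberately simplified illustration, not the analysis used in the cited studies. The fibers, firing probability, latencies, and the rule for picking the period (shortest interval with at least half the maximal count) are all assumptions.

```python
import random
from collections import Counter
from itertools import combinations

def simulate_fiber(period_ms, n_cycles, latency_ms, p_fire, rng):
    """Spike times (ms) of one fiber that fires on a random subset of cycles."""
    return [
        latency_ms + c * period_ms
        for c in range(n_cycles)
        if rng.random() < p_fire
    ]

def population_interval_distribution(fibers, max_interval_ms):
    """Pool all-order intervals from every fiber, discarding fiber identity."""
    pooled = Counter()
    for spikes in fibers:
        for a, b in combinations(spikes, 2):
            if b - a <= max_interval_ms:
                pooled[b - a] += 1
    return pooled

def estimate_period(pooled):
    """Assumed rule: shortest interval with at least half the largest count."""
    half_max = max(pooled.values()) / 2
    return min(iv for iv, n in pooled.items() if n >= half_max)

rng = random.Random(0)
# 30 hypothetical fibers, each with its own latency, driven by a 10 ms period
fibers = [simulate_fiber(10, 40, rng.randrange(0, 10), 0.5, rng) for _ in range(30)]
pooled = population_interval_distribution(fibers, max_interval_ms=25)
print(sorted(pooled))           # intervals fall only at multiples of the period
print(estimate_period(pooled))  # period estimate in ms
```

Because intervals are computed within each fiber before pooling, the different latencies of the fibers do not matter, and no information about which fiber produced which interval is retained.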

This strong example from our own experience led us to believe that similar kinds of population-wide temporal codes might exist in more central stations and that, in conjunction with temporal correlation operations, these representations could form the basis for a comprehensive neural coding framework.

Such a framework needs to handle all kinds of distinctions, from those involving but a single dimension, e.g., loudness or brightness, to those involving a few dimensions (color, texture, timbre, odor, and taste) to complex multidimensional spaces (e.g., multiple attributes of objects and semantics of words; see section “5 Discussion – testing the time-domain theory”). In order to handle the full, multidimensional, multimodal encoding of situations, objects, events, and procedures, codes on both local and global levels may be needed. For example, many unimodal perceptual distinctions could be carried by local codes that operate mostly within unimodal neural populations (e.g., auditory or visual cortex). Supramodal distinctions (e.g., timing and rhythmic patterns of events) could be conveyed by global codes that span many brain regions. If combinations of unimodal distinctions are frequently encountered and/or significantly rewarded, then characteristic patterns arising from their signal interactions may also propagate to global circuits. The time-domain theory posits that common supramodal temporal patterns that are present to some degree in all global and local circuits bind the various types of intramodal patterns together. In this manner, not all types of information for representing complex items with multimodal attributes need be present in any one brain region.

A critical assumption of the time-domain theory is that many, possibly all, informational distinctions are conveyed by temporal codes. Testing the theory therefore requires positively identifying the neural codes that subserve representations of specific attributes (e.g., attributes related to percepts such as pitch, loudness, apparent direction, shape, color, texture, apparent location, smell, taste, as well as those related to emotional, motivational, and cognitive states). Positive demonstration that a particular candidate neural code is actually used by the system entails showing a causal linkage between observed neural encodings and behavioral functions.

Although a great deal of evidence exists for temporal codes in neurons that are proximal to sensory and motor surfaces, the nature of the neural coding in central stations, e.g., cerebral cortex, striatum, cerebellum, hippocampus, and other limbic system structures, remains mostly an enigma. The further one ventures from these surfaces to address neural coding of cognitive, affective, and conative distinctions, the more these distinctions depend on other, unobserved, ill-defined, and ill-controlled internal states, and the more difficult it is to clearly identify their precise neural correlates. When we read reports of observed neuronal responses in different brain regions, we ask ourselves what the same analytical methods applied to the auditory nerve would tell us – would they be able to detect a temporal code of this sort?

Possible reasons for this difficulty are (1) that each neuron may be participating in multiple neural assemblies (they are multivalent elements, not unitary feature detectors), (2) different types of information may be multiplexed in the same spike trains, and/or (3) temporal patterns encoding informational distinctions might be distributed across neurons in volley patterns.

Reliance on experimental methods that cannot observe spike trains in individual units and analytical methods that overlook spike temporal correlations within and across single units will not detect these kinds of possibilities. Although observables, such as averaged gross electrical and magnetic responses (EEG and MEG) are useful in capturing temporally correlated activity within neuronal populations, fine timing patterns on millisecond time scales are often obscured by low-pass filtering of signals and the ways that signals are averaged.

There is always more timing information available in individual neurons than in pooled, population-wide responses. If different parts of a neural population respond with different latencies, the fine, millisecond-scale temporal structure of those responses can be smeared out and obscured. In the auditory nerve statistically significant phase-locking to 5 kHz and beyond can be observed. However, if one combines spike trains of fibers of all characteristic frequencies to form a population peristimulus histogram, due to cochlear delays, all response periodicities above roughly 200 Hz are obliterated. Even if high sampling rates above several kilohertz are used, EEG and MEG reflect only the temporally synchronized components of population responses, such that observed frequency limits of neural responses may drastically underestimate the existence of fine timing information.
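The smearing effect described above can be illustrated with vector strength, a standard measure of phase-locking. In this sketch, every fiber is perfectly locked to the stimulus, but the fibers have different latencies. The number of fibers, the 1 ms latency spread, and the two test frequencies are hypothetical values chosen for clarity and do not reproduce actual cochlear delays.

```python
import cmath
import math

def vector_strength(spike_times_s, freq_hz):
    """Length of the mean phase vector: 1 = perfect locking, 0 = none."""
    phasors = [cmath.exp(2j * math.pi * freq_hz * t) for t in spike_times_s]
    return abs(sum(phasors) / len(phasors))

def fiber_spikes(freq_hz, latency_s, n_cycles=50):
    """One spike per stimulus cycle, shifted by the fiber's latency."""
    return [latency_s + k / freq_hz for k in range(n_cycles)]

# Assumed latencies: 20 fibers spread evenly over 1 ms
latencies_s = [i * 0.001 / 20 for i in range(20)]

def locking(freq_hz):
    """Vector strength of a single fiber vs. the pooled population response."""
    single = vector_strength(fiber_spikes(freq_hz, latencies_s[3]), freq_hz)
    pooled_spikes = [t for lat in latencies_s for t in fiber_spikes(freq_hz, lat)]
    return single, vector_strength(pooled_spikes, freq_hz)

for f in (100.0, 1000.0):
    single, pooled = locking(f)
    print(f, round(single, 3), round(pooled, 3))
```

At 100 Hz the latency spread covers only a small fraction of a cycle, so the pooled response remains strongly locked. At 1 kHz the same spread covers a full cycle, and the pooled vector strength collapses toward zero even though each individual fiber is still perfectly locked.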

Enormous progress has been achieved in identifying neural correlates of memory storage and retrieval processes, salient examples being hippocampal place-phase-codes, place and time cells, hippocampal replay/pattern completion, and the roles of population- and sub-population-wide oscillations. However, the full problem of the engram – how the various specific attributes of remembered specific objects, events, and situations are encoded in neural memory traces in short- and long-term memory – has yet to be elucidated.

Brains analyze incoming sensory patterns to formulate, orchestrate, and implement appropriate actions. In order to formulate a general time-domain theory of brain function, neural networks must be reimagined as temporal correlation machines that perform time-domain operations on temporal patterns of spikes. The same central neurocomputational structures and operations are used to perform each of the various functions in Table 1, i.e., to analyze incoming sensory information, to decide what to do in terms of current goals, and to orchestrate and implement appropriate actions.

Different types of neural processing elements, operations, and architectures are required for the different types of neural codes they use for inputs, internal operations, and outputs. Taxonomies of neural networks can be formulated on the basis of what kinds of neural signals they use and what constitutes their functional states (Cariani, 2001a,c). Time-domain architectures in which spike timings play crucial roles can be contrasted with traditional “channel-domain” networks whose signals are average spike rates and whose states are characterized in terms of profiles of channel activations. These are typically rate-place activation patterns, be they dense or sparse and/or involving substantial changes in firing rates or single spiking events. Mixed time-, frequency-, and/or channel-domain neural signals and processing architectures cannot be ruled out.

The general-purpose time-domain architecture that is envisioned here has many properties in common with synfire networks (Abeles, 1990; Abeles, 2003; Abeles et al., 2004; Hayon et al., 2005), wave interference networks (Beurle, 1956; Heinz, 2010; Keller et al., 2024), neural timing nets (Cariani, 2001d; Cariani, 2004), and time-delay neural nets (TDNNs) (Jeffress, 1948; Licklider, 1951; Wang, 1995).

As with synfire chains and cycles, it consists of feedforward and recurrent networks of delay lines and coincidence detectors. As with synfire chains and wave interference networks, due to the spatial organization of delay lines, spatiotemporal patterns of spikes propagate spatially through neural populations. Patterns of interactions between waves of spikes can potentially support holograph-like distributed representations and memory traces (see section “4.2 Holography and some holography background”).

As with neural timing nets, but different from synfire networks and TDNNs, virtually all signals are also assumed to be temporally coded. TDNNs, broadly defined, are spiking neural networks that have both coincidence detectors and rate integrator elements (coincidence counters) with adjustable, stable interconnection weights and interneural delays. They can convert incoming temporal spike patterns to outgoing rate-place patterns to analyze spike temporal structure or convert incoming rate-place patterns to temporally patterned outputs as in central pattern generators.

As with TDNNs, the time-domain network also has adjustable interconnection weights that can change either dynamically through spike-timing-dependent plasticity (STDP; Markram et al., 2012) or through more stable, permanent changes in synaptic efficacy. Short-term memory, including echoic and working memory, is assumed to be reverberatory and dependent on STDP-mediated synaptic facilitations and depressions that are driven by spike timing correlations at synapses. Long-term memory is assumed to be mediated by more permanent synaptic changes and perhaps also by other molecular and cellular mechanisms that support formation of stable neural assemblies.
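For readers unfamiliar with STDP, a commonly used modeling form is a pair-based rule with exponential time windows. The sketch below uses that generic form. It is not a rule specified by the time-domain theory, and the amplitudes and time constants are assumed values.

```python
import math

def stdp_weight_change(dt_ms, a_plus=0.01, a_minus=0.012,
                       tau_plus_ms=20.0, tau_minus_ms=20.0):
    """Pair-based STDP with exponential windows (generic textbook form).

    dt_ms = t_post - t_pre. Pre-before-post (dt_ms > 0) facilitates the
    synapse; post-before-pre (dt_ms < 0) depresses it. All parameter
    values are assumptions chosen for illustration.
    """
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau_plus_ms)
    if dt_ms < 0:
        return -a_minus * math.exp(dt_ms / tau_minus_ms)
    return 0.0

for dt in (-40, -5, 5, 40):
    print(dt, round(stdp_weight_change(dt), 5))
```

The change is largest for spike pairs separated by a few milliseconds and decays for longer separations, so synaptic efficacy tracks spike timing correlations rather than average rates.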

In time-domain networks any spatiotemporal pattern of spikes can be detected and/or produced by appropriate combinations of interconnection weights and delays. Specific interneural delays can be selected by adaptively modifying synaptic efficacies, so as to permit formation of quasi-permanent trained neural assemblies. Although these interneural delays are normally conceptualized at the level of whole neurons, there can exist slightly different delays for different synapses on the same neuron. Neurons with elaborate dendritic trees, such as cortical and hippocampal pyramidal cells, may be better regarded as trees of synapses and coincidence elements in which small numbers of well-timed spikes (spatiotemporal patterns) can trigger action potentials (Beniaguev et al., 2021). Such neural elements can participate in multiple neural assemblies by producing interleaved spikes associated with different sets of inputs (Abeles, 2009). These kinds of elements might explain why pyramidal cells with thousands of inputs have such irregular firing patterns.

Because characteristic mono- and multi-synaptic delays exist between every pair of neurons, every change in the efficacy of a given synapse produces a change in the interneural delay paths, and vice versa. Thus, if specific delays can be adaptively modified (MacKay, 1962; Gutig and Sompolinsky, 2006), then particular combinations of synaptic inputs can be arranged so as to arrive simultaneously at spike initiation points, sensitizing the neuron to those combinations.
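A minimal sketch of this idea: if each input line has a delay that compensates for its position in a spatiotemporal spike pattern, the spikes of that pattern arrive together and a coincidence element fires. Here the delays are set directly rather than selected through synaptic modification, which is a simplification. The function names, window, and patterns are hypothetical.

```python
def tune_delays(pattern_ms):
    """Delays that make every spike of the pattern arrive at the same time."""
    latest = max(pattern_ms)
    return [latest - t for t in pattern_ms]

def detector_fires(input_ms, delays_ms, window_ms=1, threshold=None):
    """True if enough delayed inputs arrive within one coincidence window."""
    arrivals = [t + d for t, d in zip(input_ms, delays_ms)]
    needed = len(arrivals) if threshold is None else threshold
    most = max(
        sum(1 for a in arrivals if 0 <= a - start <= window_ms)
        for start in arrivals
    )
    return most >= needed

trained_pattern = [0, 4, 9]            # spike time on each of three input lines (ms)
delays = tune_delays(trained_pattern)  # [9, 5, 0]

print(detector_fires([0, 4, 9], delays))        # True: the trained pattern
print(detector_fires([100, 104, 109], delays))  # True: same pattern, later onset
print(detector_fires([0, 9, 4], delays))        # False: same spikes, wrong order
```

The detector responds to the relative timings within the pattern, not to its absolute onset time or to the mere presence of spikes on each line.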

Time-domain architectures appear to be consistent with many widely observed characteristics of individual neurons. The kinds of networks that are needed to analyze and produce temporal pulse codes require neural delays and spike coincidence detectors that have relatively precise temporal resolutions. Neural delays offset time durations between spiking events, while elements with narrow duration spike coincidence windows permit spike intervals of different durations to be discriminated.

Neural time delays are ubiquitous in brains. They can be produced within individual neurons or groups of neurons. Well known neural delays include synaptic, dendritic, and axonal transmission times, time-to-threshold integration times, recovery time courses (superexcitable phases and oscillatory resonances) and post-hyperpolarization rebound times (timings of “anode break excitations”). All of these delays are potential loci for plasticity and history-dependent adaptive tunings. Other possible neural delay mechanisms that could potentially support permanent storage of temporal patterns include neuroglial-mediated processes, microtubular transmissions, and molecular storage mechanisms.

By far the most widely appreciated neural delays are “tapped delay lines,” which use axonal conduction delays, branchpoints, and collaterals of axons spatially distributed along their lengths to provide systematic sets of successive delays. Sets of tapped delay lines are found widely in many canonical central structures: in cerebral cortex, hippocampus, and cerebellum. Cell types include pyramidal and granule cells as well as binaural bipolar cells of the auditory brainstem. It is not surprising then that these major canonical neurocomputational structures can be modeled as time-delay processing architectures.

These mechanisms provide for a rich set of delays. Conduction delays in myelinated and unmyelinated axons of individual neurons span several orders of magnitude, dependent on axon lengths and conduction velocities. The most numerous cell type in the brain is the unmyelinated granule cell, which is also the slowest conducting.

Recurrent delay-paths expand the lengths of delays available to these systems. Recurrent circuits enable unbounded durations of neural delays for extensive, iterated processing. Multi-synaptic delay-paths that traverse multiple neural elements combinatorically expand the numbers of delays in a network. The brain is nothing if not a network of delay loops, and its recurrent, cyclic paths, properly configured, make unbounded, longer and longer, delays and processing sequences possible.
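How a single recurrent loop enlarges the set of available delays can be shown on a toy network. The three-node graph and its delay values are invented for illustration. The search is bounded by a maximum delay so that it terminates when loops are present.

```python
def reachable_delays(edges, source, target, max_delay):
    """All total path delays (<= max_delay) from source to target; loops allowed."""
    found = set()
    seen = set()
    stack = [(source, 0)]
    while stack:
        node, elapsed = stack.pop()
        if (node, elapsed) in seen:
            continue
        seen.add((node, elapsed))
        if node == target:
            found.add(elapsed)
        for nxt, delay in edges.get(node, []):
            if elapsed + delay <= max_delay:
                stack.append((nxt, elapsed + delay))
    return sorted(found)

# Hypothetical delays in ms: two feedforward routes from A to C
feedforward = {"A": [("B", 2), ("C", 7)], "B": [("C", 3)]}
# Same network plus one recurrent loop on B with a 4 ms delay
recurrent = {"A": [("B", 2), ("C", 7)], "B": [("C", 3), ("B", 4)]}

print(reachable_delays(feedforward, "A", "C", max_delay=20))  # [5, 7]
print(reachable_delays(recurrent, "A", "C", max_delay=20))    # [5, 7, 9, 13, 17]
```

Without the loop, only two delays exist between A and C. With it, each additional circuit of the loop adds another delay, and the set is limited only by how long the signal can keep circulating.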

Spike coincidence mechanisms can be found widely in brain structures (cerebral cortex, cerebellum, and brainstems of many sensory pathways). Cortical pyramidal cells famously have numerous synaptic inputs, irregular spiking, and multivalent responses to diverse stimuli, which is consistent with the inference that relatively small subsets of well-timed incoming spikes are sufficient to cause these cells to fire (Softky and Koch, 1993). As a result, these cells may be better regarded as structured ensembles of millisecond-scale coincidence detectors, e.g., as with the Tempotron of Gutig and Sompolinsky (2006) or the multi-layered convolutional network of Beniaguev et al. (2021), rather than running integrators that average input firing rates over much longer timescales (Abeles, 1982). Complex dendritic coincidence trees would enable many different sets of synapses, each set having a specific connectivity and relative delay pattern, to trigger spikes in these cells, thereby allowing them to participate in multiple neural assemblies.

Other kinds of architectures are possible that could also potentially handle some types of temporal codes. These include neural networks that consist of coupled neural oscillatory elements (Greene, 1962) or ensembles of many elements. Oscillator networks have mainly been studied in terms of their dynamical behavior rather than specific information processing functions. Neural oscillations on population-wide scales are generally not thought to convey specific information about attributes due to their variability and limited bandwidth, but may nevertheless facilitate many non-specific integrative functions by synchronizing neural populations through common and emergent oscillatory frequency modes (Cannon et al., 2014; Cariani and Baker, 2022). Such networks can support neural codes that depend on oscillatory phase offsets (Hopfield, 1995; Cariani, 2022; Cariani and Baker, 2022). By virtue of their oscillatory resonances, such networks can be used for analyzing incoming coarse temporal patterns (e.g., beat tracking for musical rhythms), inducing brain states (e.g., sleep stages) associated with particular oscillatory modes, producing temporally patterned outputs (e.g., rhythmogenesis in central pattern generators), storing memories in the frequency-domain (Longuet-Higgins et al., 1970), visual segmentation and binding (Baldi and Meir, 1990), or creating new neural periodicities through emergent oscillatory dynamical modes (see section “4 Time-domain waveforms, signals, and systems: common signal operations, holography, radio communications, and the brain”).

With incorporation of nonlinear, multiplicative and thresholding processes, oscillatory architectures can potentially handle complex pulse-coded time-domain signals and operations, though less directly than time-delay neural coincidence networks, where specific interspike intervals can be offset with corresponding delays. Some of these issues are taken up in section “4 Time-domain waveforms, signals, and systems: common signal operations, holography, radio communications, and the brain” and elsewhere (Cariani and Baker, 2022).

Architectures that include greater neuroanatomical, cellular, and biophysical detail have also been proposed that operate on complex spatiotemporal spiking patterns (as in polychronous networks, spiking orders, and spiking order trajectories). For reverse-engineering how neural signal processing works to realize informational functions, simplified neural models with limited parameters may be more useful. Although ever more neuroanatomical and biophysical details can be incorporated, basic functional principles can be obscured by complex, inscrutable dynamics.

Beyond general time-domain architectures, canonical neurocomputational structures such as cerebral cortex, basal ganglia, hippocampus, and cerebellum will need to be reconsidered as temporally coded correlation devices (cf., Marr, 1991). Traditionally these circuits were functionally conceived in terms of channel connectivities and activations, but these circuits can be reimagined in terms of interactions of spike timing patterns in synfire chains and cycles.

Cerebral cortex can be reimagined in terms of analysis, processing, and production of temporal patterns. Cortical-striatal-thalamic circuits have been traditionally regarded in terms of task-specific motor control, interval timing, and attentional gating of thalamic sensory and motor channels. More recently, the presence of short temporal latencies, precisions, and narrow time windows of striatal action have been recognized (Pouzzner, 2020). In addition to gating or facilitating relevant channels, attentional modulation can also plausibly be achieved by matching top-down target signals with incoming, bottom-up sensory data (e.g., matched filters through correlational amplification of specific incoming signals). Given high temporal precisions, striatal spike trains could provide temporally patterned top-down signals that selectively disinhibit (amplify) similar temporal spike patterns entering the thalamus from afferent sensory pathways.
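The matching of top-down target signals against bottom-up input can be caricatured as a temporal gate: an incoming spike is passed only if it falls close in time to a spike in the top-down template. This is a strong simplification of correlational amplification, and the spike patterns and 1 ms window are assumed values.

```python
def gate_by_template(bottom_up_ms, template_ms, window_ms=1):
    """Pass only bottom-up spikes that fall within window_ms of a template spike."""
    return [
        t for t in bottom_up_ms
        if any(abs(t - s) <= window_ms for s in template_ms)
    ]

# Hypothetical bottom-up stream: two interleaved temporal patterns (ms)
pattern_a = [0, 11, 20, 29, 40]   # roughly 10 ms periodic, with jitter
pattern_b = [3, 7, 16, 25, 34]    # unrelated pattern
bottom_up = sorted(pattern_a + pattern_b)

# Top-down template matched to pattern A
template = [0, 10, 20, 30, 40]

passed = gate_by_template(bottom_up, template)
print(passed)  # [0, 11, 20, 29, 40]
```

The gate selects by temporal pattern rather than by input channel: both patterns arrive on the same line, but only the one resembling the template is passed on.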

The cerebellum has long been considered as a neural timing organ (Braitenberg, 1961, 2002; Braitenberg, 1967). In the cerebellum, massive arrays of slow, unmyelinated parallel fibers and the flat, dense dendritic trees of Purkinje cells strongly suggest functions as canonical temporal coincidence elements. Although the cerebellum was originally conceived mostly in terms of motor timing relations and real-time control of sensory surfaces, more general timing and sequencing roles in perception and cognition have recently been proposed (Braitenberg et al., 1997; Rodolfo Llinas and Negrello, 2015).

The hippocampus has traditionally been regarded in terms of a recurrent, autoassociative rate-place architecture (Marr, 1971). However, as in the cerebellum, its arrays of recurrent fibers support systems of switchable delay-paths. These paths then enable time-delay operations capable of rebroadcasting specific temporal spike sequences that can function as temporal-coded memory traces. In this view hippocampal replay essentially broadcasts selected temporal memory traces to the rest of the brain. Time compression of these spike patterns also enables them to be used as anticipatory guides in real time for prospective action (see section “3.6.1 Temporal memory traces”).

Brains are purposive, goal-driven feedback neural control systems that have embedded evaluative mechanisms for assessing goal satisfaction and for weighing the urgency of competing goals. The term “goal” is used here in a broad sense to mean any state that a system is organized to preferentially seek. Animal nervous systems have evolved sets of internal, embedded goals that promote survival and continuation of the lineage. Most goal-related operations, as well as their associated planning and steering functions, are thought to be mediated by neural circuits in pre-frontal cerebral cortex (Miller and Cohen, 2001).

Basic, immediate, “primary” goals under neural control involve preservation of internal organismic integrity (homeostasis: oxygen, water, ionic, nutrient requirements, injury avoidance, and damage control through pain minimization) and avoidance of imminent external threats (predation and injury). Longer term, less urgent, “secondary” goals go beyond basic survival to include reproduction, rearing of offspring, exploration, learning, play, stress and uncertainty reduction, and socially mediated rewards. Learning processes entail tunings of neural assemblies that improve functions (more reliable goal attainment, better performance, and better predictions) and can progress in the absence of more salient primary goals.

Motivations (goals and drives), expectations, and rewards, as well as emotional and cognitive states, determine what prospective actions will be taken. Depending on the current external and internal contexts, such as perceived opportunities for goal-attaining actions and emotional state, any goal can potentially take priority in driving behavior. Internal goals thereby focus attention, drive decision-making through signal competition, and trigger task-specific behaviors.

The temporal theory posits that the neural signal for each goal is a characteristic temporally coded pattern of spikes. The pattern is broadcast widely throughout the brain to activate task-relevant local subpopulations, circuits, and signals and to suppress those that are not. Characteristic goal and reward signals permit representations to include goals that co-occurred with objects, events and situations, thereby enabling memories to be accessed according to the goals they fulfilled. In producing and broadcasting a goal signal, the system also begins to access memory traces that contain that signal, and with them still other signals related to brain states and action sequences that occurred with its past attainment (Cariani, 2017).

The temporal theory is anticipatory and similar to predictive coding models (Friston, 2010; Seth, 2013; Seth and Friston, 2016; Friston, 2019) in that the storage and access of signals related to perceived objects, events, environmental situations, and internal goals and procedures forms an internal model of the perceived likelihood of the efficacy of some particular prospective action in the context of some particular situation.

Representations, as used here, are organized systems of neural codes that signify related attributes. See Lloyd (1989) for discussion of representations in neurally grounded theories of mind. Attributes are informational distinctions, neural activity “differences that make a difference.” Distinctions constitute alternative functional states of the system that lead to different subsequent internal states and/or behavioral outcomes. Representations can involve attributes that vary along one dimension (e.g., loudness, lightness, and the pitches of notes on a piano), a few dimensions (e.g., timbre, color, texture, and odor), or many dimensions (the neural description of a physical object that includes basic sensory attributes as well as conceptual classifications, word labels, perceived affordances, emotional valences, and memories of similar objects).

Representations of objects, events, situations, and procedures are groupings of neural signals into unified, chunked entities. We will refer to these represented entities as “composites.” Objects are collections of neural signals associated with sustained co-occurring attributes. Objects can include collections of perceived external attributes of particular physical objects (e.g., a specific fork) or collections of constructed internal attributes that constitute concepts (e.g., the concept of a fork). Objects are atemporal in that they are not defined primarily in terms of discontinuities that are marked in time; they do not have beginnings, ends, and durations. In contrast, events are collections of attributes that are demarcated in terms of neural time markers. Events have beginnings (onsets), ends (offsets), durations, and specific timings. Events can also contain mixtures of external and internal attributes such as a knock on the door, the emergence of a thought, or the recall of a memory. Situations are collections of attributes related to contexts, again either external or internal. These can be ongoing and quasi-stationary, as with objects, or emergent and episodic, as with events. Procedures are internal attributes associated with neural signals and signal sequences, such as in motor programs and trains of thought.

The time domain theory proposes that virtually all of these attribute distinctions are encoded in characteristic temporal patterns of spikes, be they within spike trains of individual neurons, spike volley patterns produced by neural ensembles, or spike timing correlations in populations.

In this theory, the dimensional structure of mental representations mirrors the correlation structure of the neural codes. For sensory representations this structure comes directly from the stimulus in phase-locked sensory systems or from characteristic stimulus-triggered spiking patterns in non-phase-locked systems. Due to their iconic nature, the various temporal patterns produced by phase-locked systems can usually be related by some continuous deformation (affine transformation) that provides spaces of correlation-based perceptual similarities and differences. For example, the distributions of interspike intervals that encode nearby musical pitches (musical C3 vs. D3) overlap with each other (Cariani, 2019, Fig. 5.8). On the other hand, the divergent correlation structures produced by different sensory modalities, such as visual forms or smells, result in discrete, separate dimensions that are highly independent of each other.

Different, independent attributes are encoded using different, orthogonal temporal patterns such that combinations of attributes can be represented as vectors. Each dimension encodes one attribute which has a characteristic set of temporal pulse patterns that indicate its signal type. For example, a musical note has a set of distinct, highly independent attributes that include loudness, duration, apparent location, F0-pitch, pitch height, and several dimensions of timbre. The theory posits that each one of these attributes is represented by characteristic neural temporal patterns of spikes that indicate which attribute is being distinguished (e.g., F0-pitch vs. loudness) and the specific value of that attribute (e.g., middle C).

The temporal coding thus permits the form of the neural signal to indicate its signal type, i.e., which attribute the neural signal signifies to the rest of the system. Because the signal type and specific value are no longer bound to particular neural channels or transmission paths, as they are in channel-domain networks, neural signals can be liberated from particular nodes and wires. This in turn enables broadcast of signals and selective reception by distant neural assemblies that are tuned to respond to different signal types.

In the temporal theory, the strength (salience) of the neural signal associated with each attribute at any given time is indexed by the relative prevalence (fraction) of the characteristic temporal pattern associated with each attribute currently being produced within a given neural population or larger network. It can be described in terms of a positive scalar (ranging from 0 to 1) reflecting the fraction itself, or binary (0 or 1) reflecting whether its fraction exceeds some threshold of significance.
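As a minimal illustrative sketch of these two strength measures, the fraction and its thresholded binary form can be written as follows. The function names, the example population of ten units, and the threshold value of 0.2 are assumptions introduced only for illustration; the theory itself does not specify them.

```python
from typing import Sequence


def salience(pattern_flags: Sequence[bool]) -> float:
    """Fraction (0 to 1) of units in a population that are currently
    producing the characteristic temporal pattern of one attribute."""
    if not pattern_flags:
        return 0.0
    return sum(pattern_flags) / len(pattern_flags)


def is_significant(strength: float, threshold: float = 0.2) -> int:
    """Binary form of the strength: 1 if the fraction exceeds the
    threshold of significance, else 0. The threshold is an assumed value."""
    return 1 if strength > threshold else 0


# Hypothetical population of 10 units, 3 of which carry the pattern.
flags = [True, False, False, True, False, False, False, True, False, False]
strength = salience(flags)
print(strength, is_significant(strength))
```

Here the scalar form preserves graded differences in prevalence, whereas the binary form only records whether the signal is effectively in play.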

At any given time, the current functional, representational state of a local neural population or global network is the set of neural signals being produced (regenerated) within that system. This can be described in terms of N attributes (types), Mi alternative distinctions (values) for each attribute i, and the strength of each attribute-value combination. The resulting vector has N × Σ Mi dimensions with one signal strength-salience-prevalence-intensity scalar for each dimension. As there are typically large numbers (thousands) of discriminable attribute values for each external attribute, for cognitive internal attributes, and for mnemonic distinctions, as well as those for emotional and motivational states, the total number of possible alternative functional states of the system is quite large.

This signal-based vectorial representational system can support analogies, i.e., that disparate entities are similar in some ways (common dimensions in signal space); generalizations, i.e., members of sets of entities that share several common attributes (multiple signal dimensions in common); and interpretability, i.e., how they are similar (what attributes the common signals connote).

Because this set is a small subset of possible neural signals, both in terms of numbers of dimensions (attribute signal types) and of distinctions within each dimension (attribute values), the representational state vectors are very high dimensional (reflecting all possible attributes) and sparse (with only a small number of attributes and their associated spike train signals in play at any time). These kinds of high-dimensional vectorial representations have many advantages for implementing concept dynamics, search/retrieval processes, and analogical reasoning (Widdows, 2004).
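One way to picture such sparse, high-dimensional state vectors is as a mapping that stores only the attribute-value signals currently in play, with every absent dimension implicitly zero. The sketch below is a hypothetical illustration: the attribute names, the strengths, and the choice of cosine similarity as a comparison measure are assumptions, not claims about neural implementation.

```python
import math
from typing import Dict, Set, Tuple

Dimension = Tuple[str, str]      # (attribute type, attribute value)
State = Dict[Dimension, float]   # sparse: only signals in play are stored


def shared_dimensions(a: State, b: State) -> Set[Dimension]:
    """Signal dimensions present in both states (the basis of an analogy)."""
    return set(a) & set(b)


def cosine_similarity(a: State, b: State) -> float:
    """Similarity of two sparse states; missing dimensions count as zero."""
    dot = sum(a[k] * b[k] for k in shared_dimensions(a, b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical composites with made-up attributes and strengths.
fork = {("shape", "pronged"): 0.8, ("material", "metal"): 0.6, ("use", "eating"): 0.9}
rake = {("shape", "pronged"): 0.7, ("material", "metal"): 0.5, ("use", "gardening"): 0.9}

print(sorted(shared_dimensions(fork, rake)))   # which attributes are shared
print(round(cosine_similarity(fork, rake), 3)) # how similar the states are
```

The set of shared dimensions corresponds to interpretability (in what respects two composites are alike), while the scalar similarity summarizes how alike they are.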

Beyond simple vectorial collections of attributes and signals currently in play, there is further organization that binds subsets of attributes into unified, separate, largely independent, objects, events, situations, and procedures.

The organization of perception has traditionally come under the rubric of Gestalt processes, segmentation and binding [the “binding problem” (von der Malsburg, 1981/1994; von der Malsburg, 1995b)], and scene analysis. The clearest, most obvious examples are found in vision and audition, with many parallel principles and analogous transformations between the two modalities. In vision, regions of images with similar forms, textures, colors, and correlated timings and motions group together. In audition, frequency components group by common onsets (roughly synchronous event timings) and common subharmonics (harmonic relations). Hearing out separate streams of related events in music (polyrhythms and polyphony) and speech (“the cocktail party problem”) involves separation of independent streams (voice lines, instruments, and speakers) and grouping together of events related to one or another stream. Within each stream are also groupings and separations of events in time (“chunking”). Events close in time tend to group together (the Gestaltist Proximity Principle): notes into musical phrases and phonetic sequences into syllables and words.

Similar binding processes also exist in cognition to group features into categories (concepts), in memory to group related events into discrete episodes, and in the orchestration of action to group specific movements and action sequences into wholes.

The temporal theory posits that bindings are realized through signal interactions. Signals with correlated timings and/or common subpatterns of pulses interact in delay-coincidence networks to reinforce each other (Figure 1B; Cariani, 2001a) and to create new temporal patterns that are related to their co-occurrence (see section below).

This theory is similar in many respects to Malsburg’s general theory of correlation-based perceptual and cognitive organization (von der Malsburg, 1981/1994; von der Malsburg, 1995a), but more explicitly asserts that the correlation relations are temporal correlations amongst spikes. There are also similarities to models of binding based on temporal synchrony (Singer, 1999; Engel and Singer, 2001). Whereas Singer’s mechanism for binding is based on temporal synchronies of channel activations, the time domain mechanism is based on temporal pattern similarities that do not necessarily require precise (zero-lag) synchronies: in the temporal pattern theory, temporal proximity is sufficient for binding. As long as the similar patterns arrive at a given location within temporal integration windows of tens to hundreds of milliseconds, they can still be bound together. Because near-simultaneous events also create characteristic patterns of signal interaction, temporal pattern representations can also incorporate response synchronies. The two kinds of binding principles are therefore not at odds with each other.
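The contrast between zero-lag synchrony and lag-tolerant pattern similarity can be sketched in a few lines. This is a toy illustration under stated assumptions: spike trains are lists of spike times in milliseconds, pattern similarity is judged by comparing successive interspike intervals, and the 100 ms window and 1 ms tolerance are hypothetical values chosen from within the range mentioned above.

```python
from typing import List


def intervals(spike_times_ms: List[float]) -> List[float]:
    """Successive interspike intervals of one spike train."""
    return [b - a for a, b in zip(spike_times_ms, spike_times_ms[1:])]


def patterns_match(a: List[float], b: List[float], tolerance_ms: float = 1.0) -> bool:
    """True if both trains carry the same interval pattern, regardless of lag."""
    ia, ib = intervals(a), intervals(b)
    return len(ia) == len(ib) and all(abs(x - y) <= tolerance_ms for x, y in zip(ia, ib))


def zero_lag_synchronous(a: List[float], b: List[float], tolerance_ms: float = 1.0) -> bool:
    """Synchrony criterion: corresponding spikes coincide in absolute time."""
    return len(a) == len(b) and all(abs(x - y) <= tolerance_ms for x, y in zip(a, b))


def can_bind(a: List[float], b: List[float],
             window_ms: float = 100.0, tolerance_ms: float = 1.0) -> bool:
    """Pattern-similarity criterion: same pattern, arriving within the
    (assumed) temporal integration window."""
    if not a or not b:
        return False
    lag = abs(a[0] - b[0])
    return lag <= window_ms and patterns_match(a, b, tolerance_ms)


pattern = [0.0, 5.0, 12.0, 20.0]
delayed = [t + 40.0 for t in pattern]      # same pattern, 40 ms later
different = [40.0, 48.0, 50.0, 60.0]       # different interval pattern
too_late = [t + 500.0 for t in pattern]    # same pattern, outside the window

print(zero_lag_synchronous(pattern, delayed), can_bind(pattern, delayed))
print(can_bind(pattern, different), can_bind(pattern, too_late))
```

In this sketch the delayed copy fails the synchrony test but satisfies the pattern-similarity test, while synchronous copies of a pattern satisfy both, which is one way to read the claim that the two binding principles are compatible.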

Grouping requires some process that binds the various attributes into a unified whole that can be represented and handled as a separate entity. The whole can be an object, event, situation, or procedure. In the time domain theory, binding is accomplished through signal interactions that produce new signal patterns that are characteristic of the whole, such that patterns that emerge from the relational interactions of the parts are different from simple superpositions (additions) of the constituent signals. The whole is more than a simple combination of parts.
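A toy example may help show why a multiplicative interaction differs from a superposition. In the sketch below, two periodic pulse trains are combined by addition and by multiplication (coincidence detection). The periods of 3 and 4 time steps and the discrete-time pulse representation are assumptions made purely for illustration and are not drawn from the theory.

```python
from typing import List


def pulse_train(period: int, length: int) -> List[int]:
    """Binary pulse train with one pulse every `period` time steps."""
    return [1 if t % period == 0 else 0 for t in range(length)]


def superpose(a: List[int], b: List[int]) -> List[int]:
    """Additive combination: every pulse of each constituent survives."""
    return [x + y for x, y in zip(a, b)]


def multiply(a: List[int], b: List[int]) -> List[int]:
    """Multiplicative combination: a pulse appears only on coincidences."""
    return [x * y for x, y in zip(a, b)]


def pulse_times(signal: List[int]) -> List[int]:
    """Time steps at which the signal is nonzero."""
    return [t for t, v in enumerate(signal) if v > 0]


a = pulse_train(3, 36)
b = pulse_train(4, 36)

print(pulse_times(superpose(a, b)))  # union of the two constituents' pulse times
print(pulse_times(multiply(a, b)))   # coincidences only: [0, 12, 24]
```

The superposition contains nothing beyond the pulses of the two constituents, whereas the product has a 12-step periodicity that is present in neither constituent alone and so could serve as a marker of their co-occurrence.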