- ¹The Integrative Neuroscience of Communication Unit, National Institute on Deafness and Other Communication Disorders, National Institutes of Health, Bethesda, MD, United States

- ²Biostatistics and Clinical Epidemiology Service, National Institutes of Health Clinical Center, National Institutes of Health, Bethesda, MD, United States

- ³Department of Radiology and Imaging Sciences, National Institutes of Health Clinical Center, National Institutes of Health, Bethesda, MD, United States

- ⁴Clinical Epilepsy Service, National Institute of Neurologic Disorders and Stroke, National Institutes of Health, Bethesda, MD, United States

Functional connectivity (FC) refers to the activation correlation between different brain regions. FC networks are typically represented as graphs with brain regions of interest (ROIs) as nodes and functional correlations as edges. Graph neural networks (GNNs) are machine learning architectures used to analyze FC graphs. However, traditional GNNs are limited in their ability to characterize FC edge attributes because they typically emphasize ROI node-based brain activation data. Line GNNs convert the edges of the original graph to nodes in the transformed graph, thereby emphasizing the FC between brain regions. We hypothesize that line GNNs will outperform traditional GNNs in FC applications. We investigated the performance of two common GNN architectures (GraphSAGE and GCN) trained on line and traditional graphs to predict task-associated FC changes across two datasets. The first dataset was from the Human Connectome Project (HCP) and included 205 participants; the second included 12 participants. The HCP dataset detailed FC changes in participants during a story-listening task, while the second dataset included the FC changes in a different auditory language task. Our findings from the HCP dataset indicated that line GNNs achieved lower mean squared error than traditional GNNs, with the line GraphSAGE model outperforming the traditional GraphSAGE by 18% (p < 0.0001). When the same models were applied to the second dataset, both line GNNs also showed statistically significant improvements over their traditional counterparts with little to no overfitting. We believe this shows that line GNN models have promising utility in FC studies.

1 Introduction

The interconnectivity of the human brain is critically important to understanding the neural basis of behavior in general and of linguistic behavior in particular. Patients with strokes that disrupt the pathways between designated regions of interest (ROIs) have been reported to have specific language deficits, such as Broca’s and Wernicke’s aphasia as well as conduction and global aphasia. However, the mechanisms by which these language deficits arise have remained elusive, and understanding them may be essential in developing novel approaches in neurorehabilitation. Therefore, the development of neurocomputational models of the neural basis of language processing in the healthy human brain may be an important step in better understanding the mechanisms that result in specific language deficits following brain injury. With the advancements in biotechnology over the last 30 years, neuroscientists have been able to image the human brain in action. Advances in computational power over the last decade have tremendous potential to advance our understanding of language processing following brain injury through the use of computer simulations. For these reasons, the merger of machine learning algorithms and non-invasive neuroimaging techniques that analyze brain activity in real time is a crucial step in the convergence of cognitive and behavioral neurology and computational neuroscience towards the burgeoning modern field of clinical neuroscience. Often, neuroimaging studies divide the brain into ROIs. Functional connectivity (FC) studies look at the relationships between those different brain regions by calculating the correlations in activation between them (Bullmore and Sporns, 2009; He and Evans, 2010).
Functional magnetic resonance imaging (fMRI) is a non-invasive neuroimaging method in which MRI images are combined with blood oxygen level-dependent (BOLD) signals, allowing us to map BOLD effects in different parts of the brain and thus measure brain activity on a per-ROI basis.

Previous fMRI-based static FC studies have shown that language-related connection strengths between ROIs change during language tasks (Tran et al., 2018; Doucet et al., 2017). Certain neurological conditions, such as strokes, are known to impact both a patient’s FC and their language abilities (Tao and Rapp, 2020; Berthier, 2005; Boes et al., 2015). This makes the neurocognitive modeling of FC processes underlying language crucial to understanding and predicting functional neural responses under conditions of central nervous system (CNS) injury (Kamarajan et al., 2020; Nebli et al., 2020). One area of mathematics that is particularly important in modeling FC is graph theory, which has traditionally allowed us to view ROIs as nodes and functional correlations as edges (Bullmore and Sporns, 2009; He and Evans, 2010).

There are examples of machine learning models that use resting state FC data to predict medical or demographic information, such as identifying depression in people with Parkinson’s disease and identifying an infant’s age, or to classify static FC changes in neurodegenerative diseases (Lin et al., 2020; Kardan et al., 2022). Graph neural networks (GNNs) are a type of neural network architecture designed to incorporate graph structures as data (Scarselli et al., 2009). Given the inherent graph-based nature of the brain, GNNs – including those that incorporate less-traditional definitions of nodes and edges, as are found in hypergraphs – are an intuitive step in analyzing network neuroscience data (Nebli et al., 2020; Kardan et al., 2022; Zong et al., 2024; Zuo et al., 2024). We assessed the use of GNNs in predicting FC changes for a language task, specifically an auditorily presented language comprehension task, as proof of concept that GNNs can serve as a powerful computational tool in predicting language-related spatial neuronal changes in static functional connectivity. We specifically compared traditional GNNs and line GNNs in predicting FC changes associated with auditory language comprehension.

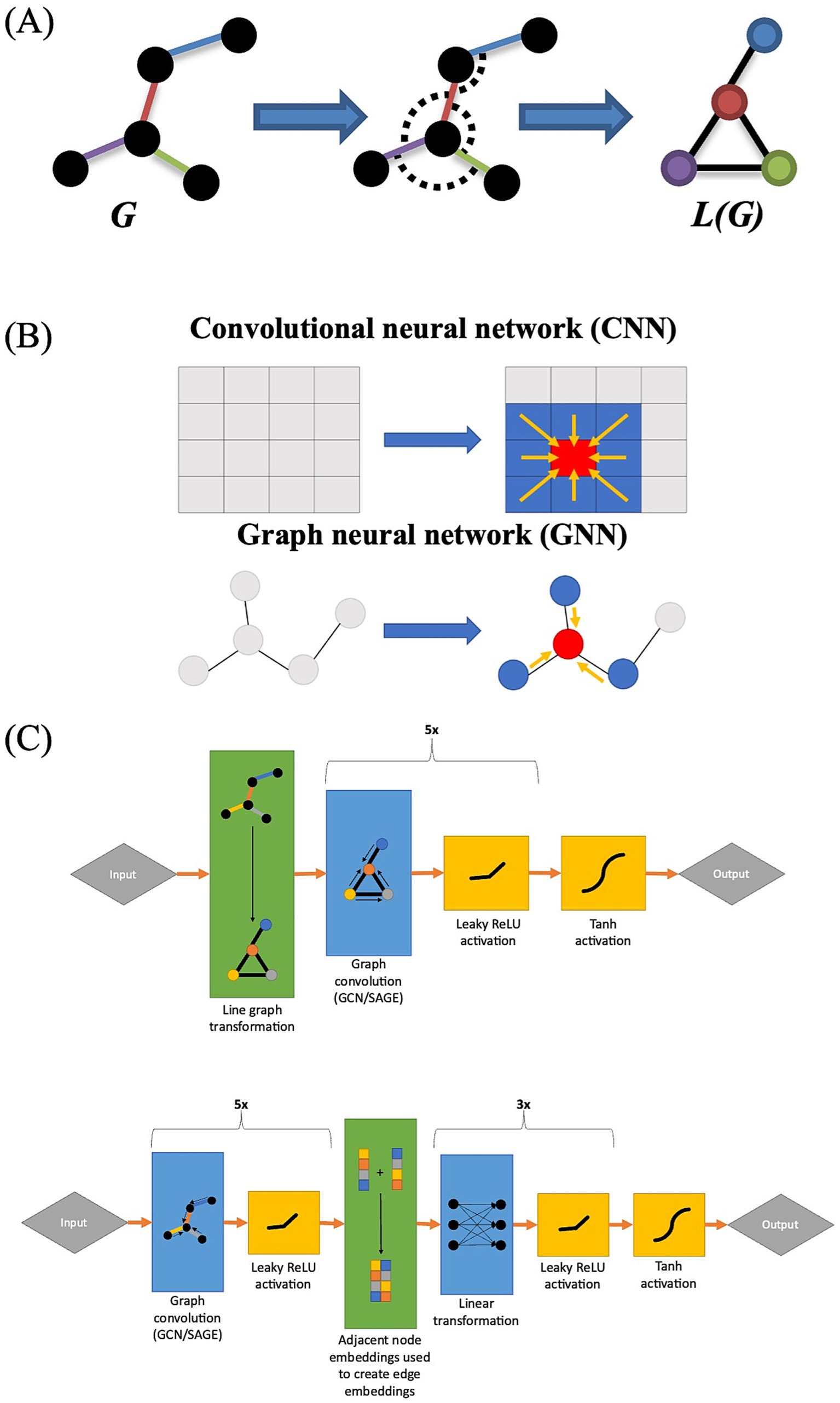

A line graph is a type of derivative graph in which the edges of the original graph are treated as nodes in the line graph (Cai et al., 2022) (Figure 1A). In computational chemistry and biomolecular interaction analysis, the use of line graphs in GNNs has proven effective in edge prediction, as line graphs permit the user to explicitly incorporate relational data into their models (Choudhary and DeCost, 2021; Zheng et al., 2022; Han and Zhang, 2023). In computational chemistry, line graphs allow researchers to represent atomic bond angles as nodes, whereas in biomolecular interaction analysis, they allow researchers to represent individual interactions as nodes (Choudhary and DeCost, 2021; Zheng et al., 2022; Han and Zhang, 2023). Given that FC analyses are edge-based, we also investigated the effectiveness of using line graph-based GNNs to predict changes in FC that occur during an auditory language task. We chose to utilize two common GNN architectures: a graph-based version of convolutional neural networks called graph convolutional networks (GCN) proposed by Kipf and Welling (2017) (Figure 1B) and an extension of this architecture called GraphSAGE (Kipf and Welling, 2017; Hamilton et al., 2017). We hypothesize that GNNs trained on FC line graphs (that is, functional correlations as nodes connected by ROIs) will show improved performance over GNNs trained on traditional FC networks.

Figure 1. (A) Graphical representation of a line graph transformation. Note how each edge in graph G corresponds to a node in graph L(G). (B) Graphical representation of the difference between GNNs and CNNs, with CNNs integrating information from adjacent cells while GNNs integrate information from adjacent nodes. (C) Architecture of line GNNs (top) and the architecture of traditional GNNs for FC analyses (bottom).
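The line graph transformation in panel (A) can be illustrated with a short sketch. The example below is a minimal pure-Python version written for illustration only; the function name `line_graph` and the toy ROI labels are assumptions, not part of the study's code. NetworkX provides an equivalent built-in, `networkx.line_graph`.

```python
from itertools import combinations

def line_graph(edges):
    """Return the line graph L(G) of an undirected graph G.

    Each edge of G becomes a node of L(G); two such nodes are joined
    when the original edges share an endpoint.
    """
    # Normalise each undirected edge to a sorted tuple so (a, b) == (b, a).
    nodes = sorted({tuple(sorted(e)) for e in edges})
    links = [
        (e1, e2)
        for e1, e2 in combinations(nodes, 2)
        if set(e1) & set(e2)  # the two original edges share an ROI
    ]
    return nodes, links

# Hypothetical toy FC graph: four ROIs joined by four functional correlations.
fc_edges = [("A", "B"), ("B", "C"), ("C", "D"), ("B", "D")]
lg_nodes, lg_links = line_graph(fc_edges)
print(lg_nodes)   # the four functional correlations, now acting as nodes
print(lg_links)   # pairs of functional correlations that share an ROI
```

In this toy graph, the correlations A–B and C–D have no ROI in common, so they are the only pair of line graph nodes left unconnected.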

2 Methods

2.1 Data collection

We performed cross-validation on two datasets to assess model performance: dataset #1, which included 205 participants, and dataset #2, which included 12 participants from a different experiment.

2.1.1 Dataset #1

Task-based fMRI data for 205 healthy adult individuals were queried from the Human Connectome Project (HCP) database. The subjects were matched for age, education, and handedness and performed an auditory language comprehension task.

Subjects were asked to perform an experimental task in which they listened to a story and answered questions about the story’s topic. For example, after listening to a story about an eagle rescuing a man after he had done the same for the eagle, the participant may be asked “Was the story about revenge or reciprocity?” They were asked to register their responses by pressing a button box. Similarly, as a baseline control task, subjects were asked to solve an auditorily presented mathematical problem, such as “Four plus twelve minus two plus nine. Does this equal twenty-two or twenty-three?” The mathematical and language tasks are described in further detail in Binder et al. (2011) and Barch et al. (2013), with the examples adapted from Binder et al. (2011). For this data collection, there were two 3.8 min imaging acquisition sessions (a total of 7.6 min). Each session contained four ~1 min epochs in which story stimuli were presented and four ~1 min epochs of math calculations as described above. The authors reported that when subjects completed the math calculations with time remaining in the epoch, additional math calculations were presented to fill the time as needed. They used the mathematical task data as a control to negate the neuroactivational contributions from nonlinguistic cognitive resources such as attention and executive memory as well as scanner and physiologic noise.

BOLD neuroactivation data were acquired noninvasively using gradient-echo EPI on a 3 T MRI scanner with a FOV of 208 × 180 mm, 2.00 × 2.00 × 2.00 mm voxels, and a multiband factor of 8. The TR was 720 ms, the TE was 33.1 ms, and the flip angle was 52 degrees.

2.1.2 Dataset #2

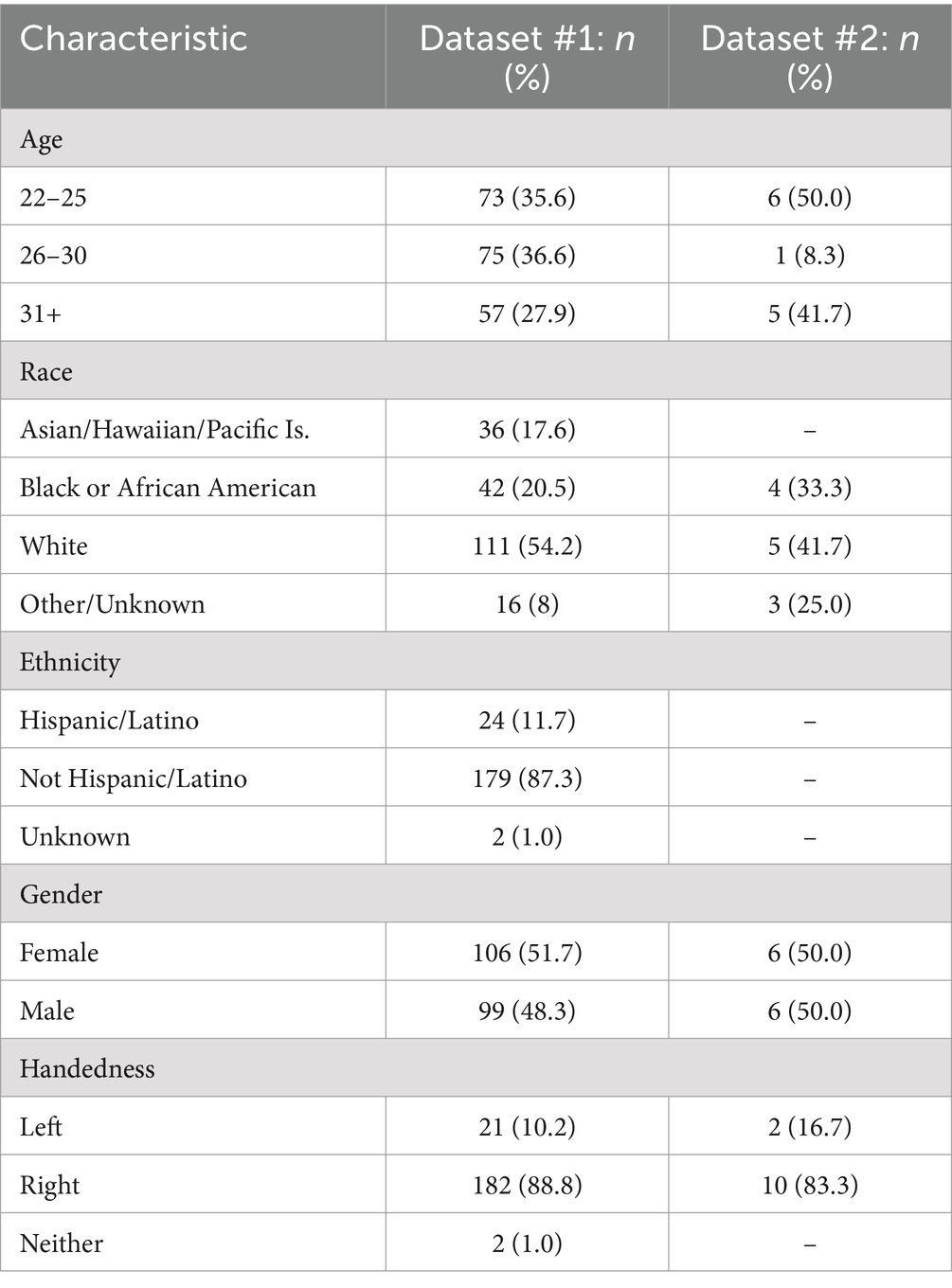

To assess the model’s generalizability, task-based fMRI data for twelve healthy controls recruited from the NINDS Epilepsy Unit under IRB-approved protocol 14-N-0061 were included in this secondary retrospective analysis. The twelve subjects were matched for education, age, and handedness. Further demographic information can be found in Table 1.

These subjects performed a different auditory language comprehension task from the one performed in the HCP dataset. In this task, called an auditory description decision task (ADDT), subjects listened to a descriptive sentence about an item from the Boston Naming Test which was either true (“a large gray animal is an elephant”) or false (“spaghetti is something you sit on”) (Rolinski et al., 2020). Subjects answered yes or no using a button box. The baseline task for the second dataset also differed from that of the HCP dataset in that subjects were asked to listen to sentences played in reverse. Because reversed speech is used as the baseline control, no syntactic or semantic information is conveyed when the subject listens to the stimuli. The associated neuroactivation in the control task, therefore, only represents listening to sound that is devoid of linguistic content.

Similar to the first dataset, the paradigm for the second dataset also followed a block design. Five cycles were performed, each consisting of a task block alternating with a control block. Each task block lasted 30 s and was followed by the control task, for a total scanning time of 2.5 min (5 × 30 s) for the experimental task and 2.5 min for the control task.

Imaging acquisition was performed on a 3 T MRI scanner at the National Institutes of Health NMR Center using a 32-channel head coil. Imaging parameters were as follows: flip angle, 65 degrees; TR, 2000 ms; TE, 30 ms; voxel size, 3 × 3 × 4 mm; FOV, 216 × 216 mm; slice thickness, 4 mm. During resting state fMRI (rs-fMRI), participants lay still in the scanner while staring at a fixation mark in the display for the duration of the scan. During task-based fMRI (tb-fMRI), participants were asked to perform an ADDT in which they listened to an auditorily presented sentence and then decided whether the following word matched the description. The details of the tb-fMRI procedure have been described elsewhere (Rolinski et al., 2020; Gaillard et al., 2007).

2.2 MRI preprocessing

MRI preprocessing was performed per previously reported techniques (RaviPrakash et al., 2021). Specifically, we preprocessed the anatomical data to achieve accurate surface segmentation. We performed surface parcellation using T1-weighted MPRAGE and fluid-attenuated inversion recovery (FLAIR) images using FreeSurfer (RaviPrakash et al., 2021; Fischl, 2012). We registered the MPRAGE volume with the MNI-305 atlas using AFNI registration to perform the cortical surface parcellation. We then performed skull stripping and followed the intensity gradients between the white and gray matter to generate surface segmentations for each hemisphere. Similarly, we generated the pial surface using the intensity gradients between the gray matter and cerebrospinal fluid. We used the different contrast in the FLAIR images to further define the pial surface segmentation, after which surface labeling was done as in Desikan et al. (2006).

2.3 fMRI preprocessing

We used the Analysis of Functional NeuroImages (AFNI) toolbox to preprocess the fMRI data and used surface-based cortical analysis pipelines (Cox, 1996; Cox and Hyde, 1997). Pre-steady state volumes acquired prior to reaching equilibrium magnetization were discarded. First, we conducted slice timing correction to synchronize timing across brain slices and then performed motion correction with a motion threshold of 0.3 mm. We censored BOLD signal at time points where motion exceeded this threshold. We applied a regression bandpass filter to the tb-fMRI data with a frequency range of 0.01–0.10 Hz to further suppress signal noise and then applied a 6 mm spatial smoothing kernel to further reduce the noise. We then added up the coherent signals as previously described (RaviPrakash et al., 2021).

The rs-fMRI preprocessing was nearly identical to the tb-fMRI preprocessing, including the use of a 6 mm spatial smoothing kernel. We used a regression bandpass filter for rs-fMRI, with a frequency range of 0.01–0.5 Hz, to further eliminate noise.

2.4 Functional connectivity network analysis

The task network was generated by first concatenating the time series of all task trials/blocks within a single subject. We then computed Spearman correlations to generate the static FC. We included all task correlations that differed significantly from baseline, provided that the functional correlations were present in at least a certain percentage of subjects. We empirically set this participation threshold at 85%. The associated p-values were corrected using Bonferroni correction. Of note, many investigators apply an edge strength threshold to ensure the inclusion of robust functional correlations in the statistical analyses. However, this can inadvertently eliminate functionally relevant correlations from the analyses. To minimize this occurrence, we included all significant (p < 0.05 post-Bonferroni correction) connections across the sample dataset and then applied a participation threshold to ensure that the correlations were robust and reproducible. This enabled us to avoid arbitrarily cutting off functional correlations whose strengths may be relevant for a particular individual’s FC performance.
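The participation threshold described above can be sketched as follows. This is an illustrative example only: it assumes that the set of Bonferroni-significant correlations has already been computed for each subject, and the function name, the ROI labels, and the toy data are all hypothetical.

```python
from collections import Counter

def edges_passing_participation(subject_edges, threshold=0.85):
    """Keep edges that are significant in at least `threshold` of subjects.

    subject_edges: list with one set of (roi_a, roi_b) tuples per subject,
    each set holding that subject's Bonferroni-significant correlations.
    """
    n_subjects = len(subject_edges)
    counts = Counter(edge for edges in subject_edges for edge in edges)
    return {edge for edge, n in counts.items() if n / n_subjects >= threshold}

# Hypothetical data: 20 subjects and three candidate functional correlations.
subjects = []
for i in range(20):
    edges = {("STG", "IFG")}          # significant in all 20 subjects
    if i < 17:
        edges.add(("STG", "MTG"))     # 17/20 = 85%, meets the threshold
    if i < 16:
        edges.add(("IFG", "AG"))      # 16/20 = 80%, falls below it
    subjects.append(edges)

kept = edges_passing_participation(subjects)
print(sorted(kept))
```

Because no edge strength cutoff is applied, a weak but consistently significant correlation survives this filter, which is the behavior the paragraph above argues for.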

This left us with 32 ROIs connected by 71 functional correlations for the first dataset and 20 ROIs connected by 12 functional correlations for the second. The static FC graphs generated were then implemented as NetworkX objects in Python (Hagberg et al., 2008).

2.4.1 Model creation

Four machine learning architectures were assessed for their predictive capabilities. The first two were graph convolutional architectures implemented using a traditional GCN as well as the GraphSAGE architecture in which the nodes were embedded with per-timepoint ROI activity levels at baseline (Figure 1C). In the traditional GCN, the edges were embedded with functional correlation measurements at baseline (Figure 1C). These models use the traditional representation of FC networks as ROIs (nodes) connected by functional correlations (edges).

The final two were GCN and GraphSAGE architectures that used a line graph configuration. In line graphs, the nodes (ROIs) of the original graph are converted to edges and the FC network edges are represented as nodes. The line GCN included per-timepoint ROI activation data embedded in its edges, whereas the line GraphSAGE discarded this information because the architecture did not permit edge attributes to be incorporated. All neural networks were implemented using the PyTorch Geometric package (Fey and Lenssen, 2019).
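To make the difference between the two configurations concrete, the sketch below shows one way the same data could be re-indexed for a line graph. It is an assumption-laden illustration rather than the study's implementation: the function name, the dictionary layout, and the toy values are hypothetical, and it assumes that each line graph edge carries the time series of the ROI shared by the two functional correlations it joins.

```python
def to_line_graph_features(roi_signal, baseline_fc):
    """Re-index FC data for a line graph.

    roi_signal:  {roi: [activation per timepoint]}   (node data in G)
    baseline_fc: {(roi_a, roi_b): correlation}       (edge data in G)

    Returns (node_features, edge_features) for L(G): every functional
    correlation becomes a node, and two such nodes are linked through the
    ROI they share, which contributes its time series as the edge attribute.
    """
    node_features = dict(baseline_fc)
    edge_features = {}
    fc_edges = sorted(baseline_fc)
    for i, e1 in enumerate(fc_edges):
        for e2 in fc_edges[i + 1:]:
            for roi in set(e1) & set(e2):
                edge_features[(e1, e2)] = roi_signal[roi]
    return node_features, edge_features

# Hypothetical toy data: three ROIs with two timepoints each.
roi_signal = {"A": [0.1, 0.4], "B": [0.3, 0.2], "C": [0.5, 0.6]}
baseline_fc = {("A", "B"): 0.62, ("B", "C"): -0.15}

node_features, edge_features = to_line_graph_features(roi_signal, baseline_fc)
print(node_features)
print(edge_features)
```

Under this layout, a model that cannot use edge attributes, such as the line GraphSAGE described above, would simply ignore `edge_features` and operate on the baseline correlations alone.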

All models were trained using the mean squared error (MSE) loss function. All neural networks were trained using the Adam optimizer over the course of 100 epochs, or training cycles. Apart from the line graph GCN, which had a learning rate of 1 × 10⁻³, all neural networks had a learning rate of 2 × 10⁻⁶. A higher learning rate was chosen for the line graph GCN because it was found to train far more slowly than the other models otherwise.

Each model had either five GCN or five GraphSAGE convolutional layers, depending on model type, with 512 neurons per layer, and a Leaky ReLU activation function with a negative slope of 0.05 was applied between layers. The models without line graph transformations also included a two-layer linear perceptron at the end to convert node embeddings to edge embeddings. A Tanh activation function was applied at the end of each model to transform the output to a (−1.0, 1.0) range.
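For reference, the layer operations named above can be written out explicitly. The block below is a sketch that assumes the standard GCN propagation rule of Kipf and Welling (2017); the symbols A (adjacency matrix), H (node embeddings at layer l), W (layer weights), and z (pre-activation output) are notation introduced here, and the exact layer variant used in the models, including how edge attributes enter, is not specified in this section.

```latex
% GCN layer (Kipf and Welling, 2017), with self-loops added to the adjacency matrix A
\tilde{A} = A + I, \qquad \tilde{D}_{ii} = \sum_j \tilde{A}_{ij}

H^{(l+1)} = \phi\!\left( \tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(l)} W^{(l)} \right)

% Leaky ReLU with negative slope 0.05, applied between layers
\phi(x) = \begin{cases} x, & x \ge 0 \\ 0.05\,x, & x < 0 \end{cases}

% Final Tanh maps each raw output z to the open interval (-1, 1)
\hat{y} = \tanh(z) = \frac{e^{z} - e^{-z}}{e^{z} + e^{-z}}
```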

The performance of each model was assessed using five-fold cross-validation. Benchmark error was calculated by creating a set of predicted values from the participants in the training set and applying this prediction to the validation set. We used this metric to determine whether the models were creating truly personalized predictions or whether they were simply converging on an average value for each functional correlation. The accuracy was quantified using MSE.
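The benchmark comparison can be sketched as below. This is a minimal illustration under stated assumptions: it takes the benchmark prediction to be the training-set mean of each functional correlation, uses contiguous unshuffled folds, and runs on made-up target values; none of the function names come from the study's code.

```python
def mse(pred, target):
    """Mean squared error between two equal-length sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)

def k_fold_indices(n, k=5):
    """Split range(n) into k contiguous validation folds of near-equal size."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds

def benchmark_mse(targets, val_idx):
    """MSE of predicting, for every validation participant, the training-set
    mean of each functional correlation (a non-personalized baseline)."""
    val = set(val_idx)
    train = [t for i, t in enumerate(targets) if i not in val]
    n_edges = len(targets[0])
    mean_pred = [sum(t[e] for t in train) / len(train) for e in range(n_edges)]
    errors = [mse(mean_pred, targets[i]) for i in val_idx]
    return sum(errors) / len(errors)

# Hypothetical FC changes: 10 participants x 3 functional correlations.
targets = [[0.1 * (i % 3), -0.05 * i, 0.2] for i in range(10)]
for fold, val_idx in enumerate(k_fold_indices(len(targets), k=5)):
    print(fold, round(benchmark_mse(targets, val_idx), 4))
```

A model whose validation MSE is no lower than this baseline would be doing no better than predicting a group average for every participant.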

2.4.2 Statistical methods

Statistical testing was performed using SciPy version 1.9.0, and we determined the significance level to be p < 0.05. Comparisons between benchmark and validation performance for each model, as well as comparisons in performance between models, were performed through a Wilcoxon signed rank test. Comparisons in the time taken to train each model were performed using a paired t-test. Both analyses that involved comparing each model to each other model were corrected for multiple comparisons through Bonferroni correction.
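The multiple-comparison step can be sketched as follows. The example covers only the Bonferroni adjustment across pairwise model comparisons; the model labels and the uncorrected p-values are hypothetical placeholders, not results from this study. In practice the uncorrected values would come from tests such as `scipy.stats.wilcoxon` or `scipy.stats.ttest_rel`.

```python
from itertools import combinations

def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capping at 1.0."""
    m = len(p_values)
    return {pair: min(1.0, p * m) for pair, p in p_values.items()}

models = ["model_a", "model_b", "model_c", "model_d"]
pairs = list(combinations(models, 2))   # 6 pairwise comparisons for 4 models

# Hypothetical uncorrected p-values, one per pair (illustrative only).
raw = dict(zip(pairs, [0.2, 0.004, 0.00001, 0.03, 0.00002, 0.00003]))
adjusted = bonferroni(raw)
significant = [pair for pair, p in adjusted.items() if p < 0.05]
print(len(pairs), significant)
```

With four models there are six pairwise comparisons, so an uncorrected p-value must fall below roughly 0.0083 to remain significant at the 0.05 level after correction.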

3 Results

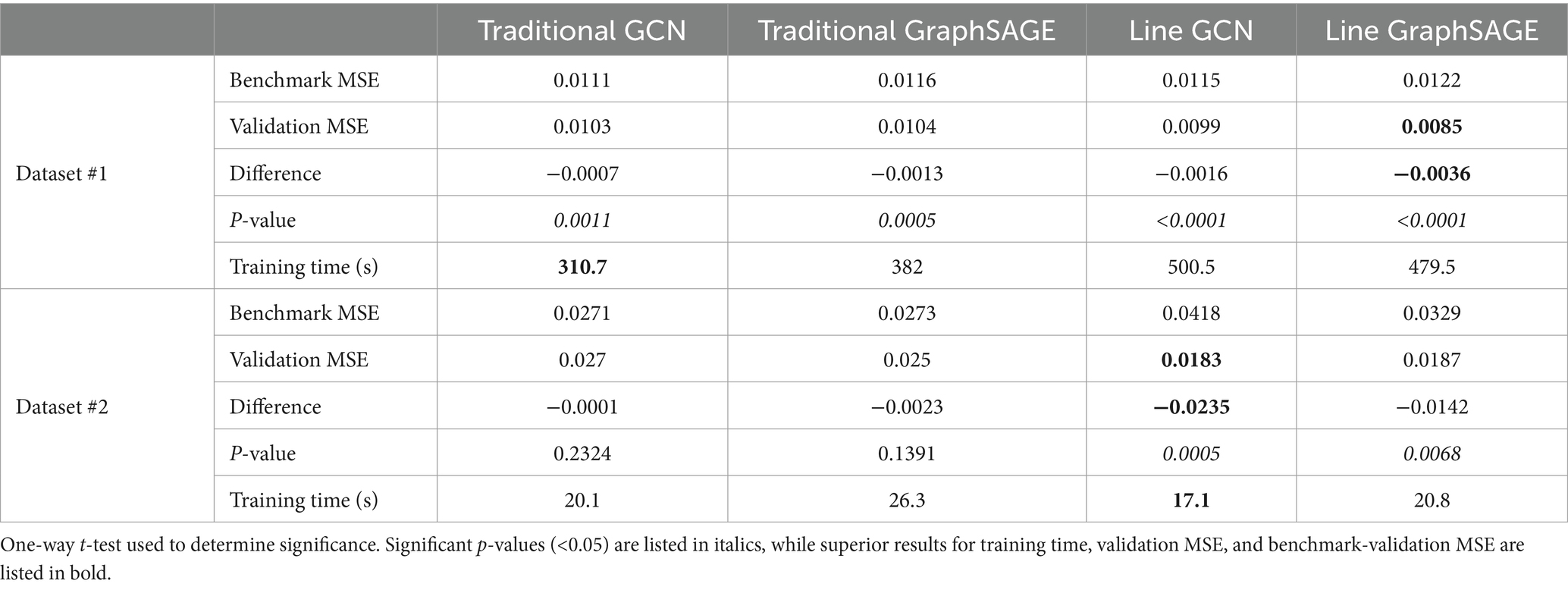

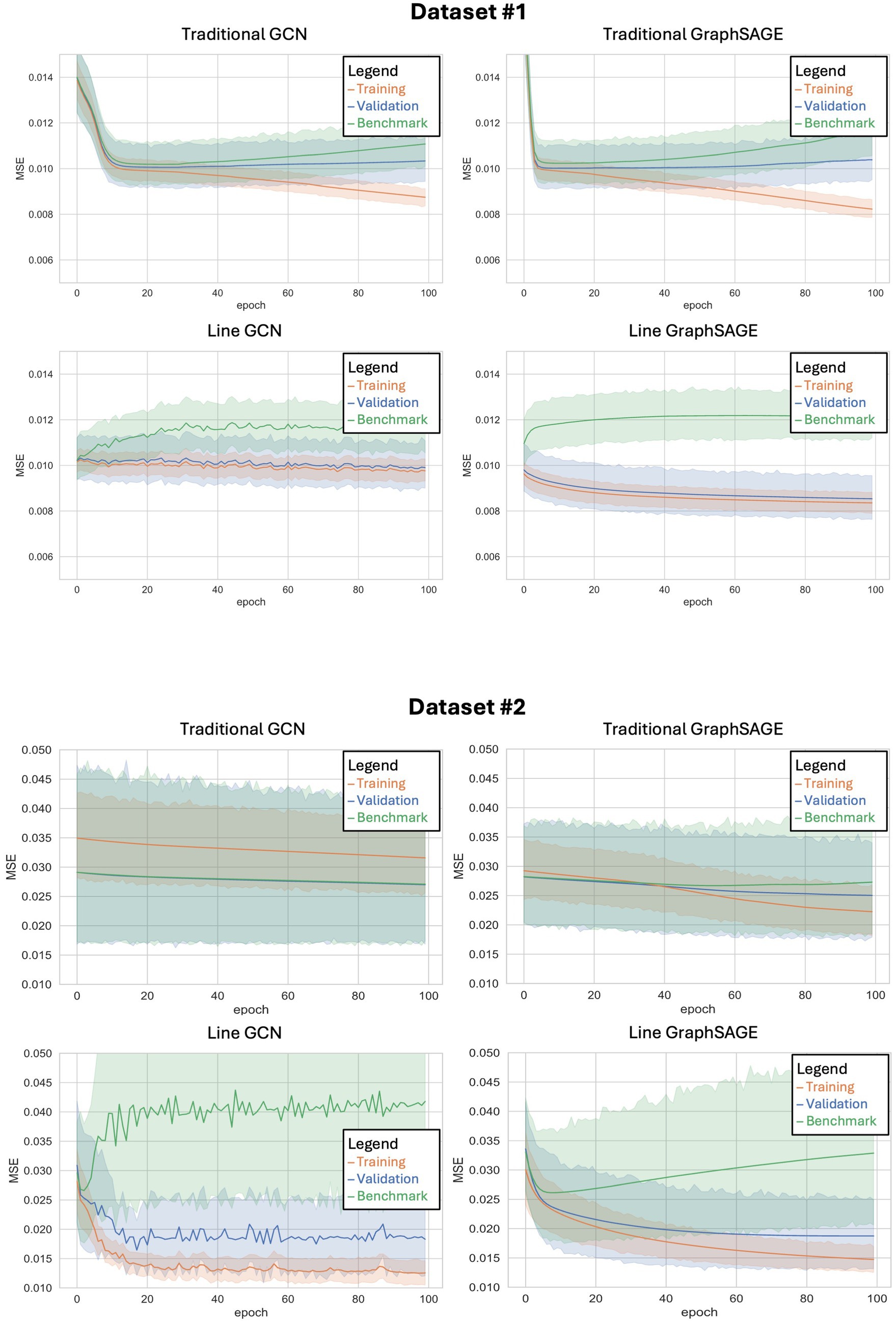

All four models were found to outperform their benchmarks with the HCP dataset and the second dataset, indicating that all of them were making individualized predictions (Figure 2; Table 2). After comparing each model’s validation performance to its benchmark performance, we examined their performance relative to one another. In the HCP dataset, we found that the line graph-based GCN, traditional GCN, and traditional GraphSAGE models did not show any statistically significant differences from each other; however, we did find that the line GraphSAGE showed a significant improvement – defined as a decrease in MSE – over all other tested architectures (p < 0.0001 in all cases).

Figure 2. Training curves for each of the four neural networks tested when applied to dataset #1 (top) and dataset #2 (bottom). The x-axis shows the number of epochs (or training cycles) that have occurred, while the y-axis shows the mean squared error for each epoch. The shaded region represents the 95% confidence interval.

When applied to the second dataset, using a different set of auditory language tasks, similar patterns emerged. The line GCN and the line GraphSAGE models demonstrated significantly improved performance over their traditional counterparts, with notable reductions in MSE and significant p-values (line GCN: p = 0.0005; line GraphSAGE: p = 0.0068), underscoring their robustness across varied datasets (Table 2; Figure 2). We performed paired t-tests comparing the validation MSEs across each fold. The p-value between traditional GCN and line GCN was 0.004953, indicating a statistically significant difference in their performance, with the line GCN model consistently outperforming the traditional GCN. Similarly, the paired t-test comparing traditional GraphSAGE and line GraphSAGE models yielded a p-value <0.003.

With the HCP dataset, both line graph-based neural networks show a divergence between validation and benchmark performance early in their training, and this difference in MSE only grows as more training epochs are completed (Figure 2). Additionally, these models show a striking resistance to overfitting, as their training error remains only marginally lower than their validation error even as the number of epochs increases. This is in stark contrast to the models without line graph transformations, both of which show an increase in training accuracy coupled with a decrease in validation accuracy in their later epochs – a widely recognized hallmark of overfitting.

As observed with the HCP dataset, both line graph-based neural networks initially diverged from the benchmark performance when trained on the second dataset (Figure 2). However, the degree of divergence in MSE between the training and validation phases was less pronounced. The line graph-based networks maintained a certain degree of resilience against overfitting, with the line GCN and line GraphSAGE models consistently demonstrating smaller gaps between training and validation errors across epochs. This adaptability was less evident in the traditional models, which, despite improvements, exhibited a greater tendency towards overfitting in the second dataset compared to the HCP dataset.

Training times varied significantly across models for the HCP dataset. Line GNNs took significantly more time to train than traditional GNNs (line GraphSAGE and line GCN vs. traditional GCN: p < 0.0001; line GraphSAGE vs. traditional GraphSAGE: p = 0.0004; line GCN vs. traditional GraphSAGE: p = 0.0002). The traditional GraphSAGE trained significantly more slowly than the traditional GCN (p = 0.0001); however, the line GraphSAGE and line GCN did not show a statistically significant difference in training time from each other (p = 0.5150) (Table 2).

The training times for the second dataset revealed additional insights. Line GCN and line GraphSAGE models did not exhibit significant differences in training duration compared to the traditional models (Table 2). The traditional GraphSAGE exhibited a slower training time compared to the traditional GCN; however, the contrast in training times between the line and traditional models was not as pronounced with the second dataset as with the HCP dataset.

4 Discussion

Specific language deficits in patients with acquired CNS injuries have been well documented in the literature, with the first reported cases dating back to the 19th century. Speech and language deficits have been reported in patients with lesions in Broca’s and Wernicke’s areas, for example. Aphasias have also been reported in patients with injuries that disrupt the pathways between designated ROIs, such as in conduction aphasia or global aphasia. However, understanding the mechanisms by which these language deficits arise is essential in developing a robust mechanistic understanding of the large-scale integration of the brain’s highly integrated structural and functional networks. Understanding how the effects of local injury may permeate throughout the brain can lead to novel approaches in neurorehabilitation.

The development of neurocomputational models of the neural basis of language processing in the healthy human brain may be an important step in eventually understanding these mechanisms. GNNs are a type of network architecture that serves as a natural deep learning tool with which to characterize the brain’s static functional connectivity changes associated with cognitive behavior. Although it is beyond the scope of this paper to evaluate the performance of all graph-based neural networks, we specifically compared the performance of four graph-based models, namely two traditional graph-based architectures versus two line graph network architectures, using two specific cognitive tasks with two different baseline datasets as input. We were particularly interested in assessing line graph architectures because they enable one to emphasize the “connections” between ROIs rather than the ROIs themselves, as is typical of traditional graph-based models.

In both the HCP dataset and the second dataset, we found that line GNNs outperformed traditional GNNs in predicting changes in FC when using baseline FC as their input. Of specific interest, the two datasets used fundamentally different baseline data as input. The HCP dataset used FC associated with word-based mathematical decisions. The model successfully predicted the static FC changes associated with language-related computations. It demonstrated gradually decreasing error over time with little to no overfitting when compared to traditional GNNs.

Even when the model architectures were trained on a second dataset using a nonlinguistic baseline, both line GCN and line GraphSAGE models showed statistically significant improvements in MSE compared to the traditional models, suggesting improved model performance across diverse datasets. However, it is important to note that all models registered higher MSEs when applied to the second dataset, a testament to the influence of dataset size and heterogeneity on model performance. That said, one of the important elements of the model’s performance was its consistency in modeling the FC changes with no overfitting.

More recent studies have applied GNN machine learning models to characterize ROI activation in fMRI where ROIs are indicated as nodes and the connections linking the nodes as edges. We compared traditional GCN models to a similar common architecture of GNNs, called GraphSAGE. In the case of traditionally constructed FC data, we found that the GraphSAGE model performed similarly to the GCN model. However, we found that both models trained on traditional FC suffered from the problem of overfitting; this was particularly true for the GraphSAGE model.

When making predictions, GNNs tend to weight node-based data particularly heavily, with some common architectures (such as GraphSAGE) even omitting edge attributes altogether. This makes feature selection challenging when the most relevant features are edge-based, as is the case with FC studies, which in turn prompted our investigation of line graph-based methods. Indeed, we found that line graph models – namely the line GraphSAGE model – resulted in improved modeling of our data compared to the traditional GNN models, regardless of the context of the specific language-based task. The line graph models for both types of very different data inputs resulted in accurate modeling of the FC changes for two very different types of language-related changes with little to no data overfitting. This supports our hypothesis that line graph models would better characterize connections between ROIs, possibly because of inherent feature selection, which is a common method to avoid overfitting (Lever et al., 2016). Because the line graph construction means that line GNNs discard much of the ROI-based activation and structural information in the original graph, and even omit this information entirely in the case of the line GraphSAGE model, the information that does remain is FC data which is more likely to be relevant to the target predictions. Thus, overfitting on less-relevant ROI data is mitigated with line graph models, which could explain the line GNNs’ improved performance with respect to overfitting.

Although our findings are encouraging vis-à-vis the potential for using line graph-based models to accurately model changes in cognitive states, several caveats warrant mentioning: (1) We only used static FC as our input source to generate the model. (2) Like most deep neural networks, line graph-based models are still “black box” architectures; future research will be needed to further develop tools, such as GNN explainers, that make line graph-based modeling more interpretable and explainable (Yuan et al., 2020), because explainable AI models will be essential in understanding structural perturbations that result from CNS injuries. (3) We found that line GNNs had longer training times than traditional GNNs when trained on the HCP dataset, which could imply a lack of scalability.

In conclusion, we found that line graph-based models can serve as powerful computational tools to model changes in static network correlations associated with different mental states, as assessed here using two different tasks, and can do so with little to no overfitting, an important characteristic. That said, we think that the typology of graph-based models in general can be exploited to better understand the dysfunctional relationships between local clusters of locally vs. long-distance affected functional correlations in whole brain network connectivity datasets, especially as affected in individual patients and patient populations. Further research comparing the performance of line GraphSAGE modeling to other graph-based models such as Bayesian networks is still warranted, followed by the use of computer simulations to assess the model’s performance in predicting network connectivity changes following CNS injury. Importantly, these models suggest that novel computational approaches that not only classify patients but also provide the basis for improved mechanistic understanding of local and long-range network correlation changes following CNS injury may be possible. This provides the basis for a novel focus of research that uses these simulations to develop promising novel mechanistic therapeutic interventions in the future.

Data availability statement

The data analyzed in this study are subject to the following licenses/restrictions: the original raw imaging datasets are from the Human Connectome Project and are publicly accessible with the appropriate permissions granted from Washington University. The code itself is available through the NIH/BTRIS data repository and can be shared upon an approved request. In addition, the anonymized fMRI data for the second dataset are also available with permission from NIH through the NIH/BTRIS data repository. Requests to access these datasets should be directed to Nadia Biassou, Ymlhc3NvdW5AbmloLmdvdg==.

Ethics statement

Ethical approval was not required for the study involving humans in accordance with the local legislation and institutional requirements. Written informed consent to participate in this study was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and the institutional requirements.

Author contributions

SA: Writing – review & editing, Writing – original draft, Visualization, Validation, Software, Methodology, Investigation, Formal analysis, Conceptualization. JL: Writing – review & editing, Software, Methodology, Formal analysis, Data curation. NS: Writing – review & editing, Software, Methodology, Formal analysis, Data curation. KW: Writing – review & editing, Writing – original draft, Validation, Software, Methodology, Investigation, Formal analysis. AK: Writing – review & editing, Methodology, Conceptualization. SR: Writing – review & editing, Writing – original draft, Formal analysis, Data curation, Conceptualization. SI: Writing – review & editing, Resources, Data curation. WT: Writing – review & editing, Resources, Data curation. NB: Resources, Writing – review & editing, Writing – original draft, Supervision, Project administration, Methodology, Investigation, Funding acquisition, Conceptualization.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. The study was funded by the National Institutes of Health Intramural Program, the National Institute on Deafness and Other Communication Disorders, the National Institute of Neurological Disorders and Stroke, and the NIH Clinical Center. Data were provided in part by the Human Connectome Project, WU-Minn Consortium (Principal Investigators: David Van Essen and Kamil Ugurbil; 1U54MH091657) funded by the 16 NIH Institutes and Centers that support the NIH Blueprint for Neuroscience Research; and by the McDonnell Center for Systems Neuroscience at Washington University.

Acknowledgments

We thank Ronald Summers, MD, PhD, and Barry Horwitz, PhD, for very helpful comments on multiple earlier drafts of the manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Barch, D. M., Burgess, G. C., Harms, M. P., Petersen, S. E., Schlaggar, B. L., Corbetta, M., et al. (2013). Function in the human connectome: task-fMRI and individual differences in behavior. NeuroImage 80, 169–189. doi: 10.1016/j.neuroimage.2013.05.033

Berthier, M. L. (2005). Poststroke aphasia: epidemiology, pathophysiology and treatment. Drugs Aging 22, 163–182. doi: 10.2165/00002512-200522020-00006

Binder, J. R., Gross, W. L., Allendorfer, J. B., Bonilha, L., Chapin, J., Edwards, J. C., et al. (2011). Mapping anterior temporal lobe language areas with fMRI: a multicenter normative study. NeuroImage 54, 1465–1475. doi: 10.1016/j.neuroimage.2010.09.048

Boes, A. D., Prasad, S., Liu, H., Liu, Q., Pascual-Leone, A., Caviness, V. S., et al. (2015). Network localization of neurological symptoms from focal brain lesions. Brain 138, 3061–3075. doi: 10.1093/brain/awv228

Bullmore, E., and Sporns, O. (2009). Complex brain networks: graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 10, 186–198. doi: 10.1038/nrn2575

Cai, L., Li, J. D., Wang, J., and Ji, S. W. (2022). Line graph neural networks for link prediction. IEEE Trans. Pattern Anal. Mach. Intell. 44, 5103–5113. doi: 10.1109/TPAMI.2021.3080635

Choudhary, K., and DeCost, B. (2021). Atomistic line graph neural network for improved materials property predictions. NPJ Comput. Mater. 7:185. doi: 10.1038/s41524-021-00650-1

Cox, R. W. (1996). AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput. Biomed. Res. 29, 162–173. doi: 10.1006/cbmr.1996.0014

Cox, R. W., and Hyde, J. S. (1997). Software tools for analysis and visualization of fMRI data. NMR Biomed. 10, 171–178. doi: 10.1002/(SICI)1099-1492(199706/08)10:4/5<171::AID-NBM453>3.0.CO;2-L

Desikan, R. S., Ségonne, F., Fischl, B., Quinn, B. T., Dickerson, B. C., Blacker, D., et al. (2006). An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. NeuroImage 31, 968–980. doi: 10.1016/j.neuroimage.2006.01.021

Doucet, G. E., He, X., Sperling, M. R., Sharan, A., and Tracy, J. I. (2017). From "rest" to language task: task activation selects and prunes from broader resting-state network. Hum. Brain Mapp. 38, 2540–2552. doi: 10.1002/hbm.23539

Fey, M., and Lenssen, J. E. (2019). Fast graph representation learning with PyTorch geometric. arXiv. doi: 10.48550/arXiv.1903.02428

Gaillard, W. D., Berl, M. M., Moore, E. N., Ritzl, E. K., Rosenberger, L. R., Weinstein, S. L., et al. (2007). Atypical language in lesional and nonlesional complex partial epilepsy. Neurology 69, 1761–1771. doi: 10.1212/01.wnl.0000289650.48830.1a

Hagberg, A., Swart, P. S., and Chult, D. (2008). Exploring network structure, dynamics, and function using NetworkX. Los Alamos, NM (United States): Los Alamos National lab. (LANL).

Hamilton, W. L., Ying, R., and Leskovec, J. (2017). Inductive representation learning on large graphs. Adv. Neural Inf. Process. Syst. 30. doi: 10.48550/arXiv.1706.02216

Han, Y., and Zhang, S. W. (2023). ncRPI-LGAT: prediction of ncRNA-protein interactions with line graph attention network framework. Comput. Struct. Biotechnol. J. 21, 2286–2295. doi: 10.1016/j.csbj.2023.03.027

He, Y., and Evans, A. (2010). Graph theoretical modeling of brain connectivity. Curr. Opin. Neurol. 23, 341–350. doi: 10.1097/WCO.0b013e32833aa567

Kamarajan, C., Ardekani, B. A., Pandey, A. K., Kinreich, S., Pandey, G., Chorlian, D. B., et al. (2020). Random Forest classification of alcohol use disorder using fMRI functional connectivity, neuropsychological functioning, and impulsivity measures. Brain Sci. 10:115. doi: 10.3390/brainsci10020115

Kardan, O., Kaplan, S., Wheelock, M. D., Feczko, E., Day, T. K. M., Miranda-Domínguez, Ó., et al. (2022). Resting-state functional connectivity identifies individuals and predicts age in 8-to-26-month-olds. Dev. Cogn. Neurosci. 56:101123. doi: 10.1016/j.dcn.2022.101123

Kipf, T. N., and Welling, M. (2017). Semi-supervised classification with graph convolutional networks. ICLR.

Lever, J., Krzywinski, M., and Altman, N. (2016). Model selection and overfitting. Nat. Methods 13, 703–704. doi: 10.1038/nmeth.3968

Lin, H., Cai, X., Zhang, D., Liu, J., Na, P., and Li, W. (2020). Functional connectivity markers of depression in advanced Parkinson's disease. Neuroimage Clin. 25:102130. doi: 10.1016/j.nicl.2019.102130

Nebli, A., Kaplan, U. A., and Rekik, I. (2020). “Deep EvoGraphNet architecture for time-dependent brain graph data synthesis from a single timepoint” in I. Rekik, E. Adeli, S. H. Park, and M. del C. Valdés Hernández (Eds), Predictive intelligence in medicine (Cham: Springer International Publishing).

RaviPrakash, H., Anwar, S. M., Biassou, N. M., and Bagci, U. (2021). Morphometric and functional brain connectivity differentiates chess masters from amateur players. Front. Neurosci. 15:629478. doi: 10.3389/fnins.2021.629478

Rolinski, R., You, X., Gonzalez-Castillo, J., Norato, G., Reynolds, R. C., Inati, S. K., et al. (2020). Language lateralization from task-based and resting state functional MRI in patients with epilepsy. Hum. Brain Mapp. 41, 3133–3146. doi: 10.1002/hbm.25003

Scarselli, F., Gori, M., Tsoi, A. C., Hagenbuchner, M., and Monfardini, G. (2009). The graph neural network model. IEEE Trans. Neural Netw. 20, 61–80. doi: 10.1109/TNN.2008.2005605

Tao, Y., and Rapp, B. (2020). How functional network connectivity changes as a result of lesion and recovery: an investigation of the network phenotype of stroke. Cortex 131, 17–41. doi: 10.1016/j.cortex.2020.06.011

Tran, S. M., McGregor, K. M., James, G. A., Gopinath, K., Krishnamurthy, V., Krishnamurthy, L. C., et al. (2018). Task-residual functional connectivity of language and attention networks. Brain Cogn. 122, 52–58. doi: 10.1016/j.bandc.2018.02.003

Yuan, H., Yu, H., Gui, S., and Ji, S. (2020). Explainability in graph neural networks: a taxonomic survey. IEEE Trans. Pattern Anal. Mach. Intell. 45, 5782–5799. doi: 10.48550/arXiv.2012.15445

Zheng, K., Zhang, X. L., Wang, L., You, Z. H., Zhan, Z. H., and Li, H. Y. (2022). Line graph attention networks for predicting disease-associated Piwi-interacting RNAs. Brief. Bioinform. 23:bbac393. doi: 10.1093/bib/bbac393

Zong, Y., Zuo, Q., Ng, M. K. P., Lei, B., and Wang, S. (2024). A new brain network construction paradigm for brain disorder via diffusion-based graph contrastive learning. IEEE Trans. Pattern Anal. Mach. Intell., 1–16. doi: 10.1109/TPAMI.2024.3442811

Keywords: graph theory, graph neural network, line graph, functional connectivity, machine learning, functional MRI

Citation: Acker S, Liang J, Sinaii N, Wingert K, Kurosu A, Rajan S, Inati S, Theodore WH and Biassou N (2024) Modeling functional connectivity changes during an auditory language task using line graph neural networks. Front. Comput. Neurosci. 18:1471229. doi: 10.3389/fncom.2024.1471229

Edited by:

Arpan Banerjee, National Brain Research Centre (NBRC), India

Reviewed by:

Shuqiang Wang, Chinese Academy of Sciences (CAS), China

Rupesh Chillale, University of Maryland, United States

Chittaranjan Hens, International Institute of Information Technology, Hyderabad, India

Copyright © 2024 Acker, Liang, Sinaii, Wingert, Kurosu, Rajan, Inati, Theodore and Biassou. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nadia Biassou, biassoun@nih.gov
