- Department of Rehabilitation Engineering, Research Institute of National Rehabilitation Center for Persons with Disabilities, Tokorozawa, Japan

We propose a mini-max feedback control (MMFC) model as a robust approach to human motor control under conditions of uncertain dynamics, such as structural uncertainty. The MMFC model is an expansion of the optimal feedback control (OFC) model. According to this scheme, motor commands are generated to minimize the maximal cost, based on an assumption of worst-case uncertainty, characterized by familiarity with novel dynamics. We simulated linear dynamic systems with different types of force fields–stable and unstable dynamics–and compared the performance of MMFC to that of OFC. MMFC delivered better performance than OFC in terms of stability and the achievement of tasks. Moreover, the gain in positional feedback with the MMFC model in the unstable dynamics was tuned to the direction of instability. It is assumed that the shape modulations of the gain in positional feedback in unstable dynamics played the same role as that played by end-point stiffness observed in human studies. Accordingly, we suggest that MMFC is a plausible model that predicts motor behavior under conditions of uncertain dynamics.

Introduction

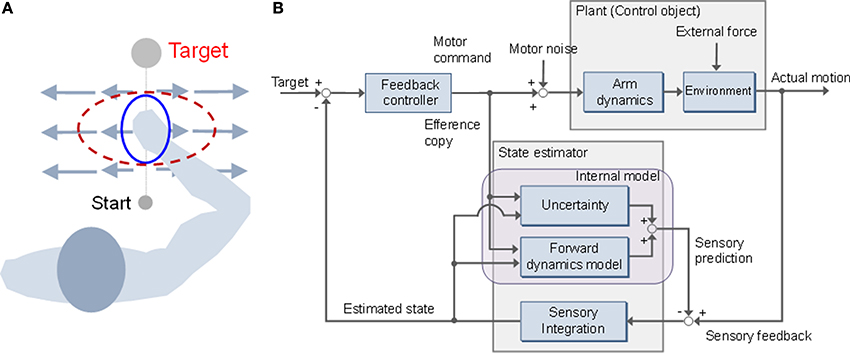

It is necessary to interact with various environments to learn how to use tools and to participate in unfamiliar sports, such as tennis and swimming. Skilled actions are achieved via interactive forces, based on human compensation. A considerable amount of research has focused on arm movement, to investigate learning mechanisms for adaptation to perturbed limb dynamics. It has been suggested that there are different mechanisms for adapting to stable and unstable dynamics (Franklin et al., 2003a,b; Osu et al., 2003). Under conditions where the dynamics are stable, it is possible to learn the forces necessary to compensate for perturbed dynamics in a feed-forward manner (Shadmehr and Mussa-Ivaldi, 1994). However, unstable dynamics make it necessary to learn the optimal mechanical impedance as the magnitude, shape, and orientation of the end-point stiffness (Figure 1A) (Burdet et al., 2001). Although an internal model can compensate for both stable and unstable dynamics, mechanisms have been identified for adapting to different approaches (Franklin et al., 2003b; Osu et al., 2003). Osu et al. reported that an inverse dynamics model that controlled the net joint torque performed well in a stable environment. However, in an unstable environment, the inverse dynamics model functions in parallel with an impedance controller to compensate for a consistent perturbing force (Osu et al., 2003). It has also been suggested that the impedance controller assists in the formation of the inverse dynamics model and contributes to improved stability (Franklin et al., 2003b). Both approaches are used selectively and combined in accordance with environmental dynamics.

Figure 1. Stiffness modulation and mini-max feedback control (MMFC). (A) Adaptation of stiffness geometry to unstable dynamics. The stiffness changes to the red dotted ellipse from the initial blue solid form. The long and short axes of the ellipse represent the directions of maximal and minimal stiffness, respectively. (B) Block diagram of MMFC with uncertainty.

Optimal feedback control (OFC) theory (Todorov and Jordan, 2002), which has been supported by the results of experimental and simulation studies (Liu and Todorov, 2007; Lockhart and Ting, 2007; Izawa and Shadmehr, 2008; Izawa et al., 2008; Nagengast et al., 2009; Pruszynski et al., 2011; Ueyama and Miyashita, 2013, 2014), suggests that the central nervous system sets up feedback controllers that continuously convert sensory input into motor output, optimally tuned to the task at hand, by trading off energy consumption with constraints, such as accuracy, on performance. According to OFC, trajectory planning is not required because the problems of motor planning and control are combined. An important feature of the model is the concept of minimum intervention: i.e., setting up feedback controllers only to correct variation deleterious to the task (Wolpert and Flanagan, 2010). For example, in a tennis serve, variation in the azimuth angle of the racket head should be corrected far less strongly than variation in the elevation angle, because the azimuthal variation has little effect on whether the ball will land in the court, whereas elevation variability can threaten the goal of landing the ball in the court. OFC is based on a linear-quadratic-Gaussian (LQG) design, which is used to describe uncertain linear systems disturbed by additive white Gaussian noise with imperfect state information (Todorov, 2005). However, a precise forward dynamics model is required. The control and sensory noise must be modeled as Gaussian statistics; however, real-world sensorimotor uncertainties are represented by non-Gaussian distributions (Orban and Wolpert, 2011). In the engineering field, robust control design has been used in various situations, because it does not require precise dynamic models for the control objects. It is necessary to represent the uncertainties of the dynamics model in a quantitatively expressible form, because the objective of robust control is to configure a control system to allow for such uncertainties. There are essentially two ways of representing the uncertainties: as unstructural or structural uncertainties. An unstructural uncertainty is represented as a perturbation of the transform function in the frequency domain. In contrast, a structural uncertainty is represented by an additive disturbance combined with the process and sensory noise, such as environmental dynamics, in the state-space model. H∞ control is a robust control technique that addresses the issue of worst-case controller design for linear plants subjected to unknown additive disturbances and plant uncertainties, including problems of disturbance attenuation and model matching and tracking (Djouadi and Zames, 2002). Furthermore, the role of game theory in the design of robust controllers, such as H∞ control, has also been recognized (Anderson and Moore, 1979; Bernhard, 1995), with the terminology “mini-max controller” adapted from statistical decision theory (Savage, 1955). Moreover, the brain might also be treated as an integrated robust control system in which components for sensing, computation, and decision are useful primarily to the extent that they affect action (Doyle and Csete, 2011).

Here, we applied a mini-max feedback controller (MMFC) to a sensorimotor control problem with environmental dynamics as a structural uncertainty (Figure 1B). MMFC operates as an extended model of OFC, by incorporating prior influence characterized by familiarity with novel dynamics. Such expansion of motor control and planning models has been recognized as a major factor in movement generalization (Yan et al., 2013). We performed numerical simulations and compared the performance of MMFC with that of OFC, as a reference, in different types of force fields with stable and unstable dynamics. In our simulations, mini-max feedback control showed better performance than OFC under conditions of dynamics, and could predict the impedance modulation in unstable dynamics to improve stability. These observations suggest that MMFC is a plausible model that predicts behaviors under structural uncertainty. Preliminary results of this study were presented in the proceedings of a conference (Ueyama and Miyashita, 2011).

Materials and Methods

In this paper, the solution to a robust control problem was obtained via a mini-max approach applied to dynamic game problems (Başar and Bernhard, 1995). We modeled the dynamics as structural uncertainties to apply the mini-max approach; the simulations used simple Euler integration with a 5 ms sampling time.

Mini-max Control Problem

The MMFC problem requires a control object to be represented as a generalized plant model. We provide the model with structural uncertainty, and the solution is obtained by minimizing energy consumption under conditions of maximal uncertainty.

Problem definition

The dynamics of a system are described by the following equation:

where xk, uk, and yk are the state, input, and output vectors, respectively, at time step k (k = 0, 1, …, N − 1), and the dynamics are described by the three matrices, A, B, and C. wk denotes a disturbance vector, and vk is a sensory noise vector, represented as zero-mean Gaussian white noise with unity covariance. The system can be rewritten as follows when a disturbance, such as an environmental perturbation or motor noise, affects the dynamics:

where ΔA, ΔB, and ΔC represent disturbances corresponding to the state, input, and output, respectively. A disturbance should be modeled as an uncertainty in the internal model. Thus, these disturbances are assumed to have the following form:

where Da, Db, Dc, Ea, Eb, and Ec are constant matrices, and Fak, Fbk, and Fck are time-varying matrices satisfying the following conditions: FTak Fak < I, FTbk Fbk < I, FTck Fck < I. The system can be transformed to the equivalent system as follows:

where,

Here, zk denotes the regulated output vector. Then, a system with structural uncertainty can be reduced to the following form, known as a generalized plant model (Zhou and Doyle, 1998):

where,

According to OFC, the cost function J(u) is given by,

where QN denotes a terminal state cost weight matrix. Instead, our proposed model adopts the cost function Jγ(u,w), given by the following equation:

where γ is a scalar parameter representing the level of disturbance attenuation. The objective of robust control is to determine the appropriate input for a worst-case disturbance. Thus, the robust control problem is related to the mini-max problem of minimizing the input u for a maximized disturbance w:

This cost function requires a task to be achieved with minimal energy consumption for the worst case of uncertainty as the maximized disturbance, in a manner analogous to the OFC problem: LQG design, which is described by a quadratic cost, and gives the solution as a combination of the feedback control law and a state estimator.

Solution

As in the LQG design, a solution of the MMFC problem can be written in a state feedback form:

where k and Lk are the estimated state and feedback gain, respectively. The estimated state and feedback gain are computed from two discrete Riccati differential equations of the following form:

where Mk and Σk denote the solutions of the Riccati equations obtained by the respective backward and forward time calculations. Here, we adopt the following assumptions to simplify the derivations:

These assumptions do not affect the generalizability, and they allow describing equations in simple forms, maintaining consistency with the OFC. Using these solutions, the feedback gain and estimated state are given by the following:

The estimated disturbance diverges to infinity if the level of disturbance attenuation γ is close to zero. Thus, the level of γ cannot be chosen freely and must satisfy the following constraints:

The strong concavity condition is given by

where

The constraints above can be translated into equivalent conditions on the spectral radius (i.e., the maximum of the absolute values of the eigenvalues) because the spectrum radius is equal to the norms of Mk + 1 DDT, Σk Qk, k + 1 Mk + 1, and Σk k. Thus, Equations (9) and (10) require the following conditions be satisfied: ρ(Mk + 1DDT) < γ2, ρ(Σk Qk) < γ2, and ρ(k + 1 Mk + 1) < γ2 or ρ(Σk k) < γ2, where ρ is the kernel of the spectral radius.

Application to a Sensorimotor System

We applied the MMFC approach to a sensorimotor system. The dynamics model is based on previous studies (Todorov, 2005; Izawa and Shadmehr, 2008; Izawa et al., 2008; Braun et al., 2009). The dynamics were simulated with uncertainties, represented by force fields: a velocity-dependent force field (VF) and a divergent force field (DF), representing stable and unstable environments, respectively (Franklin et al., 2003a,b; Osu et al., 2003). We also designed both optimal and mini-max feedback controllers for the problem and compared their performances.

Sensorimotor system

Dynamics model. We modeled a movement with two degrees of freedom, such as multi-joint flexion and extension of the shoulder and elbow joints, as cursor movements on a screen, described by shifting the position p(t) = [x(t), y(t)]T to designated targets p* = [x*, y*]T:

where m and b are the end-point mass and viscosity, respectively, and are set equal to m = 1.0 (kg) and b = 10 (Ns/m). The combined action of all muscles is represented by the force vector f(t) ∈ R2 acting on the hand. The motor command u(t) ∈ R2 is transformed into the force f(t) by adding control-dependent multiplicative noise and by applying a simplified first-order muscle-like low-pass filter of the following form:

with time constant, τ = 0.05 (s). The motor command u(t) is disturbed by signal-dependent multiplicative noise that exists in the neural system (Matthews, 1996), and plays an important role in motor planning (Harris and Wolpert, 1998). The signal-dependent noise (SDN) is given by the Gaussian white noise ε(t) ~ N(0, I) and the magnitude σu is set equal to 0.5.

Observation model. In our model, the state variables cannot be observed directly. The sensory output y(t) ∈ R8 is the position, velocity, force, and target position disturbed by sensory noise, and is given by:

where v(t) ∈ R8 and σy ∈ R8 × 8 are the Gaussian white noise v(t) ~ N(0, I) and the diagonal matrix defined by σy = diag([0.02c, 0.02c, 0.2c, 0.2c, c, c, 0, 0]), respectively. Here, c is the scaling parameter, equal to the SDNs: i.e., c = σu = 0.5, similar to a previous study (Todorov, 2005). The task is to move the hand from the starting position p(0) = [0, 0]T to the target position p*, which is located at a distance of 25 cm, and to stop at the terminal period between 600 and 700 ms, in accordance with experiments (Franklin et al., 2003a,b; Osu et al., 2003).

Environmental uncertainty

We assumed two different types of force field as uncertainty environments, VF and DF. The force fields exert a force Fext(t) ∈ R2 on the hand. The force generated by the VF is

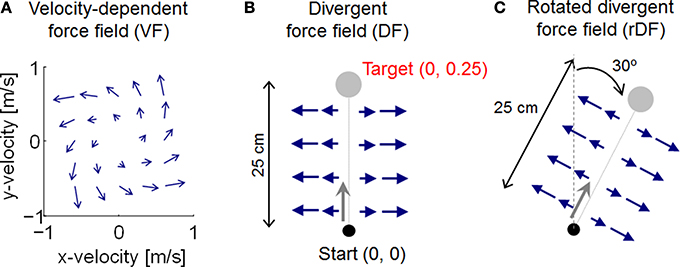

where α is a scaling parameter, set equal to 0.1 to generate effective perturbation for the trajectory. When reaching forward, the force is directed forward and to the left, as the velocity along the y-axis is increased (Figure 2A). DF produces a negative elastic force perpendicular to the target directions, with a value of zero along the y-axis: i.e., no force is applied when the path of the hand follows the y-axis, but the hand is pushed away whenever it deviates from the y-axis (Figure 2B). DF teaches subjects to move in a straight line, but to show no after-effects on the removal of the field. The task is achieved by increasing the stiffness of the arm, but only in the direction of maximum instability (Figure 1A). The force generated by DF is described by

where β is a scaling parameter, set equal to 100 to generate effective perturbation for the trajectory. Although end-point trajectories were almost straight without external dynamics, the initial movement direction varied slightly from trial to trial, due to motor output variability (Burdet et al., 2001). Thus, because DF produces an unstable interaction with the arm to amplify such variation by pushing the hand with a force proportional to the deviation from the y-axis, the initial trials in DF exhibited unstable behavior, diverging widely to the right or left of the y-axis. We also examined the additional DF case of a rotated divergent force field (rDF), which is necessary to reach a rotated position (Figure 2C). The exerted force is also rotated. If the target is realigned at an angle θ in the clockwise direction, the force is then given by

Figure 2. Illustration of force field dynamics. (A) Velocity-dependent force field (VF). (B) Divergent force field (DF). (C) Rotated divergent force field (rDF). The target and applied force are rotated 30° in the clockwise direction.

In this study, the rotational angle θ was set equal to 30°. With these force fields, the dynamic uncertainties can be expressed as follows:

In both cases, the environmental uncertainties do not depend on the motor command; however, the motor command is disturbed by the SDN. Thus, the uncertainty of the motor command is represented by ΔB = σu · B · ε(t).

Controller design

We carried out numerical simulations using both OFC and MMFC to compare their performances. In our simulations, the dynamics model was rewritten as a discrete-time system, using a state-space formulation:

where xk ∈ R8 is a state-space vector at time step k, defined by xk = [pTk, ΩTk, fTk, p*T]T. The matrices describing the system, A ∈ R8 ×8, B ∈ R8 × 2, and C ∈ R8 × 8, are expressed as follows:

where Δ is a single time step of the simulation, set equal to Δ = 0.005 s.

In these simulations, we assumed two types of condition: (i) with structural uncertainty and (ii) without structural uncertainty. Under the condition with structural uncertainty, the system matrix A of the state-space Equation (3) is not equal to the actual dynamics including the force field. In contrast, under the condition without structural uncertainty, the force field dynamics are completely represented in the internal model. Thus, the system matrix A in Equation (3) is replaced by A + ΔA.

Optimal feedback controller. An optimal feedback controller also generates motor commands, thus forming state feedback, as in Equation (6). The feedback gain is computed to minimize the following cost function:

where wp, wv, and wf are the cost weights of the end-point position, velocity, and force, with the assigned values, wp = 104, wv = 103, and wf = 102, respectively, to achieve the reaching task adequately without external dynamics, i.e., null force field (NF). In addition, the terminal cost is defined to evaluate the states between Ns = 0.6/Δ and N = 0.7/Δ steps. Thus, the cost function requires the expected state to be stabilized at close to the target in the terminal period (Ns < k < N). The feedback gain is determined by

where Sk + 1 is found by solving the Riccati equation

where Qk ∈ R8 × 8 is the task cost matrix, given by Qk = qTkqk, where

The cost weights are also used in the mini-max feedback controller design, and tuned to accomplish the tasks for all conditions in the MMFC. The state of the system is estimated from noisy observation using Kalman filtering and is expressed as follows:

where Kk ∈ R8× 8 is the Kalman gain: i.e., a function of the uncertainty of the estimated state and the measurement noise. We adapted a standard technique to calculate the gain, as follows:

where Pk|k − 1 ∈ R8×8 is the predicted accuracy of the state estimation and is given by

The Kalman gain is computed concurrently at each time step in the simulation, starting with the initial condition P0|0 = 10−3 × I.

Mini-max feedback controller. To apply the MMFC approach, uncertainty must be modeled as familiarity with itself. Thus, we represent the familiarity by the matrices Da ∈ R8 ×8, Db ∈ R8 × 8, Dc ∈ R8 × 8, and Dy ∈ R8 × 8, given by Da = κ ΔA, Db = λ σuB, Dc = 0, and Dy = λ σy, where κ and λ are the scaling parameters of familiarity. The parameter κ was set to a range of a closed interval [0, 1]. When the force field dynamics cannot be predicted—i.e., Da = 0 (κ = 0)–the structural uncertainty is not modeled. When the force field dynamics are modeled completely as the structural uncertainty, Da = ΔA (κ = 1). The controller is then designed to maximize the effect of the dynamics as the worst-case assumption. In addition, Db and Dy must be sufficiently large to exceed the maximum value of distribution, and hence the scaling parameter λ is set to λ = 5. This seems sufficient for the disturbances, because the SDN and sensory noise have a standard Gaussian white noise distribution.

Matrices representing the regulated outputs Ea ∈ R8 × 8, Eb ∈ R8 × 8, and Ec ∈ R8 × 8 were given to satisfy the assumption GT G = I and HT G = 0 by:

The terminal cost matrix QN has already been defined in the optimal feedback controller design, and the initial error cost Q0 ∈ R8 × 8 is defined as Q0 = P0|0. Finally, the disturbance attenuation level γ is set equal to 107 to satisfy Equations (9) and (10).

Results

We performed numerical simulations of point-mass reaching movement in different types of force fields–VF, DF, and rDF–using OFC and MMFC. The simulations were carried out 100 times for each case.

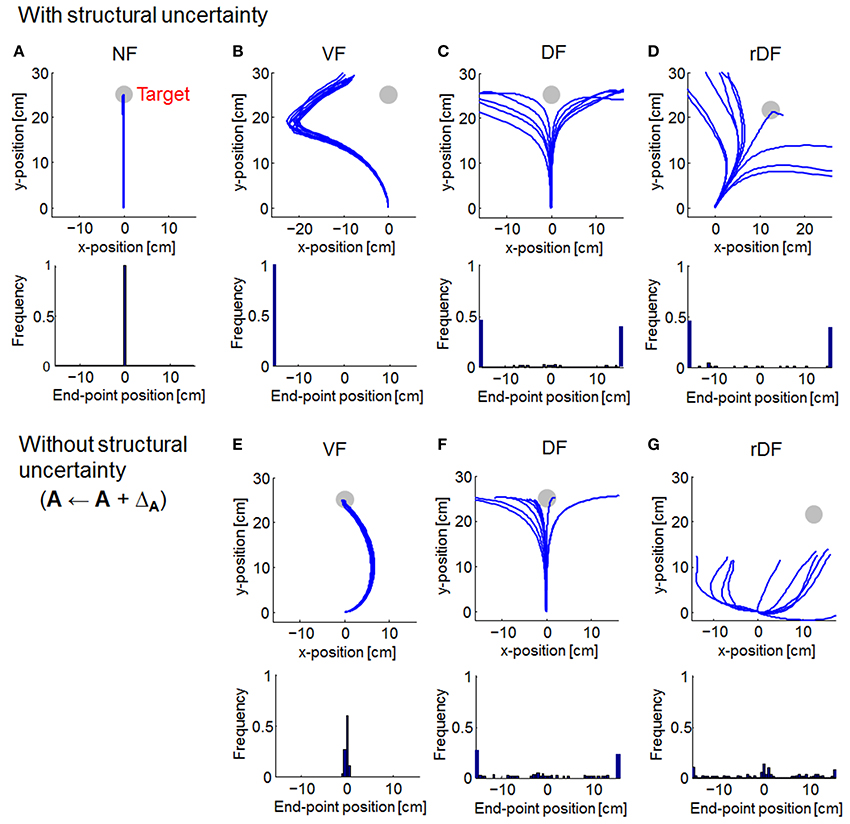

Comparison of Trajectories

We compared the trajectories of OFC and MMFC. Then, the end-point distributions were computed from the lateral distances of the target direction (based on curvature > 0.03 mm−1), following a previous report (Osu et al., 2003). The trajectories were almost straight lines for OFC in NF (Figure 3A). However, under conditions of a force field, reaching the target was difficult. In VF, the trajectories curved to the left (Figure 3B). In DF and rDF, the trajectories diverged to the left and right in accordance with the directions of the targets (Figures 3C,D). When the force field dynamics were modeled internally, i.e., A ← A + ΔA, the trajectories in VF came close to the targets with a curve; however, the trajectories of DF and rDF did not achieve their targets (Figures 3E–G). Under the rDF condition, in particular, some trajectories could not aim toward the target even immediately after the onset of movement. The behavior difference from DF was caused by cross talk in the coordinates. In DF, deviance on the x-axis was independent on the hand position on y-axis, because the diagonal components of the feedback gain were zero. In rDF, conversely, the lateral deviancy affected the vertical distance between the target hand positions through the feedback gain, and the task required more motor effort to reach the same distance to DF because each actuator acted on only the x- or y-axis.

Figure 3. Simulated trajectories of optimal feedback control (OFC). Solid lines indicate the simulated trajectories for 10 randomly selected simulations. Gray circles denote the targets. Histograms at the bottom of the trajectories show distributions of the end-point positions (based on curvature > 0.03 mm−1). (A) Null force field (NF) condition. (B–D) With structural uncertainty of force fields. (E–G) Without structural uncertainty of force fields.

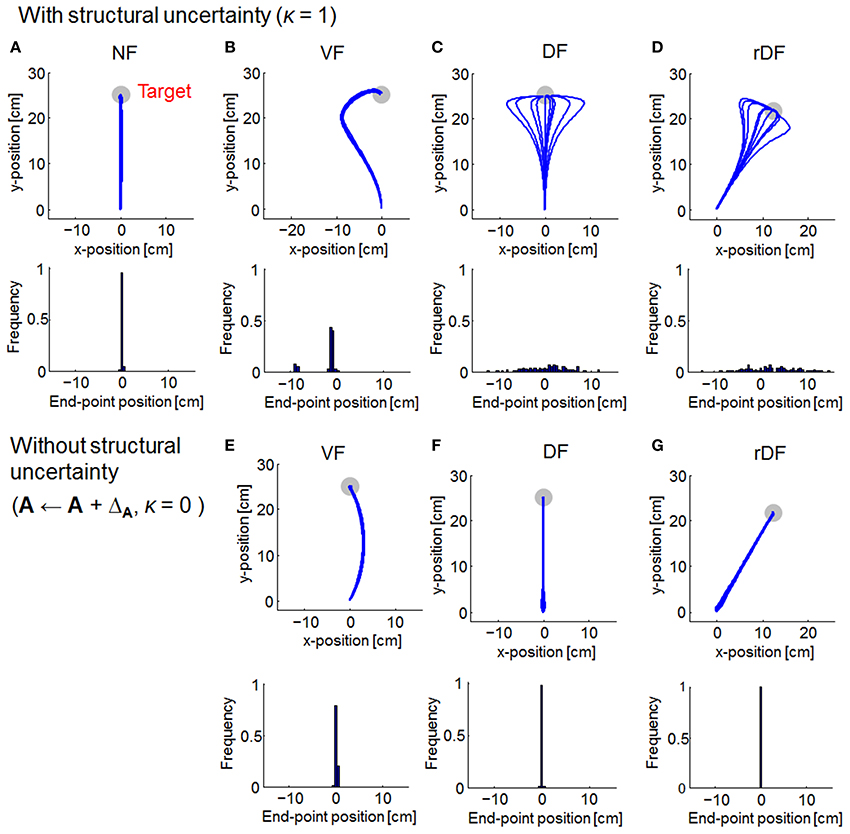

As with OFC, the trajectories of MMFC were almost straight lines in NF (Figure 4A). In VF, although the trajectories curved gradually after the onset of movement, they turned suddenly toward the target, even when the force field dynamics was not completely known (Figure 4B). In DF and rDF, even if the trajectories had diverged after the onset of movement, they finally converged to the target (Figures 4C,D). These trajectories were similar to those obtained from the results of initial trials, during adaptation to the same types of dynamics, in human experiments (Osu et al., 2003). However, in VF without structural uncertainty of force fields, i.e., A ← A + ΔA and κ = 0, the trajectories curved slightly to the right direction and achieved the target (Figure 4E). Subsequently, the trajectories were straight lines in DF and rDF (Figures 4F,G).

Figure 4. Simulated trajectories of MMFC for 10 randomly selected simulations. The format is the same as in Figure 3. In (A–D), the parameter of familiarity with the uncertainty κ is set equal to 1. In (E–G), the familiarity parameter κ is set equal to 0.

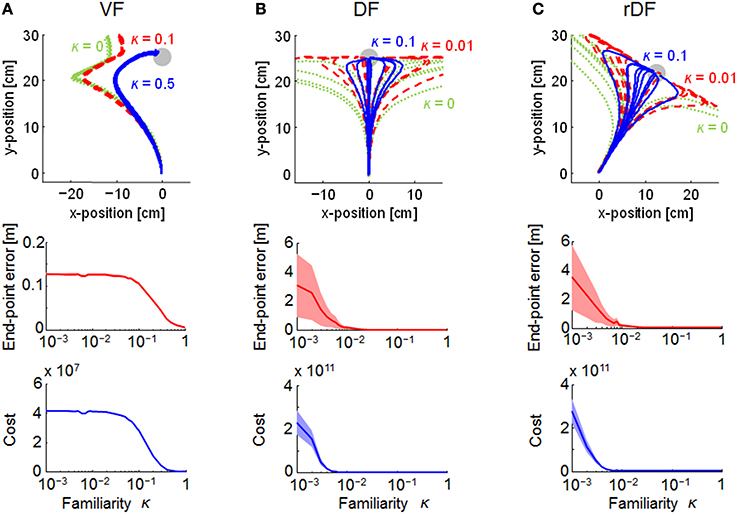

The familiarity parameter κ (0 ≤ κ ≤ 1) affects the performance of MMFC directly, because the structural uncertainty of the force fields was not reflected in the motor control when κ = 0. Thus, we evaluated the effect on the trajectories (Figure 5). When κ = 0, the trajectories could not reach the targets in all conditions, and those of DF and rDF diverged. With the increase in the parameter κ, the trajectories were close to the targets under all conditions. The variability of the trajectories as well as the end-point errors were decreased in DF and rDF. In addition, the quadratic costs, given by Equation (19), decreased to a slightly greater degree than those of the end-point errors, and the performances were saturated at around κ = 0.5 in VF, and κ = 0.01 in DF and rDF.

Figure 5. Effect of the range of structural uncertainty on the trajectories in MMFC. Green dotted, red dashed, and blue solid lines indicate lower, middle, and higher uncertainties, respectively, given by the familiarity parameter κ. The top row indicates the end-point trajectories. The middle and bottom rows are single logarithmic plots of the terminal end-point error (mean ± SD) and the mean quadratic costs and the 95% confidence intervals (CIs). The CIs were estimated using a bootstrapping procedure with re-sampling 10,000 times. (A–C) represent VF, DF, and rDF conditions, respectively.

Feedback Gain Geometries

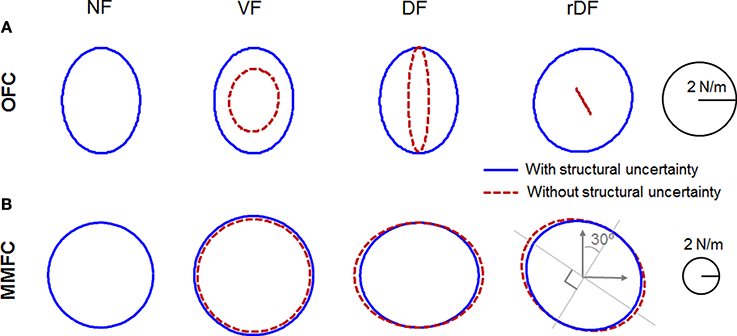

There are mathematical difficulties in incorporating the impedance generated by non-linear muscular properties with a feedback control law. However, several studies have provided evidence that sensorimotor control systems can and do regulate feedback gains for impedance control (Franklin et al., 2007; Krutky et al., 2010; Franklin and Wolpert, 2011). Although the impedance is not actually equal to the feedback gains computed by OFC or MMFC, the gains must contribute to the modulation of impedance. Thus, we computed sensory feedback gains, transferring sensory feedback errors to the motor command as products of the state feedback and filter gains. The sensory feedback gains for OFC and MMFC were then given by products of the state feedback and filter gains, as Lk · Kk and Lk · A(Σk + CT N−1C − γ−2 Qk)−1 CT N−1, respectively. We visualized the patterns of the positional gain at the midpoints of the movement time (350 ms) as ellipses, similar to the stiffness ellipses used previously (Burdet et al., 2001; Franklin et al., 2003a, 2007; Ueyama and Miyashita, 2014). The orientation, shape, and size of the ellipse are obtained by singular value decomposition of the positional gain matrix.

In NF, the gain of OFC was a vertically long ellipse (Figure 6A). In VF and DF of OFC, the gains with structural uncertainty were quite similar to the gain in NF. However, the gain in VF without structural uncertainty was rather small, and varied by ~4° in the clockwise direction; that of DF decreased in a lateral direction. In rDF, the gain with structural uncertainty was directed to the target at 30° in a clockwise direction. However, the gain without structural uncertainty was diminished and directed to −60° in a clockwise direction. As mentioned in Section Comparison of Trajectories, the lateral deviancy and target directed movement influenced each other through feedback gain. In particular, the y-axis movement was more dependent on the x-position than the y-position. Thus, the task required complicated cooperative action, and the gain geometry was squashed. However, the gain of mini-max feedback control in NF was a true circle, and larger than that of OFC (Figure 6B). The gains in VF also indicated true circles, even if they were larger than those of NF. In DF and rDF, the gains were tuned by the force field, according to the direction of instability, as in the experimental measurements of stiffness (Franklin et al., 2007). In DF, only the lateral axes of the gains were expanded, although the anteroposterior axes were the same as those of NF. In rDF, the gains were similar to the 30° rotations of those in DF. The gain without structural uncertainty of VF was a little smaller than that with uncertainty dynamics. In contrast, the gains in DF and rDF also increased toward the unstable directions, as in the conditions with structural uncertainty.

Figure 6. Positional sensory feedback gain geometries at the midpoint of the movements in Figures 3, 4. Red dotted and blue solid lines indicate the condition with uncertainty and without uncertainty, respectively. Each row indicates NF, VF, DF, and rDF conditions from the left. (A) Ellipses for OFC. (B) Ellipses for MMFC.

Discussion

In this study, MMFC is presented as an extension of OFC for use as a robust control technique. This method uses time-varying feedback control for estimated states, including worst-case disturbances expected by familiarity with novel dynamics. The uncertainties of dynamics and noise are defined as disturbances in accordance with a robust control theory. In previous research, the uncertainties were assumed to have a Gaussian distribution (Bays and Wolpert, 2007; Izawa and Shadmehr, 2008; Crevecoeur et al., 2010); however, it seems unlikely that real-world uncertainties would do so. Accordingly, we modeled the uncertainties of environmental dynamics as structural uncertainties, using the robust control design. The computational method seems adequate, because the central nervous system can minimize the uncertainty of sensory input in two ways: by combining multiple sensory signals with prior knowledge to refine sensory estimates, and by predictive filtering of sensory input to remove less informative components of the signal (Bays and Wolpert, 2007). The simulation results indicated greater performance for environmental dynamics of force fields in terms of robustness and stability, and also reproduced behavioral characteristics. Thus, we consider that MMFC could predict motor behavior in the presence of structural uncertainty, and explain the early process of motor adaptation because it was able to predict a behavior, and achieve the task without environmental information. Furthermore, the feedback gain was increased in unstable directions like the stiffness modulation of a multi-joint arm in arm-reaching movements with unstable dynamics. This suggests that the brain modulates optimal stiffness to obtain efficient robustness, overwhelming the instabilities of the environmental dynamics. Moreover, a recent study suggested that reflex gains (feedback gains) are modulated by the accumulated evidence in support of an evolving decision before the onset of movement (Selen et al., 2012). This seems to support our theory, in that the feedback gains are determined according to the uncertainty of the movement in the motor planning phase before the onset of movement.

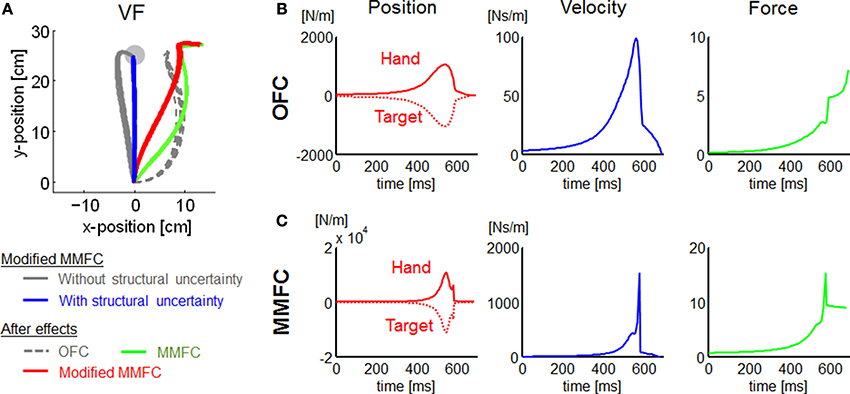

The trajectories in VF were somewhat different between our simulations and experimental measurements (Osu et al., 2003). Our simulations of the OFC and MMFC models could not predict the straight trajectory observed in the human study. The result may give the false impression that a trajectory control strategy to reduce motor effort requires a distinct deviation from the nominal straight line. However, the theoretical framework such as OFC actually may not be incompatible with the trajectory control by a cost function that trades off the discordant requirements of target accuracy, motor effort, and kinematic invariance in an acceleration-dependent force field (Mistry et al., 2013). This approach could be considered a MMFC representing the deviation from the straight line with a disturbance. During the period of movement (k ≤ Ns), we defined the regulated output matrix Ea as an identity matrix to generalize the MMFC model for motor adaptation problems. However, it was assumed that Ea transfers the state vector into a disturbance, which is determined by the kinematic constraints, bootstrapping the process of exploration and learning. The kinematic constraints appear reasonable to improve the task, particularly in the early phase of motor adaptation. Thus, we carried out extra VF simulations to examine this assumption. Then we modified the MMFC to replace Ea (k ≤ Ns) in Equation (23) with 100 · diag([1, 0, 1, 0, 0, 0, 0, 0]) as a kinematic constraint penalizing lateral deviances of the position and velocity (Mistry et al., 2013). Unsurprisingly, the modified MMFC resulted in trajectories that were close to linear (Figure 7A). Furthermore, the modified MMFC showed closer trajectories to the linear behavior than other models. These results suggest that kinematic constraints may be applied to determine an MMFC with environmental dynamics to ensure kinematic invariance.

Figure 7. Additional simulations for VF conditions, and state feedback gain profiles for NF conditions. (A) Simulated trajectories of the modified MMFC for VF conditions, and the after-effects of each model. (B) Gains for OFC. (C) Gains for MMFC. In (B,C), each row indicates position, velocity, and force gains from the left. With the position gains, the solid and dotted lines indicate the hand and target position gains, respectively, which are opposites in sign. Note that γ = 108 to satisfy Equations (9) and (10).

It has been suggested that a cost function should be modulated to increase the ratio of the energy cost, according to the uncertainty of the internal model (Crevecoeur et al., 2010), and standard forms for quantifying cost may not be sufficient to accurately examine whether human motor behavior abides by optimality principles (Berniker et al., 2013). In the model proposed here, the terms expressing the familiarity with the uncertainty are related to the cost values. That is, the cost function is indirectly modulated via the uncertainty of the internal model, which itself may also be reflected in the nervous system's use of impedance control to change the dynamic properties of the body (Burdet et al., 2001; Takahashi et al., 2001; Lametti et al., 2007; Mitrovic et al., 2010). These studies support our proposed model.

Limitations of this Model

In our simulation results, the changes in the gain in the direction of instability for the DF and rDF of MMFC model are fairly small compared to the magnitude of the experimental measurement (Franklin et al., 2007). This non-conformity may be caused by differences between actual and modeled muscle dynamics. In this study, arm dynamics was simplified as a linear point-mass model. However, the biological arm movement is actually induced by many muscles with non-linear dynamics. The muscle action forms limb stiffness geometry depending on task requirements. Our model did not reflect actual muscle dynamics. Especially, passive muscle mechanisms were not considered in the model. Even when the muscle is relaxed (the activation level is decreased), the active force disappears and the resting length is restored by the passive force (Huxley and Hanson, 1954). Then, limb stiffness is retained during maintained posture without muscle contraction, and the magnitude is not small, compared to muscle contraction effects on that (Osu and Gomi, 1999; Shin et al., 2009). Because the passive limb stiffness acts to inhibit the intended movement, agonist muscles are required to generate active force overwhelming the passive force retaining the posture to initiate movement. Thus, actual limb stiffness may be much higher than that in our simulations.

However, the feedback gain magnitude was small compared to the proprioceptive and visual feedback responses measured in human subjects (Bennett, 1994; Dimitriou et al., 2013). The difference between our simulation and the proprioceptive feedback response (the reflex response) may be attributable to the rigidity of the muscle model, analogous to the magnitude of stiffness modulation. The visual feedback gains measured in humans were purposed not to fall into the sensory feedback gain but the state feedback gain (Dimitriou et al., 2013). Moreover, the response was computed from a time window of 180–230 ms after perturbation onset, and it was not considered how the state estimation was updated for feedback latency. In fact, although the magnitude did depend directly on the cost weights and our model did not separate the visual feedback response from other feedback, our simulations for OFC and MMFC models showed sufficiently large feedback gains, exceed the feedback response reported in humans (Figures 7B,C). It has been suggested that the feedback gains show different time profiles. The visual feedback gain showed peaking at the middle of the movement and dropping rapidly at the movement end (Liu and Todorov, 2007; Dimitriou et al., 2013). In contrast, intrinsic feedback gain, measured as stiffness, showed a contrary profile, peaking at the movement onset and end, and dropping in the middle of the movement (Gomi and Kawato, 1997; Ueyama and Miyashita, 2014).

Other Models

Although an adaptation algorithm for uncertain dynamics has been proposed (Franklin et al., 2008), it is based on a feedback-error-learning strategy and requires a desired trajectory (Kawato, 1996; Ueyama and Miyashita, 2014). Thus, the adaptation process and motor planning of the desired trajectory must be considered separately and handled as different problems. In contrast, a MMFC can deal with both issues in the same context, as does OFC.

Friston raised the question of differences between internal models in motor control and perceptual inference in OFC, and suggested that active inference, a corollary of the free-energy principle, reduces to simply suppressing proprioceptive prediction errors (Friston, 2011). Moreover, it has been reported that active inference could acquire complex and adaptive behaviors using a free-energy formulation of perception (Friston et al., 2009), and generate movement trajectories shown to be remarkably robust to perturbations on a limb (Friston et al., 2010). In active inference, the cost function is absorbed into prior beliefs about state transitions and terminal states. Thus, active inference seems attractive as a means of recognizing biological optimization mechanisms, because OFC and MMFC have many free parameters (e.g., cost function and terminal time) that intricately affect the behavior. However, the behavior of active inference seems to be influenced by the estimated probability (i.e., prior assumption of noise and uncertainty) as a substitute for the definition of cost function. We consider that active inference and OFC are not mutually exclusive, and that the free-energy principle is just a “principle” that could unify motor control theories, based on the optimization of a cost. Although the free-energy principle has not been derived from empirical evidence, it can predict neurobiological implementation from the perspective of functional anatomy (Friston et al., 2012). For motor control studies, therefore, the free-energy principle seems to be a useful tool to connect the computational level to the hardware level.

Recently, behavioral studies have focused on understanding how uncertainty, or risk, is represented in motor control tasks, as well as in economic behaviors (Trommershäuser et al., 2008). Violations of risk neutrality have been reported various motor control tasks. For example, subjects exhibited risk-seeking behavior in a pointing task, because they systematically underestimated small probabilities and overestimated large probabilities (Wu et al., 2009). In addition, subjects exhibited risk-average behavior in a motor task that required them to control a Brownian particle with different levels of noise, which is consistent with the notion of a trade-off between the mean and the variance of movement cost (Nagengast et al., 2010). Moreover, it has also been suggested that the sensitivity of the risk is an important factor in motor tasks with speed/accuracy trade-offs (Nagengast et al., 2011). In contrast, when the uncertainty is assumed to have a Gaussian distribution and an exponential-quadratic error criterion, such as the expected unity function describing risk sensitivity, is used as the cost function, the MMFC problem is identified with the risk-sensitive optimal control problem of optimizing the exponential-quadratic error criterion (Speyer et al., 1992). Furthermore, an equivalence has already been established between a deterministic robust control that achieves a prescribed bound on the H∞ norm of a given closed-loop transfer function and a stochastic optimal control problem (Glover and Doyle, 1988). It has also been shown that the robust control directly connects to the risk-sensitive control via results on maximizing an entropy integral (i.e., the terminal time N → ∞). In addition, when the risk sensitivity parameter is equal to zero (in a risk-neutral case), the risk-sensitive control has been identified as an OFC problem. Although, in contrast to previous studies, the MMFC in this paper is derived as a time-varying controller, it is the same as OFC at two conditions: N → ∞ and γ → ∞. Thus, a risk-sensitive OFC seems to be a specific case of MMFC with Gaussian uncertainty. However, when there is uncertainty in the equations of motion themselves (e.g., the dynamics of a power tool such as a drill or a screwdriver are different from those of a can, resulting in strikingly different relationships between states and motor commands), structural uncertainty cannot be represented by a Gaussian distribution, and these different structures must be identified and learned (Orban and Wolpert, 2011). The MMFC proposed in this paper can handle the structural uncertainty. However, exploratory risk-taking is directly related to uncertainty in decision-making modulation (Doya, 2008), and the decision making itself may directly relate to motor control systems (Selen et al., 2012). However, the uncertainty problem may not be completely equivalent to the risk-taking problem, because the problems are distinguishable and could be identified as two independent problems (Bach et al., 2011).

Learning Process for Motor Adaptation

Feedback, adaptation, learning, and evolution have been identified as instances of wide sense adaptation, where sensory information is integrated and employed to change the control signals in various techniques and timescales (Karniel, 2011). Adaptive control is the change in the parameters of the control systems generated after the observation of previous control and sensory signals, and learning control is a structural change in the control system to generate a new type of behavior. In human studies, when we perform new or uncertain motor tasks, performance has been found to vary in accordance with the learning process (Shadmehr et al., 2010). Smith et al. reported that adaptation exhibited multiple timescales, driven by fast and slow processes (Smith et al., 2006). They suggested that the fast process, which decays quickly, is strongly affected by errors, but does not produce motor memory, whereas the slow process, which shows little decay, is weakly affected by errors but produces motor memory. On the other side, there are different mechanisms for adapting to stable and unstable dynamics (Osu et al., 2003). It has been proposed that adaptation learning is achieved by a combination of impedance control and an inverse dynamics model. In the early phase of learning, the impedance control also contributes to the formation of the inverse dynamics model, and helps to generate the necessary stability (Franklin et al., 2003b). Previous studies have shown that the function of the fast learning process is to increase the robustness of motor control systems, thereby improving their stability, and the internal model is obtained from multiple trials by impedance control during the slow learning process. We consider that the fast process is provided by instances of feedback and adaptation, whereas the slow process is achieved by adaptation and learning concepts. Thus, we propose MMFC as a robust control to increase the familiarity of both the uncertainty and the impedance in the adaptation of the fast process to improve the stability and reduce the error. The internal model, if it could improve the stability while achieving the task, would learn the actual dynamics across multiple trials, thereby decreasing the uncertainty in the learning of the slow process. Thus, it was recently proposed that complex behaviors in unstable dynamics cannot be explained in terms of a global optimization criterion, but rather require the ability to switch between different sub-optimal mechanisms (Zenzeri et al., 2014). We have assumed that the difference between the adaptation and learning mechanisms of stable and unstable dynamics requires that the internal model be represented in different forms, depending on the behavioral policies, off-policy and on-policy algorithms such as Q-learning and SARSA, respectively (Sutton, 1992). For example, unstable dynamics may require a deterministic behavior with an off-policy algorithm, because the cost (or reward) is assumed to be optimized through multiple trials fixing the policy to achieve the motor task in the unstable dynamics. That is, the estimated costs in any trials are required to converge to a value, similar to the idea of the worst-case design in the MMFC. In contrast, stable dynamics are assumed to require stochastic behavior with the on-policy algorithm, because it seems the best way to access the dynamics according to estimations by each trial. In addition, the off-policy algorithm has been recognized as an alternate strategy named “good-enough” control, in which the organism uses trial-and-error learning to acquire a repertoire of sensorimotor behaviors that are known to be useful, but not necessarily optimal (Loeb, 2012).

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

A part of this work was supported by JSPS KAKENHI Grant Number 26702023.

References

Anderson, B. D. O., and Moore, J. B. (1979). Optimal Filtering. Englewood Cliffs, NJ: Prentice-Hall.

Bach, D. R., Hulme, O., Penny, W. D., and Dolan, R. J. (2011). The known unknowns: neural representation of second-order uncertainty, and ambiguity. J. Neurosci. 31, 4811–4820. doi: 10.1523/JNEUROSCI.1452-10.2011

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Başar, T., and Bernhard, P. (1995). H∞-Optimal Control and Related Minimax Design Problems: A Dynamic Game Approach. New York, NY: Birkhauser, LLC.

Bays, P. M., and Wolpert, D. M. (2007). Computational principles of sensorimotor control that minimize uncertainty and variability. J. Physiol. 578, 387–396. doi: 10.1113/jphysiol.2006.120121

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Bennett, D. J. (1994). Stretch reflex responses in the human elbow joint during a voluntary movement. J. Physiol. 474, 339–351.

Bernhard, P. (1995). A discrete-time min-max certainty equivalence principle. Syst. Control Lett. 24, 229–234. doi: 10.1016/0167-6911(94)00027-S

Berniker, M., O'Brien, M. K., Kording, K. P., and Ahmed, A. A. (2013). An examination of the generalizability of motor costs. PLoS ONE 8:e53759. doi: 10.1371/journal.pone.0053759

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Braun, D. A., Aertsen, A., Wolpert, D. M., and Mehring, C. (2009). Learning optimal adaptation strategies in unpredictable motor tasks. J. Neurosci. 29, 6472–6478. doi: 10.1523/JNEUROSCI.3075-08.2009

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Burdet, E., Osu, R., Franklin, D. W., Milner, T. E., and Kawato, M. (2001). The central nervous system stabilizes unstable dynamics by learning optimal impedance. Nature 414, 446–449. doi: 10.1038/35106566

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Crevecoeur, F., Mcintyre, J., Thonnard, J. L., and Lefevre, P. (2010). Movement stability under uncertain internal models of dynamics. J. Neurophysiol. 104, 1301–1313. doi: 10.1152/jn.00315.2010

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Dimitriou, M., Wolpert, D. M., and Franklin, D. W. (2013). The temporal evolution of feedback gains rapidly update to task demands. J. Neurosci. 33, 10898–10909. doi: 10.1523/JNEUROSCI.5669-12.2013

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Djouadi, S., and Zames, G. (2002). On optimal robust disturbance attenuation. Syst. Control Lett. 46, 343–351. doi: 10.1016/S0167-6911(02)00148-2

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Doya, K. (2008). Modulators of decision making. Nat. Neurosci. 11, 410–416. doi: 10.1038/nn2077

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Doyle, J. C., and Csete, M. (2011). Architecture, constraints, and behavior. Proc. Natl. Acad. Sci. U.S.A. 108, 15624–15630. doi: 10.1073/pnas.1103557108

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Franklin, D. W., Burdet, E., Osu, R., Kawato, M., and Milner, T. E. (2003a). Functional significance of stiffness in adaptation of multijoint arm movements to stable and unstable dynamics. Exp. Brain Res. 151, 145–157. doi: 10.1007/s00221-003-1443-3

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Franklin, D. W., Burdet, E., Peng Tee, K., Osu, R., Chew, C. M., Milner, T. E., et al. (2008). CNS learns stable, accurate, and efficient movements using a simple algorithm. J. Neurosci. 28, 11165–11173. doi: 10.1523/JNEUROSCI.3099-08.2008

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Franklin, D. W., Liaw, G., Milner, T. E., Osu, R., Burdet, E., and Kawato, M. (2007). Endpoint stiffness of the arm is directionally tuned to instability in the environment. J. Neurosci. 27, 7705–7716. doi: 10.1523/JNEUROSCI.0968-07.2007

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Franklin, D. W., Osu, R., Burdet, E., Kawato, M., and Milner, T. E. (2003b). Adaptation to stable and unstable dynamics achieved by combined impedance control and inverse dynamics model. J. Neurophysiol. 90, 3270–3282. doi: 10.1152/jn.01112.2002

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Franklin, D. W., and Wolpert, D. M. (2011). Computational mechanisms of sensorimotor control. Neuron 72, 425–442. doi: 10.1016/j.neuron.2011.10.006

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Friston, K. (2011). What is optimal about motor control? Neuron 72, 488–498. doi: 10.1016/j.neuron.2011.10.018

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Friston, K., Adams, R. A., Perrinet, L., and Breakspear, M. (2012). Perceptions as hypotheses: saccades as experiments. Front. Psychol. 3:151. doi: 10.3389/fpsyg.2012.00151

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Friston, K. J., Daunizeau, J., and Kiebel, S. J. (2009). Reinforcement learning or active inference? PLoS ONE 4:e6421. doi: 10.1371/journal.pone.0006421

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Friston, K. J., Daunizeau, J., Kilner, J., and Kiebel, S. J. (2010). Action and behavior: a free-energy formulation. Biol. Cybern. 102, 227–260. doi: 10.1007/s00422-010-0364-z

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Glover, K., and Doyle, J. C. (1988). State-space formulas for all stabilizing controllers that satisfy an H infinity-norm bound and relations to risk sensitivity. Syst. Control Lett. 11, 167–172. doi: 10.1016/0167-6911(88)90055-2

Gomi, H., and Kawato, M. (1997). Human arm stiffness and equilibrium-point trajectory during multi-joint movement. Biol. Cybern. 76, 163–171. doi: 10.1007/s004220050329

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Harris, C. M., and Wolpert, D. M. (1998). Signal-dependent noise determines motor planning. Nature 394, 780–784. doi: 10.1038/29528

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Huxley, H., and Hanson, J. (1954). Changes in the cross-striations of muscle during contraction and stretch and their structural interpretation. Nature 173, 973–976. doi: 10.1038/173973a0

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Izawa, J., Rane, T., Donchin, O., and Shadmehr, R. (2008). Motor adaptation as a process of reoptimization. J. Neurosci. 28, 2883–2891. doi: 10.1523/JNEUROSCI.5359-07.2008

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Izawa, J., and Shadmehr, R. (2008). On-Line processing of uncertain information in visuomotor control. J. Neurosci. 28, 11360–11368. doi: 10.1523/JNEUROSCI.3063-08.2008

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Karniel, A. (2011). Open questions in computational motor control. J. Integr. Neurosci. 10, 385–411. doi: 10.1142/S0219635211002749

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Kawato, M. (1996). “Trajectory formation in arm movements: minimization principles and procedures,” in Advances in motor learning and control, ed. H. Zelaznik (Champaign, IL: Human Kinetics), 225–259.

Krutky, M. A., Ravichandran, V. J., Trumbower, R. D., and Perreault, E. J. (2010). Interactions between limb and environmental mechanics influence stretch reflex sensitivity in the human arm. J. Neurophysiol. 103, 429–440. doi: 10.1152/jn.00679.2009

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Lametti, D. R., Houle, G., and Ostry, D. J. (2007). Control of movement variability and the regulation of limb impedance. J. Neurophysiol. 98, 3516–3524. doi: 10.1152/jn.00970.2007

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Liu, D., and Todorov, E. (2007). Evidence for the flexible sensorimotor strategies predicted by optimal feedback control. J. Neurosci. 27, 9354–9368. doi: 10.1523/JNEUROSCI.1110-06.2007

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Lockhart, D. B., and Ting, L. H. (2007). Optimal sensorimotor transformations for balance. Nat. Neurosci. 10, 1329–1336. doi: 10.1038/nn1986

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Loeb, G. E. (2012). Optimal isn't good enough. Biol. Cybern. 106, 757–765. Doi: 10.1007/s00422-012-0514-6

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Matthews, P. (1996). Relationship of firing intervals of human motor units to the trajectory of post-spike after-hyperpolarization and synaptic noise. J. Physiol. 492, 597–628.

Mistry, M., Theodorou, E., Schaal, S., and Kawato, M. (2013). Optimal control of reaching includes kinematic constraints. J. Neurophysiol. 110, 1–11. doi: 10.1152/jn.00794.2011

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Mitrovic, D., Klanke, S., Osu, R., Kawato, M., and Vijayakumar, S. (2010). A computational model of limb impedance control based on principles of internal model uncertainty. PLoS ONE 5:e13601. doi: 10.1371/journal.pone.0013601

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Nagengast, A. J., Braun, D. A., and Wolpert, D. M. (2009). Optimal control predicts human performance on objects with internal degrees of freedom. PLoS Comput. Biol. 5:e1000419. doi: 10.1371/journal.pcbi.1000419

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Nagengast, A. J., Braun, D. A., and Wolpert, D. M. (2010). Risk-sensitive optimal feedback control accounts for sensorimotor behavior under uncertainty. PLoS Comput. Biol. 6:e1000857. doi: 10.1371/journal.pcbi.1000857

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Nagengast, A. J., Braun, D. A., and Wolpert, D. M. (2011). Risk sensitivity in a motor task with speed-accuracy trade-off. J. Neurophysiol. 105, 2668–2674. doi: 10.1152/jn.00804.2010

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Orban, G., and Wolpert, D. M. (2011). Representations of uncertainty in sensorimotor control. Curr. Opin. Neurobiol. 21, 629–635. doi: 10.1016/j.conb.2011.05.026

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Osu, R., Burdet, E., Franklin, D. W., Milner, T. E., and Kawato, M. (2003). Different mechanisms involved in adaptation to stable and unstable dynamics. J. Neurophysiol. 90, 3255–3269. doi: 10.1152/jn.00073.2003

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Osu, R., and Gomi, H. (1999). Multijoint muscle regulation mechanisms examined by measured human arm stiffness and EMG signals. J. Neurophysiol. 81, 1458–1468.

Pruszynski, J. A., Kurtzer, I., Nashed, J. Y., Omrani, M., Brouwer, B., and Scott, S. H. (2011). Primary motor cortex underlies multi-joint integration for fast feedback control. Nature 478, 387–390. doi: 10.1038/nature10436

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Savage, L. (1955). The foundation of statistics. Bull. Am. Math. Soc. 61, 236–239. doi: 10.1090/S0002-9904-1955-09900-2

Selen, L. P. J., Shadlen, M. N., and Wolpert, D. M. (2012). Deliberation in the motor system: reflex gains track evolving evidence leading to a decision. J. Neurosci. 32, 2276–2286. doi: 10.1523/JNEUROSCI.5273-11.2012

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Shadmehr, R., and Mussa-Ivaldi, F. A. (1994). Adaptive representation of dynamics during learning of a motor task. J. Neurosci. 14, 3208–3224.

Shadmehr, R., Smith, M. A., and Krakauer, J. W. (2010). Error correction, sensory prediction, and adaptation in motor control. Annu. Rev. Neurosci. 33, 89–108. doi: 10.1146/annurev-neuro-060909-153135

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Shin, D., Kim, J., and Koike, Y. (2009). A myokinetic arm model for estimating joint torque and stiffness from EMG signals during maintained posture. J. Neurophysiol. 101, 387–401. doi: 10.1152/jn.00584.2007

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Smith, M. A., Ghazizadeh, A., and Shadmehr, R. (2006). Interacting adaptive processes with different timescales underlie short-term motor learning. PLoS Biol. 4:e179. doi: 10.1371/journal.pbio.0040179

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Speyer, J. L., Fan, C. H., and Banavar, R. N. (1992). “Optimal stochastic estimation with exponential cost criteria,” in Decision and Control, 1992, Proceedings of the 31st IEEE Conference on (Tucson, AZ: IEEE).

Takahashi, C., Scheidt, R., and Reinkensmeyer, D. (2001). Impedance control and internal model formation when reaching in a randomly varying dynamical environment. J. Neurophysiol. 86, 1047–1051.

Todorov, E. (2005). Stochastic optimal control and estimation methods adapted to the noise characteristics of the sensorimotor system. Neural Comput. 17, 1084–1108. doi: 10.1162/0899766053491887

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Todorov, E., and Jordan, M. I. (2002). Optimal feedback control as a theory of motor coordination. Nat. Neurosci. 5, 1226–1235. doi: 10.1038/nn963

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Trommershäuser, J., Maloney, L. T., and Landy, M. S. (2008). Decision making, movement planning and statistical decision theory. Trends Cogn. Sci. 12, 291–297. doi: 10.1016/j.tics.2008.04.010

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Ueyama, Y., and Miyashita, E. (2011). “Robust control for an adaptation mechanism of uncertain dynamics in reaching movements,” in 21st Annual Conference of the Japanese Neural Network Society (Onna-son).

Ueyama, Y., and Miyashita, E. (2013). Signal-dependent noise induces muscle co-contraction to achieve required movement accuracy: a simulation study with an optimal control. Curr. Bioinformatics 8, 16–24. doi: 10.2174/157489313804871632

Ueyama, Y., and Miyashita, E. (2014). Optimal feedback control for predicting dynamic stiffness during arm movement. IEEE Trans. Ind. Electron. 61, 1044–1052. doi: 10.1109/TIE.2013.2273473

Wolpert, D. M., and Flanagan, J. R. (2010). Motor learning. Curr. Biol. 20, R467–R472. doi: 10.1016/j.cub.2010.04.035

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Wu, S. W., Delgado, M. R., and Maloney, L. T. (2009). Economic decision-making compared with an equivalent motor task. Proc. Natl. Acad. Sci. U.S.A. 106, 6088–6093. doi: 10.1073/pnas.0900102106

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Yan, X., Wang, Q. N., Lu, Z. C., Stevenson, I. H., Kording, K., and Wei, K. L. (2013). Generalization of unconstrained reaching with hand-weight changes. J. Neurophysiol. 109, 137–146. doi: 10.1152/jn.00498.2012

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Zenzeri, J., De Santis, D., and Morasso, P. (2014). Strategy switching in the stabilization of unstable dynamics. PLoS ONE 9:e99087. doi: 10.1371/journal.pone.0099087

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Keywords: reaching, motor control, H∞ control, robust control, optimal feedback control, feedback gain, stiffness tuning, force field

Citation: Ueyama Y (2014) Mini-max feedback control as a computational theory of sensorimotor control in the presence of structural uncertainty. Front. Comput. Neurosci. 8:119. doi: 10.3389/fncom.2014.00119

Received: 09 July 2014; Accepted: 05 September 2014;

Published online: 24 September 2014.

Edited by:

Arthur Prochazka, University of Alberta, CanadaReviewed by:

David W. Franklin, University of Cambridge, UKJ. Andrew Pruszynski, Umea University, Sweden

Copyright © 2014 Ueyama. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yuki Ueyama, Department of Rehabilitation Engineering, Research Institute of National Rehabilitation Center for Persons with Disabilities, 4-1 Namiki, Tokorozawa, Saitama 359-8555, Japan e-mail:dWV5YW1hLXl1a2lAcmVoYWIuZ28uanA=

Yuki Ueyama

Yuki Ueyama