
ORIGINAL RESEARCH article

Front. Med., 13 March 2025

Sec. Precision Medicine

Volume 12 - 2025 | https://doi.org/10.3389/fmed.2025.1529335

This article is part of the Research Topic Large Language Models for Medical Applications.

Hong-Qi Zhang1, Muhammad Arif2, Maha A. Thafar3, Somayah Albaradei4, Peiling Cai5, Yang Zhang6*, Hua Tang7,8*, Hao Lin1*

Introduction: Pathological myopia (PM) is a serious visual impairment that may lead to irreversible visual damage or even blindness. Timely diagnosis and effective management of PM are of great significance. Given the increasing number of myopia cases worldwide, there is an urgent need to develop an automated, accurate, and highly interpretable PM diagnostic technology.

Methods: We proposed PMPred-AE, a computational model based on EfficientNetV2-L and optimized with an attention mechanism. In addition, gradient-weighted class activation mapping (Grad-CAM) was used to provide an intuitive visual interpretation of the model's decision-making process.

Results: PMPred-AE achieved excellent performance in automatically detecting PM, with accuracies of 98.50, 98.25, and 97.25% on the training, validation, and test datasets, respectively. In addition, PMPred-AE focuses on specific regions of PM images when making detection decisions.

Discussion: The developed PMPred-AE model reliably provides accurate PM detection, and Grad-CAM offers an intuitive visual interpretation of its decision-making process. This approach gives healthcare professionals an effective tool for understanding how the AI model reaches its decisions.

Pathological myopia (PM) is a serious visual disease that can lead to irreversible visual damage or even blindness (1–3). In recent years, PM has become one of the main causes of visual impairment and permanent blindness worldwide, especially in Asian countries. According to Holden et al. (4), nearly half of the global population is projected to be affected by myopia by 2050, with approximately 10% having high myopia, which is also expected to become a leading cause of permanent blindness. In addition, myopia-related retinopathy and complications may further increase the risk of visual damage (5–7). Therefore, timely diagnosis and early detection of PM are crucial, and developing an automated, accurate, and non-invasive PM diagnosis method is an urgent task.

With the development of artificial intelligence (AI) and the accumulation of myopia data, a variety of computational methods have been developed (8–10). For example, Liu et al. (14) introduced a method using texture features and a support vector machine (SVM) (11–13) to automatically detect PM. This method processed retinal fundus images by extracting a region of interest (ROI) and detecting the optic nerve head. Texture-based metrics were then generated, categorized, and grouped into zones for context-based feature generation. Finally, an SVM was used to detect PM based on these features, achieving an accuracy (ACC) of 87.5% (14). Zhang et al. (15) proposed an automatic PM detection method based on max-relevance and min-redundancy (mRMR) feature selection. This method built a feature space from information extracted from fundus images and medical screening data, created a ranked feature library using mRMR, searched for the most compact feature set with a forward-selection wrapper, and then used an SVM for detection, achieving an ACC of 89.3% for the right eye and 88.5% for the left eye (15). Xu et al. (16) developed a PM detection method based on bag-of-features and sparse learning. During the training phase, the codebook for the bag-of-features model and the classification model were learned, and the most relevant visual features were identified through sparse learning.

In the detection phase, local features were first extracted from a given retinal fundus image and quantized using the learned codebook to obtain global features. Finally, the classification model was used to determine the presence of PM, achieving an ACC of 90.6% (16). Zhang et al. (17) also developed an automatic diagnostic method for PM based on heterogeneous biomedical data, integrating imaging, demographic/clinical, and genotyping data and using a multiple kernel learning (MKL) approach to detect PM, achieving an average area under the curve (AUC) of 0.888. Chen et al. (18) introduced a deep learning architecture for automated glaucoma diagnosis. This method used a convolutional neural network (CNN) (19, 20) with four convolutional layers and two fully connected layers, combined with dropout and data augmentation to enhance diagnostic performance, and achieved AUC values of 0.831 and 0.887 on the ORIGA and SCES datasets, respectively (18). Xu et al. (21) proposed an automated method for detecting tessellated fundus based on texture features, color features, and an SVM, achieving an ACC of 98%. Xu et al. (22) proposed a method for detecting ocular diseases from multiple informatics domains. This method combined pre-learned SVM classifiers to merge personal demographic data, genome information, and visual information from retinal fundus images. The final model obtained AUCs of 0.935 for glaucoma, 0.822 for age-related macular degeneration (AMD), and 0.946 for PM (22). Septiarini et al. (23) introduced a method based on statistical features to automatically detect peripapillary atrophy in retinal fundus images. This method involved four steps: optic nerve head (ONH) localization, ONH segmentation, preprocessing, and feature extraction.
Through these steps, three key features were extracted: standard deviation (σ), smoothness (S), and third moment (μ3). Using a backpropagation neural network (BPNN), they achieved an ACC of 95% (23). Rauf et al. (24) proposed a CNN-based method for PM detection and obtained an ACC of 95%. Although these studies have achieved positive results, several challenges remain: (1) many advanced deep learning methods have emerged, but they have not yet been applied to PM detection; (2) given the particular demands of the medical field and its high requirements for model accuracy, model performance still needs to be improved; (3) because medical facilities differ in practice, the efficiency of these models in poorly equipped medical environments is an important problem to overcome; and (4) interpretability is essential for an auxiliary diagnostic method, but current research in this area remains insufficient (25–28).

To address these challenges, this study designed an improved model named PMPred-AE, based on EfficientNetV2-L, to automatically identify and diagnose PM. We further enhanced the model's ability to identify key features in retinal images by introducing an attention mechanism, thereby improving the accuracy of PM diagnosis. To provide visual explanations of the model's decision-making process, we also adopted the gradient-weighted class activation mapping (Grad-CAM) technique. Our study provides an efficient, accurate, and explainable model for PM detection.

The study used the PALM Challenge dataset (29), which comprises training, validation, and test images. The training set contains 400 images: 187 non-PM and 213 PM. Similarly, the validation set consists of 400 images, with 189 labeled non-PM and 211 PM, and the test set includes 400 images, with 187 non-PM and 213 PM. This dataset configuration enabled rigorous evaluation and validation of the proposed method.

The PMPred-AE architecture consists of two core components: a feature extractor and a classifier. For feature extraction, we chose EfficientNetV2-L, an advanced CNN designed to speed up image processing and improve performance. As an upgraded version of the EfficientNet series, EfficientNetV2-L was pre-trained on the large-scale ImageNet dataset, which covers millions of images and thousands of categories. Through its scalable architecture, EfficientNetV2-L balances network depth, width, and resolution to achieve high accuracy and efficiency in image processing tasks. Compared with ViT-L/16 from the vision transformer (ViT) series, EfficientNetV2-L achieves higher accuracy while training about 7 times faster (30). In particular, the model uses lightweight depthwise separable convolutions, which substantially reduce computational cost and model size while maintaining efficient feature extraction. Therefore, in the context of PM detection, EfficientNetV2-L can efficiently identify key features in images and provide accurate inputs to the classifier, significantly improving model performance. Moreover, its computing speed and efficiency make it well suited to medical environments with rudimentary equipment, providing technical support for early diagnosis and treatment. In the classification stage, we used an improved fully connected neural network based on the attention mechanism. The core function of this improvement is to increase the model's attention to the most important parts of the input features.
By assigning different weights to the input features, the attention mechanism allows the model to prioritize the features that contribute most to the final classification decision, rather than treating all input features equally. This dynamic weighting not only improves the model's understanding of the data but also increases its adaptability and flexibility, enabling it to focus automatically on the most critical information. Specifically, we used a linear layer to transform the features into a one-dimensional space and then mapped the result to values between 0 and 1 with the softmax function. Finally, these weights are multiplied by the original input features to emphasize the features that contribute most to the classification result. This improvement is particularly important for PM detection because it allows the model to pay special attention to the regions that reveal the pathological features of myopia. Through this mechanism, our model provides an efficient tool for the early diagnosis and treatment of PM.
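The attention-weighted classifier described above can be sketched in a few lines. This is a minimal NumPy illustration, not the authors' implementation: it assumes the linear layer maps the d-dimensional pooled feature vector to d scores (one per feature) so that the softmax yields one weight per feature. The function names, the 1280-dimensional feature width (assumed to match the pooled output of EfficientNetV2-L), the two-class output layer, and the random weights are all hypothetical placeholders.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def attention_gate(x, w_att, b_att):
    """Reweight pooled features with softmax attention (hypothetical layout).

    x:     (batch, d) pooled backbone features
    w_att: (d, d) scoring-layer weights, b_att: (d,) bias
    Returns the reweighted features and the attention weights.
    """
    scores = x @ w_att + b_att    # one score per feature
    weights = softmax(scores)     # each weight in (0, 1); weights sum to 1 per sample
    return weights * x, weights   # element-wise emphasis of the most informative features


def classify(gated, w_out, b_out):
    """Linear output layer followed by softmax over {non-PM, PM}."""
    return softmax(gated @ w_out + b_out)


rng = np.random.default_rng(0)
d = 1280                                  # assumed pooled feature width
x = rng.standard_normal((2, d))           # stand-in for two images' features
gated, weights = attention_gate(x, rng.standard_normal((d, d)) * 0.01, np.zeros(d))
probs = classify(gated, rng.standard_normal((d, 2)), np.zeros(2))
```

Because the softmax weights over 1280 features average 1/1280, the gated features are much smaller in magnitude than the inputs; in a trained model the following linear layer's weights absorb this scale.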

To visually explain the decision-making process of the CNN in the PM detection task, we used the Grad-CAM technique to generate heatmaps. Grad-CAM shows which regions receive the most attention when the model makes a prediction. The approach relies on the model's gradient information, specifically the gradients with respect to the feature maps of the last convolutional layer, to highlight the regions that contribute most to the model's predictions. The working principle of Grad-CAM can be briefly described by the following mathematical expressions.

First, for each channel $k$ of the feature layer $A$, the gradients of the class score are global-average-pooled to obtain the weight coefficient $\alpha_k^c$ (Equation 1):

$$\alpha_k^c = \frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^c}{\partial A_{ij}^k} \tag{1}$$

where $y^c$ is the output score of the model for category $c$, $A_{ij}^k$ is the activation value of the feature layer at position $(i, j)$, $k$ is the $k$-th channel in the feature layer $A$, and $Z$ is the total number of units (spatial positions) in the feature layer.

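Then, each weight coefficient $\alpha_k^c$ is multiplied by the corresponding activation map $A^k$, and the results are summed over all channels. The final heatmap is obtained by passing this weighted sum through the ReLU function (Equation 2):

$$L_{\text{Grad-CAM}}^c = \mathrm{ReLU}\left(\sum_{k}\alpha_k^c A^k\right) \tag{2}$$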

This process ensures that only features with a positive influence on the model's prediction of category c are visualized, enhancing the clarity and interpretability of the model's decisions. When Grad-CAM is applied to PMPred-AE, the heatmap shows that the model focuses on the locations of key pathological changes in the retinal image when identifying PM. The Grad-CAM heatmaps not only help explain the model's high performance but also demonstrate its ability to focus on relevant regions, which is crucial for improving the reliability of, and trust in, the model in practical medical applications. In this way, Grad-CAM provides healthcare professionals with an intuitive tool to better understand and explain the decision-making process of PMPred-AE, especially in medical diagnosis and treatment planning.
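Equations 1 and 2 can be sketched directly on arrays. The NumPy function below is a minimal illustration, not the authors' code: it assumes the activations $A^k$ of the last convolutional layer and the gradients of the class score $y^c$ with respect to them have already been captured for one image (in PyTorch this is commonly done with forward and backward hooks). The final rescaling to [0, 1] is an added display convenience, and the toy arrays are hypothetical.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap for one image and one target class c.

    activations: (K, H, W) feature maps A^k of the last convolutional layer
    gradients:   (K, H, W) gradients dy^c / dA^k_ij of the class score
    Returns an (H, W) heatmap rescaled to [0, 1].
    """
    # Equation 1: global-average-pool the gradients over the Z = H * W positions
    alpha = gradients.mean(axis=(1, 2))                        # shape (K,)
    # Equation 2: channel-weighted sum of the feature maps, then ReLU
    cam = np.maximum(np.tensordot(alpha, activations, axes=1), 0.0)
    # Rescale for display; an all-zero map is returned unchanged
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Hypothetical toy example: two 3x3 feature maps
activations = np.zeros((2, 3, 3))
activations[0, 0, 0] = 1.0   # channel 0 fires in the top-left corner
activations[1, 2, 2] = 1.0   # channel 1 fires in the bottom-right corner
gradients = np.stack([np.full((3, 3), 0.5), np.full((3, 3), -0.5)])
heatmap = grad_cam(activations, gradients)
# Only the top-left corner survives: channel 1 has a negative weight,
# so its contribution is removed by the ReLU.
```

In practice the (H, W) map is then upsampled to the input resolution and overlaid on the fundus image to produce heatmaps like those described above.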

The learning rate is set to 0.0001, the batch size is 8, the number of epochs is 50, and the optimizer is AdamW.
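As a rough illustration of these settings, the sketch below encodes the stated hyperparameters, computes the number of optimizer steps implied by the 400 training images, and spells out one AdamW update for a single scalar parameter. The weight decay, betas, and epsilon are assumptions (common PyTorch defaults) because the text does not report them, and the step count assumes one augmented view of each training image per epoch with the last partial batch kept.

```python
import math
from dataclasses import dataclass


@dataclass
class TrainConfig:
    learning_rate: float = 1e-4   # stated in the text
    batch_size: int = 8           # stated in the text
    epochs: int = 50              # stated in the text
    weight_decay: float = 0.01    # assumption: not reported
    betas: tuple = (0.9, 0.999)   # assumption: common default
    eps: float = 1e-8             # assumption: common default


def steps_per_epoch(num_images: int, batch_size: int) -> int:
    """Optimizer steps per epoch when the last partial batch is kept."""
    return math.ceil(num_images / batch_size)


def adamw_step(theta, grad, m, v, t, cfg: TrainConfig):
    """One AdamW update for a single scalar parameter (t counts from 1)."""
    b1, b2 = cfg.betas
    theta *= 1 - cfg.learning_rate * cfg.weight_decay   # decoupled weight decay
    m = b1 * m + (1 - b1) * grad                         # first-moment estimate
    v = b2 * v + (1 - b2) * grad * grad                  # second-moment estimate
    m_hat = m / (1 - b1 ** t)                            # bias corrections
    v_hat = v / (1 - b2 ** t)
    theta -= cfg.learning_rate * m_hat / (math.sqrt(v_hat) + cfg.eps)
    return theta, m, v


cfg = TrainConfig()
per_epoch = steps_per_epoch(400, cfg.batch_size)   # 400 training images
total_steps = per_epoch * cfg.epochs
theta, m, v = adamw_step(1.0, 0.5, 0.0, 0.0, 1, cfg)
```

Under these assumptions each epoch takes 50 optimizer steps (2,500 over 50 epochs). AdamW differs from Adam with L2 regularization in that weight decay shrinks the parameters directly instead of being added to the gradient, so it is not rescaled by the adaptive denominator.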

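Several widely used evaluation metrics (31–37), including precision (Pre) (Equation 3), recall (Rec) (Equation 4), accuracy (ACC) (Equation 5), F1-score (F1) (Equation 6), and the Matthews correlation coefficient (MCC) (Equation 7), were used to evaluate the model's performance. They are defined as follows:

$$\mathrm{Pre} = \frac{TP}{TP + FP} \tag{3}$$

$$\mathrm{Rec} = \frac{TP}{TP + FN} \tag{4}$$

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$

$$F1 = \frac{2 \times \mathrm{Pre} \times \mathrm{Rec}}{\mathrm{Pre} + \mathrm{Rec}} \tag{6}$$

$$\mathrm{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{7}$$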

where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively. We also plotted the receiver operating characteristic (ROC) curve and the precision-recall curve (PRC) and computed the areas under them (AUC and AUPRC, respectively) (27, 38–41).
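Equations 3 to 7 can be checked against the reported test-set results with a short script. The confusion-matrix counts below are not reported in the article: they are back-calculated, treating PM as the positive class, from the test-set class sizes (213 PM, 187 non-PM) and the reported Pre and Rec, and they are the only integer counts consistent with those two values. Under that assumption, the sketch reproduces all five reported metrics to four decimal places.

```python
import math


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Equations 3-7 computed from confusion-matrix counts."""
    pre = tp / (tp + fp)
    rec = tp / (tp + fn)
    acc = (tp + tn) / (tp + tn + fp + fn)
    f1 = 2 * pre * rec / (pre + rec)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom
    return {"Pre": pre, "Rec": rec, "ACC": acc, "F1": f1, "MCC": mcc}


# Assumption: counts back-calculated (not reported) from the test-set
# class sizes and the reported Pre/Rec, with PM as the positive class.
metrics = classification_metrics(tp=209, tn=180, fp=7, fn=4)
rounded = {name: round(value, 4) for name, value in metrics.items()}
```

Unlike ACC, the MCC uses all four cells of the confusion matrix symmetrically, which is why it is often preferred when the classes are not perfectly balanced.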

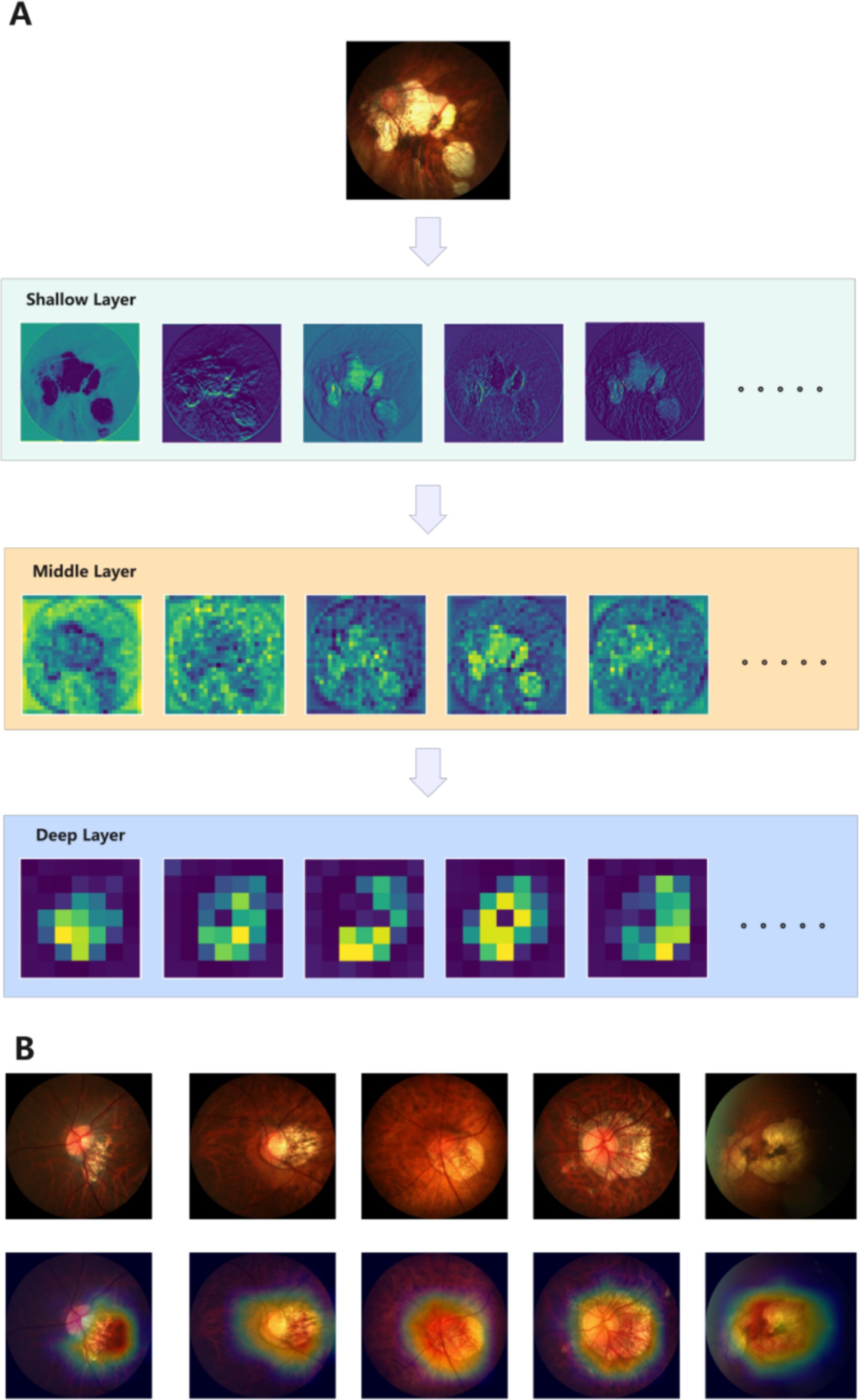

In our experiment, we first applied data augmentation to enrich and expand the original dataset and create more diverse training samples. Data augmentation included operations such as image rotation, resizing, and cropping, designed to simulate different imaging conditions and perspectives and thereby improve the model's generalization and robustness. The augmented data were used to train our PMPred-AE model, which is based on the EfficientNetV2-L architecture and optimized for the specific requirements of PM detection. EfficientNetV2-L, the foundation of our model, was pre-trained on the ImageNet dataset and therefore has strong feature extraction capabilities (42, 43). To further improve performance, we introduced an attention mechanism in the fully connected layer of the model. This mechanism enables the model to focus on the key regions related to PM diagnosis in the image, thereby improving diagnostic accuracy. During training, the model parameters were adjusted based on performance on the validation set to achieve the optimal configuration. After training, we visualized the outputs of the model at different levels (shallow, middle, and deep), which helped us understand how the model progressively extracts and uses image features. In addition, we used Grad-CAM to generate heatmaps that highlight the regions the model focuses on when making predictions. In this way, we can not only verify the decision-making process of the model but also provide doctors with intuitive visual explanations that help them understand the basis of the model's predictions. Overall, our experiment combined data augmentation, an attention mechanism, an advanced model architecture, and explanation techniques to develop an efficient, accurate, and explainable model for PM detection (Figure 1).

Because pathological images are difficult to collect and annotate, usually only a small number of samples are available. Data augmentation is therefore necessary: it can effectively reduce overfitting and encourage the model to learn more general knowledge instead of focusing on noise and idiosyncratic features, thereby improving its generalization and robustness (44–46). In this study, we combined several augmentation operations. Images were first resized to 256×256 pixels and then randomly cropped to 224×224 pixels, with anti-aliasing applied to preserve image quality. To increase visual variety, horizontal and vertical flips were each applied with a probability of 50%. Subtle random affine transformations were also applied, including rotations between −10 and 10 degrees, translations of up to 10% of the image width or height, and scaling between 90 and 110%. Furthermore, random erasing was applied with a probability of 50%, covering a small random portion of the image to improve the model's ability to handle occlusion (Figure 2). Finally, the images were converted into tensors and normalized with a specific mean and standard deviation for model training. These operations address the following issues: random cropping and resizing simulate doctors observing the eye from different distances and angles; random rotation and affine transformation help the model identify pathological features from multiple angles; random erasing simulates potential occlusions during actual medical image acquisition; normalization keeps the image data consistent during training; and anti-aliasing preserves the clarity of image details, which is crucial for identifying pathological features.
By introducing various visual perturbations, this comprehensive data augmentation strategy helps the model extract useful features from diverse image transformations, thereby enhancing its performance and robustness in real-world applications.

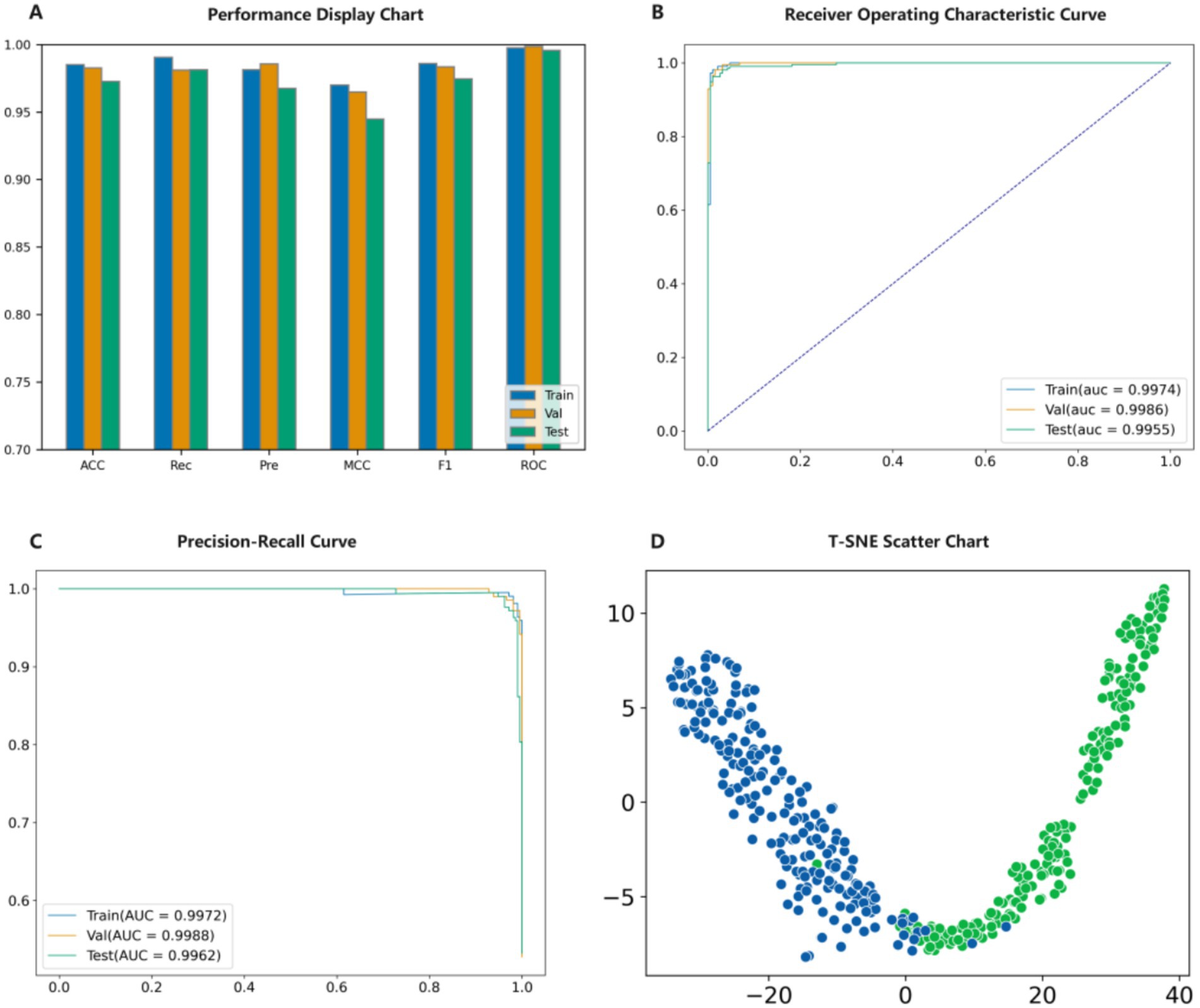

A series of experiments showed that the PMPred-AE model performs excellently in the PM classification task. The model was first trained on the training set so that it could capture the key features and patterns in the data (47–50). The validation set was then used to tune the model, further improving its performance and supporting its generalization to unseen data (51, 52). PMPred-AE performed well on the test set, with ACC, F1, Pre, Rec, and MCC values of 0.9725, 0.9744, 0.9676, 0.9812, and 0.9448, respectively, indicating that it can effectively distinguish PM from non-PM (Figure 3A, Table 1). In addition, the ROC and PRC analyses showed that PMPred-AE achieved an AUC of 0.9955 and an AUPRC of 0.9962, further demonstrating its efficiency in feature extraction and its capability to recognize PM (Figures 3B,C). Finally, we used t-SNE to visualize the output of the model (Figure 3D) (53). PM and non-PM samples were clearly separated in the low-dimensional space, indicating that the model represents their features effectively and captures the complex patterns and structural differences between them. This further suggests that PMPred-AE has broad application prospects in clinical practice.
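The AUC and AUPRC reported above summarize ranking quality across all decision thresholds rather than at a single cut-off. The pure-Python sketch below shows how such values can be computed from predicted PM probabilities. The ROC AUC uses the rank (Mann-Whitney) formulation; the AUPRC is estimated with average precision, which is one common estimator (the article does not state which one was used). The four scores are hypothetical.

```python
def roc_auc(labels, scores):
    """ROC AUC: probability that a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """Average precision: mean of the precision at the rank of each positive.

    Assumes distinct scores; tied scores would need to share one threshold.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    tp, precision_sum = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            tp += 1
            precision_sum += tp / rank   # precision at this positive's rank
    return precision_sum / tp


# Hypothetical predicted PM probabilities (1 = PM, 0 = non-PM)
labels = [1, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.6]
auc = roc_auc(labels, scores)
ap = average_precision(labels, scores)
```

For the test set, the pairwise loop compares 213 × 187 = 39,831 PM/non-PM pairs, which is cheap; library routines such as scikit-learn's `roc_auc_score` and `average_precision_score` compute the same quantities more efficiently.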

Figure 3. Model validation results. (A) Evaluation results of the model. (B) ROC curve of the model. (C) PRC of the model. (D) t-SNE visualization of the model output.

To further confirm that PMPred-AE effectively extracts features, we visualized the outputs of the model's shallow, middle, and deep layers. As the depth of the model increases, the extracted features clearly become more abstract and higher-level, indicating that the hierarchical structure of PMPred-AE effectively supports the progressive extraction and refinement of features (Figure 4A). Next, to investigate why PMPred-AE could efficiently distinguish PM from non-PM, we used Grad-CAM to generate heatmaps revealing the regions the model focused on when making predictions, thus providing an explanation for its decision-making process (Figure 4B). The heatmaps revealed that PMPred-AE effectively focused on the locations of key pathological changes in the image when identifying PM. These locations are often key to distinguishing PM from non-PM, which helps explain the model's high accuracy. This focusing ability not only supports the prediction performance of the model but also increases its reliability and credibility in practical applications, especially in medical diagnosis and treatment planning.

Figure 4. Model explanation. (A) Visualization of the outputs of the shallow, middle, and deep layers of the model. (B) Grad-CAM visualization results.

To further demonstrate the performance of PMPred-AE in detecting PM, we aimed to compare it with existing studies. However, the studies mentioned above did not share their source code and used different datasets, making a fair comparison impossible. Fortunately, we could use the 2023 PALM benchmark results (Base-2023) (29). PMPred-AE outperformed Base-2023 on all evaluation metrics (Figure 5, Table 2). This comparison further consolidates the validation of PMPred-AE and provides more reliable support for its application in clinical practice.

In this study, we designed an improved attention-based EfficientNetV2-L model (PMPred-AE) for the automatic detection of PM. By using EfficientNetV2-L as the backbone for feature extraction and introducing an attention mechanism in the classification stage, PMPred-AE efficiently identifies key features in eye images and significantly improves prediction performance. Data augmentation, including image rotation, resizing, and cropping, was used to expand the training samples and improve the model's generalization ability and reliability. In addition, Grad-CAM was applied to the trained model to generate heatmaps, providing a visual means of explaining the decision process of PMPred-AE in identifying PM. These heatmaps clearly show the regions the model focuses on when making predictions, thereby enhancing the clarity and interpretability of its decisions. Compared with the existing benchmark, PMPred-AE showed marked improvements in ACC, Rec, AUC, and F1, supporting its leading position in PM detection and its application in clinical practice.

The PMPred-AE model shows considerable potential and scalability in medical image analysis. Beyond PM detection, its framework could potentially be applied to other medical imaging tasks, such as the analysis of tumors, brain diseases, and lung diseases. Although different medical images have unique characteristics, PMPred-AE offers an efficient and interpretable framework that could be adapted to diverse medical scenarios, showing substantial clinical application potential. The clinical value of PMPred-AE lies not only in its high accuracy and efficiency but also in its ease of integration with existing healthcare systems: the model can directly process images generated by standard medical devices without requiring additional workflows. Furthermore, PMPred-AE uses Grad-CAM to generate heatmaps that visualize the regions the model focuses on, helping physicians make more precise clinical decisions. The model's efficient design supports operation in resource-constrained environments, making it particularly suitable for regions with limited healthcare resources. However, several challenges must be addressed before PMPred-AE can be deployed in practice. First, the quality and diversity of fundus images may vary with imaging devices and conditions, potentially affecting model performance; expanding the training dataset and applying data augmentation can improve the model's generalization ability. Second, although the model employs an efficient network architecture, inference speed and computational resource requirements could become limiting factors in resource-constrained environments. To mitigate this, we plan to deploy the model in the cloud, using cloud computing resources for inference to reduce the local computational burden.
Overall, although the deployment of PMPred-AE faces several challenges, improvements in data quality, optimization of computational resources, and enhanced model robustness can help address these issues and support the successful application of the model in clinical practice.

In summary, this research successfully developed an efficient, accurate, and explainable model for the detection of PM by combining advanced model architecture, attention mechanism, and explanatory techniques. This comprehensive method not only improved the performance of the model, but also provided a valuable reference for clinical diagnosis, demonstrating the great potential of deep learning in the field of medical image analysis. In the future, with the continuous advancement of algorithms and technology, such models are expected to play a greater role in improving the efficiency and accuracy of PM diagnosis. The source code has been uploaded to GitHub and can be accessed at: https://github.com/ZhangHongqi215/PMPred-AE.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

H-QZ: Data curation, Formal analysis, Investigation, Methodology, Resources, Software, Writing – original draft. MA: Investigation, Methodology, Validation, Writing – original draft. MT: Validation, Writing – original draft. SA: Methodology, Validation, Writing – original draft. PC: Formal analysis, Investigation, Writing – original draft. YZ: Conceptualization, Funding acquisition, Project administration, Writing – review & editing. HT: Conceptualization, Funding acquisition, Project administration, Supervision, Writing – review & editing. HL: Conceptualization, Funding acquisition, Project administration, Supervision, Writing – review & editing.

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the National Natural Science Foundation of China (62250028, 62172343), the Sichuan Science and Technology Program (Grant No. 2022YFS0614).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declare that no Generative AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Morgan, IG, French, AN, Ashby, RS, Guo, X, Ding, X, He, M, et al. The epidemics of myopia: aetiology and prevention. Prog Retin Eye Res. (2018) 62:134–49. doi: 10.1016/j.preteyeres.2017.09.004

2. Hemelings, R, Elen, B, Blaschko, MB, Jacob, J, Stalmans, I, and De Boever, P. Pathological myopia classification with simultaneous lesion segmentation using deep learning. Comput Methods Prog Biomed. (2021) 199:105920. doi: 10.1016/j.cmpb.2020.105920

3. Saw, SM, Gazzard, G, Shih-Yen, EC, and Chua, WH. Myopia and associated pathological complications. Ophthalmic Physiol Opt. (2005) 25:381–91. doi: 10.1111/j.1475-1313.2005.00298.x

4. Holden, BA, Fricke, TR, Wilson, DA, Jong, M, Naidoo, KS, Sankaridurg, P, et al. Global prevalence of myopia and high myopia and temporal trends from 2000 through 2050. Ophthalmology. (2016) 123:1036–42. doi: 10.1016/j.ophtha.2016.01.006

5. Yang, J, Ouyang, X, Fu, H, Hou, X, Liu, Y, Xie, Y, et al. Advances in biomedical study of the myopia-related signaling pathways and mechanisms. Biomed Pharmacother. (2022) 145:112472. doi: 10.1016/j.biopha.2021.112472

6. Jonas, JB, Jonas, RA, Bikbov, MM, Wang, YX, and Panda-Jonas, S. Myopia: histology, clinical features, and potential implications for the etiology of axial elongation. Prog Retin Eye Res. (2023) 96:101156. doi: 10.1016/j.preteyeres.2022.101156

7. Agyekum, S, Chan, PP, Zhang, Y, Huo, Z, Yip, BHK, Ip, P, et al. Cost-effectiveness analysis of myopia management: a systematic review. Front Public Health. (2023) 11:1093836. doi: 10.3389/fpubh.2023.1093836

8. Wei, L, He, W, Malik, A, Su, R, Cui, L, and Manavalan, B. Computational prediction and interpretation of cell-specific replication origin sites from multiple eukaryotes by exploiting stacking framework. Brief Bioinform. (2020) 22:bbaa275. doi: 10.1093/bib/bbaa275

9. Liu, T, Qiao, H, Wang, Z, Yang, X, Pan, X, Yang, Y, et al. CodLncScape provides a self-enriching framework for the systematic collection and exploration of coding LncRNAs. Adv Sci. (2024) 11:e2400009. doi: 10.1002/advs.202400009

10. Alhatemi, RAJ, and Savaş, S. A weighted ensemble approach with multiple pre-trained deep learning models for classification of stroke. Medinformatics. (2023) 1:10–9. doi: 10.47852/bonviewMEDIN32021963

11. Wang, Y, Zhai, Y, Ding, Y, and Zou, Q. SBSM-Pro: support bio-sequence machine for proteins. arXiv [Preprint]. arXiv:2308.10275 (2023).

12. Wang, Y, Zhang, W, Yang, Y, Sun, J, and Wang, L. Survival prediction of esophageal squamous cell carcinoma based on the prognostic index and sparrow search algorithm-support vector machine. Curr Bioinforma. (2023) 18:598–609. doi: 10.2174/1574893618666230419084754

13. Liu, B. BioSeq-analysis: a platform for DNA, RNA and protein sequence analysis based on machine learning approaches. Brief Bioinform. (2019) 20:1280–94. doi: 10.1093/bib/bbx165

14. Liu, J, Wong, DW, Lim, JH, Tan, NM, Zhang, Z, Li, H, et al. Detection of pathological myopia by PAMELA with texture-based features through an SVM approach. J Healthc Eng. (2010) 1:1–11. doi: 10.1260/2040-2295.1.1.1

15. Zhang, Z, Cheng, J, Liu, J, Sheri, YCM, Kong, CC, and Mei, SS, editors. Pathological myopia detection from selective fundus image features. 2012 7th IEEE conference on industrial electronics and applications (ICIEA). IEEE; (2012)

16. Xu, Y, Liu, J, Zhang, Z, Tan, NM, Wong, DWK, Saw, SM, et al., editors. Learn to recognize pathological myopia in fundus images using bag-of-feature and sparse learning approach. 2013 IEEE 10th International Symposium on Biomedical Imaging. IEEE; (2013)

17. Zhang, Z, Xu, Y, Liu, J, Wong, DWK, Kwoh, CK, Saw, S-M, et al. Automatic diagnosis of pathological myopia from heterogeneous biomedical data. PLoS One. (2013) 8:e65736. doi: 10.1371/journal.pone.0065736

18. Chen, X, Xu, Y, Wong, DWK, Wong, TY, and Liu, J, editors. Glaucoma detection based on deep convolutional neural network. 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). IEEE; (2015)

19. Luo, X, Wang, Y, Zou, Q, and Xu, L. Recall DNA methylation levels at low coverage sites using a CNN model in WGBS. PLoS Comput Biol. (2023) 19:e1011205. doi: 10.1371/journal.pcbi.1011205

20. Dou, LJ, Zhang, ZL, Xu, L, and Zou, Q. iKcr_CNN: a novel computational tool for imbalance classification of human nonhistone crotonylation sites based on convolutional neural networks with focal loss. Comput Struct Biotechnol J. (2022) 20:3268–79. doi: 10.1016/j.csbj.2022.06.032

21. Xu, M, Cheng, J, Wong, DWK, Cheng, C-Y, Saw, SM, and Wong, TY, editors. Automated tessellated fundus detection in color fundus images. Proceedings of the Ophthalmic Medical Image Analysis International Workshop, University of Iowa; (2016)

22. Xu, Y, Duan, L, Fu, H, Zhang, Z, Zhao, W, You, T, et al., editors. Ocular disease detection from multiple informatics domains. 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018). IEEE; (2018)

23. Septiarini, A, Harjoko, A, Pulungan, R, and Ekantini, R. Automatic detection of peripapillary atrophy in retinal fundus images using statistical features. Biomed Signal Process Control. (2018) 45:151–9. doi: 10.1016/j.bspc.2018.05.028

24. Rauf, N, Gilani, SO, and Waris, A. Automatic detection of pathological myopia using machine learning. Sci Rep. (2021) 11:16570. doi: 10.1038/s41598-021-95205-1

25. Wei, L, Tang, J, and Zou, Q. Local-DPP: an improved DNA-binding protein prediction method by exploring local evolutionary information. Inf Sci. (2017) 384:135–44. doi: 10.1016/j.ins.2016.06.026

26. Zhang, Y, Liu, C, Liu, M, Liu, T, Lin, H, Huang, C-B, et al. Attention is all you need: utilizing attention in AI-enabled drug discovery. Brief Bioinform. (2024) 25:bbad467. doi: 10.1093/bib/bbad467

27. Liu, T, Huang, J, Luo, D, Ren, L, Ning, L, Huang, J, et al. Cm-siRPred: predicting chemically modified siRNA efficiency based on multi-view learning strategy. Int J Biol Macromol. (2024) 264:130638. doi: 10.1016/j.ijbiomac.2024.130638

28. Xu, Y, Liu, T, Yang, Y, Kang, J, Ren, L, Ding, H, et al. ACVPred: enhanced prediction of anti-coronavirus peptides by transfer learning combined with data augmentation. Futur Gener Comput Syst. (2024) 160:305–15. doi: 10.1016/j.future.2024.06.008

29. Fang, H, Li, F, Wu, J, Fu, H, Sun, X, Orlando, JI, et al. PALM: open fundus photograph dataset with pathologic myopia recognition and anatomical structure annotation. arXiv [Preprint]. arXiv:2305.07816 (2023).

30. Tan, M, and Le, Q, editors. EfficientNetV2: smaller models and faster training. International Conference on Machine Learning. PMLR; (2021)

31. Zou, X, Ren, L, Cai, P, Zhang, Y, Ding, H, Deng, K, et al. Accurately identifying hemagglutinin using sequence information and machine learning methods. Front Med (Lausanne). (2023) 10:1281880. doi: 10.3389/fmed.2023.1281880

32. Zulfiqar, H, Guo, Z, Ahmad, RM, Ahmed, Z, Cai, P, Chen, X, et al. Deep-STP: a deep learning-based approach to predict snake toxin proteins by using word embeddings. Front Med (Lausanne). (2024) 10:1291352. doi: 10.3389/fmed.2023.1291352

33. Zhu, H, Hao, H, and Yu, L. Identifying disease-related microbes based on multi-scale variational graph autoencoder embedding Wasserstein distance. BMC Biol. (2023) 21:294. doi: 10.1186/s12915-023-01796-8

34. Liu, X, Yang, H, Ai, C, Ding, Y, Guo, F, and Tang, J. MVML-MPI: multi-view multi-label learning for metabolic pathway inference. Brief Bioinform. (2023) 24:bbad393. doi: 10.1093/bib/bbad393

35. Liang, C, Wang, L, Liu, L, Zhang, H, and Guo, F. Multi-view unsupervised feature selection with tensor robust principal component analysis and consensus graph learning. Pattern Recogn. (2023) 141:109632. doi: 10.1016/j.patcog.2023.109632

36. Li, H, Pang, Y, and Liu, B. BioSeq-BLM: a platform for analyzing DNA, RNA, and protein sequences based on biological language models. Nucleic Acids Res. (2021) 49:e129. doi: 10.1093/nar/gkab829

37. Liu, B, Gao, X, and Zhang, H. BioSeq-Analysis2.0: an updated platform for analyzing DNA, RNA and protein sequences at sequence level and residue level based on machine learning approaches. Nucleic Acids Res. (2019) 47:e127. doi: 10.1093/nar/gkz740

38. Zhang, ZY, Zhang, Z, Ye, X, Sakurai, T, and Lin, H. A BERT-based model for the prediction of lncRNA subcellular localization in Homo sapiens. Int J Biol Macromol. (2024) 265:130659. doi: 10.1016/j.ijbiomac.2024.130659

39. Dou, M, Tang, J, Tiwari, P, Ding, Y, and Guo, F. Drug-drug interaction relation extraction based on deep learning: a review. ACM Comput Surv. (2024) 56:1–33. doi: 10.1145/3645089

40. Charoenkwan, P, Schaduangrat, N, Lio, P, Moni, MA, Shoombuatong, W, and Manavalan, B. Computational prediction and interpretation of druggable proteins using a stacked ensemble-learning framework. iScience. (2022) 25:104883. doi: 10.1016/j.isci.2022.104883

41. Bupi, N, Sangaraju, VK, Phan, LT, Lal, A, Vo, TTB, Ho, PT, et al. An effective integrated machine learning framework for identifying severity of tomato yellow leaf curl virus and their experimental validation. Research. (2023) 6:0016. doi: 10.34133/research.0016

42. Cadrin-Chenevert, A. Moving from ImageNet to RadImageNet for improved transfer learning and generalizability. Radiol Artif Intell. (2022) 4:e220126. doi: 10.1148/ryai.220126

43. Li, X, Cen, M, Xu, J, Zhang, H, and Xu, XS. Improving feature extraction from histopathological images through a fine-tuning ImageNet model. J Pathol Inform. (2022) 13:100115. doi: 10.1016/j.jpi.2022.100115

44. Charoenkwan, P, Schaduangrat, N, Manavalan, B, and Shoombuatong, W. M3S-ALG: improved and robust prediction of allergenicity of chemical compounds by using a novel multi-step stacking strategy. Futur Gener Comput Syst. (2025) 162:107455. doi: 10.1016/j.future.2024.07.033

45. Liu, M, Li, C, Chen, R, Cao, D, and Zeng, X. Geometric deep learning for drug discovery. Expert Syst Appl. (2023) 240:122498. doi: 10.1016/j.eswa.2023.122498

46. Zeng, X, Wang, F, Luo, Y, Kang, S-g, Tang, J, Lightstone, FC, et al. Deep generative molecular design reshapes drug discovery. Cell Rep Med. (2022) 3:100794. doi: 10.1016/j.xcrm.2022.100794

47. Hasan, MM, Tsukiyama, S, Cho, JY, Kurata, H, Alam, MA, Liu, X, et al. Deepm5C: a deep-learning-based hybrid framework for identifying human RNA N5-methylcytosine sites using a stacking strategy. Mol Ther. (2022) 30:2856–67. doi: 10.1016/j.ymthe.2022.05.001

48. Shoombuatong, W, Meewan, I, Mookdarsanit, L, and Schaduangrat, N. Stack-HDAC3i: a high-precision identification of HDAC3 inhibitors by exploiting a stacked ensemble-learning framework. Methods. (2024) 230:147–57. doi: 10.1016/j.ymeth.2024.08.003

49. Manavalan, B, and Lee, J. FRTpred: a novel approach for accurate prediction of protein folding rate and type. Comput Biol Med. (2022) 149:105911. doi: 10.1016/j.compbiomed.2022.105911

50. Pham, NT, Rakkiyapan, R, Park, J, Malik, A, and Manavalan, B. H2Opred: a robust and efficient hybrid deep learning model for predicting 2'-O-methylation sites in human RNA. Brief Bioinform. (2023) 25:bbad476. doi: 10.1093/bib/bbad476

51. Manavalan, B, and Patra, MC. MLCPP 2.0: an updated cell-penetrating peptides and their uptake efficiency predictor. J Mol Biol. (2022) 434:167604. doi: 10.1016/j.jmb.2022.167604

52. Charoenkwan, P, Chumnanpuen, P, Schaduangrat, N, and Shoombuatong, W. Stack-AVP: a stacked ensemble predictor based on multi-view information for fast and accurate discovery of antiviral peptides. J Mol Biol. (2024):168853. doi: 10.1016/j.jmb.2024.168853

Keywords: myopia, pathological myopia, deep learning, EfficientNetV2, Grad-CAM

Citation: Zhang H-Q, Arif M, Thafar MA, Albaradei S, Cai P, Zhang Y, Tang H and Lin H (2025) PMPred-AE: a computational model for the detection and interpretation of pathological myopia based on artificial intelligence. Front. Med. 12:1529335. doi: 10.3389/fmed.2025.1529335

Received: 16 November 2024; Accepted: 27 February 2025;

Published: 13 March 2025.

Edited by:

Alaa Abd-alrazaq, Weill Cornell Medicine-Qatar, Qatar

Reviewed by:

Balachandran Manavalan, Sungkyunkwan University, Republic of Korea

Copyright © 2025 Zhang, Arif, Thafar, Albaradei, Cai, Zhang, Tang and Lin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yang Zhang, yangzhang@cdutcm.edu.cn; Hua Tang, huatang@swmu.edu.cn; Hao Lin, hlin@uestc.edu.cn

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
