95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Environ. Sci. , 12 November 2020

Sec. Environmental Informatics and Remote Sensing

Volume 8 - 2020 | https://doi.org/10.3389/fenvs.2020.579856

This article is part of the Research Topic Emerging Technologies and Techniques for Remote Sensing of Coastal and Inland Waters View all 14 articles

Sentinel-2 satellite imagery has been successfully used to map submerged seagrasses in clear waters, and surface-canopy forming seaweed habitats in a range of water types. We examined the ability to use Sentinel-2 remote sensing reflectance to classify fully submerged seagrass and seaweed habitats in optically complex, temperate waters within a high priority management region in Atlantic Canada. To do so, we determined the “best” Sentinel-2 image available between 2015 and 2019 based on tidal height, absence of sun glint and clouds, and water transparency. Using the full Sentinel-2 tile, we atmospherically corrected the image using ACOLITE’s dark spectrum fitting method. Our classification goal was a two-class prediction of vegetation presence and absence. Using information obtained from drop-camera surveys, the image was first partially classified using simple band thresholds based on the normalized difference vegetation index (NDVI), red/green ratio and the blue band. A random forest model was built to classify the remaining areas to a maximum depth of 10 m, the maximum depth at which field surveys were performed. The resulting habitat map had an overall accuracy of 79% and ∼231 km2 of vegetated habitat were predicted to occur (total area 345.15 km2). As expected, the classification performed best in regions dominated by bright sandy bare substrate, and dense dark vegetated beds. The classification performed less well in regions dominated by dark bare muddy substrate, whose spectra were similar to vegetated habitat, in pixels where vegetation density was low and mixed with other substrates, and in regions impacted by freshwater input. The maximum depth that bottom habitat was detectable also varied across the image. Leveraging the full capacity of the freely available Sentinel-2 satellite series with its high spatial resolution and resampling frequency, provides a new opportunity to generate large scale vegetation habitat maps, and examine how vegetation extent changes over time in Atlantic Canada, providing essential data layers to inform monitoring and management of macrophyte dominated habitats and the resulting ecosystem functions and services.

Seaweeds and seagrasses (marine macrophytes) are submerged aquatic vegetation found in nearshore coastal environments. In Atlantic Canada, seagrasses (primarily eelgrass, Zostera marina, rarely widgeon grass, Ruppia maritima) occur within subtidal soft-sedimentary habitats and seaweed canopies (dominated by brown algae in the Fucaceae and Laminariaceae families) occur along rocky habitats. In the rocky intertidal zone, Ascophyllum nodosum (rockweed), is the dominant habitat forming species in sheltered areas while Fucus spp. dominates along exposed areas, with a transition to kelps (e.g., Laminaria digitata and Saccharina latissima) in the subtidal whose canopy does not reach the surface. Coastal ecosystems which are dominated by seaweeds and seagrasses are some of the most productive habitats globally and provide several important ecosystem functions and services (Barbier et al., 2011). These include acting as ecosystem engineers to provide biogenic habitat structure, providing coastal protection against erosion, absorbing nutrient runoff, providing carbon storage, supporting biodiversity and fisheries, and generally acting as an indicator of overall ecosystem health (Orth et al., 2006; Schmidt et al., 2011; Duarte et al., 2013; Wong and Dowd, 2016; Teagle et al., 2017; Wong and Kay, 2019). In Atlantic Canada, and globally, seaweed and seagrass habitat, and ecological services, are under threat from stressors such as invasive species, climate change, coastal development, and nutrient loading (Waycott et al., 2009; Filbee-Dexter and Wernberg, 2018; Murphy et al., 2019). Tools for mapping and monitoring marine macrophyte distribution are important to understand and quantify habitat changes, particularly to inform decision making related to conservation areas for seaweed and eelgrass habitat, and resource management for commercially important seaweeds.

Satellite remote sensing has been used to map and monitor marine macrophyte distribution globally in optically shallow waters (Duffy et al., 2019; Kutser et al., 2020). Satellites measure the amount of sunlight reflected off of the seafloor at several wavelengths (including water-column attenuation) in sufficiently transparent waters, which can be classified using several approaches including empirical, image-based classification algorithms (e.g., O’Neill and Costa, 2013; Poursanidis et al., 2019), object-based techniques (e.g., Roelfsema et al., 2014; León-Pérez et al., 2019), or physics-based semi-analytical model inversion (e.g., Lee et al., 1999; McKinna et al., 2015). As the light travels from the sun, to the seafloor, and back to the satellite, it interacts with the atmosphere, which amounts to up to 90% of the top-of-atmosphere signal (Wang, 2010), sea surface, and the water column, necessitating the requirements for atmospheric (Vanhellemont and Ruddick, 2016; Vanhellemont, 2019), sun glint (Hedley et al., 2005; Kutser et al., 2009), and water column corrections (Zoffoli et al., 2014). Empirical, image-based classifications, which require in situ data to train an algorithm, are widely used to quantify bottom habitat. Historically the maximum likelihood classifier has been the preferred classification algorithm (Richards, 1986), however, machine learning algorithms such as support vector machines (Vapnick, 1995) and random forests (Breiman, 2001) have been recently demonstrated to perform better than the maximum likelihood classification (Marcello et al., 2018; Ha et al., 2020). Empirical methods are less sensitive to rigorous atmospheric and water-column corrections, but are not readily applicable to other regions (Islam et al., 2020). Object-based classifications operate on similar principles as image-based classifications, with the exception that the image is first segmented into many objects, and the classification is performed at the level of the object, opposed to the pixel (Roelfsema et al., 2014; Su and Huang, 2019). This is a hybrid approach, which can include non-spectral data layers during classification. Physics-based semi-analytical inversion models retrieve simultaneously the inherent optical properties (IOPs) of the water column (i.e., absorption and scattering coefficients), water depth and bottom reflectance (e.g., Lee et al., 1999; McKinna et al., 2015). This approach requires the development of spectral libraries for all optically active components but regional in situ data are not required for model training given the globality of the spectral libraries (Kutser et al., 2020). Its application to any water body requires highly accurate atmospheric correction to retrieve seafloor reflectance and identify bottom habitat. Satellite remote sensing of seagrass and coral habitat is widely used in tropical clear waters, where bottom habitat is readily detectable to great depths (<40 m) (Hossain et al., 2015; Kovacs et al., 2018; Wicaksono et al., 2019). Satellite remote sensing is also widely used in a range of water types for certain seaweed habitats, when the vegetation canopy reaches the surface, as the measured signal comes from the sea surface, opposed to the seafloor, and there is negligible interaction of the water-leaving signal with the water column (e.g., Schroeder et al., 2019; Bell et al., 2020; Mora-Soto et al., 2020). A more complicated classification question arises for submerged macrophytes in optically complex temperate waters, where high CDOM, suspended particulate matter, and phytoplankton concentration reduce the maximum depth at which the seafloor is visible compared to tropical habitats (3–10 m vs. <40 m). The use of satellite remote sensing in temperate habitats is becoming more common (e.g., Casal et al., 2011; O’Neill and Costa, 2013; Dierssen et al., 2019), and in the process, new methods are being developed to leverage the many benefits of satellite remote sensing to accurately quantify the distribution of marine macrophytes in areas where water transparency still permits bottom habitat mapping with passive sensors.

In the optically complex, temperate waters of Atlantic Canada there has been considerable interest in using remote sensing to classify marine macrophyte habitat using sonar, lidar, and optical satellites. Intertidal rockweed habitat along the south shore of Nova Scotia has been classified with lidar (Webster et al., 2020) and multispectral satellite sensors including Worldview and Quickbird imagery (Macdonald et al., 2012). Rockweed habitat can be easily identified using vegetation indices given its strong signal in the near-infrared (NIR) compared to its environment. In the subtidal zone, completely submerged kelp habitat has been quantified with Landsat along the Gaspé Peninsula in Quebec (Simms and Dubois, 2001), with SPOT-7 along the Mingan Archipelago also in Québec (St-Pierre and Gagnon, 2020) and with lidar along the south shore of Nova Scotia (Webster, 2018). Eelgrass habitat has been quantified with lidar (Webster et al., 2016), sonar (Vandermeulen, 2014; Barrell et al., 2015), and a variety of multispectral sensors including Worldview, Quickbird, and SPOT (Milton et al., 2009; Barrell et al., 2015; Wilson et al., 2019) at various sites along the exposed Atlantic coast of Nova Scotia and along the Northumberland Straight. The above listed studies used either an image-based classification generally based on the maximum likelihood classifier (Wilson et al., 2019; St-Pierre and Gagnon, 2020; Webster et al., 2020), or object-based classification (Milton et al., 2009; Barrell et al., 2015) to quantify marine macrophyte habitat at bay-wide scales with commercial satellites.

In this study, we were interested in understanding to what extent detailed marine macrophyte habitat could be classified using the freely available Senintel-2 satellite data series and an image-based classification procedure. Varying from previous work in the region, we aimed to classify marine macrophyte habitat at large spatial scales (hundreds of square kilometers), and with the exception of the Landsat study, with a freely available imaging platform, which allows for repeated surveys over yearly to decadal time-scales without prior tasking. We focused on a high-priority management region characterized by complex bathymetry and a multispecies environment, including fully submerged kelp and eelgrass beds, in an optically complex coastal environment. We explored the impact of various preprocessing steps such as water column corrections, and image-based classification algorithms such as maximum likelihood classification and machine learning classifiers. While our original goal was to differentiate between eelgrass and seaweed (primarily brown) habitat this was found not to be possible and maps were produced denoting vegetation presence and absence. The driver of this work was to develop a method framework for using Sentinel-2 data in a systematic manner to classify the large-scale distribution of marine macrophyte habitat, to be able to provide data layers for marine spatial planning, monitor marine macrophytes habitat extent, and consequently inform management decisions in high-priority regions (e.g., conservation). To support this, the final map classification is presented in both a binary presence-absence map, and probability of vegetated habitat to define a level of certainty in the map. To the best of our knowledge, our study is the first to use Sentinel-2 data to quantify marine macrophyte habitats in Atlantic Canada, which includes completely submerged kelp beds and eelgrass meadows.

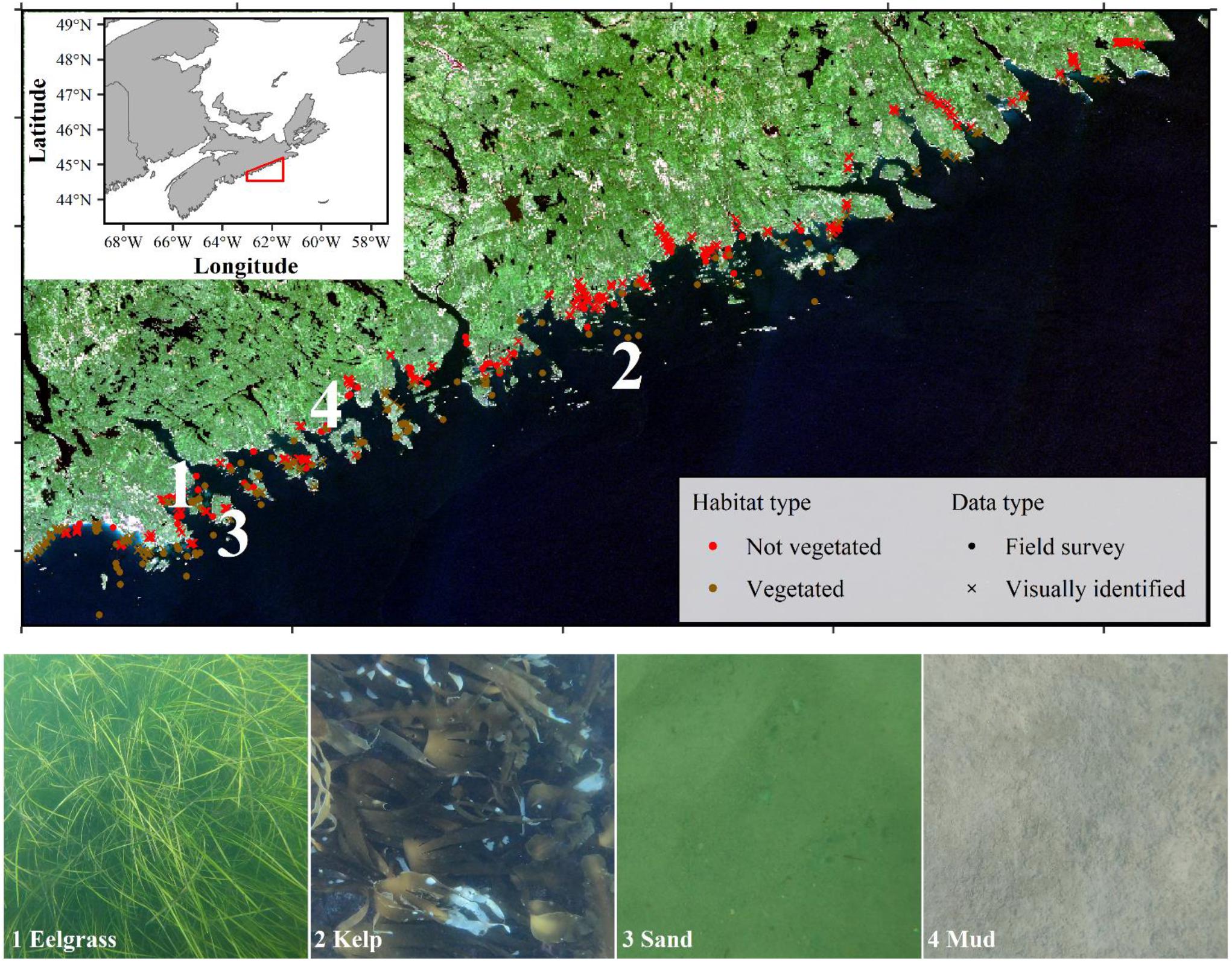

The Eastern Shore Islands (ESI) are an archipelago located along the Atlantic coast of Nova Scotia, Canada (Figure 1). The ESI archipelago is an important management area with most of the land already protected, and the marine environment under consideration for a marine protected area (DFO, 2019). The high management priority is given due to the relative pristine condition of the terrestrial and marine environments including several healthy eelgrass meadows, and rockweed and kelp beds, all important habitat forming species in the region, providing three-dimensional structure and nursery habitat for many marine species (Schmidt et al., 2011; Vercaemer et al., 2018). The ESI archipelago is characterized by a complex coastline including rocky shores, sandy beaches, and salt marshes, each at a varied degree of exposure to the Atlantic Ocean. The numerous islands result in a complex bathymetry with shallow depths (<10 m) extending kilometers offshore. This results in an optically complex environment for satellite remote sensing where bottom substrate and water transparency are highly variable.

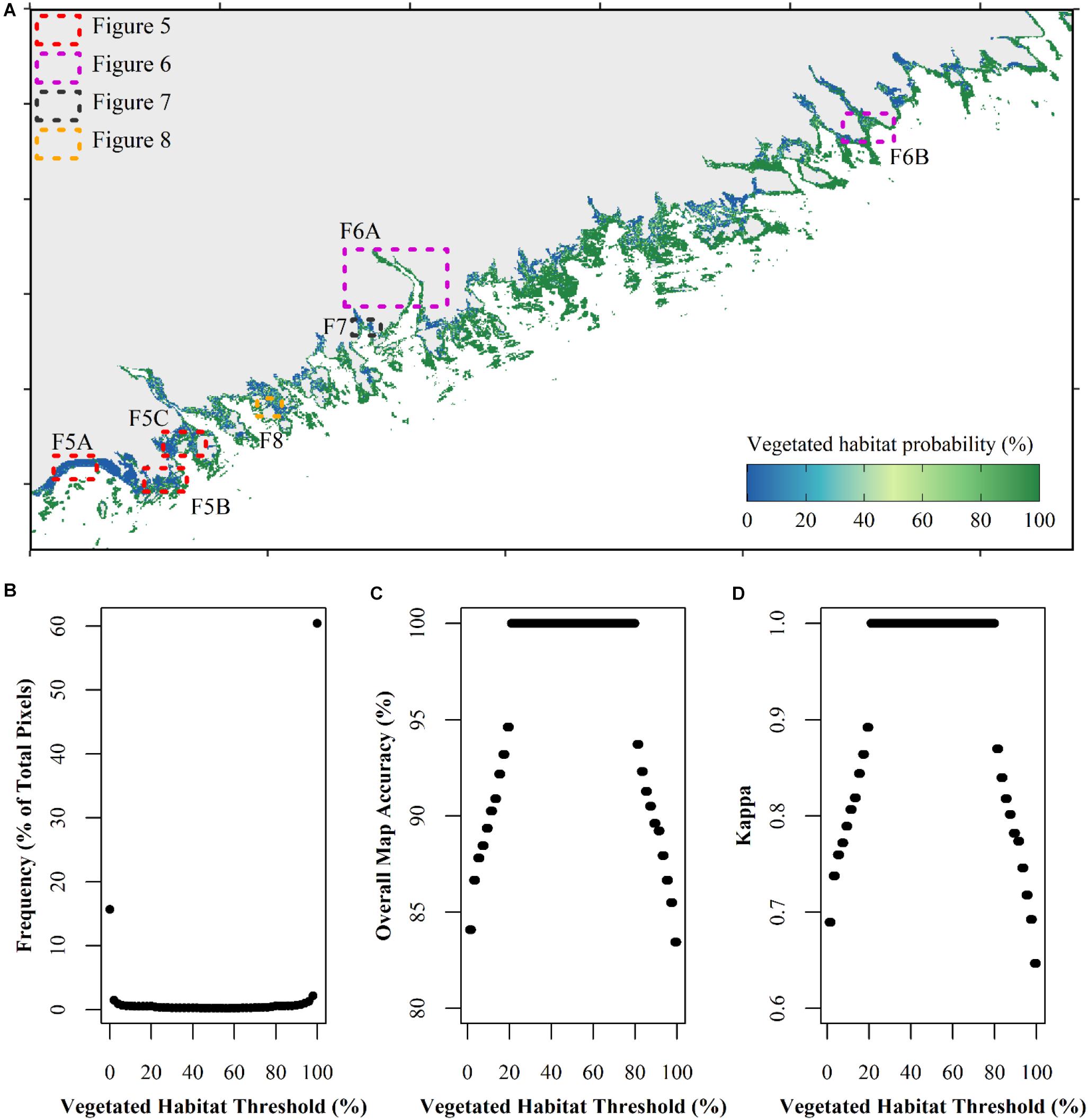

Figure 1. Atmospherically corrected true-color composite of the Sentinel-2 tile (T20NQ) of the Eastern Shore Islands in Nova Scotia, Canada acquired on September 13, 2016. Dots indicate stations where field survey data were collected, triangles indicate visually identified points, colors show where vegetated habitat is known and not known to occur. Numbers relate to image-stills of select habitat types occurring in the region.

Drop camera field surveys were conducted to characterize bottom type as well as eelgrass and seaweed presence/absence from September to October 2019. Stations (n = 128) were pre-identified based on depth (0–10 m) and substrate type (5 classifications ranging from soft mud to hard bottom) to allow stratified sampling across conditions in which both vegetated habitat types are found (hard versus soft substrate). At each station (Figure 1), an underwater video system (consisting of a GoPro Hero 7 camera inside a waterproof housing with lasers attached for scaling; Pro Squid, Spot X underwater Vision1) was deployed. The system was connected to a topside console that allowed operators to view video feed and record GPS position. The camera was lowered into the water to approximately 1 m above the sea bottom, and the boat was allowed to drift for 1–2 min while the camera video recorded the bottom substrate. Substrate type (i.e., bare, eelgrass, or seaweed) was visually determined from the live feed and then validated from the video at a later date. For image classification, all points were labeled as bare or vegetated following the video validation, as exploratory analysis showed it was not possible to separate eelgrass and seaweed habitat (results not shown).

There was about a 1- to 3-year period between the field surveys (2017–2019) and image acquisition (2016) depending on the source of the in situ data. While it is not uncommon to have a temporal gap between the field survey and image acquisition (e.g., O’Neill and Costa, 2013; Poursanidis et al., 2019), we assumed that large-scale vegetation distribution patterns would have minimally changed during the time period, particularly given that both the image acquisition and field survey were obtained in the same season. Yet, given that areas with patchy/mixed habitat types might have undergone slight shifts in vegetation density, particularly since the region was impacted by the passage of Hurricane Dorian in early September 2019, days before the field surveys were performed, an additional data quality control step was performed. The video footage for each field survey point was examined in relation to the true color composite of the satellite imagery and the spectra, to identify areas of mixed habitat types or very low density of vegetation coverage. These stations (n = 32) were omitted from image classification resulting in using 96 stations giving 218 data points. Each drift transect consisted of 2–3 observations depending on the number of GPS coordinates obtained. The quality control step was done both to use pure endmembers for model training, and to account for slight shifts in habitat, which are more likely to occur in fragmented areas. Percent cover was calculated for select video frames per station, and in general average vegetated percent cover was >75%, often higher, in stations that passed quality control. Additionally, a visual assessment between a Worldview-3 image acquired in August 2019 over a large portion of the tile, and the best Sentinel-2 image acquired in 2016 (see section “Satellite Data, Atmospheric Correction, and Land Masking”) was performed to ensure that large-scale vegetation patterns were fairly stable between 2016 and 2019.

In addition to the 2019 field survey (n = 218), additional field data from 2017 to 2019 were also included (n = 15; Wong et al. Unpublished data). Lastly some visually identified points from the imagery were added to assist in model training for habitat types missed in the field surveys for shallow bare substrate (n = 294), shallow vegetation (n = 202), and bare sand at moderate depths (n = 50; Figure 1). These visually identified points were selected from areas that were easily interpretable, were spaced evenly to cover the entire Sentinel-2 tile, and were added in an iterative approach to provide a more accurate vegetation map (Vahtmäe and Kutser, 2013). All field survey data and visually identified points were labeled as vegetated or non-vegetated for a two-class binary classification of vegetation presence and absence for a total of 779 data points (Table 1). Therefore, three separate data sources were used in model building/evaluation to maximize the amount of information available to the classification algorithm. The number of data points used in the current study lies within the number of points used for other coastal Sentinel-2 studies ranging from <150 (e.g., Fauzan et al., 2017; Poursanidis et al., 2019) to >1,000 (e.g., Traganos et al., 2018; Yucel-Gier et al., 2020).

Sentinel-2 is a European observation system composed of two identical satellites (A and B launched in 2015 and 2017, respectively), that provide images every 5 days at the equator and every 2–3 days at 45°N (Drusch et al., 2012). Sentinel-2 has a swath width of 290 km and provides 13 bands at a radiometric resolution of 12-bits and a spatial resolution from 10 to 60-m. At the spatial resolution of 10-m, four bands are available with centered wavelengths of ∼490 nm (band 2 blue), ∼560 nm (band 3 green), ∼665 nm (band 4 red), and ∼833 nm (band 8 NIR). These were the only bands used in the study to take advantage of the high spatial resolution. Level-1C products are geolocated and radiometrically corrected to top-of-atmosphere reflectances in local UTM coordinates and are available in 100 × 100 km tiles. Images can be freely downloaded, pending a registration, from Copernicus Open Access Hub2.

To determine the best image for classification, we assembled a catalog of available Sentinel-2 imagery for our region of interest between the launch of Sentinel-2 in 2015 to 2019 (see Supplementary Material 1). From the first day imagery was available for our region of interest on September 12, 2015 to December 31, 2019, we identified 464 days where the entire region, or a part of it, was imaged by Sentinel-2. Of which, 320 days were immediately discarded due to heavy cloud cover, leaving 144 days, which may be suitable for image classification (Supplementary Figure 1.1). All 2015 images were cloud covered, but in general from 2016 to 2019 at least one cloud free image existed per month and year. The tidal height at time of image acquisition varied between low and high tides, and 57 days (out of the 144) were impacted by different degrees of sunglint, both of which can impact classification success. Additionally, it was noted that water transparency varied highly across these cloud-free image dates and bottom habitat was not always visible in the Sentinel-2 imagery. Lastly, due to the various satellite tracks, only 64 days (out of the 144) imaged the entire region, the rest partially imaged the region of interest. This resulted in about 14% of all available images that were potentially suitable for bottom-habitat monitoring.

The best Sentinel-2 image from 2015 to 2019 for our region of interest was selected based on tidal height, the absence of clouds and sun glint, minimal wave action, and low turbidity. This image was acquired on September 13, 2016 at 15:07 UTC, within 10 min of a low tide (15:17 UTC at a tidal height3 of 0.58 m). The full Sentinel-2 tile (T20NQ) Level 1C image was downloaded for analysis. This image was assumed to represent the best-case scenario for image quality to understand what information about marine macrophyte coverage can optimally be extracted from Sentinel-2 for Atlantic Canada. A method workflow is presented in Figure 2 and described in Sections “Satellite Data, Atmospheric Correction, and Land Masking” and “Image Classification.”

The full tile image was atmospherically corrected with ACOLITE (Python v.20190326.0) using the dark spectrum fitting approach (Vanhellemont and Ruddick, 2016; Vanhellemont, 2019). Previous work with Sentinel-2 in another region of Atlantic Canada explored the use of ACOLITE to atmospherically correct Sentinel-2 images for bottom habitat identification and found the dark spectrum fitting method to be superior to the exponential approach (Wilson and Devred, 2019). Dark spectrum fitting is an entirely automated, image-based approach to aerosol calculation which makes no prior assumptions on which bands should be used to calculate atmospheric properties (for instance the red or NIR signal being negligible). It assumes that a tile contains pixels whose surface reflectance should be approximately zero for at least one band. Using the dark targets, and various aerosol models, a final model is chosen based on the band which defines the lowest atmospheric path reflectance. Rayleigh scattering is accounted for via lookup tables based on the 6SV radiative transfer model. All ACOLITE settings were left at their default values except for masking. All l2w_mask settings were disabled to allow for more fine-tuned masking.

Generating accurate land masks is an essential preprocessing step when working with coastal near-shore environments, where sharp transitions in the magnitude of surface reflectance occur. ACOLITE default mask settings define land values where top-of-atmosphere reflectance (ρt) in band 11 (SWIR; central wavelength ∼1,610 nm; 20-m resolution) are greater than 0.0215. Previous work in another region of Atlantic Canada found this threshold inadequate to define near-shore environments, and that an appropriate threshold was image dependent (Wilson and Devred, 2019). Additionally, as the aim of our study was to eventually use Sentinel-2 for repeated image classification over multiple years, a standard land mask was desired that would not be impacted by tidal height. Therefore, a high tide image of the same tile (T20NQ) was used to generate a standard regional land mask that could be used across multiple image dates (Roelfsema et al., 2009). High-tide was chosen over low-tide to include the entire range of habitat that marine macrophytes exist. This image was acquired on August 24, 2016 at 15:08 UTC corresponding to high tide (15:08 UTC at a tidal height of 1.62 m) and pixels with ρt ≥ 0.07 for band 11 were defined as land based on visual inspection and masked. While SWIR is strongly absorbed by water and therefore provides better delimitation of land and water surfaces than NIR, the different spatial resolution between the 10-m bands used in image classification, and the 20-m band used to generate a land mask should be accounted for. To do so, mixed pixels (i.e., containing both land and water areas) at the 20-m resolution were masked with the Normalized Difference Vegetation Index (NDVI) to identify any vegetated land pixels. Floating algae index (FAI) was not explored as it requires information from the 20-m SWIR bands. The August 24, 2016 image was atmospherically corrected with ACOLITE and surface reflectances of band 4 and band 8 were used to calculate NDVI. Pixels where NDVI ≥ 0.7 were identified as land vegetation based on visual examination and masked. This threshold was defined as the lowest threshold which did not include rockweed beds, which may float at the surface at high tide. As a final step, areas of freshwater (e.g., lakes) were manually masked.

Our regional land mask was applied to the September 13, 2016 image for the 10-m bands (i.e., bands 2, 3, 4, and 8). Next, all pixels in water depths ≥10 m were masked out to correspond with the maximum depth of the field survey (see section “Field Surveys”). The bathymetry was obtained from the Canadian Hydrographic Services multibeam data (Greenlaw Unpublished data) at a spatial resolution of 30-m and was resampled to a 10-m resolution with bilinear interpolation using the raster package (Hijmans, 2019) in R (R Core Team., 2019). As a last quality control step, pixels with at least one negative remote sensing reflectance in any of the four 10-m bands were discarded from the study (25 pixels; <0.001% of total pixels).

No water-column correction was performed on the imagery. While we explored the use of the commonly employed depth invariant indices (Lyzenga, 1978; results not shown here), we could not assume that water transparency was consistent across our study area breaking a key assumption of the approach (Zoffoli et al., 2014). Regardless, water column corrections based on depth invariant indices and more analytical bio-optical modeling have been shown to have mixed effects on image classification success (e.g., Marcello et al., 2018; Poursanidis et al., 2018; Traganos et al., 2018) and are commonly not applied (e.g., Hogrefe et al., 2014; Wicaksono and Lazuardi, 2018). In addition, given that we only used the four wavebands at 10-m resolution, resolving depth, water column and bottom properties might appear as an overoptimistic task.

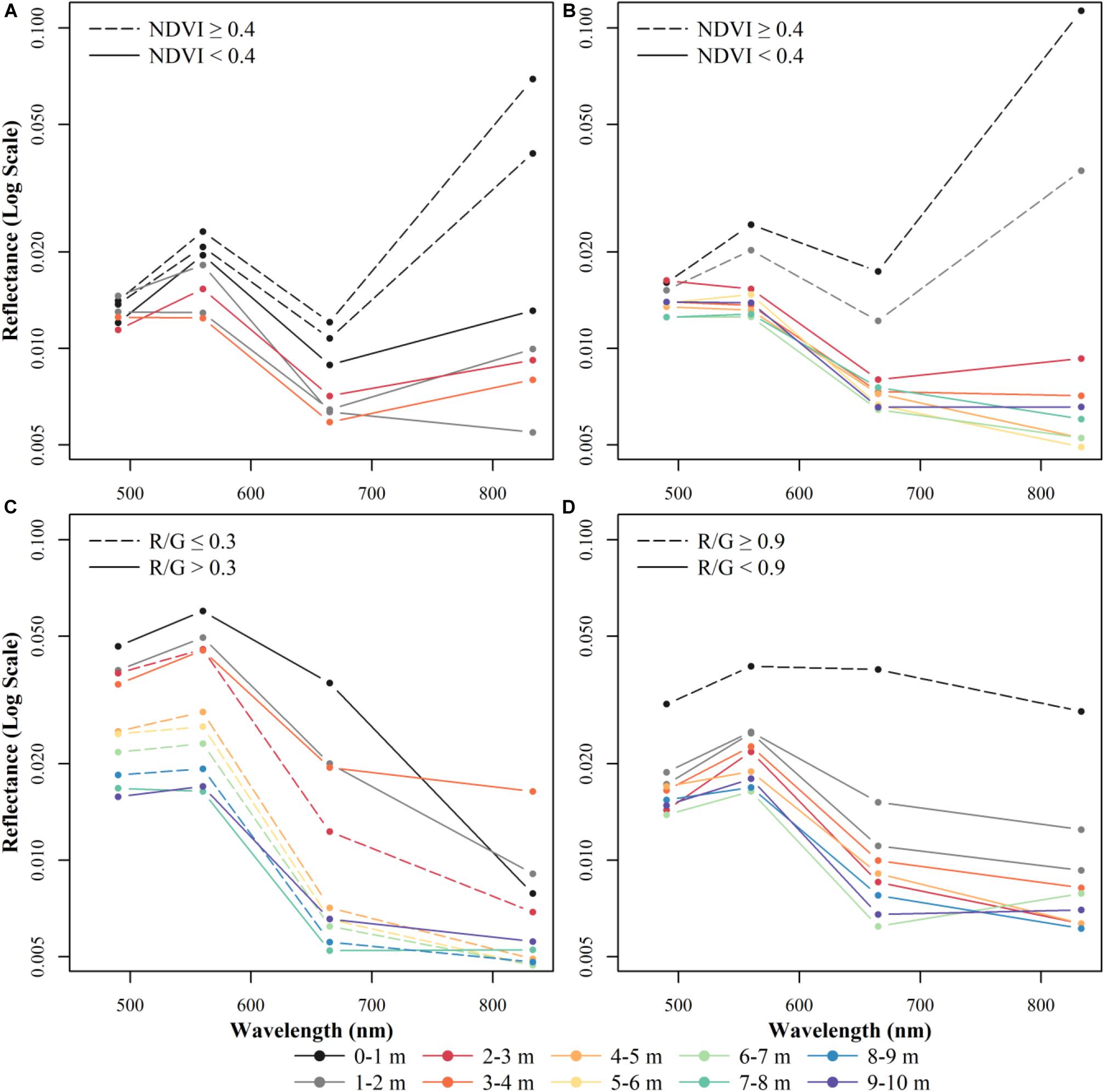

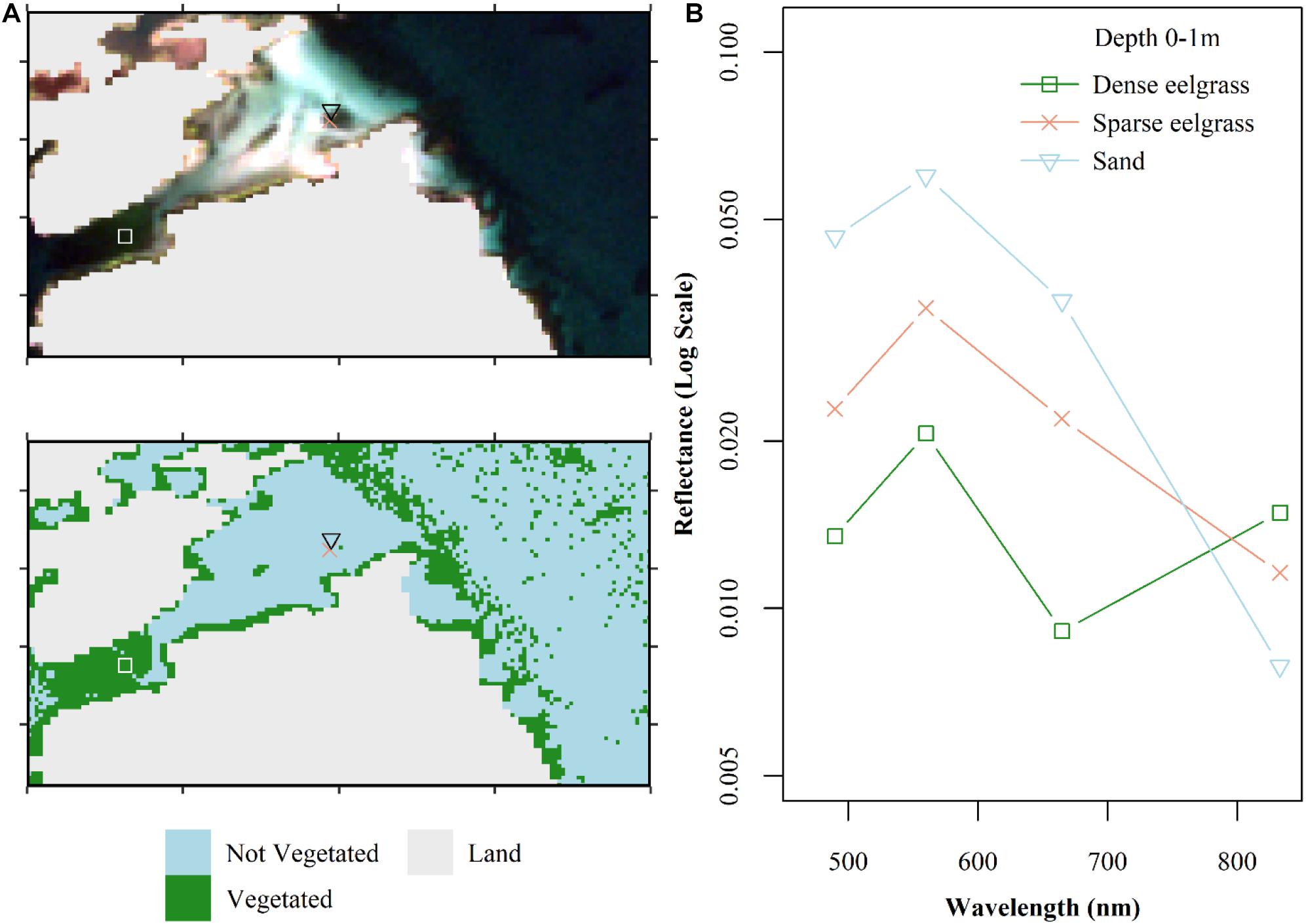

Our original classification goal was to differentiate between eelgrass and seaweed habitat but this was not found to be possible (results not shown) as their spectral signatures were very similar for the Sentinel-2 three visible bands (see Figure 3 and section “Results”). We therefore adapted our classification goal from species distribution mapping to detect vegetated versus non-vegetated habitat (see Figure 2 for an organization of data processing). Three common supervised (i.e., requiring a priori knowledge of habitat type), and one unsupervised image classification procedures were tried as a preliminary data exploration (Supplementary Table 2.1). All analyses were performed on remote sensing reflectance using R version 3.6.0 (R Core Team., 2019) with the raster (Hijmans, 2019), caret (Kuhn, 2019), RSToolbox (Leutner et al., 2019), irr (Gamer et al., 2019), and readxl packages (Wickham and Bryan, 2019). The first approach was a supervised statistical classification with maximum likelihood classification (Richards, 1986). Maximum likelihood is a simple approach, which assigns a pixel to the class it has the highest probability of being a member of. Maximum likelihood has been widely used in remote sensing studies; however, it requires many training points across all habitat (class) types, and that the remote sensing reflectance within each class follows a normal distribution. More recently, remote sensing studies have focused on machine learning classifiers that do not make any assumptions on data distributions and require fewer training points, notably support vector machines (SVM) and random forests (e.g., Traganos and Reinartz, 2018b; Poursanidis et al., 2019). The SVM approach differentiates classes based on defining the optimal hyperplane between the classes and can separate non-linearity by applying a kernel function. As such, we explored the use of SVM with a radial basis function kernel (Vapnick, 1995). Random forests build a collection of decision trees, and randomly sample these trees to create a final ensemble model, which is a robust classifier to outliers and noisy training data (Breiman, 2001). We lastly compared the supervised classifiers to an unsupervised k-means analysis (MacQueen, 1967). Following this preliminary data exploration (see Supplementary Material 2), the random forest algorithm was found to be the preferred classifier. As expected, the supervised classifiers outperformed the k-means classification, even when the k-means was performed on shallow depths (O’Neill and Costa, 2013), and the machine learning classifiers outperformed the maximum likelihood classification based on maximizing map accuracies and kappa (Supplementary Tables 2.2, 2.3; Traganos and Reinartz, 2018b; Ha et al., 2020). While SVM produced higher overall map accuracies and kappa coefficient than random forests (Supplementary Table 2.3), no threshold could be identified to correctly classify all data points in the final map (Supplementary Figure 2.1 and see following paragraphs). Additionally, visual examination of the imagery demonstrated that the random forests and SVM classifications were almost identical (Supplementary Figure 2.2). Therefore, the random forests approach was found to be the preferred classifier as it required significantly less model tuning, both in time, number of parameters, and dependency on kernel choice (Supplementary Table 2.1). It produced comparable habitat maps to the SVM classification with higher accuracy from the cross-validation runs (Supplementary Figure 2.2), and a threshold could be identified in the final map to correctly classify all data points (Supplementary Figure 2.1).

Figure 3. Example spectra from representative field survey points across multiple depths for (A) eelgrass, (B) seaweed, (C) sand, (D) mud. Dashed lines indicate pixels that would be classified using the NDVI or R/G band thresholds. Solid lines indicate pixels classified with the random forests model. Note that seaweed at 0–3 m, mud at 0–1 m, and sand at 1–2, 4–7, and 8–9 m of depth is based on visually identified points. No non-patchy eelgrass habitat was sampled at >4 m water depths and visually identified points at these depths could not be labeled with certainty to be an eelgrass bed (instead of a seaweed dominated habitat).

The full Sentinel-2 tile was classified as follows (Figure 2). First, intertidal, and very shallow subtidal pixels with canopy near-surface vegetation were classified using NDVI. Here, very shallow generally corresponded to depth ≤2 m, although we did not compare canopy height relative to water depth which would provide a more accurate threshold for vegetation detection with NDVI. NDVI was calculated with band 4 and band 8, and all pixels with a value greater or equal to 0.4 were assumed to be vegetated (Barillé et al., 2010). Second, for all pixels with NDVI < 0.4, band thresholds were used to classify some non-vegetated habitats. A threshold for band 2 (blue) of ≥0.035 was assigned to mask out the remaining “bright” pixels, which were assumed to be non-vegetated substrate such as uncovered intertidal areas to very shallow (<2–3 m) sand/rocks, or breaking waves on shore. Shallow to moderate depth bare sediment was classified using the Red/Green ratio (Band 4 divided by Band 3). Pixels with a Red/Green ratio ≤ 0.3 were classified as bare sand as in Dierssen et al. (2019), and pixels with a Red/Green ratio ≥ 0.9 were classified as mud (i.e., dark sediment) or contaminated by fresh tannic water runoff which is common along beaches in the region.

All remaining pixels (i.e., NDVI < 0.4 and Blue < 0.035 and 0.3 < Red/Green < 0.9), were classified using the random forests approach as described in Section “Image Classification” (Figure 2). The three visible wavelengths were used in the random forests model (bands 2, 3, 4; blue, green, red) for a two-class binary prediction of vegetation presence from absence. For differentiation of major macrophyte groups (e.g., eelgrass from brown seaweed) in these temperate waters, the maximum depth for image classification would need to be reduced from 10 to 2–3 m, significantly more field survey points at overlapping depths for the various vegetation types would need to be collected, and imagery with greater spectral (e.g., hyperspectral) and/or spatial resolution would be required (Kutser et al., 2020; Vahtmäe et al., 2020). In our study region, the three visible bands at 10-m resolution did not provide enough information to separate seagrass from seaweed dominated habitat.

Random forest model tuning with the “rf” method in R requires defining the number of random predictors to select at each branch of the tree. An initial model was developed with repeated k-fold cross-validations with fivefolds repeated 10 times to determine the best number of predictors. With model tuning complete, all training data were partitioned into fivefolds repeated 10 times. A random forests model was built on each partition’s training data, then predicted onto the full data set. For each partition’s test data, the withheld points were used to generate a confusion matrix including overall map accuracy, user accuracy, producer accuracy, and a Kappa coefficient with z-tests for significance from zero (Foody, 2002). This cumulated in 50 different models, habitat maps, and confusion matrices. A final confusion matrix was calculated based on the average accuracy metrics from the cross-validation runs. To generate the final map classification, the probability that a pixel was classified as vegetation was calculated by summing the number of times a pixel was classified as vegetation, divided by 50 (number of cross-validation runs) and converted to a percentage. Pixels labeled as vegetated with NDVI were labeled as 100%, and pixels labeled as bare habitat with the blue band or Red/Green ratio were labeled as 0%. Delivering a final map classification as a percentage demonstrates the level of confidence an end-user should have when using the vegetation map. Yet, if a binary map classification is required for presence from absence, we explored what vegetation probability threshold choice would maximize overall map accuracy and kappa using all data points in Table 1. For instance, if the final map classification was thresholded at 50%, where pixels ≥50% probability were labeled as vegetated and pixels with <50% were labeled as not-vegetated, we then compared how overall map accuracy and kappa would change relative to a 60% threshold.

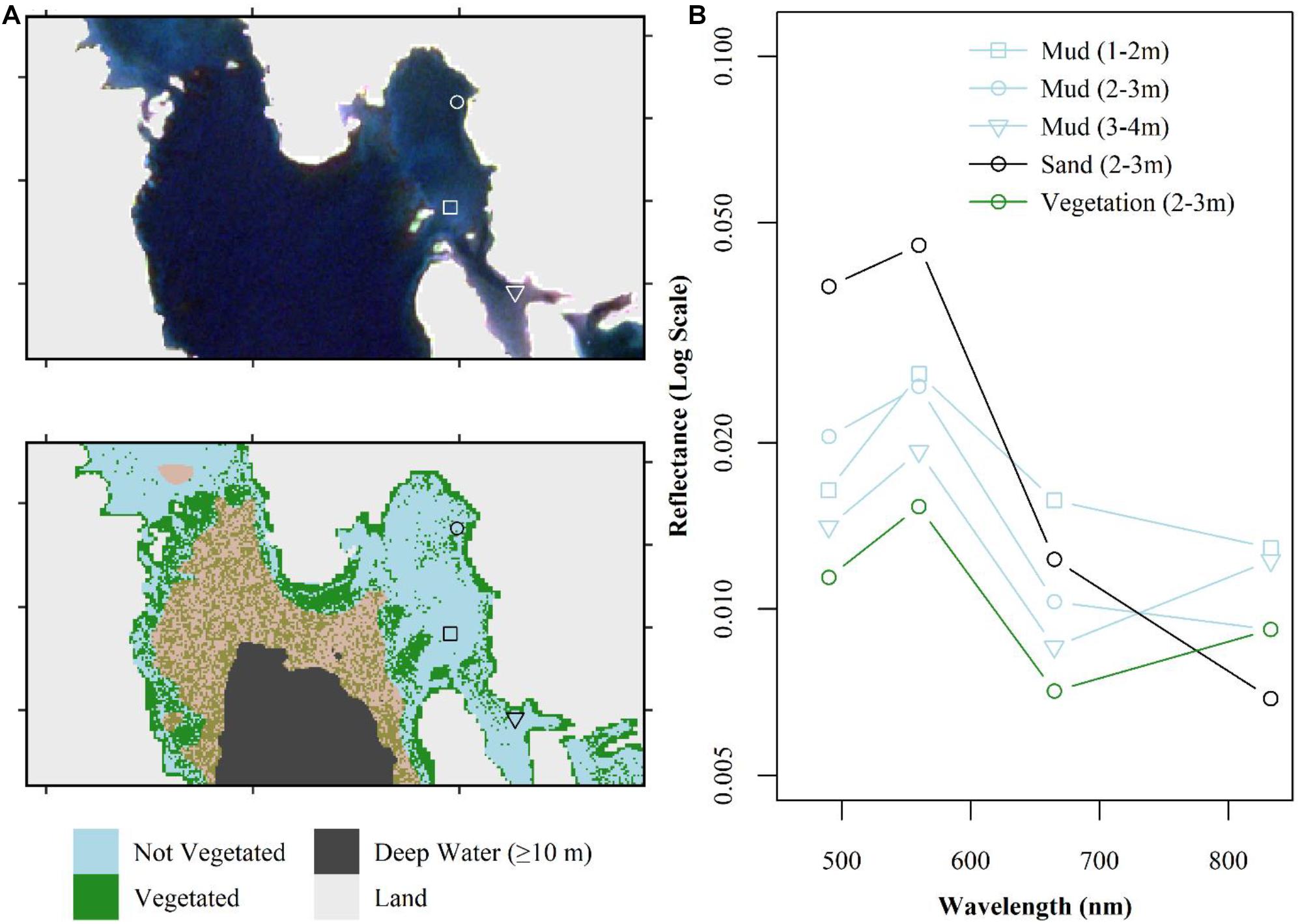

We examined changes in the spectral signatures of remote sensing reflectance for pixels extracted from the best Sentinel-2 image over four known habitat types: sand, mud, eelgrass, and seaweed (including rockweed and kelp habitat which are structured by vertical zonation patterns; Figure 3). As expected, sand had the highest reflectance values, with a clear decrease in magnitude as the wavelength increases (Figure 3C). Reflectance spectra from muddy substrate (Figure 3D) were dissimilar to the ones from sandy substrate with lower absolute reflectance values at comparable depths, and a weak spectral dependence at all depths, compared to the sharp decrease in the red and NIR for sand. For mud substrate, the red and NIR bands showed the clearest decrease as a function of depth. Both eelgrass (Figure 3A) and seaweed (Figure 3B) showed lower reflectance in the visible part of the spectrum at comparable depths than the reflectance for both sand and mud bare substrate habitats. Subtle differences are visible between the two vegetation types, where eelgrass has a higher Green/Blue ratio but a lower NIR/Red ratio. However, differences in vegetation type could not be differentiated by any image classifier and limited overlapping field points at comparable depths made a true comparison of the ratios difficult (Supplementary Figure 3.1 in Supplementary Material 3).

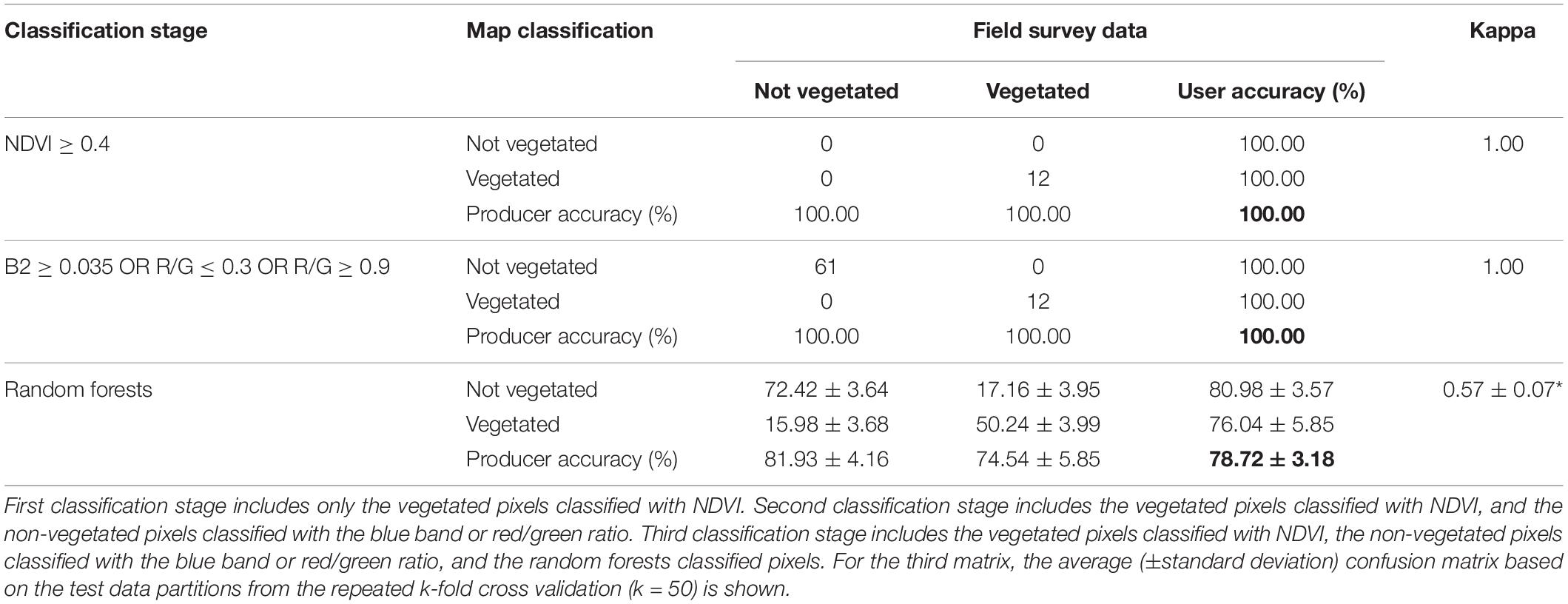

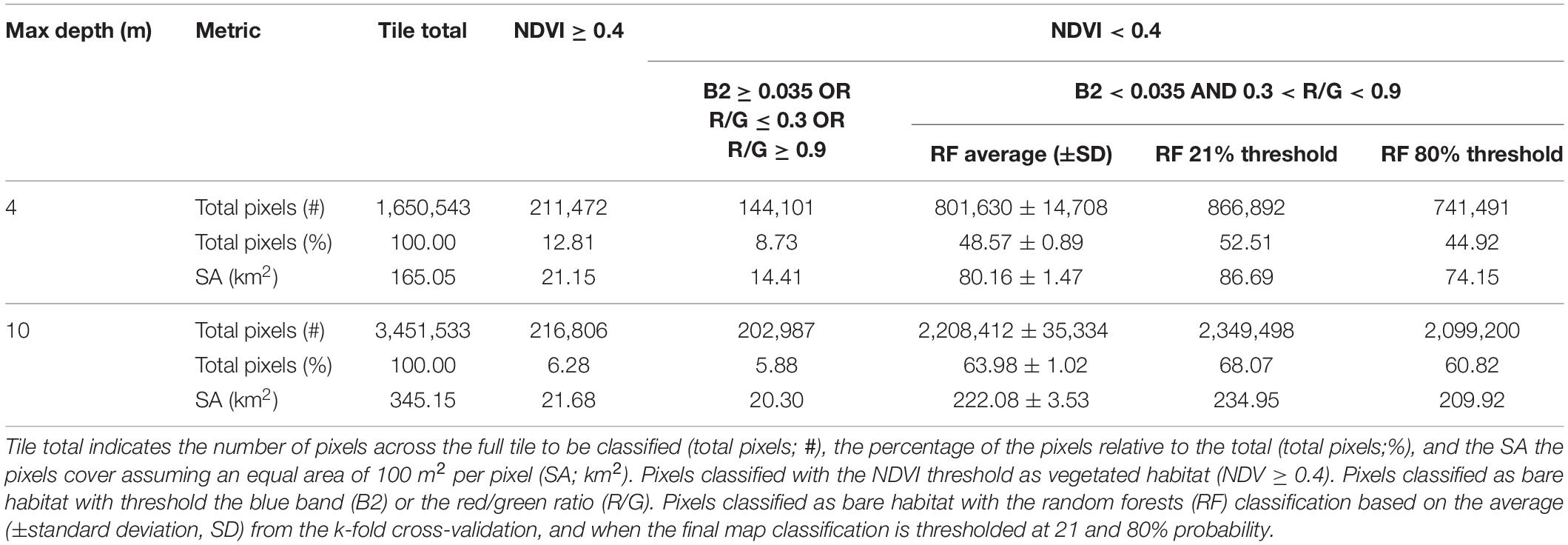

To classify the best image, the threshold for NDVI indicating vegetated habitat and the thresholds for non-vegetated habitat were set to perfectly classify (i.e., 100% classification success) the training points located within those values (Table 2). A total of 12 data points occurred within NDVI ≥ 0.4, and this threshold classified ∼6% of the pixels in the tile (Table 3). A total of 61 data points occurred within the non-vegetated thresholds (Table 2) and classified ∼6% of the pixels in the tile (Table 3). The remaining pixels (n = 3,031,740) were classified with a random forests model. Following the repeated k-fold cross validation of the random forests model, the final average overall map accuracy was 79% with an average moderate kappa value of 0.57 based on the withheld test data partitions (Table 2). Non-vegetated habitat had ∼5% higher user and producer accuracy for non-vegetated habitat, relative to the user and producer accuracy of vegetated habitat, indicating the classification was slightly better at predicting absence of vegetation.

Table 2. Confusion matrix after each classification step has been added in. Overall map accuracy is bolded. Kappa significance indicated by asterisk (*).

Table 3. Number and percentage of pixels and surface area (SA) of habitat classified across various classification steps and water depth.

The probability that a pixel was classified as vegetated with the random forests model was determined by summing the number of times a pixel was classified as vegetation, divided by 50 (number of cross-validation runs) and converted to a percentage (Figure 4A). In this classification map, 76% of the pixels were always classified the same in all 50 cross-validation runs where 16% (474,937) of the pixels were always classified as bare (non-vegetated) habitat, and 60% (1,831,911) were always classified as vegetated habitat by the random forests model (Figure 4B). When the threshold based classified pixels were included in the final classification map as either 0% (non-vegetated thresholds) or 100% (NDVI ≥ 0.4; total n = 3,451,533), we then explored the effect of thresholding the habitat probability map between 1 and 100% on overall map accuracy (Figure 4C) and kappa (Figure 4D) to determine an appropriate threshold for a binary map classification. When a threshold of 21% was chosen, meaning that any value ≥21% was labeled as vegetated habitat and any value <21% was labeled as non-vegetated, all data points were classified correctly resulting in an overall accuracy of 100% and a kappa of 1. This trend persisted until a vegetated threshold of ≥80%, where at vegetated thresholds >80% (i.e., 81% and higher) overall map accuracy and kappa began to decrease again.

Figure 4. Pre-threshold vegetation map demonstrating the probability that a pixel contains vegetated habitat with land (light gray) and deep water (≥10 m; white) masked (A). Pixels classified as vegetated or non-vegetated with the threshold classification were labeled as 100 or 0%, respectively. All other pixels were classified with the random forests classifier and probability was determined as the number of times a pixel was classified as vegetated divided by 50. Polygons with dashed borders indicate the location of zoomed in boxes in the following figures. The number of pixels at each probability value (B). The effect of various threshold choices for the map in (A) on overall map accuracy (C) and kappa value (D) using all data points (see Table 1).

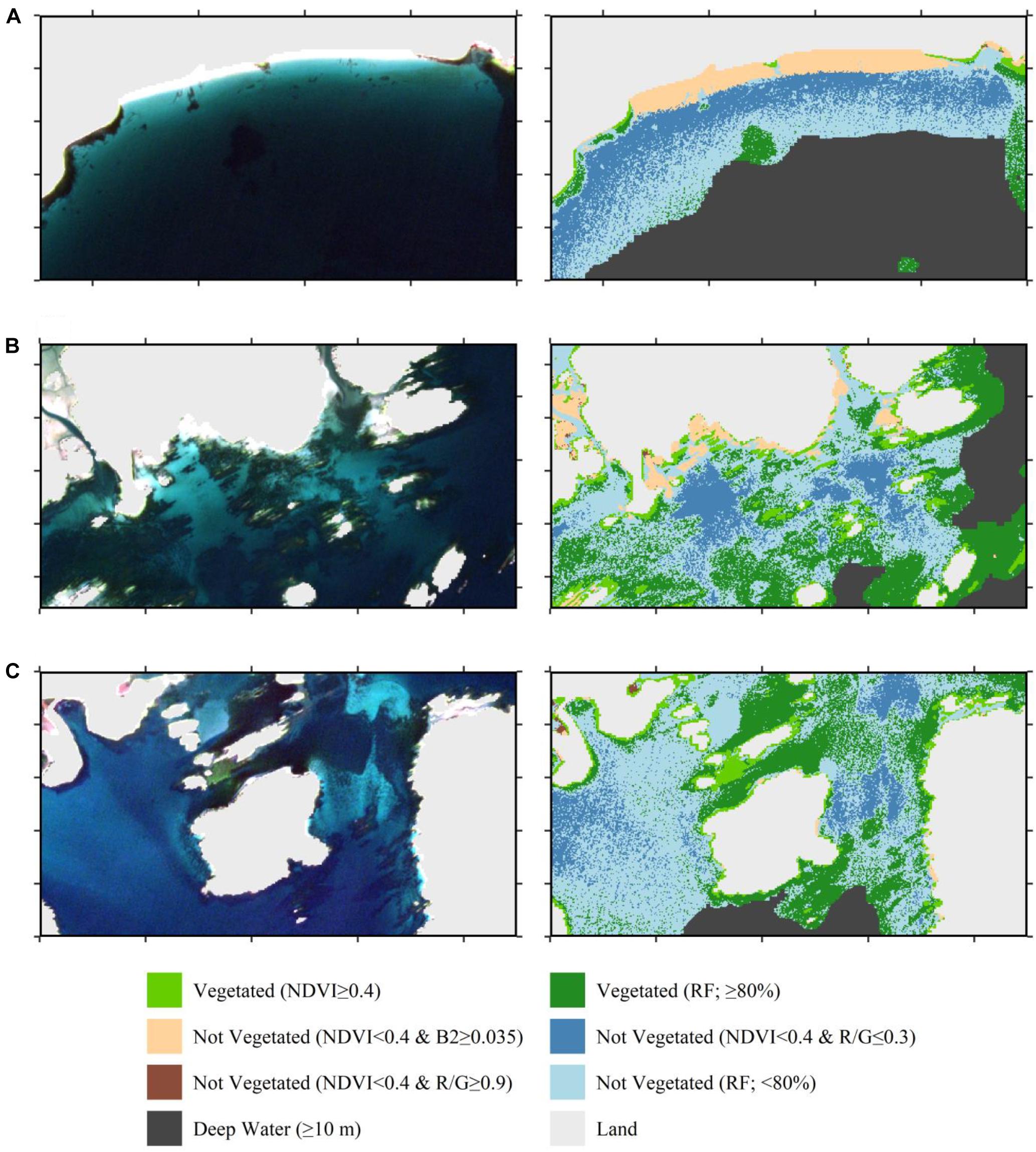

Our classification scheme applied to Sentinel-2 data performed best at identifying bright sandy habitats, and dense vegetated beds (Figure 5), which can be expected as they represent the two most distanced endmembers. The blue threshold (0.035) classified bright, bare sandy habitats to depths of 2–3 m (Figure 3). The R/G threshold of 0.3 classified bare sandy habitats from 2–3 m to roughly 7–8 m. The NDVI threshold classified both intertidal/shallow seaweed beds and shallow eelgrass beds as vegetated. The random forests classifier was able to differentiate vegetated from non-vegetated habitat to the edge of the 10 m deep water mask (Figure 5). However, this maximum depth at which bottom habitat could be classified was not consistent across the tile (Figure 6). For instance, in areas affected by freshwater runoff, even bright sand was misclassified at depths >5 m (Figure 6A), and in more estuarine regions almost all bottom habitat is classified as vegetated habitat (Figure 6B). While limited field survey data are available in estuarine regions to confirm this, it appears that freshwater with high concentrations of CDOM reduces the water depth at which the sea floor is visible due to strong absorption. These areas (i.e., pixels) present reflectance spectra similar to the ones of optically deep water even in regions of very shallow water depths. As the distance from the river increased, water transparency increased, and bottom habitat gradually became visible in seaward estuarine regions (Figure 6).

Figure 5. Finalized classification of the Sentinel-2 image from September 13, 2016 highlighting three example regions where the classification performed well including the differences between the classification stages. Left column is the true color composite. Right column is the map classification for the same region. (A) A predominantly sandy beach with distinct seaweed patches (F5A in Figure 4). (B) Mixed seaweed and eelgrass habitat interspersed with sandy habitat (F5B in Figure 4). (C) Large dense eelgrass meadows and separate kelp forests surrounded by bare substrate (F5C in Figure 4). Knowledge of vegetation type was from the field survey data only and not the map classification.

Figure 6. Finalized classification of the Sentinel-2 image from September 13, 2016 highlighting variance in maximum depth the classifier was successful. Left column is the true color composite. Right column is the map classification for the same region thresholded at 80% probability of being vegetated. (A) A sandy beach at the edge of an estuary influenced by freshwater input was successfully classified to ∼5 m, waters at depth from 5.5 to 10 m shown with transparent yellow overlay (F6A in Figure 4). (B) An estuarine brackish environment by freshwater input was successfully classified to ∼2 m, waters at depth from 2.5 to 10 m shown with transparent yellow overlay (“brown” in the figure; F6B in Figure 4).

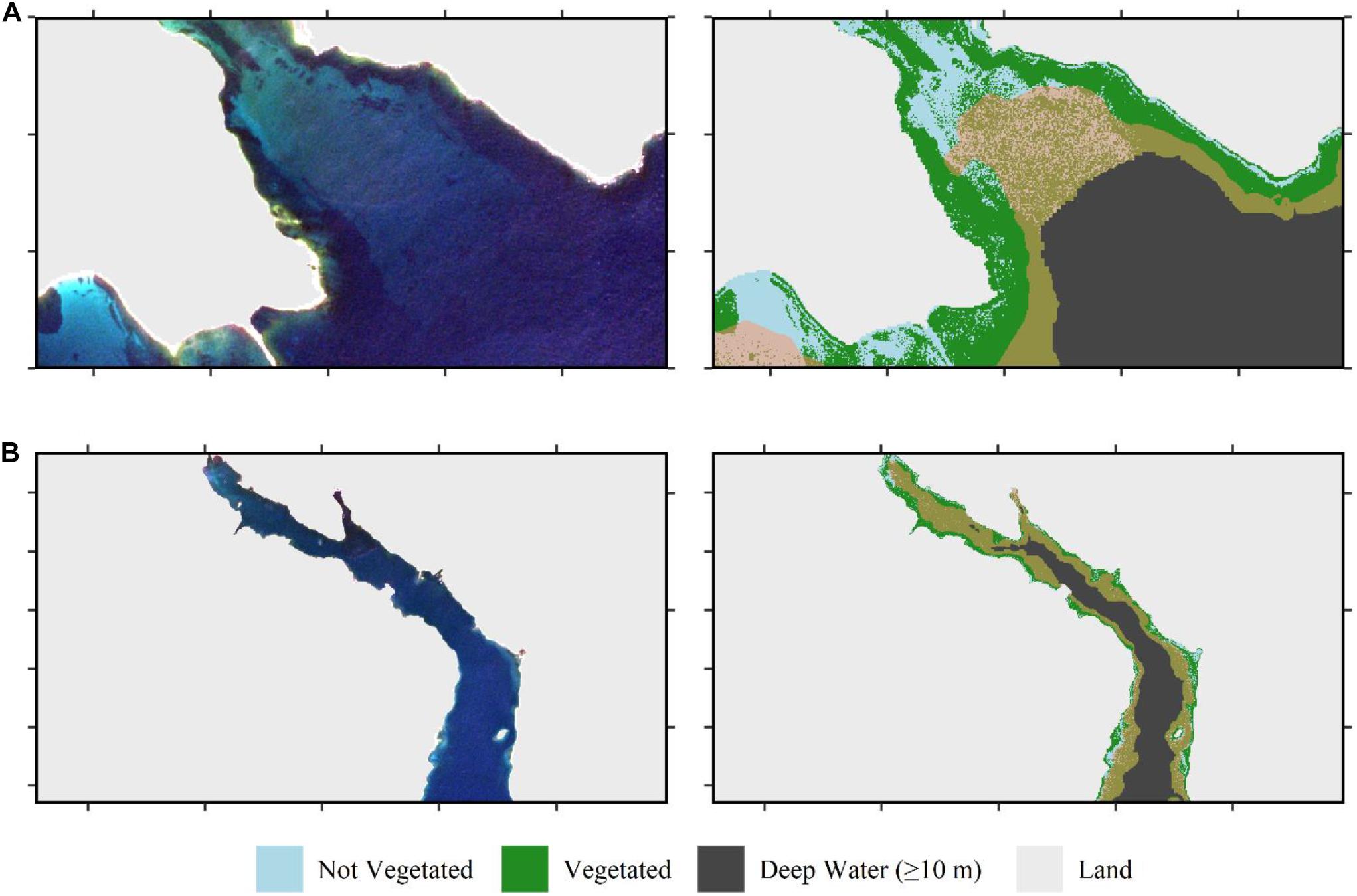

Additionally, the classification performs poorly in bare sediment habitats dominated by muddy substrate (Figure 7). The lower albedo of bare mud presents a spectral shape more similar to vegetated habitat than bare sand at comparable depths (Figure 7A). Consequently, around the 4–5 m depth (even shallower in some areas) the classification became “salt-and-peppered” suggesting that the binary classification has become purely random. Lastly, the classification also performed less well in areas of mixed habitat types. In areas of shallow waters with patchy vegetation, the bright reflectance from nearby bare sand overwhelmed the vegetation signal and the spectra of low-density vegetation on sandy substrate has a spectrum comparable to sand (Figure 8). This can be seen as a contamination due to the adjacency effect as it occurs in coastal waters close to land, where pixels in the vicinity of a brighter target are contaminated. Increased satellite resolution might address this issue. Therefore, the classification fails for low density or dense but isolated patches of vegetated habitat.

Figure 7. Finalized classification of the Sentinel-2 image from September 13, 2016 highlighting how mud is misclassified at greater depths. (A) True color composite and resulting map classification (thresholded at ≥80% probability of being vegetated) indicating location of field survey points of a muddy habitat at 1–2 m (square), 2–3 m (circle), and 3–4 m (triangle). Waters at depth from 5 to 10 m shown with transparent yellow overlay (“brown” in the figure). (B) Spectra for the same three survey points, compared to sand and vegetated dominated habitat at 2–3 m of water depth.

Figure 8. Finalized classification of the Sentinel-2 image from September 13, 2016 highlighting how low-density vegetation is misclassified. (A) True color composite and resulting map classification (thresholded at ≥80% probability of being vegetated) indicating location of field survey points of a sandy habitat (black triangle), low density eelgrass (red x), and a large, dense eelgrass bed (white square). (B) Spectra for the same three survey points which all are between 0 and 1 m of water depth. Note the low-density eelgrass training point would not have been included in model training (see section “Field Surveys”).

With these limitations in mind, the total surface area coverage of vegetated habitat was calculated (Table 3). At the maximum depth of image classification (10 m), ∼231 km2 (67%) of vegetated habitat were predicted to exist, 21.68 km2 were calculated with NDVI and 209.92 km2 calculated from the random forests calculation. Conversely, ∼114 km2 (33%) were dominated by bare sediment habitat, 20.30 km2 were calculated with thresholding the blue band and Red/Green ratio, and 93.25 km2 were calculated with the random forests classifier. There are uncertainties around the random forests surface area values depending on the average used for the cross-validation maps, and if the final map was thresholded. If the image was masked to 4 m, a depth where most of the image was well classified based on visual examination, about 95 km2 (∼58% of the total area) of the seafloor was vegetated.

We used the “best” Sentinel-2 image acquired between 2015 and 2019 for a high priority management region and examined to what detail marine macrophyte habitat could be classified in an optically complex costal environment based on empirical image-based classification procedures. We found that simple band thresholds were effective at classifying very shallow habitats, and bright sandy bare substrate to moderate depths, although a supervised image classifier was required to classify the remaining areas. Vegetated habitat extent was classified at depths shallower than 10 m, with an overall accuracy of 79%, although the maximum depth that bottom habitat was visible varied spatially across the tile (i.e., 4–10 m, due to shifts in water column content and habitat type). While the random forest model developed for our study area cannot be directly applied to other areas (Islam et al., 2020), our method workflow can be readily applied to other images provided that field survey data are available to train the algorithm. In the following section, we discuss the strengths and weaknesses of using Sentinel-2 to classify marine macrophyte habitat in Atlantic Canada, keeping in mind that our findings can be applied to other temperate coastal areas of the world.

Our stepwise approach to image classification is a unique hybrid of other methods of image classification, which generally focus on simple band ratios (e.g., Dierssen et al., 2019; Mora-Soto et al., 2020) or a supervised classifier to quantify bottom habitat (e.g., Traganos and Reinartz, 2018b; Poursanidis et al., 2019), but not both. The ratio approach was applied first to all pixels, and when the threshold was met, pixels were classified with no assumptions made on the other pixels (i.e., the one that did not meet the threshold criteria). These remaining pixels were then classified with random forests. This two-step process is performed in the classification stage, with no post-classification manipulation of pixels and therefore does not require contextual editing as in Mumby et al. (1998). It leverages the simplicity and high accuracy of band ratios in habitats that are relatively straightforward to classify, such as bright sand, and shallow, dense vegetation, with the power of machine learning classifiers in more complex classification schemes. The random forests classification was performed across all depths where the threshold approach had failed.

The band ratios include vegetation indices based on red-edge indices such as NDVI and the Red-Green ratio, which can be effective tools for quantifying intertidal vegetation at low tide or vegetation that floats at, or near, the surface (e.g., Barillé et al., 2010; Dierssen et al., 2019; Mora-Soto et al., 2020). Following Dierssen et al. (2019) we found that the Red-Green ratio could successfully classify bare sand at shallow to moderate depths, albeit at a slightly lower threshold value (<0.30 this study, <0.35 Dierssen et al., 2019). While no upper threshold could be determined to classify vegetated habitat, an upper threshold could be defined to exclude shallow muddy substrate (>0.9). These thresholds were conservatively set to not misclassify any field survey points, which cover a large spatial area, even though the inherent optical properties (IOPs) of the water would vary over this scale. The thresholds could be fine-tuned at smaller spatial scales, where water column properties remain fairly stable. In our study we also found NDVI (Band 4 and 8) to be effective at classifying intertidal to very shallow subtidal habitat (∼< 2 m). This threshold can be more readily defined from the literature instead of requiring field survey points as there is little impact of the water column in regions where NDVI is effective. No distinctions were made between major species groups, such as rockweed from shallow eelgrass beds. This is in agreement with the study of Mora-Soto et al. (2020) who found that NDVI and FAI based on Sentinel-2 imagery could be used to map Giant Kelp (Macrocystis pyrifera) forests, which float at the surface and intertidal green algae, but could not discriminate between the two vegetation types.

Our choice of a specific image classifier (random forests) was based on an initial exploratory analysis (Supplementary Material 2), and previous studies which compared different machine learning classifiers to the maximum likelihood classification and found the machine learning classifiers to be the superior image classifier (e.g., Ha et al., 2020). Therefore, our study provides further support for the shift away from the continued use of maximum likelihood. Both SVM and random forests have routinely high overall map accuracies as found in our study and other works (Traganos and Reinartz, 2018b; Poursanidis et al., 2019; Wicaksono et al., 2019). However, we chose to use random forests as it requires less model tuning than SVM. Furthermore, for acoustic seabed classification, random forests was found to be the preferred algorithm compared to k-nearest neighbor and k-means as it produced the most reliable and repeatable results (Zelada Leon et al., 2020). While the classification choice does have an impact on map accuracy values, we found that it had no effect on species level discrimination during exploratory analysis. For instance, all four classification types explored in our initial analysis could map vegetated versus non-vegetated habitat, to varying degrees of accuracy, but none could differentiate between eelgrass versus (predominantly brown) seaweed dominated habitat. We therefore had to modify our classification goal from separating eelgrass from seaweed habitat, to only classifying vegetated habitat presence and absence. This is in line with another study based on Sentinel-2 imagery in temperate waters that was also able to only classify vegetation presence from absence (Fethers, 2018). Using SPOT-6/7 imagery, with similar band placements to Sentinel-2 bands 2–4 (blue, green, and red) but at a higher spatial resolution (1.5-m), eelgrass was differentiated from seaweeds in only one of three different images for a bay-wide mapping study in Atlantic Canada (Wilson et al., 2019). Even in tropical clear waters, supervised classifiers with Sentinel-2 have had varying success to differentiate among submerged seagrass species (Kovacs et al., 2018; Traganos and Reinartz, 2018b). Therefore, while Sentinel-2 can produce large-scale coastal benthic habitat maps in Atlantic Canada, it would only be to a level of vegetation presence. If greater class separation is required, hyperspectral imagery (5–10 nm resolution depending on the part of the spectrum) can differentiate between different vegetation types, even in optically complex waters, albeit to much shallower depths (Vahtmäe et al., 2020). Our analysis found that the four 10-m spectral bands on Sentinel-2 were not enough to provide differentiation between eelgrass and seaweeds.

Including appropriate water penetrating bands is essential for developing accurate coastal benthic habitat maps. In the submerged habitat classification using random forests, we excluded the NIR band from the analysis. While the NIR provides critical information along the red-edge, strong absorption by water in this part of the spectrum introduces noise into the classification in all but the shallowest of waters (<2 m; Kutser et al., 2009). Exploratory analysis found that excluding the NIR band (8) increased the maximum depth from ∼5 to ∼10 m at which the classifier could successfully identify bottom habitat at the expense of misclassifying vegetation in very shallow (∼<2 m) waters (Wilson and Devred, 2019). Classifying intertidal/shallow habitat with NDVI provided comparable results in very shallow regions as including NIR in the random forests model, with the benefit of reducing “noise” in the classification allowing for classifying bottom habitat at greater depths. Consequently, we found the best band combination to maximize spectral information available to the classifier, while minimizing noise introduction to the blue, green, and red bands (Bands 2–4). The blue (band 2) and green (band 3) are commonly used in image classification of seagrass habitat with Sentinel-2, generally with a third band either being coastal blue (Traganos et al., 2018; Poursanidis et al., 2019) or red (Traganos and Reinartz, 2018b; Ha et al., 2020). In our study, classification performance decreased when the coastal blue band (band 1) was added due to its low spatial resolution (60-m), which was inappropriate for identification of highly heterogeneous habitat such as in our area of interest. Furthermore, while in very clear waters the coastal blue band provides valuable information on bottom habitat across all depths, the presence of CDOM and particulate matter in temperate optically complex waters limits the applicability of the coastal blue band due to their high absorption across these wavelengths (∼450 nm). Classification performance also decreased when the red band was omitted, which is commonly done in tropical studies (Traganos et al., 2018; Poursanidis et al., 2019), indicating that valuable information was still provided at this wavelengths even though it is strongly attenuated by water. Therefore, the optimum bands in temperate, optically complex waters vary compared to the typical band combination used in tropical studies. Classification of seaweed habitat with Sentinel-2 has focused on intertidal species (Kotta et al., 2018), or those with floating canopies such as Giant kelp (Mora-Soto et al., 2020), and can therefore use information about the red-edge for image classification, including the NIR bands.

Effective masking of optically deep water is an important step in image-based classification procedures as bottom reflectance is no longer detected by the satellite. If a poor maximum depth for image classification is chosen, then all habitat below this depth will be classified as vegetated due to the relatively dark spectra compared to optically shallow regions. Generally, the maximum depth is either specified to coincide with the maximum depth that field survey data were collected (Yucel-Gier et al., 2020), or analytical modeling is used to determine the maximum depth bottom reflectance that can be detected (O’Neill and Costa, 2013; Poursanidis et al., 2019). In Nova Scotia, eelgrass beds have been documented up to 12 m deep (DFO, 2009) and kelp beds to 20 m deep (Johnson and Mann, 1988), yet we masked water deeper than 10 m to coincide with the maximum depth at which field survey points were collected during our study. While the external bathymetry file was at a lower spatial resolution then the satellite data (30 versus 10-m), which would cause errors if bathymetry was required for every pixel particularly in shallower (<5 m) waters, it is reasonable to derive a deep water mask based on the 10-m contour. Therefore, most eelgrass habitat was included in our map product but there is an additional ∼333 km2 of total surface area between 10 and 20 m of water depth where kelp habitat may exist within the study area. While bottom type could be visually distinguished at depths shallower than the 11–12 m isobaths in regions with high albedo (sandy beach) and sharp contrast (sand into dense vegetation patch), this maximum depth was not consistent across the image. In some areas bottom habitat was only detectable for depths shallower than 2 m. It is not surprising that there is a large range of maximum water depths where bottom habitat can be classified. In the clear waters of the Mediterranean Sea, the seagrass species Posidonia oceanica has been consistently mapped with Sentinel-2 to water depths of 16–40 m (Traganos and Reinartz, 2018b; Traganos et al., 2018; Poursanidis et al., 2019). While in optically complex temperate environments, Sentinel-2 has been used to map eelgrass beds to depths of 4–5 m (Fethers, 2018; Dierssen et al., 2019), and retrieve bathymetry to 10-m deep in Ireland (Casal et al., 2020), both studies observed that this maximum depth was highly dependent on image quality, and depth limits were significantly shallower under non-optimal conditions, which is consistent with our results. Lastly, while Sentinel-2 has not previously been used to detect submerged kelp beds, SPOT and Landsat have been used to map vegetated bottom habitat (eelgrass and kelps) to depths of 7–8 m in Atlantic Canada (Simms and Dubois, 2001; Wilson et al., 2019; St-Pierre and Gagnon, 2020) and down to 10 m in turbid waters in Spain (Casal et al., 2011).

It is not surprising that water transparency would vary over a Sentinel-2 tile characterized by a complex coastline subject to river inputs, sediment redistribution and resuspension, CDOM runoff, and differing phytoplankton concentrations, which all impact the optical characteristics of the water column and are highly variable over even small spatial scales. Furthermore, while the 10-m maximum depth at which bottom habitat can be detected using Sentinel-2 provides a cost effective mean to quantify large-scale distribution of marine macrophytes in Nova Scotia, the variance in the maximum depth that bottom habitat is visible should be further explored. On small scales (single seagrass bed or inlet) a maximum depth should be readily detectable for classifying vegetation coverage. But on large-scales (several inlets to full Sentinel-2 tiles) another approach is required. The simplest approach would be to use the minimum water depth that bottom habitat could be consistently detected, although this would limit the extent of habitat maps to the shallowest waters. Another, albeit tedious, technique could involve manually digitizing a deep-water mask accounting for the spatial heterogeneity of water transparency. A more automated approach may involve deriving water column properties with ACOLITE and the use of detectability limits of different substrates (Vahtmäe et al., 2020) to develop contours where water transparency may shift. Furthermore, the high revisiting time of Sentinel-2 (every 2–3 days in Atlantic Canada) allows for multi-scene compositing, opposed to relying on only classifying the overall “best” image, within a time frame for which vegetation extent is expected to remain similar. This would minimize water transparency impacts on image classification as this process has shown promising results for satellite derived bathymetry studies in turbid waters with Sentinel-2 (Caballero and Stumpf, 2020), and coastal habitat classification with Landsat (Knudby et al., 2014). Regardless, our image classifier performed well to a maximum water depth of 10 m, but some interpretation is required to understand where water transparency is limiting the classification performance. As we used a “best” image for classification, we can conclude that marine macrophytes can be detected to the 10 m depth contour in Atlantic Canada under optimal conditions, and in sub-optimal conditions this value likely varies between the 4–8 m depth contour, although further work would be required to define the lower threshold.

The final map classification yielded an overall map accuracy of 79% for the binary classification of vegetation presence and absence. This is comparable to other Sentinel-2 coastal habitat mapping studies for submerged seagrasses in optically complex waters of Denmark (73%; Fethers, 2018) and in clear waters in Turkey (75–78%; Yucel-Gier et al., 2020), Italy (82–88%; Dattola et al., 2018), New Zealand (88%; Ha et al., 2020), and Greece (58–96%; Traganos and Reinartz, 2018b; Traganos et al., 2018; Poursanidis et al., 2019). The large range of accuracy values for the study carried out in the coastal waters of Greece reflects differences in their seascapes. The lowest accuracy values (58%) occurred in a fragmented seascape with large amounts of mixed habitat types (Poursanidis et al., 2019), the highest (96%) occurred in a small (∼3 km2) study region with large seagrass beds (Traganos and Reinartz, 2018b), and intermediate values (72%) were obtained for a classification encompassing the entire Aegean and Ionian Seas (Traganos et al., 2018). Elsewhere in Atlantic Canada, submerged kelp and eelgrass habitat has been classified using the high-resolution SPOT-6/7 imagery with an overall map accuracy of ∼88% at bay-wide scales (Wilson et al., 2019; St-Pierre and Gagnon, 2020). Consequently, our accuracy metrics would likely improve if we focused on smaller spatial scales where classification models can be fine-tuned to specific habitat-types and water column properties. Still, good accuracy metrics were achieved for the full tile classification, providing accurate maps of vegetated areas for marine spatial planning. While the previous Atlantic Canada studies were punctual in space and time, and demonstrated feasibility of using satellite remote sensing to map bottom habitat in Atlantic Canada, this study represents a first step to routinely classify and monitor bottom habitat in Atlantic Canada to inform habitat management policies at effective cost.

This study only explored the limits of empirical, image-based classification procedures to map marine macrophyte habitat with Sentinel-2 in the optically complex waters of Atlantic Canada. Further work includes comparing the results from the image-based classification to a map derived from a semi-analytical inversion models. Inversion methods solve simultaneously for depth, bottom reflectance, and water IOPs but are highly sensitive to atmospheric correction errors and were developed for hyperspectral data (Lee et al., 1999). To date, they have had limited applications for multispectral data due to the high number of unknown parameters (absorption, scattering, depth, and bottom reflectance) relative to the number of spectral bands (Garcia et al., 2020). In the case of Sentinel-2, only three water-penetrating spectral bands are available at a 10-m spatial resolution, which is inadequate to characterize both the water column, depth, and bottom reflectance (Dierssen et al., 2019). To our knowledge, only one study has explored semi-analytical inversion of Sentinel-2 data to map seagrass habitat and derive water column properties in a tropical environment (Traganos and Reinartz, 2018a). To do so, they first solved for water depth with an empirical satellite-derived bathymetry approach by regressing in situ measured depth against the coastal blue and green ratio, and then solved for water column properties and bottom reflectance using the three 10-m bands (blue, green, and red) with the downscaled 60-m coastal blue and 20-m red-edge (740 nm) bands, and the derived depth layer with a modified HOPE (Hyperspectral Optimization Process Exemplar) model (Lee et al., 1999). The addition of the lower-resolution spatial bands in our study region would complicate benthic habitat mapping due to the complex coastline and high spatial heterogeneity of both bottom habitat and water depth.

The freely available Sentinel-2 satellite imagery time series provides a new opportunity to generate large scale vegetation habitat maps, and examine how vegetation extent changes over time, at a spatial resolution of about a tenth that of the Landsat imagery series (i.e., 100 m2 for Sentinel-2 against 900 m2 for Landsat-8). As such, Sentinel-2 has been used to map the extent of marine macrophytes in single inlets and bays (Traganos and Reinartz, 2018a; Dierssen et al., 2019), regionally (Traganos et al., 2018), and globally (Mora-Soto et al., 2020). Such uses at both small and large spatial scales show promise in Atlantic Canada, provided that accurate water transparency masks are generated. In regions where water transparency limits Sentinel-2 mapping capabilities, gaps in coverage can be addressed with in situ approaches such as sonar deployment (Vandermeulen, 2014). Using Sentinel-2, we found that two complimentary approaches provide a unique, robust and efficient classification of bottom habitat. The simple band-ratio thresholds can classify vegetation extent in shallow, sandy waters, and when the thresholds method fails the random forests machine learning classifier successfully denotes the location of vegetated habitat. From the classified habitat maps, estimates of surface area coverage of marine macrophyte habitat can be generated. Although attempted, Sentinel-2 does not have the spectral resolution to distinguish seagrass from seaweed dominated habitats in the optically complex waters of our study region, this finding is in contrast to other studies that took place in tropical waters (e.g., Kovacs et al., 2018). As such, the final vegetation maps can be combined with other data layers including depth, substrate, exposure, species distribution models and/or local ecological knowledge to qualitatively differentiate eelgrass from seaweed habitats (Lauer and Aswani, 2008; Wilson et al., 2019; Beca-Carretero et al., 2020). Benthic habitat maps are an essential data layer to inform the monitoring and management of macrophyte dominated habitats, and Sentinel-2 provides a cost-effective tool to quantify these habitats.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation, to any qualified researcher.

MW and ED conceived the study. KW performed all data analysis and drafted the initial manuscript. MW organized field operations. All authors contributed substantially to the manuscript revision and review.

This study was funded by Fisheries and Oceans Canada Strategic Program for Ecosystem-Based Research and Advice (SPERA). The study also benefited from in-kind support from the Oceans and Coastal Management Division, Ecosystem Management Branch.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We thank J. O’Brien and B. Roethlisberger for support in the field and laboratory. We thank V. Stuart and C. Caverhill for reviewing the manuscript and A. Hilborn for IT support. We also thank ESA for freely providing Sentinel-2 data.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fenvs.2020.579856/full#supplementary-material

Barbier, E. B., Hacker, S. D., Kennedy, C., Koch, E. W., Stier, A. C., and Silliman, B. R. (2011). The value of estuarine and coastal ecosystem services. Ecol. Monogr. 81, 169–193.

Barillé, L., Robin, M., Harin, N., Bargain, A., and Launeau, P. (2010). Increase in seagrass distribution at Bourgneuf Bay (France) detected by spatial remote sensing. Aquat. Bot. 92, 185–194. doi: 10.1016/j.aquabot.2009.11.006

Barrell, J., Grant, J., Hanson, A., and Mahoney, M. (2015). Evaluating the complementarity of acoustic and satellite remote sensing for seagrass landscape mapping. Int. J. Remote Sens. 36, 4069–4094. doi: 10.1080/01431161.2015.1076208

Beca-Carretero, P., Varela, S., Stengel, D. B., Beca-Carretero, P., Varela, S., and Stengel, D. B. (2020). A novel method combining species distribution models, remote sensing, and field surveys for detecting and mapping subtidal seagrass meadows. Aquat. Conserv. Mar. Freshw. Ecosyst 30, 1098–1110. doi: 10.1002/aqc.3312

Bell, T. W., Allen, J. G., Cavanaugh, K. C., and Siegel, D. A. (2020). Three decades of variability in California’s giant kelp forests from the Landsat satellites. Remote Sens. Environ. 238:110811. doi: 10.1016/j.rse.2018.06.039

Caballero, I., and Stumpf, R. P. (2020). Towards routine mapping of shallow bathymetry in environments with variable turbidity: contribution of Sentinel-2A/B satellites mission. Remote Sens. 12:451.

Casal, G., Hedley, J. D., Monteys, X., Harris, P., Cahalane, C., and Mccarthy, T. (2020). Satellite-derived bathymetry in optically complex waters using a model inversion approach and Sentinel-2 data. Estuar. Coast. Shelf Sci. 241:106814. doi: 10.1016/j.ecss.2020.106814

Casal, G., Sánchez-Carnero, N., Sánchez-Rodríguez, E., and Freire, J. (2011). Remote sensing with SPOT-4 for mapping kelp forests in turbid waters on the south European Atlantic shelf. Estuar. Coast. Shelf Sci. 91, 371–378. doi: 10.1016/j.ecss.2010.10.024

Dattola, L., Rende, F. S., Di Mento, R., Dominici, R., Cappa, P., Scalise, S., et al. (2018). Comparison of Sentinel-2 and Landsat-8 OLI satellite images vs. high spatial resolution images (MIVIS and WorldView-2) for mapping Posidonia oceanica meadows. Proc. SPIE 10784:1078419. doi: 10.1117/12.2326798

DFO (2009). Does eelgrass (Zostera marina) meet the criteria as an ecologically significant species? DFO Can. Sci. Advis. Sec. Sci. Advis. Rep. 2009/018.

DFO (2019). Biophysical and ecological overview of the Eastern Shore Islands Area of Interest (AOI). DFO Can. Sci. Advis. Sec. Sci. Advis. Rep. 2019/016.

Dierssen, H. M., Bostrom, K. J., Chlus, A., Hammerstrom, K., Thompson, D. R., and Lee, Z. (2019). Pushing the limits of seagrass remote sensing in the turbid waters of Elkhorn Slough, California. Remote Sens. 11, 1664. doi: 10.3390/rs11141664

Drusch, M., Del Bello, U., Carlier, S., Colin, O., Fernandez, V., Gascon, F., et al. (2012). Sentinel-2: ESA’s optical high-resolution mission for GMES operational services. Remote Sens. Environ. 120, 25–36. doi: 10.1016/j.rse.2011.11.026

Duarte, C. M., Losada, I. J., Hendriks, I. E., Mazarrasa, I., and Marba, N. (2013). The role of coastal plant communities for climate change mitigation and adaptation. Nat. Clim. Chang. 3, 961–968. doi: 10.1038/NCLIMATE1970

Duffy, J. E., Benedetti-Cecchi, L., Trinanes, J., Muller-Karger, F. E., Ambo-Rappe, R., Boström, C., et al. (2019). Toward a coordinated global observing system for seagrasses and marine macroalgae. Front. Mar. Sci. 6:317. doi: 10.3389/fmars.2019.00317

Fauzan, M. A., Kumara, I. S. W., Yogyantoro, R., Suwardana, S., Fadhilah, N., Nurmalasari, I., et al. (2017). Assessing the capability of Sentinel-2A data for mapping seagrass percent cover in Jerowaru. East Lombok. Indones. J. Geogr. 49, 195–203. doi: 10.22146/ijg.28407

Fethers, J. O. (2018). Remote sensing of eelgrass using Object Based Image Analysis and Sentinel-2 Imagery. Master Thesis, Aalborg University, Aalborg.

Filbee-Dexter, K., and Wernberg, T. (2018). Rise of turfs: a new battlefront for globally declining kelp forests. Bioscience 68, 64–76. doi: 10.1093/biosci/bix147

Foody, G. M. (2002). Status of land cover classification accuracy assessment. Remote Sens. Environ. 80, 185–201. doi: 10.1016/S0034-4257(01)00295-4

Gamer, M., Lemon, J., and Singh, I. F. P. (2019). irr: Various Coefficients of Interrater Reliability and Agreement. R package version 0.84.1.

Garcia, R. A., Lee, Z., Barnes, B. B., Hu, C., Dierssen, H. M., and Hochberg, E. J. (2020). Benthic classification and IOP retrievals in shallow water environments using MERIS imagery. Remote Sens. Environ. 249:112015. doi: 10.1016/j.rse.2020.112015

Ha, N. T., Manley-Harris, M., Pham, T. D., and Hawes, I. (2020). A comparative assessment of ensemble-based machine learning and maximum likelihood methods for mapping seagrass using Sentinel-2. Remote Sens. 12:355.

Hedley, J. D., Harborne, A. R., and Mumby, P. J. (2005). Simple and robust removal of sun glint for mapping shallow-water benthos. Int. J. Remote Sens. 26, 2107–2112. doi: 10.1080/01431160500034086

Hijmans, R. J. (2019). raster: Geographic Data Analysis and Modeling. R package version 3.0-7 Google Scholar

Hogrefe, K. R., Ward, D. H., Donnelly, T. F., and Dau, N. (2014). Establishing a baseline for regional scale monitoring of eelgrass (Zostera marina) habitat on the lower Alaska Peninsula. Remote Sens. 6, 12447–12477. doi: 10.3390/rs61212447

Hossain, M. S., Bujang, J. S., Zakaria, M. H., and Hashim, M. (2015). The application of remote sensing to seagrass ecosystems: an overview and future research prospects. Int. J. Remote Sens. 36, 61–114. doi: 10.1080/01431161.2014.990649

Islam, K. A., Hill, V., Schaeffer, B., Zimmerman, R., and Li, J. (2020). Semi-supervised adversarial domain adaptation for seagrass detection using multispectral images in coastal areas. Data Sci. Eng. 5, 111–125. doi: 10.1007/s41019-020-00126-0

Johnson, C. R., and Mann, K. H. (1988). Diversity, patterns of adaptation, and stability of Nova Scotian kelp beds. Ecol. Monogr. 58, 129–154.

Knudby, A., Nordlund, L. M., Palmqvist, G., Wikström, K., Koliji, A., Lindborg, R., et al. (2014). Using multiple Landsat scenes in an ensemble classifier reduces classification error in a stable nearshore environment. Int. J. Appl. Earth Obs. Geoinf. 28, 90–101. doi: 10.1016/j.jag.2013.11.015

Kotta, J., Valdivia, N., Kutser, T., Toming, K., Rätsep, M., and Orav-Kotta, H. (2018). Predicting the cover and richness of intertidal macroalgae in remote areas: a case study in the Antarctic Peninsula. Ecol. Evol. 8, 9086–9094. doi: 10.1002/ece3.4463

Kovacs, E., Roelfsema, C. M., Lyons, M., Zhao, S., and Phinn, S. R. (2018). Seagrass habitat mapping: how do Landsat 8 OLI, Sentinel-2. ZY-3A, and Worldview-3 perform? Remote Sens. Lett. 9, 686–695. doi: 10.1080/2150704X.2018.1468101

Kutser, T., Hedley, J., Giardino, C., Roelfsema, C. M., and Brando, V. E. (2020). Remote sensing of shallow waters – A 50 year retrospective and future directions. Remote Sens. Environ. 240:111619. doi: 10.1016/j.rse.2019.111619

Kutser, T., Vahtmäe, E., and Praks, J. (2009). A sun glint correction method for hyperspectral imagery containing areas with non-negligible water leaving NIR signal. Remote Sens. Environ. 113, 2267–2274. doi: 10.1016/j.rse.2009.06.016

Lauer, M., and Aswani, S. (2008). Integrating indigenous ecological knowledge and multi-spectral image classification for marine habitat mapping in Oceania. Ocean Coast. Manag. 51, 495–504. doi: 10.1016/j.ocecoaman.2008.04.006

Lee, Z., Carder, K. L., Mobley, C. D., Steward, R. G., and Patch, J. S. (1999). Hyperspectral remote sensing for shallow waters: 2 Deriving bottom depths and water properties by optimization. Appl. Opt. 38:3831. doi: 10.1364/ao.38.003831

León-Pérez, M. C., Hernández, W. J., and Armstrong, R. A. (2019). Characterization and distribution of seagrass habitats in a caribbean nature reserve using high-resolution satellite imagery and field sampling. J. Coast. Res. 35:937. doi: 10.2112/jcoastres-d-18-00106.1

Leutner, B., Horning, N., and Schalb-Willman, J. (2019). RStoolbox: Tools for Remote Sensing Data Analysis. R package version 0.2.6.

Lyzenga, D. R. (1978). Passive remote sensing techniques for mapping water depth and bottom features. Appl. Opt. 17:379. doi: 10.1364/ao.17.000379

Macdonald, C., Webster, T., and Livingstone, B. (2012). Identification and Quantification of Rockweed Using High-Resolution Satellite Imagery. Report submitted to Acadian SeaPlants Limited.

MacQueen, J. (1967). Some methods for classification and analysis of multivariate observations. Proceedings of 5th Berkeley Symposium on Mathematical Statistics and Probability. Univ. Calif. Press 1, 281–297.

Marcello, J., Eugenio, F., Martín, J., and Marqués, F. (2018). Seabed mapping in coastal shallow waters using high resolution multispectral and hyperspectral imagery. Remote Sens. 10:1208. doi: 10.3390/rs10081208

McKinna, L. I. W., Fearns, P. R. C., Weeks, S. J., Werdell, P. J., Reichstetter, M., Franz, B. A., et al. (2015). A semianalytical ocean color inversion algorithm with explicit water column depth and substrate reflectance parameterization. J. Geophys. Res. Ocean. 120, 1741–1770. doi: 10.1002/2014JC010224

Milton, G. R., Parsons, F. M. G., and McIntyre, L. B. J. H. C. (2009). Interim report of eelgrass classification project. Nova Sco. Dep. Nat. Resour. 32.

Mora-Soto, A., Palacios, M., Macaya, E. C., Gómez, I., Huovinen, P., Pérez-Matus, A., et al. (2020). A high-resolution global map of giant kelp (Macrocystis pyrifera) forests and intertidal green algae (Ulvophyceae) with Sentinel-2 imagery. Remote Sens. 12:694. doi: 10.3390/rs12040694

Mumby, P. J., Clark, C. D., Green, E. P., and Edwards, A. J. (1998). Benefits of water column correction and contextual editing for mapping coral reefs. Int. J. Remote Sens. 19, 203–210. doi: 10.1080/014311698216521

Murphy, G. E. P., Wong, M. C., and Lotze, H. K. (2019). A human impact metric for coastal ecosystems with application to seagrass beds in Atlantic Canada. Facets 4, 210–237. doi: 10.1139/facets-2018-0044

O’Neill, J. D., and Costa, M. (2013). Mapping eelgrass (Zostera marina) in the Gulf Islands National Park Reserve of Canada using high spatial resolution satellite and airborne imagery. Remote Sens. Environ. 133, 152–167. doi: 10.1016/j.rse.2013.02.010

Orth, R. J., Carruthers, T. J. B., Denntson, W. C., Duarte, C. M., Fourgurean, J. W., and Heck, Jr. K. L, et al. (2006). A global crisis for seagrass ecosystems. Bioscience 56, 987–997.

Poursanidis, D., Topouzelis, K., and Chrysoulakis, N. (2018). Mapping coastal marine habitats and delineating the deep limits of the Neptune’s seagrass meadows using very high resolution Earth observation data. Int. J. Remote Sens. 39, 8670–8687. doi: 10.1080/01431161.2018.1490974

Poursanidis, D., Traganos, D., Reinartz, P., and Chrysoulakis, N. (2019). On the use of Sentinel-2 for coastal habitat mapping and satellite-derived bathymetry estimation using downscaled coastal aerosol band. Int. J. Appl. Earth Obs. Geoinf. 80, 58–70. doi: 10.1016/j.jag.2019.03.012