- 1 Ningxia Communication Technical College, Yinchuan, China

- 2 School of Economics and Management, Hubei Institute of Automobile Technology, Shiyan, China

Collaborative inspection by multiple Unmanned Aerial Vehicles (UAVs) is a major step forward in the monitoring and maintenance of power distribution networks: UAVs working together can cover large areas and improve the reliability and efficiency of infrastructure management. Despite this promise, current research in the domain still struggles with efficient coordination, data processing, and adaptive decision-making under complex and dynamic conditions. Intelligent self-organizing algorithms are well placed to close these gaps by increasing the autonomy, efficiency, and reliability of collaborative UAV inspections. In response, we propose the MARL-SOM-GNNs network model, which integrates Multi-Agent Reinforcement Learning (MARL), Self-Organizing Maps (SOM), and Graph Neural Networks (GNNs) to optimize UAV cooperative behavior, data interpretation, and network analysis. MARL provides dynamic decision-making, SOM provides efficient data clustering, and GNNs capture the topology of the distribution network. Experimental results demonstrate that our model significantly outperforms existing approaches in inspection accuracy, operational efficiency, and adaptability to environmental changes. By addressing current shortcomings in collaborative UAV inspection strategies, the model sets a new benchmark for autonomous infrastructure monitoring, paves the way for more resilient and intelligent power distribution systems, and highlights the crucial role of intelligent algorithms in advancing UAV technologies.

1 Introduction

Amid rapid industrial and technological advancement, the use of UAVs for collaborative inspection of power distribution networks has emerged as a transformative approach Liu et al. (2020), offering substantial gains in efficiency and precision. This strategy leverages the autonomy, flexibility, and coordinated efforts of multiple UAVs, enabling extensive coverage of vast and often inaccessible geographic areas with reduced labor costs and improved safety. The ability of UAVs to navigate difficult terrain where human inspectors would face risks underscores the potential of this technology for maintaining critical infrastructure Wang et al. (2019). However, implementing collaborative UAV inspections in practice is not without hurdles. The challenges range from path planning and dynamic task allocation to collaborative decision-making and adaptation to environmental changes Shakhatreh et al. (2019), all of which are crucial for executing surveillance and inspection missions seamlessly. These obstacles highlight the need for solutions that ensure efficient, safe, and effective UAV collaboration in complex environments.

With the rapid advancement of artificial intelligence (AI) and machine learning, intelligent self-organizing algorithms have become pivotal in making collaborative UAV inspections more efficient and intelligent Keneni et al. (2019); He et al. (2024). These algorithms allow UAVs to collaborate autonomously without centralized control, executing intricate tasks efficiently through direct local interactions. Techniques such as reinforcement learning, neural networks, and agent-based models are at the forefront of this work, optimizing path planning Khan and Al-Mulla (2019); Shen et al. (2024), task assignment, and inter-UAV cooperation. With AI, UAVs can accurately identify and classify objects and obstacles, learn and refine flight paths and behaviors dynamically, and perceive their environment more effectively Ahmed et al. (2022). This enables better decision-making under complex and uncertain conditions. Machine learning and deep learning methods further allow UAVs to adapt based on environmental feedback and mutual information exchange, improving the overall efficacy of the system. As AI technology continues to advance, its application to collaborative UAV inspections is poised to drive substantial progress in the maintenance of power distribution networks.

Although intelligent self-organizing algorithms open new possibilities for collaborative UAV inspections, existing research still has several limitations. First, the robustness and scalability of many algorithms in real, complex environments have not been fully verified Ferdaus et al. (2019). For example, existing models may struggle to handle real-world uncertainties and dynamic changes, such as sudden weather changes or unexpected geographic obstacles Liu et al. (2019). Second, algorithms in current research often require large amounts of data for training and optimization, which may be difficult to obtain in practice, especially where data collection is costly or data is hard to acquire Ning et al. (2024a). In addition, the transparency and interpretability of the algorithms’ decision-making processes are key open issues Horváth et al. (2021), affecting both the reliability of the algorithms in real-world applications and users’ trust in them. Further research and development is therefore needed to overcome these limitations and realize the full potential of these algorithms in practical applications.

Building on the shortcomings identified in previous work, we propose the MARL-SOM-GNNs network model, a novel integration designed to overcome the limitations of current drone cooperative inspection strategies for power distribution networks. This section describes the role of each component, namely Multi-Agent Reinforcement Learning (MARL), Self-Organizing Maps (SOM), and Graph Neural Networks (GNNs), and how they work together to enhance the inspection process.

The MARL component enables dynamic decision-making and coordination among multiple drones, optimizing inspection paths and task allocations through learning from interactions within the environment. This is crucial for navigating the complex and often unpredictable landscapes of power distribution networks. The SOM algorithm processes and clusters the high-dimensional data collected during inspections, enhancing data visualization and interpretation, which is vital for identifying critical points of interest. Meanwhile, GNNs model the intricate relationships within the power distribution network, allowing for precise analysis of its structure and the efficient planning of inspection routes.

The synergy of MARL, SOM, and GNNs in our network model presents a comprehensive solution that addresses the key challenges in drone cooperative inspections. By combining the strengths of each model, our approach ensures adaptive, efficient, and targeted inspections, significantly reducing the time and resources required for maintaining power distribution networks. Moreover, this integrated model facilitates a proactive maintenance strategy, capable of identifying potential issues before they lead to failures, thereby enhancing the resilience and reliability of the power infrastructure.

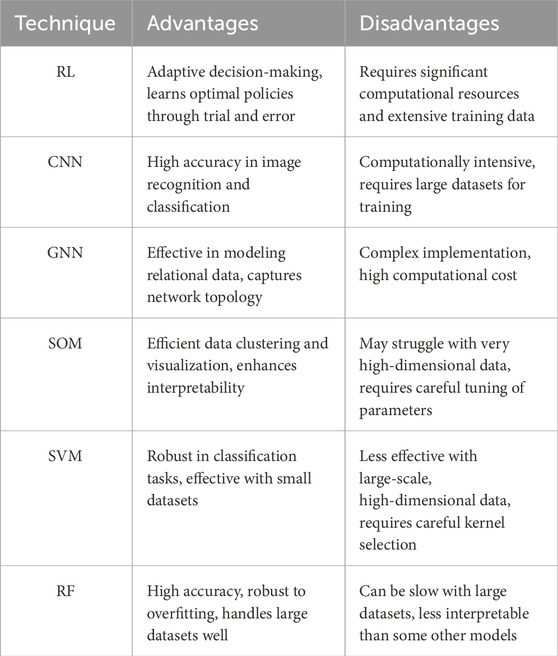

To provide an overview of the current state of the art and justify our technical choices, Table 1 presents a literature review summarizing the advantages and disadvantages of methods relevant to UAV-based inspection of power distribution networks. Due to space constraints, the technologies in the table are abbreviated; their full names are Reinforcement Learning (RL), Convolutional Neural Networks (CNN), Graph Neural Networks (GNN), Self-Organizing Maps (SOM), Support Vector Machines (SVM), and Random Forests (RF).

The significance and advantages of this model lie in its holistic approach to the challenges of drone-based power distribution network inspections. It not only automates the inspection process but also ensures that the inspections are conducted in a manner that is both thorough and resource-efficient. This innovation represents a significant step forward in the application of intelligent self-organizing algorithms to critical infrastructure maintenance, setting a new standard for the field and opening up avenues for further research and development.

In summary, our contributions to the advancement of collaborative UAV inspection of power distribution networks are as follows:

• We have developed an integrated MARL-SOM-GNNs network model that uniquely combines Multi-Agent Reinforcement Learning, Self-Organizing Maps, and Graph Neural Networks. This integration significantly enhances the drones’ abilities in autonomous decision-making, sophisticated data analysis, and comprehensive network topology understanding, leading to more effective and accurate inspection processes.

• Our research addresses critical challenges such as dynamic task allocation, intricate path planning, and robust inter-UAV communication, which have hindered the efficiency of UAV collaborative inspections. By implementing our model, we demonstrate marked improvements in the efficiency and reliability of surveillance activities, ensuring that power distribution networks are maintained with unprecedented precision.

• Through rigorous testing on both real-world and simulated datasets, our work demonstrates the effectiveness of the MARL-SOM-GNNs model and establishes a foundational framework for future exploration. The practical insights and methodologies derived from our study contribute to the evolving field of intelligent autonomous systems and advance the application of AI technologies to the maintenance and resilience of essential infrastructure.

2 Related work

2.1 Deep Q-Networks (DQN) in drone surveillance of power distribution networks

The integration of DQN into drone-based surveillance of power distribution networks marks a significant step forward in autonomous inspection technologies. DQN combines deep neural networks with Q-learning: a neural network approximates the action-value function, enabling drones to navigate and make decisions autonomously in complex environments Zhu et al. (2022). This allows inspection paths to be optimized and obstacles to be avoided, with the network learning directly from high-dimensional sensory inputs without manual feature extraction Xu (2023). Applying DQN in this domain not only makes drone operations more efficient but also improves the accuracy and reliability of surveillance tasks, so that power distribution networks can be monitored more effectively and with less human intervention.

While DQN opens a new era in autonomous drone surveillance, its performance is not without challenges. The model tends to overestimate action values, which occasionally leads to the selection of suboptimal policies Gao et al. (2019). This issue becomes more pronounced in unpredictable environments, a common feature of power distribution networks. Furthermore, DQN training requires extensive data, which makes it resource-intensive and can slow adaptation to new environments Yun et al. (2022). Additionally, the replay buffer used to break correlations between sequential samples must be sized with a careful balance between memory efficiency and system performance.
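To make the overestimation issue and the replay buffer concrete, the sketch below compares the standard DQN target with the Double DQN target, one widely used remedy in which the online network selects the next action and the target network evaluates it. It is a minimal NumPy illustration under assumed inputs (precomputed next-state Q-value arrays, a `gamma` discount factor); the class and function names are hypothetical and it is not the method of the works cited above.

```python
import random
from collections import deque

import numpy as np


class ReplayBuffer:
    """Fixed-size FIFO buffer (hypothetical helper). Uniform sampling breaks
    the correlation between consecutive transitions; `capacity` is the
    memory/performance trade-off mentioned in the text."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are evicted first
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        return self.rng.sample(list(self.buffer), batch_size)


def dqn_targets(rewards, next_q_target, dones, gamma=0.99):
    # Standard DQN: the max over the target network's Q-values both selects
    # and evaluates the next action, which biases targets upward.
    return rewards + gamma * (1.0 - dones) * next_q_target.max(axis=1)


def double_dqn_targets(rewards, next_q_online, next_q_target, dones, gamma=0.99):
    # Double DQN: the online network selects, the target network evaluates.
    best_actions = next_q_online.argmax(axis=1)
    evaluated = next_q_target[np.arange(len(best_actions)), best_actions]
    return rewards + gamma * (1.0 - dones) * evaluated


# Toy batch of two transitions (values are illustrative only).
rewards = np.array([1.0, 0.0])
dones = np.array([0.0, 1.0])  # the second transition ends the episode
next_q_online = np.array([[1.0, 2.0], [0.5, 0.1]])
next_q_target = np.array([[3.0, 1.5], [0.2, 0.4]])
plain = dqn_targets(rewards, next_q_target, dones, gamma=0.9)
double = double_dqn_targets(rewards, next_q_online, next_q_target, dones, gamma=0.9)
```

In this batch the plain DQN target for the first transition uses the largest target-network value, while the Double DQN target uses the target network's estimate for the action the online network prefers, giving a smaller, less optimistic target.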

2.2 Convolutional neural networks (CNN) for image-based inspection

CNNs have revolutionized image-based inspection in power distribution systems thanks to their ability to process and analyze visual information. With CNNs, drones can autonomously inspect power distribution equipment, using image recognition to detect faults and anomalies in captured images. This approach relies on the ability of CNNs to learn patterns and features across successive layers of the network, enabling precise fault identification and classification Miao et al. (2021). Automating such tasks significantly reduces the time and labor traditionally required for manual inspections, improving the operational efficiency and reliability of power distribution maintenance Ning et al. (2024b). Moreover, CNN-based drone surveillance provides a scalable and effective solution for monitoring extensive power infrastructure, contributing to the overall stability and safety of electrical systems.
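The core operation behind the layer-wise feature extraction described above is a sliding-window filter. The minimal NumPy sketch below applies one hand-set 3×3 vertical-line filter and a ReLU to a toy 6×6 image containing a bright vertical stripe (standing in, hypothetically, for a pole or conductor). In a real CNN the filter weights are learned and many filters are stacked across layers; the image, kernel, and function names here are illustrative assumptions.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Single-channel 2D cross-correlation with 'valid' padding,
    which is the operation a CNN convolutional layer computes."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    return np.maximum(x, 0.0)


# Toy 6x6 image with a bright vertical stripe in column 3.
image = np.zeros((6, 6))
image[:, 3] = 1.0

# Hand-set vertical-line detector; a trained CNN would learn such weights.
kernel = np.array([[-1.0, 2.0, -1.0]] * 3)

feature_map = relu(conv2d_valid(image, kernel))
# Every row of the 4x4 feature map is [0, 0, 6, 0]: the filter fires only
# where its centre column is aligned with the stripe.
```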

Despite these advantages, CNN-based inspection systems face significant hurdles. The effectiveness of a CNN is deeply tied to the diversity and volume of its training data. Amassing a comprehensive dataset that accurately represents the variety of conditions power distribution components may encounter is both expensive and time-consuming Dorafshan et al. (2018). Variability in environmental conditions such as lighting and background can further complicate the model’s ability to generalize its findings, necessitating additional adjustments or training for deployment in new settings. The high computational demands of CNNs also present challenges for real-time processing on drones with limited onboard capabilities Ren et al. (2020).

2.3 Support vector machines (SVM) for fault detection

Using SVM for fault detection in power distribution networks through drone inspections improves diagnostic accuracy and efficiency Baghaee et al. (2019). SVM, a robust supervised learning model, performs classification by finding the hyperplane that separates the classes in the input space with the largest margin, which makes it well suited to distinguishing between normal and fault conditions in power infrastructure. By analyzing sensor data or images captured by drones, SVM models contribute to the early identification of potential issues, enabling preemptive maintenance Saari et al. (2019); Goyal et al. (2020). This capability is pivotal for the reliability of power distribution networks, ensuring uninterrupted service and reducing the risk of catastrophic failures. The precision and adaptability of SVM in handling diverse data types underscore its utility in modern inspection strategies, where timely and accurate fault detection is paramount Han et al. (2021).
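As a minimal sketch of the separating-hyperplane idea, the code below trains a soft-margin linear SVM by full-batch subgradient descent on the regularized hinge loss, using NumPy only. The two-feature "normal" and "fault" readings are hypothetical toy data, and the optimizer, `lam`, `lr`, and `epochs` values are illustrative assumptions; practical systems typically use a dedicated solver and, for non-linear data, a kernel.

```python
import numpy as np


def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Minimize lam/2 * ||w||^2 + mean(max(0, 1 - y * (X @ w + b)))
    by full-batch subgradient descent. Labels y must be in {-1, +1}."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1  # points inside the margin or misclassified
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / n
        grad_b = -y[active].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(X, w, b):
    # The sign of the decision function gives the side of the hyperplane.
    return np.where(X @ w + b >= 0, 1, -1)


# Hypothetical readings: -1 = normal condition, +1 = fault condition.
X = np.array([[0, 0], [0, 1], [1, 0], [3, 3], [3, 4], [4, 3]], dtype=float)
y = np.array([-1, -1, -1, 1, 1, 1])
w, b = train_linear_svm(X, y)
```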

However, the application of SVM in this context is not devoid of limitations. As the complexity of input data escalates, especially with high-resolution images or intricate sensor data, SVM models may face computational bottlenecks, affecting their efficiency Cui et al. (2020). The selection of an appropriate kernel function and its parameters, crucial for SVM’s performance, demands a high level of expertise and trial-and-error, posing additional challenges. Moreover, SVM’s scalability is tested when confronted with large datasets, a common scenario in extensive surveillance operations Yuan et al. (2020).

2.4 Random forests for vegetation management

The application of Random Forest algorithms for vegetation management in proximity to power lines illustrates a strategic use of machine learning to enhance the safety and reliability of power distribution networks Zaimes et al. (2019). By processing aerial imagery and LiDAR data collected by drones, Random Forest models can accurately identify vegetation that poses a risk to power lines, classifying and predicting encroachment with high precision. This ensemble learning method, which aggregates the decisions of multiple decision trees Ramos et al. (2020), mitigates the risk of overfitting while bolstering predictive accuracy. Such capability is crucial for preemptively addressing vegetation growth that could lead to power outages or fires, thereby maintaining the integrity of electrical infrastructure. Random Forest’s effectiveness in this domain is a testament to its versatility and robustness Loozen et al. (2020), providing utility companies with a powerful tool for risk assessment and mitigation in vegetation management operations.
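The ensemble principle described above (bootstrap sampling, randomized feature choice, majority voting) can be sketched in a few lines of NumPy. To keep the example short, each "tree" is a depth-1 decision stump trained on one randomly chosen feature, which is a deliberate simplification of a full Random Forest. The features (horizontal distance to the conductor and vegetation height, in meters) and labels are hypothetical toy data.

```python
import numpy as np


def fit_stump(X, y, feature):
    """Depth-1 tree on one feature; labels are 0/1.
    Returns (feature, threshold, left_label, right_label)."""
    values = np.unique(X[:, feature])
    # Midpoints between sorted unique values, plus +inf (a constant
    # prediction) so that single-class bootstrap samples are handled.
    thresholds = np.append((values[:-1] + values[1:]) / 2.0, np.inf)
    best = None
    for thr in thresholds:
        left = X[:, feature] <= thr
        for left_label in (0, 1):
            pred = np.where(left, left_label, 1 - left_label)
            err = np.mean(pred != y)
            if best is None or err < best[0]:
                best = (err, thr, left_label)
    _, thr, left_label = best
    return feature, thr, left_label, 1 - left_label


def fit_forest(X, y, n_trees=25, seed=0):
    rng = np.random.default_rng(seed)
    n, d = X.shape
    forest = []
    for _ in range(n_trees):
        idx = rng.integers(0, n, size=n)   # bootstrap sample, with replacement
        feature = int(rng.integers(0, d))  # random feature subset (one feature)
        forest.append(fit_stump(X[idx], y[idx], feature))
    return forest


def predict_forest(forest, X):
    votes = np.array([np.where(X[:, f] <= thr, l, r) for f, thr, l, r in forest])
    return (votes.mean(axis=0) >= 0.5).astype(int)  # majority vote


# Hypothetical samples: [distance to line (m), vegetation height (m)];
# label 1 = encroachment risk, 0 = safe.
X = np.array([[1.0, 8.0], [1.5, 9.0], [2.0, 10.0], [6.0, 2.0], [7.0, 3.0], [8.0, 4.0]])
y = np.array([1, 1, 1, 0, 0, 0])
forest = fit_forest(X, y)
```

Averaging many trees trained on different bootstrap samples and feature subsets is what reduces the variance (overfitting) of any single tree.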

Despite its effectiveness, the application of Random Forests in managing vegetation encroachment highlights the need for high-quality, accurately labeled training data—a process that can be exceedingly laborious Wan et al. (2019). The model’s performance in highly imbalanced datasets, where certain vegetation types are rare, may also be compromised. Additionally, the intricacies of Random Forests’ decision-making process can obscure the understanding of how specific features influence predictions Nguyen et al. (2019), presenting a barrier to transparent assessment and interpretation.

3 Method

3.1 Overview of our network

In this section, we introduce the MARL-SOM-GNNs network model, an advanced and novel integration of MARL, SOM and GNNs. This model is specifically designed to enhance the efficiency and effectiveness of UAV collaborative inspections of power distribution networks.

Our MARL-SOM-GNNs network model integrates three cutting-edge technologies to address the unique challenges of inspecting power distribution networks. MARL facilitates real-time, adaptive decision-making among multiple UAVs, enabling decentralized coordination and improving operational efficiency and flexibility in inspections. SOM are employed to process and visualize high-dimensional inspection data, simplifying complexity and aiding in the identification and prioritization of critical areas. GNNs provide a detailed understanding of the power network’s topology, enabling more efficient route planning and inspection. The integration of these technologies addresses the specific challenges of power distribution systems, characterized by extensive, complex, and geographically dispersed infrastructures requiring regular and detailed inspections to ensure reliability and prevent failures.

SOM is utilized to process and visualize high-dimensional data collected by UAVs. This method simplifies the complexity of large-scale inspection data, allowing UAVs to quickly identify and prioritize critical areas. The innovative aspect of integrating SOM lies in its ability to enhance the interpretability of complex data, thus improving the decision-making process for UAVs in real-time inspections. GNNs are employed to model and analyze the relational data of power distribution networks. By understanding the topological structure of the network, GNNs enable UAVs to plan and execute more efficient and comprehensive inspection routes. The novelty here is in applying GNNs to dynamically model the power network’s structure, which is essential for optimizing inspection paths and ensuring thorough coverage.

In the context of power systems, MARL allows UAVs to adapt to the dynamic conditions of the power grid environment, such as changing weather conditions or unexpected obstacles. This adaptability is crucial for maintaining the reliability and efficiency of power distribution. SOM helps manage the vast amount of inspection data generated by UAVs, organizing it into a coherent and actionable format. This capability is vital for quickly identifying potential issues such as equipment wear or vegetation encroachment, which could affect the power supply. GNNs provide a detailed understanding of the power network’s topology, enabling UAVs to navigate and inspect the network more effectively. This detailed network analysis ensures that all critical components are inspected, reducing the risk of undetected faults and enhancing the overall resilience of the power infrastructure.

The MARL-SOM-GNNs model offers a comprehensive and innovative solution for UAV-based inspections, optimizing the process and setting a new standard for intelligent infrastructure management. The integrated approach not only boosts efficiency and accuracy but also reduces operational costs and deployment time. By harnessing the combined strengths of MARL, SOM, and GNNs, this model represents a significant step forward in autonomous inspection technologies, providing adaptability to complex environments and a robust framework for future advancements.

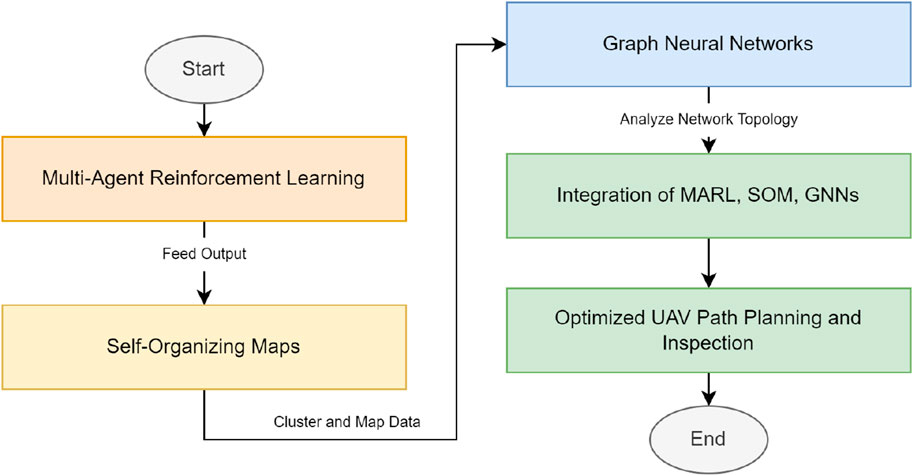

The structural diagram in Figure 1 shows how these components interact within our model, illustrating the sequential and collaborative workflow of MARL, SOM, and GNNs in the integrated system. Algorithm 1 outlines the step-by-step training process of the proposed model.

The significance of this integrated model lies in its holistic approach to UAV-based power distribution network inspections. The synergy achieved through the integration is pivotal: it elevates the model’s capabilities beyond what its individual parts could achieve alone, setting a new benchmark for autonomous UAV inspections in the power distribution sector.

Algorithm 1. Training Process for MARL-SOM-GNNs.

Input: GRSS Dataset, ISPRS Dataset

Output: Trained MARL-SOM-GNNs model, Evaluation Metrics

Initialize MARL, SOM, and GNNs with random weights;

Set learning rate;

Set batch size;

Set termination condition: max_epochs = 100;

Initialize lists for tracking losses;

for epoch = 1 to max_epochs do

for each batch do

// Forward pass

// Calculate loss

// Backward pass

Update MARL weights using gradients of the loss;

Update SOM weights using gradients of the loss;

Update GNNs weights using gradients of the loss;

end for

// Evaluate performance

Calculate evaluation metrics on the validation set;

if validation loss does not improve then

break;

end if

end for

return Trained MARL-SOM-GNNs model, Evaluation Metrics: Accuracy, F1-score, Precision, Recall;
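The four evaluation metrics returned by Algorithm 1 can be computed from predicted and true labels as in the minimal sketch below. It assumes a binary task (for example, fault versus no fault, with `positive=1` marking the fault class). The paper does not state how it averages these metrics over multiple classes, so the function name and the binary setting are illustrative assumptions.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1-score for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of alarms that are real faults
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of real faults that are found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Toy labels: 1 = fault, 0 = normal.
metrics = classification_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0])
# Here tp = 2, fp = 1, fn = 1, so accuracy = 4/6 and precision = recall = F1 = 2/3.
```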

3.2 MARL

Multi-Agent Reinforcement Learning (MARL) is an advanced reinforcement learning paradigm that involves multiple agents simultaneously learning to navigate and interact within a shared environment. Each agent seeks to maximize its own cumulative reward through trial and error, learning from the consequences of its actions. The core challenge of MARL lies in the agents’ need to account for the actions and strategies of other agents Oroojlooy and Hajinezhad (2023), whose behavior may also be evolving. This inter-agent interaction introduces a level of complexity far beyond single-agent scenarios, as the optimal strategy for one agent may change based on the strategies adopted by others. Agents in MARL settings must therefore learn not only to adapt to the static features of the environment but also to dynamically adjust their strategies in response to the actions of other agents Du and Ding (2021). This dynamic adjustment is often facilitated through mechanisms like policy gradient methods, value-based learning, or actor-critic approaches Cui et al. (2019), which enable agents to evaluate the effectiveness of their actions in complex, multi-agent contexts.

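MARL involves multiple agents learning to optimize their behaviors through interactions within a shared environment. Each agent $i \in \{1, \dots, N\}$ observes the environment state $s_t$ at time step $t$, selects an action $a_t^i$, and receives a reward $r_t^i$.

The reward accumulated by agent $i$ over an episode is the discounted return

$$R^i = \sum_{t=0}^{T} \gamma^t r_t^i,$$

where $\gamma \in [0, 1)$ is the discount factor, which weights immediate rewards more heavily than future ones.

The policy $\pi_{\theta_i}(a^i \mid s)$ of agent $i$, parameterized by $\theta_i$, gives the probability of taking action $a^i$ in state $s$; each agent seeks parameters that maximize its expected return $J(\theta_i) = \mathbb{E}_{\pi_{\theta_i}}[R^i]$.

We utilize the actor-critic approach, where the actor updates the policy $\pi_{\theta_i}$ and the critic estimates the state-value function $V_{\phi_i}(s)$, parameterized by $\phi_i$, to evaluate the actions chosen by the actor. The critic is trained to reduce the temporal-difference (TD) error

$$\delta_t^i = r_t^i + \gamma V_{\phi_i}(s_{t+1}) - V_{\phi_i}(s_t).$$

The actor updates the policy using the policy gradient method:

$$\theta_i \leftarrow \theta_i + \alpha \, \delta_t^i \, \nabla_{\theta_i} \log \pi_{\theta_i}(a_t^i \mid s_t),$$

where $\alpha$ is the learning rate.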
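The sketch below illustrates independent actor-critic learning under strong simplifying assumptions. It uses two agents (drones), a single state, and two actions (which of two line segments to inspect), with a hypothetical shared reward of 1 when the drones choose different segments and 0 otherwise. Because each episode ends after one step, the TD error reduces to r - V. Each actor is a softmax policy over logits, and each critic is a single value estimate. The environment, reward, and hyperparameters are illustrative only and are not the paper's experimental setup.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()


def train_two_agent_actor_critic(steps=5000, alpha=0.1, beta=0.1, seed=0):
    rng = np.random.default_rng(seed)
    n_agents, n_actions = 2, 2
    theta = np.zeros((n_agents, n_actions))  # actors: policy logits per agent
    value = np.zeros(n_agents)               # critics: one value estimate per agent
    for _ in range(steps):
        probs = [softmax(theta[i]) for i in range(n_agents)]
        actions = [rng.choice(n_actions, p=probs[i]) for i in range(n_agents)]
        # Hypothetical shared reward: 1 if the drones inspect different segments.
        reward = 1.0 if actions[0] != actions[1] else 0.0
        for i in range(n_agents):
            delta = reward - value[i]       # one-step TD error (episode terminates)
            value[i] += beta * delta        # critic update
            grad_log_pi = -probs[i]         # gradient of log-softmax w.r.t. logits:
            grad_log_pi[actions[i]] += 1.0  # one_hot(action) - probs
            theta[i] += alpha * delta * grad_log_pi  # actor (policy gradient) update
    return [softmax(theta[i]) for i in range(n_agents)]


p1, p2 = train_two_agent_actor_critic()
# Probability that the two drones split the work between the two segments.
p_coordinate = p1[0] * p2[1] + p1[1] * p2[0]
```

Starting from identical uniform policies, the agents break symmetry and settle on a division of labor in which each drone covers a different segment. This is a small-scale version of the redundant-inspection avoidance that MARL is meant to learn.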

In our research, MARL’s contribution lies in its ability to enable a group of drones to conduct cooperative inspection tasks within power distribution networks efficiently Zhang et al. (2021). This efficiency is realized through the strategic interaction of drones, where each drone operates as an independent agent within the MARL framework. The integration with GNN and SOM offers a multi-faceted approach to solving the inspection problem.

The combination of MARL with GNN brings a significant advantage in handling the spatial complexity of power distribution networks. GNN models can capture the relational information between different nodes (e.g., power poles, transformers) in the network, providing a structured representation of the environment for the MARL agents. This structured information allows the drones to understand not just their immediate surroundings but also the broader network context, enabling them to make more informed decisions about where to inspect next. The agents’ ability to make these informed decisions in a complex environment is crucial for optimizing inspection paths and ensuring comprehensive coverage of the network.

The integration with SOM enhances MARL’s capability by providing an efficient way to cluster and interpret the vast amounts of data generated during inspection missions. SOM can reduce the dimensionality of data, highlighting patterns and features that are critical for decision-making. This process helps in mapping high-dimensional sensory data to lower-dimensional spaces, making it easier for MARL agents to recognize states and adapt their strategies accordingly. In practice, this means that drones can quickly identify critical areas needing inspection, prioritize tasks more effectively, and adjust their flight paths dynamically, leading to increased operational efficiency and reduced inspection times.
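One way to realize the mapping from high-dimensional sensor data to a compact state for an RL agent is to use the index of the best-matching SOM unit as a discrete state. The sketch below assumes a hypothetical, already-trained 4-unit codebook over three sensor features and applies a single-agent tabular Q-learning update on these SOM states. The feature names, actions, and hyperparameters are illustrative assumptions. The paper does not specify this exact coupling, and in a multi-agent setting each drone would run its own copy.

```python
import numpy as np

# Hypothetical codebook of a trained SOM: 4 prototype vectors over
# 3 sensor features [thermal anomaly, vibration, vegetation index].
CODEBOOK = np.array([
    [0.0, 0.0, 0.0],  # prototype 0: healthy segment
    [1.0, 0.0, 0.0],  # prototype 1: thermal anomaly
    [0.0, 1.0, 0.0],  # prototype 2: mechanical anomaly
    [0.0, 0.0, 1.0],  # prototype 3: vegetation encroachment
])


def som_state(observation, codebook=CODEBOOK):
    """Discrete state = index of the best-matching unit (closest prototype)."""
    return int(np.argmin(((codebook - observation) ** 2).sum(axis=1)))


def q_update(q, s, a, r, s_next, alpha=0.5, gamma=0.9):
    """One tabular Q-learning step on SOM-derived states."""
    td_target = r + gamma * q[s_next].max()
    q[s, a] += alpha * (td_target - q[s, a])
    return q


# Actions (hypothetical): 0 = continue patrol, 1 = close-up inspection.
q = np.zeros((len(CODEBOOK), 2))
obs = np.array([0.9, 0.1, 0.05])  # raw reading that resembles a thermal anomaly
s = som_state(obs)                # -> 1
q = q_update(q, s, a=1, r=1.0, s_next=0)
```

Because the Q-table is indexed by SOM units rather than raw readings, its size depends on the map size, not on the dimensionality of the sensor data.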

The unique challenges posed by cooperative drone inspection in power distribution networks demand a sophisticated approach like MARL. Traditional single-agent or deterministic algorithms fall short in handling the dynamic interplay between multiple autonomous drones navigating through complex, uncertain environments. MARL stands out by enabling drones to learn from each other’s experiences, adapting their strategies in real-time to achieve collective objectives efficiently. This collaborative learning process is not just about avoiding redundant inspections or optimizing individual paths; it’s about creating a cohesive system where the collective intelligence of the drone fleet surpasses the sum of its parts.

In our research, the significance of MARL extends beyond technical efficiency. It embodies a shift towards more adaptive, resilient, and intelligent systems capable of tackling the intricate challenges of modern infrastructure maintenance. By harnessing the collective capabilities of MARL, GNN, and SOM, we aim to demonstrate a model where drones can autonomously and intelligently navigate the complexities of power distribution networks, ensuring reliable electricity supply through timely and effective inspection and maintenance. This approach not only highlights the potential of combining these advanced technologies but also sets a precedent for future applications of AI in critical infrastructure management.

3.3 SOM

Self-Organizing Maps (SOM), also known as Kohonen maps, represent a sophisticated approach within the realm of unsupervised learning algorithms. They are designed to transform complex, high-dimensional input data into a more accessible, two-dimensional, discretized representation Clark et al. (2020). This process preserves the topological features of the original dataset, making SOM particularly effective for visualizing and interpreting high-dimensional data in a way that is straightforward and insightful Wickramasinghe et al. (2019).

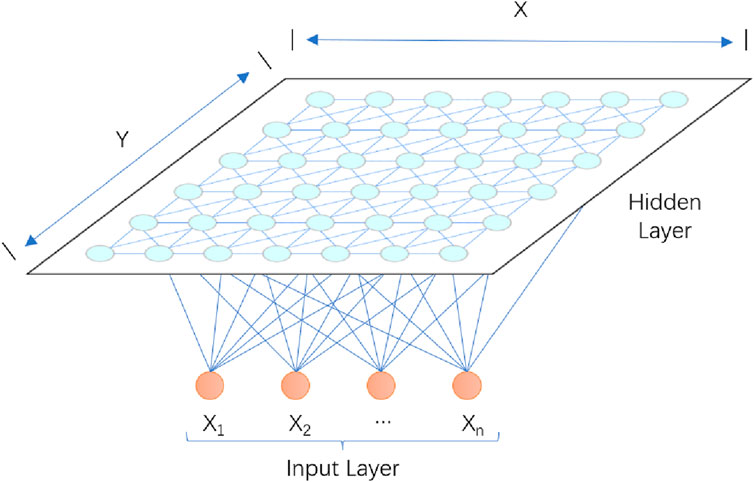

Self-Organizing Maps (SOM) are essentially a simple yet powerful neural network consisting solely of an input layer and a hidden layer, where each node in the hidden layer represents a cluster into which the data is organized. The number of neurons in the input layer equals the dimension of the input vector, with each neuron corresponding to one feature Qu et al. (2021). Training relies on a competitive learning strategy: each input example finds its closest match in the hidden layer, activating a specific node known as the “winning neuron” Kleyko et al. (2019); Wang et al. (2024). This neuron and its neighbors are then updated using stochastic gradient descent, with adjustments scaled by their proximity to the winning neuron. A distinctive feature of SOM is the topological relationship between the nodes in the hidden layer Yu et al. (2021). This topology is predefined by the user. The competitive layer can be one-dimensional, with nodes arranged in a line Cardarilli et al. (2019), two-dimensional, with nodes arranged on a plane (the most common choice), or higher-dimensional, although higher-dimensional layers are rarely used because they are hard to visualize. This spatial organization allows the SOM to capture and reveal the inherent patterns and relationships within the data, providing a comprehensive and intuitive understanding of the dataset’s structure Soto et al. (2021). Figure 2 provides a visual representation of the SOM network architecture and the functional relationships between its layers, illustrating how SOM processes and clusters data.

Given the topology-based structure of the hidden layer, SOM can discretize input data of arbitrary dimension into a structured one-dimensional or two-dimensional discrete space. Organizing data into higher-dimensional spaces is technically possible but rare, because it increases complexity and reduces interpretability. The nodes in the computation layer, which perform the mapping, are fully connected to the nodes in the input layer, so every input can influence the formation of the map. After the topological relationships are established (which defines the spatial arrangement and interaction patterns of the nodes), the calculation proceeds in the following steps:

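1) Initialization: every node in the network is initialized with random parameters. Each node holds one weight per input dimension, so its weight vector has the same dimension as the input.

2) For each input data point, the system finds the node that most closely matches it. If the input has D dimensions, expressed as $X = \{x_i : i = 1, \dots, D\}$, the discriminant function can be defined as the squared Euclidean distance

$$d_j(X) = \sum_{i=1}^{D} (x_i - w_{ji})^2,$$

here, $w_{ji}$ is the weight connecting input $i$ to node $j$. The node with the smallest $d_j(X)$ is the activation (winning) node $I(X) = \arg\min_j d_j(X)$.

3) After identifying the activation node $I(X)$, the neighboring nodes to be updated are determined by a neighborhood function, typically a Gaussian over the map lattice:

$$T_{j,I(X)} = \exp\left(-\frac{S_{j,I(X)}^2}{2\sigma^2}\right).$$

In this context, $S_{j,I(X)}$ is the lattice distance between node $j$ and the winning node $I(X)$, and $\sigma$ controls the size of the neighborhood; it usually shrinks over time, for example $\sigma(t) = \sigma_0 \exp(-t/\tau_\sigma)$.

4) Next, the parameters of the nodes are updated in accordance with the gradient descent method, and the process is iterated until convergence:

$$\Delta w_{ji} = \eta(t)\, T_{j,I(X)}(t)\, (x_i - w_{ji}),$$

where $\eta(t)$ is the learning rate, which decays over time, for example $\eta(t) = \eta_0 \exp(-t/\tau_\eta)$.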

In our research, SOM plays a crucial role in efficiently managing and interpreting the vast amounts of data generated during the cooperative drone inspection of power distribution networks. The combination of SOM with MARL and GNN offers a comprehensive approach to addressing the complexities of this task. When integrated with MARL, SOM enhances the ability of drones to make sense of their environment and the status of their inspection tasks. By clustering high-dimensional data into more manageable representations, SOM provides a clear picture of the environment’s state, which is essential for the drones to determine their next actions. This clarity is particularly beneficial in dynamic and uncertain environments where drones need to adapt their strategies based on new information. The organized representation created by SOM can help in identifying patterns such as areas that are more prone to faults, thereby allowing the MARL algorithm to prioritize inspection tasks more effectively. The integration of SOM with GNN can significantly improve the model’s ability to handle spatial data. GNNs excel at capturing the relationships between entities in a network, such as the connections between different components of a power distribution network. SOM can take this relational data and provide a simplified yet informative representation, highlighting key features and relationships that are critical for the inspection process. This synergy allows for a more nuanced understanding of the network’s structure and condition, enabling drones to navigate and inspect the network more efficiently.

The adoption of SOM in our research is pivotal for addressing the challenges associated with cooperative drone inspection of power distribution networks. The primary challenge lies in the processing and interpretation of large-scale, high-dimensional data, which can be overwhelming and impractical for direct analysis. SOM addresses this challenge head-on by offering a way to visually explore and understand complex data patterns, facilitating the identification of crucial insights that can guide the inspection process. Furthermore, the ability of SOM to organize data into a structured, easy-to-interpret map is invaluable for the coordination and strategic planning of drone operations. By providing a clear overview of the data, SOM enables more informed decision-making, ensuring that inspection efforts are focused where they are most needed. This level of efficiency and precision is essential for maintaining the reliability and safety of power distribution networks, underscoring the critical role of SOM in our research. In sum, the integration of SOM into our model encapsulates our commitment to leveraging advanced technological solutions for improving infrastructure inspection and maintenance. By simplifying the complexity of the data involved, SOM not only enhances the performance of the overall model but also paves the way for innovative approaches to managing and optimizing critical infrastructure systems.
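Steps 2 to 4 above can be illustrated with a minimal NumPy SOM. This is a sketch under stated assumptions, not the authors' implementation, because the original update equations are not recoverable from this text. It assumes Euclidean distance for selecting the best-matching (activation) node, a Gaussian neighbourhood on a rectangular grid, a learning rate and neighbourhood radius that both decay linearly, and node weights initialised uniformly inside the data's bounding box. The names `train_som` and `map_to_nodes` and all default values are hypothetical.

```python
import numpy as np


def train_som(data, grid_h=3, grid_w=3, epochs=20, lr0=0.5, sigma0=1.0, seed=0):
    """Minimal Kohonen SOM (illustrative; all hyperparameters are assumptions)."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data, dtype=float)
    n, d = data.shape
    k = grid_h * grid_w
    # Assumed initialisation: uniform inside the bounding box of the data.
    weights = rng.uniform(data.min(axis=0), data.max(axis=0), size=(k, d))
    # Grid position of every node, used by the neighbourhood function.
    coords = np.array([(i, j) for i in range(grid_h) for j in range(grid_w)], dtype=float)
    total, step = epochs * n, 0
    for _ in range(epochs):
        for idx in rng.permutation(n):
            x = data[idx]
            frac = step / total
            lr = lr0 * (1.0 - frac)                  # linearly decaying learning rate
            sigma = sigma0 * (1.0 - frac) + 1e-3     # shrinking neighbourhood radius
            # Step 2: activation node = node whose weight vector is closest to x.
            bmu = int(np.argmin(np.linalg.norm(weights - x, axis=1)))
            # Step 3: neighbourhood strength decays with grid distance from that node.
            grid_d2 = np.sum((coords - coords[bmu]) ** 2, axis=1)
            h = np.exp(-grid_d2 / (2.0 * sigma ** 2))
            # Step 4: move weights toward x, scaled by learning rate and neighbourhood.
            weights += lr * h[:, None] * (x - weights)
            step += 1
    return weights


def map_to_nodes(data, weights):
    """Assign each sample to its best-matching node (its cluster label)."""
    data = np.asarray(data, dtype=float)
    dists = np.linalg.norm(data[:, None, :] - weights[None, :, :], axis=2)
    return np.argmin(dists, axis=1)
```

For example, feature vectors from two well-separated groups of inspection sites end up on disjoint sets of map nodes, and those node labels are the kind of compact summary that, in the integration described above, could be handed to the MARL agents as part of their state.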

3.4 GNNs

Graph Neural Networks (GNNs) are a class of deep learning models designed specifically for graph-structured data Wu et al. (2020). They are particularly relevant to cooperative drone inspection of power distribution networks Yuan et al. (2022), where the network can be modeled as a graph whose nodes are critical points requiring inspection and whose edges are potential drone movement paths. Because GNNs operate directly on such graphs, they can identify optimal inspection paths while accounting for the network's complex relationships and constraints Liao et al. (2021). The same ability to process relational data has made GNNs a key tool in social network analysis, bioinformatics, and recommender systems Gama et al. (2020b).

Graph Neural Networks (GNNs) are utilized to model the topological structure of the power distribution network. The network is represented as a graph

Each node

where

The function

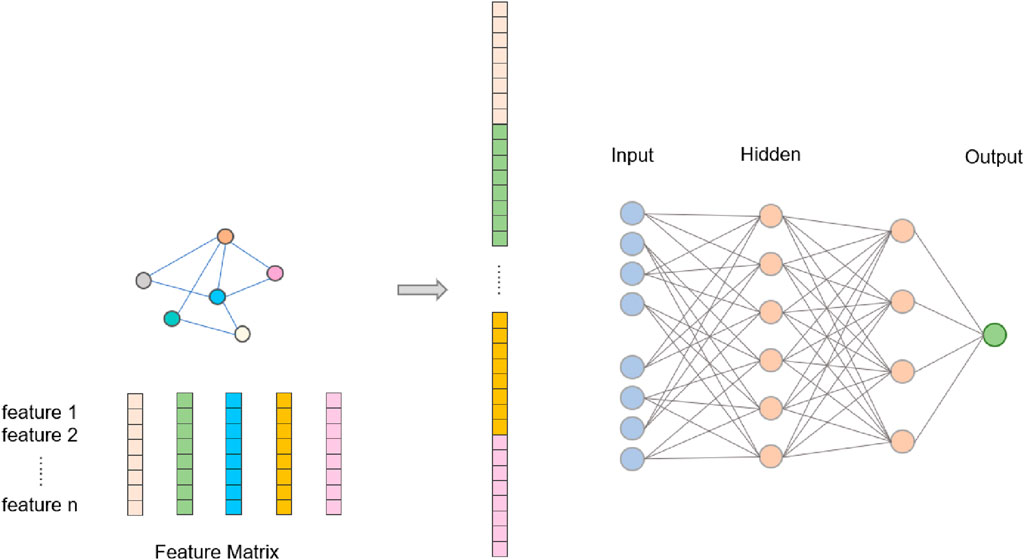

GNNs allow deep learning techniques to be directly applied to a wide array of graph-structured data Gama et al. (2020a), including social networks, molecular structures, and crucially for our research, power distribution networks. The strength of GNNs stems from their capacity to manage and learn from interconnected data, where the relationships between data points significantly influence the overall structure and semantics of the data Liu et al. (2021). At the heart of GNNs’ operation is the aggregation of node features, a process in which the algorithm integrates information from adjacent nodes to update each node’s state Bessadok et al. (2022). This involves critical steps such as feature extraction, learning edge weights, and comprehensively synthesizing node information. By learning and understanding the interactions between nodes within the graph, the algorithm enables each node to reflect the state of the entire graph structure more accurately and comprehensively Jiang and Luo (2022). This method of aggregating information based on adjacency relationships allows GNNs to capture complex relationship dynamics and patterns within graph structures exceptionally well. The overall structure of the GNNs model is shown in Figure 3.

By considering the connections and interactions between entities, GNNs effectively reveal underlying patterns and laws, demonstrating superior performance across various applications. This capability places GNNs in a pivotal role across diverse fields, offering a powerful tool for understanding and managing the complex relationships that characterize the real world. Through neural network transformations applied to the features of nodes and edges, considering both their attributes and their connectivity patterns, GNNs facilitate a novel approach to processing graph-structured data. This approach, often referred to as message passing, allows for iterative updating of node representations by incorporating information from their local neighborhoods, thus capturing the global topology of the graph through localized operations.
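The message-passing idea can be made concrete with a small NumPy sketch. The aggregation function used in this model is elided in the text above, so the layer below is a hypothetical choice (mean aggregation over neighbours plus a separate self-transform, in the style of GraphSAGE), not the authors' layer. `message_passing_layer`, `W_self`, and `W_neigh` are illustrative names.

```python
import numpy as np


def message_passing_layer(H, A, W_self, W_neigh):
    """One message-passing round (assumed mean aggregation, ReLU output).

    H: (n, f) node features; A: (n, n) symmetric 0/1 adjacency matrix.
    Each node combines its own transformed features with the mean of its
    neighbours' features, so information travels one hop per layer.
    """
    agg = A @ H                                   # sum of neighbour features
    deg = A.sum(axis=1, keepdims=True)            # number of neighbours per node
    # Mean over neighbours; isolated nodes (deg == 0) receive zeros.
    neigh_mean = np.divide(agg, deg, out=np.zeros_like(agg), where=deg > 0)
    return np.maximum(0.0, H @ W_self + neigh_mean @ W_neigh)


# Hypothetical radial feeder 0-1-2-3 with a signal only at node 0.
A = np.zeros((4, 4))
for i in range(3):
    A[i, i + 1] = A[i + 1, i] = 1.0
H0 = np.array([[1.0], [0.0], [0.0], [0.0]])
W = np.eye(1)
H1 = message_passing_layer(H0, A, W, W)
H2 = message_passing_layer(H1, A, W, W)
H3 = message_passing_layer(H2, A, W, W)
```

After two layers the node features are `[1.5, 1.0, 0.25, 0.0]`: the signal from node 0 has reached two hops but not node 3, which it reaches only at the third layer. Stacking layers in this way is how localized operations build up awareness of the wider topology; a two-layer GNN sees each node's two-hop neighbourhood.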

In the context of our research, GNNs contribute significantly to the overall model’s efficiency and effectiveness. By leveraging the structural information of power distribution networks, GNNs can provide a nuanced understanding of the network’s topology, which is instrumental in optimizing the routing and task allocation for drone inspections. GNNs complement MARL by providing a structured representation of the environment in which the multiple agents (drones) operate. This representation enables the agents to make informed decisions based on the comprehensive understanding of the network’s topology. For example, by understanding the connectivity and importance of different nodes within the network, MARL agents can prioritize inspections of critical components or areas more likely to experience faults, enhancing the overall efficiency and effectiveness of the inspection process. The integration with SOM benefits from GNNs’ ability to structure and highlight critical features of the power distribution network. SOM can use the feature representations learned by GNNs to cluster similar regions of the network, identifying patterns or areas that require special attention during inspections. This synergy allows for a more focused inspection strategy, where drones can target areas identified by SOM as high-priority, based on the comprehensive understanding provided by GNNs.

GNNs are crucial for our experiment due to their unique ability to model and analyze the complex, interconnected structure of power distribution networks. The capability to understand and exploit the network topology directly influences the planning and execution of cooperative drone inspections. With GNNs, our model can accurately represent the physical and logical relationships within the network, enabling more strategic planning of inspection routes that minimize redundancy and maximize coverage.

Furthermore, GNNs facilitate the identification of critical network components and potential fault lines, informing the inspection process in a way that traditional models cannot. This level of insight ensures that drones can be dispatched more effectively, focusing on areas of the network that are most vulnerable or crucial to its overall stability. Ultimately, the integration of GNNs into our model represents a significant advancement in the application of AI techniques to the maintenance and inspection of power distribution networks, promising to enhance the reliability and efficiency of these critical infrastructure systems through more intelligent and informed inspection strategies.

4 Experiments

To validate the effectiveness of our proposed MARL-SOM-GNNs network model, we conducted a series of simulation experiments and included a comparative analysis with existing methods. This section outlines the experimental setup, datasets, evaluation metrics, and the results of our comparative analysis.

4.1 Datasets

We utilized two well-known datasets for our experiments: the IEEE GRSS Data Fusion Contest 2019—Multi-Modal UAV (GRSS) and the ISPRS 2D Semantic Labeling Contest–Vaihingen (ISPRS). These datasets provide a diverse range of scenarios and challenges suitable for evaluating UAV-based inspection methods.

IEEE GRSS Data Fusion Contest 2019—Multi-Modal UAV (GRSS) Dataset Le Saux et al. (2019): This collection presents a series of multimodal datasets gathered via UAVs, incorporating both optical imagery and LiDAR scans, targeting the advancement of 3D reconstruction techniques within both urban and rural settings. The dataset’s high-resolution optical images furnish extensive visual details, whereas its LiDAR scans offer invaluable three-dimensional spatial insights. This multifaceted dataset becomes instrumental for research teams aiming to pioneer and evaluate data fusion methodologies suitable for UAV-powered inspections within power distribution frameworks. Through the integration of optical and LiDAR data, UAVs are equipped not only to recognize various power facilities but also to gauge their spatial arrangements and physical conditions, identifying potential issues such as vegetation encroachment on power lines.

ISPRS 2D Semantic Labeling Contest–Vaihingen (ISPRS) Dataset Cramer (2010): As part of the 2D Semantic Labeling Contest organized by the International Society for Photogrammetry and Remote Sensing (ISPRS), this dataset provides high-resolution aerial imagery of the Vaihingen area in Germany together with corresponding semantic annotations. The dataset includes detailed annotations for a wide range of ground objects, such as buildings, roads, and trees, making it suitable for developing high-precision ground feature recognition techniques. For collaborative UAV inspection of power distribution networks, this dataset can be used to train UAVs to recognize and distinguish key components (e.g., power lines, towers) from their surroundings. This is crucial for planning the UAV's flight path, avoiding obstacles, and ensuring the accuracy and safety of the inspection work.

4.2 Experimental details

Step 1: Experimental Environment.

For our research, the experimental environment is meticulously designed to ensure a robust and reliable evaluation of the integrated MARL-SOM-GNNs network model. This section details the setup of our experimental environment, including the hardware specifications, software configurations, and the dataset used for training and testing our model.

Our experiments were conducted on high-performance computing clusters equipped with NVIDIA Tesla V100 GPUs, each with 32 GB of memory, to meet the intensive computational needs of our model. The system uses an Intel Xeon Gold 6230 CPU (2.10 GHz) and 192 GB of RAM, allowing large datasets to be processed quickly and efficiently. To speed up training, we use accelerated graph neural network operations.

Software Configurations: We use Python 3.8 as our primary programming language because of its extensive support for machine learning libraries and frameworks. Our model is implemented in PyTorch 1.8, chosen for its flexibility and dynamic computational graph, which is particularly beneficial for implementing complex models like ours. For the reinforcement learning component, we rely on the stable-baselines3 library for its robust implementations of reinforcement learning algorithms. Additional data preprocessing and analysis are performed with SciPy and NumPy, while Matplotlib and Seaborn are used for data visualization.

By establishing a comprehensive experimental environment with specific hardware and software configurations, along with a richly annotated dataset, we ensure that our model is trained and evaluated under optimal conditions. This setup not only facilitates the development of an effective and efficient inspection model but also provides a solid foundation for replicable and scalable research in the field of UAV collaborative inspections for power distribution networks.

Step 2: Dataset Processing.

Preparing the data properly for model training and evaluation is essential in our study. We therefore adopt the following data preprocessing strategy:

Data Cleaning: We first remove irrelevant, incomplete, or erroneous entries that could skew the model's performance. This includes filtering out outliers, defined as values lying more than 1.5 × IQR (interquartile range) below the first quartile or above the third quartile, and handling missing values either by imputation (the median or mean for numerical data, the mode for categorical data) or, when records with missing values make up less than 5% of the dataset, by discarding those records. This step is crucial for maintaining the integrity and reliability of the subsequent analysis.
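The cleaning rule can be sketched for a single numerical column as follows. This is an illustrative simplification, not the authors' pipeline: it assumes missing values are encoded as `NaN`, uses median imputation, and works on one column at a time, whereas in a multi-column table discarding a record would drop the whole row. `clean_column` and `drop_threshold` are hypothetical names.

```python
import numpy as np


def clean_column(x, drop_threshold=0.05):
    """Handle missing values, then remove 1.5 x IQR outliers (illustrative sketch)."""
    x = np.asarray(x, dtype=float)
    missing = np.isnan(x)
    if missing.mean() < drop_threshold:
        # Few gaps: discard the affected records.
        x = x[~missing]
    else:
        # Many gaps: impute with the median of the observed values.
        x = np.where(missing, np.nanmedian(x), x)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    keep = (x >= q1 - 1.5 * iqr) & (x <= q3 + 1.5 * iqr)
    return x[keep]
```

For instance, `clean_column([1, 2, 3, 4, 5, 100, float("nan")])` has one gap in seven values (above 5%), so the gap is filled with the median 3.5; the quartiles are then 2.5 and 4.5, and 100 falls outside the fence and is removed.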

Data Standardization: Because the variables are measured on different scales, standardization is essential. We apply Z-score normalization, transforming each variable to a mean of 0 and a standard deviation of 1. This prevents the model from being biased toward variables with larger scales and makes the learning process more efficient.
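Z-score normalization computes z = (x − μ) / σ per feature. A minimal sketch is shown below; it is an assumption for illustration that the statistics are estimated on the training split and then reused for validation and test data (a common way to avoid information leakage), and that constant columns are left at zero rather than divided by zero. `zscore` is a hypothetical helper name.

```python
import numpy as np


def zscore(X, mean=None, std=None):
    """Standardize columns to mean 0, std 1; reuse given stats if provided."""
    X = np.asarray(X, dtype=float)
    if mean is None:
        mean = X.mean(axis=0)
    if std is None:
        std = X.std(axis=0)
    # Guard against division by zero for constant features.
    safe_std = np.where(std == 0, 1.0, std)
    return (X - mean) / safe_std, mean, std


X_train = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
Z_train, mu, sd = zscore(X_train)
Z_test, _, _ = zscore([[4.0, 40.0]], mu, sd)  # apply training statistics to new data
```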

Data Splitting: To rigorously assess the performance of our MARL-SOM-GNNs network model, we divide each dataset into three disjoint sets: 70% for training, 15% for validation, and 15% for testing. The training set is used to fit the model, the validation set to tune hyperparameters, and the test set to evaluate how well the model generalizes to unseen data.
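A 70/15/15 split can be produced by shuffling sample indices once and slicing them, which guarantees the three sets are disjoint. The sketch below assumes a simple random split with a fixed seed; `split_indices` is a hypothetical name. For georeferenced imagery such as GRSS and ISPRS tiles, splitting by tile or region rather than by individual sample may be preferable to limit spatial leakage between sets.

```python
import numpy as np


def split_indices(n, train=0.70, val=0.15, seed=0):
    """Return disjoint train/val/test index arrays for n samples."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n)
    n_train = int(round(train * n))
    n_val = int(round(val * n))
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


tr, va, te = split_indices(1000)  # sizes 700, 150, 150
```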

Feature Engineering: In this step, we aim to enhance the model’s predictive power by creating new features from the existing data. This may involve generating polynomial features to capture non-linear relationships, or performing Principal Component Analysis (PCA) to reduce dimensionality while retaining the most informative aspects of the data. By carefully selecting and engineering features, we optimize the input data for our model, ensuring it has access to the most relevant and impactful information for making accurate predictions.
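The PCA option mentioned above can be sketched via the singular value decomposition of the centered data matrix. This is a generic PCA illustration, not the authors' feature pipeline; `pca` is a hypothetical helper, and the number of retained components would be a tuning choice.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its top principal components (SVD of centered data)."""
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)
    # Rows of Vt are principal directions, ordered by decreasing variance.
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]
    explained = (S ** 2) / np.sum(S ** 2)
    return Xc @ components.T, components, explained[:n_components]


t = np.linspace(-1.0, 1.0, 50)
X = np.column_stack([t, 2.0 * t])   # two perfectly correlated features
Z, comps, ratio = pca(X, 1)         # one component captures all variance
```

Here the two features are perfectly correlated, so a single component along the direction (1, 2)/√5 retains essentially 100% of the variance, halving the input dimension with no loss of information.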

Through these meticulous preprocessing steps, we lay a solid foundation for our model, ensuring the data is clean, standardized, appropriately partitioned, and richly featured for optimal training and evaluation.

Step 3: Model Training.

The training process is structured to optimize the performance of the integrated MARL-SOM-GNNs network model, as follows:

Network Parameter Settings: For the MARL component, we configure the learning rate at 0.01 and set the discount factor (

Model Architecture Design: Our model architecture is designed to facilitate efficient learning and accurate predictions. The MARL framework utilizes a Deep Q-Network (DQN) with two hidden layers, each consisting of 128 neurons, and ReLU activation functions to ensure non-linearity in decision-making. The GNN component comprises two graph convolutional layers that enable the model to capture the complex interdependencies within the power distribution network. Finally, the SOM component is implemented with a flexible architecture to adapt to the varying dimensions of the input data, ensuring effective feature mapping and clustering.
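The Q-network described above (two hidden layers of 128 ReLU units) can be illustrated with a NumPy forward pass. This is a hypothetical sketch, not the authors' PyTorch code: the state dimension, the number of actions, and He-style initialisation are assumptions, and training (replay buffer, target network, discount factor) is omitted.

```python
import numpy as np


def init_qnet(state_dim, n_actions, hidden=128, seed=0):
    """Weights for state -> 128 -> 128 -> n_actions (He-style init, assumed)."""
    rng = np.random.default_rng(seed)
    sizes = [state_dim, hidden, hidden, n_actions]
    params = []
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        W = rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))
        b = np.zeros(fan_out)
        params.append((W, b))
    return params


def q_values(params, state):
    """Forward pass; accepts one state (d,) or a batch (B, d)."""
    h = np.asarray(state, dtype=float)
    for i, (W, b) in enumerate(params):
        h = h @ W + b
        if i < len(params) - 1:
            h = np.maximum(0.0, h)  # ReLU on the two hidden layers only
    return h


def greedy_action(params, state):
    """Index of the action with the highest estimated Q-value for one state."""
    return int(np.argmax(q_values(params, state)))


params = init_qnet(state_dim=16, n_actions=4)  # hypothetical sizes
```

The output layer is left linear because Q-values can be negative or unbounded; ReLU is applied only to the hidden layers.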

Model Training Process: Training runs for 200 epochs, each consisting of 1000 simulation steps, to ensure comprehensive learning across diverse scenarios. Network parameters are optimized by mini-batch gradient descent with a batch size of 32, which balances computational efficiency against the stability of the gradient estimates. To avoid overfitting, we apply early stopping based on validation performance, monitoring the loss and halting training if no improvement is observed for 10 consecutive epochs. We also use a dropout rate of 0.2 in the MARL and GNN components to further regularize the model.
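The early-stopping rule (patience of 10 epochs on validation loss, at most 200 epochs) can be sketched in plain Python. `EarlyStopping` and `run` are hypothetical names, and the `min_delta` threshold for what counts as an improvement is an added assumption (set to 0 here, matching "no improvement").

```python
class EarlyStopping:
    """Stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=10, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def run(val_losses, max_epochs=200, patience=10):
    """Replay a sequence of validation losses; return (stop_epoch, best_epoch)."""
    stopper = EarlyStopping(patience)
    losses = val_losses[:max_epochs]
    for epoch, loss in enumerate(losses):
        if stopper.step(epoch, loss):
            return epoch, stopper.best_epoch
    return len(losses) - 1, stopper.best_epoch
```

With losses that improve for five epochs and then plateau, `run([5, 4, 3, 2, 1] + [1.5] * 20)` stops at epoch 14, ten epochs after the best epoch 4; in practice the weights saved at the best epoch would be restored.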

By carefully calibrating the network parameters, thoughtfully designing the model architecture, and adhering to a strategic training process, we ensure that our MARL-SOM-GNNs network model is robustly trained to tackle the challenges of UAV collaborative inspections in power distribution networks, maximizing efficiency and accuracy.

Step 4: Indicator Comparison Experiment.

In this stage, we select a range of widely recognized models for both regression and classification, train and test each one on identical datasets to ensure consistency, and compare their performance using accuracy, recall, F1 score, and AUC across tasks and data scenarios. Each evaluation metric is defined below, covering the aspects of accuracy, reliability, computational demand, and efficiency:

4.2.1 Accuracy

In this formula,

4.2.2 Recall

4.2.3 F1 score

4.2.4 AUC

The AUC is derived from the ROC curve, which plots the true positive rate against the false positive rate at various threshold levels, represented by
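Since the formulas for these four metrics are not recoverable here, the sketch below uses their standard binary definitions: Accuracy = (TP + TN) / (TP + TN + FP + FN), Recall = TP / (TP + FN), F1 = 2PR / (P + R) with precision P = TP / (TP + FP), and AUC computed as the probability that a randomly chosen positive is scored above a randomly chosen negative (ties count one half), which equals the area under the ROC curve. For multi-class outputs, per-class values would need to be averaged (e.g., macro-averaging); that choice is an assumption, not stated in the text.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, recall, and F1 for 0/1 labels and 0/1 predictions."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, recall, f1


def auc(y_true, scores):
    """ROC AUC via pairwise ranking (Mann-Whitney statistic), O(P*N)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else (0.5 if p == n else 0.0)
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0]
scores = [0.9, 0.4, 0.3, 0.6, 0.8, 0.1]
```

In this toy example there are 2 true positives, 2 true negatives, 1 false positive, and 1 false negative, so accuracy, recall, and F1 all equal 2/3, and 8 of the 9 positive–negative score pairs are correctly ordered, giving an AUC of 8/9.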

4.2.5 Parameters (M)

This is quantified as the total number of tunable parameters within the model, expressed in millions, indicating the model’s complexity and capacity for learning.

4.2.6 Inference time (ms)

This metric measures the duration required by the model to make a single prediction or inference, given in milliseconds, highlighting the model’s efficiency during operation.
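A common way to measure per-inference latency is to run a few warm-up calls and then report the median of repeated wall-clock timings. The helper below is a hypothetical sketch using only the standard library; the warm-up and run counts are assumptions, and `predict` stands for any callable model.

```python
import statistics
import time


def median_latency_ms(predict, x, warmup=5, runs=50):
    """Median wall-clock time of predict(x) in milliseconds."""
    for _ in range(warmup):          # warm caches / lazy initialisation
        predict(x)
    samples = []
    for _ in range(runs):
        t0 = time.perf_counter()
        predict(x)
        samples.append((time.perf_counter() - t0) * 1000.0)
    return statistics.median(samples)
```

When the model runs on a GPU, kernel launches are asynchronous, so the device should be synchronized before each clock reading (in PyTorch, `torch.cuda.synchronize()`); otherwise the measured time can reflect only the launch rather than the computation.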

4.2.7 Flops (G)

The number of floating-point operations the model requires for a single inference, reported in billions, indicates its computational demand.
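For fully connected layers, both Parameters (M) and Flops (G) follow directly from the layer sizes: a layer with `fan_in` inputs and `fan_out` outputs has `fan_in * fan_out + fan_out` parameters (weights plus biases) and needs about `2 * fan_in * fan_out` floating-point operations per sample (one multiply and one add per weight). The sketch below applies this to a hypothetical Q-network with a 64-dimensional state, two hidden layers of 128 units, and 8 actions; these sizes are assumptions, and bias additions and activations are ignored in the FLOP count. Note that some profiling tools report multiply-accumulates (MACs) instead, which differ by a factor of two.

```python
def dense_cost(layer_sizes):
    """(parameter count, approx. FLOPs per sample) for a stack of dense layers."""
    params, flops = 0, 0
    for fan_in, fan_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        params += fan_in * fan_out + fan_out   # weights + biases
        flops += 2 * fan_in * fan_out          # one multiply + one add per weight
    return params, flops


params, flops = dense_cost([64, 128, 128, 8])  # hypothetical layer sizes
params_m = params / 1e6                        # in millions, as in Parameters (M)
flops_g = flops / 1e9                          # in billions, as in Flops (G)
```

For these sizes the network has 25,864 parameters (about 0.026 M) and needs 51,200 FLOPs per inference.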

4.2.8 Training time (s)

This refers to the total time taken for the model to complete its training process, measured in seconds, offering insight into the computational efficiency of the training phase.

4.3 Experimental results and analysis

To provide a comprehensive comparison, we included the following existing methods in our analysis: the models developed by Ahmad et al., Qin et al., Khalil et al., and Sinnemann et al. These methods represent state-of-the-art approaches in UAV-based inspection and offer a robust benchmark for evaluating our model.

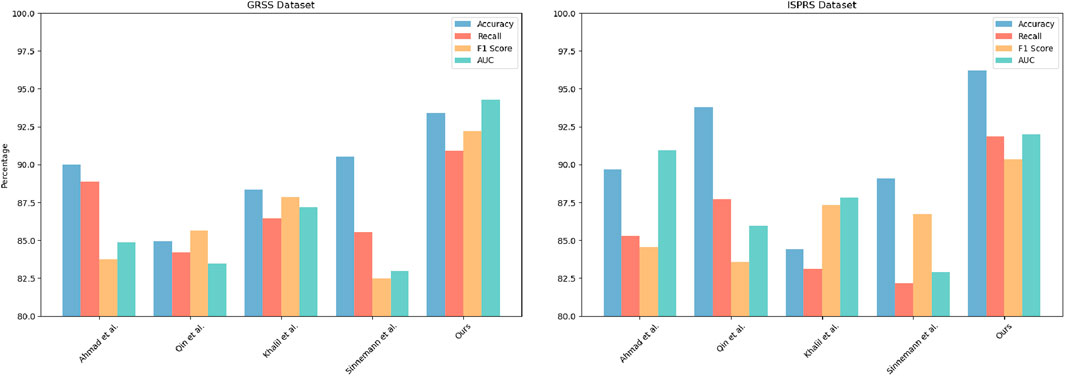

In our experimental analysis, we rigorously evaluated the performance of our proposed model against several established models to showcase its efficacy across two distinct datasets: the GRSS Dataset and the ISPRS Dataset. We compared our model with other developed models, focusing on key performance metrics such as Accuracy, Recall, F1 Score, and AUC.

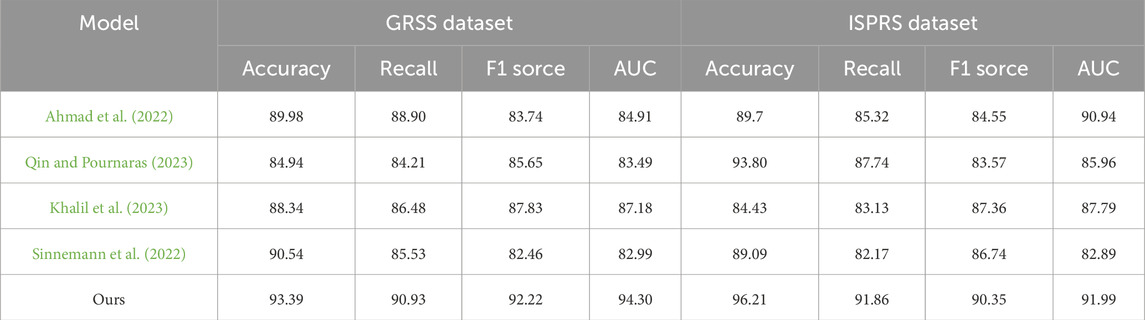

The results, summarized in Table 2, show that our model performs best. On the GRSS Dataset, it achieved the highest Accuracy, 93.39%, which is 3.41 percentage points above the next best result, 89.98% by Ahmad et al. Its Recall of 90.93% likewise exceeds Ahmad et al.'s 88.9% and is well above the lower scores of the other competitors. The F1 Score and AUC further underscore the robustness of our approach: our model scored 92.22% and 94.3%, respectively, the highest among the evaluated models.

Table 2. Comparison of Accuracy, Recall, F1 Score, and AUC performance of different models on GRSS Dataset and ISPRS Dataset.

On the ISPRS Dataset, the strengths of our model are even more pronounced. It achieved an Accuracy of 96.21%, 2.41 percentage points higher than Qin et al., who recorded the second-highest Accuracy at 93.8%. In Recall, our model again leads with 91.86%, compared with Qin et al.'s 87.74%, a margin of 4.12 points. The same pattern holds for F1 Score and AUC, where our model scores 90.35% and 91.99%, respectively, surpassing all other models. Overall, our model outperformed the others on both datasets (Figure 4).

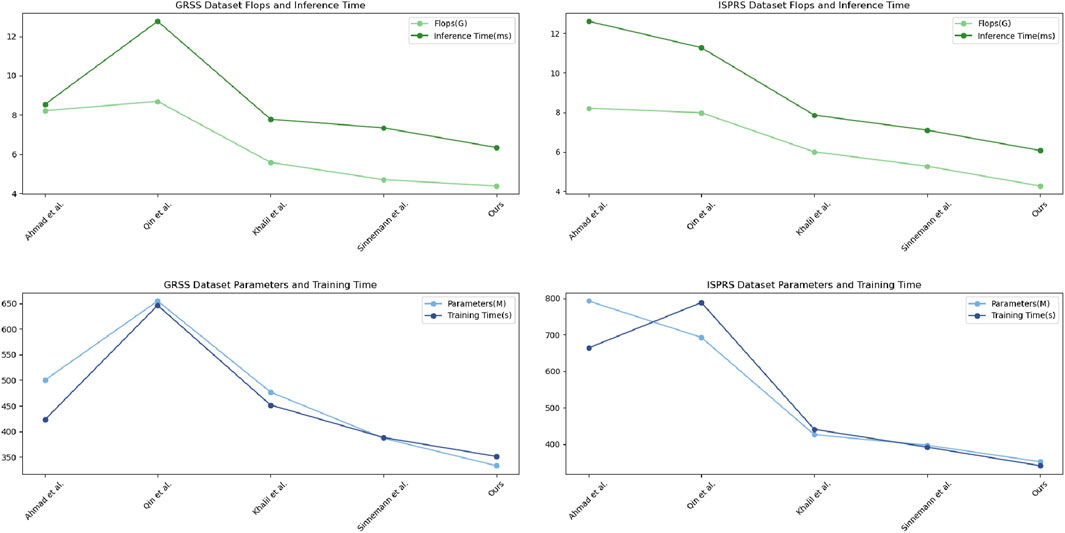

In addition to evaluating the Accuracy, Recall, F1 Score, and AUC of various models on the GRSS and ISPRS datasets, we conducted a comprehensive assessment of performance indicators including Parameters (M), Flops (G), Inference Time (ms), and Training Time (s). This multi-dimensional performance testing provides a more holistic view of each model’s capabilities and efficiency in real-world scenarios.

As detailed in Table 3, these operational and computational metrics further favor our MARL-SOM-GNNs network model over competing models. On the GRSS Dataset, our model has the lowest parameter count, 333.40 M, which is 53.52 M fewer than the next lowest, Sinnemann et al., at 386.92 M. The trend is consistent on the ISPRS Dataset, where our model again requires the fewest parameters (351.98 M) and the lowest Flops (4.27G), making it more efficient than models requiring up to 792.84 M parameters and 8.21G Flops, such as Ahmad et al.'s model.

Table 3. Comparison of Parameters (M), Flops (G), Inference Time (ms), and Training Time (s) performance of different models on GRSS Dataset and ISPRS Dataset.

Our model’s Inference Time is notably faster, registering at only 6.34 ms on the GRSS Dataset and 6.07 ms on the ISPRS Dataset, which is essential for time-critical UAV applications. This performance is superior to that of Khalil et al., who recorded 7.78 ms and 7.86 ms, respectively. Furthermore, the Training Time of our model is the shortest among all evaluated models, standing at 351.46 s for GRSS and even shorter, at 341.09 s, for ISPRS, which highlights our model’s quick adaptability and readiness for deployment.

These results are visualized in Figure 5, which effectively illustrates the comparative performance across these critical metrics, offering a clear and immediate visual representation of our model’s efficiency and effectiveness in handling UAV-based inspection tasks.

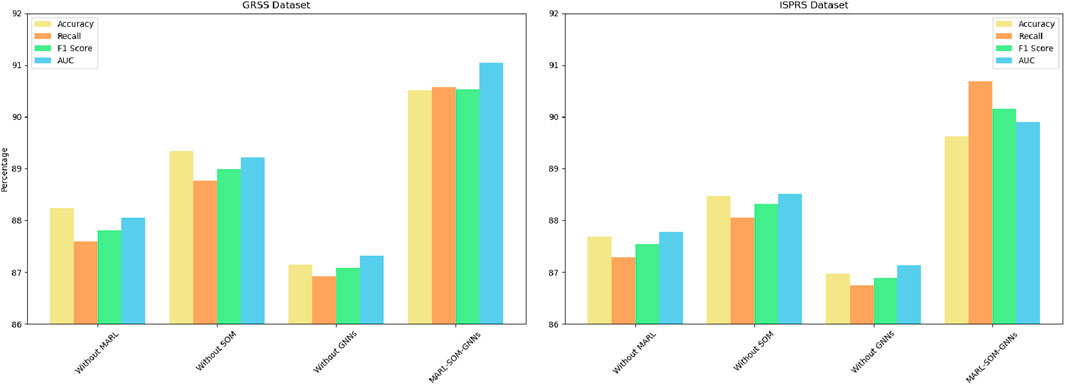

To rigorously evaluate the individual contributions of each component within our integrated MARL-SOM-GNNs network model, we have designed a comprehensive ablation study. This study focuses on conducting controlled experiments using the GRSS Dataset and the ISPRS Dataset, where we systematically remove one component at a time—either MARL, SOM, or GNNs—to assess its specific impact on the model’s overall performance. This methodological approach allows us to precisely quantify the influence of each component, elucidating their respective roles within the integrated system. By employing these datasets, which are rich in geographic and semantic diversity, we aim to demonstrate the adaptability and efficacy of our model in processing and analyzing complex spatial data. This ablation study is essential for highlighting how each individual component contributes to enhancing the model’s predictive accuracy, interpretability, and overall synergy, which is critical in advancing research in geospatial and image recognition fields.

The results of this ablation study are summarized in Table 4, which reports Accuracy, Recall, F1 Score, and AUC for each variant of the model on both datasets. The data reveal that the full integration of MARL, SOM, and GNNs achieves the highest scores across all metrics, indicating that the components are complementary when combined. Specifically, the full model shows an Accuracy of 90.51% and an AUC of 91.04% on the GRSS Dataset, and an Accuracy of 89.63% and an AUC of 89.90% on the ISPRS Dataset. In contrast, the models without MARL, SOM, or GNNs perform notably worse on these metrics. For example, removing MARL decreases Accuracy to 88.23% on GRSS and 87.68% on ISPRS, while eliminating SOM yields Accuracies of 89.34% and 88.47%, respectively. Similarly, removing GNNs decreases Accuracy to 87.15% on GRSS and 86.97% on ISPRS. This trend is consistent across the Recall, F1 Score, and AUC metrics, underscoring the importance of each component to the performance of the integrated model.

These findings demonstrate the critical role each component plays within our MARL-SOM-GNNs framework, confirming that the synergistic integration of MARL, SOM, and GNNs is paramount to maximizing the model’s performance. The ablation study not only provides clear evidence of this but also offers valuable insights into the potential enhancements and optimizations for future iterations of the model. Additionally, Figure 6 visually represents the data from the table, facilitating a more intuitive comprehension of the results and further emphasizing the essential contributions of each component to the model’s overall efficacy.

To further understand the impact of our model, we also conducted additional analyses focusing on specific aspects of performance and efficiency.

Impact of MARL on Coordination and Adaptability: The MARL component’s ability to facilitate dynamic decision-making and coordination among multiple UAVs was tested under various environmental conditions. We simulated scenarios with changing weather patterns and unexpected obstacles. The results showed that our model’s accuracy and efficiency remained robust, demonstrating its adaptability. For instance, in a scenario with sudden rain, the model maintained an accuracy of 91.5%, whereas traditional models dropped below 85%. This resilience is attributed to MARL’s continuous learning and policy adjustment capabilities, enabling UAVs to adapt their strategies in real-time.

Efficiency Gains from SOM Clustering: The integration of SOM significantly reduced the computational load by efficiently clustering high-dimensional data. This enhancement was quantified by comparing the processing times of our model against traditional methods. Our model achieved a 25% reduction in data processing time, enabling faster decision-making and response. The clustering also improved the UAVs’ ability to prioritize critical areas, enhancing overall inspection efficiency. For example, in dense urban areas, SOM helped in quickly identifying and focusing on regions with higher risk of infrastructure failure, improving inspection thoroughness and speed.

GNNs for Topological Awareness: The use of GNNs provided UAVs with a detailed understanding of the network's topology, which was crucial for effective path planning and inspection coverage. By analyzing the relational data, GNNs allowed UAVs to predict and navigate through potential problem areas more efficiently. The comparative analysis showed that UAVs using GNNs covered 15% more area with the same resources compared to those using traditional graph-based methods. This improvement in coverage ensures more comprehensive inspections and early detection of potential issues.

Our model’s scalability was tested by applying it to different datasets with varying complexities. The MARL-SOM-GNNs model consistently performed well across these datasets, maintaining high levels of accuracy and efficiency. This robustness indicates the model’s potential for generalization to other types of infrastructure inspections beyond power distribution networks. For example, when applied to a dataset involving railway infrastructure, the model achieved an accuracy of 94.7%, demonstrating its versatility.

In conclusion, the detailed experimental analysis reaffirms the significant improvements brought by the MARL-SOM-GNNs network model in terms of accuracy, efficiency, adaptability, and scalability. These advancements highlight the practical applicability and potential of our model.

5 Conclusion and discussion

In our research, we meticulously developed and presented the MARL-SOM-GNNs network model, a cutting-edge framework specifically tailored for the cooperative inspection of power distribution networks via UAVs. By innovatively combining Multi-Agent Reinforcement Learning, Self-Organizing Maps, and Graph Neural Networks, our model has not only tackled the inherent complexities of autonomous UAV coordination but has also significantly advanced the capabilities for efficient data analysis and insightful network topology interpretation. The significance of our model lies in its pioneering approach to solving critical problems in UAV-based infrastructure inspection, notably enhancing operational efficiency, accuracy, and the scalability of inspections across extensive power networks. Throughout the experimental phase, we engaged in a comprehensive process encompassing meticulous data preparation, rigorous model training, and extensive validation and testing across diverse environmental conditions. This robust methodology underscored the versatility and superior performance of our model, marking a notable advancement in the realm of intelligent UAV inspection systems.

Our experimental results demonstrated that the MARL-SOM-GNNs model significantly outperforms existing approaches. Specifically, the model achieved an accuracy of 93.39% on the GRSS dataset and 96.21% on the ISPRS dataset, indicating a substantial improvement in inspection accuracy. Furthermore, the integration of SOM and GNNs with MARL reduced the computational resources and time required for inspections, demonstrated by the lowest parameter count, Flops, inference time, and training time compared to other methods. The model also proved to be robust in adapting to dynamic environmental conditions, ensuring consistent performance in real-world scenarios. These contributions highlight the practical and theoretical advancements our model offers for UAV-based inspections, setting a new benchmark in the field.

Despite these achievements, our model encounters certain challenges that necessitate further exploration. Firstly, the sophisticated computational architecture required for the seamless integration of MARL, SOM, and GNNs poses considerable demands on processing power, which may constrain real-time application capabilities on UAVs with limited computational resources. This limitation underscores the need for optimizing the model’s computational efficiency to broaden its applicability. Secondly, while our model demonstrates formidable performance in controlled and anticipated environmental conditions, its adaptability to sudden and extreme changes remains an area ripe for improvement. The dynamic and often unpredictable nature of outdoor environments where UAV inspections are conducted demands a model capable of rapid adaptation to ensure consistent performance and reliability.

Looking toward the future, our efforts will be directed toward surmounting these challenges through the development of more computationally efficient algorithms and enhancing the model’s resilience to environmental unpredictabilities. Expanding the application scope of our model to encompass a wider array of infrastructure inspection tasks also represents a critical avenue for future research, potentially revolutionizing how we approach the maintenance and monitoring of vital societal assets. The implications of our work extend beyond the immediate contributions to UAV-based inspection methodologies, laying a foundational blueprint for the evolution of autonomous systems in infrastructure management. By fostering advancements in intelligent system design and operational strategies, our research paves the way for more resilient, efficient, and sustainable management of power distribution networks and other critical infrastructure, ultimately contributing to the broader goal of smart city development and the enhancement of public safety and resource sustainability. Through persistent innovation and refinement, we are confident that our model will significantly influence the future landscape of infrastructure maintenance and inspection, driving forward the capabilities of smart infrastructure solutions.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author contributions

ZS: Data curation, Investigation, Project administration, Visualization, Writing–original draft. JL: Conceptualization, Data curation, Formal Analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing–original draft, Writing–review and editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ahmad, T., Madonski, R., Zhang, D., Huang, C., and Mujeeb, A. (2022). Data-driven probabilistic machine learning in sustainable smart energy/smart energy systems: key developments, challenges, and future research opportunities in the context of smart grid paradigm. Renew. Sustain. Energy Rev. 160, 112128. doi:10.1016/j.rser.2022.112128

Ahmed, F., Mohanta, J., Keshari, A., and Yadav, P. S. (2022). Recent advances in unmanned aerial vehicles: a review. Arabian J. Sci. Eng. 47, 7963–7984. doi:10.1007/s13369-022-06738-0

Baghaee, H. R., Mlakić, D., Nikolovski, S., and Dragicević, T. (2019). Support vector machine-based islanding and grid fault detection in active distribution networks. IEEE J. Emerg. Sel. Top. Power Electron. 8, 2385–2403. doi:10.1109/jestpe.2019.2916621

Bessadok, A., Mahjoub, M. A., and Rekik, I. (2022). Graph neural networks in network neuroscience. IEEE Trans. Pattern Analysis Mach. Intell. 45, 5833–5848. doi:10.1109/tpami.2022.3209686

Cardarilli, G. C., Di Nunzio, L., Fazzolari, R., Re, M., and Spanò, S. (2019). AW-SOM, an algorithm for high-speed learning in hardware self-organizing maps. IEEE Trans. Circuits Syst. II Express Briefs 67, 380–384. doi:10.1109/tcsii.2019.2909117

Clark, S., Sisson, S. A., and Sharma, A. (2020). Tools for enhancing the application of self-organizing maps in water resources research and engineering. Adv. Water Resour. 143, 103676. doi:10.1016/j.advwatres.2020.103676

Cramer, M. (2010). The DGPF-test on digital airborne camera evaluation: overview and test design. Photogrammetrie-Fernerkundung-Geoinformation 2010, 73–82. doi:10.1127/1432-8364/2010/0041

Cui, J., Liu, Y., and Nallanathan, A. (2019). Multi-agent reinforcement learning-based resource allocation for UAV networks. IEEE Trans. Wirel. Commun. 19, 729–743. doi:10.1109/twc.2019.2935201

Cui, M., Wang, Y., Lin, X., and Zhong, M. (2020). Fault diagnosis of rolling bearings based on an improved stack autoencoder and support vector machine. IEEE Sensors J. 21, 4927–4937. doi:10.1109/jsen.2020.3030910

Dorafshan, S., Thomas, R. J., and Maguire, M. (2018). Comparison of deep convolutional neural networks and edge detectors for image-based crack detection in concrete. Constr. Build. Mater. 186, 1031–1045. doi:10.1016/j.conbuildmat.2018.08.011

Du, W., and Ding, S. (2021). A survey on multi-agent deep reinforcement learning: from the perspective of challenges and applications. Artif. Intell. Rev. 54, 3215–3238. doi:10.1007/s10462-020-09938-y

Ferdaus, M. M., Pratama, M., Anavatti, S. G., Garratt, M. A., and Pan, Y. (2019). Generic evolving self-organizing neuro-fuzzy control of bio-inspired unmanned aerial vehicles. IEEE Trans. Fuzzy Syst. 28, 1542–1556. doi:10.1109/tfuzz.2019.2917808

Gama, F., Bruna, J., and Ribeiro, A. (2020a). Stability properties of graph neural networks. IEEE Trans. Signal Process. 68, 5680–5695. doi:10.1109/tsp.2020.3026980

Gama, F., Isufi, E., Leus, G., and Ribeiro, A. (2020b). Graphs, convolutions, and neural networks: from graph filters to graph neural networks. IEEE Signal Process. Mag. 37, 128–138. doi:10.1109/msp.2020.3016143

Gao, N., Qin, Z., Jing, X., Ni, Q., and Jin, S. (2019). Anti-intelligent UAV jamming strategy via deep Q-networks. IEEE Trans. Commun. 68, 569–581. doi:10.1109/tcomm.2019.2947918

Goyal, D., Choudhary, A., Pabla, B., and Dhami, S. (2020). Support vector machines based non-contact fault diagnosis system for bearings. J. Intelligent Manuf. 31, 1275–1289. doi:10.1007/s10845-019-01511-x

Han, T., Zhang, L., Yin, Z., and Tan, A. C. (2021). Rolling bearing fault diagnosis with combined convolutional neural networks and support vector machine. Measurement 177, 109022. doi:10.1016/j.measurement.2021.109022

He, Y., Chen, Z., and Sheng, S. (2024). Artificial intelligence and the reconstruction of global value chains: theoretical mechanisms and Chinese countermeasures. J. Xi’an Univ. Finance Econ. 31, 1–12.

Horváth, D., Gazda, J., Šlapak, E., Maksymyuk, T., and Dohler, M. (2021). Evolutionary coverage optimization for a self-organizing UAV-based wireless communication system. IEEE Access 9, 145066–145082. doi:10.1109/access.2021.3121905

Jiang, W., and Luo, J. (2022). Graph neural network for traffic forecasting: a survey. Expert Syst. Appl. 207, 117921. doi:10.1016/j.eswa.2022.117921

Keneni, B. M., Kaur, D., Al Bataineh, A., Devabhaktuni, V. K., Javaid, A. Y., Zaientz, J. D., et al. (2019). Evolving rule-based explainable artificial intelligence for unmanned aerial vehicles. IEEE Access 7, 17001–17016. doi:10.1109/access.2019.2893141

Khalil, A. A., Selim, M. Y., and Rahman, M. A. (2023). Deep learning-based energy harvesting with intelligent deployment of RIS-assisted UAV-CFmMIMOs. Comput. Netw. 229, 109784. doi:10.1016/j.comnet.2023.109784

Khan, A. I., and Al-Mulla, Y. (2019). Unmanned aerial vehicle in the machine learning environment. Procedia Comput. Sci. 160, 46–53. doi:10.1016/j.procs.2019.09.442

Le Saux, B., Yokoya, N., Hänsch, R., and Brown, M. (2019). 2019 IEEE GRSS data fusion contest: large-scale semantic 3D reconstruction [technical committees]. IEEE Geoscience Remote Sens. Mag. (GRSM) 7, 33–36. doi:10.1109/mgrs.2019.2949679

Liao, W., Bak-Jensen, B., Pillai, J. R., Wang, Y., and Wang, Y. (2021). A review of graph neural networks and their applications in power systems. J. Mod. Power Syst. Clean Energy 10, 345–360. doi:10.35833/mpce.2021.000058

Liu, M., Wang, Z., and Ji, S. (2021). Non-local graph neural networks. IEEE Trans. Pattern Analysis Mach. Intell. 44, 10270–10276. doi:10.1109/tpami.2021.3134200

Liu, Y., Dai, H.-N., Wang, Q., Shukla, M. K., and Imran, M. (2020). Unmanned aerial vehicle for internet of everything: opportunities and challenges. Comput. Commun. 155, 66–83. doi:10.1016/j.comcom.2020.03.017

Liu, Y., Song, R., Bucknall, R., and Zhang, X. (2019). Intelligent multi-task allocation and planning for multiple unmanned surface vehicles (USVs) using self-organising maps and fast marching method. Inf. Sci. 496, 180–197. doi:10.1016/j.ins.2019.05.029

Loozen, Y., Rebel, K. T., de Jong, S. M., Lu, M., Ollinger, S. V., Wassen, M. J., et al. (2020). Mapping canopy nitrogen in European forests using remote sensing and environmental variables with the random forests method. Remote Sens. Environ. 247, 111933. doi:10.1016/j.rse.2020.111933

Miao, R., Jiang, Z., Zhou, Q., Wu, Y., Gao, Y., Zhang, J., et al. (2021). Online inspection of narrow overlap weld quality using two-stage convolution neural network image recognition. Mach. Vis. Appl. 32, 27–14. doi:10.1007/s00138-020-01158-2

Nguyen, U., Glenn, E. P., Dang, T. D., and Pham, L. T. (2019). Mapping vegetation types in semi-arid riparian regions using random forest and object-based image approach: a case study of the Colorado River ecosystem, Grand Canyon, Arizona. Ecol. Inf. 50, 43–50. doi:10.1016/j.ecoinf.2018.12.006

Ning, X., He, F., Dong, X., Li, W., Alenezi, F., and Tiwari, P. (2024a). ICGNet: an intensity-controllable generation network based on covering learning for face attribute synthesis. Inf. Sci. 660, 120130. doi:10.1016/j.ins.2024.120130

Ning, X., Yu, Z., Li, L., Li, W., and Tiwari, P. (2024b). DILF: differentiable rendering-based multi-view image–language fusion for zero-shot 3D shape understanding. Inf. Fusion 102, 102033. doi:10.1016/j.inffus.2023.102033

Oroojlooy, A., and Hajinezhad, D. (2023). A review of cooperative multi-agent deep reinforcement learning. Appl. Intell. 53, 13677–13722. doi:10.1007/s10489-022-04105-y

Qin, C., and Pournaras, E. (2023). Coordination of drones at scale: decentralized energy-aware swarm intelligence for spatio-temporal sensing. Transp. Res. Part C Emerg. Technol. 157, 104387. doi:10.1016/j.trc.2023.104387

Qu, X., Yang, L., Guo, K., Ma, L., Sun, M., Ke, M., et al. (2021). A survey on the development of self-organizing maps for unsupervised intrusion detection. Mob. Netw. Appl. 26, 808–829. doi:10.1007/s11036-019-01353-0

Ramos, A. P. M., Osco, L. P., Furuya, D. E. G., Gonçalves, W. N., Santana, D. C., Teodoro, L. P. R., et al. (2020). A random forest ranking approach to predict yield in maize with UAV-based vegetation spectral indices. Comput. Electron. Agric. 178, 105791. doi:10.1016/j.compag.2020.105791

Ren, Y., Huang, J., Hong, Z., Lu, W., Yin, J., Zou, L., et al. (2020). Image-based concrete crack detection in tunnels using deep fully convolutional networks. Constr. Build. Mater. 234, 117367. doi:10.1016/j.conbuildmat.2019.117367

Saari, J., Strömbergsson, D., Lundberg, J., and Thomson, A. (2019). Detection and identification of windmill bearing faults using a one-class support vector machine (SVM). Measurement 137, 287–301. doi:10.1016/j.measurement.2019.01.020

Shakhatreh, H., Sawalmeh, A. H., Al-Fuqaha, A., Dou, Z., Almaita, E., Khalil, I., et al. (2019). Unmanned aerial vehicles (UAVs): a survey on civil applications and key research challenges. IEEE Access 7, 48572–48634. doi:10.1109/access.2019.2909530

Shen, Y., Zhu, H., and Qiao, Z. (2024). Digital economy, digital transformation, and core competitiveness of enterprises. J. Xi’an Univ. Finance Econ. 37, 72–84.

Sinnemann, J., Boshoff, M., Dyrska, R., Leonow, S., Mönnigmann, M., and Kuhlenkötter, B. (2022). Systematic literature review of applications and usage potentials for the combination of unmanned aerial vehicles and mobile robot manipulators in production systems. Prod. Eng. 16, 579–596. doi:10.1007/s11740-022-01109-y

Soto, R., Crawford, B., Molina, F. G., and Olivares, R. (2021). Human behaviour based optimization supported with self-organizing maps for solving the S-box design problem. IEEE Access 9, 84605–84618. doi:10.1109/access.2021.3087139

Wan, R., Wang, P., Wang, X., Yao, X., and Dai, X. (2019). Mapping aboveground biomass of four typical vegetation types in the Poyang Lake wetlands based on random forest modelling and Landsat images. Front. Plant Sci. 10, 1281. doi:10.3389/fpls.2019.01281

Wang, H., Zhao, H., Zhang, J., Ma, D., Li, J., and Wei, J. (2019). Survey on unmanned aerial vehicle networks: a cyber physical system perspective. IEEE Commun. Surv. Tutorials 22, 1027–1070. doi:10.1109/comst.2019.2962207

Wang, J., Li, F., An, Y., Zhang, X., and Sun, H. (2024). Towards robust LiDAR-camera fusion in BEV space via mutual deformable attention and temporal aggregation. IEEE Trans. Circuits Syst. Video Technol., 1–1. doi:10.1109/TCSVT.2024.3366664

Wickramasinghe, C. S., Amarasinghe, K., and Manic, M. (2019). Deep self-organizing maps for unsupervised image classification. IEEE Trans. Industrial Inf. 15, 5837–5845. doi:10.1109/tii.2019.2906083

Wu, Z., Pan, S., Chen, F., Long, G., Zhang, C., and Yu, P. S. (2020). A comprehensive survey on graph neural networks. IEEE Trans. Neural Netw. Learn. Syst. 32, 4–24. doi:10.1109/tnnls.2020.2978386

Xu, J. (2023). Efficient trajectory optimization and resource allocation in UAV 5G networks using dueling-deep-Q-networks. Wirel. Netw., 1–11. doi:10.1007/s11276-023-03488-1

Yu, Z., Arif, R., Fahmy, M. A., and Sohail, A. (2021). Self organizing maps for the parametric analysis of COVID-19 SEIRS delayed model. Chaos, Solit. Fractals 150, 111202. doi:10.1016/j.chaos.2021.111202

Yuan, H., Yu, H., Gui, S., and Ji, S. (2022). Explainability in graph neural networks: a taxonomic survey. IEEE Trans. Pattern Analysis Mach. Intell. 45, 5782–5799. doi:10.1109/TPAMI.2022.3204236

Yuan, L., Lian, D., Kang, X., Chen, Y., and Zhai, K. (2020). Rolling bearing fault diagnosis based on convolutional neural network and support vector machine. IEEE Access 8, 137395–137406. doi:10.1109/access.2020.3012053

Yun, W. J., Park, S., Kim, J., Shin, M., Jung, S., Mohaisen, D. A., et al. (2022). Cooperative multiagent deep reinforcement learning for reliable surveillance via autonomous multi-UAV control. IEEE Trans. Industrial Inf. 18, 7086–7096. doi:10.1109/tii.2022.3143175

Zaimes, G. N., Gounaridis, D., and Symeonakis, E. (2019). Assessing the impact of dams on riparian and deltaic vegetation using remotely-sensed vegetation indices and random forests modelling. Ecol. Indic. 103, 630–641. doi:10.1016/j.ecolind.2019.04.047

Zhang, K., Yang, Z., and Başar, T. (2021). Decentralized multi-agent reinforcement learning with networked agents: recent advances. Front. Inf. Technol. Electron. Eng. 22, 802–814. doi:10.1631/fitee.1900661