ORIGINAL RESEARCH article

Front. Educ., 09 April 2025

Sec. Digital Education

Volume 10 - 2025 | https://doi.org/10.3389/feduc.2025.1532376

Introduction: There has been a growing shift toward practically oriented research that focuses on analyzing and improving educational interventions rather than merely validating them. However, such research is often prone to theoretical bias and result fragmentation, as it tends to prioritize certain aspects of an intervention without clear justification, losing a systemic perspective.

Methods: By applying Activity Theory within a mixed-method approach, this study introduces a holistic framework for evaluating educational interventions as interconnected systems, offering a more comprehensive foundation for research and practice. As a demonstration, this study examines the effectiveness of the second version of the mobile application “Ace Yourself,” designed to develop soft skills in higher education.

Results and discussion: This approach enables an exploration of how the application aligns with students’ needs, considers the learning context, roles, and competing activities, and ultimately contributes to learning outcomes. Viewing education as a system rather than isolating individual elements reveals new insights and contradictions through their interconnections and lays the foundation for future decision-making.

The research-practice dialogue is an important and ongoing issue, where research aims to bring more value to practice while expecting more evidence in return (Rudolph et al., 2024; Sato and Loewen, 2022). However, academic research is often criticized for its lack of practical relevance, as it typically focuses more on proving and validating educational interventions than on improving them (Honebein and Reigeluth, 2021). An educational intervention refers to the process of implementing a new approach, tool, or feature within an educational setting with the goal of enhancing learning outcomes.

In response to this challenge, more improvement-oriented research approaches—such as participatory, action, and design-based research—are gaining popularity (Burr and Degotardi, 2024; Dolmans and Tigelaar, 2012; Greenhow et al., 2022; Tinoca et al., 2022; Vaughn and Jacquez, 2020). These approaches help narrow the gap between research and practice by encouraging researchers to collaborate with practitioners, select guiding theories, and formulate research questions that steer both the investigation and the improvement of educational interventions.

However, while improvement-oriented research approaches have contributed to bridging the research-practice gap, they also have limitations and challenges that require solutions. For example, Henriksen and Ejsing-Duun (2022) highlighted the issue of limited impact beyond these improvement-oriented studies and proposed a two-dimensional model for categorizing review findings on implementation. Another common challenge is the fragmentation of studied educational interventions. In particular, relying on a single theory or lens can limit understanding of how the entire educational system operates. For instance, emphasizing students’ motivation may overlook issues like cognitive load within an educational intervention. This narrow focus may emphasize certain disadvantages while overlooking potential advantages, or vice versa. Furthermore, improvement-oriented research may exhibit ‘theoretical bias,’ as it often lacks clarity on why certain aspects of an educational intervention—such as cognitive load—are prioritized over others, like motivation, even when all these factors are important.

This paper aims to contribute to the ongoing research-practice dialogue by addressing the aforementioned issues of fragmentation and theoretical bias in improvement-oriented research. Specifically, it explores how Activity Theory (Engeström and Sannino, 2020; Leont’ev, 1978) can provide a more holistic framework for evaluating educational interventions. Activity Theory has already been applied in improvement-oriented research (Engeström, 1987; Engeström and Sannino, 2020); however, it has primarily been used in qualitative studies within organizational work systems. This paper demonstrates how Activity Theory can be adapted to the educational context and strengthened through a mixed-method study design. In doing so, it serves as a foundation for making more informed decisions about which aspects of an educational intervention require further research and which more specific theoretical frameworks should be applied. To illustrate this, I further analyze the effectiveness of an m-learning innovation designed to foster soft skills among higher education students.

The mobile learning (m-learning) literature provides extensive insights into its effectiveness and limitations. M-learning is widely applied in areas such as language acquisition (Viberg et al., 2020), reading instruction (Lin et al., 2020), and supporting self-regulated learning (Baars et al., 2022; Lobos et al., 2021). Its benefits include broad accessibility and the capability to gather real-time learning analytics (Dolowitz et al., 2023; Fu et al., 2021; Viberg et al., 2020). While m-learning can improve self-regulated learning (Palalas and Wark, 2020; Wei et al., 2022), it does not always succeed (Baars et al., 2022; Foerst et al., 2019). Similar to other learning modalities, m-learning demands self-regulation skills and depends on students’ motivation and cognitive load management (Baars and Viberg, 2022; Wei et al., 2022). Additionally, m-learning poses unique challenges, such as integrating it into existing schedules, addressing varying digital skills, ensuring perceived ease of use and usefulness, minimizing multitasking, and offering robust feedback through learning analytics (Amaefule et al., 2023; Hartley et al., 2020; Baars et al., 2022; Lindín et al., 2022; Palalas and Wark, 2020; Viberg et al., 2020).

Despite the common advantages and challenges associated with m-learning, a major criticism of m-learning programs and related research is the lack of a solid theoretical foundation (Palalas and Wark, 2020). This gap contributes to a vague understanding of “m-learning efficiency” and results in extensive lists of isolated considerations for developing m-learning programs (Baars and Viberg, 2022; Palalas and Wark, 2020; Mustafa, 2023; Wei et al., 2022). Lin et al. (2020) sought a systematic approach by examining Mobile Assisted Language Learning literature on reading skills development through the lens of the seven components of Activity Theory (Engeström, 1987; Engeström and Sannino, 2020; Leont’ev, 1978). Their review culminated in guidelines for designing m-learning programs to enhance reading skills, grouped by several Activity Theory elements: Rules, Object, Community, Division of Labor, and Outcome. By considering these broader contextual and systemic factors, the approach of Lin et al. (2020) offers a more comprehensive framework for addressing the complexities of mobile learning.

Similarly to Lin et al. (2020) and Engeström and Sannino (2020), we turn to Activity Theory (Engeström and Sannino, 2020; Leont’ev, 1978) to systematically analyze the effectiveness of the Ace Yourself app (see text footnote 1). This theory allows us to adopt a broader perspective on Ace Yourself, treating it as part of an educational system rather than considering the application in isolation. Such a systematic approach helps identify strengths and weaknesses, offering new opportunities for improvement.

Leont’ev (1978) viewed human life as a system of alternating activities, such as studying, socializing, or maintaining one’s health. According to Activity Theory, activity is a process of mutual transitions between the poles of “subject-object.” As Leont’ev (1978, p. 37) explains, “in activity, the object is transformed into its subjective form, into an image; at the same time, the activity is also transformed into its objective results, into its products.” In the context of learning, this means that educational content, tasks, and context in general are transformed into subjective forms, such as understanding, skills, competencies, attitudes, and overall experience. Activities have distinct structures, levels, and elements (Engeström, 1987; Engeström and Sannino, 2020; Leont’ev, 1978; Lin et al., 2020). In the following sections, I briefly introduce the Ace Yourself app (see text footnote 1) and analyze its structure, elements, and criteria of effectiveness through the lens of Activity Theory.

According to Activity Theory (Engeström and Sannino, 2020; Leont’ev, 1978), individuals engage in an activity because of a motive, which arises from a match between their need and an object that can satisfy it—something they expect to achieve through participating in the activity. The most powerful motives create personal meaning—a sense of value and significance of the activity in one’s life. Therefore, an educational system can be considered effective if it offers objects that align with students’ needs, allowing them to perceive the activity as meaningful and valuable (object–need fit).

Ace Yourself aims to support students in their transition by encouraging them to achieve specific “objects” in terms of Activity Theory—namely, improvements in social, study, and personal skills (see Table 1). These skills are based on the framework “Vaardighedenraamwerk voortgezet onderwijs - hoger onderwijs (VO-HO)” developed by the Ho-skills working group of the Rotterdam Partnership Working together for a better connection with vo-ho (2023). In essence, Ace Yourself assumes that students need these skills to facilitate their university transition. However, students may encounter problems and tasks requiring different skills, solutions unrelated to skills, or they may lack an understanding of how specific skills could address their challenges (Thompson et al., 2021). This misalignment—referred to as a lack of need–object match—raises the first research question: How adequately do the skills suggested by the Ace Yourself app address the university-related problems students experience?

In the more recent adaptation of Activity Theory, performing activities can lead to various outcomes—the tangible results of those activities (Engeström and Sannino, 2020). Following this perspective, I propose that an educational system can be considered effective if students achieve what was intended, creating an outcome–object fit. Ace Yourself primarily offers students scientifically-based information about social, personal, and study skills, including strategies, step-by-step guides, and examples of relevant problems and tasks. The app assumes that these skills will facilitate students’ transition to university. Consequently, the two primary expected outcomes of learning with Ace Yourself can be defined as “Supporting the transition to university” and “Developing study, social, and personal skills.” This brings me to the second research question: How does the Ace Yourself app contribute to students’ basic psychological needs (as defined by Ryan and Deci, 2020), the development of study, social, and personal skills, and the resolution of students’ university-related problems?

According to Activity Theory (Engeström and Sannino, 2020; Leont’ev, 1978), a tool acts as a mediator between an individual and the actions that constitute an activity, serving as an instrument to facilitate the performance of actions and the achievement of goals.

Therefore, I propose that an educational system is effective if its tools support students in carrying out actions directed toward their desired goals. In this context, the Ace Yourself app can be considered a tool. This leads to the research question: What features of the Ace Yourself app support or hinder students in achieving their learning goals?

According to Activity Theory (Engeström and Sannino, 2020; Leont’ev, 1978), every activity occurs within a specific context, and individuals adjust their actions based on the conditions of their environment. Therefore, I propose that an educational system is effective if it aligns with the physical and psychological learning environment. The Ace Yourself app, designed to enable students to learn anytime and anywhere, must also adapt to these conditions. This leads to the research question: Where and when do students prefer to use the Ace Yourself app for learning?

According to Activity Theory (Engeström and Sannino, 2020; Leont’ev, 1978), individuals have different motives tied to various activities, which are organized hierarchically. These motives and activities could compete, with people allocating more time and effort to those they prioritize. Based on this, I propose that an educational system is effective if it helps students prioritize it over competing activities, ensuring time and space for learning. Since the Ace Yourself app is not part of the core curriculum, students are likely to face significant interferences. This leads to the research question: What factors help or hinder students in prioritizing the Ace Yourself app among other activities?

Activity may involve various rules and roles that influence its execution (Engeström and Sannino, 2020; Leont’ev, 1978). The division of labor defines how actions are distributed among the roles involved, aiming to facilitate collaboration and achieve the desired object. Based on this, I propose that an educational system is effective when all necessary roles are clearly identified, and actions are distributed in a way that supports participants in reaching their goals. This leads to the research question: How do students perceive their roles and responsibilities when learning with the Ace Yourself app?

All the aforementioned elements of activity are interconnected, and tensions or contradictions may arise between them (Engeström and Sannino, 2020). Thus, it is essential not only to analyze each element separately but also to evaluate the entire system holistically. An educational system can be considered effective when potential contradictions between its elements are identified and addressed. Adopting this systematic perspective can reveal unanticipated aspects of the activity system—here, the learning experience with the Ace Yourself app. This leads to the final research question: What tensions, contradictions, and insights can be identified between the aforementioned elements?
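The element-by-element criteria introduced above can be collected in a single structure, which makes the mapping from each Activity Theory element to its effectiveness criterion and research question easier to scan. The following is only a minimal sketch: the class name, field names, and short element labels are hypothetical, and the order of entries simply follows the order of presentation in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActivityElement:
    """One element of the activity system (names here are illustrative)."""
    element: str            # short label for the Activity Theory element
    criterion: str          # when the educational system counts as effective
    research_question: str  # question asked about the Ace Yourself app


# Entries paraphrase the criteria and questions stated in the text above.
FRAMEWORK = [
    ActivityElement(
        "object and need",
        "offers objects that align with students' needs (object-need fit)",
        "How adequately do the suggested skills address students' "
        "university-related problems?",
    ),
    ActivityElement(
        "outcome",
        "students achieve what was intended (outcome-object fit)",
        "How does the app contribute to basic psychological needs, skills, "
        "and the resolution of university-related problems?",
    ),
    ActivityElement(
        "tool",
        "tools support actions directed toward students' desired goals",
        "What features of the app support or hinder students in achieving "
        "their learning goals?",
    ),
    ActivityElement(
        "context",
        "aligns with the physical and psychological learning environment",
        "Where and when do students prefer to use the app for learning?",
    ),
    ActivityElement(
        "competing activities",
        "helps students prioritize learning over competing activities",
        "What factors help or hinder students in prioritizing the app?",
    ),
    ActivityElement(
        "rules, roles, and division of labor",
        "necessary roles are identified and actions are distributed to "
        "support participants",
        "How do students perceive their roles and responsibilities?",
    ),
    ActivityElement(
        "system as a whole",
        "contradictions between elements are identified and addressed",
        "What tensions, contradictions, and insights arise between elements?",
    ),
]

for item in FRAMEWORK:
    print(f"{item.element}: {item.research_question}")
```

Keeping the criteria and questions side by side in this way is one possible aid for checking that every element of the system has been covered before moving on to the holistic analysis of contradictions.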

The research employed an experimental and control group design with pre- and post-measurements. A total of 152 first-year psychology students participated (Female: 132, Non-binary/Third gender: 4, Preferred not to say: 3; Mean age = 19.91, SD = 2.33). Surveys, completed via Qualtrics, took 5–15 min. Participants were randomly assigned to either the control or experimental group, with the experimental group further divided into study, social, and personal subgroups. These subgroups focused on the corresponding skills using the Ace Yourself app for one month, a duration informed by prior research on its earlier version (Baars et al., 2022). After the month, participants completed the survey again. The study was approved by the Erasmus University Rotterdam Research Ethics Review Committee (ETH2324-0283), and all participants provided informed consent. Students received course credits for their participation.

In this mixed-method study, a set of quantitative questionnaires aimed at measuring basic needs and learning outcomes was supplemented by a set of open-ended questions designed to help students reflect on their experience of learning with the Ace Yourself app.

The Need Satisfaction and Frustration Scale (NSFS) measured students’ basic psychological needs across six scales: competence satisfaction/frustration, autonomy satisfaction/frustration, and relatedness satisfaction/frustration (Longo et al., 2016).

To assess social, personal, and study skills, a combination of questionnaires and open-ended questions was administered. The personal skill “Confidence on own abilities” was measured using the Competence subscale from the NSFS and an open question on techniques for building confidence. The social skill “Peer learning” was assessed using the Peer Learning scale from the Motivated Strategies for Learning Questionnaire (MSLQ) (Pintrich, 1991), and “Create a diverse environment” was measured by five subscales from the Intercultural Effectiveness Scale (IES; Portalla and Chen, 2010): Behavioral Flexibility, Interaction Relaxation, Interactant Respect, Identity Maintenance, and Interaction Management. The study skill “Monitor your study process” was measured by the Metacognitive Self-Regulation scale from the MSLQ; “Planning and predicting” was measured by the Resource Management scale from the MSLQ and by the Proximal Goal Setting scale from the Motivational Regulation Questionnaire (Schwinger et al., 2009); and “Study strategies” was measured by the Elaboration, Organisation, and Rehearsal scales from the MSLQ. All items were rated on a 7-point Likert scale. In addition, students received open questions to reflect on the problems they faced at university before and after using the Ace Yourself app. Only participants in the experimental groups reflected on their learning experience with the app. See Supplementary Tables A, B for a more detailed description of the questionnaires and open questions.

Confirmatory factor analysis resulted in the exclusion of item 11 from the MSLQ and item 56 from the IES due to near-zero or negative loadings (see Supplementary Tables C–E). The revised models demonstrated acceptable fit indices, with CFI and TLI values ranging between 0.5 and 0.6 and RMSEA around 0.09 for study-related scales, and CFI > 0.08 and RMSEA < 0.09 for basic needs and social scales (see Supplementary Table F). Reliability analysis confirmed that the final models’ scales had adequate reliability, with both Cronbach’s alpha and McDonald’s omega exceeding 0.60 (see Supplementary Table G).
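To make the reliability criterion concrete, the sketch below computes Cronbach’s alpha for one scale using the standard formula, alpha = k / (k - 1) × (1 - sum of item variances / variance of total scores). It is an illustration only: the function name and the response data are hypothetical, and the article does not state which software was used for its reliability analysis.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for one scale.

    rows: one list of item scores per respondent (all the same length).
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_variances = [variance(column) for column in zip(*rows)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical 7-point Likert responses: 5 respondents x 3 items.
responses = [
    [5, 6, 5],
    [3, 3, 4],
    [6, 6, 7],
    [2, 3, 2],
    [4, 5, 4],
]
alpha = cronbach_alpha(responses)
print(f"alpha = {alpha:.2f}, adequate by the 0.60 threshold: {alpha > 0.60}")
```

McDonald’s omega, which the study also reports, requires factor loadings from a fitted measurement model and is therefore not covered by this sketch.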

To evaluate the app’s contribution to students’ basic needs and their study, social, and personal skills, I conducted within-group (pre-post survey) comparisons for each group and between-group (post-survey) comparisons between the experimental and control groups. Due to non-normal data, the Wilcoxon signed-rank test was used for within-group comparisons, and the Wilcoxon rank-sum test for between-group comparisons. Additionally, a thematic analysis was performed to categorize students’ reported problems, supplemented by proportion and frequency analyses to identify differences within and between groups.
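As an illustration of the within-group comparison, the following sketch implements a Wilcoxon signed-rank test with a normal approximation, together with an effect size computed as r = |z| / sqrt(n). Several points are assumptions rather than details taken from the study: the article does not state how r was calculated or which software was used, the function names and example scores are hypothetical, and the sketch omits the tie and continuity corrections that statistical packages usually apply.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def wilcoxon_signed_rank(pre, post):
    """Two-sided signed-rank test for paired scores (normal approximation)."""
    diffs = [b - a for a, b in zip(pre, post) if b != a]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the normal tail
    r = abs(z) / math.sqrt(n)             # assumed effect size definition
    return {"W": min(w_plus, w_minus), "z": z, "p": p, "r": r}


# Hypothetical pre/post scale scores for one small group.
pre_scores = [10, 12, 9, 14, 11, 13]
post_scores = [9, 10, 6, 10, 6, 7]
print(wilcoxon_signed_rank(pre_scores, post_scores))
```

For groups as small as those in this study, an exact p-value is generally preferable to the normal approximation used here; the approximation is kept only to keep the sketch short.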

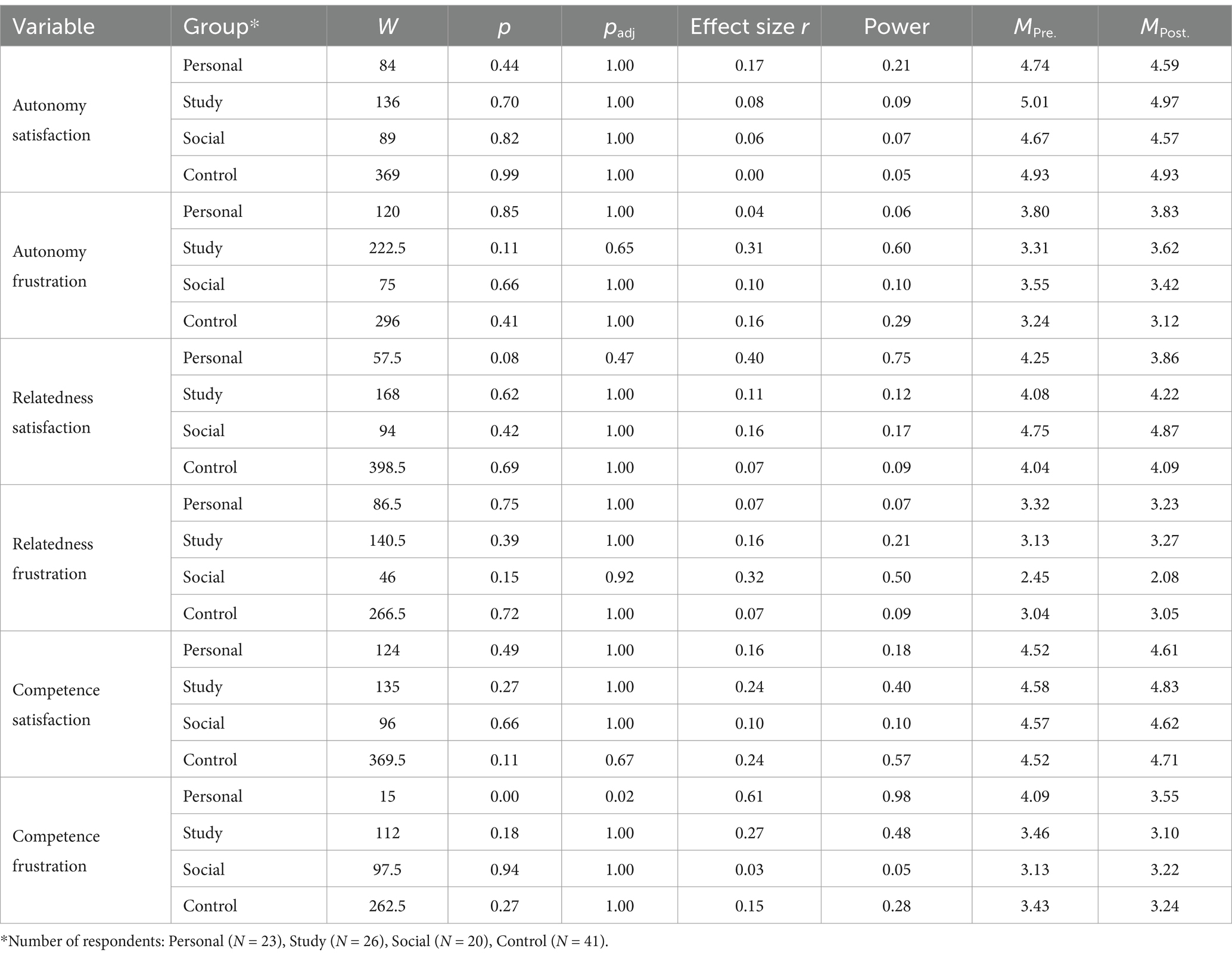

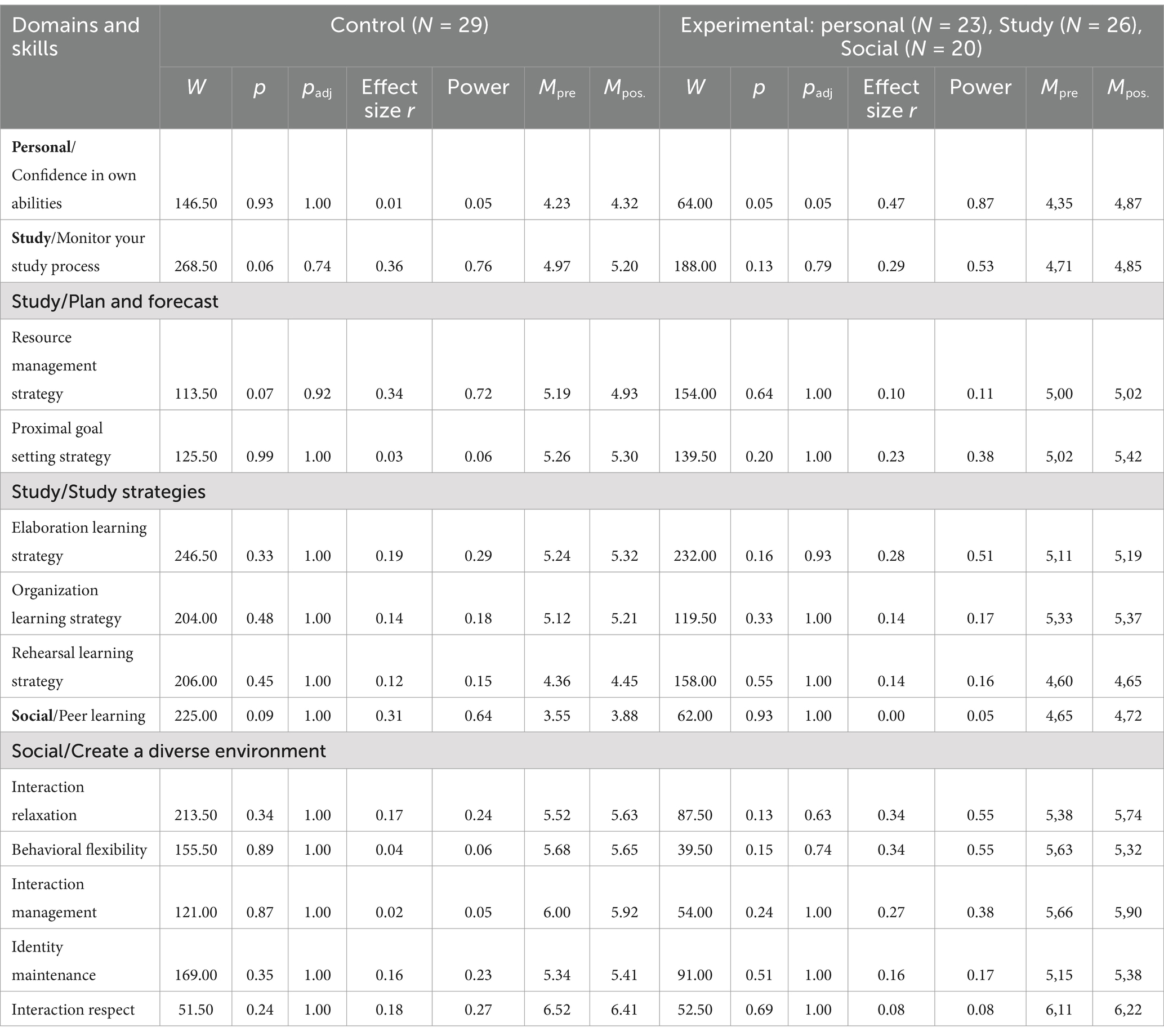

Table 2 indicates that in the within-group (pre-post survey) analysis, the Wilcoxon signed-rank test revealed no significant changes in students’ needs satisfaction, except for a decrease in competence frustration in the personal group (MPre. = 4.09; MPost. = 3.55), W = 15, p < 0.01, padj = 0.02, with a large effect size (r = 0.61). Similarly, Table 3 shows no significant changes in skills, apart from an increase in confidence in one’s own abilities within the personal group (MPre. = 4.35; MPost. = 4.87), W = 64, p = 0.048, padj = 0.048, with a medium effect size (r = 0.47).

Table 2. Wilcoxon signed-rank test results for within-group comparison of basic psychological needs (pre-post comparison).

Table 3. Wilcoxon signed-rank test results for within-group comparison of personal, study, and social skills development (pre-post comparison).

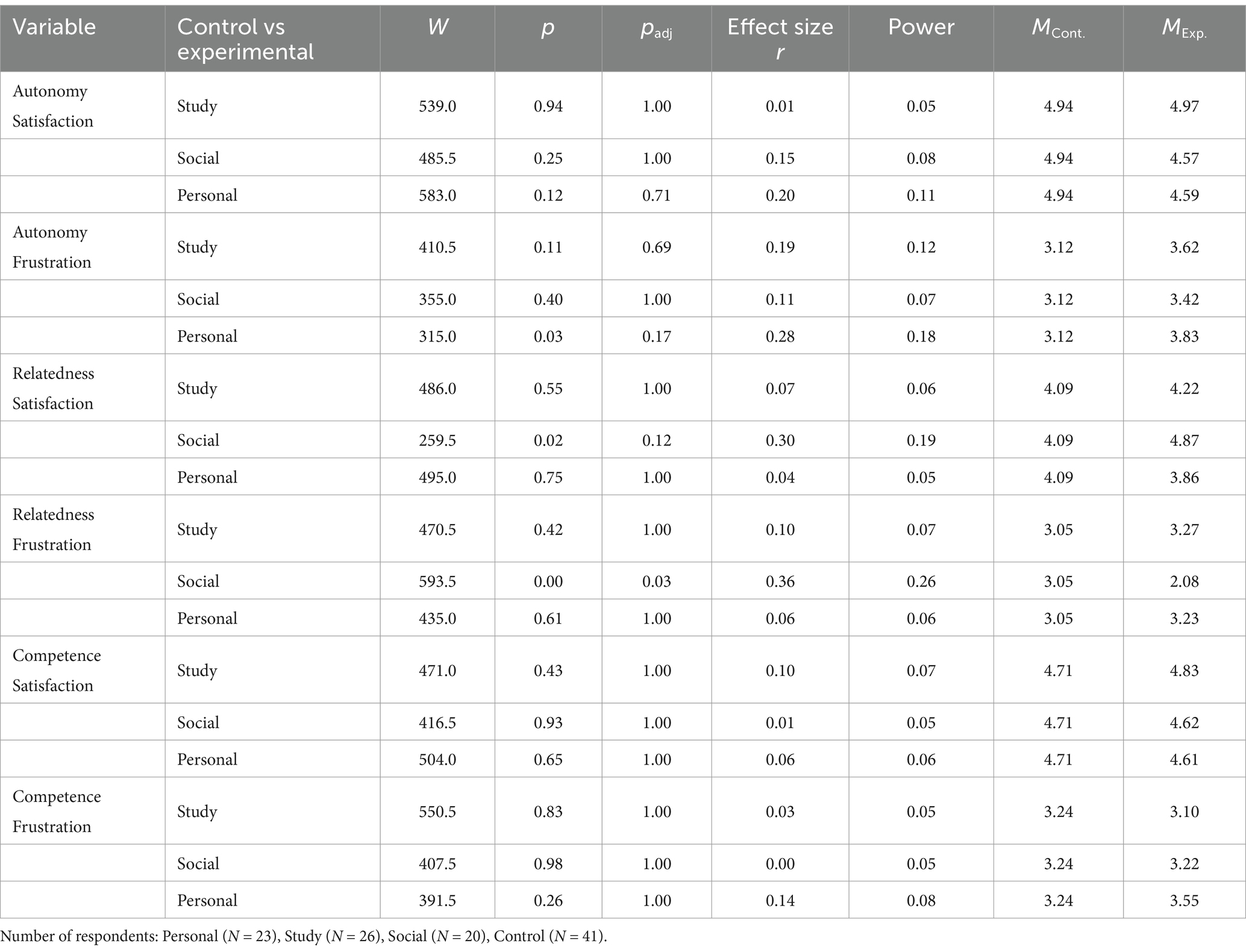

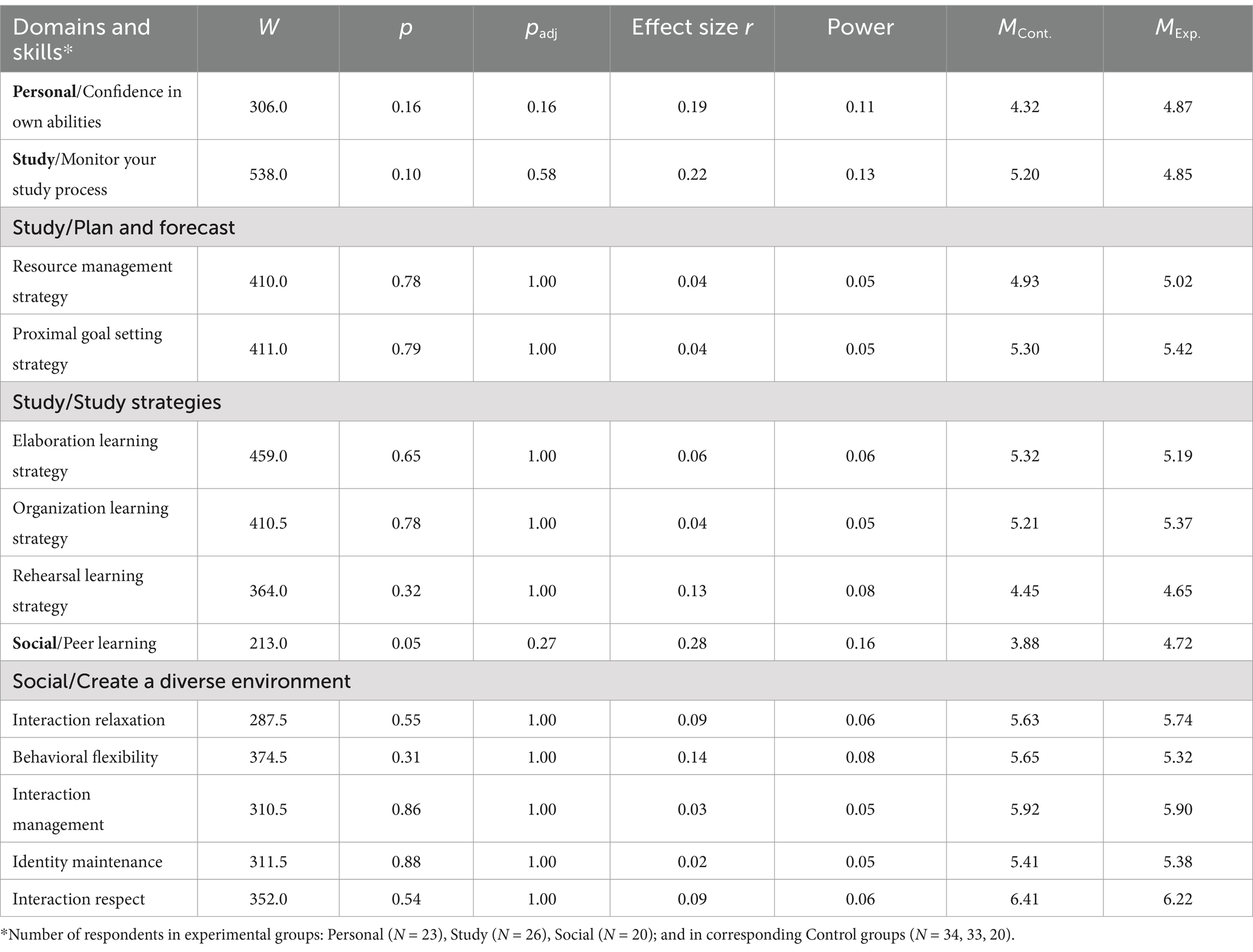

Table 4 reveals that in the between-group (post-survey) analysis, the Wilcoxon rank-sum test showed no significant differences in needs satisfaction between the control and experimental groups, except for a reduction in relatedness frustration in the social group (M = 2.08) compared to the control group (M = 3.05), W = 593.5, p = 0.01, padj = 0.03, with a medium effect size (r = 0.36). Similarly, Table 5 indicates that no significant differences in skills were observed between the control and experimental groups.
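The between-group comparison can be sketched in the same spirit. The code below implements a Wilcoxon rank-sum test with a normal approximation, where W is the rank sum of the first group minus n1(n1 + 1) / 2 (the Mann-Whitney U form). As assumptions not taken from the article: the effect size is computed as r = |z| / sqrt(n1 + n2), tie and continuity corrections are omitted, and the function names and example scores are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def wilcoxon_rank_sum(x, y):
    """Two-sided rank-sum test for two independent groups."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both groups need at least one observation")
    ranks = average_ranks(list(x) + list(y))  # rank the pooled sample
    rank_sum_x = sum(ranks[:n1])
    w = rank_sum_x - n1 * (n1 + 1) / 2        # Mann-Whitney U for group x
    mean = n1 * n2 / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))      # two-sided p from the normal tail
    r = abs(z) / math.sqrt(n1 + n2)           # assumed effect size definition
    return {"W": w, "z": z, "p": p, "r": r}


# Hypothetical post-survey scores for an experimental and a control group.
experimental = [2, 1, 3, 2, 1, 2]
control = [3, 4, 2, 5, 3, 4]
print(wilcoxon_rank_sum(experimental, control))
```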

Table 4. Wilcoxon rank-sum test results for comparison of basic psychological needs between control group and experimental groups (post survey).

Table 5. Wilcoxon rank-sum test results for comparison of skills development between the control group and experimental groups—study, social, and personal (post-survey).

It is important to note that the sample size was relatively small, varying from 20 to 41 respondents per group across different comparisons. Consequently, post hoc power analysis revealed generally low statistical power for the post-survey, with values ranging from 0.05 to 0.19 for non-significant results. This suggests that the study may have been underpowered to detect smaller effects. Still, the effect sizes for non-significant results were relatively modest, with the highest effect size being 0.28.

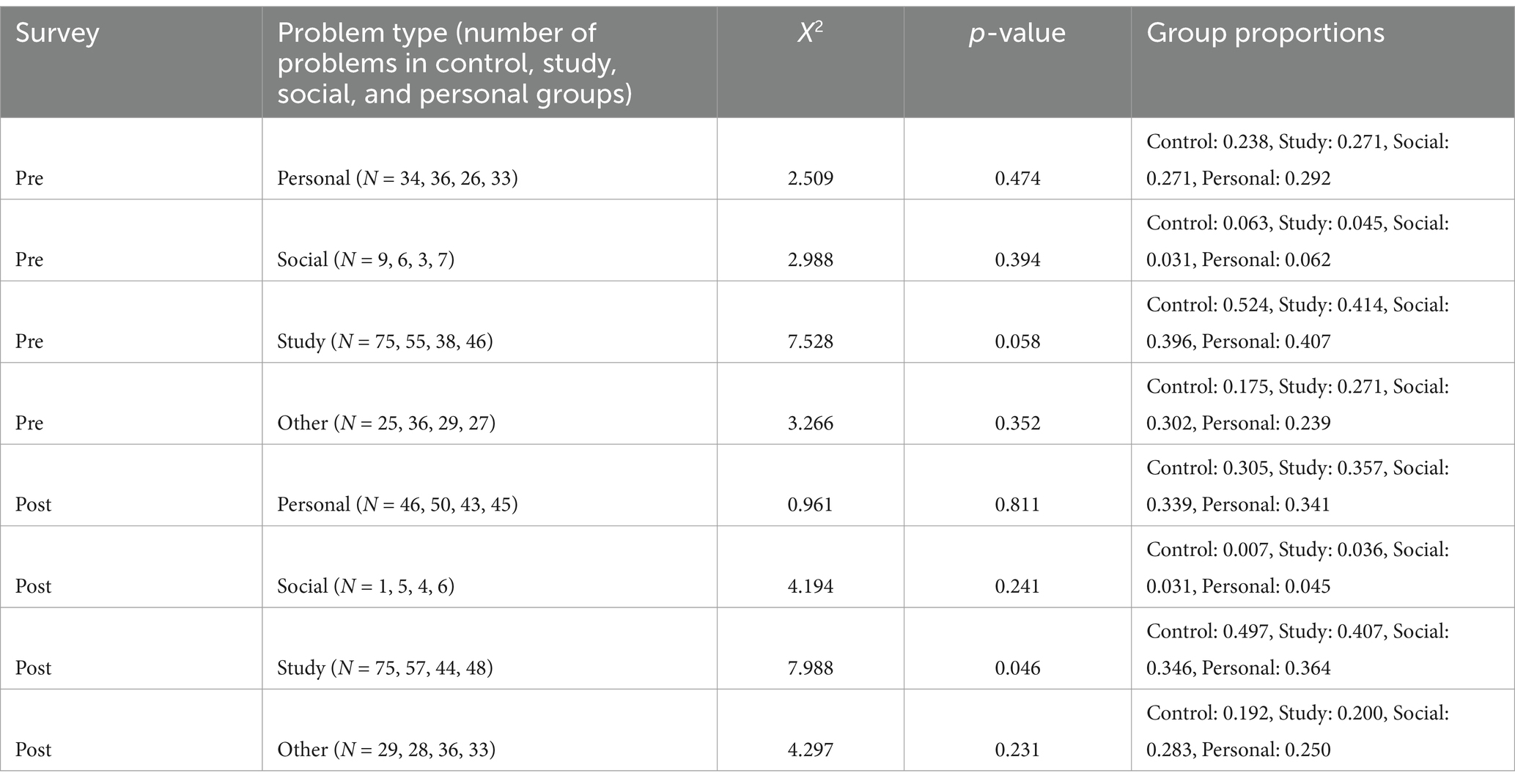

To measure learning outcomes, students were asked to list the problems they face at university, and their responses were thematically analyzed. A total of 146 students identified 485 problems in the pre-survey and 550 problems in the post-survey. These problems were categorized into personal, social, and study domains based on the skills framework underlying the Ace Yourself app (see Table 1). A four-sample test for equality of proportions without continuity correction was conducted to examine differences in the frequency of study, social, personal, and other problems across the study, social, personal, and control groups in both pre- and post-measurements.

As shown in Table 6, no significant differences were found, except for study-related problems in the post-survey (χ² = 7.988, p = 0.046). However, pairwise comparisons of proportions with Bonferroni adjustment revealed no statistically significant differences between any specific group pairs for study problems. This indicates that there were no meaningful differences between the experimental and control groups in the frequency of reported problems. Additionally, Figure 1 illustrates that the overall frequency of reported problems remained nearly unchanged from the pre- to post-survey.
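The logic of this two-step analysis can be sketched as follows: a chi-square test for equality of proportions across four groups (3 degrees of freedom, no continuity correction), followed by pairwise tests whose p-values are multiplied by the number of comparisons (six for four groups). This is a minimal sketch, not the author’s code: the function names and the counts are hypothetical, the pairwise tests omit the continuity correction for simplicity, and the closed-form survival functions cover only the 1 and 3 degrees of freedom needed here.

```python
import math
from itertools import combinations


def chi2_sf(x, df):
    """Upper-tail probability of the chi-square distribution (df 1 or 3 only)."""
    if df == 1:
        return math.erfc(math.sqrt(x / 2))
    if df == 3:
        return (math.erfc(math.sqrt(x / 2))
                + math.sqrt(2 * x / math.pi) * math.exp(-x / 2))
    raise ValueError("this sketch supports df = 1 or df = 3 only")


def proportion_test(successes, totals):
    """Chi-square statistic and df for equality of k proportions."""
    pooled = sum(successes) / sum(totals)
    if pooled in (0.0, 1.0):
        raise ValueError("pooled proportion must lie strictly between 0 and 1")
    stat = 0.0
    for s, n in zip(successes, totals):
        # Compare observed and expected counts for "yes" and "no" cells.
        for observed, expected in ((s, n * pooled), (n - s, n * (1 - pooled))):
            stat += (observed - expected) ** 2 / expected
    return stat, len(totals) - 1


def pairwise_bonferroni(successes, totals):
    """Bonferroni-adjusted p-values for every pair of groups."""
    pairs = list(combinations(range(len(totals)), 2))
    raw = []
    for i, j in pairs:
        stat, df = proportion_test([successes[i], successes[j]],
                                   [totals[i], totals[j]])
        raw.append(chi2_sf(stat, df))
    m = len(raw)
    return {pair: min(1.0, p * m) for pair, p in zip(pairs, raw)}


# Hypothetical counts: study problems out of all problems, per group.
study_problems = [30, 25, 40, 35]
all_problems = [120, 110, 130, 125]
stat, df = proportion_test(study_problems, all_problems)
print(stat, df, chi2_sf(stat, df))
print(pairwise_bonferroni(study_problems, all_problems))
```

As a consistency check on the reported result, `chi2_sf(7.988, 3)` evaluates to about 0.046, which agrees with the p-value given for study-related problems. The sketch also shows why a significant omnibus test can coexist with non-significant pairwise results: each pairwise p-value is multiplied by six before being compared with the significance threshold.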

Table 6. Proportion test results: comparing control group with study, social, and personal groups in the frequency of study, social, personal, and other problems.

Figure 1. Frequency of personal, social, study, and other problems mentioned by students in the pre- and post-survey.

To evaluate the alignment between students’ needs and the skills provided by the app, I analyzed the types of problems students face at university and how they value the skills suggested by the app. First, students were asked to describe the problems and tasks they encountered or anticipated at university. As shown in Figure 1, the most frequently mentioned issues were related to study and personal challenges, followed by other problems. Social problems were the least mentioned in both the pre- and post-surveys. Most of the reported problems aligned with the app’s skills framework (see Table 1). However, 117 out of 485 problems in the pre-survey and 126 out of 550 in the post-survey were categorized as “other problems,” which fall outside the current framework.

*Several skills from the current version of the Ace Yourself app were not represented in students’ reported problems: Create a diverse environment (7.5%), Presenting (7.5%), and Research skills (15.6%).

Second, to evaluate students’ perceived value of the skills suggested by the Ace Yourself app, we analyzed reflections from 82 students in the experimental groups on which skills they found valuable or unclear, and why. While five students did not respond, the remaining 77 expressed appreciation for the skills, with 173 categories extracted from their reflections indicating this sentiment. However, some students (N = 19) found the value of certain skills unclear, as reflected in 30 categories. The red boxes in the center of Figure 1 highlight the three most valued skills: “confidence in your abilities” from the personal domain (39 categories; 22.5%) and “study strategies” (29 categories; 16.8%) and “planning and predicting” (25 categories; 14.5%) from the study domain.

Students provided four main reasons for valuing a skill and five reasons for questioning its value. The most common reasons for considering a skill valuable were its perceived fundamental importance (37% of responses), its usefulness in overcoming challenges (25%), its contribution to academic success (24%), and its ability to foster a positive mindset (3%). An additional 10% of responses did not align with these themes. On the other hand, students questioned the value of a skill primarily due to a lack of perceived utility or implementation challenges (43%), already possessing the skill (30%), doubts about the app’s ability to develop the skill effectively (17%), vague or unclear skill descriptions (7%), and limited personal experience with the skill (3%).

Figure 2 shows that no one in the experimental groups used the app daily; most students preferred to use it 1–3 times a month.

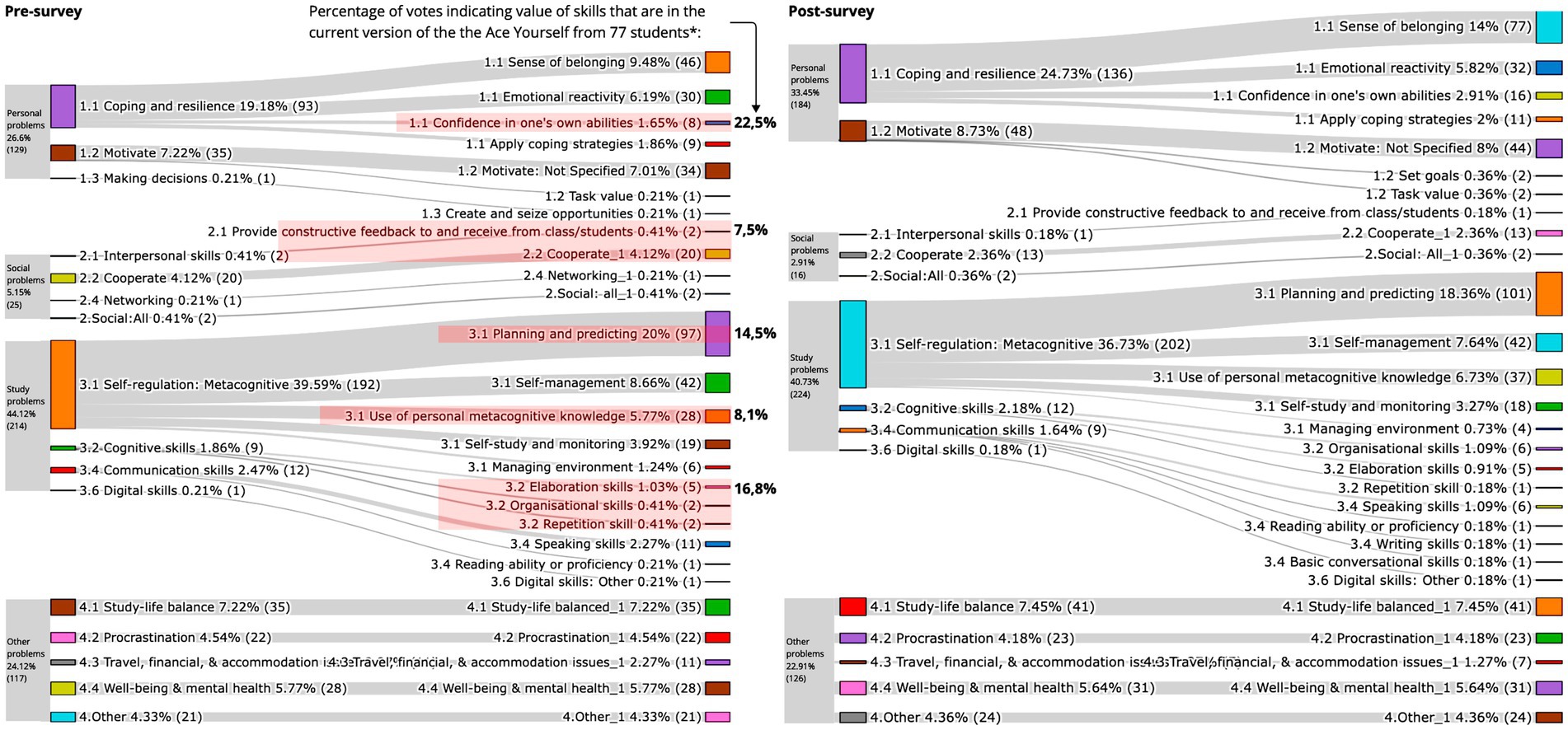

To explore how the Ace Yourself app supported or hindered learning, we analyzed reflections from 82 students in the experimental groups. These reflections included their general impressions of the app, features that facilitated or distracted from learning, reasons for not completing the modules, and their experiences with other tools designed to develop personal, social, and study skills.

First, among the 79 students who reflected on their general impressions of the app, most had a positive view: 72% of the 131 extracted categories indicated a positive impression, while 28% reflected a negative impression. Eighteen categories expressed a general positive impression, while the remaining 113 were divided across six more specific topics that shaped their perceptions (see the upper left part of Figure 3). Positive impressions were primarily linked to the app’s learning effectiveness (27% of categories) and content quality (15%), whereas negative impressions were mostly related to a perceived lack of learning effectiveness (9%) and lack of engagement (5%). Ease of use, visual appearance, and technical issues were also mentioned by students, though less frequently than the categories discussed above.

Figure 3. Distribution of the percentage of categories extracted from students’ responses regarding Ace Yourself features that facilitated and hindered learning.

Second, feedback was collected from 77 students on helpful features of the app and 72 on distracting ones. Among the 156 extracted categories, 32% reported no distractions, 2% found all features helpful, and 51% identified specific helpful features. Conversely, 3% felt nothing supported them while studying, while 15% mentioned distracting features. As shown in the upper-right part of Figure 3, among the nine identified features, the most frequently mentioned helpful aspects were the modules themselves (19%) and the content’s quality, clarity, and usability (10%), although 4% highlighted content overload as a distraction.

Third, 63 students shared what prevented them from completing the modules. As shown in the lower-left part of Figure 3, the primary reason was a lack of time (39%). Fourth, we asked students how they determined that they had learned something. From the 77 responses, 86 categories were extracted. Among these, 6% indicated that students felt they had not learned anything, while only 8% credited the app’s assessment features for helping them track their learning progress. The lower-right part of Figure 3 illustrates that most students gauged their learning through practical application of the skills gained from the app (31%) and by observing changes in their thoughts and behavior (23%).

Finally, we asked students to compare Ace Yourself with other tools for developing study, social, and personal skills. Among 28 students, 70 categories were extracted: 43% reported experience with other tools, such as faculty courses (20%), coaching (17%), practical exercises (13%), personal improvement activities (10%), classroom learning (7%), and mindfulness exercises (7%). In comparison, Ace Yourself was highlighted for seven advantages: clarity, foundational support, conciseness, interactivity, convenience, guidance, and flexibility. However, students also noted 19 disadvantages, including lack of personalization (21%), learning alone (21%), absence of practice support (16%), and other limitations (21%).

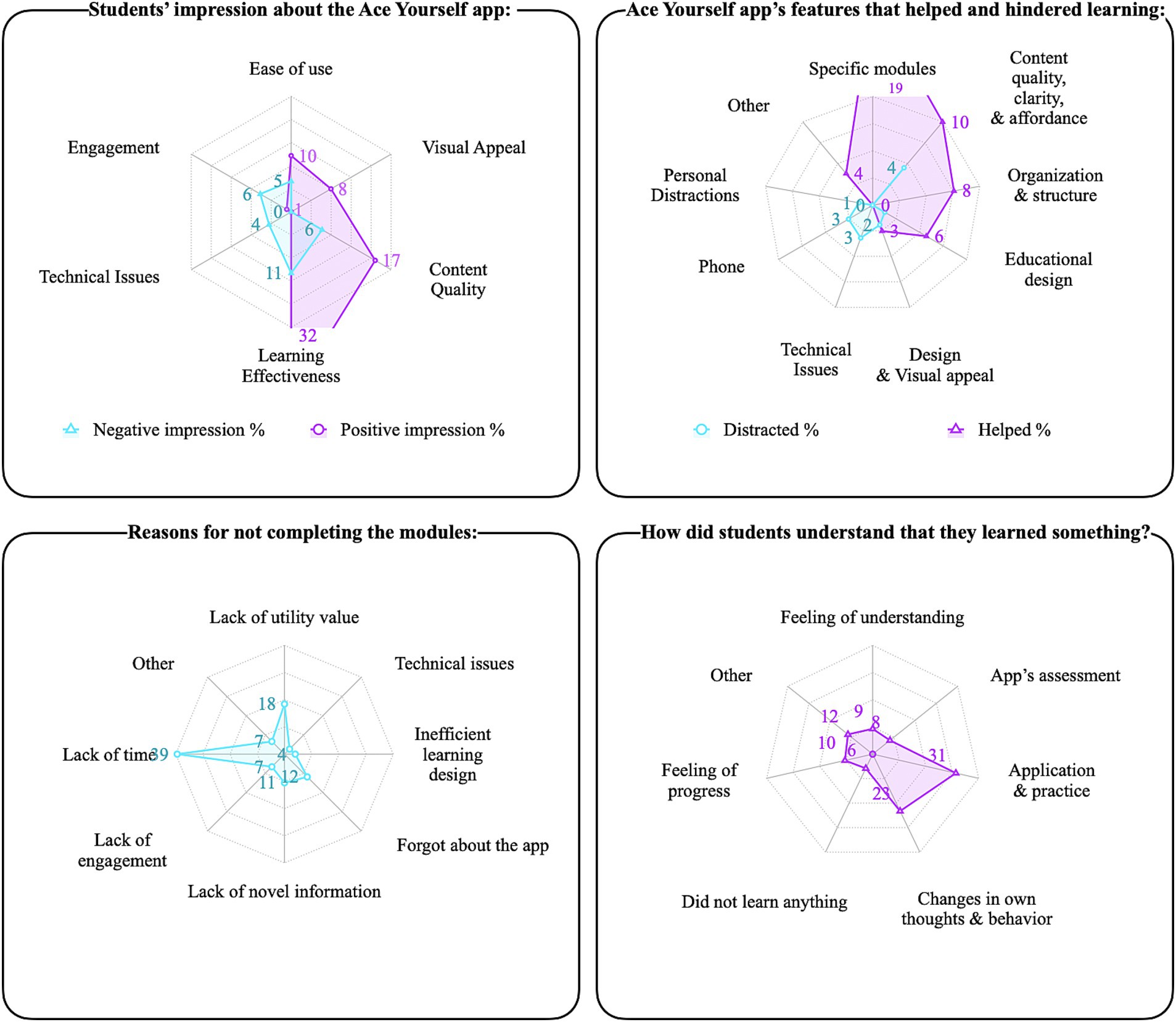

We asked students where and when they preferred to study with Ace Yourself (context), what other activities interfered when they considered studying with the app (activity system), and how they perceived their role and responsibilities while learning with it (division of labor). Figure 4 illustrates the percentage of categories extracted from students’ reflections on these themes.

Figure 4. Distribution of the percentage of categories extracted from students’ responses regarding the context, activity system, and division of labor.

First, from the responses of 79 students, 80 categories related to location and 61 categories related to time were extracted. The upper part of Figure 4 illustrates that most students preferred learning at home (79%), typically during the evening or night (44%). Among the 71 students who provided answers, 85% reported no situations in which the app’s design prevented them from learning where and when they wanted. However, a few students cited challenges, including technical issues (7%), crowded or noisy environments (6%), and difficulties completing assignments requiring peer collaboration (3%).

Second, students were asked whether they encountered situations where they had to choose between learning with Ace Yourself and engaging in another activity, and why they chose Ace Yourself. From the responses of 76 students, 77 categories were extracted: 42 indicated choosing Ace Yourself, while four chose another activity. The bottom-left part of Figure 4 highlights that the primary reasons for choosing Ace Yourself were the desire for self-development and curiosity (33%), as well as the app’s perceived utility value (24%).

Finally, students were asked how they perceived their roles and duties in learning with Ace Yourself. From 55 responses, 62 categories were extracted. The bottom-right part of Figure 4 reveals that most students saw themselves as active and self-directed learners (35%) or focused on testing the app and contributing to the research (31%).

This paper aimed to evaluate the effectiveness of the Ace Yourself app, which is designed to support students in their transition to university by enhancing personal, study, and social skills. The research is grounded in Activity Theory (Engeström and Sannino, 2020; Leont’ev, 1978), providing a systematic perspective to analyze how the app contributes to students’ skill development. The following sections examine each element of the activity system, “developing study, personal, and social skills with the Ace Yourself app,” based on the study’s findings, highlighting potential benefits and interrelated drawbacks.

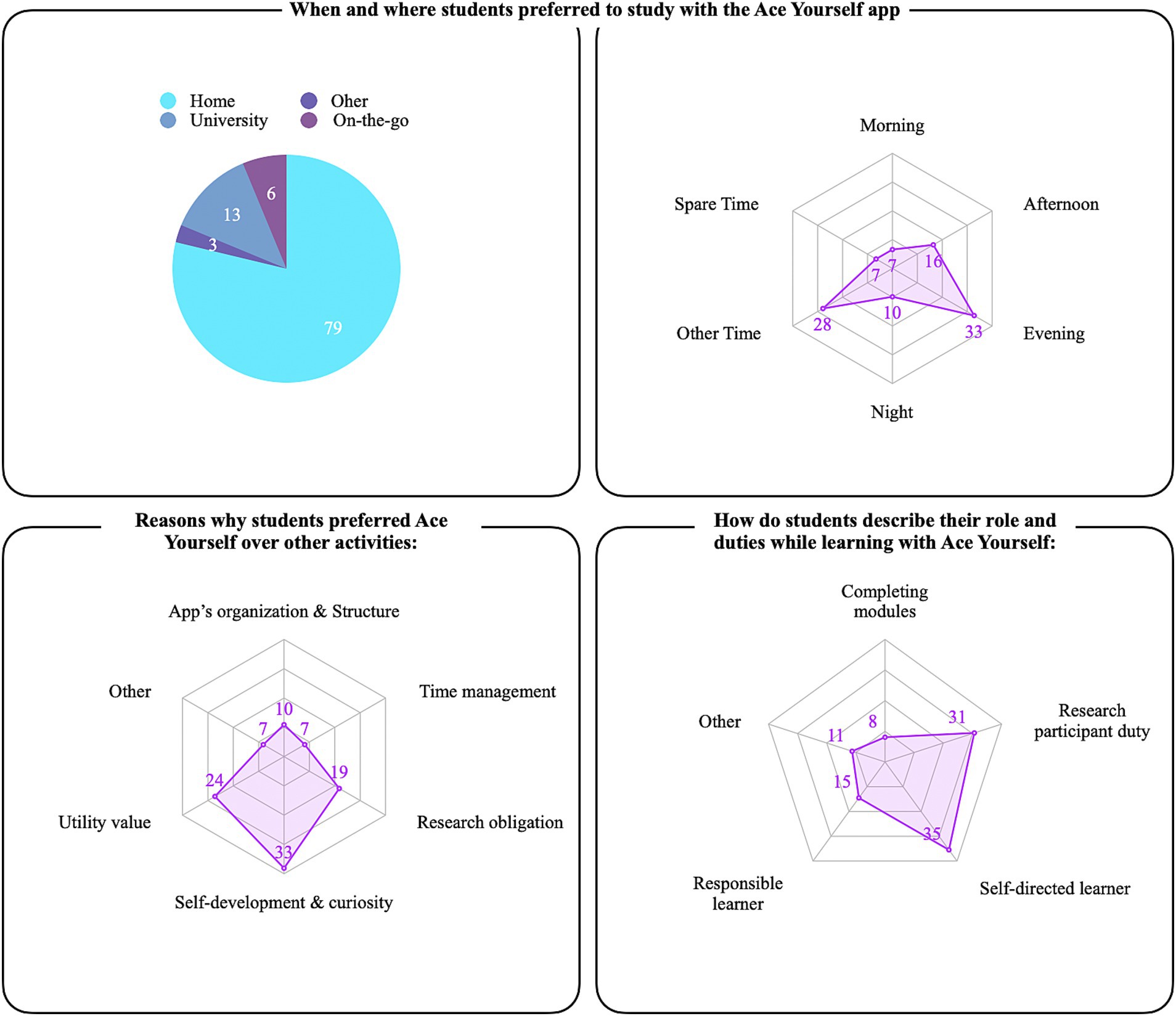

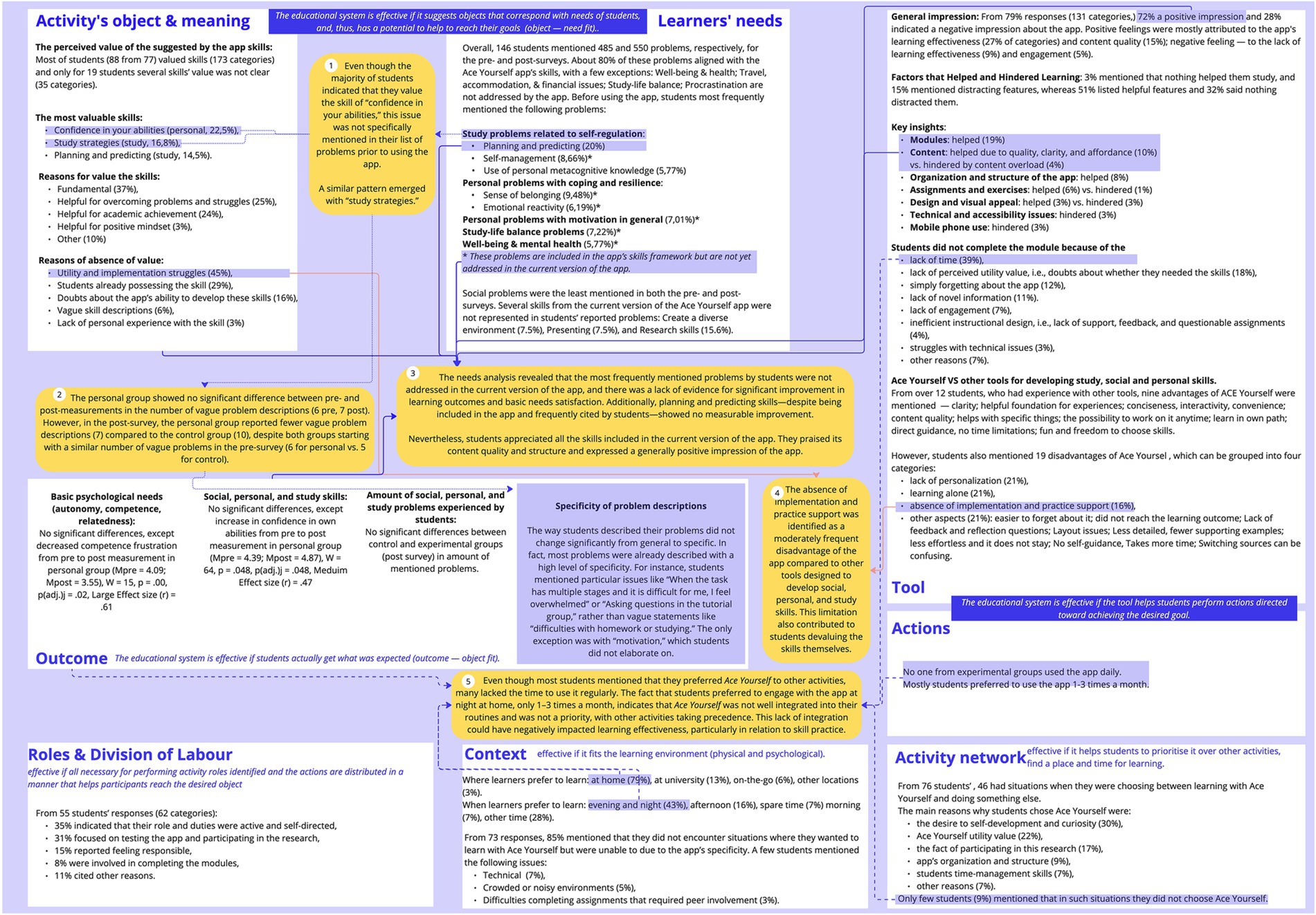

To evaluate how effectively the skills suggested by the Ace Yourself app address the university-related problems students experience (RQ1), I further discuss the alignment between the app’s suggested skills (objects) and students’ needs, specifically whether students perceive these skills as meaningful and whether the app addresses all the problems students encounter. The upper left box of Figure 5 summarizes the key findings for the elements “object” and “meaning,” showing that the majority of students valued the skills provided by the current version of the app, especially because of the skills’ perceived fundamental importance and utility. Future versions of Ace Yourself and similar mobile learning apps could include brief descriptions explaining why each skill is fundamental and how it addresses common student challenges. Such explanations could help students who struggle to see the meaning of certain skills or doubt their relevance or value. Future research can examine how the specificity with which an educational activity communicates what it offers to students influences their impressions and perceptions of its value (Vansteenkiste et al., 2004; Priniski et al., 2018).

Figure 5. Elements of activity system “developing study, personal, and social skills with the Ace Yourself app”.

The upper central box of Figure 5 summarizes the key findings related to the element “need.” Most of the problems listed by students aligned with the skills framework underlying the Ace Yourself app (see Table 1), indicating that the framework addresses the majority of students’ challenges. However, this skills framework does not address significant areas such as study-life balance, well-being, and mental health, which were also frequently highlighted by students. Future versions of Ace Yourself and similar mobile learning apps could benefit from considering not only the problems and needs students experience in general but also which of these are prioritized and most likely to occur.

Now, I discuss how Ace Yourself contributed to the element “student’s outcomes”: basic needs in autonomy, competence, and relatedness; social, study, and personal skills; and their experience of university-related problems (RQ2). The central box of Figure 5 shows that quantitative analysis of changes in students’ basic needs, social, personal, and study skills, as well as the number of problems they reported, revealed no significant differences, except for decreased competence frustration and increased confidence in their abilities. However, the small sample size may have limited the ability to detect significant results. Additionally, due to the app’s design (text-based content that explains how skills work and how to develop them), it may primarily lead to foundational learning outcomes, such as remembering and understanding, rather than the behavioral changes emphasized in this study. Measuring various levels of learning outcomes, from knowing and understanding to applying and doing, can provide a deeper understanding of the value and limitations of mobile learning (Krathwohl, 2002). Moreover, considering non-learning outcomes, such as joy, connection with others, and creating socially valuable impacts through projects, could enrich the evaluation of educational systems. After all, education is not solely about learning; it is an integral part of life (Reigeluth and An, 2020).

The right box of Figure 5 summarizes the key findings for the element “tool,” focusing on Ace Yourself features that supported or hindered learning (RQ3). Most students found the app effective and helpful for learning, primarily due to its structured and clear content. However, the most frequently cited barrier to learning was a lack of time. This suggests that it is important to consider students’ time capacity when designing mobile apps, either by streamlining the content or by finding new ways to fit the app into students’ schedules, for example by integrating it into their main curriculum.

The bottom three boxes of Figure 5 describe the final elements of the activity system—roles and division of labor, context, and activity network (RQ4–6). Most students perceived their role within Ace Yourself as either self-directed learners, focused on independent study, or research participants, focused on testing the app. Adding self-regulated learning (SRL) support features to the mobile app could enhance its effectiveness (Baars et al., 2022; Lobos et al., 2021). Furthermore, solo learning often lacks opportunities for feedback, discussions, or satisfying the need for connectedness. Including instructions on how students can self-organize peer learning teams and study collaboratively could further improve their learning experience and outcomes in mobile learning (Tlili et al., 2024).

The app was well-suited for studying, as neither the context nor other activities posed significant obstacles to learning. However, most students preferred to study at home in the evening, raising questions about the advantages of using a mobile application compared to other media, such as online courses or books. Some students may live in environments that are oversaturated with educational possibilities and demands. Future research could investigate how different educational tools can help students free up time or better align with their routines, opening new contexts for learning. Being mobile does not automatically equate to being flexible or well-suited to students’ needs and routines.

As an activity system is more than just the “sum of its elements,” it is crucial to examine the relationships between different elements, as this can reveal contradictions and insights that might remain hidden when elements are analyzed separately (RQ6). The yellow note №1 in Figure 5 highlights a contradiction between students valuing skills suggested by Ace Yourself and the problems they identify. For instance, “Confidence in your abilities” was among the most valued skills but was not initially mentioned as a problem by students, possibly being part of a broader, less defined “motivation” issue cited by 7–8% of students. After using the app, 2.91% explicitly mentioned “confidence in your abilities” as a problem. Similarly, “Study strategies” was valued but rarely identified as a problem (<3%), while higher-level issues like self-management (7%) and metacognitive knowledge use (6%) were more frequently cited.

This contradiction, in which students value skills but do not articulate related problems, prompted further investigation. The yellow note №2 in Figure 5 shows that the personal group, which focused on “confidence in yourself,” had a similar number of vague problem descriptions in the pre- and post-survey (6 and 7, respectively) but fewer vague descriptions than the control group in the post-survey (7 vs. 10), despite similar initial numbers. The specificity of problem descriptions may reflect what students learned from the app, aligning with Vygotsky’s theory of scientific and everyday concepts (Vygotsky, 1962; Eun, 2019). Students may lack the scientific concepts needed to analyze and articulate motivational problems or fail to connect these concepts to their experiences, leaving them abstract. Mobile apps that lack the ability to target higher-order learning outcomes, such as behavioral change, could instead focus on helping students identify new, previously unrecognized details of their experiences through the application of scientific concepts. Future research could focus on identifying strategies that help students connect scientific concepts to their everyday experiences (Romashchuk, 2023).

The yellow note №3 in Figure 5 highlights that the most frequently mentioned problems in students’ needs analysis are not addressed in the current version of the app. This result may explain the lack of measurable learning outcomes, as students likely prioritize addressing urgent problems not covered by the app, leaving limited opportunities to practice its provided skills. However, the planning and predicting skills, despite being included in the app and identified as key challenges by students, also showed no measurable improvement, indicating a need to revise and enhance these modules. Considering whether the intended learning outcomes of a mobile app align with the actual problems and needs of learners could be beneficial: if the app targets potential or future challenges, designers should consider how students will practice these skills when they are not currently encountering these problems. Future research could explore how to complement mobile learning with simulations that enable students to practice solving problems they have not yet encountered or rarely face.

Nevertheless, students valued all the skills included in the app, praised its content quality and structure, and had a positive overall impression. This suggests that achieving complex learning outcomes may not always be essential for maintaining motivation; a perceived value of content for addressing potential problems might suffice to sustain engagement and encourage continued learning. Future research could focus on identifying the “border” where skills are still considered valuable even though they are not immediately used, and the point at which potentially beneficial future skills lose their value.

The yellow note №4 in Figure 5 highlights that the lack of implementation and practice support was both a reason for students devaluing certain skills and a disadvantage of the app overall. This underscores the interplay between the educational form or tool and the skill it aims to develop. Different educational formats, such as a mobile app, lecture, or workshop, can target the same skill, and the same format can support the development of various skills. However, students may evaluate the effectiveness of an educational form based on their perception of the skill it addresses, and vice versa. To maintain students’ motivation to learn, it is crucial to distinguish these two aspects in their perception. For example, students could be informed about alternative ways to develop the same skills they practiced using the mobile app. The app can serve as an entry point, guiding students toward other educational formats such as books, workshops, or comprehensive training programs, creating a smooth network of educational systems. Future research could explore ways to help students distinguish between the skill or intended learning outcome and the educational format used to achieve it. In short, a skill should not be judged by the educational format used to teach it, and vice versa.

The yellow note №5 in Figure 5 suggests that although students reported no issues with interfering activities or unsuitable contexts, this does not imply the app is adaptable to all potential learning environments. Based on students’ feedback about their preferred times and places for learning, the app’s design seems to predominantly support the “home in the evening” learning context, potentially overlooking other opportunities for engagement. For instance, incorporating just-in-time or procedural information to assist students during moments of difficulty while studying could expand the contexts in which the app is used (Van Merriënboer et al., 2024). This adjustment could enable learning in real-time, addressing problems as they arise.

This study has several limitations that should be acknowledged. First, the small sample size limits the generalizability of our findings and reduces the statistical power to detect subtle effects. While the results provide valuable insights, a larger sample would allow for more robust conclusions and greater confidence in the observed patterns. Second, the absence of behavioral measures, such as detailed data analytics from the app, represents a significant limitation. These measures could have offered objective insights into participants’ engagement and usage patterns, which would complement the self-reported data and provide a more comprehensive understanding of the intervention’s impact.
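To illustrate the first limitation, the following is a minimal sketch of how statistical power depends on sample size for a two-sided comparison of two independent groups. It uses a normal approximation rather than the analysis performed in this study, and the function name `approx_power`, the effect size of 0.3, and the group sizes are hypothetical values chosen only for illustration; they are not taken from the study’s data.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(effect_size: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-group comparison.

    Uses the normal approximation to the two-sample test with equal group
    sizes. effect_size is a standardized mean difference (Cohen's d).
    All inputs are illustrative assumptions, not values from the study.
    """
    z = NormalDist()  # standard normal distribution
    z_crit = z.inv_cdf(1 - alpha / 2)  # two-sided critical value
    shift = effect_size * sqrt(n_per_group / 2)  # expected test statistic
    # Probability of landing in either rejection region.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


if __name__ == "__main__":
    # Hypothetical small effect (d = 0.3) at several hypothetical group sizes.
    for n in (15, 30, 60, 120):
        print(f"n per group = {n:3d}, approximate power = {approx_power(0.3, n):.2f}")
```

Under these assumptions, power rises with the number of participants per group, which is why a non-significant result in a small sample cannot be read as evidence that an effect is absent.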

This paper evaluated the effectiveness of the Ace Yourself app, designed to enhance university students’ personal, study, and social skills, through the lens of Activity Theory. While students appreciated the app, several drawbacks were identified. The study emphasizes the importance of viewing education as a holistic system—encompassing needs, objects, meanings, outcomes, tools, context, division of labor, and related activities. It highlights the value of not only focusing on individual elements but also exploring the insights and contradictions that arise from their interconnections.

The datasets presented in this article are not publicly available due to the Ethics and data management plan. Requests to access the datasets should be directed to ZGFyaWEuaWxpc2hraW5hQGdtYWlsLmNvbQ==.

The studies involving humans were approved by Erasmus University Rotterdam Research Ethics Review Committee (ETH2324-0283). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

DI: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing.

The author(s) declare that no financial support was received for the research and/or publication of this article.

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1532376/full#supplementary-material

Amaefule, C. O., Breitwieser, J., Biedermann, D., Nobbe, L., Drachsler, H., and Brod, G. (2023). Fostering children’s acceptance of educational apps: the importance of designing enjoyable learning activities. Br. J. Educ. Technol. 54, 1351–1372. doi: 10.1111/bjet.13314

Baars, M., Khare, S., and Ridderstap, L. (2022). Exploring students’ use of a mobile application to support their self-regulated learning processes. Front. Psychol. 13:793002. doi: 10.3389/fpsyg.2022.793002

Baars, M., and Viberg, O. (2022). Mobile learning to support self-regulated learning: a theoretical review. Int. J. Mobile Blended Learn. (IJMBL) 14, 1–12. doi: 10.4018/IJMBL.315628

Burr, T., and Degotardi, S. (2024). Design-based research in early childhood education: a scoping review of methodologies. Early Childhood Educ. J. 52, 1–16. doi: 10.1007/s10643-024-01790-x

Dolmans, D. H., and Tigelaar, D. (2012). Building bridges between theory and practice in medical education using a design-based research approach: AMEE guide no. 60. Med. Teach. 34, 1–10. doi: 10.3109/0142159X.2011.595437

Dolowitz, A., Collier, J., Hayes, A., and Kumsal, C. (2023). Iterative design and integration of a microlearning mobile app for performance improvement and support for NATO employees. TechTrends 67, 143–149. doi: 10.1007/s11528-022-00781-2

Engeström, Y. (1987). Learning by expanding: an activity-theoretical approach to developmental research. Helsinki: Orienta-Konsultit.

Engeström, Y., and Sannino, A. (2020). From mediated actions to heterogenous coalitions: four generations of activity-theoretical studies of work and learning. Mind Cult. Act. 28, 4–23. doi: 10.1080/10749039.2020.1806328

Eun, B. (2019). The zone of proximal development as an overarching concept: a framework for synthesizing Vygotsky’s theories. Educ. Philos. Theory 51, 18–30. doi: 10.1080/00131857.2017.1421941

Foerst, N. M., Pfaffel, A., Klug, J., Spiel, C., and Schober, B. (2019). SRL in der Tasche?–Eine SRL-Interventionsstudie im App-Format. Unterrichtswissenschaft 47, 337–366. doi: 10.1007/s42010-019-00046-7

Fu, J. S., Yeh, H. C., Liang, P. C., and Heng, L. (2021). Exploring adult learners’ perceptions towards learning English via mobile applications. Int. J. Learn. Technol. 16, 181–196. doi: 10.1504/IJLT.2021.119471

Greenhow, C., Graham, C. R., and Koehler, M. J. (2022). Foundations of online learning: challenges and opportunities. Educ. Psychol. 57, 131–147. doi: 10.1080/00461520.2022.2090364

Hartley, K., Bendixen, L. D., Olafson, L., Gianoutsos, D., and Shreve, E. (2020). Development of the smartphone and learning inventory: measuring self-regulated use. Educ. Inf. Technol. 25, 4381–4395. doi: 10.1007/s10639-020-10179-3

Henriksen, T. D., and Ejsing-Duun, S. (2022). Implementation in design-based research projects: a map of implementation typologies and strategies. Nordic J. Digit. Lit. 17, 234–247. doi: 10.18261/njdl.17.4.4

Honebein, P. C., and Reigeluth, C. M. (2021). To prove or improve, that is the question: the resurgence of comparative, confounded research between 2010 and 2019. Educ. Technol. Res. Dev. 69, 465–496. doi: 10.1007/s11423-021-09988-1

Krathwohl, D. R. (2002). A revision of Bloom’s taxonomy: an overview. Theory Pract. 41, 212–218. doi: 10.1207/s15430421tip4104_2

Leont’ev, A. (1978). Activity, consciousness, and personality. Translated by Maris J. Hall. Englewood Cliffs, NJ: Prentice-Hall (Original work published 1971).

Lin, C. C., Lin, V., Liu, G. Z., Kou, X., Kulikova, A., and Lin, W. (2020). Mobile-assisted reading development: a review from the activity theory perspective. Comput. Assist. Lang. Learn. 33, 833–864. doi: 10.1080/09588221.2019.1594919

Lindín, C., Steffens, K., and Bartolomé, A. (2022). Experiencing Edublocks: a project to help students in higher education to select their own learning paths. J. Interact. Media Educ. 2022:Article 7. doi: 10.5334/jime.731

Lobos, K., Sáez-Delgado, F., Bruna, D., Cobo-Rendón, R., and Díaz-Mujica, A. (2021). Design, validity and effect of an intra-curricular program for facilitating self-regulation of learning competences in university students with the support of the 4planning app. Educ. Sci. 11:449. doi: 10.3390/educsci11080449

Longo, Y., Gunz, A., Curtis, G. J., and Farsides, T. (2016). Measuring need satisfaction and frustration in educational and work contexts: the need satisfaction and frustration scale (NSFS). J. Happiness Stud. 17, 295–317. doi: 10.1007/s10902-014-9595-3

Mustafa, K. (2023). A systematic literature review on learning apps evaluation. J. Inf. Technol. Educ. Res. 21, 663–700. doi: 10.28945/5042

Palalas, A., and Wark, N. (2020). The relationship between mobile learning and self-regulated learning: a systematic review. Australas. J. Educ. Technol. 36, 151–172. doi: 10.14742/ajet.5650

Pintrich, P. R. (1991). A manual for the use of the motivated strategies for learning questionnaire (MSLQ). Ann Arbor, MI: The University of Michigan.

Portalla, T., and Chen, G. M. (2010). The development and validation of the intercultural effectiveness scale. Intercult. Commun. Stud. 9, 21–37.

Priniski, S. J., Hecht, C. A., and Harackiewicz, J. M. (2018). Making learning personally meaningful: a new framework for relevance research. J. Exp. Educ. 86, 11–29. doi: 10.1080/00220973.2017.1380589

Reigeluth, C. M., and An, Y. (2020). Merging the instructional design process with learner-centered theory: the holistic 4D model. New York: Routledge.

Romashchuk, A. N. (2023). Activity-based approach to the teaching and psychology of insightful problem solving: scientific concepts as a form of constructive criticism. Psychol. Russia State Art 16, 14–29. doi: 10.11621/pir.2023.0302

Rudolph, S., Mayes, E., Molla, T., Chiew, S., Abhayawickrama, N., Maiava, N., et al. (2024). What’s the use of educational research? Six stories reflecting on research use with communities. Aust. Educ. Res. 51, 2277–2300. doi: 10.1007/s13384-024-00693-5

Ryan, R. M., and Deci, E. L. (2020). Intrinsic and extrinsic motivation from a self-determination theory perspective: definitions, theory, practices, and future directions. Contemp. Educ. Psychol. 61:101860. doi: 10.1016/j.cedpsych.2020.101860

Sato, M., and Loewen, S. (2022). The research–practice dialogue in second language learning and teaching: past, present, and future. Mod. Lang. J. 106, 509–527. doi: 10.1111/modl.12791

Schwinger, M., Steinmayr, R., and Spinath, B. (2009). How do motivational regulation strategies affect achievement: mediated by effort management and moderated by intelligence. Learn. Individ. Differ. 19, 621–627. doi: 10.1016/j.lindif.2009.08.006

Thompson, M., Pawson, C., and Evans, B. (2021). Navigating entry into higher education: the transition to independent learning and living. J. Furth. High. Educ. 45, 1398–1410. doi: 10.1080/0309877X.2021.1933400

Tinoca, L., Piedade, J., Santos, S., Pedro, A., and Gomes, S. (2022). Design-based research in the educational field: a systematic literature review. Educ. Sci. 12:410. doi: 10.3390/educsci12060410

Tlili, A., Salha, S., Garzón, J., Denden, M., Kinshuk, Affouneh, S., et al. (2024). Which pedagogical approaches are more effective in mobile learning? A meta-analysis and research synthesis. J. Comput. Assist. Learn. 40, 1321–1346. doi: 10.1111/jcal.12950

Van Merriënboer, J. J., Kirschner, P. A., and Frerejean, J. (2024). Ten steps to complex learning: a systematic approach to four-component instructional design. New York: Routledge.

Vansteenkiste, M., Simons, J., Lens, W., Soenens, B., Matos, L., and Lacante, M. (2004). Less is sometimes more: goal content matters. J. Educ. Psychol. 96, 755–764. doi: 10.1037/0022-0663.96.4.755

Vaughn, L. M., and Jacquez, F. (2020). Participatory research methods – choice points in the research process. J. Particip. Res. Methods 1. doi: 10.35844/001c.13244

Viberg, O., Wasson, B., and Kukulska-Hulme, A. (2020). Mobile-assisted language learning through learning analytics for self-regulated learning (MALLAS): a conceptual framework. Australas. J. Educ. Technol. 36, 34–52. doi: 10.14742/ajet.6494

Vygotsky, L. S. (1962). Thought and language. Cambridge, MA: MIT Press. (Original work published 1934).

Wei, D., Talib, M. B. A., and Guo, R. (2022). A systematic review of mobile learning and student’s self-regulated learning. Eurasian J. Educ. Res. 102, 233–252.

Working together for a better connection with vo-ho Vaardighedenraamwerk voortgezet onderwijs - hoger onderwijs (VO-HO) (2023). Het vaardighedenraamwerk inclusief rubrics. Available at: https://www.aansluiting-voho010.nl/materiaal/het-vaardighedenraamwerk-inclusief-rubrics (Accessed October 30, 2023).

Keywords: Activity Theory, m-learning, mobile application, soft skills, systematic assessment, evidence-based education, educational program evaluation

Citation: Ilishkina DI (2025) Rethinking the evaluation of educational intervention effectiveness through Activity Theory: a mobile app example. Front. Educ. 10:1532376. doi: 10.3389/feduc.2025.1532376

Received: 21 November 2024; Accepted: 13 February 2025; Published: 09 April 2025.

Edited by:

Mohd Nihra Haruzuan Mohamad Said, University of Technology Malaysia, Malaysia

Reviewed by:

Zaleha Abdullah, University of Technology Malaysia, Malaysia

Copyright © 2025 Ilishkina. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Daria I. Ilishkina, ZGFyaWEuaWxpc2hraW5hQGdtYWlsLmNvbQ==
