- 1 Graduate Institute of Sustainability Management and Environmental Education, College of Science, National Taiwan Normal University, Taipei, Taiwan

- 2 Graduate Institute of Environmental Engineering, National Taiwan University, Taipei, Taiwan

Introduction: This study investigates the perceptual differences between ChatGPT and human tutors in fostering critical thinking among students, highlighting the effectiveness of Socratic tutoring methodologies in modern educational contexts.

Methods: Conducted with a sample of 230 university students in Taiwan, the research employed a mixed-methods approach, combining quantitative surveys and qualitative interviews.

Results and discussion: Results reveal that while a significant portion of students appreciates the benefits of ChatGPT—namely its non-judgmental nature and accessibility—human tutors are acknowledged for their capability to provide tailored feedback and emotional support. Through threshold analysis conducted via a Multilayer Perceptron (MLP) model, the study identified key features affecting student preferences, emphasizing the need for a balanced integration of AI and human tutoring methods. Findings underscore the importance of developing hybrid educational models that leverage both the strengths of human facilitators and the efficiencies of AI tools to enhance student learning and critical thinking skills.

1 Introduction

1.1 Overview of Socratic tutoring

Socratic tutoring is a teaching method rooted in the philosophies of Socrates, emphasizing dialogue and questioning to nurture critical thinking. By engaging students in structured conversations, this approach fosters deeper understanding and encourages learners to articulate their reasoning. The educational value of Socratic tutoring lies in its ability to develop higher-order thinking skills, such as analyzing, evaluating, and synthesizing information. Le (2019) highlights that Socratic questioning not only promotes deep comprehension but also enhances metacognitive abilities, enabling students to evaluate their own thought processes. Similarly, Pitorini (2024) emphasizes its transformative impact, fostering a growth mindset by challenging students to rethink their assumptions and explore alternative perspectives. These findings underscore that Socratic tutoring serves as a comprehensive tool for both cognitive and personal development.

This method aligns closely with Socrates' original intent, as described in Plato's dialogues, where his conversational style aimed to help interlocutors uncover contradictions in their beliefs and arrive at deeper truths. In modern contexts, Socratic tutoring reflects these principles by promoting critical self-reflection and open-ended inquiry, which remain central to contemporary education's focus on critical thinking as a core competency for navigating today's complex world (Vincent-Lancrin et al., 2019).

Traditional Socratic tutoring relies on interactive, student-centered dialogue, where tutors guide learners using open-ended questions while refraining from imposing their own views. Rahman et al. (2019) illustrate that a tutor's adaptability is critical to fostering productive discussions, ensuring conversations remain both relevant and student-centered. Hu (2023) provides empirical evidence that non-directive facilitation—where tutors focus on guiding rather than leading—encourages students to construct stronger arguments and engage critically with diverse perspectives. Together, these studies reveal the nuanced interplay between tutor guidance and learner autonomy in creating meaningful learning experiences.

By examining Socratic tutoring's methods and impact, we can better understand its enduring relevance as a pedagogical strategy that encourages independent thought, fosters intellectual curiosity, and empowers students to explore complex ideas collaboratively.

1.2 Role of AI in education

Artificial intelligence (AI) is rapidly transforming education, offering innovative tools and applications that enhance learning experiences. AI technologies provide several advantages, including personalized learning, immediate feedback, and support for self-directed study. Serban et al. (2020) highlight that AI-driven personalization significantly improves learning outcomes by tailoring content to individual needs, addressing a key limitation of traditional educational approaches. This adaptability makes education more inclusive by accommodating diverse learning styles. Efendi et al. (2020) add that AI's ability to deliver real-time feedback enables students to refine their understanding more efficiently, fostering a dynamic and iterative learning process. Together, these findings demonstrate AI's potential to create highly adaptive and learner-focused educational environments.

Despite its benefits, integrating AI into education also presents notable challenges. Abdullah et al. (2022) warn that over-reliance on AI tools can undermine intrinsic motivation and critical reasoning, potentially reducing students' engagement with deeper learning processes. Their study underscores the need for a balanced approach that leverages AI's strengths while addressing its ethical and pedagogical risks. Similarly, Sulaiman (2020) emphasizes that while tools like ChatGPT can replicate aspects of Socratic dialogue, they lack the contextual awareness and ethical judgment essential for nuanced discussions. These limitations highlight the trade-offs educators must navigate when incorporating AI into teaching practices.

ChatGPT serves as a prominent example of AI in education, capable of simulating human-like conversations to facilitate inquiry and exploration. It can answer questions, explain concepts, and engage students in dialogue similar to Socratic tutoring. Tofade et al. (2013) caution, however, that the effectiveness of such tools in fostering critical thinking depends on how they are integrated into the curriculum. Unstructured use may lead to surface-level engagement with content, whereas structured implementation paired with reflective practices can enhance students' analytical and reasoning skills. These insights illustrate that the success of AI tools lies not only in their design but also in the pedagogical frameworks that guide their use.

While ChatGPT and similar technologies have the potential to improve educational outcomes, educators must remain mindful of their limitations. These studies collectively suggest that AI's transformative potential extends beyond its technical features, emphasizing the need for ethical and strategic integration in learning environments. This calls for designing AI-driven educational experiences that prioritize critical thinking, ethical awareness, and balanced learner autonomy.

1.3 Rationale for the study

Fostering critical thinking skills is crucial for students' academic success and future problem-solving abilities. These skills enable students to analyze information, evaluate arguments, and make informed decisions, which are essential in both academic and real-world contexts (Freeman et al., 2014). As education shifts toward active learning, there is growing emphasis on teaching methods that encourage critical thinking. Integrating critical thinking into curricula not only enhances academic performance but also builds lifelong learning skills, which are increasingly important in today's evolving job market (Mal et al., 2021).

However, the Socratic method, despite its effectiveness in fostering deep thinking, faces practical challenges. One significant issue is its implementation in larger classrooms, where individualized, meaningful dialogue can be difficult to achieve. Chan and Hu (2023) warn that unstructured Socratic discussions often risk digressions or superficial engagement if the tutor does not provide adequate guidance. Similarly, Winkler and Söllner (2018) note that successful facilitation requires skilled educators capable of balancing open-ended inquiry with structured progress—skills that may not be universally available. These challenges highlight the need to critically assess the method's feasibility across diverse educational contexts.

In parallel, research on AI tools in education has revealed mixed perceptions regarding their effectiveness. For example, Chan and Hu (2023) found that students appreciate the benefits of generative AI in higher education but also emphasize the need for AI literacy to navigate its limitations. While AI tools like ChatGPT can emulate aspects of Socratic dialogue, they often lack nuanced judgment and may mislead users with plausible-sounding yet incorrect responses (Abdullah et al., 2022). Over-reliance on such tools could discourage independent critical thinking, a fundamental goal of the Socratic method.

Intelligent tutoring systems with conversational capabilities have shown promise in enhancing learning experiences, particularly through personalized feedback (Winkler and Söllner, 2018). However, there is still a significant gap in the literature comparing the effectiveness of human and AI tutors in promoting critical thinking through Socratic methods. This study aims to fill that gap, providing insights into how different tutoring approaches influence critical thinking development. Understanding these dynamics is essential for educators and researchers alike, as it can guide the design of more effective educational tools and practices (Rijdt et al., 2011).

Student perceptions of AI and human tutors play a pivotal role in determining the success of these technologies in learning environments (Khaw and Raw, 2016). By investigating these perspectives, this study not only explores the effectiveness of AI in fostering critical thinking compared to traditional human tutors but also considers the inherent limitations of both approaches. AI tutors may lack the human touch and contextual understanding required for complex discussions, while human tutors may face scalability and consistency issues in large systems. This balanced analysis provides a comprehensive view of the interplay between AI and traditional tutoring methods.

This study is timely and relevant, as it aligns with the growing integration of AI in education and the increasing demand for innovative teaching methods. By bridging gaps in the current literature, it contributes to a deeper understanding of how AI and human tutors can complement one another in fostering critical thinking.

1.4 Research objectives

The primary objectives of this study are threefold: first, to explore student perceptions of ChatGPT as a Socratic tutor in comparison to human tutors; second, to examine how these perceptions shape students' views on the role of AI in enhancing critical thinking; and third, to identify the strengths and weaknesses of both AI and human tutoring approaches from the student perspective. Achieving these objectives will provide a deeper understanding of how AI can complement traditional tutoring methods and its broader implications for educational practices.

This study's findings aim to contribute to the growing body of knowledge in educational psychology and AI-assisted learning. By examining how students perceive and interact with both AI and human tutors, educators, curriculum designers, and policymakers can make more informed decisions about integrating these tools effectively into teaching strategies. Furthermore, these insights may guide the development of AI technologies that better align with educational goals, ultimately improving the quality of learning experiences for students.

2 Methodology

2.1 Research design and participants

The study employed a mixed-methods research design, combining quantitative and qualitative approaches to gain a comprehensive understanding of student perceptions of ChatGPT as a Socratic tutor compared to human tutors. The quantitative component involved a survey distributed to students across various educational institutions in Taiwan, while the qualitative aspect consisted of semi-structured interviews.

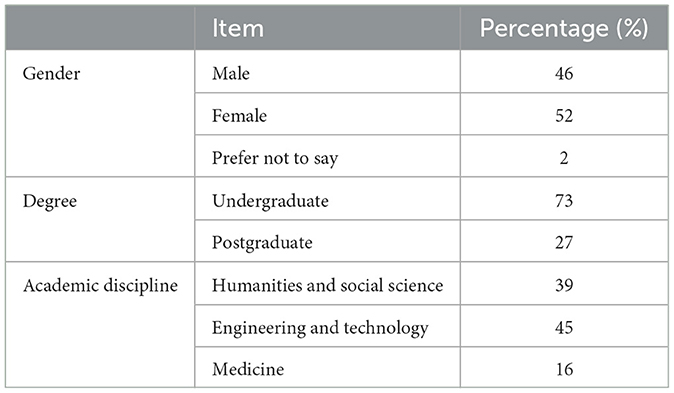

The study sample comprised 230 Taiwanese university students who had previous experience using ChatGPT for academic purposes. The participants came from different fields of study, including Humanities and Social Sciences, Engineering and Technology, and Medicine. Of the total sample, 23 students (10%) were further selected for semi-structured interviews to gain a fuller understanding of student experiences and perceptions. The interview responses were transcribed, categorized, and systematically structured to identify recurring patterns and themes. The demographic information of the participants is presented in Table 1.

2.2 Data collection and analysis

Data were collected using an online survey and semi-structured interviews. Data collection ran for 3 months, and the questionnaire was administered through an online platform to ensure broad dissemination and ease of access. Participants were invited to complete the survey via email and social media platforms such as LinkedIn. They were recruited using a combination of purposive, stratified, and snowball sampling, with the aim of representing students from different educational backgrounds. A pilot test with 25 participants was conducted to assess the questionnaire's reliability. Internal consistency was examined using Cronbach's alpha in SPSS, which yielded a value >0.9, indicating high reliability for the research.
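The reliability check described above can be illustrated with a minimal sketch of Cronbach's alpha, computed as k/(k-1) multiplied by (1 minus the sum of item variances divided by the variance of the total score). The study ran this in SPSS; the function name `cronbach_alpha` and the small pilot matrix below are hypothetical and are not the study's data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row per participant,
    one Likert score per questionnaire item)."""
    k = len(responses[0])                      # number of items
    items = list(zip(*responses))              # transpose to per-item columns
    item_variances = [variance(col) for col in items]
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical pilot responses (5 participants x 4 items, 5-point Likert).
pilot = [
    [5, 4, 5, 4],
    [3, 3, 4, 3],
    [4, 4, 4, 5],
    [2, 2, 3, 2],
    [5, 5, 4, 5],
]
print(round(cronbach_alpha(pilot), 3))
```

Values close to 1 indicate that the items vary together, which is how a result above 0.9 is usually read.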

The questionnaire had two main sections: participant demographics and items related to the study's objectives. A 5-point Likert scale was used to examine student perceptions of ChatGPT as a Socratic tutor in comparison to human tutors, assess how these perceptions affect students' opinions on critical thinking enhancement, and identify the benefits and drawbacks of both tutoring modalities from the student perspective. Additionally, semi-structured interviews with open-ended questions were conducted to gain a deeper understanding of students' impressions of their use of ChatGPT in the educational process. Thematic analysis was then performed on the qualitative interview data.

2.3 Thematic analysis

The thematic analysis process started with familiarization, which included transcribing all interviews to ensure precise capture of the information. The data was carefully reviewed several times to uncover overarching patterns and notable points of interest. Preliminary notes were recorded to capture essential observations for subsequent investigation.

Subsequently, the process of initial coding involved pinpointing and tagging relevant segments of the text with descriptive codes that correspond to words, phrases, or concepts significant to the study. The qualitative analysis software NVivo was used to systematically organize and manage these codes, which may be classified as either semantic or latent.

Following the coding phase, the identification of themes began. Similar codes were organized into larger themes that represented overarching trends within the dataset, resulting in the creation of sub-themes that offered more profound insights. Themes were subsequently examined to ensure that they accurately represented the coded data and corresponded with the comprehensive dataset.

Following this, each theme was elaborated upon and assigned labels, with thorough descriptions provided to convey their importance in connection to the study's objectives. Every theme and subtheme received a concise, descriptive title that expressed its fundamental ideas. Ultimately, the thematic descriptions were enhanced with selected segments from the interviews. This comprehensive examination revealed multiple themes and subthemes, providing significant insights into the viewpoints of participants.

2.4 Multilayer Perceptron (MLP) model analysis

In order to analyze the perceptual differences between ChatGPT and human tutors in fostering critical thinking, a Multilayer Perceptron (MLP) model was employed. The MLP model is a type of artificial neural network that is well-suited for tasks requiring the integration of diverse data types, such as quantitative survey results and qualitative interview insights (Lazri, 2022).

Quantitative data were drawn from survey responses using a 5-point Likert scale. This data captured students' perceptions of ChatGPT vs. human tutors and their impact on critical thinking. Variables included demographic details (gender, degree level, and academic discipline) and Likert scale items reflecting student perceptions and experiences. Qualitative data were obtained from semi-structured interviews, which were transcribed and subjected to thematic analysis. Natural language processing (NLP) techniques were utilized to encode qualitative data numerically. Sentiment analysis scores and thematic frequency counts were computed to provide structured input to the MLP model.

2.4.1 MLP model design

The MLP model consisted of an input layer, two hidden layers, and an output layer. The input layer received numerical representations of the quantitative survey data and the transformed interview data. The two hidden layers contained 64 and 32 neurons, respectively, and used the Rectified Linear Unit (ReLU) activation function to introduce non-linearity. The output layer was designed with dual outputs to serve the research objectives: a Softmax output for categorizing student perceptions as positive, neutral, or negative, and a linear output for regression analysis to quantify the perceived impact on critical thinking.
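As a rough illustration of this architecture, the sketch below builds an untrained network with hidden layers of 64 and 32 ReLU units, a three-class Softmax head, and a single linear head, and runs one forward pass. It is not the authors' implementation: the input width of 10 features, the He-style weight initialization, and all function names are assumptions made for the example.

```python
import math
import random


def relu(v):
    return [max(0.0, x) for x in v]


def softmax(v):
    m = max(v)                                  # subtract max for stability
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


def dense(x, weights, bias):
    """Fully connected layer: one weight row and one bias per output neuron."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def init_layer(n_in, n_out, rng):
    scale = math.sqrt(2.0 / n_in)               # He initialization (assumption)
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def build_model(n_features, seed=0):
    rng = random.Random(seed)
    return {
        "h1": init_layer(n_features, 64, rng),  # first hidden layer
        "h2": init_layer(64, 32, rng),          # second hidden layer
        "cls": init_layer(32, 3, rng),          # positive / neutral / negative
        "reg": init_layer(32, 1, rng),          # perceived impact score
    }


def forward(model, x):
    h1 = relu(dense(x, *model["h1"]))
    h2 = relu(dense(h1, *model["h2"]))
    probs = softmax(dense(h2, *model["cls"]))   # classification head
    impact = dense(h2, *model["reg"])[0]        # linear regression head
    return probs, impact


model = build_model(n_features=10)              # 10 features is hypothetical
probs, impact = forward(model, [0.5] * 10)
print(probs, impact)
```

Both heads share the two hidden layers, so one set of learned features would serve the classification and the regression objective at once.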

2.4.2 Model training and validation

The dataset was split into training and validation subsets, with 80% allocated for training and 20% for validation. The Adam optimizer was employed for efficient handling of parameter updates. For the classification task, the Categorical Crossentropy loss function was used, while the Mean Squared Error (MSE) loss function was applied for the regression task. To assess the robustness of the model, k-fold cross-validation was implemented, which helps detect overfitting and gives a more reliable estimate of model performance.
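The splitting scheme and the two loss functions can be sketched as follows. The source does not state the value of k, the random seed, or how the split was drawn, so `k=5`, `seed=0`, and the shuffling strategy are assumptions; the helper names are hypothetical.

```python
import math
import random


def train_val_split(n_samples, val_fraction=0.2, seed=0):
    """Shuffle sample indices and hold out a fraction for validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_val = int(round(n_samples * val_fraction))
    return indices[n_val:], indices[:n_val]


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train, validation) index lists; each fold validates once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, folds[i]


def categorical_crossentropy(one_hot_target, probs, eps=1e-12):
    """Loss for the Softmax (classification) output."""
    return -sum(t * math.log(max(p, eps))
                for t, p in zip(one_hot_target, probs))


def mean_squared_error(targets, predictions):
    """Loss for the linear (regression) output."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)


train_idx, val_idx = train_val_split(230)       # 230 survey respondents
print(len(train_idx), len(val_idx))
for train, val in k_fold_indices(230, k=5):
    print(len(train), len(val))
```

With 230 respondents, an 80/20 split leaves 184 samples for training and 46 for validation.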

Quantitative inputs were normalized to standardize the input range, facilitating effective training convergence. Categorical variables, such as gender and academic discipline, were encoded using one-hot encoding, ensuring model compatibility.
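A minimal sketch of this preprocessing step is shown below. The source does not say which normalization was used, so min-max scaling of the 1 to 5 Likert range onto [0, 1] is an assumption, as are the function names and the example respondent; the category lists follow the groups named in the text.

```python
GENDERS = ["Female", "Male"]
DEGREE_LEVELS = ["Undergraduate", "Postgraduate"]
DISCIPLINES = [
    "Humanities and Social Sciences",
    "Engineering and Technology",
    "Medicine",
]


def min_max_scale(values, low=1.0, high=5.0):
    """Map Likert scores from [low, high] onto [0, 1] (assumed method)."""
    return [(v - low) / (high - low) for v in values]


def one_hot(value, categories):
    """Return a vector with a single 1.0 at the position of `value`."""
    if value not in categories:
        raise ValueError(f"unknown category: {value!r}")
    return [1.0 if value == c else 0.0 for c in categories]


def encode_respondent(likert_scores, gender, degree, discipline):
    """Concatenate scaled Likert items with one-hot demographic fields."""
    return (
        min_max_scale(likert_scores)
        + one_hot(gender, GENDERS)
        + one_hot(degree, DEGREE_LEVELS)
        + one_hot(discipline, DISCIPLINES)
    )


# Hypothetical respondent with three Likert items.
features = encode_respondent([4, 5, 3], "Female", "Postgraduate", "Medicine")
print(features)
```

One-hot encoding avoids implying an order among categories such as academic disciplines, which a single integer code would do.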

Qualitative interview data underwent tokenization and were transformed using techniques such as Term Frequency-Inverse Document Frequency (TF-IDF) to convert the text into a format suitable for MLP input. TF-IDF is a widely recognized method for feature extraction in text mining, which evaluates the importance of a word in a document relative to a collection of documents (Aninditya et al., 2019; Imelda and Kurnianto, 2023). This technique assigns weights to words based on their frequency in a specific document compared to their overall frequency across all documents, thereby highlighting terms that are more relevant to the specific context of the data being analyzed (Piskorski and Jacquet, 2020; Uslu and Onan, 2023). The MLP network structure is displayed in Figure 1.
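The weighting idea can be made concrete with a small sketch of the classic, unsmoothed TF-IDF variant, where the weight of a term in a document is its relative frequency multiplied by log(N / df). The exact variant and tokenizer used in the study are not stated, so whitespace tokenization and this formula are assumptions, and the three interview snippets are invented for illustration only.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def tf_idf(documents):
    """Return (vocabulary, one weight vector per document)."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    vocab = sorted(doc_freq)
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens) or 1                # guard against empty documents
        vectors.append([
            (counts[term] / total) * math.log(n_docs / doc_freq[term])
            for term in vocab
        ])
    return vocab, vectors


# Invented snippets, not taken from the study's transcripts.
snippets = [
    "chatgpt is always available",
    "my tutor gives tailored feedback",
    "chatgpt gives quick answers",
]
vocab, vectors = tf_idf(snippets)
for term, weight in zip(vocab, vectors[0]):
    if weight > 0:
        print(term, round(weight, 3))
```

Terms that occur in every document receive a weight of zero under this variant, which is why frequent but uninformative words contribute little to the MLP input.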

3 Results and discussions

3.1 Quantitative results

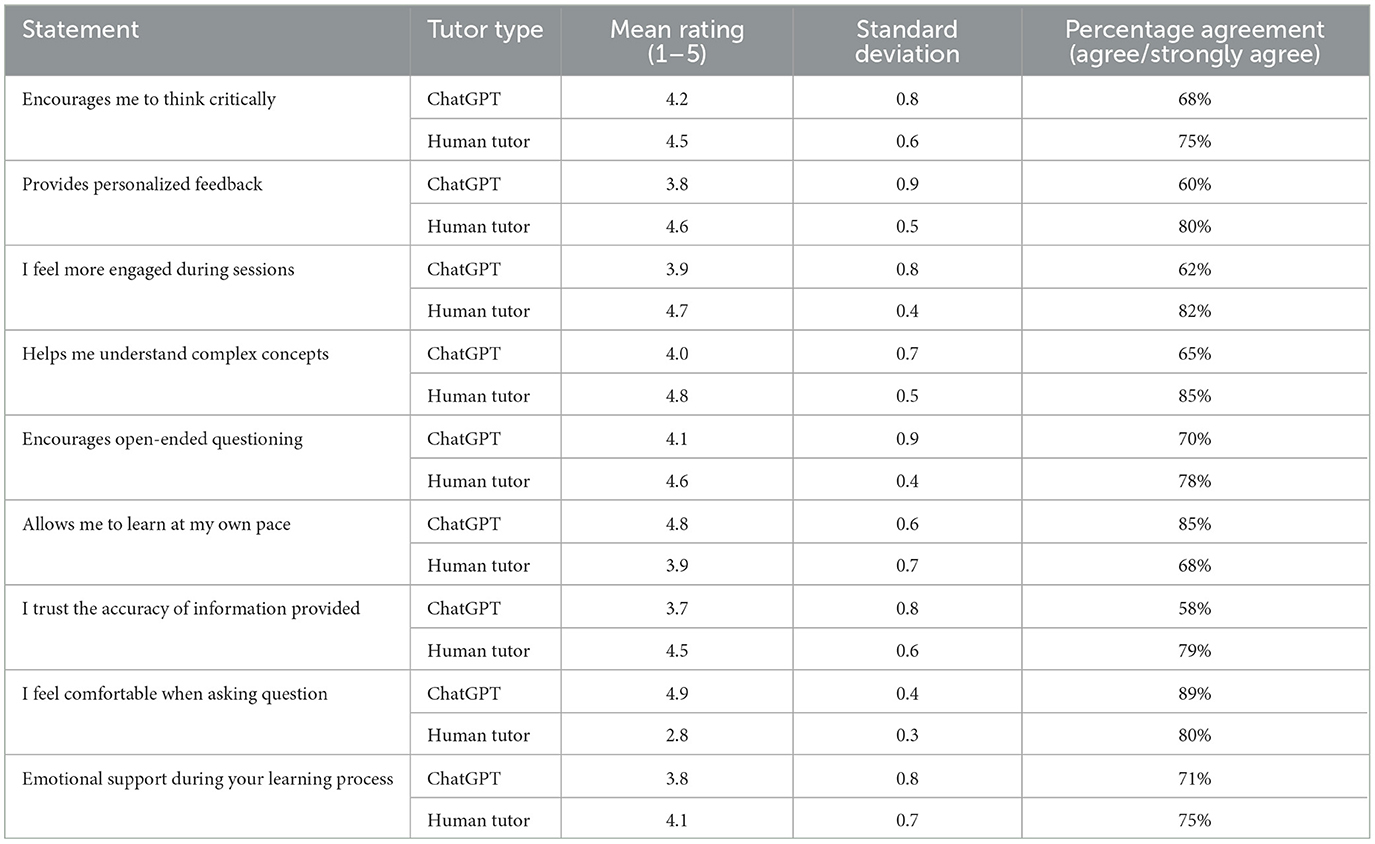

The study investigated the perceptual differences between ChatGPT and human tutors among 230 university students in Taiwan, focusing on their effectiveness in fostering critical thinking. As displayed in Table 2, responses indicated a generally positive reception toward both ChatGPT and human tutors. However, the students' perceptions also reveal distinct strengths and weaknesses for each. Human tutors scored higher across most categories, including encouraging critical thinking (mean rating 4.5 vs. ChatGPT's 4.2), providing personalized feedback (4.6 vs. 3.8), and helping students understand complex concepts (4.8 vs. 4.0). This suggests that the interpersonal engagement and tailored guidance offered by human tutors are highly valued by students.

Conversely, ChatGPT excels in allowing learners to learn at their own pace (mean rating 4.8) and creating a comfortable environment for asking questions (4.9), indicating a preference for the flexibility and immediacy of AI support. Trust in information accuracy, however, notably favored human tutors (4.5 vs. 3.7), reflecting students' concerns about the reliability of AI-generated responses. Overall, while human tutors are perceived as more effective in fostering critical thinking, engagement, and emotional support, ChatGPT offers unique advantages related to accessibility and self-paced learning.

3.1.1 Impact of demographic factors on perceptions of tutoring modalities

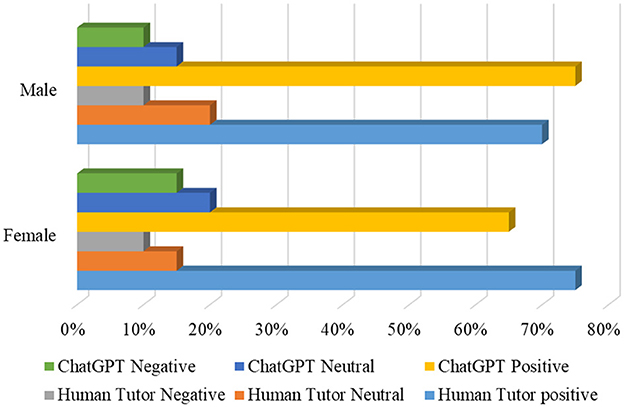

The grouped bar chart visualizes the perceptions of human tutors vs. ChatGPT among male and female students (Figure 2), illustrating distinct differences in how each demographic evaluates the effectiveness of both tutoring modalities in fostering critical thinking. For female students, 75% reported positive perceptions of human tutors compared to 65% for ChatGPT, indicating a strong preference for the relational and personalized benefits of human interaction. In contrast, 20% remained neutral and 15% had negative perceptions of ChatGPT, highlighting concerns about AI's limitations in providing individualized support. Interestingly, 75% of male students have a positive view of ChatGPT, showing a strong preference for automated assistance in learning. The lower neutral (15%) and negative (10%) perceptions of ChatGPT among males further emphasize this trend.

Overall, while both genders appreciate human tutors, female students demonstrate a more pronounced preference for them over ChatGPT, contrasting with the stronger acceptance of AI among male students. This visualization underscores the importance of human interaction in education, especially for emotional support and personalized learning. Understanding these dynamics can inform educational strategies that effectively integrate AI tools while maintaining opportunities for meaningful human engagement.

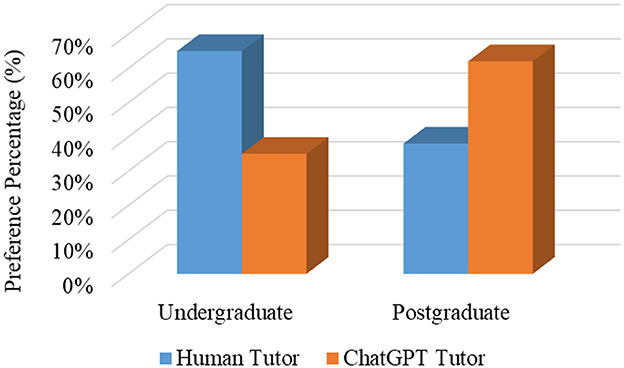

The analysis of degree level (Figure 3) reveals that undergraduates demonstrate a pronounced preference for human tutors, with 65% favoring traditional guidance over AI-based tutoring. In contrast, postgraduates display a marked shift toward AI integration, with 65% indicating a preference for ChatGPT tutors compared to human tutors. This pattern indicates a potential divide in comfort and reliance on technology-enhanced learning modalities, suggesting that undergraduates might still value direct human interaction in educational contexts. Meanwhile, postgraduates possibly exhibit greater openness and familiarity with advanced technological tools. This understanding could facilitate the development of targeted pedagogical strategies and resource allocations tailored to differing educational stages.

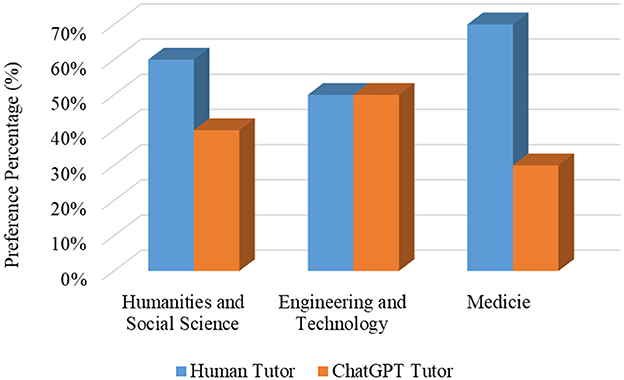

In terms of academic discipline (Figure 4), perceptions of tutoring effectiveness vary considerably. In the Humanities and Social Sciences, 60% of students prefer human tutors, suggesting that traditional approaches to learning, which emphasize discussion and human interaction, remain highly valued. In Engineering and Technology, by contrast, preferences are evenly split, with 50% of students favoring human tutors and 50% opting for ChatGPT. This parity reflects a balanced acceptance of both traditional educational methods and innovative technological approaches within the field. Students in Engineering and Technology are often at the frontier of technological innovation, which predisposes them to be comfortable with, and even enthusiastic about, cutting-edge tools like AI-driven tutoring platforms. In Medicine, 70% of students prefer human tutors, highlighting the critical role of personal interaction and empathy in the medical field, aspects that technology struggles to replicate. These insights, though hypothetical, offer a nuanced understanding of the varying degrees of comfort and perceived effectiveness associated with AI and human tutoring across different academic disciplines. Such knowledge is pivotal for crafting targeted educational strategies that align with the unique needs and learning styles of each discipline.

3.2 Qualitative results

3.2.1 Thematic analysis

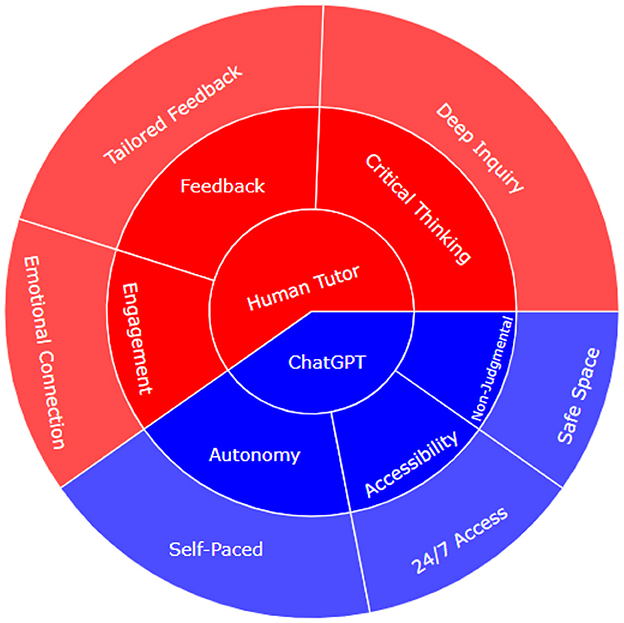

A dendrogram provides a clear visualization of the thematic analysis, illustrating the hierarchical relationships among themes, subthemes, and codes related to students' preferences for ChatGPT vs. human tutors. In the dendrogram, the main themes branch out from the center, with ChatGPT and human tutors each forming distinct pathways (Figure 5). For ChatGPT, prominent subthemes such as “Accessibility,” “Autonomy,” and “Non-Judgmental” support reflect students' appreciation for its 24/7 availability and self-paced learning, signified by large segments for codes like “24/7 Access” and “Self-Paced.” These observations underscore the appeal of ChatGPT's flexibility and independence, catering to students who thrive in self-directed and flexible learning environments. Conversely, for human tutors, the themes of “Engagement,” “Feedback,” and “Critical Thinking” branch out to highlight the importance of personalized attention. This is evidenced by significant segments for “Tailored Feedback” and “Deep Inquiry,” indicating the value students place on emotional connections and deep, analytical learning facilitated by human interaction. These findings suggest that human tutors are preferred for their ability to provide personalized guidance and foster critical thinking, elements that AI currently cannot fully replicate.

The dendrogram justifies a blended educational approach, where leveraging the strengths of both AI and human tutors could optimize learning environments. AI tools like ChatGPT can handle routine questions and flexible pacing, freeing human tutors to focus on complex, interpersonal, and critical-thinking tasks.

3.3 Trends and outliers in AI and human tutoring

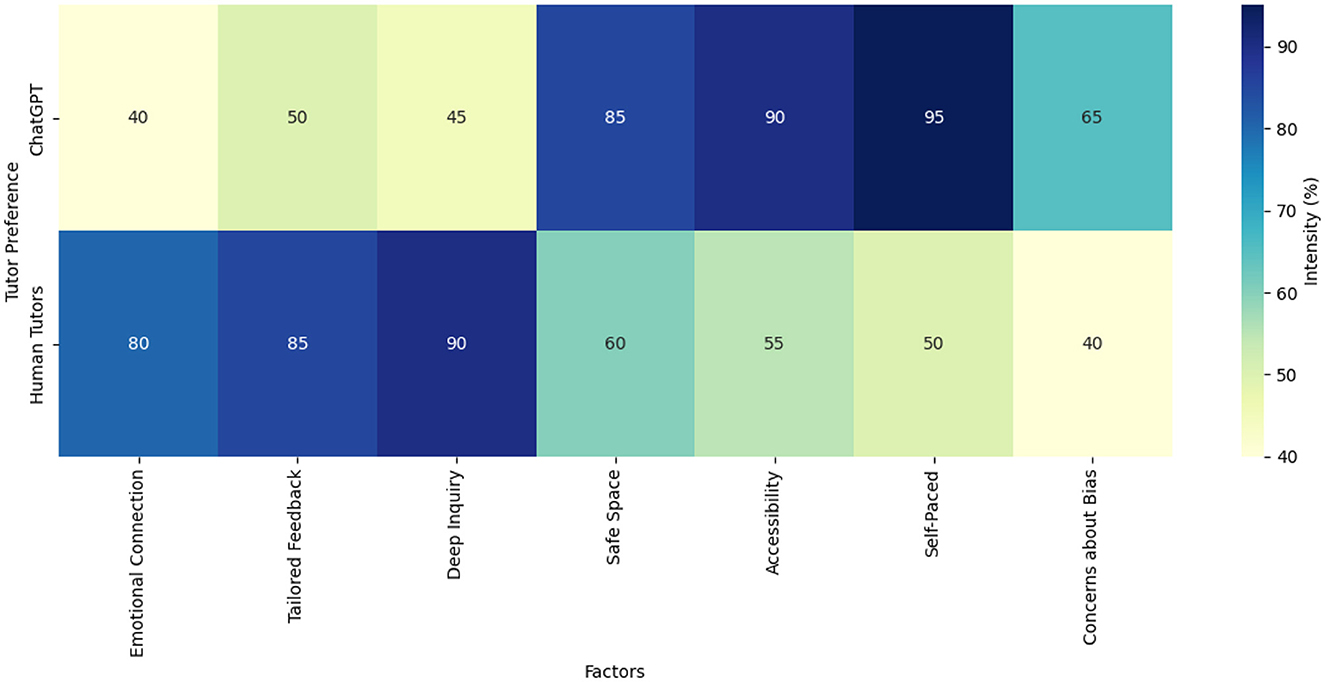

The heatmap analysis of the qualitative data provides a clear visual summary of the comparative strengths and weaknesses of ChatGPT and human tutors, and reveals several trends and notable outliers that warrant further exploration (Figure 6). A distinct divergence in preferences based on learning styles was observed: students who value flexibility and self-directed learning gravitate toward ChatGPT, while those who prioritize personalized attention and critical engagement lean toward human tutors. This trend highlights the need to align tutoring strategies with individual learning preferences to enhance educational outcomes.

Human tutors distinctly excel in delivering emotional connection, tailored feedback, and deep inquiry. These factors are critical in cultivating critical thinking and personal engagement, aligning with educational theories that emphasize relational pedagogy for deeper knowledge acquisition and emotional intelligence development (Boyatzis and Saatcioglu, 2008). Conversely, ChatGPT demonstrates significant strengths in providing a safe space, accessibility, and self-paced learning. This reveals a preference for conditions that foster autonomy and reduce the psychological barriers of asking questions, as evidenced by the rise of digital learning platforms that provide asynchronous and personalized learning experiences and have transformed the educational landscape, particularly in response to the COVID-19 pandemic. These platforms enable learners to access educational resources at their convenience, allowing for a tailored learning experience that meets individual needs and preferences (Nartiningrum and Nugroho, 2020; Songkram et al., 2023).

Notably, concerns about bias are more pronounced with ChatGPT, likely stemming from apprehensions about AI-generated inaccuracies and contextual limitations (Bender et al., 2021). However, some students appreciate the lack of human bias, highlighting the objective consistency AI can offer. By contrast, biases perceived in human tutors chiefly revolve around personal and cultural perspectives that might inadvertently skew interactions, suggesting the need for training and awareness to mitigate such tendencies.

These findings align with studies indicating that while AI can enhance operational aspects of learning through efficiency and accessibility, it struggles with emotional nuances and adaptive feedback that human tutors provide (Zawacki-Richter et al., 2019). Yet, there is a scholarly debate regarding AI's role in learning environments. Some argue that with advancements in natural language processing, the gap in understanding and empathy AI can offer may narrow, though others remain skeptical about AI's ability to fully replicate human-like emotional intelligence (Wang et al., 2021; Yaode Wang, 2024).

The heatmap serves not only as a testament to the multifaceted nature of educational experiences but also emphasizes the ongoing need for integrating both AI and human tutoring strengths to construct comprehensive learning environments. The insights reveal essential strategic areas for educators and policymakers to address, particularly in developing hybrid models that accommodate diverse learning preferences while balancing the benefits of technology and human connection.

3.4 Students' perceptions of critical thinking enhancement

The analysis of student perceptions regarding their critical thinking development reveals a complex interplay between the roles of human tutors and AI tools like ChatGPT. Human tutors are generally seen as pivotal in fostering critical thinking due to their ability to offer nuanced feedback and encourage deep questioning and reflection. This aligns with the Socratic method, which emphasizes dialogue to cultivate analytical skills (Oyler and Romanelli, 2014). Human tutors can dynamically adapt their questioning and feedback to stimulate students' intellectual growth, which is essential for deep analytical engagement. However, an intriguing contradiction arises from some student responses, where human tutors are perceived as impediments to critical thinking. This perception is linked to experiences in which certain educators were not receptive to questions, hindering inquiry. Studies indicate that in many East Asian educational contexts, including Taiwan, there is a significant emphasis on rote learning and teacher-centered classrooms, which may inadvertently suppress critical inquiry. This phenomenon is often attributed to cultural and systemic factors that prioritize memorization and standardized testing over critical thinking and inquiry-based learning (Brien, 2007). Such experiences resonate with broader observations of Taiwan's education system, where traditional lecture-based methods and cultural norms may discourage active student participation, ultimately limiting opportunities for critical engagement (Chang and Kuo, 2023).

In contrast, ChatGPT is praised for facilitating self-directed exploration by providing accessible information and encouraging independent questioning without the fear of judgment. This supports a more flexible learning environment conducive to initial levels of critical engagement. Nonetheless, AI tools like ChatGPT often fall short in fostering the depth of inquiry associated with advanced critical thinking due to their lack of contextual sensitivity and emotional intelligence (Bender et al., 2021). Research by Zawacki-Richter et al. (2019) similarly indicates that while AI supports foundational learning aspects, it lacks the nuanced interpersonal dynamics crucial for higher-order analytical processes.

AI tools, by offering efficiency and immediacy, serve as valuable assets for promoting independent learning. However, they cannot fully replace the deeper engagement facilitated by human educators. As Taiwan's educational practices continue to evolve, incorporating hybrid models that leverage both AI's technological advantages and the critical facilitation skills of human tutors will be crucial. This approach could help harmonize the technical facilitation of learning with pedagogical shifts toward more interactive, inquiry-based environments, ultimately enhancing student outcomes and aligning with global educational trends.

3.5 MLP model insights on student critical thinking preferences

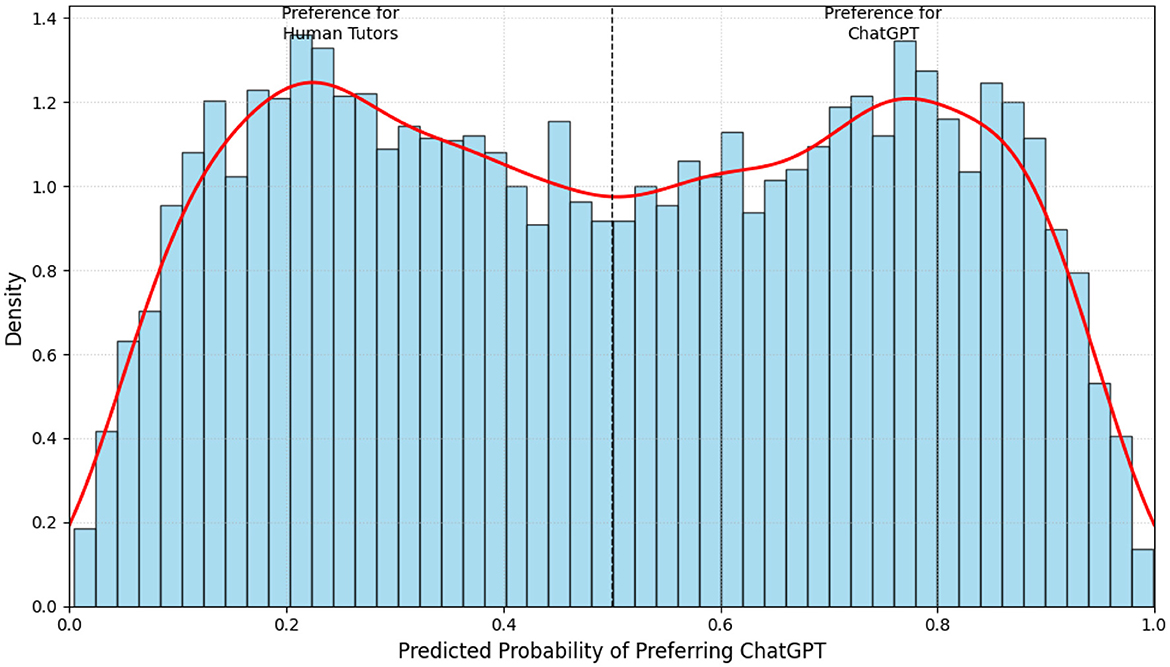

The threshold analysis conducted using the MLP model provides valuable insights into student preferences between human tutors and AI tools like ChatGPT in the context of critical thinking development (Figure 7). With a decision threshold of 0.5 applied to the predicted probability of favoring ChatGPT, the analysis reveals a distinct segmentation of student responses.
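The thresholding step can be sketched as follows. This is a minimal illustration, not the study's implementation: the function name, the labels, and the example probabilities are hypothetical, and treating a probability of exactly 0.5 as a ChatGPT preference is an assumption, since the text does not say how ties are handled.

```python
from collections import Counter


def segment_by_threshold(probabilities, threshold=0.5):
    """Label each predicted P(prefers ChatGPT) using a fixed decision threshold.

    Assumption: values at or above the threshold count as a ChatGPT preference.
    """
    labels = []
    for p in probabilities:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"probability out of range: {p}")
        labels.append("ChatGPT" if p >= threshold else "human tutor")
    return labels


# Hypothetical predicted probabilities, NOT data from the study.
example_probs = [0.03, 0.91, 0.48, 0.97, 0.12, 0.50, 0.88]
example_labels = segment_by_threshold(example_probs)
example_counts = Counter(example_labels)
print(example_counts)
```

Raising or lowering `threshold` moves borderline students between the two groups, which is why the cut-off should be reported alongside any preference counts.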

The findings indicate that a considerable number of students displayed a strong inclination toward ChatGPT as a preferred learning tool. This preference aligns with the attributes outlined in the study: ChatGPT's non-judgmental nature, accessibility, and capacity for fostering self-directed exploration. These features are critical in creating an environment where students feel empowered to engage with learning material without the fear of judgment or criticism, reinforcing the notion that technology can complement educational experiences (Bender et al., 2021). Conversely, the analysis also highlighted a substantial group of students who preferred human tutors. The MLP model's predictions for this group reflect students' recognition of the pivotal role human tutors play in enhancing critical thinking. Attributes such as the ability to provide tailored feedback and foster emotional connections are crucial for engaging students in deeper analytical processes (Zhang et al., 2021).

The distribution of predicted probabilities is somewhat bimodal, with most predictions clustered at the extremes (very low or very high probability) and relatively few in the middle ranges. The MLP model is therefore making quite polarized predictions: most students are predicted either to strongly prefer ChatGPT or to strongly prefer human tutors, with few uncertain cases. Taken together with the student responses, this pattern suggests that students recognize that while AI tools facilitate initial engagement, human tutors are indispensable for advancing critical thinking skills, which necessitate complex interpersonal dynamics and depth of interaction (Zawacki-Richter et al., 2019).
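The polarization described above can be quantified by measuring how much of the predicted-probability mass sits near 0 and 1 compared with the middle. The sketch below is illustrative only: the cut-offs of 0.2 and 0.8 and the example values are assumptions chosen for the example, not figures reported in the study.

```python
def polarization_summary(probabilities, low=0.2, high=0.8):
    """Return the share of predictions in the low, middle, and high bands.

    The band boundaries (low, high) are illustrative assumptions.
    """
    n = len(probabilities)
    if n == 0:
        raise ValueError("need at least one predicted probability")
    low_n = sum(1 for p in probabilities if p <= low)
    high_n = sum(1 for p in probabilities if p >= high)
    middle_n = n - low_n - high_n
    return {"low": low_n / n, "middle": middle_n / n, "high": high_n / n}


# Hypothetical predicted probabilities, NOT data from the study.
example_probs = [0.02, 0.05, 0.10, 0.45, 0.55, 0.90, 0.95, 0.98, 0.99, 0.15]
summary = polarization_summary(example_probs)
print(summary)
```

A small "middle" share relative to the two outer bands corresponds to the bimodal shape described in the text; a roughly even split across the three bands would indicate a model that is uncertain about many students.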

By examining the predicted probabilities from the MLP classifier, the analysis reveals that features such as Accessibility and Emotional Connection significantly shape student attitudes toward their preferred learning supports. For instance, the model suggests that students may only endorse AI tools like ChatGPT when they perceive an adequate level of Accessibility. This indicates that if students find the AI platform difficult to navigate or encounter barriers in information retrieval, their likelihood of favoring it diminishes significantly.
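One way to locate the level of a feature at which the predicted preference flips is to sweep that feature while holding the others fixed and record where the predicted probability first reaches the 0.5 threshold. The sketch below uses a hand-weighted toy network as a stand-in for a trained MLP. Every weight, the 1 to 5 rating scale, and the function names are hypothetical and are not taken from the study.

```python
import math


def toy_mlp_probability(accessibility, emotional_connection):
    """Stand-in for a trained MLP: one tanh hidden layer, sigmoid output.

    All weights are hand-picked for illustration only.
    """
    h1 = math.tanh(1.5 * accessibility - 0.5 * emotional_connection - 3.0)
    h2 = math.tanh(0.8 * accessibility - 1.2 * emotional_connection + 0.5)
    z = 2.0 * h1 + 1.5 * h2
    return 1.0 / (1.0 + math.exp(-z))


def first_crossing(predict, grid, threshold=0.5):
    """Return the first grid value where predict(value) >= threshold, else None."""
    for value in grid:
        if predict(value) >= threshold:
            return value
    return None


# Sweep Accessibility over an assumed 1-5 scale in steps of 0.1,
# holding Emotional Connection at a hypothetical mid-scale value of 3.
grid = [1 + 0.1 * i for i in range(41)]
crossing = first_crossing(lambda a: toy_mlp_probability(a, 3.0), grid)
print(crossing)
```

With a real fitted model, `toy_mlp_probability` would be replaced by a call to the model's probability output, and the sweep could be repeated at several fixed values of the other features to see how the crossing point moves.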

Additionally, the analysis illustrates the crucial role of emotional connection fostered by human tutors. The MLP predictions indicate that many students still prefer human interaction primarily due to the tailored feedback and interpersonal support that human educators can provide. This finding aligns with existing literature, which stresses how emotional intelligence and relational dynamics are vital for promoting deep analytical engagement (Zhang et al., 2021). Therefore, teacher training programs should prioritize the development of interpersonal skills, enabling educators to forge stronger emotional bonds with students and ultimately enhancing the educational experience.

Categorizing student preferences through predictive thresholds lets educational stakeholders identify the level of each feature at which preferences begin to shift. If accessibility proves to be a limiting factor and student feedback calls for more user-friendly interfaces, design work that streamlines navigation should be prioritized. Conversely, strengthening emotional connection may involve developing more interactive and engaging mentorship programs that promote open communication between students and tutors.

3.6 Implications for educators and policy makers

First, this study emphasizes the importance of hybrid learning models that effectively combine AI technologies with traditional teaching methods. ChatGPT's attributes, such as its accessibility and non-judgmental interaction style, make it a valuable complement to the relational and personalized engagement provided by human tutors. This hybrid approach leverages the strengths of AI's efficiency and scalability alongside the emotional intelligence and adaptability that human educators bring, creating a tailored and inclusive learning experience. By strategically incorporating AI tools into teaching strategies, educators can foster environments that promote critical thinking while addressing the diverse needs of students, ultimately enhancing engagement and equity in education.

Second, teacher training programs must evolve to prioritize the integration of technology alongside established pedagogical methods. The findings indicate that for educators to maximize the potential of AI tools like ChatGPT, they must be trained in effectively combining these tools with traditional teaching practices. Workshops and professional development initiatives should focus on strategies for facilitating meaningful, critical-thinking-oriented dialogues through both AI interactions and human-led teaching methods. Equipping educators with these skills ensures that technology integration complements, rather than replaces, the interpersonal connections vital to effective learning.

Third, accessibility should be a cornerstone of educational technology policies. The study highlights that student preferences for AI tools are heavily influenced by their accessibility and ease of use. Addressing these concerns requires strategic investments in digital infrastructure, particularly in underserved areas. Policymakers should focus on improving access to reliable internet services and digital devices in schools and homes, ensuring that all students have the tools needed to benefit from AI-enhanced education. These efforts can eliminate barriers to AI utilization and contribute to more equitable learning opportunities.

In addition, ongoing research and evaluation are critical to optimizing AI's role in education. Policymakers should prioritize funding for studies that examine the academic, social, and emotional impacts of AI tools, providing insights into how these technologies affect student performance, engagement, and motivation. A robust framework for continuous evaluation and feedback will enable iterative improvements to educational technologies, ensuring they adapt to the evolving needs of learners and educators alike.

Finally, involving students in the development and evaluation of AI tools can lead to more practical and user-friendly solutions. Engaging students in this process provides actionable insights into the usability and effectiveness of AI in real-world classrooms. By incorporating student feedback, developers can create AI tools that are more aligned with educational goals, increasing student buy-in and improving the overall functionality of these technologies. This collaborative approach fosters a more authentic and student-centered educational experience.

3.7 Limitations and future research

While this study offers valuable insights into student perceptions of ChatGPT and human tutors in fostering critical thinking, several limitations must be acknowledged to provide a balanced interpretation of the findings and guide future research. The sample, comprising 230 university students from Taiwan, provides a focused perspective but may not represent the broader student population across diverse educational contexts. Taiwan's educational system, which heavily emphasizes lecture-based and teacher-centered approaches, may have influenced the results. As such, perceptions of students from different cultural and educational backgrounds could vary significantly. Future research should aim for larger and more diverse samples, incorporating a range of age groups, educational levels, and cultural contexts to improve generalizability.

Additionally, the study's cross-sectional design limits its ability to capture how perceptions and preferences evolve over time. A longitudinal approach could offer richer data by tracking the same cohort over multiple semesters, revealing trends in how familiarity with AI tools and critical thinking tasks influences student attitudes. This method would provide deeper insights into how students' preferences shift as they gain more experience with both AI and human tutors, as well as how advancements in educational technology impact these preferences.

While this study identifies key factors influencing student preferences, other significant aspects may have been overlooked. For example, peer interaction, technology anxiety, and the role of instructional design in fostering critical thinking through AI tools are areas that warrant further investigation. Future research should explore a broader range of factors and examine how these elements interact to shape learning outcomes.

Broader comparisons across educational contexts could also yield important insights. For instance, investigating student perceptions in secondary education, vocational training, or adult learning environments may reveal variations in how AI and human tutors are valued. Similarly, exploring the effectiveness of AI tools like ChatGPT across different disciplines—such as humanities, sciences, engineering, and social sciences—could help determine how subject matter influences preferences and perceptions of effectiveness.

Finally, given the importance of emotional connection identified in this study, future research should examine how AI tools can support not only cognitive but also social and emotional learning. Exploring how AI can simulate social presence or encourage peer interactions may contribute to richer, more engaging learning environments. This could involve designing AI systems that better integrate emotional and relational elements to complement their cognitive capabilities.

4 Conclusion

The findings from this research provide valuable insights for educators and policymakers working to enhance learning environments and cultivate critical thinking skills among students. The distinct preferences revealed through the MLP analysis highlight a clear tension between the benefits of AI tools like ChatGPT and the relational engagement offered by human tutors. As students increasingly use technology for learning, the results suggest that innovative pedagogical strategies are needed—ones that integrate AI with traditional teaching methods, especially when it comes to fostering emotional connections and personalized learning experiences. This dual approach not only addresses diverse student preferences but also supports the development of deeper cognitive skills necessary for academic success and future problem-solving.

Moreover, the study's insights into key features like accessibility and emotional engagement can inform the design of AI tools that are more responsive to student needs. As educational technologies continue to evolve, future research should focus on the long-term effects of AI tools on student outcomes, exploring their impact across different educational settings and demographic groups. Ultimately, leveraging the complementary strengths of both human and AI interactions will create more dynamic and adaptable learning environments, better preparing students for the complexities of the modern world.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the National Cheng Kung University Human Research Ethics Committee. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

HF: Conceptualization, Funding acquisition, Project administration, Supervision, Writing – original draft. MI: Data curation, Software, Validation, Visualization, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was financially supported by the National Science and Technology Council (NSTC) of Taiwan under grant number 112-2410-H-003-184-.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that Generative AI was used in the creation of this manuscript. During the preparation of this manuscript, the authors used QuillBot and ChatGPT v2 to enhance readability and detect plagiarism. Following the use of these tools, the authors thoroughly reviewed and edited the manuscript, and assume full responsibility for its content.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdullah, N., Niazi, J., Idris, R., and Muhammad, N. (2022). Socratic questioning: a philosophical approach in developing critical thinking skills. Al Hikmah Int. J. Islamic Stud. Human Sci. 5, 143–161. doi: 10.46722/hikmah.v5i4g

Aninditya, A., Hasibuan, M. A., and Sutoyo, E. (2019). “Text mining approach using TF-IDF and Naive Bayes for classification of exam questions based on cognitive level of Bloom's taxonomy,” in Proceedings of the 2019 IEEE International Conference on Internet of Things and Intelligence System (IoTaIS), Bali, Indonesia, 112–117.

Bender, E. M., Gebru, T., McMillan-Major, A., and Mitchell, M. (2021). “On the dangers of stochastic parrots: can language models be too big?,” in Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (New York, NY: Association for Computing Machinery), 610–623. doi: 10.1145/3442188.3445922

Boyatzis, R. E., and Saatcioglu, A. (2008). A 20-year view of trying to develop emotional, social and cognitive intelligence competencies in graduate management education. J. Manage. Dev. 27, 92–108. doi: 10.1108/02621710810840785

Brien, D. L. (2007). Developing and enhancing creativity: a case study of the special challenges of teaching writing in Hong Kong. Text 11:31779. doi: 10.52086/001c.31779

Chan, C., and Hu, W. (2023). Students' voices on generative AI: perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. Higher Educ. 20:43. doi: 10.1186/s41239-023-00411-8

Chang, C.-C., and Kuo, H.-C. (2023). Exploring dialogic education used to facilitate historical thinking within the cultural context of East Asia: a multiple-case study in Taiwanese classrooms. Lang. Cult. Soc. Interact. 41:100729. doi: 10.1016/j.lcsi.2023.100729

Efendi, M., Cheng, T., Sadiyah, E., Wulandari, D., Qosyim, A., and Suprapto, N. (2020). Study of the implementation of socratic dialogue at history of physics course. Stud. Philosophy Sci. Educ. 1, 7–20. doi: 10.46627/sipose.v1i1.7

Freeman, S., Eddy, S., McDonough, M., Smith, M., Okoroafor, N., Jordt, H., et al. (2014). Active learning increases student performance in science, engineering, and mathematics. Proc. Nat. Acad. Sci. U.S.A. 111, 8410–8415. doi: 10.1073/pnas.1319030111

Hu, Z. (2023). Promoting critical thinking through Socratic questions in health sciences work-integrated learning. Int. J. Learn. Teach. Educ. Res. 22, 137–151. doi: 10.26803/ijlter.22.6.8

Imelda, I., and Kurnianto, A. R. (2023). Naïve Bayes and TF-IDF for sentiment analysis of the COVID-19 booster vaccine. J. RESTI 7, 1–6. doi: 10.29207/resti.v7i1.4467

Khaw, C., and Raw, L. (2016). The outcomes and acceptability of near-peer teaching among medical students in clinical skills. Int. J. Med. Educ. 7, 189–195. doi: 10.5116/ijme.5749.7b8b

Lazri, M. (2022). Extreme learning machine versus multilayer perceptron for rainfall estimation from MSG data. E3S Web Conf. 353:01006. doi: 10.1051/e3sconf/202235301006

Le, N. (2019). How do technology-enhanced learning tools support critical thinking? Front. Educ. 4:0126. doi: 10.3389/feduc.2019.00126

Mal, P., Piryani, S., and Gautam, N. (2021). PBL session conducted for second year undergraduate students of medicine and dentistry at Universal College of Medical Sciences Nepal: perception of the participants. Janaki Med. College J. Med. Sci. 9, 55–60. doi: 10.3126/jmcjms.v9i1.38338

Nartiningrum, N., and Nugroho, A. (2020). Online learning amidst global pandemic: EFL students' challenges, suggestions, and needed materials. Eng. Franca Acad. J. Eng. Lang. Educ. 4:115. doi: 10.29240/ef.v4i2.1494

Oyler, D. R., and Romanelli, F. (2014). The fact of ignorance: revisiting the Socratic method as a tool for teaching critical thinking. Am. J. Pharm. Educ. 78:144. doi: 10.5688/ajpe787144

Piskorski, J., and Jacquet, G. (2020). “TF-IDF character N-grams versus word embedding-based models for fine-grained event classification: a preliminary study,” in Proceedings of the Workshop on Automated Extraction of Socio-political Events from News 2020 [European Language Resources Association (ELRA)], 26–34.

Pitorini, D. (2024). Students' critical thinking skills using an e-module based on problem-based learning combined with Socratic dialogue. J. Learn. Dev. 11, 52–65. doi: 10.56059/jl4d.v11i1.1014

Rahman, Z., Hoon, T. S., and Sidhu, G. K. (2019). Using the peer Socratic questioning (PSQ) technique to develop critical thinking skills in group discussion. J. Penyelidikan Tempawan XXXVI, 91–103. doi: 10.61374/temp08.19

Rijdt, C., Rijt, J., Dochy, F., and Vleuten, C. (2011). Rigorously selected and well trained senior student tutors in problem-based learning: student perceptions and study achievements. Instruct. Sci. 40, 397–411. doi: 10.1007/s11251-011-9173-6

Serban, I. V., Gupta, V., Kochmar, E., Vu, D. D., Belfer, R., Pineau, J., et al. (2020). “A large-scale, open-domain, mixed-interface dialogue-based ITS for STEM,” in Artificial Intelligence in Education. AIED 2020. Lecture Notes in Computer Science, eds. I. Bittencourt, M. Cukurova, K. Muldner, R. Luckin, and E. Millán (Cham: Springer). doi: 10.1007/978-3-030-52240-7_70

Songkram, N., Chootongchai, S., Thanapornsangsuth, S., Osuwan, H., Piromsopa, K., Chuppunnarat, Y., et al. (2023). Success factors to promote digital learning platforms: an empirical study from an instructor's perspective. Int. J. Emerg. Technol. Learn. 18, 32–48. doi: 10.3991/ijet.v18i09.38375

Sulaiman, M. (2020). Application of teachers' knowledge of Socratic questioning in developing EFL critical thinking skills among Omani post-basic learners. Arab World Eng. J. 257, 1–187. doi: 10.31235/osf.io/kec3n

Tofade, T., Elsner, J., and Haines, S. (2013). Best practice strategies for effective use of questions as a teaching tool. Am. J. Pharm. Educ. 77:155. doi: 10.5688/ajpe777155

Uslu, N. A., and Onan, A. (2023). Investigating computational identity and empowerment of the students studying programming: a text mining study. Necmettin Erbakan Universitesi Eregli Egitim Fakultesi, Necmettin Erbakan University.

Vincent-Lancrin, S., González-Sancho, C., Bouckaert, M., de Luca, F., Fernández-Barrerra, M., Jacotin, G., et al. (2019). Fostering Students' Creativity and Critical Thinking: What It Means in School, Educational Research and Innovation. Paris: OECD Publishing. doi: 10.1787/62212c37-en

Wang, G., Albayrak, A., Kortuem, G., and Cammen, T. J. M. v. d. (2021). A digital platform for facilitating personalized dementia care in nursing homes: formative evaluation study. JMIR Format. Res. 5:e25705. doi: 10.2196/25705

Winkler, R., and Söllner, M. (2018). Unleashing the potential of chatbots in education: a state-of-the-art analysis. Acad. Manage. Proc. 2018:15903. doi: 10.5465/AMBPP.2018.15903abstract

Yaode Wang, M. G. (2024). Research on the innovation and implementation pathway of the integrated aesthetic education model in research universities within the context of digital information and intelligence strategies. J. Electr. Syst. 20, 1030–1035. doi: 10.52783/jes.1277

Zawacki-Richter, O., Marín, V. I., Bond, M., and Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education. Int. J. Educ. Technol. Higher Educ. 16:39. doi: 10.1186/s41239-019-0171-0

Keywords: Socratic tutoring, critical thinking, ChatGPT, human tutors, pedagogical strategies

Citation: Fakour H and Imani M (2025) Socratic wisdom in the age of AI: a comparative study of ChatGPT and human tutors in enhancing critical thinking skills. Front. Educ. 10:1528603. doi: 10.3389/feduc.2025.1528603

Received: 15 November 2024; Accepted: 06 January 2025; Published: 22 January 2025.

Edited by:

Maurizio Sibilio, University of Salerno, Italy

Reviewed by:

Michele Domenico Todino, University of Salerno, Italy

Amelia Lecce, University of Sannio, Italy

Copyright © 2025 Fakour and Imani. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hoda Fakour, hfakour@ntnu.edu.tw

†Present address: Moslem Imani, Yoder International Academy, Taoyuan, Taiwan
