ORIGINAL RESEARCH article

Front. Educ., 18 March 2025

Sec. Digital Learning Innovations

Volume 10 - 2025 | https://doi.org/10.3389/feduc.2025.1507803

Success in school is closely linked to students’ ability to regulate their own learning. In mathematics, self-regulated learning (SRL) strategies can help students become more independent and proactive in their learning. However, there is limited research on how students can be effectively supported in developing and applying these strategies, especially for younger students. The purpose of this study is to evaluate the impact of a self-instructional SRL material. The material is designed in collaboration between researchers and teachers, and integrated into regular mathematics instruction. Specifically, we examine whether the use of the SRL material varies across schools and student groups. A quasi-experimental research design with pre- and post-tests was employed, involving 258 students in grades 5 and 6 from five different schools over the course of one semester. Data was collected through structured surveys measuring student engagement with the material, along with performance tracking before and after the intervention. The findings indicate that co-developing SRL materials with teachers, and embedding them in regular instruction, has clear advantages. The findings also show that while the material was used equally across genders and performance levels, variations remain due to school culture, socio-economic factors, and individual teacher influence. Notably, lower-performing students showed greater progress compared to high-performers, and girls engaged more actively with SRL strategies than boys. These findings have important implications for the design and implementation of SRL interventions. While integrating SRL support within everyday teaching fosters engagement, additional measures may be needed to address persistent disparities between schools and student groups.

Having constructive strategies for regulating one’s learning, known as “self-regulated learning” (SRL), such as knowing what to do when one does not understand something or how to set realistic goals, can be crucial to how students perform in school (e.g., Dignath et al., 2008; Hattie et al., 1996). However, while some students possess a variety of strategies already at a relatively early age, others have only vague ideas on how to regulate their learning (Jönsson, 2022). Helping students find and use such strategies could therefore be a way to enable low-performing students to improve their academic outcomes. If this could be done successfully during the early stages of schooling, the gap between high- and low-performing students might be reduced, and other negative consequences for low-performing students (e.g., decrease in academic self-concept) might be diminished or avoided. Consequently, there is a strong incentive within educational research to identify constructive strategies that support the learning process, which has led to numerous studies on how to foster students’ SRL strategies (e.g., Boekaerts, 1999; Dent and Koenka, 2016; Hattie, 2009; Hitt, 2023; Muncer et al., 2022; Zimmerman and Schunk, 2001). However, there is still a lack of a clear understanding of how teachers can support students in developing SRL strategies within regular classroom teaching, especially in relation to younger students. This study thus involves the implementation and evaluation of a self-instructing material integrated into the regular curriculum.

Students who use self-regulation strategies are proactive on several levels: metacognitive, cognitive, behavioral, and motivational (Panadero, 2017). On the metacognitive level, students set specific goals and evaluate their progress in relation to these goals. They reflect on how well they performed when working on tasks and consider what they can do differently in the future (Puustinen and Pulkkinen, 2001; Zimmerman, 2002). Cognitively, students recognize what they understand and what they do not. They know and apply different strategies depending on the task and context.

On the behavioral level, students regulate their resources (such as time), adapt their learning environment when necessary, and seek help and feedback. Motivationally, they manage their emotions to achieve their goals, and failures are seen as part of the learning process (Pintrich, 2000).

Thus, self-regulated learning is not a static ability or personal trait, but rather a set of tools that help students regulate and direct their resources, behaviors, emotions, and knowledge to achieve their goals. These strategies relate to different levels (i.e., cognitive, metacognitive, behavioral, and motivational) and complement each other.

Since the late 1990s, the subject of mathematics has undergone important changes (De Corte et al., 2000). Shifting from a focus on learning concepts and procedures, mathematics has broadened to emphasize problem-solving and modeling. These changes are also reflected in the Swedish national curriculum for mathematics, which describes mathematical problem-solving both as a goal and a tool, placing great importance on students being able to communicate and reason using mathematical concepts (Swedish National Agency for Education, 2022).

However, this new perspective on mathematical proficiency places different demands on teaching and learning in mathematics (Valero et al., 2022). For instance, students need to take control to a greater extent and be more proactive in their learning, as merely memorizing answers or procedures is no longer sufficient. They need to reflect on the methods chosen, communicate their reasoning, and consider the effectiveness of the methods. Moreover, they need to be aware of how their own thinking can be used to solve problems and choose strategies accordingly.

A recent meta-analysis (Muncer et al., 2022) explores the link between the various aspects of self-regulated learning and mathematical performance. The results suggest a positive, medium-sized correlation between an individual’s self-regulation of their own learning and achievement in mathematics for adolescents aged 11–16 years. However, the meta-analysis also demonstrates heterogeneity across studies. Potentially important moderators for this heterogeneity include whether SRL is measured in offline or online contexts, and whether mathematical knowledge is assessed using simple or complex tasks. Hitt (2023) points out that self-regulated approaches to mathematical learning may not necessarily lead to increased achievement on traditional assessments in the short term. This is because it takes time and resources for students to develop ownership of their learning. Additionally, the design of assessment methods can influence the outcomes. If assessments do not capture the full range of knowledge and skills fostered through the SRL interventions, the impact on student achievement may not be evident at the level of individual schools or classrooms, even if scientific evidence suggests otherwise.

Although students can develop learning strategies independently, these strategies are not always constructive (Winne, 2016). A learning environment that supports the development of SRL could therefore be suitable for helping students develop constructive strategies. However, this view of supporting students’ development of mathematical competence through SRL is not predominant, as it is typically seen as the teacher’s responsibility to regulate students’ learning (Bell and Pape, 2014; De Corte et al., 2000; Darr and Fisher, 2005; Hitt, 2023).

A notable difference between the general use of self-regulation strategies, and the use of such strategies in mathematics, is that girls appear to use self-regulation strategies to a greater extent in mathematics, as compared to boys (Guo et al., 2023; Rohman et al., 2020). This difference may be explained by boys having more confidence in their mathematical abilities, even when they perform less well than girls. Boys are also generally more motivated to learn mathematics and perceive mathematics to be more important than girls do (Samuelsson and Samuelsson, 2016). Girls, on the other hand, tend to have less favorable views of their mathematical abilities, but are more inclined to put in effort when working on mathematical tasks (Bidjerano, 2005).

Several studies have explored how training students in SRL strategies can be implemented in classrooms (Bell and Pape, 2014; De Corte et al., 2000; Kramarski and Zoldan, 2008; Pape et al., 2003; Schunk, 1998). Meta-analyses (Dignath and Büttner, 2008; Donker et al., 2014; Wang and Sperling, 2020) have also examined the effects of various factors characterizing SRL interventions. Below, some of the most central factors affecting the implementation of SRL interventions are presented and discussed: (a) the type of strategies used in the interventions, (b) who carried out the interventions, and (c) the student population.

Previous meta-analyses (Dignath and Büttner, 2008; Donker et al., 2014) have shown that a combination of strategies at different levels contributes to better student performance compared to interventions that focus only on one or a few levels. When all four levels are included, students learn not only strategies but also how and when to use them. They are trained to metacognitively recognize when and why they need to use these strategies. Furthermore, students gain an understanding of the value of what they are learning (so-called “task value”) and become more aware of their attitudes toward the subject content (Donker et al., 2014).

In relation to learning in mathematics, Donker et al. (2014) reported that interventions including strategies focused on task processing (known as “elaboration”) result in a higher impact on student performance compared to other strategies, such as repetition. Elaboration here refers to connecting new information to prior knowledge, for example by finding similarities and differences between various tasks as a support for solving new problems. Other strategies that have proven successful in mathematics include learning to identify errors in a solution or reasoning (so-called “error diagnosis”) and self-questioning while solving a task (Wang and Sperling, 2020). Among motivational strategies, those targeting students’ perceptions of their abilities (so-called “self-efficacy”) have been the most effective (Wang and Sperling, 2020). Setting goals and creating engagement to achieve them are other strategies that have shown to be effective in improving student performance.

The effects of SRL interventions are generally higher when researchers, rather than teachers, are responsible for carrying out the intervention (Dignath and Büttner, 2008). The difference is believed to be due to researchers typically being more rigorous in applying the study’s framework when conducting the intervention. This is not necessarily because teachers lack the willingness to follow the study’s framework, but rather due to factors like limited time to plan and support students. It may also be that teachers are not sufficiently familiar with how the intervention is designed.

However, increased teacher involvement can result in a better understanding of SRL and improved alignment between teachers and researchers regarding teaching and learning. In mathematics, where most interventions are conducted by teachers, collaboration between researchers and teachers can create opportunities to integrate the intervention into the ongoing teaching (Wang and Sperling, 2020). This could lead to long-term benefits, as the use of strategies in teaching becomes more meaningful for both teachers and students. As a result, students have time to automate their use of strategies and refine their SRL skills. Therefore, more extensive professional development and increased engagement from teachers are important for achieving good outcomes from SRL interventions (Dignath and Büttner, 2008).

In terms of the student population, researchers have explored whether students’ performance levels affect the impact of SRL interventions. While there may be differences in how boys and girls apply SRL strategies, previous meta-analyses (e.g., Dignath and Büttner, 2008; Donker et al., 2014; Hattie et al., 1996; Wang and Sperling, 2020) have not identified gender as a factor influencing the effectiveness of SRL interventions. Therefore, boys and girls likely benefit equally from learning to regulate their learning.

Self-regulated learning interventions appear to benefit students across different performance levels. In their meta-analysis, Donker et al. (2014) reported positive effects for high-, medium-, and low-performing students, as well as for students with neurodevelopmental challenges. In contrast, Hattie et al. (1996) reported positive effects only for medium-performing and “underperforming” students (i.e., those performing below their potential). The researchers found that SRL interventions were too demanding for low-performing students to fully grasp. Donker et al. (2014) explained this discrepancy by suggesting that SRL interventions conducted after the meta-analysis by Hattie et al. (1996) may have been better tailored to students’ needs, making them more accessible to all students.

Regarding mathematics, studies have shown positive effects of SRL training for students struggling with problem-solving (Vauras et al., 1999) and for students with neurodevelopmental difficulties (Fuchs et al., 2003).

Research on SRL shows that students who use constructive self-regulation strategies perform better in school compared to those who do not use such strategies. In mathematics, SRL strategies not only contribute to better performance, but also form part of what is meant by mathematical competence. At the same time, SRL training is not yet a standard element of mathematics teaching. Furthermore, SRL interventions do not always yield the desired effects when implemented in practice, as they may not be fully integrated into the ongoing teaching. However, if SRL is embedded within the regular teaching, the conditions for more noticeable effects on students’ mathematics learning may improve. Since there is a lack of research in this area, it is an important task for educational research to find new ways to support teachers in implementing SRL strategies in their instruction.

In line with the conclusions outlined in the previous section, the purpose of this study is to examine how an SRL material, developed in collaboration between researchers and teachers and integrated into the ongoing teaching of mathematics, can influence students’ performance in mathematics and their use of SRL strategies in mathematics. A material integrated into the ongoing teaching may be perceived as more relevant and may thus contribute to a more rigorous application of the study’s principles. Positive effects could further lead to the reuse of the material and its dissemination among other teachers. It is therefore important to understand whether the implementation of SRL material can vary between schools and different groups of students. The content of the material addresses cognitive, metacognitive, behavioral, and motivational strategies and spans one semester.

The following research questions will be explored in relation to the different schools participating in the study and for the groups of students:

1. Are there differences between schools in terms of students’ progression in mathematics performance, degree of implementation, and perceived use of SRL strategies in mathematics?

2. Are there differences between groups of students of varying performance levels regarding progression in mathematics performance, degree of implementation, and perceived use of SRL strategies in mathematics?

3. Are there gender differences in terms of progression in mathematics performance, degree of implementation, and perceived use of SRL strategies in mathematics?

This intervention study used a quasi-experimental research design with pre- and post-tests. The intervention was conducted in authentic classrooms as an integrated part of the regular teaching. It lasted 15 weeks, approximately equivalent to one semester, and was carried out with students in grades 5 and 6.

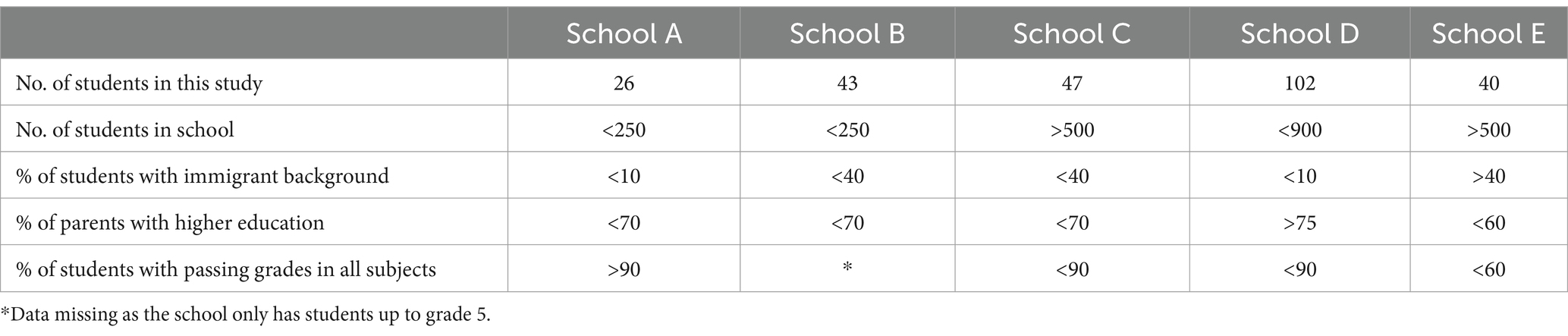

The participants in the study were students (n = 258; 45% girls) in grades 5 and 6 at five different schools, along with their teachers (n = 6). A description of key indicators for the five schools is provided in Table 1. The table does not display exact figures, as the schools could otherwise be identified based on material published online.

• Schools A and B are small schools with a relatively low proportion of students with an immigrant background.

• Schools C and E are medium-sized and comparable in size, but School E has a higher proportion of students with an immigrant background and a lower proportion of parents with higher education. The percentage of students with passing grades in all subjects is significantly lower at School E than at School C.

• School D is the largest of the schools, with the most students in the study and the highest proportion of parents with higher education.

Table 1. Key indicators for the participating schools, based on statistics provided by the Swedish National Agency for Education.

The selection of teachers for the study was based on voluntary participation. The students were then asked for their consent to participate in the study through a consent form addressed to their legal guardians.

The intervention consisted of six digital modules that students accessed through hyperlinks. The material was created in collaboration between researchers and teachers and was linked to the mathematics content that the students worked on during the semester. The mathematical content areas included the four basic arithmetic operations, fractions and decimals, percentages, equation solving, unit conversion, and problem-solving.

The content of the modules was developed through an iterative process. The strategies covered in the modules, the mathematical content, the examples used, and the extent of each module were discussed between researchers and teachers. The design process involved adapting the content to the curriculum, the students’ prior knowledge, and challenges that students commonly face, such as understanding the relationship between fractions, decimals, and percentages. The selection of strategies in the modules was based on previous research, primarily SRL interventions in mathematics (Dignath and Büttner, 2008; Donker et al., 2014; Hitt, 2023; Wang and Sperling, 2020). A key principle in selecting strategies was the need to combine strategies from different levels, i.e., (meta-)cognitive, behavioral, and motivational levels (Panadero, 2017).

A small pilot study was conducted with students outside the study to test the feasibility and clarity of the content, which led to further adjustments to the modules. Table 2 provides an overview of the strategies included in each module and the level to which these strategies belong.

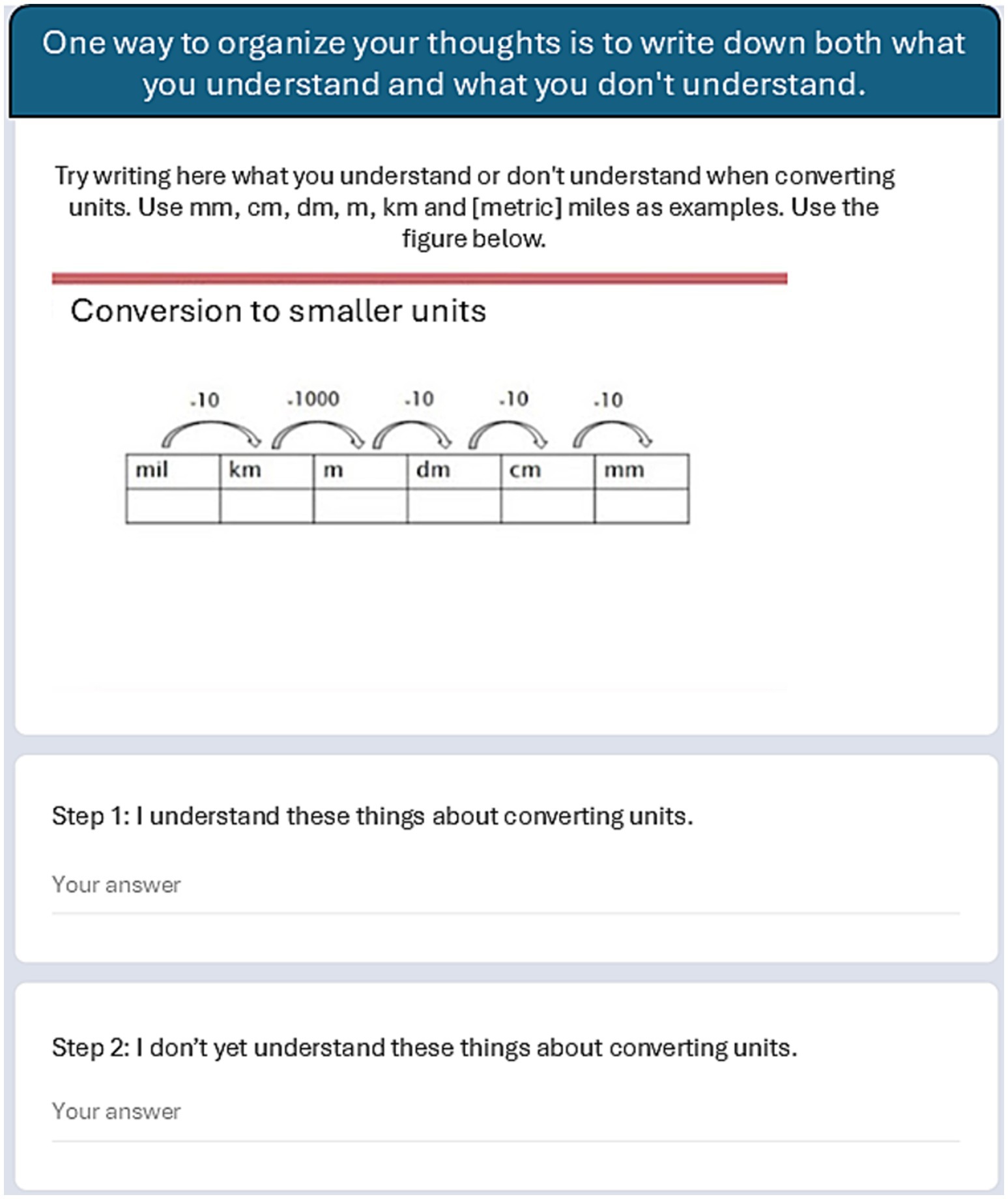

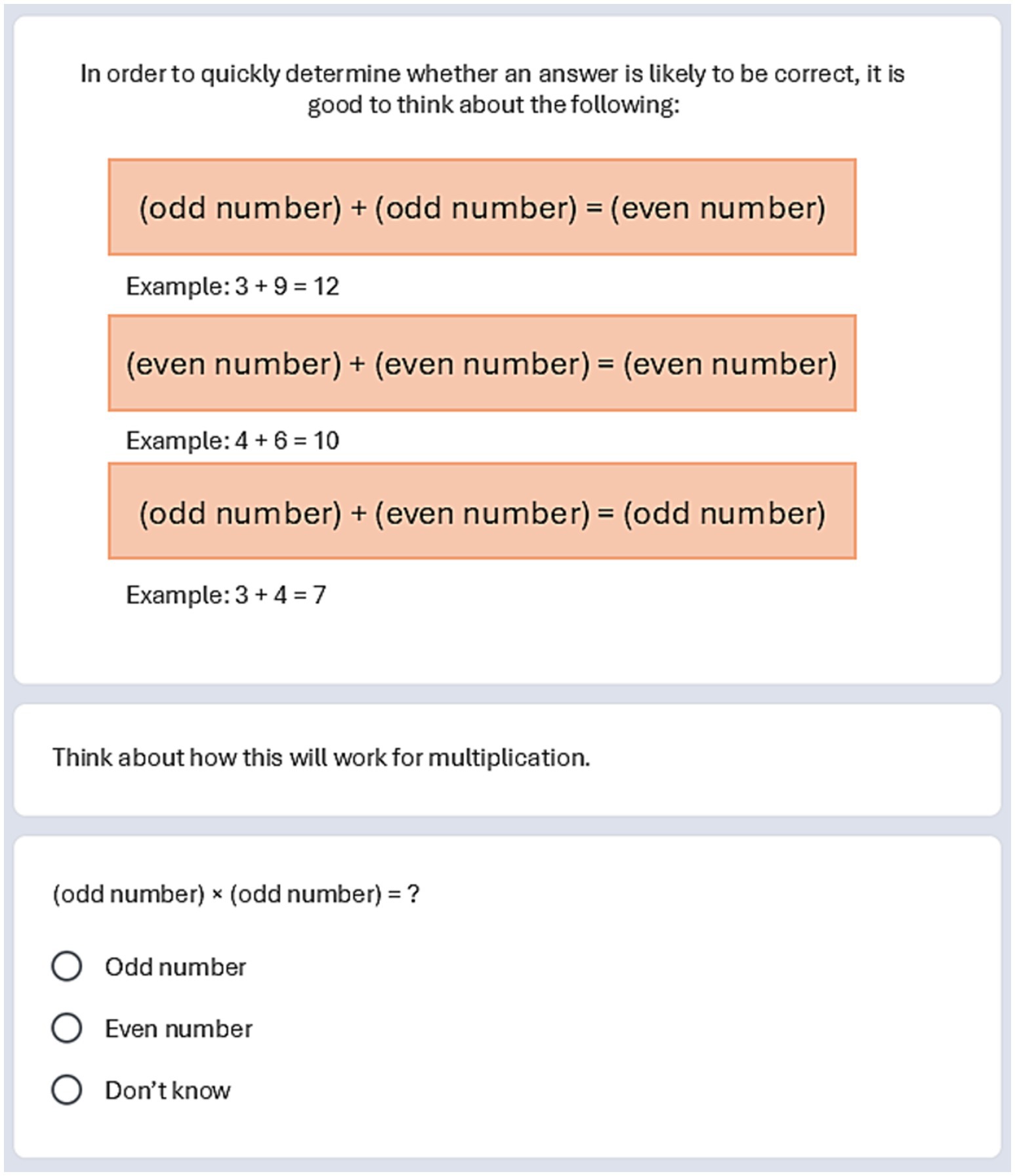

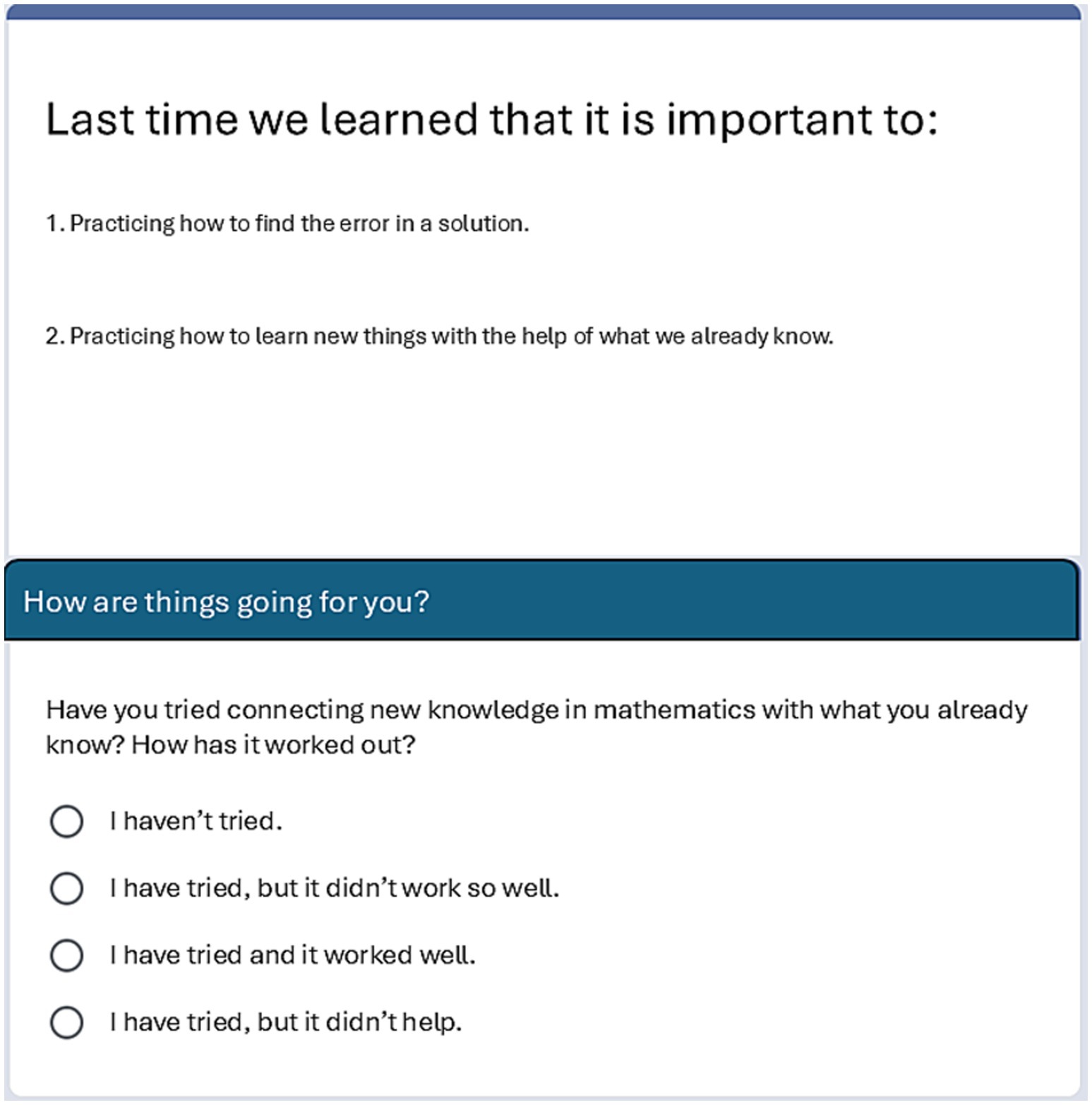

The structure of each module was as follows:

1. Remind students of the strategies covered in the previous module (except for the first module),

2. Use reflective questions on how the students experienced the application of the strategies covered,

3. Introduce new strategies through examples (see Figure 1),

4. Use reflective questions on the strategies introduced (see Figure 2), and

5. Conclude with a summary.

Figure 1. Excerpt from Module 4, which focuses on writing down what you understand (and what you do not understand) as a (cognitive) strategy. The example deals with unit conversion.

Figure 2. Excerpt from Module 6, which focuses on quickly assessing whether an answer is reasonable. The excerpt first presents an example and then prompts the student to apply the strategy in another situation.

The six modules were distributed evenly throughout the semester, with 1 week between each. The instructions for the teachers were to help students understand the content of the modules during the sessions and to assist students in practicing the strategies between modules. Each module session took between 20 and 30 minutes. The total time for module sessions over the semester was therefore 2–3 hours (six sessions of 20–30 minutes each). Figure 3 presents a flowchart of the intervention, outlining the duration of the intervention, the number of modules, and the data collection time points.

Data collection was conducted before, during, and after the intervention, using instruments that were specifically developed for this study. A knowledge test was used to measure students’ progression of mathematical performance in a specific area before and after the intervention. Students’ use of strategies was measured in two ways: by surveying their perceptions of their strategy use before and after the intervention, and by recording their ongoing use of strategies during the modules. In the modules, students were asked about the extent to which they had used the strategies covered in the previous module. Additionally, the number of modules each student completed was recorded during the intervention.

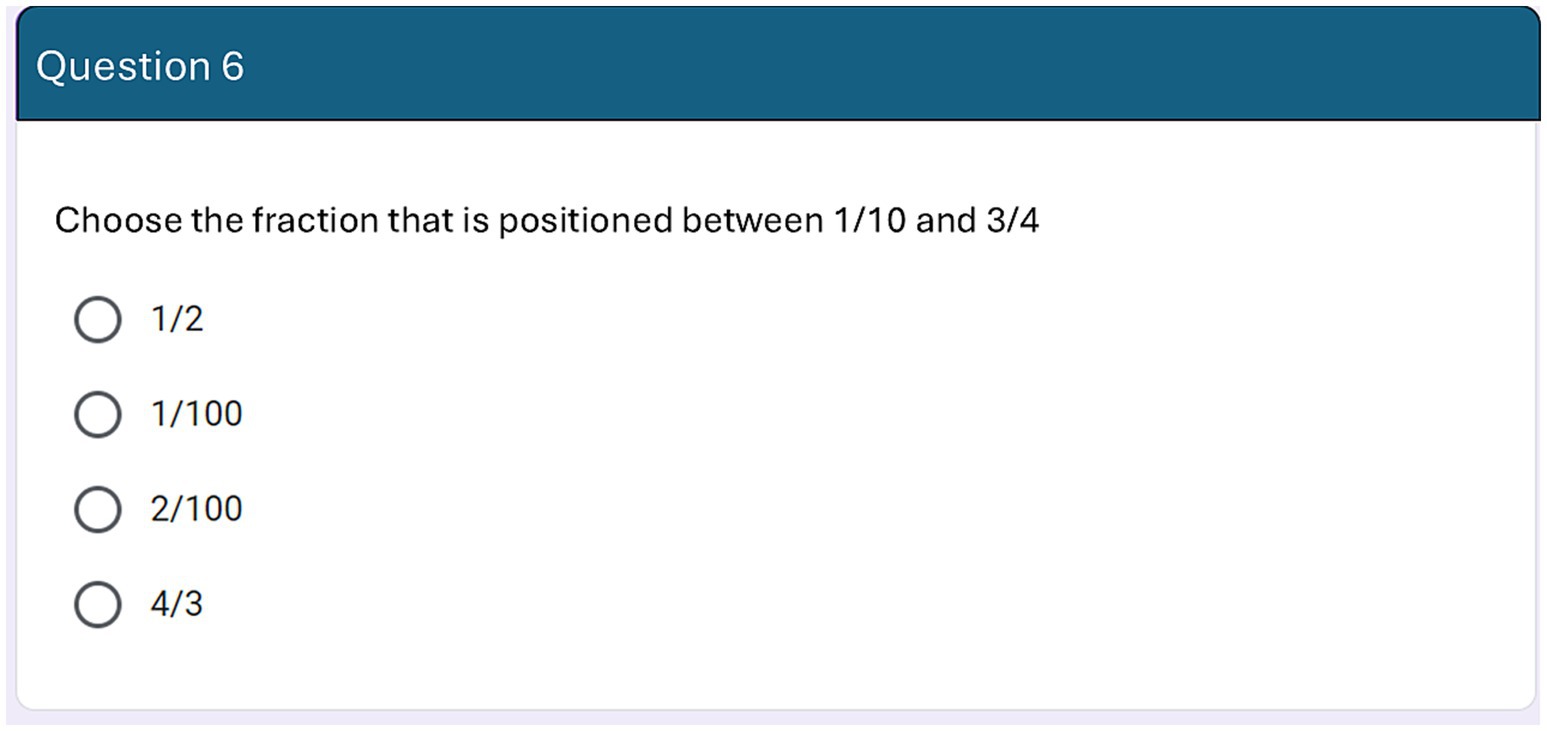

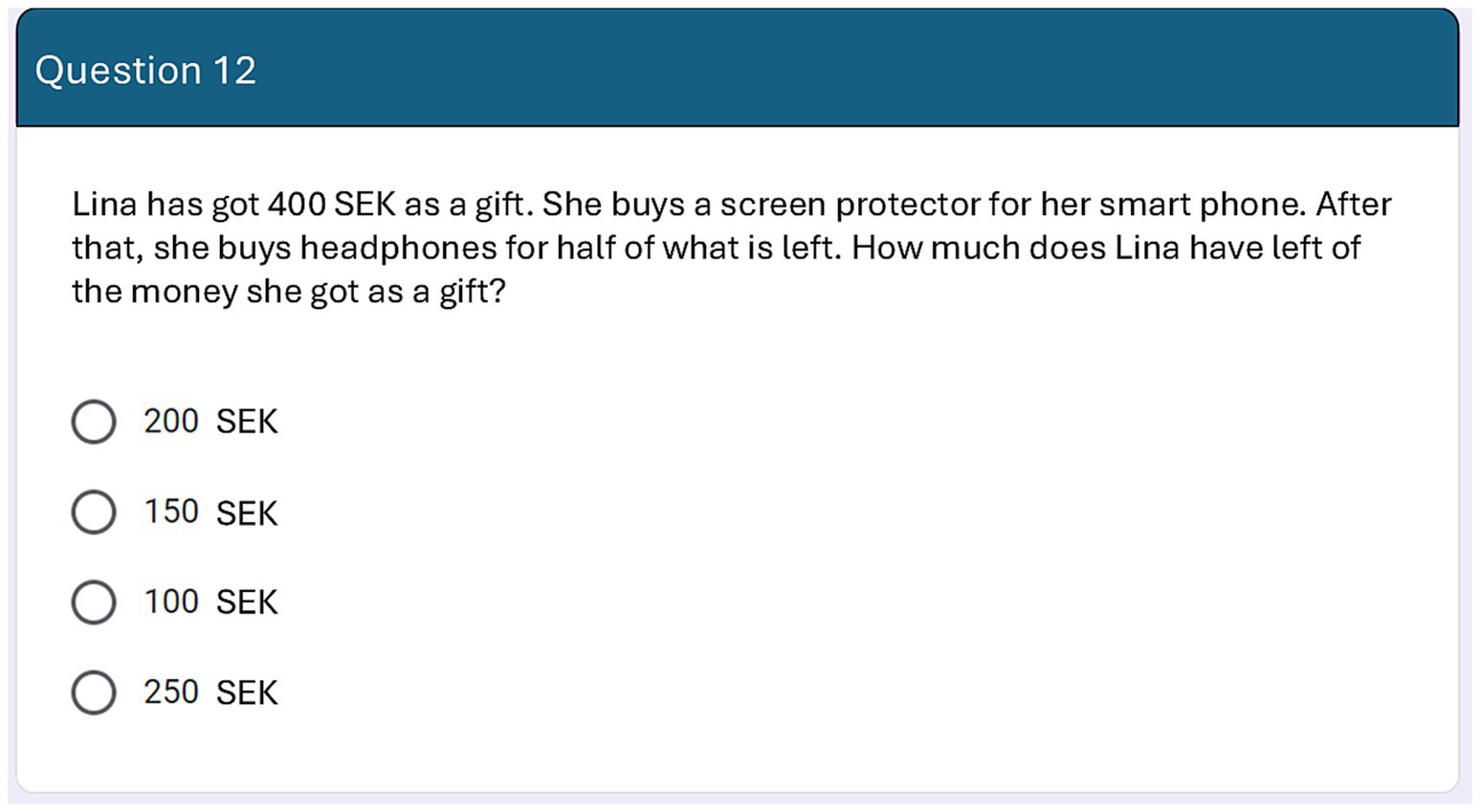

Students’ performance in mathematics was measured with a digital knowledge test before and after the intervention. The test consisted of 12 tasks that assessed students’ understanding of number sense. The tasks were based on parts of the national curriculum for grades 4–6, specifically addressing number sense. Figures 4 and 5 show two examples. The first example tests students’ understanding of fractions, while the second example tests their ability to use fractions to solve problems in everyday situations. The reliability of the test was estimated using Cronbach’s alpha, which indicated an acceptable level of internal consistency (α = 0.73).
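As an illustration of how a reliability estimate of this kind can be obtained, the following minimal sketch computes Cronbach's alpha from a students-by-items score matrix using the standard formula. The function name `cronbach_alpha` and the example scores are hypothetical and are not taken from the study's data or analysis scripts.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of rows (one row per student, one score per item)."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item (column) across students.
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of each student's total score.
    total_variance = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: 5 students x 4 tasks scored 0/1 (NOT from the study).
example_scores = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
alpha = cronbach_alpha(example_scores)
print(round(alpha, 2))
```

The same function would apply unchanged to Likert-type items, since it only relies on item and total-score variances.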

Figure 4. The figure shows one of the tasks from the knowledge test on number sense. The task assesses students’ understanding of fractions.

Figure 5. Similar to Figure 4, the figure shows a task from the knowledge test on number sense. The task assesses students’ ability to solve problems involving fractions in everyday contexts.

The digital format of the knowledge test allowed students to use some of the strategies covered in the modules directly. After each incorrectly answered question, students received brief feedback and were given the opportunity to reattempt to solve the task. At the end of the knowledge test, students were informed of the number of correct answers and were given the option to retake the entire test. Thus, students were able to apply strategies such as “using the feedback you receive” and “not giving up easily” during the test. However, in the analysis, only the students’ first answers were registered as the number of correct responses.

Students’ use of strategies was examined using 11 statements linked to the strategies introduced in the modules. The survey was conducted before and after the intervention. Students were asked to respond to statements such as “When I learn something in mathematics, I write down both what I understand and what I do not understand,” “When I get a problem wrong, I try to find the mistake before asking for help,” and “When I fail to reach my goals in mathematics, I reflect on what went wrong.” The responses were rated on a five-point Likert scale, ranging from “Does not apply at all” to “Applies completely.” The reliability of the scale was high (Cronbach’s alpha = 0.87).

Questions about the extent to which the students had used the strategies covered in the previous module were integrated into the modules. The students were reminded of the strategies discussed in the earlier module and then asked questions like, “How did it go for you? Have you tried to find the mistake in a task when you got the wrong answer?” or “Have you tried connecting new knowledge in mathematics with what you already know?” The response options were formulated in levels, from not having tried at all to having tried and succeeded (Figure 6). Responses were converted into scores from 1 to 3. Reliability was estimated using Cronbach’s alpha, suggesting an acceptable level of internal consistency (0.64).

Figure 6. Excerpt from Module 3, showing a brief repetition of the strategies covered in Module 2, followed by a question about whether students had attempted to use those strategies.

The collected data from the knowledge tests and surveys were examined for outliers and normal distribution using histograms and the Shapiro–Wilk test. Since these tests indicated that the data did not follow a normal distribution, both parametric (t-tests) and non-parametric (Wilcoxon Signed-Rank tests) tests were used to analyze the differences between pre- and post-tests. As the results were consistent across both approaches, they are presented in terms of means and standard deviations.
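The two pre/post comparisons named above can be sketched as follows. This is a minimal illustration in plain Python that returns only the test statistics (the paired t statistic with its degrees of freedom, and the Wilcoxon signed-rank sums); p-values and the Shapiro–Wilk test are deliberately left out, as they would normally come from a statistics package. The function names and the example scores are hypothetical, not the study's data.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_statistic(pre, post):
    """Return (t, degrees of freedom) for paired pre/post scores."""
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


def wilcoxon_signed_rank(pre, post):
    """Return (W+, W-): rank sums of positive and negative differences."""
    # Zero differences are dropped, as in the classic form of the test.
    diffs = sorted((b - a for a, b in zip(pre, post) if b != a), key=abs)
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(diffs):
        # Find the run of tied absolute differences and give it the average rank.
        j = i
        while j + 1 < len(diffs) and abs(diffs[j + 1]) == abs(diffs[i]):
            j += 1
        average_rank = (i + j) / 2 + 1
        for m in range(i, j + 1):
            ranks[m] = average_rank
        i = j + 1
    w_plus = sum(r for d, r in zip(diffs, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(diffs, ranks) if d < 0)
    return w_plus, w_minus


# Hypothetical pre/post test scores for six students (NOT from the study).
pre_scores = [4, 6, 5, 7, 3, 8]
post_scores = [6, 7, 5, 6, 6, 9]
t_value, dof = paired_t_statistic(pre_scores, post_scores)
w_plus, w_minus = wilcoxon_signed_rank(pre_scores, post_scores)
print(round(t_value, 3), dof, w_plus, w_minus)
```

Running both tests on the same pairs mirrors the robustness check described in the text: if the parametric and rank-based results point in the same direction, reporting means and standard deviations is easier to justify.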

To investigate the variation between schools, ANOVA was used. School affiliation was treated as the independent variable or fixed factor and coded as: School A, School B, School C, School D, and School E. The dependent variables included:

1. Knowledge progression in number sense,

2. Progression in students’ perceptions of strategies,

3. Students’ self-reported use of strategies during the intervention,

4. The extent to which students completed the modules,

5. Students’ performance levels,

6. Students’ gender.

Knowledge progression was calculated as the difference between the post- and pre-test in mathematics, while progression in students’ perceptions of strategies was calculated as the difference in survey responses before and after the intervention. Students’ self-reported use of strategies during the intervention was calculated based on their responses to questions in the modules about their use of strategies during the intervention. The extent to which students completed the modules was calculated as the number of completed modules. Students’ performance levels were classified as low- or high-performing and coded as 0 = low performance and 1 = high performance. Since Swedish students are not awarded letter grades before year 6, their grades in mathematics could not be used as an indicator of performance levels. Instead, results from the pre-test in mathematics were used to categorize students as low- or high-performing. Students’ gender was coded as 0 = boys and 1 = girls.
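The variable coding described above could be sketched roughly as follows. Note that the text does not state the cut-off used to separate low- from high-performing students; the median split on the pre-test used here is purely an assumption for illustration, as are the function name `code_students`, the record layout, and the example values.

```python
from statistics import median


def code_students(records, cutoff=None):
    """Derive the coded variables from raw pre/post scores.

    records: list of dicts with keys 'pre', 'post', and 'gender' ('boy' or 'girl').
    cutoff:  pre-test score separating low from high performance.
             ASSUMPTION: defaults to the median pre-test score, because the
             source does not report the actual cut-off.
    """
    if cutoff is None:
        cutoff = median(r["pre"] for r in records)
    coded = []
    for r in records:
        coded.append({
            # Knowledge progression: post-test minus pre-test.
            "progression": r["post"] - r["pre"],
            # 0 = low performance, 1 = high performance (based on the pre-test).
            "performance": 1 if r["pre"] > cutoff else 0,
            # 0 = boys, 1 = girls.
            "gender": 1 if r["gender"] == "girl" else 0,
        })
    return coded


# Hypothetical records (NOT from the study).
example_records = [
    {"pre": 3, "post": 6, "gender": "girl"},
    {"pre": 9, "post": 9, "gender": "boy"},
    {"pre": 6, "post": 8, "gender": "girl"},
]
coded = code_students(example_records)
print(coded)
```

Because high-performing students start closer to the 12-task ceiling, a difference score of this kind leaves them less room to improve, which is worth keeping in mind when comparing progression across performance levels.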

Significant differences from ANOVA were further examined with a post-hoc test (Tukey’s test) to identify which schools differed from each other.
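For readers less familiar with the procedure, a one-way ANOVA with school as the fixed factor boils down to comparing between-group and within-group variance. The sketch below computes the F statistic and its degrees of freedom in plain Python; it omits the p-value and Tukey's post-hoc test, which would normally be taken from a statistics package. The function name and the example groups are hypothetical and do not reproduce the study's data.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    k = len(groups)
    all_values = [x for g in groups for x in g]
    n_total = len(all_values)
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of individual scores around their own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )
    df_between = k - 1
    df_within = n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Hypothetical progression scores for three groups (NOT from the study).
example_groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_value, df_between, df_within = one_way_anova(example_groups)
print(round(f_value, 2), df_between, df_within)
```

The degrees of freedom follow directly from the design: with five schools, the between-groups value is 4, and the within-groups value equals the number of students with complete data on the variable minus 5.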

To investigate variation among students, ANOVA was conducted in two rounds. In one analysis, gender was used as the independent variable, and in the other, performance level. In both analyses, the dependent variables were:

1. Knowledge progression in number sense,

2. Progression in students’ perceptions of strategies,

3. Students’ self-reported use of strategies during the intervention,

4. The extent to which students completed the modules.

To examine the interaction between gender and performance level, this combination was also included in the analyses.

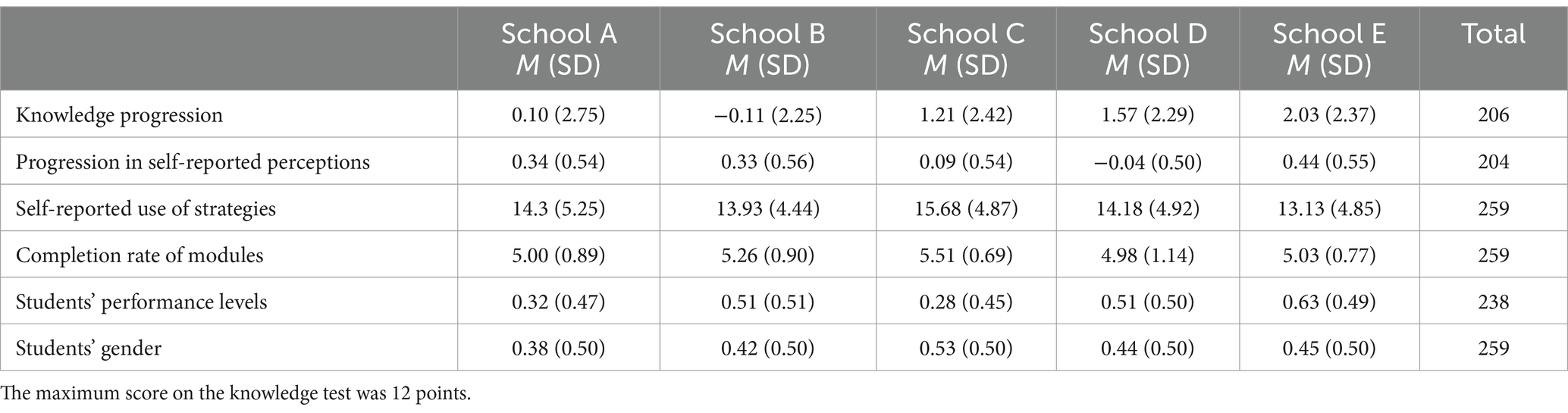

ANOVA shows a statistically significant difference in knowledge progression between at least two schools [F(4,201) = 4.804, p = 0.001]. Tukey’s test for multiple comparisons indicates that the average knowledge progression is statistically significantly different between School A and School E (∆ = 1.9 points; p = 0.03), between School B and School E (∆ = 2.1 points; p = 0.004), and between School B and School D (∆ = 1.7 points; p = 0.01). No statistically significant differences were found between School C and the other schools. Table 3 presents students’ progression on the knowledge test at the school level in terms of means and standard deviations.

Table 3. School-level results for the dependent variables: knowledge progression, self-reported use of strategies, completion rate of modules, progression in self-reported perceptions, students’ performance levels, and gender.

ANOVA shows a statistically significant difference in how students’ perceptions of their strategy use developed during the intervention between at least two schools [F(4,199) = 7.07, p < 0.001]. Tukey’s test indicates significant differences in perception progression between School A and School D (∆ = 0.38 points; p = 0.02), School B and School D (∆ = 0.38 points; p = 0.02), School B and School E (∆ = 0.35 points; p = 0.04), and School D and School E (∆ = 0.49 points; p < 0.01). The scale used in the survey that measured students’ perceptions of strategy use ranged from 1 to 5 points. Table 3 shows the progression in perceptions at the school level in terms of means and standard deviations.

ANOVA shows no statistically significant differences in students’ self-reported use of strategies [F(4,254) = 1.06, p = 0.173].

ANOVA shows a statistically significant difference in the completion rate of modules between at least two schools [F(4,254) = 2.94, p = 0.02]. Tukey’s test shows a significant difference in completion rates between School C and School D, with a mean difference of 0.53 modules (p = 0.01). Table 3 presents the completion rate at the school level in terms of means and standard deviations.

ANOVA shows a statistically significant difference in students’ performance levels between at least two schools [F(4,233) = 3.42, p = 0.01]. Tukey’s test shows that students’ performance levels differ significantly between School C and School E, with a mean difference of 0.35 performance steps (p = 0.01), where 0 represents low performance and 1 represents high performance. There are no significant differences between the other schools.

ANOVA shows no statistically significant difference in students’ gender distribution [F(4,254) = 0.48, p = 0.753].

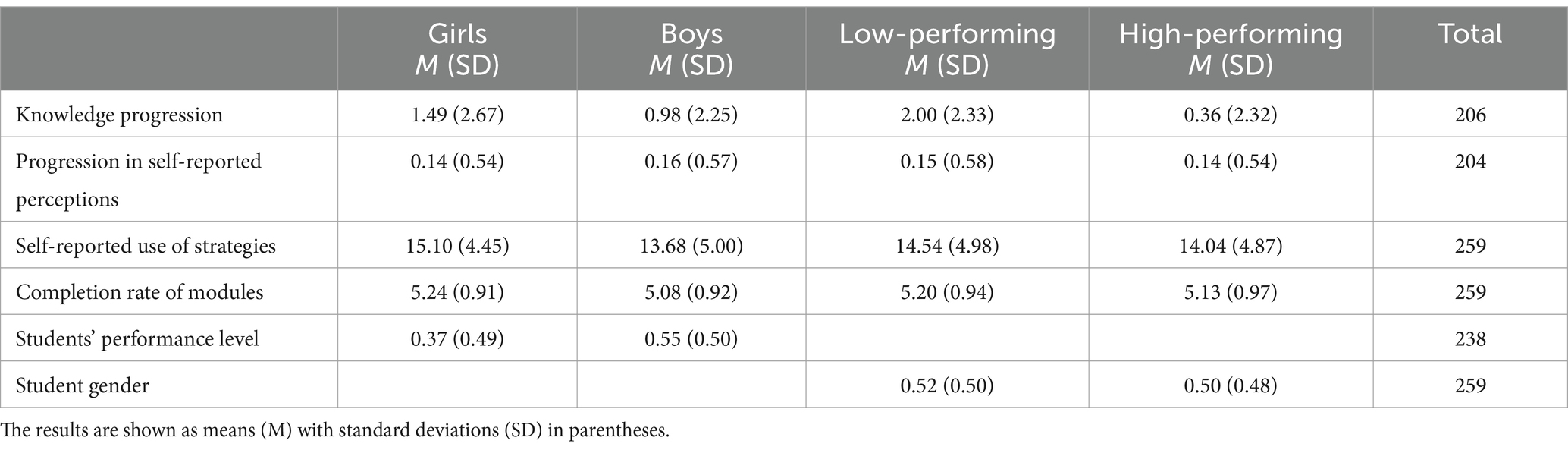

ANOVA shows no statistically significant difference in knowledge progression between girls and boys [F(1,204) = 2.19, p = 0.14], but there is a statistically significant difference between low- and high-performing students [F(1,204) = 25.39, p < 0.001]. Table 4 presents knowledge progression for low- and high-performing students in terms of means and standard deviations. Low-performing students show a knowledge progression of 2 points (equivalent to 17% of the 12-task test), while high-performing students show a knowledge progression of 0.36 points.

Table 4. Results divided by gender and performance level for the dependent variables: knowledge progression, self-reported use of strategies, completion rate of modules, and progression in self-reported perceptions.

ANOVA shows no statistically significant difference in the progression of students’ perceptions of strategies regarding gender [F(1,202) = 0.04, p = 0.85] or performance level [F(1,196) = 0.01, p = 0.90].

ANOVA shows a statistically significant difference in students’ self-reported use of strategies between girls and boys [F(1,256) = 5.73, p = 0.02]. Table 4 presents means and standard deviations for students’ self-reported use of strategies, divided by gender. On average, girls (M = 15.10) reported using more strategies from the modules than boys (M = 13.68). However, ANOVA shows no statistically significant difference in how low- and high-performing students reported using strategies from the modules [F(1,236) = 0.64, p = 0.32].

ANOVA shows no statistically significant difference in the completion rate of modules regarding gender [F(1,256) = 2.06, p = 0.15] or performance level [F(1,236) = 0.27, p = 0.61].

ANOVA shows that the combination of performance level and gender is statistically significant, regardless of whether gender is chosen as the dependent variable and performance as the independent variable or vice versa. Table 4 presents means and standard deviations for girls (M = 0.37) and boys (M = 0.55) based on performance levels. Boys have a higher performance level than girls, and this difference is statistically significant [F(1,235) = 7.27, p = 0.01].

The goal of this study was to investigate whether implementing an SRL material in mathematics that is (1) integrated into regular instruction and (2) developed in collaboration with teachers could impact students’ performance in mathematics and their use of self-regulated learning (SRL) strategies in the subject. The study also aimed to examine whether the implementation of the SRL material, integrated into ongoing teaching, varies between schools or among groups of students with different performance levels, as well as potential gender differences. The results are discussed below.

Previous research (e.g., Dignath et al., 2008; Hattie et al., 1996) has shown a positive correlation between the use of SRL strategies and student performance in school. At the same time, SRL interventions conducted by teachers (rather than researchers) tend to have a lower impact on student performance (Dignath and Büttner, 2008; Wang and Sperling, 2020). The results of this study show that knowledge progression in number sense was not achieved at every school, even though the SRL material was developed jointly by teachers and researchers and integrated into regular instruction.

One explanation for the differences between schools could be the so-called teacher factor, which may be “hidden” within school affiliation. The teachers involved in the study may have structured their mathematics teaching in different ways, thereby providing varying levels of support to students. Previous research (e.g., Hattie, 2009) supports the idea that the teacher factor can have a greater impact on students’ progression than instructional approaches (such as an SRL material).

Another explanation for the differences between schools could be the extent to which students at each school completed the modules. For instance, School C had a higher average completion rate (M = 5.51 modules) than School D (M = 4.96 modules). Despite this difference, the completion rate does not seem to explain the variation in performance, as School D, with the lowest completion rate, had relatively strong progression (M = 1.57 points) in relation to results on the knowledge test.

Yet another explanation could be the students’ performance levels, which are linked to the overall performance levels of the schools (see Table 1). The results show differences in students’ performance levels, particularly between School C (with 0.28 performance steps) and School E (with 0.62 performance steps). However, performance levels do not seem to explain the differences in progression either: School C, which had the lowest performance level, had a progression of 1.28 points, which is higher than that of School A, with a performance level of 0.32 steps and a progression of 0.10 points.

Students’ self-reported use of strategies during the intervention did not differ between schools. This indicates that students reported having tested the strategies covered in the modules to an equal extent, which is assumed to be due to the material being integrated into ongoing instruction. However, the results show differences between schools regarding the progression of students’ perceptions of SRL strategies. This means that students from different schools developed their perceptions of strategy use in mathematics to varying degrees. One explanation for this difference could (again) be the teacher factor. If teachers verbalized and repeated the use of strategies during teaching between modules, it may have positively influenced students’ perceptions. At the same time, teachers may have already worked with students’ development of SRL strategies before the intervention, leading to less progression in students’ perceptions during the intervention.

In summary, the results show certain similarities between schools, suggesting a consistent implementation of the SRL material. However, the results also reveal differences between schools that are less related to the use of the SRL material. These differences seem to be more connected to the teacher factor and the broader mathematics instruction at each school.

The results for the entire student population show greater progression for low-performing students compared to high-performing students. At the same time, the results indicate no differences between low- and high-performing students in terms of the extent to which they completed the modules or tested the strategies covered in the modules. Nor were there differences in how low- and high-performing students developed their perceptions of SRL strategies in mathematics. This aligns somewhat with previous research (Donker et al., 2014), which has shown that students’ benefits from SRL interventions are largely independent of performance level. Studies conducted in mathematics have demonstrated positive effects of SRL training for students struggling with problem-solving (Vauras et al., 1999) and for students with neurodevelopmental challenges (Fuchs et al., 2003), suggesting that low-performing students can benefit from SRL training.

One explanation for low-performing students’ greater progression could be the ceiling effect. High-performing students had relatively high scores from the start, meaning that a certain number of students reached the maximum score on the knowledge test. Another explanation could be that high-performing students had already developed effective self-regulation strategies before the intervention. In such cases, the SRL material may not have contributed much new knowledge, but may have raised students’ awareness of strategies they were already using, helping them articulate those strategies.
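The ceiling effect described above can be illustrated with a small sketch in which every student improves by the same true amount, but the test cannot register scores above its maximum. All values are hypothetical. In particular, the maximum score of 12 is an assumption inferred from the statement that 2 points correspond to about 17%, and it is not stated in this section.

```python
# Assumption: a 12-point test, inferred from "2 points (equivalent to 17%)".
MAX_SCORE = 12


def observed_gain(pre_score, true_gain, max_score=MAX_SCORE):
    """Gain the test can register when post-test scores are capped at max_score."""
    post_score = min(pre_score + true_gain, max_score)
    return post_score - pre_score


# Hypothetical pre-test scores, NOT the study's data.
low_pre = [4, 5, 6, 7]
high_pre = [10, 11, 11, 12]

# Every student is given the same true gain of 2 points.
low_gain = sum(observed_gain(s, 2) for s in low_pre) / len(low_pre)
high_gain = sum(observed_gain(s, 2) for s in high_pre) / len(high_pre)

print(f"mean observed gain, low performers:  {low_gain}")
print(f"mean observed gain, high performers: {high_gain}")
```

In this sketch the low performers register the full 2-point gain, while the high performers register only 1 point on average, even though both groups improved equally. A measured difference in progression can therefore partly reflect the test's upper limit rather than a difference in learning.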

Previous studies (Dignath and Büttner, 2008; Donker et al., 2014; Wang and Sperling, 2020) have not identified gender as a factor influencing the effects of SRL interventions. This aligns with the results of this study, which show no differences between boys and girls regarding knowledge progression. The same applies to the extent to which boys and girls completed the modules and the progression of their perceptions of SRL strategies in mathematics. However, the results do show a difference between boys and girls regarding the extent to which they tested the strategies covered in the modules.

Studies on learning in mathematics (Bezzina, 2010; Seegers and Boekaerts, 1996; Zimmerman and Martinez-Pons, 1990) have shown that girls use self-regulated learning strategies more than boys. This may indicate that the girls in the study were more inclined to adopt the content of the SRL material and therefore tested more strategies. Another explanation for the difference between boys and girls could be their attitudes toward learning, particularly mathematics. Previous studies (Eccles et al., 1984; Seegers and Boekaerts, 1996) have shown that boys in early adolescence (ages 12–13) have greater confidence in their ability to succeed in school tasks (i.e., self-efficacy), while girls show a greater willingness to learn and exert effort in mathematics. This could have affected how the girls approached the exercises and content of the modules, leading to girls testing more strategies. Another explanation for the gender difference could be the difference in performance levels, as boys had higher performance and may have already developed effective self-regulation strategies.

In summary, the results indicate that the implementation of the SRL material was consistent for students with different performance levels. However, low-performing students showed greater progression, suggesting that they benefited more from the intervention, or that there were limitations in the knowledge measurement that may not have captured the high-performing students’ development. Regarding gender, the results show no significant differences between boys and girls in knowledge progression, completion rate, or progression of perceptions. Girls did, however, test more strategies in mathematics than boys, suggesting a slight difference in how boys and girls used the SRL material. Girls showed a greater inclination to test or use strategies in mathematics, possibly due to their lower confidence in their mathematical abilities compared to boys.

The results of this study indicate that involving teachers in the development of SRL material and integrating it into regular teaching has advantages. Students, regardless of gender or performance level, completed the SRL modules to the same extent. The same applies across schools, with the exception of two schools. However, the results also show that the use of SRL material does not eliminate differences between schools, which may stem from school culture, socio-economic background, or teacher-related factors. In other words, an SRL material does not have an equalizing effect between schools when it comes to students’ development in mathematics or their perceptions of strategies in mathematics.

Despite this, certain student groups appeared to benefit more from the SRL material than others. Low-performing students showed better progression than high-performing students, and girls tested more strategies in mathematics than boys. This suggests that a revision of the material would be appropriate to ensure that the content is tailored to all student groups, regardless of performance level or degrees of self-efficacy.

If these revisions are made, the digital format of the SRL material, combined with its self-instructional design, provides good opportunities for other teachers to use it, even if they were not involved in the study or in developing the content. However, to ensure a high completion rate and students’ awareness of strategy use, teachers need to integrate the content into the rest of the instruction and regulate students’ strategy use by consistently linking back to the content in the SRL material.

The conclusions of this study can thus serve as valuable insights into the factors that may affect the implementation of SRL material and help optimize its use to enhance students’ knowledge in mathematics.

The results of the study should be interpreted in light of certain limitations that characterize the intervention. A greater variation in the student population and a higher number of participating students and teachers would have increased the generalizability of the results. This is something that future studies could consider when selecting participants.

Another limitation relates to measuring the effect of the SRL material on students’ use of strategies. In this study, the measurement was limited to students’ self-assessments of their strategy use. Both educators and researchers would benefit from understanding more about how students’ actual use of strategies has been impacted. Such knowledge would provide a better understanding of what SRL material needs to include and how regular instruction needs to be designed to support students in becoming self-regulated learners.

It is also unknown to what extent the observed effect on students’ performance and/or use of SRL strategies diminishes over time after the intervention ends. The long-term effect of SRL training is therefore another interesting topic for future research. Understanding more about how long students need to practice SRL strategies to automate their use would greatly benefit both educators and researchers when designing SRL materials. For instance, is there a certain amount of SRL training necessary to develop self-regulation strategies, or does SRL training need to be continuous to contextualize strategy use within mathematics teaching?

From a technical and practical perspective, limitations related to the implementation of SRL interventions in diverse classroom settings should also be considered. Differentiated learning, which adapts instruction to individual student needs, is a critical factor that could influence the effectiveness of SRL training. Future research should explore how SRL strategies can be tailored to accommodate students with varying levels of prior knowledge and cognitive abilities. Additionally, the role of digital tools in facilitating personalized SRL instruction warrants further investigation. For instance, how can adaptive learning technologies support differentiated SRL training, and what challenges arise when integrating these tools effectively in everyday mathematics teaching? Addressing these questions would contribute to optimizing the design and implementation of SRL interventions for diverse student populations.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethical approval was not required for the study involving human samples in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin.

AB: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Visualization, Writing – original draft, Writing – review & editing. AJ: Writing – original draft, Writing – review & editing.

The author(s) declare that no financial support was received for the research and/or publication of this article.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declare that Gen AI was used in the creation of this manuscript. Generative AI was used to translate the manuscript from Swedish to English.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Bell, C., and Pape, S. (2014). Scaffolding the development of self-regulated learning in mathematics classrooms. Middle Sch. J. 45, 23–32. doi: 10.1080/00940771.2014.11461893

Bezzina, F. H. (2010). Investigating gender differences in mathematics performance and in self-regulated learning: an empirical study from Malta. Equal. Divers. Incl. 29, 669–693. doi: 10.1108/02610151011074407

Bidjerano, T. (2005). Gender differences in self-regulated learning. Annual Meeting of the Northeastern Educational Research Association, Kerhonkson, NY, USA. Available at: https://eric.ed.gov/?id=ED490777 (Accessed February 7, 2025).

Boekaerts, M. (1999). Self-regulated learning: where we are today. Int. J. Educ. Res. 31, 445–457. doi: 10.1016/S0883-0355(99)00014-2

De Corte, E., Verschaffel, L., and Eynde, P. (2000). “Self-regulation: a characteristic and a goal in mathematics education” in Handbook of self-regulation. eds. M. Boekaerts, P. R. Pintrich, and M. Zeidner (San Diego, CA: Academic Press), 687–726.

Dent, A. L., and Koenka, A. C. (2016). The relation between self-regulated learning and academic achievement across childhood and adolescence: a meta-analysis. Educ. Psychol. Rev. 28, 425–474. doi: 10.1007/s10648-015-9320-8

Dignath, C., and Büttner, G. (2008). Components of fostering self-regulated learning among students: a meta-analysis on intervention studies at primary and secondary school level. Metacognition Learn. 3, 231–264. doi: 10.1007/s11409-008-9029-x

Dignath, C., Buettner, G., and Langfeldt, H-P. (2008). How can primary school students learn self-regulated learning strategies most effectively? A meta-analysis on self-regulation training programmes. Educ. Res. Rev. 3, 101–129. doi: 10.1016/j.edurev.2008.02.003

Donker, A. S., de Boer, H., Kostons, D., Van Ewijk, C. D., and van der Werf, M. P. (2014). Effectiveness of learning strategy instruction on academic performance: a meta-analysis. Educ. Res. Rev. 11, 1–26. doi: 10.1016/j.edurev.2013.11.002

Eccles, J. S., Adler, T., and Meece, J. (1984). Sex differences in achievement: a test of alternative theories. J. Pers. Soc. Psychol. 46, 26–43.

Fuchs, L. S., Fuchs, D., Prentice, K., Burch, M., Hamlett, C. L., Owen, R., et al. (2003). Enhancing third-grade students’ mathematical problem-solving with self-regulated learning strategies. J. Educ. Psychol. 95, 306–315. doi: 10.1037/0022-0663.95.2.306

Guo, W., Lau, K. L., Wei, J., and Bai, B. (2023). Academic subject and gender differences in high school students’ self-regulated learning of language and mathematics. Curr. Psychol. 42, 7965–7980. doi: 10.1007/s12144-021-02120-9

Hattie, J. (2009). Visible learning: a synthesis of over 800 meta-analyses relating to achievement. London: Routledge.

Hattie, J., Biggs, J., and Purdie, N. (1996). Effects of learning skills interventions on student learning: a meta-analysis. Rev. Educ. Res. 66, 99–136. doi: 10.3102/00346543066002099

Hitt, L. E. (2023). A systematic review and meta-analysis of interventions based on metacognition and self-regulation in school-aged mathematics (Doctoral dissertation). Durham University.

Jönsson, A. (2022). Young students’ use of explicit grading criteria for self-regulation. L’évaluation en éducation online, 6. doi: 10.48325/rleee.006.02

Kramarski, B., and Zoldan, S. (2008). Using errors as springboards for enhancing mathematical reasoning with three metacognitive approaches. J. Educ. Res. 102, 137–151. doi: 10.3200/JOER.102.2.137-151

Muncer, G., Higham, P. A., Gosling, C. J., Cortese, S., Wood-Downie, H., and Hadwin, J. A. (2022). A meta-analysis investigating the association between metacognition and math performance in adolescence. Educ. Psychol. Rev. 34, 301–334. doi: 10.1007/s10648-021-09620-x

Panadero, E. (2017). A review of self-regulated learning: six models and four directions for research. Front. Psychol. 8:422. doi: 10.3389/fpsyg.2017.00422

Pape, S., Bell, C., and Yetkin, I. (2003). Developing mathematical thinking and self-regulated learning: a teaching experiment in a seventh-grade mathematics classroom. Educ. Stud. Math. 53, 179–202. doi: 10.1023/A:1026062121857

Pintrich, P. R. (2000). “The role of goal orientation in self-regulated learning” in Handbook of self-regulation. eds. M. Boekaerts, P. R. Pintrich, and M. Zeidner (San Diego, CA: Academic Press), 452–502.

Puustinen, M., and Pulkkinen, L. (2001). Models of self-regulated learning: a review. Scand. J. Educ. Res. 45, 269–286. doi: 10.1080/00313830120074206

Rohman, F. M. A., Indriati, D., and Indriati, R. (2020). Gender differences on students’ self-regulated learning in mathematics. J. Phys. Conf. Ser. 1613:012053. doi: 10.1088/1742-6596/1613/1/012053

Samuelsson, M., and Samuelsson, J. (2016). Gender differences in boys’ and girls’ perception of teaching and learning mathematics. Open Rev. Educ. Res. 3, 18–34. doi: 10.1080/23265507.2015.1127770

Schunk, D. (1998). “Teaching elementary students to self-regulate practice of mathematical skills with modeling” in Self-regulated learning: from teaching to self-reflective practice. eds. D. Schunk and B. Zimmerman (New York: Guilford Press), 137–159.

Seegers, G., and Boekaerts, M. (1996). Gender-related differences in self-referenced cognitions in relation to mathematics. J. Res. Math. Educ. 27, 215–240. doi: 10.2307/749601

Swedish National Agency for Education (2022). Kursplaner för grundskolan [syllabi for compulsory school]. Stockholm: Skolverket.

Valero, P., Boistrup, L. B., Christiansen, I. M., and Norén, E. (2022). Matematikundervisningens sociopolitiska utmaningar [the sociopolitical challenges of mathematical teaching]. Stockholm: Stockholm University Press.

Vauras, M., Kinnunen, R., and Rauhanummi, T. (1999). The role of metacognition in the context of integrated strategy intervention. Eur. J. Psychol. Educ. 14, 555–569. doi: 10.1007/BF03172979

Wang, Y., and Sperling, R. (2020). Characteristics of effective self-regulated learning interventions in mathematics classrooms: a systematic review. Front. Educ. 5:58. doi: 10.3389/feduc.2020.00058

Zimmerman, B. J. (2002). Becoming a self-regulated learner: an overview. Theory Pract. 41, 64–70. doi: 10.1207/s15430421tip4102_2

Zimmerman, B. J., and Martinez-Pons, M. (1990). Student differences in self-regulated learning: relating grade, sex, and giftedness to self-efficacy and strategy use. J. Educ. Psychol. 82, 51–59. doi: 10.1037/0022-0663.82.1.51

Keywords: self-regulated learning, mathematics learning, instructional material, intervention, digital material

Citation: Balan A and Jönsson A (2025) Evaluation of a self-instructional self-regulated learning material in mathematics. Front. Educ. 10:1507803. doi: 10.3389/feduc.2025.1507803

Received: 08 October 2024; Accepted: 03 March 2025; Published: 18 March 2025.

Edited by:

Yusuf F. Zakariya, University of Agder, Norway

Reviewed by:

Fauziah Sulaiman, University Malaysia Sabah, Malaysia

Copyright © 2025 Balan and Jönsson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Anders Jönsson
