
ORIGINAL RESEARCH article

Front. Educ., 05 November 2024

Sec. Teacher Education

Volume 9 - 2024 | https://doi.org/10.3389/feduc.2024.1451002

Teachers’ abilities to critically evaluate the credibility of online information are fundamental when they educate critical online readers. This study examined pre-service teachers’ abilities to evaluate and justify the credibility of online texts on learning styles. Pre-service teachers (N = 169) read and evaluated two more and two less credible online texts on learning styles in a web-based environment. Most pre-service teachers were able to differentiate the more credible texts from the less credible ones but struggled with justifying the credibility. Pre-service teachers’ inaccurate prior beliefs about learning styles impeded questioning the less credible texts. Implications for teacher education are discussed.

Critical online reading skills, which involve analyzing, evaluating, and interpreting online texts that provide conflicting information, are crucial for teachers. First, teachers often use the internet to build professional knowledge (Andreassen and Bråten, 2013; Bougatzeli et al., 2017) to understand new demands for learning, develop their teaching practices, and solve pedagogical problems (Zimmermann et al., 2022). However, the prevalence of misinformation on the internet is a major challenge (Ecker et al., 2022; Lewandowsky et al., 2017), and the educational field is not immune to this phenomenon (Sinatra and Jacobson, 2019). Consequently, teachers must be able to evaluate the credibility of information when perusing educational topics on the internet. Lack of sufficient credibility evaluation skills may lead them to rely on unverified information instead of basing their classroom practices on evidence-based information (Dekker et al., 2012; List et al., 2022; Zimmerman and Mayweg-Paus, 2021).

Second, teachers are responsible for educating critical online readers (e.g., ACARA, 2014; NCC, 2016) and preparing their students to evaluate conflicting information they may encounter on the internet. However, previous research suggests that education systems have not fully succeeded in this task, as many students evaluate online information superficially (Coiro et al., 2015; Fraillon et al., 2020; Hämäläinen et al., 2020). Since primary and lower secondary school students, in particular, require explicit instruction to learn how to justify their evaluations (Abel et al., 2024), teachers must be able to model various ways to justify credibility and scaffold students toward in-depth reasoning.

However, little research has been conducted on pre-service teachers’ ability to evaluate the credibility of online texts or, more specifically, online educational texts. Rather, the focus has been on higher education students in general (e.g., Barzilai et al., 2020b; Kammerer et al., 2021; see also Anmarkrud et al., 2021). Our study seeks to fill this gap by examining pre-service teachers’ credibility evaluations and underlying reasoning when reading more and less credible online texts on learning styles, whose existence is not supported by current scientific knowledge (Sinatra and Jacobson, 2019).

This study is situated in the multiple-document reading context, whose requirements are depicted in the Documents Model framework (Britt et al., 1999; Perfetti et al., 1999). Building a coherent mental representation from multiple texts that complement or contradict each other requires readers to consider and evaluate the source information (e.g., the expertise and intentions of the source); connect the source information to its related content; and compare, contrast, and weigh the views of the sources.

As this study focuses on the credibility evaluation of multiple online texts, it was further guided by the bidirectional model of first- and second-hand evaluation strategies by Barzilai et al. (2020b). According to this model, readers can use first-hand evaluation strategies to judge the validity and quality of information and second-hand evaluation strategies to judge the source’s trustworthiness (see also Stadtler and Bromme, 2014). First-hand evaluation strategies consist of knowledge-based validation, discourse-based validation, and corroboration. When employing knowledge-based validation, readers evaluate the quality of information by comparing it to their prior knowledge or beliefs about the topic (see Section 2.1 for more details). In discourse-based validation, readers focus on how knowledge is justified and communicated. A crucial aspect to consider is the quality of evidence and how it is produced (e.g., whether the evidence is based on research or personal experience; Chinn et al., 2014; Nussbaum, 2020). Finally, readers can evaluate information quality through corroboration, which involves using other texts to verify the information’s accuracy.

When employing second-hand evaluation strategies, readers engage in sourcing, defined as attending to, evaluating, and using the available information about a source (Bråten et al., 2018). When evaluating the trustworthiness of a source, readers can consider whether the author has the expertise to provide accurate information on the topic in question (Bråten et al., 2018; Stadtler and Bromme, 2014). It is also essential to evaluate the author’s benevolence, which is the willingness to provide accurate information in the readers’ best interest (Hendriks et al., 2015; Stadtler and Bromme, 2014). In addition to the author’s characteristics, readers can pay attention to the publication venue, for example, whether it serves as a gatekeeper and monitors the accuracy of the information published on the website (Braasch et al., 2013). While the bidirectional model of first- and second-hand evaluation strategies separates these two sets of strategies, it also accentuates their reciprocity (Barzilai et al., 2020b). In essence, the evaluation of the validity and quality of information is reflected in the evaluation of the trustworthiness of a source, and vice versa.

Following this theoretical framing, we created a multiple-text reading task comprising four online texts on learning styles. In these texts, we manipulated the expertise and benevolence of the source, the quality of evidence, and the publication venue. As we aimed to understand how pre-service teachers employ first- and second-hand evaluation strategies, we asked them to evaluate the expertise of the author, the benevolence of the author, the publication practices of the venue, and the quality of evidence, and to justify their evaluations. We also asked pre-service teachers to rank the four online texts according to their credibility.

Several cognitive, social, and affective processes may affect people’s acquisition of inaccurate information (Ecker et al., 2022). Prior topic beliefs are one such cognitive factor, whose function has been explained in the two-step model of validation in multiple-text comprehension by Richter and Maier (2017). According to this model, in the first validation step, belief consistency is used as a heuristic when processing information across multiple texts, including when evaluating information (see also Metzger and Flanagin, 2013). Relying on this heuristic leads readers to judge belief-consistent information as more plausible, to process it more deeply, and to comprehend belief-consistent texts better. If readers identify an inconsistency between their prior beliefs and information in the text and are motivated and able to resolve the conflict, they may engage in strategic processing to resolve this inconsistency. This strategic processing, which is the second step in the validation process, may result in a balanced mental model that incorporates the different views of the texts.

Anmarkrud et al. (2021), in their review of individual differences in sourcing, concluded that approximately half of the studies investigating belief constructs provided empirical support for relationships between sourcing and beliefs. For instance, Tarchi (2019) found that university students’ (N = 289) prior beliefs about vaccines correlated with their trustworthiness judgments of five of the six online texts, with the associations varying by the texts’ position toward vaccination and their trustworthiness. Positive prior beliefs about vaccines were positively associated with judgments of texts that had a neutral or positive stance toward vaccines and negatively associated with judgments of texts that had a negative stance. Similarly, van Strien et al. (2016) found that the stronger university students’ (N = 79) prior attitudes about organic food were, the lower they judged the credibility of attitude-inconsistent websites.

Despite the importance of the credibility evaluation of online texts in teachers’ professional lives, only a few studies have examined pre-service teachers’ credibility evaluation. In Anmarkrud et al.’s (2021) review of 72 studies on individual differences in sourcing (i.e., attending to, evaluating, and using sources of information), only nine studies included participants from an educational program.

Research suggests that although pre-service teachers tend to judge educational researchers as experts, they often judge educational practitioners as more benevolent than researchers (Hendriks et al., 2021; Merk and Rosman, 2021). However, Hendriks et al. (2021) showed that epistemic aims may influence how pre-service teachers evaluate different aspects of source trustworthiness. In their study, pre-service teachers (N = 389) were asked to judge the trustworthiness (i.e., expertise, integrity, and benevolence) of educational psychology researchers and teachers in two situations: when their epistemic aim was to seek an explanation for a case in the school context and when it was to obtain practical advice for everyday school life. When the aim was to seek theoretical explanations, researchers were evaluated as possessing more expertise and integrity, but less benevolence, than teachers. However, when the aim was to gain practical advice, teachers were seen as possessing more competence, integrity, and benevolence than researchers.

In contrast to the studies by Hendriks et al. (2021) and Merk and Rosman (2021), which used researcher-designed inventories, Zimmerman and Mayweg-Paus (2021) examined pre-service teachers’ credibility evaluations with an authentic online research task. They asked pre-service teachers (N = 83) to imagine themselves as teachers searching for online information about mobile phone use in class. Pre-service teachers conducted the search individually; afterward, they were asked to justify, either individually or collaboratively, their methods and rationale for selecting the most relevant websites. Notably, only 34% of the selected websites were science-related (e.g., journal articles, scientific blogs). While collaborative reflection on the selections yielded more elaborate reasoning than individual reflection, both groups referred equally often to the different types of criteria, such as criteria related to information (e.g., scientificness, two-sided arguments), source (e.g., expertise, benevolence), or media (e.g., layperson or expert forum). A notable finding was that sources and media were mentioned relatively rarely when pre-service teachers justified their selections.

Furthermore, research suggests that pre-service teachers may perceive anecdotal evidence as more useful or trustworthy than research evidence when seeking information for professional decision-making (Ferguson and Bråten, 2022; Kiemer and Kollar, 2021; Menz et al., 2021). However, when examining pre-service teachers’ (N = 329) profiles regarding evidence evaluation, Reuter and Leuchter (2023) found that most pre-service teachers (81%) belonged to the profile in which both strong and limited scientific evidence were rated higher than anecdotal evidence. The remaining pre-service teachers (19%) belonged to the profile in which all three evidence types (strong scientific evidence, limited scientific evidence, and anecdotal evidence) were rated equally high. Reuter and Leuchter (2023) concluded that pre-service teachers might value strong scientific evidence but do not automatically evaluate anecdotal evidence as inappropriate. Finally, List et al. (2022) examined the role of source and content bias in pre-service teachers’ (N = 143) evaluations of educational app reviews. Four educational app reviews were manipulated in terms of content bias (one-sided or two-sided content) as well as source bias and commercial motivations (third-party review: objective or sponsored; commercial website: with or without a teacher testimonial). The authors found that, while pre-service teachers paid attention to source bias in their app review ratings and purchase recommendations, their consideration of source and content bias was not sufficiently prevalent. For instance, they rated the commercial website with a teacher testimonial and the objective third-party review site as equally trustworthy.

In sum, pre-service teachers may lack the skills to grasp the nuances of commercial motivations, and even if they value scientific evidence, they do not necessarily rely on scientific sources when selecting online information for practice. Moreover, their evaluations of evidence and source trustworthiness appear to depend on their epistemic aims.

The present study sought to understand pre-service teachers’ abilities to evaluate and justify the credibility of four researcher-designed online texts on learning styles, a topic on which both accurate (i.e., in line with current scientific knowledge) and inaccurate information (i.e., not in line with current scientific knowledge) circulate online (Dekker et al., 2012; McAfee and Hoffman, 2021; Sinatra and Jacobson, 2019). Two more credible online texts (a popular science news text and a researcher’s blog) contained accurate information, while two less credible texts (a teacher’s blog and a commercial text) contained inaccurate information. While reading, pre-service teachers evaluated four credibility aspects of each text (the author’s expertise, author’s benevolence, venue’s publication practices, and quality of evidence), justified their evaluations, and ranked the texts according to their credibility. Before reading and evaluating the online texts, pre-service teachers’ prior beliefs about learning styles were measured.

We examined the differences in how pre-service teachers evaluated the author’s expertise, author’s benevolence, venue’s publication practices, and quality of evidence across the four online texts (RQ1). Furthermore, we investigated how pre-service teachers justified their evaluations (RQ2) and ranked the texts according to their credibility (RQ3). Finally, we examined the contribution of pre-service teachers’ prior beliefs about learning styles to the credibility evaluation of more and less credible online texts when the reading order of the texts was controlled for (RQ4).

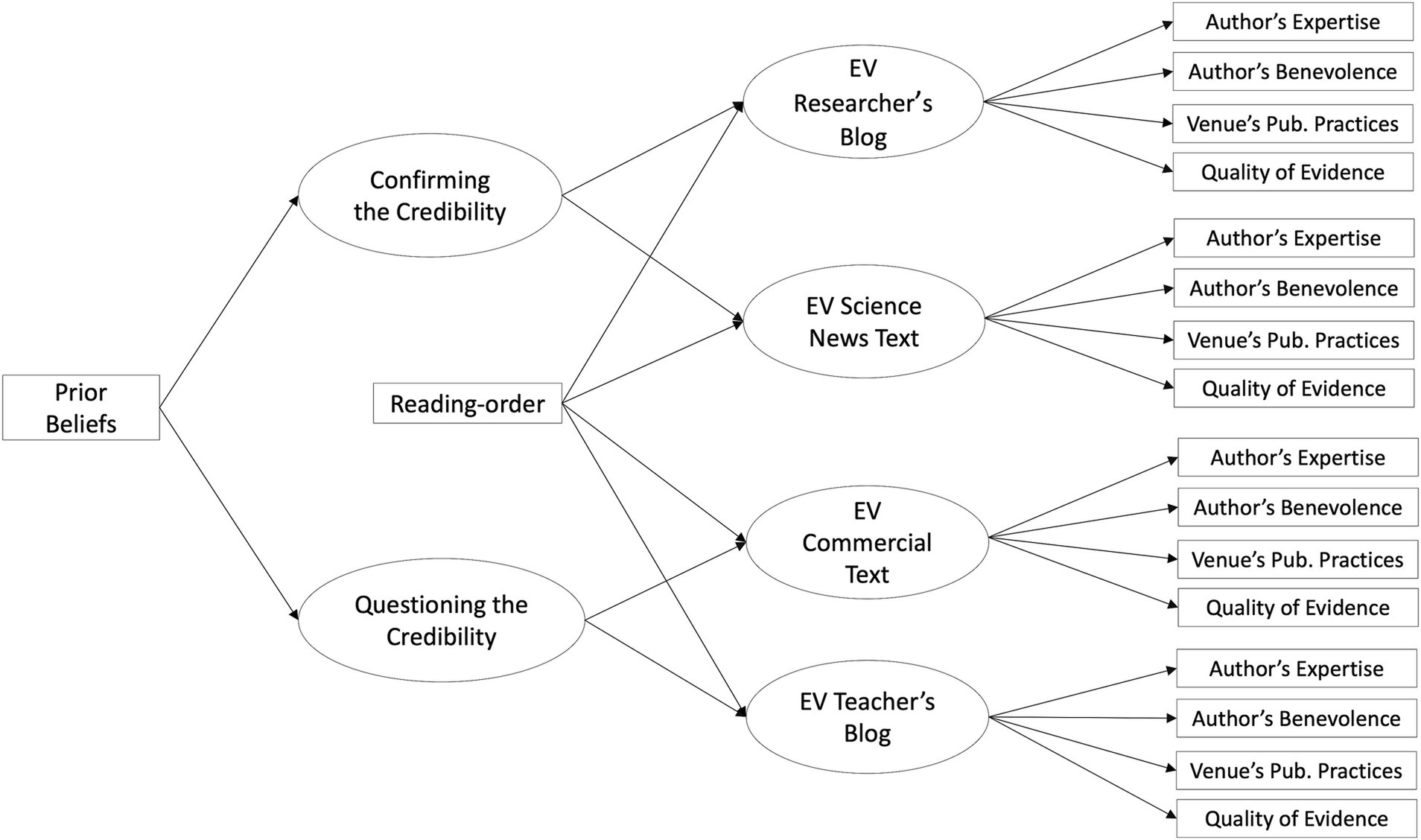

Figure 1 presents the hypothetical model of the associations between pre-service teachers’ prior beliefs about the topic and credibility evaluations. The structure of the credibility evaluation was based on our previous study (Kiili et al., 2023), in which students’ credibility evaluations loaded onto four factors according to the online texts. These four first-order factors further formed two second-order factors: confirming the credibility of more credible online texts and questioning the credibility of less credible online texts (see also Fendt et al., 2023).

Figure 1. Hypothetical model of the associations between prior beliefs about the topic and credibility evaluations, with the reading order of the texts as a control variable.

Based on theoretical considerations and empirical findings (Barzilai et al., 2020b; Richter and Maier, 2017), we assumed that pre-service teachers’ prior beliefs about learning styles (i.e., beliefs in accordance with inaccurate information about learning styles) would be negatively associated with confirming and questioning the credibility of online texts. In addition, as the reading order of controversial texts has been shown to influence reading comprehension (e.g., Maier and Richter, 2013), the reading order of the texts was controlled for in the analysis.

Basic education in Finland comprises Grades 1–9 and is meant for students between the ages of 7 and 16. In primary school (Grades 1–6), class teachers teach various subjects; in lower secondary school (Grades 7–9), subject teachers are responsible for teaching their respective subjects (Finnish National Agency for Education, 2022). Finnish teacher education has an academic basis that presumes pre-service teachers have the competence to apply research-based knowledge in their daily work (Tirri, 2014; see, e.g., Niemi, 2012 for more details on Finnish teacher education). Accordingly, both class teachers and subject teachers must complete a master’s degree, which requires a minimum of 300 ECTS credits (European Credit Transfer and Accumulation System; one ECTS credit equals 27 h of study).

Class teachers study education as their major, while subject teachers have their own majors, such as mathematics or English. Both degrees include teacher’s pedagogical studies (60 ECTS); moreover, the class teacher degree includes multidisciplinary studies in subjects and cross-curricular themes (60 ECTS). Subject teachers who supplement their studies by completing these multidisciplinary studies will have dual qualifications. The data collection of the present study was integrated into these multidisciplinary studies.

This study was integrated into an obligatory literacy course arranged in two Finnish universities. Of the 174 pre-service teachers taking this course, 169 gave informed consent to use a specific course task for research purposes. The age of the participating pre-service teachers varied from 19 to 43 years (M = 23.33; SD = 4.54). Most of the participants were female (81.2%), which is in line with the gender distribution in class teacher education: in 2021, 80.6% of the students admitted to class teacher programs were female (Vipunen – Education Statistics Finland, 2022). Due to the Finnish language requirements of teacher education programs, all pre-service teachers were fluent in Finnish.

The majority of the participants were enrolled in a class teacher program (86.5%), whereas the rest (13.5%) were enrolled in a subject teacher program targeting dual qualifications. Most of the participants (65.9%) had earned 100 or fewer ECTS credits.

The credibility evaluation task was created using Critical Online Reading Research Environment, which is a web-based environment where researchers can design critical online reading tasks for students (Kiili et al., 2023). We created four online texts on learning styles, each of which had five paragraphs and approximately 200 words (see Table 1). Two more credible online texts (Text 2: Researcher’s blog and Text 4: Popular Science Text) contained accurate information about learning styles, whereas two less credible texts (Text 1: Teacher’s blog and Text 3: Commercial text) contained inaccurate information. Text 1 claimed that students have a specific learning style, whereas Text 2 claimed the opposite. Text 3 claimed that customizing teaching according to students’ learning styles improves learning outcomes and Text 4 claimed that such improvement had not been observed. In each text, the main claim was presented in the second paragraph.

The more credible texts were based on research literature, providing evidence against the existence of specific learning styles. We wrote the researcher’s blog by drawing on several articles (Howard-Jones, 2014; Kirschner, 2017; Krätzig and Arbuthnott, 2006; Sinatra and Jacobson, 2019), whereas the popular science news text summarized a review that examined the impact of aligning instruction to modality-specific learning styles on learning outcomes (Aslaksen and Lorås, 2018). These references were also listed in the online texts that pre-service teachers read. While the texts were fictitious, existing websites served as loose templates (cf. Hahnel et al., 2020). To increase the authenticity, a graphic designer created logos for the web pages, and each web page included decorative photos (see Figure 2).

Figure 2. Screenshot from the task-environment. The text is on the left-hand side, and the questions are on the right-hand side.

We manipulated the credibility aspects of the texts in terms of the author’s expertise, author’s benevolence, quality of evidence, publication venue, and text genre (see Table 1). Pre-service teachers were asked to read and evaluate each text in terms of the author’s expertise (Evaluate how much the author has expertise about learning styles), the author’s benevolence (Evaluate how willing the author is to provide accurate information), the publishing practices of the venue (Evaluate how well the publication venue can ensure that the website includes accurate information), and the quality of evidence (Evaluate how well the author can support his/her main claim) on a 6-point scale (e.g., author’s expertise: 1 = very little; 6 = very much). Before evaluating the author’s expertise, pre-service teachers were asked to identify the author, and before evaluating the quality of evidence, they were asked to identify the main claim and supporting evidence. The purpose of these identification items was to direct pre-service teachers’ attention to the aspects they were asked to evaluate. After the evaluations, pre-service teachers were also asked to justify their evaluations by responding to open-ended items. Their final task was to order the texts according to their credibility. Pre-service teachers were informed that the texts had been designed for the task; however, they were asked to evaluate the online texts as if they were authentic.

Pre-service teachers were randomly assigned to read the texts in two different reading orders (Reading Order 1 = Text 1, Text 2, Text 4, and Text 3; Reading Order 2 = Text 2, Text 1, Text 3, and Text 4). Reading order was based on the text pairs. The main claims of the first text pair (Texts 1 and 2) concerned the existence of specific learning styles. The main claims of the second text pair (Texts 3 and 4) concerned the impact of customizing teaching according to students’ learning styles. If pre-service teachers read the less credible text first in the first text pair, they read the more credible text first in the second pair. If pre-service teachers read the more credible text first in the first text pair, they read the less credible text first in the second pair. Text order was dummy coded for the analyses (0 = Reading Order 1, 1 = Reading Order 2).
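The counterbalancing logic can be made concrete with a short sketch. The two orders below are taken from the text; the helper checks that each order puts the less credible text first in exactly one of the two text pairs. The assignment procedure (shuffle, then alternate) is an assumption for illustration only, since the study reports random assignment but not the exact mechanism, and the function names are hypothetical.

```python
import random

# Reading orders as listed in the text (text numbers 1-4).
READING_ORDERS = {
    1: [1, 2, 4, 3],  # Reading Order 1
    2: [2, 1, 3, 4],  # Reading Order 2
}
LESS_CREDIBLE = {1, 3}  # Text 1: teacher's blog, Text 3: commercial text


def less_credible_first_in_pair(order, pair):
    """Return True if the less credible text of `pair` is read before its counterpart."""
    position = {text: i for i, text in enumerate(order)}
    first = min(pair, key=lambda text: position[text])
    return first in LESS_CREDIBLE


def assign_orders(participant_ids, seed=None):
    """Hypothetical assignment: shuffle participants, then alternate between orders.

    Returns the dummy code used in the analyses (0 = Reading Order 1, 1 = Reading Order 2).
    Alternating after shuffling keeps the two groups within one participant of each other.
    """
    ids = list(participant_ids)
    random.Random(seed).shuffle(ids)
    return {pid: i % 2 for i, pid in enumerate(ids)}


if __name__ == "__main__":
    for code, order in READING_ORDERS.items():
        flags = [less_credible_first_in_pair(order, pair) for pair in [(1, 2), (3, 4)]]
        print(f"Reading Order {code}: less credible first in pairs 1 and 2 -> {flags}")
```

Under this scheme each order yields one True and one False, which is exactly the counterbalancing property described above.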

Prior to completing the evaluation task, the participants’ prior beliefs about learning styles were measured with three Likert-scale items (from 1 = highly disagree to 7 = highly agree). Each item addressed a common misconception about learning styles: Items 1 and 3 stated misconceptions, whereas Item 2 stated accurate information whose reversal reflects a common misconception. The items were as follows: (1) students can be classified into auditive, kinesthetic, and visual learners; (2) teaching according to students’ learning styles does not improve how well they acquire knowledge; and (3) students’ learning can be facilitated through learning materials that are adjusted to their learning styles. Before computing a sum variable of the items (maximum score: 21), we reversed the second item. The McDonald’s omega for the total score was 0.71.
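A minimal sketch of the prior-belief score as described: Item 2 is reversed on the 1-7 scale before summing, so higher scores indicate stronger agreement with the misconceptions and the range is 3 to 21. The function names are illustrative and not taken from the study materials.

```python
SCALE_MIN, SCALE_MAX = 1, 7  # 7-point Likert scale used for the belief items


def reverse(score, lo=SCALE_MIN, hi=SCALE_MAX):
    """Reverse a Likert score so that lo <-> hi (e.g., 2 becomes 6 on a 1-7 scale)."""
    if not lo <= score <= hi:
        raise ValueError(f"score {score} outside {lo}-{hi}")
    return lo + hi - score


def prior_belief_score(item1, item2, item3):
    """Sum of the three items with Item 2 reversed (range 3-21).

    Higher values mean stronger agreement with misconceptions about learning styles.
    """
    return item1 + reverse(item2) + item3
```

For example, a participant who fully endorses both misconceptions and fully rejects the accurate statement (7, 1, 7) reaches the maximum of 21.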

The literacy course in which the data were collected focused on pedagogical aspects of language, literacy, and literature, but not specifically on the credibility evaluation of online texts. The data were collected in two courses, one of which was taught by the first author. Owing to the COVID-19 pandemic, the course was arranged online, and the study was conducted during a 90-minute online class using Zoom. A week before the meeting, an information letter about the study was sent to pre-service teachers. The online meeting began with a short introduction to the task, during which the students watched a video tutorial on how to work in the task environment. Furthermore, pre-service teachers were informed that the online texts were designed for study purposes but that they simulate the resources people encounter on the internet.

After the introduction, pre-service teachers entered the online task environment using a code. First, they filled in a questionnaire for background information and indicated whether their responses could be used for research purposes. Following this, they completed the credibility evaluation task at their own pace. Once all pre-service teachers had completed the task, they discussed their experiences of the task. They also shared their ideas about teaching credibility evaluation to their students.

Each pre-service teacher responded to 16 justification items (justifications for credibility evaluations) during the task, resulting in 2704 justifications. We employed qualitative content analysis (Cohen et al., 2018; Elo and Kyngäs, 2008; Weber, 1990) to examine the quality of pre-service teachers’ justifications for their credibility evaluations. The unit of analysis was a justification that included the related response to the identification item.

The qualitative data analysis was performed in two phases. In Phase 1, four authors were involved in creating a scoring schema that could be applied to all credibility aspects. We employed both deductive and inductive analysis (Elo and Kyngäs, 2008). Previous knowledge of sourcing (e.g., Anmarkrud et al., 2013; Hendriks et al., 2015) and the quality of evidence (Chinn et al., 2014; Nussbaum, 2020) served as a lens for analyzing the written justifications. This phase was also informed by the scoring schema developed for a similar credibility evaluation task completed by upper secondary school students (Kiili et al., 2022). Inductive analysis was also utilized when reading through the data to allow us to become immersed in the data and consider the relevance and depth of the justifications for each credibility aspect in relation to the respective text. Thus, we read through the data, formulated the initial scoring schema, and tested it; following this, we discussed the scoring schema and revised and modified it based on the discussions.

The final scoring schema included four levels (Levels 0–3) depicting the differences in the quality of pre-service teachers’ justifications (Table 2). The lowest level (Level 0) represented inadequate justification. A response was regarded as inadequate in two cases: first, when pre-service teachers referred to the wrong author, venue, or evidence; and second, when they provided neither relevant source information revealing the author’s area of expertise nor relevant information about the author’s intentions, the venue’s publication practices, or the quality of evidence.

At the remaining levels, relevant reasoning, whether strengthening or weakening credibility, was considered in determining the level of a justification. For example, pre-service teachers considered the author’s domain of personality psychology (the associate professor in the popular science news text) as either strengthening credibility (learning styles could be considered within personality psychology; see Table 3 for an example) or weakening it (his research focuses on personality, not learning). At Level 1, justifications were limited, including one piece of accurate information on the credibility aspect in question, specifying the author’s key expertise, the author’s intention, the venue’s publication practices, or the quality of evidence. Level 2 represented elaborated justifications, in which pre-service teachers provided two or more accurate pieces of information or elaborated on one piece of information. Finally, Level 3 represented advanced justifications, namely those that included scientific reasoning or additionally considered, for example, the domain of the author’s expertise in relation to the text topic, multiple perspectives, or counterarguments. Authentic examples representing the quality levels of the justifications are presented in Table 2.
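The ordering of the level criteria can be summarized as a simple decision rule. This is only an illustrative simplification: in the study, justifications were scored by human raters reading the responses, and the feature names below (for example `relevant_pieces`) are hypothetical labels for judgments a rater would make, not variables from the study.

```python
from dataclasses import dataclass


@dataclass
class CodedJustification:
    """Hypothetical rater judgments about one written justification."""
    refers_to_correct_target: bool  # right author, venue, or evidence identified
    relevant_pieces: int            # accurate, relevant pieces of information given
    elaborated: bool                # one piece of information explained further
    advanced_reasoning: bool        # scientific reasoning, domain fit, perspectives, counterarguments


def justification_level(j: CodedJustification) -> int:
    """Map rater judgments to Levels 0-3, checking the lowest level first."""
    if not j.refers_to_correct_target or j.relevant_pieces == 0:
        return 0  # inadequate: wrong target or no relevant information
    if j.advanced_reasoning:
        return 3  # advanced: relevant information plus additional considerations
    if j.relevant_pieces >= 2 or j.elaborated:
        return 2  # elaborated
    return 1  # limited: one relevant piece of information
```

Checking Level 0 first reflects that advanced considerations only count on top of relevant information about the correct target.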

In Phase 2, we applied the scoring schema to the whole dataset. To examine inter-rater reliability, two of the authors both scored 10.7% of the responses (i.e., 289 responses). The kappa values for expertise, benevolence, publication venue, and evidence ranged from 0.72 to 0.84, 0.68 to 0.84, 0.65 to 1.00, and 0.66 to 0.86, respectively.
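For readers unfamiliar with the agreement statistic, the sketch below computes Cohen’s kappa for two raters, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected from each rater’s marginal frequencies. The text does not state whether a weighted kappa was used; this sketch assumes the unweighted version over the four levels (0-3).

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters' categorical scores (e.g., Levels 0-3)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of responses given the same level by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from the product of each rater's marginal proportions.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    categories = set(freq_a) | set(freq_b)
    p_expected = sum(freq_a[c] * freq_b[c] for c in categories) / n**2
    if p_expected == 1:
        raise ValueError("kappa is undefined when both raters use one identical category")
    return (p_observed - p_expected) / (1 - p_expected)
```

With ratings [0, 1, 2, 3, 1, 2] and [0, 1, 2, 2, 1, 2], observed agreement is 5/6, chance agreement is 11/36, and kappa is 19/25 = 0.76.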

From these scored credibility justification responses, we formed a sum variable labeled Credibility Justification Ability Score. Its reliability, assessed with McDonald’s omega, was 0.72.
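McDonald’s omega, reported here and for the prior-belief items, can be computed from a one-factor model as omega = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances). The sketch below assumes a single-factor model with standardized loadings, so each error variance defaults to 1 - loading^2. The loadings in the example are hypothetical and are not estimates from this study.

```python
def mcdonalds_omega(loadings, error_variances=None):
    """Omega total for a one-factor model.

    With standardized loadings and no error variances supplied, each item's
    error variance is taken as 1 - loading**2.
    """
    if not loadings:
        raise ValueError("at least one loading is required")
    if error_variances is None:
        error_variances = [1 - lam**2 for lam in loadings]
    common = sum(loadings) ** 2  # variance of the sum score due to the common factor
    return common / (common + sum(error_variances))
```

For three hypothetical items each loading 0.70, omega = 4.41 / (4.41 + 1.53), or about 0.74.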

The statistical analyses were conducted using SPSS 27 and Mplus (Version 8.0; Muthén and Muthén, 1998–2017). We conducted four Friedman tests to examine whether pre-service teachers’ evaluations of the author’s expertise, author’s benevolence, venue’s publication practices, and quality of evidence differed across the online texts (RQ1). For the post hoc comparisons, we used the Wilcoxon signed-rank test. Non-parametric methods were used because some of the variables were skewed. We used the correlation coefficient r as the effect size measure: a value of 0.10 indicates a small effect, 0.30 a medium effect, and 0.50 a large effect (Cohen, 1988).
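The two non-parametric quantities can be sketched in plain Python. The Friedman statistic ranks each participant’s ratings of the k texts (averaging tied ranks, which are common on a 6-point scale) and applies a tie correction, so it should match the usual tie-corrected formula that statistical packages report. The effect size is assumed to be r = |Z| / sqrt(N), with Z taken from the Wilcoxon test output; the reported pairs (Z = 10.19, r = 0.78 and Z = 9.86, r = 0.76) are consistent with N = 169. Function names are illustrative.

```python
import math


def average_ranks(values):
    """Rank one participant's ratings (1 = lowest), averaging ranks for ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # tied positions i..j share the mean of ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def friedman_chi2(data):
    """Tie-corrected Friedman statistic; data[i][j] = participant i's rating of text j.

    Compare against a chi-square distribution with k - 1 degrees of freedom.
    """
    n, k = len(data), len(data[0])
    ranks = [average_ranks(row) for row in data]
    rank_sums = [sum(r[j] for r in ranks) for j in range(k)]
    expected = n * (k + 1) / 2                      # rank sum per text under H0
    a1 = sum(x * x for r in ranks for x in r)       # sum of squared ranks
    c1 = n * k * (k + 1) ** 2 / 4
    if a1 == c1:
        raise ValueError("all ratings are tied within every participant")
    return (k - 1) * sum((rs - expected) ** 2 for rs in rank_sums) / (a1 - c1)


def effect_size_r(z, n):
    """Effect size r = |Z| / sqrt(N) for a Wilcoxon comparison."""
    return abs(z) / math.sqrt(n)
```

Three participants who all rank three texts identically give chi-square = 6.0 with 2 degrees of freedom; effect_size_r(10.19, 169) rounds to 0.78.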

We examined the associations of pre-service teachers’ prior beliefs about learning styles with their credibility evaluations (RQ4) using structural equation modeling (SEM). Prior to SEM, a second-order measurement model for pre-service teachers’ credibility evaluation (see Kiili et al., 2023) was constructed using confirmatory factor analysis (CFA). The measurement model (see Figure 1) included four first-order factors based on the evaluated online texts, which represented different genres (researcher’s blog, popular science news text, teacher’s blog, and commercial text). These first-order factors were used to define two second-order factors: confirming the credibility of the more credible texts (researcher’s blog and popular science news text) and questioning the credibility of the less credible texts (teacher’s blog and commercial text). From the teacher’s blog, we excluded two items, namely the evaluations of expertise and benevolence, because these did not require questioning: the teacher could be considered an expert who genuinely wanted to share information with her colleagues without realizing that her blog included information not aligned with current scientific knowledge. In the analysis, the scores for evaluations related to the teacher’s blog and commercial text (less credible texts) were reversed.
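The indicator layout of the measurement model can be written down as data. The sketch below lists the four first-order factors and their evaluated aspects as described (the teacher’s blog keeps only the venue and evidence items, giving 14 indicators in total) and reverses ratings of the less credible texts so that a high value always means a more accurate evaluation. The text does not give the reversal formula; 7 - x on the 1-6 scale is assumed here, and the dictionary keys are hypothetical variable names.

```python
SCALE_MAX = 6  # evaluations were given on a 1-6 scale

# First-order factors (one per text) and the aspects used as indicators.
FACTORS = {
    "researchers_blog": ["expertise", "benevolence", "venue", "evidence"],
    "popular_science_news": ["expertise", "benevolence", "venue", "evidence"],
    # Expertise and benevolence excluded: these did not require questioning.
    "teachers_blog": ["venue", "evidence"],
    "commercial_text": ["expertise", "benevolence", "venue", "evidence"],
}

# Second-order factors, each defined by two first-order factors.
SECOND_ORDER = {
    "confirming": ["researchers_blog", "popular_science_news"],
    "questioning": ["teachers_blog", "commercial_text"],
}


def indicator_scores(ratings):
    """Map ratings[text][aspect] (1-6) to indicator values for the CFA.

    Ratings of the less credible texts are reversed (assumed: 7 - x), so that a
    high value always reflects confirming or questioning credibility appropriately.
    """
    scores = {}
    for factor, aspects in FACTORS.items():
        reverse = factor in SECOND_ORDER["questioning"]
        for aspect in aspects:
            x = ratings[factor][aspect]
            if not 1 <= x <= SCALE_MAX:
                raise ValueError(f"{factor}/{aspect}: rating {x} outside 1-{SCALE_MAX}")
            scores[f"{factor}_{aspect}"] = (SCALE_MAX + 1 - x) if reverse else x
    return scores
```

The resulting dictionary has one entry per indicator and could be passed, row by row, to whatever SEM software is used.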

The CFA for the structure of the credibility evaluation indicated that the model fit the data well [χ2(84) = 121.16; p = 0.005; RMSEA = 0.05; CFI = 0.97; TLI = 0.96; SRMR = 0.06], and all parameter estimates were statistically significant (p < 0.001). Thus, this credibility evaluation structure was used in further analyses.

The model fit for the CFA and SEM models was evaluated using multiple indices: the chi-square test (χ2), root mean square error of approximation (RMSEA), comparative fit index (CFI), Tucker–Lewis index (TLI), and standardized root mean square residual (SRMR). The cut-off values indicating a good model fit were set as follows: p > 0.05 for the chi-square test, RMSEA < 0.06, CFI and TLI > 0.95, and SRMR < 0.08 (Hu and Bentler, 1999). These analyses were conducted using the Mplus software (Version 8.6; Muthén and Muthén, 1998–2017).
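The cut-offs above translate into simple checks, and the RMSEA point estimate can be recovered from the chi-square statistic, its degrees of freedom, and the sample size as RMSEA = sqrt(max(χ2 - df, 0) / (df (N - 1))). Some programs use N rather than N - 1 in the denominator; for this sample the difference is negligible. The function names are illustrative, and CFI and TLI are not recomputed because they require the baseline model’s chi-square, which is not reported.

```python
import math


def meets_cutoffs(chi2_p, rmsea, cfi, tli, srmr):
    """Check fit indices against the cut-offs listed above (Hu and Bentler, 1999)."""
    return {
        "chi2": chi2_p > 0.05,
        "RMSEA": rmsea < 0.06,
        "CFI": cfi > 0.95,
        "TLI": tli > 0.95,
        "SRMR": srmr < 0.08,
    }


def rmsea_point_estimate(chi2, df, n):
    """RMSEA point estimate; uses n - 1 (some software uses n instead)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))
```

Applied to the reported CFA values (χ2(84) = 121.16, p = 0.005, N = 169), the recomputed RMSEA is about 0.051, matching the reported 0.05, and every criterion except the chi-square test is met.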

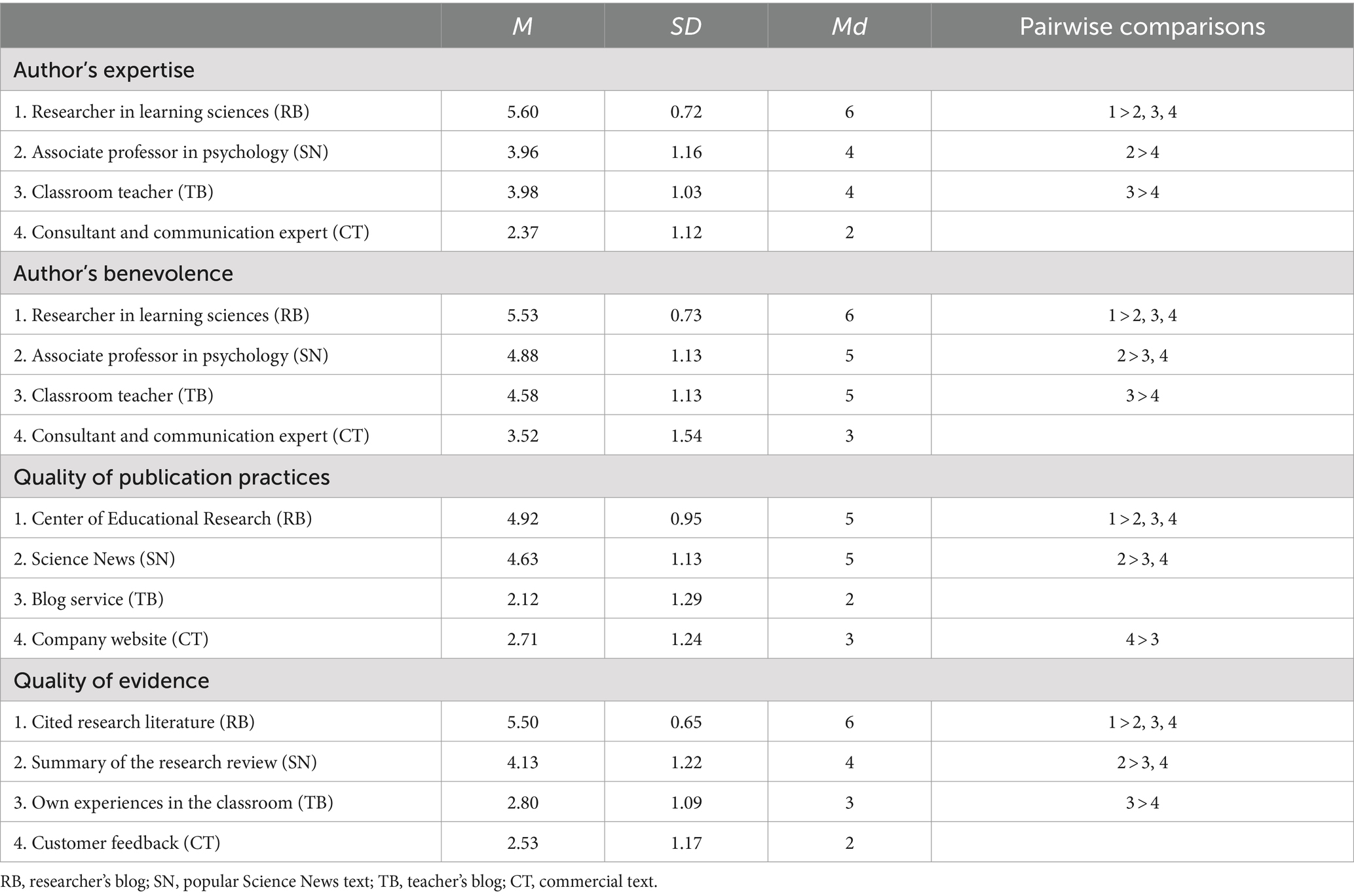

Table 4 presents the descriptive statistics for pre-service teachers’ evaluations of the author’s expertise, author’s benevolence, quality of the venue’s publication practices, and quality of evidence for each online text. Pre-service teachers’ evaluations of the author’s expertise [χ2(3) = 337.02, p < 0.001] and the author’s benevolence [χ2(3) = 157.99, p < 0.001] differed across the online texts. Post-hoc comparisons showed that pre-service teachers evaluated the researcher specializing in learning sciences as the author with the most expertise on learning styles and as the most benevolent author; her expertise was also rated considerably higher than that of the associate professor in psychology (Z = 10.19, p < 0.001; r = 0.78). The associate professor in psychology and the classroom teacher were evaluated as having a similar level of expertise. Furthermore, 28.4% of pre-service teachers did not question (i.e., used 5 or 6 on the scale) the benevolence of the consultant who served as a company’s communication expert.
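The reported effect sizes are consistent with the common convention r = |Z|/√N for paired comparisons, with N = 169. This section does not state the formula, so the sketch below is an assumption. It reproduces the reported values from the Z statistics given in this section.

```python
import math


def effect_size_r(z: float, n: int) -> float:
    """Effect size r = |Z| / sqrt(N) for a within-participant comparison."""
    return abs(z) / math.sqrt(n)


N = 169  # pre-service teachers
reported = [
    ("expertise: researcher vs. associate professor", 10.19, 0.78),
    ("evidence: cited literature vs. research review", 9.86, 0.76),
    ("justification: cited research vs. research review", 5.35, 0.41),
]
for label, z, r_reported in reported:
    r = effect_size_r(z, N)
    print(f"{label}: r = {r:.2f} (reported {r_reported})")
```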

Table 4. Descriptive statistics for the evaluations of the author’s expertise, author’s benevolence, quality of the venue’s publication practices, and quality of evidence across the online texts, with pairwise comparisons.

Similarly, pre-service teachers’ evaluations of the quality of the venue’s publication practices [χ2(3) = 314.65, p < 0.001] and the quality of evidence [χ2(3) = 335.79, p < 0.001] differed across the online texts. Regarding publication practices, the Center of Educational Research and the blog service site were evaluated as having the highest and lowest quality, respectively (Table 4). Cited research literature (four references) was evaluated considerably higher in terms of the quality of evidence than the review of 10 studies (Z = 9.86, p < 0.001; r = 0.76). In essence, research-based evidence (i.e., cited research literature and the summary of a research review) was evaluated higher than teachers’ own experiences and customer feedback, with effect sizes (r) ranging from 0.62 to 0.85. Nevertheless, 11.8% of pre-service teachers regarded the summary of the research review as unreliable evidence (i.e., used 1 or 2 on the scale). Customer feedback was evaluated as the least reliable evidence; however, 7.7% of pre-service teachers were not critical of the use of customer feedback as evidence (i.e., used 5 or 6 on the scale).
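This section does not name the omnibus test behind the χ²(3) statistics. Four related ratings per participant with df = k − 1 = 3 would be consistent with, for example, a Friedman test. The sketch below therefore shows that test as an illustration only, using made-up ratings. It ranks each participant’s ratings across the four texts, averages tied ranks, and applies the tie-corrected statistic.

```python
def average_ranks(row):
    """Rank values within one participant (1 = lowest), averaging tied ranks."""
    order = sorted(range(len(row)), key=lambda i: row[i])
    ranks = [0.0] * len(row)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and row[order[end + 1]] == row[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # convert 0-based positions to 1-based ranks
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def friedman_chi2(data):
    """Tie-corrected Friedman statistic for n participants x k related conditions.

    Returns (statistic, degrees of freedom); compare with a chi-square(k - 1) distribution.
    """
    n, k = len(data), len(data[0])
    ranks = [average_ranks(row) for row in data]
    rank_sums = [sum(r[j] for r in ranks) for j in range(k)]
    expected = n * (k + 1) / 2  # rank sum per condition under the null hypothesis
    numerator = (k - 1) * sum((s - expected) ** 2 for s in rank_sums)
    denominator = sum(x * x for r in ranks for x in r) - n * k * (k + 1) ** 2 / 4
    if denominator == 0:
        raise ValueError("every participant gave tied ratings to all conditions")
    return numerator / denominator, k - 1


# Made-up ratings: 5 participants x 4 texts (not the study's data).
ratings = [
    [6, 5, 3, 1],
    [6, 4, 3, 2],
    [5, 5, 2, 1],
    [6, 5, 4, 2],
    [5, 4, 3, 3],
]
stat, df = friedman_chi2(ratings)
print(f"chi2({df}) = {stat:.2f}")  # chi2(3) = 14.50
```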

The average total credibility justification score was 17.28 (SD = 6.04) out of 48. Table 5 presents the scores for pre-service teachers’ credibility justifications, arranged by the justified credibility aspect. In general, many justifications were shallow: the mean item scores ranged from 0.47 to 1.62 (out of 3). However, some pre-service teachers engaged in high-quality reasoning (advanced justifications). Depending on the online text, 8–20% of pre-service teachers’ justifications for the author’s expertise reached the highest level. The corresponding figures were 2–3% for benevolence, 5–8% for the venue’s publication practices, and 4–8% for the quality of evidence. Table 3 presents examples of the advanced justifications.
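The maximum of 48 points follows if each of the 16 justifications is scored 0–3. Here, the 16 justifications are assumed to be four texts × four credibility aspects, which matches the 16 open-ended responses mentioned among the limitations. The sketch below assumes that structure, and its labels and participant data are hypothetical. On this basis, the mean total of 17.28 corresponds to 1.08 points per justification (17.28/16), which falls within the reported range of item means.

```python
TEXTS = ("researcher_blog", "science_news", "teacher_blog", "commercial_text")
ASPECTS = ("expertise", "benevolence", "publication_practices", "evidence")
MAX_POINTS = 3  # assumed: each justification is scored 0-3
MAX_TOTAL = len(TEXTS) * len(ASPECTS) * MAX_POINTS  # 4 * 4 * 3 = 48


def total_score(scores):
    """Sum one participant's 16 justification scores, checking the 0-3 range."""
    total = 0
    for text in TEXTS:
        for aspect in ASPECTS:
            points = scores[(text, aspect)]
            if not 0 <= points <= MAX_POINTS:
                raise ValueError(f"invalid score {points} for {text}/{aspect}")
            total += points
    return total


def pct_at_level(participants, text, aspect, level=MAX_POINTS):
    """Percentage of participants whose justification for one item reached `level`."""
    hits = sum(1 for p in participants if p[(text, aspect)] == level)
    return 100 * hits / len(participants)


# Two made-up participants: one scores 1 everywhere; the other also reaches
# the highest level when justifying the researcher's expertise.
p1 = {(t, a): 1 for t in TEXTS for a in ASPECTS}
p2 = dict(p1)
p2[("researcher_blog", "expertise")] = 3
print(MAX_TOTAL, total_score(p1), total_score(p2))              # 48 16 18
print(pct_at_level([p1, p2], "researcher_blog", "expertise"))   # 50.0
```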

As suggested by the score means (Table 5), pre-service teachers struggled more with some credibility aspects than others. Across the texts, approximately a fifth of pre-service teachers failed to meet the minimum requirements in justifying the author’s expertise (i.e., scored 0 points); they did not consider the domain of the author’s expertise or referred to it inaccurately. In addition, four pre-service teachers (2%) referred to the publisher or the entire editorial board as the author; thus, even in higher education, students may mix up the author and the publisher or editors.

While the researcher and the associate professor were evaluated as benevolent (see Table 4), pre-service teachers struggled to justify their benevolent intentions. Notably, 47% and 59% of pre-service teachers failed to provide any relevant justification for their benevolence evaluation of the researcher and the associate professor, respectively. Several pre-service teachers considered issues related to evidence rather than the author’s intentions. In contrast, recognizing the less benevolent, commercial intentions of the consultant with marketing expertise seemed easier, as 78% of pre-service teachers received at least one point for their response.

Furthermore, pre-service teachers encountered difficulties in justifying the quality of the publication practices of the company website; 72% of pre-service teachers were unable to provide any relevant justification for their evaluation of these publication practices. They often referred to the author’s commercial intentions instead of explicating the publication practices. A few pre-service teachers (6.4%) also confused the publication venue (popular Science News) with the publication forum of the article that was summarized in the text.

Regarding the quality of evidence, pre-service teachers performed better when justifying the quality of the cited research compared to the results of the review (Z = 5.35; p < 0.001; r = 0.41). Pre-service teachers’ responses suggest that they did not recognize the value of the research review as evidence. For example, approximately 10% of pre-service teachers claimed that the author relied only on one study despite the text explicitly stating that the research review was based on 10 empirical studies. The number of references (four in the researcher’s blog vs. one in the text on the popular Science News website) was seemingly valued over the nature of the references.

Moreover, when justifying the customer survey as evidence, pre-service teachers exhibited similar patterns as when justifying the commercial website’s publication practices: some pre-service teachers’ responses focused on the author’s commercial intentions rather than the quality of evidence.

The majority of pre-service teachers (90%) were able to differentiate the texts with accurate information on learning styles from those with inaccurate information, ranking the research-based texts (researcher’s blog and popular Science News text) in the two highest positions. The remaining pre-service teachers ranked either the teacher’s blog (7%) or the commercial text (3%) in the second position. The researcher’s blog was ranked as the most credible online text more often (80%) than the popular Science News text (20%), which summarized the results of 10 experimental studies on learning styles.
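The differentiation criterion used here requires both research-based texts to occupy the two highest positions. It can be expressed as a small check over each participant’s ranking. The text labels and the four rankings in the usage lines are hypothetical.

```python
# Hypothetical labels for the two research-based texts.
RESEARCH_BASED = {"researcher_blog", "science_news"}


def differentiates(ranking):
    """True if both research-based texts occupy the two highest ranks.

    `ranking` lists four distinct texts from most to least credible.
    """
    if len(ranking) != 4 or len(set(ranking)) != 4:
        raise ValueError("expected a ranking of four distinct texts")
    return set(ranking[:2]) == RESEARCH_BASED


def summarize(rankings):
    """Return the % of participants who differentiate and the % ranking each text first."""
    n = len(rankings)
    pct_diff = 100 * sum(differentiates(r) for r in rankings) / n
    first_counts = {}
    for r in rankings:
        first_counts[r[0]] = first_counts.get(r[0], 0) + 1
    pct_first = {text: 100 * count / n for text, count in first_counts.items()}
    return pct_diff, pct_first


rankings = [
    ["researcher_blog", "science_news", "teacher_blog", "commercial_text"],
    ["science_news", "researcher_blog", "commercial_text", "teacher_blog"],
    ["researcher_blog", "teacher_blog", "science_news", "commercial_text"],
    ["researcher_blog", "science_news", "commercial_text", "teacher_blog"],
]
pct_diff, pct_first = summarize(rankings)
print(pct_diff, pct_first)  # 75.0 {'researcher_blog': 75.0, 'science_news': 25.0}
```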

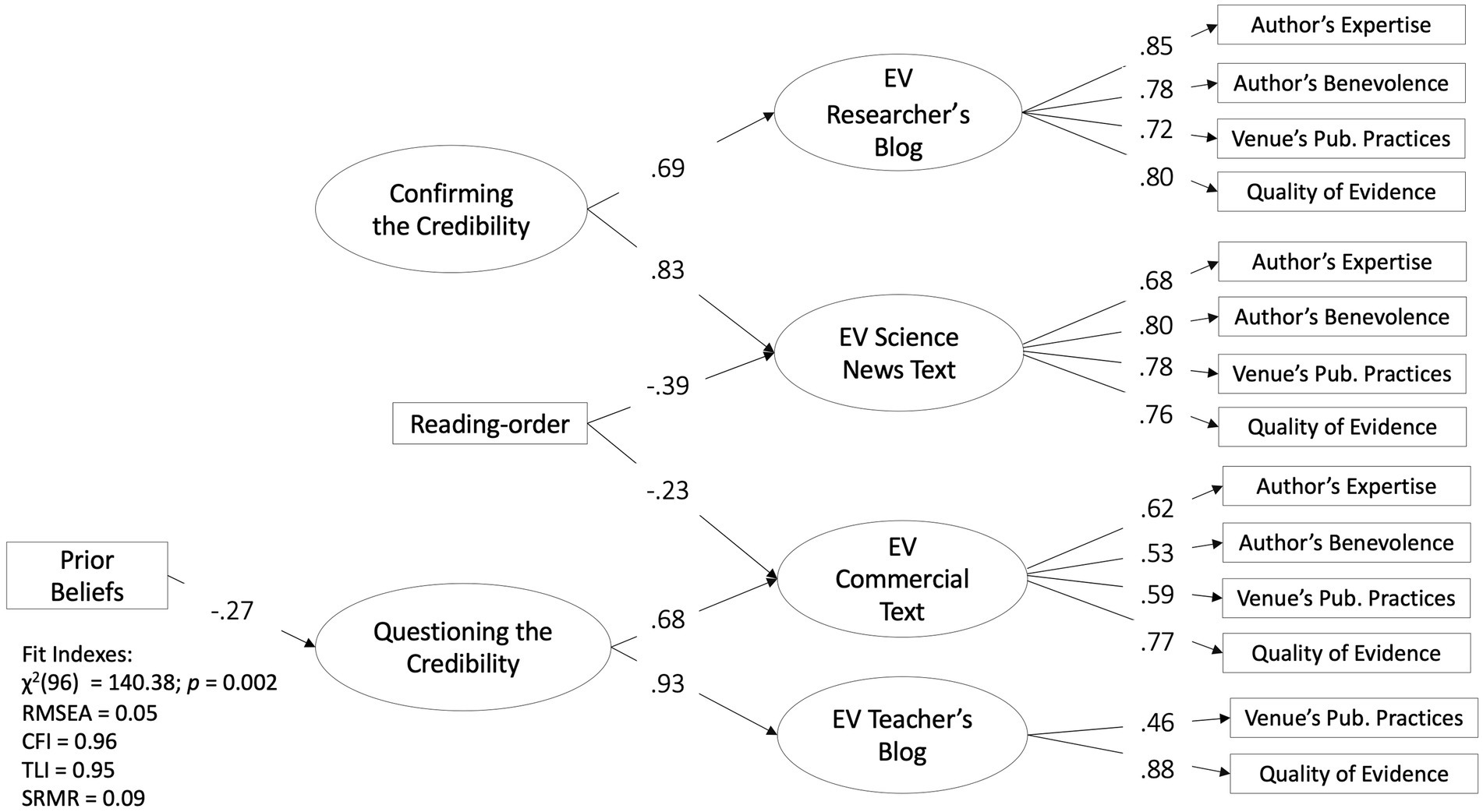

A Spearman correlation matrix for the variables used in the statistical analysis is presented in the Supplementary Appendix. We used structural equation modeling (SEM) to examine whether pre-service teachers’ prior beliefs about learning styles predicted their abilities to confirm the credibility of the more credible texts and to question the credibility of the less credible ones. The fit indices were acceptable or close to the cut-off values (see Figure 3); thus, the SEM model presented in Figure 3 was considered the final model.
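Spearman’s rho is the Pearson correlation computed on ranks, with tied values given their mean rank. The following dependency-free sketch illustrates how such a correlation matrix can be built. The variable names and values are made up and are not the study’s data.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for a constant variable")
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    """Spearman's rho: Pearson correlation of tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def spearman_matrix(columns):
    """Pairwise rho for a dict mapping variable names to value lists."""
    return {(a, b): spearman(columns[a], columns[b]) for a in columns for b in columns}


# Made-up scores for five participants (not the study's data).
data = {
    "prior_beliefs": [18, 15, 20, 12, 17],
    "questioning": [2.0, 3.5, 1.5, 4.0, 4.0],
}
matrix = spearman_matrix(data)
print(f"{matrix[('prior_beliefs', 'questioning')]:.2f}")  # -0.82
```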

Figure 3. Structural equation model with standardized estimates for the associations between credibility evaluations and prior topic beliefs, with the reading order as a control variable. All paths shown are statistically significant at p < 0.05.

On average, pre-service teachers endorsed the inaccurate conception of learning styles (M = 16.29, SD = 2.46; maximum = 21). Regarding the role of prior beliefs, our assumptions were partly confirmed. The stronger a pre-service teacher’s prior beliefs about the existence of learning styles, the less able they were to question the credibility of the online texts that included inaccurate information about learning styles. However, pre-service teachers’ prior beliefs were not associated with confirming the credibility of online texts containing accurate information.

This study sought to extend our knowledge of pre-service teachers’ ability to evaluate the credibility of online texts on learning styles, a topic on which both accurate and inaccurate information spreads online. We created four online texts that either supported or opposed the idea of learning styles and manipulated these texts in terms of the author’s expertise, the author’s benevolence, the publication venue, and the quality of evidence. This study provides unique insights into pre-service teachers’ ability to justify the credibility of more and less credible online texts from different perspectives and, thus, into their preparedness to explicate and model credibility evaluation in their own classrooms. The study also reveals associations between pre-service teachers’ prior beliefs about learning styles and their credibility evaluations. Overall, the study offers insights that can inform teacher education, helping ensure that pre-service teachers develop sufficient credibility evaluation skills while preparing for their profession as educators.

Our results showed that the majority of pre-service teachers evaluated the credibility of the more credible texts as higher than that of the less credible texts. The researcher’s blog and the popular Science News text were evaluated as more credible than the teacher’s blog and the commercial text in all credibility aspects, with one exception: the associate professor’s expertise was evaluated as highly as the teacher’s expertise. Overall, these results suggest that pre-service teachers valued scientific expertise and research-based evidence; this somewhat contradicts previous findings showing that pre-service teachers may perceive researchers as less benevolent than practitioners (Hendriks et al., 2021; Merk and Rosman, 2021), particularly when seeking practical advice (Hendriks et al., 2021). In the present study, pre-service teachers valued research-based evidence considerably higher than the educators’ testimonials on the teacher’s blog and the commercial website. However, in other contexts, such as evaluating educational app reviews, pre-service teachers may value testimonials, especially if they are presented by teachers (List et al., 2022).

Despite these encouraging results, over a fourth of pre-service teachers did not question the benevolence of the consultant who authored the text published on the commercial website. Furthermore, 12% of pre-service teachers perceived the summary of the research review as unreliable evidence, and 10% of pre-service teachers ranked either the teacher’s blog or the commercial text among the two most credible texts.

Moreover, several pre-service teachers struggled with justifying their credibility evaluations. The average total credibility justification score was 17.28 out of 48 points, and, depending on the target of the evaluation, 10–70% of pre-service teachers’ justifications were inadequate. These findings accord with previous research showing that even pre-service teachers who attend to the source may do so superficially (List et al., 2022). This is illustrated by the fifth of pre-service teachers who failed to explicate the domain of the author’s expertise in their justifications, even though it is essential to consider how well the author’s expertise aligns with the domain of the text (Hendriks et al., 2015; Stadtler and Bromme, 2014). Paying attention to the domain of expertise is essential in today’s society, where the division of cognitive labor is accentuated (Scharrer et al., 2017). This may be particularly relevant in education because many people, regardless of their background, participate in educational discussions, and even well-intended educational resources may share information that is inaccurate or incomplete (Kendeou et al., 2019).

There may be several reasons why pre-service teachers struggled to justify the different aspects of credibility. First, the problems may have stemmed from pre-service teachers’ lack of experience in considering different credibility aspects separately; several pre-service teachers treated the evidence as an indicator of the scientists’ benevolence or of commercial intentions. Second, pre-service teachers may have lacked adequate knowledge of evidence or sources. For example, our results suggest that several pre-service teachers did not have sufficient knowledge of research reviews and, therefore, did not fully recognize their value as evidence. This is understandable, as most pre-service teachers were at the beginning of their university studies. It would also be worth examining pre-service teachers’ justification abilities at a later stage of their studies.

Notably, a small proportion of pre-service teachers’ responses showed an advanced understanding of the target of credibility evaluation. Such an understanding equips pre-service teachers with a firm basis for developing practices to educate critical online readers when providing thinking tools for their students (cf. Andreassen and Bråten, 2013; Leu et al., 2017).

Our assumption regarding the association between pre-service teachers’ prior beliefs about learning styles and credibility evaluations was only partially confirmed. In line with previous research (Eitel et al., 2021), several pre-service teachers believed in learning styles; the stronger their beliefs, the more they struggled with questioning the credibility of the less credible texts whose contents aligned with their prior beliefs. This is in line with previous findings showing that prior beliefs are reflected in readers’ evaluation of information (Richter and Maier, 2017; see also van Strien et al., 2016). However, we did not find an association between pre-service teachers’ prior beliefs and confirming the credibility of more credible texts. It is possible that pre-service teachers resolved the conflict between their prior beliefs and the text content by trusting the expertise of the source (Stadtler and Bromme, 2014).

This study has certain notable limitations. First, the online texts evaluated by pre-service teachers were researcher-designed, and all credibility aspects were consistently designed to include cues pointing toward either higher or lower credibility, with the sole exception of two aspects of the teacher’s blog. Thus, the task did not fully represent credibility evaluation in an authentic online setting, which would have entailed additional complexities. For example, researchers may have biased intentions, and commercial websites may share research-based information.

Second, our credibility evaluation task was not contextualized (cf. List et al., 2022; Zimmerman and Mayweg-Paus, 2021). This is a considerable limitation, as pre-service teachers’ evaluations seem to depend on their epistemic aims (Hendriks et al., 2021). Furthermore, the lack of a task context drawn from teachers’ professional lives may have decreased readers’ engagement (Herrington and Oliver, 2000). Third, although the data were collected as part of an obligatory literacy course, pre-service teachers’ responses were not assessed. Consequently, some pre-service teachers may have lacked the motivation to respond to the items to the best of their ability (see List and Alexander, 2018). Moreover, some pre-service teachers may have found the number of items (16 open-ended responses) overwhelming, leading to a decrease in engagement.

Teachers are key players in educating critical online readers. To scaffold their students beyond superficial evaluation practices, pre-service teachers require adequate source and evidence knowledge as well as effective evaluation strategies. Our findings suggest that this is not necessarily the case at present, especially in the early stage of teacher education. Therefore, teacher education should provide opportunities for pre-service teachers to discuss what constitutes expertise in different domains, the limits of such expertise, the criteria for what constitutes good evidence in a particular domain, and the publication practices and guidelines that can ensure high-quality information. This would allow prospective teachers to model evaluation strategies for their students, provide them with constructive feedback, and engage in high-quality reasoning about credibility with them.

As pre-service teachers struggled with justifying their credibility evaluations, they would benefit from concrete models; these models could be justification examples by peers that would illustrate advanced reasoning. We collected pre-service teachers’ advanced responses, which can be used for modeling (see Table 3). Furthermore, the examples illustrate the level teacher educators can target when fostering credibility evaluation among pre-service teachers.

To engage pre-service teachers in considering the credibility of online information, teacher educators could ask them to select different types of online texts on a topical educational issue debated in public, such as the use of mobile phones in classrooms. The pre-service teachers could explore whose voices are heard in public debates, what kind of expertise these voices represent, and how strong the evidence is that the authors provide to support their claims. Before analyzing and evaluating the selected texts, pre-service teachers could be asked to record their own prior beliefs on the topic, allowing them to consider how these beliefs are reflected in their credibility evaluations. Activation and reflection could also concern pre-service teachers’ beliefs about the justification of knowledge claims: whether knowledge claims need to be justified by personal beliefs, authority, or multiple sources (see Bråten et al., 2022).

Furthermore, collaborative reflection is a promising practice for supporting pre-service teachers’ ability to evaluate the credibility of online educational texts (Zimmerman and Mayweg-Paus, 2021). Such reflection should cover not only different types of credible scientific texts but also less credible texts on complex educational issues. Teacher educators could encourage discussions that cover different credibility aspects. These discussions could be supported with digital tools designed to promote the critical and deliberative reading of multiple conflicting texts (Barzilai et al., 2020a; Kiili et al., 2016).

Although pre-service teachers could differentiate the more credible texts from the less credible ones, they struggled with justifying the credibility. Credibility evaluation in the current online information landscape is complex, requiring the ability to understand what makes one web resource more credible than another. These complexities are further amplified by readers’ prior beliefs, which may include inaccurate information. Therefore, readers must also be critical of their own potentially inaccurate beliefs in order to overcome them. As the findings of this study suggest, pre-service teachers require theoretical and practical support to become skillful online evaluators. Consequently, teacher education must ensure that prospective teachers master the rapidly changing developments in literacy environments, thereby enabling them to base their classroom practices on scientific, evidence-based information and be prepared to educate critical online readers.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethical approval was not required for the study involving human participants since no sensitive or personal data was collected. The study was conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

PK: Investigation, Methodology, Project administration, Resources, Writing – original draft, Writing – review & editing. EH: Investigation, Methodology, Resources, Writing – review & editing. MM: Methodology, Resources, Writing – review & editing. ER: Formal analysis, Methodology, Writing – review & editing. CK: Conceptualization, Formal analysis, Funding acquisition, Methodology, Resources, Writing – original draft, Writing – review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by the Strategic Research Council (CRITICAL: Technological and Societal Innovations to Cultivate Critical Reading in the Internet Era: No. 335625, 335727, 358490, 358250) and the Research Council of Finland (No. 324524).

A preprint version of this article is available on OSF (Kulju et al., 2022).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2024.1451002/full#supplementary-material

Abel, R., Roelle, J., and Stadtler, M. (2024). Whom to believe? Fostering source evaluation skills with interleaved presentation of untrustworthy and trustworthy social media sources. Discourse Process. 61, 233–254. doi: 10.1080/0163853X.2024.2339733

ACARA (2014). Australian curriculum. Senior secondary curriculum. English (Version 8.4). Available at: https://www.australiancurriculum.edu.au/senior-secondary-curriculum/english/ (Accessed June 17, 2024).

Andreassen, R., and Bråten, I. (2013). Teachers’ source evaluation self-efficacy predicts their use of relevant source features when evaluating the trustworthiness of web sources on special education. Br. J. Educ. Technol. 44, 821–836. doi: 10.1111/j.1467-8535.2012.01366.x

Anmarkrud, Ø., Bråten, I., Florit, E., and Mason, L. (2021). The role of individual differences in sourcing: a systematic review. Educ. Psychol. Rev. 34, 749–792. doi: 10.1007/s10648-021-09640-7

Anmarkrud, Ø., Bråten, I., and Strømsø, H. I. (2013). Multiple-documents literacy: strategic processing, source awareness, and argumentation when reading multiple conflicting documents. Learn. Individ. Differ. 30, 64–76. doi: 10.1016/j.lindif.2013.01.007

Aslaksen, K., and Lorås, H. (2018). The modality-specific learning style hypothesis: a mini-review. Front. Psychol. 9:1538. doi: 10.3389/fpsyg.2018.01538

Barzilai, S., Mor-Hagani, S., Zohar, A. R., Shlomi-Elooz, T., and Ben-Yishai, R. (2020a). Making sources visible: promoting multiple document literacy with digital epistemic scaffolds. Comput. Educ. 157:103980. doi: 10.1016/j.compedu.2020.103980

Barzilai, S., Thomm, E., and Shlomi-Elooz, T. (2020b). Dealing with disagreement: the roles of topic familiarity and disagreement explanation in evaluation of conflicting expert claims and sources. Learn. Instr. 69:101367. doi: 10.1016/j.learninstruc.2020.101367

Bougatzeli, E., Douka, M., Bekos, N., and Papadimitriou, F. (2017). Web reading practices of teacher education students and in-service teachers in Greece: a descriptive study. Preschool Prim. Educ. 5, 97–109. doi: 10.12681/ppej.10336

Braasch, J. L. G., Bråten, I., Strømsø, H. I., Anmarkrud, Ø., and Ferguson, L. E. (2013). Promoting secondary school students' evaluation of source features of multiple documents. Contemp. Educ. Psychol. 38, 180–195. doi: 10.1016/j.cedpsych.2013.03.003

Bråten, I., Brandmo, C., Ferguson, L. E., and Strømsø, H. I. (2022). Epistemic justification in multiple document literacy: a refutation text intervention. Contemp. Educ. Psychol. 71:102122. doi: 10.1016/j.cedpsych.2022.102122

Bråten, I., Stadtler, M., and Salmerón, L. (2018). “The role of sourcing in discourse comprehension” in Handbook of discourse processes. eds. M. F. Schober, D. N. Rapp, and M. A. Britt. 2nd Edn (New York: Routledge), 141–166.

Britt, M. A., Perfetti, C. A., Sandak, R. S., and Rouet, J.-F. (1999). “Content integration and source separation in learning from multiple texts” in Narrative comprehension, causality, and coherence: Essays in honor of Tom Trabasso. eds. S. R. Goldman, A. C. Graesser, and P. van den Broek (Mahwah, NJ: Lawrence Erlbaum Associates), 209–233.

Chinn, C. A., Rinehart, R. W., and Buckland, L. A. (2014). “Epistemic cognition and evaluating information: applying the AIR model of epistemic cognition” in Processing inaccurate information: Theoretical and applied perspectives from cognitive science and the educational sciences. eds. D. Rapp and J. Braasch (Cambridge, Massachusetts: MIT Press), 425–453.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. 2nd Edn. Hillsdale, NJ: Lawrence Erlbaum Associates.

Cohen, L., Manion, L., and Morrison, K. (2018). Research methods in education. 8th Edn. London: Routledge, Taylor & Francis Group.

Coiro, J., Coscarelli, C., Maykel, C., and Forzani, E. (2015). Investigating criteria that seventh graders use to evaluate the quality of online information. J. Adolesc. Adult. Lit. 59, 287–297. doi: 10.1002/jaal.448

Dekker, S., Lee, N. C., Howard-Jones, P., and Jolles, J. (2012). Neuromyths in education: prevalence and predictors of misconceptions among teachers. Front. Psychol. 3:429. doi: 10.3389/fpsyg.2012.00429

Ecker, U. K., Lewandowsky, S., Cook, J., Schmid, P., Fazio, L. K., Brashier, N., et al. (2022). The psychological drivers of misinformation belief and its resistance to correction. Nat. Rev. Psychol. 1, 13–29. doi: 10.1038/s44159-021-00006-y

Eitel, A., Prinz, A., Kollmer, J., Niessen, L., Russow, J., Ludäscher, M., et al. (2021). The misconceptions about multimedia learning questionnaire: an empirical evaluation study with teachers and student teachers. Psychol. Learn. Teach. 20, 420–444. doi: 10.1177/14757257211028723

Elo, S., and Kyngäs, H. (2008). The qualitative content analysis process. J. Adv. Nurs. 62, 107–115. doi: 10.1111/j.1365-2648.2007.04569.x

Fendt, M., Nistor, N., Scheibenzuber, C., and Artmann, B. (2023). Sourcing against misinformation: effects of a scalable lateral reading training based on cognitive apprenticeship. Comput. Hum. Behav. 146:107820. doi: 10.1016/j.chb.2023.107820

Ferguson, L. E., and Bråten, I. (2022). Unpacking pre-service teachers’ beliefs and reasoning about student ability, sources of teaching knowledge, and teacher-efficacy: a scenario-based approach. Front. Educ. 7:975105. doi: 10.3389/feduc.2022.975105

Finnish National Agency for Education (2022). Finnish National Agency for Education's decisions on eligibility for positions in the field of education and training. Available at: https://www.oph.fi/en/services/recognition-teaching-qualifications-and-teacher-education-studies (Accessed June 17, 2024).

Fraillon, J., Ainley, J., Schulz, W., Friedman, T., and Duckworth, D. (2020). Preparing for life in a digital world. IEA international computer and information literacy study 2018 international report. Cham: Springer.

Hahnel, C., Eichmann, B., and Goldhammer, F. (2020). Evaluation of online information in university students: development and scaling of the screening instrument EVON. Front. Psychol. 11:562128. doi: 10.3389/fpsyg.2020.562128

Hämäläinen, E. K., Kiili, C., Marttunen, M., Räikkönen, E., González-Ibáñez, R., and Leppänen, P. H. T. (2020). Promoting sixth graders’ credibility evaluation of web pages: an intervention study. Comput. Hum. Behav. 110:106372. doi: 10.1016/j.chb.2020.106372

Hendriks, F., Kienhues, D., and Bromme, R. (2015). Measuring laypeople’s trust in experts in a digital age: the muenster epistemic trustworthiness inventory (METI). PLoS One 10:e0139309. doi: 10.1371/journal.pone.0139309

Hendriks, F., Seifried, E., and Menz, C. (2021). Unraveling the “smart but evil” stereotype: Pre-service teachers’ evaluations of educational psychology researchers versus teachers as sources of information. Z. Padagog. Psychol. 35, 157–171. doi: 10.1024/1010-0652/a000300

Herrington, J., and Oliver, R. (2000). An instructional design framework for authentic learning environments. Educ. Technol. Res. Dev. 48, 23–48. doi: 10.1007/BF02319856

Howard-Jones, P. A. (2014). Neuroscience and education: myths and messages. Nat. Rev. Neurosci. 15, 817–824. doi: 10.1038/nrn3817

Hu, L. T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. Multidiscip. J. 6, 1–55. doi: 10.1080/10705519909540118

Kammerer, Y., Gottschling, S., and Bråten, I. (2021). The role of internet-specific justification beliefs in source evaluation and corroboration during web search on an unsettled socio-scientific issue. J. Educ. Comput. Res. 59, 342–378. doi: 10.1177/0735633120952731

Kendeou, P., Robinson, D. H., and McCrudden, M. (2019). Misinformation and fake news in education. Charlotte, NC: Information Age Publishing.

Kiemer, K., and Kollar, I. (2021). Source selection and source use as a basis for evidence-informed teaching. Do pre-service teachers’ beliefs regarding the utility of (non)scientific information sources matter? Z. Padagog. Psychol. 35, 127–141. doi: 10.1024/1010-0652/a000302

Kiili, C., Bråten, I., Strømsø, H. I., Hagerman, M. S., Räikkönen, E., and Jyrkiäinen, A. (2022). Adolescents’ credibility justifications when evaluating online texts. Educ. Inf. Technol. 27, 7421–7450. doi: 10.1007/s10639-022-10907-x

Kiili, C., Coiro, J., and Hämäläinen, J. (2016). An online inquiry tool to support the exploration of controversial issues on the internet. J. Literacy Technol. 17, 31–52.

Kiili, C., Räikkönen, E., Bråten, I., Strømsø, H. I., and Hagerman, M. S. (2023). Examining the structure of credibility evaluation when sixth graders read online texts. J. Comput. Assist. Learn. 39, 954–969. doi: 10.1111/jcal.12779

Kirschner, P. A. (2017). Stop propagating the learning styles myth. Comput. Educ. 106, 166–171. doi: 10.1016/j.compedu.2016.12.006

Krätzig, G. P., and Arbuthnott, K. D. (2006). Perceptual learning style and learning proficiency: a test of the hypothesis. J. Educ. Psychol. 98, 238–246. doi: 10.1037/0022-0663.98.1.238

Kulju, P., Hämäläinen, E., Mäkinen, M., Räikkönen, E., and Kiili, C. (2022). Pre-service teachers evaluating online texts about learning styles: there is room for improvement in justifying the credibility. OSF [Preprint]. doi: 10.31219/osf.io/3zwk8

Leu, D. J., Kinzer, C. K., Coiro, J., Castek, J., and Henry, L. A. (2017). New literacies: a dual-level theory of the changing nature of literacy, instruction, and assessment. J. Educ. 197, 1–18. doi: 10.1177/002205741719700202

Lewandowsky, S., Ecker, U. K. H., and Cook, J. (2017). Beyond misinformation: understanding and coping with the “post-truth” era. J. Appl. Res. Mem. Cogn. 6, 353–369. doi: 10.1016/j.jarmac.2017.07.008

List, A., and Alexander, P. A. (2018). “Cold and warm perspectives on the cognitive affective engagement model of multiple source use” in Handbook of multiple source use. eds. J. L. G. Braasch, I. Bråten, and M. T. McCrudden (New York: Routledge), 34–54.

List, A., Lee, H. Y., Du, H., Campos Oaxaca, G. S., Lyu, B., Falcon, A. L., et al. (2022). Preservice teachers’ recognition of source and content bias in educational application (app) reviews. Comput. Hum. Behav. 134:107297. doi: 10.1016/j.chb.2022.107297

Maier, J., and Richter, T. (2013). Text belief consistency effects in the comprehension of multiple texts with conflicting information. Cogn. Instr. 31, 151–175. doi: 10.1080/07370008.2013.769997

McAfee, M., and Hoffman, B. (2021). The morass of misconceptions: how unjustified beliefs influence pedagogy and learning. Int. J. Scholarship Teach. Learn. 15:4. doi: 10.20429/ijsotl.2021.150104

Menz, C., Spinath, B., and Seifried, E. (2021). Where do pre-service teachers’ educational psychological misconceptions come from? The roles of anecdotal versus scientific evidence. Z. Padagog. Psychol. 35, 143–156. doi: 10.1024/1010-0652/a000299

Merk, S., and Rosman, T. (2021). Smart but evil? Student-teachers’ perception of educational researchers’ epistemic trustworthiness. AERA Open 5, 233285841986815–233285841986818. doi: 10.1177/2332858419868158

Metzger, M. J., and Flanagin, A. J. (2013). Credibility and trust of information in online environments: the use of cognitive heuristics. J. Pragmat. 59, 210–220. doi: 10.1016/j.pragma.2013.07.012

Muthén, L. K., and Muthén, B. O. (1998–2017). Mplus user’s guide. 8th Edn. Los Angeles, CA: Muthén & Muthén.

NCC (2016). National core curriculum for basic education 2014. Helsinki: Finnish National Board of Education.

Niemi, H. (2012). “The societal factors contributing to education and schooling in Finland” in Miracle of education. eds. H. Niemi, A. Toom, and A. Kallioniemi (Rotterdam: Sense Publishers), 19–38.

Nussbaum, E. M. (2020). Critical integrative argumentation: toward complexity in students’ thinking. Educ. Psychol. 56, 1–17. doi: 10.1080/00461520.2020.1845173

Perfetti, C. A., Rouet, J. F., and Britt, M. A. (1999). “Toward a theory of documents representation” in The construction of mental representations during reading. eds. H. van Oostendorp and S. R. Goldman (Mahwah, NJ: Erlbaum), 99–122.

Reuter, T., and Leuchter, M. (2023). Pre-service teachers’ latent profile transitions in the evaluation of evidence. Teach. Teach. Educ. 132:104248. doi: 10.1016/j.tate.2023.104248

Richter, T., and Maier, J. (2017). Comprehension of multiple documents with conflicting information: a two-step model of validation. Educ. Psychol. 52, 148–166. doi: 10.1080/00461520.2017.1322968

Scharrer, L., Rupieper, Y., Stadtler, M., and Bromme, R. (2017). When science becomes too easy: science popularization inclines laypeople to underrate their dependence on experts. Public Underst. Sci. 26, 1003–1018. doi: 10.1177/0963662516680311

Sinatra, G. M., and Jacobson, N. (2019). “Zombie concepts in education: why they won’t die and why you can’t kill them” in Misinformation and fake news in education. eds. P. Kendeou, D. H. Robinson, and M. T. McCrudden (Charlotte, NC: Information Age Publishing), 7–27.

Stadtler, M., and Bromme, R. (2014). “The content–source integration model: a taxonomic description of how readers comprehend conflicting scientific information” in Processing inaccurate information: theoretical and applied perspectives from cognitive science and the educational sciences. eds. D. N. Rapp and J. L. G. Braasch (Cambridge, Massachusetts: MIT Press), 379–402.

Tarchi, C. (2019). Identifying fake news through trustworthiness judgements of documents. Cult. Educ. 31, 369–406. doi: 10.1080/11356405.2019.1597442

Tirri, K. (2014). The last 40 years in Finnish teacher education. J. Educ. Teach. 40, 1–10. doi: 10.1080/02607476.2014.956545

van Strien, J. L. H., Kammerer, Y., Brand-Gruwel, S., and Boshuizen, H. P. A. (2016). How attitude strength biases information processing and evaluation on the web. Comput. Hum. Behav. 60, 245–252. doi: 10.1016/j.chb.2016.02.057

Vipunen – Education Statistics Finland (2022). Applicants and those who accepted a place. Available at: https://vipunen.fi/en-gb/university/Pages/Hakeneet-ja-hyv%C3%A4ksytyt.aspx (Accessed June 17, 2024).

Zimmerman, M., and Mayweg-Paus, E. (2021). The role of collaborative argumentation in future teachers’ selection of online information. Zeitschrift für Pädagogische Psychologie 35, 185–198. doi: 10.1024/1010-0652/a000307

Keywords: credibility evaluation, online reading, sourcing, critical reading, misinformation, pre-service teachers, teacher education

Citation: Kulju P, Hämäläinen EK, Mäkinen M, Räikkönen E and Kiili C (2024) Pre-service teachers evaluating online texts about learning styles: there is room for improvement in justifying the credibility. Front. Educ. 9:1451002. doi: 10.3389/feduc.2024.1451002

Received: 18 June 2024; Accepted: 07 October 2024;

Published: 05 November 2024.

Edited by:

Noela Rodriguez Losada, University of Malaga, Spain

Reviewed by:

Keiichi Kobayashi, Shizuoka University, Japan

Copyright © 2024 Kulju, Hämäläinen, Mäkinen, Räikkönen and Kiili. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Pirjo Kulju, pirjo.kulju@tuni.fi

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
