- ¹Department of Biomedical Sciences, Western Michigan University Homer Stryker M.D. School of Medicine, Kalamazoo, MI, United States

- ²Department of Biomedical Sciences, University of South Carolina School of Medicine Greenville, Greenville, SC, United States

Despite general agreement that science outreach is important, the effect of science outreach programs on participants' learning is often not assessed. Brain Explorers is a neuroscience outreach program in which medical students partner with Biomedical Sciences faculty to develop lesson plans with learning objectives, interactive experiments for middle schoolers, and assessments of learning. These lessons are then implemented through a community-level intervention in which medical students teach their lesson plans to middle schoolers and help them perform activities that reinforce the concepts. Importantly, the efficacy of these active learning interventions is assessed. Throughout the program's evolution, a variety of assessment forms have been used to examine student understanding. While the goals of outreach programs are varied, here we focus on the evaluation of content knowledge gains and lay out three distinct evaluation methods: immediate post-event, immediate pre- and post-event, and spaced pre- and post-event evaluation. Specifically, using Brain Explorers lessons as examples, we explore the practicality and feasibility of various learning assessments in outreach programs, begin to examine the impacts of participation in these programs on the medical student instructors, and encourage others in the field to assess their own programs.

1 Introduction

For years, faculty, staff, and students at institutions of higher education have felt compelled to serve their communities by providing Science, Technology, Engineering, Math, and Medicine (STEMM) opportunities for community members, particularly K-12 students (Nation and Hansen, 2021). The reasons for conducting STEMM outreach range from a desire to give back to the community to improving representation in STEMM fields, among numerous others (Minen et al., 2023; Weekes, 2012). Whatever the reason for conducting STEMM outreach, it is clear that engaging in this work provides value not only for the community but also for the faculty, staff, and students who participate (Vollbrecht et al., 2019; Saravanapandian et al., 2019). While this work can be fulfilling and provide value to many stakeholders, it is important for outreach programs and events to have clear goals. Some of the most commonly stated goals of STEMM outreach are to improve societal science literacy (Kelp et al., 2023), representation in STEMM fields (Shoemaker et al., 2020), and public attitudes toward science (Crawford et al., 2021; Vennix et al., 2018).

While an increasing number of STEMM outreach programs have clear goals and objectives, many programs still lack a clearly stated direction and purpose (Jensen, 2015; Abramowitz et al., 2024). Programs that do not explicitly state their goals generally aim to improve attitudes toward science and/or increase science content knowledge. Regardless of whether a program has a clearly stated goal, many programs fail to assess whether they are meeting their goals (Minen et al., 2023; Varner, 2014; Sadler et al., 2018; Cicchino et al., 2023). As a result, there is a paucity of evidence on the most effective practices for conducting science outreach. We, and others, have recognized that effective science communication at any level begins with the development of clear goals for the interaction (Gilbertson et al., 2024; Deal et al., 2014; Gall et al., 2020). However, truly understanding the effectiveness of an outreach activity or program requires not only careful planning and goal setting but also assessment and reflection (Restini et al., 2024). The present manuscript (1) describes an outreach program that engages medical students in the process of creating, delivering, and evaluating science lessons for middle school students, and (2) presents a variety of methods for assessing learning within outreach programs, to serve as a model for other programs seeking to investigate the effectiveness of their outreach efforts.

1.1 Background and rationale

Brain Explorers is a service-learning program based at Western Michigan University Homer Stryker M.D. School of Medicine (WMed), in which medical students teach foundational neuroscience concepts to middle school students (7th and 8th grade) through a variety of one-hour events. On the surface, Brain Explorers is a community outreach program directed at middle schoolers from groups underrepresented in STEMM (including racial/ethnic minorities, students of low socioeconomic status, and individuals from rural communities), intended to increase interest in basic sciences through exposure to neuroscience, with the hope of inspiring these children to become involved in STEMM as scientists, engineers, physicians, or in other fields. Lessons presented to middle schoolers focus on active learning and hands-on engagement that can be mapped to neuroscience concepts and appropriately tagged to Next Generation Science Standards [NGSS; (National Research Council, 2013)]. While assessing the overall success of these types of programs remains difficult due to the longitudinal nature of the broader goals and outcomes (mainly, more underrepresented individuals in STEMM), studies that have examined this effect tend to show positive effects from a variety of forms of outreach (Vennix et al., 2018; Yawson et al., 2016; Zhou, 2020; Demetry et al., 2009; Mohd Shahali et al., 2019). Thus, we anticipate that outreach efforts aimed at underrepresented individuals in our program will increase representation at higher levels, although this remains to be formally evaluated.

Another, potentially less obvious, goal of the program is to provide medical students with supplemental training in communication through service-learning, which can help medical schools meet LCME accreditation standard 6.6 (Restini et al., 2024; Liaison Committee on Medical Education, 2023). Medical students are tasked with performing the entire teaching “loop”: planning the lesson and its assessment, executing those plans, assessing middle school students' learning, and adapting the original lesson plan. During this loop, medical students must distill complex scientific ideas into digestible components while maintaining scientific accuracy and validity, something they will be asked to do regularly when they work with patients in the future.

Among other possibilities, Brain Explorers yields two distinct assessment tracks for evaluating the program's efficacy: middle school students (as pupils) and medical students (as teachers). Middle school students were assessed for comprehension of the concepts taught, using several assessment types, including qualitative and quantitative surveys as well as knowledge-based quizzes. Medical students were assessed for changes in their ability to communicate scientific ideas to a lay audience. (Essentially, if you can explain it to an eighth grader, then you can explain it to a patient.) They were also assessed for effects on their own understanding of the concepts they taught.

2 Theoretical framework

Brain Explorers is conceptualized through three distinct yet intersecting theoretical frameworks: constructivism, experiential learning, and logic models for program evaluation. Brain Explorers' overarching framework is Logic Models for Program Evaluation (Van Melle, 2016). Logic models require a complete program evaluation, including identification of the “problem” being solved by the program, the mission of the program (purpose), the resources the program needs (inputs), the activities being done to solve the problem (activities), the products of the program (outputs), and the expected results of the program (outcomes). The final piece of the logic model framework is evaluation of outcomes and outputs to determine the effectiveness of the program in solving the “problem”. Previous work has demonstrated the effectiveness of logic models for program evaluation in linking evaluation instruments to specific program objectives and in clarifying which outcomes are being achieved and which need further attention (Helitzer et al., 2009; Mclaughlin and Jordan, 2015; Friedman et al., 2008). This framework drove the initial creation of a program with a strong sense of purpose and an understanding of our goals, and it continues to drive iterative assessment of the program, which has three primary goals: improving attitudes toward science in K-12 students, improving science content knowledge in K-12 students, and improving science communication skills in medical students. The focus of this manuscript is assessing science content knowledge gains in middle school students.

Under the umbrella of the Logic Models for Program Evaluation framework, two additional pedagogical frameworks help us to understand the work being done within the program's two primary populations, middle school students and medical students. Constructivism drives our understanding of the middle school student experience (Olusegun, 2015; Zajda, 2021; Mann and MacLeod, 2015). Middle school students are given opportunities to construct knowledge of the nervous system through active learning lessons and hands-on experiences. A driving question or observable phenomenon is presented early in the lesson, such as “How do I know my arm is raised if I close my eyes and raise my hand?”, and activities are designed to allow students to explore that question or phenomenon. We believe that this constructivist framework provides the best opportunity for students to learn and engage with the content, and that it also generates greater interest in the scientific process of exploration and experimentation.

Finally, the Experiential Learning framework drives our understanding of the medical student experience (Kolb, 1984; Yardley et al., 2012; Porter-Stransky et al., 2023). This framework requires action, evaluation, reflection, and experimentation. By allowing our medical students to serve as the instructors responsible for content creation, delivery, and evaluation, we provide a concrete experience on which they can reflect and then make adjustments to future iterations of the same event. Following the classic “see one, do one, teach one” approach of medical schools the world over, medical students first participate as secondary instructors to observe a lesson in action (see one); they then create a lesson plan using the template (Supplementary material) and mock teach a lesson (do one), before finally taking their lesson plan into the middle school classroom (teach one). During the mock teaching event, other medical students and faculty serve as the “middle school students” and provide feedback on the timing, suitability, engagement, and content of the event.

3 Learning environment & methods

3.1 Middle school students

To reach as many students as possible across the broadest possible range of science interest, outreach lessons were delivered in a middle school classroom during students' scheduled science classes (see Supplementary material for lessons). Each academic year, Brain Explorers visited the 8th grade classes a total of 4 or 5 times to deliver novel neuroscience lesson plans. As such, each lesson described below was not necessarily presented to the same group of students. The middle school was located in a rural Midwest town (Porter-Stransky et al., 2024). Students had limited prior exposure to neuroscience. Approximately 18 middle school students were in each of the 8th grade classes visited. First- and second-year medical students served as the instructors for these lessons. At least one medical school faculty member was present to observe medical students and help as needed. The middle school science teacher was also present during these sessions. Lessons were designed to combine didactic content delivery with active learning. All lesson plans created by Brain Explorers are designed to meet the Next Generation Science Standards (NGSS). Lesson plans were developed using a standardized lesson plan template (Supplementary material 1) to ensure that future instructors could replicate the lesson.

A variety of methods can be used to assess the effectiveness of lesson plans in increasing content knowledge for middle school students. These methods differ both in the timing of the assessment and in the assessment type. Over the years we have used several different evaluation formats in our own program. In this manuscript we lay out three distinct methods of assessment that were used over the course of two years (2021–2023): immediate post-event assessment, immediate pre- and post-event assessment, and spaced pre- and post-event assessment. The types of questions used to evaluate content knowledge gains have also varied within our program. At times we have asked students to answer two open-ended questions, one that assesses material covered during the lesson and another that evaluates neuroscience content not covered during the lesson. At other times, we have used simple multiple-choice quizzes, some on paper and others in an electronic game format such as Kahoot!. Here we describe each of the assessment types we have used to assess content knowledge. Data were analyzed and graphed using GraphPad Prism.

All assessment of K-12 student learning was approved by the WMed Institutional Review Board. Specifically, the Board determined that the assessment met the criteria for exempt status as described in 45 CFR Part 46.104(d) Category 1: Research, conducted in established or commonly accepted educational settings, that specifically involves normal educational practices that are not likely to adversely impact students' opportunity to learn required educational content or the assessment of educators who provide instruction. Furthermore, the middle schools in which the outreach events were conducted agreed to these assessments being done within their school and used for research.

3.1.1 Immediate post-event assessment

The immediate post-event assessment is probably the simplest and most time-efficient method of assessing content knowledge. In the example presented here (Supplementary material 2), students took a review quiz at the end of the lesson, in the form of an online trivia competition on the platform Kahoot! (Oslo, Norway), to assess knowledge of content from the lesson. On this platform, points are awarded for correct answers based on speed, with faster answers earning more points than slower ones; incorrect answers earn no points. This assessment is anonymous because students can make up their own names for the event. It allows determination of the percentage of students who answered each question correctly, which is generally compared with chance performance (25% for questions with four answer options).
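The item-level summary described above can be sketched in a few lines of Python. This is a minimal illustration, assuming quiz responses have been exported as one list of chosen options per student; the function names and data layout are hypothetical and do not reflect any actual Kahoot! export format. A question with k answer options is guessed correctly with probability 1/k, so for a quiz that mixes question formats the chance level is the mean of 1/k across questions.

```python
def chance_level(options_per_question):
    """Expected proportion correct for a student guessing uniformly at random.

    A question with k answer options is guessed correctly with probability 1/k,
    so the expected proportion correct is the mean of 1/k over all questions.
    """
    return sum(1 / k for k in options_per_question) / len(options_per_question)


def percent_correct_by_question(responses, answer_key):
    """Percentage of students answering each question correctly.

    responses: one list of chosen options per student (hypothetical layout);
               an unanswered question (None) counts as incorrect.
    answer_key: the correct option for each question, in the same order.
    """
    n_students = len(responses)
    return [
        100 * sum(1 for answers in responses if answers[q] == correct) / n_students
        for q, correct in enumerate(answer_key)
    ]


# Hypothetical example: four students, three four-option questions.
key = ["A", "C", "B"]
responses = [["A", "C", "B"], ["A", "D", "B"], ["B", "C", "B"], ["A", "D", None]]
print(percent_correct_by_question(responses, key))  # [75.0, 50.0, 75.0]
print(100 * chance_level([4, 4, 4]))                # 25.0
```

Reviewing the per-question percentages against the chance line is one way to see which concepts students struggled with.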

3.1.2 Immediate pre- and post-event assessment

The immediate pre- and post-event assessment was given as a short paper quiz. Students take the pre-event assessment at the beginning of the event, before any content is delivered that day. The day's lesson is then delivered, and a second quiz is given at the end of the event, again while our instructors are still present. In this case, assessments included both a single written-response question and multiple-choice questions (for an example, see Supplementary material 3). The benefits of multiple-choice and short-answer questions are discussed in Section 5.4. Students were asked to write their name on both the pre- and post-event assessments so that scores could be matched for later analysis.
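Matching pre- and post-event quizzes by student name before a paired analysis can be sketched as follows. This is a hypothetical helper, assuming each quiz has already been scored and stored as a mapping from the name a student wrote to that student's score; the light name normalization (trimming spaces, ignoring case) is an illustrative choice, and real handwritten names may still need manual reconciliation.

```python
def match_pre_post(pre_scores, post_scores):
    """Pair pre- and post-event scores for students who completed both quizzes.

    pre_scores, post_scores: dicts mapping the name a student wrote to a score.
    Names are normalized (extra whitespace removed, case-folded) so that
    "Ana " and "ana" are treated as the same student.
    Returns (matched_names, pre_list, post_list, unmatched_names); the two
    score lists are in the same student order, ready for a paired test.
    """
    def normalize(name):
        return " ".join(name.split()).casefold()

    pre = {normalize(k): v for k, v in pre_scores.items()}
    post = {normalize(k): v for k, v in post_scores.items()}
    matched = sorted(pre.keys() & post.keys())
    unmatched = sorted(pre.keys() ^ post.keys())  # on only one of the two quizzes
    return matched, [pre[n] for n in matched], [post[n] for n in matched], unmatched


# Hypothetical example
names, pre, post, dropped = match_pre_post(
    {"Ana ": 2, "Ben": 3, "Cy": 1}, {"ana": 4, "Ben": 5, "Dee": 2}
)
print(names, pre, post, dropped)  # ['ana', 'ben'] [2, 3] [4, 5] ['cy', 'dee']
```

Students who completed only one of the two quizzes are reported separately rather than silently dropped, so the number excluded from a paired analysis can be stated.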

3.1.3 Spaced pre- and post-event assessment

This assessment style is very similar to the immediate pre- and post-event assessment with the primary difference being the timing of assessment. The pre-event assessment is taken a day or two prior to instructors coming to the classroom. The post-event assessment is taken by students 1–2 weeks after the lesson took place. These assessments are both taken online by students during normal class hours. All students have a school-issued Chromebook on which to complete the assessment. In this case students were asked to answer two short written response questions. One question was based on content taught during the lesson while the “control” question was based on content that was not covered during the event (for example see Supplementary material 4).

3.1.3.1 Scoring of written responses

To score written responses, we created a rubric to help reviewers rate them. Responses ranged from “idk” (I don't know) to detailed responses involving specific content learned in the event. For an example reviewer scoring guide, see Supplementary material 4.
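Converting rubric ratings into numbers that can be compared before and after a lesson can be sketched as below. This assumes a hypothetical three-level rubric and assumes that each response is rated by more than one reviewer, with the reviewers' scores averaged; the level names, point values, and averaging rule are illustrative assumptions, and the rubric actually used is the one in Supplementary material 4.

```python
from statistics import mean

# Hypothetical rubric levels and point values (not the Brain Explorers guide).
RUBRIC = {
    "no_answer": 0,  # blank, "idk", or unrelated response
    "partial": 1,    # relevant but incomplete or partly incorrect
    "detailed": 2,   # accurate and uses specific content from the lesson
}


def score_response(ratings):
    """Average the numeric rubric scores that several reviewers gave one response."""
    return mean(RUBRIC[level] for level in ratings)


def score_all(responses):
    """responses: dict of (hypothetical) student id -> list of reviewer rubric labels."""
    return {student: score_response(r) for student, r in responses.items()}


print(score_all({"s1": ["detailed", "partial"], "s2": ["no_answer", "no_answer"]}))
```

Keeping the rubric as an explicit lookup table makes it easy to report exactly how labels were converted to scores.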

3.2 Medical students

Medical students received course credit for participating in service-learning at a variety of possible sites. Most of the students participating in Brain Explorers received credit through the Active Citizenship course. To investigate the effects of participation in Brain Explorers, medical students who had taught Brain Explorers lessons to middle schoolers were invited to participate in a focus group. A qualitative approach was chosen specifically because of (1) the program's nascency, (2) the desire to better understand the service-learning experience through open-ended questions, (3) the small number of medical student volunteers each year, which would leave quantitative analyses underpowered, and (4) the complexity and lack of standardization in evaluating communication skills.

Participation was optional and did not affect course credit. A semi-structured approach was used, with open-ended questions that asked about medical students' motivations for participating in Brain Explorers and how they viewed the experience as affecting them. The focus group session was conducted on Microsoft Teams, recorded, transcribed, and anonymized. Applied thematic analysis was conducted to evaluate these qualitative data (Guest et al., 2012). Two authors, a medical student researcher who had not volunteered with Brain Explorers (TC) and a faculty member (KAPS), read the transcripts multiple times and generated initial codes. We then discussed the codes each of us had identified and collaboratively agreed on terminology. Transcripts were reread and the codes were applied. Finally, we organized the codes into themes. The WMed Institutional Review Board determined that this project met the criteria for exempt status as described in 45 CFR Part 46.104(d).

4 Results

4.1 Middle school student assessment

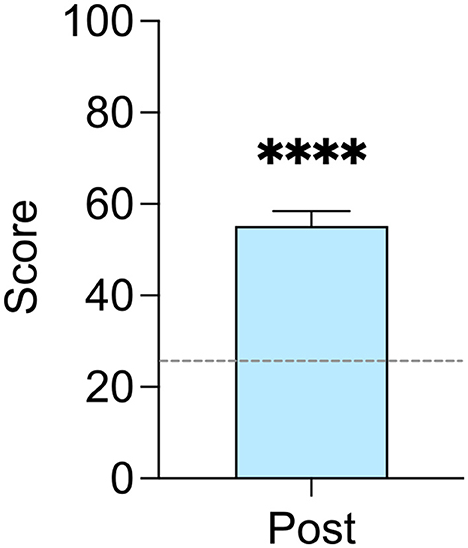

To evaluate the effectiveness of lessons in improving science content knowledge, we have used a variety of evaluation methods. The simplest assessment evaluated knowledge after a lesson using a post-event quiz. To promote learner engagement, we used a trivia game creation platform called Kahoot! (Oslo, Norway). This platform allows for the creation of multiple-choice questions; audience members log in and respond to the questions, competing against each other to answer the most questions correctly and the fastest. Based on the number of answer options, one can calculate the score that a student with no content knowledge would receive on average by choosing randomly. For example, on questions with four answer options, random guessing would yield approximately 25% correct. Students performed significantly better than chance on this post-session quiz (Figure 1, one-sample t-test, t(57) = 9.038, p < 0.0001).

Figure 1. Assessment of learning through a post-session quiz. N = 58, ****p < 0.0001 compared to chance performance (~25%; dotted line).
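The comparison with chance reported for Figure 1 is a one-sample t-test of students' percent-correct scores against a fixed value of 25%. The sketch below computes the t statistic and its degrees of freedom with Python's standard library only; the scores in the example are hypothetical. If SciPy is available, `scipy.stats.ttest_1samp(scores, popmean=25)` should give the same t along with a p-value. The authors ran their analyses in GraphPad Prism, so this is an illustration of the test, not their code.

```python
import math
from statistics import mean, stdev


def one_sample_t(scores, mu0):
    """One-sample t statistic testing whether mean(scores) differs from mu0.

    t = (mean - mu0) / (s / sqrt(n)), where s is the sample standard deviation
    (n - 1 denominator); the test has n - 1 degrees of freedom.
    """
    n = len(scores)
    t = (mean(scores) - mu0) / (stdev(scores) / math.sqrt(n))
    return t, n - 1


# Hypothetical percent-correct scores compared with 25% chance performance
t, df = one_sample_t([30, 40, 50, 60], 25)
print(round(t, 3), df)  # 3.098 3
```

With N = 58 students, as in Figure 1, the test has 57 degrees of freedom, matching the reported t(57).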

Although administering only a post-session quiz is efficient, some students may have had content knowledge before the lesson and thus could perform above chance levels regardless of the lesson. Therefore, during other events we have also used a pre- and post-event design in which middle schoolers complete identical assessments before and after the lesson. For two separate lessons delivered on different occasions, we observed a significant increase in student scores following the lesson (Figure 2, paired t-tests; 2A, t(66) = 8.836, p < 0.0001; 2B, t(68) = 14.151, p < 0.0001). These examples used both multiple-choice and open-ended questions.

Figure 2. Assessments of learning through in-class pre- and post-session quizzes. (A, B) Middle school students' scores improved after the sessions on somatosensation (A) and learning and memory (B). N = 67–69, ****p < 0.0001.
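The pre/post comparisons in Figure 2 are paired t-tests, which amount to a one-sample t-test of each student's gain (post minus pre) against zero. A minimal standard-library sketch follows, assuming the two score lists are already matched student by student; the example data are hypothetical. With SciPy installed, `scipy.stats.ttest_rel(post, pre)` should return the same t and a p-value.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Paired t statistic on within-student gains (post - pre); df = n - 1."""
    if len(pre) != len(post):
        raise ValueError("pre and post must be matched, student by student")
    gains = [b - a for a, b in zip(pre, post)]
    n = len(gains)
    t = mean(gains) / (stdev(gains) / math.sqrt(n))
    return t, n - 1


# Hypothetical matched scores for four students
t, df = paired_t([1, 2, 3, 4], [3, 3, 6, 6])
print(round(t, 3), df)  # 4.899 3
```

Because the test uses each student's own change, it removes between-student differences in baseline knowledge, which is why the names on the two quizzes need to be matched.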

Finally, to test for longer-term retention, to eliminate the potential impact of the instructors' presence, and to control for content taught vs. not taught, we have used a pre-post design spaced across multiple weeks. Students completed the first assessment before meeting the Brain Explorers instructors and completed it again 1–2 weeks after the Brain Explorers visit. In this assessment, we used two open-ended questions: one that tested a primary focus of the event and another that did not. Despite the long delay, middle schoolers scored significantly better on the post-test for the question about content that was taught, but not for the question about a neuroscience topic that was not covered during the lesson (Figure 3; two-way repeated-measures ANOVA; main effect of content, F(1, 57) = 19.440, p < 0.0001; main effect of time, F(1, 57) = 12.042, p = 0.001; interaction, F(1, 57) = 13.342, p = 0.0006; multiple comparisons with Bonferroni corrections, content taught p < 0.0001, content not taught p > 0.999).

Figure 3. Assessment of learning through spaced pre- and post-session quizzes including a control question. N = 58, ****p < 0.0001, ns = not statistically different.
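In a fully within-subject 2 × 2 design such as this one (content: taught vs. not taught; time: pre vs. post), each main effect and the interaction has one degree of freedom, and its repeated-measures F(1, n − 1) equals the square of a one-sample t statistic computed on a per-student contrast score. The Python sketch below uses that identity to compute the three F values with the standard library only, plus a Bonferroni adjustment for the two follow-up pre vs. post comparisons. It is an illustration under these assumptions, not the authors' analysis: Prism may compute its multiple comparisons differently (for example, with a pooled error term), so this sketch is not guaranteed to reproduce the reported values, and the example data are hypothetical.

```python
import math
from statistics import mean, stdev


def contrast_F(contrasts):
    """F(1, n - 1) for a one-df within-subject effect: the squared one-sample
    t statistic of the per-student contrast scores tested against zero."""
    n = len(contrasts)
    t = mean(contrasts) / (stdev(contrasts) / math.sqrt(n))
    return t * t, (1, n - 1)


def rm_anova_2x2(taught_pre, taught_post, control_pre, control_post):
    """2 (content) x 2 (time) repeated-measures ANOVA from per-student contrasts.

    Each argument holds one score per student, in the same student order.
    """
    rows = list(zip(taught_pre, taught_post, control_pre, control_post))
    # Main effect of content: taught minus not-taught, averaged over time.
    content = [(tpre + tpost) / 2 - (cpre + cpost) / 2 for tpre, tpost, cpre, cpost in rows]
    # Main effect of time: post minus pre, averaged over content.
    time = [(tpost + cpost) / 2 - (tpre + cpre) / 2 for tpre, tpost, cpre, cpost in rows]
    # Interaction: gain on the taught question minus gain on the control question.
    interaction = [(tpost - tpre) - (cpost - cpre) for tpre, tpost, cpre, cpost in rows]
    return {
        "content": contrast_F(content),
        "time": contrast_F(time),
        "interaction": contrast_F(interaction),
    }


def bonferroni(p_values):
    """Bonferroni adjustment: multiply each p-value by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical rubric scores for four students
results = rm_anova_2x2([0, 1, 0, 1], [2, 2, 1, 2], [0, 1, 0, 0], [0, 1, 1, 0])
for effect, (F, df) in results.items():
    print(effect, round(F, 2), df)
```

The two simple effects (pre vs. post for the taught question and for the control question) can each be run as a paired t-test, and their two p-values passed to `bonferroni`.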

4.2 Medical students

Applied thematic analysis of the qualitative data yielded insights into why medical students chose to participate in Brain Explorers and how they felt that the experience impacted them. Following each theme, a representative participant quotation is provided. Medical students' discussions of their motivations for volunteering for Brain Explorers clustered into two themes:

Theme 1: Desire for professional development. This theme centered on how the experience would benefit the medical students. Comments focused on gaining new experiences, potentially out of one's comfort zone, to build their skill set.

“I tend to be like a lot more introverted so I thought it would be kind of interesting to branch out and kind of give it a try.”

Theme 2: Desire to positively influence children with science. Some participants provided altruistic reasons for joining Brain Explorers. They reflected on their prior experiences as middle schoolers and sought to inspire the children through science outreach.

“I just remember like middle school being just so fun, and I remember these science events really sticking with me throughout the years, and, I thought it'd be a great way to like, pay it forward and kinda pay it back.”

When asked about how participating in Brain Explorers impacted the medical student volunteers, their responses revolved around two themes, both relating to communication:

Theme 3: Self-perceived improvement of communication skills. Multiple participants expressed that the experience of teaching the lesson plans to the middle schoolers improved their ability to effectively communicate. They learned the importance of assessing the knowledge levels of the audience members and practiced making on-the-fly modifications either within a session or between classes.

“…that aspect of Brain Explorers is really beneficial too… knowing that if you're going to explain something complex to an audience it's important to… gauge their baseline or gauge their background knowledge in more ways than one.”

“…from the first time doing it to the second time doing it, umm, you see what does work, what doesn't work, how you can be more efficient.”

Theme 4: Increased confidence communicating scientific content. Building upon the general communication skills in Theme 3, some participants also expressed an increased confidence in being able to communicate complicated scientific concepts. They recognized the difficulty in distilling complex scientific information for lay audiences and that this skill will be important in their future careers as physicians. Although the experience of teaching within the Brain Explorers program increased their confidence, some acknowledged that there was still room to grow in science communication.

“…something that, really, I think is important to me is learning how to kind of simplify complex things for people who, don't have maybe have the health literacy, or just don't have a science background.”

Lastly, experiences like Brain Explorers can yield another ancillary opportunity for medical student professional development. The aforementioned thematic analysis was performed by a medical student, working with a faculty member, and resulted in an international poster presentation and contributions to the present manuscript. Thus, in addition to community outreach and developing communication skills, Brain Explorers provides opportunities for medical students to engage in evidence-based practice in education and scholarship. Other medical students have gained research experience through systematically evaluating their lesson plans and middle schoolers' performance on the assessments. To date, Brain Explorers has yielded two medical student local/regional presentations with 5 student authors, 4 presentations at national or international meetings with 6 student authors, and 2 manuscripts with 4 medical student authors.

5 Discussion

The importance of science outreach cannot be overstated. Trust and interest in science remain a challenge. Despite a brief increase in trust in scientists during the pandemic, levels of trust in scientists continue to decline in the United States (Pew Research, 2023). For many youth, both in the United States and abroad, attitudes toward science have been noted to decline as they progress through their education (Murphy and Beggs, 2003; Kapici and Akcay, 2016; Tytler et al., 2008). Many professionals involved with STEMM outreach hope that by providing access to science professionals and interesting materials beyond what young people might otherwise receive, we can stimulate greater interest in science among younger generations. While this may in fact be true, evidence for the long-term effects of science outreach is difficult to collect and even more difficult to interpret. Despite these challenges, it is important to continue to work toward this goal. Science professionals should recognize that evidence and evaluation are critical drivers of progress. Science communication and outreach done poorly may even have detrimental effects (Simis et al., 2016; Ecklund et al., 2012; Davies, 2008; The National Academies Press, 2017). To be sure that our programs and outreach events are meeting their goals, continued evaluation should be seen not only as interesting and useful but as necessary. While we continue to evaluate our goals of reaching an underserved audience and improving attitudes toward science, these are beyond the scope of this manuscript and have been reported elsewhere (Vollbrecht et al., 2019; Gall et al., 2020).

As science outreach continues to gain a foothold within academic circles, it has become clear that many programs lack a basic evaluation of their effectiveness, and this lack of evaluation hinders their progress and success (Jensen, 2015; Abramowitz et al., 2024; Varner, 2014; Stofer et al., 2023; Borowiec, 2023). Here we have focused on short-term evaluation methods for assessing content knowledge gains achieved through outreach efforts, as well as on evaluating the impact of outreach involvement on the students serving as instructors.

To evaluate the effectiveness of our lessons in improving science content knowledge, we have used three distinct evaluation methods: we have evaluated content knowledge at the conclusion of a visit, at the beginning and end of a visit, and before and up to 2 weeks after a visit. These assessments have used a variety of question types, including multiple-choice and written-response questions. While each assessment type has its pros and cons, across multiple lesson plans and evaluation methods we have consistently observed increases (or perceived increases) in middle school students' content knowledge.

5.1 Immediate post-event assessment

There are many ways to assess content knowledge following an outreach event. Immediate post-event assessment is likely the simplest and most time-efficient, as it can take relatively little time depending on the assessment type and can even be incorporated as an interactive element. A single post-session quiz gives students and instructors an assessment of the learners' content knowledge and takes less time than pre- and post-session quizzes; however, it does not directly capture learning attributable to the session alone. For example, some students may arrive with baseline knowledge of the subject and thus could score above chance before the lesson. However, significant prior knowledge of the content is unlikely here, because the middle school participants had not yet taken a cell biology course, let alone neuroscience. Here we used a trivia-style online platform to assess content knowledge. This game allows students to compete anonymously against their classmates to answer the most questions correctly, with faster responses earning more points. While this gamification encourages participation and increases excitement for the assessment, it also risks decreasing response accuracy because students want to answer quickly. Despite this potential decrease in scores, students performed significantly better than chance on this type of assessment (Figure 1). Even this quick assessment type can provide valuable insight into which concepts students struggled with and which were easier to grasp, especially when reviewed question by question.

5.2 Immediate pre- and post-event assessment

When students are tested at the beginning and end of the session, significant gains in content knowledge would be expected, and we observed such gains in the two lessons reported here that were assessed in this manner (Figure 2). The immediate pre- and post-event assessments in this manuscript included both multiple-choice questions and an open-ended question. Immediate assessment is one of the simpler ways of assessing students, given time constraints within classrooms and easy access to students during the visit, but there are some obvious tradeoffs. First, there is no guarantee that content will be retained long-term, as the post-event assessment is done within minutes of the event's conclusion. Second, testing immediately before the event likely cues students to seek out answers to the assessment questions during the lesson, which may affect their post-event scores. Finally, time with students is limited, and including two assessments in an event can decrease the time available for content delivery or activities.

5.3 Spaced pre- and post-event assessment

Assessing content knowledge at a longer delay after the intervention generally yields smaller observed gains than immediate assessment, but gains that persist are better evidence of retention, depending on the length of time between intervention and assessment. Here we demonstrated significant gains even 1–2 weeks after the outreach lesson (Figure 3). The pre-event assessment was also done before the outreach team arrived at the school, to prevent any bias or accidental discussion of content before students took the assessment. These assessments did not include any multiple-choice questions and were unique in that they included two open-ended questions: one on content we planned to cover in the event and one on content we did not. This design allowed us to measure gains specific to the content taught during the lesson. While a pre-post design can be effective at providing a baseline before the intervention as well as assessing knowledge after it (especially in this spaced pre-post design), one should be aware of potential repeated testing effects and regression to the mean whenever the same assessment is given twice (Marsden and Torgerson, 2012). A randomized post-only design can control for these potential confounds; however, it requires a large number of participants and random assignment to groups, neither of which may be feasible in STEMM outreach studies (Friedman et al., 2008).

5.4 Question types: multiple-choice vs. open-ended

When choosing an assessment, one should consider not only the timing of the assessment relative to the event but also the format of its questions. Two common question types are multiple-choice questions and open-ended written-response questions. Multiple-choice questions are easy to grade, and knowledge gains are easy to evaluate by determining whether scores improve significantly. The two formats generally test different levels of learning. Because the answer options cue responses, multiple-choice questions are generally considered easier and are often thought to test a lower level of knowledge or understanding (Schuwirth et al., 1996; Melovitz Vasan et al., 2018; Polat, 2020), although carefully crafted multiple-choice questions can certainly still test higher-level thinking (Scully, 2019). Open-ended, or free-response, questions, on the other hand, may test higher levels of comprehension and learning. However, these questions require more careful analysis of the responses to evaluate content knowledge, and in particular to measure content knowledge gains (Schinske, 2011), although automating this analysis has received considerable attention (Sychev et al., 2019; Zhang et al., 2022; Pinto et al., 2023). Thus, using both question types may better gauge the level of learning that has occurred.

5.5 Choosing an evaluation method

In this manuscript, we used three different assessment methods to demonstrate the strengths and limitations of each. Choosing an evaluation method requires careful examination of the program or event goals, the setting, access to the audience for follow-up, and the time available.

When the primary goal of the event is content knowledge gains, a more rigorous approach such as spaced pre- and post-event evaluation is desirable (as we demonstrated in Figure 3). Additionally, if long-term retention and learning are critical goals, open-ended questions that require critical thinking skills are useful. Students' responses to these questions can be quantified using a rubric and learning gains then measured, as we demonstrated in Figure 2. Spaced pre- and post-event evaluations provide a better understanding of an audience's long-term learning, and the open-ended question format may better demonstrate understanding, as opposed to the ability simply to identify facts or information in a multiple-choice question. However, depending on the format of the outreach session and the ability to contact participants after the event, this may not be an option. When there is no feasible way to contact the audience after the event, an immediate pre- and post-event evaluation is preferable (as we did for Figure 2).
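Quantifying open-ended responses with a rubric can be as simple as recording which criteria a response meets and summing the associated points. The sketch below assumes a hypothetical three-criterion rubric (the criterion names and point values are placeholders, not the rubric used in Brain Explorers) and reports each score both in points and as a percentage of the maximum, so that pre- and post-event responses can be compared on the same scale.

```python
# Hypothetical rubric: criterion ID -> points awarded when a response meets it.
RUBRIC: dict[str, int] = {
    "names_structure": 1,     # identifies the relevant structure
    "describes_function": 1,  # explains what that structure does
    "applies_concept": 1,     # connects the idea to a new example
}


def rubric_score(criteria_met: set[str], rubric: dict[str, int] = RUBRIC) -> int:
    """Sum the points for every rubric criterion a rater marked as met."""
    unknown = criteria_met - set(rubric)
    if unknown:
        raise ValueError(f"criteria not in rubric: {sorted(unknown)}")
    return sum(rubric[c] for c in criteria_met)


def percent_of_max(criteria_met: set[str], rubric: dict[str, int] = RUBRIC) -> float:
    """Express a rubric score as a percentage of the maximum possible score."""
    return 100 * rubric_score(criteria_met, rubric) / sum(rubric.values())


# One student's hypothetical pre- and post-event responses, as coded by a rater.
pre_codes = {"names_structure"}
post_codes = {"names_structure", "describes_function", "applies_concept"}
gain = rubric_score(post_codes) - rubric_score(pre_codes)
print(f"pre {percent_of_max(pre_codes):.0f}%, post {percent_of_max(post_codes):.0f}%, gain {gain} points")
```

A rubric with several graded levels per criterion would work the same way, with each criterion mapped to the points for the level awarded.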

If the primary goal of your event or program is to improve attitudes toward science or engagement with science, more extensive content knowledge evaluation may be off-putting to participants, and a less rigorous evaluation method such as a simple post-event quiz or survey may be most appropriate (as we did for Figure 1). In either case, careful consideration of the program's primary goal should guide the choice of the most appropriate assessment method.

The setting of the event should also be considered. All of the evaluations described here took place in middle school classrooms, where quizzes and testing are expected. Had these lessons or activities taken place at a science fair or open-house-style event, a single gamified post-event evaluation would have been most appropriate.

Whichever form of assessment is used, assessing content knowledge gains following outreach events can provide critical information for improving these events and for understanding our audiences. What is simple for one audience may be difficult for another, and each assessment allows the lesson to be continually adapted to the specific audience. Depending on the goals of your program or event, long-term retention of information may be more or less important. This should be considered when determining the timing of your assessment, as a temporally distant assessment may evaluate those goals better than an immediate one. However, in outreach settings it can be difficult to carve out additional time or to follow up with attendees afterward. In these cases, immediate assessments may be more attractive.

One of the three major goals of Brain Explorers is to increase content knowledge among our middle school students; thus, assessing gains in content knowledge is critical to the mission of our program. Through increased knowledge, we hope to drive interest in the sciences as a whole. Although assessing attitudes toward science is beyond the scope of this article and has been discussed previously, it remains a critical component of our outreach efforts (Gall et al., 2020).

5.6 Evaluation of the impact of participation on medical students

Medical professionals, especially physicians, are often the only scientists with whom many people will interact during their lives. For this reason, it is critical to build future physicians' confidence in their ability to communicate difficult scientific topics to individuals who may not be familiar with scientific terminology or jargon. Because the cohorts of medical students participating in Brain Explorers are small, it will likely take several more years to obtain data beyond narrative responses. Despite this limitation, providing opportunities to improve communication skills is a goal of the program and therefore should be evaluated where possible. Focus groups provide abundant qualitative data and can be particularly useful for examining self-reported qualities such as confidence and motivations for participation.

Medical students sought out Brain Explorers primarily for opportunities to develop communication skills and to give back to the community. Our focus group indicated that medical students felt these aims were met: they reported a perceived increase in communication skills and greater confidence in their ability to explain scientific content. Previous reports have described similar effects of service-learning on perceived communication skills (McNatt, 2020; Tucker and McCarthy, 2001; Hébert and Hauf, 2015). Future studies will examine effects on communication skills beyond self-reported gains, although significant challenges exist in meeting this need.

Finally, developing curricular content for outreach programs, teaching it to students, assessing learners, and evaluating the program offer scholarship opportunities not only for faculty but also for medical students. Scholarship of teaching and learning (SoTL) is necessary to advance the field of education, and outreach and service-learning programs are ripe for SoTL (Restini et al., 2024). Such scholarship opportunities may be increasingly valuable for medical students' future careers and for securing a residency placement (Wolfson et al., 2023).

6 Limitations and conclusions

Assessing the impact of STEMM outreach programs is crucial to ensure that such programs are meeting their goals. Through examples from our Brain Explorers program, the present work provides a variety of methods for learner assessment within outreach programs. Each assessment method has advantages and disadvantages. Furthermore, the outreach setting or participants' demographics could influence which assessment methods are acceptable or practical. The assessments of middle schoolers' learning within Brain Explorers were conducted in a rural, midwestern town with relatively small class sizes, and the assessment of the impact of participation on the medical student instructors took place at a midwestern medical school. Without appropriate program evaluation, it is possible to overlook that what is effective in one population or setting may be ineffective in another. The data and evaluation methods presented here focus heavily on content knowledge gains. This is only one possible goal, and often not the primary goal, of STEMM outreach work. We encourage evaluation of all program goals, including improving attitudes toward science (Gall et al., 2020; Yawson et al., 2016; Septiyanto et al., 2024), increasing representation in STEMM (Vollbrecht et al., 2019; Carver et al., 2017), and improving science literacy (Struminger et al., 2021; Arthur et al., 2021), among others.

While it is important to create an assessment plan thoughtfully, we caution against harming participants' experience for the sake of experimental design. Rigor in the assessment of STEMM outreach should be valued, but not if it alienates or potentially traumatizes participants in the outreach activity (Friedman et al., 2008). Overzealous assessment could ultimately undermine the goals of STEMM outreach, which highlights the need for thoughtfulness in all outreach efforts and in programs' evaluations of those efforts. Thus, while our data are limited to a specific goal of one outreach program, we believe this limitation serves as a call to all involved in outreach work to continue developing program goals and evaluating the effectiveness of their programs in reaching those goals. As more programs evaluate and report their effectiveness, the outreach community can learn and grow, developing best practices from the combined efforts of all of our programs.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by Western Michigan University Homer Stryker M.D. School of Medicine Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants' legal guardians/next of kin.

Author contributions

PV: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. CC: Data curation, Formal analysis, Investigation, Methodology, Writing – original draft, Writing – review & editing. JM: Data curation, Formal analysis, Investigation, Methodology, Visualization, Writing – original draft, Writing – review & editing. TC: Data curation, Formal analysis, Investigation, Methodology, Validation, Visualization, Writing – original draft, Writing – review & editing. KP-S: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by a Pilot Grant to KP-S and PV from Western Michigan University Homer Stryker M.D. School of Medicine as well as support from the STEM Advocacy Institute.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2024.1446205/full#supplementary-material

References

Abramowitz, B., Ennes, M., Kester, B., and Antonenko, P. (2024). Scientist-school STEM partnerships through outreach in the USA: a systematic review. Int. J. Sci. Math Educ. 2024, 1–23. doi: 10.1007/s10763-024-10445-7

Arthur, B., Roberts, D., Rae, B., Marrison, M., McCleary, H., Abbott, A., et al. (2021). Ocean outreach in Australia: how a national research facility is engaging with community to improve scientific literacy. Front. Environ. Sci. 9:610115. doi: 10.3389/fenvs.2021.610115

Borowiec, B. G. (2023). Ten simple rules for scientists engaging in science communication. PLoS Comput. Biol. 19:e1011251. doi: 10.1371/journal.pcbi.1011251

Carver, S., Sickle, J., Holcomb, J. P., Quinn, C., Jackson, D. K., Resnick, A. H., et al. (2017). Operation STEM: increasing success and improving retention among first-generation and underrepresented minority students in STEM. J. STEM Educ. 18:2182.

Cicchino, A. S., Weinberg, A. E., Sample McMeeking, L. B., and Balgopal, M. M. (2023). Practice and policy Critical pedagogy of place to enhance ecological engagement activities. Conserv. Biol. 37:14023. doi: 10.1111/cobi.14023

Crawford, A. J., Hays, C. L., Schlichte, S. L., Greer, S. E., Mallard, H. J., Singh, R. M., et al. (2021). Retrospective analysis of a STEM outreach event reveals positive influences on student attitudes toward STEM careers but not scientific methodology. Adv. Physiol. Educ. 45, 427–436. doi: 10.1152/advan.00118.2020

Davies, S. R. (2008). Constructing communication: talking to scientists about talking to the public. doi: 10.1177/1075547008316222

Deal, A. L., Erickson, K. J., Bilsky, E. J., Hillman, S. J., and Burman, M. A. (2014). K-12 Neuroscience education outreach program: interactive activities for educating students about neuroscience. J. Undergrad. Neurosci. Educ. 13, A8–A20.

Demetry, C., Hubelbank, J., Blaisdell, S. L., Sontgerath, S., Nicholson, M. E., Rosenthal, E., et al. (2009). Long-term effects of a middle school engineering outreach program for girls. J. Women Minor. Sci. Eng. 15, 119–142. doi: 10.1615/JWomenMinorScienEng.v15.i2.20

Ecklund, E. H., James, S. A., and Lincoln, A. E. (2012). How academic biologists and physicists view science outreach. PLoS ONE 7:36240. doi: 10.1371/journal.pone.0036240

Friedman, A. J., Allen, S., Campbell, P. B., Dierking, L. D., Flagg, B. N., Garibay, C., et al. (2008). Framework for Evaluating Impacts of Informal Science Education Projects: Report from a National Science Foundation Workshop. The Division of Research on Learning in Formal and Informal Settings (DRL). Available at: https://stelar.edc.org/publications/framework-evaluating-impacts-broadening-participation-projects-report-national-science (accessed September 9, 2024).

Gall, A. J., Vollbrecht, P. J., and Tobias, T. (2020). Developing outreach events that impact underrepresented students: are we doing it right? Eur. J. Neurosci. 52, 3499–3506. doi: 10.1111/ejn.14719

Gilbertson, R. J., Hessler, E. E., and Leff, D. J. (2024). Active learning and community engagement: pedagogical synergy through the “mobile neuroscience lab” project. J. Undergrad. Neurosci. Educ. 20, A324–A331. doi: 10.59390/VUNA6753

Guest, G. M., MacQueen, K. E., and Namey, E. (2012). Applied Thematic Analysis. Thousand Oaks, CA: SAGE Publications, Inc.

Hébert, A., and Hauf, P. (2015). Student learning through service learning: Effects on academic development, civic responsibility, interpersonal skills and practical skills. Active Learn. High. Educ. 16, 37–49. doi: 10.1177/1469787415573357

Helitzer, D., Hollis, C., de Hernandez, B. U., Sanders, M., Roybal, S., et al. (2009). Evaluation for community-based programs: the integration of logic models and factor analysis. Eval. Program Plann. 33, 223–233. doi: 10.1016/j.evalprogplan.2009.08.005

Jensen, E. (2015). Highlighting the value of impact evaluation: enhancing informal science learning and public engagement theory and practice. J. Sci. Commun. 14:405. doi: 10.22323/2.14030405

Kapici, H. O., and Akcay, H. (2016). "Middle school students' attitudes toward science, scientists, science teachers and classes," in Asia-Pacific Forum on Science Learning and Teaching, 17. doi: 10.1186/s41029-019-0037-8

Kelp, N. C., Mccartney, M., Sarvary, M. A., Shaffer, J. F., and Wolyniak, M. J. (2023). Developing science literacy in students and society: theory, research, and practice. J. Microbiol. Biol. Educ. 24, e00058–23. doi: 10.1128/jmbe.00058-23

Kolb, D. A. (1984). Experiential Learning: Experience as the Source of Learning and Development. Englewood Cliffs, NJ: Prentice-Hall.

Liaison Committee on Medical Education (2023). Functions and Structure of a Medical School - Standards for Accreditation of Medical Education Programs Leading to the MD Degree. Available at: https://lcme.org/publications/ (accessed May 16, 2024).

Mann, K., and MacLeod, A. (2015). "Constructivism: learning theories and approaches to research," in Researching Medical Education, 49–66. doi: 10.1002/9781118838983.ch6

Marsden, E., and Torgerson, C. J. (2012). Single group, pre- and post-test research designs: Some methodological concerns. Oxf. Rev. Educ. 38, 583–616. doi: 10.1080/03054985.2012.731208

McLaughlin, J. A., and Jordan, G. B. (2015). "Using logic models," in Handbook of Practical Program Evaluation, 4th Edn., 62–87.

McNatt, D. B. (2020). Service-learning: An experiment to increase interpersonal communication confidence and competence. Education and Training 62, 129–144. doi: 10.1108/ET-02-2019-0039

Melovitz Vasan, C. A., DeFouw, D. O., Holland, B. K., and Vasan, N. S. (2018). Analysis of testing with multiple choice versus open-ended questions: outcome-based observations in an anatomy course. Anat. Sci. Educ. 11, 254–261. doi: 10.1002/ase.1739

Minen, M. T., Lebowitz, N., Ekhtman, J., Oza, K., Yusaf, I., Katara, A., et al. (2023). A critical systematic review of K-12 neurology/neuroscience pipeline programs. Front. Med. 10:1281578. doi: 10.3389/fmed.2023.1281578

Mohd Shahali, E. H., Halim, L., Rasul, M. S., Osman, K., and Mohamad Arsad, N. (2019). Students' interest towards STEM: a longitudinal study. Res. Sci. Technol. Educ. 37, 71–89. doi: 10.1080/02635143.2018.1489789

Murphy, C., and Beggs, J. (2003). Children's perceptions of school science. School Sci. Rev. 84:109.

Nation, J. M. B., and Hansen, A. K. (2021). Perspectives on community STEM: learning from partnerships between scientists, researchers, and youth. Integr. Comp. Biol. 61, 1055–1065. doi: 10.1093/icb/icab092

National Research Council (2013). “Next generation science standards: for states, by states,” in Next Generation Science Standards: For States, By States 1–2, 1–504.

Olusegun, S. (2015). Constructivism learning theory: a paradigm for teaching and learning. IOSR J. Res. Method Educ. 5, 66–70.

Pew Research (2023). Americans' Trust in Scientists and Views of Science Decline in 2023 | Pew Research Center. Available at: https://www.pewresearch.org/science/2023/11/14/americans-trust-in-scientists-positive-views-of-science-continue-to-decline/ (accessed June 6, 2024).

Pinto, G., Cardoso-Pereira, I., Monteiro, D., Lucena, D., Souza, A., Gama, K., et al. (2023). “Large language models for education: grading open-ended questions using ChatGPT,” in ACM International Conference Proceeding Series, 293–302.

Polat, M. (2020). Analysis of multiple-choice versus open-ended questions in language tests according to different cognitive domain levels. Novitas-ROYAL 14, 76–96.

Porter-Stransky, K. A., Gibson, K., Vanderkolk, K., Edwards, R. A., Graves, L. E., Smith, E., et al. (2023). How medical students apply their biomedical science knowledge to patient care in the family medicine clerkship. Med. Sci. Educ. 33, 63–72. doi: 10.1007/s40670-022-01697-5

Porter-Stransky, K. A., Yang, W., Vollbrecht, P. J., Stryker, H., Meadors, A. C., Duncan, D. J., et al. (2024). Examining the relationship between attitudes toward science and socioeconomic status among middle-class, midwestern middle school students. Front. Educ. 9:1403039. doi: 10.3389/feduc.2024.1403039

Restini, C. B. A., Weiler, T., Porter-Stransky, K. A., Vollbrecht, P. J., and Wisco, J. J. (2024). Empowering the future: improving community wellbeing and health literacy through outreach and service-learning. Front. Public Health 12:1441778. doi: 10.3389/fpubh.2024.1441778

Sadler, K., Eilam, E., Bigger, S. W., and Barry, F. (2018). University-led STEM outreach programs: purposes, impacts, stakeholder needs and institutional support at nine Australian universities. Stud. Higher Educ. 43, 586–599. doi: 10.1080/03075079.2016.1185775

Saravanapandian, V., Sparck, E. M., Cheng, K. Y., Yu, F., Yaeger, C., Hu, T., et al. (2019). Quantitative assessments reveal improved neuroscience engagement and learning through outreach. J. Neurosci. Res. 97, 1153–1162. doi: 10.1002/jnr.24429

Schinske, J. N. (2011). Taming the Testing/Grading Cycle in Lecture Classes Centered Around Open-Ended Assessment.

Schuwirth, L. W. T., Van Der Vleuten, C. P. M., and Donkers, H. H. L. M. (1996). A closer look at cueing effects in multiple-choice questions. Med. Educ. 30, 44–49. doi: 10.1111/j.1365-2923.1996.tb00716.x

Scully, D. (2019). Constructing multiple-choice items to measure higher-order thinking. Pract. Assessm. Res. Evaluat. 22:4.

Septiyanto, A., Oetomo, D., and Indriyanti, N. Y. (2024). Students' interests and attitudes toward science, technology, engineering, and mathematics careers. Int. J. Eval. Res. Educ. 13, 379–389. doi: 10.11591/ijere.v13i1.25040

Shoemaker, D. N., De Jesús, O. L. P., Feguer, L. K., Conway, J. M., Jesus, M. D., et al. (2020). Inclusive science: inspiring 8th graders from underrepresented groups to embrace STEM with microbiology and immunology. J. Microbiol. Biol. Educ. 21:1995. doi: 10.1128/jmbe.v21i1.1995

Simis, M. J., Madden, H., Cacciatore, M. A., and Yeo, S. K. (2016). The lure of rationality: Why does the deficit model persist in science communication? Public Underst. Sci. 25, 400–414. doi: 10.1177/0963662516629749

Stofer, K. A., Hanson, D., and Hecht, K. (2023). Scientists need professional development to practice meaningful public engagement. J. Respon. Innov. 10:2127672. doi: 10.1080/23299460.2022.2127672

Struminger, R., Short, R. A., Zarestky, J., Vilen, L., and Lawing, A. M. (2021). Biological field stations promote science literacy through outreach. Bioscience 71, 953–963. doi: 10.1093/biosci/biab057

Sychev, O., Anikin, A., and Prokudin, A. (2019). Automatic grading and hinting in open-ended text questions. Cogn. Syst. Res. 59, 264–272. doi: 10.1016/j.cogsys.2019.09.025

The National Academies Press (2017). Communicating Science Effectively: A Research Agenda. Washington, D.C.: The National Academies Press.

Tucker, M. L., and McCarthy, A. M. (2001). Presentation self-efficacy: increasing communication skills through service-learning. J. Manage. Issues 13, 227–244.

Tytler, R., Osborne, J., Tytler, K., and Cripps Clark, J. (2008). Opening up pathways: Engagement in STEM across the Primary-Secondary school transition A review of the literature concerning supports and barriers to Science, Technology, Engineering and Mathematics engagement at Primary-Secondary transition. Canberra ACT: Commissioned by the Australian Department of Education, Employment and Workplace Relations.

Van Melle, E. (2016). Using a logic model to assist in the planning, implementation, and evaluation of educational programs. Acad. Med. 91:1464. doi: 10.1097/ACM.0000000000001282

Varner, J. (2014). Scientific outreach: toward effective public engagement with biological science. Bioscience 64, 333–340. doi: 10.1093/biosci/biu021

Vennix, J., den Brok, P., and Taconis, R. (2018). Do outreach activities in secondary STEM education motivate students and improve their attitudes towards STEM? Int. J. Sci. Educ. 40, 1263–1283. doi: 10.1080/09500693.2018.1473659

Vollbrecht, P. J., Frenette, R. S., and Gall, A. J. (2019). An effective model for engaging faculty and undergraduate students in neuroscience outreach with middle schoolers. J. Undergrad. Neurosci. Educ. 17, A136–A150.

Weekes, N. Y. (2012). Diversity in neuroscience. We know the problem. Are we really still debating the solutions? J. Undergrad. Neurosci. Educ. 11, A52–4.

Wolfson, R. K., Fairchild, P. C., Bahner, I., Baxa, D. M., Birnbaum, D. R., Chaudhry, S. I., et al. (2023). Residency program directors' views on research conducted during medical school: a national survey. Academic Med. 98:5256. doi: 10.1097/ACM.0000000000005256

Yardley, S., Teunissen, P. W., and Dornan, T. (2012). Experiential learning: Transforming theory into practice. Med. Teach. 34, 161–164. doi: 10.3109/0142159X.2012.643264

Yawson, N. A., Amankwaa, A. O., Tali, B., Shang, V. O., Batu, E. N., Jnr, K. A., et al. (2016). Evaluation of changes in Ghanaian students' attitudes towards science following neuroscience outreach activities: a means to identify effective ways to inspire interest in science careers. J. Undergrad. Neurosci. Educ. 14:A117.

Zajda, J. (2021). Constructivist Learning Theory and Creating Effective Learning Environments. 35–50. doi: 10.1007/978-3-030-71575-5_3

Zhang, L., Huang, Y., Yang, X., Yu, S., and Zhuang, F. (2022). An automatic short-answer grading model for semi-open-ended questions. Interact. Learn. Environm. 30, 177–190. doi: 10.1080/10494820.2019.1648300

Keywords: STEM education, outreach, assessment, evaluation, K-12

Citation: Vollbrecht PJ, Cooper CEA, Magoline JA, Chan TM and Porter-Stransky KA (2024) Evaluation of content knowledge and instructor impacts in a middle school outreach program: lessons from Brain Explorers. Front. Educ. 9:1446205. doi: 10.3389/feduc.2024.1446205

Received: 09 June 2024; Accepted: 03 September 2024;

Published: 24 September 2024.

Edited by:

Kizito Ndihokubwayo, Parabolum Publishing, United States

Reviewed by:

Wang-Kin Chiu, The Hong Kong Polytechnic University, China

Lorenz S. Neuwirth, State University of New York at Old Westbury, United States

Adrian Sin Loy Loh, National Junior College, Singapore

Copyright © 2024 Vollbrecht, Cooper, Magoline, Chan and Porter-Stransky. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Peter J. Vollbrecht, peter.vollbrecht@wmed.edu
