- 1 School of Education, The University of Queensland, Brisbane, QLD, Australia

- 2 Institute for Social Science Research, The University of Queensland, Brisbane, QLD, Australia

- 3 Faculty of Humanities and Social Sciences, The University of Queensland, Brisbane, QLD, Australia

- 4 Melbourne Graduate School of Education, The University of Melbourne, Melbourne, VIC, Australia

This study investigated the effects of a teacher professional learning intervention, underpinned by a student-centred model of feedback, on student perceptions of feedback helpfulness. The study was conducted in the context of primary education English writing in Queensland, Australia. No overall differences in feedback perceptions of students in 13 intervention and 9 comparison schools were identified following the intervention. However, more detailed analyses revealed significantly greater increases in perceived helpfulness among intervention group students for six feedback strategies. This suggests the intervention changed teachers’ feedback practices, enhancing student perceptions of feedback helpfulness. Student focus group data provided valuable qualitative insights into student feedback perceptions. Overall findings highlight the interrelatedness between feedback strategies across the feedback cycle for enhancing student learning.

1 Introduction

The use of feedback to enhance students’ learning outcomes has been widely studied and is gaining much attention in educational practice and research (Winstone et al., 2017; Gotwals and Cisterna, 2022). Feedback is considered to be “among the most critical influences on student learning” (Hattie and Timperley, 2007, p. 102) because it plays a fundamental role in making students aware of how they can improve. Contemporary conceptualisations view feedback as involving bi-directional exchanges of information where the student is active, in contrast to a traditional transmissive approach with a focus on teachers providing feedback to passive student recipients (Hattie et al., 2016; Van der Kleij et al., 2019).

It is clear that the perspective of the learner is paramount given they must receive, interpret and act on feedback information in order to improve (Sadler, 1989; Hattie et al., 2016). However, empirical evidence on how feedback is perceived and used by school students is still inconclusive (Gamlem and Smith, 2013; Brooks et al., 2019b; Van der Kleij and Lipnevich, 2021). Given the potential for feedback to improve learning, there is a need to understand how school students perceive and act upon feedback so as to improve classroom feedback practices.

1.1 Feedback potential

The aim of feedback is to assist learners to close the distance between where they are and where they need to be (Sadler, 1989; Hattie and Timperley, 2007). Hattie and Timperley (2007) described feedback as “information provided by an agent (e.g., teacher, peer, book, parent, self, experience) regarding aspects of one’s performance or understanding” (p. 81). Feedback can enhance learning when students engage with it to confirm, add to, strengthen, modify or expand their existing knowledge or strategy repertoires (Butler and Winne, 1995). As such, feedback can boost achievement, for example by encouraging students to adopt more efficient learning strategies (Hattie and Timperley, 2007).

Feedback research has long focused on trying to identify aspects that make feedback effective (Van der Kleij et al., 2019). However, what happens after students “receive” feedback is highly unpredictable, and the potential of feedback to improve student learning outcomes is often not realised. Kluger and DeNisi (1996), in a major review on the effects of feedback, found that feedback produced both positive and negative effects and attributed this variance to task characteristics and individual interpretations of feedback messages. Many feedback researchers now recognise that how feedback is received and eventually acted upon is of critical importance (Hattie et al., 2016; Van der Kleij et al., 2019; Lipnevich and Smith, 2022).

1.2 Contemporary perspectives of feedback: students as active in feedback processes

Conceptualisations of the role of the student in feedback have evolved considerably over the last five decades (Van der Kleij et al., 2019). Early conceptualisations saw feedback as a one-way transmission of information. This approach to feedback relied on students valuing, understanding, and acting upon feedback, which was taken for granted. However, research shows that in practice, one-way transmissive feedback rarely results in the intended learning improvements and can instead lead to student disengagement from the feedback process (e.g., Winstone et al., 2017).

Contemporary (social) constructivist and sociocultural models of feedback build upon feedback reciprocity, a two-way process between a feedback provider and recipient, enabling the student to be active in the process (Van der Kleij et al., 2019; Gulikers et al., 2021; de Vries et al., 2024). Winstone et al. (2017) use the term “proactive recipience” to refer to “a form of agentic engagement that involves the learner sharing responsibility for making feedback processes effective” (p. 17). Importantly, in contemporary models of feedback, students are positioned as active agents in the feedback process where they engage in continuous feedback interactions with the teacher, peers, and themselves, actively seeking, interpreting and acting on feedback in order to self-regulate and improve their learning (e.g., Gulikers et al., 2021; Lipnevich and Smith, 2022; Veugen et al., 2024).

To realise sustainable feedback processes, teachers need to assist students in developing the capacity to self-regulate and actively engage with feedback to enhance their learning (Brooks et al., 2019a; Gulikers et al., 2021; de Vries et al., 2024). From this perspective, the feedback questions “Where am I going?” (feed up), “How am I going?” (feed back) and “Where to next?” (feed forward) (Hattie and Timperley, 2007) position the learner as central and active in the feedback process.

To effectively self-regulate, students first need to have a thorough understanding of the learning intentions. Further, they need to be able to evaluate how they, or their peers, are progressing in relation to the learning goals. This requires an understanding of what quality looks like (Wyatt-Smith and Adie, 2021). Simply announcing or transmitting learning goals and success criteria is not enough to assist students in developing these critical insights (Timperley and Parr, 2009; DeLuca et al., 2019; Gulikers et al., 2021). Rather, the co-construction of success criteria by teachers and students through the deconstruction of a range of models has been demonstrated to effectively develop students’ understandings of features of quality work (Brooks et al., 2021a,c; Wyatt-Smith and Adie, 2021).

Second, students need to be able to use feedback to reduce the distance between their current level of progress and the goal. Thus, students need to know how to improve (Hattie and Timperley, 2007). Research suggests that in practice, feedback is often insufficiently specific to enable students to take action (Van der Kleij and Lipnevich, 2021). Although students may prefer feedback that tells them how to improve, less individualised and specific feedback may in fact result in more productive learning, as it forces learners to engage more actively with the feedback message (Jonsson and Panadero, 2018). The major implication of these contrasting views is that students need to be empowered to decide which actions would be most appropriate in a given context, and the degree of feedback specificity may need to be tailored to students’ levels of proficiency and self-regulatory capabilities.

Further, contemporary feedback research has highlighted the importance of students being active in the role of feedback providers, through peer and self-assessment (Van der Kleij et al., 2019; Lee et al., 2020). Ideally, students draw upon feedback from multiple sources, including teacher feedback, in shaping their understandings of what quality work looks like and undertaking peer and self-assessment (Wyatt-Smith and Adie, 2021). Importantly, the benefits of peer and self-assessment are interdependent; peer-assessment has been demonstrated to result in substantial learning gains in both receivers and providers of peer feedback (Huisman et al., 2018). Namely, student participation in peer feedback processes encourages the application of critical thinking skills to detect aspects for improvement and introduces students to diverse responses and methods, encouraging transfer of ideas for self-assessment and subsequent revision of their own work. Research suggests that teacher guidance is fundamental to the successful implementation of peer and self-assessment (e.g., Timperley and Parr, 2009; DeLuca et al., 2018; Lee et al., 2019). For example, findings of recent research showed that when teachers guided students in providing and using peer feedback, students perceived peer feedback as beneficial to their learning (Lee et al., 2019).

1.3 Student perceptions of feedback

Studies examining student perceptions of feedback have focused on a broad range of variables, such as perceived usefulness, effectiveness or quality in relation to feedback characteristics such as timing, amount, valence (positive versus negative) and specificity (Van der Kleij and Lipnevich, 2021; Lipnevich and Smith, 2022; Winstone and Nash, 2023). Overall, research evidence on student feedback perceptions has produced limited meaningful findings, which is partly due to a lack of common theoretical foundations (Van der Kleij and Lipnevich, 2021). For example, Van der Kleij and Lipnevich (2021) point to a lack of consistency in research approaches to examining student feedback perceptions, which makes it difficult to compare results and generate useful insights. Winstone and Nash (2023) identified that the outcomes of many studies pointed to the need to develop more in-depth understanding of the “mechanisms underlying effective feedback” (p. 120). One key area of focus is understanding student processes for engagement with feedback, and their perceptions of which feedback strategies are helpful, and why.

Despite these inconsistencies, research points to the importance of how useful or helpful students perceive feedback to be (Van der Kleij and Lipnevich, 2021). For example, several studies have reported a positive relationship between student perceptions of feedback usefulness and their achievement levels (e.g., Rakoczy et al., 2013; Harks et al., 2014; Brooks et al., 2021b) or self-reported achievement outcomes (Mapplebeck and Dunlop, 2019; Van der Kleij and Lipnevich, 2021). However, various studies have suggested that teachers generally perceive their feedback to be more useful than their students do (e.g., Havnes et al., 2012; Gamlem and Smith, 2013). Reasons reported in research for a lack of perceived helpfulness include a lack of detail in feedback, lack of understanding of the meaning of feedback, feedback that is not useful beyond a specific task, or feedback that comes too late (Gamlem and Smith, 2013; Mapplebeck and Dunlop, 2019; Lipnevich and Smith, 2022).

How students are positioned to act in the feedback process also influences how they perceive feedback. In a study in secondary science education, students identified that feedback that required them to think and develop independence was helpful, rather than the teacher presenting information to them (Mapplebeck and Dunlop, 2019). However, not all students may want to take an active role, which can pose challenges for teachers trying to shift responsibilities in the feedback process (Jonsson et al., 2015). Considering the ultimate goal of feedback in the formative assessment process is crucial to enabling effective classroom practices (Gulikers et al., 2021). Thus, a critical question for classroom practice is how teachers can design feedback processes so that feedback is perceived as helpful by students and drives students’ active use of feedback to progress their learning.

1.4 Professional learning interventions for teachers in effective feedback

As noted, conceptualisations of feedback in the literature have shifted from a transmissive to a student-centred perspective (Van der Kleij et al., 2019). How teachers construct feedback interactions will have a major impact on how students are positioned to engage in feedback processes (Mapplebeck and Dunlop, 2019; Van der Kleij et al., 2019). Implementing feedback practices with a student-centred perspective will require a shift in thinking and practices for many teachers as well as students (Brooks et al., 2021a,c; Jonsson et al., 2015; DeLuca et al., 2019). Substantial professional learning (PL) interventions may be needed to enable teachers to realise such a shift (Jonsson et al., 2015; Voerman et al., 2015; DeLuca et al., 2018, 2019; Mapplebeck and Dunlop, 2019).

PL interventions in feedback have yielded mixed success, with some reporting positive impacts on feedback practices (Voerman et al., 2015), and others reporting moderate impact, with difficulties in shifting traditional teacher-student interactions and positioning within the formative assessment process (Jonsson et al., 2015; Gulikers and Baartman, 2017; DeLuca et al., 2018, 2019). For example, Jonsson et al. (2015) reported on the implementation of a large-scale professional development project focused on Assessment for Learning, in which feedback plays a critical role. Their results showed that although teachers had reported positive changes to their classroom practices, many struggled to shift towards shared responsibility for assessment and feedback. As a result, students remained passive receivers of highly directive teacher feedback, and were not engaged in supporting their own and peers’ learning. These findings point to the difficulties in moving away from traditional teacher-dominated orientations to assessment practice. Consistent with these findings, various studies have characterised student-centred feedback practices as advanced formative assessment practice (e.g., DeLuca et al., 2019; Gotwals and Cisterna, 2022; de Vries et al., 2024). However, there is a lack of research on how teacher PL can be shaped to enhance effective feedback processes in which students are active.

1.5 Contribution and research questions of the present study

Much of the research on teacher PL in feedback has relied on teacher self-report data (Voerman et al., 2015), failing to consider the perspectives of students as key actors. Given the central role of students in feedback, the success of PL interventions in feedback ultimately depends on how helpful feedback practices are to students. Nevertheless, there do not appear to be any studies on feedback perceptions that take account of changes in student perceptions following PL interventions (Van der Kleij and Lipnevich, 2021). To address this gap in the literature, this mixed methods study drew primarily on quantitative data from a survey instrument, which was administered to an intervention and comparison group prior to and following PL in feedback. Qualitative student focus group data were used to complement the quantitative data, to gain detailed insights into which feedback strategies were perceived as more or less helpful by students, and importantly, why. Findings provide critical new insights into how PL may assist teachers in facilitating feedback practices that are perceived as helpful by students.

The present study examined the effects of a teacher PL intervention on student perceptions of feedback helpfulness. The PL intervention was underpinned by a student-centred model of feedback (Brooks et al., 2021a), which was developed based on (social) constructivist and sociocultural models of feedback, expanding the widely-used Hattie and Timperley (2007) feedback model. We hypothesised that training teachers in effective feedback would result in increased perceived helpfulness among students, because students being active in the feedback process would make them more able to effectively act on the feedback.

The following research questions guided the study:

1. Did the PL intervention change students’ perceptions of feedback helpfulness?

2. Which feedback strategies were perceived as more or less helpful following the PL intervention, and why?

2 Method

2.1 Research context and PL intervention

This study was part of a larger three-year study (blinded for review), investigating the effects of a PL intervention underpinned by a student-centred model of feedback upon instructional leadership, teacher practice and student learning outcomes. The larger study included a series of studies, with each year focusing on a different schooling year level (grade) and investigative focus. The intervention was contextualised within the subject of English, specifically writing. This study focused on student perceptions of a range of feedback strategies that were used in the intervention.

The intervention was implemented using a student-centred model of effective feedback (Brooks et al., 2021a). This feedback model is based on the well-known model by Hattie and Timperley (2007), which posits that effective feedback processes revolve around three questions: “Where am I going?” (making learning goals explicit; feed up), “How am I going?” (assessing progress relative to the goals; feed back), and “Where to next?” (determining subsequent steps to achieve the goals and progress learning; feed forward). In our model, these questions have been translated into a classroom-level feedback cycle consisting of three stages: (1) clarify success, (2) check in on progress and (3) promote improvement. These feedback processes make up the ‘inner wheel’ of the model. Acknowledging the importance of conditions beyond the classroom, a second level, referred to as the ‘outer wheel’, focuses on conditions at the whole-school level. These conditions may enable or hinder implementation of effective feedback practices within a school. Key conditions within our model include:

• Shift thinking, from traditional conceptions of the roles of teachers and students in feedback practices, to a student-centred perspective, where teachers play a key role in activating learners

• Reviewing practice, aligning pedagogy with the prescribed curriculum and success criteria

• Build a learning culture, creating a culture of learning where there is a shared understanding about the purpose of feedback, and its role in the learning process.

The comprehensive intervention consisted of eight half-day collaborative PL sessions spaced across one school semester (6 months), as well as allocated collaboration and planning time for teachers and leaders. In addition, the research team provided on-demand support for school leaders, who were guided in providing ongoing support within their school. The intervention was supported by a resource book organised around the student-centred feedback model. The PL sessions were led by two experienced facilitators with teaching and leadership expertise. These sessions aimed to enhance teachers’ capabilities in facilitating effective feedback processes in which students are active. Teachers and leaders worked collaboratively within and between sessions, supporting one another’s practice. The PL facilitators guided participants in implementing changes in feedback practices.

The intervention was structured around the student-centred feedback model, starting with the outer wheel to establish effective feedback conditions within the school. These sessions included discussions to develop a shared learning philosophy, discussions about the potential benefits of active student engagement in feedback processes, and interrogation of curriculum standards to determine the kinds of thinking required in assessment tasks to demonstrate achievement of these outcomes. Follow-up sessions focused on the inner wheel, targeting detailed feedback processes. For example, discussions focused on how teachers could help clarify what success looks like with students. Various resources were used to illustrate how teachers may enable students to be active in the feedback process. For example, teachers were encouraged to develop models of different levels of quality, to illustrate quality features as well as common misconceptions. Teachers were then encouraged to use these models to stimulate student thinking about quality features and to co-construct success criteria. A culminating resource, containing both models and explicit criteria, is the ‘bump it up wall’. Teachers were guided in how to construct these walls in collaboration with their students. Various other feedback strategies, such as peer feedback, were discussed and modelled during the collaborative PL sessions.

2.2 Participants

Participants were recruited through purposive sampling of 22 state primary schools from a Metropolitan Region in Queensland, Australia. School selection was linked to school interest in PL in feedback and to writing being a school improvement priority. Thirteen schools participated as partners and took part in the intervention. Nine schools served as a comparison group. To incentivise participation, these comparison schools participated in a one-off PL session on effective feedback practice. For this reason, this group is referred to as the ‘comparison’ group, not the ‘control’ group. Participating schools served student populations with a range of levels of socio-educational advantage, with intervention schools having, on average, slightly higher Index of Community Socio-Educational Advantage (ICSEA) values (ranging from 956 to 1,179).

This study employed purposive sampling to select Year 4 students (aged 8–9 years) from the 22 schools in the intervention and comparison groups. To ensure the robustness of the quantitative analyses, an a priori power analysis was conducted. This analysis identified a minimum requirement of 200 observations (100 per group) to achieve a power (1 − β) of 0.80. Increasing the power to 0.90 while keeping the other parameters constant raised the minimum sample size to 265 observations in total.
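The effect size and significance level behind these figures are not reported here. Purely as an illustration of how such a calculation works, the sketch below applies the standard normal-approximation formula for a two-sided, two-group comparison of means, n = 2 × (z_(1−α/2) + z_(1−β))² / d² per group. The standardised effect size d = 0.4 and α = 0.05 are hypothetical placeholders, not values taken from the study, and the function name is illustrative.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group sample size for a two-sided, two-group comparison of means.

    Uses the normal approximation n = 2 * (z_{1-alpha/2} + z_{1-beta})**2 / d**2,
    where d is the standardised mean difference (Cohen's d).
    """
    std_normal = NormalDist()  # mean 0, standard deviation 1
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = std_normal.inv_cdf(power)          # z_{1-beta}
    return ceil(2 * (z_alpha + z_power) ** 2 / d ** 2)


# Hypothetical inputs: d = 0.4 and alpha = 0.05 are placeholders, not study values.
for target_power in (0.80, 0.90):
    n = n_per_group(d=0.4, power=target_power)
    print(f"power {target_power:.2f}: {n} per group, {2 * n} in total")
```

With these placeholder inputs the function returns 99 per group at a power of 0.80 and 132 per group at 0.90. Exact t-based calculations give slightly larger numbers, and the formula ignores the clustering of students within classes.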

Data were collected from students who had provided written informed consent (student assent as well as parental/carer consent) from 68 classes (intervention n = 52; comparison n = 16). Additionally, a small number of Year 5 (aged 10–11 years) students in composite Year 4/5 classes, who were completing the same learning tasks as their Year 4 peers, were included. The number of participants in the pre-survey was 1,255 (intervention n = 985; comparison n = 270). A total of 1,197 students completed the post-survey (intervention n = 974; comparison n = 223). Both samples exceeded the minimum sample size identified in the power analysis. For the qualitative component of the study, a sub-sample of 33 Year 4 students (3 per school in eleven intervention schools) was randomly selected to participate in focus group interviews. Given the random sampling approach, these students were not necessarily from the same class within a school.

2.3 Procedures and instruments

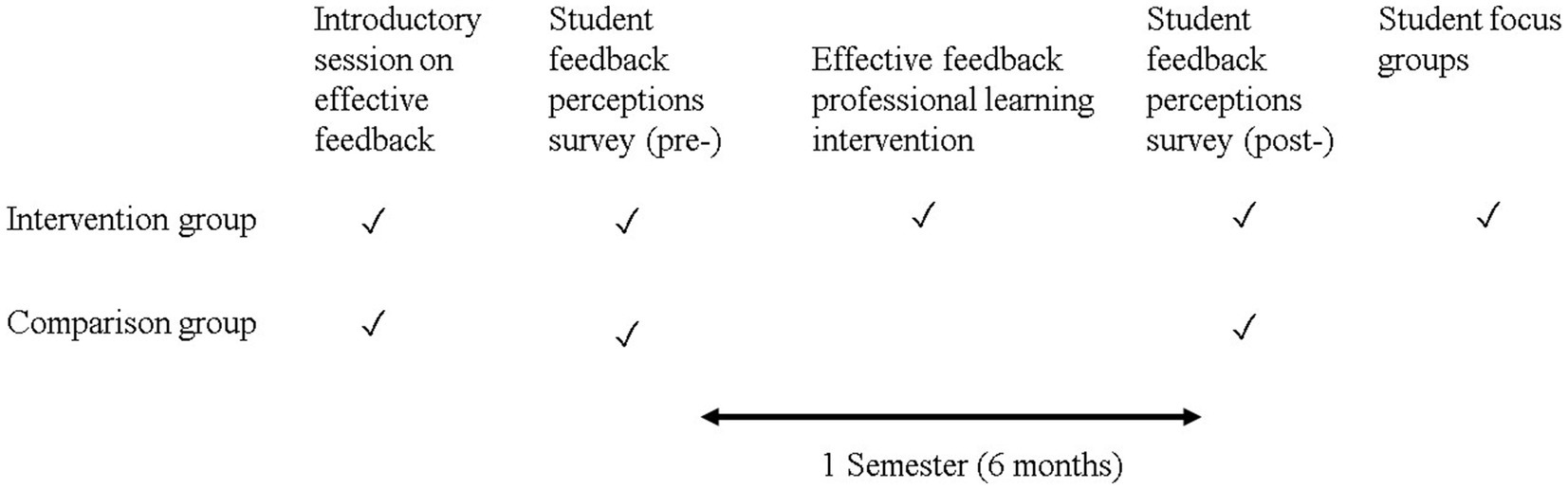

The study used an explanatory mixed methods design, with concurrent collection of quantitative and qualitative data (Creswell and Plano Clark, 2018). A quasi-experimental research design was adopted for the quantitative study component, with the qualitative component focusing only on students in the intervention group. The comparison schools participated in an introductory presentation session on effective feedback identical to that of the partnering schools, but did not take part in the PL intervention. Figure 1 provides an overview of the study procedures and data collection. As outlined in Figure 1, data collection instruments included a student feedback perceptions survey, administered before and after the PL intervention, and student focus groups.

2.3.1 Student feedback perceptions survey

The survey, administered by each teacher to their class at the beginning and end of the school semester, aimed to measure student perceptions of how helpful different feedback strategies were to their learning. Thus, student perceptions of feedback helpfulness as measured in the present study reflect their overall self-reported recollection of past experiences (Van der Kleij and Lipnevich, 2021). By comparing student ratings prior to and following the PL intervention, the data provide insights into the perceived usefulness of feedback strategies and how they are implemented. The survey was designed based on an existing survey for older students which was administered as part of the broader research study. For the current study, a new succinct survey incorporating key feedback strategies was designed to suit the Year 4 age group. The new 15-item survey addressed the interconnected elements of feed up, feed back, and feed forward (Hattie and Timperley, 2007) of the student-centred feedback model (Brooks et al., 2021a).

The three feedback questions (Hattie and Timperley, 2007) were contextually modified to: “What does success look like in English?”; “What progress are you making in English?”; and “How can you improve in English?” Four items pertaining to the helpfulness of different feedback strategies were generated for each feedback question. For example, item one asked participants “How helpful are success criteria at showing you what success looks like in English?” The survey incorporated a range of strategies from the feedback PL intervention. In particular, items addressed strategies that prompted learners to be active, rather than passive, with many of these strategies emphasising the interconnectedness of the student-centred feedback model. Strategies perceived as more traditional and not aligned to the intervention, such as issuing marking guides or criteria sheets to students, were also included to ensure comprehensive representation of strategies. Students were required to rate the helpfulness of each feedback strategy on a scale from 1 (“not helpful”) to 7 (“extremely helpful”). In cases where students were not familiar with a feedback strategy or their teacher did not use the strategy, a box labelled “do not use” could be ticked.

In addition, students were asked to rate their capability for each element of the feedback cycle (3 items). For example, students were asked: “Overall, how well do you know what success looks like in English?”

Item construction and selection were based on a review of feedback literature and previous research (Brooks et al., 2019b), as well as the researchers’ own experiences in the previous year of the study. Prior to implementation, face validity of the survey was established with students, teachers and school leaders.

To validate the survey instrument, data from a Year 5 cohort (not included in the present study) were first used to explore the underlying factor structure. Only cases with valid responses were considered in these analyses (i.e., excluding students with any missing responses, or who responded “do not use” to any of the items). These analyses revealed the presence of two factors, with most of the variance explained by the first factor. The second factor consisted of the “overall” items addressing self-reported capability in each element of the feedback cycle, with items cross-loading onto the first factor. These cross-loading items were removed, resulting in a one-factor solution. Exploratory factor analysis results were then tested through a confirmatory factor analysis using Year 4 data. Finally, tests of longitudinal invariance were performed to ensure consistency in the measurement of the feedback perceptions construct across pre- and post-survey administrations. Model fit and scalar invariance were satisfactory, with each item exhibiting a loading of 0.4 or greater.

The 12 individual items making up the perceived helpfulness of feedback scale showed substantial Cronbach’s alpha reliabilities for both pre- and post-intervention administrations (pre-intervention: α = 0.87, n = 692; post-intervention: α = 0.89, n = 987). The perceived helpfulness of feedback scale was constructed by taking the average of the 12 items for observations with valid responses on each item (pre-intervention n = 665; post-intervention n = 953). Scaled scores were not calculated for students with at least one “do not use” response, as these responses could not be quantified in the same way as the other responses. These responses also could not be considered missing at random, as they represented valid responses of a different order. These cases were therefore analysed separately, which provided some insights into strategies that were used more frequently following the PL intervention (see Supplementary material).
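The two computations described above, listwise scale scoring that skips any student with a “do not use” response and Cronbach’s alpha on complete cases, can be sketched as follows using α = k / (k − 1) × (1 − Σ item variances / variance of total scores). This is a minimal illustration under stated assumptions: encoding “do not use” as `None`, the function names and the three-item toy data are hypothetical, whereas the study’s scale has 12 items rated 1–7.

```python
from statistics import mean, pvariance
from typing import List, Optional, Sequence

DO_NOT_USE = None  # hypothetical encoding of a ticked "do not use" box


def scale_score(responses: Sequence[Optional[int]]) -> Optional[float]:
    """Mean of the 1-7 item ratings, or None if any item was marked "do not use"."""
    if any(r is DO_NOT_USE for r in responses):
        return None  # analysed separately, not imputed as missing at random
    return float(mean(responses))


def cronbach_alpha(rows: Sequence[Sequence[int]]) -> float:
    """Cronbach's alpha for complete cases (rows = students, columns = items).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    k = len(rows[0])
    item_vars = [pvariance([row[i] for row in rows]) for i in range(k)]
    total_var = pvariance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Toy data: five students x three items; the last student ticked "do not use" once.
survey: List[List[Optional[int]]] = [
    [5, 6, 5], [3, 4, 3], [7, 7, 6], [2, 3, 2], [6, None, 5],
]
complete = [row for row in survey if scale_score(row) is not None]
print([scale_score(row) for row in survey])
print(round(cronbach_alpha(complete), 3))
```

Population and sample variances give the same alpha here, because the n versus n − 1 factor cancels in the ratio, provided the same choice is used throughout.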

2.3.2 Student focus groups

To obtain detailed qualitative data about how and why elements of the feedback intervention were perceived as helpful by students, a semi-structured focus group interview (Creswell and Poth, 2018) was conducted with intervention group students. Interview questions focused on how or why classroom feedback practices emanating from the PL intervention were helpful to student learning. Due to the semi-structured nature of these interviews, the exact questions asked varied. For example, students were asked to elaborate on or explain their responses, or to respond to their peers’ contributions. The focus group interviews were led by the first two authors and were audio recorded. The duration of the focus groups ranged from 8 to 15 min.

2.4 Data analysis

All survey data were quantitatively analysed using multilevel modelling to account for students being nested in 68 classes taught by different teachers (Hayes, 2006). A multilevel approach is particularly appropriate in this context as it accounts for variation in teachers’ implementation of the intervention. A linear mixed model was used to compare intervention and comparison group students’ scaled scores of feedback helpfulness perceptions, prior to and following the intervention (addressing RQ 1). Next, item-based linear mixed model analyses were conducted to identify which strategies were perceived as more or less helpful following the PL intervention (addressing RQ 2).
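The fixed effects of the linear mixed model are not spelled out above. One common way to write a pre/post, two-group comparison with students nested in classes is a random-intercept model with a time × group interaction; the specification below is an assumed illustration, not the study’s reported model. Here y is the scaled helpfulness score (or a single item rating, for the item-level analyses) of student i in class j at time t, Post_t = 1 for the post-survey, Int_j = 1 for intervention classes, and u_j is a class-level random intercept.

```latex
y_{ijt} = \beta_0 + \beta_1\,\mathrm{Post}_t + \beta_2\,\mathrm{Int}_j
        + \beta_3\,(\mathrm{Post}_t \times \mathrm{Int}_j) + u_j + \varepsilon_{ijt},
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2), \quad
\varepsilon_{ijt} \sim \mathcal{N}(0, \sigma_\varepsilon^2)
```

Under this specification, β₃ is the difference between the groups in pre-to-post change. The random intercept u_j absorbs stable differences between classes and teachers, which is the nesting the multilevel approach is meant to address.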

Based on the feedback strategies that were perceived as significantly more or less helpful following the intervention, qualitative focus group data were thematically analysed. Analyses of student focus group data were used to explain the quantitative findings and shed further light on which feedback strategies were perceived as more or less helpful following the PL intervention, and importantly, why (addressing RQ2). A narrow coding framework was developed by author one, based on the quantitative analyses and the student-centred feedback model used in the intervention. Inter-rater reliability of coding was established between authors one and two over two rounds of blind double coding (comprising 27% of overall data) using NVivo 12 (2018). These authors collaboratively evaluated the independent coding after each round. This process increased inter-rater reliability from substantial (first round: 96.96% agreement, Cohen’s Kappa = 0.77) to almost perfect agreement (second round: 97.76% agreement, Cohen’s Kappa = 0.88). Following establishment of rater reliability, remaining data were coded by author one and cross-checked by author two. Author three narratively synthesised coded data to establish key findings.
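Percentage agreement and Cohen’s kappa can diverge because kappa discounts the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e). The sketch below computes both statistics for two raters and rearranges the formula to recover the chance agreement p_e implied by a reported pair of values. The function names and the toy codes are illustrative assumptions, not the study’s coding data.

```python
from collections import Counter
from typing import Hashable, Sequence


def percent_agreement(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Proportion of units to which both raters assigned the same code."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohen_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Cohen's kappa: kappa = (p_o - p_e) / (1 - p_e)."""
    n = len(a)
    p_o = percent_agreement(a, b)            # observed agreement
    freq_a, freq_b = Counter(a), Counter(b)  # each rater's code frequencies
    # Chance agreement: probability both raters pick the same code independently.
    p_e = sum(freq_a[code] * freq_b[code] for code in freq_a) / n ** 2
    return (p_o - p_e) / (1 - p_e)


def implied_chance_agreement(p_o: float, kappa: float) -> float:
    """Solve kappa = (p_o - p_e) / (1 - p_e) for p_e."""
    return (p_o - kappa) / (1 - kappa)


# Toy codes from two hypothetical raters.
rater_1 = ["A", "A", "B", "B"]
rater_2 = ["A", "B", "B", "B"]
print(percent_agreement(rater_1, rater_2), cohen_kappa(rater_1, rater_2))

# Chance agreement implied by the reported round-one and round-two figures.
print(round(implied_chance_agreement(0.9696, 0.77), 3))
print(round(implied_chance_agreement(0.9776, 0.88), 3))
```

Applied to the reported values, the rearranged formula implies chance agreement of roughly 0.87 in round one and 0.81 in round two. This illustrates how similar percentage agreement (about 97%) can correspond to noticeably different kappa values. The calculation treats each reported pair as coming from a single pooled table, which is an assumption, since coding-comparison software may compute these statistics per code and source before aggregating.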

3 Results

The following sections present the results of the student feedback perceptions survey, followed by an analysis of student focus group data to complement the quantitative results. To enhance readability, only statistical analyses in relation to the main findings are presented, with further detail provided in the Supplementary material.

3.1 Survey results: comparison of student perceptions of feedback helpfulness

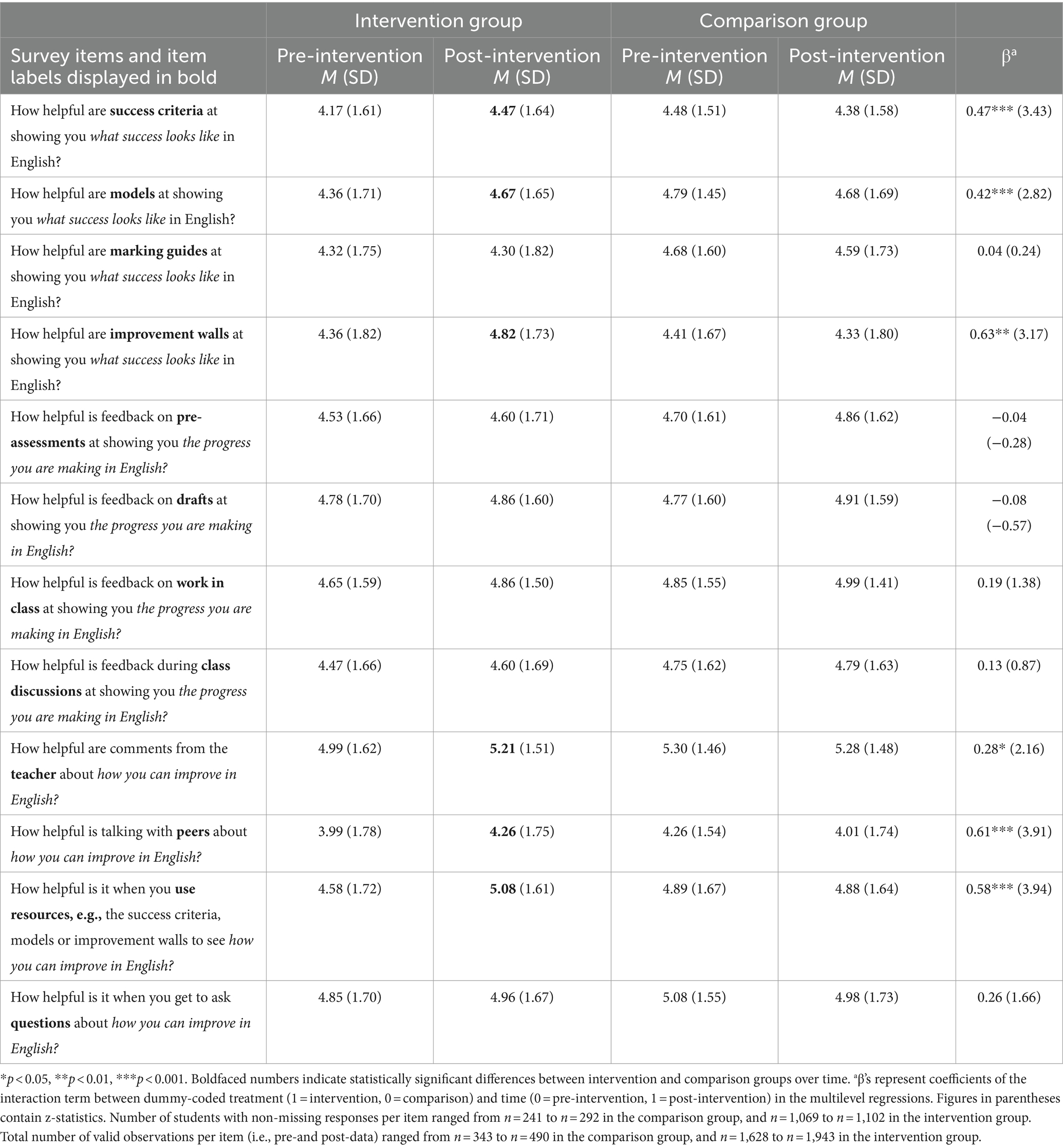

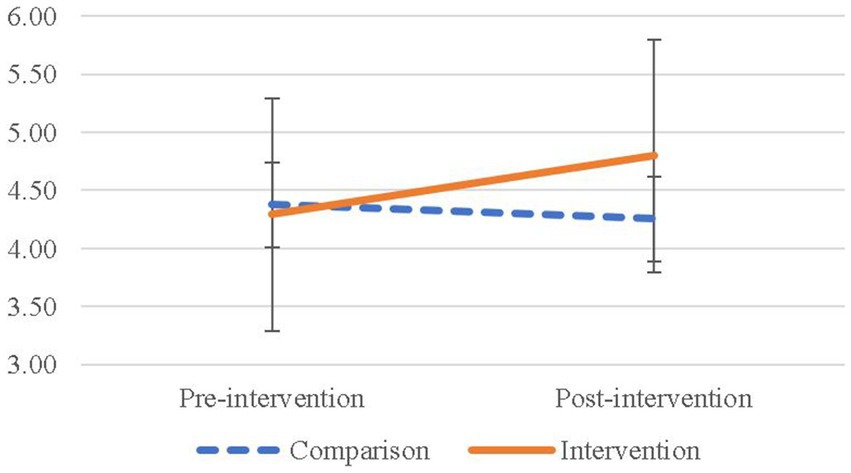

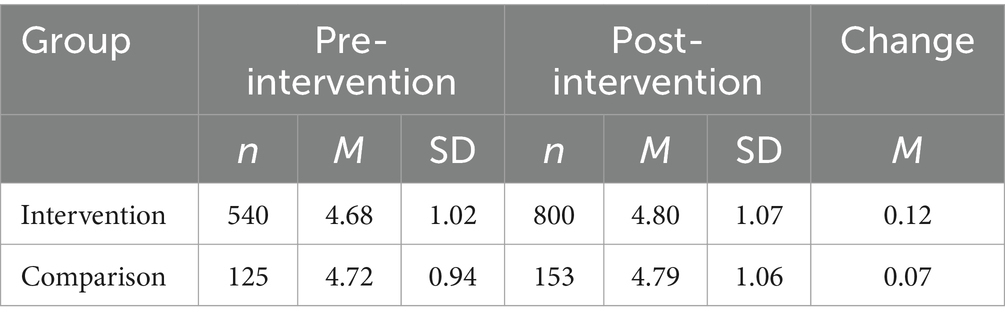

Table 1 presents the mean survey scaled scores for the intervention and comparison groups, pre- and post-intervention. The results show that students in both groups perceived feedback as mostly helpful at both survey administrations.

Table 1. Student perception survey mean scaled scores for the intervention and comparison group prior to and following the intervention.

Although student perceptions of feedback helpfulness increased slightly from pre- to post-intervention, multilevel analyses revealed no statistically significant difference between the intervention and comparison groups (β = 0.13, z = 1.17, p = 0.242). The scale comprised items measuring the perceived helpfulness of a range of feedback strategies, including strategies that were not a focus of the intervention. Item-level analyses were therefore conducted to examine the data more closely.
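The reported statistics can be checked for internal consistency. A Wald z statistic is the estimate divided by its standard error, and its two-sided p-value comes from the standard normal distribution. The sketch below assumes the reported z is such a Wald statistic, as produced by many mixed-model routines. It recovers the implied standard error and p-value using only the Python standard library.

```python
import math


def two_sided_p_from_z(z: float) -> float:
    """Two-sided p-value for a z statistic under a standard normal reference."""
    # For Z ~ N(0, 1): P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


beta, z = 0.13, 1.17           # values reported for the group comparison
implied_se = beta / z          # z = beta / SE, so SE = beta / z
p_value = two_sided_p_from_z(z)
print(f"implied SE = {implied_se:.3f}, two-sided p = {p_value:.3f}")
```

This gives p ≈ 0.242, matching the reported value. The implied standard error (about 0.11) is only approximate, because β and z are reported rounded.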

To answer the second research question, item-level responses (Table 2) were examined to identify which feedback strategies were perceived as more or less helpful following the PL intervention. Descriptive statistics showed that, with the exception of marking guides, intervention group students perceived all feedback strategies as more helpful post-intervention than pre-intervention. Of note, the use of marking guides was not endorsed in the PL intervention, as this strategy does not require active student engagement.

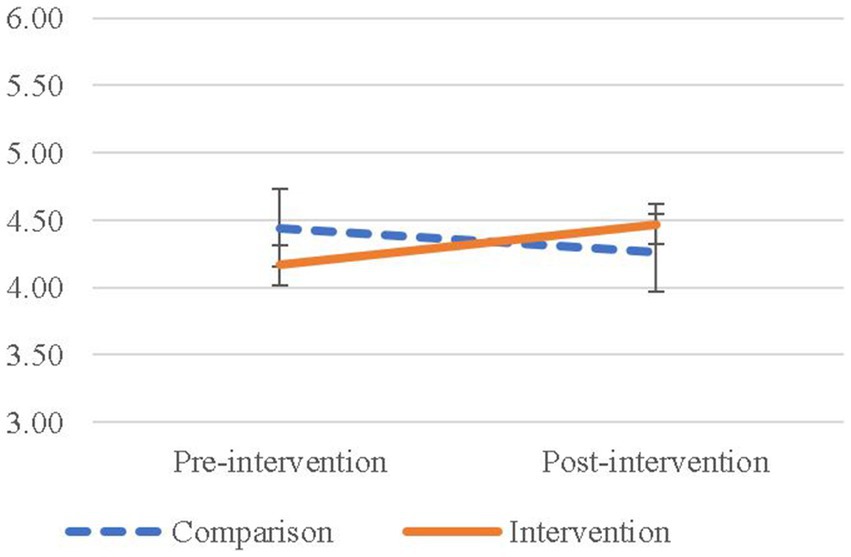

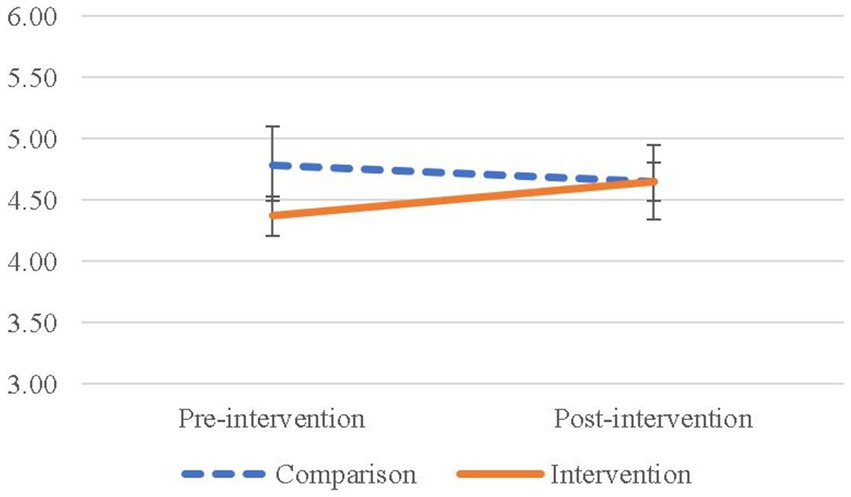

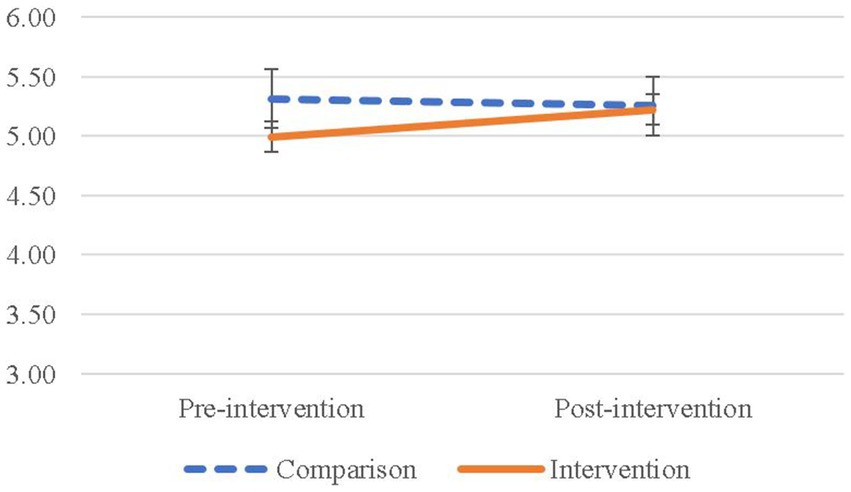

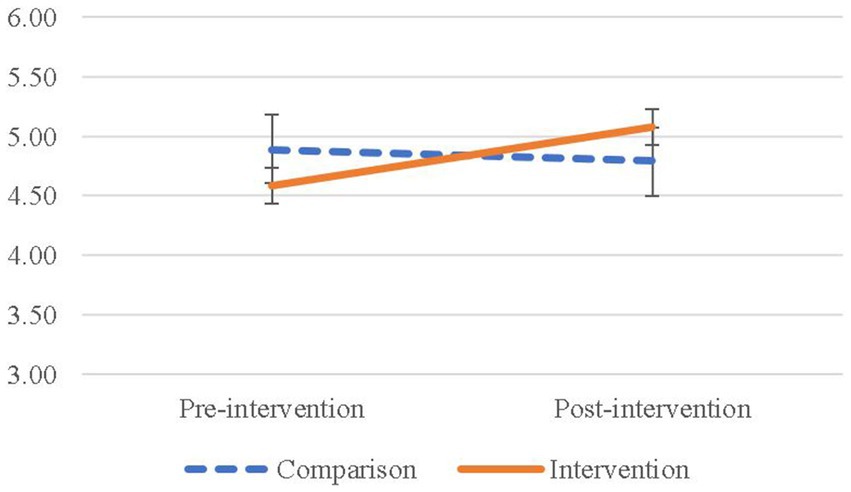

In the intervention group, the largest positive changes in perceptions were observed for the items addressing the use of resources and improvement walls. These students also perceived models, success criteria, and feedback from the teacher and peers about how to improve as more helpful following the intervention. Comparison group students perceived these strategies as relatively less helpful. Results from the multilevel regressions (Table 2) showed that the intervention group had statistically significantly greater increases in perceived helpfulness than the comparison group for the items addressing:

- success criteria (Figure 2)
- models (Figure 3)
- improvement walls (Figure 4)
- teacher comments (Figure 5)
- peer discussions (Figure 6)
- the use of resources (Figure 7)

Next, qualitative data from student focus groups were analysed to provide in-depth insights into which feedback strategies were perceived as more or less helpful after the PL intervention. These data also captured students’ explanations of why these strategies were or were not helpful.

3.2 Focus groups: perceived helpfulness of specific feedback strategies

3.2.1 Helpfulness of success criteria at showing students what success looks like in English

Students identified that success criteria were helpful because they clearly outlined the requirements for success, and hence what students needed to do to perform well. Success criteria enabled students to see what they needed to do to “work your way up.” Students demonstrated a thorough understanding of the criteria and features of quality. For example, they identified the following as features of quality: the use of meta-language and language features, and the demonstration of higher-order skills such as comparing and explaining.

Students reported a variety of approaches used by teachers to ensure a shared understanding of the success criteria. These strategies included:

1. Critiquing, sorting and discussing work samples to identify the markers of success and quality (success criteria).
2. Teacher-led classroom discussions to enable students to engage with and understand the success criteria. For example, students noted that their teacher “puts into our words, so we can understand it and have a better understanding of what we need to do,” and “we get the success criteria and we compare our work to it.”
3. Collaborative goal setting, challenging students to improve their work by demonstrating how they could apply the criteria to identify aspects for improvement and next steps.

Students’ responses made clear that they saw teacher support as critical in making success criteria helpful. Further, the focus group data show that the helpfulness of success criteria was linked to the use of models and improvement walls.

3.2.2 Helpfulness of models at showing students what success looks like in English

Students indicated that models were helpful in demonstrating what quality work looked like. Teachers used a variety of approaches to demonstrate different degrees of success by purposefully contrasting two or three models of varying quality. Importantly, teachers actively engaged students in the process of clarifying success using these models. For example, teachers placed students in small groups, asked them to evaluate which model was better and why, and facilitated critical discussions about quality. Teachers sometimes used students’ own work as samples for these discussions. As one student indicated, such discussions would focus on “someone who wrote a good report and someone who wrote an okay report; and we compare and see which one is better.” By discussing quality in a constructive yet supportive manner, teachers succeeded in making models helpful for guiding students’ learning.

3.2.3 Helpfulness of improvement walls at showing students what success looks like in English

Students identified that improvement walls (also referred to as “bump it up” walls or similar) provided a helpful reference point for clarifying what success looked like. An improvement wall is a continuously available but ever-evolving classroom resource that visually matches the success criteria and models. Teachers used a variety of approaches to construct these improvement walls, often in collaboration with students. To make the improvement walls appealing to students, teachers used metaphors such as the “road to success,” with cars representing features of quality “to get to the end of the road.” Students reported consulting the improvement wall regularly to remind themselves of quality features. One student stated that the improvement wall was particularly helpful in keeping in mind what quality looked like, without having to remember all the quality features discussed orally.

3.2.4 Helpfulness of teacher comments about how students can improve in English

Teachers used a variety of approaches to help students improve. This included whole class critiques using student work samples. For example, a student identified that:

Our teacher does it with the whole class; puts it up on the board and then we can see how they can improve their work; and then it gives us a better idea of how we work in our own work.

Some students also identified that their teacher demonstrated how to improve by collaboratively editing and improving the focus work sample (see 3.2.2), thereby highlighting strategies for improvement.

Although most students perceived teacher feedback about how they could improve as important, the teacher was often not their first point of reference. Peers and the improvement wall (see 3.2.5 and 3.2.6) also played an important role in helping students determine next steps. Students identified that teacher feedback was particularly helpful in three situations: when they found something “tricky,” when something was not on the improvement wall, or when they needed more specific guidance on what to do. As one student put it, “When you get to your teacher, you can get into the deeper knowledge of what you need to do.”

Students generally perceived teacher feedback on how to improve as helpful, yet several students identified that they did not always understand this feedback. One student indicated that self-assessment was critical in understanding and being able to use teacher feedback, as this enabled them to “see where you are at and you can improve using what they have said”.

3.2.5 Helpfulness of peer discussions about how students can improve in English

Students identified that talking to peers about how they can improve was very helpful. This helped them identify areas for improvement and check for completeness, and gave them ideas on how to improve and refine their understandings.

Peer assessment was organised in a number of different ways. Students worked in pairs to evaluate each other’s work and provide feedback focused on areas for improvement, often before requesting teacher feedback. Peer assessment was also organised in small groups. Students were sometimes asked to sort student work samples, assess their quality, and provide feedback to promote improvement using the criteria. Students indicated that sharing knowledge amongst group members helped enhance their own understanding. One student perceived sharing their work and being open to feedback as critical to improvement: “we look at other people’s work and see what they have and if we can add anything to our work.”

Students indicated that at times it was helpful to ask a peer rather than the teacher for help, for various reasons. For example, teachers were not always available to answer questions, and peers could sometimes explain the next steps in language students understood better. As one student reflected:

My teacher uses lots of big words that I don’t understand. She’s like, “Oh, you should add more blah, blah, blah,” and I’m like, “What? I don’t get what you mean.” And then I go to a friend and I understand her much better.

Students appeared to value peer feedback greatly, describing it as generally honest and helpful in guiding them on how to make their work better, one step at a time. Notably, several students identified that peer assessment was helpful for their self-assessment. For example, one student noted that by reading and providing feedback on a peer’s work, “you can see how they are going and how you are going, to compare; see who has more detail. And then you realise you have to get to their standard.” Reading other students’ work also gave students new ideas for improving their own work.

3.2.6 Helpfulness of resources to help students identify how they can improve in English

Students identified that the resources used in their classroom helped them identify how they could improve, through processes of self- and peer assessment. Specifically, students used the success criteria, models and improvement walls to self-assess their work. This helped them determine where they were at, identify aspects for improvement, and decide on next steps. Students perceived that resources were helpful in giving them ideas for how to improve their work. For example, one student reflected that “you can compare your work and see what you need to add to make your work better.” Students also emphasised the helpfulness of examples on the improvement wall for scaffolding their writing. They highlighted the importance of the improvement wall being available at all times, so they could use it to improve their work in an ongoing feedback loop. Importantly, the improvement wall appeared to challenge students to set goals to further improve their work, as it helped break improvement down into manageable steps.

4 Discussion

This study examined the effects of a teacher PL intervention underpinned by a student-centred model of feedback on student perceptions of feedback helpfulness. It sought to answer two research questions: (1) Did the PL intervention change students’ perceptions of feedback helpfulness? And (2) Which feedback strategies were perceived as more or less helpful following the PL intervention, and why? We hypothesised that training teachers in effective feedback would increase the helpfulness students perceived in feedback. The reasoning was that being active in the feedback process would make students better able to act on feedback effectively.

The findings showed no statistically significant overall difference between intervention and comparison group students in how their feedback perceptions changed from before to after the PL intervention. However, item-level analyses showed statistically significant group differences in these changes for six feedback strategies. Intervention group students perceived these strategies as more helpful following the intervention. Three of these strategies focused on feeding up; the remaining three focused on feeding forward. None of the feedback strategies were perceived as less helpful following the intervention. These findings show the potential for PL interventions to affect teachers’ classroom practices and (students’ perceptions of) feedback helpfulness for learning. Moreover, our findings demonstrate that students in lower primary education can be successfully activated in feedback processes. Qualitative student focus group data were analysed to provide in-depth insights into the findings from the quantitative analyses.

Students in the intervention group perceived three strategies for feeding up (Hattie and Timperley, 2007) as significantly more helpful following the intervention compared to those in the comparison group: success criteria, models, and improvement walls. The intervention strongly encouraged teachers to use these strategies in combination, due to their interdependent nature. We believe that what made things “stick” for students was the process of mapping the success criteria onto models and deconstructing models using success criteria. In other words, clarifying success and using models go hand in hand. The improvement walls proved to be a useful vehicle for combining the strengths of each of these strategies, ensuring students had continued access to essential resources and information. The intention was for improvement walls to be fluid in nature, drawing on understandings of quality as these were gradually co-constructed. Although metaphors were helpful in making the improvement walls look appealing, the key to their success was the extent to which they aligned with ever-evolving notions of what quality looks like. These findings corroborate prior research highlighting the value of co-constructing criteria (Wyatt-Smith and Adie, 2021).

Importantly, the interviewed students demonstrated a thorough understanding of the criteria, which appears to be critical for students to perceive criteria as helpful. This finding contrasts sharply with those of many previous studies (e.g., Timperley and Parr, 2009; DeLuca et al., 2018). In those studies, criteria may have been announced and referred to by the teacher but not actively engaged with by students. Students reported several approaches used by teachers to achieve shared understandings of the success criteria:

1. Critiquing, sorting and discussing work samples.
2. Teacher-led classroom discussions to enable students to engage with and understand the success criteria.
3. Collaborative goal setting.

Teachers were encouraged to spend considerable time actively involving students in the ongoing process of co-constructing success criteria, giving students a deeper understanding of the criteria’s purpose and intent (DeLuca et al., 2019; Brooks et al., 2021a). This ongoing process was crucial in building students’ capability to self- and peer-assess effectively.

Further, following the intervention, students in the intervention group perceived three strategies for feeding forward (Hattie and Timperley, 2007) as significantly more helpful than those in the comparison group did. These were teacher comments, peer discussions, and resources that help students identify how to move forward. The qualitative results showed that students perceived these strategies as helpful at different stages of the feedback cycle, with improvement walls serving as a first point of reference throughout the cycle. These findings suggest that students were active in self-regulating their learning to determine next steps. Nevertheless, students continued to value teacher comments. Using a combination of strategies for identifying how to improve appeared to enable students to engage effectively and efficiently with feedback, lifting the burden of feedback for improvement off the teacher. This finding is encouraging in light of previous research, which identified difficulties in shifting responsibility for feedback from teachers to students (Jonsson et al., 2015; DeLuca et al., 2018, 2019).

We believe that teachers’ more effective use of certain strategies is only one reason why students perceived these strategies as more helpful. Another possible explanation is that teachers and students openly discussed feedback strategies, giving students more insight into their value for learning. Such discussions provide an important starting point for changing how students are positioned to act in feedback processes, which is determined by how teachers construct their feedback practice (Mapplebeck and Dunlop, 2019; Van der Kleij et al., 2019). In addition, they enhance shared understandings of the purpose of feedback. This is in line with the ‘build a learning culture’ element of the student-centred feedback model’s outer wheel (Brooks et al., 2021a).

Given the young age of participating students, the positive findings regarding the perceived helpfulness of peer feedback and improvement walls are particularly noteworthy, as they suggest strong self-regulatory capacities. However, we would like to stress the interconnected nature of the feedback strategies addressed in the intervention. For example, for peer feedback practices to be effective, students first need to develop an understanding of what quality looks like. Clarifying success is therefore a fundamental first step. Further, younger students will require significant support to formulate helpful feedback that is aligned with success criteria (DeLuca et al., 2018; Lee et al., 2019). In contrast to previous research (DeLuca et al., 2018; Lee et al., 2019), interviewed students did not report issues with the trustworthiness or helpfulness of peer feedback. The intervention encouraged teachers to guide students in how to provide peer feedback by focusing on the key success criteria. We believe this approach contributed to the perceived helpfulness of peer feedback. The findings further suggest that feedback receivers perceived peer assessment as beneficial, and that it also benefited feedback providers, consistent with previous research (DeLuca et al., 2018; Huisman et al., 2018). Students reported applying new insights from reading their peers’ work to their own work, suggesting that peer assessment sparked self-feedback.

4.1 Limitations and implications for future research

Some limitations need to be acknowledged. First, purposive rather than random sampling may have introduced bias into the quantitative findings. This arose from the research design, in which intervention schools were already receiving the intervention as part of the wider study.

Furthermore, perceived helpfulness of feedback in intervention and comparison schools was already high pre-intervention, which limited the study’s ability to detect changes in perceived helpfulness. There were also limitations with respect to the survey and the use of self-report data. Age-appropriate data collection procedures were put in place, and these proved effective, as indicated by the high reliability values. The nature of the survey required excluding responses from participants who selected “do not use” for one or more items, which reduced the sample size. The comparison group was smaller than the intervention group because of difficulties recruiting schools to join this condition, which reduced statistical power. Nevertheless, sample sizes were deemed sufficiently large to detect any significant overall or item-level effects.

In addition, the findings only show that certain feedback strategies were perceived as more helpful. They do not show whether this feedback was effective in improving student achievement outcomes. Another limitation inherent in the study design was that students participated in the focus groups after completing the survey. As such, they had already been exposed to an overview of feedback strategies, making it more likely that they would refer to these. The interviewers tried to address this limitation by asking students to explain their responses, including by reflecting on specific examples.

Further research using additional measures is needed to investigate perceived and actual helpfulness of feedback (Van der Kleij and Lipnevich, 2021). For example, studies could use observational data and link self-reported perceptions of feedback helpfulness to actual use of feedback as demonstrated in different work samples. Additionally, this field of research would benefit from studies that distinguish the perceived helpfulness of feedback for learners at different proficiency levels.

4.2 Implications for feedback practice in schools

Our findings have important implications for classroom practice and teacher PL, and provide valuable insights into how teachers may facilitate active student engagement with feedback. Student-centred feedback processes, including the co-construction of success criteria and peer feedback, helped students understand what success looked like and showed them how to improve. This led them to perceive feedback as more helpful than they had prior to the intervention. Teachers would be well advised to draw on these active learning strategies to develop students’ in-depth understandings of quality work and self-regulated learning (Brooks et al., 2021a). These findings also call into question the benefit to students of the traditional, transmissive models of feedback that persist in schools. Acknowledging this, school leaders should consider PL opportunities that build teacher capability in using these student-centred feedback strategies in the classroom. In this way, students can perceive feedback as helpful without unnecessarily high burdens being placed on teachers.

The discussion above has highlighted the interrelatedness of certain feedback strategies. The feedback strategies that students perceived as more helpful following the intervention fell under feeding up and feeding forward. Nevertheless, we would like to stress that the process of feeding back to check on progress is inextricably related (Hattie and Timperley, 2007). Only when students are actively involved in each phase of the feedback cycle can the potential of feedback be fully realised.

Data availability statement

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to their containing information that could compromise the privacy of research participants.

Ethics statement

The studies involving humans were approved by the University of Queensland and the Queensland Department of Education. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin.

Author contributions

CB: Formal analysis, Writing – original draft, Writing – review & editing, Conceptualization, Data curation, Funding acquisition, Investigation, Methodology, Resources, Supervision. RB: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Writing – original draft, Writing – review & editing, Project administration. FK: Formal analysis, Writing – original draft, Writing – review & editing. CA: Formal analysis, Validation, Writing – original draft, Writing – review & editing. AC: Conceptualization, Funding acquisition, Methodology, Writing – review & editing. JH: Conceptualization, Methodology, Writing – review & editing. JS: Data curation, Writing – original draft, Writing – review & editing.

Funding

The authors declare financial support was received for the research, authorship, and/or publication of this article. This research was supported by the Australian Government through the Australian Research Council (LP160101604).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2024.1433184/full#supplementary-material

References

Brooks, C., Burton, R., Van der Kleij, F., Carroll, A., Olave, K., and Hattie, J. (2021a). From fixing the work to improving the learner: An initial evaluation of a professional learning intervention using a new student-centred feedback model. Stud. Educ. Eval. 100943. doi: 10.1016/j.stueduc.2020.100943

Brooks, C., Burton, R., Van der Kleij, F., Ablaza, C., Carroll, A., Hattie, J., et al. (2021b). Teachers activating learners: The effects of a student-centred feedback approach on writing achievement. Teach. Teach. Educ. 105:103387. doi: 10.1016/j.tate.2021.103387

Brooks, C., Burton, R., Van der Kleij, F., Carroll, A., and Hattie, J. (2021c). Towards student-centred feedback practices: Evaluating the impact of a professional learning intervention in primary schools. Assess. Educ. 28, 633–656. doi: 10.1080/0969594X.2021.1976108

Brooks, C., Carroll, A., Gillies, R. M., and Hattie, J. (2019a). A matrix of feedback for learning. Aust. J. Teach. Educ. 44, 14–32. doi: 10.14221/ajte.2018v44n4.2

Brooks, C., Huang, Y., Hattie, J., Carroll, A., and Burton, R. (2019b). What is my next step? School students’ perceptions of feedback. Front. Educ. 4:96. doi: 10.3389/feduc.2019.00096

Butler, D. L., and Winne, P. H. (1995). Feedback and self-regulated learning: a theoretical synthesis. Rev. Educ. Res. 65, 245–281. doi: 10.3102/00346543065003245

Creswell, J. W., and Plano Clark, V. L. (2018). Designing and conducting mixed methods research. 3rd Edn. Thousand Oaks, CA: Sage.

Creswell, J., and Poth, C. (2018). Qualitative inquiry & research design: choosing among five approaches. 4th Edn. Thousand Oaks, CA: Sage.

de Vries, J., Van Gasse, R., van Geel, M., Visscher, A., and Van Petegem, P. (2024). Profiles of teachers’ assessment techniques and their students’ involvement in assessment. Europ. J. Teach. Educ. doi: 10.1080/02619768.2024.2354410

DeLuca, C., Chapman-Chin, A., and Klinger, D. A. (2019). Toward a teacher professional learning continuum in assessment for learning. Educ. Assess. 24, 267–285. doi: 10.1080/10627197.2019.1670056

DeLuca, C., Chapman-Chin, A. E. A., LaPointe-McEwan, D., and Klinger, D. A. (2018). Student perspectives on assessment for learning. Curric. J. 29, 77–94. doi: 10.1080/09585176.2017.1401550

Gamlem, S. M., and Smith, K. (2013). Student perceptions of classroom feedback. Assess. Educ. 20, 150–169. doi: 10.1080/0969594X.2012.749212

Gotwals, A. W., and Cisterna, D. (2022). Formative assessment practice progressions for teacher preparation: a framework and illustrative case. Teach. Teach. Educ. 110:103601. doi: 10.1016/j.tate.2021.103601

Gulikers, J. T. M., and Baartman, L. K. J. (2017). Doelgericht professionaliseren: formatieve toetspraktijken met effect! Wat DOET de docent in de klas? (targeted professional development: Formative assessment practices with effect! What the teacher DOES in the classroom) (NRO review report no. 405–15-722). Nationaal Regieorgaan Onderwijsonderzoek.

Gulikers, J. T. M., Veugen, M. J., and Baartman, L. K. J. (2021). What are we really aiming for? Identifying concrete student behaviour in co-regulatory formative assessment processes in the classroom. Front. Educ. 6:750281. doi: 10.3389/feduc.2021.750281

Harks, B., Rakoczy, K., Hattie, J., Besser, M., and Klieme, E. (2014). The effects of feedback on achievement, interest and self-evaluation: the role of feedback’s perceived usefulness. Educ. Psychol. 34, 269–290. doi: 10.1080/01443410.2013.785384

Hattie, J., Gan, M., and Brooks, C. (2016). “Instruction based on feedback” in Handbook of research on learning and instruction. eds. R. E. Mayer and P. A. Alexander (New York, NY: Routledge).

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Havnes, A., Smith, K., Dysthe, O., and Ludvigsen, K. (2012). Formative assessment and feedback: making learning visible. Stud. Educ. Eval. 38, 21–27. doi: 10.1016/j.stueduc.2012.04.001

Hayes, A. F. (2006). A primer on multilevel modeling. Hum. Commun. Res. 32, 385–410. doi: 10.1111/j.1468-2958.2006.00281.x

Huisman, B., Saab, N., Van Driel, J., and Van Den Broek, P. (2018). Peer feedback on academic writing: undergraduate students’ peer feedback role, peer feedback perceptions and essay performance. Assess. Eval. High. Educ. 43, 955–968. doi: 10.1080/02602938.2018.1424318

Jonsson, A., Lundahl, C., and Holmgren, A. (2015). Evaluating a large-scale implementation of assessment for learning in Sweden. Assess. Educ. 22, 104–121. doi: 10.1080/0969594X.2014.970612

Jonsson, A., and Panadero, E. (2018). “Facilitating students’ active engagement with feedback” in The Cambridge handbook of instructional feedback. eds. A. Lipnevich and J. Smith (Cambridge, UK: Cambridge University Press).

Kluger, A., and DeNisi, A. (1996). The effects of feedback interventions on performance: a historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychol. Bull. 119, 254–284. doi: 10.1037/0033-2909.119.2.254

Lee, H., Chung, H. Q., Zhang, Y., Abedi, J., and Warschauer, M. (2020). The effectiveness and features of formative assessment in US K-12 education: a systematic review. Appl. Meas. Educ. 33, 124–140. doi: 10.1080/08957347.2020.1732383

Lee, I., Mak, P., and Yuan, R. E. (2019). Assessment as learning in primary writing classrooms: an exploratory study. Stud. Educ. Eval. 62, 72–81. doi: 10.1016/j.stueduc.2019.04.012

Lipnevich, A. A., and Smith, J. K. (2022). Student–feedback interaction model: revised. Stud. Educ. Eval. 75:101208. doi: 10.1016/j.stueduc.2022.101208

Mapplebeck, A., and Dunlop, L. (2019). Oral interactions in secondary science classrooms: a grounded approach to identifying oral feedback types and practices. Res. Sci. Educ. 51, 1–26. doi: 10.1007/s11165-019-9843-y

Rakoczy, K., Harks, B., Klieme, E., Blum, W., and Hochweber, J. (2013). Written feedback in mathematics: mediated by students’ perception, moderated by goal orientation. Learn. Instr. 27, 63–73. doi: 10.1016/j.learninstruc.2013.03.002

Sadler, D. R. (1989). Formative assessment and the design of instructional systems. Instr. Sci. 18, 119–144. doi: 10.1007/BF00117714

Timperley, H. S., and Parr, J. M. (2009). What is this lesson about? Instructional processes and student understandings in writing classrooms. Curric. J. 20, 43–60. doi: 10.1080/09585170902763999

Van der Kleij, F. M., Adie, L. E., and Cumming, J. J. (2019). A meta-review of the student role in feedback. Int. J. Educ. Res. 98, 303–323. doi: 10.1016/j.ijer.2019.09.005

Van der Kleij, F. M., and Lipnevich, A. A. (2021). Student perceptions of assessment feedback: a critical scoping review and call for research. Educ. Assess. Eval. Account. 33, 345–373. doi: 10.1007/s11092-020-09331-x

Veugen, M. J., Gulikers, J. T. M., and den Brok, P. (2024). Secondary school teachers’ use of formative assessment practice to create co-regulated learning. J. Format. Design Learn. doi: 10.1007/s41686-024-00089-9

Voerman, L., Meijer, P. C., Korthagen, F., and Simons, R. J. (2015). Promoting effective teacher-feedback: from theory to practice through a multiple component trajectory for professional development. Teach. Teach. 21, 990–1009. doi: 10.1080/13540602.2015.1005868

Winstone, N. E., and Nash, R. A. (2023). Toward a cohesive psychological science of effective feedback. Educ. Psychol. 58, 111–129. doi: 10.1080/00461520.2023.2224444

Winstone, N. E., Nash, R. A., Parker, M., and Rowntree, J. (2017). Supporting learners’ agentic engagement with feedback: a systematic review and a taxonomy of recipience processes. Educ. Psychol. 52, 17–37. doi: 10.1080/00461520.2016.1207538

Keywords: feedback, student perceptions, teacher professional learning, primary education, mixed methods research methodology

Citation: Brooks C, Burton R, Van der Kleij F, Ablaza C, Carroll A, Hattie J and Salinas JG (2024) “It actually helped”: students’ perceptions of feedback helpfulness prior to and following a teacher professional learning intervention. Front. Educ. 9:1433184. doi: 10.3389/feduc.2024.1433184

Edited by:

Robbert Smit, St. Gallen University of Teacher Education, Switzerland

Reviewed by:

Sebastian Röhl, University of Education Freiburg, Germany

Pınar Karaman, Sinop University, Türkiye

Miriam Salvisberg, University of Applied Sciences and Arts of Southern Switzerland, Switzerland

Copyright © 2024 Brooks, Burton, Van der Kleij, Ablaza, Carroll, Hattie and Salinas. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fabienne Van der Kleij, fabienne.vanderkleij@acer.org

Cameron Brooks

Rochelle Burton

Fabienne Van der Kleij

Christine Ablaza2

Annemaree Carroll

John Hattie