- 1 Faculty of Engineering, Ruppin Academic Center, Emek Hefer, Israel

- 2 Faculty of Economics and Business Administration, Ruppin Academic Center, Emek Hefer, Israel

- 3 Ruppin Research Group in Environmental and Social Sustainability, Ruppin Academic Center, Emek Hefer, Israel

Introduction: In recent years, numerous studies have compared traditional face-to-face (F2F) learning on campus with online learning, seeking to establish how the learning environment (online vs. F2F) affects outcomes such as student satisfaction and achievement. In a separate line of research, scholars have examined various facets of active learning—an approach that makes use of interactive learning methods—separately in online and F2F environments. However, few studies have compared the effects of active learning in classes taught online vs. F2F. The present study addresses this gap. It follows an earlier study in which we examined the effects of active learning in an online environment, particularly how the extent and variety of interactive teaching methods used affect students’ course evaluations (overall evaluations and perceived clarity of the teaching).

Methods: The present study repeats the setup of that previous study in a F2F environment, allowing us both to gain new insights into the effects of active learning in this context and to directly compare the examined outcomes in F2F vs. online learning.

Results: The results reveal consistent trends in both studies: more extensive and more varied use of interactive learning methods improves overall student evaluations and perceptions of the clarity of teaching in the course. Crucially, minimal use of interactive teaching methods results in notably lower student evaluations and perceptions of teaching clarity in F2F settings compared to online classes.

Discussion: The findings highlight the essential need for instructors to adopt diverse interactive methods in F2F environments to improve educational outcomes and reinforce the effectiveness of active learning.

1 Introduction

The COVID-19 pandemic prompted an urgent and significant transformation in educational delivery, accelerating the transition from traditional face-to-face (F2F) instruction to online learning environments. This shift was not merely logistical but also pedagogical, intensifying the focus on active learning—an educational approach that engages students through interactive methods.

Active learning techniques place students at the center of the learning process, making them protagonists of discovery rather than passive receivers of information (Deslauriers et al., 2019). These methods benefit the learning process in four main ways: (1) engaging students directly with the content, leading to deeper learning (Chi and Wylie, 2014); (2) challenging students to apply, analyze, and evaluate information rather than simply memorize facts, thereby developing higher-order cognitive skills (Konopka et al., 2015); (3) engaging students by using relevant real-world applications, such as problem-based learning techniques that often draw from real-world scenarios (Chi and Wylie, 2014); and (4) developing collaborative skills through group work, which helps students build important teamwork and communication abilities while improving academic achievement (Froyd, 2007; Saunders and Wong, 2020). Moreover, active learning seems to be especially valuable not only for raising achievement across the board, but for reducing achievement gaps for underrepresented students in STEM (Science, Technology, Engineering, and Mathematics) disciplines, as found by Theobald et al. (2020) in a comprehensive review. This finding highlights the potential of active learning to promote equity in education. The present study is part of ongoing research in which we focus on the effects of four specific active learning methods [see section “2 Background (active learning methods)”].

While extensive research has explored the efficacy of active learning within distinct online (Mou, 2021) or F2F contexts, comparative analysis of these methods across both modalities remains scant. Our prior research (Barnett-Itzhaki et al., 2023) also primarily examined these environments in isolation, potentially overlooking how active learning strategies influence student assessments depending on the instructional context. In that earlier work, we explored how students experienced active learning online during the COVID-19 period with respect to three main questions: (a) how students evaluated the course; (b) how students perceived the clarity of the teaching; and (c) how students assessed the effectiveness of online learning. That study was based on approximately 30,000 teaching evaluation surveys filled out by undergraduate and graduate students at our institution during the COVID-19 period. We analyzed these outcomes in relation to various factors, such as class characteristics and student demographics, to determine how the use of interactive learning methods influences students’ experiences when learning online. Our findings indicated that both the extent and the variety of interactive learning methods used significantly affect the perceived effectiveness and clarity of teaching.

In the 2021–2022 academic year, students at our institution returned to campus and to a face-to-face (F2F) learning environment. Simultaneously, the teaching staff adapted some of the methods and approaches acquired during the COVID-19 period for F2F learning, particularly the use of interactive learning methods. This situation prompted us to carry out a follow-up study to see how students experience active learning in a F2F environment, focusing on the same primary concerns as the previous study: (a) students’ evaluations of the course, and (b) how students perceived the clarity of the teaching in the course. The third aspect examined in the previous study, the perceived effectiveness of online learning, is not relevant to the present study.

Within the educational literature, the return to campus following the pandemic has led to several academic studies aimed at comparing online learning to F2F learning. However, most of these studies focus on differences in student performance and satisfaction between these two environments (Chisadza et al., 2021; Spencer and Temple, 2021; Shah et al., 2022). For instance, Regmi and Jones (2020) conducted a systematic review to compare the effectiveness of online and F2F learning in health professions education. Their findings suggest that online learning is at least as effective as traditional F2F learning.

As far as we can tell, few comparative studies have examined online versus F2F learning in terms of how active learning affects students’ perceptions and evaluations.

Addressing this gap, our current study aims to evaluate the impact of the extent and variety of interactive learning methods on student assessments in both online and F2F settings. We formulated two objectives: (a) to explore the active learning experience in a F2F environment, focusing on students’ course evaluations and perceived teaching clarity; and (b) to conduct a comparative analysis, examining students’ experiences of active learning in F2F versus online environments. To ensure as precise a comparison as possible, we drew our data from the same sources (teaching surveys filled out by students), in the same format as previously, and analyzed the data using the same methodology. For convenience, in what follows, the previous study is termed “the online study,” while the current study is termed “the F2F study.”

By employing a consistent set of measures and methodologies to evaluate approximately 30,000 teaching evaluation surveys, this study offers new insights into the comparative effectiveness of active learning across different learning environments. On the one hand, the findings of the F2F study are similar to those of the online study. Overall, they show that both the extent and variety of interactive teaching methods used significantly affect the studied outcomes: greater extent and variety are associated with significantly improved student evaluations of the course, and significantly higher perceived teaching clarity. On the other hand, classes with little or no use of interactive methods had statistically significantly lower evaluations and lower perceived clarity in F2F compared to online classes.

This research examines not merely the presence but also the variety and extent of active learning techniques, and directly compares their effects across the two learning environments. Ultimately, it contributes to a broader educational dialog on best practices in active learning, underscoring its pivotal role in supporting student satisfaction and academic success across diverse instructional settings.

The rest of the paper is organized as follows: in section 2, we provide background on active learning methods; in section 3, we elaborate on related work; in section 4, we present our research objectives and questions; in section 5, we describe the research methods; and in section 6, we present our findings. Finally, in section 7, we discuss the results and outline directions for possible future work.

2 Background (active learning methods)

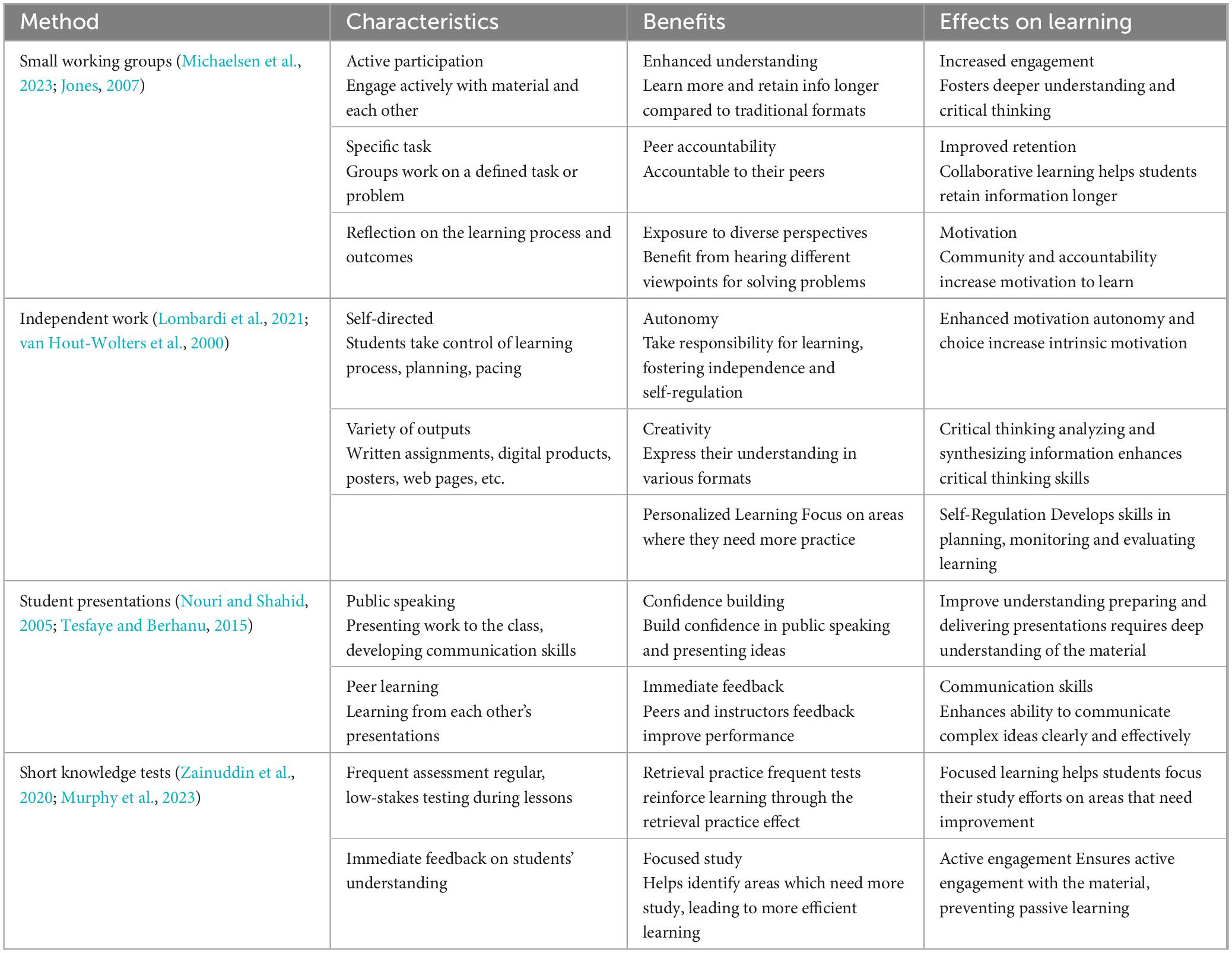

Active learning has been widely recognized as an effective pedagogical approach, leading to improved student outcomes across various disciplines in higher education (Theobald et al., 2020). According to Freeman et al. (2014), “Active learning increases student performance in science, engineering, and mathematics.” In recent years, many active learning methods have been developed (Lombardi et al., 2021). In our current and previous research (Barnett-Itzhaki et al., 2023), we have chosen to focus on four active learning methods: (1) small working groups for discussion, thinking through, or performing a task; (2) independent work during lessons (via written assignments or producing digital learning products, such as videos, posters, or web pages); (3) student presentations during lessons; and (4) short knowledge tests during lessons (e.g., quizzes and questionnaires).

In Table 1, we elaborate on the characteristics, benefits, and effects on learning with respect to each of these four methods.

In sum, these active learning techniques—small working groups, independent work, student presentations, and short knowledge tests—each contribute uniquely to enhancing the learning process. They promote active engagement, critical thinking, and deeper understanding, all of which are essential for effective learning.

3 Related work

Extensive research has explored various facets of active learning methodologies, examining their implementation in both online and F2F educational environments. In addition, numerous scholarly inquiries have delved into comparisons between online and traditional F2F education, with a particular emphasis on evaluating student performance, gauging satisfaction levels, and exploring pertinent influencing factors. However, in examining the existing literature, we identified a lack of studies comparing the use of active learning in the online and F2F modalities. This is the focus of the current research. Consequently, to put our research in context, in this section we offer an in-depth review of scholarly works that comprehensively compare these learning modalities (online and F2F) from perspectives other than active learning.

Several studies have examined students’ performance, satisfaction, or both in online versus F2F environments based on data collected in a specific course or teaching domain (e.g., Summers et al., 2005; Friday et al., 2006; Smith and Stephens, 2010; Ary and Brune, 2011; Biel and Brame, 2016; Paul and Jefferson, 2019; Regmi and Jones, 2020; Thai et al., 2020). Of these, most (e.g., Summers et al., 2005; Friday et al., 2006; Ary and Brune, 2011; Biel and Brame, 2016) found no statistically significant differences in student performance between the online and traditional environments. Smith and Stephens (2010) found a statistically significant difference in mean scores achieved on the final exam, with online students earning a markedly higher mean score. However, the study found no significant disparities in student satisfaction as assessed through student evaluations. In contrast, Summers et al. (2005) report that students who enrolled in the online version of a course rated their satisfaction markedly lower than their peers in the traditional classroom setting. Those authors recommended that instructors should carefully consider pedagogical factors, including student characteristics, motivation, and levels of instructor support, during the development of online courses to bolster student satisfaction; and they called for future research to delve deeper into the specific factors influencing student satisfaction in online courses. Biel and Brame (2016) offer several recommendations to enhance the effectiveness of online instruction, including the implementation of an online orientation for students, fostering interactions through digital communication tools, and incorporating elements that encourage student self-reflection and self-assessment.

Mali and Lim (2021) found that students perceived blended learning (BL) more positively during the COVID-19 pandemic, but preferred face-to-face (F2F) learning when COVID was no longer a concern. In a mixed-methods study with undergraduates, they showed that F2F is favored due to better interaction with lecturers, group work, peer engagement, class involvement, and the ability to ask technical questions. They argue that to improve BL, policymakers should incorporate social elements into netiquette frameworks to enhance the student experience and mitigate negative attitudes toward online and blended learning.

In a broader effort to assess the effectiveness of online versus traditional F2F instruction, Paul and Jefferson (2019) examined outcomes for an environmental science class that was offered both F2F and online between 2009 and 2016. Overall, they found no statistically significant differences in student performance between the two groups; and these conclusions remained consistent when considering factors such as gender and class rank. However, this study was context-specific, focusing primarily on a specific course tailored to non-STEM majors, which may constrain the extrapolation of these results to other academic disciplines or student populations.

Some studies have compared student achievement and satisfaction without restriction to specific teaching domains (e.g., Dell et al., 2010; Atchley et al., 2013). Intriguingly, Atchley et al. (2013) examined 14 academic disciplines and found that course completion rates varied significantly by subject. Notably, reading courses had the highest completion rate at 98.2%, while finance courses had the lowest at 82.2% (see footnote 1). These findings align with previous research suggesting that some disciplines may be better suited to online delivery than others (Noble, 2002; Carnevale, 2003; Nelson, 2007; Paden, 2006; Smith et al., 2008). This variation in completion rates across disciplines highlights the importance of considering subject matter when designing and implementing online courses. By contrast, Dell et al. (2010) found no statistically significant disparities in the quality of work between the two environments. Both these studies highlight the need for further research given the burgeoning popularity of online learning, including the potential role of variables such as student characteristics and prior experience with online learning (Atchley et al., 2013). Dell et al. (2010) also emphasize the paramount importance of instructional strategies that promote active learning, encourage student interaction, and facilitate self-reflection and self-regulation.

Other papers (e.g., Shah et al., 2022; Chisadza et al., 2021; Spencer and Temple, 2021; Kemp and Grieve, 2014; Driscoll et al., 2012; Johnson et al., 2000) also compared the effectiveness of online and face-to-face instruction in terms of satisfaction levels, performance, and other variables. The studies of Driscoll et al. (2012) and Johnson et al. (2000) found that F2F students exhibited marginally more favorable perceptions of instructors and course quality. F2F cohorts reported greater satisfaction with student interaction and the support provided by instructors and academic departments. However, no statistically significant differences were found in learning outcomes. The authors of both studies underscored the need for enhancing communication in online education settings. Shah et al. (2022) found a significant disparity in engagement and satisfaction, favoring F2F classroom settings. They called for qualitative research to help explore these disparities and for the development and implementation of training programs aimed at enhancing the quality of online education. Kemp and Grieve (2014) found that students exhibited a preference for F2F activities over their online counterparts and that F2F discussions were perceived as more engaging and conducive to immediate feedback. However, as in other studies reviewed here (e.g., Driscoll et al., 2012; Johnson et al., 2000), there were no statistically significant differences in test performance.

Chisadza et al. (2021) examined factors influencing students’ performance during the transition from face-to-face to online learning due to the COVID-19 pandemic, using survey responses and grade differences from a South African university. The results indicate that good WiFi access positively impacted performance, while difficulty in transitioning to online learning and a preference for self-study over assisted study were associated with lower performance. The study suggests enhancing digital infrastructure and lowering internet costs to mitigate educational impacts of the pandemic.

To summarize, previous studies have yielded a range of results concerning the efficacy of online and traditional education. While some studies observed no significant disparities between the two formats, others reported variations in academic outcomes (e.g., completion rates and grades) and student perceptions. Variables such as student characteristics, engagement levels, instructor support, and course design were identified as influential in shaping the success of online learning experiences. In essence, online education emerges from this literature as a viable alternative to F2F learning, but studies consistently call for further research into ways to improve its effectiveness. Notably, students tend to favor F2F discussions for their interactive and feedback-rich nature, even though both modalities can lead to comparable academic performance outcomes. Therefore, improving communication and engagement in online learning environments is imperative.

Within the academic literature, there is a conspicuous dearth of comprehensive studies offering a comparative analysis of active learning in online and F2F education, particularly regarding student satisfaction and perspectives. The present research aims to address this gap in the literature.

4 Research objectives

As mentioned, the current study (the F2F study) has two objectives: (a) to investigate the experience of active learning among students in a F2F environment, using the same measures as in our previous online study; and (b) to compare the effect of active learning on students in an online versus F2F environment, based on the findings of the previous study and the present study, respectively. In both studies, active learning is expressed through the use of one or more of the following four interactive learning methods: (1) small working groups for discussion, thinking through, or performing a task; (2) independent work during lessons (via written assignments or producing digital learning products, such as videos, posters, or web pages); (3) student presentations during lessons; and (4) short knowledge tests during lessons (e.g., quizzes and questionnaires). Further, in both studies, we account for the following student and class characteristics: instructor’s gender, student’s gender, and student’s year of study.

Based on the above, we formulated the following research questions:

RQ (1) How do the extent and variety of interactive learning methods in a F2F course affect students’ evaluations of the course and students’ perceptions of the clarity of teaching in the course, alongside the different class and student characteristics (instructor gender, student gender, student year of study)?

RQ (2) What differences (if any) exist between online and F2F classes in students’ course evaluations and perceived clarity of the teaching in relation to the extent and variety of active learning?

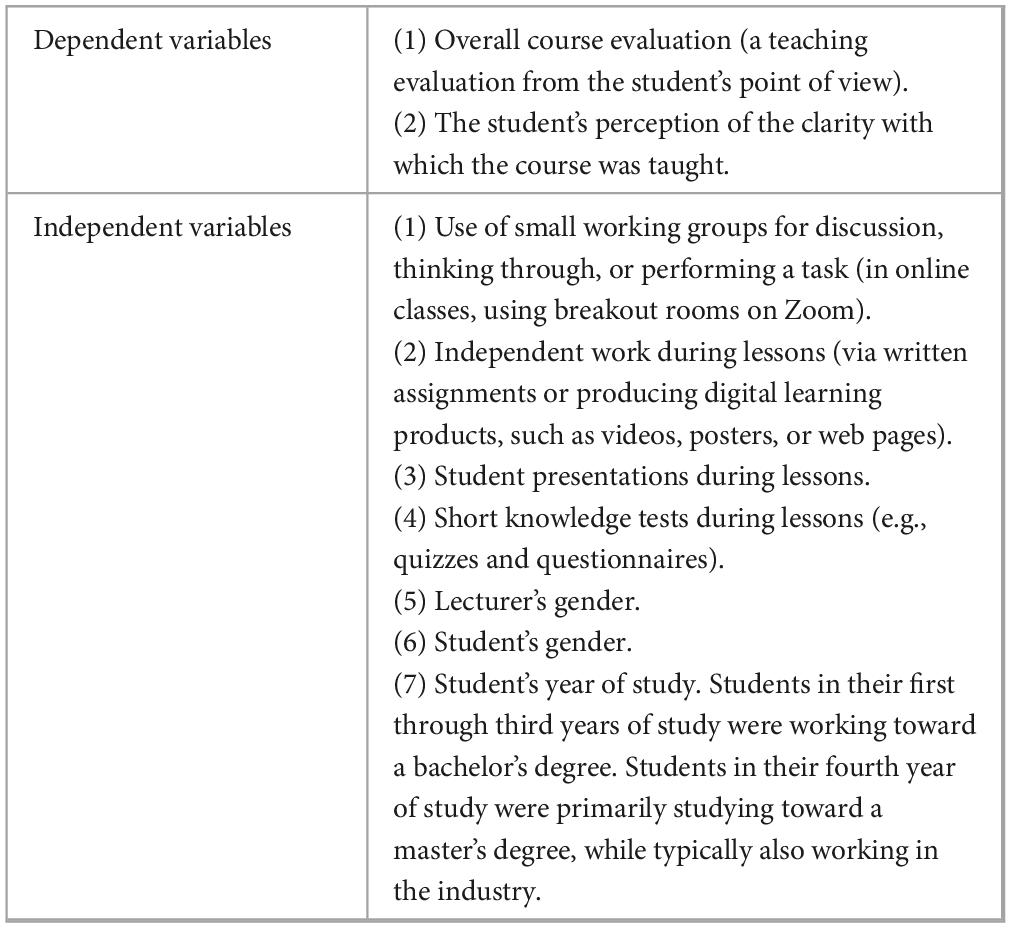

Accordingly, we have two dependent variables and seven independent variables: four for the different interactive learning methods (numbered 1–4), and three for the student and class characteristics (numbered 5–7). The variables are presented and described in Table 2.

5 Materials and methods

The research relied on evaluation surveys filled in by students during semesters A (autumn) and B (spring) of the 2021–2022 and 2022–2023 academic years, for a total of four semesters. Such surveys are routinely distributed by academic institutions to evaluate measures of student fulfillment and satisfaction. The surveys for both studies included questions related to the use of interactive learning methods.

5.1 Participants and procedure

Participants were all students at the same academic institute. The surveys related to classes in 23 departments in the four faculties of the institution: (1) Social and Community Sciences, (2) Marine Sciences, (3) Engineering, and (4) Economics and Business Administration. We analyzed only surveys referring to lecture-style classes (i.e., we did not include seminars, as these are naturally discussion-based and interactive, and so inherently employ active learning techniques). Thus, the findings specifically relate to the use of active learning methods in traditional lecture settings.

Students were asked to complete a survey for each class in which they were registered, resulting in multiple survey responses per student. Hence, the number of surveys is substantially larger than the number of students. The response rate was high, reflecting robust participation from the student body.

With respect to the gender distribution, the sample included both male and female students. The exact number of responses from male and female students was tracked but not individually identifiable due to anonymization. More precisely, as survey responses were anonymous, we cannot link particular surveys to particular students. However, the institute’s Teaching Promotion Unit (TPU), which administered the surveys, collected demographic information, including gender, during the survey administration process. This information was then provided to the researchers in a de-identified format, allowing them to track the gender distribution of responses for each class without compromising student anonymity. We also examined the gender of the instructor to test for potential differences in teaching evaluations based on instructor gender.

Table 3 provides a detailed breakdown of the sample.

5.2 Measures

The items included in the surveys were designed to elicit students’ assessments and perceptions of the course. We used six questions that appeared in the survey. Two questions solicited respondents’ overall evaluation of the class, and how they perceived the clarity of the teaching. Students were asked to rate their agreement or evaluation on a scale from 1 (lowest/most negative) to 6 (highest/most positive). The other four questions referred to the four interactive learning methods described above: (1) small working groups; (2) independent work during lessons; (3) student presentations during lessons; and (4) short knowledge tests during lessons. Students were asked to report the frequency with which the interactive learning methods were used in the class on the following scale: (1) Never used: The method was not used at all during the course. (2) Rarely used: The method was used occasionally, but not in most lessons. (3) Sometimes used: The method was used in some lessons, but not consistently throughout the course. (4) Frequently used: The method was used regularly in most or all lessons. Note, however, that these assessments are inherently subjective, as they reflect the students’ perceptions and experiences. Even the lowest score, “never used,” can vary in interpretation among students depending on their personal engagement and recollection.

5.3 Analytical strategy

As the course evaluation scores have a non-normal distribution, we analyzed them using nonparametric tests. Wilcoxon unpaired (rank-sum) tests were used to compare the evaluation scores of male and female students and to compare evaluation scores given to male and female lecturers. We further compared scores given to male and female lecturers separately for male and female students. Pearson and Spearman correlations were used to calculate relationships between evaluation scores, perceived clarity, and student’s year of study.
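For readers unfamiliar with the unpaired Wilcoxon test, the following is a minimal sketch of a two-sided rank-sum test using only the Python standard library. It is an illustration, not the analysis code used in the study (which was run in Matlab): it assumes the large-sample normal approximation with a tie correction and no continuity correction, and the function names `average_ranks` and `rank_sum_test` are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values from 1..n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation).

    Returns (z, p). Assumption: no continuity correction is applied.
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    n = n1 + n2
    combined = list(x) + list(y)
    ranks = average_ranks(combined)
    w = sum(ranks[:n1])              # rank sum of the first sample
    mean_w = n1 * (n + 1) / 2        # expected rank sum under H0
    # Tie correction: each group of t tied values contributes t^3 - t.
    counts = {}
    for v in combined:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var_w = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_w <= 0:                   # all observations identical
        return 0.0, 1.0
    z = (w - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p
```

Because evaluation scores lie on a 1 to 6 scale, ties are the norm rather than the exception, which is why the sketch averages tied ranks and corrects the variance accordingly.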

As class sizes ranged widely, we created class-related entries based on the average evaluation scores and average use of interactive learning methods reported for each class. Wilcoxon tests were used to compare the use of interactive learning methods by male and female lecturers. Spearman tests were used to identify correlations between the extent to which interactive learning methods were employed and the two dependent variables (course evaluation scores and clarity of the teaching).
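The class-level aggregation and rank correlation described above can be sketched as follows. This is a hedged illustration rather than the study's actual code: the field names `class_id`, `evaluation`, and `method_use` are hypothetical, and the Spearman coefficient is computed as the Pearson correlation of tie-averaged ranks.

```python
from collections import defaultdict
from statistics import mean


def class_means(surveys):
    """Collapse individual survey rows into one averaged entry per class."""
    grouped = defaultdict(list)
    for row in surveys:
        grouped[row["class_id"]].append(row)
    return {
        cid: {
            "evaluation": mean(r["evaluation"] for r in rows),
            "method_use": mean(r["method_use"] for r in rows),
        }
        for cid, rows in grouped.items()
    }


def ranks(values):
    """Rank values from 1..n; tied values share the mean of their ranks."""
    first, count = {}, {}
    for pos, v in enumerate(sorted(values), start=1):
        first.setdefault(v, pos)          # first position of each value
        count[v] = count.get(v, 0) + 1    # number of ties for that value
    return [first[v] + (count[v] - 1) / 2 for v in values]


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranked data.

    Assumes neither input is constant (otherwise the denominator is zero).
    """
    rx, ry = ranks(x), ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)
```

Averaging within each class first means that every class contributes one data point to the correlation, so large classes do not dominate the result.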

Next, we examined the effect of using a variety of interactive learning methods. Toward this end, we defined two groups of classes: those that made high use of a variety of interactive learning methods (at least three different methods, with interactive learning used in most of the lessons), and those that made little or no use of these methods (one method at most, and used only once). The dependent variables were compared between the two groups using Wilcoxon unpaired tests. Classes that fell between these groups, using one or two interactive learning methods infrequently but more than once, were not examined in this analysis.
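One way to express this grouping rule in code is sketched below. The numeric cutoffs are assumptions made purely for illustration: the text defines the groups verbally, and the mapping of "most of the lessons" and "used only once" onto class-mean scores on the 1 to 4 frequency scale (`HIGH_USE_CUTOFF`, `LOW_USE_CUTOFF`) is hypothetical, as are the function and key names.

```python
# Assumed cutoffs on the 1-4 frequency scale (hypothetical, for illustration).
HIGH_USE_CUTOFF = 3.0  # class mean at or above "sometimes used"
LOW_USE_CUTOFF = 2.0   # class mean at or below "rarely used"


def variety_group(method_means):
    """Assign a class to the 'high' or 'low' group, or None if in between.

    method_means maps each of the four interactive methods to the class-mean
    frequency score (1 = never used, 4 = frequently used).
    """
    scores = list(method_means.values())
    heavily_used = sum(1 for m in scores if m >= HIGH_USE_CUTOFF)
    used_at_all = sum(1 for m in scores if m > 1.0)
    if heavily_used >= 3:
        return "high"  # at least three methods used extensively
    if used_at_all <= 1 and max(scores) <= LOW_USE_CUTOFF:
        return "low"   # at most one method, and only rarely
    return None        # intermediate classes are excluded from the comparison
```

For example, a class with mean scores of 3.5, 3.2, 3.0, and 1.0 across the four methods would fall into the high-use/high-variety group under these assumed cutoffs, while a class scoring 1.8 on one method and 1.0 on the rest would fall into the low-use/low-variety group.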

Finally, we used Wilcoxon unpaired tests to address our second research question, comparing F2F and online classes for each of the two groups (the high-use/high-variety group and the low-use/low-variety group). All statistical analyses and prediction models were performed using MATLAB (version R2021b).

6 Results

Before analyzing our research questions, we first examined the effects of student and class characteristics on evaluation scores. In both the 2021–22 and 2022–23 academic years, courses taught by female lecturers received statistically significantly higher scores than courses taught by male lecturers (p < 0.001; see Table 4). In addition, female students awarded statistically significantly higher evaluation scores overall than male students (p < 0.001; see Table 4). There were no statistically significant differences between the scores given to male vs. female lecturers within each of the student gender groups. No statistically significant correlations were found between evaluation scores and student’s year of study.

Table 4. Effects of student and class characteristics on evaluation scores for online and F2F classes.

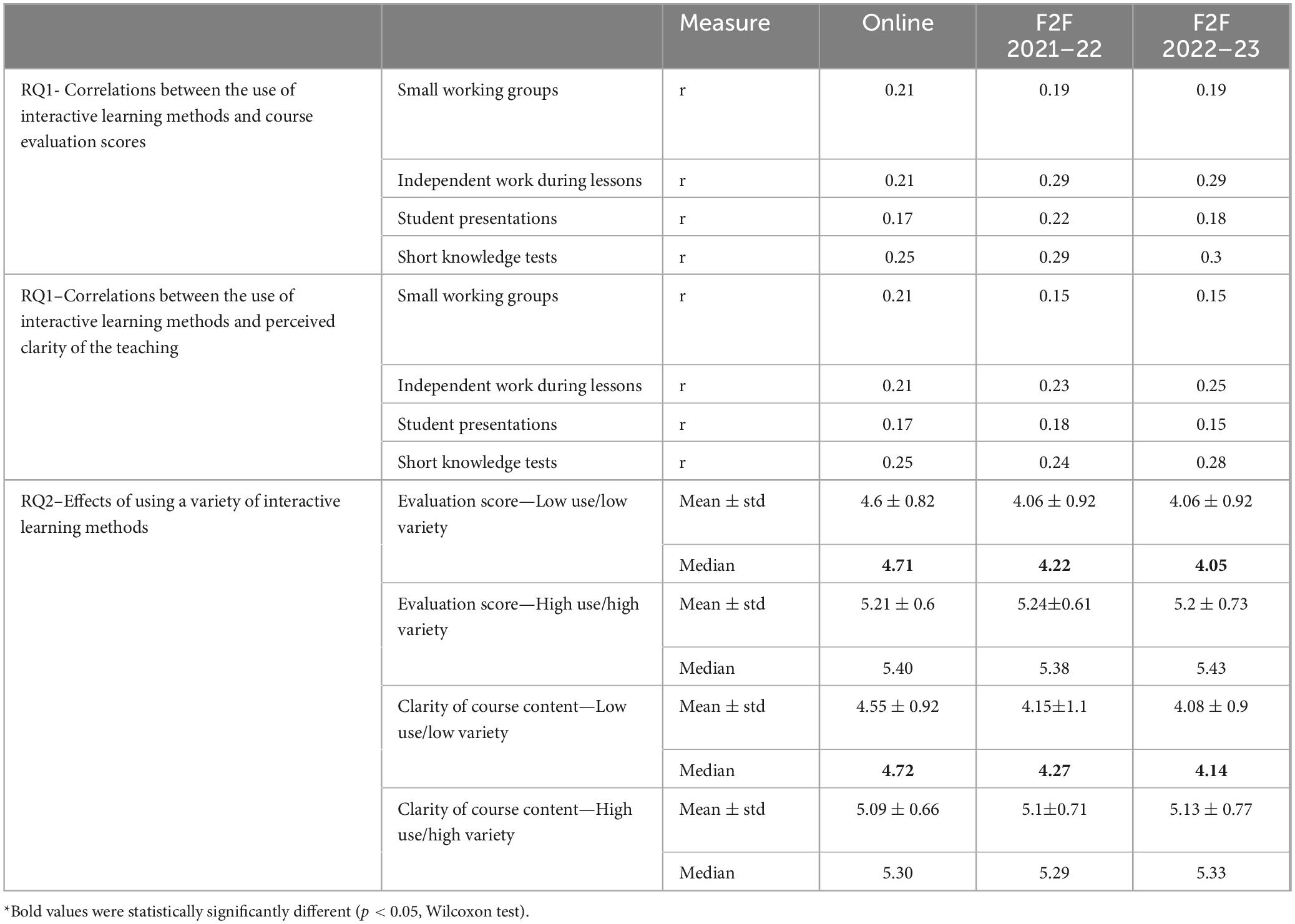

Next, we present the findings related to the research questions. All statistical results (mean, standard deviation, median, and r) are summarized in Table 5.

6.1 RQ1: Effects of interactive learning methods in F2F courses

6.1.1 Interactive learning methods (extent of use)

Overall, students reported greater use of interactive learning methods by female lecturers than by male lecturers (see Figure 1). This also held for three of the four methods when considered individually: every method except short knowledge tests was reported as used statistically significantly more by female than by male lecturers (p < 0.001). For short knowledge tests, there was no statistically significant difference between male and female lecturers.

Figure 1. Use of interactive learning tools by female vs. male lecturers (small working groups / independent work during lessons / student presentations / short knowledge tests). 1 = Never used, 4 = Used very frequently. (A) 2021–2022; (B) 2022–2023.

Importantly, there were statistically significant correlations (p < 0.05) between the use of interactive learning methods and both outcome variables. The results are presented in Table 5.

6.1.2 Interactive learning methods (variety)

Comparison of the two dependent variables (course evaluation scores and clarity of teaching) between the two examined groups of classes—those that used a variety of interactive learning methods and those that made little or no use of such methods—shows that both variables are statistically significantly higher in the high-use/high-variety group (p < 0.001).

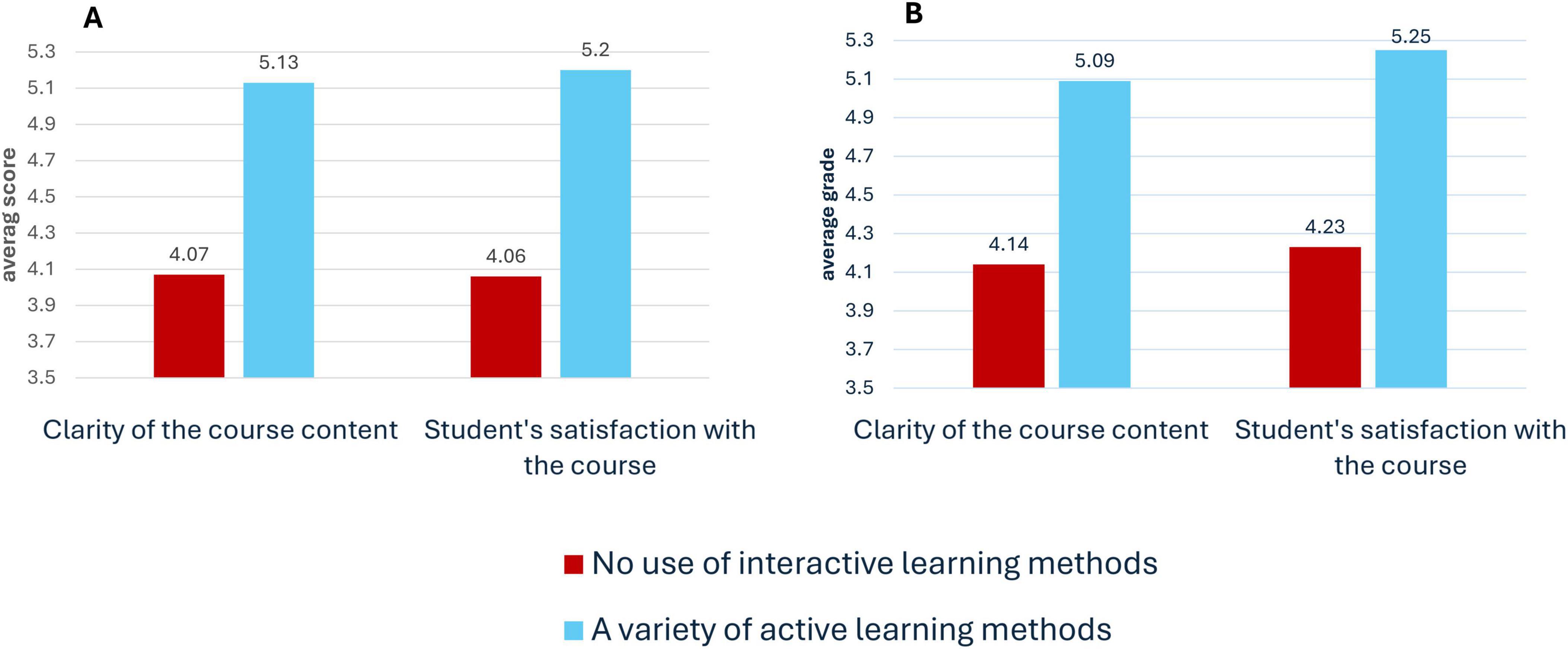

Figure 2 compares average scores for the two variables between the two groups. The comparisons were statistically significant (p < 0.01).

Figure 2. Average scores for the two dependent variables in the two groups based on the use of interactive learning methods (high-use/high-variety group vs. low-use/low-variety group). (A) 2021–2022; (B) 2022–2023.

In sum, we found that greater use of interactive learning methods in F2F courses was associated with both higher satisfaction of the students with the course (expressed in overall class evaluations), and higher perceived clarity of the teaching in the class. Interestingly, both measures were also higher in courses taught by female lecturers (see footnote 2). Most notably, greater use of a variety of interactive learning methods in F2F courses was associated with elevated satisfaction and perceived clarity of the teaching.

6.2 RQ2: The online versus F2F environment

Table 5 presents a comparison between the findings of the online and F2F studies, including the student and class characteristics and the use of interactive learning methods.

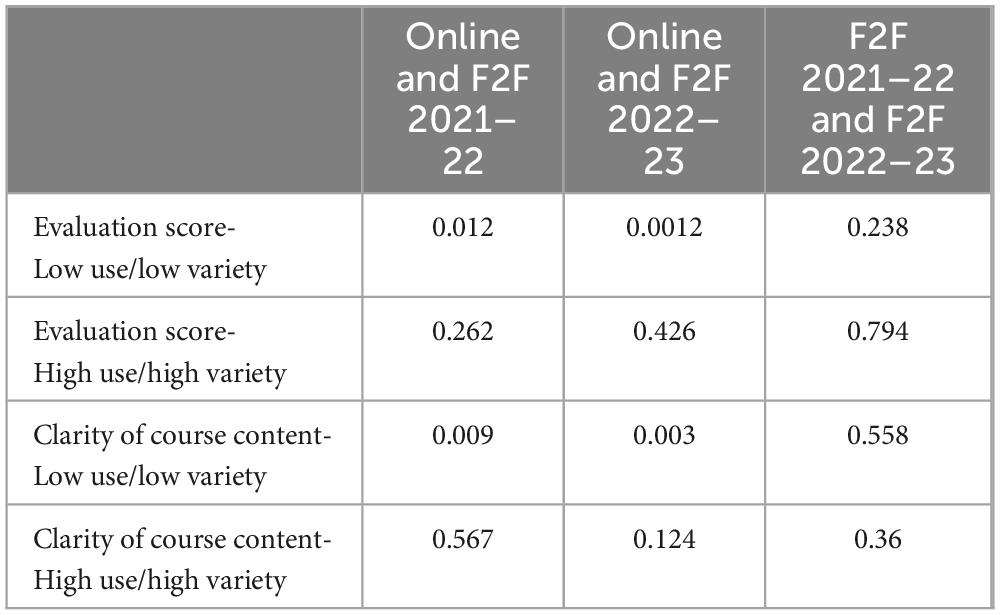

As can be seen, the trends in the results align closely between the two modalities. Importantly, when the instructor made extensive and varied use of interactive learning methods, no statistically significant differences were found between F2F and online classes. However, in classes with little or no use of interactive methods, we found statistically significantly lower evaluations and lower perceived clarity of teaching in F2F compared to online classes. It is worth mentioning that no statistically significant differences were found between the results for F2F classes of 2021–22 and 2022–23. The p-values can be found in Table 6.

7 Discussion and conclusion

Faculty in academic institutions consistently strive for excellence in teaching by exploring and discovering educational methods and approaches that will enhance student satisfaction and their evaluation of courses, thereby improving their skills (Ribeiro, 2011; Wilson et al., 2021). Numerous studies have highlighted the positive effects of active learning on student satisfaction and academic achievement across various educational settings, from traditional classrooms to online platforms (Summers et al., 2005; Rajabalee and Santally, 2021). Despite this well-documented benefit, research exploring the impact of a diverse array of active learning techniques specifically in online versus face-to-face (F2F) environments remains limited (Barnett-Itzhaki et al., 2023). Our study contributes to this area by demonstrating that a variety of active learning methods significantly enhances students’ course evaluations and their perceptions of teaching clarity.

Our analysis reveals consistent patterns across all measured factors, underscoring the effectiveness of diverse teaching methods in both online and F2F settings (see Table 5). The similar correlations between interactive learning methods and course evaluations/teaching clarity in both environments (r = 0.19 to 0.30 for F2F and r = 0.17 to 0.25 for online, as shown in Table 5) provide empirical evidence that active learning strategies are similarly beneficial regardless of the mode of instruction. This consistency not only aligns with previous research highlighting the universal advantages of active learning (e.g., Summers et al., 2005; Freeman et al., 2014; Deslauriers et al., 2019; Theobald et al., 2020) but also extends these findings by demonstrating their applicability across different educational modalities. Specifically, our results support and expand on the meta-analysis by Freeman et al. (2014), which found that active learning increases student performance in STEM disciplines, by showing similar benefits in non-STEM fields and in both online and F2F contexts. The observed patterns in our study also indicate that student engagement and satisfaction are driven more by the pedagogical approach than by the physical or virtual nature of the learning environment. This finding is consistent with research by Theobald et al. (2020), who found that active learning narrows achievement gaps for underrepresented students across various STEM disciplines, suggesting that the benefits of active learning transcend specific learning environments. This insight is particularly valuable for educators and institutions as they continue to navigate the evolving landscape of higher education, which increasingly includes both online and traditional classroom settings. The consistency in our findings reinforces the importance of implementing varied and interactive teaching methods across all educational formats to enhance student learning experiences and outcomes.
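One common way to check whether two correlation coefficients from independent samples differ is Fisher's z-transformation. The sketch below illustrates that calculation and is not an analysis reported in this paper: the function name `compare_correlations` is hypothetical, and the sample sizes are placeholders chosen for illustration, since the real counts are not given in this section.

```python
import math


def compare_correlations(r1, n1, r2, n2):
    """Compare two independent Pearson correlations via Fisher's z.

    Returns the z statistic and the two-sided p-value under the
    standard normal approximation.
    """
    # Fisher transformation: atanh(r) is approximately normal with
    # variance 1 / (n - 3).
    z1 = math.atanh(r1)
    z2 = math.atanh(r2)
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z = (z1 - z2) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# Upper ends of the ranges quoted in the text (F2F r = 0.30, online
# r = 0.25). The sample sizes of 1000 are ASSUMED placeholders.
z_stat, p_val = compare_correlations(0.30, 1000, 0.25, 1000)
print(f"z = {z_stat:.3f}, two-sided p = {p_val:.3f}")
```

Because the standard error depends on the sample sizes, the same pair of coefficients can yield a different conclusion with larger samples, so the output here says nothing about the actual studies.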

One of the most significant findings from our research is that both evaluation scores and perceived clarity of teaching are notably lower in F2F classes than in online classes when active learning methods are minimally used or absent. This discrepancy could be attributed to several factors. Primarily, traditional frontal teaching methods may cause frustration among students confined to a classroom setting, where distractions and noise levels are typically higher than in home learning environments. Online learning, by contrast, often provides a quieter, more controlled environment that may be conducive to concentration and deeper engagement. Moreover, the ability to revisit recorded online sessions allows for repeated exposure to material, a benefit not typically available in F2F settings.

These observations suggest that the physical classroom environment can significantly impact the effectiveness of teaching methods, particularly when active learning strategies are underutilized. As such, this study not only reinforces the need for implementing a broad spectrum of active learning techniques but also highlights the environmental factors that can affect their success.

Despite the significant insights gained from this study, several limitations must be acknowledged. First, the data were collected from a single institution, which may limit the generalizability of the findings to other educational contexts or institutions with different demographics and teaching practices. Second, the study relied on student self-reported data from course evaluations, which may introduce biases related to individual perceptions and experiences. Additionally, while the study controlled for various factors, it could not account for all potential variables that could influence student evaluations, such as instructor experience or specific course content. Moreover, the transition from online to face-to-face learning environments during the COVID-19 pandemic may have introduced unique challenges and adaptations that are not fully captured in this study. Finally, the focus on a limited number of active learning methods means that other potentially effective interactive techniques were not explored.

Looking ahead, the implications of our findings open several avenues for further research. Future studies might explore how interactive methods influence student satisfaction and perceptions in hybrid or blended courses, which combine online and F2F elements. Additionally, qualitative research could provide deeper insights into how these methods specifically enhance the learning process across different settings.

In conclusion, our findings advocate for the integration of a wide range of active learning strategies to overcome the challenges often faced in traditional educational environments. By adopting varied and innovative teaching methods, educators can significantly improve the educational experience and outcomes for students in both online and F2F formats.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Faculty of Social and Community Sciences Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and institutional requirements.

Author contributions

DB: Conceptualization, Investigation, Methodology, Validation, Visualization, Writing – original draft, Writing – review & editing. AT: Conceptualization, Investigation, Methodology, Validation, Visualization, Writing – original draft, Writing – review & editing. ZB-I: Conceptualization, Investigation, Methodology, Validation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The authors declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

We sincerely thank Ms. Sharon Nessis and Ms. Michal Barel, from our academic institution, for collecting the data and for their significant contribution to the manuscript and the discussion.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

- ^ While Atchley et al. (2013) do not provide a precise definition of “reading courses,” given the context of their study and the other disciplines examined, these likely refer to courses focused on teaching reading or developing reading skills, presumably within teacher education programs or the field of education.

- ^ Only the first of these analyses (on overall class evaluations) is presented in this paper. Details of the analysis on clarity of teaching by gender of the lecturer are available from the authors upon request.

References

Ary, E. J., and Brune, C. W. (2011). A comparison of student learning outcomes in traditional and online personal finance courses. MERLOT J. Online Learn. Teach. 7, 465–474.

Atchley, W., Wingenbach, G., and Akers, C. (2013). Comparison of course completion and student performance through online and traditional courses. Int. Rev. Res. Open Distrib. Learn. 14, 104–116.

Barnett-Itzhaki, Z., Beimel, D., and Tsoury, A. (2023). Using a variety of interactive learning methods to improve learning effectiveness: Insights from AI models based on teaching surveys. Online Learn. J. 27.

Biel, R., and Brame, C. J. (2016). Traditional versus online biology courses: Connecting course design and student learning in an online setting. J. Microbiol. Biol. Educ. 17, 417–422.

Chi, M. T., and Wylie, R. (2014). The ICAP framework: Linking cognitive engagement to active learning outcomes. Educ. Psychol. 49, 219–243.

Chisadza, C., Clance, M., Mthembu, T., Nicholls, N., and Yitbarek, E. (2021). Online and face-to-face learning: Evidence from students’ performance during the Covid-19 pandemic. Afr. Dev. Rev. 33, S114–S125.

Dell, C. A., Low, C., and Wilker, J. F. (2010). Comparing student achievement in online and face-to-face class formats. MERLOT J. Online Learn. Teach. 6, 30–42.

Deslauriers, L., McCarty, L. S., Miller, K., Callaghan, K., and Kestin, G. (2019). Measuring actual learning versus feeling of learning in response to being actively engaged in the classroom. Proc. Natl. Acad. Sci. U.S.A. 116, 19251–19257. doi: 10.1073/pnas.1821936116

Driscoll, A., Jicha, K., Hunt, A. N., Tichavsky, L., and Thompson, G. (2012). Can online courses deliver in–class results? A comparison of student performance and satisfaction in an online versus a face-to-face introductory sociology course. Teach. Sociol. 40, 312–331.

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., et al. (2014). Active learning increases student performance in science, engineering, and mathematics. Proc. Natl. Acad. Sci. U.S.A. 111, 8410–8415.

Friday, E., Friday-Stroud, S. S., Green, A. L., and Hill, A. Y. (2006). A multi-semester comparison of student performance between multiple traditional and online sections of two management courses. J. Behav. Appl. Manag. 8, 66–81.

Froyd, J. E. (2007). Evidence for the efficacy of student-active learning pedagogies. Project Kaleidosc. 66, 64–74.

Johnson, S. D., Aragon, S. R., and Shaik, N. (2000). Comparative analysis of learner satisfaction and learning outcomes in online and face–to–face learning environments. J. Interact. Learn. Res. 11, 29–49.

Jones, R. W. (2007). Learning and teaching in small groups: Characteristics, benefits, problems and approaches. Anaesth. Intensive Care 35, 587–592.

Kemp, N., and Grieve, R. (2014). Face-to-face or face-to-screen? Undergraduates’ opinions and test performance in classroom vs. online learning. Front. Psychol. 5:1278. doi: 10.3389/fpsyg.2014.01278

Konopka, C. L., Adaime, M. B., and Mosele, P. H. (2015). Active teaching and learning methodologies: Some considerations. Creat. Educ. 6, 1536–1545.

Lombardi, D., Shipley, T. F., and Astronomy Team, Biology Team, Chemistry Team, Engineering Team, et al. (2021). The curious construct of active learning. Psychol. Sci. Public Interest 22, 8–43. doi: 10.1111/tops.12752

Mali, D., and Lim, H. (2021). How do students perceive face–to–face/blended learning as a result of the Covid-19 pandemic? Int. J. Manag. Educ. 19:100552.

Michaelsen, L. K., Parmelee, D. X., Levine, R. E., and McMahon, K. K. (2023). Team-based learning for health professions education: A guide to using small groups for improving learning. Milton Park: Taylor & Francis.

Mou, T. Y. (2021). Online learning in the time of the COVID–19 crisis: Implications for the self-regulated learning of university design students. Act. Learn. High. Educ. 24:14697874211051226.

Murphy, D. H., Little, J. L., and Bjork, E. L. (2023). The value of using tests in education as tools for learning-Not just for assessment. Educ. Psychol. Rev. 35:89.

Nelson, B. C. (2007). Exploring the use of individualized, reflective guidance in an educational multi-user virtual environment. J. Sci. Educ. Technol. 16, 83–97.

Noble, D. F. (2002). Digital Diploma Mills. The Automation of Higher Education. Science Bought and Sold: Essays in the Economics of Science, 431.

Nouri, H., and Shahid, A. (2005). The effect of PowerPoint presentations on student learning and attitudes. Glob. Perspect. Account. Educ. 2:53.

Paden, R. R. (2006). A comparison of student achievement and retention in an introductory math course delivered in online, face-to-face, and blended modalities. Doctoral dissertation, Capella University.

Paul, J., and Jefferson, F. (2019). A comparative analysis of student performance in an online vs. face-to-face environmental science course from 2009 to 2016. Front. Comput. Sci. 1:7. doi: 10.3389/fcomp.2019.00007

Rajabalee, Y. B., and Santally, M. I. (2021). Learner satisfaction, engagement and performances in an online module: Implications for institutional e-learning policy. Educ. Inform. Technol. 26, 2623–2656. doi: 10.1007/s10639-020-10375-1

Regmi, K., and Jones, L. (2020). A systematic review of the factors–enablers and barriers-affecting e-learning in health sciences education. BMC Med. Educ. 20:91. doi: 10.1186/s12909-020-02007-6

Ribeiro, L. C. (2011). The pros and cons of problem-based learning from the teacher’s standpoint. J. Univers. Teach. Learn. Pract. 8, 34–51.

Saunders, L., and Wong, M. A. (2020). Active learning: Engaging people in the learning process. Instruction in libraries and information centers. Illinois: Windsor & Downs Press, Illinois Open Publishing Network (IOPN).

Shah, A. A., Fatima, Z., and Akhtar, I. (2022). University students’ engagement and satisfaction level in online and face to face learning: A comparative analysis. Compet. Soc. Sci. Res. J. 3, 67–78.

Smith, D. F., and Stephens, B. K. (2010). Marketing education: Online vs traditional. Proc. Am. Soc. Bus. Behav. Sci. 17, 810–814.

Smith, G. G., Heindel, A. J., and Torres-Ayala, A. T. (2008). E-learning commodity or community: Disciplinary differences between online courses. Internet Higher Educ. 11, 152–159.

Spencer, D., and Temple, T. (2021). Examining students’ online course perceptions and comparing student performance outcomes in online and face-to-face classrooms. Online Learn. 25, 233–261.

Summers, J. J., Waigandt, A., and Whittaker, T. A. (2005). A comparison of student achievement and satisfaction in an online versus a traditional face-to-face statistics class. Innov. High. Educ. 29, 233–250.

Tesfaye, S., and Berhanu, K. (2015). Improving students’ participation in active learning methods: Group discussions, presentations and demonstrations: A case of Madda Walabu University second year tourism management students of 2014. J. Educ. Pract. 6, 29–32.

Thai, N. T. T., De Wever, B., and Valcke, M. (2020). Face-to-face, blended, flipped, or online learning environment? Impact on learning performance and student cognitions. J. Comput. Assist. Learn. 36, 397–411.

Theobald, E. J., Hill, M. J., Tran, E., Agrawal, S., Arroyo, E. N., and Behling, S. (2020). Active learning narrows achievement gaps for underrepresented students in undergraduate science, technology, engineering, and math. Proc. Natl. Acad. Sci. U.S.A. 117, 6476–6483. doi: 10.1073/pnas.1916903117

van Hout-Wolters, B., Simons, R. J., and Volet, S. (2000). “Active learning: Self-directed learning and independent work,” in New learning, eds R. J. Simons, J. van der Linden, and T. Duffy (Dordrecht: Springer Netherlands), 21–36.

Wilson, R., Joiner, K., and Abbasi, A. (2021). Improving students’ performance with time management skills. J. Univers. Teach. Learn. Pract. 18, 16.

Keywords: active learning, interactive learning methods, student evaluation, online learning, F2F learning

Citation: Beimel D, Tsoury A and Barnett-Itzhaki Z (2024) The impact of extent and variety in active learning methods across online and face-to-face education on students’ course evaluations. Front. Educ. 9:1432054. doi: 10.3389/feduc.2024.1432054

Received: 13 May 2024; Accepted: 26 August 2024; Published: 19 September 2024.

Edited by:

Carlos Saiz, University of Salamanca, Spain

Reviewed by:

Inés Alvarez-Icaza Longoria, Monterrey Institute of Technology and Higher Education (ITESM), Mexico

José Cravino, University of Trás-os-Montes and Alto Douro, Portugal

Copyright © 2024 Beimel, Tsoury and Barnett-Itzhaki. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Dizza Beimel
