- 1 School of Humanities and Education, Tecnologico de Monterrey, Monterrey, Mexico

- 2 Institute for the Future of Education, Tecnologico de Monterrey, Monterrey, Mexico

- 3 Department of Sciences, School of Engineering, Tecnologico de Monterrey, Monterrey, Mexico

Introduction: Industry 5.0 is the next phase of industrial work that integrates robots and artificial intelligence to boost productivity and economic growth. It emphasizes a balance between human creativity and technological precision, built on three pillars: human centrality, sustainability, and resilience. Corporations and educational institutions must adopt an integrated approach to training their future workforce, emphasizing digital and key competencies such as creativity, communication, collaboration, and critical thinking. Higher education institutions must measure digital competencies and other key Industry 5.0 competencies to prepare students for a sustainable future. However, there is a need to identify appropriate scientific instruments that can comprehensively evaluate these competencies.

Methods: This study conducted a Systematic Literature Review to analyze the existing digital competency assessment instruments in higher education from 2013 to 2023. The focus was on instruments that measure digital competencies and core competencies for Industry 5.0, such as creativity, communication, collaboration, and critical thinking. The search process began with a strategy applied across five databases (ERIC, Google Scholar, ProQuest, Scopus, and Web of Science) to cover a broad range of literature on the design and validation of digital competency assessment tools.

Results: This search generated a total of 9,563 academic papers. Inclusion, exclusion, and quality filters were applied to select 112 articles for detailed analysis. Among these 112 articles, 46 focused on designing and validating digital competency assessment instruments in higher education. Within the reviewed literature, surveys and questionnaires emerged as the predominant methods utilized for this purpose. This study found a direct relationship between digital competencies and essential skills like communication and critical thinking.

Discussion: The study concludes that assessment tools should integrate a wide range of competencies, and students and educators should be actively involved in developing these skills. Future research should focus on designing tools that effectively evaluate these competencies in dynamic work contexts. Assessment instruments should cover a broader range of competencies, including creativity and collaboration, to meet the demands of Industry 5.0. Reliable assessments of digital competencies and soft skills are crucial, with a need for appropriate reliability tests that do not impact students’ preparedness for labor market challenges.

1 Introduction

Industry 5.0 is thought to be the next stage in the evolution of the industrial sector. It is characterized by the increased use of robotics and artificial intelligence (AI) to improve productive efficiency and promote economic growth. This stage involves integrating human creativity with technological precision and is based on three fundamental principles: the importance of the human factor, a commitment to sustainability, and resilience capabilities (Kemendi et al., 2022). Advancements in technology support a shift toward prioritizing people, inclusion, and sustainability, promoting collaboration between humans and technology for global progress and collective well-being (Leng et al., 2022).

Future professionals need advanced digital competencies to interact effectively with intelligent systems (Wang and Ha-Brookshire, 2018; Xu et al., 2021). It is important to recognize the significance of interpersonal abilities, including being creative (Forte-Celaya et al., 2021), communicating effectively (George-Reyes et al., 2024), and collaborating (Poláková et al., 2023). Possessing these skills is vital for adapting to new job demands in Industry 5.0, and companies and academic institutions must take a comprehensive approach to training their future employees (Matsumoto-Royo et al., 2021).

Continuous engineering updates are essential to ensure that current professionals and future industry entrants possess the technical knowledge and social skills required to lead in the era of Industry 5.0. This integrated approach is key for fostering a resilient and sustainable industrial ecosystem (Ahmad et al., 2023; Bakkar and Kaul, 2023). Education is pivotal in preparing individuals to adapt to constant workplace changes (Pacher et al., 2023; Ghobakhloo et al., 2023b). It is essential to reassess and modernize engineering and higher education to cultivate adaptive skills that keep up with rapid technological changes (Suciu et al., 2023; Gürdür Broo et al., 2022).

Identifying instruments that assess digital competencies and key skills for Industry 5.0 is decisive. Measuring cross-cutting competencies enhances graduates’ employability and creates an innovative and resilient work environment (Miranda et al., 2021; Suciu et al., 2023). A literature review is essential to determine if specific tools adequately address digital competencies and essential skills for Industry 5.0 in higher education, particularly for engineering students. Exploring additional tools for certifying digital competencies through a prior systematic mapping is necessary. The study aims to identify existing instruments and evaluate their ability to comprehensively address digital competencies and core competencies, preparing students for Industry 5.0.

2 Theoretical framework

2.1 Industry 5.0 and its relations with context

Industry 5.0 is the upcoming phase of industrial work that focuses on utilizing robots and AI to improve productivity and economic growth. This new industrial revolution does not necessarily indicate a technological leap from Industry 4.0 but rather a continuation incorporating existing technology within a broader framework to benefit people, the planet, and prosperity (Kemendi et al., 2022). In 2017, the idea of Industry 5.0 started taking shape. Academics focused on introducing the Fifth Industrial Revolution and their efforts were successful in 2021 when the European Commission formalized Industry 5.0 as a new industrial phase. The aim of Industry 5.0 is to integrate social and environmental considerations into technological innovation. This extends and complements the advancements in Industry 4.0 (Xu et al., 2021; Kemendi et al., 2022).

Hence, Industry 5.0 reintegrates humans into factory floors, collaborating with autonomous machines to increase production efficiency. The autonomous workforce interprets and responds to human intentions, ensuring safe and efficient interaction with robots (Leng et al., 2022). According to Xu et al. (2021) and Ivanov (2023), Industry 5.0 focuses on three fundamental pillars: human centrality, sustainability, and resilience.

Industry 5.0’s first pillar is human centrality. It represents a paradigm shift in how workers are perceived in the production sphere. Workers are no longer viewed as resources or expenses but as valuable assets whose needs should take priority in designing and executing production processes (Castagnoli et al., 2023). Technology should be designed to adapt to human diversity and promote safe work environments. This enriches work life and protects fundamental rights such as autonomy, dignity, and privacy. Technology should enhance human well-being, not diminish it (Breque et al., 2021).

Sustainability entails reconfiguring production processes based on the principle of circularity (Breque et al., 2021). This means prioritizing the reuse, reallocation, and recycling of natural resources, minimizing waste, and reducing the environmental impact of industrial production. This approach promotes efficient resource use and aligns with ecological responsibility and intergenerational equity principles, ensuring a sustainable production model in the long term (Ghobakhloo et al., 2022; Ghobakhloo et al., 2023a).

Resilience has become an essential aspect in dealing with the growing unpredictability and intricacy of the global landscape. This concept emphasizes the capacity of industrial production to preserve its strength and continuity despite being challenged by interruptions and crises caused by natural disasters or geopolitical shifts (Leng et al., 2023). Promoting productive systems that can quickly adapt and respond to these adversities is not only a requirement for business survival but also a fundamental strategy to ensure economic and social stability on a global scale (Ivanov, 2023).

In summary, Industry 5.0’s pillars provide a framework for production that balances technological advancement, social justice, and environmental responsibility (Breque et al., 2021). Industry 5.0 is a model that aims to prioritize human values, encourage sustainable and productive practices, and develop resilience capabilities. It goes beyond being efficient and innovative and instead seeks to contribute to building a more equitable and resilient future (Ivanov, 2023; Breque et al., 2021).

As Industry 5.0 takes hold, there is a growing demand for professionals with expertise in innovation, collaboration, and sustainability, which are the core values of this new industrial era (Xu et al., 2021). Today’s labor market requires professionals to possess advanced technical knowledge, people skills, and a deep understanding of technology’s social and environmental implications. This includes critical thinking, creativity, collaboration, and communication (Gürdür Broo et al., 2022).

2.2 Core competencies of Industry 5.0

Based on Ungureanu’s (2020) insights, the shift toward Industry 5.0 represents a central advancement in understanding and implementing global economic policies. This transition underscores the importance of human skills as key drivers of sustainable and equitable economic growth. In this context, the 4Cs (critical thinking, creativity, communication, and collaboration) are vital competencies that must be cultivated and integrated into our economic and educational systems.

Critical thinking is an essential skill that enables individuals to navigate the complexities of global information. It allows one to thoroughly analyze the challenges and opportunities the modern economy presents (Ungureanu, 2020). Critical thinking is considered a fundamental competency for future engineers. This skill is vital for efficiently analyzing complex problems, evaluating innovative solutions, and making informed decisions in uncertain environments that are constantly changing (Gürdür Broo et al., 2022).

Creativity is recognized for its potential to generate non-material values and promote sustainable development, with a focus on human capital. In today’s world, innovative ideas are considered the most valuable currency, and creativity is the driving force behind developing new products, services, and business models. This not only contributes to economic success but also ensures the satisfaction of human capital (Ungureanu, 2020; Aslam et al., 2020; Sindhwani et al., 2022). Creativity is an essential skill in engineering that empowers engineers to devise innovative and sustainable solutions to emerging challenges. Thinking creatively and beyond traditional frameworks is fundamental in developing technologies and systems that effectively address social and environmental needs (Gürdür Broo et al., 2022).

Effective communication is an important aspect of global economic behavior and strategy development. Communicating ideas, information, and emotions is essential in today’s interconnected world (Ungureanu, 2020). Engineers must possess strong communicative competency to exchange ideas, present technical solutions, and collaborate with colleagues from different disciplines. Communicating effectively in different contexts is necessary for leading projects, managing teams, and fostering innovation (Gürdür Broo et al., 2022).

Collaboration is working together to utilize a wide range of resources efficiently. In the global economy, where businesses are interconnected, collaboration has become essential for creating healthy business ecosystems and promoting inclusive and robust economic growth (Ungureanu, 2020; Wolniak, 2023). Working effectively in multidisciplinary and transdisciplinary teams is critical for developing complex cyber-physical systems and implementing innovative solutions that require integrating knowledge and skills from various fields (Gürdür Broo et al., 2022).

The current job market demands that future workers possess digital skills to collaborate with robots and machines effectively. Industry 5.0 is expected to create new job opportunities in human-machine interaction and computational factors, with critical areas such as AI, robotics, machine programming, machine learning, maintenance, and training (Saniuk et al., 2022). This evolution necessitates a shift in labor competencies, emphasizing the importance of continuous education and training in digital skills as a cornerstone for advancing toward a more technologically integrated economy and society (Kemendi et al., 2022).

2.3 Digital competencies

Digital competencies refer to a combination of knowledge, skills, attitudes, and values required for interacting autonomously, collaboratively, and ethically in the digital world. This includes effective communication, content creation, and management and protection of personal information and data (van Laar et al., 2017). These digital skills are considered essential competencies for personal and professional growth (Coldwell-Neilson and Cooper, 2019; Kozlov et al., 2019). Acquiring these skills enables individuals to lead in a technologically evolving environment. It equips them with the necessary tools to significantly contribute to the development and innovation in their respective fields of expertise (Rosalina et al., 2021).

The term digital competency is a constantly evolving concept influenced by technological advancements, political goals, and the expectations of citizens living in a knowledge-based society (Ilomäki et al., 2016). The definition of digital competency has undergone significant changes since its initial introduction in 2006, and its latest update in 2018 now includes not only the effective use of digital technologies but also a critical and ethical approach toward them that can be applied in educational, work, and social participation contexts (Council of the European Union, 2018; Vuorikari et al., 2022). The term digital competency has been associated with other terms, such as 21st-century skills, digital literacy, digital skills, e-skills, information and communication technologies (ICT) skills, and ICT literacy (Gutiérrez-Santiuste et al., 2023).

For instance, UNESCO (2018) defines digital competency as the knowledge, skills, attitudes, and values required to act autonomously, collaboratively, and ethically in digital environments. It includes communicating, producing digital content, and managing data. Oberer and Erkollar (2023) consider it one of the eight key competencies for lifelong learning. Various studies have emphasized the significance of acquiring digital competencies, from Industry 4.0 (Kozlov et al., 2019; Wang and Ha-Brookshire, 2018; Rosalina et al., 2021; Farias-Gaytan et al., 2022; Isnawati et al., 2021; Kipper et al., 2021; Benešová and Tupa, 2017) to Industry 5.0 (Xu et al., 2021; Leng et al., 2022; Kemendi et al., 2022; Pacher et al., 2023).

As digital competencies become increasingly important in Industry 5.0, it is necessary to evaluate them according to Gutiérrez-Santiuste et al. (2023). However, assessing these competencies is challenging due to their intricate nature (van Laar et al., 2017). The evaluation of digital competencies is necessary for the sustainable development of society, particularly for providing young people with the necessary digital skills (Fan and Wang, 2022).

In higher education, it is crucial to assess digital competencies to prepare university students for the constantly changing educational model and meet future workforce demands (Zhao et al., 2021). However, measuring digital competencies accurately is challenging for educational institutions (Tzafilkou et al., 2022), as limited valid and reliable tools are available to assess university students’ digital competencies in specific contexts. Thus, it is necessary to develop and test a questionnaire’s reliability and validity to measure digital competencies in a particular setting (Fan and Wang, 2022). To design effective educational strategies and prepare students and teachers for future challenges, it is essential to develop and validate instruments to assess digital competencies accurately (Fan and Wang, 2022; Tang et al., 2022; Tzafilkou et al., 2022).

It is imperative to ensure the accuracy of digital competency assessments by validating the instruments and establishing their reliability, so that the results accurately reflect the digital competencies of the individuals being assessed. This also leads to more effective, evidence-based educational and formative interventions (Montenegro-Rueda and Fernández-Batanero, 2023; Lázaro-Cantabrana et al., 2019). Reliability is an essential principle in evaluating measuring instruments. It represents their ability to produce consistent and reproducible results over time, in different contexts, and under the observation of various evaluators (Souza et al., 2017).

Reliability is important to ensure the quality of data obtained in research. It encompasses coherence, stability, equivalence, and homogeneity of measurements. There are several ways to assess reliability, including test–retest reliability, parallel-forms (or equivalent-forms) reliability, intra-rater reliability, and inter-rater reliability. Cronbach’s alpha coefficient is a widely used technique to calculate the internal consistency of a measurement instrument composed of multiple items (Echevarría-Guanilo et al., 2018).
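To make the internal-consistency idea concrete, the following is a minimal sketch of how Cronbach’s alpha can be computed with the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The function name `cronbach_alpha` and the response data are illustrative assumptions; they do not come from any of the reviewed instruments.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a table of item scores.

    scores: list of rows, one row per respondent, each row holding
    that respondent's score on each of the k items.
    """
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two respondents")

    # Transpose so that each tuple holds all responses to one item.
    items = list(zip(*scores))

    # Sum of the sample variances of the individual items.
    item_variance_sum = sum(variance(item) for item in items)

    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")

    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical 5-point Likert responses: 5 respondents, 3 items.
responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 4],
    [2, 3, 3],
    [4, 4, 5],
]
print(f"alpha = {cronbach_alpha(responses):.3f}")
```

When every item ranks respondents identically, the coefficient reaches 1; the less the items covary, the lower the value.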

Validation of an instrument ensures that it accurately measures what it intends to measure. However, validity is not a fixed property of the instrument and must be determined based on a specific topic and defined population (Souza et al., 2017). There are diverse types of validity, such as content validity, criterion validity, construct validity, structural construct validity, and face validity. The types of statistical techniques commonly used for instrument validation and structural analysis include Confirmatory Factor Analysis (CFA) and Exploratory Factor Analysis (EFA) (Lee, 2021).

EFA is a technique to explore the relationships between observable variables with one or more latent variables. Four key issues are important in EFA: sample size, extraction method, rotation method, and factor retention criteria (Goretzko et al., 2021). CFA is a statistical method to test if hypothetical constructs explain observed variables. CFA is useful when the researcher has specific theoretical expectations about the patterns of relationships between variables (Hoyle, 2000).

3 Methodology

This article presents a Systematic Literature Review (SLR) conducted using five databases: ERIC, Google Scholar, ProQuest, Scopus, and Web of Science. They were selected because of their broad interdisciplinary coverage, relevance in the educational field, access to academic and gray literature, and ability to support a thorough and rigorous review of the design and validation of instruments for assessing digital competencies. This review aimed to identify and examine the existing literature on the design and validation of contemporary instruments for assessing digital competencies, specifically in higher education. The research also aimed to determine whether any of the dimensions assessed by these instruments showed a relationship with the core competencies of Industry 5.0, in order to understand how assessment approaches align with the current and emerging demands of the educational sector in preparation for the challenges of the advanced digital era. Additionally, the review sought to examine the reliability and validity of the instruments used in research from the last ten years.

A systematic mapping of existing literature was developed to identify and analyze international research trends in designing and validating instruments, questionnaires, tools, and scales to assess digital competencies. The mapping followed the methodological scheme of Petersen et al. (2015) and Petersen et al. (2008). A total of 112 documents were obtained in this search, of which 46 focused on higher education. This highlights that higher education is a significant area of analysis. Therefore, it is vital to delve deeper into the instruments designed specifically to validate digital competencies in higher education.

This SLR was conducted according to the methodological guidelines proposed by Kitchenham (2004) and Kitchenham and Charters (2007). The study aimed to provide an exhaustive analysis of studies on the design and validation of modern instruments for assessing digital competencies in higher education. This methodology has been previously used in academic works and has proven to be effective and relevant for research in the field of higher education (Peláez-Sánchez et al., 2023; Hassan, 2023; George-Reyes et al., 2023).

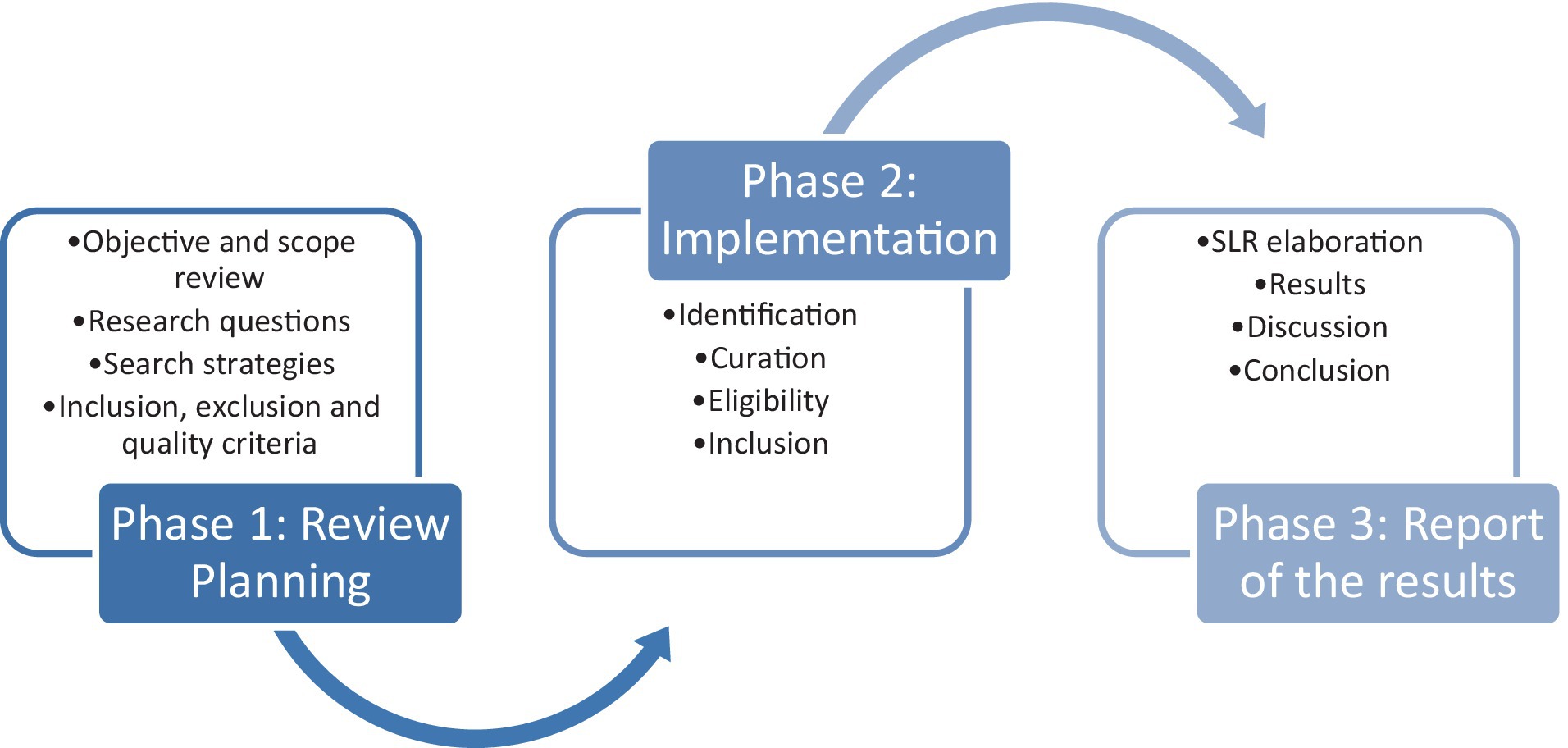

The study was conducted in three primary phases. In the first, the planning phase, the purpose and scope of the study were defined, the research questions were established, the search strategies were determined, and the inclusion, exclusion, and quality criteria were set. The second phase was implementation, which involved searching the databases and selecting relevant documents based on the previously established criteria; the selected documents were included in the SLR. Finally, the results reporting phase synthesized and discussed the research findings, culminating in the study’s conclusions (see Figure 1).

3.1 Phase 1: planning the SLR

The first phase involved establishing (a) the objective and scope of the research, (b) the research questions, search strategies, and inclusion, exclusion, and quality criteria. This planning phase was set through the methodological proposal of Kitchenham (2004) and Kitchenham and Charters (2007).

After establishing the planning foundations in Table 1, each identified component is further explored to expand the reasons for this SLR. These subsequent sections break down and expand the essential elements of planning, such as the precise definition of the research scope, the study’s research questions, the established search strategies, and the importance of setting inclusion, exclusion, and quality criteria at this phase of the study to guide a systematic study according to the methodological foundations of Kitchenham (2004) and Kitchenham and Charters (2007).

3.1.1 Objective and scope of the SLR

The objective and scope of this study were established based on a prior systematic mapping of the literature, which identified and analyzed international trends in the design and validation of instruments for assessing digital competencies, following the methodological framework recommended by Petersen et al. (2015) and Petersen et al. (2008). This systematic mapping revealed the existence of 112 documents, of which 46 were specifically developed in the context of higher education, thus highlighting the constant attention to this area. These preliminary findings underscored the importance of delving deeper into the identification and analysis of instruments designed to validate digital competencies in higher education.

With this foundation, the study’s objective focuses on conducting a thorough and detailed analysis of the existing literature on the design and validation of modern instruments for assessing digital competencies in higher education. This approach seeks not only to understand how these instruments align with the current and future demands of the educational sector in preparation for the challenges of the advanced digital era but also to examine the connection of these tools with the key competencies of Industry 5.0. Furthermore, the study aims to verify the reliability and validity of the instruments used in the most recent research, covering an analysis period of the last ten years.

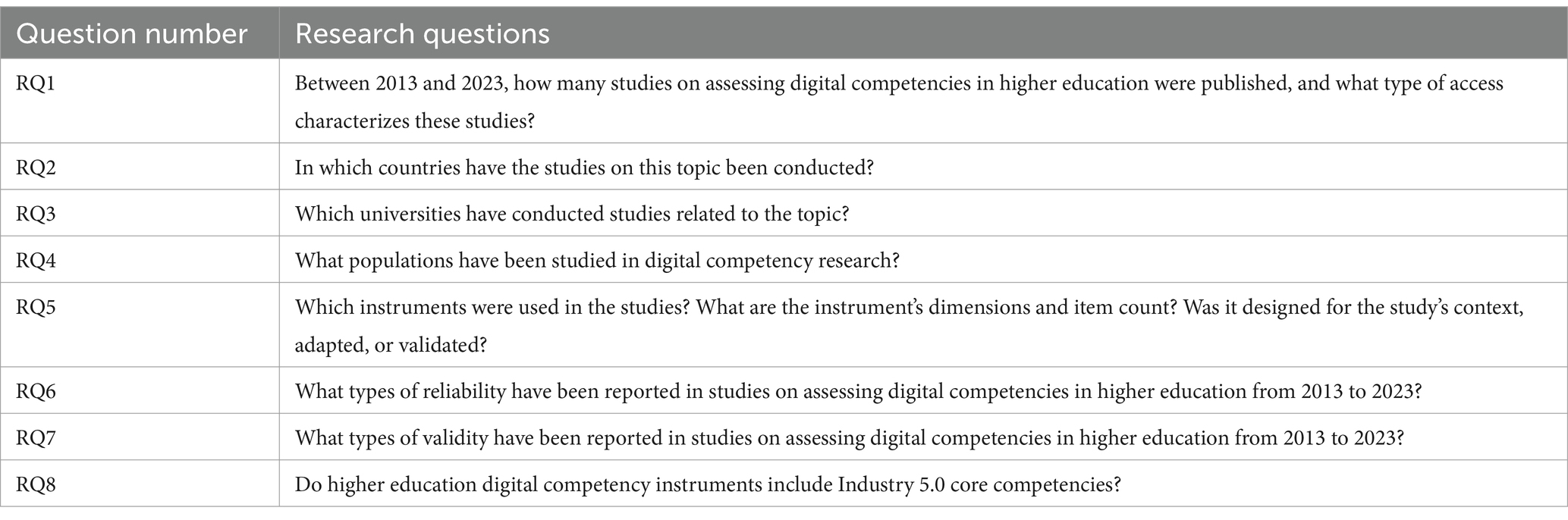

3.1.2 Research questions

The design of the research questions has been oriented to exhaustively explore the field of assessing digital competencies in higher education, particularly from 2013 to 2023. The questions RQ1, RQ2, RQ3, and RQ4 were defined to quantify the corpus of studies published in this period, discern the accessibility of these works by dividing them between open access and restricted access, and map out the geographic and institutional landscape of where and by whom this research has been carried out. It also sought to identify the target populations of these studies to understand to whom the digital competencies assessments are directed. On the other hand, RQ5 aimed to assess the extent to which the assessment instruments incorporate essential soft skills for Industry 5.0, such as creativity and leadership. RQ6 and RQ7 sought to identify the types of reliability and validity reported in the studies, as well as the fundamental aspects to ensure the effectiveness of the assessment instruments. Finally, question RQ8 sought to identify the relationship between the assessment of digital competencies and the key competencies of Industry 5.0 (see Table 1).

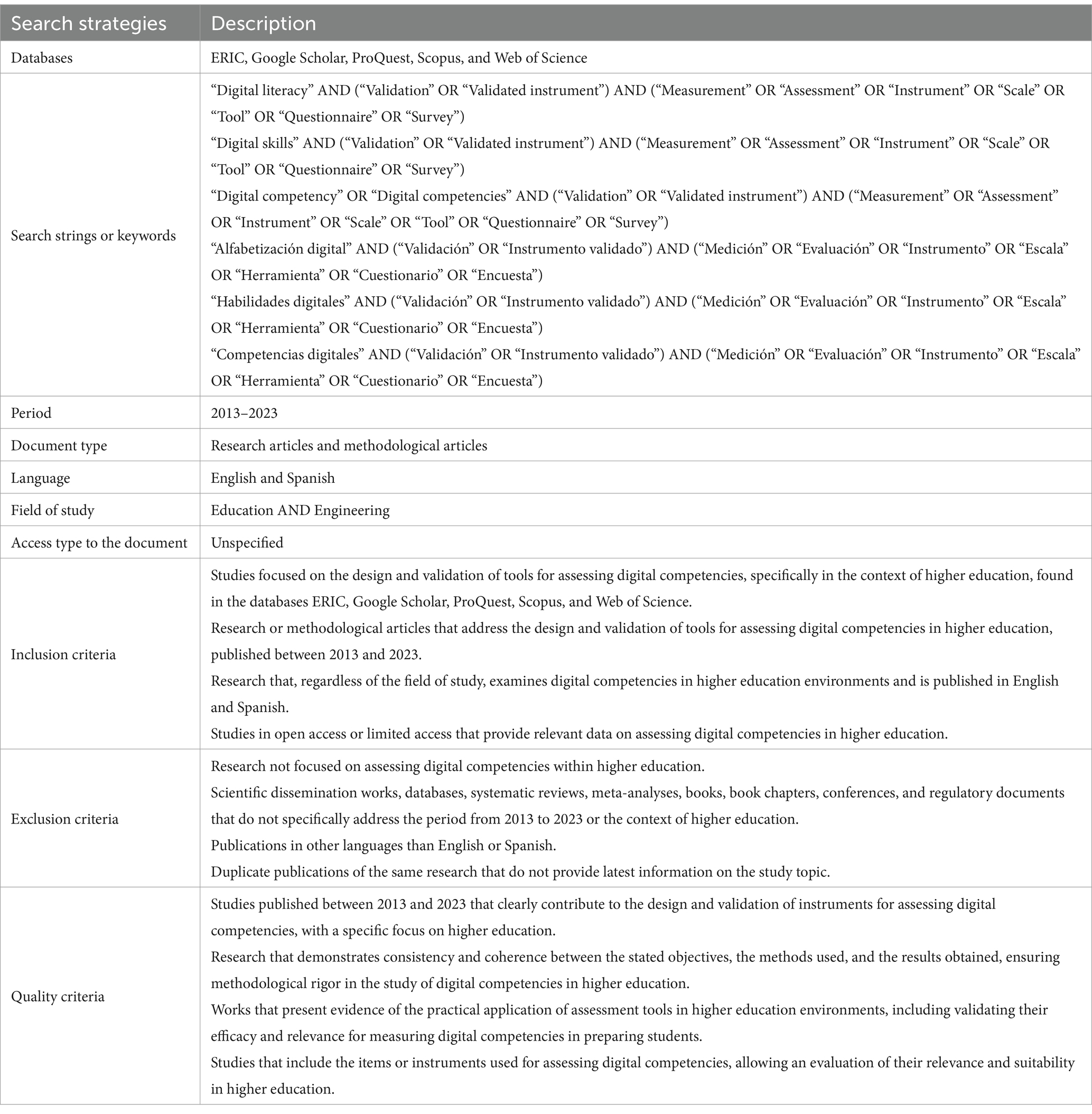

3.1.3 Search strategies

The search strategies considered: (a) databases, (b) search strings and keywords, (c) period, (d) type of document, (e) languages, (f) fields of study, and (g) type of access. Specifically, it was decided to initiate this review of existing literature through five databases: ERIC, Google Scholar, ProQuest, Scopus, and Web of Science. The goal was to gain an up-to-date understanding of the design and validation of instruments focused on assessing digital competencies in higher education. These databases were selected due to their broad recognition and usage within the academic community, ensuring access to reliable, high-quality sources. Additionally, searching these databases provides access to studies and research conducted in various countries, offering a global perspective.

The period from 2013 to 2023 was defined to capture a complete and current view of recent developments in designing and validating instruments for assessing digital competencies in higher education. This decision covers a decade of research, ensuring that both the most recent advancements and the works that laid the groundwork for current studies are included. Given the fast pace of technological change, this temporal perspective is essential to understanding how digital competencies in higher education have evolved and how assessment tools have adapted, or need to adapt, to the changing demands of the educational and technological landscape. It is also critical to determining the continued relevance and applicability of the instruments developed in this period, particularly in the context of Industry 5.0.

The choice of English and Spanish as languages for the search strategy was determined by the intention to capture a broad and diverse spectrum of research on the design and validation of instruments for assessing digital competencies in higher education. English is the predominant language in global academic literature, facilitating access to a significant volume of internationally impactful research (Liu and Hu, 2021; Meyerhöffer and Dreesmann, 2021; Kuzma, 2022). Additionally, Spanish is the second most spoken language globally and features a growing body of academic work, particularly in Spanish-speaking countries (Blaj-Ward, 2012). Including these two languages aims to maximize the coverage and cultural diversity of the reviewed research, ensuring the integration of global and regional perspectives. However, it is acknowledged that excluding other languages may limit the scope of the study. Consequently, future research will explore incorporating analyses in additional languages.

Regarding the type of access, both open-access and restricted sources were included to encompass the widest possible range of relevant literature. Considering both types of access, the review was not limited to those works available at no cost but also considered potentially relevant research published on subscription platforms or journals. This mixed approach ensures that an exhaustive evaluation of the state of the art on the subject is conducted, including pioneering or highly impactful works that might not be available in open access.

Finally, the selection of the fields of study in education and engineering was made specifically due to the central objective of the study, which focuses on conducting a thorough and meticulous analysis of the existing literature related to the design and validation of modern instruments for assessing digital competencies in higher education (see Table 2).

3.1.4 Inclusion, exclusion, and quality criteria

To ensure an accurate and rigorous systematic review of digital competencies in higher education, our team established inclusion, exclusion, and quality criteria. We meticulously selected relevant research from esteemed databases, spanning from 2013 to 2023, and including both theoretical and applied studies in English and Spanish. We excluded works outside the scope of higher education, duplicates, as well as publications not focused on the specified period or in languages other than English and Spanish. Our chosen studies had to demonstrate significant contributions to the field, exhibit methodological coherence, and provide evidence of practical applicability in higher educational environments. Our meticulous approach ensured a rigorous analysis of trends in assessing digital competencies, thus enabling a deeper comprehension and enhancement of educational practices in the digital era (see Table 2).
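The inclusion and exclusion criteria described above can be read as a sequence of filters applied to each retrieved record. The sketch below illustrates that logic only; the `Record` fields, the `screen` function, and the title-based duplicate check are hypothetical simplifications, not the procedure the authors actually implemented, and the quality criteria (contribution, methodological coherence, practical applicability) require human judgment and are not modeled.

```python
from dataclasses import dataclass

# Criteria taken from the text: 2013-2023, English or Spanish,
# higher education only, duplicates removed.
FIRST_YEAR, LAST_YEAR = 2013, 2023
ALLOWED_LANGUAGES = {"english", "spanish"}


@dataclass(frozen=True)
class Record:
    """Hypothetical minimal description of one retrieved document."""
    title: str
    year: int
    language: str
    level: str  # e.g. "higher education", "secondary education"


def screen(records):
    """Return the records that pass every inclusion/exclusion filter."""
    seen_titles = set()
    kept = []
    for record in records:
        # Assumption: duplicates are detected by normalized title.
        key = record.title.strip().lower()
        if key in seen_titles:
            continue
        seen_titles.add(key)

        if not FIRST_YEAR <= record.year <= LAST_YEAR:
            continue
        if record.language.lower() not in ALLOWED_LANGUAGES:
            continue
        if record.level.lower() != "higher education":
            continue
        kept.append(record)
    return kept
```

In practice, thematic relevance and quality would still be assessed manually on the records that survive these mechanical filters.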

3.2 Phase 2: implementation

As part of implementing the SLR, we followed the study selection and quality assessment procedure established in the planning phase. This process is an adaptation of the three stages outlined in the guidelines of Moher et al. (2015), which includes a PRISMA flow to show (a) identification of relevant studies, (b) data curation through predetermined filters, (c) eligibility of studies, and (d) the final documents included for analysis.

During the research process, a systematic selection of primary studies was conducted to identify documents focused on designing and validating tools for assessing digital competencies. This selection was conducted using the ERIC, Google Scholar, ProQuest, Scopus, and Web of Science databases, resulting in 9,563 documents. In the subsequent data curation stage, inclusion and exclusion criteria were applied to filter out irrelevant documents based on study topic, document type, discipline, language, type of access, period, thematic relevance, and duplication. After applying these filters, 4,634 documents remained for analysis.

Further analysis was conducted to determine the relevance of these documents to the research topic, leading to the inclusion of only 914 documents. A comprehensive review of these 914 documents was conducted to identify those specifically related to the design and validation of instruments for assessing digital competencies, resulting in 112 documents. Finally, to ensure the quality of the selected documents, a final filter was applied to identify those focused on higher education and with a detailed description of the items or instruments used to assess digital competencies. This rigorous selection process resulted in identifying and selecting 46 documents that met all the established criteria (see Figure 2).

Figure 2. PRISMA flowchart based on Moher et al. (2015).

A database was created using Microsoft Excel, containing information from various documents organized alphabetically by fields such as the database, author(s), document title, year, type of document, journal, or publisher, DOI, abstract, keywords, language, and type of access. The bibliographic database can be reviewed using the following link: https://bit.ly/databaseDL.

The documents were then analyzed to answer the research questions defined in the planning phase (see Table 1). The results for RQ1, RQ2, RQ4, RQ6, RQ7, and RQ8 were visualized using Tableau. The answers to RQ3 and RQ5 were presented in tabular form, given the importance of the information and to allow the analysis to be scanned quickly.

4 Results

The results section presents the findings of the SLR, organized around the eight research questions posed. The report comprises (1) an introduction, (2) the methodology (Phase 1: Preparation of the review and Phase 2: Implementation), (3) the results (Phase 3: Presentation of the findings), and (4) the discussion and conclusions.

4.1 Between 2013 and 2023, how many studies on assessing digital competencies in higher education were published, and what type of access characterizes these studies?

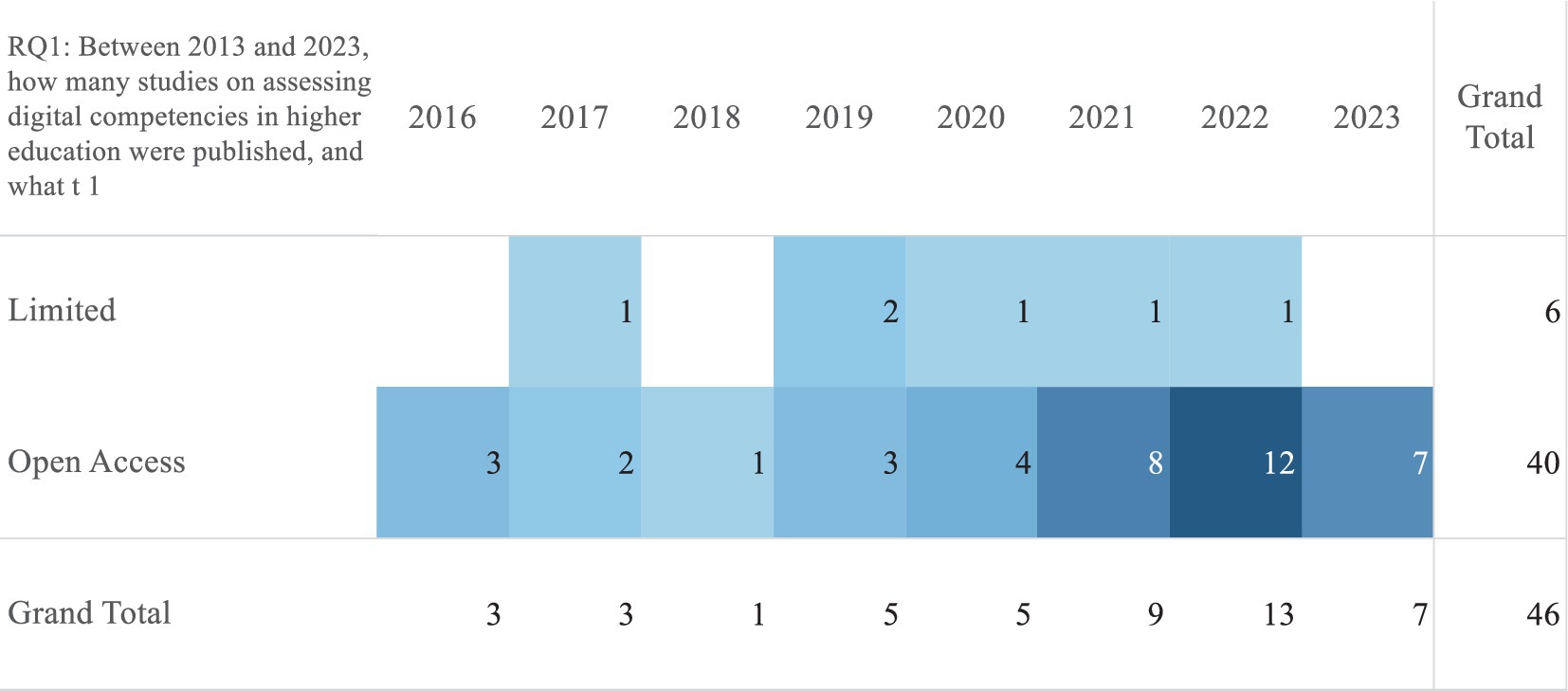

The literature analysis identified 46 studies, published between 2016 and 2023, that assessed digital competencies in higher education with available instruments. No studies on the topic with available instruments were found for 2013 to 2015 in the databases searched. The analysis also shows an overall upward publication trend, with 46 studies across the 8-year period. The year with the most published studies was 2022 (n = 13), and the year with the fewest was 2018 (n = 1). Notably, 40 of the 46 studies were published open access, suggesting a growing trend toward greater accessibility of research on digital competencies in higher education (see Figure 3).

Figure 3. Temporal evolution of the publication of studies on digital competencies in higher education, classified by type of access.

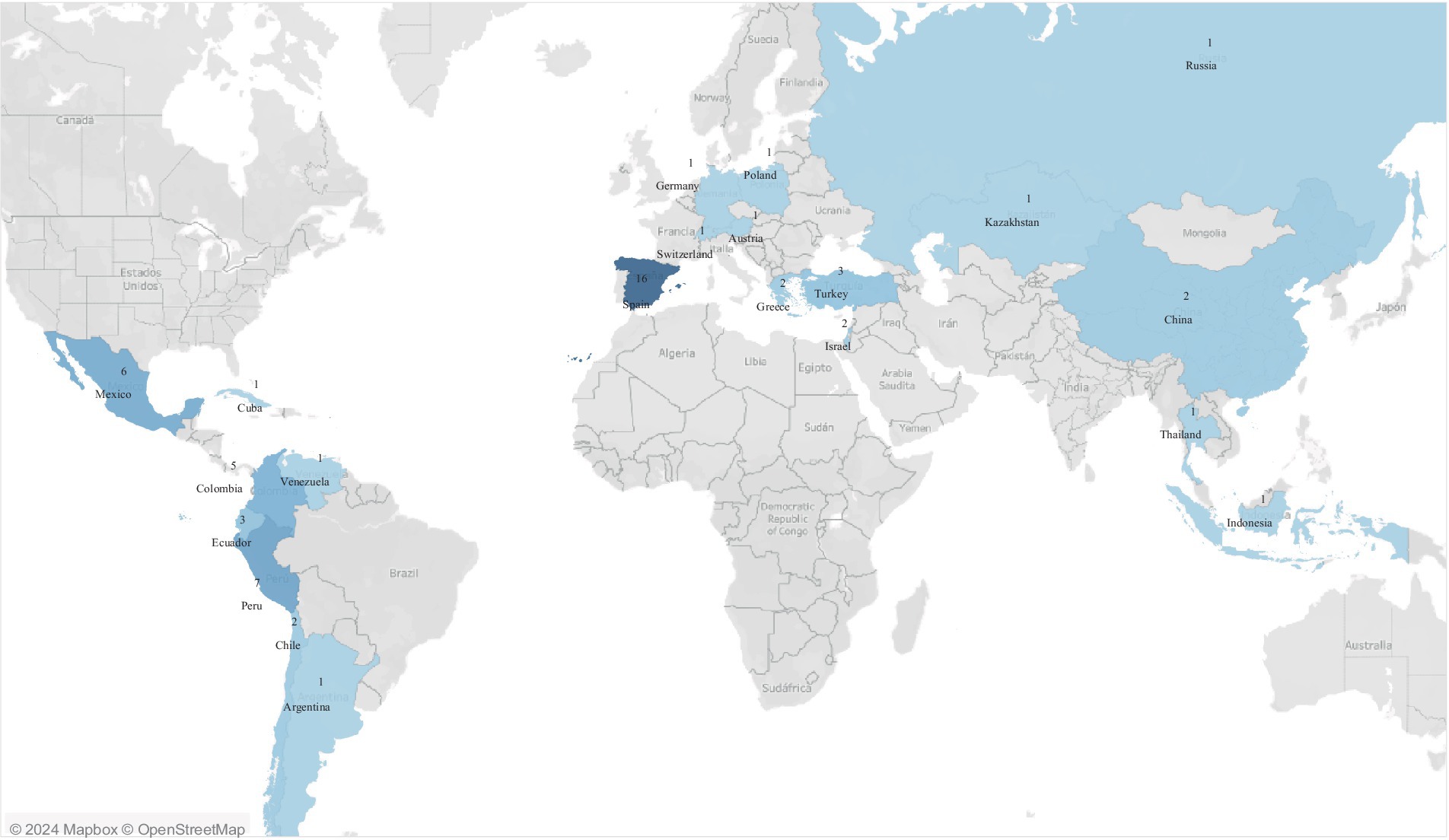

4.2 In which countries have the studies on this topic been conducted?

Across the 46 studies analyzed, a total of 58 country participations were counted, because six studies involved universities in several countries [2, 21, 25, 26, 30, 38]. This count does not include the study conducted across Europe, which was counted as a region [9]. Spain leads the number of studies (n = 16). Research was also recorded in Austria (n = 1), Greece (n = 2), Poland (n = 1), Switzerland (n = 1), and Germany (n = 1), as well as in a generalized study across various European countries [9]. This reflects an active interest in the region in digital competencies in higher education. The countries in second, third, and fourth positions, however, are located in Latin America: Peru (n = 7), Mexico (n = 6), and Colombia (n = 5). Studies in Ecuador (n = 3), Chile (n = 2), Venezuela (n = 1), Argentina (n = 1), and Cuba (n = 1) also contribute to the body of research, underscoring the relevance of the topic in Latin America. In Asia, studies were identified in China (n = 2), Israel (n = 2), Thailand (n = 1), Indonesia (n = 1), Kazakhstan (n = 1), and Russia (n = 1). No studies addressing the topic in higher education were found in English-speaking countries, indicating a significant research gap in this area. Additionally, no studies with instruments related to the topic in higher education were identified in Africa (see Figure 4).

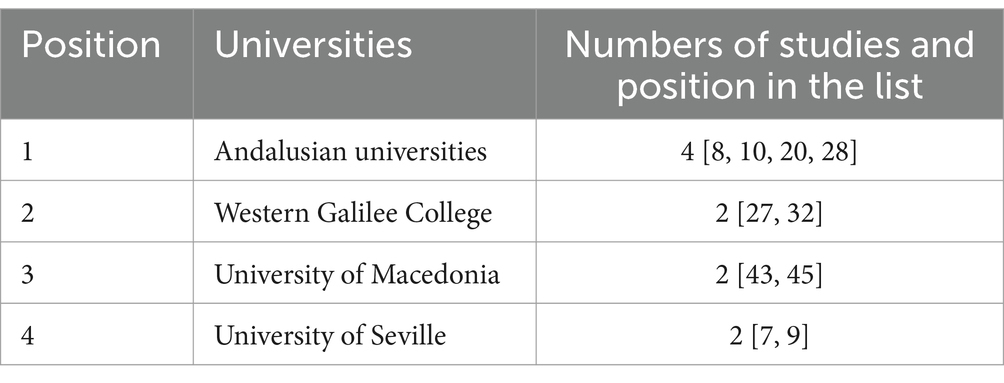

4.3 Which universities have conducted studies related to the topic?

The review of the 46 studies shows a wide dispersion across institutions, with a total of 72 institutions involved in conducting the studies. Most contributed a single study: 68 universities have a single publication. Among the institutions that conducted more than one study, the Andalusian universities stand out with four studies [8, 10, 20, 28], which may reflect a regional focus or a group of researchers active in this area in Andalusia. Western Galilee College, the University of Macedonia, and the University of Seville each contributed two studies, which may indicate a sustained interest or specialization in the topic within these universities. One study examined various universities in Spain [12]. In addition, 15 studies [4, 11, 21, 23, 25, 26, 27, 30, 31, 33, 35, 37, 38, 41, and 42] conducted their research in two or more universities. This may reflect a trend toward collaborative and transdisciplinary approaches in digital competency research. Collaboration between institutions may also be a strategy to enhance research quality by including diverse educational and cultural contexts (see Table 3).

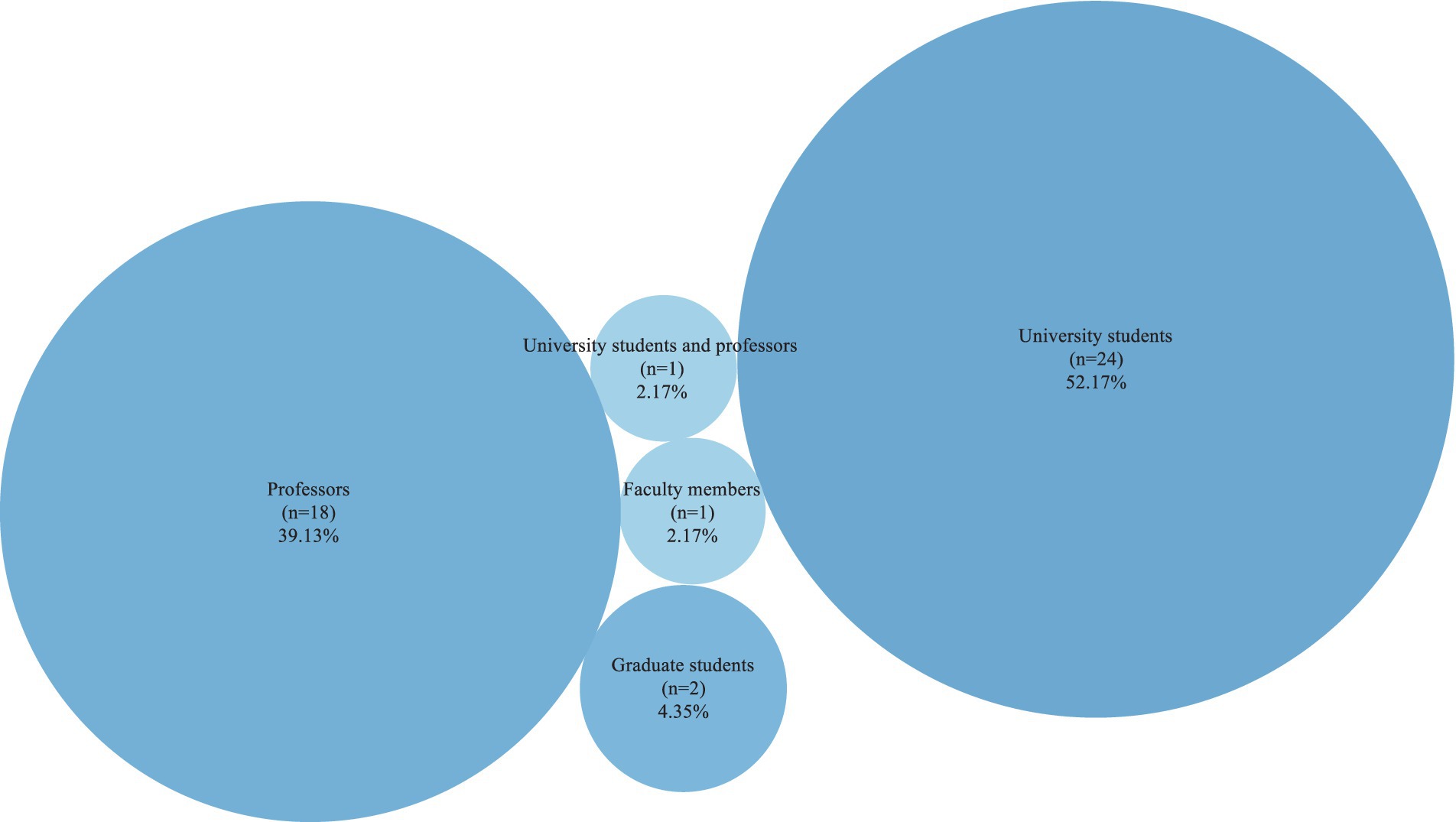

4.4 What populations have been studied in digital competency research?

The population that participated in each study was identified through the literature review. University students account for just over half of the studied populations (52.17%, n = 24), making them the most researched group. Teachers were the second largest group (39.13%, n = 18), suggesting they are also a significant research focus. One study included both population groups (2.17%, n = 1), which may indicate an interest in exploring digital competencies in a context that spans both groups. Graduate students (4.35%, n = 2) are less represented, which could reflect a lower frequency of research focused on this subpopulation within higher education institutions. This distribution indicates that most research focuses on undergraduate students and teachers, likely because of the direct relevance of digital competencies for these groups in the educational context (see Figure 5).
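
The reported percentages follow directly from the study counts and the total of 46 studies. A minimal sketch of that calculation is shown below; the dictionary keys and the `shares` helper are illustrative names, and the counts are those given in the text. Note that the four groups itemized in the text sum to 45, so one of the 46 studies is not covered by this breakdown.

```python
# Study counts per population group, as reported in the text.
TOTAL_STUDIES = 46
POPULATION_COUNTS = {
    "University students": 24,
    "Teachers": 18,
    "Graduate students": 2,
    "Students and teachers": 1,
}


def shares(counts, total):
    """Percentage of `total` represented by each group, rounded to 2 decimals."""
    return {group: round(100 * n / total, 2) for group, n in counts.items()}


if __name__ == "__main__":
    for group, pct in shares(POPULATION_COUNTS, TOTAL_STUDIES).items():
        print(f"{group:<25} {POPULATION_COUNTS[group]:>3} {pct:>6.2f}%")
    print("Studies itemized:", sum(POPULATION_COUNTS.values()))
```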

4.5 Which instruments were used in the studies? What are the instrument’s dimensions and item count? Was it designed for the study’s context, adapted, or validated?

To answer this question, the instrument from each of the 46 studies was analyzed, and its dimensions and items were reviewed. Table 4 identifies whether each instrument was designed for the specific study, validated, or adapted. Most of the instruments (n = 27) underwent a design and validation process: they were created from scratch to meet specific requirements and then validated to check that they effectively and accurately measure the intended competencies and skills. This suggests a preference for customized instruments that fit the specific needs and contexts of higher education. Thirteen instruments were adaptations of existing assessment tools, modified and validated for new contexts of use. Only six of the 46 studies reported validating a pre-existing instrument in a particular higher education context. The most used instrument was the DigCompEdu Check-In, which appeared in seven studies [7, 8, 9, 10, 16, 28, 29]. Thirteen studies developed an unnamed instrument [6, 13, 15, 17, 20, 21, 23, 27, 31, 33, 37, 39, 40] (see Table 4).

The most common structure was six dimensions (n = 12), followed by three dimensions (n = 10). Instruments with five or four dimensions are also prevalent, indicating a balance between breadth and specificity. Fewer instruments have seven dimensions (n = 3), and only one each has eight or 10 dimensions. Instruments with one or two dimensions are rare (n = 1 each), indicating less preference for assessment approaches focused on specific competencies. Regarding length, the most common item count was 22 items (n = 6), followed by 20 items (n = 3) and 14 items (n = 3). Six instruments had between 16 and 18 items. One instrument was exceptionally long, with 181 items. In contrast, one instrument had only nine items and another had 11. This analysis shows wide variation in the length of assessment instruments used in higher education (see Table 4).

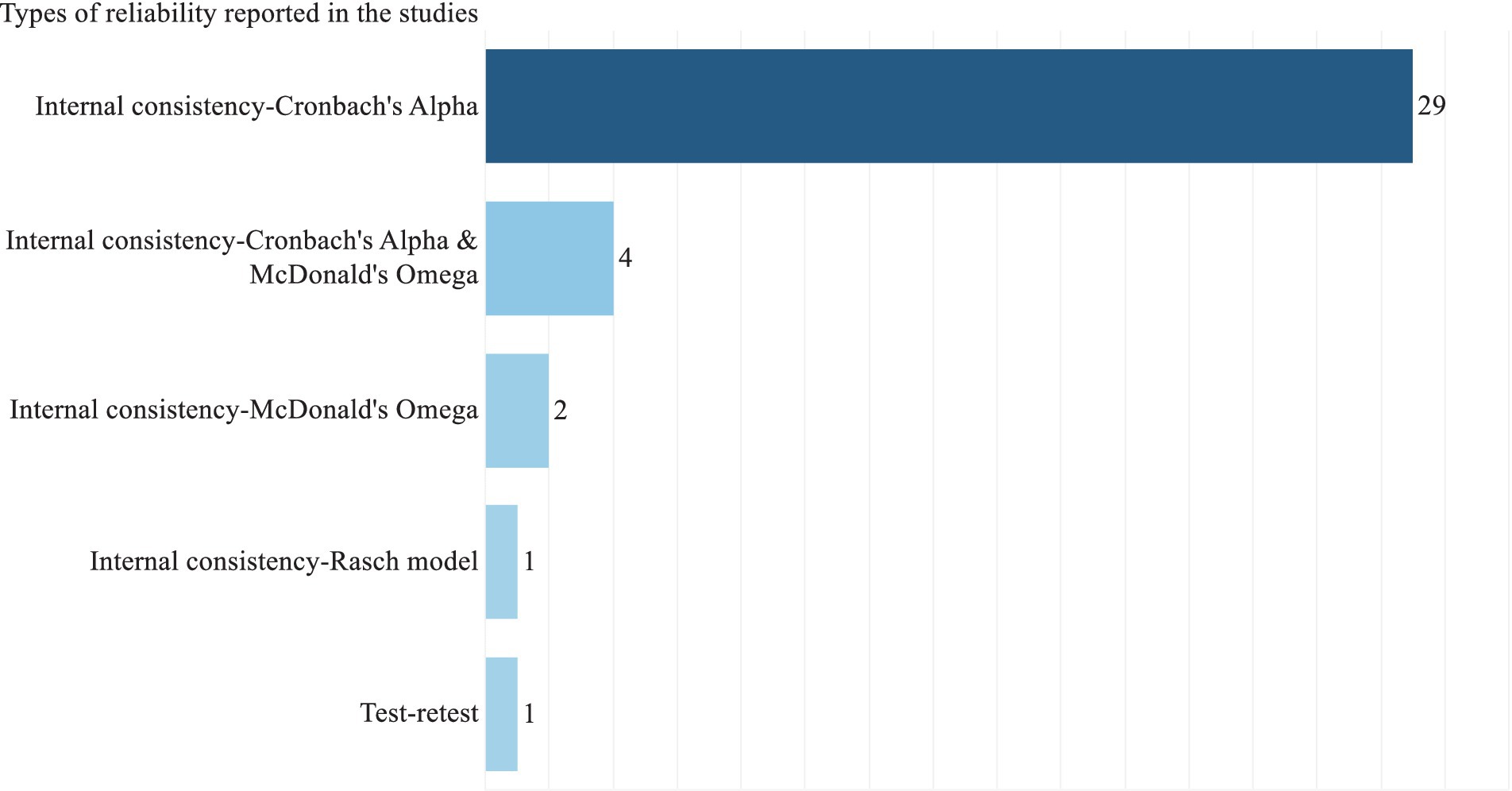

4.6 What types of reliability have been reported in studies on assessing digital competencies in higher education from 2013 to 2023?

Three types of reliability were considered: (a) internal consistency, (b) split-half reliability, and (c) test–retest. Most studies established reliability through internal consistency, primarily using Cronbach’s Alpha coefficient (n = 29). Four studies reported two internal consistency coefficients, Cronbach’s Alpha and McDonald’s Omega (n = 4), and two other studies reported McDonald’s Omega alone. The coefficient values are recorded in the study database; all instruments assessed in this way show acceptable to good reliability. One study [1] established reliability through the test–retest method. Lastly, one study established internal consistency through the Rasch Model [22]. This model places an individual’s ability and an item’s difficulty on a common logit scale (log-odds units), so that the probability of a given response depends on the difference between the two. The study reported that the Rasch Model was used to enhance the reliability of the results (Hidayat et al., 2023). No study among the 46 analyzed reported split-half reliability. Additionally, 10 studies that used an instrument related to digital competencies in higher education did not report any reliability test [3, 9, 15, 18, 23, 24, 34, 38, 39, 43] (see Figure 6).
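
To make the two main approaches concrete, the sketch below computes Cronbach's Alpha from a respondents-by-items score matrix and evaluates the dichotomous Rasch response probability on the logit scale. It is an illustration under stated assumptions, not the procedure used by any of the reviewed studies: the function names and the sample scores are hypothetical, and sample variances (n - 1 denominator) are assumed.

```python
import math
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's Alpha for a list of respondents, each a list of k item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("At least two items are required.")
    items = list(zip(*scores))  # transpose: one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


def rasch_probability(ability, difficulty):
    """Probability of a positive response in the dichotomous Rasch model.

    Both arguments are expressed in logits; only their difference matters.
    """
    return 1 / (1 + math.exp(-(ability - difficulty)))


if __name__ == "__main__":
    # Hypothetical 5-point Likert responses: 5 respondents x 4 items.
    sample = [
        [4, 5, 4, 4],
        [2, 3, 2, 3],
        [5, 5, 4, 5],
        [3, 3, 3, 2],
        [1, 2, 2, 1],
    ]
    print(f"Cronbach's Alpha: {cronbach_alpha(sample):.3f}")
    print(f"P(ability = 1, difficulty = 0): {rasch_probability(1.0, 0.0):.3f}")
```

When ability equals difficulty the Rasch probability is exactly 0.5, which is what it means for the two quantities to share a scale.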

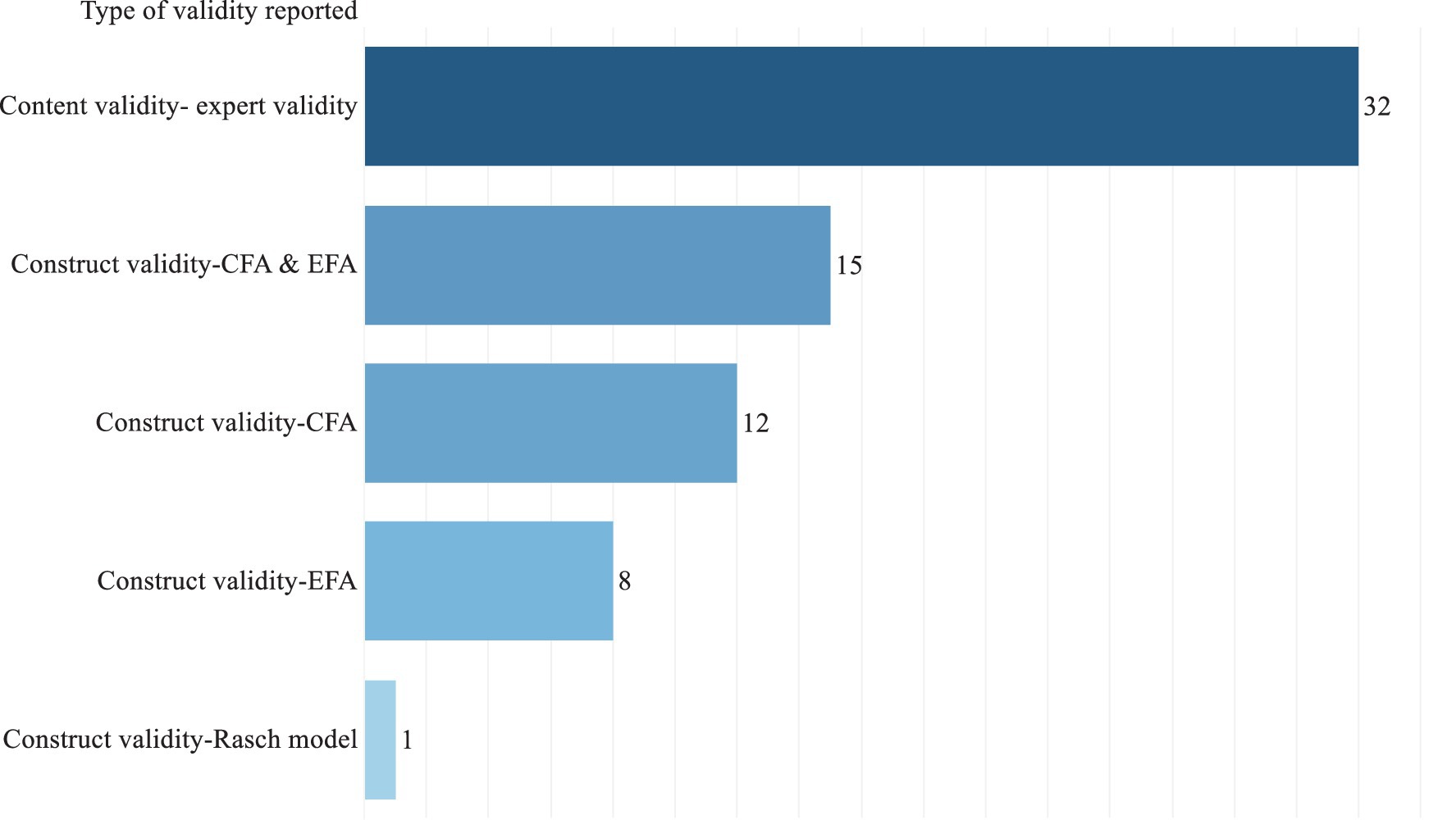

4.7 What types of validity have been reported in studies on assessing digital competencies in higher education from 2013 to 2023?

Four types of validity were considered: (a) content validity, (b) construct validity, (c) criterion validity, and (d) face validity. For construct validity, two types of analysis were identified: confirmatory factor analysis (CFA) and exploratory factor analysis (EFA). Fifteen studies reported both analyses, 12 reported only CFA, and eight reported only EFA. Content validity based on expert judgment predominates in the analyzed research, with 32 studies reporting its use. This finding suggests careful attention to ensuring that the measurement instruments adequately reflect the conceptual domain of digital competencies through qualitative review by experts in the field. The study by Hidayat et al. (2023) reported using the Rasch model for data cleaning and for testing the reliability and validity of the instrument. Criterion validity was reported in only three studies [21, 29, 34], and no study reported face validity. All studies reported at least one type of validity, most often content or construct validity (see Figure 7).
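
The construct validity counts can be combined to see how many of the 46 studies used factor analysis at all. The following minimal sketch uses only the counts reported in the text; the variable names are illustrative.

```python
# Construct validity counts as reported in the text.
TOTAL_STUDIES = 46
CONSTRUCT_VALIDITY = {
    "CFA and EFA": 15,
    "CFA only": 12,
    "EFA only": 8,
}

# Studies reporting any factor-analytic evidence of construct validity.
construct_total = sum(CONSTRUCT_VALIDITY.values())
construct_share = round(100 * construct_total / TOTAL_STUDIES, 2)

# Studies that used CFA, with or without EFA.
cfa_total = CONSTRUCT_VALIDITY["CFA and EFA"] + CONSTRUCT_VALIDITY["CFA only"]

if __name__ == "__main__":
    print(f"Construct validity: {construct_total} studies ({construct_share}%)")
    print(f"Studies using CFA:  {cfa_total}")
```

With these counts, 35 studies report construct validity, about 76% of the total.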

4.8 Do higher education digital competency instruments include Industry 5.0 core competencies?

To determine which tools are associated with key competencies of Industry 5.0, such as creativity, communication, collaboration, and critical thinking, the dimensions and items of the instruments were analyzed. For this analysis, instruments that provided full access to their dimensions and items from the initial selection phase were prioritized, allowing an exhaustive qualitative analysis of each key competency. This strategy ensured that each evaluated instrument offered a detailed view of how these competencies are conceptualized and operationalized. Analyzing the dimensions made it possible to discern the presence of these competencies in instruments that assess digital competencies, which is critical for evaluating their alignment with the requirements of Industry 5.0. The studies selected within the database show how these key competencies manifest in real educational contexts and provide a foundation for future academic and curricular interventions.

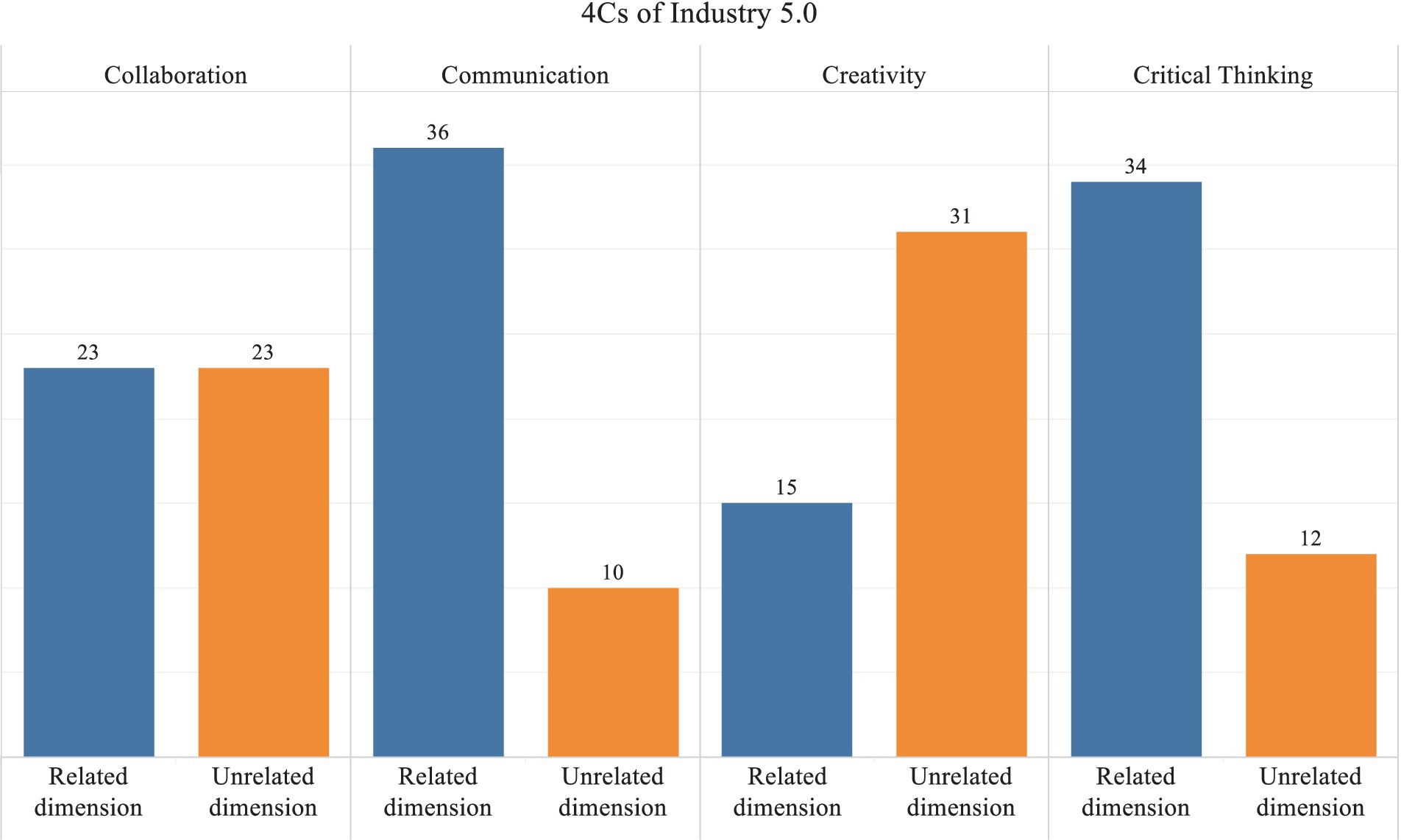

Communication emerged as the most represented skill across the instruments’ dimensions and items (n = 36), underscoring its crucial role in the digital landscape of Industry 5.0. Nonetheless, 10 instruments were identified that did not relate to this Industry 5.0 competency. For instance, Tang et al. (2022) assessed digital competencies for online teaching, focusing on specific technical and pedagogical aspects rather than directly on communication skills. Similarly, Gallardo-Echenique (2013) validated an instrument for digital teacher competency that concentrated on general professional and pedagogical skills. Another example is the instrument adapted by Betancur-Chicué et al. (2023), which focused more on digital content and learning assessment than on direct communication, potentially explaining the lack of an explicit link to communication competency.

Critical thinking was the second most represented competency in the instruments’ dimensions (n = 34), with only 12 instruments lacking dimensions related to it. A notable example is the validation by Riquelme-Plaza et al. (2022), which incorporated a high level of critical thinking by requiring teachers to develop and implement innovative and effective solutions in digital educational contexts, with dimensions such as “Digital Content Creation” and “Problem-Solving.” Similarly, the instrument validated by Bernate et al. (2021) targeted the digital competencies of university students, with a dimension focusing on “Critical Thinking, Problem Solving, and Decision Making,” underscoring the significance of critical thinking within digital competencies and its central role in the effective and ethical use of technology. Moreover, the study by Monsalve et al. (2021) on digital competencies in virtual and distance learning programs included “Critical Thinking, Problem Solving, and Decision Making” as a dimension, highlighting the fundamental importance of critical thinking in navigating and managing digital environments effectively.

Furthermore, 23 instruments related to the collaboration competency were identified, with an equal number lacking dimensions linked to collaboration. A connection between communication and collaboration was also noted among the dimensions of the instruments addressing this Industry 5.0 competency. For example, von Kotzebue et al. (2021) evaluated communication and collaboration within the same dimension, emphasizing the importance of promoting collaborative practices and effective communication in science education environments. Tzafilkou et al. (2022) established a dimension encompassing communication, collaboration, and information sharing, which are essential competencies for teamwork and group projects in educational settings and facilitate student interaction and information exchange. Additionally, Cabero-Almenara and Palacios-Rodríguez (2020) validated the DigCompEdu Check-In questionnaire, linking communication and collaboration as skills for interacting and collaborating efficiently in digital environments. The questionnaire prepares teachers in training to use these competencies in their teaching practice, thereby enhancing group work dynamics and interaction with students.

A limited number of tools (n = 15) were observed to incorporate dimensions or items related to creativity, making it the least emphasized core competency in the context of digital skills for higher education. One instrument that considers creativity, in its “Digital Content Creation” dimension, is that of Casildo-Bedón et al. (2023), since creating digital content is a fundamental skill that enables students to explore and express their creativity by designing solutions and content that reflect their unique understanding and vision. Similarly, Hidayat et al. (2023) analyzed teachers’ digital competencies in Indonesia, and one of the dimensions reviewed was the ability to create innovative digital content, which is critical for developing creative skills. This dimension assesses how future teachers design and create digital resources essential for creative teaching and active learning. Another example is the validation of an instrument based on the INTEF framework (2017), which includes a digital content creation dimension focused on developing original and relevant digital content, a central skill for creative teaching and the generation of innovative educational materials (Chávez-Melo et al., 2022). These three studies consider the relationship between creativity and the digital competencies of teachers (Casildo-Bedón et al., 2023; Chávez-Melo et al., 2022; Hidayat et al., 2023). The results of the analysis of the 46 instruments can be visualized in Figure 8.

Figure 8. Exploring the relationship between Industry 5.0’s core competencies and digital competency instruments.
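
The coverage figures reported in this subsection can be placed side by side with a short calculation. The sketch below is illustrative only: the counts come from the text, while the `coverage_table` helper and the rounding to one decimal are choices made here.

```python
# Number of instruments (out of 46) whose dimensions or items relate
# to each Industry 5.0 core competency, as reported in the text.
TOTAL_INSTRUMENTS = 46
COVERED = {
    "communication": 36,
    "critical thinking": 34,
    "collaboration": 23,
    "creativity": 15,
}


def coverage_table(covered, total):
    """Return (competency, covered, not_covered, percent) sorted by coverage."""
    ordered = sorted(covered.items(), key=lambda item: item[1], reverse=True)
    return [
        (name, n, total - n, round(100 * n / total, 1))
        for name, n in ordered
    ]


if __name__ == "__main__":
    for name, n, missing, pct in coverage_table(COVERED, TOTAL_INSTRUMENTS):
        print(f"{name:<18} covered={n:>2} not covered={missing:>2} ({pct}%)")
```

The complement counts match those stated in the text for communication (10), critical thinking (12), and collaboration (23), and imply that 31 instruments have no dimension or item linked to creativity.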

5 Discussion

The study’s findings reveal that the dimensions of the instruments measuring digital competencies in higher education across the 46 analyzed studies show a direct relationship with communication and critical thinking competencies. Specifically, the results demonstrate an interconnection of digital competencies with communication in various fundamental aspects. For instance, Durán Cuartero et al. (2016a) designed an instrument to certify ICT competencies among university faculty, including effective communication and collaboration with students and peers among its dimensions. On the other hand, González-Calatayud et al. (2022) developed a tool based on the EmDigital model to assess how university students utilize their digital competencies in entrepreneurship and collaboration initiatives, emphasizing the importance of clear communication and teamwork in the digital entrepreneurship domain. From this scenario, it is evident, as noted by Ungureanu (2020) and Wolniak (2023), that effective communication is essential as it acts as the link facilitating effective collaboration and idea exchange, fundamental elements for progress in academic and professional environments. This connection becomes even more relevant in a fully digitalized world, where the ability to adapt and function within digital communication networks is fundamental for personal and professional success.

Furthermore, the results reveal a clear trend toward integrating critical thinking into the dimensions assessed by various digital competency instruments, underscoring its significance in higher education. For example, Bernate et al. (2021) highlight how critical thinking, problem-solving, and decision-making are essential for managing digital information. This approach fosters technical competency and promotes a reflective and analytical attitude toward information and technology, encouraging responsible and conscious use. This is particularly important given the significance of critical thinking in Industry 5.0, as it is fundamental for navigating the complexity of global information, enabling individuals to analyze deeply and reflectively the challenges and opportunities presented by the modern economy (Ungureanu, 2020). Therefore, integrating critical thinking in digital education prepares students to face technological challenges and equips them with transferable skills crucial for success in the information and knowledge society. This perspective is supported by the growing labor market demand for skills that encompass both technical competency and critical and analytical capabilities, making critical thinking a central pillar of modern education at all levels (Gürdür Broo et al., 2022).

Through the analysis of the dimensions of the instruments, the interrelation between communication and collaboration in several of the analyzed instruments is evident, where these skills are recognized as fundamental for collective work and learning in digital environments. For instance, von Kotzebue et al. (2021) emphasize the need to promote collaborative practices and effective communication, particularly in the educational context of the sciences, where the ability to work together and communicate findings is vital. Similarly, Tzafilkou et al. (2022) recognize the importance of information sharing, communication, and collaboration as essential skills for successful group projects in education. These examples illustrate the perception that collaboration is not merely an additional skill but an essential component of digital competency that drives educational effectiveness and preparedness for the modern labor market, where the ability to work in teams and communicate effectively is more critical than ever. According to Gürdür Broo et al. (2022), communicative competency is a key element for engineers, enabling the effective exchange of ideas, clear presentation of technical solutions, and effective collaboration with colleagues from various disciplines. This integration of competencies reflects the growing demand for interdisciplinary skills and effective collaboration, underscoring the importance of educating future professionals in a context that values both technical competency and social and communicative skills.

The limited presence of creativity in the evaluated tools is both a significant challenge and an opportunity for digital competency assessment in higher education. This analysis suggests an urgent need to expand the assessment of digital skills to include and enhance creative thinking, which is essential not only for responding to current technical demands but also for cultivating the innovative capacities necessary in a global and technologically advanced environment. As mentioned by Ungureanu (2020) and Aslam et al. (2020), in a world where innovative ideas are critically valuable, creativity is indispensable for developing new and effective solutions. This capability enriches the technology sector and drives efficiency and sustainability. This need to focus assessment on broader and transversal competencies aligns with the current demands of a constantly evolving work environment, where creativity is fundamental for the development of new technologies and sustainable solutions, as noted by Gürdür Broo et al. (2022). As we move toward a more interconnected and technologically dependent society, assessment instruments must reflect and promote a broader spectrum of digital skills, including those that drive innovation and adaptability.

In this sense, the studies highlight the importance of developing valid assessment tools encompassing a broader spectrum of competencies, including creativity and collaboration. This need aligns with the transition toward Industry 5.0, marking an evolution in the demand for labor competencies, where human skills emerge as essential elements to foster sustainable and equitable economic development (Breque et al., 2021; Ivanov, 2023). Therefore, it is necessary both to develop and to monitor the four key competencies of Industry 5.0: creativity, communication, collaboration, and critical thinking, both in the workforce and in higher education and engineering (Gürdür Broo et al., 2022). Assessment tools must link digital competencies with the competencies relevant to Industry 5.0, ensuring that graduates are competent in the use of advanced technologies and possess the interpersonal and creative skills necessary to lead in an evolving work environment.

Given the growing importance of core competencies alongside digital competencies for Industry 5.0, assessment instruments must be able to measure these complex constructs reliably. The analysis showed that 10 studies implementing assessment instruments for digital competencies in higher education did not report any reliability test. Where reliability was reported, it was mostly evaluated through Cronbach’s Alpha and McDonald’s Omega; only one study reported test–retest reliability, and no instrument was evaluated using split-half reliability. Unreliable measurement can lead to underestimating or overestimating these skills, affecting students’ preparedness for job market challenges. Future studies that design, adapt, or validate instruments for assessing digital competencies should consider that each type of reliability addresses a different aspect, from the internal consistency of an instrument to its temporal stability and the equivalence between various forms of measurement (Echeverría Samanes and Martínez Clares, 2018). The absence of reliability assessment limits the validity of the obtained results and affects educators’ ability to design appropriate educational interventions supported by reliable data. Moreover, although Cronbach’s alpha is widely accepted, its predominance as a method of assessing reliability may not be sufficient to fully capture the complexity and multidimensionality of digital competencies and soft skills. Including complementary approaches in the reliability assessment not only enriches the interpretation of the data but also strengthens the validity of the instruments used, allowing for more precise and well-founded interventions in educational and professional settings. This is especially relevant in the context of digital competencies, where the speed of technological change and the diversity of practical applications demand instruments that are not only current but also adaptive and sensitive to contextual variations (Xu et al., 2021; Gürdür Broo et al., 2022).

In contrast, the review of the instruments used in the 46 studies reveals a consistent focus on validation: each reported at least one type of validity. Notably, 32 instruments underwent a content validity process with expert intervention, emphasizing the importance of ensuring that the items faithfully reflect the specific domain intended to be measured. Furthermore, construct validity, assessed through CFA and EFA, was applied in 35 instruments, representing 76.09% of the total examined. By incorporating advanced statistical techniques, this approach facilitates a rigorous exploration of how items cluster into dimensions and how these reflect the specific competencies in question (Lee, 2021). However, the context in which these instruments are applied must be considered carefully to determine their validity and applicability, highlighting the need for an adaptive and contextualized approach in validating assessment tools (Goretzko et al., 2021). This emphasis on robust and contextualized validation underscores a rigorous approach to measuring digital competencies and soft skills, which is essential for preparing students within the framework of Industry 5.0. The ability to assess these competencies reliably is crucial, as it paves the way for developing educational strategies that respond effectively to the changing demands of the work environment. Thus, this study contributes to educational assessment by providing a foundation for future research and practical applications in higher education.

The findings of this SLR underscore the need to keep exploring and refining assessment instruments that address both digital competencies and soft skills. The interplay between these competencies and the evolving demands of the labor market calls for assessments that extend beyond technical proficiency to encompass abilities such as creativity, communication, and collaboration, which are pivotal in the context of Industry 5.0 (Goretzko et al., 2021). This study highlights the need for innovative approaches to measuring these competencies and reinforces the role of higher education in preparing professionals capable of leading and adapting within a global environment dominated by information and the knowledge economy. Additionally, this research could guide the design of future studies that integrate and assess both foundational and digital competencies in higher education, so that students are adequately equipped to confront the challenges of a changing workplace. It also lays the groundwork for subsequent initiatives to refine competency assessment methodologies and foster a more holistic and relevant educational approach.

6 Conclusion

The study aimed to explore the connection between Industry 5.0 core competencies and digital competency assessment instruments in higher education. The results highlight the need for valid assessment tools that cover a broad range of competencies, such as creativity, communication, and collaboration. These tools should include digital competencies and core competencies of Industry 5.0 to align higher education with the vision of a sustainable and human-centered future. It is essential to involve students and educators in developing digital competencies and soft skills. Future research should also focus on creating instruments that effectively measure technical and soft skills in dynamic work environments.

The study provides a relevant analysis of the tools used to assess digital competencies. However, it has limitations related to its design, methodology, and scope. One limitation is the selection of languages, as it only considered documents in English and Spanish. This prevented the inclusion of relevant research published in other languages, which could have provided different perspectives on digital competencies in higher education. This limitation should be considered when interpreting the results and planning future research; studies aiming for a more global scope should include publications in various languages. Additionally, the study focuses only on higher education. Therefore, the findings and conclusions cannot be generalized to other educational levels, such as primary, secondary, or technical and vocational education, or to the labor market. This focus precludes exploring how digital competencies are developed and assessed throughout the entire educational and career trajectory. Understanding this evolution from early stages could be crucial for designing effective educational interventions that prepare students to face digital challenges at more advanced levels of education and in the job market.

Moreover, instruments validated and evaluated in the context of higher education may not be directly applicable or relevant for students at lower educational levels, such as upper secondary or basic education. This underscores the importance of adapting and validating instruments for different student populations, considering age, cognitive development level, and prior digital competencies. In summary, while the study provides valuable information on the assessment of digital competencies in higher education, its restriction to one educational level highlights the need for additional research that addresses the assessment and development of these competencies across a broader educational spectrum with the depth of an SLR.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

IP-S: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Resources, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. LG-M: Investigation, Supervision, Validation, Writing – review & editing. GR-F: Investigation, Supervision, Validation, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. The authors would like to thank Tecnologico de Monterrey for the financial support provided through the ‘Challenge-Based Research Funding Program 2023’, Project ID #IJXT070-23EG99001, titled ‘Complex Thinking Education for All (CTE4A): A Digital Hub and School for Lifelong Learners.’ The authors also acknowledge the academic and financial support of the Writing Lab, Institute for the Future of Education, Tecnologico de Monterrey, México.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2024.1415800/full#supplementary-material

References

Ahmad, S., Umirzakova, S., Mujtaba, G., Amin, M. S., and Whangbo, T. (2023). Education 5.0: requirements, enabling technologies, and future directions. Available at: http://arxiv.org/abs/2307.15846 (Accessed February 22, 2024).

Alagözlü, N., Koç, D. K., Ergül, H., and Bağatur, S. (2019). News media literacy skills and violence against women in news reporting in Turkey: instrument development and testing. Gend. Technol. Dev. 23, 293–313. doi: 10.1080/09718524.2019.1672296

Alarcón, R., del Pilar Jiménez, E., and de Vicente-Yagüe, M. I. (2020). Development and validation of the DIGIGLO, a tool for assessing the digital competence of educators. Br. J. Educ. Technol. 51, 2407–2421. doi: 10.1111/bjet.12919

Arroba-Freire, E., Bonilla-Jurado, D., Guevara, C., and Ramírez-Casco, A. (2022). Factor analysis: an application of the digital competencies questionnaire in students of the business administration career of the Instituto Tecnológico Superior España (ISTE). J. High. Educ. Theory Pract. 22:199. doi: 10.33423/jhetp.v22i18.5711

Arslantas, T. K., and Gul, A. (2022). Digital literacy skills of university students with visual impairment: a mixed-methods analysis. Educ. Inf. Technol. 27, 5605–5625. doi: 10.1007/s10639-021-10860-1

Aslam, F., Aimin, W., Li, M., and Rehman, K. U. (2020). Innovation in the Era of IoT and industry 5.0: absolute innovation management (AIM) framework. Information 11:124. doi: 10.3390/info11020124

Bakkar, M. N., and Kaul, A. (2023). “Education 5.0 Serving Future Skills for Industry 5.0 Era,” in Advanced Research and Real-World Applications of Industry 5.0. eds. M. Bakkar and E. McKay (IGI Global), 130–147.

Benešová, A., and Tupa, J. (2017). Requirements for education and qualification of people in Industry 4.0. Proc. Manufact. 11, 2195–2202. doi: 10.1016/j.promfg.2017.07.366

Bernate, J., Fonseca, I., Guataquira, A., and Perilla, A. (2021). Digital competences in bachelor of physical education students. Retos 41, 309–318. doi: 10.47197/retos.v0i41.85852

Betancur-Chicué, V., Gómez-Ardila, S.-E., Cárdenas-Rodríguez, Y.-P., Hernández-Gómez, S.-A., Galindo-Cuesta, J.-A., and Cadrazco-Suárez, M.-A. (2023). Instrumento Para La Identificación de Competencias Digitales Docentes Validación de Un Instrumento Basado En El DigCompEdu En La Universidad de La Salle, Colombia. Prisma Soc. 41, 27–46.

Blaj-Ward, L. (2012). Academic writing in a global context. Compare J. Comparat. Int. Educ. 42:3. doi: 10.1080/03057925.2012.657928

Breque, M., De Nul, L., and Petridis, A. (2021). Industry 5.0: towards a sustainable, human-centric and resilient European industry. Brussels, Belgium: European Commission.

Cabero-Almenara, J., Barroso-Osuna, J., Antonio, C. L.-C., Cabero-almenara, J., and Barroso-osuna, J. (2022). Validation of the European Digital Competence Framework for Educators (DigCompEdu) through Structural Equations Modeling. Revista Mexicana de Investigación Educativa RMIE 27, 185–208.

Cabero-Almenara, J., Barroso-Osuna, J., Gutiérrez-Castillo, J. J., and Palacios-Rodríguez, A. (2020a). Validation of the digital competence questionnaire for pre-service teachers through structural equations modeling. Bordon. Revista de Pedagogia 72, 45–63. doi: 10.13042/Bordon.2020.73436

Cabero-Almenara, J., Gutiérrez-Castillo, J.-J., Palacios-Rodríguez, A., and Barroso-Osuna, J. (2020b). Development of the Teacher Digital Competence Validation of DigCompEdu Check-In Questionnaire in the University Context of Andalusia (Spain). Sustainability 12:6094. doi: 10.3390/su12156094

Cabero-Almenara, J., and Palacios-Rodríguez, A. (2019). Digital Competence Framework for Educators «DigCompEdu». Translation and Adaptation of «DigCompEdu Check-In» Questionnaire. Edmetic Educación Mediática y TIC 9, 213–234.

Cabero-Almenara, J., and Palacios-Rodríguez, A. (2020). Marco Europeo de Competencia Digital Docente «DigCompEdu». Traducción y Adaptación Del Cuestionario «DigCompEdu Check-In». EDMETIC 9, 213–234. doi: 10.21071/edmetic.v9i1.12462

Casildo-Bedón, N. E., Sánchez-Torpoco, D. L., Carranza-Esteban, R. F., Mamani-Benito, O., and Turpo-Chaparro, J. (2023). Propiedades Psicométricas Del Cuestionario de Competencias Digitales En Estudiantes Universitarios Peruanos. Campus Virtuales 12:93. doi: 10.54988/cv.2023.1.1084

Castagnoli, R., Cugno, M., Maroncelli, S., and Cugno, A. (2023). “The role of human centricity in the transition from industry 4.0 to industry 5.0: an integrative literature review,” in Managing technology integration for human resources in industry 5.0. eds. N. Sharma and K. Shalender (Hershey, PA: IGI Global), 68–96.

Chávez-Melo, G., Cano-Robles, A., and Navarro-Rangel, Y. (2022). Validación Inicial de Un Instrumento Para Medir La Competencia Digital Docente. Campus Virtuales 11:97. doi: 10.54988/cv.2022.2.1104

Coldwell-Neilson, J., and Cooper, T. (2019). “Digital literacy meets industry 4.0” in Education for employability, vol. 2. eds. J. Higgs, W. Letts, and G. Crisp (Leiden: Koninklijke Brill NV), 37–50.

Contreras-Espinoza, K. L., González-Martínez, J., and Gallardo-Echenique, E. (2022). Psychometric validation of an instrument to assess digital competencies. Revista Iberica de Sistemas e Tecnologias de Informacao 2022, 296–309. doi: 10.22430/21457778.1083

Council of the European Union (2018). Key competences for lifelong learning: a European reference framework. Off. J. Eur. Union 2, 1–20.

Durán Cuartero, M., Gutiérrez, I., María, P., Espinosa, P. P., Durán, M., Paz, M., et al. (2016b). Certificación de La Competencia TIC Del Profesorado Universitario. Diseño y Validación de Un Instrumento. Revista Mexicana de Investigación Educativa RMIE 21:14056666.

Durán Cuartero, M., Isabel Gutiérrez, P. M., Paz Prendes Espinosa, M. D., María, P., and Prendes, E. (2016a). Certificación de La Competencia TIC Del Profesorado Universitario. Diseño y Validación de Un Instrumento. Revista Mexicana de Investigación Educativa RMIE 21.

Echevarría-Guanilo, M. E., Gonçalves, N., and Romanoski, P. J. (2018). Propriedades psicométricas de instrumentos de medidas: bases conceituais e métodos de avaliação - parte I. Texto Contexto-Enfermagem 26, 1–12. doi: 10.1590/0104-07072017001600017

Echeverría Samanes, B., and Martínez Clares, P. (2018). Revolución 4.0, Competencias, Educación y Orientación. Revista Digital de Investigación En Docencia Universitaria 12, 4–34. doi: 10.19083/ridu.2018.831

Esquinas, R., Helena, M., González, J. M. M., Ariza, M. D. H., and Carrasco, C. A. (2023). Validación de Un Cuestionario Sobre Hábitos y Usos de Las Redes Sociales En Los Estudiantes de Una Universidad Andaluza. Aula Abierta 52, 109–116. doi: 10.17811/rifie.52.2.2023.109-116

Fabian, O., Richard, J., Galindo, W. G., Huaytalla, R. P., Samaniego, E. S., and Casabona, R. C. A. (2020). Competencias Digitales En Estudiantes de Educación Secundaria de Una Provincia Del Centro Del Perú. Revista Educación 45, 52–69. doi: 10.15517/revedu.v45i1.41296

Fan, C., and Wang, J. (2022). Development and validation of a questionnaire to measure digital skills of Chinese undergraduates. Sustainability 14:3539. doi: 10.3390/su14063539

Farias-Gaytan, S., Aguaded, I., and Ramirez-Montoya, M.-S. (2022). Transformation and digital literacy: systematic literature mapping. Educ. Inf. Technol. 27, 1417–1437. doi: 10.1007/s10639-021-10624-x

Forte-Celaya, J., Ibarra, L., and Glasserman-Morales, L. D. (2021). Analysis of creative thinking skills development under active learning strategies. Educ. Sci. 11:621. doi: 10.3390/educsci11100621

Gallardo-Echenique, E. E. (2013). Competencia digital: Revisión Integradora de La Literatura. Revista de Ciencias de La Educación ACADEMICUS 1, 56–62.

Garcia-Tartera, F., and Vitor, G. (2019). “Digital competence in University teaching of the 21st Century,” in Around innovation in higher education: Studies, perspectives, and research. eds. N. I. Rius and B. S. Fernández (Valencia: University of Valencia), 167–178. Available at: https://bibliotecadigital.ipb.pt/handle/10198/19817 (Accessed February 10, 2024).

George-Reyes, C. E., Peláez-Sánchez, I. C., and Glasserman-Morales, L. D. (2024). Digital environments of education 4.0 and complex thinking: communicative literacy to close the digital gender gap. J. Interact. Media Educ. doi: 10.5334/jime.833

George-Reyes, C. E., Peláez Sánchez, I. C., Glasserman-Morales, L. D., and López-Caudana, E. O. (2023). The metaverse and complex thinking: opportunities, experiences, and future lines of research. Front. Educ. 8:1166999. doi: 10.3389/feduc.2023.1166999

Ghobakhloo, M., Iranmanesh, M., Foroughi, B., Tirkolaee, E. B., Asadi, S., and Amran, A. (2023a). Industry 5.0 implications for inclusive sustainable manufacturing: an evidence-knowledge-based strategic roadmap. J. Clean. Prod. 417, 1–16. doi: 10.1016/j.jclepro.2023.138023

Ghobakhloo, M., Iranmanesh, M., Morales, M. E., Nilashi, M., and Amran, A. (2023b). Actions and approaches for enabling industry 5.0-driven sustainable industrial transformation: a strategy roadmap. Corp. Soc. Responsib. Environ. Manag. 30, 1473–1494. doi: 10.1002/csr.2431

Ghobakhloo, M., Iranmanesh, M., Mubarak, M. F., Mubarik, M., Rejeb, A., and Nilashi, M. (2022). Identifying Industry 5.0 contributions to sustainable development: a strategy roadmap for delivering sustainability values. Sustain. Prod. Consum. 33, 716–737. doi: 10.1016/j.spc.2022.08.003

González, C., Cristian, M. L. H., Vidallet, J. L. S., and Medrano, L. V. (2022). Propósitos de Uso de Tecnologías Digitales En Estudiantes de Pedagogía Chilenos: Construcción de Una Escala Basada En Competencias Digitales. Pixel-Bit Revista de Medios y Educación 64, 7–25. doi: 10.12795/pixelbit.93212

González Martínez, J., Vidal, C. E., and Cervera, M. G. (2010). La Evaluación Cero de La Competencia Nuclear Digital En Los Nuevos Grados Del EEES. @tic. Revista d’innovació Educativa 4, 13–20.

González-Calatayud, V., Paz Prendes-Espinosa, M., and Solano-Fernández, I. M. (2022). Instrument for analysing digital entrepreneurship competence in higher education. RELIEVE-Revista Electronica de Investigacion y Evaluacion Educativa 28, 1–19. doi: 10.30827/relieve.v28i1.22831

González-Quiñones, F., Tarango, J., and Villanueva-Ledezma, A. (2019). Hacia Una Propuesta Para Medir Capacidades Digitales En Usuarios de Internet. Revista Interamericana de Bibliotecología 42, 197–212. doi: 10.17533/udea.rib.v42n3a01

Goretzko, D., Pham, T. T. H., and Bühner, M. (2021). Exploratory factor analysis: current use, methodological developments and recommendations for good practice. Curr. Psychol. 40, 3510–3521. doi: 10.1007/s12144-019-00300-2

Guillén-Gámez, F. D., and Mayorga-Fernández, M. J. (2021). Design and validation of an instrument of self-perception regarding the lecturers’ use of ICT resources: to teach, evaluate and research. Educ. Inf. Technol. 26, 1627–1646. doi: 10.1007/s10639-020-10321-1

Gürdür Broo, D., Kaynak, O., and Sait, S. M. (2022). Rethinking engineering education at the age of Industry 5.0. J. Ind. Inf. Integr. 25:100311. doi: 10.1016/j.jii.2021.100311

Gutiérrez-Castillo, J. J., Cabero-Almenara, J., and Estrada-Vidal, L. I. (2017). Design and validation of an instrument for evaluation of digital competence of university student. Espacios 38, 1–16.

Gutiérrez-Santiuste, E., García-Lira, K., and Montes, R. (2023). Design and validation of a questionnaire to assess digital communicative competence in higher education. Int. J. Instr. 16, 241–260. doi: 10.29333/iji.2023.16114a

Hamutoğlu, N. B., Güngören, Ö. C., Uyanık, G. K., and Erdoğan, D. G. (2017). Dijital Okuryazarlık Ölçeği: Türkçe’ye Uyarlama Çalışması. Ege Eğitim Dergisi 18, 408–429. doi: 10.12984/egeefd.295306

Hassan, R. H. (2023). Educational vlogs: a systematic review. SAGE Open 13:215824402311524. doi: 10.1177/21582440231152403

Hidayat, M. L., Hariyatmi, D. S., Astuti, B. S., Meccawy, M., and Khanzada, T. J. S. (2023). Digital competency mapping dataset of pre-service teachers in Indonesia. Data Brief 49:109310. doi: 10.1016/j.dib.2023.109310

Hoyle, R. H. (2000). “Confirmatory factor analysis,” in Handbook of applied multivariate statistics and mathematical modeling. eds. H. E. A. Tinsley and S. D. Brown (Academic Press), 465–497. Available at: https://www.sciencedirect.com/science/article/pii/B9780126913606500173.