- ¹University of Missouri, Columbia, MO, United States

- ²University of Minnesota Twin Cities, St. Paul, MN, United States

- ³Southern Methodist University, Dallas, TX, United States

- ⁴The University of Texas at Austin, Austin, TX, United States

- ⁵University of Missouri–St. Louis, St. Louis, MO, United States

In this paper we describe the process of monitoring fidelity of implementation for a teacher-implemented early writing intervention. As part of a large, federally funded project, teachers who worked with students in grades 1 through 3 in schools across two states in the US were recruited and then randomly assigned to implementation and control conditions. Using Data-Based Individualization (DBI) as a framework for best practice in assessment and intervention, teachers in the implementation group received professional development on early writing intervention and assessment and then implemented these practices with their students who had significant writing challenges. Coaches, who were part of the research project, supported teachers and also observed teachers in both the implementation and control conditions at least twice during the course of the 20-week study. This paper focuses on the results of the fidelity measures that were administered throughout the project. An overview of the importance of fidelity checks is followed by a description of the fidelity tools used, as well as data from those tools. Areas of strength and challenge for teachers when implementing early writing assessment and intervention and engaging in data-based decision making with fidelity are discussed, along with recommendations regarding the practical and research importance of fidelity checks.

Introduction

Early writing intervention is of increasing importance (Berninger et al., 2008), a point underscored by the current writing performance of students in the United States. Data from national assessments consistently show that approximately 75% of 4th-, 8th-, and 12th-grade students perform below proficient levels in writing (Irwin et al., 2021). Through early intervention, educators can help lay a strong foundation for writing and literacy skills. This foundation supports overall academic success and equips students with essential communication and critical thinking skills that extend beyond school.

Intervening in students' writing development during their formative years is crucial in part because writing is related to reading (Fitzgerald and Shanahan, 2000; Kendeou et al., 2009; Kim, 2020; Kim et al., 2015; National Early Literacy Panel, 2008). Because of this interplay, improvements in one domain can catalyze advancements in the other, which underscores the value of early intervention (Wanzek et al., 2017). Furthermore, early writing intervention harnesses the power of writing as a learning tool: writing enables children to establish connections between ideas, fostering critical thinking and encouraging them to explore and apply new concepts (McMaster et al., 2017). Early intervention can not only empower children to approach their education with confidence and curiosity but may also reduce the number of students who need special education services under the category of Specific Learning Disabilities (SLD; Lovett et al., 2017). Moreover, early exposure to writing instruction sets the stage for students to excel in school and in the diverse contexts they will encounter later, from postsecondary education to the professional world (Diamond et al., 2008; Graham and Perin, 2007).

Although most students respond positively to standard intervention protocols, a small proportion require more intensive, individualized instruction (Wanzek and Vaughn, 2009). Delivering such individualized instruction precisely is particularly challenging for educators, who may be insufficiently prepared to do so (e.g., Boardman et al., 2005; Roehrig et al., 2008; Stecker et al., 2005). This challenge is compounded by evidence that teachers often struggle to implement interventions as prescribed (Groskreutz et al., 2011; Noell et al., 2000; Sanetti et al., 2015, 2018). Thus, the success of data-based individualization in early writing research and instruction depends on implementation fidelity, which bridges the gap between intention and execution for the benefit of individual students.

Supporting teacher implementation of writing interventions

To address underachievement in writing, teachers must identify writing difficulties early and use systematic, data-based approaches to align students' needs with evidence-based interventions. Data-based individualization (DBI) is one such systematic approach for customizing instruction for students with significant learning needs (Deno and Mirkin, 1977; Fuchs et al., 2010; National Center on Intensive Intervention, 2024). DBI involves targeted instruction, weekly progress monitoring, and data-based decision making.

As outlined by Deno and Mirkin (1977), Fuchs et al. (2010), and the National Center on Intensive Intervention (2024), DBI provides a structured framework that supports teachers in tailoring instruction for students who struggle with specific academic skills. DBI employs evidence-based diagnostic assessments, targeted interventions, and consistent progress monitoring. This process enables educators to make data-driven decisions about student development and to adjust their instructional strategies as needed (National Center on Intensive Intervention, 2024). DBI is thus a vital tool for educators who aim to deliver effective, targeted instruction and to make adaptations that maximize skill development (Lembke et al., 2018). Research suggests that DBI offers structure and guidance for teachers and has improved student outcomes compared with business-as-usual instruction or interventions implemented without attention to student progress (Jung et al., 2018; Stecker et al., 2005).

The Early Writing Project

To support teachers' use of the DBI framework in early writing, tools were developed as part of a larger study called Data-Based Individualization–Tools, Learning, Coaching (“DBI-TLC”). DBI-TLC is a comprehensive professional development system whose components are designed to facilitate student growth in early writing. The DBI-TLC system was implemented through The Early Writing Project, an Institute of Education Sciences (IES)-funded project that supports educators' implementation of intensive early writing interventions and their adherence to the DBI process. The Early Writing Project has been implemented in various educational settings, with evidence of feasibility and usability (Lembke et al., 2018; Poch et al., 2020). It has improved teacher outcomes related to DBI in early writing and has shown promising signs of improved student outcomes (McMaster et al., 2020). Multiple fidelity tools were created as part of the project to monitor and support effective implementation.

Learning modules

One key element of DBI-TLC is training teachers through learning modules, which provide extensive instruction in and practice with various tools, including instructional materials, assessment resources, and data analysis techniques. These tools help teachers gain a deep understanding of their students' writing abilities, allowing for tailored and precise instruction. Through these modules, DBI-TLC emphasizes continuous learning and knowledge-building for educators, recognizing that teachers require a solid foundation of expertise and skills to support their students effectively (McMaster et al., 2020). DBI-TLC therefore offers comprehensive educational resources to equip teachers with the necessary knowledge and competencies.

Coaching

Ongoing coaching support is also integral to the DBI-TLC process. Coaches provide educators with the guidance and assistance they need to adapt and improve their instructional practices, helping teachers effectively address their students' intensive early writing needs over time. Importantly, coaching is integral not only to the DBI process but also to maintaining the treatment integrity of the intervention and assessment. Continual support for implementation through coaching, ongoing professional development (PD), and performance feedback has been shown to help maintain high levels of intervention fidelity (Codding et al., 2008; Saunders et al., 2021). Fidelity is therefore a crucial component of the Early Writing Project and the DBI-TLC materials, helping ensure that teachers' assessment, instruction, and decision making align with the intended procedures.

Role of fidelity

According to the National Center on Intensive Intervention (intensiveintervention.org), the first step in the DBI process is to ensure that the chosen, validated intervention is delivered with fidelity (National Center on Intensive Intervention, 2024). Fidelity, also known as implementation fidelity, is essential for effective academic intervention because it ensures that prescribed procedures are closely followed (Durlak and DuPre, 2008; McMaster et al., 2017; Sanetti and Collier-Meek, 2015). Procedural fidelity pertains to how closely educators adhere to intended programs, assessments, and implementation plans (e.g., dosage, frequency); broader conceptualizations of fidelity also include student responsiveness and program differentiation (Dane and Schneider, 1998). Within intervention implementation, there is widespread consensus on three factors that influence intervention outcomes: (1) adherence to the prescribed intervention, (2) exposure, or the frequency and duration of the intervention, and (3) the quality with which the intervention is delivered (Sanetti and Kratochwill, 2009).

When interventions and assessments are implemented with fidelity, intervention teams can make more accurate and informed decisions about individual students' progress and their need for intervention modifications or intensification (Dane and Schneider, 1998; O'Donnell, 2008; Swanson et al., 2013). Without fidelity, it is difficult to establish a clear link between student outcomes and the instruction provided, which hinders evaluation of intervention effectiveness; collecting fidelity data is therefore important in education research (Durlak, 2015; Guo et al., 2016; Sanetti and Kratochwill, 2009; Webster-Stratton et al., 2011).

Research findings indicate that interventions implemented with fidelity show average effect sizes two to three times greater than those implemented poorly (Durlak and DuPre, 2008). Fidelity is vital for identifying whether a student requires more intensive support and forms the foundation of the DBI process (Lemons et al., 2014). Without implementation fidelity, the collected data may not support appropriate individualization, because a student's lack of progress could reflect either an ineffective intervention or an intervention that was not delivered as intended (Collier-Meek et al., 2013; McKenna et al., 2014). Fidelity data can also reveal difficulties that practitioners or interventionists encounter during implementation (Knoche et al., 2010). These data can promote dialogue about potential barriers and the modifications needed to best support the generalizability of the intervention (Gadke et al., 2021; Kimber et al., 2019; McKenna and Parenti, 2017).

Reporting implementation fidelity is of increasing importance in educational research. A review of special education research from 1995 to 1999 found that 18% of studies reported implementation fidelity (Gresham et al., 2000), whereas a review of general and special education research from 2005 to 2009 found that 47% of studies conducted fidelity checks (Swanson et al., 2013). Similarly, in school psychology, a review of literature from 1995 to 2008 found that 37% of studies reported treatment fidelity (Sanetti et al., 2011), and a more recent review of the same school psychology journals found that a majority of studies (72.8%) now did so (Sanetti et al., 2020). Although fidelity monitoring is gaining traction in educational research, making it accessible and easy for teachers to implement remains of utmost importance (McKenna et al., 2014).

We highlight fidelity because an intervention's full strength can be realized only when it is implemented as intended. In this study, we systematically identified areas in which fidelity data would be important, created measures, and collected and analyzed data. An important consideration for our research team was not only the data themselves but also how we used them to give teachers feedback to improve their practice; this feedback is a critical piece of data-based decision making. In this paper, we describe the types of fidelity data we collected and how we collected them, to show how the data served both to support the internal validity of our study and to support teachers as part of the professional development we provided.

Fidelity in The Early Writing Project

Given that fidelity of implementation of a validated intervention program is the foundation of the DBI process and promotes the generalizability of an intervention, it is imperative to integrate fidelity checks into the process (McMaster et al., 2020). To support teachers' fidelity to DBI implementation, the project's principal investigators (PIs) created fidelity assessment instruments modified from the Accuracy of Implementation Rating Scales (AIRS; Fuchs et al., 1984). These fidelity forms were designed to assess essential teacher and student actions expected after the professional development learning modules, including curriculum-based measurement (CBM) administration, writing instruction, and decision making. The forms (provided in the Appendices) were created to provide feedback to teachers and, perhaps more importantly, to ensure that research procedures were implemented as intended. Fidelity observations were completed for each DBI component as well as for each TLC component. In the Method section, we describe how the fidelity tools were developed and integrated into The Early Writing Project. Although we focus on early writing in this paper, the fidelity checks we describe would be important in any high-quality study in which implementation as intended is critical. We focus on two of the three factors that influence intervention outcomes: (1) adherence to the prescribed intervention and (2) the quality with which the intervention was delivered (Sanetti and Kratochwill, 2009). Systematically collecting exposure data was beyond the scope of this project.

To highlight the importance of fidelity, we report the fidelity-of-implementation checklist data collected during observations conducted for The Early Writing Project. These data included teachers' implementation of CBM administration and writing instruction, teachers' data-based decision making (DBDM), the implementation of professional development learning modules, and the implementation of coaching. The following research questions guided this portion of the study:

1. With what level of fidelity did teachers implement DBI, including CBM, writing instruction, and DBDM, and did fidelity vary by cohort?

2. At what level of fidelity were professional development and coaching implemented?

Method

Setting and participants

The Early Writing Project study was a randomized controlled trial conducted in 23 urban, suburban, and rural public school districts in two Midwestern states. The study took place over three academic years with three cohorts of teacher participants: 2018–2019 (Cohort 1), 2019–2020 (Cohort 2), and 2021–2022 (Cohort 3). Cohort 3 was postponed by a year because of the COVID-19 pandemic. The methods and results of this randomized controlled trial are reported in detail in McMaster et al. (2024).¹

To be eligible for participation, teachers needed to provide direct writing instruction to students with significant writing difficulties. Given the time commitment that project participation required, we included only teachers with at least 2 years of teaching experience. Of the 154 teachers who were recruited and randomized to treatment and control conditions across 3 years, 132 completed the study (n = 68 treatment; n = 64 control). Of the 154 randomized teachers, most were female (96.1%), White (92.2%), and special educators (89.6%). Teachers had an average of 11.35 years of teaching experience (SD = 8.49). For Cohorts 1 and 3, there were no statistically significant differences in teacher demographics between the treatment and control groups. For Cohort 2, however, the control group had significantly more experience than the treatment group, measured by years in the current position and years teaching elementary school. Differences between the treatment and control groups in hours of professional development (PD) in CBM (curriculum-based measurement; M range = 1.7–2.3) and data-based decision making (DBDM; M range = 2.1–2.5) were not significant. However, for Cohort 2, there was a significant difference favoring the treatment group in PD on writing assessment, instruction, and intervention (M range = 1.1–1.9). Teacher participants nominated students in grades 1–3 who had significant writing difficulties, access to the general education curriculum, functional English skills, and the ability to write one or more letters of the alphabet. The research team screened nominated students using two CBM tasks, word dictation and picture word, each administered for 3 min.

Word dictation is designed to evaluate word-level transcription skills. In this individually administered task, the examiner dictates phonetically regular grade 1 words from the Common Core State Standards for students to write and may repeat each word only once. The task is scored using correct letter sequences (CLS), that is, pairs of adjacent letters written in the correct order according to the word's correct spelling. Picture word is designed to measure students' sentence-level transcription and text generation skills and can be administered in a group or individually. Before administration, the examiner models the task for the student(s). After practice, students are instructed to write as many sentences as they can, using each picture and its corresponding word to generate ideas for their writing. Each picture word CBM includes 12 picture prompts, and students are given 3 min for the task. The task is scored using correct word sequences (CWS), that is, pairs of adjacent words that are correctly spelled and used correctly in the sentence.

From each teacher's nominated students, we selected the two or three who scored lowest on these measures. Of 365 eligible students who completed pretesting, 309 completed their participation in the study. Of the eligible students, 67.4% were male, 29.3% were White, 55.3% were in second grade, and 84.9% received special education services. The most frequent primary disabilities were other health impairment (21.1%), autism (20.0%), and specific learning disability (19.7%). On average, students scored in the “low” range (M = 68.67, SD = 14.22) on the Written Expression subtest of the Kaufman Test of Educational Achievement, Third Edition (KTEA-3; Kaufman and Kaufman, 2014).
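The CLS and CWS scoring rules described above can be illustrated with a minimal sketch. It is a simplification under stated assumptions, not the project's scoring procedure: CLS is approximated by matching the student's adjacent-letter pairs against the target word's pairs, and the `^`/`$` word-boundary markers follow a common CBM scoring convention that is assumed here rather than stated in the text. For CWS, a human scorer's per-word judgments are taken as input, because deciding whether a word is "used correctly" cannot be automated this simply. All function names are hypothetical.

```python
from collections import Counter


def letter_pairs(word: str) -> list[str]:
    """Adjacent-letter pairs, with '^' and '$' marking word boundaries (assumed convention)."""
    padded = f"^{word.lower()}$"
    return [padded[i:i + 2] for i in range(len(padded) - 1)]


def correct_letter_sequences(target: str, response: str) -> int:
    """Approximate CLS: count response pairs that also occur in the target spelling.

    Each target pair can be credited at most once (multiset matching). This is a
    simplification of hand scoring, in which a scorer judges each pair in position.
    """
    available = Counter(letter_pairs(target))
    score = 0
    for pair in letter_pairs(response):
        if available[pair] > 0:
            available[pair] -= 1
            score += 1
    return score


def correct_word_sequences(word_is_correct: list[bool]) -> int:
    """Approximate CWS: count adjacent word pairs that a scorer marked as both correct.

    word_is_correct[i] is the scorer's judgment that word i is spelled correctly and
    used correctly in the sentence. Sentence-boundary sequences are not counted here.
    """
    return sum(a and b for a, b in zip(word_is_correct, word_is_correct[1:]))


print(correct_letter_sequences("cat", "cat"))  # 4
print(correct_letter_sequences("cat", "kat"))  # 2
print(correct_word_sequences([True, True, False, True, True]))  # 2
```

For "kat," only "at" and the final boundary pair match the target, so the sketch credits 2 of the 4 possible sequences for "cat."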

Study design and conditions

The Early Writing Project study was a randomized controlled trial in which teachers were randomly assigned within schools to either a treatment or a business-as-usual (BAU) control condition, with students nested within teachers.

Treatment teachers received a DBI-TLC package, which included instruction, assessment, and decision-making tools, at the beginning of their participation in the study. They also received four training sessions and biweekly coaching. Coaching meetings started around mid- to late August and ended around late March or early April. Treatment teachers delivered interventions using the mini-lessons and materials provided in the DBI-TLC package, and they administered CBM and graphed student data weekly. Treatment teachers were instructed to implement writing interventions three times per week for at least 20–30 min per session. Teachers made an instructional decision for each target student after collecting eight progress monitoring data points and repeated decision making every 4–6 weeks after the first decision.

Business-as-usual teachers in the control condition did not have access to DBI-TLC during the study and continued implementing their typical direct writing instruction for their participating students. After the study was completed, BAU teachers received all DBI-TLC materials and participated in one 8-h training session that included abbreviated content from all learning modules.

DBI-TLC implementation fidelity

Given the multicomponent nature of DBI-TLC, we measured teachers' fidelity of implementation for multiple study procedures. In this paper, we report fidelity data related to teachers' writing instruction, CBM administration, and data-based decision making (DBDM). We also evaluated the fidelity of DBI-TLC procedures by measuring the fidelity of the research team's learning modules and coaching. For each fidelity category, the sections below describe its conceptualization, how it was measured, and the measurement procedures. The fidelity forms are provided in Appendices A through G.

Writing instruction fidelity

In the pilot phase of DBI-TLC, we assessed teachers' implementation fidelity using an adapted version of the Accuracy of Implementation Rating Scales (AIRS), originally created by Fuchs et al. (1984), which measured adherence to core DBI procedures: writing instruction, CBM administration, and DBDM. However, the pilot writing instruction fidelity tool did not meaningfully differentiate between the instruction of DBI and control teachers, suggesting that it did not adequately capture the intended impacts of DBI-TLC on instruction (Lembke et al., 2018). Evaluating writing instruction in the context of DBI presented a unique challenge: determining how best to capture fidelity when teachers were delivering individualized instruction. Additionally, our observations of DBI-TLC teachers' writing instruction suggested that the measure did not adequately capture dimensions of instructional quality, such as meaningful teacher–student interactions and student engagement. As a result, we revisited and refined the measure for the efficacy phase of our study.

The final writing instruction fidelity tool (see Appendix A) was a checklist of observable steps representing adherence components of explicit and intensive writing instruction. These adherence components were scored on a scale of 0 to 2 (for single-event observations, from “not in-place” to “in-place and effective”; for recurring observations, from “rarely or never observed” to “all or almost all of observed instances”). Total fidelity scores were calculated as observed item points divided by possible item points, multiplied by 100. The writing instruction of both treatment and control teachers was observed, because all eligible teachers provided direct writing instruction to students with significant writing difficulties. One aim of the updated fidelity tool was to capture the fidelity of flexible, adapted writing instruction; that is, the tool accounted for the individualized nature of treatment teachers' instruction (e.g., Johnson and McMaster, 2013). In the primary writing study from which the present data were drawn, teachers created individualized lesson plans by selecting from researcher-created activities. To evaluate fidelity to these individualized aspects of instruction, we created two to three fidelity items for each instructional activity, which observers then used in their fidelity evaluations. In addition to adherence items, we included items measuring instructional quality, scored on the same 0 to 2 scale (for single-event observations, from “not in-place” to “in-place and effective”; for recurring observations, from “rarely or never” to “all or almost all of observed instances”). These items addressed how students engaged with the instruction and the instructional environment.
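The total-score rule above (observed item points divided by possible item points, multiplied by 100) can be sketched for the 0 to 2 scale as follows. The item labels, the example scores, and the split into adherence and quality subscores are hypothetical illustrations, not the contents of Appendix A.

```python
from dataclasses import dataclass

MAX_POINTS = 2  # each item is scored 0, 1, or 2


@dataclass
class Item:
    name: str   # hypothetical item label
    kind: str   # "adherence" or "quality"
    score: int  # 0, 1, or 2


def percent_fidelity(items: list[Item]) -> float:
    """Observed item points divided by possible item points, multiplied by 100."""
    if not items:
        raise ValueError("no items were scored")
    if any(item.score not in (0, 1, 2) for item in items):
        raise ValueError("items are scored 0, 1, or 2")
    observed = sum(item.score for item in items)
    possible = MAX_POINTS * len(items)
    return 100 * observed / possible


# Invented scores for one observed lesson
observation = [
    Item("states lesson objective", "adherence", 2),
    Item("models the activity", "adherence", 2),
    Item("provides corrective feedback", "adherence", 1),
    Item("students actively engaged", "quality", 2),
]

total = percent_fidelity(observation)
adherence = percent_fidelity([i for i in observation if i.kind == "adherence"])
quality = percent_fidelity([i for i in observation if i.kind == "quality"])
print(f"total {total:.1f}%, adherence {adherence:.1f}%, quality {quality:.1f}%")
# total 87.5%, adherence 83.3%, quality 100.0%
```

Keeping adherence and quality items in one list but tagging their type lets the same function produce both the overall score and separate subscores.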

Before scoring independently, research team members evaluating teachers' writing instruction fidelity reached 85% agreement with an expert evaluator on the research team. Fidelity of writing instruction was observed both informally and formally, either in person or, when necessary, through teacher-recorded videos of writing instruction. Coaches informally observed their assigned teachers' writing instruction once a month and provided feedback during coaching meetings to support implementation. Graduate research assistants (GRAs) and project coordinators (PCs) also conducted two formal observations, one in the fall and one in the spring, of teachers they were not coaching, to ensure that teachers were delivering intensive intervention as trained during the learning modules. After each observation, researchers reviewed the writing instruction fidelity scores with teachers and provided feedback on how to improve fidelity, if needed.
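The 85% agreement criterion can be checked with item-by-item percent agreement, a common way of computing interobserver agreement. The text does not state how agreement was computed, so this formula is an assumption; each list holds one observer's item scores for the same lesson, and the scores are invented.

```python
def percent_agreement(trainee: list[int], expert: list[int]) -> float:
    """Item-by-item agreement: number of matching item scores / total items * 100."""
    if len(trainee) != len(expert) or not trainee:
        raise ValueError("both observers must score the same, non-empty item list")
    agreements = sum(t == e for t, e in zip(trainee, expert))
    return 100 * agreements / len(trainee)


CRITERION = 85.0  # agreement required before scoring independently

trainee_scores = [2, 2, 1, 0, 2, 2, 1, 2, 2, 2]
expert_scores = [2, 2, 1, 1, 2, 2, 1, 2, 2, 2]
agreement = percent_agreement(trainee_scores, expert_scores)
print(agreement, agreement >= CRITERION)  # 90.0 True
```

Exact-match agreement is strict on a 0 to 2 scale; a project could instead count adjacent scores as agreements, which would be a different (more lenient) criterion.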

Control observations

Project staff observed control teachers twice during the study using the same fidelity of writing instruction tool used with treatment teachers and took detailed field notes. Observed activities ranged considerably (e.g., writing days of the week, sentence construction, writing responses to reading). A more detailed description of control teacher instruction is available in McMaster et al. (2024) (see text footnote 1).

Students were typically taught in small groups, for 8–50 min (M = 25 min). Fidelity of control teachers' writing instruction ranged from 38% to 97% (M = 75%, SD = 13.24) across cohorts, sites, and time points.

CBM administration fidelity

We collected CBM administration fidelity data to ensure that treatment teachers were collecting reliable progress monitoring data for their students. The CBM administration fidelity tool was an adherence checklist of the steps required to administer each of the three CBM writing tasks (see Appendices B–D). Observations were conducted in person, via video recording, or via audio recording. Items were scored as 0 (not observed) or 1 (observed). For audio recordings, in which some steps could not be observed (e.g., “Has materials on hand”), evaluators marked the item as “N/A” (not applicable). Teachers also had the option of using shortened CBM directions once their students were familiar with the procedure; in these cases, items not included in the shortened directions were also scored as “N/A.” Total fidelity scores were calculated as observed item points divided by possible item points, multiplied by 100.

Before assessing teachers' CBM administration fidelity, researchers were trained on how to administer the measures using the fidelity form. Researchers needed to demonstrate 85% fidelity during an administration rehearsal, and then again during several actual administrations with a student during pretesting for the study. Researchers formally observed teachers twice, once in the fall and once in the spring. After observing, researchers reviewed CBM administration fidelity scoring with teachers and provided feedback on how to increase fidelity, if necessary. If teachers demonstrated <80% fidelity during the administration, another CBM administration was observed to determine if fidelity had improved.
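The CBM scoring rules (0/1 items, with “N/A” items dropped from the denominator) and the follow-up rule for scores below 80% can be combined in a short sketch. Representing “N/A” as `None`, and all step labels except “Has materials on hand,” are hypothetical choices rather than the wording of Appendices B–D.

```python
from typing import Optional

FOLLOW_UP_THRESHOLD = 80.0  # below this, another administration is observed


def cbm_fidelity(items: dict[str, Optional[int]]) -> float:
    """Percent of applicable steps observed; None marks an N/A step and is excluded."""
    applicable = [score for score in items.values() if score is not None]
    if not applicable:
        raise ValueError("no applicable steps were scored")
    return 100 * sum(applicable) / len(applicable)


audio_observation = {
    "has materials on hand": None,  # cannot be judged from an audio recording
    "reads standardized directions": 1,
    "starts timer after directions": 1,
    "repeats each word only once": 0,
    "stops students at 3 min": 1,
}

score = cbm_fidelity(audio_observation)
print(score, score < FOLLOW_UP_THRESHOLD)  # 75.0 True
```

Excluding N/A items, rather than scoring them 0, keeps audio-only observations and shortened directions from lowering a teacher's score for steps that could not be judged.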

DBDM fidelity

Treatment teachers collected weekly CBM data and made one to three decisions per student over the course of the 20-week study, depending on the number of decision-making opportunities. We collected decision-making data to ensure that treatment teachers were making timely and appropriate decisions in response to student data. When making instructional decisions, teachers completed an online Qualtrics survey recording what decisions they made and why. Copies of these survey records (maintained in Google Sheets) and of the progress monitoring graph for the corresponding student and decision were used to calculate DBDM fidelity. A fidelity tool (see Appendix E) created by the principal investigators was used to assess each teacher's decision-making fidelity. For each decision-making opportunity, the form recorded the percentage of timely decisions (DBDM adherence), the percentage of appropriate decisions (DBDM quality), and overall fidelity. Timely decisions were defined as those made after the first eight data points were collected and every 4–6 data points thereafter. Decisions were deemed appropriate if teachers raised the goal when the trend line was steeper than the goal line, continued the same instruction when the trend line was on track with the goal line, or changed instruction when the trend line was flatter than the goal line. All guidelines for timely and appropriate decisions were taught and practiced during the professional development learning modules and supported through coaching.
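The timeliness and appropriateness rules above can be expressed as a small sketch. Several details are assumptions not specified in the text: the trend line is fit by ordinary least squares (CBM guidance also describes other methods, such as the Tukey method), “on track” means a trend within a hypothetical tolerance of the goal-line slope, and a first decision counts as timely only at exactly eight data points. Function names and data are hypothetical.

```python
from statistics import mean
from typing import Optional


def ols_slope(scores: list[float]) -> float:
    """Least-squares slope of weekly scores against week number (0, 1, 2, ...)."""
    if len(scores) < 2:
        raise ValueError("need at least two data points")
    weeks = range(len(scores))
    w_bar, s_bar = mean(weeks), mean(scores)
    num = sum((w - w_bar) * (s - s_bar) for w, s in zip(weeks, scores))
    den = sum((w - w_bar) ** 2 for w in weeks)
    return num / den


def appropriate_decision(scores: list[float], goal_slope: float,
                         tolerance: float = 0.1) -> str:
    """Decision implied by comparing the trend line with the goal line."""
    trend = ols_slope(scores)
    if trend > goal_slope + tolerance:
        return "raise goal"            # trend steeper than goal line
    if trend < goal_slope - tolerance:
        return "change instruction"    # trend flatter than goal line
    return "continue instruction"      # trend on track with goal line


def is_timely(points_now: int, points_at_last_decision: Optional[int] = None) -> bool:
    """First decision at 8 data points (assumed); later ones 4-6 points after the previous."""
    if points_at_last_decision is None:
        return points_now == 8
    return 4 <= points_now - points_at_last_decision <= 6


cws = [10, 12, 14, 16, 18, 20, 22, 24]  # eight weekly CWS scores (made-up data)
print(appropriate_decision(cws, goal_slope=1.0))  # raise goal
print(is_timely(8), is_timely(13, 8), is_timely(16, 8))  # True True False
```

A fidelity rater could compare a teacher's recorded decision with `appropriate_decision` and the timing with `is_timely`; the tolerance value strongly affects how many decisions count as “on track,” so it would need to match whatever rule the training materials used.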

Fidelity of learning modules

Teachers participated in four learning modules during the study. During module development, the key components that had to be addressed through explanation, modeling, guided practice, and application during training were identified. While each training was delivered, an observer noted whether each component was observed. Learning module adherence fidelity (see Appendix F for an example) was recorded as the percentage of components observed during the trainings.

Fidelity of coaching

During biweekly coaching sessions with treatment teachers, coaches used a coaching conversation form to guide the meeting, following a general coaching procedure. All coaches audio recorded two of their coaching sessions for fidelity purposes. The principal investigators (PIs) listened to the recordings and rated adherence to key components of coaching on a scale of 0–1 (see Appendix G), including rapport-building, review of objectives, review of DBI steps and goals, discussion of student data, and planning for next steps. The PIs also rated components of coaching quality on a scale of 1–4, including how well the coach fostered a collaborative conversation with the teacher, how responsive the coach was to the teacher's needs, and how well the coach made appropriate and logical connections between challenges, solutions, and next steps (see Appendix G). Total coaching fidelity scores were calculated by dividing the number of observed points by the number of possible points and multiplying by 100.
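Because the coaching form mixes 0–1 adherence items with 1–4 quality items, the total can be sketched by summing points on each item's own scale. The component names follow the text above, but the example scores are invented, and pooling points across both item types is an assumed reading of “observed points divided by possible points.”

```python
ADHERENCE_MAX = 1  # adherence items: 0-1 scale
QUALITY_MAX = 4    # quality items: 1-4 scale


def coaching_fidelity(adherence: dict[str, int], quality: dict[str, int]) -> float:
    """(Observed points / possible points) * 100, pooled across both item types."""
    if any(s not in (0, 1) for s in adherence.values()):
        raise ValueError("adherence items are scored 0 or 1")
    if any(not 1 <= s <= 4 for s in quality.values()):
        raise ValueError("quality items are scored 1 to 4")
    observed = sum(adherence.values()) + sum(quality.values())
    possible = ADHERENCE_MAX * len(adherence) + QUALITY_MAX * len(quality)
    return 100 * observed / possible


session = coaching_fidelity(
    adherence={"rapport-building": 1, "review of objectives": 1,
               "review of DBI steps and goals": 1, "discussion of student data": 1,
               "planning for next steps": 0},
    quality={"collaborative conversation": 4, "responsive to teacher needs": 3,
             "logical connections": 3},
)
print(round(session, 1))  # 82.4
```

Because quality items cannot score below 1, the pooled total has a floor above 0%: with these five adherence and three quality items, a session scoring 0 on every adherence item and 1 on every quality item would still receive 3/17, about 17.6%.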

Results

Our first research question addressed the level at which teachers implemented DBI including writing instruction, CBM, and DBDM, and whether that fidelity varied by cohort.

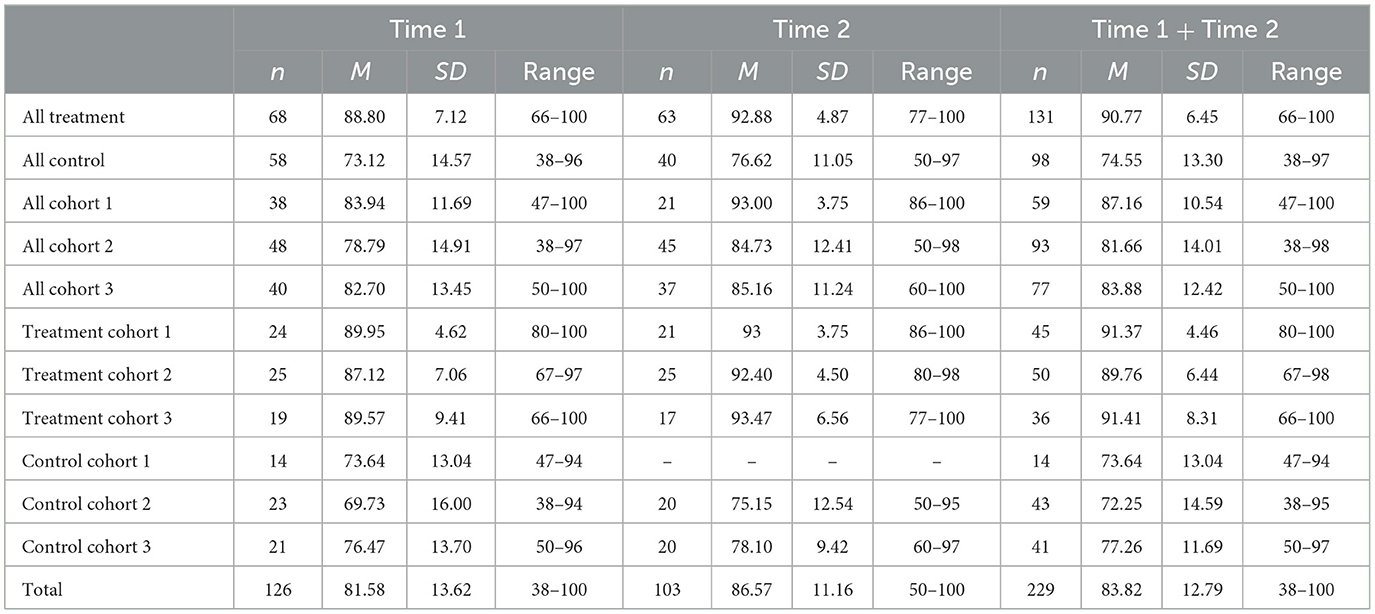

Table 1 shows the results of fidelity observations for writing instruction. On average, treatment teachers demonstrated noticeably higher overall writing instruction fidelity (M = 90.77%, range = 66%–100%, SD = 6.45%) than control teachers (M = 74.55%, range = 38%–97%, SD = 13.30%). Scores were generally lower for the lesson setup and wrap-up components, although control teachers also demonstrated lower fidelity on other components. By cohort, treatment teachers' average writing instruction fidelity was 91.37% for Cohort 1, 89.76% for Cohort 2, and 91.41% for Cohort 3. Control teachers showed lower fidelity in each cohort: 73.64% for Cohort 1, 72.25% for Cohort 2, and 77.26% for Cohort 3. Within each experimental condition, then, variation across cohorts was small, and treatment teachers consistently maintained high overall fidelity in writing instruction.

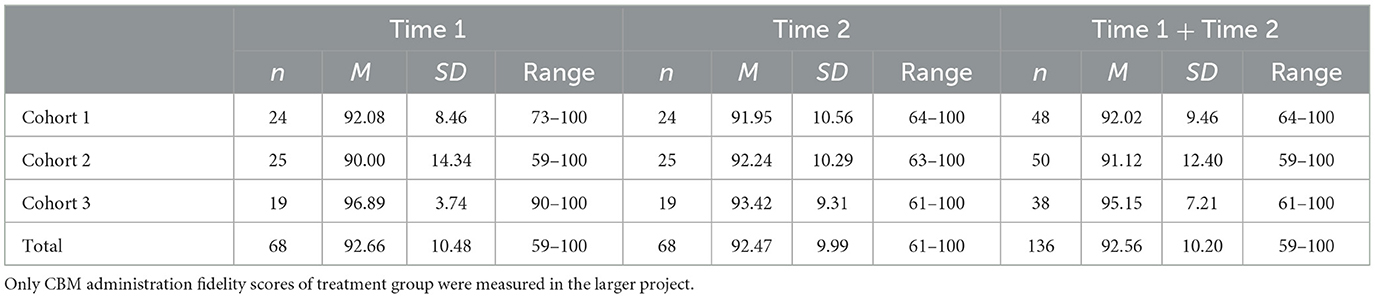

Table 2 presents the results of fidelity observations of CBM administration conducted with treatment teachers. Collapsing across cohorts and observation time points, treatment teachers administered the various CBM tasks with 92.56% fidelity on average (range = 59%–100%, SD = 10.2%). We did not observe any significant patterns or differences by cohort or observation time point.

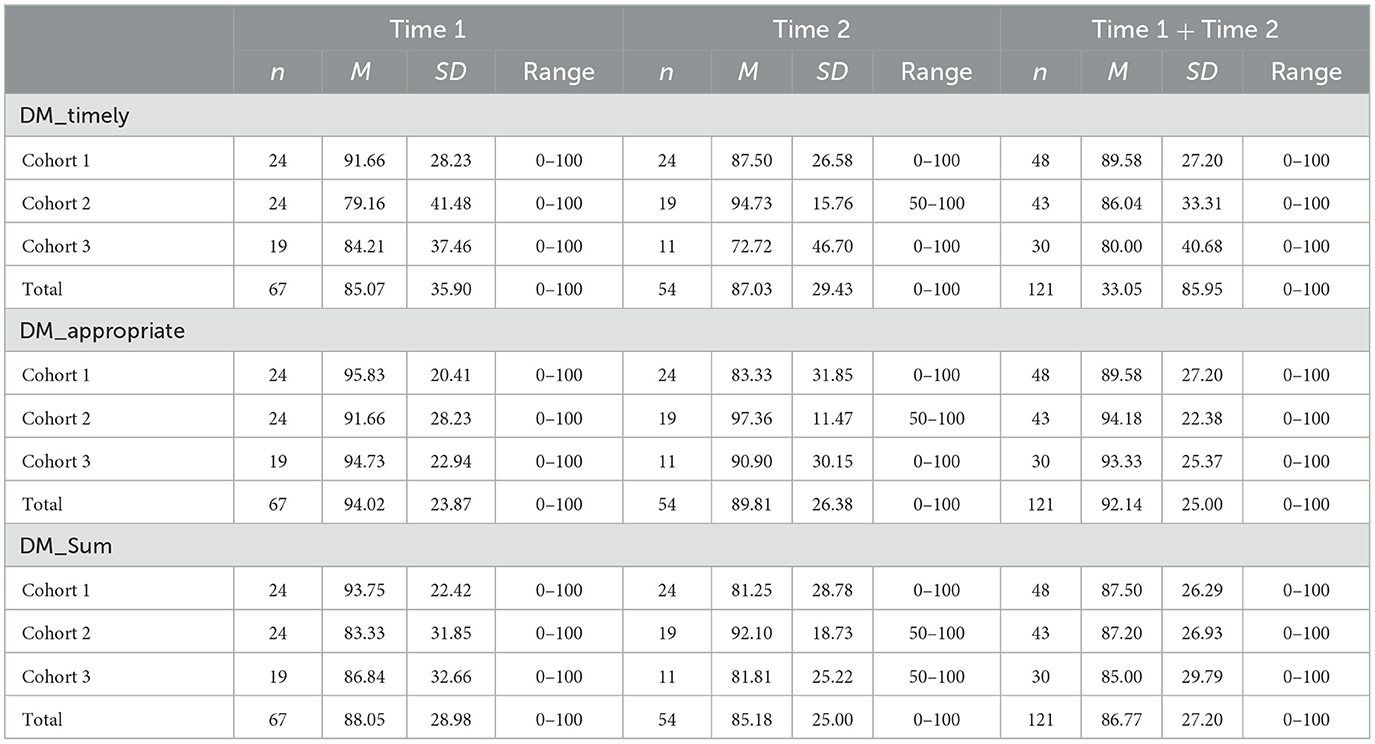

Table 3 displays the results of fidelity observations for decision-making, which were assessed only for treatment teachers. Collapsing across cohorts and evaluation time points, overall decision-making fidelity was 86.77% (range = 0%–100%, SD = 27.2%). Separating the adherence (i.e., timely) and quality (i.e., appropriate) dimensions of decision-making, fidelity averaged 85.95% for timely decisions (range = 0%–100%, SD = 33.05%) and 92.14% for appropriate decisions (range = 0%–100%, SD = 25%). Treatment teachers' fidelity in making appropriate and timely decisions showed a medium-sized correlation (r = 0.42), and each was strongly correlated with overall decision-making fidelity (rs = 0.66 and 0.92, respectively). For treatment teachers, correlations among fidelity in CBM administration, writing instruction, and decision-making were weak (rs = −0.03 to 0.17) and not statistically significant, suggesting that a teacher who showed high fidelity in one area did not necessarily show high fidelity in another.
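The correlations above are reported as r without naming the coefficient. Assuming Pearson's r, a minimal sketch of how such a correlation could be computed from teacher-level fidelity percentages is shown below. The function `pearson_r` and the score lists are hypothetical illustrations, not the study's data or analysis code.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squared deviations from each mean
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable has no variance")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical per-teacher fidelity percentages (NOT study data)
timely = [100.0, 50.0, 100.0, 0.0, 100.0, 100.0]
appropriate = [100.0, 100.0, 100.0, 50.0, 100.0, 100.0]
print(round(pearson_r(timely, appropriate), 2))
```

Small samples with many ceiling scores (as in the 0%–100% ranges above) can make r unstable, so the sketch only illustrates the arithmetic.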

Our second research question addressed the level of fidelity with which professional development and coaching were implemented. In addition to evaluating teachers' fidelity, it was crucial to assess the fidelity of the learning modules and coaching, as detailed in McMaster et al. (2024) (see text footnote 1). This assessment was important to ensure that the professional development and supports provided to teachers were implemented as intended. It was also important that professional development and coaching were delivered uniformly, given that the study was implemented across two sites.

To assess the fidelity of the learning modules, assigned GRAs or PCs rated the presentation components and teacher activities of each module using a researcher-developed checklist (see Appendix F). The score was calculated by dividing the number of observed items by the total number of presentation components and activities (23 for Module 1, 30 for Module 2, 30 for Module 3, and 28 for Module 4) and multiplying the result by 100. Across learning modules, sites, and cohorts, adherence fidelity scores ranged from 96% to 100%.
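As a small worked example of the module scoring rule, the sketch below divides observed items by the module-specific totals reported for Modules 1–4. The function `module_fidelity` and the 29-of-30 example are hypothetical illustrations, not the project's scoring tool.

```python
# Number of presentation components/activities per module, as reported in the text
MODULE_ITEM_TOTALS = {1: 23, 2: 30, 3: 30, 4: 28}


def module_fidelity(module: int, observed_items: int) -> float:
    """Percent of a module's checklist items that were observed during delivery."""
    total = MODULE_ITEM_TOTALS[module]
    if not 0 <= observed_items <= total:
        raise ValueError(f"observed_items must be between 0 and {total}")
    return observed_items / total * 100


# Hypothetical example: 29 of Module 2's 30 items observed
print(f"{module_fidelity(2, 29):.1f}%")  # prints 96.7%
```

Keeping the totals in one lookup table makes it explicit that a single missed item costs more on the shorter Module 1 (23 items) than on Modules 2 or 3 (30 items).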

Coaching fidelity was also assessed and monitored using a researcher-developed checklist (see Appendix G). The checklist recorded whether each component specified in the coaching conversation form was observed (0 = not observed; 1 = observed) and rated coaching quality during the session on a 4-point scale (4 = high quality). Overall fidelity scores were calculated by dividing the total points awarded by the total possible score (23 points: 11 binary observation items plus three items rated on a 4-point scale) and multiplying the result by 100; scores ranged from 83% to 100% across cohorts and sites. When adherence and quality were scored separately, average coaching fidelity was 88% and 95%, respectively.
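Because the coaching checklist mixes binary and 4-point items, the denominator is 11 × 1 + 3 × 4 = 23 possible points. A minimal sketch of that arithmetic follows, assuming each quality item can earn at most 4 points; the function `coaching_fidelity` and the example session are hypothetical.

```python
from typing import Sequence


def coaching_fidelity(adherence: Sequence[int], quality: Sequence[int]) -> float:
    """Combine binary adherence items and 1-4 quality ratings into one percentage."""
    if any(a not in (0, 1) for a in adherence):
        raise ValueError("adherence items must be 0 (not observed) or 1 (observed)")
    if any(q not in (1, 2, 3, 4) for q in quality):
        raise ValueError("quality items must be rated 1-4")
    earned = sum(adherence) + sum(quality)
    # With 11 adherence items and 3 quality items: 11 * 1 + 3 * 4 = 23
    possible = len(adherence) * 1 + len(quality) * 4
    return earned / possible * 100


# Hypothetical session: 10 of 11 components observed; quality rated 4, 4, 3
score = coaching_fidelity([1] * 10 + [0], [4, 4, 3])
print(f"{score:.1f}%")  # prints 91.3%
```

Note that under this rule the quality items carry 12 of the 23 points, so they weigh slightly more in the total than the 11 adherence items combined.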

Discussion

While most students respond to standard interventions, some do not and require intensive, individualized instruction (Wanzek and Vaughn, 2009). Unfortunately, research suggests that teachers often have difficulty implementing interventions as prescribed (Noell et al., 2000; Sanetti et al., 2015, 2018). Data-based individualization (DBI) offers a systematic approach that educators can use to customize instruction for students who do not respond to intervention (Deno and Mirkin, 1977; Fuchs et al., 2010; National Center on Intensive Intervention, 2024). DBI encompasses targeted instruction along with weekly progress monitoring and data-based decision-making. It is therefore important to measure fidelity not only for the intervention itself but also for CBM administration, data-based decision-making, and any related PD or coaching provided to teachers. Assessing intervention fidelity also has implications for special education eligibility determination in the US. In many states that use an instructional model (i.e., Multi-Tiered System of Supports or Response to Intervention) to identify students for special education under the category of specific learning disability (SLD), a required component of eligibility is documentation that research-based interventions were delivered with fidelity and that the student did not make adequate progress (Fletcher et al., 2007; Fuchs and Fuchs, 2006).

In the current study, we monitored the fidelity of two groups: teachers participating in the study and members of our team delivering professional learning and coaching. First and foremost, it was important to monitor the fidelity with which teachers implemented DBI, for several reasons: (1) we wanted to support teachers in implementing the best possible framework for their students; (2) we wanted teachers to implement well, with support, so that they could sustain implementation after the study ended; and (3) to adequately test DBI-TLC against business as usual, we needed to ensure that teachers implemented the framework as intended. Although it took some planning and several trials to establish a good fidelity routine (including both the measures used and the system for observation and feedback), the time was well spent: teachers demonstrated strong overall fidelity for writing instruction, decision-making, and CBM administration. Perhaps most important, when a teacher's fidelity on a given observation was not above 80%, the coach provided feedback to help remediate the particular area of need, and a second observation was conducted shortly afterward.
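The feedback rule amounts to a threshold check on each observation. The sketch below assumes that a score of exactly 80% counts as "not above 80%" and therefore triggers feedback; the `Observation` record, `needs_follow_up`, `follow_up_plan`, and the example teachers are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import List

FEEDBACK_THRESHOLD = 80.0  # percent; scores at or below this trigger feedback


@dataclass
class Observation:
    teacher_id: str
    area: str        # e.g., "writing instruction", "CBM administration"
    fidelity: float  # percent of checklist points earned


def needs_follow_up(obs: Observation, threshold: float = FEEDBACK_THRESHOLD) -> bool:
    """True when fidelity is not above the threshold (feedback plus re-observation)."""
    return obs.fidelity <= threshold


def follow_up_plan(observations: List[Observation]) -> List[str]:
    """List coaching actions for every observation at or below the threshold."""
    return [
        f"{o.teacher_id}: give feedback on {o.area}, then re-observe shortly after"
        for o in observations
        if needs_follow_up(o)
    ]


# Hypothetical observations (not study data)
obs = [
    Observation("T01", "writing instruction", 91.0),
    Observation("T02", "CBM administration", 76.5),
    Observation("T03", "decision-making", 80.0),
]
for action in follow_up_plan(obs):
    print(action)
```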

This pattern of observation and feedback could be replicated in a school by developing a fidelity checklist (e.g., the 5–10 elements most critical to the effectiveness of the intervention or process) and either recording video for later review or conducting a direct observation. A peer colleague could use the checklist to provide brief feedback on strengths and areas of need in the instruction, and a cycle of observation and feedback could be established with teacher pairs throughout the year. Although this process would take time, it would be time well spent in terms of enhancing instructional practices.

Our second set of fidelity observations focused on our team's delivery of professional development through the learning modules and on our own coaching practices. These observations were important for maintaining the integrity of the research, and also for making the most of teachers' time during professional development and delivering coaching of the highest quality. Our teachers, like most teachers, were very busy with many in-school and out-of-school obligations and activities. We wanted to provide targeted, helpful coaching that could support and improve their practices. Developing an effective coaching routine and training our coaches to use it was therefore an important way to maximize teachers' time and provide high-quality feedback.

Limitations

Several limitations of the current study highlight the need for more research on the fidelity of DBI-TLC. First, teachers were mostly female and white, and students were mostly male and white, which limits the generalizability of the findings to other teacher and student populations. Second, the teacher sample was limited to those with at least 2 years of teaching experience; less experienced teachers may have different needs and/or respond differently to coaching than more experienced teachers. Lastly, although the current study measured many aspects of fidelity, the initial writing instruction fidelity tool was revised to capture instructional quality, which may be more subjective to rate than intervention adherence. For instance, coaches may have had to make judgment calls about qualitative aspects of instruction as they observed. Given the nature of the project, it was also not possible to capture exposure, which is a critical dimension of intervention fidelity (Sanetti and Kratochwill, 2009).

Directions for future research

Future studies should seek a more diverse sample of teachers and students across racial, ethnic, and gender groups to evaluate the effectiveness of DBI-TLC across demographic groups. Future studies may also include novice teachers to measure effects across teachers with varying levels of experience. All treatment teachers in this study received biweekly coaching from a member of the research team; follow-up studies could explore how to identify the appropriate frequency and duration of coaching for each teacher. For instance, teachers with a good understanding of DBI may need fewer coaching sessions than teachers with a more limited understanding. Another critical question for future research is whether the skills and practices learned can be sustained without researcher or coaching support. Because coaching in schools can be costly (Knight, 2012), it is important to explore the sustainability of DBI without coaching support.

Implications for practice

There are several implications for practice that can be drawn from the current study including those that relate to teacher PD, writing assessment, and writing intervention.

First, in the current study, treatment teachers were observed using the writing instruction fidelity tool, which captured both individualized and flexible components of writing instruction. Research indicates that fidelity of writing instruction should include components of adherence (i.e., to what extent each component was implemented) and quality (i.e., how well each component was implemented; Collier-Meek et al., 2013; Sanetti and Collier-Meek, 2015). Treatment teachers were observed by their coach using the fidelity tool, and the results were used to give feedback on which components of the intervention were delivered with fidelity and which could be improved. Overall, treatment teachers had higher levels of writing intervention fidelity than control teachers. Educators may therefore want to consider using fidelity checks as an opportunity to provide feedback on teachers' instructional practices, especially when new instructional practices or interventions are introduced. It is important to remember, however, that treatment teachers had also received professional development and coaching, which could have positively affected their implementation.

Second, intensive writing intervention should be informed by consistent CBM progress monitoring data, which allow teachers to monitor student progress and make appropriate, timely instructional adaptations. In the current study, teachers' fidelity of CBM administration and scoring was measured. This was especially important because all of the Early Writing Project CBMs are teacher-administered, scored, and graphed, unlike many reading and math CBMs, which are often computer-based. Teachers' decision-making fidelity was also measured for both timely and appropriate decisions. With the support of their coaches, treatment teachers reached acceptable levels of fidelity for both, scoring higher on average for appropriate decisions than for timely decisions. Real-life barriers such as snow days, student absences, and competing priorities may have hindered teachers' ability to make timely decisions. Schools will want to plan ahead for these potential barriers to consistent progress monitoring and data-based decision-making.

Finally, it is important for schools to invest in ongoing professional development (PD) opportunities for teachers that focus on evidence-based instructional practices. Because all components of the training should be delivered each time PD is offered, it may be useful for those delivering PD to create a fidelity checklist that an observer can use to measure fidelity.

Data availability statement

The datasets presented in this article are not readily available. Requests to access the datasets should be directed to Erica Lembke, lembkee@missouri.edu.

Ethics statement

The studies involving humans were approved by University of Missouri IRB, Columbia, MO and University of Minnesota IRB, Minneapolis, MN. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

EL: Writing – original draft, Writing – review & editing. KM: Writing – original draft, Writing – review & editing. MD-M: Writing – original draft, Writing – review & editing. EM: Writing – original draft, Writing – review & editing. SC: Writing – original draft, Writing – review & editing. ES: Writing – original draft, Writing – review & editing. JA: Writing – original draft, Writing – review & editing. KS-D: Writing – original draft, Writing – review & editing. SB: Writing – original draft, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. The research reported here was funded by the Institute of Education Sciences, U.S. Department of Education, through Grant R324A170101 to the University of Minnesota.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Author disclaimer

The opinions expressed are those of the authors and do not represent views of the Institute or the U.S. Department of Education.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2024.1380295/full#supplementary-material

Footnotes

1. ^McMaster, K. L., Lembke, E. S., Shanahan, E., Choi, S., An, J., Schatschneider, C., et al. (2024). Supporting Teachers' Data-Based Individualization of Early Writing Instruction: An Efficacy Trial (under review).

References

Berninger, V. W., Nielsen, K. H., Abbott, R. D., Wijsman, E., and Raskind, W. (2008). Writing problems in developmental dyslexia: under-recognized and under-treated. J. Sch. Psychol. 46, 1–21. doi: 10.1016/j.jsp.2006.11.008

Boardman, A. G., Argüelles, M. E., Vaughn, S., Hughes, M. T., and Klingner, J. (2005). Special education teachers' views of research-based practices. J. Spec. Educ. 39, 168–180. doi: 10.1177/00224669050390030401

Codding, R. S., Livanis, A., Pace, G. M., and Vaca, L. (2008). Using performance feedback to improve treatment integrity of classwide behavior plans: an investigation of observer reactivity. J. Appl. Behav. Anal. 41, 417–422. doi: 10.1901/jaba.2008.41-417

Collier-Meek, M. A., Fallon, L. M., Sanetti, L. M., and Maggin, D. M. (2013). Focus on implementation: assessing and promoting treatment fidelity. Teach. Except. Child. 45, 52–59. doi: 10.1177/004005991304500506

Dane, A. V., and Schneider, B. H. (1998). Program integrity in primary and early secondary prevention: are implementation effects out of control? Clin. Psychol. Rev. 18, 23–45. doi: 10.1016/S0272-7358(97)00043-3

Deno, S. L., and Mirkin, P. K. (1977). Data-Based Program Modification: A Manual. Reston, VA: Council for Exceptional Children.

Diamond, K. E., Gerde, H. K., and Powell, D. R. (2008). Development in early literacy skills during the pre-kindergarten year in Head Start: relations between growth in children's writing and understanding of letters. Early Child. Res. Q. 23, 467–478. doi: 10.1016/j.ecresq.2008.05.002

Durlak, J. A. (2015). Studying program implementation is not easy but it is essential. Prev. Sci. 16, 1123–1127. doi: 10.1007/s11121-015-0606-3

Durlak, J. A., and DuPre, E. P. (2008). Implementation matters: a review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am. J. Community Psychol. 41, 327–350. doi: 10.1007/s10464-008-9165-0

Fitzgerald, J., and Shanahan, T. (2000). Reading and writing relations and their development. Educ. Psychol. 35, 39–50. doi: 10.1207/S15326985EP3501_5

Fletcher, J. M., Lyon, G. R., Fuchs, L. S., and Barnes, M. A. (2007). Learning Disabilities: From Identification to Intervention. New York, NY: Guilford.

Fuchs, D., and Fuchs, L. S. (2006). Introduction to response to intervention: what, why, and how valid is it? Read. Res. Q. 41, 93–99. doi: 10.1598/RRQ.41.1.4

Fuchs, D., Fuchs, L. S., and Stecker, P. M. (2010). The “blurring” of special education in a new continuum of general education placements and services. Except. Child. 76, 301–323. doi: 10.1177/001440291007600304

Fuchs, L. S., Deno, S. L., and Mirkin, P. K. (1984). The effects of frequent curriculum-based measurement and evaluation on pedagogy, student achievement, and student awareness of learning. Am. Educ. Res. J. 21, 449–460. doi: 10.3102/00028312021002449

Gadke, D. L., Kratochwill, T. R., and Gettinger, M. (2021). Incorporating feasibility protocols in intervention research. J. Sch. Psychol. 84, 1–18. doi: 10.1016/j.jsp.2020.11.004

Graham, S., and Perin, D. (2007). A meta-analysis of writing instruction for adolescent students. J. Educ. Psychol. 99:445. doi: 10.1037/0022-0663.99.3.445

Gresham, F. M., MacMillan, D. L., Beebe-Frankenberger, M. E., and Bocian, K. M. (2000). Treatment integrity in learning disabilities intervention research: do we really know how treatments are implemented? Learn. Disabil. Res. Pract. 15, 198–205. doi: 10.1207/SLDRP1504_4

Groskreutz, N. C., Groskreutz, M. P., and Higbee, T. S. (2011). Effects of varied levels of treatment integrity on appropriate toy manipulation in children with autism. Res. Autism Spectr. Disord. 5, 1358–1369. doi: 10.1016/j.rasd.2011.01.018

Guo, Y., Dynia, J. M., Logan, J. A., Justice, L. M., Breit-Smith, A., Kaderavek, J. N., et al. (2016). Fidelity of implementation for an early-literacy intervention: dimensionality and contribution to children's intervention outcomes. Early Child. Res. Q. 37, 165–174. doi: 10.1016/j.ecresq.2016.06.001

Irwin, V., Zhang, J., Wang, X., Hein, S., Wang, K., Roberts, A., et al. (2021). Report on the Condition of Education 2021 (NCES 2021-144). U.S. Department of Education. Washington, DC: National Center for Education Statistics. Available at: https://nces.ed.gov/pubsearch/pubsinfo.asp?pubid=2021144 (accessed July 23, 2024).

Johnson, L. D., and McMaster, K. L. (2013). “Adapting research-based practices with fidelity: flexibility by design,” in Evidence-Based Practices, 1st Edn, Vol. 26, eds. B. G. Cook, M. Tankersley, and T. J. Landrum (Leeds: Emerald Group Publishing Limited), 65–91. doi: 10.1108/S0735-004X(2013)0000026006

Jung, P. G., McMaster, K. L., Kunkel, A. K., Shin, J., and Stecker, P. M. (2018). Effects of data-based individualization for students with intensive learning needs: a meta-analysis. Learn. Disabil. Res. Pract. 33, 144–155. doi: 10.1111/ldrp.12172

Kaufman, A. S., and Kaufman, N. L. (2014). Kaufman Test of Educational Achievement, 3rd Edn. Bloomington, MN: NCS Pearson.

Kendeou, P., Van den Broek, P., White, M. J., and Lynch, J. S. (2009). Predicting reading comprehension in early elementary school: the independent contributions of oral language and decoding skills. J. Educ. Psychol. 101:765. doi: 10.1037/a0015956

Kim, Y. S., Al Otaiba, S., and Wanzek, J. (2015). Kindergarten predictors of third grade writing. Learn. Individ. Differ. 37, 27–37. doi: 10.1016/j.lindif.2014.11.009

Kim, Y. S. G. (2020). “Interactive dynamic literacy model: an integrative theoretical framework for reading-writing relations,” in Reading-Writing Connections. Literacy Studies, Vol. 19, eds. R. A. Alves, T. Limpo, and R. M. Joshi (Cham: Springer). doi: 10.1007/978-3-030-38811-9_2

Kimber, M., Barac, R., and Barwick, M. (2019). Monitoring fidelity to an evidence-based treatment: practitioner perspectives. Clin. Soc. Work J. 47, 207–221. doi: 10.1007/s10615-017-0639-0

Knoche, L. L., Sheridan, S. M., Edwards, C. P., and Osborn, A. Q. (2010). Implementation of a relationship-based school readiness intervention: a multidimensional approach to fidelity measurement for early childhood. Early Child. Res. Q. 25, 299–313. doi: 10.1016/j.ecresq.2009.05.003

Lembke, E. S., McMaster, K. L., Smith, R. A., Allen, A., Brandes, D., Wagner, K., et al. (2018). Professional development for data-based instruction in early writing: tools, learning, and collaborative support. Teach. Educ. Spec. Educ. 41, 106–120. doi: 10.1177/0888406417730112

Lemons, C. J., Kearns, D. M., and Davidson, K. A. (2014). Data-based individualization in reading: intensifying interventions for students with significant reading disabilities. Teach. Except. Child. 46, 20–29. doi: 10.1177/0040059914522978

Lovett, M. W., Frijters, J. C., Wolf, M., Steinbach, K. A., Sevcik, R. A., Morris, R. D., et al. (2017). Early intervention for children at risk for reading disabilities: the impact of grade at intervention and individual differences on intervention outcomes. J. Educ. Psychol. 109, 889–914. doi: 10.1037/edu0000181

McKenna, J. W., Flower, A., and Ciullo, S. (2014). Measuring fidelity to improve intervention effectiveness. Interv. Sch. Clin. 50, 15–21. doi: 10.1177/1053451214532348

McMaster, K. L., Kunkel, A., Shin, J., Jung, P., and Lembke, E. S. (2017). Early writing intervention: a best evidence synthesis. J. Learn. Disabil. 51, 1–18. doi: 10.1177/0022219417708169

McMaster, K. L., Lembke, E. S., Shin, J., Poch, A. L., Smith, R. A., Jung, P. G., et al. (2020). Supporting teachers' use of data-based instruction to improve students' early writing skills. J. Educ. Psychol. 112:1. doi: 10.1037/edu0000358

National Center on Intensive Intervention (2024). Washington, DC: Office of Special Education Programs; U.S. Department of Education.

National Early Literacy Panel (2008). Developing Early Literacy: Report of the National Early Literacy Panel. Washington, DC: National Institute for Literacy. Available at: http://www.nifl.gov/earlychildhood/NELP/NELPreport.html (accessed August 25, 2024).

Noell, G. H., Witt, J. C., LaFleur, L. H., Mortenson, B. P., Ranier, D. D., and LeVelle, J. (2000). Increasing intervention implementation in general education following consultation: a comparison of two follow-up strategies. J. Appl. Behav. Anal. 33, 271–284. doi: 10.1901/jaba.2000.33-271

O'Donnell, C. L. (2008). Defining, conceptualizing, and measuring fidelity of implementation and its relationship to outcomes in K–12 curriculum intervention research. Rev. Educ. Res. 78, 33–84. doi: 10.3102/0034654307313793

Poch, A. L., McMaster, K. L., and Lembke, E. S. (2020). Usability and feasibility of data-based instruction for students with intensive writing needs. Elem. Sch. J. 121, 197–223. doi: 10.1086/711235

Roehrig, A. D., Bohn, C. M., Turner, J. E., and Pressley, M. (2008). Mentoring beginning primary teachers for exemplary teaching practices. Teach. Teach. Educ. 24, 684–702. doi: 10.1016/j.tate.2007.02.008

Sanetti, L. M. H., Charbonneau, S., Knight, A., Cochrane, W. S., Kulcyk, M. C., Kraus, K. E., et al. (2020). Treatment fidelity reporting in intervention outcome studies in the school psychology literature from 2009 to 2016. Psychol. Sch. 57, 901–922. doi: 10.1002/pits.22364

Sanetti, L. M. H., and Collier-Meek, M. A. (2015). Data-driven delivery of implementation supports in a multi-tiered framework: a pilot study. Psychol. Sch. 52, 815–828. doi: 10.1002/pits.21861

Sanetti, L. M. H., Collier-Meek, M. A., Long, A. C., Byron, J., and Kratochwill, T. R. (2015). Increasing teacher treatment integrity of behavior support plans through consultation and implementation planning. J. Sch. Psychol. 53, 209–229. doi: 10.1016/j.jsp.2015.03.002

Sanetti, L. M. H., Gritter, K. L., and Dobey, L. M. (2011). Treatment integrity of interventions with children in the school psychology literature from 1995 to 2008. Sch. Psych. Rev. 40:72. doi: 10.1080/02796015.2011.12087729

Sanetti, L. M. H., and Kratochwill, T. R. (2009). Toward developing a science of treatment integrity: Introduction to the special series. Sch. Psych. Rev. 38:445.

Sanetti, L. M. H., Williamson, K. M., Long, A. C., and Kratochwill, T. R. (2018). Increasing in-service teacher implementation of classroom management practices through consultation, implementation planning, and participant modeling. J. Posit. Behav. Interv. 20, 43–59. doi: 10.1177/1098300717722357

Saunders, A. F., Wakeman, S., Cerrato, B., and Johnson, H. (2021). Professional development with ongoing coaching: a model for improving educators' implementation of evidence-based practices. Teach. Except. Child. doi: 10.1177/00400599211049821

Stecker, P. M., Fuchs, L. S., and Fuchs, D. (2005). Using curriculum-based measurement to improve student achievement: review of research. Psychol. Sch. 42, 795–819. doi: 10.1002/pits.20113

Swanson, E., Wanzek, J., Haring, C., Ciullo, S., and McCulley, L. (2013). Intervention fidelity in special and general education research journals. J. Spec. Educ. 47, 3–13. doi: 10.1177/0022466911419516

Wanzek, J., Petscher, Y., Otaiba, S. A., Rivas, B. K., Jones, F. G., Kent, S. C., et al. (2017). Effects of a year long supplemental reading intervention for students with reading difficulties in fourth grade. J. Educ. Psychol. 109:1103. doi: 10.1037/edu0000184

Wanzek, J., and Vaughn, S. (2009). Students demonstrating persistent low response to reading intervention: three case studies. Learn. Disabil. Res. Pract. 24, 151–163. doi: 10.1111/j.1540-5826.2009.00289.x

Webster-Stratton, C., Reinke, W. M., Herman, K. C., and Newcomer, L. L. (2011). The incredible years teacher classroom management training: the methods and principles that support fidelity of training delivery. Sch. Psych. Rev. 40, 509–529. doi: 10.1080/02796015.2011.12087527

Keywords: writing, data-based, intervention, fidelity, elementary

Citation: Lembke ES, McMaster KL, Duesenberg-Marshall MD, McCollom E, Choi S, Shanahan E, An J, Sussman-Dawson K and Birinci S (2024) Data based individualization in early writing: the importance and measurement of implementation fidelity. Front. Educ. 9:1380295. doi: 10.3389/feduc.2024.1380295

Received: 01 February 2024; Accepted: 30 September 2024;

Published: 30 October 2024.

Edited by:

Christoph Weber, University of Education Upper Austria, Austria

Reviewed by:

John Romig, University of Texas at Arlington, United States

Diana Alves, University of Porto, Portugal

Copyright © 2024 Lembke, McMaster, Duesenberg-Marshall, McCollom, Choi, Shanahan, An, Sussman-Dawson and Birinci. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Erica S. Lembke, lembkee@missouri.edu
