- 1 System Engineering and Evolution Dynamics, Université Paris Cité INSERM, Paris, France

- 2 Learning Planet Institute, Research Unit Learning Transitions (UR LT, 202324521H, joint unit with CY Cergy Paris University), Paris, France

- 3 Laboratoire de Recherche sur les Apprentissages en Contexte (LaRAC), Université Grenoble Alpes, Grenoble, France

- 4 Université Rennes 2 Laboratory of Psychology: Cognition, Behavior and Communication, Rennes, France

- 5 Laboratoire de Sciences Cognitives et Psycholinguistique, Ecole Normale Supérieure, CNRS, EHESS, PSL University, Paris, France

Introduction: Teachers’ use of research has been increasingly advocated in the past few decades, and some studies have documented the factors that positively or negatively influence it. However, the existing research offers little guidance on how to prioritize among different ways of facilitating teachers’ use of research. In addition, different professionals working in education may have divergent opinions about such priorities. This study therefore asks: according to teachers, teacher trainers, educational decision makers and researchers, which factors most influence teachers’ use of research?

Methods: We conducted a factorial survey experiment on six factors with 100 participants (pilot study) and 340 participants (main study) to identify which factors were perceived as most influencing teachers’ use of research and to compare respondents’ perceptions according to their main role in education.

Results: Support for research use by the institution and the instrumental utility of research were judged to be the most impactful factors. Some categories of respondents had conflicting views about specific factors; for instance, researchers perceived teachers’ involvement in research as less likely to facilitate teachers’ use of research.

Conclusion: These findings can help decision makers and teacher trainers with limited resources to allocate them more effectively, while taking into account the disagreements across professions in order to resolve conflicts that may arise.

1 Introduction

In recent decades, educational policies have pushed to ground the teaching profession in research (Basckin et al., 2021) in many countries, including France (Lima and Tual, 2022) and the US (Joyce and Cartwright, 2020). In parallel, researchers have increasingly studied teachers’ use of educational research and have shown a clear gap between educational research and teachers’ practice: despite institutional pressure, teachers rarely rely on research as a primary source of knowledge to inform their practice (Carnine, 1997; Borg, 2010). Teachers’ use of research has been explored using terms such as evidence-based education or practice (Biesta, 2010; Dachet and Baye, 2020), use of research-based information (Dagenais et al., 2012) and use of research evidence (Tatto, 2020). Terms that refer to promising ways to improve teachers’ use of research include research-practice partnerships or knowledge brokering (Anwaruddin, 2016; Rycroft-Smith, 2022; Wentworth et al., 2023). This diversity of research and terminology makes it difficult to gain a broad understanding of the field, and some authors have highlighted the need to clarify what we mean by “research” or “use” (Rycroft-Smith, 2022). For example, Penuel et al. (2016) used Weiss and Bucuvalas’ (1980) conceptualization, in which the use of research can be conceptual (changing one’s ideas about a problem), instrumental (changing one’s practices), or symbolic (justifying an action taken). A more complex model adds “imposed use,” which is “use mandated by government initiatives to promote evidence-based programs and practices” (Doucet, 2019, p. 1), and “process use,” or “the learnings gleaned by practitioners when they engage in research production” (Doucet, 2019). Cain et al. (2019) sought to model more precisely the ways in which research can inform educational practice:

• It can inform bounded decision-making by providing evidence that is understood in the light of assumptions and brought into discussion from which decisions and actions emerge.

• It can inform teachers’ reflection, influencing both what teachers think about and how they think, leading to changes in their “professional self”.

• It can inform organizational learning when it is brought into professional conversations, both formal and informal (Cain et al., 2019, p. 12).

Referring to Weiss and Bucuvalas’ widely used conceptual-instrumental-symbolic model (Gitomer and Crouse, 2019; Finnigan, 2021), many authors have criticized an overemphasis on the instrumental use of research (Cain et al., 2019; Rycroft-Smith, 2022), while the conceptual use of research is often undervalued (Farrell and Coburn, 2016). One reason for this may be that researchers find it difficult to capture teacher change related to conceptual use of research, which may be more long-term and less amenable to measurement or observation. However, the distinction may still be useful, as various studies highlight that many teachers want clear, practical activities inspired by research that they can adapt quickly (Drill et al., 2013; Joram et al., 2020), while deep conceptual use of research may take longer, although it is arguably more important.

Farrell and Coburn (2016) identify various ways in which conceptual use can occur: research can “introduce new concepts,” “broaden or narrow understandings about the kinds of solutions [that] should be considered and [that] are most appropriate to pursue,” or help by “provid[ing] a framework to guide action.” However, we still need more comprehensive models of teachers’ use of research.

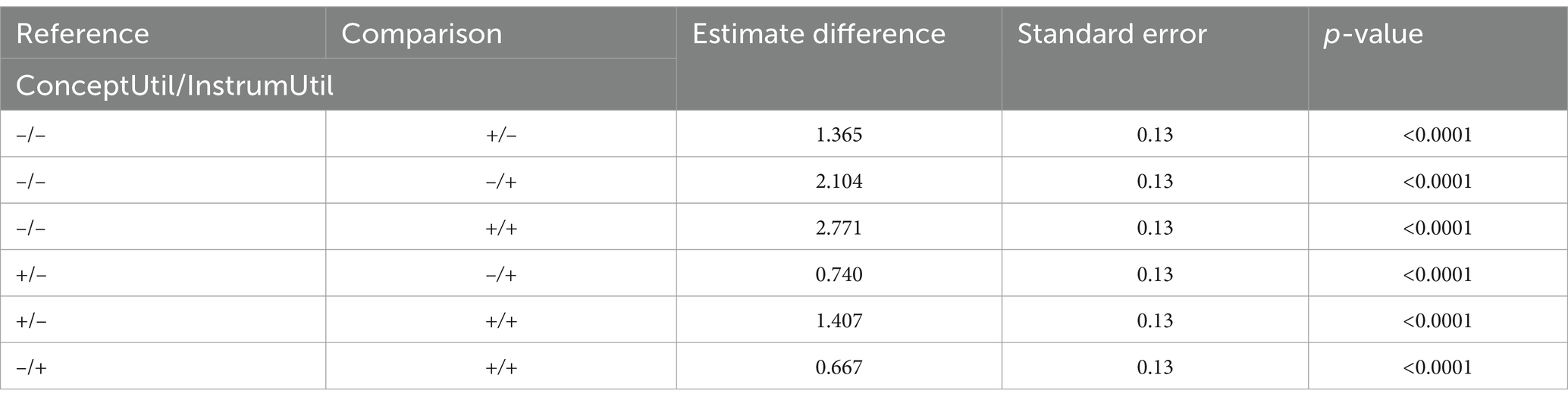

Dagenais et al. (2012) conducted a literature review in which they listed 32 factors that play a role in teachers’ use of research. This list was divided into four sections: factors related to the characteristics of practitioners (10), research (5), communication (7), and schools (10). Table 1 presents two examples of factors from each category listed by Dagenais et al. (2012).

Table 1. Examples for each category of factors influencing teachers’ use of research (Dagenais et al., 2012, pp. 297–299).

On closer examination, some factors appear to overlap (e.g., Prior participation in research and Involvement in research), and most lack an explicit and detailed definition (e.g., Access to research and data). This leaves the understanding of these factors open to interpretation (e.g., what counts as relevant, according to whom and on the basis of what criteria). This ambiguity of terms raises similar problems to the lack of a clear definition of “research” or its use described earlier. Authors such as Rycroft-Smith (2022) argue that progress in supporting teachers’ use of research could be achieved with greater conceptual clarity. Studying factors that influence (positively or negatively) teachers’ use of research may help us to clarify the concepts and better support teachers willing to use research.

Many authors report that teachers lack time and institutional support to use research (e.g., Borg, 2010; Anwaruddin, 2016). It is therefore of interest to consider specifically how professionals who support teachers (e.g., researchers, teacher educators, or decision makers) could better support them to use research, rather than leaving the burden on teachers. Such a focus would lead us to set aside Dagenais’ factors related to the characteristics of practitioners and to emphasize the relevance of factors that these professionals can act on (e.g., researchers can act on the characteristics of research, trainers on communication, and decision makers on schools). Narrowing the focus to a few carefully selected factors could help to conduct empirical research on effective ways to support teachers’ use of research.

While Dagenais et al. (2012) contribute by providing a broad account of the factors that influence teachers’ use of research, some work is still needed to understand which factors should be considered first in order to best support teachers’ use of research.

Among efforts to facilitate teachers’ use of research, much work has been devoted to disseminating research findings (Anwaruddin, 2016) in a top-down, linear and unidirectional manner. Unfortunately, such an approach may not only be ineffective but also problematic (Rycroft-Smith, 2022): it disempowers teachers (Dupriez and Cattonar, 2018), who are seen as mere technicians applying things designed by researchers (Biesta, 2010). The example of the Education Endowment Foundation (2019), a large-scale research project in the UK investigating different ways of communicating research on literacy teaching and learning to schools, illustrates well the ineffectiveness of dissemination. Indeed, the project’s partners used research summaries, evidence-based practice guides, webinars, face-to-face continuous professional development events and online tools without any significant effect on teachers’ practice.

Beyond dissemination, according to Gorard et al. (2020), promising approaches include ongoing, iterative approaches, such as coaching with personalized feedback, or collaborations with researchers to involve practitioners who are doing research themselves. Having research champions or leaders within a school who are familiar with research on a topic can also help teachers engage more with that research. But as the authors put it, “We need better studies of evidence-into-use in education [...] There are currently too few, and the overall picture is unclear” (Gorard et al., 2020, p. 29).

The professional judgment of teachers, researchers, teacher educators and decision makers may also be used to prioritize the actions we can take to facilitate teachers’ use of research. On the one hand, these education professionals are arguably in the best position to help teachers, and on the other hand, any divergent perspectives could be informative for improving the collaborations needed for teachers’ use of research. For example, it may be very important for teachers to be involved in the research process and to have clear guidance on how to translate research findings into concrete practice while researchers may overlook it. If researchers believe that teachers should not contribute to producing research because this would reduce its quality, these conflicting views need to be resolved in order to move forward. If they cannot, then teachers are faced with a “blizzard of advice” (Bryk, 2015, p. 471) that makes their decisions unmanageable.

Thus, comparing the perspectives of teachers and other educational stakeholders may shed light on the divergent views that need to be resolved in order to effectively support teachers to use research.

Our study aims to understand how different stakeholders perceive the influence of different factors on teachers’ use of research. We will focus on a limited number of factors that researchers, teacher trainers or decision makers can act on to support overburdened teachers. As we have already mentioned, promising factors might relate to time and support for teachers’ use of research, collaboration between teachers and researchers, or different ways in which research information could be effectively communicated to support different uses of research by teachers.

The research questions guiding this work are:

• According to different educational stakeholders, what are the factors that most influence teachers’ use of research?

• What are the differences in the judgements of educational stakeholders according to their role?

2 Methods

We first chose factors influencing teachers’ use of research and included them in vignettes (short descriptions of a fictional situation) rated in a survey by participants based on the likelihood that the fictional teachers would use research of interest to them. We conducted a pilot study with the first responses to the survey to generate specific hypotheses and estimate the sample size required to test them. We then tested the hypotheses in our main study and explored other findings with the responses of all the other participants.

We will first describe the experimental factorial survey method that we used. We will then describe the process of selecting the factors included in this study, the construction of the survey and its administration, and the data analysis.

2.1 Factorial surveys

Our study consisted of an experimental factorial survey (Hox et al., 1991; Wallander, 2009), also known as experimental vignette design (Atzmüller and Steiner, 2010; Aguinis and Bradley, 2014). This research method helps to understand participants’ beliefs or judgments (Auspurg and Hinz, 2015) by using vignettes that participants can judge according to specific questions. In most experimental vignette-based designs, many vignettes are systematically generated and each participant is asked to judge several vignettes. The responses of multiple participants to different vignettes are then analyzed to identify elements of the situation (or characteristics of the participants) that influence their responses. This method has been widely used to judge the fairness of household incomes based on situations in which socio-demographic characteristics such as gender, schooling, and years of professional experience vary (Auspurg and Hinz, 2015).

Factorial surveys have also been used in education (e.g., Geven et al., 2021 on teachers’ expectations of students). In our context, we used such a method to assess the relative weight of different factors influencing teachers’ use of research as perceived by various educational stakeholders. As vignette evaluation is useful for testing the influence of the participant’s role in the evaluation of the vignette (Gutfleisch, 2021), we used this method to assess the difference in perception between different educational stakeholders regarding the factors influencing teachers’ use of research.

2.2 Choice of the factors influencing teachers’ use of research

The methodological recommendation for factorial surveys is to have (7 ± 2) factors with a low number of levels (2 or 3) (Auspurg and Hinz, 2015, p. 48). This is to avoid having too large a vignette universe (defined as the product of the levels of each factor), which would either require a larger sample size or reduce the power of the survey.

We listed the 32 factors from Dagenais et al. (2012) in a table and defined each of them. We then invited two teachers, two decision-makers and two researchers from our personal contacts working in France to independently produce a shorter list of 10–15 factors. Their task was to select, reformulate and possibly merge the initial factors. They were asked to provide concrete examples of situations in which each factor could play a role. The instructions and files used are available on https://osf.io/xc948/files/osfstorage.

Our team then built on this work and scientific literature to produce a short list of clearly defined factors. For example, research led us to include the time required for research use (Borg, 2010), the distinction between instrumental and conceptual use of research (Penuel et al., 2016), or collaboration between researchers and teachers (Gorard et al., 2020).

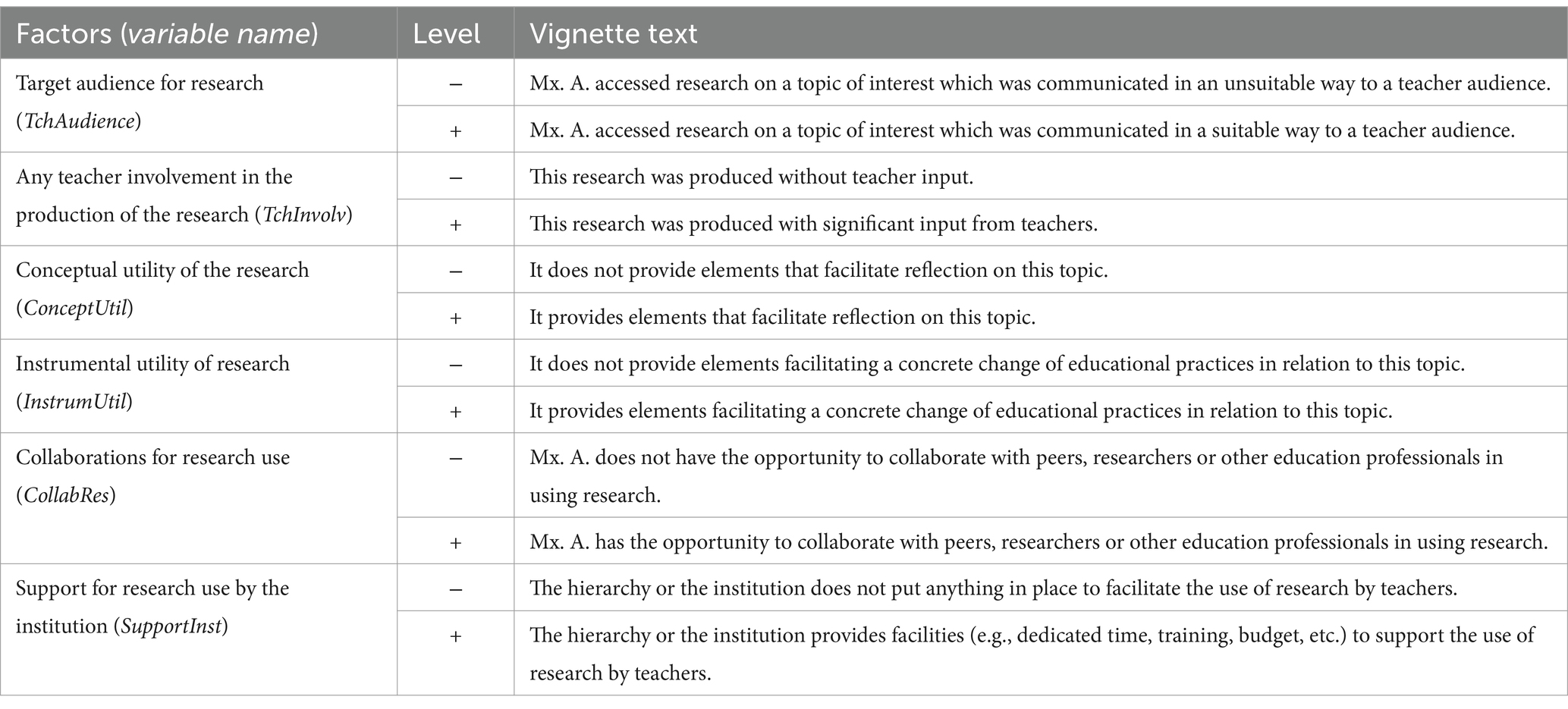

In Table 2, we present our final list of six factors influencing teachers’ use of research that are external to teachers (e.g., related to characteristics of the research or teachers’ institutional context), where each factor could take a negative (−) or positive (+) value. We described each level as a short sentence that was included in the vignettes shown to the participant, so that one piece of information from each factor was included in all vignettes (see example below). We described the factors so that there would be no overlap between them, and in such a way that each would refer to real-life situations in which it played a role in teachers’ use of research.

2.3 Survey construction

We created a survey to collect demographic information from respondents and present them with a series of vignettes. The demographic questions in our survey (see https://osf.io/xc948/files/osfstorage) included (1) years of experience in education; (2) main role held in the past three years; (3) other role(s) held in the past; (4) category-specific information (e.g., school level and subject taught for teachers; research topics for researchers; specific roles and institutions held by trainers and decision makers). We included years of experience because both the use of research and years of experience have been studied in relation to teaching quality (e.g., Gorard et al., 2020; Graham et al., 2020). We included the other variables to explore the relationship between role and respondents’ judgments about teachers’ use of research, which, to our knowledge, has not been done before.

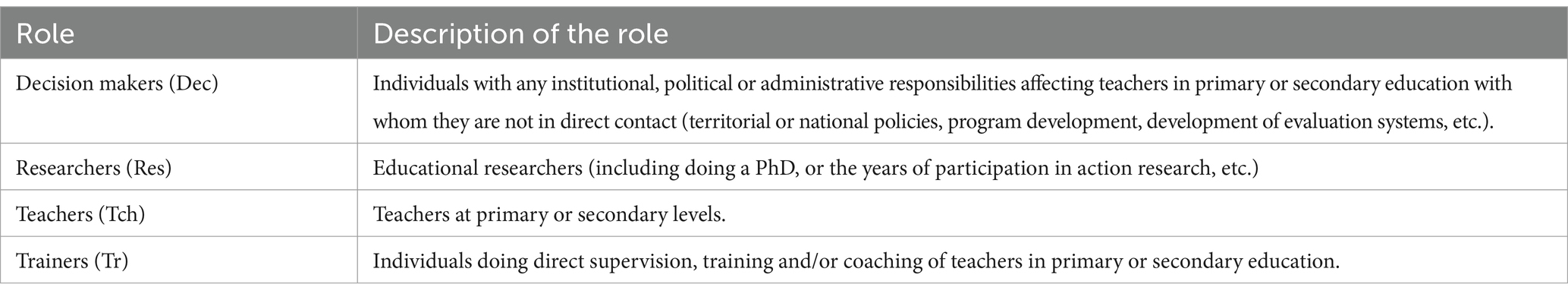

We categorized the different roles that educational stakeholders could take as (a) decision makers, (b) researchers, (c) teachers, and (d) trainers (see Table 3).

From the list of 6 factors with 2 levels, the full universe of vignettes (consisting of 2^6 = 64 possible combinations of the different levels) was systematically generated by selecting exactly one level per factor to create a short description. The following is an example of a vignette; all vignettes used are available at https://osf.io/xc948/files/osfstorage.

“Mx. A. accessed research communicated in an unsuitable way to a teacher audience [TchAudience-] on a topic of interest to her. This research was produced with significant input from teachers [TchInvolv+].

It provides elements that facilitate reflection on this topic [ConceptUtil+] and it does not provide elements facilitating a concrete change of educational practices in relation to this topic [InstrumUtil-].

Mx. A. does not have the opportunity to collaborate with peers, researchers or other education professionals in using research [CollabRes-]. The hierarchy or the institution provides facilities (e.g., dedicated time, training, budget, etc.) to support the use of research by teachers [SupportInst+].”
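The construction of the vignette universe described above can be sketched in a few lines. This is an illustrative Python sketch, not the authors' code (their analysis was carried out in R); the function names `vignette_universe` and `tag_string` are hypothetical, and the factor labels are taken from the bracketed tags in the example vignette.

```python
from itertools import product

# Factor labels as they appear in the bracketed tags of the example vignette.
FACTORS = ["TchAudience", "TchInvolv", "ConceptUtil",
           "InstrumUtil", "CollabRes", "SupportInst"]
LEVELS = ["-", "+"]  # negative and positive level of each factor


def vignette_universe(factors=FACTORS, levels=LEVELS):
    """Return every combination of exactly one level per factor."""
    return [dict(zip(factors, combo))
            for combo in product(levels, repeat=len(factors))]


def tag_string(vignette):
    """Render a vignette as the sequence of tags used in the example."""
    return " ".join(f"[{name}{level}]" for name, level in vignette.items())


universe = vignette_universe()

# The example vignette quoted in the text, expressed as factor levels.
example = {"TchAudience": "-", "TchInvolv": "+", "ConceptUtil": "+",
           "InstrumUtil": "-", "CollabRes": "-", "SupportInst": "+"}

print(len(universe))        # size of the full universe, 2**6
print(tag_string(example))  # tags of the example vignette
```

In an actual survey, each level would be mapped to its sentence from Table 2 rather than to a tag.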

After each vignette, respondents were asked to rate the situation: “Given this context, how likely do you think it is that [the teacher described in the situation] will use the research that (s)he is interested in?” Participants answered using a slider corresponding to an 11-point Likert scale ranging from “extremely unlikely” (−5) to “extremely likely” (5).

To avoid respondent fatigue, it is recommended not to present more than 10 vignettes to each participant (Auspurg and Hinz, 2015). Furthermore, reducing the total number of vignettes reduces the number of participants required for the study. To reduce the number of vignettes in a study after choosing the factors and levels, Auspurg and Hinz (2015) recommend using a D-efficient sample of the vignette universe instead of a random sample. A D-efficient sample is an optimal way to balance the number of occurrences of each level, thus gaining power for the analysis without losing too much information. The D value is an index representing the extent to which the sample is balanced, and the closer that value is to 100, the better. The skpr package v. 1.0.0 in R (Morgan-Wall and Khoury, 2021) can not only generate a D-efficient sample of a given vignette universe, but also split it into blocks. We therefore used it to generate an optimal D-efficient sample (D = 100) of 16 out of the 64 possible vignettes with two equivalent blocks of 8 vignettes each, so that each participant had to judge only 8 vignettes.
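To make the idea of a balanced 16-vignette sample in two blocks concrete, here is a minimal Python sketch. It does not reproduce the skpr search algorithm or the authors' actual design: it builds a classical regular 2^(6−2) fractional factorial, and the generators (E = ABC, F = BCD) and the blocking rule (on the AB interaction) are assumptions chosen for illustration. D-efficiency is computed with the common formula 100 · |X′X|^(1/p) / n for an intercept plus main-effects model with −1/+1 coding.

```python
from itertools import product

FACTORS = ["TchAudience", "TchInvolv", "ConceptUtil",
           "InstrumUtil", "CollabRes", "SupportInst"]


def fractional_design():
    """Return 16 runs of a 2^(6-2) design as (levels, block) pairs, coded -1/+1."""
    runs = []
    for a, b, c, d in product((-1, 1), repeat=4):
        e = a * b * c                    # assumed generator: E = ABC
        f = b * c * d                    # assumed generator: F = BCD
        block = 1 if a * b == 1 else 2   # assumed blocking on the AB interaction
        runs.append(((a, b, c, d, e, f), block))
    return runs


def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    m = [[float(v) for v in row] for row in matrix]
    n = len(m)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            return 0.0
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det
        det *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n):
                m[r][k] -= factor * m[col][k]
    return det


def d_efficiency(level_rows):
    """D-efficiency (0-100) for an intercept + main-effects model."""
    x = [[1, *row] for row in level_rows]
    n, p = len(x), len(x[0])
    xtx = [[sum(r[i] * r[j] for r in x) for j in range(p)] for i in range(p)]
    return 100 * determinant(xtx) ** (1 / p) / n


design = fractional_design()
print(round(d_efficiency([levels for levels, _ in design]), 6))
for block_id in (1, 2):
    block_rows = [levels for levels, blk in design if blk == block_id]
    # Column sums of zero mean each factor shows its + and - level equally often.
    print(block_id, len(block_rows), [sum(col) for col in zip(*block_rows)])
```

Because every pair of columns in this design is orthogonal, X′X is diagonal and the index reaches its maximum; a computer-generated design such as the one produced by skpr may differ in which 16 vignettes are selected.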

We used LimeSurvey software to create the survey. The survey began by informing all participants of how their data would be used for this research and of their right to request that their data be deleted afterwards. We then asked for demographic information (see https://osf.io/xc948/files/osfstorage) and randomly assigned respondents to one of two blocks of vignettes. Within each block, the order of presentation of the vignettes was randomized (Auspurg and Hinz, 2015). Participants could stop at any time by closing the survey, and previous responses were recorded. Responses from participants who only provided demographic information, but did not rate any vignettes, were not used.

2.3.1 Survey administration

Respondents were recruited in France and were expected to work in a French context. We used convenience sampling, social media, researchers’ networks and snowball sampling. The survey was launched on 23 June 2022 and closed after six months.

2.3.2 General analysis plan

We designed a fractional factorial survey experiment using multilevel modeling and a confounded factorial design. It includes both crossed variables (the factors influencing teachers’ use of research all co-occur for each participant) and nested variables (the vignette judgments are nested under the participants and the factors are nested under the blocks) (Auspurg and Hinz, 2015). The demographic characteristics and factors are the independent variables, and the participants’ judgments of the vignettes are the dependent variable. This analysis plan was used for both the pilot and main studies.

2.4 Pilot study

To our knowledge, no study has compared the relative weights of the factors, so we had limited background on which to build hypotheses. We therefore used the first 100 survey responses (50 from each block) to conduct an exploratory pilot study to generate specific hypotheses and estimate the sample size required to test them.

2.4.1 Data analysis

First, we created a full Linear Mixed-effects Model using the R package lme4 (Bates et al., 2015) including all 6 factors (TchAudience, TchInvolv, ConceptUtil, InstrumUtil, CollabRes, SupportInst) without Role and its interaction with the factors.

Here ‘answer’ corresponds to the participant’s judgment of the vignette; block is either of the two blocks of 8 vignettes that the participant was assigned to; Part_id is the unique identifier of each participant, Vign is the vignette being judged; 1|Part_id and 1|Vign are the random intercepts associated with the participant and vignette, respectively.

Our aim was to simplify each model as much as possible, while increasing our ability to explain the participants’ responses, in order to identify which factors played a significant role. To do this, we iteratively dropped variables from (m1) and tested whether the simpler model differed from the original. To compare the models, we used likelihood ratio tests via the anova command applied to lmer model objects (Bates et al., 2015). If the two models were not significantly different, we repeated the process using the simpler model. We first tried to drop the (1|Vign) component, as the randomization of vignettes should prevent any significant vignette random effect. We then tried to drop the non-significant interaction effects, but as all factors played a significant role, we kept the following model:

We then examined the relative weight of each factor in the reduced sample used for the pilot study according to the model m2. Our results showed differences worth exploring in our main study: some factors clearly seemed to be rated as influencing teachers’ use of research more than others, while other factors seemed to be judged more or less equally. We used this to generate our first two hypotheses below.

Similarly, we created a full linear mixed-effects model including all 6 factors and adding their interaction with the participant’s main role (Role), using the teacher role (Tch) as the reference level of all analyses.

Role*TchAudience (likewise for the others) means that the model includes both variables TchAudience and Role, but also their interaction (TchAudience:Role).

We dropped non-significant interactions one by one, to reach the following model in our pilot study:

This means that the only statistically significant interaction between Role and the factors was related to SupportInst in our pilot study. We therefore generated one hypothesis regarding this difference to be tested in the main study.
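The model-comparison step used throughout this subsection (a likelihood ratio test between a model and a simpler nested model) can be illustrated as follows. This is a hedged Python sketch of the general test, not the authors' R code: the helper names `chi2_sf` and `likelihood_ratio_test` are hypothetical, and the log-likelihood values in the example are made up for illustration and are not results from the study.

```python
import math


def chi2_sf(stat, df):
    """Upper-tail probability of a chi-square distribution with integer df >= 1."""
    if stat <= 0:
        return 1.0
    half = stat / 2.0
    if df % 2 == 0:
        q, a = math.exp(-half), 1.0               # closed form for df = 2
    else:
        q, a = math.erfc(math.sqrt(half)), 0.5    # closed form for df = 1
    # Recurrence on the regularized upper incomplete gamma function:
    # Q(a + 1, x) = Q(a, x) + x**a * exp(-x) / Gamma(a + 1)
    while a < df / 2.0:
        q += half ** a * math.exp(-half) / math.gamma(a + 1)
        a += 1.0
    return q


def likelihood_ratio_test(loglik_full, loglik_reduced, df_diff):
    """Return (statistic, p-value) for two nested models fitted by maximum likelihood."""
    stat = 2.0 * (loglik_full - loglik_reduced)
    return stat, chi2_sf(stat, df_diff)


# Hypothetical log-likelihoods: the reduced model drops 3 parameters.
stat, p_value = likelihood_ratio_test(-5200.0, -5201.2, df_diff=3)
keep_simpler_model = p_value >= 0.05  # no significant loss of fit
print(round(stat, 3), round(p_value, 3), keep_simpler_model)
```

When the p-value is above the chosen threshold, the simpler model is retained and the procedure is repeated with the next candidate term.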

2.4.2 Hypotheses

From the models (m2) and (m4) found in the pilot study, we constructed the following hypotheses, as we found no reason in the scientific literature to explore other hypotheses:

H1: Educational stakeholders judge that teachers who benefit from institutional support for research use (SupportInst) or who have access to research suggesting instrumental use (InstrumUtil) are significantly more likely to use research results than teachers who benefit from collaboration for research use (CollabRes). We will write it (SupportInst ≈ InstrumUtil) > CollabRes below.

H2: Educational stakeholders judge that teachers benefiting from collaboration for research use (CollabRes) are significantly more likely to use research results compared to teachers benefiting from research communicated to a teacher audience (TchAudience), research involving teachers in its production (TchInvolv), or research suggesting conceptual use (ConceptUtil). We will write it CollabRes > (TchAudience ≈ TchInvolv ≈ ConceptUtil) below.

H3: Decision makers judge teachers who benefit from institutional support for research use (SupportInst) as more likely to use research than other respondents do. We will write it RoleDec:SupportInst > (RoleRes:SupportInst ≈ RoleTr:SupportInst ≈ RoleTch:SupportInst) below.

For each hypothesis, when we say ‘teachers who benefit from [a factor]’, we mean ‘compared to teachers who do not benefit from [that factor]’. For example, teachers who benefit from support for the use of research by the institution are to be compared with teachers who do not benefit from such support, all other things being equal.

2.4.3 Power analyses and sample size estimation

To estimate the number of respondents needed to test our hypotheses with a standard power of 0.80 (1 − β) and a significance threshold (α) of 0.05, we conducted a power analysis based on the data from the pilot study. We first used bootstrapping (Efron, 1979) in R (v. 4.1.3) to create a database containing information with similar statistical properties to the original data, but with more participants. We created bootstrapped datasets of n = 200, 300, ..., 1,000 participants and used the powerSim function from the simr package in R (Green and MacLeod, 2016) to assess the number of participants with which we could expect to reach the 80% threshold for our hypotheses.
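The bootstrapping step can be sketched as resampling whole participants with replacement until the target sample size is reached. The Python sketch below is illustrative only: the text does not specify the resampling unit, so drawing entire participants (which keeps each participant's ratings together) is an assumption, and the function name `bootstrap_participants` and the toy ratings are hypothetical.

```python
import random


def bootstrap_participants(ratings_by_participant, n_target, seed=0):
    """Draw n_target participants with replacement, giving each draw a new id.

    Resampling whole participants (an assumption of this sketch) preserves
    the nesting of vignette ratings within participants.
    """
    rng = random.Random(seed)
    source_ids = list(ratings_by_participant)
    resampled = {}
    for new_id in range(1, n_target + 1):
        source = rng.choice(source_ids)
        resampled[new_id] = list(ratings_by_participant[source])
    return resampled


# Toy pilot data (made up): participant id -> ratings on the -5..5 scale.
pilot = {"p1": [3, -1, 4, 0], "p2": [-2, -5, 1, 2], "p3": [5, 5, 2, -3]}

# One enlarged dataset per candidate sample size, as in n = 200, 300, ..., 1,000.
datasets = {n: bootstrap_participants(pilot, n, seed=n) for n in range(200, 1001, 100)}
print({n: len(data) for n, data in datasets.items()})
```

Each enlarged dataset would then be passed to a simulation-based power estimate, which this sketch does not attempt to reproduce.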

2.5 Main study

We discarded the data used in the pilot study and used the remaining 340 complete survey responses for the main study, which aimed to test our hypotheses. In order to test the underlying assumption that all factors are independent from each other, we tested the interaction effects among factors with the following model:

In the rest of the study, we added any significant interactions between factors to our other models and tested whether this changed the conclusions. If not, these effects are reported independently in the results sections and we otherwise use the simpler model without these interactions.

2.5.1 Hypothesis testing

To test H1 and H2 (relative importance between factors in their ability to influence vignette judgment), we fitted the following linear mixed effects model, following the same procedure as in the pilot study:

We performed pairwise comparisons using the glht function from the multcomp package in R (Bretz et al., 2010) to test, for any two factors, the null hypothesis that their coefficients are equal. For the first hypothesis, our pairwise comparisons focused on SupportInst, InstrumUtil and CollabRes. Based on (m2), testing H1, (SupportInst ≈ InstrumUtil) > CollabRes, meant comparing (a) SupportInst with InstrumUtil; (b) SupportInst with CollabRes; and (c) InstrumUtil with CollabRes. To validate H1, (a) would have to show no statistically significant difference, and (b) and (c) would have to show that CollabRes is statistically significantly lower.

Similarly, using the model m2, testing H2, CollabRes > (TchAudience ≈ TchInvolv ≈ ConceptUtil), meant comparing (a) CollabRes with TchAudience; (b) CollabRes with TchInvolv; (c) CollabRes with ConceptUtil; (d) TchAudience with TchInvolv; (e) TchAudience with ConceptUtil; and (f) TchInvolv with ConceptUtil. To validate H2, (a), (b) and (c) would simultaneously have to show a statistically significant difference, and (d), (e) and (f) would have to show no statistically significant difference.

To test H3, we created a full linear mixed effects model as in the pilot study, starting with the full model (m3) including all interactions.

We dropped the non-significant interaction effects between Role and the different factors one by one, according to successive likelihood ratio test comparisons using the anova command. As the interaction Role:SupportInst was not statistically significant, H3 could be rejected and we ended up using the following model for exploratory analyses:

As explained in the results, no further analyses were needed to test H3.

2.5.2 Exploratory analyses

For each factor, we first assessed the significance of the difference between the mean judgments at its negative and positive values using the emmeans package (Searle et al., 1980). For example, for the factor TchAudience, if TchAudience- and TchAudience+ are the means of the judgments with its negative and positive values respectively, we assessed whether the difference between TchAudience- and TchAudience+ was statistically significant. If so, this difference can be interpreted as the respondents’ perceived weight of the factor in teachers’ research use. We then computed all pairwise comparisons using the glht function from the multcomp package in R (Bretz et al., 2010), in addition to testing H1 and H2, in order to rank the factors based on their respective weights, grouping together factors for which the weight differences were not statistically significant.
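The notion of a factor's perceived weight as the difference between mean judgments at its positive and negative levels can be shown on toy data. This Python sketch uses raw means rather than the model-based estimated marginal means used in the study, and it performs no significance testing; the function name `factor_weight` and the four ratings are hypothetical.

```python
from statistics import mean


def factor_weight(ratings, factor):
    """Mean judgment at the '+' level minus mean judgment at the '-' level."""
    plus = [r["answer"] for r in ratings if r[factor] == "+"]
    minus = [r["answer"] for r in ratings if r[factor] == "-"]
    return mean(plus) - mean(minus)


# Toy ratings (made up) for two of the six factors on the -5..5 scale.
toy_ratings = [
    {"SupportInst": "+", "CollabRes": "+", "answer": 4},
    {"SupportInst": "+", "CollabRes": "-", "answer": 2},
    {"SupportInst": "-", "CollabRes": "+", "answer": 0},
    {"SupportInst": "-", "CollabRes": "-", "answer": -2},
]

weights = {f: factor_weight(toy_ratings, f) for f in ("SupportInst", "CollabRes")}
ranking = sorted(weights, key=weights.get, reverse=True)
print(weights, ranking)
```

In a balanced design such as the one used here, each level of a factor co-occurs equally often with the levels of the other factors, which is what makes such differences comparable across factors.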

We then carried out a second exploratory analysis with all the interaction effects between Role and the factors, a third including participants’ auxiliary or previous roles (Auxiliary) for each of the four roles (RoleTch, RoleRes, RoleTr, RoleDec), and a fourth including participants’ years of experience in education (yexp).

For the second exploratory analysis, we used the model (m5) that included all possibly statistically significant interaction effects between Role and the factors, therefore excluding interaction effects between Role and CollabRes or SupportInst, but keeping the interactions between Role and the four other factors.

For the third type of exploratory analysis, we conducted four similar analyses, each starting with the full model including all possible interaction effects between one of the variables RoleTch, RoleRes, RoleTr, RoleDec and the factors, and then iteratively dropping variables as before. Each of these variables could take three values: (1) Main (if the respondent reported this role as the main role they played in the last three years); (2) Auxiliary (if the respondent either played this role more than three years ago or played it in the last three years but as a minor function); (3) Never (if the respondent never played this role).

We set the significance threshold for model comparison at a conservative 0.01 to account for the multiple explorations and to try to avoid false positives.

For the fourth exploratory analysis, we started with the full model, including all possible interaction effects between yexp and the factors, and then iteratively dropped variables as before.

2.6 Research reproducibility

This study was pre-registered on the Open Science Framework registry following analysis of the pilot study.1 The link to download the anonymized data, the code with detailed instructions for data analysis using R software, and all other materials can be found there.

3 Results

3.1 Factors choice

Of the six contacts invited to participate, both teachers, both decision-makers and one of the two researchers took part in this stage of the study. Each of these five participants independently suggested between 9 and 13 factors. Our research team then synthesized the work done, resulting in a list of 13 different factors. We then kept the 6 factors (Table 2) unrelated to teacher characteristics and iteratively defined, through internal discussion, the wording of each factor and its levels to be used in the vignettes.

3.2 Power analysis

Eight hundred participants were needed to also test H3 with an 80% power threshold. However, we closed the survey before this sample size was reached. Although it is likely that we had enough participants to test H1 and H2, we did not first compute the number of participants required for these tests.

3.3 Characteristics of survey respondents

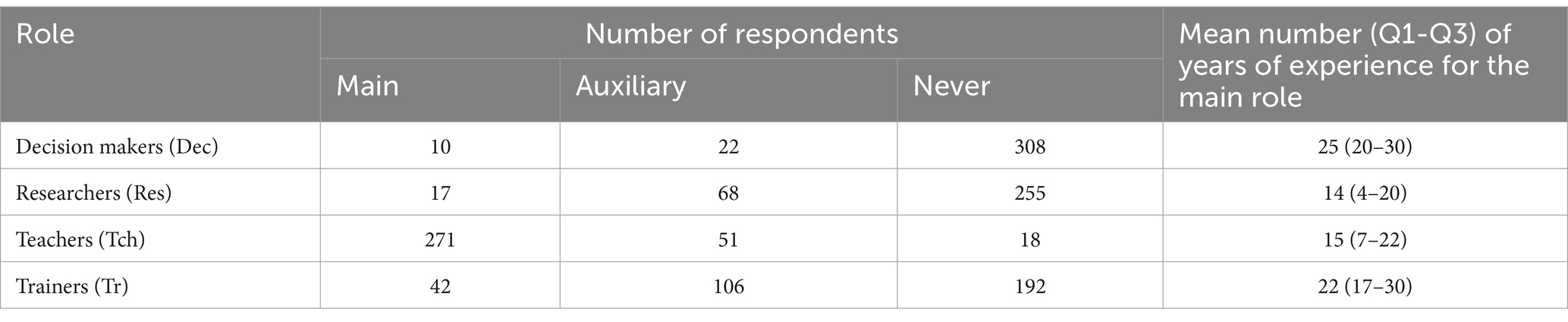

A total of 777 responses were collected, of which 337 were discarded because participants did not rate any of the vignettes. We then used 100 responses for the pilot study. This left a total of 340 participants for the main study. The unit of analysis in a factorial survey is the vignette, and in our case each participant could rate up to 8 vignettes, giving a total of 2,720 (=8*340) possible vignette ratings, of which our participants rated a total of 2,447 vignettes. On average, our participants had a total of 16 years of experience (min = 0, Q1 = 8, Q3 = 22, max = 55). Of the 340 participants in the main study, 10 participants (3%) presented their main role as a decision maker, 17 (5%) as a researcher, 271 (80%) as a teacher and 42 (12%) as a trainer. Table 4 below summarizes the distribution of respondents according to their main role in education and the number of vignettes each responded to.
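The sample accounting in this paragraph can be checked with a few lines of arithmetic. The sketch below uses only the counts reported above; the variable names are chosen for illustration.

```python
collected = 777
discarded = 337   # respondents who did not rate any vignette
pilot = 100       # responses used for the pilot study
main = collected - discarded - pilot

vignettes_per_participant = 8
possible = vignettes_per_participant * main
rated = 2447
response_rate = rated / possible  # share of possible vignette ratings obtained

# Main role reported by the participants of the main study.
roles = {"decision maker": 10, "researcher": 17, "teacher": 271, "trainer": 42}
shares = {role: round(100 * n / main) for role, n in roles.items()}

print(main, possible, round(100 * response_rate), shares)
```

The role counts sum to the 340 main-study participants, and the rated vignettes amount to roughly nine in ten of the possible ratings.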

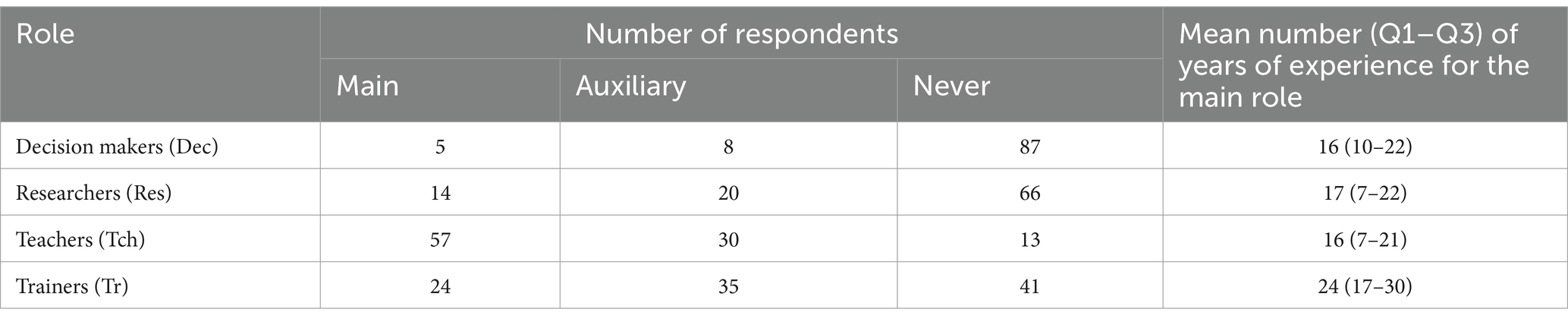

Compared to the participants whose responses were used for the pilot study, the main study participants were more likely to be teachers and less likely to be in any other role (see Table 5).

3.4 Interactions between factors

We simplified the model (m0) until we reached a model with only statistically significant interactions between factors (m0bis).

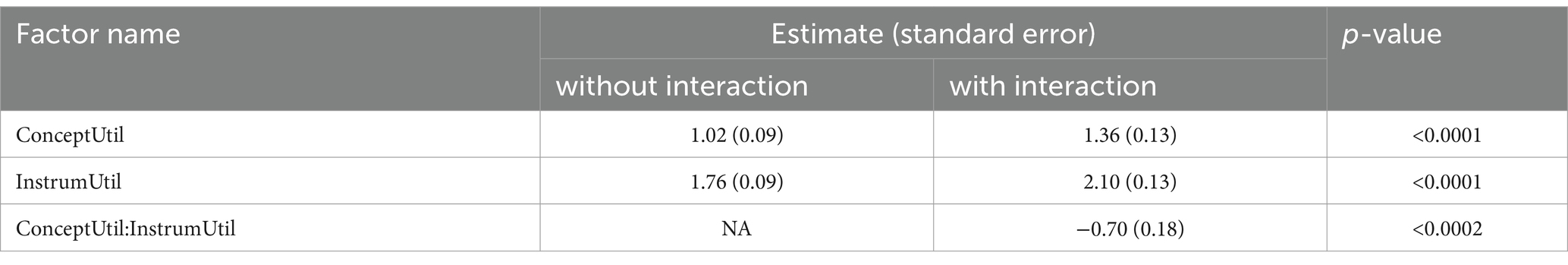

This model showed a significant interaction between ConceptUtil and InstrumUtil, and is compared to the simpler model (m2) in Table 6.

Including this significant interaction effect shows that ConceptUtil and InstrumUtil each appear to have a larger effect when only one of them takes its positive value; when both are positive at the same time, the difference is more nuanced.
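A short numerical sketch may help to read such an interaction. It assumes 0/1 dummy coding of the two factors and uses invented coefficients (`b_cu`, `b_iu`, `b_int`); none of these values come from the fitted models, and the coding used in the original analysis may differ.

```python
def predicted_shift(cu, iu, b_cu=1.0, b_iu=1.5, b_int=-0.5):
    """Shift in predicted rating relative to a vignette with both factors negative.

    cu, iu: 1 if ConceptUtil / InstrumUtil takes its positive value, else 0.
    b_cu, b_iu, b_int: hypothetical main-effect and interaction coefficients.
    """
    return b_cu * cu + b_iu * iu + b_int * cu * iu

only_cu = predicted_shift(1, 0)
only_iu = predicted_shift(0, 1)
both = predicted_shift(1, 1)

print(only_cu, only_iu, both)
# With a negative interaction term, 'both' is smaller than only_cu + only_iu.
```

With a negative interaction coefficient, the gain from having both factors positive is smaller than the sum of the two separate gains, which is one way a model without the interaction term can understate each factor's individual effect.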

3.5 Hypotheses testing

We tested the hypotheses chosen after consideration of the scientific literature and our pilot study, as described in the methodology section.

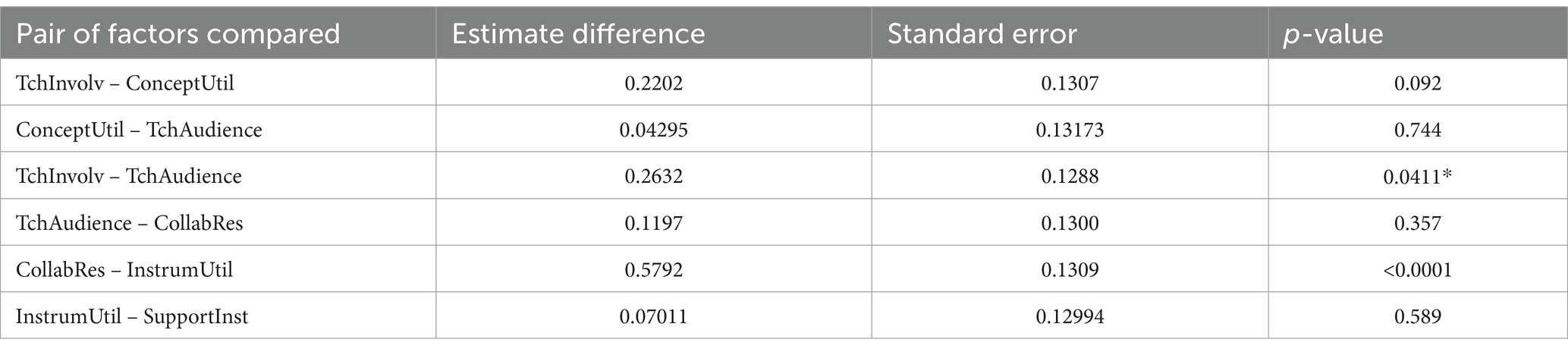

For the first hypothesis (H1): We found no statistically significant difference between the beta coefficients of InstrumUtil and SupportInst (p = 0.589), but there was a statistically significant difference between the beta coefficients of InstrumUtil and CollabRes (p < 0.001) and of SupportInst and CollabRes (p < 0.001). This confirms our hypothesis H1: (SupportInst ≈ InstrumUtil) > CollabRes.

For the second hypothesis (H2): There was a statistically significant difference between the beta coefficients of TchInvolv and CollabRes (p = 0.003), but no statistically significant difference between the beta coefficients of TchAudience and CollabRes (p = 0.357) or of ConceptUtil and CollabRes (p = 0.217). This result refutes our hypothesis H2: CollabRes > (TchAudience ≈ TchInvolv ≈ ConceptUtil).

For the third hypothesis (H3): The interaction effect between SupportInst and Role was removed by successive model comparisons, which was sufficient to reject H3: RoleDec:SupportInst > (RoleRes:SupportInst ≈ RoleTr:SupportInst ≈ RoleTch:SupportInst).

3.6 Exploratory analyses

3.6.1 Factors’ weight comparison

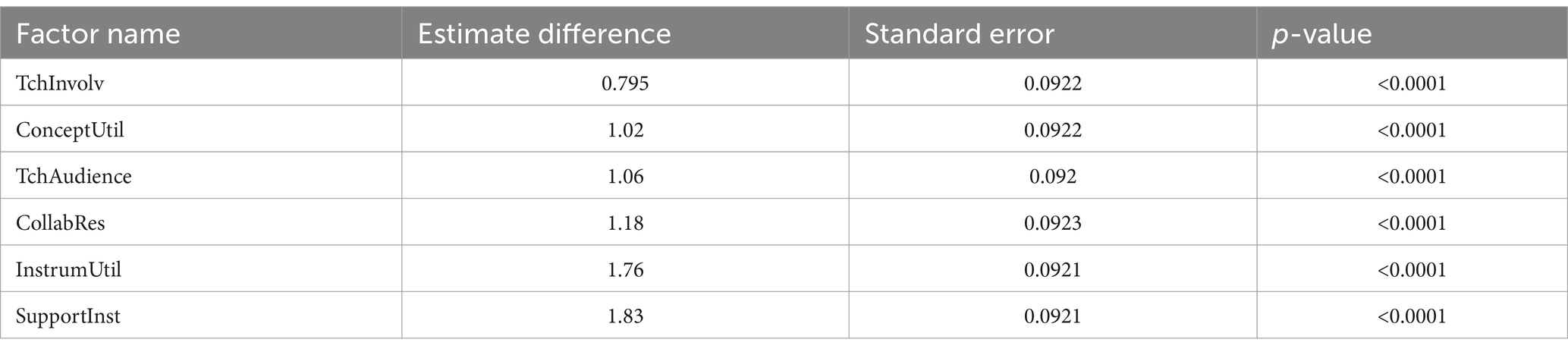

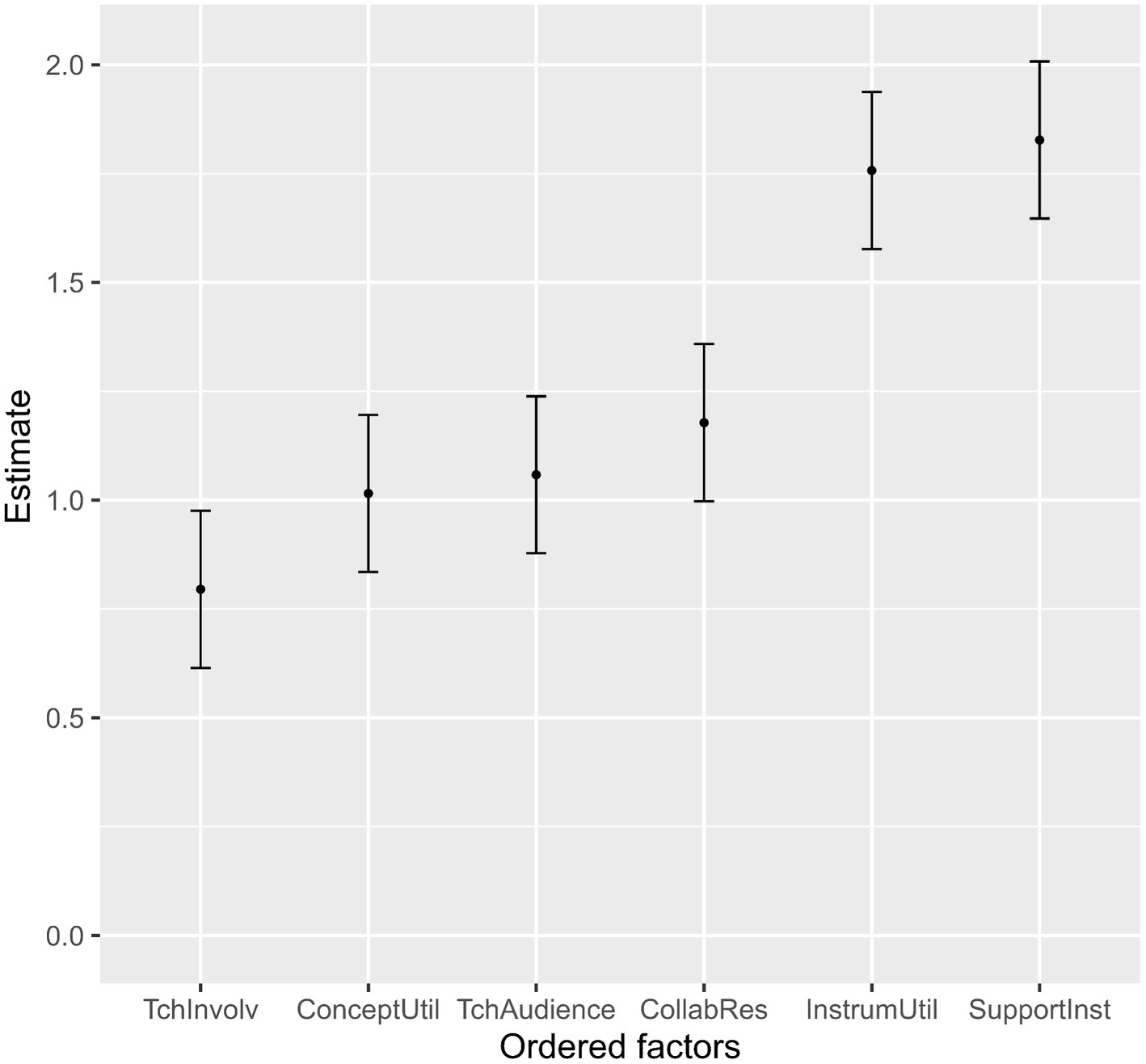

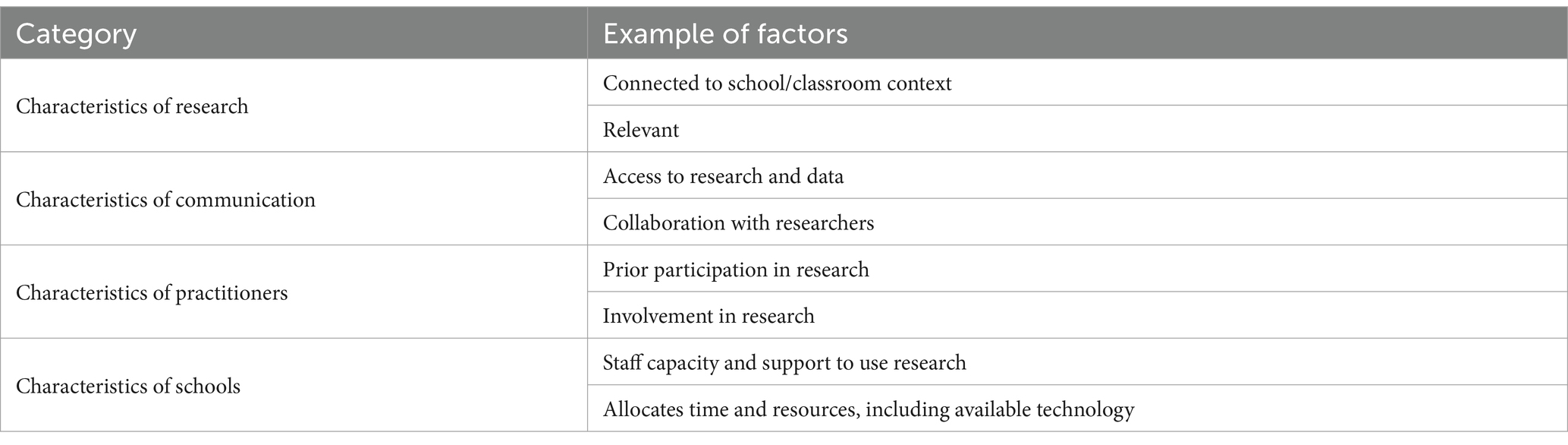

As Table 7 shows, pairwise comparisons of the beta coefficients for each factor revealed that each factor was judged to have an overall positive effect on teachers’ use of research, although to varying degrees.

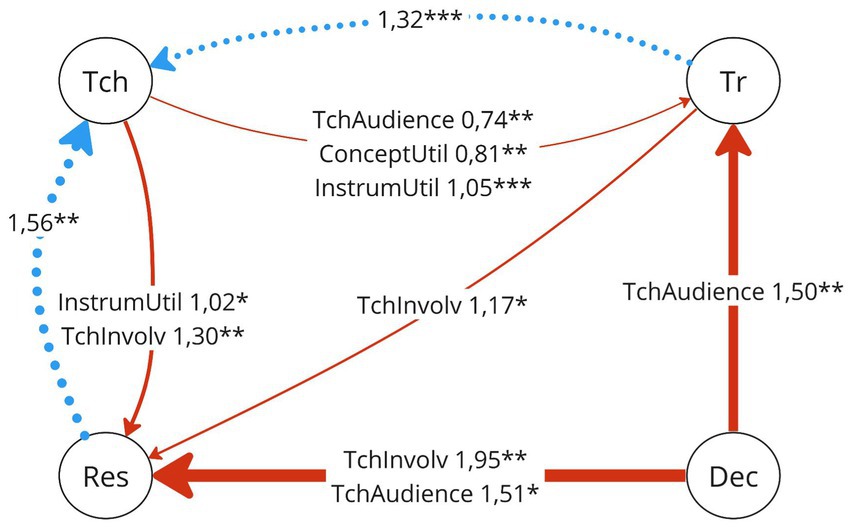

Figure 1 shows a suggested hierarchy in terms of how much each of the factors affects respondents’ judgments about teacher use of research.

Our pairwise comparisons (Table 7) support a clear difference between InstrumUtil and SupportInst on the one hand and the other factors on the other (which is consistent with our validation of H1). However, there is no strong significant difference among the lower rated group of four factors. The lowest rated factor, TchInvolv, does not show a statistically significant difference with the second lowest rated factor, ConceptUtil, but it does show a statistically significant difference with the third lowest rated factor, TchAudience. However, using the model including ConceptUtil:InstrumUtil, the increased weight of ConceptUtil causes it to become significantly different from the weight of TchInvolv. This suggests that TchInvolv may weigh significantly less than other factors according to our respondents (Table 8).

We also looked into the interaction effect between ConceptUtil (CU) and InstrumUtil (IU) (Table 9).

3.6.2 Role-factor interactions

Each arrow represents a difference in judgment between two roles, with the role at the origin of the arrow rating higher on average than the role at the destination of the arrow. The dotted blue arrows (Tr → Tch and Res → Tch) represent the mean difference in the ratings of the vignettes, i.e., on average teachers tend to rate the vignettes significantly lower than trainers or researchers – in other words, they think it less likely that the fictional teacher shown would use the research. The full red arrows represent the difference in judgment for the named factors only. The size of the arrows reflects the relative weighting of the roles, while statistical significance is indicated by the following significance codes: “***” p < 0.001, “**” p < 0.01, “*” p < 0.05 (see Figure 2).

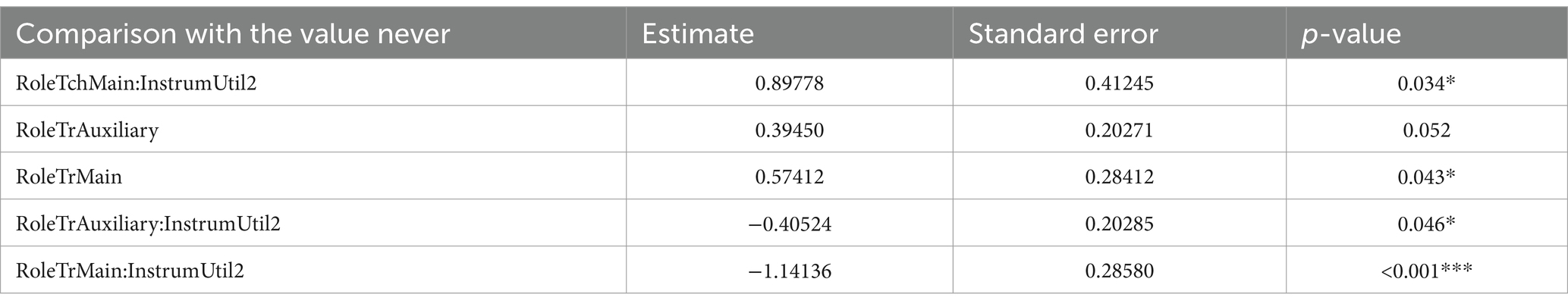

3.6.3 Moderator effects of the auxiliary roles

Using “never” as a reference in both cases, the two models with RoleTch and RoleTr showed statistically significant differences in some interaction terms. The more parsimonious models m8a and m8b were derived from m6a and m6b, respectively. Table 10 summarizes the statistically significant results.

3.6.4 Years of experience in education

Starting with a model with full interaction effects between yexp and the factors, we fitted our model by successive likelihood ratio test comparisons using the anova command and found no effect of yexp on participants’ judgements. This suggests that participants’ experience in education does not influence their perceptions of what influences teachers’ use of research.
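The decision rule behind one step of such a model simplification can be sketched as follows. This is an illustration in Python, not the R workflow used in the study: it covers only the case where the two nested models differ by a single parameter, and the log-likelihood values passed to it below are hypothetical. The 0.01 threshold is the one stated in the methods.

```python
import math

ALPHA = 0.01  # significance threshold for model comparison stated in the methods

def lrt_p_value_df1(loglik_full, loglik_reduced):
    """p-value of a likelihood ratio test for models differing by ONE parameter."""
    stat = max(0.0, 2.0 * (loglik_full - loglik_reduced))
    # Survival function of a chi-square distribution with 1 degree of freedom.
    return math.erfc(math.sqrt(stat / 2.0))

def keep_term(loglik_full, loglik_reduced, alpha=ALPHA):
    """Keep the tested term only if dropping it significantly worsens the fit."""
    return lrt_p_value_df1(loglik_full, loglik_reduced) < alpha

# Hypothetical log-likelihoods, for illustration only.
print(keep_term(-100.0, -101.92))  # small loss of fit: term is dropped
print(keep_term(-100.0, -105.0))   # large loss of fit: term is kept
```

Comparisons involving more than one parameter at a time need the chi-square distribution with the corresponding degrees of freedom, which this sketch does not implement.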

4 Discussion

4.1 Interpretation of findings

We conducted a factorial survey experiment to assess the relative importance given by educational stakeholders to different factors influencing teachers’ use of research. We involved teachers, decision-makers and researchers in the development of our survey and ended up focusing on 6 factors that educational researchers, trainers or decision-makers can act on. We therefore excluded teacher-related factors. The first achievement of this study is to define and operationalize the factors used, building on the work of Dagenais et al. (2012). Based on the responses of 340 educational stakeholders in France, our main study shows that all the factors included in the study play a significant role in teachers’ use of research, according to the participants. This is in line with previous studies that have listed similar factors as influencing teachers’ use of research (e.g., Dagenais et al., 2012). In addition, our study contributed to a better understanding of the relative importance of these factors. In particular, institutional support for the use of research (Borg, 2010; Anwaruddin, 2016) and elements that facilitate the instrumental use of research (Oancea and Pring, 2008), on which decision-makers and researchers, respectively, may act, are considered most important. Our hypothesis testing did not allow us to identify other patterns. We will now discuss the interpretation of the exploratory analyses and suggest practical implications for both research and practice.

4.1.1 Factors interactions

The finding that ConceptUtil and InstrumUtil interact significantly is interesting and can be interpreted in three ways.

Firstly, the way the vignettes are constructed may have caused this difference, since both factors are presented together in the same sentence: “[The research] provides elements that (do not) facilitate reflection on this topic and it (does not) provide elements that facilitate a concrete change of educational practices in relation to this topic.” A clear separation of the two may qualify this interaction effect.

Secondly, participants may judge that both conceptual and instrumental use of research are important, but that what matters most is that at least one of these two possible uses is facilitated by the piece of research. This would explain the lower benefit of having both factors together in their positive value, compared to either of them in isolation.

Thirdly, this interaction may be interpreted as a limitation of the Weiss and Bucuvalas (1980) model, as one could argue that the conceptual and instrumental uses of research are intertwined and strongly linked. For example, it could be argued that some kind of change in reflection (conceptual use) is necessary to lead to a change in practice (instrumental use), because if there is no reason to change, instrumental use would not occur.

Overall, this interaction does not substantially change the other findings of the current study.

4.1.2 Factors’ weight comparison

The superior ratings of InstrumUtil and SupportInst should encourage future research to empirically test the extent to which they actually contribute to teachers’ use of research. Due to methodological constraints, our study maintained a broad definition of instrumental utility and institutional support. We therefore believe that future research should explore which elements of these factors are the most cost-effective and scalable.

We also believe that while there is no clear hierarchy between the other factors, larger studies could help to prioritize among them. In the meantime, our study supports the idea of trying to address all of these factors simultaneously whenever possible.

4.1.3 Role-factor interactions

The most notable differences that might be worth exploring in further studies are first between teachers and trainers, and then between teachers and researchers. In particular, with regard to the InstrumUtil factor, which is rated higher by teachers than by trainers and researchers, it could be debated to what extent the elements that facilitate instrumental use of research by teachers actually work.

Many researchers have criticized the overemphasis on the instrumental use of research (e.g., Farrell and Coburn, 2016; Cain et al., 2019), instead emphasizing the importance of the conceptual use of research. As mentioned earlier, it is unlikely that there could be an instrumental use of research – that is, a change in practice – if there was no conceptual use at all, unless teachers were blindly applying research-based practices. Teachers are professionals (Bourdoncle, 1994; Dupriez and Cattonar, 2018) and their relationship with research is more complex, whether they use it (Cain et al., 2019) or not (Cain, 2016).

Similarly to Rycroft-Smith (2022), we believe that there is a tension in research knowledge brokering – or facilitating teachers’ use of research – which she identifies as “the potential conflict between short- and long-term goals, which may also be conceptualized as the tension between impact and teacher autonomy” (Rycroft-Smith, 2022, p. 35). The idea of focusing on the instrumental use of research can be seen as a short-term perspective, and the conceptual use a long-term one. Instrumental use responds to teachers’ need for something that can be implemented immediately in the classroom, while conceptual use may slowly influence the way they teach in different dimensions that may not be captured by research on instrumental use and impact. We agree with her statement that “Knowledge brokering is not just about translating findings from research into ‘takeaways’ for practice, and there is a real danger it is seen straightforwardly as such” (Rycroft-Smith, 2022). In their literature review, Heinsch et al. (2016) show that teachers’ use of research is seen as synonymous with evidence-based practice (Biesta, 2010). This is problematic in at least two ways. First, ‘evidence-based’ refers to a limited range of research that is then to be used in an instrumental way. Second, the focus on evidence-based research has been widely criticized, for example by Biesta (2010), who argues that what counts as evidence is always subject to interpretation and that underlying values may conflict with other values but are nonetheless important.

Further research could explore other ways of conceptualizing the different ways in which research can be used, which could then be used to better understand the discrepancy between teachers’ and other educators’ judgments about the instrumental use of research.

The other relevant interaction in our study is between the researchers and the ‘teacher involvement in research’ factor. Although not statistically significant, the only case where respondents on average rated the negative version of a factor higher than the positive situation was researchers rating TchInvolv. This means that some researchers believe that involving teachers in research makes them less likely to use research. Our study shows that all other roles rated involving teachers in doing research as significantly more important in influencing teachers’ use of research.

Such a difference between researchers and others reflects debates among researchers. On the one hand, the review by Borg (2010) cites various arguments against the idea that teacher involvement in research is good. For example, “the [limited] validity of the findings in much of this research” (Borg, 2010, p. 404), “that [teacher research] is of poor quality, methodologically-speaking, is also often underpinned by conventional scientific notions of research (e.g., large-scale, replicable, quantitative)” (Borg, 2010, p. 405) or “that in most teaching contexts teachers receive no compensation for the extra work that engaging in research involves” (Borg, 2010). On the other hand, Gorard and colleagues mention in their review that “Users conducting research themselves is a promising idea that has not really been tested yet” (Gorard et al., 2020, p. 26).

The idea of research produced by teachers has epistemological implications – the kind of knowledge produced with/by teachers may be necessary to solve “wicked problems in education” (Mosher et al., 2014). It also has political implications – those who decide what is “valuable knowledge for teaching” have power (Dupriez and Cattonar, 2018; Rycroft-Smith, 2022). In a sense, this element of TchInvolv calls into question the nature of the teaching profession (Bourdoncle, 1994) and research (Stenhouse, 1981).

Empirical research is needed to understand the settings in which such participation might be valuable for teachers’ use of research, and theoretical research will provide a better conceptualization of what teachers’ use of research entails.

4.1.4 Moderator effect of the auxiliary roles

Although small and of questionable significance, our results indicate an effect of being a trainer on the respondents’ judgements and point to different views on the factor InstrumUtil. In line with the previous results of this paper, we believe that the discrepancy between teachers and trainers regarding the InstrumUtil factor seems important to investigate in order to better understand its cause and practical consequences. Finally, the role of the trainer seems to have a small but possibly interesting effect on the participants’ overall ratings on InstrumUtil. Future research could help to better understand this effect.

4.1.5 Years of experience in education

A surprising finding from our study is that there is no effect of years of experience in teaching on participants’ ratings of vignettes. This is consistent with Graham et al. (2020) who show no effect of years of experience in education on teaching competence. Similarly, years of experience is not a promising way to explain differences in judgments about what influences teachers’ use of research.

4.2 Limitations of the current study

The most important limitation of our study is that it focuses on the perceptions of educational stakeholders rather than the actual use of research by teachers. The fact that stakeholders believe that institutional support is important in facilitating teachers’ use of research does not mean that such support actually facilitates it. As Gorard et al. put it, “asking people what they prefer or what they think works can be so misleading” (Gorard et al., 2020, p. 17).

A second limitation is that, despite our efforts to clarify the definition and operationalization of each of our factors, the factors had to remain somewhat vague and broad in the situations presented to participants in the vignettes. Further research could address this limitation by breaking down institutional support (or other relevant factors) into “sub-factors”. In this example, institutional support could be broken down into the provision of time or money for teachers to use research; having only verbal support from the hierarchy; having dedicated trained professionals within schools to help teachers find and interpret research.

A third limitation concerns our sample, which may not be representative due to the recruitment process. In addition, the respondents of the pilot and main studies differed markedly in terms of role distribution (57% of teachers for the pilot study versus 80% for the main study). Therefore, our study remains largely exploratory, and future studies in different countries should aim to benefit from institutional support to reach educational stakeholders in a more systematic way, or to collect specific demographic data to be able to compare with large-scale studies such as the Teaching and Learning International Survey (TALIS) (Ainley and Carstens, 2018).

A fourth limitation is that we do not address contexts in which teachers may not want or be able to access research. In our vignettes, it is assumed that the fictional teacher portrayed has accessed research on a topic of interest to her. As with teacher characteristics that may influence teachers’ use of research, the issue of teachers’ access to research was not included in this study and may be worth exploring in parallel.

A fifth limitation is that our study took place in France, and results may differ from country to country, as the research culture in education may differ, as may the educational settings. It may be interesting to replicate this study in countries with a different educational culture, and whenever necessary, to adapt our study to include variables specific to the educational context and environment studied.

A sixth limitation is that our study had a surprising, slightly significant vignette effect, which is not expected according to Auspurg and Hinz (2015). Nevertheless, we removed it from our model because its effect was very small and its significance limited. This effect could be due to an imbalance in the number of respondents for each block. If more important effects emerge in further studies, caution should be exercised in interpreting the results.

4.3 Conclusion

Echoing the concerns of authors such as Cain et al. (2019) or Rycroft-Smith (2022), there is a need for a better conceptualization of research use in education. This means, for example, moving beyond Weiss and Bucuvalas' (1980) simple separation of conceptual, instrumental and symbolic research use to include more detailed views of conceptual research use (Farrell and Coburn, 2016; Cain et al., 2019). Promising ideas include creating links with other scientific literatures such as information literacy and ergonomics, or theory of acceptance (Khechine et al., 2016), considering teachers as information seekers (e.g., Boubée and Tricot, 2010) or as users (e.g., Marion, 2018). Furthermore, clarifying the underlying epistemologies and possible consequences of the choices made when conceptualizing the use of research is an important commitment that researchers in our field should make.

Our study makes progress on the need to prioritize the means of supporting teachers’ use of research, and to map the different perspectives of educational stakeholders. We hope to leave promising avenues of research for both theoretical and empirical work on teachers’ use of research and knowledge brokering.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://osf.io/avdhu.

Ethics statement

The studies involving humans were approved by Inserm’s Institutional Review Board (IRB00003888). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

NJ: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Validation, Visualization, Writing – original draft, Writing – review & editing. JJ: Data curation, Formal analysis, Methodology, Supervision, Writing – review & editing. PD: Conceptualization, Methodology, Project administration, Supervision, Writing – review & editing. IA: Conceptualization, Data curation, Formal analysis, Methodology, Project administration, Supervision, Visualization, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was partly funded from a doctoral grant attributed to the main author (Université Paris Cité, ED 474 FIRE) and funding from Bettencourt Schueller Foundation as part of a larger research project entitled Teachers as Researchers, led by IA.

Acknowledgments

The authors would like to thank Murillo Pagnotta for his advice at an early stage of this project, Liliane Portelance and Kristine Balslev for their helpful comments, Thomas Canva for his time and help with some parts of the code in R. The authors are grateful toward Éric, Dominique, Audrey, Nicolas, and Florence who participated in the reduction and definition of the factors used in this study, and the authors appreciate the time taken by all the respondents of the survey. Huge thanks to Salomé Cojean for her useful comments on this manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aguinis, H., and Bradley, K. J. (2014). Best practice recommendations for designing and implementing experimental vignette methodology studies. Organ. Res. Methods 17, 351–371. doi: 10.1177/1094428114547952

Ainley, J., and Carstens, R. (2018). Teaching and learning international survey (TALIS) 2018 conceptual framework. OECD Education Working Papers, No. 187. Paris: OECD Publishing.

Atzmüller, C., and Steiner, P. M. (2010). Experimental vignette studies in survey research. Methodology 6, 128–138. doi: 10.1027/1614-2241/a000014

Basckin, C., Strnadová, I., and Cumming, T. M. (2021). Teacher beliefs about evidence-based practice: a systematic review. Int. J. Educ. Res. 106:101727. doi: 10.1016/j.ijer.2020.101727

Bates, D., Mächler, M., Bolker, B., and Walker, S. (2015). Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67, 1–48. doi: 10.18637/jss.v067.i01

Biesta, G. (2010). Why ‘what works’ still Won’t work: from evidence-based education to value-based education. Stud. Philos. Educ. 29, 491–503. doi: 10.1007/s11217-010-9191-x

Borg, S. (2010). Language teacher research engagement. Lang. Teach. 43, 391–429. doi: 10.1017/S0261444810000170

Bourdoncle, R. (1994). Savoir professionnel et formation des enseignants. Une typologie sociologique. Spirale. Revue de recherches en éducation 13, 77–95. doi: 10.3406/spira.1994.1894

Bretz, F., Hothorn, T., and Westfall, P. (2010). Multiple comparisons using R. Boca Raton: Chapman and Hall/CRC.

Bryk, A. S. (2015). Accelerating how we learn to improve AERA distinguished lecture. Educ. Res. 44, 467–477. doi: 10.3102/0013189x15621543

Cain, T. (2016). Denial, opposition, rejection or dissent: why do teachers contest research evidence? Res. Pap. Educ. 32, 611–625. doi: 10.1080/02671522.2016.1225807

Cain, T., Brindley, S., Brown, C., Jones, G., and Riga, F. (2019). Bounded decision-making, teachers’ reflection and organisational learning: how research can inform teachers and teaching. Br. Educ. Res. J. 45, 1072–1087. doi: 10.1002/berj.3551

Carnine, D. (1997). Bridging the research-to-practice gap. Except. Child. 63, 513–521. doi: 10.1177/001440299706300406

Dachet, D., and Baye, A. (2020). Evidence-based education: the (not so simple) case of French-speaking Belgium. ECNU Rev. Educ. 4, 164–189. doi: 10.1177/2096531120928086

Dagenais, C., Lysenko, L., Abrami, P. C., Bernard, R. M., Ramde, J., and Janosz, M. (2012). Use of research-based information by school practitioners and determinants of use: a review of empirical research. Evid. Pol. J. Res. Debate Pract. 8, 285–309. doi: 10.1332/174426412X654031

Doucet, F. (2019). Centering the margins: (re)defining useful research evidence through critical perspectives. New York: William T. Grant Foundation.

Drill, K., Miller, S., and Behrstock-Sherratt, E. (2013). Teachers’ perspectives on educational research. Brock Educ. J. 23:1. doi: 10.26522/brocked.v23i1.350

Dupriez, V., and Cattonar, B. (2018). Between evidence-based education and professional judgment, what future for teachers and their knowledge? In R. Normand, M. Liu, L. M. Carvalho, D. A. Oliveira, and L. LeVasseur (Eds.) Education policies and the restructuring of the educational profession: global and comparative perspectives. Singapore: Springer.

Education Endowment Foundation (2019). The literacy Octopus: communicating and engaging with research: projects. Education Endowment Foundation. Available at: https://educationendowmentfoundation.org.uk/projects-and-evaluation/projects/the-literacy-octopus-communicating-and-engaging-with-research/ (Accessed September 8, 2023).

Efron, B. (1979). Bootstrap methods: another look at the jackknife. Ann. Stat. 7, 1–26. doi: 10.1214/aos/1176344552

Farrell, C., and Coburn, C. (2016). What is the conceptual use of research, and why is it important? New York: William T. Grant Foundation.

Finnigan, K. S. (2021). The current Knowledge Base on the use of research evidence in education policy and practice: a synthesis and recommendations for future directions.

Geven, S., Wiborg, Ø. N., Fish, R. E., and van de Werfhorst, H. G. (2021). How teachers form educational expectations for students: a comparative factorial survey experiment in three institutional contexts. Soc. Sci. Res. 100:102599. doi: 10.1016/j.ssresearch.2021.102599

Gitomer, D., and Crouse, K. (2019). Studying the use of research evidence: a review of methods. New York: William T. Grant Foundation.

Gorard, S., See, B. H., and Siddiqui, N. (2020). What is the evidence on the best way to get evidence into use in education? Rev. Educ. 8, 570–610. doi: 10.1002/rev3.3200

Graham, L. J., White, S. L. J., Cologon, K., and Pianta, R. C. (2020). Do teachers’ years of experience make a difference in the quality of teaching? Teach. Teach. Educ. 96:103190. doi: 10.1016/j.tate.2020.103190

Green, P., and MacLeod, C. J. (2016). SIMR: an R package for power analysis of generalized linear mixed models by simulation. Methods Ecol. Evol. 7, 493–498. doi: 10.1111/2041-210X.12504

Gutfleisch, T. R. (2021). A study of hiring discrimination using factorial survey experiments: theoretical and methodological insights. Luxembourg: University of Luxembourg.

Heinsch, M., Gray, M., and Sharland, E. (2016). Re-conceptualising the link between research and practice in social work: a literature review on knowledge utilisation. Int. J. Soc. Welf. 25, 98–104. doi: 10.1111/ijsw.12164

Hox, J. J., Kreft, I. G. G., and Hermkens, P. L. J. (1991). The analysis of factorial surveys. Sociol. Methods Res. 19, 493–510. doi: 10.1177/0049124191019004003

Joram, E., Gabriele, A. J., and Walton, K. (2020). What influences teachers’ “buy-in” of research? Teachers’ beliefs about the applicability of educational research to their practice. Teach. Teach. Educ. 88:102980. doi: 10.1016/j.tate.2019.102980

Joyce, K. E., and Cartwright, N. (2020). Bridging the gap between research and practice: predicting what will work locally. Am. Educ. Res. J. 57, 1045–1082. doi: 10.3102/0002831219866687

Khechine, H., Lakhal, S., and Ndjambou, P. (2016). A meta-analysis of the UTAUT model: eleven years later. Canadian Journal of Administrative Sciences/Revue Canadienne des Sciences de l’Administration 33, 138–152. doi: 10.1002/cjas.1381

Lima, L., and Tual, M. (2022). De l’étude randomisée à la classe: Est-il suffisant d’avoir des données probantes sur l’efficacité d’un dispositif éducatif pour qu’il produise des effets positifs en classe? Éducation et didactique 16-1, 153–162. doi: 10.4000/educationdidactique.9899

Marion, C. (2018). Transfert des connaissances issues de la recherche (TCIR) en éducation: Proposition d’un modèle ancré dans une prise en compte des personnes que sont les utilisateurs.

Morgan-Wall, T., and Khoury, G. (2021). Optimal design generation and power evaluation in R: the skpr package. J. Stat. Softw. 99, 1–36. doi: 10.18637/jss.v099.i01

Mosher, J., Anucha, U., Appiah, H., and Levesque, S. (2014). From research to action: four theories and their implications for knowledge mobilization. Scholarly and Research Communication 5, 1–17. doi: 10.22230/src.2014v5n3a161

Oancea, A., and Pring, R. (2008). The importance of being thorough: on systematic accumulations of ‘what works’ in education research. J. Philos. Educ. 42, 15–39. doi: 10.1111/j.1467-9752.2008.00633.x

Penuel, W. R., Briggs, D. C., Davidson, K. L., Herlihy, C., Sherer, D., Hill, H. C., et al. (2016). Findings from a National Study on research use among school and district leaders Technical report no. 1. In National Center for research in policy and practice. National Center for research in policy and practice.

Rycroft-Smith, L. (2022). Knowledge brokering to bridge the research-practice gap in education: where are we now? Rev. Educ. 10:e3341. doi: 10.1002/rev3.3341

Searle, S. R., Speed, F. M., and Milliken, G. A. (1980). Population marginal means in the linear model: an alternative to least squares means. Am. Stat. 34, 216–221. doi: 10.1080/00031305.1980.10483031

Stenhouse, L. (1981). What counts as research? Br. J. Educ. Stud. 29, 103–114. doi: 10.1080/00071005.1981.9973589

Tatto, M. (2020). What do we mean when we speak of research evidence in education? In L. Beckett (Ed.), Research-informed teacher learning. Critical perspectives on theory, research and practice. Routledge.

Wallander, L. (2009). 25 years of factorial surveys in sociology: a review. Soc. Sci. Res. 38, 505–520. doi: 10.1016/j.ssresearch.2009.03.004

Weiss, C., and Bucuvalas, M. (1980). Social science research and decision-making. 1st. Columbia: Columbia University Press.

Keywords: use of research, evidence-based education, factorial survey, teachers, research-practice partnerships

Citation: Jeune N, Juhel J, Dessus P and Atal I (2024) Six factors facilitating teachers’ use of research. An experimental factorial survey of educational stakeholders perspectives. Front. Educ. 9:1368565. doi: 10.3389/feduc.2024.1368565

Edited by:

Raona Williams, Ministry of Education (United Arab Emirates), United Arab Emirates

Reviewed by:

Lina Kaminskienė, Vytautas Magnus University, Lithuania

Clara Gutierrez, National University of Cordoba, Argentina

Copyright © 2024 Jeune, Juhel, Dessus and Atal. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nathanael Jeune, nathanael.jeune@learningplanetinstitute.org

†ORCID: Nathanael Jeune, orcid.org/0000-0003-0835-5845

Philippe Dessus, orcid.org/0000-0001-6076-5150

Ignacio Atal, orcid.org/0000-0002-7323-6511
