
ORIGINAL RESEARCH article

Front. Educ., 27 June 2024

Sec. Digital Learning Innovations

Volume 9 - 2024 | https://doi.org/10.3389/feduc.2024.1339142

This article is part of the Research Topic Insights in Digital Learning Innovations: 2022/2023

Online education has become an integral part of everyday life. As one form of online education, traditional Massive Open Online Courses (MOOCs) mostly rely on video-based learning materials. To enhance accessibility and add variety to the learning content, we studied how podcasts can be integrated into online courses. In three studies, we investigated the acceptance and impact of podcasts made available to learners on the online education platform openHPI, applying different methodologies such as a Posttest-Only Control Group study and a Static-Group Comparison. In the first two studies, we found that podcasts can serve as a reasonable addition to MOOCs, supporting learning just as well as videos, and we investigated the optimal podcast design for our learners. In the third study, conducted alongside one of our six-week cybersecurity courses with more than 1,500 learners, we found that consuming an additional podcast can increase learning outcomes by up to 7.9%. In this manuscript, we discuss the applied methodologies and explain the reasoning behind design decisions, e.g., concerning podcast structure and presentation, as inspiration for other educators.

Amplified by the COVID-19 pandemic, online education has boomed over the past decade (Wang and Song, 2022). Online programs and opportunities have become increasingly prominent in workplaces as well as in educational settings. The shift toward online education has improved the inclusion of various groups and enabled their participation, including people who live remotely and cannot afford long commutes, and students abroad who formerly could not afford the travel costs.

In the majority of online education programs and scenarios, such as Massive Open Online Courses (MOOCs) (Stöhr et al., 2019; Deng and Gao, 2023), online schools (Nigh et al., 2015; Satparam and Apps, 2022), and university programs (Bruff et al., 2013; Muñoz-Merino et al., 2017; Voudoukis and Pagiatakis, 2022), video-based education is seen and used as a quasi-standard. From recorded lectures (Kandzia et al., 2013; Santos-Espino et al., 2016) to Talking-Head-with-Slides videos (Kizilcec et al., 2014; Díaz et al., 2015) and YouTube-style Explainer videos (Beautemps and Bresges, 2021; Steinbeck et al., 2022), teachers employ various forms of video-based education.

To increase the accessibility of online education, for example, during a daily commute with unreliable internet access or while driving a car, we investigated and developed an auxiliary podcast for our video-based education platform, openHPI. Audio-based education further improves accessibility for visually impaired learners and lowers the barrier for educators who may lack the technical skills or equipment to record high-quality video content.

This manuscript presents results from a year of podcast experimentation alongside three major online courses on the MOOC platform openHPI. We summarize findings from previous publications on two of the three studies and extend them by further analyzing and reflecting on all three studies. Our research thereby addresses the question:

How do audio-based podcasts contribute to learner experience and success in video-based MOOCs?

Our studies make the following contributions:

Contribution 1: In a study with more than 900 learners during an online course offered in 2022, we assessed the applicability of audio-based education (Köhler et al., 2022). We identified no statistically significant differences in learning success between video- and audio-based education. Hence, we conclude that audio-based education could prove to be a suitable addition to video-based online courses.1

Contribution 2: A follow-up study and survey in another 2022 online course with more than 1,400 participants and 250 survey respondents revealed learners' expectations for podcasts offered alongside video-based MOOCs. From that survey, we derived, e.g., an ideal podcast length of 35–45 min and our participants' preferred way of consuming podcasts.

Having established the applicability, context, and participants' wishes regarding podcast design, we performed an in-depth study with weekly podcasts provided alongside a 6-week MOOC on cybersecurity.

Contribution 3: Using a Static-Group Comparison, we identified a statistically significant increase in the learning success of participants who exposed themselves to the podcasts (Köhler et al., 2023). We contextualize the findings through an in-depth discussion of alternative explanations and threats to the validity of our study.2

In this manuscript, we extend our previous publications in particular by providing insights into participants' expectations toward our podcasts (Contribution 2) and by discussing the results of Contribution 3, including threats to validity and potential alternative explanations. In the remainder of this work, we describe the methodologies employed in the different studies (Section 3). In Section 4, we briefly present results already reported in the previous publications (Köhler et al., 2022, 2023). Section 5 then discusses Contribution 3 in depth and provides further references for contextualizing the observed results.

Massive Open Online Courses (MOOCs) are online educational programs provided free of charge to anybody interested. MOOCs often have a fixed start and end date with a given duration, in which learners have to work through the course content to receive a certificate of completion. Educators design MOOCs with asynchronous teaching elements, such as short pre-recorded videos, so that they are inherently scalable and allow thousands of learners to participate simultaneously. Our research on developing a podcast alongside a MOOC contributes to the field of audio-based education, which can enable a more accessible learning experience. Moreover, mixed-method approaches using podcasts increase the variety of content in an online course, leading to higher learner engagement and a better perception of the course (Guàrdia et al., 2013; Celaya et al., 2019).

MOOCs provide open and scalable education over the Internet and often rely on pre-recorded videos for primary educational content to achieve these goals. One of the main target groups of MOOCs is lifelong learners who pursue further education alongside their jobs. Such education can enable a career change or a broader understanding of a specific domain (Moore and Blackmon, 2022; Sallam et al., 2022).

Podcasts as a medium for information exchange and learning have risen in popularity in recent years (Koehler et al., 2021). Like video-based MOOCs, they provide free knowledge and are easy for listeners to consume. To the best of our knowledge, explicit comparisons between the two education formats are scarce in previous publications, and some researchers have explicitly excluded the comparison from their work (Drew, 2017). Only a few studies have concretely compared audio-based to video-based education, and this research is often limited by small study groups (N < 100) (Daniel and Woody, 2010; Shqaidef et al., 2021).

In other cases, podcasts are used to help students with disabilities keep up with their peers and the learning content (Kharade and Peese, 2012). Gunderson and Cumming (2023) performed a literature review on podcasts in higher-education teaching and learning, examining 17 previous studies. Set in university higher education, these studies are likewise limited in participant size, with fewer than 150 students or learners each. The authors report that most studies link podcasts to favorable student perception and that podcasts increased or supported students' knowledge of the respective subject.

Our work adds to research on podcasts as an educational medium (Salomon and Clark, 1977) by evaluating whether current video-based MOOCs can substantially benefit from podcasts. Learners can already choose to only listen to videos (thereby treating them as audio content), but they would then miss the video's visual component, including any slides shown. In contrast, content created specifically for a podcast following an audio-first principle is designed to be listened to without relying on visual input. Research in this field could thereby open up possibilities for integrating education into a busy day, such as on a commute or during household chores, and could even enhance the MOOC experience for visually impaired learners (Kharade and Peese, 2012; Amponsah and Bekele, 2022).

Various researchers investigate methods to help learners succeed in online courses. A particularly well-studied topic is video-based education, in which researchers identify best practices for increasing learning success and content retention and for maintaining students' interest (Prensky, 2006; Xia et al., 2022). Similarly, many researchers study gamification and game-based learning, as both can be implemented in various forms to improve learning outcomes for all age groups (Strmečki et al., 2015; Orhan Göksün and Gürsoy, 2019; Hadi Mogavi et al., 2022). Finally, recent years have seen increased research on Self-Regulated Learning (SRL) methods, which aim to help learners stay focused and make the most of their invested time (Zimmerman and Schunk, 2011; Broadbent and Poon, 2015; Kizilcec et al., 2017; Cohen et al., 2022).

Most previous studies on podcasts as an educational medium have reported podcasts to impact learner perception positively (Stoten, 2007; Kay, 2012; Goldman, 2018; Hense and Bernd, 2021; Gunderson and Cumming, 2023). Podcasts as a standalone medium are widely accepted due to their ease of use and flexibility. Our study investigates whether podcasts could be a suitable addition to video-based online courses to help with problems such as video fatigue and limited interactivity in the course, thereby opening up new possibilities for learners to consume the learning content without being tied to a screen.

We performed all three studies presented in this manuscript in 2022 alongside regular MOOCs on internet technologies and cybersecurity topics offered on the online education platform openHPI (Meinel et al., 2022). The platform supports round-robin randomization of learners into different groups, enabling different experiment setups (Hagedorn et al., 2023). After a statement on human subject research, the following paragraphs introduce the methodologies used in the three studies. We motivate each study methodology based on relevant related work and on what we learned from the respective previous studies.
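The round-robin assignment mentioned above can be sketched as follows. This is a minimal, hypothetical illustration and not the openHPI implementation described by Hagedorn et al. (2023); the class name `RoundRobinAssigner`, its method, and the group labels are assumptions. New learners are placed into the groups in rotation as they arrive, and each learner's group is remembered so that repeated experiments see a stable assignment.

```python
from itertools import cycle


class RoundRobinAssigner:
    """Hypothetical sketch: rotate new learners through groups, keep assignments stable."""

    def __init__(self, groups):
        self._rotation = cycle(groups)  # endless group_1, group_2, group_3, group_1, ...
        self._assignments = {}          # learner id -> assigned group

    def group_for(self, learner_id):
        # First visit: take the next group in the rotation.
        # Later visits: return the group assigned on the first visit.
        if learner_id not in self._assignments:
            self._assignments[learner_id] = next(self._rotation)
        return self._assignments[learner_id]


assigner = RoundRobinAssigner(["group_1", "group_2", "group_3"])
print([assigner.group_for(u) for u in ["u1", "u2", "u3", "u4", "u2"]])
# ['group_1', 'group_2', 'group_3', 'group_1', 'group_2']
```

Round-robin assignment keeps group sizes balanced by construction; it acts like randomization only insofar as the order in which learners arrive is unrelated to their characteristics.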

Throughout the three studies, we used podcasts to provide secondary content, i.e., content that expands on the primary course content and topics offered in traditional lecture videos presented by the course's main lecturer. Examples of secondary content include further perspectives, ideas, and considerations on a topic, deep dives into the technological background, or assessments of related studies and works. Our courses already contained optional Deep Dive learning elements in various presentation formats that present secondary knowledge. One example is an additional Deep Dive video in which a researcher explains their current research in more depth; learners did not need to watch it to complete the course. For the podcast studies, we extended the variety of educational formats used for Deep Dives with audio-based ones.

For each employed study methodology, we provide a semi-formal representation alongside the detailed description below. These representations follow notations used by fellow researchers such as Campbell and Stanley (1966) and Ross and Morrison (2004). In them, X denotes the treatment under study (in our case, the podcasts as optional Deep Dive elements), O denotes an assessment or test, and R denotes randomization between groups.

Within our studies, we investigate the behavior of human subjects who receive or do not receive a specific treatment. All studies were carried out on the online education and research platform openHPI. One of the core goals of the team of researchers behind openHPI is developing and assessing new methods and types of online education. Users agree that pseudonymized data can be evaluated to enhance the services and drive research. This data includes, e.g., users' course performance. Additional data, such as demographic information, can be provided optionally by a user in voluntary surveys, which are not linked to or required for course participation or success. All studies presented within this manuscript are based on additional, optional course content. Participation in the studies and working on the respective course content was not required to complete the courses offered on the platform, and users could opt out of the study at any time.

To the best of our knowledge, the impact of audio-based compared to video-based education is understudied. Some authors have explicitly excluded the comparison from their work (Drew, 2017), or their studies had few participants (N < 100) (Daniel and Woody, 2010; Shqaidef et al., 2021). As an initial foundation for potential follow-up studies, we therefore examined whether podcasts are suitable for providing educational content in the context of MOOCs. To assess the differences between audio- and video-based content transmission, we performed a Posttest-Only Control Group study (Campbell and Stanley, 1966). We randomized (R) learners into three groups, exposed them to three different treatments (X1, X2, X3), and observed the learning outcome through an identical posttest (O). The experiment was repeated three times with three different Deep Dives throughout the course, and each user's treatment-group assignment remained the same across the three experiments.
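In the notation introduced above (R, X, O), the design of the first study can be summarized as follows. This is a reconstruction from the prose rather than the original figure: each row is one randomized group receiving one of the three treatments, and the whole design was repeated for each of the three Deep Dives.

```latex
% Posttest-Only Control Group design with three treatment groups
% (reconstructed from the text; Campbell and Stanley, 1966).
\begin{array}{ccc}
R & X_1 & O \\
R & X_2 & O \\
R & X_3 & O
\end{array}
```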

Traditionally, our MOOCs feature a presenter and slides to accompany the content presentation. To build an initial understanding of the applicability of audio-based education, we provided two podcast variants for the Deep Dive elements: one with a single presenter (Traditional Podcast) and one recording an interview between two presenters (Interview Podcast). To compare the Interview Podcast specifically against video-based education, we provided Interview Videos to the third group of learners. After completing each learning item, learners were asked to take a content quiz and provide further feedback in a survey.

We first recorded the Interview Video to ensure content consistency across the treatments and then extracted its audio track to serve as our Interview Podcast. Our presenters did not use additional visual aids, such as presentation slides, so that no supporting visual presentation would be missing in the audio-only variant. Afterwards, we wrote a script for a single presenter based on the recorded video and ensured that the Traditional Podcast, which we recorded subsequently, covered the same content.

In the initial study, we learned that podcasts serve as well as videos for providing learning content (Contribution 1, Section 4.1). Our second study therefore aimed to identify how learners on the MOOC platform openHPI prefer to consume podcasts. For this, we performed a One-Shot Case Study (Campbell and Stanley, 1966).

Of particular interest in this study were participants' answers in a survey following the Deep Dive, in which we asked, e.g., about the ideal podcast length and the kind of content they prefer to listen to. For this study, we offered all learners of our MOOC two learning items for the Deep Dive and let them choose the presentation form freely. On the one hand, we provided an 80-min podcast with three presenters, available either in a web player or for streaming via common platforms such as Spotify™. On the other hand, we provided a video of the same podcast: we placed three cameras in the recording room to film the speakers while they recorded the podcast. Hence, the video contained no additional visual aids, such as presentation slides, and its audio track is identical to the podcast available for streaming. Participants could freely switch back and forth between both forms of presentation.

Building on what the two previous studies revealed about ideal podcasts for learners on the MOOC platform openHPI, we performed a third study analyzing the impact of podcasts in a 6-week course. Based on insights from the previous study (Contribution 2, Section 4.2), we aimed for a podcast length of ~40–45 min. Previous research has shown that this is far too long for single videos in a MOOC (Guo et al., 2014; Renz et al., 2015), so we decided not to offer a video-based alternative to the podcasts. As we wanted to offer the content to all learners, we refrained from group randomization in this study; instead, learners could self-select whether to consume or skip the optional podcasts. We therefore employed a Static-Group Comparison (Campbell and Stanley, 1966) as our study methodology. We discuss the implications of self-selected exposure, such as threats to validity, in Section 5.4.

In each of the 6 weeks of our course (indicated by the annotation [[W1..W6]]), learners self-selected whether they wanted to listen to the podcast (X). We discuss the resulting self-selection bias later in the manuscript. As in the previous studies, each podcast served as a Deep Dive in the course and was followed by an optional survey and knowledge quiz (O_K). Further, each of the 6 weeks concluded with a graded exam (O_GE). After all six course weeks, learner knowledge was additionally assessed in a final examination (O_FE). We used all three knowledge assessments to analyze the impact of the podcasts on secondary knowledge throughout the course. The dashed line (- - -) in the semi-formal representation of our study design indicates that, without randomization, we cannot ensure that both groups are equivalent. Table 1 gives an overview of the course structure on our platform, notably including the optional podcast elements used in this study.
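For reference, the general form of a Static-Group Comparison in Campbell and Stanley's notation is shown below, with the dashed line separating the two non-randomized groups. Mapping it onto the third study is our reconstruction rather than the original figure: the pattern repeats in each week W1..W6, the top row stands for self-selected podcast listeners, the bottom row for non-listeners, and O stands for the assessments described in the paragraph above.

```latex
% Static-Group Comparison (Campbell and Stanley, 1966):
% top row = self-selected podcast listeners, bottom row = non-listeners;
% the dashed row marks that the groups were not randomized.
\begin{array}{cc}
X & O \\
\multicolumn{2}{c}{\mbox{- - - - - -}} \\
  & O
\end{array}
```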

We designed the podcasts for the third study building on the knowledge derived from the previous two studies (see Section 4.2). Additionally, we investigated whether podcasts can increase interaction in the course. We therefore dedicated about 20% of each podcast to picking up hot topics from the course forum and adjusted the content distribution accordingly. Our podcasts were aimed at a length of 35–45 min and were planned to consist of the following parts (Köhler et al., 2023):

20% Discussion Forum review and answers.

10% Review of the current week and overview of topics.

70% Additional Content building on the content from the current week.
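The planned shares above translate into minutes per part as sketched below. The function name and the part labels are illustrative assumptions; the percentages are the ones listed above, kept as integers so the shares can be checked to add up to exactly 100.

```python
# Planned podcast structure from the list above, in percent of episode length.
PLANNED_PARTS = {
    "forum_review": 20,        # Discussion Forum review and answers
    "week_review": 10,         # Review of the current week and overview of topics
    "additional_content": 70,  # Additional Content building on the current week
}


def plan_minutes(episode_minutes, parts=PLANNED_PARTS):
    """Split an episode length (in minutes) into planned minutes per part."""
    if sum(parts.values()) != 100:
        raise ValueError("planned shares must add up to 100 percent")
    return {name: episode_minutes * pct / 100 for name, pct in parts.items()}


for length in (35, 45):  # the targeted 35-45 min range
    print(length, plan_minutes(length))
```

For the 35–45 min target, this yields about 7–9 min of forum discussion, 3.5–4.5 min of weekly review, and 24.5–31.5 min of additional content.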

Massive Open Online Courses on the learning platform openHPI are usually prepared and run by a teaching team of experts on the course topic. A principal lecturer records the presentation videos to ensure consistency across them. For our podcasts, we chose teaching-team members as speakers, which allows more reflection on the course topics because additional perspectives are included. The resulting reactive content within the course can help foster learner reflection and interaction (Lewis, 2020).

In the following sections, we present an analysis of all three studies. The in-depth analysis of the Initial Study was previously published in 2022 (Köhler et al., 2022) and is therefore only briefly summarized here. Similarly, preliminary results of the Third Study were published in 2023 (Köhler et al., 2023). Besides covering all studies, this manuscript therefore focuses on the in-depth analysis and discussion of the extensive Third Study on podcasts.

The initial study's research question covered how audio-based education performs compared to video-based education. Specifically, we investigated learning success and learner perception for these two education methods.3 We performed the study alongside an English-language cybersecurity MOOC in which 2,815 learners actively participated. For the entire course, learners were randomized into one of the three treatment groups we compared. Every learner who progressed far enough in the course could experience all three Deep Dive learning items in their group's format. After each item, they could answer a survey and test their knowledge in an optional quiz. A total of 1,186 learners (42% of all active learners) completed the course. Table 2 presents an overview of participation statistics for the study content in this course.

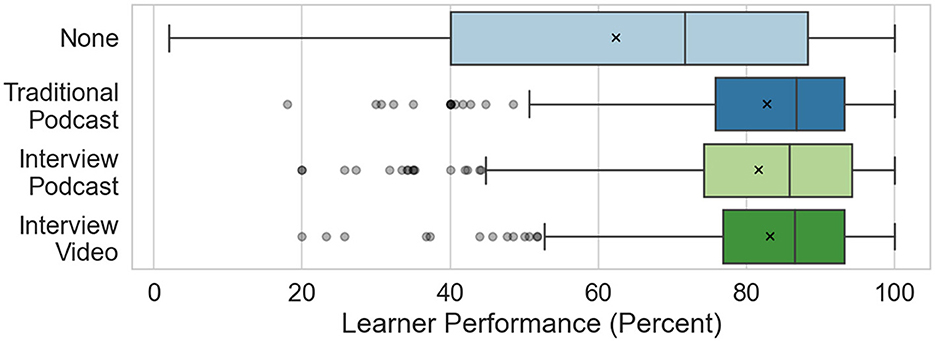

On average, the new learning items were rated at least Good on a school-grade scale (1: Very Good; 6: Insufficient). The Traditional Podcast received the worst average rating (2.18), followed by the Interview Video (2.03), while the Interview Podcast was rated best (1.73). Regarding learning success, Figure 1 gives an overview of learner performance in the self-tests following the respective learning items, partitioned by the type of Deep Dive content learners received. Between the three groups, we observe no significant differences in learning outcomes, interpreted as performance across the entire course (one-way ANOVA, p = 0.68). We therefore conclude that the three studied types of content transmission serve equally well at presenting knowledge to learners.
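For readers less familiar with the test, the sketch below computes the one-way ANOVA F statistic from scores in each group. It only illustrates the statistic; the study's exact inputs and tooling are not described here, and the function name is hypothetical. The p-value is then read from the F distribution with (df_between, df_within) degrees of freedom; with SciPy available, `scipy.stats.f_oneway` returns both the statistic and the p-value.

```python
from statistics import fmean


def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA across the given groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    group_means = [fmean(g) for g in groups]
    grand_mean = fmean([x for g in groups for x in g])
    # Between-group sum of squares: how far group means lie from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy scores for three groups (illustrative only, not study data).
print(one_way_anova_f([1, 2, 3], [2, 3, 4], [3, 4, 5]))  # (3.0, 2, 6)
```

Groups may differ in size; the between-group term weights each group mean by its number of learners.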

Figure 1. Course performance of learners partitioned by the different Deep Dive presentation formats. Black lines mark median values, × the mean. N = 1,308, adapted from Köhler et al. (2022).

We further asked participants whether the content was understandable, using a 5-point Likert scale (Likert, 1932) on which they could express their (dis)agreement with the respective statement. Across all three Deep Dives, at least 89% of learners agreed that the content was easy to understand for all content types. Generally, learners understood content presented by multiple presenters better than that of our Traditional Podcasts with a single presenter.

In our surveys, we also asked whether learners would prefer video- or audio-based content and whether they would like it presented by a single or multiple presenters. For the number of speakers, participants tended to prefer the variant they had experienced during the study. Regarding the availability of video content, participants in all groups slightly preferred having a video available; however, indecisiveness was higher among those who had received the audio-only podcast than among learners with the Interview Video. Across all Deep Dives, users wished for more content in the form they had experienced. However, learners already showed signs of fatigue when multiple podcasts were offered within a single course week (Köhler et al., 2022).

Contribution 1: In this initial study on the use of podcasts in combination with Massive Open Online Courses, we found no significant differences in learning outcome between podcast learners and video-based learners. Learners showed great interest in individual podcasts but appeared to tire once podcasts were used multiple times within a single course week. Hence, how podcasts should be integrated into MOOCs on our platform remains subject to further analysis.

Building on the knowledge obtained in the first study of podcasts on our online education platform, openHPI, we further investigated how learners would like podcasts to be presented, including the ideal length and the topics to be covered. Alongside a 2-week German-language course on Cloud Computing, we provided a ~90-min podcast on secondary content, expanding on the content of the course videos.

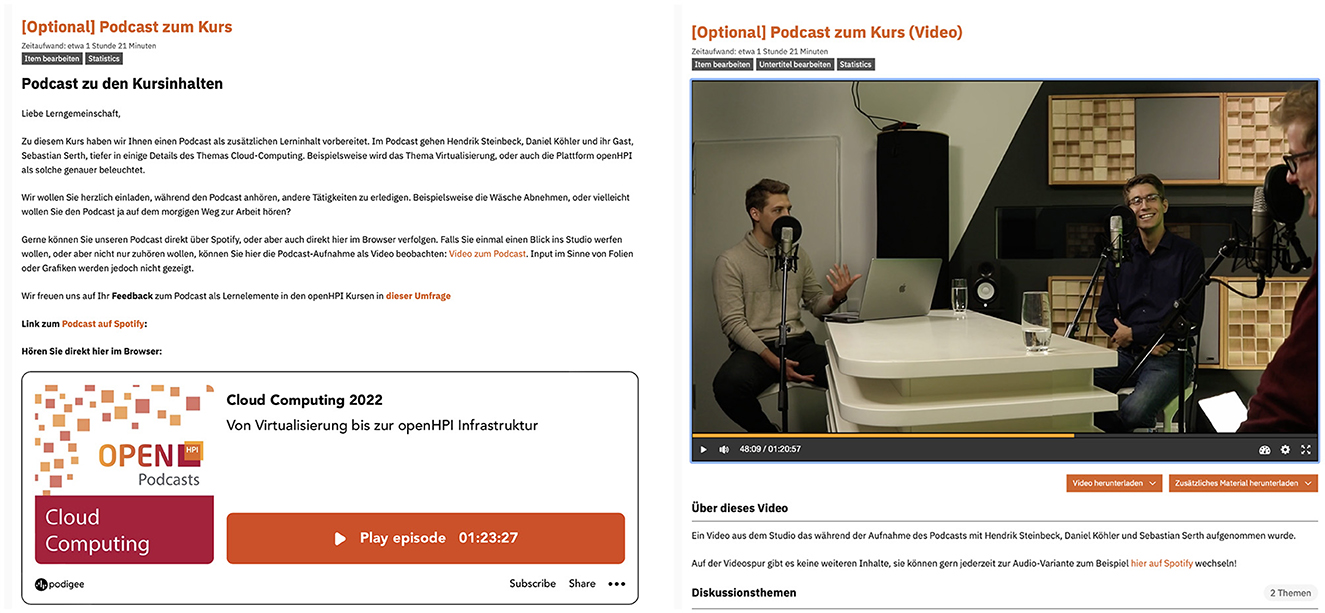

The podcast could be consumed in the course using an embedded audio player, listened to via Spotify™, or watched as a video from the recording studio in the regular video player. Figure 2 shows screenshots of both conditions, between which participants could choose freely. Afterward, learners could test their knowledge in a content quiz and provide additional feedback in a survey. Seven hundred and sixty-one learners reported that they only listened to the audio (regardless of the player), while 445 chose to watch the video recording. One hundred and ninety learners reported using both methods.

Figure 2. Comparison of the two available conditions in our second study. Introductory text with links to Spotify™ and an embedded web-player (left), and a video recording from the podcast recording studio (right).

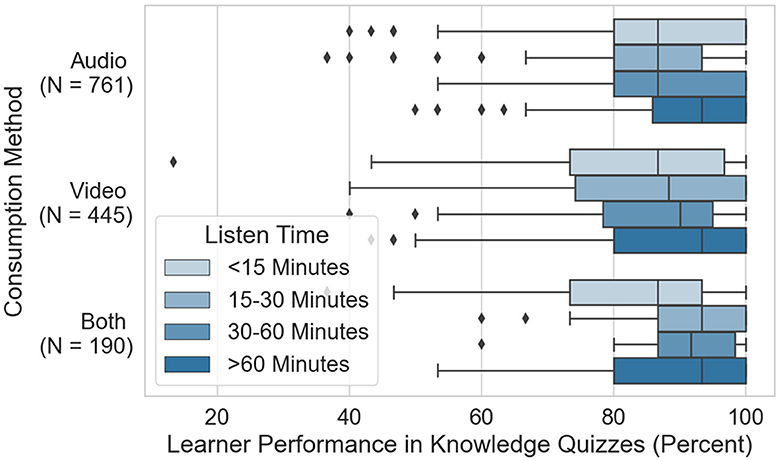

After listening to or watching the podcast, learners could take a content quiz covering content presented only in the podcast and not available elsewhere in the online course. Figure 3 depicts learners' performance in this content quiz. We refrain from directly comparing the consumption methods in this study, as they are subject to a strong selection bias that a One-Shot Case Study cannot sufficiently counteract. Instead, we draw attention to the fact that, for all three consumption methods, longer exposure to the content goes along with higher quiz performance. We assume that the exceptions to this pattern, for learners who used both methods and listened for 15–30 or 30–60 min, are due to the small group sizes (N_15–30 = 28, N_30–60 = 15). Primarily, we interpret the results as showing that even during prolonged podcast consumption (e.g., >60 min), participants are still able to understand and internalize (new) content.
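The grouping behind such a figure can be sketched as below: learners are binned by self-reported listening time within each consumption method, and each bin's mean quiz score is reported together with its size, since small bins are noisy. The bin edges, labels, record layout, and function names are assumptions for illustration, not the authors' analysis code.

```python
from collections import defaultdict
from statistics import fmean

# Assumed upper bin edges (exclusive) in minutes; longer times fall into "60+ min".
BINS = ((15, "<15 min"), (30, "15-30 min"), (60, "30-60 min"))


def exposure_bin(minutes):
    """Map a self-reported listening time in minutes to an exposure bin."""
    if minutes < 0:
        raise ValueError("listening time cannot be negative")
    for upper, label in BINS:
        if minutes < upper:
            return label
    return "60+ min"


def mean_score_by_method_and_bin(records):
    """records: iterable of (method, minutes, quiz_score) tuples."""
    scores = defaultdict(list)
    for method, minutes, score in records:
        scores[(method, exposure_bin(minutes))].append(score)
    # Keep the group size next to the mean so small bins can be spotted.
    return {key: (fmean(vals), len(vals)) for key, vals in scores.items()}


example = [("audio", 10, 4), ("audio", 12, 6), ("video", 45, 9)]  # toy records
print(mean_score_by_method_and_bin(example))
# {('audio', '<15 min'): (5.0, 2), ('video', '30-60 min'): (9.0, 1)}
```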

Figure 3. Overview of participants' performance in the content quiz on the podcast grouped by consumption method and exposure (listening time).

The main goal of this study, however, was the qualitative exploration of participants' expectations toward podcasts offered alongside our Massive Open Online Courses. Two hundred and eighty-three participants answered questions in the optional follow-up survey; participation varied slightly between the questions presented below. For the ideal podcast length, participants could enter any number. The mean of participants' preferences was 34.97 min (N = 234, σ = 30.22).

Regarding the technical implementation of the podcasts (N = 283, multiple answers allowed), 61% of respondents wished for show notes to be made available alongside the podcast. In qualitative free-text answers, the central argument for this wish was that written show notes allow an easier recap of specific information from the episodes. Next, 56% wished for chapter marks for easier podcast navigation, and 45% wished for a content overview of the podcast either in the first minute(s) or in the description. Much less important were better integration into our platform (11%) and availability of the podcast on other streaming services (4%).

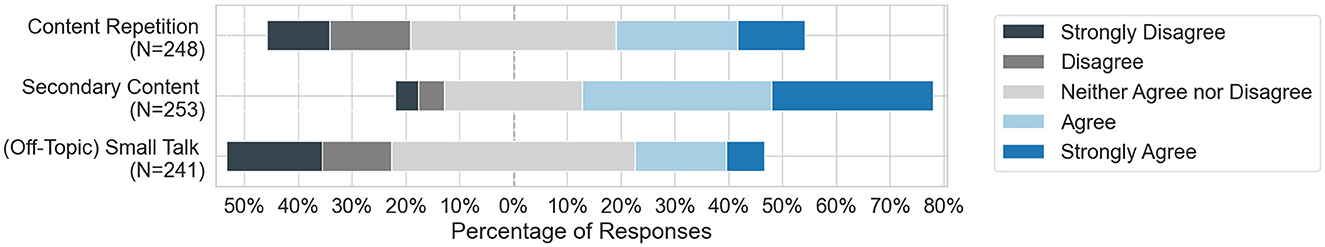

The content presented in our podcast can generally be divided into Repetition of underlying content and knowledge; Secondary Content, which expands on the basic content provided in the online course; and Small Talk, such as personal reflections and stories related to the topic. Using a 5-point Likert scale (Likert, 1932), we asked whether participants liked the amount of time the podcast spent on each type of content. Of the respondents, 77.5% (N = 258) liked the proportion of Repetition we included, 84.5% (N = 258) liked the proportion of Secondary Content, and only 58.1% (N = 248) liked the amount of Small Talk. We used the same Likert-scale questions to investigate which content types learners would like to see more of. Figure 4 presents the results of this question block for the three content types. Taken together, both question blocks (on the current amount and on a potential increase of each content type) show a tendency: participants would like more Secondary Content and less Small Talk, while content repetition can stay as it is but should not increase much. Based on these survey insights, learners' preferred consumption method, and the observed learning outcomes, we derive the following requirements for ideal podcasts integrated into our MOOCs.
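The text does not state how the 5-point responses were condensed into the share of participants who liked an amount. One common convention, assumed here, is a top-two-box share: the fraction of valid answers in the two most positive categories, taking 5 as the most positive. The function name is hypothetical.

```python
def top_two_box_share(responses, scale_max=5):
    """Fraction of valid answers (1..scale_max) in the two highest categories."""
    valid = [r for r in responses if 1 <= r <= scale_max]
    if not valid:
        raise ValueError("no valid responses")
    positive = sum(1 for r in valid if r >= scale_max - 1)
    return positive / len(valid)


print(top_two_box_share([5, 4, 4, 3, 1]))  # 0.6
```

Read in reverse, the reported shares correspond to roughly 200 of 258 respondents for Repetition (77.5%), 218 of 258 for Secondary Content (84.5%), and 144 of 248 for Small Talk (58.1%).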

Figure 4. Responses to the question of whether participants wished more of the respective content type in our podcasts. Feedback was provided on a 5-point Likert scale.

Contribution 2: Most learners chose to consume the audio-only presentation form of the learning content. An ideal podcast for our population would be ~35–45 min long and should combine content repetition (15%) with a large share of new, secondary content (~80%). Small Talk should be used only sparingly (the remaining 5%), ideally adding valuable further perspectives on a topic. From a technical perspective, show notes, chapter marks, and an overview of the episode's content should be provided.

In the third study, we provided one podcast for each of the 6 weeks of a MOOC on cybersecurity. Learners enrolled in the course could self-select whether to expose themselves to the treatment, i.e., listen to the podcasts. Learners could start listening to the weekly podcasts at any time and, once they had started, could freely pause, continue, or stop listening.

Limitation 1: Due to technical limitations, we cannot precisely track a learner's exposure to the podcast in terms of the exact time they listened. Instead, we have to rely on their self-reports in the surveys. We discuss this limitation in Section 5.3.

As highlighted in the methodology (Section 3.4), the study design allows us to measure users' learning success in three forms:

(1) The immediate learning result upon podcast completion, measured in the respective knowledge quizzes (O_K).

(2) The overall result of learners in each course week, as observed in the weekly graded exams (O_GE).

(3) The overall knowledge of a learner upon completing the entire course, after exposure to up to six podcasts, as measured in the final examination (O_FE).

Finally, we can report a few qualitative insights from the survey responses, e.g., whether users did other activities while listening to the podcasts. One optional survey was provided alongside each podcast.

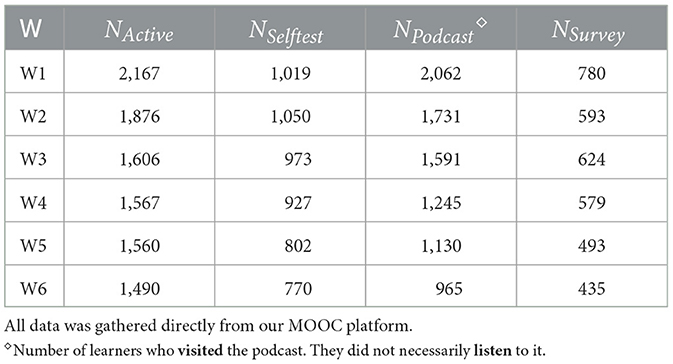

Table 3 presents participation statistics throughout the course weeks. We consider active learners per week (NActive) to be those who participated in the weekly graded assignments. Learners who visited the podcasts (NPodcast) did not necessarily listen to them (compare our discussion of exposure in Section 5.3). Over the 6 weeks, the share of learners visiting the podcast drops to 64.8%, probably because learners not interested in the podcast grow used to skipping the learning item featuring it. At the same time, the share of podcast visitors who answered our podcast survey (NSurvey/NPodcast) increases from 37.8 to 45.1%. This is consistent with the assumption that learners not interested in the podcast increasingly skip the learning item entirely.

Table 3. Overview of weekly active learners in comparison with learners visiting the podcast, those participating in the survey, or taking the content quiz.

To better understand how the learners who exposed themselves to the treatment are distributed regarding demographic criteria, we present Table 4. For each category and each group of learners, the table lists the response rates to the surveys or to the respective questions within the surveys. The share of each answer is calculated relative to the total number of answers given for that category. The self-reported gender of learners shows a similar ratio between the treatment and control groups. However, people aged between 50 and 70 are slightly more likely to consume the additional podcasts. Further, the group of learners answering the surveys has a higher share of learners who had previously consumed podcasts.

Table 4. Demography of learners divided by their exposure to the treatment. NTotal = 2,167, adapted from Köhler et al. (2023).
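As a minimal sketch of the normalization described for Table 4, assume that each learner's answer for one category is stored as a string and that unanswered questions are recorded as `None` (both assumptions, as is the function name). The share of each answer is then computed relative to all answers given in that category, separately per group:

```python
from collections import Counter
from typing import Optional


def category_shares(answers: list[Optional[str]]) -> dict[str, float]:
    """Share of each answer relative to all answers given in one category.

    Unanswered questions (None) are excluded from the denominator.
    """
    counts = Counter(a for a in answers if a is not None)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {answer: n / total for answer, n in counts.items()}


if __name__ == "__main__":
    # Toy answers for one category (e.g., age group); not study data.
    treatment = ["50-70", "30-50", "50-70", None, "18-30"]
    control = ["30-50", "30-50", "50-70", "18-30", None]
    print(category_shares(treatment))
    print(category_shares(control))
```

Comparing the two dictionaries answer by answer mirrors the treatment-versus-control comparison in the table.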

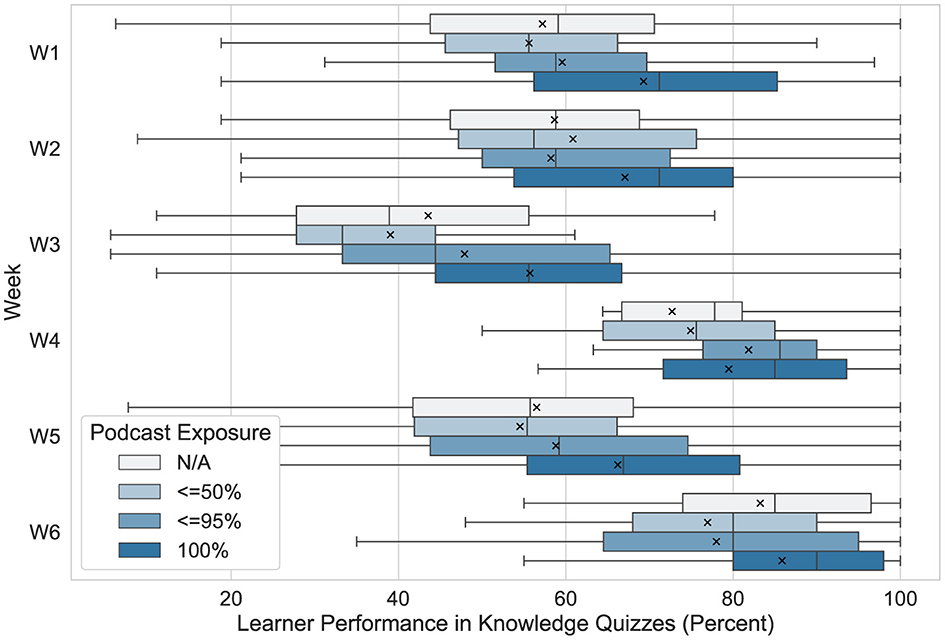

Before assessing whether the podcasts affected general learning success, we verify whether the deep-dive knowledge presented in the podcasts was new to learners. Figure 5 illustrates learners' performance in the quiz accompanying each podcast, grouped by their exposure to the treatment. Exposure is taken from the surveys, in which learners reported what percentage of the respective podcast episode they had consumed. As depicted in the figure, we divided that exposure into four major categories. N/A is assigned to those who did not answer the survey. Comparisons with the N/A group are therefore slightly skewed, as it includes learners who might have listened to the podcast but did not answer our surveys. The figure shows that greater exposure to the podcast is generally associated with higher quiz performance. This indicates that learners actually acquire new knowledge while listening to the podcast (Köhler et al., 2023).

Figure 5. Overview of learners' performance in the weekly knowledge quizzes following the podcasts, grouped by their exposure to the respective podcast as reported in the surveys. Participants who did not report whether they listened to the podcast are labeled as N/A. NTotal = 5,547.
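The grouping behind Figure 5 can be sketched as follows. The text does not specify the boundaries of the four exposure categories, so the quartile-style bins below are a hypothetical choice, as are the record format and function names; learners without a survey answer fall into N/A.

```python
from statistics import mean
from typing import Iterable, Optional

# Hypothetical, inclusive bin boundaries; the study only states that four
# exposure categories plus N/A were used.
BINS = [(0, 25, "0-25%"), (26, 50, "26-50%"), (51, 75, "51-75%"), (76, 100, "76-100%")]


def exposure_label(reported: Optional[int]) -> str:
    """Map a self-reported exposure percentage to a category label."""
    if reported is None:
        return "N/A"  # learner did not answer the survey
    for low, high, label in BINS:
        if low <= reported <= high:
            return label
    raise ValueError(f"exposure out of range: {reported}")


def mean_quiz_by_exposure(records: Iterable[tuple[Optional[int], float]]) -> dict[str, float]:
    """Average quiz score per exposure category from (exposure, score) pairs."""
    groups: dict[str, list[float]] = {}
    for exposure, score in records:
        groups.setdefault(exposure_label(exposure), []).append(score)
    return {label: mean(scores) for label, scores in groups.items()}


if __name__ == "__main__":
    # Toy (exposure %, quiz score) records; not study data.
    print(mean_quiz_by_exposure([(None, 60), (100, 90), (80, 80), (10, 50)]))
```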

The previous analysis has shown that the podcasts teach new (secondary) content: learners who listened less to the podcast performed worse in the quizzes and thus apparently had not known the content beforehand. In contrast, the weekly graded exams were based only on the content provided in the week's educational videos. Hence, all knowledge required to answer the exam questions was available without listening to the podcasts. While we briefly reiterated the weekly learning content in the podcast (cf. Section 3.4), we did not go into any details of the respective topics. Figure 6 presents learner performance in the weekly graded exams, grouped, as in the previous analysis, by the learners' survey responses on podcast consumption. Learners with higher podcast exposure perform better in the exams (Köhler et al., 2023). We used an independent-samples t-test to compare performance between those who were exposed to ≤ 50% of the podcasts and those who listened to more. The median performance of the two groups shows a 7.5% improvement from 84.2% (≤ 50% exposure, σ = 13) to 90.5% (> 50% exposure, σ = 18). The t-test indicates a significant difference (T = 10.12, p < 0.001) with a small effect size (Cohen's d = 0.29).

Figure 6. Overview of learners' performances in the weekly graded exams throughout the course. NTotal = 10,266, adapted from Köhler et al. (2023).
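The comparison of the two exposure groups can be sketched with Python's standard library. This is a minimal illustration, not the authors' analysis code: it assumes a Student's (pooled-variance) t-test, defines Cohen's d with the pooled standard deviation, and uses made-up toy records; all function names are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def split_by_exposure(records, threshold=50):
    """Split (exposure_percent, score) pairs into <= threshold and > threshold groups."""
    low = [score for exposure, score in records if exposure <= threshold]
    high = [score for exposure, score in records if exposure > threshold]
    return low, high


def pooled_sd(a, b):
    """Pooled standard deviation of two independent samples."""
    na, nb = len(a), len(b)
    return sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2))


def cohens_d(a, b):
    """Standardized mean difference of b over a, using the pooled SD."""
    return (mean(b) - mean(a)) / pooled_sd(a, b)


def student_t(a, b):
    """Equal-variance independent-samples t statistic and its degrees of freedom."""
    na, nb = len(a), len(b)
    se = pooled_sd(a, b) * sqrt(1 / na + 1 / nb)
    return (mean(b) - mean(a)) / se, na + nb - 2


if __name__ == "__main__":
    # Toy (exposure %, exam score) records; not study data.
    records = [(20, 70), (40, 80), (50, 75), (60, 85), (80, 95), (100, 90)]
    low, high = split_by_exposure(records)
    t, df = student_t(low, high)
    print(f"d = {cohens_d(low, high):.2f}, t({df}) = {t:.2f}")  # d = 3.00, t(4) = 3.67
```

For real data, SciPy's `scipy.stats.ttest_ind(a, b, equal_var=True)` computes the same statistic together with its p-value; passing `equal_var=False` switches to Welch's test, which may be preferable when the group variances differ as much as the reported σ values.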

The final examination covered knowledge from all 6 weeks of the course. On average, 40.2% of learners reported their podcast consumption in our surveys. To evaluate results across the entire course population, we therefore chose a different indicator of podcast exposure: instead of relying on self-reported podcast usage in the surveys, we evaluated whether a learner had visited a podcast learning item in our course. The potential bias introduced by this imprecise measure of learners' listening time was noted earlier as Limitation 1 and is discussed in Section 5.3.

Figure 7 depicts learners' performance in the final examination grouped by their visits to the podcast learning items. We observe a 7.9% increase in mean performance [from 81.4% (σ = 14) to 87.8% (σ = 10)] between users who did not visit any podcast and those who visited all six podcasts. A t-test shows that this increase is statistically significant [t(758) = 5.9, p < 0.001], with a moderate effect size (Cohen's d = 0.59). We consider learners whose performance lies in the lower quartile of the distribution to be the weaker learners. With the same grouping of users by their visits to the podcast learning items, we identify a 12.9% increase for the weaker learners (from 74.3 to 83.9%). Thus, the podcasts appear to have allowed weaker learners to catch up to their peers.
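The reported improvements are relative changes, not percentage-point differences. The short sketch below recomputes them from the scores given in the text; the helper name `relative_change` is illustrative only.

```python
def relative_change(before: float, after: float) -> float:
    """Relative change in percent between two scores given in percent."""
    return (after - before) / before * 100


# Scores reported in the text (in %): (comparison group, podcast group).
REPORTED = {
    "weekly exams, median (<=50% vs >50% exposure)": (84.2, 90.5),
    "final exam, mean (no podcast vs all six)": (81.4, 87.8),
    "final exam, weaker learners": (74.3, 83.9),
}

if __name__ == "__main__":
    for label, (before, after) in REPORTED.items():
        print(f"{label}: +{after - before:.1f} points, "
              f"+{relative_change(before, after):.1f}% relative")
```

For example, the final-exam gain of 6.4 percentage points corresponds to the 7.9% relative increase.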

Interaction is one of the critical success factors of a course but, at the same time, one of the significant challenges when designing and running MOOCs (Shao and Chen, 2020; Estrada-Molina and Fuentes-Cancell, 2022). We classify interaction into two categories: learner-to-learner and learner-to-teacher interaction. Discussion forums have proven to be an effective means of enabling both kinds of interaction (Nandi et al., 2012; Staubitz et al., 2014; Khlaif et al., 2017; Staubitz and Meinel, 2020). However, a growing number of learners makes learner-to-teacher interaction in a discussion forum increasingly difficult, given the number of posts and contributions teachers would need to respond to. Therefore, our podcasts were designed with two key features in mind:

• Approximately 5–10 min per episode were reserved for reiterating and catching up with major questions in the discussion forum.

• The content presented is more personal than the lecture videos. In every episode, we linked the theoretical knowledge to our daily lives, which generated a more personal connection between learners and the team.

Both design aspects of the podcasts aim to enhance the perceived interaction between the teaching team and the learners. Some learners reported that listening to the podcasts felt like listening to a campfire chat. During our 2022 course, 448 discussions were opened in the forum, in which 592 users participated. This corresponds to a slightly smaller share of users involved in the forum than in the previous iteration of the course (29.5% in 2020 vs. 27.3% in 2022).

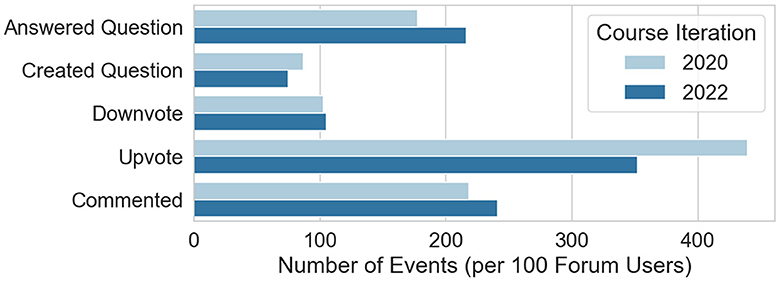

Figure 8 compares the activities in the discussion forum between the current and the previous iteration of the online course, normalized by the number of active forum users in each course. While users in the 2022 iteration posted ~14% fewer questions, they showed a 15% higher interest in answering and commenting on questions.

Figure 8. Number of events in the discussion forum normalized per 100 active forum users. NTotal = 16,120.

The podcasts are comparable to the other deep-dive videos in our courses, as both are optional, additional learning items. During the 2020 course, which had no podcasts, 40 posts were created on topics from the deep dives (6.8 per 100 discussions). In our 2022 iteration, featuring the same deep dives plus the podcasts, learners began 15 discussions (3.3 per 100 discussions) on the topics of the traditional deep-dive videos. At the same time, 47 discussions (10.5 per 100 discussions) were opened on topics of the podcasts, some with dozens of replies. We therefore conclude that podcasts provide a good starting point for increased learner engagement, as they accounted for more than 10% of the forum discussions in our 2022 course.
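The 2022 rates can be checked with simple arithmetic: dividing the discussion counts by the 448 discussions opened in 2022 reproduces the reported values of 3.3 and 10.5, whereas dividing by the 592 participating users yields different figures. The sketch below computes both bases side by side; the constant and function names are illustrative.

```python
DISCUSSIONS_2022 = 448  # discussions opened in the 2022 forum
USERS_2022 = 592        # users participating in those discussions

topic_counts_2022 = {"deep-dive videos": 15, "podcasts": 47}


def per_hundred(count: int, base: int) -> float:
    """Normalize a count to a rate per 100 units of the given base."""
    return count / base * 100


if __name__ == "__main__":
    for topic, count in topic_counts_2022.items():
        print(f"{topic}: {per_hundred(count, DISCUSSIONS_2022):.1f} per 100 discussions, "
              f"{per_hundred(count, USERS_2022):.1f} per 100 users")
```

The podcast-related share of 10.5 per 100 discussions is also what underlies the statement that podcasts accounted for more than 10% of the 2022 forum discussions.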

Contribution 3: In the Static-Group Comparison performed in this study, we observed improved learning outcomes for the learners exposed to our treatment. This improvement of up to 7.9% (in the final examination; 7.5% in the weekly exams) is statistically significant according to t-tests and corresponds to a small (weekly exams, d = 0.29) to moderate (final examination, d = 0.59) effect size. Further, we observe that the podcasts foster learner interaction in the course forum.

In the previous sections, we presented the results of our three studies on podcasts in the context of video-based MOOCs. Some of these results were published previously (Köhler et al., 2022, 2023). After presenting the preliminary results of the third study at a conference in 2023, we were able to discuss them with fellow researchers. In this manuscript, we present the outcomes of our internal and external discussions on the third study's results, which the previous publication did not investigate in depth. To reflect on our results, we discuss in Section 5.1 to what extent the observations could be explained by other factors. In Section 5.2, we then discuss the validity of the podcast medium compared to providing more videos. We continue by evaluating limitations, such as the observed exposure, in Section 5.3 and consider threats to our study's internal and external validity in Section 5.4. We conclude by discussing the applied methodology of a Static-Group Comparison in Section 5.5.

The previous sections indicated that optional podcasts help to foster in-depth learning and learners' course success. Following Shadish et al. (2002), we enumerate alternative explanations for the observed study outcome. In the following, we highlight three potential alternative explanations for the observed effects and provide evidence supporting the genuineness of the effect of integrating podcasts into MOOCs.

We conducted our study with the second iteration of our online course. Hence, some of our learners might be retaking the course.

Hypothesis: Only learners retaking the course are interested in the podcast. Those with exposure to podcasts perform better in the examinations as it is their second time studying the course content.

Of the 1,668 learners who completed the course, 28% (462 learners) had already completed the previous course iteration. In both groups, about 92% of learners consumed at least one podcast, so podcast consumption is evenly spread between the two groups.

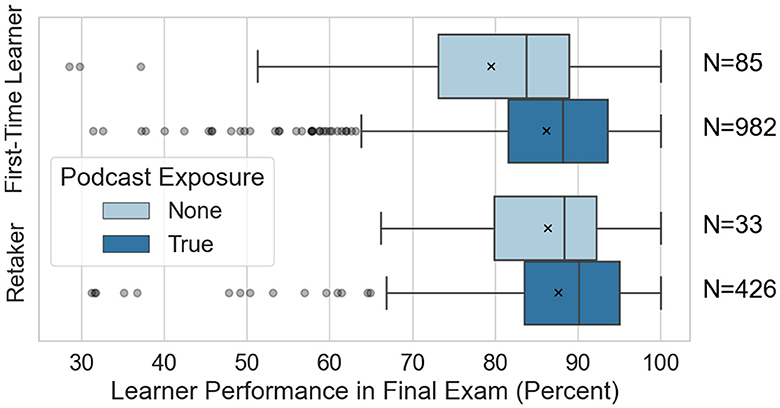

Figure 9 compares the final examination performance of both groups of learners, depending on whether or not they exposed themselves to any podcasts. For first-time learners, we observe a significant improvement in average performance of 8.4% [t(1,065) = 5.59, p < 0.001]. The lower quartile of these learners shows an even more substantial improvement of 11.6%. For second-time learners, a smaller improvement of the average grade (1.5%) was observable when they were exposed to podcasts. According to a t-test, this improvement is not statistically significant [t(457) = 0.67, p = 0.50]. The lack of significance could be due to the small group of learners retaking the course without being exposed to podcasts (N = 33). Still, we observe that weaker learners (lower quartile of the box plot) improved by 4.5%.

Figure 9. Comparison of the performance in the final examination between learners who had or had not previously taken the course, split by whether they were exposed to podcasts. NTotal = 1,668.

We conclude that podcasts are used equally between the two groups. Those taking the course for a second time have a higher initial knowledge. The podcasts help those taking the course for the first time to catch up with their peers. Similarly, the stronger improvement for weaker learners persists in both groups. We hence reject our hypothesis that only those learners retaking the course are interested in the podcast.

Another possible explanation for the improved performance associated with stronger podcast exposure is a difference in (initial) skill level between the groups of learners.

Hypothesis: Only particularly good learners opt to consume the podcasts. Hence, the results of learners who listened to the podcasts are always better.

Across the variables we analyzed in the previous sections, almost all results show that, beyond the increase in median performance, learners in the lower quartile of the distribution benefit more substantially. Hence, while podcasts benefit stronger learners, they especially help weaker learners who are willing to invest the additional time to catch up to their peers. Therefore, we reject this hypothesis and argue that all learners can benefit from the podcasts offered.
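To make the lower-quartile comparison concrete, the sketch below contrasts the first quartile (the lower edge of each box in the box plots) with the median for a comparison group and a podcast group. Treating "weaker learners" as the first-quartile value is our reading of the text, and the scores are toy data; the function names are hypothetical.

```python
from statistics import quantiles


def q1_and_median(scores):
    """First quartile and median (Python's default 'exclusive' method)."""
    q1, median, _ = quantiles(scores, n=4)
    return q1, median


def relative_gains(control, treated):
    """Relative gain (%) of the treated group at the first quartile and the median."""
    c_q1, c_med = q1_and_median(control)
    t_q1, t_med = q1_and_median(treated)
    return {
        "q1_gain": (t_q1 - c_q1) / c_q1 * 100,
        "median_gain": (t_med - c_med) / c_med * 100,
    }


if __name__ == "__main__":
    # Toy final-exam scores in %; not study data.
    control = [40, 60, 70, 80, 85, 90, 95]
    treated = [66, 72, 80, 88, 90, 94, 98]
    print(relative_gains(control, treated))
```

In this toy example the first quartile improves by about 20% while the median improves by about 10%, the same pattern as a larger gain for weaker learners.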

Another alternative explanation for the success of podcasts in our course might be the factor of “New”.

Hypothesis: Learners are particularly interested in new types of learning content, therefore listen more closely to podcasts, and hence perform better.

The course spanned 6 weeks. Throughout all weeks, we observed improved learner performance associated with exposure to the podcasts (compare Figure 6). Kulik et al. (1983) found that methods should be tested for at least 5 weeks to account for the factor of “New”. Therefore, we argue that by the last week(s), this factor should no longer account for the results. Evaluating results from the sixth week alone, we observe that learners exposed to podcasts score 3.5% better than those who skipped them [89.9% (σ = 12) vs. 86.9% (σ = 18)]. This effect is statistically significant even in the sixth week alone [t(1,489) = 2.5, p = 0.012], and we conclude that the factor “New” is not the driver of the improved success.
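Because the degrees of freedom are large (df = 1,489), the t distribution is close to the standard normal, so the reported p-value can be approximated from the t statistic alone. The following is a sketch using only the standard library, with a hypothetical function name; the exact t-distribution p-value is marginally larger than this normal approximation.

```python
from math import erfc, sqrt


def two_sided_p_normal(t: float) -> float:
    """Two-sided p-value for a t statistic, approximating t by the standard normal.

    erfc(|t| / sqrt(2)) equals 2 * (1 - Phi(|t|)). The approximation is close
    only for large degrees of freedom, such as df = 1,489 here.
    """
    return erfc(abs(t) / sqrt(2))


if __name__ == "__main__":
    print(f"week 6: p ~ {two_sided_p_normal(2.5):.4f}")      # t(1,489) = 2.5
    print(f"final exam: p ~ {two_sided_p_normal(5.9):.1e}")  # t(758) = 5.9
```

The week-six value of roughly 0.012 agrees with the reported p = 0.012.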

In the third podcast study, we provided additional secondary knowledge through podcasts and observed increased learning success in the graded exercises included in the course. However, the medium of podcasts is not necessarily the reason for this. One could hypothesize that presenting similar additional content as videos or live streams would be an equally suitable choice. However, increasing the weekly effort for an online course from three to approximately four hours with optional content burdens the learners' schedules. Audio-only educational content was therefore the obvious choice for our study, as it gives learners as much flexibility as possible in consuming it.

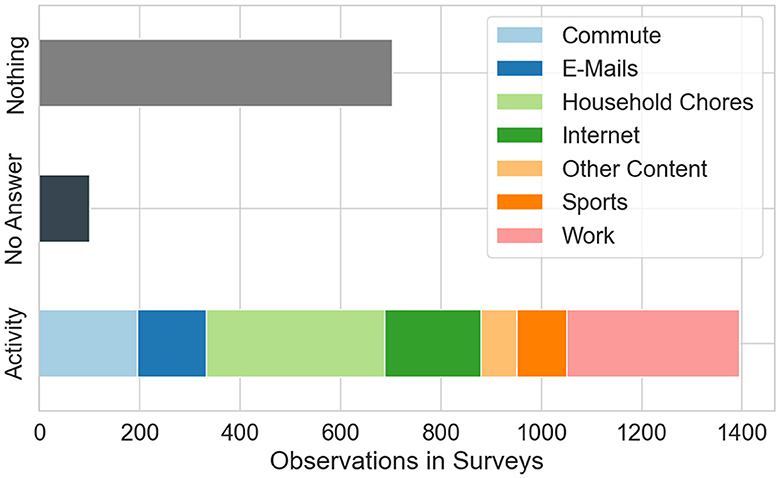

From the survey answers shown in Figure 10, we observe that most learners use the flexibility that audio-only educational material provides. When asked which activities they were doing while listening to the podcast, only a third of learners answered Nothing. This share remained unchanged throughout the course weeks.

Figure 10. Activities learners reported to have conducted while listening to the podcasts in our third study. NTotal = 2,198.

As presented in Section 4.3.5, learners actively interacted and discussed the content of the podcasts with each other and with the teaching team in our discussion forum. Based on qualitative feedback in the forum and in course-end surveys, we judge that these discussions benefited from the atmosphere of the podcast. As recording podcasts requires no camera and recording audio alone is easier, we were less constrained by our available time in the (video) recording studio. Hence, we could take plenty of time before starting each recording, ensuring that every member of the teaching team was in the mood for it. This generated a relaxed and friendly atmosphere, which enabled our speakers to remain relaxed throughout the prolonged recording and helped create a certain feeling of privacy. Reflections by learners in the course forum suggested that it felt like joining a personal discussion between the speakers. Similar perceptions could be created with fireside-chat-like videos and interviews, but hardly with traditional presentation videos recorded in a professional atmosphere.

In summary, podcasts are easier to create for the teaching team and allow a more relaxed setting than videos. Further, podcasts allow learners to allocate the time required to consume the additional learning material more flexibly per week. Hence, we conclude that while we could have provided videos with the same content, we do not think similarly positive results would have been obtained. A concrete comparison, however, remains subject to future research.

Our Third Study on podcasts in the context of online courses was performed in a large-scale MOOC spanning 6 weeks. However, as previously discussed, we were limited in evaluating participants' exposure to our podcasts. Only 40.2% of learners responded to our surveys throughout the online course, providing information on how much of the podcasts they listened to. As the actual number of users listening to the podcasts is likely higher than the number who reported it in the surveys, we had to base our analysis on a different proxy variable. For the performance analysis in the final examination (see Section 4.3.4), we therefore determined learner exposure to the treatment based on their visits to the learning item. However, this also includes learners who visited the learning item without actually listening to the podcast. Both variables we measured in our study are thus inaccurate, which is an important consideration for future research. In the following, we therefore attempt an educated estimate of the actual learner exposure to the podcasts.

As the first indicator, we calculate the upper bound of podcast plays by our learners. A single learner could theoretically play and listen to a total of six podcasts, one in each course week. The sum of active learners over the six course weeks (cf. Table 3) is, hence, the upper bound of our estimate. As indicated in Table 5, this sum is 10,266 potential plays by course learners. Our learning analytics data allows us to evaluate which learning items a user has visited. Our podcasts generated a total of 8,724 impressions by learners who visited the respective learning items but did not necessarily listen to the podcast. As a final value for the upper bound, we can derive actual access to the podcast through either the web player or any streaming platform. These podcast plays, however, cannot be attributed to individual learners in our course. From the hosting platform, we derive that ~7,900 podcast plays occurred throughout the course. However, this includes multiple counts for learners who opened and listened to (different parts of) the same podcast multiple times.

As a lower bound for our educated estimate, we observed 3,504 reports of learners listening to (different) podcast episodes. These reports were provided in the weekly surveys. Based on previous experience with surveys in our online courses, we know that only ~30–40% of learners answer (weekly) surveys. Hence, we consider 6,000 actual plays an optimistic estimate of the number of podcasts listened to. This would still be roughly 24% less than the number of plays reported by our hosting platform, with the difference attributable to learners listening to an episode multiple times. With learner exposure in that range, around 60% of active learners each week would actually have listened to the podcast. Even with a more pessimistic estimate of around 4,500 plays, we would see a conversion rate of about 44%, which we consider worth the effort of creating the podcasts. For our future work (cf. Section 6), improved tracking of podcast consumption is essential to enable a more precise investigation.
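The bounds discussed above can be collected in a few lines to check the derived ratios. The constants are the figures reported in the text; the 6,000 and 4,500 plays are the authors' optimistic and pessimistic estimates, and the variable and function names are illustrative only.

```python
# Figures reported in the text (cf. Table 5).
POTENTIAL_PLAYS = 10_266  # sum of weekly active learners over six weeks
ITEM_VISITS = 8_724       # impressions of the podcast learning items
HOSTED_PLAYS = 7_900      # approximate plays reported by the hosting platform
SURVEY_REPORTS = 3_504    # self-reported listens in the weekly surveys

ESTIMATES = {"optimistic": 6_000, "pessimistic": 4_500}


def percent(part: float, whole: float) -> float:
    """Express part as a percentage of whole."""
    return part / whole * 100


if __name__ == "__main__":
    for label, plays in ESTIMATES.items():
        print(f"{label}: {percent(plays, POTENTIAL_PLAYS):.1f}% of potential plays, "
              f"{100 - percent(plays, HOSTED_PLAYS):.1f}% fewer than hosted plays")
```

Both estimates fall between the survey-based lower bound and the hosting platform's count, corresponding to 58.4% (optimistic) and 43.8% (pessimistic) of potential plays; the optimistic estimate lies about 24% below the hosted plays.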

The following paragraphs discuss the internal and external validity of the Third Study on podcasts. While we provide reasons to consider our study internally valid, a single experiment cannot achieve external validity, interpreted as generalizability (McDermott, 2011).

Internal validity refers to whether, for the performed experiment or study, the observed outcome and the inferred causality can be considered sound (Campbell, 1957; McDermott, 2011). Quasi-experimental and pre-experimental designs such as ours are particularly prone to issues regarding internal validity (Ross and Morrison, 2004). Campbell and Stanley list eight categories of variables potentially threatening internal validity: (1) History, (2) Maturation, (3) Testing, (4) Instrumentation, (5) Regression, (6) Selection, (7) Mortality, and (8) Interaction of the previous factors (Campbell and Stanley, 1966). For Static-Group Comparisons, Campbell and Stanley mainly highlight four of these categories as threats to internal validity: (2), (6), (7), and (8). For our study, in which we added podcasts to an online course while allowing learners to expose themselves to the podcasts in each course week, we consider the following points significant for the discussion of internal validity:

• History describes potential issues due to historical events occurring during the study. Within the 2022 iteration of our MOOC during which the weekly podcasts were employed, no major salient events occurred that we would reason to have impacted the study outcome.

• Maturation covers study participants who are particularly advanced in certain topics and might therefore alter the results. The participants in our online courses are diverse learners. Some have already studied multiple online courses, and some have taken the same course multiple times. As discussed in Section 5.1.1, we observed similar results for both groups of learners, those completing the course for the first time and those retaking the course (NRetaker = 462 and NFirst = 1,206).

• Selection concerns potential biases in the assignment of participants to the treatment. As we did not assign learners ourselves but let them seek exposure to the treatment, no bias stems from our assignment; self-selection, however, can introduce a bias of its own. We previously showed that the learners participating in the podcast are diverse regarding their (demographic) features (compare Table 4). Hence, we found no indication that self-selection introduced a demographic bias. We observed slight trends toward older people and those accustomed to the medium of podcasts, but the overall distribution between both groups is similar. Still, a future repetition of the study would have to address the bias of self-selection.

• Mortality covers issues stemming from a potential dropout of participants due to treatment. We discuss the threat of experimental mortality in more detail in our discussion of the chosen study methodology in Section 5.5. As we performed multiple evaluations throughout the study, we consider experimental mortality a low threat to our analysis and the corresponding conclusions.

• Interaction of the factors considers whether other issues arise from the interplay of any of the previous factors. We additionally evaluated our learners' familiarity with podcasts alongside their demography (see Table 4). As prior experience is distributed similarly across both groups, with a slight bias toward regular podcast consumers opting for our treatment, we conclude that no other interplay of variables should lead to a false attribution of the effect.

External validity concerns the generalizability of a study's findings. Aronson et al. (1989) stated that no single study alone can achieve generalizability, as it can only be inferred through systematic, repeated testing and verification of a hypothesis in different contexts. In our research, we examined the impact of podcasts in a study with a total of 2,167 participants across all age groups who self-enrolled in the study. We thereby cover a broad cross-section of the population. Still, our courses teach cybersecurity, a niche topic within IT education.

In our conception of the MOOC and the accompanying study, we chose to allow all learners to consume the podcast. Therefore, we could not employ randomization and control groups for the treatment. Based on the previous discussions of validity, demographic factors, and alternative explanations, we argue that we would have observed similar results in a randomized experiment.

Campbell and Stanley (1966) discuss experimental mortality as a threat: people receiving (or not receiving) a treatment could drop out precisely because of, e.g., the treatment itself and can hence no longer be evaluated in comparison after the treatment. When comparing completion rates between the 2020 and the current 2022 iteration of the course, we observe that fewer enrolled learners completed the current course (77.0% in 2022 compared to 82.4% in 2020). This dropout could have various causes, from learners who previously took the course and realized that they remember almost everything to learners being tired of computer-based training after 2 years of COVID-19 safety practices. To minimize the influence of experimental mortality on our results, we experimented with six stages of treatment (one podcast in each course week) and repeated evaluations throughout the course. During each week, our learners could seek exposure to the treatment voluntarily. Additionally, in each week, we evaluated the success of the treatment at two points: with a content quiz immediately after the podcast and with a graded exam at the end of the week. Hence, we consider that we have counteracted the threat of experimental mortality as well as possible in our context.

To enhance the experience for learners, we further opted against a pretest at the beginning of our course, which would have turned our Static-Group Comparison into a quasi- or pseudo-experiment, such as the Nonequivalent Control Group Design (Campbell and Stanley, 1966). As we had already tasked the learners with weekly surveys on the podcasts, we wanted to keep any further effort for participating in the study low. Additionally, learners could answer course-start and course-end surveys, which are required for internal analysis of the courses. As we wanted to avoid burdening the learners with another quiz or task before the course began, we opted against the pretest.

The core limitation of our study is the lack of precision in evaluating learners' exposure to the podcasts. To measure exposure, we could rely only on the survey responses we received from the learners. Unfortunately, this leaves the exposure of learners who did not answer the surveys open to interpretation. While planning the study, we expected that we would be unable to measure exposure properly because most learners would listen to the podcast on third-party platforms such as Spotify™. However, our hosting platform showed that ~55% of users listened to the podcast using the web player embedded in our course item. For a future study, we aim to develop a web player that collects more detailed and precise information on listening behavior and, hence, on the exposure of our learners.

Further future work on podcasts alongside online courses could cover, e.g., the technical implementation. In our Second Study, we identified that many participants wished for written show notes. We did not provide these in the Third Study, as we did not want their availability to affect our results: show notes would have allowed learners to read instead of listen to the podcasts, e.g., while answering the exam or quiz questions. Additionally, we would like to evaluate the impact of the presenters on learners, for example by including external guests as speakers, which could make the podcast even more attractive. Finally, further uses of podcasts could be investigated, such as a podcast serving as preparation or kick-off for the course before its first week starts.

This article presents insights into developing and assessing podcasts as an additional learning element on the online education platform openHPI. Across three studies performed in German- and English-language Massive Open Online Courses (MOOCs), we evaluated the feasibility, learner acceptance, and impact of podcasts. While some individual results had been published previously, their in-depth discussion and reflection had been omitted and are provided in this manuscript. In our third, large-scale study on podcasts alongside a 6-week MOOC, we observed improved learning results for learners listening to the podcasts. This improvement was observed in performance on the primary content of the course, as measured through the regular graded exams, even though this content was not the main focus of the podcasts. Instead, the repetition and contextualization of knowledge that occurred when discussing secondary content in the podcasts appears to have significantly helped learners enhance their understanding of the fundamental content of the course.

Our study is subject to limitations and certain considerations. Therefore, we discussed alternative explanations for the observed results internally and with fellow researchers upon presenting the preliminary results of our third study at a large-scale conference (Köhler et al., 2023). The alternative explanations, threats to validity, and limitations presented in this work can provide a starting point for discussing similar research in the future.

Our research on providing podcasts alongside MOOCs has provided three major contributions:

C1 Podcasts serve equally well for providing content as videos and can, therefore, be considered a suitable expansion to MOOCs.

C2 Learners wish for podcasts on secondary content without much repetition of previous content or small talk, lasting about 35–45 min.

C3 Providing weekly podcasts alongside MOOCs can significantly increase the learning success of those learners opting to consume the podcast.

Podcasts offer clear advantages, including a larger variety of perspectives, easier preparation of and reflection on topics, and the lower technical skill required to record and provide them to learners. Therefore, every course creator should consider them a feasible medium to accompany their online course.

The data analyzed in this study is subject to the following licenses/restrictions: the data analyzed is based on the participation of learners on the MOOC platform openHPI. In accordance with the data protection regulations of the platform, we may only share aggregated results. Requests to access these datasets should be directed to DK, daniel.koehler@hpi.de.

Ethical approval was not required for the studies involving humans because the studies did not endanger the participants in any way. Participants actively decided to take part in an online educational course. Within the study, all participants received access to the same content; it was their decision to consume or skip it within their course context. Participants can have their data deleted at any time. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants' legal guardians/next of kin in accordance with the national legislation and institutional requirements because the study was carried out on the education and research platform openHPI. One goal of the platform, as stated in its terms of use, is to enhance online education. Therefore, participants agree that their platform usage data is evaluated in pseudonymized form for research purposes. All other information, such as gender or age, was provided in dedicated questionnaires and surveys within each study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

DK: Conceptualization, Writing – original draft, Writing – review & editing. SS: Validation, Writing – review & editing. CM: Supervision, Funding acquisition, Writing – review & editing.

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Some illustrations used in this work, such as figures and tables, have been published previously. We thank the corresponding publishers for permission to republish them in this manuscript. Figure 1 and Table 2 were first published in Lecture Notes in Computer Science (LNCS, volume 13450) in 2022 by Springer Nature (Köhler et al., 2022). Figure 6 and Tables 1 and 4 were first published in L@S '23: Proceedings of the Tenth ACM Conference on Learning @ Scale in 2023 by the Association for Computing Machinery (ACM) under a CC BY-NC 4.0 License (Köhler et al., 2023).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. The in-depth write-up of the study (Köhler et al., 2022) is published in the EC-TEL 2022 proceedings by Springer.

2. A preliminary analysis of the study's results (Köhler et al., 2023) is published in the L@S 2023 proceedings by ACM.

3. We previously published the in-depth write-up of this study in Köhler et al. (2022).

Amponsah, S., and Bekele, T. A. (2022). Exploring strategies for including visually impaired students in online learning. Educ. Inf. Technol. 28, 9355–9377. doi: 10.1007/s10639-022-11145-x

Aronson, E., Ellsworth, P. C., Merrill, C. J., and Gonzales, M. H. (1989). Methods of Research in Social Psychology, 2nd Edn. London: McGraw-Hill Humanities, Social Sciences & World Languages, 376.

Beautemps, J., and Bresges, A. (2021). What comprises a successful educational science YouTube Video? A five-thousand user survey on viewing behaviors and self-perceived importance of various variables controlled by content creators. Front. Commun. 5:600595. doi: 10.3389/fcomm.2020.600595

Broadbent, J., and Poon, W. (2015). Self-regulated learning strategies & academic achievement in online higher education learning environments: a systematic review. Int. High. Educ. 27, 1–13. doi: 10.1016/j.iheduc.2015.04.007

Bruff, D. O., Fisher, D. H., McEwen, K. E., and Smith, B. E. (2013). Wrapping a MOOC: student perceptions of an experiment in blended learning. J. Online Learn. Teach. 9, 187–200. Available online at: https://jolt.merlot.org/vol9no2/bruff_0613.htm

Campbell, D. T. (1957). Factors relevant to the validity of experiments in social settings. Psychol. Bull. 54, 297–312. doi: 10.1037/h0040950

Campbell, D. T., and Stanley, J. C. (1966). Experimental and Quasi-Experimental Designs for Research. Boston, MA: Houghton Mifflin (Academic).

Celaya, I., Ramírez-Montoya, M. S., Naval, C., and Arbués, E. (2019). “The educational potential of the podcast: an emerging communications medium educating outside the classroom,” in Proceedings of the Seventh International Conference on Technological Ecosystems for Enhancing Multiculturality, TEEM'19 (New York, NY: ACM), 1040–1045. doi: 10.1145/3362789.3362932

Cohen, G., Assi, A., Cohen, A., Bronshtein, A., Glick, D., Gabbay, H., et al. (2022). “Video-assisted self-regulated learning (SRL) training: COVID-19 edition,” in Educating for a New Future: Making Sense of Technology-Enhanced Learning Adoption, Lecture Notes in Computer Science, eds. I. Hilliger, P. J. Muñoz-Merino, T. De Laet, A. Ortega-Arranz, and T. Farrell (Cham: Springer International Publishing), 59–73. doi: 10.1007/978-3-031-16290-9_5

Daniel, D. B., and Woody, W. D. (2010). They hear, but do not listen: retention for podcasted material in a classroom context. Teach. Psychol. 37, 199–203. doi: 10.1080/00986283.2010.488542

Deng, R., and Gao, Y. (2023). Using learner reviews to inform instructional video design in MOOCs. Behav. Sci. 13:330. doi: 10.3390/bs13040330

Díaz, D., Ramírez, R., and Hernández-Leo, D. (2015). “The effect of using a talking head in academic videos: an EEG study,” in 2015 IEEE 15th International Conference on Advanced Learning Technologies (Hualien), 367–369. doi: 10.1109/ICALT.2015.89

Drew, C. (2017). Edutaining audio: an exploration of education podcast design possibilities. Educ. Media Int. 54, 48–62. doi: 10.1080/09523987.2017.1324360

Estrada-Molina, O., and Fuentes-Cancell, D.-R. (2022). Engagement and desertion in MOOCs: systematic review. Comunicar 30, 107–119. doi: 10.3916/C70-2022-09

Goldman, T. (2018). The Impact of Podcasts in Education. Santa Clara, CA: Pop Culture Intersections, 29. Available online at: https://scholarcommons.scu.edu/engl_176/29/

Guàrdia, L., Maina, M. F., and Sangrà, A. (2013). MOOC Design Principles. A Pedagogical Approach from the Learners Perspective. eLearning Papers. Barcelona: elearningeuropa.info.

Gunderson, J. L., and Cumming, T. M. (2023). Podcasting in higher education as a component of Universal Design for Learning: a systematic review of the literature. Innovat. Educ. Teach. Int. 60, 591–601. doi: 10.1080/14703297.2022.2075430

Guo, P. J., Kim, J., and Rubin, R. (2014). “How video production affects student engagement: an empirical study of MOOC videos,” in Proceedings of the First ACM Conference on Learning @ Scale Conference (Atlanta, GA: ACM), 41–50. doi: 10.1145/2556325.2566239

Hadi Mogavi, R., Guo, B., Zhang, Y., Haq, E.-U., Hui, P., and Ma, X. (2022). "When gamification spoils your learning: a qualitative case study of gamification misuse in a language-learning app," in Proceedings of the Ninth ACM Conference on Learning @ Scale (New York, NY: ACM), 175–188. doi: 10.1145/3491140.3528274

Hagedorn, C., Sauer, D., Graichen, J., and Meinel, C. (2023). “Using randomized controlled trials in elearning: how to add content A/B tests to a MOOC environment,” in 2023 IEEE Global Engineering Education Conference (EDUCON) (Kuwait), 1–10. doi: 10.1109/EDUCON54358.2023.10125270

Hense, J., and Bernd, M. (2021). Podcasts, Microcontent & MOOCs. EMOOCs 2021. Potsdam: Universitätsverlag Potsdam, 289–295. doi: 10.25932/publishup-51736

Kandzia, P.-T., Linckels, S., Ottmann, T., and Trahasch, S. (2013). Lecture recording—a success story. Inf. Technol. 55, 115–122. doi: 10.1524/itit.2013.1014

Kay, R. H. (2012). Exploring the use of video podcasts in education: a comprehensive review of the literature. Comput. Human Behav. 28, 820–831. doi: 10.1016/j.chb.2012.01.011

Kharade, K., and Peese, H. (2012). Learning by E-learning for visually impaired students: opportunities or again marginalisation? E-Learn. Digit. Media 9, 439–448. doi: 10.2304/elea.2012.9.4.439

Khlaif, Z., Nadiruzzaman, H., and Kwon, K. (2017). Types of interaction in online discussion forums: a case study. J. Educ. Issues 3, 155–169. doi: 10.5296/jei.v3i1.10975

Kizilcec, R. F., Papadopoulos, K., and Sritanyaratana, L. (2014). “Showing face in video instruction: effects on information retention, visual attention, and affect,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Toronto, ON: ACM), 2095–2102. doi: 10.1145/2556288.2557207

Kizilcec, R. F., Pérez-Sanagustín, M., and Maldonado, J. J. (2017). Self-regulated learning strategies predict learner behavior and goal attainment in Massive Open Online Courses. Comp. Educ. 104, 18–33. doi: 10.1016/j.compedu.2016.10.001

Koehler, D., Serth, S., and Meinel, C. (2021). “Consuming security: evaluating podcasts to promote online learning integrated with everyday life,” in 2021 World Engineering Education Forum/Global Engineering Deans Council (WEEF/GEDC) (Madrid), 476–481. doi: 10.1109/WEEF/GEDC53299.2021.9657464

Köhler, D., Serth, S., Steinbeck, H., and Meinel, C. (2022). "Integrating podcasts into MOOCs: comparing effects of audio- and video-based education for secondary content," in Educating for a New Future: Making Sense of Technology-Enhanced Learning Adoption, Lecture Notes in Computer Science, Vol. 13450, eds. I. Hilliger, P. J. Muñoz-Merino, T. De Laet, A. Ortega-Arranz, and T. Farrell (Cham: Springer International Publishing), 131–144. doi: 10.1007/978-3-031-16290-9_10

Köhler, D., Serth, S., and Meinel, C. (2023). “On air: benefits of weekly podcasts accompanying online courses,” in Proceedings of the Tenth ACM Conference on Learning @ Scale (Copenhagen: ACM), 311–315. doi: 10.1145/3573051.3596178

Kulik, J. A., Bangert, R. L., and Williams, G. W. (1983). Effects of computer-based teaching on secondary school students. J. Educ. Psychol. 75, 19–26. doi: 10.1037/0022-0663.75.1.19

Lewis, R. (2020). “This is what the news won't show you”: YouTube creators and the reactionary politics of micro-celebrity. Telev. New Media 21, 201–217. doi: 10.1177/1527476419879919

Likert, R. (1932). A technique for the measurement of attitudes. Arch. Psychol. 22, 5–55. Available online at: https://archive.org/details/likert-1932

McDermott, R. (2011). “Internal and external validity,” in Cambridge Handbook of Experimental Political Science (New York, NY: Cambridge University Press), 27–40. doi: 10.1017/CBO9780511921452.003

Meinel, C., Willems, C., Staubitz, T., Sauer, D., and Hagedorn, C. (2022). openHPI: 10 Years of MOOCs at the Hasso Plattner Institute. Potsdam: Technische Berichte des Hasso-Plattner-Instituts für Digital Engineering an der Universität Potsdam, 148. doi: 10.25932/publishup-56020

Moore, R. L., and Blackmon, S. J. (2022). From the learner's perspective: a systematic review of MOOC learner experiences (2008–2021). Comp. Educ. 190:104596. doi: 10.1016/j.compedu.2022.104596

Muñoz-Merino, P. J., Ruipérez-Valiente, J. A., Delgado Kloos, C., Auger, M. A., Briz, S., de Castro, V., et al. (2017). Flipping the classroom to improve learning with MOOCs technology. Comp. Appl. Eng. Educ. 25, 15–25. doi: 10.1002/cae.21774

Nandi, D., Hamilton, M., and Harland, J. (2012). Evaluating the quality of interaction in asynchronous discussion forums in fully online courses. Dist. Educ. 33, 5–30. doi: 10.1080/01587919.2012.667957

Nigh, J., Pytash, K. E., Ferdig, R. E., and Merchant, W. (2015). Investigating the potential of MOOCs in K-12 teaching and learning environments. J. Online Learn. Res. 1, 85–106. Available online at: https://www.learntechlib.org/p/149853/

Orhan Göksün, D., and Gürsoy, G. (2019). Comparing success and engagement in gamified learning experiences via Kahoot and Quizizz. Comp. Educ. 135, 15–29. doi: 10.1016/j.compedu.2019.02.015

Prensky, M. (2006). Don't Bother Me, Mom, I'm Learning!: How Computer and Video Games Are Preparing Your Kids for 21st Century Success and How You Can Help! St. Paul, MN: Paragon House.

Renz, J., Bauer, M., Malchow, M., Staubitz, T., and Meinel, C. (2015). “Optimizing the video experience in MOOCs,” in EDULEARN15 Proceedings, 7th International Conference on Education and New Learning Technologies (Barcelona: IATED), 5150–5158.

Ross, S. M., and Morrison, G. R. (2004). "Experimental research methods," in Handbook of Research on Educational Communications and Technology, eds. D. Jonassen and M. Driscoll (New York, NY: Routledge), 1021–1043. doi: 10.4324/9781410609519

Sallam, M. H., Martín-Monje, E., and Li, Y. (2022). Research trends in language MOOC studies: a systematic review of the published literature (2012-2018). Comp. Assist. Lang. Learn. 35, 764–791. doi: 10.1080/09588221.2020.1744668

Salomon, G., and Clark, R. E. (1977). Reexamining the methodology of research on media and technology in education. Rev. Educ. Res. 47, 99–120. doi: 10.3102/00346543047001099

Santos-Espino, J. M., Afonso-Suárez, M. D., and Guerra-Artal, C. (2016). Speakers and boards: a survey of instructional video styles in MOOCs. Tech. Commun. 63, 101–115. Available online at: https://www.stc.org/techcomm/wp-content/uploads/sites/3/2016/08/TechComm2016Q2_Web.pdf#page=28

Satparam, J., and Apps, T. (2022). A systematic review of the flipped classroom research in K-12: implementation, challenges and effectiveness. J. Educ. Manag. Dev. Stud. 2, 35–51. doi: 10.52631/jemds.v2i1.71

Shadish, W. R., Cook, T. D., and Campbell, D. T. (2002). Experimental and Quasi-Experimental Designs for Generalized Causal Inference. Boston, MA: Houghton Mifflin, 623.

Shao, Z., and Chen, K. (2020). Understanding individuals' engagement and continuance intention of MOOCs: the effect of interactivity and the role of gender. Int. Res. 31, 1262–1289. doi: 10.1108/INTR-10-2019-0416

Shqaidef, A. J., Abu-Baker, D., Al-Bitar, Z. B., Badran, S., and Hamdan, A. M. (2021). Academic performance of dental students: a randomised trial comparing live, audio recorded and video recorded lectures. Eur. J. Dental Educ. 25, 377–384. doi: 10.1111/eje.12614

Staubitz, T., Renz, J., Willems, C., and Meinel, C. (2014). "Supporting social interaction and collaboration on an xMOOC platform," in EDULEARN14 Proceedings, 6th International Conference on Education and New Learning Technologies (Barcelona: IATED), 6667–6677.

Staubitz, T., and Meinel, C. (2020). “A systematic quantitative and qualitative analysis of participants' opinions on peer assessment in surveys and course forum discussions of MOOCs,” in 2020 IEEE Global Engineering Education Conference (EDUCON) (Porto), 962–971. doi: 10.1109/EDUCON45650.2020.9125089

Steinbeck, H., Zobel, T., and Meinel, C. (2022). “Using the YouTube Video Style in a MOOC: (re-)testing the effect of visual experience in a field-experiment,” in Proceedings of the Ninth ACM Conference on Learning @ Scale, L@S '22 (New York, NY: ACM), 142–150. doi: 10.1145/3491140.3528268