ORIGINAL RESEARCH article

Front. Educ., 22 March 2024

Sec. Assessment, Testing and Applied Measurement

Volume 9 - 2024 | https://doi.org/10.3389/feduc.2024.1308688

Authentic assessment promotes professional skills, bridging the gap between academic knowledge and real-world scenarios. Given the challenges faced by vocational education in Chile, there is keen interest in assessment methods in both secondary schools and higher education institutions. This study used a non-experimental quantitative design with a cross-sectional survey approach. The sample included 244 students, 37 teachers, and 905 questions drawn from written examinations. The findings reveal disparities among the perspectives of teachers, those of students, and the examinations reviewed. On the one hand, secondary vocational education teachers rate the quality of their assessments more highly than their counterparts in higher vocational education do. On the other hand, students in higher vocational education report deeper learning and greater engagement than their secondary vocational education peers. The analysis of the assessments shows that while written examinations are more prevalent in secondary vocational schools, they often include open-ended and analytical questions. In contrast, higher vocational education institutions rely predominantly on closed-response questions that lean toward rote memorization. However, when these questions are open-ended, they are more oriented toward knowledge transfer than those at the secondary vocational level. Realism is more pronounced in higher vocational education than in secondary vocational education. Both educational levels fall short of the principles of authentic assessment.

The Chilean education system is complex and mixed, encompassing both public and private sectors. The system provides early childhood, primary, secondary, and higher education, with the first three being compulsory. Early childhood education is designed for children from birth to school entry. From the age of five, education becomes mandatory, with 97.2% of 5-year-olds attending 4,373 educational establishments, totaling 250,316 preschool students. Regular primary education lasts 8 years (ages 6–13). Secondary education spans 4 years (ages 14–17), with the first 2 years focusing on general education and the last 2 on differentiated education: humanistic-scientific, technical-professional (referred to in this article as vocational education), and artistic. Nationally, there are 11,342 primary and secondary institutions serving 3,607,980 students. The higher education system consists of the vocational and university subsystems, comprising 140 institutions serving 1,221,017 students (UNESCO, 2019).

Vocational education, the focus of this article, is offered at both the secondary and higher levels. Vocational secondary education enrolls 40% of the 16- and 17-year-old students in the country's schools. It provides specialized training in the last 2 years of secondary education, preparing students for the workforce while keeping open the option to pursue further studies and engage in lifelong learning. In Chile, 944 educational establishments offer vocational secondary education, and these specialized institutions foster collaborations with the productive sector and with state Technical Training Centers (CFT). These schools organize their offerings into 15 economic sectors (e.g., Health, Technology, Tourism, Construction, Electricity, Mining, Agriculture, Forestry, Gastronomy), 35 specialties (e.g., Information Technology, Electrical Installation, Culinary Arts, Automotive Mechanics), and 17 specialization tracks, or menciones (e.g., Network Administration, Pastry and Baking, Web Design, Solar Panel Installation) (Brunner et al., 2022).

Higher vocational education, accessible to students graduating from secondary education after the age of 18, constitutes over 50% of the first-year enrollment in the tertiary education sector. This education is delivered through Technical Training Centers (CFT) offering four-semester programs (e.g., Nursing Technician, Gastronomy Technician, Electrician Technician, and Tourism Technician) and Professional Institutes (IP) with eight-semester programs (e.g., Computer Engineering, Electrical and Industrial Automation Engineering, Logistics and Transportation Engineering, Costume and Textile Design, International Gastronomy). Presently, 57% of tertiary education students are enrolled in higher vocational education, while the remaining 43% are concentrated in universities (Higher Education Information Service, 2022).

The objective of vocational education is to equip students with specific skills and knowledge, along with personal and social abilities, enabling them to enter the technical and professional workforce upon completing secondary and/or higher education. Its goals are closely linked to integrating employment opportunities and fostering social mobility (Brunner et al., 2022). This is particularly relevant considering that 60% of students in Technical Training Centers and Professional Institutes come from low-income sectors (Alonso et al., 2024).

However, this training faces difficulties and challenges that affect its quality and effectiveness. First, vocational education is often perceived as having lower social prestige than university education, despite the actual demand for technical professionals in the labor market. Students entering this type of education tend to be economically vulnerable, have lower socio-cultural capital, and show significant academic weaknesses. For example, 50% of enrolled students have insufficient reading and writing proficiency, while 60% do not exceed the introductory level of numeracy (Arroyo and Valenzuela, 2018). Relatedly, over 20% of vocational education students at the secondary and tertiary levels drop out of their studies prematurely (Higher Education Information Service, 2022), and fewer than half graduate within the expected timeframe (National Productivity Commission, 2018).

There is also an observed gap between the technical training provided by educational institutions and the specific needs of the labor market. This lack of alignment can result in graduates with skills that do not adequately meet current labor market demands (Sotomayor Soloaga and Rodríguez Gómez, 2020). In this vein, the rapid evolution of industries and technologies demands constant curriculum updates in technical education, but the curricula tend to be inflexible in accommodating these changes and run the risk of becoming obsolete within a few years. For example, the probability of automation in Chile is 52%, according to Nedelkoska and Quintini (2018), with the highest-risk industries being transportation, storage, and communications (46.9%), mining and quarrying (27.6%), and financial intermediation (23.2%). If the automation scenario is not considered in the curriculum implementation for careers in these economic sectors, the graduates' competencies may not meet the needs of the workforce, affecting their employability (Alvarez et al., 2021).

Similarly, employers have questioned the technical skills of graduates and students in technical education, stating that they have gaps in their training. For example, they do not handle important technical procedures adequately, lack the necessary skills to operate machinery or other technology, and fail to follow safety protocols (Amaral et al., 2018; Riquelme-Brevis et al., 2018). From another perspective, graduates of technical education perceive that their technical skills lack depth and precision, affecting their performance in the workplace (Riquelme-Brevis et al., 2018). Investigating these training gaps has led to the conclusion that optimal training for technical education students depends, in part, on training and assessment activities being meaningful and challenging, requiring the application of skills in a context such as the workplace setting (Pugh and Lozano-Rodríguez, 2019).

There is an urgent need to better align vocational education with the requirements of the workforce, and one way to do this is to transform the assessment system. Graduates need to develop the skills required to navigate real-life situations and the workplace (Brunner et al., 2022) through a contextualized and realistic teaching and learning process that fosters deep learning (Fullan et al., 2017; Magen-Nagar and Steinberger, 2022). Research suggests that changes in assessment practices indirectly affect pedagogical approaches (Boud and Falchikov, 2007; Boud and Molloy, 2013; Yan, 2021). Focusing on assessment allows teachers to collect evidence that learning outcomes have been achieved and then to adjust their teaching so that students can reach the expected results (Biggs, 2014; Reynolds and Dowell, 2017).

Assessment strongly influences what and how students study and learn (Kearney et al., 2015). When teachers implement assessment from a learning perspective, they begin to shift their focus from teaching to fostering deep student learning through practices that require students to take a more active role (Biggs and Tang, 2011; Bingham et al., 2023). In this sense, assessment provides feedback and allows teaching activities to be improved (Watkins et al., 2005).

Moreover, authentic assessment has been shown to influence the quality and depth of students' learning (Brown and Sambell, 2023), as well as the development of higher-order cognitive skills (Ashford-Rowe et al., 2014; Ajjawi et al., 2020). Authentic assessment fosters learning and the development of professional competencies (Koh and Chapman, 2019). It connects curriculum knowledge with everyday scenarios, enabling students to apply acquired knowledge to solve real-world problems (Wiliam, 2011; Neely and Tucker, 2012; Haryanti, 2023). It engages students with significant problems or questions that hold value beyond the classroom. In this vein, assessment tasks replicate, or are analogous to, issues encountered in the outside world, so that students apply their knowledge to genuine problems and demonstrate effective and creative performance (Schlichting and Fox, 2015).

In authentic assessment, students undertake assessment tasks that simulate real-world challenges and then demonstrate, through their performance, that they have achieved the expected learning outcomes (Asgarova et al., 2022). Authentic assessment tasks with high intellectual demands have made learning more meaningful and enjoyable for students, enabling them to recognize the connections between classroom activities and real-world applications (Koh et al., 2018; Akbari et al., 2022). Furthermore, authentic assessment influences the development of graduates' generic attributes (Sotiriadou et al., 2020; Karunanayaka and Naidu, 2021) and contributes to employability (Dacre Pool and Sewell, 2007; Sokhanvar et al., 2021). For these reasons, authentic assessment, with its realism, contextualization, problematization, and development of complex competencies, offers one way to align the vocational education provided to students more closely with the demands of the working world.

In this article, authentic assessment practices are conceived as encompassing three major dimensions: realism, cognitive complexity, and feedback (Villarroel et al., 2017, 2019, 2021). The following section describes them:

a. Realism. Realistic contexts or problem-based situations are presented, mirroring real-life and/or professional issues (Saye et al., 2017), aiming to establish connections with the real world beyond the classroom (Koh, 2011). This realistic context can take the form of a written case study, a YouTube video, an audio file, or a photograph that relates to the learning outcome being evaluated (Koh and Tan, 2016). The problem situation is relevant, pertinent, meaningful, useful, and valuable for students' everyday life (McArthur, 2022), and it includes background information to assist students in contextualizing the problematic situation (Koh and Tan, 2016).

b. Cognitive Complexity. Authentic tasks involve the application of knowledge and the use of higher-order cognitive skills to analyze, criticize, evaluate, or create (Wiggins, 2011), aiming to construct authentic products or performances (Koh and Tan, 2016). In this way, the goal is to foster depth of thinking, critical thought, knowledge application, and student agency (Koh, 2011). In other words, when faced with problematic situations anchored in realistic contexts, students engage in processes of problem-solving, knowledge application, and decision-making, corresponding to the development of cognitive and metacognitive skills, rather than simply repeating declarative or conceptual knowledge (Elliott and Higgins, 2005; Newmann et al., 2007).

Through the analysis and integration of various taxonomies (Marzano et al., 1997; Anderson and Krathwohl, 2001; Fink, 2003; Biggs, 2006; Chi and Wylie, 2014), three levels of cognitive complexity for assessment were defined: superficial (memorization), deep (analytical), and transfer, as also proposed by Hattie and Clarke (2020). An assessment is at the memorization level if it asks the student to recognize and recall information, retrieving it “as a whole,” as it was taught, read, or memorized from a text. It is analytical if it requires the student to discriminate which parts of what they have learned are useful for solving the problem, separating, grouping, synthesizing, and integrating knowledge as needed. Finally, an assessment measures knowledge transfer when the student is asked to go beyond school learning and use it in other contexts and for other purposes.

c. Feedback and Development of Evaluative Judgment. This dimension entails practices that promote students' understanding of the criteria and standards associated with proficient performance (Tai et al., 2018) and that provide ongoing teacher feedback relating those quality standards to the student's own performance (Panadero et al., 2014; Panadero and Brown, 2017). It also includes self-assessment and peer assessment exercises (Koh et al., 2015; Schlichting and Fox, 2015) that enable students to practice evaluative judgment.

Considering the relevance of authentic assessment for vocational training, the objective of this article is to analyze how authentic the assessment used in secondary and higher vocational education is, examining its realism, cognitive complexity, and feedback. In this way, the study determines the authenticity of the assessment, the alignment between secondary and higher education in terms of assessment instruments, and the congruence between teachers' and students' perceptions of what characterizes assessment in the vocational area.

A non-experimental quantitative design was employed to analyze students' and teachers' perceptions of the assessment practices used in vocational education and to analyze the assessment instruments used, mainly written examinations. The methodological approach was a cross-sectional survey.

The sample was composed of students, teachers, and a set of assessment instruments (written examinations) from seven vocational training institutions (three secondary education schools and four higher education institutions) in the two most populous regions of Chile. The specialized areas of the secondary education schools included: Administration, Health and Education, Electricity, Industrial Mechanics, Metal Constructions, Automotive Mechanics, and Telecommunications. The higher education institutions' programs covered Education, Commerce, Information and Communication, Manufacturing Industries, Accommodation and Food Service, Health and Social Assistance, Electricity, Gas, Steam, and Air Conditioning Supply, Construction, and Artistic Activities.

In the case of the students, 244 individuals participated (96 females and 146 males), with an average age of 20.6 years. Among them, 150 students were from secondary education schools (21.6% females and 78.4% males), with an average age of 17 years (SD = 0.8), 39% in their junior year and 61% in their senior year. The remaining 94 students attended higher education vocational institutions for 2-year programs (68.1% females and 31.9% males), with an average age of 26.5 years (SD = 8.5), 72% of students in their first year of higher education, and 28% in their second year.

Regarding the teachers, 37 individuals participated (35.1% females and 64.9% males) with an average age of 50.2 years (SD = 10.0) and an average of 17.2 years of teaching experience in vocational education (SD = 10.4). Among them, 16.7% were from secondary education schools (50.0% females and 50% males) and 83.3% were from higher education vocational institutions offering 2-year programs (33.3% females and 66.7% males).

Finally, 905 questions from written examinations were reviewed: 235 questions (125 open-ended and 110 closed-ended) from secondary school tests and 670 questions (124 open-ended and 546 closed-ended) from tests in 2-year higher vocational education programs.

Teacher data were collected through a closed-ended questionnaire of 75 items covering: (a) the quality of assessment, (b) the use of traditional assessment such as written examinations, (c) the use of performance-based task assessments, (d) the use of multiple-choice items in written examinations, (e) the use of open-ended items in written examinations, (f) different assessment modalities, (g) the use of written feedback, (h) the use of individual feedback, and (i) the use of group feedback, as well as the teacher's teaching approach, measured through (j) student-centered teaching (ATI) and (k) teacher-centered teaching (ATI).

The last two scales (j and k) come from the Approaches to Teaching Inventory (ATI) by Trigwell and Prosser (1996), validated in Chile by González et al. (2011). The teaching approach was included alongside the characteristics of the assessment applied by the teacher because it helps interpret the evaluative decisions teachers make. The response format is a 5-point Likert scale (5 = Always, 4 = Almost always, 3 = Sometimes, 2 = Rarely, 1 = Never). Table 1 presents the reliability of the analyzed scales and sample items from each.
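Table 1 reports a reliability coefficient for each scale, and the analysis section names Cronbach's alpha. As a minimal sketch of that computation (not the authors' R code, which the article does not show), the Python below codes the frequency labels onto the 1 to 5 scale and applies the standard formula alpha = k/(k - 1) * (1 - sum of item variances / variance of total scores). The four respondents and three items are hypothetical, and the function assumes rows are respondents, columns are the items of one scale, and there are no missing answers.

```python
from statistics import variance

# Frequency labels of the 1-5 response scale described above.
LIKERT = {"Never": 1, "Rarely": 2, "Sometimes": 3, "Almost always": 4, "Always": 5}


def cronbach_alpha(responses):
    """Cronbach's alpha for one scale.

    `responses` has one row per respondent and one column per item of the
    scale, already coded 1-5; missing answers are not handled.
    """
    k = len(responses[0])  # number of items in the scale
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sum of the sample variances of each item (columns).
    item_variances = sum(variance(item) for item in zip(*responses))
    # Sample variance of each respondent's total score (row sums).
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variances / total_variance)


# Hypothetical answers from four respondents to a three-item scale.
answers = [
    ["Always", "Almost always", "Always"],
    ["Sometimes", "Sometimes", "Rarely"],
    ["Almost always", "Always", "Almost always"],
    ["Rarely", "Never", "Rarely"],
]
coded = [[LIKERT[label] for label in row] for row in answers]
print(round(cronbach_alpha(coded), 3))
```

Because both variances use the same (n - 1) denominator, the choice of sample versus population variance does not change alpha.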

Student data were collected through a closed-ended questionnaire of 56 items covering: (a) assessment through multiple-choice items, (b) assessment through open-ended items, (c) assessment through performance-based tasks, (d) assessment aimed at the application and use of knowledge, (e) assessment through real-world problems, (f) use of various assessment methods, (g) surface learning (SPQ), (h) deep learning (SPQ), (i) engagement: vigor (UWES-S), (j) engagement: dedication (UWES-S), and (k) engagement: absorption (UWES-S). Two of these instruments are validated questionnaires. The first is the Study Process Questionnaire (SPQ) by Biggs (1987), validated in Chile by González et al. (2011), from which the surface learning and deep learning subscales (g and h) were taken. The second is the Utrecht Work Engagement Scale for Students (UWES-S) developed by Schaufeli et al. (2002) and validated in Chile by Parra and Pérez (2010), with its three subscales: vigor, dedication, and absorption (i, j, and k). It was considered relevant to gather students' own perceptions of assessment characteristics and to integrate their learning approach and engagement, in order to characterize vocational (technical-professional) students on these personal variables that affect their learning. The response format is a 5-point Likert scale (5 = Always, 4 = Almost always, 3 = Sometimes, 2 = Rarely, 1 = Never). Table 2 presents the reliability of the analyzed subscales and sample items from each.

The written examinations in the study sample were analyzed question by question. Each question was scored with a rubric measuring three dimensions: realism, cognitive complexity, and problem solving. Each dimension was rated at one of three achievement levels (high, medium, or low) according to the performance description for each level. These dimensions derive from the conceptualization of authentic assessment outlined in this article. The rubric is presented in the Supplementary Appendix of this article.
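The question-by-question coding can be represented as one record per question holding a level on each rubric dimension; shares such as the percentage of low-complexity questions at each educational level then follow by counting. The sketch below is illustrative only: the class and field names are hypothetical, the four sample questions are invented, and reading the low, medium, and high cognitive-complexity levels as memorization, analytical, and transfer is an assumption based on the three levels defined earlier, not something the rubric description states.

```python
from collections import Counter
from dataclasses import dataclass

LEVELS = ("low", "medium", "high")
# Assumption: for cognitive complexity, low/medium/high is read as
# memorization/analytical/transfer (the three levels defined earlier).


@dataclass(frozen=True)
class ScoredQuestion:
    """Hypothetical record for one exam question after rubric coding."""
    tier: str                  # "secondary" or "higher" vocational education
    realism: str               # one of LEVELS
    cognitive_complexity: str  # one of LEVELS
    problem_solving: str       # one of LEVELS

    def __post_init__(self):
        # Reject levels outside the three-level rubric.
        for name in ("realism", "cognitive_complexity", "problem_solving"):
            if getattr(self, name) not in LEVELS:
                raise ValueError(f"{name} must be one of {LEVELS}")


def level_shares(questions, tier, dimension):
    """Percentage of one tier's questions at each level of one rubric dimension."""
    scores = [getattr(q, dimension) for q in questions if q.tier == tier]
    if not scores:
        raise ValueError(f"no questions for tier {tier!r}")
    counts = Counter(scores)
    return {level: 100 * counts[level] / len(scores) for level in LEVELS}


# Four invented questions, for illustration only.
sample = [
    ScoredQuestion("higher", "low", "low", "low"),
    ScoredQuestion("higher", "medium", "high", "medium"),
    ScoredQuestion("secondary", "low", "medium", "low"),
    ScoredQuestion("secondary", "low", "low", "low"),
]
print(level_shares(sample, "higher", "cognitive_complexity"))
```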

Each written test was analyzed by two research assistants with experience in the field who had been trained for the task, so each question received two independent ratings (one from each assistant) on each dimension. The inter-rater agreement was 0.96. In cases of disagreement, a third judge made the final decision.
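The article reports an inter-rater agreement of 0.96 without naming the index used. Purely as an assumption, the sketch below shows two common options for two raters assigning rubric levels, simple percent agreement and Cohen's kappa (which corrects for chance agreement), together with the adjudication rule described above, in which a third judge settles disagreements. The `third_judge` callable and the sample ratings are hypothetical.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Share of questions on which both raters chose the same rubric level."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    p_obs = percent_agreement(rater_a, rater_b)
    n = len(rater_a)
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the two raters' counts for each level.
    chance_matches = sum(count_a[level] * count_b[level] for level in count_a)
    if chance_matches == n * n:
        raise ValueError("kappa is undefined when chance agreement is 1")
    p_exp = chance_matches / (n * n)
    return (p_obs - p_exp) / (1 - p_exp)


def adjudicate(rater_a, rater_b, third_judge):
    """Keep agreed levels; send each disagreement to a third judge.

    `third_judge` is a hypothetical callable that receives the question index.
    """
    return [a if a == b else third_judge(i)
            for i, (a, b) in enumerate(zip(rater_a, rater_b))]


rater_1 = ["low", "low", "medium", "high", "low"]
rater_2 = ["low", "low", "medium", "medium", "low"]
print(percent_agreement(rater_1, rater_2), round(cohen_kappa(rater_1, rater_2), 3))
```

Kappa is typically lower than raw agreement when one level (here "low") dominates, which is worth keeping in mind when reading a single agreement figure.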

Secondary schools and higher education institutions with vocational programs rated within the range of excellence by the Chilean Ministry of Education were invited to participate in the study. For the Ministry, an excellence rating involves three principles: high expectations, a focus on learning outcomes, and a focus on the classroom. Seven of the invited institutions agreed to participate; the study's objectives were explained to them, and the research's ethical approval certificate was presented.

The Ethics Committee of Universidad del Desarrollo approved this research in June 2022. The approval certificate ensures compliance with all ethical safeguards of scientific research, such as confidentiality, anonymity, voluntary participation, the right to withdraw from the study or choose not to answer, the absence of risks associated with participation, and the competence of the research team. This certificate of ethical approval was provided to the institutions invited to participate in the study.

At each institution, the questionnaires, which were built as Google Forms, were sent to teachers and students via email. The questionnaires included informed consent outlining the research objectives and the ethical safeguards in place. The study adhered to the ethical standards and code of conduct of the American Psychological Association (2017).

Likewise, each institution's representatives provided the research team with a sample of written examinations used in the past year, drawn from different specialized technical training areas.

The chi-square test of independence was used to analyze the relationship between pairs of categorical variables, while the Mann–Whitney U test was applied to test for differences in scores between groups. Scale reliability was assessed with Cronbach's alpha coefficient. All analyses were performed using R version 4.3.0.
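The article does not show its R code. As a language-neutral illustration of what the Mann–Whitney U test does with Likert-type scale scores, the Python sketch below ranks the pooled scores (averaging ranks across ties), computes U for the first group, and uses the normal approximation with a tie correction to obtain a two-sided p-value. The group scores are hypothetical. R's `wilcox.test` reports this U for its first argument as W and, by default, applies a continuity correction in the normal approximation, so its p-values can differ slightly from this sketch.

```python
import math
from collections import Counter


def midranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of 1-based positions start..end
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test via the normal approximation.

    Returns (U for sample x, z, p). Includes a tie correction in the
    variance but, unlike R's default, no continuity correction.
    """
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    n = n1 + n2
    u1 = sum(midranks(pooled)[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    # Tie correction: sum of (t^3 - t) over each group of t tied values.
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if var_u <= 0:
        raise ValueError("all observations are tied; the test is undefined")
    z = (u1 - mean_u) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u1, z, p


# Hypothetical scale scores (item means on the 1-5 scale) for two groups.
secondary = [4.2, 3.8, 4.5, 4.0, 4.7]
higher = [3.6, 3.9, 3.4, 4.0, 3.1, 3.5]
u, z, p = mann_whitney_u(secondary, higher)
print(f"U = {u}, z = {z:.2f}, p = {p:.3f}")
```

A rank-based test suits these data because Likert-derived scale scores are ordinal and often skewed, so the comparison does not rely on normally distributed means.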

A general descriptive analysis shows that teachers as a whole perceive quality and coherence in their evaluative practices. They frequently provide written feedback and group feedback and engage in student-centered teaching. Practices reported with moderate frequency include using different assessment modalities, performance-based tasks, and open-ended items, providing individualized feedback, and teacher-centered teaching. Traditional assessment methods and closed-ended items are the least frequent.

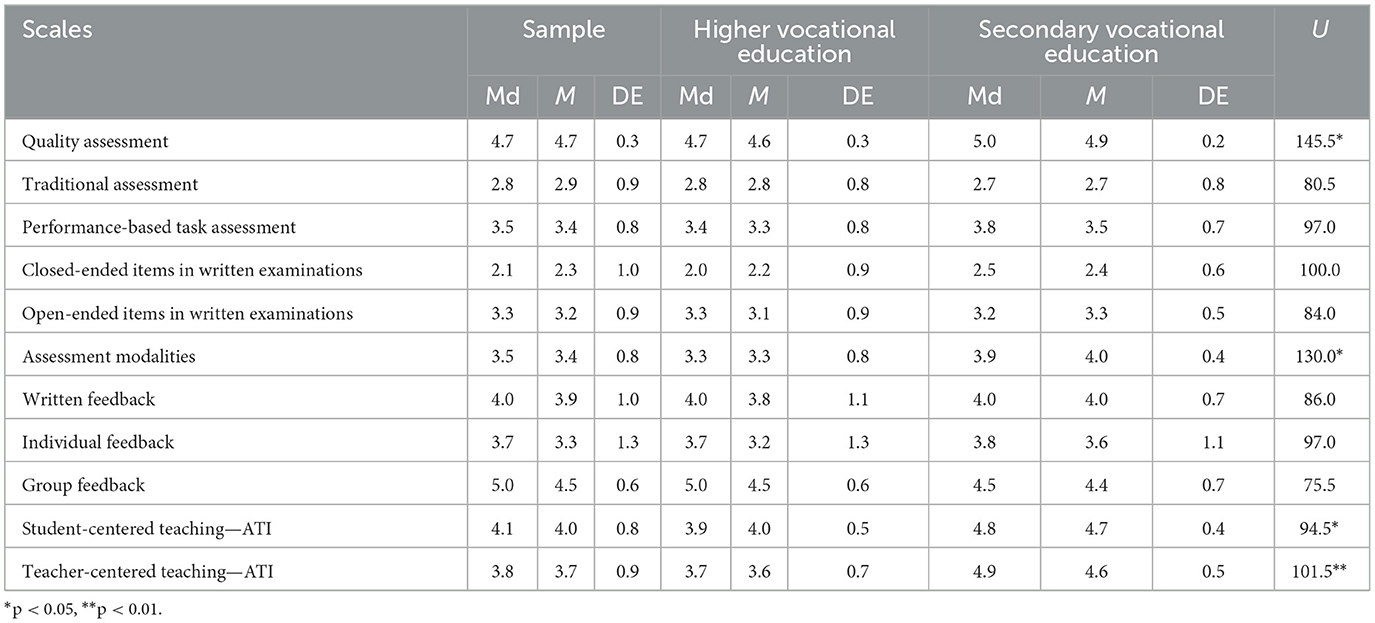

Secondary vocational education teachers differ significantly from their counterparts in higher education in four aspects: quality of assessment, different assessment modalities, student-centered teaching, and teacher-centered teaching. Secondary vocational education teachers give higher ratings on all four: they perceive their assessment practices as being of higher quality and coherence, more applied and contextualized, and more varied in method. They also score higher on both teaching approach scales, reporting both student-centered and teacher-centered approaches more intensely than higher education teachers. Table 3 illustrates these differences.

Table 3. Differences in perception of evaluative practices between secondary vocational education and higher vocational education teachers.

A general analysis of all student responses shows that students perceive their assessments as consistently demanding the application and use of knowledge, and they report dedication to their educational process. With moderate frequency, students perceive that assessments involve real-world issues and use both closed-ended and open-ended items. Students also report, frequently or moderately often, feeling enthusiastic about their learning process and adopting both deep and surface approaches to the content. Less frequently, students report being assessed through performance-based tasks or through different assessment modalities.

Higher vocational education students differ significantly from their secondary vocational education peers in six aspects: deep learning, vigor, dedication, absorption, the perception that assessments seek the application and use of knowledge, and connection to the world of work. They report a deeper learning approach and greater engagement with their learning than their secondary counterparts. They also perceive the assessments they face as more closely related to what they will encounter in the working world and as aimed at applying what they have learned. Conversely, secondary vocational education students differ significantly from higher vocational education students in two aspects: assessments with closed-ended items and assessments with open-ended items. That is, secondary vocational education students perceive that they are more frequently assessed through written examinations with both closed-ended and open-ended items. Table 4 illustrates these differences.

Table 4. Differences in perception of learning and assessment practices experienced between secondary vocational education and higher vocational education students.

The analysis of the items in the written examinations was conducted considering item type, the presence of realism, the relevance of the realistic context to answer the questions, the cognitive complexity inherent in the question, and how much the question required some form of problem solving.

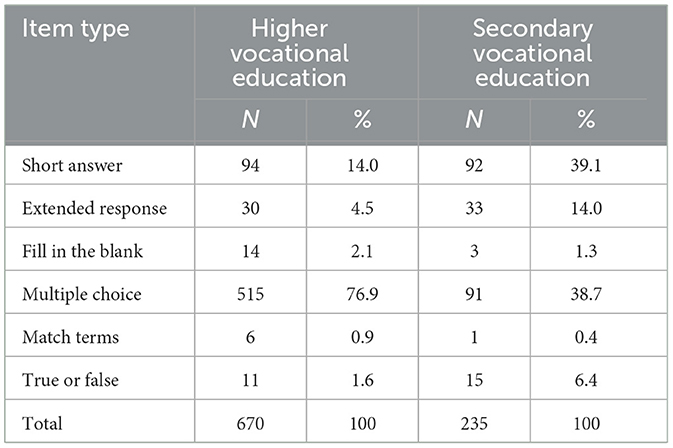

The distribution of item types in the written examinations differed significantly between secondary and higher vocational education [Chi2(5) = 128.8, p < 0.001]. The main differences occur in multiple-choice items (more frequent in higher vocational education), short-answer items (more frequent in secondary vocational education), and extended-response items (more frequent in secondary vocational education). Thus, the items used in higher vocational education are mostly closed-ended, while those in secondary vocational education are mostly open-ended. Table 5 illustrates these differences.
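The chi-square test of independence compares observed counts with the counts expected if item type and educational level were unrelated (row total × column total / grand total). As a minimal sketch, the Python below applies it to a 2 × 2 simplification built from the counts in the Method section (open-ended vs closed-ended items per level). This collapsed table is an illustration only: it is not the test reported above (five degrees of freedom, with the full item-type counts in Table 5), and it applies no continuity correction.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square statistic for an r x c table of counts.

    Returns (statistic, degrees of freedom). No Yates continuity correction.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / grand
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


def p_value_df1(stat):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(stat / 2))


# Item counts reported in the Method section: [open-ended, closed-ended].
items = [
    [125, 110],  # secondary vocational education (235 questions)
    [124, 546],  # higher vocational education (670 questions)
]
stat, df = chi_square_independence(items)
print(f"chi2({df}) = {stat:.1f}, p = {p_value_df1(stat):.2e}")
```

With one degree of freedom the statistic is the square of a standard normal variable, which is why `math.erfc` suffices for the p-value here; larger tables need a general chi-square distribution function.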

Table 5. Comparison between secondary vocational education and higher vocational education item type used.

There are significant differences in the level of realism according to item type [Chi2(2) = 243.4, p < 0.001]. The main differences occur in fill-in-the-blank, multiple-choice, and true-or-false items, all of which are at the lowest level of realism. Table 6 shows these differences.

There are significant differences in the level of cognitive complexity according to item type [Chi2(2) = 357.58, p < 0.001]. The main differences occur in fill-in-the-blank, multiple-choice, term-matching, and true-or-false items, all of which are significantly more oriented toward rote learning. Table 7 shows these differences.

There are significant differences in the required level of problem solving according to item type [Chi2(2) = 210.80, p < 0.001]. The main differences occur in fill-in-the-blank, multiple-choice, term-matching, and true-or-false items, all of which fall at the lowest level of problem solving. Table 8 shows these differences.

There are significant differences in the level of realism of assessment questions between secondary and higher vocational education [Chi2(2) = 39.86, p < 0.001]. The main difference is the low level of realism in secondary vocational education assessments compared with the moderate realism in higher vocational education. Table 9 shows these differences.

There are significant differences in the level of cognitive complexity of assessments between secondary and higher vocational education [Chi2(2) = 14.16, p < 0.001]. The main differences lie in the higher analytical level of assessments in higher vocational education and the greater presence of learning transfer in secondary vocational education. Table 10 shows these differences.

There are no significant differences in the level of problem solving in assessments between secondary and higher vocational education [Chi2(2) = 1.81, p = 0.41]. Table 11 summarizes this result.

The objective of this article was to analyze how authentic the assessment used in secondary and higher vocational education is, examining its realism, cognitive complexity, and feedback. This study aims to initiate a discussion on the authenticity of assessment in vocational education, considering that this assessment method may better articulate these two educational levels with the world of work. Authentic assessment seeks precisely this connection with reality, as proposed by Ajjawi et al. (2020).

From the analysis of results, it is concluded that at both educational levels, secondary and higher, there is a need to advance in applying the principles of authentic assessment. Opportunities for improvement are observed in realism, cognitive complexity, and feedback, as well as in the type of items included in written examinations. In particular, secondary vocational education has more room for improvement in realism, and both secondary and higher vocational education in cognitive complexity aligned with the application and transfer of knowledge, as well as in individual and dialogical feedback.

Taking action to improve the authenticity of vocational training assessments could better align students' skills with current labor market demands, helping to close the gap between vocational training and the needs of the labor market (Sotomayor Soloaga and Rodríguez Gómez, 2020).

Because secondary vocational education prepares students either to join the workforce or to continue learning, higher vocational education teachers may include realistic contexts in their assessments more often than secondary vocational education teachers: higher vocational education students are more likely to start working after graduating, or may already be working while they study, whereas secondary vocational education students probably have no work experience yet. This lack of work experience could also explain the same pattern from the secondary side: secondary vocational education teachers may consider that including realistic contexts in their assessments would hurt students' performance because students are unfamiliar with work situations. Future research should explore these hypotheses further.

The findings on cognitive complexity at both the secondary and higher vocational levels could be related to the academic weaknesses of vocational training students (Arroyo and Valenzuela, 2018); one hypothesis is that vocational education assessments are designed to match students' skills. However, the lack of cognitive stimulation that these findings suggest could also be related to these academic weaknesses and to the lower social prestige of vocational education (Arroyo and Valenzuela, 2018). Future research should explore these hypotheses further.

Regarding realism, teachers state that the assessments they administer are linked to real-world contexts and the world of work. However, student perceptions differ significantly between levels: higher vocational education students considered their tests more realistic and more connected to everyday problems and the world of work than secondary vocational education students did. A possible explanation is that higher vocational education students may be more familiar with work situations than their secondary vocational education peers and, therefore, more aware of the realism of the contexts provided in their assessments. These perceptions do not necessarily coincide with the analysis of written examinations, as 54.2% of higher vocational education assessments and 77% of secondary vocational education assessments lack realistic contexts, meaning that they are not situated in authentic, realistic scenarios and do not address problematic situations resembling those encountered in the workplace. However, when analyzing the percentage of items contextualized either theoretically or realistically, higher vocational education assessments are indeed more contextualized (45.8%) than secondary vocational education assessments (33%), consistent with what students reported.

Concerning cognitive complexity, higher vocational education students report that their assessments focus more on applying knowledge than secondary vocational education students do. Teachers' perceptions show no significant differences in this area between educational levels. However, the analysis of assessment instruments shows a tendency toward memorization: 70.3% of questions in higher vocational education assessments and 74% of questions in secondary vocational education assessments involve low cognitive complexity, related to the recall and recognition of information. This is linked to the prevalent use of closed-ended items at both educational levels, which in more than 80% of cases do not assess problem-solving. A possible explanation is that vocational education institutions enroll large numbers of students, so teachers may prefer closed-ended items because they are easier to grade. In addition, teachers may lack knowledge of how to build higher cognitive complexity into closed-ended questions. Future research should investigate teachers' assessment decisions.

Finally, regarding feedback, no significant differences are observed between the reports of secondary and higher vocational education students and teachers. In general, written and group feedback are used more frequently, while individual and dialogical feedback are provided less often. The key question is how this feedback helps or hinders students' internalization of quality criteria that guide their future performance, as proposed by Villarroel et al. (2017, 2019).

On the other hand, secondary vocational education teachers perceive their assessment practices as being of higher quality and more consistent with the intended objectives than higher vocational education teachers do. They also perceive their teaching as more student-centered and their assessment modalities as more diverse. Secondary vocational education teachers also report using more open-ended items than higher vocational education teachers, which aligns with the perceptions of secondary vocational education students and with the analysis of written examinations. That is, secondary vocational education examinations contain fewer multiple-choice items and more open-ended questions (brief and extensive) than higher vocational education tests.

Despite this positive picture of secondary vocational education, drawn from teachers, students, and the analysis of instruments regarding the quality of teaching and assessment, higher vocational education students perceive their learning experiences as deeper and report greater commitment to their education than secondary vocational education students. They also perceive the assessments they take as closely aligned with real-world work scenarios and aimed at assessing the practical application of the knowledge they have acquired. Thus, it can be hypothesized that the realism of assessment motivates and engages students in their learning, giving meaning and utility to what they learn.

In this sense, from the students' perspective, secondary vocational education has opportunities to improve in promoting deep learning, engagement with learning, and the application of knowledge in realistic contexts. Higher vocational education can enhance authenticity by diversifying assessment, incorporating more performance-based tasks, reducing closed-ended items, and increasing open-ended items, as suggested by Brown and Sambell (2023).

The study's limitations relate to its sample size and exploratory nature. In addition to increasing the number of participating institutions, future research should incorporate the input of employers and graduates from secondary and higher vocational education, include in-depth interviews to give meaning to and interpret the collected data, and review assessments other than written examinations.

Employers are rarely included in spaces for dialogue aimed at improving vocational education, and where feedback processes that include the perceptions of graduates and employers do exist, they are specific to each institution. Moreover, their results have not always been used to change the training each institution provides (Amaral et al., 2018). However, based on the preceding discussion, a cross-cutting dialogue with the world of work becomes essential, as it would enrich the teaching, learning, and assessment processes and infuse them with realism.

The study findings may be valuable for training vocational teachers to design assessment strategies that move toward an authentic, realistic, contextualized, challenging, and continuously feedback-oriented approach.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving humans were approved by Francisco Ceric, Ethics Committee of Universidad del Desarrollo. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants' legal guardians/next of kin.

VV: Conceptualization, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Writing – original draft. RM: Formal analysis, Software, Writing – review & editing. JS: Data curation, Investigation, Writing – review & editing. DA: Data curation, Writing – review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by the Center for Educational Leadership Innovation (CILED) at Universidad del Desarrollo under Research Grant Competition 2022.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2024.1308688/full#supplementary-material

Ajjawi, R., Tai, J., Nghia, T. L. H., Boud, D., Johnson, L., Patrick, C., et al. (2020). Aligning assessment with the needs of work-integrated learning: the challenge of authentic assessment in a complex context. Assess. Eval. High. Educ. 45, 304–316. doi: 10.1080/02602938.2019.1639613

Akbari, M., Nguyen, H. M., McClelland, R., and Van Houdt, K. (2022). Design, implementation and academic perspectives on authentic assessment for applied business higher education in a top performing Asian economy. Educ. Train. 64, 69–88. doi: 10.1108/ET-04-2021-0121

Alonso, P., Bravo, M., Muñoz, M. P., and Ortúzar, S. (2024). Desafío Educación Media Técnico Profesional (EMTP) en Chile. Santiago de Chile: Editorial Grupo Educar.

Alvarez, J., Labraña, J., and Brunner, J. J. (2021). La educación superior técnico profesional frente a nuevos desafíos: la cuarta revolución industrial y la pandemia por COVID-19. Rev. Educ. Polít. Soc. 6, 11–38. doi: 10.15366/reps2021.6.1.001

Amaral, N., de Diego, M. E., Pagés, C., and Prada, M. F. (2018). Hacia un Sistema de Formación Técnico-Profesional de Chile: Un Análisis Funcional. Washington, DC: Banco Interamericano de Desarrollo. doi: 10.18235/0001395

American Psychological Association (2017). Ethical Principles of Psychologists and Code of Conduct. Available online at: https://www.apa.org/ethics/code (accessed September 28, 2022).

Anderson, L. W., and Krathwohl, D. R. (2001). A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom's Taxonomy of Educational Objectives. Washington, DC: Pearson Education.

Arroyo, C., and Valenzuela, A. (2018). PIAAC: Competencias de la Población Adulta en Chile, un Análisis al Sistema Educativo y Mercado Laboral. Comisión Nacional de Productividad. Available online at: https://www.comisiondeproductividad.cl/wp-content/uploads/2018/06/Nota-T%C3%A9cnica-5-PIACC.pdf (accessed September 21, 2022).

Asgarova, R., Macaskill, A., and Abrahamse, W. (2022). Authentic assessment targeting sustainability outcomes: a case study exploring student perceptions. Int. J. Sustain. High. Educ. 24, 28–45. doi: 10.1108/IJSHE-07-2021-0266

Ashford-Rowe, K., Herrington, J., and Brown, C. (2014). Establishing the critical elements that determine authentic assessment. Assess. Eval. High. Educ. 39, 205–222. doi: 10.1080/02602938.2013.819566

Biggs, J. (1987). Study Process Questionnaire Manual. Hawthorn: Australian Council for Educational Research.

Biggs, J. (2006). SOLO Taxonomy. Available online at: https://www.johnbiggs.com.au/academic/solo-taxonomy/ (accessed October 14, 2022).

Biggs, J., and Tang, C. (2011). Teaching for Quality Learning at University: What the Student Does. Berkshire: Open University Press.

Bingham, R. P., Bureau, D., and Duncan, A. G. (Eds.). (2023). Leading Assessment for Student Success: Ten Tenets that Change Culture and Practice in Student Affairs. New York, NY: Taylor and Francis.

Boud, D., and Falchikov, N. (Eds.). (2007). Rethinking Assessment in Higher Education: Learning for the Longer Term. London: Routledge/Taylor and Francis Group. doi: 10.4324/9780203964309

Boud, D., and Molloy, E. (2013). Rethinking models of feedback for learning: the challenge of design. Assess. Eval. High. Educ. 38, 698–712. doi: 10.1080/02602938.2012.691462

Brown, S., and Sambell, K. (2023). “Rethinking assessment by creating more authentic, learning-oriented tasks to generate student engagement”, in Formative and Shared Assessment to Promote Global University Learning, ed. J. Sánchez-Santamaría (Hershey: IGI Global), 150–167. doi: 10.4018/978-1-6684-3537-3.ch008

Brunner, J., Labraña, J., and Alvarez, J. (2022). Educación Superior Técnico Profesional en Chile: Perspectivas Comparadas. Santiago: Ediciones Universidad Diego Portales.

Chi, M. T. H., and Wylie, R. (2014). The ICAP framework: linking cognitive engagement to active learning outcomes. Educ. Psychol. 49, 219–243. doi: 10.1080/00461520.2014.965823

Dacre Pool, L., and Sewell, P. (2007). The key to employability: developing a practical model of graduate employability. Educ. Train. 49, 277–289. doi: 10.1108/00400910710754435

Elliott, N., and Higgins, A. (2005). Self and peer assessment – does it make a difference to student group work? Nurse Educ. Pract. 5, 40–48. doi: 10.1016/j.nepr.2004.03.004

Fink, L. D. (2003). Creating Significant Learning Experiences: An Integrated Approach to Designing College Courses. San Francisco, CA: Jossey-Bass.

Fullan, M., Quinn, J., and McEachen, J. (2017). Deep Learning: Engage the World Change the World. Thousand Oaks, CA: SAGE Publications.

González, C., Montenegro, H., López, L., Munita, I., and Collao, P. (2011). Relación entre la experiencia de aprendizaje de estudiantes universitarios y la docencia de sus profesores. Calid. Educ. 35, 21–49. doi: 10.4067/S0718-45652011000200002

Haryanti, Y. D. (2023). The urgency of social studies learning through strengthening authentic assessment for teachers in elementary school. Pegem J. Educ. Instruct. 13, 140–148. doi: 10.47750/pegegog.13.04.17

Hattie, J., and Clarke, S. (2020). Aprendizaje Visible: Feedback. Madrid, Spain: Ediciones Paraninfo.

Higher Education Information Service (2022). Informe 2022. Matrícula en Educación Superior en Chile. Santiago: Higher Education Under Secretary, Ministry of Education of Chile (accessed September 29, 2022).

Karunanayaka, S., and Naidu, S. (2021). Impacts of authentic assessment on the development of graduate attributes. Distance Educ. 42, 231–252. doi: 10.1080/01587919.2021.1920206

Kearney, S., Perkins, T., and Kennedy-Clark, S. (2015). Using self- and peer-assessments for summative purposes: analysing the relative validity of the AASL (Authentic Assessment for Sustainable Learning) model. Assess. Eval. High. Educ. 41, 1–14. doi: 10.1080/02602938.2015.1039484

Koh, K. (2011). Improving teachers' assessment literacy through professional development. Teach. Educ. 22, 255–276. doi: 10.1080/10476210.2011.593164

Koh, K., Burke, L. E. C., Luke, A., Grove, K., Gong, W., Tan, C., et al. (2018). Developing the assessment literacy of teachers in Chinese language classrooms: a focus on assessment task design. Lang. Teach. Res. 22, 264–288. doi: 10.1177/1362168816684366

Koh, K., and Chapman, O. (2019). “Problem-based learning, assessment literacy, mathematics knowledge, and competencies in teacher education,” in Papers on Postsecondary Learning and Teaching: Proceedings of the University of Calgary Conference on Postsecondary Learning and Teaching. 3, 74–80.

Koh, K., Hadden, J., Parks, C., Sanden, L., Monaghan, M., Gallant, A., LaFrance, M., et al. (2015). “Building teachers' capacity in authentic assessment and assessment for learning”, in Proceedings of the IDEAS: Designing Responsive Pedagogy Conference, eds P. Preciado Babb, M. Takeuchi, and J. Lock (Calgary: Werklund School of Education, University of Calgary), 43–52. Available online at: https://prism.ucalgary.ca/bitstream/handle/1880/50852/IDEAS%202015%20FINAL.pdf?sequence=3&isAllowed=y (accessed August 2, 2023).

Koh, K., and Tan, C. (2016). Promoting reflection in pre-service teachers through problem-based learning: an example from Canada. Reflective Pract. 17, 347–356. doi: 10.1080/14623943.2016.1164683

Magen-Nagar, N., and Steinberger, P. (2022). Developing teachers' professional identity through conflict simulations. Teach. Educ. 33, 102–122. doi: 10.1080/10476210.2020.1819975

Marzano, R., Pickering, D., Arredondo, D. E., Blackburn, G. J., Brandt, R. S., Moffett, C. A., et al. (1997). Dimensions of Learning. Teacher's Manual, 2nd ed. Aurora, CO: Association for Supervision and Curriculum Development.

McArthur, J. (2022). Rethinking authentic assessment: work, well-being, and society. High. Educ. 85, 85–101. doi: 10.1007/s10734-022-00822-y

National Productivity Commission (2018). Formación de Competencias Para el Trabajo en Chile. Santiago de Chile: National Productivity Commission.

Nedelkoska, L., and Quintini, G. (2018). Automation, Skills Use and Training. Paris: OECD Publishing.

Neely, P., and Tucker, J. (2012). Using business simulations as authentic assessment tools. Am. J. Bus. Educ. 5, 449–456. doi: 10.19030/ajbe.v5i4.7122

Newmann, F. M., King, M. B., and Carmichael, D. L. (2007). Authentic Instruction and Assessment. Common Standards for Rigor and Relevance in Teaching Academic Subjects. Iowa Department of Education. Available online at: http://psdsped.pbworks.com/w/file/fetch/67042713/Authentic-Instruction-Assessment-BlueBook.pdf (accessed October 1, 2022).

Panadero, E., and Brown, G. T. L. (2017). Teachers' reasons for using peer assessment: positive experience predicts use. Eur. J. Psychol. Educ. 32, 133–156. doi: 10.1007/s10212-015-0282-5

Panadero, E., Brown, G. T. L., and Courtney, M. G. (2014). Teachers' reasons for using self-assessment: a survey self-report of Spanish teachers. Assess. Educ. Princ. Policy Pract. 21, 365–383. doi: 10.1080/0969594X.2014.919247

Parra, P., and Pérez, C. (2010). Propiedades psicométricas de la escala de compromiso académico (UWES-S) (versión abreviada), en estudiantes de psicología. Rev. Educ. Cienc. Salud 7, 128–133.

Pugh, G., and Lozano-Rodríguez, A. (2019). El desarrollo de competencias genéricas en la educación técnica de nivel superior: un estudio de caso. Calid. Educ. 50, 143–179. doi: 10.31619/caledu.n50.725

Reynolds, H., and Dowell, K. (2017). A planning tool for incorporating backward design, active learning, and authentic assessment in the college classroom. Coll. Teach. 65, 17–27. doi: 10.1080/87567555.2016.1222575

Riquelme-Brevis, H., Rivas-Burgos, M., and Riquelme-Brevis, M. (2018). Criteria for employability in professional technical education. Tensions and challenges in health specialty, Araucanía, Chile. Rev. Electr. Educare 22, 1–25. doi: 10.15359/ree.22-2.11

Saye, J. W., Kohlmeier, J., Howell, J. B., McCormick, T. M., Jones, R. C., Brush, T., et al. (2017). Scaffolded lesson study: promoting professional teaching knowledge for problem-based historical inquiry. Soc. Stud. Res. Pract. 12, 95–112. doi: 10.1108/SSRP-03-2017-0008

Schaufeli, W. B., Martínez, I. M., Marques Pinto, A., Salanova, M., and Bakker, A. B. (2002). Burnout and engagement in university students: a cross-national study. J. Cross-Cult. Psychol. 33, 464–481. doi: 10.1177/0022022102033005003

Schlichting, K., and Fox, K. (2015). An authentic assessment at the graduate level: a reflective capstone experience. Teach. Educ. 26, 310–324. doi: 10.1080/10476210.2014.996748

Sokhanvar, Z., Salehi, K., and Sokhanvar, F. (2021). Advantages of authentic assessment for improving the learning experience and employability skills of higher education students: a systematic literature review. Stud. Educ. Eval. 70:101030. doi: 10.1016/j.stueduc.2021.101030

Sotiriadou, P., Logan, D., Daly, A., and Guest, R. (2020). The role of authentic assessment to preserve academic integrity and promote skill development and employability. Stud. High. Educ. 45, 2132–2148. doi: 10.1080/03075079.2019.1582015

Sotomayor Soloaga, P., and Rodríguez Gómez, D. (2020). Factores explicativos de la deserción académica en la Educación Superior Técnico Profesional: el caso de un centro de formación técnica. Rev. Estud. Exp. Educ. 19, 199–223. doi: 10.21703/rexe.20201941sotomayor11

Tai, J., Ajjawi, R., Boud, D., Dawson, P., and Panadero, E. (2018). Developing evaluative judgement: enabling students to make decisions about the quality of work. High. Educ. 76, 467–481. doi: 10.1007/s10734-017-0220-3

Trigwell, K., and Prosser, M. (1996). Changing approaches to teaching: a relational perspective. Stud. High. Educ. 21, 275–284. doi: 10.1080/03075079612331381211

UNESCO (2019). Perfil de país: Chile. Buenos Aires: SITEAL. Available online at: https://siteal.iiep.unesco.org/sites/default/files/sit_informe_pdfs/chile_dpe_-_25_09_19.pdf (accessed December 28, 2023).

Villarroel, V., Bloxham, S., Bruna, D., Bruna, C., and Herrera-Seda, C. (2017). Authentic assessment: creating a blueprint for course design. Assess. Eval. High. Educ. 43, 840–854. doi: 10.1080/02602938.2017.1412396

Villarroel, V., Boud, D., Bloxham, S., Bruna, D., and Bruna, C. (2019). Using principles of authentic assessment to redesign written examinations and tests. Innov. Educ. Teach. Int. 57, 38–49. doi: 10.1080/14703297.2018.1564882

Villarroel, V., Bruna, D., Brown, G. T. L., and Bustos, C. (2021). Changing the quality of teacher's written tests by implementing an authentic assessment teachers' training program. Int. J. Instr. 14, 987–1000. doi: 10.29333/iji.2021.14256a

Watkins, D., Dahlin, B., and Ekholm, M. (2005). Awareness of the backwash effect of assessment: a phenomenographic study of the views of Hong Kong and Swedish lecturers. Instr. Sci. 33, 283–309. doi: 10.1007/s11251-005-3002-8

Wiggins, G. (2011). Moving to modern assessments. Phi Delta Kappan 92:63. doi: 10.1177/003172171109200713

Wiliam, D. (2011). What is assessment for learning? Stud. Educ. Eval. 37, 3–14. doi: 10.1016/j.stueduc.2011.03.001

Keywords: authentic assessment, assessment practices, vocational education, secondary education, higher education

Citation: Villarroel V, Melipillán R, Santana J and Aguirre D (2024) How authentic are assessments in vocational education? An analysis from Chilean teachers, students, and examinations. Front. Educ. 9:1308688. doi: 10.3389/feduc.2024.1308688

Received: 06 October 2023; Accepted: 11 March 2024;

Published: 22 March 2024.

Edited by:

Ronal Watrianthos, Padang State University, Indonesia

Reviewed by:

Sara Cervai, University of Trieste, Italy

Copyright © 2024 Villarroel, Melipillán, Santana and Aguirre. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Verónica Villarroel, veronica.villarroel@uss.cl
