- School of Education, Johns Hopkins University, Baltimore, MD, United States

Background: Innovative approaches to graduate education that foster interdisciplinary learning are necessary, given the expansion of interdisciplinary research (IDR) and its ability to explore intricate issues and cutting-edge technology.

Purpose: This study examines an intervention to develop critical reading skills of the primary literature (CRPL), which are often assumed and unaided by formal instruction in graduate education (GE) yet are crucial for academic success and adapting to new research fields.

Methods: This study applied mixed methods and a pre-post design to assess the effectiveness of a CRPL intervention among 24 doctoral students from diverse fields engaging in the interdisciplinary field of science policy research. The intervention was a 4-week online course with explicit instruction in a categorical reading approach, the CERIC method (claim, evidence, reasoning, implications, and context), combined with social collaborative annotation (SCA) to facilitate low-stakes, peer-based discourse practice. The study examined how participation changed participants’ CRPL skills and self-perceptions.

Results: The intervention significantly improved CRPL, t(23) = 13.6, p < 0.0001; research self-efficacy, t(23) = 4.9, p < 0.0001; and reading apprehension, t(23) = 4.3, p < 0.0001. Qualitative findings corroborated these results and highlighted the importance of explicit CRPL instruction and the value of reading methods applicable to IDR. These results aligned with sociocultural and social cognitive theories and underscored the role of discourse and social engagement in learning critical reading, which is traditionally viewed as a solitary activity.

Conclusion: The findings present a valid and innovative model for developing CRPL skills in interdisciplinary GE, one that scaffolds CRPL and can be adapted to IDR contexts more broadly.

Implications: The study findings call for revising graduate curricula to incorporate explicit CRPL instruction with peer-based discourse wherever students in higher education encounter primary literature. The findings advocate for formal and informal adoption of the reviewed methods, offering a significant contribution to interdisciplinary GE pedagogy.

Introduction

Graduate education (GE) marks a crucial crossroads where dependent students evolve into independent researchers. This juncture forces a major change in learning: no longer focusing on developing academic skills as learning objectives, GE requires applying these skills as the primary means of generating original knowledge (Boote and Beile, 2005; Sverdlik, 2019). The successful co-construction of the core reading, writing, and research skills is integral to the entire scholarly endeavor (Norris and Phillips, 2003). Reading is the most important among these skills in shaping academic identity, thinking, writing, and expertise (McAlpine, 2012; Pyhältö et al., 2012). While there is an extensive body of literature on helping graduate students write about research (e.g., Aitchison and Guerin, 2014; Canseco, 2010; Feak and Swales, 2009; Kamler and Thomson, 2014; Swales and Feak, 2009), there is very little on helping them read (Burgess et al., 2012; McAlpine, 2012). “[While writing is often supported at university,] reading…is usually left unprobed and unaided” (van Pletzen, 2006, p. 105). Indeed, many graduate programs and advisors assume students already possess critical reading of the primary literature (CRPL) skills, leading to a lack of explicit instruction in this area (Kwan, 2008). Without strong CRPL skills, students struggle to make sense of primary research literature and synthesize it into new knowledge, skills that undergird GE reading and writing benchmarks, such as the literature review, thesis proposal, and dissertation (Boote and Beile, 2005; Kwan, 2009).

Lack of explicit CRPL instruction and its significance in graduate education

The teaching gap in CRPL skills is part of a broader problem where GE skills are assumed to exist without formal instruction. This “hidden curriculum” leads to barriers for graduate students, such as hidden expectations (Margolis, 2001) and major academic challenges with the co-constructed skills of writing and research (Kwan, 2008; van Pletzen, 2006). The latter is identified broadly as weak reading that leads to weak writing (Matarese, 2013), such as poorly constructed literature reviews (Boote and Beile, 2005). Academic challenges are especially acute for students from under-resourced backgrounds who may not have been exposed to these skills previously and may be disproportionately screened out (van Pletzen, 2006). This is exemplified by the finding that doctoral students of color typically quit their programs at 23 months, an entire year earlier than their White peers (Sowell, 2009). A common attribute among those more likely to complete their degrees is access to crucial resources and support (Bourdieu, 1986; Coleman, 1993).

The failure to explicitly teach CRPL skills can also lead students to adopt ineffective reading strategies. Examples include reading only for an assignment, skipping most readings, and skimming major sections, such as the abstract, figures, and discussion (Burgess et al., 2012; Lie et al., 2016; McMinn et al., 2009). Worse, some students avoid reading altogether (Burchfield and Sappington, 2000; Burgess et al., 2012; Clump and Doll, 2007; Gorzycki et al., 2020; McMinn et al., 2009). This avoidance undermines the quality of academic work by impairing students’ capacity to participate in disciplinary discourse, where they practice synthesizing primary research findings into new knowledge. The situation is exacerbated by the assumption that students can independently manage complex tasks like literature reviews without adequate support (Zaporozhetz, 1987), potentially setting up many students for academic delays or dropping out (Bair and Haworth, 2004; Haynes, 2008; Spaulding and Rockinson-Szapkiw, 2012; Varney, 2010).

The imperative of developing strong CRPL skills is intrinsically linked to the evolving requirements of interdisciplinary research (IDR). IDR goes beyond combining two disciplines to create a unified output; it demands deeper integration and synthesis of diverse ideas and methodologies. This complexity requires researchers to engage critically with concepts and literature beyond their original disciplines in new fields (National Academy of Sciences et al., 2005), underscoring the necessity of strong critical reading abilities. Skills like CRPL that empower collaboration are becoming ever more valuable as IDR topics grow in complexity and urgency, including areas such as climate change, nanotechnology, genomics, proteomics, bioinformatics, neuroscience, conflict, and terrorism (National Academy of Sciences et al., 2005). The importance of CRPL skills in the IDR context is further highlighted by the observable increase in cross-disciplinary references in scholarly work across the natural and social sciences since the mid-1980s (Larivière and Gingras, 2014; Van Noorden, 2015), as well as a notable rise in long-term citations (>13 years) of interdisciplinary papers (Wang et al., 2015), indicating a growing interconnectedness between various fields. These trends underscore the essential role of IDR in a research landscape that is becoming more interconnected, highlighting the necessity for CRPL skills to assess and evaluate evidence-based arguments within and across various fields.

Given the importance of CRPL skills for mastering the scope and complexities of IDR, it is essential to consider underlying theoretical frameworks that facilitate effective learning in GE around the fundamental concepts of language, learning, and socialization. Two theoretical perspectives pertain: sociocultural theory (Vygotsky et al., 1978) and social cognitive theory (Bandura, 1986). Sociocultural theory applied to GE posits that learning is primarily advanced through discursive interactions, including dialogues between professor and student, among students, and between students and primary literature (Brown and Renshaw, 2000). This theory suggests that graduate students hone their academic abilities by engaging with reading, writing, and receiving feedback from more experienced practitioners, including advisors, professors, peers, and authors (Aitchison et al., 2012; Spaulding and Rockinson-Szapkiw, 2012). Social cognitive theory (Bandura, 1986) emphasizes the role of social contexts in learning processes. It highlights how graduate students internalize and practice disciplinary and cultural norms by participating in various academic activities such as coursework, lectures, conferences, and reading groups that prioritize primary literature; in other words, the literacy practices of a discipline (Casanave and Li, 2008). This theory also stresses the significance of observational learning and behavioral modeling, illustrating how engagement with scholarly literature influences writing practices and guides future reading selections and research endeavors (Kwan, 2008). Together, these theories provide a comprehensive understanding of the multifaceted nature of learning in GE, suggesting that both social interaction and individual cognitive strategies are essential for developing the sophisticated skills required for successful reading, writing, research, and scholarly communication.

Literature review of critical reading interventions

The literature review examines strategies, approaches, and technologies that enhance critical reading practices and foster collaborative learning environments in higher education. First, a brief review of critical reading interventions highlights the significance of active and structured reading strategies and the importance of adaptable and interdisciplinary approaches to teaching and learning. Second, a brief review of social collaborative annotation (SCA) considers digital tools for interactive learning and how these tools can promote engagement, comprehension, and a sense of community among students through collaborative annotation.

Critical reading interventions

Critical reading involves a range of strategies, such as continual critique, close reading, rereading key sections, critical responses, use of graphics, and understanding text structure to enhance comprehension and apply disciplinary knowledge for a deeper understanding of primary literature (Shanahan and Shanahan, 2008; Shanahan et al., 2011). Moreover, critical readers apply these strategies to dissect primary literature, using text structure and disciplinary knowledge to understand the main ideas better, informing their oral and written discourse (Shanahan et al., 2011). Recognizing the essential role these strategies play in mastering CRPL, this section of the literature review focuses on how educational interventions incorporate these techniques across higher educational settings.

Active reading strategies such as paraphrasing, self-questioning, annotation, and note-taking have been shown to enhance undergraduate reading comprehension and the integration of reading and writing skills (Johnson et al., 2010; Kalir, 2020; Kiewra, 1985; Kobayashi, 2009; Ozuru et al., 2004; Peverly et al., 2003; Yeh et al., 2017). Categorical reading, as defined by Schunk (2012), employs an active top-down approach that focuses on finding pre-determined kinds of information, versus the more typical bottom-up approach, where students read the whole text from start to end. For example, the SQ3R and SOAR study systems underscore the importance of structured reading and note-taking methods for improved retention and understanding (Baker and Lombardi, 1985; Kiewra, 2005). A study by Jairam et al. (2014) comparing these systems among 25 undergraduate psychology students studying a 2,100-word text found that, despite similar initial abilities, the SOAR group surpassed the SQ3R group in concept understanding by 13%, fact recall by 14%, and identifying relationships by 20%, showcasing enhanced learning and information transfer (Mayer, 2008). The SOAR system’s emphasis on selecting, organizing, associating, and regulating information contributes to its efficacy, a finding supported by further research in online and hybrid settings (Daher and Kiewra, 2016; Jairam and Kiewra, 2009).

However, this literature review reveals how the research on critical reading strategies predominantly focuses on undergraduate and K-12 populations, leaving a significant gap in the context of graduate education (Hoskins et al., 2007; Janick-Buckner, 1997; Yarden et al., 2015). This gap in the literature supports prior findings that CRPL skills are often presumed to exist and often are not taught in GE (Kwan, 2008; van Pletzen, 2006). This gap is significant as many graduate students are unaware of the essential nature of reading primary literature for effective writing or its distinctiveness from narrative texts (Lie et al., 2016; Sverdlik et al., 2018).

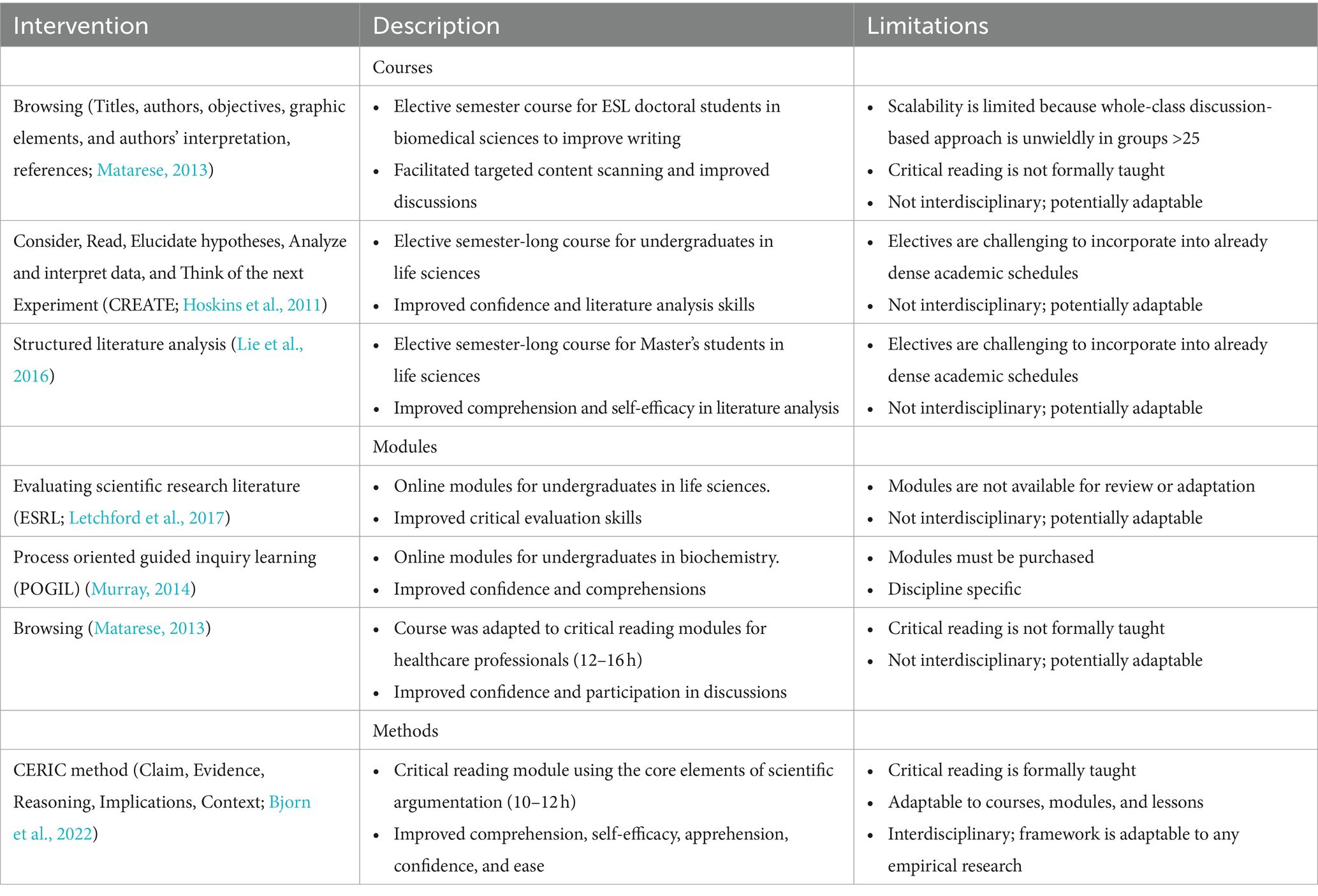

Despite this gap in the GE literature, numerous educational interventions in higher education settings aimed to improve CRPL skills by integrating critical reading strategies into various formats. Table 1 summarizes the educational interventions focusing on critical reading, literature analysis, and scientific argumentation. These interventions can be categorized into three main types: courses, modules, and a method, each with unique approaches and considerations.

Courses, such as the elective “Browsing” course for ESL doctoral students in biomedical sciences (Matarese, 2013), the CREATE course for undergraduates in life sciences (Kararo and McCartney, 2019; Hoskins et al., 2011), and the Structured Literature Analysis course for Master’s students in life sciences (Abdullah et al., 2015; Lie et al., 2016), focus on improving specific skills, including writing, confidence, literature analysis, and discussion. It is unclear whether the learning gains persist after students leave a CREATE course or change majors (Sato et al., 2014). Moreover, elective courses have limited scalability because of dense academic schedules. In addition, these courses lack interdisciplinarity by design, although they are potentially adaptable to fields outside of life sciences.

Modules, such as ESRL (Letchford et al., 2017), Figure Facts (Round and Campbell, 2013), POGIL (Murray, 2014), and the adaptation of the Browsing course into modules for healthcare professionals (Matarese, 2013), offer flexibility through shorter-duration, hybrid delivery. Modules can be integrated into existing courses or delivered stand-alone. These interventions improved critical evaluation skills, confidence, and comprehension. Like courses, modules are potentially adaptable to other disciplines. However, limited access and availability, the need for purchase, and a focus on specific disciplines restrict their broader applicability.

One method, the CERIC method, is grounded in the core elements of scientific argumentation outlined by Toulmin et al. (1984) and further developed and modernized by Bjorn et al. (2022). CERIC enhances critical reading by teaching it formally. This method teaches claims, evidence, reasoning, implications, and context, mirroring categorical strategies from Matarese (2013) and the SOAR system (Jairam et al., 2014) while promoting a scientific mindset akin to the CREATE approach (Hoskins et al., 2011). It improves reading comprehension, research self-efficacy, apprehension, confidence, and ease. Unlike other interventions, CERIC is modular, adaptable, and applicable across various educational formats and disciplines, providing a versatile and interdisciplinary framework for the analysis of empirical research. Its design is scalable and accessible, making integration into any course or module feasible and efficiently bridging the instructional gap in CRPL skills (Bjorn, 2022).

In summary, interventions such as courses, modules, and methods play crucial roles in enhancing CRPL skills in higher education, and each presents unique benefits and considerations. However, the limitations observed in interdisciplinary integration, scalability, and broad accessibility highlight a pressing need for ongoing innovation and research in educational strategies. The CERIC method, in particular, stands out for its interdisciplinary and adaptable approach that integrates CRPL instruction into diverse educational contexts and offers a promising direction for overcoming existing barriers and effectively improving analytical skills across and between academic domains.

Social collaborative annotation

Social Collaborative Annotation (SCA) has emerged as a powerful tool in enhancing educational engagement, comprehension, motivation, and critical thinking across various levels of higher education. This innovative approach involves students and educators engaging in collaborative digital annotations. It fosters interactive learning environments beyond traditional teaching methods, classrooms, and course schedules (Novak et al., 2012; Zhu et al., 2020).

In practical applications, SCA can be performed with many tools, such as OneNote (Microsoft; Redmond, WA), Google Suite (Alphabet, Inc.; Mountain View, CA), and Hypothes.is (San Francisco, CA). These tools allow users to create and share digital reading notes and annotations. Digital annotations serve as rich resources for retrieval practice and, when shared within a group, transform into socially constructed learning opportunities. This collaborative approach has been shown to improve compliance with reading assignments and increase engagement in course activities, making it a valuable addition to the educational toolkit where interleaved practice is essential (Berry, 2017; Brown et al., 2014; Cohn, 2018; Martin and Bolliger, 2018).

The efficacy of SCA in educational settings has been the subject of numerous studies. Comprehensive reviews reveal a growing interest in this field, particularly in K-12 and undergraduate education contexts. Focusing only on higher education, an initial review in 2012 identified 10 empirical studies (Novak et al., 2012), with a later review in 2020 finding 16 empirical studies (Zhu et al., 2020), plus three of graduate education (Chen, 2019; Eryilmaz et al., 2014; Hollett and Kalir, 2017). These empirical studies report mixed effects on cognitive skills and motivation, with several highlighting significant improvements in reading comprehension when employing strategies such as predicting, questioning, clarifying, and summarizing, indicative of SCA’s potential in enhancing literacy development.

The effectiveness of SCA is influenced by several key factors, including the provision of adequate technology training for teachers and students, instructional support during SCA activities, and the use of small teams (e.g., two or three people) for collaborative efforts (Bateman et al., 2007; Johnson et al., 2010; Mendenhall and Johnson, 2010; Razon et al., 2012). Additionally, the design and technical features of SCA tools matter; for instance, ease of use and compatibility with various electronic formats play a crucial role in their usability and effectiveness in educational contexts (Kawase et al., 2009).

In addition, SCA offers diverse benefits in educational settings. For instance, studies by Reid (2014) and Gao (2013) offer insight into this range, highlighting its cognitive, motivational, social, and community-building benefits. Reid’s mixed-methods study among community college students demonstrates how synchronous collaborative annotation can significantly enhance reading comprehension and reduce mental effort. In contrast, Gao’s survey of undergraduate pre-service teachers using Diigo (Reno, NV) highlighted increased student engagement and community building.

Despite the evident benefits of SCA in undergraduate education, research on its impact on graduate learning remains limited. To date, three studies have considered SCA in GE. One explored SCA in a technical context, using prompts that focused learners’ attention on challenging concepts, and found instructor-provided prompts more effective for generating high-quality discussions (Eryilmaz et al., 2014). Two others explored the use of social apps like Slack and Hypothes.is for annotation in formal coursework and found that SCA significantly improved student engagement, discussion quality, and a sense of agency among graduate students, highlighting its potential to enrich academic discourse by bridging formal and informal educational practices (Chen, 2019; Hollett and Kalir, 2017).

Notably, the literature review identifies a gap in research concerning integrating SCA with critical reading strategies, suggesting a promising area for future investigation. This gap underscores the need for further studies to explore how SCA can be effectively combined with critical reading approaches to enhance educational outcomes across various levels of education. Combining these approaches is a pedagogical innovation with the potential for integrating structured critical reading strategies with collaborative digital tools to create a more engaging, effective, and comprehensive educational environment and to better serve diverse learning needs and preferences, including those unique to interdisciplinary research.

Research gap and main claim

To date, the literature has not fully explored the integration of SCA with critical reading strategies within the context of GE, revealing a notable research gap. This oversight is particularly significant given the demonstrated efficacy of critical reading strategies to improve comprehension of primary literature (Shanahan et al., 2011) and of SCA to deepen engagement with course materials and improve comprehension and critical thinking among students in higher education (Chen, 2019; Gao, 2013; Reid, 2014). The current study demonstrates that integrating explicit instruction in the CERIC method (i.e., a categorical reading approach) with SCA supported by advance organizers (Ausubel, 2012; Bjorn et al., 2022) significantly improved CRPL skills among doctoral participants reading interdisciplinary research articles in the field of science policy. This approach represents a novel pedagogy designed to explicitly teach and practice CRPL in a socially constructed and interactive learning environment that better equips students for the complexities of interdisciplinary research (IDR).

Methodology

Sampling and recruitment

Participant recruitment occurred through convenience sampling (Creswell and Plano Clark, 2018). The sponsoring organization, the National Science Policy Network (NSPN), contacted its nationwide membership (n = 1,574) with an online invitation to participate in the course. NSPN sent the same invitation through the email newsletter, website posts, and social media channels. While the online intervention could, in theory, accommodate an unlimited number of participants, the study’s qualitative nature provided a practical limit on sample size. Thus, the first 58 people to enroll in the course were accepted and asked to check a box if they wanted to opt in to receive information about participating in the research study. Of those who opted in, 28 indicated an interest in the study, and 24 met inclusion criteria, consented, and began the study. The four people who did not qualify were not currently enrolled doctoral students (i.e., one was an undergraduate, and three were post-baccalaureates). All 24 participants (100%) completed the study and were included in the analysis. Nine study participants volunteered and completed follow-up interviews. While interdisciplinary writing was not a goal of the study, notably, a few weeks after the study was completed, the host organization, NSPN, held a science policy writing competition, and two study participants ranked among the top 10 finalists (Schmel, 2022).

Study design

The study employed a mixed-methods parallel convergent and interrupted time series design to examine an intervention’s impact on developing CRPL skills among doctoral students through a 4-week online course. The study balanced quantitative and qualitative data collection. Matched pairs refer to individual participants’ pre-test and post-test measures. A G*Power analysis (Faul et al., 2007) indicated that the sample size of 24 participants, together with the 100% completion rate, was sufficient to achieve the desired statistical power for detecting significant differences in reading scores from pre- to post-test at a significance level of alpha = 0.05. Insights from small-N studies can provide an in-depth look into how students learn science (Gouvea and Passmore, 2017). However, the study was not powered for more complex statistical analyses, such as ANOVA, and generalizing the findings was not a goal (Hatry et al., 2015).
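To make the matched-pairs comparison concrete, the following is a minimal sketch of how a paired-samples t statistic and its standardized effect size (d_z) can be computed from pre-test and post-test scores. It assumes a paired-samples t-test, which is consistent with the reported t(23) for 24 matched pairs but is not spelled out as such in the study. The function name `paired_t` and the five-participant scores are hypothetical and serve only as an illustration; they are not data from the study.

```python
import math
import statistics


def paired_t(pre, post):
    """Return (t, df, d_z) for matched pre-test/post-test scores.

    Hypothetical helper for illustration; not the study's analysis code.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must contain one score per participant")
    n = len(pre)
    if n < 2:
        raise ValueError("need at least two matched pairs")
    # Each participant serves as their own control: analyze the differences.
    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_diff / (sd_diff / math.sqrt(n))  # paired t statistic
    d_z = mean_diff / sd_diff                 # standardized mean difference
    return t, n - 1, d_z


# Hypothetical scores for five participants (NOT data from the study).
pre_scores = [10, 12, 9, 14, 11]
post_scores = [13, 15, 11, 18, 12]

t_stat, df, d_z = paired_t(pre_scores, post_scores)
print(f"t({df}) = {t_stat:.2f}, d_z = {d_z:.2f}")
```

With 24 matched pairs, the degrees of freedom would be 23. Because t = d_z × √n for a paired design, the same number of participants yields a larger t statistic as the standardized pre-post difference grows.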

The qualitative data, enriched by SCAs, reflections, and interviews, informed the process evaluation, helping to refine the intervention and contribute to future research directions. The design allowed the researcher to gain process evaluation information essential for modifying the intervention as it progressed to meet participants’ needs and for future research and refinement of the online course (Mertens and Wilson, 2019). This methodological approach aimed to triangulate findings for increased trustworthiness, as Guba (1981) recommended, while addressing the practical limits of qualitative analysis in educational research (Shadish et al., 2002; Matarese, 2013). Finally, because of the high volume of qualitative data from SCAs, reflections, and interviews with corresponding time-intensive analyses, a practical upper limit for the researcher was 25 participants (Shadish et al., 2002).

CRPL intervention approach

The study assessed the effectiveness of a 4-week online course in interdisciplinary science policy designed to improve CRPL skills among enrolled doctoral students. Aiming to fill gaps identified in the literature review, the intervention combined the critical reading method, CERIC (claim, evidence, reasoning, implications, and context; Bjorn et al., 2022), with SCA (Kalir, 2020) in an online graduate course with 10 h of treatment. This format included synchronous direct whole-class instruction and asynchronous individual CRPL practice with interdisciplinary research articles, advance organizers, and peer-based discussion groups to facilitate engagement with the readings, deep learning, and skill development. Completion was determined by participants finishing 90 percent of the activities, and 100 percent of participants completed the intervention.

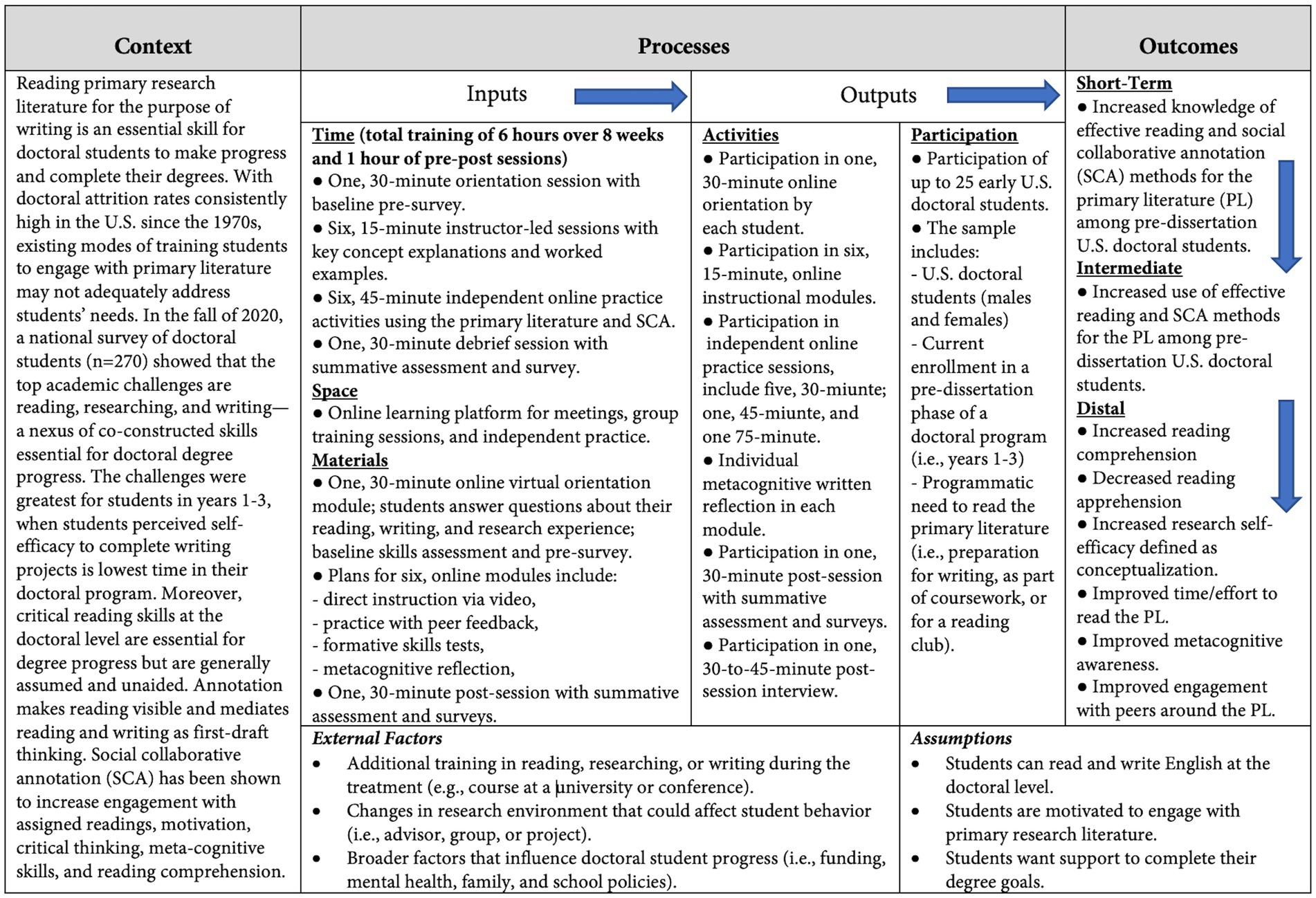

Theory of treatment and logic model

The theory of treatment appears in Figure 1, showing the idea that increased knowledge of effective CRPL methods would lead to increased usage of them. This theory of treatment informed the logic model, as shown in Figure 2, which describes the intervention’s learning activities divided between 4 weeks of synchronous meetings and asynchronous activities. The sync session activities included direct instruction in the CERIC reading method (Bjorn, 2022; Bjorn et al., 2022), worked examples, and practice time in breakout rooms. The async activities included optional instructional videos, common readings from the interdisciplinary primary literature on science policy, SCA activities with prompts (Kalir, 2020), a discussion board exercise, and a meta-cognitive self-reflection exercise.

Figure 1. Theory of treatment. The theory of treatment (from left to right) summarizes the educational intervention, the mediating variable of increased knowledge, the proximal outcome of increased use of knowledge, and the distal outcomes as they relate to study and scale measures.

Figure 2. Logic model. The logic model (from left to right) summarizes the context of the problem, the processes of inputs and outputs directly attributable to the education intervention, and the resulting outcomes, focusing on short-term, intermediate, and distal outcomes.

Course structure

The course was structured as synchronous and asynchronous components, tailored to accommodate learners’ schedules and preferences, as determined by a pre-course survey conducted by the National Science Policy Network (NSPN). Synchronous sessions were conducted via Zoom and involved a blend of check-ins, instruction, and breakout room activities. The synchronous meeting day and time (i.e., Tuesday from 6:30 p.m. to 8:00 p.m. Eastern) was selected by NSPN based on pre-course survey preferences and prior experience with professional development courses for their members. Four 90-min synchronous meetings were held on Zoom using NSPN’s account, and each participant accessed the meetings with a unique URL issued by Zoom upon registration. NSPN recorded the course meetings with learners’ permission (including study participants and non-participants). After each sync session, the student researcher posted a meeting recording link to the course LMS, allowing learners to review the sessions as needed.

The asynchronous sessions were student-led and self-paced and included small group discussions, reading, and reflection activities aimed at processing the course material through peer interaction. Students formed the async discussion groups by signing up for a group with two or three members of their choosing, and the groups remained stable throughout the course. The discussion groups were also led by students, with the instructor only checking the annotation work for quality and questions. In addition, the instructor provided prompts for discussion each week (i.e., the same prompts for all discussion groups), and students were encouraged to raise additional questions within their discussion groups. When new questions arose, the instructor posted these on that week’s course page and reviewed them in the sync session so everyone would be exposed to the same questions.

Course sessions

The course sessions followed a regular pattern. The first 30 min of each sync session covered a weekly check-in survey, a recap of prior learning and discussion questions, and direct instruction of new knowledge. The remaining 60 min were devoted to worked examples, practice, and work time in breakout rooms. Each session closed with a reminder list of asynchronous activities to be completed during the week. The weekly asynchronous activities consisted of optional instructional videos, common readings from the primary literature, SCA activities, a discussion board prompt, and a meta-cognitive self-reflection prompt. Three of the four intervention sessions included surveys (i.e., pre-, mid-, and post-surveys). Additional async activities included group annotation activities, an individual compare/contrast SCA activity using two readings, and an individual policy topic selection activity relevant to the second part of the course, which was not a part of the intervention. Further, when confusion arose on the week’s topics, such as on the Week One discussion board about qualitative research methods used in that module’s reading, the instructor added an optional set of readings and videos to the LMS. These optional activities were not included in data collection and analysis and were not part of the study evaluation, but they helped answer questions.

Finally, during the study, emergent needs arose from participants. They asked to form private SCA groups to carry on SCA after the intervention ended. Also, they asked for a group chat to stay in communication. After the intervention ended, the student researcher created a GroupMe chat to facilitate ongoing communication and guided participants through adding the private group function to their SCA software accounts to support these emergent needs. Notably, these interactions fell outside the scope of the study and were not included in data collection or analysis.

Participants

The study participants included only currently enrolled doctoral students in United States university programs. Individual doctoral students were the unit of analysis. The primary inclusion criterion was an active need to engage with primary literature (PL), such as having an interest in interdisciplinary science policy research. The primary exclusion criterion was enrollment in a non-United States doctoral program. A secondary exclusion criterion for United States doctoral students was enrollment in programs where reading is a topic of study, such as English, Journalism, and Rhetoric. There was no theoretical upper limit for participation in the course because it was free, online, and open to the NSPN membership. In total, 58 people enrolled in the 8-week course. However, data collection and analysis included only the 24 participants who met all the study inclusion criteria and consented to participate in the 4-week study (i.e., the first half of the course).

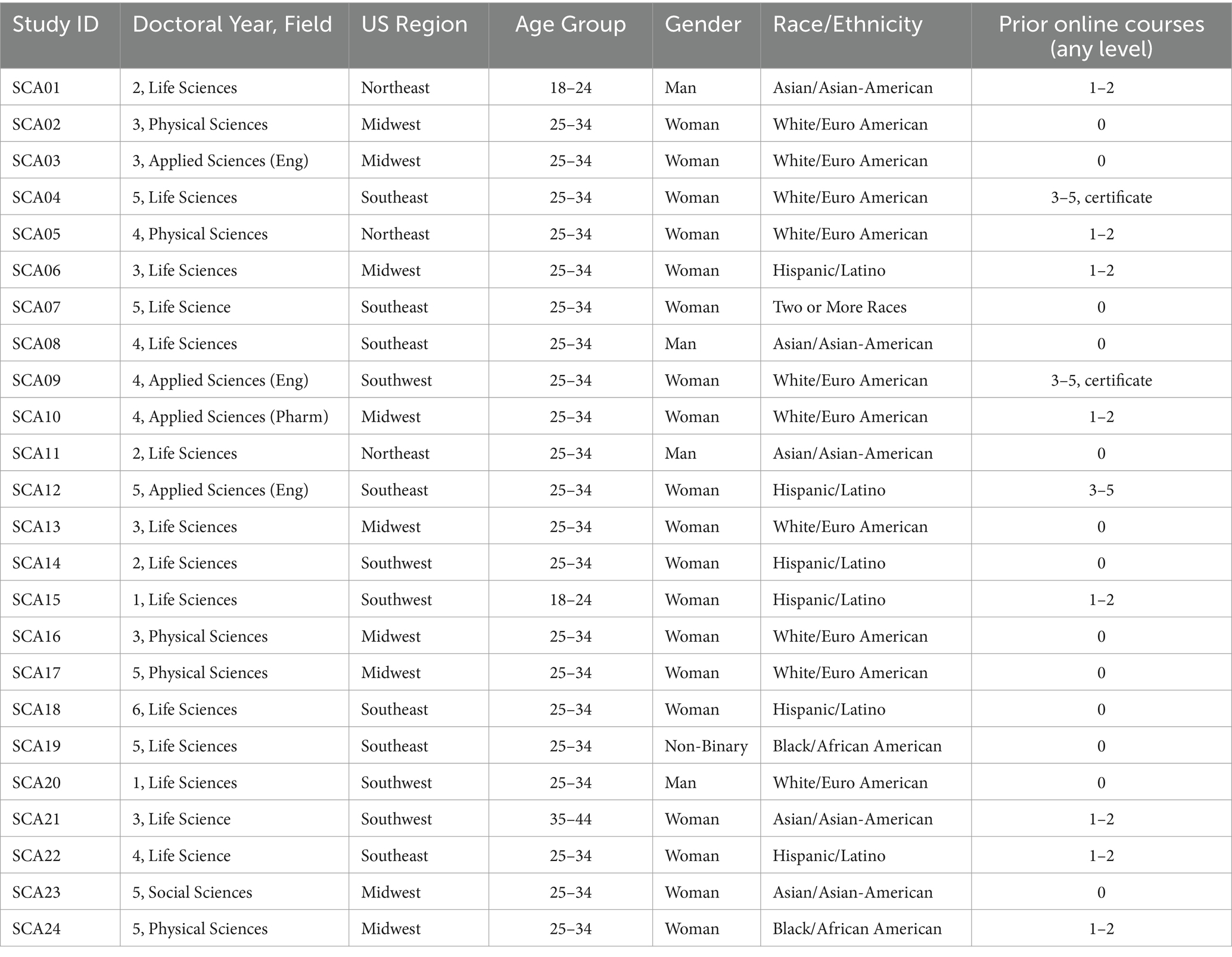

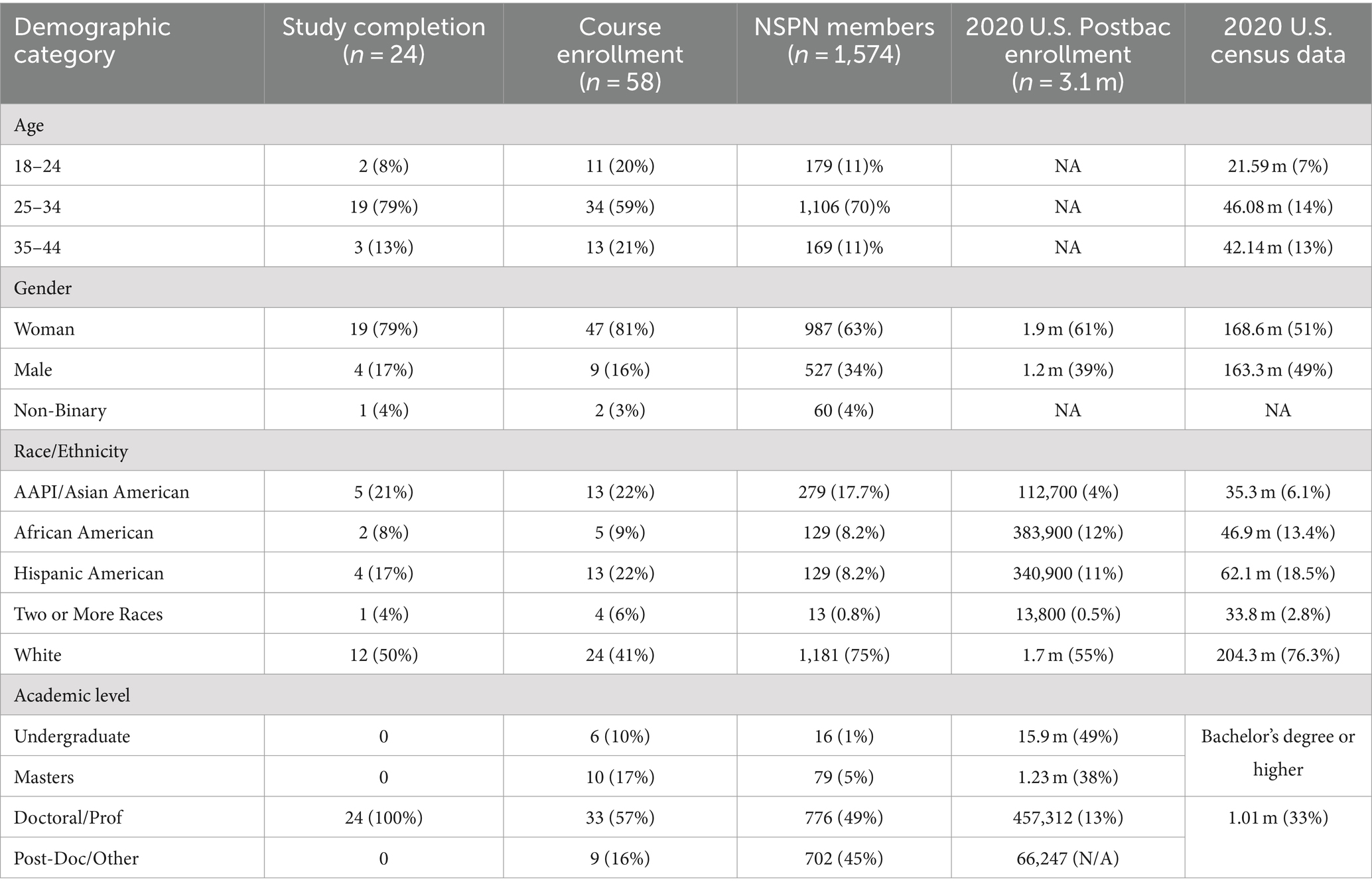

A summary of participant profiles appears in Table 2, and Table 3 shows how participant demographics compare to the learners in the course, NSPN members, national postbaccalaureate enrollment, and the United States population. Women and participants of color had higher representation in the study than other demographic groups. Race/ethnicity information was collected in this study to contextualize participants’ raciolinguistic experiences within the sociocultural framework of peer-based discourse about PL. The raciolinguistic perspective acknowledges that language is inherently related to race and vice versa (Rosa and Flores, 2017), and online environments can unmask covert racism (Eschmann, 2020).

No participants had formal preparation in science policy, the field that served as the intervention’s topic and source for interdisciplinary research articles, and thus, interdisciplinary science policy reading was new to all participants. Likewise, no participants had formal preparation in astrophysics, the field from which the pre-post reading assessments were drawn, and the course did not teach astrophysics content knowledge. Isolating CRPL in a new field was an intentional aspect of the study design for two reasons. First, reading in a new field neutralized differences in participants’ formal academic preparation and disciplinary background knowledge, which are inherent in the highly diverse membership of the National Science Policy Network (NSPN), an organization that attracts students in all fields. Second, reading in a new field reflects the interdisciplinary reality of working in United States science policy, even within broad science policy domains. For instance, students wanting to specialize in agricultural policy must be able to read, analyze, and interpret findings in fields as disparate as crop and soil sciences, climate change, infectious diseases, and social science.

Finally, participants were randomly assigned unique study numbers, ranging from SCA01 to SCA24, to protect their identities. Quotations in the remaining discussion reflect only this anonymous identifier. Citing participants by study number, while nontraditional, is not proscribed and allows for greater accuracy and transparency in reporting this rich and complex data set that would not be possible with traditional citation methods (Johnson and Onwuegbuzie, 2004; Shadish et al., 2002; Miles et al., 2013).

Data collection and analysis

Data collection included multiple quantitative and qualitative instruments. The quantitative instruments included a researcher-generated pre-post reading comprehension assessment (Bjorn, 2022), the 15-item Research Self-Efficacy (RSES) Conceptualization subscale with Cronbach’s alpha of 0.92 (Bieschke et al., 1996), the 10-item Reading Anxiety in College Students (RACS) scale with Cronbach’s alpha of 0.91 (Edwards et al., 2021), and software reports from Canvas LMS, Hypothes.is, and Google Suite. In addition, participant self-reports about ease, confidence, and understanding were measured using a five-point Likert scale, where one means never applicable and five means always applicable. Higher scores on this scale indicate participants’ perceptions of greater positive feelings.

The quantitative data were analyzed using descriptive statistics and matched-pair t-tests. Assumptions of normality were checked before running additional tests (Wagner, 2019). Data for the indicators of reading comprehension, research self-efficacy, and reading apprehension met the criteria for normality. Then, the author conducted one-tailed, matched-pair t-tests of pre-post group means for each of the above indicators. The sample size was too small to apply additional tests, such as ANOVA or correlation tests.
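To make the matched-pair comparison concrete, the following minimal Python sketch computes a paired t statistic from pre and post scores. The scores and the function name `paired_t` are hypothetical and chosen only for illustration; they are not the study data, and the sketch does not claim to reproduce the software used in the study.

```python
import math
import statistics


def paired_t(pre, post):
    """Return (t, df) for a matched-pair t-test on the post - pre differences."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    t = mean_diff / (sd_diff / math.sqrt(n))  # mean difference over its standard error
    return t, n - 1


# Hypothetical scores for five participants (NOT the study data).
pre_scores = [12, 15, 9, 14, 18]
post_scores = [19, 21, 17, 20, 22]

t_stat, df = paired_t(pre_scores, post_scores)
print(f"t({df}) = {t_stat:.2f}")
```

A one-tailed p-value would then come from the t distribution with the returned degrees of freedom; with SciPy available, `scipy.stats.ttest_rel(post_scores, pre_scores, alternative="greater")` is one way to obtain both values in a single call.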

The qualitative instruments were numerous. These included participants’ work products during the intervention, weekly written responses to reflective prompts about reading skills, instructor observations, and structured interviews. The qualitative data were analyzed using content and thematic analysis (Miles et al., 2013). Content coding and thematic analysis of the participants’ interviews were appropriate analysis methods to address qualitative research questions about participants’ lived experiences and understanding (Miles et al., 2013). Specific qualitative analysis techniques included inductive coding, pattern analysis, thematic analysis, frequency (i.e., counting), and proximity analysis (Armborst, 2017; Hsieh and Shannon, 2005; Jackson and Trochim, 2002; Miles et al., 2013).

The initial analysis occurred in three rounds: content coding, pattern analysis, and thematic analysis. The first analysis round included pattern analysis and thematic coding, highlighting patterns and themes within the same participant’s work over a few weeks and between participants. The second coding round was focused coding, aiming for code saturation and testing emerging ideas with disconfirming information (Rädiker and Kuckartz, 2020). The final coding round was theoretical coding, building on emergent concepts to clarify relationships between codes and themes, comparing findings with theory, and generating new hypotheses. Finally, frequency analysis of SCA patterns from the Hypothes.is data sets was visualized by Crowdlaaers (Denver, CO) using learning analytics and quantization (Kalir, 2020). Additional software support for qualitative analysis came from NVivo (QSR International Inc., Melbourne, Australia), which the researcher used to code all assets digitally.

Results

The online CRPL intervention with CERIC plus SCA produced rich results. The intervention improved participants’ pre-post test scores for reading comprehension as CRPL, research self-efficacy, and reading apprehension—an empowering combination of literacy skills. In addition, participants reported positive changes in their perceptions of reading comprehension and apprehension. Qualitative findings corroborated the quantitative pre-posttest findings and revealed nuanced experiences with the learning activities. Participants described numerous beneficial aspects of the intervention, including learning a categorical reading approach, the CERIC method, combined with SCA to interact with peers and discuss the primary literature in a low-stakes learning environment. In addition, the qualitative findings about SCA provided a very rich dataset sufficient to warrant a separate report about the effects of peer engagement (Bjorn, 2023).

Regarding participant demographics, all were doctoral students enrolled in United States postsecondary institutions, per the study design. The academic domains represented were life sciences (60%), physical sciences (22%), and applied sciences (18%). None had academic training in astronomy, the subject area of the pre-post assessments. Participants were based in 20 different U.S. states, representing a near-equal mixture of politically blue and red states. Participants were predominantly females (79%) ages 25–34 (79%). The study reflected patterns in NSPN membership of mostly females (63%) aged 25–34 (70%). BIPOC representation in the study (50%) was similar to that among the learners enrolled in the course (59%), and both the study and the course had more BIPOC representation than NSPN’s membership (25%) and U.S. national postbaccalaureate enrollment (45%). For instance, representation in the racial categories of Asian/Asian-American, Hispanic, and Two or More Races was higher in the study (21, 17, and 4%, respectively) than in NSPN membership (19, 8, and 1%, respectively) and U.S. national postbaccalaureate enrollment (4, 11, and 0.5%, respectively). By comparison, representation in the Black/African American category (8%) was comparable to NSPN membership (8%) and slightly lower than national postbaccalaureate enrollment (11%). Representation in the White racial category (50%) was much lower than in NSPN membership (75%) and somewhat lower than in national enrollment (55%). Thus, the participation rate of people who identify as BIPOC was a significant outcome for NSPN, even though this representation was not an aim of the study. Nonetheless, the result was meaningful to stakeholders because the NSPN organization actively seeks ways to increase diversity, equity, and inclusion in science policy activities.

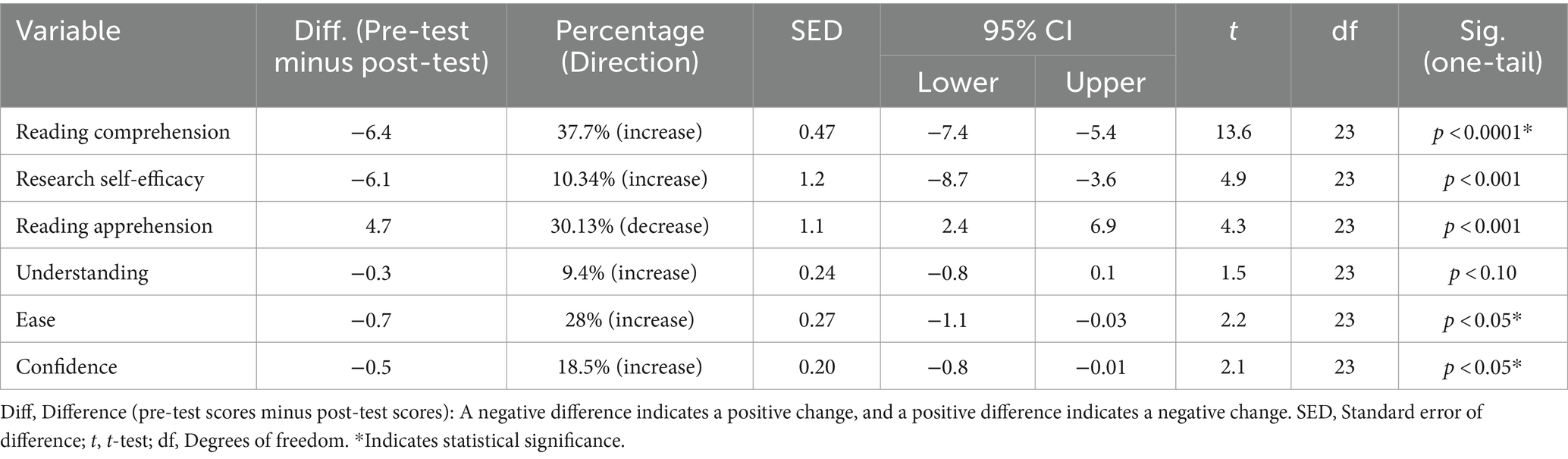

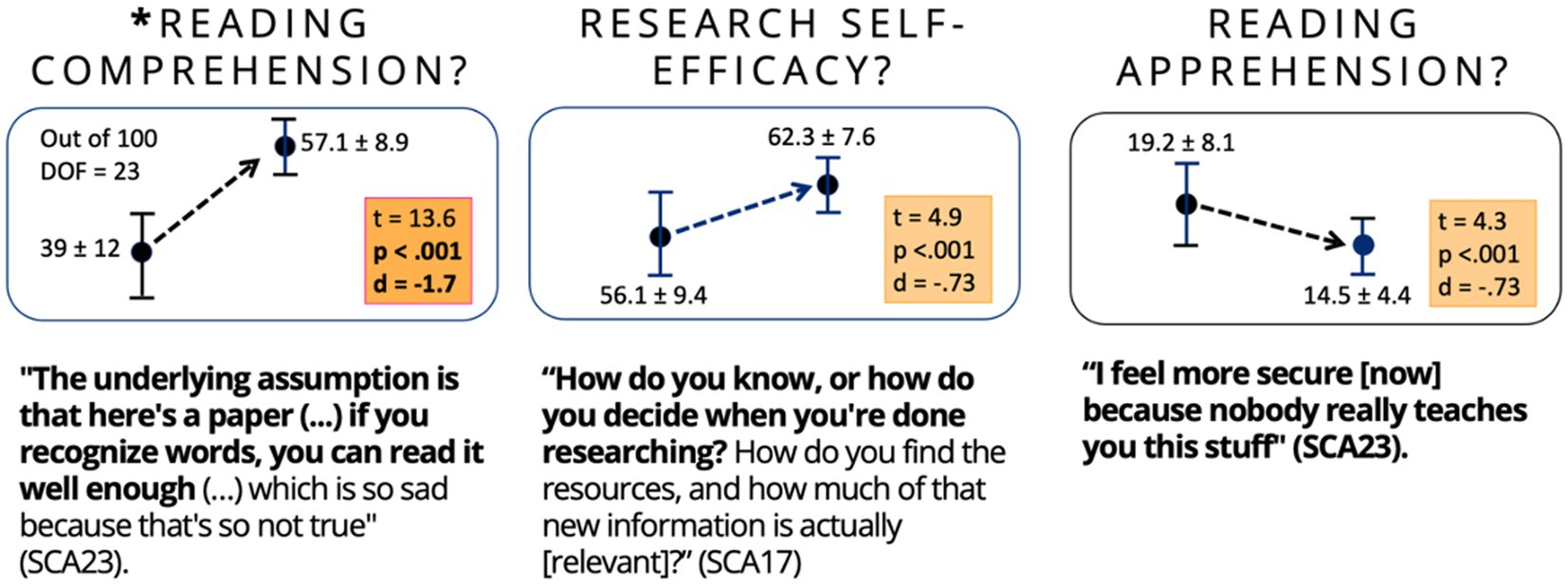

Finding 1: participation significantly improved reading comprehension as critical reading of primary literature

The quantitative analysis identified a statistically significant difference in the mean scores on the pre-post reading comprehension assessment, with raw pre-posttest scores shown in Table 4. The study power was sufficient to detect a difference in the pre-post-test means with a sample size of 24 for a matched-pair, one-tailed t-test, as shown in Table 5. The pre-test (M = 13.7, SD = 4.3) and post-test (M = 20.1, SD = 3.1) scores indicate that the intervention resulted in a statistically significant improvement in reading comprehension as CRPL outside the participants’ fields of study, t(23) = 13.6, p < 0.0001. By conventional criteria, the mean of the differences between the pre- and post-test reading comprehension scores (−6.4, p < 0.0001) is considered statistically significant with a large effect size, Cohen’s d = 1.7. The effect size adds a measure of practical significance to the reading comprehension finding. These findings are summarized visually in Figure 3. Thus, reading comprehension as CRPL was a significant area of improvement for participants in this study.
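The reported effect size can be approximately reproduced from the summary statistics above. The sketch below assumes that Cohen’s d was computed as the mean difference divided by the pooled standard deviation of the pre- and post-test scores (the square root of the average of the two variances). The text does not state which formula was used, so this choice is an assumption, and the function name `cohens_d_pooled` is illustrative.

```python
import math


def cohens_d_pooled(mean_pre, sd_pre, mean_post, sd_post):
    """Cohen's d using the pooled SD of two equally sized groups (an assumed formula)."""
    pooled_sd = math.sqrt((sd_pre ** 2 + sd_post ** 2) / 2)
    return (mean_post - mean_pre) / pooled_sd


# Summary statistics reported for the reading comprehension assessment.
d_reading = cohens_d_pooled(mean_pre=13.7, sd_pre=4.3, mean_post=20.1, sd_post=3.1)
print(round(d_reading, 2))
```

Under this assumption the result rounds to the reported value of 1.7. Other conventions for paired designs, such as dividing by the standard deviation of the difference scores, would give a different number.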

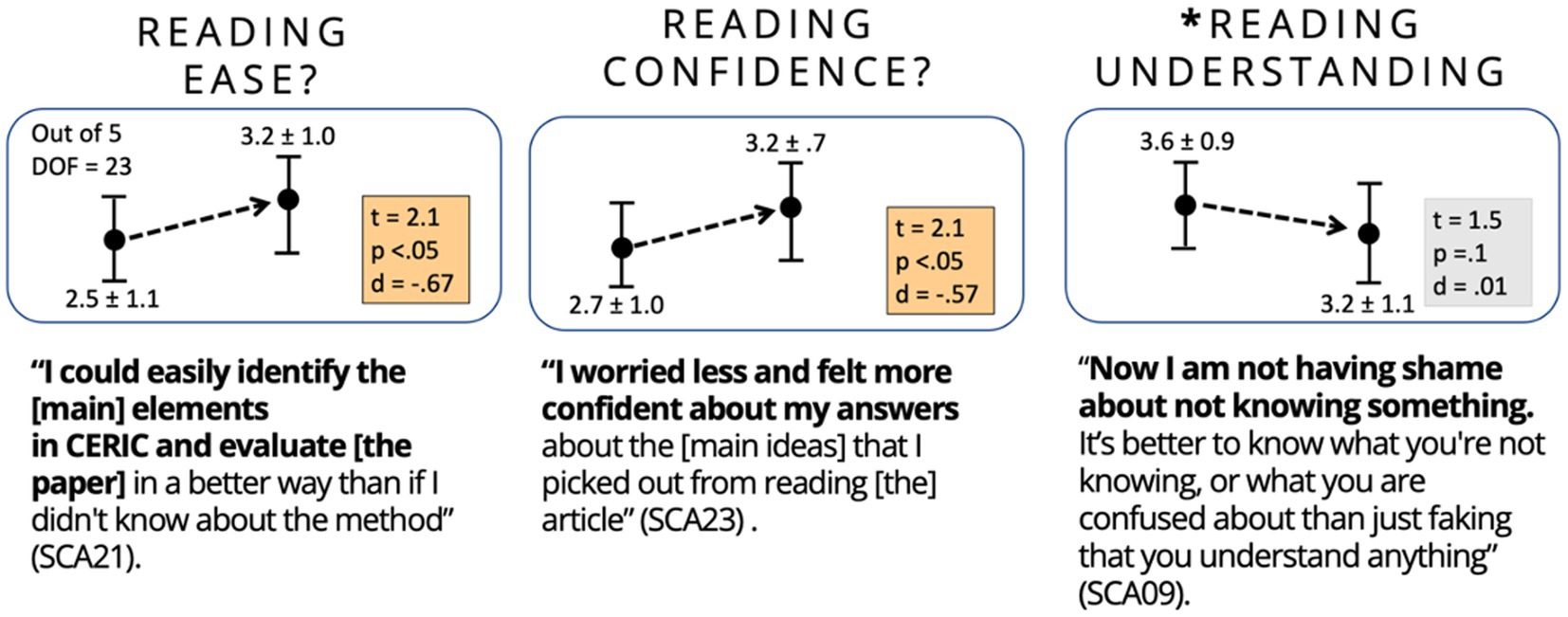

Figure 3. Summary of research findings: pre-post reading skills. Images depict pre-test and post-test results. *Reading comprehension was defined as critical reading, not content knowledge of astrophysics, which was the topic of assessment.

In addition, as shown in Table 5 and Figure 4, there was a statistically significant increase in participants’ self-reported feelings of ease with reading outside their field (M = 3.2, SD = 1) compared to before the intervention (M = 2.5, SD = 1.1), t(23) = 2.2, p < 0.005. Participants’ confidence in the correctness of their responses (M = 3.2, SD = 0.7) also showed a statistically significant increase compared to baseline (M = 2.7, SD = 1), t(23) = 2.1, p < 0.02. However, there was no statistically significant finding for self-reported feelings of understanding. These self-reported findings about ease, confidence, and understanding (Table 5; Figure 4) make sense in the study design context because the pre-post passages came from astrophysics, a field new to all participants, and astrophysics content was not addressed in any way during the intervention. Instead, the participants were taught a method for reading critically in a new interdisciplinary field of science policy using CERIC plus SCA, which improved their sense of ease and confidence without building any specific field-based knowledge.

Figure 4. Summary of research findings: pre-post self-reported perceptions. Images depict pre-test and post-test results. *Astrophysics was the topic of the critical reading assessment, which represented a field in which no participant had any formal academic training.

The qualitative data showed convergent results, reflecting emergent themes of reading comprehension, reading practices, and peer engagement. Participants expressed the idea that their reading comprehension as CRPL improved by learning a strategic reading method (i.e., CERIC). One participant explained her experience:

I feel very adept at reading papers in my own field, and I never consciously applied a formal method to it. I do a lot of skimming. So, it was interesting to learn about a more systematic way of [reading]. And I found this especially helpful when I was reading papers outside of my field of expertise. I could easily identify the [main] elements in CERIC and evaluate [the paper] in a better way than if I didn't know about the method (SCA21).

This student’s experience illustrates how learning a strategic reading method (i.e., CERIC) helped her read and understand CRPL in science policy research outside her graduate field of study in life science. Reading in multiple and interdisciplinary fields is an essential skill set for people interested in careers in public policy, which was her goal. She also described the learning process as interesting because she had “never learned a formal reading method [for primary literature]” (SCA21). Another participant echoed this practice of skimming, “I normally skim the paper first, to try to see the more relevant things related to my research topic, and then, when it is relevant, then I read the full paper from start to the end. It takes me a lot of time, which is why I’m always behind” (SCA12). One benefit of strategic reading is that it “allowed for deeper comprehension and discussion” (SCA13). Thus, many participants reported that they stopped skimming when they were trying harder to understand a paper.

A key to improved comprehension of PL appears to be less about speed and more about “focus and embedding analysis in the reading process” (SCA10). For instance, a participant in psychology explained:

In my field, [papers] always have a leading argument for each section and then some evidence. We talk about it and analyze, How is this evidence tied to the leading argument? I feel like the flow of [analyzing] the paper while reading it with CERIC [in this course] was very much the same (SCA23).

Participants who previously practiced strategic reading still benefitted from explicit instruction and practice in evaluating the main argument (Lie et al., 2016; McMinn et al., 2009).

Nonetheless, one participant experienced no change in her reading comprehension during the intervention. Instead, she felt that she already had an effective method and thought that the activities helped her deconstruct papers in a way that could make her a better teacher by making CRPL more accessible to her students. She explained:

When mentoring and teaching undergraduate students how to present a poster, I used the CERIC method to explain the logical flow between ideas and data. I noticed the students took to the concept easily, and it helped them a lot. I think this could be an effective monitoring tool for switching to new fields and catching up and for teaching junior students (SCA10).

Switching to new fields, mentoring, and teaching are some of the applications this participant identified as key uses of the approach.

Finally, three participants experienced a slight decrease in their reading comprehension during the intervention (i.e., −1, −2, and −2 raw points from pre- to posttest; Table 4). Two of these participants expressed feeling “totally overwhelmed by so much new information” (SCA09, SCA23) and “like I was thrown into a deep end of learning SCA and CERIC at the same time” (SCA23). They expressed a need for more time to process and learn each element separately and would prefer an entirely self-paced course. The third felt that she had difficulty focusing during the intervention because of so many outside demands, including getting sick with COVID-19 (SCA06). These findings highlight some participants’ needs for a longer study duration and differentiated approaches.

The qualitative findings centered on a theme connecting CERIC and SCA to improved perceptions of reading comprehension. Several patterns explained this connection, including seeing other people’s ideas, improved focus, and engagement in critical thinking. One participant succinctly summarized this experience as “annotating the reading materials helped me to process what I was reading and improved my comprehension” (SCA14). Only two participants reported no change in their self-perceptions of reading comprehension.

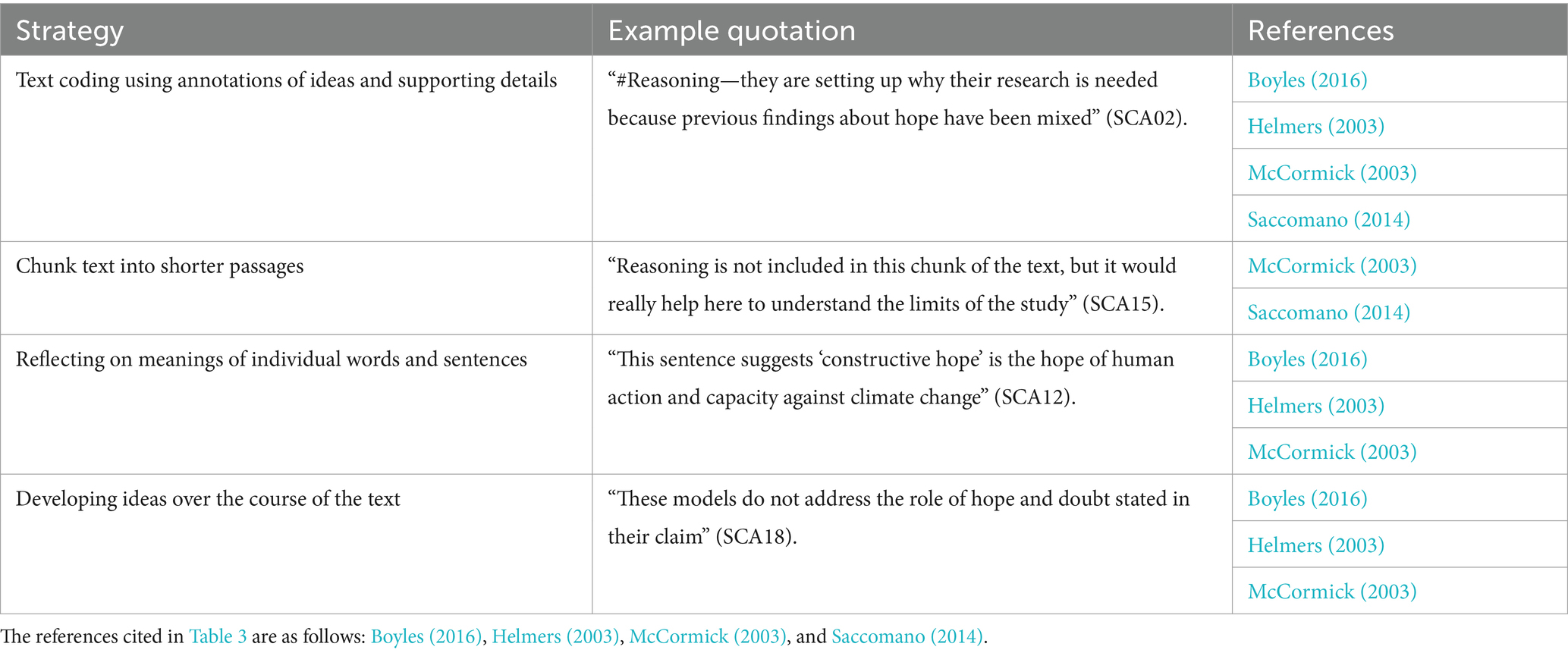

While the intervention offered formal instruction in a structured reading method, participants had openness and flexibility to use their preferred reading strategies and techniques. Participants engaged in structured and close reading methods, making 2–25 annotations per session. Further, frequency analysis of coded reading strategies appearing in annotations showed that participants most often used the CR strategies of determining importance (78%), summarizing (39%), asking questions (35%), making connections (34%), making inferences (22%), paraphrasing (13%), and synthesizing information (6%). Thus, the reading method, strategies, and frequency of annotations reflected a mix of formal instruction, prior knowledge, participant agency, and engagement with the activities and text.
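The frequency analysis of coded strategies can be illustrated with a short counting sketch. Because the reported percentages sum to more than 100%, the sketch assumes that a single annotation may carry several strategy codes and that each share is the percentage of annotations carrying a given code. The unit of analysis, the function name `strategy_shares`, and the sample data are assumptions for illustration only; the study coded its data in NVivo, and these are not the study data.

```python
from collections import Counter


def strategy_shares(coded_annotations):
    """Percent of annotations carrying each strategy code (codes may overlap)."""
    total = len(coded_annotations)
    if total == 0:
        return {}
    # Count each code at most once per annotation.
    counts = Counter(code for codes in coded_annotations for code in set(codes))
    return {code: 100 * n / total for code, n in counts.most_common()}


# Hypothetical coded annotations (NOT the study data).
annotations = [
    {"determining importance", "summarizing"},
    {"determining importance"},
    {"asking questions"},
    {"determining importance", "making connections"},
]

shares = strategy_shares(annotations)
for code, pct in shares.items():
    print(f"{code}: {pct:.0f}%")
```

Counting overlapping codes this way explains how one strategy can appear in most annotations while several others still account for a third or more each.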

The qualitative data further expound that all participants engaged in substantive annotations reflecting active usage of reading comprehension strategies (i.e., plans to achieve goals, such as reading with a purpose) and methods (i.e., ways to achieve goals, such as reading for CERIC information and using SCA to check for understanding). Strategic reading, close reading, and critical thinking and focus emerged as major activities supporting improved reading comprehension as CRPL.

Strategic reading

Participants used structured annotations to determine the importance of information. The researcher prepped the articles in advance with categorical reading prompts integrative to primary research literature. The prompts included instructions to identify types of information, tag them, and then determine their importance. Tags served as a shorthand for determining that the information is essential to an overall understanding of the text. In one example, a participant identified important information by tagging critical passages in the text, such as “for many people, reasons to be hopeful that we can address climate change are not obvious,” to which the participant added a tag, “#claim” (SCA01). In a second example, this participant again tagged essential information, “in study 1, responses to open-ended questions reveal a lack of hope among the public,” to which the participant added a tag, “#evidence” (SCA01). This participant tagged various types of critical information to mark them as important.

In another pattern, participants used tags to identify important information. Then they added short summaries or paraphrases to determine importance, thereby layering two reading comprehension strategies into a single annotation. For instance, the text read, “Americans, by and large, are not hearing about these efforts,” to which the participant added a tag and paraphrased, “[tag] #claim – Americans, in general, are not receiving the full impact of efforts to improve the negative impacts of climate change” (SCA24). This participant tagged and summarized. In another instance, a participant annotated the section of the text, “most common reason relates to social phenomena—seeing others and believing that collective awareness is rising (constructive hope),” to which the participant added, “#reasoning – constructive hope = sense of everyone collectively increasing their awareness” (SCA06). This participant tagged and paraphrased. As participants followed embedded prompts, many engaged in strategic reading focused on gathering information, tagging, determining its importance, and paraphrasing (SCA01, SCA06, SCA07, SCA10, SCA12, SCA13, SCA18, SCA21, and SCA23).

Moreover, several participants described using prompts and related them to their perceptions of reading abilities in a new field (SCA01, SCA04, SCA09, SCA14, SCA17, SCA21, and SCA24). A participant described embedded prompts as “prompts within the small group ask[ing] about information, like general critical thinking, and [I] could trace it [the paper’s argument] with more confidence” (SCA17). Another participant explained, “I liked having the [prompts] as like a bar for a general expectation to meet [with annotations]” (SCA09). The participants felt that the prompts focused their reading and thinking, which improved their understanding and confidence.

Some participants suggested that the prompts could be developed further. Prompts could encourage people to “do more [idea] synthesis” (SCA21), “name and discuss doubts [about the paper]” (SCA15) or develop more group social interaction by prompting the group to “do a summary together, and then you have to submit those summaries” (SCA18). These participants suggested how prompts could be developed further to include additional uses beyond the scope of this study.

However, some participants found the prompts confusing or in need of improvement. A participant expressed initial confusion over the prompts:

At first, [I] was struggling to parse out the different prompts and what they mean (i.e., what is a claim as compared to evidence or implication?). This is new information/tools I haven't encountered before (SCA24).

However, interaction with group members helped her “improve my confidence in [understanding] the prompts” (SCA24).

Participants also described how prompts could be improved. One participant elaborated:

I think more SCA prompts would be good because the goal is to synthesize the information that you got from this [paper]. And then a couple of general prompts are okay, as in you have this information. Here are some questions that you need to ask about information, which is just general critical thinking. But [prompts] specific to the [shared] paper should have more depth like, how did you know this was happening? And how could we trace it back and feel like really secure about the information? And then how did you know this was happening? (SCA17).

A mix of general and deeper prompts would allow for more practice opportunities to overcome initial confusion and could be formulated to prompt various critical reading and thinking strategies.

Nonetheless, prompting strategic reading practices moved participants beyond aimless skimming or minimalist agreement/disagreement and supported improved reading comprehension as CRPL. These findings support the notion that prompts embedded within the annotation space are pedagogically useful for improving CR and confidence.

Close reading

Many participants also engaged in line-by-line close reading and produced annotations reflecting a complex mix of reading comprehension strategies (SCA02, SCA03, SCA04, SCA05, SCA08, SCA09, SCA10, SCA11, SCA12, SCA16, SCA17, SCA18, SCA20, SCA21, and SCA22). Close reading, as defined by the Partnership for Assessment of Readiness for College and Careers (PARCC) (2011, p. 7), is a form of analytic reading that “stresses engaging with a text of sufficient complexity directly and examining meaning thoroughly and methodically, encouraging students to read and reread deliberately.” Close reading also enables readers to reflect on many levels, including individual words and sentences, paragraphs, and developing ideas and arguments about the text (Shanahan and Shanahan, 2008). The practice of close reading allows learners to arrive at a deeper, more holistic understanding of the text. The goal of close reading is for readers to reflect on, monitor, and assess their thinking in the context of processing the thoughts of others (Elder and Paul, 2009), which in doctoral education are expressed through PL. At the graduate level and beyond, close reading strategies vary by discipline; for example, expert readers in chemistry and history focused their re-reading only on the information identified previously as important (Shanahan et al., 2011).

Table 6 shows common close reading strategies paired with examples of participant quotations. To illustrate the theme of close reading, several example annotation sets reflected close reading of the text using multiple reading comprehension strategies (SCA03, SCA09). In the first example, the participant determined that context was important by tagging “#context” and then paraphrased a primary point of the paper’s context as a “little body of previous research examining hope and doubt” (SCA03). She also annotated the rationale for the study as “further exploration is needed.” Next, the participant summarized an essential point of the experimental model as “one direction causation” and added her thinking about the strength of that implication as “a weak implication to me (…) [because] of limited evidence in the cross-sectional data.” Finally, the participant made connections between sections of the text, specifically evidence and implications, and then explained, “I think this is an exaggerated implication because 23% of respondents said they were not hopeful or do not know what makes them hopeful, meaning that 77% did have things that make them hopeful” (SCA03). The participant concluded that the authors exaggerated the implication compared to the evidence presented.

In another example of close reading using multiple strategies, a participant combined several reading strategies when making annotations related to a passage in the text. In the first annotation of the set, she connected with prior knowledge of statistics by noting, “I would love to know the R^2 value on that line,” and summarized the data as “all over the place” (SCA09). Next, she made an inference about what would strengthen the argument, “I feel this figure would fit their argument better if they were saying ‘there is a trend.’” Then she asked a question about the strength of the argument expressed as, “maybe I should go back a re-read (…) [because] I feel that they are making a stronger connection (…) which I do not know that this data actually support.” In the second annotation of the set, the participant paraphrased and made a connection between study 1 and study 2 as “another direct purpose statement [that] restates the purpose of study 1 for added background and reinforcement of initial idea and connection to [study 2].” Finally, in the third annotation set, the participant embedded a summary “although 42% saying they have hope human will act in some way” and then paraphrased “11% said they thought nature divine intervention was possible” into a broader question “one in 10 people think God is going to solve this?” These examples show how participants layered multiple close reading strategies to improve their reading comprehension as CRPL.

Critical thinking and focus

Another major pattern emerged whereby CERIC plus SCA helped participants focus and improve critical thinking, which led to a better understanding of the texts. CERIC supported strategic reading while SCA supported critical thinking through several processes, including checking for understanding (SCA12), identifying key points (SCA18, SCA04, SCA16), finding pitfalls and breaks in logic (SCA18, SCA04), making connections (SCA04, SCA10, SCA13), and summarizing conclusions (SCA04, SCA13, SCA16). A participant explained how SCA worked for her:

When first reading a paper in a new field, it's easy to accept the claims as probably valid since you don't have the background knowledge to be super critical of their methods yet. The course activities [CERIC plus SCA] reminded me to always be critical and take the time to learn the background knowledge necessary to properly critique a paper. While annotating isn't necessary to understand reading, SCA definitely helped me to learn the key points and make connections (SCA04).

Another participant also elaborated on the dynamic relationship between SCA, critical thinking, and checking thinking:

Annotating a reading helps provide labels to certain pieces of information for a reader. Understanding a reading means that a reader needs to connect the annotations and be able to explain critically why the authors did what they did. CERIC helps with that. SCA adds a way to check your thinking (SCA10).

Annotations became markers for strategic information, and SCA was a way to check the thinking.

CERIC plus SCA also helped participants focus, leading to an improved understanding of the research articles. Before the intervention, most participants reported skimming research papers and reading the abstract, a finding supported by the scholarship on doctoral reading approaches (McMinn et al., 2009). Annotation disrupts skimming “because it slows me down and helps me to fully grasp what the authors are trying to claim” (SCA19). The annotations also “require you to focus in on the specific details you might miss if you just skim over it” (SCA10). Better focus is “very helpful for improving your understanding of a paper” (SCA10). For instance, a participant explained her experience with reading papers outside of her field of study:

I have an internship in tech transfer where I'm faced with technologies outside my field. I have noticed that, with CERIC and SCA, I can understand these technologies more quickly than before. Annotation also helps me focus on the methods and rationales that other fields use, rather than what I used to do with these papers, which was to skim around aimlessly. They have made reading primary literature easier and more efficient (SCA24).

This situation of needing to read well in a field outside one’s primary area of study was an experience common to all participants by design and is a critical skill for interdisciplinary collaborations and transitioning to new fields, such as policy research. Monitoring reading skills in a new field is complex and challenging. CERIC plus SCA helped participants to focus, quickly grasp the central points of a paper, check their thinking, and make connections with other readings (SCA01, SCA10, SCA19, and SCA24).

In summary, participants annotated using one or more reading comprehension strategies that reflected either a strategic or close reading method (or both) and showed improved critical thinking and focus. The findings highlight the need for multiple and differentiated approaches. Participants reported an improved self-perception of their reading abilities in a new field that corresponded with statistically significant increases in reading comprehension, confidence, and ease. In an exit survey of participants about what they gained from the intervention, all reported improved reading skills, a better understanding of interdisciplinary science policy research, and skills to build a resume; most (19) reported enhanced research analysis skills and benefits from meeting like-minded people; and about half (11) reported an improved sense of confidence and competence. These findings aligned with what participants hoped to gain when surveyed at the beginning of the intervention and suggest that the study’s activities supported participants’ hopes and goals.

Finally, participants responded that annotation is a marker for understanding and does not replace deeper reading. For instance:

It's important not to be caught up in only annotating because when annotating, I tend to just hunt for sentences and mark them up. I don't fully process what I'm reading. It's very important to then go back and actually read the content of what you annotated, using the annotations as markers for understanding (SCA21).

Thus, annotation did not replace deeper reading and provided markers for understanding that the annotator must still process.

Finding 2: participation significantly improved research self-efficacy

The quantitative analysis found a statistically significant difference in the mean scores on the pre-post research self-efficacy assessment, as shown in Table 5 and Figure 3. Bieschke et al. (1996, p. 60) defined research self-efficacy as “the degree to which an individual believes she or he [can] complete various research tasks.” The 15-question assessment used a five-point Likert scale, where one meant never applicable, and five meant always applicable (Bieschke et al., 1996). Higher scores on this scale indicate greater research self-efficacy. A dependent-sample t-test tested whether participation in the intervention improved research self-efficacy scores. The pre- (M = 56.1, SD = 9.4) and post- (M = 62.3, SD = 7.6) scores indicate that the intervention resulted in a statistically significant increase in research self-efficacy, t(23) = 4.9, p < 0.0001, with a medium effect size, Cohen’s d = 0.72. Thus, research self-efficacy represents a significant area of improvement for participants in this study.
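As a rough check on the reported effect size, the sketch below computes Cohen's d from the summary statistics given above. The article does not state which variant of Cohen's d was used, so the pooled-standard-deviation form here is an assumption, and the function name `cohens_d_pooled` is hypothetical. Because the inputs are rounded means and SDs, the result can only approximate the reported value.

```python
import math


def cohens_d_pooled(mean_pre: float, sd_pre: float,
                    mean_post: float, sd_post: float) -> float:
    """Cohen's d using the root-mean-square of the two SDs (equal group sizes).

    Assumption: the article does not say which d variant it used; this is
    one common choice, not necessarily the author's calculation.
    """
    pooled_sd = math.sqrt((sd_pre ** 2 + sd_post ** 2) / 2)
    return (mean_post - mean_pre) / pooled_sd


# Rounded summary statistics reported for research self-efficacy.
d = cohens_d_pooled(mean_pre=56.1, sd_pre=9.4, mean_post=62.3, sd_post=7.6)
print(f"Cohen's d is approximately {d:.2f}")
```

Under this assumption, the value lands within rounding distance of the reported d = 0.72, which falls in the medium range by Cohen's conventional benchmarks.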

The qualitative data showed convergent results. Participants reported that learning a strategic reading method (i.e., CERIC) improved their research self-efficacy and that seeing others’ ideas through SCA helped them know when they had enough information. One participant explained the dilemma of not knowing when to quit:

How do you know, or how do you decide when you're done researching? How do you find the resources, and how much of that new information is actually [relevant]? A lot of times while finding your own original research, [it’s] hard knowing what sources to trust. All of that [in the course] was completely new to me (SCA17).

Knowing when to quit and what sources to trust are key aspects of research self-efficacy. Another participant shared her experience with this dilemma:

When I started doing a Ph.D., I felt really lost in reading papers. I didn’t know what was important or when to quit. I got much better over time by read[ing] a lot, and I wrote reviews, synthesizing literature, which forced me to read even more. But I would have struggled a lot less at the start of [my] research career if I had more training [in] strategic reading and [annotation] (SCA21).

This experience of not knowing what is important or when to stop reading for research is consistent with Bieschke et al. (1996), who found that a doctoral student’s number of years in graduate school (p < 0.05) and involvement in research activities (p < 0.01) contributed significantly to the prediction of research self-efficacy.

However, it is possible to go too far. Another participant described how she balanced reading and research:

At this point, I'm very overdoing research. I need a break. So, now I’m like, what’s the main takeaway of this paper? And then, basically, I look through figures and anything that describes the figures and then move on. I think the optimal time to learn a systematic reading method like CERIC was probably when I was first starting to read papers in undergrad, but also at the start of grad school. I think it would have been helpful then because now I already have my shortcuts (SCA04).

This participant described a shortcut, looking at a paper’s figures to decide what is important and when to stop, that she adopted because she felt she was overdoing research and needed a break. She was beginning to write her dissertation, and reading only the figures may have been a way to cope with a heavy workload.

Finally, it was challenging for participants to know what they did not know about a new field. The places where participants did not mark up the shared text generated insight that informed instruction. For instance, when participants avoided annotating the section in the intervention text about using Cronbach’s alpha values to validate constructs, the absence of any annotation suggested a need for support, explicit instruction, or other strategies to process the information. In response to these data, the author added information about Cronbach’s alpha to the weekly discussion about social science methods. Thus, SCA was also a method for improving the intervention in real time.

Finding 3: participation significantly reduced reading apprehension

The quantitative analysis found a significant difference in the mean scores on the pre-post reading apprehension assessment, as shown in Table 5 and Figure 3. The assessment used a five-point Likert scale, where one meant never applicable, and five meant always applicable (Edwards et al., 2021). Higher scores on this scale indicate greater reading apprehension, and no questions required reverse coding. However, reading apprehension is an inverse indicator, meaning a lower score was the intervention’s goal. A dependent-sample t-test tested whether participation in the intervention reduced reading apprehension scores. The pre- (M = 19.2, SD = 8.1) and post- (M = 14.5, SD = 4.4) scores indicate that the intervention resulted in a statistically significant decrease in reading apprehension of PL outside the participants’ fields of study, t(23) = 4.3, p < 0.0001, with a medium effect size, Cohen’s d = 0.73. Thus, reading apprehension represents a significant area of improvement for participants in this study.
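For readers unfamiliar with the dependent-sample t-test named above, the following minimal sketch shows how the statistic is formed from paired pre-post scores. The function name `paired_t` and the toy scores are hypothetical illustrations, not the study's data or analysis code, and the sketch assumes differences are taken as pre minus post so that a decrease gives a positive t.

```python
import math
import statistics


def paired_t(before: list[float], after: list[float]) -> tuple[float, int]:
    """Return (t statistic, degrees of freedom) for a dependent-sample t-test.

    Differences are taken as before - after, so a decrease yields a positive t.
    """
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for b, a in zip(before, after)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    return t_stat, n - 1


# HYPOTHETICAL toy scores for five readers; NOT data from this study.
toy_pre = [20, 18, 25, 15, 22]
toy_post = [15, 16, 19, 13, 17]
t_stat, df = paired_t(toy_pre, toy_post)
print(f"t({df}) = {t_stat:.2f}")
```

With 24 participants, the same calculation has 23 degrees of freedom, which is the source of the t(23) notation in the text.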

The qualitative data showed convergent results. Participants reported that their reading apprehension (i.e., anxiety) about PL decreased through participating in the course activities, including learning a strategic reading method (CERIC) and seeing other people’s ideas (SCA). One participant explained her experience:

I was really getting anxious about [reading] a scientific paper [outside my field]. I was like, oh my God, I don't understand anything. So, CERIC was really enlightening because it was a different way to look for the important information in a paper. Social science papers are completely different from molecular biology or cell biology, which I am really used to. So, I learned how I can start seeing a paper and to get more reflective about the process. Also, the [social] annotations were really helpful. You can first annotate what you don't understand and then compare it to others. Now, I am not having shame about not knowing something. It’s better to know what you're not knowing or what you are confused about than just faking that you understand anything (SCA09).

This participant described how she experienced confusion, shame, and anxiety about not understanding a scientific paper outside her doctoral field. She also mentioned the act of faking understanding in the presence of confusion. She explained how participating in the course activities (i.e., CERIC and SCA) helped her become aware of what she did not know and develop a process for gaining an understanding that included a structured approach and checking her ideas with other people in the course.

Another participant elaborated on how the experience of explicit reading instruction had an impact on her deeper feelings of security:

I feel more secure having those skills because nobody really teaches you this stuff. I guess the underlying assumption is that here's a paper, go read it, and if you recognize words, you can read it well enough. Yeah, I think that has always been the assumption in undergrad training and graduate training, which is so sad, because that's so not true. I wish I would have learned this method when I first had to read primary lit as an undergrad. I would have struggled much less and probably felt like less of a fraud in grad school (SCA23).

This participant expressed how being taught reading methods for the PL earlier in her higher education career would have increased feelings of security, reduced struggle, and reduced feelings associated with the imposter phenomenon. Worry and apprehension are emotions with negative connotations.

Discussion

This intervention provides an empirically validated model for improving reading comprehension as CRPL. This study examined the effectiveness of a CRPL reading intervention among 24 doctoral student participants in various fields motivated to read research in a new field (i.e., interdisciplinary science policy research). The intervention was a 4-week online course that included explicit instruction in a strategic reading method (i.e., CERIC) with SCA practice. The study compared the participants’ pre- and post-test mean scores in reading comprehension as CRPL, research self-efficacy, and reading apprehension. Analysis of the pre-post tests, additional work products, surveys, and interviews with nine participants showed that participants significantly improved in the measured indicators, with large and medium effect sizes. The qualitative analysis corroborated and explained these findings. In addition, a major qualitative finding was that scientific reading is inadequately taught in GE, and doctoral students need and benefit from explicit instruction in CRPL.

Broadly, these findings connect with a central tenet of sociocultural theory that we learn from others (Brown and Renshaw, 2000). Social interaction is essential for human learning, and discourse is a primary way to construct knowledge at the doctoral level. SCA offers social interaction and a flexible forum for discourse about research literature, which helped participants understand the primary literature better.

The finding on reading comprehension as CRPL aligns with scholarship showing that, without instruction, students will skim a paper’s major sections, such as the abstract, results, and discussion. McMinn et al. (2009) found that doctoral students later in their years of study (i.e., years 3 and 4) tend to read less thoroughly, skim more, and leave more assignments unread than first-year doctoral students. The CERIC method (first described by Bjorn et al., 2022) disrupted skimming by providing an explicit strategic reading method rooted in cognitive approaches to learning, namely categorical reading supported with advance organizers. Both strategies shift reading from passive to active.

In addition, scholarship on SCA shows increased critical reflection among groups of learners using it (Kalir and Garcia, 2021; Yang, 2009). Critical reflection and critical thinking are often used interchangeably in the literature, where critical reflection is necessary for critical thinking. Critical reflection is active, persistent, and careful consideration of a belief or supposed form of knowledge, the grounds that support that knowledge, and the further conclusions to which that knowledge leads (Dewey, 1933). Learners monitor what they know and need to know and how they bridge that gap during learning situations. CERIC helped learners focus, and SCA provided low-stakes peer feedback essential for monitoring.

The finding on research self-efficacy connects to the literature on self-efficacy through a set of critical variables affecting doctoral students’ beliefs in their ability to conduct research-related activities, also known as research self-efficacy. Bieschke et al. (1996) identified a doctoral student’s number of years in graduate school (p < 0.05) and involvement in research activities (p < 0.01) as contributing significantly to the prediction of research self-efficacy. In addition, prior research warns that about 40–60% of doctoral candidates complete all degree requirements except for the written dissertation (Kelley and Salisbury-Glennon, 2016).

The finding on reading apprehension aligns with research on reading apprehension, whereby a situational fear of reading can have physical and cognitive ramifications (Jalongo and Hirsh, 2010) when an initially neutral stimulus (e.g., reading) pairs repeatedly with a negative unconditioned stimulus (e.g., teacher judgment, peer ridicule). Because of this pairing, the learner—even at the doctoral level—can form an association between reading and negative emotions, such as shame and anxiety. In addition, this finding connects with scholarship on self-concept, whereby reading anxiety correlates with other essential reading-related measures, such as reading self-concept (r = −0.58; Katzir et al., 2018). Reading self-concept is a person’s perception of their ability to adequately complete reading tasks (Conradi et al., 2014) and relates to self-efficacy (Bandura, 1986). Low confidence or worry, rather than anxiety, reflects a more optimistic evaluation of one’s knowledge and capability to deal with environmental demands.

The findings imply an opportunity to improve doctoral education by integrating CRPL interventions into doctoral programs, graduate education, and undergraduate education whenever students encounter primary literature. Improved reading comprehension and research self-efficacy support practices necessary for degree progress, while reading anxiety can impede skill development necessary for degree progress. Participants suggested many applications of CERIC and SCA, including formal instruction (i.e., integration with coursework and literature reviews) and informal guidance (i.e., integration with reading clubs and professional development seminars). In summary, doctoral students need explicit reading instruction in the PL, and this intervention serves as an evidence-based model for improving reading comprehension of PL. Further, this study’s findings suggest many possible implementations of this intervention that could improve doctoral education.

Finally, a limitation of this study was its use of convenience sampling, where only people within the sponsoring organization’s membership network were invited to participate (Creswell and Plano Clark, 2018). However, NSPN’s members reflect a national network of individuals representing a diverse range of higher education institutions (e.g., 2-year and 4-year colleges and R1 through R3 universities), which helps offset that concern. In addition, recruitment efforts were channeled through exosystem-level networks of research professionals across the United States (Bronfenbrenner, 1979) in various fields and at all academic levels.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by Johns Hopkins University Homewood IRB (HIRB). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

GB: Software, Writing – review & editing, Writing – original draft, Visualization, Validation, Project administration, Methodology, Investigation, Formal analysis, Data curation, Conceptualization.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments