- IOE (Faculty of Education and Society), University College London, London, United Kingdom

Educators and educational researchers show continued interest in how schools can best use research evidence to bring about change in practice. A number of models have been developed to support schools in this challenge, such as research learning communities and lesson study. However, questions remain about the effectiveness of such models, their fit to the particular needs of schools and the extent to which they contribute meaningfully to the body of evidence used to inform changes to practice within the field of education. This issue is of particular relevance to the inclusion of autistic children in the classroom. This is partly because of the large body of research being undertaken on autism across a range of domains with varying epistemological perspectives (e.g., neuroscience, psychology, pedagogy), and partly because of the widespread need to support autistic children in the classroom. Questions have also been raised about the evidence policy “agenda,” particularly its reliance on positivist models centered on randomized controlled trials. These concerns focus on the extent to which performative or neoliberal perspectives on effectiveness might mask the complexity of how practice and knowledge (or evidence) are related in models of teacher professional working. One approach that could help address these concerns is Theory of Change (ToC). ToC models come from the field of theory-driven evaluation. They draw on frameworks for relating practice to knowledge such as realist evaluation, in which the evaluation focuses on understanding how complex programs work in specific contexts by examining the mechanisms that lead to particular outcomes. ToC models consider under what conditions, for whom, and for what reasons or aims a given activity will achieve its intended outcomes.
This paper considers the scope for applying ToC models by reviewing a selected case from a completed study on the implementation of models for developing evidence-informed practice in schools for autism education. By applying a ToC lens to what actually happened in this case, we “re-imagine” it from a ToC perspective. This approach illustrates how ToC models could be used in future practice to advance evidence-informed practice in autism education.

Introduction

There has been a growing focus on the use of evidence-informed practice in schools. Slavin’s (2020) review notes the increasing trend toward evidence-informed approaches. This trend is visible both at the macro level of policy and more locally at district or school level, where approaches encourage teachers to implement practices that could improve educational outcomes. The trend is at least partly reflected in the millions of pounds that have been spent on the design and implementation of interventions, often based on evidence derived from randomized controlled trials (RCTs), to address persistent challenges in education. In the UK, for example, the Education Endowment Foundation (EEF) has published 16 guidance reports. It also offers a Teaching and Learning Toolkit comprising research summaries for early years, school and post-16 settings, and has funded “hundreds of experiments” (Thomas, 2021, p. 504). In the US, the What Works Clearinghouse initiative (NCEE, n.d.) has similarly produced a range of recommendations and reports, again mainly based on evidence from trials.

How schools can effectively engage with evidence-informed practice is a particular issue for special educational needs (SEN) and autism education (by which we mean the practice of schools and teachers with autistic children in mainstream and specialist settings, and the study of such practice). This is because of the particular focus of psychology and other disciplines such as psychiatry and neuroscience on amassing evidence about interventions for children with SEN and autism in particular (Mintz, 2022). Sweileh et al.’s (2016) bibliometric review of academic articles on autism demonstrated that many thousands of new papers are published each year on this topic. Although this exercise has not been repeated more recently, it is likely that the numbers have increased further still. Autism’s positioning as a “quasi-psychological” or “quasi-health” condition that straddles education and health (see Mintz et al., 2012) means that the issue of what evidence to use and how to use it is particularly acute in respect of autism education.

There are, of course, long-standing debates about different types of evidence and how we can demonstrate causality in social science and education. The use of RCTs is premised on the idea that randomized designs can minimize the impact of confounding variables. They can thus make clear whether a particular intervention had a causal effect on outcomes. Theory-driven evaluation, by contrast, is based on the premise that causality in social contexts is complex. It emphasizes the importance of developing a rich understanding of the underlying mechanisms and contextual factors that might influence the link between interventions and outcomes (Johnson et al., 2007). In this paper, we explore the role of such theory-driven evaluation in how schools can engage with evidence-informed practice. We do this by discussing the experience of implementing an intervention, “MARAT” (Making Autism Research Accessible to Teachers), with seven schools in the London area.

As noted, there are some concerns about a simplistic reliance on RCT evidence as a “gold standard.” These fall into two categories. Firstly, there are epistemological concerns (i.e., concerns about how we can best understand something). These concern whether RCTs in fact represent an effective way of deriving knowledge about complex social systems such as schools. Secondly, even if RCTs are accepted as an epistemologically sound source of knowledge to guide teacher practice, there are concerns about how effectively the knowledge derived from a trial can be translated from the “lab” to real-world settings. Different schools each have their own local contexts and actors. In this paper, we consider both categories of concern, but our main focus is on the latter. That is, we consider how methods of adaptation to local context, particularly Theory of Change (ToC) models, could help schools implement evidence-informed practices.

In terms of the first category of concern, a number of theorists have raised objections to what counts as evidence when recommending which interventions schools and educators should adopt. Biesta (2007) in particular has criticized the focus on experimental approaches such as RCTs as the primary source of evidence for “what works” in education. Thomas (2021) presents a similar critique. He notes that the large-scale fair test approach underpinning RCTs is not the only way to establish causation, i.e., what it is that makes a difference, in this case to different educational outcomes. Thomas notes that in scientific fields such as geology and paleontology, theorists work with a range of evidence, including direct observation and simple deductive inference, to arrive at the most likely explanation of causation: “from the intelligent examination of evidence, theory about cause is built and rejected or refined and ultimately accepted” (p. 507). Thomas thus argues that it is possible to draw valid conclusions without controlled experimentation. He also argues that even well-controlled studies are “as vulnerable to distortion” (p. 513) as any other type of enquiry.

Critiques such as this raise the possibility of a more important role for what might be termed co-constructed, ecologically responsive research in education. This could include the long tradition of schools engaging in their own small-scale enquiries (Stenhouse, 1981; Stoll and Louis, 2007; Brown, 2015). In these enquiries the causal chain is demonstrably complex but, crucially, is explored locally, with the aim of reaching the most likely explanation of causation. The counterargument is that such small-scale localized studies raise issues of validity and generalizability (see, for example, Gage, 1989). This is, of course, the explicit rationale for the emphasis on experimental approaches such as RCTs in social science and education. Part of Thomas’ (2021) response is that such a position masks the complexity of scientific method in many scientific fields, and its responsiveness to different types of evidence. A parallel response comes from the literature on teacher research (see Cochran-Smith and Lytle, 1999) and teacher reflective practice (e.g., Pollard et al., 2014), which explores alternative conceptualizations of validity. Focusing on case study research, Yin (2003, p. 34) describes three types of validity:

- construct validity (establishing the correct model);
- internal validity (relevant only to case studies that investigate causal relationships);
- external validity (accurately establishing the domain to which the case study results can be generalized).

Yin (2003) argues that case studies establish their validity through a different type of generalization from that used in statistical analysis. He describes an “analytical generalization” with different features from the more traditional “statistical generalization.” In analytical generalization, one aims to generalize a particular set of results to a more general theory.
Yin discusses the example of Jacobs’ (1961) seminal study of urban planning. This study drew on experiences from a single “case,” New York City, to build a broader theory of urban planning. That theory covers issues such as the role of sidewalks, green spaces, and the need for mixed use in city design. On this view, the determining factor in establishing the validity of case study research might be the quality of the analysis, rather than the representativeness or size of a sample.

Our argument in this paper is that schools might achieve such quality of analysis, in meeting the needs of their own students with SEN, through the explicit adoption of a theory of change model. We see this happening in two different ways. Firstly, such analytical generalization could take place within the school. That is, local action research might first be undertaken in one class or one year group. This research could be based on the local implementation of evidence-based and/or evidence-informed practices. The knowledge and theory developed from that experience might then be generalized to other classes or across the school as a whole. Secondly, there could be external analytical generalization, in which the knowledge and theory developed are generalized to other schools, as in the example from Yin above. The initial novel intervention or practice may have been based on external evidence such as the results of RCTs. In either case, such an approach can then be considered a way of addressing the second category of concern about RCTs and evidence noted above. Thus, analytical generalizability could be used as a framework for considering how evidence from RCTs could be effectively adapted for implementation in local contexts. Schools could introduce ToC models into the ways in which they address the implementation of such evidence. This is a potential way of further supporting the generalization of a particular set of results to a local theory that fits the needs of individual schools. In respect of evidence-informed practice, this approach might also offer a deeper understanding of underpinning theory to support reflective practice and data collection.

We should note at this point that the debates outlined above are to some extent reflected in the different terms used to describe the use of evidence, both in education and more widely. Evidence-based practice or practices (EBPs) typically refers to specific, structured programs that have been tested for efficacy, usually through randomized controlled trials or quasi-experiments (Odom et al., 2014). Such programs are often, but not necessarily, commercially available. Evidence-informed practice, by contrast, can be understood as activities that are empirically supported but have not necessarily been formally evaluated. The term evidence-informed practice also tends to reflect researcher and practitioner engagement with, and acknowledgement of, the complexities of a school environment (Nelson and Campbell, 2017). Our position is that evidence-informed is a more appropriate and useful way of thinking about the use of research evidence in schools. However, both terms are employed in this paper, and we use EBP particularly when that is the term used by the authors of studies that we discuss.

Theory of change

Ghate (2018) characterizes a ToC as connecting what a new intervention or practice does with its intended outcomes. It also explains why and how the change introduced brings about, or could bring about, those outcomes. For Chen (2016), a ToC sets out three things:

- the small steps that together achieve a longer-term aim or outcome;
- the connections and assumptions between individual intervention steps or activities;
- the links to what happens after each activity (i.e., the intended outcome) as the overall intervention progresses.

Importantly, a ToC model considers the assumptions and preconditions underlying the explanatory factors that link what is done to the intended or hoped-for outcomes. ToC models are widely used in evaluations of social enterprises, particularly by voluntary or third sector organizations. For example, the National Council for Voluntary Organisations in the UK has a toolkit for developing a ToC model (see NCVO, n.d.). A ToC approach is also commonly used in the health sector. Examples include the design and implementation of public health interventions (Breuer et al., 2018), quality improvement interventions for primary care in Australia (Schierhout et al., 2013) and child health service interventions (Jones et al., 2022). Ghate (2018) argues, citing UK Medical Research Council guidance (Moore et al., 2015), that the health field broadly agrees on the importance of understanding the underlying theory of an intervention. This understanding matters both in implementing an intervention and in evaluating its roll out.

A ToC usually has one or more logic models. Their purpose is to describe how resources and activities (i.e., what actors in the system will do differently) are designed to achieve the goals of the intervention or program, and how specifically they will bring about change (Kellogg Foundation, 2004; PCAR, 2018; NCVO, n.d.). A logic model usually includes the following elements (PCAR, 2018):

- a description of the situation (the problem or issue to be addressed);
- the resources available, in terms of people and relevant infrastructure, that will contribute toward the activities (what people will actually do) in the program;
- outputs, which are the services provided that will reach targeted participants (usually children, in education programs);
- outcomes, the benefits for the participants arising from the activities.

Another element is the consideration of assumptions. These often concern the beliefs and attitudes of actors in the system and how they are expected to react to changes. Thinking about assumptions is also meant to help identify gaps, or points where additional activities may need to be inserted to bring about the expected outputs and outcomes (Kellogg Foundation, 2004). ToCs and the logic models that underpin them are usually developed through a collaborative process involving different actors in the system, both before and during the implementation of an intervention or program. This collaborative process gives ToC models their potential to make a difference to how well interventions are implemented in practice (Kellogg Foundation, 2004; NCVO, n.d.). It involves linking activities to outputs to outcomes, and thinking about how this relates to the local context and what needs to be adapted to meet it.
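The logic model components listed above can be represented as a simple data structure, which makes the idea of checking for gaps concrete. The following is a minimal Python sketch only. The `LogicModel` class, its field names, the representation of causal links as (from, to) pairs, and the example content about imaginative play are all hypothetical illustrations. None of them is taken from the PCAR, Kellogg Foundation or NCVO materials.

```python
from dataclasses import dataclass, field


@dataclass
class LogicModel:
    """Hypothetical sketch of a logic model's components (not an official toolkit format)."""
    situation: str                 # the problem or issue to be addressed
    resources: list[str]           # people and infrastructure available
    activities: list[str]          # what people will actually do
    outputs: list[str]             # services that reach targeted participants
    outcomes: list[str]            # benefits for participants
    assumptions: list[str] = field(default_factory=list)
    # Assumed representation: each causal link is a (from_element, to_element) pair.
    links: set[tuple[str, str]] = field(default_factory=set)

    def gaps(self) -> dict[str, list[str]]:
        """Return elements that have no link to the next stage of the chain."""
        def unlinked(sources: list[str], targets: list[str]) -> list[str]:
            return [s for s in sources
                    if not any((s, t) in self.links for t in targets)]
        return {
            "activities_without_outputs": unlinked(self.activities, self.outputs),
            "outputs_without_outcomes": unlinked(self.outputs, self.outcomes),
        }


# Hypothetical example content about a play-focused school project.
model = LogicModel(
    situation="Focus pupils rarely take part in imaginative play",
    resources=["staff time", "literature review"],
    activities=["observe children at play", "talk with families"],
    outputs=["weekly observation notes"],
    outcomes=["more peer interaction during play"],
    assumptions=["staff have time to observe and reflect together"],
    links={("observe children at play", "weekly observation notes")},
)
print(model.gaps())
# {'activities_without_outputs': ['talk with families'],
#  'outputs_without_outcomes': ['weekly observation notes']}
```

In this invented example, the check flags two gaps. Conversations with families have no planned output, and the observation notes are not connected to any outcome. This reflects the point above that working through the chain can show where additional activities, or additional data collection, may be needed.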

We now turn to a key approach in the field to understanding how interventions link to practice and outcomes, namely implementation science.

Implementation science

There is already considerable attention in the field to how evidence-based practices could be implemented in schools for autism education. These practices are usually derived from RCTs, as well as from other designs such as pre- and posttest studies and single-case ABA or ABAB studies. Much of this attention focuses on the potential role of implementation science.

Eccles and Mittman (2006, p. 1) defined Implementation Science (IS) as the “study of methods to promote the adoption and integration of evidence-based practices, interventions, and policies into routine care.” IS developed across a range of public services as a reaction to a perceived gap. In many fields, considerable scientific evidence was available, but it was unclear how that evidence could be translated into professional practice. IS frameworks aim to address this gap. They focus on a number of areas, including those specific to the internal specification of an intervention (or other evidence-based practice), such as treatment integrity (or fidelity) (see Sanetti et al., 2014). Treatment integrity can be seen as having three aspects:

- adherence, the extent to which an intervention is implemented as planned;
- quality, how well the intervention elements (or steps) are implemented;
- exposure, the frequency and duration of the intervention.

Consider a school that delivers an intervention only once a week when it is intended to be delivered every day. Suppose also that the teachers do not follow the set plan for the intervention and have a poor understanding of the techniques involved. Treatment integrity will then be low, at least relative to the delivery intended in the initial studies showing efficacy. The impact of the intervention is likely to be low as well. Models of IS also consider wider elements beyond the internal specification of an intervention. For example, the Exploration, Preparation, Implementation, and Sustainment (EPIS) model (Aarons et al., 2011) sets out an “ecology” of factors that surround and influence the implementation of an evidence-based practice. These are classified into outer and inner contexts.
Outer contexts include the sociopolitical framework, national or regional leadership in relation to policy, interorganizational networks, and the engagement of the intervention developer. Inner contexts include organizational characteristics such as culture, local leadership styles and approaches, fiscal viability and resourcing, training, and fidelity and monitoring of activities and support. Models also usually have a linear aspect. They move from initial stages focused on introducing the intervention or practice to a new setting, through to later stages focused on sustaining its use in the medium to longer term (see the review of IS models by Meyers et al., 2012). Such models, then, clearly take into account the need to position any intervention or practice in terms of how the social actors in a particular setting might interpret and locally adapt it. Indeed, one of the innovations of this aspect of IS is its recognition that successful implementation might only be achieved through engagement with such local meaning-making. Much of the literature focuses on identifying specific techniques and approaches to facilitate this (Schierhout et al., 2013). One such approach has been to use Theory of Change within IS. ToC has been considered in relation to IS in various fields, including public health (Teachout et al., 2021), youth work (Moroney, 2020), and, to a limited extent, education. One example of the use of ToC models in IS in education is Størksen et al.’s (2021) study of the rollout of the results of an RCT in early childhood education in Norway.
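The three aspects of treatment integrity described above (adherence, quality and exposure) can be illustrated with the once-a-week scenario. The Python sketch below is hypothetical. The record fields, the 1 to 5 observer quality scale, and the treatment of exposure as frequency only (ignoring duration) are our assumptions. So is the choice to report the three aspects separately rather than as a composite score. None of these is a scoring scheme from the studies cited above.

```python
from dataclasses import dataclass
import math


@dataclass
class DeliveryRecord:
    """Hypothetical record of one week's delivery of an intervention."""
    planned_sessions: int       # e.g. 5 if the intervention is meant to run every school day
    delivered_sessions: int     # sessions actually delivered
    protocol_steps: int         # steps in the written intervention plan
    steps_followed: int         # steps observed being delivered as planned
    quality_ratings: list[int]  # assumed 1-5 observer rating per delivered session


def integrity_profile(rec: DeliveryRecord) -> dict[str, float]:
    """Report adherence, quality and exposure separately (no composite score)."""
    return {
        # Adherence: proportion of planned steps actually followed.
        "adherence": rec.steps_followed / rec.protocol_steps,
        # Quality: mean observer rating; NaN if no session was rated.
        "quality": (sum(rec.quality_ratings) / len(rec.quality_ratings)
                    if rec.quality_ratings else math.nan),
        # Exposure (frequency only): delivered sessions as a share of planned sessions.
        "exposure": rec.delivered_sessions / rec.planned_sessions,
    }


# Scenario from the text, with hypothetical numbers: intended daily
# (5 sessions a week) but delivered once, with the plan only partly followed.
week = DeliveryRecord(planned_sessions=5, delivered_sessions=1,
                      protocol_steps=8, steps_followed=3, quality_ratings=[2])
print(integrity_profile(week))
# {'adherence': 0.375, 'quality': 2.0, 'exposure': 0.2}
```

Keeping the three figures separate reflects the point that low integrity can arise in different ways. A school that delivers an intervention every day but follows only part of the plan would show high exposure with low adherence. That is a different problem from the one illustrated here.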

Some theorists in the field, such as Ghate (2018), have noted that many instances of the wider implementation of evidence-based approaches across social policy and health still lack a fully articulated ToC (Davis et al., 2015). This aspect of effective implementation is increasingly being recognized within IS (Ghate, 2018; Kainz and Metz, 2019). The ToC approach focuses on understanding local processes and local adaptation. This points toward a tension with the more programmatic elements of IS models, specifically those that focus on adherence to treatment fidelity. Kainz and Metz (2019) note explicitly that such an overemphasis on treatment fidelity could lead those responsible for implementing interventions to downplay or ignore the need for local adaptation. Local adaptation here means local actors making sense of interventions in terms of their own existing frames of reference: their experiences, beliefs, limitations, motivations and wants (Heckman, 2005). As Ghate (2018) argues, the potential power of ToC lies partly in the way it can help organizations set out the assumptions involved in moving from the introduction of an intervention to its expected impacts and outcomes. In education, some of those assumptions may relate to the ways in which teachers integrate (or do not integrate) new approaches into their existing classroom practice.

Implementation science, theory of change, and autism education

Echoing Ghate’s (2018) concerns in relation to social science and health, our review of the literature indicated relatively little attention to the use of ToC. This holds both in education for special educational needs more widely and in autism education specifically. Smolkowski et al. (2019), in their overview of the use of IS in research on learning disabilities, note that the field as a whole is still relatively underdeveloped. Their review also indicates that the focus is very much on implementation fidelity. This is true both of the review authors and of the authors of the individual studies considered. Issues of local adaptation, or the potential role of ToC, are not given any real consideration. A similar picture can be seen in the significant body of work on IS and autism interventions, most of which has taken place in the United States in the last 10 years or so. Odom et al. (2020) set out the picture in the US, with a particular focus on regional strategies to roll out EBPs for autism. For example, Odom et al. (2013) used the US National Implementation Research Network (NIRN) IS model to implement state-wide systems of professional development in relation to EBPs for autism. Their evaluation indicated changes in the extent to which teachers effectively used EBPs.

At regional and national level in the US, the National Professional Development Center on ASD (NPDC) established, at district level, the Evidence-Based Individualized Program for Students with Autism (EPIBSA) (Odom et al., 2012). EPIBSA aims to promote effective use of EBPs for autistic children by teachers. In common with other IS framework models, it measures quantified outcomes as an indicator of overall implementation effectiveness, in this case goal attainment in relation to student functioning. As a linear model, EPIBSA starts with a state-wide leadership team and then a 2-year plan for state-wide partnership with the NPDC. An implementation and autism training team then works with districts and schools on the selection, implementation and evaluation of EBPs. This is a resource-intensive, large-scale operation. NPDC staff set up and support state-wide implementation teams, and these teams in turn facilitate regular visits to schools, including extensive coaching in line with the NIRN IS model.

However, the literature on these national and local approaches to the use of IS in autism education makes very limited reference to the use of ToC within such models (e.g., Odom et al., 2013, 2014, 2020; NIRN, n.d.). Indeed, we searched PSYCINFO and SCOPUS for the terms “Implementation Science,” “Autism” and “Theory of Change.” We found no substantive papers that had examined the use of ToC as an element of IS in autism education. This absence is notable given the concerns raised in the wider literature on IS in social science, health and education. These concern the tensions between approaches to IS that focus purely on implementation fidelity and approaches that take account of local factors and local adaptation. Odom et al. (2014) do refer to the concept of ToC in discussing the use of IS to implement a high school program for autism. However, they give no clear definition of a particular ToC model or of how it was used. It could be argued that the work of Odom and colleagues, as cited, tends to elide the complexities at play when conceptualizing evidence in the context of autism education (Mintz, 2022). We further note a similar lack of attention, within the current field of implementation science and its application to autism education, to the local factors at play when EBPs developed under trial conditions are implemented in the wider field.

Applying ToC in autism education: the place of programs to support schools in engaging with evidence-informed practice

We can conceptualize the application of ToC to autism education, in the context of the work of teachers and other professionals, in two distinct but interrelated ways. Firstly, as we have discussed, ToC could be used within implementation science to improve the effectiveness of the roll out of EBPs, as in the work of the NPDC in the US. However, such programs are only one element of the “ecosystem” of how schools engage with research evidence and EBPs in autism education. Another important element to which ToC could be applied is the growing number of “research informed school programs” that support schools in engaging with research evidence. In these programs, schools use collaborative models within and between schools to foster engagement with research evidence. Such programs are now very common in many educational systems. Examples include:

- research school networks in England (Dixon et al., 2020);
- professional learning community models focused on research evidence engagement in India (Zahedi et al., 2021);
- knowledge networks in Canada (Cooper et al., 2017);
- Evidence Based Community of Practice approaches in the US (Office of Elementary and Secondary Education, 2020).

There has also been some limited attention to the specific use of such networks for developing teacher engagement with research related to special educational needs and inclusion (Mintz et al., 2021). Programs that use IS models roll out EBPs directly. Such initiatives share their aim: to get schools to engage with research evidence and then to implement new approaches across the school based on that engagement. It should be noted, of course, that in research informed school programs, schools usually look at a wide base of potential interventions and evidence sources. They make their own judgments about what types of evidence to use and how to apply them in their local context.
Nevertheless, such use and implementation of evidence could also be considered appropriate for the application of ToC models. A ToC model can provide a mechanism for schools to consider how a particular practice or intervention, and its supporting evidence (whether from an RCT or a case study), can be interpreted in terms of local context and conditions. It can also provide a framework for both understanding and evaluating the factors that contribute to successful implementation in that context. At the same time, as noted, a ToC approach can provide a framework for considering analytical generalizability, that is, how the evidence from case studies undertaken in other schools could be applied in this school. Analytical generalizability could also apply to case studies (e.g., pilots of interventions) undertaken in a particular class within this school. The question then is how these could be applied across the school more widely. Our review of the literature on the application of ToC models to education suggests that this would be a new departure. Extant studies in education have generally focused on the use of ToC models in the implementation of specific interventions or EBPs. For example, Thompson et al. (2020) used a ToC approach in the implementation and evaluation of an intervention to manage significant disruptive behavior in schools. Jocson and Martínez (2020) used a ToC model to consider ways of engaging high school students with career and technical education. Wijekumar et al. (2013) developed a ToC for the implementation of a web-based intelligent tutoring system for elementary schools. However, our review indicated that no studies to date have formally considered how ToC models could be used in research informed school programs, whether for education generally or for special educational needs specifically.

We next show how, albeit retrospectively, a clear articulation of a theory of change could help schools with the implementation of evidence-informed approaches. We apply this articulation to an existing case study from a research informed school program for autism education. “Making Autism Research Accessible to Teachers” (MARAT) was conceptualized as a knowledge exchange program based on the model of research learning communities (RLC) (Brown, 2017; Mintz et al., 2021). We use the experiences of one school in this program to illustrate two things a ToC model could potentially enable schools to do. It could help them describe their work more accurately. It could also help them focus more closely on the “active ingredient/s” of their change mechanisms. This would lead to greater fidelity both in implementing their plans and in their approaches to data collection.

The MARAT program—options for introducing ToC

Seven schools in and around London participated in MARAT in the 2017/2018 school year:

- two mainstream elementary schools;
- one mainstream secondary school;
- two elementary special schools;
- two “all-through” special schools (one for ages 3–16 and one for ages 4–19).

The program involved schools engaging with a literature review and with research methods in a particular focus area within the autism literature. This took place via a series of workshops, facilitated by a university research team that included researchers with specific expertise in autism. The university team tailored the literature review to summarize the evidence on potential interventions and approaches for autism education in an area of interest to the schools. All the participating schools agreed this focus before the program started. In this iteration, the focus was developing positive relationships for children with autism in elementary school education.

Two staff from each school, one a school leader and one a practitioner working at the “chalk-face,” participated in the program and attended the workshops together. This arrangement also allowed collaboration between the participating schools. In these workshops, schools developed an action plan based on an impact template, using themes, ideas or strategies arising from a series of literature engagement exercises.

It should be made clear that, in this iteration of MARAT, the project team did not formally introduce the concept of Theory of Change, nor was it used as a model in the program. Here, we explore how such a ToC model could be introduced and why it might have been, and could still be, beneficial.

The action plan drew on the literature review to identify potential strategies to pilot with particular children or classes that the school participants were currently focused on in their day-to-day work. We see this as the initial “formation stage” for the emergence of embryonic theory(ies) of change within the program. Subsequently, schools worked with a facilitator to initiate a small-scale action research project in school, focused on using strategies identified from the literature review. The resulting school activities were designed to be specific, actionable and, usually, quite small-scale measurable interventions intended to achieve the desired outcome. We propose that these school actions could be reframed as logic models describing the practical implementation designed to achieve the outcome set out in the emerging theory of change.

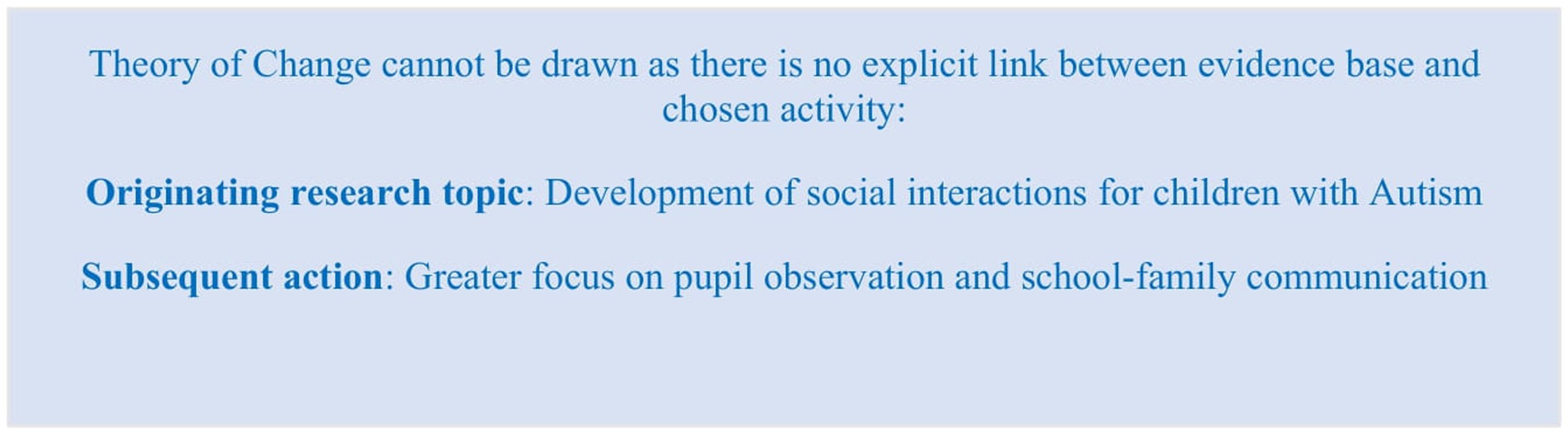

Seven case studies of the schools’ experiences were written up for the project report for this iteration of MARAT. A research assistant interviewed the participants about their experience of the program and the impact they felt it had had on bringing about change, both for their focus children and classes and more widely across the school. The case studies were compiled primarily from these interviews and from each school’s final presentation, which reported on the outcomes of its action research project. Each case study articulated a “driver” for innovation and identified an element from the literature that had inspired the school. For example, one of the mainstream elementary schools, which sought to improve focus pupils’ access to imaginative play, was inspired by Rubin’s (2012) chapter on play in autistic children. Based on their reading of this chapter, the participants from the school commented that “social interaction is the bedrock for all humans and they (pupils with autism) will not develop unless they see others as interacting. I do not think we have looked at it in that way before.” This led to a refined focus on observing children at play, on staff collaboration and reflection on potential ways of offering additional support, and on increased engagement with, and listening to, families. In the participants’ perception, the result was a more reflective culture in relation to working with autistic children in the school. However, no data were recorded on changes to children’s play.

This case study illustrates why a more explicitly argued theory of change might be useful. The school was inspired by a particular piece of research, but its subsequent activities were not specifically guided by it. In fact, its tacit, unstated theory of change could be said to be closer to something like this: “Higher expectation of pupils’ engagement in play, coupled with increased staff collaboration and focus, revitalizes elements of good practice such as structured observation of pupils and family engagement.”

In the first element of our proposed revised model (see Figure 1), the ToC needs to link explicitly to the research with which the school has engaged.

As noted, in this case the lack of an explicitly articulated theory of change, grounded in the school’s engagement with the literature, meant that the link between theory and intervention was weak. In turn, without an explicit evidence-based rationale, the type of short-term outcome being sought was unclear. In addition, no child-related data were shared, perhaps suggesting a lack of clarity over what could, or should, be measured. This is not to suggest that the program had no positive impact on the school; rather, the question is whether an explicitly articulated theory of change, underpinned by context-specific logic models, could have offered greater clarity on theoretical models and so increased rigor in school-based practice. This should not in any way be construed as a criticism of the school; the aim is to identify the potential space for adding ToC to the program design.

We now look in more detail at the experiences of one of the schools, referred to here as School 1. School 1 is a special school that takes children with a range of needs, including autistic children and children with physical disabilities. It has around 180 students, with 12 students per class.

The focus for School 1 in MARAT was on unstructured times in school, such as break times, which were becoming problematic both for students and for staff, who had to manage issues that spilled back into the classroom afterward. The school had noticed that autistic students in particular were falling out with each other, not understanding the rules of games and not engaging in games “appropriately,” leading to conflict, emotional dysregulation and frustration.

Case study: School 1

School 1’s engagement did not focus directly on the literature in the review provided by the MARAT team. Staff from this school were studying on a master’s program and, through this, had become interested in research by Calder et al. (2013), which suggested that there was little evidence that directly teaching social interaction skills to students with autism would be effective in this instance. They also looked at Hochhauser and Engel-Yeger’s (2010) study, which seemed to indicate that autistic children may need time to do things on their own, at least initially, rather than being pressured into group activities. On this basis, their action research project looked in part at separating students (or giving them more space or opportunity to be separate) during break times. Although these studies were not in the review, they are in a broadly cognate area to its focus, and it may well be that engaging with the review stimulated the staff’s interest in this further research. It should also be noted that the teaching of social skills to autistic children was not treated as an isolated activity in School 1; it was embedded within a child-centered educational context, with weight given to both child voice and an enabling environment as part of day-to-day school provision.

Their research question was: Will teaching positive interaction strategy improve social communications in breaktimes? They worked collaboratively with other teachers in the school, the school sports coach and a school speech and language therapist. After a period of observation, they implemented a multi-faceted approach to helping autistic pupils identify and manage emotions during periods of conflict. This involved two members of staff independently observing three focus children during unstructured times, as well as administering a questionnaire to these students about their behavior and activity at breaktimes. The class teacher also developed a tracking sheet on which the focus students could record how “good” their breaktime was and who they had played with; this was triangulated with teaching assistants’ observations of breaktime. The team then took the most common behaviors, such as not taking turns when playing cards, and recorded what they had seen. Staff used these recordings to create role-play scenes, which were then shown to the students, who were asked what they thought about the behaviors they observed. Children identified that they “lost their temper” and could not “remember what to do to behave sensibly.” To better understand what it felt like to lose their temper, the staff developed a further intervention, which they called the “Gingerbread men” activity, in which students were asked to write on a cut-out figure what it felt like to win or lose. Some students colored the hands or heads red because losing made them want to hit things; others said that they felt like crying but could not cry. Confusion was the dominant theme, and the class teacher perceived this to be a direct result of the lack of clear guidelines at breaktime, in contrast to the students’ classroom experience. For example, students got cross when they did not understand the rules. In turn, staff talked with the students about what they could do in this type of scenario.
Staff also started to put in place scripts for what to do in games (phrases such as “can I join in,” “what game are you playing,” “can you tell me the rules”) and encouraged the students to play with different children.

In interviews, the project participants (i.e., the school staff) noted:

“after watching staff re-enact behaviours it broke down barriers. It had an element of humour in it, it took the anxiety out of it and engaged them…We found that talking with the focus students they were confused about the rules of games, they could not understand why the rules might change and that they should be the same for everyone.”

The project participants reported that staff said they “are starting to see a more positive return to class.” The tracking system, included in the participants’ presentation at the final workshop, showed that two of the three pupils felt more positive (although the teacher noted that one of the students may have been responding in the way they thought the teacher would like).

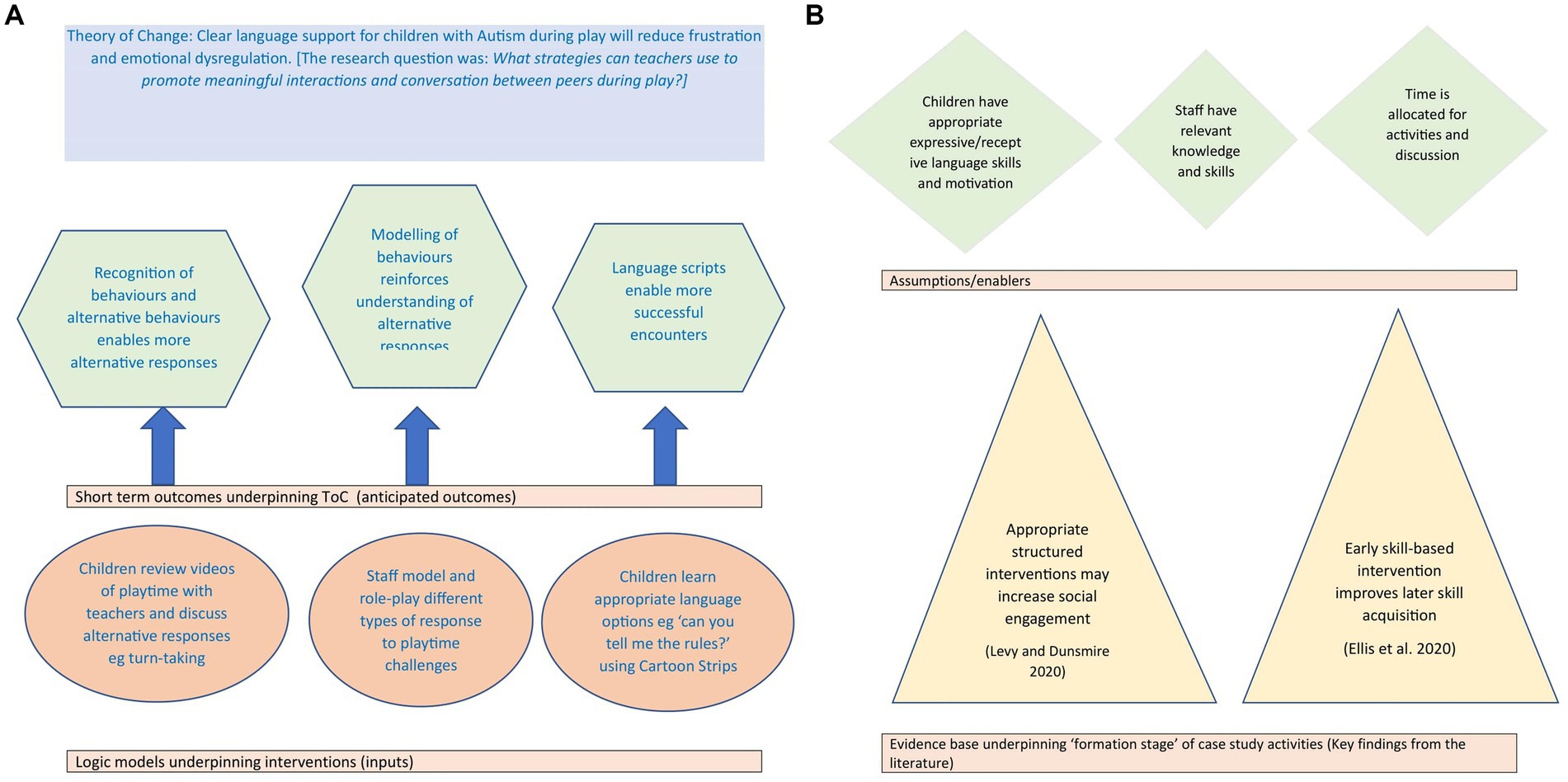

Adding a ToC model for School 1

In considering how a ToC model could have been added to this case, we theorized that a simple Theory of Change for this project, with underpinning logic models, might look like the one presented in Figure 2.

The logic models consist of the “Input” or “Activity” linked to the “Short Term Outcome.” These represent strands of intentional activity undertaken by schools, which combine to enable the school’s overarching theory of change. For example, the input “children reviewing videos” underpins the short-term outcome “recognition of behaviors…enables more appropriate responses,” which in turn underpins the ToC “Clear language support for children with Autism during play will reduce frustration and emotional dysregulation.” This model illustrates the conceptualizations underpinning the initiation of a small-scale action research project in school, focused on the use of strategies identified from a literature review. The logic models describe the practical implementation designed to achieve the outcome described by the emerging theory of change, providing a clear and articulated link between theory and intervention.

Once the model has been established, more detailed decisions can be made, such as which material and human resources need to be deployed to carry out the planned activities (inputs). For example, “children reviewing videos” requires appropriate permissions; available equipment; people to operate the equipment; time and space to review recordings; and appropriate opportunities to discuss the footage and use it to deepen understanding of social skill development and to enhance peer relationships.

Once this has been established, greater consideration can be given to how the activity might be used to support the intended short-term outcomes. For example, which types of footage are most beneficial for discussion? Are there any ethical issues or unintended outcomes? Have staff identified ways in which discussion of footage might extend situational understanding, enhance empathy or provide additional options for response? This may pave the way for challenging assumptions, such as those about the motivation of autistic students or the skill level of staff.

Finally, these discussions lend themselves to a much more focused approach to school data collection. School data collection is dogged by variable quality (Godfrey, 2017; Tancred et al., 2018); this approach supports monitoring both the extent to which the planned activity was carried out and the fidelity with which it was undertaken. Clarity about outcomes then potentially allows data collection on those outcomes to be better conceptualized. For example, in this case, the school might collect data on changes in empathy; changes in responses to situations similar to those explored in the footage; or changes in the language used to explore certain scenarios. Broader data on changes in pupil relationships might also be collected.

How, then, might such an explicit theory of change model have made a difference to the project? We propose several possibilities. Firstly, it may make the link between the actions taken in the school and the research engaged with more explicit, both for the school as a whole and for individual teachers, helping them to reflect further on that research, their assessment of its weight and how they feel it relates to their local context. Secondly, it creates a concrete representation of the operational working theory that the school and the actors within it adopt; it brings into sharper focus the process of analytical generalizability as an outcome of engagement with the research and the program. This could mean that, when rolling out changes more widely across the school or to other schools in the local area, there is an explicit model of the assumptions and processes involved in the change in the local context, which could lead to more successful implementation. This “more successful implementation” might include greater fidelity to the intentions of the intervention, better collegiality through a shared language and shared vision, and possibly more rigorous data collection owing to greater clarity of objectives and purpose. We recognize that this is, in a sense, only a “thought experiment,” but we nevertheless hope that it illustrates at least the potential for ToC models in this space.

Conclusion

Our review of the literature shows that models for the rollout of evidence-based (or evidence-informed) practices in autism education, including implementation science models, lack a focus on local adaptation. We also identify the role of research-informed schools programs in supporting such local adaptation across a wide range of research evidence. We then argue, using a “thought experiment” approach, that the work of schools on implementing interventions through research-informed schools programs could be further developed by including ToC models. We also note that adopting such models would concomitantly allow schools to further theorize how they see such interventions or evidence-informed practices being used in the local context, thus increasing the “analytical generalizability” of their local case studies exploring the implementation of interventions or practices.

Simply articulating a Theory of Change model or models, underpinned by a clear identification of inputs and anticipated outcomes, makes it easier to position the work of schools within a theoretical framework and to understand the actions being undertaken. It also enables greater clarity in decisions about desirable data collection. In Yin’s terms, adopting ToCs in a research-informed schools program could lead to increased validity and allow schools to present more carefully curated local data about the impact of interventions, in a more robust manner, to both internal and external audiences. In addition, adopting such ToC models may, particularly through enhanced transparency, help ensure greater fidelity to program design during ongoing implementation.

Over time, a Theory of Change structure used by schools in case study reporting may facilitate the grouping and archiving of schools’ reflective practice cases. It may also facilitate building a database of expected outcomes across similar logic models, against which schools could compare their own progress.

A further logical step could be to incorporate such programs, bolstered by ToC models, into the wider ecosystem for rolling out interventions for autism education using IS models.

Further research on the use of ToC models in practice, within research-informed school programs such as MARAT, would of course be needed to explore and validate the arguments made in this paper. We argue that schools’ articulation of a Theory of Change model may create a potential paradigm shift, with a number of possible benefits. In addition to deepening understanding of the link between the evidence base and activities within school, a shared language of intent, outcome, theory and logic model may help sustain a culture of ongoing reflective practice both within and between schools.

Data availability statement

The datasets presented in this article are not readily available because we do not have permission to share the data from the study further. Requests to access the datasets should be directed to j.mintz@ucl.ac.uk.

Ethics statement

The studies involving human participants were reviewed and approved by the UCL Institute of Education Research Ethics Committee. The participants provided their written informed consent to participate in this study.

Author contributions

JM led on the development of the MARAT model. AR led on its implementation as described in this manuscript. AR and JM contributed equally to the conceptual and theoretical development and writing of the manuscript. All authors contributed to the article and approved the submitted version.

Funding

Partial funding for the MARAT implementation discussed in this study was received from the UK Higher Education Innovation Fund via UCL IOE, Faculty of Education and Society.

Acknowledgments

The authors would like to acknowledge the contributions and input of the teachers involved in the implementation of MARAT described in this study.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aarons, G. A., Hurlburt, M., and Horwitz, S. M. (2011). Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm. Policy Ment. Health Ment. Health Serv. Res. 38, 4–23. doi: 10.1007/s10488-010-0327-7

Biesta, G. (2007). Why “what works” won’t work: evidence-based practice and the democratic deficit in educational research. Educ. Theory 57, 1–22. doi: 10.1111/j.1741-5446.2006.00241.x

Breuer, E., De Silva, M., and Lund, C. (2018). Theory of change for complex mental health interventions: 10 lessons from the programme for improving mental healthcare. Glob. Ment. Health 5:e24. doi: 10.1017/gmh.2018.13

Brown, C. (2017). Research learning communities: how the RLC approach enables teachers to use research to improve their practice and the benefits for students that occur as a result. Res. All 1, 387–405. doi: 10.18546/RFA.01.2.14

Calder, L., Hill, V., and Pellicano, E. (2013). ‘Sometimes I want to play by myself’: understanding what friendship means to children with autism in mainstream primary schools. Autism 17, 296–316. doi: 10.1177/1362361312467866

Chen, H. T. (2016). Interfacing theories of program with theories of evaluation for advancing evaluation practice: reductionism, systems thinking, and pragmatic synthesis. Eval. Prog. Plann. 59, 109–118. doi: 10.1016/j.evalprogplan.2016.05.012

Cochran-Smith, M., and Lytle, S. L. (1999). The teacher research movement: a decade later. Educ. Res. 28, 15–25. doi: 10.3102/0013189X028007015

Cooper, A., Klinger, D. A., and McAdie, P. (2017). What do teachers need? An exploration of evidence-informed practice for classroom assessment in Ontario. Educ. Res. 59, 190–208. doi: 10.1080/00131881.2017.1310392

Davis, R., Campbell, R., Hildon, Z., Hobbs, L., and Michie, S. (2015). Theories of behaviour and behaviour change across the social and behavioural sciences: a scoping review. Health Psychol. Rev. 9, 323–344. doi: 10.1080/17437199.2014.941722

Dixon, M., Brookes, J., and Siddle, J. (2020). “Hearts and minds: the research schools network: from evidence to engagement” in Getting evidence into education, ed. S. Gorard (London: Routledge), 53–68.

Eccles, M. P., and Mittman, B. S. (2006). Welcome to implementation science. Implement. Sci. 1:1. doi: 10.1186/1748-5908-1-1

Gage, N. (1989). The paradigm wars and their aftermath: a “historical” sketch of research on teaching since 1989. Educ. Res. 18, 4–10. doi: 10.3102/0013189X018007004

Ghate, D. (2018). Developing theories of change for social programmes: co-producing evidence-supported quality improvement. Palgrave Commun. 4, 1–13. doi: 10.1057/s41599-018-0139-z

Godfrey, D. (2017). What is the proposed role of research evidence in England’s ‘self-improving’ school system? Oxf. Rev. Educ. 43, 433–446. doi: 10.1080/03054985.2017.1329718

Heckman, J. J. (2005). The scientific model of causality. Sociol. Methodol. 35, 1–97. doi: 10.1111/j.0081-1750.2006.00164.x

Hochhauser, M., and Engel-Yeger, B. (2010). Sensory processing abilities and their relation to participation in leisure activities among children with high-functioning autism spectrum disorder (HFASD). Res. Autism Spectr. Disord. 4, 746–754. doi: 10.1016/j.rasd.2010.01.015

Jocson, K. M., and Martínez, I. D. (2020). Extending learning opportunities: youth research in CTE and the limits of a theory of change. Equity Excell. Educ. 53, 165–176. doi: 10.1080/10665684.2020.1763552

Johnson, R. B., Onwuegbuzie, A. J., and Turner, L. A. (2007). Toward a definition of mixed methods research. J. Mixed Methods Res. 1, 112–133. doi: 10.1177/1558689806298224

Jones, B., Nagraj, S., and English, M. (2022). Using theory of change in child health service interventions: a scoping review protocol. Wellcome Open Res. 7:30. doi: 10.12688/wellcomeopenres.17553.1

Kainz, K., and Metz, A. (2019). Causal thinking for embedded, integrated implementation research. Evid. Policy 15, 125–141. doi: 10.1332/174426416X14779418584665

Kellogg Foundation (2004). Using logic models to bring together planning, evaluation, and action: Logic model development guide. Battle Creek, MI: Kellogg Foundation Available at: https://wkkf.issuelab.org/resource/logic-model-development-guide.html (Accessed June 30, 2022).

Meyers, D. C., Durlak, J. A., and Wandersman, A. (2012). The quality implementation framework: a synthesis of critical steps in the implementation process. Am. J. Community Psychol. 50, 462–480. doi: 10.1007/s10464-012-9522-x

Mintz, J. (2022). The role of universities and knowledge in teacher education for inclusion. Int. J. Incl. Educ., 1–11. doi: 10.1080/13603116.2022.2081877

Mintz, J., Branch, C., March, C., and Lerman, S. (2012). Key factors mediating the use of a mobile technology tool designed to develop social and life skills in children with autistic spectrum disorders. Comput. Educ. 58, 53–62. doi: 10.1016/j.compedu.2011.07.013

Mintz, J., Seleznyov, S., Peacey, N., Brown, C., and White, S. (2021). Evidence informed practice for autism, special educational needs and disability in schools: expanding the scope of the research learning community model of professional development. Support Learn. 36, 159–182. doi: 10.1111/1467-9604.12349

Moore, G. F., Audrey, S., Barker, M., Bond, L., Bonell, C., Hardeman, W., et al. (2015). Process evaluation of complex interventions: Medical Research Council guidance. Br. Med. J. 350:h1258. doi: 10.1136/bmj.h1258

Moroney, D. A. (2020). From model to reality: the role of implementation readiness. J. Youth Dev. 15, 162–170. doi: 10.5195/jyd.2020.1057

NCEE (n.d.). What works clearinghouse. Available at: https://ies.ed.gov/ncee/wwc/ (Accessed June 30, 2022).

NCVO (n.d.). How to build a theory of change—NCVO Knowhow. Available at: https://knowhow.ncvo.org.uk/how-to/how-to-build-a-theory-of-change (Accessed June 30, 2022).

Nelson, J., and Campbell, C. (2017). Evidence-informed practice in education: meanings and applications. Educ. Res. 59, 127–135. doi: 10.1080/00131881.2017.1314115

NIRN (n.d.). National implementation science network: active implementation framework: stages of implementation, lesson 7. Chapel Hill, NC: Frank Porter Graham Child Development Institute, University of North Carolina Available at: https://implementation.fpg.unc.edu/modules-and-lessons (Accessed June 30, 2022).

Odom, S. L., Cox, A. W., and Brock, M. E., National Professional Development Center on ASD (2013). Implementation science, professional development, and autism spectrum disorders. Except. Child. 79, 233–251. doi: 10.1177/0014402913079002081

Odom, S. L., Duda, M. A., Kucharczyk, S., Cox, A. W., and Stabel, A. (2014). Applying an implementation science framework for adoption of a comprehensive program for high school students with autism spectrum disorder. Remedial Spec. Educ. 35, 123–132. doi: 10.1177/0741932513519826

Odom, S. L., Hall, L. J., and Suhrheinrich, J. (2020). Implementation science, behavior analysis, and supporting evidence-based practices for individuals with autism. Eur. J. Behav. Anal. 21, 55–73. doi: 10.1080/15021149.2019.1641952

Odom, S. L., Hanson, M., Lieber, J., Diamond, K., Palmer, S., Butera, G., et al. (2012). “Prevention, early childhood intervention, and implementation science” in Handbook of youth prevention science, eds. B. Doll, W. Pfohl, and J. Yoon (New York: Routledge), 413–432.

Office of Elementary and Secondary Education (2020). Evidence-based practices community of practice resources. Available at: https://oese.ed.gov/resources/oese-technical-assistance-centers/state-support-network/resources/evidence-based-practices-community-practice-resources/ (Accessed June 30, 2022).

PCAR (2018). Theory of change and logic models. Pennsylvania coalition against rape Available at: https://pcar.org/resource/theory-change-and-logic-models (Accessed May 30, 2022).

Pollard, A., Black-Hawkins, K., Cliff-Hodges, G., Dudley, P., and James, M. (2014). Reflective teaching in schools: evidence-informed professional practice, London: Bloomsbury Publishing.

Rubin, L. C. (2012). “Playing on the autism spectrum” in Play-based interventions for children and adolescents with autism spectrum disorders, eds. L. Gallo-Lopez and L. C. Rubin (New York: Routledge), 47–64.

Sanetti, L., Kratochwill, T., Collier-Meek, M., and Long, A. (2014). PRIME (planning realistic implementation and maintenance by educators). Storrs, CT: University of Connecticut Available at: https://implementationscience.uconn.edu/wp-content/uploads/sites/1115/2014/12/PRIME_guide1.pdf (Accessed June 30, 2022).

Schierhout, G., Hains, J., Si, D., Kennedy, C., Cox, R., Kwedza, R., et al. (2013). Evaluating the effectiveness of a multifaceted, multilevel continuous quality improvement program in primary health care: developing a realist theory of change. Implement. Sci. 8, 1–15. doi: 10.1186/1748-5908-8-119

Slavin, R. E. (2020). How evidence-based reform will transform research and practice in education. Educ. Psychol. 55, 21–31. doi: 10.1080/00461520.2019.1611432

Smolkowski, K., Crawford, L., Seeley, J. R., and Rochelle, J. (2019). Introduction to implementation science for research on learning disabilities. Learn. Disabil. Q. 42, 192–203. doi: 10.1177/0731948719851512

Stenhouse, L. (1981). What counts as research? Br. J. Educ. Stud. 29, 103–114. doi: 10.1080/00071005.1981.9973589

Stoll, L., and Louis, K. S. (2007). Professional learning communities, London: McGraw-Hill Education.

Størksen, I., Ertesvåg, S. K., and Rege, M. (2021). Implementing implementation science in a randomized controlled trial in Norwegian early childhood education and care. Int. J. Educ. Res. 108:101782. doi: 10.1016/j.ijer.2021.101782

Sweileh, W. M., Al-Jabi, S. W., Sawalha, A. F., and Zyoud, S. H. (2016). Bibliometric profile of the global scientific research on autism spectrum disorders. Springerplus 5, 1–12. doi: 10.1186/s40064-016-3165-6

Tancred, T., Paparini, S., Melendez-Torres, G. J., Thomas, J., Fletcher, A., Campbell, R., et al. (2018). A systematic review and synthesis of theories of change of school-based interventions integrating health and academic education as a novel means of preventing violence and substance use among students. Syst. Rev. 7:190. doi: 10.1186/s13643-018-0862-y

Teachout, E., Rowe, L. A., Pachon, H., Tsang, B. L., Yeung, L. F., Rosenthal, J., et al. (2021). Systematic process framework for conducting implementation science research in food fortification programs. Glob. Health Sci. Pract. 9, 412–421. doi: 10.9745/GHSP-D-20-00707

Thomas, G. (2021). Experiment’s persistent failure in education inquiry, and why it keeps failing. Br. Educ. Res. J. 47, 501–519. doi: 10.1002/berj.3660

Thompson, A. M., Stinson, A. E., Sinclair, J., Stormont, M., Prewitt, S., and Hammons, J. (2020). Changes in disruptive behavior mediated by social competency: testing the STARS theory of change in a randomized sample of elementary students. J. Soc. Soc. Work Res. 11, 591–614. doi: 10.1086/712494

Wijekumar, K. K., Meyer, B. J., and Lei, P. (2013). High-fidelity implementation of web-based intelligent tutoring system improves fourth and fifth graders content area reading comprehension. Comput. Educ. 68, 366–379. doi: 10.1016/j.compedu.2013.05.021

Keywords: theory of change, autism education, evidence-informed teaching, implementation science, inclusion

Citation: Mintz J and Roberts A (2023) Prospects for applying a theory of change model to the use of research evidence in autism education. Front. Educ. 8:987688. doi: 10.3389/feduc.2023.987688

Edited by:

Waganesh A. Zeleke, Virginia Commonwealth University, United States

Reviewed by:

Wenonah Campbell, McMaster University, Canada
Athina Stamou, University of Roehampton London, United Kingdom

Copyright © 2023 Mintz and Roberts. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Joseph Mintz, j.mintz@ucl.ac.uk

†These authors have contributed equally to this work

Joseph Mintz, Amelia Roberts†