- ¹Department of Natural and Applied Sciences, University of Dubuque, Dubuque, IA, United States

- ²Department of Biology, San Francisco State University, San Francisco, CA, United States

- ³Department of Biological and Environmental Sciences, Capital University, Columbus, OH, United States

- ⁴Department of Biology, University of Wisconsin-River Falls, River Falls, WI, United States

- ⁵Department of Medical Education, Tufts University School of Medicine, Boston, MA, United States

Course-based undergraduate research experiences (CUREs) are a high-impact educational practice that engages students in authentic research in the classroom. CURE development models range from CUREs designed and implemented at individual institutions to wide-reaching multi-institutional network CUREs. The latter have lowered barriers to implementation by providing a centralized support system, centralized training and curricula, and mentoring. CURE learning outcomes span the three domains of learning: knowledge, skills, and attitudes. Assessment of these domains can serve a variety of purposes for different stakeholders. To better understand the CURE assessment landscape from the instructor's point of view, we surveyed instructors from an established network CURE. We found that these instructors, particularly those from associate's colleges, overwhelmingly prefer knowledge- and skills-based assessments over attitudinal CURE assessment instruments. Instructors value knowledge and skills assessment data when deciding whether to adopt a particular CURE, for identifying student misconceptions to improve CURE instruction, and for documentation needed for community college transfer agreements or for gaining approval from curriculum committees. CURE learning models have pointed toward the use of generalizable instruments for measuring CURE outcomes, but because knowledge and skills assessments are typically CURE-specific, obtaining funds for their development may not be realistic. To address this concern, we outline a CURE network stakeholder co-design process for developing and validating a knowledge and skills assessment instrument without external support or a sizable time commitment. We encourage network CUREs to leverage their communities to generate and validate knowledge and skills assessment instruments to further lower barriers to instructor adoption.

Introduction

Over the years, a large body of evidence has accumulated supporting the value of undergraduate research experiences (UREs) in promoting student interest, engagement, and persistence in STEM (Kremer and Bringle, 1990; Alexander et al., 1998; Nagda et al., 1998; Zydney et al., 2002; Lopatto, 2004; Russell, 2006; Laursen et al., 2010). Course-based undergraduate research experiences (CUREs) can reach many more students than traditional UREs because the research can be embedded in a required curriculum (Shaffer et al., 2010; Bangera and Brownell, 2014; Jordan et al., 2014; Wolkow et al., 2014; National Academies of Sciences, Engineering, and Medicine, 2015; Dolan, 2016; Rodenbusch et al., 2016; Dolan and Weaver, 2021; Buchanan and Fisher, 2022; Hanauer et al., 2022; Hensel, 2023). CUREs are a high-impact educational practice (Kuh, 2008) that blends formal teaching and research and has diverse learning outcomes. The cross-institutional network CURE model (Lopatto et al., 2014; Dolan, 2016; Shortlidge et al., 2016; Connors et al., 2021), which uses a standardized curriculum and protocols across institutions that share a common research goal, is an alternative to a locally developed CURE. The scalability of CUREs across multiple institutions has led to the formation of several national and international CURE networks (e.g., Jordan et al., 2014; Elgin et al., 2017; Murren et al., 2019; Roberts et al., 2019; Fuhrmeister et al., 2021; Hurley et al., 2021; Hyman et al., 2021; Vater et al., 2021; Pieczynski et al., 2022; CUREnet, 2023). Network CUREs often serve as a centralized support system providing training, curriculum, mentoring, and other support tools (Lopatto et al., 2014; Hanauer et al., 2017; Pieczynski et al., 2022). This support is critical for the many faculty who experience barriers to adopting and implementing CUREs (Lopatto et al., 2014; Spell et al., 2014; Genné-Bacon et al., 2020). Network CUREs often focus on engaging students early in their undergraduate training and have been described as inclusive research education communities (Hanauer et al., 2017).

Early CURE assessment focused on students' subjective self-assessment and later moved toward more objective assessments of the skills and knowledge gained during the CURE. More recently, seminal papers have used evidence, learning theory, and robust methodology to push the CURE evaluation community beyond simple pre-/post-quizzes and student self-reported learning gains, toward understanding whether CURE participation leads to desired long-term outcomes and which components of the experience contribute to those outcomes (Sadler et al., 2010; Sadler and McKinney, 2010; Auchincloss et al., 2014; Corwin et al., 2015; Linn et al., 2015; Krim et al., 2019). A comprehensive effort to identify the full range of potential CURE outcomes distinguished outcomes expected after minimal exposure to research from those that require multiple or longer exposures (Auchincloss et al., 2014; Corwin et al., 2015). These potential CURE learning outcomes span the three domains of learning (Hoque, 2016): (1) cognitive (knowledge), (2) psychomotor/behavioral (skills), and (3) affective (attitudes/social/emotional/feeling). The types of knowledge and skills expected to be gained through CURE participation have been discussed previously (Auchincloss et al., 2014; Corwin et al., 2015). Gains in knowledge and skills are predicted to be measurable over the short term, whereas long-term outcomes are likely to trend toward the affective (e.g., enhanced science identity, persistence in science). Modeling of how outcomes are achieved identified potential focal points for assessment (Auchincloss et al., 2014; Corwin et al., 2015). These “hubs” include self-efficacy, sense of belonging, and science identity.

Generalizable, validated instruments are necessary to measure outcomes across different programs, institution types, and student demographic groups (Sadler et al., 2010; Sadler and McKinney, 2010; Auchincloss et al., 2014; Corwin et al., 2015; Linn et al., 2015). Knowledge- and skills-based assessments are generally specific to each CURE and therefore not generalizable across different CUREs. Conversely, assessments of affective domains are generalizable and have the advantage of targeting presumed “hubs” that are foundational for reaching desired long-term outcomes.

Outcomes expected during or after participation in a semester-long CURE may not be achieved after participation in a short CURE module. In the last few years, several modular multi-institutional network CUREs have been designed to let instructors test how it might feel to run a CURE in their course without committing to a full-semester CURE (e.g., Staub et al., 2016; Elgin et al., 2017; Hanauer et al., 2018; Anderson et al., 2020; Dizney et al., 2021; Fuhrmeister et al., 2021; Reyna and Hensley, 2023). This is important for faculty who may not have complete control over their course or who depend on administrators or curriculum committees to approve a new (CURE) course. In these cases, evidence of efficacy may be required before the instructor can embark on a full-semester CURE, yet existing validated instruments that measure presumed short-term outcomes, such as developing hypotheses, designing experiments, or project ownership (Feldon et al., 2011; Sirum, 2011; Brownell et al., 2014; Dasgupta et al., 2014; Deane et al., 2014; Hanauer and Dolan, 2014), may not be appropriate given the brevity of a single-module CURE experience. We fully acknowledge that, as Brownell and Kloser (2015) point out, focusing on gains in skills or learning does not capture the holistic nature of CUREs; however, we argue that in certain contexts such assessment may be valuable.

Instructor-perceived value of CURE assessment instruments and datasets

Assessments of CURE-derived knowledge and skills can be invaluable for audiences that may be skeptical of CURE outcomes or that are deciding whether to implement a CURE. CURE-specific knowledge and skills data may persuade faculty of the value of the CURE or, at minimum, convince them that it will “do no harm.” To investigate the instructor-perceived value of different types of CURE student assessment instruments (e.g., knowledge, skills, affective), we surveyed instructors in the Prevalence of Antibiotic Resistance in the Environment (PARE) CURE network (Supplementary material). PARE is a network CURE focused on systematic surveillance of environmental antibiotic resistance, with participation from over 165 undergraduate institutions reaching thousands of students annually (Genné-Bacon and Bascom-Slack, 2018; Fuhrmeister et al., 2021; Bliss et al., 2023). PARE is implemented across diverse institution and course types, with target students ranging from non-majors to upper-level biology majors. We specifically examined which assessment types (i.e., knowledge, skills, attitudinal) were most valuable to instructors for various purposes (e.g., student evaluation, course approvals, support for CURE implementation).

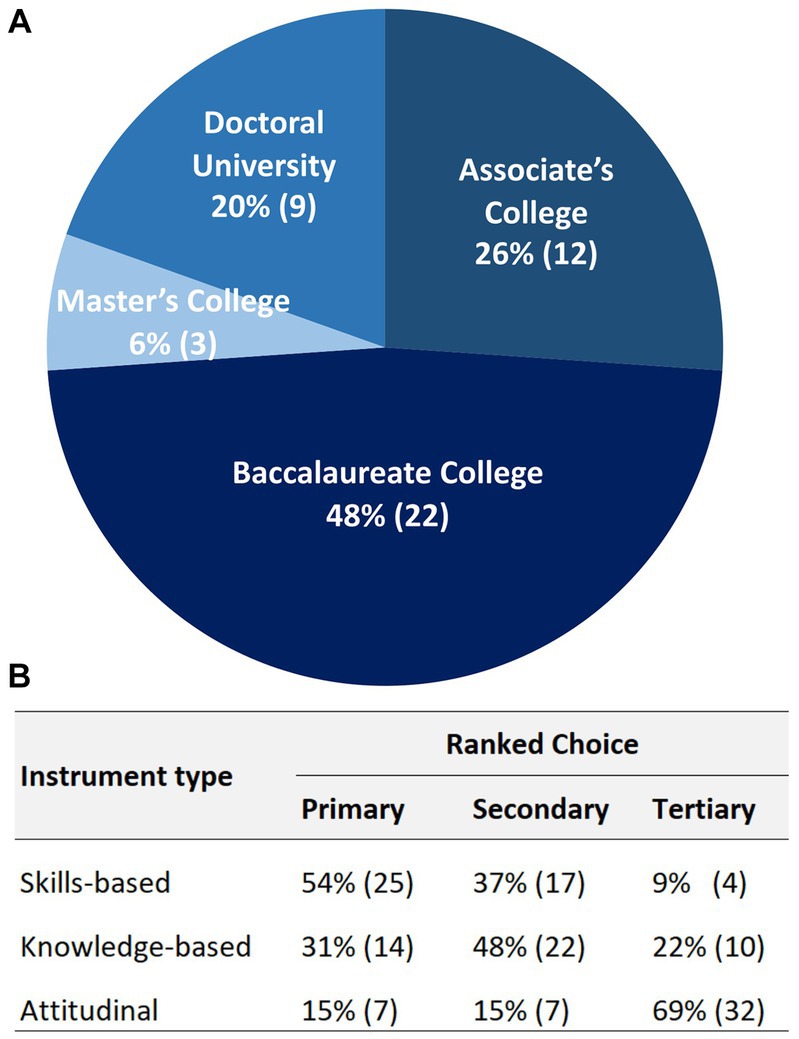

We received data from 46 respondents representing four institution types and at least 43 unique institutions (Figure 1A). Although instructors overwhelmingly believe attitudinal course learning outcomes (CLOs) are important, few have formally adopted attitudinal CLOs for their CURE-integrated course, relative to knowledge or skills CLOs. Over 80% of respondents have curriculum committee-approved knowledge and skills CLOs, while <20% have attitudinal CLOs as part of their CURE-integrated course (Supplementary Figures 1, 2). Furthermore, departments and/or institutions rarely require evidence for attitudinal CLOs compared to knowledge and skills CLOs. Over 78% and 71% of respondents' departments and/or institutions require or encourage collection of evidence for knowledge- and skills-based CLOs, respectively, while less than 24% require or encourage collection of evidence for attitudinal outcomes (Supplementary Figure 3A).

Figure 1. (A) PARE CURE network instructor survey respondents (n = 46) split by institution type. (B) Survey respondents overwhelmingly prefer the use of knowledge and skills-based assessments over attitudinal CURE assessment instruments when asked to rank their preference.

Individual instructors surveyed collect attitudinal CLO data far less often than knowledge and skills CLO data. Less than 48% of instructors anticipate collecting attitudinal evidence for personal use, while >97% and >93% anticipate collecting knowledge- and skills-based evidence for personal use, respectively (Supplementary Figure 3B). Although a large percentage of instructors value attitudinal outcomes, a much smaller fraction actually collect attitudinal data (Supplementary Figures 1, 3). Notably, a subset of respondents collects attitudinal data even though their department and/or institution does not require or encourage it.

Over 80% of respondents indicated that it was somewhat or very important to see data on network CURE knowledge and skills outcomes prior to adoption, compared with 63% for attitudinal outcomes data (Supplementary Figure 4). When considering whether to adopt a CURE, faculty generally value knowledge- and skills-based data more than attitudinal data. Thus, designing a CURE-specific knowledge and skills assessment instrument may increase the likelihood of network CURE adoption.

When asked to rank assessment types, survey respondents overwhelmingly prefer knowledge- and skills-based over attitudinal CURE assessment instruments (Figure 1B). Among respondents who ranked attitudinal instruments as least preferable (n = 32), the top reasons were that these instruments are least useful for documenting that the CURE meets course requirements, including community college transfer agreements, and for in-class formative and/or summative student assessment. Additionally, 70% and 80% of instructors indicated that limited class time and avoiding student assessment fatigue, respectively, would lead them to implement only a subset of the instruments if all three types were available. Knowledge- and skills-based assessments were preferred regardless of institution type (Supplementary Figure 5; 66–100% of respondents), although respondents from institutions other than associate's colleges were more open to ranking attitudinal assessments first (18–33% of respondents).

Instructors' low ranking of affective assessments (Figure 1B) is likely linked to the fact that less than 20% of survey respondents have curriculum committee-approved CLOs focusing on affective outcomes, compared with more than 80% who have knowledge- and skills-based CLOs (Supplementary Figure 2). Likewise, CLOs are typically guided by program learning outcomes (PLOs), and over 84% of respondents reported that their programs lack attitudinal PLOs (Supplementary Figure 2). Furthermore, although instructors overwhelmingly believe that knowledge, skills, and attitudinal CLOs all have value, respondents indicated that their departments and/or institutions place greater importance on knowledge and skills CLOs (Supplementary Figure 1). This pattern aligns with a previous characterization of PLOs across institutions (Clark and Hsu, 2023). Developing knowledge- and skills-based assessment instruments, especially for network CUREs, may bolster wider adoption.

Attitudinal instruments are very important for assessing long-term CURE benefits, but CURE-specific knowledge and skills instruments may help recruit faculty interested in implementing a specific network CURE. Over 32% of respondents indicated that knowledge- and skills-based data were important for acceptance and approval of CURE curricula by an institutional curriculum committee, while 52% and 48% indicated that these data were important for gaining departmental and administrative support, respectively (Supplementary Figure 4). Additionally, over 71% of respondents indicated that these data would be important for documenting that a CURE meets course requirements, including community college transfer agreements. Instructors also value access to a CURE pre/post assessment instrument for measuring students' gains in knowledge and skills after CURE adoption; respondents indicated the need for such an instrument for course improvement and for in-class formative or summative assessment (Supplementary Figure 6). Thus, knowledge and skills outcome data can inform educators' CURE adoption decisions, support instructional improvement and formative and summative student assessment, and demonstrate that a newly adopted CURE meets the course learning objectives critical to transfer agreements between community colleges and four-year institutions.

Network co-design model for knowledge and skills instrument development

Our CURE network instructor survey data were consistent with early program feedback from PARE instructors who desired a content- and skills-based assessment instrument. We recognize the commitment of resources necessary to develop and validate such an instrument. These resources may be difficult to secure given that knowledge and skills assessment is not the current focus of the CURE assessment research community. However, survey respondents overwhelmingly indicated the importance of seeing network CURE knowledge and skills outcomes prior to adoption.

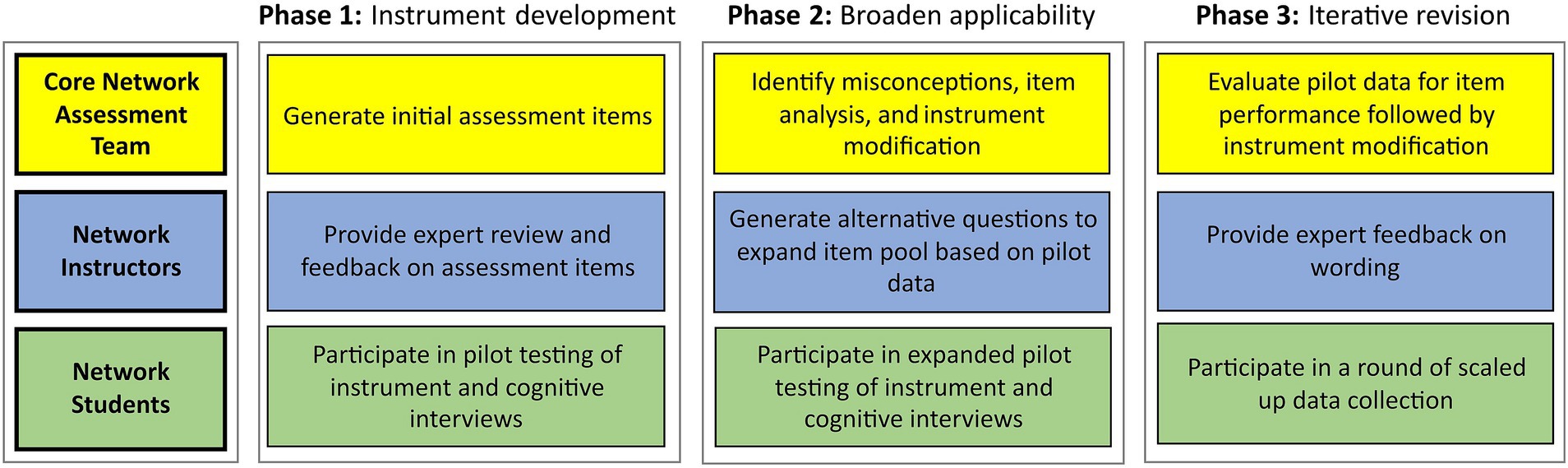

We present an assessment development and validation model for network CUREs (Figure 2) that can lower barriers for creation of a tailored knowledge and skills assessment instrument. This network co-design model for instrument development encompasses participants with varied viewpoints, institution types, and course types in a comprehensive effort to generate a widely applicable and reliable instrument (Hong and Page, 2004). We provide a mechanism that incorporates feedback from current faculty implementing the network CURE but does not impose such a time burden as to require extensive external funding.

Figure 2. Inclusive network co-design model for knowledge and skills assessment instrument development and validation.

Our three-phase co-design methodological framework is grounded in a CURE network assessment team composed of diverse stakeholders: CURE network participants with varying levels of disciplinary experience and a wide variety of course-implementation and institution types. During phase I, the core assessment team works collaboratively to generate a mixture of open-response and multiple-choice questions built around CURE curricular learning outcomes, including assessment of gateway concepts of the discipline and identification of student misconceptions. Expert review by other network CURE participants through asynchronous surveying provides feedback and serves as one means of validity testing. Piloting the instrument with network students, using follow-up explanation fields for multiple-choice items and/or cognitive interviews, provides additional validity evidence. Item analysis and pre/post scoring of the pilot dataset provide insight into item difficulty and can identify questions with problematic or unclear wording.
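To make the phase I item analysis concrete, the following minimal sketch computes two statistics commonly used for this purpose: the classical difficulty index (the proportion of students answering an item correctly) and a Hake-style normalized gain computed from class-average pre/post scores. It is an illustration under stated assumptions, not the procedure used for the PARE instrument: it assumes responses are already scored 0/1 and matched pre/post by student, the choice of normalized gain is ours, and the data and function names (`item_difficulty`, `percent_correct`, `normalized_gain`) are hypothetical.

```python
from statistics import mean


def item_difficulty(scores):
    """Classical difficulty index per item: proportion of students answering correctly."""
    n_students = len(scores)
    n_items = len(scores[0])
    return [sum(row[i] for row in scores) / n_students for i in range(n_items)]


def percent_correct(row):
    """One student's total score as a percentage of items answered correctly."""
    return 100 * sum(row) / len(row)


def normalized_gain(pre_pct, post_pct):
    """Hake-style normalized gain: (post - pre) / (100 - pre), with scores in percent."""
    if pre_pct >= 100:
        return None  # undefined when the pre-test average is already at ceiling
    return (post_pct - pre_pct) / (100 - pre_pct)


# Hypothetical matched pilot data: 4 students x 3 items, scored 0/1.
pre = [[1, 0, 0], [1, 1, 0], [0, 0, 0], [1, 0, 1]]
post = [[1, 1, 0], [1, 1, 1], [1, 0, 0], [1, 1, 1]]

print("pre difficulty: ", item_difficulty(pre))   # [0.75, 0.25, 0.25]
print("post difficulty:", item_difficulty(post))  # [1.0, 0.75, 0.5]

class_pre = mean(percent_correct(row) for row in pre)
class_post = mean(percent_correct(row) for row in post)
print("class normalized gain:", round(normalized_gain(class_pre, class_post), 3))  # 0.571
```

Comparing pre and post difficulty item by item suggests which concepts moved most; an item already near 1.0 on the pre-test leaves little room to detect gains.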

Phase II focuses on broadening the instrument's applicability across a range of student readiness levels and converting the instrument to streamline scoring for larger-scale deployment. Instrument revision is driven by the core assessment team, with expert input solicited from instructors across the network. Using item difficulty data from the pilot, additional items targeting the same concept or skill are generated to provide a subset of questions spanning a range of difficulty. Additionally, student answers to open-response items are used to identify common misconceptions, which guide the writing of multiple-choice versions of the pilot instrument's open-response questions. Item wording and other modifications are completed before a second, extended round of piloting the revised instrument. Phase II pilot data are used to evaluate the performance of items converted to multiple-choice format for scaled-up data collection, with item analysis and pre/post scores used to judge item performance.
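Phase II rests on two simple tallies: how pilot difficulty values are distributed across items targeting a concept, and which incorrect ideas appear most often in coded open responses. A minimal sketch, assuming open responses have already been hand-coded into categories; the codes (`C`, `M1` to `M4`), the difficulty cut-offs, and the function names are hypothetical illustrations rather than values from the PARE instrument.

```python
from collections import Counter


def difficulty_band(p, easy=0.8, hard=0.3):
    """Label a difficulty index p (proportion correct) using hypothetical cut-offs."""
    if p >= easy:
        return "easy"
    if p <= hard:
        return "hard"
    return "moderate"


def distractor_candidates(coded_responses, correct_code, k=3):
    """Return the k most frequent incorrect codes as candidate multiple-choice distractors."""
    wrong = Counter(code for code in coded_responses if code != correct_code)
    return [code for code, _count in wrong.most_common(k)]


# Hypothetical pilot difficulty values for three items targeting one concept.
pilot_p = {"item_1": 0.92, "item_2": 0.55, "item_3": 0.21}
bands = {item: difficulty_band(p) for item, p in pilot_p.items()}
print(bands)  # a concept covered only by "easy" items may need harder variants

# Hypothetical hand-coded open responses: "C" = correct, "M1".."M4" = misconception codes.
coded = ["C"] * 5 + ["M1"] * 6 + ["M2"] * 3 + ["M3"] * 2 + ["M4"]
print(distractor_candidates(coded, correct_code="C"))
```

Drawing distractors from the most frequent student misconceptions, rather than writing them from scratch, is one way to keep the converted multiple-choice items diagnostic.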

Phase III provides a subsequent round of iterative instrument revision and refinement by CURE network participants. Expert and student feedback are taken into account. Poor-performing items are revised and/or alternative items are proposed by network faculty after review. This methodological framework provides a foundation for network CUREs to adopt for establishing their knowledge and skills assessment toolkit, while leveraging the diverse human capital within the network to potentially lower instrument development barriers.
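Phase III depends on deciding which items are poor-performing. One common classical test theory approach, sketched here as an assumption rather than the authors' stated criteria, flags items whose difficulty falls outside a target range or whose upper-lower discrimination index is low. The 27% upper/lower grouping and the thresholds (D below 0.2; p outside 0.2 to 0.9) are conventional rules of thumb chosen for illustration, and the data and function names are hypothetical.

```python
def discrimination_index(scores, item, frac=0.27):
    """Upper-lower discrimination index D for one 0/1-scored item.

    Students are ranked by total score; D is the proportion correct in the top
    `frac` of students minus the proportion correct in the bottom `frac`.
    """
    ranked = sorted(scores, key=sum, reverse=True)  # stable sort: ties keep input order
    n_group = max(1, round(len(ranked) * frac))
    upper, lower = ranked[:n_group], ranked[-n_group:]
    p_upper = sum(row[item] for row in upper) / n_group
    p_lower = sum(row[item] for row in lower) / n_group
    return p_upper - p_lower


def flag_items(scores, min_d=0.2, p_range=(0.2, 0.9)):
    """Return (item, difficulty p, discrimination D) for items needing review."""
    n_students = len(scores)
    flagged = []
    for item in range(len(scores[0])):
        p = sum(row[item] for row in scores) / n_students
        d = discrimination_index(scores, item)
        if d < min_d or not (p_range[0] <= p <= p_range[1]):
            flagged.append((item, round(p, 2), round(d, 2)))
    return flagged


# Hypothetical pilot data: 10 students x 5 items, scored 0/1.
scores = [
    [1, 1, 0, 1, 1], [1, 1, 0, 1, 1], [1, 1, 0, 1, 1],  # strongest students
    [1, 1, 0, 0, 1], [0, 1, 1, 1, 0], [1, 1, 1, 0, 0], [0, 1, 0, 1, 1],
    [0, 1, 1, 0, 0], [0, 1, 1, 0, 0], [0, 1, 1, 0, 0],  # weakest students
]
print(flag_items(scores))  # [(1, 1.0, 0.0), (2, 0.5, -1.0)]
```

Here item 1, which every student answered correctly, and item 2, which weaker students answered correctly more often than stronger ones, would be returned to network faculty for revision. Flags of this kind are prompts for expert review, not automatic deletions.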

Conclusion

While measuring attitudinal outcomes in CURE evaluation is important, there is a coexisting perspective that values measurement of knowledge- and skills-based outcomes. Here we have shed light on instructors' perspectives on CURE assessment and have presented a model for knowledge and skills assessment instrument generation and validation that incorporates input from instructors in many different teaching contexts (Figure 2). The knowledge and skills assessment instrument that we developed using this process for the PARE network has generated a public dataset that prospective CURE instructors can review (Kleinschmit et al., 2023). We hope that the results presented here help other network CUREs reflect on their own assessments and on what might best benefit their network. In our instructor survey, we also examined how instructors who administered the PARE student knowledge and skills assessment used the resulting data. These instructors indicated that data from their course were useful for tailoring instructional planning for future course improvement and for gauging the effectiveness of the CURE when deciding whether to continue implementing it. Other respondents noted that the instrument was useful because they did not have to invest as much time in developing their own assessments.

Network CUREs have historically pooled intellectual and physical resources for adoption and implementation. These shared resources, coupled with the instrument development model presented here, could be further used to develop network knowledge and skills assessment instruments. Our data suggest that instructors strongly desire such instruments, and the resulting aggregate data could serve as a recruitment tool. An aggregate CURE knowledge and skills dataset can establish a foundation of short-term outcomes that allows stakeholders to more confidently validate the medium- and long-term affective outcomes depicted in CURE assessment frameworks (Corwin et al., 2015). The framework of Corwin et al. (2015) underscores the need for CURE students to master knowledge and skills domains in order to gain access to the potential added value of affective outcomes.

In summary, faculty within our network see value in knowledge and skills data for clearing the hurdles often involved in integrating CUREs into curricula, such as gaining departmental and/or administrative support, obtaining approval of CURE curricula from institutional curriculum committees, and providing the documentation needed for community college transfer agreements. Additionally, given limited classroom time and student attention, faculty were more receptive to administering knowledge and skills instruments than affective survey instruments, and anticipated being more likely to use the resulting data. Considering the wide reach of network CUREs, it could be beneficial to use a mechanism such as the one proposed here to generate a knowledge- and skills-based assessment instrument.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by University of Dubuque Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

AK: Writing – original draft, Writing – review & editing, Conceptualization, Data curation, Formal analysis, Supervision, Visualization. BG: Writing – review & editing, Conceptualization. JL: Writing – review & editing, Conceptualization. AQ: Writing – review & editing, Conceptualization. CB-S: Writing – original draft, Writing – review & editing, Conceptualization, Funding acquisition.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This manuscript is based upon work supported by the National Science Foundation Grant #1912520 awarded to CB-S.

Acknowledgments

We would like to thank the PARE network survey respondents for providing insights into the instructor perceived value of CURE student assessment instrument types.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2023.1291071/full#supplementary-material

References

Anderson, L. J., Dosch, J. J., Lindquist, E. S., McCay, T. S., et al. (2020). Assessment of student learning in undergraduate courses with collaborative projects from the ecological research as education network (EREN). Scholar. Prac. Under. Res. 4, 15–29. doi: 10.18833/spur/4/1/2

Alexander, B., Foertsch, J., Daffinrud, S., and Tapia, R. (1998). The spend a summer with a scientist program: a study of program outcomes and essential elements for success. Madison, WI: LEAD Center, University of Wisconsin-Madison.

Auchincloss, L. C., Laursen, S. L., Branchaw, J. L., Eagan, K., Graham, M., Hanauer, D. I., et al. (2014). Assessment of course-based undergraduate research experiences: a meeting report. CBE Life Sci. Educ. 13, 29–40. doi: 10.1187/cbe.14-01-0004

Bangera, G., and Brownell, S. E. (2014). Course-based undergraduate research experiences can make scientific research more inclusive. CBE Life Sci. Educ. 13, 602–606. doi: 10.1187/cbe.14-06-0099

Bliss, S. S., Abraha, E. A., Fuhrmeister, E. R., Pickering, A. J., and Bascom-Slack, C. A. (2023). Learning and STEM identity gains from an online module on sequencing-based surveillance of antimicrobial resistance in the environment: an analysis of the PARE-Seq curriculum. PLoS One 18:e0282412. doi: 10.1371/journal.pone.0282412

Brownell, S. E., and Kloser, M. J. (2015). Toward a conceptual framework for measuring the effectiveness of course-based undergraduate research experiences in undergraduate biology. Stud. High. Educ. 40, 525–544.

Brownell, S. E., Wenderoth, M. P., Theobald, R., Okoroafor, N., Koval, M., Freeman, S., et al. (2014). How students think about experimental design: novel conceptions revealed by in-class activities. Bioscience 64, 125–137. doi: 10.1093/biosci/bit016

Buchanan, A. J., and Fisher, G. R. (2022). Current status and implementation of science practices in course-based undergraduate research experiences (CUREs): a systematic literature review. CBE Life Sci. Educ. 21:ar83. doi: 10.1187/cbe.22-04-0069

Clark, N., and Hsu, J. L. (2023). Insight from biology program learning outcomes: implications for teaching, learning, and assessment. CBE Life Sci. Educ. 22:ar5. doi: 10.1187/cbe.22-09-0177

Connors, P. K., Lanier, H. C., Erb, L. P., Varner, J., Dizney, L., Flaherty, E. A., et al. (2021). Connected while distant: networking CUREs across classrooms to create community and empower students. Integr. Comp. Biol. 61, 934–943. doi: 10.1093/icb/icab146

Corwin, L. A., Graham, M. J., and Dolan, E. L. (2015). Modeling course-based undergraduate research experiences: an agenda for future research and evaluation. CBE Life Sci. Educ. 14:es1. doi: 10.1187/cbe.14-10-0167

CUREnet. (2023). Available at: https://serc.carleton.edu/204585. Accessed 20 August 2023

Dasgupta, A. P., Anderson, T. R., and Pelaez, N. (2014). Development and validation of a rubric for diagnosing students’ experimental design knowledge and difficulties. CBE Life Sci. Educ. 13, 265–284. doi: 10.1187/cbe.13-09-0192

Deane, T., Nomme, K., Jeffery, E., Pollock, C., and Birol, G. (2014). Development of the biological experimental design concept inventory (BEDCI). CBE Life Sci. Educ. 13, 540–551. doi: 10.1187/cbe.13-11-0218

Dizney, L., Connors, P. K., Varner, J., Duggan, J. M., Lanier, H. C., Erb, L. P., et al. (2021). An introduction to the squirrel-net teaching modules. CourseSource 7. doi: 10.24918/cs.2020.26

Dolan, E. L. (2016). Course-based undergraduate research experiences: current knowledge and future directions. Natl. Res. Counc. 1, 1–34.

Elgin, S. C., Hauser, C., Holzen, T. M., Jones, C., Kleinschmit, A., Leatherman, J., et al. (2017). The GEP: crowd-sourcing big data analysis with undergraduates. Trends Genet. 33, 81–85. doi: 10.1016/j.tig.2016.11.004

Feldon, D. F., Peugh, J., Timmerman, B. E., Maher, M. A., Hurst, M., Strickland, D., et al. (2011). Graduate students’ teaching experiences improve their methodological research skills. Science 333, 1037–1039. doi: 10.1126/science.1204109

Fuhrmeister, E. R., Larson, J. R., Kleinschmit, A. J., Kirby, J. E., Pickering, A. J., and Bascom-Slack, C. A. (2021). Combating antimicrobial resistance through student-driven research and environmental surveillance. Front. Microbiol. 12:577821. doi: 10.3389/fmicb.2021.577821

Genné-Bacon, E. A., and Bascom-Slack, C. A. (2018). The PARE project: a short course-based research project for National Surveillance of antibiotic-resistant microbes in environmental samples. J. Microbiol. Biol. Educ. 19. doi: 10.1128/jmbe.v19i3.1603

Genné-Bacon, E. A., Wilks, J., and Bascom-Slack, C. (2020). Uncovering factors influencing instructors’ decision process when considering implementation of a course-based research experience. CBE Life Sci. Educ. 19:ar13. doi: 10.1187/cbe.19-10-0208

Hanauer, D. I., and Dolan, E. L. (2014). The project ownership survey: measuring differences in scientific inquiry experiences. CBE Life Sci. Educ. 13, 149–158. doi: 10.1187/cbe.13-06-0123

Hanauer, D. I., Graham, M. J., Jacobs-Sera, D., Garlena, R. A., Russell, D. A., Sivanathan, V., et al. (2022). Broadening access to STEM through the community college: investigating the role of course-based research experiences (CREs). CBE Life Sci. Educ. 21:ar38. doi: 10.1187/cbe.21-08-0203

Hanauer, D. I., Graham, M. J., SEA-PHAGES, Betancur, L., Bobrownicki, A., Cresawn, S. G., et al. (2017). An inclusive research education community (iREC): impact of the SEA-PHAGES program on research outcomes and student learning. Proc. Natl. Acad. Sci. 114, 13531–13536. doi: 10.1073/pnas.1718188115

Hanauer, D. I., Nicholes, J., Liao, F.-Y., Beasley, A., and Henter, H. (2018). Short-term research experience (SRE) in the traditional lab: qualitative and quantitative data on outcomes. CBE Life Sci. Educ. 17:ar64. doi: 10.1187/cbe.18-03-0046

Hensel, N. H. (2023). Course-based undergraduate research: Educational equity and high-impact practice. Sterling, VA: Stylus Publishing.

Hong, L., and Page, S. E. (2004). Groups of diverse problem solvers can outperform groups of high-ability problem solvers. Proc. Natl. Acad. Sci. 101, 16385–16389. doi: 10.1073/pnas.0403723101

Hoque, M. (2016). Three domains of learning: cognitive, affective and psychomotor. J. EFL Educ. Res. 2, 45–51.

Hurley, A., Chevrette, M. G., Acharya, D. D., Lozano, G. L., Garavito, M., Heinritz, J., et al. (2021). Tiny earth: a big idea for STEM education and antibiotic discovery. MBio 12, e03432–e03420. doi: 10.1128/mbio.03432-20

Hyman, O., Doyle, E., Harsh, J., Mott, J., Pesce, A., Rasoul, B., et al. (2021). CURE-all: large scale implementation of authentic DNA barcoding research into first-year biology curriculum. CourseSource 6. doi: 10.24918/cs.2019.10

Jordan, T. C., Burnett, S. H., Carson, S., Caruso, S. M., Clase, K., DeJong, R. J., et al. (2014). A broadly implementable research course in phage discovery and genomics for first-year undergraduate students. MBio 5, e01051–e01013. doi: 10.1128/mbio.01051-13

Kleinschmit, A. J., Genné-Bacon, E., Drace, K., Govindan, B., Larson, J. R., Qureshi, A. A., et al. (2023). A framework for leveraging network course-based undergraduate research (CURE) faculty to develop, validate, and administer an assessment instrument. Submitted 2023.

Kremer, J. F., and Bringle, R. G. (1990). The effects of an intensive research experience on the careers of talented undergraduates. J. Res. Develop. Edu. 24, 1–5.

Krim, J. S., Coté, L. E., Schwartz, R. S., Stone, E. M., Cleeves, J. J., Barry, K. J., et al. (2019). Models and impacts of science research experiences: a review of the literature of CUREs, UREs, and TREs. CBE—Life Sci. Educ. 18:ar65. doi: 10.1187/cbe.19-03-0069

Kuh, G. (2008). High-impact educational practices: what they are, who has access to them, and why they matter. Available at: https://www.aacu.org/publication/high-impact-educational-practices-what-they-are-who-has-access-to-them-and-why-they-matter. Accessed 12 July 2023

Laursen, S., Hunter, A.-B., Seymour, E., Thiry, H., and Melton, G. (2010). Undergraduate research in the sciences: engaging students in real science. San Francisco, CA: Jossey-Bass.

Linn, M. C., Palmer, E., Baranger, A., Gerard, E., and Stone, E. (2015). Undergraduate research experiences: impacts and opportunities. Science 347:1261757. doi: 10.1126/science.1261757

Lopatto, D. (2004). Survey of undergraduate research experiences (SURE): first findings. Cell Biol. Educ. 3, 270–277. doi: 10.1187/cbe.04-07-0045

Lopatto, D., Hauser, C., Jones, C. J., Paetkau, D., Chandrasekaran, V., Dunbar, D., et al. (2014). A central support system can facilitate implementation and sustainability of a classroom-based undergraduate research experience (CURE) in genomics. CBE—Life Sci. Educ. 13, 711–723. doi: 10.1187/cbe.13-10-0200

Murren, C. J., Wolyniak, M. J., Rutter, M. T., Bisner, A. M., Callahan, H. S., Strand, A. E., et al. (2019). Undergraduates phenotyping Arabidopsis knockouts in a course-based undergraduate research experience: exploring plant fitness and vigor using quantitative phenotyping methods. J. Microbiol. Biol. Educ. 20. doi: 10.1128/jmbe.v20i2.1650

Nagda, B. A., Gregerman, S. R., Jonides, J., von Hippel, W., and Lerner, J. S. (1998). Undergraduate student-faculty research partnerships affect student retention. Rev. High. Educ. 22, 55–72. doi: 10.1353/rhe.1998.0016

National Academies of Sciences, Engineering, and Medicine. (2015). Integrating discovery-based research into the undergraduate curriculum: Report of a convocation. Washington, D.C.: National Academies Press.

Pieczynski, J., Wolyniak, M., Pattison, D., Hoage, T., Carter, D., Olson, S., et al. (2022). The CRISPR in the classroom network: a support system for instructors to bring gene editing technology to the undergraduate classroom. FASEB J. 36. doi: 10.1096/fasebj.2022.36.S1.R3488

Reyna, N. S., and Hensley, L. L. (2023). CBEC: Cell Biology Education Consortium (RCN-UBE introduction). QUBES Educ. Resour. doi: 10.25334/7ZJZ-W895

Roberts, R., Hall, B., Daubner, C., Goodman, A., Pikaart, M., Sikora, A., et al. (2019). Flexible implementation of the BASIL CURE. Biochem. Mol. Biol. Educ. 47, 498–505. doi: 10.1002/bmb.21287

Rodenbusch, S. E., Hernandez, P. R., Simmons, S. L., and Dolan, E. L. (2016). Early engagement in course-based research increases graduation rates and completion of science, engineering, and mathematics degrees. CBE—Life Sci. Educ. 15:ar20. doi: 10.1187/cbe.16-03-0117

Russell, S. H. (2006). Evaluation of NSF support for undergraduate research opportunities: Follow-up survey of undergraduate NSF program participants: draft final report. Menlo Park, CA: SRI International.

Sadler, T. D., Burgin, S., McKinney, L., and Ponjuan, L. (2010). Learning science through research apprenticeships: a critical review of the literature. J. Res. Sci. Teach. 47, 235–256. doi: 10.1002/tea.20326

Sadler, T. D., and McKinney, L. (2010). Scientific research for undergraduate students: a review of the literature. J. Coll. Sci. Teach. 39, 43–49. doi: 10.2505/3/jcst10_039_05

Shaffer, C. D., Alvarez, C., Bailey, C., Barnard, D., Bhalla, S., Chandrasekaran, C., et al. (2010). The genomics education partnership: successful integration of research into laboratory classes at a diverse group of undergraduate institutions. CBE—Life Sci. Educ. 9, 55–69. doi: 10.1187/cbe.09-11-0087

Shortlidge, E. E., Bangera, G., and Brownell, S. E. (2016). Faculty perspectives on developing and teaching course-based undergraduate research experiences. Bioscience 66, 54–62. doi: 10.1093/biosci/biv167

Spell, R. M., Guinan, J. A., Miller, K. R., and Beck, C. W. (2014). Redefining authentic research experiences in introductory biology laboratories and barriers to their implementation. CBE—Life Sci. Educ. 13, 102–110. doi: 10.1187/cbe.13-08-0169

Staub, N. L., Poxleitner, M., Braley, A., Smith-Flores, H., Pribbenow, C. M., Jaworski, L., et al. (2016). Scaling up: adapting a phage-hunting course to increase participation of first-year students in research. CBE—Life Sci. Educ. 15:ar13. doi: 10.1187/cbe.15-10-0211

Vater, A., Mayoral, J., Nunez-Castilla, J., Labonte, J. W., Briggs, L. A., Gray, J. J., et al. (2021). Development of a broadly accessible, computationally guided biochemistry course-based undergraduate research experience. J. Chem. Educ. 98, 400–409. doi: 10.1021/acs.jchemed.0c01073

Wolkow, T. D., Durrenberger, L. T., Maynard, M. A., Harrall, K. K., and Hines, L. M. (2014). A comprehensive faculty, staff, and student training program enhances student perceptions of a course-based research experience at a two-year institution. CBE—Life Sci. Educ. 13, 724–737. doi: 10.1187/cbe.14-03-0056

Keywords: course-based undergraduate research experience (CURE), assessment, network CUREs, instrument development and validation, undergraduate research experiences, course-based research experiences, science education

Citation: Kleinschmit AJ, Govindan B, Larson JR, Qureshi AA and Bascom-Slack C (2023) The CURE assessment landscape from the instructor’s point of view: knowledge and skills assessments are highly valued support tools for CURE adoption. Front. Educ. 8:1291071. doi: 10.3389/feduc.2023.1291071

Edited by:

Melissa C. Srougi, North Carolina State University, United States

Reviewed by:

Ellis Bell, University of San Diego, United States

Copyright © 2023 Kleinschmit, Govindan, Larson, Qureshi and Bascom-Slack. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Adam J. Kleinschmit, akleinschmit@dbq.edu

†ORCID: Adam J. Kleinschmit https://orcid.org/0000-0003-3820-524X

Jennifer R. Larson https://orcid.org/0000-0003-4352-3517

Carol Bascom-Slack https://orcid.org/0000-0002-2950-8997
