
ORIGINAL RESEARCH article

Front. Educ., 22 September 2023

Sec. Assessment, Testing and Applied Measurement

Volume 8 - 2023 | https://doi.org/10.3389/feduc.2023.1241998

This article is part of the Research Topic Insights in Assessment, Testing, and Applied Measurement: 2022

Introduction: Teacher conceptions of feedback ideally predict their feedback practices, but little robust evidence identifies which beliefs matter to practices. It is reasonable to expect that teacher conceptions of feedback would align with the policy framework of an educational jurisdiction. The Teacher Conceptions of Feedback (TCoF) inventory was developed in New Zealand, which has a relatively low-stakes, formative policy framework.

Methods: This study surveyed 451 Swedish teachers working in Years 1–9, a policy context that prioritises teachers using multiple data sources to help students learn. The study used a translated version of the TCoF inventory but separated six items describing formative feedback practices from their original factors.

Results: A six-factor TCoF model was recovered (Praise, Improvement, Ignore, Required, PASA, and Timely), partially replicating the previous study. A well-fitting structural equation model showed that formative practices were predicted by just two conceptions of feedback (i.e., feedback improves learning and students may ignore feedback).

Discussion: This study demonstrates that the TCoF inventory can be used to identify plausible relations from feedback beliefs to formative feedback practices.

Teacher conceptions of, or beliefs about, feedback are likely to matter to how feedback is implemented and whether it contributes to greater learning or better teaching (Barnes et al., 2015). However, the Theory of Planned Behaviour (Ajzen, 1991) indicates that the influence of cognitive and affective attitudes on behavioural intentions is constrained by social norms and perceptions of behavioural control. Thus, because teacher feedback practices are likely to be shaped by the conceptions of feedback shared within a specific educational system, it is important to examine these relationships within a specific context. The present paper contributes to the field by being the first survey study to explicitly link teacher conceptions of feedback to their self-reported feedback practices. Moreover, it reports a structural equation model of these relationships within the education system of Sweden, which takes a low-stakes formative approach to assessment rather than implementing a high-stakes testing regime. A stronger emphasis on feedback as a mechanism for improving educational outcomes might therefore be expected in the Swedish educational system than in systems where assessments carry higher stakes. In addition, it is reasonable to expect that a conception emphasising feedback as a vehicle for improvement will be reflected in teachers’ feedback practices. Incidentally, the study also provides insights into the generalisability of a teacher self-report inventory about feedback conceptions that was developed in New Zealand and administered here in Swedish. The goal of this study was therefore to measure teachers’ self-reported formative feedback practices and to identify possible predictor beliefs among their conceptions of the nature and purpose of feedback. Our hypotheses were:

1. Swedish teachers will strongly exhibit improvement-oriented beliefs about feedback.

2. Improvement-oriented beliefs about feedback will predict self-reported formative feedback practices.

Under a conventional definition, feedback is a consequence of performance (Hattie and Timperley, 2007). An important function of feedback, then, is to provide information to learners and teachers about what each party needs to do in the classroom to meet the curricular objectives of schooling. Performance data can include classroom interactions (e.g., question and answer), more formal diagnostic testing (Brown and Hattie, 2012), or analysis of errors made in classroom or home practice (Bejar, 1984). From these kinds of performance data, teachers can formatively make appropriate adjustments to their classroom instruction and to student learning activities (Lai and Schildkamp, 2016). This includes giving learners feedback about the task, the learning process, and students’ metacognitive self-awareness of the instructional objectives (Hattie and Timperley, 2007). Naturally, this approach to assessment requires resources (i.e., assessments that diagnose needs and time to plan responses), policies that prioritise using assessment formatively rather than solely for administrative or summative purposes, and teacher commitment to generating and providing feedback formatively for improvement.

Further, given the importance of the teacher’s active role in using performance data in this way, it is reasonable to expect that teacher beliefs about feedback matter to the efficacy of these processes. Ajzen’s (1991) Theory of Planned Behaviour (TPB) implies that teachers’ beliefs and attitudes toward assessment and feedback are essential to understanding their intentions and actions. TPB identifies attitudes, social norms, and perceptions of behavioural control as predictors of intentions, behaviours, and outcomes. This aligns well with Fives and Buehl’s (2012) model, in which teacher beliefs act as filters, frames, and guides for the cognitive resources that shape teachers’ actions. Of course, teacher beliefs about the proper role of feedback are not universal; they are bound by the policy and practice framework in which teachers are employed (Brown and Harris, 2009; Fulmer et al., 2015). As Bonner (2016) makes clear, teacher assessment practices are shaped by policy and regulatory pressures. Where external agencies permit teachers a great deal of control and autonomy in teaching (e.g., Sweden), their beliefs are likely to shape their practices strongly. Hence, it is plausible that teacher conceptions of feedback will influence their self-reported feedback practices.

Research into teacher beliefs about feedback indicates that teachers hold multiple conceptions of it, endorsed with varying intensity (Brown et al., 2012). Because feedback serves multiple purposes and uses, teachers within any jurisdiction hold multiple and complex attitudes in response to those uses. The relative strength of these conceptions appears to be ecologically rational, in that teachers generally endorse the policies and purposes that apply to the level at which they are employed (Rubie-Davies et al., 2012).

Research on how conceptions of feedback relate to behaviours is largely limited to self-reported rather than observed practices. This raises the possibility that such data are invalid because of memory failure or ego-protective responses. To minimise that threat, valid data collection uses multiple items for each potential latent cause, designed to present theoretically important stimuli, and analyses the responses statistically to determine their fit to the theory (Brown, 2023). Observation alone cannot reveal what the most knowledgeable informant, the individual, knows about their own beliefs, thoughts, ideas, emotions, and attitudes; hence the need for self-report (Brown, 2023).

Even so, survey research still provides limited information about how beliefs about feedback relate to feedback practices. For example, a survey of 54 Tanzanian mathematics teachers (Kyaruzi et al., 2018) found that endorsement of approaches to feedback focused on monitoring (e.g., asking students to indicate what went well and what went badly with their assignments) and scaffolding (e.g., adjusting instruction whenever I notice that students do not understand a topic) strongly predicted high-quality feedback delivery practices (e.g., being supportive when giving students feedback and encouraging students to ask for feedback whenever they are uncertain). In contrast, a small survey of 61 Ethiopian teachers found no statistically significant correlation between teacher beliefs about feedback and their practices (Dessie and Sewagegn, 2019).

Using the Teachers Conceptions of Feedback inventory (TCoF; Harris and Brown, 2008), a nationally representative survey of 518 New Zealand teachers found that endorsement of feedback about learning processes and of involving students in feedback predicted greater use of non-teacher feedback methods (Brown et al., 2012). The same study reported that the use of praise in feedback predicted feedback actions that protected students from negative evaluative consequences. A survey of 390 Pakistani teachers using the TCoF found that endorsement of feedback as encouragement predicted greater use of protective evaluation practices, such as giving positive messages to students and avoiding critical comments (Aslam and Khan, 2021).

According to the joint European Values Study and World Values Survey 2005–2022 (EVS/WVS, 2022), Sweden is a strongly secular-rational and individualistic country with a strong emphasis on equality and on the individual’s freedom and wellbeing. A similar description emerges from the six-dimensional Index of National Culture (INC) by Hofstede et al. (2010). This is reflected in teachers’ relatively high degree of freedom to interpret and concretize the objectives of the national curriculum and to decide on appropriate teaching methods to help students achieve these goals (Helgøy and Homme, 2007). The Swedish egalitarian ideal is also reflected in teacher–student relations: teachers neither receive nor demand respect solely on the basis of their position or role in society. In the classroom, the average student is the norm, and separating students into, for example, special classes or educational tracks for gifted or underperforming students is rare. Publicly rating students on their school achievement, whether explicitly or implicitly, is not in line with Swedish culture.

The Swedish curriculum is goal-oriented, with national standards for student learning in years 3, 6, and 9 (nominally ages 9, 12, and 15). Grades are given in school years 6–9, but only the year 9 grades are high-stakes, because they matter for admission to upper secondary school. The grades are criterion-referenced: if the standards are achieved, any number of students can receive a given grade. Legally, the municipality is responsible for providing adequate resources for education and for conducting systematic evaluations [Utbildningsdepartementet (Ministry of Education), 2010]. To support schools and teachers in fulfilling their obligations, the Swedish National Agency for Education (SNAEd) provides national screening materials, assessment support materials, and standardised national tests. These materials and tests serve various purposes: informing decisions about support and adaptations of teaching, informing grading, and, at an aggregated level, providing point estimates of student achievement at the school or system level to support between-school equivalence in grading and trend analysis [Skolverket (National Agency for Education), 2020].

The national standardised tests (NSTs) in years 3, 6, and 9 are mandatory. When grading students, teachers are required to use all available information about students’ knowledge and skills, with particular consideration of the NST results [Utbildningsdepartementet (Ministry of Education), 2010]. Accordingly, the SNAEd advises that teachers design and use different types of assessment situations for formative and summative purposes [Skolverket (National Agency for Education), 2022] and that teachers at all school levels choose, design, and implement their own classroom assessment.

Research on Swedish teachers’ conceptions of feedback is limited in both number and scope. However, by interviewing and surveying approximately 70 teachers and principals with different qualifications and experience at seven schools in four of the largest cities in Sweden and Norway, Helgøy and Homme (2007) found that Swedish teachers, to a greater extent than Norwegian teachers, perceived NSTs as valuable tools for grading and for improving their teaching. Moreover, unlike the Norwegian teachers, the Swedish teachers did not perceive NSTs as limiting their autonomy in interpreting national goals and organising teaching to help students reach those goals.

This study used a self-administered, self-report survey inventory with a forced-choice ordinal agreement response scale. A survey was used for several reasons: (a) human beliefs are not directly observable, so self-report is a viable way to elicit them; (b) teacher practices cannot be observed anonymously or easily without the teacher’s awareness, so the accuracy and completeness of observations cannot be guaranteed; and (c) a reliable measure of a teacher’s feedback based on classroom observations would require many hours of lesson observation per teacher. Consequently, survey methodology was deemed appropriate and feasible for the present study. Furthermore, a contribution of this study is to examine whether an inventory containing both conceptions factors and a practices factor is valid (see the section Adaptation below). Analysis was done within the multiple indicators, multiple causes (MIMIC; Jöreskog and Goldberger, 1975) framework, in which each latent construct is manifested by multiple indicators and each survey item response is explained by a latent factor plus a residual capturing all unexplained variance. The study uses confirmatory factor analysis and structural equation modelling to establish both the structure of responses and the relations of factors to each other. Although the data are non-experimental, we assume a causal path of influence from antecedent conceptions of feedback to self-reported feedback practices.
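The measurement idea behind this framework can be illustrated with a small simulation. The sketch below is an illustration under simplifying assumptions, not the authors’ model: it covers only the measurement part (one latent factor, three standardised items, normally distributed scores), and the loadings 0.8, 0.7, and 0.6 and the function name `simulate_items` are hypothetical. Each simulated item is its loading times the latent factor plus a residual, so the model implies that the correlation between two items equals the product of their loadings.

```python
import numpy as np

def simulate_items(loadings, n, seed=0):
    """Simulate standardised item scores from a one-factor measurement model.

    Each item x_j = lambda_j * eta + e_j, where eta ~ N(0, 1) is the latent
    factor and e_j ~ N(0, 1 - lambda_j**2) is the item residual, so every
    item has unit variance.
    """
    rng = np.random.default_rng(seed)
    lam = np.asarray(loadings, dtype=float)
    eta = rng.standard_normal(n)                       # latent factor scores
    resid_sd = np.sqrt(1.0 - lam**2)                   # residual SD per item
    e = rng.standard_normal((n, lam.size)) * resid_sd  # unexplained variance
    return eta[:, None] * lam + e                      # n x items matrix

loadings = [0.8, 0.7, 0.6]          # hypothetical standardised loadings
x = simulate_items(loadings, n=200_000)
r = np.corrcoef(x, rowvar=False)    # observed item correlation matrix
# The model-implied correlation of items 1 and 2 is 0.8 * 0.7 = 0.56
print(round(r[0, 1], 2))
```

With a large simulated sample, the observed correlation between the first two items should sit close to the implied 0.56. Confirmatory factor analysis works in the opposite direction, estimating loadings from observed correlations.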

A total of 461 teachers working in Years 1–9 in a northern city in Sweden responded to the survey, a 62% response rate for the municipality. This sample was chosen because the research group and the municipality had previously decided to initiate a larger research project on improving assessment practices in these school years; investigation of teacher conceptions was therefore included as part of a multi-method, multi-study project. Prior to analysis, participants with more than 10% of responses missing per instrument were removed. After deletion of these 11 cases, 450 teachers were retained (82% women, 17% men, 1% missing). Most participants had a teaching degree (78%), 5% did not, and 17% did not answer. Teaching experience was grouped by range: 5% <2 years, 16% 2–5 years, 12% 6–10 years, and 66% >10 years. Participants were distributed almost equally across the grade levels taught (Years 1–3, n = 149; Years 4–6, n = 156; Years 7–9, n = 141).
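The exclusion rule described above (more than 10% of responses missing per instrument) can be expressed as a simple filter. This is a minimal sketch assuming responses for one instrument are stored as a participants × items NumPy array with `np.nan` marking a missing answer; the function name `screen_missing` and the demo data are hypothetical.

```python
import numpy as np

def screen_missing(responses, max_missing=0.10):
    """Return a boolean mask of participants to keep for one instrument.

    A participant is dropped when the share of missing items exceeds
    max_missing (here 10%, matching the rule stated in the text).
    """
    responses = np.asarray(responses, dtype=float)
    missing_share = np.isnan(responses).mean(axis=1)  # per-participant share
    return missing_share <= max_missing

# Hypothetical 10-item instrument answered by three participants
demo = np.array([
    [5, 6, 4, 5, 6, 5, 4, 6, 5, 5],            # complete
    [5, np.nan, 4, 5, 6, 5, 4, 6, 5, 5],       # 10% missing -> kept
    [np.nan, np.nan, 4, 5, 6, 5, 4, 6, 5, 5],  # 20% missing -> dropped
])
keep = screen_missing(demo)
print(keep.tolist())  # [True, True, False]
```

Applied separately to each instrument, a participant flagged on any instrument would be excluded before imputation.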

The Teachers Conceptions of Feedback inventory (TCoF; Harris and Brown, 2008) probes nine different aspects of how teachers perceive or conceive of the nature and purpose of feedback. Drawing on Hattie and Timperley’s (2007) description of feedback, factors were developed for the levels of feedback, for the assumption that feedback exists to improve learning, and for the legitimate expectation that feedback will be provided (Table 1). The Improvement factor focuses on students using the feedback they receive. Reporting and Compliance contains statements indicating that feedback is expected by stakeholders (e.g., leaders and parents) and should inform parents about student progress. The Task factor focuses on giving students information about aspects of their work that could be improved. The Process factor focuses on allowing students to engage actively in responding to feedback. The Self-regulation factor includes items about student autonomy and agency in evaluating their own work. The Encouragement factor includes statements suggesting that praising students boosts their self-esteem. Two additional factors draw on the assessment for learning emphases of involving students in assessment and providing timely feedback: the Peer and Self-Feedback factor focuses on students actively giving themselves and each other feedback, and the Timeliness factor includes items on the importance of responding promptly to student work.

This inventory was developed in New Zealand, with a measurement model that was statistically invariant across primary and secondary teachers (Brown et al., 2012). Both New Zealand primary and secondary teachers positively endorsed (i.e., mean score > 4.00 out of 6.00) the Improvement, Task, and Process factors, and primary teachers scored substantially higher (i.e., Cohen’s d > 0.60) than secondary teachers on feedback is Required, Peer and Self-feedback, Process, and Timeliness. That survey related TCoF conceptions to self-reported feedback practices that had been aggregated into four types. Consistent with the notion that beliefs predict behaviours, the Improvement factor had a positive path to Teacher Formative feedback practices (i.e., giving detailed written comments; writing hints, tips, and reminders on work; discussing work with students; and giving spoken comments in class). The Encouragement conception of feedback predicted teachers’ Protective-Evaluation feedback, which involves giving stickers, stamps, or smiley faces on student work and praising students for how hard they have worked. Emphasis on feedback Reporting and Compliance with expectations increased the prevalence of Parent Reporting practices (i.e., parent–teacher conferences and reports to parents).

While the TCoF focuses on teacher conceptions of the nature and purpose of feedback, a close reading of the inventory suggested that it contained eight statements describing specific behaviours teachers might enact. Six of these came from the Hattie and Timperley (2007) process (Process1, 2, 5), self-regulation (SRL1, 3), and praise (Praise6) factors; the other two came from Timeliness (Time1, 4). To test whether these items formed a Practices factor, they were removed from their original scales and combined into a new, separate Feedback Practices scale, which theoretically would be predicted by the remaining TCoF conceptions of feedback factors. Examining whether this adaptation is valid is therefore a major contribution of this study. An advantage of this approach is that it minimises the number of items needed to elicit both beliefs about feedback and practices.

The instruments were translated into Swedish by the authors, prioritising functional rather than literal equivalence. After translation, the functional equivalence of the items was validated by three external reviewers fluent in both languages. Items were presented in a mixed order, as shown by the item numbers in the Supplementary material. Participants responded using a positively packed, 6-point agreement scale. This type of scale has two negative options (Strongly Disagree and Moderately Disagree, scored 1 and 2, respectively) and four positive options (Slightly Agree, Moderately Agree, Mostly Agree, and Strongly Agree, scored 3–6, respectively). This approach gives greater ability to discriminate the degree of positivity participants hold toward positively valued statements and is appropriate when participants are likely to endorse statements (Lam and Klockars, 1982; Klockars and Yamagishi, 1988; Masino and Lam, 2014). Hence, when participants are expected to respond positively to a stimulus (e.g., teachers responding to a policy expectation), giving them more choices in the positive part of the response continuum allows finer discrimination among their responses.
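The scoring of the positively packed scale can be written as a small lookup table. This sketch assumes responses arrive as the English labels listed above (the Swedish wording is not given here); `SCALE`, `score`, and `factor_mean` are hypothetical names, and the factor mean is the unweighted average of raw item scores, the approach the text describes for factor mean scores.

```python
from statistics import mean

# Positively packed 6-point scale as scored in the text:
# two disagree options (1-2) and four agree options (3-6).
SCALE = {
    "Strongly Disagree": 1,
    "Moderately Disagree": 2,
    "Slightly Agree": 3,
    "Moderately Agree": 4,
    "Mostly Agree": 5,
    "Strongly Agree": 6,
}

def score(labels):
    """Convert a list of response labels to numeric scores."""
    return [SCALE[label] for label in labels]

def factor_mean(labels):
    """Unweighted mean of the raw item scores belonging to one factor."""
    return mean(score(labels))

# Hypothetical answers to three items loading on a single factor
answers = ["Moderately Agree", "Mostly Agree", "Strongly Agree"]
print(factor_mean(answers))  # 5
```

Because the scale is asymmetric, a score of 3 already signals (slight) agreement, so factor means are read against the verbal anchors rather than against a neutral midpoint.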

After deleting participants with more than 10% missing responses, we imputed the remaining missing values with the expectation maximisation algorithm (Dempster et al., 1977). Little’s (1988) Missing Completely at Random (MCAR) test was not statistically significant (χ2 = 1508.122, df = 1,453, p = 0.153), indicating that the pattern of missing responses was consistent with random missingness. Hence, all analyses were conducted on complete data. All but one variable met accepted standards for skew (<2.00) and kurtosis (<7.00), meaning those variables were acceptably close to normal (Kim, 2013). Because item Irr4 had kurtosis = 11.40, it was transformed using a Box-Cox transformation (Courtney and Chang, 2018) in the normalr ShinyApp. This reduced kurtosis to −0.40, and the transformed version of the item was used in all analyses.
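Two of these checks can be reproduced with SciPy. The sketch below (a) recomputes the upper-tail p-value of the reported MCAR chi-square statistic and (b) screens an item against the skew and kurtosis thresholds before applying a Box-Cox transformation with `scipy.stats.boxcox`, which estimates λ by maximum likelihood. It is a stand-in for the normalr ShinyApp, not a reproduction of it; the item data and the helper name `is_nonnormal` are hypothetical, and applying the kurtosis threshold to excess kurtosis (SciPy’s default) is an assumption.

```python
import numpy as np
from scipy import stats

# (a) Upper-tail p-value of the reported MCAR statistic (chi2 = 1508.122, df = 1453)
p_mcar = stats.chi2.sf(1508.122, df=1453)

# (b) Non-normality screen using the thresholds quoted in the text
def is_nonnormal(x, max_skew=2.0, max_kurt=7.0):
    """Flag an item whose |skew| or |kurtosis| reaches the thresholds.

    Assumption: SciPy's default Fisher (excess) kurtosis is used.
    """
    return abs(stats.skew(x)) >= max_skew or abs(stats.kurtosis(x)) >= max_kurt

# Hypothetical, heavily skewed item on the 1-6 scale (Box-Cox needs x > 0)
item = np.array([1] * 90 + [2] * 5 + [3] * 3 + [6] * 2, dtype=float)
transformed, lmbda = stats.boxcox(item)  # lambda chosen by maximum likelihood
print(round(float(p_mcar), 3), is_nonnormal(item), round(float(lmbda), 2))
```

The recomputed p-value should agree with the reported p = 0.153; after transformation, the item would be re-screened before use.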

Consistent with the MIMIC framework, a two-step process (Anderson and Gerbing, 1988) was implemented: measurement models for each construct (i.e., conceptions of feedback and feedback practices) were tested through confirmatory factor analysis (CFA) before a structural model linking beliefs to practices was tested. Once a well-fitting model for each construct was found, a structural equation model (SEM) was tested in which beliefs about feedback were positioned as predictors of feedback practices, on the assumption that beliefs predict behaviours (Ajzen, 1991).

We tested a correlated model of eight conceptions of feedback factors, but this had poor fit. Consequently, we inspected modification indices to identify items that violated simple structure (Revelle and Rocklin, 1979) or the assumption of independent residuals (Barker and Shaw, 2015), while aiming to retain the eight conceptions factors. Items with weak loadings on their intended factors (i.e., <0.30) were candidates for deletion, as were items with strong modification indices (i.e., MI > 20) to other factors or whose residuals were strongly correlated with those of other items (Bandalos and Finney, 2010). Although most researchers expect three items per factor, it is possible in multi-factorial inventories to estimate factors with only two items (Bollen, 1989).
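The screening criteria above can be sketched as a filter that flags, but does not automatically delete, items. This assumes the standardised loadings and the largest modification index per item have already been extracted from CFA output into dictionaries; the function name, item names, and values are hypothetical.

```python
def deletion_candidates(loadings, max_mi, min_loading=0.30, mi_cutoff=20.0):
    """Return item names flagged for possible deletion.

    loadings: {item: standardised loading on its intended factor}
    max_mi:   {item: largest modification index involving the item
               (cross-loading or correlated residual)}
    """
    flagged = []
    for item, lam in loadings.items():
        weak = abs(lam) < min_loading                  # weak loading
        misfit = max_mi.get(item, 0.0) > mi_cutoff     # strong MI
        if weak or misfit:
            flagged.append(item)
    return flagged

# Hypothetical values for illustration only
loadings = {"Item1": 0.72, "Item2": 0.25, "Item3": 0.64, "Item4": 0.58}
max_mi = {"Item1": 4.1, "Item2": 3.0, "Item3": 31.7, "Item4": 12.5}
print(deletion_candidates(loadings, max_mi))  # ['Item2', 'Item3']
```

Flagged items remain candidates only: the decision to drop one also weighs theory and, as the text notes, the number of items left on each factor.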

Fit of both CFA and SEM models was established by inspecting multiple fit indices (Hu and Bentler, 1999). Because the chi-square measure of discrepancy between a model and its data is sensitive to sample size and model complexity, we accepted statistically non-significant values of the normed chi-square (i.e., χ2/df) as support for a model (Wheaton et al., 1977). Further evidence for not rejecting a model arises when the comparative fit index (CFI) is >0.90 and the root mean square error of approximation (RMSEA) is <0.08. However, both the CFI and RMSEA are sensitive to models with more than three factors, with the CFI tending toward rejection and the RMSEA toward non-rejection under those conditions (Fan and Sivo, 2007). Thus, greater reliance is placed on gamma hat >0.90 and the standardised root mean square residual (SRMR) <0.08, because these are more robust than the CFI or RMSEA to sample size, model complexity, and model misspecification. Scale reliability was estimated using the Coefficient H maximal reliability index, which is based on an optimally weighted composite of the standardised factor loadings (Hancock and Mueller, 2001). Factor mean scores were calculated by averaging the raw scores of the items contributing to each factor, an appropriate method when simple structure (i.e., each item belongs to only one factor) is present (DiStefano et al., 2009).
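Several of these statistics have simple closed forms, sketched below in plain Python. Assumptions: RMSEA uses N − 1 in the denominator (some software uses N; at N = 450 the difference is negligible), gamma hat uses the common formula with p observed variables, and Coefficient H is computed from standardised loadings. The inputs are the structural-model values reported in the results (χ2 = 465.334, df = 258) and the retained sample of 450; p is not stated in this section, so gamma hat is left as a function.

```python
import math

def normed_chisq(chisq, df):
    """Chi-square divided by its degrees of freedom."""
    return chisq / df

def rmsea(chisq, df, n):
    """Root mean square error of approximation (N - 1 convention)."""
    return math.sqrt(max(chisq - df, 0.0) / (df * (n - 1)))

def gamma_hat(chisq, df, n, p):
    """Gamma hat; p is the number of observed variables (items)."""
    return p / (p + 2.0 * (chisq - df) / (n - 1))

def coefficient_h(loadings):
    """Hancock and Mueller's maximal reliability from standardised loadings."""
    s = sum(l**2 / (1.0 - l**2) for l in loadings)
    return 1.0 / (1.0 + 1.0 / s)

print(round(normed_chisq(465.334, 258), 2))      # 1.8
print(round(rmsea(465.334, 258, 450), 3))        # 0.042
print(round(coefficient_h([0.7, 0.7, 0.7]), 3))  # 0.742
```

The first two lines reproduce the reported χ2/df = 1.80 and RMSEA = 0.042; the Coefficient H call uses three hypothetical loadings of 0.7 to show how H rises with stronger and more numerous loadings.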

CFA and SEM were conducted in the Jamovi Project (2022) platform using the lavaan package (Rosseel, 2012). Because six-point ordinal scales function similarly to continuous variables (Finney and DiStefano, 2006), maximum likelihood estimation was used. Model syntax is provided in Supplementary material.

After analysis was completed, member checking (Tong et al., 2007) was conducted with two interview groups in the city in which the survey had been administered, to examine our interpretation of items that did not load as expected and the meaning of the unexpected paths. Ten teachers were recruited face-to-face on a voluntary, convenience basis to participate in two group interviews (n = 4 and 6, respectively) held on separate occasions. In the interviews, the teachers were first given time to think individually about each issue and then shared their thinking in a joint discussion. The teachers had completed the survey, but their responses had been anonymous, so they were commenting on aggregate results to which they had contributed. Groups were led by two of the authors. In these discussions, we explored how respondents had interpreted the items and how they understood the unexpected relations we had detected. The authors took field notes during the 1-h conversations, which were also audio-recorded and transcribed verbatim. Authors 2 and 4 aggregated responses to the focus issues, and themes were identified through manual coding.

An inter-correlated factor model had promising fit, but fit remained below accepted standards. Because of their high inter-correlations, merging the SRL, Task, Process, and Improvement items into a single Improvement factor improved fit. Further modifications removed items that violated simple structure or were strongly correlated with other items, further improving fit to the data. Paths that were not statistically significant were also removed. These modifications produced six correlated conceptions of feedback factors and one practices of feedback factor.

The teacher conceptions of feedback factors were:

I. Feedback praises students (Praise),

II. Feedback improves student learning (Improvement),

III. Students ignore feedback (Ignore),

IV. Feedback is expected or required by school policy (Required),

V. Feedback is generated by involving peers and the self (PASA), and

VI. Feedback is prompt or timely (Timely).

The teacher Feedback Practices (Practices) factor consisted of three process items, two self-regulation of learning items, and one praise item. Together, these items describe a set of formative practices that give students information and time to think about and improve their work while taking responsibility for their own outcomes. The feedback teachers provide also includes comments on the effort students put into their work, as well as on how it can be improved.

Items, standardised loadings, and scale coefficient H statistics for all seven factors are given in Table 2.

The inter-correlations of the conceptions of feedback factors are shown in Table 3. As expected, the Students Ignore factor was negatively correlated with three other factors (i.e., Improvement, Required, and PASA) and not significantly correlated with Praise and Timely. In contrast, all other factors were moderately and positively inter-correlated, with values ranging from 0.36 ≤ r ≤ 0.70. This suggests that commitment to feedback for improvement is simultaneously positively related to feedback being required, using praise, feedback from peers and self, and feedback being timely, while feedback is not seen as something students ignore. Teacher beliefs therefore appear generally adaptive and in line with feedback theory.

Factor means are shown in Table 4, with between-factor effect sizes (Cohen’s d; Cohen, 1992). Values of d > 0.60 are considered large in educational research (Hattie, 2009). In general, teachers gave the strongest endorsement to the conception of feedback for Improvement, with large effects compared with all other feedback factors and Practices. Praise and Timely feedback, with scores below moderately agree, differed substantially from both the Required and Ignore factors. Both PASA and Required, above slightly agree, differed substantially only from Ignore, which was close to moderately disagree. Note that this low score indicates that, on average, teachers rejected the notion that students ignore feedback. Formative feedback Practices, with a score just above moderately agree, was much higher than PASA, Required, and Ignore, had medium and small differences from Timely and Praise, respectively, and was much lower than the Improvement conception.
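Effect sizes of this kind express the gap between two factor means in standard-deviation units. The paper does not state which variant of Cohen’s d was used, so the sketch below assumes the simple version that divides the mean difference by the root mean square of the two standard deviations; the means and SDs are hypothetical, not the values in Table 4, and the 0.60 cut-off is the benchmark cited in the text.

```python
import math

def cohens_d(m1, m2, sd1, sd2):
    """Mean difference divided by the root mean square of the two SDs."""
    pooled = math.sqrt((sd1**2 + sd2**2) / 2.0)
    return (m1 - m2) / pooled

def magnitude(d, large=0.60):
    """Label using the d > 0.60 'large' benchmark cited in the text."""
    return "large" if abs(d) > large else "not large"

# Hypothetical factor means and SDs (illustration only)
d = cohens_d(5.0, 4.4, 0.6, 0.8)
print(round(d, 2), magnitude(d))
```

Because every teacher answered all factors, variants that adjust for the correlation between the two factor scores would give somewhat different values for the same means.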

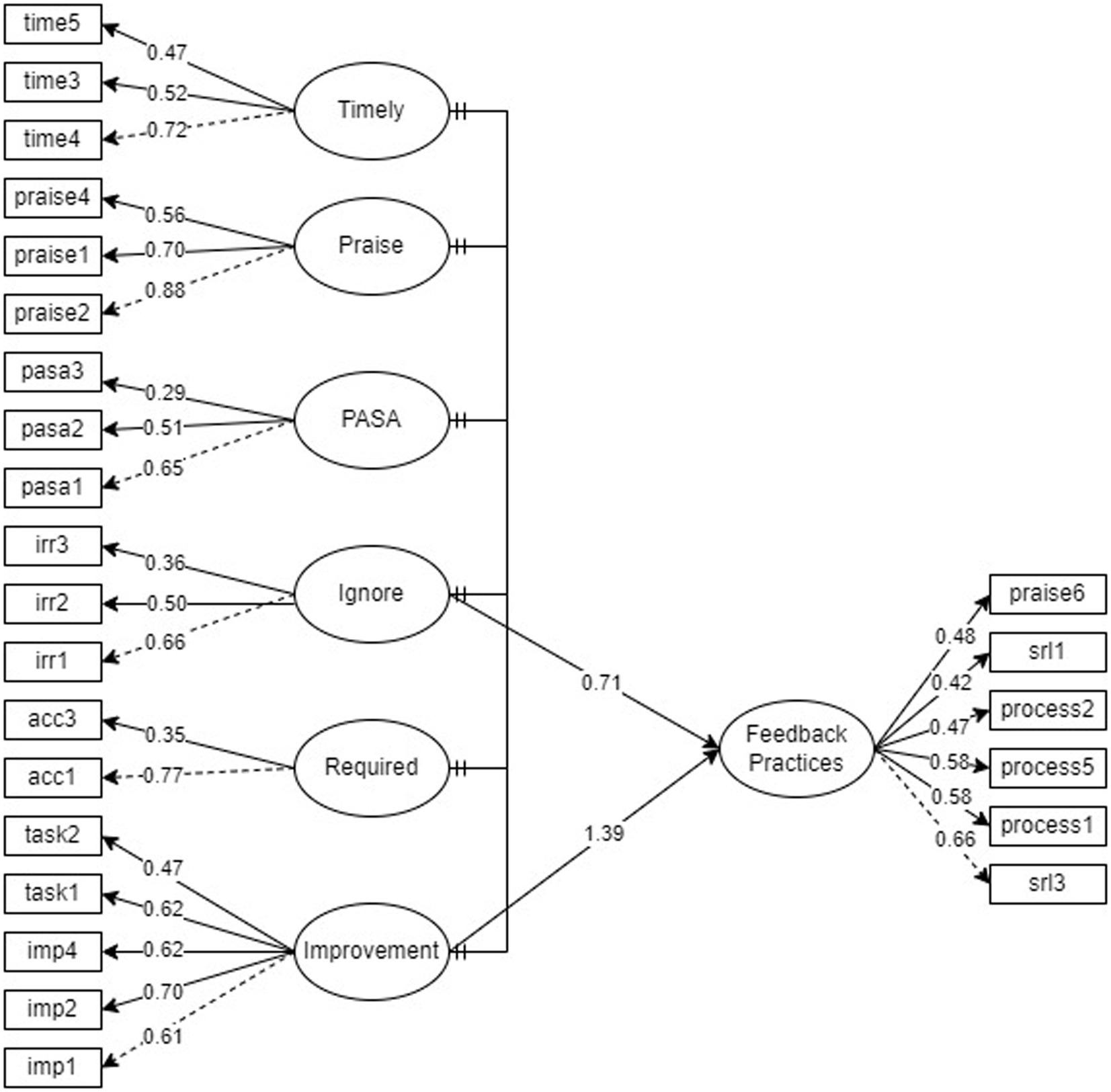

Structural paths were retained in the model only if they were statistically significant. The only statistically significant predictors of Practices were Improvement (β = 1.24) and Ignore (β = 0.61) (Figure 1). This model had acceptable to good fit indices (χ2 = 465.334, df = 258, χ2/df = 1.80, p = 0.18; CFI = 0.90; gamma hat = 0.96; RMSEA = 0.042, 90% CI = 0.036–0.048; SRMR = 0.050) and so was not rejected. Note that Improvement and Ignore were negatively correlated, yet both had positive paths to Practices; this non-transitive relationship is possible given the relatively modest correlation (r = −0.65) (Kim and Mueller, 1978). This suggests that implementing formative feedback practices depends both on seeing feedback as something that will help students improve their learning and on seeing it as something students tend to ignore, a tendency these practices may need to overcome. This interpretation was supported by the member checking process: all teachers expressed the view that feedback is an inherent part of good teaching and something they are expected to provide. Moreover, when students ignore feedback, the teachers saw this as an indication that they needed to improve either the feedback itself or the learning situation in which it is provided.
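A standardised coefficient above 1.0, such as β = 1.24 here, can look implausible, but it is admissible when the predictors are negatively correlated. For two standardised predictors, the explained variance is R² = β1² + β2² + 2·β1·β2·r12. The sketch below plugs in the reported values (β = 1.24 and 0.61, r = −0.65); it assumes standardised latent variables and ignores any other part of the model, so it is an approximate check rather than a reproduction of the SEM. The result is close to the R2 = 0.94 reported for practices, with the gap plausibly due to rounding of the published coefficients.

```python
def r_squared(b1, b2, r12):
    """R^2 of an outcome on two standardised, correlated predictors."""
    return b1**2 + b2**2 + 2.0 * b1 * b2 * r12

# Reported paths for Improvement and Ignore, and their correlation
r2 = r_squared(1.24, 0.61, -0.65)
print(round(r2, 2))  # 0.93

# Same coefficients with uncorrelated predictors would imply R^2 > 1
print(round(r_squared(1.24, 0.61, 0.0), 2))  # 1.91
```

If the predictors were uncorrelated, these coefficients would imply an impossible R² of about 1.91; the negative cross-term is what keeps the solution admissible, which is the non-transitive pattern described above.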

Figure 1. TCoF conceptions as predictors of self-reported feedback practices. Dashed line is seed value path; values are standardised loadings; residuals removed for simplicity; no correlated residuals; inter-correlation values in Table 3.

This study surveyed teachers’ self-reported conceptions of feedback and related those conceptions to self-reported formative feedback practices. Confirmatory factor analysis of responses from Swedish primary and lower secondary school teachers partially recovered previously published results from New Zealand teachers (Brown et al., 2012). The factor structure of the TCoF model was largely similar, albeit reduced to six factors, with the practices items moved into a new, separate scale. Moreover, the teachers in general moderately to mostly agreed with feedback for improved teaching and learning, while rejecting the idea that students ignore feedback. In addition, the Swedish teachers moderately agreed with the formative feedback practices, a construct not previously identified. It is important to remember that the Students Ignore Feedback conception had a moderate negative correlation (r = −0.65) with the Improvement Feedback conception. This exposes a non-transitive relationship, in that two negatively correlated factors both have positive paths to the same outcome (β = 0.61 and 1.24, respectively).

Unlike the small-scale survey in Ethiopia (Dessie and Sewagegn, 2019), this study found that teacher beliefs about feedback had statistically significant relationships with practices, a result reported elsewhere (Brown et al., 2012; Kyaruzi et al., 2018; Aslam and Khan, 2021). Like Kyaruzi et al. (2018), this study found that formative feedback practices were supported by improvement-oriented beliefs about the purpose of feedback. Previous studies (Brown et al., 2012; Aslam and Khan, 2021) found that feedback as Praise predicted Protective practices. This was not replicated, most likely because feedback practices were operationalised here as formative, improvement-oriented practices. A novel result was the supportive role that the belief that students might ignore feedback played in formative feedback practices.

Teachers’ conceptions of feedback are related to each other and to self-reported feedback practices. Most importantly, this study showed that formative feedback practices were positively predicted by only two conceptions of feedback. Specifically, the belief that feedback should contribute to improved learning and the belief that students tend to ignore feedback explained substantial variation in practices (R² = 0.94). Together with the results of the member checking, this suggests that teachers who are concerned that students might ignore formative feedback mitigate that concern by engaging in these formative feedback practices. Likewise, teachers who want feedback to support improved learning report using these practices.

These results may be understood through the function of beliefs in teacher actions (Fives and Buehl, 2012). The theory of planned behaviour (TPB; Ajzen, 1991) identifies social norms, attitudes, and perceptions of behavioural control as essential predictors of intentions and actions. Teacher beliefs about the role of feedback are bound by the policy and practice framework in which teachers are employed (Brown and Harris, 2009; Fulmer et al., 2015). Swedish teachers work within an education system that claims that all students can learn and that gives teachers a high degree of freedom to interpret and concretise the objectives of the national curriculum and to select appropriate teaching methods and materials. The education system also supports teachers in using multiple data sources to monitor student learning, and the stakes of assessment are moderate or low for both teachers and students. In such an educational system, feedback whose main purpose is learning and improvement may be seen as an integral part of day-to-day teaching and assessment practice.

In contexts that de-emphasise consequences around achievement, there is opportunity to use error and failure productively for greater achievement and performance. However, in cultures and educational systems with high-stakes testing regimes and policies that use assessment mostly for demonstrating accountability and summative purposes, teacher perceptions of what feedback is and how it functions will be coloured by the first known effect of accountability (i.e., compliance with superiors; Lerner and Tetlock, 1999). Consequently, the teacher perceptions of feedback and their associations with formative feedback practices reported in this study may be similar only to those of other jurisdictions that have similar low-stakes frameworks for assessment (e.g., New Zealand).

The Swedish context avoids pronounced incentives for students to ignore feedback because there is little risk of looking bad if they fail to use it successfully. Public ranking of students, as is the case in more competitive environments, may inculcate a culture of ignoring information that induces shame. Nonetheless, student autonomy permits the possibility of choosing to ignore feedback that could be perceived as threatening to ego enhancement or well-being (Harris et al., 2018). Hence, it is reasonable for teachers to consider this possibility and act to minimise ego-protective reasons to disregard important information in feedback. Thus, it seems legitimate for teachers to signal that their feedback practices incorporate minimising the possibility that students would treat feedback maladaptively.

Teachers generally agreed with the conception that the purpose of feedback is to enhance students’ self-esteem and, hence, should be full of encouraging and positive comments (i.e., Praise). Although this goal is commendable, a focus on giving praise may hamper learning. Studies have shown that focussing on praise may come at the expense of identifying students’ learning needs and suggestions on how to improve learning (Brown et al., 2012). Indeed, research has shown that praise commonly does not have a positive effect on students’ achievement (Hattie and Timperley, 2007). These results are corroborated by our model (Figure 1), where praise is not directly associated with the formative feedback practices that focus on the improvement of students work and self-regulation in learning. Hence, the goal of caring about the student and giving formative feedback may be in conflict. Since both goals are present in most curriculum statements, the solution is not to exclude either one, but instead to learn how to accomplish both. Thus, teacher education needs to address this tension and discuss how to circumvent it.

This could, for example, involve prospective teachers having to learn how to establish a classroom culture in which shortcomings (e.g., failure or not knowing) are seen as a natural part of learning and that attending to them is essential for learning. A prerequisite for the establishment of such a culture would be to find strategies to counteract the tendency of many students to link their school achievement with their self-esteem. Then, person-centered praise aiming at making students feel good about themselves could be replaced by positive comments aimed at linking positive outcomes to causes controllable by the student. Indeed, helping students to make adaptive attributions and to experience learning progress is associated with wellbeing (Winberg et al., 2014). Thus, teacher education must ensure that prospective teachers understand and enact caring for students by helping them to develop competence, rather than simply protect them from “bad” news.

We consider the results presented here likely to be typical of Swedish teachers rather than just of the teachers participating in this study. While school administration is very localised, the same policy and resource constraints apply to teachers elsewhere in the nation working in publicly funded primary and junior secondary schools. Nonetheless, a national survey would be needed to confirm the generalisability claims made here. The relatively modest coefficient H values (all H < 0.80) suggest that the stability of these results is less than ideal, so the stability of the factor measurement models needs to be tested in a further sample of Swedish teachers.
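Coefficient H (Hancock and Mueller, 2001) summarises how well a set of indicators reproduces its latent factor. It is computed from standardised loadings as H = 1 / (1 + 1 / Σ[l² / (1 − l²)]). The sketch below applies that formula. The function name and the example loadings are hypothetical and are not taken from the study's estimates.

```python
# Sketch: coefficient H (Hancock and Mueller, 2001) from standardised loadings.
# The loadings used in the example are hypothetical, not the study's estimates.

def coefficient_h(loadings: list[float]) -> float:
    """Return H = 1 / (1 + 1 / sum(l^2 / (1 - l^2)))."""
    if not loadings:
        raise ValueError("need at least one loading")
    info = 0.0
    for l in loadings:
        if not -1.0 < l < 1.0:
            raise ValueError("standardised loadings must lie strictly between -1 and 1")
        info += l ** 2 / (1.0 - l ** 2)   # signal-to-noise contribution of one item
    if info == 0.0:
        return 0.0                        # no item carries information about the factor
    return 1.0 / (1.0 + 1.0 / info)


if __name__ == "__main__":
    print(round(coefficient_h([0.6, 0.6, 0.6]), 3))       # 0.628, below 0.80
    print(round(coefficient_h([0.8, 0.8, 0.8, 0.8]), 3))  # 0.877
```

Each item's l² / (1 − l²) term is non-negative, so adding a weak item cannot lower H. Values below 0.80 therefore point to loadings that are modest overall rather than to a single poor item.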

Of course, given that the data come from a survey, we have made informed interpretations of what the factors mean and why the path values are what they are, corroborated by a small-scale member-checking exercise. Follow-up qualitative studies exploring teachers' understanding of the results may provide further confidence in our explanations. Providing teachers with their own factor scores may also yield further insight into what the factors mean to teachers and into their confidence in our findings.
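Returning factor scores to teachers requires choosing a scoring method. One common refined option is the regression (Thurstone) method, which weights standardised item responses by Σ⁻¹λ. The sketch below assumes a single standardised factor with uncorrelated uniquenesses. Under that assumption the Sherman–Morrison identity gives the weights without a matrix library. All names and numbers here are hypothetical and are not the study's procedure.

```python
# Sketch: regression (Thurstone) factor scores for ONE standardised factor,
# assuming Sigma = lambda lambda' + Psi with Psi = diag(1 - l^2).
# Sherman-Morrison gives Sigma^{-1} lambda = Psi^{-1} lambda / (1 + lambda' Psi^{-1} lambda).

def regression_score_weights(loadings: list[float]) -> list[float]:
    """Item weights w = Sigma^{-1} lambda for a single-factor model."""
    uniq = [1.0 - l ** 2 for l in loadings]                # unique variances
    scaled = [l / u for l, u in zip(loadings, uniq)]       # Psi^{-1} lambda
    a = sum(l * s for l, s in zip(loadings, scaled))       # lambda' Psi^{-1} lambda
    return [s / (1.0 + a) for s in scaled]


def factor_score(z_scores: list[float], loadings: list[float]) -> float:
    """Estimated factor score for one respondent's standardised item responses."""
    w = regression_score_weights(loadings)
    return sum(wi * zi for wi, zi in zip(w, z_scores))


if __name__ == "__main__":
    loadings = [0.6, 0.6, 0.6]       # hypothetical standardised loadings
    teacher = [1.0, 1.0, 1.0]        # hypothetical z-scores on three items
    print(round(factor_score(teacher, loadings), 2))   # 1.05
```

With modest loadings, the estimated scores carry considerable uncertainty. This is one reason to present individual scores to teachers as discussion prompts rather than as precise measurements.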

This study contributes to our understanding of how teachers conceive of feedback. A significant contribution is the identification of a separate self-reported feedback practices scale within the TCoF inventory. The study shows that teachers' concerns to use feedback to improve learning and to minimise students' tendencies to ignore feedback explain the formative feedback practices they report implementing.

The datasets presented in this article are not readily available because, in accordance with Swedish law and GDPR, the datasets cannot be published until the data have been anonymized. Anonymization of the data can be done 10 years after the completion of the research project at the earliest. Requests to access the datasets should be directed to the corresponding author.

The research took place in accordance with the research ethics guidelines recommended by the Swedish Research Council (codex.vr.se). Informed consent was obtained from participating teachers. Before they were asked for consent to participate in the study, they were informed about the project’s intended implementation and purpose, that they could cancel their participation at any time, that data would be handled by the researchers only, and that results would only be reported in such a way that individuals could not be identified. The data collected did not contain sensitive personal data and were processed in accordance with Umeå University’s data management plan. This plan was developed to ensure that data are processed in accordance with the General Data Protection Regulation (GDPR). A project of this kind, involving such Swedish data, does not require approval from the Swedish Ethics review board.

GB: conceptualisation, methodology, software, formal analysis, data curation, and writing – original draft. CA: validation, investigation, and writing – review and editing. MW: validation, investigation, writing – review and editing. TP: conceptualisation, validation, investigation, writing – review and editing, project administration, and funding acquisition. All authors contributed to the article and approved the submitted version.

This research was funded by a grant from the Swedish Research Council (#2019-04349).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2023.1241998/full#supplementary-material

Ajzen, I. (1991). The theory of planned behavior. Organ. Behav. Hum. Decis. Process. 50, 179–211. doi: 10.1016/0749-5978(91)90020-T

Anderson, J. C., and Gerbing, D. W. (1988). Structural equation modeling in practice – a review and recommended 2-step approach. Psychol. Bull. 103, 411–423. doi: 10.1037/0033-2909.103.3.411

Aslam, R., and Khan, N. (2021). Secondary school teachers’ knowledge and practices about constructive feedback: evidence from Karachi, Pakistan. Cakrawala Pendidikan 40, 532–543. doi: 10.21831/cp.v40i2.35190

Bandalos, D. L., and Finney, S. J. (2010). “Factor analysis: exploratory and confirmatory” in The Reviewer's guide to quantitative methods in the social sciences. eds. G. R. Hancock and R. O. Mueller (New York: Routledge), 93–114.

Barker, L. E., and Shaw, K. M. (2015). Best (but oft-forgotten) practices: checking assumptions concerning regression residuals. Am. J. Clin. Nutr. 102, 533–539. doi: 10.3945/ajcn.115.113498

Barnes, N., Fives, H., and Dacey, C. M. (2015). “Teachers’ beliefs about assessment” in International handbook of research on teacher beliefs. eds. H. Fives and M. Gregoire Gill (New York: Routledge), 284–300.

Bejar, I. I. (1984). Educational diagnostic assessment. J. Educ. Meas. 21, 175–189. doi: 10.1111/j.1745-3984.1984.tb00228.x

Bonner, S. M. (2016). “Teachers’ perceptions about assessment: competing narratives” in Handbook of human and social conditions in assessment. eds. G. T. L. Brown and L. R. Harris (New York: Routledge), 21–39.

Brown, G. T. L. (2023). Principles and assumptions of psychometric measurement. Revista Digital de Investigación en Docencia Universitaria [Digital Journal of University Teaching Research] 17:e1834. doi: 10.19083/ridu.2023.1834

Brown, G. T. L., and Harris, L. R. (2009). Unintended consequences of using tests to improve learning: how improvement-oriented resources heighten conceptions of assessment as school accountability. J. Multidiscip. Eval. 6, 68–91. doi: 10.56645/jmde.v6i12.236

Brown, G. T. L., Harris, L. R., and Harnett, J. (2012). Teacher beliefs about feedback within an assessment for learning environment: endorsement of improved learning over student well-being. Teach. Teach. Educ. 28, 968–978. doi: 10.1016/j.tate.2012.05.003

Brown, G. T., and Hattie, J. (2012). “The benefits of regular standardized assessment in childhood education: guiding improved instruction and learning” in Contemporary educational debates in childhood education and development. eds. S. Suggate and E. Reese (London: Routledge), 287–292.

Courtney, M. G. R., and Chang, K. C. (2018). Dealing with non-normality: an introduction and step-by-step guide using R. Teach. Stat. 40, 51–59. doi: 10.1111/test.12154

Dempster, A. P., Laird, N. M., and Rubin, D. B. (1977). Maximum likelihood estimation from incomplete data via the EM algorithm (with discussion). J. R. Stat. Soc. Ser. B 39, 1–38. doi: 10.1111/j.2517-6161.1977.tb01600.x

Dessie, A. A., and Sewagegn, A. A. (2019). Moving beyond a sign of judgment: primary school teachers' perception and practice of feedback. Int. J. Instr. 12, 51–66. doi: 10.29333/iji.2019.1224a

DiStefano, C., Zhu, M., and Mîndrilă, D. (2009). Understanding and using factor scores: considerations for the applied researcher. Pract. Assess. Res. Eval. 14. doi: 10.7275/da8t-4g52

EVS/WVS. (2022). European values study and world values survey: Joint EVS/WVS 2017–2022 dataset (joint EVS/WVS). GESIS Data Archive, Cologne. ZA7505. Dataset Version 4.0.0. doi: 10.4232/1.14023

Fan, X., and Sivo, S. A. (2007). Sensitivity of fit indices to model misspecification and model types. Multivar. Behav. Res. 42, 509–529. doi: 10.1080/00273170701382864

Finney, S. J., and DiStefano, C. (2006). “Non-normal and categorical data in structural equation modeling” in Structural equation modeling: A second course. eds. G. R. Hancock and R. O. Mueller (Greenwich, CT: Information Age Publishing), 269–314.

Fives, H., and Buehl, M. M. (2012). “Spring cleaning for the “messy” construct of teachers' beliefs: what are they? Which have been examined? What can they tell us?” in APA Educational Psychology handbook: individual differences and cultural and contextual factors. eds. K. R. Harris, S. Graham, and T. Urdan, Vol. 2 (Washington, DC: APA), 471–499.

Fulmer, G. W., Lee, I. C. H., and Tan, K. H. K. (2015). Multi-level model of contextual factors and teachers’ assessment practices: an integrative review of research. Assessment in Education: Principles, Policy & Practice 22, 475–494. doi: 10.1080/0969594X.2015.1017445

Hancock, G. R., and Mueller, R. O. (2001). “Rethinking construct reliability within latent variable systems” in Structural equation modeling: Present and future – a festschrift in honor of Karl Jöreskog. eds. R. Cudeck, S. du Toit, and D. Sörbom (Lincolnwood, IL: Scientific Software International), 195–216.

Harris, L. R., and Brown, G. T. (2008). Teachers' conceptions of feedback inventory (TCoF) [Measurement instrument]. Auckland, NZ: University of Auckland, Measuring Teachers’ Assessment Practices (MTAP) Project. doi: 10.17608/k6.auckland.4800901.v1

Harris, L. R., Brown, G. T. L., and Dargusch, J. (2018). Not playing the game: student assessment resistance as a form of agency. Aust. Educ. Res. 45, 125–140. doi: 10.1007/s13384-018-0264-0

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Helgøy, I., and Homme, A. (2007). Towards a new professionalism in school? A comparative study of teacher autonomy in Norway and Sweden. European Educational Research Journal 6, 232–249. doi: 10.2304/eerj.2007.6.3.232

Hofstede, G., Hofstede, G. J., and Minkov, M. (2010). Cultures and organizations: Software of the mind (3rd ed.). New York: McGraw-Hill.

Hu, L.-T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. 6, 1–55. doi: 10.1080/10705519909540118

Jamovi Project (2022). jamovi (Version 2.3.21) [Computer software]. Available at: https://www.jamovi.org

Jöreskog, K. G., and Goldberger, A. S. (1975). Estimation of a model with multiple indicators and multiple causes of a single latent variable. J. Am. Stat. Assoc. 70, 631–639. doi: 10.1080/01621459.1975.10482485

Kim, H.-Y. (2013). Statistical notes for clinical researchers: assessing normal distribution (2) using skewness and kurtosis. Restorative Dentistry & Endodontics 38, 52–54. doi: 10.5395/rde.2013.38.1.52

Kim, J.-O., and Mueller, C. W. (1978). Factor analysis: Statistical methods and practical issues (14). Thousand Oaks, CA: Sage.

Klockars, A. J., and Yamagishi, M. (1988). The influence of labels and positions in rating scales. J. Educ. Meas. 25, 85–96. doi: 10.1111/j.1745-3984.1988.tb00294.x

Kyaruzi, F., Strijbos, J.-W., Ufer, S., and Brown, G. T. L. (2018). Teacher AfL perceptions and feedback practices in mathematics education among secondary schools in Tanzania. Stud. Educ. Eval. 59, 1–9. doi: 10.1016/j.stueduc.2018.01.004

Lai, M. K., and Schildkamp, K. (2016). “In-service teacher professional learning: use of assessment in data-based decision-making” in Handbook of human and social conditions in assessment. eds. G. T. L. Brown and L. R. Harris (New York: Routledge), 77–94.

Lam, T. C. M., and Klockars, A. J. (1982). Anchor point effects on the equivalence of questionnaire items. J. Educ. Meas. 19, 317–322. doi: 10.1111/j.1745-3984.1982.tb00137.x

Lerner, J. S., and Tetlock, P. E. (1999). Accounting for the effects of accountability. Psychol. Bull. 125, 255–275. doi: 10.1037/0033-2909.125.2.255

Little, R. J. A. (1988). A test of missing completely at random for multivariate data with missing values. J. Am. Stat. Assoc. 83, 1198–1202. doi: 10.2307/2290157

Masino, C., and Lam, T. C. M. (2014). Choice of rating scale labels: implication for minimizing patient satisfaction response ceiling effect in telemedicine surveys. Telemedicine and e-Health 20, 1–6. doi: 10.1089/tmj.2013.0350

Revelle, W., and Rocklin, T. (1979). Very simple structure: an alternative procedure for estimating the optimal number of interpretable factors. Multivar. Behav. Res. 14, 403–414. doi: 10.1207/s15327906mbr1404_2

Rosseel, Y. (2012). Lavaan: an R package for structural equation Modeling. J. Stat. Softw. 48, 1–36. doi: 10.18637/jss.v048.i02

Rubie-Davies, C. M., Flint, A., and McDonald, L. G. (2012). Teacher beliefs, teacher characteristics, and school contextual factors: what are the relationships? Br. J. Educ. Psychol. 82, 270–288. doi: 10.1111/j.2044-8279.2011.02025.x

Skolverket (National Agency for Education). (2020). Att planera, bedöma och ge återkoppling: stöd för undervisning [To plan, assess, and give feedback: Support for teaching]. Stockholm: Skolverket [National Agency for Education].

Skolverket (National Agency for Education) (2022). “Betyg och prövning. Kommentarer till Skolverkets allmänna råd om betyg och prövning” in Grades and examination. Comments to the National Agency for Education’s general advice on grades and examination (Stockholm: Skolverket [National Agency for Education])

Tong, A., Sainsbury, P., and Craig, J. (2007). Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int. J. Qual. Health Care 19, 349–357. doi: 10.1093/intqhc/mzm042

Utbildningsdepartementet (Ministry of Education) (2010). Skollag (SFS 2010:800) [The Education Act]. Stockholm: Utbildningsdepartementet [Ministry of Education].

Wheaton, B., Muthén, B., Alwin, D. F., and Summers, G. F. (1977). Assessing reliability and stability in panel models. Sociol. Methodol. 8, 84–136. doi: 10.2307/270754

Keywords: feedback, formative practices, classroom teachers, Sweden, beliefs, perceptions, conceptions

Citation: Brown GTL, Andersson C, Winberg M and Palm T (2023) Predicting formative feedback practices: improving learning and minimising a tendency to ignore feedback. Front. Educ. 8:1241998. doi: 10.3389/feduc.2023.1241998

Received: 18 June 2023; Accepted: 05 September 2023;

Published: 22 September 2023.

Edited by:

Jeffrey K. Smith, University of Otago, New Zealand

Reviewed by:

Erkan Er, Middle East Technical University, Türkiye

Copyright © 2023 Brown, Andersson, Winberg and Palm. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gavin T. L. Brown, gavin.brown@umu.se

†ORCID: Gavin T. L. Brown https://orcid.org/0000-0002-8352-2351

Catarina Andersson https://orcid.org/0000-0002-8738-8639

Mikael Winberg https://orcid.org/0000-0002-1535-873X

Torulf Palm https://orcid.org/0000-0003-0895-8232
