- 1 Department of Curriculum and Instruction, College of Education, Zhejiang Normal University, Jinhua City, Zhejiang, China

- 2 Department of Teaching and Learning, College of Education, University of Iowa, Iowa City, IA, United States

- 3 Northwest Evaluation Association (NWEA), Portland, OR, United States

- 4 Department of Psychological and Quantitative Foundations, College of Education, University of Iowa, Iowa City, IA, United States

- 5 Department of Curriculum and Instruction, College of Education, University of Alabama, Tuscaloosa, AL, United States

The dimensionality of the epistemic orientation survey (EOS) was examined across four occasions with item factor analysis (IFA). Because of an emphasis on the knowledge generation of epistemic orientation (EO), four factors were selected and built into a short form of EOS (EOS-SF) including knowledge generation, knowledge replication, epistemic nature of knowledge, and student ability. To track the stability of the factor structure for each factor of EOS-SF, longitudinal invariance models were conducted. Partial measurement invariance was obtained for each of the four factors of EOS-SF. This study provides an example of ongoing instrument development in the field of applied assessment research.

1. Introduction

Substantial work in the learning sciences and especially in science education emphasizes teachers eliciting students’ prior knowledge and using this as a basis to support generative classrooms in which students’ knowledge can grow and develop (Chin, 2007; National Research Council, 2012; NGSS Lead States, 2013; Chen and Techawitthayachinda, 2021). Shifts in classroom practice that adopt this approach represent a change in the role of the teacher from a fount of established knowledge to a resource for accessing and validating knowledge (Duschl and Bybee, 2014; Ash and Hand, 2022; Seung et al., 2023), which necessarily require support for teachers’ changing practices, understandings, and beliefs (Hashweh, 1996; Fulmer, 2008; Maggioni and Parkinson, 2008; Desimone, 2009). One foundational element in this change is a teacher’s epistemic orientations, which are beliefs about the epistemic nature of knowledge (Suh et al., 2022) that influence their planning and practice (Windschitl, 2002; Buehl and Fives, 2009). The epistemic nature of knowledge addresses the extent to which a teacher believes that knowledge is evolving, and that students’ own thinking and abilities are malleable through learning experiences (Muis, 2007; Suh et al., 2022).

Recent work has shown that teachers’ epistemic orientation plays a critical role in implementing reform-oriented classroom environments (Bae et al., 2022) by helping teachers move beyond the status quo to think more deeply about their instructional practice and their classroom environment (Morandi et al., 2022; Lammert et al., 2022b) to embrace adaptiveness rather than routines (Suh et al., 2023). Attention to epistemic orientation has also explored how it can be developed over time through teacher professional development and ongoing support (Lammert et al., 2022a, 2023).

The substantial work in defining epistemic orientation and studying its role in classroom practice shows a successful and impactful line of study around the import of epistemic orientation. However, one area of concern is whether the measurement of epistemic orientation is itself stable across time and can be used to study teacher change over the medium to long terms. This is one example of the need for ongoing validation and interpretation of measurement instruments (Liu and Fulmer, 2008; Ding et al., 2023). To address this issue, the present study examines the factor structure of an existing epistemic orientation survey (EOS; Suh et al., 2022). The EOS includes four dimensions measuring teachers’ orientation towards knowing, knowledge, teachers’ instruction, and students’ learning ability. Based on the focus of this study, we will select certain domains of epistemic orientation developed by Suh et al. (2022) and build a short form of the questionnaire of EOS.

2. Literature review

2.1. Defining epistemic orientation

Suh et al. (2022) defined epistemic orientation among teachers as beliefs about knowing and teaching. A focus on teachers’ epistemic orientation towards constructivism is argued to help researchers explore how their classrooms allow students to engage in knowledge construction (Weiss et al., 2022). Epistemic orientation toward teaching for knowledge generation is defined as “a particular direction of thinking concerning how to deal with knowledge and knowledge generation processes when a teacher aims to create generative learning environments” (Suh et al., 2022, p. 1653) that is informed by beliefs while also drawing on preferences and tendencies that influence one’s thinking and actions.

With the definition of epistemic orientation for teachers, Suh et al. (2022) developed an instrument to measure teachers’ epistemic orientation, which has four domains: epistemic alignment, classroom authority, epistemic nature of knowledge, and student ability. Two domains, classroom authority and epistemic nature of knowledge, had similar beliefs of knowledge defined by Hofer and Pintrich (1997). Specifically, the epistemic nature of knowledge means that knowledge is continuously changing. Suh et al. (2022) defined the nature of knowledge as a set of beliefs about what knowledge is, which emphasizes that scientific knowledge is open to revision with new evidence. Moreover, the classroom authority domain was more aligned with the idea that knowledge could be challenged and created through critical thinking. Classroom authority is a set of beliefs about power relations between teachers and students, which emphasizes that students are active learners and have ownership of knowledge. Classroom authority also emphasizes that teachers should give students opportunities to develop ideas and construct their own knowledge.

In addition to the epistemic nature of knowledge and classroom authority, two key elements in epistemic orientation (Palma et al., 2018), Suh et al. (2022) also created another two domains for epistemic orientation: epistemic alignment and student ability. Suh et al. (2022) defined epistemic alignment as a set of beliefs about knowing, learning, and teaching, which emphasizes scientific investigation and knowledge justification by argumentation with evidence. Student ability is a set of beliefs about students’ competence to learn, emphasizing that students can overcome learning challenges. Those two domains connect teaching and learning and go beyond the theories of knowledge.

2.2. Measuring epistemic orientation

Epistemic orientation is conjectured to comprise a continuum from replication to constructivism (Weiss et al., 2022). Teachers with an epistemic orientation towards constructivism tend to provide students with more opportunities to construct their knowledge and engage students in epistemic science practice (Bae et al., 2022). Also, such teachers will be aware that students are active agents in knowledge construction and have the power to shape knowledge production (Stroupe, 2014; Miller et al., 2018). Finally, they will facilitate a learning environment for students to construct new knowledge in science for meaningful learning. Teachers’ epistemic orientation guides their decisions to create a learning environment for students and employ teaching approaches.

The original questionnaire by Suh et al. (2022), the Epistemic Orientation Survey (EOS), has four subscales to address the dimensions of epistemic orientation. The instrument consists of 44 Likert-type items in four subscales representing the four domains—epistemic alignment, authority relations, nature of knowledge, and student ability—with five response anchors: strongly disagree, disagree, unsure, agree, and strongly agree. The initial study provided evidence about the instrument’s domain analysis and functionality.

Epistemic orientation is a trait that may change. Howard et al. (2000) developed constructivist approaches in a teacher training course. They found that three aspects of teachers’ epistemology changed, and teachers tended to accept that students can: (1) examine complex knowledge and draw conclusions; (2) learn by discovering or doing rather than from textbooks and well-designed curricular material; and (3) develop their concepts through construction and clarifying misconceptions. Thus, they concluded that epistemology was a less stable trait than was previously supposed. More recently, Morandi et al. (2022) found that 2 years of teacher professional development showed changes in teachers’ epistemic orientation toward knowledge generation, although not necessarily on all subscales with the greatest change in the area of epistemic alignment and student ability. They did not find significant differences after only 1 year of professional development. So, they argued that change in epistemic orientation may be slow and could be uneven. Taken together, these disparate findings emphasize the need for studies that can examine epistemic orientation over time while controlling for potential changes in the factor structure that could affect measures and interpretations. Our science education program conducted a two-year professional development and collected data with the survey developed by Suh et al. (2022), so it is possible to track the change in teachers’ epistemic orientation.

2.3. Factor analysis and measurement invariance of instrument development

One way to study and manage potential changes in a factor structure is repeated analysis of the factor structure in different samples or at different times, which is an ongoing process that provides further evidence for an instrument (Betts et al., 2010; Byrne and van de Vijver, 2010; Kaufman et al., 2016). Suh et al. (2022) developed the EOS and examined the factor structure of the instrument with a sample of pre- and in-service teachers on a single occasion. Examining the factor structure over time would provide a stronger basis for understanding the stability and validity of the construct. In this study, the factor structure was examined repeatedly with in-service teachers on four occasions. This not only expands the investigation of the construct validity evidence of the EOS but also provides a broader perspective on the topic.

First, the present study examined whether the hypothesized structure of EOS was consistent across time. If the factor structure of epistemic orientation changes over time, then a new structure may be needed to track the changing nature of the construct. However, if the factor structure stays stable, this indicates that the instrument measures the same construct over time. Second, the study examined the invariance of the EOS over time, aiming to determine the extent to which the relationship between indicators and underlying factors for each subscale of EOS remains consistent across occasions (Millsap and Yun-Tein, 2004; Liu et al., 2017). For instance, if the indicator measurement properties were not invariant across time, then inferences drawn from scores on EOS may not relate to just factor-level change as intended. Failure to adequately comprehend temporal variation in the measurement model parameters could result in inaccurate interpretations of teachers’ teaching orientation (Liu et al., 2017). Additionally, the variation in measurement also offers valuable insights for researchers about teachers’ epistemic orientation. Moreover, if the instrument demonstrates a comparable factor structure across time via evidence for measurement invariance, it will be better suited to offering valuable insights for future studies. For example, that makes it more likely that an observed change could be due to intervention rather than shifts in the construct definition.

3. Research questions

The purpose of this paper is to investigate the factor structure of an instrument of epistemic orientation and investigate the measurement invariance of the instrument. There are three questions:

1. What is the dimensionality of the EOS at each occasion?

2. Based on the factor structure of the EOS, what would be an appropriate short form of the EOS (EOS-SF) for potential future use?

3. To what extent does the EOS-SF provide for measurement invariance across time for elementary science teachers? This means that each dimension of EOS-SF measures the same construct over time.

4. Method

This research investigated the EOS (Suh et al., 2022) with epistemic orientation as an overall latent variable with four hypothesized subfactors, using data from four waves of teacher data gathered during a longitudinal professional development project. All analyses were completed in Mplus Version 8.3 (Muthén and Muthén, 2021).

4.1. Data collection and participants

Respondents in the study were teacher participants in a two-year professional development (PD) workshop on the Science Writing Heuristic that focused on elementary teachers’ orientation to generative learning in the teaching of science. In the workshops, teachers explored theories of learning and how to apply teaching approaches in science classrooms (Lammert et al., 2022a) by focusing on certain epistemic tools (Fulmer et al., 2021, 2023). All elementary teachers were from midwestern or southeastern U.S. districts, and they joined the program based on their interest in knowing more about learning theories and new teaching approaches. The Epistemic Orientation Survey (EOS) was distributed to teachers through email during the initial workshop and then approximately every six months afterward. Each survey response was gathered through the online survey platform Qualtrics.

The current study sample consisted of 123 elementary science teachers with complete data at baseline (occasion 1), whose teaching experience ranged from 1 to 32 years (M = 14, SD = 8). On occasion 2, there were 111 respondents with teaching experience ranging from 1 to 32 years (M = 14, SD = 9). On occasion 3, there were 123 respondents with teaching experience ranging from 1 to 32 years (M = 14, SD = 9). On occasion 4, there were 104 respondents with teaching experience ranging from 1 to 32 years (M = 15, SD = 9). Most teachers identified as women; three teachers who identified as men participated in the workshop on all four occasions. The time interval between any two adjacent occasions was about 6 months. The sample size is adequate to estimate the IFA for each dimension per occasion (Singh and Masuku, 2014).

4.2. Measures

The Epistemic Orientation Survey (EOS; Suh et al., 2022) was designed to measure teachers’ orientation toward constructivist learning. The self-report measure consists of 44 items in a five-point Likert response format, each scored on a scale of 0 to 4. For each statement, participants chose the response that best described their understanding of learning in the workshops. Negatively worded items were reverse-coded before conducting analyses, such that higher scores indicate higher epistemic orientation and lower scores indicate lower epistemic orientation. The EOS includes four subscales: Epistemic Nature of Knowledge (ENK), measured by eight items; Epistemic Alignment (EA), measured by 24 items; Classroom Authority (CA), measured by eight items; and Student Ability (SA), measured by four items.
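The reverse-coding step can be illustrated with a minimal sketch. It assumes the 0 to 4 scoring described above; the item labels and the choice of which item is negatively worded are hypothetical and are not taken from the EOS.

```python
from typing import Dict, Iterable

SCALE_MAX = 4  # items are scored 0-4, as described in the Measures section


def reverse_code(score: int, scale_max: int = SCALE_MAX) -> int:
    """Flip a 0..scale_max Likert score so that 0 <-> 4, 1 <-> 3, and 2 stays 2."""
    if not 0 <= score <= scale_max:
        raise ValueError(f"score {score} is outside 0..{scale_max}")
    return scale_max - score


def recode_responses(
    responses: Dict[str, int], negative_items: Iterable[str]
) -> Dict[str, int]:
    """Return a copy of one respondent's answers with negatively worded items flipped."""
    negative = set(negative_items)
    return {
        item: reverse_code(score) if item in negative else score
        for item, score in responses.items()
    }


# Hypothetical respondent and hypothetical negatively worded item (illustration only).
example = {"item_x": 4, "item_y": 1, "item_z": 2}
recoded = recode_responses(example, negative_items=["item_y"])
print(recoded)
```

After recoding, a higher score on every item points in the same direction, which is what allows the items of a subscale to load on a common factor with the same sign.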

4.3. Data analysis

Item factor analysis (IFA) was first conducted for the original EOS with 44 items. Then a short form of EOS (EOS-SF) was created based on this study’s definition of epistemic orientation. Measurement invariance was conducted for each factor of the EOS-SF after examining the stability of each factor.

4.3.1. Item factor analysis (IFA)

Item factor analysis (IFA) with a limited-information diagonally weighted least squares (WLSMV) estimator was used to examine each of the four hypothesized EOS scales separately (Liang and Yang, 2014). For each subscale, polychoric correlations were used to investigate the items’ associations (Maydeu-Olivares, 2013). All measurement models in this study were scaled with a Z-score method, which sets factor means to 0 and factor variances to 1 and estimates all item loadings and thresholds (Ferrando and Lorenzo-Seva, 2018). We evaluated global model fit based on the comparative fit index (CFI), the standardized root mean square residual (SRMR), and the root mean square error of approximation (RMSEA). Good model fit is indicated by a CFI value ≥0.95, and SRMR and RMSEA values ≤0.06 (Brown, 2015). For nested models, the DIFFTEST function was used to identify the best model (Asparouhov et al., 2006; Muthén and Muthén, 2021).
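The fit criteria above amount to a simple decision rule. The sketch below encodes only the cutoffs stated in the text (CFI ≥ 0.95, SRMR ≤ 0.06, RMSEA ≤ 0.06); the class and function names and the example values are hypothetical and are not results from this study.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FitIndices:
    cfi: float
    srmr: float
    rmsea: float


def failed_criteria(fit: FitIndices) -> List[str]:
    """List the global fit criteria that a model does not meet."""
    failures = []
    if fit.cfi < 0.95:
        failures.append("CFI")
    if fit.srmr > 0.06:
        failures.append("SRMR")
    if fit.rmsea > 0.06:
        failures.append("RMSEA")
    return failures


def has_good_global_fit(fit: FitIndices) -> bool:
    """A model has good global fit only when all three criteria are met."""
    return not failed_criteria(fit)


# Made-up values for illustration: a good CFI does not guarantee a good RMSEA.
example_fit = FitIndices(cfi=0.97, srmr=0.05, rmsea=0.09)
print(failed_criteria(example_fit))
```

Reporting which criterion failed, rather than a single pass or fail flag, makes mixed outcomes visible, such as a model with a good CFI but a poor RMSEA.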

Sources of local misfit were identified by examining the correlation matrix of residuals, which shows how far the model-predicted polychoric correlations were from the polychoric correlations in the data and can be used to refine the factor structure (Shi et al., 2018; Bandalos, 2021). In this matrix, relatively large positive residual correlations indicate that the items were more related than was predicted by the latent factors, and relatively large negative residual correlations indicate that the items were less related than was predicted by the latent factors. Modification indices also suggest which pairs of items should be more related (Hill et al., 2007). Residual correlations between items for which the standardized expected parameter change index was greater than 0.3 were considered for inclusion, but decisions to add error covariances were made by combining local misfit information with a review of the item content. When three or more items share additional error covariance, this can be accounted for with a new latent factor (McNeish, 2017), which measures a different construct than the rest of the items. Otherwise, when only pairs of items are involved, error covariances can be added to account for their additional relationship, as in the present study.
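A small sketch may help make the idea of residual correlations concrete. It assumes a one-factor model with standardized loadings, a factor variance of 1, and no error covariances, in which case the model-implied correlation between two items is the product of their loadings. The loadings, observed correlations, and the flagging cutoff of 0.10 are all made up for illustration; the cutoff in particular is not the criterion used in this study.

```python
from itertools import combinations
from typing import Dict, List, Tuple

Pair = Tuple[str, str]


def residual_correlations(
    loadings: Dict[str, float], observed: Dict[Pair, float]
) -> Dict[Pair, float]:
    """Observed minus model-implied correlation for every item pair.

    Under a one-factor model with standardized loadings, the implied
    correlation between items a and b is loadings[a] * loadings[b].
    Pairs are keyed in sorted order, e.g. ("a", "b").
    """
    residuals = {}
    for a, b in combinations(sorted(loadings), 2):
        implied = loadings[a] * loadings[b]
        residuals[(a, b)] = observed[(a, b)] - implied
    return residuals


def flag_local_misfit(residuals: Dict[Pair, float], cutoff: float) -> List[Pair]:
    """Return pairs whose absolute residual correlation exceeds the cutoff."""
    return [pair for pair, value in residuals.items() if abs(value) > cutoff]


# Made-up standardized loadings and observed polychoric correlations.
toy_loadings = {"a": 0.8, "b": 0.7, "c": 0.6}
toy_observed = {("a", "b"): 0.56, ("a", "c"): 0.48, ("b", "c"): 0.62}

toy_residuals = residual_correlations(toy_loadings, toy_observed)
flagged = flag_local_misfit(toy_residuals, cutoff=0.10)  # hypothetical cutoff
print(flagged)
```

In this toy case, items b and c are more strongly correlated than the single factor predicts, so that pair would be a candidate for an error covariance, subject to a review of the item content.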

In IFA models, reliability is trait-specific and is most often characterized by a quantity known as test information per factor. With the WLSMV estimator, test information was calculated for each factor and then converted to reliability, which can range from 0 to 1, using the formula: reliability = information / (information + 1) (Milanzi et al., 2015).
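As a quick illustration of this conversion, the sketch below applies the stated formula and its algebraic inverse. The function names and the example information values are hypothetical.

```python
def reliability_from_information(information: float) -> float:
    """Convert test information at a trait level to reliability.

    Implements reliability = information / (information + 1).
    """
    if information < 0:
        raise ValueError("test information cannot be negative")
    return information / (information + 1.0)


def information_from_reliability(reliability: float) -> float:
    """Algebraic inverse: information = reliability / (1 - reliability)."""
    if not 0 <= reliability < 1:
        raise ValueError("reliability must be in [0, 1)")
    return reliability / (1.0 - reliability)


# Illustrative values only: information of 4 gives 0.80, and 9 gives 0.90.
for info in (1.0, 4.0, 9.0):
    print(info, round(reliability_from_information(info), 2))
```

Because information varies with the trait level, the resulting reliability is a curve rather than a single number, which is why reliabilities are reported over ranges of the trait.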

4.3.2. Item selection for a short form of EOS (EOS-SF)

Based on the analysis of the hypothesized four-scale EOS factor structures, items appropriate for a short form of EOS (EOS-SF) can be identified. An effective short form focuses the survey on the generative orientation of teaching while reducing the response burden on participants. Indicators suitable for inclusion in the short form must relate closely to the idea of knowledge generation and to how teachers prepare the learning environment, and they must also have strong factor loadings that contribute to the test information per factor. Factors were not chosen if they were redundant with existing factors or unrelated in meaning to the defined epistemic orientation (Lorenzo-Seva and Ferrando, 2021).

4.3.3. Longitudinal measurement invariance

The longitudinal invariance of each subscale of EOS was examined within the IFA framework (Muthén and Asparouhov, 2002). Three steps were used for assessing measurement invariance: configural invariance, metric invariance, and scalar invariance (Byrne and van de Vijver, 2010). At each step of the analysis, the estimated model has additional restrictions imposed on the measurement model. Each less-restrictive model is then compared with the corresponding more-restrictive model using the DIFFTEST function. Invariance is met if the fit of the more-restrictive model is not significantly worse than that of the less-restrictive model (Widaman et al., 2010). Examples of Mplus input scripts for measurement invariance are available in supplementary material.

First, configural invariance allows all measurement model parameters (loadings, thresholds) to be estimated at each of the four occasions. This is a baseline model for further comparison with the following measurement invariance models. Factor means and factor variances for all occasions were constrained as 0 and 1, respectively, and all item residual variances across occasions were initially fixed to 1. Factor covariances and same-item residual covariances were estimated.

Second, metric invariance, also called weak factorial invariance (Hirschfeld and Von Brachel, 2014), evaluates the equality of factor loadings across time (Milfont and Fischer, 2010). To do so, the factor variance at the reference occasion was fixed to 1, and factor variances were freely estimated at the other occasions. Factor means for all occasions were fixed to 0. The first metric model was a full metric model with equal factor loadings for the same item across time. Modification indices suggested which items’ loadings tended to differ across occasions, and on that basis one loading at a time was released. The model was then re-estimated and compared with the configural model. The procedure was repeated until the metric invariance model was not significantly worse than the configural invariance model. At this step, at least a partial metric invariance model was achieved.

Third, scalar invariance, also called strong factorial invariance (McGrath, 2015), evaluates the equality of thresholds across time (Milfont and Fischer, 2010; McGrath, 2015). For the first occasion, the factor mean was fixed to 0, and the factor variance was fixed to 1. Factor means and factor variances at the other occasions were freely estimated. For measurement invariance at this stage, the thresholds of items that failed to have equal loadings in the metric invariance model were freely estimated in the scalar model. Other thresholds were then freed based on modification indices. Models for scalar invariance were compared with the last model in metric invariance. At each re-examination, only one item’s thresholds were freely estimated. The procedure was repeated until the scalar invariance model was not significantly worse than the metric invariance model. The final resulting model offers a set of factors that have a stable structure and measurement invariance over time. A supported scalar invariance model indicates that the survey measures the same construct over time and that teachers’ epistemic orientations are comparable across time (Bollen, 1989; Byrne and van de Vijver, 2010).
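The release-one-parameter-at-a-time procedure used at the metric and scalar steps can be sketched as a simple loop. This is only an outline of the control flow for a single step, not the Mplus analysis itself: the `fit_and_compare` callable is a hypothetical stand-in for fitting the constrained model and running the nested model comparison, the significance level of 0.05 is an assumption that the text does not state, and the toy modification indices are made up.

```python
from typing import Callable, Dict, FrozenSet, List, Sequence, Tuple

# Hypothetical callable: given the set of freed items, it fits the constrained
# model and returns (p-value of the comparison with the less-restrictive model,
# modification indices for the items that are still constrained).
FitFn = Callable[[FrozenSet[str]], Tuple[float, Dict[str, float]]]


def search_partial_invariance(
    constrained_items: Sequence[str],
    fit_and_compare: FitFn,
    alpha: float = 0.05,  # assumed significance level; not stated in the text
) -> List[str]:
    """Free one item's constraint at a time until the constrained model
    is no longer significantly worse than the less-restrictive model."""
    freed: List[str] = []
    while True:
        p_value, mod_indices = fit_and_compare(frozenset(freed))
        if p_value >= alpha:
            return freed  # full invariance if empty, partial invariance otherwise
        candidates = {
            item: mi
            for item, mi in mod_indices.items()
            if item in constrained_items and item not in freed
        }
        if not candidates:
            raise RuntimeError("all constraints freed without reaching invariance")
        # Release the constraint with the largest modification index.
        freed.append(max(candidates, key=candidates.get))


def toy_fit(freed: FrozenSet[str]) -> Tuple[float, Dict[str, float]]:
    """Toy stand-in for model estimation, using made-up numbers."""
    made_up_mi = {"item_a": 12.0, "item_b": 8.0, "item_c": 1.0}
    remaining = {k: v for k, v in made_up_mi.items() if k not in freed}
    noninvariant = {"item_a", "item_b"}
    p_value = 0.20 if noninvariant <= freed else 0.01
    return p_value, remaining


freed_items = search_partial_invariance(["item_a", "item_b", "item_c"], toy_fit)
print(freed_items)
```

In the toy run, the two items with the largest modification indices are freed in turn, after which the comparison is no longer significant and the remaining item serves as an invariant anchor.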

5. Results

5.1. Item factor analysis (IFA)

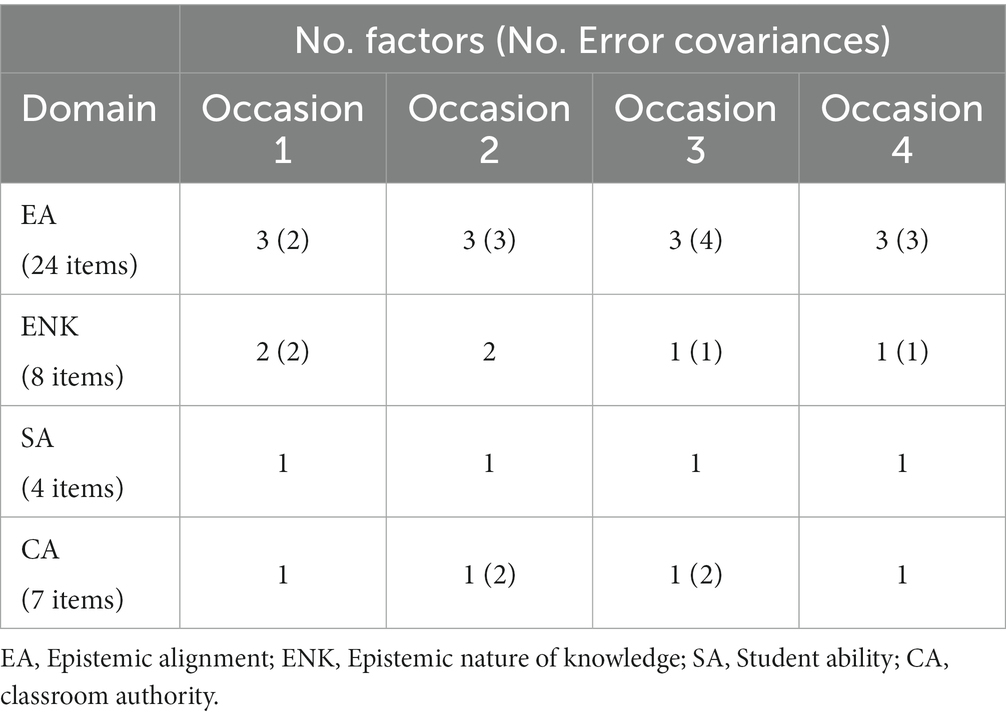

To answer research question 1, the factor structure of the EOS was examined with item factor analysis. Although each of the four dimensions was designed to be unidimensional (Suh et al., 2022), multiple factors were found for two dimensions of EOS on each of the four occasions. As we outline below, two dimensions (epistemic alignment, EA; epistemic nature of knowledge, ENK) had different factor structures across the four occasions, and two dimensions (student ability, SA; classroom authority, CA) had consistent factor structures across the four occasions. Table 1 summarizes the results for the factor structures; the detailed results for EA, ENK, SA, and CA follow.

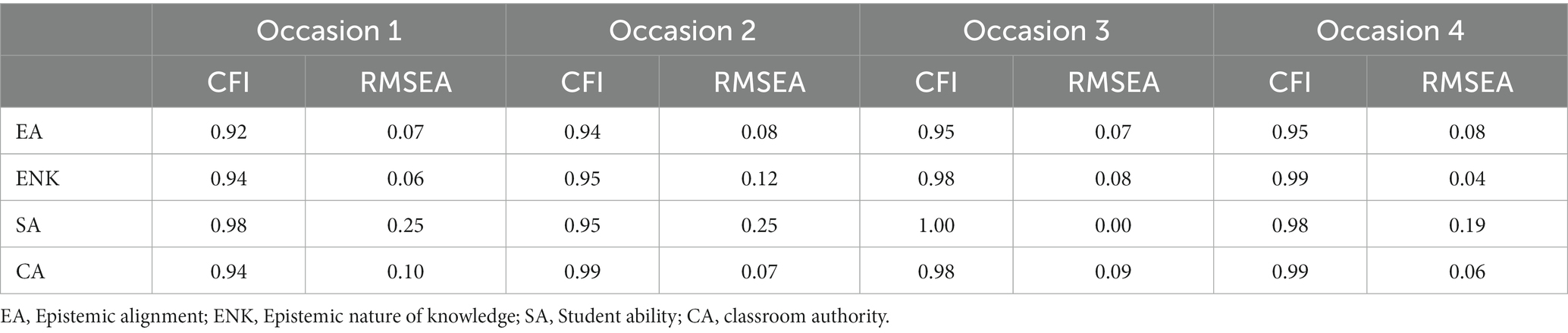

The EA dimension broke into three factors across four occasions: attitudes towards correct answers (AtCA), knowledge generation (KG), and knowledge replication (KR). The three-factor models had acceptable model fit (Table 2). The test reliability values for AtCA fluctuated over time. Specifically, the reliability values were more informative (0.70 ~ 0.90) on occasions 2 and 4 for people with traits from −3.0 to 2.0 SD. Meanwhile, reliabilities on occasions 1 and 3 were lower (0.65 ~ 0.80) than on occasions 2 and 4. The test reliabilities for KG were consistently informative (0.80 ~ 0.95) for people with lower traits from −4.4 to 1.6 SD on occasions 2, 3, and 4. The reliability of KG at occasion 1 was lower (0.70 ~ 0.80) for people with traits from −4.0 to 3.6 SD compared to the other three occasions. The test reliabilities for KR were informative (0.80 ~ 0.92) for people with lower traits from −4.0 to 1.2 SD on occasions 2, 3, and 4. The reliability of KR at occasion 1 was lower (0.75 ~ 0.85) for people with traits from −4.2 to 2.2 SD. This means that the hypothesized one-factor EA includes three unidimensional factors, and each factor measures one construct.

The ENK indicators had two-factor models on occasions 1 and 2 and one-factor models on occasions 3 and 4. Models for each occasion had good model fit (Table 2). The two factors of ENK on occasions 1 and 2 had test reliabilities of 0.70 ~ 0.92 for people with lower and medium level ability from −4.0 to 1.2 SD. On occasions 3 and 4, the ENK had test reliabilities of 0.75 ~ 0.90 for people with lower and medium level ability from −3.8 to 1.8 SD. This means that the factor structure of ENK changes over time.

The SA indicators had a one-factor structure across time. The CFIs were good, but the RMSEAs were unacceptable (Table 2). The test reliabilities for SA across time were stable and high (0.70 ~ 0.93) for people with lower traits from −3.2 to 0.3 SD, but the scale lacked information at higher trait levels. This means that the hypothesized one-factor SA has a stable one-factor structure over time.

For the CA dimension, item I23, “Teachers are responsible for managing classroom environments,” was unclear about what kind of classroom environment teachers should create for students and had low polychoric correlations. This item was excluded from further analysis. The remaining seven items had one-factor models across four occasions with a good model fit (Table 2). Reliabilities for CA across time were stable and high (0.75 ~ 0.90) for people with lower or medium traits from −4.0 to 1.8 SD. This means that the hypothesized one-factor CA has a stable one-factor structure over time.

These results indicate that the number of factors for the four proposed dimensions of the original EOS is not equally stable across measurement occasions. This is not only a matter of the number of items in the proposed dimensions but also of the structure. Therefore, attempting to create a short form of the EOS, as described in the next step, would require analyzing and building off the stable factor structure to select dimensions that are consistent with the theoretical definition of epistemic orientation.

5.2. Short form of EOS (EOS-SF)

To answer research question 2, a short form of EOS was created from the results for the factor structure of EOS. As shown, the hypothesized four-factor structure of EOS can be represented by six factors across time: knowledge generation (KG), knowledge replication (KR), attitudes towards correct answers (AtCA), classroom authority (CA), epistemic nature of knowledge (ENK), and student ability (SA). Not all of these empirical factors are fully consistent with the theoretical definition of epistemic orientation that this study focused on. In this study, we define epistemic orientation as a continuum from knowledge replication to construction. We chose the orientation towards knowing and knowledge and did not examine the orientation towards teaching and learning defined by Suh et al. (2022).

Teachers with a more informed orientation towards knowledge construction give students control over their learning because they know that scientific knowledge is constructed by scientists and develops with the efforts of the scientific community. Based on this definition of epistemic orientation, four factors were chosen to form a short form of EOS (EOS-SF): ENK, which relates to scientific knowledge’s constructive and evolving nature; KG and KR, which relate to the two ends of the orientation continuum; and CA, which relates to student and teacher control of learning in the classroom. Two factors were not chosen: AtCA, which shows redundancy with KR, but was less reliable across measurement occasions, and SA, which measured beliefs about students’ own competence but did not closely relate to the core idea of stance toward knowledge in the classroom.

Taking these four factors, a shortened version of the EOS consisting of 35 items was created and named the short form of the epistemic orientation survey (EOS-SF; Appendix A) to measure teachers’ epistemic orientation. The factors are classroom authority (CA) with seven indicators, epistemic nature of knowledge (ENK) with eight indicators, knowledge generation (KG) with twelve indicators, and knowledge replication (KR) with eight indicators. The four factors together focus on teachers’ beliefs about knowing and knowledge, ranging from more generative to more replicative. These four factors were then analyzed for measurement invariance over time.

5.3. Measurement invariance of each factor in EOS-SF

To answer research question 3, the measurement invariance of each factor of EOS-SF was investigated. Testing the factor structure of the EOS-SF for measurement invariance over time examines the extent to which the measurement remains effective even as respondents change in their levels of the underlying attribute. Two sets of structural models were tested for the measurement invariance of the four factors. The first structural model was a consistent one-factor model over time for three of the factors (CA, KG, and KR). The second structural model was for ENK, which had a two-factor structure on occasions 1 and 2 but a one-factor structure on occasions 3 and 4 (Table 1).

The first type of structural model for longitudinal measurement invariance was a one-factor model where each factor’s latent variables across time were correlated, such as for CA, KG, and KR. In addition, the responses for each item across four occasions were also dependent, so each item’s residuals across four occasions were correlated. The reference occasion’s factor variance and factor mean were fixed to 1 and 0, respectively. Detailed tables of model comparisons are available in supplementary material, and summarized below.

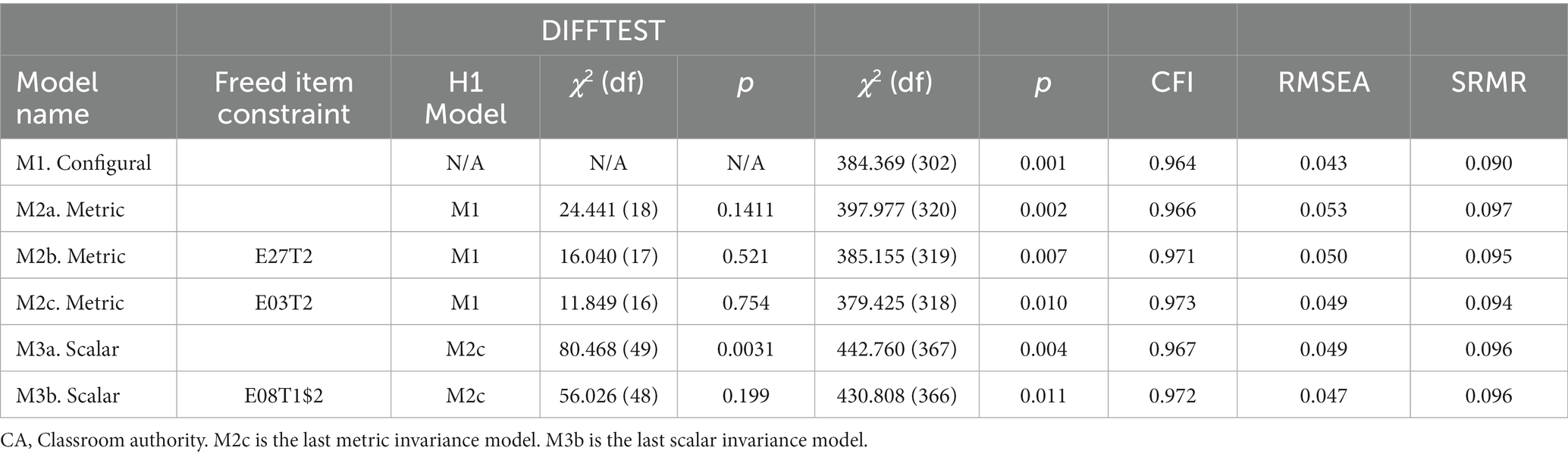

The subdomain classroom authority (CA) was measured by seven ordinal indicators (items 03, 08, 27, 13, 18, 31, 35). By conducting configural invariance, metric invariance, and scalar invariance testing, it was found that a partial scalar invariance model (model 3b in Table 3) is the best-fitting invariance model. For model 3b, factor loadings of five items (08, 13, 18, 31, 35) were kept invariant across time. Also, thresholds of four items (13, 18, 31, 35) were kept invariant across time. With four out of seven items having the same structure with the latent factor over time, seven items measured something in common. The invariant items served as anchors for the relations between items and latent factors at each occasion to the same scale, which made the subdomain comparable across occasions (Byrne and van de Vijver, 2010).

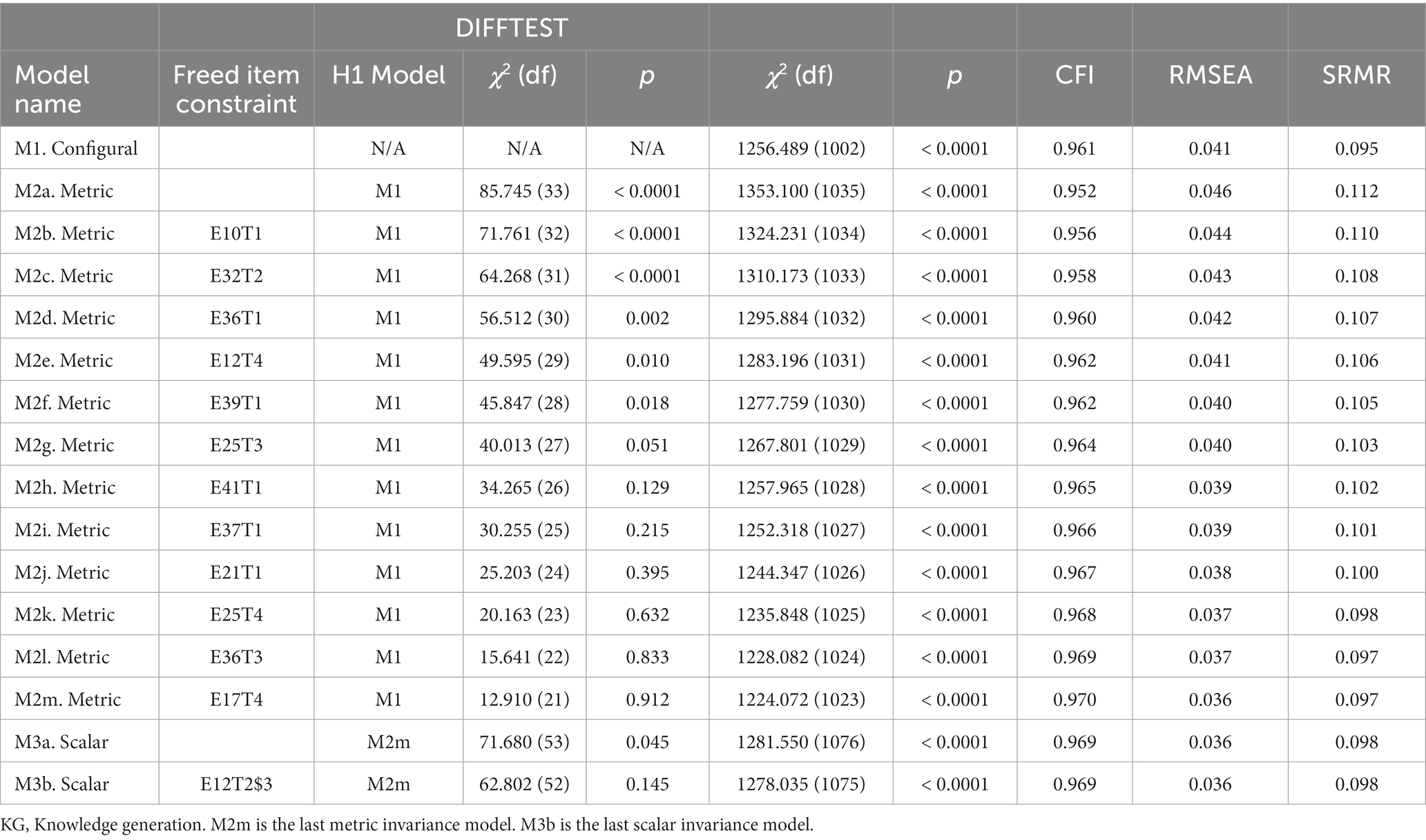

The subdomain knowledge generation (KG) was measured by 12 ordinal indicators (items 05, 10, 12, 17, 21, 25, 32, 36, 37, 39, 41, 43). By conducting configural invariance, metric invariance, and scalar invariance testing, it was found that the partial scalar model (model 3b in Table 4) is the best-fitting model for invariance. For model 3b, factor loadings and thresholds of two items (05, 43) were kept invariant across time.

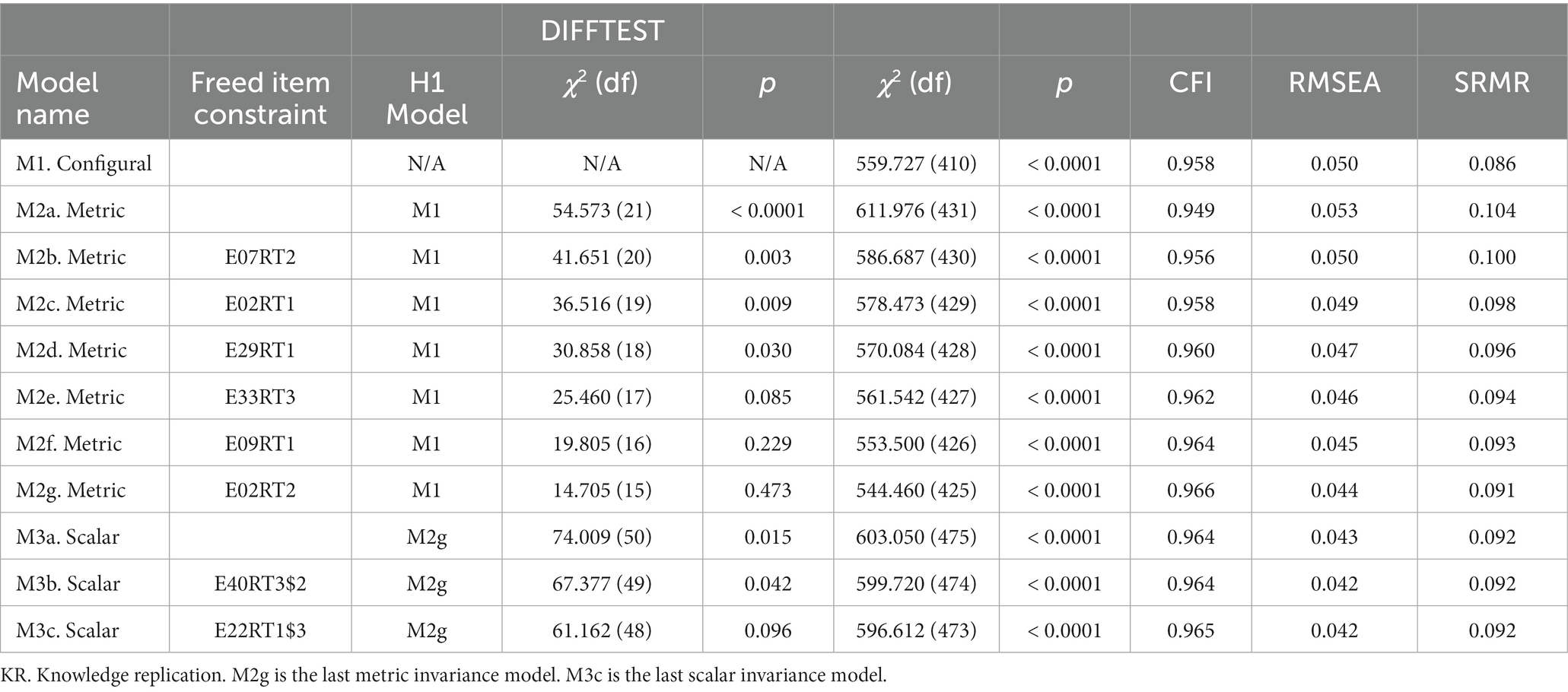

Knowledge replication (KR) was measured by eight ordinal indicators (items 02, 07, 09, 22, 26, 29, 33, 40). By conducting configural invariance, metric invariance, and scalar invariance testing, it was found that the partial scalar model (model 3b in Table 5) was the final model. For model 3b, factor loadings of three items (22, 26, 40) were kept invariant across time. Also, the thresholds of one item (26) were kept invariant across time.

The second type of structural model for measurement invariance is for ENK because this factor had different factor structures across occasions. On occasions 1 and 2, ENK had the same two-factor structure: one factor included four items (1, 6, 14, 19), and the other included four indicators (11, 16, 24, 28). However, the subdomain ENK had a one-factor structure on occasions 3 and 4. To conduct measurement invariance, the factor structure of ENK was kept the same across time. Therefore, eight items of ENK had a one-factor structure with four items (1, 6, 14, 19) correlated on each occasion to approximate the second factor as needed. In addition, the residuals for the same items across time were also correlated.

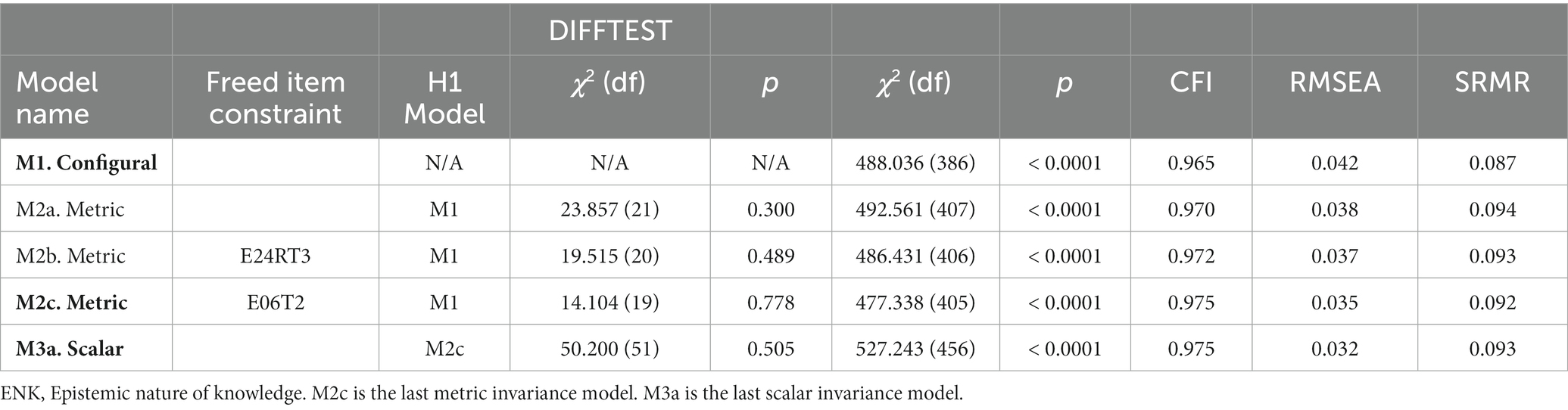

The subdomain epistemic nature of knowledge (ENK) was measured by eight ordinal indicators (items 01, 06, 14, 19, 11, 16, 24, 28). Configural, metric, and scalar invariance testing showed that the partial scalar model (model 3a in Table 6) was the final invariance model. In model 3a, the factor loadings and thresholds of six items (01, 14, 19, 11, 16, 28) were held invariant across time.
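The item-level results reported above for the four factors can be tallied in a few lines. The following is a minimal sketch: the item numbers are transcribed from the text, while the dictionary layout and the names `FACTORS`, `summarize`, and `SUMMARY` are illustrative assumptions rather than part of the study's analysis. Loadings and thresholds are counted separately because the text reports them separately for CA and KR.

```python
# Item sets transcribed from the invariance results reported in the text.
# The data layout and function names are hypothetical, for illustration only.
FACTORS = {
    "CA": {
        "items": ["03", "08", "27", "13", "18", "31", "35"],
        "invariant_loadings": ["08", "13", "18", "31", "35"],
        "invariant_thresholds": ["13", "18", "31", "35"],
    },
    "KG": {
        "items": ["05", "10", "12", "17", "21", "25",
                  "32", "36", "37", "39", "41", "43"],
        "invariant_loadings": ["05", "43"],
        "invariant_thresholds": ["05", "43"],
    },
    "KR": {
        "items": ["02", "07", "09", "22", "26", "29", "33", "40"],
        "invariant_loadings": ["22", "26", "40"],
        "invariant_thresholds": ["26"],
    },
    "ENK": {
        "items": ["01", "06", "14", "19", "11", "16", "24", "28"],
        "invariant_loadings": ["01", "14", "19", "11", "16", "28"],
        "invariant_thresholds": ["01", "14", "19", "11", "16", "28"],
    },
}


def summarize(spec):
    """Count items with invariant loadings, thresholds, and both."""
    loadings = set(spec["invariant_loadings"])
    thresholds = set(spec["invariant_thresholds"])
    return {
        "n_items": len(spec["items"]),
        "n_loadings": len(loadings),
        "n_thresholds": len(thresholds),
        # Items whose loadings AND thresholds are both held equal over time.
        "n_both": len(loadings & thresholds),
    }


SUMMARY = {name: summarize(spec) for name, spec in FACTORS.items()}

for name, row in SUMMARY.items():
    print(
        f"{name}: {row['n_items']} items, "
        f"{row['n_loadings']} invariant loadings, "
        f"{row['n_thresholds']} invariant thresholds, "
        f"{row['n_both']} invariant on both"
    )
```

A tally like this makes it easy to see which factors are anchored by many items and which rest on only a few.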

In sum, this study first used item factor analysis (IFA) to examine the four dimensions of EOS developed by Suh et al. (2022). Epistemic alignment (EA) had three factors across time: knowledge generation (KG), knowledge replication (KR), and attitudes toward correct answers (AtCA). The dimensions of student ability (SA) and classroom authority (CA) each had a one-factor structure over time. The dimension epistemic nature of knowledge (ENK) had a two-factor structure on occasions 1 and 2 and a one-factor structure on occasions 3 and 4. Based on the definition of epistemic orientation, four factors were chosen to form a short form of EOS (EOS-SF): KG, KR, ENK, and CA. Longitudinal invariance testing was conducted for each of the four factors of the EOS-SF.

6. Discussion

The present study examined the dimensionality of the Epistemic Orientation Survey (EOS) across four occasions. We found that the hypothesized four dimensions included six factors, which described different aspects of epistemic orientation. Based on our focus on generative learning as the target of teachers’ epistemic orientation, four factors were selected to be included in a short form version, EOS-SF. We found that there was evidence for longitudinal measurement invariance of each factor in EOS-SF, suggesting that the subscales of the short form survey could capture the change in epistemic orientation over time.

Researchers can choose certain subscales or the whole survey depending on their research purposes. The six factors of the original EOS can be regarded as subscales measuring different aspects of epistemic orientation. In the present study, for example, the short form was created by choosing four of the six factors from the EOS originally developed by Suh et al. (2022) to match our focus on the learning orientation to generate knowledge and the evolving nature of science knowledge. Attitude toward correct answers (AtCA) was not chosen because its content appeared redundant with the knowledge replication (KR) factor, and student ability (SA) was not chosen because it concerns a mindset toward students’ intelligence. The short form proposed in this study is consistent with the stance that all students can generate their own knowledge of science regardless of their perceived intelligence level.

One finding from the study of the factor structure over time is that the factor structure of epistemic nature of knowledge (ENK) changed over time. On the first two occasions, ENK included two sub-factors: revisable knowledge and absolute knowledge. On the last two occasions, ENK had one factor, the evolving nature of knowledge, which indicates that there was no distinction between revisable and absolute knowledge. The change in the factor structure of ENK might indicate that teachers gradually adopted a more informed orientation toward knowledge generation (Suh et al., 2022) after 1 year of participation in the PD workshops, thereby developing a closer connection between the aspects of ENK over time.

A second finding is that partial measurement invariance was obtained for each of the four factors of EOS-SF. CA had four out of seven items that kept strong invariance. KG had two out of twelve items with strong invariance, so this factor was only barely invariant. KR had three out of eight items that had strong invariance, and ENK had six out of eight items that kept strong invariance. The partial invariance is an important empirical finding that supports an underlying assumption of many studies that aim to track change in epistemic orientation over time. The finding of partial measurement invariance, coupled with tools for estimating factor scores that account for measurement non-invariance, provides a strong case for using the questionnaire across repeated occasions to study growth. This is because the invariant items place the items from different occasions on the same scale, so change in the latent trait can be compared over time. This supports other research that has aimed to study changes in epistemic orientation across time (e.g., Bae et al., 2022), and how this may connect to experiences of professional learning or to enacted instructional practice (e.g., Lammert et al., 2022b; Morandi et al., 2022). As an essential next step, we suggest that subsequent work use invariant factor scores in analyses of teacher growth in epistemic orientation.
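The anchoring argument can be made explicit with a generic ordinal factor model. The block below is a sketch under a standard textbook parameterization; the symbols (latent response $y^{*}$, loading $\lambda$, threshold $\tau$, latent factor $\eta$, anchor set $A$) are introduced here for illustration and are not taken from the study's model specification.

```latex
\[
\begin{aligned}
% Latent response of person i to item j at occasion t
y^{*}_{ijt} &= \lambda_{jt}\,\eta_{it} + \varepsilon_{ijt}, \\
% Observed ordinal category c is determined by the item thresholds
y_{ijt} = c \quad &\Longleftrightarrow \quad
  \tau_{jt,c} < y^{*}_{ijt} \le \tau_{jt,c+1}, \\
% Partial metric invariance: equal loadings for the anchor items only
\lambda_{j1} = \lambda_{j2} = \lambda_{j3} = \lambda_{j4}
  \quad &\text{for } j \in A_{\lambda}, \\
% Partial scalar invariance: equal thresholds for the anchor items only
\tau_{j1,c} = \tau_{j2,c} = \tau_{j3,c} = \tau_{j4,c}
  \quad &\text{for all } c,\ j \in A_{\tau}.
\end{aligned}
\]
```

Items outside $A_{\lambda}$ and $A_{\tau}$ keep occasion-specific parameters. Because the anchor items share loadings and thresholds across the four occasions, differences in the mean and variance of $\eta_{it}$ over time can be interpreted on a common scale.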

Thirdly, the methods used in this study could serve as an example of how to measure change in constructs over time. Factor structure and measurement invariance were both used to examine the stability of the factors. Usually, researchers choose one method to examine the dimensionality of an instrument (Lei et al., 2020). When an instrument is used multiple times, we can gain a comprehensive understanding of its dimensionality and stability. In addition, measurement invariance allows researchers to compare change in latent traits after several rounds of training in a specific teaching theory or instructional method.

Fourthly, the EOS-SF may be applied to teachers in other grade levels, even though it was developed with data from elementary science teachers. With an epistemic orientation toward knowledge generation, teachers are more likely to create a generative learning environment in which students construct their understanding of science concepts (Bae et al., 2022; Weiss et al., 2022), which is also important for middle and high school students. Moreover, the EOS-SF would be a useful tool for PD developers and implementers to track teachers’ levels of epistemic orientation at the beginning of a PD experience and across time, and to use this information to tailor learning opportunities to teachers’ different levels of epistemic orientation. Teachers with more informed levels of epistemic orientation may need different types of support and experiences during PD than teachers with epistemic orientations less supportive of knowledge generation.

Lastly, this work is part of an emerging tradition in the field to engage in applied assessment research using measurement principles as an ongoing area of inquiry. This differs from work that would release a “finalized” instrument or questionnaire. Rather, the field shows a keen interest in the persistent study of the application and interpretation of tools (Liu and Fulmer, 2008; Ding et al., 2023; Fulmer et al., 2023), whether for the improvement of that specific tool or to inspire new patterns of assessment development and use (Ruiz-Primo and Shavelson, 1996; Mislevy, 2018; Harris et al., 2022). In the present case, this enables us to gain a richer interpretation of the instruments and understanding of epistemic orientation. It also provides a stronger basis for future work that can study changes of epistemic orientation over time. Thus, the field’s effort in the reexamination of instruments can provide additional evidence for the quality and effectiveness of any existing assessment.

7. Limitation

In this study, error covariances were added to the factor structure in CFA and IFA. Usually, the common variance among items is explained by the factor loadings. Theoretically, the residuals of items are uncorrelated (Barker and Shaw, 2015) because the latent trait is the only reason the items relate to each other. However, some researchers suggest adding error covariances when two items have something else in common that cannot be explained by the latent trait (Cattell and Tsujioka, 1964). The residual covariances among items partition the measurement noise covariance for a better fit of the latent trait (Deng et al., 2019). Researchers can also choose to add a new factor for three or more overly related items when this is a reasonable choice (McNeish, 2017). In this study, for example, we added error covariances for three factors in the epistemic alignment construct (see Supplementary material). Researchers could decide on the factor structure based on the residual covariances and the statements of the items.
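The role of a residual covariance can be illustrated with a small numerical sketch. In a one-factor model, the model-implied covariance between two items is the product of their loadings and the factor variance, plus any residual covariance between them. All numbers and names below are hypothetical and chosen only for illustration; they are not estimates from this study.

```python
def implied_covariance(loading_j, loading_k,
                       factor_variance=1.0, residual_covariance=0.0):
    """Model-implied covariance between two items on a single factor."""
    return loading_j * loading_k * factor_variance + residual_covariance


# Hypothetical standardized loadings and observed association for two items
# that share wording beyond what the latent trait explains.
LOADING_A = 0.7
LOADING_B = 0.6
OBSERVED = 0.62

# Without a residual covariance, the factor alone must reproduce OBSERVED.
implied_without = implied_covariance(LOADING_A, LOADING_B)

# The leftover association is what a residual covariance would absorb.
leftover = OBSERVED - implied_without

# With that residual covariance added, the pair is reproduced exactly.
implied_with = implied_covariance(
    LOADING_A, LOADING_B, residual_covariance=leftover
)

print(f"implied without residual covariance: {implied_without:.2f}")
print(f"leftover association:                {leftover:.2f}")
print(f"implied with residual covariance:    {implied_with:.2f}")
```

When several items share such leftover association, modeling it as an additional factor rather than as pairwise residual covariances is the alternative discussed above.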

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found at: https://www.openicpsr.org/openicpsr/project/184283.

Ethics statement

The studies involving humans were approved by Institutional Review Boards (IRBs). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

CD conducted data analysis and drafted the paper. GF assisted in revising the writing. LH provided help with the data analysis when CD had questions about data analysis. GF, BH, and JS, who are co-PIs for the funded grant, designed the project, recruited teachers, conducted PD workshops, and collected data used in this research. All authors contributed to the article and approved the submitted version.

Funding

The project was funded by the National Science Foundation (Grant Agreement Number: DRL-1812576). This grant is about developing the adaptive expertise of elementary science teachers.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2023.1239751/full#supplementary-material

References

Ash, A. M., and Hand, B. (2022). Knowledge evaluation and disciplinary access: mutually supportive for learning. Learn. Res. Pract. 8, 116–132. doi: 10.1080/23735082.2022.2092645

Asparouhov, T., Muthén, B., and Muthén, B. O. (2006). Robust chi square difference testing with mean and variance adjusted test statistics. Matrix 1, 1–6. Available at: http://www.statmodel.com/download/webnotes/webnote10.pdf

Bae, Y., Hand, B. M., and Fulmer, G. W. (2022). A generative professional development program for the development of science teacher epistemic orientations and teaching practices. Instr. Sci. 50, 143–167. doi: 10.1007/s11251-021-09569-y

Bandalos, D. L. (2021). Item meaning and order as causes of correlated residuals in confirmatory factor analysis. Struct. Equ. Model. Multidiscip. J. 28, 903–913. doi: 10.1080/10705511.2021.1916395

Barker, L. E., and Shaw, K. M. (2015). Best (but oft-forgotten) practices: checking assumptions concerning regression residuals. Am. J. Clin. Nutr. 102, 533–539. doi: 10.3945/ajcn.115.113498

Betts, J. E., Appleton, J. J., Reschly, A. L., Christenson, S. L., and Huebner, E. S. (2010). A study of the factorial invariance of the student engagement instrument (SEI): results from middle and high school students. Sch. Psychol. Q. 25, 84–93. doi: 10.1037/a0020259

Brown, T. A. (2015). Confirmatory factor analysis for applied research. New York: Guilford publications.

Buehl, M. M., and Fives, H. (2009). Exploring teachers' beliefs about teaching knowledge: where does it come from? Does it change? J. Exp. Educ. 77, 367–408. doi: 10.3200/JEXE.77.4.367-408

Byrne, B. M., and van de Vijver, F. R. (2010). Testing for measurement and structural equivalence in large-scale cross-cultural studies. Int. J. Test. 10, 107–132. doi: 10.1080/15305051003637306

Cattell, R. B., and Tsujioka, B. (1964). The importance of factor-trueness and validity, versus homogeneity and orthogonality, in test scales. Educ. Psychol. Meas. 24, 3–30. doi: 10.1177/001316446402400101

Chen, Y. C., and Techawitthayachinda, R. (2021). Developing deep learning in science classrooms: tactics to manage epistemic uncertainty during whole-class discussion. J. Res. Sci. Teach. 58, 1083–1116. doi: 10.1002/tea.21693

Chin, C. (2007). Teacher questioning in science classrooms: approaches that stimulate productive thinking. J. Res. Sci. Teach. 44, 815–843. doi: 10.1002/tea.20171

Deng, F., Yang, H. L., and Wang, L. J. (2019). Adaptive unscented Kalman filter based estimation and filtering for dynamic positioning with model uncertainties. Int. J. Control. Autom. Syst. 17, 667–678. doi: 10.1007/s12555-018-9503-4

Desimone, L. M. (2009). Improving impact studies of teachers’ professional development: toward better conceptualizations and measures. Educ. Res. 38, 181–199. doi: 10.3102/0013189X08331140

Ding, C., Lammert, C., and Fulmer, G. W. (2023). Refinement of an instrument measuring science teachers’ knowledge of language through mixed method. Discip. Interdiscip. Sci. Educ. Res. 5. doi: 10.1186/s43031-023-00080-7

Duschl, R. A., and Bybee, R. W. (2014). Planning and carrying out investigations: an entry to learning and to teacher professional development around NGSS science and engineering practices. Int. J. STEM Educ. 1, 1–9. doi: 10.1186/s40594-014-0012-6

Ferrando, P. J., and Lorenzo-Seva, U. (2018). Assessing the quality and appropriateness of factor solutions and factor score estimates in exploratory item factor analysis. Educ. Psychol. Meas. 78, 762–780. doi: 10.1177/0013164417719308

Fulmer, G. W. (2008). Successes and setbacks in collaboration for science instruction: connecting teacher practices to contextual pressures and student learning outcomes. Riga, Latvia: VDM Publishing.

Fulmer, G. W., Hansen, W., Hwang, J., Ding, C., Ash, A., Hand, B., et al. (2023). “Development and application of a questionnaire on teachers' knowledge of argument as an epistemic tool” in Advances in applications of Rasch measurement in science education. eds. W. Liu and W. Boone (Cham: Springer)

Fulmer, G. W., Hwang, J., Ding, C., Hand, B., Suh, J. K., and Hansen, W. (2021). Development of a questionnaire on teachers' knowledge of language as an epistemic tool. J. Res. Sci. Teach. 58, 459–490. doi: 10.1002/tea.21666

Harris, L. R., Adie, L., and Wyatt-Smith, C. (2022). Learning progression–based assessments: a systematic review of student and teacher uses. Rev. Educ. Res. 92, 996–1040. doi: 10.3102/00346543221081552

Hashweh, M. Z. (1996). Effects of science teachers' epistemological beliefs in teaching. J. Res. Sci. Teach. 33, 47–63. doi: 10.1002/(SICI)1098-2736(199601)33:1<47::AID-TEA3>3.0.CO;2-PC

Hill, C. D., Edwards, M. C., Thissen, D., Langer, M. M., Wirth, R. J., and Burwinkle, T. M. (2007). Practical issues in the application of item response theory: a demonstration using items from the pediatric quality of life inventory (PedsQL) 4.0 generic core scales. Med. Care 45, S39–S47. doi: 10.1097/01.mlr.0000259879.05499.eb

Hirschfeld, G., and Von Brachel, R. (2014). Improving multiple-group confirmatory factor analysis in R–A tutorial in measurement invariance with continuous and ordinal indicators. Pract. Assess. Res. Eval. 19:7. doi: 10.7275/qazy-2946

Hofer, B. K., and Pintrich, P. (1997). The development of epistemological theories: beliefs about knowledge and knowing and their relation to learning. Rev. Educ. Res. 67, 88–140. doi: 10.3102/00346543067001088

Howard, B. C., McGee, S., Schwartz, N., and Purcell, S. (2000). The experience of constructivism: transforming teacher epistemology. J. Res. Comput. Educ. 32, 455–465. doi: 10.1080/08886504.2000.10782291

Kaufman, E. A., Xia, M., Fosco, G., Yaptangco, M., Skidmore, C. R., and Crowell, S. E. (2016). The difficulties in emotion regulation scale short form (DERS-SF): validation and replication in adolescent and adult samples. J. Psychopathol. Behav. Assess. 38, 443–455. doi: 10.1007/s10862-015-9529-3

Lammert, C., Hand, B., Suh, J. K., and Fulmer, G. (2022a). “It’s all in the moment”: a mixed-methods study of elementary science teacher adaptiveness following professional development on knowledge generation approaches. Discip. Interdiscip. Sci. Educ. Res. 4:12. doi: 10.1186/s43031-022-00052-3

Lammert, C., Sharma, R., and Hand, B. (2023). Beyond pedagogy: the role of epistemic orientation and knowledge generation environments in early childhood science teaching. Int. J. Sci. Educ. 45, 431–450. doi: 10.1080/09500693.2022.2164474

Lammert, C., Suh, J. K., Hand, B., and Fulmer, G. (2022b). Is epistemic orientation the chicken or the egg in professional development for knowledge generation approaches? Teach. Teach. Educ. 116:103747. doi: 10.1016/j.tate.2022.103747

Lei, L., Huang, X., Zhang, S., Yang, J., Yang, L., and Xu, M. (2020). Comparison of prevalence and associated factors of anxiety and depression among people affected by versus people unaffected by quarantine during the COVID-19 epidemic in Southwestern China. Med. Sci. Monit. 26:e924609. doi: 10.12659/MSM.924609

Liang, X., and Yang, Y. (2014). An evaluation of WLSMV and Bayesian methods for confirmatory factor analysis with categorical indicators. Int. J. Quan. Res. Educ. 2, 17–38. doi: 10.1504/IJQRE.2014.060972

Liu, X., and Fulmer, G. (2008). Alignment between the science curriculum and assessment in selected NY state regents exams. J. Sci. Educ. Technol. 17, 373–383. doi: 10.1007/s10956-008-9107-5

Liu, Y., Millsap, R. E., West, S. G., Tein, J. Y., Tanaka, R., and Grimm, K. J. (2017). Testing measurement invariance in longitudinal data with ordered-categorical measures. Psychol. Methods 22, 486–506. doi: 10.1037/met0000075

Lorenzo-Seva, U., and Ferrando, P. J. (2021). MSA: the forgotten index for identifying inappropriate items before computing exploratory item factor analysis. Methodology 17, 296–306. doi: 10.5964/meth.7185

Maggioni, L., and Parkinson, M. M. (2008). The role of teacher epistemic cognition, epistemic beliefs, and calibration in instruction. Educ. Psychol. Rev. 20, 445–461. doi: 10.1007/s10648-008-9081-8

Maydeu-Olivares, A. (2013). Goodness-of-fit assessment of item response theory models. Measurement 11, 71–101. doi: 10.1080/15366367.2013.831680

McGrath, R. E. (2015). Measurement invariance in translations of the VIA inventory of strengths. Eur. J. Psychol. Assess. 32, 187–194. doi: 10.1027/1015-5759/a000248

McNeish, D. (2017). Thanks coefficient alpha, we'll take it from here. Psychol. Methods 23:412. doi: 10.1037/met0000144

Milanzi, E., Molenberghs, G., Alonso, A., Verbeke, G., and De Boeck, P. (2015). Reliability measures in item response theory: manifest versus latent correlation functions. Br. J. Math. Stat. Psychol. 68, 43–64. doi: 10.1111/bmsp.12033

Milfont, T. L., and Fischer, R. (2010). Testing measurement invariance across groups: applications in cross-cultural research. Int. J. Psychol. Res. 3, 111–130. doi: 10.21500/20112084.857

Miller, E., Manz, E., Russ, R., Stroupe, D., and Berland, L. (2018). Addressing the epistemic elephant in the room: epistemic agency and the next generation science standards. J. Res. Sci. Teach. 55, 1053–1075. doi: 10.1002/tea.21459

Millsap, R. E., and Yun-Tein, J. (2004). Assessing factorial invariance in ordered-categorical measures. Multivar. Behav. Res. 39, 479–515. doi: 10.1207/S15327906MBR3903_4

Mislevy, R. J. (2018). Sociocognitive foundations of educational measurement. Oxfordshire, England, UK: Routledge.

Morandi, S., Hagan, C., Granger, E. M., Schellinger, J., and Southerland, S. A. (2022). Exploration of epistemic orientation towards teaching science in a longitudinal professional development study. Paper presented at the annual meeting of NARST (Vancouver, BC). Available at: https://par.nsf.gov/servlets/purl/10330968

Muis, K. R. (2007). The role of epistemic beliefs in self-regulated learning. Educ. Psychol. 42, 173–190. doi: 10.1080/00461520701416306

Muthén, B., and Asparouhov, T. (2002). Latent variable analysis with categorical outcomes: multiple-group and growth modeling in Mplus. Mplus Web Notes 4, 1–22. Available at: https://www.statmodel.com/download/webnotes/CatMGLong.pdf

Muthén, L. K., and Muthén, B. (2021). Mplus. The comprehensive modelling program for applied researchers: User’s guide, 8 Available at: https://www.statmodel.com/download/usersguide/MplusUserGuideVer_8.pdf.

National Research Council (2012). A framework for K-12 science education: practices, crosscutting concepts, and core ideas. Washington, DC: The National Academies Press.

NGSS Lead States (2013). Next generation science standards: for states, by states. Washington, DC: The National Academies Press.

Palma, E. M. S., Gondim, S. M. G., and Aguiar, C. V. N. (2018). Epistemic orientation short scale: development and validity evidence in a sample of psychotherapists. Paidéia (Ribeirão Preto) 28:e2817. doi: 10.1590/1982-4327e2817

Ruiz-Primo, M. A., and Shavelson, R. J. (1996). Rhetoric and reality in science performance assessments: an update. J. Res. Sci. Teach. 33, 1045–1063. doi: 10.1002/(SICI)1098-2736(199612)33:10<1045::AID-TEA1>3.0.CO;2-S

Seung, E., Park, S., Kite, V., and Choi, A. (2023). Elementary preservice teachers’ understandings and task values of the science practices advocated in the NGSS in the US. Educ. Sci. 13:371. doi: 10.3390/educsci13040371

Shi, D., Maydeu-Olivares, A., and DiStefano, C. (2018). The relationship between the standardized root mean square residual and model misspecification in factor analysis models. Multivar. Behav. Res. 53, 676–694. doi: 10.1080/00273171.2018.1476221

Singh, A. S., and Masuku, M. B. (2014). Sampling techniques & determination of sample size in applied statistics research: an overview. Int. J. Econ. Com. Manag. 2, 1–22.

Stroupe, D. (2014). Examining classroom science practice communities: how teachers and students negotiate epistemic agency and learn science-as-practice. Sci. Educ. 98, 487–516. doi: 10.1002/sce.21112

Suh, J. K., Hand, B., Dursun, J. E., Lammert, C., and Fulmer, G. (2023). Characterizing adaptive teaching expertise: teacher profiles based on epistemic orientation and knowledge of epistemic tools. Sci. Educ. 107, 884–911. doi: 10.1002/sce.21796

Suh, J. K., Hwang, J., Park, S., and Hand, B. (2022). Epistemic orientation toward teaching science for knowledge generation: conceptualization and validation of the construct. J. Res. Sci. Teach. 59, 1651–1691. doi: 10.1002/tea.21769

Weiss, K. A., McDermott, M. A., and Hand, B. (2022). Characterising immersive argument-based inquiry learning environments in school-based education: a systematic literature review. Stud. Sci. Educ. 58, 15–47. doi: 10.1080/03057267.2021.1897931

Widaman, K. F., Ferrer, E., and Conger, R. D. (2010). Factorial invariance within longitudinal structural equation models: measuring the same construct across time. Child Dev. Perspect. 4, 10–18. doi: 10.1111/j.1750-8606.2009.00110.x

Keywords: epistemic orientation, factor structure, longitudinal invariance, item factor analysis, knowledge generation

Citation: Ding C, Fulmer G, Hoffman L, Hand B and Suh JK (2023) The dimensionality of the epistemic orientation survey and longitudinal measurement invariance for the short form of EOS (EOS-SF). Front. Educ. 8:1239751. doi: 10.3389/feduc.2023.1239751

Edited by:

Gavin T. L. Brown, The University of Auckland, New Zealand

Reviewed by:

Kerstin Kremer, University of Giessen, Germany

Chia-Lin Tsai, University of Northern Colorado, United States

Copyright © 2023 Ding, Fulmer, Hoffman, Hand and Suh. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gavin Fulmer, gavin-fulmer@uiowa.edu
