- Department of Mathematics, Freudenthal Institute, Utrecht University, Utrecht, Netherlands

Introduction: We present a validated instrument for assessing Environmental Citizenship (EC) of students in lower secondary education. The Environmental Citizenship Opinions (ECO) questionnaire focusses on general citizenship components, key sustainability competences, and Socio-Scientific Reasoning aspects. By combining these domains, our work provides a needed innovation as these different aspects of EC have not previously been covered in one single, balanced and validated measurement instrument.

Methods: The ECO questionnaire was validated through a pilot round and a subsequent large-scale study (781 lower secondary students). Several rounds of Confirmatory Factor Analysis resulted in a final model of 38 items divided into 7 first-order and 5 second-order constructs.

Results: The final model fit statistics indicate near excellent quality of our model (RMSEA = 0.036, CFI = 0.93, TLI = 0.93, SRMR = 0.05), which consists of EC knowledge, EC attitudes, EC skills, EC reflection and complexity of EC issues. Calculations of the relative contribution of each of the five main constructs to overall environmental sustainability citizenship highlight that attitudes and reflection skills are the most important constituents.

Discussion: Our results present the ECO questionnaire as a valuable, valid and reliable tool to measure environmental citizenship of students. Applications in practice include monitoring students’ development and supporting teachers during the challenging task of effective teaching for EC in and outside the classroom.

1. Introduction

Sustainability issues and Environmental Citizenship (EC) are increasingly important themes for (science) education throughout the world. The UN Decade of Sustainable Development (Buckler et al., 2014), the UN Sustainable Development Goals (United Nations, 2019), and EU Competences for Lifelong Learning (European Commission, 2019) all pay explicit attention to sustainability and EC. A key aspect of EC is collective and individual decision making and action taking on sustainability issues (Benninghaus et al., 2018). Since many sustainability issues can be labelled as wicked problems, this decision making is a complex and open-ended endeavor (Lönngren and Van Poeck, 2021). Because of this wicked nature, taking appropriate action on sustainability issues first demands opinion forming to determine what course of action to take. Furthermore, many sustainability issues can be considered Socio-Scientific Issues (SSIs), since they are open-ended, complex, concern multiple stakeholders, and have both scientific and social ramifications (Ratcliffe and Grace, 2003). Opinion forming and dialog have been singled out as being of critical importance as educational strategies for effective SSI education in general (Cian, 2020) and sustainability education specifically (Garrecht et al., 2018).

It is clear then that opinion forming plays an important role in EC from a theoretical perspective. Many national and local science curricula also acknowledge the importance of opinion forming in a sustainability context. The Next Generation Science Standards in the US for example pay explicit attention to this aspect of EC, for instance in its section on human sustainability: “When evaluating solutions, it is important to take into account a range of constraints, including cost, safety, reliability, and aesthetics, and to consider social, cultural, and environmental impacts” (National Research Council, 2012, p. 208). In a similar fashion, the Dutch national curriculum aims to teach secondary school students how to make conscious decisions regarding sustainability issues and how to oversee the consequences these decisions might have (Stichting Leerplanontwikkeling, 2016).

Despite a widespread implementation of sustainability opinion forming and decision making in national curricula, science teachers struggle with assessing progress in fostering these EC competences in an educational setting. In a large-scale interview study with science teachers in the Netherlands, we found that about half of them feel ill-equipped to teach about citizenship in their science lessons, because of difficulty with differences of opinion and with guiding discussions, and a lack of assessment and evaluation tools (Van Harskamp et al., 2021). Many teachers from this study aimed for opinion forming as an EC learning outcome, yet they felt this was hard to teach as well as to assess. Similarly, throughout a 3-year-long professional development course, teachers in Sweden indicated that their main struggle when implementing education for sustainable development into their educational practice is the assessment of the students’ learning outcomes (Boeve-de Pauw et al., 2022). There appears to be a clear need for validated assessment tools that teachers can use to track EC development of their students in general, and on opinion forming and decision making aspects of EC specifically. The research community could employ this type of tool to assess effectiveness of interventions.

There have been previous efforts to develop assessment instruments for EC or closely related concepts, each with their own specific focus. However, in the current landscape of assessment instruments, opinion forming regarding sustainability issues is underrepresented. For instance, Gericke et al. (2018) developed an instrument based strongly on the Sustainable Development Goals, which means opinion forming aspects are represented less clearly. It focusses on EC aspects related to taking pro-environmental action and preventing new environmental issues, but since EC is a broader, complex concept, “it [their questionnaire] might need to be complemented with other instruments when evaluating educational interventions, depending on what specific aspects of EEC need to be evaluated” (Ariza et al., 2021, p. 18). On the other end of the spectrum, Ten Dam et al. (2011) developed an assessment tool that focuses specifically on general citizenship competence, without the ambition to include sustainability competences. Although there are other examples (e.g., Bouman et al., 2018; Hadjichambis and Paraskeva-Hadjichambi, 2020; Olsson et al., 2020; Sass et al., 2021a), none of the existing assessment tools paint an integrated picture of EC competence that would render the instrument useful for assessing educational goals related to sustainability opinion forming and decision making.

Most existing instruments focus on learning aims that deal with attitudes and sustainability behavior, whereas learning aims related to sustainability opinion forming and the ability to take part in dialogue about sustainability issues remain uncovered. Additionally, none of the existing assessment tools focus specifically on lower secondary level, or more precisely 11–15 year olds. At this age, students have been found to go through a dip in sustainability attitudes and behaviors (Olsson et al., 2019), or a dip in sustainability knowingness, attitudes and behaviors in for instance Sweden (Olsson and Gericke, 2016) and Taiwan (Olsson et al., 2019). Similarly, Ten Dam et al. (2014) found that students aged 14–15 scored slightly lower on citizenship attitudes and reflection compared to their younger peers. They argue that people in this age category might be busier with developing their own identity and are therefore less interested in processes around them. To better describe the changes that this age category is going through, it is worthwhile to assess EC opinion forming and decision making for this specific age group.

To this end, the current study aims to develop and validate an assessment tool that focusses on opinion forming and decision making aspects of EC at lower secondary level. First, the concepts of sustainability and EC competence are defined, after which relevant pre-existing assessment instruments are discussed. We then move on to describing what these instruments have to offer, and what is still lacking based on research literature about EC. Next, we describe the developmental process of our assessment instrument and discuss how it was validated. Finally, we discuss the possibilities of our instrument and implications of its development for practice.

2. Theoretical background

Defining sustainability remains challenging, yet the definition from the influential Our Common Future report is widely used. It defines sustainable development as development ‘that meets the needs of the present without compromising the ability of future generations to meet their own needs’ (WCED, 1987, p. 14). Sustainability has three main dimensions: ecology, society, and economy, more commonly referred to with the 3 Ps of people, planet, and prosperity (Benninghaus et al., 2018). This complexity has led to sustainability issues being dubbed wicked problems (Lönngren and Van Poeck, 2021). Being able to form informed opinions and make informed decisions regarding sustainability issues while taking into account their inherent complexity is an important characteristic of EC (Ojala, 2013; Olsson et al., 2022).

As is commonly the case with sustainability related competences, and as can be seen from the definition of Our Common Future, EC includes a focus on developments within and between generations, and on collective and individual action taking (Benninghaus et al., 2018). This focus of EC on justice within and between generations culminates in decisions that take into account people elsewhere and in other points in time. Taking action in this sense requires opinion forming regarding possible action strategies and action possibilities. Wiek et al. (2011) have marked a set of five key competences for sustainable action taking, which therefore are important constituents of EC: (i) systems thinking competence, which involves variables and complex cause-effect chains; (ii) anticipatory competence, concerning past, present, and future effects, plausibility and risk; (iii) normative competence, involving values, fairness and justice; (iv) strategic competence, which concerns interventions, success factors and obstacles; and (v) interpersonal competence, focusing on collaboration and empathy. An environmental citizen is able to employ these competences when taking sustainable action. These five competences should therefore be covered by assessment tools for EC that aim to assess learning outcomes related to sustainable opinion forming and decision making.

Another main constituent of the opinion forming and decision making process is reasoning about sustainability issues. Because many sustainability issues are SSIs, the concept of Socio-Scientific Reasoning (SSR) is relevant for an instrument assessing opinion forming and decision making aspects of EC. Sadler et al. (2007) identified four main dimensions when coining the concept of SSR: (i) recognizing inherent complexity of SSIs, for instance related to environmental, social, and economic sides of the dilemma; (ii) examining issues from multiple perspectives, ensuring points of view from different stakeholders and individuals are heard; (iii) appreciating that SSIs are subject to ongoing inquiry, related to uncertainty and risk associated with SSIs and sustainability issues; and (iv) being skeptical to information about issues, for instance consulting multiple sources and checking conflicts of interest of authors of information. SSR fits well in an educational context that observes holism and pluralism, which are two central concepts of effective education for EC (Boeve-de Pauw et al., 2015; Olsson et al., 2022). Holism, on the one hand, concerns observing different dimensions of issues, for instance related to spatial and temporal dimensions and ecological, social, and economic aspects (Öhman, 2008). Pluralism, on the other hand, concerns leaving room for different points of view, different values, emotions and other affective or normative considerations in education for EC (Sund and Öhman, 2014). SSR fits well into these essential aspects of EC, for instance because of its inherent focus on complexity (ensuring holism) and multiperspectivity (ensuring pluralism) in dialogue about controversial issues. It therefore is worthwhile to pay attention to the four aspects of SSR in education for EC, since they are known to influence the opinion forming and decision making process in the context of SSI, and, to that extent, of sustainability issues.

2.1. Existing assessment tools

Several EC assessment instruments exist, each of them with a different emphasis. The Sustainability Consciousness Questionnaire (SCQ; Gericke et al., 2018) introduces the concept of sustainability consciousness. It is defined by the researchers as ‘the experience or awareness of sustainability phenomena. These include experiences and perceptions that we commonly associate with ourselves such as beliefs, feelings and actions.’ (Gericke et al., 2018, p. 3). The SCQ contains items on sustainability knowingness, or ‘what people acknowledge as the necessary features of [sustainable development]’; sustainability attitudes, which explore attitudes towards sustainable development, and sustainability behavior, or ‘what people do in relation to [sustainable development]’ (Gericke et al., 2018, p. 5). Each of these dimensions is divided in environmental, social, and economic items. Overall, the SCQ is strongly connected to the UN’s Sustainable Development Goals. Because of this focus, it does not incorporate the reflexive component of citizenship competence as defined by Ten Dam et al. (2011). The SCQ is tailored to monitor development related to knowingness, attitudes and behaviors, with a focus on EC goals such as civic participation, critical and active engagement, and solving and preventing environmental problems (Ariza et al., 2021). It does not include items or subscales related to opinion forming or discussion, and is therefore less suitable to track learning in these areas of EC.

Another recent instrument is the Environmental Citizenship Questionnaire (ECQ), developed by Hadjichambis and Paraskeva-Hadjichambi (2020). It contains three main categories: activities as an environmental citizen, competences of an environmental citizen, and intention to act in the future as an environmental citizen. The ECQ is rooted firmly in the definition of EC as formulated by the ENEC project (European Network for Environmental Citizenship, 2018). This translates into items that are often based on activism, social and environmental justice, and fairness. It also contains highly specific EC actions that students could perform, such as organizing an online discussion group or contacting elected representatives to discuss their sustainability policy. Because of this highly specific nature, the ECQ is deemed less suitable for measuring EC in a more general, less applied context. Its items are less suitable to track other citizenship learning aims such as dialog skills. The ECQ is aimed mostly at assessing learning aims that concern sustainability behavior.

Several other researchers chose a narrower focus, for instance developing instruments limited to environmental or sustainability attitudes (Milfont and Duckitt, 2010) or values (Bouman et al., 2018). The recently developed Action Competence in Sustainable Development Questionnaire (ACiSD-Q) aims to assess the concept of Action Competence in the context of sustainable development (Sass et al., 2021a). The authors identify four aspects of AciSD: (i) relevant knowledge, (ii) willingness of individuals to take action, (iii) capacity expectations related to trust in one’s capacity for change and self-efficacy, and (iv) outcome expectancy which concerns a trust in effectiveness of the action. It goes one step beyond the Self-Perceived Action Competence for Sustainability Questionnaire (SPACS-Q; Olsson et al., 2020), which does not discern between the two capacity expectations of the AciSD. Other large-scale studies incorporate subscales or sets of items that relate to EC or sustainability in more general instruments. An example is the Relevance Of Science Education (ROSE) questionnaire (Schreiner and Sjøberg, 2004), which focusses on the participants’ views on science. While these instruments each incorporate aspects of EC and sustainability, they are not specifically designed to assess these concepts in relation to opinion forming and decision making.

On the other end of the spectrum are studies that aim to measure citizenship in general, while sometimes touching on aspects of EC and sustainability. An example is the International Civic and Citizenship Education Study (ICCS; Schulz et al., 2018). This study contains several items that explore the perceived threat of environmental and sustainability issues to the participant’s life quality, thus dealing with EC attitudes. Ten Dam et al. (2011) developed the Citizenship Competences Questionnaire (CCQ), which focuses solely on citizenship competence. Its four main constituents are Knowledge, Attitudes, Skills, and Reflection. In this context, reflection entails adopting a critical outlook on oneself, situations in the world and one’s personal role in these situations (Ten Dam and Volman, 2007). It relates to self-reflectiveness, evaluating your thoughts and actions, and discussing these with others (Bandura, 2001).

While all these instruments focus on EC or related concepts, there is as of yet no assessment tool that specifically focusses on opinion forming and decision making aspects of EC. The discussed instruments are therefore less suitable for assessing learning aims related to one of EC’s central concepts, opinion forming. Such a tool should incorporate the five key sustainability competences of Wiek et al. (2011) and the four aspects of Socio-Scientific Reasoning (Sadler et al., 2007). Finally, most of these instruments are aimed at upper secondary students, mostly ignoring lower secondary level. In sum, it is fair to state that within the landscape of survey tools focusing on EC of students in formal education, there is both a conceptual gap regarding integrated assessment of opinion forming and decision making literature as well as regarding the specific age of early adolescence (11–15 year olds). With the current study we aim to fill exactly these gaps.

2.2. Conceptualizing our assessment tool

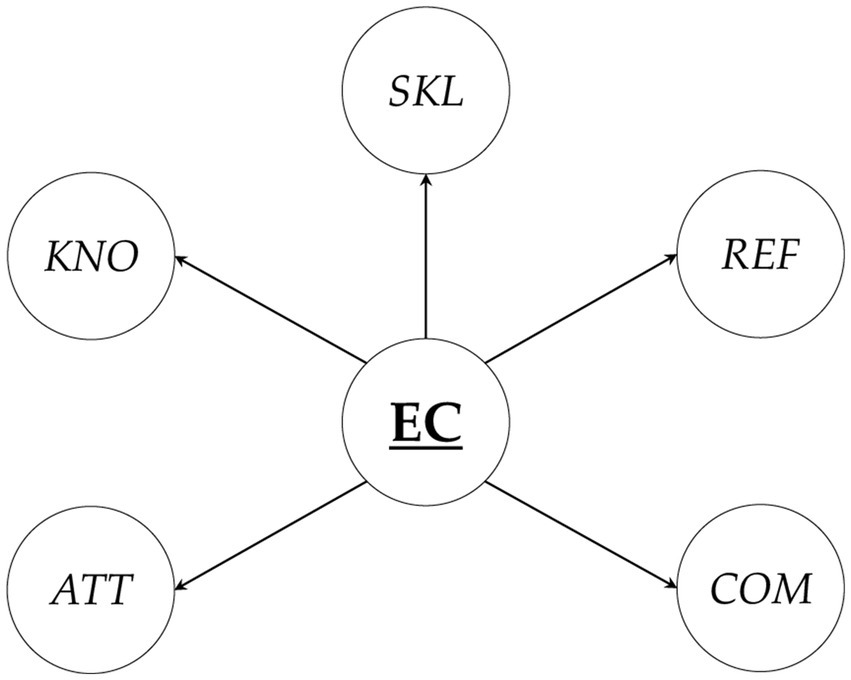

Common aspects of many of the previously discussed tools are knowledge, attitudes, skills, and reflection. These, according to Ten Dam et al. (2011), are the main constituents of citizenship competence. In their model, knowledge is interpreted on a meta-level, concerning knowing what principles underlie citizenship, and knowing how to deal with differences of opinion. Citizenship attitudes, according to Ten Dam et al. (2011), relate to thoughts, desires, and willingness to act, concerning a desire to learn about different opinions, willingness to explore conflicts, and upholding social justice. Citizenship skills in their vision refer to an estimation of what one can do in relationship to asserting opinions of oneself and others, and being able to function in unfamiliar social situations. Finally, reflection is important for citizenship for its critical character: “‘Good citizenship’ therefore implies that they can critically evaluate different perspectives, explore strategies for change, and reflect on issues of justice (in)equality and democratic engagement” (Ten Dam et al., 2011, p. 354). In this interpretation of citizenship, reflection entails thinking about conflicts of interest, about equality, democracy, and the possibilities to solve conflicts. These four aspects of citizenship competence should therefore be central to an EC assessment tool, since such a tool concerns citizenship in the context of sustainability. Translating Ten Dam et al.’s (2011) four aspects of general citizenship competence into a sustainability context, knowledge in the case of EC relates to students understanding what sustainability entails. Sustainability attitudes describe the relation between the student and sustainability, such as their interest in the topic and their willingness to invest in a sustainable world. EC skills relate both to discussion skills and the ability to make sustainable decisions. They include the ability to discuss opinions while leaving room for multiple perspectives or ideologies. They furthermore include a critical attitude towards information sources and the skills to find out suitable courses of action. Finally, sustainability reflection concerns how often students think about sustainability related themes, and whether they discuss these topics at home or with friends. These four aspects of citizenship form the first four main constructs of EC that we aim to integrate into our novel assessment tool (Figure 1).

Figure 1. The overarching construct of environmental citizenship (EC) and its five main second order constructs of our assessment tool: knowledge (KNO) about EC; attitudes (ATT) towards EC; EC skills (SKL); reflection (REF) about EC; and complexity (COM) of EC.

Environmental citizens are able to reason about complex sustainability issues in order to determine suitable courses of action. This type of reasoning closely resembles Socio-Scientific Reasoning as described by Sadler et al. (2007), for instance because of its previously discussed holistic and pluralistic outlook and its collaborative nature, which are important aspects of effective education for EC (Boeve-de Pauw et al., 2015; Olsson et al., 2022). Because of the importance of reasoning about sustainability issues, its four main aspects (complexity of SSIs, multiple perspectives, ongoing inquiry, and skepticism) should be present in an assessment tool for EC (Table 1). Since complexity is uncovered by the Ten Dam et al. categorization, it is added to our model as the fifth main construct (Figure 1). Finally, an assessment tool for EC that focusses on opinion forming and decision making should take into account common competencies that are labelled essential for sustainable decision making and action taking. Wiek et al.’s (2011) five key sustainability competences (systems thinking competence, anticipatory competence, normative competence, strategic competence, and interpersonal competence) relate to reasoning about sustainability issues, discussion and dialog, values, discussing sustainability with others, and inquiry skills. The items for our assessment tool were therefore designed to cover these five key competences (Table 1).

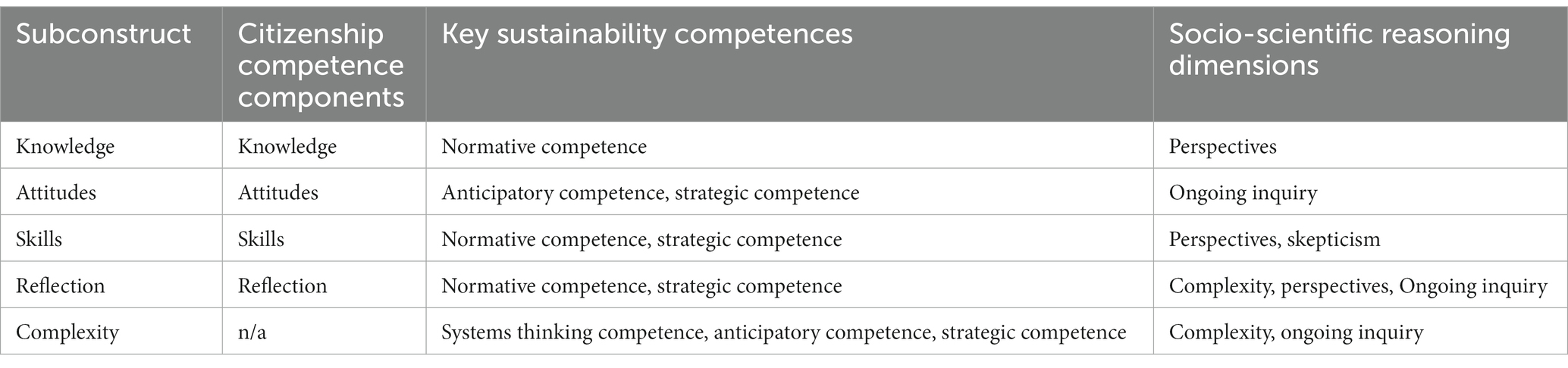

Table 1. The five subconstructs from our factor model of the assessment tool, with connections to the four main components of citizenship competence (Ten Dam et al., 2011), the five key sustainability competences (Wiek et al., 2011), and the four socio-scientific reasoning aspects (Sadler et al., 2007).

For an assessment tool for EC to be effective in measuring learning gains related to opinion forming and decision making, it should unite Ten Dam et al.’s (2011) citizenship competence components, for these strongly focus on opinion-forming as a pivotal competence in citizenship. Furthermore, Wiek et al.’s (2011) sustainability competences are important aspects for such a tool, since they cover commonly pursued competences for effective sustainable decision-making and action-taking. Finally, Sadler et al.’s (2007) Socio-Scientific Reasoning dimensions are important to include since they inherently feature two central requirements of effective education for EC, holism and pluralism, while covering the broader scope of reasoning and opinion-forming regarding complex and controversial issues such as those related to sustainability. An assessment tool that focusses on measuring EC opinion-forming and decision-making should unite these three models into a coherent whole. This important area of EC competence is as of yet uncovered by existing assessment instruments, despite its widely acknowledged societal value and worldwide prevalence as curricular aim. Our model unites these three dimensions in its five main constructs: EC Knowledge, EC Attitudes, EC Skills, EC Reflection, and EC Complexity. With this study we aim to develop a validated assessment tool for opinion forming and decision making aspects of EC at lower secondary level.

3. Methods

3.1. Initial version and pilot study

In a first step, we designed a pilot version of the Environmental Citizenship Opinions (ECO) questionnaire in which we included an item battery drawn from existing surveys. About a quarter of the pilot items were based on the instruments of Ten Dam et al. (2011), Gericke et al. (2018), Milfont and Duckitt (2010), and Schreiner and Sjøberg (2004). The rest of the items we constructed ourselves during the design process, based on the theoretical underpinning described in the theoretical framework. The instrument was developed and administered in Dutch. To ensure translation accuracy, backtranslation was used whenever items were originally written in English. The tool’s items offer 5-point Likert scale response options, in either a Strongly disagree, Disagree, Neutral, Agree, Strongly agree format or, for the Reflection subscales, a Never, Occasionally, Sometimes, Often, Very often format. Several items adopted inverted scales in order to check for response bias.
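Before scale scores are computed, items with inverted scales are usually recoded so that a higher score always points in the same direction. The sketch below illustrates this step for a 5-point scale. It is a minimal illustration under assumptions: the text does not describe how the inverted items were recoded, and the function name and example values are hypothetical.

```python
def reverse_code(score, scale_min=1, scale_max=5):
    """Mirror a Likert score around the scale midpoint (assumed recoding rule)."""
    if not scale_min <= score <= scale_max:
        raise ValueError(f"score {score} outside {scale_min}-{scale_max}")
    return scale_max + scale_min - score


# Hypothetical answers to one inverted item (1 = Strongly disagree, 5 = Strongly agree)
raw_answers = [1, 2, 3, 4, 5]
recoded_answers = [reverse_code(answer) for answer in raw_answers]
```

After recoding, "Strongly agree" on an inverted item counts the same as "Strongly disagree" on a regularly worded item, while the neutral midpoint is unchanged.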

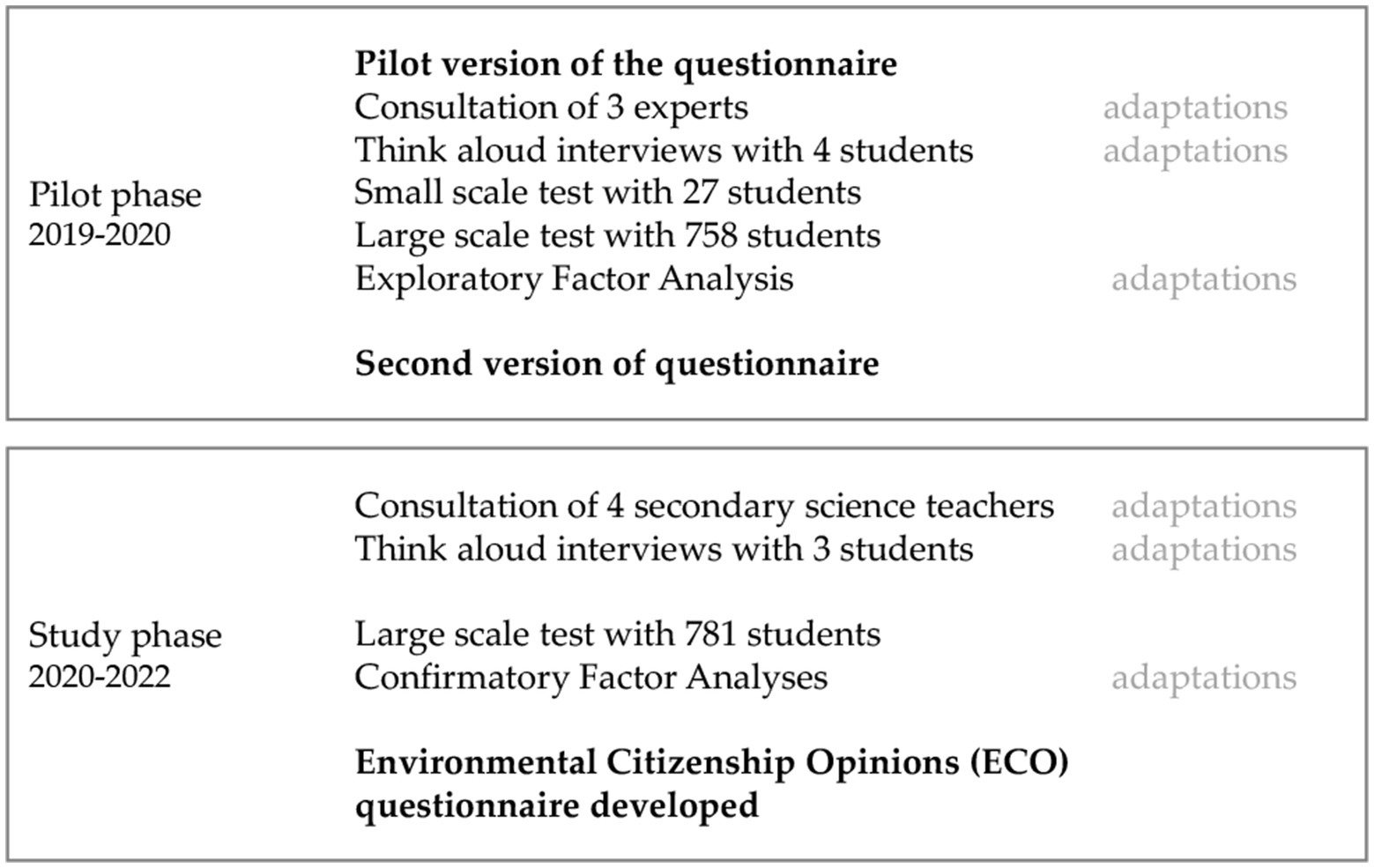

The pilot version of the questionnaire was discussed in a one-on-one setting with several independent experts (Figure 2), after which they provided written feedback (Marissen, 2019). The consulted experts included a science teacher who commented on the applicability of the questionnaire for the target group, a psychology professor experienced in developing questionnaires who commented on the questions and wording, and an assistant professor specializing in sustainability who discussed its inclusion of the topic of sustainability. This resulted in minor but meaningful changes in wording. Subsequently, think aloud interviews with four lower secondary students were carried out, during which the questionnaire’s cognitive validity was tested. This again led to some formulation changes. A small-scale test with a class of 27 students followed, which was aimed mainly at testing the platform and the process of online administration. Finalizing the pilot phase, the initial questionnaire of 65 items was tested in a large-scale data collection round with 758 lower secondary students. The data for this pilot round were analyzed with IBM SPSS (version 25) during several rounds of exploratory factor analysis (Principal Axis Factoring). Based on this pilot phase, a second version of the questionnaire was developed. Some items were adapted and new items were added, ultimately leading to 73 items. Most adaptations were based on results from the EFA, to improve item loadings whilst ensuring theoretical coverage of the items would remain sufficiently high. For example, new items were designed for the knowledge and complexity subscales in order to improve their quality.

Figure 2. Summary of the pilot phase and the study phase, and the steps taken during the development of the assessment tool for EC.

The first part of the study phase (Figure 2) again started with expert consultation. The questionnaire was discussed with four secondary school teachers and during think aloud interviews with three lower secondary students. Some small changes were made regarding wording of several items. The resulting version of the questionnaire was subsequently tested during a round of large-scale data collection.

3.2. Participants

Participants for the large-scale test during the study phase were sought through teachers from the researchers’ network. Purposive sampling was used: schools were selected to ensure a representative spread of urban and rural areas. Ultimately, 894 students from 11 schools throughout the Netherlands took part in the study. Of these, 113 responses were excluded from analysis, either because 80% or more of the answers fell in one answer category or on the basis of the inverted items that were included as a check for social-desirability bias. The final dataset contained fully completed questionnaires of 781 lower secondary students (female: 399; male: 363; neither female nor male: 19; average age 13.5; median age 13).
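The first exclusion criterion can be expressed as a simple screening rule. The sketch below flags a response set when a single answer category accounts for 80% or more of a participant's answers. The function names and example data are hypothetical, and the check on inverted items is not covered because the text does not specify how it was applied.

```python
from collections import Counter


def max_category_share(responses):
    """Return the share of answers that fall in the most frequently used category."""
    counts = Counter(responses)
    return max(counts.values()) / len(responses)


def is_excluded(responses, threshold=0.80):
    """Flag a response set when one category holds at least `threshold` of all answers."""
    return max_category_share(responses) >= threshold


# Hypothetical 10-item response sets on a 1-5 Likert scale
varied = [1, 2, 3, 4, 5, 4, 3, 2, 1, 3]
uniform = [3, 3, 3, 3, 3, 3, 3, 3, 4, 2]

kept = [r for r in (varied, uniform) if not is_excluded(r)]
```

In this example the second response set uses the middle category for 8 of 10 items and is therefore dropped, while the first is kept.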

3.3. Data collection

Data were collected from January 2020 till June 2022. The questionnaire was administered online using FormDesk software. Participants either filled in the questionnaire during school hours in a regular science lesson or at home in their own time. Data collection took place within ethical boundaries set by Utrecht University.

3.4. Data analysis

The collected data were used to test the fit of our theoretical model to the dataset. To that end we conducted a series of Confirmatory Factor Analyses (CFAs) using the Mplus software package, version 8.8 (Muthen and Muthen, 2010). First, we checked for normality by looking at the skewness and kurtosis calculations of the individual items. Several rounds of CFA followed. In each round, the model fit parameters were checked as a reference for applicability of the model. We considered dropping items if very low factor loadings occurred and if this improved the model fit in subsequent CFAs. Multiple fit indices were used to evaluate the model, with the recommended values of 0.95 for the comparative fit index (CFI) and Tucker-Lewis index (TLI). For the root mean square error of approximation (RMSEA) we used a value of 0.06 (Tabachnick and Fidell, 2007). Where necessary, error covariances between items were added to further improve the model (Byrne, 2012). These were selected from the modification indices suggested by Mplus, retaining only those that lowered the overall chi-square by more than 50 and were sensible from a theoretical point of view.
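The comparison of fit indices against these recommended values can be sketched as follows. CFI and TLI are better when higher, whereas RMSEA is better when lower. Treating a value exactly equal to the cutoff as meeting it is an assumption, and the example values are hypothetical rather than taken from the analyses.

```python
# Cutoffs as stated in the text: 0.95 for CFI and TLI, 0.06 for RMSEA.
CUTOFFS = {"CFI": 0.95, "TLI": 0.95, "RMSEA": 0.06}
LOWER_IS_BETTER = {"RMSEA"}


def meets_cutoff(index, value):
    """Check one fit index against its recommended value."""
    cutoff = CUTOFFS[index]
    if index in LOWER_IS_BETTER:
        return value <= cutoff
    return value >= cutoff


def evaluate_fit(fit):
    """Return, per fit index, whether the recommended value is met."""
    return {index: meets_cutoff(index, value) for index, value in fit.items()}


# Hypothetical fit statistics for illustration only
example_fit = {"RMSEA": 0.05, "CFI": 0.96, "TLI": 0.94}
result = evaluate_fit(example_fit)
```

Such a check only summarizes the cutoffs; whether a model that narrowly misses one of them is still acceptable remains a matter of judgment.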

Finally, Cronbach’s alpha was calculated using IBM SPSS Statistics (version 28.0.1.1) for each second- and third-order construct to explore their reliability as an indication of their internal consistency. This combination of factor analysis, which models single-construct scales, and Cronbach’s alpha, which indicates equivalence of items within these single scales, provides relevant information about reliability of scales (Taber, 2018).
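For readers who want to reproduce this reliability estimate outside SPSS, the standard formula for Cronbach's alpha can be sketched as below: alpha = k / (k - 1) × (1 - sum of item variances / variance of total scores), with k the number of items. This is a minimal illustration using sample variances, not the routine used in the study, and the data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Compute Cronbach's alpha.

    item_scores: list of items, each a list with one score per respondent
    (all items must cover the same respondents in the same order).
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("at least two items are required")
    # Total score per respondent across all items
    totals = [sum(scores) for scores in zip(*item_scores)]
    item_variance_sum = sum(variance(item) for item in item_scores)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical two-item scale answered by four respondents
item_a = [1, 2, 3, 4]
item_b = [2, 1, 4, 3]
alpha = cronbach_alpha([item_a, item_b])
```

Note that the function fails with a division by zero when all respondents have the same total score, in which case alpha is undefined.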

4. Results

Several rounds of confirmatory factor analysis (CFA) were carried out to test the hypothetical model, which was based on the theoretical underpinning of our instrument. We set out to confirm that the construct of EC is composed of five latent psychometric constructs: knowledge, attitudes, skills and reflection, with the addition of complexity of EC issues (Figure 1).

For the first round of CFA, we included 46 of the initial 73 items. The item pool from the pilot study was narrowed down to improve usability of the instrument whilst simultaneously ensuring theoretical coverage of the instrument was not diminished. This first selection of items was based on factor loadings and creating a balanced distribution of items across the subconstructs. The first CFA showed a promising yet unacceptable model fit to the data, with the Root Mean Square Error Of Approximation (RMSEA) being 0.048, a Comparative fit index (CFI) of 0.84, and a Tucker-Lewis Index (TLI) of 0.83. This indicated the need for modifications to improve the model. Modification indices in Mplus showed possible improvements, but none would raise the model fit indices to acceptable levels (CFI = 0.89, TLI = 0.88, and RMSEA = 0.039). Error covariances that were suggested and applied include all six covariances present in the final model, with the addition of six further covariances. None of the covariances were between items in different latent constructs.

During the second round of CFA we decided to drop items with very low loadings from the model with the aim to improve model fit. This led to exclusion of items 42 and 43 from the Attitudes subscale and items 48 and 51 from the Skills subscale, meaning the second round model included 42 items. One more error covariance was included, between items 64 and 61. Removing error covariances that were included in the first CFA round would not lead to improvements in model fit, which made us decide to keep the six covariances from the first round of CFA. Adaptations to our base model improved model fit indices, but CFI and TLI maxed out at 0.92 and 0.91, respectively, with an RMSEA of 0.036, which indicates an almost acceptable fit of our model to the data.

A third and final round of CFA followed (Figure 3), for which the model from round two was used as a base. This third round was furthermore informed by a CFA which included all 73 original items. This 73-item model suggested basing the Knowledge subscale solely on social and human rights items (items 8, 9, 10, and 11). The Knowledge subscale was adapted accordingly. Furthermore, the Complexity subscale was adapted by excluding items 61 and 64 because of low factor loadings, whilst item 62 was included again to keep the number of items in the subscale in balance with the other subscales. Finally, items 69 and 70 were excluded to further improve the Complexity subscale.

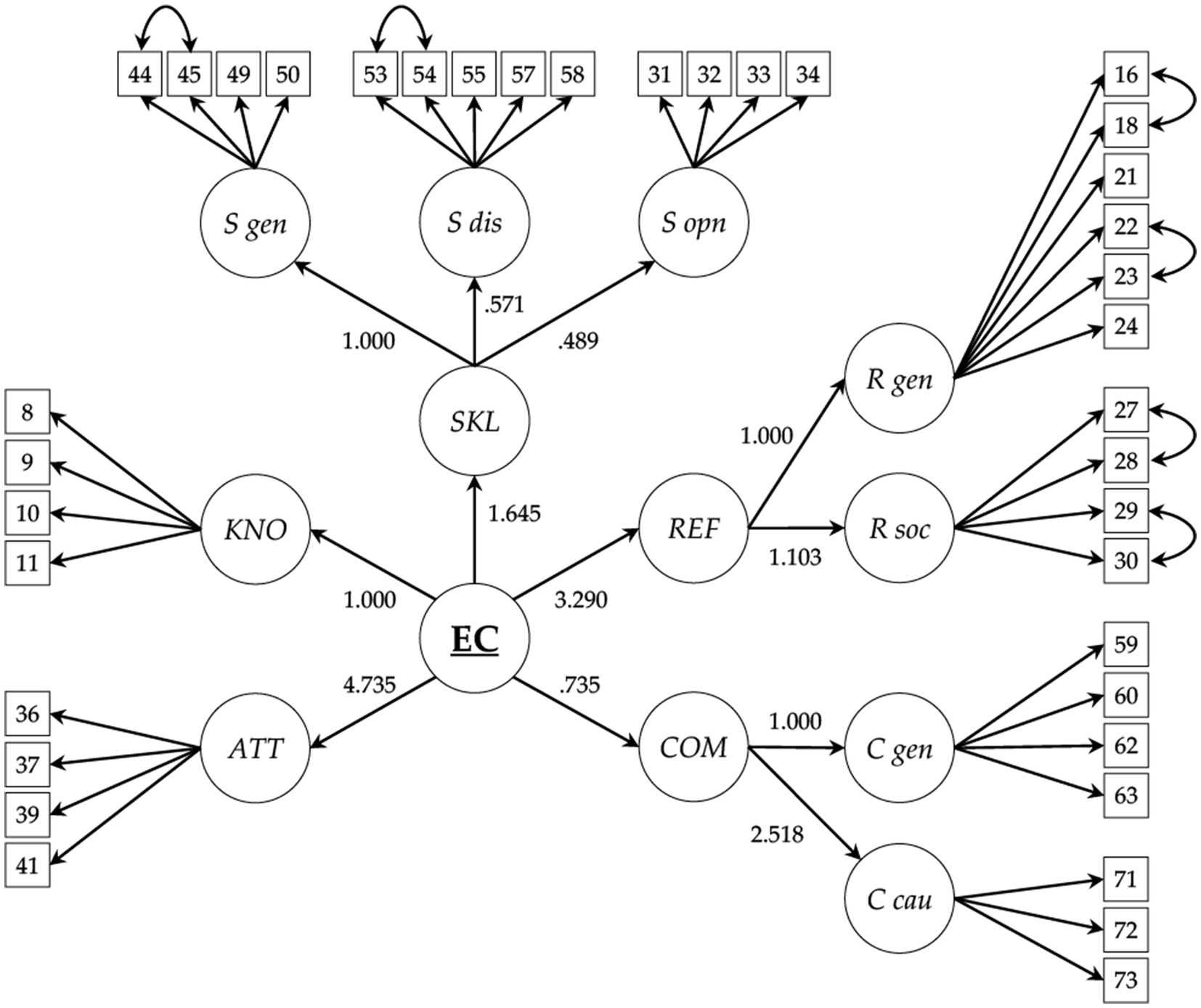

Figure 3. The final higher order factor structure of the ECO questionnaire. EC, Environmental Citizenship; KNO, Knowledge; ATT, Attitudes; SKL, Skills; REF, Reflection; COM, Complexity. Subconstructs include general skills (S gen), discussion skills (S dis), opinion skills (S opn), general reflection (R gen), social reflection (R soc), general complexity (C gen), and causes complexity (C cau). Estimates for the structural parameters based on the final confirmatory factor analysis are shown. Estimates for the residual errors, error covariances and measurement errors are available in Online Resource 1.

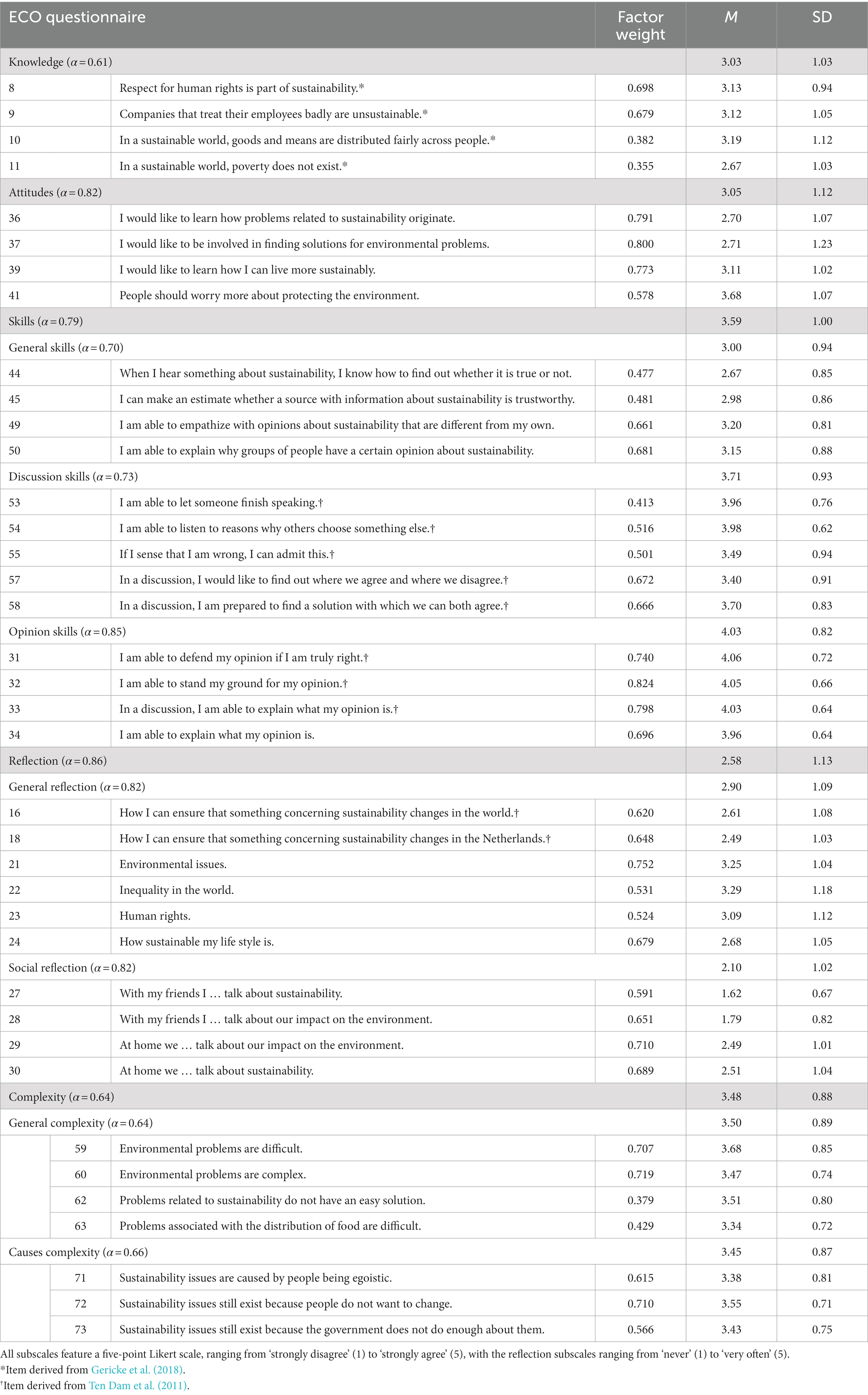

The final model thus included 38 items that cover the broad scope of EC theory that we initially set out to include in our model. These adaptations improved the model fit values, leading to a final model fit of RMSEA = 0.036, CFI = 0.93, and TLI = 0.93, with a Standardized Root Mean Square Residual (SRMR) of 0.05, indicating near excellent model fit. The SRMR acts as an approximation of model fit that combines the standardized residual covariances into one overall statistic (Maydeu-Olivares and Shi, 2017). The final list of items, including their standardized factor weight, mean scores and standard deviations, is provided in Table 2. This table also shows the Cronbach’s alpha values that were calculated for each of the subscales as an estimation of their reliability. All alpha values are above the acceptable level, ranging from 0.61 for the Knowledge subscale to 0.86 for the Reflection subscale.
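As an illustration of how such reliability estimates are obtained, the following minimal sketch computes Cronbach’s alpha from the standard formula, α = k/(k − 1) × (1 − Σ item variances / variance of the sum score), where k is the number of items in the subscale. The function name and the response data are hypothetical and serve only to show the calculation; they are not drawn from our data set.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for one subscale.

    rows: list of respondents, each a list of item scores of equal length
          (complete cases only; missing answers are not handled here).
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")

    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of the respondents' sum scores.
    total_variance = variance([sum(row) for row in rows])

    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical answers of five students to a four-item subscale.
example = [
    [4, 5, 4, 4],
    [2, 3, 2, 3],
    [5, 5, 4, 5],
    [3, 3, 3, 2],
    [1, 2, 2, 1],
]
print(round(cronbach_alpha(example), 2))
```

Alpha rises as items covary more strongly and, all else being equal, as the number of items increases, which is one reason why short subscales tend to yield lower values.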

Table 2. Final version of the environmental citizenship opinions (ECO) questionnaire, showing Cronbach’s alpha (α) for each (sub)construct, and standardized factor weight, mean (M) and standard deviation (SD) for each item.

5. Discussion

In this study we set out to describe the development and validation of the Environmental Citizenship Opinions (ECO) questionnaire. Based on the results of the different steps in our analyses we will first discuss the ECO questionnaire’s quality. We then describe underlying relationships between the different constructs of EC in our instrument, and finally we reflect on implications for teachers.

As can be seen from the results of our analyses, the ECO questionnaire offers a valid and reliable way to assess EC of students in lower secondary education. For the first time, opinion forming and decision making aspects are integrated in a single validated EC assessment tool. The overall structure of the model was based on Ten Dam et al.’s (2011) four main components of citizenship competence. Two of the third-order subconstructs are made up entirely of items from their measurement tool for citizenship, those being Discussion skills (items 53–55, 57, 58) and Opinion skills (items 31–34). The fifth subconstruct in our model, Complexity, was included because of its importance in Sadler et al.’s (2007) Socio-Scientific Reasoning model, one of the main constituents of the ECO questionnaire. Other Socio-Scientific Reasoning items in our assessment tool include, for instance, ‘I can assess whether a source with information about sustainability is trustworthy’ (item 45, representing ‘scepticism’). Wiek et al.’s (2011) key sustainability competences are covered by such items as ‘In a discussion I am prepared to find a solution with which we can both agree’ (item 58, a strategic competence item) and ‘I am able to empathize with opinions about sustainability that are different from my own’ (item 49, a normative competence item). With this, the ECO questionnaire covers the theoretical concepts which we set out to unite in one assessment tool.

Model fit indices and Cronbach’s alpha estimates indicate that our assessment tool is both valid and reliable. The final model fit indices fall well within range of excellent quality (Tabachnick and Fidell, 2007). Furthermore, all subconstructs have acceptable to excellent Cronbach’s alpha estimates. Since these alphas were calculated on single-construct scales, as modeled during factor analysis, these values are assumed to indicate that items within these constructs are interrelated (Taber, 2018). Four subconstructs have alpha values between 0.6 and 0.7. Since the items in these subconstructs are related given their performance in our model during the rounds of Confirmatory Factor Analysis, and since the generally assumed level of satisfactory alpha is an arbitrary one (Taber, 2018), the reliability of these subscales is considered to be satisfactorily high to use them as a measure for their corresponding subconstructs of EC.

Looking at the item scores, there is no occurrence of a ceiling effect, which indicates that our tool is not prone to eliciting favorable answering modes from students. Furthermore, a large spread of scores between respondents can be found, with standard deviations of individual items frequently being greater than one. Taken together, these findings show that the instrument is sensitive enough to measure differences between individuals in a valid and reliable manner.

5.1. Environmental citizenship and relationships between its subconstructs

This novel tool in itself is an important outcome of our study, but the results also allow us to explore the interrelations of the subconstructs of EC included in the questionnaire. When looking at the factor structure and the estimates for its structural parameters, a clear difference in relative weight of the subconstructs can be seen (Figure 3). The Attitudes and Reflection subconstructs, for instance, have a relative weight that is three to four times higher than that of the other second order constructs. We know from literature that attitudes and other affective constructs are important constituents of one’s pro-environmental behavior (e.g., Böhme et al., 2018). In redefining action competence for sustainable development, Sass et al. (2020) for instance included the attitudinal construct of ‘Willingness to take action’ as one of the driving forces behind taking action. We also know from research that affective variables such as emotions and intuitions, like attitudes, strongly influence decision making (Haidt, 2001; Ojala, 2013). Since we cannot distinguish between fruitful and unfruitful emotions for environmental citizens, the emotional aspect was beyond the scope of our assessment tool for EC. Yet the development of our model once more underscores the relatively strong weight of affective variables on EC compared to, for instance, the cognitive subscale.

Just like in our model, previous studies show that knowledge does not always play an equally important role in this process: “Even for the most engaged citizens, automatic unconscious intuitions are generally responsible of final political decisions, which are often resistant to any information that confronts those emotional insights” (Estellés and Fischman, 2021, p. 224). Similarly, in their model for pro-environmental competences for adolescents, Roczen et al. (2014) found that different knowledge subscales had a lower impact on general ecological behavior than attitudinal factors. Likewise, our model further emphasizes this relatively low weight effect of knowledge on EC and the relatively stronger relationship of Attitudes and EC.

Going one step beyond this previously identified relationship, our results point to Reflection about sustainability as being in the same range of importance as affective constructs for one’s EC. Although the relevance of reflection as a subconstruct for citizenship competence (Ten Dam et al., 2011) or Socio-Scientific Reasoning (Sadler et al., 2007) has previously been acknowledged within the research community, our findings provide a further empirical basis for underscoring its importance as one of the key constituents of EC. This has several implications for education. If one wishes to promote EC, for example, learning aims related to reflecting on sustainability are recommended. Education could be tailored towards increasing the frequency of these reflective moments.

The Knowledge subconstruct could not be composed of items representing both environment themed items on the one hand and human rights or socio-economic items on the other with satisfactory model fit indices and reliability estimates. This tells us something about the participating students’ interpretation of sustainability. It shows that the consulted students do not equally consider the environmental and the social or economic dimension of sustainability. Although further research is needed to check conceptual understanding of the target group, previous studies provide similar results. This was for instance shown by an overemphasis of the environmental or planet dimension of sustainability in student understanding of the concept (Walshe, 2008; Benninghaus et al., 2018; Sass et al., 2021b) or of underrepresentation of the economic dimension in student summaries of sustainability issues (Berglund and Gericke, 2018; Van Harskamp et al., 2022). It is furthermore known that science teachers have narrow views of sustainability. An interview study on teachers’ EC practice showed that the ecological dimension is overemphasized in science teachers’ definitions of sustainability (Van Harskamp et al., 2021). With teachers having this overly ecological interpretation of what sustainability entails, it comes as no surprise that their students hold similar views. The relationship of teacher and student interpretation of sustainability as a concept could be explored further in subsequent studies. For our assessment instrument, however, it is important to focus on aspects of sustainability knowledge that would otherwise be underrepresented, those being the people and prosperity dimensions. These are therefore strongly represented in the current items of the Knowledge subconstruct as well as throughout other subconstructs, for instance in Reflection (e.g., inequality and human rights).

The relatively low overall score for the Social reflection subconstruct in our sample (a 2.1 average) indicates that the participating students hardly ever discuss sustainability with friends or their family. This contrasts with the students’ interpretation of their own Discussion and Opinion skills (3.7 and 4.0, respectively), which is relatively high. The students in our sample feel confident in their abilities to discuss their sustainability opinions, but they simultaneously report hardly ever putting these skills into practice at home or with friends. Since our sample does not represent the spread across educational levels in the Netherlands, these results cannot be easily generalized. In our sample, the vocational level was underrepresented. There is no reason to assume that this has an effect on the structure of the model, but it does possibly influence the overall scores of the subconstructs. The Ten Dam et al. (2011) items, for instance, have been found to lead to higher averages for pre-university students than for those of other educational levels (Ten Dam et al., 2014). A mitigating factor here could be that the aforementioned means of the Skills subscales in our study closely resemble the means of the original instrument from which these items were taken, for which a representative sample was used (Ten Dam et al., 2011). However, caution needs to be taken when interpreting mean scores from our study, since our sample was selected with an eye to high quality instrument development rather than to representation of the Dutch population of students.

5.2. Implications for use in practice

Our assessment tool can be used by researchers to monitor EC opinion forming and decision making learning outcomes at lower secondary level, for instance by pre-post-test design. To make our instrument usable by (science) teachers, several steps need to be taken. First, guidelines need to be provided for teachers to ensure data collection conditions are appropriate. Second, instructions need to be written which describe how to calculate scores for first, second, and third order constructs. An (online) tool to perform these calculations would be helpful. Finally, an explanation needs to be given for how to interpret the results and for drawing conclusions and understanding implications for teaching practice. The clear and straightforward structure of our instrument, mainly when considering the five second order constructs, might facilitate this process. Furthermore, with 38 items, our assessment tool is relatively short, which enhances usability in a field where time is a scarce commodity.
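By way of illustration, the score calculation mentioned above could be sketched as follows. This is a hypothetical example rather than a finalized scoring procedure: it assumes that a subscale score is the mean of a student’s answered items, and it only includes the item-to-subscale assignments that are named explicitly in this article (Knowledge: items 8–11; Discussion skills: items 53–55, 57, 58; Opinion skills: items 31–34). The function name and the example responses are invented. How subscale scores should be combined into second and third order scores is not specified here and is therefore left out.

```python
from statistics import mean

# Item numbers per subscale as named in the text; other subscales omitted.
SUBSCALES = {
    "knowledge": [8, 9, 10, 11],
    "discussion_skills": [53, 54, 55, 57, 58],
    "opinion_skills": [31, 32, 33, 34],
}


def subscale_scores(responses, subscales=SUBSCALES):
    """Mean score per subscale for one student.

    responses: dict mapping item number to a numeric answer, or None if
               the item was skipped. Skipped items are ignored
               (an assumption, not a prescribed rule).
    Returns a dict of subscale name to mean score, or None when no item
    of that subscale was answered.
    """
    scores = {}
    for name, items in subscales.items():
        answered = [responses[i] for i in items if responses.get(i) is not None]
        scores[name] = mean(answered) if answered else None
    return scores


# Hypothetical answers of one student (item 54 was skipped).
student = {
    8: 2, 9: 3, 10: 3, 11: 2,
    31: 5, 32: 4, 33: 5, 34: 4,
    53: 4, 54: None, 55: 3, 57: 4, 58: 5,
}
print(subscale_scores(student))
```

A pre-post-test comparison would then amount to computing these scores at both measurement moments and comparing them per subscale.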

5.3. Limitations

As with every questionnaire, choices were made during the development process which must be considered before implementation. Our instrument was validated in a Dutch context, and was developed with the specific age group of lower secondary level in mind. The general higher and pre-university levels were overrepresented in the final dataset. The validity and reliability of the instrument have not been explored in other contexts, which means applying the questionnaire in other countries or using it for other age groups should be done with caution. Further studies of the ECO questionnaire in other contexts would be a valuable addition to our understanding of its broader applicability. These studies could also explore specifications of the general subscales in the current version (e.g., General skills, General reflection). Despite the high quality of the current version of the instrument, these aspects could be improved in subsequent developmental rounds.

Apart from these limitations, the instrument behaves satisfactorily and offers valuable insight into students’ EC. Of course, our model does not cover the theoretical constructs of EC in their full breadth. However, taken together, our assessment tool is able to assess learning outcomes that were previously excluded from validated assessment tools. This provides insight into key characteristics of opinion forming and decision making regarding the elusive construct of EC.

5.4. Conclusion

We set out to develop an assessment tool for EC of lower secondary students. The ECO questionnaire and all of its lower order constructs form a broad and meaningful overview of one’s level of EC. Judging by its performance through rigorous testing, it measures EC in a valid and reliable way. The tool therefore offers opportunities for teachers to assess students’ levels of EC in the classroom. This could help them understand what areas of EC are sufficiently developed, and which EC aspects demand further nourishment. Additionally, it could provide science education researchers with valuable information on where students currently stand concerning EC.

The added value of this new tool to the existing pool of instruments is threefold. First, it is the first time that these three dimensions of EC, the general citizenship components of Ten Dam et al. (2011), the key sustainability competences of Wiek et al. (2011), and the Socio-Scientific Reasoning aspects from Sadler et al. (2007), are unified in one coherent tool. Our effort to unify these EC elements thus fills a gap in the availability of tools. It enables teachers to assess learning aims other than those related to behaviors or knowledge. It also allowed us to explore the different constituents of EC and their underlying relationships, which improved our understanding of EC in general. Second, the scores from our assessment tool show a large spread and there is no ceiling effect in the data. Our tool is therefore sensitive enough to discern differences between individual students. Finally, our instrument was aimed at lower secondary students, age 11–15. This specific group has not yet been selected as the main target group for previously developed EC assessment tools. With the development of the Environmental Citizenship Opinions questionnaire, we have filled several gaps in the availability of EC assessment tools. In doing so, we hope to contribute to effective teaching for EC in the (science) classroom for lower secondary education and beyond.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://doi.org/10.17026/dans-xra-buzx.

Ethics statement

The studies involving human participants were reviewed and approved by the Utrecht University Ethics Board of the Faculty of Science. Written informed consent from the participants' legal guardian/next of kin was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

MvH, M-CK, and WvJ contributed to the study conception, material preparation, and data collection. MvH, M-CK, JB-dP, and WvJ contributed to the study design, data analysis, writing process, and commented on the different versions of the manuscript. MvH wrote the first draft of the manuscript. All authors read and approved the final manuscript.

Funding

This study was funded by the Nationaal Regieorgaan Onderwijsonderzoek (NRO) under grant number 40.5.18540.030.

Acknowledgments

The authors would like to thank Anne-Wil Marissen and the consulted experts for their work during the pilot round, and the teachers and their students who participated in this study during the different test phases.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2023.1182824/full#supplementary-material

References

Ariza, M. R., Christodoulou, A., van Harskamp, M., Knippels, M.-C. P. J., Kyza, E. A., Levinson, R., et al. (2021). Socio-scientific inquiry-based learning as a means toward environmental citizenship. Sustainability 13:11509. doi: 10.3390/su132011509

Bandura, A. (2001). Social cognitive theory: an agentic perspective. Annu. Rev. Psychol. 52, 1–26. doi: 10.1146/annurev.psych.52.1.1

Benninghaus, J. C., Kremer, K., and Sprenger, S. (2018). Assessing high-school students’ conceptions of global water consumption and sustainability. Int. Res. Geogr. Environ. Educ. 27, 250–266. doi: 10.1080/10382046.2017.1349373

Berglund, T., and Gericke, N. (2018). Exploring the role of the economy in young adults’ understanding of sustainable development. Sustainability 10:2738. doi: 10.3390/su10082738

Boeve-de Pauw, J., Gericke, N., Olsson, D., and Berglund, T. (2015). The effectiveness of education for sustainable development. Sustainability 7, 15693–15717. doi: 10.3390/su71115693

Boeve-de Pauw, J., Olsson, D., Berglund, T., and Gericke, N. (2022). Teachers’ ESD self-efficacy and practices: a longitudinal study on the impact of teacher professional development. Environ. Educ. Res. 28, 867–885. doi: 10.1080/13504622.2022.2042206

Böhme, T., Stanszus, L. S., Geiger, S. M., Fischer, D., and Schrader, U. (2018). Mindfulness training at school: a way to engage adolescents with sustainable consumption? Sustainability 10:3557. doi: 10.3390/su10103557

Bouman, T., Steg, L., and Kiers, H. A. L. (2018). Measuring values in environmental research: a test of an environmental portrait value questionnaire. Front. Psychol. 9, 1–15. doi: 10.3389/fpsyg.2018.00564

Buckler, C., and Creech, H., UNESCO. (2014). Shaping the future we want: UN Decade of Education for Sustainable Development (2005–2014): Final Report. International Report; UNESCO: Paris, France.

Byrne, B. M. (2012). Structural equation modeling with Mplus: basic concepts, applications, and programming. New York: Routledge.

Cian, H. (2020). The influence of context: comparing high school students’ socioscientific reasoning by socioscientific topic. Int. J. Sci. Educ. 42, 1503–1521. doi: 10.1080/09500693.2020.1767316

Estellés, M., and Fischman, G. E. (2021). Who needs global citizenship education? A review of the literature on teacher education. J. Teach. Educ. 72, 223–236. doi: 10.1177/0022487120920254

European Commission. (2019). Key competences for lifelong learning. International report; EC: Luxembourg. doi: 10.2766/569540

European Network for Environmental Citizenship (2018). Defining environmental citizenship 2018, 52–54. doi: 10.21820/23987073.2018.8.52

Garrecht, C., Bruckermann, T., and Harms, U. (2018). Students’ decision-making in education for sustainability-related extracurricular activities—a systematic review of empirical studies. Sustainability 10:3876. doi: 10.3390/su10113876

Gericke, N., Boeve-de Pauw, J., Berglund, T., and Olsson, D. (2018). The sustainability consciousness questionnaire: the theoretical development and empirical validation of an evaluation instrument for stakeholders working with sustainable development. Sustain. Dev. 27, 35–49. doi: 10.1002/sd.1859

Hadjichambis, A. C., and Paraskeva-Hadjichambi, D. (2020). Environmental citizenship questionnaire (ECQ): the development and validation of an evaluation instrument for secondary school students. Sustainability 12, 1–12. doi: 10.3390/SU12030821

Haidt, J. (2001). The emotional dog and its rational tail: a social intuitionist approach to moral judgment. Psychol. Rev. 108, 814–834. doi: 10.1037/0033-295X

Lönngren, J., and Van Poeck, K. (2021). Wicked problems: a mapping review of the literature. Int. J. Sustain. Dev. World Ecol. 28, 481–502. doi: 10.1080/13504509.2020.1859415

Marissen, J. W. (2019). Development of a questionnaire to assess lower secondary school students’ environmental citizenship. Master thesis, Utrecht University, Utrecht, Netherlands. Available at: https://studenttheses.uu.nl/handle/20.500.12932/33362

Maydeu-Olivares, A., and Shi, D. (2017). Effect sizes of model misfit in structural equation models. Methodology 13, 23–30. doi: 10.1027/1614-2241/a000129

Milfont, T. L., and Duckitt, J. (2010). The environmental attitudes inventory: a valid and reliable measure to assess the structure of environmental attitudes. J. Environ. Psychol. 30, 80–94. doi: 10.1016/j.jenvp.2009.09.001

Muthen, L. K., and Muthen, B. O. (2010). Mplus user’s guide (6th Edn.). Los Angeles, United States: Muthén & Muthén.

National Research Council. (2012). A framework for K-12 science education: practices, crosscutting concepts, and Core ideas. Committee on a conceptual framework for new K-12 science education standards. Board on science education, division of Behavioral and social sciences and education. Washington, DC: The National Academies Press.

Öhman, J. (2008). Values and democracy in education for sustainable development: contributions from Swedish research. Malmö: Liber.

Ojala, M. (2013). Emotional awareness: on the importance of including emotional aspects in education for sustainable development (ESD). J. Educ. Sustain. Dev. 7, 167–182. doi: 10.1177/0973408214526488

Olsson, D., and Gericke, N. (2016). The adolescent dip in student sustainability consciousness—implications for education for sustainable development. J. Environ. Educ. 47, 35–51. doi: 10.1080/00958964.2015.1075464

Olsson, D., Gericke, N., and Boeve-de Pauw, J. (2022). The effectiveness of education for sustainable development revisited–a longitudinal study on secondary students’ action competence for sustainability. Environ. Educ. Res. 28, 405–429. doi: 10.1080/13504622.2022.2033170

Olsson, D., Gericke, N., Boeve-de Pauw, J., Berglund, T., and Chang, T. (2019). Green schools in Taiwan – effects on student sustainability consciousness. Glob. Environ. Chang. 54, 184–194. doi: 10.1016/j.gloenvcha.2018.11.011

Olsson, D., Gericke, N., Sass, W., and Boeve-de Pauw, J. (2020). Self-perceived action competence for sustainability: the theoretical grounding and empirical validation of a novel research instrument. Environ. Educ. Res. 26, 742–760. doi: 10.1080/13504622.2020.1736991

Ratcliffe, M., and Grace, M. (2003). Science education for citizenship. Maidenhead: Open University Press.

Roczen, N., Kaiser, F. G., Bogner, F. X., and Wilson, M. (2014). A competence model for environmental education. Environ. Behav. 46, 972–992. doi: 10.1177/0013916513492416

Sadler, T. D., Barab, S. A., and Scott, B. (2007). What do students gain by engaging in socioscientific inquiry? Res. Sci. Educ. 37, 371–391. doi: 10.1007/s11165-006-9030-9

Sass, W., Boeve-de Pauw, J., De Maeyer, S., and Van Petegem, P. (2021a). Development and validation of an instrument for measuring action competence in sustainable development within early adolescents: the action competence in sustainable development questionnaire (ACiSD-Q). Environ. Educ. Res. 27, 1–20. doi: 10.1080/13504622.2021.1888887

Sass, W., Boeve-de Pauw, J., Olsson, D., Gericke, N., De Maeyer, S., and Van Petegem, P. (2020). Redefining action competence: the case of sustainable development. J. Environ. Educ. 51, 292–305. doi: 10.1080/00958964.2020.1765132

Sass, W., Quintelier, A., Boeve-de Pauw, J., De Maeyer, S., Gericke, N., and Van Petegem, P. (2021b). Actions for sustainable development through young students’ eyes. Environ. Educ. Res. 27, 234–253. doi: 10.1080/13504622.2020.1842331

Schreiner, C., and Sjøberg, S. (2004). Sowing the seeds of ROSE: background, rationale, questionnaire development and data collection for ROSE (The Relevance of Science Education): a comparative study of students’ views of science and science education. International Report; Unipub AS: Oslo, Norway.

Schulz, W., Ainley, J., Fraillon, J., Losito, B., Agrusti, G., and Friedman, T. (2018). Becoming citizens in a changing world IEA international civic and citizenship education study 2016 international report. International Report; IEA: Amsterdam, Netherlands.

Stichting Leerplanontwikkeling (2016). Karakteristieken en Kerndoelen. Onderbouw voortgezet onderwijs. Report. Nationaal Expertisecentrum Leerplanontwikkeling, Enschede, Netherlands.

Sund, L., and Öhman, J. (2014). On the need to repoliticise environmental and sustainability education: rethinking the postpolitical consensus. Environ. Educ. Res. 20, 639–659. doi: 10.1080/13504622.2013.833585

Tabachnick, B. G., and Fidell, L. S. (2007). Using multivariate statistics. 5th Edn. New York: Allyn and Bacon.

Taber, K. S. (2018). The use of Cronbach’s alpha when developing and reporting research instruments in science education. Res. Sci. Educ. 48, 1273–1296. doi: 10.1007/s11165-016-9602-2

Ten Dam, G., Geijsel, F., Reumerman, R., and Ledoux, G. (2011). Measuring young people’s citizenship competences. Eur. J. Educ. 46, 354–372. doi: 10.1111/j.1465-3435.2011.01485.x

Ten Dam, G. T. M., and Volman, M. L. L. (2007). Educating for adulthood or for citizenship: social competence as an educational goal. Eur. J. Educ. 42, 281–298. doi: 10.1111/j.1465-3435.2007.00295.x

Ten Dam, G., Geijsel, F., Ledoux, G., Reumerman, R., Keunen, M., and Visser, A. (2014). Burgerschap Meten - Handleiding, versie 1.2. Amsterdam: Rovict BV/Universiteit van Amsterdam.

United Nations. (2019). The sustainable development goals report. International Report; UN: New York, USA.

Van Harskamp, M., Knippels, M. C. P. J., and van Joolingen, W. R. (2021). Secondary science teachers’ views on environmental citizenship in the Netherlands. Sustainability 13, 1–22. doi: 10.3390/su13147963

Van Harskamp, M., Knippels, M.-C. P. J., and Van Joolingen, W. R. (2022). “Sustainability issues in lower secondary science education: a socioscientific, inquiry-based approach” in Innovative approaches to Socioscientific issues and sustainability education. Learning Sciences for Higher Education. eds. Y. S. Hsu, R. Tytler, and P. J. White (Singapore: Springer).

Walshe, N. (2008). Understanding students’ conceptions of sustainability. Environ. Educ. Res. 14, 537–558. doi: 10.1080/13504620802345958

WCED. (1987). Our common future: the United Nations world commission on environment and development. International Report; UN: Oslo, Norway.

Keywords: environmental citizenship, questionnaire, lower secondary, confirmatory factor analysis, sustainability education

Citation: van Harskamp M, Knippels M-CPJ, Boeve-de Pauw JNA and van Joolingen WR (2023) The environmental citizenship opinions questionnaire: a self-assessment tool for secondary students. Front. Educ. 8:1182824. doi: 10.3389/feduc.2023.1182824

Edited by:

Janet Clinton, The University of Melbourne, Australia

Reviewed by:

Cristina Corina Bentea, Dunarea de Jos University, Romania

Durdane Dury Bayram Jacobs, Eindhoven University of Technology, Netherlands

Copyright © 2023 van Harskamp, Knippels, Boeve-de Pauw and van Joolingen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Michiel van Harskamp
