- ¹School of Rehabilitation Science, McMaster University, Hamilton, ON, Canada

- ²CanChild Centre for Childhood Disability Research, McMaster University, Hamilton, ON, Canada

Background: Partnering for Change (P4C) is a school-based occupational therapy service intended to build the capacity of educators to support children with motor difficulties.

Aims: This paper describes the development of the Partnering for Change Educator Questionnaire and evaluates its structural validity and internal consistency.

Methods and procedures: The P4C Educator Questionnaire was completed by 1,216 educators four times across 2 years. Data from the initial time point were analysed via exploratory factor analysis (n = 436). Subsequently, Cronbach’s alpha and mean interitem correlations were calculated. Finally, the proposed factor structure was confirmed by testing it against data from times two through four using confirmatory factor analysis (n = 688).

Outcomes and results: A three-factor structure was evident and confirmed in hypothesis testing. The factor structure was interpretable according to the framework for building educator capacity used in this study. Internal consistency was high, with the total scale outperforming each subscale.

Conclusions and implications: A novel measure of educator self-reported capacity to support students with motor difficulties demonstrated structural validity and internal consistency. We currently recommend use as a complete scale accompanied by additional validation research.

1. Introduction

School-based occupational therapy traditionally has involved identifying children’s impairments via direct assessment (Bayona et al., 2006; Villeneuve, 2009), and then removing children from their social and academic contexts to receive remedial interventions (McIntosh et al., 2011; Grosche and Volpe, 2013; Jimerson et al., 2016). However, more recently, there has been a notable shift away from one-to-one direct models of service delivery toward models that focus on capacity building in adults around the child (Villeneuve, 2009; Hutton et al., 2016) and that target universal, school-wide outcomes (Hutton et al., 2016; Chu, 2017). Indeed, occupational therapists internationally are transitioning to tiered models of service delivery (Cahill et al., 2014; Kaelin et al., 2019), including Response to Intervention and Partnering for Change models (Kaelin et al., 2019). This shift in service models is attributable to a number of influences, including efforts to better align school-based services with inclusive education mandates (e.g., Tomas et al., 2018), recognition of the importance of participation as a key focus for school-based services (e.g., Bonnard and Anaby, 2016), and a growing need to make effective use of limited resources coupled with increasing demand for services (e.g., Anaby et al., 2019). In this contemporary context, supports for educators through training and capacity building have featured prominently in recommendations for efficient and effective school-based services (Anaby et al., 2019).

Partnering for Change (P4C) is a model for delivering school-based occupational therapy services that was designed with capacity building and knowledge translation as essential elements (Missiuna et al., 2012a,b). P4C is a ‘tiered’ model where children receive occupational therapy intervention of varying intensity depending upon the extent of their needs and their response to interventions (Missiuna et al., 2012a,b). The interventions provided move beyond one-to-one therapy to include whole class and differentiated instruction. Services are organized in tiers from universal design suggestions that are collaboratively developed for whole classes (tier 1) through to targeted strategies and supports for groups of children (tier 2), and, finally, to individualized strategies and accommodations that are necessary to support a specific child (tier 3). Children are not restricted to receiving supports at a specific service tier and may receive supports simultaneously at multiple tiers. Dynamic performance analysis (Polatajko et al., 2000) is used to observe the child in the classroom context and generate strategies to be trialled at any tier; the child’s response to intervention is then monitored and supports are adjusted as needed (Campbell et al., 2016). Occupational therapists (OTs) then share this knowledge with educators and families. P4C was originally developed to support children with motor coordination difficulties who were experiencing significant wait times for school-based occupational therapy services (Missiuna et al., 2012a,b). However, the model is relevant for the development of many other skills needed for successful engagement and participation at school (Missiuna et al., 2017).

P4C aims to support the entire school community to facilitate the meaningful participation of all children in their daily environments, including through supporting educators (Pollock et al., 2017). P4C “focuses on capacity building through collaboration and coaching in context” (Missiuna et al., 2012a,b, p. 43), harnessing opportunities for job-embedded learning to effect change in the classroom to support children with motor difficulties. Recognized as an effective way to build educators’ capacity (Coggshall et al., 2012), job-embedded learning takes place when educators ‘learn by doing’ throughout the workday, reflect on their current practices, and identify solutions or areas for improvement (Croft et al., 2010). In P4C, therapists collaborate with educators, problem-solving about possible strategies or changes to the environment. When a solution is found, knowledge exchange is emphasized such that therapists and educators discuss why that strategy was helpful and other circumstances when it could be used. Qualitative research has indicated that educators appreciate and respond in overwhelmingly positive ways to this collaborative knowledge sharing with occupational therapists (Wilson and Harris, 2018). Further, building educator capacities has been recommended as a key area of growth for school-based occupational therapy (Wehrmann et al., 2006). Indeed, findings from a recent scoping review by Meuser et al. (2023) highlighted that enhancing educator competencies and working collaboratively are key features of interventions that facilitate children’s classroom participation. However, measures are needed that align with this focus in occupational therapy practice to determine if educators perceive that their capacity and skills are increasing, and thus, that services are having the impact that is intended. Maciver et al. (2020) similarly recognized the need for a measure that not only could evaluate a child’s successful participation in the classroom, but that also reflected the importance of the classroom environment. Their development of the educator-completed School Participation Questionnaire reflects the need for instruments that better target the changed focus of school-based interventions and services.

Building capacity to support children with disabilities is recognized as a key active ingredient of tiered service delivery models (VanderKaay et al., 2021), including the one implemented in this study. Teacher self-report of their capacities to support children with all needs is linked to their attitudes towards and use of inclusive educational practices (Yada et al., 2022), and formal and informal capacity building is recommended as an essential element of inclusive educational practice (Wray et al., 2022). To our knowledge, there are no available, published outcome measures designed for assessing educators’ capacities to support children with motor difficulties. As a core ingredient of the P4C service delivery model, an outcome measure for this concept was needed. Consequently, we developed a self-report measure to address this need. In this study, we report an initial evaluation of a novel outcome measure designed to capture changes in educators’ knowledge and skills regarding supporting children with motor difficulties within daily classroom activities. We focus specifically on the structural validity and internal consistency of the scale, as these have been identified as key measurement scale properties by international consensus (Mokkink et al., 2010). We acknowledge that many other attributes of outcome measurements, psychometric and otherwise, are critical to consider when appraising a measure for research or for clinical practice (Hesketh and Sage, 1999; Mokkink et al., 2010). However, we have chosen this starting point because evaluating these measurement properties is a critical first step that lays the groundwork for future validation work. Indeed, other key psychometric properties seem unlikely to be robust if structural validity and internal consistency are lacking.

To summarize, the purpose of this study was to evaluate a novel measure for educator self-reported knowledge, skills, and capacities to support children with motor difficulties in the classroom. Specifically, the research objectives were as follows:

1. To assess the structural validity of the scale by modelling the underlying factor structure,

2. To evaluate the internal consistency of the total scale, as well as any subscales,

3. And to confirm the proposed factor structure by testing model fit against independent sample data.

2. Materials and methods

2.1. Study design

Using a quasi-experimental pre- to post-test research design, the P4C service delivery model was implemented and evaluated in 40 elementary schools over 2 years in Ontario, Canada (Missiuna et al., 2012a,b). In Ontario, elementary schools generally are inclusive of grades Kindergarten through Grade 8. Ethics approval was obtained from the Hamilton Integrated Research Ethics Board. The study also was approved by each school board and by the agencies who funded school-based occupational therapy services at the time. Parents or guardians were informed about the purpose of the study and provided written informed consent for their children to participate (see Missiuna et al., 2017). Educators who completed surveys were told the purpose of the study and that their survey data was being collected anonymously. Their informed consent was implied by their decision to complete and submit their survey.

2.2. Participants

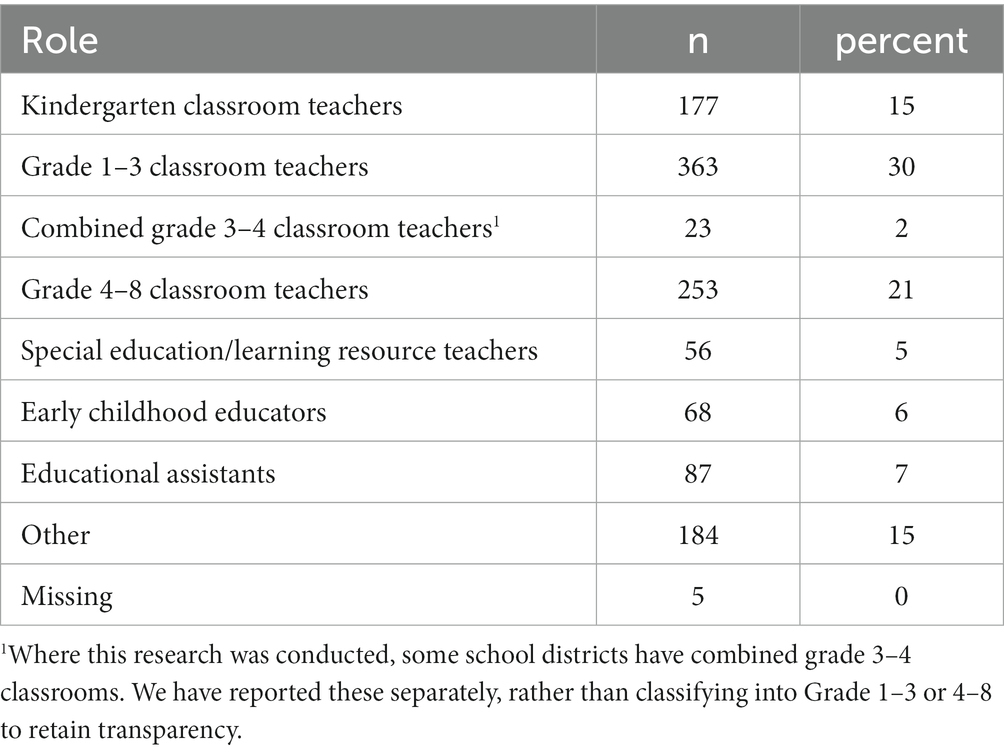

Educators at each of the 40 participating schools participated voluntarily by completing the P4C Educator Questionnaire (Missiuna et al., 2014), further described under measures. A total of 1,216 educators participated in the study. See Table 1 for a breakdown of their roles within their schools. A formal sample size calculation was not completed, as this analysis was not the primary goal of the overall research project. However, each time point sample met and far exceeded sample size guidelines for more complex models with latent factors (Wolf et al., 2013), which range from 30 to 460 observations depending on the features of the sample and model at hand, and so we judged the sample size to be sufficient.

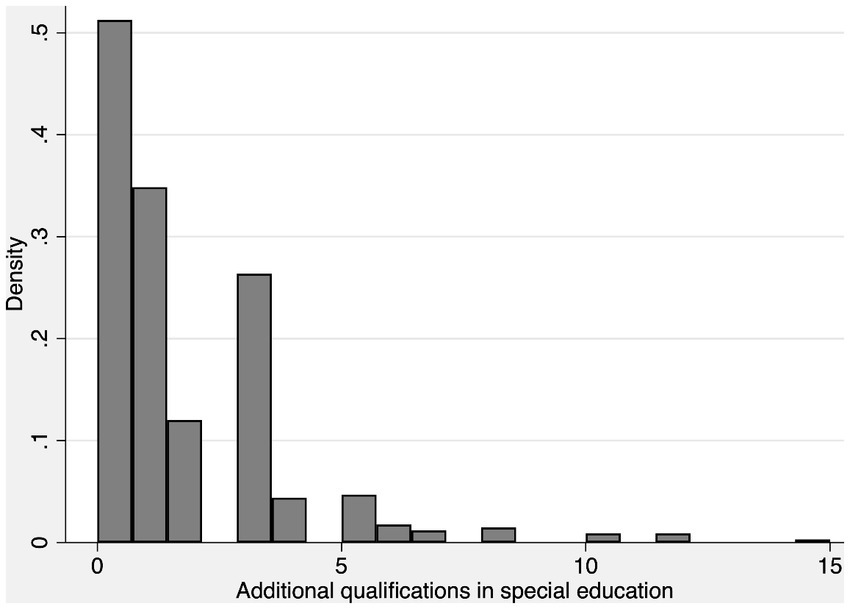

To describe the sample for each analysis, we calculated basic descriptive statistics using the demographic data available. Participants were only asked to provide information about their professional experiences, specifically, their years of experience in education and the number of additional qualifications in special education that they had completed. Due to skew in these distributions, we report the median and interquartile range (IQR). We also provide histograms to display the dispersion of demographic descriptors. The median years of experience was 10 years with an IQR of 9 years. For additional qualifications, the median was 1 qualification, and the IQR was 3. See Figures 1, 2 for visual representations of the distributions.

2.3. Procedures

From 2013 to 2015, the Ontario Ministries of Health and Long-Term Care and Education funded a study involving the delivery of P4C in 40 schools, located in three school boards, within two health care regions. Questionnaires from a prior demonstration project (Missiuna et al., 2012a,b) were reviewed by the multidisciplinary team, modified, and then used with educators as measures of capacity-building. While the focus at the beginning of this study was on providing the P4C service to children with developmental coordination disorder, the population was expanded, and services were provided to all children with motor difficulties.

As part of this project, we administered the P4C Educator Questionnaire at four time points, once at the beginning and end of each school year. Paper copies of the measure were given to the participating OTs to distribute to educators. Educators were in turn asked to complete them and return them to the OT. No personally identifying information was collected on the questionnaire. A total of 1,216 educators completed the questionnaire. Some educators completed the questionnaire more than one time (n = 327), although most educators completed it once (n = 877).

2.4. Measures

2.4.1. Measure development

The P4C Educator Questionnaire was developed over the course of several studies of the P4C service delivery model (Missiuna et al., 2012a,b). The questionnaire as evaluated in the present study was piloted and refined in a demonstration study (Missiuna et al., 2012a,b). Occupational therapists who provided P4C services were trained regarding best practices for supporting children with motor coordination disorders in schools (Pollock et al., 2017). This training included using the M.A.T.C.H. Framework (Missiuna et al., 2004) for building educator capacities for managing the needs of children with motor coordination difficulties (including children with developmental coordination disorder). The acronym stands for (1) modifying tasks, (2) altering expectations, (3) teaching strategies, (4) changing the environment, and (5) helping by understanding. In the demonstration study, the OTs used the M.A.T.C.H. Framework (Missiuna et al., 2004) in collaboration with 209 educational staff (including classroom teachers, special education teachers, and education assistants) to support students in 183 distinct classrooms (Missiuna et al., 2012a,b).

The research team consulted with OTs involved in the demonstration study to develop the questionnaire items, which reflected the occupational therapists’ use of the framework in their own collaboration with educators. Based on this consultation, the specific strategies and targets reported by the OTs were used to develop the P4C Educator Questionnaire. The measure was expanded and refined by members of the research team, incorporating members’ experience in school-based services for children with motor difficulties and input from the occupational therapists who first implemented the P4C model of service. This process yielded the version of the P4C Educator Questionnaire used in the present study. In this way, the measure was informed by, and is reflective of, but not directly derived from, the M.A.T.C.H. Framework (Missiuna et al., 2004).

2.4.2. Measure description

This version of the P4C Educator Questionnaire is a 19-item measure that uses a 5-point scale ranging from “Not at all confident” (anchored to 1) to “Very confident” (anchored to 5). The measure was positively scaled, with a higher score indicating greater self-reported knowledge and skills to support children with motor difficulties in the classroom. Educators were instructed to rate their knowledge and confidence regarding their understanding of the impact of motor coordination difficulties on classroom functioning, as well as their ability to differentiate instruction and provide appropriate accommodations for children with motor difficulties.

2.5. Data analysis

Study data were collected, entered, and managed using REDCap electronic data capture tools hosted at McMaster University (Harris et al., 2009). All statistical analyses were completed using Stata IC.16 (StataCorp, 2019).

2.5.1. Step 1: exploratory factor analysis

First, we performed a principal factor exploratory factor analysis with orthogonal rotation to investigate the underlying factor structure using data from Time 1 only. Exploratory factor analysis (EFA) is an important multivariate method used to understand how multiple observed measures reflect underlying, unobservable constructs of theoretical interest (Watkins, 2018). In this method, each observed measure is assumed to reflect one or more underlying factors of interest which cannot be directly measured (i.e., a latent factor), in combination with some degree of measurement error. When observed items vary together strongly and systematically, they will be grouped together on a shared latent factor (Boateng et al., 2018). By allowing each observed measure to be explained by one or more latent factors plus measurement error, an EFA can help “cut through” a larger number of items to demonstrate the smaller number of underlying constructs which are driving observed variation, and which are of greater interest for clinical and research inference than are individual items.

Data were inspected visually and by indicators of skew and kurtosis to evaluate whether an assumption of normality was justified. Sampling adequacy was determined using the Kaiser-Meyer-Olkin Test, following established interpretation guidelines (Kaiser, 1974). We used scree plots and parallel analysis to guide selection of the number of factors and required that items placed on a factor have a loading of at least 0.5 to be retained. These factor loadings indicate how much variation in the observed measure can be attributed to the latent factor, with numbers closer to 1 indicating a stronger relationship between the observed variable and the latent factor. Ideally, in an EFA of a well-constructed scale, all observed items will load heavily (here, defined as 0.5 or greater) onto a single underlying factor, while only trivially loading onto other factors (Watkins, 2018), indicating that the variation in the observed item is primarily driven by variation in a single underlying latent factor of interest. After selecting the number of factors to retain after inspection of the EFA results (with the assistance of factor loadings, scree plots, and parallel analysis), we divided the scale into subscales and then calculated descriptive statistics for each subscale, as well as the total questionnaire.
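The factor-retention step described above can be illustrated with a minimal sketch of parallel analysis. This is not the authors' Stata code: it assumes the common variant that compares eigenvalues of the observed correlation matrix against the 95th percentile of eigenvalues from random normal data of the same dimensions. The function names, the simulated three-factor data set, and all parameter values are hypothetical and chosen only for illustration.

```python
import numpy as np


def sorted_eigenvalues(data):
    """Eigenvalues of the item correlation matrix, largest first."""
    corr = np.corrcoef(data, rowvar=False)
    return np.sort(np.linalg.eigvalsh(corr))[::-1]


def parallel_analysis(data, n_sims=200, percentile=95, seed=0):
    """Count leading factors whose eigenvalues exceed those of random data.

    Assumption: eigenvalues come from the unreduced correlation matrix,
    which is one common variant and may differ from the study's software.
    """
    rng = np.random.default_rng(seed)
    n, p = data.shape
    observed = sorted_eigenvalues(data)
    simulated = np.empty((n_sims, p))
    for s in range(n_sims):
        simulated[s] = sorted_eigenvalues(rng.standard_normal((n, p)))
    threshold = np.percentile(simulated, percentile, axis=0)
    exceeds = observed > threshold
    # Retain factors up to (not including) the first one that fails.
    n_factors = p if exceeds.all() else int(np.argmin(exceeds))
    return n_factors, observed, threshold


def simulate_three_factor_data(n=500, seed=1):
    """Hypothetical data: 9 items, 3 uncorrelated factors, loadings of 0.8."""
    rng = np.random.default_rng(seed)
    factors = rng.standard_normal((n, 3))
    loadings = np.kron(np.eye(3), np.full((1, 3), 0.8))  # shape (3, 9)
    unique = rng.standard_normal((n, 9)) * np.sqrt(1 - 0.8 ** 2)
    return factors @ loadings + unique


if __name__ == "__main__":
    n_factors, observed, threshold = parallel_analysis(simulate_three_factor_data())
    print("factors retained:", n_factors)
```

In practice the result would be read alongside the scree plot and the 0.5 loading criterion, as described above, rather than used as the sole decision rule.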

2.5.2. Step 2: estimates of internal consistency

Internal consistency is a psychometric property related to how closely items correlate independent of the number of items (Tang et al., 2014), or in other words, how well items vary together, consistently representing a single construct. Traditionally, a strict binary approach to internal consistency has been used, relying on cut-off values of Cronbach’s α (Spiliotopoulou, 2009). However, this approach is no longer considered best practice; instead, a combination of internal consistency indicators is recommended (Ponterotto and Ruckdeschel, 2007; Spiliotopoulou, 2009). This change reflects a recognition that numerous aspects of the data can influence estimates of Cronbach’s α (Spiliotopoulou, 2009). Importantly, it is easy to inflate internal consistency with a single indicator by increasing scale length (Ponterotto and Ruckdeschel, 2007). Consequently, careful interpretation of Cronbach’s α supplemented by interitem correlations has been recommended (Ponterotto and Ruckdeschel, 2007; Spiliotopoulou, 2009). We calculated Cronbach’s α and mean interitem correlation for each subscale as well as the total questionnaire. We used recommended guidelines for interpretation in psychometric research, targeting an α ≥ 0.80 and a mean interitem correlation between 0.40 and 0.50 given our sample size, items per scale, and conceptual narrowness of the constructs (Ponterotto and Ruckdeschel, 2007).
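As an illustration of the two indicators named above, the following sketch computes Cronbach’s α and the mean interitem correlation from an item-response matrix. It also includes the standardized α formula, which shows why α rises with scale length even when the mean interitem correlation stays fixed. The function names and the example values (a mean correlation of 0.45 with 3 versus 17 items) are assumptions for illustration, not results from this study.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, n_items) array."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_variances = items.var(axis=0, ddof=1)
    total_variance = items.sum(axis=1).var(ddof=1)
    return (k / (k - 1)) * (1 - item_variances.sum() / total_variance)


def mean_interitem_correlation(items):
    """Mean of the off-diagonal item correlations."""
    corr = np.corrcoef(np.asarray(items, dtype=float), rowvar=False)
    upper = corr[np.triu_indices_from(corr, k=1)]
    return upper.mean()


def standardized_alpha(n_items, mean_r):
    """Standardized alpha implied by a scale length and a mean correlation."""
    return n_items * mean_r / (1 + (n_items - 1) * mean_r)


if __name__ == "__main__":
    # Hypothetical comparison: same mean correlation, different scale lengths.
    for k in (3, 17):
        print(k, "items:", round(standardized_alpha(k, 0.45), 3))
```

Holding the mean interitem correlation constant, the longer scale yields a noticeably higher α, which is why α is interpreted here together with the mean interitem correlation rather than alone.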

2.5.3. Step 3: confirmatory factor analysis

We then performed a confirmatory factor analysis using the SEM command in Stata IC.16, modelling the factor structure derived from the EFA. CFA models are a preferred standard in testing many aspects of scale construction because they offer the ability to test model hypotheses (Jackson et al., 2009). Consistent with recommendations for scale development (Boateng et al., 2018), we used this technique to confirm that the model derived from the EFA was not idiosyncratic to our sample, and that the proposed factor structure showed evidence of generalizability. Using this technique, researchers propose an underlying model (whether derived from a prior EFA or existing theory) and can test the hypothesized model against the data, with various fit indices used as indicators of whether data from independent samples falsify the hypothesized model.

We drew on data from a group of educators who had not provided a Time 1 questionnaire; therefore, they provided a unique data set allowing us to test the factor structure beyond the original EFA sample. We considered modification indices in the case of a lack of absolute or relative fit, using the root mean squared error of approximation (RMSEA) and the standardized root mean squared residual (SRMR) for the former and the comparative fit index (CFI) and Tucker-Lewis index (TLI) for the latter. Using these fit indices, the hypothesized model can be tested against the data. Following recommended guidelines (Schreiber et al., 2006), we targeted an RMSEA with a point estimate < 0.06, an SRMR ≤ 0.08, and a CFI and TLI ≥ 0.95, and required at least one measure of absolute and relative fit each to meet these targets before accepting the model. In the case of disagreement between the measures of absolute fit, we deferred to the SRMR, as this index outperforms the RMSEA for factor analysis using ordinal scales with large samples (Shi et al., 2020). Although complex in their interpretation, not meeting these suggested values for fit indices indicates that the hypothesized model does not match the observed patterns in the data.
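The acceptance rule described above can be written out as a short sketch. This is one reading of the stated rule rather than the authors' code: the class and function names are hypothetical, and the tie-breaking logic encodes the decision to defer to the SRMR when the two absolute fit indices disagree. The two example inputs use the fit values reported for the initial and revised models in the Results section.

```python
from dataclasses import dataclass


@dataclass
class FitIndices:
    rmsea: float
    srmr: float
    cfi: float
    tli: float


def evaluate_fit(fit: FitIndices) -> bool:
    """Apply the stated targets: RMSEA < 0.06, SRMR <= 0.08, CFI/TLI >= 0.95."""
    rmsea_ok = fit.rmsea < 0.06
    srmr_ok = fit.srmr <= 0.08
    # Absolute fit: if RMSEA and SRMR disagree, defer to the SRMR;
    # if they agree, their shared verdict is used.
    absolute_ok = srmr_ok if rmsea_ok != srmr_ok else rmsea_ok
    # Relative fit: at least one of CFI and TLI must meet its target.
    relative_ok = fit.cfi >= 0.95 or fit.tli >= 0.95
    return absolute_ok and relative_ok


# Fit values as reported in the Results section of this paper.
initial_model = FitIndices(rmsea=0.12, srmr=0.05, cfi=0.88, tli=0.86)
revised_model = FitIndices(rmsea=0.07, srmr=0.04, cfi=0.96, tli=0.95)

if __name__ == "__main__":
    print("initial model accepted:", evaluate_fit(initial_model))
    print("revised model accepted:", evaluate_fit(revised_model))
```

Under this reading, the initial model is rejected because neither relative fit index meets its target, while the revised model is accepted on the SRMR together with the CFI and TLI.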

If a model does not perform well on fit indices, it is sometimes possible to introduce minor modifications into the model to achieve fit, where the model is no longer falsified by the data; however, it is important to strictly constrain any modifications to prevent “fishing” for an adequately fitting model that is not linked to appropriate theoretical frameworks. Modifications were only considered if they had a clear theoretical or substantive rationale, while maximizing model parsimony. To select the final model, we required that the model meet these requirements, and that any modifications to the model improve the Bayesian and Akaike’s Information Criteria, which are indicators that penalize the addition of unnecessary model complexity. In other words, although we modified the model so that it could better represent the independent sample data, these modifications were carefully restricted to ensure that all changes were theoretically justified, while simultaneously retaining the simplest possible model which was not falsified by the data.
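To make the information-criteria requirement concrete, the sketch below computes Akaike’s and the Bayesian Information Criterion from a model log-likelihood and checks whether a modified model improves both. The formulas are the standard definitions; the function names, log-likelihoods, and parameter counts in the example are hypothetical placeholders, since the paper does not report those quantities. Only the sample size of 688 is taken from the study.

```python
import math


def aic(log_likelihood, n_params):
    """Akaike's Information Criterion: lower is better."""
    return 2 * n_params - 2 * log_likelihood


def bic(log_likelihood, n_params, n_obs):
    """Bayesian Information Criterion: lower is better."""
    return n_params * math.log(n_obs) - 2 * log_likelihood


def modification_improves(base, modified, n_obs):
    """Return True only if the modified model lowers both AIC and BIC.

    `base` and `modified` are (log_likelihood, n_params) tuples.
    """
    base_ll, base_k = base
    mod_ll, mod_k = modified
    aic_better = aic(mod_ll, mod_k) < aic(base_ll, base_k)
    bic_better = bic(mod_ll, mod_k, n_obs) < bic(base_ll, base_k, n_obs)
    return aic_better and bic_better


if __name__ == "__main__":
    # Hypothetical values: three added residual correlations (3 extra parameters).
    base_model = (-12000.0, 54)
    modified_model = (-11800.0, 57)
    print(modification_improves(base_model, modified_model, n_obs=688))
```

Because both criteria add a penalty per estimated parameter, a modification that barely changes the log-likelihood fails this check even though it would nominally improve raw fit.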

3. Results

3.1. Step 1: exploratory factor analysis

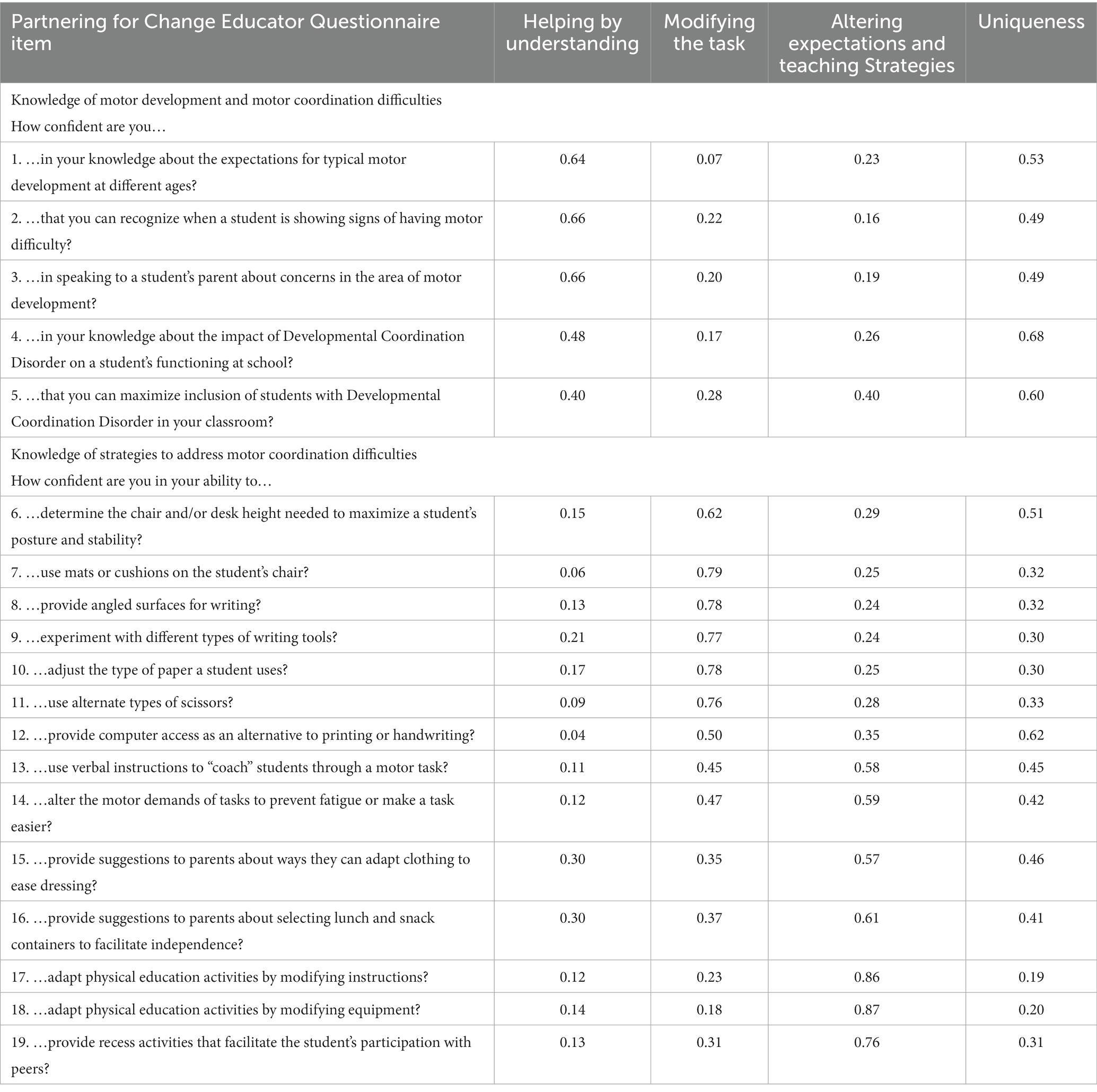

To assess the underlying structure of this novel outcome measure, we performed the exploratory factor analysis as planned. Visual inspection of scale items and scale totals, as well as estimates of kurtosis and skew, were consistent with normality assumptions. The Kaiser-Meyer-Olkin measure of sampling adequacy was 0.92, or “marvelous” according to interpretation guidelines (Kaiser, 1974; Watkins, 2018). The analysis included 436 participants, with 41 participants having been removed due to missing items (9% missingness for this sample). Following inspection of the scree plot and the parallel analysis results, we retained three factors in the model. All but two items loaded well (factor loading ≥0.50) onto a single factor, and the results were not consistent with any cross loading of items. Two items did not load onto any factor, and so these items were removed from the scale in subsequent analyses. See Table 2 for item factor loadings and communalities, including those for excluded items. Our proposed three-factor model accounted for 96% of the observed variance after rotation.

Table 2. Factor loadings and communalities from the exploratory factor analysis of items from the Partnering for Change Educator Questionnaire (n = 436).

3.2. Step 2: estimates of internal consistency

To evaluate internal consistency, we calculated Cronbach’s α and average interitem correlations for each subscale, as well as the complete scale. See Table 3 for these estimates, along with descriptive statistics for each subscale. The mean interitem correlations for each subscale exceeded the recommended targets. Additionally, α was low for the first subscale, although this is likely an artefact of the scale length (3 items), as both excluded items had originally been included in this subscale. However, the overall scale met established targets for internal consistency.

Table 3. Descriptive statistics for the Partnering for Change Educator Questionnaire (subscales and questionnaire total).

3.3. Step 3: confirmatory factor analysis

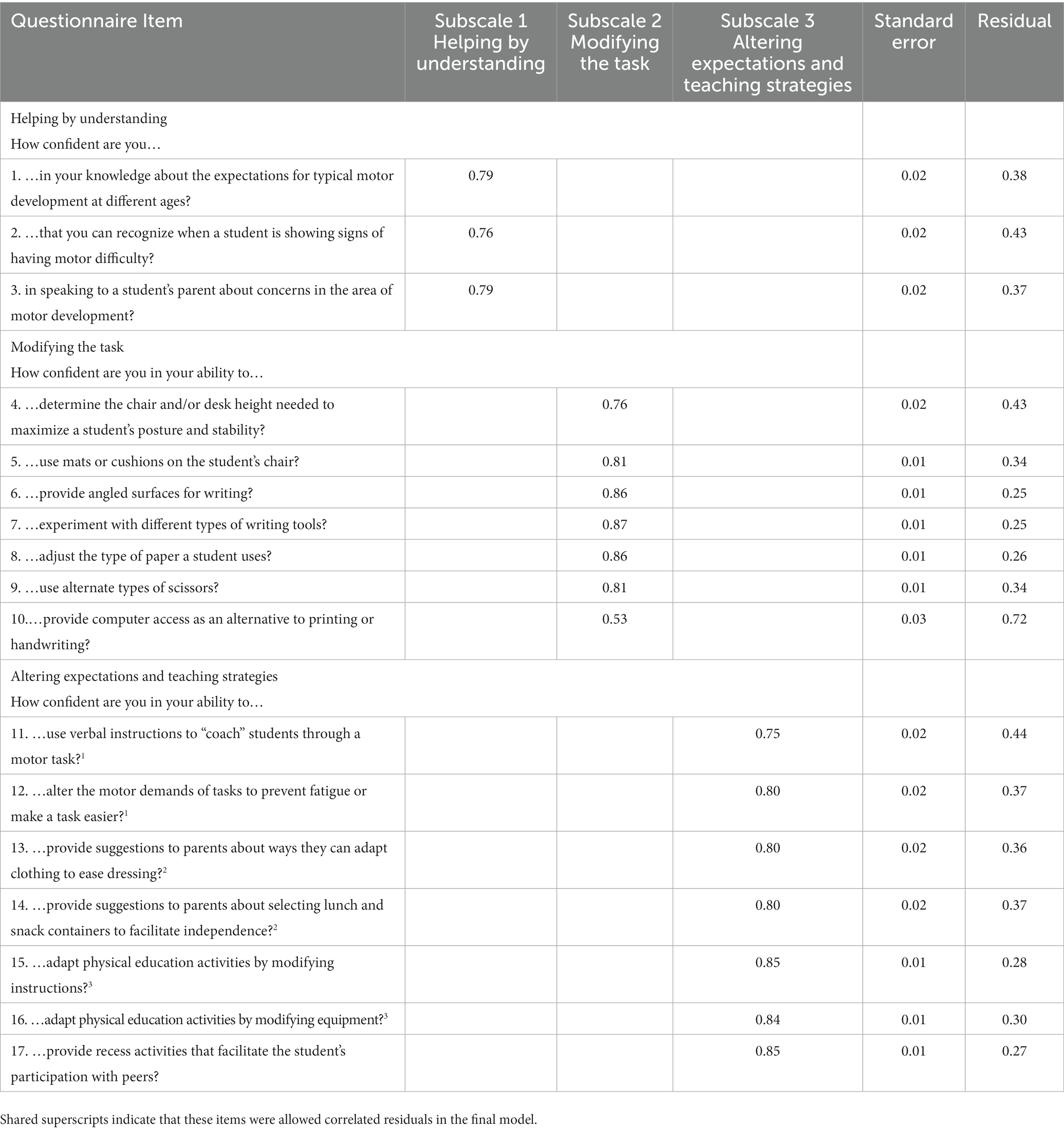

To test the structural validity of the scale and its underlying factor structure, we performed a confirmatory factor analysis with an independent sample of P4C Educator Questionnaire data using the model derived from the EFA. In this model, each item was loaded only onto a single factor and the scale was reduced to 17 items through the removal of two items which did not load onto any factor in the EFA. A sample of 733 P4C Educator Questionnaire administrations was available for the CFA; 45 were excluded due to missing items (6% missingness), yielding a final sample size of 688. We judged this to be a low level of missingness and were unable to discern any pattern to missing data, and so used maximum likelihood estimation. The RMSEA = 0.12 (90% confidence interval, 0.11–0.13), CFI = 0.88, and TLI = 0.86 indicated lack of fit, although the SRMR = 0.05 was adequate. We then revised this model following consideration of the modification indices, allowing error terms to be correlated in cases where there was a close and clear conceptual relation between items. Specifically, we allowed items 11 and 12, 13 and 14, and 15 and 16 to have correlated residuals (these items are labelled with matching superscripts in Table 4). When we re-estimated the model, the SRMR = 0.04, CFI = 0.96, and TLI = 0.95 indicated that our model specifications fit the data, although the RMSEA = 0.07 (90% confidence interval, 0.07–0.08) continued to exceed the recommended value. However, given that SRMR outperforms the RMSEA when sample size is large and observed variables are ordinal data (Shi et al., 2020), and that both measures of comparative fit agreed with the SRMR, we deferred to the SRMR for absolute fit. Finally, both information criteria improved non-trivially, and our modifications did not introduce substantive challenges to parsimony, and so we chose to accept this as the final model. The results of this CFA estimation (standardized factor loadings, standard errors, and residuals) can be found in Table 4.

Table 4. Factor loading results from confirmatory factor analysis (n = 688) for Partnering for Change Educator Questionnaire.

4. Discussion

In this study, we used a series of analyses to evaluate the construction of a novel outcome measure targeting self-reported educator capacity to support children with motor difficulties in the classroom. The results of this evaluation are promising, notwithstanding the need for further revisions and improvements.

4.1. Exploratory factor analysis

In the EFA, a three-factor structure without cross loadings was evident, indicating that responses to each question were primarily driven by one underlying construct (plus measurement error). We noted that the items for each latent factor corresponded well to the M.A.T.C.H. Framework (Missiuna et al., 2004), although changing the environment was not represented by any factor, and altering expectations and teaching strategies were represented by a single latent factor. As the OTs had originally been trained to use this framework, we considered the emergence of factors related to the framework domains to be appropriate and plausible. These results are consistent with a useful and interpretable underlying structure to the questionnaire, while leaving open the possibility for conceptual expansion in subsequent versions. Therefore, we judged this factor structure appropriate to justify the three subscales (one per factor) in the current version of the outcome measure and have named each subscale after the appropriate sections of the M.A.T.C.H. Framework (Missiuna et al., 2004).

4.2. Estimates of internal consistency

In the analysis of internal consistency, the total questionnaire performed well, while there were challenges with each of the three subscales. Specifically, the mean interitem correlations were higher than the ideal targets, although not outside an acceptable range (Ponterotto and Ruckdeschel, 2007). In other words, these subscales overperformed, with items correlating closely with all other items. These results suggest that the questions within each subscale may be overly homogeneous and narrow, allowing for additional diversification within subscales in future revisions. For example, it may be possible to include less commonly addressed task modifications to increase the breadth of the instrument while maintaining appropriate internal consistency. However, the overall measure performed well within the pre-established ideal target range, strengthening the argument for use of the entire scale.

4.3. Confirmatory factor analysis

In the CFA, we tested and confirmed the three-factor structure identified by the EFA by testing the hypothesized latent model against independent sample data. We were able to achieve appropriate fit with minor modifications that were conceptually justified and did not pose significant threats to model parsimony. We allowed three pairs of items (six items in total) to have correlated residuals (error terms), indicating that these questions had extremely similar response patterns (i.e., educators responded similarly to each pair of questions). These allowed residual correlations, like the high mean interitem correlations, suggest the possibility of further item revision and differentiation. Nevertheless, we were able to construct and test a latent conceptual model of the scale that corresponded closely with the framework for occupational therapist-educator capacity building (Missiuna et al., 2004) used to train OTs working within the P4C model of school-based service delivery, further strengthening our confidence in scale construction and score interpretation.

4.4. Integrated findings

Synthesizing our results, we affirm that the P4C Educator Questionnaire appears highly promising as an outcome measure of educator capacities. While we have highlighted potential improvements, we argue that such an outcome measure is useful and timely given the importance of collaboration between health professionals and educators (Villeneuve, 2009; Anaby et al., 2019) and the significance of teacher self-assessment of capacities to inclusive education (Wray et al., 2022; Yada et al., 2022). This measure addresses an important area of growth for school-based OT services (Wehrmann et al., 2006). Such a measure has great utility for service models that include educator capacity building as an essential component, including, but not limited to, P4C. Additionally, this outcome measure, combined with other appropriate measures, facilitates the empirical testing of theories of service organization, which already posit educator knowledge and skills as an important outcome contributing to capacity building within the service system (VanderKaay et al., 2021). Such empirical studies would provide relevant evidence for occupational therapists on which to base decision making around service organization and delivery. Acknowledging the limitations of this measure, we currently recommend that the scale be used in its entirety, unless the choice of a subscale is strongly motivated by an appropriate theoretical framework. A complete, copyrighted reproduction of this measure is available as Supplementary material to this manuscript.

4.5. Limitations

At this point, we must be cognizant of the substantial limitations of this work. First, additional research is needed to validate the use of this measure to support specific clinical inferences, such as estimating the increase in scores necessary to detect minimally clinically important change in capacities (Streiner et al., 2015), as well as to evaluate its performance on other key psychometric properties not addressed within the current study, such as responsiveness and test–retest reliability (Mokkink et al., 2010). Further, the sample of participants was partially self-selected, and so it is possible that we would have observed different results had we used a different sampling strategy. We also took an exploratory-confirmatory approach to the current study, rather than building the entire conceptual framework a priori and immediately testing it against the empirical data. We have not yet completed an invariance analysis, nor have we examined differential item functioning to determine how the scale results may differ based on participant characteristics, limiting our ability to understand the measure’s implications for equitable services. This is an important area for future work. Finally, all data collection took place within an implementation project for a specific service delivery model in a particular jurisdiction; thus, we cannot preclude the possibility that, in other contexts, the measure would yield different results.

5. Conclusion

In this study, we reported a preliminary investigation into the measurement properties of the P4C Educator Questionnaire, a novel outcome measure of educator capacity to support children with motor difficulties in their daily academic and social environments. Overall, the measure performed well, demonstrating a parsimonious and interpretable factor structure, confirmed against data from independent samples, and with good internal consistency at the whole scale level. The scale, given its current construction, reflects underlying variation in educator capacities to help students by understanding motor difficulties, modifying classroom tasks and activities, and altering expectations and teaching strategies to better support students with motor difficulties. We believe that such an outcome measure is important and timely as school-based services transition towards collaborative, capacity-building models of school-based supports, and could serve as a template for the development of additional measures of educator capacity to support students with other challenges, such as communication or behavioral challenges. We recommend further scale revisions and improvements, coupled with additional research evaluating the validity of this outcome measure to support specific clinical inferences, as there was sufficient evidence for structural validity and internal consistency in the tested version of the Partnering for Change Educator Questionnaire.

Data availability statement

The dataset analyzed for this study can be found online via the Open Science Framework: https://osf.io/c9tpq/?view_only=bec5271526794ea28d528d199a090165.

Ethics statement

The studies involving humans were approved by Hamilton Integrated Research Ethics Board. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants’ legal guardians/next of kin because educators participating in this study completed an anonymous survey. Consent was implied by their decision to fill out and submit the survey.

Author contributions

CM, WC, LD, and CD conceptualized and designed the study. PC designed and performed all statistical analyses. PC and WC prepared the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This study was supported by the Ontario Ministries of Health and Long-Term Care and Education (grant number HLTC2972FL-2012-324). CM and WC also acknowledge the support of the John and Margaret Lillie Chair in Childhood Disability Research.

Acknowledgments

We acknowledge Nancy Pollock, John Cairney, and Sheila Bennett for their contributions to the questionnaire construction and study conceptualization. We also thank Scott Veldhuizen for his statistical consultation services. The authors are grateful to the Ontario Ministries of Health and Long-Term Care and Education for funding this research, to the Central West and Hamilton Niagara Haldimand Brant Community Care Access Centres who provided occupational therapy services through School Health Support Services, and to the partners and stakeholders who provided leadership and contributed to the research activities. The authors would also like to thank the children, families, educators and school communities, occupational therapists, and team members who contributed to this study.

Conflict of interest

All authors have been involved in the development and/or evaluation of the Partnering for Change service delivery model.

The authors declare that this research was conducted in the absence of any financial relationships that could be construed as potential conflicts of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2023.1174097/full#supplementary-material

References

Anaby, D. R., Campbell, W. N., Missiuna, C., Shaw, S. R., Bennett, S., Khan, S., et al. (2019). Recommended practices to organize and deliver school-based services for children with disabilities: a scoping review. Child Care Health Dev. 45, 15–27. doi: 10.1111/cch.12621

Bayona, C. L., McDougall, J., Tucker, M. A., Nichols, M., and Mandich, A. (2006). School-based occupational therapy for children with fine motor difficulties: evaluating functional outcomes and fidelity of services. Phys. Occup. Therapy Pediat. 26, 89–110. doi: 10.1300/J006v26n03_07

Boateng, G. O., Neilands, T. B., Frongillo, E. A., Melgar-Quiñonez, H. R., and Young, S. L. (2018). Best practices for developing and validating scales for health, social, and behavioral research: a primer. Front. Public Health 6, 1–18. doi: 10.3389/fpubh.2018.00149

Bonnard, M., and Anaby, D. (2016). Enabling participation of students through school-based occupational therapy services: towards a broader scope of practice. Br. J. Occup. Ther. 79, 188–192. doi: 10.1177/0308022615612807

Cahill, S. M., McGuire, B., Krumdick, N. D., and Lee, M. M. (2014). National survey of occupational therapy practitioners’ involvement in response to intervention. Am. J. Occup. Ther. 68, e234–e240. doi: 10.5014/ajot.2014.010116

Campbell, W. N., Kennedy, J., Pollock, N., and Missiuna, C. (2016). Screening children through response to intervention and dynamic performance analysis: the example of partnering for change. Curr. Dev. Disord. Rep. 3, 200–205. doi: 10.1007/s40474-016-0094-6

Chu, S. (2017). Supporting children with special educational needs (SEN): an introduction to a 3-tiered school-based occupational therapy model of service delivery in the United Kingdom. World Federation of Occupational Therapists Bulletin. 73, 107–116. doi: 10.1080/14473828.2017.1349235

Coggshall, J. G., Rasmussen, C., Colton, A., Milton, J., and Jacques, C. (2012). Generating teaching effectiveness: The role of job-embedded professional learning in teacher evaluation. National Comprehensive Center for Teacher Quality. Available at: https://gtlcenter.org/sites/default/files/docs/GeneratingTeachingEffectiveness.pdf (Accessed November 4, 2021).

Croft, A., Coggshall, J. G., Dolan, M., and Powers, E. (2010). Job-embedded professional development: What it is, who is responsible, and how to get it done well. National Comprehensive Center for Teacher Quality. Available at: https://gtlcenter.org/sites/default/files/docs/JEPDIssueBrief.pdf (Accessed November 4, 2021).

Grosche, M., and Volpe, R. J. (2013). Response-to-intervention (RTI) as a model to facilitate inclusion for students with learning and behaviour problems. Eur. J. Spec. Needs Educ. 28, 254–269. doi: 10.1080/08856257.2013.768452

Harris, P. A., Taylor, R., Thielke, R., Payne, J., Gonzalez, N., and Conde, J. G. (2009). Research electronic data capture (REDCap)-a metadata-driven methodology and workflow process for providing translational research informatics support. J. Biomed. Inform. 42, 377–381. doi: 10.1016/j.jbi.2008.08.010

Hesketh, A., and Sage, K. (1999). For better, for worse: outcome measurement in speech and language therapy. Adv. Speech-Lang. Pathol. 1, 37–45. doi: 10.3109/14417049909167152

Hutton, E., Tuppeny, S., and Hasselbusch, A. (2016). Making a case for universal and targeted children’s occupational therapy in the United Kingdom. Br. J. Occup. Ther. 79, 450–453. doi: 10.1177/0308022615618218

Jackson, D. L., Gillaspy, J. A., and Purc-Stephenson, R. (2009). Reporting practices in confirmatory factor analysis: an overview and some recommendations. Psychol. Methods 14, 6–23. doi: 10.1037/a0014694

Jimerson, S. R., Burns, M. K., and VanDerHeyden, A. M. (2016). “From response to intervention to multi-tiered Systems of Support: advances in the science and practice of assessment and intervention” in Handbook of response to intervention: The science and practice of multi-tiered systems of support. eds. S. R. Jimerson, M. K. Burns, and A. M. VanDerHeyden. 2nd ed (Springer Science+Business Media: New York, NY), 1–6.

Kaelin, V. C., Ray-Kaeser, S., Moioli, S., Kocher Stalder, C., Santinelli, L., Echsel, A., et al. (2019). Occupational therapy practice in mainstream schools: results from an online survey in Switzerland. Occup. Ther. Int. 2019, 1–9. doi: 10.1155/2019/3647397

Kaiser, H. F. (1974). An index of factorial simplicity. Psychometrika 39, 31–36. doi: 10.1007/BF02291575

Maciver, D., Tyagi, V., Kramer, J. M., Richmond, J., Todorova, L., Romero-Ayuso, D., et al. (2020). Development, psychometrics and feasibility of the school participation questionnaire: a teacher measure of participation-related constructs. Res. Dev. Disabil. 106:103766. doi: 10.1016/j.ridd.2020.103766

McIntosh, K., MacKay, L. D., Andreou, T., Brown, J. A., Mathews, S., Gietz, C., et al. (2011). Response to intervention in Canada: definitions, the evidence base, and future directions. Can. J. Sch. Psychol. 26, 18–43. doi: 10.1177/0829573511400857

Meuser, S., Piskur, B., Hennissen, P., and Dolmans, D. (2023). Targeting the school environment to enable participation: a scoping review. Scand. J. Occup. Ther. 30, 298–310. doi: 10.1080/11038128.2022.2124190

Missiuna, C. A., Pollock, N., Campbell, W. N., Bennett, S., Hecimovich, C., Gaines, R., et al. (2012b). Use of the Medical Research Council framework to develop a complex intervention in pediatric occupational therapy: assessing feasibility. Res. Dev. Disabil. 33, 1443–1452. doi: 10.1016/j.ridd.2012.03.018

Missiuna, C., Pollock, N., Campbell, W., DeCola, C., Hecimovich, C., Sahagian Whalen, S., et al. (2017). Using an innovative model of service delivery to identify children who are struggling in school. Br. J. Occup. Ther. 80, 145–154. doi: 10.1177/0308022616679852

Missiuna, C. A., Pollock, N. A., Levac, D. E., Campbell, W. N., Whalen, S. D. S., Bennett, S. M., et al. (2012a). Partnering for change: an innovative school-based occupational therapy service delivery model for children with developmental coordination disorder. Can. J. Occup. Ther. 79, 41–50. doi: 10.2182/cjot.2012.79.1.6

Missiuna, C. A., Rivard, L., and Pollock, N. (2004). They’re bright but can’t write: developmental coordination disorder in school aged children. TEACHING Excep. Child. Plus 1:3.

Missiuna, C. A., Pollock, N., Campbell, W., Bennett, S., and Levac, D. (2014). P4C educator questionnaire.

Mokkink, L. B., Terwee, C. B., Patrick, D. L., Alonso, J., Stratford, P. W., Knol, D. L., et al. (2010). The COSMIN study reached international consensus on taxonomy, terminology, and definitions of measurement properties for health-related patient-reported outcomes. J. Clin. Epidemiol. 63, 737–745. doi: 10.1016/j.jclinepi.2010.02.006

Polatajko, H. J., Mandich, A., and Martini, R. (2000). Dynamic performance analysis: a framework for understanding occupational performance. Am. J. Occup. Ther. 54, 65–72. doi: 10.5014/ajot.54.1.65

Pollock, N. A., Dix, L., Whalen, S. S., Campbell, W. N., and Missiuna, C. A. (2017). Supporting occupational therapists implementing a capacity-building model in schools. Can. J. Occup. Ther. 84, 242–252. doi: 10.1177/0008417417709483

Ponterotto, J. G., and Ruckdeschel, D. E. (2007). An overview of coefficient alpha and a reliability matrix for estimating adequacy of internal consistency coefficients with psychological research measures. Percept. Mot. Skills 105, 997–1014. doi: 10.2466/pms.105.3.997-1014

Schreiber, J. B., Nora, A., Stage, F. K., Barlow, E. A., and King, J. (2006). Reporting structural equation modeling and confirmatory factor analysis results: a review. J. Educ. Res. 99, 323–338. doi: 10.3200/JOER.99.6.323-338

Shi, D., Maydeu-Olivares, A., and Rosseel, Y. (2020). Assessing fit in ordinal factor analysis models: SRMR vs. RMSEA. Struct. Equ. Model. 27, 1–15. doi: 10.1080/10705511.2019.1611434

Spiliotopoulou, G. (2009). Reliability reconsidered: Cronbach’s alpha and paediatric assessment in occupational therapy. Aust. Occup. Ther. J. 56, 150–155. doi: 10.1111/j.1440-1630.2009.00785.x

Streiner, D. L., Norman, G. R., and Cairney, J. (2015). Health measurement scales: A practical guide to their development and use. 5th edn. Oxford, UK: Oxford University Press.

Tang, W., Cui, Y., and Babenko, O. (2014). Internal consistency: do we really know what it is and how to assess it? J. Psychol. Behav. Sci. 2, 205–220.

Tomas, V., Cross, A., and Campbell, W. N. (2018). Building bridges between education and health care in Canada: how the ICF and universal Design for Learning Frameworks mutually support inclusion of children with special needs in school settings. Front. Educ. 3:18. doi: 10.3389/feduc.2018.00018

VanderKaay, S., Dix, L., Rivard, L., Missiuna, C., Ng, S., Pollock, N., et al. (2021). Tiered approaches to rehabilitation services in education settings: towards developing an explanatory programme theory. Int. J. Disability Dev. Educ. 70, 540–561. doi: 10.1080/1034912X.2021.1895975

Villeneuve, M. (2009). A critical examination of school-based occupational therapy collaborative consultation. Can. J. Occup. Ther. 76, 206–218. doi: 10.4324/9781003233671-10

Watkins, M. W. (2018). Exploratory factor analysis: a guide to best practice. J. Black Psychol. 44, 219–246. doi: 10.1177/0095798418771807

Wehrmann, S., Chiu, T., Reid, D., and Sinclair, G. (2006). Evaluation of occupational therapy school-based consultation service for students with fine motor difficulties. Can. J. Occup. Ther. 73, 225–235. doi: 10.2182/cjot.05.0016

Wilson, A. L., and Harris, S. R. (2018). Collaborative occupational therapy: teachers’ impressions of the partnering for change (P4C) model. Phys. Occup. Therapy Pediat. 38, 130–142. doi: 10.1080/01942638.2017.1297988

Wolf, E. J., Harrington, K. M., Clark, S. L., and Miller, M. W. (2013). Sample size requirements for structural equation models: an evaluation of power, bias, and solution propriety. Educ. Psychol. Meas. 73, 913–934. doi: 10.1177/0013164413495237

Wray, E., Sharma, U., and Subban, P. (2022). Factors influencing teacher self-efficacy for inclusive education: a systematic literature review. Teach. Teach. Educ. 117:103800. doi: 10.1016/j.tate.2022.103800

Keywords: Partnering for Change, occupational therapy, structural validity, internal consistency, motor disorders, capacity building, children, schools

Citation: Cahill PT, Missiuna CA, DeCola C, Dix L and Campbell WN (2023) Structural validity and internal consistency of an outcome measure to assess self-reported educator capacity to support children with motor difficulties. Front. Educ. 8:1174097. doi: 10.3389/feduc.2023.1174097

Edited by:

Raman Grover, Consultant, Vancouver, Canada

Reviewed by:

Francine Seruya, Mercy College, United States

Amayra Tannoubi, University of Sfax, Tunisia

Copyright © 2023 Cahill, Missiuna, DeCola, Dix and Campbell. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Wenonah N. Campbell, campbelw@mcmaster.ca
