- ¹Faculty for Physics, Ludwig-Maximilians-Universität München, München, Germany

- ²Institute of Physics (IFP), Didactics of Physics, Otto-von-Guericke-Universität, Magdeburg, Germany

The ability to direct pupils’ attention to relevant information during the experimental process is important for all science teachers. The aim of this article is to investigate the effects of training the ability of prospective physics teachers to direct attention during the presentation of experiments with eye tracking visualizations of pupils’ visual attention as a feedback tool. Many eye tracking studies in the field of learning use eye movement recordings to investigate the effectiveness of an instructional design by varying cues or the presentation format. Another important line of research studies the teacher’s gaze in a real classroom setting (mobile eye tracking). Here we use eye tracking in a new way: eye tracking serves as a feedback tool for prospective teachers, showing them the effects of their verbal moderations when trying to direct their pupils’ attention. The study is based on a mixed methods approach and is designed as a single factor quasi-experiment with pre-post measurement. Pre- and post-test are identical. Prospective teachers record their verbal moderations on a “silent” experimental video. The quality of the moderation is rated by several independent physics educators. In addition, pupils’ eye movements while watching the videos are recorded using eye tracking. The resulting eye movements are used by the lecturer to give individual feedback to the prospective teachers, focusing on the ability to control attention in class. The effect of this eye tracking feedback on the prospective teachers is recorded in interviews. Between the pre-test and the post-test, the results show a significant improvement in the quality of the moderations of the videos. The results of the interviews show that the reason for this improvement is the perception of one’s own impact on the pupils’ attention through eye tracking feedback.
The overall training program of moderating “silent videos” including eye tracking as a feedback tool allows for targeted training of the verbal guidance of the pupils’ attention during the presentation of experiments.

1. Introduction

1.1. Directing attention

1.1.1. Learning and the role of paying attention

The Cognitive Theory of Multimedia Learning (CTML) is based on three assumptions that are known as the cognitive principles of learning. The first principle is that multimedia learning takes place via a visual and an auditory processing channel. The second is that both channels have a limited capacity. Accordingly, learners can only process a certain amount of information per channel at the same time. The third is the principle of active learning. It states that learning takes place through cognitive processes (Mayer, 2014). Mayer (2014) identifies five cognitive processes: (1) selecting relevant words, (2) selecting relevant images, (3) organizing the selected words into a coherent verbal representation, (4) organizing the selected images into a coherent pictorial representation, and (5) integrating the pictorial and verbal representations and prior knowledge. The selection of words or images implies that learners pay attention to the information presented (Mayer, 2014). With regard to attention, a distinction is made between visual attention, auditory attention and other forms that are not relevant here (see Amso, 2016). With the help of “silent videos,” we want to investigate the ability of prospective teachers to direct pupils’ visual attention through speech. Therefore, only visual and auditory attention are considered in this study.

• Visual Attention. Lockhofen and Mulert (2021) further specify the role of attention in the learning process. They define: “Visual attention is the cognitive process that mediates the selection of important information from the environment.” (Lockhofen and Mulert, 2021, p. 1).

• Auditory Attention. “It is well-known that stimulus-focused attention improves auditory performance by enabling one to process relevant stimuli more efficiently” (Folyi et al., 2012, p. 1).

Another distinction is the trigger that activates attention. Katsuki and Constantinidis (2014) distinguish between bottom-up attention and top-down attention. Bottom-up attention is an externally induced process. The information to be processed is selected automatically. Top-down attention is an internally generated process. The information is actively sought out based on self-selected factors (Katsuki and Constantinidis, 2014). The reasons for the attention diversion are different for bottom-up and top-down. However, their effects are similar. In both attentional processes, objects are processed preferentially. In both cases, a stronger neural response follows, which can induce better storage in memory (Pinto et al., 2013).

Therefore, both forms of attention may be of interest for teaching. The bottom-up process is a stimulus-driven process (Pinto et al., 2013). So, it could be specifically triggered by signals or cues to direct visual or auditory attention to relevant information. The top-down process is influenced by prior knowledge (Lingzhu, 2003; Lupyan, 2017) or previous experience (Addleman and Jiang, 2019).

1.1.2. Controlling attention through cueing

Cues are often defined as content-free information that is intended to direct attention and thus support cognitive processes (Hu and Zhang, 2021). Spotlights (de Koning et al., 2010; Jarodzka et al., 2013), color changes (e.g., Ozcelik et al., 2010) and arrows (Kriz and Hegarty, 2007; Boucheix and Lowe, 2010) are good ways of directing visual attention. However, cues often differ greatly, and not only in how they appear, when they appear, or what they look like. The classical categorization by modality (e.g., auditory or visual) does not do justice to this fact. One category that has received little attention so far is the content richness of the cues (Watzka et al., 2021). By definition, cues should be free of content. However, in many classic examples, such as the label, content is present. A label therefore has a different quality than a spotlight, which is only intended to direct attention. In this study, only verbal cues are used, which can be offered with or without content. For example, one cue can simply direct attention (“Look to the left!”), whereas another can convey specific details (“the wooden block is an opaque object”).

In meta-analyses covering different subject areas, Richter et al. (2016), Schneider et al. (2018), and Alpizar et al. (2020) confirm the positive effect of the cueing principle on learning, especially for novices. The analysis of Richter et al. (2016) includes 27 studies. Their main finding is that cues have a positive effect on learning performance with small to medium effect sizes and that especially learners with low prior knowledge benefit from cues. The analysis by Schneider et al. (2018) includes 103 studies and also includes eye tracking data. In summary, they also confirm the beneficial effect of cueing on learning success. In addition, attentional cues with small to medium effect sizes seem to induce longer learning times in general and longer gaze durations on relevant information in particular (Schneider et al., 2018). The mean gaze duration can be attributed to the cognitive process of organizing in the CTML (Alemdag and Cagiltay, 2018) and indicates the degree of mental effort (Jarodzka et al., 2015). Ozcelik et al. (2010) interpret a long mean gaze duration as a sign of more demanding tasks and correspondingly higher mental effort. Cues lead to longer viewing of the information addressed by the cues in learning materials than in learning materials without cues (Boucheix and Lowe, 2010; Ozcelik et al., 2010; Glaser and Schwan, 2015; Xie et al., 2019).

In a predominantly image-based learning material such as videos, verbal cues in particular have a positive effect on visual attention and learning success (e.g., Glaser and Schwan, 2015). An explanation for the better suitability of spoken text compared to written text can be found in CTML (Mayer, 2014). Due to the limited capacity of the processing channels, it makes sense to use additional resources of the auditory processing channel and thus follow the modality principle (see section “1.1.3. Modality principle and learning with experimentation videos”).

1.1.3. Modality principle and learning with experimentation videos

The modality principle generally means that it is beneficial for learning if the text which accompanies graphics is spoken instead of written. Among other things, the modality principle has a positive effect on visual attention, because there is no split-attention effect to worry about (Schmidt-Weigand et al., 2010). In a predominantly image-based learning material (e.g., videos), spoken texts (e.g., moderations) in particular have a positive effect on visual attention and learning (Glaser and Schwan, 2015).

In a meta-analysis comprising 43 studies that cover a vast spectrum of subjects and visualizations, Ginns (2005) confirms the modality effect with a medium effect size and shows that learning materials with visualizations and spoken texts generally lead to better learning outcomes than learning materials with visualizations and written texts.

The beneficial learning impact of the modality effect is explained by a more effective use of working memory capacity (see section “1.1.1. Learning and the role of paying attention”). Accordingly, more cognitive resources can be used for processing the learning content and learning performance increases (Sweller et al., 2011). When demonstrating experiments in class, teachers automatically use their voice as their main tool of communication. They automatically give verbal cues, some of which are content-related (e.g., mentioning the function) and some of which control attention (e.g., mentioning a surface feature). The question is how prospective teachers learn to control the attention of their pupils. This paper is about supporting prospective physics teachers in guiding their pupils to select relevant information by controlling bottom-up visual attention during experimentation through verbal cues. In this study, visual bottom-up attention is controlled via the cueing principle (verbal cues), since this technique can be applied without effort to classroom practice when teachers present experiments. A special feedback format, which is classified theoretically in the following section, supports the prospective teachers in this.

1.2. Feedback

1.2.1. Definition and phases

“Feedback is information provided by an agent regarding aspects of one’s performance or understanding” (Hattie and Timperley, 2007, p. 81). Focusing especially on learners, Shute defines feedback as “information communicated to the learner that is intended to modify his or her thinking or behavior for the purpose of improving learning” (Shute, 2008, p. 153). Feedback thus shows the gap between the target and the current state and should enable the recipient to recognize and close this gap. In this study, the presentation of pupils’ gaze behavior is intended to provide feedback and to help prospective teachers become aware of their ability to control attention. The three classic feedback phases described in the literature (Wisniewski et al., 2020) all occur in this setting:

• “Feed-up” (comparison of the actual status with a target status): Students and teachers get information about the learning goals to be accomplished. By watching the gaze overlays of their first moderation (pre), the prospective teachers got information about how the pupils reacted to their moderation of the video.

• “Feed-back” (comparison of the actual state with a previous state): Students and teachers see what they have achieved in relation to an expected standard or previous performance. By watching the gaze overlay of their second try, the prospective teachers could see what they had achieved relative to their first performance.

• “Feed-forward” (explanation of the target state based on the actual state): Students and teachers receive information that leads to an adaptation of learning in the form of enhanced challenges. After analyzing both moderations, the prospective teachers became aware of the positive skills they should develop and the mistakes they should avoid in the future.

In general, feedback is considered a very powerful tool. Wisniewski et al. (2020) obtain an average effect size of 0.48 in a meta-analysis. However, feedback does not per se lead to better learning outcomes. Kluger and DeNisi (1996) note that about one third of feedback results in negative learning effects. Moreover, learning depends on a variety of different influences (Hattie, 2021), so there is no standardized way to use feedback. What helps one student today may not help another. Tomorrow, the same feedback may have the opposite effect or no effect at all (Hattie, 2021). How feedback is received depends not only on the form in which it is given, but also on a variety of characteristics of the recipient (Shute, 2008). Important factors are, for example, the recipient’s self-assessment and experience of self-efficacy (Shute, 2008).

1.2.2. Levels and forms of feedback

To understand the effectiveness of feedback, one must first be aware of the different levels that feedback addresses (Hattie and Timperley, 2007). First, feedback works at the task level (FT): is the answer to the task right or wrong? Second, feedback addresses the process level (FP), i.e., information about the process, how to deal with the task and/or how to understand it. Third, feedback works on the self-regulation level (FR), where the learner checks, controls, and self-regulates his or her processes and behavior. Finally, feedback also operates on the so-called self-level (FS), where positive (and negative) expressions and evaluations about the learner are expressed (Hattie and Timperley, 2007). Eye tracking feedback on one’s own moderation should ideally trigger the task and process levels.

The level of feedback addressed depends largely on the form in which it is given. Different authors distinguish forms of feedback according to the medium (written, computer-aided, oral, pictorial, etc.) or according to the content, for example, formative tutorial (Narciss and Huth, 2006) or actionable (Cannon and Witherspoon, 2005). A detailed description of the different forms can be found in Hattie and Timperley (2007) and Wisniewski et al. (2020). By watching the gaze overlays of individual moderated videos, we concentrate on a certain form of visual and auditory feedback (see section “1.2.4. Eye tracking as feedback tool” and section “3.2.1. Pre-test, first eye tracking feedback and pre-interview”).

1.2.3. Feedback directions/student feedback

Much of the research describes forms and effects of feedback on the learner. Recently, feedback from the learner to the teacher has received more attention (Rollett et al., 2021). The focus here is on the extent to which pupil feedback affects the quality of the teacher’s teaching and thus improves the pupils’ learning success. This raises the question of how reliable and valid pupil feedback is; recent studies show that pupil feedback provides teachers with valid information about their teaching quality (Rollett et al., 2021). In this study, training with pupils’ gaze overlays should provide valid information for feedback, especially since the pupils provide this feedback without being aware of it.

Röhl et al. (2021) describe in the “Process Model of Student Feedback on Teaching (SFT)” a circuit diagram of how pupil feedback affects the teacher. The process begins with collecting and measuring pupil perceptions, which are then reported back to the teacher. The teacher interprets this feedback information, which stimulates cognitive but also affective reactions and processes in the teacher. This information can increase the teacher’s knowledge about his teaching and thus trigger a development to improve his own teaching, so that in the following the learning success of the pupils can increase again. By giving the prospective teachers feedback information about their moderations we assume that the development will be triggered to better direct the attention of pupils.

1.2.4. Eye tracking as feedback tool

The use of eye tracking as a feedback tool in education has recently been increasingly emphasized in various disciplines (e.g., Cullipher et al., 2018). Eye movement recordings have been used to analyze and optimize the effectiveness of the design of learning materials (Langner et al., 2022). Mussgnug et al. (2014) describe how eye tracking recordings as a teaching tool improve awareness of user experiences with designed objects and how these experiences can be implemented in design education. Xenos and Rigou (2019) outline the use of eye tracking data collected and analyzed to help students improve their design. In contrast to gaze data of other people looking at specific objects, the gaze of teachers in real classrooms has also been the subject of various studies (McIntyre et al., 2017; Stuermer et al., 2017; McIntyre and Foulsham, 2018; Minarikova et al., 2021). In addition to using the gaze data of others, one’s own gaze can also be used as feedback (Hansen et al., 2019). Szulewski et al. (2019) investigated the effect of eye tracking feedback on emergency physicians during a simulated response exercise, presumably triggering self-reflection processes. Keller et al. (2022) examined the effect of eye tracking feedback on prospective teachers observing and commenting on their own gaze during a lesson they were teaching.

We use eye tracking in a different way, somewhere in between the above: Eye tracking is used as a feedback tool for prospective teachers, showing them the effects of their verbal moderations as they try to direct their pupils’ attention, as happens in the regular classroom.

2. Research question

Directing pupils’ attention during the presentation of an experiment is crucial to its success. Pupils need to look at the right time at the right place to make the important observations. External cues such as speech can influence visual attention (Glaser and Schwan, 2015; Xie et al., 2019; Watzka et al., 2021). The overall question is how to improve the competence of prospective teachers in moderating experiments in the classroom. Therefore, we used the method of moderating “silent videos” to train prospective teachers’ ability to control their pupils’ attention. The particular focus of this method is on verbal cues through spoken language during the presentation of a video. Based on the five cognitive processes (Mayer, 2014), one of the main objectives of an appropriate presentation is to allow pupils to make the necessary observations, among many other aspects (see section “3.3.1. Assessment of prospective teachers’ competence in moderating experimental videos”). To assess this process, the times when observation tasks are set and when pupils are explicitly given the opportunity to observe are summarized as “pupil-activating time”.

Eye tracking is often used to study how a stimulus affects a person’s perception. Conversely, visualizations of eye tracking data can be used to draw conclusions about the observer’s attention and the effectiveness of cues. By using eye tracking as a feedback tool, we tried to show prospective teachers the impact of their moderation of an experimental video on pupils, so that they in turn could draw consequences for further presentations. This leads to the following research questions.

RQ: To what extent can training with eye-tracking visualizations of pupils’ visual attention improve prospective teachers’ guidance of pupils’ gaze? The following more detailed questions should be considered.

RQ1: Does training with eye tracking feedback help prospective teachers explain the set-up of an experiment in a way that is adapted to pupils’ prior knowledge and cognitive and linguistic development?

RQ2: Does training with eye tracking feedback help prospective teachers to increase the pupil-activating time?

3. Materials and methods

3.1. Participants

A subsample of 15 prospective physics teachers from a German (Bavarian) university was selected. They were on average 22.4 years old (SD = 3.4) and in the 5th semester. Two of the participants were female and 13 were male. Before the study, all participants had attended the experimental physics lectures and an introductory lecture on physics education with a theoretical introduction to criteria for setting up and conducting experiments. Thus, all students had the necessary content and pedagogical knowledge on the topic of the study.

3.2. Procedure and material

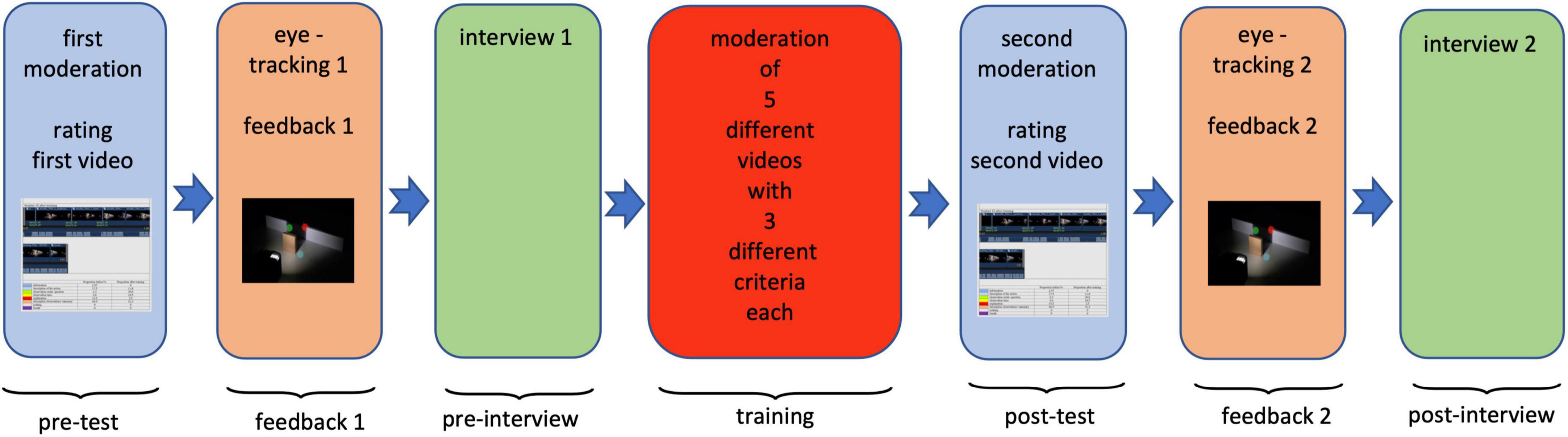

The study uses a pre/post-test design. Between the pre-test and the post-test, a training phase of several weeks took place for the moderation of demonstration experiments. “Silent videos” were used both in the pre- and post-test as a survey instrument and in the training as learning material. The overall process of the study is shown in Figure 1.

3.2.1. Pre-test, first eye tracking feedback and pre-interview

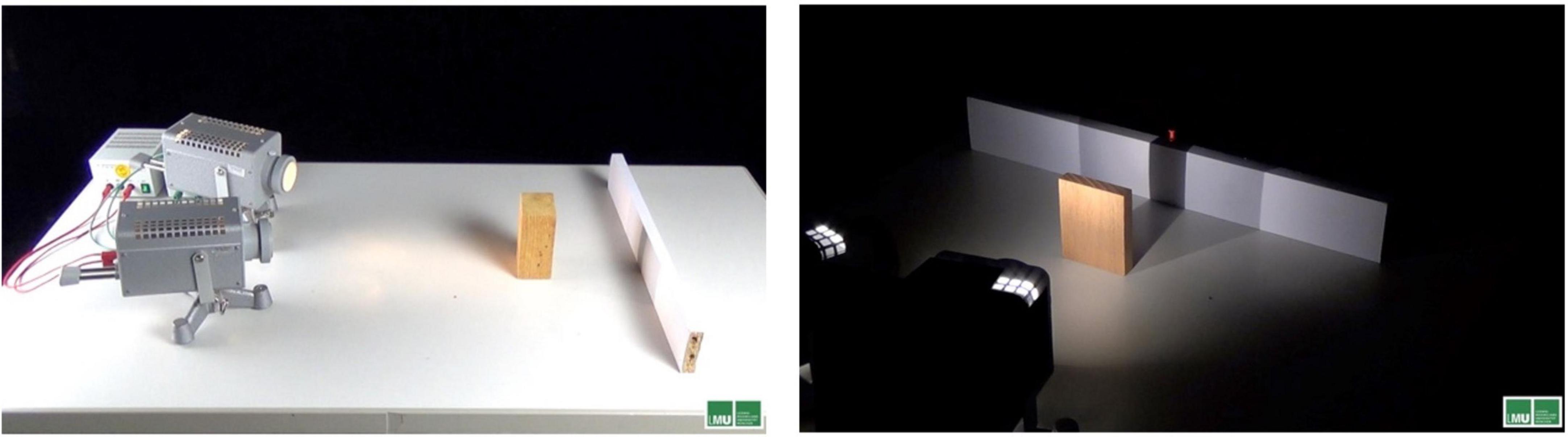

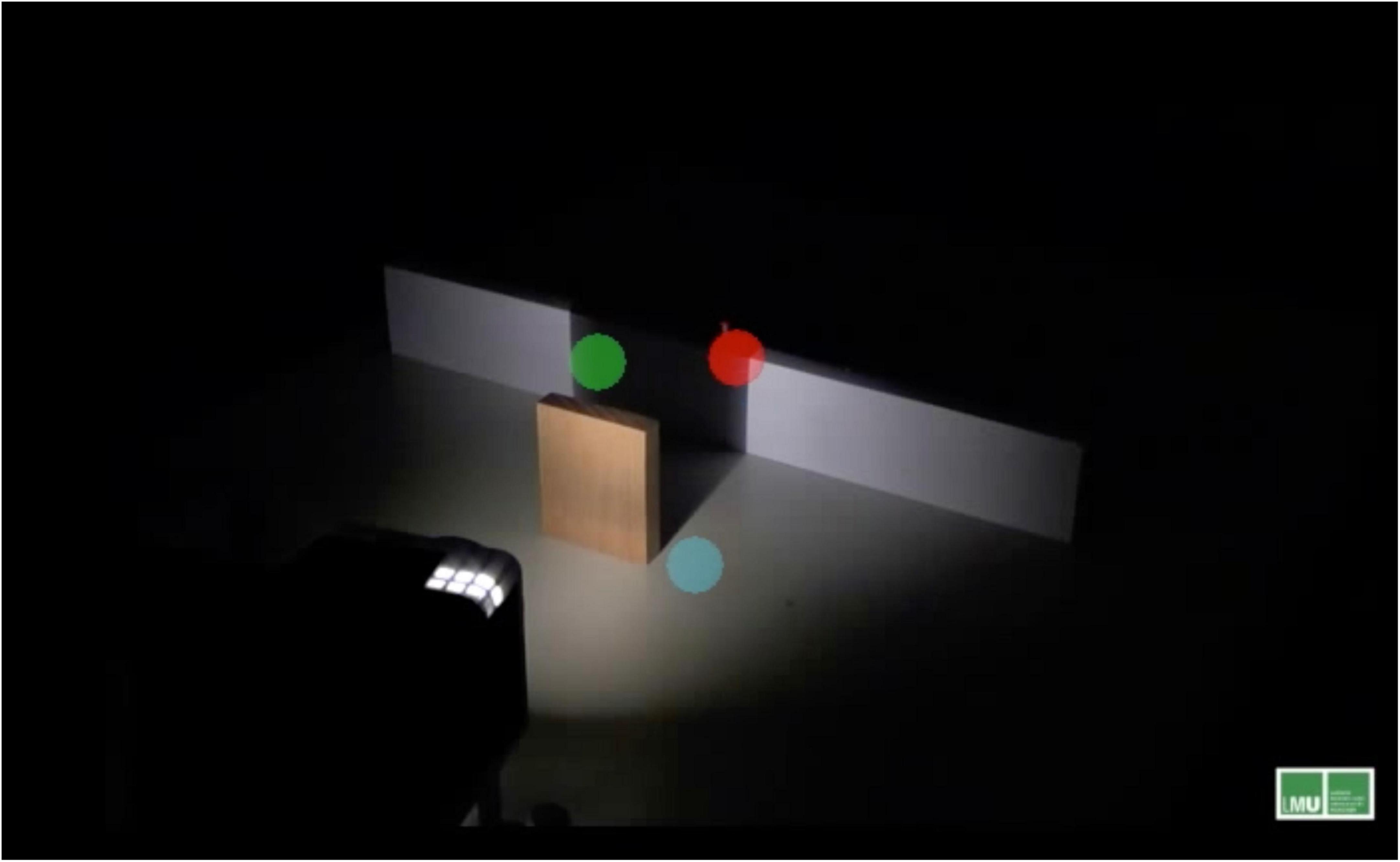

As a pre-test, the prospective teachers had to moderate a “silent video” about core shadows and semi shadows (see Figure 2). The video is divided into two main parts: a static part showing the set-up for about 30 s and a dynamic part showing the execution of the experiment for about 60 s. The video shows a small opaque block that is illuminated by two sources from different angles. A white elongated rail serves as a screen on which the different kinds of shadow can be seen. Everything is recorded from the pupils’ perspective and presented in real time. All activities are shown as they would normally be done in a live classroom demonstration. For further information about the training with “silent videos,” see Schweinberger and Girwidz (2022).

Figure 2. Screenshot of set-up and execution of the study’s experiment. The method of “silent videos” is described in detail by Schweinberger and Girwidz (2022).

The task for the prospective teachers was to moderate the video appropriately for pupils in their first year of learning physics in junior high school. The prospective teachers were told to assume that their pupils had prior knowledge of the model of the rectilinear propagation of light and the appearance of cast shadows. The moderation of the videos was evaluated by four or five raters according to the criteria (see section “3.3.1. Assessment of prospective teachers’ competence in moderating experimental videos”).

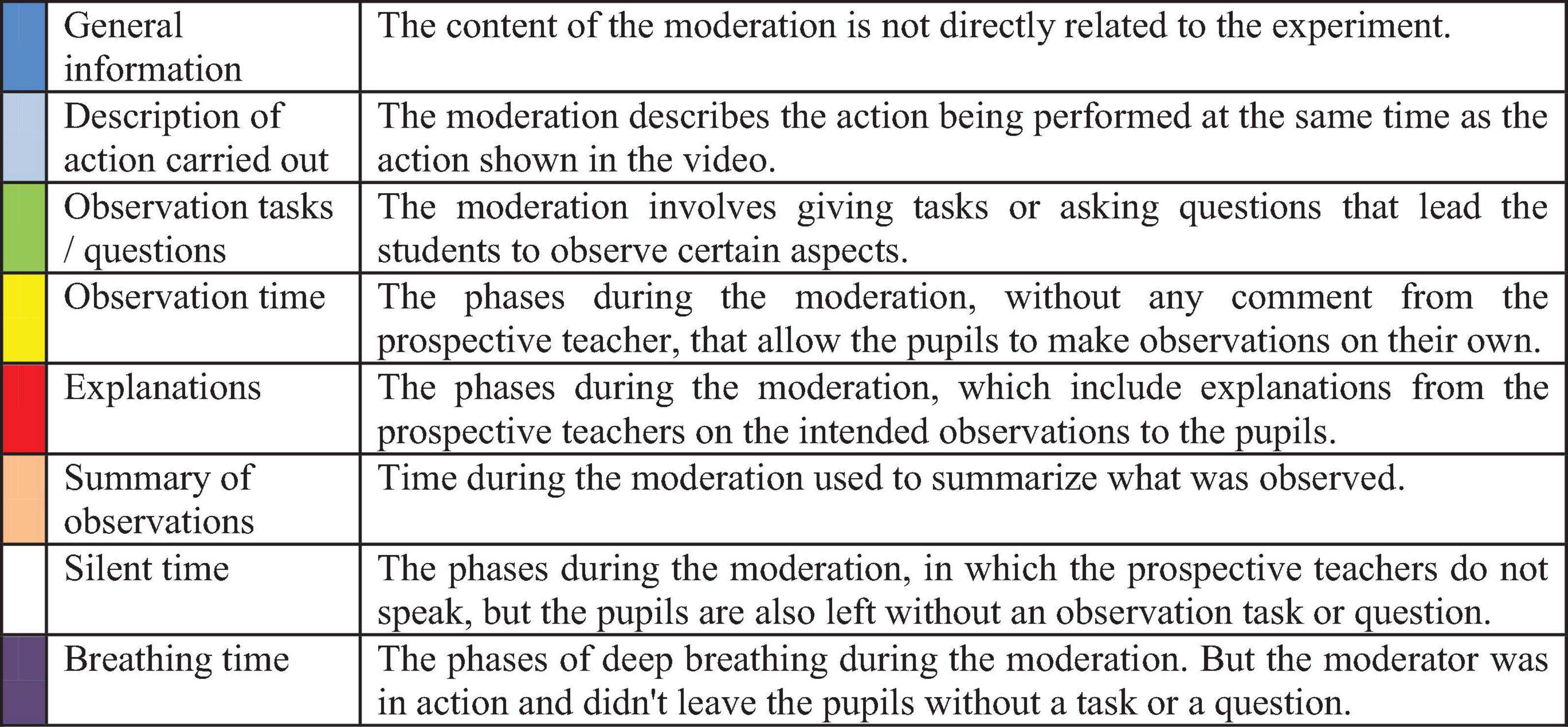

In the next step, individual feedback was given to the prospective teachers. For the eye tracking feedback, each video moderated by the prospective teachers was shown to three randomly selected pupils of the 7th grade and their eye movements were recorded using eye tracking. The data from this tracking was used to create a single gaze overlay video, in which the three gaze overlays of the pupils were superimposed (see Figure 3). When the three overlays are superimposed at the same time, it is easier to see the commonality of the pupils’ responses than when all three overlays are viewed in sequence.

Figure 3. Video excerpt of the gaze overlay feedback on a prospective teacher’s moderation of the shadow video. The colored dots are the gaze overlays of three different pupils watching and reacting to one prospective teacher’s moderation.

The feedback took the form of a short, written critique by the lecturer before the discussion of the gaze overlay video. The gaze overlays were used to illustrate to the prospective teacher the immediate consequences of the criticisms previously made. In an individual conversation the lecturer and every prospective teacher watched the video with the gaze overlays together and discussed the connection between moderation and the pupils’ reactions (e.g., did the pupils look to the area they should and how long did they stay). For this purpose, the gaze overlay feedback was stopped at important points to study certain situations more intensively. If necessary, the gaze overlay feedback was viewed several times.

Afterward, the prospective teachers were interviewed for the first time by another research assistant. They were asked to what extent the feedback from the pupils’ gaze helped them to assess their own ability to manage their pupils’ attention (for details, see section “3.3.2. Interview and survey guide”).

3.2.2. Training phase

The prospective teachers moderated a total of six videos over a 10-week period later in the term to build up their skills. As in the pre-test, the prospective teachers had to moderate “silent videos” of different experiments in the training phase. To do this, they had to write their own script in advance, considering the criteria. In order to train as many facets of a moderation as possible, three criteria from the catalog (see list of criteria in the Supplementary Figure 1) were given by the lecturer for each training video. The main focus was on the development of the prospective teachers’ ability to direct pupils’ attention in a targeted way. Each of these moderations was analyzed individually in a small group discussion based on the three pre-defined criteria. In preparation for these discussion meetings, each prospective teacher received a brief written critique in advance. Afterward, they had to set up the respective experiment in the seminar and present it to their colleagues. Thus, the prospective teachers received verbal and written feedback from the lecturer and their student colleagues in the training phase; no further gaze overlays were shown (see section “6. Limitations”).

3.2.3. Post-test, second eye tracking feedback and post-interview

After the training, at the end of the term, the prospective teachers had to moderate the first video about core shadows and semi shadows from the pre-test for a second time (post-test). The moderation of the videos in the post-test was also evaluated according to the criteria (see section “3.3.1. Assessment of prospective teachers’ competence in moderating experimental videos”) as in the pre-test.

To generate the second eye tracking feedback, the moderated videos from the post-test were again shown to three pupils and their gazes were recorded. We decided to use different pupils than in the pre-test because we expected quite a large repetition effect. The content was a very simple phenomenon, and we wanted all pupils to have comparable prior knowledge. The resulting gaze overlay videos were produced as in the first feedback and shown to the prospective teachers to illustrate a second short written critique. In addition, the prospective teachers watched the gaze overlay video of their first trial to discuss the developments between pre-test and post-test.

The prospective teachers were then interviewed a second time by another research assistant using the same questions as in the first interview.

3.3. Assessment

3.3.1. Assessment of prospective teachers’ competence in moderating experimental videos

The criteria were developed over several years from practical experience and then discussed intensively by five physics lecturers from the chair of Physics Education at LMU Munich and two physics teacher trainers. The criteria are subject to constant further development. Due to the two different parts of the video (static set-up and dynamic execution), two evaluation schemes had to be developed (which also were explained to the prospective teachers).

In the set-up, each relevant object in the experiment had to be described in terms of three categories:

• the location (e.g., “on the left side of the table”),

• two surface characteristics (e.g., “brown, wooden block”), and

• the function (e.g., “provides shade”).

Reading from left to right results in three consecutive sequences: first the lamps, then the block, and finally the screen. For each of the relevant objects, the number of mentions was counted.

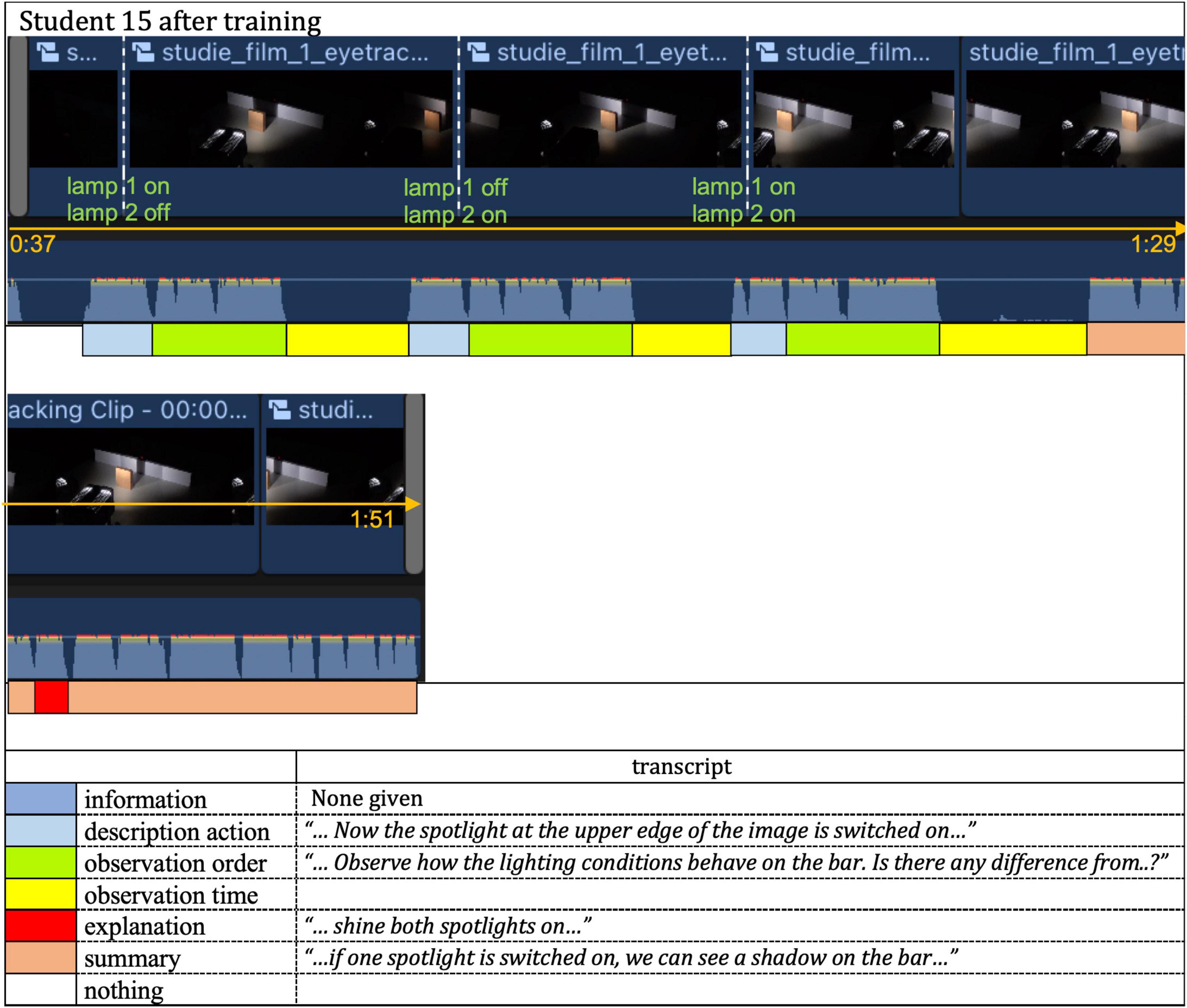

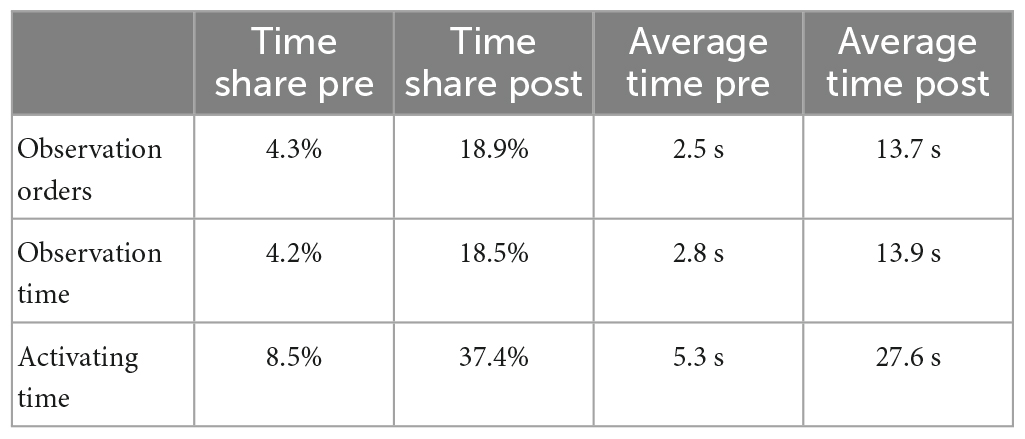

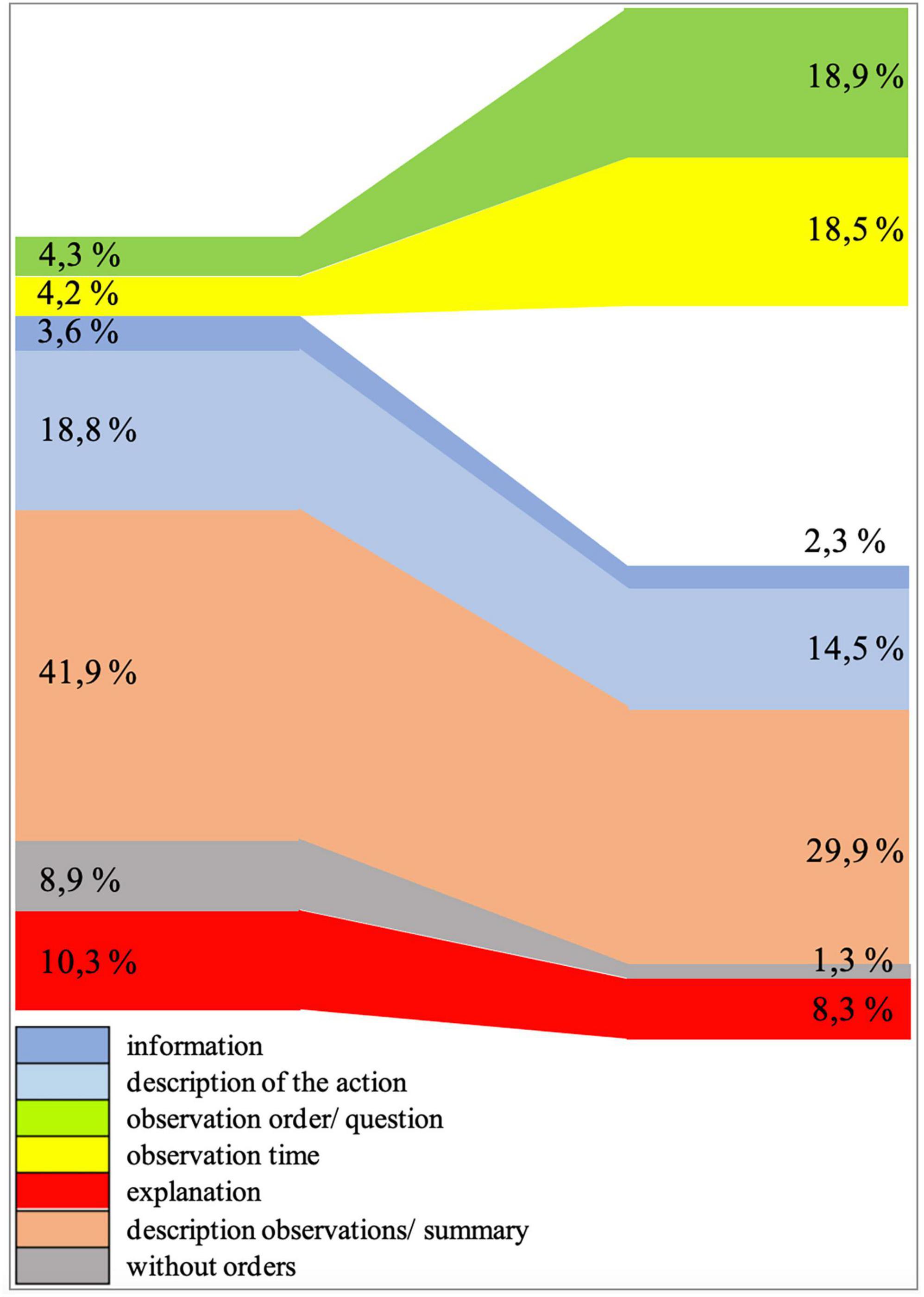

Different categories were chosen to assess the moderation of the execution of the experiments (see Figure 4):

Figure 4. Coding scheme for moderating the execution of the experiment. The colors represent the categories.

The following procedure was used to assess the extent to which the moderations met the criteria. After adding the prospective teachers’ audio tracks to the “silent videos,” the different categories were localized in the timeline and marked by a correspondingly colored bar. The categories were: general information about the experiment (blue), description of the action carried out (light blue), observation orders or questions (green), observation time (yellow), explanations (red), summary of the observations (orange), breathing time (purple), and time without content (no color). The length of the bars is proportional to the temporal length of these intervals. The codes are rated on whether they appear. Then the ratios of the corresponding intervals to the total length of the moderation of the execution were calculated. This was done for the moderations of both the pre-test and the post-test (see Figure 5).

Figure 5. Timeline with rating of a prospective teacher’s moderation: at the top the video track, below it the audio track, and below that sample codes for the assignment to the categories.

This, of course, raises the question of how the categories should relate to each other in an optimal moderation. Discussions with various training teachers and lecturers led to the conclusion that it is difficult, if not impossible, to define an ideal moderation of an experiment. Individual teaching styles and classroom situations vary too much. We limited ourselves to the general consensus that a good moderation must give the pupils the opportunity to make the necessary observations, i.e., the pupils must be activated to make these observations. In addition, a good moderation should not involve explanations, as these disrupt the observation process, deprive pupils of the opportunity to think through the process themselves, or anticipate the content of subsequent lessons. To assess these pupil-activating segments, we summed observation time and observation orders into one total pupil-activating time.
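The share of each coded category and the combined pupil-activating time can be sketched as follows. This is only an illustration under stated assumptions: the function names, the tuple layout, and the example intervals are invented here and are not taken from the study's data or tooling.

```python
from collections import defaultdict

# Categories that count as pupil-activating, following the text above.
PUPIL_ACTIVATING = {"observation order", "observation time"}


def category_shares(intervals, total_s):
    """Return each category's share of the total moderation length.

    `intervals` is a list of (category, start_s, end_s) tuples (hypothetical layout);
    `total_s` is the length of the moderated execution part in seconds.
    """
    if total_s <= 0:
        raise ValueError("total_s must be positive")
    durations = defaultdict(float)
    for category, start, end in intervals:
        if end < start:
            raise ValueError(f"interval ends before it starts: {category}")
        durations[category] += end - start
    return {category: d / total_s for category, d in durations.items()}


def pupil_activating_share(intervals, total_s):
    """Sum the shares of observation orders and observation time."""
    shares = category_shares(intervals, total_s)
    return sum(shares.get(c, 0.0) for c in PUPIL_ACTIVATING)


# Invented example: a 60 s execution part coded into six intervals.
example = [
    ("general information", 0.0, 6.0),
    ("description of action", 6.0, 18.0),
    ("observation order", 18.0, 24.0),
    ("observation time", 24.0, 42.0),
    ("explanation", 42.0, 54.0),
    ("summary of observations", 54.0, 60.0),
]
share = pupil_activating_share(example, total_s=60.0)
```

In this invented example, observation orders (6 s) and observation time (18 s) together make up 24 of 60 s of the execution part.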

3.3.2. Interview and survey guide

The interview and survey guide included 13 questions; the eight questions used for this research were the same in the pre- and post-interviews. The prospective teachers’ ratings were recorded using single items in the form of a 4-point Likert scale (“4: completely agree,” “3: agree,” “2: disagree,” and “1: completely disagree”). To obtain detailed information about their specific experiences, an open-ended question was added to each item. The interview and survey guide contained questions about the effects of moderating “silent videos” and of getting eye tracking feedback on their personal learning process (see Supplementary Figure 2). The prospective teachers were asked to describe how their skills in controlling attention in particular and in moderating the videos in general had changed (Q 2). They were also asked about the effects on their professional language (Q 3, 6) and the consequences for their own actions in experimentation (Q 7). Another important part of the interview questions was the prospective teachers’ experiences of eye tracking as a feedback tool. They were asked how they perceived the effectiveness of their moderation on the pupils. A major question was how eye tracking showed the connection between guiding (tasks and questions) and the pupils’ attentional response (Q 9, 10, 11, and 12). Finally, the prospective teachers were asked how they rated their learning progress between the two measurement points with regard to their approach and to controlling attention through language when moderating experiments (additional question in the second interview). All interviews were evaluated and analyzed by two independent persons.

3.4. Eye tracking system

In this study, eye tracking was used as a feedback tool. It therefore does not measure an outcome variable; instead, it is part of the intervention/training. The eye movements were recorded with an Eye Follower eye tracker from LC Technology. This system uses four cameras, two for tracking head motions and two for tracking the eyes. The accuracy was less than 0.4° of visual angle. The distance between participant and monitor was between 55 and 65 cm. The video area had a resolution of 1920 × 1080 pixels, and the resolution of the 24″ monitor was 1920 × 1200 pixels. The stimulus was enlarged to full monitor width and proportionally adjusted in height. The fixations and saccades were recorded at a sampling rate of 120 Hz, and the discrimination between saccades and fixations was done by the LC Fixation Detector (a dispersion-based algorithm: Salvucci and Goldberg, 2000).
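To illustrate what a dispersion-based fixation filter does, the following is a minimal sketch of the generic dispersion-threshold idea (I-DT) described by Salvucci and Goldberg (2000). It is not the LC Fixation Detector, whose internals are not documented here. The dispersion threshold of 30 pixels and the minimum fixation duration of 100 ms are assumptions chosen for illustration; only the 120 Hz sampling rate is taken from the set-up above.

```python
def dispersion(window):
    """Dispersion of gaze points: (max x - min x) + (max y - min y)."""
    xs = [x for x, _ in window]
    ys = [y for _, y in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples, max_dispersion_px, min_samples):
    """Generic I-DT sketch.

    samples: list of (x, y) gaze positions in pixels, equally spaced in time.
    Returns a list of (first_index, last_index, centroid_x, centroid_y).
    """
    fixations = []
    start, n = 0, len(samples)
    while start + min_samples <= n:
        end = start + min_samples  # exclusive end of the current window
        if dispersion(samples[start:end]) <= max_dispersion_px:
            # Grow the window while the points stay close together.
            while end < n and dispersion(samples[start:end + 1]) <= max_dispersion_px:
                end += 1
            window = samples[start:end]
            cx = sum(x for x, _ in window) / len(window)
            cy = sum(y for _, y in window) / len(window)
            fixations.append((start, end - 1, cx, cy))
            start = end
        else:
            # Window too spread out: treat the first sample as part of a saccade.
            start += 1
    return fixations


SAMPLING_RATE_HZ = 120   # from the set-up described above
MIN_FIXATION_S = 0.1     # assumed minimum fixation duration
min_samples = round(SAMPLING_RATE_HZ * MIN_FIXATION_S)

# Invented gaze trace: 20 samples on one spot, then 20 samples on another.
samples = [(100.0, 100.0)] * 20 + [(500.0, 500.0)] * 20
fixations = detect_fixations(samples, max_dispersion_px=30.0, min_samples=min_samples)
```

In this invented trace the jump between the two spots exceeds the dispersion threshold, so the samples are split into two separate fixations.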

3.5. Analysis

The moderations of the videos were rated by four to five independent raters based on the categories (see section “3.3.1. Assessment of prospective teachers’ competence in moderating experimental videos”). The raters marked the beginning and end of each category on the timeline of the videos and calculated the percentage of time for each category. The intraclass correlation coefficient (model: two-way mixed and type: absolute agreement) was used to determine the agreement of the raters.
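For readers unfamiliar with this agreement measure, the sketch below computes a two-way, absolute-agreement coefficient from the usual ANOVA mean squares. It assumes the single-measures form; the text does not state whether single or average measures were reported, so this is an illustration rather than the exact computation used here. The function name and the example ratings are hypothetical.

```python
def icc_absolute_agreement(ratings):
    """Two-way, absolute-agreement, single-measures ICC.

    ratings: list of rows, one row per rated moderation, one column per rater.
    """
    n = len(ratings)        # number of rated moderations
    k = len(ratings[0])     # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + (k / n) * (ms_cols - ms_error)
    )


# Invented time shares (%) for three moderations rated by two raters.
# The second rater is consistently 2 points higher, which lowers
# absolute agreement even though the rank order is identical.
example = [[10.0, 12.0], [20.0, 22.0], [30.0, 32.0]]
icc = icc_absolute_agreement(example)
```

The example shows why the absolute-agreement type is the stricter choice: a constant offset between raters reduces the coefficient, whereas a consistency-type coefficient would ignore it.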

Dependent samples t-tests were used to test whether the mean speaking times per category differed between the pre-test and the post-test. The Bonferroni correction was used to counteract the accumulation of alpha errors by performing each individual test at a reduced significance level. The significance level of individual tests is calculated as the global significance level to be maintained divided by the number of individual tests (4 tests, significance level α = 0.0125).
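The two steps described above can be sketched as follows. The global significance level of 0.05 is inferred from the reported value of 0.0125 for four tests. The sign convention (pre minus post, so that an increase yields a negative t) is an assumption based on the negative t values reported in the results, and the example values are invented.

```python
import math
from statistics import mean, stdev


def bonferroni_alpha(global_alpha, n_tests):
    """Significance level for each individual test."""
    return global_alpha / n_tests


def paired_t_statistic(pre, post):
    """t statistic of a dependent samples t-test (pre minus post)."""
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / math.sqrt(n))


alpha_per_test = bonferroni_alpha(0.05, 4)

# Invented speaking-time shares (%) for three participants.
pre = [1.0, 2.0, 3.0]
post = [2.0, 4.0, 3.0]
t_value = paired_t_statistic(pre, post)

# An individual test counts as significant only if its p-value
# falls below the corrected level (hypothetical p-values).
p_values = [0.001, 0.02, 0.2, 0.012]
significant = [p < alpha_per_test for p in p_values]
```

Note that a p-value such as 0.02 would pass an uncorrected 5% threshold but not the corrected level.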

The interviews were analyzed using qualitative content analysis according to Mayring (2015). We followed a descriptive approach, analyzing the texts with a deductively formulated category system. We recorded the occurrence of these categories as category frequencies. The resulting scale has an ordinal scale level, so Cohen’s weighted kappa coefficient was calculated for the raters’ agreement. We chose quadratic weights, where the distances between the two raters’ ratings are squared. This gives more weight to ratings that are far apart than to ratings that are close together.
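The effect of quadratic weighting can be made concrete with a short sketch. It implements the standard definition of the weighted kappa, one minus the ratio of weighted observed disagreement to weighted chance-expected disagreement, with weights proportional to the squared distance between categories. The function name and the example ratings are hypothetical.

```python
def quadratic_weighted_kappa(rater_a, rater_b, categories):
    """Cohen's weighted kappa with quadratic weights for two raters.

    categories: ordered list of all possible ordinal categories.
    """
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must rate the same items")
    k = len(categories)
    n = len(rater_a)
    index = {c: i for i, c in enumerate(categories)}

    # Observed joint proportions of the two raters' ratings.
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[index[a]][index[b]] += 1.0 / n

    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2  # squared distance
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * row[i] * col[j]
    return 1.0 - weighted_observed / weighted_expected


# Invented ratings on a four-level ordinal scale.
scale = [1, 2, 3, 4]
kappa_same = quadratic_weighted_kappa([1, 2, 3, 4, 2, 3], [1, 2, 3, 4, 2, 3], scale)
kappa_reversed = quadratic_weighted_kappa([1, 2, 3, 4], [4, 3, 2, 1], scale)
```

Identical ratings give a kappa of 1, while fully reversed ratings are penalized heavily because the largest distances carry the largest weights.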

4. Results

The moderation of the set-up was evaluated by four independent raters. The results of the rater agreement analyses show an agreement between the four raters of r = 0.799 [95% CI (0.686, 0.887)].

The moderation of the execution was evaluated by five independent raters. The value of the inter-rater correlation coefficient r = 0.993 shows a very high level of agreement between the five raters [95% CI (0.991, 0.994); see Cicchetti, 1994].

The interviews were evaluated by two independent raters. The rater agreement analysis shows an agreement between the two raters of κ = 0.694, which narrowly misses the 5% significance level [p = 0.068; 95% CI (0.378, 1.010)].

The findings to answer the first research question, namely, whether eye tracking feedback helps prospective teachers to explain the experimental set-up in a way that is appropriate for pupils, are divided into a general and a specific part.

4.1. Set-up: general results

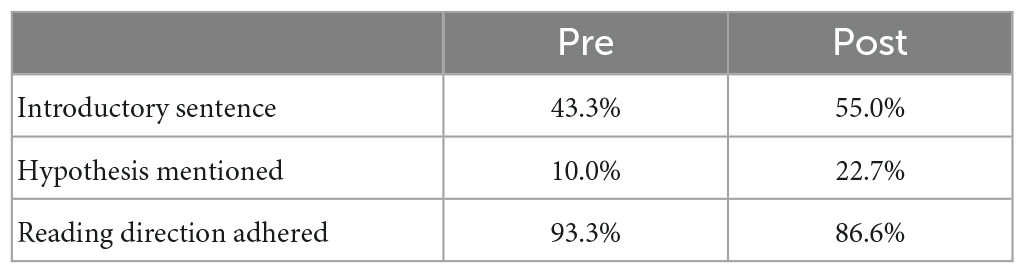

Before looking at the individual objects of the set-up to answer RQ 1, we will examine the connection of the set-up with the previous knowledge and the subsequent execution. A total of 43% of the prospective teachers started their first moderation attempt with an introductory sentence about the topic of the upcoming experiment, with only two of them really connecting to the pupils’ prior knowledge. The number of participants who started with a reasonable introductory sentence increased to 55% in the post attempt. The number of participants moving from set-up to execution with a research question or hypothesis increased from 10 to 23%. In both cases, the low percentage indicates that the participants were not aware or did not become aware of the importance of the transition between set-up and execution of the experiment. With 93%, the overwhelming majority adhered to the reading direction (from left to right), with virtually all participants (except one) adhering to the reading direction in the post-trial when trying to direct the pupils’ attention (see Table 1).

Table 1. Percentage of items “introductory sentence”, “hypothesis mentioned” and “reading direction adhered” mentioned in the pre- and post-test.

4.2. Set-up: specific results

The introductory sentence, the link to prior knowledge and the reading direction do not directly influence the pupils’ visual attention to certain areas. Thus, there is no focusing effect on the observed gaze overlays; the pupils’ gazes move across the whole screen and become more focused as soon as the experimental set-up appears and the prospective teachers start talking. This behavior of the pupils did not change between the pre- and post-trial.

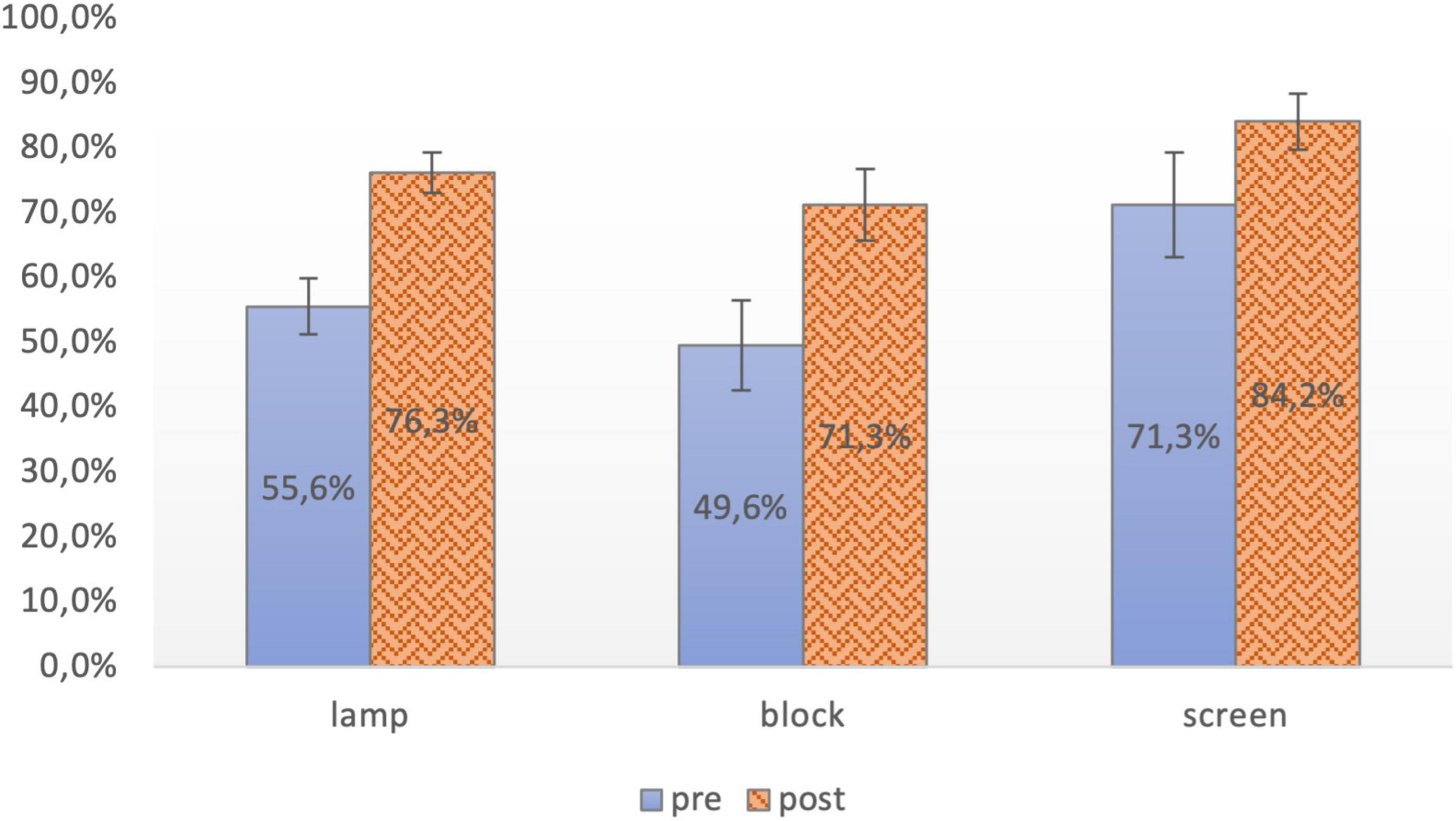

After the introductory sentence, the number of mentions regarding each object was counted (e.g., location, function and two surface features, see section “3.3.1. Assessment of prospective teachers’ competence in moderating experimental videos”). The mentions for the lamps increased from 56 to 76% (t = −4.636, p < 0.001, Cohen’s |d| = 4.575, n = 15), those concerning the block from 50 to 71% (t = −15.756, p < 0.001, Cohen’s |d| = 2.926, n = 15) and the screen from 71 to 84% (t = −9.1454, p < 0.001, Cohen’s |d| = 1.698, n = 15). The number of mentions increased for all subjects (see Figure 6).

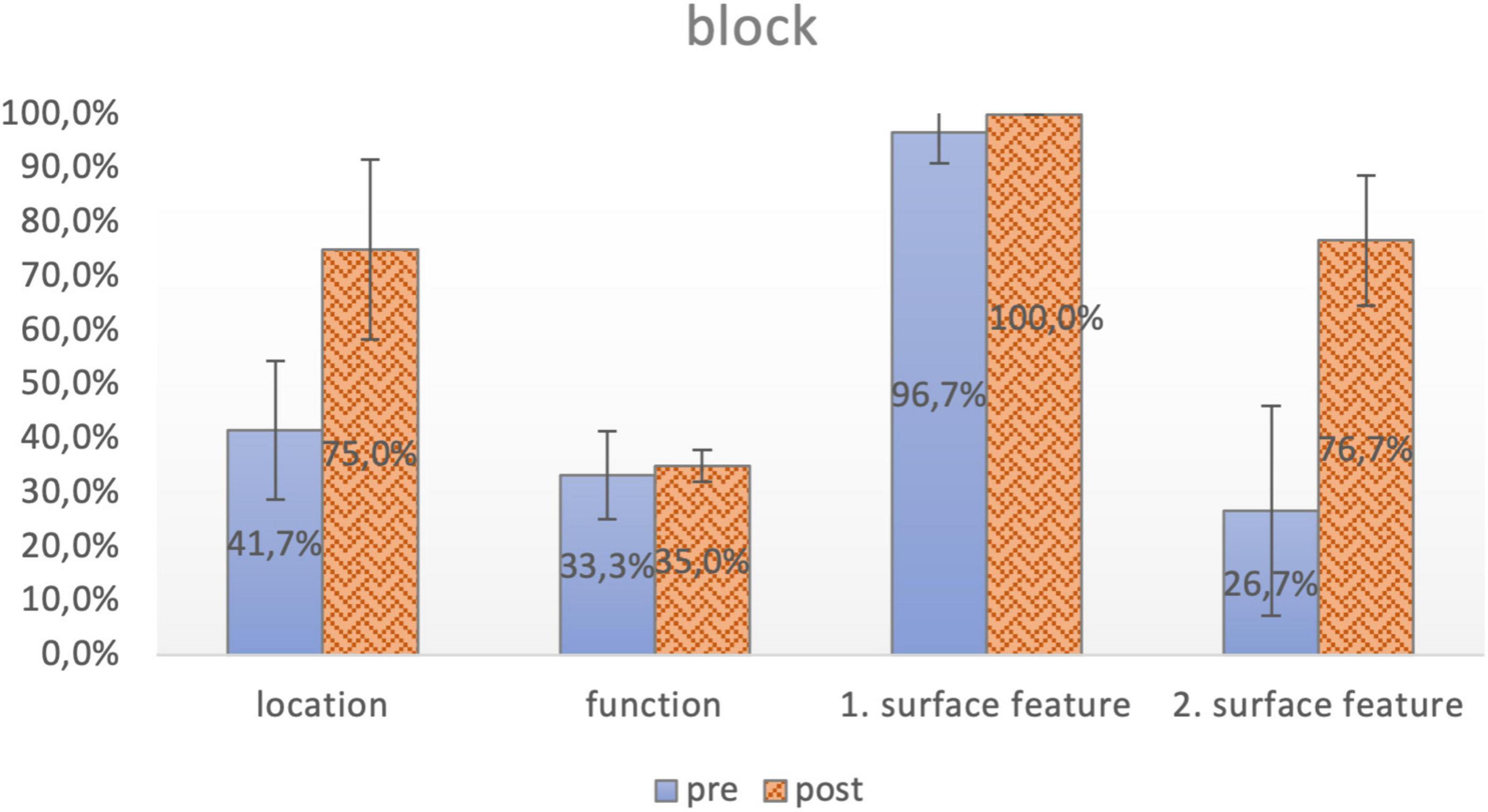

4.2.1. Mentioning “block” (detailed)

A more detailed analysis, here of the description of the opaque block in the light path, provides further insights. Cues referring to the location of the block increased from 42 to 75% applicable mentions, while the description of the block’s function in the experiment remained at about 33% (two participants who had mentioned the block’s function in the first attempt did not mention it in the second attempt). Altogether, the function of the block seems to be too obvious for many prospective teachers to mention. In the post-attempt, all prospective teachers described the block with at least one surface feature, with the share increasing from 97 to 100%. A total of 77% of them also mentioned a second feature, up from 27% in the first trial (see Figure 7).

4.3. Execution: specific results

A total of 60% of the prospective teachers did not ask any question or give any observation order to the pupils in the first attempt. The same holds for giving the pupils time for observations: 60% of the prospective teachers did not leave any time to do so. In the second moderation, after the training phase and the eye tracking feedback, all prospective teachers gave observation orders and time to carry out these tasks.

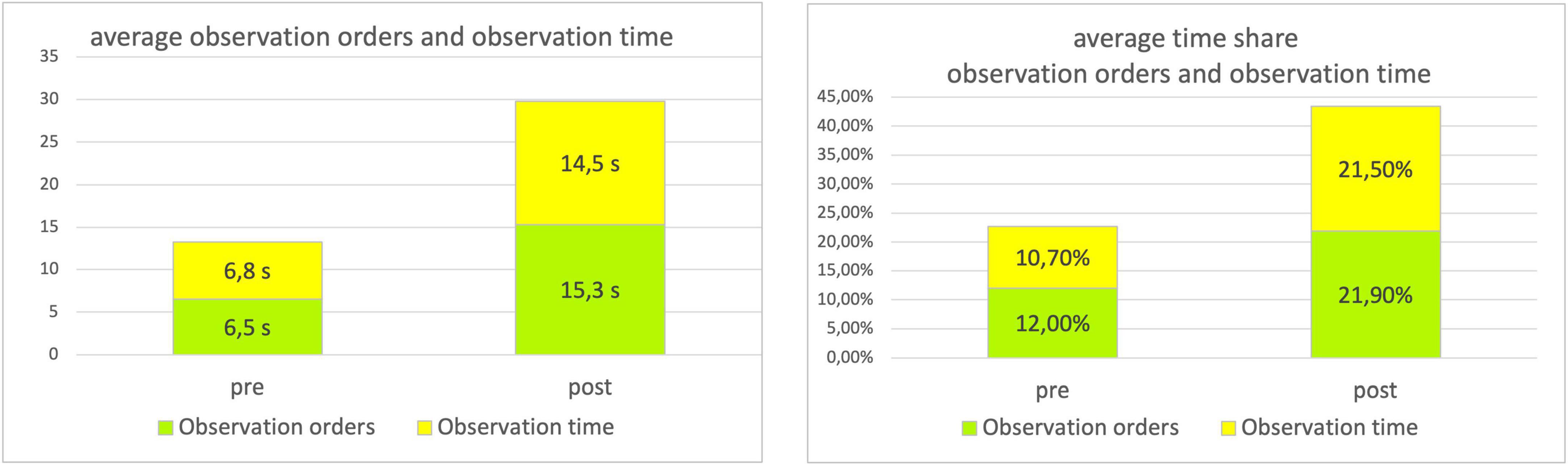

4.3.1. Activating time

Observation order time and observation time together result in the activating time. In the pre-trial, 40% of the prospective teachers placed observation orders. The average order time increased from 2.5 to 13.7 s, which means an increase of time share from 4.3 to 18.9%. The same development can be seen in the amount of observation time given to the pupils. In the pre-trial, 40% of the prospective teachers gave the pupils time for observations. The average observation time for all participants increased from 2.8 to 13.9 s, which means an increase of time share from 4.2 to 18.5%. Due to the high number of prospective teachers who did not give observation orders or time in the pre-trial, the SD is very high, i.e., the variance in response behavior is large. The gap between prospective teachers who activated pupils and those who did not is very large.

If we restrict ourselves to the participants who gave both observation order and observation time (n = 7), the following picture emerges:

The results of the dependent samples t-test show a significant difference with a high effect size between the mean percentage of activating time before and after moderation training with feedback [t = −3.075, p = 0.033, 95% CI (−29.21, −7.61), Cohen’s |d| = 15.77, n = 7]. After training with eye tracking feedback (M = 29.66%, SD = 11.29), subjects used significantly more pupil activating “tools” in their moderation than before training (M = 11.33%, SD = 11.13). Due to the small sample size, a bootstrapping procedure with 10,000 samples was applied.
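A bootstrapping procedure of this kind can be sketched as follows. The sketch assumes a simple percentile bootstrap of the mean paired difference (pre minus post); the text does not specify which bootstrap variant was applied, and the data below are invented for illustration and are not the data of this study.

```python
import random


def bootstrap_ci_mean_diff(pre, post, n_boot=10_000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the mean of pre - post."""
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible

    # Resample the paired differences with replacement and store each mean.
    boot_means = sorted(
        sum(rng.choices(diffs, k=n)) / n for _ in range(n_boot)
    )
    tail = (1.0 - level) / 2.0
    lower = boot_means[int(tail * n_boot)]
    upper = boot_means[int((1.0 - tail) * n_boot) - 1]
    return lower, upper


# Invented activating-time shares (%) for seven participants.
pre = [2.0, 5.5, 8.0, 10.0, 12.5, 15.0, 26.0]
post = [18.0, 20.0, 25.5, 30.0, 33.0, 36.0, 45.0]
lower, upper = bootstrap_ci_mean_diff(pre, post)
```

Resampling the paired differences rather than the two samples separately preserves the dependence between each participant's pre- and post-trial values.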

For prospective teachers who gave both orders and observation time in the first trial, the average length of orders increased from 6.5 to 15.3 s while the observation time given increased from 6.8 to 14.5 s. The share of pupil activating time more than doubled after the training (see Table 2 and Figure 8).

Table 2. Prospective teachers’ time share and average time (pre and post) for observation orders, observation time and activating time when moderating the execution of an experiment.

Figure 8. Increase of observation orders and observation time (pupils activating time) for prospective teachers who have given both in the first trial.

Overall, however, one of the most important findings is that all prospective teachers, regardless of what they did in the pre-trial, gave observation orders and observation time after the training with eye tracking feedback. The average percentage of pupil-activating time in the second trial was 34%, so that more than one-third of the execution time was used to activate the pupils.

4.3.2. Decrease of other parameters

Part of the training concept is that no explanations should be given while conducting an experiment. Explanations are a major part of the next step in the lesson; only observations should be made and recorded during the experiment. Nevertheless, the percentage of time spent on explanations decreased only slightly, from 10 to 8.3%, with the number of teachers giving explanations remaining the same. The prospective teachers did not seem to find this instruction meaningful.

The time spent on summaries also decreased from 42 to 30%, which seems to be a consequence of the fact that the prospective teachers were able to describe the essential content more precisely.

The descriptions of action followed the same trend as the summaries, falling from 19 to 15% of time, although the number of individual descriptions increased: The execution of the experiment is divided into three sequences (lamp1, lamp2, lamp1 and 2). In the post-trial 14 out of 15 prospective teachers gave concise and accurate action descriptions of these sequences. This was followed by observation tasks, with the timing of these messages much better aligned with the temporal sequence. The shorter duration of the action descriptions is again a consequence of the much more precise formulation of the descriptions.

Fortunately, the number of prospective teachers who temporarily left their pupils without any task dropped from eleven to five, i.e., it more than halved. The corresponding portion of time fell from 8.9 to 1.3%. All increases and decreases are shown in the following Sankey diagram (see Figure 9).

Figure 9. Sankey-diagram of all changes of the moderation of the execution of an experiment (all prospective teachers).

4.4. Results of the interviews and surveys

To answer research question 2 (RQ2), namely whether training with eye tracking feedback helps the prospective teachers to increase their pupils’ activating time, we analyzed the interviews and surveys. We considered the following statements to evaluate and rate the interviews:

• Category 1: Awareness of own impact “Eye tracking made me aware of my own impact on pupils.”

The Likert item “Eye tracking made me aware of my own impact on pupils” was answered in the pre-test with a mean M = 3.20 and SD = 0.75 and in the post-test with a mean M = 3.40 and SD = 0.49. This indicates that a large share of the prospective teachers showed high agreement with the statement and that this agreement even increased in the post-test. The decrease in SD shows that their responses also became more consistent.

To evaluate the interviews regarding this category the following two key phrases were used: (1) “see reaction of the pupils.” and (2) “see importance of orders.”

In the interview analysis, 63% of the participants fell into this category after the pre-trial and 70% after the post-trial. One prospective teacher (student_14) commented: “Eye tracking feedback is really good because you can just see how you’re affecting the pupils. You’re really doing something practical where you can directly see the consequences of your actions.”

• Category 2: Connection between control codes and pupils’ reaction “Eye tracking made me realize the connection between control codes (such as assignments and questions) and the response of the pupils.”

The Likert item “Eye tracking made me realize the connection between control codes (such as assignments and questions) and the response of the pupils” was answered in the pre-test again with a mean M = 3.20 and SD = 0.65 and in the post-test with a mean M = 3.73 and SD = 0.44. This indicates that a large share of the prospective teachers showed high agreement with the statement and that this agreement increased to very high agreement in the post-test. The decrease in SD shows that their responses also became more consistent. All prospective teachers in the study saw the pupils’ reaction to the control codes they applied.

To evaluate the interviews regarding this category the following three key phrases were used: (1) “see the effect of the control codes,” (2) “see effect of the spoken word,” (3) “see where the pupils look to.”

A total of 53% of the participants fell into this category after the pre-trial, 63% after the post-trial. “You can clearly see where the children look during the experiment, especially how they react to instructions”, was one of the prospective teachers’ comments (student_10).

• Category 3: Perceived difficulty in directing attention “I found it easy to direct the attention of the pupils to a certain area of the experiment.”

The Likert item “I found it easy to direct the attention of the pupils to a certain area of the experiment” was answered in the pre-test with a mean M = 2.20 and SD = 0.65. After the first interview it became clear that the prospective teachers were rather reserved about their ability to direct pupils’ attention. The mean M = 2.87 and SD = 0.44 in the post-test indicate that the perceived difficulties in directing the attention of the pupils decreased and that the prospective teachers largely agreed about this development. However, agreement with this item remained lower than with the other Likert items.

Responses that prospective teachers felt were important in directing pupils’ attention were used to evaluate this category, i.e., the following three key words were used: (1) “location items,” (2) “… surface features,” (3) “observation order.”

According to the interviews, 63% of the participants fell into this category after the pre-trial and 80% after the post-trial. “I have noticed which work orders help more,” commented student_9.

Another interesting finding from the interviews with the prospective teachers and the examination of many moderated videos must be mentioned. A total of 75% of prospective teachers indicated that it is important not only to direct the pupils’ eyes to a particular area, but also to keep them there. It therefore seems necessary to follow the order where to look with a second assignment, so that the pupils’ gazes stay on that spot.

This result was not expected and was not previously part of our considerations of attention-controlling moderation of experiments. It therefore seems necessary to investigate this circumstance more closely.

Generally, the overwhelming majority of prospective teachers stated that “it was very enlightening and informative to see where the pupils were looking and how they reacted to the instructions” (student_13).

5. Discussion

In this study, we investigated a training with eye tracking feedback to improve prospective teachers’ abilities of moderating experiments in class. To do this, we demonstrated to prospective teachers their ability to direct pupils’ attention using only verbal cues. We encouraged these skills through training and intensive feedback on their abilities. The three phases of feedback described in the literature (Wisniewski et al., 2020) could be realized in our approach: “feed-up” was realized by the prospective teachers watching the gaze overlay videos of their moderation, “feed-back” by comparing pre- and post-trial, and “feed-forward” by becoming aware which skills they should develop and which they should avoid. This feedback consisted of an assessment of the quality of the moderation (rating) and, in particular, the pupils’ reactions to the moderation (eye tracking). The success of this approach was measured by an assessment and through interviews.

Our results answering RQ 1 show that our approach significantly improves the ability to moderate the experimental set-up through verbal cues. We could show that training with eye tracking feedback and rating feedback helped prospective teachers to explain the set-up of an experiment in a pupil-appropriate way. The number of categories mentioned by the prospective teachers increased for all three objects in the set-up. Initially, the prospective teachers rarely provided a second surface feature and often relied on the pupils’ presumed prior knowledge. It is interesting to note that although the prospective teachers became better at locating the individual objects and at the same time named a second surface feature much more frequently, there is no significant change for any of the three objects in terms of their function. The function of an object also hardly appears in the interviews. To the prospective teachers, the function of an object seemed to be automatically supplied with the naming of the object or not worth mentioning. The wooden block seemed to be of little concern in both trials, although, compared to lamps and screens, the block’s function as a shadow-casting object is not self-evident. When asked why the block was given so little attention, reference was made to the corresponding preliminary experiment, although only two prospective teachers, and then only in the second trial, made a sufficient connection to the previous knowledge (in this case the creation of a simple shadow in the model of the rectilinear propagation of light). Overall, the prospective teachers had difficulty making transitions between the different phases of the experiment, with a total of only three (pre) or four (post) leading to execution with a research question or similar. With regard to the moderation of the execution of the experiments, the results show that the time in which pupils were given observation orders and observation time more or less doubled, while all the other parameters approximately halved.
Not only did the activating time increase, but the prospective teachers also paid much more attention to the respective sequences, so that the observation period corresponded much better to the action in the experiment. The time share spent explaining also decreased, although not as much as hoped. The prospective teachers seemed to have difficulty refraining from explanations during the presentation. But with the more intensive study of their own linguistic guidance, the prospective teachers’ moderations became steadily shorter and more concise in content. This was reflected in the decrease of complaints that the playtime of the videos was too short. Nevertheless, some moderations remained long-winded. However, the linguistic content analysis of the moderations is still pending.

Training with feedback through eye tracking and assessment resulted in a significant increase in pupil activating time (RQ2). Our results of the interviews and surveys show that training with eye tracking as a feedback tool has a high level of acceptance and perceived usefulness among the prospective teachers. In the interviews, the prospective teachers described, among other things, how eye tracking feedback made them aware of their previous abilities to accompany experiments linguistically. The direct feedback from the pupils set in motion a process that made them realize the value of a good description of the experimental set-up but also the possibilities of attention-grabbing work assignments. This feedback acted back on our prospective teachers as described in the Process Model of SFT (Röhl et al., 2021). Furthermore, the eye tracking feedback with the accompanying verbal analysis by the lecturer provided prospective teachers with information on how to improve their moderation skills. The interviews revealed the extent to which prospective teachers grappled with this information and developed individual instructional approaches (see section “1.2.2. Levels and forms of feedback”).

In the gaze overlay videos, when comparing pre- and post-moderation, one can clearly see the stronger focus of the pupils’ gaze and the longer stay in one area. Unfortunately, this effect cannot be statistically represented in our approach since we only had three pupils per prospective teacher available. Watching the gaze overlay videos of their own moderation showed the prospective teachers the gap between the target (directing pupils’ attention) and the current state.

6. Limitations

The results of the study should be interpreted with the following limitations. Firstly, the sample size is relatively small. Fifteen 5th semester prospective teachers participated in the study. At that time, they were all the prospective teachers enrolled in that semester, at that location and on that course. Expanding the sample to include other locations or prospective teachers from other semesters might have led to biases in their prior knowledge or experience. In addition, the recorded video experiments of the pre-test and the post-test of all participating subjects were shown to three pupils each and their eye movements were recorded. The effort involved was already very high and would have increased massively with a larger sample. This was therefore not done. Secondly, the pupils’ eye movements show the participants how their attentional cues work on the pupils’ visual attention. Of course, they do not provide any information about what the pupils have actually learned. The purpose of this study was not to measure changes in the pupils’ knowledge, but to use the pupils’ reactions to the verbal input of the prospective teachers as feedback.

7. Conclusion

Observing the gaze behavior of pupils watching a “silent video” moderated by prospective teachers themselves gives them authentic feedback on their own effectiveness. Prospective teachers can literally see the impact of their words, the reaction of the pupils listening to them. They see where the pupils are looking and individually recognize when or why the pupils leave the currently important areas of the set-up. The most important achievement, however, is that all prospective teachers can directly see and experience their own individual learning progress in accompanying experiments in an attention-activating verbal way. They can see how even small changes (e.g., giving a second surface feature or describing the function) in moderating an experiment can have a lasting impact on pupils’ attention.

The analysis of the connection between control codes (given as verbal attentional cues) and pupils’ response leads to another important result of the use of eye tracking: pupils follow the command to look at a particular area of the set-up immediately almost every time, but as quickly as they look, they leave it again. To keep their attention on the spot, it is necessary to give a second assignment or to describe another feature such as the function (given as verbal content-related cues) or another surface feature of an object. Pupils who have received at least two pieces of information or assignments stay longer on this area of the set-up. To stay longer on a certain spot is very necessary for the pupils to make the observation the teacher intended. Thus, the results show that one strength of verbal cues, namely, being able to offer attentional guidance and content support, should also be used. With the more intensive study of one’s own linguistic guidance the prospective teachers’ moderations became steadily shorter and more concise in content. However, the linguistic content analysis of the moderations is still pending.

Demonstrating experiments in class in a way that is effective for learning requires a lot of practice. Training with “silent videos” is a promising method to support this exercise process, although it cannot replace real-life execution. It is not the intent of this training to standardize prospective teacher moderation, just as there is no ideal type of moderation, but rather everyone should develop their own individual appropriate teaching style. However, using eye tracking feedback gives prospective teachers unbiased and direct feedback from real pupils on their verbal skills and the impact of their use of language on their pupils. This study has so far analyzed only the group that received both the training and the eye tracking feedback. It is therefore unclear how much of the prospective teachers’ positive development can be attributed to the training or the eye tracking feedback. Further research should explore how much of the improvement in the video presentations can be explained by the eye tracking feedback factor and how much by the training factor.

Data availability statement

The original contributions presented in this study are included in the article/Supplementary material, further inquiries can be directed to the corresponding author.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study.

Author contributions

MS, BW, and RG contributed to the conception and design of the study. MS organized the database and wrote the first draft of the manuscript. MS and BW performed the statistical analysis. BW wrote sections of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

Funding

This work “silent videos” of the Chair for Physics Education at LMU Munich is part of the project Lehrerbildung@LMU run by the Munich Center for Teacher Education, which was funded by the Federal Ministry of Education and Research under the funding code 01JA1810.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2023.1140272/full#supplementary-material

Footnotes

- For all “silent videos” see: https://www.didaktik.physik.uni-muenchen.de/lehrerbildung/lehrerbildung_lmu/video/

References

Addleman, D. A., and Jiang, Y. V. (2019). Experience-driven auditory attention. Trends Cogn. Sci. 23, 927–937. doi: 10.1016/j.tics.2019.08.002

Alemdag, E., and Cagiltay, K. (2018). A systematic review of eye tracking research on multimedia learning. Comput. Educ. 125, 413–428. doi: 10.1016/j.compedu.2018.06.023

Alpizar, D., Adesope, O. O., and Wong, R. M. (2020). A meta-analysis of signaling principle in multimedia learning environments. Educ. Tech. Res. Dev. 68, 2095–2119. doi: 10.1007/s11423-020-09748-7

Amso, D. (2016). Visual attention: Its role in memory and development. Washington, DC: American Psychological Association. Available online at: https://dcnlab.psychology.columbia.edu/sites/default/files/content/Visual-attention-Its-role-in-memory-and-development.pdf

Boucheix, J. M., and Lowe, R. K. (2010). An eye tracking comparison of external pointing cues and internal continuous cues in learning with complex animations. Learn. Instr. 20, 123–135. doi: 10.1016/j.learninstruc.2009.02.015

Cannon, M. D., and Witherspoon, R. (2005). Actionable feedback: Unlocking the power of learning and performance improvement. Acad. Manage. Perspect. 19, 120–134. doi: 10.5465/ame.2005.16965107

Cicchetti, D. V. (1994). Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Psychol. Assess. 6:284. doi: 10.1037/1040-3590.6.4.284

Cullipher, S., Hansen, S. J., and VandenPlas, J. R. (2018). “Eye tracking as a research tool: An introduction,” in Eye tracking for the chemistry education researcher, eds J. R. VandenPlas, S. J. R. Hansen, and S. Cullipher (Washington, DC: American Chemical Society), 1–9. doi: 10.1021/bk-2018-1292.ch001

de Koning, B. B., Tabbers, H. K., Rikers, R. M., and Paas, F. (2010). Attention guidance in learning from a complex animation: Seeing is understanding? Learn. Instr. 20, 111–122. doi: 10.1016/j.learninstruc.2009.02.010

Folyi, T., Fehér, B., and Horváth, J. (2012). Stimulus-focused attention speeds up auditory processing. Int. J. Psychophysiol. 84, 155–163. doi: 10.1016/j.ijpsycho.2012.02.001

Ginns, P. (2005). Meta-analysis of the modality effect. Learn. Instr. 15, 313–331. doi: 10.1016/j.learninstruc.2005.07.001

Glaser, M., and Schwan, S. (2015). Explaining pictures: How verbal cues influence processing of pictorial learning material. J. Educ. Psychol. 107:1006. doi: 10.1037/edu0000044

Hansen, S. J. R., Hu, B., Riedlova, D., Kelly, R. M., Akaygun, S., and Villalta-Cerdas, A. (2019). Critical consumption of chemistry visuals: Eye tracking structured variation and visual feedback of redox and precipitation reactions. Chem. Educ. Res. Pract. 20, 837–850. doi: 10.1039/C9RP00015A

Hattie, J. (2021). “Foreword,” in Student feedback on teaching in schools, eds W. Rollett, H. Bijlsma, and S. Röhl (Cham: Springer), 1–7.

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Hu, J., and Zhang, J. (2021). The effect of cue labeling in multimedia learning: Evidence from eye tracking. Front. Psychol. 12:736922. doi: 10.3389/fpsyg.2021.736922

Jarodzka, H., Janssen, N., Kirschner, P. A., and Erkens, G. (2015). Avoiding split attention in computer-based testing: Is neglecting additional information facilitative? Br. J. Educ. Technol. 46, 803–817. doi: 10.1111/bjet.12174

Jarodzka, H., Van Gog, T., Dorr, M., Scheiter, K., and Gerjets, P. (2013). Learning to see: Guiding students’ attention via a model’s eye movements fosters learning. Learn. Instr. 25, 62–70. doi: 10.1016/j.learninstruc.2012.11.004

Katsuki, F., and Constantinidis, C. (2014). Bottom-up and top-down attention: Different processes and overlapping neural systems. Neuroscientist 20, 509–521. doi: 10.1177/1073858413514136

Keller, L., Cortina, K. S., Müller, K., and Miller, K. F. (2022). Noticing and weighing alternatives in the reflection of regular classroom teaching: Evidence of expertise using mobile eye tracking. Instr. Sci. 50, 251–272. doi: 10.1007/s11251-021-09570-5

Kluger, A. N., and DeNisi, A. (1996). The effects of feedback interventions on performance: A historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychol. Bull. 119:254. doi: 10.1037/0033-2909.119.2.254

Kriz, S., and Hegarty, M. (2007). Top-down and bottom-up influences on learning from animations. Int. J. Hum. Comput. Stud. 65, 911–930.

Langner, A., Graulich, N., and Nied, M. (2022). Eye tracking as a promising tool in pre-service teacher education: A new approach to promote skills for digital multimedia design. J. Chem. Educ. 99, 1651–1659. doi: 10.1021/acs.jchemed.1c01122

Lockhofen, D. E. L., and Mulert, C. (2021). Neurochemistry of visual attention. Front. Neurosci. 15:643597. doi: 10.3389/fnins.2021.643597

Lupyan, G. (2017). Changing what you see by changing what you know: The role of attention. Front. Psychol. 8:553. doi: 10.3389/fpsyg.2017.00553

Mayer, R. E. (2014). “Cognitive theory of multimedia learning,” in The Cambridge handbook of multimedia learning, ed. R. E. Mayer (Cambridge: Cambridge University Press), 43–71. doi: 10.1017/CBO9781139547369.005

Mayring, P. (2015). “Qualitative content analysis: Theoretical background and procedures,” in Approaches to qualitative research in mathematics education. Advances in mathematics education, eds A. Bikner-Ahsbahs, C. Knipping, and N. Presmeg (Dordrecht: Springer). doi: 10.1007/978-94-017-9181-6_13

McIntyre, N. A., and Foulsham, T. (2018). Scanpath analysis of expertise and culture in teacher gaze in real-world classrooms. Instr. Sci. 46, 435–455. doi: 10.1007/s11251-017-9445-x

McIntyre, N. A., Mainhard, M. T., and Klassen, R. M. (2017). Are you looking to teach? Cultural, temporal and dynamic insights into expert teacher gaze. Learn. Instr. 49, 41–53. doi: 10.1016/j.learninstruc.2016.12.005

Minarikova, E., Smidekova, Z., Janik, M., and Holmqvist, K. (2021). Teachers’ professional vision: Teachers’ gaze during the act of teaching and after the event. Front. Educ. 6:716579. doi: 10.3389/feduc.2021.716579

Mussgnug, M., Lohmeyer, Q., and Meboldt, M. (2014). “Raising designers’ awareness of user experience by mobile eye tracking records,” in DS 78: Proceedings of the 16th International conference on engineering and product design education (E&PDE14), design education and human technology relations, (The Netherlands: University of Twente).

Narciss, S., and Huth, K. (2006). Fostering achievement and motivation with bug-related tutoring feedback in a computer-based training for written subtraction. Learn. Instr. 16, 310–322. doi: 10.1016/j.learninstruc.2006.07.003

Ozcelik, E., Arslan-Ari, I., and Cagiltay, K. (2010). Why does signaling enhance multimedia learning? Evidence from eye movements. Comput. Hum. Behav. 26, 110–117. doi: 10.1016/j.chb.2009.09.001

Pinto, Y., van der Leij, A. R., Sligte, I. G., Lamme, V. A. F., and Scholte, H. S. (2013). Bottom-up and top-down attention are independent. J. Vis. 13:16. doi: 10.1167/13.3.16

Richter, J., Scheiter, K., and Eitel, A. (2016). Signaling text-picture relations in multimedia learning: A comprehensive meta-analysis. Educ. Res. Rev. 17, 19–36. doi: 10.1016/j.edurev.2015.12.003

Röhl, S., Bijlsma, H., and Rollett, W. (2021). “The process model of student feedback on teaching (SFT): A theoretical framework and introductory remarks,” in Student feedback on teaching in schools, eds W. Rollett, H. Bijlsma, and S. Röhl (Cham: Springer). doi: 10.1007/978-3-030-75150-0_1

Rollett, W., Bijlsma, H., and Röhl, S. (2021). “Student feedback on teaching in schools: Current state of research and future perspectives,” in Student feedback on teaching in schools, eds W. Rollett, H. Bijlsma, and S. Röhl (Cham: Springer), 259–270. doi: 10.1007/978-3-030-75150-0_16

Salvucci, D. D., and Goldberg, J. H. (2000). “Identifying fixations and saccades in eye-tracking protocols,” in Proceedings of the 2000 symposium on eye tracking research & applications, (Palm Beach Gardens, FL: ACM), 71–78. doi: 10.1145/355017.355028

Schmidt-Weigand, F., Kohnert, A., and Glowalla, U. (2010). A closer look at split visual attention in system- and self-paced instruction in multimedia learning. Learn. Instr. 20, 100–110. doi: 10.1016/j.learninstruc.2009.02.011

Schneider, S., Beege, M., Nebel, S., and Rey, G. D. (2018). A meta-analysis of how signaling affects learning with media. Educ. Res. Rev. 23, 1–24. doi: 10.1016/j.edurev.2017.11.001

Schweinberger, M., and Girwidz, R. (2022). “‘Silent videoclips’ for teacher enhancement and physics in class—material and training wheels,” in Physics teacher education, eds J. Borg Marks, P. Galea, S. Gatt, and D. Sands (Cham: Springer), 149–159. doi: 10.1007/978-3-031-06193-6_11

Shute, V. J. (2008). Focus on formative feedback. Rev. Educ. Res. 78, 153–189. doi: 10.3102/0034654307313795

Stuermer, K., Seidel, T., Mueller, K., Häusler, J., and Cortina, K. S. (2017). What is in the eye of preservice teachers while instructing? An eye-tracking study about attention processes in different teaching situations. Zeitschrift für Erziehungswissenschaft 20, 75–92. doi: 10.1007/s11618-017-0731-9

Sweller, J., Ayres, P., and Kalyuga, S. (2011). Cognitive load theory. New York, NY: Springer. doi: 10.1007/978-1-4419-8126-4

Szulewski, A., Braund, H., Egan, R., Gegenfurtner, A., Hall, A. K., Howes, D., et al. (2019). Starting to think like an expert: An analysis of resident cognitive processes during simulation-based resuscitation examinations. Ann. Emerg. Med. 74, 647–659. doi: 10.1016/j.annemergmed.2019.04.002

Watzka, B., Hoyer, C., Ertl, B., and Girwidz, R. (2021). Wirkung visueller und auditiver Hinweise auf die visuelle Aufmerksamkeit und Lernergebnisse beim Einsatz physikalischer Lern-videos. Unterrichtswissenschaft 49, 627–652. doi: 10.1007/s42010-021-00118-7

Wisniewski, B., Zierer, K., and Hattie, J. (2020). The power of feedback revisited: A meta-analysis of educational feedback research. Front. Psychol. 10:3087. doi: 10.3389/fpsyg.2019.03087

Xenos, M., and Rigou, M. (2019). Teaching HCI design in a flipped learning M.Sc. course using eye tracking peer evaluation data. arXiv [preprint].

Keywords: silent videos, eye tracking, feedback, directing attention, self-perception, verbal cues

Citation: Schweinberger M, Watzka B and Girwidz R (2023) Eye tracking as feedback tool in physics teacher education. Front. Educ. 8:1140272. doi: 10.3389/feduc.2023.1140272

Received: 08 January 2023; Accepted: 05 May 2023;

Published: 30 May 2023.

Edited by:

Pascal Klein, University of Göttingen, Germany

Reviewed by:

Sebastian Becker, University of Cologne, Germany

Nicole Graulich, University of Giessen, Germany

Copyright © 2023 Schweinberger, Watzka and Girwidz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Matthias Schweinberger, m.schweinberger1@lmu.de
