- 1Department of Physics and Department of Chemical Engineering, Auburn University, Auburn, AL, United States

- 2Graduate School of Education, Stanford University, Stanford, CA, United States

Introduction: Many experts have predicted a drop in students’ academic performance due to an extended period of remote instruction and other harmful effects of the pandemic.

Methods: As university instructors and education researchers, we sought to investigate the effects of the pandemic on students’ preparation for college-level coursework and their performance in early college using mixed effects regression models. Data were collected from STEM students at a public research university in the southeastern United States.

Results: We found that demographic gaps in high school preparation (as measured by ACT scores) between men and women, as well as between underrepresented minority and majority students, remained relatively consistent after the start of the pandemic. These gaps were approximately 1 point (out of 36) and 3 points, respectively. However, the gap between first-generation and continuing-generation students increased after 2020, from approximately 1 point to 2 points. This increased gap in preparation was not accompanied by a corresponding shift in the demographics of the student population, and there was no corresponding increase in the demographic gaps in students’ first-term grades.

Discussion: The data seem to suggest that first-generation students in STEM suffered more from the changes to secondary instruction during the pandemic, but that college instructors were able to mitigate some of these effects on first-semester grades. However, these effects were only mitigated to the extent that they preserved the status quo of pre-pandemic inequities in undergraduate STEM education.

Introduction

The COVID-19 pandemic has had a profound impact on all aspects of the lives of instructors and post-secondary students. Some examples from the realm of formal education are reported declines in student mental health (Malik and Javed, 2021; Elsner et al., 2022) and increased concerns about academic dishonesty in an online teaching environment (Hill et al., 2021). Not all reported outcomes of the pandemic have been negative; for example, there have been some positive changes in the realm of online education (Pokhrel and Chhetri, 2021). However, the majority of reported effects have been negative for students and teachers alike. A survey of instructors and students across three vastly different universities in three different regions (Europe, the United States, and Australia) found that both instructors and students significantly preferred in-person classes over the emergency remote instruction experience (Salehi et al., in review). What remains unclear is the long-term effect of the pandemic and emergency remote teaching on student academic outcomes.

Despite the relative scarcity of data, there are many reports in the popular media in which university faculty claim that students are much less academically prepared than they were before the pandemic (How to Solve the Student Disengagement Crisis, 2022; Malesic, 2022). The most comprehensive study of academic preparedness at this level in the United States comes from a private company that administers college entrance exams (the ACT). Their study compared 600,000 11th grade students from 3,900 high schools across 38 states, who took the ACT during the school day in the spring of 2021, with historical data (Allen, 2022). The analysis showed a reduction in ACT scores in the U.S. of about 0.5 points on a 36-point scale, which the author equates with a 3-month loss of learning. However, it is unclear what this indicates about college preparation, as this reduction may have been due to many universities waiving standardized test requirements during the pandemic. That is, students may not have been motivated to try as hard during the test because the tests were not considered in the admissions process at many schools. Additionally, some of the author’s methodological choices could have made the sample unrepresentative of the national student population. For example, the study omits students who took the tests on Saturday mornings outside of school. It also includes only schools where 75% of students or more took the test (many students who are not going to college, or who plan to attend community college, may choose not to take the ACT, for example). The author also acknowledges that, because the data come from in-person schools, there is likely an undersampling of students from areas where remote instruction continued into 2021 (Allen, 2022). Most relevant for this study, the author found that decreases in ACT scores were less pronounced for students of color and students from low-income households.

One factor that has received limited attention in most investigations of academic preparedness during the pandemic is demographics. There are many reports in STEM education of the systemic inequities that disproportionately affect marginalized groups: women, students of color, and first-generation students (Van Dusen and Nissen, 2020). Many have hypothesized that these groups would be affected more by the pandemic than white men. In some ways this has been shown to be true (e.g., access to health care: Robertson et al., 2022; access to a proper remote study setting, differential gender changes in commitments outside study, and/or anxiety about familial and professional stressors due to the pandemic: Sifri et al., 2022), but there has been relatively little data available on how academic outcomes differed for marginalized versus overrepresented students during the pandemic. Additionally, among the investigations available, most focus on the student body as a whole and do not break down how STEM and non-STEM students may have fared. As the ACT has been shown to be strongly predictive of STEM outcomes (Salehi et al., 2020), it seems relevant to consider the population of STEM students separately. The contribution of this study is to specifically investigate the impact of the pandemic on academic outcomes for STEM students along multiple dimensions of demographic backgrounds, which, to our knowledge, has not been done previously.

The primary goal of this work is to provide additional data as to how students’ academic preparation and performance may have changed from pre-pandemic times to the present. We are particularly interested in whether any changes observed may differ by different demographic groups. Such examinations can help educators to leverage more insightfully institutional and instructional practices to mitigate the long-term educational impacts of the pandemic. The generalizability of this study may be limited as we draw data from a single institution in the United States, but we hope to encourage others with similar data to investigate and share their results so that the educational research community can develop a more complete understanding of the problems that will face students and instructors moving forward.

Theoretical framework

We draw on theoretical frameworks of equity that are rooted in quantitative critical theory (QuantCrit; Stage, 2007; Lopez et al., 2018). QuantCrit complements qualitative critical studies of equity, which are historically centered on the lived experiences of marginalized students, by using large-scale data to represent student data in ways that draw attention to structural inequities that perpetuate injustices against gender minorities, people of color, and people from lower socioeconomic classes. QuantCrit investigations should also encourage researchers to identify where society fails to measure outcomes for marginalized groups. Importantly for this study, QuantCrit conceptualizes differences in educational outcomes between marginalized and majority groups as “educational debts” owed to the marginalized students (Van Dusen and Nissen, 2020). These are also sometimes referred to as “opportunity gaps” (Shukla et al., 2022).

We note that, for the population studied here (STEM students), there are many aspects of identity that can lead to marginalization. These include gender (women are underrepresented in STEM), race/ethnicity, and whether a student’s parents attended college (Reardon, 2018). In the United States, race/ethnicity and parental education often serve as proxies for socioeconomic status. Demographically marginalized students tend to come from underfunded rural and urban school districts without the same educational opportunities as those found in districts that serve predominantly white and Asian students. As a result, white and Asian students have been overrepresented in higher education relative to their share of the total US population (Van Dusen and Nissen, 2020). There is also a plethora of research showing that women are underrepresented in STEM generally (Costello et al., 2023).

Our framework of equity is based on work by Rodriguez et al. (2012) and was extended by Burkholder et al. (2020). This framework provides four different definitions of equity:

1. Equity of individuality: students from a marginalized group see improvements in performance following an intervention. This definition of equity does not compare different groups of students, but instead centers itself on improving the experiences of and outcomes for marginalized students. For the current study, a relevant example would simply be an increase in GPAs of first-generation students in 2021 compared to pre-pandemic.

2. Equity of outcomes: this is the definition of equity instructors and researchers typically strive for in educational interventions. This is defined as students from marginalized and majority groups having the same outcomes at the end of an intervention, such as a course. In our study, this would correspond to majority and marginalized students having identical distributions of ACT scores after the transition to online instruction.

3. Equity of learning: this is defined as students in marginalized and majority groups seeing equal changes in performance over the duration of an intervention. For example, if administering a pre- and post-test of conceptual knowledge, the change in scores would be the same for students of color and white students. Note that this does not necessarily indicate equity of outcomes.

4. Equity of opportunity: this definition of equity compares outcomes for students from marginalized and majority groups accounting for existing inequities between the groups at the start of an intervention. Specifically, it compares the outcomes of students from marginalized groups and majority groups who were otherwise identical on available measures of academic performance and experience. In our study, this is operationalized by calculating demographic opportunity gaps in first-semester GPA while controlling for ACT scores. Thus, our reported effect size may be, for example, the average difference in GPA scores between first- and continuing-generation students who had otherwise equivalent ACT scores.

Each definition of equity has different implications for how opportunity gaps or educational debts might shift over time. Equity of learning and equity of opportunity correspond to a preservation of systemic inequities – the marginalized group is still owed an educational debt, but the debt is not any larger than it was before the intervention. Equity of outcomes represents the resolution of educational debts from a quantitative perspective: despite inequities in academic preparation, the marginalized and majority groups achieve the same level of understanding or performance. Equity of individuality may correspond to either preservation or mitigation of educational debts but is centered on the experiences of marginalized students, so it does not make the comparison directly. Finally, if educational debts grow, no definition of equity is achieved.

We argue that the multiple definitions of equity are not all mutually exclusive and may be used in combination. For example, one may simultaneously investigate equity of outcomes and equity of individuality. In the study below there is an explicit example of this where we show that students from marginalized racial/ethnic groups were seeing faster increases in standardized test scores compared to their overrepresented counterparts prior to the pandemic (equity of individuality), but that there remains a racial gap in standardized test scores (equity of outcomes). The only definition of equity that will not be used in this study is equity of learning, as we are not investigating changes because of an instructional intervention.

The authors of this paper are white and cisgendered. E. W. B. identifies as a man and a member of the LGBTQIA+ community and is a U.S. citizen. S. S. identifies as an immigrant and a woman with a background in engineering. The authors are both dedicated to understanding and mitigating demographic disparities in higher education outcomes for students of all races, ethnicities, genders, and socioeconomic strata. We believe that disparities in academic achievement reflect systemic inequities and not deficiencies in the students. Thus, when we discuss gaps in ACT scores, we interpret these gaps as how the system fails or is biased against certain groups of students.

Research questions

In light of our theoretical framework, we had the following research questions:

1. How did opportunity gaps between majority and minority groups in undergraduate STEM education, as measured by standardized tests such as ACT/SAT, shift during the pandemic?

2. Did any changes in opportunity gaps correspond to changes in the demographic composition of undergraduate STEM students in the population studied?

3. Did any changes in opportunity gaps correspond to changes in early academic performance in college?

We hypothesize that there will be an increase in the demographic gaps between majority and minority students along racial/ethnic and parental education boundaries as these serve as proxies for socioeconomic status. There are many reports indicating that the pandemic was most impactful on the poorest Americans (Robertson et al., 2022). We do not anticipate any change in the gender gap, as the student population studied is relatively young and mostly lives on campus, so most do not have caretaking responsibilities. We anticipate that any gaps in pre-college education will translate into gaps in academic performance in college, as research has shown that pre-college performance is highly predictive of performance early in college (Salehi et al., 2020). We would also expect a shift in demographic composition of the incoming student body, as standardized tests such as the ACT play an important role in college admissions in the U. S. That is, we expected that increased demographic gaps in ACT scores would mean that more marginalized students were scoring low enough that they were not admitted to the school in the first place. This assumes that the procedure for admitting students remained the same after the start of the pandemic, which we cannot say for sure as it is a highly guarded process.

Methods

Data were collected from institutional records at a single public research university in the southeastern United States. We had access to student records for nearly 50,000 students who first enrolled at the university between the Fall of 2013 and the Fall of 2022. We removed transfer students and international students from this sample because (1) they represent a very small fraction of students at the university (<3%) and (2) they may be subject to different admissions requirements than first-time freshmen (for example, ACT scores are not required for transfer students, and international students must also complete an English proficiency test). We included only students initially intending to study STEM. We used a broad definition of STEM including engineering, physics, chemistry, mathematics, geoscience, biological sciences, nursing, agriculture, forestry, and wildlife ecology. The final pool of students was 18,701 STEM majors. Students who matriculated in or prior to the Fall of 2020 were considered “pre-pandemic” (they had already been admitted by the start of the pandemic in the United States), and students who matriculated after the Fall of 2020 were considered “during-pandemic.”
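The sample construction described above can be sketched as a simple filter followed by a cohort label. This is a minimal illustration under stated assumptions, not the authors' code: the record fields (`admit_type`, `is_international`, `intended_major`, `matric_year`), the function names, and the lowercase major labels are all hypothetical, and `matric_year` is assumed to be the calendar year of the fall term in which a student first enrolled.

```python
from dataclasses import dataclass

# Intended majors counted as STEM, following the broad list in the text.
# The exact labels used in institutional records are an assumption.
STEM_FIELDS = {
    "engineering", "physics", "chemistry", "mathematics", "geoscience",
    "biological sciences", "nursing", "agriculture", "forestry",
    "wildlife ecology",
}


@dataclass
class StudentRecord:
    admit_type: str          # "first_time" or "transfer" (hypothetical coding)
    is_international: bool
    intended_major: str
    matric_year: int         # year of the fall term of first enrollment


def in_sample(rec: StudentRecord) -> bool:
    """Keep domestic, first-time students who initially intended a STEM major."""
    return (
        rec.admit_type == "first_time"
        and not rec.is_international
        and rec.intended_major.lower() in STEM_FIELDS
    )


def cohort_label(rec: StudentRecord) -> str:
    """Fall 2020 or earlier counts as pre-pandemic; later as during-pandemic."""
    return "pre-pandemic" if rec.matric_year <= 2020 else "during-pandemic"
```

Under these assumptions, a first-time domestic physics student who enrolled in the Fall of 2020 is kept and labeled "pre-pandemic", while a transfer student is dropped regardless of major.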

For each student in the sample, we had access to their ACT composite scores. The ACT is one of two college entrance examinations offered in the United States; the other test is the SAT. This university accepts both SAT and ACT scores but converts all scores using information from the test manufacturers (ACT, 2018) to create an equivalent ACT score for all students (as only 15% of the scores were SAT scores). The ACT covers mathematics, science, reading, and writing and is scored out of 36 points. Historically, the interquartile range of ACT scores at this university is 25–31. We note an important change in the admissions policy at this institution that occurred at the start of the pandemic. Prior to the pandemic, all students were required to submit ACT scores to the university. As of the Fall of 2021, students who had a high school GPA greater than 3.6 (on a 5.0 scale) were no longer required to submit these scores, though less than 3% of students who met this criterion chose not to submit scores. Because the amount of missing data is small, we simply omitted students for whom we had no ACT score, but we discuss the potential bias of this choice in the discussion below. We found that both the low-GPA and high-GPA students tended to submit their scores, while students who were closer to the GPA threshold were more likely not to submit. Thus, the range and variance of high school GPAs (which are correlated with ACT scores) were not changed by deleting the missing ACT scores. Indeed, the difference between high school GPA for students with and without ACT scores submitted was not significant (p = 0.31); therefore, we do not expect this omission to bias our results.
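The check that students with and without ACT scores had similar high school GPAs can be illustrated with a two-sided permutation test for a difference in means. The text does not say which test produced p = 0.31, so the permutation test here is a swapped-in technique chosen because it needs only the standard library; the function name and arguments are hypothetical.

```python
import random


def permutation_p_value(group_a, group_b, n_perm=5000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Repeatedly shuffles the pooled values, re-splits them into groups of
    the original sizes, and counts how often the shuffled mean difference
    is at least as large as the observed one.
    """
    rng = random.Random(seed)
    n_a = len(group_a)
    pooled = list(group_a) + list(group_b)
    n_b = len(pooled) - n_a

    observed = abs(sum(group_a) / n_a - sum(group_b) / n_b)

    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        if diff >= observed:
            hits += 1

    # Add-one correction so the estimated p-value is never exactly zero.
    return (hits + 1) / (n_perm + 1)
```

A large p-value from such a test, applied to the high school GPAs of score submitters and non-submitters, would be consistent with the claim that dropping students without ACT scores does not shift the GPA distribution.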

In addition to these measures of high school preparation, we had access to student demographic information: namely a binary measure of their gender, their race, and whether they were a first-generation college student (defined as a student with no parent that received a bachelor’s degree). Because the student population at this university is relatively racially homogeneous (80% white), we aggregated students into two groups for statistical power: persons excluded on the basis of ethnicity or race (PEER; Asai, 2020) – defined as non-white and non-Asian students – and non-PEER students. Students with multiple racial identities are coded as PEER. We acknowledge this as an important limitation of the analysis that may obscure effects of other major events that occurred during the pandemic, e.g., high-profile instances of police violence against black people in the United States, that may have differentially impacted different groups of students. We encourage other institutions to study similar trends to better understand the variation in needs of their incoming students. We also acknowledge the limitation of binary measures of gender (which is still standard data-keeping practice at most institutions). We urge institutions that maintain more nuanced measures of gender identity to repeat our analyses and share their results so that we can better understand the impacts of the pandemic on non-binary people.
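The PEER coding rule stated above (non-white and non-Asian students, with multiracial students coded as PEER) can be written as a short function. This is a sketch only: the function name, the string labels for racial identities, and the choice to raise an error on missing data are assumptions, not details from the study.

```python
# Identities that, when reported alone, are coded as non-PEER.
NON_PEER_IDENTITIES = {"white", "asian"}


def is_peer(racial_identities):
    """Return True if a student is coded as PEER under the rule in the text.

    racial_identities: iterable of identity labels reported for one student.
    """
    identities = {label.strip().lower() for label in racial_identities}
    if not identities:
        # Handling of missing race data is not described in the text.
        raise ValueError("no racial identity recorded")
    if len(identities) > 1:
        # Students with multiple racial identities are coded as PEER.
        return True
    return not identities <= NON_PEER_IDENTITIES
```

Note that under this rule a student who reports both white and Asian identities is coded as PEER, because the multiple-identity rule takes precedence.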

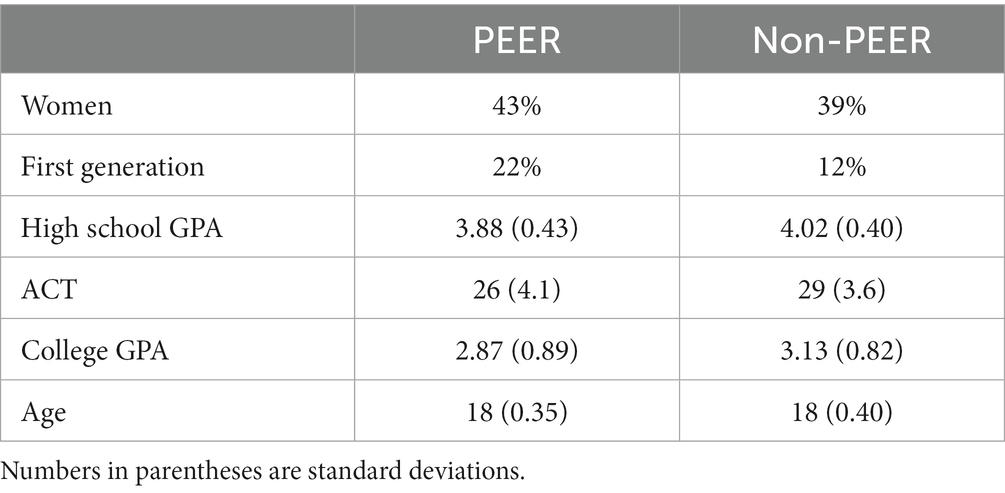

Finally, we had students’ first-term GPAs. A first-term STEM student will typically enroll in a writing course, a math course (either calculus or trigonometry depending on the program), a science course (most often chemistry or physics) and an elective course. These GPAs are reported on a standard 4.0 scale. We note that instruction was fully in-person prior to the 2020 Spring Semester and had returned to fully in-person in the fall of 2021. In a previous study of instructors from this institution, we found that relatively little changed with respect to assessment policies and teaching practices because of the pandemic (Salehi et al., in review). A demographic summary of the sample population may be found in Table 1.

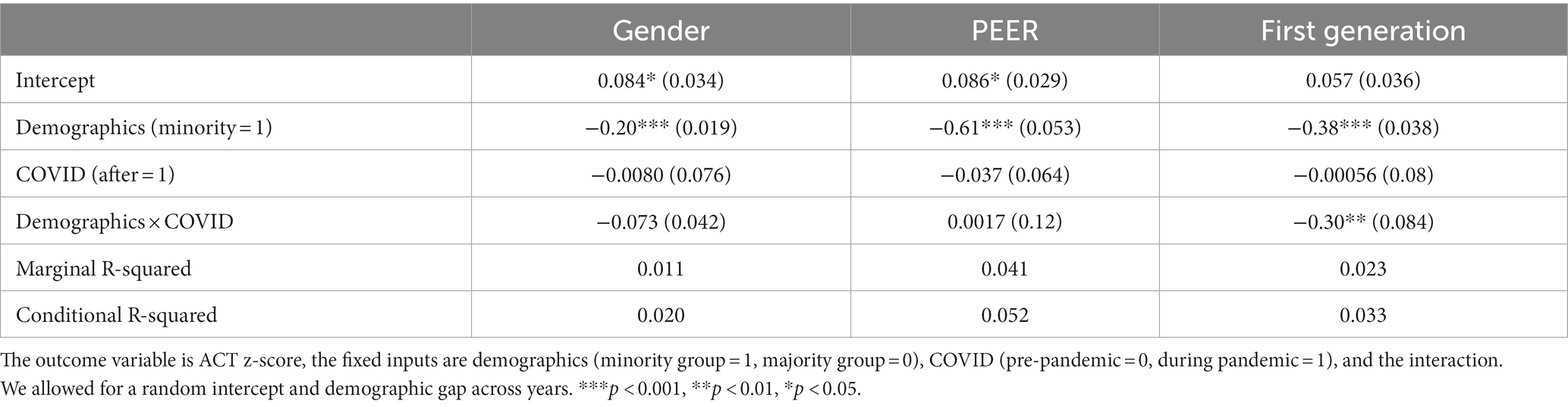

The first research question we answered using mixed-effects regression models of the difference between the ACT scores of majority and minority students. In particular, the model was:

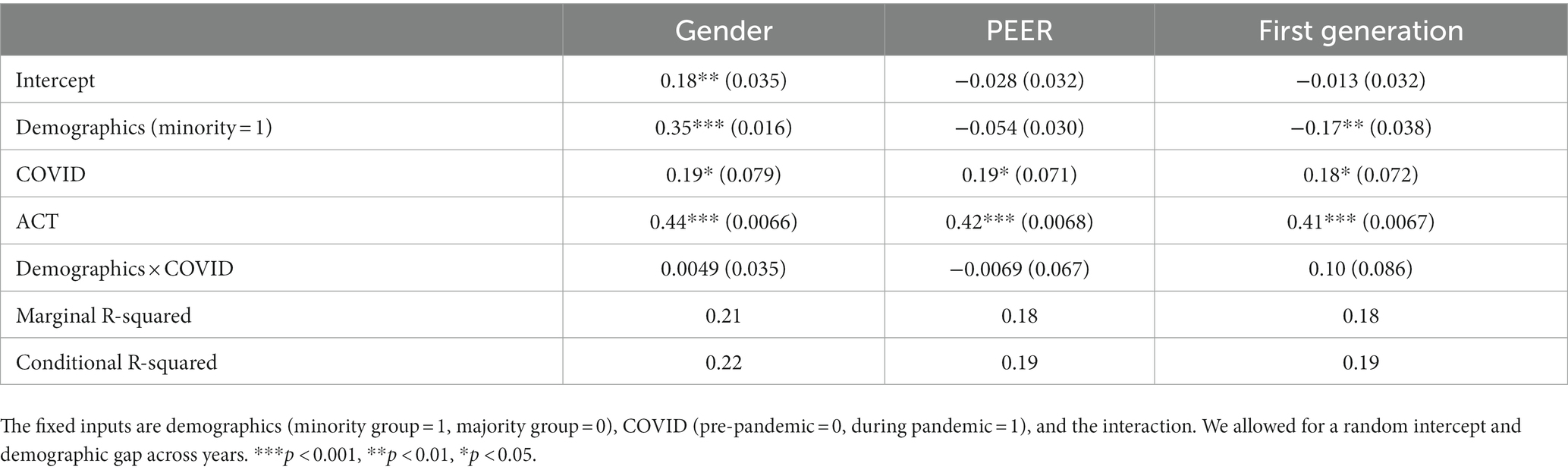

The second research question we answered by examining the demographic composition of the population before and during the pandemic. The third research question we answered by calculating differences between majority and minority students’ first-term GPAs in each year using a model analogous to the equation above.
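The core quantity behind research questions 1 and 3 is how a demographic gap changes from the pre-pandemic to the during-pandemic cohorts. As a deliberately simplified stand-in for the mixed-effects regression (it ignores the time trend, any random effects, and standard errors), the change in gap can be computed as a difference-in-differences of group means. In a regression containing only a group indicator, a pandemic indicator, and their interaction, the interaction coefficient equals this quantity. The function name and the tuple layout are hypothetical.

```python
from statistics import mean


def gap_change(records):
    """Difference-in-differences of mean scores.

    records: iterable of (score, is_minority, is_pandemic) tuples.
    Returns (pre_gap, post_gap, change), where each gap is the majority
    mean minus the minority mean and change = post_gap - pre_gap.
    """
    cells = {}
    for score, is_minority, is_pandemic in records:
        key = (bool(is_minority), bool(is_pandemic))
        cells.setdefault(key, []).append(score)

    means = {key: mean(values) for key, values in cells.items()}

    pre_gap = means[(False, False)] - means[(True, False)]
    post_gap = means[(False, True)] - means[(True, True)]
    return pre_gap, post_gap, post_gap - pre_gap


# Made-up scores for illustration only; these are not data from the study.
toy = [
    (28, False, False), (30, False, False),   # majority, pre-pandemic
    (27, True, False), (29, True, False),     # minority, pre-pandemic
    (28, False, True), (30, False, True),     # majority, during-pandemic
    (26, True, True), (28, True, True),       # minority, during-pandemic
]
pre_gap, post_gap, change = gap_change(toy)
```

A positive `change` means the gap widened after the start of the pandemic; the regression approach additionally provides uncertainty estimates and adjusts for the year-to-year trend.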

Results

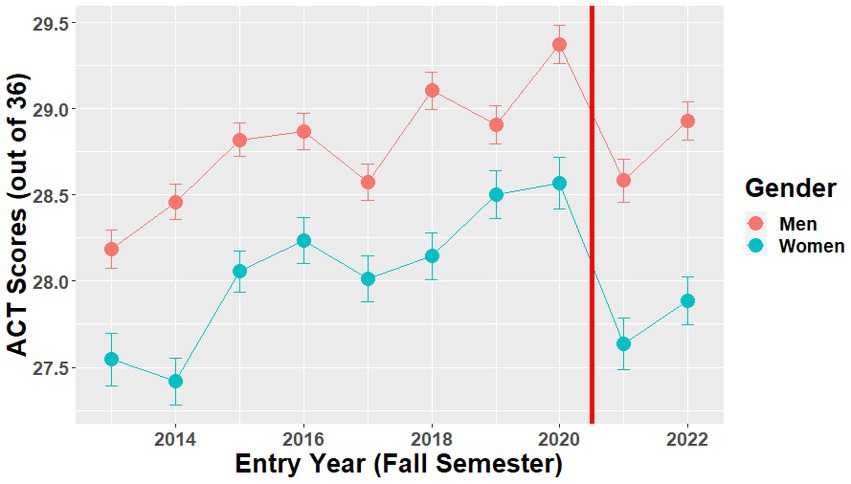

In the following, we first review the differences in ACT scores across demographic groups. The differences in ACT scores between men and women are shown in Figure 1. Before the pandemic, men on average scored 0.19 standard deviations higher than women on the ACT (p < 0.001). After 2020, men’s average ACT scores dropped by 0.08 standard deviations, which was within the annual fluctuations of the ACT score (p = 0.35). The gender gap after the start of the pandemic grew to 0.25 standard deviations, but this increase was within the year-to-year fluctuations in gap size (p = 0.12); hence, the change in the ACT gender gap from before to after the start of the pandemic was not significant. We note that there is a trend of increasing ACT scores over time (p = 0.004), but this trend is not significantly different for men and women (p = 0.31).

Figure 1. ACT scores for men (blue) and women (red) over time. Error bars represent standard error. The vertical red line indicates the division between pre-pandemic and current data.

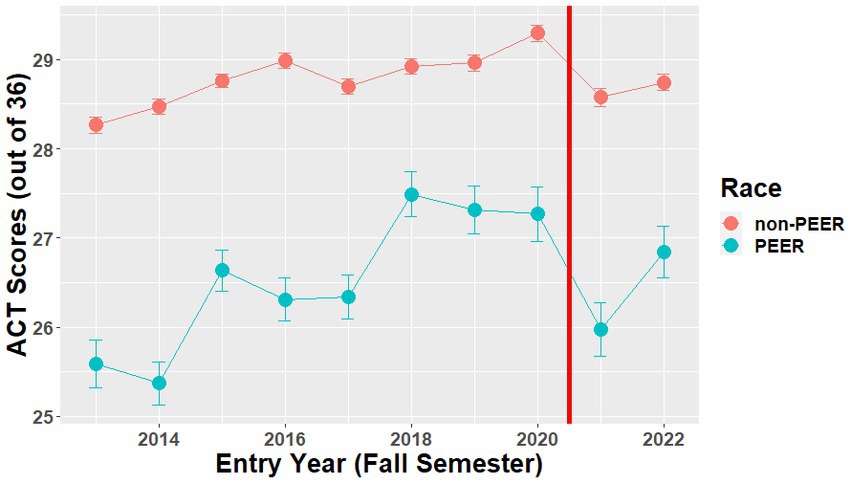

The differences in ACT scores for PEER and non-PEER students are shown in Figure 2. Prior to the pandemic, PEER students on average scored 0.60 standard deviations lower than non-PEER students on the ACT (p < 0.001). During the pandemic, non-PEER students saw a decrease in scores of 0.03 standard deviations (not significant, p = 0.71), while PEER students saw a decrease of 0.04 standard deviations (difference not significant). Therefore, the change in the ACT gap across PEER status from before to after the start of the pandemic was not significant. As above, there has been a trend of ACT scores increasing over time for all students, but the scores of PEER students have been increasing faster than those of non-PEER students (p = 0.002).

Figure 2. ACT scores for PEER students (blue) and non-PEER students (red) over time. Error bars represent standard error. The vertical red line indicates the division between pre-pandemic and current data.

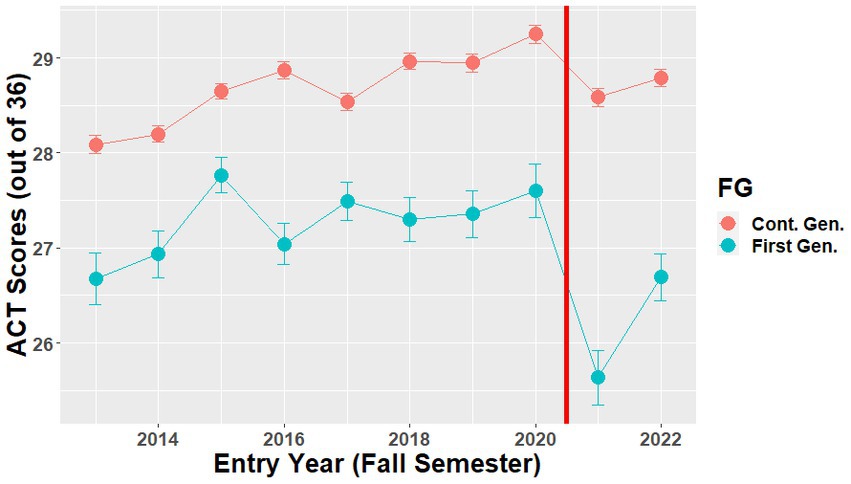

The differences in ACT scores for first generation (FG) and continuing generation (CG) students are shown in Figure 3. Prior to the pandemic, the gap in ACT scores between FG and CG students was 0.36 standard deviations (p < 0.001). During the pandemic, there was essentially no change in CG students’ ACT scores (0.004 standard deviations, p = 0.96), whereas FG students’ ACT scores fell by 0.28 standard deviations (p < 0.001). Therefore, unlike the results for gender and PEER status, the ACT gaps across FG and CG students significantly increased from prior to the pandemic to after the pandemic. Once again, there was a general trend of increasing ACT scores prior to the pandemic (p < 0.001), but this increase was marginally smaller for FG students compared to CG students (p = 0.072; Table 2).
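As a rough consistency check on these numbers, and assuming the reported changes simply add to the pre-pandemic gap (an assumption, since the fitted model also includes a time trend), the implied FG gap during the pandemic is:

```latex
\text{gap}_{\text{during}}
  \approx \text{gap}_{\text{pre}} + \lvert \Delta_{\text{FG}} \rvert \pm \lvert \Delta_{\text{CG}} \rvert
  = 0.36 + 0.28 \pm 0.004
  \approx 0.64 \ \text{standard deviations}
```

Here $\Delta_{\text{FG}}$ and $\Delta_{\text{CG}}$ are shorthand introduced for this sketch for the reported changes in FG and CG scores. The sign of the CG change is not stated in the text, but it is too small to affect the result at two decimal places.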

Figure 3. ACT scores for FG students (blue) and CG students (red) over time. Error bars represent standard error. The vertical red line indicates the division between pre-pandemic and current data.

To summarize the results for research question 1: the gaps in ACT scores between men and women, and PEER and non-PEER students remained the same after 2020. The gap between FG and CG students, however, grew.

Quantitative critical theories strongly urge the consideration of intersectionality in quantitative analysis. That is, when possible, one should ask how the results change for students with multiple overlapping marginalized identities. We considered these variables during our analysis and found that none of the interaction terms between different demographic variables were significant. This was found using a backwards stepwise regression procedure. Indeed, for research question 1, the results showed only main effects of gender, ethnicity, and first-generation status and the interaction between COVID and first-generation status. What this indicates for our sample is that students with multiple overlapping marginalized identities experience compounded effects of inequities. For example, a first-generation student of color has lower ACT scores than a continuing-generation student of color. However, the effects of the pandemic did not compound on multiple different axes of identity.

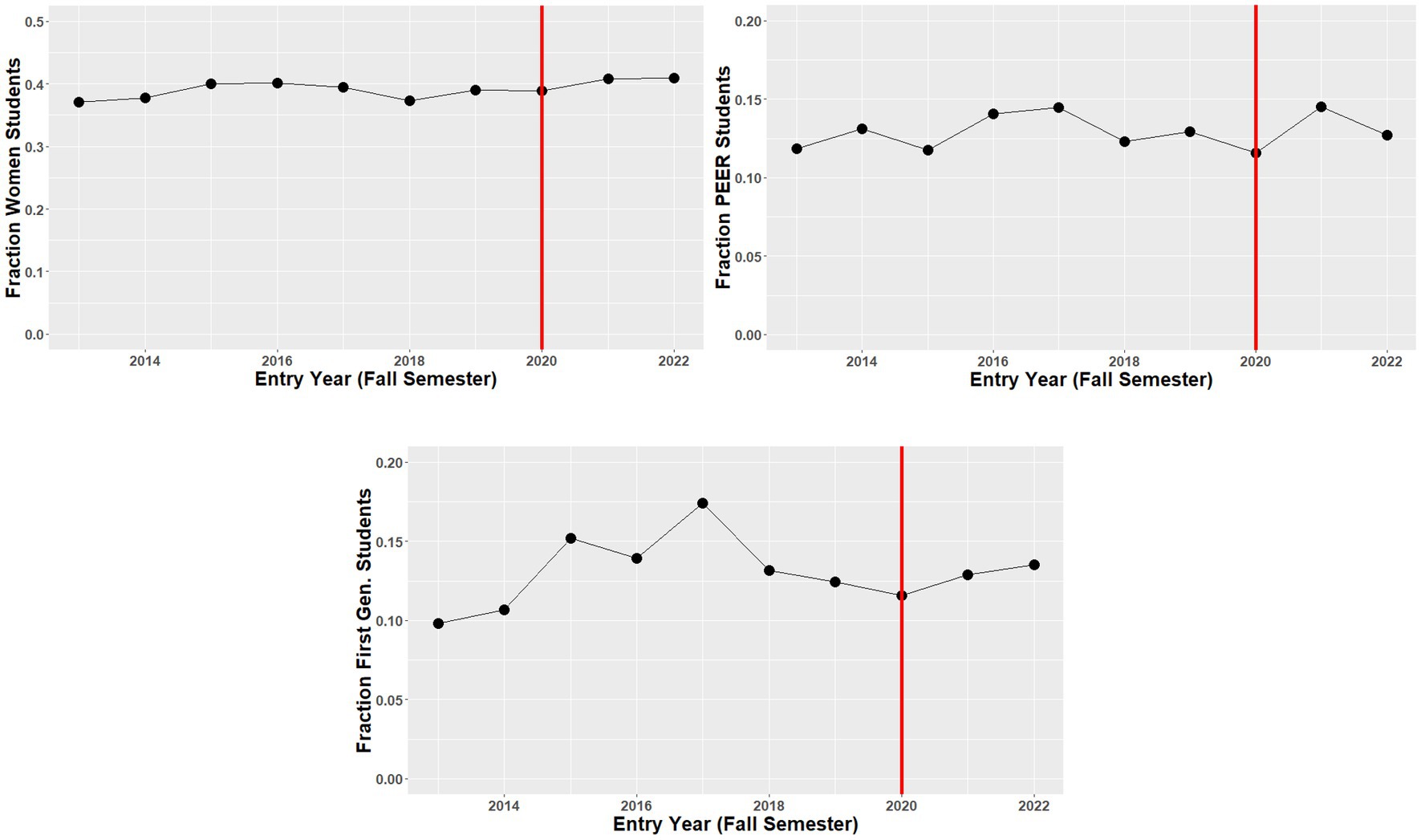

To answer research question 2, we looked at the demographic composition of the entering freshman class over time. As seen in Figure 4 below, there was minimal change in the demographics of the freshman class during the past 10 years. The fraction of PEER and FG students remained steady over time, whereas the fraction of women in the incoming class has grown slightly (p = 0.036), but this change is not significant when controlling for multiple comparisons using the Bonferroni procedure. Thus, any changes in ACT scores over time observed in the prior results have not translated into immediate changes in the demographic composition of the incoming student population.
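The Bonferroni procedure divides the significance level by the number of comparisons. The text does not state how many comparisons were made; the sketch below assumes three (one trend test each for the fractions of women, PEER, and FG students) and the conventional level of 0.05, both of which are assumptions made for illustration.

```python
def bonferroni_threshold(alpha, n_comparisons):
    """Per-test significance threshold under the Bonferroni procedure."""
    if n_comparisons < 1:
        raise ValueError("n_comparisons must be at least 1")
    return alpha / n_comparisons


def is_significant(p_value, n_comparisons, alpha=0.05):
    """True if p_value passes the Bonferroni-corrected threshold."""
    return p_value < bonferroni_threshold(alpha, n_comparisons)


# Reported p-value for the trend in the fraction of women; the count of
# three comparisons is an assumption, not a figure from the study.
women_trend_significant = is_significant(0.036, n_comparisons=3)
```

With three comparisons the corrected threshold is 0.05 / 3 ≈ 0.0167, so p = 0.036 falls short of significance, in line with the statement in the text; uncorrected, it would pass at 0.05.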

Figure 4. Demographic composition of the incoming class over time. Vertical red line indicates the transition to the pandemic.

To investigate the third research question, we repeated the analysis for research question 1 with students’ first-semester GPAs as the outcome variable. In addition, we controlled for students’ ACT scores to investigate how the first-semester GPA changed relative to the temporal and demographic changes in ACT scores. The results are shown in Table 3. Prior to the pandemic, we saw that women were earning higher first-semester GPAs than men with equivalent ACT scores (p < 0.001), and that FG students were earning lower GPAs than CG students with the same ACT score (p < 0.01). We observed no gap between PEER and non-PEER students with equivalent ACT scores. We did see an increase in GPA for all students after the start of the pandemic, unlike the non-uniform change in ACT scores.
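One way to read "controlling for ACT scores" is through a simplified regression of the following form. This is an illustrative fixed-effects sketch with notation introduced here; the exact specification of the mixed-effects model, including any random effects and time trend, is not restated in the text.

```latex
\mathrm{GPA}_i = \beta_0 + \beta_1\,\mathrm{ACT}_i + \beta_2\,G_i + \beta_3\,P_i
               + \beta_4\,(G_i \times P_i) + \varepsilon_i
```

Here $G_i$ indicates membership in the marginalized group (for example, FG status) and $P_i$ indicates matriculation during the pandemic. Under this reading, $\beta_2$ is the pre-pandemic GPA gap between students with equivalent ACT scores, so equity of opportunity corresponds to $\beta_2 = 0$, and $\beta_4$ is the change in that gap after the start of the pandemic.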

Discussion

In our analysis, we found that the ACT score gap grew only between FG and CG students during the pandemic, and that it remained constant across gender and PEER status. For most students, there was no significant change in ACT score after 2020. These findings largely stand in contrast with anecdotal reports (Malesic, 2022) and reports from the ACT (Allen, 2022) that students are, overall, not as prepared for their college coursework as they were prior to the pandemic. However, we did observe that for FG students, unlike other demographic groups, ACT scores dropped significantly compared to pre-pandemic years, and hence there was a significant increase in the ACT gap between FG and CG students. This FG gap, nevertheless, did not translate into an immediate change in the composition of the admitted students, nor did it translate into an increased gap in college academic performance.

Connecting back to our theoretical framework, we explored equity of outcomes and equity of opportunity with the analyses presented above. Overall, we found neutral to negative results in terms of equity of outcomes. There were significant gaps in ACT scores between majority and minority students, most of which remained the same over time, indicating a dynamic issue with equity that seems to be preserved even as characteristics of the whole student population continue to change.

The more clearly negative result in terms of equity of outcomes was the increased FG gap in ACT scores during the pandemic. This could have many possible explanations, but we hypothesize this was primarily due to an issue in access to educational resources (Donham et al., 2022). In the United States, FG students are more likely to come from households that earn below the national median income (Reardon, 2018), and at this university, are more likely to come from more rural areas. Thus, they may not have had access to high-speed internet necessary to fully participate in online schooling, leading to reduced learning and lower ACT scores (Cullinan et al., 2021). Another reported issue was simply access to ACT testing locations (Schnieders and Moore, 2022). Because these students are from more rural areas, they typically would have to travel farther to take the ACT. Taking the ACT also costs a substantial amount of money. Thus, taking the test multiple times to get the best possible score may not have been an option for these students compared with students whose parents attended college.

We originally hypothesized that the increased FG gap in academic preparation would not only affect students who enrolled at this university but would also affect the application process. That is, we expected the increased demographic gaps in ACT scores would mean that more FG students were scoring low enough that they were not admitted to the school in the first place. We did not observe this in the data. One possible explanation is that the university adjusted its admissions policies slightly after the start of the pandemic, for example by placing less weight on standardized test scores during the process or giving more consideration to other situational factors. As the admission process is highly guarded, we cannot say for sure whether this was the case.

The question of whether first-semester college GPA changed as a result of the pandemic was investigated as a question of equity of opportunity: given certain high school preparation as measured by the ACT, were there demographic differences and did they change over time? The results were different for different demographic groups. For PEER students, we found equity of opportunity: given a student’s ACT score, their ethnicity did not significantly affect their first-term GPA. For women, we saw an increase in equity of opportunity, as women had higher GPAs than would be expected given their generally lower ACT scores than men. However, for FG students, the story was reversed. Even considering the fact that FG students had lower ACT scores, their first-term GPAs were lower than those of CG students. None of these results changed over time.

We expected that this increased FG gap in ACT scores would translate to increased gaps in first-term GPAs at the university, due to the many reports that performance in introductory college courses is strongly related to metrics of high school preparation (Salehi et al., 2020). Again, we did not observe this in the data. What is interesting is that, in a separate survey of instructors at this same university, there were minimal changes reported in assessment and teaching practices during the pandemic (Salehi et al., in review). Thus, it seems unlikely that there were teaching policies put in place that helped remedy the increased FG gap in high school preparation. Another point to note is that we did not see any changes over time in the demographic gaps in high school GPAs, another measure of college preparation (albeit a more complex one). This indicates that the increased gap in ACT scores could simply reflect reduced opportunity to take the ACT multiple times, and may not actually reflect the learning that took place during the pandemic.

We do not wish to discount the human toll of this pandemic on students’ lives. Students’ mental health has suffered profoundly as a result of the pandemic, and many students have lost friends and relatives. The total impact of the pandemic on students’ lives cannot be measured by ACT scores and GPAs. However, a detailed understanding of how the pandemic impacted student preparation is essential for faculty and institutional policymakers alike. Ethically, those responsible for educating the next generation of scientists should make sure they are doing their best to serve the students at their institutions and in their classrooms. That means that, if the students are less prepared, instructors should adjust to account for this fact.

Conclusion

The COVID-19 pandemic appears to have had a disproportionate impact on the academic preparation of first-generation STEM students, while inequities along other demographic dimensions were maintained through the pandemic. The fact that most students saw minimal changes in college preparation during the pandemic contradicts narratives in the popular media. One way to interpret this result is that inequities in STEM preparation and performance transcend the pandemic and are much larger systemic issues that were relatively unchanged by lockdowns and emergency remote teaching. Thus, while continuing with pre-pandemic instructional strategies may prove as effective overall as before, it will continue to systematically exclude certain groups of students.

Data availability statement

The data analyzed in this study are subject to the following licenses/restrictions: the data are not available per IRB restrictions. Requests to access these datasets should be directed to klb0002@auburn.edu.

Ethics statement

The studies involving human participants were reviewed and approved by Auburn University IRB. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author contributions

EB is responsible for executing the data analysis and writing the manuscript. SS provided guidance on methodology to address the research questions and interpretation of results, and contributed to the writing of the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

ACT. (2018). Guide to the 2018 ACT/SAT Concordance. Available at: https://www.act.org/content/act/en/products-and-services/the-act/scores/act-sat-concordance.html (Accessed March 3, 2023).

Allen, J. (2022). Have ACT Scores Declined during the COVID-19 Pandemic? An Examination of State and District Testing Data. Available at: https://www.act.org/content/act/en/research/pdfs/COVID-Impact-Fall-ACT-State-and-District-2021-5.html

Burkholder, E., Walsh, C. J., and Holmes, N. G. (2020). Examination of quantitative methods for analyzing data from concept inventories. Phys Rev Phys Educ Res 16:010141. doi: 10.1103/PhysRevPhysEducRes.16.010141

Costello, R. A., Salehi, S., Ballen, C. J., and Burkholder, E. W. (2023). Pathways of opportunity in STEM: comparative investigation of degree attainment across different demographic groups at a large research institution. Accepted Int. J. STEM Educ.

Cullinan, J., Flannery, D., Harold, J., Lyons, S., and Palcic, D. (2021). The disconnected: COVID-19 and disparities in access to quality broadband for higher education students. Int. J. Educ. Technol. High. Educ. 18:26. doi: 10.1186/s41239-021-00262-1

Donham, C., Barron, H. A., Alkhouri, J. S., Kumarath, M. C., Alejandro, W., Menke, E., et al. (2022). I will teach you here or there, I will try to teach you anywhere: perceived supports and barriers for emergency remote teaching during the COVID-19 pandemic. Int. J. STEM Educ. 9:19. doi: 10.1186/s40594-022-00335-1

Elsner, J. N., Sadler, T. D., Zangori, L., Friedrichsen, P. J., and Ke, L. (2022). Student interest, concerns, and information-seeking behaviors related to COVID-19. Discip. Interdiscip. Sci. Educ. Res. 4:11. doi: 10.1186/s43031-022-00053-2

Hill, G., Mason, J., and Dunn, A. (2021). Contract cheating: an increasing challenge for global academic community arising from COVID-19. Res. Pract. Technol. Enabled Learn. 16:24. doi: 10.1186/s41039-021-00166-8

How to Solve the Student Disengagement Crisis. (2022). Chronicle of Higher Education. Available at: https://www.chronicle.com/article/how-to-solve-the-student-disengagement-crisis

Lopez, N., Erwin, C., Binder, M., and Chavez, M. J. (2018). Making the invisible visible: advancing quantitative methods in higher education using critical race theory and intersectionality. Race Ethn. Educ. 21, 180–207. doi: 10.1080/13613324.2017.1375185

Malik, M., and Javed, S. (2021). Perceived stress among university students in Oman during COVID-19-induced e-learning. Middle East Current Psychiatry. 28:49. doi: 10.1186/s43045-021-00131-7

Pokhrel, S., and Chhetri, R. (2021). A literature review on impact of COVID-19 pandemic on teaching and learning. High. Educ. Future. 8, 133–141. doi: 10.1177/2347631120983481

Reardon, S. F. (2018). “The Widening Academic Achievement Gap between the Rich and the Poor,” in Inequality in the 21st Century (New York: Routledge), 177–189. doi: 10.4324/9780429499821-33

Robertson, M. M., Shamsunder, M., Brazier, E., Mantravadi, M., Rane, M. S., Westmoreland, D. A., et al. (2022). Racial/ethnic disparities in exposure to COVID-19, susceptibility to COVID-19 and access to health care - findings from a U.S. national cohort. Emerg Infect Dis 28, 2172–2180. doi: 10.1101/2022.01.11.22269101

Rodriguez, I., Brewe, E., Sawtelle, V., and Kramer, L. H. (2012). Impact of equity models and statistical measures on interpretations of educational reform. Phys. Rev. Spec. Top. Phys. Educ. Res. 8:020103. doi: 10.1103/PhysRevSTPER.8.020103

Salehi, S., Cotner, S., and Ballen, C. J. (2020). Variation in incoming academic preparation: Consequences for minority and first-generation students. Front. Educ. 5, 1–14.

Salehi, S., Ballen, C. J., Laksov, K. B., Ismayilova, K., Poronnik, P., Ross, P. M., et al. (in review). Learning from Emergency Remote Instruction during the COVID-19 pandemic: Global perspectives in higher education.

Schnieders, J. Z., and Moore, R. (2022). College preparation opportunities, the pandemic, and student preparedness. ACT Insights Educ. Work.

Shukla, S. Y., Theobald, E. J., Abraham, J. K., and Price, R. M. (2022). Reframing educational outcomes: moving beyond achievement gaps. CBE Life Sci. Educ. 21:es2. doi: 10.1187/cbe.21-05-0130

Sifri, R. J., McLoughlin, E. A., Fors, B. P., and Salehi, S. (2022). Differential impact of the COVID-19 pandemic on female graduate students and postdocs in the chemical sciences. J. Chem. Educ. 99, 3461–3470. doi: 10.1021/acs.jchemed.2c00412

Stage, F. K. (2007). Answering critical questions using quantitative data. New Dir. Inst. Res. 2007, 5–16. doi: 10.1002/ir.200

Keywords: equity, COVID-19, demographics, ACT, GPA and academic achievement and study persistence

Citation: Burkholder EW and Salehi S (2023) Effects of the COVID-19 pandemic on academic preparation and performance: a complex picture of equity. Front. Educ. 8:1126441. doi: 10.3389/feduc.2023.1126441

Edited by: Christoph Kulgemeyer, University of Bremen, Germany

Reviewed by: Christoph Vogelsang, University of Paderborn, Germany; Rebecca L. Matz, University of Michigan, United States

Copyright © 2023 Burkholder and Salehi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Eric W. Burkholder, ewb0026@auburn.edu