- ¹InZone, Faculté de traduction et d'interprétation, University of Geneva, Geneva, Switzerland

- ²CTI/African Higher Education in Emergencies Network, University of Nairobi, Nairobi, Kenya

- ³Azraq Refugee Camp, Azraq, Jordan

This study reports on the design and development of a methodological toolbox prototype for evaluating EdTech deployed in the contexts of fragility and crisis. The project adopted a bottom-up approach: training EdTech users in participatory action research approaches was followed by a comprehensive mapping of problems in the Azraq refugee camp that might be addressed through the chosen EdTech installed in a local Makerspace. Students as researchers used a developmental evaluation approach to deepen their understanding of evaluation as a concept and as a process and proceeded to match the results of their Azraq camp problem-tree analysis with evaluation questions related to the EdTech tools available in the Makerspace. The study concludes with a proposed methodological toolbox prototype, a set of approaches and processes that include research capacity building in fragile contexts, and user-led evaluation that emphasizes the notion of evaluation as a learning process driven by those designed to benefit from EdTech in fragile contexts.

Background

“EdTech is a tool that needs to be constructed with principles of pedagogy in mind” (Tauson and Stannard, 2018, p. 8). While early responses to the challenges imposed by the COVID-19 pandemic focused on technology, attention shifted quickly to pedagogy and engagement as the key determinants of keeping the learning going (Vegas, 2020). IRC (2022) defines EdTech as the application of a tool that combines hardware, software, educational theory, and practice, which promotes learning. Kucirkova (2022) contends, however, that EdTech and companies have become bigger but not necessarily more educational. According to Hirsh-Pasek et al. (2022), education lags behind digital leaps, which allows technology rather than educators to define what counts as an educational opportunity. IRC (2022) leverages the six Cs first introduced by Hirsh-Pasek et al. (2022) to describe the characteristics that make EdTech truly educational: Collaboration, Communication, Content, Critical Thinking, Creative Innovation, and Confidence. Tauson and Stannard (2018) deplore the extraordinary lack of evaluations and impact studies of EdTech in emergency settings and how challenging it had been to represent the views on EdTech of those living in humanitarian contexts due to the paucity of research carried out in those settings in general, and the complete absence of relevant cost-benefit analyses. The promise of EdTech to produce change at scale in humanitarian and development contexts has not materialized (Rodriguez-Segura, 2022). Literature reviews of usability studies of EdTech (Lu et al., 2022) make no mention of humanitarian and development contexts and focus more on the ease of use of technologies, rather than on their specific relevance to the context in which they were used. Lu et al. (2022) emphasize that no standard evaluation tool exists for assessing usability with all of the studies included in their meta-analysis employing a study-specific observation or questionnaire protocol. 
The gap in evaluation approaches for EdTech is therefore not limited to humanitarian and development contexts, although the significant funding gaps in Education in Emergencies (EiE) (Geneva Global Hub for Education in Emergencies, 2022) should have encouraged more systematic analyses of how investments in EdTech in emergencies impact humanitarian contexts and youth in displacement.

Very little published research in EdTech has been led by researchers living in contexts of forced displacement (Tauson and Stannard, 2018), and the literature offers only occasional mention of participant-led monitoring and evaluation (Davies and Elderfield, 2022). As decolonization has taken root in humanitarian and development contexts, extractive research led by Global North institutions is increasingly and rightly questioned on ethical grounds (Haelewaters et al., 2021). When considering EdTech, there continues to be considerable emphasis on all education initiatives being designed to contribute to meeting national curriculum requirements for primary and secondary school students and ensuring that at higher education levels, students reach the same learning outcomes verified through formal, yet modified assessment procedures. As the COVID-19 pandemic has abated, universities have relaunched, promoting the advantages of online and hybrid learning, while primary and secondary schools have returned to the classroom, with modifications. There are numerous discussions on catch-up strategies to achieve scheduled learning outcomes; some countries, such as Kenya, decided to simply skip an entire school year and had everyone repeat. There continues to be talk of school reforms, education reforms, and higher education reforms, but only scant inquiry into learning itself, and insufficient questioning of education models or of the delivery channels that are designed to ultimately serve the same purpose as education was designed to serve prior to the pandemic. The focus appears to remain on delivery channels and the corresponding tools to deliver learning and content, without questioning either the learning or the content, or paying close attention to what kind of EdTech is deployed and how its deployment affects youth in displacement (UNICEF-Innocenti, 2022).
Compilations of how digital technologies accompany youth from learning to earning document promising practices (UNICEF, 2021) but focus more on digital technology in humanitarian and development contexts in general, rather than on EdTech, and do not include clear references on user assessment.

With the entire world as an EiE context during the COVID-19 pandemic, EdTech was seen as key to overcoming the gaps in learning resulting from schools and universities closing their doors, and the initial response to physical isolation was thus emergency remote teaching. This only exacerbated inequities already present in education systems worldwide, as few countries were able to respond by furnishing their learners with the needed devices, while others, including those living through other types of emergencies, were quick to return to the lowest levels of technology, with educational radio making a major comeback (Muñoz-Najar et al., 2021).

The project we report on in this study was launched prior to the onset of the COVID-19 pandemic but implemented during it, and it was conceived for humanitarian contexts from the start. The project output was to design and develop a methodological toolbox for evaluating EdTech deployed in contexts of fragility and crisis and to identify evaluation questions related to the context and location in which EdTech was used, with a view to informing policy and practice surrounding EdTech in humanitarian contexts.

Monitoring and evaluation approaches in humanitarian contexts encounter challenges in developing reliable indicators and in meaningfully capturing the important parameters of interventions. ALNAP's Guide to Evaluating Humanitarian Action (Buchanan-Smith et al., 2016) lists six challenges to evaluating projects reliably: constrained access to where a project is implemented; lack of data, where data are of very poor quality, irrelevant, or unavailable, with no baselines recorded, which is particularly difficult in protracted emergencies; rapid and chaotic responses in the absence of a theory of change whenever the program was implemented rapidly; high staff turnover, as projects are often of short duration and key informants are difficult to come by; and data protection, as well as ethical considerations regarding data collection within a do-no-harm framework.

In considering the above, the activities identified to design the EdTech methodological toolbox prototype included the co-design of a participatory, youth-led research methodology that would be part of an evolving methodological toolbox to assess the potential of EdTech tools in humanitarian contexts. Leveraging the potential of forcibly displaced youth is an integral part of the Grand Bargain's spirit of a coordinated approach to community engagement and participation (IASC, 2017). It emphasizes the inclusion of the most vulnerable and promotes sharing and analyzing data to strengthen decision-making, transparency, and accountability in humanitarian contexts. The Inter-Agency Standing Committee (IASC), the United Nations' humanitarian coordination mechanism, is home to the Grand Bargain, a commitment of currently 66 signatories (donors, UN member states, NGOs, UN agencies, and the Red Cross movement) that originated at the World Humanitarian Summit 2016. Workstream 6 focuses on the Participation Revolution and promotes the inclusion of people receiving aid in making decisions that affect their lives (IASC, n.d.) and defines effective “participation” of people affected by humanitarian crises as “put[ting] the needs and interests of those people at the core of humanitarian decision making, by actively engaging them throughout decision-making processes.” This revolution “requires an ongoing dialogue about the design, implementation, and evaluation of humanitarian responses with people, local actors, and communities who are vulnerable or at risk…” (IASC, 2017). Similarly, the Inter-Agency Network for Education in Emergencies Minimum Standards include Community Participation as Standard 1 (INEE, 2010): “Community members participate actively, transparently, and without discrimination in analysis, planning, design, implementation, monitoring, and evaluation of education responses.”

In line with these recommendations and standards, the methodological framework chosen for this research is Participatory Action Research (PAR), which is collaborative in nature and focuses on gathering information that can be used for change on social issues by those who are affected by those issues and who are encouraged to take a leading role in producing and using knowledge about them (Pain et al., 2012). PAR offers a democratic model of who can produce, own, and use knowledge and is thus greatly adaptable to the contexts in which it is applied.

The implementation context

The project was originally designed with three implementation sites (Lebanon, Jordan, and Djibouti), but the fallout of both political and health emergencies in Lebanon and Djibouti left Jordan as the only remaining project site. This clearly dealt a blow to the ambition of significantly strengthening the evidence base. Having to choose between rescheduling the entire project and continuing the implementation in Jordan's Azraq refugee camp, the authors decided to modify the original terms of reference to adapt to a double emergency: the already protracted crisis of Syrian refugees living in the Azraq camp, coupled with the pandemic, which exacerbated existing humanitarian challenges. Expertise gained by one of the authors in other refugee contexts through systematic programming to build social-emotional learning competencies further strengthened the resolve to continue the project in the Azraq camp and to build on a foundation that had been laid through the relevant course and project work over the preceding 18 months. During that period, a new learning hub for tertiary education had been completed in Azraq's Village 3, where two HP Learning Studios had been installed (Global Citizen, 2018) as EdTech solutions.

The Azraq camp in Jordan, nestled in a remote desert plot approximately 100 km east of Amman, opened in 2014 to receive refugees from the Syrian Civil War; its population has remained stable at approximately 40,000, of whom 60% are children, with families living in an estimated 9,000 shelters (UNHCR, 2022). Over 11,000 children are enrolled in formal schooling in 15 camp schools. There are no tertiary education institutions on-site, and only 8% of the total working-age population hold work permits for Jordan, with another 10% employed by UN agencies and NGOs in the camp's incentive-based work scheme as paid volunteers.

Addressing the deliverable of a methodological toolbox prototype to assess the potential of EdTech tools in humanitarian contexts, this project site was chosen based on the prior engagement of refugee students with EdTech within projects related to Makerspace. This was to ensure shared interest/knowledge, opportunities, and relationships, the three parameters identified by the Connected Learning Alliance as key to meaningful and powerful learning, whether digital, blended, or face-to-face (Connected Learning Alliance, 2020).

The Azraq refugee camp in Jordan had benefited from programming on the HP Learning Studios, each consisting of a Sprout Pro by HP and including an HP 3D Capture Stage, a business-size printer, a charging cabinet, and 15 laptops tailored to different education levels. Additionally, two editions of a development engineering course offered by Purdue University had been rolled out, followed by the setting up of an engineering projects interest group and an engineering facilitator training course (de Freitas and DeBoer, 2019). The foundation engineering class, Localized Engineering in Displacement, was designed to challenge students to apply engineering skills to solve local problems. Purdue's DeBoer Lab leveraged the affordances of the HP Learning Studios, given that the learning outcomes envisaged by the HP Learning Studios and the engineering in displacement course showed considerable overlap. The HP laptops and modeling software (HP Sprout) aligned with the engineering course curriculum. The laptops were versatile and compatible with all the software used to program and visually sketch circuits for microcontroller setups that students used in their prototypes. Students in the Azraq camp had thus learned to collaborate, explore, innovate, and test real solutions, taking advantage of the variety of technologies afforded by the devices of the HP Learning Studio.

Participants in the engineering course had worked on the design of spaces in preparation for their role in shaping the construction of the new Learning Hub in Azraq's Village 3, thus contributing to the design of a common space in their community using insights from observation and interviews. Projects built on the design thinking process were explored in the HP Learning Studio training course and introduced themes of inclusive design, accessibility, and equity. Together, then, the HP Learning Studio approach and that of Localized Engineering in Displacement both identified and addressed real-world problems; they encouraged participants to work in teams to solve these problems and to explore, test, and evaluate multiple possible solutions on their own. In short, the Azraq refugee camp offered the capacity to pursue the development of the methodological toolbox for EdTech assessment.

The methodological EdTech tool(box)—prototype as the main outcome of the project

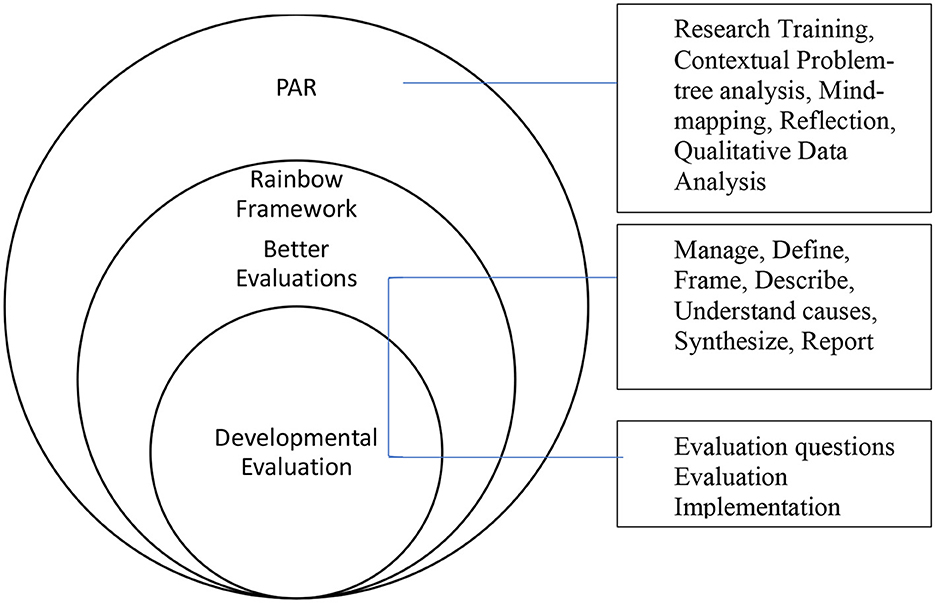

First, we describe the methodological toolbox in detail (Figure 1), followed by a description of how it was developed, tested, and verified for humanitarian contexts. We then synthesize and discuss the implications of the toolbox for evaluating EdTech solutions for protracted displacement contexts and conclude with recommendations regarding the involvement of forcibly displaced youth in monitoring and evaluation.

The BetterEvaluation (2022) approach served as the basic framework for the evaluation process chosen for this project; it sets out the different steps and provides a timeline along which these need to be implemented. The methodological toolbox contains the following: capacity building through training in Participatory Action Research (PAR) as a process, with more specific tools of bottom-up problem-tree analysis facilitated by mind-mapping, qualitative data analysis of reflections (iterative) and outputs (evaluation questions), and an open developmental evaluation approach to selecting evaluation questions and implementing the final evaluation phase as outcomes.

BetterEvaluation as an overarching framework

The Rainbow Framework (BetterEvaluation, 2022) provides a systematic approach to Monitoring & Evaluation (M&E) in that it organizes the different M&E methods and processes into tasks and task clusters that facilitate application. These task clusters are labeled as follows: Manage, Define, Frame, Describe, Understand Causes, Synthesize, and Report & Support Use. This framework is part of the Global Evaluation Initiative that supports efforts aligned with local needs, goals, and perspectives.

Managing an evaluation involved agreeing on how decisions would be made for each task cluster of the evaluation. This involved understanding and engaging stakeholders, establishing decision-making processes, deciding who would conduct the evaluation for the tool we were to develop, what resources we had available, and how we would secure them, defining ethical and quality standards, documenting the process, developing planning documents for the evaluation system, and building the capacity to assess whether a tool developed using this approach could indeed be used in different complex contexts.

The challenge was defining what was to be evaluated and how it was supposed to work. While the HP Learning Studio itself could have lent itself as the EdTech to be evaluated, its installation had not been linked to any specific outcome. This meant that no assumptions were made a priori about what the evaluation tool was to measure or what it would look like; there was no theory of change and hence no traditional logic frame to inform the build phase of the project. It was thus important to identify an evaluation approach that did not require these traditional M&E components, that was flexible, and that could be implemented in low-resource environments involving the ultimate users/beneficiaries of EdTech as the drivers of the build phase. They would constantly learn and adapt their developments to new levels of knowledge and understanding of the context in which the tool was built. This led us ultimately to identify the developmental evaluation approach as optimal for the humanitarian contexts in which the tool would be developed and later used.

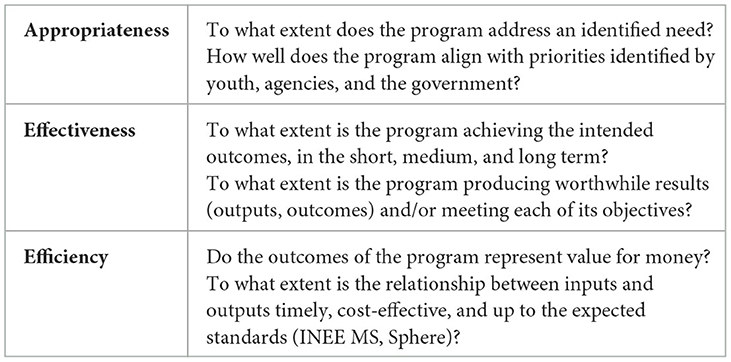

Framing the boundaries of the evaluation represented a key task cluster, as it included identifying intended users, deciding on one or all of the evaluation purposes (accountability, knowledge construction, and improvement), specifying the key evaluation questions (Table 1), and eventually also determining what success might look like. This task cluster represented a major component of the methodological toolbox prototype, as can be seen from the description below of activities and outputs. Synthesizing data from the evaluation to document the merit of an EdTech and reporting on the findings in ways that are useful to the intended users completed the series of tasks within the BetterEvaluation Framework.

Table 1. Types of evaluation questions (Adapted from BetterEvaluation, 2022).

Participatory action research training

As the remit for this project was the development of an evaluation tool(box) for EdTech and as the program within which this project was embedded was a Higher Education in Emergencies program, we wanted to proceed within the framework of a participatory approach and therefore needed to start with training researchers/evaluators in humanitarian contexts where EdTech was to be used and ultimately evaluated. Starting with the skill-building approach ultimately allowed for observing how this empowers forcibly displaced youth, the ultimate beneficiaries of EdTech in the refugee camp, to design a tool that would genuinely evaluate educational technology in a complex and changing environment, such as a humanitarian context. Our choice among evaluation frameworks was guided by our vision of refugee empowerment within the larger localization framework of the 2016 World Humanitarian Summit (IASC, 2017).

Potential researchers were recruited during the Management phase of the project. Outreach by one of the engineering course graduates was initiated to recruit participants through purposive sampling in what came to be called the HP Research Group, linking the project to the installation of the HP Learning Studio. A total of eight participants joined the project (three female, five male; age range 19–35; all Syrian refugees living in the Azraq refugee camp, Azraq, Jordan), supported by two humanitarian interpreters with a background in engineering and research. All of them had graduated from secondary school, and some were enrolled in formal or non-formal higher education programs offered in the Azraq refugee camp or by local universities. The level of English proficiency was mixed, requiring educational interpreting during the on-site seminars on participatory research and when the group was meeting in the Learning Hub where the HP Learning Studio had been set up. To facilitate communication, a dedicated forum on a messaging app was set up in which the interpreters also participated to ensure sharing of information across the entire group. The messaging forum was active for a total of 288 days (678 messages, 11,645 words, 64 media files made up mostly of project photos, and 35 documents); this analysis was based on the Chatvisualizer output.

Participants received detailed printed information regarding the objectives of the project and subsequently reviewed it during the first onboarding workshop. It was made clear that this research project did not provide academic credit, but that individual participants would receive confirmation of their participation in the project. It was then decided at a later stage that participants would co-author the present academic paper and that this would represent evidence of the competencies they had acquired on this project.

As on-site project lead, participants nominated one of their female peers, a graduate of the Localized Engineering in Displacement and the engineering projects courses, who had also participated in the initiation course to the HP Learning Studios and was active in designing and developing engineering projects. In this role, she was in charge of local coordination and decision-making as well as liaison with the management of the Learning Hub. She supervised the document production process, coordinated on-site meetings, ensured that assignment deadlines were respected, and kept supplies well stocked.

Building research capacity was accomplished through a bespoke blended course module designed by the project lead from the University of Geneva, launched with an on-site workshop in the Azraq refugee camp and continued virtually using the participatory action research (PAR) framework (Pain et al., 2012; KT Pathways, 2022). The course module walked project participants through the typical and recurring stages of a PAR project, such as planning, action, and reflection, followed by evaluation. More specifically, the topics covered through the eight phases of the course module involved research design, ethics, knowledge and accountability, research questions, working relationships and information required, and the evaluation of reflection processes as a whole.

At the end of the capacity-building phase of the project, participants had learned about different kinds of research, knew how to distinguish between researcher-led and community-led research projects, had decided that the project course would fall into the category of community-led inquiry, and proceeded to define the EdTech tool on which their evaluation toolbox project would focus as a Makerspace.

Developmental evaluation

As there was no a priori intervention to be evaluated and in light of the complexity of the humanitarian context, developmental evaluations (Patton, 2010, 2012) emerged as the most appropriate tool to be added to our methodological toolbox. They are designed for complex systems, for innovation spaces where the indicators are not known, where we do not know where to find them, but where the objective is to create them through approaches that are flexible and adaptable, that respect local culture, identify, and build on existing knowledge, that design for learning over time, that support the application and adaptation of new knowledge to local contexts, and that establish true partnerships for mutual learning (BetterEvaluation, 2022).

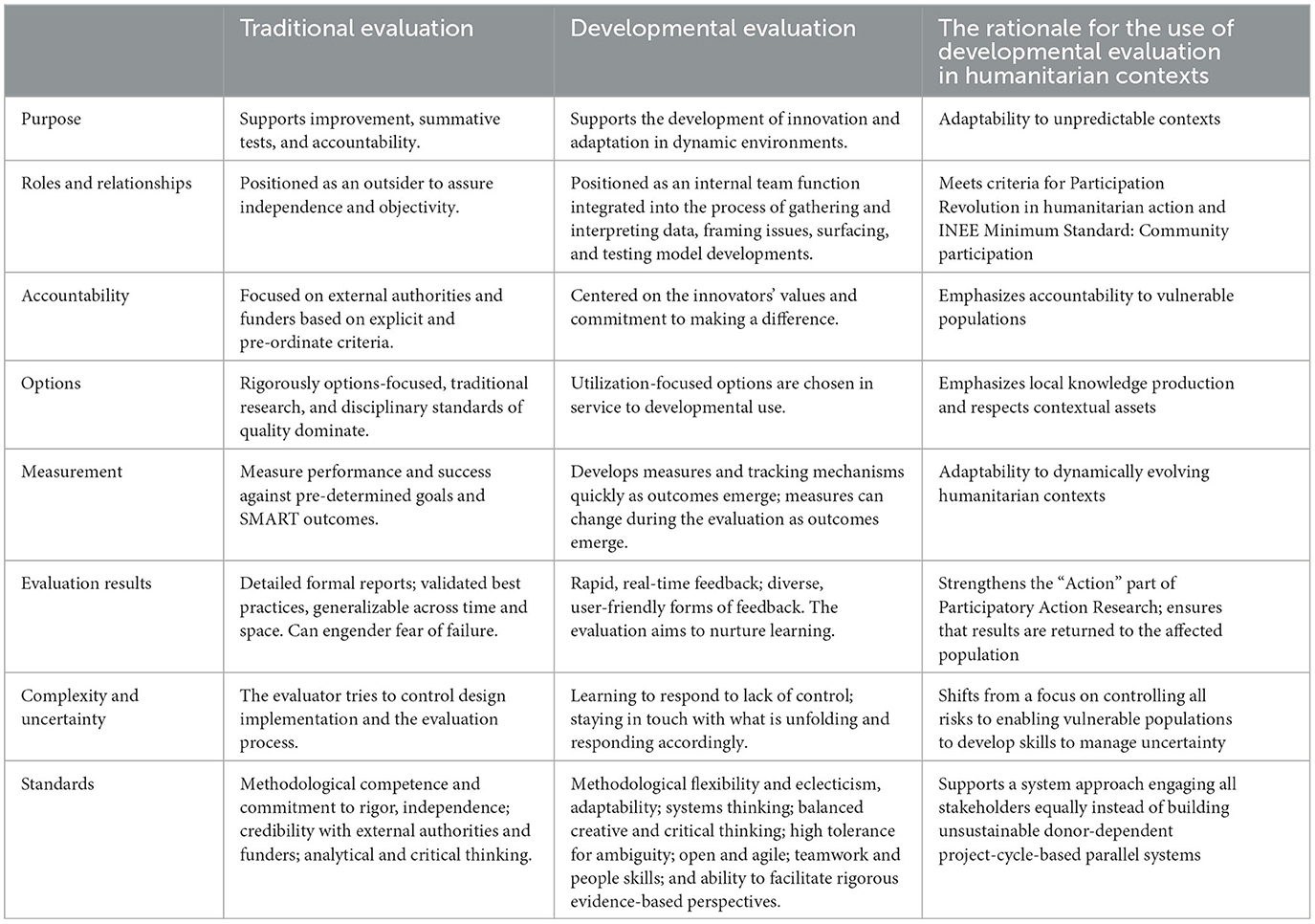

With traditional evaluation models, evaluators create logic models that include well-defined and preferably SMART indicators (Sandhu-Rojon, 2017) to clearly measure outcomes and ultimately impact. In developmental evaluation, however, it is appropriate to start without well-defined goals and objectives if we find ourselves in new territory where the goalposts might be moving and where we are confronted with “wicked problems, where we don't know the solutions” (Patton, 2012), where the key is to find out what works and what does not, rather than following established pathways to change, and where continuous adaptive learning is key. The developmental evaluation framework captures that learning in real time. The focus is therefore on innovation and adaptation to emergent and complex situations. Patton (2006) lays out the differences between traditional and developmental evaluation along eight parameters, a comparison that provided helpful guidance and corroboration of the innovative approach to evaluation this project was taking.

According to Dozois et al. (2010), there are no established or fixed ways or templates for going about a developmental evaluation. One of the key differences highlighted by Simister (2020) between traditional evaluation, which is often carried out primarily to demonstrate accountability to donors or government agencies, and developmental evaluation is that the latter is focused primarily on learning in order to improve performance within the project or program being evaluated. Table 2 illustrates the key differences between traditional and developmental evaluation and highlights the relevance of the latter for humanitarian contexts.

Table 2. Differences between traditional and developmental evaluations (adapted and expanded from Patton, 2006).

Operationalizing the EdTech toolbox

In this section, we provide concrete examples of how each of the tools was operationalized by the group of student researchers following the task sequence of the Rainbow Framework and present examples of the output.

Putting the rainbow framework in place

The management phase

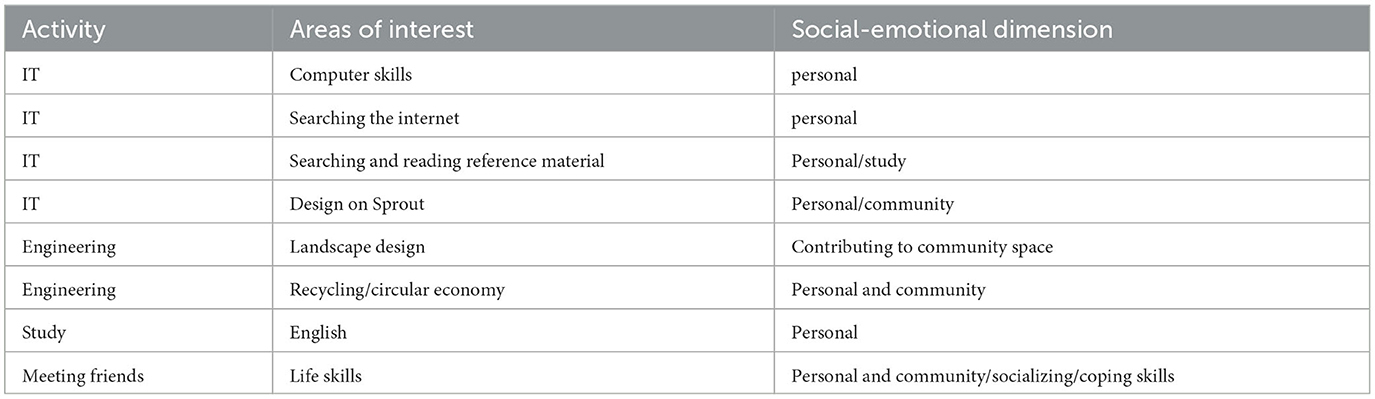

The HP Research Group set up the HP Learning Studio combined with the EngStarter and additional materials as the Makerspace, reflected on possible users, invited a pilot group of users, observed their use of the space, and then created an outreach flier. Users were reached through this recruitment phase, which was organized according to guidelines established for outreach in humanitarian contexts, and then came into the Makerspace. The HP Research Group mapped the users, observed what they were interested in doing in the Makerspace, and documented their own observations and reflections as part of the PAR course assignments. Throughout these first six phases of the PAR course, the group had undergone iterative cycles of Action–Reflection–Action–Reflection by way of six course assignments, with an additional two scheduled for the last phase of the course, which overlapped with the operationalization of the methodological EdTech toolbox prototype.

Participants shared their insights into what they had learned throughout the first six phases of the PAR course module. A thematic analysis (Braun and Clarke, 2006) of their contributions, posted on the messaging forum, identified the following key concepts: cooperation, social change, assessing community needs, working as a team, building knowledge, dividing tasks among team members, and stakeholder participation. Key concepts were then returned to the group with each member contributing an example of at least one of the concepts (cf Supplementary material). Participants were now equipped with the necessary skills to carry out contextual problem-tree analyses, use specific tools, such as mind-mapping (Genovese, 2017), design short surveys, collect and analyze data, and reflect on their practice.

In addition, the group mapped the users' interests over the course of 3 months; the total number of users in the Makerspace over the project period was 256. This provided input to a second thematic analysis of the different project ideas users produced (plastic art, computer skills, Arabic calligraphy, hydroponics, time-bank project to swap expertise, using recycled materials to make toys, learning English, developing expertise in distance learning, etc.), which the group subsequently organized by area of interest as shown in Table 3.

The definition phase

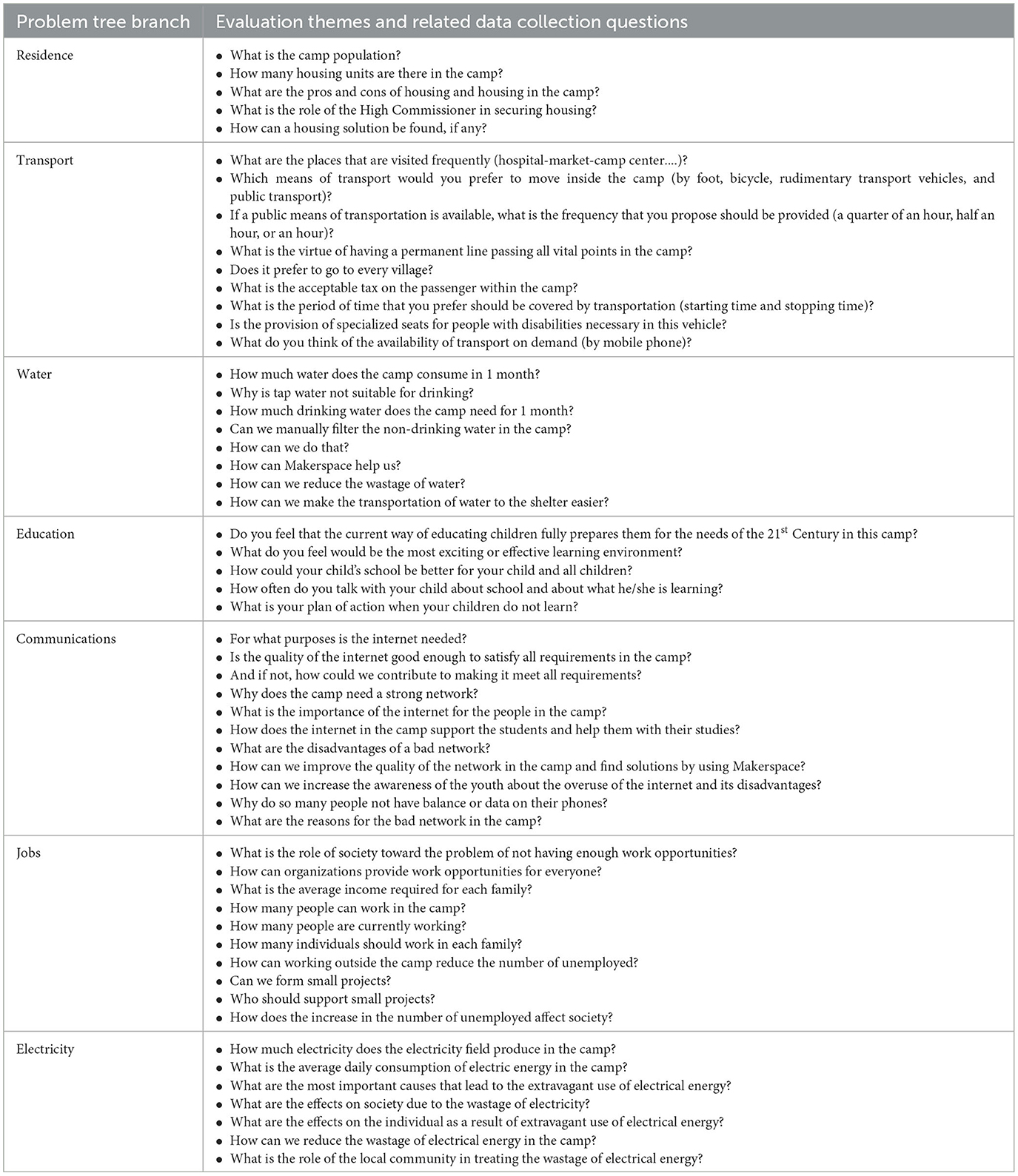

The group had thus arrived at the critical stage of defining what is to be evaluated (Table 4). They now knew enough about the Makerspace as the EdTech tool they would evaluate, about youth as users of the Makerspace in the Azraq camp, and about their interests and preferences, to be able to advance to identifying the “what is to be evaluated” before formulating research and evaluation questions. In keeping with the bottom-up approach, participants were introduced to tools rather than given theoretical lectures on designing research and evaluation questions. Through videos and facilitated readings, the group learned mind mapping and proceeded to design their problem tree (Chevalier and Buckles, 2019), a fairly involved and time-consuming process, which produced rich information and insights into the needs of the Azraq camp inhabitants and their community.

All group members worked on the identification of the main topic, the trunk of the tree, using the problem-tree or situational analysis tool (Chevalier and Buckles, 2008) that is widely used in participatory research to map problems, their causes, and needs. Two group members then teamed up to identify the topics that would become the branches (Figure 2), and all branches were then combined and presented as the completed tree (Figure 2).

Figure 2. Completed map transformed into a problem tree with seven branches (clockwise from the top: residence, transport, water, education, communication, jobs, and electricity).

Framing the evaluation phase

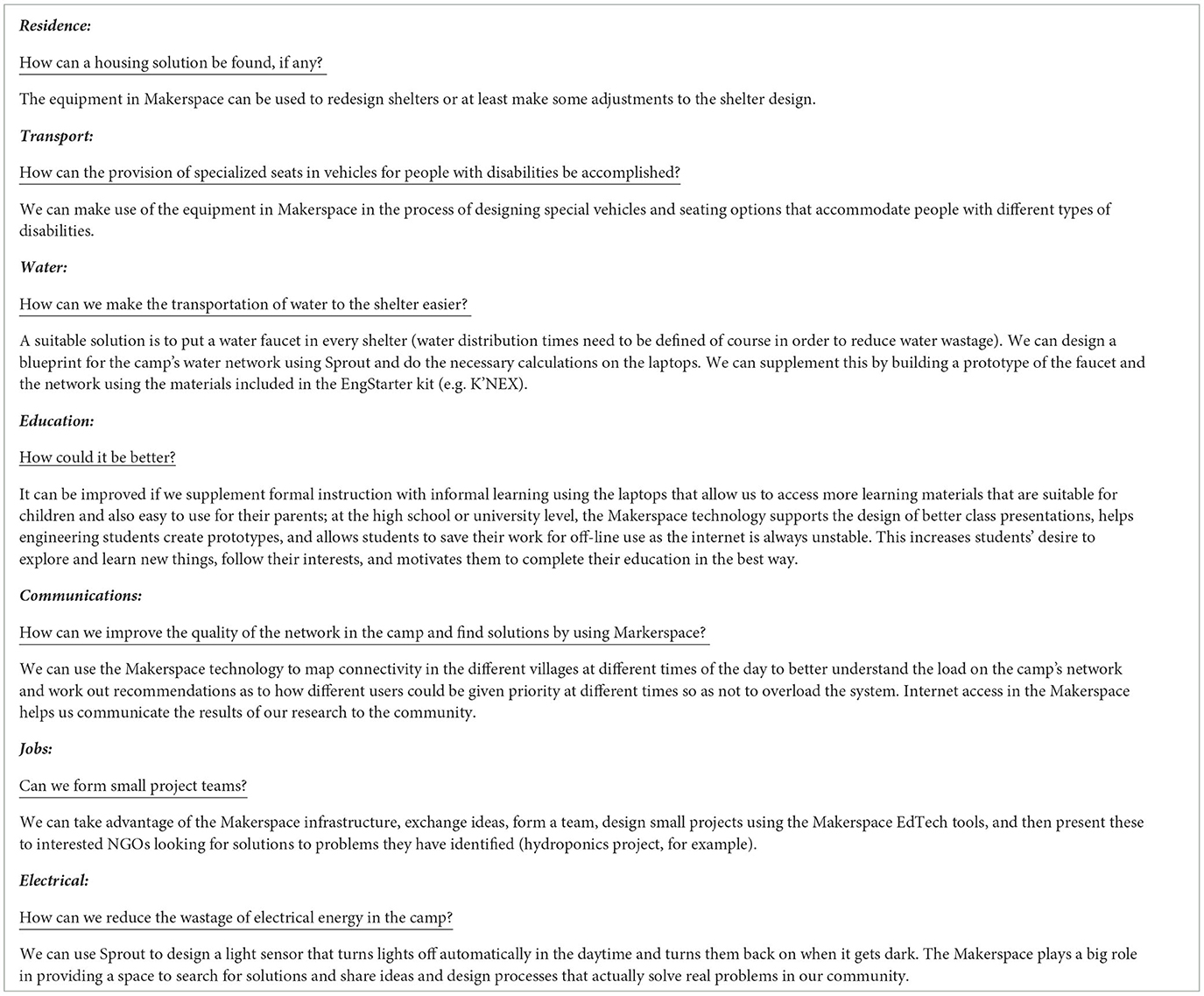

The group had thereby developed a very comprehensive picture of the needs of the Azraq camp community and how these needs were related to each other and was ready to relate the affordances of the Makerspace to solving these needs; in short, they were ready to evaluate how the Makerspace and its EdTech as installed in their camp could actually solve problems of camp residents that they had identified through their problem-tree analysis.

They then proceeded to the next step of the project: defining evaluation questions, i.e., if and how the EdTech of the Makerspace could contribute to solving community problems. In that, the group was guided by three key question types (cf Table 1), with output summarized in Table 5.

Description and causal allocation phase

Based on the Azraq camp problem tree, the group produced a large number of potential evaluation questions (cf Table 5).

The synthesis phase

In a second iteration, the table became the input for in-depth group discussions as to which of these were evaluation questions linked to the potential uses of the Makerspace as an example of EdTech, and how this type of EdTech could serve the needs identified by the HP Research Group and the users of the Makerspace. Table 5 summarizes the selection of evaluation questions linked to the Makerspace as EdTech and provides examples of how, with the EdTech embedded in the Makerspace, solutions could be generated by the users. The addition of potential solutions in response to the evaluation questions identified by the researchers was to test the contextual relevance of the evaluation questions.

Meeting the criteria for developmental evaluation in humanitarian contexts

The comparison between traditional and developmental evaluations and the latter's relevance to humanitarian contexts is summarized in Table 2 above. Moving to the last task of the Rainbow Framework, and in light of the fact that pandemic-related restrictions and cost-cutting had not yet allowed for carrying out evaluations in the camp, participants decided to repurpose the last phase of the BetterEvaluation framework and describe the impact of designing and using new tools to evaluate EdTech in humanitarian contexts. To this end, the research group engaged in reflection on how the overall framework and the different tools met the needs of forcibly displaced youth and their communities. Had the Rainbow Framework and developmental evaluation together with the tools of Participatory Action Research responsibly supported Core Humanitarian Standards (CHS Alliance, 2014)? Traditional evaluations are almost always initiated by outside consultants to ensure impartiality. However, accountability to affected populations requires deeper and more sustained community engagement that goes beyond participation in interviews, surveys and focus groups. While fear of negative feedback from communities, lack of resources, and competing priorities are often cited by humanitarian actors as reasons for choosing traditional and donor-facing evaluation approaches, community participation ensures access to affected populations and their resources, makes assistance more efficient and speeds up recovery (CHS Alliance, 2014). Putting crisis-affected youth at the center of a humanitarian response also yields important (social) protection benefits and contributes to their self-reliance (Holmes and Lowe, 2023).

Measured against the key criteria listed for developmental evaluations and their application in humanitarian contexts, this project adapted to the needs of an unpredictable camp context and emphasized community participation, local knowledge production, and respect for contextual assets. It put vulnerable youth in charge of “Action” and, through detailed analyses of needs in their camp, enabled them to exercise accountability to their community. The project thereby met the criteria for the Participation Revolution in humanitarian action and the INEE Minimum Standard for community participation as referenced above.

Reporting impact

The last phase of the Rainbow Framework (BetterEvaluation, 2022) provides for reporting on the impact and on developing recommendations that support the future use of the evaluated EdTech. In Phase 3 of the BetterEvaluation framework, participants produced a number of evaluation questions related to the potential use of the EdTech involved in this project and demonstrated how participatory approaches could produce a comprehensive contextual understanding of the impact a particular EdTech could have on the humanitarian community where it was implemented. The final selection of evaluation questions and implementation of the remaining phases of the BetterEvaluation framework for this particular EdTech remained subject to funding and restrictions related to the pandemic.

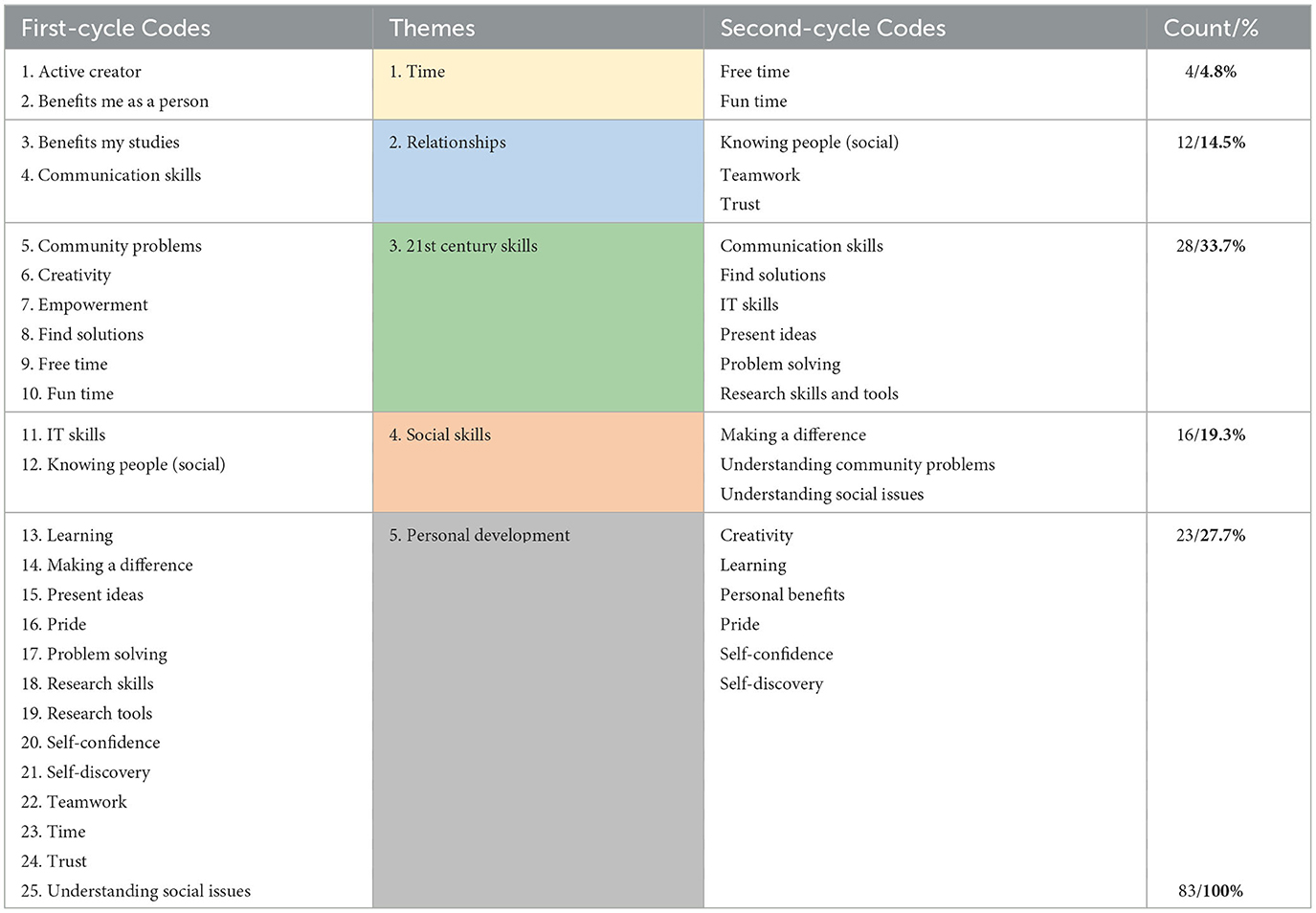

As the focus of this study is on the design and development of methodological tools, we therefore propose a meta-analysis of the process, the program of activities, and the impact these have on the community, in particular on the group of student researchers. To this end, personal reflections on the project and its implementation were requested from each group member. There was no prescribed format; participants could choose video, audio, or narrative, as well as samples of the creations at the Makerspace. A total of 12 comprehensive files were submitted anonymously and subsequently analyzed by the project lead using thematic analysis based on Braun and Clarke (2006). Submitted video and audio files were first transcribed for analysis. Inductive and semantic coding was then used to develop first-cycle codes; these 25 codes were grouped into five themes, with duplicate or near-duplicate codes eliminated. During a subsequent third sweep through the qualitative data, a total of 83 items distributed across the five themes were identified.

Table 6 provides an overview of the process from the first-cycle coding to the grouping into salient themes to the second-cycle coding and quantification, with percentages of second-cycle codes allocated to each theme (cf. Appendix for sample quotes).
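The quantification step described above (allocating second-cycle items to themes and reporting each theme's share) can be illustrated with a minimal sketch. It is not the procedure used by the project lead. The theme labels and per-theme counts below are hypothetical placeholders chosen only so that they sum to the 83 items reported, and the function name `theme_percentages` is likewise an assumption made for illustration.

```python
from collections import Counter


def theme_percentages(coded_items):
    """Return the share (in percent, one decimal) of coded items per theme.

    coded_items is a list of (item_id, theme) pairs, one per coded item.
    """
    counts = Counter(theme for _, theme in coded_items)
    total = sum(counts.values())
    return {theme: round(100 * n / total, 1) for theme, n in counts.items()}


# Hypothetical counts per theme (NOT taken from Table 6); they only
# reproduce the reported total of 83 items across five themes.
hypothetical_counts = {
    "Theme A": 25,
    "Theme B": 20,
    "Theme C": 16,
    "Theme D": 12,
    "Theme E": 10,
}

# Expand the counts into one (item_id, theme) pair per coded item.
coded_items = [
    (f"{theme}-{i}", theme)
    for theme, n in hypothetical_counts.items()
    for i in range(n)
]

shares = theme_percentages(coded_items)
for theme, pct in shares.items():
    print(f"{theme}: {pct}%")
```

Keeping one record per coded item, rather than only the totals, would allow each percentage to be traced back to the underlying quotes, which matters when the analysis is shared with the participants who produced them.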

Synthesis and discussion

Understanding evaluation as a concept and as a process was challenging for recent high school graduates with little background in the social sciences. This required balancing the building of foundational knowledge of specific accepted scientific approaches against minimal interference with locally informed approaches, while preserving a degree of fuzziness and messiness that would support bottom-up innovation that did not follow a received innovation design process. The developmental evaluation framework provided considerable flexibility for the participants to scope ideas and to follow up with collaborative inquiry, observation of the Makerspace users, and scoping of evaluation questions, while remaining entirely embedded in the reality of the humanitarian context of their community, for which the use of EdTech tools was to be evaluated. Emerging from an education system that did not prioritize independent, creative, and critical thinking, these recent high school graduates were ideal participants in that they were not entirely set in their ways of learning and exploring their environment. This supported trial-and-error learning, being receptive to feedback, opening up to new and diverse ideas, and gaining confidence in their abilities to contribute to science. As a learning opportunity, this project allowed them to acquire 21st century skills and contributed greatly to social-emotional learning during the pandemic. “What is important is that we develop a culture of evaluation, in which mistakes are seen as learning opportunities and learning as a major source of growth and development” (Feinstein, 2006, p. 8).

From a methodological point of view, the different tools that make up the toolbox prototype are responsive to the Grand Bargain Commitments regarding local ownership of projects and processes, appropriate to the context in which they will be used, effective in that they produce usable results, and efficient in that they do not require lengthy training. The prototype as a whole is greater than the sum of its parts, i.e., its individual methods and tools. And yet, even a selection of individual tools is likely to generate important insights and learning opportunities that are participant-led and community-embedded.

As noted in the background section, there is scant evidence of EdTech in emergency situations (World Bank, 2016). The project reported on in this study seeks to contribute to the creation of evidence regarding EdTech interventions in fragile contexts through the design of a methodological toolbox prototype, by and for forcibly displaced youth living in fragile contexts. The project built on principles that included a clear understanding of low-resource humanitarian contexts, that EdTech is a tool and not the solution, that we need to start with the problem and not the technology, and that the human factor is critical to impactful EdTech applications (Tobin and Hieker, 2021). The proposed methodological toolbox prototype was designed with refugee youth aged 18–35, but the framework and tools can be easily adapted for use by secondary school students, and even upper-level primary school students can engage in participatory research initiatives. The EdTech to be evaluated for implementation need not be an entire suite of IT tools, as was the case with the HP Learning Studio, as the toolbox approach lends itself readily to participatory evaluation for low-resource humanitarian contexts of stand-alone tools, such as educational apps and learning management systems.

Conclusion

Raluca et al. (2022) conclude a recent policy brief regarding EdTech use during the global pandemic as follows:

“What we know less about is whether distance learning and EdTech can help offset [these] negative consequences…A core constraint, however, is the availability of local data and information to inform this decision-making. Without an operating footprint of schools to channel information up and down, policymakers have to be realistic. They are likely to have less information than what is normally available from weak education management and data collection systems, meaning they will be unable to optimize their decisions.” (Raluca et al., 2022, p. 3).

Years of emphasis on more evidence-based approaches to learning in fragile contexts have largely oriented the focus to applying the “gold standard” of research methods, RCTs, and other quasi-empirical methods, to constructing this evidence. The guide to assessing the strength of evidence in education (Building Evidence in Education Working Group, 2015) follows a similar pattern, relying on the understanding that evidence is constructed using the accepted scientific method as usually applied in Western research centers and higher education institutions where controlling variables and methodological finesse are an integral part of the research enterprise and where research output generally does not directly affect people's lives. When such approaches are exported to fragile contexts they invariably set up a power differential between those who are studied and those who are doing the research (Fox et al., 2020). This approach, however, is increasingly questioned by those whose voices are supposedly included in the research, but who are at best enumerators, collecting data on variables and indicators to whose identification they had never contributed, and which will inform research and evaluation questions that they themselves rarely learn about. As the research and evaluation results are only occasionally returned to the population under study, this type of extractive research is increasingly questioned (Bastida et al., 2009; Cordner et al., 2012) not only on ethical grounds (Kouritzin and Nakagawa, 2018) but also in terms of validity and reliability of its results. If including refugee voices is limited to their participation in focus groups and KI interviews—the selection is often co-determined by their level of proficiency in the language of the researcher(s)—it is difficult to claim that the quality of the evidence warrants its consideration for policymaking that directly affects the studied population. 
It is thus not surprising that so much of EdTech in humanitarian contexts remains unused or not used as intended and that potentially valuable uses remain unidentified. This usually leads to the commissioning of more studies that use the same traditional research and evaluation approaches and largely produce more of the same results.

In this project, we invested considerable effort in being and remaining grounded (Strauss and Corbin, 1994). We dug into the toolbox of research methods that would be compatible with empowering young people to become evaluators and researchers, giving them enough time and opportunity to build their skills and use their voices, and breaking new ground not by inventing new research methods but by combining respected methods in ways that would ultimately contribute to a responsible methodological toolbox for use in humanitarian contexts. Setting the approach within a highly respected evaluation framework (BetterEvaluation, 2022) was initially a risky departure from our grounded approach, but the Rainbow Framework stood the test and proved to be a valuable guide and roadmap. It also ensured an interface to the more traditional evaluation approaches with which the humanitarian community would be more familiar, thus perhaps improving the chances of acceptance of the project's outcomes.

While building the methodological toolbox took time and benefited from locally available resources, the resulting prototype responds to the demands of efficiency in humanitarian contexts: the user does not need to go through all the stages in the same in-depth way but can prioritize certain stages and invest more resources where considered appropriate. No two humanitarian contexts are alike. The resource base can be extremely varied, and a one-size-fits-all approach is thus ill suited for fragile contexts. Each context merits study by those who live in it and are the intended beneficiaries of EdTech. EdTech innovators should constantly ask themselves whether they have correctly identified the “customer's pain point” (Atwater, 2022): “it's easy to fall in love with a solution first and back into the problem it solves. In a crisis, we make fewer mistakes in the choice of the problem, and we do a much better job about picking solutions.” Much of EdTech is designed as a solution without a profound understanding of the problem; worse yet, it is designed as a solution rather than as an open-ended, fuzzy, and messy tool that lets the ultimate users decide on its use and usefulness. Evaluating EdTech thus needs to be user- and context-driven, and the process of learning how to evaluate EdTech might turn out to be at least as important as the outcome, if not more so, strengthening both our accountability to youth in fragile contexts and our engagement as higher education actors in operationalizing the Grand Bargain commitments.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

Ethical review and approval was not required for the study involving human participants in accordance with the local legislation and institutional requirements. Written informed consent to participate in this study was not required from the participants in accordance with the national legislation and the institutional requirements.

Author contributions

KA coordinated the junior researchers RAlh, RAlS, AA, AH, BB, MHA, and MMA in the refugee camp during research training and research implementation. BM-M provided the research training and framework, compiled and analyzed the data, and wrote the article. All authors contributed equally to this study.

Funding

This research was conducted as part of a Ford Foundation grant awarded to the corresponding author while at the Université de Genève/InZone Center. Grant # 129478. The grant was awarded for the development of Higher Education in Emergencies programs in Azraq refugee camp, Jordan. Open access funding by University of Geneva.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Atwater, E. (2022). Available online at: https://entrepreneurship.babson.edu/babson-magazine/spring-2020 (accessed September 5, 2022).

Bastida, E., Tseng, T., McKeever, C., and Jack, L. (2009). Ethics and community-based participatory research: perspectives from the field. Health Prom. Pract. 11, 16–20. doi: 10.1177/1524839909352841

BetterEvaluation (2022). BetterEvaluation. Available online at: https://www.betterevaluation.org/ (accessed September 5, 2022).

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qualit. Res. Psychol. 3, 77–101. doi: 10.1191/1478088706qp063oa

Buchanan-Smith, M., Cosgrave, J., and Warner, A. (2016). ALNAP Evaluation of Humanitarian Action Guide. Available online at: https://www.alnap.org/system/files/content/resource/files/main/alnap-evaluation-humanitarian-action-2016.pdf (accessed September 5, 2022).

Building Evidence in Education Working Group (2015). Assessing the Strength of Evidence in the Education Sector. Available online at: https://reliefweb.int/report/world/assessing-strength-evidence-education-sector (accessed September 5, 2022).

Chevalier, J., and Buckles, D. (2019). Handbook of participatory action research. Available online at: https://www.betterevaluation.org/sites/default/files/Toolkit_En_March7_2013-S.pdf (accessed September 5, 2022).

CHS Alliance (2014). The Core Humanitarian Standards. Available online at: https://corehumanitarianstandard.org/files/files/Core%20Humanitarian%20Standard%20-%20English.pdf (accessed April 7, 2023).

Connected Learning Alliance (2020). What is connected learning. Available online at: https://clalliance.org/about-connected-learning (accessed September 5, 2022).

Cordner, A., Ciplet, D., Brown, P., and Morello-Frosch, R. (2012). Reflexive research ethics for environmental health and justice: Academics and Movement Building. Soc. Move. Stud. 11, 161–176. doi: 10.1080/14742837.2012.664898

Davies, D., and Elderfield, E. (2022). Language, power and voice in monitoring, evaluation, accountability and learning: a checklist for practitioners. Forced Migr. Rev. 70, 32–35.

de Freitas, C., and DeBoer, J. (2019). “A Mobile Educational Lab Kit for Fragile Contexts,” in 2019 IEEE Global Humanitarian Technology Conference (GHTC). doi: 10.1109/GHTC46095.2019.9033133

Dozois, E., Langlois, M., and Blanchet-Cohen, N. (2010). A Practitioner's Guide to Developmental Evaluation. Montreal, QC: The J.W. McConnell Family Foundation and the International Institute for Child Rights and Development. Available online at: https://mcconnellfoundation.ca/wp-content/uploads/2017/07/DE-201-EN.pdf (accessed September 5, 2022).

Feinstein, O. (2006). Evaluation as a learning tool. Paper presented at the International Congress on Human Development 2006, Madrid. Available online at: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.1068.6086&rep=rep1&type=pdf (accessed September 5, 2022).

Fox, A., Baker, S., Charitonos, K., Jack, V., and Moser-Mercer, B. (2020). Ethics-in-practice in fragile contexts: Research in education for displaced persons, refugees and asylum seekers. Br. Educ. Res. J. 46, 829–847. doi: 10.1002/berj.3618

Geneva Global Hub for Education in Emergencies (2022). Education in Emergencies. Financing in the wake of COVID-19: Time to reinvest to meet growing needs. Available online at: https://eiehub.org/wp-content/uploads/2022/06/2022_EiE-Financing-in-the-Wake-of-COVID-19_Time-to-Reinvest-to-Meet-Growing-Needs.pdf (accessed April 7, 2023).

Genovese, J. (2017). The Mindmapper's Toolkit. Available online at: https://mailchi.mp/d90a79068c8c/the-mind-mappers-toolkit (accessed December 7, 2020).

Global Citizen (2018). How HP Is Creating Educational Opportunities for Syrian Refugees in Jordan. HP Learning Studios' initiative is helping refugees rebuild their lives. Available online at: https://www.globalcitizen.org/en/content/hp-jordan-azraq-refugee-camp-education/ (accessed April 7, 2023).

Haelewaters, D., Hofmann, T. A., and Romero-Olivares, A. L. (2021). Ten simple rules for Global North researchers to stop perpetuating helicopter research in the Global South. PLoS Comput. Biol. 17, e1009277. doi: 10.1371/journal.pcbi.1009277

Hirsh-Pasek, K., Zosh, J. M., Shwe Hadani, H., Golinkoff, R. M., Clark, K., Donohue, C., et al (2022). A Whole New World. Education Meets the Metaverse. Center for Universal Education at Brookings. Available online at: https://www.fenews.co.uk/wp-content/uploads/2022/02/A-whole-new-world-Education-meets-the-Metaverse-Feb-(2022).pdf (accessed April 7, 2023).

Holmes, R., and Lowe, C. (2023). Strengthening inclusive social protection systems for displaced children and their families. London: ODI and New York: UNICEF. Available online at: https://odi.org/en/publications/strengthening-inclusive-social-protection-systems-for-displaced-children-and-their-families/ (accessed April 8, 2023).

IASC (2017). Grand Bargain Participation Revolution work stream. Agreed, practical definition of the meaning of “participation” within the context of this workstream. IASC. Available online at: https://interagencystandingcommittee.org/system/files/participation_revolution_-_definition_of_participation.pdf (accessed April 7, 2023).

IASC (n.d.). The Grand Bargain. Available online at: https://interagencystandingcommittee.org/node/40190 (accessed April 7, 2023).

INEE (2010). Minimum Standards for Education. Domain 1: Foundational Standards. Available online at: https://inee.org/minimum-standards/domain-1-foundational-standards/community-participation-standard-1-participation (accessed April 7, 2023).

IRC (2022). International Rescue Committee. Humanitarian EdTech Innovation Toolkit. Tools and insights for innovating with technology-based education in humanitarian contexts. Available online at: https://reliefweb.int/report/world/humanitarian-edtech-innovation-toolkit-tools-and-insights-innovating-technology-based-education-humanitarian-context (accessed April 7, 2023).

Kouritzin, S., and Nakagawa, S. (2018). Toward a non-extractive research ethics for transcultural, translingual research: perspectives from the coloniser and the colonised. J. Multil. Multic. Develop. 39, 675–687. doi: 10.1080/01434632.2018.1427755

KT Pathways (2022). A Short Guide to Community Based Participatory Action Research. Available online at: https://www.ktpathways.ca/resources/short-guide-community-based-participatory-action-research (accessed September 5, 2022).

Kucirkova, N. (2022). EdTech has not lived up to its promises. Here is how to turn that around. Opinion piece. World Economic Forum. Available online at: https://www.weforum.org/agenda/2022/07/edtech-has-not-lived-up-to-its-promises-heres-how-to-turn-that-around/ (accessed April 7, 2023).

Lu, J., Schmidt, M., Lee, M., and Huang, R. (2022). Usability research in educational technology: a state-of-the-art systematic review. Educ. Tech Res. Dev. 70, 1951–1992. doi: 10.1007/s11423-022-10152-6

Muñoz-Najar, A., Gilberto, A., Hasan, A., Cobo, C., Azevedo, J. P., and Akmal, M. (2021). Remote Learning during COVID-19: Lessons from Today, Principles for Tomorrow. Washington, D.C.: World Bank Group. Available online at: http://hdl.handle.net/10986/36665 (accessed September 5, 2022).

Pain, R., Whitman, G., Milledge, D., and Lune Rivers Trust. (2012). Participatory action research toolkit: an introduction to using PAR as an approach to learning, research and action. Durham University/RELU/Lune Rivers Trust. Available online at: https://www.durham.ac.uk/media/durham-university/research-/research-centres/social-justice-amp-community-action-centre-for/documents/toolkits-guides-and-case-studies/Participatory-Action-Research-Toolkit.pdf (accessed September 5, 2022).

Patton, M. (2006). Evaluation for the Way We Work. Nonprofit Quarterly. Available online at: https://nonprofitquarterly.org/evaluation-for-the-way-we-work/ (accessed September 5, 2022).

Patton, M. (2010). Developmental Evaluation: Applying Complexity Concepts to Enhance Innovation and Use. New York: Guilford Press.

Patton, M. (2012). Developmental evaluation: Conclusion. Expert seminar at Wageningen Center for Development Innovation. Available online at: https://www.youtube.com/watch?v=h6-vCT-cv4Y (accessed September 5, 2022).

Raluca, D., Pellini, A., Jordan, K., and Phillips, T. (2022). Education during the COVID-19 crisis. Opportunities and constraints of using EdTech in low-income countries. Policy Brief. Available online at: http://edtechhub.org/coronavirus (accessed September 5, 2022).

Rodriguez-Segura, D. (2022). EdTech in developing countries: a review of the evidence. World Bank Res. Obser. 37, 171–203. doi: 10.1093/wbro/lkab011

Sandhu-Rojon, R. (2017). Selecting indicators for impact evaluation. UNDP. Available online at: https://www.ngoconnect.net/sites/default/files/resources/Selecting%20Indicators%20for%20Impact%20Evaluation.pdf (accessed September 5, 2022).

Simister, N. (2020). Developmental evaluation. Available online at: https://www.intrac.org/wpcms/wp-content/uploads/2017/01/Developmental-evaluation.pdf (accessed September 5, 2022).

Strauss, A., and Corbin, J. (1994). “Grounded theory methodology,” in Handbook of qualitative research, eds. N. Denzin and Y. Lincoln (Thousand Oaks: Sage), 217–285.

Tauson, M., and Stannard, L. (2018). EdTech for learning in emergencies and displaced settings. London: Save the Children. Available online at: https://resourcecentre.savethechildren.net/node/13238/pdf/edtech-learning.pdf (accessed September 5, 2022).

Tobin, E., and Hieker, C. (2021). What the EdTech experience in refugee camps can teach us in times of school closure. Blended learning, modular and mobile programs are key to keeping disadvantaged learners in education. Challenges 12, 19. doi: 10.3390/challe12020019

UNHCR (2022). Refugee camps Jordan. Available online at: https://www.unhcr.org/jo/refugee-camps (accessed April 7, 2023).

UNICEF (2021). Unlocking the Power of Digital Technologies to Support ‘Learning to Earning’ for Displaced Youth. New York: UNICEF. Available online at: https://www.unicef.org/media/105686/file/Learning%20to%20earning%E2%80%99%20for%20displaced%20youth.pdf (accessed April 7, 2023).

UNICEF-Innocenti (2022). Responsible Innovation in Technology for Children. Digital Technology, Play and Child-Wellbeing. Florence: UNICEF-Innocenti. Available online at: https://www.unicef-irc.org/ritec (accessed April 7, 2023).

Vegas, E. (2020). What can COVID-19 teach us about strengthening education systems? Brookings Institution – Education Plus. Available online at: https://www.brookings.edu/blog/education-plus-development/2020/04/09/what-can-covid-19-teach-us-about-strengthening-education-systems/ (accessed September 5, 2022).

World Bank (2016). ICT and the Education of Refugees. Available online at: https://documents1.worldbank.org/curated/en/455391472116348902/pdf/107997-WP-P160311-PUBLIC-ICT-and-the-Education-of-Refugees-final.pdf (accessed April 7, 2023).

Appendix

This produced the following rich output of (unedited) examples:

Output from follow-up assignment:

Building knowledge: We have developed the skills of members (using the place) and expanded their awareness in many areas, including teaching them how to use advanced computers (Sprout).

Social change: By working in the Makerspace and following up on place users, we have noticed a lot of harmony, cooperation, and exchange of ideas, despite their different cultures and social groups, this is a goal that we have achieved and a great achievement. Hope this works!

Stakeholders: They are the people directly related[sic] and affected, and the involvement of stakeholders in the research helps in gathering information and helps to define the needs of the local community. They are generally people in the community (and part of them are users of the place).

Division of tasks: We divided the tasks among the team members according to the skills and experiences of each member of the team, for example, when we featured the brochure, Mohamed Al-Hamoud, with the assistance of Rawan Al-Maher, designed the external pictures of the publication. Ahmed and Ali were tasked with writing the content in both languages. Arabic and English, Rowan and Bashar were tasked with spelling and final design on the laptop, which was the task of Kawkab and Muhammad al-Qadri.

Community needs assessment: Through our follow-up of the place, users, their sharing of ideas, and through discussions, we were able to determine the needs of the local community. For example, the youth in the camp need to learn the English language in order to study and work. Another example: People here need plants, but the soil is not suitable for cultivation, so the idea of a hydroponic project was born.

Cooperation between the members of the group: Since the beginning of the course, I and the members of the group were sympathetic at the beginning. We distributed the tasks to the members according to the skill of each individual. Some of them had the task of entering data on the laptop, and some of them had to register what we needed of[sic] materials. Also, they cooperated in distributing publications in the centers. In addition, we had to determine the content and form of the publication.

Working as a team: We were always in contact with each other, either through periodic sessions or via WhatsApp, cooperating and helping each other to complete tasks in[sic] time and coordinating among us. For example, when we distribute brochures in the camp, we worked as a team and each member helped.

Keywords: EdTech in humanitarian contexts, developmental evaluation, accountability to affected populations, localization, PAR

Citation: Moser-Mercer B, AlMousa KK, Alhaj Hussein RM, AlSbihe RK, AlGasem AS, Hadmoun AA, Bakkar BA, AlQadri MH and AlHmoud MM (2023) EdTech in humanitarian contexts: whose evidence base? Front. Educ. 8:1038476. doi: 10.3389/feduc.2023.1038476

Received: 07 September 2022; Accepted: 17 May 2023;

Published: 16 June 2023.

Edited by:

Jorge Membrillo-Hernández, Tecnologico de Monterrey, Mexico

Reviewed by:

Claudio Freitas, Purdue University Fort Wayne, United States

Gerid Hager, International Institute for Applied Systems Analysis (IIASA), Austria

Copyright © 2023 Moser-Mercer, AlMousa, Alhaj Hussein, AlSbihe, AlGasem, Hadmoun, Bakkar, AlQadri and AlHmoud. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Barbara Moser-Mercer
