ORIGINAL RESEARCH article

Front. Educ., 25 October 2022

Sec. Teacher Education

Volume 7 - 2022 | https://doi.org/10.3389/feduc.2022.994739

This article is part of the Research Topic: Good Teaching is a Myth!?

Conceptualizing and measuring instructional quality is important for clarifying what counts as “good teaching” and for developing approaches to improve instruction. There is a consensus in teaching effectiveness research that instructional quality should be considered a multidimensional rather than a unitary construct, with at least three basic dimensions: student support, cognitive activation, and classroom management. Many studies have used this or similar frameworks as a foundation for empirical research. The purpose of this paper is to investigate the relation between the conceptual indicators underlying the conceptual definitions of the quality dimensions in the literature, and the various operational indicators used to operationalize these factors in empirical studies. We examined (a) which conceptual indicators are used to conceptualize the basic dimensions theoretically, (b) the extent to which the operational indicators in the literature cover these conceptual indicators, and (c) which additional indicators are addressed by the measurement instruments but are not part of the theoretical conceptualization. We conducted a systematic literature review on the conceptualization and operationalization of Instructional Quality in Primary and Secondary Mathematics Education based on PRISMA procedures. We describe the span of conceptual indicators connected to the three basic dimensions over all articles (a) and analyze the extent to which the measurement instruments are in line with these conceptual indicators (b, c). For each measurement dimension, the quality dimensions identified are, taken together, largely representative of the conceptual indicators connected to the core factor, but a number of critical misconceptions also occurred. Our review provides a comprehensive overview of the three basic dimensions of instructional quality in mathematics based on theoretical conceptualizations and measurement instruments in the literature.
Beyond this, we observed that the descriptions of a substantial number of quality dimensions and their conceptualizations did not clearly specify whether the intended measurement referred to the learning opportunities orchestrated by the teacher, or the utilization of these opportunities by students. It remains a challenge to differentiate measures of instructional quality (as orchestrated by the teacher) from (perceived) teacher competencies/knowledge, and students’ reactions to the instruction. Recommendations are made for measurement practice, as well as directions for future research.

For almost five decades, instructional quality has remained a key topic in mathematics education research (FIAC, Schlesinger and Jentsch, 2016). The evaluation of teaching quality in mathematics has become increasingly important following international student assessment studies indicating that even in economically developed countries such as those in Europe, the USA, and Australia, approximately 20% of students lack sufficient skills in mathematics (Maass et al., 2019). Therefore, improving the quality of mathematics instruction has become a pressing issue for both researchers and practitioners (Cobb and Jackson, 2011).

Instructional quality, which is generally considered an “elusive” concept (Brown and Kurzweil, 2017, p. 3), refers to the degree to which instruction is effective, efficient, and engaging. Brophy and Good (1984) argued that research on effective teaching was largely influenced by the measurement of instructional quality. In this study, instructional quality refers to observable characteristics of classroom instruction that are orchestrated by teachers and go along with desirable development of students’ learning outcomes in a theoretically plausible way, supported by empirical evidence. Valid measures of instructional quality are important because they provide theoretical conceptualizations of instruction that lead to students’ cognitive and affective–motivational learning progress and that have been put to an empirical test. Therefore, they have the potential to go beyond simply measuring the amount of instruction and can serve as a means of improving instruction (Boston, 2012), to provide useful feedback to guide instructional improvements (Schlesinger and Jentsch, 2016), to focus on the quality of the learning environments teachers create for students, to assist districts in monitoring and evaluating reform efforts (Learning Mathematics for Teaching Project, 2010), and to trigger conversations about equitable learning opportunities (Boston, 2012). However, researchers lack adequate knowledge of the characteristics of effective teaching in classrooms needed to establish a robust link between teaching and learning (Blömeke et al., 2016). Furthermore, there has been a long-standing debate about how these characteristics of effective teaching for successful learning in schools should be evaluated (Schlesinger and Jentsch, 2016).

All models of instructional quality differentiate between various measurement dimensions, which are assumed to describe different characteristics of effective instruction that relate to differences in learning progress. In this study, the term measurement dimension refers to a single, empirically measurable dimension of instructional quality mentioned in a manuscript, which can be at different levels of granularity (Praetorius and Charalambous, 2018): for example, dimensions, sub-dimensions, indicators, coding-items, -rubrics, and single codes. A well-known German framework of Three Basic Dimensions has been developed by several studies from German-speaking countries within the TIMSS Video Study to define teaching quality as a combination of three overarching basic measurement dimensions: (a) a clear, well-structured classroom management, (b) supportive, student-oriented classroom climate, and (c) cognitive activation (Klieme, 2013).

Many studies have used this or a similar three-dimensional framework provided by Klieme et al. (2009) as a foundation for further empirical research (Schlesinger and Jentsch, 2016). In the sequel, we will refer to these three dimensions as basic dimensions. Taut and Rakoczy (2016) briefly addressed a content-specific conceptualization for an extended version. Seidel and Shavelson (2007) argued that classroom assessment is also an important additional factor of instructional quality. They further divided student orientation and added classroom assessment to the three frequently identified basic dimensions: (a) classroom management; (b) cognitive activation; (c) student orientation, consisting of the component of (c1) organizational choices on the one hand, and (c2) supportive relationships on the other; and (d) classroom assessment. Pianta and Hamre (2009) also conceptualize three global, generic dimensions that help to understand how practices and content are implemented. Similar to the three dimensions proposed by Klieme et al. (2009), they distinguish (a) classroom organization, (b) emotional support, and (c) instructional support. In their teaching and learning model, Seidel and Shavelson (2007) distinguish (a) goal clarity and orientation, (b) learning climate, (c) teacher support and guidance, (d) executing learning activities, and (e) evaluation.

Despite its popularity, the three-dimensional framework is plagued by incoherent conceptualization. Multiple labels (e.g., classroom management/organization, classroom climate/student orientation/emotional support) are used to characterize the same characteristics of the classroom, which leads to misunderstandings among scholars and practitioners. Theoretically, the three-dimensional frameworks we just described were conceptualized as being generic in nature (Praetorius et al., 2018). However, the extent to which a generic perspective on instructional quality requires a subject-specific specification, extension or differentiation is subject to ongoing discussion in the field (e.g., Schlesinger and Jentsch, 2016; Jentsch et al., 2020; Lindmeier and Heinze, 2020; Praetorius et al., 2020; Dreher and Leuders, 2021; Praetorius and Gräsel, 2021). Furthermore, the conceptualization of the dimension Cognitive Activation can differ largely between studies within one subject (e.g., mathematics; Schlesinger and Jentsch, 2016).

Given this heterogeneity, it has become important to structure or systematically analyze previous studies to capture the commonalities and differences in these approaches, to clarify misconceptions, and to propose recommendations for future conceptualizations of instructional quality. For example, Praetorius and Charalambous (2018) recently reviewed 12 classroom observation frameworks used for measuring quality in mathematics instruction and reflected on the differences in the theoretical underpinnings and their operationalization related to the frameworks. This work represents one of two perspectives that may provide insights into what is actually understood under the basic dimensions: Measurement instruments applied in empirical studies to measure instructional quality, and specifically the basic dimensions, draw on compositions of observable and measurable properties of classroom instruction, called operational indicators (Figure 1, blue area). However, how a construct is operationalized using sub-scales and items and dimensions is crucially dependent on how it is conceptualized from a theoretical perspective. While Praetorius and Charalambous (2018, p. 539) already claimed that “the process of operationalization is closely associated with fundamental theoretical questions,” measurement and conceptualization were addressed independently. From this second theoretical perspective, research works usually start from conceptual definitions (Quarantelli, 1985) for each basic dimension. In the literature, these conceptual definitions often comprise essential and in principle observable properties of classroom instruction, called conceptual indicators here (Figure 1, yellow area), which define the corresponding basic dimension of instructional quality. The conceptual indicators used to define the basic dimensions in the original manuscript are presented by the red area in Figure 1.

The primary purpose of this study is to investigate the extent to which the indicators from the two perspectives match the three basic dimensions from Klieme’s (2013) framework: To what extent do the operational indicators and conceptual indicators used in the literature overlap? Are there indications of concept underrepresentation (yellow area without blue area) or indications of construct-irrelevant measurement (blue area without yellow area)? Finally, does the original definition of the basic dimensions fall inside the overlap of conceptual and operational indicators? The second goal of the manuscript is concerned with measurement dimensions that are discussed in the literature but do not come under the purview of one of the three basic dimensions. The main question here is how they can be systematized into a set of new, additional overarching quality dimensions, which may represent additional “basic dimensions” in the future. Even though initial answers to this question based on the analysis of observation tools are available, more and diverse indicators of instructional quality are discussed in the literature, beyond those found in observation tools. In the following sections, we will prepare this investigation by considering each of the two perspectives comprehensively.
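The three questions about overlap can be read as simple set comparisons. The following is a minimal sketch of that reading, not the coding procedure used in this review. The indicator labels, the function name `compare_indicators`, and the coverage ratio are all hypothetical and were invented purely for illustration.

```python
# Hypothetical indicator labels for one basic dimension (illustration only;
# these are NOT the indicators identified in the review).
conceptual = {"time on task", "clear rules", "monitoring", "dealing with disruptions"}
operational = {"time on task", "clear rules", "teacher enthusiasm"}
original_definition = {"time on task"}


def compare_indicators(conceptual, operational, original_definition):
    """Compare conceptual and operational indicator sets for one dimension."""
    # Indicators that are both defined conceptually and actually measured.
    overlap = conceptual & operational
    # Conceptual indicators no instrument measures (concept underrepresentation).
    underrepresented = conceptual - operational
    # Measured indicators absent from the conceptualization
    # (possible construct-irrelevant measurement).
    construct_irrelevant = operational - conceptual
    # Share of conceptual indicators covered by at least one operational indicator.
    coverage = len(overlap) / len(conceptual) if conceptual else 0.0
    return {
        "overlap": overlap,
        "underrepresented": underrepresented,
        "construct_irrelevant": construct_irrelevant,
        "coverage": coverage,
        # Does the original definition fall inside the overlap?
        "original_inside_overlap": original_definition <= overlap,
    }


result = compare_indicators(conceptual, operational, original_definition)
for key, value in result.items():
    print(key, value)
```

In practice, deciding whether a conceptual and an operational indicator refer to the same property requires qualitative judgment by coders. The exact label matching used here is a simplifying assumption.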

Deciding on measurements in research involves the process of defining how a construct will be assessed, known as conceptualization. Prior attempts to measure instructional quality traditionally focused on instructional inputs or instructional outputs (Brown and Kurzweil, 2017). Output-based definitions of instructional quality focus on student behaviors and accomplishments, such as student performance on a posttest or student affect toward learning after instruction (Merrill et al., 1979). While students’ learning progress is unquestionably a critical criterion to validate measures of instructional quality, students’ test performance does not indicate which characteristics of classroom instruction may have caused the corresponding development (Boston, 2012). To capture the relationship between instruction and students’ development, it is essential to examine all major factors influencing student achievement, which may relate to students’ prior characteristics, school- or family-related context variables, or instruction itself (Junker et al., 2005). Meanwhile, input measures for instructional quality include school infrastructure, teaching and learning materials, or characteristics of teachers or instructors (Otara and Niyirora, 2016). This research tradition reflects, for example, the assumed importance of teacher characteristics for high-quality teaching (Blömeke et al., 2016). However, this perspective again does not directly capture the effects of classroom instruction, which makes it difficult to exploit the corresponding measures as described above.

Further research focused on the teaching process, that is, teacher behavior and observable characteristics of classroom instruction instead of output or input measures. Traditionally, this research focused on what are called “surface structures” today (Köller and Baumert, 2001). These surface structures are discrete and easily observable units of teaching activity, such as whether the teacher asks questions, whether the students give correct answers, or whether the teacher reinforces students. These behaviors are measured in terms of the presence or absence of specific actions, and require limited inference by the observer. This process–product paradigm produces a diverse list of effective teaching components that the researchers choose to observe and assume to influence teaching quality. Following Borich (1977), classroom assessment should include both process and product measures, since we cannot assume that stable teacher behavior always produces stable pupil outcomes, or that stable pupil outcomes are always attributable to stable teacher behaviors. Glass (1974) qualifies this statement by specifying that no characteristic of teaching should be incorporated into rating scales until research has established that it can be reliably observed and that it significantly relates to desired pupil outcomes.

Research during the past four decades has ceased to concentrate on discrete, directly observable teaching practices and teacher personality or teacher behavior in the classroom to explain learning progress (Creemers and Kyriakides, 2015). The research focus has shifted toward a more interactive Process–Mediation–Product Paradigm (Brophy and Good, 1986; Brophy, 2000, 2006). This paradigm emphasizes, models, and investigates the relationship between the teaching acts, techniques or strategies (processes) as orchestrated by the teacher, and students’ usage (mediation) of the learning opportunities entailed in this orchestration, which ultimately leads to students’ progress (product) (Praetorius et al., 2014). From this perspective, instructional quality is a construct that reflects those instructional practices of teachers that can be connected to students’ learning processes (mediation) in a theoretically plausible and empirically observable way (Blömeke et al., 2016).

Although most studies on instructional quality are grounded in this process–mediation–product paradigm (Jentsch and Schlesinger, 2017), available empirical results are rather weak and inconsistent for those characteristics of instruction that go beyond discrete, directly observable characteristics and require a substantial amount of inference by the observer, teachers, or students (Krauss and Bruckmaier, 2014). For example, Johnson and Johnson (1999) argued that the traditional classroom learning group, in which students are assigned to work together and accept that they have to do so, is different from the “real” cooperative learning group in which students work together to accomplish shared goals by discussing material with each other, helping one another understand it, and encouraging each other to work hard. In this sense, indicators for instructional quality can be categorized into surface structures (e.g., grouping students), which can be observed directly without much inference, and deep structures (e.g., encouraging cooperative learning), which require interpretations based on subject-specific or general models of teaching and learning (Schlesinger and Jentsch, 2016).

Evaluating deep structures usually requires more inference than evaluating surface structures, but deep-structure measures have often provided more valid results (e.g., on the role of high-level thinking) than related surface structure measures (e.g., students’ oral participation in class) (Schlesinger and Jentsch, 2016). Praetorius et al. (2014) argue that low-inference ratings cannot adequately assess the characteristics of the deep structure of instruction, for instance, cognitive activation. Therefore, most classroom observation tools dig into the deep structure of teaching (Lanahan et al., 2005; Praetorius et al., 2014) using high-inference coding systems. The presence of aspects from surface structures and the quality of the deep structures can vary almost independently from each other (Baumert et al., 2010). Nevertheless, Schlesinger and Jentsch (2016) claimed that an instrument containing items at every inference level is necessary, since in some cases the indicators for the surface structure are a necessary, yet not sufficient, indication of quality at the deep structure. For example, students’ mathematical high-level thinking can only occur when the lesson time is connected to the learning of mathematics (i.e., time on task).

First models, such as the basic dimension framework, exist to describe main (and hypothetically independent) factors of instructional quality at the deep structure. However, as argued above, conceptualization and operationalization of the construct in the literature nevertheless vary widely. Praetorius and Charalambous (2018) identified critical issues regarding the conceptualization of instructional quality: First, previous studies conceptualized the construct with various foci from individual learning and development to classroom discourse, from teacher knowledge over task potential to teacher classroom behavior. Second, for none of the reviewed frameworks was it entirely clear as to why certain conceptual indicators were included. Even in cases when explicit references were made to theories, these references were often rather brief “leaving the reader without a clear understanding of how the respective theory led to the conceptualization of a specific framework element” (Praetorius and Charalambous, 2018, p. 539). In short, a coherent theoretical understanding of the deep structure of instructional quality is not available. The main goal of this study is to approach this issue by reviewing how different studies conceptualize basic dimensions of instructional quality, and relate these to the way these dimensions are measured in research.

The way mathematics instructional quality is measured in empirical studies might thus provide a second, additional perspective on the construct. Research on instructional quality has drawn on a range of data sources to capture how teachers orchestrate classroom instruction. Until recently, the body of research measuring instructional quality relied predominantly on data from student ratings of instruction and teacher self-reports to tap a variety of different quality aspects (Wagner et al., 2016). Student ratings are occasionally criticized as being rather global, not specific to different dimensions of teacher behavior, and easily influenced by students’ personal preferences (De Jong and Westerhof, 2001). Teacher reports are sometimes considered to be biased by self-serving strategies or perceived teaching ideals. Some scholars have used teacher surveys or teacher lesson logs to reconstruct patterns of curriculum coverage and the way this curriculum is delivered, or have drawn on interviews to gather information about teachers’ instructional practice (Ball and Rowan, 2004). Other studies have focused on teaching documents, such as tasks (e.g., Baumert et al., 2010) or textbooks, to derive indicators of instructional quality (e.g., Van Den Ham and Heinze, 2018; Sievert et al., 2019, 2021a, 2021b), although this method does not yield accurate information about the nature of interactions between teachers and students and interpretations of reform practices (Mayer, 1999). Likewise, analyses of student work may provide crucial information about students’ use of learning opportunities provided by the teacher and their performance. However, these documents reflect not only the quality of instruction, as orchestrated by the teacher, but also the characteristics of the group of students, for example, in terms of prior achievement or motivation.

In light of this criticism, research has turned toward more direct assessments of instructional quality, such as classroom observations. Such observation has involved detailed field notes of teachers’ and students’ activities, videotaping, or the use of more structured checklists or codes to reduce the data into categories of construct(s) underlying consistent high-quality instruction, such as students’ opportunities to learn, their engagement in learning, and teachers’ interactions with students over instructional tasks (Ball and Rowan, 2004). Even though classroom observations are time-consuming, expensive, and prone to distortions by rater biases, they are considered to be among the most promising ways to assess instructional quality (Taut and Rakoczy, 2016). Capturing how teachers orchestrate students’ work and learning in classrooms, and the process of teaching and learning, may offer an external and, in the best case, objective perspective on the quality of instruction.

Over the past decade, a wide range of observation instruments have been developed to assess the classroom environment globally or examine specific aspects of the classroom setting. These instruments vary in the facets of instructional quality addressed, in their specificity to a single subject such as mathematics, and in the bandwidth of grade levels covered (Praetorius et al., 2014). The Classroom Assessment Scoring System (CLASS; Pianta et al., 2008) is a standardized observation measure that assesses global classroom quality across grades and across content areas, from preschool to high school. Hamre and Pianta (2007) proposed a latent structure for organizing meaningful patterns of teacher–child interaction, which in turn are the basis for the three dimensions of interaction: Emotional Support, Classroom Organization, and Instructional Support. Within each of its three broad domains is a set of more identifiable and scalable dimensions of classroom interactions that are presumed to be important to students’ academic and social development. For example, the domain of Emotional Support includes three dimensions: positive classroom climate, teacher sensitivity, and regard for student perspectives. Within each of these dimensions, a set of behavioral indicators reflective of that dimension is posited. For instance, the positive classroom climate dimension includes observable behavioral indicators such as the frequency and quality of teacher affective communications with students (smiles, positive verbal feedback) as well as the degree to which students appear to enjoy spending time with one another.
The Elementary Mathematics Classroom Observation Form (EMCOM; Thompson and Davis, 2014) was designed to observe classroom strategies and activities with a specific focus on the teaching and learning of primary mathematics, with quality dimensions such as (a) calculation and math concepts, (b) student engagement, (c) instruction, (d) technology activities, and (e) materials and manipulative activities.

In sum, there is a growing focus on observation as a useful approach to capturing the quality of classrooms. The available observation tools select different sets of quality dimensions based on their respective focus. However, some of these quality dimensions show partial overlap between observation tools, for example, instruction in EMCOM, classroom talk in the Instructional Quality Assessment (IQA; Matsumura et al., 2008), and the two talk dimensions in the Flanders Interaction Analysis Category (FIAC; Amatari, 2015). Therefore, the question arises as to what extent these observation instruments reflect a joint understanding of instructional quality, as it is, for instance, proposed by Klieme et al.’s (2009) framework. Moreover, this perspective, as well as perspectives drawing on other data sources, requires that the conceptual definitions and their entailed conceptual indicators are described in terms of operational indicators, which can be evaluated objectively, reliably, and validly by the rater (e.g., trained research staff, student, teacher) based on the available data (e.g., videos, independent classroom observation, or own experience of the lesson). This leads to the question as to what extent the operational indicators used in the literature actually reflect the conceptual definitions of basic dimensions of instructional quality.

Curby et al. (2011) argued that without consistent and appropriate conceptualization of a construct, attempts to operationalize, measure, and manipulate instructional quality by professional development are doomed to failure. In the past, there was a strong emphasis on the measurement of instructional quality rather than the conceptualization of the multifaceted construct (Seidel and Shavelson, 2007). While numerous attempts have been made to conceptualize instructional quality, the corresponding frameworks vary widely.

Only the few frameworks mentioned above have explicitly justified their theoretical structure (Praetorius and Charalambous, 2018). One of them is the German framework of the Three Basic Dimensions (TBD) as mentioned above, where the three-part structure is explained referring to the three generic goals of classroom teaching and learning distinguished by Diederich and Tenorth (1997). According to Lipowsky et al. (2009), these basic dimensions are latent variables that are related but not identical to specific instructional practices. Praetorius et al. (2018) identified a few studies that conducted confirmatory or exploratory factor analyses to examine the underlying factor structure of the three-dimensional instrument (Kunter et al., 2005; Lipowsky et al., 2009; Kunter and Voss, 2013; Fauth et al., 2014; Künsting et al., 2016; Taut and Rakoczy, 2016). Lipowsky et al. (2009) found that 10 video rating dimensions of instructional quality could be subsumed under the three-factor structure. Fauth et al. (2014) found the same three-dimensional structure in elementary school students’ ratings on instructional quality. Kunter et al. (2007) combined three sources of information (student reports, teachers’ self-reports, and expert ratings of tasks given to students by teachers) to examine instructional quality and confirmed its three-dimensional structure.

While most of the identified empirical studies support the three-factorial separation of the basic dimensions, two other studies identified more than three dimensions in their analyses (Kunter et al., 2005; Taut and Rakoczy, 2016). Rakoczy (2008) found four factors in a factor analysis based on the data used by Lipowsky et al. (2009) but using a larger set of video rating dimensions. The factor called student-oriented climate was divided into one factor for organizational aspects (provision of choice, individualization) and one for social aspects (teacher–student relationship). Taut and Rakoczy (2016) further indicated that the empirical structure of the observation instrument lacks correspondence with its original normative model but mirrors a five-factor model based on recent literature, with an extension of an assessment and feedback factor, as well as two different aspects of student orientation. Furthermore, Praetorius and Charalambous’s (2018) analysis of observation instruments found operational indicators that could not be subsumed under the three basic dimensions. Therefore, the authors of that paper proposed four additional dimensions (Content Selection and Presentation, Practicing, Assessment, and Cutting across Instructional Aspects aiming to maximize student learning).

In sum, the strong theoretical basis speaks for taking the three basic dimensions as a first structuring framework, when analyzing conceptual and operational indicators from the literature on instructional quality in mathematics. Most extensions proposed in the past were based on existing observation frameworks (or data generated with these frameworks), and resulted either in splitting existing dimensions into sub-dimensions, or adding further dimensions. However, a broader consideration of instructional quality in the literature, including but not limited to observation instruments and taking into account conceptual as well as operational indicators, may provide a more accurate picture of how instructional quality is conceptualized in current research on mathematics instruction.

One may ask whether frameworks of instructional quality that are specific to a single subject such as mathematics are necessary. While the principle of Occam’s razor calls for prioritizing generic frameworks when more specific extensions do not add to understanding, points have indeed been made that all three basic dimensions may contain subject-specific indicators in some sense (e.g., Praetorius et al., 2020). It remains unclear in many papers, however, what constitutes subject-specificity of an instructional quality framework.

First, one may ask whether an indicator of instructional quality can be described and applied validly without referring to the domain (e.g., using visualizations; example adapted from Dreher and Leuders, 2021), whether it must be specified to the subject (e.g., using representations that support mathematical learning), or even to the specific learning content (e.g., using representations that appropriately represent the structure of algebraic expressions). Several authors argue that many generic indicators need to be specified in a subject- or content-specific way to be measured validly. Lipowsky et al. (2009) followed exactly this approach and could show that video-based coding of instructional quality, which was very specific to the content at hand, contributed to the explanation of student learning beyond generic dimensions of instructional quality. Therefore, from the perspective of applicability, it is an open question as to what extent indicators of instructional quality need to be specified to a single subject or content.

Second, and related to this, one may consider to what extent the same indicators of instructional quality dimensions are considered relevant by instructional quality experts from different subjects. In Praetorius et al. (2020), experts in science, physical, and history education jointly compared indicators for each dimension of the Praetorius and Charalambous (2018) framework between the three subjects. They found indicators that were only considered relevant for one or two of the three subjects for five of the seven dimensions (including classroom management, but not the dimensions related to exercises and formative assessment).

Third, another perspective connects the subject-specificity of instructional quality to the extent to which subject-specific knowledge is necessary to judge or rate it (Wüsten, 2010; Dorfner et al., 2017; Heinitz and Nehring, 2020; Lindmeier and Heinze, 2020; Dreher and Leuders, 2021). Some studies have shown that at least some aspects of instructional quality are related to teachers’ subject-specific professional knowledge (e.g., Baumert et al., 2010; Jentsch et al., 2021). We do not know of any studies on the effects of raters’ subject-specific knowledge on ratings of instructional quality. Studying the necessity of subject-specific knowledge for judging or enacting certain indicators of instructional quality is a promising but challenging desideratum for future research.

Finally, how general or specific an indicator of instructional quality is may also be judged empirically by studying whether it is equally predictive of student learning in different subjects or, at a more fine-grained level, for different contents or learning goals (cf., Lindmeier and Heinze, 2020; Dreher and Leuders, 2021), provided that the indicator is sufficiently broadly applicable.

This review primarily aims at forming a basis for judging the relevance of different indicators for one specific subject, mathematics. This approach allows describing what is discussed in the literature for this specific subject, retaining potentially specific aspects, and preventing premature abstraction into generic dimensions. With this approach, one may not necessarily expect (though it is possible) to identify dimensions or indicators that are specific to a single subject. However, one may expect to find patterns of indicators and dimensions that are characteristic of a specific subject and deviate from corresponding patterns for other subjects. It must be noted, however, that analyzing a single subject restricts the possibility of identifying dimensions as clearly subject-specific. What can be done is to provide first insights by analyzing subject-specificity regarding applicability, relevance, knowledge, or predictivity (as described above) of the different dimensions and indicators for mathematics based on the literature.

In summary, a coherent analysis of conceptual and operational indicators used to describe instructional quality in mathematics is not currently available. However, such an analysis would be of particular importance to systematize the wide range of existing conceptualizations and measurement instruments into a coherent structure, and to contrast the emerging conceptualization for mathematics with similar conceptualizations in other subjects.

How the quality of mathematics instruction as a multifaceted construct is conceptualized, measured, and how these measures are validated in terms of their content, is of considerable importance for mathematics education. In this contribution, we examine conceptualizations and measurements of instructional quality under the perspective of Klieme et al.’s (2009) basic dimensions framework. As noted above, conceptualizations of the basic dimensions in this framework vary in the literature.

Therefore, one of the goals is to systematize descriptions of the basic dimensions from the literature into a clear and concise conceptual definition (conceptualization). In this vein, our first goal is to collect, for each basic dimension, those observable characteristics of classroom instruction that are usually used to characterize the dimension in theoretical terms (conceptual indicators, yellow ellipse in Figure 1). The starting point for this is the conceptual indicators given in Klieme et al.’s (2009) framework for each basic dimension (red area in Figure 1), but other conceptual indicators may arise from the literature. A second goal is to describe which observable characteristics of classroom instruction are captured by measurement dimensions that are intended to assess the basic dimensions (operational indicators, blue ellipse in Figure 1). Our third goal is to compare the conceptual indicators and operational indicators assigned to each of the three basic dimensions. To capture the overall state of the discussion, we disregard whether the conceptual indicators and operational indicators occur in the same or in different manuscripts. Optimally, every conceptual indicator that is used in a conceptual definition of a basic dimension corresponds to an operational indicator that is assessed by some measurement dimension. Conversely, every operational indicator assessed by any measurement dimension should reflect a conceptual indicator that occurs in a conceptual definition of the basic dimension (overlap of yellow and blue region). We assume that the conceptual indicators given in the original framework (Klieme et al., 2009) fall into this region. Other indicators in the overlapping region outside the red area might be candidates for extending the original definition. We also assume that the overlap between the conceptual indicators and measurement dimensions is not perfect. This allows the study of construct-irrelevant aspects of measurement dimensions (parts of the blue ellipse outside the yellow ellipse), and of construct underrepresentation in the measurement dimensions in the literature (parts of the yellow ellipse outside the blue ellipse).
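
The comparison of the two ellipses can be read as elementary set operations. The following minimal Python sketch illustrates this reading; the indicator labels and the function name `compare_indicators` are hypothetical placeholders chosen for illustration, not results or tools of this review.

```python
# Hypothetical indicator labels for one basic dimension (illustration only).
conceptual = {"clear rules", "time on task", "monitoring", "dealing with disruptions"}
operational = {"clear rules", "time on task", "dealing with disruptions", "use of technology"}


def compare_indicators(conceptual, operational):
    """Split indicators into the three regions of the two overlapping ellipses."""
    return {
        # Overlap of yellow and blue: shared understanding of the dimension.
        "shared": conceptual & operational,
        # Yellow without blue: construct underrepresentation.
        "underrepresented": conceptual - operational,
        # Blue without yellow: construct-irrelevant aspects.
        "construct_irrelevant": operational - conceptual,
    }


regions = compare_indicators(conceptual, operational)
for name, items in regions.items():
    print(name, sorted(items))
```

In this toy example, "monitoring" would count as a blind spot of the measurement instruments, whereas "use of technology" would be measured without being part of the conceptual definition.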

Based on the distinction between conceptual definitions and measurement dimensions, and the distinction between conceptual indicators and operational indicators, the purpose of this review is to describe the commonalities and differences between the conceptualizations and the measurement of the three basic dimensions of instructional quality in mathematics. Accordingly, we conducted a systematic analysis of the literature on instructional quality in primary and secondary mathematics education, focusing on the following guiding questions:

1) Conceptual definitions and conceptual indicators for basic dimensions

a) Which conceptual indicators are used in the literature to conceptualize the three basic dimensions in instructional quality from a theoretical perspective?

b) How much variability can be found in this theoretical conceptualization of the basic dimensions in the literature?

2) Measurement dimensions and operational indicators for basic dimensions

a) To what extent is it possible to assign the measurement dimensions found in the literature to one of the three basic dimensions of Klieme et al.’s framework based on the operational indicators used to assess instructional quality?

b) To what extent do the descriptions of these operational indicators define subject-specific aspects of instructional quality?

3) How can the conceptual (from Q1) and operational indicators (from Q2) be synthesized to sharpen and extend the basic dimensions framework?

a) Which characteristics of classroom instruction occur as conceptual indicators as well as operational indicators for each basic dimension of instructional quality (overlapping area of yellow and blue ellipses)? We assume that this overlap characterizes a common understanding of the corresponding basic dimension of instructional quality.

b) To what extent are the conceptual indicators (from Q1) completely covered by the identified operational indicators (from Q2)? This question refers to construct underrepresentation, that is, “blind spots” in the empirical research on the basic dimensions (yellow, without blue part).

c) To what extent do the operational indicators (from Q2), which are used to assess the basic dimensions, correspond to conceptual indicators (from Q1) for the same basic dimension? This question refers to the content validity of the measurement dimensions found in the literature. Measurement dimensions, which are subsumed under a basic dimension in empirical research, but address conceptual indicators that are not connected to conceptual definitions of the basic dimension (blue, without yellow), run counter to the validity of the measurement dimension.

d) How can the operational indicators (from Q2) belonging to measurement dimensions, which cannot be assigned to basic dimensions, be grouped into new factors of instructional quality?

This study was undertaken as a systematic literature review based on the PRISMA guidelines (Moher et al., 2009; Figure 2). The PRISMA statement consists of four steps: identification, screening, eligibility, and inclusion. Identification is the process of enriching the main keywords in several steps so that a wide range of articles can be retrieved from the database. The second phase is screening, in which articles retrieved from the database are included or excluded based on criteria defined by the authors. Here, excluding articles means eliminating those that are not relevant because of their type. The third phase is eligibility: all articles are examined by reading through the title, abstract, method, results, and discussion to ensure that they meet the inclusion criteria and align with the current research objectives. The final phase is inclusion, in which the remaining articles fulfill the requirements to be analyzed.

The Web of Science (all databases) was last searched, mainly by one reviewer, on October 25, 2020. The search strategy consisted of three groups of search terms combined with the Boolean operator “OR,” representing the following components: (1) “Mathematics AND Instructional Quality,” (2) “Mathematics AND Classroom Quality,” and (3) “Mathematics AND Teaching Quality.” Title and abstract were used as search fields. Articles in languages other than English were not included. After eliminating duplicates, n = 1,841 publications were in the initial database.
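
The structure of this search strategy can be sketched as follows. The helper `build_query` and the quoting of phrases are assumptions made for illustration; the exact syntax entered into the database interface is not reported here.

```python
def build_query(subject, quality_terms):
    """Combine one subject term with several quality terms: AND within a group, OR between groups."""
    groups = [f'("{subject}" AND "{term}")' for term in quality_terms]
    return " OR ".join(groups)


# The three components named in the text; phrase quoting is an assumption.
query = build_query(
    "Mathematics",
    ["Instructional Quality", "Classroom Quality", "Teaching Quality"],
)
print(query)
```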

Studies with a focus on general issues relating to mathematics instruction, such as School Management, Education Policy, Textbooks, New Technology, Teachers’ Professional Development, and Cultural and International Comparisons (n = 413), were excluded. We also excluded studies that specialized in instruction in University, College, and Higher Education (n = 384), that focused on learners in Kindergarten, Preschool, Early Childhood, and the Head Start Program (n = 112), or that focused on learners with disabilities and other special needs (n = 61). Furthermore, studies that primarily investigated teaching in Physics, Science, general STEM instruction, and other disciplines (n = 356) were not included. Studies that assessed instructional quality by Student Performance, Motivation, Competences, Interest, Self-concept, Peer Interaction, or other measures that did not directly correspond to actual classroom instruction (n = 200) were also excluded. Finally, abstracts were selected for retrieval of the paper only if the publication was a peer-reviewed journal article, a conference paper, or a book chapter.

The full text could not be obtained for 48 of the remaining 341 articles. Therefore, 237 articles were considered in detail for eligibility based on titles, abstract, method, result, and discussion to ensure they met the inclusion criteria. The full texts were coded independently by two reviewers, who marked each article as “included” or “excluded.” For excluded articles, a reason for the exclusion was documented. The first author selected relevant studies by judging the title, abstract, and full text against the criteria for inclusion and exclusion. In case of doubt, the second author independently judged these papers. Subsequently, two authors discussed the eligibility of these publications until consensus was reached. Accordingly, another 125 publications were excluded, leaving 112 publications (95 journal articles and 17 conference papers) from 2006 to 2020 for the analysis of operational indicators.

To analyze conceptualizations of the Basic Dimensions proposed by Klieme et al. (2009) used in the literature, we selected 10 of the remaining publications for each basic dimension (Classroom Management, Student Support, and Cognitive Activation). Since the keywords for the conceptual definitions of the three basic dimensions, such as Cognitive Activation, may not appear in the title or abstract, we selected those 10 articles from the existing pool of full-text articles that were included for the analysis of operational indicators. The study applied a systematic approach to collect conceptual definitions based on three inclusion criteria: (a) conceptualizing all the three basic dimensions; (b) specifying the multiple indicators that can be used to measure the basic dimensions and the different aspects of the basic dimensions; (c) citing other references to affirm the validity of the conceptual definitions.

A qualitative content analysis was conducted to identify the conceptual indicators that constitute the conceptual definitions given in the texts selected for each basic dimension. To derive these conceptual indicators from the definitions, we applied a text mining method proposed by Kaur and Gupta (2010). Conceptual indicators were extracted by identifying “keywords,” that is, a small set of words or key phrases that capture the crucial information in the conceptual definitions.

Accordingly, the conceptual definitions were preprocessed manually in the following steps: (a) stopword elimination: common words that carry no semantic content and add no relevant information to the task (e.g., “the,” “a”) were eliminated; (b) stemming: semantically similar terms, such as “Dealing with disruptions,” “Coping with disruptions,” and “Managing disruption,” were considered equivalent, and the redundant wordings were therefore replaced by a single term. In this case, we retained only the verb “Deal with.” However, semantically related words from the target educational field, such as disruption, misbehavior, and disciplinary conflict, were kept separate and clustered as a group of candidate indicators. Consequently, all semantically similar words relating to the conceptual element were combined and counted as one indicator, “Dealing with disruptions/misbehavior/disciplinary conflict.” Stopword removal and semantic stemming served to convert the textual data to an appropriate format and size for further qualitative analysis.
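
Although these steps were carried out manually, their logic can be illustrated with a short Python sketch. The stopword list and the lookup table `CANONICAL` are hypothetical and stand in for the coders' judgment about which wordings are equivalent; they are not the procedure of Kaur and Gupta (2010).

```python
from collections import Counter

# Hypothetical stopword list (illustration only).
STOPWORDS = {"the", "a", "an", "of"}

# Hypothetical lookup table mapping equivalent wordings to one canonical term.
CANONICAL = {
    "coping": "dealing",
    "managing": "dealing with",
    "disruptions": "disruption",
}


def normalize(phrase):
    """Lowercase a phrase, drop stopwords, and replace equivalent words by one term."""
    words = [w for w in phrase.lower().split() if w not in STOPWORDS]
    return " ".join(CANONICAL.get(w, w) for w in words)


# The three example wordings from the text collapse into a single indicator.
phrases = ["Dealing with disruptions", "Coping with disruptions", "Managing disruption"]
counts = Counter(normalize(p) for p in phrases)
print(counts)
```

A lookup table is used instead of an algorithmic stemmer because the equivalences described above are semantic rather than morphological.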

We used a qualitative method that combines deductive and inductive coding to analyze the measurement dimensions used in empirical studies on instructional quality. From a deductive standpoint, our analysis tests the German framework of three basic dimensions and is hence anchored in assigning the measurement dimensions to the three basic dimensions. From an inductive standpoint, no previous framework is comprehensive enough to code all operational indicators of the measurement dimensions. Although the findings build on the German framework of three basic dimensions and are also influenced by conceptual indicators outlined by previous researchers, they arise directly from the analysis of the raw data, not from a priori expectations or a predefined model. The combination of these approaches allows us to (a) condense raw textual measurement dimensions into a brief, summary format; (b) establish clear links between the conceptual indicators and the summary operational indicators derived from the empirical evidence on measurement dimensions; and (c) develop a framework of the underlying operational indicators going beyond the existing German framework of Three Basic Dimensions.

To support full-text analysis, the computer-assisted qualitative data analysis software MAXQDA was used (Kuckartz and Rädiker, 2019). The code system was developed iteratively based on a subsample of the texts, adding further articles after each revision. Reliability results across the segmenting, deductive coding, and inductive coding phases can be found in Table 1.

As units of analysis (Strijbos et al., 2006), we extracted measurement dimensions from the publications. Measurement dimensions are single, empirically measurable dimensions of instructional quality mentioned in the manuscript text under consideration. As mentioned above, measurement dimensions are structured hierarchically (Praetorius and Charalambous, 2018). At the lowest hierarchy level, a single coding rubric for one observable classroom characteristic or a single questionnaire item may form a measurement dimension. In a manuscript, authors may combine or aggregate several measurement dimensions into a higher-level (parent) measurement dimension (e.g., different rubrics or items referring to higher-order thinking), and several of these higher-level measurement dimensions may again be collected into even higher-level parents (e.g., cognitive activation).
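
This hierarchy can be pictured as a simple tree in which every measurement dimension optionally points to its parent. The sketch below is only an illustration; the class `MeasurementDimension`, its fields, and the example item are hypothetical and do not reproduce the coding scheme used in MAXQDA.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MeasurementDimension:
    """One measurement dimension with an optional definition and parent."""
    name: str
    definition: Optional[str] = None
    parent: Optional["MeasurementDimension"] = None

    def ancestors(self):
        """Return the names of all parents, from the closest to the top level."""
        names = []
        node = self.parent
        while node is not None:
            names.append(node.name)
            node = node.parent
        return names


# Hypothetical three-level example following the levels described in the text.
top = MeasurementDimension("cognitive activation")
mid = MeasurementDimension("higher-order thinking", parent=top)
item = MeasurementDimension(
    "hypothetical questionnaire item",
    definition="Illustrative definition text.",
    parent=mid,
)
print(item.ancestors())
```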

For segmenting, the name of each measurement dimension was marked in each manuscript. Moreover, the following data were marked for each measurement dimension: its operational definition and its parent measurement dimension (if there was one). Two coders initially segmented five randomly selected manuscripts to obtain a joint understanding of the measurement dimensions that would be identified as the unit of analysis. The level of agreement between the coders was calculated as Percent Agreement. According to House et al. (1981), a value of 70% is necessary, 80% is adequate, and 90% is good. During the training phase, the percentage of agreement on segmenting between the two raters was 84% in the first training round (5 manuscripts) and 86% in the second round (10 manuscripts). Both reviewers segmented all remaining articles. Disagreements were resolved by group discussion between the authors, and by jointly reviewing the articles until consensus was reached.
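
As an illustration of the agreement measure, the following sketch computes Percent Agreement for two coders and maps it to the benchmarks cited above. The function names and the example decisions (1 = text span marked as a measurement dimension, 0 = not marked) are hypothetical and are not the data of this review.

```python
def percent_agreement(coder_a, coder_b):
    """Share of units (in percent) on which both coders made the same decision."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must rate the same non-empty set of units.")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


def interpret(value):
    """Benchmarks as cited in the text from House et al. (1981)."""
    if value >= 90:
        return "good"
    if value >= 80:
        return "adequate"
    if value >= 70:
        return "necessary minimum"
    return "below the necessary minimum"


# Hypothetical segmenting decisions for ten candidate text spans.
coder_a = [1, 1, 0, 1, 0, 1, 1, 0, 1, 1]
coder_b = [1, 1, 0, 0, 0, 1, 1, 1, 1, 1]
agreement = percent_agreement(coder_a, coder_b)
print(agreement, interpret(agreement))
```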

We assigned each measurement dimension to one of the basic dimensions proposed by Klieme et al. (2009): Student Support, Cognitive Activation, and Classroom Management. If none of them was found to fit, the measurement dimension would be labeled as Not Assignable. The decision was based on the name and operational definition of the measurement dimension. When there was still doubt, we also took into account the parent measurement dimensions to which it was assigned in the corresponding article.

Two human coders were trained in the spring of 2020 and introduced to the project, the coding manual, and the unit of analysis. Any comprehension questions were resolved in this context as well. In the four coding phases, both coders coded about 30 randomly selected measurement dimensions independently. After the first two coding phases, interrater agreement was considered suboptimal, so further training and clarifying discussions were implemented. In the fourth coding phase, the two raters reached 77% agreement and a Cohen’s Kappa of 0.68, which was considered sufficient. Each of the two coders then analyzed a disjoint subset of the articles. One more phase of double coding was conducted to check whether the two coders still achieved an acceptable level of interrater agreement.
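
Cohen’s Kappa corrects the observed agreement for the agreement expected by chance, given how often each coder uses each category. A minimal sketch of the standard formula is given below; the function name and the eight example codes (SS, CA, CM, and NA for Not Assignable) are hypothetical and do not reproduce the coding data of this review.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders assigning nominal categories to the same units."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must rate the same non-empty set of units.")
    n = len(coder_a)
    # Observed proportion of identical assignments.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement from the coders' marginal category frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        raise ValueError("Kappa is undefined when chance agreement equals 1.")
    return (observed - expected) / (1 - expected)


# Hypothetical assignments of eight measurement dimensions.
coder_a = ["CM", "CM", "SS", "SS", "CA", "CA", "NA", "NA"]
coder_b = ["CM", "CM", "SS", "CA", "CA", "CA", "NA", "SS"]
kappa = cohens_kappa(coder_a, coder_b)
print(round(kappa, 2))
```

In this toy example, the coders agree on six of eight units (75%), chance agreement is 25%, and Kappa is therefore about 0.67.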

The general inductive approach was used to analyze the measurement dimensions to identify operational indicators to measure instructional quality. Since the unit of analysis (measurement dimensions) was identified, the inductive coding began with close and multiple readings of measurement dimensions and consideration of the operational indicators inherent in the dimensions.

Two coders then created a label (e.g., a word or short phrase) for an emerging indicator to which the measurement dimension was assigned. The label conveyed the core theme or essence of a measurement dimension. Emerging indicators were developed by studying the measurement dimensions repeatedly and considering corresponding conceptual indicators and how these fit with the German Framework of Three Basic Dimensions. The principles of the inductive coding included: (a) the label for the upper level of operational indicators referred to the general basic dimensions (e.g., Classroom Management, Cognitive Activation); (b) the label for a lower-level or specific indicator could be one of the sub-dimensions of the three-dimensional framework (see Appendix 1), if the sub-dimensions outlined in previous works fully represented the underlying meaning of the operational indicators (e.g., Challenging Tasks and Questions, Effective Time Use/Time on Task); (c) some indicators could be combined or linked under a superordinate indicator when the underlying meanings were closely related, according to the outlined conceptual indicators. For example, Behavior Management referred to all classroom activities to identify/strengthen desirable student behaviors, to prevent disciplinary conflicts/disruptions/undesirable behaviors, and to deal with disruptions/misbehavior/disciplinary conflict; (d) if an operational indicator was closely associated with one of the conceptual indicators outlined before, but was not explicitly described by the conceptual definition, we gave it a label and integrated the new label into the existing framework of the basic dimensions. For example, Instructional Design and Plan was assumed to be closely associated with Lesson Structure, Lesson Procedure, and Transitions between Lesson Segments. Therefore, we linked all these closely associated indicators under the superordinate indicator Instructional Structure; (e) if a measurement dimension was not closely associated with any conceptual indicator, but appeared quite often in the empirical instruments, we assigned it to a unified label, such as Technology, Assessment, Content, and Presentation. The primary purpose of the inductive approach is to allow research findings to emerge from the frequent, dominant, or significant themes inherent in the raw data. This process led to broader operational indicators that might neither be embedded in any basic dimension of the German framework nor be described in their conceptual definitions.

In inductive coding, the coded indicators were continuously revised and refined. During the process, coders searched for new insights, including contradictory points of view, and consequently gained joint understanding of the coding system. If new codes emerged, the coding frame was changed according to the new structure. Finally, a hierarchical framework of specific operational indicators was developed inductively. We identified 27 operational indicators on the second level and 15 on the third level corresponding to one of the basic dimensions, which were deductively coded as the first level of the hierarchical framework. A complete list of these operational indicators can be found in Table 2.

We analyzed the conceptual indicators derived from the conceptual definitions given in the 10 selected texts for each basic dimension. In this section, we present the conceptual indicators across all texts (Q1a), as well as results on the variability in conceptual definitions (Q1b).

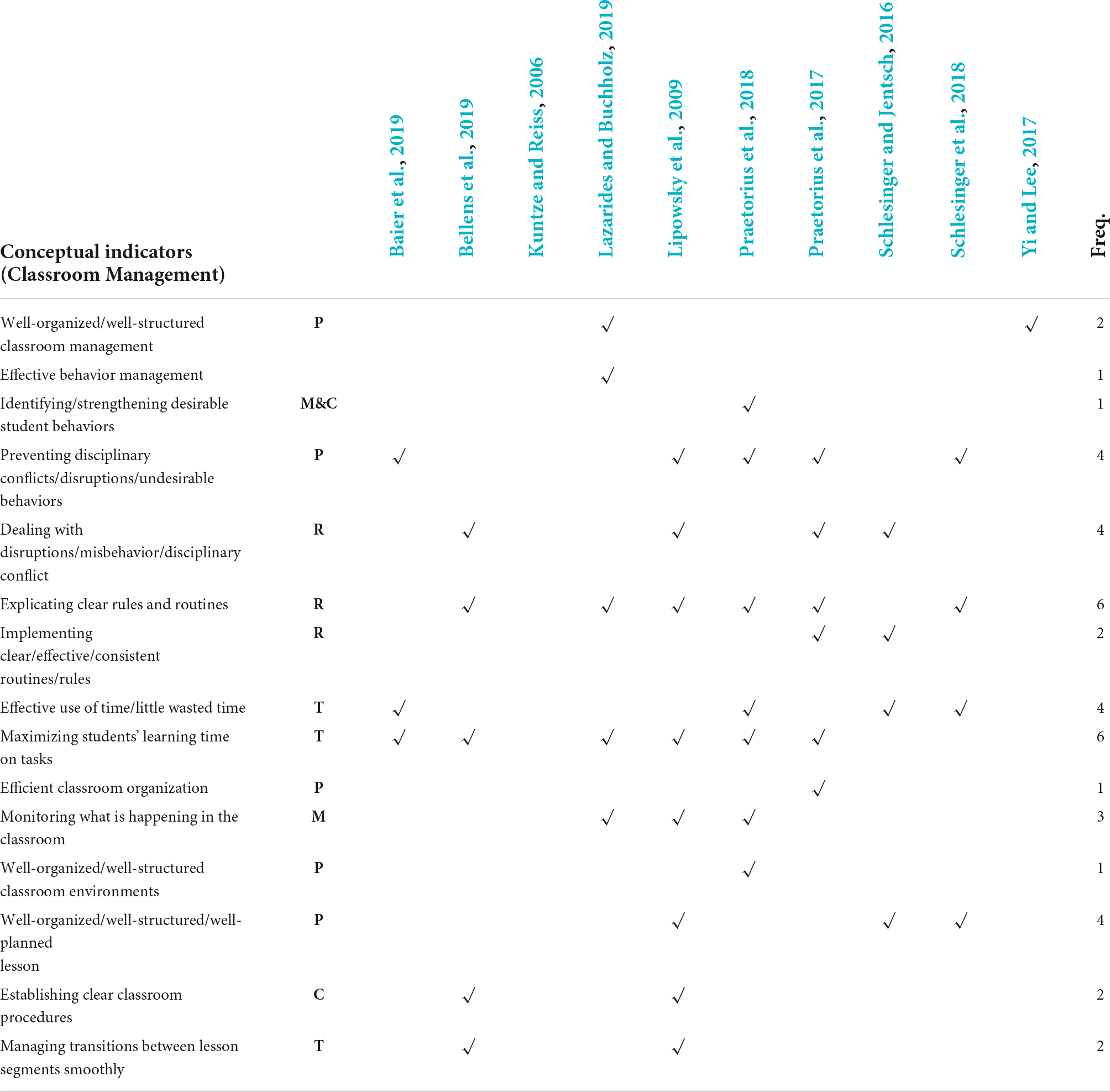

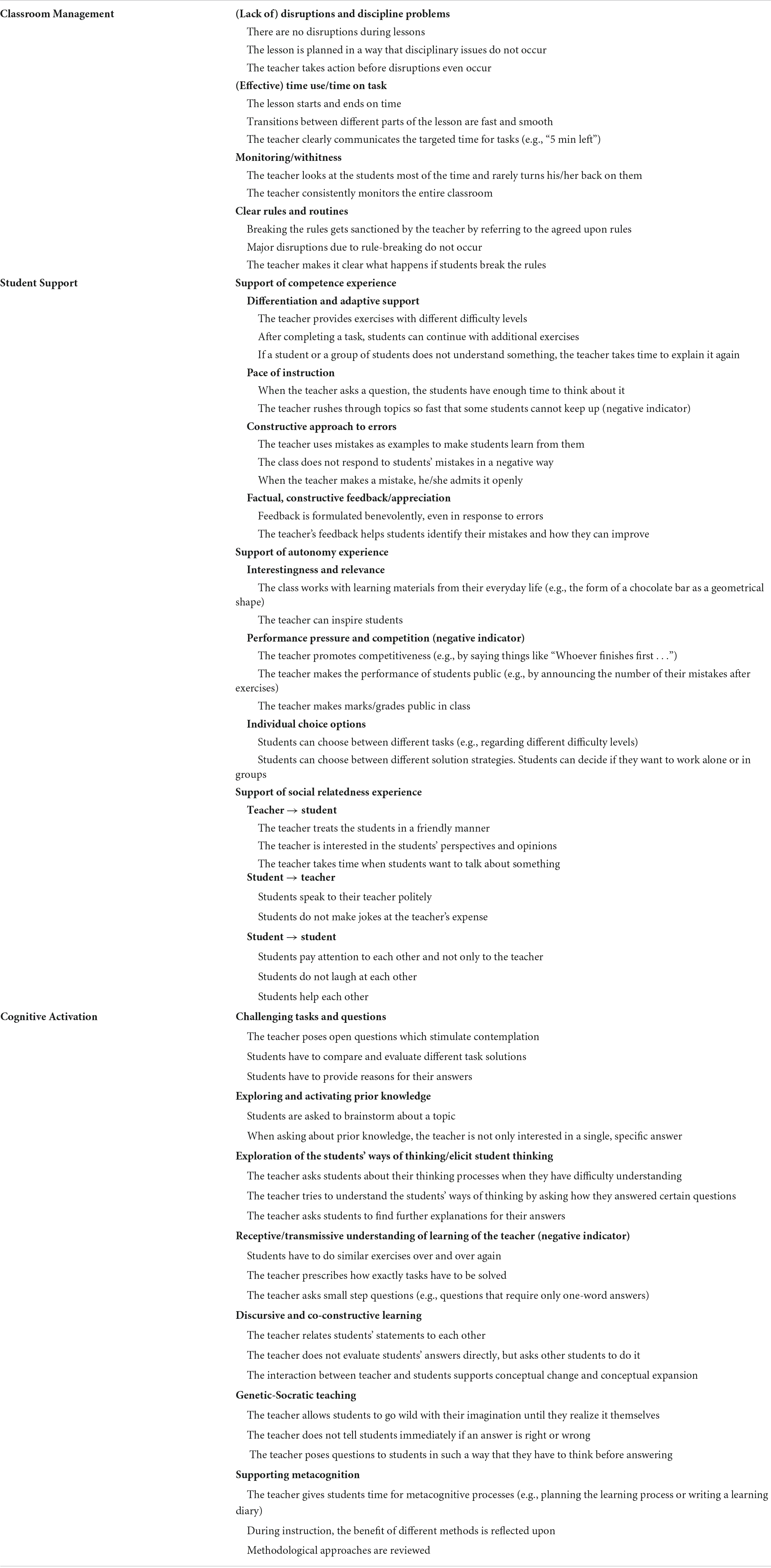

According to Klieme et al. (2009, p. 141), Classroom Management requires teachers to “establish clear rules and procedures, manage transitions between lesson segments smoothly, keep track of students’ work, plan and organize their lessons well, manage minor disciplinary problems and disruptions, stop inappropriate behavior, and maintain a whole-group focus.” Going beyond the original definition, Table 3 shows the conceptual indicators identified in the conceptual definitions of the basic dimension Classroom Management. To further systematize the conceptual indicators, we divided them into a set of sub-dimensions. The same sub-dimensions of the three-dimensional framework (see Appendix 1) were found within the conceptual definitions: disruptions and discipline problems (D), effective time use/time on task (T), monitoring/withitness (M), and clear rules and routines (R). Beyond this classification, the indicator referring to planning instruction (P) emerged as an additional sub-dimension. The resulting classification for analyzing the conceptual indicators is presented in the second column of Table 3.

Table 3. Overview of conceptual indicators of Classroom Management described by various conceptual definitions in previous studies.

The most frequent conceptual indicators used for classroom management are explicating clear rules and routines, dealing with and preventing disruptions/misbehavior/disciplinary conflict (taken together here), and maximizing students’ learning time on task.

Some conceptual indicators are described in more abstract terms, and combine other conceptual indicators. For example, Lazarides and Buchholz (2019) conceptualized classroom management as a form of effective behavior management in class. Meanwhile, Lipowsky et al. (2009) and Praetorius et al. (2018) claimed that there are various ways to manage behavior in the classroom: identifying and strengthening desirable student behaviors, preventing disruptions and minimizing the likelihood of disciplinary problems, and dealing with misbehavior, disruptions, and conflicts.

However, some terms are used in the conceptual definitions, which are not straightforward to compare. For example, Schlesinger et al. (2018) argued that structured and well-organized lessons are evidence-based characteristics of effective classroom management. Well-organized and well-structured classroom environments have been identified by Praetorius et al. (2018) as one of the core components of successful instruction. More abstractly, Lazarides and Buchholz (2019) mention well-organized classroom management. To what extent well-organized lessons and well-organized classroom environments should be taken as the same thing or point to different aspects of instruction is not clarified in the literature.

Prior studies have mentioned aspects of classroom management, which are specific to subjects (other than mathematics; Praetorius et al., 2020). In line with the generic nature of the three basic dimensions framework, however, the reviewed conceptual definitions of classroom management did not consider aspects that could be identified as specific to mathematics education.

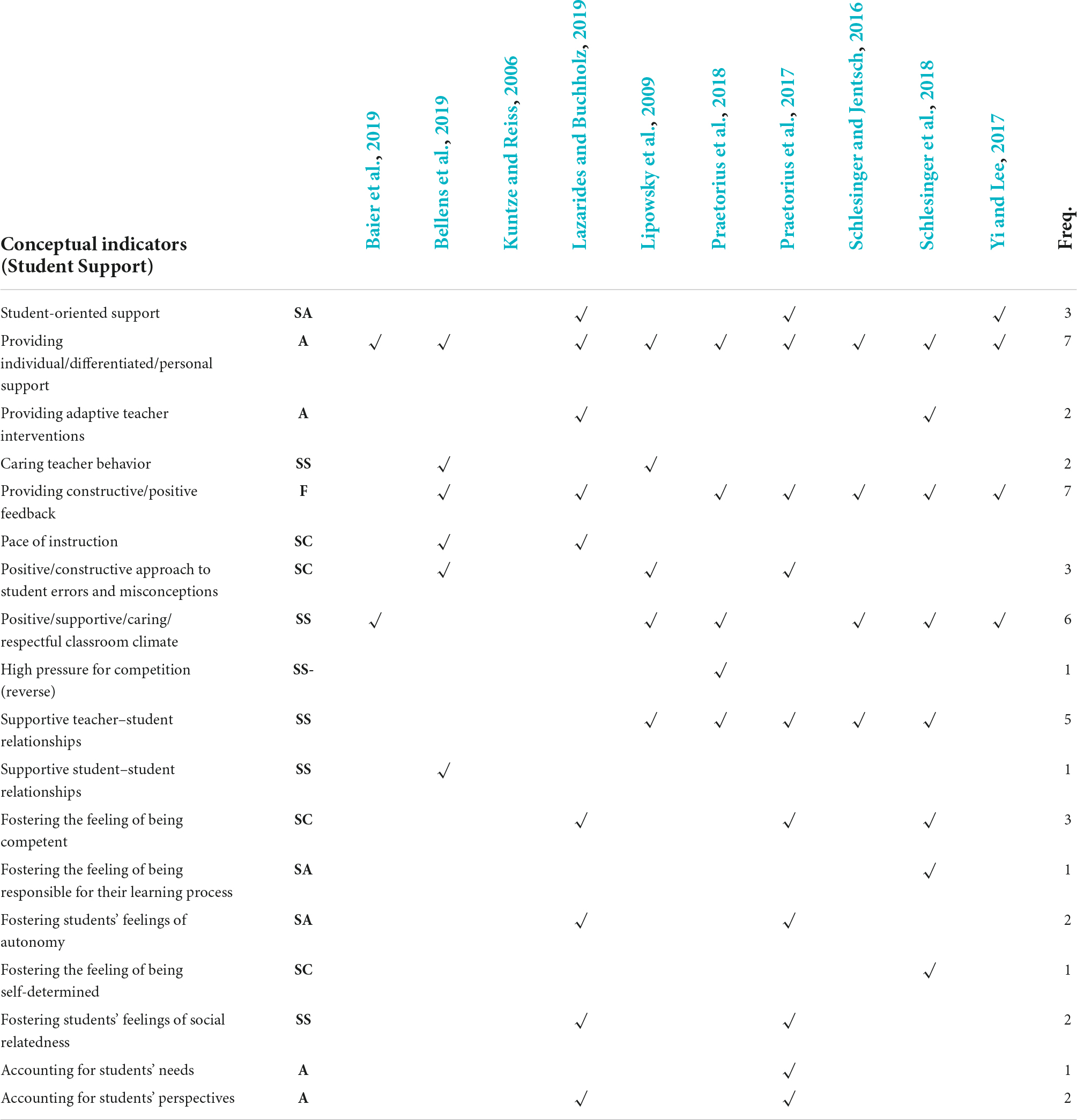

The original idea behind the basic dimension Student Support is “supportive teacher–student relationships, positive and constructive teacher feedback, a positive approach to student errors and misconceptions, individual learner support and caring teacher behavior” (Klieme et al., 2009, p. 141). In line with this, the most frequently mentioned indicators of student support are: providing constructive/positive feedback, offering individual/differentiated/personal support, positive/supportive/caring/respectful classroom climate, and supportive student–student relationships. As shown in Table 4, the sub-dimensions adopted from the general framework are support of competence experience (SC), support of autonomy experience (SA), and support of social relatedness experience (SS). Additionally, the sub-dimensions referring to general learning support (LS), adaptive teacher support (A), and feedback (F) emerged from the analysis.

Table 4. Overview of conceptual indicators of Student Support described by various conceptual definitions in previous studies.

Confusion remains in naming the different conceptual indicators. In the conceptualization of Klieme et al. (2006), according to Lipowsky et al. (2009), the construct of supportive classroom climate covers a range of features of teacher behavior, which include caring teacher behavior. The indicators, however, are not clearly defined there. Averill (2012) describes a broad range of specific “caring” teacher behaviors, including involving students in classroom decision-making, using “safe” questioning practices (i.e., those that do not expose students to potential embarrassment or intimidation), creating a sense of shared endeavor, encouraging and expecting respectfulness and being respectful of students, and incorporating specific pedagogies such as collaborative work, stories and narratives, and journaling.

In some studies, student support is conceptualized as an overarching dimension of teaching behaviors that aim to enhance students’ feelings of autonomy (Praetorius et al., 2017; Schlesinger et al., 2018). Yet at present these approaches diverge in how they define the term “autonomy.” Dickinson (1995) considers autonomy as measured in terms of three shared key concepts: learner independence, learner responsibility, and learner choice. Praetorius et al. (2017) argued that autonomy is closely aligned with and derived from self-determination theory, but they also included other basic needs, such as feeling competent or being socially integrated, as part of teacher support. Therefore, despite a relatively simple conceptual definition, the construct of student support is connected to a wide range of varying conceptual indicators, which makes it difficult to compare results across studies.

In spite of this wide range of conceptual indicators, our review only identified a few aspects in the conceptual definitions of student support, which could be seen as subject-specific to mathematics. The most prominent aspect could be a positive and encouraging approach to errors and misconceptions, which has attracted specific attention in the field of mathematics (but may be of some, though varying importance also in other subjects). It comprises interventions by the teachers, which help students to deal with negative emotions when dealing with their own errors or misconceptions (Rach et al., 2012; Tulis, 2013; Kyaruzi et al., 2020).

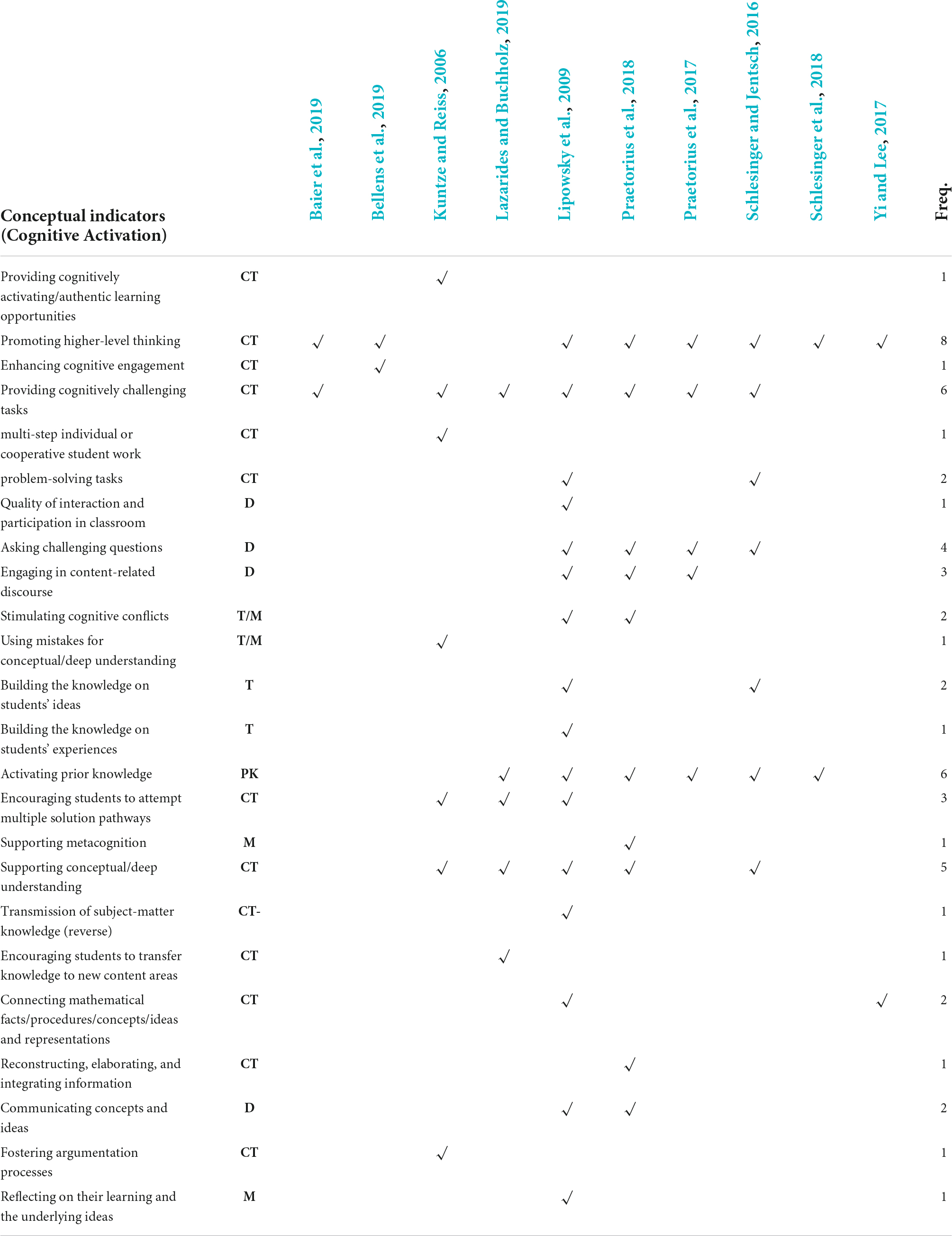

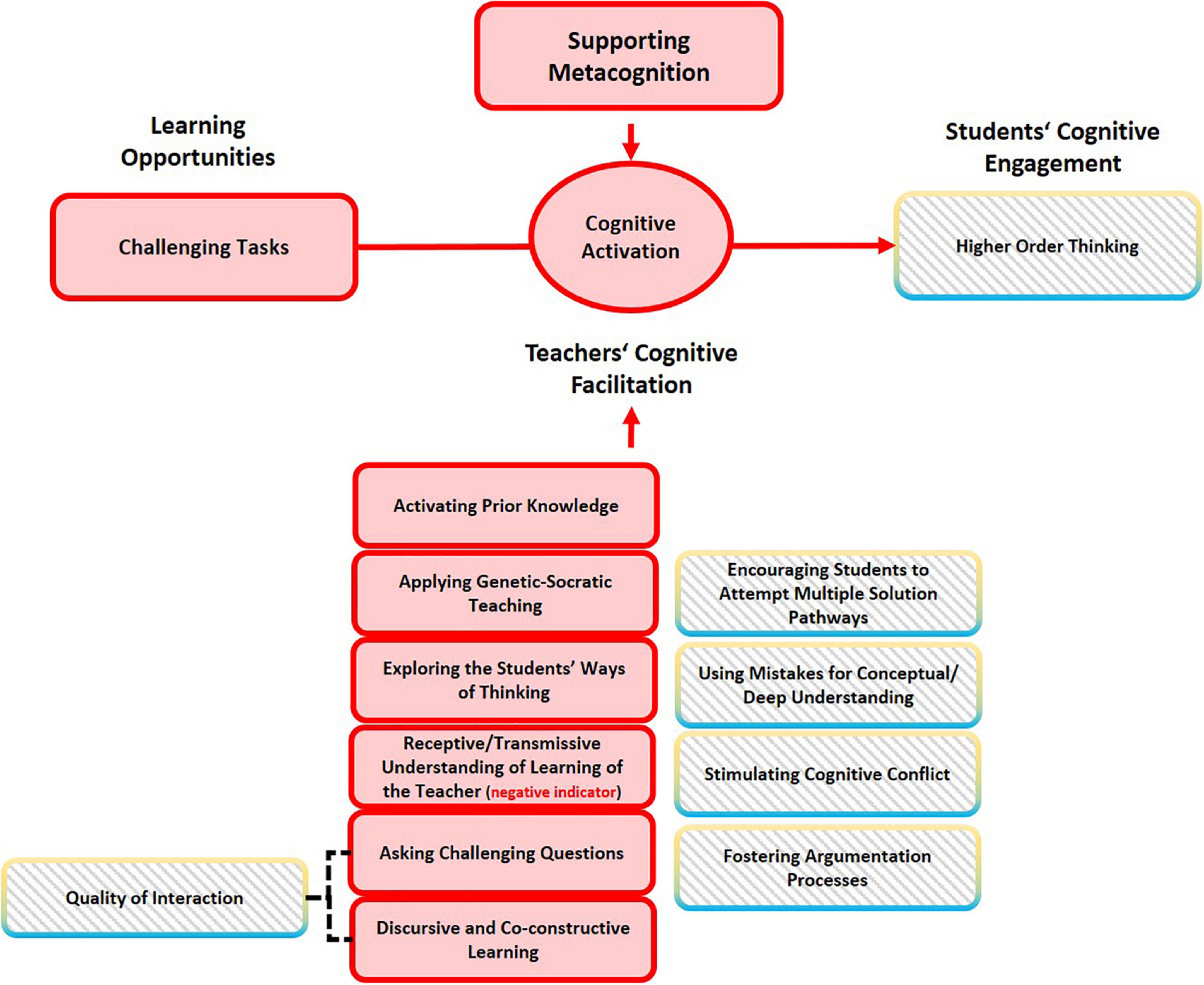

Klieme et al. (2009, p. 140) integrated “these key features of mathematical instruction—challenging tasks, activating prior knowledge, content-related discourse and participation practices within the construct of cognitive activation.” The most frequently mentioned indicators of cognitive activation are: Activating prior knowledge, providing cognitively challenging tasks, promoting higher-level thinking, and supporting conceptual/deep understanding. The resulting classification thus comprises challenging tasks and questions (CT), exploring and activating prior knowledge (PK), and discursive and co-constructive learning (D). Moreover, exploration of the students’ ways of thinking/elicit student thinking (T) and supporting metacognition (M) emerged as additional sub-dimensions.

Compared with the other two basic dimensions, the conceptual indicators underlying Cognitive Activation are more diverse. This suggests that this basic dimension may be more complex than the other two.

In Table 5 it can be seen that the most frequently mentioned indicator in the conceptual definition is higher-order thinking. For example, according to Lipowsky et al. (2009), cognitive activation is an instructional practice that encourages students to engage in higher-level thinking and thereby develop an elaborated knowledge base. In cognitively activating instruction, the teacher stimulates the students to disclose, explain, share, and compare their thoughts, concepts, and solution methods by presenting them with challenging tasks, cognitive conflicts, and differing ideas, positions, interpretations, and solutions.

Table 5. Overview of conceptual indicators of Cognitive Activation described by various conceptual definitions in previous studies.

Divergent opinions exist with regard to facilitating higher-order thinking. An incomplete list of the instructional practices assumed to facilitate higher-order thinking includes encouraging students to transfer knowledge to new content areas (Lazarides and Buchholz, 2019), connecting mathematical facts/procedures/concepts/ideas and representations (Lipowsky et al., 2009; Yi and Lee, 2017), reconstructing, elaborating, and integrating information (Praetorius et al., 2018), providing problem-solving tasks (Lipowsky et al., 2009; Schlesinger and Jentsch, 2016), fostering argumentation processes (Kuntze and Reiss, 2006), and having students reflect on their learning and the underlying ideas (Lipowsky et al., 2009).

Lipowsky et al. (2009) pointed out that the quality of interaction and participation in classrooms is another important means of cognitive activation. In cognitively activating classrooms, interaction is characterized by the teachers’ use of questions to stimulate students to think critically about concepts, to use them in problem-solving, decision-making or other higher-order applications, and to engage in discourse about their own ideas about these concepts and their application (Brophy, 2000).

The qualitative content analysis used to identify the conceptual indicators was based on the conceptual definitions of the three basic dimensions, which are considered to be geared toward a generic, subject-overarching conceptualization (Charalambous and Praetorius, 2018). It is therefore important to mention that some of these conceptual indicators also contain aspects that are closely associated with the content to be taught or with special pedagogic methods commonly used in mathematics education. The subject-specific aspects among the identified conceptual indicators for cognitive activation include the following. (a) A constructive, learning-oriented approach to student errors and misconceptions, which was specifically discussed from a mathematical perspective in several studies (Rach et al., 2012; Tulis, 2013; Heemsoth and Heinze, 2014). (b) Encouraging students to attempt multiple solutions. Multiple solution methods are discussed in mathematics education as a way to build up well-connected knowledge about mathematical concepts and procedures (Achmetli et al., 2019) and to support students’ interest and self-regulation (Schukajlow and Rakoczy, 2016). Lipowsky et al. (2009) regarded it as a reverse indicator if students are requested to solve mathematical problems and tasks in a standard manner previously demonstrated by the teacher. In Cognitive Activation in the Classroom (COACTIV; Bruckmaier et al., 2016), subject-specific PCK was measured by asking teachers to provide multiple solutions to a problem. (c) Using and connecting different representations, which can further contribute to gaining a deeper understanding of the learning contents (Goldin, 1998; Duval, 2006; Große, 2014). (d) Providing adaptive teacher interventions. Supporting students’ mathematical proficiency requires teachers to continuously adapt their instruction in response to their students’ instructional needs (Gallagher et al., 2022).

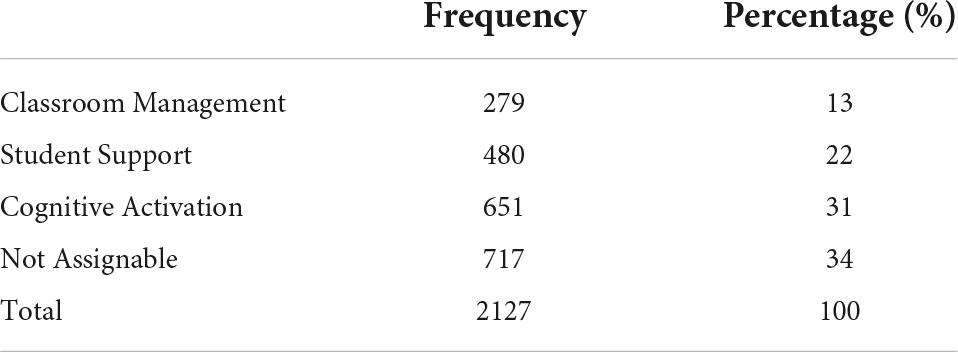

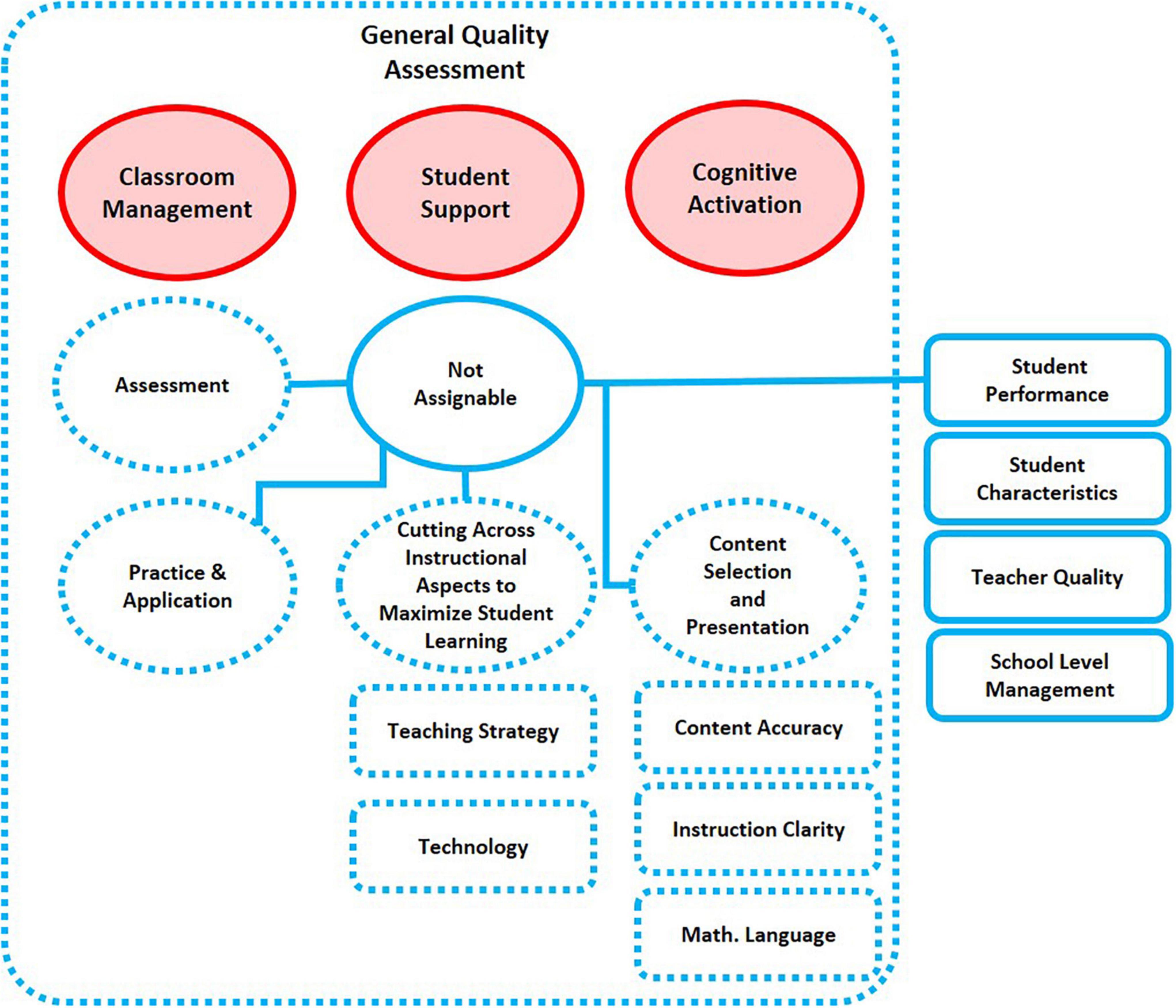

The second research question concerns the extent to which the measurement dimensions found in the literature can be assigned to one of the three basic dimensions of Klieme et al.’s framework on the basis of the operational indicators they use to assess instructional quality. In the 112 reviewed publications, 292 coding frameworks to measure Instructional Quality were investigated either theoretically or empirically. They included 2,127 measurement dimensions, and 63.5% of the identified measurement dimensions (N = 1,351) had explicit operational definitions that could be used to try to assign them to one of the three basic dimensions. In the other cases, assignments were based, whenever possible, on the names of the measurement dimensions and of the overarching measurement dimensions they were assigned to in the manuscript. Table 6 shows the results. Among the three basic dimensions, classroom management accounted for the fewest measurement dimensions (13%) and cognitive activation for the most (31%). Notably, 34% of the measurement dimensions could not be assigned to any of the three basic dimensions.
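The reported share of measurement dimensions with explicit operational definitions can be reproduced from the two counts given above. The following is a minimal arithmetic check in Python; the variable names are illustrative assumptions, and only the two counts are taken from the text.

```python
# Counts as reported in the text; variable names are illustrative.
total_dimensions = 2127          # all identified measurement dimensions
with_explicit_definition = 1351  # dimensions with an explicit operational definition

# Share of dimensions with an explicit operational definition, in percent.
share_percent = round(100 * with_explicit_definition / total_dimensions, 1)

# Dimensions that had to be assigned on the basis of their names instead.
without_explicit_definition = total_dimensions - with_explicit_definition

print(share_percent)
print(without_explicit_definition)
```

The second printed value is simply the remainder of the two counts; it is not a figure reported separately in the text.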

Table 6. Frequency table of the identified measurement dimensions across the three basic dimensions (deductive coding).

Classroom management and student support were originally conceptualized as generic dimensions without reference to a specific subject (Klieme et al., 2009). In the current study, a number of measurement dimensions show some subject-specificity, albeit often on a superficial level. For instance, the subject-specific nature of Classroom Management is signaled merely by adding an adverbial phrase (e.g., in math lessons, it is obvious what we are or are not allowed to do; in mathematics, it takes a very long time at the start of the lesson until the students settle down and start working; in mathematics, our teacher makes sure that we pay attention). The adverbial phrases do not in any way modify the fundamental meaning of the measurement dimensions. Similarly, student support can appear subject-specific because the teacher’s subject is named (e.g., our mathematics teacher does his/her best to respond to students’ requests as far as possible; our mathematics teacher tells me how to do better when I make a mistake; our mathematics teacher is concerned). In these cases, however, mathematics can easily be replaced by any other subject, such as biology or English.

Beyond these more formal references to the subject, other instruments clearly refer to the content at hand as a norm to evaluate instruction. For example, instead of capturing teacher–student communication in general, these instruments attend to the interactions through a content-related lens, focusing on aspects such as the mathematical precision and accuracy in communication and the appropriateness of the mathematical language and notations used (Charalambous and Praetorius, 2018).

Most examples of operational indicators that are substantially associated with the specific subject could be assigned to the basic dimension Cognitive Activation: Challenging Tasks (e.g., our mathematics teacher modifies tasks in a way that allows us to recognize what we have understood); Using Mistakes for Deep Understanding (e.g., remediating student errors and difficulties: substantially addressing students’ misconceptions and difficulties with math); Encouraging Students to Attempt Multiple Solutions (e.g., comparing or considering multiple solution strategies for a mathematical problem; our mathematics teacher provides us with tasks that do not have a clear solution and lets us explain this); Using and Connecting Different Representations (e.g., whether manipulatives or drawn representations were used for this purpose; whether the representations were appropriate for explaining the algorithm; whether the representation was explicitly and completely mapped to the algorithm); Building the Knowledge on Students’ Ideas, Experiences, and Prior Knowledge (e.g., the teacher uses mathematical contributions: captures whether and how the teacher responds to and builds on students’ mathematical product).

Some of the operational indicators that are substantially associated with a specific subject could not be assigned to any of the generic dimensions. This refers to specific ways of assessment (e.g., in a math problem, my teacher values the procedure and not just the results), or to Content Selection and Presentation (e.g., the teacher focuses on the fundamental mathematical aspects. The teacher initiates the adequate use of mathematical language). Some measurement frameworks took into account indicators like the depth of the mathematical lesson; the Richness of the mathematics; or Mathematical focus, coherence, and accuracy. The application of technology that was specifically developed for working mathematically (e.g., spreadsheet software) or mathematics learning can be seen as further examples of subject-specific indicators (e.g., Use a wide variety of materials and resources, such as games, puzzles, riddles, and technological devices, for teaching and learning mathematics. Use computers and digital technologies as tools in teaching mathematics.).

In the next step, the operational definitions of the measurement dimensions in the literature were analyzed and classified. Table 2 provides an overview of the operational indicators, which were identified through inductive coding. Although not shown in the table, some measurement dimensions in the literature were too general to derive meaningful operational indicators, since they tended to address overall evaluations of Instructional Quality (N = 13), or only named general constructs, such as one of the three basic dimensions—Classroom Management (N = 44), Cognitive Activation (N = 108), and Student Support (N = 31).

One of the challenges in this assignment was that many measurement dimensions address more than one operational indicator, such as “Challenging tasks and questions,” or “Lesson structuring and assessment.” In such cases, the measurement dimension was counted under both operational indicators.
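The counting rule described above can be illustrated with a short Python sketch. It is not the coding procedure used in the review: the function name `count_indicators`, the mapping, and the indicator names on the right-hand side are hypothetical; only the two compound labels are taken from the text.

```python
from collections import Counter


def count_indicators(coded_dimensions):
    """Count each measurement dimension once per operational indicator it addresses."""
    counts = Counter()
    for indicators in coded_dimensions.values():
        # set() guards against listing the same indicator twice for one dimension.
        counts.update(set(indicators))
    return counts


# Hypothetical coding result: measurement dimension -> operational indicators.
coded = {
    "Challenging tasks and questions": ["Challenging tasks", "Questioning"],
    "Lesson structuring and assessment": ["Lesson structuring", "Assessment"],
    "Challenging tasks": ["Challenging tasks"],
}

indicator_counts = count_indicators(coded)
print(indicator_counts["Challenging tasks"])
print(sum(indicator_counts.values()), len(coded))
```

Under this rule, the indicator counts can sum to more than the number of measurement dimensions, which should be kept in mind when reading frequency tables built this way.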

A total of 52 operational indicators were found to be reverse-scored items. Among them, the most common negatively worded items were assigned to Higher-Order Thinking (N = 11, e.g., memorizing formulas and procedures; doing similar exercises over and over again) and Time Management (N = 7, e.g., Students do not start working for a long time after the lesson begins; A lot of time gets wasted in mathematics lessons).

In Table 2, we further examined whether the identified operational indicators correspond to the conceptual indicators summarized above (Q1), and whether the operational indicators were already described in the general framework outlined by Praetorius et al. (2018). This illustrative list of indicators serves to indicate both the overlaps and the differences between existing conceptual and operational definitions. Certain subtle differences, which cannot simply be classified as inclusions (√) or deletions (–), are marked with question marks.

Question 3a is concerned with the overlapping area of the yellow and blue ellipses in Figure 1, that is, which characteristics of classroom instruction occur as both conceptual and operational indicators for each basic dimension of instructional quality. In addition, we considered which of the indicators mentioned above go beyond the definitions of the basic dimensions by Klieme et al. (2009). In general, our analysis indicates that the original German framework of three basic dimensions covers fewer constructs than the understanding of the basic dimensions reflected in Tables 2–5. Some adjustments can be suggested to extend the three-factor general framework in light of the review conducted above.

In short, some of the operational indicators used in measurement dimensions assigned to the basic dimensions in the literature and some conceptual indicators used to characterize them go beyond the conceptual indicators described by Klieme et al. (2009). Therefore, we suggest adjustments to ensure that the new framework comprehensively reflects the conceptualization and measurement of these basic dimensions in the literature.

Moreover, some measurement dimensions used in the literature could not be assigned to any of the three basic dimensions. Accordingly, we propose additional basic dimensions as an extension of Klieme et al.’s (2009) model, which reflects a broader spectrum of the measurement dimensions used in the literature. The diagrams visualizing these suggestions use the following conventions:

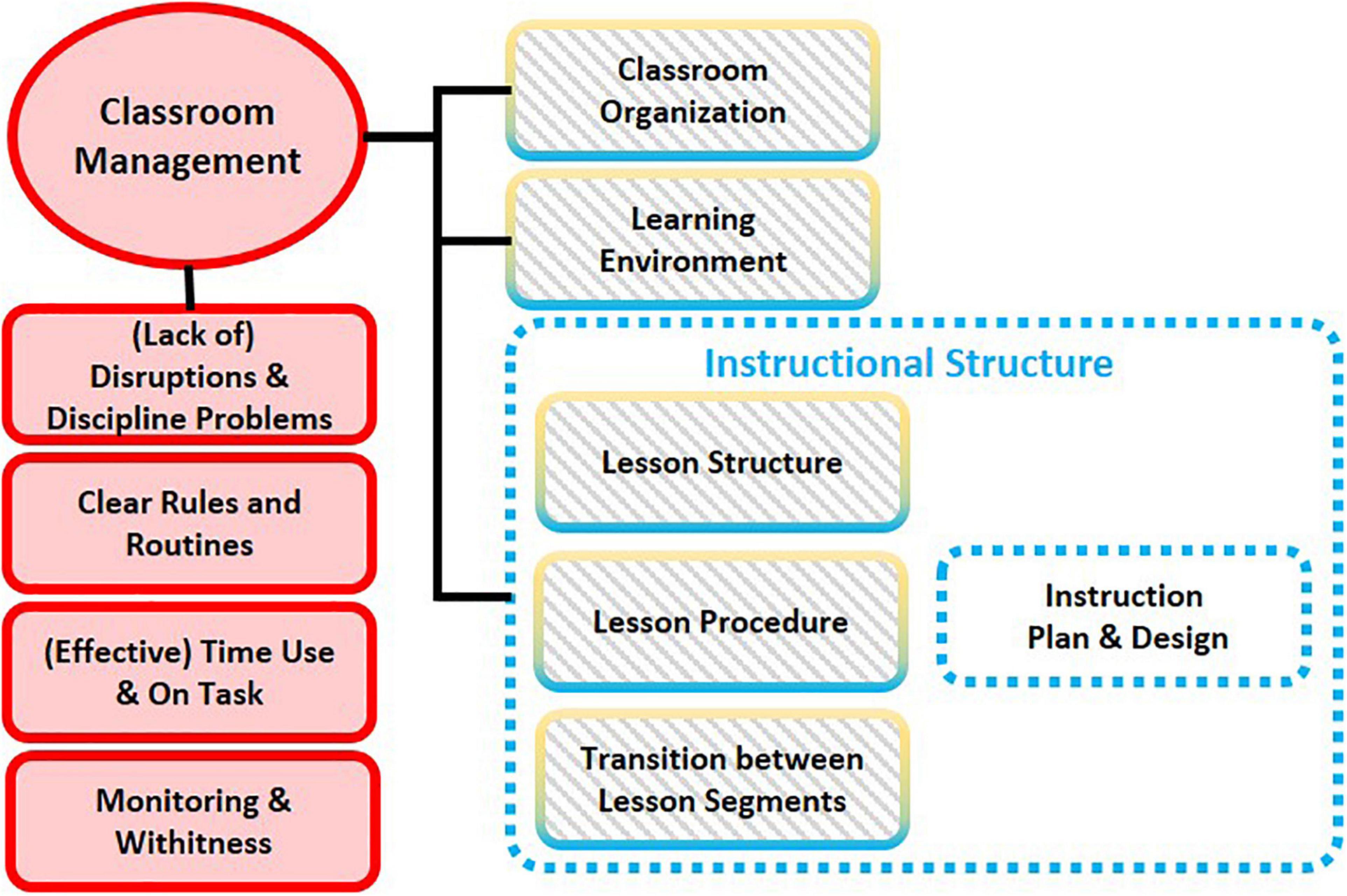

• Red circles with red area refer to the three original basic dimensions.

• Red boxes with red area describe groups of operational indicators outlined by Praetorius and Charalambous (2018).

• Dual color boxes with gray area describe groups of indicators that occur as conceptual indicators in conceptual definitions and in measurement dimensions in the literature, but not in the Klieme et al. (2009) framework.

• Blue-dotted boxes describe groups of indicators that do not occur as conceptual indicators in conceptual definitions in the literature, but occur in measurement dimensions in the literature, which can be assigned to the basic dimension.

• Blue-dotted circles with white area describe basic dimensions that we suggest adding to the framework.

• Blue boxes with white area describe those indicators irrelevant to instructional quality.

As shown in Figure 3, the three-dimensional framework already contains four indicators: (Lack of) disruptions and discipline problems, (Effective) time use/time on task, Monitoring/withitness, and Clear rules and routines. Besides these, Classroom Organization and Learning Environment should be considered as additional indicators to measure Classroom Management. This extension is based on the conceptual definition of the basic dimension and is supported by empirical evidence. Similarly, an indicator at the upper level, which can be further divided into a set of sub-indicators, is assumed to be insightful for assessing the instructional structure in the mathematics classroom.

Figure 3. Suggested adjustments on the measurement framework of Classroom Management according to the comparison between conceptual indicators and empirical measurement dimensions.

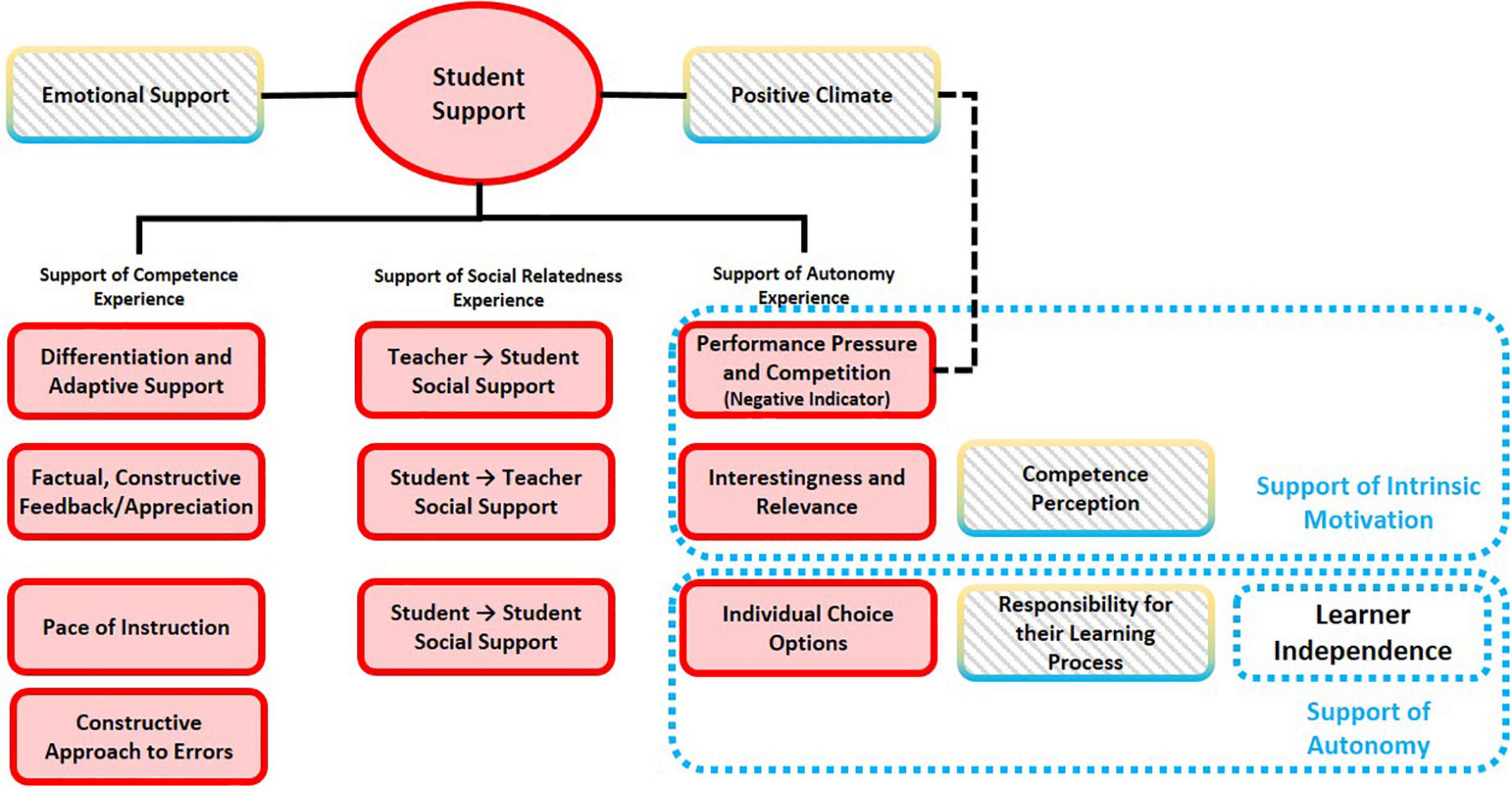

In the existing three-dimensional framework (as shown in Figure 4), Student Support is assessed from three major perspectives: Support of Competence Experience, Support of Social Relatedness Experience, and Support of Autonomy Experience. In the refined framework, we suggest adding two more indicators, namely Emotional Support and Positive Climate. The latter is in some sense the opposite of the negative indicator of Support of Autonomy Experience, namely Performance Pressure and Competition.

Figure 4. Suggested adjustments on the measurement framework of Student Support according to the comparison between conceptual indicators and empirical measurement dimensions.

The original three-dimensional framework presents a mixed perspective that combines different indicators to assess the sub-dimension Support of Autonomy Experience. Confusion can be caused by indicators that obviously do not belong to the sub-dimension of Autonomy, such as Interestingness and Relevance, and Performance Pressure and Competition. According to the Intrinsic Motivation Inventory (IMI; Ryan, 1982; McAuley et al., 1989), interest/enjoyment is considered the self-report measure of intrinsic motivation; the perceived competence concepts are theorized to be positive predictors of intrinsic motivation, and pressure/tension is theorized to be a negative predictor of intrinsic motivation. In addition, our conceptual analysis of the indicators related to supporting motivation and autonomy suggests that a restructuring of the indicators would be more consistent with the three shared key concepts of autonomy identified by Dickinson (1995), namely, learner independence, learner responsibility, and learner choice. Therefore, we suggest integrating more operational indicators identified from the literature and refining the structure of indicators based on the conceptual definitions of the complex constructs Intrinsic Motivation and Autonomy, which can in turn be regarded as the upper level of the sub-dimensions of the basic dimension Student Support.

Note that in Praetorius and Charalambous (2018), the student support dimension was divided into a socio-emotional dimension capturing aspects of social relatedness and a dimension capturing cross-cutting instructional aspects to maximize student learning; the latter covers most of what refers to adaptive teacher behavior (e.g., differentiation and adaptive support) and autonomy support in our categorization.