- ¹Department of Psychology, Ohio University, Athens, OH, United States

- ²Department of Visual Communication, Ohio University, Athens, OH, United States

Many students with social, emotional, and behavioral problems receive school-based services. Multi-Tiered System of Support (MTSS) is one of the most frequently referenced systems for coordinating these services. The goal of this framework is to match assessment and services effectively to the needs of individual students. In many schools, however, this process is limited by the lack of an overall coordinating system. As a result, many students receive services for social, emotional, and behavioral problems that are unlikely to be effective, are not guided by progress monitoring, and are not adequately informed by current and historical data. The Beacon System is a web-based tool created to enhance the quality of service provision for students with social, emotional, and behavioral problems by supporting continuous progress monitoring, helping educators identify which services are likely to help a particular student given their age and presenting problems, and providing educators and school mental health professionals with information to help them implement both familiar and unfamiliar interventions. Additionally, Beacon will enhance educators’ ability to coordinate with a student’s entire intervention team and will allow for continuity as a child changes grades, teachers, or schools. Enhancing these parts of the overall process can improve educators’ efforts to achieve the MTSS goal of providing effective interventions matched to students’ needs. The purpose of this manuscript is to describe the iterative development process used to create Beacon and to highlight specific examples of some of the methods. In addition, we describe how feedback from stakeholders (e.g., teachers, school mental health professionals) was used to inform design decisions. Finally, we describe the final development steps taken prior to pilot implementation studies and our plans for additional data collection to inform continued development of Beacon, including the strategies being used to measure outcomes at multiple levels, from a variety of behaviors of the professionals in the schools to student outcomes. These data will inform continuous development work that will keep us moving toward our goal of improving the outcomes of students with social, emotional, and behavioral problems.

Introduction

Students with social, emotional, and behavioral (SEB) problems are at risk for poor long-term outcomes such as dropping out of school, being unemployed, being arrested, experiencing relationship difficulties, and exhibiting high rates of substance use (Last et al., 1997; Kimonis and Frick, 2010; Kuriyan et al., 2013). Students in special education due to SEB problems have the worst long-term outcomes of all students with disabilities (Newman et al., 2009). These problems persist into high school and the transition to adulthood. Compared to typically developing high school students, studies report these poor outcomes for students with ADHD (Barkley et al., 2006; Molina et al., 2009; Kent et al., 2011), with depression (Jaycox et al., 2009), and with a history of childhood anxiety (Grover et al., 2007). Emerging adults with SEB problems demonstrate lower achievement in post-secondary education, lower employer ratings, and more job terminations than individuals without these problems (Vander Stoep et al., 2000; Wagner et al., 2005; Barkley et al., 2006; Kuriyan et al., 2013).

School-based services for students with SEB problems tend to include classroom-based interventions, counseling services offered by school mental health professionals (SMHPs; i.e., school counselors, school social workers, or school psychologists), or alternative classroom services (e.g., special education resource rooms). However, research suggests that many services provided for these students are not evidence-based (Spiel et al., 2014; Kern et al., 2019; Hustus et al., 2020). Treatment development researchers have designed many services for students with SEB problems and evaluated them in schools, with results indicating substantial benefits for students, including gains extending well beyond the end of services (e.g., Evans et al., 2016; Lee et al., 2016). Many of these studies include comparison conditions that are often “community care” or “treatment as usual” conditions. Participants in these conditions continue to receive services from school or community practitioners; investigators typically measure the services received but do not prevent participants from receiving other care that is typically provided in the school or community. As a result, the benefits found for those in the experimental intervention conditions are rarely compared to the effects of receiving no services; rather, they are compared to the outcomes of students receiving services as they normally would. The contrasting outcomes reported in these studies represent the science-practice gap in our field and document the gap between what is and what could be for these students (Weisz et al., 2017).

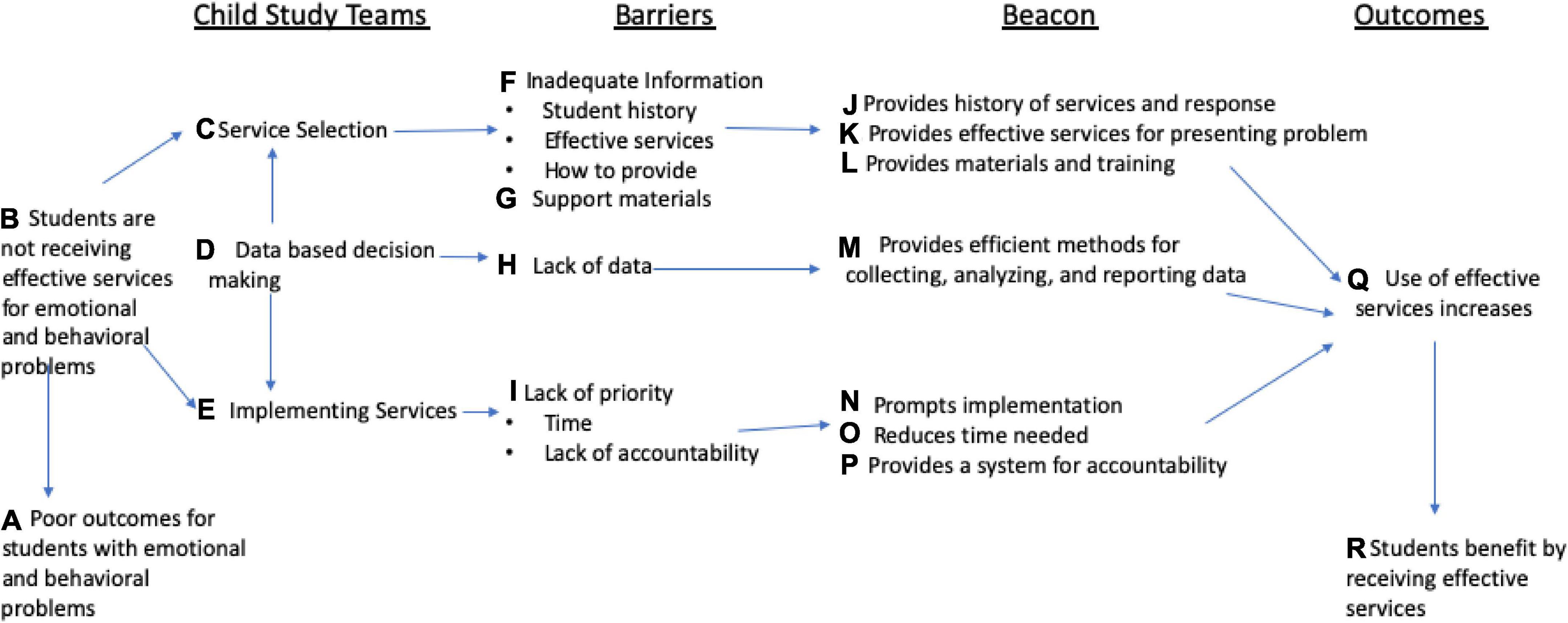

The purpose of this manuscript is to describe the intervention development process employed to develop the Beacon system. The goal of Beacon is to support educators and SMHPs when selecting and providing tier 2 or 3 services to students with SEB problems within a multi-tiered system of support (MTSS). Beacon makes the referral, progress monitoring, and intervention procedures inherent in MTSS more efficient. In addition, it provides user-friendly explanations of the latest research on practices to inform decision-making. Thus, Beacon is not a direct intervention to be provided to students; rather, it is an education and management system, based on our theory of change (see Figure 1), that helps educators improve services and reduce the science-practice gap.

Barriers to closing the science-practice gap in schools

The potential to dramatically improve student outcomes by closing the science-practice gap has motivated many to develop techniques for improving the uptake of evidence-based practices in school settings (e.g., Cook et al., 2015; Lyon and Bruns, 2019; Owens et al., 2020). Informed by our theory of change (Figure 1), our goal was to address three main obstacles to the effective adoption and implementation of evidence-based practices in schools: lack of information and support, absence of useful data, and low priority (see items G–J in Figure 1). Information may be most critically missing at meetings where services and measures are selected for individual students (e.g., student assistance, child study, or special education team meetings), because its absence often leads to uninformed decisions.

Barrier 1 – Lack of adequate information and support

Researchers have found that the interventions school professionals select tend to be heavily marketed programs that are similar to previous practices and often lack scientific support (Hallfors and Godette, 2002). Even when teachers intend to use an evidence-based intervention, they often face barriers to obtaining information about how to implement it with fidelity (Sanetti and Kratochwill, 2009). Supports are needed to master the complexities of some effective interventions (Collier-Meek et al., 2019). Common methods for obtaining this information include searching online, attending workshops, or purchasing manuals and other materials. These steps and costs lead to delays that may discourage the use of effective practices. As a result, teachers often implement what they know or what is easily accessible.

Barrier 2 – Lack of data

A report by the U.S. Department of Education outlines a variety of reasons why educators do not use the data available to them. One reason is the perception that available data lack value (Means et al., 2009). Although extensive and systematic data related to academic performance are collected in schools, in many schools remarkably few data related to students with SEB problems are collected and retained in a usable manner. This is especially true for students who are not in special education. In our experience, data related to students with SEB problems are often gathered in the context of school-based team meetings, sometimes referred to as Child Study Team (CST) meetings (these teams have different names in different schools). Data gathered in this context are typically collected in a non-systematic manner and, if retained, are often kept in places that make it infeasible to retrieve a child’s data in later years or to compile the data over time. As a result, future decisions are often not informed by a student’s history of responses to previous services.

Barrier 3 – Lack of priority

There is inconsistency across schools and staff regarding the importance of selecting valid assessments and effective interventions. Some of this inconsistency reflects competing demands on teachers’ time and differences in the priority placed on addressing the needs of students with SEB problems. Many teachers, administrators, and SMHPs believe that other tasks have a higher priority than implementing evidence-based practices for students with SEB problems (Means et al., 2009; Collier-Meek et al., 2019). In addition, many SMHPs are pulled to respond to immediate crises, which diminishes the time they have for proactive prevention or intervention efforts. In some schools, collaborative efforts between educators to address the needs of struggling students are not actively supported (Greenway et al., 2013). Further, in many schools attendance at CST meetings is optional, so the priority given to completing team recommendations can vary at least partly as a function of the priority the principal places on the activity (Ransford et al., 2009). Finally, because record keeping in team meetings is quite variable, there is often little accountability for implementing CST decisions.

Efforts to address barriers

Given these barriers to high-quality service provision in schools, there have been attempts to develop systems that can enhance educators’ and SMHPs’ knowledge and use of effective practices, increase capacity for data collection and data-driven decision making, and provide supports for high-quality, consistent implementation. Unfortunately, current attempts toward this goal remain inadequate. Some systems are described within the mental health literature and include interventions that could be provided in schools; other systems have been developed specifically for classroom-based service delivery. Some of these are described below.

Mental health practices

There are many online resources for SMHPs to learn about evidence-based practices including a comprehensive set of videos prepared by the Society of Clinical Child and Adolescent Psychology (Division 53, APA) and the Center for Children and Families at Florida International University. These videos provide information and models; however, they are primarily based on clinical diagnoses (e.g., bipolar disorder, depression) and the application of many of the treatments in school settings would require substantial adaptations including the development of materials needed to provide the treatments.

A second mental health focused system is PracticeWise. It organizes services into modules that can be used based on a student’s presenting problems, provides flow charts that facilitate clinicians’ understanding of how to apply the modules when the student has one or multiple problems, and offers detailed materials for implementation (i.e., handouts for parents and youth, checklists for clinicians, and videos describing the strategies). Although comprehensive, this system’s application in schools is limited in several ways. First, it was designed for clinicians working in hospitals and clinics, and it relies on the typical service delivery contexts of these settings. Unlike clinic-based care, where students are often seen with their parents for weekly 50-min sessions, services provided at schools are much more fluid, often delivered without parents, and integrated into students’ schedules and classrooms. Second, many of the interventions target parents and focus on problems experienced in the home setting. Third, national prevalence data (CDC, Danielson et al., 2018) suggest that many of the common student behavior problems faced by teachers are related to ADHD; however, the system places little focus on this population. Finally, although PracticeWise offers flow charts that include some guidance for when the student is not responding, the system does not include a portal for entering progress monitoring data and therefore does not adequately support data-driven decision making specific to student performance, a feature that is critical to MTSS. Given these limitations, PracticeWise does not adequately address the barriers described above that keep students from receiving needed services.

Classroom-based practices

There are a few programs specifically designed for use in schools, including Infinite Campus, PowerSchool, and Positive Behavioral Interventions and Supports (PBIS) apps such as the School-Wide Information System (SWIS). Infinite Campus and PowerSchool are learning management systems (e.g., they organize calendars, grades, and course materials), communication and notification systems (across school professionals, parents, and students), and data warehouses (e.g., organizing attendance and grade data), but they do not offer educators resources related to evidence-based services for students with SEB problems. SWIS is a web-based collection of applications that help educators document the frequency and location of behavioral infractions, track students who are referred for individualized supports and the actions resulting from disciplinary infractions, and create reports to guide educators’ decisions about positive behavioral support programming. SWIS is primarily limited to discipline and attendance, although it does include modest support for a check-in/check-out intervention. The What Works Clearinghouse (WWC) offers information about some services but is not comprehensive. Further, the WWC web pages and practice guides are not efficient to use in CST meetings when discussing a referred student. Lastly, new education technologies are emerging (e.g., Class Dojo, Panorama) that are geared toward enhancing students’ social, emotional, and behavioral functioning; however, they have undergone limited scientific testing for effectiveness and focus primarily on universal strategies (e.g., classroom management, universal screening procedures) rather than on supporting educators’ use of evidence-based tier two and three interventions for youth with SEB problems. Thus, existing programs and resources designed for use in schools make important contributions, but they have limited utility for tracking the wide array of target behaviors relevant to students with SEB problems and for supporting the selection and implementation of interventions.

In summary, although programs and online systems exist to address barriers to high-quality service provision in schools, most address only a narrow aspect of the problem, and many are not designed to efficiently help educators address the needs of a particular student. To adequately address the barriers described above and be efficient and helpful when addressing the needs of students with SEB problems, a system should include features that address multiple barriers and can be integrated into the decision-making process in the meetings where and when decisions are made. If a web-based system is going to effectively influence practices and support professionals’ efforts to help students with SEB problems, it must (a) fit into schools’ systems of care, (b) inform the choices of services available to educators, (c) provide materials, support, and training to facilitate use of the services, (d) provide a system for tracking a student’s response to intervention, and (e) provide mechanisms for implementation supports and accountability. The Beacon system was designed, in collaboration with educators, SMHPs, and school administrators, to achieve these goals.

Beacon development: What is Beacon?

To explain Beacon and its use in schools, we provide the following example of a general education teacher referring a student to be discussed at the school’s CST meeting. After listening to the teacher’s concerns, members of the CST enter the student’s name into Beacon, and information from any previous referrals appears. As the team discusses the teacher’s concerns about the student, the chair of the CST selects the most relevant presenting problems from the list provided in Beacon. This list was developed in collaboration with educators at partner schools and our network of stakeholders. Next, the team is provided with progress monitoring items that correspond to the presenting concerns, and the team assigns a teacher and/or others to complete the progress monitoring questions (typically 1–3 questions) at the intervals selected. At each timepoint, Beacon generates a text or email to the educators charged with responding to the progress monitoring questions. Each educator completes the requested ratings using an app on their mobile phone or in response to an email on any device. Next, the CST is prompted to select at least one intervention to implement for the student. In Beacon, interventions are organized according to which of the two professionals who most commonly work with students with SEB problems would deliver them: general education teachers or SMHPs. If this is an initial referral to the CST, the team may want the general education teacher to try a classroom intervention first, and these interventions appear in the General Education Classroom tab. Based on the presenting problems and age of the student, Beacon presents a list of services that could be provided for the student in the general education classroom. The items on the list were selected by the Beacon development team with input from our community partners and stakeholders. The Beacon development team extensively researched the literature on these interventions to include indices of the evidence supporting their short-term benefits, long-term benefits, and ease of implementation (i.e., Beacon Intervention Tables). The CST or individual staff can click on each service to read how to implement it, view videos about the intervention, or download forms and handouts to use in the intervention. The CST chooses from the list provided or enters information about another intervention. The student’s progress monitoring data can be reviewed in Beacon at the next CST meeting to make decisions about further steps.
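The referral workflow above pairs the selected presenting problems with brief progress monitoring items and a schedule of prompts to the assigned rater. The Python sketch below illustrates that pairing under stated assumptions; it is not Beacon's implementation. The item bank, the item wording, the rater label, and the function `build_monitoring_plan` are hypothetical; only the limit of roughly 1–3 items and the interval-based prompting come from the description above.

```python
from datetime import date, timedelta

# Hypothetical item bank (not Beacon's actual items): one brief rating per presenting problem.
ITEM_BANK = {
    "disruptive behavior": "How disruptive was the student today? (0 = not at all, 10 = extremely)",
    "off-task behavior": "About what percentage of class time was the student on task?",
    "worry": "How worried did the student appear today? (0 = not at all, 10 = extremely)",
}

MAX_ITEMS = 3  # the text reports that teams typically assign 1-3 questions


def build_monitoring_plan(problems, rater, start, every_days, n_prompts):
    """Select items for the chosen problems and list the dates the rater is prompted."""
    items = [ITEM_BANK[p] for p in problems if p in ITEM_BANK][:MAX_ITEMS]
    prompts = [start + timedelta(days=every_days * k) for k in range(n_prompts)]
    return {"rater": rater, "items": items, "prompts": prompts}


# Usage: weekly prompts to a hypothetical teacher over four weeks.
plan = build_monitoring_plan(["off-task behavior", "worry"], "Teacher A",
                             start=date(2024, 9, 2), every_days=7, n_prompts=4)
for due in plan["prompts"]:
    # In the workflow described above, each date would trigger a text or email.
    print(due.isoformat(), "->", len(plan["items"]), "item(s) for", plan["rater"])
```

Capping the list keeps each prompt brief, which matches the stated emphasis on single-item measures that raters can complete quickly on a phone.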

If the student’s problems persist and additional interventions are warranted (e.g., members of the team may suspect that a mental health problem is contributing to the problems identified by the teacher), the team may decide to involve SMHPs. The CST can add the school psychologist to the student’s team in Beacon so that the school psychologist has access to the student’s information. The school psychologist may then begin using the SMHP tab on the identified student’s pages in Beacon. The school psychologist may interview the child, consult with teachers and parents, gather additional data, and then form a hypothesis about the mental health problem that may be affecting the student and leading to the teacher-identified problems. Based on the student’s age and suspected problems, the school psychologist may decide to screen for various types of problems. Beacon does not support diagnosing a student using Diagnostic and Statistical Manual of Mental Disorders, 5th Edition (American Psychiatric Association, 2013) criteria, but it does provide brief assessments that can be used to narrow the focus of the student’s presenting issues (e.g., issues related to anxiety or mood). Based on this assessment and the SMHP’s leading hypothesis about the student, Beacon presents services typically provided for a student of that age with the problems identified by the SMHP. For some of these interventions, Beacon provides information about the evidence supporting their effectiveness, some guidance about implementation, and information about where to learn more (i.e., Beacon Intervention Tables). For example, Beacon describes cognitive behavioral therapy (CBT) for anxiety and provides some guidance for school-based delivery (i.e., materials and video demonstrations), but Beacon does not train an SMHP to provide CBT if they do not already possess the competencies needed to implement this technique.
The Beacon dashboard shows which interventions are being provided by each professional and the results of progress monitoring data over time to evaluate the impact of the classroom and SMH interventions. This process continues for as long as deemed necessary by the CST.
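One simple way to read progress monitoring data against the timing of an intervention is to compare ratings collected before it began with ratings collected afterward. The sketch below is hypothetical: the function `phase_means` and the ratings are invented for illustration, and comparing phase means is only one elementary summary, not necessarily how the Beacon dashboard computes or displays results.

```python
from datetime import date
from statistics import mean


def phase_means(ratings, intervention_start):
    """Average (date, rating) pairs before, and on or after, an intervention start date."""
    before = [r for d, r in ratings if d < intervention_start]
    after = [r for d, r in ratings if d >= intervention_start]
    return (mean(before) if before else None, mean(after) if after else None)


# Hypothetical weekly disruption ratings (higher = more disruptive).
ratings = [(date(2024, 9, 2), 8), (date(2024, 9, 9), 7),
           (date(2024, 9, 16), 5), (date(2024, 9, 23), 4)]
print(phase_means(ratings, date(2024, 9, 16)))  # (7.5, 4.5)
```

Two averages can hide trends within a phase, so a team would likely still inspect the graphed ratings before deciding whether to continue, modify, or replace an intervention.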

Beacon was designed to address the three barriers described in the theory of change guiding our work (see Figure 1). The information provided by Beacon informs school professionals about how to measure response to services and about the levels of evidence for a variety of school services for presenting problems across students of various ages. This addresses the lack of information and support barrier to providing effective services. Most directly, Beacon provides considerable information about each intervention, such that a teacher unfamiliar with an intervention could learn to provide it without much additional support. The inclusion of resources that outline procedures for implementing evidence-based practices, tips for implementing the interventions with fidelity and overcoming obstacles, video models demonstrating implementation, and links to external training resources can empower educators to embrace new techniques. The inclusion of relevant worksheets, measures, and other materials allows educators and SMHPs to avoid the time-consuming task of gathering or creating the resources needed to implement interventions. Beacon also addresses the lack of data barrier. Progress monitoring data gathered from school professionals, families, and students provide information important to decisions about services. Furthermore, data about the history of the problems over time, responses to services provided in earlier grades, and the timing and coordination of services help inform how best to help a student. Finally, Beacon is designed to address the lack of priority barrier in two ways. First, Beacon includes functions that make many of the tasks of serving students with SEB problems more efficient than current practices. For example, Beacon prompts school professionals to complete progress monitoring measures and makes all records pertaining to the intervention plan and the roles of team members easily accessible. Second, Beacon is designed to make team members accountable to each other through tracking and communication systems built into Beacon. Similarly, summary data are available to administrators, who can see how implementation and measurement are progressing with students in the school.

Approach to development

Although the original idea grew out of years of experience working with students and professionals in schools, bringing the project to fruition required recruiting a team of experts. We needed expertise in evidence-based school practices for students with SEB problems, as well as expertise in the development and use of web-based systems and in designing user-friendly interfaces and supports. Finally, we also needed practicing educators, administrators, and SMHPs to share their expertise. We wanted to build this product not only for them, but with them. It was critical to capitalize on the expertise of this diverse team throughout the development process.

We leveraged the implementation science and intervention development literature to guide our development process. Many of the development models in this literature focus on integrating theory and clinical expertise into the design of a new product. For example, in describing the Deployment Focused Model (Weisz et al., 2005), the authors emphasized the importance of combining theory about the nature and treatment of the particular problem, the clinical literature, and the guidance of those who actually intervene with students who have this type of presenting problem. Many models of intervention development stress the importance of iteratively refining the product or intervention within the development process. In particular, the ORBIT model (Czajkowski et al., 2015), the Deployment Focused Model (Weisz et al., 2004), and the National Institutes of Health’s (NIH) Stage Model (Onken et al., 2014) highlight iteration in their development processes.

We employed this iterative development process and created procedures to integrate information from practitioners with findings from the latest research literature. We created a process to solicit user feedback from teachers, school administrators, and SMHPs focused on specific functions within Beacon. We recruited practitioners to the team using a convenience sample of school personnel from previous partnerships and an advertisement soliciting educators in a national newsletter. To date, we have collected feedback from 24 educators who work in K-12 schools in the United States and whose professional roles include special education teachers, general education teachers, school counselors, and service coordinators. The stakeholders’ years of experience ranged from less than 4 years to more than 20 years.

We gathered data in phases reflecting the progress we made developing Beacon. We developed tasks for practitioners to complete in Beacon and asked them to provide feedback about the usability of the system, why they chose certain interventions for their fictional student, and other features relevant to the task selected for each phase of feedback. For example, on one occasion we asked our collaborators to change the presenting problems for a particular student and revise the progress monitoring measures and services to be provided. We asked them to complete a survey about their attempts to complete the assignment and provided tools for them to submit screenshots of specific areas of the system that were confusing or not working properly. We integrated their survey feedback with data generated in the Beacon system that showed us what they did when trying to complete the assignment. This information helped us identify points of confusion in the system and features that were not adequately achieving our goals. Further, many of the collaborators suggested very specific changes to the interface that would improve clarity and ease of use. For example, their feedback led us to add numerous information bubbles describing features at the points where collaborators indicated they were needed. We also used their feedback to guide modifications to our progress monitoring graphs to make them easier to interpret. The feedback we received from stakeholders during early developmental testing was incorporated into the design of the system and helped us maximize the usability and utility of Beacon.

In addition to involving practitioners, we recruited colleagues with expertise in the field to provide regular feedback on various development decisions. For example, the algorithm for calculating levels of evidence for various services was reviewed by two school psychology experts and one clinical child psychology expert, who provided recommendations for refinement. Further, the progress monitoring items used in Beacon were informed by the extensive research on single-item scales (e.g., Chafouleas et al., 2010). Based on our experience and practitioner feedback, we prioritized measurement tools that are valid indicators of what they are intended to measure and are as brief as possible.

Another critical, ongoing aspect of Beacon’s development is creating and maintaining a comprehensive list of services that are either based on research or frequently used for each presenting problem. Although we hope that using Beacon will increase the use of evidence-based practices, based on feedback from our stakeholders we also list services that are not likely to be effective but may be frequently used (e.g., fidget toys). This way, users of the system will encounter interventions with which they are familiar and be able to compare them to alternative interventions. A team of students works with the investigators to maintain the research data for all of the services. New articles that document evaluations of a service’s effectiveness are added to the collection of research, then processed and coded using the algorithm mentioned above. After the codes are double-checked, the indices of effectiveness in Beacon are updated when warranted. The indices of effectiveness in the Beacon system are based on the evidence for the intervention and the age of participants in the relevant studies, so the same intervention may have different levels of effectiveness for different ages. For example, a daily report card (DRC) has a strong level of evidence for elementary school students for many problems, but almost no evidence for use with high school students. Thus, if the student entered into the system is in 10th grade, the indices of effectiveness for a DRC will be poor for that student. The algorithm used to determine the indices of effectiveness was informed by the What Works Clearinghouse system for determining levels of effectiveness, as well as by similar systems used to identify evidence-based practices by the Society of Clinical Child and Adolescent Psychology (SCCAP; Div. 53 of the American Psychological Association) and to develop the treatment guidelines of the American Academy of Pediatrics (AAP). In addition to a straightforward indication of levels of effectiveness, Beacon also provides scores indicating the ease with which an intervention can be integrated into a classroom and the magnitude of the effects found in the research. Regardless of the indices provided in Beacon, a user is free to select any intervention. This is consistent with Beacon’s goal of helping users make well-informed decisions when selecting services for each student rather than serving as a tool that prescribes interventions.
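The age dependence of the effectiveness indices, and the principle that indices inform rather than restrict choice, can be sketched as a lookup keyed by service and age band. In this hypothetical Python sketch, the grade cut points, the 0–4 scale, the numeric values, and the names "Service X" and "Service Y" are assumptions; only the DRC pattern (strong evidence for elementary students, almost none for high school students) comes from the text. The sketch does not reproduce Beacon's coding algorithm; it only shows a precomputed index being looked up by age and services being ranked rather than removed.

```python
def age_band(grade):
    """Map a grade (0 = kindergarten) to a hypothetical age band."""
    if grade <= 5:
        return "elementary"
    if grade <= 8:
        return "middle"
    return "high"


# Hypothetical precomputed indices on an assumed 0-4 scale.
EVIDENCE = {
    ("Daily report card", "elementary"): 4,
    ("Daily report card", "high"): 0,
    ("Service X", "elementary"): 2,
    ("Service X", "high"): 3,
}


def rate_services(services, grade):
    """Attach the age-specific index to every service; nothing is filtered out."""
    band = age_band(grade)
    rated = [(s, EVIDENCE.get((s, band))) for s in services]
    # Highest index first; unrated services (None) sort last but remain selectable.
    return sorted(rated, key=lambda item: (item[1] is None, -(item[1] or 0)))


print(rate_services(["Daily report card", "Service X"], grade=10))
# [('Service X', 3), ('Daily report card', 0)]
```

Ranking instead of filtering keeps low-evidence but familiar services visible, which mirrors the stated aim of letting users compare familiar interventions with alternatives.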

Supporting educators’ use of Beacon

Another key aim of Beacon is to support educators’ implementation of evidence-based interventions through the provision of accessible and user-friendly web-based resources. Previous research on stakeholders’ perceptions of web-based trainings has shown that users value access to the materials needed for implementation and the ability to view authentic applications of the technique (e.g., Helgadottir and Fairburn, 2014). Several elements of the Beacon system have been designed to meet these stakeholder needs. For example, in Beacon, each intervention or strategy has a corresponding webpage that provides the information and resources needed to support effective implementation of that technique. Webpages include a description of the technique, a summary of the evidence of effectiveness, a step-by-step guide for implementation, downloadable forms that support implementation, and a list of tips for successful implementation. These pages provide users with information about how to use the intervention, videos showing its use, and explanations of the effectiveness ratings. For example, these explanations and tips may include concerns about the effectiveness of a technique for certain students or in specific contexts, recommendations for applying the intervention with students at various developmental levels, descriptions of common challenges or mistakes, information about when a technique may be contraindicated, or recommendations about when the technique may need to be combined with another intervention or strategy to maximize effectiveness. In sum, each page is designed to be a rich source of information both for educators who are considering using the intervention and for those working to ensure they are implementing it correctly.

Our use of videos on these pages was guided by literature showing that video examples are perceived as a cognitively engaging element of training in classroom management strategies (Kramer et al., 2020). The instructional videos for Beacon were designed according to several practices recommended for developing effective educational videos (see Brame, 2016 for a review). The first practice is segmentation (Zhang et al., 2006). Unlike a professional development workshop or course designed to train educators in evidence-based targeted interventions, Beacon's instructional videos are chunked so that the user can learn about and view examples of specific interventions or strategies one at a time. This practice reduces working memory load and allows users to get support for the specific technique they are learning at a given point in time. In some cases, the videos are further segmented by the developmental level of the target student (i.e., elementary- versus secondary-school students). Another recommendation is to strive for brevity (Hsin and Cigas, 2013). Beacon videos are designed to be brief, highlighting the critical components of the intervention or strategy and referring the user to the webpage for more details.

It is also recommended practice to use complementary visual and auditory information to convey complex information (Mayer and Moreno, 2003). Beacon's instructional videos are therefore designed to include a mix of modalities. Although most videos begin and end with a Beacon team member speaking directly to the camera to introduce or conclude the discussion of a given intervention, most of the video content closely integrates visual and auditory information (e.g., a list shown on screen while a voiceover describes it; a voiceover discussing an intervention technique while a teacher is shown using the technique with a student). This practice is intended to enhance both engagement with and understanding of the material.

The content pages and their videos are intended to be a useful resource both when interventions are being selected and during implementation after a meeting at which services were decided. They are also available to users who wish to browse information about various services without reference to a specific student. For example, a teacher may choose to begin implementing an intervention with a student or group of students before making a referral to a CST. In such cases, Beacon provides the teacher with support for independently trying approaches in the classroom.

Final development and pilot testing

Having progressed through the development process in a manner similar to the implementation science procedures described earlier, we now have a fully functioning early version of Beacon. At this point, we are developing partnerships with school staff who agree to collaborate with us on the development process by using Beacon in their schools and providing regular feedback and recommendations. The primary purposes of this work are to evaluate feasibility, collect observational data on how the system is used, and use these data to inform final revisions and refinements to the interface and training procedures. Partnering school staff will use Beacon in their CST meetings and will also make it available to individual teachers and SMHPs. A member of our development team will attend the CST meetings at each school to observe how CST members use Beacon and will complete a Beacon fidelity and feasibility checklist for each student discussed. In addition, staff will take notes on aspects of the process that appear to confuse users and will record users' recommendations and comments about the process and design. Finally, data from Google Analytics will be collected to determine the frequency and duration of use of Beacon's various pages and features. If use of the system is low in a particular school, for example, we will examine the reasons and then modify Beacon, develop educational materials for the school teams, or take other steps so that Beacon meets their needs. Because Beacon is hosted in a secure cloud environment, revisions can be made promptly without interrupting users or compromising data.

Pilot testing process

Subsequent development work is guided by an iterative process that continues indefinitely. As described above, the first pilot work focuses almost exclusively on feasibility and user experience, with the goal of refining Beacon's features so that it becomes as useful and valuable as possible to its intended users. During early pilot testing there is little focus on measuring the project's ultimate goal of improving student outcomes. Over time, the emphasis will shift toward teacher practices and student outcomes and away from feasibility. Neither outcome nor feasibility measurement is ever discontinued; only the priority within the development work shifts. Ultimately, we will be able to examine the intervention choices made by CST members, evaluate adherence to progress monitoring procedures, and study students' gains as a function of those choices.

Measuring feasibility and use

The NIH Science of Behavior Change approach to intervention development emphasizes using appropriate measures to determine whether an intervention is functioning as expected (Nielsen et al., 2018). As with other web-based systems, if Beacon is to influence practice and support professionals' efforts to help students with SEB problems, it must (a) fit into schools' systems of care, (b) inform educators' choices among available services, (c) provide materials, support, and training to facilitate use of those services, (d) provide a system for tracking a student's response to intervention, and (e) be responsive to educators' reports on the usability and feasibility of the system. Data will also come from the tracking forms completed by development staff attending CST meetings, along with feedback from the CST chair. Development staff will also track process-specific activities, such as whether all students referred to the CST for SEB problems are entered into Beacon and, if not, why not. We want to learn who is most likely to use Beacon, which students are most likely to receive Beacon intervention plans, how often educators use Beacon, and which interventions teams choose for their students. The tracking forms will also record the dates and lengths of meetings to determine whether, and to what extent, using Beacon lengthens or shortens CST meetings. These data will inform further development of the system and training procedures.

The ultimate test of Beacon's value to schools is the extent to which they use it outside of our development work and research. Many school-based interventions are rated by educators as feasible, yet teachers do not continue using them after a study ends. We will soon face this challenge, and we are preparing for it by including educators and SMHPs as stakeholders in the development process, adding education technology dissemination experts as consultants, and hiring staff who have worked in schools in roles relevant to Beacon.

Measuring impact of Beacon

As we begin to incorporate outcome measures focused on our primary goals for Beacon, our measurement focus will shift to the process educators use to select interventions and assessment procedures. The degree to which professionals change their selection of interventions to better align with the scientific literature is the proximal measure of Beacon's impact. The degree to which student outcomes improve in relation to these changes is the distal measure and the most important outcome. Student outcomes will be measured within Beacon through progress monitoring data and through brief assessments completed by educators and collected by our development staff. These assessments, administered outside Beacon's progress monitoring system, will be used to examine the validity of Beacon's progress monitoring data.

The overall purpose of Beacon is to help school professionals make well-informed decisions about services for students with or at risk for SEB problems, implement these services with high quality, and make data-driven decisions about them over time. These improved service decisions should, in turn, lead to improved outcomes for students. Data accumulated over time will help us evaluate the barriers described earlier in this manuscript. If there is evidence that Beacon is reducing these barriers (i.e., inadequate information and support, lack of data, lack of priority) yet student outcomes do not improve, this will raise questions about whether the most important barriers to providing evidence-based practices were identified. We can then consider alternative barriers by examining the intervention choices being made.

Because Beacon does not prescribe services but instead presents educators with a user-friendly menu of services (and ratings of their effectiveness) that can be used to address teacher concerns about a student, data generated from its use in practice can help us understand how these choices are made. Choices may be driven, for example, by which interventions are easiest to provide or most familiar to staff. By learning how intervention choices are made for students, we can advance the science and guide practice to narrow the science-practice gap.

Data availability statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Author contributions

SE conceptualized the manuscript and did the majority of the writing. HB, DA, SG, JO, and EE wrote sections and made revisions to the document. All authors contributed to the article and approved the submitted version.

Funding

This work was funded by the Institute of Education Sciences (grant number R305A210323) and by an innovation grant from Ohio University.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

American Psychiatric Association (2013). Diagnostic and Statistical Manual of Mental Disorders, 5th Edn. Arlington, VA: American Psychiatric Association. doi: 10.1176/appi.books.9780890425596

Barkley, R. A., Fischer, M., Smallish, L., and Fletcher, K. (2006). Young adult outcome of hyperactive children: adaptive functioning in major life activities. J. Am. Acad. Child Adolesc. Psychiatry 45, 192–202. doi: 10.1097/01.chi.0000189134.97436.e2

Brame, C. J. (2016). Effective educational videos: principles and guidelines for maximizing student learning from video content. CBE Life Sci. Educ. 15:es6. doi: 10.1187/cbe.16-03-0125

Chafouleas, S. M., Briesch, A. M., Riley-Tillman, T. C., Christ, T. J., Black, A. C., and Kilgus, S. P. (2010). An investigation of the generalizability and dependability of Direct Behavior Rating Single Item Scales (DBR-SIS) to measure academic engagement and disruptive behavior of middle school students. J. Sch. Psychol. 48, 219–246. doi: 10.1016/j.jsp.2010.02.001

Collier-Meek, M. A., Sanetti, L. M., and Boyle, A. M. (2019). Barriers to implementing classroom management and behavior support plans: an exploratory investigation. Psychol. Sch. 56, 5–17. doi: 10.1002/pits.22127

Cook, C. R., Lyon, A. R., Kubergovic, D., Browning Wright, D., and Zhang, Y. (2015). A supportive beliefs intervention to facilitate the implementation of evidence-based practices within a multi-tiered system of supports. Sch. Mental Health 7, 49–60. doi: 10.1007/s12310-014-9139-3

Czajkowski, S. M., Powell, L. H., Adler, N., Naar-King, S., Reynolds, K. D., Hunter, C. M., et al. (2015). From ideas to efficacy: the ORBIT model for developing behavioral treatments for chronic diseases. Health Psychol. 34, 971–982. doi: 10.1037/hea0000161

Danielson, M. L., Visser, S. N., Chronis-Tuscano, A., and DuPaul, G. J. (2018). A national description of treatment among United States children and adolescents with attention-deficit/hyperactivity disorder. J. Pediatr. 192, 240–246.e1. doi: 10.1016/j.jpeds.2017.08.040

Evans, S. W., Langberg, J. M., Schultz, B. K., Vaughn, A., Altaye, M., Marshall, S. A., et al. (2016). Evaluation of a school-based treatment program for young adolescents with ADHD. J. Consult. Clin. Psychol. 84, 15–30. doi: 10.1037/ccp0000057

Greenway, R., McCollow, M., Hudson, R. F., Peck, C., and Davis, C. A. (2013). Autonomy and accountability: teacher perspectives on evidence-based practice and decision-making for students with intellectual and developmental disabilities. Educ. Train. Autism Dev. Disabil. 456–468.

Grover, R. L., Ginsburg, G. S., and Ialongo, N. (2007). Psychosocial outcomes of anxious first graders: a seven-year follow-up. Depress. Anxiety 24, 410–420. doi: 10.1002/da.20241

Hallfors, D., and Godette, D. (2002). Will the 'principles of effectiveness' improve prevention practice? Early findings from a diffusion study. Health Educ. Res. 17, 461–470. doi: 10.1093/her/17.4.461

Helgadottir, F. D., and Fairburn, C. G. (2014). Web-centered training in psychological treatments: a study of therapist preferences. Behav. Res. Therapy 52, 61–63. doi: 10.1016/j.brat.2013.10.010

Hsin, W. J., and Cigas, J. (2013). Short videos improve student learning in online education. J. Comput. Sci. Coll. 28, 253–259.

Hustus, C. L., Evans, S. W., Owens, J. S., Benson, K., Hetrick, A. A., Kipperman, K., et al. (2020). An evaluation of 504 and individualized education programs for high school students with attention deficit hyperactivity disorder. Sch. Psychol. Rev. 49, 333–345. doi: 10.1080/2372966X.2020.1777830

Jaycox, L. H., Stein, B. D., Paddock, S., Miles, J. N. V., Chandra, A., Meredith, L. S., et al. (2009). Impact of teen depression on academic, social, and physical functioning. Pediatrics 124, e596–e605. doi: 10.1542/peds.2008-3348

Kent, K. M., Pelham, W. E., Molina, B. S. G., Sibley, M. H., Waschbusch, D. A., Yu, J., et al. (2011). The academic experience of male high school students with ADHD. J. Abnormal Child Psychol. 39, 451–462. doi: 10.1007/s10802-010-9472-4

Kern, L., Hetrick, A. A., Custer, B. A., and Commisso, C. E. (2019). An evaluation of IEP accommodations for secondary students with emotional and behavioral problems. J. Emot. Behav. Disord. 27, 178–192. doi: 10.1177/1063426618763108

Kimonis, E. R., and Frick, P. J. (2010). Oppositional defiant disorder and conduct disorder grown-up. J. Dev. Behav. Pediatr. 31, 244–254. doi: 10.1097/DBP.0b013e3181d3d320

Kramer, C., König, J., Strauss, S., and Kaspar, K. (2020). Classroom videos or transcripts? A quasi-experimental study to assess the effects of media-based learning on pre-service teachers’ situation-specific skills of classroom management. Int. J. Educ. Res. 103, 1–13. doi: 10.1016/j.ijer.2020.101624

Kuriyan, A. B., Pelham, W. E., Molina, B. S. G., Waschbusch, D. A., Gnagy, E. M., Sibley, M. H., et al. (2013). Young adult educational and vocational outcomes of children diagnosed with ADHD. J. Abnormal Child Psychol. 41, 27–41. doi: 10.1007/s10802-012-9658-z

Last, C. G., Hansen, C., and Franco, N. (1997). Anxious children in adulthood: a prospective study of adjustment. J. Am. Acad. Child Adolesc. Psychiatry 36, 645–652. doi: 10.1097/00004583-199705000-00015

Lee, S. S., Victor, A. M., James, M. G., Roach, L. E., and Bernstein, G. A. (2016). School-based interventions for anxious children: long-term follow-up. Child Psychiatry Hum. Dev. 47, 183–193. doi: 10.1007/s10578-015-0555-x

Lyon, A. R., and Bruns, E. J. (2019). From evidence to impact: joining our best school mental health practices with our best implementation strategies. Sch. Ment. Health 11, 106–114. doi: 10.1007/s12310-018-09306-w

Mayer, R. E., and Moreno, R. (2003). Nine ways to reduce cognitive load in multimedia learning. Educ. Psychol. 38, 43–52. doi: 10.1207/S15326985EP3801_6

Means, B., Padilla, C., DeBarger, A., and Bakia, M. (2009). Implementing Data-Informed Decision making in Schools: Teacher Access, Supports and Use. Menlo Park, CA: SRI International.

Molina, B. S. G., Hinshaw, S. P., Swanson, J. M., Arnold, L. E., Vitiello, B., Jensen, P. S., et al. (2009). The MTA at 8 years: prospective follow-up of children treated for combined-type ADHD in a multisite study. J. Am. Acad. Child Adolesc. Psychiatry 48, 484–500. doi: 10.1097/CHI.0b013e31819c23d0

Newman, L., Wagner, M., Cameto, R., and Knokey, A.-M. (2009). The post-high school outcomes of youth with disabilities up to 4 years after high school. A Report From the National Longitudinal Transition Study-2 (NLTS2) (NCSER 2009-3017). Menlo Park, CA: SRI International.

Nielsen, L., Riddle, M., King, J. W., Aklin, W. M., Chen, W., Clark, D., et al. (2018). The NIH science of behavior change program: transforming the science through a focus on mechanisms of change. Behav. Res. Therapy 101, 3–11. doi: 10.1016/j.brat.2017.07.002

Onken, L. S., Carroll, K. M., Shoham, V., Cuthbert, B. N., and Riddle, M. (2014). Reenvisioning clinical science: unifying the discipline to improve the public health. Clin. Psychol. Sci. 2, 22–34. doi: 10.1177/2167702613497932

Owens, J. S., Evans, S. W., Coles, E. K., Holdaway, A. S., Himawan, L. K., Mixon, C., et al. (2020). Consultation for classroom management and targeted interventions: examining benchmarks for teacher practices that produce desired change in student behavior. J. Emot. Behav. Disord. 28, 52–64. doi: 10.1177/1063426618795440

Ransford, C. R., Greenberg, M. T., Domitrovich, C. E., Small, M., and Jacobson, L. (2009). The role of teachers’ psychological experiences and perceptions of curriculum supports on the implementation of a social and emotional learning curriculum. Sch. Psychol. Rev. 38, 510–532.

Sanetti, L. M. H., and Kratochwill, T. R. (2009). Toward developing a science of treatment integrity: introduction to the special series. Sch. Psychol. Rev. 38:15.

Spiel, C. F., Evans, S. W., and Langberg, J. M. (2014). Evaluating the content of individualized education programs and 504 plans of young adolescents with attention deficit/hyperactivity disorder. Sch. Psychol. Q. 29, 452–468. doi: 10.1037/spq0000101

Vander Stoep, A., Beresford, S. A., Weiss, N. S., McKnight, B., Cauce, A. M., and Cohen, P. (2000). Community-based study of the transition to adulthood for adolescents with psychiatric disorder. Am. J. Epidemiol. 152, 352–362. doi: 10.1093/aje/152.4.352

Wagner, M., Kutash, K., Duchnowski, A. J., and Epstein, M. H. (2005). The special education elementary longitudinal study and the national longitudinal transition study: study designs and implications for children and youth with emotional disturbance. J. Emot. Behav. Disord. 13, 25–41. doi: 10.1177/10634266050130010301

Weisz, J. R., Chu, B. C., and Polo, A. J. (2004). Treatment dissemination and evidence-based practice: strengthening intervention through clinician-researcher collaboration. Clin. Psychol. Sci. Pract. 11, 300–307. doi: 10.1093/clipsy.bph085

Weisz, J. R., Jensen, A. J., and McLeod, B. D. (2005). “Development and dissemination of child and adolescent psychotherapies: Milestones, methods, and a new Deployment-Focused Model,” in Psychosocial Treatment for Child and Adolescent Disorders: Empirically based strategies for clinical practice, 2nd Edn, eds E. D. Hibbs and P. S. Jensen (Washington, DC: American Psychological Association), 9–45.

Weisz, J. R., Kuppens, S., Ng, M. Y., Eckshtain, D., Ugueto, A. M., Vaughn-Coaxum, R., et al. (2017). What five decades of research tells us about the effects of youth psychological therapy: A multilevel meta-analysis and implications for science and practice. Am. Psychol. 72:79. doi: 10.1037/a0040360

Keywords: school, intervention, technology, development, emotional, behavior

Citation: Evans SW, Brockstein H, Allan DM, Girton SL, Owens JS and Everly EL (2022) Developing a web-based system for coordinating school-based care for students with social, emotional, and behavioral problems. Front. Educ. 7:983392. doi: 10.3389/feduc.2022.983392

Received: 01 July 2022; Accepted: 24 August 2022;

Published: 28 September 2022.

Edited by:

Antonella Chifari, Institute of Didactic Technologies (CNR), Italy

Reviewed by:

Colin McGee, ADDISS, The National ADHD Charity, United Kingdom

Crispino Tosto, Institute of Didactic Technologies (CNR), Italy

Copyright © 2022 Evans, Brockstein, Allan, Girton, Owens and Everly. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Steven W. Evans, evanss3@ohio.edu