METHODS article

Front. Educ., 19 October 2022

Sec. Digital Learning Innovations

Volume 7 - 2022 | https://doi.org/10.3389/feduc.2022.960430

Science, technology, engineering, and mathematics (STEM) occupations are projected to grow over two times faster than the total for all occupations in the next decade. This will require reskilling and upskilling those currently in the workforce. In response, many universities are deliberately developing academic programs and individual courses focused on providing relevant skills that can be transferred to the workplace. In turn, developers of such programs and courses need ways to assess how well the skills taught in their courses translate to the workforce. Our framework, Course Assessment for Skill Transfer (CAST), is a suite of conceptual tools intended to aid course designers, instructors, or external evaluators in assessing which essential skills are being taught and to what extent. The overarching aim of the framework is to support skills transfer from the classroom to the workplace. This paper introduces the framework and provides two illustrative examples for applying the framework. The examples show that the framework offers a customizable structure for course facilitators and evaluators to assess the skills taught, learned, and retained based on their needs and the resources available to evaluate the quality and usefulness of course offerings in higher education.

Science, technology, engineering, and mathematics (STEM) occupations are projected to grow over two times faster than the total for all occupations in the next decade (US Bureau of Labor Statistics, 2021). At the same time, the speed of disruptive change in the workplace is accelerating exponentially with the introduction of artificial intelligence (AI). With this innovation, STEM occupations will transform profoundly, or the skills needed to perform them will change (Gownder et al., 2015). There is growing consensus among learning researchers and labor economists that a select set of essential skills, such as complex problem solving, critical thinking, creativity, emotional intelligence, systems thinking, teamwork, and interpersonal communication, are emerging as foundational to an increasing number of professions, in addition to and beyond the technical or specialized knowledge and skills that form the basis of a profession (National Research Council [NRC], 2012; Gray, 2016; National Academies of Sciences Engineering and Medicine [NASEM], 2017). As many more skilled workers will be needed to fill rapidly changing professions, these positions will require higher education institutions to produce workforce-ready students with the requisite dispositions and abilities to adjust to the quickly evolving nature of work in STEM and beyond.

In response to these trends, there are calls for higher education to work more closely with industry partners to connect their curricula with the workforce’s needs (Kinash and Crane, 2015; Oraison et al., 2019). Accordingly, universities and private education companies are increasingly adding workforce-focused academic programs and courses to help students succeed immediately in the workforce (Caudill, 2017). To successfully respond to the shifting needs of the workforce, the design of these courses needs to emphasize competencies rather than foundational knowledge (De Notaris, 2019) and create a bridge from the university to industry (Santandreu and Shah, 2016). Research suggests that skill transfer is best supported in courses emphasizing the relevance of learning skills, teaching theory that underpins the skills, and developing similarities between learning and workplace contexts (Jackson, 2016; National Academies of Sciences Engineering and Medicine [NASEM], 2018).

Many workforce-focused learning opportunities are online. These programs are often self-driven, asynchronous online courses hosted through an institution’s learning management platform or commercial providers such as Coursera (Bouchet and Bachelet, 2019). Researchers have assessed and evaluated online learning environments from various perspectives to facilitate the growth and development of digital learning. For instance, Guest et al. (2018) evaluated student satisfaction transitioning from in-person learning to online learning, and Zhang and Dempsey (2019) developed a reflective thinking tool to assess transformative learning (equipping students to access and use new information) in an online setting. Few studies have focused on how to support broader skill development and transfer. This level of evaluation is important because the onus of delivering relevant skills and adequately assessing, documenting, and communicating learning outcomes falls to the providers of these learning opportunities (Toutkoushian, 2005; Voorhees and Harvey, 2005; Praslova, 2010).

One reason for the lack of such studies may be a shortage of evaluation frameworks and instruments designed to evaluate course-based skill development and transfer. This is partly because course designers may find it challenging to apply design thinking to reverse-engineer course content and instructional design, working back from on-the-job needs as they occur in real-life work situations to course features designed to support those needs (Brown, 2008). For example, existing evaluation instruments such as the CET Synchronous Online Teaching Observation Checklist (USC Center for Excellence in Teaching), CET Asynchronous Online Teaching Observation Checklist, and the Classroom Observation Protocol for Undergraduate STEM (COPUS) focus on actions and behaviors of the instructor and/or students rather than assessing the course design based on skills taught and transferred (Smith et al., 2013).

The impetus for the CAST framework came from a search for an existing framework that could answer the following questions: How does a course facilitator assess whether their course facilitates skill learning and transfer as intended? Are the skills they identify in the learning objectives and syllabus actualized in the course? Are the course offerings connected to relevant, workforce-ready skills? We could not find a single framework that fully captures how courses facilitate skill acquisition and transfer and is also easy to use. We developed the Course Assessment for Skill Transfer (CAST) framework to address this gap by aiding in course design and evaluating how courses facilitate deeper learning and skills transfer. We used a conceptual thinking process to develop the framework, resulting in a suite of conceptual tools to aid course facilitators in assessing their courses for skill transfer. This paper introduces the framework and illustrates how we developed and applied the framework in two asynchronous online courses.

Throughout the development of the framework, we used our initial questions to guide our conceptual thinking process: How does a course facilitator assess whether their course facilitates skill learning and transfer as intended? Are the skills they identify in the learning objectives and syllabus actualized in the course? Are the course offerings connected to relevant, workforce-ready skills? We also referred to key elements of skill transfer highlighted in the literature, mainly where in the course skills are introduced with context about their relevance and where these skills are practiced and connected to workplace contexts (Jackson, 2016; National Academies of Sciences Engineering and Medicine [NASEM], 2018).

This process resulted in the Course Assessment for Skill Transfer (CAST) framework. CAST is a conceptual tool for course designers, instructors, or external evaluators to create and implement a custom essential skills assessment plan for existing or in-development courses. Ultimately, CAST can empower instructors and course designers to strengthen the claims they make about the skills students gain from their course, in a broader effort to connect students and workers with reliable upskilling/reskilling learning opportunities. CAST is designed as a conceptual framework, specifically an analytical tool to be used by course facilitators as a guide for course assessment. Course facilitators are encouraged to adapt it to their capacity and desired level of course assessment. CAST is intended to provoke reflection and provide flexible guidelines for generating a course-specific action plan to review skills. It is not intended to be prescriptive or reductive, nor is it intended to be used as a model of skill transfer.

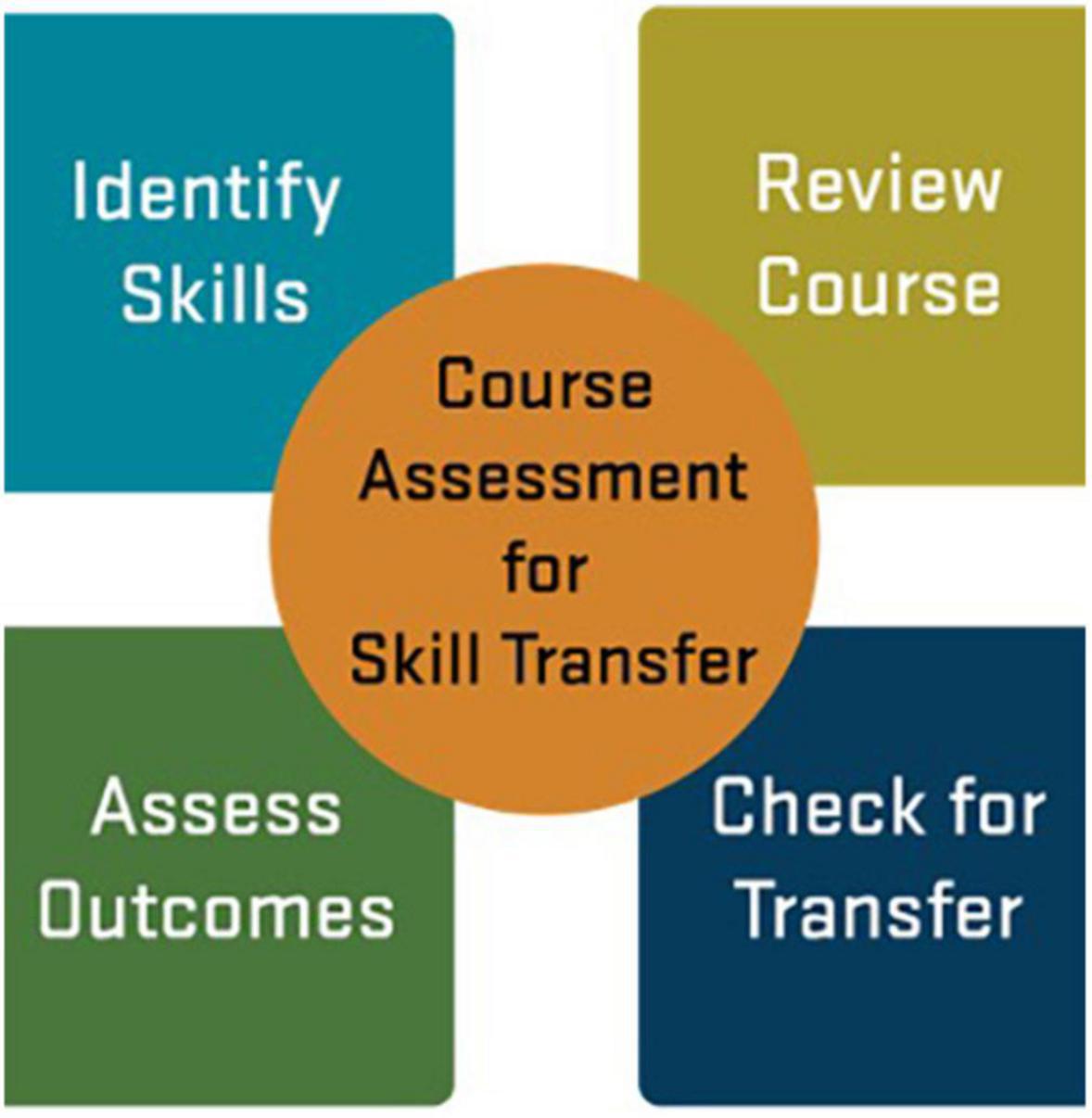

CAST consists of four parts (Figure 1): (1) identify the skills intended to be taught in a course (Identify Skills), (2) review the extent to which identified skills appear in the course (Review Course), (3) assess skills-based learning outcomes (Assess Outcomes), and (4) track the transfer of skills into subsequent courses or the workforce (Check for Transfer).

Figure 1. The course assessment for skills transfer framework is comprised of four steps: identify skills, review the course, assess outcomes, and check for transfer.

CAST can be implemented to fit the capacity and goals of the users. For instance, course instructors seeking a holistic understanding of the skills their students learn and then carry beyond the course itself into another course or their work environment may choose to invest resources into carrying out all four parts of CAST. Alternatively, course designers launching a new course may choose to implement only Part 1 as an exercise to reach an internal consensus on the skills intended to be taught. As such, the intended users of CAST can vary as well. They can be individuals or teams, internal or external, course designers, instructors, graduate students, evaluators, and researchers. We will broadly refer to CAST users (those who use CAST to review a course) as “reviewers.”

Table 1 provides an overview of each part of the framework, and the following section describes all four parts of CAST, including goals, example methods, and example outcomes of each part. The example methods and outcomes do not include every possible method used to implement the framework but highlight some of the methods we used to apply the framework to the courses we assessed.

Before using CAST, course facilitators should reflect on the needs and goals for this type of evaluative exercise (what do you hope to get out of this process?), identify the resources available (time, money) and the contributors to the review (internal or external?), determine roles (who is in charge of what aspects?), and decide upon a plan (enact Part 1 only or the whole CAST framework?). Once these questions have been addressed and there is a comprehensive plan for the evaluation, the course designer, instructor, facilitator, or evaluator can address the review details by mapping out methods and desired outcomes for each part of CAST they plan to deploy.

The goal of Part 1 (“Identify Skills”) is to determine and state which skills instructors or course designers mean to address in the course. At the end of Part 1, reviewers should answer these three questions: (1) What skills will students learn or practice in the course? (2) What is the common understanding of those skills in this course? (3) What “story” is being told to the outside world about the skills taught in the course?

Understanding what skills students should learn explicitly or practice as part of engaging with content encourages reviewers to identify a specific, limited set of skills beyond topical content that the course introduces, reinforces, and operationalizes for students. This is important and valuable because it emphasizes essential skills that are not always “called out” in outcomes and reduces ambiguity for students and instructors. It is also important that skills identified as part of learning outcomes be addressed directly in a course; claiming that a course touches on specific skills without showing where or how this might occur in Part 2 of the evaluation could lead to changes in course outcomes. Addressing this question also helps a course facilitator understand which skills are most important to teach and helps them focus on a set of select skills to prevent skill overload or the possibility that designers and instructors might believe that a wide range of skills are being addressed simply because one might be able to imagine that students are using these skills in the course. Not all skills one might imagine a student needs to complete a course are explicitly addressed and/or repeatedly practiced and reflected upon in a course. Therefore, part 1 focuses on identifying skills that students will learn and prompts reflection on which skills the course is most fit to teach in some deliberate and active form. Ideally, these are skills in demand in the growing STEM industries.

Reflection tools for identifying skills consist mostly of interviews with course designers or instructors. Written reflection activities can also help address which skills students should learn or practice in the course. Interviews with course designers and instructors may include questions about the course’s ultimate goal, how this was determined, the course’s learning outcomes that address the goal, and how those tie to current workforce needs. Course facilitators should also be asked about the specific skills they aim to teach in the class and asked to define them, specify how they will teach them, and clarify why they think these skills are essential.

Examining the common understanding of the identified skills in the course encourages reviewers to use a common language to name and describe the nature of the skills aimed at or claimed to be taught in the course. Addressing this also helps establish a common understanding of what those skills mean and how they are operationalized in the course, making it easier to identify where those skills are addressed. Many skills, especially essential skills, are referred to by various names (e.g., “soft skills,” “workforce-ready skills,” or “21st Century skills”) and have different definitions and different metrics to measure competency in the skills. This makes it even more important to clearly define the skills and use a common language to understand what counts and what doesn’t count as “that skill” in the context of the course.

The use of non-technical terms in describing or defining skills can help to ensure that course goals and objectives are not hiding behind psychology or educational jargon. What, for instance, does it mean when a course claims to teach or exercise “non-routine problem-solving”? What might be the more common vernacular for the term? And what does it entail, i.e., how is it different from “routine problem-solving”? Using common English terms can help non-experts see beyond the buzzwords. In the example of non-routine problem-solving, a course designer or educator might want to specify that this term refers to one’s ability and willingness to recognize when an issue arises that is out of the norm and then use systematic strategies to address it, which might entail solving it oneself or having enough information to seek out help and support from those who might be in a position to address the issue directly. Once skills are defined and further described in common terms, the next level of analysis needs to ensure that terminology is used consistently across course descriptions, marketing materials, syllabi, etc., to avoid confusion around what a course promises and ultimately delivers.

Developing a skills rubric for the course or program can help clarify goals and outcomes for a course related to skill development. A skills rubric provides further detail beyond defining specific characteristics of a particular skill. Skill rubrics and frameworks can be adopted from the literature or adapted to a particular evaluation need. For instance, the NRC’s consensus study on 21st Century Skills proposed a framework that focuses on key skills that promote deeper learning (learning that allows for transfer from one to another situation or setting), college and career readiness, student-centered learning, and higher-order thinking. It was developed by aligning common 21st-century skills with cognitive, interpersonal, and intrapersonal taxonomies (National Research Council [NRC], 2012).

In addition, developing a rubric and clearly defining the skills in the course is a crucial step toward translating and potentially aligning a course curriculum to skills a student might see listed in a job advertisement. This also helps address what “story” is being told to the outside world about the skills taught in the course. Answering this question encourages reviewers to better align the course goals (what skills students are meant to learn or practice in the course) with learner expectations (what skills students think they will learn or practice in the course). Aligning goals and expectations for the course creates the foundation for supporting learner satisfaction, which has implications for future course taking by target audiences.

An external document review is a key method to ensure that the course goals and skills are accurately and clearly advertised. This document review would use the skills rubric and associated codes to mark where skills are advertised and how they are presented to potential course takers. The documents in this review may include the course syllabus, course catalog listing, department/program website, or the introductory page of the course on the Learning Management System (LMS) (e.g., Blackboard or Canvas). For resources on performing document analysis, see Bernard et al. (2017). One proprietary tool that partially automates analyzing documents for workforce skills is Skillabi by EMSI, a labor market analytics firm that uses data to connect learning opportunities with the job market. Open access skills frameworks, such as the National Research Council’s Clusters of 21st Century Competencies, can be used for manually coding skills.
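As a rough illustration of how a rubric-driven document review could be organized, the sketch below marks the sentences in a course document that mention a rubric skill. It is a simplified stand-in for manual coding, not the procedure used in this paper: the function name `code_document`, the indicator phrases, and the sample syllabus text are all hypothetical, and plain phrase matching will miss skills that are described without the chosen phrases.

```python
import re

def code_document(text, rubric):
    """Return {skill: [sentences that mention any of its indicator phrases]}.

    `rubric` maps each skill name to a list of indicator phrases
    (hypothetical; a real review would rely on human coders).
    """
    # Naive sentence split on ., !, or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    hits = {}
    for skill, phrases in rubric.items():
        if not phrases:
            hits[skill] = []
            continue
        pattern = re.compile("|".join(re.escape(p) for p in phrases), re.IGNORECASE)
        hits[skill] = [s for s in sentences if pattern.search(s)]
    return hits

# Hypothetical rubric excerpt and syllabus text, for illustration only.
rubric = {
    "analysis": ["analyze", "analysis"],
    "self-direction": ["self-paced", "independently"],
}
syllabus = (
    "Students analyze simulation data. "
    "Lessons are self-paced. "
    "Grades are posted weekly."
)
hits = code_document(syllabus, rubric)
for skill, found in hits.items():
    print(skill, found)
```

A reviewer would still read each flagged sentence to judge how the skill is presented to potential course takers; the sketch only narrows down where to look.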

The goal of Part 2 (“Review Course”) is to understand how the course structures support students’ learning and practicing of priority skills. In Part 2, reviewers should be able to answer two key questions: (1) Where are the key skills taught or practiced in the course? (2) To what extent are the key skills taught or practiced? Determining where a skill is taught in the course encourages reviewers to connect Part 1 with Part 2 by taking inventory of the course and locating the lesson or activity where the skills identified in Part 1 are explicitly addressed. It is important to capture when skills are being practiced and reinforced because substantial research suggests that learning and transfer are more successful when learners can practice a skill in various contexts (Freeman et al., 2014). In addition to noting the occurrence of a skill, reviewers are encouraged to note the characteristics of each occurrence in search of traces of processes or structures that support deeper learning, i.e., the acquisition or practice of a skill that allows the learner to successfully use skills developed in one context in a different and maybe even unfamiliar context. This is not only a demand from employers but also an expectation many educators hold about the use of prior knowledge and skills, i.e., they expect that what was learned in, for instance, chemistry 1 can form the foundation of chemistry 2, and that what students learn in both courses about “doing chemistry” will then be the basis for tackling more specialized, higher-level chemistry courses.

A structural review of a course could build on structured observation protocols for classroom evaluation and apply them to an asynchronous online course. Structured protocols provide a common set of statements or codes to which observers respond and judge how the teaching conforms to a specific standard. Several existing protocols for structural course review are not STEM specific, including Inside the Classroom: Observation and Analytic Protocol (Weiss et al., 2003), the Reformed Teaching Observation Protocol (RTOP; Sawada et al., 2002), and the Teaching Dimensions Observation Protocol (TDOP; Hora et al., 2013), which was developed to observe postsecondary non-laboratory courses. In addition, the STEM-specific Classroom Observation Protocol for Undergraduate STEM (COPUS) allows observers to characterize how students and instructors spend their time in undergraduate STEM classrooms. However, none of these existing protocols examines skills or is concerned with what learning claims the instructor makes and what evidence exists for these claims. We therefore developed an observation protocol that identifies the skills intended to be taught in the course and assesses when and where the skills are taught (Supplementary material).

The goal of Part 3 (“Assess Outcomes”) is to measure the extent to which students learned the identified skills through the course. In Part 3, reviewers should be able to answer questions like these: (1) How confident or proficient are students in target skills at the end of the course compared to at the beginning of the course? (2) To what degree did students engage (with) target skills during the course? (3) Did students improve on measures of target skills over the course? (4) Did students meet specific performance expectations around target skills at the end of the course?

Answering questions on student engagement with, growth in, or achievement of targeted skills is ultimately essential to determining success. It can provide formative insight into what concepts or skills need to be reinforced or practiced to a greater degree throughout the course. Note that assessment of student growth or performance on skills is not used to motivate student grades (though that is possible) but to grade the course: in that sense, student success on skill development is a measure of course quality.

Various methods can assess course outcomes, including student pre-post surveys, student retrospective surveys, student interviews, embedded assessments of tasks associated with targeted skills, performance tests, or student peer review. The main difference between methods lies in whether attitudes or dispositions toward skills, perceptions of ability, or actual performance are desired outcome measures. All of them have legitimacy, and all of them are important. A pre-post design may ask students to self-report their confidence in specific skills before taking the course, then in a post-survey, ask them to self-report on the same skills and assess if there is an increase in confidence/knowledge of those skills. A retrospective post-pre survey would similarly ask students about their growth at the end of the course. Student interviews or focus groups would focus on similar questions about skill confidence and knowledge. In our applications of the framework, we relied on student surveys to assess the outcomes of the courses. Objective performance measures around essential skills are rare, and self- or peer assessment is mainly used as a proxy, in part because objective (and often reductionist) measures for complex skills such as the ability to work well in teams, creative or non-routine problem solving, resourcefulness or self-regulation tend to not exist for use in college courses.
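The pre-post design described above can be sketched as a paired comparison: only students who answered both surveys are kept, and the mean change in self-reported confidence is computed per skill. This is a minimal sketch under stated assumptions. The function name `paired_change`, the student identifiers, and the 1 to 5 confidence ratings are hypothetical and are not data from the courses reviewed here.

```python
from statistics import mean

def paired_change(pre, post):
    """Mean post-minus-pre rating per skill, using matched students only.

    `pre` and `post` map a student ID to {skill: self-reported rating}.
    """
    matched = pre.keys() & post.keys()  # students with both surveys
    skills = set()
    for sid in matched:
        skills |= pre[sid].keys() & post[sid].keys()
    return {
        skill: mean(
            post[sid][skill] - pre[sid][skill]
            for sid in matched
            if skill in pre[sid] and skill in post[sid]
        )
        for skill in sorted(skills)
    }

# Hypothetical ratings on a 1-5 scale; student "s3" skipped the post-survey.
pre = {
    "s1": {"analysis": 2, "creativity": 3},
    "s2": {"analysis": 3, "creativity": 4},
    "s3": {"analysis": 1, "creativity": 2},
}
post = {
    "s1": {"analysis": 4, "creativity": 3},
    "s2": {"analysis": 4, "creativity": 5},
}
changes = paired_change(pre, post)
print(changes)  # {'analysis': 1.5, 'creativity': 0.5}
```

A positive value indicates an average gain in self-reported confidence. With real survey data, a reviewer would also want to check sample size and consider an appropriate paired statistical test before drawing conclusions.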

Part 4 (“Check for Transfer”) aims to understand which identified skills have been transferred beyond the course. Transfer results from deeper learning, or “the process through which an individual becomes capable of taking what was learned in one situation and applying it to new situations” (National Research Council [NRC], 2012, 5). In Part 4, reviewers should be able to answer these questions: (1) How successful are students in applying target skills outside the context of the course, either in subsequent courses or within the context of their work? (2) To what degree do students attribute their ability to use these skills in new contexts to the course? [Note that they may also learn or practice skills in other contexts, making causal attributions at times difficult].

Checking for transfer can be done qualitatively and quantitatively. Still, no matter the approach, claims about transfer are more complex to measure and verify than claims about immediate course outcomes, since students are no longer easily accessible and approaches to evaluation rely on staying reasonably well connected to alumni of a course. Therefore, studies on transfer cannot be an afterthought but have to be designed into the fabric of the class. The easiest way to ascertain skills transfer is through surveys, interviews, or focus groups with alumni, who can report to what degree and under what circumstances they were able to apply specific skills in a new setting. Reporting back on transfer could be automated as alumni “recommender” systems for the course (similar to the rating systems now common in online assessments of products and services). More formal and intrusive studies on transfer could rely on observations at the workplace, journaling, or employer/supervisor assessments. In limited ways, analyzing online profiles of alumni on LinkedIn or other online profiling sites might provide insights, but these need to be interpreted with caution due to inconsistencies in how individuals present themselves on these sites.

The CAST Framework was developed and applied in two asynchronous online courses: the Habitable Worlds course at Arizona State University and the Visual Analytics certificate course at Indiana University. These two courses, their instructors, and course designers were involved in the larger project on course improvements toward transfer funded by the US National Science Foundation and agreed to participate in developing the CAST framework. We provide detailed descriptions of how the CAST Framework was utilized in each course to illustrate the potential usefulness of the framework for assessing skills learning and transfer in various courses. Note that institutional review board approval was obtained for the assessment of both courses (OSU IRB-2020-0658).

Habitable Worlds is a fully online science course intended for non-science majors, and it counts for ASU’s general studies laboratory science credit (Horodyskyj et al., 2018). It emphasizes learning-by-doing and authentic scientific practices centered on simulations, data analysis, and hypothesis formulation and testing. An adaptive, intelligent tutoring system (ITS) enables it to provide rapid formative feedback (the Smart Sparrow Adaptive e-Learning System). The curriculum centers on the search for life in the Universe, giving learners a tangible objective—discover a habitable planet in a rich, scientifically authentic simulation. Attaining the aim of finding a habitable planet requires learning material from several disciplines and combining that knowledge to reach the objective. Consequently, Habitable Worlds teaches procedural knowledge and critical thinking as much as content knowledge.

Habitable Worlds has been offered since 2010 to approximately 5,000 students at ASU, roughly evenly split between students enrolled in fully online degree programs at ASU Online and students taking an online class while enrolled in traditional, in-person degree programs. The online degree students are on average approximately 31 years old and are predominantly in the workforce. Many come through a partnership program between ASU and a major US employer that is free of charge to permanent employees who enroll in ASU degree programs.

This illustrative example took an “evaluator-driven” approach, piloting the scenario in which course designers or instructors bring in outside perspectives to review the course. Note that these study activities were part of a larger goal of refining the CAST Framework, so not all study activities can be combined to draw broader, course-wide conclusions; rather, they illustrate the potential uses and value of the framework overall.

In Part 1 (“Identify Skills”), evaluators interviewed course instructors and designers to identify the key skills taught in the course. Evaluators analyzed the course syllabus, highlighting the skills that appear and cross-walking those skills with the National Research Council’s framework for 21st-century competencies. After identifying 16 different 21st-century competencies implicitly and explicitly appearing in the syllabus, the course designers and evaluators iteratively edited and refined the list of skills, skill definitions, and examples for consensus-building. This process resulted in a skill rubric comprising 11 skills: analysis, creativity, critical thinking/reasoning/argumentation, decision making, executive function, information literacy, interpretation, problem-solving, self-direction, type-1 self-regulation, and type-2 self-regulation.

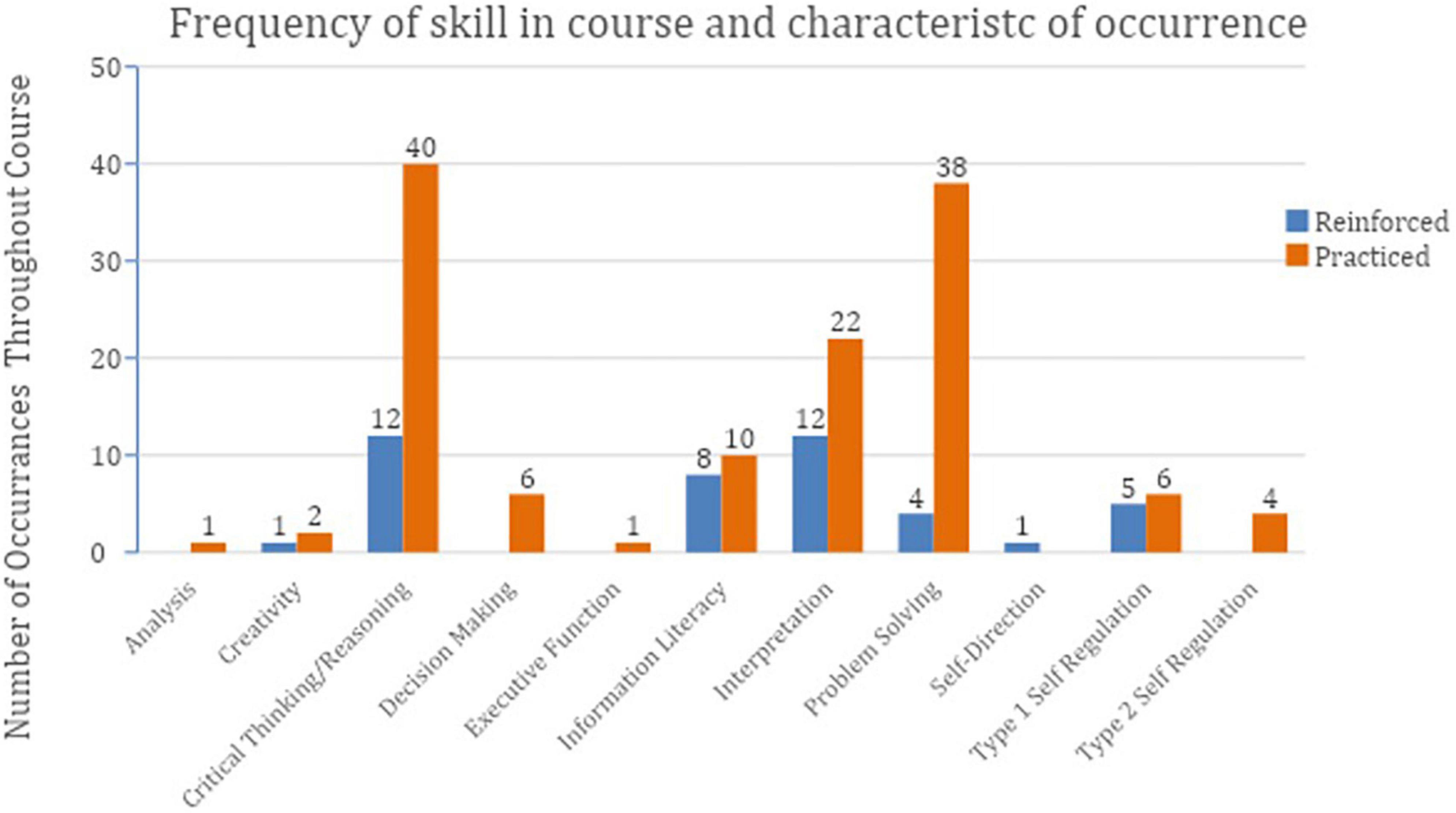

The skill rubric was then used in Part 2 (“Review Course”) to assess the presence of skills in the course at the lesson level (there were a total of 51 lessons reviewed) (Supplementary material). Researchers marked the occurrence of a skill and denoted the skill characteristic of each occurrence as either introduced, reinforced, or practiced—positing that when a particular skill is introduced, reinforced, and practiced within a course, this culmination may promote “deep learning” or transferability of the skill beyond course context. “Introduced” refers to the first time the competency appears in the course. Each competency can only be coded as “introduced” one time. “Reinforced” refers to the appearance(s) of the competency in subsequent lessons embedded in the lesson material (a competency can only be coded as “reinforced” once per lesson). “Practiced” refers to students using, discussing, or engaging with the competency (a competency was only coded as “practiced” once per lesson).
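The coding rules above can be expressed as a small routine. The following is a minimal sketch, not the protocol in the Supplementary material: the function names, the lesson data layout, and the sample lessons are hypothetical. It also assumes that a skill first encountered through practice alone is still coded as “introduced” in that lesson, which the text does not specify.

```python
from collections import defaultdict

def code_occurrences(lessons):
    """Code each skill per lesson as introduced, reinforced, or practiced.

    `lessons` is an ordered list of dicts with two sets of skill names:
    "in_material" (skill appears in the lesson content) and "practiced"
    (students use, discuss, or engage with the skill).
    """
    seen = set()
    codes = defaultdict(list)  # skill -> [(lesson_number, code), ...]
    for number, lesson in enumerate(lessons, start=1):
        for skill in sorted(lesson["in_material"] | lesson["practiced"]):
            if skill not in seen:
                # First appearance anywhere in the course: coded once only.
                codes[skill].append((number, "introduced"))
                seen.add(skill)
            elif skill in lesson["in_material"]:
                # Later appearance in lesson material: at most once per lesson.
                codes[skill].append((number, "reinforced"))
            if skill in lesson["practiced"]:
                # Student engagement: at most once per lesson.
                codes[skill].append((number, "practiced"))
    return codes

def fully_covered(codes):
    """Skills that were introduced, reinforced, and practiced."""
    required = {"introduced", "reinforced", "practiced"}
    return sorted(
        skill for skill, entries in codes.items()
        if required <= {code for _, code in entries}
    )

# Hypothetical three-lesson course, for illustration only.
lessons = [
    {"in_material": {"analysis", "creativity"}, "practiced": set()},
    {"in_material": {"analysis"}, "practiced": {"analysis"}},
    {"in_material": set(), "practiced": {"creativity"}},
]
codes = code_occurrences(lessons)
print(fully_covered(codes))  # ['analysis']
```

Using sets for each lesson enforces the once-per-lesson limit on “reinforced” and “practiced” codes, and the `seen` set enforces the once-per-course limit on “introduced.”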

In Part 3 (“Assess Outcomes”), students in two cohorts (Fall 2019 and Spring 2020) were surveyed at the beginning of the course and upon concluding the course to understand student perception of or confidence in the skills they learned or practiced in the course. A total of 129 (Fall 2019) and 206 (Spring 2020) students participated in both surveys, which allowed us to investigate changes in students’ perceptions of their scientific skills and beliefs after participating in the Habitable Worlds course. Finally, in Part 4 (“Check for Transfer”), course alumni from two different cohorts were surveyed to understand if any skills students perceived to have learned or practiced in Habitable Worlds were used outside the course.

Using the CAST framework, course facilitators were encouraged to reflect on the course and hone in on a set of focal skills they aimed to teach (Table 2). This set of skills was then used in the “Review Course” step. All skills identified in the rubric appeared at least twice in the course; 6 of the 11 skills were introduced, reinforced, and practiced (see Figure 2 for an example of the skills frequency graph). These skills then became the focus of the questions in the student and alumni surveys, which provided evidence of skill transfer.

Figure 2. The frequency of skills addressed in the course and how the skill was addressed (reinforced or practiced).

The assessment helped the course facilitators see the misalignment between skills promised to be addressed in the course and those ultimately being taught and reinforced. It also helped instructors understand why students took this course instead of other science credit courses and how the course could be optimized to produce transferable skills to non-science majors.

The Visual Analytics Certificate (VAC) is a fully online, 6-week course with 5 h per week at Indiana University. Students design and execute data mining and visualization workflows for their data using a Make-A-Vis online interface that records their actions. The course implements the Data Visualization Literacy framework introduced in Börner et al. (2018) to define, measure, and improve people’s ability to render data into actionable knowledge.

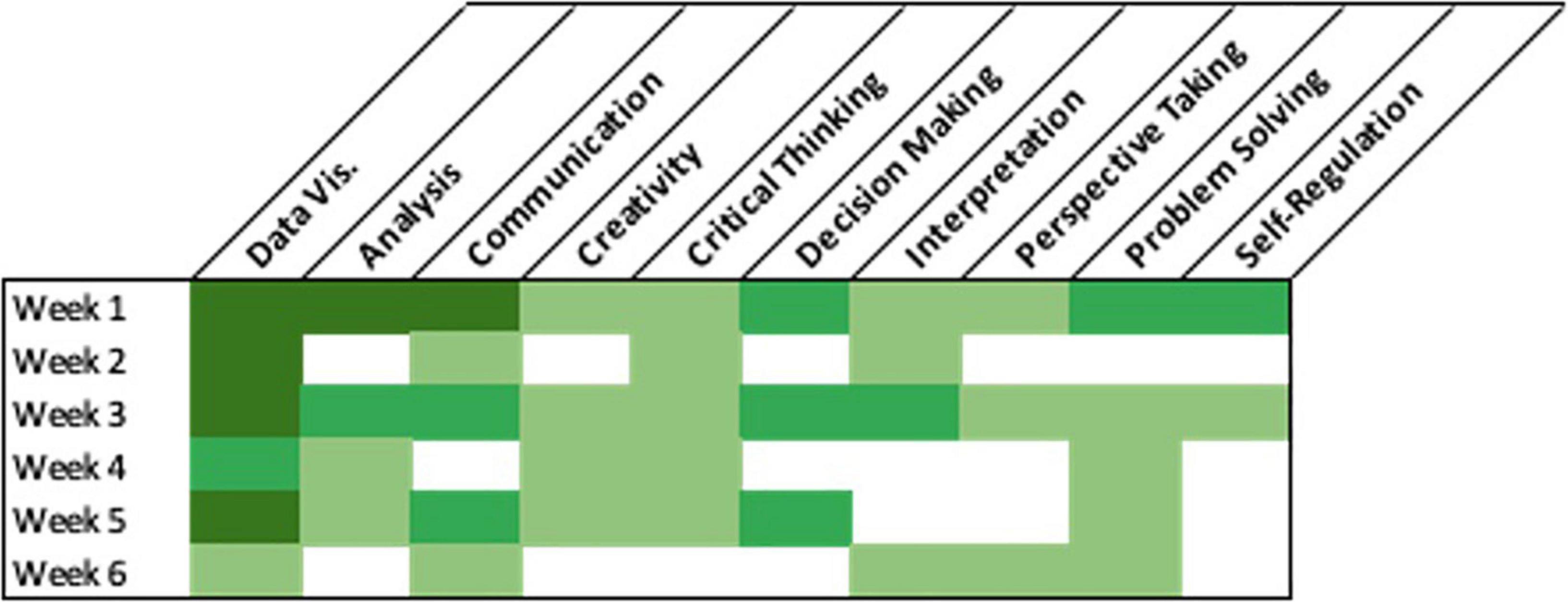

For this example, the evaluators employed a hybrid review process: they guided the VAC course team through the framework and helped them analyze and interpret outcomes from each step. For Part 1, the course facilitators and the evaluators met to co-review the course materials and identify key skills. The VAC team then mapped those key skills in their course (Figure 3). The evaluators helped develop the surveys used in Parts 3 and 4. The VAC team administered the surveys and co-analyzed and interpreted the results.

Figure 3. Heatmap of the key skills and when in the Visual Analytics Certificate course those skills are present.

The steps in the CAST framework helped the course facilitators identify the skills they want to make sure they are teaching and reinforcing in the course. The “Assess Outcomes” and “Check for Transfer” steps examined whether students picked up these skills. In the initial step, the evaluators encouraged course facilitators to reflect on and home in on a set of focal skills they aimed to teach in the course. This process yielded a skills rubric, used throughout the rest of the skills review process. The “Assess Outcomes” step included surveys of the students about what they learned in the course, and students were able to describe a range of course-related outcomes that they considered applicable in their workplace. In the alumni survey, students were asked to identify which skills they believed to have learned or practiced in the course, which ones they applied in life or work, and their confidence in applying these skills (Table 3). This resulted in a skill transfer table that allowed course designers to better understand the connection between the skills taught in the course and how those skills might be applied in real-life situations.

Table 3. The frequency of skills addressed in the course and how the skill was addressed (reinforced or practiced).
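A skill transfer table of the kind described for the alumni survey could be assembled along these lines. This is a hedged sketch: the response layout (`learned`, `applied`, `confidence`), the function `skill_transfer_table`, the rating scale, and the sample answers are hypothetical, and the choice to use all respondents as the denominator is an assumption rather than the authors' stated method.

```python
def skill_transfer_table(responses, skills):
    """Summarize alumni answers per skill.

    Each response is a dict with "learned" and "applied" (sets of skills)
    and "confidence" ({skill: rating}). Percentages use all respondents
    as the denominator (an assumed convention).
    """
    n = len(responses)
    rows = []
    for skill in skills:
        learned = sum(skill in r["learned"] for r in responses)
        applied = sum(skill in r["applied"] for r in responses)
        ratings = [r["confidence"][skill] for r in responses
                   if skill in r["confidence"]]
        rows.append({
            "skill": skill,
            "learned_pct": round(100 * learned / n, 1) if n else None,
            "applied_pct": round(100 * applied / n, 1) if n else None,
            "mean_confidence": round(sum(ratings) / len(ratings), 2)
            if ratings else None,
        })
    return rows


# Hypothetical alumni responses for illustration only.
responses = [
    {"learned": {"data visualization", "data mining"},
     "applied": {"data visualization"},
     "confidence": {"data visualization": 4, "data mining": 3}},
    {"learned": {"data visualization"},
     "applied": {"data visualization"},
     "confidence": {"data visualization": 5}},
    {"learned": {"data visualization", "data mining"},
     "applied": set(),
     "confidence": {"data visualization": 3, "data mining": 2}},
    {"learned": {"data mining"},
     "applied": {"data mining"},
     "confidence": {"data mining": 4}},
]
table = skill_transfer_table(responses, ["data visualization", "data mining"])
```

Comparing `learned_pct` with `applied_pct` for each skill gives a rough view of which skills alumni report carrying beyond the course.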

The Council of Economic Advisers (2018) recommended that workers seeking upskilling or reskilling should invest in learning opportunities that “could lead to precisely the skills and credentials employers seek” (p. 12). The market for these learning opportunities is large and mostly unregulated. The degree to which a product (a course, for instance) addresses a learner’s need and ultimately delivers on the promise is often unclear even to those who offer them. Consequently, skill seekers may not be aware of a course’s potential for reskilling/upskilling because course designers do not routinely assess, document, and communicate the outcomes of a course related to the current in-demand skills. The CAST framework provides a useful structure for course facilitators and evaluators to review their courses and identify what skills are being claimed to be taught, if they are taught, and if they are being transferred to where they are needed, namely the workplace. One of our course facilitators remarked, “We need to make it clear what we are not going to teach students and support some of our claimed skills better by improving course activities and tools.” Another commented, “it’s positive to see that a high percentage of students are applying this knowledge in their life. The question is, how do we tie this in more to their work through guided activities.”

There are some limitations to using a framework like CAST to assess a course. The tool requires substantial buy-in from the course facilitators and, depending on the assessment methods, from students. Facilitators need to commit not only to the initial review of the course and its materials but also to acting on the assessment results by making changes to the course and program. A simple place to start implementing evidence-based changes is the course syllabus: make sure the skills taught in the course are properly described across all written material. Using existing skills terminologies, a course instructor can “translate” their syllabus into more universally recognized terms for specific skills and define these skills and how they are introduced and practiced in the course. A course facilitator can do this independently or use a proprietary service like EMSI’s Skillabi, which helps course instructors translate their syllabus.

The nature of the CAST framework as an analytical tool for course facilitators to assess the course design and its effect on skill transfer sets it apart from other existing course evaluation instruments. Many of these existing instruments focus on the actions and behaviors of the students and instructors [e.g., CET Synchronous Online Teaching Observation Checklist (USC Center for Excellence in Teaching), CET Asynchronous Online Teaching Observation Checklist, and the Classroom Observation Protocol for Undergraduate STEM (COPUS)]. Using one of these action- and behavior-focused tools along with CAST can give a course facilitator a complete picture of the design of the course, the implementation of that design by the instructor, and the impact of the course on the student. Further, the use of the CAST framework can be bolstered by additional methods to assess skill transfer. For example, we use embedded assessments in another course assessment project to track skills transfer through a course and a certificate program.

We could not apply all layers of assessment or evaluation associated with CAST. We were funded to explore the specific courses used in the illustrative examples and hence have not closely examined the applicability and practicality of the framework in other types of courses. We, therefore, do not yet know what support structure is needed for the framework to be applied successfully in a situation in which an evaluation team is not readily available and funded to support the effort. We assume, however, that all phases of the framework might benefit from professional support from experienced social science researchers or evaluators. Another limitation is that the framework was designed initially for asynchronous online courses. To further determine the framework’s applicability, it should be used to assess other types of online courses, such as synchronous online courses, short courses, and MOOCs.

The framework can help create a plan for designing courses that facilitate skills transfer (provide strengths, weaknesses, and ideas for future iterations) since even small investments into reflective practices with a course can lead to valuable improvements on the margin. While learning management systems (LMS) provide course facilitators with lots of data and analytics about a course, more data does not necessarily translate into valuable and actionable insights, and conflicting results can hamper improvement cycles. It is not always clear how to improve course design or delivery, even if evidence suggests that outcomes are not achieved as desired. Improvement might require additional expertise, or it might sometimes be enough to reframe the course’s purpose or make fewer or less grandiose claims about course outcomes. The most important result of using the CAST framework might be a level of honesty about what can and cannot be learned during a course.

De-identified data will be made available by the authors upon request.

The studies involving human participants were reviewed and approved by Oregon State University IRB. The patients/participants provided their written informed consent to participate in this study.

HF oversaw the development of the framework, wrote the manuscript, and performed data analysis for the exemplary studies. KP researched skills transfer, designed an outline of the framework, developed methodologies associated with the framework, performed data collection and data analysis, and wrote the findings for the illustrative studies. NS compiled and wrote portions of the literature review, developed the observational methodologies used to apply the framework, and performed data analysis for the exemplary studies. MS advised the writing of the manuscript, provided intellectual support for the discussion and conclusion section, and served as co-PI on the project which funded this work. All authors contributed to the article and approved the submitted version.

This work was funded by NSF award number: 1936656, Convergence Accelerator Phase I (RAISE): Analytics-Driven Accessible Pathways To Impacts-Validated Education (ADAPTIVE).

We acknowledge the support of the instructors of the courses and the other members of the ADAPTIVE team. We thank Jessica Sawyer of the STEM Research Center for her valuable contributions.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2022.960430/full#supplementary-material

Bernard, H., Russell, A. W., and Gery, W. R. (2017). Analyzing qualitative data: Systematic approaches. Thousand Oaks: SAGE publications.

Börner, K., Rouse, W. B., Trunfio, P., and Stanley, H. E. (2018). Forecasting innovations in science, technology, and education. Proc. Natl. Acad. Sci. U.S.A. 115, 12573–12581. doi: 10.1073/pnas.1818750115

Bouchet, F., and Bachelet, R. (2019). “Socializing on MOOCs: Comparing university and self-enrolled students,” in Digital education: At the MOOC crossroads where the interests of academia and business converge. EMOOCs 2019. Lecture notes in computer science, Vol. 11475, eds M. Calise, C. Delgado Kloos, J. Reich, J. Ruiperez-Valiente, and M. Wirsing (Cham: Springer). doi: 10.1007/978-3-030-19875-6_4

Caudill, J. (2017). “The emerging formalization of MOOC coursework: Rise of the MicroMasters,” in Proceedings of the EdMedia 2017, ed. J. Johnston (Washington, DC: Association for the Advancement of Computing in Education (AACE)), 1–6.

Council of Economic Advisers (2018). Addressing America’s reskilling challenge. Washington, DC: Executive Office of the President of the United States.

De Notaris, D. (2019). “Reskilling higher education professionals,” in European MOOCs stakeholders summit, eds M. Calise, C. D. Kloos, J. Reich, J. A. Ruiperez-Valiente, and M. Wirsing (Cham: Springer), 146–155.

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., et al. (2014). Active learning boosts performance in STEM courses. Proc. Natl. Acad. Sci. U.S.A. 111, 8410–8415. doi: 10.1073/pnas.1319030111

Gownder, J. P., Koetzle, L., Gazala, M. E., Condon, C., McNabb, K., Voce, C., et al. (2015). The future of jobs, 2025: Working side by side with robots. Available online at: Forrester.Com (accessed October 7, 2019).

Gray, A. (2016). The 10 skills you need to thrive in the fourth industrial revolution, Vol. 19. Cologny: World Economic Forum.

Guest, R., Rohde, N., Selvanathan, S., and Soesmanto, T. (2018). Student satisfaction and online teaching. Assess. Eval. High. Educ. 43, 1084–1093. doi: 10.1080/02602938.2018.1433815

Hora, M. T., Oleson, A., and Ferrare, J. J. (2013). Teaching dimensions observation protocol (TDOP) user’s manual. Madison, WI: Wisconsin Center for Education Research.

Horodyskyj, L. B., Mead, C., Belinson, Z., Buxner, S., Semken, S., and Anbar, A. D. (2018). Habitable worlds: Delivering on the promises of online education. Astrobiology 18, 86–99. doi: 10.1089/ast.2016.1550

Jackson, D. (2016). Modelling graduate skill transfer from university to the workplace. J. Educ. Work 29, 199–231. doi: 10.1080/13639080.2014.907486

Kinash, S., and Crane, J. (2015). “Enhancing graduate employability of the 21st century learner,” in Proceedings of the international mobile learning festival 2015: Mobile learning, MOOCs and 21st century learning, May 22-23, 2015, Hong Kong, 148–171.

National Academies of Sciences Engineering and Medicine [NASEM] (2017). Supporting students’ college success: The role of assessment of intrapersonal and interpersonal competencies. Washington, DC: National Academies Press.

National Academies of Sciences Engineering and Medicine [NASEM] (2018). How people learn II: Learners, contexts, and cultures. Washington, DC: National Academies Press.

National Research Council [NRC] (2012). Education for life and work: Developing transferable knowledge and skills in the 21st century. Washington, DC: The National Academies Press. doi: 10.17226/13398

Oraison, H., Konjarski, L., and Howe, S. (2019). Does university prepare students for employment?: Alignment between graduate attributes, accreditation requirements and industry employability criteria. J. Teach. Learn. Grad. Employability 10, 173–194. doi: 10.21153/jtlge2019vol10no1art790

Praslova, L. (2010). Adaptation of Kirkpatrick’s four level model of training criteria to assessment of learning outcomes and program evaluation in higher education. Educ. Assess. Eval. Account. 22, 215–225. doi: 10.1007/s11092-010-9098-7

Santandreu, C. D., and Shah, M. A. (2016). MOOCs, graduate skills gaps, and employability: A qualitative systematic review of the literature. Int. Rev. Res. Open Distrib. Learn. 17, 67–90. doi: 10.19173/irrodl.v17i5.2675

Sawada, D., Piburn, M. D., Judson, E., Turley, J., Falconer, K., Benford, R., et al. (2002). Measuring reform practices in science and mathematics classrooms: The reformed teaching observation protocol. Sch. Sci. Math. 102, 245–253. doi: 10.1111/j.1949-8594.2002.tb17883.x

Smith, M. K., Jones, F. H. M., Gilbert, S. L., and Wieman, C. E. (2013). The classroom observation protocol for undergraduate STEM (COPUS): A new instrument to characterize university STEM classroom practices. CBE Life Sci. Educ. 12, 618–627. doi: 10.1187/cbe.13-08-0154

Toutkoushian, R. K. (2005). What can institutional research do to help colleges meet the workforce needs of states and nations? Res. High. Educ. 46, 955–984. doi: 10.1007/s11162-005-6935-5

US Bureau of Labor Statistics (2021). Employment in STEM occupations September 8, 2021. Available online at: https://www.bls.gov/emp/tables/stem-employment.htm (accessed March 11, 2022).

Voorhees, R. A., and Harvey, L. (2005). Higher education and workforce development: A strategic role for institutional research. New Dir. Inst. Res. 2005, 5–12. doi: 10.1002/ir.159

Weiss, I. R., Pasley, J. D., Smith, P. S., Banilower, E. R., and Heck, D. J. (2003). A study of K-12 mathematics and science education in the United States. Chapel Hill, NC: Horizon Research.

Keywords: essential skills, online course evaluation tools, skill transfer, assessment framework, digital learning

Citation: Fischer HA, Preston K, Staus N and Storksdieck M (2022) Course assessment for skill transfer: A framework for evaluating skill transfer in online courses. Front. Educ. 7:960430. doi: 10.3389/feduc.2022.960430

Received: 02 June 2022; Accepted: 15 September 2022;

Published: 19 October 2022.

Edited by:

Jacqueline G. Bloomfield, The University of Sydney, Australia

Reviewed by:

Shalini Garg, Guru Gobind Singh Indraprastha University, India

Copyright © 2022 Fischer, Preston, Staus and Storksdieck. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Heather A. Fischer, heather.fischer@oregonstate.edu
