- ¹Department of Education and Human Development, Clemson University, Clemson, SC, United States

- ²School of Education, Iowa State University, Ames, IA, United States

This article provides a brief overview of the development of an online system to support algebra progress monitoring, built across several years of an iterative process of development, feedback, and revision. Online instructional modules addressed progress monitoring concepts and procedures; administration and scoring of three types of algebra measures, including teacher accuracy with scoring; and navigation and use of the online data management system, including data entry, graphing, and skills analyses. In the final year of this federally funded research project, the functionality of the completed system was tested, and teachers’ knowledge, accuracy, and satisfaction with the online professional development were evaluated. Specifically, 29 general and special education secondary school teachers independently completed 11 fully developed online instructional modules and administered two of three types of algebra measures weekly across 10 weeks with one of their classes of students. Data analysis included teacher accuracy in the scoring of the measures; change in their knowledge of student progress monitoring and data-based decision making; and teacher satisfaction with the online system, including instructional content, feasibility, and usability for data-based decision making. Directions for future research and implications for classroom use of this online system are discussed.

Introduction

Progress monitoring is an essential component of data-based decision making (Espin et al., 2017). Progress data help teachers to pinpoint, throughout the year, students whose response to their mathematics program appears insufficient to meet year-end instructional benchmarks or goals. Research corroborates that teachers who use progress monitoring to make instructional decisions, that is, teachers who revise student instruction when their data reveal inadequate progress, effect greater student achievement than teachers who use their own methods of assessment (see Stecker et al., 2005, for a review). For teachers to use data-based decision making effectively, however, they need to be knowledgeable users of technically sound progress data. Espin et al. (2017, 2021a) and Wagner et al. (2017) demonstrated that teachers have difficulty using progress data for instructional decision making. Moreover, instructional supports, such as graphs with prompts about applying decision-making rules, student skills profiles illustrating levels of mastery by problem types, and consultation (in person or through system-generated recommendations), may be needed to support teachers’ effective use of data (Stecker et al., 2005; Jung et al., 2018; Fuchs et al., 2021). Professional development (PD) materials may include information, directions, and examples to support teachers’ and preservice teachers’ knowledge and skill acquisition in a particular domain. Espin et al. (2021b) examined available PD materials related to progress monitoring using curriculum-based measurement and coded content in four areas: general information, conducting progress monitoring, data-based decision making, and other. They found that data-based decision making was not addressed as much as the other topics and recommended that greater consideration be devoted to this area in future PD materials. The current PD project focused on progress monitoring in algebra. 
This fully online PD included general content about progress monitoring, information about conducting progress monitoring in algebra, and several features related to data-based decision making.

Although several conceptually based measures exist for algebra readiness (e.g., see Helwig et al., 2002; Ketterlin-Geller et al., 2015 for sample items and description), few technically sound measures are available for secondary mathematics in algebra. Foegen et al. (2017) have developed and established the technical adequacy of three types of progress monitoring measures for algebra (Espin et al., 2018; Genareo et al., 2019). As with the scoring rules and decisions for some elementary-level reading measures (e.g., knowing types of miscues that count as errors in oral reading) and mathematics measures (e.g., scoring digits correct in answers), the content and scoring of the algebra measures require explicit instruction to ensure accuracy, or reliability, of scoring and fidelity of implementation. For example, with the algebra measures, students construct written responses, and teachers score written papers, making judgments about whether answers are mathematically equivalent. One type of measure requires examination of student work on the item solution to determine whether partial credit should be awarded when the final answer is incorrect but part of the solution is appropriate for reaching a correct answer. Because of the teacher judgment involved in progress monitoring, accuracy in scoring and fidelity of implementation are critical for effective data-based decision making. In response to interest in the measures, a professional development (PD) workshop was created in 2008 for practitioners and delivered in person, most often with the PD staff going to the practitioners. While the in-person PD option increased access to the algebra progress monitoring measures, it was not feasible or cost-effective for individual teachers or for small districts, including those in more remote areas. The Professional Development for Algebra Progress Monitoring project was funded to address this need (Foegen and Stecker, 2009-2012). 
Over the course of 5 years, the research team worked with secondary teachers to develop, revise, and test an online PD system to make algebra progress monitoring accessible and efficient. In this paper, we briefly describe the development and features of the online system and the research results from the final year of the project on teachers’ learning and their use of the system. Specifically, we examined whether teachers (a) could learn critical content about algebra progress monitoring from the online professional development and (b) could use the online system accurately and efficiently. Researchers also examined teacher satisfaction data about the system’s content, navigation, feasibility, and usability.

Materials and methods

Algebra progress monitoring measures

The PD online system was developed to support three algebra progress monitoring measures (Algebra Basic Skills, Algebra Foundations, and Algebra Content Analysis) that had been developed during a previously funded project, Algebra Instruction and Assessment: Meeting Standards (AAIMS; Foegen, 2004-2007). During the earlier AAIMS grant, an iterative development process that incorporated teacher input, student data collection, statistical analyses to examine technical adequacy, and teacher feedback on the results was used over 4 years to refine and test five alternative algebra measures designed to reflect Pre-Algebra and Algebra 1 content typically addressed in grades 7−12. Based on our design and technical adequacy criteria, three of the five types of algebra measures were deemed acceptable for dissemination (Foegen et al., 2017; Espin et al., 2018; Genareo et al., 2019). Each of these three AAIMS measures had been based upon principles of curriculum-based measurement (Deno, 1985) that incorporated use of alternate forms of systematic sampling of core algebra skills or problem types that related to success in algebra, along with standardized administration and scoring procedures. Assessments were completed as relatively brief, timed paper/pencil tasks, either individually or as a whole class. Teachers scored the measures using the same scoring guidelines implemented in the research that established evidence of technical adequacy. Twelve parallel forms were developed for each of the three types of AAIMS measures. These three measures (Algebra Basic Skills, Algebra Foundations, and Algebra Content Analysis), originally developed through the AAIMS grant, became the foundation for the current PD project, which focused on teachers’ acquisition of progress monitoring knowledge as well as data management associated with administration, scoring, and decision making for a group of their own students. 
These measures differed in the algebra skills addressed as well as the format used, which is described in Foegen et al. (2008). Images of the entire first page of each of the three types of algebra measures used in this study are included in Supplementary materials 1–3.

Development of the professional development online system

The online system for the current project was developed using an existing tool (i.e., ThinkSpace) to support case-based and critical thinking instruction in higher education (Danielson et al., 2007; Bender and Danielson, 2011; Kruzich, 2013; Wolff et al., 2017). The PD system included two hubs, which are separate features of the system navigable to and from the homepage. One hub comprises the teacher PD; the other hub organizes the data management activities for entering and scoring student data and for tracking progress. Researchers worked with the developer of this platform to adapt the original tool, first creating six asynchronous modules in the PD hub to support teacher learning about algebra progress monitoring and then creating the data management hub with five asynchronous modules to support teachers’ management, review, and decision making using graphed progress monitoring data and diagnostic tools.

The content for the first six PD modules mirrored the content for the in-person workshop for practitioners and incorporated multimedia presentations of information (i.e., videos, transcripts) and interactive activities. Within each online module, interactive activities included self-check questions where user answers were followed by expert responses for comparison. All three modules that provided instruction on administration and scoring for each of the three types of algebra progress monitoring measures used a simulated administration of the measures that teachers completed to better understand the student experience. In addition, teachers engaged in scoring exercises in which the modules guided teachers through scoring procedures and provided samples of student work to score as well as an answer key. At the conclusion of each of these modules about the algebra measures, teachers completed a check-out exercise of their scoring accuracy that included automated evaluation of their scoring responses for a completed sample student paper. Teachers were required to achieve at least 90% accuracy in scoring before continuing with the next module. Additional feedback and practice opportunities were available within the system as well as additional testing opportunities to meet the criterion of 90% accuracy if it was not reached on the first attempt. Following the modules about administration and scoring, we used the same format and approach to develop five new modules to help teachers learn about the online system’s data management and decision-making features. Teachers completed all modules asynchronously at times convenient to them. The online PD modules listed the duration of the videos on each page; total module video time ranged from just under 9 to 34.33 mins (for the Algebra Content Analysis module that involved partial credit scoring based on a rubric). 
Total video time for the 11 modules was 2.59 h; we estimated additional time for teachers to complete activities within each module would add approximately two additional hours for a total time of 4.5−5.0 h.

Prior to the current study, we used an iterative development process across 4 years that included two rounds of “in-house” testing completed by undergraduate preservice teachers or graduate students in mathematics education or special education, followed by testing with four cohorts of teacher participants. Holistic ratings and page-by-page comments were gathered to obtain users’ views, and they provided feedback on the content, clarity, visual appeal, and usability of each module. Researchers used this feedback to make refinements to the system and to test the extent to which the system functioned as intended. Although new modules were developed sequentially with several added as each new teacher cohort tried the system, participants evaluated all modules completed to that point in time, so the refinements that researchers made to earlier modules were evaluated by subsequent cohorts. All 11 asynchronous modules were evaluated at least once prior to their use in our final study. The current PD study examined the online PD system by requiring teachers (a) to use all 11 revised modules independently and (b) to administer, score, and use the data management system for at least 10 weeks with at least one class of students taking algebra-related content.

Study design

The current study was funded as a part of a research development grant that required use of a multi-year iterative development process. This process emphasized teacher feedback as the most critical aspect of the project’s evaluation, which included feedback about the usability and potential utility of the system. Student progress monitoring is, indeed, an evidence-based practice that teachers may use to inform instructional planning and to effect student gains in achievement, particularly with students who are low achieving (Stecker et al., 2005; Jung et al., 2018; Fuchs et al., 2021); however, teachers who merely collect data and do not do anything differently instructionally based on student data patterns are not likely to effect greater student achievement (Stecker et al., 2005). Consequently, it is important that teachers learn about progress monitoring and how to use data to make meaningful decisions about the adequacy of student progress and the potential need for intervention. Beyond functionality of the system, teacher satisfaction with the PD system, both with instructional features and with the data management tools, remains a necessary first step for its effective use.

Following this iterative process of development, testing, and revisions, the research team conducted a final study of the entire system. District special education directors and curriculum coordinators sent email invitations to general education algebra and special education teachers on behalf of the research team. Interested teachers spoke with research staff for further information and clarification about study components, and the resulting volunteers became the primary research participants. Participating teachers from Iowa, Minnesota, and South Carolina completed 11 instructional online modules on their own. These modules focused on progress monitoring features; three types of algebra progress monitoring measures, including administration and scoring guidelines; and data management, including data entry, graph interpretation, and skills and error analyses. Teachers took a pre- and posttest about their progress monitoring knowledge, provided feedback at three points during their interaction with the modules, and responded to a written questionnaire at the end of the study. Following completion of the online instructional modules and in consultation with researchers, teachers were expected to administer one researcher-assigned algebra measure each week across a period of 10 weeks to at least one class of their algebra students. In addition, teachers administered one self-selected assessment of the two remaining measures to the same students across the 10 weeks and administered the third measure four times, during the first and last 2 weeks of the project. Consequently, the teacher-selected groups of students for whom they administered and scored measures and viewed progress became the secondary research participants. Because the focus of this research was on the teachers’ use of the PD system, student participation was necessary for teachers as they considered their students’ performance and provided feedback about the system.

Participants

Teachers

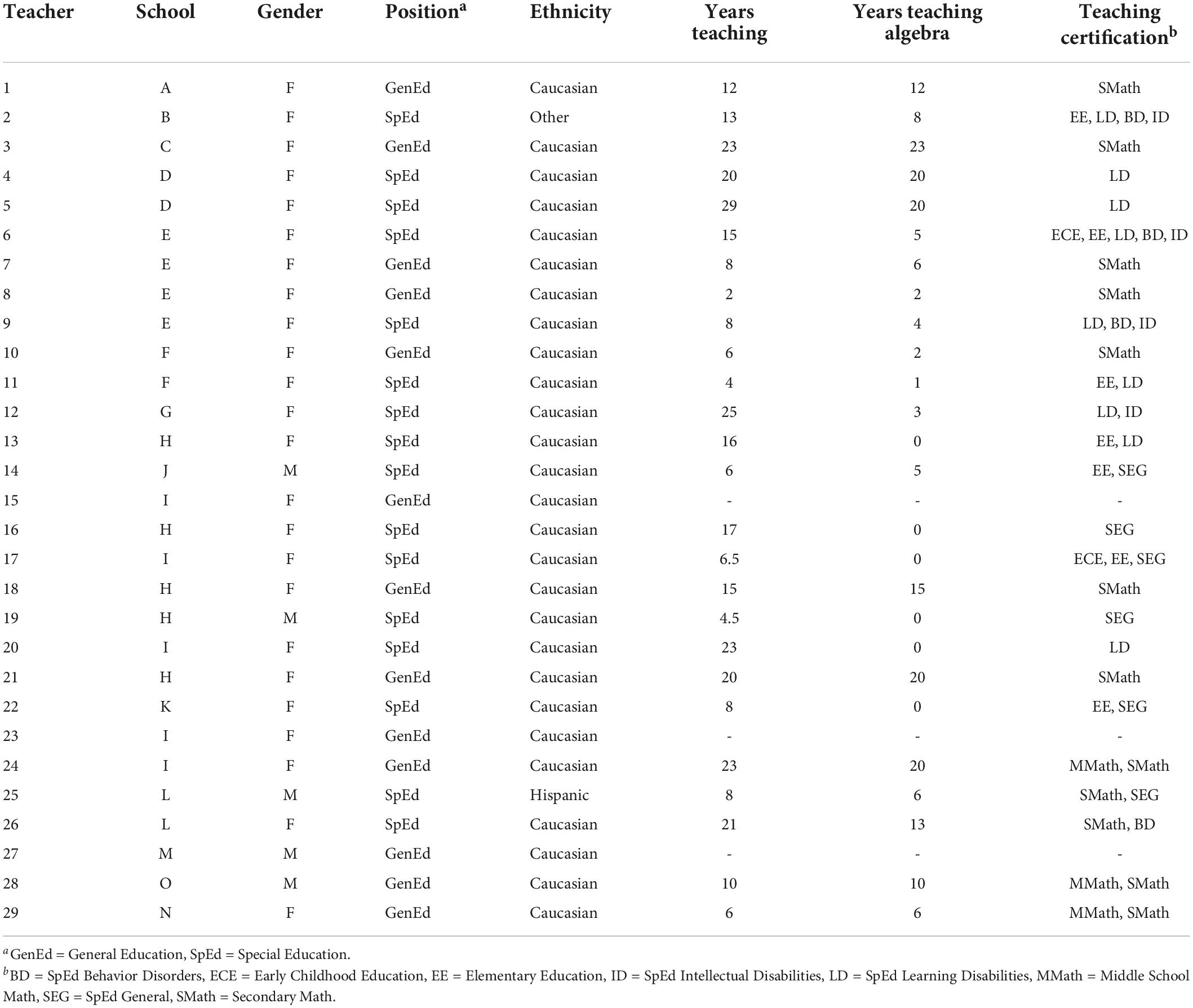

A total of 29 teachers participated in this study and completed all training, including 12 teachers in SC, 14 teachers in Iowa, and 3 teachers in Minnesota. Initially, 4 teachers had been recruited in Minnesota, but 2 discontinued their participation in the study shortly after it started, and a third teacher was recruited through nomination. Of these 29 teachers, 16 were special educators (7 in SC and 9 in IA) and 13 were general educators (5 in SC, 5 in IA, and 3 in MN). See Table 1 for demographic information for each teacher, including the number of years spent teaching, years teaching algebra, type of teacher certification held, gender, and ethnicity.

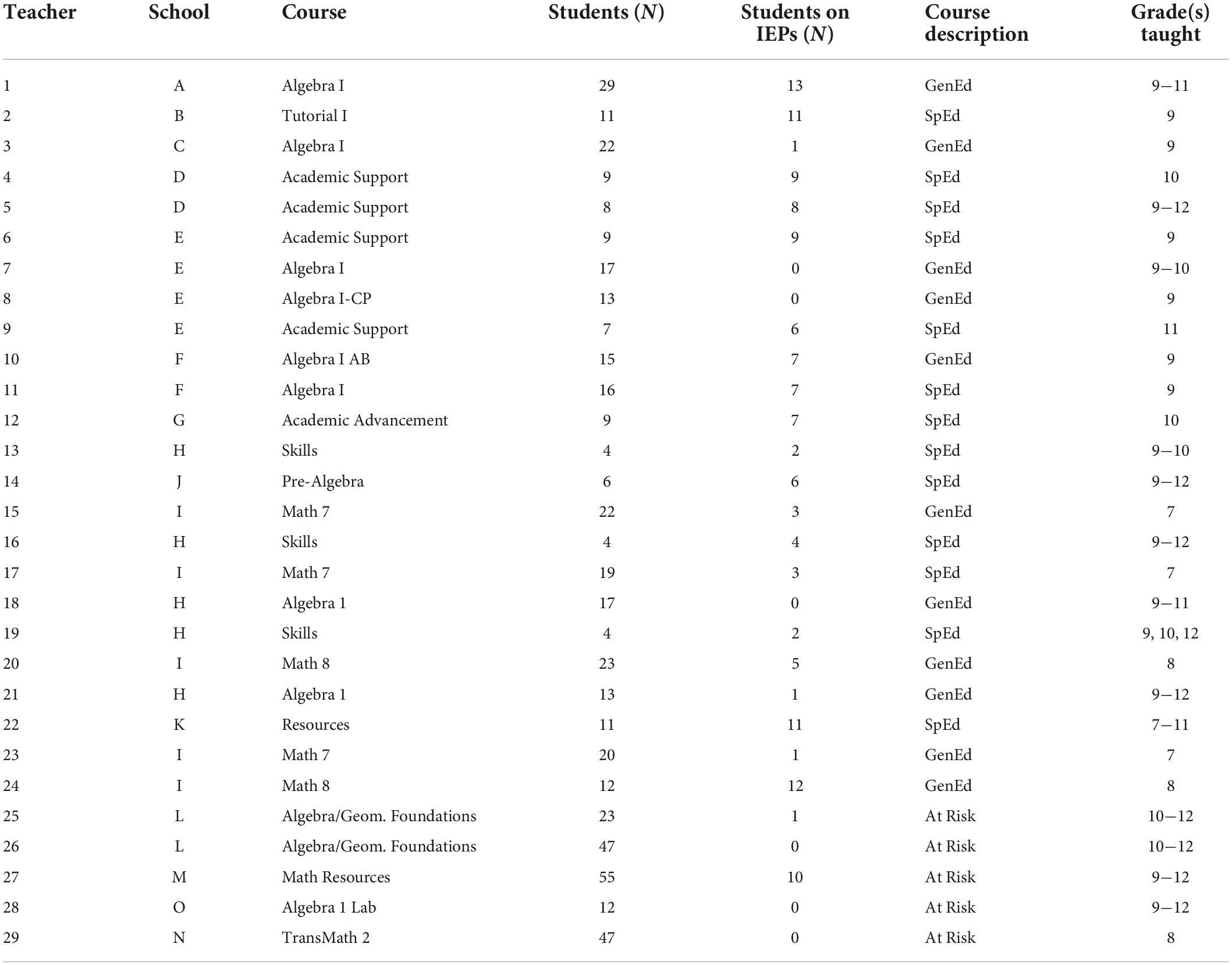

Students

Students who took the algebra progress monitoring measures (N = 460) spanned grade levels from 7 to 12, with the majority of students attending high schools. The types of courses represented included 7th- and 8th-grade General Math, Pre-Algebra, Algebra/Geometry Foundations, Skills and Instructional Strategies, and Algebra 1. Students were typically developing, or they had Individualized Education Plans (IEPs) with goals in mathematics. Students with IEPs received mathematics instruction in inclusive classrooms or received instructional support in algebra by special education teachers in special education settings. The number of students involved, including the number of students with IEPs, and the types of courses in which teachers conducted the progress monitoring activities can be found in Table 2.

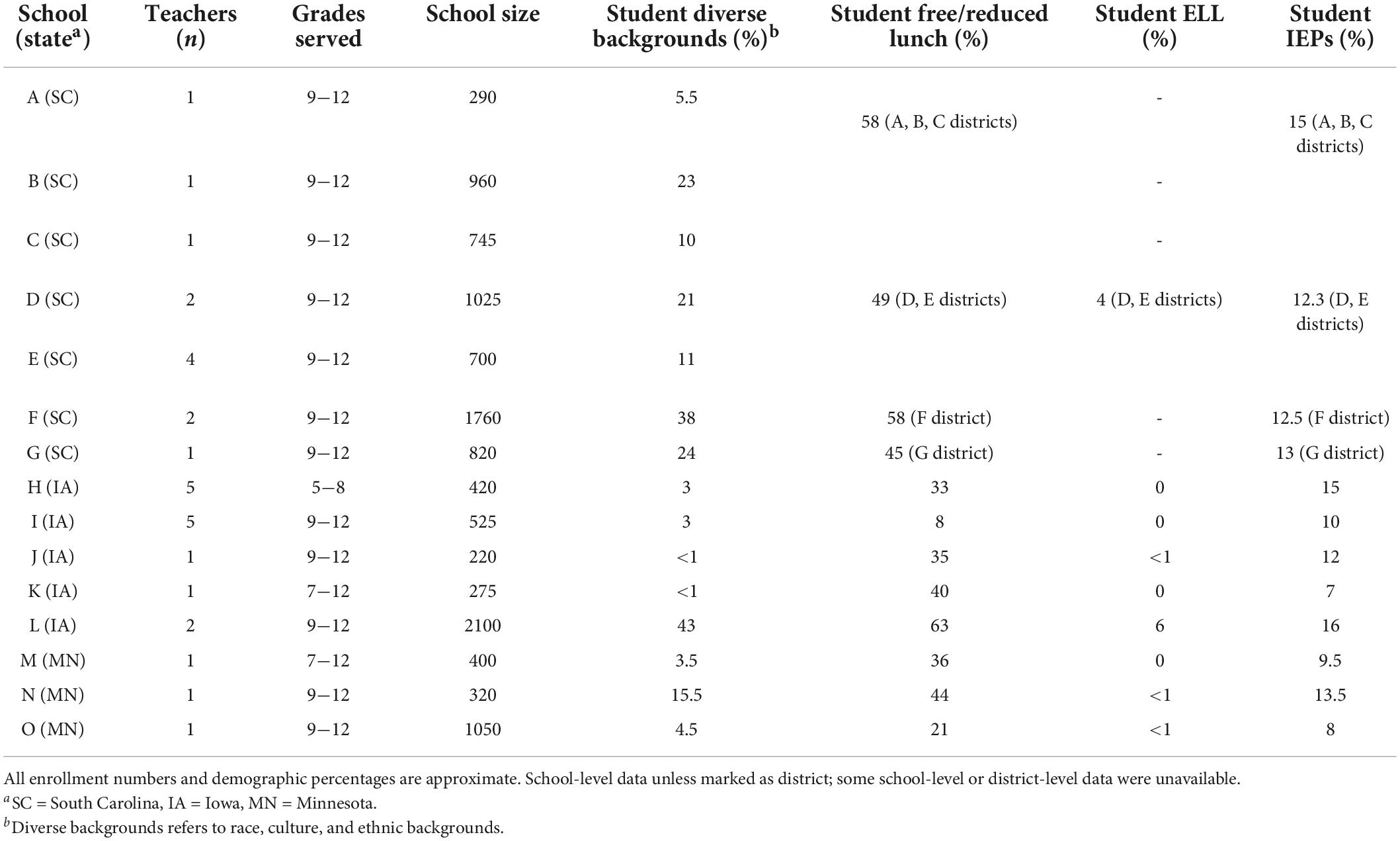

Table 3 provides demographic information that gives a general profile of each school or district. The number of teachers participating in the research in each school; grades included in the school; student enrollment; and percentages of students with diverse backgrounds, receiving free/reduced lunch (a common proxy for low-income households in the United States), learning English, and with IEPs are summarized for each school or district according to available data.

Dependent measures

Teacher knowledge and accuracy

Knowledge pre- and post-test

For this study, teacher knowledge about progress monitoring and the use of the data-based system was evaluated twice: prior to the start of the instructional modules and again after teachers had completed online instruction, 10 weeks of data collection, and data management. Researchers developed the knowledge test, which comprised 25 multiple-choice items, each with four possible answer selections (see Supplementary material 4 for the actual assessment used). In addition to assessing general knowledge about progress monitoring, specific items related to administration and scoring of the three algebra measures and the use of the data management system were included. Test items were scored as either correct or incorrect. Cronbach’s alpha for the items on the knowledge pretest was 0.86, indicating acceptable internal consistency. Cronbach’s alpha for items on the knowledge posttest was 0.84.
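For readers less familiar with the statistic, the sketch below shows one way Cronbach’s alpha can be computed for dichotomously scored items. It is illustrative only: the function name `cronbach_alpha` and the toy response matrix are hypothetical and are not the study’s data or analysis code.

```python
from statistics import pvariance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a respondents-by-items matrix.

    item_scores: list of rows, one row per respondent, each row a list of
    item scores (here 1 = correct, 0 = incorrect). Hypothetical helper.
    """
    k = len(item_scores[0])  # number of items
    # Variance of each item across respondents.
    item_vars = [pvariance([row[i] for row in item_scores]) for i in range(k)]
    # Variance of respondents' total scores.
    total_var = pvariance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Toy data (not from the study): 4 respondents, 3 items.
toy = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
alpha = cronbach_alpha(toy)
```

Because alpha depends only on the ratio of summed item variances to total-score variance, using population or sample variances gives the same value as long as the choice is consistent.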

Accuracy of scoring and data entry

Although teachers collected data from student progress measures for 10 weeks as a part of the project, the focus of this study was on teachers’ use of the system rather than student performance. To determine the extent to which teachers could learn from the online modules about scoring and data entry, however, researchers included accuracy checks of teachers’ scoring of their student progress measures as well as the accuracy of data entry in the online data management system (see the “Data analysis” section for the procedures used to determine scoring and data entry accuracy).

Teacher use and satisfaction

Module feedback

Similar to the earlier iterative cycles of development, instructional modules included feedback pages in the final online PD at several points during the study in which teachers responded to Likert-type scales and open-ended items. Teachers were asked about the quality of features of the online system, ease of navigation, and their level of engagement during the instruction. They also responded to items about the content of the modules and their level of understanding. In addition, they judged the appropriateness of their time spent in instruction and offered suggestions for revisions that potentially could improve the system or their learning.

Final questionnaire

At the conclusion of the project, in their final meeting with a researcher, teachers independently completed a written questionnaire that required holistic ratings and written responses about the time they spent looking at student data during the project, tasks in which they engaged across the training and research, any instructional decisions they made based on the data they collected, and specific features of the online system.

Procedures

Meetings

Before teachers began the module training, researchers held an individual face-to-face meeting with each teacher, except for the one teacher who was recruited later in Minnesota and met virtually with a project staff member. Following a common outline, researchers presented information about the study and gave teachers a checklist of weekly responsibilities, and teachers took the knowledge pretest. At the end of the project, staff met again with individual teachers, who took the knowledge posttest and completed a written questionnaire.

Online professional development

Eleven online instructional modules provided the content for teachers to learn about progress monitoring in general and, more specifically, how to give and score three types of algebra progress monitoring measures, as well as how to use a custom-designed data management system to record and summarize student graphed data and analyses of their skills and errors. As teachers worked through the online PD on their own, they gave feedback at three points during the modules (early, middle, and endpoint), in which they responded to questions about specific features of the system. They also were able to add comments in each module at any point during their training. Teacher comments and feedback pages were intended to give researchers information about features that seemed to work well as well as any glitches or problems encountered, so any future revisions of the modules could incorporate this information.

Beginning online modules and first evaluation

The first six PD modules focused on content and activities related to progress monitoring concepts and practices and the three algebra measures included in the online system. The first two modules provided the background information for progress monitoring and development research of the algebra measures. The first module, Core Concepts, focused on central ideas about the purpose of progress monitoring, its history, and basic features. The Project AAIMS module described the development of the progress monitoring tools during a previously funded federal research project. Following completion of these first two modules, teachers completed the first, or early, round of teacher feedback on these two beginning modules.

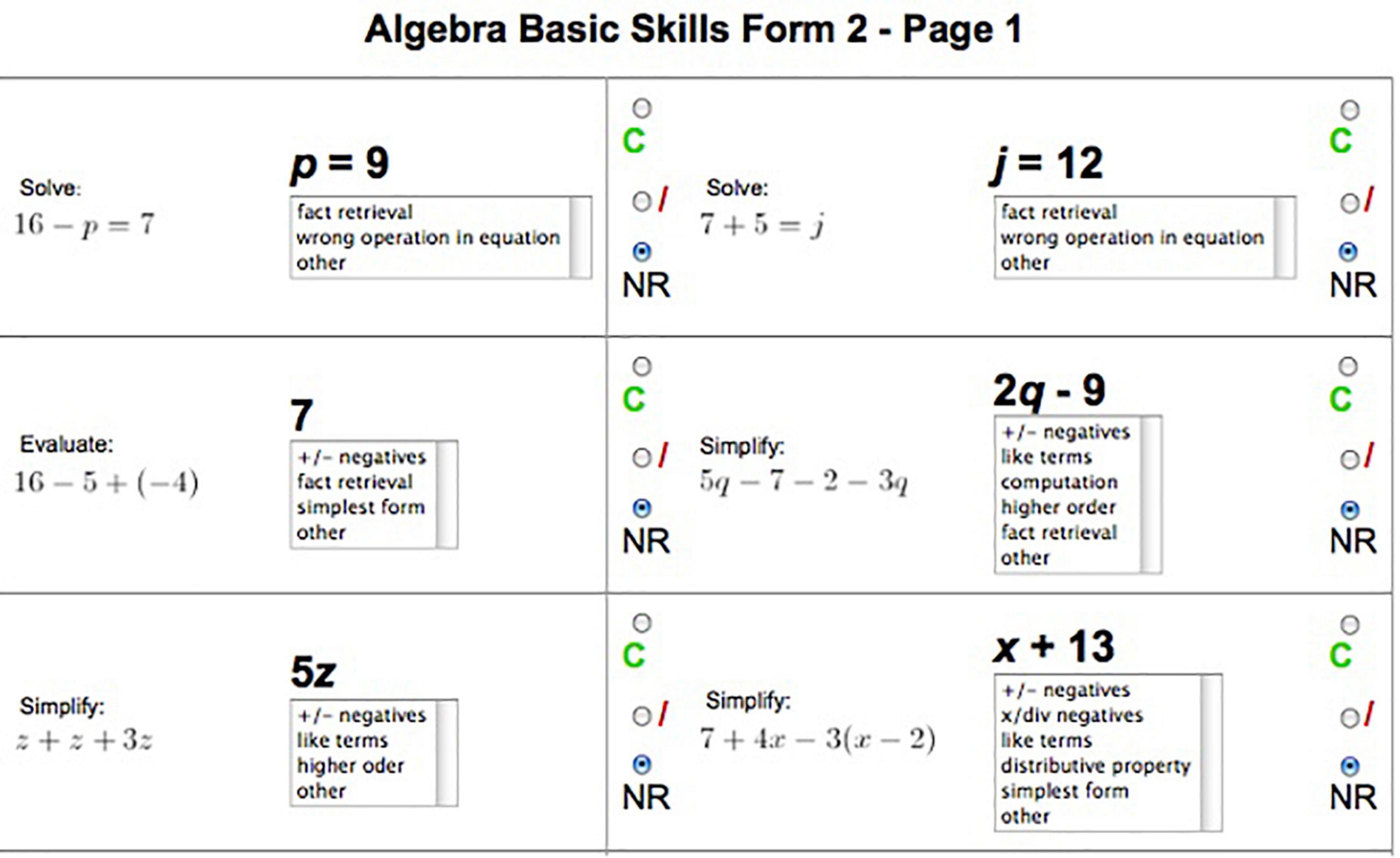

Middle set of online modules and second evaluation

The next four modules addressed the specific algebra measures. The Measures Introduction module provided information common to all three of the algebra progress monitoring measures included in this PD system. The next three modules presented information specific to administration and scoring of each algebra tool: Algebra Basic Skills, Algebra Foundations, and Algebra Content Analysis. In these three modules, teachers had the opportunity to take a measure themselves, so they could experience what would be expected of a student. Teachers learned conventions for scoring each type of measure and had a couple of opportunities to score sample student measures with feedback provided by the system on their accuracy. Teachers had to earn at least 90% accuracy with scoring a type of measure before being allowed to move to the next module. Additional practice and retest options were available. Following their learning and scoring of these three algebra measures, teachers completed the evaluation feedback page, responding to the same items for this second set of modules.

Last set of modules and third evaluation

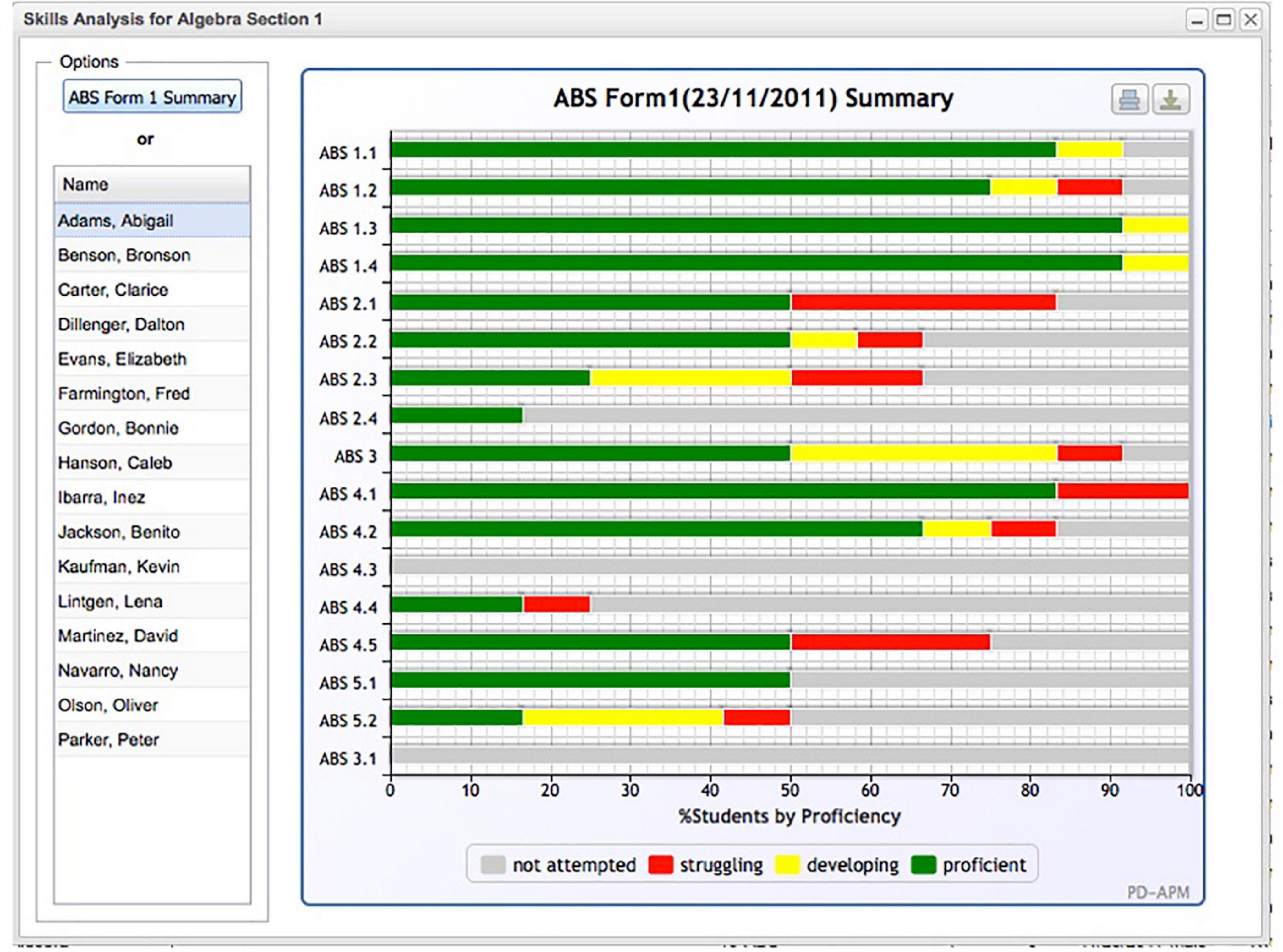

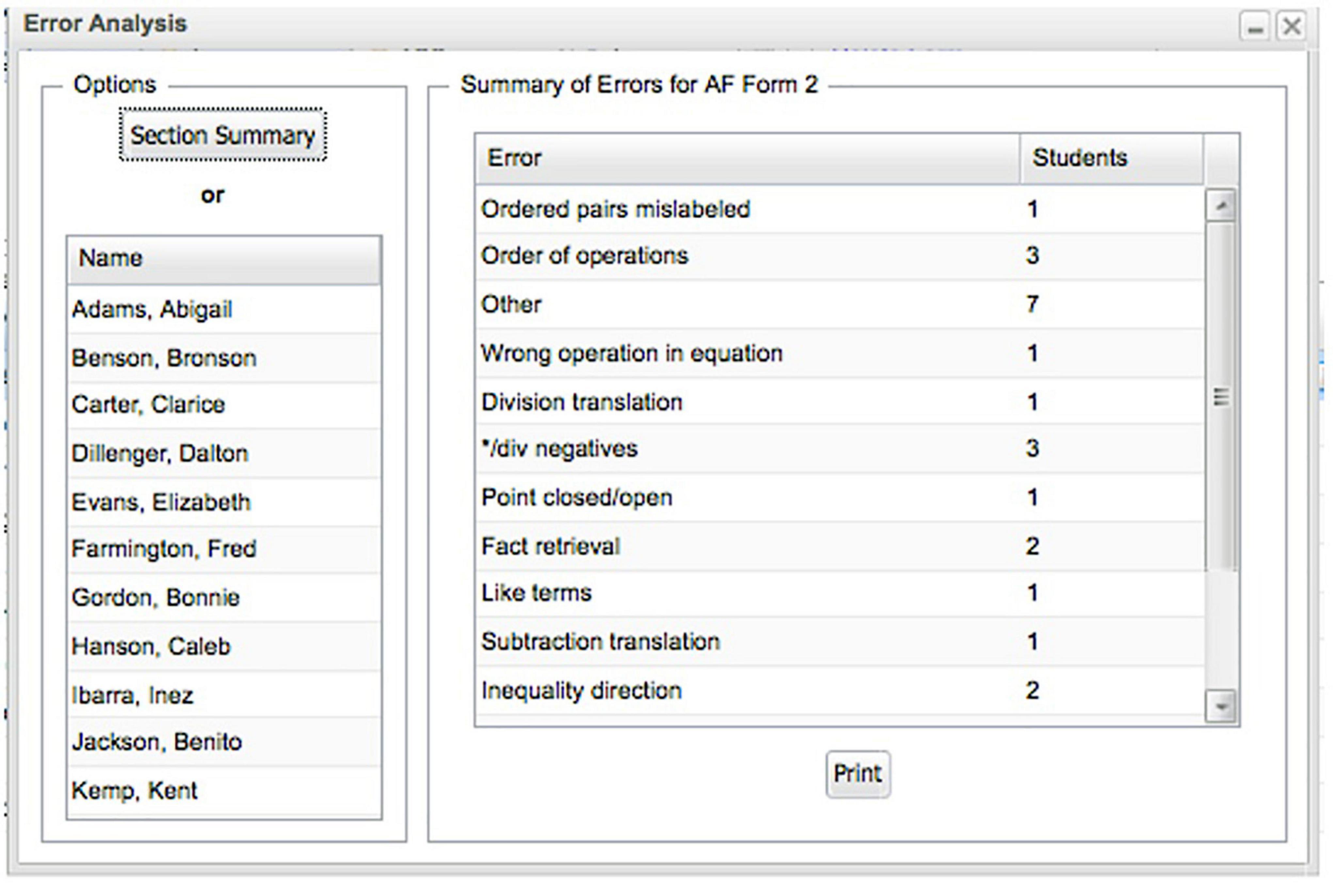

The last five online modules focused on features of the data management system. The first of these modules, Introduction to Data Management, described the overall capabilities of the system, especially how to add classes or individual students to the database, how to edit student data and make adjustments when students were absent, check student progress, and examine reports that could be generated. The next module, Evaluating Student Progress, focused on how to input student scores for the measures and how to view and interpret corresponding student graphs of progress. The Instructional Decision Making module showed teachers how to document instructional changes on the graph and how to add or change goals. In addition, recommendations for how to determine the efficacy of the instruction by evaluating graphed student progress were described. Although teachers (rather than the system) scored student performance on the algebra measures, teachers could input total scores as well as item-level data for use in aggregating information about skill proficiency and the common errors students were making. The Skills Analysis module showed teachers how data on problem types (i.e., skills) were aggregated for display. Skill reports could be generated to show skill proficiency for an individual or for a class of students. The Error Analysis module explained how teachers could choose from a list of common errors to note a potential misunderstanding a student made with an incorrect response. Although teachers made the judgments about potential student errors, a drop-down menu of common errors facilitated teachers’ data entry. An error analysis report could be generated to depict individual or classwide information, as long as the teacher had entered this item-level data. Finally, teachers completed the last evaluation page for the third set of instructional modules.

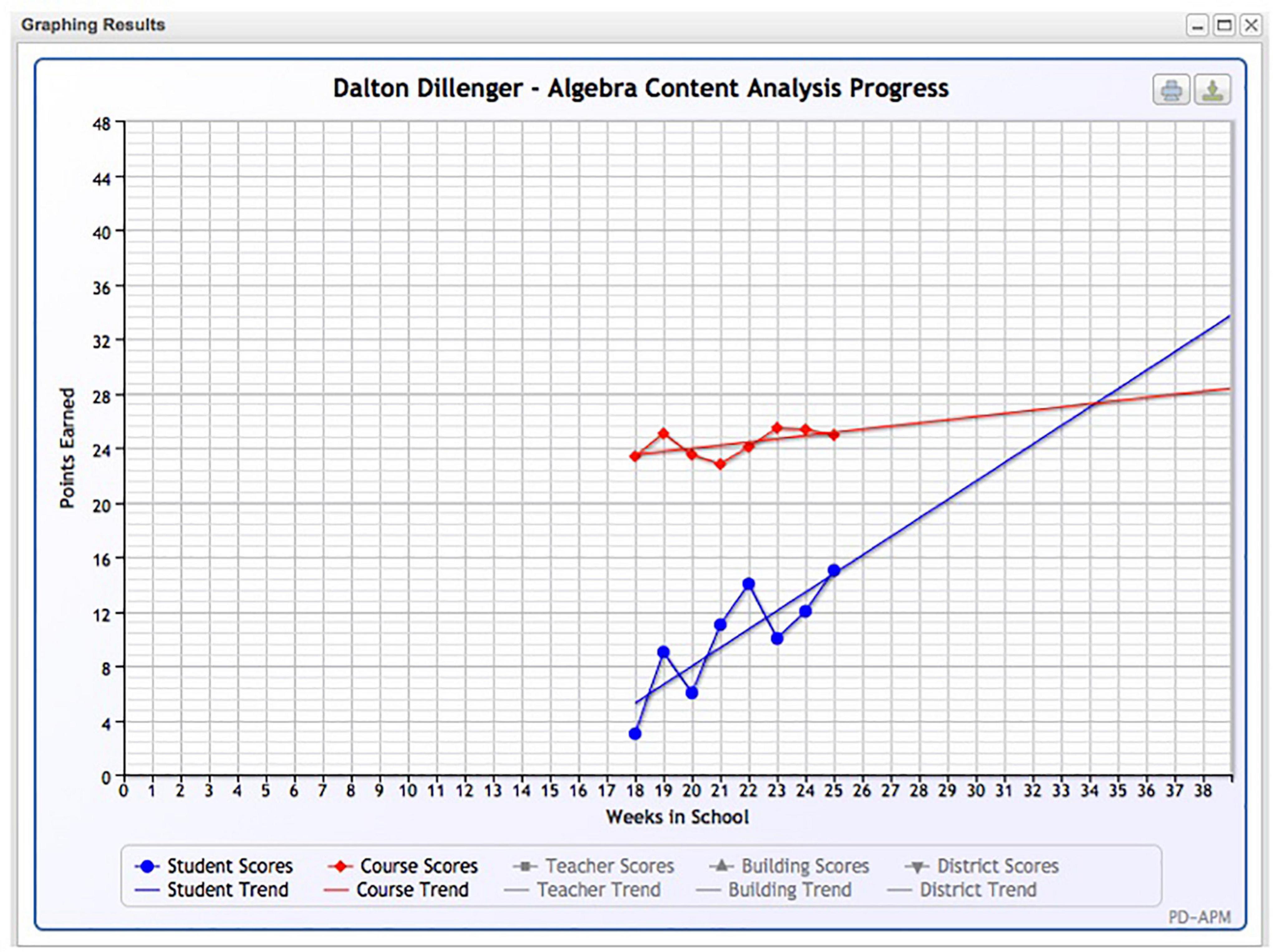

Data management and optional features

Once teachers had completed the modules on their own, they were given access to the Data Management hub and could proceed to add students to course sections, input measures used, and enter student data. After several scores were entered, the data management system could generate a student graph and show the trend of student progress. Teachers had the option to set goals for future achievement and to include phase-change lines to indicate when an instructional modification to the student’s program was made. Graphs depicted individual student data points (i.e., scores) and the trend of student progress but also could show the average score and average trend across the entire class. Figure 1 shows a student’s progress monitoring graph with trend line compared to the course average scores and trend.
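The article does not state how the data management system computes the trend of student progress. As one plausible illustration, the sketch below fits an ordinary least-squares line to weekly scores, a common choice in progress monitoring. The function name `ols_trend` and the sample scores are hypothetical.

```python
def ols_trend(scores):
    """Return (slope, intercept) of a least-squares line through weekly scores.

    Assumes scores[0] is week 1, scores[1] is week 2, and so on, and that
    at least two scores are available. This is an assumed method, not the
    documented behavior of the online system.
    """
    n = len(scores)
    weeks = range(1, n + 1)
    mean_x = sum(weeks) / n
    mean_y = sum(scores) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(weeks, scores))
    sxx = sum((x - mean_x) ** 2 for x in weeks)
    slope = sxy / sxx  # average gain in score per week
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical student scores across four weekly administrations.
slope, intercept = ols_trend([2, 4, 6, 8])
```

The same function could be applied to weekly class averages to obtain the class trend against which an individual student’s trend is compared.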

Progress monitoring

To make sure that all three types of algebra measures were administered and scored during the project, researchers assigned one of the three measures to each teacher, giving consideration to the type of course each teacher selected to monitor and the teacher’s preferences. Then teachers were allowed to select a second measure themselves. Teachers gave these two measures weekly across 10 weeks to the entire class. They were required, however, to score student performance and enter data into the data management system for only the primary measure. In addition, teachers administered the third type of progress monitoring measure but only during the first 2 and last 2 weeks of the 10-week period, primarily as a way to document student growth in another way and for researchers to examine relations among the types of measures. Although allowed, teachers were not required to score either their secondary or tertiary measures. All measures were turned in to project staff.

Across the teachers, 16 teachers administered Algebra Basic Skills as the primary measure (6 in SC, 8 in IA, 2 in MN), 11 teachers administered Algebra Foundations (4 in SC, 6 in IA, 1 in MN), and 2 teachers administered Algebra Content Analysis (2 in SC). For the secondary measures, 6 teachers administered Algebra Basic Skills, 13 teachers administered Algebra Foundations, and 10 teachers administered Algebra Content Analysis. For the tertiary measure (given during first and last 2 weeks only), 6 teachers administered Algebra Basic Skills, 5 teachers administered Algebra Foundations, and 18 teachers administered Algebra Content Analysis.

Analysis of skills and errors

For required progress monitoring activities, teachers gave the primary assessments weekly to at least one class of students, scored their performance, and entered total correct responses into the data management system. However, for two students in the group, teachers were required also to enter item-level data, that is, accuracy for each student response, and indicate a possible reason for the error for any item answered incorrectly, if they were able to determine one. In this way, teachers had practice using these components of the online system without having to enter data for all items for every student. For this more fine-grained, item-level data entry, teachers were encouraged to select two students who were lower achieving or who had Individualized Education Plans (IEPs). When teachers entered item-level data (see Figure 2 for sample screen of item-level data entry), the online system was able to generate individual reports (or classroom when applicable) about level of proficiency on each type of skill evaluated on the measure (i.e., proficient, developing, struggling, or not attempted). See Figure 3 for illustration of an individual skills proficiency report. When teachers marked an item as being incorrect, they could choose from a drop-down menu the type of error the student made in that problem, or they could type in an error pattern if they did not see it listed. The system could generate a report of common errors made by student (or by class, if applicable). See Figure 4 for sample common errors report for an individual student.

Data analysis

Teacher knowledge tests and ratings

Teachers’ answers on the multiple-choice knowledge pre- and posttests were scored as correct or incorrect, and a matched-pairs t-test was used to examine gains. For teacher ratings, descriptive statistics, including frequency counts and/or means, were used to summarize teacher feedback.
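As a minimal sketch of the matched-pairs analysis, the test statistic can be computed from each teacher’s posttest-minus-pretest difference. The function name `paired_t` and the scores below are hypothetical and do not reproduce the study’s data; obtaining a p-value would additionally require a t distribution from a statistics package.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (t, df) for a matched-pairs t-test on pre/post scores.

    pre and post are equal-length lists with one entry per teacher, in the
    same teacher order. Hypothetical helper for illustration.
    """
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    # t = mean difference divided by its standard error.
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Hypothetical pretest and posttest totals for four teachers.
t_stat, df = paired_t([10, 12, 14, 16], [12, 15, 15, 20])
```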

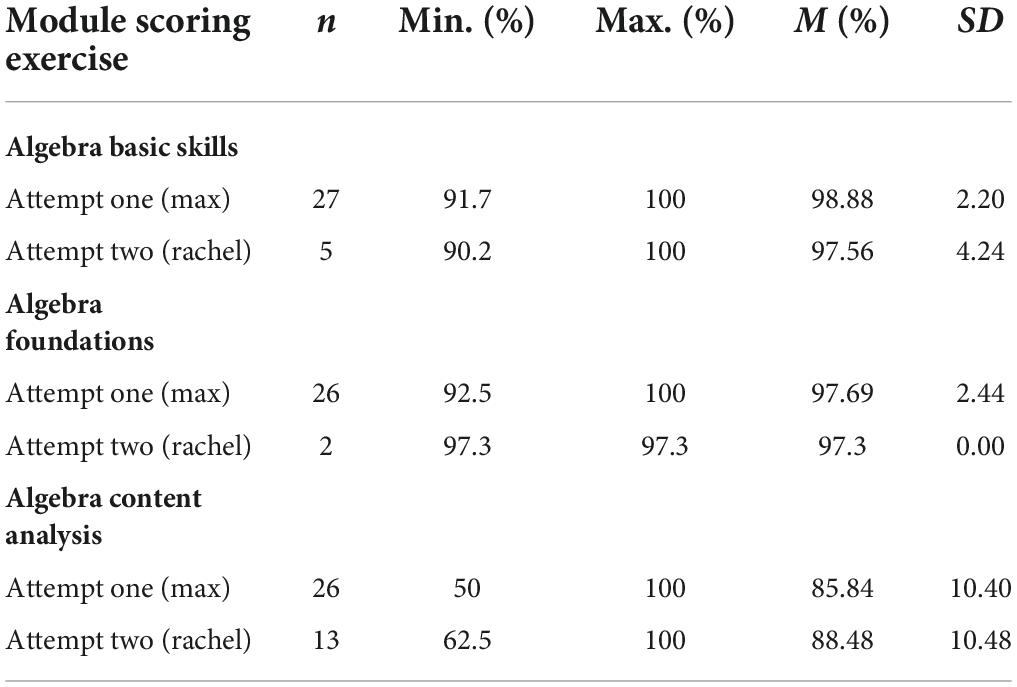

Scoring of module practice activities and student measures

To determine whether online instruction was successful in instructing teachers in scoring conventions, we examined the accuracy of their scoring during the interactive practice activities they did during online instruction about the three algebra measures. At the end of each module that addressed a type of algebra measure, teachers had a check-out exercise for a hypothetical student Max, in which they had to reach at least 90% accuracy in scoring to be allowed to move to the next module.

To check reliability of scoring with the assessments that teachers gave to their students, researchers required teachers to turn in scored papers for the first two test administrations to project staff, who then rescored the entire class. Any disagreements in scoring were discussed with the teacher. Even if the teacher had surpassed the 90% accuracy criterion during the online practice activities, researchers required a 95% accuracy threshold for scoring their own students’ measures. Any teacher who did not meet at least 95% for interrater agreement had to return additional sets of their scored measures for an interrater agreement check on all measures until they reached the 95% accuracy threshold. For subsequent administrations after reaching the 95% criterion, researchers rescored a sample of at least 20% of the class measures or a minimum of five assessments for each class administration, whichever was more. When accuracy fell below the 95% threshold, the entire class set of papers was rescored. A few teachers chose to score performance on the secondary and tertiary measures themselves. When they did, their scoring reliability was checked in the same way.
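The rescoring rule described above can be summarized in a short sketch. The function name `papers_to_rescore` is hypothetical, and capping the sample at the class size (for classes with fewer than five papers) is an assumption not stated in the procedures.

```python
def papers_to_rescore(class_size, criterion_met=True):
    """Number of papers researchers rescore for one class administration.

    Before a teacher reaches the 95% criterion (or after accuracy falls
    below it), the entire class set is rescored. Otherwise the sample is
    the larger of 20% of the class or five papers. Capping at class_size
    is an assumption for very small classes.
    """
    if not criterion_met:
        return class_size
    twenty_percent = (class_size + 4) // 5  # integer ceiling of 20%
    return min(class_size, max(5, twenty_percent))


# Hypothetical class sizes.
sample_large = papers_to_rescore(30)   # 20% of 30 papers
sample_small = papers_to_rescore(12)   # five-paper minimum applies
```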

For Algebra Basic Skills and Algebra Foundations, responses were scored simply as correct or incorrect. Consistent with other progress monitoring research (e.g., Fuchs et al., 1994), interrater agreement was calculated as the total number of agreements in scoring divided by the sum of the total agreements and total disagreements. For Algebra Content Analysis, however, students could show work and be awarded partial credit for each of the 16 problems. Interrater agreement for this measure was calculated by subtracting the number of scoring disagreements from 16 and then dividing that difference by 16.
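The two agreement calculations can be written out as a minimal sketch. The function names and the list-based representation of a scored paper are hypothetical; only the two formulas come from the description above.

```python
def agreement_correct_incorrect(teacher_marks, researcher_marks):
    """Agreements / (agreements + disagreements) for items scored right or wrong."""
    if not teacher_marks or len(teacher_marks) != len(researcher_marks):
        raise ValueError("both scorers must mark the same non-empty set of items")
    agreements = sum(t == r for t, r in zip(teacher_marks, researcher_marks))
    disagreements = len(teacher_marks) - agreements
    return agreements / (agreements + disagreements)


def agreement_content_analysis(teacher_points, researcher_points, n_problems=16):
    """(16 - disagreements) / 16 for the partial-credit measure."""
    if len(teacher_points) != n_problems or len(researcher_points) != n_problems:
        raise ValueError("each scorer must award points for every problem")
    # Any difference in points awarded on a problem counts as one disagreement.
    disagreements = sum(t != r for t, r in zip(teacher_points, researcher_points))
    return (n_problems - disagreements) / n_problems
```

For example, a teacher and a researcher who award different points on 2 of the 16 Algebra Content Analysis problems would have an agreement of 14/16, or 87.5%.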

Data entry of total scores on primary measures

To determine reliability of teachers’ data entry, researchers compared the student scores teachers had recorded on the student measures with the scores they had entered into the data management system. Even if researchers had determined that the teacher had scored a student measure inaccurately and had adjusted that student score for analyses of student data, researchers still compared what the teachers had written directly on the student measures with the data they entered in the online system. For each class, researchers counted the number of matches between the recorded scores on student papers and the scores entered into the system. The number of matches was divided by the total number of students to determine the percentage of agreement for data entry.
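As a rough sketch of this data-entry check, the comparison for one class might look like the following. The dictionary layout, the function name `data_entry_agreement`, and the treatment of a missing entry as a mismatch are all assumptions made for illustration.

```python
def data_entry_agreement(paper_scores: dict, entered_scores: dict) -> float:
    """Share of students whose entered score matches the score written on paper."""
    if not paper_scores:
        raise ValueError("at least one student paper is required")
    # A student with no entry in the system is counted as a mismatch (assumption).
    matches = sum(
        1
        for student, score in paper_scores.items()
        if entered_scores.get(student) == score
    )
    return matches / len(paper_scores)
```

The comparison uses the score the teacher wrote on the paper, not the researcher-corrected score, which mirrors the procedure described above.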

Results

Researchers analyzed data to examine the extent to which the online system worked as intended. We examined whether the online system led to improved teacher knowledge and skills with algebra progress monitoring. Researchers evaluated teachers’ knowledge through a pre- and posttest and also evaluated teachers’ accuracy in scoring and data entry. Efficiency of the system and teacher satisfaction with instructional modules were examined through teacher self-report information and rating scales. A total of 29 teachers completed the training from beginning to end, administering algebra measures, scoring student performance, entering data in the online management system, and giving feedback. Note that some data were not accessible due to technical glitches with the system or because a few teachers chose not to respond to particular questions.

Teacher knowledge and accuracy

Knowledge test

The same knowledge assessment was given to teachers as a pre- and posttest. Cronbach’s alphas for the pretest and posttest were 0.86 and 0.84, respectively, indicating adequate internal consistency. The posttest was administered during the final, wrap-up meeting with project staff. A paired t-test indicated that teachers’ accuracy improved significantly from pre- to posttest, t(28) = −7.59, p < 0.001. Means with standard deviations in parentheses for item accuracy on the pretest and posttest were 9.97 (5.02) and 17.66 (2.83), respectively, for this 25-item assessment.
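For readers who want to see how a matched-pairs t statistic is formed, the following is a minimal sketch using only the Python standard library. The scores are hypothetical and are not data from this study; taking differences as pretest minus posttest is an assumption chosen because it yields a negative t when scores improve, as in the statistic reported above.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (t, degrees_of_freedom) for a matched-pairs t-test."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    # Differences as pretest minus posttest, so gains give a negative t (assumption).
    diffs = [a - b for a, b in zip(pre, post)]
    # Standard error of the mean difference uses the sample SD of the differences.
    standard_error = stdev(diffs) / sqrt(len(diffs))
    return mean(diffs) / standard_error, len(diffs) - 1


# Hypothetical scores for four teachers; not data from the study.
t, df = paired_t([10, 12, 9, 11], [14, 15, 13, 16])
```

With 29 teachers, the degrees of freedom are 29 − 1 = 28, which matches the t(28) reported above.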

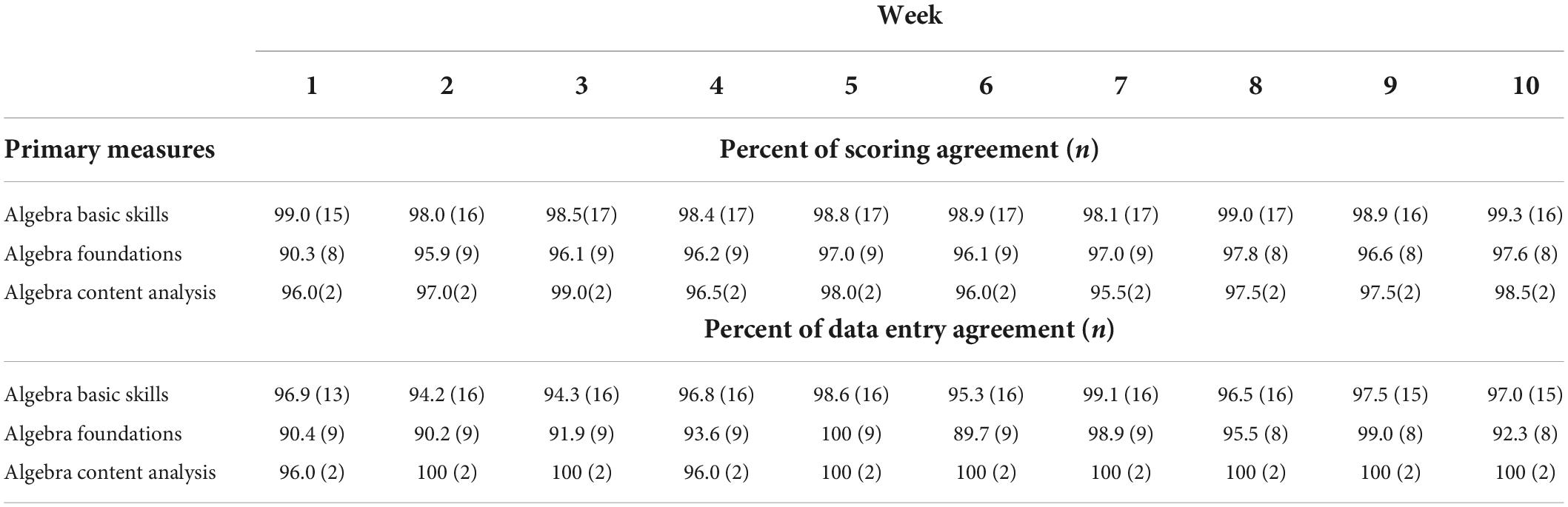

Accuracy in online scoring activities

Teachers had to reach a criterion level of accuracy in scoring the student exercise(s) before moving forward with another module (see Table 4). However, researchers also were interested in the accuracy with which teachers scored their own students’ papers. Therefore, project staff evaluated interrater agreement for teachers’ scoring on their primary measures. In addition to the scoring accuracy of algebra measures, researchers checked teachers’ accuracy for data entry based on teachers’ markings of the measures themselves. Table 5 shows percentage of interrater agreement for scoring each of the primary measures across 10 weeks of weekly data collection and the number of teachers engaged each week with those tasks as well as accuracy of their data entry in the online data management system.

Teachers’ use of the online system

Ratings of the instructional modules

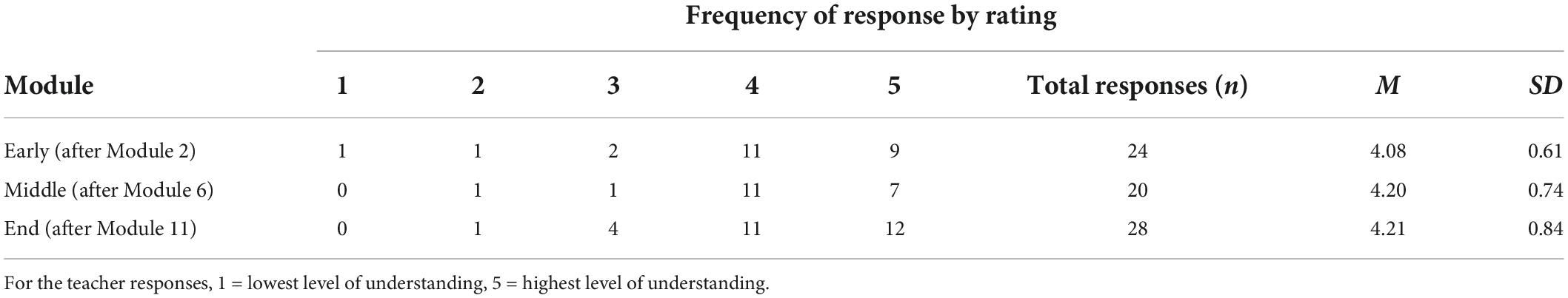

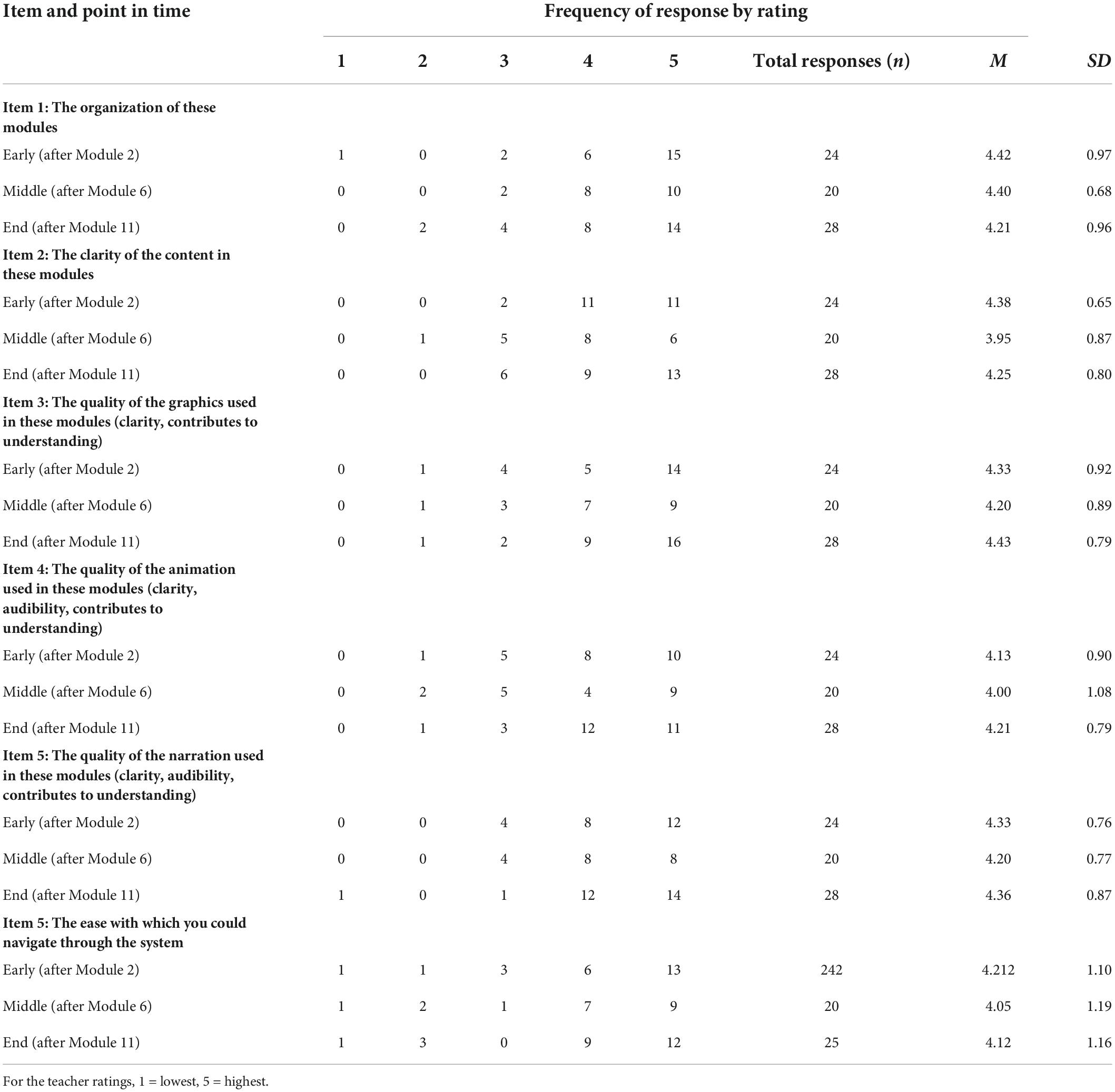

At three points during the online training (i.e., early, middle, and end), teachers completed the same set of Likert-scale ratings to indicate their level of understanding of the online instructional content on a scale of 1−5, with 1 indicating the lowest level of understanding and 5 indicating thorough understanding. The early evaluation followed the first two modules that focused on critical concepts of progress monitoring and the background research for the development of the three algebra measures to be taught. The middle evaluation took place after the next four modules. These modules introduced the three algebra measures and then focused on each individually, requiring practice in how to administer and score each type of assessment. The last set of module evaluation ratings took place after the next set of five modules that focused on features of the data management system and data entry of scoring, skill performance, and common errors. Frequencies for the teachers’ ratings are found in Table 6.

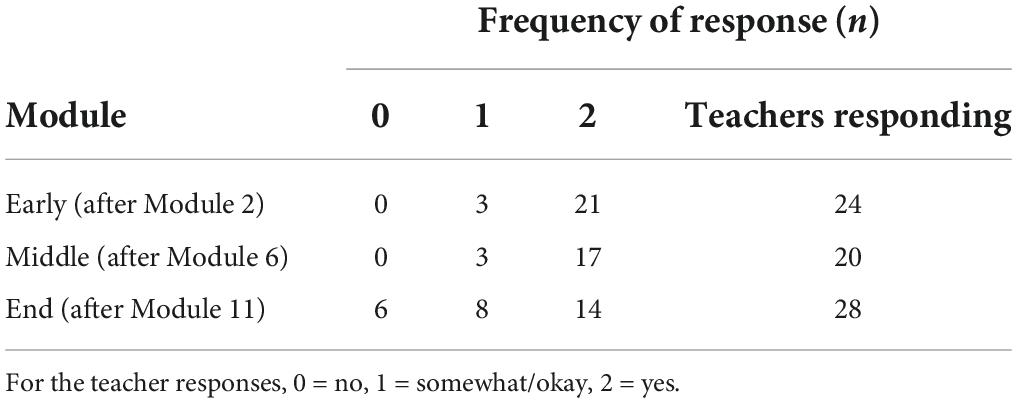

Efficiency of online modules, administration, and scoring tasks

Teachers were asked an open-ended question about whether they thought the time they spent viewing the instructional modules was reasonable. Researchers also asked for explanations to support their responses. Responses were classified and coded as a “0” if the teacher responded negatively, a “1” if indicating the time was “okay,” “somewhat” or another variation indicating moderate satisfaction, and a “2” if responding “yes.” Table 7 provides this information across teachers at the three evaluation checkpoints (i.e., early, middle, and end).

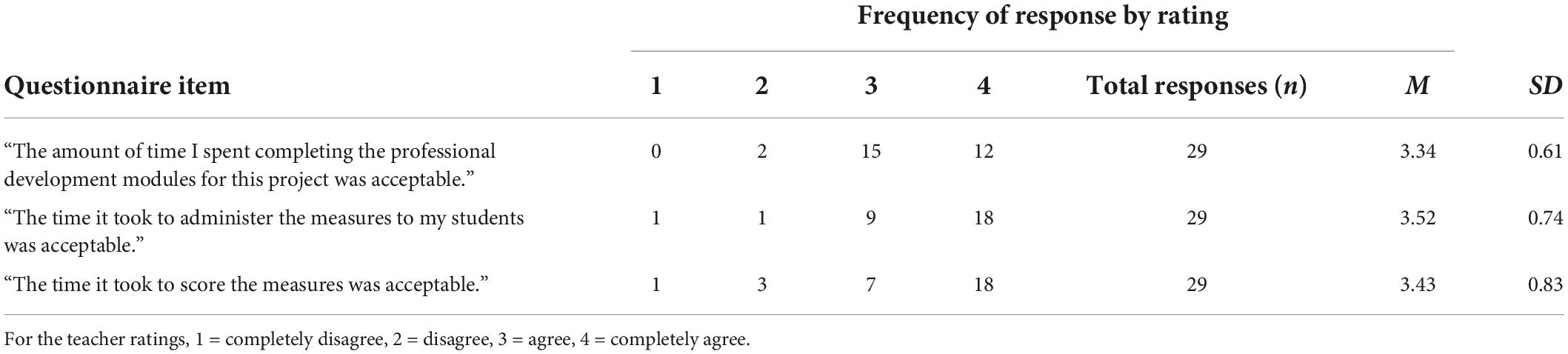

Teachers also were asked during the final meeting with researchers to complete a questionnaire containing items about the acceptability of the amount of time they spent in various activities. This Likert-type scale ranged from 1 to 4, with 1 = completely disagree and 4 = completely agree. Table 8 provides acceptability of time involved in the completion of the instructional modules, administration of measures, and scoring of measures.

Table 8. Final questionnaire: Acceptability of time for professional development (PD), administration, and scoring.

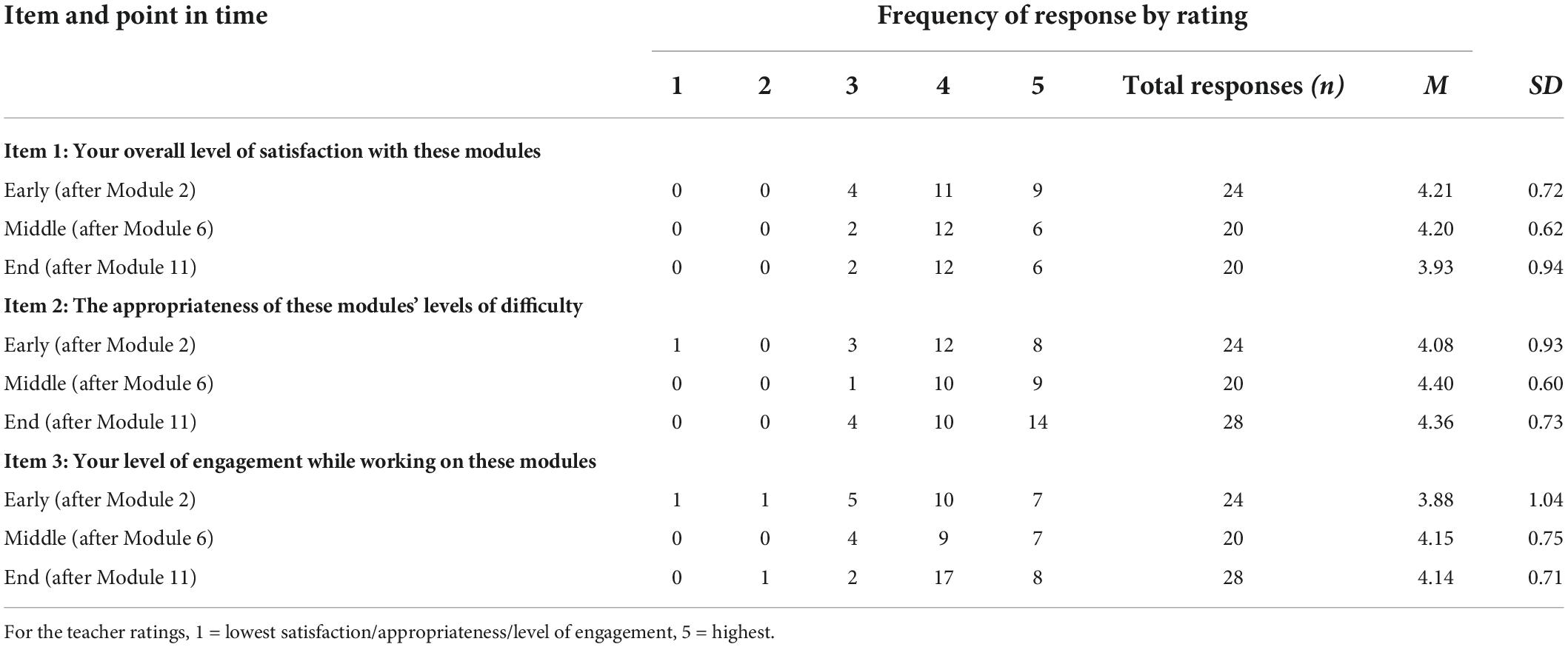

Teacher overall satisfaction with online modules

At three checkpoints during the online instruction, teachers rated their level of satisfaction (1 = low satisfaction, 5 = high satisfaction) with the modules, appropriateness of the modules’ level of difficulty, and the teachers’ level of task engagement during the modular instruction. Table 9 presents these teacher satisfaction data.

Teachers also rated their level of satisfaction (1 = low satisfaction, 5 = high satisfaction) with features imbedded in the online PD, such as the quality of graphics in the modules, clarity of module content, organization of the module, and ease of navigation. Table 10 displays the number of teachers who rated each feature by their level of satisfaction with system features.

Additionally, on the final questionnaire, teachers indicated whether they thought the content reflected in the progress monitoring measures was appropriate for their classes. Teachers used a Likert-type scale (1 = completely disagree, 2 = disagree, 3 = agree, 4 = completely agree) to reflect their level of agreement: 1 = 1 teacher, 2 = 2 teachers, 3 = 11 teachers, and 4 = 15 teachers, with M = 3.38 and SD = 0.78.
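The mean and standard deviation reported above can be recovered directly from the frequency counts. The sketch below is illustrative; the function name `likert_summary` is hypothetical, and the n − 1 (sample) denominator is an assumption, although it does reproduce the reported SD.

```python
from math import sqrt


def likert_summary(counts):
    """Return (n, mean, sample SD) from a {rating: number_of_teachers} mapping."""
    n = sum(counts.values())
    if n < 2:
        raise ValueError("at least two responses are required")
    mean = sum(rating * c for rating, c in counts.items()) / n
    # Sum of squared deviations, weighted by how many teachers gave each rating.
    ss = sum(c * (rating - mean) ** 2 for rating, c in counts.items())
    return n, mean, sqrt(ss / (n - 1))


n, m, sd = likert_summary({1: 1, 2: 2, 3: 11, 4: 15})
print(n, round(m, 2), round(sd, 2))  # 29 3.38 0.78
```

The weighted sum of ratings is 98 across 29 teachers, giving 98/29 ≈ 3.38.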

Use of optional online features

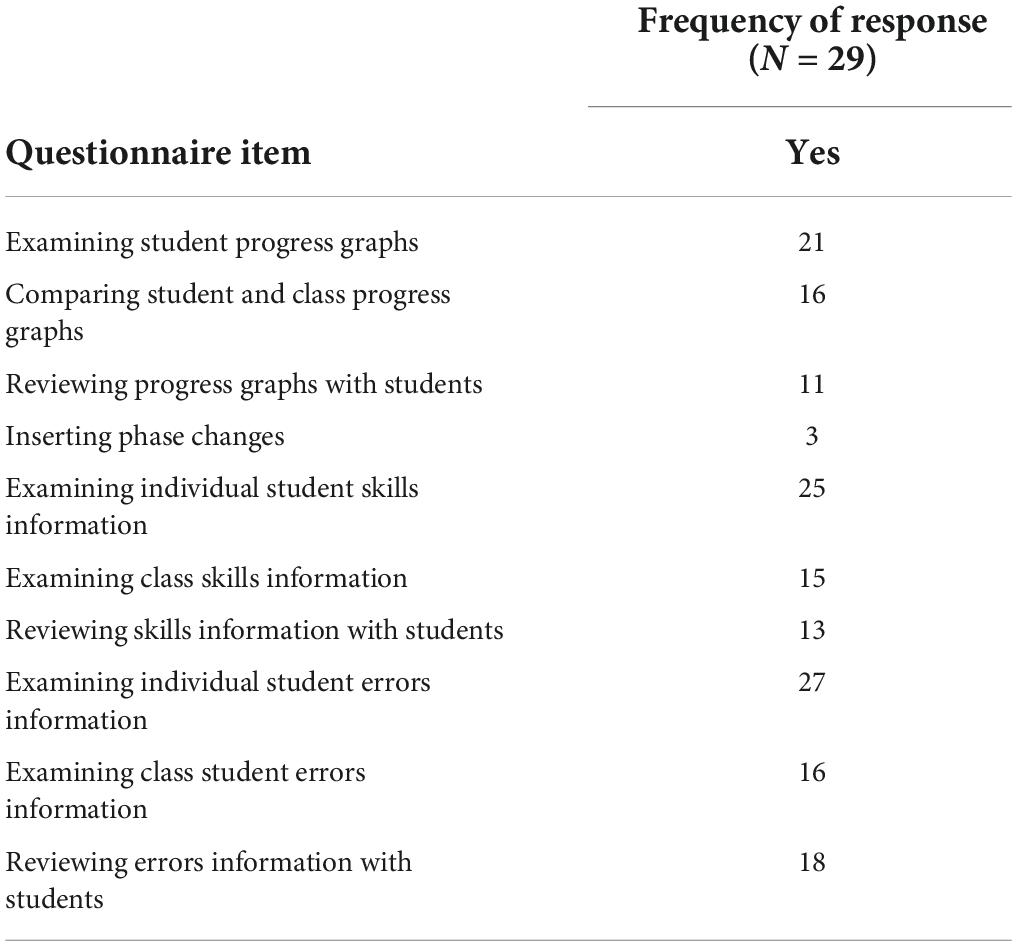

Several features in the data management system were covered in the online PD but were not required for use during the project, such as reviewing student graphs, comparing individual and class progress graphs, examining individual or class skills information, and examining individual or class common errors. However, some teachers chose to use these optional features during the project. At the final meeting, teachers indicated whether they had used specific system features. Table 11 provides the number of teachers using each data-based decision-making feature that was available but not required to be used during the project period.

Discussion

Teacher knowledge and accuracy

One goal of the study was to determine whether knowledge about algebra progress monitoring could be improved among teachers using the professional development online system and to verify that they could be highly accurate in scoring algebra measures based on the online instruction. Without a comparison group, increases in teacher knowledge must be interpreted cautiously. However, based on the study information, teachers improved significantly on the knowledge assessment about progress monitoring and the use of the online system from pre- to posttest. Teachers grew by an average of almost eight items by posttest. However, actual growth may have been a little greater. At pretest, two of the teachers took the assessment outside of research staff meetings due to complications that arose with scheduling and the distance required for travel and exhibited the highest pretest scores across the entire teacher sample (i.e., scores of 17 and 18). Consequently, the fidelity of these results is unclear.

To determine whether teachers could learn to apply scoring conventions accurately with the algebra measures used, researchers evaluated teacher learning during the practice exercises in the modules (see Table 4). Results from the practice exercises in the PD modules indicated that teachers were successful in learning scoring conventions and applying them to completed student problems. Accuracy for Algebra Basic Skills and Algebra Foundations measures was very high at 99% and 98%, respectively. The Algebra Content Analysis measure, though, required more complex scoring with potential awarding of partial credit for problems exhibiting student work. Consequently, teachers’ accuracy was not as high (i.e., 86%). More teachers completed a second scoring exercise in the module for Algebra Content Analysis measures than they had for Algebra Basic Skills and Algebra Foundations. They improved modestly with this second attempt, but not every teacher achieved the 90% criterion for moving to the next module. When that occasion occurred, researchers met with teachers individually to review scoring procedures, answer questions, and provide support.

Importantly, teachers were highly accurate in scoring their own students’ papers (see Table 5). The lowest interrater agreement percentages across all three measures occurred during the first couple of weeks of test administration, indicating that teachers improved their accuracy with additional practice. Although interrater agreement was very high for the more difficult Algebra Content Analysis measure when scoring their own students’ papers, only two of the teachers were required to score the Algebra Content Analysis as their primary assessment. Consequently, evaluation of additional teachers scoring Algebra Content Analysis measures is recommended. In addition to scoring student measures, teachers had to enter scores in the online data management system. Teachers were accurate in transferring scores from their student measures to the online system.

Teacher satisfaction and use of the online professional development system

Instructional modules

Researchers asked teachers to rate their level of understanding of the module content on three occasions: after the first two modules, after the next four modules, and after the last five modules. Teachers used a Likert-type scale with 1 indicating the lowest level and 5 indicating the highest level of understanding. Mean scores for all three occasions were greater than 4.0, indicating that teachers thought they understood the information being presented. The lowest mean rating (i.e., 4.08) was for the earliest feedback occasion in which the modules being considered included background information about progress monitoring and the research endeavors to support development of the algebra measures. The rest of the modules focused more directly on hands-on tasks for teachers (i.e., giving and scoring the algebra measures and using the data management system) and were rated more highly in terms of teachers’ level of understanding.

Several other questions probed teacher satisfaction with the online PD system. At these same three feedback intervals, teachers rated their overall satisfaction with the modules, the appropriateness of the level of difficulty of the modules, and their level of engagement while working through the modules. Likert-type ratings from 1 to 5 were used with “1” indicating the lowest and “5” as the highest satisfaction, appropriateness of difficulty, or level of engagement. Mean scores ranged from 3.88 to 4.40, indicating overall high teacher ratings of the PD modules. With respect to the item about overall satisfaction with the modules, the lowest mean rating (i.e., 3.93) was given for the modules describing the components of the data management system. Corroborating ratings for their level of understanding of the instructional modules, the lowest mean rating for both the appropriateness of difficulty of the instructional modules and teachers’ level of engagement during the PD was given for the early modules on the background of progress monitoring and research development of the algebra measures. Across these results, the research team inferred that the background and research information may have been a little less engaging and perhaps harder to understand than the other modules focused on information that teachers would use directly with their students or within the data management features. Interestingly, though, teachers reported an overall high level of satisfaction with this same group of modules (i.e., section “Instructional modules”).

At these same three feedback intervals, researchers also asked about the technical features of the PD system. Teachers rated, from 1 to 5 (i.e., low to high), the organization of the modules, clarity of content, quality of graphics used, quality of animation used, quality of narration, and ease of navigation through the system. Teachers’ mean ratings were high across all these features for each of the three sets of modules. In fact, mean ratings were 4.0 or higher at each feedback interval for every item except one: the mean rating for clarity of content was 3.95 for the middle group of modules that focused on administration and scoring of the measures. The lowest mean ratings for the quality of the graphics, animation, and narration and for the ease of navigation through the system (although still high at 4.0 or higher) also occurred for this same group of instructional modules. This group of modules addressed three different types of algebra progress monitoring measures and taught teachers how to administer and score them. The modules were highly interactive and expected teachers to engage in practice activities. In fact, this set of modules was the only set that required teachers to reach a specified criterion with scoring before proceeding to the next module. It could be that narration, clarity, graphics, and animation were even more critical with these modules, as teachers observed models of the tasks they were to perform. Another possible explanation is that this group of modules included the scoring of the Algebra Content Analysis measures. Based on accuracy data and attempted practice exercises, this measure was harder for the teachers to learn to score successfully. It was the last module in this group of modules prior to completing the feedback, so the recency of this more difficult task may have affected teacher ratings across the entire group of modules.

Efficiency

Another aspect of teacher acceptability for the final version of the online PD system was teachers’ judgments of PD efficiency. That is, at the three requested feedback intervals, teachers indicated whether the time they spent working through the online PD was reasonable to them. They responded through an open-ended format, so they could provide context for their responses. All of the teachers indicated that the amount of time was somewhat reasonable or reasonable at the first two feedback occasions. However, 6 of 28 teachers judged the time spent on the last five modules that focused on the data management aspect of the system to be unreasonable. Several teachers reported having internet connectivity problems or being busier with other tasks at this time. Because it was the last set of modules, some teachers explained that they thought the PD could have fewer modules or perhaps more condensed versions of the modules. Also, it should be noted that this last group of modules all focused on the use of the data management system. The research team first converted previous face-to-face PD to the six beginning online instructional modules. The research team then developed the data management system as a part of the overall grant project and created corresponding PD instructional modules to match the new data management system. The last several modules had been viewed by only two teachers prior to the current study. Consequently, the team had not received as much feedback for refinement with these modules as they had received with earlier modules. Additionally, other research corroborates that data-based decision making is difficult for teachers (and preservice teachers) to apply and that their interpretations often are qualitatively different (e.g., less cohesive) from those of expert users and trainers of progress monitoring (Espin et al., 2017, 2021a; Wagner et al., 2017).
More attention may need to be directed to crafting these data-based decision-making modules to make them more explicit and acceptable to teachers. Additional feedback and knowledge checks should be solicited for each of these modules.

Researchers also asked teachers about efficiency on the final questionnaire at the end of the study. Teachers were asked to think back across all the PD as well as the administration and scoring of the progress monitoring measures. On this questionnaire, teachers used a rating scale to indicate their level of agreement with statements about the acceptability of the time they spent completing modules, administering progress measures, and scoring measures. All statements were written in the affirmative (i.e., time in tasks was acceptable), but the ratings forced a choice between agreement and disagreement. The scale was “1” for completely disagree, “2” for disagree, “3” for agree, and “4” for completely agree. All 29 teachers responded to these items. Only two teachers disagreed with the statement about the amount of time spent in the instructional modules as acceptable. Thus, across all the teachers and considering the totality of the project, teachers rated their time in the online PD as acceptable.

Of the three ratings about the acceptability of time that it took to complete tasks, teachers rated administration of the student measures with the highest mean rating of acceptability (3.52 of 4.0). However, two teachers either disagreed or completely disagreed with the statement that the time spent was acceptable. These progress measures took either 5 or 7 min once per week, depending on the particular type of measure given. However, it is possible that these two teachers were considering that administration of all three measures (when only one was required to be scored and entered into the system) was not acceptable. The majority of the teachers, however, agreed or completely agreed that time spent administering the algebra progress measures and scoring the measures was acceptable, 27 of 29 and 25 of 29, respectively.

Optional features of the data management system

On the final questionnaire at the end of the study, teachers also responded to items indicating whether they had used components of the data management system on their own. That is, using these features was not required as a part of study participation, but the online instructional modules provided information about how to access and use these features. The majority of teachers reported examining student progress graphs (21 of 29), but far fewer actually reviewed the graphs with their students (only 11 of 29). Although teachers had been asked to enter item-level information from the measures for only two of their lower performing students, some teachers chose to enter skills and/or common errors information for more of their students or their entire class. In fact, almost all of the teachers (27 of 29) reported examining individual student errors information, with 18 teachers reviewing common error information with their students, and 16 teachers examining student errors across their class. Similarly, 25 of 29 teachers examined individual skill proficiency (i.e., level of mastery for skills included on the measures), with 13 teachers reviewing the skills information with their students, and 15 examining skills information across their class. The activity in which the fewest teachers engaged was inserting phase change lines on student graphs. When asked about time spent viewing student data each week, teachers reported a range of 5−150 min with a mean of 45 min. Thus, teachers appeared to take advantage of the available data management tools in the online system even when not required to do so.

Summary of results and future research

Conclusion

With this online PD system, teachers acquired knowledge and skills about how to conduct progress monitoring in algebra. They scored student algebra progress measures accurately and entered data successfully into the online management system. Teachers reported overall high levels of satisfaction with the modular training, including the content, difficulty level, and organization of the instruction as well as the clarity of the imbedded technological features. They were able to access the system’s data management components and store student data. They reported that the time spent in the PD activities, including the instructional modules and the administration and scoring of student measures, was acceptable to them. Overall, the development and implementation of an online PD system for instructing teachers in how to conduct and manage algebra progress monitoring appeared successful. It functioned as intended and enabled 29 general education and special education teachers to learn to give and score three types of algebra progress measures as well as store and view student data across time.

Study limitations

A number of limitations should be noted with this study. First, the study required teachers to report their satisfaction with the system, which could be positively biased. Second, a pretest/posttest design was used to collect information. Without a comparison group, it is difficult to judge fully the efficacy of the PD. Third, not all teachers responded to all requests for feedback. Although teachers viewed an online evaluation page at three points during the online instruction, teachers could proceed to the next module even if they failed to complete some (or all) of the items. Additionally, occasional internet connectivity issues at schools or teachers’ homes sometimes made access difficult or interfered with particular tasks. Fourth, although researchers were able to calculate accuracy for the knowledge test and scoring of student measures, direct observations of teachers working through online modules, administering measures in the classrooms, or using the data management system were not conducted. Of course, the overall purpose of the study was to determine whether teachers could learn to conduct algebra progress monitoring on their own. However, teacher self-report responses, with unknown reliability, provided the majority of the data for this project evaluation. Fifth, teachers were not required to use all the available components of the data management system in this evaluation study. Consequently, researchers received only anecdotal information about some of the available online features. Sixth, although some schools had multiple teachers using the online system, we did not evaluate systematically whether teachers completed all activities independently or whether they discussed features with one another. Last, researchers experienced a several-month delay in getting all features of the online system fully functional. 
Although all teachers completed all the online PD modules, depending on how quickly they worked through modules independently, some teachers had to begin administration of algebra progress measures prior to completing the modules related to data management. That is, they needed to administer progress measures for 10 weeks, and several teachers would have run out of time in the school year if they had waited until completing all modules before administering the 10 weeks of assessments. Therefore, some teachers did not enter data into the management system on a weekly basis; instead, they grouped batches of assessments (especially the first few weeks of data) to enter at one time after they had completed the modules about using the data management system, which may have affected their reliability in scoring. Relatedly, during the final meeting in which teachers completed a questionnaire about their overall satisfaction with the PD, some teachers reported anecdotally that it had been a long time since they had worked through the modules, while others said they had completed all modules closer in time to the final meeting. It is not known how this variation in length of time spanned to complete all the modules may have affected teachers’ responses about the PD modules and the related assessment activities or how the time they had left after completing data management modules affected their interest in exploring the data management features that had not been required to be used during the project.

Implications for future work

Although teachers were asked to enter problem-by-problem accuracy on measures for two students in their classes and indicate a possible error when the student’s response was inaccurate, some teachers chose to enter item-level data for their entire class. At first glance, the assumption could be made that teachers understood the potential benefits of such a data management system for ongoing progress monitoring. However, fewer teachers reported viewing individual student graphs of total scores, and less than half the teachers reported showing graphs to students. In fact, more teachers reported examining student skills and errors in the data management system and showing these graphics to students than examining and showing student graphs of progress monitoring scores of measures across time. It may be that teachers recognized how knowing about proficiency of algebra skills and the common errors students made could assist them as they decided how to alter instruction for their students. However, the basic tenets of progress monitoring that include decision making about instructional effectiveness tied to judgments about student rate of improvement may not have been realized by all the teachers or perhaps not emphasized enough in the PD. With progress monitoring, technically sound data should be used for ongoing instructional decision making, especially for determining when student progress is not adequate for meeting goal expectations. An equally important aspect in data-based individualization is the use of available progress monitoring data and other diagnostic data to determine the nature of the instructional modifications to better meet individual needs. Consequently, implementation of this PD system may need to include more specific content about both instructional decision making and appropriate intensification of intervention, especially for individual students who are not progressing as expected.

Future research with this online PD should include systematic evaluation of all the data management components of the system. Teacher evaluation of each module could be required prior to navigation to subsequent modules. Features that were optional for teachers or minimally required in the current study should be evaluated further. Recognizing that teachers frequently need support to make the best use of progress monitoring data and instructional decision making (Stecker et al., 2005; Espin et al., 2017; Wagner et al., 2017; Jung et al., 2018; Fuchs et al., 2021), a module that includes additional information focused on data interpretation and instructional utility may need to be developed. Perhaps a module for administrators or lead teachers could assist school staff if implementation of procedures were adopted for particular courses. Exploring how in-person or online data team meetings might be used effectively to support teacher decision making is another aspect that could be examined. In addition to refining data utilization aspects, the current online PD could include support for teachers about generally effective algebra instruction and how to intensify instruction when students continue to struggle.

The PD system also could be adapted easily for use with other areas of readily available mathematics measures, such as those for elementary and middle school levels in computational fluency and concepts/applications or problem solving or for early numeracy (e.g., number identification, quantity discrimination, missing number). It could be expanded for use with progress monitoring in other academic areas, such as reading, writing, and discipline-specific vocabulary.

Next steps for online professional development

The development of the online PD and data management system was led by the faculty member who originally developed ThinkSpace (Bender and Danielson, 2011). Following his retirement, a small company took over the development leading to the version used in this paper and in a subsequent research project. Due to transitions within the company, along with transitions at the university level, efforts to shift the system from the cloud system used by the developer to the university’s information technology system have required more time than was anticipated. New opportunities for completing this process have become available, and we anticipate that this online PD system will be moving toward wider accessibility in the near future.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the Iowa State University Institutional Review Board; Clemson University Institutional Review Board. The teacher/participants provided their written informed consent to participate in this study and aggregate information about students in their classes.

Author contributions

Both authors listed have made a substantial, direct, and intellectual contribution to the work, and approved it for publication.

Funding

The research reported here was supported by the Institute of Education Sciences, United States Department of Education, through Grant R324A090295 to Iowa State University as part of the Professional Development for Algebra Progress Monitoring Project.

Acknowledgments

The authors wish to acknowledge Jeannette Olson, Vince Genareo, Amber Simpson, and Renee Lyons for their contributions to the development and evaluation of the online PD system.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Author disclaimer

The opinions expressed here are those of the authors and do not represent views of the Institute or the United States Department of Education.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2022.944836/full#supplementary-material

References

Bender, H. S., and Danielson, J. A. (2011). A novel educational tool for teaching diagnostic reasoning and laboratory data interpretation to veterinary (and medical) students. Clin. Lab. Med. 31, 201–215. doi: 10.1016/j.cll.2010.10.007

Danielson, J. A., Mills, E. M., Vermeer, P. J., Preast, V. A., Young, K. M., Christopher, M. M., et al. (2007). Characteristics of a cognitive tool that helps students learn diagnostic problem solving. Educ. Tech. Res. Dev. 55, 499–520. doi: 10.1007/s11423-006-9003-8

Deno, S. L. (1985). Curriculum-based measurement: The emerging alternative. Except. Child. 52, 219–232. doi: 10.1177/001440298505200303

Espin, C., Chung, S., Foegen, A., and Campbell, H. (2018). “Curriculum-based measurement for secondary-school students,” in Handbook on Response to Intervention and Multi-Tiered Instruction, eds P. Pullen and M. Kennedy (New York, NY: Routledge), 291–315.

Espin, C. A., Förster, N., and Mol, S. E. (2021a). International perspectives on understanding and improving teachers’ data-based instruction and decision making: Introduction to the special series. J. Learn. Disabil. 54, 239–242. doi: 10.1177/00222194211017531

Espin, C. A., van den Bosch, R. M., van der Liende, M., Rippe, R. C. A., Beutick, M., Langa, A., et al. (2021b). A systematic review of CBM professional development materials: Are teachers receiving sufficient instruction in data-based decision making? J. Learn. Disabil. 54, 256–268. doi: 10.1177/0022219421997103

Espin, C. A., Wayman, M. M., Deno, S. L., McMaster, K. L., and de Rooij, M. (2017). Data-based decision-making: Developing a method for capturing teachers’ understanding of CBM graphs. Learn. Disabil. Res. Pract. 32, 8–21. doi: 10.1111/ldrp.12123

Foegen, A., Olson, J., Genareo, V., Dougherty, B., Froelich, A., Zhang, M., et al. (2017). Algebra Screening and Progress Monitoring data: 2013-2014 (Technical Report 3). Ames, IA: Iowa State University.

Foegen, A., Olson, J., and Impecoven-Lind, L. (2008). Developing progress monitoring measures for secondary mathematics: An illustration in algebra. Assess. Effect. Interv. 33, 240–249. doi: 10.1177/1534508407313489

Foegen, A. (2004-2007). Project AAIMS: Algebra assessment and instruction—Meeting standard (Award # HC324C030060). [Grant]. Washington, DC: U.S. Department of Education.

Foegen, A., and Stecker, P. M. (2009-2012). Professional Development for Algebra Progress Monitoring. (Award # R324A090295). [Grant]. Washington, DC: National Center for Special Education Research.

Fuchs, L. S., Fuchs, D., Hamlett, C. L., and Stecker, P. M. (1994). Effects of curriculum-based measurement and consultation on teacher planning and student achievement in mathematics operations. Am. Educ. Res. J. 28, 617–641. doi: 10.2307/1163151

Fuchs, L. S., Fuchs, D., Hamlett, C. L., and Stecker, P. M. (2021). Bringing data-based individualization to scale: A call for the next generation technology of teacher supports. J. Learn. Disabil. 54, 319–333. doi: 10.1177/0022219420950654

Genareo, V. R., Foegen, A., Dougherty, B., DeLeeuw, W., Olson, J., and Karaman Dundar, R. (2019). Technical adequacy of procedural and conceptual assessment measures in high school algebra. Assess. Effect. Interv. 46, 121–131. doi: 10.1177/1534508419862025

Helwig, R., Anderson, L., and Tindal, G. (2002). Using a concept-grounded, curriculum-based measure in mathematics to predict statewide test scores for middle school students with LD. J. Special Educ. 36, 102–112.

Jung, P.-G., McMaster, K. L., Kunkel, A. K., Shin, J., and Stecker, P. M. (2018). Effects of data-based individualization for students with intensive learning needs: A meta-analysis. Learn. Disabil. Res. Pract. 33, 144–155. doi: 10.1111/ldrp.12172

Ketterlin-Geller, L. R., Gifford, D. B., and Perry, L. (2015). Measuring middle school students’ algebra readiness: Examining validity evidence for three experimental measures. Assess. Effect. Interv. 41, 28–40.

Kruzich, L. (2013). Thinkspace technology improves critical thinking and problem solving in simulations. J. Acad. Nutr. Dietetics 113:A68. doi: 10.1016/j.jand.2013.06.239

Stecker, P. M., Fuchs, L. S., and Fuchs, D. (2005). Using curriculum-based measurement to improve student achievement: Review of research. Psychol. Sch. 42, 795–819. doi: 10.1002/pits.20113

Wagner, D. L., Hammerschmidt-Snidarich, S. M., Espin, C. A., Seifert, K., and McMaster, K. L. (2017). Pre-service teachers’ interpretation of CBM progress monitoring data. Learn. Disabil. Res. Pract. 32, 22–31. doi: 10.1111/ldrp.12125

Keywords: professional development for teachers, progress monitoring, algebra, curriculum-based measurement, online learning, data-based decision making

Citation: Stecker PM and Foegen A (2022) Developing an online system to support algebra progress monitoring: Teacher use and feedback. Front. Educ. 7:944836. doi: 10.3389/feduc.2022.944836

Received: 15 May 2022; Accepted: 22 August 2022; Published: 15 September 2022.

Edited by:

Erica Lembke, University of Missouri, United States

Reviewed by:

Michael Schurig, Technical University Dortmund, Germany

Kaitlin Bundock, Utah State University, United States

Copyright © 2022 Stecker and Foegen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Anne Foegen, afoegen@iastate.edu

†These authors have contributed equally to this work

Pamela M. Stecker Anne Foegen2*†
