- Department of Management, Binus Online Learning, Bina Nusantara University, Jakarta, Indonesia

The impact of student performance is the focus of online learning because it can determine the success of students and higher education institutions to get good ratings and public trust. This study explores comprehensively the factors that can affect the impact of student performance in online learning. An empirical model of the impact of student performance has been developed from the literature review and previous research. The test of reliability and validity of the empirical model was evaluated through linguist reviews and statistically tested with construct reliability coefficients and confirmatory factor analysis (CFA). Overall, the results of this study prove that the structural model with second-order measurements produces a good fit, while the structural model with first-order measurements shows a poor fit.

Introduction

Apart from the COVID-19 disaster, since 2021, the Indonesian government has launched a distance education system as the forerunner of e-learning. This system brings new colors to the learning process and challenges to adopt and innovate learning. The success of a learning system is highly dependent on mixed conditions, including the learning environment, teaching methods, resources, and learning expectations.

Many developed countries have integrated e-learning systems in higher education, but Indonesia, as a developing country, has not effectively adopted this technology. Several previous studies have acknowledged the severe challenges that hinder the integration of quality e-learning in universities, particularly in developing countries (Al-Adwan et al., 2021; Basir et al., 2021). Therefore, the various benefits of e-learning as a mode of education to improve the teaching-learning process and the barriers and challenges to adopting e-learning technology must also be considered.

The characteristics of students who take online education are different from students who apply traditional learning systems. Broad reach, a high level of flexibility, and easy access are reasons for students who are just starting a career in companies and professionals to improve their careers. For this reason, universities are campaigning for the efficiency of online education to meet the needs of students (Paul and Pradhan, 2019) resulting in a big boom in the education technology (EdTech) segment (Semeshkina, 2021).

The use of technology in online learning can improve the quality of learning and help students complete assignments quickly and gain insight and skills. In this study, the impact of online learning performance refers to the extent to which online learning affects student performance in terms of resource savings, productivity, competence, and knowledge (Aldholay et al., 2018). Universities need to pay attention to factors that can improve online learning performance by increasing student satisfaction and involvement.

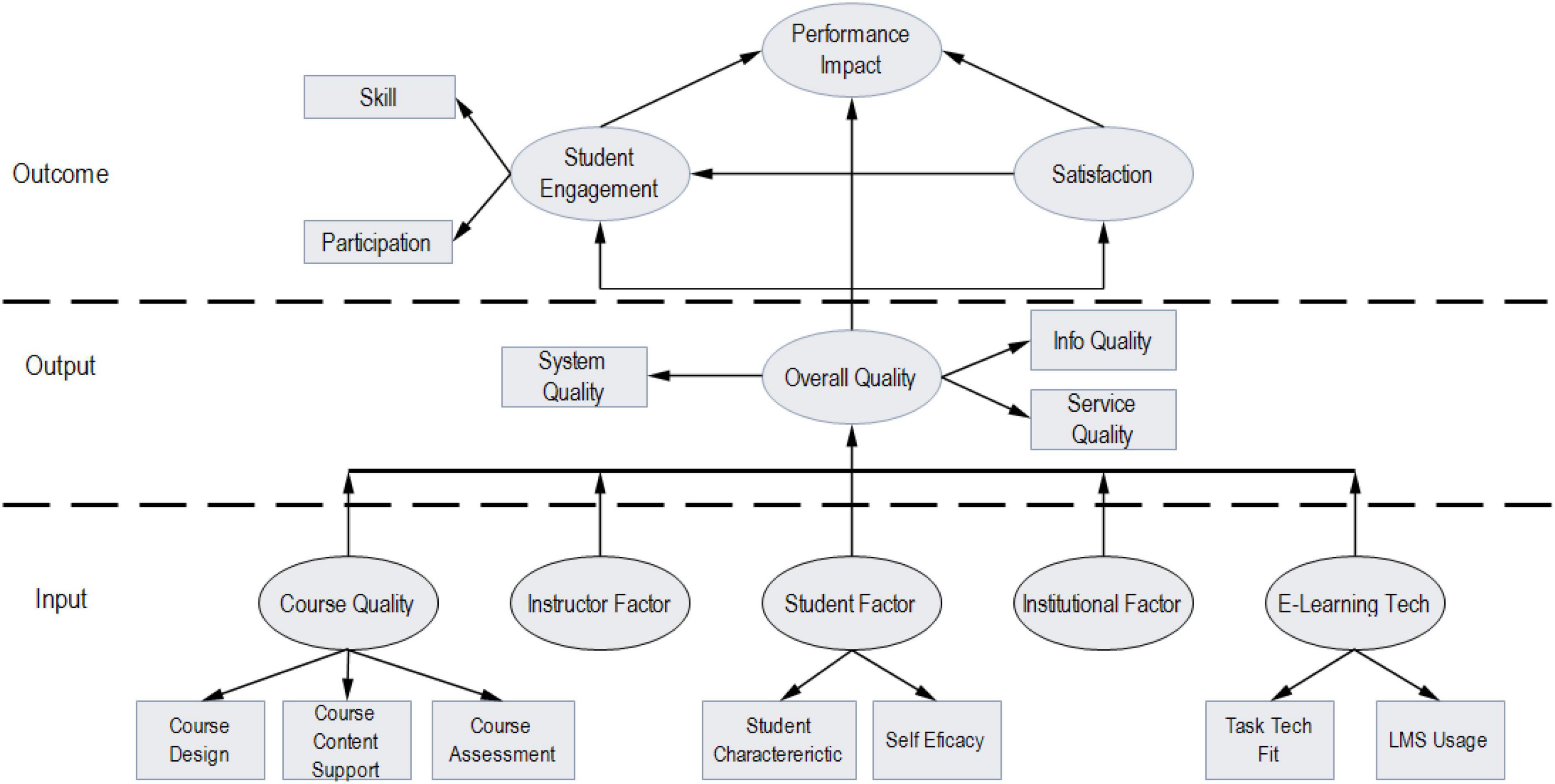

Previous researchers have investigated the determinants of student performance in the context of online learning (Zimmerman and Nimon, 2017; Aldholay et al., 2018; Büchele, 2021). The scope of their studies is limited to student factors in learning and college infrastructure as education service providers. This study extends the results of previous researchers by examining input factors [course quality (Jaggars and Xu, 2016; Debattista, 2018), instructor (Daouk et al., 2016), student, institutional (Hadullo et al., 2018), and technology (Kissi et al., 2018; Almaiah and Alismaiel, 2019; Sheppard and Vibert, 2019; Ameri et al., 2020; Yadegaridehkordi et al., 2020)], output [overall quality (Aldholay et al., 2018; Hadullo et al., 2018; Almaiah and Alismaiel, 2019; Thongsri et al., 2019)] and outcome [engagement (Büchele, 2021), satisfaction and performance (Aldholay et al., 2018)] during the online learning process. Based on the experience and knowledge of researchers, these factors can improve the performance of online learning students. This study aims to propose a conceptual framework for students’ performance impact in online learning (Figure 1). This paper therefore serves as a preliminary study in the development of the proposed model.

Literature review

Online learning in higher education

Online learning has become an appropriate and attractive solution for students who pursue their education while undergoing busy activities (Seaman et al., 2018). Therefore, universities are constantly looking for ways to improve the quality of online courses to increase student satisfaction, enrollment, and retention (Legon and Garrett, 2017).

A unique feature of online learning is that students and lecturers are physically far apart and require a medium for delivering course material (Wilde and Hsu, 2019). The interaction of students and lecturers is mediated by technology, and the design of virtual learning environments significantly impacts learning outcomes (Bower, 2019; Gonzalez et al., 2020). Online learning has been studied for decades, and the effectiveness of online teaching results from instructional design and planning (Hodges et al., 2020). The COVID-19 pandemic forced students worldwide to shift from offline to online learning environments. Students and teachers have limited capacities for information processing, and a combination of learning modalities may result in cognitive overload that impairs the ability to learn new information effectively (Patricia Aguilera-Hermida, 2020). In addition, a lack of confidence in the new technology used for learning, or the absence of cognitive engagement and social connections, leads to less than optimal student learning outcomes (Bower, 2019).

The existence of technology, if used effectively, can provide opportunities for students and teachers to collaborate (Bower, 2019; Gonzalez et al., 2020). The success of the transition from offline to online learning is strongly influenced by the intention and usefulness of technology (Yakubu and Dasuki, 2018; Kemp et al., 2019), so the effectiveness of online learning is highly dependent on the level of student acceptance (Tarhini et al., 2015). Therefore, it is essential to analyze the factors related to online learning to achieve student learning outcomes.

Course quality

As online learning continues to mature and evolve in higher education, faculty and support staff (instructional designers, developers, and technologists) need guidance on how to best design and deliver practical online courses. Course quality standards are a valuable component in the instructional design process. They help guide course writers and identify needed improvements in courses and programs and create consistency in faculty expectations and student experience (Scharf, 2017).

In general, quality is an essential factor in online learning to provide a helpful learning experience for students (Barczyk et al., 2017), while course quality supports university learning performance. Quality Matters™ (QM) is an international organization that involves collaboration between institutions and creating a shared understanding of online course quality (Ralston-Berg, 2014). This research measures course quality by three dimensions: course design, course content support, and course assessment (Hadullo et al., 2018). These three dimensions are determinants in assessing the quality of learning.

Instructor factor

The quality of the instructor in delivering the material becomes the input to achieve learning performance (Ikhsan et al., 2019). To facilitate an active learning process, instructors should use strategies to increase participation in learning. While the responsibility for learning lies with the learner, the instructor plays an essential role in enhancing learning and engagement in the online environment (Arghode et al., 2018). As an essential actor in the classroom, instructors must have psychological similarities with students to help academically lacking students by changing perceptions of external barriers and stereotypes (Sullivan et al., 2021).

Several research results have proven the influence of instructor interactivity in the classroom on online teaching, including active learning (Muir et al., 2019), instructor presence (Roque-Hernández et al., 2021), discussion and assessment techniques (Chakraborty et al., 2021; McAvoy et al., 2022), and feedback (Kim and Kim, 2021). Some of these study areas are topics often studied with the needs and values of instructor interaction. In this study, the importance of instructor interactivity in online learning is related to online discussion forum activities and instructor interaction.

Student factor

The characteristics of students who take online learning education are different from those who study conventionally (face to face). Several essential factors drive student success in online learning: understanding computers and the internet, personal desires, motivation from instructors, and reasonable access to online learning systems (Hadullo et al., 2018; Bashir et al., 2021; Glassman et al., 2021; Rahman et al., 2021). Self-efficacy is explained by social cognitive theory as the ability to self-regulate (Bandura, 2010). According to social cognitive theory, people can develop self-efficacy by observing how other people achieve goals and by having made various successful attempts in the past to achieve challenging goals (Duchatelet and Donche, 2019). People who have high levels of self-efficacy tend to be confident in their ability to succeed in challenging tasks, drawing both on their own past successes and on observing others achieve goals.

Institutional factor

Institutional theory has been used to explore organizational behavior toward technology acceptance, as it explains how institutions adapt to institutional change (Rohde and Hielscher, 2021). Currently, most higher education institutions have migrated from traditional to online learning systems, thereby changing traditional learning environments such as the physical presence of teachers, classrooms, and exams (Bokolo et al., 2020). Today’s developing technologies have improved education through online learning, teleconferencing, computer-assisted learning, web-based distance learning, and other technologies (Bailey et al., 2022; Fauzi, 2022). Online learning systems provide more flexibility and improve teaching and learning processes, offering more opportunities for reflection and feedback (Archambault et al., 2022). Online learning offers interactive teaching and easy access, and it is largely cost-effective (Sweta, 2021).

E-learning technology

E-learning technology in this study is defined as the learning media used by universities to deliver online learning. The task-technology fit (TTF) model has been used to assess how technology generates performance, evaluate the effect of use, and assess the fit between task requirements and technological competence (Wu and Chen, 2017). The TTF model suggests that the user accepts the technology because it is appropriate to the task and improves learning performance (Kissi et al., 2018). Technology acceptance is determined by the individual’s understanding of and attitude toward technology, but the compatibility between task and technology must also be considered (Zhou et al., 2010). When a student decides to use a technology such as an LMS, the decision most likely reflects a match between the assignment and the technology.

Overall quality

Developments and challenges in information systems inspire researchers and practitioners to improve the quality and functionality of a new system to take advantage of its growth potential (Aldholay et al., 2018). Overall quality is understood as a new construct that includes system quality, information quality, and service quality (Ho et al., 2010; Isaac et al., 2017d). System quality is defined as the extent to which users believe that the system is easy to use, easy to learn, easy to connect, and fun to use (Jiménez-Bucarey et al., 2021). Information quality is understood as the extent to which system users think that online learning information is up-to-date, accurate, relevant, comprehensive, and organized (Raija et al., 2010). Service quality is referred to through various attributes, such as tangible, reliability, responsiveness, assurance, functionality, interactivity, and empathy (Preaux et al., 2022).

Student engagement

Student engagement in online learning is when they use online learning platforms to learn, including behavioral, cognitive, and emotional engagement (Hu et al., 2016). Student engagement in online learning is not only due to the behavioral performance of reading teaching materials, asking questions, participating in interactive activities, and completing homework, but more importantly, cognitive performance (Lee et al., 2015). In this study, cognitive behavior is all mental activities that enable students to relate, assess, and consider an event to gain knowledge afterward. In addition, cognitive behavior is closely related to a person’s intelligence and skill level. For example: when someone is studying, building an idea, and solving a problem.

Student behavioral engagement is essential in online learning but is difficult to define clearly and fully reflect student efforts. So, it must consider students’ perception, regulation, and emotional support in the learning process (ChanMin et al., 2015). Students must fully enter online learning, including the quantity of engagement and quality of engagement, communication with others and conscious learning, guidance, assistance from others, and self-management and self-control.

Student satisfaction

Perceived satisfaction is not limited to marketing concepts but can also be used in the context of online learning (Caruana et al., 2016). User satisfaction is one of the leading indicators when assessing success in adopting a new system (Montesdioca and Maçada, 2015; DeLone and McLean, 2016). User satisfaction also refers to perceiving a system as applicable and wanting to reuse it. In the context of online learning, student satisfaction is defined as the extent to which students who use online learning are satisfied with their decision to use it and how well it meets their expectations (Roca et al., 2006). Students who are satisfied while studying with an online learning system will strive to achieve good academic scores.

Student performance impact

In the context of education, performance is the result of the efforts of students and lecturers in the learning process and students’ interest in learning (Mensink and King, 2020). The essence of education is student academic achievement; therefore, student achievement is considered the success of the entire education system. Student academic achievement determines the success and failure of academic institutions (Narad and Abdullah, 2016).

It is crucial to explore problems with online learning systems in higher education to improve the student experience in learning. Therefore, the university’s ability to design effective online learning will impact university performance and student performance. The failure of online learning design and technology can frustrate students and lead to negative perceptions of students (Gopal et al., 2021).

With rapidly changing technology and the introduction of many new systems, researchers focus on the results of using systems in terms of performance improvement to evaluate and measure system success (Montesdioca and Maçada, 2015; Isaac et al., 2017a,b,c,d). Performance impact is defined as the extent to which the use of the system improves the quality of work by helping to complete tasks quickly, enabling control over work, improving job performance, eliminating errors, and increasing work effectiveness (Isaac et al., 2017d; Aldholay et al., 2018). In this study, performance impact is interpreted as an outcome of the use of technology in online learning.

Materials and methods

Participants

As a preliminary study, this study involved 206 students at a private university in Jakarta, Indonesia, that has implemented an online learning system. At the university, only five study programs fully implement the online learning system. Therefore, we decided to take all five study programs as the unit of analysis: Management Department, Accounting Department, Information System Department, Computer Science Department, and Industrial Engineering. Stratified sampling was used, and each study program returned about 41–42 responses. Researchers sent questionnaires to each head of department to distribute to students online. All questionnaires were received within 1 week. Each participant received a souvenir for taking 15–20 min to answer all the questions in the questionnaire.

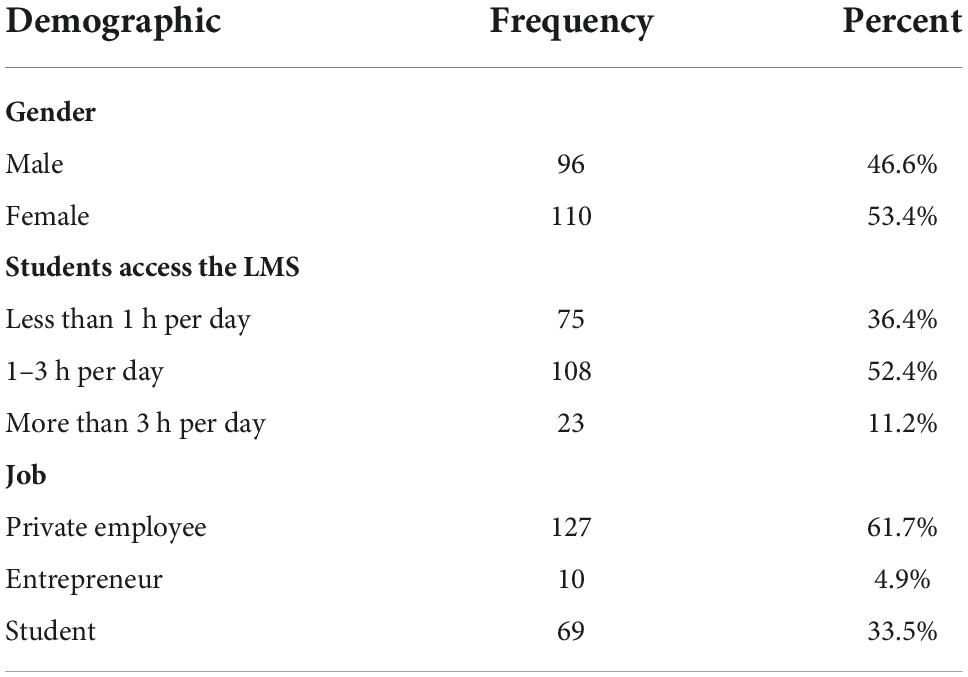

A total of 206 responses were collected, from 96 female students and 110 male students. Of these, 108 students access the LMS for 1–3 h per day, 75 students access the LMS for less than 1 h per day, and 23 students access the LMS for more than 3 h per day. Most students are private employees (n = 127), some are entrepreneurs (n = 10), and the rest are students only (n = 69) (Table 1).

Questionnaire development

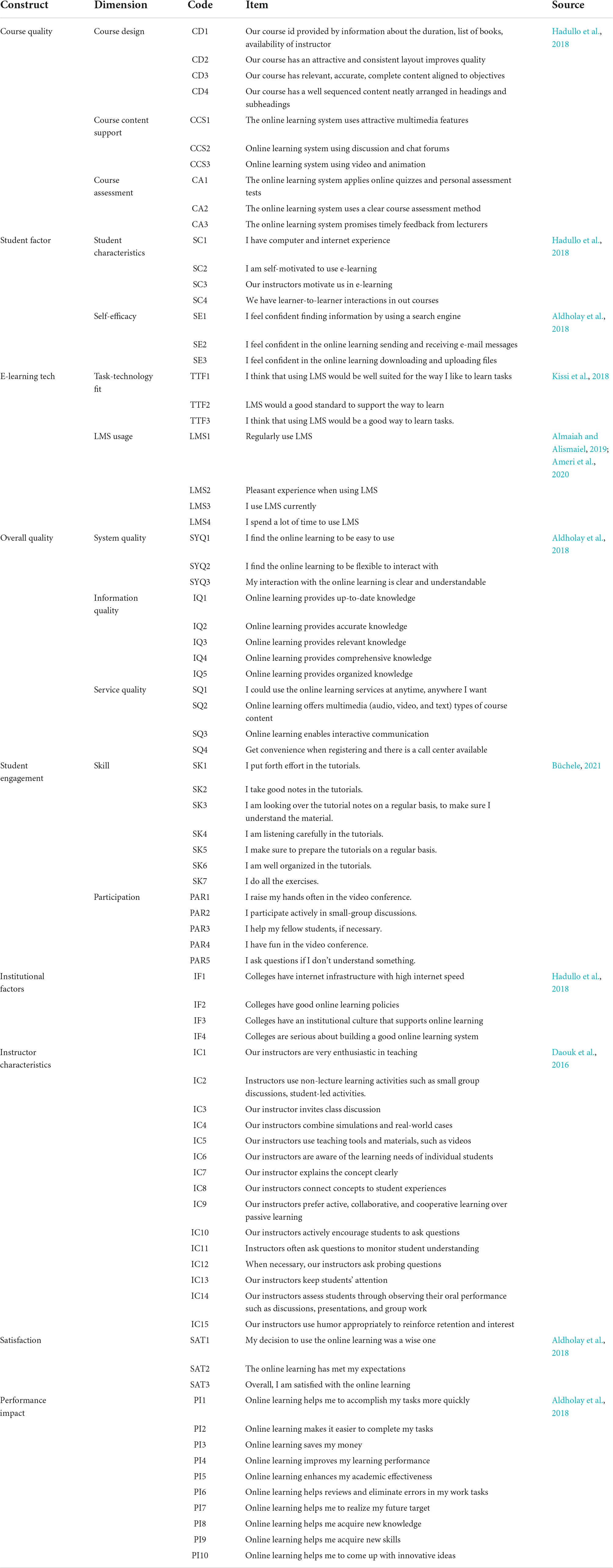

The questionnaire used in this study was adapted from several prior studies that match the research context, i.e., online learning; details are given in Table 2. The first draft of the questionnaire, containing 80 statement items, was tested virtually with ten students and one Indonesian language expert to ensure that each statement was easy to understand. All items are answered on a 5-point Likert scale, from (1) strongly disagree to (5) strongly agree.

Validation process

The first step in the questionnaire item validation procedure starts from the pre-test and linguist review. Then the empirical data collected was calculated using Confirmatory Factor Analysis (CFA) and Construct Reliability (CR) analysis as a condition for construct validity and internal consistency.

CFA is a statistical tool for identifying the structure of a construct from a set of manifest variables, or for testing whether a variable is adequately represented by the manifest indicators assumed to build it. Confirmatory analysis is therefore suitable for testing a theory about a variable against its indicators, where the variable is assumed to be measured only by those indicators (Hair et al., 2019). The CFA results show whether the multiple items in the questionnaire measure the constructs as hypothesized by the underlying theoretical framework, and so provide empirical evidence of the validity of the instrument’s scores under that framework (George and Mallery, 2019). Construct reliability (CR) measures the internal consistency of the indicators of a variable, that is, the degree to which the indicators jointly reflect the construct they form. Construct reliability is considered acceptable if the CR value is > 0.70 (Hair et al., 2019).
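As an illustration of how a CR value can be obtained, the following minimal sketch computes construct reliability from standardized loadings using the commonly cited composite reliability formula, CR = (Σλ)^2 / [(Σλ)^2 + Σ(1 − λ^2)]. The function name and the loadings are hypothetical and are not taken from this study’s data; the sketch assumes standardized loadings with uncorrelated error terms.

```python
def construct_reliability(loadings):
    """Composite (construct) reliability from standardized loadings.

    Assumption: each indicator's error variance equals 1 - loading**2,
    i.e. standardized loadings with uncorrelated errors.
    """
    if not loadings:
        raise ValueError("at least one loading is required")
    sum_loadings = sum(loadings)
    sum_errors = sum(1.0 - lam ** 2 for lam in loadings)
    return sum_loadings ** 2 / (sum_loadings ** 2 + sum_errors)


# Hypothetical standardized loadings for a three-indicator construct.
example_loadings = [0.7, 0.8, 0.9]
cr = construct_reliability(example_loadings)
print(f"CR = {cr:.3f}; acceptable (> 0.70): {cr > 0.70}")
```

Under these assumptions, a construct whose indicators all load strongly yields a CR well above the 0.70 cutoff, whereas weak loadings pull the value down.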

In the Structural Equation Modeling (SEM) framework, both variance-based and covariance-based, a questionnaire item is considered valid if its loading factor is at least 0.5 for covariance-based analysis (Hair et al., 2019) and at least 0.7 for variance-based analysis (Hair Joseph et al., 2019). In addition, the average variance extracted (AVE) value should be more than 0.5 (Hair et al., 2019). In CFA, several goodness-of-fit indices, namely Chi-square (χ2), Normed Chi-Square (NCS; χ2/df), Root Mean Square Error of Approximation (RMSEA), and Comparative Fit Index (CFI), were calculated to assess the fit of the model framework under study (Kline, 2015).
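The loading and AVE criteria can be sketched as follows. This is a minimal illustration, assuming AVE is computed as the mean of the squared standardized loadings; the function names, default cutoffs as parameters, and example loadings are hypothetical and do not reproduce the authors’ software output.

```python
def average_variance_extracted(loadings):
    """AVE as the mean of the squared standardized loadings."""
    if not loadings:
        raise ValueError("at least one loading is required")
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def convergent_validity_ok(loadings, min_loading=0.5, min_ave=0.5):
    """True when every loading reaches min_loading and AVE exceeds min_ave.

    The defaults mirror the covariance-based cutoffs quoted in the text;
    pass min_loading=0.7 for the variance-based cutoff.
    """
    all_loadings_ok = all(lam >= min_loading for lam in loadings)
    return all_loadings_ok and average_variance_extracted(loadings) > min_ave


# Hypothetical loadings, not taken from this study's tables.
strong = [0.7, 0.8, 0.9]
weak = [0.4, 0.8, 0.9]
print(round(average_variance_extracted(strong), 3), convergent_validity_ok(strong))
print(round(average_variance_extracted(weak), 3), convergent_validity_ok(weak))
```

Note that the second construct fails even though its AVE exceeds 0.5, because one indicator falls below the loading cutoff; both conditions are checked separately.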

Chi-square, NCS, and RMSEA statistics, as absolute fit indices, can be used to indicate the quality of the theoretical model being tested (Kline, 2015; Hair et al., 2019). The χ2-test shows the difference between the observed and expected covariance matrices. Therefore, a smaller χ2-value indicates a better-fitting model (Gatignon, 2010). The χ2-test should be non-significant for models with an acceptable fit. However, the statistical significance of the χ2-test is very sensitive to sample size (Kline, 2015; Hair et al., 2019). Therefore, the NCS should also be considered. NCS is equal to chi-square divided by degrees of freedom (χ2/df). A smaller NCS value indicates a better model fit, and an NCS value equal to or less than 5 supports a good model fit (West et al., 2012; Hair et al., 2019). Another fit index is the RMSEA, which quantifies the difference between the population covariance matrix and the theoretical model. A smaller RMSEA indicates a better model, and a value below 0.08 marks the limit of acceptable model fit (Gatignon, 2010; West et al., 2012; Hair et al., 2019). CFI was also used to assess model fit in this study. A CFI value greater than 0.90 indicates an acceptable model fit (Kline, 2015; Hair et al., 2019). The alpha (α) level in this study was set at 0.05 for the goodness-of-fit chi-square test (χ2).
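The three indices described above can be computed directly from the model and baseline chi-square statistics. The sketch below uses the textbook point-estimate formulas and assumes N − 1 in the RMSEA denominator; some SEM programs use N instead, so reported values may differ slightly. The function names and the numbers in the example are hypothetical and are not the values reported in this study.

```python
import math


def normed_chi_square(chi2, df):
    """NCS: chi-square divided by its degrees of freedom."""
    return chi2 / df


def rmsea(chi2, df, n):
    """RMSEA point estimate (assumption: N - 1 in the denominator)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_model, df_model, chi2_baseline, df_baseline):
    """CFI: model misfit relative to the independence (baseline) model."""
    d_model = max(chi2_model - df_model, 0.0)
    d_baseline = max(chi2_baseline - df_baseline, d_model)
    if d_baseline == 0:
        return 1.0
    return 1.0 - d_model / d_baseline


# Hypothetical inputs for illustration only.
chi2_m, df_m, n_obs = 200.0, 100, 201
chi2_b, df_b = 2100.0, 120
print(f"NCS   = {normed_chi_square(chi2_m, df_m):.3f}")
print(f"RMSEA = {rmsea(chi2_m, df_m, n_obs):.3f}")
print(f"CFI   = {cfi(chi2_m, df_m, chi2_b, df_b):.3f}")
```

Because NCS and RMSEA both divide the chi-square discrepancy by the degrees of freedom, they are less affected than the raw χ2-test by model size, which is why they are read alongside it.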

Results

Descriptive analyses

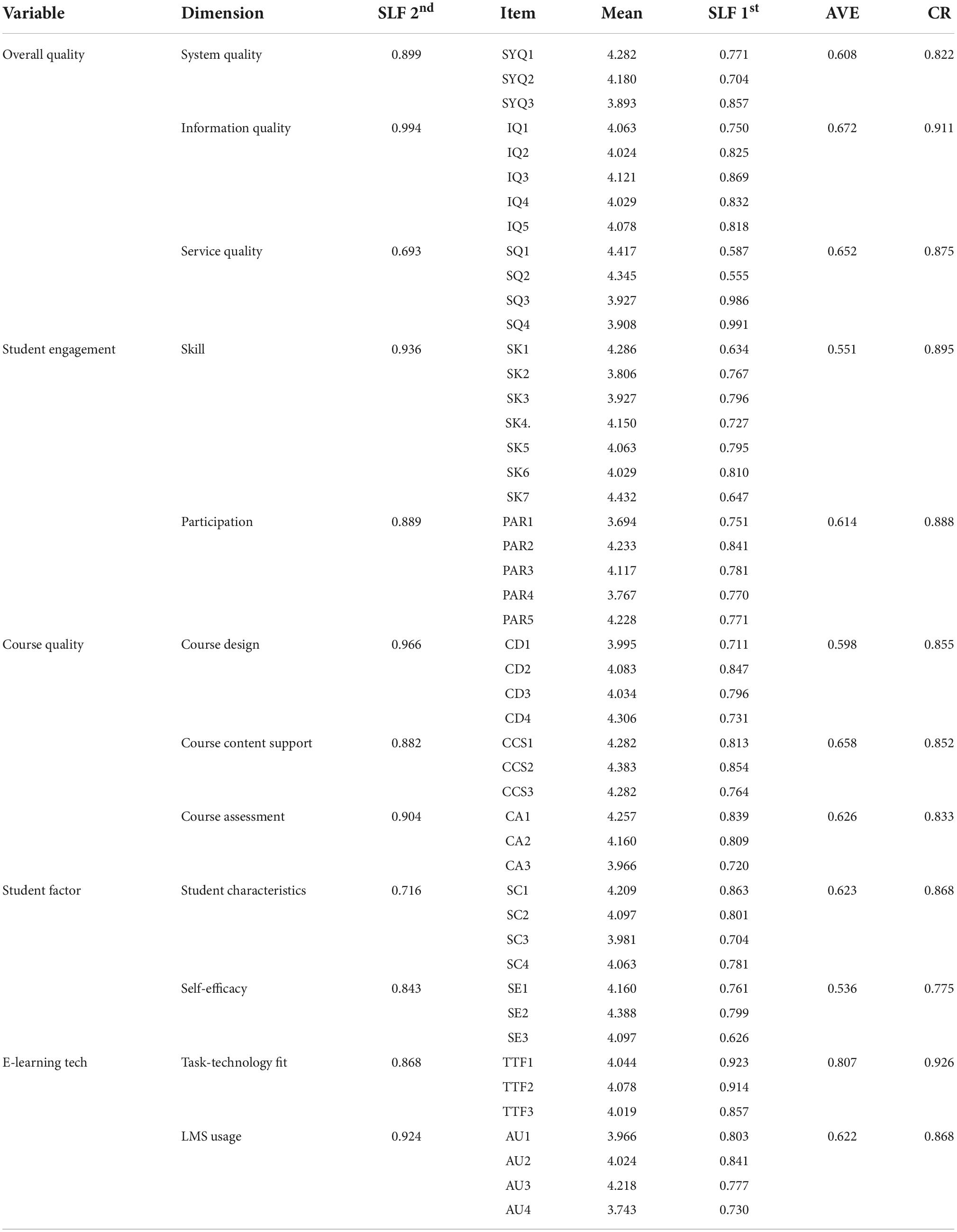

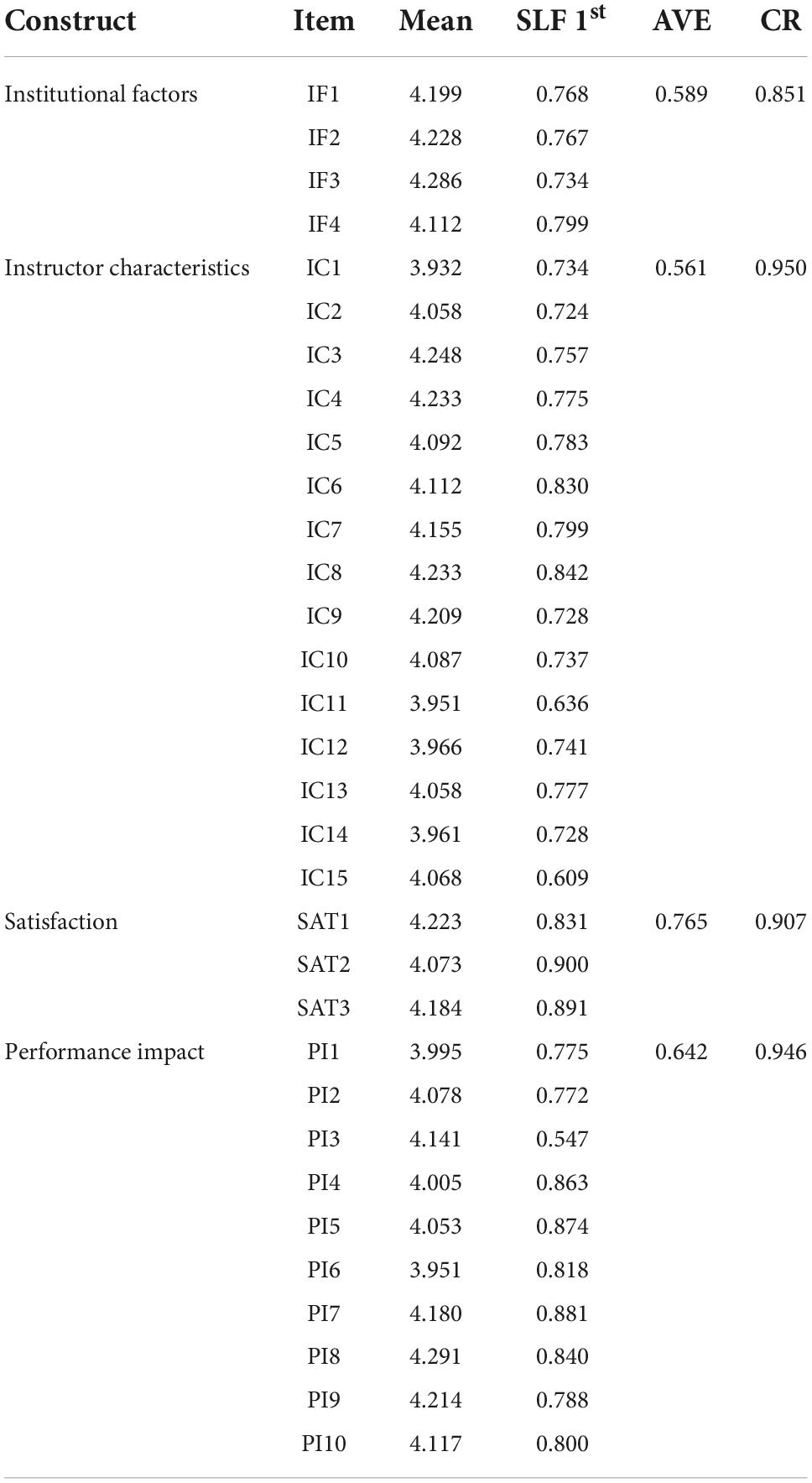

Based on descriptive analysis (Tables 3, 4), the mean value of overall quality items is in the interval range of 3.893 (SYQ3) to 4.417 (SQ1). It shows that students have given positive responses to all overall quality items. Furthermore, the mean value of student engagement items is in the interval range of 3.694 (PAR1) to 4.432 (SK7), which means that students give positive responses to all student engagement items. In the course quality construct, the mean value ranges from 3.966 (CA3) to 4.306 (CD4). That is, all students gave positive responses to the course quality items. The mean value of the student factor is in the range of 3.981 (SC3) to 4.388 (SE2). In e-learning technology, the mean value ranges from 3.743 (AU4) to 4.218. Students gave a positive response to the student factor and e-learning technology. Finally, on the constructs of institutional factors, instructor characteristics, satisfaction, and performance impact, the average student responded positively to all statement items because the mean value was in the range of 3.932–4.291. It can be concluded that all students gave a positive response to all the constructs measured in this study.

Normality test

Hair et al. (2019) state that testing data normality is an absolute requirement in multivariate analysis. If the data are not normally distributed, the validity and reliability of the results can be affected. In this study, we used the One-Sample Kolmogorov-Smirnov Test with the Monte Carlo method (Metropolis and Ulam, 1949). The resulting Monte Carlo significance value is 0.120 > 0.05, so the data used in testing validity and reliability can be treated as normally distributed.
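The idea behind a Monte Carlo significance value for a one-sample Kolmogorov-Smirnov test can be sketched as follows. This is an illustrative reimplementation using only the Python standard library, not the procedure of the statistical package used in this study: it assumes the normal mean and standard deviation are estimated from the sample, and it estimates the p-value by comparing the observed KS distance with distances from simulated normal samples. All function names, the number of simulations, and the seed are hypothetical choices.

```python
import math
import random
from statistics import NormalDist, mean, stdev


def ks_statistic(sample):
    """Largest gap between the empirical CDF and a normal CDF whose
    mean and SD are estimated from the same sample."""
    n = len(sample)
    fitted = NormalDist(mean(sample), stdev(sample))
    d = 0.0
    for i, x in enumerate(sorted(sample)):
        cdf = fitted.cdf(x)
        d = max(d, (i + 1) / n - cdf, cdf - i / n)
    return d


def monte_carlo_p_value(sample, n_sim=500, seed=0):
    """Share of simulated normal samples whose KS distance is at least
    as large as the observed one (with the usual +1 correction)."""
    rng = random.Random(seed)
    observed = ks_statistic(sample)
    n = len(sample)
    exceed = 0
    for _ in range(n_sim):
        simulated = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if ks_statistic(simulated) >= observed:
            exceed += 1
    return (exceed + 1) / (n_sim + 1)


# Hypothetical data: evenly spaced normal quantiles versus a skewed series.
normal_like = [NormalDist().inv_cdf((i + 0.5) / 100) for i in range(100)]
skewed = [math.exp(i / 10) for i in range(100)]
print("normal-like p > 0.05:", monte_carlo_p_value(normal_like) > 0.05)
print("skewed p > 0.05:", monte_carlo_p_value(skewed) > 0.05)
```

A p-value above 0.05 means the hypothesis of normality is not rejected, which is the decision rule applied to the reported significance value of 0.120.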

The result of internal consistency and confirmatory factor analysis

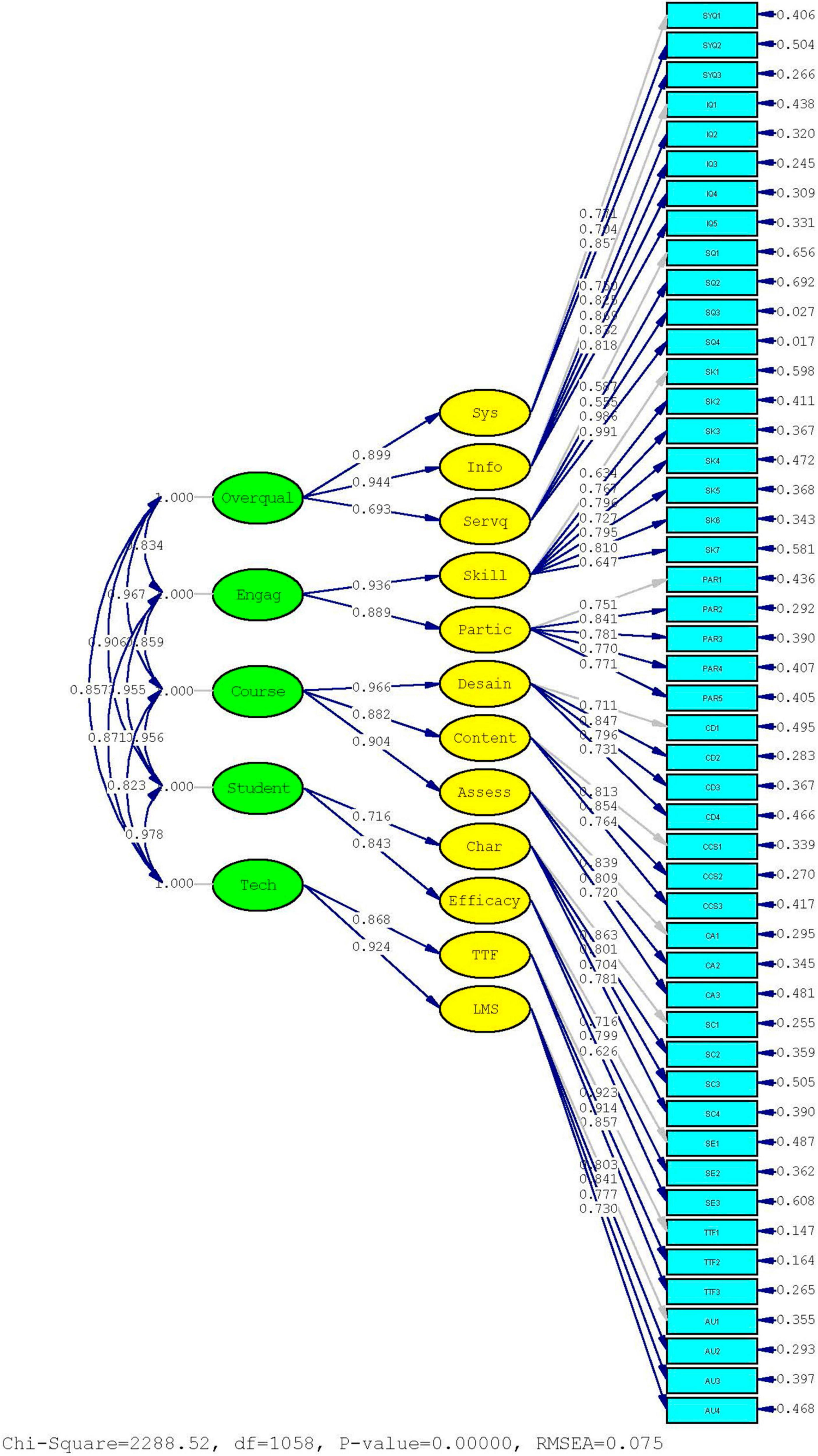

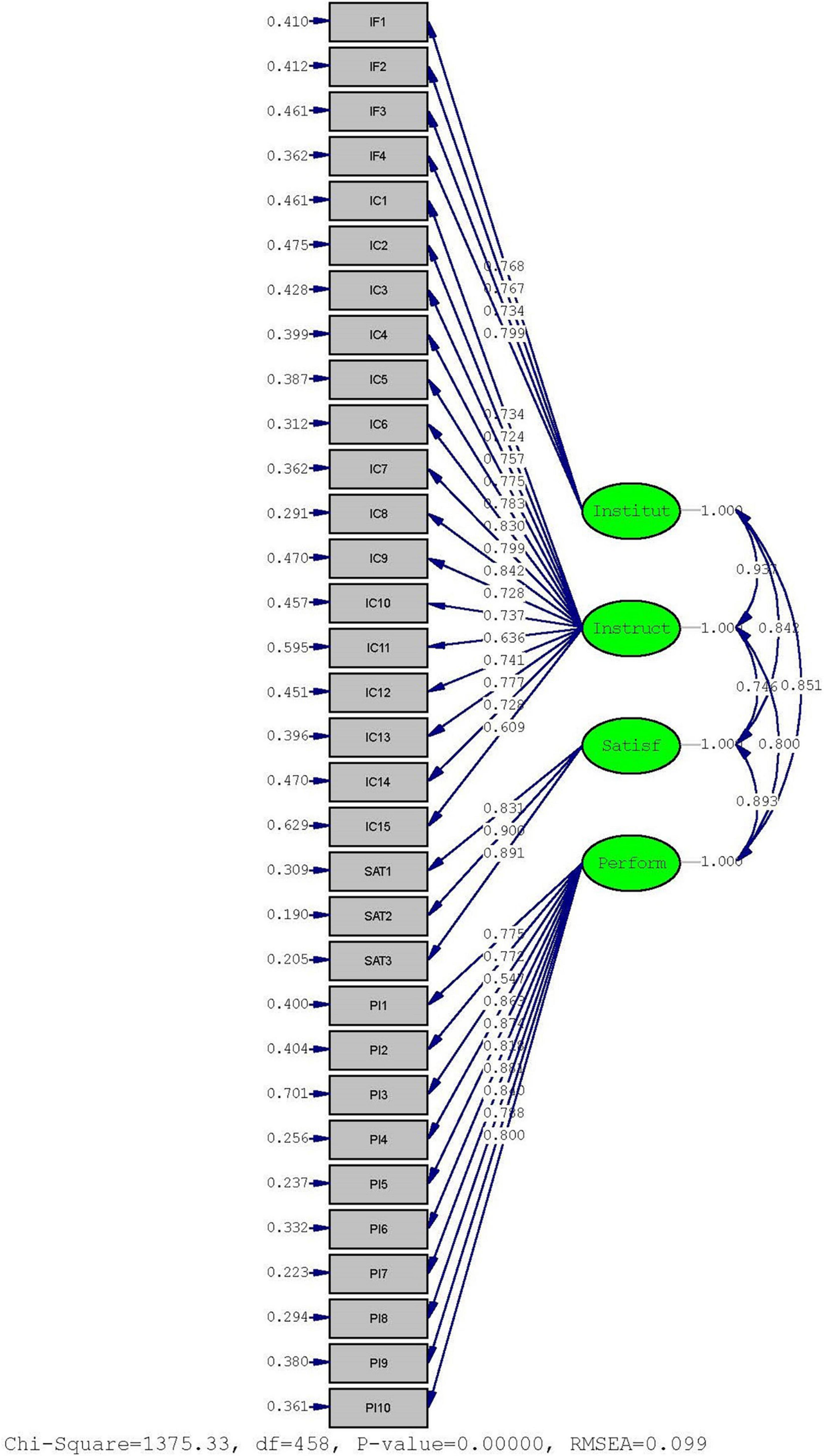

Tables 3, 4 present the overall results of the validity and reliability tests, namely the CFA, AVE, and CR analyses. A construct can be unidimensional or multidimensional, which affects how its validity and reliability are tested. When a construct is unidimensional, validity and reliability are tested using first-order CFA; when it is multidimensional, second-order CFA is used. In this study, the constructs of course quality, student factor, e-learning technology, overall quality, and student engagement are multidimensional, so they must be measured using a second-order procedure. The constructs of institutional factors, instructor characteristics, satisfaction, and performance impact are unidimensional, so they must be measured using a first-order procedure.

Reliability testing for all constructs in the theoretical model, both second order and first order, resulted in a CR value of more than 0.7. It means that every dimension and indicator of each measured construct reflects the primary construct consistently. In other words, the questionnaire has a high level of internal consistency. Likewise, for validity testing, all indicators and dimensions of the primary constructs produce standardized loading factor and AVE values of more than 0.5. It means that each dimension and indicator can reflect its primary construct. In conclusion, the questionnaire used in this study shows a high level of validity and reliability. In addition, examination of the correlations between the various factors shows that the factors are highly correlated, and the standardized loading factor (SLF) coefficients between the tested factors and their items show that no loading falls below the minimum acceptable loading threshold.

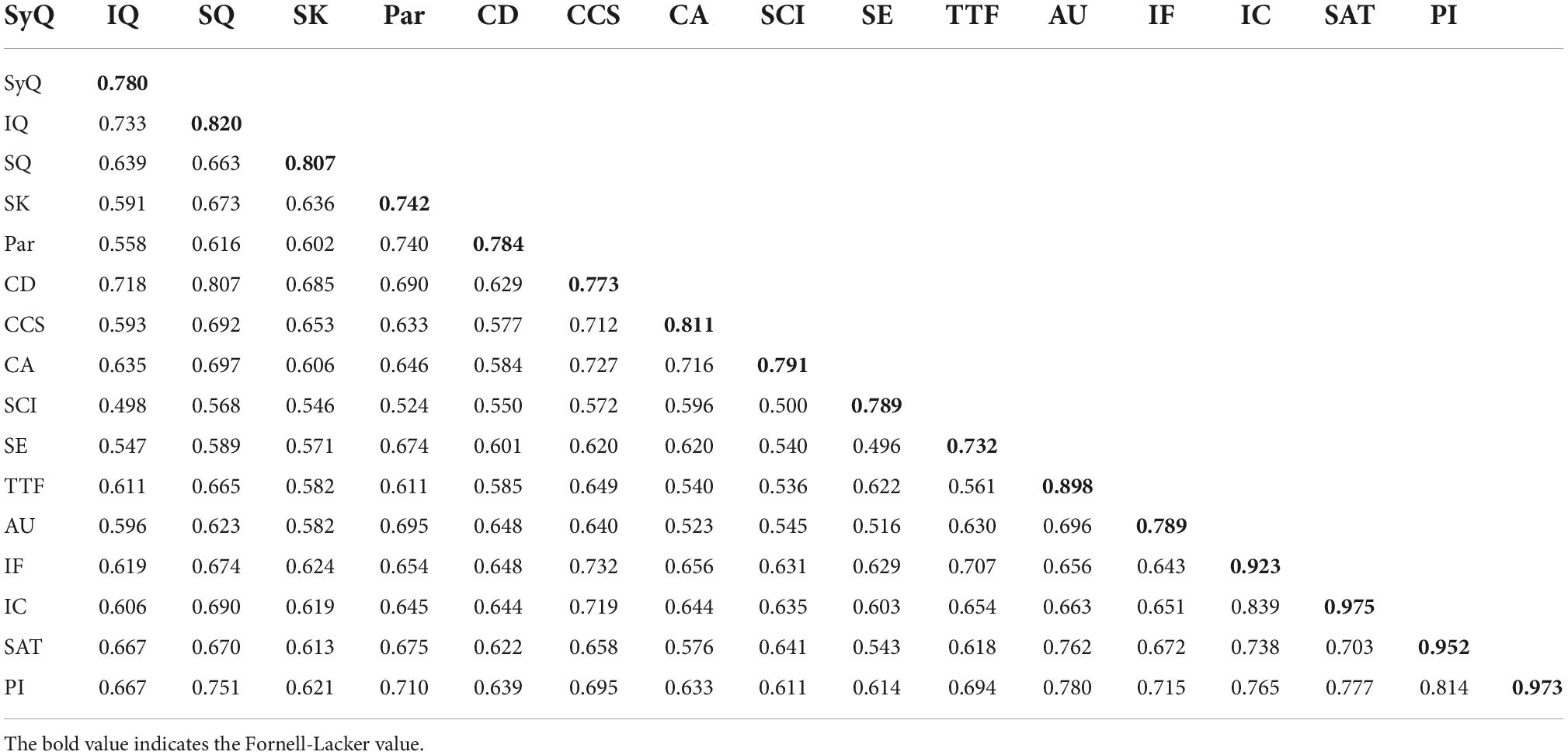

Discriminant validity

Discriminant validity means that two constructs are conceptually distinct and show a sufficient empirical difference. The point is that a combined set of indicators from different constructs is not expected to be unidimensional. The discriminant validity test in this study used the Fornell-Larcker criterion. The Fornell-Larcker postulate states that a latent variable shares more variance with its underlying indicators than with other latent variables. Interpreted statistically, the AVE value of each latent variable must be greater than its highest r2-value with any other latent variable (Henseler et al., 2015). Table 5 shows that the square root of the AVE for each variable is greater than its correlations with the other variables. Discriminant validity is therefore fulfilled.
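The Fornell-Larcker comparison can be expressed as a short check. The sketch below is illustrative only: the function name, the construct labels, and the AVE and correlation values are hypothetical and are not the entries of Table 5. It flags any construct whose square root of AVE does not exceed the absolute correlation with another construct.

```python
import math


def fornell_larcker_violations(ave, correlations):
    """Return (construct, pair) entries where sqrt(AVE) <= |r|.

    ave: dict mapping construct name -> AVE value.
    correlations: dict mapping (construct_a, construct_b) -> correlation.
    An empty list means the criterion holds for every pair.
    """
    violations = []
    for (a, b), r in correlations.items():
        for construct in (a, b):
            if math.sqrt(ave[construct]) <= abs(r):
                violations.append((construct, (a, b)))
    return violations


# Hypothetical values for three constructs.
ave_values = {"satisfaction": 0.64, "engagement": 0.58, "performance": 0.70}
latent_correlations = {
    ("satisfaction", "engagement"): 0.55,
    ("satisfaction", "performance"): 0.62,
    ("engagement", "performance"): 0.48,
}
problems = fornell_larcker_violations(ave_values, latent_correlations)
print("criterion met" if not problems else problems)
```

Comparing the square root of AVE with the correlation is equivalent to comparing AVE with the squared correlation, which is the form stated in the text.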

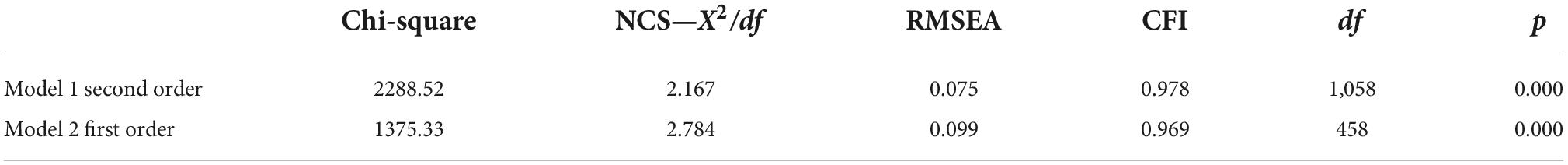

This study also assesses the goodness of fit of the theoretical model using the chi-square, NCS, RMSEA, and CFI statistics. Since chi-square is too sensitive to sample size (Hair et al., 2019), the ratio of chi-square to degrees of freedom (χ2/df, NCS) was applied. An NCS value of less than 3 means that the model fit is acceptable (Hair et al., 2019). The results are presented in Table 6 and Figure 2. In the first model, overall quality, course quality, student factor, student engagement, and e-learning technology, as measured by the second-order model, resulted in a significant chi-square value (χ2 = 2288.520, p = 0.000 < 0.05), which on its own indicates that the model does not fit well. However, the NCS value of the theoretical model indicates model fit (NCS = 2.167 < 3), and the RMSEA value (0.075 < 0.08) indicates that the theoretical model fits the population covariance matrix. Because the RMSEA and NCS values meet the goodness-of-fit requirements, they support a reasonable fit between the theoretical model and the data. CFI is also used to determine whether the model fits the data. CFI compares the fit of the theoretical model under test with that of the independence model, in which all latent variables are uncorrelated. In this study, the CFI value of 0.978 is greater than 0.90, so it can be concluded that the model fits.

The second model, measured with first-order CFA, comprises institutional factors, instructor characteristics, satisfaction, and performance impact (Figure 3). The first-order model shows a poor fit to the data (χ2 = 1375.33; χ2/df = 2.784; CFI = 0.969; RMSEA = 0.099). Because the RMSEA value of 0.099 exceeds 0.08, the measurement model does not meet this fit criterion. However, other researchers state that an RMSEA < 0.10 still indicates fit, albeit a poor one (Singh et al., 2020). In addition, the NCS value of 2.784 < 3 and the CFI of 0.968 > 0.90 meet the goodness-of-fit criteria.

Discussion and implication

This study aims to propose a conceptual framework for measuring the impact of student performance in online learning on a sample of students from various study programs. Because the learning models in social and technical study programs are different, measuring the two groups of samples is necessary. This study offers an instrument for the online learning context that focuses on measuring student perceptions of course quality, student engagement, e-learning technology, overall quality, student factors, institutional factors, instructor characteristics, and satisfaction that impact student performance. The results show that measuring course quality, student engagement, e-learning technology, overall quality, and student factors on a second-order basis produces good validity and reliability values, supported by model fit. The measurements of instructor characteristics, institutional factors, satisfaction, and performance impact also produced good validity and reliability values, but the model’s fit was not satisfactory. In particular, because the RMSEA value indicates misfit, the LISREL program suggests refining the model by correlating the error terms of the instructor characteristics and performance impact factors. However, because this study reports initial findings, this modification was not applied (Hulland et al., 2018; Hair et al., 2019).

Generally, this study provides information that most students respond positively to all constructs. It can be interpreted that students who attend lectures using the online learning method view all exogenous constructs as essential to improving their performance. Our results align with previous literature, which explains that the performance of students participating in online learning programs is still less than optimal (Kim et al., 2021), and there are still students who are unwilling to continue their studies (Xavier and Meneses, 2021). Students are unfamiliar with online learning systems and are used to traditional pedagogical styles (Maheshwari, 2021). In addition, internet access is still low compared to developed countries because infrastructure is still not well developed in Indonesia.

Conclusion, limitation, and future research

This study concludes that the model for measuring student learning performance in universities that implement online learning systems is acceptable. The results of this study can help universities and educators improve student learning outcomes. This model will guide them in achieving practical national education goals or help them improve the current system. From the educator’s view, it helps in planning teaching materials that are effective and meet students’ needs. For universities, it provides input for improving the online learning system currently in use so that it can produce graduates who can compete in the world of work.

This study has several limitations, such as the small sample size, which affects the value of the model’s fit. In addition, this study does not distinguish the validity and reliability of results between social and engineering study programs. Further research could therefore use a larger sample to provide more comprehensive results and distinguish the validity and reliability of responses from students in social studies and engineering programs, because the courses in these programs differ. In addition, to test the hypotheses of the proposed conceptual model, students’ level of performance in social studies and engineering programs could be compared using the multigroup analysis method.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

HP, RI, and YY contributed to the conception and design of the study, wrote the first draft of the manuscript, conceptual framework, and literature review. RI organized the database and performed the statistical analysis. All authors contributed to the manuscript revision, read, and approved the submitted version.

Funding

This work was supported by “Model Evaluasi Pembelajaran Daring Dalam Menilai Kualitas Sistem Pendidikan Tinggi Di Indonesia” (contract number: 3481/LL3/KR/2021 and contract date: 12 July 2021).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Al-Adwan, A. S., Albelbisi, N. A., Hujran, O., Al-Rahmi, W. M., and Alkhalifah, A. (2021). Developing a holistic success model for sustainable E-learning: A structural equation modeling approach. Sustainability 13:9453. doi: 10.3390/su13169453

Aldholay, A., Isaac, O., Abdullah, Z., Abdulsalam, R., and Al-Shibami, A. H. (2018). An extension of Delone and McLean IS success model with self-efficacy. Int. J. Inform. Learn. Technol. 35, 285–304. doi: 10.1108/IJILT-11-2017-0116

Almaiah, M. A., and Alismaiel, O. A. (2019). Examination of factors influencing the use of mobile learning system: An empirical study. Educ. Inform. Technol. 24, 885–909. doi: 10.1007/s10639-018-9810-7

Ameri, A., Khajouei, R., Ameri, A., and Jahani, Y. (2020). Acceptance of a mobile-based educational application (LabSafety) by pharmacy students: An application of the UTAUT2 model. Educ. Inform. Technol. 25, 419–435. doi: 10.1007/s10639-019-09965-5

Archambault, L., Leary, H., and Rice, K. (2022). Pillars of online pedagogy: A framework for teaching in online learning environments. Educ. Psychol. 57, 178–191. doi: 10.1080/00461520.2022.2051513

Arghode, V., Brieger, E., and Wang, J. (2018). Engaging instructional design and instructor role in online learning environment. Eur. J. Train. Dev. 42, 366–380. doi: 10.1108/EJTD-12-2017-0110

Bailey, D. R., Almusharraf, N., and Almusharraf, A. (2022). Video conferencing in the e-learning context: explaining learning outcome with the technology acceptance model. Educ. Inform. Technol. 27, 7679–7698. doi: 10.1007/s10639-022-10949-1

Bandura, A. (2010). “Self-Efficacy,” in The Corsini Encyclopedia of Psychology, eds I. B. Weiner and W. E. Craighead (Hoboken, NJ: John Wiley & Sons, Inc), 1–3.

Barczyk, C. C., Hixon, E., Buckenmeyer, J., and Ralston-Berg, P. (2017). The effect of age and employment on students’ perceptions of online course quality. Am. J. Distance Educ. 31, 173–184. doi: 10.1080/08923647.2017.1316151

Bashir, A., Bashir, S., Rana, K., Lambert, P., and Vernallis, A. (2021). Post-COVID-19 adaptations; the shifts towards online learning, hybrid course delivery and the implications for biosciences courses in the higher education setting. Front. Educ. 6:711619. doi: 10.3389/feduc.2021.711619

Basir, M., Ali, S., and Gulliver, S. R. (2021). Validating learner-based e-learning barriers: developing an instrument to aid e-learning implementation management and leadership. Int. J. Educ. Manag. 35, 1277–1296. doi: 10.1108/IJEM-12-2020-0563

Bokolo, A., Kamaludin, A., Romli, A., Mat Raffei, A. F., Eh Phon, D. N., Abdullah, A., et al. (2020). A managerial perspective on institutions’ administration readiness to diffuse blended learning in higher education: Concept and evidence. J. Res. Technol. Educ. 52, 37–64. doi: 10.1080/15391523.2019.1675203

Bower, M. (2019). Technology-mediated learning theory. Br. J. Educ. Technol. 50, 1035–1048. doi: 10.1111/bjet.12771

Büchele, S. (2021). Evaluating the link between attendance and performance in higher education: the role of classroom engagement dimensions. Assess. Eval. High. Educ. 46, 132–150. doi: 10.1080/02602938.2020.1754330

Caruana, A., La Rocca, A., and Snehota, I. (2016). Learner Satisfaction in Marketing Simulation Games: Antecedents and Influencers. J. Market. Educ. 38, 107–118. doi: 10.1177/0273475316652442

Chakraborty, P., Mittal, P., Gupta, M. S., Yadav, S., and Arora, A. (2021). Opinion of students on online education during the COVID-19 pandemic. Hum. Behav. Emerg. Technol. 3, 357–365. doi: 10.1002/hbe2.240

ChanMin, K., Seung Won, P., Joe, C., and Hyewon, L. (2015). From Motivation to engagement: The role of effort regulation of virtual high school students in mathematics courses. J. Educ. Technol. Soc. 18, 261–272.

Daouk, Z., Bahous, R., and Bacha, N. N. (2016). Perceptions on the effectiveness of active learning strategies. J. Appl. Res. High. Educ. 8, 360–375. doi: 10.1108/JARHE-05-2015-0037

Debattista, M. (2018). A comprehensive rubric for instructional design in e-learning. Int. J. Inform. Learn. Technol. 35, 93–104. doi: 10.1108/IJILT-09-2017-0092

DeLone, W. H., and McLean, E. R. (2016). Information systems success measurement. Found. Trends® Inform. Syst. 2, 1–116. doi: 10.1561/2900000005

Duchatelet, D., and Donche, V. (2019). Fostering self-efficacy and self-regulation in higher education: a matter of autonomy support or academic motivation? High. Educ. Res. Dev. 38, 733–747. doi: 10.1080/07294360.2019.1581143

Fauzi, M. A. (2022). E-learning in higher education institutions during COVID-19 pandemic: current and future trends through bibliometric analysis. Heliyon 8:e09433. doi: 10.1016/j.heliyon.2022.e09433

Gatignon, H. (2010). “Confirmatory factor analysis,” in Statistical analysis of management data, ed. H. Gatignon (New York, NY: Springer New York), 59–122. doi: 10.1007/978-1-4419-1270-1_4

George, D., and Mallery, P. (2019). IBM SPSS Statistics 25 Step by Step: A Simple Guide and Reference. London: Routledge. doi: 10.4324/9780429056765

Glassman, M., Kuznetcova, I., Peri, J., and Kim, Y. (2021). Cohesion, collaboration and the struggle of creating online learning communities: Development and validation of an online collective efficacy scale. Comput. Educ. Open 2:100031. doi: 10.1016/j.caeo.2021.100031

Gonzalez, T., de la Rubia, M. A., Hincz, K. P., Comas-Lopez, M., Subirats, L., Fort, S., et al. (2020). Influence of COVID-19 confinement on students’ performance in higher education. PLoS One 15:e0239490. doi: 10.1371/journal.pone.0239490

Gopal, R., Singh, V., and Aggarwal, A. (2021). Impact of online classes on the satisfaction and performance of students during the pandemic period of COVID 19. Educ. Inform. Technol. 26, 6923–6947. doi: 10.1007/s10639-021-10523-1

Hadullo, K., Oboko, R., and Omwenga, E. (2018). Status of e-learning quality in Kenya: Case of Jomo Kenyatta University of agriculture and technology postgraduate students. Int. Rev. Res. Open Distrib. Learn. 19:24. doi: 10.19173/irrodl.v19i1.3322

Hair, J. F., Black, W. C., Babin, B. J., and Anderson, R. E. (2019). Multivariate Data Analysis. United Kingdom: Cengage Learning EMEA.

Hair Joseph, F., Risher Jeffrey, J., Sarstedt, M., and Ringle Christian, M. (2019). When to use and how to report the results of PLS-SEM. Eur. Bus. Rev. 31, 2–24. doi: 10.1108/EBR-11-2018-0203

Henseler, J., Ringle, C. M., and Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Market. Sci. 43, 115–135. doi: 10.1007/s11747-014-0403-8

Ho, L. A., Kuo, T. H., and Lin, B. (2010). Influence of online learning skills in cyberspace. Internet Res. 20, 55–71. doi: 10.1108/10662241011020833

Hodges, C. B., Moore, S., Lockee, B. B., Trust, T., and Bond, M. A. (2020). The Difference Between Emergency Remote Teaching and Online Learning [Online]. Boulder, CO: EDUCAUSE.

Hu, M., Li, H., Deng, W., and Guan, H. (2016). “Student engagement: One of the necessary conditions for online learning,” in Proceedings of the 2016 International Conference on Educational Innovation through Technology (EITT), Tainan. 122–126. doi: 10.1109/EITT.2016.31

Hulland, J., Baumgartner, H., and Smith, K. M. (2018). Marketing survey research best practices: evidence and recommendations from a review of JAMS articles. J. Acad. Market. Sci. 46, 92–108. doi: 10.1007/s11747-017-0532-y

Ikhsan, R. B., Saraswati, L. A., Muchardie, B. G., and Vional Susilo, A. (2019). “The determinants of students’ perceived learning outcomes and satisfaction in BINUS online learning,” in Proceedings of the 2019 5th International Conference on New Media Studies (CONMEDIA), Bali, 68–73. doi: 10.1109/CONMEDIA46929.2019.8981813

Isaac, O., Abdullah, Z., Ramayah, T., Mutahar, A., and Alrajawy, I. (2017a). Towards a better understanding of internet technology usage by Yemeni employees in the public sector: an extension of the task-technology fit (TTF) model. Res. J. Appl. Sci. 12, 205–223.

Isaac, O., Abdullah, Z., Ramayah, T., and Mutahar Ahmed, M. (2017b). Examining the relationship between overall quality, user satisfaction and internet usage: An integrated individual, technological, organizational and social perspective. Asian J. Inform. Technol. 16, 100–124.

Isaac, O., Abdullah, Z., Ramayah, T., and Mutahar, A. M. (2017c). Internet usage within government institutions in Yemen: An extended technology acceptance model (TAM) with internet self-efficacy and performance impact. Sci. Int. 29, 737–747.

Isaac, O., Abdullah, Z., Ramayah, T., and Mutahar, A. M. (2017d). Internet usage, user satisfaction, task-technology fit, and performance impact among public sector employees in Yemen. Int. J. Inform. Learn. Technol. 34, 210–241. doi: 10.1108/IJILT-11-2016-0051

Jaggars, S. S., and Xu, D. (2016). How do online course design features influence student performance? Comput. Educ. 95, 270–284. doi: 10.1016/j.compedu.2016.01.014

Jiménez-Bucarey, C., Acevedo-Duque, Á, Müller-Pérez, S., Aguilar-Gallardo, L., Mora-Moscoso, M., and Vargas, E. C. (2021). Student’s satisfaction of the quality of online learning in higher education: An empirical study. Sustainability 13:11960. doi: 10.3390/su132111960

Kemp, A., Palmer, E., and Strelan, P. (2019). A taxonomy of factors affecting attitudes towards educational technologies for use with technology acceptance models. Br. J. Educ. Technol. 50, 2394–2413. doi: 10.1111/bjet.12833

Kim, D., Jung, E., Yoon, M., Chang, Y., Park, S., Kim, D., et al. (2021). Exploring the structural relationships between course design factors, learner commitment, self-directed learning, and intentions for further learning in a self-paced MOOC. Comput. Educ. 166:104171. doi: 10.1016/j.compedu.2021.104171

Kim, S., and Kim, D.-J. (2021). Structural relationship of key factors for student satisfaction and achievement in asynchronous online learning. Sustainability 13:6734. doi: 10.3390/su13126734

Kissi, P. S., Nat, M., and Armah, R. B. (2018). The effects of learning–family conflict, perceived control over time and task-fit technology factors on urban–rural high school students’ acceptance of video-based instruction in flipped learning approach. Educ. Technol. Res. Dev. 66, 1547–1569. doi: 10.1007/s11423-018-9623-9

Kline, R. B. (2015). Principles and Practice of Structural Equation Modeling, Fourth Edn. New York, NY: Guilford Publications.

Lee, E., Pate, J. A., and Cozart, D. (2015). Autonomy support for online students. TechTrends 59, 54–61. doi: 10.1007/s11528-015-0871-9

Legon, R., and Garrett, R. (2017). The Changing Landscape of Online Education, Quality Matters & Eduventures Survey of Chief Online Officers, 2017. Available online at: https://www.qualitymatters.org/sites/default/files/research-docs-pdfs/CHLOE-First-Survey-Report.pdf (accessed March 20, 2022).

Maheshwari, G. (2021). Factors influencing entrepreneurial intentions the most for university students in Vietnam: educational support, personality traits or TPB components? Educ. Train. 63, 1138–1153. doi: 10.1108/ET-02-2021-0074

McAvoy, P., Hunt, T., Culbertson, M. J., McCleary, K. S., DeMeuse, R. J., and Hess, D. E. (2022). Measuring student discussion engagement in the college classroom: a scale validation study. Stud. High. Educ. 47, 1761–1775. doi: 10.1080/03075079.2021.1960302

Mensink, P. J., and King, K. (2020). Student access of online feedback is modified by the availability of assessment marks, gender and academic performance. Br. J. Educ. Technol. 51, 10–22. doi: 10.1111/bjet.12752

Metropolis, N., and Ulam, S. (1949). The Monte Carlo method. J. Am. Stat. Assoc. 44, 335–341. doi: 10.1080/01621459.1949.10483310

Montesdioca, G. P. Z., and Maçada, A. C. G. (2015). Measuring user satisfaction with information security practices. Comput. Secur. 48, 267–280. doi: 10.1016/j.cose.2014.10.015

Muir, T., Milthorpe, N., Stone, C., Dyment, J., Freeman, E., and Hopwood, B. (2019). Chronicling engagement: students’ experience of online learning over time. Distance Educ. 40, 262–277. doi: 10.1080/01587919.2019.1600367

Narad, A., and Abdullah, B. (2016). Academic performance of senior secondary school students: Influence of parental encouragement and school environment. Rupkatha J. Interdiscipl. Stud. Human. 8, 12–19. doi: 10.21659/rupkatha.v8n2.02

Patricia Aguilera-Hermida, A. (2020). College students’ use and acceptance of emergency online learning due to COVID-19. Int. J. Educ. Res. Open 1:100011. doi: 10.1016/j.ijedro.2020.100011

Paul, R., and Pradhan, S. (2019). Achieving student satisfaction and student loyalty in higher education: A focus on service value dimensions. Serv. Market. Q. 40, 245–268. doi: 10.1080/15332969.2019.1630177

Preaux, J., Casadesús, M., and Bernardo, M. (2022). A conceptual model to evaluate service quality of direct-to-consumer telemedicine consultation from patient perspective. Telemed. e-Health [Online ahead of print] doi: 10.1089/tmj.2022.0089

Rahman, M. H. A., Uddin, M. S., and Dey, A. (2021). Investigating the mediating role of online learning motivation in the COVID-19 pandemic situation in Bangladesh. J. Comput. Assist. Learn. 37, 1513–1527. doi: 10.1111/jcal.12535

Raija, H., Heli, T., and Elisa, L. (2010). DeLone & McLean IS success model in evaluating knowledge transfer in a virtual learning environment. Int. J. Inform. Syst. Soc. Change 1, 36–48. doi: 10.4018/jissc.2010040103

Ralston-Berg, P. (2014). Surveying student perspectives of quality: Value of QM rubric items. Int. Learn. J. 3:10. doi: 10.18278/il.3.1.9

Roca, J. C., Chiu, C.-M., and Martínez, F. J. (2006). Understanding e-learning continuance intention: An extension of the technology acceptance model. Int. J. Hum. Comput. Stud. 64, 683–696. doi: 10.1016/j.ijhcs.2006.01.003

Rohde, F., and Hielscher, S. (2021). Smart grids and institutional change: Emerging contestations between organisations over smart energy transitions. Energy Res. Soc. Sci. 74:101974. doi: 10.1016/j.erss.2021.101974

Roque-Hernández, R. V., Díaz-Roldán, J. L., López-Mendoza, A., and Salazar-Hernández, R. (2021). Instructor presence, interactive tools, student engagement, and satisfaction in online education during the COVID-19 Mexican lockdown. Interact. Learn. Environ. 2021:1912112. doi: 10.1080/10494820.2021.1912112

Scharf, P. (2017). The Importance of Course Quality Standards in Online Education [Online]. Hoboken, NJ: Wiley University Services.

Seaman, J. E., Allen, I. E., and Seaman, J. (2018). Grade Increase: Tracking Distance Education in the United States. Boston, MA: The Babson Survey Research Group.

Semeshkina, M. (2021). Five Major Trends In Online Education To Watch Out For In 2021 [Online]. Forbes. Available online at: https://www.forbes.com/sites/forbesbusinesscouncil/2021/02/02/five-major-trends-in-online-education-to-watch-out-for-in-2021/?sh=2678d06621eb (accessed September 25, 2021).

Sheppard, M., and Vibert, C. (2019). Re-examining the relationship between ease of use and usefulness for the net generation. Educ. Inform. Technol. 24, 3205–3218. doi: 10.1007/s10639-019-09916-0

Singh, S., Javdani, S., Berezin, M. N., and Sichel, C. E. (2020). Factor structure of the critical consciousness scale in juvenile legal system-involved boys and girls. J. Commun. Psychol. 48, 1660–1676. doi: 10.1002/jcop.22362

Sullivan, T. J., Voigt, M. K., Apkarian, N., Martinez, A. E., and Hagman, J. E. (2021). “Role with it: Examining the impact of instructor role models in introductory mathematics courses on student experiences,” in Proceedings of the 2021 ASEE Virtual Annual Conference Content Access, Washington DC.

Sweta, S. (2021). “Educational Data Mining in E-Learning System,” in Modern Approach to Educational Data Mining and Its Applications, ed. S. Sweta (Singapore: Springer Singapore), 1–12. doi: 10.1007/978-981-33-4681-9_1

Tarhini, A., Hone, K., and Liu, X. (2015). A cross-cultural examination of the impact of social, organisational and individual factors on educational technology acceptance between British and Lebanese university students. Br. J. Educ. Technol. 46, 739–755. doi: 10.1111/bjet.12169

Thongsri, N., Shen, L., and Bao, Y. (2019). Investigating factors affecting learner’s perception toward online learning: evidence from ClassStart application in Thailand. Behav. Inform. Technol. 38, 1243–1258. doi: 10.1080/0144929X.2019.1581259

West, S. G., Taylor, A. B., and Wu, W. (2012). “Model fit and model selection in structural equation modeling,” in Handbook of Structural Equation Modeling, ed. R. H. Hoyle (New York, NY: Guilford Press), 209–231.

Wilde, N., and Hsu, A. (2019). The influence of general self-efficacy on the interpretation of vicarious experience information within online learning. Int. J. Educ. Technol. High. Educ. 16:26. doi: 10.1186/s41239-019-0158-x

Wu, B., and Chen, X. (2017). Continuance intention to use MOOCs: Integrating the technology acceptance model (TAM) and task technology fit (TTF) model. Comput. Hum. Behav. 67, 221–232. doi: 10.1016/j.chb.2016.10.028

Xavier, M., and Meneses, J. (2021). The tensions between student dropout and flexibility in learning design: The voices of professors in open online higher education. Int. Rev. Res. Open Distrib. Learn. 22, 72–88. doi: 10.19173/irrodl.v23i1.5652

Yadegaridehkordi, E., Nilashi, M., Shuib, L., and Samad, S. (2020). A behavioral intention model for SaaS-based collaboration services in higher education. Educ. Inform. Technol. 25, 791–816. doi: 10.1007/s10639-019-09993-1

Yakubu, M. N., and Dasuki, S. I. (2018). Factors affecting the adoption of e-learning technologies among higher education students in Nigeria: A structural equation modelling approach. Inform. Dev. 35, 492–502. doi: 10.1177/0266666918765907

Zhou, T., Lu, Y., and Wang, B. (2010). Integrating TTF and UTAUT to explain mobile banking user adoption. Comput. Hum. Behav. 26, 760–767. doi: 10.1016/j.chb.2010.01.013

Keywords: overall quality, course quality, e-learning technology, online learning, student engagement, student and institutional factors, instructor characteristics, student performance

Citation: Prabowo H, Ikhsan RB and Yuniarty Y (2022) Student performance in online learning higher education: A preliminary research. Front. Educ. 7:916721. doi: 10.3389/feduc.2022.916721

Received: 09 May 2022; Accepted: 22 September 2022;

Published: 03 November 2022.

Edited by:

Shashidhar Venkatesh Murthy, James Cook University, Australia

Reviewed by:

Betty Kusumanigrum, Universitas Sarjanawiyata Tamansiswa, Indonesia

Enjy Abouzeid, Suez Canal University, Egypt

Copyright © 2022 Prabowo, Ikhsan and Yuniarty. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ridho Bramulya Ikhsan
