ORIGINAL RESEARCH article

Front. Educ., 26 August 2022

Sec. Digital Learning Innovations

Volume 7 - 2022 | https://doi.org/10.3389/feduc.2022.899535

This article is part of the Research Topic A Paradigm Shift in Designing Education Technology for Online Learning: Opportunities and Challenges

Joshua Littenberg-Tobias* Rachel Slama

How can large-scale open online learning serve the professional learning needs of educators, which are often highly localized? In this mixed-methods study, we examine this question through studying the learning experiences of participants in four massive open online courses (MOOCs) that we developed on educational change leadership (N = 1,712). We observed that educators were able to integrate their learning from the online courses across a variety of educational settings. We argue that a key factor in this process was that the design of online courses was attentive to various levels in which participants processed and applied their learning. We therefore propose the “Content-Collaboration-Context Model” (C-C-C) as a design model for designing and researching open online learning experiences for professional learning settings where participants’ work is highly localized. In analyzing learner experiences in our MOOCs, we apply this model to illustrate how individuals integrated the de-contextualized content of the online courses into their context-specific practices. We conclude with implications for the design and research on open online professional learning experiences.

Over the last decade, massive open online courses (MOOCs) have become an important part of the online learning landscape. However, with falling enrollments and challenges to their business model, many MOOC providers began developing new online-based professional degree and credential programs (Reich and Ruipérez-Valiente, 2019). This shift also reflects a move away from content-based courses toward job-embedded professional learning with an emphasis on applying knowledge to real-world problems (Naidu and Karunanayaka, 2019).

One potential area of growth for MOOCs is developing professional learning courses for educators. Professional educators make up a large portion of total MOOC users and often enroll in non-educator-specific courses as forms of professional learning (Seaton et al., 2014; Glass et al., 2016). Although the concept of developing MOOCs specifically as professional learning for educators has been around for nearly a decade (Kleiman et al., 2013), major research institutions have only recently begun offering free or low-cost professional learning for educators with certificate and credentialing options. For example, the Friday Institute at North Carolina State has developed a set of MOOCs for educators which have been used by over 40,000 users and highlighted by the U.S. Institute of Education Sciences (IES) as an innovative form of professional learning for teachers (Puamohala Bronson, 2019).

Yet, one of the challenges of creating MOOCs for educators is that the content of courses is targeted at a massive population of learners from all over the world, in a wide variety of school types. The work of educators in these various schools is highly contextual. When professional learning is offered to 10 or 100 educators in a physical place, the course can be tailored to the shared needs of the participants. When professional learning is offered to 1,000 or 10,000 learners online from around the world the content cannot be customized for the needs of each location. Furthermore, the global, large-scale nature of MOOC learning must be reconciled with the wealth of empirical evidence that finds that teachers best improve their practice when learning with others in their school context (Desimone et al., 2002; Wayne et al., 2008; Kraft and Papay, 2014). Thus, the main dilemma that we address in this paper is how to make learning in a MOOC, which is global and decontextualized, relevant for the highly contextual and situational nature of teacher professional learning.

In this study, we explore this dilemma through the example of four educator professional learning MOOCs we developed on educational change leadership. These four MOOCs had 1,712 active participants in six course instances over an 18-month period. The design of these MOOCs was informed by a design model which we describe as the “Content-Collaboration-Context Model” (“C-C-C”). Using this model, we explore the extent to which participants changed their practices after taking these MOOCs. We also examine whether the learning design of the courses aided participants in integrating the generalized ideas and concepts from the MOOCs into their specific contexts.

United States teachers and schools invest significant time and resources in professional learning. One study estimated that districts spend 1–6% of their budgets on professional learning activities for teachers (Hill, 2009). Researchers have identified many characteristics of effective educator professional learning related to its content and opportunities for collaboration. Namely, researchers have determined that effective professional learning is active, skill-based, linked to curriculum and school and district goals, intensive, and done in collaboration with others in their context (Garet et al., 2001; Desimone et al., 2002; Correnti, 2007; Wayne et al., 2008; Desimone, 2009). Causal evaluations of professional learning that included these elements have found meaningful improvements in teacher practice and student learning (Neuman and Cunningham, 2009; Matsumura et al., 2010; Allen et al., 2011).

Some researchers have critiqued this literature for not paying enough attention to variation in outcomes, specifically, the role that context plays in the relationship between teacher learning, changes in instruction, and improvements in student outcomes (Opfer and Pedder, 2011; Hill et al., 2013). Educators, like other professionals, learn through observing and applying ideas within their own work setting (Lave and Wenger, 1991; Brown and Duguid, 1991). Educators who work in a context with supportive and effective colleagues are more likely to learn and shift their practices toward instruction that increases student learning. For example, in a study based on nearly a decade’s worth of archival, test scores and demographic data, Kraft and Papay (2014) found that teachers in more supportive contexts (as measured by a statewide teacher survey of professional learning context) improved more over time compared to their peers working in less supportive contexts. Over a period of 10 years, teachers working in schools rated at the 75th percentile of professional environment improved 38% more than teachers in schools at the 25th percentile. The instructional practices of other teachers in the school can also influence improvements in teaching. In a longitudinal study of a large urban U.S. school district, Loeb et al. (2011) found that teachers who worked in schools that were previously more effective at raising student achievement had greater growth in performance themselves than those working in schools that were less effective at raising student achievement.

The field of online professional learning for educators has expanded greatly in the past two decades as new technologies emerge and social practices around those technologies evolve (Dede et al., 2009; Lantz-Andersson et al., 2018). Online professional learning for educators, whether through online courses (Dash et al., 2012), learning networks (Noble et al., 2016; Trust et al., 2016), or small independent groups (Reich et al., 2011), connects educators across varied school contexts. However, the research literature on online professional learning has been divided between, on one side, a focus on evaluating the effectiveness of formal online professional learning experiences (Dominguez et al., 2006; Dash et al., 2012; Choi and Morrison, 2014) and, on the other side, understanding how learners apply their learning from informal online settings in their own contexts (Britt and Paulus, 2016; Noble et al., 2016; Trust, 2016).

Designers of MOOCs for educators are faced with the challenge of designing courses that can be implemented at a large scale but are also flexible enough that educators can integrate their learning within their specific context. Previous research on MOOCs for educators has found some evidence that educators do integrate their learning into their practice. For example, Brennan et al. (2018) studied participant learning in a MOOC-based online workshop designed to support K-12 teachers teaching Scratch, the open online programming environment for young people to create their own games, animations, and stories. They found that teachers reported the activity, peers, culture, and relevance being most salient to their learning. Similarly, Avineri et al. (2018) examined a set of MOOCs for US-based K-5 math teachers developed by the Friday Institute at North Carolina State University. They found that elements in the courses triggered changes in practices, particularly the case study videos of instruction and the interactive tools in the courses. Focusing on an international audience, Laurillard (2016) developed a MOOC for educators on the use of information and communication technology (ICT) in primary education that attracted educators from 174 countries and found evidence of application in practice.

However, there has been limited theorizing in the literature about how to design learning in a MOOC, which is large-scale and decontextualized, so that it is effective for the contextual and situational nature of educator professional learning. This dilemma does not just affect MOOCs for educators, but is arguably a challenge for all online learning models that seek to provide skill-based professional learning across a variety of contexts (Naidu and Karunanayaka, 2019). These challenges are distinctly different from those faced by academic MOOCs in the sciences or humanities. For professional learning MOOCs, designers must consider how to make the learning immediately relevant across a range of work contexts. Otherwise, they risk losing learners who do not find the learning experience flexible and specific enough to their professional learning needs.

In the next section, we propose a design model for designing and researching MOOCs for educators, and other large-scale job-embedded professional learning environments, that models the relationship between the content of the course and the integration into practice.

Designing effective online professional learning at scale requires the integration of two ecosystems of learning. One ecosystem is the online learning platforms themselves. Previously, theorists have argued that online learning must be attentive to the need for community and to variation in individual learner needs in virtual environments. For example, Luckin (2010) developed the “Ecology of Resources” model to argue that the instructional designers of learning technologies should take a learner-specific approach to context. Applying a socio-cultural approach, Luckin argued that designers must consider the various resources and constraints within the learners’ own context that affect how they interact with technology. Another example is the “Community of Inquiry” framework (Garrison et al., 2001; Garrison and Arbaugh, 2007), which describes the role of cognitive, social, and teaching presences in conceptualizing the online learning process. The framework argues that learning designers need to be attentive not just to the design and organization of the teaching (e.g., setting curriculum and methods) but also to learners’ cognitive processes (e.g., how they reflect and integrate new content with existing knowledge) and social climate (e.g., how learners interact and collaborate with one another).

The second ecosystem is the local context in which educators work. Teaching is a highly situated form of learning (Lave and Wenger, 1991) and teaching practices cannot be easily de-contextualized from teachers’ contexts (Sanders and McCutcheon, 1986; Bartolomé, 1994). Moreover, educator professional learning is often highly relationship-based and dependent on building mutual trust (Bryk and Schneider, 2003). In order for changes in teachers’ practice to stick, they need to be built upon the relationships and networks that already exist among educators within schools (Moolenaar et al., 2012).

We argue that effective design of professional learning environments needs to be attentive to both of these ecosystems and think about how they interact. Designing effective online professional learning requires thinking about how to both build an effective online learning model and thinking about how to build support so learners can share and disseminate their learning within their specific contexts.

We describe this design model in terms of three layers of focus: content – the interaction between learners and the online content; collaboration – the interactions that participants have with others around the content (either in-person or online) through working together and sharing resources; and context – the workplace setting where participants apply their learning with its specific culture around teaching and learning. We describe this as the “Content-Collaboration-Context” model (“C-C-C” model) (Figure 1).

In the model, the content layer represents the online learning ecosystem – what participants see and view within the online learning platform. In this layer, designers must work to make content relevant and flexible across a variety of potential settings. In the context layer, participants apply their learning within their specific supports and constraints of their own context. The middle layer, collaboration, connects these two ecosystems. Designers need to provide opportunities for both in-course and out-of-course social learning. Within the online learning platform, this might include structured discussion forums, affinity groups, Zoom office hours, Twitter chats, or Slack workspaces. Outside the learning platforms, designers should include support for facilitating local sharing and collaboration through study groups or learning circles (Napier et al., 2020; Wollscheid et al., 2020). Course designers can support these groups by developing facilitator guides, PDF versions of the courses, and video playlists. By including these instructional supports, the designers can help learners fill in the spaces between the content of the course and the specific conditions in their work environment.

The setting of this study was a set of four different MOOCs focused on change leadership for educators: the principles of how to create change within the messy conditions of schools (Fullan, 2002). The courses were administered over an 18-month period from January 2018 to June 2019. Two of the four courses were offered twice during this period, so there were six total course “instances.” We describe the contents of each individual course below (using pseudonyms for blinding purposes):

• Change Leadership for Innovation in Schools was a 14-week self-paced course focused on preparing leaders at all levels, teachers, principals, superintendents, for the challenge of leading innovation in schools. This course was offered in Fall 2018.

• Introduction to Design Thinking for Educators was a 6-week course where learners were introduced to the concept of design thinking, a systematic approach to solving complex problems through iterative cycles of discovery, imagining, prototyping, testing, reflecting, and evaluating. This course was offered in both Spring 2018 and Spring 2019.

• Developing a Graduate Profile for Your School was a 4-week course focused on supporting participants in developing a graduate profile, a shareable document that conveys what high school graduates in their community should know and be able to do. This course was offered in both Spring 2018 and Spring 2019.

• Introduction to Competency-Based Education (CBE) was an 8-week course that introduced participants to CBE, explained why schools might pursue it, and described the opportunities and challenges educators and others face when implementing it. This course was offered in Spring 2019.

All courses were created by the same instructional design team, which included one of the authors of this paper, and were inspired by the principles of the C-C-C model. Most units began with instructor videos that introduced key concepts and vocabulary. Case study videos highlighted applied examples of these concepts by demonstrating how educators applied them in their practice. Readings, activities, and links to resources provided opportunities for participants to extend their learning beyond the videos to deeper engagement with the content. Finally, assignments were structured around having learners apply their learning in their context. Participants might be asked to collect some data or experiment with a form of instruction and then reflect on their experience. The instructional designers set up systems to encourage participants to share this work within the course forums and provide structured feedback to other participants on their assignments.

The instructional designers also provided support for participants to take the course with other participants in their context. Participants were encouraged to form “learning circles” which were learning communities where participants could discuss the content of the courses and reflect on how they might apply their learning within their school or organization. To support the formation of the learning circles, the instructional designers created facilitators’ guides which contained tips for organizing and maintaining learning circles, suggested schedules and agendas for meetings throughout the course, questions to prompt discussions about course content, and strategies for making assignments and activities more collaborative. Course-designers also developed downloadable versions of course resources, sometimes called “take-out” packages, and created video playlists to facilitate the sharing of course resources within the participants’ context.

In this article, we investigate participant learning in four MOOCs for educators through the C-C-C model to better understand how these environments can be designed to facilitate the transfer from large-scale online learning to local, contextualized practice. By mapping educator learning in these MOOCs to this model, we provide evidence about the value of this model for designing and researching powerful learning experiences for educators and other professionals. We address the following research questions:

(1) How did participants integrate the content of the course into their practice?

(2) How did participants collaborate and share with others in both virtual and local contexts? To what extent were different forms of collaboration/sharing related to changes in practice?

(3) How did variation in participants’ local school context relate to how participants chose to collaborate with others and to changes in practices?

We used an integrative mixed-methods approach to our research design (Teddlie and Tashakkori, 2006). Participants were included in the analytic sample if they either (a) completed the course (which is defined by edX as having earned at least 60% of the available points on course assignments) or (b) spent at least 1 h in the course platform. Additionally, we limited the analysis sample to participants who had at least partially completed a pre- or post-course survey. Pre- and post-course surveys were integrated into the survey platform in both courses. Within the analysis sample, response rates were 91% for the pre-survey and 51% for the post-survey. Across all courses, participants in the analytic sample were more likely to be female, were older, had higher levels of formal education, and were more likely to be from North America than registrants not in the analysis sample (Table 1).
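The inclusion rule described above can be sketched as a simple filter. This is a minimal illustration, not the authors' processing code: the `Registrant` record and its field names are hypothetical, and only the 60% completion threshold and the 1 h minimum come from the text.

```python
from dataclasses import dataclass

# Thresholds taken from the text; the record fields below are hypothetical names.
COMPLETION_THRESHOLD = 0.60  # share of available assignment points
MIN_HOURS = 1.0              # minimum time spent in the course platform


@dataclass
class Registrant:
    points_share: float      # fraction of available points earned (0 to 1)
    hours_in_course: float   # time spent in the course platform
    answered_pre: bool       # at least partially completed the pre-survey
    answered_post: bool      # at least partially completed the post-survey


def in_analytic_sample(r: Registrant) -> bool:
    """Return True if the registrant meets the stated inclusion criteria."""
    # Criterion (a) or (b): completed the course, or spent at least 1 h in it.
    active = r.points_share >= COMPLETION_THRESHOLD or r.hours_in_course >= MIN_HOURS
    # Additional restriction: some pre- or post-course survey response exists.
    surveyed = r.answered_pre or r.answered_post
    return active and surveyed
```

Under this reading, a registrant who spent 90 minutes in the platform and answered only the post-survey is included, while a course completer with no survey responses is not.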

Survey links were emailed to participants in the analysis sample (N = 1,712) 4–8 months after their respective course ended. Overall, 30% of participants in the analytic sample responded to the follow-up survey. Participants who responded to the follow-up survey had higher rates of completion and spent more time in the course (61% completion, 4.8 h in the course) than participants in the analytic sample who did not respond to the follow-up-survey (39% completion, 3.9 h in the course). However, because our proposed modeling method measured individual-level change in practices from the pre-survey (see section “Analysis Methods”) rather than the overall levels of practices, we can still glean valuable insights from this analysis.

We also conducted interviews with a stratified random sample of participants in each of the four courses, over-sampling for participants currently working in K-12 schools (N = 35). Interviews were conducted over the phone or video chat using a semi-structured interview protocol. The content of the interview focused on their current school context, motivations for taking the course, and current or planned future actions based on what they learned in the course. All participants were interviewed at least once during the course. In two of the courses, “Developing a Graduate Profile for Your School” and “Introduction to Design Thinking in Education,” participants were also interviewed 4–6 weeks after the courses were completed.

We developed scales of course-related practices for each course, and measured them on the pre, post, and follow-up surveys. Descriptive statistics, and reliability for each scale can be found in Supplementary Appendix Table A1. Outcomes variables were standardized using the pre-course survey mean and standard deviation for each course to allow comparisons across courses.
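As an illustration of this standardization, the sketch below rescales scores from any survey wave by the pre-course mean and standard deviation of a single course. The function name and the example scores are hypothetical, and the use of the sample (n − 1) standard deviation is an assumption, since the text does not say which estimator was used.

```python
from statistics import mean, stdev


def standardize_by_pre(pre_scores, scores):
    """Express scores in units of the pre-survey SD for one course."""
    pre_mean = mean(pre_scores)
    pre_sd = stdev(pre_scores)  # sample SD (n - 1); an assumption, not stated in the text
    return [(s - pre_mean) / pre_sd for s in scores]


# Hypothetical scale scores for one course (not data from the study).
pre = [2.0, 3.0, 4.0]
post = [3.0, 4.0, 5.0]

z_post = standardize_by_pre(pre, post)
```

Because every wave is scaled by the same pre-course values, a standardized post-survey score of 1.0 reads as one pre-survey standard deviation above the pre-survey mean for that course, which is what makes effects comparable across courses.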

We measured collaboration using four indicators: participation in learning circles, sharing of course resources, downloading the facilitator’s guide, and participating in the online forums. We measured learning circle participation (N = 1,126) and sharing of course resources (N = 376) using self-reported data from the course survey. We used log data from the courses to determine which participants had downloaded the facilitator’s guide or posted in the course forums (N = 1,712).

We measured educators’ school contexts using both self-reported and publicly available data. To assess participants’ perceptions of their school’s instructional context, we administered a six-item scale that measured respondents’ attitudes toward the instructional culture in their school (e.g., “Faculty share a common vision of quality teaching and learning”). The scale was administered on the pre-survey, only to participants who identified as K-12 educators (N = 326) (see Supplementary Appendix Table A). We also administered a question on the pre-survey about the socio-economic characteristics of the students in participants’ schools. Socio-economic characteristics of students are often good proxies for the resource level of the school (Payne and Biddle, 1999), academic achievement (Sirin, 2005), and school climate (Hopson and Lee, 2011). Using an item from the 2011 Trends in International Mathematics and Science Study (Martin et al., 2013), we asked participants to report the percentage of students who were economically disadvantaged on a scale of 1 (0–10%) to 4 (More than 50%) (N = 436). Additionally, for educators in the U.S., we used information collected about participants’ schools on the pre-survey to match their school to National Center for Education Statistics (NCES) data on that school from the 2015–2016 school year (N = 140). In the U.S., the measure of the economic characteristics of students in a school is based on eligibility for a free or subsidized lunch program. Students are eligible for a free lunch if they have family incomes at or below 130% of the poverty level and for a reduced-price lunch if their family’s income is between 130 and 185% of the poverty level (United States Department of Agriculture, 2017).
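The lunch-eligibility thresholds stated above amount to a two-cutoff rule, sketched here for concreteness. The function name and return labels are hypothetical, and treating exactly 185% as eligible for the reduced-price category is an assumption about how the upper boundary is handled.

```python
def lunch_eligibility(income_pct_of_poverty: float) -> str:
    """Classify a family income, given as a percentage of the poverty level."""
    if income_pct_of_poverty <= 130:
        return "free"            # at or below 130% of the poverty level
    if income_pct_of_poverty <= 185:
        return "reduced-price"   # above 130% and up to 185% (upper bound assumed inclusive)
    return "not eligible"
```

A school-level indicator of economic disadvantage would then be the share of enrolled students falling in either of the first two categories.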

We used demographic variables in all of our analyses as statistical controls. All students are asked to fill out a brief demographic survey when signing up for the courses. The survey included questions about their age, gender, level of education, and country. Additionally, the server records the modal IP address of each student when they are taking the course which allows for identification of the user’s continent. We supplemented this data with demographic information from the pre-course survey when there was no data available from the course platform.

We organize the description of the mixed-methods analysis by research question. In cases where we repeat the same analysis procedure across multiple research questions, we refer back to previous research questions.

To contextualize the changes in practices we found in the quantitative data, we developed short vignettes (N = 6) from the sample of participants whom we interviewed. The vignettes drew on quotes from the interviews as well as forum responses and open-text responses from the pre, post, and follow-up surveys. A description of the participants in the vignettes is included in Table 2.

To model changes in practices, we used a hierarchical linear growth model (Raudenbush and Bryk, 2002) to measure changes in practices across the pre, post, and follow-up survey. Since there were only three time-points in the study, we chose to model time-points as a categorical variable in order to account for non-linearity in changes in participants’ practices. The level-1 model is as follows:
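A minimal sketch of a level-1 specification consistent with this description is given here. The notation is assumed rather than taken from the original: $Y_{si}$ is the standardized practice score for participant $i$ at survey occasion $s$, and $\mathrm{Post}_{si}$ and $\mathrm{FollowUp}_{si}$ are dummy indicators for the post and follow-up surveys, with the pre-survey as the reference category.

```latex
Y_{si} = \pi_{0i} + \pi_{1i}\,\mathrm{Post}_{si} + \pi_{2i}\,\mathrm{FollowUp}_{si} + e_{si},
\qquad e_{si} \sim N(0, \sigma^{2})
```

Under this coding, $\pi_{0i}$ is participant $i$'s expected pre-survey score, and $\pi_{1i}$ and $\pi_{2i}$ are the changes from the pre-survey at the post and follow-up surveys. Treating time as categorical means the two changes are estimated separately instead of being forced onto a single linear trend.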

Demographic covariates were added to the model for the intercept to adjust for differences in the pre-survey. The level-2 equation
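One plausible form of the level-2 equations is sketched here under assumptions the text does not confirm. In this assumed notation, $\pi_{0i}$ is participant $i$'s pre-survey intercept, $\pi_{1i}$ and $\pi_{2i}$ are the changes at the post and follow-up surveys, $X_{ki}$ is the $k$-th demographic covariate, and only the intercept carries a participant-level random effect.

```latex
\begin{aligned}
\pi_{0i} &= \beta_{00} + \sum_{k=1}^{K} \beta_{0k} X_{ki} + r_{0i}, \qquad r_{0i} \sim N(0, \tau_{00}) \\
\pi_{1i} &= \beta_{10} \\
\pi_{2i} &= \beta_{20}
\end{aligned}
```

In this form, $\beta_{10}$ and $\beta_{20}$ are the average changes in practices at post and follow-up, adjusted for demographic differences in pre-survey levels.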

All analyses were conducted using the lmer function from the lme4 R package (Bates et al., 2015). The full model results can be found in the Supplementary Appendix.

We use the same HLM model described in the previous question but added cross-level interaction terms to the slopes for the post and follow-up surveys for each of the collaboration indicators, running separate models for each term. The level-2 intercept slopes are as follows (where t represents the time point)
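A hedged sketch of what such slope equations could look like follows, with notation assumed for illustration: $\pi_{ti}$ is participant $i$'s change from the pre-survey at time point $t$, and $C_{i}$ is a single collaboration indicator (for example, learning circle participation), entered one indicator per model.

```latex
\pi_{ti} = \beta_{t0} + \beta_{t1}\, C_{i}, \qquad t \in \{\text{post}, \text{follow-up}\}
```

Here $\beta_{t0}$ is the average change at time point $t$ for participants with $C_{i} = 0$, and $\beta_{t1}$ is the cross-level interaction: the additional change associated with that form of collaboration.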

We used the same approach for modeling the interaction with the school context indicators.

In Research Question 1, we asked about the extent to which participants integrated the content of their course into their practice. We illustrate the possibility space of this question through the example of Dan, a participant in “Introduction to Competency-Based Education.” During the course, Dan worked for a district office of a large U.S.-based school district where he was responsible for supporting teachers in developing Individualized Education Plans (IEPs) for students with special needs that documented the skills that students would need to learn to be successful after graduation. Previously, he reported struggling to find a way to help teachers meaningfully connect the state learning standards with the skills in the IEP: “we don’t have a coherent map where we can look at the standards and identify how all of the skills within that standard are also represented across different standards.”

Taking the MOOC changed how he approached his work with teachers. Dan began working with departmental teams at various schools in the district to create a coherent map, across grade levels and subjects, between the state learning standards and the skills students would be required to master. Dan reported that the process energized teachers in his district, promoting increased collaboration across departments and schools:

It has gotten to this point where now I have two schools that have said I’m wondering how much more overlap we can create… So that school, their two teams started planning together in social studies and ELA. And another school, which is in our network was invited to participate…. So I was just amazed at seeing that

Across all participants in the analysis sample, we observed increases in their use of practices related to the content of the courses (Figure 2). Compared to the pre-survey, participants reported higher prevalence of course-related practices on the post-survey: on average, practices increased by 0.42 standard deviations (SD) (p < 0.001) above pre-survey averages. Although the effect attenuated somewhat on the follow-up survey, it persisted: 4–8 months after the course ended, participants still reported levels of course-related practices 0.25 SD above their pre-survey averages (p < 0.001).

The content of the courses offered multiple “hooks” that allowed participants across varied contexts to apply the generalized concepts of the course to specific problems from their own context. Dan observed how the course videos shifted his understanding of the difference between standards and competencies:

And in the video they made them reference, the two people made the reference toward standards and competencies and up to that point, I was interpreting them as pretty much the same…when I realized that oh, okay when you’re making this reference to competencies it’s to the knowledge and skills within these standards and how these skills can be represented across that. And so it also informed my understanding too that some places have a coherent map.

This idea from the video then fostered specific actions. Dan began working with teachers to map skills across standards in different grade levels and subject areas showing how they were connected.

Participants also described how doing the assignments helped them understand how these ideas might fit within their own practice and local context. We illustrate this through the example of “Ann,” a kindergarten teacher who described in an interview how using a particular problem-solving approach that she learned in “Introduction to Design Thinking in Education” with her students prompted her to think about broader shifts in her curriculum.

We do a unit on construction, so it kind of already fits in with the kindergarten curriculum that we use. Again, I kind of like the give back to the community aspect, which isn’t part of our curriculum. I’d really try to go back and look into the language around that and how I could incorporate that method into my class.

Eight months later, on a follow-up survey, Ann reported that she had begun making those changes to her curriculum:

I am seeking out more opportunities to engage in engineering. I am exploring novel engineering and maker mindset professional development courses…I have been able to incorporate some engineering and design and am hoping to implement more in the spring as it fits in with our curriculum.

In Research Question 2, we examined the role of collaboration in translating learning from the course content into practice. In our analysis, we examined two main forms of collaboration around course content. The first, which we describe as “virtual collaboration,” occurred between participants in the online course and was mainly clustered within the course forums. Based on log data, 79% of participants in the analysis sample visited the forums and 52% posted at least once. Visiting the discussion forum was not required, but participants were encouraged to interact there in order to meet other learners and share what they learned.

Participants used what they learned in the discussion forums about others’ contexts to re-evaluate circumstances in their own context. “Beth,” a participant in “Introduction to Competency-Based Education,” was developing professional development for her U.S.-based school district around a large-scale learning initiative which was related to the content of the MOOC. Beth described how hearing about what other participants were doing in the forums along with the school profile videos were helpful in highlighting how difficult it would be to implement the initiative.

Just through reading and watching the videos and seeing the posts of what other people are doing, I think I’ve gained a much better understanding of what I would have to teach better with regards to CBE.

At the end of the course, Beth reported that one way the course affected her practice was by making her think more critically about how she approached this initiative:

I used to think that it wouldn’t be too difficult to implement [the initiative] if we just had the right people willing to work hard, but now I think that there are many more layers to [it] than I previously considered and for full implementation to occur, much more expansive, strategic planning is needed.

Four months after the course ended, Beth reported that the course had changed how she facilitated professional learning: “Information I share with teachers is more accurate and how we model PD is evolving.” Learning from others in the forums helped Beth re-calibrate how she approached this new initiative with teachers in her district.

The second type of collaboration we observed was “local collaboration,” where participants took their learning outside of the online course platform by forming learning circles with others in their local context. In surveys, 37% of participants reported that they either planned to participate or had participated in a learning circle during the course. According to course log data, 11% of participants downloaded the facilitators’ guide for the course.

Another way participants collaborated with others in their local context was by sharing materials from the online courses. Nearly seven in ten participants (68%) reported sharing materials from the course with others in their school or organization. Among those who reported sharing content, the most common ways of sharing were presenting content at in-person meetings (86%), adapting the content of the course for use in a professional development setting (61%), and integrating the course content into existing professional development offerings (51%).

Participants adapted the learning from the course when collaborating and sharing with others in their local context. Amina was a Canadian high school teacher whose goal in taking the online course was to build her skills to better engage with the families of immigrant students in her school. During the course, Amina organized meetings for parents in the community to help them understand how innovative practices might benefit their children. She credited the course with helping her develop the language around innovation: “I gained more knowledge about how to implant innovation in the classroom and better collaborate with the school.”

Six months after completing the course, she reported organizing workshops for educators about how they can implement change in their school.

I became a change leader in my community and in my school, collaboration with the administration, parents and educators. I organized workshops on how other educators can implement change in their schools.

Through sharing with others, using the tools and resources they learned in the course, participants were able to integrate their learning from the online courses into their practice in their local context. This also extended the reach of the course, enabling even those who didn’t take the online course to be exposed to the ideas and concepts from the course within their own local setting.

As part of Research Question 2, we also investigated the extent to which collaboration and sharing, both locally and virtually, were related to learners’ changes in practices related to the content of the courses. We found that most forms of collaboration and sharing we examined did not contribute to greater changes in practices (Figure 3). Participants who formed learning circles (ES = 0.27 SD, p < 0.001) and shared resources in their context (ES = 0.44 SD, p < 0.001) reported significantly higher levels of practices on the pre-survey than those who did not. However, even though all participants made gains in practices on the post and follow-up surveys, there were no significant differences in the rate at which participants changed their practices. Participants who reported participating in a learning circle did not report any meaningfully higher rates of changes in practices (ES Post = –0.02 SD, p > 0.1, ES Follow-up = 0.001 SD, p > 0.1). Sharing resources was positively related to slightly higher rates of changing practices (ES Post = 0.08 SD, p > 0.1, ES Follow-up = 0.17 SD, p > 0.1), but the estimates were not statistically significant. Additionally, we saw no meaningful differences in the rate of changes in practices by whether participants posted in the discussion forums (ES Post = 0.06 SD, p > 0.1, ES Follow-up = 0.06 SD, p > 0.1).

However, we did find that accessing the facilitator’s guide was related to changes in practice. Participants who downloaded the facilitator’s guide had significantly greater growth in practices on both the post (ES = 0.30 standard deviations, p < 0.05) and follow-up survey (ES = 0.27 standard deviations, p < 0.05). This suggests that making resources available to support local collaboration may have contributed to changes in practice within participants’ local contexts.

In Research Question 3, we examined how local school contexts were related to how participants collaborated with one another, both virtually and within their local contexts. To explore this question, we use the contrasting examples of “Danielle” and “Alexis,” two U.S.-based high school teachers who participated in “Developing a Graduate Profile for Your School.” Both approached the course with similar motivations to change the culture of instruction at their respective schools. Danielle was concerned that instruction in her school was overly focused on standardized testing, observing that “so as far as academic content, it’s really focused on making sure that the kids are ready for the test, unfortunately.” She found that this environment often undermined student motivation for learning: “the kids are just… They’re really not excited about being there and the work that they do.” Alexis similarly found her colleagues’ instruction lacking in student engagement: “this school, the majority of teachers still do direct instruction. They stand up. They teach. They do the book and answer questions. That’s it.”

However, the local contexts that they worked in differed substantially. The school where Danielle worked was a large, racially diverse public school. The academic culture was heavily focused on standardized testing and the curriculum was controlled by the district. Danielle was the only person in her school taking the course at the time it was being offered. For Danielle, participating in the course widened the gulf between her own views on education and those of her colleagues in her school. In her interview, she described her evolution this way:

I kind of thought before taking the course, I was kind of thinking that every teacher on campus kind of has an idea of what graduates should know and be able to do, and then they’re incorporating that into their lessons, because it was always important to me to make sure that we were creating good citizens. So I kind of thought that was in every teacher’s mindset when they were planning their lessons. But as I’ve been talking to people, they were just kind of focusing on academic content, and not really thinking about what we want the humans to be able to do beyond academics.

Participating in the online course helped her critically examine her school’s approach to instruction and develop a plan to act to address those concerns. Danielle successfully convinced her administration to change her schedule so she could spend more time providing extra support to students to make sure they stay on track. By taking individual steps to better support students, she was able to begin the process of transforming her own teaching practice.

In contrast, “Alexis” worked at a private school that was not mandated to participate in standardized testing. Alexis was also able to organize a learning circle for teachers and administrators at her school to work on the course together. Her goal with the learning circle was to integrate the concept of a graduate profile from the online course into her school’s strategic plan. She observed that “my goals are to help us create a cohesive profile that allows us and articulates the need to move the faculty forward.”

Using concepts she learned in the online course, Alexis and the colleagues in her learning circle organized a 3-day workshop for faculty in her school. She and her colleagues also encouraged the school to create graduate profiles for faculty and administrators in addition to students. She observed that ideas from the course had become part of the culture at her school.

That profile process is really good for us, going through it, because we’re now using it to create a common language across the entire school. In how we talk about things, how we interact with each other, how we look at the characteristics of what we want in people we work with and work around.

Although Danielle and Alexis both had the same goal of shifting instructional practices in their school, how they integrated what they learned from the online courses differed based on factors in their local context. Because Danielle worked in a school with fewer resources and opportunities for collaboration, the changes she chose to make focused more on her own teaching practice. Alexis, by comparison, had greater access to resources, the support of the school administration, and colleagues with whom she could collaborate on the course. As a result, she was able to begin to make more systemic changes to her school’s instructional culture.

Throughout the analysis sample, we observed this variation in school contexts. For example, a substantial share of participants reported working in schools where less than one-tenth of the students were economically disadvantaged (40%), while another substantial share reported working in schools where more than half of the students were economically disadvantaged (23%).

We found similar variation in student demographics for participants working in U.S. public schools. For participants in U.S. public schools, we were able to link to public records about the percent of students eligible for free or reduced price lunch, an indicator of economic disadvantage (Harwell and LeBeau, 2010). In our sample, the average proportion of students eligible for this program within participants’ schools was 36% with a standard deviation of 27%. This meant that a participant one standard deviation below the mean on this metric was working at a school with very few economically disadvantaged students (9%). In contrast, a participant one standard deviation above the mean on this metric worked at a school with more than half (63%) of the students coming from economically disadvantaged families.
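The 9% and 63% figures above follow directly from the reported mean and standard deviation. A minimal Python sketch of that arithmetic is shown here for readers who want to reproduce it; the function name `one_sd_band` and the clamping to the 0 to 100 range are illustrative assumptions, not part of the study's analysis code.

```python
def one_sd_band(mean_pct, sd_pct):
    """Return the values one standard deviation below and above the mean.

    Hypothetical helper: results are clamped to [0, 100] because the
    quantity is a percentage of students.
    """
    lower = max(0, mean_pct - sd_pct)
    upper = min(100, mean_pct + sd_pct)
    return lower, upper


# Values reported in the text: mean 36%, standard deviation 27%.
low, high = one_sd_band(36, 27)
print(f"-1 SD: {low}%  +1 SD: {high}%")
```

With the reported values, the lower bound is 36 − 27 = 9 and the upper bound is 36 + 27 = 63, matching the percentages cited in the paragraph.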

Another way that educators’ school contexts varied was in the school cultures around instruction, teacher leadership, and collaboration. On the pre-survey, participants were asked to answer questions about their school cultures around instruction, teacher leadership, and collaboration, which we averaged into a scale we termed “school instructional climate.” Reported school instructional climate varied substantially between participants in the analysis sample; the mean was 4.10 and the standard deviation was 0.90. Participants in schools one standard deviation below the mean reported much less supportive work environments than participants in schools one standard deviation above the mean (Table 3).

Based in part on the examples of Alexis and Danielle, we theorized that participants in schools with fewer economically disadvantaged students and/or better instructional climates would also be more likely to collaborate with colleagues in their schools. We report the results from this analysis in Figure 4, which shows the relationship between school demographics and forms of collaboration. We found that having fewer economically disadvantaged students and a better school instructional climate were related to higher levels of local collaboration. Participants were more likely to report participating in learning circles and sharing resources from the courses in schools with fewer economically disadvantaged students and better reported instructional climates. However, the opposite was true for virtual collaboration. Participants were more likely to post in the forums if they reported worse instructional climates in their schools and, among U.S. public school teachers, if they worked in schools with more economically disadvantaged students.

These results are echoed by another finding from a question on the follow-up survey about learning circles. For participants who said they did not participate in a learning circle, we asked why they did not join one. The most common response, selected by 42% of participants, was that “there wasn’t anyone in their school or organization who could take the course with them.” This suggests that factors at the school level may have influenced the extent to which participants collaborated with others in their local context.

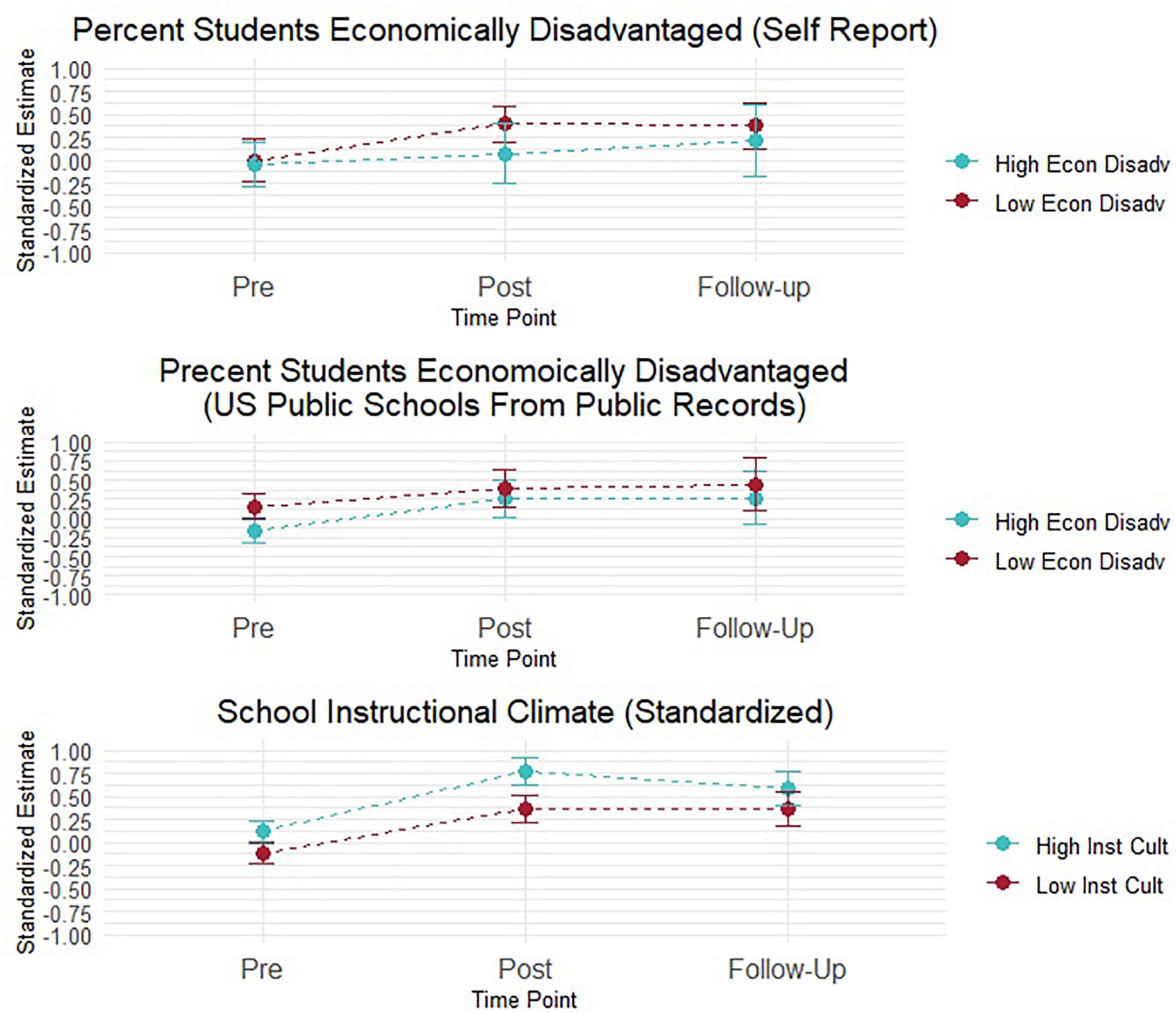

As part of Research Question 3, we also examined whether school context was related to changes in practices. We plot the estimates from our model in Figure 5, which shows the relationship between school demographics and growth in practices. Participants who reported high percentages of economically disadvantaged students (more than 50%) in their schools had less growth in practices than participants in schools with few economically disadvantaged students (0–10%) (ES = –0.29 SD, p < 0.1) and smaller, but not statistically significant, differences on the follow-up survey (ES = –0.14 SD, p > 0.1).

Figure 5. Estimated change in practices from pre-survey average by economically disadvantaged students and school instructional climate.

Among participants in U.S. public schools, for whom we had publicly available data, we found that participants with more economically disadvantaged students had lower reported levels of practice on the pre-survey (ES = –0.15 SD, p < 0.1), but there were no meaningful differences in changes in practice (Post ES = 0.08 SD, p > 0.1, Follow-up ES = 0.06 SD, p > 0.1). Additionally, participants in schools with better school instructional cultures reported higher levels of course-related practices on the pre-survey (ES = 0.12 SD, p < 0.05). However, there were no statistically significant differences in changes in practice on the post (ES = 0.08 SD, p > 0.1) or follow-up surveys (ES = –0.01 SD, p > 0.1).

These findings suggest that participants across a variety of contexts were able to identify ways of changing their practice after completing the online courses. Although context played a significant role in how participants interacted with course content and collaborated with others, as exemplified by Alexis and Danielle, the content was flexible enough that participants could identify ways within their own context to change their practice.

As MOOC developers seek to develop more offerings targeted at learners looking for job-embedded professional learning, the question of how to design learning environments that work for users across different contexts will become increasingly relevant. In our C-C-C design model, we proposed an instructional design model that sought to link the two ecosystems of online learning and teacher practice by offering participants multiple, varied opportunities to integrate the knowledge they learn in the online course with their own specific understanding of their context and by supporting both virtual and in-person collaboration. Applying the design principles of this model to our set of four MOOCs, we found that participants were largely successful in making these connections, as evidenced by quantitative evidence about average changes in practice and qualitative case studies of specific learners.

Our study also found several promising components of designing courses with this model in mind. For participants in the courses we studied, the learning process within the online course was deeply intertwined with their own practice. Course content (lecture videos, video school case studies, and assignments) gave participants opportunities to integrate knowledge and practice. Because the content offered multiple “hooks” into practice, through assignments and videos, participants could choose how they would integrate the learning from the course into their practice. After the course, the ideas and concepts that participants came across in the videos and assignments were integrated into their own professional practice.

Additionally, educators’ context was only one of many contributing factors to changes in practices. Participants reported changes in practice across different types of contexts, and we did not find strong evidence that context moderated changes in practice. One reason for this may be that participants adjusted how they interacted with the courses based on the limits and constraints of their context. For example, participants in schools with a higher percentage of students from economically disadvantaged backgrounds and worse school instructional climates were less likely to collaborate with others in their local context by joining learning circles or sharing resources. However, these participants were more likely to collaborate virtually with others through the course discussion forums.

These findings are consistent with other studies about how educators integrate their online learning with their own specific contextual needs. For example, Trust (2016) examined the learning processes that teachers go through when they interact with other teachers in an emergent online network. The study found that teachers were transferring and adapting their learning between two settings, the context of their school and classroom and their online context, and that their learning was mediated by both contexts. Similarly, Brennan et al. (2018), studying the experiences of educators in a MOOC, argued that because educators’ contexts are variable, the design of online professional learning needs to be iteratively adaptive to learners’ specific needs. Finally, other studies have also found benefits for educators participating in MOOCs from both peer interactions in the online learning environment (Kellogg et al., 2014) and study groups with fellow educators in their context (Wollscheid et al., 2020).

What our findings and other related literature suggest is that the design of online professional learning cannot be conceived as a “closed stable system” where design elements lead to pre-specified outcomes (Opfer and Pedder, 2011). Rather, the design should maximize flexibility and adaptivity across different contexts. By including flexibility in design, instructional designers can close the gap between the large-scale audience and content of a course and the specificity of participants’ local contexts. The goal should not be to generate content that is applicable to every context; this would be quite difficult to accomplish in practice. Nor should instructional designers develop MOOC content that serves only a particular audience. Rather, instructional designers should structure content so individuals have multiple, varied opportunities to integrate their knowledge both in the online setting and with others in their specific context.

However, the analysis also identified limits to how much we can link design elements in the courses with outcomes across varied contexts using the observational approach we employed in this study. Although we found qualitative evidence that participants found collaboration to be useful for their learning, we did not find significant differences in how much participants changed their practices for most of the collaboration indicators we examined. One potential issue we identified in the data was that the form of collaboration was highly contextual and participants’ contexts were highly variable. This constraint makes it difficult to correlate design features intended to foster collaboration with outcomes, since context was likely a strong confounding factor. Additionally, the courses were perhaps unique in attempting to appeal to a broad audience of educators without focusing on a specific subject matter or age level. As a result, the findings of this study may not be relevant to other online professional learning environments that are more limited in scope and audience. Furthermore, the audience for change leadership MOOCs is likely different from the audiences for other subject areas, and thus the specific findings may not apply to other online professional learning settings.

In subsequent work, we intend to expand on this research and explore more deeply the connections between design features and integration into context. Because this was an observational study in which participants were not randomly assigned to conditions, we cannot make causal claims about how course elements, collaboration, or context influenced participants’ outcomes. In future studies, we will use experimental research designs to study the causal relationships between design features and changes in practices. The design of these studies will need to ensure fairness in access to learning resources across conditions while still being robust enough to study the impact of these features across varied contexts. Additionally, we will more deeply investigate how participants integrate their learning into their own contexts through ethnographic observation and site-based case studies. Finally, we will aim to collect more robust data about learning within the course through activities and open-ended reflection prompts that can help link content with changes in practices. Through these activities, we will build on the initial set of research findings to further elaborate and expand on the C-C-C model.

In this study, we addressed the dilemma of how to make the generalized content of MOOCs work across varied local contexts focusing on the example of educator professional learning. This study offers evidence of the importance of incorporating flexibility and support for in-person collaboration into the design of MOOCs in order to facilitate professional learning across varied contexts. The evidence described in this study lays a foundation for many potential future uses in other online professional learning contexts where these questions will continue to emerge.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by Committee on the Use of Humans as Experimental Subjects, Massachusetts Institute of Technology. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

JL-T designed the instruments, conducted the analysis, and contributed most of the writing. RS reviewed and provided feedback on the research design and on the manuscript at several stages. Both authors contributed to the article and approved the submitted version.

The study was funded through a gift by an anonymous donor. The funder was not involved in the study design, collection, analysis, interpretation of data, the writing of this article or the decision to submit it for publication.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2022.899535/full#supplementary-material

Allen, J. P., Pianta, R. C., Gregory, A., Mikami, A. Y., and Lun, J. (2011). An interaction-based approach to enhancing secondary school instruction and student achievement. Science 333, 1034–1037. doi: 10.1126/science.1207998

Avineri, T., Lee, H. S., Tran, D., Lovett, J. N., and Gibson, T. (2018). “Design and Impact of MOOCs for Mathematics Teachers,” in Distance Learning, E-Learning and Blended Learning in Mathematics Education: International Trends in Research and Development, eds J. Silverman and V. Hoyos (Cham: Springer). doi: 10.1007/978-3-319-90790-1_11

Bartolomé, L. (1994). Beyond the methods fetish: toward a humanizing pedagogy. Harvard Educ. Rev. 64:173. doi: 10.17763/haer.64.2.58q5m5744t325730

Bates, D., Mächler, M., Bolker, B. M., and Walker, S. C. (2015). Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67, 1–48. doi: 10.18637/jss.v067.i01

Brennan, K., Blum-Smith, S., and Yurkofsky, M. M. (2018). From checklists to heuristics: Designing MOOCs to support teacher learning. Teach. Coll. Record 120, 1–48.

Britt, V. G., and Paulus, T. (2016). “Beyond the Four Walls of My Building”: A Case Study of #Edchat as a Community of Practice. Am. J. Dis. Educ. 30, 48–59. doi: 10.1080/08923647.2016.1119609

Brown, J. S., and Duguid, P. (1991). Organizational learning and communities-of-practice: toward a unified view of working, learning, and innovation. Organ. Sci. 2, 40–57.

Bryk, A. S., and Schneider, B. (2003). Trust in schools: a core resource for school reform. Educ. Leadersh. 60, 40–45.

Choi, D. S. Y., and Morrison, P. (2014). Learning to get it right: Understanding change processes in professional development for teachers of English learners. Prof. Dev. Educ. 40, 416–435. doi: 10.1080/19415257.2013.806948

Correnti, R. (2007). An empirical investigation of professional development effects on literacy instruction using daily logs. Educ. Eval. Policy Anal. 29, 262–295. doi: 10.3102/0162373707309074

Dash, S., De Kramer, R. M., O’Dwyer, L. M., Masters, J., and Russell, M. (2012). Impact of online professional development on teacher quality and student achievement in fifth grade mathematics. J. Res. Technol. Educ. 45, 1–26. doi: 10.1080/15391523.2012.10782595

Dede, C., Jass Ketelhut, D., Whitehouse, P., Breit, L., and McCloskey, E. M. (2009). A research agenda for online teacher professional development. J. Teach. Educ. 60, 8–19. doi: 10.1177/0022487108327554

Desimone, L. M. (2009). Improving impact studies of teachers’ professional development: toward better conceptualizations and measures. Educ. Res. 38, 181–199. doi: 10.3102/0013189X08331140

Desimone, L. M., Porter, A. C., Garet, M. S., Yoon, K. S., and Birman, B. F. (2002). Effects of professional development on teachers’ instruction: results from a three-year longitudinal study. Educ. Eval. Policy Anal. 24, 81–112. doi: 10.3102/01623737024002081

Dominguez, P. S., Nicholls, C., and Storandt, B. (2006). Experimental Methods and Results in a Study of PBS TeacherLine Math Courses. Syracuse, NY: Hezel Associates.

Fullan, M. (2002). Leading in a Culture of Change. Available online at: https://books.google.com/books?hl=en&lr=&id=7i0KAwAAQBAJ&oi=fnd&pg=PR9&dq=Fullan+2007&ots=kfkq3kqG-J&sig=tj9tPQf_zKaicXbN8xHk_QV8hvo#v=onepage&q=Fullan2007&f=false (accessed January 1, 2022).

Garet, M. S., Porter, A. C., Desimone, L., Birman, B. F., and Yoon, K. S. (2001). What makes professional development effective? Results from a national sample of teachers. Am. Educ. Res. J. 38, 915–945. doi: 10.3102/00028312038004915

Garrison, D. R., Anderson, T., and Archer, W. (2001). Critical thinking, cognitive presence, and computer conferencing in distance education. Am. J. Dis. Educ. 15, 7–23. doi: 10.1080/08923640109527071

Garrison, D. R., and Arbaugh, J. B. (2007). Researching the community of inquiry framework: Review, issues, and future directions. Internet High. Educ. 10, 157–172. doi: 10.1016/j.iheduc.2007.04.001

Glass, C. R., Shiokawa-Baklan, M. S., and Saltarelli, A. J. (2016). Who Takes MOOCs? New Dir. Inst. Res. 2015, 41–55. doi: 10.1002/ir.20153

Harwell, M., and LeBeau, B. (2010). Student eligibility for a free lunch as an SES measure in education research. Educ. Res. 39, 120–131. doi: 10.3102/0013189X10362578

Hill, H. C. (2009). Fixing teacher professional development. Phi Delta Kappan 90, 470–476. doi: 10.1177/003172170909000705

Hill, H. C., Beisiegel, M., and Jacob, R. (2013). Professional development research. Educ. Res. 42, 476–487. doi: 10.3102/0013189X13512674

Hopson, L. M., and Lee, E. (2011). Mitigating the effect of family poverty on academic and behavioral outcomes: The role of school climate in middle and high school. Child. Youth Serv. Rev. 33, 2221–2229. doi: 10.1016/j.childyouth.2011.07.006

Kellogg, S., Booth, S., and Oliver, K. (2014). A social network perspective on peer supported learning in MOOCs for educators. Int. Rev. Res. Open Dist. Learn. 15, 263–289. doi: 10.19173/irrodl.v15i5.1852

Kleiman, G. M., Wolf, M. A., and Frye, D. (2013). The Digital Learning Transition MOOC for Educators: Exploring a Scalable Approach to Professional Development. Available online at: http://www.mooc-ed.org/ (accessed January 1, 2022).

Kraft, M. A., and Papay, J. P. (2014). Can professional environments in schools promote teacher development? Explaining heterogeneity in returns to teaching experience. Educ. Eval. Policy Anal. 36, 476–500. doi: 10.3102/0162373713519496

Lantz-Andersson, A., Lundin, M., and Selwyn, N. (2018). Twenty years of online teacher communities: A systematic review of formally-organized and informally-developed professional learning groups. Teach. Teach. Educ. 75, 302–315. doi: 10.1016/j.tate.2018.07.008

Laurillard, D. (2016). The educational problem that MOOCs could solve: Professional development for teachers of disadvantaged students. Res. Learn. Technol. 24:29369. doi: 10.3402/rlt.v24.29369

Lave, J., and Wenger, E. (1991). Situated Learning: Legitimate Peripheral Participation. Cambridge: Cambridge University Press.

Loeb, S., Kalogrides, D., and Béteille, T. (2011). “Effective Schools: Teacher Hiring, Assignment, Development, and Retention”. NBER Working Papers 17177. Cambridge, MA: National Bureau of Economic Research, Inc.

Luckin, R. (2010). “The ecology of resources model of context: A unifying representation for the development of learning-oriented technologies,” in Proceedings of the 2nd International Conference on Mobile, Hybrid, and On-Line Learning, Manhattan, NY.

Martin, M. O., Mullis, I. V. S., Foy, P., and Stanco, G. M. (2013). TIMSS 2011 International Results in Science. Amsterdam: International Association for the Evaluation of Educational Achievement.

Matsumura, L. C., Garnier, H. E., Correnti, R., Junker, B., and DiPrima Bickel, D. (2010). Investigating the Effectiveness of a Comprehensive Literacy Coaching Program in Schools with High Teacher Mobility. Element. Sch. J. 111, 35–62. doi: 10.1086/653469

Moolenaar, N. M., Sleegers, P. J. C., and Daly, A. J. (2012). Teaming up: Linking collaboration networks, collective efficacy, and student achievement. Teach. Teach. Educ. 28, 251–262. doi: 10.1016/j.tate.2011.10.001

Naidu, S., and Karunanayaka, S. P. (2019). “Orchestrating Shifts in Perspectives and Practices About the Design of MOOCs,” in MOOCs and Open Education in the Global South, eds K. Zhang, C. J. Bonk, T. C. Reeves, and T. H. Reynolds (Milton Park: Routledge), 72–80. doi: 10.4324/9780429398919-9

Napier, A., Huttner-Loan, E., and Reich, J. (2020). Evaluating Learning Transfer from MOOCs to Workplaces: A Case Study from Teacher Education and Launching Innovation in Schools. Rev. Iberoam. Educ. Dis. 23, 45–64. doi: 10.5944/ried.23.2.26377

Neuman, S. B., and Cunningham, L. (2009). The impact of professional development and coaching on early language and literacy instructional practices. Am. Educ. Res. J. 46, 532–566. doi: 10.3102/0002831208328088

Noble, A., McQuillan, P., and Littenberg-Tobias, J. (2016). “A lifelong classroom”: Social studies educators’ engagement with professional learning networks on Twitter. J. Technol. Teach. Educ. 24, 187–213.

Opfer, V. D., and Pedder, D. (2011). Conceptualizing teacher professional learning. Rev. Educ. Res. 81, 376–407. doi: 10.3102/0034654311413609

Payne, K. J., and Biddle, B. J. (1999). Poor school funding, child poverty, and mathematics achievement. Educ. Res. 28, 4–13. doi: 10.3102/0013189X028006004

Puamohala Bronson, H. (2019). IES Director Highlights Friday Institute MOOC-Eds in First-Year Report. Raleigh, NC: NC State University.

Raudenbush, S., and Bryk, A. (2002). Hierarchical Linear Models: Applications and Data Analysis Methods. Thousand Oaks, CA: Sage Publications.

Reich, J., Levinson, M., and Johnston, W. (2011). Using online social networks to foster preservice teachers’ membership in a networked community of praxis. Contemp. Issues Technol. Teach. 11, 382–397.

Reich, J., and Ruipérez-Valiente, J. A. (2019). The MOOC Pivot. Science 363, 130–131. doi: 10.1126/science.aav7958

Sanders, D., and McCutcheon, G. (1986). The development of practical theories of teaching. J. Curricul. Supervis. 2, 50–67.

Seaton, D. T., Coleman, C. A., Daries, J. P., and Chuang, I. (2014). Teacher Enrollment in MIT MOOCs: Are We Educating Educators? Available online at: http://dspace.mit.edu/bitstream/handle/1721.1/96661/SSRN-id2515385.pdf?sequence=1 (accessed January 1, 2022).

Sirin, S. R. (2005). Socioeconomic status and academic achievement: a meta-analytic review of research. Rev. Educ. Res. 75, 417–453. doi: 10.3102/00346543075003417

United States Department of Agriculture (2017). The National School Lunch Program. Available online at: http://www.fns.usda.gov/tn/team-nutrition (accessed January 1, 2022).

Teddlie, C., and Tashakkori, A. (2006). A general typology of research designs featuring mixed methods. Res. Sch. 13, 12–28.

Trust, T. (2016). New model of teacher learning in an online network. J. Res. Technol. Educ. 48, 290–305. doi: 10.1080/15391523.2016.1215169

Trust, T., Krutka, D. G., and Carpenter, J. P. (2016). “Together we are better”: Professional learning networks for teachers. Comput. Educ. 102, 15–34. doi: 10.1016/j.compedu.2016.06.007

Wayne, A. J., Yoon, K. S., Zhu, P., Cronen, S., and Garet, M. S. (2008). Experimenting with teacher professional development: motives and methods. Educ. Res. 37, 469–479. doi: 10.3102/0013189X08327154

Keywords: professional learning, online learning, large-scale learning environments, remote learning, MOOC (massive open online course)

Citation: Littenberg-Tobias J and Slama R (2022) Large-Scale Learning for Local Change: The Challenge of Massive Open Online Courses as Educator Professional Learning. Front. Educ. 7:899535. doi: 10.3389/feduc.2022.899535

Received: 18 March 2022; Accepted: 21 June 2022; Published: 26 August 2022.

Edited by:

Dilrukshi Gamage, Tokyo Institute of Technology, Japan

Reviewed by:

Enna Ayub, Taylor’s University, Malaysia

Copyright © 2022 Littenberg-Tobias and Slama. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Joshua Littenberg-Tobias, jltobias@mit.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
