ORIGINAL RESEARCH article

Front. Educ., 19 April 2022

Sec. Special Educational Needs

Volume 7 - 2022 | https://doi.org/10.3389/feduc.2022.890832

This article is part of the Research Topic: The role of evidence in developing effective educational inclusion

This paper considers the engagement by teachers and school leaders in England in educational practices that are both ‘research-informed’ and supportive of inclusive education. We do so by seeking to understand the benefits, costs, and signifying factors these educators associate with research-use. In undertaking the study, we first worked to develop and refine a survey instrument (the ‘Research-Use BCS survey’) that could be used to uniquely and simultaneously measure these concepts. Our survey development involved a comprehensive process that comprised: (1) a review of recent literature; (2) item pre-testing; and (3) cognitive interviews. We then administered this questionnaire to a representative sample of English educators. Although response rates were somewhat impacted by the recent COVID-19 pandemic, we achieved a sufficient number of responses (147 in total) to allow us to engage in descriptive analyses, as well as the production of classification trees. Our analysis resulted in several key findings, including that: (1) if respondents see the benefits of research, they are likely to use it (with the converse also true); (2) if educators have the needed support of their colleagues, they are more likely to use research; and (3) perceiving research-use as an activity that successful teachers and schools engage in is also associated with individual-level research use. We conclude the paper by pointing to potential interventions and strategies that might serve (at least, in the English context) to enhance research-use, so increasing the likelihood of the development and use of effective inclusive practices in schools.

This paper considers the engagement by teachers and school leaders in England in educational practices that are both ‘research-informed’ and supportive of inclusive education. For the purposes of this paper, we define research-informed educational practice (RIEP) as the use of academic research by teachers and school leaders, in order to improve aspects of their teaching, decision-making, leadership or ongoing professional learning (Walker, 2017; Brown, 2020). Inclusive practice, meanwhile, represents the development and enactment of approaches to pedagogy, curriculum and assessment that enable all students, irrespective of ability, to learn together in one environment. In other words, the aim of such practices is to enable all children to participate meaningfully and effectively in mainstream education, whilst avoiding the marginalization of learners based on labeling, pre-conception or access (Mintz and Wyse, 2015; Mintz et al., 2020).

There are strong reasons to encourage RIEP generally. For instance, the emerging evidence base indicates that, if educators engage with research-evidence to make or change decisions, embark on new courses of action, or develop new practices, then this can have a positive impact for both teaching and learning outcomes (e.g., Cordingley, 2013; Mincu, 2014; Cain, 2015; Godfrey, 2016; Rose et al., 2017; Crain-Dorough and Elder, 2021). There are also myriad social and moral imperatives which, together, present the case that educators ‘should’ engage with research-evidence if it is possible for them to do so. This argument is nicely encapsulated by Anne Oakley (2000) who some 20 years ago argued that: “those who intervene in other people’s lives [should] do so with the utmost benefit and least harm” (2000: p. 3). When it comes to inclusion, therefore, this imperative dictates that practitioners ‘ought’ to ensure approaches to inclusive practice are informed by the best available evidence, so as to be as beneficially impactful as possible. Naturally this engagement should be critical in nature, and the research in question should be of recognizably high quality; and for a comprehensive overview of both critical engagement and how to assess the quality of research-evidence, we point readers in the direction of Gough (2021).

Inclusive education is increasingly seen as a core part of how equitable education systems, globally, should function (Van Mieghem et al., 2020). Simultaneously, however, whether we consider inclusive practice or any other type of educational practice, RIEP – as a ‘business as usual’ way of working – is yet to take hold in the vast majority of schools. This is the case both in England and more widely (Graves and Moore, 2017; Wisby and Whitty, 2017; Biesta et al., 2019; Crain-Dorough and Elder, 2021). This ‘research-practice gap’ is apparent in the findings of a mixed methods study undertaken by Coldwell et al. (2017) to examine England’s progress toward a research-evidence-informed school system. Coldwell et al.’s (2017, p. 7) findings include that educators generally did not feel confident in using research-evidence and that there was “limited evidence from [their] study of teachers directly [using] research findings to change their practice.” Later work, such as the recent survey of 1,670 teachers in England undertaken by the National Foundation for Educational Research, presents a similar picture. Here it was found that academic research had only a ‘small to moderate’ influence on teacher decision making. Instead of research-evidence, teachers were in fact much more likely to draw ideas and support from their own experiences (60 percent of respondents identified ‘ideas generated by me or my school’), or the experiences of other teachers/schools (42 percent of respondents identified ‘ideas from other schools’), when deciding on approaches to improve student outcomes. In addition, non-research-based continuing professional development (CPD) was also cited as an important influence (54 percent of respondents). These compare to the much lower figures of 13 percent and 7 percent for ‘sources based on the work of research organizations’ and ‘advice/guidance from a university or research organization,’ respectively (Walker et al., 2019).

Using research-evidence to facilitate any kind of educational improvement typically involves educators (either collectively or individually): (1) accessing academic research; (2) being able to comprehend academic research; (3) being able to critically engage with research-evidence, understanding both its strengths and weaknesses, as well as how its warrants for truth can be justified; (4) relating research-evidence to existing knowledge and understanding; and, where relevant, (5) making or changing decisions, embarking on new courses of action, or developing new practices. Reasons traditionally given for the disconnect between research and practice invariably relate to each of these five steps. For example, it has been suggested that educators can often struggle to access academic research, which can often be situated behind pay walls (Goldacre, 2013). It can also be hard for educators to engage with academic research due to the esoteric nature of the language used (Hargreaves, 1996; Goldacre, 2013; Cain et al., 2019). There has been much critique of the quality of educational research as well as the concomitant suggestion that it should not be trusted to provide a firm basis for practice development (Hargreaves, 1996; Hammersley, 1997; Biesta, 2007; Goldacre, 2013; Wisby and Whitty, 2017; Wrigley, 2018). Academic research is also often critiqued for being either too context independent or because it reports on very specific contexts. This means educators can often find it difficult to know how best to apply findings to their settings (Biesta, 2007; Wrigley, 2018; Cain et al., 2019; Gough, 2021). Another often-cited reason for the research-practice gap is that teachers and school leaders do not always have enough time to engage with research, to learn from it, or use it to develop new practices (Galdin-O’Shea, 2015; Brown and Flood, 2019; Brown, 2020). 
Linked to the issue of time, however, is that schools in England are typically characterized by action-orientated cultures, which serve to hinder processes that take place over the mid to long term, such as research inquiry cycles (Cain et al., 2019; Mintrop and Zumpe, 2019). Related is research on educational organizations in the tradition of institutional theory (e.g., Honig, 2006), which argues that, when seeking to solve their problems, educators often privilege legitimacy (i.e., acting according to public expectations of what is appropriate) over evidence of effectiveness (Mintrop and Zumpe, 2019). In high autonomy/high accountability systems such as England, this notion of legitimacy tends to relate to the twin forces of government accountability and performativity.

At the same time, it is also clear that if teachers are to use research to promote inclusive education, then, as well as the RIEP-related issues outlined above, teachers and school leaders must also see merit in this form of education (which may prove a source of tension in high autonomy/high accountability systems). In other words, they must see value in the unique contributions that students of all backgrounds offer and want diverse groups to grow side by side, to the benefit of all. Furthermore, as well as inclusion signifying to educators an ethical vision to aim for, teachers and school leaders must also embody the catalytic behavior that can realize this change (Brown et al., 2021). This means educators need to be aware of the sociocultural context they operate in, have high expectations and a desire to make a difference, and be cognizant of the need to challenge the deficit mindset of colleagues. They may also need to identify the various means through which to overcome the professional antinomies often faced by those working in disadvantaged and challenging situations, including drawing on those holding ‘local knowledge,’ such as teaching assistants (Von Hippel, 2014; Lee and Louis, 2019; Brown et al., 2021). It is within this context, and toward the identification of such means, that educators are likely to direct their efforts at RIEP for inclusion.

For the purposes of this paper, we make the assumption that our work is for those who already have the ethical drive to pursue inclusive education. Our focus then is how this might be achieved in a research informed way. We note that there have already been a range of national and local initiatives which have attempted to address the separations between research and practice, which, in theory, should enable the achievement of research informed inclusive education to flourish. Most recently, these include the establishment of the Education Endowment Foundation (EEF): the ‘what works’ center for education in England, which provides freely available and accessible summaries of what works research-evidence for educators to use. In addition to this substantial investment, in 2014 the EEF launched a £1.4m fund for projects to improve the use of research in schools. This initiative was followed up in 2016 with the launch of the EEF’s Research Schools initiative; schools charged with leading RIEP development in their local area. There has also been a substantial rise in bottom-up/teacher-led initiatives, such as the emerging network of ‘Teachmeets’ and ‘ResearchED’ conferences (Wisby and Whitty, 2017) designed to help teachers connect more effectively with educational research. Furthermore, a prominent example of a teacher-led initiative was the 2017 launch of England’s Chartered College of Teaching: an organization led by and for teachers, and whose mission (in part at least) is to support the use of RIEP (Wisby and Whitty, 2017). RIEP is also increasingly promoted and supported at a government level. For example, England’s Department for Education ensured the inclusion of references to RIEP within its standards for school leaders and in the pilot Early Career Framework for newly qualified teachers. 
Finally, the periodic Research Excellence Framework (the ‘REF’), via which United Kingdom universities are funded, now requires them to account for the ‘impact’ their research has had on, “the economy, society, culture, public policy or services … beyond academia” (Higher Education Funding Council, England, 2011, p. 48). In other words, the government’s aim is to use REF to encourage universities to ensure that their research is used in the world beyond academia, for example, by encouraging academics to work directly with teachers and schools (Cain et al., 2019). That the evidence-practice gap still exists, however, would seem to imply that these initiatives are not fully ‘hitting the mark’ and that there are, in fact, a range of factors preventing RIEP which are still unaddressed. This is clearly problematic if we wish teachers to engage with or develop ‘research-informed’ practices that support inclusive education. In response, the purpose of this paper is to use a novel theoretical perspective to attempt to uncover additional insights into why educators do or do not employ research evidence, and to provide practice and policy-recommendations as to how this situation can be improved.

Research in the area of RIEP has often been criticized for being ‘under-theorized’ (e.g., Nutley et al., 2007; Cooper and Levin, 2010; Brown, 2014). This is problematic to the extent that it may lead to researchers failing to consider, either comprehensively or with sufficient complexity, the full range of factors influencing the research-practice gap. To provide a theoretical basis for our analysis, we adopt Baudrillard’s (1968) semiotic theory of consumption. This theoretic lens allows us to view the use of research-evidence by educators as being firmly situated within the overall culture of consumerism that encapsulates Western societies. As a social phenomenon, consumerism can be thought of as being ‘formally’ identified by Veblen (1899) in The Theory of the Leisure Class: here Veblen identified that, as well as a way of meeting needs, consumption also represents a means through which wealth can be displayed, in order to demonstrate social status. With Veblen, then, the notion of the consumer society – the society which consumes because it wants rather than needs to – was born. But while Veblen’s analysis was ground breaking, in that it identified consumption as something which stretched far beyond subsistence, what it did not do was identify the myriad ways in which the leisure class might engage with what they buy, or the ‘relationships’ that might exist between consumer and consumed. Such a theory can be located in Baudrillard’s (1968) The System of Objects. Here Baudrillard concerns himself with both consumer behavior and the ‘objects’ which are consumed: in other words, how objects are ‘experienced’ and what needs they serve in addition to those which are purely functional. Here Baudrillard (1968) utilizes semiotic analysis to contend that all consumer goods in fact possess three values. 
Specifically, these are: (i) their ‘benefit’ value, which corresponds to the utility that can be derived from a good; (ii) their ‘cost’ value, which represents what it takes to consume a specific good; and (iii) the value of the good as a ‘sign’: in other words, what messages an act of consumption is signifying both to the consumer in question and to others.

We argue that employing Baudrillard’s theoretical frame as a deductive lens for examining teachers’ use of research-evidence is warranted for three reasons. First, it makes intuitive sense that, as with any other consumer object, any educator’s use of research will be a function of some combination of the following three factors:

(1) The benefits associated with using academic-research: here, using Baudrillard’s framework, the key question facing educators is whether using research-evidence is likely to have positive benefits for their leadership, their teaching practice or their professional learning. A further question is whether any perceived benefits are likely to be higher or lower than those of other means of improving their leadership, practice or professional learning: for instance, the benefits associated with professional development courses, those gained from engaging with trusted colleagues, or those that can be accrued from using social media.

(2) The costs associated with using academic-research: research-use costs can be manifold and relate not only to money (e.g., in instances where research can only be accessed via subscription or payment), but also to the time involved in searching for, engaging with and acting on research-evidence. Costs can also be mental, since research-use can be a cognitively challenging process. As with benefits, Baudrillard’s framework views such costs as relative to the costs of engaging with other forms of information, which may be cheaper, easier to find, quicker to engage with or easier to understand. Costs are also perceived in terms of whether they are likely to outweigh the benefits that might accrue from evidence-use.

(3) The signification associated with using academic research: Baudrillard’s notion of signification, as applied to this study, corresponds to the extent to which research-use is perceived by educators as desirable. This type of desirability differs from any benefits associated with research-use. Rather, desirability refers to specific actions or behaviors that one wants to be associated with. With consumer objects such as coffee makers or clothes, desirability often comes from perceptions associated with a given brand. In other words, we typically want to purchase an object of a given brand because of the cachet it affords us (especially when we hold benefits and costs constant). For research-use, desirability concerns the extent to which one wants to be associated with the act of engaging with academic research. Such desirability could be a function of whether an educator’s colleagues expect them to behave in this way, but equally, it could be that engaging with research provides teachers with a positive sense of professional identity: in other words, the desirability in question is internally motivated.

As well as making intuitive sense, our second reason for adopting Baudrillard’s theoretical frame is that it provides a clear focus for investigating what might be causing the research-practice gap, as well as guiding the development of possible interventions for closing it. In other words, the framework enables us to ask whether the research-practice gap is caused by educators failing to perceive the benefits of engaging in RIEP; by educators believing that the costs involved with research-use are too high; or by RIEP-type activity not being sufficiently desirable for them to want to engage in it (or, more likely, by some combination of all of these factors).

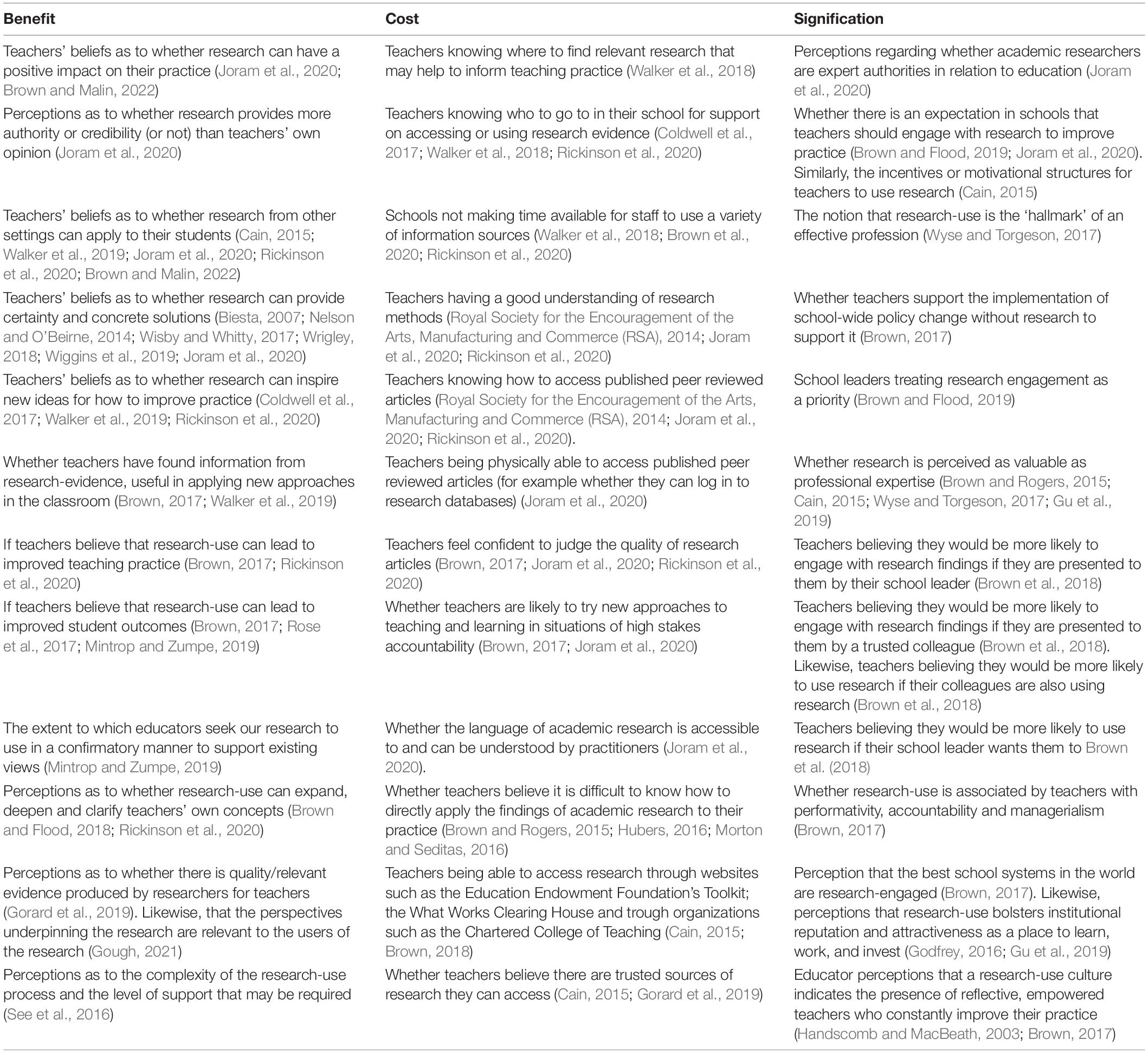

Third, Baudrillard’s frame also appears to fit the available evidence. We illustrate this using a thematic analysis of recent empirical studies that have examined educators’ use of academic research. Recent work in this area has involved a range of methods and analyses, from qualitative exploration to the use of surveys to examine behaviors on a larger scale, with each study reporting on key research-use barriers and enablers. As can be seen in Table 1, below, the factors identified from these studies all sit comfortably within one of the three headings of ‘benefit,’ ‘cost,’ or ‘signification.’ Furthermore, we are yet to identify a single research-use factor from the vast corpus of research examining research-use, knowledge mobilization, close to practice research, evidence-informed practice, as well as a range of related fields, that does not correspond to one of these three themes. At the same time, however, no studies appear to have quantitatively measured all of these factors simultaneously, nor used statistical modeling approaches to ascertain each factor’s relative importance. This means we have no firm understanding regarding which factors are more or less likely to impact either positively or negatively on educators’ research-use.

Table 1. ‘Benefits,’ ‘costs,’ and ‘signification’ associated with research-use, identified from current research literature.

Above, we identified that a major knowledge gap is a comprehensive understanding of the relationship between the educators’ reported use of research, and the benefits, costs and signifying factors they associate with research-evidence. As such, the research questions we now address in this paper, in order to increase the likelihood that research-informed educational practices for inclusion are developed, are as follows:

• RQ1: What potential benefit, cost and signification factors can be identified that might account for the current research-practice gap?

• RQ2: Which individual and combinations of benefits, cost and signification factors appear to be most closely associated with educators’ use of research evidence?

• RQ3: What implications emerge for policy and practice in terms of how to increase educators’ use of research-evidence, so leading to more effective inclusive practice?

A survey methodology was used to address these questions. To develop the survey and address RQ1, the research team first reviewed recent literature (broadly 2010 and later) that generally encapsulated the area of RIEP (e.g., research on research-use, knowledge mobilization, close to practice research, research on evidence-informed practice, and so on). The aim of this review was to identify as many of the factors associated with the barriers to and enablers of RIEP as possible. Where this literature was empirically based, we attempted, where feasible, to adopt the questions and scales used by these studies. When the literature was non-empirical, we identified key ideas and themes from these papers and used these to develop survey question items. All survey question items were then organized according to whether they represented the benefits, costs or any signification associated with RIEP. The research team (comprising two experienced professors, one post-doctoral researcher, who is also an experienced educator, and one experienced educator undertaking a PhD in this area) also brainstormed other possible benefit, cost and signification related reasons that might influence RIEP. Survey question items were then also developed to represent these ideas. In order to ascertain the relationship between the benefit, cost and signification (BCS) factors and the reported use of research, scales were also developed to explore if and/or how educators used research to improve their practice and professional learning. We also developed questions to examine other possible sources for practice development (such as courses, newsletters, publications from membership bodies, the use of social media, advice from colleagues etc.). Questions were also developed to examine the culture of respondents’ schools in terms of the factors associated with practice development and learning generally. 
These factors include, for instance, the presence of cultures of trust, as well as instances of innovation, risk taking and experimentation (Brown et al., 2016; Kools and Stoll, 2016). Finally, we developed questions to capture socio-demographic information, including respondents’ levels of education, their experience, their role and the context of the school in which they work.
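To illustrate how items organized under the three BCS headings might later be summarized for a single respondent, a minimal sketch follows. It is not the scoring procedure used in this study: the item identifiers, the 1 to 5 agreement scale and the use of a simple mean per heading are all assumptions made purely for illustration.

```python
from statistics import mean

# Hypothetical item identifiers grouped under the three BCS headings.
# The real survey items are listed in the Supplementary Material.
ITEMS_BY_FACTOR = {
    "benefit": ["b1", "b2"],
    "cost": ["c1", "c2"],
    "signification": ["s1", "s2"],
}


def bcs_scores(response):
    """Average one respondent's answers within each heading.

    Unanswered items (None or missing) are skipped; a heading with no
    answered items is scored as None.
    """
    scores = {}
    for factor, items in ITEMS_BY_FACTOR.items():
        answered = [response[i] for i in items if response.get(i) is not None]
        scores[factor] = mean(answered) if answered else None
    return scores


# Illustrative respondent on an assumed 1-5 agreement scale.
example = {"b1": 4, "b2": 5, "c1": 2, "c2": None, "s1": 3, "s2": 3}
print(bcs_scores(example))
```

Keeping the mapping from items to headings in one place means that an item can be reassigned, or a heading extended, without changing the scoring function.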

To reduce the likelihood of measurement error and establish initial support for the validity of the questionnaire, we then completed a comprehensive three-stage review process. The first stage involved two rounds of ex ante item review (known as item pretesting). In the first round, we made use of Graesser’s (2006) Question Understanding Aid web-based program, which takes individual questionnaire items as input and returns a list of potential problems, including unfamiliar technical terms, unclear relative terms, vague or ambiguous noun phrases, complex syntax, and working memory overload. As the program itself is strictly diagnostic, the research team systematically screened the output for each item and, as a team, determined any necessary revisions. In the second round, we used Willis and Lessler’s (1999) Questionnaire Appraisal System to individually screen each questionnaire item for any further issues, such as with instructions and explanations, clarity, assumptions made or underlying logic, respondent knowledge or memory, sensitivity or bias, and the adequacy of response categories. Here the research team compared individual findings and determined whether any additional changes were necessary.

For the second stage, cognitive interviews were held with one school leader and two teachers. During the interviews, respondents were asked to work their way through the questionnaire and describe what they thought each survey item was asking them to consider. Respondents were also asked to highlight any language or comprehension issues. Finally, for the third stage, expert interviews were held with three independent academics with substantive experience of research in the area of RIEP. Expert respondents were asked to consider whether the survey comprehensively covered the key issues associated with RIEP and to highlight possible gaps. They were also asked to consider face validity and to give their opinion on whether survey items were measuring what the research team intended them to measure, as well as to assess the overall suitability of the framework for addressing the problem in hand. All feedback from stages two and three was incorporated into the design of the survey. The final version of the survey (which we have entitled the ‘Research-Use BCS survey’) can be found in the Supplementary Material.

Our efforts to ensure a rigorous questionnaire development procedure are noteworthy given the mounting evidence that few measures related to research-use have been developed with attention to their psychometric or pragmatic qualities (e.g., Asgharzadeh et al., 2019; Lawlor et al., 2019). When a measure lacks a strong theoretical and empirical basis, it cannot necessarily be assumed that the inferences and actions that emerge from its use are adequate or appropriate (Messick, 1995). Too often, disproportionate emphasis is given to supplying evidence on validity at the back-end of instrument development (i.e., after pilot data has been collected) through methods such as factor analysis and reliability analysis (Gehlbach and Brinkworth, 2011). While such evidence is important, it is only one component of the full picture. Our focus on the front-end of instrument development (ex ante item review, cognitive interviews, and expert review) has thus helped ensure that interpretations following from responses to our questionnaire are grounded in a sound scientific basis.

The aim of our sampling strategy was to achieve a representative sample of teaching staff in England, both in terms of their own individual characteristics and in terms of the characteristics of the schools they work in. To identify teacher characteristics, we drew on the Department for Education’s school workforce briefing note and associated data tables. Here the latest data available at the time of the analysis (November 2018: see Department for Education, 2019a) shows that of the 499,972 full time equivalent (FTE) teachers in England, 24 percent were male while 76 percent were female. Male teachers overwhelmingly work in secondary schools (65 percent vs. 30 percent who work in primary schools, while 5 percent work in special or alternative provision). For female teachers the opposite is true: 58 percent of female teachers work in primary schools, 37 percent work in secondary schools (with 6 percent of female teachers working in special or alternative provision). This picture changes somewhat for teaching assistants (TAs), however: here 43 percent of male TAs and 74 percent of female TAs work in primary schools; 33 percent of male TAs and 14 percent of female TAs work in secondary schools; while 24 percent of male and 13 percent of female TAs work in special or alternative provision. Furthermore, the vast majority of teaching staff are classroom teachers (85 percent when just considering teachers, middle leaders and school leaders and 53 percent when considering the wider teaching workforce, including teaching assistants).

Whether teaching staff work part time or full time can impact on research-engagement, with RIEP tending to be a behavior more associated with full time teachers/teaching assistants (Brown, 2020). According to the Department for Education’s school workforce briefing note and associated data tables, the majority of teaching staff are full time, and this proportion increases with seniority: while only five percent of school leaders are part time, more than a quarter (26 percent) of classroom teachers are part time. For TAs, meanwhile, the vast majority (85 percent) work part time. There has been no analysis associating research-use with the age of teachers, although from various analyses which examine the diffusion of innovations and the likely adoption of new ideas by teachers (e.g., Rogers, 1995; Hargreaves and Fullan, 2012), it can perhaps be inferred that younger teachers may well be more enthusiastic about engaging with new ideas, such as those represented in research studies. Interestingly, Department for Education data indicates that the teaching workforce in England is relatively young, with some 57 percent of teachers aged under 40. Finally, Department for Education data (Department for Education, 2019a) indicates that 99 percent of all teachers are educated to degree level. No detail is provided, however, on post graduate qualifications such as Masters or PhDs, which might well be expected to positively impact on teachers’ engagement with research (Malin et al., 2019).

In terms of school characteristics, as well as ensuring that the sample was generally representative of England’s total population of schools (for instance, in terms of school type and geographic location), we also wanted to ensure the sample mirrored those school level characteristics thought to impact on teachers’ research engagement, such as school inspection outcomes. To identify key school level characteristics, we first drew on the Department for Education’s annual schools briefing note and associated data tables. For January 2019 (Department for Education, 2019b) this showed there were a total of 24,323 schools in England. The main attributes of these schools and their pupils are set out in Table 2, below:

The first column in Table 2 provides the distribution of schools by school type. There is some indication that school phase impacts on research-use, although this picture is not necessarily clear cut (e.g., Coldwell et al., 2017). The final three columns of this table look at pupil characteristics and provide an indicator of the nature and diversity of the school intake. We are unaware of any analysis linking measures of the disadvantaged or diverse nature of a school’s intake with teachers’ engagement with research evidence. In theory, any cohort that is relatively more complex might engender higher levels of research-use as teachers seek to find ways to improve their effectiveness. Alternatively, teachers may find themselves so mired in the day-to-day activity of teaching diverse or disadvantaged groups that they are unable to find additional time, energy or resource to seek out research evidence. Examining this data as part of our analysis will therefore provide additional insight into the extent to which school intake helps or hinders research-engagement. Our approach to sampling also took into account the percentage of schools that are currently academies or free schools (which comprise 32% of primary schools and 75% of secondary schools). This is relevant, since, as schools operating outside of Local Authority funding and control, academies and free schools have certain freedoms to innovate and are expected to use such freedoms to improve teaching and learning – such as through engaging with research-evidence (Brown and Greany, 2017; Coldwell et al., 2017; Brown, 2019).

We also wished to ensure our sample mirrored the national distribution of school inspection ratings (with school inspections undertaken by OFSTED, England’s school inspection agency). Such data is also relevant to our analysis, since there is some indication that schools tend to be more likely to engage with research evidence if they have been categorized as ‘good’ or ‘outstanding.’ This is because such a rating affords schools the freedom to experiment with potentially risky ways to improve further (alternatively, it could be that such schools are outstanding because they have been so successful in embedding a culture of inquiry and experimentation). This stands in contrast to ‘inadequate’ or ‘requires improvement’ schools, which are regarded as being more likely to stick to what they feel are ‘safe’ or ‘tried and tested’ means of achieving improvement, including narrowing the curriculum to focus on English and Maths and on ensuring pupils achieve well in progress tests in these two subject areas (Coldwell et al., 2017; Greany and Earley, 2018; Ehren, 2019).

As no database of teachers exists, it is not possible to sample at a teacher level. As such, we derived our sample at a school level, using the Department for Education’s website (https://get-information-schools.service.gov.uk/Downloads), which provides a downloadable database of all schools in England. This database was used (after removing records for schools that were closed, proposed to close or not yet open) to provide a randomly selected sample of ten percent of all schools in England (2,424 schools). As would be expected, the characteristics of this random sample mirrored those of the school population described above. Having identified our sample, we then located the email addresses of either the school leader or school gatekeeper and emailed them a link to the survey, asking them to distribute this link to all teaching staff (school leaders, teachers, and teaching assistants). Follow-up emails were sent 1 month after the first. Overall response to the survey was relatively low (147 teachers, or 6.1 percent). However, schools were facing unprecedented challenges due to the global COVID-19 pandemic during the period of our fieldwork, and we correspondingly did not feel that further follow-up was ethically justifiable. We also believed that the sample was sufficient to provide some initial insight and could be followed up with further surveying at a later point.
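The sampling step described above can be sketched in a few lines. This is a minimal illustration, not the authors' procedure: the `status` field, its labels, and the toy records are hypothetical stand-ins for the columns of the real download, and the fixed seed is there only so the sketch is repeatable. The last line simply checks the arithmetic behind the reported 6.1 percent (147 responses against 2,424 sampled schools).

```python
import random


def draw_school_sample(schools, fraction=0.10, seed=0):
    """Return a simple random sample of schools that are currently open.

    `schools` is a list of dicts with a hypothetical "status" key; the real
    download uses its own column names, which are not reproduced here.
    """
    excluded = {"closed", "proposed to close", "not yet open"}
    eligible = [s for s in schools if s["status"].lower() not in excluded]
    k = round(len(eligible) * fraction)
    rng = random.Random(seed)  # fixed seed only to make the sketch repeatable
    return rng.sample(eligible, k)


def response_rate(responses, invited):
    """Responses expressed as a percentage of invited units."""
    return 100.0 * responses / invited


# Toy sampling frame: 1,000 open schools plus 50 closed ones (made-up records).
toy = [{"urn": i, "status": "Open"} for i in range(1000)]
toy += [{"urn": 1000 + i, "status": "Closed"} for i in range(50)]

sample = draw_school_sample(toy)
rate = response_rate(147, 2424)  # figures quoted in the text
print(len(sample), round(rate, 1))
```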

At the same time, not only was the response rate low, but 30 percent of these responses included missing data. To explore the representativeness of the sample, therefore, it was decided to make the categories broader, so as to ensure individual categories were larger and so comparable (for example, the age variable was reduced to just two categories, under 45 and over 45, rather than the original five in the survey). Once these categories were collapsed, the survey data was compared with national data from the Department for Education (Department for Education, 2019a) (see Table 3). Furthermore, as well as broadening the categories, percentages from the survey data were calculated from the number of responses to each question rather than from the total number of returns (147), thus accounting for (by removing) the unknown data.
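As a rough illustration of the two adjustments just described, the sketch below collapses a detailed age variable into two groups and then computes percentages over answered questions only. The five band labels and the toy responses are hypothetical; the text does not list the survey's original bands.

```python
from collections import Counter

# Hypothetical labels for the five original age bands (not given in the text).
UNDER_45 = {"under 25", "25-34", "35-44"}
OVER_45 = {"45-54", "55 and over"}


def collapse_age(age_band):
    """Map a detailed band to one of two broad groups; None stays missing."""
    if age_band is None:
        return None
    if age_band in UNDER_45:
        return "under 45"
    if age_band in OVER_45:
        return "over 45"
    raise ValueError(f"unexpected age band: {age_band!r}")


def valid_percentages(values):
    """Percentages based on answered items only, ignoring missing (None)."""
    answered = [v for v in values if v is not None]
    counts = Counter(answered)
    return {k: 100.0 * n / len(answered) for k, n in counts.items()}


# Toy responses: five returns, one of which skipped the age question.
raw = ["25-34", "35-44", None, "55 and over", "under 25"]
shares = valid_percentages([collapse_age(a) for a in raw])
print(shares)
```

Dividing by the number of answers to each question, rather than by all 147 returns, keeps missing answers from deflating every category's share.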

In addition to the missing survey data, there were other apparent limitations of the sample when compared with the national data. For example, the responses showed a significant over-representation of senior and middle leaders (54.5 percent in the sample vs. 10 percent overall). Likewise, there were fewer staff employed in state schools than the national average, and fewer in Northern England and the Midlands.

Given the response rate and resultant sample size obtained in this study, it was not possible to conduct a multivariate analysis of research use. Instead, to address RQ2 (“Which individual and combinations of benefits, cost and signification factors appear to be most closely associated with educators’ use of research evidence?”), we examined descriptive statistics and correlations alongside univariate classification tree models (produced using SPSS 26). The first step in this process involved exploratory factor analyses for each category of predictor variables. Factors were extracted using principal axis factoring (Fabrigar et al., 1999) and, given the potential for correlation between factors in the same predictor category, an oblique rotation (direct quartimin) was used to clarify the factor structure (Costello and Osbourne, 2005): see the Supplementary Material file for more detail on this process. Following the exploratory factor analyses, descriptive statistics were calculated for all items and factor scores, and internal consistency of each factor was determined using Cronbach’s alpha. The strength and direction of the linear relationship between factors was calculated using Pearson product-moment correlations.
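For readers less familiar with the two summary statistics named above, the following sketch shows how Cronbach's alpha and a Pearson product-moment correlation are computed. It is a plain-Python illustration on made-up scores, not the SPSS routine used in the study, and the function names are our own.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items.

    `items` holds one list of scores per item, with respondents in the
    same order in every list.
    """
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # per-respondent sum
    sum_item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


def pearson_r(x, y):
    """Pearson product-moment correlation between two score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Made-up 5-point responses from six respondents to three items of one factor.
factor_items = [
    [4, 5, 3, 4, 2, 5],
    [4, 4, 3, 5, 2, 5],
    [5, 5, 2, 4, 3, 4],
]
alpha = cronbach_alpha(factor_items)
r = pearson_r(factor_items[0], factor_items[1])
print(round(alpha, 2), round(r, 2))
```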

In preparation for the classification tree analyses, responses to the dependent variable representing individual-level use of research were dichotomized at the median value into ‘use’ (N = 75) and ‘non-use’ (N = 72). These categories correspond, respectively, to educators who were self-assured about their use of research knowledge and those who were comparatively unsure. A chi-square automatic interaction detection (CHAID) algorithm was then used to construct classification trees for each category of predictor variables. One of the most common types of decision tree algorithms, CHAID is a non-parametric approach for recursively partitioning responses to the dependent variable into subgroups (nodes) of the independent variables that maximize homogeneity (Milanović and Stamenković, 2016). Beginning with a single unsorted group of data, the algorithm creates a hierarchically arranged set of nodes by applying “if-then” logic to determine the optimal number of partitions for each independent variable (see Kass, 1980). Central to the logic operations is the use of chi-square tests to determine split points for each independent variable, creating different branches of the classification tree. The overall sequence of independent variables follows in order of strongest to weakest significant association with the dependent variable. The algorithm stops when it reaches a point at which further splits are not statistically significant. The result, called a terminal node, provides a predicted value for the dependent variable given the values for the independent variables in each node of the respective branch.
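The core test behind each CHAID split can be sketched as follows. This is only the chi-square building block on a toy table, under two stated assumptions: scores strictly above the median are labeled ‘use’ (the text does not say how ties at the median were handled), and the p-value is unadjusted, whereas CHAID implementations typically also merge categories and apply a Bonferroni adjustment, both omitted here.

```python
from math import erfc, exp, sqrt
from statistics import median


def dichotomize_at_median(scores):
    """Label scores above the median 'use', the rest 'non-use' (assumed rule)."""
    cut = median(scores)
    return ["use" if s > cut else "non-use" for s in scores]


def chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for a count table.

    Rows are candidate predictor groups; columns are outcome classes.
    """
    row_tot = [sum(row) for row in table]
    col_tot = [sum(col) for col in zip(*table)]
    n = sum(row_tot)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_tot[i] * col_tot[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(row_tot) - 1) * (len(col_tot) - 1)
    return stat, df


def unadjusted_p(stat, df):
    """Upper-tail chi-square probability, closed forms for df = 1 or 2 only."""
    if df == 1:
        return erfc(sqrt(stat / 2))
    if df == 2:
        return exp(-stat / 2)
    raise ValueError("this sketch only handles df of 1 or 2")


# Toy split: two predictor groups by (non-use, use) counts, made-up numbers.
toy_table = [[20, 10], [10, 20]]
stat, df = chi_square(toy_table)
p = unadjusted_p(stat, df)
print(round(stat, 2), df, round(p, 4))
```

A split is retained only if its (adjusted) p-value falls below the chosen significance level; otherwise the node becomes terminal.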

We begin the analysis with our initial descriptive analyses and correlations. These can be found in Table 4, below. Beginning with the factors describing research-use (R1 – R3), respondents most strongly felt that research-use formed a part of their individual professional practice (M = 3.23, SD = 0.68). By contrast, respondents were less affirmative about the extent to which their schools and colleagues were using research to inform practices. In terms of the benefits of research-use factors (B1 and B2), respondents were largely in agreement that using research could improve teaching and learning by, for example, providing new ideas, guiding the development of new teaching practices, and promoting improved student outcomes. Similarly, respondents did not generally agree with statements which suggested research conferred no benefits (for instance, in terms of strength of agreement in relation to the question: “research evidence can’t provide me with concrete solutions”). Broadly, respondents also disagreed that potential costs associated with research were sufficient to discourage its use. The perceived cost most likely to influence use concerned respondents’ ability to connect research with tangible changes to practice (M = 3.04, SD = 0.74; e.g., that, “Research evidence needs to be ‘translated’ and made practitioner friendly if I am to use it effectively”). However, response variance was greatest for whether respondents had access to research knowledge (M = 3.73, SD = 1.07) and whether there was social support for research-use in their schools (M = 3.82, SD = 1.27). That is, responses suggest a gulf between educators who worked in environments that provided access and support for research-use and educators who were largely unsure how to access research knowledge and felt unsupported in changing this situation.
Turning finally to the signification factors, respondents were generally neutral about the extent to which research-use was becoming a norm (M = 3.45, SD = 0.80), an indicator of successful teachers and schools (M = 3.85, SD = 0.82), and an outcome of local organizational and social influence (M = 3.24, SD = 0.98).

Table 5 presents the Pearson product-moment correlation for each pair of factors. Although multiple correlations were statistically significant, only five could be considered strong (|r| > 0.50). The first of these suggested a positive relationship between respondents’ perceptions of their schools’ organizational climate of innovation (R1) and the extent to which they experienced joint efforts for school improvement (R3). Notably, however, neither of these factors was associated with individual-level research-use. Rather, whether respondents were engaging in research-use (R2) appeared closely related to their perceptions that using research can improve teaching and learning (B1). Shifting to the signification factors, the extent to which respondents believed research-use was becoming a norm (S1) was strongly associated with their schools’ social environment, in terms of both the existence of joint efforts for school improvement (R3) and whether they possessed the social relations that could support research-use (C1). Finally, respondents’ belief that research-use is an indicator of successful teachers and schools (S2) was linked to their belief that research-use improves teaching and learning (B1).

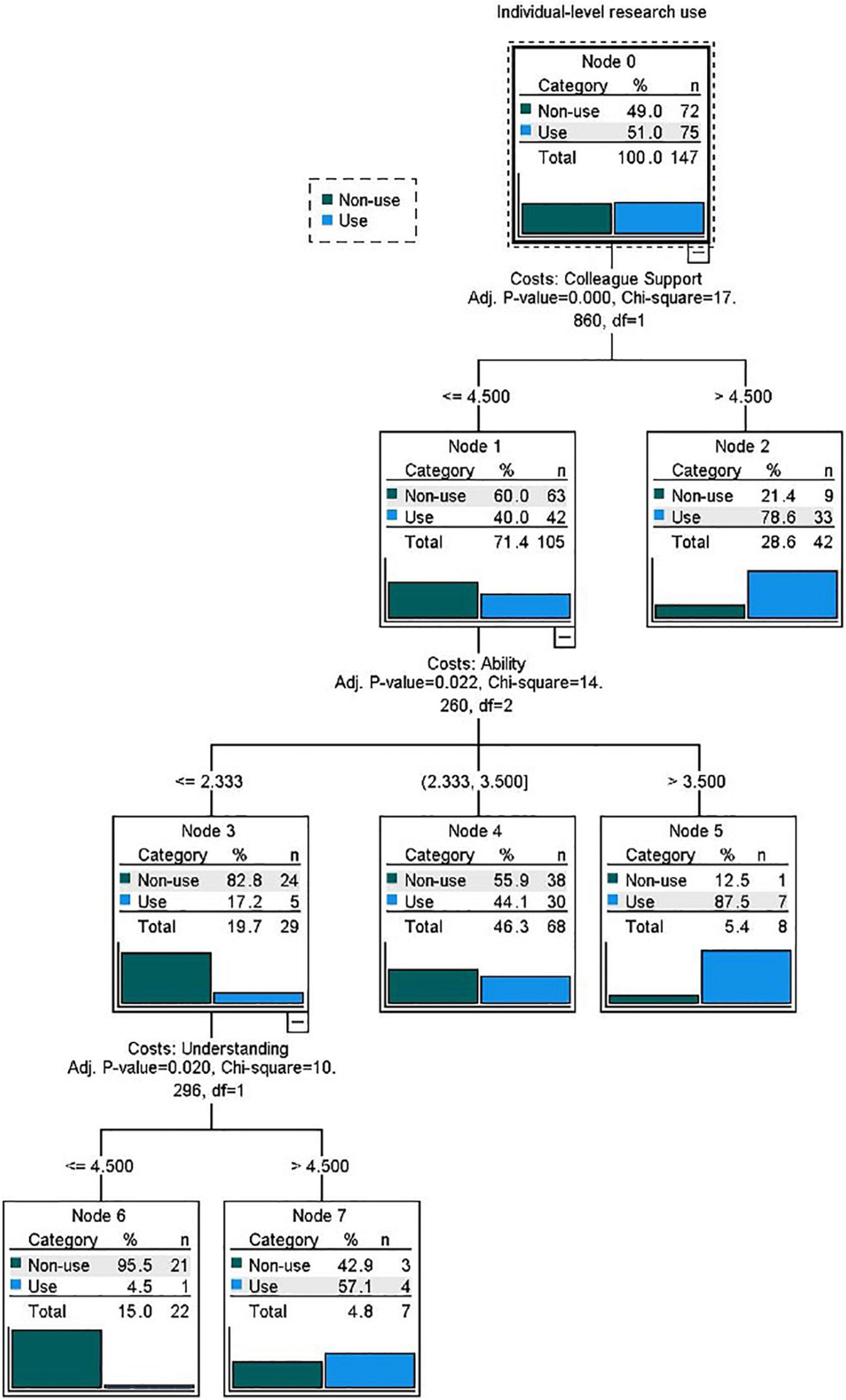

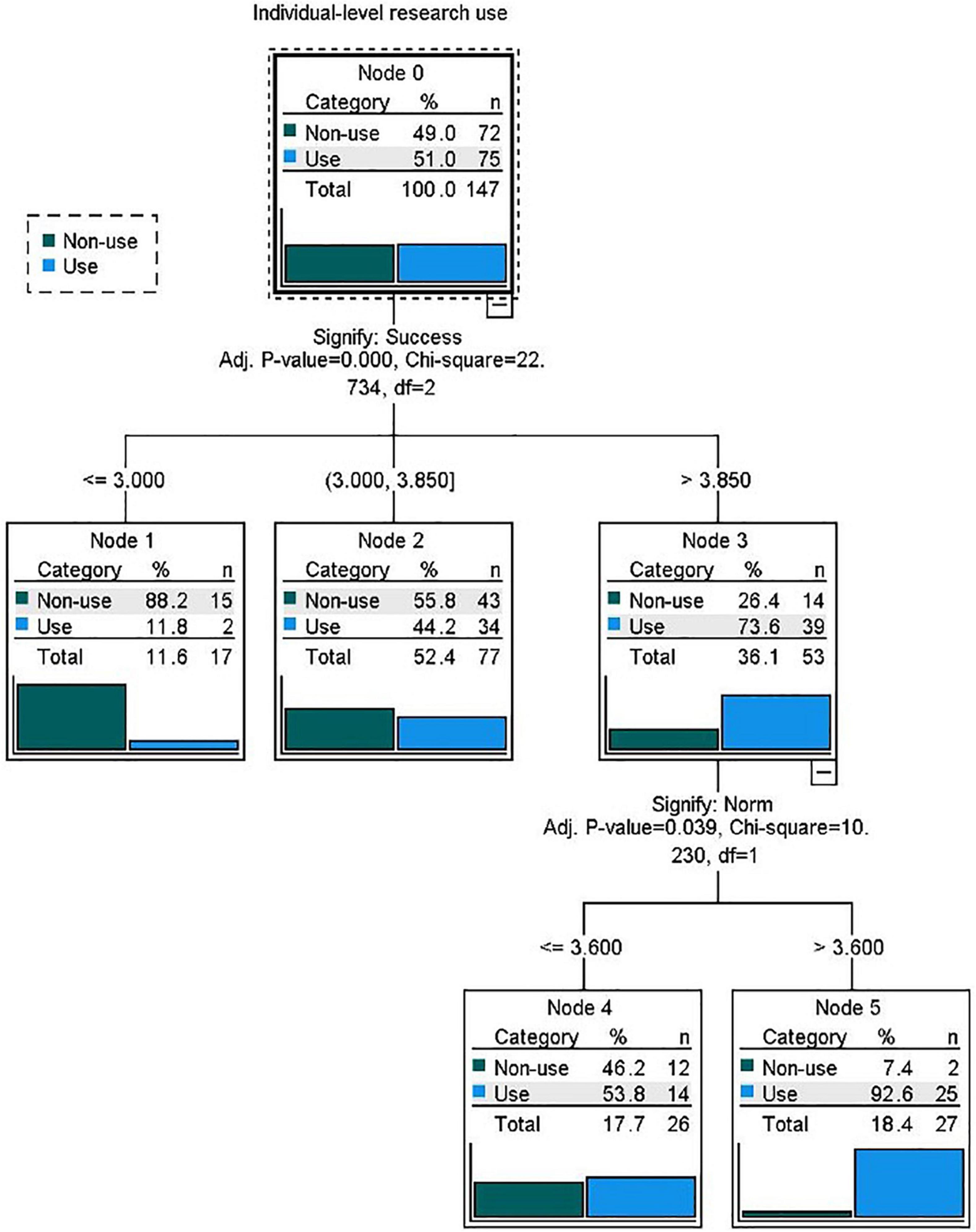

As indicated earlier, the CHAID classification tree algorithm was used for each category of factors: benefits (B1 and B2), costs (C1, C2, C3, and C4), and signification (S1, S2, and S3), using individual-level research-use (R2) as the dependent variable. As previously mentioned, only these factors were included in this exploratory analysis, as the sample size did not permit including demographic variables, such as educational qualifications or school type. Table 6 presents the prediction accuracy and risk of respondent misclassification for each category; the former represents the percentage of correctly identified respondents (i.e., use or non-use) in the terminal nodes of each classification tree model, while the latter represents the probability that a respondent chosen at random would be misclassified by the respective model. What stands out in this table is that each predictor category was approximately equally accurate, with cost factors most accurately predicting non-use and benefits factors most accurately predicting use. The model for each predictor category will now be examined in turn.

Modeling individual-level research-use (R2) based on perceived benefits yielded a one-layer classification tree that correctly classified non-use for 79.2% of respondents and use for 60.0%. Of the two predictors, only the factor corresponding to respondents’ belief that using research can improve teaching and learning (B1) was statistically significant, χ2(2) = 27.72, p < 0.001, rpb = 0.397. This factor was split into three nodes (see Figure 1, below), each with a specific range of values and a terminal classification. Nodes 1 and 2 (non-use) together contained respondents who did not strongly agree (M ≤ 4.43) on the benefits of research-use, while Node 3 contained respondents who strongly agreed (M > 4.43) that research can, for instance, guide the development of new teaching practices, provide new ideas and inspiration, and deepen and clarify understandings of teaching and pedagogy. In other words, this association suggests that for educators to engage in research-use, they need to see its benefits in practice.

Figure 1. Decision classification tree for the benefits of research-use factors. Light gray shading within terminal nodes denotes the final classification.

Modeling individual-level research-use (R2) based on perceived costs yielded a three-layer classification tree that correctly classified non-use for 81.9% and use for 58.7% of respondents. As illustrated in Figure 2, the most significant predictor of use was respondents’ belief that they possessed the social relations needed to support research-use (C1), χ2(1) = 17.86, p < 0.001, rpb = 0.205. At this first layer of the classification tree, the model sorted respondents into either Node 2 (use) if they strongly agreed (M > 4.50) that they had the necessary social relations, or Node 1 if they were less assured (M ≤ 4.50) about such relations. Branching from Node 1 was the second layer of the classification tree, splitting respondents into three groups based on their belief that they possessed the ability to connect research knowledge to practice (C4), χ2(2) = 14.26, p = 0.02, rpb = 0.220. Node 5 (use) corresponded to respondents who agreed (M > 3.50) they had the ability to combine their professional knowledge with research knowledge, whereas Node 4 (non-use) and Node 3 corresponded to respondents who were comparatively neutral or felt they did not possess this ability (M ≤ 3.50) due to constraints, such as time and research needing to be translated by others. Branching from Node 3 was the third and final layer of the classification tree, which split respondents into two groups based on their reported understanding of research methods (C3), χ2(1) = 10.30, p = 0.02, rpb = 0.270. Node 7 (use) contained respondents who strongly agreed (M > 4.50) they understood the strengths and weaknesses of different research methods and could judge the quality of research knowledge. Node 6 (non-use) contained respondents who were comparatively less confident about their understanding in this area (M ≤ 4.50).

Figure 2. Decision classification tree for the costs of research-use factors. Light gray shading within terminal nodes denotes the final classification.
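The branching described for the cost factors can be read as a short chain of if-then rules, sketched below. Two points are assumptions rather than reported results: the text does not state the C4 boundary between Node 3 and Node 4, so it is a required, hypothetical argument (`c4_low_cut`), and Node 3 is taken to hold the lowest C4 scores, with Node 4 in the middle.

```python
def classify_by_costs(c1, c4, c3, c4_low_cut):
    """Return (terminal node, label) for one respondent's cost factor scores.

    c1: social relations supporting research-use
    c4: ability to connect research knowledge to practice
    c3: understanding of research methods
    c4_low_cut: hypothetical boundary between Node 3 and Node 4 (not reported
        in the text); it must not exceed the 3.50 cut-off for Node 5.
    """
    if c4_low_cut > 3.50:
        raise ValueError("c4_low_cut must be at or below 3.50")
    if c1 > 4.50:           # layer 1: strong collegial support
        return 2, "use"
    if c4 > 3.50:           # layer 2: can connect research to practice
        return 5, "use"
    if c4 > c4_low_cut:     # layer 2: middle group (assumed ordering)
        return 4, "non-use"
    if c3 > 4.50:           # layer 3: confident about research methods
        return 7, "use"
    return 6, "non-use"


# Example with a made-up boundary of 2.50 for the Node 3 / Node 4 split.
print(classify_by_costs(c1=4.0, c4=2.0, c3=4.8, c4_low_cut=2.50))
```

Written this way, the tree makes visible that respondents without strong collegial support are classified as users only through individual capacities (C4 or C3).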

Modeling individual-level research-use (R2) based on its perceived signification yielded a two-layer classification tree that correctly classified non-use for 80.6% of respondents and use for 52.0%. As can be seen in Figure 3, below, the most significant predictor of use was respondents’ belief that research-use is an indicator of successful teachers and schools (S2), χ2(2) = 22.73, p < 0.001, rpb = 0.289. At this first layer of the classification tree, the model split respondents into either Nodes 1 and 2 (non-use) if they were neutral or disagreed (M ≤ 3.85) with the connection between research and successful education delivery, or Node 3 if they agreed (M > 3.85) that research-use is increasingly a hallmark of an effective profession and something that enhances a school’s reputation and attractiveness as a place to learn and work. Branching from Node 3 was the second layer of the classification tree, splitting respondents into two groups based on their belief that research-use is becoming a norm in the field of education (S1), χ2(1) = 17.86, p < 0.001, rpb = 0.220. Although both nodes were classified as use, Node 4 corresponded to respondents who were neutral or disagreed that teachers and school leaders are increasingly aware of and using research in their practice (M ≤ 3.60), and Node 5 corresponded to respondents who agreed with this perspective (M > 3.60).

Figure 3. Decision classification tree for the signification of research-use factors. Light gray shading within terminal nodes denotes the final classification.

A summary of all terminal node classifications is presented in Table 7. Close inspection of this table in combination with Table 6 reveals that each model was more successful at correctly classifying respondents in the “non-use” category of R2 than those in the “use” category. This is evident, for instance, in the number of respondents predicted as non-use but observed as use. This result suggests that while non-use of research knowledge may be relatively straightforward to predict based on educators’ beliefs about the benefits and costs of research-use as well as what it signifies, predicting use may be comparatively complex and dependent on the interaction of multiple factors.
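To make the two quantities reported for each model concrete, the sketch below computes overall prediction accuracy and risk from class-level counts. The counts are not copied from Table 6; they are back-calculated from the percentages quoted in the text and the group sizes (72 non-use, 75 use), so the printed totals are illustrative arithmetic and may differ from the tabled values through rounding. Risk is taken here to be the share of all respondents who are misclassified.

```python
def accuracy_and_risk(correct_non_use, n_non_use, correct_use, n_use):
    """Return (overall accuracy, risk) as proportions.

    Risk is computed as 1 - accuracy, i.e. the chance that a respondent
    picked at random is misclassified by the model.
    """
    total = n_non_use + n_use
    accuracy = (correct_non_use + correct_use) / total
    return accuracy, 1.0 - accuracy


N_NON_USE, N_USE = 72, 75  # group sizes reported in the text

# Back-calculated counts of correctly classified respondents (an assumption):
# for example, 57/72 is about 79.2% and 45/75 is 60.0% for the benefits model.
models = {
    "benefits": (57, 45),
    "costs": (59, 44),
    "signification": (58, 39),
}

for name, (ok_non_use, ok_use) in models.items():
    acc, risk = accuracy_and_risk(ok_non_use, N_NON_USE, ok_use, N_USE)
    print(f"{name}: accuracy={acc:.3f}, risk={risk:.3f}")
```

In every model the non-use class contributes more correct classifications than the use class, which is the asymmetry discussed above.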

This study sought to understand educators’ use of research relative to the benefits, costs, and signifying factors (Baudrillard, 1968) they associate with it. This understanding sits within an overall umbrella of seeking to improve the ability of educators to engage in effective inclusive practice. In undertaking our study, we first worked to develop and refine a survey instrument (the ‘Research-Use BCS survey’) that could uniquely and simultaneously measure these concepts. We undertook this work after thoroughly reviewing research-evidence use literature relative to Baudrillard’s semiotic theory of consumption. We then administered our questionnaire using a sample of English educators and analyzed survey data mainly through the production of descriptive analysis and classification trees. This section focuses primarily on the meaning and implications of our results. Perhaps most importantly, these results collectively hint at what interventions and strategies might work (at least, in the English context) to enhance evidence use.

To begin with, the results from our survey appeared to provide intuitively correct findings relative to the benefits of research-use. Although we cannot determine the direction of causation, seeing benefits in research and engaging in research-use are closely linked; this finding is most evident when looking at the extremes present in the B1 factor score. If respondents see the benefits of research, they are likely to use it (with the converse also true). At the same time, however, there was another group in the middle, comprising individuals who were more undecided. While these individuals were more likely to be classified as non-users, this distinction was not necessarily clear-cut. This suggests other factors are also likely to explain their decision-making around research-use, something that we would be able to better identify with a larger sample size (which would enable us to produce further significant classification tree nodes).

We can also pick out several patterns in the classification of respondents based on their perceptions about the costs of research-use. First, if educators have the needed support of their colleagues, they are more likely to use research, a finding that coheres with the literature (e.g., Coldwell et al., 2017; Walker et al., 2018; Rickinson et al., 2020; also see Table 1). However, when such collegial support is not available, respondents engaging in research-use tended to be those who either (a) believed they personally possessed the expertise required to connect research and practice; or (b) were confident in their understanding of research methods and quality. Both of these are human rather than social capital factors. Turning finally to the signification factors, the findings suggest that seeing research-use as an activity that successful teachers and schools engage in is associated with individual-level use. Here as well, however, the association was not unambiguous. Even when some respondents agreed with this linkage between research-use and professional success, they were less likely to engage in research-use themselves when it was not perceived as a norm among school leaders and their colleagues.

Looking at the positive or negative ends of each category of factors (benefits, costs, and signification), reasonable explanations can be developed to explain respondents’ use or non-use of research. However, for the individuals who fall closer to the middle of each spectrum (e.g., Node 2 in Figure 1; Node 4 in Figure 2; and Node 2 in Figure 3), there are clearly complex decision-making processes at play, including either combinations of these factors or other predictors not included in these models (e.g., demographic variables). This point is also evident when inspecting the accuracy of the models, which highlights that ‘non-use’ is relatively straightforward to predict, while ‘use’ is a more complex phenomenon. Nonetheless, our results are suggestive of potential interventions for enhancing research-evidence use, which, in the context of this paper, may well lead to more effective inclusive practice. For example, our results suggest that increased support of school leadership for research-evidence use (see Brown and Malin, 2017) would move a substantial number of evidence non-users to users. Likewise, were research-use to become a norm in more work settings (which again implicates the role of school leaders, but might also be promoted via external entities or policies; MacGregor et al., 2022; see Brown and Malin, 2022), one could imagine enhanced research-use as a consequence. For example, improvements in the mediation space (e.g., better, more well-tailored externally-provided knowledge and a more accessible knowledge provider network) might serve to reduce certain typical ‘costs,’ making it easier to obtain relevant research-evidence when needed.
As well, we imagine that interventions geared toward elevating the profile and stature of research (signification) might, in some cases, attend to broadening or altering educators’ understanding of what research is and how it can be helpful given one’s particular context and interests (e.g., showing how it can illuminate one’s thinking, can be carried out via participatory approaches, can be focused on enhancing equity and/or on producing counternarratives, and so on). Again, it might be possible for some professionals to make such a case and, in turn, improve the desirability of research-use for others. Relatedly, these results have prompted us to envision a set of individuals who are apparently very close to becoming research users (e.g., analogous to ‘undecided’ or ‘swing’ voters), if only their environment were to slightly shift in favorable ways. Such individuals, for example, may already perceive benefits of research-evidence use, and merely need a bit more support around such work (e.g., more time together, better access, opportunities to discuss problems of practice and research in relation to these interests) to fully embrace it.

Although this analysis, for the most part, tends to cohere with what one might have been able to glean from the literature to date, we believe our research, and its employment of Baudrillard’s theoretical frame, does provide further clarity and focus with regards to potential points for intervention. For instance, it establishes (at least for this sample) the relative importance of benefits, costs, and signification for research-use and non-use. At the same time, however, the study does have certain limitations and delimitations. These are: (1) its focus on the English context; (2) the unrepresentative nature of its sample; and (3) the relatively small size of its sample. The first of these impacts on our ability to generalize widely, while the last two foreclose certain analytic possibilities, including the use of regression analyses and Structural Equation Modeling (the ability to create a model of logical causal relationships between variables). As such we recommend further research to collect the views of a greater number of teachers in England, as well as the use of the survey in additional contexts. Pursuing both avenues of investigation would help us understand the wider validity of the questionnaire, as well as provide a more representative set of responses (thus enabling generalizability). Furthermore, combined with different forms of statistical analysis, these approaches should mean that this tool will, in future, be able to provide a useful way of diagnosing areas of strength/promise regarding research-use, as well as potential areas of focus with an eye toward increasing such use in the development of effective educational practices in a given context (whether for inclusion or other areas of interest). As such, we will continue to search out approaches to using the survey to bring about more research-use (in integration with other key evidentiary forms) as educators make key educational decisions (Malin et al., 2020).
We do so under the assumption that this is a sustainable and effective way to enhance teaching and learning.

The data analyzed for this study can be found at https://osf.io/p45nx/.

The studies involving human participants were reviewed and approved by Durham University School of Education Ethics Committee. The patients/participants provided their written informed consent to participate in this study.

CB: conceptualization, formal analysis, methodology, project administration, writing – original draft preparation, writing – review and editing. SM: data curation, formal analysis, investigation, methodology, and software. JF: project administration, writing – original draft preparation, writing – review and editing. JM: writing – review and editing. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2022.890832/full#supplementary-material

Asgharzadeh, A., Shabaninejad, H., Aryankhesal, A., and Majdzadeh, R. (2019). Instruments for assessing organisational capacity for use of evidence in health sector policy making: a systematic scoping review. Evid. Policy 17, 29–57. doi: 10.1332/174426419X15704986117256

Biesta, G. (2007). Why ‘what works’ won’t work: evidence-based practice and the democratic deficit in educational research. Educ. Theory 57, 1–22. doi: 10.1111/j.1741-5446.2006.00241.x

Biesta, G., Ourania, F., Wainwright, E., and Aldridge, D. (2019). Why educational research should not just solve problems, but should cause them as well. Br. Educ. Res. J. 45, 1–4. doi: 10.1002/berj.3509

Brown, C. (2014). Evidence Informed Policy And Practice In Education: A Sociological Grounding. London: Bloomsbury.

Brown, C. (2017). Further exploring the rationality of evidence-informed practice: a semiotic analysis of the perspectives of a school federation. Int. J. Educ. Res. 82, 28–39. doi: 10.1016/j.ijer.2017.01.001

Brown, C. (2018). Improving Teaching and Learning in Schools Through the Use of Academic Research: Exploring the Impact of Research Learning Communities, 135 Edn. Research Intelligence, 17–18.

Brown, C. (2019). School/University Partnerships: An English perspective, Die Deutsche Schule, Vol. 111. Münster: Waxmann, 22–34.

Brown, C. (2020). The Networked School Leader: How To Improve Teaching And Student Outcomes Using Learning Networks. London: Emerald.

Brown, C., and Flood, J. (2018). Lost in translation? Can the use of theories of action be effective in helping teachers develop and scale up research-informed practices? Teach. Teach. Educ. 72, 144–154. doi: 10.1016/j.tate.2018.03.007

Brown, C., and Flood, J. (2019). Formalise, Prioritise and Mobilise: How School Leaders Secure The Benefits of Professional Learning Networks. London: Emerald.

Brown, C., and Greany, T. (2017). The evidence-informed school system in England: where should school leaders be focusing their efforts? Leadersh. Policy Sch. 17, 1–23.

Brown, C., MacGregor, S., and Flood, J. (2020). Can models of distributed leadership be used to mobilise networked generated innovation in schools? A case study from England. Teach. Teach. Educ. 94:103101. doi: 10.1016/j.tate.2020.103101

Brown, C., and Malin, J. (eds) (2022). The Handbook of Evidence-Informed Practice in Education: Learning from International Contexts. London: Emerald.

Brown, C., and Malin, J. R. (2017). Five Vital Roles For School Leaders In The Pursuit Of Evidence Of Evidence-Informed Practice. Teachers College Record. Available online at: http://www.tcrecord.org/Content.asp?ContentId=21869 (accessed June 15, 2020).

Brown, C., and Rogers, S. (2015). Knowledge creation as an approach to facilitating evidence-informed practice: examining ways to measure the success of using this method with early years practitioners in Camden (London). J. Educ. Change 16, 79–99. doi: 10.1007/s10833-014-9238-9

Brown, C., Daly, A., and Liou, Y.-H. (2016). Improving trust, improving schools: findings from a social network analysis of 43 primary schools in England. J. Prof. Cap. Community 1, 69–91. doi: 10.1108/jpcc-09-2015-0004

Brown, C., White, R., and Kelly, A. (2021). Teachers as educational change agents: what do we currently know? Findings from a systematic review. Emerald Open Res. 3:26. doi: 10.35241/emeraldopenres.14385.1

Brown, C., Zhang, D., Xu, N., and Corbett, S. (2018). Exploring the impact of social relationships on teachers’ use of research: a regression analysis of 389 teachers in England. Int. J. Educ. Res. 89, 36–46. doi: 10.1016/j.ijer.2018.04.003

Cain, T. (2015). Teachers’ engagement with published research: addressing the knowledge problem. Curric. J. 26, 488–509. doi: 10.1080/09585176.2015.1020820

Cain, T., Brindley, S., Brown, C., Jones, G., and Riga, F. (2019). Bounded decision-making, teachers’ reflection, and organisational learning: how research can inform teachers and teaching. Br. Educ. Res. J. 45, 1072–1087. doi: 10.1002/berj.3551

Coldwell, M., Greany, T., Higgins, S., Brown, C., Maxwell, B., Stiell, B., et al. (2017). Evidence-informed Teaching: An Evaluation Of Progress In England. London: Department for Education.

Cooper, A., and Levin, B. (2010). Some Canadian contributions to understanding knowledge mobilization. Evid. Policy 6, 351–369. doi: 10.1332/174426410x524839

Cordingley, P. (2013). The contribution of research to teachers’ professional learning and development. Oxford Rev. Educ. 41, 234–252. doi: 10.1080/03054985.2015.1020105

Costello, A. B., and Osbourne, J. W. (2005). Best practices in exploratory factor analysis: four recommendations for getting the most from your analysis. Pract. Assess. 10, 1–9. doi: 10.1080/00224499.2015.1137538

Crain-Dorough, M., and Elder, A. (2021). Absorptive capacity as a means of understanding and addressing the disconnects between research and practice. Rev. Res. Educ. 45, 67–100. doi: 10.3102/0091732x21990614

Department for Education (2019a). School Workforce In England: November 2018. Available online at: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/811622/SWFC_MainText.pdf (accessed June 15, 2020).

Department for Education (2019b). Schools, Pupils And Their Characteristics: January 2019. Available online at: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/812539/Schools_Pupils_and_their_Characteristics_2019_Main_Text.pdf (accessed June 15, 2020).

Ehren, M. (2019). “Accountability structures that support school self-evaluation, enquiry and learning,” in An Eco-System for Research Engaged Schools: Reforming Education Through Research, eds D. Godfrey and C. Brown (London: Sage), 41–55.

Fabrigar, L. R., MacCallum, R. C., Wegener, D. T., and Strahan, E. J. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychol. Methods 4, 272–299. doi: 10.1037/1082-989x.4.3.272

Galdin-O’Shea, H. (2015). “Leading the use of research & evidence in schools,” in Leading ‘Disciplined Enquiries’ in Schools, ed. C. Brown (London: IOE Press), 91–106.

Gehlbach, H., and Brinkworth, M. E. (2011). Measure twice, cut down error: a process for enhancing the validity of survey scales. Rev. Gen. Psychol. 15, 380–387. doi: 10.1037/a0025704

Godfrey, D. (2016). Leadership of schools as research-led organisations in the English educational environment: cultivating a research-engaged school culture. Educ. Manag. Admin. Leadersh. 44, 301–321. doi: 10.1177/1741143213508294

Goldacre, B. (2013). Building Evidence Into Education. Available online at: https://www.gov.uk/government/news/building-evidence-into-education (accessed January 27, 2020).

Gorard, S., Griffin, N., See, B., and Siddiqui, N. (2019). How Can We Get Educators To Use Research Evidence?. Durham: Durham University Evidence Centre for Education.

Gough, D. (2021). Appraising evidence claims. Rev. Res. Educ. 45, 1–26. doi: 10.3102/0091732x20985072

Graesser, A. (2006). Question understanding aid (QUAID): a web facility that tests question comprehensibility. Public Opin. Q. 70, 3–22. doi: 10.1093/poq/nfj012

Graves, S., and Moore, A. (2017). How do you know what works, works for you? An investigation into the attitudes of school leaders to using research evidence to inform teaching and learning in schools. Sch. Leadersh. Manag. 38, 259–277. doi: 10.1080/13632434.2017.1366438

Greany, T., and Earley, P. (2018). The paradox of policy and the quest for successful leadership. Prof. Dev. Today 19, 6–12.

Gu, Q., Hodgen, J., Adkins, M., and Armstrong, P. (2019). Incentivising Schools To Take Up Evidence-Based Practice To Improve Teaching And Learning; Evidence From The Evaluation Of The Suffolk Challenge Fund. Final Report, July, 2019. London: Education Endowment Foundation.

Hammersley, M. (1997). Educational research and teaching: a response to David Hargreaves’ TTA lecture. Br. Educ. Res. J. 23, 141–161. doi: 10.1080/0141192970230203

Handscomb, G., and MacBeath, J. (2003). The Research Engaged School. Forum for Learning and Research Enquiry (FLARE). Essex: Essex County Council.

Hargreaves, A., and Fullan, M. (2012). Professional Capital: Transforming Teaching in Every School. New York, NY: Teachers College Press.

Hargreaves, D. (1996). The Teacher Training Agency Annual Lecture 1996: Teaching As A Research Based Profession: Possibilities And Prospects. Available online at: http://eppi.ioe.ac.uk/cms/Portals/0/PDF%20reviews%20and%20summaries/TTA%20Hargreaves%20lecture.pdf (accessed May 14, 2020).

Higher Education Funding Council, England (2011). Assessment Framework and Guidance on Submissions. Bristol: HEFCE.

Honig, M. (2006). Street-level bureaucracy revisited: frontline district central-office administrators as boundary spanners in education policy implementation. Educ. Eval. Policy Anal. 28, 357–383. doi: 10.3102/01623737028004357

Hubers, M. D. (2016). Capacity Building By Data Team Members To Sustain Schools’ Data Use. Enschede, NL: Gildeprint.

Joram, E., Gabriele, A., and Walton, K. (2020). What influences teachers’ ‘buy-in’ of research? Teachers’ beliefs about the applicability of educational research to their practice. Teach. Teach. Educ. 88:102980. doi: 10.1371/journal.pone.0131121

Kass, G. V. (1980). An exploratory technique for investigating large quantities of categorical data. J. R. Stat. Soc. C 29, 119–127. doi: 10.2307/2986296

Kools, M., and Stoll, L. (2016). What Makes A School A Learning Organisation: A Guide For Policy-Makers, School Leaders And Teachers. Available online at: https://www.oecd.org/education/school/school-learning-organisation.pdf (accessed July 12, 2020).

Lawlor, J. A., Mills, K. J., Neal, Z., Neal, J. W., Wilson, C., and McAlindon, K. (2019). Approaches to measuring use of research evidence in K-12 settings: a systematic review. Educ. Res. Rev. 27, 218–228. doi: 10.1016/j.edurev.2019.04.002

Lee, M., and Louis, K. S. (2019). Mapping a strong school culture and linking it to sustainable school improvement. Teach. Teach. Educ. 81, 84–96. doi: 10.1016/j.tate.2019.02.001

MacGregor, S., Malin, J. R., and Farley-Ripple, L. (2022). An application of the social-ecological systems framework to promoting evidence-informed policy and practice. Peabody J. Educ. 97, 112–125. doi: 10.1080/0161956x.2022.2026725

Malin, J. R., Brown, C., and Saultz, A. (2019). What we want, why we want it: K-12 educators’ evidence use to support their grant proposals. Int. J. Educ. Policy Leadersh. 15, 1–19. doi: 10.1017/9781108611794.001

Malin, J. R., Brown, C., Ion, G., Van Ackeren, I., Bremm, N., Luzmore, R., et al. (2020). World-wide barriers and enablers to achieving evidence-informed practice in education: what can be learnt from Spain, England, the United States, and Germany? Hum. Soc. Sci. Commun. 7, 1–14. doi: 10.1108/978-1-80043-141-620221003

Messick, S. (1995). Validity of psychological assessment: validation of inferences from persons’ responses and performances as scientific inquiry into score meaning. Am. Psychol. 50, 741–749. doi: 10.1037/0003-066X.50.9.741

Milanović, M., and Stamenković, M. (2016). CHAID decision tree: methodological frame and application. Econ. Themes 54, 563–586. doi: 10.1515/ethemes-2016-0029

Mincu, M. (2014). Inquiry Paper 6: Teacher Quality And School Improvement: What Is The Role Of Research?. London: British Educational Research Association.

Mintrop, R., and Zumpe, E. (2019). Solving real life problems of practice and education leaders’ school improvement mindsets. Am. J. Educ. 3, 295–344. doi: 10.1086/702733

Mintz, J., and Wyse, D. (2015). Inclusive pedagogy and knowledge in special education: addressing the tension. Int. J. Incl. Educ. 19, 1161–1171. doi: 10.1080/13603116.2015.1044203

Mintz, J., Hick, P., Solomon, Y., Matziari, A., Ó’Murchú, F., Hall, K., et al. (2020). The reality of reality shock for inclusion: how does teacher attitude, perceived knowledge and self-efficacy in relation to effective inclusion in the classroom change from the pre-service to novice teacher year? Teach. Teach. Educ. 91, 1–11.

Morton, S., and Seditas, K. (2016). Evidence synthesis for knowledge exchange: balancing responsiveness and quality in providing evidence for policy and practice. Evid. Policy 14, 155–167. doi: 10.1332/174426416x14779388510327

Nelson, J., and O’Beirne, C. (2014). Using Evidence In The Classroom: What Works And Why?. Slough: National Foundation for Educational Research.

Nutley, S. M., Walter, I., and Davies, H. T. O. (2007). Using Evidence: How Research Can Inform Public Services. Bristol: The Policy Press.

Oakley, A. (2000). Experiments In Knowing: Gender And Method In The Social Sciences. Cambridge: Polity Press.

Rickinson, M., Walsh, L., Cirkony, C., Salisbury, M., and Gleeson, J. (2020). Monash Q Project “Using Evidence Better” Quality Use Of Research Evidence Framework. Melbourne: Monash University & Paul Ramsay Foundation.

Rose, J., Thomas, S., Zhang, L., Edwards, A., Augero, A., and Rooney, P. (2017). Research Learning Communities Evaluation Report And Executive Summary (December 2017). Available online at: https://educationendowmentfoundation.org.uk/public/files/Projects/Evaluation_Reports/Research_Learning_Communities.pdf (accessed May 15, 2020).

See, B., Gorard, S., and Siddiqui, N. (2016). Teachers’ use of research evidence in practice: a pilot study of feedback to enhance learning. Educ. Res. 58, 56–72. doi: 10.1080/00131881.2015.1117798

Royal Society for the Encouragement of the Arts, Manufacturing and Commerce (RSA) (2014). The Role Of Research In Teacher Education: Reviewing the Evidence. Available online at: www.bera.ac.uk/wp-content/uploads/2014/02/BERA-RSA-Interim-Report.pdf (accessed May 8, 2020).

Van Mieghem, A., Verschueren, K., Petry, K., and Struyf, E. (2020). An analysis of research on inclusive education: a systematic search and meta review. Int. J. Incl. Educ. 24, 675–689. doi: 10.1080/13603116.2018.1482012

Von Hippel, A. (2014). Program planning caught between heterogeneous expectations – An approach to the differentiation of contradictory constellations and professional antinomies. Edukacja Dorosłych 1, 169–184.

Walker, J., Nelson, J., Bradshaw, S., and Brown, C. (2018). Researching Teachers’ Engagement with Research and EEF Resources. London: Education Endowment Foundation.

Walker, J., Nelson, J., Bradshaw, S., and Brown, C. (2019). Teachers’ Engagement with Research: What Do We Know? A Research Briefing. London: Education Endowment Foundation.

Walker, M. (2017). Insights into the Role of Research and Development in Teaching Schools. Slough: NfER.

Wiggins, M., Jerrim, J., Tripney, J., Khatwa, M., and Gough, D. (2019). The Rise Project; Evidence Informed School Improvement, Final Report, May 2019. London: EEF.

Willis, G., and Lessler, J. T. (1999). Question Appraisal System: QAS-99. Rockville, MD: Research Triangle Institute.

Wisby, E., and Whitty, G. (2017). “Is evidence-informed practice any more feasible than evidence-informed policy,” Paper presented at the British Educational Research Association Annual Conference, Sussex.

Wrigley, T. (2018). The power of ‘evidence’: reliable science or a set of blunt tools? Br. Educ. Res. J. 44, 359–376. doi: 10.1002/berj.3338

Keywords: research-use, research-informed practice, teacher research use, classification tree analysis, Jean Baudrillard, benefits of research, costs of research, signification of research

Citation: Brown C, MacGregor S, Flood J and Malin J (2022) Facilitating Research-Informed Educational Practice for Inclusion. Survey Findings From 147 Teachers and School Leaders in England. Front. Educ. 7:890832. doi: 10.3389/feduc.2022.890832

Received: 06 March 2022; Accepted: 25 March 2022; Published: 19 April 2022.

Edited by:

Joseph Mintz, University College London, United Kingdom

Reviewed by:

Kevin Cahill, University College Cork, Ireland

Copyright © 2022 Brown, MacGregor, Flood and Malin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chris Brown, chris.brown@durham.ac.uk

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
