- Department of Psychology, Denison University, Granville, OH, United States

College students with disabilities may be entitled to academic accommodations such as additional time on exams, testing in a separate setting, or assistance with note-taking. To receive accommodations, students must request services from their college and show that they experience substantial limitations in academic functioning. Without norm-referenced data, it is difficult for college disability support professionals to determine if students’ self-reported academic problems reflect substantial limitations characteristic of a disability, or academic challenges experienced by most other students. The Academic Impairment Measure (AIM) is a brief, multidimensional, norm-referenced rating scale that can help professionals identify college students with significant impairment who need academic support. Exploratory and confirmatory factor analyses indicate that the AIM assesses seven distinct and interpretable domains of academic functioning relevant to postsecondary students. Initial studies also provide evidence of internal and temporal consistency; composite reliability; content, convergent, and discriminant validity; and the ability to differentiate students with and without disabilities. Finally, the AIM includes a response validity scale to detect non-credible ratings. Normative data from a large, diverse standardization sample allow professionals to use AIM scores to screen students for significant impairment, tailor accommodations to students’ specific limitations, and monitor the effectiveness of accommodations over time.

Introduction

College students with disabilities may be entitled to academic accommodations under the auspices of the Americans with Disabilities Act (ADA; US Department of Justice, 2016; Keenan et al., 2019). Academic accommodations such as additional time on exams; testing in a separate, distraction-reduced setting; or assistance with reading, math, or note-taking are designed to give students with disabilities access to educational opportunities equal to that of their classmates without disabilities (Lovett, 2014; Lovett and Lewandowski, 2015). To receive accommodations, students must contact their school’s disability support office and show that they have a condition that “substantially limits their ability to perform a major life activity compared to most people in the general population” (US Department of Justice, 2016, p. 53224). College disability professionals will typically interview students and collect data regarding their current academic functioning (Banerjee et al., 2020). In practice, the type of evidence that colleges require from students to support their accommodation requests and the methods disability professionals use to render accommodation decisions vary across institutions (Miller et al., 2019).

The Association on Higher Education and Disability (AHEAD, 2012) provides guidance regarding disability determination and accommodation granting in postsecondary settings. Previously, the Association on Higher Education and Disability (1994) established best practices for evaluating students’ accommodation requests. These practices involved engaging the student in an interactive process to gather information about current limitations, interviewing other informants (e.g., parents, teachers) about the student’s developmental history and accommodation use, and reviewing the student’s educational, medical, or psychological records. These best practices also described essential components of documentation, including a clear diagnosis made by a qualified professional, a written description of the student’s current limitations, and recommendations tailored to mitigate the student’s academic problems.

Association on Higher Education and Disability (2012) replaced these best practices with new guidance designed to facilitate the provision of services. The new guidance identifies three sources of “documentation” that colleges might use when rendering accommodation decisions. Primary documentation consists of the student’s narrative regarding his or her disability history, current limitations, and perceived effectiveness of past accommodations. According to the guidance, the student’s narrative “may be sufficient for establishing disability and a need for accommodation” (p. 2). Secondary documentation includes the disability professional’s impressions of the student. The disability professional is told to use a “commonsense standard” (Association on Higher Education and Disability, 2012, p. 4) when determining the need for accommodations and to “trust your instincts” (Meyer et al., 2020, p. 3) when assessing the validity of the student’s reports. Tertiary documentation includes all third-party information about the student’s history and current functioning such as educational, medical, or psychological records; observations or reports from parents, teachers, and other informants; data showing the provision and/or effectiveness of previous accommodations; and the results of psychoeducational testing. The guidance emphasizes that “no third-party information may be necessary to confirm a disability or evaluate requests for accommodations… and no specific language, tests, or diagnostic labels are required” (p. 4).

Altogether, disability determination and accommodation granting in higher education have shifted in emphasis from objective evidence to self-reports and impressions (Lovett and Lindstrom, 2022). For students with chronic physical or sensory disabilities, there is typically little need for colleges to gather extensive, third-party documentation before granting services. However, most postsecondary students seeking accommodations report limitations caused by non-apparent conditions such as attention-deficit/hyperactivity disorder (ADHD), learning disabilities, and anxiety. Many of these students have no history of a formal diagnosis or functional limitations prior to college (Sparks and Lovett, 2009a,b; Weis et al., 2012, 2017; Harrison et al., 2021b). In these instances, reliance on self-reports and impressions may lead to accommodation decision-making errors (Lovett et al., 2015).

Based on an interview alone, it is difficult to determine if students’ self-reported academic problems indicate substantial limitations in functioning compared to most people in the general population (i.e., a disability) or reflect the academic challenges experienced by most college students (Lovett and Lindstrom, 2022). Reading long and complex passages, completing high-stakes exams, and learning new languages are difficult and often stressful activities. Many students without disabilities report problems with common academic tasks like these (Suhr and Johnson, 2022) and recognize that accommodations might increase academic performance (Lewandowski et al., 2014). Without normative data, disability professionals must make accommodation decisions based on the quality of students’ narratives and their subjective impressions. Students with the social awareness to know that accommodations are available; the support of parents, teachers, and other individuals to seek them out; and the cultural, linguistic, and self-advocacy skills to convince disability professionals that they are warranted may be most likely to receive services, regardless of their disability status (Waterfield and Whelan, 2017). In contrast, reliance on interviews, impressions, and instincts may disadvantage students with disabilities who lack these resources (Bolt et al., 2011; McGregor et al., 2016; Lovett, 2020; Cohen et al., 2021). Indeed, the number of students receiving accommodations has increased exponentially at America’s most selective and expensive private colleges, whereas access to accommodations at community colleges has remained low (Weis and Bittner, 2022).

The purpose of our study was to develop a norm-referenced, self-report measure of academic impairment that might help college disability professionals render accommodation decisions in an evidence-based manner. The Academic Impairment Measure (AIM) is designed to be a brief, multidimensional, norm-referenced instrument that assesses substantial limitations in major academic activities typically required of college students. When combined with the results of psychoeducational testing and/or a review of educational, medical, or psychological records, the AIM can help professionals systematically collect information about students’ academic functioning across multiple domains, assess the validity of students’ self-reports, and determine if students’ perceived problems reflect substantial limitations in functioning or academic challenges experienced by most postsecondary students. Four principles guided the development of our measure.

Academic impairment

The AIM is designed to assess academic impairment in college students, that is, substantial limitations in students’ ability to effectively engage in major academic activities relevant to postsecondary education. Examples of academic impairment include problems with reading and understanding assignments, taking notes during class, and completing tests within standard time limits. Impairment is different from, and often the consequence of, the symptoms of neurodevelopmental and psychiatric disorders experienced by college students (Gordon et al., 2006). For example, students with ADHD may experience symptoms of inattention and poor concentration. As a result, they may experience substantial limitations taking notes during class or ignoring distractions during exams. Similarly, students with anxiety disorders may experience the symptoms of fear, panic, or worry. As a result, they may be unable to give a class presentation, or they may forget information during tests and earn low grades.

The ADA differentiates the symptoms of disorders from their functional consequences (Lovett et al., 2016). According to the law, not every physical or mental condition automatically constitutes a disability; only disorders that substantially limit a major life activity are classified as disabilities and require accommodation (US Department of Justice, 2016). Similarly, the American Psychiatric Association (2013) recognizes that not all people with symptoms show impairment. They caution, “the clinical diagnosis of a mental disorder…does not imply that an individual with such a condition meets…a specific legal standard (e.g., disability). Additional information is usually required beyond that contained in the DSM-5 diagnosis, which might include information about the individual’s functional impairments and how these impairments affect the abilities in question” (p. 25).

Many mental health professionals who conduct disability evaluations for college students fail to document impairment (Nelson et al., 2019). Similarly, many college students who receive accommodations have no evidence of previous or current limitations (Weis et al., 2015, 2019, 2020). This lack of attention to impairment is concerning, given that it is central to the legal conceptualization of a disability (Lovett et al., 2016) and because research has shown only modest correlations between symptom severity and impairment (Gordon et al., 2006; Miller et al., 2013; Suhr et al., 2017).

Professionals who want to assess college students’ academic impairment face the additional challenge that most existing measures conflate diagnostic signs and symptoms with their functional consequences (Lewandowski et al., 2016; Lombardi et al., 2018). For example, the Kane Learning Difficulties Assessment (KLDA; Kane and Clark, 2016) includes items like “I tend to act impulsively” and “It’s hard for me to sit still for very long,” which describe DSM-5 ADHD symptoms rather than impairment. Similarly, the Learning and Study Strategies Inventory (LASSI; Weinstein et al., 2016) includes items like “I worry that I will flunk out of school” and “I feel very panicky when I take an important test,” which describe the symptoms of anxiety disorders rather than their impact on learning.

We wanted to create a measure that assesses impairment in a manner that is largely independent of diagnostic signs and symptoms. An independent assessment of academic functioning is important given the multifinality of many neurodevelopmental and psychiatric disorders. For example, students with ADHD can experience a range of adverse consequences including problems with reading, note-taking, and time management (DuPaul et al., 2021). Similarly, an independent assessment of academic functioning is important because of the equifinality of students’ academic difficulties. For example, problems completing timed tests might arise because of ADHD, learning disabilities, or anxiety disorders (Gregg, 2012). There is seldom a one-to-one correspondence between specific disorders and their functional consequences. We hoped that our measure might assess impairment independent of diagnostic signs and symptoms and be applicable to students with a range of neurodevelopmental and psychiatric conditions.

Multidimensional

The AIM is designed to assess multiple domains of academic functioning. In contrast, most existing impairment measures assess academic functioning using a single or limited number of items (Lombardi et al., 2018). For example, the Barkley Functional Impairment Scale (Barkley, 2011) assesses limitations in educational activities using one item. The World Health Organization Disability Assessment Schedule 2.0 (Üstün et al., 2010) includes only two items that assess school and work functioning, respectively. Although useful for research purposes, these measures do not allow professionals in applied settings to determine which areas of academic functioning require attention (Lewandowski et al., 2016).

Other instruments assess multiple domains of academic functioning, but their scales overlap substantially, making interpretation difficult. For example, the LASSI (Weinstein et al., 2016) assesses 10 domains of academic functioning. However, the same items are used to assess multiple domains, and one-fourth of the correlations between domains exceed 0.50, indicating conceptual and statistical overlap. The KLDA (Kane and Clark, 2016) yields scores on 8 scales and 14 subscales of academic functioning. However, the same items are used to calculate scores on multiple scales, correlations between different scales are very high (e.g., r = 0.88), and correlations between scales and subscales approach unity (e.g., r = 0.97). These features make it difficult to use these measures in applied settings (Prevatt et al., 2006).

We hoped to assess seven domains of academic functioning: reading, math, foreign language learning, social-academic functioning, note-taking, test-taking, and time management. These domains reflect major life activities identified in the ADA or corresponding regulations (US Department of Justice, 2016) or activities that often necessitate accommodations in college (Gregg, 2012). We wanted to measure these domains separately by creating an instrument with a simple factor structure, in which each item loads onto only one domain and the domains show low to moderate intercorrelations. Consequently, we hoped professionals might use the AIM to identify specific areas of academic impairment, to plan accommodations, and to monitor the effectiveness of interventions over time.

Norm-referenced

The AIM is designed to be a norm-referenced measure that allows professionals to compare students’ self-reported academic problems to the reports of others. Without normative data, it is difficult to determine if students’ perceived difficulties reflect substantial limitations in functioning or academic challenges experienced by most postsecondary students. Several studies have shown high rates of self-reported academic problems among students without disabilities (Lewandowski et al., 2008; Suhr and Johnson, 2022). Students without disabilities may report academic problems because they evaluate their performance with respect to an idealized level of functioning or to academically talented peers (Johnson and Suhr, 2021). A student who finds himself working much harder and earning lower grades in college than in high school might believe he has significant impairment, when his experience may simply reflect the increased demands of postsecondary education. Similarly, a student who compares her academic skills to those of other high-functioning classmates at her elite institution may perceive significant limitations, although her functioning may be within normal limits (Weis et al., 2017).

Although the authors of the ADA did not define a “substantial limitation” in major life activities, they emphasized that limitations should be judged in comparison to other people in the general population (US Department of Justice, 2016). We wanted to create a measure with normative data from a large sample of undergraduates from diverse backgrounds and educational settings, allowing professionals to measure the degree of impairment each respondent experiences compared to others.¹ Impairment scores more than 1 or 2 standard deviations above the mean of the standardization sample could indicate a substantial limitation in functioning in that domain. Such a limitation might merit mitigation if corroborated by data from other sources.
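To make this comparison concrete, the sketch below shows one conventional way to express a raw domain score relative to a standardization sample: a z-score, a T-score (mean 50, SD 10), and a percentile rank, labeled with the 1 and 2 SD cutoffs mentioned above. It is a minimal illustration, not the AIM's published scoring procedure. The `DomainNorms` type and the normative mean and SD in the example are hypothetical, and the percentile assumes, only for illustration, that scores are roughly normally distributed.

```python
from dataclasses import dataclass
from statistics import NormalDist


@dataclass(frozen=True)
class DomainNorms:
    """Hypothetical normative statistics for one domain's raw score."""
    mean: float
    sd: float


def z_score(raw: float, norms: DomainNorms) -> float:
    """Distance of a raw score from the normative mean, in SD units."""
    return (raw - norms.mean) / norms.sd


def t_score(raw: float, norms: DomainNorms) -> float:
    """Rescale z to the T metric (mean 50, SD 10)."""
    return 50 + 10 * z_score(raw, norms)


def percentile(raw: float, norms: DomainNorms) -> float:
    """Percentile rank, assuming (for illustration only) normally distributed scores."""
    return 100 * NormalDist().cdf(z_score(raw, norms))


def elevation(raw: float, norms: DomainNorms) -> str:
    """Label a score using the >1 SD and >2 SD cutoffs discussed in the text."""
    z = z_score(raw, norms)
    if z > 2:
        return ">2 SD above mean"
    if z > 1:
        return ">1 SD above mean"
    return "within normal limits"


# Hypothetical norms for one domain (not actual AIM values).
reading = DomainNorms(mean=12.0, sd=4.0)
print(z_score(21, reading), t_score(21, reading), elevation(21, reading))
# prints: 2.25 72.5 >2 SD above mean
```

Whether 1 or 2 SD is the appropriate threshold is a policy choice; either way, an elevated score would still need corroboration from other sources before it supports an accommodation.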

Response validity

The AIM is designed to estimate response validity. Between 10% and 40% of postsecondary students seeking accommodations for non-apparent disabilities provide non-credible data (Suhr et al., 2017; Harrison et al., 2021a). Non-credible responding is especially likely when students are motivated to receive accommodations (Cook et al., 2018). Unfortunately, students can effectively feign non-apparent disabilities with minimal preparation (Johnson and Suhr, 2021) and professionals are typically unable to assess the validity of students’ reports based on interviews alone (Wallace et al., 2019; Sweet et al., 2021). Professionals who make accommodation decisions based only on self-reports and impressions are prone to decision-making errors. Consequently, we wanted to create a response validity scale for our measure. It would include items that appear to reflect academic problems experienced by college students but are endorsed by few students in the standardization sample. Elevated ratings on this scale might indicate either severe impairment that is atypical of nearly all college students, or non-credible responding.
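The logic of such an infrequency scale can be sketched in a few lines: estimate how often each candidate item is endorsed in the standardization sample, keep the items that almost nobody endorses, and count how many of them a new respondent endorses. This is a hypothetical illustration only. The endorsement threshold (a rating of 4, "often," or higher), the 10% rarity cutoff, and the item names are assumptions, not the AIM's actual validity-scale rules.

```python
from __future__ import annotations

from typing import Mapping, Sequence

ENDORSE_AT = 4  # assumption: a rating of 4 ("often") or 5 ("always") counts as endorsing an item


def endorsement_rates(sample: Sequence[Mapping[str, int]]) -> dict[str, float]:
    """Proportion of standardization respondents who endorse each item."""
    n = len(sample)
    return {item: sum(resp[item] >= ENDORSE_AT for resp in sample) / n for item in sample[0]}


def select_rare_items(rates: Mapping[str, float], max_rate: float = 0.10) -> list[str]:
    """Candidate validity items: endorsed by at most `max_rate` of the sample (hypothetical cutoff)."""
    return sorted(item for item, rate in rates.items() if rate <= max_rate)


def infrequency_score(response: Mapping[str, int], rare_items: Sequence[str]) -> int:
    """Number of rarely endorsed items that one respondent endorses."""
    return sum(response[item] >= ENDORSE_AT for item in rare_items)


# Toy standardization sample of 20 respondents with three hypothetical items.
sample = [
    {"item_a": 5 if i < 10 else 1, "item_b": 5 if i == 0 else 2, "item_c": 1}
    for i in range(20)
]
rare = select_rare_items(endorsement_rates(sample))
print(rare)  # ['item_b', 'item_c']
print(infrequency_score({"item_a": 5, "item_b": 4, "item_c": 5}, rare))  # 2
```

An elevated count is ambiguous by design: it may reflect impairment that is atypical of nearly all college students or non-credible responding, so any interpretive cutoff would need to be derived from the standardization data.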

To create the AIM, we followed the methods of scale development recommended by DeVellis (2017) including item generation and screening; exploratory factor analysis and item reduction; confirmatory factor analysis and refinement; reliability and validity analyses; and examination of the response validity scale. We relied on diverse samples of degree-seeking undergraduates from postsecondary institutions across the United States. We hoped to develop a brief, multidimensional measure of academic impairment, with a simple factor structure, that professionals could use to identify students with substantial limitations in specific areas of academic functioning.

Study 1: Item generation and screening

Purpose

The purpose of Study 1 was to generate items and examine their initial descriptive statistics using a development sample. The AIM was designed as a brief, self-report measure of academic impairment for postsecondary students. Our operational definition of “academic impairment” mirrored the ADA definition of a disability: a substantial limitation in major academic activities typically required by degree-seeking, postsecondary students. We operationally defined “substantial limitation” as a statistically significant elevation (e.g., 1 or 2 standard deviations above the mean) in self-reported academic problems compared to other degree-seeking postsecondary students in the standardization sample.

Method

Item generation

We generated items that reflected major life activities that are often the basis of ADA accommodations, relevant to the academic life of postsecondary students, and frequently reported as limited by students with non-apparent disabilities (Gregg, 2012; US Department of Justice, 2016). These items conceptually fell into seven broad domains: reading, math, foreign language learning, social-academic functioning (e.g., working with classmates, giving a class presentation), note-taking, test-taking, and time management. The initial pool consisted of items like those on existing measures of academic functioning including the Academic Competence Evaluation Scales (DiPerna and Elliott, 2001), Behavior Assessment System for Children (BASC; Reynolds and Kamphaus, 2015), KLDA (Kane and Clark, 2016), LASSI (Weinstein et al., 2016), and Weiss Functional Impairment Rating Scale (WFIS; Weiss, 2000). Additional items were generated from the research literature on academic problems typically reported by college students with non-apparent disabilities. Finally, we generated items by identifying the most common accommodations granted to college students with disabilities (Gregg, 2012; Weis et al., 2015, 2020; US Government Accountability Office, 2022) and then inferring the academic impairments these accommodations were designed to mitigate. For example, two common test accommodations, additional time and testing in a separate location, are typically granted when students experience problems completing exams within standard time limits and ignoring distractions during exams, respectively.

We reduced the initial item pool in several ways. First, we combined or eliminated items that were redundant. Second, we eliminated most items that described the signs or symptoms of disorders (e.g., anxiety, inattention, diminished concentration, loss of energy, panic, worry) rather than their functional consequences. However, we retained items reflecting impairment in reading or math, because they describe both the DSM-5 criteria for specific learning disorder and academic impairment (American Psychiatric Association, 2013). Third, we eliminated items that reflected attitudinal or motivational factors rather than academic problems. For example, the WFIS asks students to report “problems with teachers” and “problems with school administrators,” which could reflect interpersonal conflicts rather than academic difficulties. Similarly, the BASC includes the items “I am bored with school” and “I feel like quitting school,” which seem to assess motivational factors. Fourth, we simplified wordy, double-barreled, or otherwise confusing items so that they were written at a 5th-grade reading level. Finally, we asked two groups of people to review our item pool: 25 undergraduates receiving academic accommodations for non-apparent disabilities and 5 researchers at other institutions who study college students with disabilities.

The final pool consisted of 54 items that we believed would assess specific domains of academic impairment. At the recommendation of others, we included three additional items that might reflect students’ perceptions of overall impairment: needing to spend more effort on academic tasks than other people to perform well; needing to spend more time on academic tasks than other people to perform well; and earning course grades that do not show my full potential. These items would be included in the final version of our measure but were not designed to measure specific areas of impairment.

Item screening

We examined the psychometric properties of the initial item pool using a development sample of undergraduates (DeVellis, 2017). Specifically, we calculated each item’s mean, standard deviation, and corrected item-scale correlation. We also examined each scale’s internal consistency to determine the dimensionality of the measure and identify items that might be deleted because they did not fit the intended scale or were redundant.
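For readers who want to reproduce this kind of screening, the sketch below computes Cronbach's alpha for a scale and each item's corrected item-scale correlation, that is, the correlation between an item and the sum of the other items on the same scale, so the item does not inflate its own correlation. It uses only the Python standard library; the data layout (one list of ratings per item) and the function names are illustrative assumptions, and the default cutoff of 0.50 mirrors the value applied in the results that follow.

```python
from math import sqrt
from statistics import mean, pvariance
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; assumes neither variable is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """alpha = k/(k-1) * (1 - sum of item variances / variance of total score).

    `items` holds one column of ratings per item, all from the same respondents.
    """
    k = len(items)
    totals = [sum(row) for row in zip(*items)]
    item_var = sum(pvariance(col) for col in items)
    return k / (k - 1) * (1 - item_var / pvariance(totals))


def corrected_item_total(items: Sequence[Sequence[float]]) -> list[float]:
    """Correlate each item with the sum of the *other* items on the scale."""
    totals = [sum(row) for row in zip(*items)]
    return [pearson_r(col, [t - v for t, v in zip(totals, col)]) for col in items]


def flag_low_items(items: Sequence[Sequence[float]], cutoff: float = 0.50) -> list[int]:
    """Indices of items whose corrected item-scale correlation falls below `cutoff`."""
    return [i for i, r in enumerate(corrected_item_total(items)) if r < cutoff]


# Hypothetical ratings: three items (columns) from five respondents.
scale = [
    [1, 2, 3, 4, 5],
    [2, 3, 4, 5, 5],
    [5, 1, 4, 2, 3],
]
print(flag_low_items(scale))  # [0, 2]
```

In a real analysis each domain's items would be screened separately, and flagged items would be inspected rather than dropped automatically.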

We recruited participants using Prolific, an online crowdsourcing data collection platform for behavioral research. The sample consisted of 200 degree-seeking, adult undergraduates attending 2- or 4-year postsecondary institutions in the United States. Participants included 30.7% men, 65.3% women, and 4.0% non-binary gender identity. Participants’ racial identification included White (73.4%), Asian or Asian American (20.1%), Black or African American (8.5%), American Indian or Alaska Native (1.5%), Native Hawaiian or Pacific Islander (0.5%), and other (3%). Approximately 14.6% reported Hispanic or Latino ethnicity. Participants’ ages ranged from 18 to 51 years (M = 22.02, Mdn = 21.00, SD = 4.66). Participants were enrolled in public (77%) or private (23%) postsecondary institutions. Carnegie classification of these institutions included associate’s (7%), baccalaureate (6%), master’s (16%), doctoral (70%), and special-focus colleges or universities (1%). One participant failed the attention check items and was removed from subsequent analysis.

Participants were invited to complete an online survey about academic problems experienced by college students. After granting consent, they were asked to read each item and rate how much they experience problems with each activity compared to most other people using a five-point scale: (1) never, (2) rarely, (3) sometimes, (4) often, or (5) always a problem. After responding to the items, participants completed a demographics questionnaire and were asked to report their history of disabilities and/or accommodations. Our procedures followed the American Psychological Association’s Ethical Principles of Psychologists and Code of Conduct and were approved by our university’s Institutional Review Board.

Results and discussion

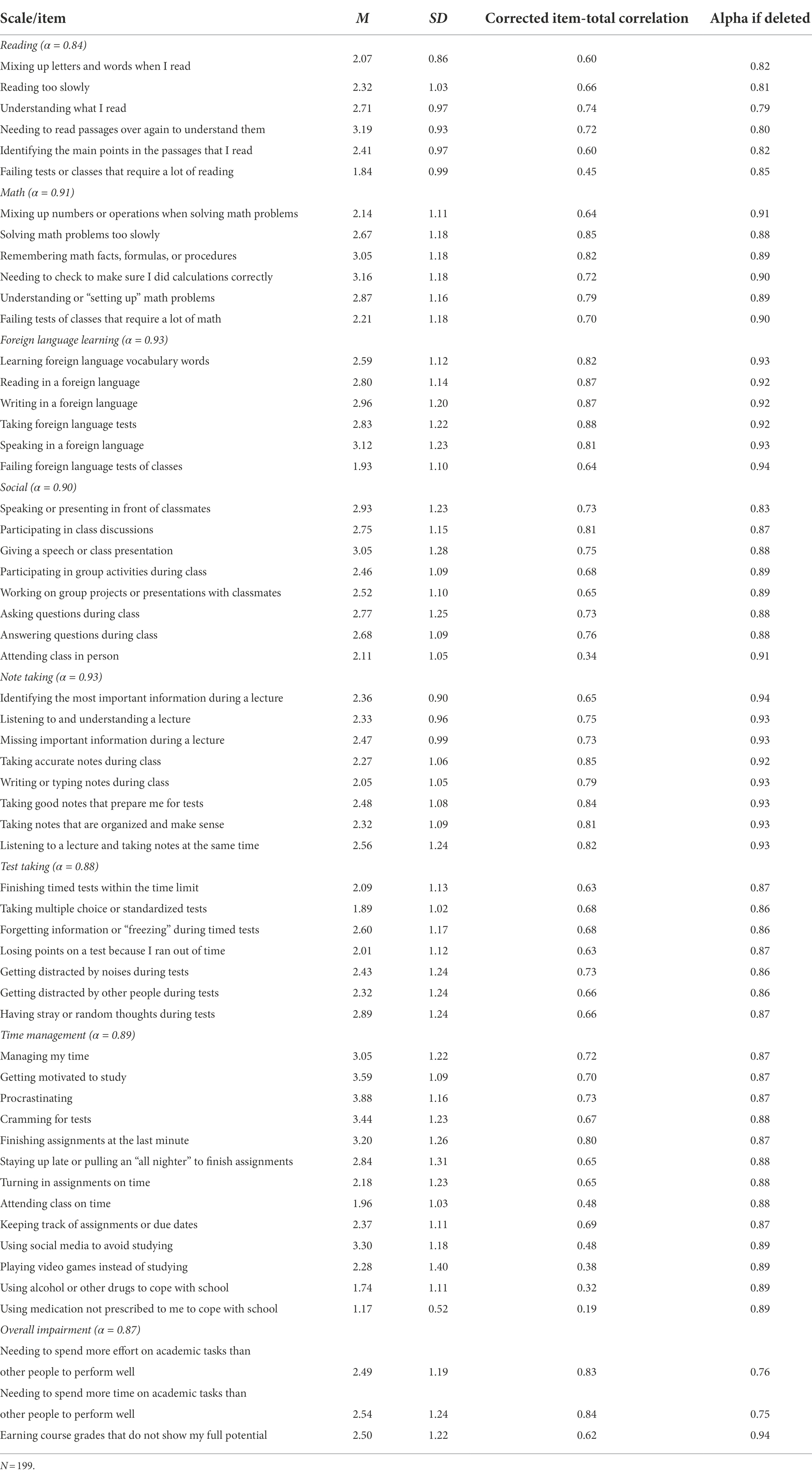

Results (Table 1) showed internal consistency estimates (Cronbach’s alpha) ranging from 0.84 to 0.93, all of which exceed the conventional lower bound for acceptable reliability (Streiner, 2003). Although alphas above 0.90 are sometimes interpreted as a sign of item redundancy, DeVellis (2017) argues that such values are appropriate for scales intended for academic decision making.

Descriptive statistics showed item means falling in the low to middle range of the scale, with standard deviations consistent across items. No item showed clear evidence of a floor or ceiling effect, restricted range, or heterogeneity of variance, with the exception of “using medication not prescribed to me.” This item also correlated weakly with other items believed to assess impairment in time management. Consequently, we removed it from the seven-dimension structure of the measure but included it on the final version of the AIM to flag students who might engage in this behavior.

Corrected item-scale correlations revealed several other items that did not correlate strongly with the remaining items on their intended scales. Using a cutoff of r > 0.50 for retention, these items included “failing tests or classes that require a lot of reading,” “attending class in person,” “attending class on time,” “using social media to avoid studying,” “playing video games instead of studying,” and “using alcohol or other drugs to cope with school.” Inspection of the inter-item correlation matrix confirmed that these items did not exceed this cutoff; consequently, we removed them from the seven-dimension structure of the measure. However, we kept the item “using alcohol or other drugs to cope with school” on the final version of the AIM to flag students who might engage in this behavior. The final pool consisted of 47 items that reflected specific areas of impairment, three items that reflected overall impairment, and two items that reflected maladaptive substance use.

Study 2: Principal component analysis

Purpose

The purpose of Study 2 was to refine the measure by determining the number of components that best reflect students’ self-reported impairment. Although we wanted to create a measure that assessed multiple domains of academic functioning, it is possible that students’ perceived impairment is more accurately conceptualized as a unidimensional construct. To accomplish this goal, we administered the 47 specific impairment items to a new sample of undergraduates and used principal component analysis (PCA) to determine the optimal number of impairment domains (Tabachnick and Fidell, 2017; Widaman, 2018). We anticipated that seven components would emerge from the data. We hoped to rotate these components in a manner that yielded an interpretable solution, reflecting the domains of impairment previously described. We also hoped to refine the measure by eliminating unnecessary items and generating a simple structure, that is, a solution in which each item loads highly on only one theoretically meaningful component.

Method

We recruited a new sample of 325 undergraduates using the criteria and methods described previously. Participants included 29.0% men, 67.6% women, and 3.4% non-binary gender identity. Participants’ racial identification included White (70.8%), Asian or Asian American (21.2%), Black or African American (10.2%), American Indian or Alaska Native (1.2%), Native Hawaiian or Pacific Islander (0.3%), and other (4.3%). Approximately 11.7% reported Hispanic or Latino ethnicity. Participants’ ages ranged from 18 to 55 years (M = 22.96, Mdn = 21.00, SD = 5.76). Participants were enrolled in public (75%) or private (25%) postsecondary institutions. Carnegie classification of these institutions included associate’s (10%), baccalaureate (7%), master’s (20%), doctoral (62%), and special-focus colleges or universities (1%). Four participants failed the attention check items and were removed from subsequent analyses.

Results and discussion

We conducted a PCA on the 47 impairment items to determine the optimal number of components. An initial, unrotated extraction yielded a Kaiser-Meyer-Olkin (KMO) value of 0.91, a significant Bartlett’s test of sphericity (χ²(1081) = 12,011.60, p < 0.001), and small values in the off-diagonal elements of the anti-image correlation matrix, indicating adequate sample size and factorability (Tabachnick and Fidell, 2017). The data showed no violations of normality or linearity, and there were no outliers or missing data.
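Both factorability checks have closed-form definitions. Bartlett's statistic is χ² = -[(n - 1) - (2p + 5)/6] ln|R| with p(p - 1)/2 degrees of freedom, where R is the p × p item correlation matrix and n is the number of respondents; the overall KMO index compares the squared off-diagonal correlations with the squared off-diagonal partial (anti-image) correlations. The NumPy sketch below implements these textbook formulas as an illustration, not as the authors' software; the simulated one-factor data are an assumption. With p = 47 items, the degrees of freedom are 47 × 46 / 2 = 1081, matching the value reported above.

```python
from __future__ import annotations

import numpy as np


def bartlett_sphericity(data: np.ndarray) -> tuple[float, int]:
    """Bartlett's test statistic and df for an (n respondents x p items) array."""
    n, p = data.shape
    r = np.corrcoef(data, rowvar=False)
    _, logdet = np.linalg.slogdet(r)  # log|R|, numerically stabler than log(det(R))
    stat = -((n - 1) - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return float(stat), df


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    r = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(r)
    d = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(d, d)  # anti-image (partial) correlations
    off_diag = ~np.eye(r.shape[0], dtype=bool)
    r2 = np.sum(r[off_diag] ** 2)
    a2 = np.sum(partial[off_diag] ** 2)
    return float(r2 / (r2 + a2))


# Simulated data (not the study's data): one common factor behind 6 items.
rng = np.random.default_rng(0)
factor = rng.standard_normal((321, 1))
items = factor + 0.5 * rng.standard_normal((321, 6))
print(bartlett_sphericity(items), round(kmo(items), 2))
```

A p-value for the statistic can then be obtained from the chi-square distribution with the returned degrees of freedom.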

Nine components had eigenvalues ≥1; however, inspection of the scree plot showed only seven components with eigenvalues >1.5, with the remaining values close to or below 1. Parallel analysis, which compared the observed eigenvalues with the 95th-percentile eigenvalues from 500 randomly generated data matrices, also suggested seven components (Vivek et al., 2017).
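Parallel analysis retains a component only while its observed eigenvalue exceeds the corresponding eigenvalue expected from random data of the same size. The sketch below follows the settings described above (500 simulated matrices, 95th percentile) and contrasts the result with the eigenvalue ≥1 (Kaiser) rule. It assumes normally distributed random data and principal-component eigenvalues of the correlation matrix; the function names and the simulated two-factor example are illustrative.

```python
import numpy as np


def eigenvalues(data: np.ndarray) -> np.ndarray:
    """Eigenvalues of the item correlation matrix, largest first."""
    r = np.corrcoef(data, rowvar=False)
    return np.sort(np.linalg.eigvalsh(r))[::-1]


def kaiser_count(data: np.ndarray) -> int:
    """Number of components with eigenvalue >= 1."""
    return int(np.sum(eigenvalues(data) >= 1))


def parallel_analysis(data: np.ndarray, n_sims: int = 500,
                      percentile: float = 95, seed: int = 0) -> int:
    """Number of components whose eigenvalues beat random-data eigenvalues."""
    n, p = data.shape
    rng = np.random.default_rng(seed)
    random_eigs = np.array([eigenvalues(rng.standard_normal((n, p))) for _ in range(n_sims)])
    thresholds = np.percentile(random_eigs, percentile, axis=0)
    keep = 0
    for observed, threshold in zip(eigenvalues(data), thresholds):
        if observed <= threshold:
            break  # stop at the first component that random data can match
        keep += 1
    return keep


# Simulated example: 12 items driven by two independent factors (6 items each).
rng = np.random.default_rng(1)
f = rng.standard_normal((300, 2))
data = np.hstack([
    f[:, [0]] + 0.6 * rng.standard_normal((300, 6)),
    f[:, [1]] + 0.6 * rng.standard_normal((300, 6)),
])
print(kaiser_count(data), parallel_analysis(data))
```

In clean simulated data like this both rules agree; with real item responses the Kaiser rule often retains extra weak components, which is why the text relies on the scree plot and parallel analysis.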

We used oblique rotation to interpret these components because students with neurodevelopmental and psychiatric disabilities often experience impairment in multiple domains of academic functioning. For example, the executive functioning deficits often seen in students with ADHD can lead to impairment in reading and math, problems taking accurate notes in class, and difficulty ignoring distractions during timed exams (DuPaul et al., 2021). Similarly, the psychological distress and maladaptive thoughts that characterize social anxiety disorder can cause students to avoid class presentations and group activities, to panic during exams, or to procrastinate (Nordstrom et al., 2014). Unlike orthogonal rotation, oblique rotation allows components to correlate, which is consistent with this pattern of co-occurring impairment across domains.

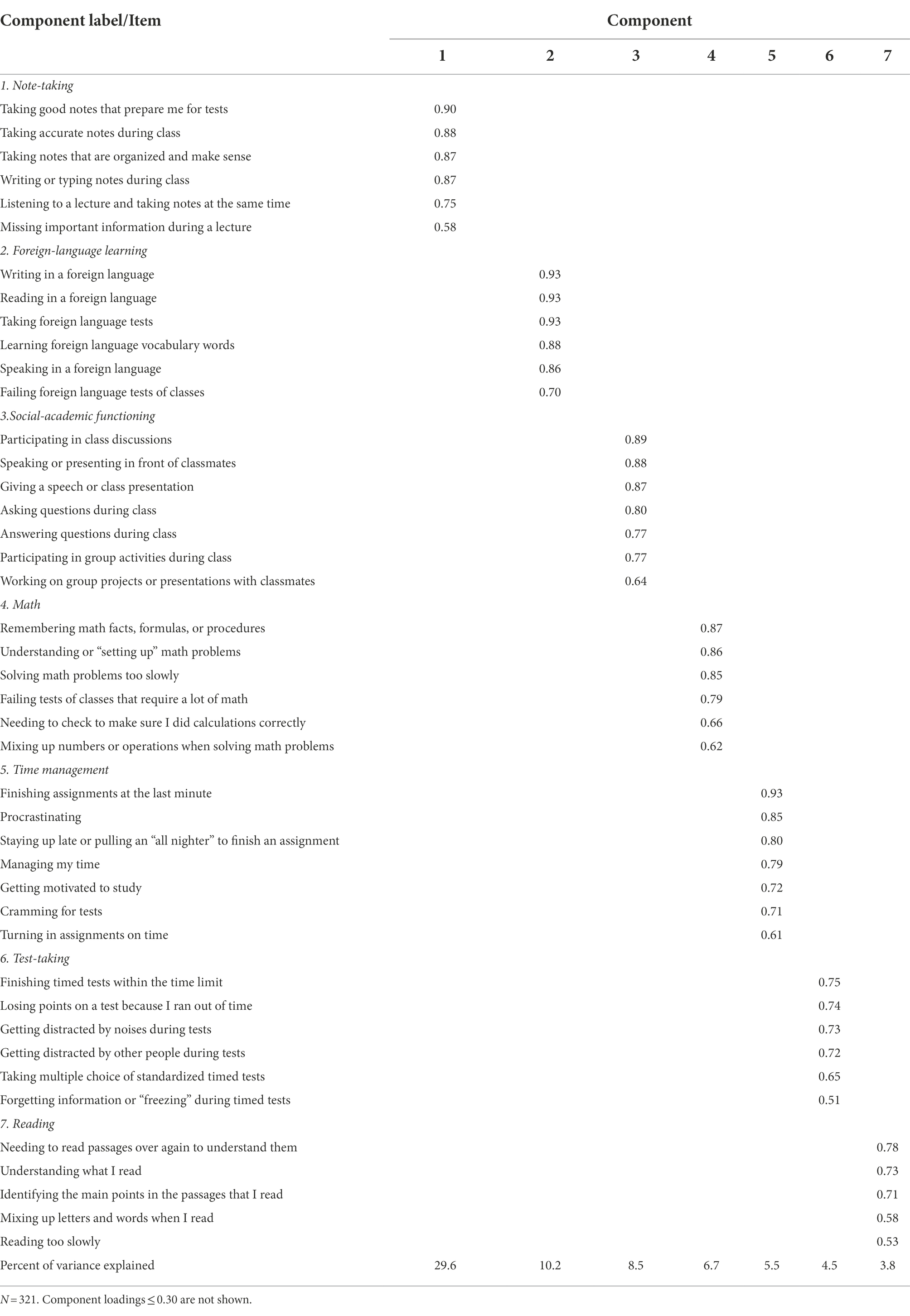

Results (Table 2) indicated a seven-component solution consisting of 43 items and accounting for 70% of the variance in academic impairment. The rotated matrix yielded an interpretable structure, with each item loading ≥0.50 on its expected component and <0.30 on all other components, consistent with the recommendations of previous authors (Tabachnick and Fidell, 2017). The components reflect impairment in note-taking, foreign-language learning, social-academic functioning, math, time management, test-taking, and reading, respectively.
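The retention rule described above (a primary loading of at least 0.50 and no other loading of 0.30 or more) can be applied mechanically to a rotated loading matrix. The sketch below assumes the rotation has already been performed elsewhere (the specific oblique method is not stated in this section) and uses a small, made-up loading matrix; it simply sorts items into retained and flagged sets.

```python
import numpy as np


def apply_simple_structure(loadings, primary_min=0.50, cross_max=0.30):
    """Split items into those meeting the simple-structure rule and those flagged.

    `loadings` is an (items x components) matrix of rotated loadings. An item is
    retained if its largest absolute loading is >= primary_min and every other
    absolute loading is < cross_max.
    """
    abs_l = np.abs(np.asarray(loadings, dtype=float))
    retained, flagged = [], []
    for i, row in enumerate(abs_l):
        j = int(np.argmax(row))     # component with the largest loading
        others = np.delete(row, j)  # all remaining loadings for this item
        if row[j] >= primary_min and np.all(others < cross_max):
            retained.append((i, j))
        else:
            flagged.append(i)
    return retained, flagged


# Made-up loadings for four items on three components.
demo = [
    [0.72, 0.10, 0.05],  # clean primary loading
    [0.65, 0.35, 0.02],  # cross-loads >= 0.30
    [0.08, 0.81, 0.12],  # clean primary loading
    [0.20, 0.15, 0.44],  # primary loading below 0.50
]
print(apply_simple_structure(demo))  # ([(0, 0), (2, 1)], [1, 3])
```

Each retained pair records the item index and the component it defines; flagged items, like the four cross-loading items described next, are candidates for removal.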

Four items were removed from the measure because they loaded on multiple components. Two items that were believed to describe impaired note-taking (i.e., identifying the most important information during a lecture, listening to and understanding a lecture) also loaded onto the reading impairment component, perhaps because deficits in oral language might underlie impairment in both reading and listening comprehension (Snowling and Hulme, 2021). Two other items (i.e., having stray or random thoughts during tests, keeping track of assignments or due dates) loaded onto multiple components, indicating that they reflect more general problems with academic functioning.

Table 3 shows the intercorrelations among the component composite scores. Three correlations exceeded 0.32, indicating at least 10% overlap in variance. Therefore, oblique rotation was likely warranted (Tabachnick and Fidell, 2017). It is noteworthy that components did not show the very high intercorrelations seen in previous research, suggesting that although several components are associated, they can be differentiated from one another and may meaningfully reflect different academic domains.

Study 3: Confirmatory factor analysis

Purpose

The purpose of Study 3 was to test the factorial validity of our measure using confirmatory factor analysis (CFA). Although the PCA yielded a seven-part, oblique structure, the resulting model could capitalize on chance or reflect idiosyncrasies of the dataset (Byrne, 2016). CFA with an independent sample is necessary to examine how well the seven-part model fits students’ reported academic problems, whether AIM items accurately measure their respective impairment domain (i.e., convergent validity), and whether the domains can be distinguished from each other (i.e., discriminant validity).

Method

We recruited a new sample of 650 undergraduates using the same criteria and methods described previously. The sample included 40.5% men, 55.7% women, and 3.8% non-binary gender identity. Participants’ racial identification included White (70.9%), Asian or Asian American (18.5%), Black or African American (12.6%), American Indian or Alaska Native (1.5%), Native Hawaiian or Pacific Islander (0.5%), and other (3.7%). Approximately 15.4% reported Hispanic or Latino ethnicity. Participants’ ages ranged from 18 to 55 years (M = 22.42, Mdn = 21.00, SD = 5.163). Participants were enrolled in public (74%) or private (26%) postsecondary institutions. Carnegie classification of these institutions included associate’s (6%), baccalaureate (6%), master’s (23%), doctoral (63%), and special-focus colleges or universities (1%). Four participants failed the attention check items and were removed from subsequent analyses.

Results and discussion

Model estimation

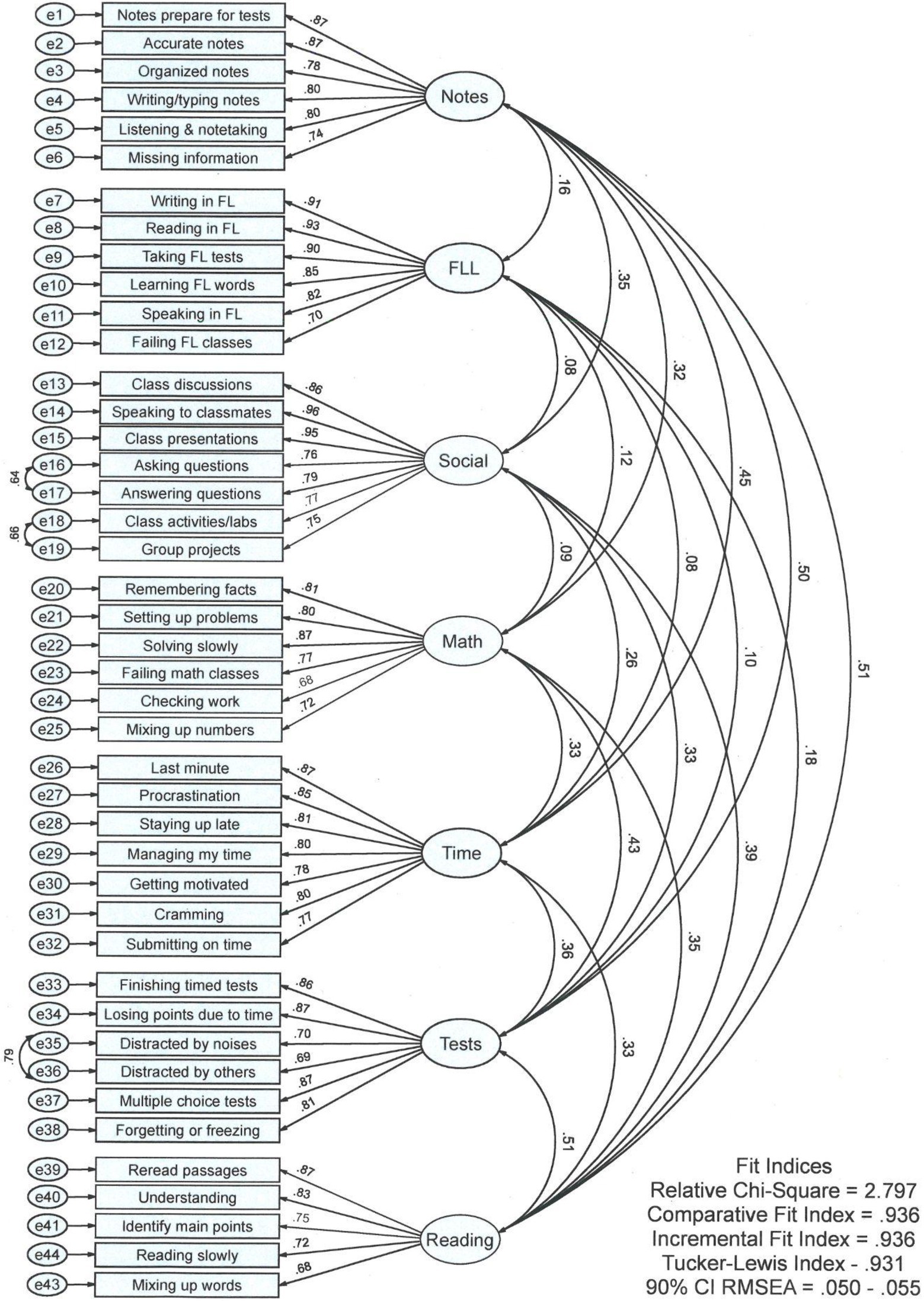

We used AMOS 26 to examine the factorial validity of the measure. The model (Figure 1) was based on the results of the PCA and consisted of seven correlated latent variables, each reflecting one domain of academic impairment, with 43 indicators. Assumptions of univariate normality and linearity were met. There were no missing data. We used maximum likelihood estimation on the covariance matrix for all analyses.

An initial estimation suggested three modifications resulting in a significant improvement in overall fit (i.e., Δχ2 > 10.00). All three modifications involved correlating the error terms of two indicators within the same latent variable. According to Collier (2020), such modifications are appropriate if they are rationally justifiable and improve the overall fit and utility of the model. First, we correlated the error variances of the items “asking questions during class” and “answering questions during class” because both seemed to reflect class participation. Second, we correlated the error variances of the items “getting distracted by noises during tests” and “getting distracted by other people during tests” for similar reasons. Third, we correlated the error variances of the items “participating in group activities during class” and “working on group projects with classmates” because these items seemed to reflect similar activities, albeit in potentially different settings.

As expected, the independence model provided a poor fit for the data, χ2(903, N = 650) = 24387.12, p < 0.001; CFI, IFI, NFI, and TLI = 0; RMSEA = 0.200, CI.90 = 0.198–0.202. The hypothesized model provided a significantly better fit for the data, Δχ2(67, N = 650) = 22048.72, p < 0.001. Although the overall goodness-of-fit statistic was significant, χ2(836, N = 650) = 2338.40, p < 0.001, it is sensitive to complex models with large samples (Collier, 2020). The relative chi-square test (i.e., χ2/df), which partially adjusts for these characteristics, was 2.80, which indicated acceptable fit according to criteria suggested by Kline (2011) and Schumacker and Lomax (2004). In contrast, the relative chi-square statistic for the independence model was 27.01 which is considered unacceptable by these standards. Various incremental fit statistics yielded values >0.90, suggesting acceptable fit: CFI = 0.94, IFI = 0.94, NFI = 0.91, and TLI = 0.93. The root mean square error of approximation indicated fit within the acceptable range (RMSEA = 0.053; CI.90 = 0.040–0.055) using criteria suggested by MacCallum et al. (1996).
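
Because most of these indices are simple functions of the model and independence-model chi-squares, the reported values can be checked by hand. The Python sketch below applies the standard formulas for the relative chi-square, CFI, TLI, IFI, and the RMSEA point estimate to the statistics reported above. The function name `fit_indices` is our own, and AMOS's internal computations may differ in minor details; the RMSEA confidence interval, which requires a noncentral chi-square distribution, is not reproduced.

```python
import math


def fit_indices(chi2_m, df_m, chi2_b, df_b, n):
    """Fit indices from a model (m) and an independence/baseline model (b)."""
    # Relative chi-square: model chi-square divided by its degrees of freedom
    relative = chi2_m / df_m
    # CFI: 1 minus the model's noncentrality relative to the baseline's
    noncent_m = max(chi2_m - df_m, 0.0)
    noncent_b = max(chi2_b - df_b, noncent_m)
    cfi = 1.0 - noncent_m / noncent_b
    # TLI (non-normed fit index): compares the two chi-square/df ratios
    tli = (chi2_b / df_b - chi2_m / df_m) / (chi2_b / df_b - 1.0)
    # IFI (Bollen's incremental fit index)
    ifi = (chi2_b - chi2_m) / (chi2_b - df_m)
    # RMSEA point estimate, using N - 1 in the denominator
    rmsea = math.sqrt(noncent_m / (df_m * (n - 1)))
    return {"chi2/df": relative, "CFI": cfi, "TLI": tli, "IFI": ifi, "RMSEA": rmsea}


# Chi-square values reported above for the hypothesized and independence models
model = fit_indices(2338.40, 836, 24387.12, 903, 650)
for name, value in model.items():
    print(f"{name}: {value:.3f}")
```

Rounded to two decimals (three for RMSEA), these values match the relative chi-square, CFI, TLI, IFI, and RMSEA reported in the text.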

Internal consistency, convergent, and discriminant validity

To estimate internal consistency, we calculated the composite reliability of each of the seven academic impairment domains (Raykov, 1997). Composite reliability estimates the internal consistency of scales using the factor loadings of indicators generated from CFA (Hair et al., 2014). Our calculations yielded the following composite reliabilities for note-taking (0.91), foreign language learning (0.93), social-academic functioning (0.93), math (0.90), time management (0.92), test-taking (0.91), and reading (0.88).
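
Composite reliability can be computed directly from standardized loadings. The sketch below assumes the common form CR = (Σλ)² / [(Σλ)² + Σ(1 − λ²)], which treats error terms as uncorrelated. The loadings are hypothetical rather than the AIM's, and a model with correlated errors (such as the three modifications described above) would strictly add those error covariances to the denominator.

```python
def composite_reliability(loadings):
    """Composite reliability from standardized loadings, assuming uncorrelated errors."""
    sum_loadings = sum(loadings)
    # With standardized indicators, each error variance is 1 - loading**2
    error_variance = sum(1.0 - lam ** 2 for lam in loadings)
    return sum_loadings ** 2 / (sum_loadings ** 2 + error_variance)


# Hypothetical standardized loadings for a six-item domain (illustrative only)
example_loadings = [0.78, 0.82, 0.75, 0.85, 0.80, 0.79]
print(f"CR = {composite_reliability(example_loadings):.2f}")
```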

We assessed convergent validity in two ways, following the recommendations of Collier (2020). First, we examined each standardized factor loading for indicators assessing the same academic domain (see Figure 1). All loadings were significant and ranged from 0.68 to 0.96. Using criteria established by Comrey and Lee (2013), four loadings fell in the very good range (i.e., ≥ 0.63 but < 0.71) and the other loadings fell in the excellent range (i.e., ≥ 0.71), indicating that they converge on a similar construct. Second, we examined the average variance extracted (AVE) for each of the academic impairment domains. Fornell and Larcker (1981) suggest that an AVE ≥ 0.50 is necessary to show that a latent variable explains, on average, at least one-half of the variance of its indicators. Our calculations showed that all seven impairment domains met this criterion, supporting the convergent validity of the items: note-taking (0.66), foreign language learning (0.74), social-academic functioning (0.70), math (0.61), time management (0.69), test-taking (0.65), and reading (0.60).
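
Both convergent-validity checks can be expressed compactly. In this sketch, AVE is the mean squared standardized loading, and loadings are labeled using only the two Comrey and Lee cutoffs cited in the text (0.63 and 0.71). The loadings are hypothetical, and the function names are our own.

```python
def average_variance_extracted(loadings):
    """Average variance extracted: the mean squared standardized loading."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def loading_label(loading):
    """Label a standardized loading using the two Comrey and Lee cutoffs cited in the text."""
    if loading >= 0.71:
        return "excellent"
    if loading >= 0.63:
        return "very good"
    return "below very good"


# Hypothetical standardized loadings for one domain (illustrative only)
example_loadings = [0.68, 0.74, 0.81, 0.77, 0.85]
ave = average_variance_extracted(example_loadings)
print(f"AVE = {ave:.2f}; meets 0.50 criterion: {ave >= 0.50}")
print([loading_label(lam) for lam in example_loadings])
```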

Evidence of discriminant validity can be shown by comparing the AVE within each of the seven impairment domains to the variance shared between domains. The AVE for each domain of impairment should be higher than its shared variance with any other domain (Fornell and Larcker, 1981). To make this comparison, we computed an average composite score for each participant on the seven academic impairment domains. Then, we calculated bivariate correlations between the seven average composite scores. Finally, we squared these correlations to estimate the shared variance between domains (see Collier, 2020). Shared variance ranged from 0.01 (between foreign language learning and time management) to 0.22 (between reading and note-taking), with a median of 0.10. All values were far below the lowest AVE of any domain (0.60), indicating that the domains reflect distinguishable facets of academic functioning.
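
The Fornell and Larcker comparison reduces to checking every pair of domains. In the sketch below, the AVE values are those reported above for the seven AIM domains. However, the correlations are illustrative placeholders chosen to reproduce the reported minimum (0.01) and maximum (0.22) shared variances, not the study's correlation matrix. The function name is hypothetical. A pair is flagged when its shared variance reaches the smaller of the two domains' AVEs.

```python
from itertools import combinations

# AVE values reported in the text for the seven AIM domains
AVE = {
    "note-taking": 0.66, "foreign language": 0.74, "social-academic": 0.70,
    "math": 0.61, "time management": 0.69, "test-taking": 0.65, "reading": 0.60,
}

# Illustrative correlations between domain composites (not the study's matrix)
CORR = {("reading", "note-taking"): 0.47, ("foreign language", "time management"): 0.10}


def fornell_larcker_violations(ave, corr):
    """Return pairs whose squared correlation is not below both domains' AVE."""
    violations = []
    for a, b in combinations(ave, 2):
        r = corr.get((a, b), corr.get((b, a)))
        if r is None:
            continue  # pair not supplied in this illustration
        shared = r ** 2
        if shared >= min(ave[a], ave[b]):
            violations.append((a, b, round(shared, 2)))
    return violations


print(fornell_larcker_violations(AVE, CORR))
```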

Altogether, analyses indicated that a seven-part model provided an acceptable fit for the data. The seven domains of academic impairment displayed acceptable internal consistency and convergent validity. Although modestly correlated with each other, these domains could also be rationally and statistically differentiated from one another.

Study 4: Content validity

Purpose

The purpose of Study 4 was to assess the content validity of our measure, that is, to determine whether the final items in the measure accurately and adequately reflect the seven domains of academic impairment. To accomplish this goal, we asked college disability support professionals to rate the relevance, representativeness, and clarity of each item (DeVellis, 2017).

Method

Thirty-one AHEAD members participated in the study. All members were college disability support professionals and had experience in disability determination and accommodation granting. Participants’ professional experience ranged from 2 to 20 years (M = 9.81, Mdn = 10.00, SD = 5.69). Participants worked in public (74%) or private (26%) institutions. Carnegie classification of these schools included baccalaureate (6.5%), master’s (83.8%), and doctoral (9.7%).

Participants were recruited through the AHEAD members message board and asked to participate in a study to assess the quality of items for a new measure of academic impairment in college students. Participants were offered a $15 gift card for their help with the study. Participants rated each item on a five-point scale ranging from 1 (low) to 5 (high) in terms of its relevance, representativeness, and clarity (Haynes et al., 1995). Relevance refers to the appropriateness of the item to the targeted construct and purpose of the questionnaire. Does the item relate to that domain of impairment or something else? Representativeness refers to the degree to which the item captures an important facet of the targeted construct. Does the item reflect a central feature of that domain of impairment or something tangential or unimportant? Clarity refers to the degree to which the item is likely to be understood by respondents. Would a college student interpret the item correctly or is the item confusing or vague?

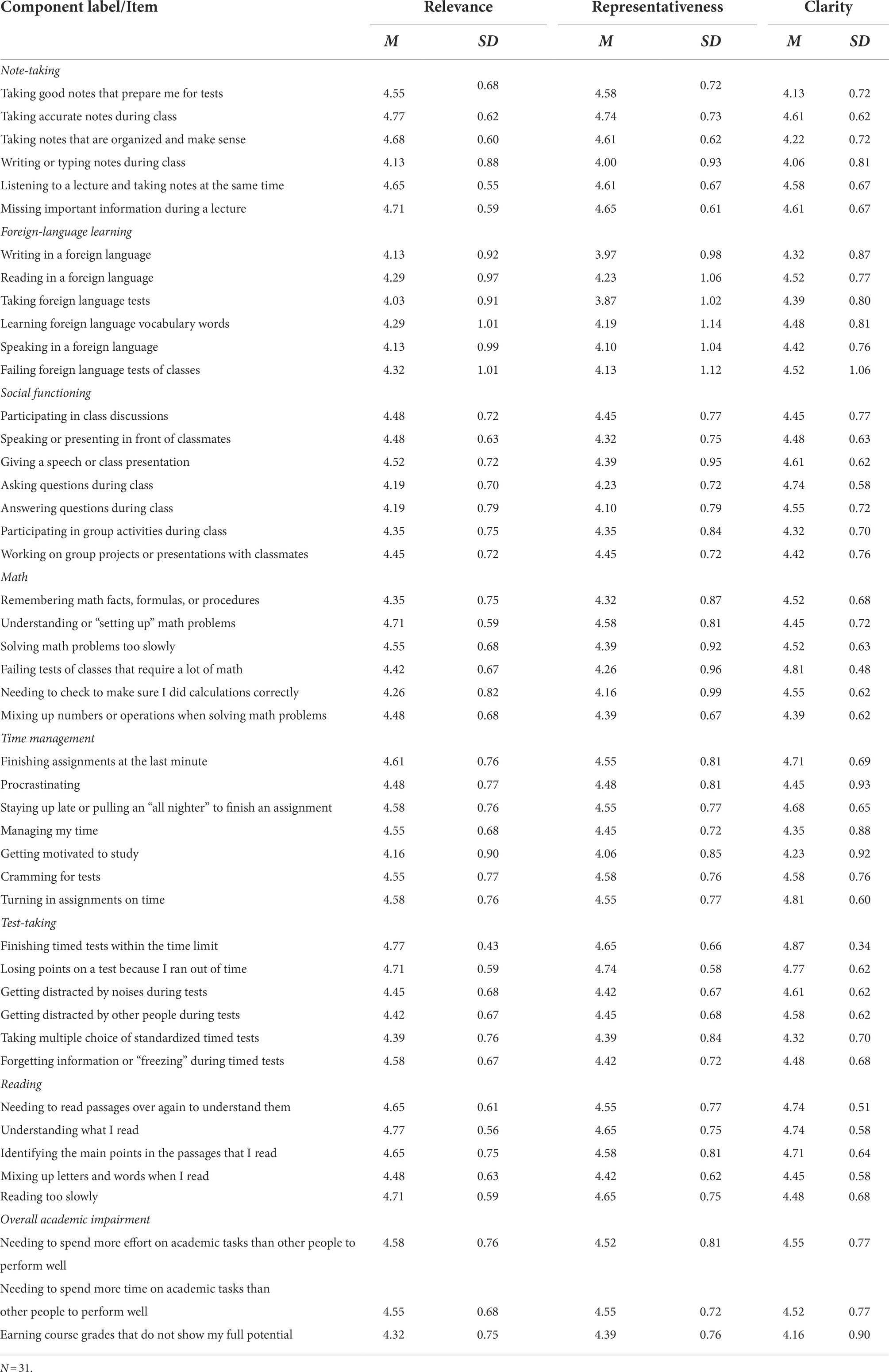

Results and discussion

Nearly all of the mean ratings (Table 4) exceeded 4 on a 5-point scale with standard deviations ≤1. Only two items received representativeness ratings <4: reading in a foreign language (M = 3.97, SD = 0.98) and taking foreign language tests (M = 3.87, SD = 1.02). This finding may be due to the fact that most non-apparent disabilities experienced by college students, such as ADHD and learning disabilities, typically do not impair foreign language learning (Sparks and Luebbers, 2018) and because some undergraduates are not required to complete foreign language classes to earn their degrees. Altogether, however, results support the content validity of the items.

Study 5: Temporal stability

Purpose

The purpose of Study 5 was to estimate the test–retest reliability of the AIM impairment composite scores. Most non-apparent disabilities experienced by college students should be relatively stable over time. For example, neurodevelopmental disorders such as ADHD, autism spectrum disorder (ASD), and learning disabilities are generally considered to be chronic conditions; although symptom severity may decrease over time as individuals learn compensatory strategies and participate in treatment, some impairment tends to persist (American Psychiatric Association, 2013). Similarly, the median duration of depressive episodes in young adults is 3 to 6 months, with roughly one-third showing a recurrent or chronic course. The median duration of most anxiety disorders tends to be longer (Penninx et al., 2011). Because of these findings, we expected consistency in students’ self-reported impairment over time.

Method

One hundred participants from Study 1 completed the measure a second time, 1 month after their initial assessment. The sample included 49% women, 47% men, and 4% non-binary gender identity. Participants’ racial identification included White (70%), Asian or Asian American (27%), Black or African American (9%), American Indian or Alaska Native (4%), and other (2%). Approximately 14% reported Hispanic or Latino ethnicity. Participants’ ages ranged from 18 to 55 years (M = 25.3, Mdn = 22.0, SD = 7.46). Participants were enrolled in public (82%) or private (18%) postsecondary institutions. Carnegie classification of these institutions included associate’s (5%), baccalaureate (8%), master’s (26%), and doctoral (61%).

Results and discussion

Bivariate correlations between composite scores across the 1-month interval were as follows: note-taking (0.83), foreign language learning (0.86), social-academic functioning (0.88), math (0.86), time management (0.89), test-taking (0.80), and reading (0.85). All correlations were significant and indicated acceptable temporal stability.

Study 6: Criterion-related validity

Purpose

The purpose of Study 6 was to assess the criterion-related validity of the measure. Criterion-related validity refers to the degree to which test scores relate to theoretically expected indicators of the construct the test purports to measure (Cronbach and Meehl, 1955). One way to examine criterion-related validity is to see if students’ self-reported impairment varies as a function of their disability status. If the domains assess academic impairment, students with a history of disabilities, students who have received academic accommodations, and students currently receiving treatment should report significantly greater impairment than students without this history or current treatment.

Method

We combined participants in Studies 1, 2, and 3 to render a dataset of 1,175 students. The gender distribution was 35% men, 60% women, and 5% non-binary gender identity. Race included White (71%), Asian or Asian American (20%), Black or African American (11%), American Indian or Alaska Native (1%), and other (4%). Approximately 14% reported Hispanic or Latino ethnicity. Participants’ ages ranged from 18 to 55 years (M = 22.5, Mdn = 21.0, SD = 5.26). These demographics approximate the population of degree-seeking undergraduates in the United States with respect to race, ethnicity, and age (de Brey et al., 2019). The representativeness of our sample’s gender identity to the US undergraduate population is difficult to ascertain because the National Center for Education Statistics does not provide the percent of students who report non-binary gender identity. However, the percent of students who reported non-binary gender identity in our study (5%) is nearly identical to the percent of adults aged 18 to 30 in the US population who report this gender identity (5.1%; Brown, 2022).

After completing the AIM, participants were asked a series of questions to assess their disability status and current functioning. First, participants were asked if they had ever been diagnosed by a physician, psychologist, or other licensed professional with any of the following conditions: ADD or ADHD; specific learning disability such as dyslexia, dyscalculia, or dysgraphia; ASD; hearing impairment including deafness; visual impairment including blindness; orthopedic impairment such as cerebral palsy; speech or language disorder; traumatic brain injury; intellectual disability; or none. These categories correspond to the disabilities recognized by the Individuals with Disabilities Education Act. Participants who reported that they were unsure about their history were not included in the analyses.

Second, participants were asked three questions about their history of accommodations: “In primary or secondary school, did you receive an IEP, Section 504 Plan, or special education?” “In high school, did you receive test accommodations on college entrance exams like the ACT or SAT?” and “In college, did you receive academic accommodations such as extra time on exams because of a disability?” Participants who reported that they were unsure about their history were not included in the analyses.

Finally, participants were asked if, in the past 12 months, they received treatment for any of the following conditions: ADD or ADHD; anxiety; depression; another mental health problem; or none of these conditions.

Results and discussion

Twenty percent of participants reported a history of at least one disability. This percentage is nearly identical to the percentage reported for the US undergraduate population (19.4%; US Department of Education, 2021). Approximately 10.8% of participants reported that they received an IEP, 504 Plan, or special education prior to college. This percentage is similar to the percentage of K-12 students enrolled in such programs 6 years earlier, when most of our participants attended primary or secondary school (12%; US Department of Education, 2022). Roughly 7.8% of participants reported current treatment for ADHD, a percentage similar to that of US undergraduates taking psychostimulants (6%; Eisenberg et al., 2022). Roughly 28.1% and 27.3% of participants reported anxiety and depression, respectively. These percentages also approximate the percentages of US undergraduates reporting anxiety (31%) or depressive (27%) disorders (Eisenberg et al., 2022).

We calculated average composite scores for each participant on the seven impairment domains. Specifically, we added the participant’s ratings on the items comprising each composite and divided by the number of items to generate an average score ranging from 1 to 5. Average composite scores have an advantage over total scores because they allow comparisons across composites that have an unequal number of items.
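
The averaging step can be written in a few lines. In this Python sketch, the item-to-domain key and the item labels are hypothetical placeholders, since the actual AIM item assignments are not listed here. Each domain's composite is the mean of its 1-5 ratings, so domains with different numbers of items share the same 1-5 metric.

```python
from statistics import mean

# Hypothetical item-to-domain key; item labels are placeholders, not AIM items
DOMAIN_ITEMS = {
    "reading": ["item01", "item02", "item03"],
    "math": ["item04", "item05", "item06", "item07"],
}


def average_composites(responses, key=DOMAIN_ITEMS):
    """Average each domain's 1-5 ratings so composites stay on the 1-5 scale."""
    return {domain: mean(responses[item] for item in items) for domain, items in key.items()}


student = {"item01": 4, "item02": 5, "item03": 3, "item04": 1, "item05": 2, "item06": 1, "item07": 2}
print(average_composites(student))
```

With total scores, the four-item math domain would have a larger maximum (20) than the three-item reading domain (15). Averaging keeps both composites between 1 and 5, so they can be compared directly.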

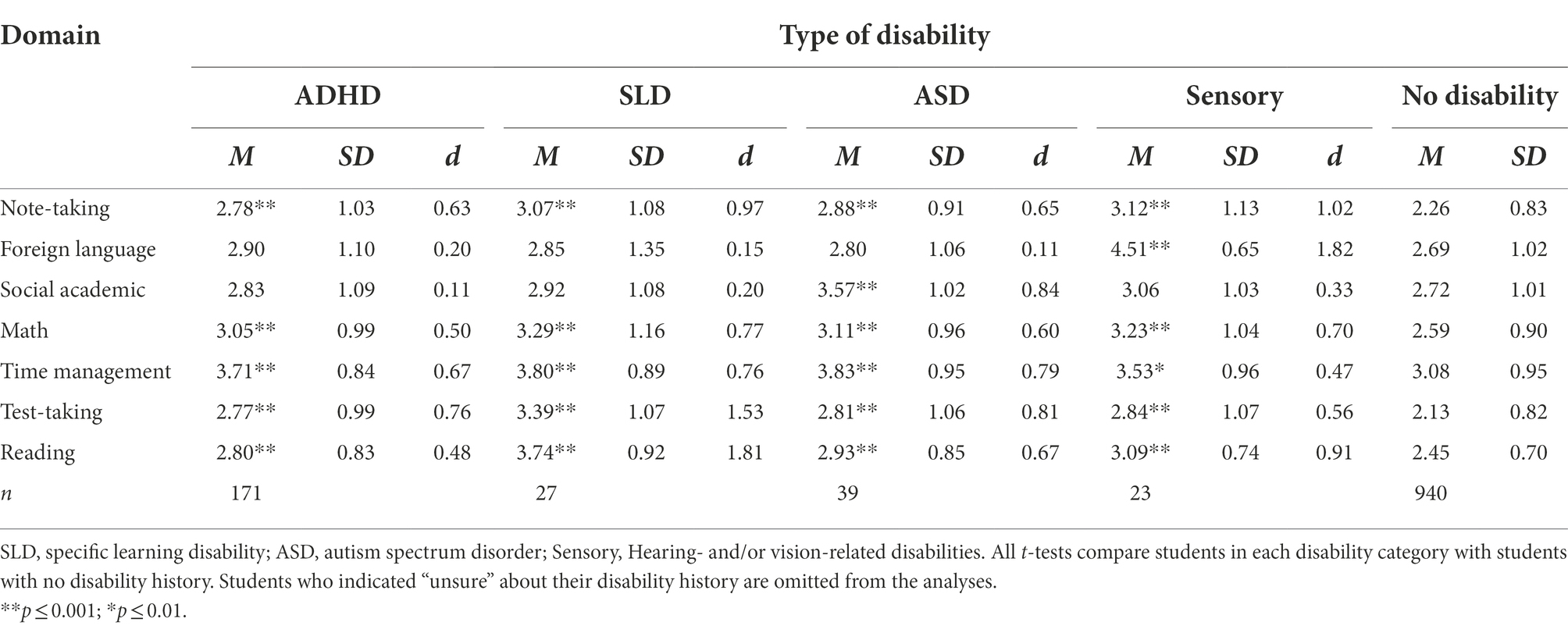

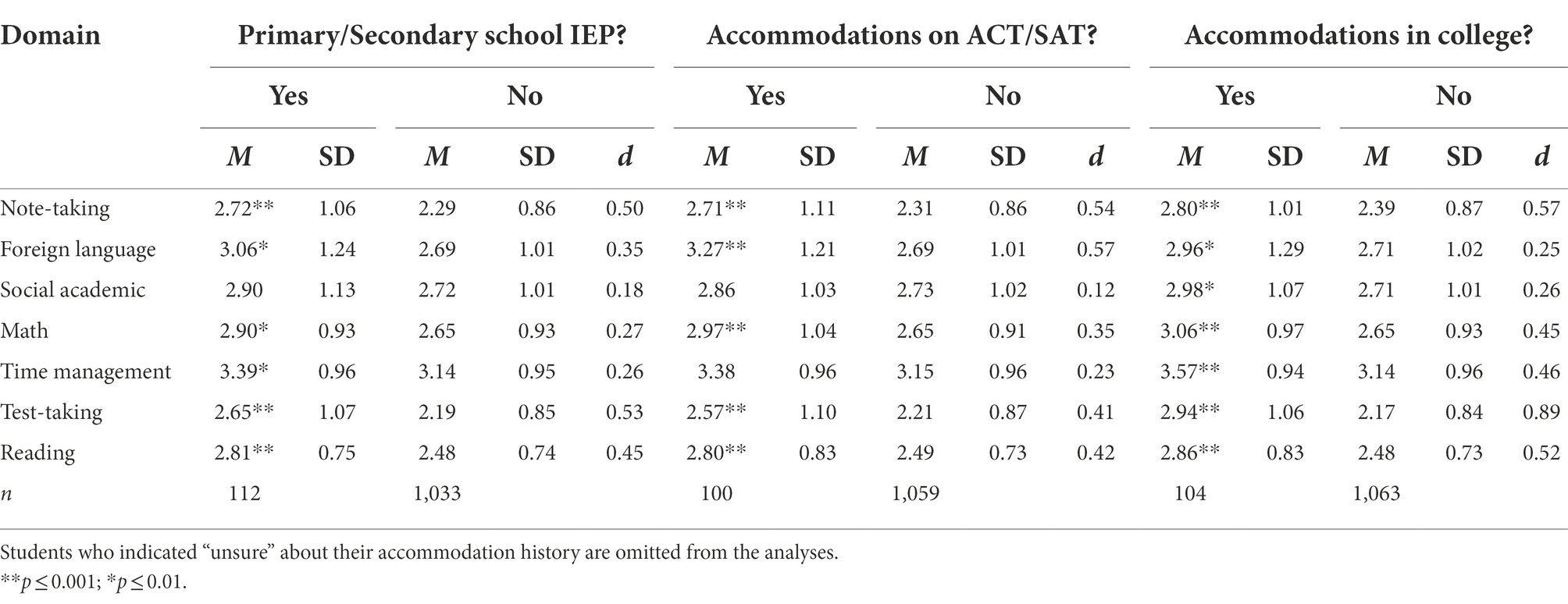

Next, we conducted a series of independent sample t-tests examining differences in average composite scores as a function of students’ disability history (Table 5), accommodation history (Table 6), and current treatment (Table 7). Each t-test compares students with a disability history, accommodation history, or current treatment to students without a disability history, accommodation history, or current treatment, respectively.

Results provided support for the criterion-related validity of the AIM impairment domains. Students with a history of disabilities, previous accommodations, or current treatment earned significantly higher scores on the reading, math, note-taking, test-taking, and time management domains than students without a history of disabilities, accommodations, or current treatment, respectively. The magnitude of these differences ranged from moderate (d ≥ 0.50) to large (d ≥ 0.80; Cohen, 1988).
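
The text does not specify whether pooled-variance (Student) or Welch t-tests were used. The sketch below assumes the pooled-variance version. It computes Cohen's d with the pooled standard deviation and labels d using Cohen's (1988) conventional cutoffs of 0.20, 0.50, and 0.80. Because Python's standard library lacks the t distribution, the sketch does not compute p-values; `scipy.stats.ttest_ind` provides them. The scores and function names are hypothetical.

```python
import math
from statistics import mean, variance


def pooled_t_and_d(group1, group2):
    """Independent-samples t (pooled variance), its df, and Cohen's d."""
    n1, n2 = len(group1), len(group2)
    diff = mean(group1) - mean(group2)
    # Pooled SD from the two sample variances (n - 1 denominators)
    pooled_sd = math.sqrt(((n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)) / (n1 + n2 - 2))
    t = diff / (pooled_sd * math.sqrt(1 / n1 + 1 / n2))
    d = diff / pooled_sd
    return t, n1 + n2 - 2, d


def effect_label(d):
    """Cohen's (1988) conventional labels for |d|."""
    size = abs(d)
    if size >= 0.80:
        return "large"
    if size >= 0.50:
        return "moderate"
    if size >= 0.20:
        return "small"
    return "negligible"


# Hypothetical reading composites (1-5 scale) for students with and without a disability history
with_history = [3.2, 2.8, 3.6, 2.5, 3.9, 3.1]
without_history = [2.1, 1.8, 2.6, 2.2, 1.9, 2.4]
t, df, d = pooled_t_and_d(with_history, without_history)
print(f"t({df}) = {t:.2f}, d = {d:.2f} ({effect_label(d)})")
```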

Evidence of the validity of the foreign language learning domain comes from significantly higher scores reported by students with sensory disabilities and a history of accommodations. These findings likely reflect the way hearing and/or vision problems can interfere with second-language learning and the need for accommodations to mitigate educational barriers for these students. Students with a history of other disabilities and students experiencing current mental health problems did not report impairment in foreign language learning on average, which is consistent with research showing that students with ADHD, learning disabilities, and similar conditions typically do not experience limitations in second-language learning and do not automatically require modifications to foreign language coursework (Sparks, 2016). However, in our sample, 19.9% of students with a history of ADHD, 18.5% of students with learning disabilities, and 12.8% of students with ASD reported impairment in foreign language learning ≥1.5 SD above the mean. Moreover, accommodations for impairment in foreign language learning are often provided to students with these conditions (Gregg, 2012). Consequently, it was important to retain this domain on the AIM so that clinicians could identify students who report significant impairment in foreign language learning and differentiate these students from those who report problems within normal limits.

Evidence for the validity of the social-academic impairment domain comes from significantly higher scores reported by students with ASD, anxiety, or depression. ASD is characterized by problems with social communication which often lead to difficulty interacting with others in academic settings (Ashbaugh et al., 2017). Similarly, students with anxiety and mood disorders often have trouble in peer relationships and interactions at school (Moeller and Seehuus, 2019).

Study 7: Response validity

Purpose

The purpose of our final study was to provide initial descriptive data regarding a potential response validity scale for our measure. Base rates for non-credible responding among college students participating in disability testing for ADHD range from 11% to 40% (Harrison et al., 2012), whereas approximately 15% of students seeking accommodations for learning disabilities typically provide non-credible data (Weis and Droder, 2019). There are also well-documented cases of students deliberately misreporting academic problems or performing poorly on diagnostic tests to obtain services (Harrison et al., 2012; Lovett, 2020). It is best practice for psychologists to administer symptom and performance validity tests as part of the assessment process (Sweet et al., 2021). Unfortunately, many psychologists do not include the results of validity testing in their reports, making it difficult for college disability professionals to judge the validity of students’ narratives and test data (Nelson et al., 2019; Weis et al., 2019).

We embedded eight items in our measure to estimate response validity. These items describe common tasks that appear to require academic skills, but that nearly all undergraduates would perform without difficulty. Respondents who engage in unsophisticated impairment exaggeration or feigning may report that many of these tasks are “often” or “always” a problem in contrast to their peers. Significant response validity composite scores (e.g., ≥ 1 or 2 SD above the mean) might indicate non-credible responding and suggest caution when interpreting the student’s self-reported impairment.

Method

The 1,175 undergraduates described in Study 6 participated in this study. Each participant completed the eight response validity items embedded within the AIM using the same response scale. The content of the eight response validity items is not provided to protect test integrity.

Results and discussion

Item mean scores ranged from 1.23 (SD = 0.52) to 1.65 (SD = 0.96) on a five-point scale, indicating that respondents generally regarded the activities described by these items as “never” or “seldom” a problem, with little variability. Cronbach’s alpha for the scale was 0.83, and the corrected item-total correlations indicated that removing any item would not improve internal consistency.
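
The alpha coefficient and related item diagnostics can be sketched as follows. The code assumes that responses are stored as one list per item, with respondents in the same order across lists. It uses the standard alpha formula and also computes corrected item-total correlations and alpha-if-item-deleted. The ratings are hypothetical, since the validity items are withheld.

```python
import math
from statistics import mean, variance


def cronbach_alpha(items):
    """Cronbach's alpha; items is a list of columns, one list of ratings per item."""
    k = len(items)
    totals = [sum(row) for row in zip(*items)]
    item_variance = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def corrected_item_total(items):
    """Correlate each item with the sum of the other items."""
    results = []
    for i, col in enumerate(items):
        rest = [sum(row) - row[i] for row in zip(*items)]
        results.append(pearson(col, rest))
    return results


def alpha_if_deleted(items):
    """Alpha recomputed with each item removed in turn (needs at least three items)."""
    return [cronbach_alpha(items[:i] + items[i + 1:]) for i in range(len(items))]


# Hypothetical ratings: 4 items x 6 respondents on a 1-5 scale
ratings = [
    [1, 1, 2, 1, 3, 1],
    [1, 2, 2, 1, 3, 1],
    [1, 1, 1, 2, 4, 1],
    [2, 1, 2, 1, 3, 2],
]
print(round(cronbach_alpha(ratings), 2))
print([round(r, 2) for r in corrected_item_total(ratings)])
print([round(a, 2) for a in alpha_if_deleted(ratings)])
```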

We calculated each participant’s response validity composite using mean ratings for the eight items on the scale. Results (M = 1.48, Mdn = 1.25, SD = 0.54) indicated that participants did not report impairment in the activities described on this scale. The distribution of composite scores was strongly positively skewed (skewness = 1.36). Approximately 86% of participants had mean composite scores ≤2.00, indicating that activities were “never” or “seldom” a problem. In contrast, only 2.2% of participants had composite scores ≥3.00, indicating that activities were “often” or “always” a problem.
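
The composite and its summary statistics can be sketched as shown below. The skewness formula is an assumption. Because the text does not name one, the code uses the adjusted Fisher-Pearson coefficient that many statistical packages report. The eight-item ratings are hypothetical, since the validity items are withheld.

```python
import math
from statistics import mean, median, stdev


def validity_composite(ratings):
    """Mean of a respondent's ratings on the response validity items (1-5 scale)."""
    return mean(ratings)


def adjusted_skewness(values):
    """Adjusted Fisher-Pearson skewness coefficient (G1)."""
    n = len(values)
    m = mean(values)
    m2 = sum((x - m) ** 2 for x in values) / n
    m3 = sum((x - m) ** 3 for x in values) / n
    g1 = m3 / m2 ** 1.5
    return math.sqrt(n * (n - 1)) / (n - 2) * g1


def describe(composites):
    """Summaries of the kind reported for the validity composite."""
    n = len(composites)
    return {
        "M": mean(composites),
        "Mdn": median(composites),
        "SD": stdev(composites),
        "skew": adjusted_skewness(composites),
        "pct_at_or_below_2": 100 * sum(c <= 2.0 for c in composites) / n,
        "pct_at_or_above_3": 100 * sum(c >= 3.0 for c in composites) / n,
    }


# Hypothetical respondents, each with eight item ratings
sample = [
    [1, 1, 1, 1, 1, 1, 1, 1],
    [1, 2, 1, 1, 2, 1, 1, 1],
    [2, 1, 1, 2, 1, 1, 2, 1],
    [1, 1, 1, 1, 1, 2, 1, 1],
    [4, 3, 3, 4, 3, 3, 4, 3],
]
composites = [validity_composite(r) for r in sample]
print(describe(composites))
```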

We conducted a series of paired-samples t-tests comparing mean composite scores on the response validity scale with mean composite scores on the seven impairment scales. Results were evaluated at p ≤ 0.007 to control for familywise error. All results were significant, with participants reporting higher scores on each of the impairment scales than on the response validity scale. Effect sizes were large, ranging from d = 0.89 (between the validity scale and test-taking impairment) to d = 1.74 (between the validity scale and time management impairment). Correlations between the validity scale and the impairment scales were small to moderate, ranging from 0.12 to 0.37.
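
This comparison can be sketched in Python as shown below. Two choices are assumptions rather than details given in the text. First, the threshold is computed as a Bonferroni correction (0.05 divided by the seven comparisons, about 0.0071). Second, the effect size is d_z (the mean difference divided by the standard deviation of the differences), which is only one of several ways to define d for paired data. The scores are hypothetical. P-values would come from a t-distribution routine such as `scipy.stats.ttest_rel`.

```python
import math
from statistics import mean, stdev

N_COMPARISONS = 7
ALPHA = 0.05 / N_COMPARISONS  # Bonferroni-adjusted threshold, about 0.0071


def paired_t_and_dz(impairment, validity):
    """Paired-samples t, its df, and d_z for each respondent's two composites."""
    diffs = [a - b for a, b in zip(impairment, validity)]
    mean_diff, sd_diff = mean(diffs), stdev(diffs)
    t = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    d_z = mean_diff / sd_diff
    return t, len(diffs) - 1, d_z


# Hypothetical composites for the same five respondents
time_management = [2.8, 3.1, 2.2, 3.5, 2.6]
validity = [1.2, 1.5, 1.1, 1.4, 1.3]
t, df, d_z = paired_t_and_dz(time_management, validity)
print(f"alpha = {ALPHA:.4f}; t({df}) = {t:.2f}; d_z = {d_z:.2f}")
```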

Altogether, our findings indicate that the response validity scale shows adequate internal consistency, that most participants did not report impairment on the activities described by the items on this scale, and that response validity composite scores were not strongly associated with academic impairment. Mean response validity composite scores ≥3 indicate statistically infrequent responding. When students earn high scores on the validity composite, professionals should interpret the results of the AIM impairment scores with extreme caution. Although professionals should always look for evidence of academic impairment before granting accommodations, objective data about students’ real-world functioning are especially necessary for students with elevated validity scores. High validity composite scores, combined with a lack of evidence of impairment from other sources (e.g., educational, medical, psychological records), would likely indicate non-credible responding.

It is important to note that the response validity composite provides only one estimate of unsophisticated non-credible responding and should be interpreted alongside the results of other measures of symptom or performance validity (see Harrison et al., 2021a,b for a review). Disability professionals should insist on at least one indicator of response validity before granting accommodations.

General discussion

The number of postsecondary students seeking accommodations has increased significantly, especially at America’s most elite institutions (Weis and Bittner, 2022). Students recognize the value of additional test time, access to technology during assignments and exams, and other modifications to their methods of instruction, testing, and curricular requirements. College disability professionals face the challenging task of reviewing students’ accommodation requests and deciding who will and will not receive services. Accommodation decision-making errors can have immediate, high-stakes consequences. Failing to accommodate students with disabilities risks discrimination and denies students services to which they are legally and ethically entitled. On the other hand, accommodating students without substantial impairment, however well-intentioned, may give these students an advantage over their classmates, waste limited resources, and erode academic integrity (Weis et al., 2021). We sought to develop a self-report rating scale that can assist professionals in rendering accommodation decisions in an evidence-based way.

The AIM is a brief, multidimensional, self-report measure of academic impairment in college students. It is unique in at least four respects. First, it assesses academic impairment in a manner consistent with the ADA conceptualization of a disability. High scores on the AIM reflect substantial limitations in major academic activities compared to most other people. The AIM’s impairment domains correspond to the major academic activities mentioned in the ADA and relevant to college students (US Department of Justice, 2016). Unlike most other measures, AIM items do not conflate diagnostic signs and symptoms with their functional consequences or include items that reflect attitudinal or motivational factors. As a result, the AIM yields information about students’ perceived academic impairment that is largely independent of their diagnostic labels or thoughts and feelings about school. The content validity of items is further supported by feedback from students with disabilities and researchers during the item-generation stage of test development and the ratings of college disability professionals regarding relevance, representativeness, and clarity.

Second, the AIM is a standardized, norm-referenced measure that allows professionals to compare the ratings of each student to the ratings of a large and diverse group of degree-seeking undergraduates in the standardization sample. College disability professionals are told to rely on students’ narratives and their subjective impressions to judge the need for accommodations (Association on Higher Education and Disability, 2012; Meyer et al., 2020). These methods favor students who are aware that accommodations are available in college, have the support of parents, teachers, and other adults to request them, and possess the social and self-advocacy skills needed to convince disability professionals that they are warranted. Students with disabilities who lack these resources may be denied the legal protection they deserve. First-generation students; international students; students from diverse cultural, economic, and linguistic backgrounds; or students with communication, language, or social skill deficits may be especially at risk (Bolt et al., 2011; McGregor et al., 2016; Waterfield and Whelan, 2017).

As a standardized measure, the AIM allows professionals to systematically collect information about each student’s academic functioning. It prompts students to rate their degree of impairment on a wide range of academic domains, rather than requiring students to recall and describe their academic difficulties during an interview. As a norm-referenced measure, the AIM allows professionals to compare a student’s functioning with other undergraduates and quantify the degree of impairment. Consequently, the AIM can help professionals differentiate students with substantial limitations in academic activities from students experiencing challenges that are typical of most undergraduates.
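
To illustrate norm-referenced scoring, the sketch below converts a student's domain composites to z-scores against normative means and standard deviations. It then flags domains at or above 1.5 SD, the cutoff used earlier in this article for foreign language learning. The normative values are hypothetical placeholders, not AIM norms. The percentile uses a normal approximation; because rating-scale composites can be skewed, a published norms table based on the empirical distribution would be preferable in practice.

```python
from statistics import NormalDist

# Hypothetical normative (mean, SD) pairs per domain; not actual AIM norms
NORMS = {
    "reading": (2.1, 0.8),
    "math": (2.3, 0.9),
    "time management": (2.6, 0.9),
}


def norm_referenced(domain, composite, norms=NORMS, cutoff_sd=1.5):
    """Return z-score, approximate percentile, and whether the score meets the cutoff."""
    mean_, sd = norms[domain]
    z = (composite - mean_) / sd
    percentile = 100 * NormalDist().cdf(z)  # normal approximation
    return z, percentile, z >= cutoff_sd


student = {"reading": 3.5, "math": 2.4, "time management": 2.9}
for domain, score in student.items():
    z, pct, elevated = norm_referenced(domain, score)
    print(f"{domain}: z = {z:.2f}, ~{pct:.0f}th percentile, elevated = {elevated}")
```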

Third, the AIM is a multidimensional instrument that allows professionals to assess functioning across a wide range of academic tasks. AIM composite scores have adequate internal consistency and temporal stability over 1 month. Factorial validity of the seven-part structure is supported by PCA and CFA using two large, independent samples. Impairment scores show adequate composite reliability. Their convergent validity is supported by high standardized factor loadings and AVEs. Their discriminant validity is supported by low to moderate intercorrelations among the composites, whose shared variances fall well below each domain’s AVE. Initial evidence of their criterion-related validity comes from significant and theoretically expected differences in composite scores as a function of students’ disability history, previous accommodations, and current treatment, with effect sizes in the moderate to large range. Altogether, these findings suggest that the AIM assesses seven distinct and interpretable domains of academic impairment. Professionals can use scores on these domains to identify specific areas in need of accommodation, skill development, or treatment.

Finally, the AIM includes a response validity scale that can help professionals identify non-credible ratings. Previous research has shown moderate rates of non-credible responding among college students seeking accommodations (Harrison et al., 2021a,b). Reasons for non-credible responding include a desire to gain academic accommodations or curricular modifications; access to stimulant medication for ADHD; eligibility for bursary funds, scholarships, and other financial resources available to students classified with disabilities; and the maintenance of a “disability identity” that can help students preserve self-esteem despite academic struggles (Harrison et al., 2021a,b). Unfortunately, college students can feign disabilities with little preparation and professionals are largely unable to detect non-credible responding without the results of testing (Johnson and Suhr, 2021). Elevated scores (i.e., ≥ 3) on the AIM’s response validity composite reflect atypical impairment, seen in only 2.2% of students in the standardization sample. High scores could indicate very severe impairment that is uncharacteristic of nearly all undergraduates or non-credible responding. In either case, elevated response validity scores should prompt professionals to collect additional information about students’ real-world functioning and interpret assessment results with caution.

Recommendations for professionals

The AIM can be used in applied settings in three ways. First, it can be used to screen students who seek accommodations but who lack recent documentation to support their request for services. In postsecondary settings, disability support professionals can use the AIM to determine if a student’s self-reported impairment is significant prior to referring the student for a formal evaluation. In clinical settings, psychologists might use the AIM early in the assessment process to determine if more extensive testing is necessary. If the student’s limitations are within normal limits, professionals may wish to discuss other ways to improve academic functioning rather than grant accommodations. For example, professionals may refer students to the college’s academic support service, tutors, or coaches to help students develop more effective ways to study, take notes, or manage their time. Alternatively, students might benefit from individual or group counseling to reduce academic stress, test anxiety, or social–emotional problems that can interfere with learning. If students’ reports indicate significant elevations on the response validity scale, professionals may wish to explore other reasons for students’ perceived need for services.

Second, for students with a documented disability history and current limitations, the AIM can be used to plan accommodations. Recall that accommodations are designed to provide students with disabilities access to educational opportunities equal to that of their classmates without disabilities (Lovett, 2014; Lovett and Lewandowski, 2015). Accommodations mitigate limitations that arise from the interaction between the student’s medical, neurodevelopmental, or psychiatric condition and the demands of the educational setting. Therefore, accommodations must be tailored to the specific environmental barriers experienced by the student rather than awarded in an arbitrary or non-specific manner. Unfortunately, psychologists and disability professionals often grant accommodations that are not connected to students’ functional limitations (Weis et al., 2017). For example, a student with dyslexia might be awarded additional time on all exams, including math exams, despite having impairment only in reading. A student with ADHD might be granted a waiver for foreign language coursework despite having no impairment in second-language learning. The AIM can help professionals select accommodations that target specific limitations; consequently, students receive accommodations that are most likely to reduce barriers to learning while being held to the same academic expectations as their peers in areas where they do not experience significant impairment. In doing so, the AIM can help professionals focus on the purpose of accommodations, which is to guarantee access rather than success in college.

Third, the AIM can be used to monitor accommodation effectiveness. It is critical that disability professionals evaluate each accommodation they assign to determine whether it effectively removes functional barriers to students’ learning. The Standards for Educational and Psychological Testing warn, “the effectiveness of a given accommodation plays a role in determinations of appropriate use. If a given accommodation or modification does not increase access…there is little point in using it” [American Educational Research Association, American Psychological Association, and National Council on Measurement in Education (AERA/APA/NCME), 2014, p. 62]. Assessing accommodation effectiveness is particularly important given that so few randomized controlled studies have examined the efficacy of accommodations for adults with disabilities. The AIM’s brevity and temporal stability allow professionals to periodically reassess students’ functioning to determine the effectiveness of accommodations over the course of the academic year. Evidence of effectiveness might come from AIM impairment scores within normal limits after the implementation of accommodations. Elevated scores after the provision of accommodations could suggest that the accommodations are not being used consistently, that they do not fully mitigate the student’s disability, or that there are other reasons for the student’s academic problems.
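The reassessment logic described in this paragraph can be sketched as a comparison of domain scores before and after accommodations are implemented. This is a minimal illustration under stated assumptions, not part of the AIM or its calculator: it assumes T scores (mean 50, SD 10) have already been computed for each impairment domain, treats T ≥ 60 (the lower of the cut-offs the authors describe for the AIM Calculator) as the elevation threshold, and uses hypothetical placeholder domain labels. The function name `review_accommodations` is likewise hypothetical.

```python
from typing import Dict

# Assumed elevation threshold: 1 SD above the normative mean on the T-score scale.
ELEVATED_T = 60.0


def review_accommodations(baseline: Dict[str, float],
                          follow_up: Dict[str, float]) -> Dict[str, str]:
    """Classify each domain that was elevated at baseline by its follow-up T score."""
    summary: Dict[str, str] = {}
    for domain, t_before in baseline.items():
        if t_before < ELEVATED_T:
            continue  # not an impaired (targeted) domain at baseline; nothing to evaluate
        t_after = follow_up.get(domain)
        if t_after is None:
            summary[domain] = "not reassessed"
        elif t_after < ELEVATED_T:
            summary[domain] = "within normal limits after accommodation"
        else:
            # Mirrors the three explanations in the text: inconsistent use,
            # incomplete mitigation, or other causes of academic problems.
            summary[domain] = "still elevated: review use, fit, or other causes"
    return summary


if __name__ == "__main__":
    # Hypothetical T scores for illustration only; labels are placeholders.
    baseline = {"domain_A": 68.0, "domain_B": 55.0, "domain_C": 63.0}
    follow_up = {"domain_A": 57.0, "domain_B": 54.0, "domain_C": 66.0}
    for domain, status in review_accommodations(baseline, follow_up).items():
        print(f"{domain}: {status}")
```

A domain that falls below the threshold at follow-up is consistent with, but does not prove, an effective accommodation; a domain that remains elevated still has to be explained through the interactive process with the student.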

To help professionals administer, score, and interpret the instrument, we developed the AIM Calculator (neuropsychology.denison.edu/tests). Professionals can either administer the AIM digitally using the calculator or print the AIM from the calculator and transfer students’ ratings into it. The calculator converts students’ responses to standardized T scores and percentile ranks using data from the standardization sample. Scores at least 1 or 2 standard deviations above the mean on any of the AIM impairment domains (i.e., T scores ≥60 or ≥70) suggest a substantial limitation in academic activities compared to most other people and a possible need for accommodation. The calculator also provides a narrative and graphical interpretation of students’ functioning on each of the seven impairment domains and flags students who report potentially problematic alcohol or other substance use. Unlike commercial measures of academic functioning (e.g., KLDA, LASSI), the AIM, the calculator, and the interpretive report are free to use.
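The conversion the calculator performs can be illustrated with the standard linear T-score transformation, T = 50 + 10z, where z = (raw - mean) / SD is computed from the normative sample. The sketch below is an illustration under assumptions rather than the calculator's actual algorithm: the normative mean and SD are hypothetical placeholders, not published AIM norms; percentile ranks are approximated from the normal curve, whereas the calculator draws on the standardization sample itself (and may use empirical or normalized scores); and the function names are hypothetical. Only the ≥60 and ≥70 cut-offs come from the text.

```python
from statistics import NormalDist


def to_t_score(raw: float, norm_mean: float, norm_sd: float) -> float:
    """Linear T score: rescales a raw score so the norm group has mean 50, SD 10."""
    z = (raw - norm_mean) / norm_sd
    return 50.0 + 10.0 * z


def normal_percentile(t: float) -> float:
    """Percentile rank a T score would have if scores were normally distributed."""
    return 100.0 * NormalDist(mu=50.0, sigma=10.0).cdf(t)


def interpret(t: float) -> str:
    """Apply the T >= 60 and T >= 70 cut-offs (1 and 2 SD above the mean)."""
    if t >= 70.0:
        return "at least 2 SD above the mean"
    if t >= 60.0:
        return "at least 1 SD above the mean"
    return "within normal limits"


if __name__ == "__main__":
    # Hypothetical norms for a single domain: mean 12, SD 4.
    t = to_t_score(18, norm_mean=12, norm_sd=4)
    print(f"T = {t:.0f}, percentile rank ~ {normal_percentile(t):.0f}, {interpret(t)}")
    # prints: T = 65, percentile rank ~ 93, at least 1 SD above the mean
```

Because a linear T score preserves the shape of the raw-score distribution, percentile ranks taken from the normal curve are only approximations when domain scores are skewed, which is one reason norm-referenced tools often report percentiles directly from the standardization sample.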

Although the AIM provides a norm-referenced assessment of a student’s functioning across multiple academic domains, it is not designed to replace the interactive accommodation decision-making process between the student and the disability resource professional. A significant score on an AIM composite suggests the need for accommodations when performing certain academic activities, but it cannot by itself determine which accommodations are most appropriate. The student and disability resource professional must work together to consider the specific demands of the academic task and barriers to participation, the nature of the student’s disability and preferences for accommodations, the effectiveness of the accommodation in mitigating the student’s disability, and whether the accommodation fundamentally alters the activity itself. Indeed, when addressing disability determination in the workplace, the US Equal Employment Opportunity Commission (2022) warns, “The Americans with Disabilities Act avoids a formulistic approach to accommodations in favor of an interactive discussion between the employer and the individual with a disability.” The US District Court’s initial ruling in Doe v. Skidmore College (No. 1:17–1,269) suggests that the same interactive process is required in postsecondary settings. Consequently, the AIM helps to identify students with substantial limitations in specific academic domains but refrains from prescribing specific accommodations in an actuarial manner.

Limitations and future directions

Professionals must be mindful of the AIM’s limitations. Like all self-report measures, the AIM is susceptible to reporting biases (Nelson and Lovett, 2019) and non-credible responding (Suhr et al., 2017; Harrison et al., 2021a,b). Consequently, professionals must corroborate elevated AIM composite scores with other indicators of real-world impairment. Students’ educational, medical, or psychological records should provide clear evidence of barriers to learning and/or test-taking resulting in normative deficits in functioning compared to most other adults in the general population.

It is equally important to remember that elevated AIM scores and real-world academic problems do not necessarily indicate the existence of a disability or merit accommodations. Professionals must rule out alternative causes for students’ academic difficulties. Some students engage in maladaptive alcohol and other drug use that contributes to low achievement.2 Other students experience sleep problems that cause daytime fatigue and low grades. Many students lack basic academic skills because of disruptions to education caused by the pandemic. Perhaps most commonly, students often face family, financial, interpersonal, or occupational stressors that compromise their academic performance. However well-intentioned, granting accommodations to these students does them a disservice by ignoring the causes of their academic troubles. Professionals can use students’ responses on the AIM as an invitation to explore alternative reasons for students’ impairment and provide interventions that target the source of their academic problems.

Professionals must also be mindful of our limited knowledge regarding the efficacy of many commonly granted accommodations (Madaus et al., 2018). We know very little about the way many academic accommodations affect students’ learning and the validity of test scores (Lovett and Nelson, 2021). For example, additional time on exams is the most commonly granted accommodation for postsecondary students (Gelbar and Madaus, 2021; US Government Accountability Office, 2022); however, not all students with ADHD, anxiety, or depression experience deficits in test-taking speed. Moreover, providing 50% or 100% additional time to any student, regardless of disability status, can yield test scores that exceed those of students without disabilities who complete exams under normal time limits (Miller et al., 2015), and such extensions may not be necessary to mitigate impairment (Holmes and Silvestri, 2019; Lindstrom et al., 2021). We know even less about the effects of other types of accommodations, such as providing a separate testing location, presenting exams in different formats, allowing different methods of responding or grading, and granting waivers or substitutions for required coursework. Perhaps most concerning, we do not know how the provision of accommodations affects students’ long-term educational attainment or the quality and safety of the services they provide to the public after graduation. Ideally, accommodations should be granted on the basis of evidence supporting their effectiveness, either from empirical studies of individuals with similar disabilities or from objective data generated by the student [American Educational Research Association, American Psychological Association, and National Council on Measurement in Education (AERA/APA/NCME), 2014].

Finally, accommodation planning must not overshadow evidence-based treatment. The past decade has witnessed a marked increase in the number of time-limited psychosocial treatments for mental health problems in college students. For example, several cognitive-behavioral treatment packages have been developed for postsecondary students with ADHD who want to improve their studying, note-taking, and time management skills (Anastopoulos et al., 2021; Solanto and Scheres, 2021). Similarly, cognitive-behavioral, interpersonal, and mindfulness-based interventions have been developed to help young adults overcome academic and social problems caused by anxiety and mood disorders (Cuijpers et al., 2016; Bamber and Morpeth, 2019). Whereas accommodations are often necessary to help students with these conditions access educational opportunities in the short term, they do not teach new skills or improve students’ long-term social–emotional wellbeing.

We hope our research will prompt future studies investigating the validity and clinical utility of the AIM. For example, considerable attention has been directed in recent years to the need for psychologists and college disability professionals to look for evidence of response and/or performance validity before making accommodation decisions (Harrison et al., 2021a,b). Future research might further validate the AIM’s response validity scale using a simulation study to examine its ability to identify non-credible responding. Other research might determine the optimal cut scores on each of the AIM impairment scales to differentiate students with and without disabilities. Finally, future research might examine the AIM’s sensitivity to the benefits of accommodations. Ideally, our measure might be used to routinely monitor the effectiveness of accommodations on students’ real-world functioning and provide systematic feedback to professionals in everyday practice.

Data availability statement

The datasets presented in this article are not readily available because we do not have permission to share user-level data with others. Requests to access the datasets should be directed to RW, weisr@denison.edu.

Ethics statement

The studies involving human participants were reviewed and approved by the Denison University IRB. The patients/participants provided their written informed consent to participate in this study.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

This research was supported by grants from the Laurie and David Hodgson Endowment for Disability Research and the Denison University Research Foundation.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. ^Ideally, norms would be collected from adults in the general population rather than from college students. However, most people in the general population do not regularly engage in academic activities such as taking notes in class, giving a class presentation, or completing time-limited exams, and items reflecting these activities would be inapplicable to these respondents.

2. ^The two AIM items that assess this behavior can screen for substance use problems and provide an avenue by which professionals might gather additional information and provide assistance.

References

American Educational Research Association, American Psychological Association, and National Council on Measurement in Education (AERA/APA/NCME) (2014). Standards for Educational and Psychological Testing. Washington, DC: AERA.

American Psychiatric Association (2013). Diagnostic and Statistical Manual of Mental Disorders, 5th Edn. Arlington, VA: American Psychiatric Publishing.

Anastopoulos, A. D., Langberg, J. M., Eddy, L. D., Silvia, P. J., and Labban, J. D. (2021). A randomized controlled trial examining CBT for college students with ADHD. J. Consult. Clin. Psychol. 89, 21–33. doi: 10.1037/ccp0000553

Ashbaugh, K., Koegel, R., and Koegel, L. (2017). Increasing social integration for college students with autism spectrum disorder. Behav. Dev. Bull. 22, 183–196. doi: 10.1037/bdb0000057

Association on Higher Education and Disability (1994). Best Practices: Disability Documentation in Higher Education. Huntersville, NC: Author.

Association on Higher Education and Disability (2012). Supporting Accommodation Requests: Guidance on Documentation Practices. Huntersville, NC: Author.