- ¹Centro de Investigação em Psicologia para o Desenvolvimento, Instituto de Psicologia e Ciências da Educação, Universidade Lusíada, Porto, Portugal

- ²Centro de Psicologia da Universidade do Porto, Faculdade de Psicologia e Ciências da Educação da Universidade do Porto, Porto, Portugal

- ³Centro de Investigação em Psicologia, Escola de Psicologia, Universidade do Minho, Braga, Portugal

Fluency is a central skill for successful reading. Research has provided evidence that systematic reading fluency interventions can be effective; however, little research has compared the effects of interventions delivered remotely with those delivered face-to-face. This study investigated the efficacy of a systematic, standardized intervention to promote reading fluency in third-grade students (N = 207) during the COVID-19 pandemic. The study used a pretest, posttest, and follow-up design, with two intervention groups (remote vs face-to-face) and a control group. The intervention groups received 20 intervention sessions (2 sessions per week), each lasting approximately 50 min. Word reading accuracy, text reading accuracy, and fluency were measured at each of the three assessment points. In both intervention groups, all reading measures showed gains from pretest to posttest. The results also suggested that the intervention was similarly effective in the remote and face-to-face modalities. These findings highlight the relevance of systematic interventions for increasing reading fluency and support remote delivery as an adequate alternative to face-to-face intervention.

Introduction

Learning to read is perhaps the most challenging goal that children face in the first years of elementary school. The first reading goal is the acquisition of word- and text-level decoding (Suggate, 2016). With instruction and training, this process helps the child become an increasingly fluent reader. Reading fluency is commonly defined as the ability to read text quickly, accurately, and with prosody (National Institute of Child Health and Human Development, 2000; Zimmerman et al., 2019), and it is one of the foundational skills for reading competency (National Governors Association Center for Best Practices and Council of Chief State School Officers, 2010; Gersten et al., 2020). Accordingly, there is broad consensus that reading fluency has three main elements: word reading accuracy, word reading speed, and prosody in oral reading (Rasinski et al., 2011).

Word recognition has two major components: accuracy and automaticity (National Institute of Child Health and Human Development, 2000). Accuracy refers to the reader’s precision in orally representing words from their orthographic forms (National Institute of Child Health and Human Development, 2000; Zimmerman et al., 2019). However, accuracy alone is insufficient for reading fluency. The speed and ease of word recognition (i.e., automaticity) develop later, through reading practice (National Institute of Child Health and Human Development, 2000), as words become embedded in the learner’s repertoire of instantly recognized words (Ehri, 1998). When word and sentence recognition is effortless, more cognitive resources are available for the higher-level thinking processes that are often decisive for reading comprehension (LaBerge and Samuels, 1974; Zimmerman et al., 2019).

Although accuracy and automaticity are essential for reading fluency, they do not account for all of the variance in fluency. A third element of fluency is reading prosody, which can be described as the ability to read aloud with expression and intonation and is often considered to be “the music of speech” (Wennerstrom, 2001). Adding prosody to the definition of fluency helped to dismantle the idea that a good reader is simply one who reaches the end of the text quickly (Dowhower, 1991). Instead, prosody implies that a “good reader” reads in the same manner that he or she speaks; that is, with appropriate rhythm and intonation, which helps the listener understand the content (Kuhn et al., 2010; Calet et al., 2017). Prosody comprises the timing, smoothness and pace, expression, volume, and phrasing that speakers use to convey meaning (Rasinski and Padak, 2005; Calet et al., 2017; Zimmerman et al., 2019; Godde et al., 2020).

Prior studies have identified various features associated with student improvement in reading fluency (Wanzek et al., 2016). Evidence supports the use of systematic, explicit instruction and indicates that gains in fluency are influenced by the amount of time students spend (a) practicing this skill, (b) listening to reading models (teacher-assisted reading training), (c) receiving immediate feedback, and (d) recording and listening to their own reading (Grabe, 2010; Gersten et al., 2020; Beach and Philippakos, 2021). Studies have also shown that “repeated reading” fluency interventions improve oral reading fluency, including in struggling readers (Meeks et al., 2014; Martens et al., 2019; Zimmerman et al., 2019). Repeated reading strategies involve students reading a grade-level text multiple times, either for a defined number of readings or until they reach a predetermined fluency criterion. However, research shows that gains from this approach generalize poorly to new texts (Martens et al., 2019; Zimmerman et al., 2019).

Nonrepetitive reading fluency interventions have been recommended to overcome this limitation of repeated reading. Nonrepetitive reading strategies involve the same procedures as repeated reading but apply them to different texts, with an implicit focus on the story as a whole rather than on specific text extracts. In this way, students encounter a wider range of new words, genres, and text structures, which may facilitate the transfer of fluency gains to unpracticed texts (Kuhn, 2005; Zimmermann et al., 2021). Current literature advises complementing nonrepetitive reading with a broad reading strategy in which students read passages from multiple texts with support, modeling, and correction from an interventionist (Lembke, 2003; Zimmermann et al., 2021). Text genre and text length also seem to contribute to fluency outcomes; intervention studies have mainly used literary texts, informational texts, or a combination of the two, and short texts (range = 94–600 words per text) (Zimmermann et al., 2021).

Although fluency interventions have a much stronger empirical basis (Zimmerman et al., 2019; Gersten et al., 2020; Beach and Philippakos, 2021), research has also focused on accuracy. According to Szadokierski et al. (2017), the most common method for assessing reading accuracy is to ask a student to read instructional material in three 1-min tests and determine the percentage of words read correctly. They suggest that if this percentage falls below 93%, an intervention that targets accuracy is needed. Begeny and Martens (2006) found that direct methods, such as teaching students the alphabetic principle and having them practice reading a large number of words, improved the reading accuracy of third-grade students.
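The 93% accuracy criterion described by Szadokierski et al. (2017) can be sketched as a small screening function. This is a hypothetical illustration, not a procedure used in the present study: pooling the three 1-min readings into one percentage (rather than, say, taking their median) is our assumption, and the names `Probe`, `percent_correct`, and `needs_accuracy_intervention` are illustrative.

```python
from dataclasses import dataclass

ACCURACY_CRITERION = 93.0  # percent of words read correctly (Szadokierski et al., 2017)


@dataclass
class Probe:
    """One 1-min reading of instructional material (hypothetical structure)."""
    words_correct: int
    words_read: int  # total words attempted during the minute


def percent_correct(probes: list[Probe]) -> float:
    """Pooled percentage of words read correctly across all probes (assumption)."""
    total_read = sum(p.words_read for p in probes)
    if total_read == 0:
        raise ValueError("no words were read")
    total_correct = sum(p.words_correct for p in probes)
    return 100.0 * total_correct / total_read


def needs_accuracy_intervention(probes: list[Probe]) -> bool:
    """True when pooled accuracy falls strictly below the 93% criterion."""
    return percent_correct(probes) < ACCURACY_CRITERION
```

For example, three readings with 45, 47, and 44 correct words out of 50 each give 136/150, or about 90.7% correct, which falls below the criterion.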

Several recent meta-analyses indicate that many variables influence the effectiveness of reading fluency strategies and interventions (see Wanzek et al., 2016; Gersten et al., 2020; Kim et al., 2020; Zimmermann et al., 2021), including the duration of the intervention, session length, number of sessions, and group size. These meta-analyses consistently find that scripted, short interventions (i.e., more than 10 and fewer than 100 sessions) delivered to small groups of students (i.e., two to five students) with similar academic needs, in sessions of 20–40 min (or 10–60 min) held three to five times a week, are effective in promoting reading outcomes, including reading fluency (Wanzek et al., 2016; Gersten et al., 2020; Kim et al., 2020). Kim et al. (2020) and Wanzek et al. (2016) also suggest that universal screening, as part of the Response to Intervention (RTI) process, is an appropriate method for identifying at-risk students, who can then receive intensive small-group intervention that produces positive effects (Fuchs and Fuchs, 2006; Gilbert et al., 2012).

A noteworthy variable considered in meta-analyses of reading fluency interventions is the type of interventionist (i.e., the person who implements the intervention). Reading interventionists can include certified teachers, paraprofessionals, researchers, reading specialists, university students working with researchers, psychologists, and other educational specialists (Gersten et al., 2020; Kim et al., 2020). Several studies have found that effect sizes from interventions delivered by different types of interventionists did not differ significantly (Balu et al., 2015; Scammacca et al., 2015; Suggate, 2016; Wanzek et al., 2016). However, a meta-analysis by Kim et al. (2020) identified larger effect sizes for groups taught by teachers. This evidence suggests that adequate training of reading interventionists is a critical condition for increasing the likelihood of students’ growth in reading. Nonetheless, Wanzek et al. (2016) indicate that less extensive interventions have the potential to be implemented by a variety of interventionists.

Reading interventions aim to improve each student’s reading (intraindividual gains) but also to reduce differences in reading between students (interindividual gains). Pfost et al. (2014) summarize three developmental patterns of early interindividual differences in reading that are associated with different theoretical approaches. The first is consistent with the so-called Matthew effect (Stanovich, 1986): gains in reading across time are faster for better readers and slower for poor readers, so the difference between them grows over time. In contrast, the second developmental pattern draws on the compensatory model or developmental-lag model of reading development (Francis et al., 1996) and assumes “a negative relationship between students’ initial reading level and the developmental gains leading to decreasing differences in reading over the course of development” (Pfost et al., 2014, p. 205). The third developmental pattern assumes full stability (i.e., no increase or decrease) in the differences between good and poor readers.

With an unprecedented impact on education systems, COVID-19 reinforced concerns about educational equity and raised concerns about the quality of teaching and learning in online environments, as well as about possible learning deficits and losses resulting from long-term school closures. New concerns also arose about how to design reading instruction and interventions, even though previous studies had already explored models of service delivery other than face-to-face in several domains of healthcare and education (Barbour and Reeves, 2009; Vasquez et al., 2011; Valentine, 2015). In Portugal, the first lockdown began on March 18th, 2020, and elementary school students only returned to school in September. Between March and July, teachers’ responses to the school closures ranged from sending worksheets via WhatsApp or email to using Zoom, Google Classroom, Teams, or similar platforms to host classes and provide assignments (Carvalho et al., 2020).

Research on how the pandemic may have influenced reading performance is still scarce, for both typical and struggling readers, and only a few studies have examined the effects of online education and interventions. For example, during the COVID-19 outbreak, Beach et al. (2021) implemented an intervention in foundational reading skills, including fluency. This reading intervention was delivered in a virtual format and involved 15–21 h of synchronous instruction with groups of two or fewer students. During this remote intervention, students maintained their performance in fluency skills and improved their scores on curriculum-based mastery tests. Duijnen (2021) also describes a synchronous online fluency intervention with three struggling second- and third-grade readers who had similar reading performance. The students took part in an 8-week small-group intervention comprising 15 sessions of 45 min each. This study identified growth in word reading accuracy, decoding skills, and reading comprehension as a result of the intervention. Alves and Romig (2021) adapted a face-to-face instruction plan for students with reading disabilities into an online synchronous intervention, proposing that virtual instruction can be an important service delivery model in various situations beyond the pandemic. Despite these promising findings, more studies are needed to investigate the efficacy of online reading interventions.

The Present Study

The present study aimed to contribute to the current literature by implementing a short-term, standardized intervention to promote reading fluency. Given the conditions imposed by the COVID-19 outbreak and first lockdown, the intervention was designed to be implemented in two modalities: remote and face-to-face. The research question guiding this study was whether fluency training had an impact on the reading accuracy and fluency skills of third-grade students and whether the effects differed between the face-to-face and remote modalities. Three rounds of data collection were used to measure the impact of the intervention. The first round took place before the intervention began, at the beginning of third grade. The second round occurred immediately after the intervention ended, in April. The final round, a follow-up assessment in June, examined whether the effects on reading performance were maintained without further intervention.

Materials and Methods

Participants

In total, 207 third graders (94.1% Portuguese, 3.5% Brazilian, 1.2% Ukrainian and 1.2% Romanian; 51.2% female; mean age = 7.97 years; SD = 0.57; 94.1% had Portuguese as a primary language) participated in this study. These students were attending seven state-funded schools in the northern (64.2%), central (34.4%) and southern (1.4%) regions of Portugal.

Because of the limited human resources available for implementing the intervention, only 59% of the eligible students in these schools participated in this study. Students were selected randomly from the schools’ databases and then randomly assigned to two conditions: intervention (n = 121; 58.5%) and control (n = 86; 41.5%). Students assigned to the intervention condition were screened for computer and internet access at home and for their parents’ availability for the intervention schedule. Depending on whether these conditions were met, students were then assigned to either the face-to-face intervention group (n = 45) or the remote intervention group (n = 76). The remote intervention group comprised 49.3% males and 50.7% females (mean age = 8.00 years; SD = 0.52). The face-to-face intervention group comprised 43.8% males and 56.3% females (mean age = 7.94 years; SD = 0.56). The control group comprised 50.7% males and 49.3% females (mean age = 7.88 years; SD = 0.37). The groups did not differ significantly in age (F (2,175) = 1.093, p > 0.05) or gender (χ² (2) = 0.436, p > 0.05).

Measures

Word Reading Accuracy. We used the Test of Word Reading (Chaves-Sousa et al., 2017a; Chaves-Sousa et al., 2017b), designed for primary school students, to assess word reading accuracy. This measure, administered using a computer application, has four equated test forms, each comprising 30 items (single words). Each word is presented in isolation, in randomized order, on a computer screen. During administration, participants are asked to read each word aloud (untimed). Word reading accuracy (correct vs incorrect) was recorded by the evaluator, and a total score was computed by summing the number of words read correctly. This raw score was then converted into an equated score. The equated scores of all test forms are placed on a single scale, so that scores obtained with different test forms can be compared. During test development, vertical scaling was performed using a non-equivalent groups design with anchor items (Viana et al., 2014; Cadime et al., 2021): each test form included items specific to its grade level as well as items common to the adjacent grades’ test forms. This design allowed the scores to be linked and placed on a single scale, enabling direct comparison. The estimated reliability coefficients (person separation reliability, Kuder–Richardson formula 20, and item separation reliability) were higher than 0.80 for all test forms. As evidence of validity, scores on the test correlate with scores on other tests of word reading, oral reading fluency, reading comprehension, vocabulary, and working memory, and with teachers’ assessments of reading skills (Chaves-Sousa et al., 2017a).
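Because each item of the Test of Word Reading is scored as correct or incorrect, the Kuder–Richardson formula 20 mentioned above applies: KR-20 = k/(k − 1) · (1 − Σ pⱼqⱼ / σ²ₓ), where k is the number of items, pⱼ is the proportion of examinees who read item j correctly, qⱼ = 1 − pⱼ, and σ²ₓ is the variance of total scores. The sketch below is a textbook implementation on made-up data, not the test developers’ code; using the population variance for σ²ₓ is our assumption, chosen to match the population form of pⱼqⱼ.

```python
from statistics import pvariance


def kr20(responses):
    """Kuder-Richardson formula 20 for dichotomously scored items.

    responses: one row per examinee, each row a list of 0/1 item scores.
    """
    n, k = len(responses), len(responses[0])
    if k < 2:
        raise ValueError("KR-20 needs at least two items")
    totals = [sum(row) for row in responses]
    var_total = pvariance(totals)  # population variance of total scores
    if var_total == 0:
        raise ValueError("total scores have zero variance")
    sum_pq = 0.0
    for j in range(k):
        p = sum(row[j] for row in responses) / n  # proportion correct on item j
        sum_pq += p * (1 - p)
    return (k / (k - 1)) * (1 - sum_pq / var_total)
```

With four examinees scoring [1, 1, 1], [1, 1, 0], [1, 0, 0], and [0, 0, 0], the item terms sum to 0.625 and the total-score variance is 1.25, so KR-20 = 1.5 × 0.5 = 0.75.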

Text Reading Accuracy and Fluency. We used the “O Rei” reading fluency and accuracy test (Carvalho, 2010) to evaluate text reading accuracy and fluency. In this test, participants are asked to read aloud a 281-word text, with a 3-min time limit. The number of words read correctly and the number of words read incorrectly were recorded. Text reading fluency was calculated by dividing the total number of words read correctly by the reading time (i.e., words read correctly per minute). Text reading accuracy was calculated by dividing the total number of words read correctly by the total number of words read (i.e., % words read correctly). The reliability of the scores was good: test–retest coefficients were 0.938 for fluency and 0.797 for accuracy. Validity evidence has been provided by statistically significant correlations with teachers’ assessments of oral reading fluency (Carvalho, 2010).
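The two scoring rules above can be written as a short function. This is a sketch under stated assumptions: reading time is taken in seconds and converted to minutes so that fluency is expressed as words read correctly per minute, the 281-word and 180-s bounds are simply the test limits described above, and the function name is hypothetical.

```python
TEXT_LENGTH_WORDS = 281   # length of the "O Rei" text
TIME_LIMIT_SECONDS = 180  # 3-min limit


def text_reading_scores(words_correct: int, words_incorrect: int,
                        seconds: float) -> tuple[float, float]:
    """Return (fluency in words correct per minute, accuracy in %)."""
    words_read = words_correct + words_incorrect
    if not 0 < seconds <= TIME_LIMIT_SECONDS:
        raise ValueError("reading time must be in (0, 180] seconds")
    if not 0 < words_read <= TEXT_LENGTH_WORDS:
        raise ValueError("words read must be between 1 and 281")
    fluency_wcpm = words_correct / (seconds / 60.0)     # correct words per minute
    accuracy_pct = 100.0 * words_correct / words_read   # % of words read correctly
    return fluency_wcpm, accuracy_pct
```

A student who reads 192 words in the full 3 min with 12 errors scores 180 / 3 = 60 words correct per minute and 180 / 192 = 93.75% accuracy. A student who finishes the whole text early is scored over the shorter reading time, which is why time is an input rather than a constant.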

Procedures

The study was conducted according to the ethical recommendations of the Psychology for Positive Development Research Center and was integrated within a larger project on reading performance after the first COVID-19 lockdown.

We obtained formal authorization for all assessment and intervention procedures at the beginning of the school year. Individual assessments were scheduled with school teachers on days and at times that would not disrupt students’ daily routines. Trained psychologists collected data between October and December 2020 (pretest), in April 2021 (posttest), and in June 2021 (follow-up). The intervention program took place between January and April 2021 (i.e., between pretest and posttest data collection). Data from five students who missed more than five intervention sessions were excluded from the final analysis. To comply with our ethical responsibility to deliver an effective intervention to all eligible students, the control group received the same 20-session intervention between April and June 2021.

Fluency Training

Fluency Training included techniques such as non-repetitive reading, model reading, and assisted reading. Student reading fluency was trained by reading aloud in small groups (two to four students).

The program involved two training sessions per week over 10 weeks (20 sessions in total). Each session used a different brief text (range = 62–204 words), with complexity increasing over time. The selected texts covered different genres (nine narrative, four poetic, four informative, and three dramatic texts). Sessions lasted 50–60 min each, meaning that, on average, students received approximately 18 h of intervention.
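The figure of approximately 18 h follows from the session count if one takes the midpoint of the 50–60 min range and assumes all 20 sessions were attended; the ends of the range give about 16.7 h and 20 h:

```latex
\begin{align*}
20 \times 50~\text{min} &= 1000~\text{min} \approx 16.7~\text{h}\\
20 \times 55~\text{min} &= 1100~\text{min} \approx 18.3~\text{h}\\
20 \times 60~\text{min} &= 1200~\text{min} = 20~\text{h}
\end{align*}
```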

The training sessions were delivered by psychologists and teachers (regular and special education teachers), referred to as “fluency coaches,” who had themselves completed a 25-h training program. The teachers and psychologists involved in implementing the intervention did not support any other third-grade students (e.g., control group students) during the school year.

The intervention took place outside the classroom, either remotely or face-to-face. Remote sessions were held via Zoom on a schedule set by the students’ parents, after school and/or at the weekend. Face-to-face sessions were held during the school day on a schedule set by the school. In both modalities, the intervention was delivered to groups of two to four students.

Each session had the same basic structure. First, the fluency coach introduced the new text and read its title. This prompted discussion of the meaning of the text and activated students’ prior knowledge about its content. Next, the fluency coach read four challenging words, selected because they were expected to be difficult for students in terms of their meaning or phonological structure. Students were invited to read each word after the fluency coach and discuss its meaning. This part of the session ended with each student reading the four words.

Next, the fluency coach read the text aloud to the students, modeling skilled reading and arousing general curiosity about the text. Students were invited to follow along. Afterward, the fluency coach led the students in a short discussion of the meaning of the text. Next, each student practiced reading the text aloud, with the fluency coach assisting if necessary and providing feedback.

Once all students had read the text, they took part in retelling it. This strategy was used to explore the meaning of the text and its main ideas. Retell fluency is considered an effective strategy for improving and remediating instructional reading needs (Zimmerman et al., 2019). Next, the students were given another opportunity to rehearse their reading, so that they could feel the sense of success and achievement that comes from practice (Zimmerman et al., 2019). Specifically, this rehearsal aimed to achieve correct decoding, increase automaticity, improve text understanding, and improve prosody.

Students were then given different tasks according to the type of text. Examples include: (a) read the text as if it were the TV news, (b) read the text as if you were a character from the text, and (c) read the text with different emotions (e.g., as if it were sad, as if it were very happy). Once this task was complete, students read the text again and their readings were recorded.

Next, each student’s reading of the text was analyzed in terms of word and sentence recognition, speed, and prosody. Students performed this analysis using a specifically designed evaluation sheet, and the fluency coach supported it by giving feedback on the reading. Once agreement was reached on each component, the fluency coach recorded the outcome on the sheet. The same procedure was followed for each student.

Students took a printed copy of the text home (or received it by email) and were encouraged to practice reading it until the next session. At the beginning of each new session, each student reread the text, followed by an analysis of progress since the last reading. The fluency coach recorded the performance on the sheet and discussed with each student the changes in reading fluency and the contribution of the homework.

Fidelity of Implementation

To ensure fidelity of implementation in both the remote and face-to-face modalities, the research team provided each fluency coach with training (25 h in total, 10 h of which took place before the intervention began) and supervision (monthly 3-h sessions). The team also provided a guided practice manual that explained each activity and contained structured, organized session plans. In addition, fluency coaches completed a monitoring sheet at the end of each session, reporting the strategies employed, the time spent in the session, and the number of students who attended. Finally, the accuracy, fluency, and prosody of each student’s reading of the session text were recorded in every session. These steps were taken to ensure adherence to the intervention protocol (Suggate, 2016).

Data Analysis

Data were analyzed using IBM SPSS version 26. First, we performed univariate analyses of variance (ANOVAs) to determine whether there were pre-intervention differences in reading measures between the three study groups (control vs remote intervention vs face-to-face intervention).

To address our research question, we performed two 3 × 2 mixed-model ANOVAs (between-subjects factor: Group [remote intervention vs face-to-face intervention vs control]; within-subjects factor: Time [pretest vs posttest]), one for word reading accuracy and one for text reading accuracy. These analyses allowed us to compare how the three groups differed in their change in reading over time (Tabachnick and Fidell, 2007). When groups differed significantly on a reading measure at pretest (as was the case for reading fluency), we performed an analysis of covariance (ANCOVA) to control for these differences.
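With only two time points, the Group × Time interaction in a mixed-model ANOVA is equivalent to a one-way between-subjects ANOVA on gain scores (posttest minus pretest): both yield the same F on (k − 1, N − k) degrees of freedom, where k is the number of groups and N the number of students. The sketch below uses that equivalence to show what the interaction tests; it is not the SPSS procedure the authors ran, the function names are hypothetical, and the example data are made up.

```python
def one_way_anova_f(groups):
    """One-way between-subjects ANOVA; returns (F, df_between, df_within)."""
    values = [v for g in groups for v in g]
    n, k = len(values), len(groups)
    grand_mean = sum(values) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    if ss_within == 0:
        raise ValueError("no within-group variability; F is undefined")
    df_between, df_within = k - 1, n - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


def group_by_time_interaction(pre_by_group, post_by_group):
    """Group x Time F for a two-level within factor, computed via gain scores."""
    gains = [[post - pre for pre, post in zip(pre_g, post_g)]
             for pre_g, post_g in zip(pre_by_group, post_by_group)]
    return one_way_anova_f(gains)
```

For example, two groups with gains of 1, 2, 3 and 4, 5, 6 give F (1, 4) = 13.5. A p-value can then be obtained from the F distribution, for instance with `scipy.stats.f.sf(F, df_between, df_within)`.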

We performed 2 × 2 mixed-model ANOVAs (between-subjects factor: Group [remote intervention vs face-to-face intervention]; within-subjects factor: Time [posttest vs follow-up]) to compare the retention of gains in each intervention group over the 2 months following posttest.

Partial eta squared (ηp²) was used as a measure of effect size: ηp² > 0.14 indicates a large effect, ηp² > 0.06 a medium effect, and ηp² > 0.01 a small effect (Cohen, 1992).
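Because ηp² = SS_effect / (SS_effect + SS_error), it can also be recovered from a reported F ratio and its degrees of freedom as ηp² = F · df_effect / (F · df_effect + df_error). The sketch below applies that identity together with the thresholds stated above; the function names are hypothetical, and the "negligible" label for values at or below 0.01 is our own addition.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


def effect_label(eta_p2):
    """Thresholds as stated in the text; 'negligible' is an added label."""
    if eta_p2 > 0.14:
        return "large"
    if eta_p2 > 0.06:
        return "medium"
    if eta_p2 > 0.01:
        return "small"
    return "negligible"
```

For instance, F (2, 198) = 3.688 gives ηp² ≈ 0.036 (small, 0.04 when rounded), and F (2, 202) = 10.868 gives ηp² ≈ 0.097 (medium, 0.10 when rounded), in line with the values reported in the Results.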

Finally, to estimate the extent to which final gains depended on initial performance, we computed Pearson correlations between pretest scores (M1) and the gain from pretest to follow-up (M3 − M1) for word reading accuracy, text reading accuracy, and fluency. We also calculated the intraclass correlation coefficient (ICC) to analyze the stability of change, following the suggestions of Cicchetti (1994) and Field (2005) (values above 0.80 indicate stability in change). At follow-up, 10 students from the remote intervention group and three from the face-to-face intervention group were not assessed because they were in prophylactic isolation after high-risk COVID-19 contacts.
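The text does not state which ICC form was computed. As one plausible reading, the sketch below implements ICC(3,1), the two-way mixed-effects, consistency, single-measures form, which indexes whether students keep their relative positions across occasions even if everyone’s score shifts. The choice of form and the function name are assumptions, and the example data are made up.

```python
def icc_consistency(scores):
    """ICC(3,1): two-way mixed effects, consistency, single measures (assumed form).

    scores: one row per student, one column per occasion (e.g. [M1, M3]).
    """
    n, k = len(scores), len(scores[0])
    if n < 2 or k < 2:
        raise ValueError("need at least two students and two occasions")
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between students
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between occasions
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols                  # residual
    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    denominator = ms_rows + (k - 1) * ms_error
    if denominator == 0:
        raise ValueError("no variability; ICC is undefined")
    return (ms_rows - ms_error) / denominator
```

If every student gains exactly 5 points, as in [[10, 15], [20, 25], [30, 35]], the ICC is 1.0, because relative positions are perfectly preserved; a uniform shift does not lower a consistency ICC.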

Results

Three ANOVAs were performed to determine if there were pre-intervention differences in reading measures between the three groups. These analyses indicated that there was a significant difference in reading fluency between the groups, F (2, 207) = 6.81, p < 0.01. For word reading accuracy and text reading accuracy the differences between groups at pretest were not significant (p > 0.05).

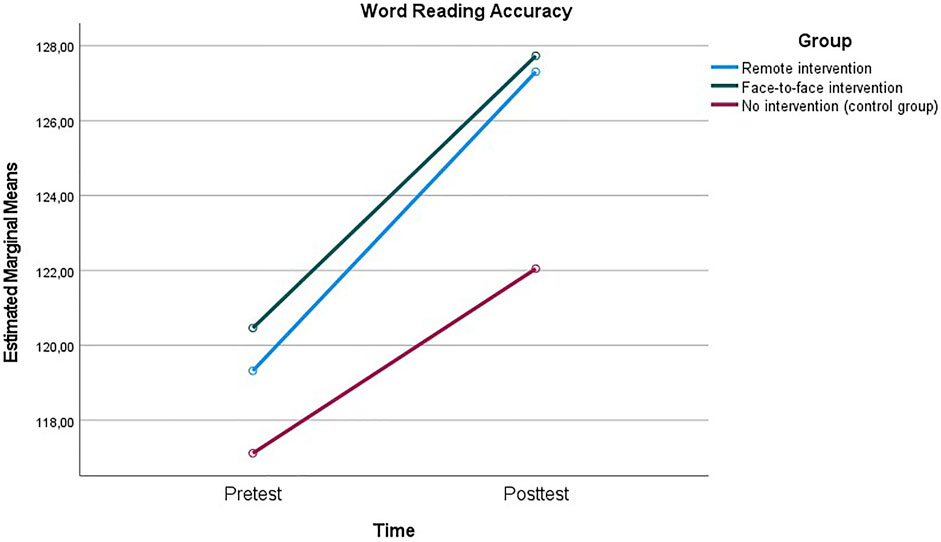

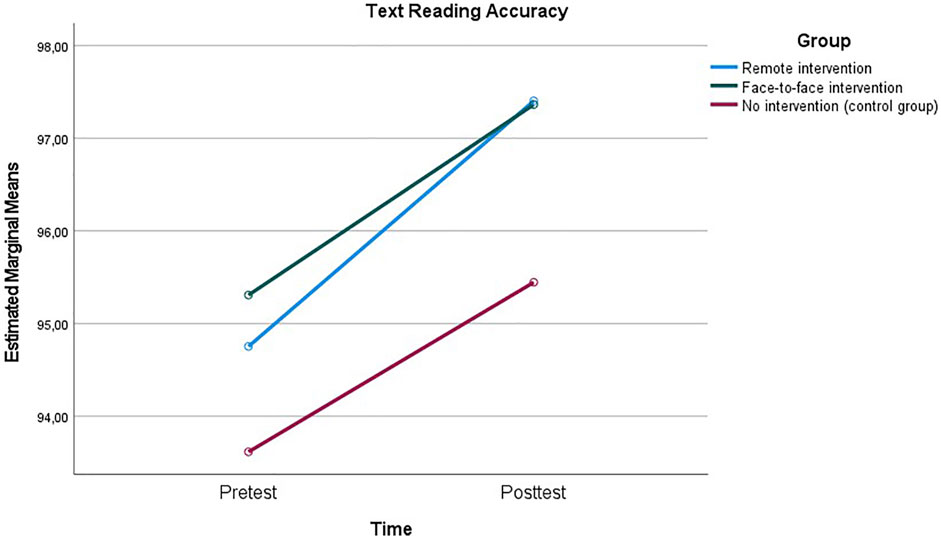

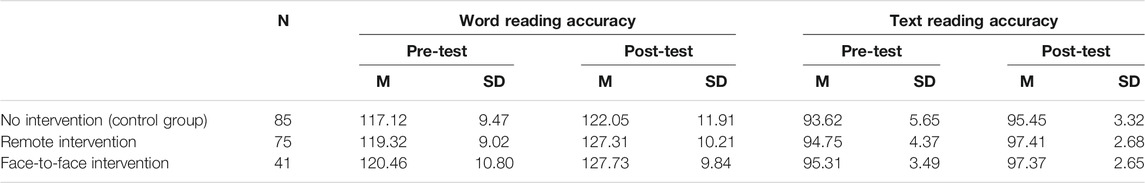

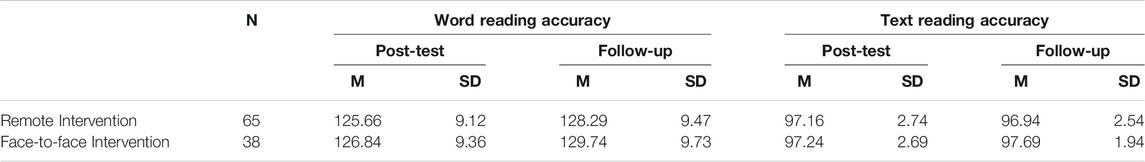

Next, we used mixed-model ANOVAs to examine whether the three groups improved in reading accuracy from pretest to posttest. Table 1 reports the means and standard deviations for word reading accuracy and text reading accuracy at pretest and posttest.

TABLE 1. Means and standard deviations for word reading accuracy and text reading accuracy in pre-test and post-test.

Multivariate results indicated a significant interaction effect Group × Time, Wilks’ Lambda = 0.951, F (4, 394) = 2.496, p < 0.05, ηp² = 0.03. Univariate results revealed a significant interaction for word reading accuracy, F (2, 198) = 3.688, p < 0.05, ηp² = 0.04, but not for text reading accuracy, F (2, 198) = 1.236, p > 0.05, ηp² = 0.01. Multivariate results for the between-subjects effect of Group were also significant, Wilks’ Lambda = 0.948, F (4, 394) = 2.685, p < 0.05, ηp² = 0.03. Univariate results indicated significant effects both for word reading accuracy, F (2, 198) = 4.381, p < 0.05, ηp² = 0.04, and text reading accuracy, F (2, 198) = 4.800, p < 0.01, ηp² = 0.05.

Post-hoc pairwise comparisons showed significant differences in word reading accuracy between the control group and the two intervention groups (p < 0.05), whereas the two intervention groups did not differ significantly (p > 0.05). Similar results were found for text reading accuracy (see Figures 1 and 2). However, these differences (representing gains in reading accuracy) were small for both variables.

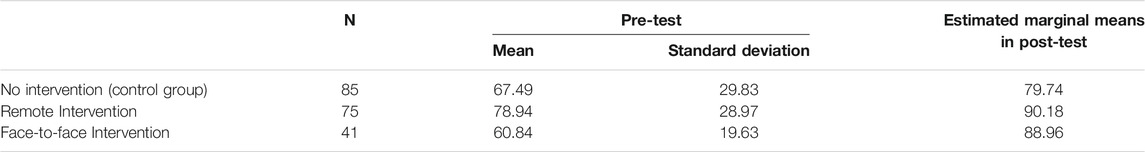

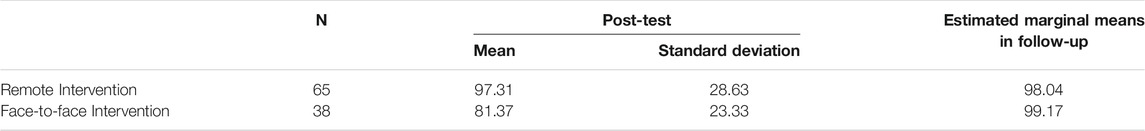

Because the groups differed significantly in fluency at pretest, we performed an ANCOVA controlling for these differences. Descriptive statistics for this analysis are presented in Table 2.
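An ANCOVA of this kind compares a model with the covariate only against a model with the covariate plus group, assuming a common regression slope in all groups. The sketch below computes the resulting F for the group effect from pooled within-group and total sums of squares and cross-products, for one covariate (e.g., pretest fluency) and one outcome (e.g., posttest fluency). It is a hypothetical illustration under that homogeneity-of-slopes assumption, not a reproduction of the SPSS output in Table 2, and the example data are made up.

```python
def _sums(xs, ys):
    """Sums of squares and cross-products around the means: (Sxx, Sxy, Syy)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    syy = sum((y - my) ** 2 for y in ys)
    return sxx, sxy, syy


def ancova_group_f(groups):
    """groups: list of (covariate_values, outcome_values), one pair per group.

    Returns (F, df_effect, df_error) for the covariate-adjusted group effect.
    """
    within = [_sums(xs, ys) for xs, ys in groups]
    sxx_w, sxy_w, syy_w = (sum(s[i] for s in within) for i in range(3))
    all_x = [x for xs, _ in groups for x in xs]
    all_y = [y for _, ys in groups for y in ys]
    sxx_t, sxy_t, syy_t = _sums(all_x, all_y)
    if sxx_w == 0:
        raise ValueError("covariate does not vary within groups")
    sse_full = syy_w - sxy_w ** 2 / sxx_w     # residual: group + covariate
    sse_reduced = syy_t - sxy_t ** 2 / sxx_t  # residual: covariate only
    if sse_full == 0:
        raise ValueError("zero residual variance; F is undefined")
    df_effect, df_error = len(groups) - 1, len(all_x) - len(groups) - 1
    f_value = ((sse_reduced - sse_full) / df_effect) / (sse_full / df_error)
    return f_value, df_effect, df_error
```

For two groups with covariate values 1, 2, 3 and outcomes 1, 3, 2 versus 4, 6, 5, the adjusted group effect is F (1, 3) = 13.5.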

TABLE 2. Analysis of covariance for the differences between groups at post-test, after controlling for initial performance in reading fluency.

In this analysis, the covariate (initial fluency performance) explained a significant amount of variance in the dependent variable, F (2, 202) = 509.239, p < 0.001. A significant between-subjects effect of Intervention Group was also identified, F (2, 202) = 10.868, p < 0.001, with a medium effect size (ηp² = 0.10). Post-hoc pairwise comparisons revealed a significant difference between the students in the two intervention groups (remote and face-to-face) and the control group (p < 0.01). There was no significant difference in fluency between students in the face-to-face and remote intervention groups (p > 0.05).

Table 3 reports the means and standard deviations for measures of word reading accuracy and text reading accuracy at posttest and follow-up.

TABLE 3. Means and standard deviations for word reading accuracy and text reading accuracy in post-test and follow-up.

Multivariate results indicated that neither the Group × Time interaction, Wilks’ Lambda = 0.959, F (2, 100) = 2.123, p > 0.05, ηp² = 0.04, nor the effect of intervention group, Wilks’ Lambda = 0.992, F (2, 100) = 0.405, p > 0.05, ηp² = 0.01, was significant. However, the effect of time was significant, with a medium effect size, Wilks’ Lambda = 0.873, F (2, 100) = 7.246, p < 0.01, ηp² = 0.13. Univariate results suggest that the effect of time was significant for word reading accuracy, F (1,101) = 13.409, p < 0.001, ηp² = 0.12, but not for text reading accuracy, F (1,101) = 0.532, p > 0.05, ηp² = 0.01. These results suggest gains in word reading accuracy for both groups at follow-up, but stabilization in text reading accuracy.

For fluency, we performed an ANCOVA to explore the existence of differences between remote and face-to-face intervention groups in follow-up, after controlling for fluency levels at posttest.

The results for the between-subjects effects show that the covariate (fluency performance at posttest) explained a significant amount of variance in fluency at follow-up, F (1, 105) = 52,335.725, p < 0.001. There was no significant effect of intervention group on fluency at follow-up, F (1, 105) = 0.116, p > 0.05, suggesting a similar evolution in both groups (Table 4).

TABLE 4. Analysis of covariance for the differences in fluency between groups at follow-up, after controlling for performance in reading fluency at post-test.

Finally, to estimate the extent to which the final gains of the intervention groups depended on initial performance, we estimated Pearson correlations between pretest scores (M1) and the gain from pretest to follow-up (M3 − M1) for word reading accuracy, text reading accuracy, and fluency. The correlation coefficients for word reading accuracy (r = −0.408, p < 0.001), text reading accuracy (r = −0.797, p < 0.001), and fluency (r = −0.260, p < 0.01) were negative and statistically significant, which suggests that students with weaker performance at M1 showed greater growth on each variable.

The intraclass correlation coefficients were statistically significant for word reading accuracy (ICC = 0.769, p < 0.001), text reading accuracy (ICC = 0.784, p < 0.001), and fluency (ICC = 0.846, p < 0.001), at the 95% confidence level. These results indicate that, although all students showed gains and students with lower initial performance showed larger gains, their relative positions within the group were maintained.

Discussion

This study aimed to investigate whether fluency training had an impact on the reading accuracy and fluency skills of third-grade students and whether the effects differed between face-to-face and remote modalities. The results suggest that the intervention had a positive and significant effect on students’ reading accuracy and fluency compared with the control group, with no significant differences between the two intervention groups. Previous studies conducted during the COVID-19 pandemic indicated that remote interventions in reading skills are effective (e.g., van Duijnen, 2021; Beach et al., 2021). Our results extend this finding by showing that a remote intervention appeared to be about as effective as a face-to-face intervention. This is an important result, given that remote interventions may be the primary method of intervention in future pandemic lockdowns. We also note that the intervention was universal, i.e., it was delivered to all students, whether or not they were experiencing fluency difficulties. Overall, the observed positive effects of the intervention are consistent with previous findings indicating that reading interventions tend to benefit all readers (Suggate, 2016). Moreover, although the effect sizes in our study were small, this result is also consistent with previous research. For example, a meta-analysis by Scammacca et al. (2015) showed that interventions in reading fluency had smaller effect sizes than interventions in other reading components, such as reading comprehension.
Overall, the results of our study strengthen findings regarding the benefits of strategies such as model reading, immediate feedback, recording and listening to one’s own reading, complementary training at home, and non-repetitive approaches in which students are exposed to a wide range of texts (Grabe, 2010; Gersten et al., 2020; Beach and Philippakos, 2021; Zimmermann et al., 2021). The results also suggest that both intervention groups performed similarly in reading accuracy and fluency 2 months after the end of the intervention (follow-up). Moreover, the results indicate gains in word reading accuracy for both groups at follow-up, but stabilization of text reading accuracy. This finding may reflect the need for continuous practice and deliberate intervention so that students can generalize the gains obtained in word reading accuracy to text reading accuracy.

The study also shows that gains in accuracy and fluency depended on students’ initial levels at pretest. Specifically, the negative correlation coefficients indicate that students with lower reading levels before the intervention obtained the largest gains. This finding is in accordance with Suggate’s (2010) meta-analysis, in which the largest effect sizes of reading interventions were found for students with the greatest reading deficits (struggling readers). This result suggests that this type of intervention is especially important for students experiencing difficulties in reading acquisition, such as those receiving Tier 2 support in Response to Intervention (RTI) models. RTI integrates assessment and intervention within Multi-tiered Systems of Support (MTSS) to maximize students’ academic achievement. To ensure that RTI works most effectively, schools use universal screening data to identify students at risk, provide differentiated, evidence-based interventions to those students, monitor the effectiveness of those interventions, and adjust instruction based on how each student responds (Jenkins et al., 2013). This process is designed to accelerate instructional attention to students presenting risk factors in learning to read (van Norman et al., 2020). In this study, the program was delivered to all students, regardless of their reading status. However, a universal implementation of this program, which requires small-group work twice a week and highly trained professionals, demands substantial human resources and, consequently, high costs. Therefore, it may be more feasible to implement this program only with students who are at high risk of failing to develop automatic reading.

The study showed that students tend to maintain their relative position within the group, regardless of the improvement in reading skills. On the one hand, this result indicates that the program is effective for all achievement levels, and not only for some subgroups, such as struggling readers. On the other hand, it also suggests that students with low reading levels are unlikely to catch up with or surpass peers who had higher reading levels at pretest.

Although the goal of our study was not to compare models of the development of early interindividual differences in reading, our findings are consistent with a compensatory model (e.g., Parrila et al., 2005): although interindividual differences tended to be stable, students with lower reading proficiency showed higher gains after the intervention program. We also highlight that the intraclass correlation coefficients obtained in our study were close to the reference value of 0.80. Thus, the widespread use of evidence-based and systematic reading fluency intervention programs can contribute to changing patterns of failure in reading acquisition.

The outcomes of this study have some important practical implications. As noted above, our findings suggest that remote interventions can be a useful tool in a scenario of generalized lockdown during a pandemic. Beyond such scenarios, remote interventions also have the potential to be a practical alternative to face-to-face interventions, increasing students’ access to systematic reading fluency intervention programs. Because coaches and students do not need to be in the same space, the intervention can reach students who need it, even in regions where human resources to deliver this type of program are scarce. Moreover, the program can be delivered outside school hours and thus need not conflict with or disrupt classroom activities.

Some limitations of this study should be highlighted. While the students were randomly sampled within schools, the schools themselves were selected using a convenience strategy. Therefore, we caution against generalizing the results to different samples. Additionally, the allocation of students to the remote or face-to-face intervention groups depended on specific conditions being met, including the availability of a computer and internet access. Future studies should consider full randomization when forming the intervention groups and should provide adequate equipment to students who receive the remote intervention.

Another limitation relates to the procedures used to assess intervention fidelity, i.e., adherence to the intervention protocol (Carroll et al., 2007; Trickett et al., 2020). Although some techniques, such as creating a manual and a monitoring sheet to be completed by each trainer, were used to increase the likelihood that the program was administered as intended, other intervention fidelity procedures were not used. King-Sears et al. (2018) suggested a five-step fidelity process: 1) modeling the intervention; 2) sharing the intervention’s fidelity protocol with the coordinator for program delivery; 3) coaching the coordinator for program delivery before implementation; 4) observing fidelity during implementation; and 5) reflecting with the coordinator for program delivery using fidelity data. Although the first three steps were addressed in the fluency coach training, the last two were not performed and should be undertaken in future studies.

A further limitation was that the measures used to assess the effects of the program covered only two fluency dimensions: accuracy and speed. Future studies should explore whether the program also has a positive impact on prosody, especially as research has suggested that this type of intervention has strong effects on prosody (for a review, see Hudson et al., 2020).

The timing of the follow-up (only 2 months after the intervention ended) was also a limitation of this study, as this interval may be insufficient to evaluate the long-term maintenance of gains. For example, in Suggate’s (2016) meta-analysis, which explored the long-term effects of phonemic awareness, phonics, fluency, and reading comprehension interventions, the mean time from posttest to follow-up in the reviewed studies was around 11 months. Future research should investigate whether the gains in reading accuracy and fluency obtained with this program, in both modalities, are maintained over longer intervals. However, studies with longer follow-up intervals should be interpreted with caution, as the results may be confounded by summer effects. Future studies should also explore the effects of the program with struggling readers, not only to assess its feasibility and effects when used as a Tier 2 intervention, but also to obtain evidence of its long-term effects in this group of students. The meta-analysis by Suggate (2016) showed greater retention of intervention effects at follow-up for low-achieving students than for typical readers. However, it is unclear whether these long-term effects also hold when interventions are delivered remotely.

In conclusion, the findings of this study suggest that our evidence-based and systematic program for promoting reading accuracy and fluency was effective when delivered both face-to-face and remotely. The fluency training presented in this study is a scripted intervention for typical readers, so its implementation decisions can be replicated in other contexts: the focus on promoting reading fluency through non-repetitive reading, the type of texts used, the intervention length and frequency, the training of the interventionists, and the size of the groups.

Data Availability Statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by Comissão de Ética da Universidade Lusíada. Written informed consent to participate in this study was provided by the participants’ legal guardian/next of kin.

Author Contributions

JC made substantial contributions to the conception and design of the study, data collection, statistical data analysis, and interpretation of the results. SMe, SMa, and DA made substantial contributions to conception and design of the study, data collection, and discussion of the results. IC made substantial contributions to statistical data analysis and discussion of the results. All authors were involved in drafting the manuscript and revising it critically for important intellectual content.

Funding

This work was financially supported by the Portuguese Foundation for Science and Technology (FCT) and the Portuguese Ministry of Science, Technology, and Higher Education through national funds within the framework of the Psychology for Positive Development Research Center (CIPD; grant number UIDB/04375/2020) and the Psychology Research Centre (UIDB/PSI/01662/2020).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alves, K. D., and Romig, J. E. (2021). Virtual Reading Lessons for Upper Elementary Students with Learning Disabilities. Intervention Sch. Clinic 57 (2), 95–102. doi:10.1177/10534512211001865

Balu, R., Zhu, P., Doolittle, F., Schiller, E., Jenkins, J., and Gersten, R. (2015). Evaluation of Response to Intervention Practices for Elementary School Reading. NCEE 2016-4000. Washington, DC: National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, U.S. Department of Education. Available at: http://ies.ed.gov/ncee/pubs/20164000/pdf/20164000.pdf.

Barbour, M. K., and Reeves, T. C. (2009). The Reality of Virtual Schools: A Review of the Literature. Comput. Educ. 52 (2), 402–416.

Beach, K. D., and Traga Philippakos, Z. A. (2021). Effects of a Summer Reading Intervention on the Reading Performance of Elementary Grade Students from Low-Income Families. Reading Writing Q. 37 (2), 169–189. doi:10.1080/10573569.2020.1760154

Beach, K. D., Washburn, E. K., Gesel, S. A., and Williams, P. (2021). Pivoting an Elementary Summer Reading Intervention to a Virtual Context in Response to COVID-19: An Examination of Program Transformation and Outcomes. J. Educ. Students Placed Risk 26 (2), 112–134. doi:10.1080/10824669.2021.1906250

Begeny, J. C., and Martens, B. K. (2006). Assisting Low-Performing Readers with a Group-Based Reading Fluency Intervention. Sch. Psychol. Rev. 35 (1), 91–107. doi:10.1080/02796015.2006.12088004

Cadime, I., Chaves-Sousa, S., Viana, F. L., Santos, S., Maia, J., and Ribeiro, I. (2021). Growth, Stability and Predictors of Word Reading Accuracy in European Portuguese: A Longitudinal Study from Grade 1 to Grade 4. Curr. Psychol. 40 (10), 5185–5197. doi:10.1007/s12144-019-00473-w

Calet, N., Gutiérrez-Palma, N., and Defior, S. (2017). Effects of Fluency Training on Reading Competence in Primary School Children: The Role of Prosody. Learn. Instruction 52, 59–68. doi:10.1016/j.learninstruc.2017.04.006

Carroll, C., Patterson, M., Wood, S., Booth, A., Rick, J., and Balain, S. (2007). A Conceptual Framework for Implementation Fidelity. Implement. Sci. 2, 40. doi:10.1186/1748-5908-2-40

Carvalho, A. (2010). Teste de avaliação da fluência e precisão de leitura. O Rei. Vila Nova de Gaia: Edipsico.

Carvalho, M., Azevedo, H., Cruz, J., and Fonseca, H. (2020). “Inclusive Education on Pandemic Times: From Challenges to Changes According to Teachers’ Perceptions,” in Proceedings: 13th annual International Conference of Education, Research and Innovation-ICERI 2020. Seville, 6113–6117. doi:10.21125/iceri.2020.1316

Chaves-Sousa, S., Ribeiro, I., Viana, F. L., Vale, A. P., Santos, S., and Cadime, I. (2017a). Validity Evidence of the Test of Word Reading for Portuguese Elementary Students. Eur. J. Psychol. Assess. 33 (6), 460–466. doi:10.1027/1015-5759/a000307

Chaves-Sousa, S., Santos, S., Viana, F. L., Vale, A. P., Cadime, I., Prieto, G., et al. (2017b). Development of a Word Reading Test: Identifying Students At-Risk for Reading Problems. Learn. Individ. Diff. 6, 159–166. doi:10.1016/j.lindif.2016.11.008

Cicchetti, D. V. (1994). Guidelines, Criteria, and Rules of Thumb for Evaluating Normed and Standardized Assessment Instruments in Psychology. Psychol. Assess. 6 (4), 284–290. doi:10.1037/1040-3590.6.4.284

Dowhower, S. L. (1991). Speaking of Prosody: Fluency's Unattended Bedfellow. Theor. Into Pract. 30 (3), 165–175. doi:10.1080/00405849109543497

Ehri, L. C. (1998). “Grapheme-phoneme Knowledge Is Essential for Learning to Read Words in English,” in Word Recognition in Beginning Literacy. Editors J. L. Metsala, and L. C. Ehri (Mahwah, NJ: Erlbaum), 3–40.

Francis, D. J., Shaywitz, S. E., Stuebing, K. K., Shaywitz, B. A., and Fletcher, J. M. (1996). Developmental Lag versus Deficit Models of Reading Disability: A Longitudinal, Individual Growth Curves Analysis. J. Educ. Psychol. 88 (1), 3–17. doi:10.1037/0022-0663.88.1.3

Fuchs, D., and Fuchs, L. S. (2006). Introduction to Response to Intervention: What, Why, and How Valid Is It? Read. Res. Q. 41 (1), 93–99. doi:10.1598/rrq.41.1.4

Gersten, R., Haymond, K., Newman-Gonchar, R., Dimino, J., and Jayanthi, M. (2020). Meta-analysis of the Impact of Reading Interventions for Students in the Primary Grades. J. Res. Educ. Eff. 13 (2), 401–427. doi:10.1080/19345747.2019.1689591

Gilbert, J. K., Compton, D. L., Fuchs, D., and Fuchs, L. S. (2012). Early Screening for Risk of Reading Disabilities: Recommendations for a Four-step Screening System. Assess. Eff. Interv. 38 (1), 6–14. doi:10.1177/1534508412451491

Godde, E., Bosse, M.-L., and Bailly, G. (2020). A Review of Reading Prosody Acquisition and Development. Read. Writ. 33 (2), 399–426. doi:10.1007/s11145-019-09968-1

Grabe, J. (2010). Marine Gründungsbauwerke. Read. Foreign Lang. 22 (1), 71–135. doi:10.1002/9783433600443.ch9

Hudson, A., Koh, P. W., Moore, K. A., and Binks-Cantrell, E. (2020). Fluency Interventions for Elementary Students with Reading Difficulties: A Synthesis of Research from 2000–2019. Educ. Sci. 10 (3), 52. doi:10.3390/educsci10030052

Jenkins, J. R., Schiller, E., Blackorby, J., Thayer, S. K., and Tilly, W. D. (2013). Responsiveness to Intervention in Reading. Learn. Disabil. Q. 36 (1), 36–46. doi:10.1177/0731948712464963

Kim, D., An, Y., Shin, H. G., Lee, J., and Park, S. (2020). A Meta-Analysis of Single-Subject Reading Intervention Studies for Struggling Readers: Using Improvement Rate Difference (IRD). Heliyon 6 (11), e05024. doi:10.1016/j.heliyon.2020.e05024

King-Sears, M. E., Walker, J. D., and Barry, C. (2018). Measuring Teachers' Intervention Fidelity. Intervention Sch. Clinic 54 (2), 89–96. doi:10.1177/1053451218765229

Kuhn, M. R. (2005). A Comparative Study of Small Group Fluency Instruction. Reading Psychol. 26 (2), 127–146. doi:10.1080/02702710590930492

Kuhn, M. R., Schwanenflugel, P. J., Meisinger, E. B., Levy, B. A., and Rasinski, T. V. (2010). Aligning Theory and Assessment of Reading Fluency: Automaticity, Prosody, and Definitions of Fluency. Read. Res. Q. 45 (2), 230–251. doi:10.1598/RRQ.45.2.4

LaBerge, D., and Samuels, S. J. (1974). Toward a Theory of Automatic Information Processing in Reading. Cogn. Psychol. 6 (2), 293–323. doi:10.1016/0010-0285(74)90015-2

Lembke, E. S. (2003). Examining the Effects of Three Reading Tasks on Elementary Students’ Reading Performance. Doctoral Thesis. Minnesota (MN): University of Minnesota.

Martens, B. K., Young, N. D., Mullane, M. P., Baxter, E. L., Sallade, S. J., Kellen, D., et al. (2019). Effects of Word Overlap on Generalized Gains from a Repeated Readings Intervention. J. Sch. Psychol. 74, 1–9. doi:10.1016/j.jsp.2019.05.002

Meeks, B. T., Martinez, J., and Pienta, R. S. (2014). Effect of Edmark Program on Reading Fluency in Third-Grade Students with Disabilities. Int. J. Instr. 7 (2), 103–118.

National Governors Association Center for Best Practices and Council of Chief State School Officers (2010). Common Core State Standards Initiative. Available at: http://www.corestandards.org/the-standards/english-language-arts-standards. Accessed August 15, 2021

National Institute of Child Health and Human Development (2000). Report of the National Reading Panel. Teaching Children to Read: An Evidence-Based Assessment of the Scientific Research Literature on Reading and Its Implications for Reading Instruction (NIH Publication No. 00-4769). Rockville: U.S. Government Printing Office.

Parrila, R., Aunola, K., Leskinen, E., Nurmi, J.-E., and Kirby, J. R. (2005). Development of Individual Differences in Reading: Results from Longitudinal Studies in English and Finnish. J. Educ. Psychol. 97 (3), 299–319. doi:10.1037/0022-0663.97.3.299

Pfost, M., Hattie, J., Dörfler, T., and Artelt, C. (2014). Individual Differences in Reading Development. Rev. Educ. Res. 84 (2), 203–244. doi:10.3102/0034654313509492

Rasinski, T. V., and Padak, N. D. (2005). 3-Minute Reading Assessments: Word Recognition, Fluency and Comprehension. New York: Scholastic Incorporated.

Rasinski, T. V., Reutzel, R. D., Chard, D., and Linan-Thompson, S. (2011). “Reading Fluency,” in Handbook of Reading Research. Editors M. L. Kamil, P. D. Pearson, B. Moje, and P. Afflerbach (New York: Routledge), 286–319.

Scammacca, N. K., Roberts, G., Vaughn, S., and Stuebing, K. K. (2015). A Meta-Analysis of Interventions for Struggling Readers in Grades 4-12: 1980-2011. J. Learn. Disabil. 48 (4), 369–390. doi:10.1177/0022219413504995

Stanovich, K. E. (1986). Matthew Effects in Reading: Some Consequences of Individual Differences in the Acquisition of Literacy. Read. Res. Q. 21 (4), 360–407. doi:10.1598/RRQ.21.4.1

Suggate, S. P. (2010). Why What We Teach Depends on When: Grade and Reading Intervention Modality Moderate Effect Size. Dev. Psychol. 46 (6), 1556–1579. doi:10.1037/a0020612

Suggate, S. P. (2016). A Meta-Analysis of the Long-Term Effects of Phonemic Awareness, Phonics, Fluency, and Reading Comprehension Interventions. J. Learn. Disabil. 49 (1), 77–96. doi:10.1177/0022219414528540

Szadokierski, I., Burns, M. K., McComas, J. J., and Eckert, T. (2017). Predicting Intervention Effectiveness from Reading Accuracy and Rate Measures through the Instructional Hierarchy: Evidence for a Skill-By-Treatment Interaction. Sch. Psychol. Rev. 46 (2), 190–200. doi:10.17105/SPR-2017-0013.V46-2

Tabachnick, B. G., and Fidell, L. S. (2007). Using Multivariate Statistics. London: Pearson Education.

Trickett, E., Rasmus, S. M., and Allen, J. (2020). Intervention Fidelity in Participatory Research: a Framework. Educ. Action. Res. 28 (1), 128–141. doi:10.1080/09650792.2019.1689833

Valentine, D. T. (2015). Stuttering Intervention in Three Service Delivery Models (Direct, Hybrid, and Telepractice): Two Case Studies. Int. J. Telerehabil. 6 (2), 51–63. doi:10.5195/ijt.2014.6154

van Duijnen, T. (2021). The Efficacy of a Synchronous Online Reading Fluency Intervention with Struggling Readers. Master's Thesis. Victoria, BC: University of Victoria.

van Norman, E. R., Nelson, P. M., and Klingbeil, D. A. (2020). Profiles of Reading Performance after Exiting Tier 2 Intervention. Psychol. Schs. 57 (5), 757–767. doi:10.1002/pits.22354

Vasquez, E., Forbush, D. E., Mason, L. L., Lockwood, A. R., and Gleed, L. (2011). Delivery and Evaluation of Synchronous Online Reading Tutoring to Students At-Risk of Reading Failure. Rural Spec. Educ. Q. 30 (3), 16–26. doi:10.1177/875687051103000303

Viana, F. L., Ribeiro, I., Vale, A. P., Chaves-Sousa, S., Santos, S., and Cadime, I. (2014). Teste de Leitura de Palavras [Word Reading Test]. Lisbon: CEGOC.

Wanzek, J., Vaughn, S., Scammacca, N., Gatlin, B., Walker, M. A., and Capin, P. (2016). Meta-analyses of the Effects of Tier 2 Type Reading Interventions in Grades K-3. Educ. Psychol. Rev. 28 (3), 551–576. doi:10.1007/s10648-015-9321-7

Wennerstrom, A. (2001). The Music of Everyday Speech: Prosody and Discourse Analysis. New York: Oxford University Press.

Zimmerman, B. S., Rasinski, T. V., Was, C. A., Rawson, K. A., Dunlosky, J., Kruse, S. D., et al. (2019). Enhancing Outcomes for Struggling Readers: Empirical Analysis of the Fluency Development Lesson. Reading Psychol. 40 (1), 70–94. doi:10.1080/02702711.2018.1555365

Keywords: reading fluency, reading accuracy, reading intervention, remote intervention, elementary education

Citation: Cruz J, Mendes SA, Marques S, Alves D and Cadime I (2022) Face-to-Face Versus Remote: Effects of an Intervention in Reading Fluency During COVID-19 Pandemic. Front. Educ. 6:817711. doi: 10.3389/feduc.2021.817711

Received: 18 November 2021; Accepted: 10 December 2021;

Published: 18 January 2022.

Edited by:

Angela Jocelyn Fawcett, Swansea University, United Kingdom

Reviewed by:

Chase Young, Sam Houston State University, United States

Gerald Tindal, University of Oregon, United States

Copyright © 2022 Cruz, Mendes, Marques, Alves and Cadime. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Joana Cruz, joanacruz@por.ulusiada.pt
