ORIGINAL RESEARCH article

Front. Educ., 19 October 2021

Sec. Educational Psychology

Volume 6 - 2021 | https://doi.org/10.3389/feduc.2021.759624

This article is part of the Research Topic COVID-19: Mid- and Long-Term Educational and Psychological Consequences for Students and Educators

K. Supriya1,2†, Chris Mead3†, Ariel D. Anbar3, Joshua L. Caulkins4, James P. Collins4, Katelyn M. Cooper1, Paul C. LePore5, Tiffany Lewis4, Amy Pate4, Rachel A. Scott1, Sara E. Brownell1*

Institutions across the world transitioned abruptly to remote learning in 2020 due to the COVID-19 pandemic. This rapid transition to remote learning has generally been predicted to negatively affect students, particularly those marginalized due to their race, socioeconomic class, or gender identity. In this study, we examined the impact of this transition in the Spring 2020 semester on the grades of students enrolled in the in-person biology program at a large university in the Southwestern United States as compared to the grades earned by students in the fully online biology program at the same institution. We also surveyed in-person instructors to understand changes in assessment practices as a result of the transition to remote learning during the pandemic. Finally, we surveyed students in the in-person program to learn about their perceptions of the impacts of this transition. We found that both online and in-person students received a similar small increase in grades in Spring 2020 compared to Spring 2018 and 2019. We also found no evidence of disproportionately negative impacts on grades received by students marginalized due to their race, socioeconomic class, or gender in either modality. Focusing on in-person courses, we documented that instructors made changes to their courses when they transitioned to remote learning, which may have offset some of the potential negative impacts on course grades. However, despite receiving higher grades, in-person students reported negative impacts on their learning, interactions with peers and instructors, feeling part of the campus community, and career preparation. Women reported a more negative impact on their learning and career preparation compared to men. This work provides insights into students’ perceptions of how they were disadvantaged as a result of the transition to remote instruction and illuminates potential actions that instructors can take to create more inclusive education moving forward.

In the early months of 2020, the COVID-19 pandemic led to an unprecedented disruption of the normal mode of course instruction across most institutions of higher education. In the United States, most universities abruptly stopped conducting in-person classes and closed their campuses in March 2020 (Baker et al., 2020; Hartocollis, 2020). Mid-semester, many students and instructors were forced into learning and teaching remotely, respectively, for the first time due to the need for social distancing as a response to the pandemic (Johnson et al., 2020; Moralista and Oducado, 2020). Syllabi, teaching approaches, and assessments had to be modified to account for this altered mode of learning; most instructors only had one to two weeks to redesign their courses before remote instruction began. This abrupt shift to remote learning has been distinguished from online learning in general (Hodges et al., 2020) and it is commonly assumed that this abrupt shift adversely affected student learning (Kimble-Hill et al., 2020; Gin et al., 2021). There are many factors directly associated with the shift to remote learning that could have affected student learning (Hodges et al., 2020; Gin et al., 2021), which are in addition to the stress experienced by students in other aspects of their lives affected by the pandemic (e.g., health, employment, isolation, issues of societal inequalities).

The pandemic affected people across various social identities such as age, nationality, racial/ethnic background, LGBTQ+ status, and socio-economic status. Although politicians like New York’s Governor Andrew Cuomo and celebrities such as Madonna termed it “the great equalizer” (Gaynor and Wilson, 2020; van Buuren et al., 2020), the pandemic had differential impacts on people along the lines of power and privilege in our society due to various systems of oppression including, but not limited to, racism, classism, sexism, and ableism (Copley et al., 2020; Garcia et al., 2020; Gaynor and Wilson, 2020; Lokot and Avakyan, 2020; Mein, 2020). In the United States, case and death rates have been higher among Black, Hispanic/Latinx, and Native American people than white people (Gold et al., 2020; Karaca-Mandic et al., 2020; Wortham, 2020). COVID-19 infections and deaths were also higher for people living in areas with higher poverty levels compared to areas with little or no poverty (Krieger et al., 2020; Chen and Krieger, 2021). Further, these more vulnerable communities experienced more negative financial impacts such as job losses or reduced working hours due to the economic shutdowns (Moen et al., 2020). When considering the educational impact of this crisis, these differential medical and financial impacts may have contributed to more negative educational consequences for students with marginalized social identities.

In addition to health and financial impacts, several other factors may have differentially exacerbated the negative effects of the COVID-19 pandemic on student learning in Spring 2020. Losing access to student housing and meal plans contributed to housing and food insecurities for many students, including low-income students, international students, first-generation students, and Black, Hispanic/Latinx, and Indigenous students (Chen et al., 2020; Lederer et al., 2020; Barber et al., 2021). Heightened housing and food insecurities impacted off-campus students as well (Goldrick-Rab et al., 2020). Moreover, poor internet connection and lack of a quiet or safe space to study made it more difficult for students to complete their assignments and succeed during remote instruction (Means and Neisler, 2020; Ramachandran and Rodriguez, 2020; Tigaa and Sonawane, 2020; Villanueva et al., 2020; Barber et al., 2021). For example, one recent study of college students in introductory sociology courses showed that more than 50% of all students experienced occasional internet problems during remote learning in Spring 2020 (Gillis and Krull, 2020). In the same study, about 90% of the students reported distractions in their new workspace and about 65% of the students reported the lack of a dedicated workspace (Gillis and Krull, 2020). While these issues negatively affect all students, students from low-income families, first generation to college students and Black, Hispanic/Latinx, and Indigenous students were more likely to be disproportionately impacted by poor internet connections or distracting environments (Barber et al., 2021). Another factor that likely affected remote learning in Spring 2020 is additional caregiving responsibilities necessitated by remote learning in K-12 schools and greater health risks for older family members (Collins et al., 2020). These additional responsibilities would reduce available time for coursework and could affect academic outcomes. 
Likely due to societal gender roles that assume women take on primary caregiving, these responsibilities are reported to have disproportionately affected women (Alon et al., 2020; Collins et al., 2020; Fortier, 2020). Needing to work jobs that require frequent interaction with others at places such as grocery stores and pharmacies is yet another element influencing student learning during the pandemic, especially for Black, Hispanic/Latinx, immigrant students, and those from low-income households (McCormack et al., 2020). Working such jobs could increase students’ risk of contracting COVID-19 and may cause greater anxiety in their daily lives (Pappa et al., 2020; Parks et al., 2020). All these factors are likely to differentially affect students depending on their locations along the various axes of power and privilege.

A limited number of studies have examined the educational impact of the pandemic on students. Several publications have reported that students were less engaged (Perets et al., 2020; Wester et al., 2021) and struggled with their motivation to study after the transition to remote learning in Spring 2020 (Al-Tammemi et al., 2020; Gillis and Krull, 2020; Means and Neisler, 2020; Petillion and McNeil, 2020), although one study on public health students at Georgia State University did not report lower motivation among students (Armstrong-Mensah et al., 2020), perhaps because of the heightened awareness of the relevance of public health during a global pandemic. It has also been demonstrated that the transition to remote learning had a negative impact on student relationship-building, specifically the extent to which students interact with each other in and out of class (Jeffery and Bauer, 2020; Means and Neisler, 2020), and on students’ sense of belonging in the class (Means and Neisler, 2020; Wester et al., 2021). In response to the pandemic, several universities changed course policies to extend the deadline for course withdrawals or to allow greater access to pass/fail grading options (Burke, 2020). For example, Villanueva and colleagues (Villanueva et al., 2020) found higher course withdrawal rates among general chemistry undergraduates after students were offered an extended deadline for withdrawing from the course. Despite these negative student experiences, some studies have reported small increases in student grades in Spring 2020 compared to similar courses in previous years (Gonzalez et al., 2020; Loton et al., 2020; Bawa, 2021). Similarly, a nationwide analysis of scores on a microbiology concept inventory showed no decline and even some improvement in student learning gains in Spring 2020 (Seitz and Rediske, 2021).

There is some evidence for differential impacts of the transition to remote learning for students with different social identities. For example, a report based on survey data from 600 undergraduates in STEM courses across the United States showed that women, Hispanic students, and students from low-income households experienced major challenges to continuing with remote learning more often than men, white students, and students from middle- or high-income households, respectively (Means and Neisler, 2020). Another survey study found that the likelihood of lower-income students delaying graduation because of COVID-19 was 55% higher than that of higher-income students (Aucejo et al., 2020).

In contrast to students in in-person degree programs whose mode of learning changed drastically, the crisis did not fundamentally change the mode of learning for students who were already enrolled in fully online degree programs. Although other aspects of the lives of online students were still affected by the pandemic, online learning was not new to them or their instructors, courses did not need to be modified halfway through the term, and students expected to complete all coursework remotely when they signed up for the course. Therefore, comparing the impact of the pandemic on the grades of online and in-person students might allow us to tease apart the influence of the rapid transition to online learning from the stress of living through a global pandemic. One prediction would be that online students would experience less of a negative impact on learning due to the pandemic compared to their in-person counterparts because their educational modality did not change. An alternative prediction is that the differences in the student populations online and in person, specifically the higher percentage of individuals in the online program who hold one or more marginalized social identities and may be more vulnerable to the negative effects of the pandemic outside the class, would lead to greater negative impacts for online students as a result of the COVID-19 pandemic. Specifically, we know that the percentages of women, older students, students who are primary caregivers, and students from low-income households are consistently higher in online programs compared to in-person programs (Wladis et al., 2015; Cooper et al., 2019; Mead et al., 2020). These are groups that have been unequally disadvantaged during the pandemic in general. Therefore, it is important to control for demographic variables when comparing the effects of the COVID-19 pandemic on grades between students in online and in-person degree programs. 
Even though grades are an imperfect measure of learning (Yorke, 2011), it is important to examine them because receiving poor grades in STEM courses has been shown to have a major effect on students’ trajectories in college (Weston et al., 2019).

The biology program at Arizona State University (ASU) offers a unique opportunity to examine the impact of the emergency transition to remote learning on undergraduates. First, ASU offers equivalent in-person and fully online biology degree programs that have aligned curricula. This allows for comparison of the experiences of students in an in-person program transitioned to remote learning to the experiences of students enrolled in an online program prior to the COVID-19 pandemic. Our approach is akin to the “difference-in-difference” approach as we compare students’ grades before and during the pandemic in our “treatment group,” i.e., students who experienced an abrupt transition to remote learning, to the “control group,” i.e., students in the online program who did not experience an abrupt transition. However, we do not intend to make causal claims, but instead view the comparison to the online program courses as helping us in understanding the results from the courses that transitioned to remote learning. Second, ASU has a large, diverse population of students that allows for the examination of the extent to which the transition affected students with different social identities. Science, technology, engineering, and math (STEM) disciplines, such as biology, have long been exclusionary spaces dominated by relatively wealthy white men (Noordenbos, 2002; Hill et al., 2010; Ong et al., 2011). Underrepresentation of women, people of color, people with disabilities, and people with low socioeconomic status is well documented in the sciences (National Science Foundation and National Center for Science and Engineering Statistics, 2019). Therefore, it is important to examine the impact of the transition to remote learning on STEM students with social identities historically underrepresented in the sciences, for which ASU’s biology program provides a suitable context.
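The comparison described above can be written as a difference of differences in mean grades. The notation below is introduced here for illustration only and is not the authors'; consistent with the caveat in the text, the quantity is descriptive rather than a causal estimate.

```latex
\Delta_{\mathrm{DiD}} =
  \left(\bar{G}_{\text{in-person},\,2020} - \bar{G}_{\text{in-person},\,\mathrm{pre}}\right)
  - \left(\bar{G}_{\text{online},\,2020} - \bar{G}_{\text{online},\,\mathrm{pre}}\right)
```

Here $\bar{G}$ denotes a mean course grade and "pre" is assumed to pool the Spring 2018 and Spring 2019 semesters. A value of $\Delta_{\mathrm{DiD}}$ near zero would indicate that grades in the two programs changed by a similar amount in Spring 2020.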

In this study, following the recommendation from Hodges et al. (2020), we use the term “remote” to refer to in-person courses that transitioned abruptly to online instruction, while using the term “online” for courses that were designed to be online from the beginning. One important difference between the online and in-person programs after the transition to remote learning in Spring 2020 was that courses in the online program were fully asynchronous. In contrast, the courses in the in-person program were generally taught synchronously using web conferencing (e.g., Zoom) for lectures and typical in-class activities.

This study uses course grades during the Spring 2020, Spring 2019, and Spring 2018 semesters and survey data from instructors and students about the Spring 2020 semester to examine the impacts of the abrupt transition to remote learning due to COVID-19 during the Spring 2020 semester. While previous studies have examined the impact of the abrupt transition to remote learning on either student grades or instructional practices or student experiences, our study looks at all three of these in the same student population. Thus, our study gives us a more holistic understanding of the impact of the transition to remote learning on student learning in undergraduate STEM courses.

Specifically, our research questions were:

1. Did the abrupt transition to remote learning due to the COVID-19 pandemic affect grades for undergraduate students in an in-person biology program during the Spring 2020 semester? Was this effect on grades different from that found in the equivalent online biology program during Spring 2020? To what extent did the abrupt transition to remote learning disproportionately affect students with identities historically underrepresented in STEM?

2. What changes did in-person biology instructors make to their assessment practices after the abrupt transition to remote learning in Spring 2020 and to what extent do these explain any differences in student grades observed?

3. To what extent do in-person biology students perceive that their learning, interactions with peers and instructors, career preparation, interest in science, and feeling a part of the biology community were affected because of the abrupt transition to remote learning? To what extent did the abrupt transition to remote learning disproportionately affect these perceptions for students with identities historically underrepresented in STEM?

We acknowledge that our own identities influence the research questions that we ask and how we may interpret the data. Our author team includes individuals who identify as men, women, white, South Asian, Jewish, first-generation college-goers, first-generation immigrants, military veterans, and members of the LGBTQ+ community; members of our team grew up in middle class families in the United States, except KS who grew up in India. All the authors are committed to diversity, equity, and inclusion in the sciences and conduct education research focused on equity. This paper was motivated by our concerns regarding social inequities and how they are perpetuated and, in some cases, may be amplified in undergraduate science classrooms.

To understand the context of our data collection, it is necessary to briefly summarize the university’s academic policy responses to the COVID-19 pandemic. All in-person instruction was shifted to remote learning at the midpoint of the 15-week Spring 2020 semester. Online degree programs operate on a 7.5-week schedule, so although these students did not experience a change in learning modality, the societal effects of the pandemic would have been present in the Spring 2020 “B”-term. Hybrid or fully remote learning remained the norm in Fall 2020 when the surveys for this study were collected. This research was conducted under a protocol approved by the Arizona State University institutional review board (STUDY #9105).

We obtained course grades and student demographic information from the university registrar for Spring 2020 and two spring semesters prior to the pandemic for comparison, Spring 2019 and Spring 2018. Note that for the online degree program, the Spring 2020 “A”-term was completed before COVID-19 spread widely in the United States, so those course enrollments are treated as “pre-COVID.” The population of interest is undergraduate biology majors enrolled in either the in-person biology degree program or the fully online biology degree program. Therefore, we obtained course grades for 42 STEM courses that are core courses taken by students in these biology majors, including general biology courses, biochemistry, chemistry, physics, mathematics, and statistics. See Supplementary Table S1 for the full list of courses.

Our initial grades analysis dataset included a total of 25,100 student-course enrollments, with 8,323 from the Spring 2020 pandemic semester and the remainder from Spring 2018 or 2019. Of these, 19,181 course enrollments were in-person courses and the remaining 5,919 were in online degree program courses.

The demographic variables we collected for this study were gender, race/ethnicity, and two proxies for socioeconomic status (college generation status and federal Pell grant eligibility). Federal Pell grants are given to undergraduate students in the United States based on financial need, and the eligibility criteria take both income and assets into account (Federal Pell Grants, 2021). Therefore, it is an appropriate proxy for socioeconomic status for college students in the United States. The transition away from an in-person lecture and having to adapt to a large change mid-semester could also have negatively affected the learning of students with disabilities (Gin et al., 2021) as changing learning environments have presented novel challenges for deaf and hard of hearing students (Lynn et al., 2020) and students with disabilities more broadly (Gin et al., 2021). However, because we are using institutional data in these analyses and data on disabilities is protected by federal law, we were not able to examine the impact of the transition on students with disabilities in this study, nor were we able to explore other identities not routinely collected by the university registrar.

To explore changes in instructional practices in the Spring 2020 semester for instructors who had to transition to remote learning, we created a preliminary survey with several open-ended questions regarding changes in instructional practices, such as ways that they may interact with students and assessments used after the transition to remote learning (a copy of the survey questions analyzed is provided in the Supplementary Material). We contacted all of the biology instructors whose Spring 2020 courses transitioned to remote learning (132 in total); 27 instructors responded to the survey (20% response rate). Faculty members were recruited first via email, then verbally encouraged to participate at several follow-up virtual events attended by many of those in the recruitment group.

Building on the open-ended responses from the preliminary instructor survey, we created a second survey that asked in more detail about instructional changes in response to the pandemic. To assess cognitive validity, we conducted two think-aloud interviews with biology faculty members who taught in person during Spring 2020 and had to transition to remote learning (Beatty and Willis, 2007). These think-aloud interviews indicated that the instructors understood the questions. We then distributed this revised survey to all biology instructors who taught in-person courses in Spring 2020 (n = 132). In the event that they taught multiple courses, the survey asked them to respond based on their largest course size. This was done because large course instructors are subject to greater practical constraints when considering how to shift instruction to remote learning and because the larger sizes mean that a greater number of students in total are impacted by these decisions. The survey first asked instructors to identify any changes they made in their course. This question used a multiple-selection format with 1) 24 options provided, 2) an option to say that no changes were made, and 3) an option to describe other changes not listed. The survey also asked instructors to report the extent to which they tried to reduce cheating in their course, the extent to which they made their course more flexible, and the extent to which they made their course easier. Each of these questions was answered using a six-point Likert scale from strong agreement to strong disagreement with no neutral option and they were asked to explain each answer (a copy of the survey questions analyzed is provided in the Supplementary Material). While instructors also experienced many of the same personal challenges resulting from the pandemic that students did, our focus was on the student experience and therefore we only asked instructors about instructional changes.

A total of 43 out of the 132 biology instructors who were contacted completed the second survey (33% response rate) based on their experiences teaching an in-person biology course that shifted to fully remote instruction in the Spring 2020 semester. Of these, 18 had taught an in-person course that transitioned during Spring 2020 with at least 100 students.

To explore student perceptions of learning during Spring 2020, we surveyed in-person biology students during Fall 2020 to ask specifically about their experiences during the Spring 2020 semester when their in-person courses rapidly transitioned to remote learning.

Our survey contained both closed-ended and open-ended questions. We asked students to think about the largest biology course they took in the Spring 2020 semester to answer the questions that were course-specific (i.e., impact on grades, impact on learning, and perceived instructional changes). Asking about the largest class made it more likely that student survey responses would be comparable to instructor survey data. To assess cognitive validity of survey items, we conducted six think-aloud interviews with undergraduate students and iteratively revised survey items until no further changes were suggested (Beatty and Willis, 2007). The final survey contained questions about the perceived impact of the rapid transition to remote learning on student learning, grades, interest in their biology major, interest in learning about scientific topics, feeling a part of the biology community at the university, and career preparation. Each question was answered using a seven-point scale from “strong negative impact” to “strong positive impact.” In addition, we asked about the impact of the transition on the amount of time spent interacting with instructors and other students, and the amount of time spent studying. These items were also answered using a seven-point scale ranging from “greatly decreased” to “greatly increased.” During our think-aloud interviews with undergraduate students, the necessity of a “neutral” option for these survey items was brought up by multiple students. Therefore, we used a seven-point scale for these items instead of the six-point scale used in our instructor survey. We also asked students about perceived instructional changes to the course in terms of measures to prevent cheating, increase flexibility, and make the course easier. These were on a six-point scale from “strongly agree” to “strongly disagree” with no neutral option for consistency with the instructor survey (see Supplementary Material for the analyzed survey questions).

We included some demographic questions at the end of the survey so we could test for any differential effects on student experience by social identities, specifically gender, race/ethnicity, college generation status, and eligibility for federal Pell grants. For race/ethnicity, we asked students two questions: whether they identified as Hispanic/Latinx and whether they identified as Black/African American, Native American/Alaska Native, or Native Hawaiian/Pacific Islander. Students who selected “yes” to either of these questions were grouped together as BLNP for our analyses. We grouped students in this manner because all these groups are historically underrepresented in the sciences and our sample sizes for the student survey were not large enough to allow us to disaggregate race/ethnicity data.
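The grouping rule described above can be sketched in a few lines of Python. The function name, argument names, and the "yes"/"no" response encoding are hypothetical choices made for illustration; the source does not describe how the survey responses were stored.

```python
def is_blnp(hispanic_latinx: str, black_native_pacific: str) -> bool:
    """Return True if a respondent answered "yes" to either race/ethnicity item.

    Assumed encoding: each answer is a string such as "yes" or "no".
    """
    answers = (hispanic_latinx.strip().lower(), black_native_pacific.strip().lower())
    return "yes" in answers


# Hypothetical respondents: (Hispanic/Latinx item, second race/ethnicity item)
respondents = [("Yes", "No"), ("no", "no"), ("no", "YES")]
blnp_flags = [is_blnp(a, b) for a, b in respondents]
```

A single "yes" on either item is enough to place a respondent in the combined group, which mirrors the "either of these questions" wording in the text.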

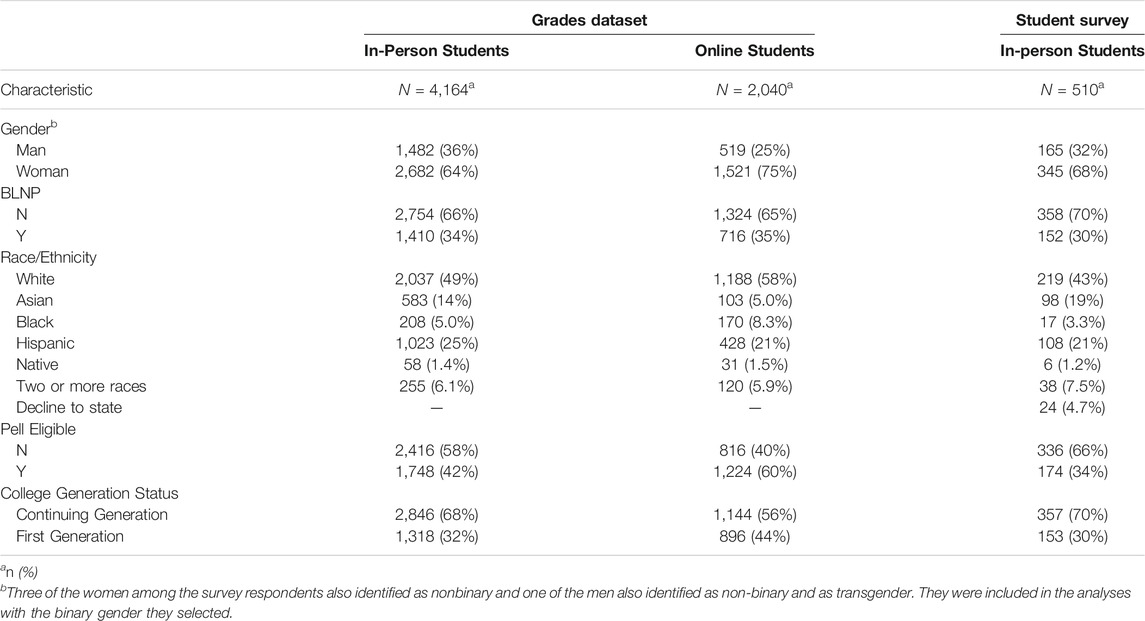

In Fall 2020, we used a convenience sampling approach to recruit eight biology instructors who agreed to distribute our survey to students in their classes. The survey was sent to a total of 1,540 students in these eight courses and students were offered a small amount of extra credit for completing the survey. A total of 798 students completed the survey, resulting in a response rate of 51.8%. However, only 601 of these students were enrolled in the in-person biology degree program in Spring 2020. Of these students, 70 reported that they did not take any biology courses in Spring 2020 and 21 students had missing data. After removing these students, we were left with responses from 510 students who had taken in-person biology courses that had transitioned to remote learning in Spring 2020 (Table 1). The demographics of student survey respondents included in the analyses largely reflect the demographics of in-person students in the course grades dataset. However, Pell-eligible students, white students, and Hispanic/Latinx students were slightly under-represented among survey respondents (Table 1). Asking students to think about the largest in-person biology course they took in Spring 2020 for the survey gave us data for 25 courses, although for 13 of these courses, we had fewer than 10 respondents.
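The sample-flow arithmetic in this paragraph can be checked with a short Python sketch. All counts are taken from the text; the variable names are ours.

```python
# Counts reported in the text for the student survey
surveys_sent = 1540
surveys_completed = 798
in_person_program = 601   # respondents enrolled in the in-person program in Spring 2020
no_biology_course = 70    # took no biology course in Spring 2020
missing_data = 21

# Response rate as a percentage, rounded to one decimal place
response_rate = round(100 * surveys_completed / surveys_sent, 1)

# Respondents retained for analysis after both exclusions
analytic_sample = in_person_program - no_biology_course - missing_data
```

Both derived values agree with the figures reported in the paragraph (a 51.8% response rate and 510 retained students).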

TABLE 1. Demographics for unique students in the in-person and online course grades and survey data set. Pell eligibility and college generation status are included as proxies for socioeconomic status. BLNP refers to Black, Latinx, Native American, and Pacific Islanders.

Course grades were analyzed on a 0–4.33 scale (A+ = 4.33, A = 4.0, A− = 3.66, ... E = 0). Two students identified as non-binary gender and were excluded from the analyses due to small sample size. Grades other than A–E (e.g., withdraw grades) were excluded from analysis; this was a total of 2,404 student-course enrollments, or 9.6% of the total dataset. The decision to remove these grades from analysis is consistent with prior studies (Matz et al., 2017; Mead et al., 2020). To control for prior academic performance, we use “GPAO,” which refers to a student’s grade point average in other courses, including both STEM and non-STEM courses (Huberth et al., 2015; Matz et al., 2017). Entries with missing GPAO were excluded; this occurs for first-semester students who enroll in a single course or who withdraw from all courses. After all exclusions, our final dataset contained 22,314 student-course enrollments.
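As a rough illustration of the GPAO control, the sketch below computes, for each student-course enrollment, the grade point average over that student's other courses. The credit weighting, the tuple layout, and the function name are assumptions made for this example; the source only states that GPAO is the grade point average in other courses and that entries with missing GPAO were excluded.

```python
from collections import defaultdict


def compute_gpao(enrollments):
    """Map (student_id, course_id) -> GPA in the student's other courses.

    enrollments: iterable of (student_id, course_id, grade_points, credits).
    Returns None for an enrollment when the student has no other graded
    course, which corresponds to a missing GPAO.
    Assumption: credit-weighted averaging.
    """
    enrollments = list(enrollments)
    totals = defaultdict(lambda: [0.0, 0.0])  # student -> [points * credits, credits]
    for student, _course, points, credits in enrollments:
        totals[student][0] += points * credits
        totals[student][1] += credits

    gpao = {}
    for student, course, points, credits in enrollments:
        other_credits = totals[student][1] - credits
        if other_credits <= 0:
            gpao[(student, course)] = None  # no other courses: GPAO is missing
        else:
            other_points = totals[student][0] - points * credits
            gpao[(student, course)] = other_points / other_credits
    return gpao


# Hypothetical data: student "s1" has three courses, "s2" has only one
example = [
    ("s1", "BIO", 4.0, 3),
    ("s1", "CHM", 3.0, 3),
    ("s1", "ENG", 2.0, 3),
    ("s2", "BIO", 3.0, 3),
]
result = compute_gpao(example)
```

In this made-up example the GPAO for s1 in BIO averages only the CHM and ENG grades, and the single-course student s2 has no GPAO, matching the exclusion case described in the text.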

In Spring 2018 and 2019, the non-letter grades were almost exclusively W or “withdraw” grades. In Spring 2020, about a third of the non-letter grades were “Y” grades, which is designated as “satisfactory” work at a level of a C or higher. In normal circumstances, most students would not be eligible to receive a Y grade, but this was relaxed in response to the unique circumstances of the pandemic. There were no policy changes made regarding withdrawals and the withdraw dates were consistent across the three terms studied. The combined proportion of non-letter grades increased in Spring 2020 compared to 2018 and 2019, rising to 14.6% from 13.8% for online courses and to 9.5% from 7.5% for in-person courses. Looking in detail, the withdraw percentage decreased in both modalities but this was largely offset by the number of students taking the Y grade. We can infer from this that students employed a strategic approach to the Y grade in Spring 2020 that is similar to the approach ordinarily taken to the withdraw option. We will return to this issue in the discussion.

To determine the direction and significance of the effect of the shift to remote learning on student grades, we performed a linear mixed-effects regression on the numerical course grades. The fixed effects in the model included a dummy variable for the Spring 2020 (“COVID-19”) semester, whether the student was enrolled in the in-person or online degree program, an interaction between these two variables, and the GPAO term. We included random effect terms for course section, to account for the fact that each section was graded differently, and for each student, to account for the fact that most students are represented multiple times across the grades data. We examined and report intraclass correlation coefficients (ICC) for each model to quantify the contribution of the random effects.
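One way to write down the model described above is given below. The symbols and the 0/1 coding of the dummy variables are our assumptions for illustration; the authors' exact specification is in their supplementary material.

```latex
G_{ij} = \beta_0
       + \beta_1\,\mathrm{COVID}_{j}
       + \beta_2\,\mathrm{InPerson}_{i}
       + \beta_3\,\left(\mathrm{COVID}_{j} \times \mathrm{InPerson}_{i}\right)
       + \beta_4\,\mathrm{GPAO}_{ij}
       + u_{j} + v_{i} + \varepsilon_{ij},
\qquad
u_{j} \sim \mathcal{N}(0, \sigma_u^2),\quad
v_{i} \sim \mathcal{N}(0, \sigma_v^2),\quad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma_\varepsilon^2)
```

Here $G_{ij}$ is the numerical grade of student $i$ in course section $j$, $u_j$ is the section random intercept, and $v_i$ is the student random intercept. Under this formulation, $\beta_3$ captures whether the Spring 2020 change in grades differed between the in-person and online programs. A common definition of the intraclass correlation for, say, the section effect is

```latex
\mathrm{ICC}_{\text{section}} = \frac{\sigma_u^2}{\sigma_u^2 + \sigma_v^2 + \sigma_\varepsilon^2}
```

with the analogous expression using $\sigma_v^2$ in the numerator for the student effect. Whether the authors report per-effect or combined ICC values is not stated in this section.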

To determine the direction and significance of the effect of the shift to remote learning on grades received by students with identities historically underrepresented in STEM, we added interaction terms between the dummy variable for the Spring 2020 (“COVID-19”) semester and each of the demographic terms to the model described above. We again controlled for GPAO and included random effect terms for course section and student in this model (see Supplementary Table S2 for model specifications). From this model, we performed stepwise removal of terms and made model selections based on AIC and BIC values and statistical comparisons using likelihood ratio tests to arrive at a final regression model.
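The quantities used in this kind of model comparison can be sketched as follows. This is an illustration of the standard AIC, BIC, and likelihood ratio formulas, not the code used in the analysis. The function names are hypothetical, and the p-value helper covers only the case where the two nested models differ by a single parameter.

```python
import math


def aic(log_lik: float, n_params: int) -> float:
    """Akaike information criterion: 2k - 2 ln(L). Lower is better."""
    return 2 * n_params - 2 * log_lik


def bic(log_lik: float, n_params: int, n_obs: int) -> float:
    """Bayesian information criterion: k ln(n) - 2 ln(L). Lower is better."""
    return n_params * math.log(n_obs) - 2 * log_lik


def lrt_statistic(log_lik_full: float, log_lik_reduced: float) -> float:
    """Likelihood ratio statistic for two nested models."""
    return 2 * (log_lik_full - log_lik_reduced)


def lrt_p_value_df1(statistic: float) -> float:
    """Upper-tail chi-square probability for 1 degree of freedom.

    Uses the identity P(X > x) = erfc(sqrt(x / 2)) for X ~ chi-square(1).
    """
    if statistic < 0:
        raise ValueError("likelihood ratio statistic must be non-negative")
    return math.erfc(math.sqrt(statistic / 2))
```

In a stepwise removal, a term would typically be dropped when removing it lowers AIC and BIC and the likelihood ratio test does not favor the larger model.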

We summarized the changes in instructional practices based on the frequency of selection of practices by instructors. To understand the extent to which changes in assessment practices made by instructors might explain differences in student grades in Spring 2020 compared to previous semesters, we examined data from 10 instructors who responded to our survey who had taught the same course in Spring 2020 and either Spring 2019 or 2018. We performed course-level linear regressions on the relative grade difference using the following variables as predictors: total number of changes made, use of lockdown browsers for exams, whether they made efforts to reduce cheating, and whether they worked to make the course easier. All variables were dichotomous except number of changes made. The question about making the course more flexible was not included because all ten of the instructors who had taught the same course in Spring 2020 and Spring 2019 or 2018 agreed with this question.

We calculated the total percentage of students that reported negative impacts on their learning, amount of time studying and interacting with peers and instructors, career preparation, interest in science, and feeling a part of the biology community. To analyze the open-ended data, we used open-ended coding methods to identify themes that emerged from student responses (Strauss and Corbin, 1990). We used constant comparison methods to develop the coding scheme; student responses were assigned to a category and were compared to ensure that the description of the category was representative of that response and not different enough to require a different category. Inter-rater reliability was established by having two coders (S.E.B. and R.A.S.) analyze 20% of the data, after which one person coded the rest of the data. For the codes describing student perceptions of the positive impact of the transition to remote instruction on learning, the two raters compared their codes and their inter-rater reliability was at an acceptable level (k = 0.88). For the codes describing student perceptions of the negative impact of the transition on learning, inter-rater reliability was likewise at an acceptable level (k = 0.88). We report any code that at least 10 students mentioned.
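To illustrate how an agreement statistic of this kind is computed, the sketch below implements Cohen's kappa for two raters who each assign one code per response. That the reported k is Cohen's kappa is our assumption; the text does not name the statistic. The function name is hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(codes_a: Sequence[Hashable], codes_b: Sequence[Hashable]) -> float:
    """Cohen's kappa: chance-corrected agreement between two raters."""
    if len(codes_a) != len(codes_b) or len(codes_a) == 0:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(codes_a)
    # Observed proportion of items on which the raters agree.
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    # Agreement expected by chance, from each rater's marginal code frequencies.
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical code throughout
    return (observed - expected) / (1 - expected)
```

Kappa equals 1 for perfect agreement and 0 when agreement is no better than chance, which is why it is preferred over raw percent agreement when some codes are much more common than others.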

For eight of the Spring 2020 courses in our dataset, we had data from both the instructor and more than 10 students for each course. For these courses, we assessed if student responses to perceived instructional changes to the course aligned with the instructional changes as reported by the instructors. We analyzed the strength of this relationship through Spearman rank correlations between the median Likert value for students in each course and the strength of the instructor’s agreement using a Likert scale.
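A Spearman rank correlation of this kind can be sketched as the Pearson correlation of the ranked values, with tied values sharing their average rank. This is a self-contained illustration rather than the code used in the analysis, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            ranks[order[k]] = mean_rank
        pos = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sd_x * sd_y)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("inputs must have equal length of at least 2")
    return pearson(average_ranks(x), average_ranks(y))
```

Here `x` would hold the median student Likert value for each course and `y` the instructor's Likert response for the same course. With only eight courses and a five-point scale, ties are common, so the average-rank handling matters.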

To examine demographic differences in the perceived impact on students, we used ordinal mixed model regressions with the Likert scale option chosen by students as the outcome and gender, race/ethnicity, Pell-eligibility, and first-generation to college status as predictors. We used course section as a random effect with varying intercepts in all the models to account for the nested nature of our data.
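One way to write such an ordinal mixed model is as a cumulative link model with a random intercept for course section. The logit link, the sign convention, and the coding of each predictor as a 0/1 indicator are assumptions made for illustration; the text does not specify them.

```latex
\operatorname{logit} P(Y_{ij} \le k)
  = \theta_k - \left(\beta_1\,\text{Woman}_i + \beta_2\,\text{BLNP}_i
  + \beta_3\,\text{Pell}_i + \beta_4\,\text{FirstGen}_i + u_j\right),
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2)
```

Here Y_ij is the Likert option chosen by student i in section j, θ_k are the ordered thresholds between adjacent response options, and u_j is the varying intercept for course section.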

All statistical analyses were performed in R (R Core Team, 2019) and made use of the lme4 (Bates et al., 2015), lmerTest (Kuznetsova et al., 2017), performance (Lüdecke et al., 2020), sjPlot (Lüdecke, 2020), and ordinal (Christensen, 2015) packages.

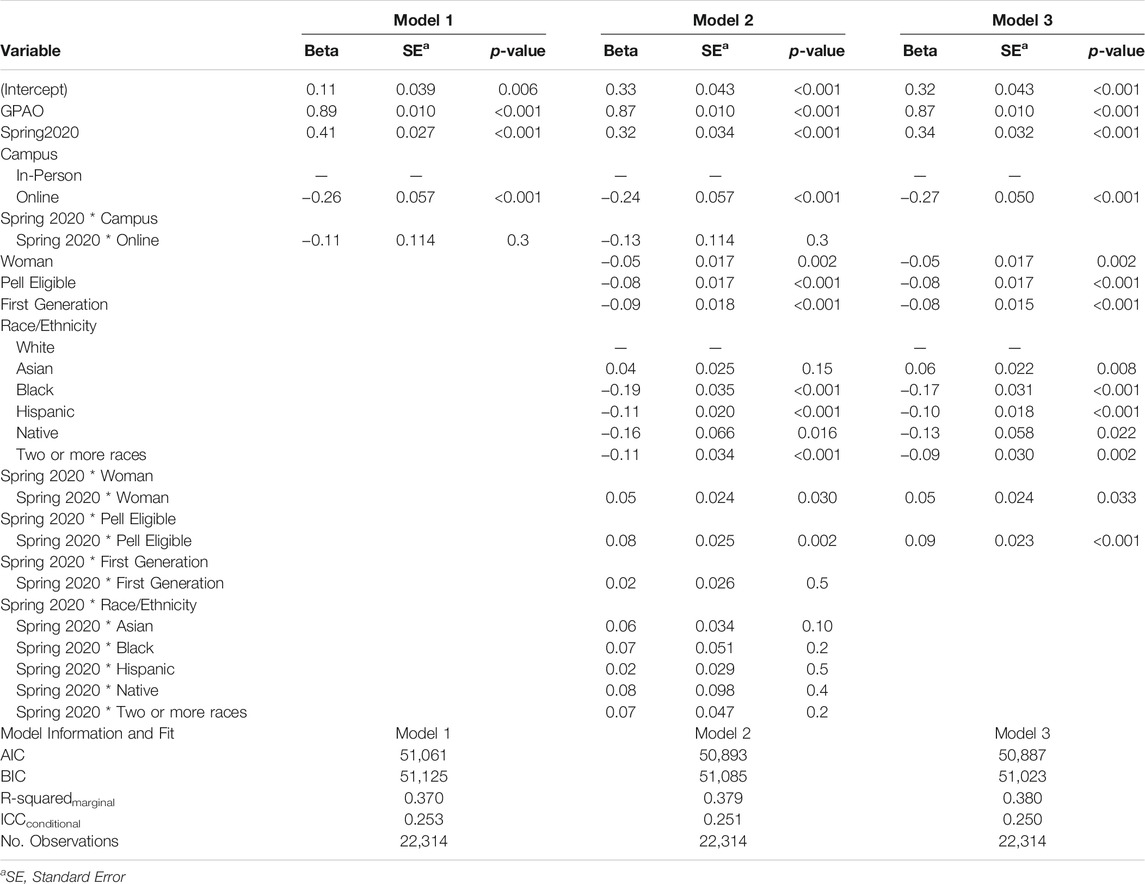

Overall, our linear mixed effects regression results show that the Spring 2020 semester was associated with a positive grade shift of 0.41 grade units (Table 2). Students earned higher grades in Spring 2020 courses compared to students enrolled in those courses in Spring 2019 and Spring 2018. Results also show that this Spring 2020 grade effect was not significantly different between the online and in-person programs (Table 2). The online program is associated with lower course grades overall, which is consistent with our prior work (Mead et al., 2020).

TABLE 2. Linear regression results for courses in the in-person and online degree programs. These models show interaction effects between each demographic category and the COVID-19 semester. Model 3 is the final result of a model selection process starting from Model 2.

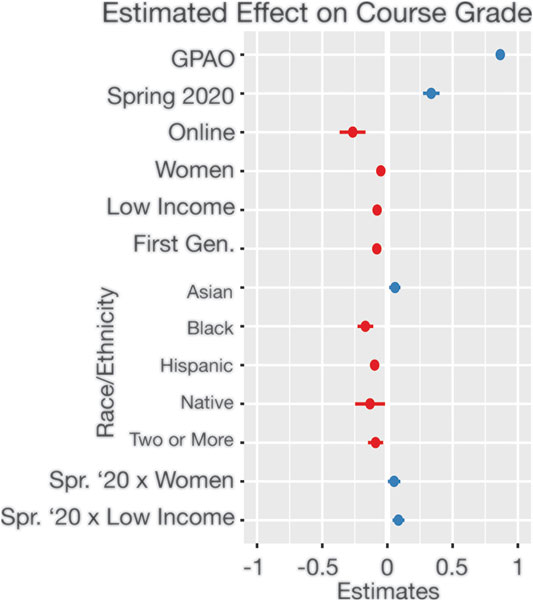

Our final regression model (Model 3) showed no significant negative interactions for any of the demographic variables that we examined, including gender, race/ethnicity, and socioeconomic status (Figure 1; Table 2). Contrary to our prediction, the modelling shows positive, but mostly non-significant, interaction effects for all groups compared to their historically overrepresented counterparts. The two statistically significant interactions indicate that women have a Spring 2020 effect 0.05 greater than men and that Pell-eligible students have an effect 0.09 greater than non-Pell-eligible students.

FIGURE 1. Regression estimates of effect on course grade. Results are shown from the final model (Model 3) after model selection. Full model specifications, including reference categories for categorical variables, are provided in Table 2. All predictors shown are statistically significant. Error bars are ± 2SE.

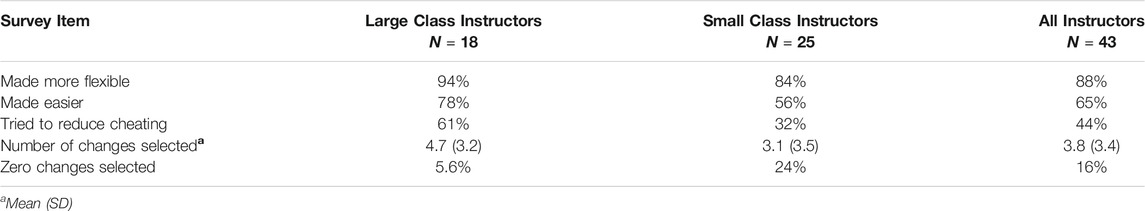

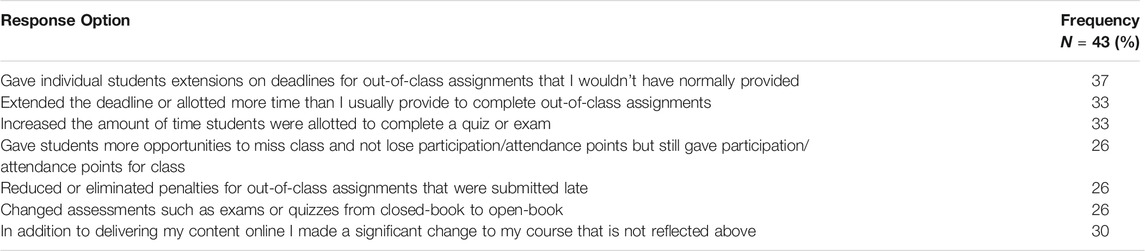

Overall, most instructors who responded to the survey reported making changes to their in-person courses when they needed to transition to remote learning during the COVID-19 pandemic, including being more flexible and making the course easier (Table 3). Focusing on the large courses, about 60% of instructors agreed that they took steps to reduce cheating. Nearly all large course instructors (94%) agreed that they made changes to be more flexible to help students who were experiencing challenges and most (78%) agreed that they made it easier for students to do well.

TABLE 3. Summary of in-person instructor survey responses about the changes they made to their course after the transition to remote learning in Spring 2020.

From our list of 24 options (Table 4; see Supplementary Table S3 for the full set of options), instructors of large courses selected about five changes on average. The most frequently selected changes were generally related to time and deadline extensions as well as conducting open-book exams. Changing the weighting or number of exams or changing the difficulty of questions on quizzes or exams were less commonly selected. Thirteen respondents added open-ended comments in addition to the provided choices. Five of these related to changes needed to replace planned fieldwork or labs. The remainder detailed specific content-related adjustments or discussed changes to increase instructor availability to students.

TABLE 4. Frequencies of selection of fixed choice options for course changes by in-person instructors after the transition to remote learning in Spring 2020. This table only shows options chosen by ≥25% of respondents; for full results, see Supplementary Table S4.

Within the subset of surveyed instructors who taught the same class in Spring 2020 and one of the prior Spring terms, our linear regression showed that none of the instructional changes in assessment practices were significant predictors of the difference in grades received by the analyzed students in Spring 2020 compared to the previous two Spring semesters (Supplementary Table S4). Greater instructor flexibility could be associated with the increase in grades across all courses, but we were not able to test this relationship because all ten of the instructors in this subset reported increasing flexibility in their courses.

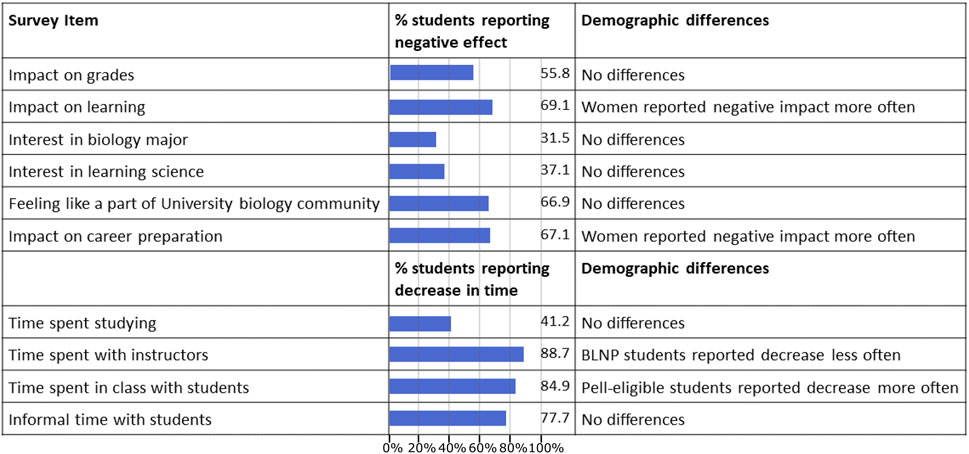

About 56% of students reported that they think the transition to remote learning negatively impacted their grade, although our grade analysis suggests this is unlikely considering that the average student earned higher grades. However, almost 70% of students said the transition to remote learning negatively impacted their learning in the same course (Figure 2). We analyzed the reasons why students felt that the transition to remote instruction either positively or negatively affected their learning (Tables 5, 6). For the 30% of students who thought that it positively impacted their learning, they said it did so because lectures were recorded so they could review them or see more of them (18.3%), they felt as though they could learn at their own pace (15.0%), they felt like remote learning allowed them to engage with the material in a more active learning way (11.7%), or they felt more comfortable learning at home as opposed to in a large classroom (8.3%). There was also a subset of students who felt as though they had more time in general during the pandemic, which allowed them to focus more on studying (16.7%). For the 70% of students who reported that the pandemic negatively impacted their learning, 27.2% of students reported that they felt as though they understood less and remembered less during remote instruction (Table 6). Students also reported a loss of concentration or focus (24.6%), fewer opportunities to interact with others and ask questions (17.0%), and having less motivation or interest (9.9%). Less common responses included: feeling overwhelmed by greater amounts of work after the transition to remote learning (5.9%), lack of hands-on learning, particularly in lab courses (4.0%), general stress associated with the pandemic that increased distractions outside of coursework (3.7%), procrastination and less accountability (3.1%), and technical issues (2.8%).

FIGURE 2. Percentage of students who reported a negative impact or reported a decrease in the time spent on various activities during Spring 2020 along with ordinal regression results on demographic differences. BLNP refers to Black, Latinx, Native American, and Pacific Islanders. Pell eligibility and college generation status were included as proxies for socioeconomic status. The reference groups for the regression analyses were: men, non-BLNP students, continuing-generation students, and students who were not eligible for Pell grants.

Our analyses of the closed-ended Likert scale data showed that a large proportion of students (67.1%) reported that the transition to remote learning in Spring 2020 had a negative impact on their career preparation. A smaller, but still substantial, proportion of students reported a negative impact of the transition to remote learning on their interest in their biology major (31.5%) or interest in learning about scientific topics (37.1%). However, many more students (66.9%) reported a negative impact of the transition to remote learning on their feeling of being a part of the biology community at the university. See the Supplementary Material for full Likert scale responses for each of the survey items.

Most students reported that the amount of time they spent on interactions with instructors and other students, both in and outside of class, decreased as a result of the transition to remote learning. In fact, about 63% of students said that the amount of time they spent interacting with other students in class and outside of class greatly decreased in Spring 2020, which was the strongest response option (Supplementary Table S11). However, student responses were fairly split on the amount of time spent studying for a course, with about 45% of students reporting an increase in the amount of time they spent studying and 41% reporting a decrease (Figure 2).

We compared student and instructor perceptions of the adjustments made in Spring 2020. For the eight courses where both student and instructor data were available, this comparison showed that students’ perceptions of instructional practices were not correlated with instructors’ perceptions of their own practices. Spearman rank correlations were not statistically significant for any of the three questions about instructional practices posed to instructors and students, i.e., whether instructors took steps to reduce cheating in their course, whether instructors tried to make their course more flexible, and whether instructors tried to make the course easier. Visual examination of our data shows that students tended to slightly overestimate instructor efforts to reduce cheating and slightly underestimate instructor efforts to make the course easier and more flexible (Supplementary Figure S1).

We did not find significant demographic differences in the student Likert responses to most of the survey items. In Figure 2, we describe the few demographic differences we found through our ordinal mixed models (see full ordinal regression results in the Supplementary Material). Although most students reported that the time spent with instructors decreased or greatly decreased during the pandemic, the proportion of BLNP students that chose these options was lower than non-BLNP students. Pell-eligible students were more likely to report that time spent with other students in class greatly decreased compared to students that were not Pell-eligible. Lastly, women were significantly more likely than men to report negative impacts on their learning in a course and on career preparation.

Contrary to our predictions, transition to remote learning due to the COVID-19 pandemic in Spring 2020 did not have a negative effect on student grades and instead had a small positive effect across demographic groups among students enrolled in the in-person and online biology degree programs. Our instructor surveys showed that instructors who had to transition to remote learning increased flexibility and made several other changes in assessment practices that might have contributed to the slight increase in student grades in the in-person courses. Despite this increase in grades, our student surveys revealed several negative impacts of the transition to remote learning, particularly on students’ perceived understanding of course content, interactions with other students and instructors, feeling like a part of the biology community at the university, and career preparation. These negative impacts do not seem to have a stronger effect on students with certain social identities over others for the most part. However, women were more likely to report negative impacts on their learning and career preparation compared to men, a result consistent with concerns about widening gender inequities due to the COVID-19 pandemic. Additionally, Pell-eligible students reported a decrease in the amount of time spent in and outside of class interacting with other students more often, which is consistent with concerns regarding logistical difficulties for students from less wealthy backgrounds. Together these findings suggest that instructor responses were effective in mitigating negative impacts on student grades across all demographic groups examined in this study, and notably did not seem to induce any new inequities based on demographics, but that the abrupt transition to remote learning still led to a diminished perception of learning and career development during the Spring 2020 semester for many students.

The observed mismatch between grades and student perceptions of their learning might be because students underestimated their learning (Carpenter et al., 2020). Some studies have shown that student perceptions of learning can be positively correlated with their grades (Anaya, 1999; Kuhn and Rundle-Thiele, 2009; Rockinson-Szapkiw et al., 2016). However, a recent study comparing the effects of active and passive (i.e., lectures) instruction on student learning found that students who received active instruction scored higher on the learning assessment but perceived that they learned less than their peers who received passive instruction (Deslauriers et al., 2019). Thus, even though it has been shown that students, on average, learn more from active learning (Freeman et al., 2014; Theobald et al., 2020), students’ perception of learning might not match their actual learning. A meta-analysis showed that student perceptions of their learning are more strongly related to affective outcomes, such as motivation and satisfaction, and have a much weaker relationship to learning outcomes, such as scores (Sitzmann et al., 2010). However, one reason for this may be that grades are often not an accurate measure of student learning (Yorke, 2011). Given this background and our results that instructors were more flexible with grading after the transition to remote learning in Spring 2020, we think it is likely that the increase in grades does not actually reflect an increase in student understanding of the course material. Instead, students earned higher grades while self-reporting that they learned less, which we find concerning for the extent to which their completion of these college courses is preparing them for their future courses and careers.

The slight increase in average student grades in Spring 2020 compared to previous semesters is consistent with other studies that have examined student grades in Spring 2020 at other institutions (Gonzalez et al., 2020; Loton et al., 2020; Bawa, 2021). Interestingly, no significant interaction was observed between instruction mode and the Spring 2020 effect, indicating that the increase in grades was similar for courses that experienced the emergency transition to remote learning and courses in the online degree program that did not experience a transition in modality. Although we did not survey the online instructors, this suggests that both in-person and online instructors may have been responsive to the public health and economic crisis due to the COVID-19 pandemic and been more lenient and flexible in their grading. Our models show no evidence that the shift in grades in the Spring 2020 term exacerbated pre-existing demographic grade gaps. In fact, for women and Pell-eligible students, the Spring 2020 grade shift was slightly more positive than for men and non-Pell-eligible students, respectively. Thus, the grade increase in Spring 2020 did not fall along the lines of power and privilege in our society and benefited students with all social identities. A similar result was found in a study on student scores at Victoria University in Australia where the researchers found statistically significant but very small differences in the impact of COVID-19 on student scores between demographic groups (Loton et al., 2020).

The instructor surveys show that among our study population, most instructors made accommodations related to deadlines and stated that they took steps to make their courses easier for students to do well. Other studies have also reported greater flexibility among instructors in Spring 2020, including instructors in general chemistry courses at a liberal arts college in the United States (Villanueva et al., 2020). A survey study of faculty members and administrators across the United States found that 64% of faculty members changed the kinds of exams or assignments they asked students to complete in the course and about half of them lowered expectations on the amount of work from their students in the Spring 2020 semester (Johnson et al., 2020). Additionally, many universities expanded access to pass/fail grading structure instead of the more traditional A-F letter grades for students, with some institutions even making the pass/fail grading structure mandatory for all courses (Burke, 2020). Arizona State University allowed faculty members to use the range of grading options that have always been available, but perhaps not used as often prior to Spring 2020. This included the traditional A through E grading scale, plus the use of the I or Incomplete grade (allowing students to complete coursework within 1 year of the end of the term) and the Y grade which indicates “Satisfactory” work at a level of C or higher, similar to the Pass grade at other universities. Thus, our study affirms other reports that the focus across colleges and universities to make courses more flexible and less stressful for students in Spring 2020 may have off-set potential drops in student grades. While we see the benefit of this flexibility for students, particularly that we did not see demographic differences in these grade increases, we do find it concerning that students still felt as though they learned less. 
We encourage instructors to be thoughtful about what they are doing to make their courses flexible. Some of these changes may have eliminated difficulty unrelated to course content and/or learning goals (e.g., timed exams), and retaining these changes could make courses more equitable and inclusive moving forward. Such changes can be made while maintaining the quality of teaching and providing students with ways to engage in deep learning so that they are not disadvantaged at a later timepoint because they have not learned as much as they needed to in that earlier course.

Many students recognized the positive impact of greater instructor flexibility and changes in assessment practices on their grades, while recognizing the negative impact of the transition on their understanding of the course material. This is consistent with other survey studies that show that students perceived a negative impact on their learning or were less satisfied with their learning after the transition to remote learning (Loton et al., 2020; Means and Neisler, 2020; Petillion and McNeil, 2020). In our study, most students also reported negative impacts on interactions with other students and instructors, career preparation, and a feeling of being a part of the biology community at the university. These are also consistent with other studies on student experiences (Jeffery and Bauer, 2020; Means and Neisler, 2020). A larger survey study of in-person students at Arizona State University across various degree programs, the same institution where our study was conducted, found several striking negative impacts on career preparation due to COVID-19. According to this study, 13% of students delayed graduation, 40% suffered the loss of a job/internship, and 29% of students expected to earn less by age 35 (Aucejo et al., 2020).

We found similar perceptions of negative impacts on student learning, interactions, and career preparation across demographic groups with few significant differences. We found that women were more likely to report negative impacts on their learning and career preparation compared to men. This is not surprising given the greater childcare obligations with school closures and that women spend more time doing unpaid care work compared to men (Fortier, 2020). In an interview study of engineering students, women reported having to spend more time on domestic duties while men described having more free time after the transition to remote learning during the Spring 2020 semester (Gelles et al., 2020). Together this suggests that the COVID-19 pandemic has exacerbated gender inequities and could have long-term negative impacts on women’s education and careers that are not captured in simply examining student course grades. We encourage future studies to explore how the COVID-19 pandemic affected the persistence of women in STEM careers.

The only survey item in which we found a significant difference between BLNP and non-BLNP students was the time spent with instructors, where BLNP students chose the option “greatly decreased” less often. Previous studies show that BLNP students often have more interactions with faculty members compared to white students, although they also have negative interactions with faculty members more often (Lundberg and Schreiner, 2004; Park et al., 2020). Still, their greater experience of interacting with faculty members might have prepared them better to communicate with instructors during emergency remote learning. High-quality interactions with faculty members have been shown to have positive effects on student learning (Lundberg and Schreiner, 2004; Cole, 2011; Tovar, 2015). However, BLNP students did not report fewer negative impacts on learning compared to non-BLNP students. This suggests that even though BLNP students reported a decrease in the time spent with instructors less often, it might not have translated into benefits for their learning.

We also found that students from less wealthy backgrounds (operationalized through federal Pell grant eligibility) more often reported a reduction in time spent with other students in class after the transition to remote learning. Pell-eligible students were also 1.2 times more likely to be working a job after the transition to remote learning and 1.5 times more likely to be working more than 20 h a week compared to students that were not eligible for federal Pell grants (Supplementary Table S12). With greater availability of recorded lectures, Pell-eligible students may have attended fewer synchronous sessions, thus further reducing their interactions with other students. Although the decrease in interactions with other students is not desirable, making lectures available for students to watch later might offer students greater flexibility in juggling coursework with other work/family responsibilities. Indeed, some students reported positive impacts on learning after the transition to remote learning due to the availability of recorded lectures and being able to learn at their own pace (Table 5). Overall, instructors may need to find a balance between asynchronous learning, which makes learning more accessible, and synchronous learning, which fosters peer interactions.

The transition to remote learning had a negative impact on interest in the biology major or in learning about scientific topics for about a third of the students. A similar study of students enrolled in a general chemistry course at a large public university in the southern United States found no significant change to students’ identities and intention to pursue a career in science due to COVID-19 (Forakis et al., 2020). We did not find any demographic differences in student responses to questions about science interest, which is encouraging given the importance of increasing representation of women, Black students, Latinx students, and students that grew up in low-income households in STEM. Almost two-thirds of students reported a negative impact of the transition to remote learning on their feelings of being a part of the biology community at the university, which is alarming, although not surprising, given that students reported spending less time interacting with both instructors and their peers. Creating opportunities for increasing interactions using various modes of synchronous and asynchronous communication (e.g., online office hours, discussion boards, apps) might help students feel a greater sense of community and social presence of others in the class.

Instructor responses to our survey items about whether they took steps to prevent cheating, increased flexibility, or made the course easier are in broad agreement with student responses to those survey items. Most students seem to recognize their instructors’ efforts during the transition to adapt their courses to the online modality as well as to the public health and economic crisis. However, students’ underestimation of instructor flexibility and changes to make courses easier suggests that communication between students and instructors might need to be strengthened. Instructors may have needed to use more “instructor talk,” which is defined as any discussion that is not specific to the course content, to signal the changes that they were making to the courses and why they were making these changes (Seidel et al., 2015). It is also possible that the steps that instructors took might not have been sufficient to meet students’ needs or expectations. Because instructors tend to be in better financial situations than their students, perhaps they underestimated some of the student challenges. Setting up robust systems of communication among students, instructors, student support staff members, and administrators might improve the academic climate for all stakeholders and prepare us better for future emergencies or needs to change instruction rapidly. Indeed, an interview study with engineering students found that faculty members communicating care and increasing flexibility was a key element for supporting students (Gelles et al., 2020). In another study, students indicated the need for constant communication from instructors during remote learning (Murphy et al., 2020). Thus, developing stronger communication with students and improving “instructor social presence” in online courses, i.e., the sense that the instructor is connected and available for interactions, is critical (Pollard et al., 2014; Richardson and Lowenthal, 2017; Oyarzun et al., 2018).
This may be done through casual conversations on discussion boards, leveraging social media, and using time in class and during office hours to build classroom community.

Prior work shows that grades are an imperfect measure of student learning. Thus, we are limited in our ability to accurately measure the effects of the abrupt transition to remote learning due to COVID-19 on student learning (Allen, 2005; Yorke, 2011; Schinske and Tanner, 2014). Moreover, student perceptions of negative impacts of the transition on their learning that we observed might be attributed to the abrupt transition itself or the difficulty of learning during a pandemic. Surveying students in the online program about their experiences in the Spring 2020 semester could have helped us tease apart these two factors more. Similarly, because we surveyed only in-person instructors, we do not know the extent to which the online program instructors made changes to their instruction or course policies. However, the similarity of Spring 2020 grade shifts across instruction modes in our regression models suggests that accommodations were made in response to the pandemic even in courses that did not undergo a change in instruction mode. We also had a relatively low response rate from in-person instructors that shifted to remote learning, and it is possible that instructors that were more flexible were more likely to respond to our survey. In spite of the overall low response rate, the dataset includes responses from most instructors that taught large enrollment courses required for biology majors.

In our course grade analysis, we excluded all grades other than letter grades (A–E), yet we know that the percentage of non-letter grades increased somewhat in Spring 2020. Because many of the non-letter grades indicate poor course performance, it is possible that our decision to exclude these data has created a positive grade bias in favor of the Spring 2020 term. To test for this possibility, we recoded all non-letter grades as 0.0 (“E” or fail). This is an extremely conservative standard as it is quite possible that most students who received Y grades would not have failed and that some of them would not have chosen to withdraw under normal circumstances. Rerunning our final model (Model 3) with these alternative grades reduces the regression coefficient associated with the Spring 2020 term from 0.34 to 0.28. Although this is not a trivial difference, we are confident that this sensitivity test demonstrates that our overall conclusions have not been unduly affected by our treatment of the non-letter grades.
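The recoding step in this sensitivity test can be illustrated with a simple mean in place of the full regression. The sketch below is a toy illustration under that simplification, not the analysis itself. It represents non-letter grades (such as W or Y) as `None`, and the function name is hypothetical.

```python
from typing import List, Optional


def mean_grade(grades: List[Optional[float]], recode_non_letter: bool = False) -> float:
    """Mean grade with non-letter grades (None) either excluded or recoded to 0.0."""
    if recode_non_letter:
        # Conservative treatment: every non-letter grade counts as a fail (0.0).
        values = [0.0 if g is None else g for g in grades]
    else:
        # Main analysis treatment: non-letter grades are dropped.
        values = [g for g in grades if g is not None]
    if not values:
        raise ValueError("no grades available to average")
    return sum(values) / len(values)
```

Comparing the two treatments term by term shows how much of an apparent grade increase could be attributed to a larger share of non-letter grades being left out.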

Another limitation of our study is the relatively small sample size of our survey dataset, which caused us to group data from Black/African American, Hispanic/Latinx, Native American/Alaska Native, and Pacific Islander/Native Hawaiian students for analyses. The histories and experiences of racial oppression of these groups in the United States differ from each other, and grouping them together erases these differences. Similarly, grouping white and Asian students together is problematic because the category of “Asian” in the United States spans several different ethnicities, including some that are underrepresented in STEM (Teranishi, 2002). Despite limited statistical power, we ran ordinal regressions on the survey data with disaggregated race/ethnicity data and have included the results in the Supplementary Material. We found some significant effects by race/ethnicity in those analyses. Specifically, Asian students less often perceived negative impacts on grades, sense of community, and career preparation. In addition, Black students more often reported a positive impact on the amount of time spent studying, and multiracial students more often reported a negative impact on grades.

The choice to ask instructors and students to respond about only their largest courses means that our conclusions regarding those survey data are primarily relevant to larger courses. That said, the analysis of course grades was not conditional on course size and, as indicated by Table 3, we did receive a number of responses from instructors of smaller courses. Another limitation is that we did not separate the survey responses of students who opted to receive a Y grade from those of students who took the course for a letter grade. Student perceptions of their learning in a course might be affected by this choice. However, given that students who opted for a Y grade were a relatively small proportion of the student population, we do not expect this to have a significant impact on our overall conclusions.

Finally, the indicators of socioeconomic status we used (federal Pell grant eligibility and first-generation status) are coarse measures that do not capture socioeconomic status accurately. However, these were the only indicators that we could access from the university registrar.

Instructors responded to the rapid transition to remote learning in Spring 2020 with greater flexibility in grading, and students received higher grades on average. This suggests that the instructor response was effective in preventing grade declines for students and in doing so equitably across the student population. However, student perceptions of the Spring 2020 semester were less positive, including a sense of diminished learning, loss of community, and reduced career preparation. Even if students’ perceptions of their learning are not accurate, perceived learning losses might still have important effects on students’ confidence in the course content or interest in pursuing a career in biology. Similar learning losses may have occurred in the Fall 2020 and Spring 2021 semesters as the COVID-19 pandemic continued to spread in the United States and worldwide.

As we look ahead, students affected by the pandemic may need more support in subsequent courses, especially in courses that build on prior learning. Dedicating class time to reviewing important concepts at the beginning of each course or course module could be one form of support. However, upper-level courses may not have class time to spare, so adding supplemental tutorials or instruction may be an alternative way to counteract potential deficits in prerequisite knowledge. Further, students’ diminished sense of being part of the biology community needs to be addressed. More intentional community-building exercises in classes, or in the larger department outside of classes, could help repair the damage to students’ sense of belonging.

Although COVID-19 may affect college education only for a particular timeframe, it is important to garner lessons from this experience to prepare for the next emergency, which could be global, such as a pandemic, or local, such as a natural disaster. Building robust networks of communication among students, instructors, and staff members, and offering instructors greater training and support for online teaching, are steps that could help us prevent some of the challenges associated with the rapid transition to remote learning experienced during the COVID-19 pandemic. We hope that some of the flexibility afforded to students during the pandemic is carried on even after in-person courses resume, as instructors may now have a better understanding of the myriad challenges that college students experience daily. This could include making video recordings of in-person class sessions available as standard practice. Lastly, as the COVID-19 pandemic reminded us, our classrooms and universities do not exist in isolation; they are part of the larger society and are therefore affected by the societal forces and power structures that shape student learning in our institutions. Therefore, we must continue to strive toward social justice inside and outside our higher education institutions.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

The studies involving human participants were reviewed and approved by The Arizona State University Institutional Review Board. Written informed consent from the participants' legal guardian/next of kin was not required to participate in this study in accordance with the national legislation and the institutional requirements.

KS, CM, and SB conceptualized the study, and all authors contributed to the study design. KS and CM collected the data and analyzed all the quantitative data. SB and RS analyzed students' open-ended responses on the survey. KS, CM, and SB wrote the first draft of the manuscript. AA, JPC, PL, and SB acquired funding for the project. All authors contributed to the review and editing of the manuscript.

This work was supported by grant #GT11046 from the Howard Hughes Medical Institute (www.hhmi.org), awarded to JPC, SB, PL, and AA and grant #1711272 from the National Science Foundation (www.nsf.gov), awarded to SB.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We would like to thank the instructors who filled out our survey and those who distributed our survey to students. We would also like to thank the students who filled out our survey and shared their experiences. Lastly, we want to thank the instructors and undergraduate researchers who participated in our think-aloud interviews to establish cognitive validity of our survey instruments. A version of this manuscript previously appeared online in the form of a preprint (Supriya et al., 2021).

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2021.759624/full#supplementary-material

Al-Tammemi, A. B., Akour, A., and Alfalah, L. (2020). Is it Just about Physical Health? an Online Cross-Sectional Study Exploring the Psychological Distress Among University Students in Jordan in the Midst of COVID-19 Pandemic. Front. Psychol. 11, 562213. doi:10.3389/fpsyg.2020.562213

Allen, J. D. (2005). Grades as Valid Measures of Academic Achievement of Classroom Learning. The Clearing House 78, 218–223. doi:10.3200/TCHS.78.5.218-223

Alon, T., Doepke, M., Olmstead-Rumsey, J., and Tertilt, M. (2020). The Impact of COVID-19 on Gender Equality. Natl. Bur. Econ. Res. 36, 1475–1499. doi:10.3386/w26947

Anaya, G. (1999). College Impact on Student Learning: Comparing the Use of Self-Reported Gains, Standardized Test Scores, and College Grades. Res. Higher Educ. 40, 499–526. doi:10.1023/A:1018744326915

Armstrong-Mensah, E., Ramsey-White, K., Yankey, B., and Self-Brown, S. (2020). COVID-19 and Distance Learning: Effects on Georgia State University School of Public Health Students. Front. Public Health 8, 576227. doi:10.3389/fpubh.2020.576227

Aucejo, E. M., French, J., Ugalde Araya, M. P., and Zafar, B. (2020). The Impact of COVID-19 on Student Experiences and Expectations: Evidence from a Survey. J. Public Econ. 191, 104271. doi:10.1016/j.jpubeco.2020.104271

Baker, M., Hartocollis, A., and Weise, K. (2020). First U.S. Colleges Close Classrooms as Virus Spreads. More Could Follow. The New York Times. Available at: https://www.nytimes.com/2020/03/06/us/coronavirus-college-campus-closings.html (Accessed December 10, 2020).

Barber, P. H., Shapiro, C., Jacobs, M. S., Avilez, L., Brenner, K. I., Cabral, C., et al. (2021). Disparities in Remote Learning Faced by First-Generation and Underrepresented Minority Students during COVID-19: Insights and Opportunities from a Remote Research Experience. J. Microbiol. Biol. Educ. 22, ev22i1–2457. doi:10.1128/jmbe.v22i1.2457

Bates, D., Mächler, M., Bolker, B., and Walker, S. (2015). Fitting Linear Mixed-Effects Models Using lme4. J. Stat. Soft. 67, 1–48. doi:10.18637/jss.v067.i01

Bawa, P. (2020). Learning in the Age of SARS-COV-2: A Quantitative Study of Learners' Performance in the Age of Emergency Remote Teaching. Comput. Educ. Open 1, 100016. doi:10.1016/j.caeo.2020.100016

Beatty, P. C., and Willis, G. B. (2007). Research Synthesis: The Practice of Cognitive Interviewing. Public Opin. Q. 71, 287–311. doi:10.1093/poq/nfm006

Burke, L. (2020). Colleges Go Pass/fail to Address Coronavirus. Inside HigherEd. Available at: https://www.insidehighered.com/news/2020/03/19/colleges-go-passfail-address-coronavirus (Accessed December 16, 2020).

Carpenter, S. K., Witherby, A. E., and Tauber, S. K. (2020). On Students' (Mis)judgments of Learning and Teaching Effectiveness. J. Appl. Res. Mem. Cogn. 9, 137–151. doi:10.1016/j.jarmac.2019.12.009

Chen, J. H., Li, Y., Wu, A. M. S., and Tong, K. K. (2020). The Overlooked Minority: Mental Health of International Students Worldwide under the COVID-19 Pandemic and beyond. Asian J. Psychiatr. 54, 102333. doi:10.1016/j.ajp.2020.102333

Chen, J. T., and Krieger, N. (2021). Revealing the Unequal Burden of COVID-19 by Income, Race/Ethnicity, and Household Crowding: US County versus Zip Code Analyses. J. Public Health Manag. Pract. 27 (Suppl. 1), S43–S56. doi:10.1097/PHH.0000000000001263

Christensen, R. H. B. (2015). Analysis of Ordinal Data with Cumulative Link Models—Estimation with the R-Package Ordinal.

Collins, C., Landivar, L. C., Ruppanner, L., and Scarborough, W. J. (2020). COVID‐19 and the Gender gap in Work Hours. Gend. Work Organ. 28, 101–112. doi:10.1111/gwao.12506

Cooper, K. M., Gin, L. E., and Brownell, S. E. (2019). Diagnosing Differences in what Introductory Biology Students in a Fully Online and an In-Person Biology Degree Program Know and Do Regarding Medical School Admission. Adv. Physiol. Educ. 43, 221–232. doi:10.1152/advan.00028.2019

Copley, A., Decker, A., Delavelle, F., Goldstein, M., O'Sullivan, M., and Papineni, S. (2020). COVID-19 Pandemic through a Gender Lens. World Bank 1, 1–8. doi:10.1596/34016

Darnell Cole, D. (2010). Debunking Anti-intellectualism: An Examination of African American College Students' Intellectual Self-Concepts. Rev. Higher Educ. 34, 259–282. doi:10.1353/rhe.2010.0025

Deslauriers, L., McCarty, L. S., Miller, K., Callaghan, K., and Kestin, G. (2019). Measuring Actual Learning versus Feeling of Learning in Response to Being Actively Engaged in the Classroom. Proc. Natl. Acad. Sci. U.S.A. 116, 19251–19257. doi:10.1073/pnas.1821936116

Federal Pell Grants (2021). Federal Pell Grants | Federal Student Aid. Available at: https://studentaid.gov/understand-aid/types/grants/pell (Accessed June 21, 2021).

Forakis, J., March, J. L., and Erdmann, M. (2020). The Impact of COVID-19 on the Academic Plans and Career Intentions of Future STEM Professionals. J. Chem. Educ. 97, 3336–3340. doi:10.1021/acs.jchemed.0c00646

Fortier, N. (2020). COVID-19, Gender Inequality, and the Responsibility of the State. Intnl. J. Wellbeing 10. Available at: https://www.internationaljournalofwellbeing.org/index.php/ijow/article/view/1305 (Accessed December 14, 2020).

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., et al. (2014). Active Learning Increases Student Performance in Science, Engineering, and Mathematics. Proc. Natl. Acad. Sci. U.S.A. 111, 8410–8415. doi:10.1073/pnas.1319030111

Garcia, M. A., Homan, P. A., García, C., and Brown, T. H. (2020). The Color of COVID-19: Structural Racism and the Disproportionate Impact of the Pandemic on Older Black and Latinx Adults. The Journals Gerontol. Ser. B 76, e75–e80. doi:10.1093/geronb/gbaa114

Gaynor, T. S., and Wilson, M. E. (2020). Social Vulnerability and Equity: The Disproportionate Impact of COVID-19. Public Adm. Rev. 80, 832–838. doi:10.1111/puar.13264

Gelles, L. A., Lord, S. M., Hoople, G. D., Chen, D. A., and Mejia, J. A. (2020). Compassionate Flexibility and Self-Discipline: Student Adaptation to Emergency Remote Teaching in an Integrated Engineering Energy Course during COVID-19. Educ. Sci. 10, 304. doi:10.3390/educsci10110304

Gillis, A., and Krull, L. M. (2020). COVID-19 Remote Learning Transition in Spring 2020: Class Structures, Student Perceptions, and Inequality in College Courses. Teach. Sociol. 48, 283–299. doi:10.1177/0092055X20954263

Gin, L. E., Guerrero, F. A., Brownell, S. E., and Cooper, K. M. (2021). COVID-19 and Undergraduates with Disabilities: Challenges Resulting from the Rapid Transition to Online Course Delivery for Students with Disabilities in Undergraduate STEM at Large-Enrollment Institutions. CBE Life Sci. Educ. 20, ar36. doi:10.1187/cbe.21-02-0028

Gold, J. A. W., Rossen, L. M., Ahmad, F. B., Sutton, P., Li, Z., Salvatore, P. P., et al. (2020). Race, Ethnicity, and Age Trends in Persons Who Died from COVID-19 - United States, May-August 2020. MMWR Morb Mortal Wkly Rep. 69, 1517–1521. doi:10.15585/mmwr.mm6942e1

Goldrick-Rab, S., Coca, V., Kienzl, G., Welton, C., Dahl, S., and Magnelia, S. (2020). #RealCollege during the Pandemic: New Evidence on Basic Needs Insecurity and Student Well-Being. Rebuilding Launchpad: Serving Students During Covid Resource Libr. 5, 1–23.

Gonzalez, T., de la Rubia, M. A., Hincz, K. P., Comas-Lopez, M., Subirats, L., Fort, S., et al. (2020). Influence of COVID-19 Confinement on Students' Performance in Higher Education. PLOS ONE 15, e0239490. doi:10.1371/journal.pone.0239490

Hartocollis, A. (2020). ‘An Eviction Notice’: Chaos after Colleges Tell Students to Stay Away. The New York Times. Available at: https://www.nytimes.com/2020/03/11/us/colleges-cancel-classes-coronavirus.html (Accessed December 10, 2020).