- ¹Faculty of Education (CETL), The University of Hong Kong, Pokfulam, Hong Kong, SAR China

- ²Teaching and Learning Evaluation and Measurement Unit, The University of Hong Kong, Pokfulam, Hong Kong, SAR China

Effective assessment of university experiences is critical for quality assurance/enhancement but is fragmented across Pacific-Asian universities. A shared conceptual and measurement foundation for understanding student experiences is a necessary first step for inter-institutional communication across the region. The current study is a first step toward such a foundation, uniting two of the most internationally and locally prominent instruments: Chinese College Student Survey (university engagement; Mainland China) and Student Learning Experience Questionnaire (programme engagement; Hong Kong). The survey was completed by students from one research-intensive Hong Kong university (n = 539). Random split-half CFA, latent reliability, pair-wise correlations, ANOVA (gender) and MANOVA (faculty) analyses were conducted. Factor structure (good CFA fit for the test/retest) and scale reliability (Raykov’s Rho > 0.70) suggested that a robust, short (63-item) survey resulted. Intra-/inter-survey relationships were consistent with existing Student Engagement and Student Approaches to Learning theory. ANOVA indicated small gender differences for a few latent constructs, but MANOVA revealed substantial differences across the 10 faculties. This study resulted in a robust Pacific-Asian intra-/inter-institutional student experience instrument which brings together two equally important perspectives on the student experience. This comprehensive student engagement instrument stands ready for cross-national and longitudinal tests. The new instrument’s benefits extend to theoretical connections to student experience assessment in the United States, Australia, and the United Kingdom, enabling international connections to be made.

Introduction

In general, the more we know about a thing, the better situated we are to improve on it. Adding to the power of the depth and breadth of our information are points of shared reference, which are critical for measuring progress and establishing lines of communication (and potential collaboration). These basic principles fit universities’ increasingly complex quality assurance/teaching needs: an in-depth understanding of their students and students from other universities (i.e., points of reference). While all universities assess the student experience in one way or another, and many have access to points of reference (shared national surveys), two critical gaps are common. The first gap is theoretical, as most institutions, and in fact most nations, pursue a single theoretical perspective on the student experience. The second is a failure to embrace the international nature of higher education: institutions often seek only to compare themselves with, and potentially learn from, other institutions in their own nation.

The present measurement-oriented study, along with a critical and integrative review of the literature (Zeng et al., 2019; Zeng et al., Forthcoming), seeks to address these issues. The focus of our review (Zeng et al., 2019; Zeng et al., Forthcoming) was to establish the rationale for integrating the two major theories of higher education student experience: student approaches to learning (Marton and Säljö, 1984) and student engagement (Pace, 1984). The focus of the study presented herein was the development of a short integrative instrument combining Mainland Chinese (Student Engagement; Chinese College Student Survey, CCSS; Luo, 2016) and Hong Kong (Student Approaches to Learning; Student Learning Experience Questionnaire, SLEQ; Zhao et al., 2017a; Zhao et al., 2017b) student experience assessment surveys. This research yields a student experience instrument that integrates critical theory (expanding our understanding of the student experience) and crosses borders (expanding institutions’ points of reference and opportunities to learn from each other).

Background

Assessing the Student Experience

The majority of our current student experience assessment practices can be traced back five or more decades. Two points of origin stand out for their long-term impact on many of today’s assessment practices. Unsurprisingly, these origins are in the national epicenters of modern-day higher education: the United States and Great Britain. The United States, in addition to having a very well-established system of higher education and being home to many of the world’s most prestigious institutions, also has one of the largest and most complex systems. One persistent issue, not specific to the United States but certainly critical there, is student retention. By the 1970s, “university dropout” had noticeably shifted from a sign of institutional prestige (i.e., our university is so challenging that only the best students survive) to a statistical concern (i.e., our university needs to take better care of its students) that needed to be addressed (Barefoot, 2004). This transition was both heralded and stimulated by higher education researchers. From the growing literature around the topic of retention in the United States, a collative theory (combination of several theoretical components) of engagement emerged. This theory was formed slowly across three research programmes: A) Astin’s (Astin, 1973; 1984) theory of involvement, which focused on the amount of physical and psychological energy students invest in their higher education; B) Tinto’s work, which acknowledged the role of involvement for positive outcomes but contributed the critical role of students integrating into university life (i.e., making university their new home); and C) a third programme of research, shared by several researchers and still ongoing: the development of the College Student Experiences Questionnaire (CSEQ; Pace, 1984) and the eventual National Survey of Student Engagement (NSSE; Kuh, 1997).

The second point of origin is experimental research testing the relationship between learners’ cognitive processing and their perceptions of future assessment (Marton and Säljö, 1976a; Marton and Säljö, 1976b). Early work established connections between expectations of surface knowledge assessment (e.g., multiple choice-based tests) and surface cognitive processing. This link between perceptions of the learning environment and the depth of cognitive processing led to the development of large-scale surveys assessing university students’ surface and deep processing (Entwistle and Hounsell, 1979; Entwistle and Ramsden, 1983) and perceptions of courses’ learning environments (Ramsden, 1991). For recent in-depth reviews of both points of origin, we direct you to Fryer et al. (2020).

Measuring University Engagement

University engagement grew into its current national roles by successfully bringing together multiple traditions of research (e.g., time on task, Tyler, 1966; involvement, Astin, 1984; integration, Tinto, 1993). The CSEQ (Pace, 1984) and its successor, the NSSE, were widely adopted across the United States. Partly due to this success and in reaction to other approaches to assessing the student experience, assessing university engagement has seen substantial growth during the past decade. Australia was one country that made a significant commitment to assessing university student engagement, developing a national instrument based in part on the NSSE: the Australasian Survey of Student Engagement (AUSSE; Coates, 2009). By far the largest expansion of this field was Mainland China’s decision to adopt this tradition of university student engagement as the central means of assessing university students’ experiences nationwide. The Chinese College Student Survey (CCSS; Shi et al., 2014) is the latest version of this national instrument.

Programme Experiences

Entwistle and Ramsden played a central role in transitioning Marton and Säljö’s early experimental findings to the large-scale survey tradition of Student Approaches to Learning (SAL; Marton and Säljö, 1984). Seeking to marry students’ approaches to learning and their broader perceptions of course environments, Entwistle and Ramsden’s early collaborations (for a review see Entwistle and Ramsden, 1983) resulted in instruments for both. The Course Experience Questionnaire and variations on its constructs have been utilised widely in Australia (Ginn et al., 2007; Lizzio et al., 2002), the United Kingdom (Sun and Richardson, 2012) and Hong Kong (e.g., Webster et al., 2009).

Both traditions of assessing the student experience reviewed here have considerable theoretical and empirical history. Both are directed at the student experience and aim to assess factors contributing to students’ learning outcomes. However, they diverge substantially in their grain sizes (i.e., level of measurement) and in how they hypothesise students achieve these learning outcomes. Assessment of university engagement has the larger grain size (i.e., general university experiences) and hypothesises that an increasing amount of behavioural engagement in university activities (student self-reported) will predict increasing amounts of learning outcomes. At the smaller grain size (programme or course level), instruments assessing perceptions of the learning environment focus narrowly on course learning experiences and their contribution to the quality of student learning (deep and surface) and learning outcomes.

University engagement and Student Approaches to Learning overlap in their grand aims (i.e., assessing factors contributing to learning outcomes). They can be seen as either having opposing hypotheses about how students reach these aims, or they can be seen as two equally critical halves of this pursuit. The current study took the latter stance and undertook to bring these halves together in a single, robust instrument.

Current Study

The current study is a quantitative, large-scale survey-based investigation that brings together the university engagement and SAL traditions of assessing the student experience in the specific context of Greater China (Hong Kong and Mainland China). The CCSS (Mainland China; Guo and Shi, 2016; Luo, et al., 2009) and the Student Learning Experience Questionnaire (SLEQ, Hong Kong; Zhao et al., 2017a; Zhao et al., 2017b) are therefore the focus of this integrative empirical investigation.

The CCSS and its national implementation grew out of concerns about the quality of undergraduate education in Mainland China (Liu, 2013). In the face of these concerns, Mainland China’s higher education system shifted from the expansion of quantity to the enhancement of quality during the early 2000s (Yin and Ke, 2017). During this transition, there was a move from focusing on macro-factors such as facilities and regulations of teaching administration to micro-factors such as the realities of teaching and learning experiences (Yin and Ke, 2017). During this period, a Chinese version of the NSSE, NSSE-China, was adapted and trialed by a research team at Tsinghua University. This process included interviews and piloting toward an instrument that reflects the characteristics of Chinese student learning and Chinese higher education (Luo et al., 2009). This initial adaptation served as a basis for the current China College Student Survey (CCSS), which is now used across a substantial number of higher education institutions in Mainland China as a national measure of student engagement and institutional effectiveness (Guo and Shi, 2016).

Universities across Hong Kong adopted an outcome-based approach to student learning (Biggs and Tang, 2011) as their model of university teaching and learning. Under this model, Hong Kong universities are required to demonstrate evidence of student learning for accountability purposes (ECEQW, 2005). With the aim of enhancing student learning experiences and achieving student learning outcomes, Hong Kong universities also established internal processes for monitoring quality and facilitating continuous improvement of teaching and learning. In response to these external (e.g., accountability) and internal (e.g., quality monitoring and improvement) demands, the SLEQ was developed as a means of informing curriculum efforts and enhancing student learning. It has been administered institutionally on a regular basis for more than 10 years. The focus of this administration is the assessment of a range of students’ perceptions related to the learning environment and the extent to which students perceive the attainment of the university-wide learning outcomes. The SLEQ items pertaining to students’ perceptions of the learning environment are modeled on the Course Experience Questionnaire (CEQ), which was developed based on the work of Ramsden (1991) in the context of Australian higher education. The factor structure of the CEQ (e.g., good teaching, clear goals and standards, appropriate workload, and emphasis on independence) has been validated in various studies with Western and Eastern populations (e.g., Ginn et al., 2007; Webster et al., 2009; Fryer et al., 2012).

Consistent with a burgeoning body of evidence (Voyer and Voyer, 2014), gender is considered to be a potentially critical source of variance across this study’s constructs and therefore must be addressed along with construct validity/reliability analysis. Similarly, the implications of students being clustered within faculties (disciplines; Leach, 2016) need to be assessed to ensure the resulting instrument is sufficiently sensitive to contextual factors.

The current research brings these two perspectives on the university student experience, and their respective instruments, together in an integrative, validation study. The general aim of this study is to develop an instrument that combines the best parts of programme and university experience evaluation. While the current study integrates Hong Kong and Mainland Chinese evaluation approaches, the long-term aim is for the present study to be the first in a series of adaptation and validation studies across Pacific Asia. This series of studies will yield a valid, reliable, and comprehensive instrument, which can act as a benchmark to support communication about student learning (programme and university levels) across Pacific Asian Higher Education.

Research Questions

The present study addressed four specific questions related to the adaptation, integration, and validation of the SLEQ and CCSS.

RQ1 and RQ2: Are translated (i.e., into traditional Chinese script) versions of the SLEQ and CCSS sufficiently valid (convergent, Confirmatory Factor Analysis; and divergent, inter/intra-correlations) and reliable (Raykov’s Rho) to support use in the Hong Kong university context?

RQ3: Are the finalised CCSS and SLEQ constructs inter-related in a manner consistent with their underlying theory (i.e., university engagement and student approaches to learning)?

RQ4: The final question was related to differences across the SLEQ and CCSS constructs. Were there significant gender and/or faculty differences for each construct assessed?

Methods

Participants

The current study was undertaken at one research-intensive university in Hong Kong. Prior to beginning this research and in accordance with the Hong Kong Research Grant Council (which funded this research), the project was reviewed by an Institutional Ethics Review Board and subsequently permission to conduct the study was received.

Participants in the study were first- and second-year students from across the university’s 10 faculties. Students were asked to volunteer to complete the short survey at seven public locations (e.g., libraries, campus thoroughfares, study halls, etc.) across the university’s main and new campuses and at four university Residential Halls. The survey’s instructions, ethics statement (informed consent), and items were presented in Cantonese only. This study therefore specifically targeted local undergraduate students, who comprise roughly 80% of Hong Kong’s undergraduate population. This approach was taken for three reasons: first, to ensure a relatively uniform population; second, to enable a single survey language; and third, to be confident of students’ understanding of all survey items (i.e., in their first language) for this first-step validation study.

In addition to restricting sampling to local students, research assistants were instructed to sample strategically, aiming to balance gender and, where possible, faculties representativeness (Table 1). Finally, sampling aimed to collect a large enough data set to permit a random split-half exploratory and confirmatory construct validation of the surveys’ scales. The final sample (n = 539; Female = 321) was sufficient to achieve each of these aims.

Procedures

Sampling for this research was undertaken strategically, managed by a project coordinator. Research assistants (university students) were selected from across the university’s faculties and on-campus Halls (i.e., dormitories). Research assistants were sent out to collect data at specific locations across campus, across a period of 3 weeks near the end of the second semester of university. The growing sample was monitored and sampling locations/times were progressively adjusted to ensure sample proportions that resembled the overall undergraduate student body. Prospective participants were approached and asked by research assistants to complete the two-page survey. Willing students were asked to first review the ethics statement and project information before making a final decision about proceeding. Survey data were input separately by two research assistants and cross-referenced to address potential input errors.

Instruments

The CCSS

In the transition from the Chinese-NSSE to the CCSS, variables were added to reflect the Chinese educational context and interests. Due to the wide variety of undergraduate students in Mainland, the CCSS includes more items that look into the diverse background of the college students, such as social/economic status, pre-college education, enrollment information, financial support information, and so on (Tu, 2013). The major dimensions that have consistently been reported on as having sound psychometric properties include: Level of Academic Challenge, Active and Collaborative Learning, Student-faculty Interaction, Enriching Educational Experience, Supportive Campus Environment (Tu et al., 2013; Shi et al., 2014; Guo and Shi, 2016). Since the development of the CCSS, the team at Tsinghua University has adjusted it based on annual results; however, the overall structure of the questionnaire has not changed substantially since its inception (Guo and Shi, 2016). Due to word-length limitations, for a full review of these constructs, we refer you to the original research and the full, piloted survey included in the Supplementary materials: Supplemental Materials 1: Piloted Integrated Survey of Student Engagement (71 items).

The SLEQ

The SLEQ was designed to assess how undergraduates have experienced their learning in their university studies, with a focus on students’ perceptions of the learning environment and learning outcomes. Using items adapted from the CEQ, which has been validated in multiple studies (e.g., Ginn et al., 2007; Webster et al., 2009), this study assessed students’ perceptions of their learning environment with five scales from the SLEQ (i.e., Active Learning, Feedback From Teacher, Good Teaching, Clear Goals, and Assessment For Understanding), 15 items in total. Cronbach’s alphas for the scales ranged from 0.78 to 0.90, indicating acceptable to high reliabilities. These 15 items formed a correlated five-factor structure based on factor analysis (Zhao et al., 2017a). In the current study, students’ perceptions of their achievement of learning outcomes were captured by three domains: Cognitive Learning Outcomes (e.g., critical thinking, lifelong learning, and problem-solving), Social Learning Outcomes (e.g., empathetic understanding, communication skills, and collaboration) and Value Learning Outcomes (e.g., global perspective and civic commitment). The original version contained a total of 27 items demonstrating high reliability and uni-dimensionality for each of the three domains (Zhao et al., 2017b). In the present study, we adopted a short 12-item version by selecting the four most highly discriminating items in each domain, while balancing construct coverage.

Combined Instrument

For the current study, both surveys were translated into Chinese (Cantonese; traditional, rather than the simplified script used in Mainland China). Translation followed standard procedures: back-translation and verification (followed by a discussion to address discrepancies) by the two bilingual authors and a research assistant (Brislin, 1980). Items were self-reported across a cumulative scale from 1–5 (SLEQ) and 1–4 (CCSS). For the CCSS the scale labels were b1 to b45 (Integrated Learning (CIL); Reflective Learning (CRL); Information Analysis (CIA); Academic Challenge (BAC); Active and Collaborative Learning (BAL); Student-Faculty Interaction (BFI); Richness of Educational Experience (BRE); Expanded Learning (BEL)). For the SLEQ the scale labels were s1–s15 and m1–m12. There were five items asking about demographic information such as gender, faculty, years of study, and so on. The combined pilot survey included 77 items in total and took between 5–8 min to complete.

Analyses and Results

For all latent analysis, missing data were handled through Full Information Maximum Likelihood, which is widely accepted as being the most appropriate means of dealing with missing data, particularly when it is less than five percent, as it was in the current study (Enders, 2010). Construct validity was assessed by utilising Confirmatory Factor Analyses. Multiple fit indices were used to assess all structural models, in alignment with current quantitative approaches. Comparative Fit Index (CFI) and Tucker Lewis Index (TLI) were judged to be acceptable and excellent if >0.90 and >0.95, respectively (Hu and Bentler, 1999), while Root Mean Square Error of Approximation (RMSEA) was judged to be acceptable and excellent if <0.08 and <0.05, respectively (Yuan, 2005). The latent reliability of the instruments was calculated as Raykov’s Rho (Raykov, 2009), with >0.7 representing acceptable reliability. Consistent with Tabachnick and Fidell (2007), the cut-off for multicollinearity-related concerns was set at 0.90, with correlations below this value considered acceptable.
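The decision rules in this paragraph can be written down compactly. The following is a minimal sketch, not the authors’ analysis code: the function names are hypothetical, the RMSEA rule uses the conventional reading (<0.05 excellent, <0.08 acceptable), and the reliability function uses the common composite-reliability form for standardized loadings with uncorrelated errors, which is assumed here to approximate how Raykov’s Rho would be obtained from a fitted CFA.

```python
from typing import Dict, Sequence


def classify_fit(cfi: float, tli: float, rmsea: float) -> Dict[str, str]:
    """Label fit indices with the heuristic cut-offs described in the text."""

    def incremental(value: float) -> str:
        # CFI / TLI: higher is better.
        if value > 0.95:
            return "excellent"
        if value > 0.90:
            return "acceptable"
        return "poor"

    def absolute(value: float) -> str:
        # RMSEA: lower is better.
        if value < 0.05:
            return "excellent"
        if value < 0.08:
            return "acceptable"
        return "poor"

    return {"CFI": incremental(cfi), "TLI": incremental(tli), "RMSEA": absolute(rmsea)}


def composite_reliability(std_loadings: Sequence[float]) -> float:
    """Composite reliability from standardized loadings.

    Assumes a single-factor scale with uncorrelated errors, so each
    item's error variance is 1 - loading**2.
    """
    loading_sum = sum(std_loadings)
    error_sum = sum(1.0 - loading ** 2 for loading in std_loadings)
    return loading_sum ** 2 / (loading_sum ** 2 + error_sum)


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(classify_fit(cfi=0.96, tli=0.93, rmsea=0.06))
    rho = composite_reliability([0.7, 0.7, 0.7, 0.7])
    print(f"rho = {rho:.3f}, acceptable = {rho > 0.7}")
```

In practice these quantities would come from the SEM software’s output; the sketch only makes the thresholds explicit.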

Construct validity analysis (RQ1–RQ2) proceeded in two stages. In the first stage, the aim was an initial examination of the prior latent constructs as they have been used in their original contexts. This was done by conducting CFAs for the SLEQ and CCSS, each separately with a random split-half of the sample (twice), followed by a single CFA with the entire sample and both surveys together. This was an appropriate first stage of tests because both surveys had seen considerable, high-stakes use (the CCSS in Mainland China and the SLEQ in Hong Kong), and the only adjustment for the current study was language: from Mandarin to Cantonese (two strongly related languages) for the CCSS and from English to Cantonese for the SLEQ. These five CFAs sought to establish the validity of the translated surveys’ scales and the pairing of the surveys within one inventory. Toward the second stage, these analyses were used to indicate where we might reduce the number of scale items while strengthening their convergent and divergent construct validity. When considering removing items, underfit (<0.50 factor loading), overfit (>0.95 factor loading), and a balance of item content coverage were considered (Hair et al., 2019) in addition to overall model fit. Following construct validity/reliability analysis, the potential implications of gender and faculty clustering were assessed through ANOVA and MANOVA, respectively. To describe the magnitude of the relationships (Pearson’s correlation r) or differences (Cohen’s d) presented in this study, well-established heuristic cutoffs (Cohen, 1988) were utilised.
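The two mechanical steps in this procedure, drawing a random split-half and screening items against the loading thresholds, can be sketched as follows. This is an illustration under stated assumptions rather than the study’s code: the function names, the seed, and the example loadings are hypothetical, and the item labels simply reuse the b-prefixed naming mentioned for the CCSS.

```python
import random
from typing import Dict, List, Tuple


def random_split_half(n_rows: int, seed: int) -> Tuple[List[int], List[int]]:
    """Randomly partition row indices 0..n_rows-1 into two halves."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    half = n_rows // 2
    # Sorting only makes the halves easier to inspect; membership is random.
    return sorted(indices[:half]), sorted(indices[half:])


def flag_items(loadings: Dict[str, float],
               low: float = 0.50,
               high: float = 0.95) -> Dict[str, str]:
    """Flag items whose standardized loading falls outside [low, high]."""
    flags = {}
    for item, loading in loadings.items():
        if abs(loading) < low:
            flags[item] = "underfit"
        elif abs(loading) > high:
            flags[item] = "overfit"
    return flags


if __name__ == "__main__":
    first_half, second_half = random_split_half(n_rows=539, seed=1)
    print(len(first_half), len(second_half))
    # Hypothetical loadings for three items.
    print(flag_items({"b1": 0.42, "b2": 0.71, "b3": 0.97}))
```

Flagged items are only candidates for removal; as the text notes, content coverage and overall model fit are weighed alongside the loadings.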

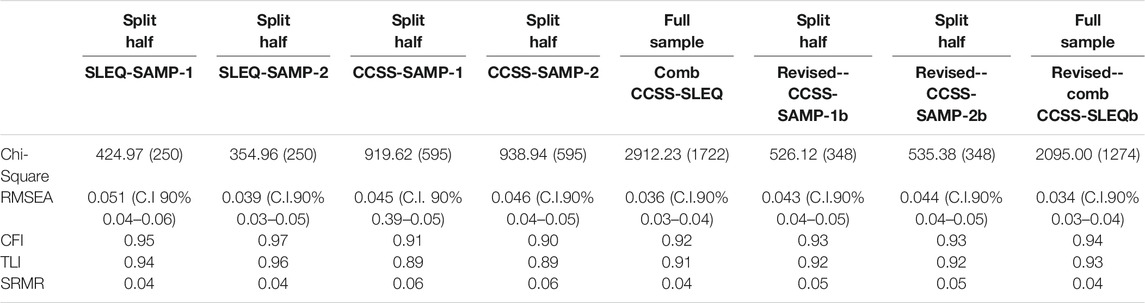

Results for stage one analyses are presented in Table 2. Stage one analyses indicated excellent fit for the SLEQ scales (with the exception of the two-item scale for Good Teaching), but marginal fit for the CCSS and the CFA of the combination of the two surveys together.

TABLE 2. Fit for random split-halves and full sample for each SLEQ and CCSS as well as the combined instrument.

For the second stage of individual survey analysis, five of the CCSS scales had their number of items reduced: BAL = 11 to 5; CIL = 5 to 4; CRL = 6 to 5; BAC = 5 to 4; BRE = 9 to 5. In addition, the CCSS Expanded Learning Behavior scale (two items) was removed due to poor fit (i.e., multicollinearity). With the exception of Good Teaching (two items), which was removed due to poor fit (i.e., multicollinearity), SLEQ scales were maintained in full. Utilising a second split-half pair of data sets, the revised CCSS was tested and retested with CFAs. Fit for the revised scale arrangement improved (Table 2). In a final analysis, the revised CCSS and SLEQ were tested (CFA) together again with the full sample. Based on the model fit guidelines presented and given the complexity of the combined survey, the resulting model fit was acceptable. Along with gender, the fully latent pairwise intercorrelations between the two surveys’ scales were calculated, along with the Raykov’s Rho for each finalised scale. Along with mean and standard deviation, all of these results are presented in Table 3.

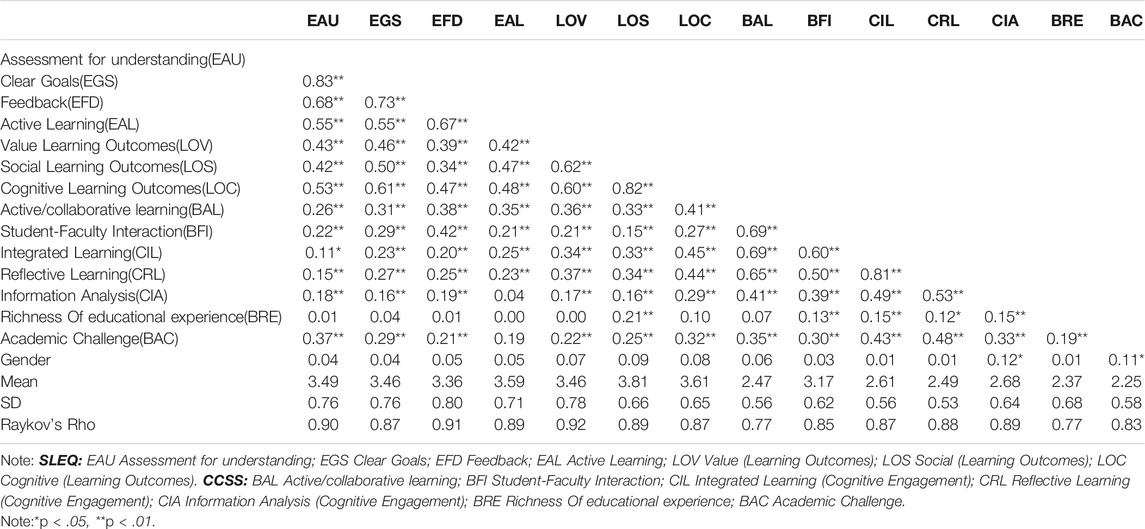

Inter-relationships Between University and Programme Engagement

Intercorrelations (Table 3) within and across each survey were reviewed to assess the theoretical consistency of the empirical connections (RQ3). As expected, strong intra-survey correlations between constructs were present. The strongest were between the learning environment components of the SLEQ (i.e., Assessment for Understanding and Clear Goals, r = 0.83) and the learning components of the CCSS (Integrated Learning and Reflective Learning, r = 0.81); both were to be expected given their related content. Smaller relationships between the SLEQ and CCSS were present. In particular, relationships between SLEQ scales and the CCSS’ learning scales (i.e., Integrated Learning, Reflective Learning, and Information Analysis) were generally small (r = 0.11 to 0.45, p < 0.01). The CCSS construct Richness of Educational Experience stood out as it presented a statistically significant relationship with only one SLEQ construct (Learning Outcome Social: r = 0.21, p < 0.01). Overall, most of the inter-survey construct relationships were small to medium in size and statistically significant (p < 0.01).

Faculty and Gender Differences

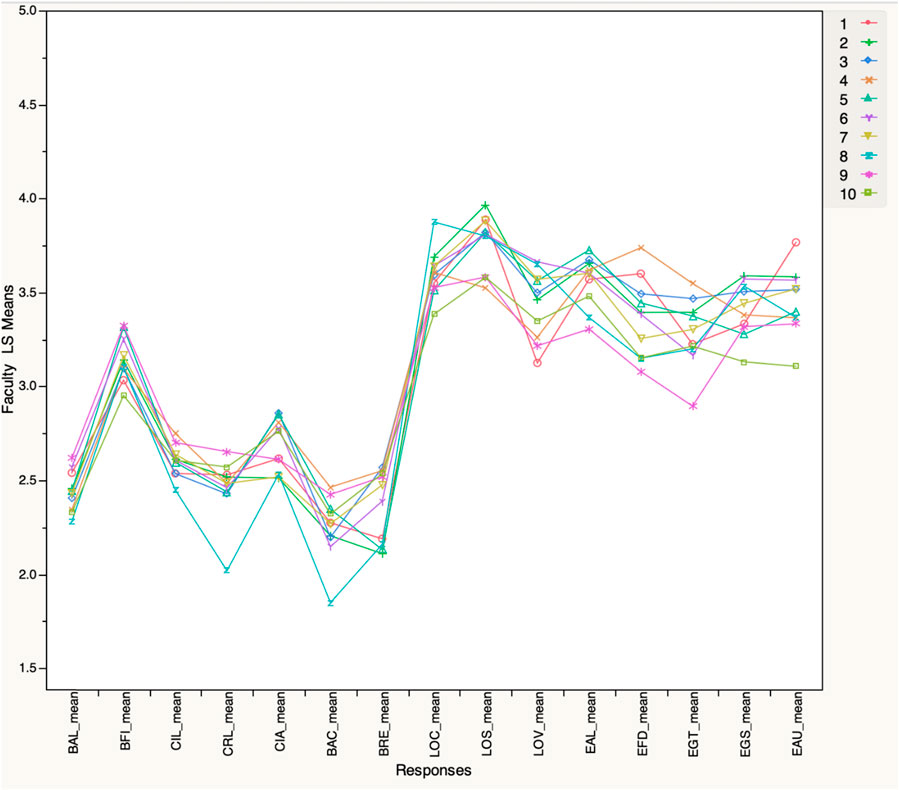

To examine how a student’s specific faculty context impacted their university and programme engagement, a MANOVA was conducted. For the MANOVA, faculty (all 10 of the university’s faculties were represented) was the independent variable and the 14 constructs (seven SLEQ and seven CCSS) were the dependent variables. The MANOVA suggested that a substantial amount of variance (R² = 0.41) could be explained by students’ faculties (Wilks’ Lambda = 0.59, F = 2.14, DF = 135, p < 0.0001). Figure 1 (presented in the appendices) provides a visual sense of faculty differences across the 14 finalised constructs.
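The reported variance explained and Wilks’ Lambda are two views of the same quantity: a common multivariate effect size is simply one minus Lambda. A minimal sketch of that arithmetic follows; the function name is hypothetical, and it is an assumption that this is how the reported value was derived.

```python
def variance_explained_from_wilks(wilks_lambda: float) -> float:
    """Multivariate variance explained, computed as 1 - Wilks' Lambda."""
    if not 0.0 <= wilks_lambda <= 1.0:
        raise ValueError("Wilks' Lambda must lie between 0 and 1")
    return 1.0 - wilks_lambda


if __name__ == "__main__":
    # Using the Lambda reported for the faculty MANOVA.
    print(round(variance_explained_from_wilks(0.59), 2))
```

Because Lambda is the proportion of generalized variance not accounted for by the grouping variable, smaller values indicate larger faculty differences.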

FIGURE 1. Faculty differences across the 14 finalised constructs. Notes: SLEQ: EAU Assessment for understanding; EGS Clear Goals; EFD Feedback; EAL Active Learning; LOV Value (Learning Outcomes); LOS Social (Learning Outcomes); LOC Cognitive (Learning Outcomes). CCSS: BAL Active/collaborative learning; BFI Student-Faculty Interaction; CIL Integrated Learning (Cognitive Engagement); CRL Reflective Learning (Cognitive Engagement); CIA Information Analysis (Cognitive Engagement); BRE Richness Of educational experience; BAC Academic Challenge. 1) Architecture 2) Business-Economics 3) Arts 4) Dentistry 5) Education 6) Social Sciences 7) Science 8) Law 9) Engineering 10) Medicine.

To assess the role of students’ gender on their university and programme engagement, an ANOVA was conducted wherein gender (Male = 1, Female = 2) was the independent variable and the 14 engagement constructs were the dependent variables. Only two constructs were statistically significantly different between the genders. For Information Analysis (CCSS), women reported engaging in information analysis more than men (Male mean = 2.57, Female mean = 2.75; F = 8.96, DF = 537, p = 0.003). The difference would be considered small in size (Cohen’s d = 0.25). In contrast, men reported having stronger Cognitive Learning Outcomes than women (Male mean = 3.67, Female mean = 3.56; F = 4.05, DF = 537, p = 0.04). The difference was marginally statistically significant and small (Cohen’s d = 0.18).
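For a two-group comparison, Cohen’s d can be approximated from the F statistic and the group sizes, which offers a rough consistency check on the effect sizes above. The sketch below is illustrative only. It assumes a one-way ANOVA with two groups (so t equals the square root of F), takes the group sizes from the sample description (321 women, and therefore 218 men if all 539 participants reported gender), and uses a hypothetical function name.

```python
import math


def cohens_d_from_f(f_value: float, n_group1: int, n_group2: int) -> float:
    """Approximate absolute Cohen's d for a two-group, one-way ANOVA.

    With two groups, t = sqrt(F), and d = t * sqrt(1/n1 + 1/n2).
    The sign of the difference is not recoverable from F alone.
    """
    t_value = math.sqrt(f_value)
    return t_value * math.sqrt(1.0 / n_group1 + 1.0 / n_group2)


if __name__ == "__main__":
    n_female = 321
    n_male = 539 - n_female  # assumption: no missing gender responses
    for label, f_value in [("Information Analysis", 8.96),
                           ("Cognitive Learning Outcomes", 4.05)]:
        d = cohens_d_from_f(f_value, n_male, n_female)
        print(f"{label}: d is approximately {d:.2f}")
```

Under these assumptions the approximations land close to the reported values; small gaps could reflect missing responses or a different pooling formula in the original analysis.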

Discussion

In the current study, two well-established student experience surveys, one regarding programme experiences in Hong Kong (SLEQ) and the second regarding university experiences in Mainland China (CCSS), were integrated to become one short, theoretically integrative university student experience inventory. The resulting instrument was completed by 539 students at one research-intensive university in Hong Kong. Construct validations (convergent and divergent: CFA) for each survey were conducted twice on random split-half samples (RQ1). Following refinement and finalisation of the CCSS (RQ2), the combined inventory was assessed with CFA, resulting in an acceptable fit. Reliability for the 14 latent constructs included in the final inventory was found to be acceptable. In summary, the SLEQ presented excellent construct validity (with the exception of the two-item Good Teaching scale) despite translation (English to Cantonese). The CCSS (Mandarin to Cantonese) benefited from shortening some of its longer scales to a more manageable number of items, undertaken based on the theoretical consistency of items and their respective factor loadings.

A review of the two surveys’ intra-correlations suggested reasonable relationships consistent with theory and past empirical findings. Inter-survey relationships similarly were consistent with theoretical alignment between the surveys’ latent constructs (RQ3). The finalised inventory of paired surveys resulted in robust construct validity and reliability. The constructs presented appropriate relationships consistent with the theoretical alignment of their content.

MANOVA assessed the effect of students’ faculty context on students’ programme and university experiences. Considerable variance was explained, which is reasonable to expect given the very different environments that faculties such as Medicine and Dentistry provide relative to Arts and Social Sciences (RQ4). The final test was an ANOVA assessing gender differences for each of the 14 finalised inventory constructs. Statistically significant, small differences were observed for just two constructs (higher Cognitive Learning Outcomes for men and higher Information Analysis for women). Difference testing therefore pointed to the strong effect of specific faculty environments on students’ experiences, but small to no effect for gender.

Theoretical Implications

As expected, the individual surveys’ constructs presented strong intra-correlations. The only exception to this was the Richness of Educational Experience CCSS construct (BRE). This construct presented low or non-significant relationships with other CCSS constructs and was only related to Social Learning Outcomes from the SLEQ. This was the construct (BRE) that had its items reduced the most dramatically during the current study. Many of the removed items overlapped with other constructs and/or presented very low factor loadings, providing sufficient rationale for removal (Hair et al., 2019). This reduction of weaker items is unlikely to be the reason for the resulting low correlations. An alternative hypothesis is that this construct is too general and too loosely defined to present clear connections with more specific aspects of the student experience at the university or programme level. Despite these overall weak connections, BRE’s connection to SLEQ Social Learning Outcomes and its face validity as an important component of the higher education experience that many institutions aspire to provide (Denson and Shirley, 2010) make it worthwhile including in future studies.

Overall the strongest points of interconnection between the two perspectives were for Active/collaborative Interaction (CCSS construct with SLEQ survey constructs) and Cognitive Learning Outcomes (SLEQ construct with CCSS survey constructs), rather than the obvious alternative (i.e., Social Learning Outcomes). These areas of robust connection point to the broader implications of students’ collaborative learning and learning experience yielding deeper outcomes across and through university and programme experiences.

In the present pairing of two lenses on the university student experience (i.e., broad university engagement, Pace, 1984; programme engagement, Entwistle and Ramsden, 1983) two potential, equally valid, outcomes were possible. First, a bifocal integration, with two separate focal distances in one combined lens or, second, a binocular, stereoscopic vision bringing them both into focus together. The correlational findings across the integrated instruments’ constituent parts, with the exception of Richness, were consistently medium in size. This suggested the kind of moderate overlap to be expected from a stereoscopic view of the student experience. In particular, the robust connections between the programme level constructs (e.g., assessment for understanding and clear goals) and broader aspects of university (e.g., academic challenge and student-faculty interaction) indicate both how these different levels of engagement might elaborate on and potentially reciprocate between each other.
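To make the notion of a "medium" correlation concrete, the sketch below computes a Pearson correlation between two sets of observed scores and labels its size using the conventional benchmarks from Cohen (1988): 0.10 small, 0.30 medium, 0.50 large. It is illustrative only. The study itself reports latent correlations, and the function names `pearson_r` and `cohen_label`, the "negligible" label, and the example scores are assumptions rather than part of the original analysis.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def cohen_label(r):
    """Label a correlation's magnitude using Cohen's (1988) benchmarks."""
    size = abs(r)
    if size >= 0.5:
        return "large"
    if size >= 0.3:
        return "medium"
    if size >= 0.1:
        return "small"
    return "negligible"


# Hypothetical scale scores for two constructs (not data from this study).
construct_a = [2.0, 3.0, 3.5, 4.0, 4.5, 5.0]
construct_b = [3.0, 2.5, 4.0, 3.0, 4.5, 3.5]
r = pearson_r(construct_a, construct_b)
print(f"r = {r:.2f} ({cohen_label(r)})")
```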

The scant and small differences between the genders’ university and programme experiences were somewhat surprising based on recent reviews of the individual difference literature (e.g., Voyer and Voyer, 2014). This might simply be due to the less commonly researched nature of the institutional context (i.e., a research-intensive Asian university).

Practical Implications

Students’ faculty-specific experiences clearly had a substantial effect on the nature of their university and, to a greater degree, their programme experiences. This was to be expected and suggests that the instrument would support benchmarking faculties intra-institutionally. Senior management would understandably prefer to see consistency in the quality of students’ experiences across their institution, a consistency that might be supported by robust indicators. The pairing of students’ experience evaluations at the university and programme levels provides both university and faculty management an opportunity to compare vertically and horizontally. University experiences might act as a baseline for programme experiences and vice versa. Furthermore, points of strong connection between these spheres of experience (e.g., collaborative learning and cognitive learning outcomes) can also suggest how one might enhance the other.

While the inventory resulting from this project has benchmarking implications intra-institutionally, its real added potential is inter-institutional communication. The finalised inventory was shown to be empirically robust and clearly connected conceptually. Its constituent components are already used by a considerable proportion of the Pacific Asian higher education population (i.e., hundreds of institutions: CCSS across Mainland China and variations on the SLEQ across Hong Kong). The final programme and university experience inventory draws on the world’s two most dominant theories for understanding students’ higher education experiences. If adopted, even at a smaller but representative scale, at universities across Pacific Asia, it would provide a valuable foundation for discussion and shared development.

It is worth noting that the theories being employed are consistent with student experience assessment in Australia, the United Kingdom, and the United States. While the inventory’s constructs do not always correspond one-to-one with the constructs from student experience surveys in these nations, they are often close enough to enable discussion. Universities everywhere are keen to improve, and many universities in Pacific Asia feel the need to catch up with the West (e.g., Rung, 2015; Yonezawa, 2016), which dominates international rankings. As the student experience and the environment that supports it grow in importance (regionally and internationally), a shared understanding of what that experience is might provide Pacific Asian universities with a sound foundation for regional and international communication/collaboration. This will give them the international injection they need and at the same time support Pacific Asian universities in growing together, ensuring they take centre stage in the coming Asian Century (Kahanna, 2019).

Limitations and Future Directions

There are several limitations of the present study that might be overcome by future research in this area. The first is that the current research was undertaken at one university. This work needs to be expanded to a range of universities in Hong Kong and Mainland China to ensure reliability across contexts (Greater China) and populations. The present study was undertaken in Cantonese; future studies will need to be undertaken in English and Mandarin to start, but Korean and Japanese versions will be necessary to develop a meaningful Pacific Asian benchmarking instrument.

In addition to confirming the inventory’s reliability/validity in other tertiary contexts, it is also critical that future studies include a range of additional self-reported and observed learning-related covariates. These will be necessary to demonstrate the implications of increasing and decreasing amounts of the student experience components assessed.

Conclusion

The present research set out to adapt and integrate two instruments from two schools of thought regarding the university student experience. Considerable research in the areas of programme (e.g., Webster et al., 2009; Fryer and Ginns, 2018; Zhao et al., 2017) and university student engagement (Coates, 2009; Shi et al., 2014; Luo, 2016) has marked them as important, separate areas of quality assurance. This study emphasises the considerable overlap and points to interconnections that might be key to unlocking further enhancement in both. One example is the robust relationship between collaborative learning (BAL) experiences and important programme-level learning outcomes. These connections point to how the university and programme experience inter-relate and potentially amplify each other, which in turn stresses the importance of a stereoscopic vision that integrates both levels of student engagement.

This research resulted in an inventory of 14 constructs assessing the student experience both within specific programmes and broadly across a university. The finalised inventory (Supplemental Materials 2: Finalised Integrated Survey of Student Engagement) demonstrated robust construct validity, reliability and conceptual consistency. The finalised inventory is short, consisting of 63 items, fits comfortably on one page (double-sided) and can be completed in less than 10 min. The inventory’s pairing of the two dominant university student experience theories and its links to assessment across Greater China and much of the Western world position it as a powerful tool to support dialogue about the student experience locally and internationally. The results of the current research are in a strong position to create a foundation for discussion and ensure Pacific Asian institutions begin to learn from each other as well as the West.

Data Availability Statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by Hong Kong University Ethics Committee. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

Hong Kong Research Grant Council. General Research Fund Grant # 17615218.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2021.748590/full#supplementary-material

References

Astin, A. W. (1984). Student Involvement: A Developmental Theory for Higher Education. J. Coll. Student Personnel 25, 297–308.

Barefoot, B. O. (2004). Higher Education's Revolving Door: Confronting the Problem of Student Drop Out in US Colleges and Universities. Open Learn. J. Open, Distance e-Learning 19, 9–18. doi:10.1080/0268051042000177818

Biggs, J. B., and Tang, C. (2011). Teaching for Quality Learning at University: What the Student Does. McGraw-Hill Education.

Brislin, R. W. (1980). “Translation and Content Analysis of Oral and Written Materials,” in Handbook of Cross-Cultural Psychology. Editors H. C. Triandis, and J. W. Berry (Boston: Allyn & Bacon), 389–444.

Coates, H. (2009). Development of the Australasian Survey of Student Engagement (AUSSE). High Educ. 60, 1–17. doi:10.1007/s10734-009-9281-2

Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences. New York, NY: Routledge.

Denson, N., and Zhang, S. (2010). The Impact of Student Experiences with Diversity on Developing Graduate Attributes. Stud. Higher Edu. 35 (5), 529–543. doi:10.1080/03075070903222658

ECEQW (2005). Education Quality Work: The Hong Kong Experience: A Handbook on Good Practices in Assuring and Improving Teaching and Learning Quality. Hong Kong: Editorial Committee of Education Quality Work/The Hong Kong Experience on behalf of the eight UGC-funded higher education institutions.

Fryer, L. K., Ginns, P., Walker, R. A., and Nakao, K. (2012). The Adaptation and Validation of the CEQ and the R-SPQ-2f to the Japanese Tertiary Environment. Br. J. Educ. Psychol. 82, 549–563. doi:10.1111/j.2044-8279.2011.02045.x

Fryer, L. K., Lee, S., and Shum, A. (2020). “Student Learning, Development, Engagement, and Motivation in Higher Education,” in Oxford Bibliographies in Education. Editor A. Hynds (Oxford University Press). doi:10.1093/OBO/9780199756810-0246

Fryer, L. K., and Ginns, P. (2018). A Reciprocal Test of Perceptions of Teaching Quality and Approaches to Learning: A Longitudinal Examination of Teaching-Learning Connections. Educ. Psychol. 38 (8), 1032–1049. doi:10.1080/01443410.2017.1403568

Ginns, P., Prosser, M., and Barrie, S. (2007). Students’ Perceptions of Teaching Quality in Higher Education: The Perspective of Currently Enrolled Students. Stud. Higher Edu. 32, 603–615. doi:10.1080/03075070701573773

Guo, F., and Shi, J. (2016). The Relationship between Classroom Assessment and Undergraduates' Learning within Chinese Higher Education System. Stud. Higher Edu. 41, 642–663. doi:10.1080/03075079.2014.942274

Hair, J. F., Risher, J. J., Sarstedt, M., and Ringle, C. M. (2019). When to Use and How to Report the Results of PLS-SEM. Eur. Business Rev. 31, 2–24. doi:10.1108/EBR-11-2018-0203

Hu, L. T., and Bentler, P. M. (1999). Cutoff Criteria for Fit Indexes in Covariance Structure Analysis: Conventional Criteria versus New Alternatives. Struct. Equation Model. A Multidisciplinary J. 6, 1–55. doi:10.1080/10705519909540118

Kuh, G. D., Pace, C. R., and Vesper, N. (1997). The Development of Process Indicators to Estimate Student Gains Associated with Good Practices in Undergraduate Education. Res. Higher Edu. 38, 435–454. doi:10.1023/a:1024962526492

Leach, L. (2016). Exploring Discipline Differences in Student Engagement in One Institution. Higher Edu. Res. Dev. 35 (4), 772–786. doi:10.1080/07294360.2015.1137875

Liu, S. (2013). Quality Assessment of Undergraduate Education in China: Impact on Different Universities. High Educ. 66 (4), 391–407. doi:10.1007/s10734-013-9611-2

Lizzio, A., Wilson, K. L., and Simons, R. (2002). University Students' Perceptions of the Learning Environment and Academic Outcomes: Implications for Theory and Practice. Stud. Higher Edu. 27, 27–52. doi:10.1080/03075070120099359

Luo, Y. (2016). Globalization and University Transformation in China (In Chinese). Fudan Edu. Forum 3, 5–10.

Luo, Y., Ross, H., and Cen, Y. H. (2009). Higher Education Measurement in the Context of Globalization: The Development of NSSE-China: Cultural Adaptation, Reliability and Validity (In Chinese). Fudan Edu. Forum 7 (5), 12–18.

Marton, F., and Säljö, R. (1976b). On Qualitative Differences in Learning: II-Outcome as a Function of the Learner's Conception of the Task. Br. J. Educ. Psychol. 46, 115–127. doi:10.1111/j.2044-8279.1976.tb02304.x

Marton, F., and Säljö, R. (1984). “Approaches to Learning,” in The Experience of Learning. Editors F. Marton, D. J. Hounsell, and N. Entwistle (Edinburgh, UK: Scottish Academic Press), 36–55.

Marton, F., and Säljö, R. (1976a). On Qualitative Differences in Learning: I-Outcome and Process. Br. J. Educ. Psychol. 46, 4–11. doi:10.1111/j.2044-8279.1976.tb02980.x

Pace, C. R. (1984). Measuring the Quality of College Student Experiences. Los Angeles, CA: Higher Education Research Institute.

Ramsden, P. (1991). A Performance Indicator of Teaching Quality in Higher Education: The Course Experience Questionnaire. Stud. Higher Edu. 16, 129–150. doi:10.1080/03075079112331382944

Raykov, T. (2009). Evaluation of Scale Reliability for Unidimensional Measures Using Latent Variable Modeling. Meas. Eval. Couns. Dev. 42, 223–232. doi:10.1177/0748175609344096

Shi, J. H., Wen, W., Li, Y. F., and Chu, J. (2014). China College Student Survey (CCSS): Breaking Open the Black Box of the Process of Learning. Int. J. Chin. Edu. 3, 132–159.

Sun, H., and Richardson, J. T. E. (2012). Perceptions of Quality and Approaches to Studying in Higher Education: a Comparative Study of Chinese and British Postgraduate Students at British Business Schools. Higher Edu. 63, 299–316. doi:10.1007/s10734-011-9442-y

Tabachnick, B. G., and Fidell, L. S. (2007). Using Multivariate Statistics. 5th ed. Pearson, NY: Pearson Education.

Tinto, V. (1993). Leaving College: Rethinking the Causes and Cures of Student Attrition. 2nd ed. Chicago: University of Chicago Press.

Tu, D. B., Shi, J. H., and Guo, F. F. (2013). The Study on Psychometric Properties of NSSE-China (In Chinese). Fudan Edu. Forum 11, 55–62.

Tyler, R. W. (1966). Assessing the Progress of Education. Sci. Ed. 50, 239–242. doi:10.1002/sce.3730500312

Voyer, D., and Voyer, S. D. (2014). Gender Differences in Scholastic Achievement: a Meta-Analysis. Psychol. Bull. 140, 1174–1204. doi:10.1037/a0036620

Webster, B. J., Chan, W. S. C., Prosser, M. T., and Watkins, D. A. (2009). Undergraduates' Learning Experience and Learning Process: Quantitative Evidence from the East. High Educ. 58, 375–386. doi:10.1007/s10734-009-9200-6

Yin, H., and Ke, Z. (2017). Students' Course Experience and Engagement: an Attempt to Bridge Two Lines of Research on the Quality of Undergraduate Education. Assess. Eval. Higher Edu. 42, 1145–1158. doi:10.1080/02602938.2016.1235679

Yonezawa, A. (2016). “Can East Asian Universities Break the Spell of Hierarchy? The Challenge of Seeking an Inherent Identity,” in The Palgrave Handbook of Asia Pacific Higher Education (New York: Palgrave Macmillan), 247–260. doi:10.1057/978-1-137-48739-1_17

Yuan, K. H. (2005). Fit Indices versus Test Statistics. Multivariate Behav. Res. 40, 115–148. doi:10.1207/s15327906mbr4001_5

Zeng, L. M., Fryer, L. K., and Zhao, M. Y. (2019). Measuring Student Engagement and Learning Experience for Quality Assurance in Higher Education. Paper presented at the International Conference on Education and Learning. Osaka, Japan.

Zeng, L. M., Fryer, L. K., and Zhao, M. Y. (Forthcoming). Toward a Comprehensive and Distributed Approach to Assessing University Student Experience. High. Educ. Quart.

Zhao, Y., Huen, J. M. Y., and Chan, Y. W. (2017). Measuring Longitudinal Gains in Student Learning: A Comparison of Rasch Scoring and Summative Scoring Approaches. Res. High Educ. 58, 605–616. doi:10.1007/s11162-016-9441-z

Keywords: student experience, Pacific Asia, programme evaluation, university engagement, SEM

Citation: Fryer LK, Zeng LM and Zhao Y (2021) Assessing University and Programme Experiences: Towards an Integrated Asia Pacific Approach. Front. Educ. 6:748590. doi: 10.3389/feduc.2021.748590

Received: 24 August 2021; Accepted: 12 October 2021;

Published: 26 November 2021.

Edited by:

Kate M. Xu, Open University of the Netherlands, Netherlands

Reviewed by:

Rining (Tony) Wei, Xi’an Jiaotong-Liverpool University, China

Yaw Owusu-Agyeman, University of the Free State, South Africa

Copyright © 2021 Fryer, Zeng and Zhao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Luke Kutszik Fryer