- 1 Department of Electrical and Computer Engineering, Virginia Commonwealth University, Richmond, VA, United States

- 2 Office for Diversity, Equity, and Inclusion, University of Virginia, Charlottesville, VA, United States

- 3 Department of Teaching and Learning, School of Education, Virginia Commonwealth University, Richmond, VA, United States

- 4 Office of Graduate and Postdoctoral Affairs, Diversity Programs, University of Virginia, Charlottesville, VA, United States

- 5 School of Medicine, University of Virginia, Charlottesville, VA, United States

Millions of dollars are invested each year in intervention programs to broaden participation and improve bachelor’s degree graduation rates of students enrolled in science, technology, engineering, and mathematics (STEM) disciplines. The Virginia–North Carolina Louis Stokes Alliance for Minority Participation (VA-NC Alliance), a consortium of 11 higher education institutions and one federal laboratory funded by the National Science Foundation (NSF), is one such investment. The VA-NC Alliance partners implement evidence-based STEM intervention programs (SIPs) informed by research and specifically designed to increase student retention and graduation rates in STEM majors. The VA-NC Alliance is conducting an Alliance-wide longitudinal research project, titled “What’s Your STEMspiration?”, that is grounded in Social Cognitive Career Theory (SCCT). The goal of the project is to assess the differentiated impacts and effectiveness of the Alliance’s broadening participation efforts and to identify emergent patterns, adding to the body of knowledge about culturally responsive SIPs. In other words, “What’s Your STEMspiration?” explores what influences and inspires undergraduates to pursue a STEM degree and career, and how the development of a STEM identity supports students in achieving their goals. To conduct the quantitative portion of the study, the research team developed a survey instrument. Two preliminary studies, statistical analysis, and cognitive interviews were used to develop and validate the instrument. This paper discusses the theoretical and conceptual frameworks and preliminary studies upon which the survey is built, the methodology used to validate the instrument, and the resulting final survey tool.

Introduction

A 2015 study from the US Bureau of Labor Statistics (Xue and Larson 2015) found that certain disciplines in science, technology, engineering, and mathematics (STEM) were in a labor market crisis because of a shortage of trained professionals in the workforce. Furthermore, some regions experienced this crisis more acutely than others. The study found that in Virginia and North Carolina, the supply of STEM professionals (specifically, B.S. degree holders in engineering, cybersecurity specialists, software developers, data scientists, and workers in skilled trades) does not meet demand, particularly in industries that must hire U.S. citizens or permanent residents for security reasons. Although representation has increased in some STEM occupations, women and racial–ethnic minorities continue to be underrepresented in many STEM fields (Byars-Winston et al., 2015). For example, the number of racial–ethnic minorities completing bachelor’s degrees in psychology, the social sciences, the biological sciences, and computer science has increased over the past two decades. However, as Fouad and Santana (2017) observe, underrepresented racial–ethnic minorities’ graduation rates in engineering and the physical sciences have been flat since 2000, and their numbers have dropped in mathematics and statistics (National Science Foundation, 2017). The President’s Council of Advisors on Science and Technology (2012) describes how the ongoing underrepresentation of certain racial and ethnic groups in STEM fields remains a pressing national concern. To address the challenges of the 21st century, particularly in the science and technology sectors, increased diversification of the United States STEM labor force is critical to enhancing the nation’s competitiveness.

The Virginia–North Carolina Louis Stokes Alliance for Minority Participation (VA-NC Alliance) was established and funded to address the pressing need to broaden participation in STEM. The VA-NC Alliance is a consortium of 11 higher education institutions and one federal laboratory funded by the National Science Foundation (NSF).1 The VA-NC Alliance implements several types of intervention programs to increase the recruitment, retention, and graduation rates of students from underrepresented racial and ethnic groups in STEM fields2. For the purpose of this work, the research team refers to individual program participants who identify as members of these groups as AALANAI (African American, Latinx American, Native American or Indigenous populations). These student participants are enrolled in community colleges, Historically Black Colleges and Universities (HBCUs), and predominantly white research institutions (PWIs) within the VA-NC Alliance. The VA-NC Alliance’s overarching goal is to broaden participation in the STEM disciplines and contribute to the nation’s critical need for a more diverse STEM workforce.

By preparing members of groups previously underrepresented in STEM fields for the workforce, the VA-NC Alliance helps ensure that diverse perspectives are applied to complex, global problems, benefiting its geographic region and the nation. The VA-NC Alliance partners implement evidence-based STEM intervention programs (SIPs) informed by research and specifically designed to increase student retention and graduation rates in STEM majors. These efforts include transition programs, tutoring, peer mentoring, speaker series, undergraduate research experiences, financial support (stipends), intrusive or targeted advising, academic monitoring, professional development workshops, and graduate school preparation, among others. While SIPs have shown varying degrees of success in improving academic achievement and graduation rates, a better understanding is needed of how such programs affect targeted students and improve (or fail to improve) their chances of attaining a bachelor’s degree. Since the inception of the Alliance in 2007, the number of STEM degrees obtained by AALANAI students at the partner institutions has increased by 285%. During the same period, the number of AALANAI students enrolled in STEM disciplines at the partner institutions has increased by 210%. Given this record, the VA-NC Alliance is uniquely situated to conduct a research study on the specific impacts of the partner schools’ environments and SIPs on students’ persistence and STEM career goals.

Thus, the VA-NC Alliance is conducting an Alliance-wide longitudinal research study to assess the differentiated impacts and effectiveness of the Alliance’s broadening participation efforts and to identify emergent patterns, adding to the body of knowledge about the impacts of culturally responsive SIPs. The study explores the degree to which SIPs, cultural contexts, and personal inputs affect students’ interests, goals, and actions pertaining to college retention, career decisions, and expected outcomes. Because the Alliance partners implement similar interventions, each tailored to its own campus, the VA-NC Alliance can conduct a longitudinal comparison of SIPs within the unique cultural context of each institution. A consortium such as the VA-NC Alliance provides a useful setting for this study for three reasons. First, the Alliance comprises three institutional types, allowing the research team to compare student experiences across these contexts; the team anticipates identifying strengths and needs within each institutional context that can inform programming. Second, the Alliance provides access to a pool of AALANAI students and control groups to recruit for survey participation and, later, for focus groups and interviews. Third, the Alliance and its partner schools offer STEM intervention programs that would benefit from assessment: outcome data can show which programs are most impactful and which may be correlated with STEM students’ academic and career achievements. This information would help signal which targeted interventions institutions should implement and funding agencies should invest in.
This paper discusses the theoretical and conceptual frameworks for this research, the preliminary studies, the methodology used to validate the instrument, and the resulting final survey tool.

Overview of Study

The Alliance-wide longitudinal research study, “What’s Your STEMspiration?”, will provide additional understanding of the factors affecting AALANAI students’ academic success, retention, graduation, and post-graduate career decisions in STEM disciplines at VA-NC Alliance institutions. The goal of the research project is to assess how students’ personal inputs and sources of self-efficacy intersect with the differentiated impacts and effectiveness of the Alliance’s broadening participation efforts. For this study, “STEMspiration” encompasses what influences and inspires undergraduates to pursue a STEM degree and career, and how the development of a STEM identity supports students in achieving their goals. The research team seeks to evaluate the effectiveness of interventions, identify differentiated impacts, and describe emergent patterns, adding to the body of knowledge about culturally responsive SIPs.

The study is based primarily on the theoretical framework of Social Cognitive Career Theory (SCCT) and investigates the underlying processes that impact AALANAI students’ successful pursuit of STEM degrees and careers. Building upon existing theoretical frameworks and two preliminary studies conducted at VA-NC Alliance partner schools, the research team developed a survey instrument to identify specific areas to explore further in focus groups and interviews, increase knowledge pertaining to AALANAI STEM student success, and adapt Alliance programming as needed in response to the study’s findings. Statistical analyses of pilot survey data and cognitive interviews utilizing the inductive methodological approach of grounded theory were used to validate the survey instrument.

Theoretical Foundation

The “What’s Your STEMspiration?” theoretical framework builds upon the work of Vincent Tinto, John C. Weidman et al., Martin M. Chemers et al., and theorists associated with Social Cognitive Career Theory. The foundation for the NSF’s Louis Stokes Alliance program was Tinto’s model of student retention, which emphasizes the academic and social integration of students into the institution (Tinto, 1987). In its early years, the VA-NC Alliance relied on the Tinto model for its program design. When the Alliance’s research study team formed in 2017, members broadened their understanding of student identity through Weidman’s concept of disciplinary socialization, a process by which students build community and develop interpersonal relationships with others in their discipline (Weidman et al., 2014). Because the Alliance’s study would focus on self-efficacy, STEM interventions, outcome expectations, and identity, the research team turned to Chemers et al. (2011) and their mediation model of the effects of science support experiences, in which various support components affect relevant psychological processes that in turn lead to commitment to and involvement in a scientific career. To incorporate sources of self-efficacy and the career development process into the study, the research team drew on the work of Byars-Winston et al. (2010) and others associated with Social Cognitive Career Theory (SCCT). This theory posits that students’ interests, choices, and performance are shaped by contextual factors throughout the lifelong process of academic and career development. SCCT considers the influence of self-efficacy, outcome expectations, identity, and goal attainment on academic and career interests and on goal setting (Bandura 1986; Lent R. W. et al., 2005; Usher and Pajares, 2008; Byars-Winston et al., 2010; Navarro et al., 2014; Lent R. W. et al., 2015; Byars-Winston et al., 2016; Dickinson et al., 2017).
As Fouad and Santana stated:

The SCCT (Lent R. W. et al., 1994; Lent R. W. et al., 2000) has continued to be the major theoretical framework investigating factors that have contributed to the underrepresentation of women and racial–ethnic minorities in STEM fields. This has continued to be an area of investigation because there have been consistent race and gender disparities at the educational and occupational levels in STEM professions, even 35 years after Betz and Hackett (1981) began to study it. SCCT has also been used as a frame to examine all of the empirical studies in the past 40 years that have examined gender differences in STEM careers (Kanny et al., 2014), primarily because the model explicitly incorporates gender as a person input and explicitly includes contextual influences at proximal and distal levels (Fouad and Santana, 2017, 26).

The SCCT interest model, which focuses on the role of individual interests in motivating choices of behavior and skill acquisition, and the choice model, which holds that interests are typically related to the choices people make and to the actions they take to implement those choices, use domain-specific self-efficacy, outcome expectations, and interests, as well as proximal and distal experiences, to explore factors that influence career choices. Studies over the past four decades (Betz and Hackett 1981; Hackett and Betz 1989; Betz and Schifano 2000; Ferry et al., 2000; Fouad and Byars-Winston 2005; Carlone and Johnson 2007; Hurtado et al., 2009; Blake-Beard et al., 2011; Lent R. W. et al., 2011; Johnson et al., 2012; Flores et al., 2014; Navarro et al., 2014; Alhaddab and Alnatheer 2015; Lent R. W. et al., 2015; Dickinson et al., 2017; Fouad and Santana 2017) have examined the fit of the SCCT interest and choice models among college students and have shown that building self-efficacy in a STEM-related domain (mathematics, science, etc.) and fostering positive and realistic outcome expectations about entering a STEM career lead to interest in STEM-related activities, which in turn leads to STEM career goals and to preparation for, and entry into, a STEM career. Furthermore, the SCCT framework incorporates contextual factors, such as research experiences, mentoring, and intervention programs, in understanding the underrepresentation of certain populations in STEM careers. As Fouad and Santana state, “Using an SCCT framework allows us to understand the complexity of factors and opportunities for intervention presented along a career trajectory. SCCT can also be an asset to those working in direct practice, as it points directly to areas where intervention can facilitate the decision-making process” (Fouad and Santana, 2017, 27).
“In sum, SCCT has been instrumental in investigating undergraduate women and underrepresented minorities’ career interests, choice, and persistence while pursuing STEM majors” (Fouad and Santana, 2017, 32).

Building on the work of Tinto, Weidman, and others, the “What’s Your STEMspiration?” survey instrument specifically incorporated existing SCCT measures (Byars-Winston et al., 2010) and mediation model measures (Chemers et al., 2011). Chemers et al. examined how psychological factors, such as self-efficacy and personal identity, mediated the relationships between science support experiences (i.e., research experience, mentoring, and community involvement) and desirable outcomes (i.e., commitment to and effort expended toward a career in scientific research). Byars-Winston et al. (2016) developed and validated a survey instrument based on SCCT, reporting internal reliability and factor analyses for measures of research-related self-efficacy beliefs, sources of self-efficacy, outcome expectations, and science identity. The “What’s Your STEMspiration?” study responds to the call from Byars-Winston et al. (2010) for additional research into how cognitive, cultural, and contextual characteristics indirectly influence AALANAI STEM students’ outcomes, and to the call from Fouad and Santana (2017) to examine whether some contextual supports (professors, financial aid, mentors, or research experiences) are more important for some groups than for others and whether there are key intervention points that would effectively prevent college attrition in STEM majors.

The “What’s Your STEMspiration?” study also builds upon two preliminary research studies on undergraduate recruitment and retention, conducted within the VA-NC Alliance at two partner schools: Virginia Commonwealth University (VCU) and the University of Virginia (UVA). The VCU study focused only on VCU’s Louis Stokes Alliance for Minority Participation (LSAMP) activities and used an emergent mixed-methods design that included a survey instrument and focus groups. The UVA study, which was qualitative, spanned the VA-NC Alliance and used participant interviews. The results from these two preliminary studies informed the development of the survey instrument for this longitudinal study. Overviews of the two studies are provided below.

Virginia Commonwealth University Preliminary Study: An Exploration of Factors Influencing VCU LSAMP Students’ Decisions to Stay in STEM

The VCU LSAMP program has offered various SIPs over its fourteen-year history, including transition programs, research experiences, mentoring, and scholarship programs, that engage undergraduate AALANAI STEM majors. During that time, the VCU LSAMP team has conducted studies and evaluations to improve program outcomes (Alkhasawneh R. and Hobson, 2009; Alkhasawneh R. and Hobson, 2010; Alkhasawneh R. and Hobson, 2011; Alkhasawneh R. and Hargraves, 2012; Brinkley et al., 2014; Alkhasawneh R. and Hargraves, 2014; Griggs et al., 2016). In 2015, the VCU team conducted a preliminary research study of the design and implementation of the VCU LSAMP Hybrid Summer Transition Program and its accompanying intervention programs, which were designed to facilitate students’ academic and social integration into VCU. The team developed a 63-item survey instrument to investigate 1) factors that contributed to retention and academic success for their LSAMP students; 2) the impact of the summer transition program on student retention and academic success, including first-year success; and 3) the role existing STEM intervention programs played in students’ academic integration, social integration, and career preparedness. The survey was developed from existing publicly available surveys that assessed academic and social integration and was informed by Tinto’s model of academic and social integration (Tinto, 1987), Strayhorn’s model of sense of belonging (Strayhorn, 2012; Strayhorn, 2018), and Bourdieu’s cultural capital model (Bourdieu, 1986).

After the study received IRB approval (HM#20001406), all 154 students then in the VCU LSAMP program were invited to participate. The survey findings identified areas of focus for the qualitative portion of the study, which used focus groups and interviews with targeted students to explore the extent to which SIPs had influenced their perceptions of issues deemed crucial to academic success. Two focus groups were conducted, in which 10–12 students, all current or former STEM majors who had participated in one or more LSAMP SIPs, took part.

The VCU study identified activities and factors important to the LSAMP students’ academic and social integration and to their sense of belonging in a STEM field. These findings informed areas of inquiry for the “What’s Your STEMspiration?” survey instrument. The VCU study also provided insight into specific response options for certain survey questions (see Model Development and Pilot Survey Instrument). Regarding STEM-related academic support activities and STEM intervention programs, students expressed willingness to attend peer mentoring sessions and career/professional development events, warranting exploration of these activities in the Alliance-wide study. However, students were less likely to take advantage of university-sponsored SIPs such as tutoring, academic coaching, the writing center, or even faculty office hours, warranting their possible exclusion from the Alliance-wide survey. While students felt positively about their social interactions with other students in their program and about their choice of academic major, they were neutral about their faculty members’ knowledge of their future plans. No statistically significant relationship emerged between the sense of belonging and academic capital variables examined and students’ GPAs. When asked about their plans for the future, students most often cited personal interest, aptitude, and employment and salary opportunities as reasons for remaining in STEM, whereas the most commonly cited reason for considering leaving STEM was unappealing employment opportunities. Further findings from the VCU LSAMP preliminary study are explored in Griggs et al. (2016).

University of Virginia Preliminary Study: An Exploration of LSAMP Students’ Experiences and Future Plans

The UVA study was designed to test qualitative research protocols and to inform the development of the “What’s Your STEMspiration?” survey instrument’s questions and response options before the broader Alliance-wide research study was conducted.3 After receiving IRB approval (SBS # 2017021800), the research team conducted interviews over three months, aiming to interview two students from each of the partner schools (nine schools at the time of this preliminary study), for a total of eighteen interviews. Using an online randomization tool called Research Randomizer4, the research team randomly selected participants representing each school from the registration database of the 2017 VA-NC Alliance Annual Undergraduate Research Symposium, which listed students and their home institutions. In the recruitment email, the research team informed students that participation in the study was completely voluntary. Despite offering each participant a $20 Amazon gift card as an incentive, the team recruited fifteen rather than eighteen interview participants. Respondents were de-identified using pseudonyms, and findings were reported in aggregate, keeping participant identities confidential.
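The selection step amounts to stratified random sampling: group the symposium registrants by home institution, then draw a fixed number at random from each group. The sketch below is a hypothetical Python analogue of that step using the standard `random` module rather than Research Randomizer; the record format, the `select_interviewees` helper, the seed, and the sample records are all illustrative assumptions, not the team’s procedure or data.

```python
import random
from collections import defaultdict


def select_interviewees(registrants, per_school=2, seed=None):
    """Draw up to `per_school` students at random from each institution.

    `registrants` is a list of (student_id, institution) pairs, a hypothetical
    stand-in for the symposium registration database.
    """
    rng = random.Random(seed)  # seeded generator so a draw can be reproduced
    by_school = defaultdict(list)
    for student_id, school in registrants:
        by_school[school].append(student_id)

    selection = {}
    for school, students in sorted(by_school.items()):
        # A school with fewer registrants than `per_school` contributes all of them.
        k = min(per_school, len(students))
        selection[school] = rng.sample(students, k)
    return selection


# Hypothetical registration records (not study data).
registrants = [
    ("s01", "School A"), ("s02", "School A"), ("s03", "School A"),
    ("s04", "School B"), ("s05", "School B"),
    ("s06", "School C"),
]
picked = select_interviewees(registrants, per_school=2, seed=2017)
for school, ids in picked.items():
    print(school, ids)
```

With nine schools and `per_school=2`, the target would be eighteen interviewees, as in the study design; capping the draw at the number of registrants keeps a small school from raising an error.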

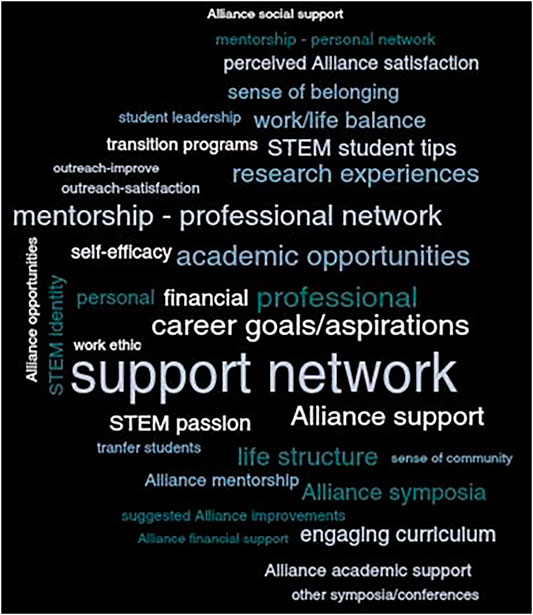

Based on the interview transcripts, each research team member drafted a set of codes with definitions, and the codes were revised until consensus was reached. The transcripts and codes were then entered into Dedoose. Of the 17 parent codes, those applied most often to transcript excerpts were, in descending order, “support network,” “career goals/aspirations,” and “academic opportunities” (see Figure 1). Interviewees described a variety of support networks, including family, friends in the residence halls who were also struggling with STEM courses, professional organizations, peer mentors, graduate students, faculty, and research labs. Analysis of the interviews revealed the importance of mentors for students; some students from Bennett College noted that they have multiple mentors. Others, such as a student from Saint Augustine’s University, described how academic opportunities shaped her career goals/aspirations, saying that the undergraduate research symposium she attended was

“really an eye-opener for me because I was able to surround myself with people who think like I do, and people who have done work in areas that I didn’t know before, and sparked interests in areas that I would have never knew [sic] if I didn’t go … That’s the role it played for me, is really an eye-opener into reality and what other scientists are doing across the nation.”

Analysis of how the codes intersected with each other made clear to the research team that factor analysis would be necessary in the Alliance-wide study to understand how numerous variables interact.
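Both the code-frequency ranking and the check of how codes intersect reduce to simple counts over coded excerpts: how often each code was applied, and how often each pair of codes was applied to the same excerpt. The Python sketch below illustrates those two counts on a few hypothetical excerpts; it assumes each excerpt is exported as a list of applied parent codes, which is an illustrative format and not Dedoose’s export or the study’s data.

```python
from collections import Counter
from itertools import combinations

# Hypothetical coded excerpts (not study data): each entry lists the parent
# codes applied to one transcript excerpt.
excerpts = [
    ["support network", "career goals/aspirations"],
    ["support network", "academic opportunities"],
    ["career goals/aspirations", "academic opportunities", "support network"],
    ["support network"],
    ["career goals/aspirations"],
]

# How often each code was applied (counted once per excerpt).
code_counts = Counter(code for codes in excerpts for code in set(codes))

# How often each unordered pair of codes was applied to the same excerpt.
pair_counts = Counter()
for codes in excerpts:
    # Sorting gives each unordered pair a single canonical key.
    for pair in combinations(sorted(set(codes)), 2):
        pair_counts[pair] += 1

# most_common() lists entries in descending order of count.
for code, n in code_counts.most_common():
    print(f"{code}: {n}")
for (a, b), n in pair_counts.most_common():
    print(f"{a} + {b}: {n}")
```

Co-occurrence counts like these are descriptive only; they indicate which themes travel together but do not estimate the underlying structure the way a factor analysis of survey data would.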

The UVA preliminary study identified activities, topics, and themes important to the interviewees; these informed the areas of exploration that the Alliance-wide research study prioritized and incorporated into its survey instrument. Furthermore, the analysis demonstrated how various forms of academic and social support were interconnected in students’ minds. This informed the structure of the broader Alliance-wide study’s survey questions, response options, and analysis. Development of the Alliance-wide survey instrument is discussed in What’s Your STEMspiration? Instrument Development.

What’s Your STEMspiration? Instrument Development

Model Development and Pilot Survey Instrument

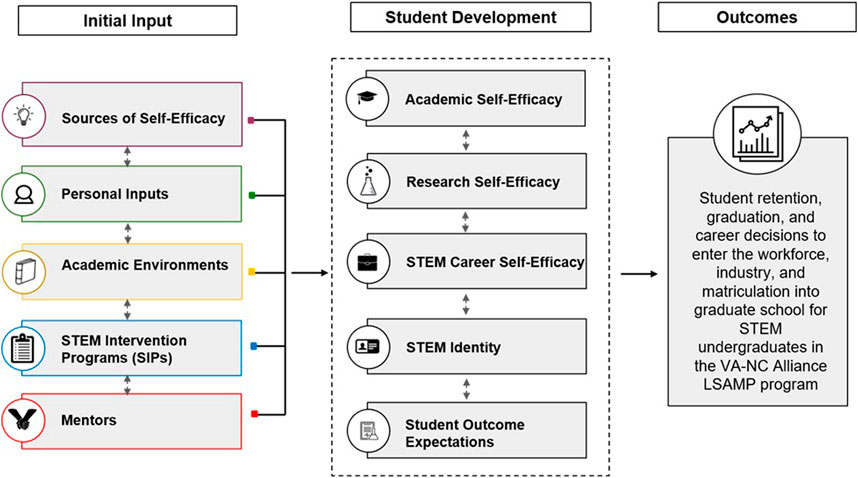

Using the instrument from the VCU preliminary study and the findings from the UVA preliminary study, the research team developed a pilot survey for a VA-NC Alliance-wide longitudinal research study. The purpose of this study was to better understand the factors affecting academic success, retention, graduation, and post-graduate career decisions for STEM students in the VA-NC Alliance. The survey instrument comprised associated factors mapped to ten content areas organized in a two-tier model. This two-tier conceptual framework divides the study’s exploration of factors influencing student retention and career decisions into five factors in each tier, as shown in Figure 2.

FIGURE 2. Survey instrument model of the effects of associated factors on student retention and career decisions in the VA-NC Alliance program.

The first tier, labeled the “Initial Input” tier, comprises five factors: sources of self-efficacy, personal inputs, academic environments, STEM intervention programs, and mentors. Bandura et al. (1999) hypothesized four sources of self-efficacy: mastery experiences, vicarious experiences, verbal and social persuasion, and emotional and physiological states. These experiences and states influence students’ self-efficacy in the three domains explored in this research (academic-related, research-related, and STEM-career self-efficacy); thus, the model incorporates sources of self-efficacy. For this study, personal inputs are defined as the experiences and the distal and proximal contextual affordances that may have played a role in students’ choice of major or desire to pursue a STEM career (Lent R. W. et al., 2000). While sources of self-efficacy may include personal inputs, this study identifies personal inputs as a separate factor that includes social identities, academic information (e.g., major, GPA, institution), and previous experiences that may have contributed to the student’s choice to pursue a STEM degree. To explore the impact of student participation in STEM intervention programs and the nuanced differences in students’ experiences across institution types (community colleges, HBCUs, and PWIs), both SIP participation and academic environment are included as input factors. Common themes that emerged from the UVA preliminary study interviews included “support network” and “mentoring”; thus, it was important to include mentoring as a stand-alone input factor in the “Initial Input” tier.

Prior research guided the selection of the five associated factors in the “Student Development” tier of the model: research-related self-efficacy, academic-related self-efficacy, STEM-career self-efficacy, STEM identity, and student outcome expectations. Self-efficacy is a central tenet of SCCT and has been shown to influence students’ choices of career paths, including STEM (Byars-Winston et al., 2016). Dickinson et al. (2017) also reported that harmful academic treatment of African American students may discourage undergraduates from taking classes that prepare them for STEM careers, thereby negatively affecting self-efficacy and outcome expectations. Because several VA-NC Alliance students noted that they participated in research experiences or internships, it was important to include research-related self-efficacy and STEM-career self-efficacy in the “Student Development” tier in addition to academic self-efficacy. Academic self-efficacy refers to one’s perceived capability to perform given academic tasks at desired levels. It is often conceptualized as a domain-specific construct, and its relationships with various achievement indexes have frequently been examined in the context of carrying out a specific task of interest (Bong, 1997). Research-related self-efficacy (or research self-efficacy) is defined as one’s confidence in successfully performing tasks associated with conducting research, such as performing a literature review or analyzing data (Forester et al., 2004). STEM-career self-efficacy is defined as one’s belief in one’s ability to successfully pursue a STEM career and perform the job functions it requires (Milner et al., 2014).

Researchers have also examined the role of science identity in students’ persistence in STEM. Students who see themselves as scientists are more likely to pursue careers in the field (Estrada et al., 2011). Because the VA-NC Alliance includes majors beyond the sciences, such as engineering, agriculture, technology, and mathematics, it was important to explore not just science identity but STEM identity. The research team therefore included STEM identity broadly as a factor in the “Student Development” tier. Moreover, because students are pursuing interdisciplinary career interests and finding that traditional disciplinary boundaries are fading, they may be more likely to see themselves as part of a broad STEM community rather than solely as scientists, engineers, mathematicians, or technologists.

As illustrated in Figure 2, the research team hypothesized that the initial inputs directly shape the student development factors in the second tier: academic self-efficacy, research self-efficacy, STEM-career self-efficacy, STEM identity, and student outcome expectations. The research team also hypothesized that factors within a tier are interdependent and may influence one another; for example, academic self-efficacy could influence STEM-career self-efficacy. The pilot survey also included behavioral questions to account for influences that may have contributed to a student’s academic performance, support, and well-being, such as employment, family obligations and engagement, transportation (i.e., commuting between job, school, or class), involvement in academic activities outside of class, study time, social media use, and physical activity (i.e., university athletics, intramural sports, physical recreation). The pilot survey included most of the questions from the VCU survey instrument, along with new questions on research self-efficacy, STEM-career self-efficacy, STEM identity, and mentoring. These questions were added based on the findings of the UVA interviews and to fit the proposed model. What began as an SCCT model evolved into a nuanced model appropriate for this study; however, with the additional questions, the 63-item survey instrument grew to 103 questions. Because of branching logic, respondents did not have to answer every question; nevertheless, the instrument became much longer.
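One way to make the hypothesized structure explicit is to list it as a set of candidate directed paths, which also shows how many relationships a full test of the model would involve. The Python sketch below is an illustrative encoding of Figure 2, not the authors’ model specification: the factor labels come from the text, while treating every cross-tier pair and every ordered within-tier pair as a candidate path, and the `paths_into` helper, are assumptions for illustration.

```python
from itertools import permutations, product

# Factor labels follow the text; the lists are an illustrative encoding only.
INITIAL_INPUT = [
    "sources of self-efficacy",
    "personal inputs",
    "academic environment",
    "STEM intervention programs",
    "mentors",
]
STUDENT_DEVELOPMENT = [
    "academic self-efficacy",
    "research self-efficacy",
    "STEM-career self-efficacy",
    "STEM identity",
    "outcome expectations",
]

# Assumed direct paths: each initial input may shape each development factor.
cross_tier_paths = list(product(INITIAL_INPUT, STUDENT_DEVELOPMENT))

# Assumed within-tier influences, as ordered (source, target) pairs.
within_tier_paths = [
    pair
    for tier in (INITIAL_INPUT, STUDENT_DEVELOPMENT)
    for pair in permutations(tier, 2)
]


def paths_into(factor):
    """Hypothetical helper: every candidate source that points at `factor`."""
    return [src for src, dst in cross_tier_paths + within_tier_paths if dst == factor]


print(len(cross_tier_paths), len(within_tier_paths))
print(paths_into("STEM-career self-efficacy"))
```

Under these assumptions, five factors per tier yield 25 cross-tier and 40 within-tier candidate paths, and `paths_into` lists the nine candidate influences on a single factor such as STEM-career self-efficacy.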

Testing the Validity of the Pilot Survey Instrument – Statistical Analysis

To test the pilot survey instrument before distributing it more widely, the research team sent surveys directly to participants in the VCU LSAMP program and the Elizabeth City State University (ECSU) LSAMP program. Contact information for these participants had previously been provided by program staff. In total, more than 350 students and alumni from the two programs were invited to participate. Study data were collected and managed using REDCap5 (Research Electronic Data Capture), a secure web application hosted by VCU for designing and administering surveys and research databases.

Traditionally, mixed methods research supports the validity of a study through triangulation, whereby the generalizable findings of quantitative research are enhanced by the contextual understanding provided by qualitative research. This form of validation, however, generally concerns checking the results, not verifying that the instrument actually measures what it is intended to measure. The research team therefore sought to validate the instrument statistically. Yet after several months of soliciting responses, only 49 completed surveys had been collected, even after the initial deadline was extended by two months and additional requests were sent to VA-NC Alliance students.

The research team also noted a high proportion of partial responses (approximately 50%), raising concerns about survey fatigue. The team revisited the estimated completion time of 15–20 min, which was based on preliminary testing by coordinators at the VA-NC partner schools, and noted that, when all branches were counted, the instrument contained 243 separate questions. A closer review of the partial responses in REDCap revealed no clear drop-out pattern: some participants stopped about halfway through the survey, while others stopped when they were close to finishing. The research team then discussed conducting cognitive interviews to evaluate the survey instrument’s feasibility, simplicity, and time required. Ultimately, the team decided first to run a principal component analysis (PCA) in SPSS (Statistical Package for the Social Sciences) to confirm that the factors represented in the survey were the ones the team intended to measure. This analysis would also help the team identify poorly performing items from quantitative summaries of the data and thereby inform the decision about reducing the number of questions.

Before performing the PCA, the research team discussed in detail the questions and their intended mapping to the study’s proposed model (Figure 2). During this process, the team recognized that parts of the measure had been adapted directly from other instruments (Byars-Winston et al., 2010; Chemers et al., 2011; Byars-Winston et al., 2016). The team therefore focused the analysis on the questions that were newly created or added for this study and that served as indicators of attributes in the Student Development tier (Figure 2). These survey questions were grouped according to five factors: Academic Self-Efficacy, Research Self-Efficacy, STEM Career Self-Efficacy, STEM Identity, and Student Outcome Expectations. Only completed survey responses were included, but zeros for non-applicable responses were retained. Missing values in completed surveys (e.g., where a question was skipped through branching) were replaced with the column mean (which was 0 in every such instance) to avoid errors during analysis. A principal component analysis with Varimax (orthogonal) rotation was then performed in SPSS to test the hypothesized five-factor structure.
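For readers who want to reproduce this kind of analysis outside SPSS, the preprocessing and rotation steps described above can be sketched in Python with NumPy. This is an illustrative sketch under stated assumptions, not the team’s procedure: the function names are hypothetical, skipped items are assumed to be stored as NaN, the data are simulated, and the varimax routine omits the Kaiser normalization that SPSS applies by default, so its loadings may differ somewhat from SPSS output.

```python
import numpy as np


def impute_column_means(X):
    """Replace NaN entries with the mean of their column (NaNs ignored)."""
    X = np.array(X, dtype=float)
    col_means = np.nanmean(X, axis=0)
    rows, cols = np.where(np.isnan(X))
    X[rows, cols] = col_means[cols]
    return X


def pca_loadings(X, n_components):
    """Unrotated principal-component loadings from the item correlation matrix.

    Returns the loadings (items x components) and the proportion of total
    variance explained by the retained components.
    """
    R = np.corrcoef(X, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(R)              # eigenvalues in ascending order
    keep = np.argsort(eigvals)[::-1][:n_components]   # largest components first
    eigvals, eigvecs = eigvals[keep], eigvecs[:, keep]
    loadings = eigvecs * np.sqrt(eigvals)
    explained = eigvals.sum() / R.shape[0]            # trace of R equals the number of items
    return loadings, explained


def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation (hypothetical helper; no Kaiser normalization)."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        L = loadings @ rotation
        # Gradient of the varimax criterion, projected onto an orthogonal matrix via SVD
        target = L**3 - L @ np.diag((L**2).sum(axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        previous, criterion = criterion, s.sum()
        if previous != 0 and criterion / previous < 1 + tol:
            break
    return loadings @ rotation


# Simulated data (not study data): 49 respondents x 12 items, one skipped item
rng = np.random.default_rng(0)
X = rng.normal(size=(49, 12))
X[5, 3] = np.nan
loadings, explained = pca_loadings(impute_column_means(X), n_components=5)
rotated = varimax(loadings)
print(f"Variance explained by 5 components: {explained:.2%}")
```

Because varimax is an orthogonal rotation, it redistributes variance among the components without changing each item’s communality (the row sum of squared loadings) or the total variance explained; only the pattern of loadings becomes easier to interpret.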

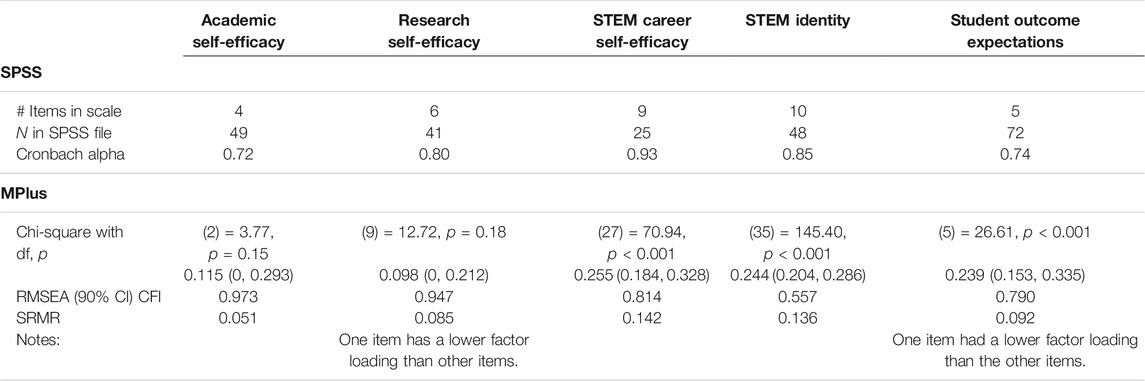

The analysis yielded five factors that together explained 48.96% of the variance in the full set of variables. The communalities of the included variables were rather low overall, suggesting that the variables chosen for this analysis share relatively little common variance. However, the correlation matrix showed that most items were correlated to some degree, with coefficients ranging from r = −0.7 to r = 0.966. Every question loaded onto at least one factor. Cronbach’s alpha (CA) was used to assess the internal consistency of questions loading onto the same factor. Values ranged from 0.72 to 0.93 (Table 1), indicating that item reliability was good and the scales were acceptable. However, because the communalities were rather low, and because this type of analysis does not provide information about significant cross-loadings, the research team decided to conduct a confirmatory factor analysis (CFA) using Mplus (Table 1).
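Cronbach’s alpha compares the sum of the individual item variances with the variance of the summed scale score: alpha = k / (k − 1) × (1 − Σ item variances / variance of total score), where k is the number of items. The sketch below is a minimal NumPy version of that formula on toy data; it is not the SPSS routine the team used, and the function name is illustrative. A commonly cited rule of thumb treats values of about 0.70 or above as acceptable, a benchmark that the reported range of 0.72 to 0.93 clears.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for a respondents x items array of scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score)
    Sample variances (ddof=1) are used throughout; the choice cancels in the ratio.
    """
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    if k < 2:
        raise ValueError("alpha requires at least two items")
    item_variances = items.var(axis=0, ddof=1)
    total_variance = items.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - item_variances.sum() / total_variance)


# Toy example (not study data): two items answered by four respondents
scores = np.array([[1, 2],
                   [2, 1],
                   [3, 4],
                   [4, 3]])
print(round(cronbach_alpha(scores), 2))  # 0.75
```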

Before conducting the analysis in Mplus, the team retrieved all responses (partial and complete) and recoded variables to ensure that none had been flipped. Overall results are reported in Table 1. The model for STEM Identity terminated normally, although one item was not significant and overall model fit was poor, likely because of low power resulting from the small N. The small N also made it difficult to test the STEM Career Self-Efficacy scale with CFA; however, its Cronbach’s alpha was high, indicating that this construct is reliable as a single measure. The small N may also have affected the testing of the Academic Self-Efficacy scale: the residual covariance matrix was not positive definite, which could indicate high correlations or linear dependency among variables, although this is difficult to confirm with so small a sample. With the exception of those for the Research Self-Efficacy scale, most Mplus indicators of model fit did not meet acceptable levels. Ultimately, this different analytic approach indicated that two questions had low factor loadings (see Table 1) and that a change was needed to the question “previously you indicated that you are considering changing your major,” which mapped to Student Outcome Expectations. Specifically, descriptive statistics showed that nine of the 13 items were never checked, producing many zeros that affected the reliability of the factor analysis. The wording of the question was therefore changed to “please explain why you are considering changing your major,” followed by a fill-in-the-blank field. Based on the Mplus findings for this question, the research team also noted the need to review, and in some cases revise, all multi-item questions.
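Two of the diagnostics mentioned above can be illustrated generically: flagging checkbox options that almost no one selects, and testing whether a covariance or correlation matrix is positive definite. The sketch below is not how Mplus performs its checks; the function names, the 5% endorsement threshold, and the data are hypothetical. The last example shows the extreme case of the small-N problem: with fewer respondents than items, a sample covariance matrix is necessarily singular.

```python
import numpy as np


def sparse_items(X, min_endorsement=0.05):
    """Indices of 0/1 checkbox items endorsed by fewer than min_endorsement of respondents."""
    X = np.asarray(X, dtype=float)
    endorsement = (X != 0).mean(axis=0)
    return np.flatnonzero(endorsement < min_endorsement)


def is_positive_definite(matrix, tol=1e-10):
    """True if a symmetric matrix has all eigenvalues greater than tol."""
    matrix = np.asarray(matrix, dtype=float)
    return bool(np.linalg.eigvalsh(matrix).min() > tol)


# Toy checkbox data (not study data): 6 respondents x 4 options; options 2 and 3 never checked
checks = np.array([[1, 0, 0, 0],
                   [0, 1, 0, 0],
                   [1, 1, 0, 0],
                   [1, 0, 0, 0],
                   [0, 1, 0, 0],
                   [1, 1, 0, 0]])
print(sparse_items(checks))  # [2 3]

# Two perfectly correlated items give a singular (not positive definite) matrix
print(is_positive_definite([[1.0, 1.0], [1.0, 1.0]]))  # False
print(is_positive_definite(np.eye(3)))                 # True

# 5 simulated respondents, 8 items: the sample covariance has rank at most 4
rng = np.random.default_rng(1)
few = rng.normal(size=(5, 8))
print(is_positive_definite(np.cov(few, rowvar=False)))  # False
```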

Overall, the research team concluded that running factor analyses on the available data provided some initial information, but not enough to justify adjusting additional items in the survey. The results of the SPSS and Mplus analyses were similar, leaving the team confident that no factors were missing from the model. In short, the desired domains are being captured, and the reliability of the instrument is good. However, reliability does not establish validity, and there were not enough data to conduct a robust analysis or to decide which questions could be removed to see whether that would improve the low response rate. The research team therefore revisited the idea of conducting cognitive interviews to identify sources of confusion in assessment items and to gather validity evidence based on content and response processes.

Testing the Validity of the Pilot Survey Instrument – Cognitive Interviews

The research team decided to conduct cognitive interviews to determine whether respondents understood the questions as intended, whether they could recall and provide accurate answers for the time periods covered by the survey, whether any respondent experiences were missing from the survey, and whether the response options captured respondents’ experiences. The team also wanted to determine whether the survey items supported the survey constructs surrounding self-efficacy.

Cognitive Interview Methods

Seven students from one partner university were invited to participate in the cognitive interviews, and five female LSAMP students, including one freshman and one senior, consented. One team member conducted the interviews via Zoom while another captured responses through extensive notes. Interviews took approximately 60 min. At the start of each interview, the interviewee was emailed a PDF copy of the survey with the questions to be tested highlighted in yellow. The interviewer used “think aloud talk aloud” and probing methods to elicit responses that allowed the team to understand how interviewees conceptualized the questions and where their answers came from.

At the end of each cognitive interview, interviewees were asked about the overall purpose of the survey. Specifically, each interviewee was reminded of the concepts of self-efficacy and STEM identity and then asked the following meta questions:

• What does STEM identity mean to you? Or: In what way do you feel you have a STEM identity?

• How well do you feel this survey asked you about your own perseverance, determination, or any barriers you have overcome as a STEM student? Or: What has helped you to create an ability to overcome obstacles and succeed as a STEM student?

Following the grounded theory framework for qualitative data analysis, interview notes were loaded into Dedoose for blind coding by three team members. The team developed a coding index of four parent codes, based on the four broad cognitive interview categories, and a set of child codes. The four parent codes were:

• Understanding: the interviewee had difficulty understanding the question, terms, or concepts, or misinterpreted the question.

• Recall: the interviewee had limited knowledge or experience to answer the question; had difficulty remembering the time-period; or could not do the mental calculations to answer the question (e.g., hours, number of times, etc.).

• Response: the interviewee could not find a response option that reflected their experience; response options were not mutually exclusive.

• Judge: the interviewee found the question sensitive; did not give an honest response; or the question or response options were not relevant.

The child codes specified the challenge or issue an interviewee had with the question. For example, if an interviewee could not find a response option that matched their experience, the item was coded “RESPNSMISS” (response missing). Responses to the STEM identity questions were coded “STEMID = ” and paired with a child code describing what STEM identity meant to that interviewee. This parent code was also applied whenever interviewees described or discussed their STEM identity at any point during the interview. Sources of self-efficacy were coded “SESOURCE = ” with a child code for the source, linking back to the literature. As with STEM identity, this parent code was applied whenever an interviewee discussed a source of self-efficacy. This data set was analyzed separately, and recommendations regarding changes to survey items were made to the team.

Dedoose Memos were used to categorize the types of changes being recommended by interviewees. The following memo categories were used:

• Add: add a response option or question

• Change: make a change in the survey structure or question structure

• Clarify: change the language used to clarify a time-period, a term, a response option, or the instructions

• Rephrase: rephrase the question or a response option

• Two additional Memo categories were created:

o Question: a memo that contains a question for the team (these were not analyzed but discussed by the team)

o STEMID: a description or memo related to the STEMID = or SESOURCE = codes, further explaining how the interviewee’s view of their identity or source of self-efficacy links to the literature or connects to other interviewees’ understanding of the survey construct

Three team members blind coded all the interviews. The team then reviewed the coded interviews to identify items on which coding did not agree and discussed these few instances (1.72%), comparing each item to others in the code group to decide which code to use. The results of the cognitive interview (CI) analysis were then mapped onto the survey questions, along with recommended changes based on the analysis.
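The 1.72% disagreement figure can be expressed as a simple proportion. The paper does not state the unit of analysis, so the sketch below assumes that each coded excerpt receives one code per coder and counts an excerpt as a disagreement whenever the three coders did not all assign the same code. The function name and toy data are hypothetical; chance-corrected statistics such as Fleiss’ kappa are a common alternative that this sketch does not compute.

```python
def disagreement_rate(codings):
    """Share of coded excerpts on which the coders did not all assign the same code.

    codings: a list with one tuple per excerpt, holding each coder's code.
    """
    if not codings:
        raise ValueError("no coded excerpts")
    disagreements = sum(1 for codes in codings if len(set(codes)) > 1)
    return disagreements / len(codings)


# Toy example (not study data): three coders, four excerpts, parent codes only
codings = [
    ("Understanding", "Understanding", "Understanding"),
    ("Recall", "Recall", "Recall"),
    ("Response", "Response", "Judge"),   # coders disagree on this excerpt
    ("Judge", "Judge", "Judge"),
]
print(f"{disagreement_rate(codings):.2%}")  # 25.00%
```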

Results of the Cognitive Interviews

Overall, the cognitive interviews revealed that the survey needed adjustment because of challenges with interviewee understanding, recall, and response options. Questions, terms, and response options needed to be clarified or rephrased because of assumptions, confusing terms, missing elements, and generational differences in language. In addition, the interviews revealed that students’ STEM identity began in high school; however, the survey did not include this time period in its questions or response options. As a result, interviewees felt they could not accurately answer many questions.

More broadly, responses to the meta questions showed that interviewees felt the survey was about their study habits, not their self-efficacy. Because of this perception, they reported answering many questions based on how they wanted faculty to see them rather than how they saw themselves or the actions they had actually taken. Consequently, interviewees expected that other students would not answer questions honestly. In addition, they pointed out that the survey lacked questions about their belief in themselves, their perseverance or persistence, and any obstacles they had faced as STEM students. During the interviews, students described many challenges they had overcome and how their own persistence had helped build their academic self-efficacy. Even though the survey evoked these memories as part of the answering process, the instrument was not capturing or measuring these aspects of academic-related self-efficacy or STEM identity.

Two students noted that their self-efficacy came from their own agency, which included changing from their current STEM major to another STEM major they “enjoy” more and that better suited their long-term career goals. This suggests that changing majors may not be a barrier to academic-related self-efficacy but rather a source of it, depending on whether students view themselves as active agents (changing majors to suit personal goals) or passive participants (changing because of low grades or parental pressure).

Interviews also showed that academic self-efficacy waxed and waned depending on the point in the semester and the student’s class standing when they took the survey. Interviewees who were juniors or seniors reported feeling very confident because they were close to graduation. This raised a question about how students’ graduation dates might influence the data.

Case Study: Mentors and Academic Self-Efficacy

Although the survey asked questions about mentors, CI interviewees found these questions confusing or jargon-laden, or could not find a response option that fit their experience. This section presents a case study of the changes made to questions and response options related to mentors.

The survey used the term “mentor” throughout but defined it only in the question dedicated to mentoring near the end of the survey. The cognitive interviews revealed that interviewees’ definition of a mentor included role models, or people who had inspired their interest in science. For example, one interviewee considered her African American female pediatrician a mentor. The student had looked up to this woman as a young girl and described how the pediatrician contributed to her STEM identity, but the relationship she described was that of a role model.

Another question grouped having mentors under academic services and opportunities (Which of the services or activities listed below did you take part in or use during your undergraduate career?). Interviewees noted this formalized the mentoring process as a university sponsored activity, which did not reflect their experience. As a result, they did not report having mentors in this question. Therefore, these response options were removed from the question.

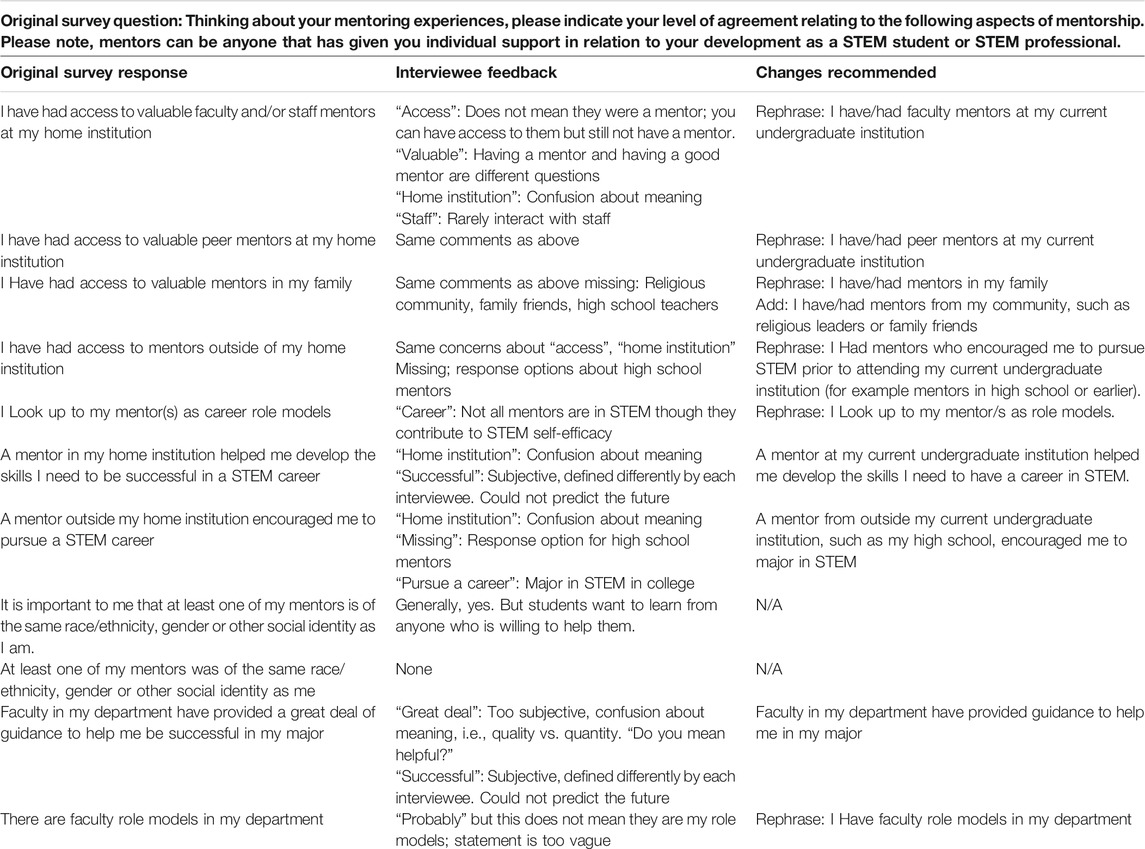

The primary question on mentoring asked interviewees to indicate their level of agreement, on a five-point scale, with a series of statements about their experience being mentored. The cognitive interviews showed that high school mentors had significant influence on students’ decisions about college and majoring in STEM, and continued to mentor these students during college. However, these high school mentors were not reflected in the statements for the mentoring question or anywhere else in the survey. Interviewees also found language in the statements confusing or vague. For example, interviewees found the phrase “home institution,” which appeared in many of the response options, confusing. In addition, response options contained subjective terms, such as “valuable,” or referred to having “access” to a mentor rather than having a mentor. More generally, interviewees commented throughout the survey that their STEM identity and self-efficacy were not as narrowly defined as the survey questions and response options assumed. For example, interviewees reported having mentors outside STEM who nonetheless contributed to their STEM self-efficacy. Table 2 presents the original question and statements, the feedback from interviewees, and the initial suggested changes.

TABLE 2. Original survey question and statements with the feedback from interviewees, and the initial suggested change.

Interviewee comments about subjective terms, such as “valuable,” led to team discussions about the purpose of the mentoring question. What did the research team really want to know: whether students had mentors, or who those mentors were and what they contributed to STEM identity and self-efficacy? Based on interviewee comments and its own discussion, the research team restructured the mentoring question so that it links mentors more directly to STEM identity and self-efficacy.

The new question (Table 3) provides respondents with a list of people and asks them first to indicate who has been a mentor to them, currently or in the past. The list includes high school teacher, faculty member at my current undergraduate institution, family member or guardian, peer, and other general categories. The selected answers are then piped into a matrix question that asks respondents to indicate their level of agreement with a series of statements about what they may have gained from these mentors. The statements are directly linked to sources of self-efficacy.
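The piping step, in which only the mentors a respondent selects reappear as rows of the follow-up matrix question, can be sketched in a platform-neutral way. This is not the survey platform’s implementation: the mentor list follows the categories named above, with “Other” standing in for the remaining general categories, and the statements are hypothetical placeholders for the items in Table 3.

```python
# Mentor categories named in the text; "Other" is a placeholder for the remaining general categories.
MENTOR_OPTIONS = [
    "High school teacher",
    "Faculty member at my current undergraduate institution",
    "Family member or guardian",
    "Peer",
    "Other",
]

# Hypothetical statements; the actual items appear in Table 3.
STATEMENTS = [
    "helped me believe I can succeed in my STEM major",
    "showed me how someone like me can succeed in STEM",
]

SCALE = ["Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree"]


def build_matrix_rows(selected):
    """Return one (mentor, statement) matrix row per selected mentor and statement."""
    unknown = set(selected) - set(MENTOR_OPTIONS)
    if unknown:
        raise ValueError(f"unknown mentor option(s): {sorted(unknown)}")
    # Keep the original option order regardless of the order in which boxes were clicked
    mentors = [m for m in MENTOR_OPTIONS if m in selected]
    return [(m, s) for m in mentors for s in STATEMENTS]


# A respondent who selected two mentor categories sees rows for those two only
rows = build_matrix_rows({"Peer", "High school teacher"})
for mentor, statement in rows:
    print(f"{mentor}: {statement} [{' / '.join(SCALE)}]")
```

Preserving the original option order, rather than the order in which boxes were clicked, keeps the matrix layout consistent across respondents.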

“What’s Your STEMspiration?” Finalized Survey Instrument

Based on the cognitive interviews and statistical analysis, the validated survey instrument was finalized. The questions were tailored to address each area of the conceptual framework (Figure 2). It is anticipated that this research will provide insight into how STEM intervention programs, and the experiences and opportunities they provide, influence STEM-career self-efficacy, research-related self-efficacy, academic-related self-efficacy, sources of self-efficacy, outcome expectations, and STEM identity within different institutional contexts.

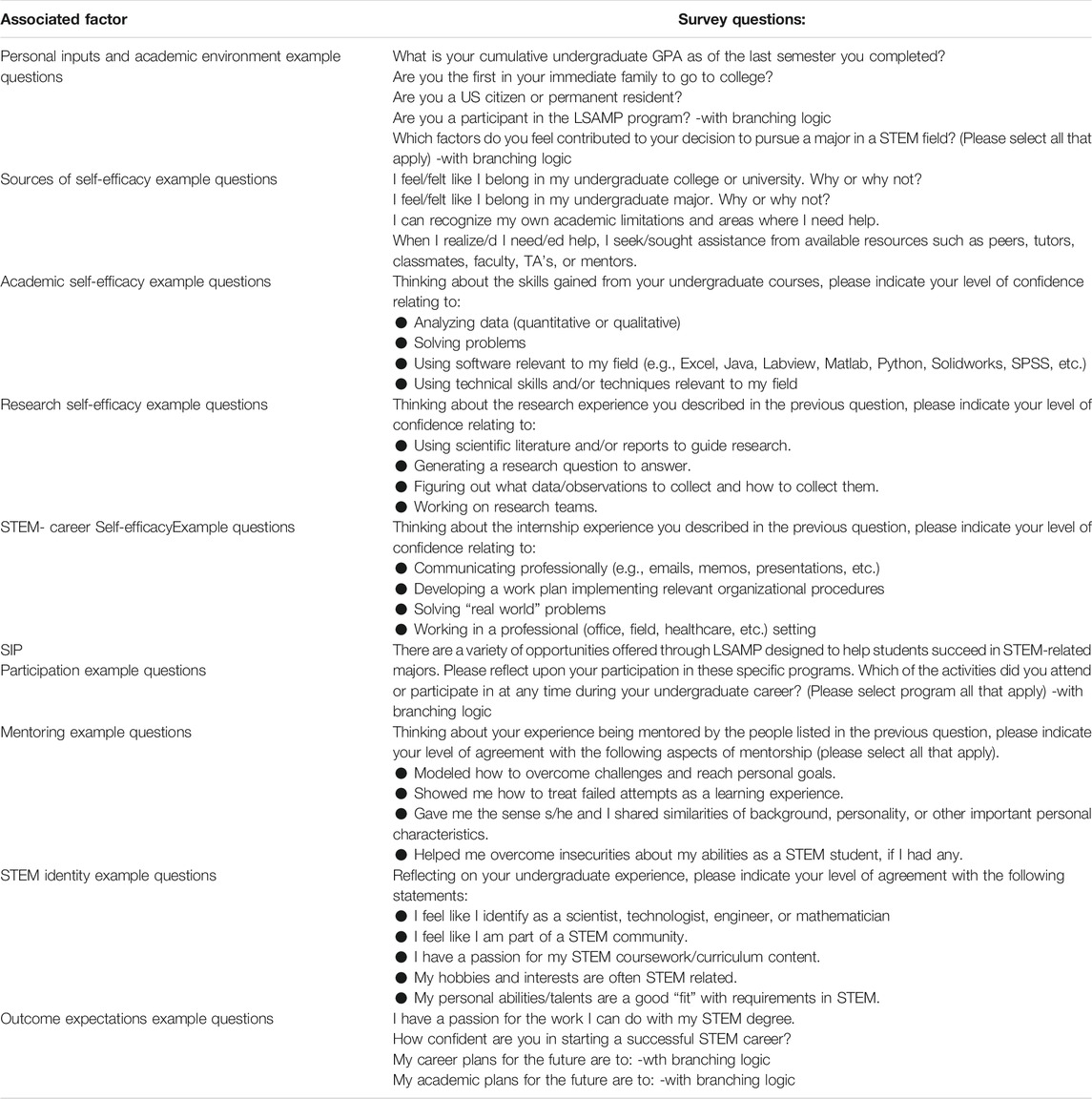

A subset of questions was used to determine survey respondents’ personal inputs, which are defined as those distal and proximal contextual factors that may have played a role in the students’ choice of major or desire to pursue a STEM career. These “personal inputs” are unique lived experiences and cultural/social identities that influence choices, behaviors, norms, and expectations. These may be distal (e.g., family encouragement, middle school experiences, etc.) or more proximal (e.g., undergraduate extracurricular activities, cumulative GPA, etc.) in time. The survey also includes demographic information as personal inputs in this category, recognizing that students’ social identities and cultural context may also provide contextual information (see examples in Table 3).

While personal inputs, mentors, participation in SIPs, and academic environments are all sources of self-efficacy in the domains of research, academic, and STEM careers, the “What’s Your STEMspiration?” survey explores other factors that also influence self-efficacy. These include a sense of belonging at the respondent’s institution and/or major, their confidence in their ability to remain in their major and complete their course work, and their own self-awareness. The survey explores these aspects as sources of self-efficacy with a series of questions, a subset of which are shown in Table 3.

As shown in Figure 2, the “What’s Your STEMspiration?” survey investigates self-efficacy across three domains: academic, research, and STEM career. These three areas were chosen based on the responses from the UVA study, the cognitive interviews, and the types of intervention programs and opportunities offered by the VA-NC Alliance partners. For example, research experiences and research preparation are core components of the VA-NC Alliance programs, so it is important to investigate how participation in these programs correlates with research self-efficacy. Many VA-NC Alliance students participate in internships, externships, and/or cooperatives, so this area was also deemed a focus. Finally, fostering academic self-efficacy is a central tenet of the student educational experience and of several SIPs (e.g., through peer mentoring, tutoring, supplemental instruction, and study skills workshops). If students do not achieve mastery of the skills needed for academic success in their majors, their retention in the major and their expected outcomes could be affected. Sample questions that explore these areas are also provided in Table 3. Initially, mentoring was not included as a specific area of inquiry for this survey. However, responses during UVA’s preliminary study showed that mentoring was a key component of the VA-NC Alliance student experience. Even though some models might include mentoring under sources of self-efficacy, SIPs, or personal inputs, this research indicated that mentoring was significant enough to warrant its own factor in the model (Table 3).

As defined by Carol Couvillion Landry (2003), outcome expectancy is a “person’s estimate that a certain behavior will produce a resulting outcome … Outcome expectation is thus a belief about the consequences of a behavior.” In the domain of student outcome expectations, the research team explores the future students envision for themselves after graduating with a STEM degree and how their career or educational “next steps” align with their passions. The team also explores how prepared students feel to embark on that career given the educational experiences (curricular, co-curricular, and extracurricular) in which they have been able to participate (Table 3).

The finalized survey instrument explores all aspects of the proposed model. The responses will inform the questions for the focus groups and interviews to be conducted in the next stage of this research.

Conclusion

The research team plans to compare and contrast survey responses regarding student perceptions of the following: self-efficacy, research-related self-efficacy, academic-related self-efficacy, sources of self-efficacy, outcome expectations, and STEM identity in the context of their overall experiences at their undergraduate institution(s), STEM disciplines, participation in SIPs, and aspirations for STEM graduate school and/or STEM careers. To identify disparities, the research team will also compare the responses of community college transfer, HBCU, and PWI students, as well as other groups within the Alliance (e.g., categorized by major, race, ethnicity, or gender). Distribution of the validated survey instrument began in February 2021. The data will be compared longitudinally and will inform the questions asked in planned student focus groups.

Understanding that organizational cultures differ amongst Alliance institutions and that students possess intersecting identities, the research team anticipates finding a range of student experiences and program impacts specific to institutional contexts and personal inputs. This research project will assess the differentiated impacts and effectiveness of the Alliance’s broadening participation efforts in order to improve program effectiveness. In addition, the research team will seek to identify emergent patterns, adding to the field of knowledge about culturally responsive SIPs. Results will be shared with the VA-NC Alliance partners, the Alliance’s external evaluator, the National Science Foundation, and LSAMP programs across the country, among other stakeholders.

Data Availability Statement

The datasets presented in this article are not readily available because the raw dataset is available only to the research team, as specified in the IRB approval. Requests to access the datasets should be directed to rhobson@vcu.edu.

Ethics Statement

The studies involving human participants were reviewed and approved by the Virginia Commonwealth University Institutional Review Board. The participants provided their written informed consent to participate in this study.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

This material is based upon work supported by the National Science Foundation under grant number 1712724. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation. The VA-NC Alliance social science research study team recognizes the generous support of the National Science Foundation’s Louis Stokes Alliances for Minority Participation Program for its longstanding support. In addition, the team is deeply grateful for the VA-NC Alliance partner schools’ project directors, program coordinators, and other personnel who provided feedback about the study and survey as well as assisted with student recruitment to test the instrument.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The team greatly appreciates the students from the UVA and VCU preliminary studies, the Alliance student respondents to the initial survey instrument, and those who participated in the cognitive interviews. Everyone’s participation significantly improved the instrument and the study. The team wishes to express thanks to the following individuals who contributed to the study in important ways: Ruba Alkhasawneh, Kendra Brinkley, Krystal Clemons, DeVante’ Cunningham, Joy Elmore, Lauren Griggs, Brianna James, Wendy Kliewer, Jessica McCauley Huskey, Falcon Rankins, Mychal Smith, Maurice Walker, and LaChelle Waller.

Footnotes

1The partner institutions are: Bennett College, Elizabeth City State University, George Mason University, Johnson C. Smith University, the National Radio Astronomy Observatory, Old Dominion University, Piedmont Virginia Community College, Saint Augustine’s University, Thomas Nelson Community College, University of Virginia, Virginia Commonwealth University, and Virginia Tech.

2The NSF defines historically underrepresented racial and ethnic minorities in STEM as African Americans (or Black), Alaska Natives, Hispanic Americans (or Latinx), Native Americans, Native Hawaiians, and Native Pacific Islanders.

3At the time of the UVA study, the VA-NC Alliance included the following partner schools: Bennett College, Elizabeth City State University, George Mason University, Johnson C. Smith University, Piedmont Virginia Community College, St. Augustine’s University, University of Virginia, Virginia Commonwealth University, and Virginia Tech.

5https://www.project-redcap.org/

References

Alhaddab, T. A., and Alnatheer, S. A. (2015). Future Scientists: How Women's and Minorities' Math Self-Efficacy and Science Perception Affect Their STEM Major Selection. Princeton, NJ: IEEE. doi:10.1109/isecon.2015.7119946

Alkhasawneh, R., and Hargraves, R. H. (2012). Identifying Significant Features that Impact URM Student Academic Success and Retention Upmost Using Qualitative Methodologies: Focus Groups. San Antonio, TX: Atlanta: American Society for Engineering Education-ASEE.

Alkhasawneh, R., and Hobson, R. (2009). Summer Transition Program: A Model for Impacting First Year Retention Rates for Underrepresented Groups. Atlanta.

Alkhasawneh, R., and Hobson, R. (2011). Modeling Student Retention in Science and Engineering Disciplines Using Neural Networks. Amman: IEEE. doi:10.1109/educon.2011.5773209

Alkhasawneh, R., and Hobson, R. (2010). Pre College Mathematics Preparation: Does it Work? Louisville, KY: Atlanta: American Society for Engineering Education-ASEE.

Alkhasawneh, R., and Hargraves, R. (2014). Developing a Hybrid Model to Predict Student First Year Retention in STEM Disciplines Using Machine Learning Techniques. J. STEM Educ. 15 (3), 35.

Strayhorn, T. L. (2018). College Students' Sense of Belonging: A Key to Educational Success for All Students. Baltimore, MD: Routledge.

Bandura, A. (1986). Social Foundations of Thought and Action: A Social Cognitive Theory. Englewood Cliffs, NJ: Prentice-Hall.

Bandura, A., Freeman, W., and Lightsey, R. (1999). Self-efficacy: The Exercise of Control. New York, NY: Springer.

Betz, N. E., and Hackett, G. (1981). The Relationship of Career-Related Self-Efficacy Expectations to Perceived Career Options in College Women and Men. J. Couns. Psychol. 28 (5), 399–410. doi:10.1037/0022-0167.28.5.399

Betz, N. E., and Schifano, R. S. (2000). Evaluation of an Intervention to Increase Realistic Self-Efficacy and Interests in College Women. J. Vocational Behav. 56 (1), 35–52. doi:10.1006/jvbe.1999.1690

Blake-Beard, S., Bayne, M. L., Crosby, F. J., and Muller, C. B. (2011). Matching by Race and Gender in Mentoring Relationships: Keeping Our Eyes on the Prize. J. Soc. Issues 67 (3), 622–643. doi:10.1111/j.1540-4560.2011.01717.x

Bong, M. (1997). Generality of Academic Self-Efficacy Judgments: Evidence of Hierarchical Relations. J. Educ. Psychol. 89 (4), 696–709. doi:10.1037/0022-0663.89.4.696

Bourdieu, P. (1986). “Cultural Capital: The Forms of Capital.” in The Handbook of Theory and Research for the Sociology of Education. Palo Alto, CA: Stanford University Press, 241–258.

Brinkley, K. W., Rankins, F., Clinton, S., and Hargraves, R. H. (2014). Keeping up with Technology: Transitioning Summer Bridge to a Virtual Classroom. Atlanta: American Society for Engineering Education-ASEE.

Byars-Winston, A., Estrada, Y., Howard, C., Davis, D., and Zalapa, J. (2010). Influence of Social Cognitive and Ethnic Variables on Academic Goals of Underrepresented Students in Science and Engineering: a Multiple-Groups Analysis. J. Couns. Psychol. 57 (2), 205–218. doi:10.1037/a0018608

Byars-Winston, A., Fouad, N., and Wen, Y. (2015). Race/ethnicity and Sex in U.S. Occupations, 1970-2010: Implications for Research, Practice, and Policy. J. Vocational Behav. 87, 54–70. doi:10.1016/j.jvb.2014.12.003

Byars-Winston, A., Rogers, J., Branchaw, J., Pribbenow, C., Hanke, R., and Pfund, C. (2016). New Measures Assessing Predictors of Academic Persistence for Historically Underrepresented Racial/Ethnic Undergraduates in Science. CBE Life Sci. Educ. 15 (3), ar32. doi:10.1187/cbe.16-01-0030

Carlone, H. B., and Johnson, A. (2007). Understanding the Science Experiences of Successful Women of Color: Science Identity as an Analytic Lens. J. Res. Sci. Teach. 44 (8), 1187–1218. doi:10.1002/tea.20237

Chemers, M. M., Zurbriggen, E. L., Syed, M., Goza, B. K., and Bearman, S. (2011). The Role of Efficacy and Identity in Science Career Commitment Among Underrepresented Minority Students. J. Soc. Issues 67 (3), 469–491. doi:10.1111/j.1540-4560.2011.01710.x

Dickinson, J., Abrams, M. D., and Tokar, D. M. (2017). An Examination of the Applicability of Social Cognitive Career Theory for African American College Students. J. Career Assess. 25 (1), 75–92. doi:10.1177/1069072716658648

Estrada, M., Woodcock, A., Hernandez, P. R., and Schultz, P. W. (2011). Toward a Model of Social Influence that Explains Minority Student Integration into the Scientific Community. J. Educ. Psychol. 103 (1), 206–222. doi:10.1037/a0020743

Ferry, T. R., Fouad, N. A., and Smith, P. L. (2000). The Role of Family Context in a Social Cognitive Model for Career-Related Choice Behavior: A Math and Science Perspective. J. Vocational Behav. 57 (3), 348–364. doi:10.1006/jvbe.1999.1743

Flores, L. Y., Navarro, R. L., Lee, H. S., Addae, D. A., Gonzalez, R., Luna, L. L., et al. (2014). Academic Satisfaction Among Latino/a and White Men and Women Engineering Students. J. Couns. Psychol. 61 (1), 81–92. doi:10.1037/a0034577

Forester, M., Kahn, J. H., and Hesson-McInnis, M. S. (2004). Factor Structures of Three Measures of Research Self-Efficacy. J. Career Assess. 12 (1), 3–16. doi:10.1177/1069072703257719

Fouad, N. A., and Byars-Winston, A. M. (2005). Cultural Context of Career Choice: Meta-Analysis of Race/Ethnicity Differences. Career Development Q. 53 (3), 223–233. doi:10.1002/j.2161-0045.2005.tb00992.x

Fouad, N. A., and Santana, M. C. (2017). SCCT and Underrepresented Populations in STEM Fields. J. Career Assess. 25 (1), 24–39. doi:10.1177/1069072716658324

Griggs, L., Stringer, J., Rankins, F., and Hargraves, R. H. (2016). Investigating the Impact of a Hybrid Summer Transition Program. IEEE Front. Education Conf. (FIE), 12–15. doi:10.1109/FIE.2016.7757527

Hackett, G., and Betz, N. E. (1989). An Exploration of the Mathematics Self-Efficacy/Mathematics Performance Correspondence. J. Res. Math. Educ. 20 (3), 261–273. doi:10.5951/jresematheduc.20.3.0261

Hurtado, S., Cabrera, N. L., Lin, M. H., Arellano, L., and Espinosa, L. L. (2009). Diversifying Science: Underrepresented Student Experiences in Structured Research Programs. Res. High Educ. 50 (2), 189–214. doi:10.1007/s11162-008-9114-7

Johnson, J. D., Starobin, S. S., Laanan, F. S., and Russell, D. (2012). The Influence of Self-Efficacy on Student Academic Success, Student Degree Aspirations, and Transfer Planning. Ames, IA: Iowa State University, Office of Community College Research and Policy.

Kanny, M. A., Sax, L. J., and Riggers-Piehl, T. A. (2014). Investigating Forty Years of STEM Research: How Explanations for the Gender Gap Have Evolved over Time. J. Women Minor. Scien. Eng. 20 (2), 127–148. doi:10.1615/jwomenminorscieneng.2014007246

Landry, C. C. (2003). Self-efficacy, Motivation, and Outcome Expectation Correlates of College Students' Intention Certainty. Ann Arbor, MI: ProQuest Dissertations Publishing. doi:10.1037/e343782004-001

Lent, R. W., Brown, S. D., and Hackett, G. (1994). Toward a Unifying Social Cognitive Theory of Career and Academic Interest, Choice, and Performance. J. Vocational Behav. 45 (1), 79–122. doi:10.1006/jvbe.1994.1027

Lent, R. W., Brown, S. D., and Hackett, G. (2000). Contextual Supports and Barriers to Career Choice: A Social Cognitive Analysis. J. Couns. Psychol. 47 (1), 36–49. doi:10.1037/0022-0167.47.1.36

Lent, R. W., Brown, S. D., Sheu, H.-B., Schmidt, J., Brenner, B. R., Gloster, C. S., et al. (2005). Social Cognitive Predictors of Academic Interests and Goals in Engineering: Utility for Women and Students at Historically Black Universities. J. Couns. Psychol. 52 (1), 84–92. doi:10.1037/0022-0167.52.1.84

Lent, R. W., Lopez, F. G., Sheu, H.-B., and Lopez, A. M. (2011). Social Cognitive Predictors of the Interests and Choices of Computing Majors: Applicability to Underrepresented Students. J. Vocational Behav. 78 (2), 184–192. doi:10.1016/j.jvb.2010.10.006

Lent, R. W., Miller, M. J., Smith, P. E., Watford, B. A., Hui, K., and Lim, R. H. (2015). Social Cognitive Model of Adjustment to Engineering Majors: Longitudinal Test across Gender and Race/ethnicity. J. Vocational Behav. 86, 77–85. doi:10.1016/j.jvb.2014.11.004

Milner, D. I., Horan, J. J., and Tracey, T. J. G. (2014). Development and Evaluation of STEM Interest and Self-Efficacy Tests. J. Career Assess. 22 (4), 642–653. doi:10.1177/1069072713515427

National Science Foundation, National Center for Science and Engineering Statistics (2017). Women, Minorities, and Persons with Disabilities in Science and Engineering: 2017. Arlington, VA.

Navarro, R. L., Flores, L. Y., Lee, H.-S., and Gonzalez, R. (2014). Testing a Longitudinal Social Cognitive Model of Intended Persistence with Engineering Students across Gender and Race/ethnicity. J. Vocational Behav. 85 (1), 146–155. doi:10.1016/j.jvb.2014.05.007

President’s Council of Advisors on Science and Technology (2012). Report to the President. Engage to Excel: Producing One Million Additional College Graduates with Degrees in Science, Technology, Engineering, and Mathematics. Washington, D.C.: Executive Office of the President, President’s Council of Advisors on Science and Technology.

Strayhorn, T. L. (2012). Exploring the Impact of Facebook and Myspace Use on First-Year Students' Sense of Belonging and Persistence Decisions. J. Coll. Student Development 53 (6), 783–796. doi:10.1353/csd.2012.0078

Tinto, V. (1987). Leaving College: Rethinking the Causes and Cures of Student Attrition. Chicago, IL: University of Chicago Press.

Usher, E. L., and Pajares, F. (2008). Sources of Self-Efficacy in School: Critical Review of the Literature and Future Directions. Rev. Educ. Res. 78 (4), 751–796. doi:10.3102/0034654308321456

Weidman, J. C., DeAngelo, L., and Bethea, K. A. (2014). Understanding Student Identity from a Socialization Perspective. New Dir. Higher Educ. 2014 (166), 43–51. doi:10.1002/he.20094

Keywords: STEM identity, self-efficacy, outcome expectations, survey instrument validation, cognitive interviews, social cognitive career theory

Citation: Hargraves RH, Morgan KL, Jackson H, Feltault K, Crenshaw J, McDonald KG and Martin ML (2021) What’s Your STEMspiration?: Adaptation and Validation of A Survey Instrument. Front. Educ. 6:667616. doi: 10.3389/feduc.2021.667616

Received: 14 February 2021; Accepted: 27 April 2021;

Published: 31 May 2021.

Edited by:

Chris Botanga, Chicago State University, United States

Reviewed by:

Taro Fujita, University of Exeter, United Kingdom
Wang Ruilin, Capital Normal University, China

Copyright © 2021 Hargraves, Morgan, Jackson, Feltault, Crenshaw, McDonald and Martin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rosalyn Hobson Hargraves, rhobson@vcu.edu
