- Department of Communication Sciences and Disorders, Adelphi University, Garden City, NY, United States

Research methods courses are a critical component of teaching the application of evidence-based practice in the health professions. With the shift to online learning during the Covid-19 pandemic, new possibilities for teaching research methods have emerged. This case study compares two 5-week asynchronous online graduate-level research methods courses in the field of Communication Sciences and Disorders. One online section used traditional methods (TDL) common in face-to-face courses: recorded slide-based lectures, written discussion forums, and a final presentation. The other online section used project-based learning (PBL), consisting of weekly projects that required students to engage with the literature and to work both collaboratively and autonomously. We measured students’ research self-efficacy and course satisfaction before and after their courses. Overall, research self-efficacy was higher for the TDL class at both time points. However, the PBL class showed a larger percent increase in research self-efficacy, specifically for more difficult and unfamiliar tasks such as statistical analysis. Students in both courses were equally satisfied with their course and instructor; however, students in the PBL class reported a greater workload and level of difficulty. We interpret the results as showing benefits of PBL in facilitating greater engagement with the research literature and course content, while TDL had advantages in students’ confidence with the course, likely due to familiarity with the instructional format.

Introduction

There is sparse research regarding students’ experiences in online research methods courses, particularly in the health professions. At the same time, there is increased awareness of the importance of research-based skills in training pre-service students in clinical fields. In the field of Communication Sciences and Disorders (CSD), there is a growing research-to-practice gap (Olswang and Prelock, 2015). Successful engagement of pre-service clinical students in research methods courses and exposure to the research process can increase their knowledge and use of evidence-based practice later in their careers (Zipoli and Kennedy, 2005). Further, knowledge of how research informs evidence-based practice expands the clinician’s role in implementation science, which is needed to translate research into clinical practice (Olswang and Prelock, 2015). Moreover, online health-related programs are now expanding in scale under pandemic restrictions. In the health professions, there is a translational relationship between online learning and telehealth services, which are now increasing due to Covid-19 (McCormack et al., 2014; Ng et al., 2014; Regina Molini-Avejonas et al., 2015; McDonald et al., 2018; Overby, 2018). The online format frees students to spend more time in their clinical practicum experiences during their externship training (e.g., Ng et al., 2014). Success in online courses has been tied to collaborative and active learning (Chickering and Ehrmann, 1996; Gaytan and McEwen, 2007) and instructor presence (Zhao et al., 2005; Dixson, 2010). Students often enroll in research methods courses with a preconceived notion of their difficulty or lack of relevance to their lives (Early, 2013; Ni, 2013). It is unknown how online research methods courses facilitate engagement in research and the development of skills to translate research into practice.

Approximately 1.4 billion students shifted to online learning worldwide at the onset of the Covid-19 pandemic (Crawford et al., 2020). As the World Health Organization declared Covid-19 a public health emergency of international concern, communities across the globe went into quarantine and many schools and universities closed (World Health Organization, 2020). Faculty were compelled to rapidly alter their instructional methods and shift to online teaching (Ilanes et al., 2020). This rapid and temporary shift, termed “emergency remote teaching,” stands in contrast to planned online instruction (Hodges et al., 2020). Partners and Analytics (2020) report that prior to the fall 2020 semester, only 50% of faculty felt that online learning was an effective method for teaching. Given the protracted period of vaccine development and distribution, virtual learning will continue to be a mainstay in higher education (Burki, 2020), prompting investigations of online instructional practices during and after the pandemic.

There is a growing body of literature regarding online pedagogy and its advantages (or lack of disadvantages) relative to offline learning (McKenna et al., 2014; Pei and Wu, 2019; Rapanta et al., 2020; Reeves et al., 2017). Students in online courses can outperform their counterparts in traditional face-to-face learning environments (Conolly et al., 2007; Lim et al., 2008; Maki and Maki, 2007) and show greater engagement with course content (Robinson and Hullinger, 2008). Johnson and Aragon (2003) suggest that interactive and active learning, rather than recorded lectures, traditional readings, homework, and tests, drives success in online learning. Online course offerings in higher education can be a cost-effective approach that enhances individualized interaction with students (e.g., Kashy et al., 2000; National Education Association (NEA), 2001; Swan, 2003), flexibility for learning (e.g., Floyd and Coogle, 2015; National Education Association (NEA), 2001), and access to higher education for students from all backgrounds (National Education Association (NEA), 2001). During the semi-permanent shift to online learning during Covid-19, faculty reported some positive revisions to their courses, including updating learning objectives, assessments, and activities; using new digital tools; and enhancing student engagement with more active learning elements (Partners and Analytics, 2020). As many faculty were thrust into online instruction, they needed to find novel ways to facilitate collaboration, engagement, and presence. Because this shift to online learning is semi-permanent, further investigation of online pedagogy is needed to inform faculty’s instructional choices. The aim of this case study is to describe research self-efficacy and satisfaction in online research methods courses taught using either project-based learning or traditional methods commonly found in face-to-face learning.

Research Methods in Communication Sciences and Disorders

Graduate programs in CSD train audiologists and speech-language pathologists to diagnose and treat communication impairments that affect hearing, speech, language, learning, feeding, and swallowing in education and healthcare contexts. Fundamental to these clinical skills is evidence-based practice (EBP), the integration of external scientific evidence, internal evidence from clinical practice, clinical expertise/expert opinion, and client/patient/caregiver perspectives (American Speech-Language-Hearing Association [ASHA], 2019, n.d.; Sackett et al., 1996). Research methods coursework is a critical component of EBP training in graduate programs in CSD (Greenwald, 2006). The curriculum of graduate CSD programs is designed to reinforce students’ understanding and application of research principles in both coursework and clinical practicum experiences. All graduate programs in CSD offer a core course in research methods to address knowledge and skills in research (Council on Academic Accreditation, 2020). To engage in EBP, student clinicians must learn how to ask clinical questions, locate research resources and search the primary literature, critically review the literature, and apply findings to clinical practice.

Zipoli and Kennedy’s (2005) survey of speech-language pathologists indicated that attitudes toward EBP are predicted by exposure to research and EBP during both graduate training and the first year of clinical practice. However, exposure to research decreases significantly between graduation and the first year of clinical practice. Thus, clinicians who continue engaging with research during the first year of clinical practice are more likely to implement EBP (Zipoli and Kennedy, 2005). Beyond engaging in EBP as consumers of research, increasing the number of doctoral-level scholars in the field is critical for the growth of CSD as a profession. In 2002, the Council on Academic Programs in Communication Sciences and Disorders (CAPCSD) and ASHA identified a shortage, termed a “looming crisis,” of doctoral-level scholars to assume positions in academic programs (Council on Academic Programs in Communication Sciences and Disorders (CAPCSD), 2002). Prior research experience increases interest in pursuing a doctor of philosophy (PhD) degree (Davidson et al., 2013). Therefore, one mechanism for increasing the number of potential doctoral applicants is increasing research engagement through research-focused coursework in undergraduate and master’s level programs.

Project and Problem-Based Learning

One way to increase active learning and engagement with research is to involve students in inquiry-based experiences. Project-based learning is a constructivist approach, organized around projects designed to stimulate problem solving, critical thinking, and learner autonomy using realistic problems. However, the term project-based learning has been used broadly to encompass many different pedagogical practices (Tretten and Zachariou, 1997). John and Thomas’s (2000) review of project-based learning outlined five criteria: projects are central, not peripheral, to the curriculum; projects focus on questions and problems that force students to encounter the concepts and principles of their discipline; projects involve constructive investigation; projects are student-driven rather than teacher-led; and projects are realistic. Barber and King (2016) contrasted project-based learning with traditional lecture: project-based learning is student-centered rather than teacher-centered, uses real-world rather than theoretical situations, emphasizes collaborative rather than individual work, and allows multiple outcomes rather than one correct outcome.

Another type of experiential learning, problem-based learning, has been used in clinical education in the health professions. Problem-based learning is often considered a branch of project-based learning, although it has its own history. The main contrast between the two methodologies is that project-based learning results in the creation of an artifact, while problem-based learning results in a solution to a problem. Barrows (1996) outlined the objectives and characteristics of problem-based learning, which correspond to the learning processes and objectives in clinical education. In problem-based learning, knowledge is structured for clinical contexts through the development of clinical reasoning processes. Students gain self-directed learning skills while increasing their motivation for learning. Strobel and Van Barneveld (2009) performed a meta-synthesis, a qualitative study that examines both qualitative and quantitative studies, to identify themes regarding outcomes of problem-based learning compared with traditional learning in medical education. Results of the meta-synthesis indicated that problem-based learning favored student and faculty satisfaction, long-term knowledge retention, response elaboration, and case-based assessments focused on clinical skill and reasoning. Conversely, traditional teaching and learning approaches were found to favor short-term knowledge retention and performance on multiple-choice and true-false questions.

Ng and colleagues (2014) compared outcomes of online and in-class problem-based learning in undergraduate CSD students at the University of Hong Kong. Noting the logistical difficulties of students having field placements combined with the emphasis on collaborative learning in problem-based curricula, the authors examined the outcomes of problem-based learning in an online environment. Results indicated that students preferred online to in-person problem-based learning, reporting that it saved them commuting time, that they contributed as much as they did in in-person problem-based learning, and that they felt less anxiety and more freedom to communicate. Academic outcomes were equivalent between the online and in-class problem-based learning groups.

Kong (2014) examined CSD students’ perception of problem-based learning in a course on cognitive communication disorders. Students recorded their reflections about their experiences working collaboratively through clinical problems in the problem-based learning course. Content analysis identified positive and negative themes in the students’ reflections. Positive themes included collaboration, deeper understanding of material, and development of clinical skills. Negative themes included organization and clarity of information presented and concerns with the depth and breadth of information provided by peers. Interestingly, collaboration with peers was viewed both positively and negatively depending on the effort and contribution of peers. Although negative themes were identified in the students’ reflections, negative comments decreased as the semester progressed, indicating that problem-based learning was viewed more positively as students adjusted to a new method of learning.

Due to its focus on a clinical problem, outcomes of problem-based learning, but not project-based learning, have been reported in the field of CSD. Several authors argue that project-based learning and problem-based learning exist on a continuum and play complementary roles in active learning (Donnelly and Fitzmaurice, 2005; DeFillippi and Milter, 2009; Whitehill et al., 2014). Indeed, there is considerable overlap in the application of the two approaches (Bereiter and Scardamalia, 2003). Both approaches increase critical thinking in students, although project-based learning shows greater outcomes for creativity (Anazifa and Djukri, 2017). Thus, depending on the learning objectives, an instructor may employ either of these approaches, or some combination of them, within a framework of collaborative, active learning.

Greenwald (2006) discusses the use of problem-based learning in CSD research methods courses. She notes that problem-based learning supports students in developing the self-directed learning skills that underlie the development of clinically-based research skills. For example, using problem-based learning students formulate clinical questions, learn research design as they investigate answers to clinical questions, work collaboratively in groups that mimic clinical and research teams, and experimentally compare different clinical methods (Greenwald, 2006). Similarly, online learning fosters self-directed learning skills as part of the pedagogical process. Students in online courses develop self-directed learning skills as they keep track of assignments, manage their time due to the flexibility of online lectures, and initiate engagement with peers and the instructor (Xu, 2020).

Research Self-Efficacy

Self-efficacy is a social cognitive construct, defined as one’s beliefs in one’s own ability to carry out specific tasks (Bandura, 1977; Bandura, 1997). Self-efficacy has previously been measured in undergraduate and graduate students in pedagogical settings across disciplines (Phillips and Russell, 1994; Kahn, 2001; Pasupathy and Bogschutz, 2013; Lambie et al., 2014). However, it is unknown whether hands-on learning via problem- or project-based learning increases self-efficacy in students. Research self-efficacy is one’s beliefs regarding one’s ability to carry out research-related tasks (Forrester et al., 2004). Self-efficacy is believed to develop through positive enactive experiences (Gist, 1987; Pajares, 2002). For example, research self-efficacy in students is shaped by mentorship and positive research experiences (Bieschke et al., 1996; Love et al., 2007; Lambie et al., 2014). Thus, one may theorize that enactive experiences with hands-on activities in class may increase research self-efficacy. Research self-efficacy is a predictor of future research productivity in both graduate students and faculty (Bieschke et al., 1996; Kahn, 2001; Landino and Owen, 1988; Pasupathy and Siwatu, 2014), and higher research self-efficacy is also related to interest in conducting research (Bishop and Bieschke, 1998). Both clinical and research self-efficacy have been studied in CSD. Clinical self-efficacy is positively correlated with clinical performance in CSD graduate students (Pasupathy and Bogschutz, 2013) and increases over the course of study (Lee and Schmaman, 1987). Research doctoral students in rehabilitation sciences, who have more hands-on research experience, rated themselves higher in research self-efficacy than clinical doctoral students in the same program (Pasupathy, 2018).
Hands-on experience with research equipment increased research self-efficacy and interest in pursuing a PhD degree in CSD master’s students (Randazzo and Khamis-Dakwar, 2018). Research self-efficacy is greater in graduate students when research courses are combined with hands-on research experience (Unrau and Beck, 2004). Pasupathy (2018) notes that both research production and consumption may be moderated by one’s beliefs about one’s own research understanding and capabilities. Because research self-efficacy is predicted by research experience, it would follow that research self-efficacy would be higher with skill-focused projects and problem-based learning activities than with traditional lecture-based instruction.

Current Study/Research Questions

The current case study compared two fully online, asynchronous, graduate-level Research Methods in Communication Sciences and Disorders classes taught in a 5-week session during summer 2020. One online class employed a combination of problem-based and project-based learning (PBL), while the other employed a traditional format with online lectures (TDL). Both courses covered the same topics and course content and assigned the same required readings from Research in Communication Sciences and Disorders: Methods for Systematic Inquiry, 3rd Edition (Nelson, 2016). We compared the two courses on pre-post measures of research self-efficacy and student satisfaction. The research questions addressed in this study are:

(1) Does PBL or TDL drive a greater increase in research self-efficacy from pre- to post-course, as measured by the adapted Research Self-Efficacy Inventory (RSEI)?

(2) Do students report greater satisfaction with PBL or TDL in an online course as measured by their ratings of the usefulness of their assignments, the likelihood of engaging in research and doctoral study in the future, and open-ended comments?

We predict that students engaged in both PBL and lecture-based TDL online learning will increase their research self-efficacy by the end of the course. However, given the hands-on aspect of PBL, we predict that students in the PBL section of the course will show greater gains in research self-efficacy. We also predict that students may report greater engagement in the PBL course but may perceive its workload as greater, which could decrease satisfaction.

Methods

Participants

Participants were graduate students in the Communication Sciences and Disorders Master of Science Program at Adelphi University who had completed their second semester in the program at the time of the study. All students had completed a full academic year of face-to-face courses and at least one semester of fully online courses, so they were in a position to evaluate their experiences in these online courses. Thirty-eight students were enrolled in Research Methods in Communication Sciences and Disorders as an asynchronous online course during a 5-week summer 2020 session. Students were blind to the format of the two course sections during registration and enrollment. The PBL class, conducted as a combination of problem- and project-based learning, had 18 students (18 females), and the TDL class, conducted online as a traditional lecture-based course, had 20 students (20 females). Three students were excluded from the TDL class’s data because their pre-post anonymous codes were incongruent and their data could not be matched. Therefore, the TDL group’s data consisted of 17 students (17 females). In the PBL class, 5 students reported previous experience working in a research environment, 7 reported previously participating in an experiment, and 2 reported previously presenting at a research conference. In the TDL class, 5 students reported previous experience working in a research environment, 10 reported previously participating in an experiment, and 2 reported previously presenting at a research conference. Based on this information, the two classes were deemed comparable in prior research exposure and experience.

Procedures

This scholarship of teaching and learning case study was granted exempt status by the Institutional Review Board at Adelphi University. Students were advised in the syllabus that they could contact the research coordinator to opt out of the study and that their instructor would be blind to whose data were included in the final analysis. The PBL class, taught by the first author, and the TDL class, taught by the third author, were conducted asynchronously over a 5-week summer session during the Covid-19 pandemic. The PBL instructor had 10 years of experience teaching in higher education, including 4 years teaching in online and hybrid formats. The PBL instructor had previously used PBL activities in the online research methods course, which they had taught twice before the current study, but formally transitioned the course to the PBL format for the current study. The TDL instructor had 16 years of experience teaching in higher education, including 8 years teaching online and hybrid courses, and had taught research methods for 10 years. Both instructors are licensed speech-language pathologists and principal investigators of their respective laboratories. Students in the PBL and TDL classes completed pre-post measures of research self-efficacy and student satisfaction. All course content was delivered through the Moodle Learning Management System. Pre-post measures were collected anonymously through SurveyMonkey Pro via links provided on the class Moodle page. Students created a unique identifier code that was used to match their data between the beginning and end of the semester. Survey completion counted towards participation points in each course. Data were exported from SurveyMonkey Pro and analyzed in Jamovi Version 1.2.27.0.
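Because students generated their own identifier codes, Time 1 and Time 2 responses had to be paired by code, and three TDL students were excluded when their codes did not match. As a hedged illustration only, the sketch below shows one way such pairing could be done. The record fields (`code`, `score`), the helper names, and the case-insensitive comparison are assumptions for illustration, not a description of how the authors matched data exported from SurveyMonkey Pro.

```python
from typing import Dict, List, Set, Tuple

Record = Dict[str, object]  # one survey response; field names are hypothetical


def normalize(code: str) -> str:
    # Assumption: codes are compared ignoring case and surrounding whitespace.
    return code.strip().upper()


def match_pre_post(
    pre: List[Record], post: List[Record]
) -> Tuple[List[Tuple[Record, Record]], Set[str]]:
    """Pair Time 1 and Time 2 responses that share an identifier code.

    Returns the matched (pre, post) pairs, sorted by code, and the set of
    codes that appear at only one time point. If a code occurs more than
    once at the same time point, the last response with that code is kept.
    """
    pre_by_code = {normalize(str(r["code"])): r for r in pre}
    post_by_code = {normalize(str(r["code"])): r for r in post}
    matched = pre_by_code.keys() & post_by_code.keys()
    unmatched = (pre_by_code.keys() | post_by_code.keys()) - matched
    pairs = [(pre_by_code[c], post_by_code[c]) for c in sorted(matched)]
    return pairs, unmatched


# Hypothetical example: two students match; one code appears only at each time point.
pre = [{"code": "ab12", "score": 4.5}, {"code": "CD34", "score": 5.0}, {"code": "ef56", "score": 6.0}]
post = [{"code": "AB12", "score": 8.0}, {"code": "cd34 ", "score": 7.5}, {"code": "gh78", "score": 9.0}]
pairs, unmatched = match_pre_post(pre, post)
print(len(pairs), sorted(unmatched))  # 2 ['EF56', 'GH78']
```

Unmatched codes are returned rather than silently dropped, so an analyst can report how many responses were excluded, as the Participants section does.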

Course Descriptions

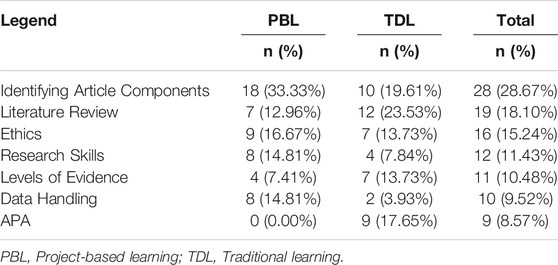

Both the TDL and PBL courses addressed the ASHA (2020) standards outlined in Table 1. Within these standards, both courses addressed overlapping learning objectives (e.g., describe the design of a research study; identify the components of a clinical Population, Intervention, Comparison, Outcome [PICO] question; evaluate the findings of a research article). Students in each course completed four open-book quizzes based on the textbook chapters. The reading quizzes covered evidence-based practice, ethics, research designs, data collection and analysis, and APA formatting. Students in both courses also completed the Collaborative Institutional Training Initiative (CITI Program) Human Subjects Training Certificate in Social Behavioral Research (www.citiprogram.org).

TABLE 1. A breakdown of ASHA (2020) Standards and how each class addressed them.

The PBL class did not include any lecture-based materials. To meet the learning objectives in the project-based format, four projects were designed to facilitate engagement with course content while creating an artifact, in line with the pedagogy of project-based learning. Two of the projects were group projects. Analytic rubrics with instructor feedback and student revisions were used as formative assessments for each project. Competencies in the PBL class favored problem-solving skills, collaboration, and procedures and techniques related to statistical analysis and applying research to clinical practice. The PBL class addressed Bloom’s Taxonomy by emphasizing applying, analyzing, and creating in the development of procedural knowledge (Forehand, 2010; Krathwohl, 2002). The assigned projects were as follows:

(1) Informed Consent in Aphasia Project: Students were given the outline of a research experiment. They wrote a protocol for obtaining informed consent from a participant with aphasia.

(2) Experimental Research Project: Students participated in an online research experiment examining working memory using the Stroop Task with a reading speed control task. Students were randomly assigned to a morning or evening group and asked to complete the task either within one hour of waking up or within one hour of going to bed. They identified the research questions, research design, and independent and dependent variables of the study that they themselves created and participated in.

(3) Data Analysis and Reporting Project: With their group, students analyzed the class data obtained from the Experimental Research Project. They wrote up a research report including literature review, research questions and hypotheses, methods, results including graphs and tables, discussion, and limitations.

(4) Critical Evaluation Project: Students compared two research articles with competing findings. They prepared a webinar examining the strengths and weaknesses of each study. Students voted in a “Webby Awards” for the best webinar across different categories.
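The random assignment in the Experimental Research Project can be sketched as follows. This is a minimal illustration assuming a simple shuffle-and-split into two groups of (near-)equal size; the function name `assign_groups`, the student IDs, and the seed are hypothetical, and the source does not say how students were actually randomized.

```python
import random
from typing import Dict, List, Optional


def assign_groups(students: List[str], seed: Optional[int] = None) -> Dict[str, List[str]]:
    """Randomly split students into 'morning' and 'evening' groups.

    Hypothetical helper: shuffles a copy of the roster and splits it in half,
    so group sizes differ by at most one (the morning group gets the extra
    student when the roster size is odd).
    """
    rng = random.Random(seed)  # private generator; a fixed seed makes the split reproducible
    shuffled = list(students)  # copy so the caller's roster is not reordered
    rng.shuffle(shuffled)
    half = (len(shuffled) + 1) // 2
    return {"morning": shuffled[:half], "evening": shuffled[half:]}


roster = [f"S{i:02d}" for i in range(1, 19)]  # 18 hypothetical student IDs
groups = assign_groups(roster, seed=2020)
print(len(groups["morning"]), len(groups["evening"]))  # 9 9
```

Shuffling and splitting guarantees balanced group sizes, whereas flipping a coin per student can leave groups unequal in a class of 18.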

The TDL class included five asynchronous online lectures, totaling 300 minutes over the 5-week semester. In addition to the reading quizzes and human subjects training, students in the TDL class completed two individual assignments. In required forum discussions, students posted a response to a prompt related to evidence-based practice or ethics and replied to at least one peer. TDL students also individually completed a critically appraised topic article review. Students recorded their review as a slide presentation, which included their literature search strategy, a summary and appraisal of the evidence, and a discussion of the experimental aspects of the clinical question, along with findings and limitations. The TDL instructor used a combination of formative and summative assessments, along with analytic rubrics. Competencies in the TDL class favored content knowledge, models of evidence-based practice, and ethics in clinical practice. The TDL class addressed Bloom’s Taxonomy by emphasizing remembering, understanding, and analyzing in the development of content knowledge (Krathwohl, 2002; Forehand, 2010).

The main distinctions between the courses were collaboration in group projects in the PBL class and extensive online lectures in the TDL class. Additionally, the courses diverged in how the assignments addressed research methods within the overlapping learning objectives. For example, PBL students actively engaged in the research process to collect data, analyze data, and report and interpret their findings. In contrast, TDL students reported on already published research in the critical appraisal assignment. When PBL students reported on already published research in the Critical Evaluation Project, they compared two articles with competing findings.

Measures

Research self-efficacy was examined using an adaptation of the Research Self-Efficacy Inventory (RSEI), which is composed of several scales outlined below (Pasupathy and Siwatu, 2014). Participants rated their confidence, on a scale from no confidence at all (0) to completely confident (10), in completing research-related tasks from the General Research Self-Efficacy (GRSE) scale and the Mixed Methods Research Self-Efficacy (MMRSE) scale of the RSEI. The GRSE is composed of 10 items for which participants rate their confidence in their ability to execute tasks related to conducting a research study in the social and behavioral sciences. The GRSE has good internal consistency, with a Cronbach’s alpha of .94 (Sijtsma, 2009). The MMRSE is composed of items from the Quantitative Research Self-Efficacy scale and the Qualitative Research Self-Efficacy scale, which have high internal consistency ratings of .97 and .94, respectively (Sijtsma, 2009). For the purposes of the current study, we used all 10 items from the GRSE and adapted 7 items from the MMRSE that would be appropriate for master’s level students with limited hands-on research experience. Responses to the RSEI items were further divided into two categories that reflect the focus of the research methods courses in the current study: Interpreting Research (e.g., identify the variables of a study, identify the research design of a study; 10 items) and Research Processes (e.g., collect quantitative data, perform a statistical analysis; 7 items). Cronbach’s alpha for our adaptation of the RSEI is α = .91, indicating high internal consistency (Cronbach, 1951; Santos, 1999; Tavakol and Dennick, 2011). The RSEI was completed the week before the classes started (Time 1) and during the last week of class (Time 2). Data for the RSEI measure were analyzed using a non-parametric 2×2 Friedman ANOVA with Class (PBL, TDL) and Time (1, 2). See Appendix A for the RSEI items used in this study.
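Cronbach’s alpha, the internal-consistency index reported above for the GRSE, the MMRSE subscales, and the adapted RSEI, is conventionally defined as α = k/(k − 1) × (1 − Σ item variances / variance of total scores), where k is the number of items. The sketch below implements that standard formula with Python’s standard library. It is not the authors’ Jamovi computation, and the ratings are hypothetical.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of ratings.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    Sample variances (n - 1 denominator) are used throughout; the ratio is the
    same with population variances as long as one convention is used.
    """
    k = len(responses[0])  # number of items
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    items = list(zip(*responses))  # transpose: one tuple of ratings per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical ratings: 4 respondents x 2 items on the 0-10 RSEI scale.
ratings = [[2, 3], [4, 4], [6, 5], [8, 8]]
print(round(cronbach_alpha(ratings), 3))  # 0.97
```

Alpha rises toward 1 as items covary, because the variance of the total then exceeds the sum of the item variances; items that moved in perfect lockstep would give exactly 1.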

At the end of the course (Time 2), students were asked what they had learned during the course and to list three skills or knowledge areas they had mastered. They rated their satisfaction with the course according to the usefulness of the various assignments and features of their respective courses on a scale from not useful at all (1) to extremely useful (5). They also compared aspects of the course with their previous classes (e.g., number of assignments, difficulty of content, support from instructor) on a scale of less than my other classes (1), the same as my other classes (2), or more than my other classes (3). Finally, students rated, based on their experience during the course, how likely they were to engage in a future research project and to pursue a doctoral degree, on a scale from very unlikely (1) to very likely (5). These ratings, along with open-ended comments, were taken as subjective measures of satisfaction with the course. The Mann-Whitney U test was used to examine differences between the PBL and TDL classes on Likert-style ratings of satisfaction with course components. Open-ended responses were analyzed using text analyzers and subdivided into themes and subcategories.
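The Mann-Whitney U test compares two independent groups using ranks, which suits ordinal Likert-style ratings. A minimal sketch of the U statistic follows, assuming midranks for tied ratings (ties are common on a 1 to 5 scale). The ratings and helper names are hypothetical, and the sketch computes only U, not the p-value the authors obtained in Jamovi.

```python
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Advance j to the last sorted position holding the same value as position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (U_x, U_y); U_x + U_y always equals len(x) * len(y)."""
    ranks = average_ranks(list(x) + list(y))
    rank_sum_x = sum(ranks[: len(x)])  # pooled ranks belonging to sample x
    u_x = rank_sum_x - len(x) * (len(x) + 1) / 2
    return u_x, len(x) * len(y) - u_x


# Hypothetical 1-5 usefulness ratings for one course component.
pbl = [5, 4, 4, 3]
tdl = [3, 3, 2, 4]
print(mann_whitney_u(pbl, tdl))  # (13.0, 3.0)
```

U_x counts how often a rating from the first group exceeds one from the second, with ties counted as one half. To turn U into a p-value, it is compared with its exact null distribution or a tie-corrected normal approximation; SciPy’s `scipy.stats.mannwhitneyu` offers both.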

Results

Research Self-Efficacy

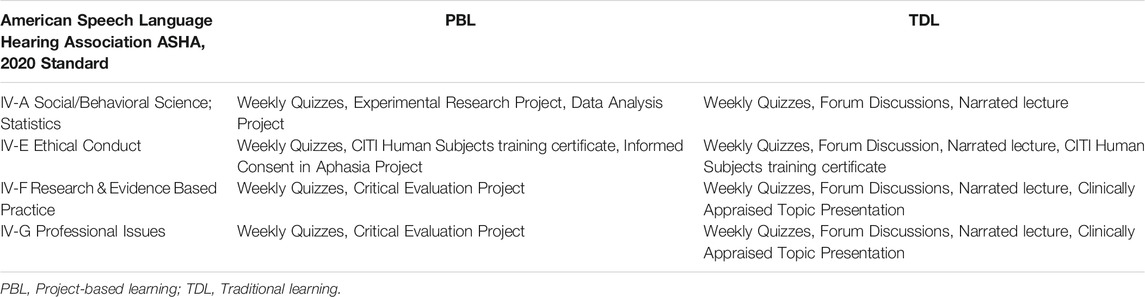

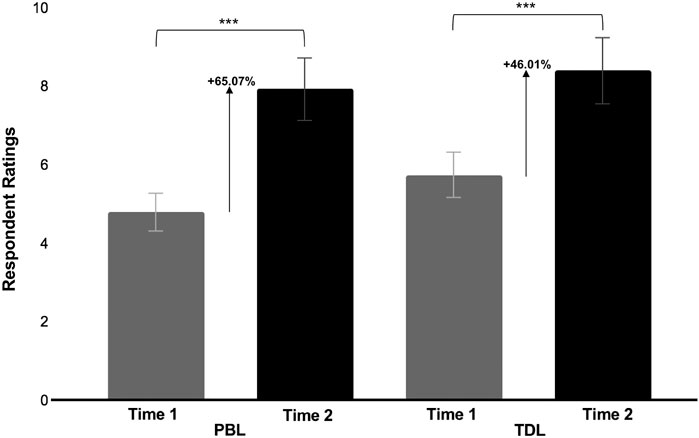

A non-parametric 2×2 Friedman ANOVA with Class (PBL, TDL) and Time (1, 2) was conducted on the adapted RSEI Interpreting Research and Research Processes scores. In the PBL class, Interpreting Research scores differed significantly (χ²(3) = 39.7, p < .001) between Time 1 (M = 4.81, SD = 1.96) and Time 2 (M = 7.94, SD = 0.99), a percent increase of 65.07%. PBL Research Processes scores differed significantly (χ²(3) = 34, p < .001) between Time 1 (M = 4.01, SD = 1.80) and Time 2 (M = 7.49, SD = 0.97), a percent increase of 86.78%. In the TDL class, Interpreting Research scores differed significantly (χ²(3) = 39.7, p < .001) between Time 1 (M = 5.76, SD = 1.79) and Time 2 (M = 8.41, SD = 0.83), a percent increase of 46.01%. TDL Research Processes scores differed significantly (χ²(3) = 34, p < .001) between Time 1 (M = 5.16, SD = 1.83) and Time 2 (M = 8.29, SD = 1.00), a percent increase of 60.66%.
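The reported percent increases are consistent with (Time 2 mean − Time 1 mean) / Time 1 mean × 100 computed from the group means in the paragraph above. The short check below recomputes all four values; the function name is illustrative.

```python
def percent_increase(time1_mean: float, time2_mean: float) -> float:
    """Percent change from the Time 1 mean to the Time 2 mean."""
    return (time2_mean - time1_mean) / time1_mean * 100


# Group means (0-10 scale) as reported in the Results text.
reported_means = {
    ("PBL", "Interpreting Research"): (4.81, 7.94),
    ("PBL", "Research Processes"): (4.01, 7.49),
    ("TDL", "Interpreting Research"): (5.76, 8.41),
    ("TDL", "Research Processes"): (5.16, 8.29),
}
for (cls, scale), (t1, t2) in reported_means.items():
    print(f"{cls} {scale}: {percent_increase(t1, t2):.2f}%")
```

This prints 65.07%, 86.78%, 46.01%, and 60.66%, matching the reported values. Because the Time 1 mean is the denominator, the same raw gain yields a larger percent increase for a group that starts lower. For example, PBL Interpreting Research and TDL Research Processes both rose by 3.13 points, giving 65.07% and 60.66%, respectively.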

Between classes, Interpreting Research scores did not differ significantly at Time 1 (p = .107) between the PBL class (M = 4.81, SD = 1.96) and the TDL class (M = 5.76, SD = 1.79), whereas Research Processes scores did differ significantly at Time 1 (p = .049) between the PBL class (M = 4.01, SD = 1.80) and the TDL class (M = 5.16, SD = 1.83). At Time 2, neither Interpreting Research scores (χ2(1) = 2.21, p = .137; PBL: M = 7.94, SD = 0.99; TDL: M = 8.41, SD = 0.83) nor Research Processes scores (p = .267; PBL: M = 7.49, SD = 0.97; TDL: M = 8.29, SD = 1.00) differed significantly between classes. A breakdown of participants’ average RSEI ratings is shown in Figure 1 for Interpreting Research and Figure 2 for Research Processes. All averages are out of a maximum of 10.

FIGURE 1. Average Interpreting Research Ratings for both the PBL and TDL class at Time 1 and Time 2. PBL = Project-based learning; TDL = Traditional learning. Note: *= p < .05; **= p < .01; ***= p < .001; ****= p < .0001

FIGURE 2. Average Research Processes Ratings for both the PBL and TDL class at Time 1 and Time 2. PBL = Project-based learning; TDL = Traditional learning. Note: *= p < .05; **= p < .01; ***= p < .001; ****= p < .0001

To identify the tasks students felt most and least confident performing, Interpreting Research and Research Processes RSEI item ratings, from 0 (least confident) to 10 (most confident), were divided into quartiles (Pasupathy and Siwatu, 2014). At Time 1, upper quartile items for Interpreting Research were “Utilize APA style guidelines in writing a clinical or research report” for the PBL class (M = 7.28, SD = 2.37) and “Search an electronic database for peer-reviewed articles relevant to your clinical or research question” for both classes (PBL: M = 7.94, SD = 1.66; TDL: M = 7.47, SD = 1.55). At Time 1, neither class had lower quartile items for Interpreting Research. At Time 2, upper quartile items for Interpreting Research included “Search an electronic database for peer-reviewed articles relevant to your clinical or research question” for both classes (PBL: M = 9.00, SD = 0.97; TDL: M = 9.06, SD = 1.03). At Time 2, lower quartile items for Interpreting Research included “Interpret the statistical significance of the findings of a research study” for both classes (PBL: M = 6.72, SD = 1.32; TDL: M = 7.47, SD = 1.77).
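The quartile split can be sketched as follows, assuming that an item counts as upper (lower) quartile when its mean rating lies strictly above the third (below the first) quartile of all item means on that subscale. The paper does not state how the cutoffs were computed, so the use of Python’s `statistics.quantiles` with `method="inclusive"` and the strict comparisons are assumptions, and `flag_quartile_items` is a hypothetical helper.

```python
from statistics import quantiles


def flag_quartile_items(item_means: dict[str, float]) -> dict[str, list[str]]:
    """Flag items whose mean lies above Q3 or below Q1 of all item means.

    Assumes the inclusive quartile method and strict cutoffs; both are
    illustrative choices, not the study's documented procedure.
    """
    q1, _, q3 = quantiles(item_means.values(), n=4, method="inclusive")
    return {
        "upper": [item for item, m in item_means.items() if m > q3],
        "lower": [item for item, m in item_means.items() if m < q1],
    }
```

With strict cutoffs, items whose means tie at a cutoff are not flagged, and when all item means are equal no item falls in either quartile.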

At Time 1, neither class had items that fell within the upper or lower quartiles for Research Processes; that is, all item ratings fell between the 25th and 75th percentiles, likely because most participants’ ratings clustered around the median. At Time 2, upper quartile items for Research Processes included “Obtain informed consent for a research study from a participant with a communication disorder” for both classes (PBL: M = 8.83, SD = 1.10; TDL: M = 9.23, SD = 1.05) and “Create visual representations of data (e.g. charts, graphs, tables)” for the PBL class (M = 8.00, SD = 1.61). At Time 2, lower quartile items included “Select an appropriate research design that will answer a specific research question” for both classes (PBL: M = 6.78, SD = 1.44; TDL: M = 7.76, SD = 1.39) and “Perform a statistical analysis of data to provide answers to existing research questions” for the TDL class (M = 7.53, SD = 1.59).

Student Satisfaction

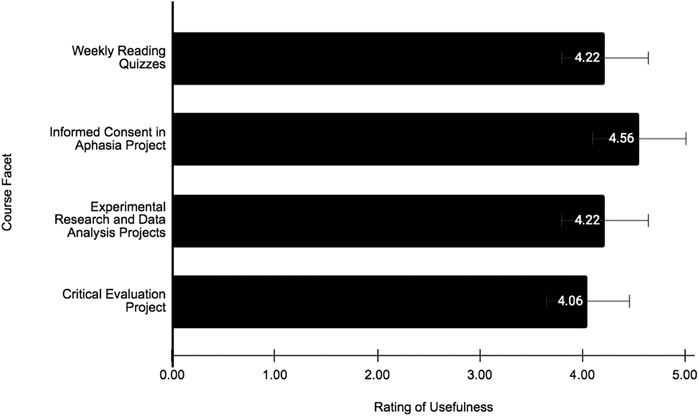

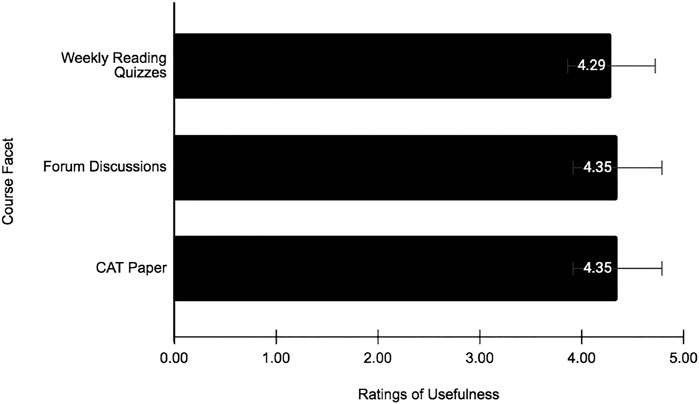

Students were asked to rate the usefulness of several aspects of the course on a scale of 1 (not useful at all) to 5 (very useful). In the PBL class, average ratings were as follows: the weekly reading quizzes, for preparing to answer research-based questions on the Praxis/Comprehensive Exam (M = 4.22, SD = 0.94); the Informed Consent in Aphasia Project, for understanding how to use clinical skills in a research environment (M = 4.56, SD = 0.62); the Experimental Research and Data Analysis Projects, for gaining “hands on” experience of all aspects of the research process (M = 4.22, SD = 0.94); and the Critical Evaluation Project, for providing the opportunity to engage in critical evaluation of competing resources in the context of clinical decision-making (M = 4.06, SD = 1.00). In the TDL class, average ratings were as follows: the weekly reading quizzes, for preparing to answer research-based questions on the Praxis/Comprehensive Exam (M = 4.29, SD = 0.77); the forum discussions, for understanding the course content (M = 4.35, SD = 0.79); and the Intervention Paper, for providing the opportunity to engage in critical evaluation of research articles (M = 4.35, SD = 0.79). Average ratings for the usefulness of the different course aspects are shown in Figures 3 and 4.

FIGURE 3. Average ratings for the usefulness of different course characteristics for the PBL class at Time 2. PBL = Project-based learning.

FIGURE 4. Average ratings for the usefulness of different course characteristics for the TDL class at Time 2. CAT = Critically-appraised topic. TDL = Traditional learning.

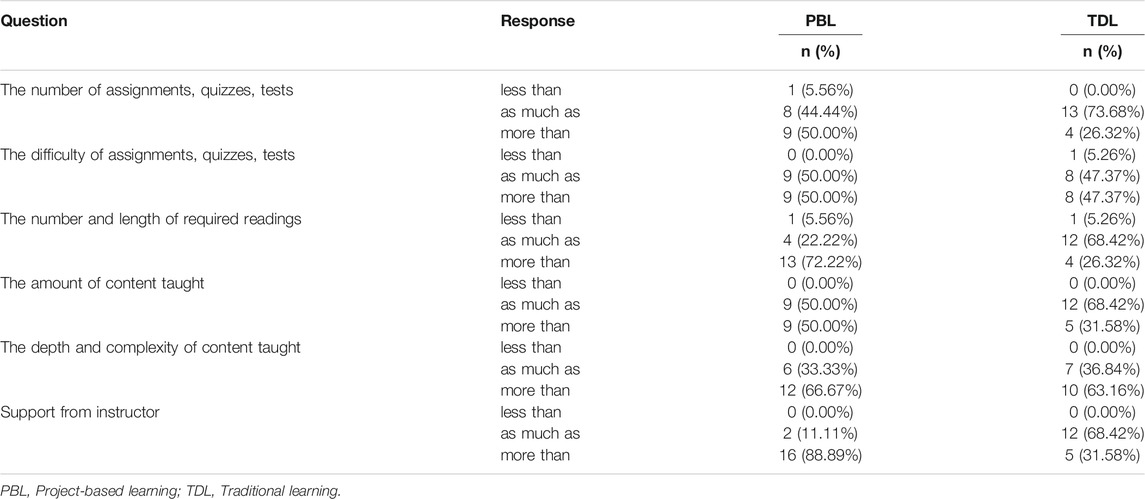

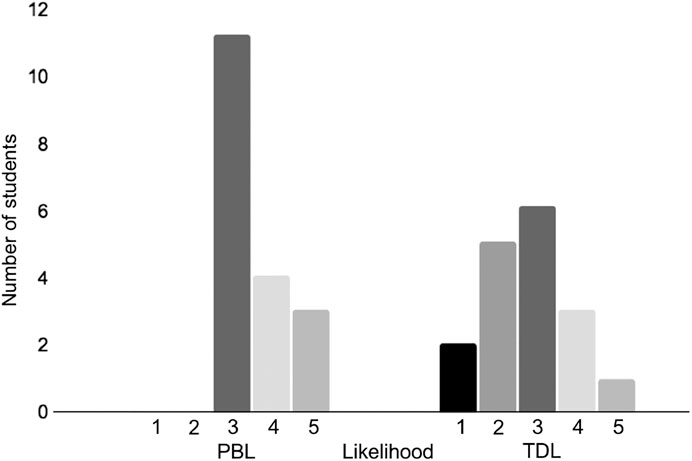

Students were asked to compare different aspects of their course to other courses they had taken in the program, rating each aspect as less than, the same as, or more than their other courses. Table 2 outlines these responses. Mann-Whitney U tests were run to determine whether the PBL and TDL classes differed in their ratings of the likelihood of engaging in a future research project and of joining a doctoral program. Average ratings for the likelihood of engaging in a future research project did not differ significantly between the PBL class (M = 3.72, SD = 0.75) and the TDL class (M = 3.59, SD = 0.78; U = 148, p = .861). Average ratings for the likelihood of joining a doctoral program differed significantly between the PBL class (M = 3.56, SD = 1.12) and the TDL class (M = 2.76, SD = 1.09; U = 88.5, p = .024). Figure 5 shows student ratings for joining a doctoral program.

TABLE 2. A breakdown of ratings of different aspects of the course taken at Time 2, split by PBL and TDL.

FIGURE 5. Average ratings for the likelihood of considering joining a doctoral program in the future, taken at Time 2, split by class. PBL = Project-based learning; TDL = Traditional learning.

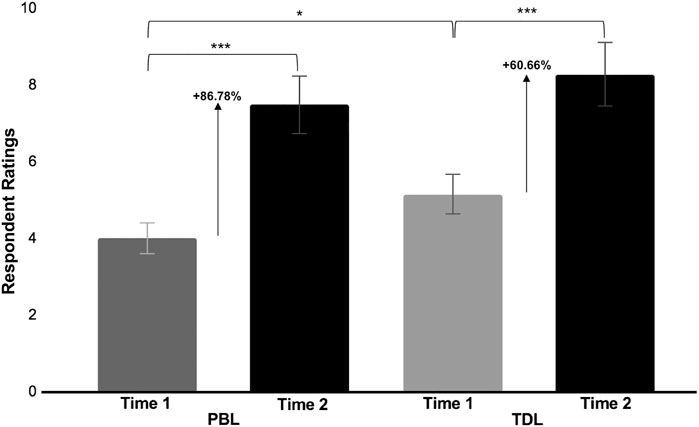

Students were asked to list three things they learned from the course. Their responses were entered into two different text analyzers to identify the most frequently used words and help define broad themes. Two judges (the second author and an outside rater) mapped relationships between responses to conceptualize themes, then broke these themes down into subcategories for response coding. To minimize bias or unwarranted assumptions, the judges avoided attributing further meaning to comments during rating and classification. Finally, the responses were re-coded and categorized according to the judges’ final group consensus. The following categories were created: Identifying Article Components, Literature Review, Ethics, Research Skills, Levels of Evidence, Data Handling, and American Psychological Association (APA) style.
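The two text analyzers are not named, so as an illustration of the kind of word-frequency count such tools produce, the sketch below tallies the most frequent words across open-ended responses after removing a small, hypothetical stop-word list. It covers only the automated first step; the judges’ thematic coding was done by hand and is not reproduced. `top_words` and `STOP_WORDS` are illustrative names.

```python
import re
from collections import Counter

# Hypothetical stop-word list; the analyzers used in the study are not specified.
STOP_WORDS = {"a", "an", "and", "for", "in", "my", "of", "the", "to", "when"}


def top_words(responses: list[str], k: int = 5) -> list[tuple[str, int]]:
    """Return the k most frequent non-stop-words across free-text responses."""
    counts = Counter()
    for response in responses:
        # Lowercase and split on anything that is not a letter or apostrophe.
        words = re.findall(r"[a-z']+", response.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts.most_common(k)
```

Applied to the seven example PBL responses quoted in the paragraph that follows, `top_words(responses, k=2)` returns `[('research', 3), ('develop', 2)]`.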

In the PBL class, 18 respondents listed three things they learned during the course (54 responses total). These responses fell under the themes of Identifying Article Components (“Figuring out dependent and independent variables within research”), Literature Review (“Develop resources to use when finding literature”), Ethics (“Creating an informed consent paper for research participants”), Research Skills (“The ability to create my own research and develop a sufficient report and interpret my data”), Levels of Evidence (“Defining the level of evidence of a study”), Data Handling (“Knowledge regarding Excel spreadsheets”), and APA style (“writing in APA”). Open-ended coded responses are outlined in Table 3.

Discussion

Results of the study indicate that both classes increased in research self-efficacy and found elements of the courses beneficial; however, there were differential responses and scores reflective of the method of course facilitation.

Research Self-Efficacy

Research self-efficacy was examined for skills related to Interpreting Research and Research Processes. Overall, the PBL class showed a larger percent increase between Time 1 and Time 2 in their confidence in their ability to carry out research-related tasks. For Interpreting Research, students in both classes shared the same upper and lower quartile items at Time 2. For example, both PBL and TDL students were highly confident in their ability to search an electronic database for peer-reviewed articles. At Time 2, interpreting the statistical significance of research findings was in the lower quartile for students in both courses. Thus, the PBL class’s practice in performing a statistical analysis did not generalize to their confidence in interpreting the statistics of a research article. Research self-efficacy ratings may therefore increase only with direct practice of a skill, without generalizing to related skills. Given the short time frame of the course, it is also possible that students need repeated practice over a longer period to increase their self-efficacy with more challenging tasks. As Prosser and Sze (2014) noted, problem-based learning did not favor clinical applications in the short term. Burda and Hageman (2015) described a cyclical process of engagement in problem-based learning, as students continually evaluate what they know and what they need to know to solve a realistic problem. This cyclical process may not fully unfold during a 5-week session.

Although the TDL class initially rated their self-efficacy for Research Processes higher than the PBL class did, by Time 2 the PBL class demonstrated a larger percent increase in self-efficacy for Research Processes. Unrau and Beck (2004) noted a similar phenomenon when comparing the research self-efficacy of social work and speech-language pathology students who were enrolled in practicum-only or research-plus-practicum experiences. Initially, the practicum-only students had higher research self-efficacy ratings, but the students who completed a research experience showed greater gains in research self-efficacy by the end of the study. Both classes in the current study felt highly confident in obtaining informed consent from a research participant at Time 2. Although students in the PBL course wrote protocols to obtain informed consent from a research participant with aphasia, students in the TDL course also felt highly confident in performing this task. Thus, for tasks on which students already reported high self-efficacy, hands-on practice through PBL did not differentiate the two classes. At Time 2, performing statistical data analysis was in the upper quartile for the PBL course, showing an increase from Time 1. However, performing a statistical analysis was in the lower quartile for the TDL class at Time 2. Thus, the gains in research self-efficacy appear to pertain to more demanding tasks that require hands-on practice, such as using software programs to conduct analyses. A recent survey of PhD graduates in CSD indicated that limited skills in statistics were among the top barriers to degree completion (Crais and Savage, 2020). Hands-on practice with statistics in the Master’s-level research methods course may increase research self-efficacy, which may in turn benefit students who later pursue a PhD.

The TDL class had higher overall RSE ratings than the PBL class at both time points. Although these scores were numerically but not statistically higher at the end of the course, the ratings are indicators of self-perception. Similarly, Barraket (2005) found that students favored more didactic teaching of research methods. Self-reported confidence in one’s ability can diverge from actual performance, such that individuals rate themselves higher because they lack awareness of what it takes to complete a task (Kruger and Dunning, 1999). Once a skill is learned, self-confidence becomes more calibrated to actual ability. Burda and Hageman (2015) noted that students may feel insecure when transitioning to a PBL course. They may feel that information is not presented in a straightforward way, may feel anxious or frustrated with group dynamics, and may feel underprepared because more time is required to complete projects (Visconti, 2010; Kong et al., 2014; Burda and Hageman, 2015). This insecurity can result in lower overall self-efficacy in the PBL group despite some hands-on practice with research tasks. Thus, self-efficacy may follow a U-shaped curve in project-based learning. Additionally, self-efficacy may increase over a more protracted period in novel instructional settings like PBL.

Prosser and Trigwell (1999) outlined two approaches to learning in problem-based curricula: surface and deep. Surface learning favors short-term retention of knowledge in order to reproduce content. Students engaged in surface learning in a problem-based assignment are generally applying strategies learned in a traditional context. In contrast, a deep approach to learning focuses on long-term retention and application of information. In a case study of student perceptions, Prosser and Sze (2014) found that during a problem-based learning course, students initially applied traditional learning strategies focused on short-term knowledge, favoring surface learning. They argued that for problem-based learning to be useful, learning processes should be explicitly taught. Given the short format of the current case study, it is possible that students were still transitioning from traditional strategies to more problem-based strategies at the post-course interval.

Overall, students’ research self-efficacy ratings align with the topics they most often reported learning during their course. The topics of identifying article components, ethics, research skills, and data handling were reported most often by the PBL class. The PBL class mentioned research skills twice as often, and data handling three times as often, as the TDL class. This is reflected in their upper quartile Research Processes scores for performing a statistical analysis, creating visual representations of data, and obtaining informed consent from a participant. The topics of literature review, identifying article components, and using APA style were reported most often by the TDL class. The TDL class had twice as many students mention Levels of Evidence, and all nine responses categorized under APA were from TDL students, as the final presentation assignment placed a greater emphasis on citations. This is reflected in their upper quartile item of searching an electronic database for peer-reviewed articles.

Student Satisfaction

Students in both classes found their respective courses beneficial to achieving the learning objectives. Ratings for the usefulness of individual assignments in both courses were high, indicating that the assignments, regardless of pedagogy, met the students’ needs in achieving the course objectives. Exposure to research during graduate training is a predictor of future attitudes toward research and EBP (Zipoli and Kennedy, 2005). Both classes inspired students to become more involved in research. However, students in the PBL class indicated they were more likely to pursue doctoral studies in the future, possibly due to their hands-on experience with research. This finding aligns with previous work in CSD showing that hands-on research experience increases student interest in pursuing a PhD (Mueller and Lisko, 2003; Hagstrom et al., 2009; Friberg et al., 2013; Coston and Myers-Jennings, 2014; Randazzo and Khamis-Dakwar, 2018). Given the shortage of doctoral-level scholars in the field of CSD, this preliminary finding suggests a pathway to increase research interest and engagement. The majority of students in both classes rated the depth and complexity of content as greater than that of their other classes. This is not surprising, as this is the only research course required in the program. Ni (2013) found that online research methods courses were more difficult for students than face-to-face research courses. Additionally, the majority of students in both classes rated the difficulty of their respective courses as equivalent to or greater than that of the other courses they had taken in the program. The agreement between the two classes on these items suggests that, regardless of the type of instruction (PBL vs TDL), online courses carry at least the same rigor as face-to-face courses (Ng et al., 2014).

Students’ ratings diverged between instructional methods in how they perceived the workload. The majority of students in PBL perceived the course to be more work than their other classes, while the majority of students in TDL perceived it to be the same amount of work as their other classes. Thus, the multiple projects in PBL likely required greater effort to complete the course requirements. Similarly, the majority of students in PBL reported that the number and length of readings were greater than in their other classes, while the majority of students in TDL indicated the readings were equivalent to those of their other classes. Both courses had the same required readings from Nelson (2016). PBL students likely rated their course as having more readings because they had to engage more with the literature to find resources to complete their projects (Kong et al., 2014). For the amount of content taught, PBL students were split between equivalent to and more than their other classes, while the majority of TDL students indicated that the amount of content was equivalent to their other classes. Again, the projects likely expanded the content, as students had to do more research outside of class to complete them.

Students in PBL perceived greater instructor support, although both courses were online and asynchronous. This finding is consistent with previous work noting that PBL educators spent 3 to 4 times more student contact hours than instructors in traditional courses (Koh et al., 2008). The TDL instructor engaged with students in the weekly discussion board postings and provided recorded lectures, while the PBL instructor communicated with students via email or video chat as needed to facilitate project completion. Although we do not have a direct measure of instructor time in the current study, given the short time frame of the 5-week course and the asynchronous format, instructors likely spent a similar amount of time engaged with students. We therefore attribute this finding not to the amount of time spent, but to the individualized attention that facilitated project completion in the PBL class (Visconti, 2010; Burda and Hageman, 2015).

Summary and Implications

The current study indicates that both the TDL and PBL online research methods courses provided constructive learning environments that supported the learning goals for research methods. Students in both classes increased their research self-efficacy and were satisfied with their course, assignments, and instructor. However, the results suggest that, in a short session, what is emphasized in a course is more closely related to students’ confidence in their abilities than how it is taught. TDL yielded more immediate benefits in terms of workload and confidence in completing familiar tasks. The benefits of PBL emerged for more demanding tasks that students likely had the least experience performing and that require hands-on practice, such as statistical analysis. Additionally, PBL showed advantages in facilitating engagement with course content and outside resources.

One differential impact of offering a PBL course rather than a TDL course relates to students’ potential future engagement in research. This finding highlights the importance of infusing research engagement opportunities into CSD programs. We argue that research engagement opportunities for students in the Master’s program need to be expanded to increase the number of researchers and ameliorate the shortage of doctoral-level scholars in the field. Offering research methods courses in a project-based learning format may provide students with hands-on opportunities that build their self-efficacy and inspire them to pursue research careers (Mueller and Lisko, 2003; Hagstrom et al., 2009; Friberg et al., 2013; Coston and Myers-Jennings, 2014).

Instructors considering a transition to project-based or problem-based learning in their online courses should weigh the findings of this case study alongside the research on instructor effort in these formats. Donner and Bickley (1993) noted that problem-based learning instructors devoted 4 to 5 times more preparation hours than instructors in traditional formats. First, developing problems and projects requires careful consideration (Pawson, 2006; Visconti, 2010; Burda and Hageman, 2015). Assignments should have real-world implications, engage students in the literature, and facilitate active problem solving (Barrows, 1996). Second, instructors act as facilitators of student-led inquiry (Albanese and Mitchell, 1993; Bridges and Hallinger, 1996; Savery and Duffy, 1995). This process provides more individualized attention to students, but may take more of the instructor’s time (Albanese and Mitchell, 1993; Stinson and Milter, 1996). Results of the current study suggest that traditional lectures, recorded for the online format, provided an added benefit in research self-efficacy as a measure of confidence (see also Tiwari et al., 2006). Time spent writing and recording lectures for TDL may pay off in student satisfaction and in the confidence that comes with a familiar format. On the other hand, time spent developing and facilitating PBL activities may benefit students’ confidence in completing tasks of greater perceived difficulty. Further, PBL activities are collaborative, which may support the development of interpersonal skills that can be applied to clinical and interprofessional practice. Whitehill et al. (2014) note that instructors have flexibility in how they use problem-based learning in their courses. As instructors transition to online teaching or revise current courses in the post-Covid instructional landscape, they may consider using either PBL or TDL methodology tailored to their specific learning goals.

Limitations and Future Directions

This case study presents findings that are informative to instructors planning online research methods courses. The current sample is small but homogeneous, which allows for direct comparisons between the two classes; however, the findings are not generalizable. In the field of CSD, 95.5% of clinicians are female and 91.7% are white (American Speech-Language-Hearing Association, 2019). The current study did not specifically address the demographics of the students in the research methods courses. Future studies should further explore the impact of online courses on Black, Indigenous, and People of Color (BIPOC) students, who are currently under-represented in the field of CSD. BIPOC students have reported experiencing marginalization, bias, and micro-aggressions in face-to-face classes (Ginsberg, 2018) or limited access to resources (Fuse and Bergen, 2018). Further examination of online course offerings may elucidate whether the inherent distance of online learning decreases or exacerbates negative experiences for BIPOC students. Additionally, we cannot account for differences between the instructors, although the asynchronous online format should minimize their influence.

We focused on research self-efficacy, which, although well validated, measures only self-perceived ability. Future studies should examine the relationship between research self-efficacy and performance on research-related tasks as it relates to method of instruction. PBL emphasizes learning over instruction, so measures that tap into learning and application of knowledge should be explored further. These learning opportunities may be enhanced by increased instructor resources in the form of clinical and research case examples that correspond to the textbook and learning goals. Students in the PBL class indicated they were more likely to consider applying to a doctoral program in the future than those in the TDL class. Future studies may more directly examine how the experience of PBL instruction in research methods contributes to interest in pursuing research and a doctoral degree. The courses examined in this case study took place over an intensive 5-week summer session. Although the short time frame provides greater control of potential confounds, self-efficacy ratings could change given more time to develop skills during a longer semester. Furthermore, although these courses are always taught fully online during the summer in our program, the data for this paper were collected during the Covid-19 pandemic, so it may be difficult to separate students’ perceptions of rigor or complexity in the course from other factors outside the scope of this study. Future studies should examine differences between PBL and TDL online research methods courses over longer periods and follow up after the course to evaluate the retention and application of skills and knowledge.

Data Availability Statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Adelphi University Institutional Review Board. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author Contributions

MR and RK-D contributed to the conception and design of the study. MR, RP, and RK-D collected the data. RP managed the data, performed the statistical analysis, and created the figures and tables. MR wrote the first draft of the manuscript. All authors contributed to manuscript revision and read and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Appendix A

RSEI Items

Interpreting Research

(1) Identify a clinically-relevant research problem that can be investigated scientifically.

(2) Write clinically-relevant research questions to address issues in clinical practice.

(3) Search an electronic database for peer-reviewed articles relevant to your clinical or research question.

(4) Identify the research design of a scientific study.

(5) Identify the independent and dependent variables of a scientific study.

(6) Identify the level of evidence of a peer-reviewed article.

(7) Interpret the statistical significance of the findings of a research study.

(8) Apply the results from a research study to your clinical practice.

(9) Discuss the limitations of the findings of a research study.

(10) Utilize APA style guidelines in writing a clinical or research report.

Research Processes

(11) Select an appropriate research design that will answer a specific research question.

(12) Obtain informed consent for a research study from a participant with a communication disorder.

(13) Collect quantitative data using techniques that are suitable in answering research questions regarding clinical practice.

(14) Collect qualitative data using techniques that are suitable in answering research questions regarding clinical practice.

(15) Perform a statistical analysis of data to provide answers to existing research questions.

(16) Create visual representations of data (e.g. charts, graphs, tables).

(17) Write a research report documenting the findings of a research study.

References

American Speech-Language-Hearing Association (2020). Evidence-Based Practice (EBP). Available at: https://www.asha.org/certification/2020-slp-certification-standards/ (Accessed September 12, 2020).

American Speech-Language-Hearing Association (2019). The 2019 Member and Affiliate Profile. Available at: https://www.asha.org/uploadedFiles/2019-Member-Counts.pdf (Accessed September 12, 2020).

Anazifa, R. D., and Djukri, D. (2017). Project-Based Learning and Problem-Based Learning: Are They Effective to Improve Student's Thinking Skills? Jpii. 6 (2), 346–355. doi:10.15294/jpii.v6i2.11100

Bandura, A. (1977). Self-efficacy: toward a Unifying Theory of Behavioral Change. Psychol. Rev. 84 (2), 191–215. doi:10.1037/0033-295X.84.2.191

Bandura, A. (1997). Self-efficacy: The Exercise of Control. W H Freeman/Times Books/Henry Holt & Co.

Barber, W., and King, S. (2016). Teacher-Student Perspectives of Invisible Pedagogy: New Directions in Online Problem-Based Learning Environments. Electron. J. e-Learning 14 (4), 235–243.

Barraket, J. (2005). Teaching Research Method Using a Student-Centred Approach? Critical Reflections on Practice. J. Univ. Teach. Learn. Pract. 2 (2), 3.

Barrows, H. S. (1996). Problem-based Learning in Medicine and beyond: A Brief Overview. New Dir. Teach. Learn. 1996 (68), 3–12. doi:10.1002/tl.37219966804

Bereiter, C., and Scardamalia, M. (2003). “Learning to Work Creatively with Knowledge,” in Powerful Learning Environments: Unravelling Basic Components and Dimensions. Editors E. DeCorte, N. Entwistle, and J. van Merriënboer (Amsterdam: Pergamon), 55–68.

Bieschke, K. J., Bishop, R. M., and Garcia, V. L. (1996). The Utility of the Research Self-Efficacy Scale. J. Career Assess. 4 (1), 59–75. doi:10.1177/106907279600400104

Burda, A. N., and Hageman, C. F. (2015). Problem-based Learning in Speech-Language Pathology: Format and Feedback. Cicsd. 42, 47–71. doi:10.1044/cicsd_42_S_47

Burki, T. K. (2020). COVID-19: Consequences for Higher Education. Lancet Oncol. 21 (6), 758. doi:10.1016/s1470-2045(20)30287-4

Chickering, A. W., and Ehrmann, S. C. (1996). Implementing the Seven Principles: Technology as a Lever. AAHE Bull., 3–6. Available at: http://www.tltgroup.org/programs/seven.html (Accessed December 12, 2020).

Coston, J. H., and Myers-Jennings, C. (2014). Engaging Undergraduate Students in Child Language Research. Sig10 Perspect. 17 (1), 4–16. doi:10.1044/aihe17.1.4

Council on Academic Accreditation (2020). Standards for Accreditation of Graduate Education Programs in Audiology and Speech-Language Pathology. Available at: https://caa.asha.org/wp-content/uploads/Accreditation-Standards-for-Graduate-Programs.pdf (Accessed September 12, 2020).

Council on Academic Programs in Communication Sciences and Disorders (CAPCSD) (2002). Crisis in the Discipline: A Plan for Reshaping Our Future. Available at: http://www.asha.org/members/phd-faculty-research/reports/ and http://www.capcsd.org/status.html (Accessed September 12, 2020).

Crais, E., and Savage, M. H. (2020). Communication Sciences and Disorders PhD Graduates' Perceptions of Their PhD Program. Perspect. ASHA Sigs. 5 (2), 463–478. doi:10.1044/2020_PERSP-19-00107

Crawford, J., Butler-Henderson, K., Rudolph, J., Malkawi, B., Glowatz, M., Burton, R., Lam, S., et al. (2020). COVID-19: 20 Countries' Higher Education Intra-period Digital Pedagogy Responses. J. Appl. Learn. Teach. 3 (1), 1–20. doi:10.37074/jalt.2020.3.1.7

Cronbach, L. J. (1951). Coefficient Alpha and the Internal Structure of Tests. Psychometrika. 16 (3), 297–334. doi:10.1007/bf02310555

Davidson, M. M., Weismer, S. E., Alt, M., and Hogan, T. P. (2013). Survey on Perspectives of Pursuing a PhD in Communication Sciences and Disorders. Cicsd 40 (Fall), 98–115. doi:10.1044/cicsd_40_F_98

DeFillippi, R., and Milter, R. G. (2009). “Problem-based and Project-Based Learning Approaches: Applying Knowledge to Authentic Situations,” in The Sage Handbook of Management Learning, Education and Development (London: SAGE Publications Ltd), 344–363.

Dixson, M. D. (2010). Creating Effective Student Engagement in Online Courses: What Do Students Find Engaging? J. Scholarship Teach. Learn. 1–13.

Donnelly, R., and Fitzmaurice, M. (2005). Collaborative Project-Based Learning and Problem-Based Learning in Higher Education: A Consideration of Tutor and Student Roles in Learner-Focused Strategies. Emerging Issues Pract. Univ. Learn. Teach., 87–98.

Donner, R. S., and Bickley, H. (1993). Problem-based Learning in American Medical Education: an Overview. Bull. Med. Libr. Assoc. 81 (3), 294–298.

Floyd, K., and Coogle, C. (2015). Synchronous and Asynchronous Learning Environments of Rural Graduate Early Childhood Special Educators Utilizing Wimba© and Ecampus. [Electronic Version]. J. Online Learn. Teach. 11 (2), 173–187.

Friberg, J., Folkins, J., Harten, A., and Pershey, M. (2013). SIGnatures: Undergrad Today, CSD PhD Tomorrow. Leader. 18 (7), 54–55. doi:10.1044/leader.SIGN.18072013.54

Fuse, A., and Bergen, M. (2018). The Role of Support Systems for Success of Underrepresented Students in Communication Sciences and Disorders [Electronic Version]. Teach. Learn. Commun. Sci. Disord. 2 (3). doi:10.30707/tlcsd2.3fuse

Gaytan, J., and McEwen, B. C. (2007). Effective Online Instructional and Assessment Strategies. Am. J. Distance Edu. 21 (3), 117–132. doi:10.1080/08923640701341653

Ginsberg, S. M. (2018). Stories of Success: African American Speech-Language Pathologists' Academic Resilience. Tlcsd. 2 (3). doi:10.30707/TLCSD2.3Ginsberg

Gist, M. E. (1987). Self-efficacy: Implications for Organizational Behavior and Human Resource Management. Amr. 12 (3), 472–485. doi:10.5465/amr.1987.4306562

Greenwald, M. L. (2006). Teaching Research Methods in Communication Disorders. Commun. Disord. Q. 27 (3), 173–179. doi:10.1177/15257401060270030501

Hagstrom, F., Baker, K. F., and Agan, J. P. (2009). Undergraduate Research: A Cognitive Apprenticeship Model. Sig10 Perspect. 12 (2), 45–52. doi:10.1044/ihe12.2.45

Hodges, C., Moore, S., Lockee, B., Trust, T., and Bond, A. (2020). The Difference between Emergency Remote Teaching and Online Learning. Educause Rev. 27, 1–12.

John, W. T., and Thomas, W. (2000). A Review of Research on Project-Based Learning. California: TA Foundation.

Johnson, S. D., and Aragon, S. R. (2003). An Instructional Strategy Framework for Online Learning Environments. New Dir. Adult Contin. Educ. 2003 (100), 31–43. doi:10.1002/ace.117

Kahn, J. H. (2001). Predicting the Scholarly Activity of Counseling Psychology Students: A Refinement and Extension. J. Couns. Psychol. 48 (3), 344–354. doi:10.1037/0022-0167.48.3.344

Kashy, E., Thoennessen, M., Albertelli, G., and Tsai, Y. (2000). Implementing a Large On-Campus ALN: Faculty Perspective. J. Asynchronous Learn. Networks 4 (3), 231–244. doi:10.1002/j.2168-9830.2001.tb00631.x

Koh, G. C.-H., Khoo, H. E., Wong, M. L., and Koh, D. (2008). The Effects of Problem-Based Learning during Medical School on Physician Competency: a Systematic Review. Can. Med. Assoc. J. 178 (1), 34–41. doi:10.1503/cmaj.070565

Kong, A. P.-H. (2014). Students' Perceptions of Using Problem-Based Learning (PBL) in Teaching Cognitive Communicative Disorders. Clin. Linguistics Phonetics. 28 (1-2), 60–71. doi:10.3109/02699206.2013.808703

Kong, L.-N., Qin, B., Zhou, Y.-q., Mou, S.-y., and Gao, H.-M. (2014). The Effectiveness of Problem-Based Learning on Development of Nursing Students' Critical Thinking: A Systematic Review and Meta-Analysis. Int. J. Nurs. Stud. 51 (3), 458–469. doi:10.1016/j.ijnurstu.2013.06.009

Krathwohl, D. R. (2002). A Revision of Bloom's Taxonomy: An Overview. Theor. into Pract. 41 (4), 212–218. doi:10.1207/s15430421tip4104_2

Kruger, J., and Dunning, D. (1999). Unskilled and Unaware of it: How Difficulties in Recognizing One's Own Incompetence Lead to Inflated Self-Assessments. J. Personal. Soc. Psychol. 77 (6), 1121–1134. doi:10.1037/0022-3514.77.6.1121

Lambie, G. W., Hayes, B. G., Griffith, C., Limberg, D., and Mullen, P. R. (2014). An Exploratory Investigation of the Research Self-Efficacy, Interest in Research, and Research Knowledge of Ph.D. in Education Students. Innov. High Educ. 39 (2), 139–153. doi:10.1007/s10755-013-9264-1

Lee, C., and Schmaman, F. (1987). Self-efficacy as a Predictor of Clinical Skills Among Speech Pathology Students. High Educ. 16 (4), 407–416. doi:10.1007/bf00129113

Lim, J., Kim, M., Chen, S. S., and Ryder, C. E. (2008). An Empirical Investigation of Student Achievement and Satisfaction in Different Learning Environments. [Electronic Version]. J. Instructional Psychol. 35 (2), 113–119.

Love, K. M., Bahner, A. D., Jones, L. N., and Nilsson, J. E. (2007). An Investigation of Early Research Experience and Research Self-Efficacy. Prof. Psychol. Res. Pract. 38 (3), 314–320. doi:10.1037/0735-7028.38.3.314

Maki, R. H., and Maki, W. S. (2007). “Online Courses,” in Handbook of Applied Cognition. Editor F. T. Durso. 2nd ed. (New York: Wiley & Sons, Ltd), 527–552.

McCormack, J., Easton, C., and Morkel-Kingsbury, L. (2014). Educating Speech-Language Pathologists for the 21st century: Course Design Considerations for a Distance Education Master of Speech Pathology Program. Folia Phoniatr Logop. 66 (4-5), 147–157. doi:10.1159/000367710

McDonald, P. L., Harwood, K. J., Butler, J. T., Schlumpf, K. S., Eschmann, C. W., and Drago, D. (2018). Design for Success: Identifying a Process for Transitioning to an Intensive Online Course Delivery Model in Health Professions Education. Med. Educ. Online 23 (1), 1415617. doi:10.1080/10872981.2017.1415617

McKenna, L., Boyle, M., Palermo, C., Molloy, E., Williams, B., and Brown, T. (2014). Promoting Interprofessional Understandings through Online Learning: a Qualitative Examination. Nurs. Health Sci. 16 (3), 321–326. doi:10.1111/nhs.12105

Mueller, P. B., and Lisko, D. (2003). Undergraduate Research in CSD Programs: A Solution to the PhD Shortage? Cicsd. 30 (Fall), 123–126. doi:10.1044/cicsd_30_F_123

National Education Association (NEA) (2001). Focus on Distance Education, Update 7(2). Washington, DC.

Nelson, L. K. (2016). Research in Communication Sciences and Disorders: Methods for Systematic Inquiry. 3rd Edition. San Diego: Plural Publishing.

Ng, M. L., Bridges, S., Law, S. P., and Whitehill, T. (2014). Designing, Implementing and Evaluating an Online Problem-Based Learning (PBL) Environment - A Pilot Study. Clin. Linguistics Phonetics. 28 (1-2), 117–130. doi:10.3109/02699206.2013.807879

Ni, A. Y. (2013). Comparing the Effectiveness of Classroom and Online Learning: Teaching Research Methods. J. Public Aff. Edu. 19 (2), 199–215. doi:10.1080/15236803.2013.12001730

Olswang, L. B., and Prelock, P. A. (2015). Bridging the gap between Research and Practice: Implementation Science. J. Speech Lang. Hear. Res. 58 (6), S1818–S1826. doi:10.1044/2015_JSLHR-L-14-0305

Overby, M. S. (2018). Stakeholders' Qualitative Perspectives of Effective Telepractice Pedagogy in Speech-Language Pathology. Int. J. Lang. Commun. Disord. 53 (1), 101–112. doi:10.1111/1460-6984.12329

Pajares, F. (2002). Gender and Perceived Self-Efficacy in Self-Regulated Learning. Theor. into Pract. 41 (2), 116–125. doi:10.1207/s15430421tip4102_8

Pasupathy, R., and Bogschutz, R. J. (2013). An Investigation of Graduate Speech-Language Pathology Students' SLP Clinical Self-Efficacy. Cicsd. 40 (Fall), 151–159. doi:10.1044/cicsd_40_F_151

Pasupathy, R. (2018). Rehabilitation Sciences Doctoral Education: A Study of Audiology, Speech-Language Therapy, and Physical Therapy Students' Research Self-Efficacy Beliefs. Clin. Arch. Commun. Disord. 3 (1), 59–66. doi:10.21849/cacd.2018.00255

Pasupathy, R., and Siwatu, K. O. (2014). An Investigation of Research Self-Efficacy Beliefs and Research Productivity Among Faculty Members at an Emerging Research University in the USA. Higher Edu. Res. Develop. 33 (4), 728–741. doi:10.1080/07294360.2013.863843

Pawson, R. (2006). Evidence-based Policy: A Realist Perspective. Thousand Oaks: Sage Publications. doi:10.4135/9781849209120

Pei, L., and Wu, H. (2019). Does Online Learning Work Better Than Offline Learning in Undergraduate Medical Education? A Systematic Review and Meta-Analysis. Med. Educ. Online 24 (1), 1666538. doi:10.1080/10872981.2019.1666538

Phillips, J. C., and Russell, R. K. (1994). Research Self-Efficacy, the Research Training Environment, and Research Productivity Among Graduate Students in Counseling Psychology. Couns. Psychol. 22 (4), 628–641. doi:10.1177/0011000094224008

Prosser, M., and Sze, D. (2014). Problem-based Learning: Student Learning Experiences and Outcomes. Clin. Linguistics Phonetics 28 (1-2), 131–142. doi:10.3109/02699206.2013.820351

Prosser, M., and Trigwell, K. (1999). Understanding Learning and Teaching: The Experience in Higher Education. UK: McGraw-Hill Education.

Randazzo, M., and Khamis-Dakwar, R. (2018). Development of a Neuroimaging Course to Increase Research Engagement in Master's Degree Students. Perspect. ASHA Sigs. 3 (10), 83–101. doi:10.1044/persp3.SIG10.83

Rapanta, C., Botturi, L., Goodyear, P., Guàrdia, L., and Koole, M. (2020). Online University Teaching during and after the Covid-19 Crisis: Refocusing Teacher Presence and Learning Activity. Postdigital Sci. Edu., 1–23. doi:10.1007/s42438-020-00155-y

Reeves, S., Fletcher, S., McLoughlin, C., Yim, A., and Patel, K. D. (2017). Interprofessional Online Learning for Primary Healthcare: Findings from a Scoping Review. BMJ Open 7 (8), e016872. doi:10.1136/bmjopen-2017-016872

Regina Molini-Avejonas, D., Rondon-Melo, S., de La Higuera Amato, C. A., and Samelli, A. G. (2015). A Systematic Review of the Use of Telehealth in Speech, Language and Hearing Sciences. J. Telemed. Telecare. 21 (7), 367–376. doi:10.1177/1357633X15583215

Robinson, C. C., and Hullinger, H. (2008). New Benchmarks in Higher Education: Student Engagement in Online Learning. J. Edu. Business 84 (2), 101–109. doi:10.3200/JOEB.84.2.101-109

Santos, J. R. A. (1999). Cronbach’s Alpha: A Tool for Assessing the Reliability of Scales. J. Extension 37 (2), 1–5.

Sijtsma, K. (2009). On the Use, the Misuse, and the Very Limited Usefulness of Cronbach's Alpha. Psychometrika. 74 (1), 107–120. doi:10.1007/s11336-008-9101-0

Strobel, J., and Van Barneveld, A. (2009). When Is PBL More Effective? A Meta-Synthesis of Meta-Analyses Comparing PBL to Conventional Classrooms. Interdiscip. J. Problem-based Learn. 3 (1), 44–58. doi:10.7771/1541-5015.1046

Swan, K. (2003). “Learning Effectiveness: What the Research Tells Us,” in Elements of Quality Online Education, Practice and Direction. Editors J. Bourne, and J. C. Moore (Needham, MA: Sloan Center for Online Education), 13–45.

Tiwari, A., Chan, S., Wong, E., Wong, D., Chui, C., Wong, A., et al. (2006). The Effect of Problem-Based Learning on Students' Approaches to Learning in the Context of Clinical Nursing Education. Nurse Educ. Today. 26 (5), 430–438. doi:10.1016/j.nedt.2005.12.001

Tretten, R., and Zachariou, P. (1997). Learning about Project-Based Learning: Assessment of Project-Based Learning in Tinkertech Schools, 37. San Rafael, CA: The Autodesk Foundation.

Tyton Partners, and Bay View Analytics (2020). Time for Class 2020 Resource Collection. Context and Implementation Matter in the Use of Courseware, pp. 14–15. Available at: https://www.everylearnereverywhere.org/resources/time-for-class-2020.

Unrau, Y. A., and Beck, A. R. (2004). Increasing Research Self-Efficacy Among Students in Professional Academic Programs. Innov. High Educ. 28 (3), 187–204.

Visconti, C. F. (2010). Problem-based Learning: Teaching Skills for Evidence-Based Practice. Sig10 Perspect. 13 (1), 27–31. doi:10.1044/ihe13.1.27

Whitehill, T. L., Bridges, S., and Chan, K. (2014). Problem-based Learning (PBL) and Speech-Language Pathology: A Tutorial. Clin. Linguistics Phonetics 28 (1-2), 5–23. doi:10.3109/02699206.2013.821524

World Health Organization (2020). Coronavirus Disease (COVID-19). Available at: https://www.who.int/emergencies/diseases/novel-coronavirus-2019.

Xu, D. (2020). COVID-19 and the Shift to Online Instruction: Can Quality Education Be Equitably Provided to All? – Third Way. Available at: https://www.thirdway.org/report/covid-19-and-the-shift-to-online-instruction-can-quality-education-be-equitably-provided-to-all (Accessed December 15, 2020).

Zhao, Y., Lei, J., Yan, B., Lai, C., and Tan, H. S. (2005). What Makes the Difference? A Practical Analysis of Research on the Effectiveness of Distance Education. Teach. Coll. Rec. 107 (8), 1836–1884. doi:10.1111/j.1467-9620.2005.00544.x

Keywords: research methods, project-based learning, online, research self-efficacy, COVID-19

Citation: Randazzo M, Priefer R and Khamis-Dakwar R (2021) Project-Based Learning and Traditional Online Teaching of Research Methods During COVID-19: An Investigation of Research Self-Efficacy and Student Satisfaction. Front. Educ. 6:662850. doi: 10.3389/feduc.2021.662850

Received: 01 February 2021; Accepted: 07 May 2021;

Published: 28 May 2021.

Edited by:

Carla Quesada-Pallarès, Universitat Autònoma de Barcelona, Spain

Reviewed by:

Lori Caudle, The University of Tennessee, Knoxville, United States
Sandra Fernandes, Portucalense University, Portugal

Copyright © 2021 Randazzo, Priefer and Khamis-Dakwar. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Melissa Randazzo, mrandazzo@adelphi.edu
