- Applied Cognitive Neuropsychology Laboratory, Department of Psychology, University of South Carolina, Columbia, SC, United States

Research suggests that Specific Learning Disabilities (SLD) are directly linked to specific neurocognitive deficits that result in unexpected learning delays in academic domains for children in schools. However, meta-analytic studies have failed to find supporting evidence for using neurocognitive tests and, consequently, have discouraged their inclusion in SLD identification policies. The current study critically reviews meta-analytic findings and the methodological validity of over 200 research studies used in previous meta-analytic studies to estimate the causal effect of neurocognitive tests on intervention outcomes. Results suggest that only a very small percentage (6–12%) of the studies used in previous meta-analytic studies were methodologically valid for estimating a direct effect of cognitive tests on academic intervention outcomes; the majority of studies had no causal link between neurocognitive tests and intervention outcomes. Additionally, significant reporting discrepancies and inaccurate effect size estimates were found that warrant legitimate concerns about the conclusions and policy recommendations provided in several meta-analytic studies. Given the lack of methodological rigor linking cognitive testing to academic interventions in current research, removing neurocognitive testing from learning disability evaluations may be premature. Suggestions for future studies evaluating the impact of neurocognitive tests on intervention outcomes, as well as guidelines for synthesizing meta-analytic findings, are discussed.

Introduction

Theoretical explanations of Specific Learning Disabilities (SLD) have historically referenced a neurological basis for the disability; however, the specific neurocognitive causes have only recently begun to be understood through interdisciplinary research in cognitive neuroscience, neuropsychology, and molecular genetics (Grigorenko et al., 2020). Research has also found that different neurocognitive deficits underlie different types of learning disabilities (Butterworth et al., 2011; Butterworth and Kovas, 2013; Berninger et al., 2015; Eckert et al., 2017; Pennington, 2019) and that these deficits are responsive to specific interventions (Frijters et al., 2011; Lorusso et al., 2011). Moreover, neurocognitive theories have gained importance for guiding clinical applications, specifically special education evaluations in schools (Decker, 2008; Miller, 2008; Callinan et al., 2015).

Translational neurocognitive research holds considerable promise for education (Decker, 2008), especially for helping children with neurodevelopmental learning problems in schools (D'Amato, 1990; Berninger and Richards, 2002; Wodrich and Schmitt, 2006; Ellison and Semrud-Clikeman, 2007; Miller, 2007; Decker, 2008; Feifer, 2008; Pennington, 2009). Surprisingly, however, recent meta-analytic findings have been summarized as suggesting that there is insufficient evidence for using neurocognitive tests in schools (Burns, 2016). Based on a review of over 200 studies in seven different meta-analyses, Burns (2016) concluded, “Assessing students' cognitive abilities to determine appropriate academic interventions has clearly been shown to be ineffective by multiple meta-analyses.” (p. 5). More definitively, the synthesized findings led Burns (2016) to conclude, “Examining cognitive processing data does not improve intervention effectiveness and doing so could distract attention from more effective interventions.” (p. 4). Given that federal law requires educational policy decisions to be based on evidence (Zirkel and Krohn, 2008), primarily from meta-analytic studies (Burns et al., 2015; Burns, 2016), the lack of evidence from meta-analytic studies for using neurocognitive tests has led some researchers to suggest that SLD identification policies be changed to no longer permit the option of using neurocognitive tests as part of a comprehensive disability evaluation in schools (Reschly, 2000; Miciak et al., 2016; Fletcher and Miciak, 2017; Kranzler et al., 2019; Grigorenko et al., 2020).

More specifically, meta-analytic studies have been viewed as collectively invalidating SLD identification options that permit the use of a child's Pattern of Strengths and Weaknesses (PSW) (Stuebing et al., 2015; McGill and Busse, 2016; Miciak et al., 2016; Kranzler et al., 2019), an approach that has primarily been guided by neurocognitive research. Because educational policies have a significant impact on the integration of neuroscience research in educational settings (Decker, 2008; Decker et al., 2013), policy recommendations for SLD identification that specifically exclude neurocognitive testing in schools (Fletcher and Miciak, 2017, 2019; RTI Action Network, 2021) have created a barrier to using neuropsychological research in education (Reschly and Gresham, 1989; Reschly and Ysseldyke, 2002).

While meta-analytic studies are important for guiding educational policies, eliminating neurocognitive testing as an SLD identification option may, according to Kearns and Fuchs (2013), be premature because: “First, the evidence suggests it may have potential. Second there is indisputable need for alternative methods of instruction for the 2 to 6% …of the general student population for whom academic instruction—including DI [Direct Instruction]-inspired skills-based instruction—is ineffective.” (p. 285). Similarly, Schwaighofer et al. (2015) suggested that calls to discontinue cognitively informed academic intervention were “pre-mature” and that research on cognitive interventions “…could instead be interpreted as implicating that we have not even started to seriously design and vary the training conditions or, put more generally, the learning environment.” (p. 157). More importantly, recent meta-analytic findings have yet to be validated by external reviews, which is considered a required step before they are used to guide educational policy decisions (Slavin, 2008). Critical reviews are vital because meta-analytic findings can be affected by theoretical assumptions that may introduce an explicit (Kavale and Forness, 2000; Rosenthal and DiMatteo, 2001; Maassen et al., 2020) or implicit (Card, 2012) bias into research study outcomes. The critical need to review meta-analytic findings was most notably demonstrated by Maassen et al. (2020), who found that only half of the reported effect sizes (250 of k = 500) in 33 different published meta-analytic studies could actually be validated, typically because of missing or inadequately reported methodological procedures.

Prior to informing educational policy decisions, meta-analytic studies should be critically reviewed and externally validated (Kavale and Forness, 2000; Rosenthal and DiMatteo, 2001; Simmons et al., 2011; Ferguson and Brannick, 2012; Maassen et al., 2020). Concerningly, meta-analytic studies evaluating the treatment utility of neurocognitive tests have already begun to influence SLD identification policies (e.g., Burns et al., 2015; Burns, 2016; Fletcher and Miciak, 2017, 2019; RTI Action Network, 2021), despite not yet having been externally reviewed or validated. Specifically, research has created a “false dichotomy” between an RTI approach and a cognitive testing approach to SLD identification (Feifer, 2008). Perpetuating this dichotomous view, Fletcher and Miciak (2019) characterized federal guidelines for SLD identification by noting, “Two general frameworks are relevant for IDEA 2004: cognitive discrepancy frameworks and instructional frameworks that emanate from RTI” (p. 20), a characterization consistent with the perspectives of national organizations providing training and guidance to schools (National Association of School Psychologists, 2011; RTI Action Network, 2021). Because the options are framed dichotomously, the lack of supporting evidence from meta-analytic studies (e.g., Burns et al., 2015; Burns, 2016) has led to recommendations to no longer permit cognitive testing as an option in SLD policy, which would leave RTI as the only option for learning disability evaluations (Burns et al., 2015; Stuebing et al., 2015; Burns, 2016; Fletcher and Miciak, 2017, 2019; RTI Action Network, 2021).

Problems with RTI models identified in other research have yet to be considered relevant to these recommendations (Gerber, 2005; Fuchs and Deshler, 2007; Reynolds and Shaywitz, 2009). Furthermore, numerous concerns about using RTI in schools have emerged on a variety of issues, including legal challenges (Dixon et al., 2011), procedural problems (Office of Special Education Programs, 2011), and parent dissatisfaction (Phillips and Odegard, 2017; Ward-Lonergan and Duthie, 2018; Mather et al., 2020), all of which suggest systemic problems. More concerning, these systemic problems are consistent with concerns identified by the first large-scale evaluation study of RTI, which found some negative impacts on reading outcomes for young children (Balu et al., 2015).

Arguably, the greatest relevance of neuroscientific research for education lies in understanding the neurocognitive causes of learning disabilities. Additionally, the consequences of not permitting identification methods based on neurocognitive research have yet to be fully explored (Fuchs and Deshler, 2007). Not only is mandating the use of instructional-response methods for SLD identification unsupported by research, but it would also create significant barriers to bridging neuroscience and education, a process facilitated by educational psychologists.

Educational psychologists are important mediators for ensuring that neuroscientific research applied to education is evidence-based. Specifically, educational psychologists can help filter out illegitimate neuroscientific research using basic cognitive neuroscience assumptions and decide which neurocognitive-based interventions could feasibly be used within an academic setting. Evaluating the validity of meta-analytic assumptions that have policy implications for permitting neurocognitive-based methods of SLD identification, thus, has important implications for supporting educational psychologists in translating neuroscientific research into educational applications.

Theoretical Basis of Neurocognitive Impacts on Intervention Outcomes

A critical aspect of understanding the causal impacts of intervention research is the theoretical model used to create behavioral change (Michie, 2008; De Los Reyes and Kazdin, 2009). The theoretical foundation of intervention studies provides insight not only into how interventions work (Rothman, 2009) but also into how to conceptualize indirect and mediating factors that may affect intervention outcomes (Melnyk and Morrison-Beedy, 2018). Unfortunately, theoretical specification has been largely neglected in intervention research (Michie et al., 2009) as well as in meta-analytic reviews of intervention studies, in part because it has not been included in coding schemes (Brown et al., 2003).

Understanding the theoretical assumptions of translational research in educational neuroscience is especially important given the diversity of perspectives and the widespread misconceptions in popular “Left Brain-Right Brain” theories of education (Hruby, 2012). More importantly, reviewing theoretical assumptions is necessary for understanding the causal relationship between neurocognitive tests and intervention outcomes. Logically, neurocognitive tests should have no plausible impact on intervention outcomes unless they are theoretically specified as part of the intervention process. Ensuring that neurocognitive deficits are theoretically linked to academic deficits is a critical element of using neurocognitive tests in schools (Feifer, 2008; Flanagan et al., 2010b; Decker et al., 2013).

Although effect size estimates in meta-analytic studies are interpreted as reflecting causal hypotheses (Burns et al., 2015; Burns, 2016), the degree to which the theoretical framework of individual research studies permits a causal interpretation of the effect size estimates reported in meta-analytic reviews is often unclear. Additionally, inclusion/exclusion criteria in meta-analytic studies, which are a primary source of bias in meta-analytic methodology (Rosenthal and DiMatteo, 2001), have typically not considered the theoretical assumptions of individual studies, despite the importance of linking neurocognitive deficits to the targeted skill deficits in interventions (Decker et al., 2013). For example, evidence supports neurocognitive theories linking phonological deficits to word reading deficits (Morris et al., 2012; Berninger et al., 2015).

Given the impact on special education policy decisions, there is a critical need to review research evaluating the treatment utility of neurocognitive tests. The aim of the current study is to systematically review meta-analytic studies that evaluate cognitive-based interventions and educational outcomes in order to: (1) evaluate the theoretical and methodological basis of every research study in each meta-analysis to specify causal impacts between cognitive/neurocognitive tests and intervention outcomes, and (2) determine potential confounds that may affect the validity of the conclusions and policy recommendations provided in each meta-analytic study. Toward this goal, each individual study included in previous meta-analyses is reviewed, as is the methodological approach each meta-analysis used to integrate effect size estimates across studies. This approach is used to identify methodological and theoretical assumptions that may be relevant to understanding the impact of cognitive deficits on learning outcomes in intervention research. The results from this study are important for ensuring that neurocognitive theories of learning disabilities are appropriately translated into educational applications.

Methods

The current study used keyword search criteria from previous meta-analytic reviews (i.e., Burns, 2016) to ensure that only the most recently published meta-analytic studies in peer-reviewed journals were selected for review. Consistent with the most recently published studies (i.e., Burns et al., 2015; Burns, 2016), inclusion criteria required meta-analytic studies to have been published 2015-present, and search terms included “cognitive tests,” “intervention”/“academic interventions,” and “meta-analysis/review.” The meta-analysis/critical review also had to include some form of effect size analysis and had to include studies written primarily in English. A total of 8 studies were identified (Stuebing et al., 2009, 2015; Scholin and Burns, 2012; Kearns and Fuchs, 2013; Melby-Lervåg and Hulme, 2013; Burns et al., 2015; Faramarzi et al., 2015; Schwaighofer et al., 2015), one of which was excluded because its individual articles were written primarily in Persian and could not be coded (Faramarzi et al., 2015). These results are consistent with the studies reviewed by Burns (2016). Of note, although Kearns and Fuchs (2013) was not identified as a meta-analysis, the authors grouped effect size estimates, which have been synthesized by other studies (Burns, 2016).

The identified studies were considered to have some relevance for evaluating the intervention utility of neurocognitive testing. However, unlike Burns (2016), the current study included a review of the theoretical basis for the hypotheses specified in each meta-analytic study. For example, hypotheses specified in Burns et al. (2015) primarily referenced the neuropsychological models specified by Feifer (2008) and, more generally, neuropsychological models for identifying children with SLD (Hale et al., 2006). Kearns and Fuchs (2013) addressed three very specific hypotheses that were, arguably, the most methodologically valid for making causal inferences, although they were also not guided by any particular theory. Similarly, the studies by Stuebing et al. (2009) and Stuebing et al. (2015) made no reference to cognitive or neurocognitive theories and relied only on studies retrieved from “keyword” searches of general terms.

Coding of Theoretical Assumptions of Cognitive Interventions

The general coding scheme developed by Michie and Prestwich (2010) to review intervention research was adapted for the current study. The adaptation was guided by hypotheses stated in previous meta-analytic studies and was designed specifically to describe the theoretical assumptions of the studies used to answer the question: Are academic learning outcomes influenced by academic interventions informed by cognitive test results? Detailed coding of individual research studies was conducted because it: (1) provided objective criteria applied uniformly to all studies, (2) provided data to evaluate the causal impact of cognitive/neuropsychological tests on intervention outcomes, (3) informed the generalizability of theoretical models in applied settings, and (4) helped determine the consistency between the data actually reported in individual studies and the data reported as an integrated summary in meta-analytic studies.

Consistent with the coding scheme of Michie and Prestwich (2010), four criteria reflecting the general principles recommended for using neurocognitive tests (Decker, 2008) were used to document the theoretical assumptions linking neurocognitive tests to intervention outcomes. Each criterion was rated “Yes” or “No” to indicate whether it was met by each individual study. The criteria were:

1. Did the study use the results of cognitive/neuropsychological tests (however defined by the study) to directly inform an intervention or intervention group assignment?

2. Did the results of the study provide any direct empirical value or estimate for determining the degree to which cognitive/neuropsychological tests contributed to intervention outcomes?

3. Did the study evaluate or include a theoretical model for linking academic and cognitive deficits to academic interventions?

4. Did the authors of the study make any explicit comment or conclusions regarding the utility of cognitive/neuropsychological tests for academic interventions?
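The four Yes/No criteria lend themselves to a simple tally per meta-analysis, such as the number of studies meeting all four criteria (or three or more) summarized in Tables 1–7. The sketch below shows one way such ratings could be recorded and counted; the class, field names, and example studies are hypothetical and are not the coding instrument used in this review.

```python
from dataclasses import dataclass


@dataclass
class StudyCoding:
    """Hypothetical record of Yes/No ratings (True = "Yes") for the four coding criteria."""
    study: str
    c1_test_informed_intervention: bool
    c2_direct_estimate_of_test_effect: bool
    c3_theoretical_model: bool
    c4_explicit_conclusion: bool

    def criteria_met(self) -> int:
        # bools sum as 0/1, so this counts the "Yes" ratings
        return sum([
            self.c1_test_informed_intervention,
            self.c2_direct_estimate_of_test_effect,
            self.c3_theoretical_model,
            self.c4_explicit_conclusion,
        ])


def summarize(codings: list[StudyCoding], threshold: int = 4) -> tuple[int, float]:
    """Return the number of studies meeting at least `threshold` criteria and their share of all studies."""
    n_meeting = sum(1 for c in codings if c.criteria_met() >= threshold)
    share = n_meeting / len(codings) if codings else 0.0
    return n_meeting, share


# Hypothetical codings for three studies in one meta-analysis
codings = [
    StudyCoding("Study A", True, True, True, True),
    StudyCoding("Study B", True, False, True, True),
    StudyCoding("Study C", False, False, False, True),
]
n_all, share_all = summarize(codings)                   # studies meeting all four criteria
n_three, share_three = summarize(codings, threshold=3)  # studies meeting three or more
print(f"all four: {n_all} ({share_all:.0%}); three or more: {n_three} ({share_three:.0%})")
# → all four: 1 (33%); three or more: 2 (67%)
```

Counting criteria rather than weighting them treats each criterion as equally important, which is a simplifying assumption of this sketch.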

The criteria provide a general indicator of the causal validity of intervention research by coding the most basic elements required for making causal attributions. Generally, research evaluating causal relationships requires methodologies intentionally designed to measure intervention effects, using valid measures of behavior consistent with the theoretical model guiding the specific intervention being evaluated. More specifically, the theoretical and methodological approaches of over 200 studies were objectively coded to evaluate each study's adequacy for providing a valid estimate of the treatment utility of neurocognitive tests. Individual studies were coded by two primary reviewers, each trained to identify specific features of research articles. A third coder was included to provide a reliability estimate. Consistency across raters was high (>90%), as anticipated given the relative simplicity of the criteria. For the few cases of inconsistent ratings, the third coder resolved the discrepancy by reviewing the article independently and considering each coder's justification. Generally, rating differences arose from ambiguity in semantic interpretations of whether an explicit statement met criterion 4.
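The reliability check described above can be sketched as simple percent agreement between the two primary coders, with the third coder's rating used only where they disagree. The ratings below are hypothetical, and the resolution rule is a simplification of the procedure described (the third coder also weighed each coder's justification).

```python
def percent_agreement(rater_a: list[bool], rater_b: list[bool]) -> float:
    """Proportion of items on which two coders gave the same Yes/No rating."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def resolve(rater_a: list[bool], rater_b: list[bool], third: list[bool]) -> list[bool]:
    """Keep agreed ratings; where the two primary coders disagree, use the third coder's rating."""
    return [a if a == b else c for a, b, c in zip(rater_a, rater_b, third)]


# Hypothetical ratings of one criterion across 20 studies
coder_1 = [True, False] * 10
coder_2 = coder_1.copy()
coder_2[3] = not coder_2[3]   # a single disagreement
coder_3 = coder_1.copy()      # hypothetical third coder sides with coder 1 on the disputed item

print(percent_agreement(coder_1, coder_2))  # 0.95
final = resolve(coder_1, coder_2, coder_3)
```

Raw percent agreement does not correct for agreement expected by chance; a chance-corrected statistic such as Cohen's kappa would be a stricter check, particularly when most ratings fall in one category.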

After coding the individual studies within each meta-analysis, the authors reviewed the general methodology of each meta-analysis. Specifically, the method of effect size estimation described in each meta-analysis was recorded and its adequacy for synthesizing effects was evaluated. Effect size estimates were not recalculated for each meta-analysis for several reasons. First, most meta-analytic studies lacked transparency in their effect size reports, contrary to standard guidelines in meta-analysis methodology (Borman and Grigg, 2009; Stegenga, 2011), which made such a task impossible. Typically, the meta-analytic studies reviewed in the current study did not report effect sizes for individual studies, but only the summarized effect sizes derived from numerous studies. Additionally, many studies provided insufficient detail to accurately replicate the procedure for identifying both the effect size and the sample size calculations for each individual study. Unfortunately, the inability to trace or replicate the exact methods and procedures used in some meta-analyses is not uncommon and is another example of the “replicability problem” in psychology (Maassen et al., 2020; Sharpe and Poets, 2020). As such, the current focus on research methodology was shaped by the information that could be obtained from each original meta-analysis, and was considered important given the influence of these studies on educational policy for SLD identification practices.

Methodology for Identifying and Excluding Redundant Studies

Previous studies attempting to synthesize meta-analytic findings (i.e., Burns, 2016) have neglected to account for studies included in more than one meta-analytic study. Including redundant studies that overlap across meta-analytic studies violates a key statistical assumption for synthesizing effect sizes: the assumption that effect sizes are independent. The lack of independence of effect sizes within a specific meta-analysis is a recognized methodological issue in the field (Rosenthal and DiMatteo, 2001), and it could “percolate up” (and possibly become a larger confound) as successive meta-analyses are combined. For example, non-independence may be problematic if numerous studies come from the same research lab, which should be examined as a moderator variable (Rosenthal and DiMatteo, 2001). As such, the overlap of studies was considered when evaluating the meta-analyses.
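Identifying redundant studies amounts to counting how many meta-analyses list each study. A minimal sketch, assuming each study has already been reduced to a normalized identifier (for example, a consistent author-year-title key; this preprocessing step is hypothetical here):

```python
from collections import Counter


def overlap_summary(meta_analyses: dict[str, set[str]]) -> tuple[int, int, dict[str, int]]:
    """Return (total study entries, unique studies, {shared study: number of meta-analyses listing it})."""
    counts = Counter(study for studies in meta_analyses.values() for study in studies)
    total_entries = sum(counts.values())
    shared = {study: n for study, n in sorted(counts.items()) if n > 1}
    return total_entries, len(counts), shared


# Hypothetical meta-analyses; study IDs are assumed to be normalized citation keys
meta = {
    "Meta-analysis A": {"Study 1", "Study 2", "Study 3"},
    "Meta-analysis B": {"Study 3", "Study 4"},
    "Meta-analysis C": {"Study 1", "Study 3", "Study 5"},
}
total, unique, shared = overlap_summary(meta)
print(total, unique, shared)  # 8 5 {'Study 1': 2, 'Study 3': 3}
```

In this hypothetical example, 8 entries reduce to 5 unique studies. Two studies are shared, yet they contribute three redundant entries because one of them appears in all three meta-analyses, so the number of shared studies and the difference between total and unique entries need not match.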

To retrieve articles, the authors used EBSCOhost, ERIC, and Google Scholar, and relied on Interlibrary Loan when articles could not otherwise be located.

Results

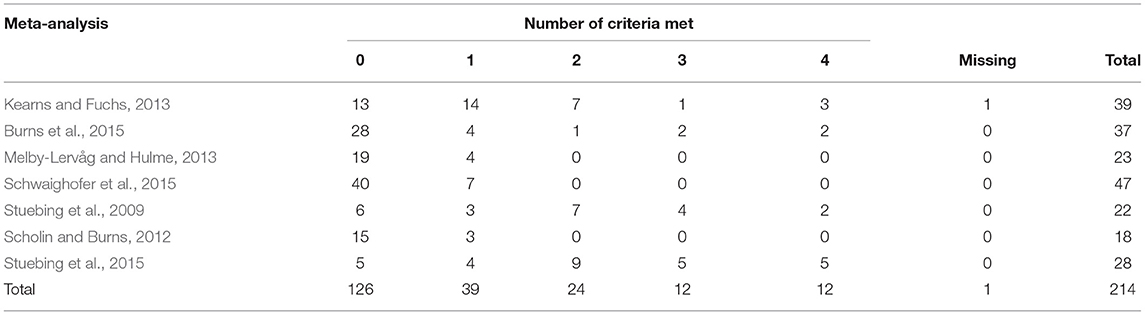

Tables 1–7 provide the coding results for each study used in each of the seven meta-analytic studies. For organizational purposes, the review categorized each meta-analysis by its unique methodological aspects: either the specific types of interventions and assessments evaluated or the effect size extrapolation method used. In total, the seven meta-analyses reviewed included 214 studies, 176 of which were unique articles. Only one article could not be retrieved and was not coded (Boden and Kirby, 1995). Coding each study revealed that 36 studies overlapped across different meta-analytic reviews. Although the degree of bias introduced by violating the independence assumption cannot be determined, effect size estimates that treat all 214 studies as independent may be inaccurate because this overlap was not accounted for.

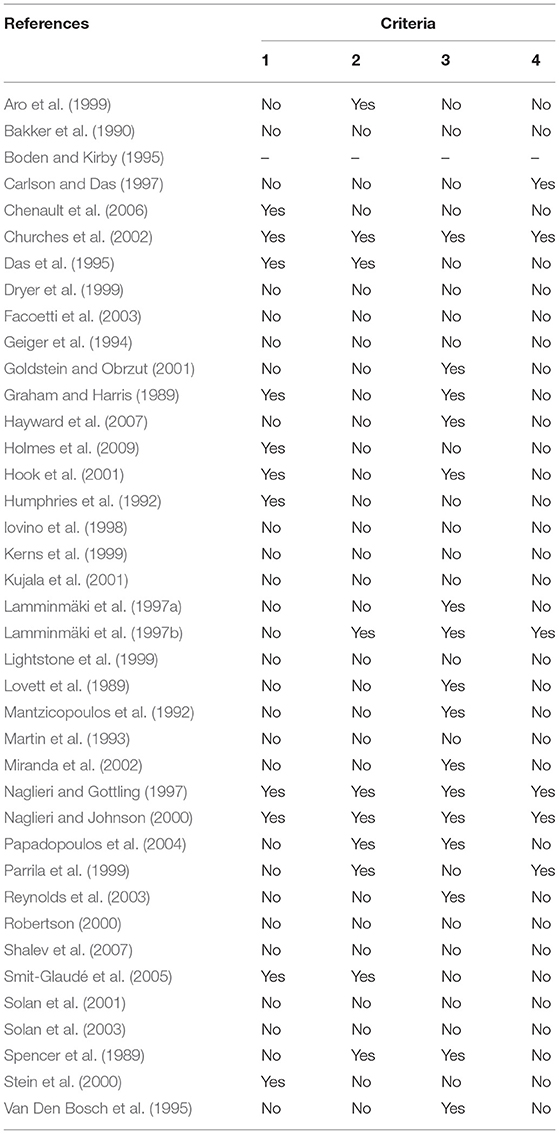

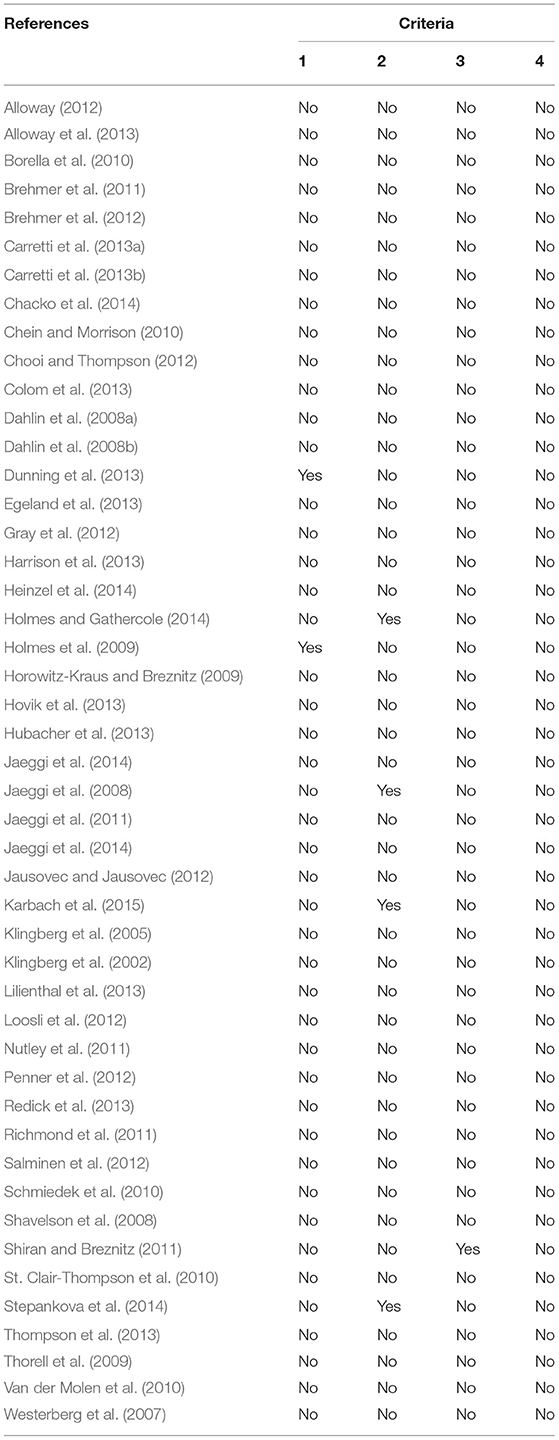

Table 1. Kearns and Fuchs (2013).

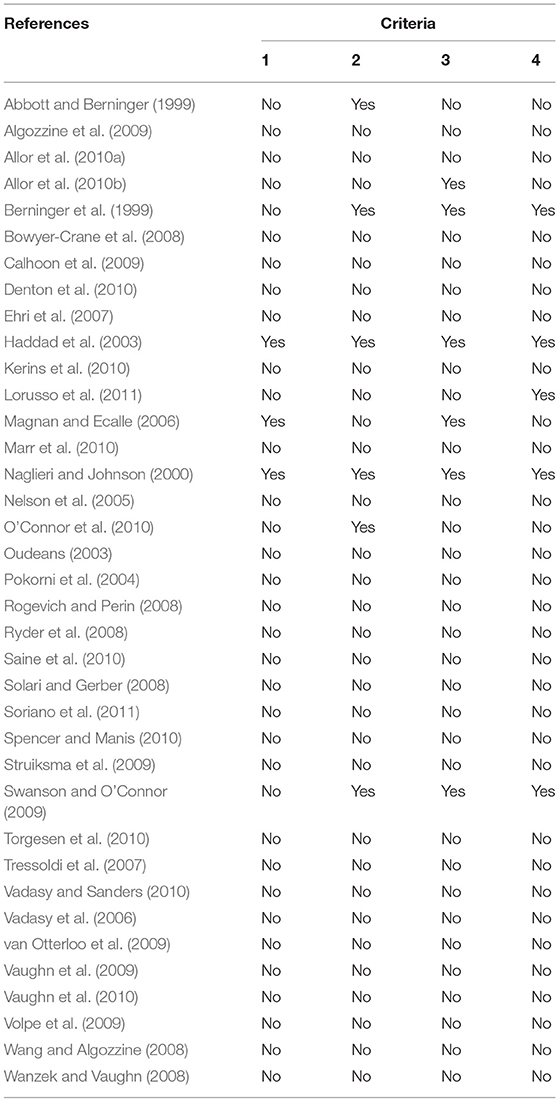

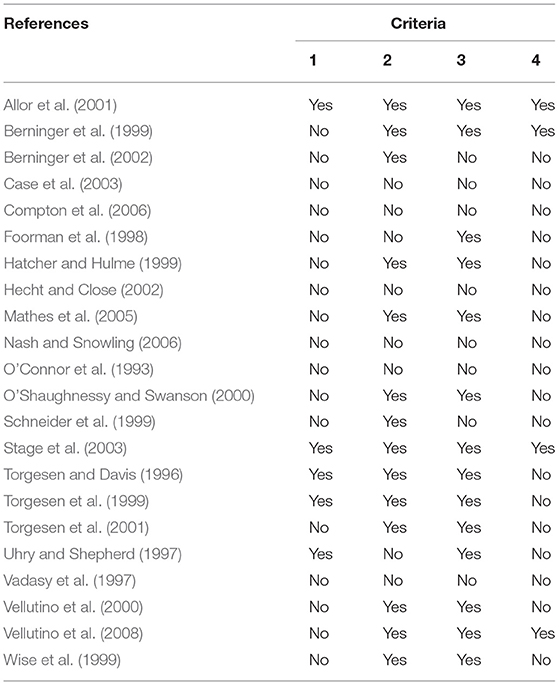

Table 2. Burns et al. (2015).

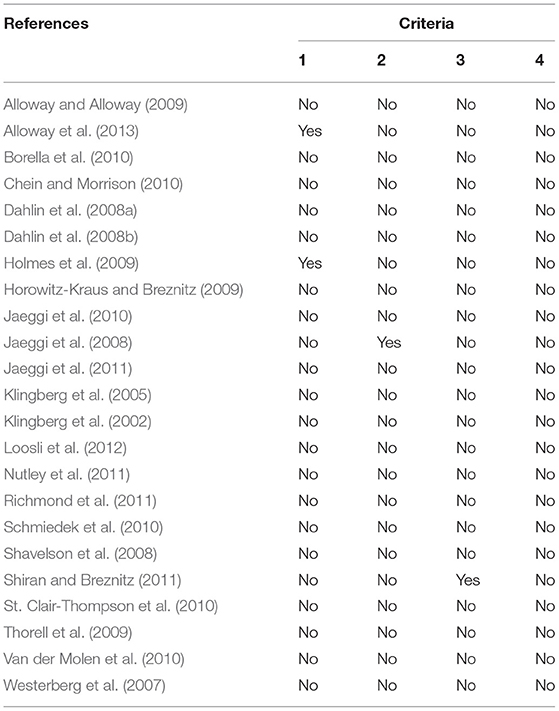

Table 3. Melby-Lervåg and Hulme (2013).

Table 4. Schwaighofer et al. (2015).

Table 5. Stuebing et al. (2009).

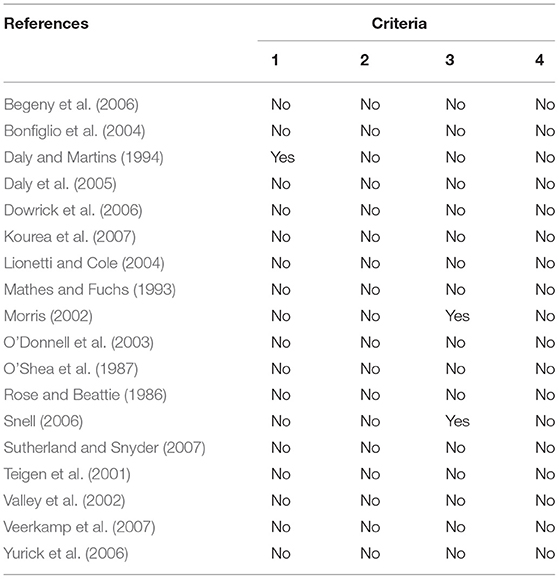

Table 6. Scholin and Burns (2012).

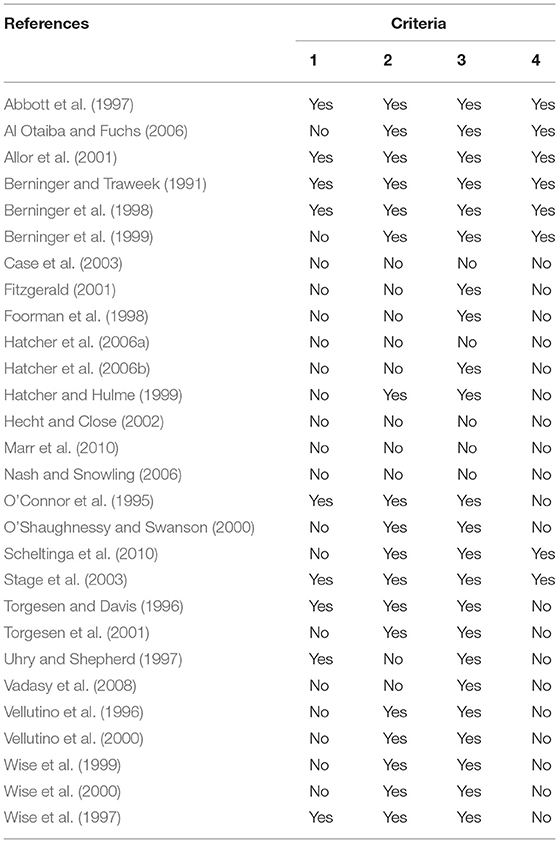

Table 7. Stuebing et al. (2015).

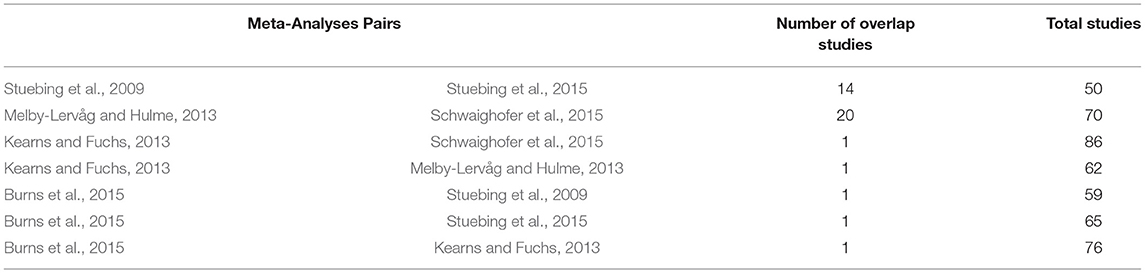

Table 8 compares the studies used in each meta-analytic review to determine the number of overlapping studies. As Table 8 indicates, every meta-analytic study included at least one study that overlapped with another meta-analytic study. The percentage of overlapping studies was low for most meta-analyses but ranged from 1 to 29%. Because effect sizes from most studies could not be replicated, the impact of the overlap could not be determined, but it should be considered when synthesizing data from different meta-analytic studies.
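Pairwise overlap percentages depend on the choice of denominator, which is not stated in this section. The sketch below assumes the denominator is the number of studies in the row meta-analysis, which makes the measure asymmetric: the same shared studies can be a large share of a small meta-analysis and a small share of a large one. The labels and study sets are hypothetical.

```python
from itertools import permutations


def pairwise_overlap_pct(meta_analyses: dict[str, set[str]]) -> dict[tuple[str, str], float]:
    """For each ordered pair (row, col), percent of the row meta-analysis's studies also found in col.

    The choice of the row's study count as denominator is an assumption of this sketch.
    """
    return {
        (row, col): 100 * len(meta_analyses[row] & meta_analyses[col]) / len(meta_analyses[row])
        for row, col in permutations(meta_analyses, 2)
    }


# Hypothetical: A has 4 studies, B has 8, and they share 2
meta = {
    "A": {"s1", "s2", "s3", "s4"},
    "B": {"s3", "s4", "s5", "s6", "s7", "s8", "s9", "s10"},
}
pct = pairwise_overlap_pct(meta)
print(pct[("A", "B")], pct[("B", "A")])  # 50.0 25.0
```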

Meta-Analytic Studies Explicitly Evaluating Diagnostic Testing on Intervention Outcomes

Two meta-analytic studies were found that explicitly attempted to evaluate the causal impact of diagnostic testing (i.e., cognitive, neuropsychological, and/or academic testing) on intervention outcomes. However, the research approaches used in the two studies differed considerably. As such, the relevant details of each study's research methodology were reviewed.

Study 1

Kearns and Fuchs (2013) analyzed 39 articles that specifically addressed multiple hypotheses related to the impact of cognitive testing on intervention outcomes. Although reported as a meta-analysis by other articles (Burns, 2016), Kearns and Fuchs (2013) noted “…we did not conduct a meta-analysis.” (p. 286); rather, the article is a literature review. One of the hypotheses tested by Kearns and Fuchs directly evaluated the relationship between cognitive tests and intervention outcomes by stipulating that studies must explicitly match interventions to cognitive deficits. The mean effect size reported for this hypothesis, which most directly evaluates the effect of cognitive tests on intervention outcomes, was large (d = 1.12, k = 5). This large effect size has generally been disregarded because of the small number of studies and one potential outlier effect. However, a notable strength of this study was its inclusion of a methodological evaluation of research studies to determine effect size estimates.
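Why a mean of k = 5 effects is fragile can be shown with a leave-one-out check, which recomputes the mean with each study omitted in turn. The d values below are hypothetical and are not taken from Kearns and Fuchs (2013); an unweighted mean is used for simplicity, whereas meta-analyses typically weight effects by their precision.

```python
from statistics import mean


def leave_one_out_means(effects: list[float]) -> list[float]:
    """Unweighted mean effect size recomputed with each study omitted in turn."""
    return [mean(effects[:i] + effects[i + 1:]) for i in range(len(effects))]


# Hypothetical d values for k = 5 studies, the last one an apparent outlier
effects = [0.25, 0.5, 0.75, 0.5, 3.0]
print(f"all five studies: {mean(effects):.2f}")  # all five studies: 1.00
for i, m in enumerate(leave_one_out_means(effects), start=1):
    print(f"without study {i}: {m:.2f}")
```

Omitting any one of the first four studies leaves the mean between about 1.06 and 1.19, whereas omitting the fifth alone halves it to 0.50. With so few studies, a single value can determine whether the summary effect looks large or moderate.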

Of note to the current evaluation, although the interventions specifically matched cognitive deficits, most of the individual interventions analyzed in the studies were purely cognitive rather than academic (e.g., brain stimulation interventions aimed at improving reading). As such, when the studies in Kearns and Fuchs (2013) were coded, only three met all four criteria for testing academic intervention effectiveness using cognitive tests (Table 1). Kearns and Fuchs (2013) concluded that outcomes improved for interventions (both cognitive and academic) specifically aimed at alleviating tested cognitive deficits. Although the review is comprehensive, the studies in Kearns and Fuchs (2013) do not primarily evaluate cognitively informed academic interventions and, as such, do not specifically address whether cognitive testing should be used to inform academic interventions.

Study 2

Burns et al. (2015) conducted a meta-analysis to evaluate the utility of cognitive/neuropsychological testing for informing academic interventions. Burns et al. (2015) provided the statistical formula used for calculating effect sizes; however, neither the data sampled from each study nor each study's adjusted effect size was reported. A total of 37 studies met the review's inclusion criteria. The effect size reported across all studies was small (d = 0.47, k = 37). More specific effect size estimates were also reported by grouping studies into narrower categories. The generally small effect sizes were interpreted as evidence against the general use of cognitive/neuropsychological tests in special education evaluations and as discrediting SLD identification approaches that use cognitive testing (e.g., Naglieri and Johnson, 2000; Dehn, 2008; Feifer, 2008; Flanagan et al., 2010a; Fiorello et al., 2014).
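Because per-study inputs were not reported, the summary d cannot be recomputed from the published article. For reference, a minimal sketch of a common between-group standardized mean difference (pooled-SD Cohen's d) with Hedges' small-sample correction is shown below; this generic formula is an assumption for illustration and is not necessarily the formula Burns et al. (2015) used. The summary statistics are hypothetical.

```python
import math


def cohens_d(mean_t: float, mean_c: float, sd_t: float, sd_c: float, n_t: int, n_c: int) -> float:
    """Between-group standardized mean difference using the pooled standard deviation."""
    pooled_var = ((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / (n_t + n_c - 2)
    return (mean_t - mean_c) / math.sqrt(pooled_var)


def hedges_g(d: float, n_t: int, n_c: int) -> float:
    """Apply the approximate small-sample correction J = 1 - 3 / (4 * df - 1), df = n_t + n_c - 2."""
    df = n_t + n_c - 2
    return d * (1 - 3 / (4 * df - 1))


# Hypothetical post-test summary statistics for one study
d = cohens_d(mean_t=105.0, mean_c=100.0, sd_t=10.0, sd_c=10.0, n_t=20, n_c=20)
g = hedges_g(d, n_t=20, n_c=20)
print(round(d, 3), round(g, 3))  # 0.5 0.49
```

Each study's means, standard deviations, and group sizes (or equivalent statistics) are needed to reproduce such a value, which is why reporting per-study inputs matters for external validation.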

After coding, only four articles included in Burns et al. (2015) met three or four criteria; these articles therefore used a methodological approach that could potentially provide a valid estimate of the effect of cognitive/neuropsychological tests on intervention outcomes, as defined in the current review (Table 2). Although they did not meet the full coding criteria, 6 (19%) of the reviewed studies did directly link diagnostic test results to intervention conditions (Criterion #2) (Abbott and Berninger, 1999; Berninger et al., 1999; Naglieri and Johnson, 2000; Haddad et al., 2003; Swanson and O'Connor, 2009; O'Connor et al., 2010), and each of these studies may therefore also provide an accurate estimate of the effect of cognitive tests on intervention outcomes.

Meta-Analytic Studies Focused on Working Memory

Two meta-analytic studies relevant to academic interventions have focused more narrowly on a specific cognitive domain: Working Memory (WM) (Melby-Lervåg and Hulme, 2013; Schwaighofer et al., 2015). While perhaps seemingly less relevant, a significant number of studies have evaluated WM interventions on academic outcomes, and these studies have previously been identified as highly relevant for evaluating the utility of cognitive tests (Burns, 2016). The large number of available studies is due, in part, to well-developed theoretical research linking WM performance to important variables such as academic learning, general cognitive ability, and diagnostic classification for numerous clinical conditions (Englund et al., 2014; Schneider and McGrew, 2018). Additionally, numerous studies have provided evidence for the effectiveness of formal working memory intervention programs (e.g., Holmes and Gathercole, 2014; Pearson, 2018), and the veracity of these claims is an active topic of research (Morrison and Chein, 2011; Shipstead et al., 2012).

Study 3

The study by Melby-Lervåg and Hulme (2013) provided a meta-analytic review of intervention studies focused specifically on the cognitive construct of Working Memory. Melby-Lervåg and Hulme (2013) reviewed 23 studies that were systematically coded to determine whether moderator variables may have affected the WM interventions. Only research studies that included a control group and pre-test/post-test measures were included. Effect size estimates were reported for each study, and a transparent coding of article features is provided in Table 3. The importance of WM has led to the development of intervention approaches to remediate WM deficits, with CogMed being one of the most researched (Pearson, 2018). A core question in this meta-analysis was whether WM interventions produced short-term gains that were sustained over longer periods and whether intervention effects generalized (i.e., transferred) to other variables, such as academic achievement. Overall, the authors concluded that the evidence does not support the use of WM training programs for clinical purposes.

Evaluating the efficacy of WM interventions is beyond the scope of the current article, as it remains a topic of debate. However, there are several important concerns with using this meta-analysis to evaluate the relevance of cognitive tests for guiding academic interventions. As in Kearns and Fuchs (2013), many articles in Melby-Lervåg and Hulme (2013) used cognitive working memory interventions rather than cognitively informed academic interventions. Consequently, none of the studies included in the meta-analytic review of WM training met more than one criterion (Table 3). Additionally, as Melby-Lervåg and Hulme (2013) also noted, few studies provided any theoretical basis for linking WM interventions to specific tests of WM; most WM interventions “…do not appear to rest on any detailed task analysis or theoretical account of the mechanisms by which such adaptive training regimes would be expected to improve working memory capacity.” (p. 272). Taken together, the findings in Melby-Lervåg and Hulme (2013) demonstrate little value of stand-alone cognitive interventions for academic outcomes (Burns, 2016) and do not address the impact of cognitively informed academic interventions on academic outcomes.

Study 4

Schwaighofer et al. (2015) also reported a meta-analysis specifically of WM interventions. It drew on a diverse array of studies (47 in total) that included children as well as adults up to the age of 75. The methodology and hypotheses were similar, if not identical, to those of Melby-Lervåg and Hulme (2013). As stated by the authors, this meta-analysis served to include research studies published after the Melby-Lervåg and Hulme (2013) study and to provide a more differentiated analysis of the training conditions used in each study. Effect size calculations were made transparent and listed for each study. Unlike other meta-analytic reviews, it used a random-effects model, a more methodologically defensible approach for integrating varied effect sizes, as well as mixed-effects models for examining the influence of moderating variables. Overall, results were consistent with Melby-Lervåg and Hulme (2013) and suggested that WM training produces both immediate and sustained near-transfer effects on WM but does not result in far-transfer effects (e.g., on word decoding and math skills). Importantly, the authors note that cognitive training should not be conducted in isolation from its intended effect or goal, but should instead be embedded as part of the academic intervention. For example, children with reading comprehension deficits due to low WM are more likely to benefit from WM interventions that embed supports for specific cognitive elements directly in evidence-based academic interventions [see Peng and Goodrich (2020)].
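A random-effects model lets the true effect vary across studies by adding a between-study variance, tau^2, to each study's sampling variance before weighting. The specific estimator used by Schwaighofer et al. (2015) is not described here; the sketch below uses the DerSimonian-Laird moment estimator, one widely used option, with hypothetical effect sizes and variances.

```python
import math


def random_effects_dl(effects: list[float], variances: list[float]) -> tuple[float, float, float]:
    """Pool effects with the DerSimonian-Laird random-effects estimator.

    Returns (pooled effect, its standard error, tau^2 between-study variance).
    """
    w = [1 / v for v in variances]                                     # fixed-effect weights
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))     # Cochran's Q
    df = len(effects) - 1
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0                   # truncated at zero
    w_star = [1 / (v + tau2) for v in variances]                       # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    return pooled, math.sqrt(1 / sum(w_star)), tau2


effects = [0.1, 0.3, 0.5, 0.9]  # hypothetical study effect sizes
variances = [0.04] * 4          # hypothetical sampling variances
pooled, se, tau2 = random_effects_dl(effects, variances)
print(f"pooled = {pooled:.2f}, SE = {se:.3f}, tau^2 = {tau2:.3f}")
```

With these inputs, tau^2 is about 0.077 and the pooled standard error is about 0.17, compared with 0.10 under a fixed-effect model (equal weights of 1/0.04), illustrating how heterogeneity widens the uncertainty around a single summary effect.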

While the significant heterogeneity of outcomes across studies makes calculating a single effect size challenging, the methodological limitations of the study were clearly described. However, one concern for the current review is the large number of studies that overlapped with Melby-Lervåg and Hulme (2013). A total of 20 studies were shared between the two meta-analyses, amounting to 57% of the articles. Although this overlap was intentional, it is problematic for any attempt to synthesize effect sizes from both meta-analyses because it violates the assumption of mutually independent outcomes (Rosenthal and DiMatteo, 2001; Card, 2012). Indeed, the effect sizes reported for each meta-analysis by Burns (2016) do not appear to account for this overlap. More generally, both the Melby-Lervåg and Hulme (2013) and Schwaighofer et al. (2015) meta-analyses specifically examined WM-based cognitive interventions and thus are not useful for answering the question of whether cognitive testing is important for informing academic interventions.

Meta-Analytic Studies Using Extrapolated Data

The following three studies were grouped conceptually because they shared a methodological approach to estimating effect sizes (for diagnostic tests on intervention outcomes) that generally required some form of extrapolation. In these meta-analyses, effect sizes were typically extrapolated from correlation matrices. Constructing these matrices often required various conversion methods or re-estimation with statistical models to obtain empirical estimates of the effect of diagnostic tests on intervention outcomes in each study evaluated.
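One routine conversion in such syntheses places correlation-based estimates on the same metric as standardized mean differences. The article does not specify which conversions each meta-analysis used; the sketch below assumes the standard textbook formulas relating r and Cohen's d for two groups of equal size, and the function names are hypothetical.

```python
import math

def r_to_d(r):
    """Convert a correlation r to Cohen's d (assumes two groups of equal size)."""
    return 2.0 * r / math.sqrt(1.0 - r ** 2)

def d_to_r(d):
    """Convert Cohen's d back to r under the same equal-group-size assumption."""
    return d / math.sqrt(d ** 2 + 4.0)

# Illustrative values only
for r in (0.10, 0.30, 0.50):
    d = r_to_d(r)
    print(f"r = {r:.2f} -> d = {d:.2f} -> r = {d_to_r(d):.2f}")
```

Under these formulas a correlation of .30 corresponds to d ≈ 0.63. Because every such conversion carries its own assumptions, each added step of extrapolation moves the resulting estimate further from what the original studies actually measured.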

Study 5

Stuebing et al. (2009) reviewed 22 reading intervention studies that included IQ measures. Effect size estimates reported by Stuebing et al. (2009) were based on the correlations of IQ scores with intervention outcomes reported in individual studies. These correlations were averaged across studies, and the averages were then used to evaluate different theoretical models. As described in the methods section, “…if we had two effects, one of which was r = −0.2 and the other was r = 0.3 and we average them, we get a mean r = 0.05 as our result.” (p. 5). Using various models to control for pre-test reading scores, IQ was found to have a small to negligible relationship with intervention outcomes. The results were interpreted as refuting previous studies demonstrating that intelligence uniquely predicted intervention response (Fuchs and Young, 2006), findings that Stuebing et al. (2009) attributed to biased methods.

As noted by the authors, none of the studies reviewed were specifically designed to evaluate the effect of IQ on intervention response, and the effect sizes used were extrapolated from the correlation matrices of each study. Of concern, estimating effect sizes by directly averaging correlations is a critical data-analytic error (Silver and Dunlap, 1987). Averaging correlation coefficients requires an initial transformation (e.g., Fisher's z); averaging untransformed coefficients underestimates the association between two variables (Silver and Dunlap, 1987). All effect size estimates derived from this approach should therefore be considered invalid.
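To make the averaging problem concrete, the sketch below contrasts the arithmetic mean of the two correlations quoted from Stuebing et al. (2009) with a Fisher's z average (transform each r with z = atanh(r), average the z values, then back-transform with tanh). It is a minimal illustration, not a reanalysis; the function names are hypothetical, and the optional n − 3 weighting is the standard inverse-variance weight for z, added here as an assumption rather than taken from the reviewed studies.

```python
import math

def naive_mean_r(rs):
    """Simple arithmetic mean of raw correlations (the approach criticized above)."""
    return sum(rs) / len(rs)

def fisher_mean_r(rs, ns=None):
    """Average correlations via Fisher's z: z = atanh(r), average, back-transform.

    If sample sizes ns are given, each z is weighted by n - 3 (the inverse of
    its sampling variance); otherwise an unweighted mean is used.
    """
    zs = [math.atanh(r) for r in rs]
    weights = [n - 3 for n in ns] if ns is not None else [1.0] * len(rs)
    mean_z = sum(w * z for w, z in zip(weights, zs)) / sum(weights)
    return math.tanh(mean_z)

rs = [-0.2, 0.3]  # the two values from the Stuebing et al. (2009) quotation
print(round(naive_mean_r(rs), 4))   # 0.05
print(round(fisher_mean_r(rs), 4))  # 0.0533
```

For these two values the gap is small (0.050 vs. 0.053), but the discrepancy depends on the size and spread of the correlations being combined, and sample-size weighting can shift the pooled value further.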

As noted in Table 5, only two articles met all four coding criteria, and an additional four articles met three criteria. Although many studies in this meta-analysis met the criterion of having some theoretical model linking academic and cognitive deficits, it was uncommon for them to use an academic intervention that matched the underlying cognitive deficits or to measure the impact of neuropsychological testing on intervention outcomes.

Study 6

Scholin and Burns (2012) reported a meta-analytic review of research that focused specifically on predictors of oral reading fluency intervention outcomes. A total of 18 studies were reviewed, encompassing 31 different reading interventions. Eight of these studies included IQ data. Effect size estimates were based on the correlations reported in each study between IQ scores and two outcomes: post-intervention fluency scores and growth, defined as the change in scores divided by the number of weeks of intervention. Estimates were corrected for restriction of range, and Fisher's z transformation was applied to the correlations. The study reported a negative correlation between IQ and post-intervention reading fluency (−0.47) as well as a negative correlation between IQ and reading fluency growth (−0.09). Non-parametric analyses were presented by dividing 37 participants into three categories based on the percentile range of their IQ scores (below 5th percentile, 6th to 25th percentile, and 25th percentile or greater) and comparing mean-rank reading growth scores. The nonsignificant Kruskal-Wallis test was interpreted as evidence refuting the recommendations of Fiorello et al. (2006) for linking cognitive measures to the intervention process. Based on this finding, the authors concluded, “…a negative relationship with post-intervention levels raised questions about the relevance of IQ to intervention design.” (p. 394), and, despite its limitations, “…this meta-analysis could potentially validate RTI research and practice. Practitioners could question the utility of IQ scores within an intervention framework…” (p. 395).
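The article reports that Scholin and Burns (2012) corrected for restriction of range but does not say which formula was used. The sketch below assumes the widely used Thorndike Case II correction for direct restriction on the predictor, followed by Fisher's z so that the corrected value can be pooled; the function name and the example standard deviations are hypothetical.

```python
import math

def correct_range_restriction(r, sd_unrestricted, sd_restricted):
    """Thorndike Case II correction for direct restriction of range on the predictor.

    u is the ratio of the unrestricted to the restricted predictor SD
    (e.g., a population IQ SD of 15 versus the SD observed in an at-risk sample).
    """
    u = sd_unrestricted / sd_restricted
    return (r * u) / math.sqrt(1.0 - r ** 2 + (r ** 2) * (u ** 2))

# Hypothetical sample: observed r = 0.30, IQ SD of 10 in the sample vs. 15 in the population
r_c = correct_range_restriction(0.30, 15.0, 10.0)
z_c = math.atanh(r_c)  # Fisher's z of the corrected correlation, ready for pooling
print(round(r_c, 3), round(z_c, 3))
```

Because the correction scales correlations away from zero without changing their sign, it cannot by itself turn a positive IQ-outcome relationship into the negative correlations reported; how those values arose depends on transformation steps that were not described.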

Unlike the previous studies, Scholin and Burns (2012) appropriately used a Fisher's z transformation when combining correlation estimates. However, the quality of evidence was low, as none of the reviewed studies explicitly used cognitive test (i.e., IQ) scores to inform the interventions being evaluated. As seen in Table 6, only two studies in this review met even a single coding criterion, and none met two or more. Interestingly, the authors acknowledged this theoretical disconnection, noting that the reviewed studies used test scores only “…for screening purposes rather than to design specific interventions…” (p. 388) (Scholin and Burns, 2012). Additionally, a negative correlation between IQ and reading tests is unusual and inconsistent with findings from other research studies (Fuchs and Young, 2006). Unfortunately, the data transformation methods used to estimate IQ correlations with growth z-scores were not transparently described. There were also significant inconsistencies between the original effect size estimates reported in Scholin and Burns (2012) and the effect size estimate Burns (2016) used to represent the findings of this study. For example, the effect size estimate reported by Burns (2016) (d = 0.27, k = 18) does not appear in any table or in the text of Scholin and Burns (2012). Thus, the validity of results from both studies is questionable, and the lack of transparency illustrates the replication problems in psychological research that underscore the need for critical reviews (Maassen et al., 2020). Nonetheless, these effect size estimates were interpreted as support for policy recommendations to discontinue IQ testing (Scholin and Burns, 2012). Although similar effect size estimates were found for reading comprehension tests, caution is warranted when using this meta-analysis to determine the impact of cognitively informed academic interventions on academic outcomes.

Study 7

Stuebing et al. (2015) conducted a meta-analysis to evaluate the importance of pre-intervention cognitive characteristics for predicting reading growth, drawing on studies that evaluated various reading interventions. The review included 28 studies that reported cognitive test scores for participants who received reading interventions. One important strength of this study is that cognitive predictors were not limited to general IQ composites but included specific reading-related measures (e.g., phonological analysis and rapid naming), which provided a more direct evaluation of how cognitive tests are used in neurocognitive theories. Effect sizes were coded for any study with pre-intervention measures of cognition that also provided correlations with intervention outcomes. Additionally, a coding scheme rated, on a Likert scale from 1 to 5, the degree to which each study provided sufficient information for relating cognitive scores to intervention outcome metrics. This coding scheme was used because different studies provided different data, and effect sizes were presented according to each study's classification. Cognitive and intervention effect sizes were estimated from the available data. Additionally, because the type of model was considered a confound, effects were analyzed with three statistical models predicting growth curve slope, gain, or post-intervention reading after controlling for pre-intervention reading levels. Effect sizes for the relationship between cognitive characteristics and reading growth ranged from 0.15 (conditional model) to 0.31 (growth model). Based on these small effect sizes, the authors concluded that this “…calls into question the utility of using cognitive characteristics for prediction of response…” (p. 395).

Given that effect sizes for cognitive characteristics on intervention outcomes were extrapolated from different studies, it is not surprising that only 5 of the 28 studies met all four criteria (see Table 7). However, the methodological rating used in this meta-analysis suggests that evaluating the methodology of individual studies within meta-analyses may improve the quality of study inclusion. Regardless, the majority of studies included in Stuebing et al. (2015) did not directly test the effect of using cognitive test results to inform theory-based intervention approaches. Furthermore, while it included studies with attention and working memory measures, this meta-analysis also categorized “print knowledge,” “spelling,” “word reading,” and other academic variables as “cognitive” characteristics. Most studies used phonological awareness as the cognitive measure: only one effect size was listed for attention and two for WM, and none of these had effect sizes reported for all three estimation models.

While the results of Stuebing et al. (2015) were interpreted to suggest that cognitive characteristics are unimportant for predicting intervention response, it is important to note that, given the study's methodological approach, the results have little relevance for determining the value of cognitive test scores, however defined, for improving academic interventions. Rather, the results provide an estimate of the correlation between cognitive test scores and changes in reading scores for a restricted range of children (at-risk) receiving some type of reading intervention for some unspecified amount of time. That is, the estimated correlations likely reflect predictors of a more general learning effect found across reading interventions. While interesting, this is inconsistent with how cognitive tests are used in neurocognitive theories, which suggest that children whose reading deficits stem from phonological awareness deficits would benefit more from a phonological reading intervention than from other types of interventions, such as a vocabulary-building intervention (Decker et al., 2013). Similarly, cognitive predictors of growth are only relevant if they are meaningfully related to the type of intervention provided, and they are only useful for predicting intervention success if there is a clear standard for determining a child's successful or unsuccessful response to intervention. In such circumstances, cognitive predictors provide a method for determining the relative odds of success with a particular intervention, given a specific level of cognitive functioning (as measured by cognitive/neuropsychological tests).
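The relative-odds framing above can be made concrete with a 2 × 2 table that cross-classifies a cognitive profile (e.g., a phonological awareness deficit) against response to a matched intervention, judged by a predefined responder criterion. The counts below are entirely hypothetical and serve only to show the arithmetic; the function name is illustrative, and the 95% Wald interval is one standard choice among several.

```python
import math

def odds_ratio(resp_a, nonresp_a, resp_b, nonresp_b):
    """Odds of responding in group A relative to group B, with a 95% Wald CI."""
    or_ = (resp_a * nonresp_b) / (nonresp_a * resp_b)
    # Standard error of log(OR) from the four cell counts
    se_log = math.sqrt(1 / resp_a + 1 / nonresp_a + 1 / resp_b + 1 / nonresp_b)
    lo = math.exp(math.log(or_) - 1.96 * se_log)
    hi = math.exp(math.log(or_) + 1.96 * se_log)
    return or_, (lo, hi)

# Hypothetical counts: group A = phonological deficit, group B = no deficit,
# both receiving a phonologically based reading intervention
or_, ci = odds_ratio(resp_a=30, nonresp_a=10, resp_b=20, nonresp_b=20)
print(f"OR = {or_:.2f}, 95% CI = ({ci[0]:.2f}, {ci[1]:.2f})")
```

Note that this estimate only exists once a responder criterion is fixed and the intervention is matched to the cognitive profile, which is precisely the design feature missing from most studies pooled in the correlational meta-analyses.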

Results From Coding Summaries Across All Studies

Table 9 summarizes the theoretical and/or methodological adequacy of each research study for directly estimating the causal effect of neurocognitive tests on intervention outcomes, based on the coding scheme previously described. Duplicates were not excluded, and 1 of the 214 studies could not be retrieved for review (Boden and Kirby, 1995), leaving a total of 213 studies. Of the 213 reviewed studies, over half (59%) were coded as meeting 0 criteria, indicating that most studies were theoretically or methodologically insufficient to estimate the effect of cognitive/neurocognitive tests on intervention outcomes. For the rest, 18% of all reviewed studies were coded as a one, 11% as a two, 6% as a three, and only 6% as a four.
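The ranges reported in the Discussion (59–88% and 6–12%) follow directly from these Table 9 percentages. The short calculation below uses only the percentages reported above; the variable names are illustrative, and because the percentages are rounded, the derived study counts are approximate.

```python
# Percentages of the 213 reviewed studies meeting 0-4 coding criteria (Table 9)
pct_by_criteria = {0: 59, 1: 18, 2: 11, 3: 6, 4: 6}
n_studies = 213

assert sum(pct_by_criteria.values()) == 100

# Did not adequately test the effect: 0 criteria (strict) up to <= 2 criteria (lenient)
inadequate_low = pct_by_criteria[0]
inadequate_high = sum(pct_by_criteria[c] for c in (0, 1, 2))
# Adequately tested the effect: all 4 criteria (strict) up to >= 3 criteria (lenient)
adequate_low = pct_by_criteria[4]
adequate_high = pct_by_criteria[3] + pct_by_criteria[4]

print(f"inadequate: {inadequate_low}-{inadequate_high}%")  # 59-88%
print(f"adequate:   {adequate_low}-{adequate_high}%")      # 6-12%
# Approximate study counts per rating (percentages are rounded)
print({c: round(p / 100 * n_studies) for c, p in pct_by_criteria.items()})
```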

Table 9. Coding summary of evidence quality (0 to 4 with higher ratings representing higher quality of evidence) for research studies included in each meta-analytic study.

When evaluating why many studies did not meet criteria, the authors found that many intervention studies included “cognitive tests” only “incidentally,” with no methodological connection to the intervention being evaluated. For example, the intervention study by Vaughn et al. (2010) did include a cognitive test, the Kaufman Brief Intelligence Test-2 (KBIT-2; Kaufman, 2004), but as stated by its authors, the KBIT-2 was “…used in this study for descriptive purposes.” (p. 7). As such, many of the studies evaluated used cognitive/neuropsychological tests, but not in a way that informed the academic interventions being examined.

Additionally, meta-analytic studies that included ratings of research quality (Kearns and Fuchs, 2013; Stuebing et al., 2015) also had more studies meeting at least three criteria, which may be an important consideration for future studies evaluating the utility of cognitive testing for academic intervention outcomes. In contrast, most meta-analytic studies did not rate research quality and predominantly included research studies with insufficient validity to evaluate the treatment utility of cognitive/neurocognitive tests. Examples include intervention studies that reported cognitive test results only to describe the sample or to rule out low intellectual ability as a confound. Nonetheless, data from Table 9 suggest that the meta-analytic studies collectively used to guide SLD identification policy may be limited by the small percentage of studies (approximately 6%) with an adequate theoretical or methodological basis for evaluating the potential of neurocognitive testing to impact intervention outcomes.

Discussion

Identifying children with learning disabilities and recommending appropriate intervention strategies is a complex diagnostic decision that should be guided by evidence-based research (Decker et al., 2013). Meta-analytic studies serve to summarize results across numerous research studies and guide social policy decisions (Kavale and Forness, 2000). However, meta-analytic studies have not supported the utility of neuropsychologically based interventions, which creates a significant barrier to integrating neuropsychological research into the educational environment. The goal of the current study was to provide a critical review of recent meta-analytic studies, with a specific focus on the theoretical and methodological validity of the included research studies for establishing a causal link between cognitive or neuropsychological measures and intervention outcomes. Studies were evaluated to determine whether they answered the question: Are academic learning outcomes influenced by academic interventions informed by cognitive test results? More specifically, over 200 studies were reviewed and evaluated based on their theoretical and methodological adequacy to directly estimate the treatment utility of neurocognitive tests.

Overall, the current study found that 59–88% of the 213 individual studies within meta-analyses that estimated the effect of diagnostic cognitive/neuropsychological tests did not adequately test the direct impact of neuropsychological tests on educational outcomes through academic interventions. Indeed, many studies only “incidentally” included cognitive tests for descriptive or exclusionary purposes. Only 6–12% of the individual studies reviewed here (those meeting 3 or 4 criteria) used adequate methodology to directly test the impact of neuropsychological testing on targeted academic interventions and educational outcomes. Additionally, a lack of transparency, methodological adjustments, and the selective omission of effect sizes were noted as potential methodological confounds affecting special education policy recommendations informed by these meta-analytic studies (e.g., Fletcher and Miciak, 2019). Given how few studies within these meta-analyses directly tested the association between cognitive testing and academic interventions, and given these possible methodological confounds, it is questionable whether neuropsychological testing should be removed from educational identification practices.

Additionally, clear methodological and reporting inconsistencies were found in meta-analytic studies evaluating neurocognitive tests. For example, the small effect size for neurocognitive tests (d = 0.26, k = 203) reported by Burns (2016), which integrated seven meta-analytic studies (Stuebing et al., 2009, 2015; Scholin and Burns, 2012; Kearns and Fuchs, 2013; Melby-Lervåg and Hulme, 2013; Burns et al., 2015; Schwaighofer et al., 2015), was based on inaccurate effect size and sample size estimates. Specifically, one meta-analytic study (Burns et al., 2015) included a total of 37 studies, of which only 8 were used to estimate the effect size of cognitive/neurocognitive tests (d = 0.17, k = 8). However, when integrated with the other meta-analytic studies, the effect size from this small subcategory of 8 studies was reported as representing all 37 studies [see Table 2 in Burns (2016)]. This misattribution lowers the effect size while inflating the sample size, and the substituted value differs substantially from the overall effect size reported in that study (d = 0.41, k = 37). Of additional concern, some meta-analytic studies found large effect sizes for neuropsychological tests on learning outcomes, often from studies specifically designed to measure such an effect (e.g., Kearns and Fuchs, 2013). However, the effect sizes from these studies were omitted from the effect size calculation in synthesized studies despite being counted in the overall sample size (e.g., Burns, 2016), which systematically lowers the effect size while inflating the sample size. Although these problems clearly call into question the validity of the reported effect size estimates, it is unclear to what extent such methodological discrepancies have affected the educational policy recommendations derived from these meta-analytic studies (Burns et al., 2015; Burns, 2016; Fletcher and Miciak, 2017).
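The direction of the bias described above can be illustrated with a simple synthesis that weights each meta-analysis's effect by its number of studies (k). This weighting is an assumption made for illustration, since the exact pooling rule in Burns (2016) is not given here. Only the Burns et al. (2015) values (d = 0.17, k = 8; d = 0.41, k = 37) come from the text; the "others" entry is a hypothetical placeholder, with k chosen so the total matches k = 203, and is not a value from Burns (2016).

```python
def k_weighted_mean(entries):
    """Pool (d, k) pairs as a k-weighted mean; returns (pooled_d, total_k)."""
    total_k = sum(k for _, k in entries)
    pooled = sum(d * k for d, k in entries) / total_k
    return pooled, total_k

# HYPOTHETICAL placeholder standing in for all other meta-analyses in the synthesis
others = (0.30, 166)

scenarios = {
    # Subcategory effect (d = 0.17) credited to all 37 studies, as described above
    "misattributed": [(0.17, 37), others],
    # Subcategory effect credited only to the 8 studies it came from
    "subcategory k": [(0.17, 8), others],
    # Overall effect reported for all 37 studies
    "overall effect": [(0.41, 37), others],
}

for label, entries in scenarios.items():
    d, k = k_weighted_mean(entries)
    print(f"{label:>15}: d = {d:.3f}, k = {k}")
```

Under these assumptions, crediting the subcategory effect to all 37 studies both pulls the pooled estimate down and reports a larger k than the evidence supports. The direction of the shift holds whenever the misattributed effect is smaller than the rest of the pool; the specific numbers depend entirely on the placeholder.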

As noted in the current study, few research studies have rigorously evaluated the effect of diagnostic tests on academic intervention outcomes. Additionally, the general lack of training in cognitive neuroscience, as well as the lack of explicit methodological standards for testing neurocognitive models, has likely contributed to conceptual misunderstandings (Decker, 2008). Educational psychologists must address these misconceptions to prevent erroneous conclusions arising from research studies based on inaccurate assumptions about how brain-based research can be used to inform educational applications. Educational research often focuses exclusively on behavioral outcomes of learning from instruction. In contrast, neuroscience research places greater focus on the mechanisms by which neurocognitive processes influence behavioral outcomes. Educational psychology research is ideally suited to integrating neuropsychological theories with behavioral measures of academic achievement to evaluate the validity of cognitively informed academic interventions.

Most contemporary cognitive or neurocognitive tests are grounded in research-based theoretical models; thus, only studies that directly test the link between theory-based test results and interventions seem relevant to informing educational policy. However, the meta-analytic studies reviewed here have primarily estimated the general, non-specific association of cognitive test results with intervention outcomes rather than the more specific effect of cognitive test data (when used to inform or adapt interventions) on learning outcomes from specific interventions.

Overall, this review of the meta-analytic studies forming the evidence base for special education policy suggests a critical need for more research and greater transparency in meta-analytic methodology before neurocognitive testing is removed from current SLD identification practices. Given the lack of research directly testing the impact of cognitively informed academic interventions on educational outcomes, changes to special education policies on diagnostic testing based on the meta-analytic studies reviewed here may be premature. Likewise, the growing insistence on reducing diagnostic identification options on the basis of “evidence-based” research may also be premature. While RTI approaches are valuable, implementation challenges have created a need to investigate alternative approaches, as well as integrated approaches that combine RTI with diagnostic testing. Moreover, theoretical bias has been a historic problem in SLD research and has been a specific concern for the use of “scientific, research-based” interventions under IDEA 2004 (Allington and Woodside-Jiron, 1999; Allington, 2002; Hammill and Swanson, 2006; Swanson, 2008; Kaufman et al., 2020).

Educational policy decisions concerning identification and intervention practices are guided by research evidence. As such, the onus is on educational psychology researchers to adequately assess the evidence base for neuropsychological testing and for academic interventions based on neurocognitive models of learning. As noted in the current review, research studies that argue against the use of neuropsychological testing in schools, although important in their own right, often do not test the causal link between neuropsychological testing and academic outcomes and, as such, should not be used to make educational decisions until their utility is adequately assessed. Although it is beyond the scope of the current study, the authors strongly recommend a new synthesis of research articles that methodologically test whether neuropsychological testing that results in targeted academic interventions truly improves educational outcomes. The current study identified the need for such research and urges educational agencies to consider the plethora of neuroscientific research suggesting the importance of neuropsychologically targeted academic interventions for improving educational outcomes.

Author Contributions

SD conceived the original idea for the review. JL helped to code all articles in the review and synthesized results. SD and JL wrote and edited the entire manuscript. Both authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Abbott, S. P., and Berninger, V. W. (1999). It's never too late to remediate: Teaching word recognition to students with reading disabilities in grades 4–7. Ann. Dyslexia 49, 221–250. doi: 10.1007/s11881-999-0025-x

Abbott, S. P., Reed, E., Abbott, R. D., and Berninger, V. W. (1997). Year-long balanced reading/writing tutorial: a design experiment used for dynamic assessment. Learn. Disabil. Q. 20, 249–263. doi: 10.2307/1511311

Al Otaiba, S., and Fuchs, D. (2006). Who are the young children for whom best practices in reading are ineffective? An experimental and longitudinal study. J. Learn. Disabil. 39, 414–431. doi: 10.1177/00222194060390050401

Algozzine, B., Marr, M. B., Kavel, R. L., and Dugan, K. K. (2009). Using peer coaches to build oral reading fluency. J. Educ. Stud. Placed Risk 14, 256–270. doi: 10.1080/10824660903375735

Allington, R. L. (2002). “Research on reading/learning disability interventions,” in What Research has to Say About Reading Instruction, Vol. 3, 261–290.

Allington, R. L., and Woodside-Jiron, H. (1999). Politics of literacy teaching: How “research” shaped education policy. Educ. Res. 28, 4–13. doi: 10.3102/0013189X028008004

Allor, J. H., Fuchs, D., and Mathes, P. (2001). Do students with and without lexical retrieval weaknesses respond differently to instruction? J. Learn. Disabil. 34, 264–275. doi: 10.1177/002221940103400306

Allor, J. H., Mathes, P. G., Roberts, J. K., Cheatham, J. P., and Champlin, T. M. (2010a). Comprehensive reading instruction for students with intellectual disabilities: findings from the first three years of a longitudinal study. Psychol. Schools 47, 445–466. doi: 10.1002/pits.20482

Allor, J. H., Mathes, P. G., Roberts, J. K., Jones, F. G., and Champlin, T. M. (2010b). Teaching students with moderate intellectual disabilities to read: An experimental examination of a comprehensive reading intervention. Educ. Train. Aut. Dev. Disab. 45, 3–22.

Alloway, T., and Alloway, R. (2009). The efficacy of working memory training in improving crystallized intelligence. Nat. Prec. doi: 10.1038/npre.2009.3697.1

Alloway, T. P. (2012). Can interactive working-memory training improve learning? J. Inter. Learn. Res. 23, 197–207.

Alloway, T. P., Bibile, V., and Lau, G. (2013). Computerized working memory training: can it lead to gains in cognitive skills in students? Comp. Human Behav. 29, 632–638. doi: 10.1016/j.chb.2012.10.023

Aro, T., Ahonen, T., Tolvanen, A., Lyytinen, H., and de Barra, H. T. (1999). Contribution of ADHD characteristics to the academic treatment outcome of children with learning difficulties. Dev. Neuropsychol. 15, 291–305. doi: 10.1080/87565649909540750

Bakker, D. J., Bouma, A., and Gardien, C. J. (1990). Hemisphere-specific treatment of dyslexia subtypes: a field experiment. J. Learn. Disabil. 23, 433–438. doi: 10.1177/002221949002300707

Balu, R., Zhu, P., Doolittle, F., Schiller, E., Jenkins, J., and Gersten, R. (2015). Evaluation of Response to Intervention Practices for Elementary School Reading. NCEE 2016-4000. National Center for Education Evaluation and Regional Assistance.

Begeny, J., Daly, E. J. III, and Valleley, R. (2006). Improving oral reading fluency through response opportunities: a comparison of phrase drill error correction with repeated readings. J. Behav. Educ. 15, 229–235. doi: 10.1007/s10864-006-9028-4

Berninger, V., and Traweek, D. (1991). Effects of a two-phase reading intervention on three orthographic-phonological code connections. Learn. Individ. Diff. 3, 323–338. doi: 10.1016/1041-6080(91)90019-W

Berninger, V. W., Abbott, R. D., Vermeulen, K., Ogier, S., Brooksher, R., Zook, D., et al. (2002). Comparison of faster and slower responders to early intervention in reading: differentiating features of their language profiles. Learn. Disabil. Q. 25, 59–76. doi: 10.2307/1511191

Berninger, V. W., Abbott, R. D., Zook, D., Ogier, S., Lemos-Britton, Z., and Brooksher, R. (1999). Early intervention for reading disabilities: teaching the alphabet principle in a connectionist framework. J. Learn. Disabil. 32, 491–503. doi: 10.1177/002221949903200604

Berninger, V. W., Richards, T., and Abbott, R. D. (2015). Differential diagnosis of dysgraphia, dyslexia, and OWL LD: behavioral and neuroimaging evidence. Read. Writ. 28, 1119–1153. doi: 10.1007/s11145-015-9565-0

Berninger, V. W., and Richards, T. L. (2002). Brain literacy for educators and psychologists. San Diego, CA: Academic Press.

Berninger, V. W., Vaughan, K., Abbott, R. D., Brooks, A., Abbott, S. P., Rogan, L., et al. (1998). Early intervention for spelling problems: teaching functional spelling units of varying size with a multiple-connections framework. J. Educ. Psychol. 90:587. doi: 10.1037/0022-0663.90.4.587

Boden, C., and Kirby, J. R. (1995). Successive processing, phonological coding, and the remediation of reading. J. Cog. Educ. 4, 19–32.

Bonfiglio, C. M., Daly, E. J. III, Martens, B. K., Lin, L. R., and Corsaut, S. (2004). An experimental analysis of reading interventions: generalization across instructional strategies, time, and passages. J. Appl. Behav. Anal. 37, 111–114. doi: 10.1901/jaba.2004.37-111

Borella, E., Carretti, B., Riboldi, F., and De Beni, R. (2010). Working memory training in older adults: evidence of transfer and maintenance effects. Psychol. Aging 25, 767–778. doi: 10.1037/a0020683

Borman, G. D., and Grigg, J. A. (2009). “Visual and narrative interpretation,” in The Handbook of Research Synthesis and Meta-Analysis, eds H. Cooper, L. V. Hedges, and J. C. Valentine (Russell Sage Foundation), 497–520.

Bowyer-Crane, C., Snowling, M. J., Duff, F. J., Fieldsend, E., Carroll, J. M., Miles, J., et al. (2008). Improving early language and literacy skills: differential effects of an oral language versus a phonology with reading intervention. J. Child Psychol. Psychiatry 49, 422–432. doi: 10.1111/j.1469-7610.2007.01849.x

Brehmer, Y., Rieckmann, A., Bellander, M., Westerberg, H., Fischer, H., and Backman, L. (2011). Neural correlates of training-related working-memory gains in old age. Neuroimage 58, 1110–1120. doi: 10.1016/j.neuroimage.2011.06.079

Brehmer, Y., Westerberg, H., and Backman, L. (2012). Working-memory training in younger and older adults: training gains, transfer, and maintenance. Front. Hum. Neurosci. 6, 1–7. doi: 10.3389/fnhum.2012.00063

Brown, S. A., Upchurch, S. L., and Acton, G. J. (2003). A framework for developing a coding scheme for meta-analysis. West. J. Nurs. Res. 25, 205–222. doi: 10.1177/0193945902250038

Burns, M. K. (2016). Effect of cognitive processing assessments and interventions on academic outcomes: can 200 studies be wrong? NASP Commun. 44:1. Available online at: http://readingmatterstomaine.org/wp-content/uploads/2016/10/Burns-2016.pdf

Burns, M. K., Petersen-Brown, S., Haegele, K., Rodriguez, M., Schmitt, B., Cooper, M., et al. (2015). Meta-analysis of academic interventions derived from neuropsychological data. Sch. Psychol. Q. 31, 28–42. doi: 10.1037/spq0000117

Butterworth, B., and Kovas, Y. (2013). Understanding neurocognitive developmental disorders can improve education for all. Science 340, 300–305. doi: 10.1126/science.1231022

Butterworth, B., Varma, S., and Laurillard, D. (2011). Dyscalculia: from brain to education. Science 332, 1049–1053. doi: 10.1126/science.1201536

Calhoon, M. B., Sandow, A., and Hunter, C. V. (2009). Reorganizing the instructional reading components: Could there be a better way to design remedial reading programs to maximize middle school students with reading disabilities' response to treatment? Ann. Dyslexia 60, 57–85. doi: 10.1007/s11881-009-0033-x

Callinan, S., Theiler, S., and Cunningham, E. (2015). Identifying learning disabilities through a cognitive deficit framework: can verbal memory deficits explain similarities between learning disabled and low achieving students? J. Learn. Disabil. 48, 271–280. doi: 10.1177/0022219413497587

Card, N. A. (2012). Applied Meta-Analysis for Social Science Research. New York, NY: The Guilford Press.

Carlson, J. S., and Das, J. P. (1997). A process approach to remediating word-decoding deficiencies in Chapter 1 children. Learn. Disabil. Q. 20, 93–102. doi: 10.2307/1511217

Carretti, B., Borella, E., Fostinelli, S., and Zavagnin, M. (2013a). Benefits of training working memory in amnestic mild cognitive impairment: specific and transfer effects. Int. Psychogeriatr. 25, 617–626. doi: 10.1017/S1041610212002177

Carretti, B., Borella, E., Zavagnin, M., and de Beni, R. (2013b). Gains in language comprehension relating to working-memory training in healthy older adults. Int. J. Geriatr. Psychiatry 28, 539–546. doi: 10.1002/gps.3859

Case, L. P., Speece, D. L., and Molloy, D. E. (2003). The validity of a response-to-instruction paradigm to identify reading disabilities: a longitudinal analysis of individual differences and contextual factors. Sch. Psychol. Rev. 32, 557–582. doi: 10.1080/02796015.2003.12086221

Chacko, A., Bedard, A. C., Marks, D. J., Feirsen, N., Uderman, J. Z., Chimiklis, A., et al. (2014). A randomized clinical trial of Cogmed Working-memory training in school-age children with ADHD: a replication in a diverse sample using a control condition. J. Child Psychol. Psychiatry 55, 247–255. doi: 10.1111/jcpp.12146

Chein, J. M., and Morrison, A. (2010). Expanding the mind's workspace: training and transfer effects with a complex working memory span task. Psychon. Bull. Rev. 17, 193–199. doi: 10.3758/PBR.17.2.193

Chenault, B., Thomson, J., Abbott, R. D., and Berninger, V. W. (2006). Effects of prior attention training on child dyslexics' response to composition instruction. Dev. Neuropsychol. 29, 243–260. doi: 10.1207/s15326942dn2901_12

Chooi, W.-T., and Thompson, L. A. (2012). Working-memory training does not improve intelligence in healthy young adults. Intelligence 40, 531–542. doi: 10.1016/j.intell.2012.07.004

Churches, M., Skuy, M., and Das, J. P. (2002). Identification and remediation of reading difficulties based on successive processing deficits and delay in general reading. Psychol. Rep. 91, 813–824. doi: 10.2466/pr0.2002.91.3.813

Colom, R., Román, F. J., Abad, F. J., Shih, P. C., Privado, J., Froufe, M., et al. (2013). Adaptive n-back training does not improve fluid intelligence at the construct level: gains on individual tests suggest that training may enhance visuospatial processing. Intelligence 41, 712–727. doi: 10.1016/j.intell.2013.09.002

Compton, D. L., Fuchs, D., Fuchs, L. S., and Bryant, J. D. (2006). Selecting at-risk readers in first grade for early intervention: a two-year longitudinal study of decision rules and procedures. J. Educ. Psychol. 98, 394–409. doi: 10.1037/0022-0663.98.2.394

Dahlin, E., Neely, A., Larsson, A., Bäckman, L., and Nyberg, L. (2008b). Transfer of learning after updating training mediated by the striatum. Science 320, 1510–1512. doi: 10.1126/science.1155466

Dahlin, E., Nyberg, L., Bäckman, L., and Neely, A. (2008a). Plasticity of executive functioning in young and older adults: immediate training gains, transfer, and long-term maintenance. Psychol. Aging 23, 720–730. doi: 10.1037/a0014296

Daly, E., Bonfiglio, C., Matton, T., Persampieri, M., and Foreman-Yates, K. (2005). Experimental analysis of academic skills deficits: an investigation of variables that affect generalized oral reading performance. J. Appl. Behav. Anal. 38, 485–497. doi: 10.1901/jaba.2005.113-04

Daly, E., and Martens, B. (1994). A comparison of three interventions for increasing oral reading performance: application of the instructional hierarchy. J. Appl. Behav. Anal. 27, 459–469. doi: 10.1901/jaba.1994.27-459

D'Amato, R. C. (1990). A neuropsychological approach to school psychology. Sch. Psychol. Q. 5, 141–160. doi: 10.1037/h0090608

Das, J. P., Mishra, R. K., and Pool, J. E. (1995). An experiment on cognitive remediation of word-reading difficulty. J. Learn. Disabil. 28, 66–79. doi: 10.1177/002221949502800201

De Los Reyes, A., and Kazdin, A. E. (2009). Identifying evidence-based interventions for children and adolescents using the range of possible changes model: a meta-analytic illustration. Behav. Modif. 33, 583–617. doi: 10.1177/0145445509343203

Decker, S. L. (2008). School neuropsychology consultation in neurodevelopmental disorders. Psychol. Sch. 45, 799–811. doi: 10.1002/pits.20327

Decker, S. L., Hale, J. B., and Flanagan, D. P. (2013). Professional practice issues in the assessment of cognitive functioning for educational applications. Psychol. Sch. 50, 300–313. doi: 10.1002/pits.21675

Dehn, M. J. (2008). Working Memory and Academic Learning: Assessment and Intervention. New York, NY: Wiley.

Denton, C. A., Nimon, K., Mathes, P. G., Swanson, E. A., Kethley, C., Kurz, T. B., et al. (2010). Effectiveness of a supplemental early reading intervention scaled up in multiple schools. Except. Child. 76, 394–416. doi: 10.1177/001440291007600402

Dixon, S. G., Eusebio, E. C., Turton, W. J., Wright, P. W., and Hale, J. B. (2011). Forest Grove School District vs. T.A. Supreme Court case: implications for school psychology practice. J. Psychoeduc. Assess. 29, 103–113. doi: 10.1177/0734282910388598

Dowrick, P., Kim-Rupnow, W. S., and Power, T. (2006). Video feed forward for reading. J. Spec. Educ. 39, 194–207. doi: 10.1177/00224669060390040101

Dryer, R., Beale, I. L., and Lambert, A. J. (1999). The balance model of dyslexia and remedial training: an evaluative study. J. Learn. Disabil. 32, 174–186. doi: 10.1177/002221949903200207

Dunning, D. L., Holmes, J., and Gathercole, S. E. (2013). Does working memory training lead to generalized improvements in children with low working memory? A randomized controlled trial. Dev. Sci. 16, 915–925. doi: 10.1111/desc.12068

Eckert, M. A., Vaden, K. I., Maxwell, A. B., Cute, S. L., Gebregziabher, M., and Berninger, V. W. (2017). Common brain structure findings across children with varied reading disability profiles. Sci. Rep. 7:6009. doi: 10.1038/s41598-017-05691-5

Egeland, J., Aarlien, A. K., and Saunes, B. K. (2013). Few effects of far transfer of working memory training in ADHD: a randomized controlled trial. PLoS ONE 8:e75660. doi: 10.1371/journal.pone.0075660

Ehri, L. C., Dreyer, L. G., Flugman, B., and Gross, A. (2007). Reading Rescue: an effective tutoring intervention model for language-minority students who are struggling readers in first grade. Am. Educ. Res. J. 44, 414–448. doi: 10.3102/0002831207302175

Ellison, P. A., and Semrud-Clikeman, M. (2007). Child Neuropsychology: Assessment and Interventions for Neurodevelopmental Disorders. New York, NY: Springer.

Englund, J. A., Decker, S. L., Allen, R. A., and Roberts, A. M. (2014). Common cognitive deficits in children with attention-deficit/hyperactivity disorder and autism: working memory and visual-motor integration. J. Psychoeduc. Assess. 32, 95–106. doi: 10.1177/0734282913505074

Facoetti, A., Lorusso, M. L., Paganoni, P., Umiltà, C., and Mascetti, G. G. (2003). The role of visuospatial attention in developmental dyslexia: evidence from a rehabilitation study. Cogn. Brain Res. 15, 154–164. doi: 10.1016/S0926-6410(02)00148-9

Faramarzi, S., Shamsi, A., Samadi, M., and Ahmadzade, M. (2015). Meta-analysis of the efficacy of psychological and educational interventions to improve academic performance of students with learning disabilities in Iran. J. Educ. Health Promot. 4:58. doi: 10.4103/2277-9531.162372

Feifer, S. G. (2008). Integrating response to intervention (RTI) with neuropsychology: a scientific approach to reading. Psychol. Sch. 45, 812–825. doi: 10.1002/pits.20328

Ferguson, C., and Brannick, M. (2012). Publication bias in psychological science: prevalence, methods for identifying and controlling, and implications for the use of meta-analyses. Psychol. Methods 17, 120–128. doi: 10.1037/a0024445

Fiorello, C. A., Flanagan, D. P., and Hale, J. B. (2014). The utility of the pattern of strengths and weaknesses approach. Learn. Disabil. 20, 57–61. doi: 10.18666/LDMJ-2014-V20-I1-5154

Fiorello, C. A., Hale, J. B., and Snyder, L. E. (2006). Cognitive hypothesis testing and response to intervention for children with reading problems. Psychol. Sch. 43, 835–853. doi: 10.1002/pits.20192

Fitzgerald, J. (2001). Can minimally trained college student volunteers help young at-risk children to read better? Read. Res. Q. 36, 28–46. doi: 10.1598/RRQ.36.1.2

Flanagan, D. P., Alfonso, V. C., Ortiz, S. O., and Dynda, A. M. (2010a). “Integrating cognitive assessment in school neuropsychological evaluations,” in Best Practices in School Neuropsychology: Guidelines for Effective Practice, Assessment, and Evidence-Based Intervention, ed D. C. Miller (Hoboken, NJ: John Wiley & Sons, Inc.), 101–140. doi: 10.1002/9781118269855.ch6

Flanagan, D. P., Fiorello, C. A., and Ortiz, S. O. (2010b). Enhancing practice through application of Cattell-Horn-Carroll theory and research: a “third method” approach to specific learning disability identification. Psychol. Sch. 47, 739–760. doi: 10.1002/pits.20501

Fletcher, J. M., and Miciak, J. (2017). Comprehensive cognitive assessments are not necessary for the identification and treatment of learning disabilities. Arch. Clin. Neuropsychol. 32, 2–7. doi: 10.1093/arclin/acw103

Fletcher, J. M., and Miciak, J. (2019). The Identification of Specific Learning Disabilities: A Summary of Research on Best Practices. Austin, TX: Texas Center for Learning Disabilities.

Foorman, B. R., Francis, D. J., Fletcher, J. M., Schatschneider, C., and Mehta, P. (1998). The role of instruction in learning to read: preventing reading failure in at-risk children. J. Educ. Psychol. 90, 37–55. doi: 10.1037/0022-0663.90.1.37

Frijters, J. C., Lovett, M. W., Steinbach, K. A., Wolf, M., Sevcik, R. A., and Morris, R. D. (2011). Neurocognitive predictors of reading outcomes for children with reading disabilities. J. Learn. Disabil. 44, 150–166. doi: 10.1177/0022219410391185

Fuchs, D., and Deshler, D. D. (2007). What we need to know about Responsiveness to Intervention (and shouldn't be afraid to ask). Learn. Disabil. Res. Pract. 22, 129–136. doi: 10.1111/j.1540-5826.2007.00237.x

Fuchs, D., and Young, C. L. (2006). On the irrelevance of intelligence in predicting responsiveness to reading instruction. Except. Child. 73, 8–30. doi: 10.1177/001440290607300101

Geiger, G., Lettvin, J. Y., and Fahle, M. (1994). Dyslexic children learn a new visual strategy for reading: a controlled experiment. Vision Res. 34, 1223–1233. doi: 10.1016/0042-6989(94)90303-4

Gerber, M. M. (2005). Teachers are still the test: limitations of response to instruction strategies for identifying children with learning disabilities. J. Learn. Disabil. 38, 516–524. doi: 10.1177/00222194050380060701

Goldstein, B. H., and Obrzut, J. E. (2001). Neuropsychological treatment of dyslexia in the classroom setting. J. Learn. Disabil. 34, 276–285. doi: 10.1177/002221940103400307

Graham, S., and Harris, K. R. (1989). Components analysis of cognitive strategy instruction: effects on learning disabled students' compositions and self-efficacy. J. Educ. Psychol. 81, 353. doi: 10.1037/0022-0663.81.3.353

Gray, S. A., Chaban, P., Martinussen, R., Goldberg, R., Gotlieb, H., Kronitz, R., et al. (2012). Effects of a computerized working-memory training program on working memory, attention, and academics in adolescents with severe LD and comorbid ADHD: a randomized controlled trial. J. Child Psychol. Psychiatry 53, 1277–1284. doi: 10.1111/j.1469-7610.2012.02592.x

Grigorenko, E. L., Fuchs, L. S., Willcutt, E. G., Compton, D. L., Wagner, R. K., and Fletcher, J. M. (2020). Understanding, educating, and supporting children with specific learning disabilities: 50 years of science and practice. Am. Psychol. 75, 37–51. doi: 10.1037/amp0000452

Haddad, F. A., Garcia, Y. E., Naglieri, J. A., Grimditch, M., McAndrews, A., and Eubanks, J. (2003). Planning facilitation and reading comprehension: instructional relevance of the PASS theory. J. Psychoeduc. Assess. 21, 282–289. doi: 10.1177/073428290302100304

Hale, J. B., Kaufman, A., Naglieri, J. A., and Kavale, K. A. (2006). Implementation of IDEA: integrating response to intervention and cognitive assessment methods. Psychol. Sch. 43, 753–770. doi: 10.1002/pits.20186

Hammill, D. D., and Swanson, H. L. (2006). The National Reading Panel's meta-analysis of phonics instruction: another point of view. Elem. Sch. J. 107, 17–26. doi: 10.1086/509524

Harrison, T. L., Shipstead, Z., Hicks, K. L., Hambrick, D. Z., Redick, T. S., and Engle, R. W. (2013). Working-memory training may increase working memory capacity but not fluid intelligence. Psychol. Sci. 24, 2409–2419. doi: 10.1177/0956797613492984

Hatcher, P. J., Goetz, K., Snowling, M. J., Hulme, C., Gibbs, S., and Smith, G. (2006a). Evidence for the effectiveness of the Early Literacy Support programme. Br. J. Educ. Psychol. 76, 351–367. doi: 10.1348/000709905X39170

Hatcher, P. J., and Hulme, C. (1999). Phonemes, rhymes, and IQ as predictors of children's responsiveness to remedial reading instruction: evidence from a longitudinal study. J. Exp. Child Psychol. 72, 130–153. doi: 10.1006/jecp.1998.2480

Hatcher, P. J., Hulme, C., Miles, J. N., Carroll, J. M., Hatcher, J., Gibbs, S., et al. (2006b). Efficacy of small group reading intervention for beginning readers with reading-delay: a randomised controlled trial. J. Child Psychol. Psychiatry 47, 820–827. doi: 10.1111/j.1469-7610.2005.01559.x

Hayward, D., Das, J. P., and Janzen, T. (2007). Innovative programs for improvement in reading through cognitive enhancement: a remediation study of Canadian First Nations children. J. Learn. Disabil. 40, 443–457. doi: 10.1177/00222194070400050801

Hecht, S. A., and Close, L. (2002). Emergent literacy skills and training time uniquely predict variability in response to phonemic awareness training in disadvantaged kindergarteners. J. Exp. Child Psychol. 82, 93–115. doi: 10.1016/S0022-0965(02)00001-2