- School of Education, Bath Spa University, Bath, United Kingdom

Classroom assessment is purposeful when the information is utilised by teachers to support learning. Such formative assessment practices can be difficult to enact in a primary science classroom, with the whole class often involved in practical activities and with limited lesson time. This preliminary study seeks to explore formative decision-making and the subsequent actions taken by teachers in the classroom. Primary teachers who used a Teacher Assessment in Primary Science (TAPS) Focused Assessment activity were asked to describe what action they took as a result of the classroom interactions stimulated by the activity. 142 teachers in 9 regions of England completed a paper questionnaire at a training day. The qualitative data pertinent to the study was extracted and thematic content analysis carried out to determine the kinds of actions and changes to practice that were described. It was found that the “next step” described by teachers varied in timing; some made changes within the lesson, others provided follow up activities or made longer-term adaptation to teaching practices. Being responsive to the assessment information provided by the children took many forms, for example, supporting pupils to reflect on investigations during the lesson, discussing vocabulary or concepts, providing time for further exploration, or explicit modeling of science skills. Formative decisions were taken at a whole class level, rather than making individual adaptations. It is argued that enabling teachers to be more explicit about their tacit decision-making could support them to make more formative use of assessment information to support pupil learning.

Introduction

Formative assessment has widely been hailed as key to supporting children’s learning (e.g. Black and Wiliam, 1998; Harlen, 2013; Wiliam, 2018). Gardner et al. (2010) assert that assessment should focus on improving learning, explaining why formative assessment became commonly known as “Assessment for Learning” (Assessment Reform Group, 1999). However, in practice, formative use of assessment information has been difficult to implement, with changes to teacher practice taking time and often skewed by current policy such as an increased focus across the world on using assessment for accountability (DeLuca et al., 2019). The difficulties encountered with implementation indicate that there is still a need for further research in this area, with the aim of finding manageable ways for teachers to make use of formative assessment in the classroom. Low levels of teacher assessment literacy or capability across the profession (Gardner, 2007) also point to a need for further support for teachers. This article aims to explore the teacher decision-making involved in utilising formative assessment information, in order to make such processes more explicit and enhance understanding in the field.

Assessment education is in a constant state of flux (DeLuca et al., 2019) as it responds to changes in assessment policy and emphasis. Significant changes have taken place in the last decade in England, with primary schools (for children aged 4–11) following a new statutory National Curriculum with assessment indicators presented as age-related expectations (Department for Education, 2013). Science is included as a core subject, but with English and mathematics featuring on school league tables, primary science is often perceived to be of lower status, meaning less time for both teaching and teacher professional learning (CFE Research, 2017). Such time pressures mean that primary science lessons would typically cover a different topic each lesson, with it being normal to “move on” once the lesson was taught. This means that there would be little time for follow up or extension discussions, little time for acting upon formative assessment information.

Formative assessment, with its focus on supporting learning, could be a useful tool for schools dealing with the impact of the Covid-19 global pandemic. Modeling from seasonal learning research suggests that attainment may slow or decline during long periods of school closures (Kuhfeld and Tarasawa, 2020). Others have suggested that disadvantaged children are more likely to experience such a “learning loss,” further widening the gap between children from lower socio-economic backgrounds and their more affluent peers (Education Endowment Foundation, 2020; Müller and Goldenberg, 2020). However, an over-emphasis on “identifying gaps” on a return to school may miss the point of formative assessment. Focusing on “lost learning” via frequent testing has long been identified as a misinterpretation of formative assessment (Klenowski, 2009); identifying the “gap” is only a precursor to formative action.

The purpose of formative assessment is to inform decisions about future learning experiences in the classroom (Harlen, 2007). Strategies associated with formative assessment include: identifying and making explicit success criteria; elicitation of children’s existing ideas; feedback; self-assessment and peer assessment (Wiliam, 2018). However, these strategies are not separate from classroom teaching: formative assessment is embedded as part of the teaching process. For researchers, this makes it difficult to monitor, but for teachers, this means it should not add to their workload. By following such an approach, any interaction with pupils can provide useful assessment information. Such “assessment interactions” point to the need for planning and teaching to be responsive rather than wholly decided in advance: the interaction becomes formative when it provokes a response, when “action” is taken. Black and Wiliam suggest that: “assessment provides information to be used as feedback … Such assessment becomes “formative assessment” when the evidence is actually used to adapt the teaching work to meet the needs” (1998: 2). Thus it is the use of assessment information to support the learning process which distinguishes formative from summative assessment, rather than the assessment task itself. Use of such assessment information could include: judgment according to criteria or comparison with previous performance in similar events to identify ongoing areas of concern, consideration of next steps, decision making and then formative action. Such formative assessment interactions and actions can be at the class, group or individual level.

Webb and Jones (2009) note that change in teacher practice is difficult and takes time, with practice needing time to be trialled, integrated and embedded. Teacher assessment literacy is a developmental process that requires teachers’ reflection and critical evaluation of their diverse uses of assessment (DeLuca et al., 2016). Assessment literacy or capability also requires an understanding of the subject being taught: content and pedagogical content knowledge (PCK, Shulman, 1986), since the teacher needs an understanding of the subject matter to be able to make judgements regarding pupil understanding, as well as pedagogical understanding of the most appropriate ways to teach and assess the content. Assessment capability cannot be separated from the subject context (Edwards, 2013). The teacher needs knowledge of the key concepts to identify what to assess and knowledge of assessment processes to identify how to assess and what to do with the information gained. This means that professional learning around assessment needs subject-specific elements for it to be usable in the classroom.

The Primary Science Teaching Trust funds the Teacher Assessment in Primary Science (TAPS) project (2013+) to develop support for teachers, which includes a range of examples and activity plans on the TAPS website linked to each of the curricula in the four countries of the United Kingdom (TAPS Website, 2020). TAPS uses a Design-Based Research methodology, which promotes collaboration between teachers and researchers, involving iterative cycles to trial and refine both resources and theoretical principles to impact educational practice (Design-Based Research Collective, 2003; Anderson and Shattuck, 2012; Easterday et al., 2018). The principles of formative assessment provide the theoretical basis for guidance to support teacher decision-making (Davies et al., 2017). When applied to the primary science classroom, these principles emphasise the elicitation of pupil understanding, the responsiveness of teachers to adapt their lessons in response to this information from the pupils and the active role of pupils in self and peer assessment (Wiliam, 2018).

One strand of TAPS, which is still evolving in the iterative cycles, is the use of a Focused Assessment approach for teaching and assessing scientific inquiry (Davies and McMahon, 2003). This approach proposes that one element of inquiry becomes the focus for teacher attention and any pupil drawing or writing, within the context of a whole inquiry. For example, in an investigation dropping different sized paper “spinners” (or helicopters) the teacher selects one part which will be given more teaching time. For example, a focus on recording results could include time on drawing tables or graphs; a focus on controlling variables could include more time planning and setting up the investigation; whilst a focus on drawing conclusions could involve individual writing to draw conclusions from the results. Selection of a focus in this way is designed to make teaching and assessment more manageable in a practical lesson. The TAPS Focused Assessment activity plans are being trialled in all four countries of the United Kingdom, and the approach has become the subject of a large randomised control trial across England, which is being funded by the Education Endowment Foundation and the Wellcome Trust.

The TAPS Focused Assessment approach provides practical guidance for suggested activities, but carrying out the activities is not the same as implementing formative assessment. Formative assessment requires action: something needs to be done with the information gained from interactions with pupils. This study sought to find out what teachers who have carried out a TAPS task do next, whether they use the assessment information to tweak their teaching, what kind of action is taken and when this takes place. This study analyses initial findings to answer the following research question:

RQ. How do primary teachers act on information arising from classroom interactions stimulated by the TAPS Focused Assessment activities?

Methods

This preliminary study of teacher decision-making is a small part of the larger TAPS project, which utilises a Design-Based Research methodology of iterative and collaborative research and development (Anderson and Shattuck, 2012). In order to answer the RQ, teachers undertaking TAPS training were directly asked to describe their practice, forming a purposive sample (Teddlie and Yu, 2007) who would be able to comment on their classroom interactions in response to TAPS Focused Assessment activities.

During the 2019–20 academic year, 142 teachers in 9 regions across England took part in TAPS Focused Assessment professional development (first day in October 2019, second day in January/February 2020, third day canceled due to Covid-19). The training included explanation of the science inquiry process (since many primary school teachers are not science specialists), together with consideration of assessment strategies. In between training days, the teachers were asked to carry out some TAPS Focused Assessment activities with their class and then feed back about their use at the next training day. On Day 2, teachers discussed their experiences, shared pupil work and completed a paper questionnaire (sharing of further cycles of formative assessment did not take place due to the cancellation of Day 3). As part of the questionnaire, all teachers were asked to describe the activities carried out with their class and what they did as a result of such classroom interactions. Responses to the question “What did you do next?” form the basis for this study. The teachers were explicitly asked to provide details of the changes to their practice. Such changes indicate formative use of information: teachers changing their practice in response to information gained from interactions with the children.

An open-ended question was selected so that teachers described their practice rather than assigned it to a pre-determined category (Oppenheim, 1992), which is particularly important for a preliminary study seeking to find out how teachers acted on the classroom information. It should be noted that the lead trainer was also the lead researcher, which may have influenced the teacher responses; however, it also enabled a fuller understanding of the teacher responses for this preliminary study. The study is qualitative, exploring the participant experience, but the size of the sample does enable numerical summaries for discussion of prevalence. The sample consisted of half science subject leaders (teaching any year group) and half Year five teachers (pupils aged 9–10). For full teacher details, see Table 1.

In line with ethical procedures, all teachers were fully informed regarding the collection, use and storage of their questionnaire answers. They were also given the opportunity to withdraw their data (BERA, 2018). The paper questionnaires were anonymised at the point of typing up and then stored securely.

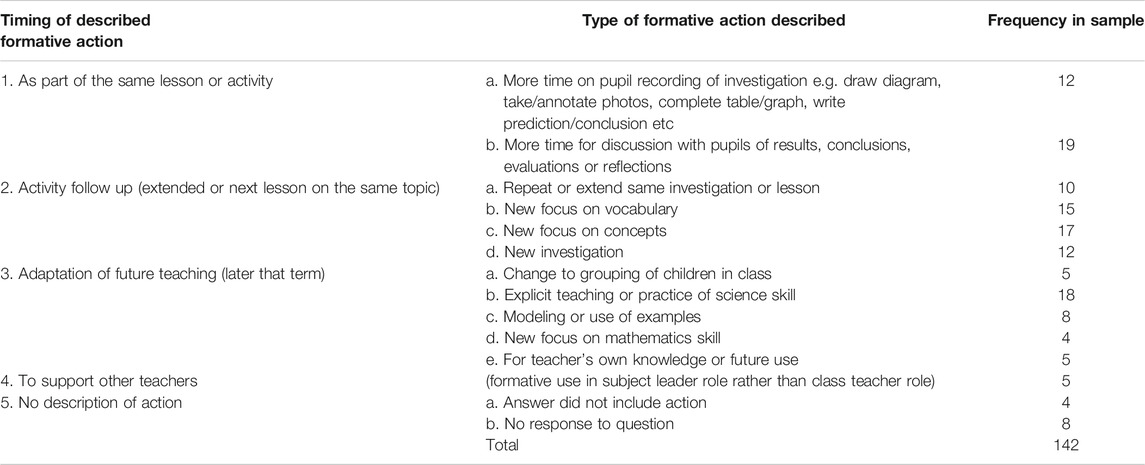

The data for the “What did you do next?” question was extracted into a spreadsheet for this study. Thematic content analysis was carried out on the 142 descriptions. They were sorted thematically into emergent groups and this was revisited multiple times to ensure that the final themes represented the dataset. Initially the data was sorted twice: in terms of timing of the described “next step” or action (during the lesson, extensions to the lesson, future teaching) and separately into the type of action described (changes to the teaching, the next tasks given to the children, the children’s groups etc). Types of teacher action mapped onto the timings for when this took place, with different kinds of action happening at different times. For example, children’s groups could be changed in the following lesson, but this did not happen during the same lesson. Thus the final themes presented below consist of types of action, placed into time order.

Results

The majority of teachers described an action, something that they did next in response to information gained from interactions with pupils during the TAPS lesson. In time-pressured primary science, the “normal” next step would be to move on to the next topic as per the pre-written school planning, so taking an action which, for example, extended or adapted the lesson would indicate that the teachers were making a formative decision.

Thematic groups emerged in terms of both the kind of action taken and whether the action took place immediately: as part of the same lesson; soon after in a follow up lesson; or the adaptation of future teaching. Examples of the thematic groups are listed below, following a frequency table to show prevalence of the teacher actions in Table 2.

Theme 1a. As part of same lesson or activity, the teacher’s next step focused on a change to pupil recording of the results, such as drawing a table or graph (N = 12). For example, using a “planning board” from the TAPS resources to support children to construct a graph, or the addition of discussion time for children to discuss how they had recorded their results:

“Children worked as a group to put their data onto a bar graph - we used the planning boards to help to know where to put the correct data” (Teacher 13).

“After spinners - discussion explaining how to record our results” (Teacher 87).

Theme 1b. As part of same lesson or activity, the teacher noted the discussion of conclusions, perhaps supporting the pupils to evaluate or reflect on their investigation (N = 19). In a normal primary science lesson, full discussion of conclusions is often difficult to include because it needs to take place at the end of the lesson, when “tidying up” time may seemingly take priority, so making time for this “review” stage of the investigation indicates a change from normal practice. For example:

“Discussed the results with the class and got those who understood to share their findings with others” (Teacher 18).

“Reflection on their own planning of an experiment using their recordings/findings, how would they replan/do differently” (Teacher 5).

“Review - what did we find out? How could it change?” (Teacher 97).

Theme 2a. In a follow up to the activity, which could take place in an immediate lesson extension (continuing the same lesson) or continue into the next lesson (on the same topic), the teacher may support the pupils to repeat or extend their investigation (N = 10). Finding time for this (and the other actions below) indicates a change to the normal practice of moving on to the next topic. For example:

“Allowed them/us time to carry out improvements. Gave them time to record” (Teacher 17).

“Let the pupils choose other materials to test” (Teacher 15).

Theme 2b. Other actions following the activity focused on the pupils’ use of vocabulary (N = 15). For example:

“Identify areas of weakness to build on e.g. including scientific vocab in conclusions/explanations” (Teacher 26).

“Ensure vocabulary displayed in classroom/table mats. Quick quiz at the start of a lesson to recap vocabulary and ensure retention” (Teacher 33).

“Give children opportunities to explore and discuss scientific vocabulary more in depth before investigation and sharing their interpretations” (Teacher 45).

Theme 2c. For some teachers, the next step involved further consideration of conceptual understanding (N = 17). For example:

“Verbal recap of different forces. Explaining what each force does” (Teacher 25).

“I showed them videos of a harp - real life example of pitch with different lengths of string” (Teacher 32).

“Returned to asking questions about air resistance - concept cartoons and post-it notes to elicit” (Teacher 42).

“Discussed misconceptions as a class. Discussed particles and why types of sugar dissolve certain ways” (Teacher 110).

Theme 2d. Other teachers chose to continue investigating the same topic or set up new inquiries on the same topic (N = 12), rather than moving on to the next topic, which would have been normal practice. For example:

“Set up further experiments based on reversing dissolving” (Teacher 80).

“Next we investigated shadows so we used the planning booklets and post-its more confidently but only recorded the table and results in books. They found it hard to look at patterns in data so did some discreet work on this from ‘Handling Science Data Y5.’ From this we then did Biscuit Dunk to compare our Y4 and 6 reflections to look at progression of skills” (Teacher 131).

“Applied results of investigation to real-life scenarios - new footpath at school” (Teacher 133).

Theme 3a. For some teachers, the formative assessment information was not used immediately but was used to adapt future lessons, for example by changing the way pupils were grouped (N = 5). For example:

“Grouped children differently for follow up lesson for insulation lesson” (Teacher 1).

“In the groups, assigned a role to each child e.g. to time, to measure, to record, etc. Discussed conclusions, improvements, etc as a class” (Teacher 75).

Theme 3b. In response to formative assessment information, some teachers decided to adapt their future lessons by being more explicit in their teaching of science skills (N = 18). For example:

“I taught how to identify variables and make choices” (Teacher 9).

“Lots of work around fair testing - use of planning grids with post-its as a whole class (only changing one variable). Use of planning grids in small groups - scaffolded at each stage” (Teacher 85).

“Changed to have a measure focus and taught each child to read from the scale” (Teacher 134).

Theme 3c. Some teachers planned to make more use of modeling and examples in their next lessons (N = 8). For example:

“Give examples of ways we could measure and discuss which was most appropriate” (Teacher 21).

“I modeled to children how to write a conclusion. Next experiment - children wrote their own conclusion” (Teacher 86).

Theme 3d. Other teachers decided that mathematics skills needed a focus in future lessons (N = 4). It is not clear whether these were changes to planned maths lessons, but this indicates a recognition from the teacher of the interplay between the subjects. For example:

“Maths - thermometer lesson. 1 key recorder (whiteboard)” (Teacher 30).

“In maths - looked at graphs - in particular line graphs (scales)” (Teacher 35).

Theme 3e. A small number of teachers described how they would use the experience to feed into later teaching, but without a specific next step (N = 5). Such lack of specific action could indicate a lack of clarity or use of the formative assessment information. For example:

“Used lesson to plan for the next input” (Teacher 74).

“Follow the plan more and focus on individual children to ascertain learning” (Teacher 99).

Theme 4. For those teachers with subject leadership roles, the next step was more about supporting other teachers, rather than specific next steps for pupils (N = 5). For example:

“Meet with the staff to moderate progress across the school. Talk about and note down next steps” (Teacher 108).

“Reflected with staff” (Teacher 136).

Theme 5. A small number of teachers did not answer the question (N = 8) or described the interaction with pupils, without explaining how the information would be utilised (N = 4). This included activities carried out at the end of term, or teachers who were trialling the activities with pupils who they did not normally teach. For example:

“This was the final lesson at the end of term” (Teacher 59).

“The graphs were marked but (they were not my class) there were no formative comments. The students did not follow up this activity with either conclusions or evaluations” (Teacher 139).

It is important to note that a “what next” question requires an end point to an activity, which may not take into account the ongoing responsive teaching taking place. It should also be noted that the action, or planned action, described by the teachers is specific to the activity. It would be expected that the same teacher may take a different action in a different situation, since responsive teaching is context-specific. Tracking the effectiveness of such feedforward actions was not possible in this study, both due to Covid-19 school closures and the difficulties of following the effect of individual formative actions without access to the classroom setting. The impact of teachers’ formative actions on children’s learning, in the short, medium and long term, merits further research.
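As a quick consistency check on the prevalence figures, the N values reported for each theme above can be tallied by timing group. The following is a minimal sketch rather than the author's analysis procedure: the counts are copied from the theme descriptions in this section, while the variable names, the short labels and the grouping by the first character of the theme code are illustrative assumptions. The counts sum to the 142 descriptions analysed, which suggests each description was assigned to a single theme, although this is not stated explicitly.

```python
from collections import defaultdict

# (theme code, short label, N) copied from the theme descriptions above.
# The labels are paraphrases for illustration, not the author's wording.
REPORTED = [
    ("1a", "recording of results", 12),
    ("1b", "discussion of conclusions", 19),
    ("2a", "repeat or extend investigation", 10),
    ("2b", "vocabulary", 15),
    ("2c", "conceptual understanding", 17),
    ("2d", "further inquiries on same topic", 12),
    ("3a", "pupil grouping", 5),
    ("3b", "explicit teaching of science skills", 18),
    ("3c", "modeling and examples", 8),
    ("3d", "mathematics skills", 4),
    ("3e", "no specific next step", 5),
    ("4", "supporting other teachers", 5),
    ("5", "no answer", 8),
    ("5", "no use of information described", 4),
]


def totals_by_group(rows):
    """Sum N per top-level theme (first character of the theme code)."""
    totals = defaultdict(int)
    for code, _label, n in rows:
        totals[code[0]] += n
    return dict(totals)


group_totals = totals_by_group(REPORTED)
grand_total = sum(group_totals.values())

# Print each group's count and its share of all descriptions.
for group, n in sorted(group_totals.items()):
    print(f"Theme {group}: {n} ({n / grand_total:.1%})")
print("Total:", grand_total)
```

Grouping in this way gives 31 descriptions for Theme 1 (within the lesson), 54 for Theme 2 (follow up) and 40 for Theme 3 (future teaching), with the remaining 17 falling under Themes 4 and 5.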

Discussion

The focus for this article was to explore teacher formative decision-making, to consider the kind of actions teachers took as a result of assessment interactions. The majority of teachers in this sample described an action, a change to practice, using the information gained from an interaction with pupils to make a decision about what to do next with the class. Making such changes, for example adapting the end of the lesson or the subsequent lesson, suggests that teachers were using the assessment information formatively (Black and Wiliam, 1998).

Findings from this preliminary study suggest that teachers may adapt their practice: within the lesson (Theme 1), when following up the lesson (Theme 2) or when planning future teaching (Theme 3); each of which will be considered in turn.

The Theme 1 actions “within the lesson” included the addition of discussions with children regarding pupil recording of results (12 teachers) or drawing conclusions (19 teachers). It could be questioned whether the teachers interpreted the question “what happened next” to mean “what happened after the practical activity?” and so just described the end of the lesson. However, during the second training day both of the Theme 1 actions were raised by teachers, for example, discussing how they had made more time for reflecting on investigation findings, so their responses could indicate that these were key areas that had changed in their practice. Pupil recording (drawing, writing etc) was discussed a number of times at the training day, both considering how to record the results of a science investigation in terms of the layout of a table or graph to help with interpretation of results, and the bigger question of how much of an investigation the pupils should be writing down. Traditional experiment write ups (Method, Results, Conclusion) can take a large amount of time for younger children, meaning that the lessons can become focused on the mechanics of writing rather than science content. The essence of the TAPS Focused Assessment approach is that an inquiry focus should be selected for the lesson, for example, if conclusions is the focus then more lesson time is devoted to this and it is this part of the inquiry which the pupils will record. By spending more time on areas which may normally be neglected (e.g. with lesson time often running out before discussing conclusions), the teachers could be applying their training to both act on formative assessment interaction and maintain a science focus for the lesson.

Many teachers identified elements that merited further class time on the same topic, for example, extending the investigation, and addressing gaps in vocabulary or conceptual understanding (Theme 2). Such decisions require flexibility in planning and teaching time, together with appropriate content knowledge regarding the choice of which are the key concepts to follow up (Shulman, 1986; Edwards, 2013). It is important to note that these were class-wide interventions. The teacher had identified something that a number of children in the class struggled with, so this could be considered formative assessment at a class level rather than an individual level. This is perhaps an indication that use of formative assessment information needs to be manageable, especially in a subject that is only taught once a week. There cannot be an expectation of individual interventions for each of the pupils in a class of 30, but if many of them struggle with one element, a “tipping point” is reached and it is a reasonable adjustment to change or adapt subsequent sessions to address this. Looking at formative assessment at a class level could lead to individual needs going unaddressed, but teaching is a balance of what is ideal and what is possible. This suggests that in practical primary science lessons, it may often only be manageable to gauge a general level of understanding or performance, and individual assessments may need a different elicitation strategy.

For those teachers who described adapting future teaching, there was consideration of explicit teaching of skills (science or mathematics) with modeling of further examples to support pupils’ learning (Theme 3). Again this occurred largely at the class level, with future planning adapted to include more opportunities to teach and practice. Identification of next steps for learning is a key feature of formative assessment. It appears that the majority of teachers in this sample were able to use the information gained from the assessment interactions to decide on next steps for learning. Whether such knowledge is acted upon immediately within the lesson, or in subsequent lessons, depends on a variety of factors about the lesson content and the class. It is not necessarily preferable to act immediately, since it may be that content needs revisiting in a different way or with a different example. It also may not be possible to act immediately, if areas for development are not identified until the end or after the lesson. Nevertheless, if teachers only “log” areas for development, but never get the chance to return to them, then formative assessment does not fulfill its purpose.

This study has found that teacher decision-making in response to formative assessment interactions can result in changes in the same or future lessons. Teaching adaptations include making space in the lesson schedule for further discussion and reflection, or explicit teaching and modeling of particular skills or concepts. It was found that in the time-pressured primary science context, balancing the need to support individual learning with whole class manageability may lead to formative decision-making which is a “best fit” approach for the class. Decision-making within lesson time is difficult, especially when the teacher is busy managing the classroom activities as well as collecting assessment information. Such decision-making can be supported by subject-specific assessment training, since teachers need both assessment literacy and subject knowledge to be able to consider and decide on appropriate next steps. Developing an appropriate classroom assessment language to articulate and share evidence, decisions and the effectiveness of future actions with colleagues could lead to more assessment capable teachers (DeLuca et al., 2019). Enabling teachers to be more explicit about their tacit decision-making could support them to make more formative use of assessment information to support pupil learning. This study was only able to consider one cycle of teacher action and reflection due to Covid-19 school closures, so further research is needed to explore changes to teacher practice over time and whether such formative decision-making processes become embedded in teacher practice or are just a one-off “project effect”. Research into such professional learning is ongoing, with the TAPS project working with teachers across the United Kingdom to collaboratively design principled support for the use of formative assessment in primary science.

Data Availability Statement

The raw data supporting the conclusions of this article can be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by Bath Spa University. The participants provided their written informed consent to participate in this study.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Funding

The Teacher Assessment in Primary Science (TAPS) project benefits from funding from the Primary Science Teaching Trust, the Education Endowment Foundation and the Wellcome Trust.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Thanks go to the teachers and members of the TAPS team who share their thoughts about assessment so readily.

References

Anderson, T., and Shattuck, J. (2012). Design-based research: a decade of progress in education research?. Educ. Res. 41 (1), 16–25. doi:10.3102/0013189x11428813

Assessment Reform Group (1999). Assessment for learning: beyond the black box. Cambridge: University of Cambridge Faculty of Education.

CFE Research (2017). ‘State of the nation’ report of UK primary science education: baseline research for the Wellcome Trust Primary Science Campaign. Leicester: CFE Research.

Davies, D., Earle, S., McMahon, K., Howe, A., and Collier, C. (2017). Development and exemplification of a model for teacher assessment in primary science. Int. J. Sci. Educ. 39 (14), 1869–1890. doi:10.1080/09500693.2017.1356942

Davies, D., and McMahon, K. (2003). Assessment for enquiry: supporting teaching and learning in primary science. Sci. Educ. Int. 14 (4), 29–39.

DeLuca, C., LaPointe-McEwan, D., and Luhanga, U. (2016). Approaches to Classroom Assessment Inventory: a new instrument to support teacher assessment literacy. Educ. Assess. 21 (4), 248–266. doi:10.1080/10627197.2016.1236677

DeLuca, C., Willis, J., Cowie, B., Harrison, C., Coombs, A., Gibson, A., and Trask, S. (2019). Policies, programs and practices: exploring the complex dynamics of assessment education in teacher education across four countries. Front. Educ. 4, 132. doi:10.3389/feduc.2019.00132

Department for Education (DfE) (2013). National Curriculum in England: science programmes of study. London: DfE.

Design-Based Research Collective (2003). Design-based research: an emerging paradigm for educational inquiry. Educ. Res. 32 (1), 5–8. doi:10.3102/0013189X032001005

Easterday, M., Rees Lewis, D., and Gerber, E. (2018). The logic of design research. Learn. Res. Pract. 4 (2), 131–160. doi:10.1080/23735082.2017.1286367

Education Endowment Foundation (2020). Impact of school closures on the attainment gap: rapid evidence assessment. https://educationendowmentfoundation.org.uk/covid-19-resources/best-evidence-on-impact-of-school-closures-on-the-attainment-gap Accessed June 3, 2020.

Edwards, F. (2013). Quality assessment by science teachers: five focus areas. Sci. Educ. Int. 24 (2), 212–226.

Gardner, J., Harlen, W., Hayward, L., and Stobart, G., with Montgomery, M. (2010). Developing teacher assessment. Maidenhead: Open University Press.

Harlen, W. (2013). Assessment and inquiry-based science education: issues in policy and practice. Trieste: Global Network of Science Academies.

Klenowski, V. (2009). Assessment for learning revisited: an Asia-Pacific perspective. Assess. Educ. Princ. Pol. Pract. 16 (3), 263–268. doi:10.1080/09695940903319646

Kuhfeld, M., and Tarasawa, B. (2020). The COVID-19 slide: What summer learning loss can tell us about the potential impact of school closures on student academic achievement. Collaborative for Student Growth: Brief https://www.nwea.org/blog/2018/summer-learning-loss-what-we-know-what-were-learning Accessed June 2, 2020.

Müller, L. M., and Goldenberg, G. (2020). Education in times of crisis: the potential implications of school closures for teachers and students https://chartered.college/2020/05/07/chartered-college-publishes-report-into-potential-implications-of-school-closures-and-global-approaches-to-education Accessed June 2, 2020.

Oppenheim, A. (1992). Questionnaire design, interviewing and attitude measurement. 2nd edition. London: Pinter Publishers.

Shulman, L. (1986). Those who understand: knowledge growth in teaching. Educ. Res. 15 (2), 4–14. doi:10.3102/0013189x015002004

Teacher Assessment in Primary Science (TAPS) Website (2020). https://pstt.org.uk/resources/curriculum-materials/assessment Accessed July 15, 2020.

Teddlie, C., and Yu, F. (2007). Mixed methods sampling: a typology with examples. J. Mix. Methods Res. 1, 77–100. doi:10.1177/1558689806292430

Webb, M., and Jones, J. (2009). Exploring tensions in developing assessment for learning. Assess. Educ. Princ. Pol. Pract. 16 (2), 165–184. doi:10.1080/09695940903075925

Keywords: TAPS, design-based research, primary science, formative assessment, teacher assessment literacy

Citation: Earle S (2021) Formative Decision-Making in Response to Primary Science Classroom Assessment: What to do Next? Front. Educ. 5:584200. doi: 10.3389/feduc.2020.584200

Received: 16 July 2020; Accepted: 21 December 2020;

Published: 25 January 2021.

Edited by:

Gavin T. L. Brown, The University of Auckland, New Zealand

Reviewed by:

Wei Shin Leong, Ministry of Education, Singapore
Hui Yong Tay, Nanyang Technological University, Singapore

Copyright © 2021 Earle. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sarah Earle, s.earle@bathspa.ac.uk