
ORIGINAL RESEARCH article

Front. Educ., 09 June 2020

Sec. Digital Education

Volume 5 - 2020 | https://doi.org/10.3389/feduc.2020.00072

This article is part of the Research Topic: Research in Underexamined Areas of Open Educational Resources

One solution to the increasing cost of textbooks is open educational resources (OER). While previous studies have evaluated the effects of OER on student performance and perceptions of the material quality, few studies have evaluated other possible effects of OER. The goal of these studies was to examine whether the use of OER affects students' perceptions of instructors and whether students are more likely to select hypothetical courses using OER than courses using commercial materials. Results indicated that instructors assigned to use OER were rated more positively than those assigned a commercial textbook and students were more likely to select courses that had no course costs. These findings should motivate instructors and universities to adopt and advertise their OER use.

The rapid rise in textbook costs in the United States (US Public Interest Research Group, 2014) has motivated a drive to increase the use of open educational resources (OER), which are freely available and have liberal copyright licenses which allow for modification of content (i.e., editing, remixing). There has been a related push to conduct research on OER to ensure that educators are not trading the burden of high textbook costs for a subpar learning experience. One popular approach to studying OER is the Cost, Outcomes, Use, Perceptions (COUP; Bliss et al., 2013) framework. This framework has guided much of the early research on OER and provides a multi-faceted view of how use of OER can affect both students and instructors. Overall, this work has been promising with results generally indicating that students who use OER perform as well as, or better than, students using commercial textbooks (Hilton, 2016; Hardin et al., 2018; Jhangiani et al., 2018). Moreover, recent research suggests that the use of OER may have enhanced benefits for students who are racial minorities and those with financial need. Specifically, Colvard et al. found that OER improved grades and reduced D/F/W rates (i.e., students who earn a D/F in the class or withdraw from the class) for all students, but at even higher rates for racial minorities, part-time students, and Pell-eligible students (Colvard et al., 2018).

Survey research using the COUP framework has further indicated that nearly half of students self-reported that they have not registered for a specific class because of the costs of the associated textbook (Florida Virtual Campus, 2016) and ~16% indicated they had dropped or withdrawn from at least one course because the textbook was too expensive (Hendricks et al., 2017; Jhangiani and Jhangiani, 2017). Results such as these have led a number of institutions (e.g., the University of Hawaii, Rutgers University, the City University of New York system) to label courses in their catalogs based on textbook costs and to denote courses that have zero textbook costs. This allows students to have the information necessary to make informed decisions about their course costs when enrolling in classes. Given that we know high course costs can detrimentally affect a variety of student outcomes, such as grades, number of courses taken per semester, and withdrawals (US Public Interest Research Group, 2014), it is possible that this type of labeling would affect the outcomes portion of the COUP research framework. However, the literature on outcomes has predominantly focused on student outcomes within specific OER-using courses (e.g., how does the use of OER affect grades in the course using OER) and to our knowledge no prior research has evaluated whether these course designations affect student behaviors such as selections of courses or majors, which are important for long-term student outcomes (Webber, 2016).

Further, much of the work focused on the perceptions portion of the COUP framework has exclusively examined student and instructor perceptions of the open course materials. However, there are other types of perceptions that may be affected by the use of OER. For example, it is not currently clear whether and/or how the use of OER may affect student perceptions of instructors who use OER. To date, only one study has looked at whether the use of OER affects student perceptions of instructors. Specifically, Vojtech and Grissett (2017) had participants read vignettes about instructors who either used an open or commercial textbook. They found that the instructor who was described as using an open textbook was rated as more kind, encouraging, and creative than the instructor described as using a commercial book. Additionally, participants reported that they wanted to take the class with the instructor using the open textbook more than the class with the instructor using the commercial textbook. More work is needed to explore this novel finding.

The present studies sought to address several gaps in the literature on how OER might affect non-performance aspects of the student educational experience. First, we examined whether the use of OER would affect student perceptions of (a) instructors who they were currently taking a class from and (b) instructors whose syllabi they were evaluating. Second, we assessed whether the inclusion of cost indicators in a hypothetical course catalog would affect students' course selections.

Participants were recruited from 11 sections of Introductory Psychology at a large Pacific Northwest university in the Fall 2018 semester. Six of these sections were assigned an open textbook and five were assigned a commercial textbook. Of the sample of 774 participants, 397 (open n = 228, closed n = 169) reported reading the assigned textbook and were included in the study. Note that the pattern of results remains the same when participants who did not read the book are also included in the sample. The mean age of this sample was 18.96 (SD = 1.31). The sample was predominantly women (72.2%). A total of 27% of the sample identified as an ethnic minority and 29.9% identified as a first-generation student. Comparisons of the two groups are shown in Table 1.

The Office of Research Assurances deemed the study exempt from the need for review by the Institutional Review Board under exemption categories 45 CFR 46.101(b)(1) and 45 CFR 46.101(b)(2) [Certificate # 16758-001]. The course coordinator pseudo-randomly assigned the instructors of the 11 sections of Introductory Psychology to use either a traditional commercial textbook (Scientific American: Psychology from Worth Publishing) or an open textbook (OpenStax Psychology). This process differs from complete randomization, as some factors related to the instructors were taken into consideration when making the assignment. Specifically, instructor skill, course days (2 vs. 3 days per week), and course time were considered when making the assignments. For example, the course coordinator attempted to assign roughly equal numbers of new instructors to each group and efforts were made to spread the M/W/F classes and the T/Th classes equally between the groups. Importantly, the instructors had no influence on which textbook they were assigned to use.

As part of a larger online survey administered at the end of the semester, students were asked about their experiences in the course. The results from a subset of these survey questions are reported in this paper. More specifically, students provided informed consent to participate in the study and then completed a questionnaire asking them to rate how well a series of adjectives describe the instructor they had for their course. The descriptors are shown in Table 2. Participants used a scale ranging from 1 (strongly disagree) to 5 (strongly agree) to indicate how well each descriptor characterized their instructor. Following the completion of the semester, student information (i.e., standardized test scores) was provided by the Institutional Research office at our university, with the students' informed consent. Despite the Office of Research Assurances deeming the study exempt, a consent document approved by the Department of Psychology was used in order to comply with our department policies and the Nuremberg Code. Students who completed the survey were given extra credit in their class.

A multivariate analysis of covariance (MANCOVA) with group (open, closed) as the independent variable and ratings on the 17 adjectives used to describe the instructor as the dependent variables was conducted to evaluate whether use of OER would affect students' perceptions of instructors from whom they were currently taking a class. Age, standardized test scores, and number of courses enrolled in during the current semester were included as covariates as they differed significantly between groups (see Table 1).

There was a significant main effect of group, F(25, 306) = 3.327, p < 0.001, ηp2 = 0.214. As shown in Table 2, follow-up one-way analyses of covariance (ANCOVAs) indicated that ratings of all of the descriptors were significantly different between the two groups, with instructors randomly assigned to use the open textbook rated better than those assigned to use the commercial textbook.

Participants were recruited from the Department of Psychology's subject pool. They completed the study in exchange for a credit they could apply to an eligible psychology course. The study contained two parts: (i) instructor perceptions and (ii) course selection and a total of 440 participants completed at least one part of the study. More specifically, 436 completed the instructor perceptions task (OER n = 218, Traditional Cost n = 218) and 401 completed the course selection task (OER n = 199, Traditional Cost n = 202). The mean age of the entire sample was 20.91 (SD = 3.91). The sample was predominantly white (69%) and predominantly women (73.7%). The two sets of randomly assigned groups did not differ significantly on any demographic variables.

The Office of Research Assurances deemed the study exempt from the need for review by the Institutional Review Board under exemption category 45 CFR 46.104(d)(2)(i) [Certificate # 17605-001]. Participants in this study were randomly assigned to complete one version of an instructor perceptions task and one version of a course selection task in a random order.

For the instructor perceptions task, participants viewed the first page of three syllabi from three different subjects and then completed an instructor perceptions questionnaire. More specifically, generic History, Economics, and Biology syllabi were found online and anonymized for use in the study. Only the first page of each syllabus was presented to participants. Participants were asked to read each syllabus as if they were a student in the course. Each participant was randomly assigned to either a Traditional Cost group in which they viewed three syllabi that included course costs (i.e., History—$115 new/$88 used; Economics—$181 paperback/$135 loose-leaf; and Biology—$191 at bookstore/$64 e-book) or to an OER group in which they viewed three syllabi that indicated the course materials were available at no cost. After viewing each syllabus, participants completed the same instructor perceptions questionnaire used in Study 1. This same questionnaire was used in order to have continuity between Study 1 and Study 2. As such, each participant provided three sets of quantitative results.

For the course selection task, participants were presented with descriptions of 10 courses and their associated textbook costs and were instructed to select their top two courses. An example course description is: “HD 101: Human Development across the Lifespan: Overview of lifespan development from a psychosocial ecological perspective; individuals, families, organizations, and communities and their interrelationships $140.” They completed this task for five categories of courses. Specifically, in order to enhance mundane realism, we adopted the general education categories used at our university and all courses were actual courses offered at our university. Participants were randomly assigned to select from all 50 courses with full text costs (Traditional Cost group) or from 35 courses with full text costs and 15 “target” courses with zero cost (OER group). More specifically, three of the 10 courses in each of the five groups were zero cost. Text costs were found at the university bookstore website. If costs could not be found for a course, a representative book was located and the cost from an online book retailer was used. Finally, participants completed a series of demographic questions.

For each syllabus, a multivariate analysis of variance (MANOVA) was conducted with course material cost (Traditional Cost, OER) as the independent variable and ratings on the 17 adjectives used to describe the hypothetical instructor as the dependent variables. These analyses were used to examine whether the use of OER would affect students' perceptions of instructors whose syllabi they were evaluating. Follow-up univariate analyses examining the effect of course material cost on each of the 17 ratings were conducted when the multivariate test was significant in order to further explore which adjectives were affected by the manipulation of course materials costs on the syllabus.

There was no significant multivariate effect of group on ratings of instructors for either the Biology [F(19, 393) = 0.974, p = 0.491, ηp2 = 0.046] or the Economics syllabus [F(19, 374) = 1.220, p = 0.237, ηp2 = 0.058]. However, there was a significant multivariate effect of group on ratings of the instructor listed on the History syllabus [F(19, 375) = 10.115, p < 0.001, ηp2 = 0.339]. Follow-up analyses of variance (ANOVAs) further revealed that the only significant group difference was for the descriptor “engaging” [F(1, 393) = 91.432, p < 0.001, ηp2 = 0.189], with participants rating the hypothetical instructor in the Traditional Cost group as significantly more engaging (M = 2.90, SD = 0.736) than the hypothetical instructor in the OER group (M = 2.07, SD = 0.975).
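As a reading aid, the reported effect sizes can be checked against the F statistics and their degrees of freedom. The sketch below is not the authors' analysis code; it assumes the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error), which holds for a univariate F test and for a two-group multivariate test. The function name `partial_eta_squared` is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom.

    Assumes eta_p^2 = (F * df_effect) / (F * df_effect + df_error).
    """
    scaled_f = f_value * df_effect
    return scaled_f / (scaled_f + df_error)


# Values reported in the text for Study 2.
history = partial_eta_squared(10.115, 19, 375)   # multivariate effect, History syllabus
economics = partial_eta_squared(1.220, 19, 374)  # multivariate effect, Economics syllabus
engaging = partial_eta_squared(91.432, 1, 393)   # univariate follow-up, "engaging"

print(round(history, 3), round(economics, 3), round(engaging, 3))
# prints: 0.339 0.058 0.189
```

Under this assumption, the computed values agree with the reported History, Economics, and "engaging" effect sizes to three decimal places.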

Two primary dependent measures were assessed to determine whether the inclusion of courses with no textbook costs would alter course selections. First, we examined whether participants were more likely to select courses when they were listed as having no cost vs. when they were listed as having an associated textbook cost. That is, we directly compared selections of the 15 target courses that were free of costs in the OER group with selections of the same 15 courses that had associated text costs in the Traditional Cost group. To this end, we tallied the number of times those 15 target courses were selected for each group and compared these numbers across the two groups using an independent-samples t-test.
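The tally-and-compare step described above could be sketched as follows. This is a minimal illustration rather than the authors' procedure: it assumes the tally is made per participant and that the test is a pooled-variance (Student's) t-test, which is consistent with the reported degrees of freedom (n1 + n2 − 2). Apart from HD 101, the course codes and all selections are hypothetical, and the helper names are made up for the example.

```python
import math
from statistics import mean, variance


def count_target_picks(selections, target_ids):
    """Count how many of a participant's selected courses are target courses."""
    return sum(1 for course in selections if course in target_ids)


def pooled_t_test(group_a, group_b):
    """Student's independent-samples t-test with pooled variance.

    Returns the t statistic and its degrees of freedom (n_a + n_b - 2).
    """
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    std_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / std_error, df


# Hypothetical target courses and selections (illustrative data only).
target_ids = {"HD 101", "HIST 105", "BIOL 102"}
oer_selections = [
    ["HD 101", "HIST 105"],
    ["HD 101", "CHEM 101"],
    ["BIOL 102", "HIST 105"],
    ["HD 101", "BIOL 102"],
]
traditional_selections = [
    ["CHEM 101", "ECON 101"],
    ["HD 101", "CHEM 101"],
    ["ECON 101", "PHIL 101"],
    ["HIST 105", "ECON 101"],
]

oer_counts = [count_target_picks(s, target_ids) for s in oer_selections]
trad_counts = [count_target_picks(s, target_ids) for s in traditional_selections]
t_stat, df = pooled_t_test(oer_counts, trad_counts)
print(oer_counts, trad_counts, df)
```

A positive t statistic here means the target courses were picked more often in the first group passed to `pooled_t_test`.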

Second, we compared the overall textbook costs for the courses that participants selected. We conducted this analysis because it is feasible that students may select the courses that are free of costs more often, but then counteract that by selecting other courses that have more expensive course materials. Because costs were totaled, participants who did not make all choices (n = 86; i.e., those who skipped any sections) were removed from these analyses. Once again, these total costs were compared across the two groups using an independent-samples t-test to determine whether the inclusion of cost indicators in the hypothetical course catalog would affect students' course selections.
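The cost-totaling step, including the removal of participants with incomplete choices, could look like the sketch below. It is an illustration under stated assumptions, not the authors' code: a skipped choice is represented as `None`, the price tables and participant IDs are hypothetical (only the $140 cost for HD 101 comes from the example course description), and the OER catalog is modeled as the same price list with a target course set to zero.

```python
from typing import Optional


def total_cost(choices: list[Optional[str]], prices: dict[str, float]) -> Optional[float]:
    """Sum textbook costs for one participant.

    Returns None when any choice was skipped, so the participant can be
    excluded rather than counted with an artificially low total.
    """
    if any(course is None for course in choices):
        return None
    return sum(prices[course] for course in choices)


# Hypothetical price lists: the OER catalog zeroes out one target course.
prices_traditional = {"HD 101": 140.0, "CHEM 101": 95.0, "ECON 101": 181.0}
prices_oer = {**prices_traditional, "HD 101": 0.0}

# Hypothetical OER-group participants; None marks a skipped choice.
oer_participants = {
    "p1": ["HD 101", "CHEM 101"],
    "p2": ["HD 101", None],
    "p3": ["ECON 101", "CHEM 101"],
}

totals = {pid: total_cost(choices, prices_oer) for pid, choices in oer_participants.items()}
# Keep only participants with a complete set of choices.
complete_oer = {pid: cost for pid, cost in totals.items() if cost is not None}
print(complete_oer)
```

The resulting per-participant totals for each group are what would then be compared with the independent-samples t-test.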

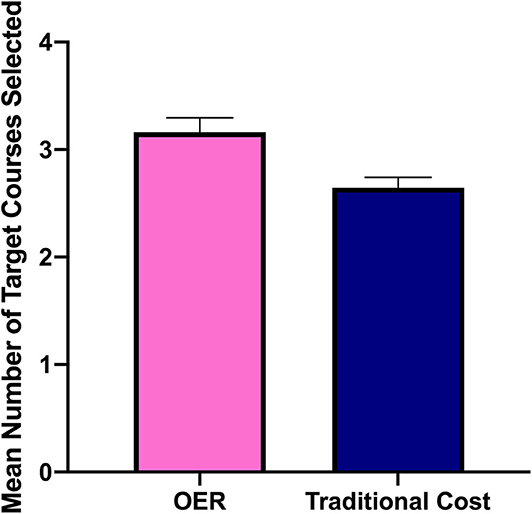

An independent-samples t-test revealed a significant group difference [t(399) = 3.092, p = 0.002], such that the 15 target courses were selected more often in the OER group than in the Traditional Cost group (see Figure 1).

A second independent-samples t-test revealed a significant group difference [t(313) = −15.151, p < 0.001], with those in the OER group spending significantly less money overall on course costs than those in the Traditional Cost group (see Figure 2).

Figure 2. Overall mean cost of textbooks in courses selected between OER and Traditional Cost groups.

Results of the present studies shed light on how increasing use of OER may affect student perceptions of their instructors and their course selections. Study 1 demonstrated that students who were enrolled in actual courses using OER rated their instructors significantly more positively on many descriptors (e.g., approachable, encouraging, kind) than students enrolled in courses using a commercial textbook. However, this effect did not generalize to Study 2 where participants were evaluating hypothetical courses and instructors. Study 2 further revealed that when participants were selecting courses for a hypothetical semester, they were more likely to select courses when they were listed as zero cost than when they were listed with an associated textbook cost.

While the predominant framework for evaluating OER (the COUP framework) includes perceptions, past research utilizing this framework has focused primarily on how students and instructors perceive the OER materials they are using in the course (e.g., are the materials equivalent in quality to commercial materials?). Here, we have demonstrated that students' perceptions of their instructors can also be enhanced as a function of their use of OER. In many studies, the use of OER is confounded by the instructor's willingness to use innovative methods (e.g., Fischer et al., 2015; Winitzky-Stephens and Pickavance, 2017). However, in the present study the instructors were randomly assigned to use either the OER or commercial textbook, which means that the instructors' willingness to adopt OER is not a confounding factor. This suggests that there is something distinct about use of OER that leads to students having more positive perceptions of their instructors, independent of the instructor's willingness to use novel teaching tools.

However, the effect of OER on instructor perceptions was only present for students actually enrolled in courses using OER and it did not extend to students evaluating syllabi for hypothetical courses and instructors using OER. This is in contrast with previous work from Vojtech and Grissett (2017), who found that students rated hypothetical instructors using OER more positively than hypothetical instructors who were using a commercial textbook. Instead of looking at a syllabus, as in our study, their participants read a paragraph describing the hypothetical instructors, their classes, and the textbooks in use. The discrepancy between our findings and theirs suggests that participants in our study may not have been paying sufficient attention to the sample syllabi and may not have noticed or considered the textbook costs that were listed. It is possible that students evaluating hypothetical instructors need either direct contact with the instructor (as in Study 1) or additional information in a narrative format (as in the Vojtech and Grissett study) for the use of OER to be apparent enough to affect decisions and perceptions. Finally, the questionnaire used to rate instructors may not have been as appropriate for Study 2 as it was for Study 1, and more differences in the groups' ratings may have been detected if we had used items more related to aspects of the instructor that could be gleaned from reviewing the syllabi. These assertions should be empirically tested in future research.

The results of Study 2 also indicate that the use of OER may increase the likelihood that students will take a specific class compared to an equivalent class with an associated textbook cost. This finding has important implications for institutions seeking to reduce costs for students and decrease withdrawal rates. Previous research indicates that 16% or more of students have dropped or withdrawn from at least one course because the textbook was too expensive (Hendricks et al., 2017; Jhangiani and Jhangiani, 2017). Providing up-front information about associated textbook costs would allow students to make informed financial decisions about their courses prior to enrollment. Some institutions have already begun including textbook costs in their course catalogs and our findings support such practices by indicating that students use this information to reduce their course costs. Students with the option to select hypothetical courses using OER saved $219 on average, which is roughly equivalent to 1 month of groceries on a low-cost food plan for adults age 19–50 (United States Department of Agriculture, 2019). If all courses moved to free course materials, students could save enough for almost a semester's worth of groceries. Finally, the finding that students are more likely to select courses without associated textbook costs also supports the importance of ensuring that OER courses have equivalent or better outcomes than courses that use traditional textbooks. Fortunately, research generally shows that students in OER courses perform just as well as, or better than, students using commercial textbooks (e.g., Hilton, 2016; Jhangiani et al., 2018).

While OER are generally considered a net gain for students, it is important to remember that changing textbooks or redesigning courses around a new textbook represents a substantial burden for instructors. This is especially important considering that more than 70% of faculty positions at US institutions are non-tenure-track, suggesting some level of precarity for most instructors (American Association of University Professors (AAUP), 2018). If more colleges move to openly disclosing their OER courses, and there are many instructors in non-permanent positions who may not have the time or resources to adapt their courses, this could result in a situation where students gravitate away from their classes. While this scenario is entirely hypothetical, it is important to consider any possible implications that further marginalize adjunct or other part-time instructors. It should be noted that this is not an argument for failing to provide students with the information they need to make informed decisions, but rather an argument for providing precarious instructors with resources such as small grants to support the creation and adoption of OER. Indeed, it was a small grant from our university that allowed us to begin using OER in introductory psychology and conduct Study 1. Nevertheless, the results of Study 1 indicate that one hidden benefit of OER may be improved instructor evaluations. This finding may help to further compel some instructors to devote the time, energy, and resources to adopting OER for their courses.

There are several limitations to these studies. The first relates to when we collected data for Study 1. These data were collected near the end of the semester, which means it is possible that some students had withdrawn from the course by that point in the semester. It is possible that the students who withdrew would have provided important information about our questions of interest. For example, research shows GPA (Aragon and Johnson, 2008; Boldt et al., 2017) and financial aid availability (Morris et al., 2005) are both related to student persistence in a course. It is also true that our samples were predominantly white, young adult, and continuing-generation. Past research shows that students who are marginalized are more likely to take higher loan amounts (e.g., Furquim et al., 2017), shoulder the financial burden of college on their own (McCabe and Jackson, 2016), default on their loans once they leave college (Hillman, 2014), and benefit more from OER (Colvard et al., 2018). All of this suggests that students who are most likely to be helped by the use of an OER (e.g., those with low GPAs and high amounts of financial need and stress) were not well-represented in our sample. Future studies should assess the effects of OER throughout the semester, not just at the end, and examine OER perceptions and outcomes at more diverse campuses and/or programs.

Second, while participants were more likely to select hypothetical courses that had no associated textbook costs, it is unclear whether this effect would hold when students are making actual course selections. When students are selecting classes they will actually take, other factors likely come into play such as the day/time of classes, which classes fulfill requirements for their major, and their familiarity with, or the reputation of, the instructor teaching the course. It is also true that the salience of the cost of the course materials could be reduced when this other information is provided to students. Future research is needed to examine whether course selection decisions based on use of OER interact with these other factors. Finally, it is possible that the questionnaire used to assess students' perceptions of the hypothetical instructors in Study 2 was not appropriate to evaluate perceptions of instructors on the basis of their syllabi alone.

Overall, results from the present two studies demonstrate that the use of OER at both the classroom and institutional level can affect student outcomes beyond performance in any one particular class. Specifically, our results indicate that OER use can affect students' perception of their instructors and increase their likelihood of selecting specific classes. As such, findings from our studies suggest that OER have important hidden impacts and the potential to affect more than simple student outcomes in any given class.

The datasets generated for this study are available on request to the corresponding author.

The studies involving human participants were reviewed and approved by Washington State University Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

AN and CC contributed conception and design of the study. AN organized the data and performed the statistical analyses with help from CC. AN wrote the first draft of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

AN would like to acknowledge the Open Education Group and the Hewlett Foundation for funding her open educational resources fellowship.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

American Association of University Professors (AAUP) (2018). Data Snapshot: Contingent Faculty in US Higher Ed. Retrieved from: https://www.aaup.org/news/data-snapshot-contingent-faculty-us-higher-ed#.XYhc4ChKg2w (accessed September 22, 2019).

Aragon, S. R., and Johnson, E. S. (2008). Factors influencing completion and noncompletion of community college online courses. Am. J. Distance Educ. 22, 146–158. doi: 10.1080/08923640802239962

Bliss, T. J., Robinson, T., Hilton, J., and Wiley, D. (2013). An OER COUP: college teacher and student perceptions of open educational resources. J. Interactive Media Educ. 1:4. doi: 10.5334/2013-04

Boldt, D. J., Kassis, M. M., and Smith, W. J. (2017). Factors impacting the likelihood of student withdrawals in core business classes. J. Coll. Student Retent. 18, 415–430. doi: 10.1177/1521025115606452

Colvard, N. B., Watson, C. E., and Park, H. (2018). The impact of open educational resources on various student success metrics. Int. J. Teach. Learn. Higher Educ. 30, 262–276.

Fischer, L., Hilton, J., Robinson, T. J., and Wiley, D. A. (2015). A multi-institutional study of the impact of open textbook adoption on the learning outcomes of post-secondary students. J. Comput. Higher Educ. 27, 159–172. doi: 10.1007/s12528-015-9101-x

Florida Virtual Campus (2016). 2016 Florida Student Textbook and Course Materials Survey. Tallahassee, FL.

Furquim, F., Glasener, K. M., Oster, M., McCall, B. P., and DesJardins, S. L. (2017). Navigating the financial aid process: borrowing outcomes among first-generation and non-first-generation students. Ann. Am. Acad. Political Soc. Sci. 671, 69–91. doi: 10.1177/0002716217698119

Hardin, E. E., Eschman, B., Spengler, E. S., Grizzell, J. A., Moody, A. T., Ross-Sheehy, S., et al. (2018). What happens when trained graduate student instructors switch to an open textbook? a controlled study of the impact on student learning outcomes. Psychol. Learn. Teach. 18, 48–64. doi: 10.1177/1475725718810909

Hendricks, C., Reinsberg, S. A., and Rieger, G. (2017). The adoption of an open textbook in a large physics course: an analysis of cost, outcomes, use, and perceptions. Int. Rev. Res. Open Distribut. Learn. 18:3006. doi: 10.19173/irrodl.v18i4.3006

Hillman, N. W. (2014). College on credit: a multilevel analysis of student loan default. Rev. Higher Educ. 37, 169–195. doi: 10.1353/rhe.2014.0011

Hilton, J. (2016). Open educational resources and college textbook choices: a review of research on efficacy and perceptions. Educ. Technol. Res. Dev. 64, 573–590. doi: 10.1007/s11423-016-9434-9

Jhangiani, R., and Jhangiani, S. (2017). Investigating the perceptions, use, and impact of open textbooks: a survey of post-secondary students in British Columbia. Int. Rev. Res. Open Distribut. Learn. 18:3012. doi: 10.19173/irrodl.v18i4.3012

Jhangiani, R. S., Dastur, F. N., Le Grand, R., and Penner, K. (2018). As good or better than commercial textbooks: students' perceptions and outcomes from using open digital and open print textbooks. Can. J. Scholar. Teach. Learn. 9:22. doi: 10.5206/cjsotl-rcacea.2018.1.5

McCabe, J., and Jackson, B. A. (2016). Pathways to financing college: race and class in students' narratives of paying for school. Soc. Curr. 3, 367–385. doi: 10.1177/2329496516636404

Morris, L. V., Wu, S.-S., and Finnegan, C. L. (2005). Predicting retention in online general education courses. Am. J. Distance Educ. 19, 23–36. doi: 10.1207/s15389286ajde1901_3

United States Department of Agriculture (2019). Official USDA Food Plans: Cost of Food at Home at Four Levels. U.S. Average. Retrieved from: https://fns-prod.azureedge.net/sites/default/files/media/file/CostofFoodAug2019.pdf (accessed October 15, 2019).

US Public Interest Research Group Student PIRG. (2014). Fixing the Broken Textbook Market. Retrieved from: https://uspirg.org/sites/pirg/files/reports/NATIONAL%20Fixing%20Broken%20Textbooks%20Report1.pdf (accessed September 24, 2019).

Vojtech, G., and Grissett, J. (2017). Student perceptions of college faculty who use OER. Int. Rev. Res. Open Distribut. Learn. 18:3032. doi: 10.19173/irrodl.v18i4.3032

Webber, D. A. (2016). Are college costs worth it? How ability, major, and debt affect the returns to schooling. Econ. Educ. Rev. 53, 296–310. doi: 10.1016/j.econedurev.2016.04.007

Keywords: open educational resources, educational equity, textbooks, marginalized students, college costs

Citation: Nusbaum AT and Cuttler C (2020) Hidden Impacts of OER: Effects of OER on Instructor Ratings and Course Selection. Front. Educ. 5:72. doi: 10.3389/feduc.2020.00072

Received: 08 January 2020; Accepted: 07 May 2020;

Published: 09 June 2020.

Edited by:

Xiaoxun Sun, Australian Council for Educational Research, Australia

Reviewed by:

Anthony Philip Williams, University of Wollongong, Australia

Copyright © 2020 Nusbaum and Cuttler. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Amy T. Nusbaum, amy.nusbaum@wsu.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
