ORIGINAL RESEARCH article

Front. Educ., 29 May 2020

Sec. STEM Education

Volume 5 - 2020 | https://doi.org/10.3389/feduc.2020.00066

This paper aims at identifying ontological categories as higher-order knowledge structures that underlie engineering students' thinking about technical systems. Derived from interviews, these ontological categories include, inter alia, a focus on the behavior, structure, or purpose of a technical system. We designed and administered a paper-based test to assess these ontological categories in a sample of N = 340 first-year students in different engineering disciplines. Based on their activation patterns across ontological categories, students clustered into six different ontological profiles. Study program, gender as well as objective and self-perceived cognitive abilities were associated with differences in jointly activated ontological categories. Additional idiosyncratic influences and experiences, however, seemed to play a more important role. Our results can inform university instruction and support successful co-operation in engineering.

Many of today's challenges are engineering problems that refer to the design, construction, or maintenance of technical systems, from mobile phones or car brakes to power plants or automatic production facilities. Such technical systems consist of several interrelated technical components that belong together in a larger unit and work together to achieve some common objective (Lauber and Göhner, 1999; VDI, 2011). They may comprise software, mechanical, and electronic components. To solve engineering problems, technical content knowledge is necessary (Ropohl, 1997; Abdulwahed et al., 2013). However, due to the complexity of technical systems, different content knowledge could be activated to think about a certain engineering problem. For instance, imagine you had to construct a soccer-playing robot: what comes to your mind immediately might differ between individuals and range from depicting the robot body to reflecting on programming solutions. The question we ask in this study is whether there is a superordinate structure that determines what comes to your mind immediately and that could be described as a thinking tendency adopted to deal with engineering problems. A problem solver, for example, could have the tendency to focus on the logical relations and object classifications within the technical system (e.g., reflecting on programming solutions), on the configuration of the different components (e.g., what components the soccer robot is made of), on the behavior of the system (e.g., how sensory information leads to certain body movements), or on its appearance (e.g., depicting the robot body), to name just a few. As knowledge structures, these tendencies are subject to change: they develop based on prior experiences and can potentially be modified by instruction (Chi et al., 1981, 2012; Jacobson, 2001; Vosniadou et al., 2012; Björklund, 2013; Smith et al., 2013).
Being able to identify such tendencies in thinking about engineering problems can open up new ways of analyzing communication in engineering teams and designing instruction in engineering education.

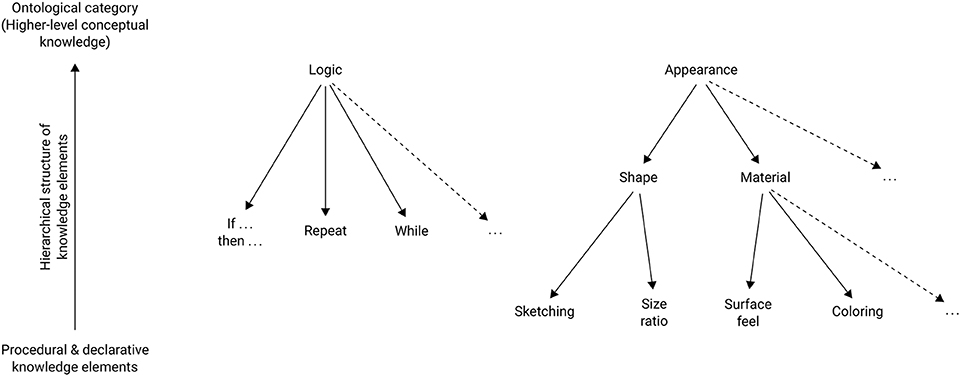

A focus on the “appearance of a technical system” (e.g., depicting the robot body in the above example) can be considered to represent higher-level conceptual knowledge that activates subordinate knowledge including procedural knowledge about designing pleasant facial expressions or conceptual knowledge about the look and feel of different surface materials. Likewise, a focus on the “logic of technical systems” (e.g., reflecting on programming solutions) may represent another higher-level knowledge element that triggers subordinate elements such as knowledge about repeat or while algorithms. Since these higher-level knowledge elements to some extent determine the possibility space of thinking, they are called ontological categories (e.g., focus on appearance, logic, behavior, or on structures of technical systems). Since one may activate several ontological categories (e.g., focus on the appearance and the behavior of a system) during engineering problem solving, we speak of ontological profiles to describe the joint activation of different ontological categories.

Most instruments focus on the assessment of conceptual or procedural knowledge, for example, about programming languages, characteristics of different surface materials for soccer-playing robots, physics laws or circuits. Examining ontological profiles allows us to describe similarities and differences in first-year civil-, mechanical-, electrical-, and software engineering students' tendencies in thinking about technical systems. Acknowledging and explicitly addressing ontological categories in engineering education could improve university instruction and support student learning. To do so, we first aim to identify the ontological categories that students entering engineering disciplines may activate when they think about engineering problems. In this paper, we present and discuss an approach to assess these higher-level knowledge structures in first-year engineering students.

In the following sections, we first briefly describe the cognitive architecture model this work is based on and illustrate the idea of ontological categories and ontological profiles. Covariates of ontological profiles and the assessment of conceptual knowledge structures are addressed in the next sections before we focus on the present study.

Knowledge is organized in several layers of hierarchically and network-like structured knowledge elements (see Figure 1 for an exemplary structure in the context of technical systems)—with basic declarative knowledge, like surface feel, and procedural knowledge, like sketching, at the lowest level that may be grouped to intermediate-level conceptual knowledge structures, like material (Gupta et al., 2010; Chi et al., 2012). Conceptual knowledge structures describe content-specific knowledge elements and their interrelations (Posner et al., 1982; Chi, 1992; de Jong and Ferguson-Hessler, 1996; Carey, 2000; Duit and Treagust, 2003), shaping reasoning, communication, and thus thinking in the respective knowledge domain (Schneider and Stern, 2010; Shtulman and Valcarcel, 2012). Higher-level conceptual knowledge structures are also referred to as ontologies that provide categories for thinking about entities, like “appearance” in Figure 1. Attributes of an ontological category describe its substantial characteristics (e.g., entities in the ontological category “appearance of technical systems” all share attributes such as purposeful or visual, and entities in the ontological category “animals” all share attributes such as has blood or breathes, see Slotta et al., 1995). Building on the ideas of Piaget's cognitive constructivism (Powell and Kalina, 2009), individuals actively construct intermediate- and higher-level conceptual knowledge structures based on their existing knowledge. Without formal instruction, these knowledge structures often reflect naïve theories or everyday experiences. They develop and change with increasing expertise in a content domain (Baroody, 2003; Chi, 2006; Ericsson, 2009; Kim et al., 2011; Vosniadou et al., 2012).

Figure 1. Visualization of a potential hierarchical structure of knowledge elements in the context of “technical systems” with ontological categories at the highest level, intermediate-level conceptual knowledge, and procedural and declarative knowledge elements at the lowest level. The trees could be extended in any manner.

The often cumbersome process of developing and revising existing conceptual knowledge on the intermediate level has been investigated and described in conceptual change studies (Hake, 1998; Hofer et al., 2018; Vosniadou, 2019). However, only a few researchers have assessed higher-level conceptual knowledge structures (i.e., ontological categories). One exception is the assessment and modification of the ontological categories “sequential processes” and “emergent processes” in the specific context of understanding diffusion, heat transfer, and microfluidics in a sample of undergraduate engineering students (Yang et al., 2010). Beyond that, ontological categories in engineering have been largely unknown so far.

Depending on the discipline and situational requirements, the activation of certain ontological categories may be more beneficial than others. Accordingly, physics experts have been found to flexibly apply different ontological categories to deal with intermediate-level concepts such as energy or quantum particles depending on situational goals and requirements such as communication with novices or experts (Gupta et al., 2010). In studies on complex systems problem solving, novices thought about complex systems as static structures, while experts thought about complex systems as equilibration processes (Jacobson, 2001; Chi et al., 2012).

An engineering problem that is defined by the underlying technical system is by definition multifaceted and allows approaching a solution from several perspectives, as illustrated at the beginning with the soccer-playing robot. To give another example, if you had to sketch a shelf stocker system, you could immediately think about the appearance of the system, or the processes performed by the system, or the functions of different elements of the system, or the logic behind the system. We think of these different tendencies in thinking as examples of ontological categories that might be activated in the context of engineering problems. We refer to different patterns of ontological categories that are activated when thinking about engineering problems as ontological profiles.

Due to the complexity of engineering problems, we expect that first-year students without formal discipline-specific education may tend to activate several of such ontological categories during problem solving, whereas experts might tend to adhere to particular ontological categories that reflect common discipline-specific thinking approaches (to give an example from the field of medicine, a dermatologist might focus on a patient's skin and a neurologist on a patient's behavior when diagnosing). What first comes to these students' minds when thinking about technical systems, however, is unlikely to be arbitrary and can be expected to depend on cognitive prerequisites and prior experiences (Chi et al., 1981, 2012; Jacobson, 2001; Vosniadou et al., 2012; Björklund, 2013; Smith et al., 2013). We accordingly included objective as well as self-perceived measures of ability (e.g., general reasoning, spatial ability, self-concept) that have proven to correlate with success in technology-related domains (Deary et al., 2007; Marsh and Martin, 2011; Wei et al., 2014; Hofer and Stern, 2016) to explore whether differences in ontological category activation are associated with differences on these variables.

More specifically, differences and similarities may be associated with individual characteristics that have been found to predict achievement in STEM subjects. General reasoning ability and verbal abilities were identified as predictors of academic achievement in general (e.g., Deary et al., 2007). While numerical abilities turned out to be particularly predictive of science achievement, the spatial ability to mentally manipulate figural information successfully predicted spatial-practical achievement (Gustafsson and Balke, 1993). There is broad evidence that spatial abilities, especially mental rotation, contribute to the development of expertise in STEM domains and, in particular, in engineering (e.g., Shea et al., 2001; Sorby, 2007; Wai et al., 2009). In a sample of engineering students, for instance, spatial ability could significantly predict overall course grades (Hsi et al., 1997). A recent study assessing undergraduate mechanical engineering and math-physics students, reported that verbal and numerical abilities were associated with students' achievements on most physics and math courses, whereas spatial ability was associated with students' achievements on an engineering technical drawing course (Berkowitz and Stern, 2018). Based on existing literature, we can accordingly expect general reasoning ability as well as verbal, numerical and—in particular—spatial abilities to affect thinking about engineering problems in specific underlying ontological profiles. In addition to basic cognitive abilities, students' subject-specific belief in their own abilities—their self-concept—turned out to be a frequent predictor of academic success (Marsh, 1986; Marsh and O'Mara, 2008; Marsh and Martin, 2011; Marsh et al., 2012). Students' self-concept related to technology may hence be informative when interpreting ontological profiles in engineering.

In addition, students choosing different study programs in the engineering domain (e.g., mechanical engineering vs. software engineering) may also differ in their thinking approaches (e.g., a stronger focus on the behavior and purpose of a system vs. a stronger focus on classification and logic; see Vogel-Heuser et al., 2019). Although in the first semester at the university these knowledge structures can be expected to be still rather unaffected by formal education in the different engineering disciplines, student self-selection effects reflecting their prior experiences can be expected.

Conceptual knowledge structures have been assessed directly, for example, by means of thinking-aloud protocols (Ford and Sterman, 1998) that externalize participants' thinking processes, and indirectly by means of observations of actual behavior (Badke-Schaub et al., 2007; Jones et al., 2011; Silva and Hansman, 2015), in the context of design briefs, for instance (Björklund, 2013). Those methods are rather time-consuming and not fit for efficient data collection in large groups. More efficient concept tests that are based on single- or multiple-choice questions have been proposed for different STEM contents (Hestenes et al., 1992; Klymkowsky and Garvin-Doxas, 2008; Hofer et al., 2017; Lichtenberger et al., 2017). As far as we know, however, they have not yet been applied to assess higher-level conceptual knowledge.

Supporting students to become successful problem solvers in engineering domains can be considered one central goal of engineering education. To reach this goal, one reasonable step is to learn more about how students think about technical systems and advance research on the identification, assessment, and description of engineering students' ontological categories. The present study aims at assessing tendencies in thinking about engineering problems. Since ontological categories in engineering have been largely unknown so far, a first goal of this study was to identify the different ontological categories activated by individuals when thinking about engineering problems. For this first step, a structured interview study was conducted. To efficiently assess the ontological categories identified in the first step in larger groups, a test instrument inspired by existing concept tests was developed and evaluated in the next step. In the third step and main part of this study, this test was administered to a sample of first-year civil-, mechanical-, electrical-, and software engineering students. We expected engineering students at the beginning of their studies to show ontological profiles that are characterized by several ontological categories that are activated when thinking about technical systems. We further assumed that inter-individual differences in ontological profiles might be associated with differences in cognitive prerequisites and prior experiences. Accordingly, cluster analyses were used to describe students' ontological profiles. Objective and self-perceived measures of ability as well as study program were used as covariates of profile membership to better understand and interpret students' ontological profiles.

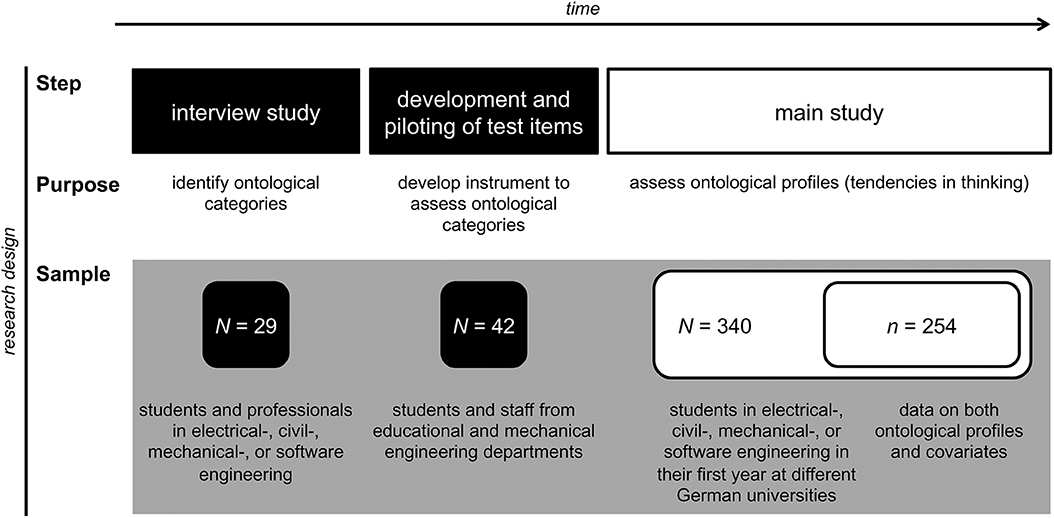

Figure 2 represents the research design of this study. To assess tendencies in thinking about engineering problems, we proceeded in three steps. First, we conducted an interview study to identify ontological categories occurring in novices' and experts' thinking about technical systems. In a second step, we designed test items to assess these categories on a quantitative level and evaluated our instrument in a pilot study. In the main study, we applied this test instrument to first-year university students and clustered the data to describe their ontological profiles in engineering.

Figure 2. Schematic representation of the research design. Time flows from left to right. Corresponding information is vertically aligned. The first row provides information on the step within the research process, the second row on its purpose, and the third row on the samples examined in each step.

Twenty-nine students and professionals in electrical-, civil-, mechanical-, or software engineering were recruited at different German universities to participate in the interview study on a voluntary basis. Forty-two participants from the educational and mechanical engineering department of the Technical University of Munich, Germany, served to evaluate our test instrument to assess ontological categories in engineering. A total of N = 340 students (26.8% female) took part in the main study. They studied electrical-, civil-, mechanical-, or software engineering in their first year at different German universities. For a subsample of n = 254 students (28.5% female), we have data on both ontological profiles and covariates (see Table 1). Their mean age was 20.8 years (SD = 2.8).

Ontological categories for thinking about engineering problems were derived from structured interviews with open card sorting tasks, where N = 29 participants with varying expertise were asked to describe what comes to their minds when dealing with technical systems, i.e., a bicycle gearing (including shifting mechanism, sprocket, and chain) and an extended pick and place unit (including stack depot, crane, sorting belt, and stamp; see Vogel-Heuser et al., 2014). Instead of written or verbal stimuli, we used two short silent video sequences that visualized the technical system without any descriptions that could influence participants' thinking in a certain direction (for the bicycle gearing-scenario, we used a video zooming in on a common bicycle gearing in action; for the extended pick and place unit, see Video 1 in the Supplementary Material). After watching a video, participants were instructed to describe what they saw in the film in their own words. They were asked to verbalize their initial impression of what they saw. After that, they used empty cards of different color and shape that they could label to visualize their thinking about the technical system, while thinking aloud. To give some examples, they used the cards to represent different components of the bicycle gearing or the pick and place unit and arranged the cards on the table reflecting the shape of the technical system. Other participants used the cards to indicate functions of elements of the system or included arrows to visualize a process or temporal order. Their verbalized thoughts during this activity helped us to understand the meaning of their card sorting (for a more detailed description of the interview study, see Vogel-Heuser et al., 2019). When finished, the whole procedure was repeated with the video of the second technical system. The order of the two technical systems was randomized. 
Participants gave written consent for the use of the recorded audio files as well as photographs of the produced card sorting models for research purposes by the authors.

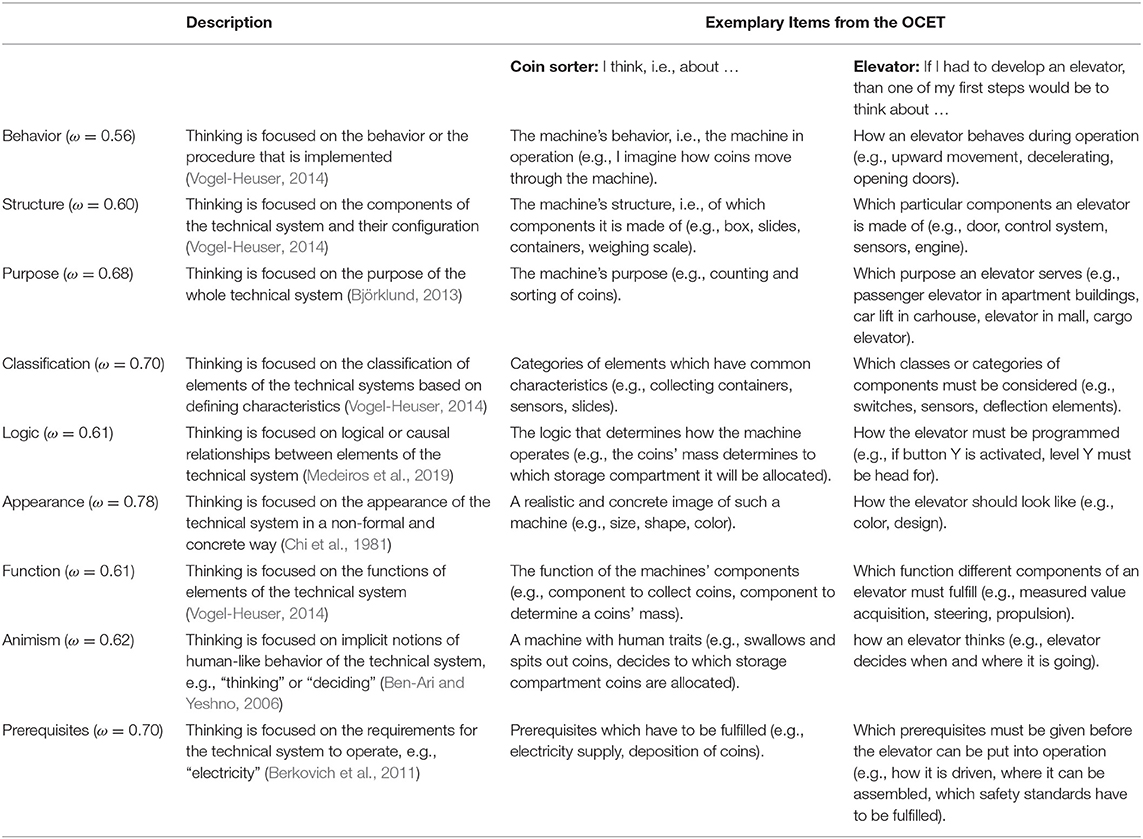

Based on Grounded Theory (Charmaz and Belgrave, 2015) all available data—interview data and the products from the open card sorting tasks—were jointly scrutinized to derive potential categories of thinking that were present in the interviewees' mental representations of the technical systems. These potential categories were then described in a coding manual (general description of each category, indicators) and used by three raters to code the data of all interviewees. Discussions of the results of the coding process within the author team led to the exclusion, merging, refining, broadening, and/or renaming of categories. This process was repeated until all interviewees' representations could be unambiguously described by relating their statements and visual structures to specific ontological categories. These empirically derived categories are theoretically substantiated by existing research in psychology and engineering (see Table 2). For each of the nine final categories Behavior, Structure, Purpose, Classification, Logic, Appearance, Function, Animism, and Prerequisites, Table 2 gives brief category descriptions, substantiating literature references, and two sample items from the test we developed in the next step.

Table 2. Nine ontological categories and their reliability, their descriptions, literature references, and two sample items from the Ontological Categories in Engineering Test (OCET).

We developed a test instrument asking students to judge whether or not statements representing each of the nine ontological categories in the context of a variety of different engineering problems conform with their spontaneous mental representation of the problem. The spontaneous mental representations triggered by short paragraphs describing the respective engineering problem (like drafting an elevator) should reflect the ontological categories that shape students' thinking. The engineering problems were embedded into different technical systems, i.e., coin sorter, assembly line, shelf stocker, traffic lights, Leonardo's alternate motion machine, elevator, respirator, bicycle gearing, computer, hamburger automat, drip coffee maker, TV remote control device, ticket machine, and robot in assembly line production (the seven technical systems printed in bold type are included in the final instrument).

Each engineering problem requires the student to contemplate nine forced-choice items representing the nine ontological categories and asks students to judge whether or not each statement conforms with their initially activated mental representation (i.e., activated knowledge structures). Sample items from the final test are provided in Table 2 for the coin sorter and the elevator.

This approach allows detecting the pattern of activation across the nine ontological categories. The first draft of the instrument with all of the 14 technical systems listed above was evaluated in an unpublished pilot study with an independent sample of N = 42 participants. In addition to the psychometric analysis of the test items, participants' comments were recorded, discussed, and considered in the test instrument, if applicable. This evaluation led to the exclusion of seven technical systems and modifications in the instructions and item wording. The final instrument (i.e., Ontological Categories in Engineering Test, OCET) contains the seven technical systems printed in bold type above (see Vogel-Heuser et al., 2019, for a more detailed description of the test development process). Accordingly, the OCET assesses each ontological category with seven items that refer to seven different engineering problems.
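
The structure of the resulting data (nine forced-choice judgments for each of seven technical systems) can be illustrated with a small scoring sketch. This is a minimal Python illustration and not the authors' scoring procedure: the response layout, the 0/1 coding, the helper names `blank_answers` and `category_scores`, and the choice to sum the judgments per category are all assumptions made for the example.

```python
# Category names are taken from the text; everything else is hypothetical.
CATEGORIES = [
    "Behavior", "Structure", "Purpose", "Classification", "Logic",
    "Appearance", "Function", "Animism", "Prerequisites",
]


def blank_answers():
    """Return a response sheet for one system with every statement rejected."""
    return {category: 0 for category in CATEGORIES}


def category_scores(responses):
    """Sum the forced-choice judgments per ontological category.

    `responses` maps a technical system name to a dict that maps each
    category to 1 (statement conforms with the initial mental
    representation) or 0 (it does not). Summing is an assumed scoring rule.
    """
    scores = {category: 0 for category in CATEGORIES}
    for answers in responses.values():
        for category in CATEGORIES:
            scores[category] += answers[category]
    return scores


# Invented answers for two systems, for illustration only.
example = {
    "coin sorter": {**blank_answers(), "Behavior": 1, "Logic": 1},
    "elevator": {**blank_answers(), "Behavior": 1},
}
scores = category_scores(example)
print(scores)
```

Under these assumptions, a complete test with seven systems would yield a score between 0 and 7 for each of the nine categories.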

In the following sections, we introduce the instruments used in the main assessment of the study that aimed at describing and understanding students' ontological profiles. Study program (electrical-, civil-, mechanical-, or software engineering) was directly assessed in a brief demographic questionnaire.

With the OCET, we assessed the following nine ontological categories of thinking about engineering problems: Behavior, Structure, Purpose, Classification, Logic, Appearance, Function, Animism, and Prerequisites. Each ontological category is measured by seven items across seven different technical systems (i.e., the engineering problem context). McDonald's ω was calculated for each ontological category (see Table 2) and ranged from ω = 0.56 (Behavior) to ω = 0.78 (Appearance). The partly rather low reliability scores could be explained by the different engineering problem contexts that might have stimulated the activation of particular ontological categories in first-year students. For instance, students might be more likely to activate the ontological category “Behavior” when they have to think about a “hamburger automat” (preparing hamburgers) compared to “traffic lights.” Capturing the fluctuation (several ontological categories activated across the seven problem contexts in the OCET) and uniformity (the same few ontological categories activated across the seven problem contexts) of ontological category activation is one of the objectives of the OCET. Table 2 provides more detailed information on this test instrument. An independent double coding of 50% of all tests revealed an excellent inter-rater reliability, κ = 0.98. For the final instrument, please contact the corresponding authors of this paper.

We assessed verbal, numerical, spatial, and general reasoning ability, and students' self-concept regarding technical systems.

Verbal Ability was measured with a verbal analogies scale: 20 items; possible scores range from 0 to 20; ω = 0.67; pair of words given, missing word in a second pair must be picked out of five possible answer alternatives to reproduce the relation represented in the given pair; part of the Intelligence Structure Test IST 2000 R (see Liepmann et al., 2007).

Numerical Ability was measured with a calculations scale: 20 items; possible scores range from 0 to 20; ω = 0.78; basic arithmetic problem given that has to be solved with the solution being a natural number; part of the Intelligence Structure Test IST 2000 R (see Liepmann et al., 2007).

Spatial Ability was measured with a mental rotation scale: 24 items; possible scores range from 0 to 24; ω = 0.93; three-dimensional geometrical structure built out of cubes given, the two correctly rotated structures must be picked out of four (see Peters et al., 1995, 2006).

General Reasoning was measured with a short form of Raven's Advanced Progressive Matrices: 12 items; possible scores range from 0 to 12; ω = 0.69 (see Arthur and Day, 1994).

In addition, we adapted standardized measures for participants' self-concept (Marsh and Martin, 2011) on four-point Likert-scales—as beliefs in one's own abilities regarding technical systems (Self-Concept; 5 items; possible scores representing the mean Likert-scale-scores across the five items range from 1 to 4; ω = 0.85; sample item: “I am perceived as an expert with technical systems”).

An independent double coding of 7% of the sample revealed acceptable to excellent inter-rater reliabilities: all scales showed inter-rater reliabilities of κ > 0.96, with the exception of the Calculations scale with κ = 0.65.
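
To make the inter-rater statistic concrete, the following Python sketch computes a chance-corrected agreement coefficient for two coders. It assumes that κ refers to Cohen's kappa for two raters, which the text does not state explicitly; the function name `cohens_kappa` and the codings are invented for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who coded the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must code the same non-empty item set.")
    n = len(rater_a)
    # Proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance from each rater's marginal frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # Degenerate case: both raters used one identical label.
    return (observed - expected) / (1.0 - expected)


# Invented codings of ten item responses (1 = scored correct, 0 = incorrect).
coder_1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
coder_2 = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0]
kappa = cohens_kappa(coder_1, coder_2)
print(round(kappa, 2))
```

In this toy case the coders agree on nine of ten items, and chance agreement is 0.5, so the raw agreement of 0.9 shrinks to a kappa of about 0.8.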

Participants were tested in their major lectures during the first semester of their university studies. All instruments were paper-based and conducted under controlled and standardized conditions by the authors.

Participants received the cognitive and affective scales in a 40-page booklet. We kept to the time limits recommended for each scale resulting in a total working time of 45 min. In a subsequent lecture, students worked on the OCET (a 44-page booklet) for 40 min (as shown in the pilot study, this time limit allows working on the test without time pressure). The OCET was explicitly introduced as a non-evaluative test instrument that does not assess correct or wrong answers.

Participants took part in the study on a voluntary basis and were not given any remuneration. They were asked for their informed consent in both tests separately. Consent could be withdrawn within a time period of two months—15.4% of the students attending the lectures did not give consent and were excluded from the analyses. Data from both tests were matched using anonymized personal codes. A list matching the anonymized personal codes and participants' names was kept by one person not related to this study and was destroyed after the given time for withdrawing consent—resulting in completely anonymized data. Data was analyzed only after this anonymization process was completed.

All statistical analyses were run in R (R Core Team, 2008). In a first step, a hierarchical cluster analysis on the data of the full sample of N = 340 participants was conducted on the nine ontological categories (i.e., Behavior, Structure, Purpose, Classification, Logic, Appearance, Function, Animism, and Prerequisites). As we were interested in ontological profiles across all nine ontological categories—and not mere differences in the scale values—we used a hierarchical clustering algorithm with Pearson correlation coefficients as proximity measures in combination with a complete linkage approach. Scores were z-standardized within the scales before clustering. We took quantitative and qualitative indicators (elbow criterion, gap statistic, consideration of the explanatory value of each cluster solution) into account to specify the number of clusters.
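
The clustering step can be sketched in a few lines. The following is a minimal pure-Python illustration, not the authors' R code: it z-standardizes each scale, uses 1 minus the Pearson correlation between two participants' profiles as the dissimilarity (an assumed conversion of the correlation proximity), and merges clusters with complete linkage. The function names, the three-scale toy data, and the fixed number of clusters are assumptions for the example; the study used nine scales and chose the number of clusters with the criteria named above.

```python
from itertools import combinations
from statistics import mean, stdev


def z_standardize_columns(rows):
    """Standardize each scale (column) to mean 0 and sample SD 1."""
    columns = list(zip(*rows))
    stats = [(mean(col), stdev(col)) for col in columns]
    return [[(v - m) / s for v, (m, s) in zip(row, stats)] for row in rows]


def pearson(x, y):
    """Pearson correlation between two participants' profile vectors."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ss_x = sum((a - mx) ** 2 for a in x)
    ss_y = sum((b - my) ** 2 for b in y)
    return cov / (ss_x * ss_y) ** 0.5


def complete_linkage(rows, n_clusters):
    """Agglomerative clustering on 1 - r with complete linkage."""
    dist = {
        (i, j): 1.0 - pearson(rows[i], rows[j])
        for i, j in combinations(range(len(rows)), 2)
    }

    def linkage(pair):
        # Complete linkage: distance of the two most dissimilar members.
        a, b = pair
        return max(dist[min(i, j), max(i, j)] for i in a for j in b)

    clusters = [[i] for i in range(len(rows))]
    while len(clusters) > n_clusters:
        a, b = min(combinations(clusters, 2), key=linkage)
        clusters = [c for c in clusters if c is not a and c is not b] + [a + b]
    return clusters


# Invented scores of four participants on three scales (illustration only).
raw = [[7, 1, 4], [6, 2, 4], [1, 7, 3], [2, 6, 5]]
profiles = complete_linkage(z_standardize_columns(raw), n_clusters=2)
print(profiles)
```

Because the dissimilarity is based on correlations, two participants with the same pattern of relatively high and low scales end up close together even if their overall score levels differ, which matches the stated interest in profiles rather than mere differences in scale values.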

In a second step, the resulting ontological profiles (i.e., the clusters) were further described by analyzing the distribution of study program within the profiles and the profile-specific manifestations of the cognitive and affective covariates described above. For study program, we used Chi-squared tests to check for differences in the distributions between each profile and the total sample.
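
One way to read this comparison is as a goodness-of-fit test of a profile's study program counts against the proportions in the total sample. The Python sketch below follows that reading, which is an assumption because the text does not specify the exact test variant. The counts and proportions are invented, and the closed-form tail probability in `chi2_sf_df3` holds only for three degrees of freedom (four study programs).

```python
from math import erfc, exp, pi, sqrt


def chi_squared_gof(observed, reference_proportions):
    """Chi-squared goodness-of-fit statistic and its degrees of freedom."""
    total = sum(observed)
    expected = [total * p for p in reference_proportions]
    statistic = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return statistic, len(observed) - 1


def chi2_sf_df3(x):
    """Upper-tail probability of a chi-squared variable with exactly 3 df."""
    return erfc(sqrt(x / 2)) + sqrt(2 * x / pi) * exp(-x / 2)


# Invented counts of civil, mechanical, electrical, and software engineering
# students in one profile, and invented proportions in the total sample.
profile_counts = [10, 20, 30, 40]
sample_proportions = [0.25, 0.25, 0.25, 0.25]

statistic, df = chi_squared_gof(profile_counts, sample_proportions)
p_value = chi2_sf_df3(statistic)
print(statistic, df, p_value)
```

With these invented numbers, each program would be expected 25 times in the profile, so the statistic is 20 with 3 degrees of freedom, and the distribution within the profile would be judged to differ from the total sample.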

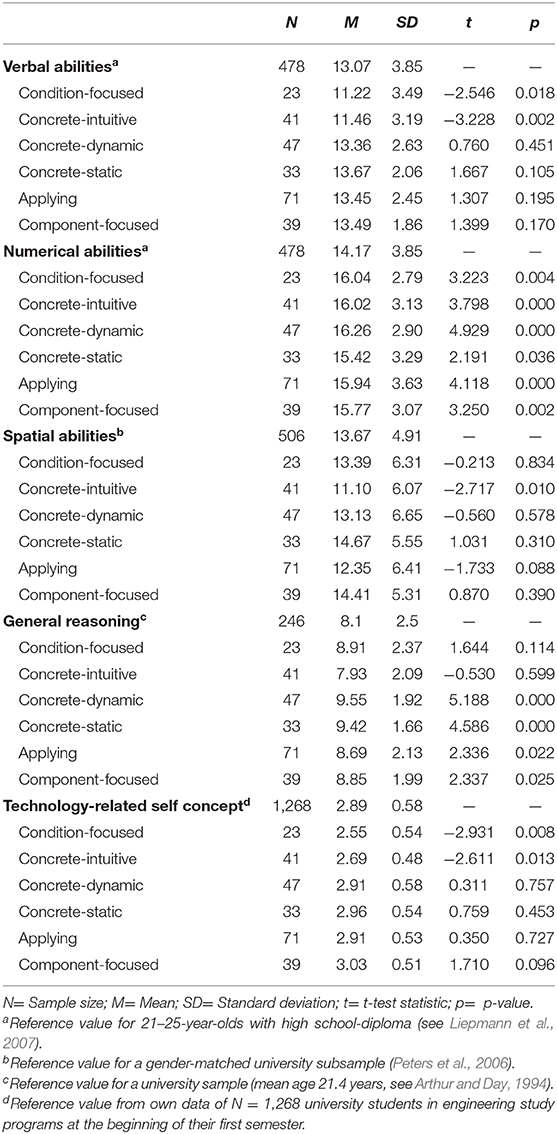

For the cognitive and affective covariates, we conducted one-sample t-tests against reported means from comparable reference groups based on the subsample of n = 254 students (see Table 1) for which data on both the ontological profiles and the cognitive and affective covariates were available. For verbal and numerical abilities, the reference sample consists of 21–25-year-old students from Germany with comparable formal education (i.e., higher education entrance qualification, German: “Abitur,” see Liepmann et al., 2007). For general reasoning, we compared our sample to a sample of US university students with a mean age of 21.4 years, which provides the only available published reference values for the short form of the Raven APM (see Arthur and Day, 1994). For spatial ability, we compared our sample to a gender-matched sample of university students from Germany within science study fields (see Peters et al., 2006). For technology-related self-concept, we refer to our own data collection of N = 1,268 university students in engineering study programs at the very beginning of their first semester.
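
The test statistic behind these comparisons can be written down directly. The following Python sketch computes the one-sample t statistic and its degrees of freedom for a set of scores against a fixed reference mean; the function name `one_sample_t`, the scores, and the reference mean are invented, and the p-value is deliberately left to a statistics package (for example `t.test` in R or `scipy.stats.ttest_1samp` in Python).

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(values, reference_mean):
    """One-sample t statistic and degrees of freedom against a fixed mean."""
    n = len(values)
    standard_error = stdev(values) / sqrt(n)  # sample SD divided by sqrt(n)
    t = (mean(values) - reference_mean) / standard_error
    return t, n - 1


# Invented ability scores of five students in one profile, compared with an
# invented published reference mean of 13.
profile_scores = [12, 14, 16, 18, 20]
t_value, df = one_sample_t(profile_scores, reference_mean=13)
print(round(t_value, 3), df)
```

Here the profile mean of 16 lies three points above the reference mean, and the standard error is about 1.41, which gives a t value of about 2.12 with 4 degrees of freedom.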

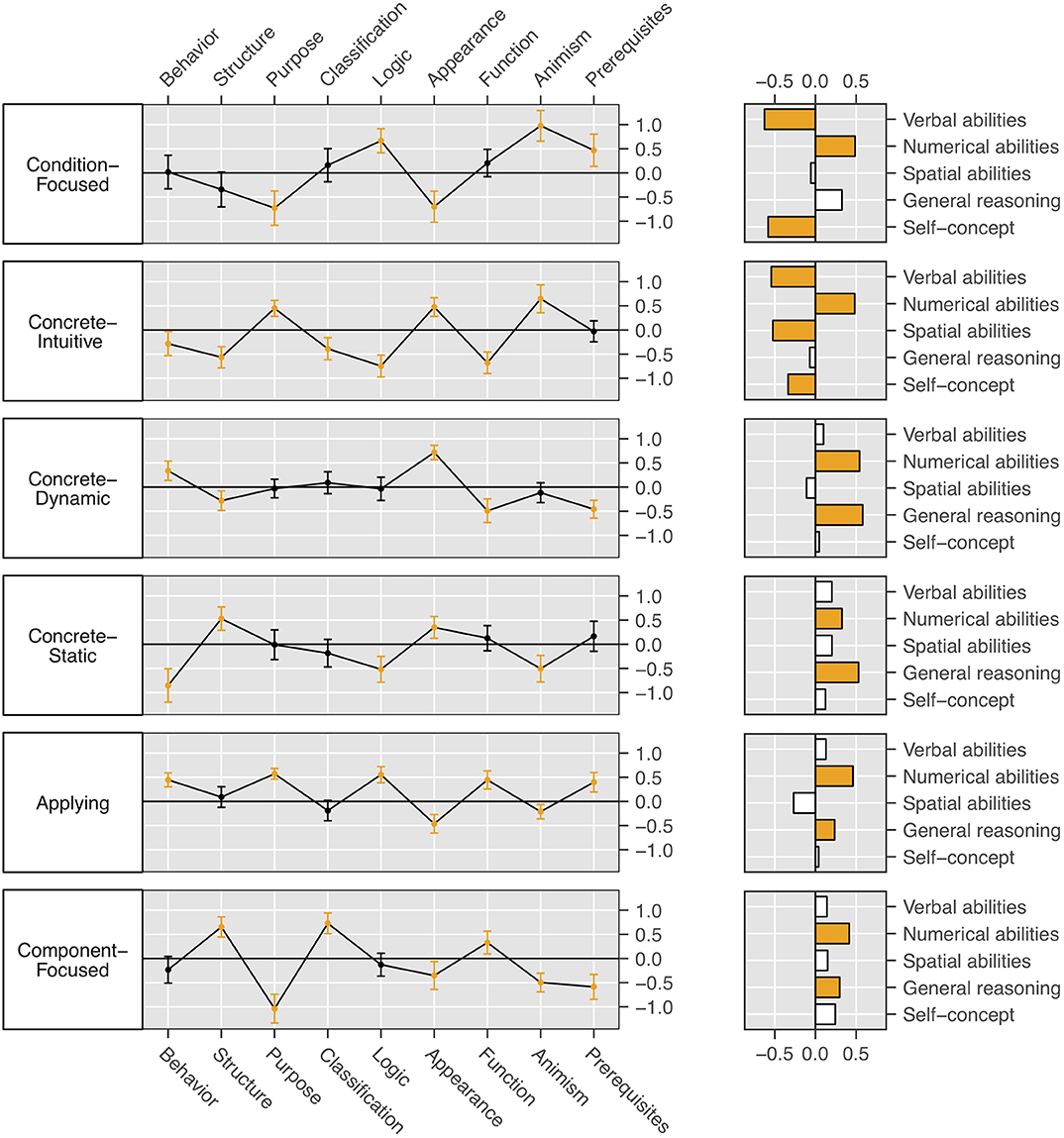

We used the OCET to assess first-year students' answer patterns across the nine ontological categories which are assumed to reflect tendencies in thinking about engineering problems. A cluster analysis was conducted on the nine ontological categories, which were each standardized at the category mean. Based on the elbow criterion, the gap statistic, and with respect to the explanatory value of each cluster solution, we decided to interpret a solution that distinguished between six ontological profiles shown in Figure 3. In addition to study program, we used standardized measures of general reasoning, verbal, numerical, and spatial ability as well as students' technology-related self-concept to describe similarities and differences in the resulting ontological profiles. To ease interpretation, the profile-specific scores on these measures were compared to published reference values or representative samples (only for self-concept), respectively (see Table 5). The results of all analyses are reported jointly in the context of the descriptions of the six ontological profiles. For the sake of readability, the description and interpretation of the ontological profiles are not reported in separate sections but jointly presented in the next sections.

Figure 3. Left: Cluster centers of the six ontological profiles (and 95% CIs), resulting from the cluster analysis of 340 university students based on the OCET, assessing nine ontological categories in engineering. Values are z-standardized for each ontological category on the total sample. Values differing significantly (i.e., p < 0.05) from the total sample are colored in orange. Right: Ontological profile-specific scores on the covariates compared to published reference values (i.e., general reasoning, verbal, numerical, and spatial ability; see Arthur and Day, 1994; Peters et al., 2006; Liepmann et al., 2007) or the sample mean (i.e., technology-related self-concept). Values are z-standardized for each covariate on the reference sample. Values differing significantly (i.e., p < 0.05) from the reference values are colored in orange.

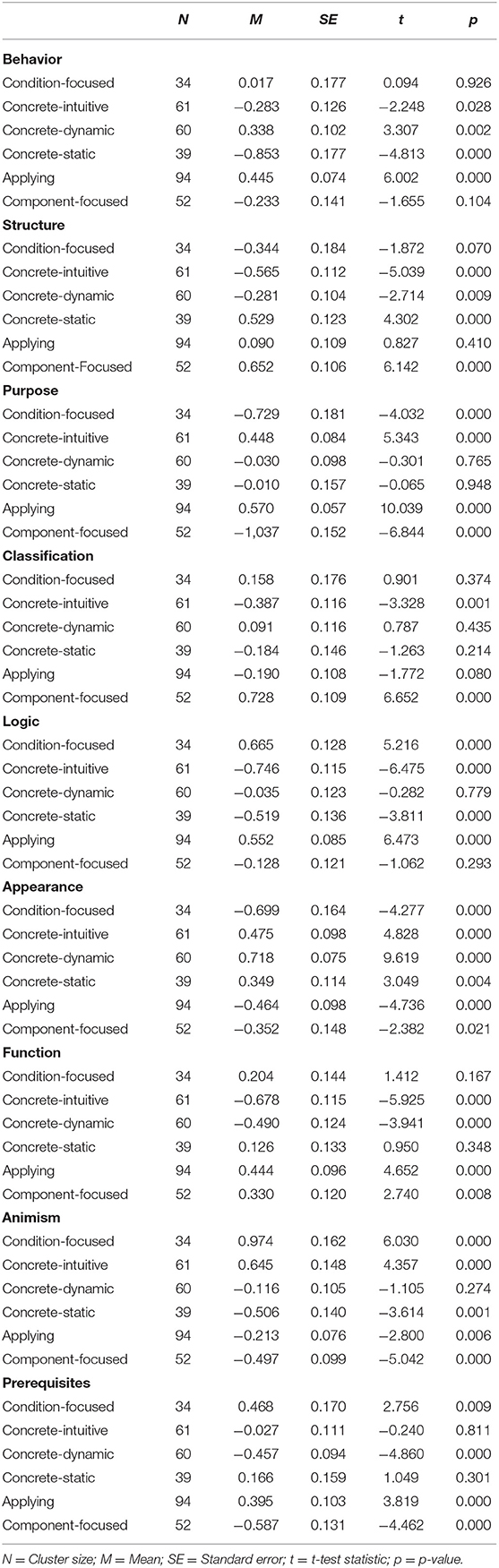

In the following, we describe the ontological profiles by focusing on those ontological categories within each profile that differ significantly from the sample mean (see Table 3 and Figure 3) and provide a short interpretation. Please refer to Table 4 for profile sizes and the distribution of gender and study program for each ontological profile.

Table 3. Z-standardized values and one-sample t-tests (H0: μ = 0) of the ontological category scales in the OCET for each ontological profile used in Figure 1.

The profiles do not reflect enduring and stable traits but rather the current state of students' higher-level conceptual knowledge structures, which are malleable and expected to change in the course of their discipline-specific socialization at university (Schneider and Hardy, 2013; cf. Flaig et al., 2018).

Students in the Condition-Focused profile focus on the conditions that determine the technical system, both logical conditions and more general prerequisites (e.g., “What conditions and conditional relations have to be considered in order that the system can work?”). They pay little attention to the appearance and purpose of the system and instead tend toward animistic thinking. Although their numerical abilities are above average, they show below-average verbal abilities and a lower technology-related self-concept (Tables 3, 5).

Table 5. Values and one-sample t-tests against reported means from comparable reference groups of the cognitive and affective scales for each ontological profile used in Figure 1.

The Concrete-Intuitive profile consists of students who report animism as one major ontological category when thinking about engineering problems. Their thinking is context-driven in the sense that they focus on the concrete appearance (e.g., “What does the technical system look like?”) and the purpose of the technical system at hand. They do not focus on the classification of elements of technical systems, logical relationships between those elements, their function, behavior, or structure (Table 3). These students hence show a profile that reflects intuitive thinking and a naturalistic, pragmatic approach to technical systems. Besides above-average numerical abilities, they showed below-average scores on the verbal and spatial ability measures and a low technology-related self-concept (Table 5).

Students in the Concrete-Dynamic profile seem to have a very naturalistic (focused on appearance) and dynamic conception (focused on behavior) of the technical system when solving engineering problems: they activate a realistic representation of the system at work. They do so without zooming in on the components of the system and their function and without zooming out to consider the general prerequisites for using the system (Table 3). Students in this profile show above-average general reasoning abilities in addition to above-average numerical abilities.

Students in the Concrete-Static profile also seem to have a very naturalistic conception of the technical system (focused on appearance). Unlike students in the Concrete-Dynamic profile, however, their conception is static: it is characterized by a focus on the structure of the system with little consideration of its behavior. They tend to visualize the appearance of the system with its structural components but without considering the logical relationships between them. These students do not show an animistic approach when thinking about technical systems (Table 3). They are characterized by above-average general reasoning abilities in addition to above-average numerical abilities (Table 5).

Within the Applying profile, students focus on what the technical system can be used for (purpose), its requirements to operate (prerequisites), its behavior, as well as on logical relationships and the functions of its elements. These students show a profile that vividly considers many aspects and conditions that are important for its application without considering its appearance and without implicit notions of human-like behavior (animism) of the technical system (Table 3). They reached above-average general reasoning and numerical ability scores (Table 5).

In addition to low animistic tendencies, students within the Component-Focused profile do not think about the purpose, the appearance, or the prerequisites of a technical system. They rather focus on its structural components, their classification, and their specific functions within the technical system. These students seem to follow a rather theoretical approach that focuses on the characteristics and functions of the components of the technical system (Table 3). They likewise show above-average general reasoning and numerical ability scores (Table 5).

Importantly, students in all six ontological profiles show above-average numerical abilities (see Figure 3 and Table 5), which can be considered a fundamental prerequisite for choosing a study program in the field of engineering (representing one main driver for self-selection into engineering disciplines, e.g., Gustafsson and Balke, 1993). Students in the last four profiles (Concrete-Dynamic, Concrete-Static, Applying, Component-Focused) all show the same pattern of above-average general reasoning abilities in addition to above-average numerical abilities, although their ontological profiles differ considerably (Table 5). The only characteristic they have in common is a low tendency for animism. The two remaining profiles, Concrete-Intuitive and Condition-Focused, seem to have activated the ontological category animism more often during engineering problem solving. Together with the students' lower technology-related self-concept and lower scores on the verbal ability measure in both profiles, this tendency may reflect higher uncertainty in dealing with engineering problems.

Three profiles are described as Concrete; all of them tend toward a non-formal and naturalistic approach to engineering problem solving (focus on appearance). Students in the Concrete-Intuitive profile, with slightly less favorable cognitive and affective preconditions, additionally focused on purpose and animism, whereas students in the Concrete-Dynamic and Concrete-Static profiles focused on either the behavior or the structure of a technical system.

Contrasting the three profiles not concerned with the appearance of a technical system, students in the Condition-Focused profile have in mind what is necessary for the system to work—both in terms of general requirements like electricity and in terms of the logic behind all operations such as “if-then” relations. By contrast, students in the Component-Focused profile seem to zoom in on the individual constituents of the system. Students in the Applying profile, on the other hand, focus on both conditions and the functionality of the system, from a dynamic (behavior) perspective and without thinking about the component structure and component classification.

Only in the Concrete-Intuitive profile did the distribution over study programs deviate significantly from expectations, χ²(3, N = 336) = 11.73, p < 0.01. It is noteworthy that there were significantly more women within that profile than could be expected from the gender ratio within the total sample, χ²(1, N = 337) = 5.14, p < 0.05. Since a high number of students in the Concrete-Intuitive profile attend study programs with a relatively high proportion of female students (especially civil engineering), and a low number of students in this profile study mechanical engineering, the program with the lowest proportion of female students, differences between study programs could be attributed to a gender effect (gender-specific preferences resulting in self-selection into particular study programs; Table 3). However, self-selection effects and effects of experiences gained in different engineering study programs cannot yet be separated in this study. Although still at the beginning of their studies, students attending civil engineering classes might have already developed more intuitive, naturalistic, and pragmatic thinking approaches adequate for problem solving in this engineering domain. By contrast, this ontological profile might be particularly different from problem solving approaches encountered at the beginning of mechanical engineering studies. Apart from the effects reported for the Concrete-Intuitive profile, study program per se could not explain differences and similarities in ontological profiles. With the exception described above, how individuals think about engineering problems does not seem to influence their choice of study program within the engineering domain or, to put it the other way around, first contact with their specific study program apparently did not yet influence their thinking approaches.
Accordingly, first-year students' ontological profiles may rather reflect idiosyncratic preconditions and personal experiences than self-selection or the socialization into discipline-specific thinking that only has just begun in the first semester of their study program.
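To make the reported χ² statistics easier to follow, the sketch below computes a Pearson chi-square statistic for a table of counts. The table layout (in profile versus not in profile, crossed with gender) is an assumption inferred from the reported degrees of freedom and sample sizes, and all counts are hypothetical rather than taken from Table 4.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c count table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if row and column variables were independent
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, df


# Hypothetical counts: rows = in profile / not in profile, columns = women / men
counts = [[20, 30], [30, 120]]
chi2, df = chi_square_independence(counts)
print(f"chi2({df}, N = {sum(map(sum, counts))}) = {chi2:.2f}")
```

With one degree of freedom, the critical value at the 0.05 level is 3.84, so a statistic above that value would indicate that the gender ratio within the profile deviates from the ratio expected from the total sample.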

Following up on the previous section, the cross-sectional design can be considered a limitation of this study. By considering ontological categories in longitudinal models of knowledge development in engineering, future research may be able to differentiate between idiosyncratic variation (including prior experiences with engineering topics, for instance), variation by self-selection, and variation by socialization into different study programs. Such designs also allow investigating differences between discipline experts and novices in terms of lower- and higher-level knowledge as well as ontological flexibility, that is, the ability to flexibly activate different profiles depending on the problem context and the collaboration partner. For instance, an inverted U-shaped development could be expected for ontological flexibility, with many years of socialization in a specific discipline leading to less flexibility after an initial increase.

The solution of complex problems often involves co-operation between many different individuals who need to understand each other. Although ways of thinking and underlying knowledge structures may differ largely, people tend to extrapolate from their own knowledge to the knowledge of others (the so-called false-consensus bias). As a consequence, co-operations are likely to suffer from misunderstandings and often fail to reach their full potential (Bromme, 2000; Godemann, 2006, 2008; Reif et al., 2017). Existing research focuses on infrastructure and organizational techniques supporting co-operation or on consequences of differing and shared knowledge (Bromme et al., 2001; Gardner et al., 2017). Assessing and identifying ontological profiles that reflect tendencies in thinking about not only engineering but also other complex problems might open up new ways to investigate and support teamwork.

Our assessment instrument, the OCET, does not capture the time sequence of ontological category activation on a more fine-grained level. With slight modifications (for instance, instructing participants to indicate what came to their minds first, second, third, etc., in a single-choice format), we could use the OCET to learn more about the hierarchy of ontological categories. In this first study, we did not include any achievement measures that could be related to the ontological categories and profiles. We are currently conducting a follow-up study in which we can additionally examine students' examination performance.

Developing sensitivity for different thinking tendencies, which are subject to change themselves, can be an important step toward university instruction that acknowledges diversity and away from uniform teaching for a selective group of students. Addressing higher-level knowledge structures (i.e., ontological categories and ontological profiles) can pave the way for constructing and reconstructing intermediate-level knowledge elements (i.e., concepts). For example, students thinking about technical systems mostly in terms of the behavior of the system (Concrete-Dynamic and Applying) may struggle with instructional materials that assume a structure perspective and try to integrate the new content into the behavior-focused thinking approach. If students have to work with a block definition diagram, for instance, the Component-Focused profile might be particularly beneficial. This ontological profile is the only one where thinking is focused on the classification of elements of the technical system based on defining characteristics. Classification is a central element of block definition diagrams. Other thinking approaches might be advantageous when doing a fault tree analysis, a deductive procedure that requires the specification of manifold human errors and system failures potentially causing undesired events. A fault tree analysis of a technical system, such as a space capsule, may be easier with the Applying profile activated, given its focus on logic (event A leading to event B if C), function (knowledge of the function of each system element under normal conditions), behavior (knowledge of the different system states and their succession), and prerequisites (knowledge of requirements of the system to function normally). Constructive university instruction may explicitly address ontological knowledge structures, thereby also facilitating intermediate-level conceptual learning (Jacobson, 2001; Yang et al., 2010; Chi et al., 2012).
Direct teaching of an emergent-causal ontological category to interpret non-sequential science processes, for instance, helped students develop appropriate conceptual knowledge on the emergent process of diffusion (Chi et al., 2012). Ontological flexibility may further be supported by university instruction that explicitly discusses how different ontological categories shape thinking in different situations, when working on engineering problems. By doing so, students may develop metacognitive knowledge on the relation between ontological categories, intermediate-level conceptual knowledge, and situational requirements (Gupta et al., 2010). In addition to meta-knowledge on when to activate which connection, this kind of model flexibility requires highly interconnected knowledge (Krems, 1995).

This study identified and interpreted similarities and differences in the higher-order knowledge structures (the ontological categories) that underlie engineering problem solving in a sample of first-year students in engineering disciplines. These students seem to begin their studies with quite different conceptions of engineering problems that vary to some extent with individual characteristics such as study program or technology-related self-concept. Additional idiosyncratic influences and experiences, however, seem to play a more important role at this early stage of their subject-specific socialization. Individual engineering-related experiences like special engineering courses or activities at secondary school (e.g., Dawes and Rasmussen, 2007) or different attitudes toward engineering (e.g., Besterfield-Sacre et al., 2001) may affect students' thinking approaches. Just as is the case for intermediate knowledge structures, the ontological profiles described in this paper may also partly reflect diverse everyday experiences (Baroody, 2003; Chi, 2006; Ericsson, 2009; Kim et al., 2011; Vosniadou et al., 2012). This study is exploratory in nature, and confirmatory follow-up studies are required to substantiate and further explain our results. While we focused on a sample of first-year students, the approach suggested in this paper allows modeling how individual characteristics and discipline-specific learning opportunities may shape thinking about engineering problems and knowledge development over time.

The datasets generated for this study are available on request to the corresponding author.

Ethical review and approval were not required for the study on human participants in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

SH, FR, FL, and BV-H: conception or design of the work, data collection, data analysis and interpretation, critical revision of the article, and final approval of the version to be published. SH and FR: drafting the article.

This work was supported by the German Research Foundation (DFG) and the Technical University of Munich within the funding programme Open Access Publishing.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We thank Sarah Scheuerer, Saskia Böck, Eleni Pavlaki, Dominik Farcher, Martin Mayer, Sebastian Seehars, and Kristina Reiss for their support throughout the project.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2020.00066/full#supplementary-material

Video 1. Video of the extended pick and place unit (Vogel-Heuser et al., 2014) in action, as used in the structured interviews with open card sorting tasks.

Abdulwahed, M., Balid, W., Hasna, M. O., and Pokharel, S. (2013). “Skills of engineers in knowledge based economies: a comprehensive literature review, and model development,” in Proceedings of 2013 IEEE International Conference on Teaching, Assessment and Learning for Engineering (TALE) (Bali, Indonesia: IEEE), 759–765. doi: 10.1109/TALE.2013.6654540

Arthur, W., and Day, D. V. (1994). Development of a short form for the raven advanced progressive matrices test. Educ. Psychol. Meas. 54, 394–403. doi: 10.1177/0013164494054002013

Badke-Schaub, P., Neumann, A., Lauche, K., and Mohammed, S. (2007). Mental models in design teams: a valid approach to performance in design collaboration? CoDesign 3, 5–20. doi: 10.1080/15710880601170768

Baroody, A. J. (2003). “The development of adaptive expertise and flexibility: the integration of conceptual and procedural knowledge,” in Studies in Mathematical Thinking and Learning. The Development of Arithmetic Concepts and Skills: Constructing Adaptive Expertise, eds A. J. Baroody and A. Dowker (Mahwah, NJ: Lawrence Erlbaum), 1–33.

Ben-Ari, M., and Yeshno, T. (2006). Conceptual models of software artifacts. Interact. Comput. 18, 1336–1350. doi: 10.1016/j.intcom.2006.03.005

Berkovich, M., Esch, S., Mauro, C., Leimeister, J. M., and Krcmar, H. (2011). “Towards an artifact model for requirements to it-enabled product service systems” in Wirtschaftsinformatik Proceedings 2011, 95. Available online at: https://aisel.aisnet.org/wi2011/95

Berkowitz, M., and Stern, E. (2018). Which cognitive abilities make the difference? Predicting academic achievements in advanced STEM studies. J. Intell. 6:E48. doi: 10.3390/jintelligence6040048

Besterfield-Sacre, M., Moreno, M., Shuman, L. J., and Atman, C. J. (2001). Gender and ethnicity differences in freshmen engineering student attitudes: a cross-institutional study. J. Eng. Educ. 90, 477–489. doi: 10.1002/j.2168-9830.2001.tb00629.x

Björklund, T. A. (2013). Initial mental representations of design problems: differences between experts and novices. Des. Stud. 34, 135–160. doi: 10.1016/j.destud.2012.08.005

Bromme, R. (2000). “Beyond one's own perspective: the psychology of cognitive interdisciplinarity,” in Practising Interdisciplinarity, eds N. Stehr and P. Weingart (Toronto: University of Toronto Press), 115–133. doi: 10.3138/9781442678729-008

Bromme, R., Rambow, R., and Nückles, M. (2001). Expertise and estimating what other people know: the influence of professional experience and type of knowledge. J. Exp. Psychol. Appl. 7, 317–330. doi: 10.1037/1076-898X.7.4.317

Carey, S. (2000). Science education as conceptual change. J. Appl. Dev. Psychol. 21, 13–19. doi: 10.1016/S0193-3973(99)00046-5

Charmaz, K., and Belgrave, L. L. (2015). “Grounded theory,” in The Blackwell Encyclopedia of Sociology, ed G. Ritzer (Oxford: John Wiley & Sons, Ltd). doi: 10.1002/9781405165518.wbeosg070.pub2

Chi, M. (1992). “Conceptual change within and across ontological categories: examples from learning and discovery in science,” in Cognitive Models of Science, ed R. N. Giere (Minneapolis, MN: University of Minnesota Press), 129–186.

Chi, M. (2006). “Laboratory methods for assessing experts' and novices' knowledge,” in The Cambridge Handbook of Expertise and Expert Performance, eds K. A. Ericsson, N. Charness, P. J. Feltovich, and R. R. Hoffman (Cambridge: Cambridge University Press), 167–184. doi: 10.1017/CBO9780511816796.010

Chi, M., Feltovich, P. J., and Glaser, R. (1981). Categorization and representation of physics problems by experts and novices*. Cogn. Sci. 5, 121–152. doi: 10.1207/s15516709cog0502_2

Chi, M., Roscoe, R. D., Slotta, J. D., Roy, M., and Chase, C. C. (2012). Misconceived causal explanations for emergent processes. Cogn. Sci. 36, 1–61. doi: 10.1111/j.1551-6709.2011.01207.x

Dawes, L., and Rasmussen, G. (2007). Activity and engagement - keys in connecting engineering with secondary school students. Aust. J. Eng. Educ. 13, 13–20. doi: 10.1080/22054952.2007.11464001

de Jong, T., and Ferguson-Hessler, M. G. M. (1996). Types and qualities of knowledge. Educ. Psychol. 31, 105–113. doi: 10.1207/s15326985ep3102_2

Deary, I. J., Strand, S., Smith, P., and Fernandes, C. (2007). Intelligence and educational achievement. Intelligence 35, 13–21. doi: 10.1016/j.intell.2006.02.001

Duit, R., and Treagust, D. F. (2003). Conceptual change: a powerful framework for improving science teaching and learning. Int. J. Sci. Educ. 25, 671–688. doi: 10.1080/09500690305016

Ericsson, K. A., (ed.). (2009). Development of Professional Expertise: Toward Measurement of Expert Performance and Design of Optimal Learning Environments. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511609817

Flaig, M., Simonsmeier, B. A., Mayer, A-K., Rosman, T., Gorges, J., and Schneider, M. (2018). Conceptual change and knowledge integration as learning processes in higher education: a latent transition analysis. Learn. Individ. Diff. 62, 49–61. doi: 10.1016/j.lindif.2017.12.008

Ford, D. N., and Sterman, J. D. (1998). Expert knowledge elicitation to improve formal and mental models. Syst. Dyn. Rev. 14, 309–340. doi: 10.1002/(SICI)1099-1727(199824)14:4<309::AID-SDR154>3.0.CO;2-5

Gardner, A. K., Scott, D. J., and AbdelFattah, K. R. (2017). Do great teams think alike? An examination of team mental models and their impact on team performance. Surgery 161, 1203–1208. doi: 10.1016/j.surg.2016.11.010

Godemann, J. (2006). Promotion of interdisciplinarity competence as a challenge for higher education. J. Soc. Sci. Educ. 5, 51–61. doi: 10.4119/UNIBI/jsse-v5-i4-1029

Godemann, J. (2008). Knowledge integration: a key challenge for transdisciplinary cooperation. Environ. Educ. Res. 14, 625–641. doi: 10.1080/13504620802469188

Gupta, A., Hammer, D., and Redish, E. F. (2010). The case for dynamic models of learners' ontologies in physics. J. Learn. Sci. 19, 285–321. doi: 10.1080/10508406.2010.491751

Gustafsson, J-E., and Balke, G. (1993). General and specific abilities as predictors of school achievement. Multivar. Behav. Res. 28, 407–434. doi: 10.1207/s15327906mbr2804_2

Hake, R. R. (1998). Interactive-engagement versus traditional methods: a six-thousand-student survey of mechanics test data for introductory physics courses. Am. J. Physics 66, 64–74. doi: 10.1119/1.18809

Hestenes, D., Wells, M., and Swackhamer, G. (1992). Force concept inventory. Physics Teach. 30, 141–158. doi: 10.1119/1.2343497

Hofer, S. I., Schumacher, R., and Rubin, H. (2017). The test of basic Mechanics Conceptual Understanding (bMCU): using Rasch analysis to develop and evaluate an efficient multiple choice test on Newton's mechanics. Int. J. STEM Educ. 4:18. doi: 10.1186/s40594-017-0080-5

Hofer, S. I., Schumacher, R., Rubin, H., and Stern, E. (2018). Enhancing physics learning with cognitively activating instruction: a quasi-experimental classroom intervention study. J. Educ. Psychol. 110, 1175–1191. doi: 10.1037/edu0000266

Hofer, S. I., and Stern, E. (2016). Underachievement in physics: when intelligent girls fail. Learn. Individ. Diff. 51, 119–131. doi: 10.1016/j.lindif.2016.08.006

Hsi, S., Linn, M. C., and Bell, J. E. (1997). The role of spatial reasoning in engineering and the design of spatial instruction. J. Eng. Educ. 86, 151–158. doi: 10.1002/j.2168-9830.1997.tb00278.x

Jacobson, M. J. (2001). Problem solving, cognition, and complex systems: differences between experts and novices. Complexity 6, 41–49. doi: 10.1002/cplx.1027

Jones, N. A., Ross, H., Lynham, T., Perez, P., and Leitch, A. (2011). Mental models: an interdisciplinary synthesis of theory and methods. Ecol. Soc. 16:46. doi: 10.5751/ES-03802-160146

Kim, K., Bae, J., Nho, M-W., and Lee, C. H. (2011). How do experts and novices differ? Relation versus attribute and thinking versus feeling in language use. Psychol. Aesth. Creat. Arts 5, 379–388. doi: 10.1037/a0024748

Klymkowsky, M. W., and Garvin-Doxas, K. (2008). Recognizing student misconceptions through ed's tools and the biology concept inventory. PLoS Biol. 6:e3. doi: 10.1371/journal.pbio.0060003

Krems, J. F. (1995). “Cognitive flexibility and complex problem solving,” in Complex Problem Solving: The European Perspective, eds P. A. Frensch and J. Funke (New York: Routledge), 201–218.

Lauber, R., and Göhner, P. (1999). Prozessautomatisierung 1. Heidelberg: Springer. doi: 10.1007/978-3-642-58446-6

Lichtenberger, A., Wagner, C., Hofer, S. I., Stern, E., and Vaterlaus, A. (2017). Validation and structural analysis of the kinematics concept test. Phys. Rev. Phys. Educ. Res. 13:010115. doi: 10.1103/PhysRevPhysEducRes.13.010115

Liepmann, D., Beauducel, A., Brocke, B., and Amthauer, R. (2007). Intelligenz-Struktur-Test 2000 R [Intelligence Structure Test 2000 R]. 2nd ed. Göttingen, Germany: Hogrefe.

Marsh, H. W. (1986). Global self-esteem: its relation to specific facets of self-concept and their importance. J. Person. Soc. Psychol. 51, 1224–1236. doi: 10.1037/0022-3514.51.6.1224

Marsh, H. W., and Martin, A. J. (2011). Academic self-concept and academic achievement: relations and causal ordering: academic self-concept. Br. J. Educ. Psychol. 81, 59–77. doi: 10.1348/000709910X503501

Marsh, H. W., and O'Mara, A. (2008). Reciprocal effects between academic self-concept, self-esteem, achievement, and attainment over seven adolescent years: unidimensional and multidimensional perspectives of self-concept. Person. Soc. Psychol. Bull. 34, 542–552. doi: 10.1177/0146167207312313

Marsh, H. W., Xu, M. K., and Martin, A. J. (2012). “Self-concept: a synergy of theory, method, and application,” in APA Educational Psychology Handbook, eds K. R. Harris, S. Graham, and T. C. Urdan (Washington, DC: American Psychological Association), 427–458. doi: 10.1037/13273-015

Medeiros, R. P., Ramalho, G. L., and Falcao, T. P. (2019). A systematic literature review on teaching and learning introductory programming in higher education. IEEE Trans. Educ. 62, 77–90. doi: 10.1109/TE.2018.2864133

Peters, M., Laeng, B., Latham, K., Jackson, M., Zaiyouna, R., and Richardson, C. (1995). A redrawn vandenberg and kuse mental rotations test - different versions and factors that affect performance. Brain Cogn. 28, 39–58. doi: 10.1006/brcg.1995.1032

Peters, M., Lehmann, W., Takahira, S., Takeuchi, Y., and Jordan, K. (2006). Mental rotation test performance in four cross-cultural samples (N = 3367): overall sex differences and the role of academic program in performance. Cortex 42, 1005–1014. doi: 10.1016/S0010-9452(08)70206-5

Posner, G. J., Strike, K. A., Hewson, P. W., and Gertzog, W. A. (1982). Accommodation of a scientific conception: toward a theory of conceptual change. Sci. Educ. 66, 211–227. doi: 10.1002/sce.3730660207

Powell, K. C., and Kalina, C. J. (2009). Cognitive and social constructivism: developing tools for any effective classroom. Education 130, 241–250.

R Core Team (2008). R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing. Available online at: https://www.R-project.org/ (accessed March 10, 2019).

Reif, J., Koltun, G., Drewlani, T., Zaggl, M., Kattner, N., Dengler, C., et al. (2017). “Modeling as the basis for innovation cycle management of PSS: making use of interdisciplinary models,” in 2017 IEEE International Systems Engineering Symposium (ISSE) (Vienna: IEEE), 1–6. doi: 10.1109/SysEng.2017.8088310

Ropohl, G. Ü. (1997). Knowledge types in technology. Int. J. Technol. Des. Educ. 7, 65–72. doi: 10.1023/A:1008865104461

Schneider, M., and Hardy, I. (2013). Profiles of inconsistent knowledge in children's pathways of conceptual change. Dev. Psychol. 49, 1639–1649. doi: 10.1037/a0030976

Schneider, M., and Stern, E. (2010). The developmental relations between conceptual and procedural knowledge: a multimethod approach. Dev. Psychol. 46, 178–192. doi: 10.1037/a0016701

Shea, D. L., Lubinski, D., and Benbow, C. P. (2001). Importance of assessing spatial ability in intellectually talented young adolescents: a 20-year longitudinal study. J. Educ. Psychol. 93, 604–614. doi: 10.1037/0022-0663.93.3.604

Shtulman, A., and Valcarcel, J. (2012). Scientific knowledge suppresses but does not supplant earlier intuitions. Cognition 124, 209–215. doi: 10.1016/j.cognition.2012.04.005

Silva, S. S., and Hansman, R. J. (2015). Divergence between flight crew mental model and aircraft system state in auto-throttle mode confusion accident and incident cases. J. Cogn. Eng. Decis. Mak. 9, 312–328. doi: 10.1177/1555343415597344

Slotta, J. D., Chi, M. T. H., and Joram, E. (1995). Assessing students' misclassifications of physics concepts: an ontological basis for conceptual change. Cogn. Instruct. 13, 373–400. doi: 10.1207/s1532690xci1303_2

Smith, J. I., Combs, E. D., Nagami, P. H., Alto, V. M., Goh, H. G., Gourdet, M. A. A., et al. (2013). Development of the biology card sorting task to measure conceptual expertise in biology. LSE 12, 628–644. doi: 10.1187/cbe.13-05-0096

Sorby, S. A. (2007). Developing 3D spatial skills for engineering students. Aust. J. Eng. Educ. 13, 1–11. doi: 10.1080/22054952.2007.11463998

VDI (2011). VDI 4499-2: Digitale Fabrik: Digitaler Fabrikbetrieb [Digital factory: Digital Factory Operations]. Berlin: Beuth.

Vogel-Heuser, B., Legat, C., Folmer, J., and Feldmann, S. (2014). Researching Evolution in Industrial Plant Automation: Scenarios and Documentation of the Pick and Place Unit. Technical Report TUM-AIS-TR-01-14-02, Technische Universität München. Available online at: https://mediatum.ub.tum.de/node?id=1208973 (accessed August 05, 2019).

Vogel-Heuser, B., Loch, F., Hofer, S., Neumann, E.-M., Reinhold, F., Scheuerer, S., et al. (2019). “Analyzing students' mental models of technical systems,” in 2019 IEEE 17th International Conference on Industrial Informatics (INDIN) (Helsinki, Finland: IEEE), 1119–1125. doi: 10.1109/INDIN41052.2019.8972071

Vosniadou, S. (2019). The development of students' understanding of science. Front. Educ. 4:32. doi: 10.3389/feduc.2019.00032

Vosniadou, S., De Corte, E., Glaser, R., and Mandl, H. (2012). “Learning environments for representational growth and cognitive flexibility,” in International Perspectives on the Design of Technology-supported Learning Environments (Abingdon: Routledge), 23–34.

Wai, J., Lubinski, D., and Benbow, C. P. (2009). Spatial ability for STEM domains: aligning over 50 years of cumulative psychological knowledge solidifies its importance. J. Educ. Psychol. 101, 817–835. doi: 10.1037/a0016127

Wei, X., Wagner, M., Christiano, E. R. A., Shattuck, P., and Yu, J. W. (2014). Special education services received by students with autism spectrum disorders from preschool through high school. J. Spec. Educ. 48, 167–179. doi: 10.1177/0022466913483576

Keywords: conceptual knowledge, ontologies, engineering education, higher education, cluster analysis

Citation: Hofer SI, Reinhold F, Loch F and Vogel-Heuser B (2020) Engineering Students' Thinking About Technical Systems: An Ontological Categories Approach. Front. Educ. 5:66. doi: 10.3389/feduc.2020.00066

Received: 21 November 2019; Accepted: 04 May 2020; Published: 29 May 2020.

Edited by:

Subramaniam Ramanathan, Nanyang Technological University, Singapore

Reviewed by:

Nuria Jaumot-Pascual, TERC, United States

Copyright © 2020 Hofer, Reinhold, Loch and Vogel-Heuser. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Frank Reinhold, frank.reinhold@tum.de; Sarah Isabelle Hofer, sarah.hofer@lmu.de

†These authors share first authorship

‡ORCID: Birgit Vogel-Heuser 0000-0003-2785-8819

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
