- ISPA-Instituto Universitário, CIE-ISPA, Lisbon, Portugal

Classroom interactions play an important role in the learning and teaching of mathematics, and feedback emanating from these interactions is a powerful tool for enhancing student learning. These exchanges have been widely studied in higher education, but very few investigations have been carried out at the level of elementary students and teachers. This study aimed to contribute to existing knowledge of feedback, and to formulate guidelines to improve teacher feedback in elementary school. The specific objectives were to analyse the focus of feedback (a) by lesson purpose and type of interaction, (b) by type of question and student's answer, and (c) by gender and student achievement. Participants comprised five teachers and their 82 third-grade students attending an elementary school in Portugal. Mathematics lessons were video-recorded and a categorisation system to assess teacher-student interaction was developed, based on a review of the literature and empirical data. The results showed that most of the teacher–student interactions contained feedback, which was usually focused on a specific task, and less frequently on the ways in which tasks were processed. In terms of lesson purpose, teachers' feedback was evaluative, especially when they had initiated the interaction. Feedback became more effective when the initial move was made by the students. The focus of feedback was not related to the type of question asked, but it was associated with the certitude of the students' answers. We also observed an interaction effect between the focus of feedback, gender and achievement, with high-achievement boys receiving advantages. Our results hold important implications for teachers' classroom practices and professional development.

Introduction

Sociocultural researchers (e.g., Mercer, 2010; Mercer and Howe, 2012) have focused their attention on the effects of teacher-student dialogues on problem-solving, learning, and conceptual change. In the mathematical learning process, interaction between teacher and students can facilitate students' learning (Apriliyanto and Saputro, 2018). For socio-constructivists (e.g., Hardman et al., 2003), learning is a social, active process involving others, and takes the form of a constant interplay between student and teacher that assists the learner in acquiring the necessary skills and knowledge. The learning process comprises a succession of steps that the student takes, building on the scaffolding provided by the teacher, ultimately leading to the learner's self-regulation and development through a process of internalisation (Vygotsky, 1978). In addition, socio-constructivists argue that language serves to mediate higher order thinking, thereby playing a critical role in the teaching-learning process.

Nevertheless, Hardman et al. (2003) found that the discourses invoked in classroom situations do not always support learning, as much of the class time is devoted to teachers' talk, with little time spent on teacher-student interactions or group discussions. Similar results were obtained by Burns and Myhill (2004), who reported that teachers made statements or asked factual questions 84% of the time. Classrooms were dominated by teachers' talk and students' answers to teachers' demands. The dominance of the teacher's talk entrenches the predominance of a transmissive method of knowledge, controlled by the teacher, with students having little autonomy and involvement in their learning (Hattie, 2012b).

Previous studies surfaced the structure of teacher talk, which corresponds to the structural model of classroom conversation developed by Sinclair and Coulthard (1975). Teaching exchanges are used to deliver the pedagogic content of the lesson, and several exchange structures can be distinguished in terms of their moves. Among them is the I-R-F structure, which consists of an initiation (I), a response (R), and finally a follow-up/feedback move (F). This structure is characteristic of discussion controlled by the teacher, who starts the interaction by posing a closed question to the students (I), for which a particular response is expected (R), and to which the teacher then responds (F), ultimately conforming to the result obtained previously (Hattie, 2012b). Teaching exchanges also include two structures initiated by the student: the I-R structure, in which the pupil's opening move elicits a verbal response from the teacher, and the I-F structure, in which the pupil conveys information to the teacher and the teacher provides feedback (Raine, 2010).

The evidence provided through the studies of Hardman et al. (2003) and Burns and Myhill (2004) showed that classes were often dedicated to teaching rather than learning, with the transmission of contents in such a way as to produce the intended objectives being the central goal. In these scenarios, instead of classroom interactions that open talk to students to promote their deep thinking, classroom talk was found to be teacher-centred (Hattie, 2012b).

Studying classroom talk in primary school classrooms in England, France, India, Russia, and the USA, Alexander (2001) frequently found the I-R-F exchange utilised in classrooms, with the majority initiated by the teacher. Nevertheless, he observed that students' participation and cognitive engagement differed across nationalities, based on teachers' pedagogical approaches. Based on these results (Alexander, 2004), Alexander (2006) developed the concept of dialogical talk applied to the classroom. This approach comprises different characteristics: collective (teacher and students address learning activities together); reciprocal (teacher and students listen to each other, share ideas and provide their different viewpoints); supportive (they help each other in a supportive environment with no fear of making a mistake); and cumulative (involving ongoing discussions to build their knowledge based on their own and each other's ideas). Finally, by posing open-ended questions, teachers encourage problem-solving instead of mere explanation and recall, and such an approach is therefore purposeful, with educational goals always present in the teacher's mind. According to Alexander (2017) and Alexander et al. (2017), dialogic teaching has a positive impact on primary school children's achievement, engagement and overall learning, and it helps students to develop core skills such as listening and arguing, formulating questions, and developing critical thinking.

Teachers usually spend large amounts of classroom time questioning their students, considering this to be a strategy that enables students to participate in class, remain active and become interested in the contents they are taught. However, Hattie (2012a) points out that the majority of questions teachers ask are factual, and little time is provided for students to consider their answers, although the best students are frequently afforded extra time to consider their responses. We argue that the number of factual questions asked is related to teachers' conceptions of learning and teaching. If a teacher's conceptions or beliefs are focused on the teaching of content, and the learner's role is to memorise the contents, it makes sense to ask many factual questions to check whether students have learned the information transmitted. These surface questions promote surface knowledge. In contrast, if teachers are focused on a student-centred or learning-oriented approach, they will be more preoccupied with supporting students' autonomy and individual differences during the learning process. In those situations, teachers tend to ask more open questions that require justifications, arguments and connections between subjects, promoting conversation, student involvement, and the use of dialogue for learning (Smith et al., 2006). In this scenario, higher-order questions can promote deeper understanding.

A study by Smart and Marshall (2013) revealed that teachers' classroom questioning, specifically with regard to the level of questioning and the complexity of the question, was directly related to students' achievement. Based on the revised Bloom's Taxonomy (Noble, 2004), teachers' question levels were categorised as follows: (1) lower order (recall, remember, and understand); (2) apply (demonstrate, modify, compare); (3) analyse/evaluate (verify, justify, interpret); and (4) create (combine, construct, develop, formulate). The researchers observed a prevalence of lower-order questioning strategies that resulted in lower levels of student cognitive functioning. Regarding complexity of questions, Smart and Marshall (2013) analysed data along a continuum of teachers' feedback focus, ranging from a focus on the correct answer to a focus on evidence and reasoning. They found a positive correlation between students' cognitive level and the complexity of questions. Nevertheless, examining classroom interactions, and teachers' questions in particular, Smith and Higgins (2006) emphasise that, in order to provoke student participation in an effective interaction, what is more important is how teachers react to students' responses, coupled with the intentions of their questioning, rather than the type of questions asked. Against this backdrop it becomes clear that teacher-student interactions should be aimed at the collaborative construction of knowledge.

In dialogical talk teachers' speech is as important as students' talk (Alexander, 2017). As Chin and Osborne (2010) argue, the questions students ask during the learning process help them to engage in dialogic argumentation. They claim that students' questions foster critical dialogue and can support students' argument-construction by stimulating co-elaboration and justification of their points of view. If students' questions can benefit learners, teachers should foster and encourage discussion and debate in classroom discourses. It becomes vital for teachers to challenge their students to ask questions and make statements to resolve doubts or seek answers, because doing so helps students to broaden their own thinking. Furthermore, Chapin et al. (2009) state that teachers' responses to students' questions are important tools in maintaining the interaction in a way that facilitates improved mathematical thinking and reasoning among students. Thus, as these authors suggest, classroom talk promotes students' learning under certain circumstances. Asking students to talk about mathematics fosters their understanding and renders visible their thinking processes and capacity to reason.

Recent research in the domain of feedback supports the concepts of dialogic talk (Alexander, 2017) and dialogic feedback (Carless, 2016). In line with this research, feedback is central to learning when teachers' comments enable students to enhance their learning strategies. Effective feedback involves a dialogic process involving both student and teacher, and it is essential that students understand the meaning of teachers' feedback and use that information to close the gap between what they know and what they are expected to know (Sadler, 1989). Such a process needs to generate opportunities for students to actively participate in their own learning and to talk about their understanding of the work they have to do. The student must be able to comprehend, judge and act on the information given by the teacher (Henderson et al., 2018).

Different perspectives inform the definition of feedback. For many years feedback was conceived as information transmission, and research in this field focused principally on the content and delivery of feedback (Ajjawi and Boud, 2017). In this approach the learner is viewed as a passive recipient of what the teacher says, without any concern regarding the learner's level of understanding or ability to act on the feedback provided by the teacher. More recently, and in contrast to more traditional approaches, researchers like Carless (2016) and Molloy and Boud (2013) reinforced the argument that feedback should be seen as a dialogic process of communication that is socially constructed. The main purpose of feedback, in this socio-constructivist view, is to promote self-regulation.

Hattie and Timperley's (2007) conceptual model of feedback takes into account its self-regulatory purpose. They consider that three questions need to be addressed in feedback interactions. The first of these, “where is the student going?,” relates to the goals the student needs to master. The second, “how is the student going?,” relates to the student's current level of achievement. The third, “where to next?,” is the most important for students as it not only describes the learning strategies they need to choose to master the goals, but also facilitates self-regulation in that process.

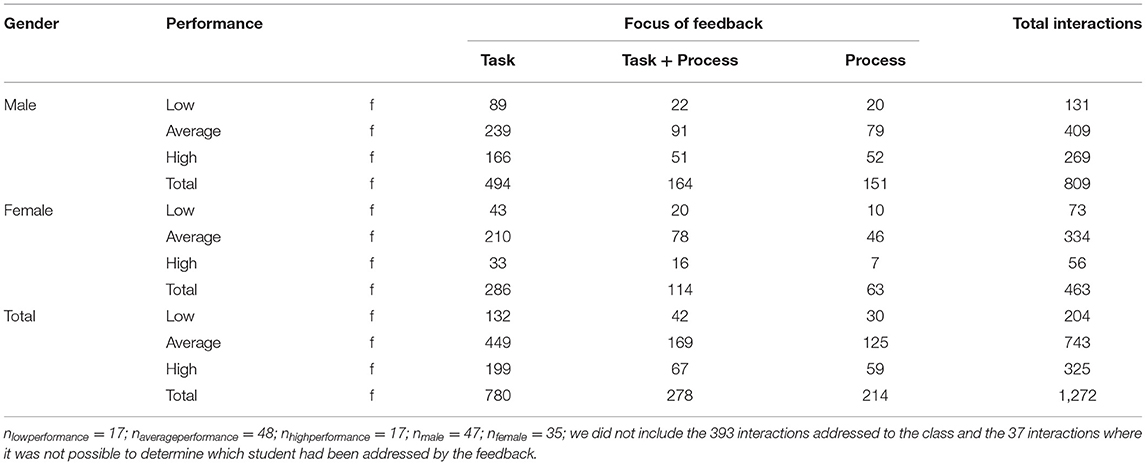

As reported by Hattie and Timperley (2007), each of these questions can work at four separate levels: task, process, self-regulation, and self. They argue that feedback has to be related to the task in order to have a positive impact on learning. This is a condition that feedback at the level of self, also known as praise, does not fulfil. When teachers praise their students, students take their attention off the task and focus it on the praise. Feedback at the task or product level has limited positive consequences and promotes more surface knowledge. The potential for development in terms of learning is greater when the feedback focuses on the level of process. This type of feedback gives the student access to more detailed information on the process needed to solve the task, enhances deeper learning, and improves self-efficacy. Feedback at the level of self-regulation is focused on the development of students' skills in monitoring their own learning process; it gives students greater confidence to get involved with the task and promotes their autonomy (Table 1). Hattie (2012a) considers these feedback levels to form a progression, from task, to process, and finally to self-regulation:

The first three feedback levels (Task, Process and Self-regulation) form a progression. The hypothesis is that it is optimal to provide appropriate feedback at or one level above where the student is currently functioning and to clearly distinguish between feedback at the first three and the fourth (self) level. Feedback at the self-level can interact negatively with attainment as it focuses more on the person than the proficiencies (Hattie, 2012a, p.267).

Table 1. Focus of the feedback: the four levels (Hattie and Timperley, 2007).

According to Hattie (2012a), for feedback to be effective teachers need to provide feedback one level above the student's actual position; clarify the goal of the activity; ensure that the student understands the feedback; and ask students to provide feedback on their teaching. When studying the effects of feedback on students' outcomes, researchers have found several variables that mediate these effects. Nevertheless, it seems that strategies for self-regulation promote student engagement, effort and self-efficacy (Butler and Winne, 1995; Hattie and Timperley, 2007). According to Hattie and Gan (2011), self-regulatory feedback is the most powerful type, yet task feedback is more often given in the classroom (Van den Bergh et al., 2013). This is also consistent with results obtained by Gan and Hattie (2014) related to peer feedback. They investigated the features of students' feedback in relation to the use of feedback levels, and found that, as with teachers, task-level feedback was the most commonly used among peers, while self-regulation feedback was rarely provided.

Hattie and Yates (2014) stress that the levels of feedback should be adapted to students' needs. They consider three stages of knowledge according to students' needs. In the first, initial knowledge acquisition, learners construct new knowledge, and need feedback focused on the task level. The application of knowledge is the second stage, where students have already acquired some basic concepts and now need to apply their skills. The focus of feedback at this stage should be at the level of process to assure that the students are on the right path and, if not, they need to be supported with alternative strategies and interventions that help them apply the knowledge acquired earlier. At the advanced mastery stage, where students exhibit high levels of expertise, feedback should be aimed at the level of self-regulation. Hattie and Yates (2014) point out that these stages work in a cyclical manner and so, when a student attains the first stage, they need more cognitive resources to move on to the next stage. Barriers to these cognitive resources may lead the student to give up or reduce their effort in accomplishing the task and seek easier goals.

The existence of gender bias in teacher-student interactions in mathematics has been of concern for researchers (Sadker and Sadker, 1986; Eccles and Harold, 1992; Kelly, 1998; Zakkamaris and Balash, 2017). Not only are female students consistently treated differently in mathematics classrooms, but teachers also give more attention to male students. Sadker and Sadker's (1986) research on classroom interactions in elementary and secondary schools showed that male students received more attention from teachers and were given more time to talk in classrooms when compared to female students. Moreover, their findings demonstrate that the types of interactions teachers have with boys differ from those they have with girls. In their study, teachers provided more feedback, including praise, criticism, help, and correction, to boys, while providing mostly confirmation feedback to girls. Boys were more likely than girls to be rewarded for a correct answer, or given feedback to enhance their learning. According to these researchers, educators are generally unaware of the presence or impact of this bias. The results obtained by Stevens (2015) and Zakkamaris and Balash (2017) similarly reveal that teachers in mathematics classes provide a wider range of interactions to male than to female students. In contrast, Myhill (2002) found that the student's status as a learner (low achiever vs. high achiever) was a significantly greater indicator of whether a child would interact in the classroom. In her study, low achievers, regardless of gender, did not participate in classroom discussions as much as high achievers did. Based on her findings, Myhill argues that gender does not play such an important role in the mediation of academic achievement.

Apart from the students' gender, needs and performance level, there is another possible factor that could determine the form of a teacher's feedback, namely the nature of the student's response (Chin, 2006). In her study, Chin (2006) analysed the types of teacher feedback to students' responses (correct, combination of correct and incorrect, and incorrect answers). In feedback to students' correct answers, the teacher confirmed and reinforced the correct responses and then continued to present the material as before. In feedback to an answer containing a combination of correct and incorrect information, the teacher accepted the answer, then asked a series of questions related to what the student had just answered in an attempt to get the student to better explain their thinking. In feedback to an incorrect student response, the teacher corrected it explicitly and then further explained the answer, making evaluative or neutral comments followed by a reformulation of the question. Thus, the quality of feedback differs according to the nature of the student's response and, as Kluger and DeNisi (1996) demonstrated in their meta-analysis, while feedback usually improved performance, in some cases it decreased performance, especially when the feedback consisted of simple judgments of correct or incorrect responses.

The current study of classroom interaction forms part of a larger investigation into the assessment and feedback practices of mathematics teachers in Portuguese primary schools. The aim of the current study is to examine teacher-student interactions, mainly to characterise the focus of feedback used by third-grade teachers and whether it differs according to the type of interaction, the lesson purpose, the type of question used by the teacher, the type of answer given by the student, and the students' gender and prior achievement.

According to Hattie and Timperley (2007) the focus of feedback can be categorised into four major levels: about task; aimed at the process; focused on self-regulation; and self-directed. They claim that “the level at which feedback is directed influences its effectiveness” (Hattie and Timperley, 2007, p.90). They concluded that feedback at process and self-regulation levels are the most effective in promoting achievement.

In order to characterise teacher feedback during active learning we examined the focus of the feedback, especially the four levels of feedback (task, process, self-regulation, and self-level), and four research questions guided our study:

1. What are the characteristics of teacher–student interactions related to the teacher feedback move (task, process, self-regulation, and self) during active learning in third-grade mathematics lessons in primary schools?

2. Are teacher feedback characteristics (F moves) different according to

i. the patterns of classroom interactions (I-R-F or S-F) and the lesson purpose (introducing new content, practising, or assessment)?

ii. the type of question posed by the teacher (open or closed) and the type of answer provided by the student (correct or other than correct)?

iii. the gender (male or female) and prior achievement (lower, average, or higher) of the student at whom the feedback is addressed?

Materials and Methods

Participants

Data collected for this cross-sectional study were part of a broader longitudinal research project. We used multiple-case sampling to add confidence to our findings (Miles et al., 2014). The methodological option for case studies allowed us to achieve a more profound understanding of the data gathered, and a better comprehension of the participants' behavior and of the contextual and socio-cultural influences on that behavior during the observations (Rahman, 2017). As suggested by Miles et al. (2014) we selected five researched cases, the minimum for multiple-case sampling adequacy, consisting of five teachers and their 82 students attending the third grade of four private elementary schools in Lisbon. The main reason for choosing private schools as study sites was the necessity of ensuring that students remained at the same school with the same teacher for 2 years. This prerequisite would be difficult to fulfil at a public school, as most teachers must apply annually to work in any educational institution. To ensure maximum variation sampling (Etikan et al., 2016) teacher selection also took into account teachers' years of professional experience and gender, to see if the main pattern observed in one teacher would hold for the others; the selected teachers (one male and four females) had between 3 and 25 years' experience. Class size varied between 11 and 23 students per classroom. Three teachers had their respective classes for the first time, and two had accompanied their classes for more than 1 or 2 years. Students' average age was 8.07 years (SD = 0.47), ranging from 7 to 10; 47 students were male and 35 female. The proportions of girls and boys were similar in all classes [χ²(4) = 2.17, p = 0.705].
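As a quick consistency check on the reported gender-by-class test, the sketch below recomputes the p-value from the statistic. It assumes the reported value 2.17 is a chi-square statistic from a 5 × 2 table (five classes by two genders), which gives 4 degrees of freedom; the function name `chi2_upper_tail_even_df` is ours, not the authors'.

```python
import math

def chi2_upper_tail_even_df(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable.

    Uses the closed form that holds for even degrees of freedom:
    exp(-x/2) * sum_{k=0}^{df/2 - 1} (x/2)^k / k!
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("closed form only valid for positive even df")
    half = x / 2.0
    term = 1.0   # (x/2)^0 / 0!
    total = 1.0
    for k in range(1, df // 2):
        term *= half / k
        total += term
    return math.exp(-half) * total

# Assumed design: 5 classes x 2 genders -> (5 - 1) * (2 - 1) = 4 df.
p_value = chi2_upper_tail_even_df(2.17, 4)
print(round(p_value, 3))  # 0.705
```

Under that assumption the recomputed value matches the reported p = 0.705.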

Instruments and Procedures

To determine students' prior achievement in mathematics, report card grades from previous evaluations were collected from school records. In Portugal, for the first cycle of basic education, the information resulting from the summative assessment translates into qualitative attribution on a four-point scale: very good, good, satisfactory, and unsatisfactory. In our sample, however, some teachers used a five-point scale: unsatisfactory, satisfactory, good, very good, and excellent. All grades were converted to z-scores, which allowed for a context-free evaluation that captured the individual achievement of each student relative to their classroom's mean and standard deviation (Cross, 1995; Stake, 2002). We grouped the students as follows: the lower achievement group comprised the 20% of students with the lowest achievement (n = 17, mean z-score = −1.40); the higher achievement group comprised the 20% of students with the highest achievement (n = 17, mean z-score = 1.0); and the average achievement group consisted of the remaining students (n = 48, mean z-score = 0.11).
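The within-classroom standardisation described above can be sketched as follows. This is an illustration only: the numeric codes assigned to the grade labels, the use of the population standard deviation, and the example grades are all assumptions, since the source does not report them.

```python
from statistics import mean, pstdev

# Hypothetical ordinal codings for the two report-card scales
# (the numeric values are an assumption, not taken from the study).
FOUR_POINT = {"unsatisfactory": 1, "satisfactory": 2, "good": 3, "very good": 4}
FIVE_POINT = {**FOUR_POINT, "excellent": 5}

def classroom_z_scores(labels, scale):
    """Standardise grades relative to one classroom's mean and SD."""
    values = [scale[label] for label in labels]
    centre = mean(values)
    spread = pstdev(values)  # population SD; the sample SD is equally plausible
    if spread == 0:
        return [0.0 for _ in values]  # no variation within the class
    return [(v - centre) / spread for v in values]

# Invented example classes, one per scale.
class_a = ["good", "very good", "satisfactory", "good", "unsatisfactory"]
class_b = ["excellent", "good", "very good", "satisfactory", "good"]

z_a = classroom_z_scores(class_a, FOUR_POINT)
z_b = classroom_z_scores(class_b, FIVE_POINT)
print([round(z, 2) for z in z_a])
print([round(z, 2) for z in z_b])
```

In this invented example the grade "good" falls above the mean in the first class and below it in the second, which is the sense in which z-scores place students relative to their own classroom rather than on a common absolute scale.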

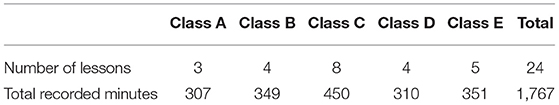

The complexity of mathematics and the kind of reasoning involved in doing mathematics are important characteristics that are difficult to define and code reliably (Hiebert et al., 2003). To attenuate this effect, the structured observations of classroom interaction were undertaken in two curricular units, common to all classes, covering the following mathematical topics: stem and leaf diagrams, and addition and subtraction with decimal numbers. Each curricular unit was composed of several lessons with specific aims, designed to teach those topics as part of the curriculum. We filmed both units in their entirety for each of the five teachers. A total of 24 lessons were videotaped, accounting for a total of 1,767 min of footage.

According to the teachers, the recorded lessons were similar to their usual mathematics lessons and teachers followed their normal lesson plans. Mathematics lessons differed in terms of the amount of time teachers allocated to teaching these curricular units. The teachers of classes C, E, and B devoted more time to these topics than the teachers of classes A and D. Table 2 displays the total number of lessons and the total number of minutes filmed for each class in both units.

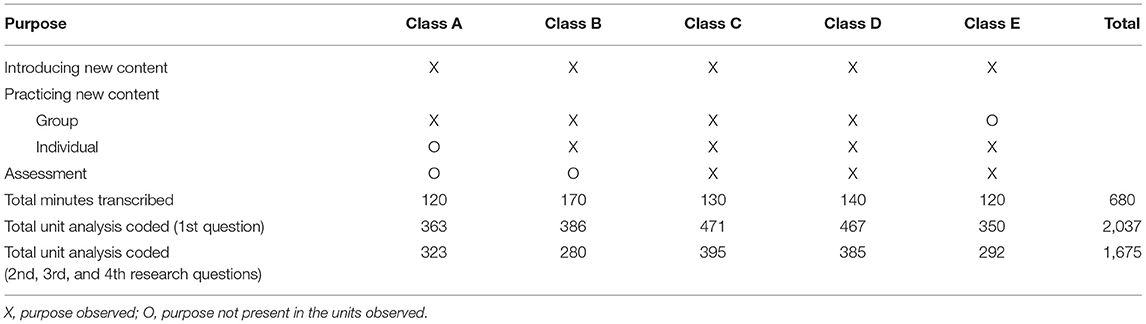

For each entire unit videotaped, the purposes of each lesson were identified. We classified the lesson purposes according to Hiebert et al. (2003):

1. Introducing new content: The teacher introduces content that students had not previously worked on by using expositions, demonstrations, illustrations, problem-solving, and class discussions.

2. Practicing new content: Students practice or apply the newly introduced content, usually in one or both of the following formats:

i. working individually; and

ii. working in a small group (two to four students).

3. Assessment: This additional category was included as the first two lesson purposes were considered non-exhaustive and over-inclusive for the analysis of lesson structures of some of the teachers. This category focused on verbally checking the answers for previously completed problems, quizzes and grading exercises completed during the Practicing lessons.

Each lesson may have a single purpose, or it may have been segmented into different purposes. Table 3 displays the types of lesson purposes for each class in the two curricular units.

The amount of time teachers devoted to each purpose differed, so, for each purpose identified, segments of up to 30 min of video data were selected for closer analysis, with a total of 680 min of videotaped data selected for transcription and analysis (see Table 3). The qualitative data from the selected segments were analysed by two trained researchers, using an observation grid derived from literature on feedback assessment practices (Brophy, 1981; Balzer et al., 1989; Hattie and Timperley, 2007; Shute, 2008; Hattie, 2012b; Brookhart, 2017).

The unit of analysis/interaction was limited to the following exchanges: teacher-student-teacher (I-R-F) and student-teacher (S-F). The acronyms for the student-initiated structures were modified from the original, as both original structures (I-R and I-F) were merged into a single structure (S-F). Only interactions about the identified mathematical topics (stem and leaf diagrams, and addition and subtraction with decimal numbers) were analysed. A total of 2,037 units of analysis/interactions were analysed in response to the first research question, and 1,675 of these interactions were analysed to answer the remaining three research questions. To characterise the teacher feedback (first research question), we included all the interactions that occurred between the teacher and the students on the relevant topics, regardless of whether or not the teacher gave feedback to the answers or questions posed by the students. To answer the remaining research questions, we only used interactions where there was teacher feedback and which, according to Hattie and Timperley (2007), would somehow contribute to student learning. Thus, only the interactions in which the teacher's feedback was focused on the task, process or self-regulation were analysed. In Table 3 we present the number of interactions observed for each class. The proportion of these interactions did not differ significantly among the classes (z-test, p > 0.050).

The classroom interactions were analysed in accordance with Sinclair and Coulthard's (1975) I-R-F pattern of talk (opening, answering, and follow-up/feedback moves). In opening moves, the teacher elicits a verbal response from a pupil. Each opening move is followed by a student answering move, in response to the opening move. Follow-up moves follow answering moves, and are defined as feedback moves from the teacher.

Within each I-R-F discourse move, two different modifiers are available concerning the type of question and response. The question was coded as “open question” if the teacher accepted more than one answer and extended students' answers with the intention of bringing the interaction back in line, or as “closed question” if the teacher accepted only one correct answer which both teacher and pupils know to be the true answer to an original factual question. Initially, student responses were coded according to four categories: “correct,” where the teacher accepts the answer; “incorrect,” where the teacher's feedback indicated that the student's answer was wrong; “incomplete,” if feedback indicated that the response was incomplete; and “don't know,” if the student did not answer the question. Because few data were obtained for the categories “incorrect,” “incomplete,” and “don't know,” it was decided to group all the relevant data into a single category, “other than correct.”

In the S-F discourse moves, the pupil elicits a verbal response from the teacher or conveys information to the teacher in the opening move (S), followed by the follow-up/feedback move (F) from the teacher. In this case, any response by the teacher to a student's interlocution on the subject under study was considered to be feedback. The teachers' feedback moves were examined with respect to the focus of feedback, as described by Hattie and Timperley (2007), who proposed four levels of feedback focus, with a distinction between the different levels of feedback (Table 1).

Using the observation grid, approximately 30% of all recorded interactions were coded by a third researcher to determine the percentage inter-rater agreement, as described by McHugh (2012). Further, both original researchers recoded all the interactions 4 months after the first coding to determine the intra-rater reliability. Agreement was calculated as the number of agreement scores divided by the total number of scores. Mean agreements of 91.6% and 93.7% were achieved for inter-coder reliability; the lowest agreement was 70.7% on the dimension “Type of question.” For intra-coder reliability, mean agreements of 92.5% and 94.7% were achieved; the lowest agreement was 79.8% on the dimension “Focus of feedback.”
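The percentage agreement measure described above (agreements divided by total scores) can be sketched in a few lines. The function name and the example codes are hypothetical and serve only to illustrate the calculation.

```python
def percent_agreement(codes_a, codes_b):
    """Percentage of units on which two codings assign the same category."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("codings must be non-empty and of equal length")
    agreements = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100.0 * agreements / len(codes_a)

# Invented example: two coders rating the focus of feedback on five interactions.
coder_1 = ["task", "process", "task", "self", "task"]
coder_2 = ["task", "process", "process", "self", "task"]
print(percent_agreement(coder_1, coder_2))  # 80.0
```

The same function applies to intra-rater reliability by passing one coder's first and second codings of the same interactions.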

Data Analysis

The aim of this study was to analyse teacher-student interactions and, more specifically, to characterise the focus of feedback used by third-grade teachers and to determine whether it changed according to the type of interaction (I-R-F or S-F), the lesson purpose (introducing new content, practising, or assessment), the type of question (open or closed) used by the teacher, the type of answer given by the student (correct or other than correct), the student's gender (male or female), and prior achievement (lower, average, or higher). With this in mind, the qualitative data from the 2,037 interactions collected using the observation grid were converted into categorical variables that could be analysed using descriptive and inferential statistics (Bazeley, 2018). Then, we calculated the frequency of all the categories in the observed interactions. We organized the data into contingency tables containing the number of interactions in each category of the three dimensions studied for each research question.

To analyse these complex and multivariate contingency tables, we used a log-linear analysis, which permits the examination of various types of higher order interactions among several categorical variables. It is similar to an analysis of variance, but for entirely categorical data (Field, 2015). Since most studies have used univariate techniques to study the relationships between feedback and the variables under study, one of the contributions of the current research was the use of multivariate statistics to answer complex questions involving more than two variables. The use of multivariate statistics in the current study allowed us to examine these relationships in depth and to quantify them; this approach “gives a much richer and realistic picture than looking at a single variable and provides a powerful test of significance compared to univariate techniques” (Shiker, 2012, p. 56).

Log-linear analyses aim to fit a simpler model to the data in the contingency tables, without any substantial loss of predictive power. Therefore, log-linear analysis works on a principle of backward elimination. It starts with the saturated model, including all interaction effects, after which terms are removed from the model (starting with the highest-order interaction) to see whether their removal significantly affects the fit of the model. If the removed term does affect the fit of the model, the term is not removed but rather interpreted, and all lower-order effects are ignored (Field, 2015).
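
The first step of this backward elimination can be sketched as follows, assuming a three-way table with positive margins. The model without the three-way term is fitted by iterative proportional fitting, a standard technique that is swapped in here for illustration and is not necessarily the estimation routine used by SPSS. The function names and both 2 × 2 × 2 tables are hypothetical.

```python
import math
from itertools import product


def fit_no_three_way(obs, cycles=50):
    """Fit the log-linear model containing all two-way terms by iterative
    proportional fitting. obs[i][j][k] holds observed counts; every
    two-way margin is assumed to be positive."""
    I, J, K = len(obs), len(obs[0]), len(obs[0][0])
    fit = [[[1.0] * K for _ in range(J)] for _ in range(I)]
    for _ in range(cycles):
        for i, j in product(range(I), range(J)):  # match the A x B margin
            scale = sum(obs[i][j]) / sum(fit[i][j])
            for k in range(K):
                fit[i][j][k] *= scale
        for i, k in product(range(I), range(K)):  # match the A x C margin
            scale = (sum(obs[i][j][k] for j in range(J))
                     / sum(fit[i][j][k] for j in range(J)))
            for j in range(J):
                fit[i][j][k] *= scale
        for j, k in product(range(J), range(K)):  # match the B x C margin
            scale = (sum(obs[i][j][k] for i in range(I))
                     / sum(fit[i][j][k] for i in range(I)))
            for i in range(I):
                fit[i][j][k] *= scale
    return fit


def likelihood_ratio(obs, fit):
    """G2 = 2 * sum(O * ln(O / E)); empty observed cells contribute zero."""
    total = 0.0
    dims = (range(len(obs)), range(len(obs[0])), range(len(obs[0][0])))
    for i, j, k in product(*dims):
        if obs[i][j][k] > 0:
            total += obs[i][j][k] * math.log(obs[i][j][k] / fit[i][j][k])
    return 2.0 * total


# Chi-square critical value for 1 degree of freedom at alpha = 0.05.
# A 2 x 2 x 2 table has (2-1)(2-1)(2-1) = 1 df for the three-way term.
CRITICAL_1DF = 3.841

# Hypothetical tables: the first has no three-way interaction, the second does.
no_interaction = [[[10, 20], [30, 60]], [[20, 40], [60, 120]]]
with_interaction = [[[20, 5], [5, 20]], [[5, 20], [20, 5]]]

for name, table in (("no_interaction", no_interaction),
                    ("with_interaction", with_interaction)):
    g2 = likelihood_ratio(table, fit_no_three_way(table))
    verdict = "retain and interpret" if g2 > CRITICAL_1DF else "remove"
    print(f"{name}: G2 = {g2:.2f}, three-way term: {verdict}")
```

When the three-way term can be removed, the same comparison would be repeated for each two-way term in turn, which is the logic followed in the Results section.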

In the current study, log-linear analysis assumptions (independence of cells and expected frequencies >5) were met. The focus of feedback was defined as the response variable, with the remaining dimensions as explanatory variables. Therefore, potential main effects and interactions between the explanatory variables were implied by using an asymmetrical inquiry as suggested by Kennedy (1988). Three asymmetrical log-linear analyses were applied to three-way contingency tables of frequencies, with the focus of feedback as a dependent variable and using the procedures suggested by Kennedy (1988): (a) logit-models were specified, (b) their expected cell frequencies were compared with the observed data, and (c) model terms that contributed significantly to the enhancement of these models were identified. The model was subsequently interpreted by collapsing the table to the factors in the term of interest and analysing the percentages (Field, 2015). We also calculated the odds ratios (OR) by breaking the effects into logical 2 × 2 contingency tables, as suggested by Field (2015). All the analyses were implemented with the statistical analysis software SPSS Statistics (v. 25).
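
For readers unfamiliar with odds ratios, the following minimal sketch shows the calculation for a single 2 × 2 table. The function name `odds_ratio` and the counts are hypothetical and do not reproduce any table in this study.

```python
def odds_ratio(a, b, c, d):
    """Odds ratio for the 2 x 2 table [[a, b], [c, d]].

    a, b: counts with / without the outcome in the group of interest;
    c, d: the same counts in the comparison group.
    """
    if 0 in (a, b, c, d):
        raise ValueError("odds ratio is undefined when a cell is empty")
    return (a * d) / (b * c)


# Hypothetical counts. Rows: I-R-F vs. S-F interactions;
# columns: task-level feedback vs. any other focus.
print(odds_ratio(90, 30, 60, 40))  # odds of task-level feedback, I-R-F vs. S-F
print(odds_ratio(60, 40, 90, 30))  # the same comparison seen from S-F
```

Swapping the two rows yields the reciprocal value, which is why an effect reported as higher odds for one category appears as an OR below 1 for the complementary category.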

Results

Teacher-Student Interaction: Feedback Characteristics

A total of 2,037 units of analysis/interactions were examined and classified in two categories related to the pattern/type of interaction. Of those, 1,275 (62.6%) interactions were initiated by the teacher (I-R-F) and 762 (37.4%) by the students (S-F).

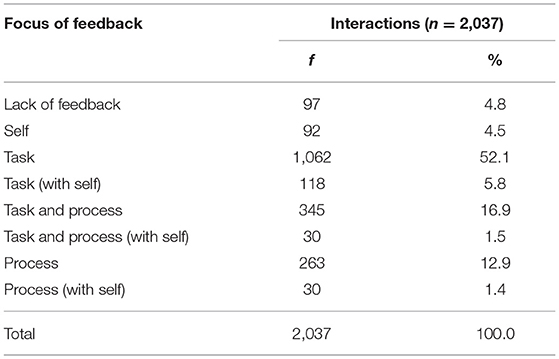

Interactions were also categorised according to the teacher's feedback move (Table 4). After observing the interactions and feedback moves, we found that teachers would sometimes focus feedback at one level only in one utterance. In other utterances, however, feedback was focused at two or three levels, as illustrated in the following example: “What a disaster! (Self) …this isn't the way to represent these data! (Task) I asked you what kind of graph you would choose to represent this data. For example, you could try to look at the table, to the title, numbers and words. You want to compare parts of a whole, in different categories (Process).”

Analysis revealed that in 4.8% of the interactions the teacher decided not to give any feedback; in 13.2% of the interactions, teachers' feedback was at the level of self (alone or combined with other feedback levels). In 69% of the interactions, teachers' feedback focused at the level of task (16.9% combined with the process level). Only 12.9% of the interactions were focused at the level of process, autonomously. We did not observe any interactions with feedback at the self-regulation level. Table 4 presents a description of the feedback focus of the observed interactions.

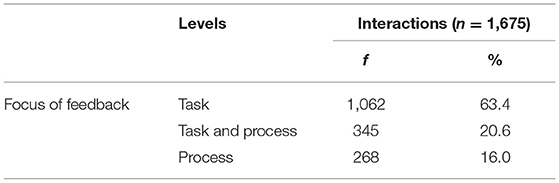

To answer the second, third, and fourth research questions, feedback at the level of self was not included. Several authors consider self-level feedback to have a detrimental impact on learning (Brooks et al., 2019), even when it is used in combination with other feedback levels. So, after omitting the interactions with feedback moves that included self-level feedback, a total of 1,675 interactions were analysed and classified according to the different levels. In Table 5 we present the frequency for all levels of feedback observed. Teachers' feedback focused at the level of task in 69% of the interactions, with 16.9% also incorporating some content at the level of process. Only 13.3% of the interactions were focused at the level of process alone. As mentioned before, we did not observe interactions with feedback at the self-regulation level.

Focus of Feedback by Lesson Purpose and Type of Interaction

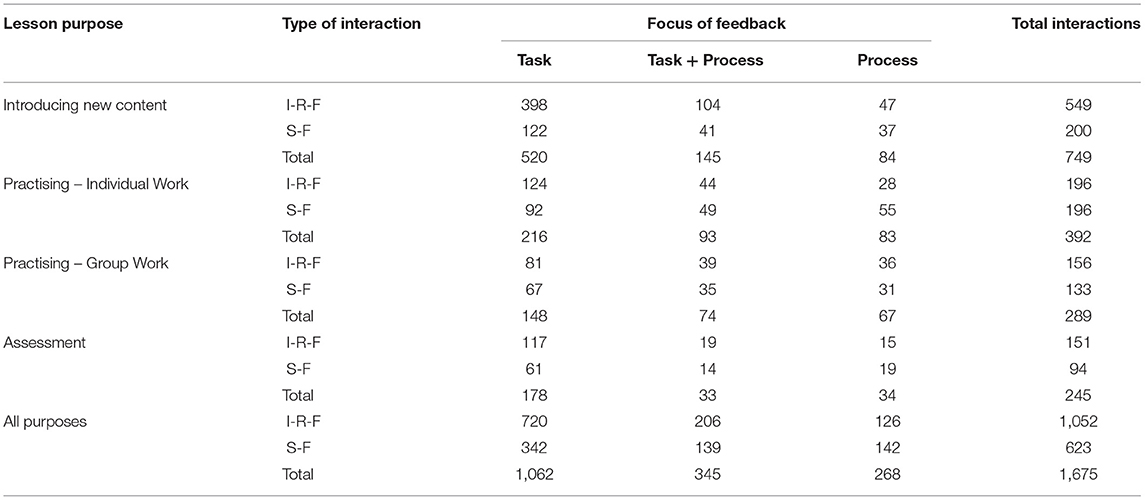

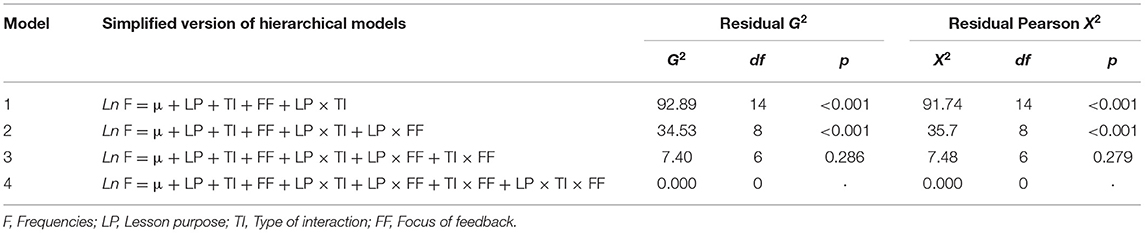

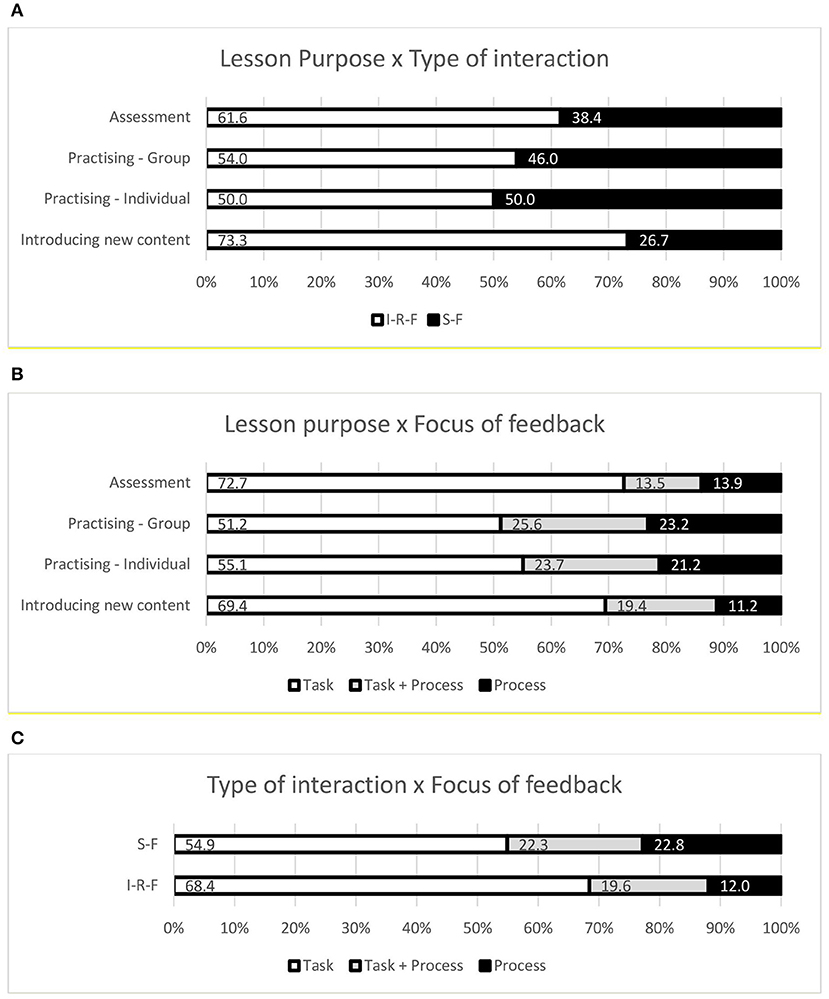

To understand if the teacher feedback characteristics (focus of feedback) differed according to the patterns of classroom interactions (I-R-F or S-F) and lesson purpose (introducing new content, practising individually, practising in a group, or assessment), a log-linear analysis was applied to the data organized in the three-way contingency table of frequencies presented in Table 6: Lesson purpose (4 categories) × Type of Interaction (2 categories) × Focus of Feedback (3 categories). Since we are assuming focus of feedback to be the dependent variable, an asymmetrical inquiry was used. This means that we incorporated the interaction between the independent variables lesson purpose and type of interaction in all models. If not controlled, these interactions could serve to distort patterns of response over levels of the focus of feedback (Kennedy, 1988). Consequently, in Model 1 we included all main effects and the two-way interaction between the Lesson purpose and the Type of interaction; in Model 2 we added the two-way interaction between the Lesson purpose and the Focus of feedback; in Model 3 we added the two-way interaction between the Type of interaction and the Focus of feedback; and finally, in Model 4, we added the three-way interaction among all variables. The residual maximum likelihood ratio statistic (G2) and the residual Pearson chi-square statistic (X2) associated with the four tested models are presented in Table 7.
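
For reference, the two residual statistics have the following standard definitions. The symbols are notational assumptions introduced here: O_i is the observed frequency in cell i, E_i the frequency expected under the model being tested, and M_s a simpler model nested in M_c.

```latex
G^2 = 2 \sum_{i} O_i \ln\frac{O_i}{E_i},
\qquad
X^2 = \sum_{i} \frac{(O_i - E_i)^2}{E_i}

% Contribution of the terms that M_c adds to M_s:
\Delta G^2 = G^2(M_s) - G^2(M_c),
\qquad
\Delta df = df(M_s) - df(M_c)
```

Both statistics are referred to a chi-square distribution. A non-significant residual statistic indicates that the model's expected frequencies do not differ significantly from the observed data, and a significant difference between nested models indicates that the added terms improve the fit.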

Table 6. Frequency of the focus of feedback according to the lesson purpose and the type of interaction.

As can be observed in Table 7, when the highest-order effect (the interaction between the three variables) was removed from the model, the likelihood ratio statistic did not significantly change, indicating that removing the three-way interaction does not significantly affect the fit of the model, and our data can be explained by a simpler model. Thus, we moved on to the lower-order interactions. Removing the interaction between Type of interaction and Focus of feedback (Model 2) or the interaction between the Lesson purpose and the Focus of feedback (Model 1) changed the likelihood ratio statistic significantly; that is, removing these interaction terms has a significant effect on the fit of the model. Therefore, the most efficient model capable of properly describing the observed distributions is Model 3, which presented residuals that did not differ significantly from our data (p > 0.050, cf. Table 7). This model includes the main effect of the three variables and the significant effects of the interactions: Lesson purpose × Type of interaction, Lesson purpose × Focus of feedback, and Type of interaction × Focus of feedback.

Table 7. Logit model analysis of proportional focus of feedback by lesson purpose and type of interaction.

The interaction Lesson purpose × Type of interaction was significant, indicating that there was an association between these variables that explained the distribution observed in Table 6. As shown in Figure 1A, there was a higher proportion of I-R-F interactions in lessons with the purpose of introducing new content. The chances of teachers initiating the interaction were 2.3 times higher when the purpose was introducing new content than for any other purpose. Figure 1A shows that there were more S-F interactions (initiated by students) when the lesson purpose was practising the new content. The OR indicated that the chances of the student initiating the interaction were 2 and 1.5 times higher when practising individually and in a group, respectively. Figure 1A further depicts the large proportions of I-R-F interactions in the lesson segments aimed at assessment. Yet, the adjusted estimated residuals indicate that there was no significant effect of this purpose on the type of interaction, and we cannot confirm, with 95% confidence, that the higher numbers of I-R-F interactions observed in the assessment segments were not due to chance.
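
The role of adjusted residuals in this kind of judgement can be illustrated with a minimal sketch for a two-way table under independence. This is a simplification of the residuals SPSS reports for a fitted log-linear model, and the counts below are hypothetical. An adjusted residual beyond ±1.96 marks a cell that deviates from its expected frequency at the 95% confidence level.

```python
import math


def adjusted_residuals(table):
    """Adjusted standardised residuals for a two-way table of counts,
    with expected frequencies computed under independence."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    residuals = []
    for i, row in enumerate(table):
        out = []
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            # Standard error accounts for the row and column proportions.
            se = math.sqrt(expected
                           * (1 - row_totals[i] / n)
                           * (1 - col_totals[j] / n))
            out.append((observed - expected) / se)
        residuals.append(out)
    return residuals


# Hypothetical counts. Rows: two lesson purposes; columns: I-R-F vs. S-F.
example = [[30, 10], [10, 30]]
for row in adjusted_residuals(example):
    print([round(z, 2) for z in row])
```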

Figure 1. Graphical representation of the association between the Lesson purpose and the Type of interaction (A), between the Lesson purpose and the Focus of feedback (B), and between the Type of interaction and the Focus of feedback (C).

The interaction Lesson purpose × Focus of feedback was also significant. The graphic representation of this interaction in Figure 1B allows us to observe that, in lessons with the purpose of introducing new content and/or assessment, the proportion of feedback at the task level was 1.6 times higher than in lessons with a different purpose. When the lesson purpose was practising new content, the chances of the teachers using task-level feedback were lower (OR = 0.6 for individual work and OR = 0.5 for group work). Further, the chances of teachers using process-level feedback were higher when the purpose was practising new content (1.6 and 1.8 times higher), but were 0.4 times lower when the purpose was introducing new content. The use of task + process feedback was significantly higher when the purpose was practising in group work (OR = 1.4), and significantly lower when the purpose was the assessment of the students (OR = 0.6).

Finally, there was a significant association between the type of interaction and the focus of feedback that helps to explain the distribution observed in Table 6. As depicted in Figure 1C, there was more feedback at the task level in I-R-F interactions, and more feedback at the process level in S-F interactions. The teachers used task-level feedback 1.8 times more when they initiated the interactions, and 0.6 times less when the interactions were initiated by the students. In contrast, the chances of teachers using process-level feedback were 0.5 times lower in I-R-F interactions and 1.0 time higher in S-F interactions. No significant effect of the type of interaction was observed on the use of task + process feedback.

Focus of Feedback by Type of Question and Type of Answer in I-R-F Interactions

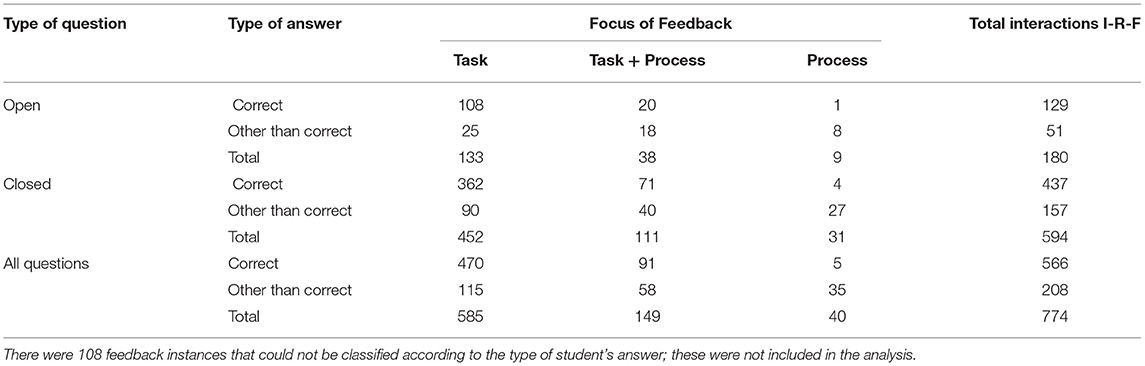

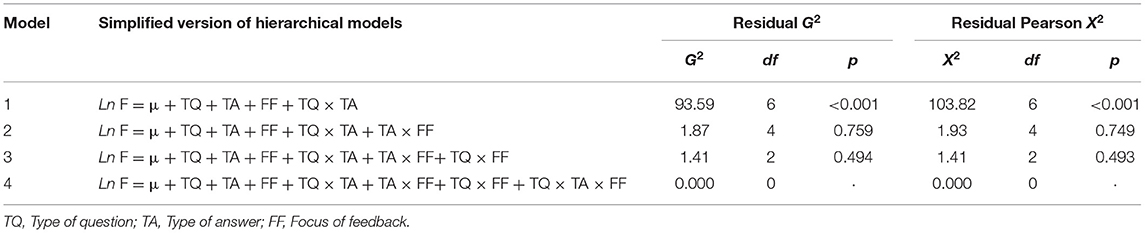

Our third research question related to whether teacher feedback characteristics (F moves) differed according to the type of question posed by the teacher (open or closed) and the type of answer provided by the student (correct or other than correct). Similar to what was done for the previous research question, a log-linear analysis was applied to the three-way contingency table of frequencies (Table 8) to analyse the relations among: Type of question (2) × Type of answer (2) × Focus of feedback (3). Again, since we assume the focus of feedback to be the response variable, we incorporated the interaction between the type of question and the type of answer in all models to control distorted response patterns across different levels of the focus of feedback. The residual maximum likelihood ratio statistic and the residual Pearson chi-square statistic associated with the four tested models are presented in Table 9.

Table 8. Frequency of the focus of feedback according to type of question and type of answer in I-R-F interactions.

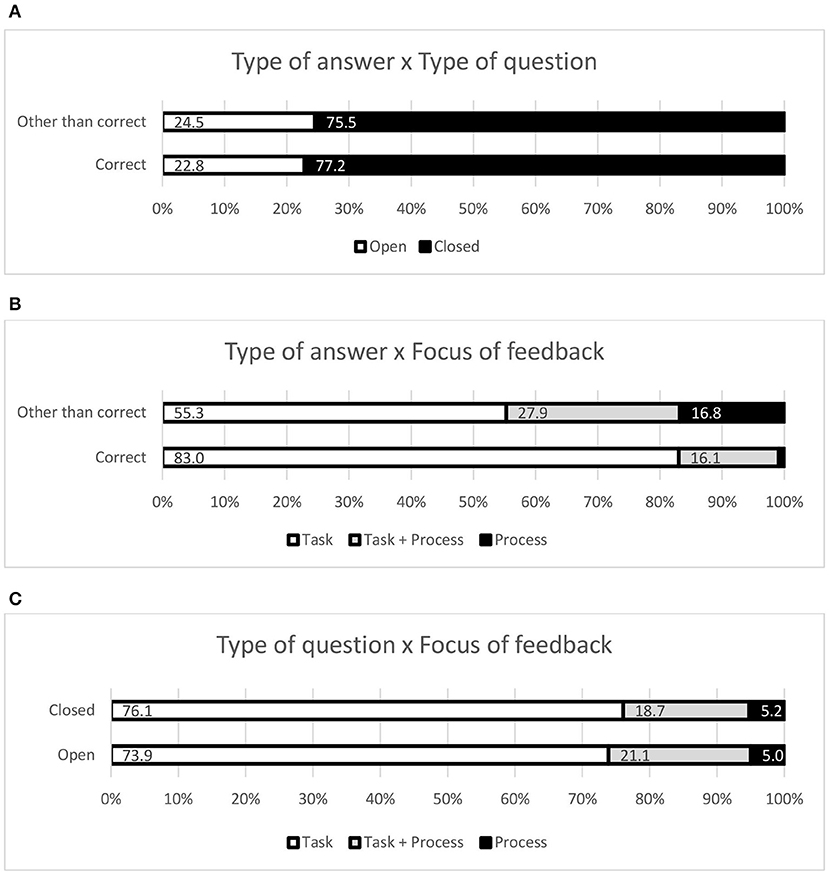

According to the log-linear modelling (Table 9), removing the three-way interaction did not significantly affect the fit of the model, so Model 4 was not the simplest model that could explain our data. Removing the interaction between Type of question and Focus of feedback (Model 3) did not significantly affect the fit of the model either. Only the removal of the interaction between the Type of answer and the Focus of feedback (Model 1) changed the likelihood ratio statistic significantly, having a significant effect on the fit of the model. The most efficient model capable of properly describing the observed distributions is Model 2 (p < 0.050, cf. Table 9), which includes the main effect of the three variables and the significant effect of the interactions: Type of answer × Type of question and Type of answer × Focus of feedback. The interaction Type of question × Focus of feedback did not contribute significantly to the model, indicating that there were only minor differences in the focus of feedback between the open and closed questions. As we can see in the graphic representation of this interaction in Figure 2C, for both closed and open questions, ~75% of the feedback was focused at the task level, close to 20% was focused at both the task and process levels, and only 5% was focused at the level of process.

Table 9. Logit model analysis of proportional focus of feedback by type of question and type of answer in I-R-F interactions.

Figure 2. Graphical representation of the association between the Type of answer and the Type of question (A), between the Type of answer and the Focus of feedback (B), and between the Type of question and the Focus of feedback (C).

The interaction between the type of question and the type of answer was included in the model to control distorted response patterns across the levels of focus of feedback, as recommended by Kennedy (1988). Yet, there was no significant relation between these variables [ = 0.25, p = 0.614]. As we can see in Figure 2A, approximately the same proportion of “correct” (22.8%) and “other than correct” answers (24.5%) were observed for both open and closed questions.

The only significant association that explains the distribution in Table 8 is the association between the type of answer and the focus of feedback, represented in Figure 2B. In this graphic, we can see that students received a higher proportion of task feedback when their answers were correct, and more task + process and process-level feedback when their answers were incorrect or incomplete. The chances of a student receiving feedback at the level of task when their answer was correct were 4.2 times higher than when their answer was other than correct. Further, students had a 2.7 times higher chance of receiving feedback at the level of task + process, and a 20.7 times higher chance of receiving feedback at the process level when their answer was other than correct.

Focus of Feedback by Gender and Achievement of the Student

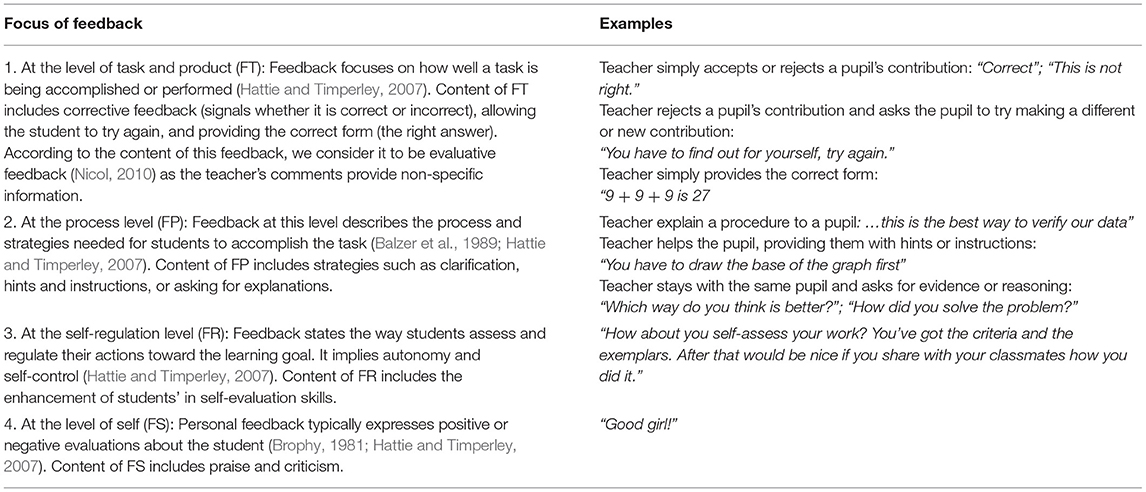

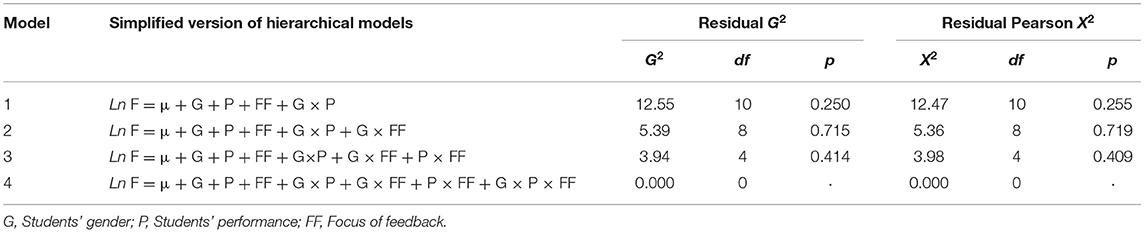

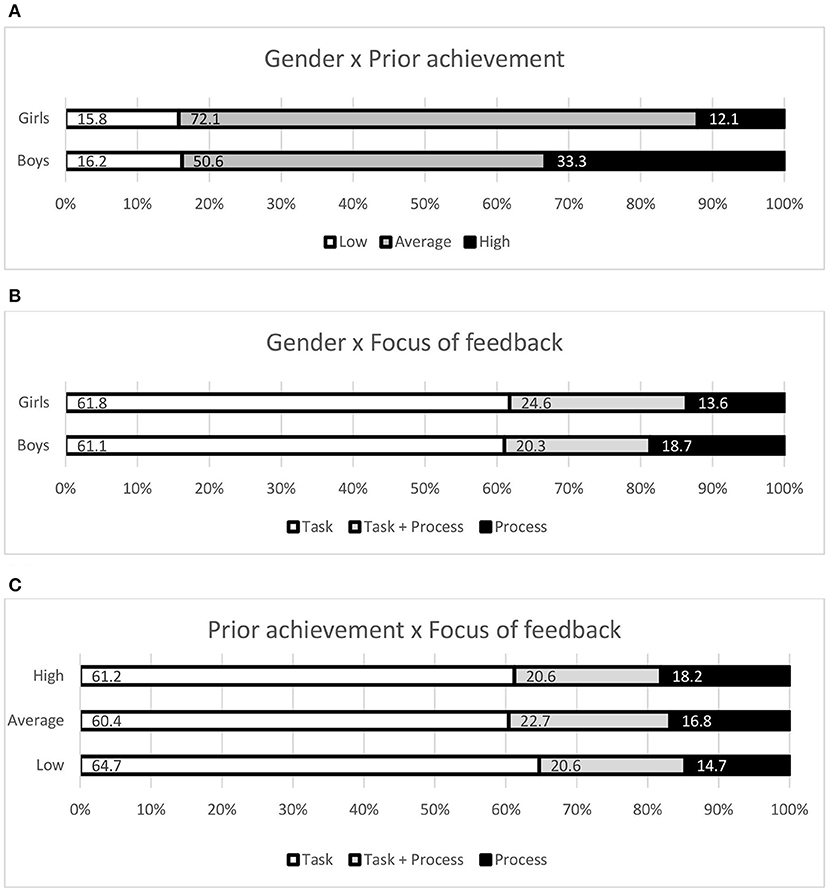

Finally, our last question pertained to whether teacher feedback characteristics (F moves) differed according to the gender (male or female) and prior achievement (lower, average, or higher) of the student to whom the feedback was addressed. The focus of feedback according to students' gender and prior achievement is presented in Table 10. Once again, log-linear analysis was applied to this three-way contingency table in order to respond to our fourth research question. Again, since we are assuming that the focus of feedback is the response variable, we incorporated the interaction between students' gender and prior achievement in all models.

According to the log-linear modelling, Model 1 is sufficient to explain the distributions observed in Table 10 (p < 0.050, cf. Table 11). Yet, Model 2 significantly improves the fit [G2(2) = 7.19, p = 0.027; X2(2) = 7.11, p = 0.028]. Therefore, the most efficient model capable of properly describing the observed distributions is Model 2, which includes the main effect of the three variables and the significant effects of the interactions: Gender × Focus of feedback and Gender × Achievement.

Table 11. Logit model analysis of proportional focus of feedback by students' gender and performance.

As depicted in Figure 3A, there were more boys with high prior achievement and more girls with average prior achievement. The OR indicated that the chances of boys presenting high prior achievement were 3.62 times higher, while the chances of girls presenting average prior achievement were 2.5 times higher. The other significant association observed is between gender and level of feedback. According to the adjusted residuals, there was a significant effect of gender only on the chances of receiving feedback at the process level. Boys had a 1.5 times higher chance of receiving feedback at this level than girls, as shown in Figure 3B. The interaction between prior achievement and focus of feedback did not contribute to explaining the distributions observed in Table 10. As shown in Figure 3C, the students received approximately the same proportion of the different levels of feedback, independently of their prior achievement.

Figure 3. Graphical representation of the association between students' Gender and students' Prior achievement (A), between students' Gender and the Focus of feedback (B), and between students' Prior achievement and the Focus of feedback (C).

Discussion

In order to better understand everyday teacher-student interactions in mathematics classrooms, we analysed the exchanges that occurred in terms of I-R-F and S-F patterns. Our results support the findings of Hardman et al. (2003), which suggest that teaching is dominated by teacher talk. Most of the time, exchanges between teacher and student present an I-R-F pattern controlled by teachers, with their major goal being the evaluation of students' surface understanding of what they were taught. Thus, the majority of exchanges were initiated by the teacher, who asked closed questions requiring factual answers well-known to the students, and leading to a level of feedback that was focused at the task or product. This narrow focus limits gains in learning and restrains the increase of knowledge and understanding (Alexander, 2017). According to social constructivist theories of learning, this pattern of classroom discourse is not effective, as pupils do not play an active part in their learning (Zher, 2017). Teachers should therefore encourage students to speak and actively participate in class, promoting dialogical teaching and learning (Alexander, 2017) which could stimulate students' cognitive engagement. Deeper learning should be emphasised, and this can only occur through exchanges where students have the opportunity to talk about, present, and discuss their ideas with their teacher.

In order to be effective, feedback should be of high quality and adapted to the student's needs (Hattie, 2012b). Nevertheless, we found several patterns of interaction in the current study where the feedback move was characterised by feedback directed to the “self,” with and without the addition of another level of feedback. According to Hattie (2012a), such feedback rarely enhances achievement or learning and, when it is combined with more effective types, like process-level feedback, it weakens the positive effect of such comments. We also found a high number of interactions where the teacher did not provide any feedback on their students' answers. Thus, if feedback plays an important role in assisting learners to improve their performances (Hattie, 2012b) and if adequate feedback enables the student to close the gap between what they know and a desirable level of achievement (Sadler, 1989), we propose that moving forward may be a huge challenge for students who do not receive the information necessary to close or even reduce that gap from their teacher, compromising their engagement in mathematics activities.

In the present study we observed that there were more I-R-F interactions than S-F interactions, and in both types of interaction patterns the teacher's feedback focused essentially on the task. However, teacher feedback focused at the process level tends to occur in S-F interactions in particular. According to Carless (2019) and Hattie and Timperley (2007) students' responses to teachers' feedback are “influenced by the level at which the feedback operates” (p. 2). We observed that teachers mostly use feedback at the task level, meaning that they provide information about a specific response, namely the current student performance level. This has a limiting impact on students' future responses; can lead to students' dependence on teachers' judgements; and does not promote dialogical feedback as it does not stimulate students' engagement in obtaining information to improve and self-regulate their learning (Carless, 2019). The teacher's feedback goal seems to be to provide information about the task to students, who are passive recipients of these facts. Nevertheless, when teachers' feedback operates at the process level, they focus on how to perform the task. This is a type of feedback that Hattie and Timperley (2007) consider more effective in promoting achievement, and we suggest that it stimulates students' thinking and reflection which are important supports in leading the students to act. This form of feedback, which promotes student engagement, is essential for students to understand the meaning of teachers' feedback and to know how to use this information to close the gap. This process more closely resembles dialogic feedback (Carless, 2019).

Feedback in everyday classroom interactions is part of the communication between the teacher and students; researchers have claimed that some types of feedback are more effective than others when it comes to learning. Numerous studies have been carried out in this field implementing very detailed micro-level analysis, ignoring the influence of classroom contexts on teacher feedback (Svanes and Skagen, 2017). To address this gap in the literature, we took the classroom setting into account, considering the lesson purpose and the students' participation to better understand the daily feedback teachers provide in the classroom.

In line with the findings of Hattie and Yates (2014), we expected that in the lessons where the purpose was to introduce new content, a moment dedicated to the acquisition of new knowledge, the probability of a teacher providing feedback focussing at the task-level would be higher than in lessons with other purposes, where students should exhibit advanced levels of mastery. While this was confirmed by our results, we found that this pattern of feedback spreads throughout all the lessons, regardless of their purpose. As students improve their knowledge, feedback focused at the task level should be replaced with more elaborate, informative and dialogical feedback.

The exchanges analysed from practice lessons (both individual and group) showed that these lessons contained process-level feedback more often than lessons where teachers introduced new content, or even lessons aimed at assessing learned content. This finding was reinforced by our observation that practice lessons tended to contain more student-initiated interactions (S-F). Hattie and Timperley (2007) claim that a student's response to a teacher's feedback is influenced by the level at which the feedback operates; feedback focused at the process level tends to produce more interactions between the teacher and students, and stimulates students' involvement in classroom dialogue by facilitating participation. In lessons requiring individual work, rather than merely praising their students, teachers introduced a type of process-level feedback that allowed a more conversational reaction from students by providing them with opportunities to ask questions and comment on their learning difficulties. This type of feedback move is important in the facilitation of dialogic feedback (Carless, 2016) and in the promotion of achievement. Although this type of exchange (S-F focused at the process level) emerged more in lesson moments with the purpose of learning consolidation or practice, its occurrence was, however, infrequent.

Lessons with the purpose of assessment should represent a time when students are better able to self-regulate and consequently require less support from the teacher, as students would have already mastered the subject of assessment (Hattie, 2012a). Nevertheless, in the present research this moment was used by teachers to ensure that students had acquired the necessary knowledge, and we observed a predominance of I-R-F interactions with feedback moves mostly focused at the task-level. Teachers' intention was to verify knowledge and provide corrective feedback, instead of supporting self-regulated learning.

Our third research problem was to consider the possibility of relationships among the type of question asked by the teacher, the students' answer, and the type of feedback provided by the teacher. In the present research, in the opening moves made by the teachers in all the observed lessons, closed questions were usually employed to test students' knowledge. Also, most of the questions were answered correctly by the students, confirming that both the question level and complexity were low. Noble (2004) states that lower-order questioning strategies would promote lower student cognitive levels. The results of some studies showed that the cognitive level of questions posed by students is, to some extent, dependent on the type of instruction that their teachers use (e.g., Hofstein et al., 2004) and on the nature of classroom tasks and cognitive demands required of the students (e.g., Chin et al., 2002). So, if students do not experience instruction that enhances their ability to ask good quality questions, and if they do not practise through learning tasks that provide opportunities for them to ask questions in different formats, students will continue to use the common and basic information questions that their teachers ask most often (Chin and Osborne, 2008).

According to Zher (2017), students need coaching in these learning processes to become more self-regulated toward the learning goal—learners who frequently and regularly frame their own questions and comments in different formats tend to be more independent of their teacher and develop greater confidence in their own competence. Our findings show that the type of question (mostly closed) and the type of feedback (mostly at the level of task) did not vary much, and that the teacher's feedback only varied according to the student's response. In general, as we have seen, the level of feedback was focused at the task or product, particularly when the answers were correct. For other types of responses (incorrect and incomplete), teachers tended to provide more process feedback than in the case of correct answers. For these teachers, verification feedback is considered sufficient when the student knows the right answer. When teachers provide feedback at the level of process it is meant to assist students with difficulties. In these infrequent situations, the purpose of teachers' feedback can facilitate the construction of knowledge among students. It is important to note that successful students also need constructive feedback. The correctness of the student's answer should therefore not be the only consideration in terms of feedback, but learners should also be encouraged to explain why it is so (Brookhart, 2017). Suggestions for next steps should be encouraged, and sharing the process of doing the work with classmates is very important, as additional information in the feedback message will improve learning (Butler et al., 2013).

In general, scholars have agreed that regular and effective feedback could improve student achievement (Hattie and Jaeger, 1998; Hattie, 2012b); it is, in fact, one of the most powerful influences on student achievement. Hattie and Jaeger (1998, p. 111–112) postulate “that achievement is enhanced as a function of feedback” and “that achievement is enhanced to the degree that students are trained to receive feedback to verify rather than enhance their sense of efficacy of achievement.” In the current study, we found that students received approximately the same proportion of the different levels of feedback, regardless of their prior achievement. In general, the quality of feedback was often poor, it was focused at the level of task, and was mostly evaluative. According to Hattie (2009, 2012a), these are characteristic of the least effective forms of feedback for enhancing achievement. In fact, some authors (Carless, 2016; Alexander, 2017) consider feedback to be central to learning where teachers' comments enable students to use it to enhance their work or learning strategies. This is not the case for evaluative feedback because it contains little task information. Using only corrective feedback strategies, where students are encouraged to try again and provide the correct answer, makes it difficult for teachers and students to reduce the discrepancy between current achievement and learning goals (Hattie, 2009).

When considering gender, prior achievement, and type of feedback—the fourth research question of the current study—teachers provided more feedback focused at the process level for boys than they did for girls, who received more task-level feedback. We can conclude that these teachers provide more effective feedback to male learners, which can promote better forms of learning. As far as the other groups are concerned, the practices applied by teachers have limited positive consequences in learning (Hattie and Timperley, 2007).

Although we cannot ascertain from our findings the reasons for the differences in treatment of learners in mathematics classrooms, we posit that it can be explained in light of the subject matter being taught (mathematics) and teachers' expectations—an interpretation that is supported by Brophy (1985) and Eccles and Blumenfeld (1985). Brophy (1985) found that in teacher-student interactions in maths classrooms, teachers interacted with and gave more attention to boys than girls, which translates practically into a pattern of interaction that is more advantageous to boys. Eccles and Blumenfeld (1985) introduced another variable to elucidate the differences in classroom interaction: teachers' expectations. They argue that teachers' expectations are related to students' gender and achievement in maths, and claim that when teachers have low expectations for girls—based on their belief that girls would perform more poorly than boys—they would provide girls with more evaluative feedback than statistically predicted. This would be an adequate strategy as teachers tend to perceive low achieving girls as docile and not engaged. On the other hand, the authors suggested that teachers' high expectations of boys, informed by their belief that boys would perform better than girls, translated into higher rates of interactions with teachers, as well as higher quality feedback. As stated by Hattie (2012a), the most effective form of feedback to close students' knowledge gaps is targeted at, or just above, the level where they are working. We can infer that these teachers, thinking that girls will perform poorly, focus their feedback for girls at lower levels (task), whereas they focus their feedback to boys at the process level in anticipation of high achievement in maths. Self-regulation is facilitated when teachers provide feedback at higher levels.

In general, to provide effective feedback, teachers should employ strategies aimed at filling the gap between what the students know and the goal of their activity (Sadler, 1989), ensuring that the students understand the feedback and use it in their learning (Carless, 2016). The findings of the present study suggest that the level of feedback in mathematics is contingent on learner attributes and teacher practices, which could limit the effectiveness of the feedback. We observed that teachers' talk dominated all lessons, regardless of the lesson purpose, and that their exchanges mostly followed the I-R-F structure. Teachers generally encouraged students to respond by asking closed questions, while feedback moves were frequently focused at the task level, becoming more focused at the process level when students initiated the verbal interaction. This was especially the case in those lessons where the purpose was for students to apply their knowledge. The teachers also used this pattern of feedback regardless of the type of question and the quality of the student's answer. Finally, they interacted more with the boys who were good students, providing them with more process feedback.

This paper contributes to existing literature in the domain of classroom feedback, especially as it pertains to the currently understudied area of elementary school education. It presents an ecological description of teachers' feedback moves that contributes to the debate on what teachers in primary school classrooms intend to communicate to their pupils through feedback, and it highlights the use of feedback as a tool to aid students' learning. These findings can be used to inform policy recommendations for teachers' pedagogic practices.

Hattie and Timperley's (2007) research showed that feedback focused at the level of process and on the student's metacognition was effective in improving student learning. Although feedback on students' metacognition was scarce across all teacher-student interactions, in our study we could corroborate this earlier finding at the level of Task + Process and at the level of process itself. Hattie and Timperley (2007) conclude that feedback at the level of process seems to be more powerful in terms of enhancing student learning than feedback at the level of task. They add that feedback at the level of task can be effective when it is combined with feedback at the level of task processing, enhancing students' use of different strategies. Based on these results, it is reasonable to state that teachers should pay more attention to the development of their students' metacognitive knowledge and skills during learning. In order to enhance students' quality of learning, it is important for teachers to improve students' metacognition, which will help them progress toward their goal and close the learning gap (Van den Bergh et al., 2013).

As we have also argued, in most teacher–student interactions, teachers provided feedback in an evaluative way. This may be linked to the role of the teacher during the learning process. If the teacher's intentions are to transfer knowledge, they control the learning process. In a previous study we identified that these teachers mostly used summative assessment practices (Monteiro et al., 2018), which reinforced this more transmissive approach to knowledge. Effective feedback, focused at the levels of process and self-regulation, requires teachers to interact as tutors who promote dialogical feedback. Therefore, we consider that teachers need more preparation for this role as facilitators of the construction of learning.

Finally, one major finding emerged from our study related to gender. Boys and girls in mathematics lessons have different lived experiences. Sometimes undetected or even ignored, gender bias is still present in elementary schools, and it is important to support teachers with different strategies to improve the level of equality in their classroom.

Several limitations to this study have to be noted. We are aware of the researchers' subjective role in the qualitative observation of the classroom, especially when they had to decide what kind of feedback the teacher was giving their students. To minimise observer bias, researchers received adequate training in the appropriate recording of findings, and the methods, tools, and timeframes for data collection were clearly defined. Although the collection of information was quite extensive, we are also aware that the data collected may not provide an accurate representation of the teacher's effectiveness in the classroom. As we included a very small number of teachers (five) in our study, the findings should not be generalised to all primary school teachers. Indeed, we recommend more studies employing qualitative and quantitative research methods to observe student-teacher interaction in the classroom, as a larger body of evidence will enable greater understanding of the value and utility of feedback as a promoter of meaningful learning.

Data Availability Statement

The datasets generated for this study are available on request from the corresponding author.

Ethics Statement

This study was approved by the ethical committee (of the centre for research management of ISPA - Instituto Universitário), and by the National Commission for Data Protection (CNPD). Written informed consent was obtained from school boards, teachers, parents and students. Written informed consent to participate in this study was provided by the participants' legal guardian/next of kin.

Author Contributions

Each author contributed equally to the conception of this work, and to the analysis and interpretation of data. All authors drafted the work and revised it, and have approved the submitted version.

Funding

This study was supported by the FCT—Science and Technology Foundation—Research project PTDC/MHC-CED/1680/2014 and UID/CED/04853/2016.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Ajjawi, R., and Boud, D. (2017). Researching feedback dialogue: an interactional analysis approach. Assess. Eval. High. Educ. 42, 252–265. doi: 10.1080/02602938.2015.1102863

Alexander, R. J. (2001). “Basics, cores and choices: towards a new curriculum,” in Teacher Development: Exploring Our Own Practice, eds J. Soler, A. Craft, and H. Burgess (London: Paul Chapman), 26–40.

Alexander, R. J. (2004). Still no pedagogy? Principles, pragmatism and compliance in primary education. Camb. J. Educ. 34, 7–33. doi: 10.1080/0305764042000183106

Alexander, R. J. (2006). “Dichotomous pedagogies and the promise of cross-cultural comparison,” in Education: Globalisation and Social Change, eds A. H. Halsey, P. Brown, H. Lauder, and J. Dilabough (Oxford: Oxford University Press), 722–733.

Alexander, R. J. (2017). “Developing dialogue: process, trial, outcomes,” in Paper Presented at the 2017 EARLI Conference, (Tampere). Available online at: http://www.robinalexander.org.uk/wp-content/uploads/2017/08/EARLI-2017-paper-170825.pdf (accessed December 15, 2018).

Alexander, R. J., Hardman, F., Hardman, J., Rajab, T., and Longmore, M. (2017). Changing Talk, Changing Thinking: Interim Report From the in-House Evaluation of the CPRT/UoY Dialogic Teaching Project. Available online at: http://eprints.whiterose.ac.uk/151061/ (accessed December 15, 2018).

Apriliyanto, B., and Saputro, D. R. S. (2018). Student's social interaction in mathematics learning. J. Phys. Conf. Ser. 983, 1–6. doi: 10.1088/1742-6596/983/1/012130

Balzer, W. K., Doherty, M. E., and O'Connor, R. Jr. (1989). Effects of cognitive feedback on performance. Psychol. Bull. 106, 410–433. doi: 10.1037//0033-2909.106.3.410

Brooks, C., Carroll, A., Gillies, R. M., and Hattie, J. (2019). A matrix of feedback for learning. Aust. J. Teach. Educ. 44, 14–32. doi: 10.14221/ajte.2018v44n4.2

Brophy, J. (1981). Teacher praise: a functional analysis. Rev. Educ. Res. 51, 5–32. doi: 10.3102/00346543051001005

Brophy, J. (1985). “Interactions of male and female students with male and female teachers,” in Gender Influences in Classroom Interaction, eds L. C. Wilkinson and C. B. Marrett (Orlando, FL: Academic Press), 115–142.

Burns, C., and Myhill, D. (2004). Interactive or inactive? A consideration of the nature of interaction in whole class teaching. Camb. J. Educ. 34, 35–49. doi: 10.1080/0305764042000183115

Butler, A. C., Godbole, N., and Marsh, E. J. (2013). Explanation feedback is better than correct answer feedback for promoting transfer of learning. J. Educ. Psychol. 105:290. doi: 10.1037/a0031026

Butler, D. L., and Winne, P. H. (1995). Feedback and self-regulated learning: a theoretical synthesis. Rev. Educ. Res. 65, 245–281. doi: 10.3102/00346543065003245

Carless, D. (2016). “Feedback as dialogue,” in Encyclopedia of Educational Philosophy and Theory, ed M. A. Peters (Singapore: Springer), 803–808. doi: 10.1007/978-981-287-532-7_389-1

Carless, D. (2019). Feedback loops and the longer-term: towards feedback spirals. Assess. Eval. High. Educ. 44, 705–714. doi: 10.1080/02602938.2018.1531108

Chapin, S. H., O'Connor, C., O'Connor, M. C., and Anderson, N. C. (2009). Classroom Discussions: Using Math Talk to Help Students Learn, Grades K-6. California, CA: Math Solutions.

Chin, C. (2006). Classroom interaction in science: teacher questioning and feedback to students' responses. Int. J. Sci. Educ. 28, 1315–1346. doi: 10.1080/09500690600621100

Chin, C., Brown, D. E., and Bruce, B. C. (2002). Student-generated questions: a meaningful aspect of learning in science. Int. J. Sci. Educ. 24, 521–549. doi: 10.1080/09500690110095249

Chin, C., and Osborne, J. (2008). Students' questions: a potential resource for teaching and learning science. Stud. Sci. Educ. 44, 1–39. doi: 10.1080/03057260701828101

Chin, C., and Osborne, J. (2010). Students' questions and discursive interaction: their impact on argumentation during collaborative group discussions in science. J. Res. Sci. Teach. 47, 883–908. doi: 10.1002/tea.20385

Eccles, J. S., and Blumenfeld, P. (1985). “Classroom experiences and student gender: are there differences and do they matter?,” in Gender Influences in Classroom Interaction, eds L. C. Wilkinson and C. B. Marrett (Orlando, FL: Academic Press), 79–114.

Eccles, J. S., and Harold, R. D. (1992). “Gender differences in educational and occupational patterns among the gifted,” in Talent Development: Proceedings from the 1991 Henry B. and Jocelyn Wallace National Research Symposium on Talent Development, eds N. Colangelo, S. G. Assouline, and D. L. Ambroson (Unionville, NY: Trillium), 3–29.

Etikan, I., Musa, S. A., and Alkassim, R. S. (2016). Comparison of convenience sampling and purposive sampling. Am. J. Theor. Appl. Stat. 5, 1–4. doi: 10.11648/j.ajtas.20160501.11

Gan, M. J., and Hattie, J. (2014). Prompting secondary students' use of criteria, feedback specificity and feedback levels during an investigative task. Instr. Sci. 42, 861–878. doi: 10.1007/s11251-014-9319-4

Hardman, F., Smith, F., and Wall, K. (2003). Interactive whole class teaching in the national literacy strategy. Camb. J. Educ. 33, 197–215. doi: 10.1080/03057640302043

Hattie, J. (2012a). Visible Learning for Teachers: Maximizing Impact on Learning. New York, NY: Routledge.

Hattie, J. (2012b). “Feedback in schools,” in Feedback: The Communication of Praise, Criticism, and Advice, eds R. Sutton, M. J. Hornsey, and K. M. Douglas (New York, NY: Peter Lang Publishing), 265–278.

Hattie, J., and Gan, M. (2011). “Instruction based on feedback,” in Handbook of Research on Learning and Instruction, eds R. E. Mayer and P. A. Alexander (New York, NY: Routledge), 263–285.

Hattie, J., and Jaeger, R. (1998). Assessment and classroom learning: a deductive approach. Assess. Educ. Princ. Policy Pract. 5, 111–122. doi: 10.1080/0969595980050107

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Hattie, J. A. (2009). Visible Learning: A Synthesis of Over 800 Meta-Analyses Relating to Achievement. New York, NY: Routledge.

Hattie, J. A., and Yates, G. C. (2014). “Using feedback to promote learning,” in Applying Science of Learning in Education: Infusing Psychological Science into the Curriculum, eds V. A. Benassi, C. E. Overson, and C. M. Hakala (London: Routledge), 45–58. Available online at: http://teachpsych.org/ebooks/asle2014/index.php (accessed December 15, 2018).

Henderson, M., Boud, D., Molloy, E., Dawson, P., Phillips, M., Ryan, T., et al. (2018). Feedback for Learning: Closing the Assessment Loop–Final Report. Canberra, ACT: Australian Government Department of Education and Training.

Hiebert, J., Gallimore, R., Garnier, H., Givvin, K. B., Hollingsworth, H., Jacobs, J., et al. (2003). Teaching Mathematics in Seven Countries: Results from the TIMSS 1999 Video Study. Washington, DC: National Center for Education Statistics.

Hofstein, A., Shore, R., and Kipnis, N. (2004). Providing high school students with opportunities to develop learning skills in an inquiry-type laboratory. Int. J. Sci. Educ. 26, 47–62. doi: 10.1080/0950069032000070342

Kelly, A. (1998). Gender differences in teacher-pupil interactions: a meta-analytic review. Res. Educ. 9, 1–23. doi: 10.1177/003452378803900101

Kennedy, J. J. (1988). Applying log-linear models in educational research. Aus. J. Educ. 32, 3–24. doi: 10.1177/000494418803200101

Kluger, A. N., and DeNisi, A. (1996). The effects of feedback interventions on performance: a historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychol. Bull. 119, 254–284.

McHugh, M. L. (2012). Interrater reliability: the kappa statistic. Biochem. Med. 22, 276–282. doi: 10.11613/BM.2012.031

Mercer, N. (2010). The analysis of classroom talk: Methods and methodologies. Brit. J. Educ. Psychol. 80, 1–14. doi: 10.1348/000709909X479853

Mercer, N., and Howe, C. (2012). Explaining the dialogic processes of teaching learning: the value potential of sociocultural theory. Learn. Cult. Soc. Interact. 1, 12–21. doi: 10.1016/j.lcsi.2012.03.001

Miles, M. B., Huberman, A. M., and Saldaña, J. (2014). Qualitative Data Analysis: A Methods Sourcebook, 3rd Edn. Thousand Oaks, CA: SAGE.

Molloy, E., and Boud, D. (2013). “Changing conceptions of feedback,” in Feedback in Higher and Professional Education, eds D. Boud and E. Molloy (London: Routledge), 21–43.