
ORIGINAL RESEARCH article

Front. Educ., 15 October 2019

Sec. Digital Education

Volume 4 - 2019 | https://doi.org/10.3389/feduc.2019.00110

This article is part of the Research Topic “Research in Underexamined Areas of Open Educational Resources.”

Open textbooks, which provide students with electronic access to texts without fees, have been developed as alternatives to commercial textbooks. Building on prior quasi-experiments, the purpose of this study is to experimentally compare an open and a commercial textbook. College students (N = 144) were randomly assigned to read an excerpt from an open or a commercial textbook, answer questions about the content, and indicate their perceptions of textbook quality. Learning was similar between textbook types. Perceptions differed: the discussion of research findings was rated as higher quality in the open textbook, whereas the visuals and writing were rated as higher quality in the commercial textbook. Neither perceptions of research findings nor perceptions of visuals correlated with learning performance. However, perceptions of writing quality and everyday examples were correlated with learning performance. The findings may inform initiatives for open textbook adoption as well as textbook development, but they are limited by the use of an excerpt.

Reading to learn is a fundamental activity for knowledge construction (Duke et al., 2003; Alfassi, 2004; Maggioni et al., 2015). Textbooks are common educational tools for reading to learn, even in the digital age (Fletcher et al., 2012; Knight, 2015; Illowsky et al., 2016). The rising cost of commercial textbooks, along with the affordances of the internet and growing interest in expanding access to knowledge, has brought about the development of open textbooks, which students can access electronically without cost (Smith, 2009). Multiple studies have indicated that students' learning from and opinions of open textbooks are similar to or better than those of commercial textbooks (e.g., Clinton, 2018; Lawrence and Lester, 2018; Medley-Rath, 2018; Cuttler, 2019; Grissett and Huffman, 2019). However, these studies have all been quasi-experimental or correlational; therefore, causal claims were not possible.
Moreover, students in these studies were aware that the open textbooks were free whereas the commercial textbooks were not, which could bias their attitudes (Clinton, 2019). An experimental examination with participants who are naive to the cost of the textbook would address the confounds related to student awareness of cost. The purpose of this experiment is to examine students' learning from and perceptions of an open textbook compared to a commercial textbook.

Textbooks are frequently used for required reading in college courses (Illowsky et al., 2016). Students use them for independent reading as well as for class activities (Landrum et al., 2012; Seaton et al., 2014). College instructors often expect their students to learn independently from reading (McCormick et al., 2013); therefore, it is not surprising that course grades tend to increase with the amount of reading completed (Gurung et al., 2012; Junco and Clem, 2015).

Many college students lack the financial resources for commercial textbooks (Smith et al., 2016). Often, college students do not purchase the required textbooks for their courses to save money (Florida Virtual Campus, 2016). This is understandable given that the cost of commercial textbooks has increased dramatically over the past few decades, far exceeding the rate of inflation (Perry, 2015; Senack and Donaghue, 2016; U.S. Bureau of Labor Statistics, 2016). As a result, students often choose not to purchase a textbook or to enroll in fewer courses, both of which can have detrimental academic consequences (U.S. Public Interest Research Group Education Fund and Student Public Interest Research Groups [USPIRG], 2014; Florida Virtual Campus, 2016). Therefore, one advantage of open textbooks is that more students have access to the material.

Overall, numerous studies have indicated no meaningful differences in student learning performance between open and commercial textbooks. For example, after controlling for high school grade point averages, course grades for students in Introduction to Psychology courses with commercial or open textbooks were similar (Clinton, 2018). Another study of Introduction to Psychology courses observed consistent findings: students in courses with open or commercial textbooks, who had comparable levels of prior psychology knowledge based on pretest scores, had similar exam grades (Jhangiani et al., 2018). In physics, students earned similar grades and experienced similar changes in attitudes toward science in courses with commercial and open textbooks (Hendricks et al., 2017). However, a large study comparing multiple disciplines found a benefit for open textbooks over commercial textbooks in terms of final grades, especially for students categorized as lower in socioeconomic status based on financial aid eligibility (Colvard et al., 2018). Nevertheless, when comparisons were expanded to students at different institutions, at least one study noted poorer learning performance for students in courses using open textbooks (Gurung, 2017).

Systematic reviews and a meta-analysis have concluded that, based on the overarching findings across studies, the learning outcomes from open and commercial textbooks are similar (see Hilton, 2016, 2019, for systematic reviews; Clinton and Khan, 2019, for a meta-analysis). However, these findings regarding the efficacy of open textbooks are confounded in several ways. One issue is that a substantial portion of these studies did not hold the instructor and course constant (Clinton and Khan, 2019), which is problematic as teaching quality and grading criteria vary by instructor (de Vlieger et al., 2016). Additionally, the learning performance measures in studies often varied for courses with open compared to commercial textbooks (see Gurung, 2017, for exceptions). The issue of objective, identical measures is notable given that Gurung (2017) found students enrolled in courses with open textbooks did less well on objective measures of learning than did students in courses with commercial textbooks.

Another issue when considering findings on open textbook adoption is the access hypothesis, which posits that students have better access to open textbooks than to commercial textbooks because there is no cost barrier (Grimaldi et al., 2019). Under the access hypothesis, open textbooks would primarily benefit students who could not afford commercial textbooks, because these students would no longer struggle academically from a lack of materials. Students who can afford commercial textbooks would not gain an academic advantage in courses with open textbooks because they would have the textbook regardless of whether instructors adopted commercial or open textbooks. According to Grimaldi et al. (2019), the overall lack of differences in student learning outcomes when open textbooks are adopted may be due to the small number of students who benefit from the access that open textbooks afford.

Previous comparisons of student performance in courses with open textbooks vs. commercial textbooks have been quasi-experimental, meaning comparisons were generally made using naturally occurring groups rather than random assignment to textbook type (Hilton, 2016, 2019). The findings from these quasi-experimental studies have ecological validity, as they involve the learning performance of actual students in actual courses. Some of these quasi-experiments accounted for measured baseline characteristics, such as prior academic achievement (e.g., Allen et al., 2015; Grewe and Davis, 2017; Gurung, 2017; Clinton, 2018), which enhances the validity of their findings. However, quasi-experiments are more likely than randomized experiments to have group differences on unmeasured characteristics, which makes them more susceptible to bias (Penuel and Frank, 2015; What Works Clearinghouse, 2017). Examples of potential biases when comparing students in different courses include students self-selecting into courses with open textbooks to save costs, changes in the student population across semesters, and changes in institutional policies on course enrollment (e.g., Hilton et al., 2013).

In addition to student access, perception of textbook quality is an important area of inquiry regarding open textbooks (Illowsky et al., 2016). College students tend to see value in textbooks as reliable sources of knowledge and appreciate that topics are covered in a uniform manner (in terms of writing style, layout, and visuals; Skinner and Howes, 2013). Students often view textbooks as useful learning tools for activities such as understanding the material presented in class and preparing for class discussions and exams (Skinner and Howes, 2013; French et al., 2015). However, many students resent the high costs of commercial textbooks (Skinner and Howes, 2013). This resentment could lead students to report more positive views of open textbooks based on price rather than actual quality. A randomized controlled experiment in which students are unaware of textbook costs would be an effective means of addressing this possible confound.

Student perceptions are important to consider in textbook research because students are likely more inclined to read and subsequently learn from a textbook perceived to be of high quality compared to one perceived to be of low quality. However, previous research has not demonstrated many significant relationships between student perceptions of textbooks and learning in terms of exam scores (Gurung and Martin, 2011). That said, there has been minimal inquiry directly testing this relationship. Having a better understanding of how student perceptions of textbooks relate to student learning from textbooks would be informative for interpreting findings comparing open and commercial textbooks. If students perceive one aspect of a textbook as better than another, but that aspect is not related to learning from the textbook, differences in that aspect would arguably be inconsequential. Moreover, examining relationships between student perceptions of quality and learning performance could inform textbook design. In particular, textbook designers would better understand how the characteristics of a textbook relate to student learning.

One way to build on previous research findings on open textbooks would be through a randomized controlled experiment in which students read about the same topic from open and commercial textbooks. Although it is impractical to randomly assign students to courses with open and commercial textbooks, students in a research study can be randomly assigned to read excerpts from different types of textbooks covering similar concepts. Moreover, this design allows for a standardized measure of learning performance, which addresses another confound in previous research. All students in the experiment would have a copy of the textbook excerpt; therefore, access would be equivalent. Students would not know the cost of the textbook from which they read; therefore, their perceptions of quality would not be biased by cost. Although the findings from this study are limited in generalizability because only an excerpt on a single topic in one discipline was used, they would build on the body of quasi-experimental findings on open textbooks.

The following research questions were addressed in this experiment:

1) Does the type of textbook (open or commercial) affect student learning performance?

2) Do student perceptions of textbook features differ between types of textbooks (open or commercial)?

3) What are the associations between student learning performance and perceptions of textbook quality?

To increase the robustness of the findings, measures of reading skill and prior academic achievement are included in the analyses. These constructs are known to be related to student learning from textbooks (Voss and Silfies, 1996; Ozuru et al., 2009).

Approval of all study procedures was obtained from the authors' Institutional Review Board prior to data collection. Participants were 144 undergraduate students at a mid-sized Midwestern university who received course credit for taking part in the study. All participants indicated they were native speakers of English who had not previously taken Introduction to Sociology from the study authors. The average age of participants was 19.45 years (SD = 1.94) and their average high school grade point average was 3.62 (out of 4.00; SD = 0.45). The majority were in their first year of postsecondary education (60.8%), with 20.9% in their second year, 12.2% in their third year, and 6.1% in their fourth year or beyond. In terms of gender, 74.3% reported identifying as women, 25% reported identifying as men, and one participant declined to report their gender identity. Regarding racial and ethnic identities, most participants indicated that they were Caucasian (92.6%), 1.4% identified as African American, 1.4% identified as Native American or Pacific Islander, 2.1% identified as bi- or multi-racial, one participant (0.7%) identified as Middle Eastern, and two participants (1.4%) declined to report their race/ethnicity.

The textbook excerpts for the experiment covered the topic of marriage and family structures. The open textbook was Introduction to Sociology, Second Edition (Griffiths et al., 2015), published by OpenStax; its excerpt had 2166 words. The commercial textbook was Sociology Now: The Essentials, Second Edition (Kimmel and Aronson, 2011); its excerpt had 1958 words. A sociology professor chose these textbooks because they are well-known and commonly used and they contain similar concepts on marriage and families. For example, both textbooks described definitions of family, challenges families face, and different family units, forms of marriage, and lines of descent. Both textbook excerpts had photographs to illustrate the content, but no diagrams or tables. In the interest of ecological validity, the only changes made to the texts were the removal of summary content at the beginning of one excerpt and the removal of additional content at the end of the other, because this material was not covered in both textbooks. These removals also helped to ensure that the two excerpts were similar in length.

The computerized text tool Coh-Metrix (Graesser et al., 2004) was used to provide descriptive information about the readability of the two textbook excerpts. As the output shows (see Supplementary Materials), most of the measures were similar between the two texts. Nevertheless, there were some notable differences. The Flesch Reading Ease, which is based on the number of syllables per word and the number of words per sentence, was 43.18 for the open textbook and 52.07 for the commercial textbook, with higher scores indicating greater reading ease (Kincaid et al., 1975). The use of pronouns, especially second-person pronouns (e.g., you), was also higher in the commercial textbook excerpt than in the open textbook excerpt. Pronouns can benefit reading comprehension because the process of connecting a pronoun to its referent can assist in making connections in the text (Graesser and McNamara, 2011). In addition, passive voice, which tends to be more difficult to comprehend than active voice (Just and Carpenter, 1987), was more common in the open textbook excerpt than in the commercial textbook excerpt. Taken together, the Coh-Metrix output indicates that the commercial textbook excerpt was easier to read than the open textbook excerpt.
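The Flesch Reading Ease score combines sentence length and word length in a fixed linear formula: FRE = 206.835 − 1.015 × (words / sentences) − 84.6 × (syllables / words). The minimal Python sketch below assumes the word, sentence, and syllable counts are already available and shows how the two ratios drive the score. The function name is hypothetical, and the sketch will not necessarily reproduce the Coh-Metrix values reported here, because tools differ in how they segment sentences and count syllables.

```python
def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease from raw counts; higher scores mean easier text."""
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    words_per_sentence = words / sentences    # average sentence length
    syllables_per_word = syllables / words    # average word length in syllables
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


if __name__ == "__main__":
    # Hypothetical counts, not taken from either excerpt.
    print(round(flesch_reading_ease(words=100, sentences=5, syllables=150), 3))
```

Longer sentences or more syllables per word lower the score, which is why the open excerpt's 43.18 indicates harder reading than the commercial excerpt's 52.07.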

A maze task, in which readers must circle the correct word out of three options for every seventh word in a text (Chung et al., 2018), was used to assess reading skill. This type of measure is thought to assess a variety of constructs important to reading, including word recognition, fluency, and comprehension (Muijselaar et al., 2017; Shin and McMaster, 2019). The 3-min measure used in this study has been found to be a reliable and valid measure of reading skill for undergraduate students (Hebert, 2016). The score was the number of correct choices a student made within the 3-min time limit. Scores on this measure have been found to correlate positively with longer measures of reading comprehension and fluency for college students, such as the Nelson-Denny Reading Test (Brown et al., 1993; reading comprehension subtest, r = 0.42, p < 0.001; reading rate, r = 0.43, p < 0.001) and the Scholastic Abilities Test for Adults (Bryant et al., 1991; correlations from Hebert, 2016; reading comprehension subtest, r = 0.27, p < 0.01; reading rate, r = 0.35, p < 0.001). In the current study, the validity of the maze task was also assessed by examining correlations with two measures of prior academic achievement: self-reported high school grade point averages and ACT scores (see Background questionnaire for details). Maze task scores were positively correlated with self-reported high school grade point averages, r(142) = 0.22, p = 0.01, and ACT scores, r(136) = 0.49, p < 0.001, which supports the validity of this measure. The correlation with the total score on the learning assessment of the textbook excerpt for this study (see Assessment) was also positive, r(144) = 0.40, p < 0.001.
A brief measure such as this maze task suited the purposes of this study because it did not fatigue students before the key tasks: reading about a sociology topic and having their learning on this topic assessed.
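To make the maze format and its scoring rule concrete, the sketch below builds a maze passage by replacing every seventh word with a three-option choice and scores a reader's selections as the number of correct choices, counting only items reached before time ran out. It is a hypothetical illustration, not the instrument from Hebert (2016): the text does not describe how distractors were chosen, so the caller supplies them, and the alphabetical ordering of options is an assumption made to keep the correct word's position unpredictable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MazeItem:
    correct: str
    options: tuple  # the correct word plus two caller-supplied distractors


def build_maze(passage, distractors, step=7):
    """Replace every `step`-th word with a three-option choice (hypothetical helper)."""
    words = passage.split()
    needed = len(words) // step
    if len(distractors) < needed:
        raise ValueError(f"need {needed} distractor pairs, got {len(distractors)}")
    items, display = [], []
    pairs = iter(distractors)
    for position, word in enumerate(words, start=1):
        if position % step == 0:
            first, second = next(pairs)
            # Alphabetical order hides which option is correct (an assumption).
            options = tuple(sorted((word, first, second)))
            items.append(MazeItem(word, options))
            display.append("(" + " / ".join(options) + ")")
        else:
            display.append(word)
    return " ".join(display), items


def score_maze(items, choices):
    """Number of correct selections; items the reader never reached are not counted."""
    return sum(1 for item, choice in zip(items, choices) if choice == item.correct)


if __name__ == "__main__":
    text = ("the quick brown fox jumps over the lazy dog and then "
            "runs far away from the farm")
    maze_text, maze_items = build_maze(text, [("cat", "run"), ("home", "blue")])
    print(maze_text)
    print(score_maze(maze_items, ["the", "home"]))  # one of two correct
```

Because `score_maze` zips items with the choices actually made, a reader who stops partway through simply contributes fewer scored items, mirroring a timed count of correct choices.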

The assessment of student learning originally consisted of 10 multiple-choice questions. A sociology professor chose five items from the test bank for the open textbook and five from the test bank for the commercial textbook. The information needed to choose the correct answer for each item could be found in both textbook excerpts. The items were adapted for use in this study. Distractors were revised to make them more plausible, thereby reducing the likelihood of guessing a correct answer (Gierl et al., 2017). In addition, stems and options were edited to reduce wording clues to the correct answer (also termed clang associations; Draaijer et al., 2016). In a few instances, options were reworded to make their lengths similar within items (Haladyna et al., 2002). Following McNeish (2018) and Peters (2014), internal consistency was assessed with omega total. Using the “psych” package in R (Revelle, 2018), internal consistency for this assessment was Ω = 0.71. This type of assessment has ecological validity because it resembles assigning students to independently read a section of their textbook and answer questions about it (i.e., take a quiz), which is common in postsecondary education (Starcher and Proffitt, 2011; Heiner et al., 2014). To further examine validity, total scores on this assessment were correlated with two measures of prior academic achievement: self-reported high school grade point averages and ACT scores (see Background questionnaire for details). Assessment scores were positively correlated with self-reported high school grade point averages, r(142) = 0.18, p = 0.03, and ACT scores, r(136) = 0.52, p < 0.001, which supports the validity of this assessment.
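Omega total expresses reliability as the share of total score variance attributable to common factors. For the simplest case of one factor with standardized loadings λ_i and uncorrelated errors, it is (Σλ_i)² / [(Σλ_i)² + Σ(1 − λ_i²)]. The sketch below implements only that single-factor form with hypothetical loadings. The psych package's `omega()` function instead fits a general factor plus group factors (a Schmid-Leiman solution) and divides by the observed total variance, so its estimates will generally differ from this simplified version.

```python
def omega_total_one_factor(loadings):
    """McDonald's omega for a single-factor model with standardized loadings."""
    if not loadings:
        raise ValueError("at least one loading is required")
    if any(abs(lam) > 1 for lam in loadings):
        raise ValueError("standardized loadings must lie in [-1, 1]")
    common = sum(loadings) ** 2                     # variance due to the common factor
    unique = sum(1 - lam ** 2 for lam in loadings)  # summed item uniquenesses
    return common / (common + unique)


if __name__ == "__main__":
    # Hypothetical loadings for a 10-item test, not estimates from this study.
    print(round(omega_total_one_factor([0.5] * 10), 3))
```

Stronger loadings raise the common-variance term relative to the uniquenesses, so omega rises toward 1 as items become better indicators of the shared factor.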

A 14-item Textbook Assessment and Usage Scale (TAUS; Gurung and Martin, 2011) was adapted to examine perceptions of the textbook excerpts. Specifically, the TAUS items about figures and tables were removed because there were no figures or tables in the textbook excerpts used in this study. Students responded to each item on a seven-point Likert scale ranging from “not at all” to “very much so.” Two open-ended items (“What features of the text were most helpful for your learning?” and “What features of the text were least helpful for your learning or distracted from your learning?”) were added.

Students were asked to self-report their demographics, including gender, race/ethnicity, age, and year in school. They were also asked to report their high school grade point average as a measure of prior academic achievement (two students did not report their high school grade point average) and their ACT scores (eight students did not report their ACT scores). Self-reported GPAs and ACT scores have been found to be highly and positively correlated with actual values (Cole and Gonyea, 2010; Sanchez and Buddin, 2015).

The experiment was administered in groups of 1–15 participants in a small lecture hall on campus. All participants in each data collection session received the same textbook excerpt (either the commercial or the open textbook excerpt). After providing informed consent, participants had 3 min to complete the maze assessment. Next, participants were given the textbook excerpt and instructed that they had 10 min to read it and should read as if they were preparing for an exam. Participants were then informed that they had 5 min to answer the assessment items. Finally, participants completed the TAUS and self-reported demographic and other background information. Participants were thanked and debriefed upon completion.

Random assignment to condition does not necessarily ensure that groups are comparable on key variables (Saint-Mont, 2015). To determine whether there were significant differences in reading skill or prior academic achievement, three one-way analyses of variance were conducted with textbook type (open or commercial) as the independent variable and maze score, high school grade point average, or ACT score as the dependent variable. There were no significant differences between textbook types in reading skill based on maze scores, F(1, 144) = 0.06, p = 0.80, Cohen's d = 0.04 (M = 23.36, SD = 7.13 for open; M = 23.65, SD = 6.87 for commercial), or in academic achievement based on high school grade point average (on a four-point scale), F(1, 142) = 1.10, p = 0.30, Cohen's d = 0.18 (M = 3.59, SD = 0.51 for open; M = 3.67, SD = 0.38 for commercial), and ACT scores, F(1, 135) = 0.07, p = 0.80, Cohen's d = 0.05 (M = 24.72, SD = 4.14 for open; M = 24.91, SD = 4.16 for commercial). Based on the What Works Clearinghouse's (2017) criteria for baseline equivalence, there was baseline equivalence for maze scores and ACT scores, but not for high school GPA. Following WWC guidelines (What Works Clearinghouse, 2017), high school GPA was used as a covariate in analyses comparing assessment performance by textbook condition (research question one).
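The effect sizes above can be checked from the reported means and standard deviations with the pooled-standard-deviation form of Cohen's d, and the What Works Clearinghouse (2017) bands for baseline differences can then be applied (at most 0.05 SD: equivalent; above 0.05 and up to 0.25 SD: equivalent only with statistical adjustment; above 0.25 SD: not equivalent). Group sizes are not reported in this section, so the sketch assumes equal groups of 72; with equal groups the pooled variance is simply the average of the two variances, so the exact n does not change d. WWC computes Hedges' g, which adds a small-sample correction, so this is only an approximation of the WWC procedure.

```python
import math


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Mean difference (group 2 minus group 1) divided by the pooled standard deviation."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (m2 - m1) / math.sqrt(pooled_var)


def wwc_baseline_band(d):
    """Classify an absolute baseline difference using the WWC (2017) cutoffs."""
    size = abs(d)
    if size <= 0.05:
        return "equivalent"
    if size <= 0.25:
        return "equivalent only with statistical adjustment"
    return "not equivalent"


if __name__ == "__main__":
    # Means and SDs from the text (open first, commercial second); n = 72 per group is assumed.
    reported = {
        "maze": (23.36, 7.13, 23.65, 6.87),
        "hs_gpa": (3.59, 0.51, 3.67, 0.38),
        "act": (24.72, 4.14, 24.91, 4.16),
    }
    for name, (m_open, sd_open, m_comm, sd_comm) in reported.items():
        d = cohens_d(m_open, sd_open, 72, m_comm, sd_comm, 72)
        print(f"{name}: d = {d:.2f} ({wwc_baseline_band(d)})")
```

Under these assumptions the sketch recovers the reported values of 0.04, 0.18, and 0.05, and high school GPA is the only measure that lands in the band calling for statistical adjustment.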

To address the first research question, an ANCOVA was conducted with textbook type (open or commercial) as the independent variable, total assessment score as the dependent variable, and self-reported high school GPA (as a measure of prior academic achievement) as the covariate. No statistically significant differences in assessment score by textbook condition were noted, F(1, 139) = 0.05, p = 0.82, Cohen's d = 0.04 (adjusted mean for open = 6.99, SE = 0.24, adjusted mean for commercial = 7.07, SE = 0.23), and high school GPA was a significant covariate, F(1, 139) = 4.45, p = 0.04.
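An ANCOVA with one two-level factor and one covariate is equivalent to comparing two nested regression models: one with an intercept, the covariate, and a textbook indicator, and one without the indicator. The sketch below uses NumPy least squares on simulated data (not the study's data) to show that comparison and why the error degrees of freedom are n − 3, which gives 139 for the 142 participants who reported a GPA. It assumes homogeneous regression slopes (no textbook × GPA interaction) and a 0/1 coding of textbook type; both are illustrative choices, and the authors' software is not specified here.

```python
import numpy as np


def ancova_group_f(score, group, covariate):
    """F statistic for a 0/1 group effect adjusted for one covariate.

    Returns (F, df_effect, df_error) from the nested-model comparison
    intercept + covariate  vs.  intercept + covariate + group.
    """
    y = np.asarray(score, dtype=float)
    g = np.asarray(group, dtype=float)
    x = np.asarray(covariate, dtype=float)
    n = y.size
    ones = np.ones(n)

    def residual_ss(design):
        beta, *_ = np.linalg.lstsq(design, y, rcond=None)
        resid = y - design @ beta
        return float(resid @ resid)

    full = np.column_stack([ones, g, x])
    reduced = np.column_stack([ones, x])
    df_error = n - full.shape[1]  # n minus intercept, group, and covariate
    rss_full = residual_ss(full)
    f_stat = (residual_ss(reduced) - rss_full) / (rss_full / df_error)
    return f_stat, 1, df_error


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 142  # participants with a self-reported GPA
    textbook = rng.integers(0, 2, size=n)  # simulated: 0 = open, 1 = commercial
    gpa = rng.normal(3.6, 0.45, size=n)    # simulated GPAs
    score = 2.0 + 1.4 * gpa + rng.normal(0.0, 1.5, size=n)  # no textbook effect built in
    f_stat, df1, df2 = ancova_group_f(score, textbook, gpa)
    print(f"F({df1}, {df2}) = {f_stat:.2f}")
```

The numerator is the reduction in residual error gained by adding the textbook indicator after GPA is already in the model, which is what "adjusted for the covariate" means here.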

To address the second research question, a series of one-way analyses of variance was conducted with textbook type as the independent variable and aspects of perceived textbook quality as the dependent variables. As shown in Table 1, students perceived no significant differences in the difficulty of the textbook, how well the everyday life examples explained the material, the helpfulness of the everyday life examples, the relevance of the examples, and visual distractions. The commercial textbook was rated as having more relevant photographs, better placed photographs, more visual appeal, more engaging writing, and more understandable/clear writing. The open textbook was rated higher on the three research items, including how well research findings explained the material and how recent the research findings were.

In addition to the data gathered from the reading assessments, students were asked to provide open-ended feedback on what they found most and least helpful about the textbook excerpts. Careful consideration was given to the best method of analyzing these qualitative data in relation to the quantitative metrics. Individually, each response carried little meaning, and the responses would have been difficult to compare in a spreadsheet. To ground the responses in the larger research question, a coding method based on grounded theory was developed.

Grounded theory is a method of qualitative assessment that emphasizes measurement and accountability (Miles and Huberman, 1994; Padgett, 2017). In grounded theory, the goal is to relate data that may not be easily classifiable or comparable to real-world situations. Often, the method focuses on coding qualitative data and counting instances of each code to determine how grounded the code is (Weaver-Hightower, 2019). One example of a similar grounded theory-based method is Williams' analysis of the validity of patient satisfaction as a concept (Williams, 1994), in which he analyzed patient survey responses and counted references to the idea of satisfaction; these references were then coded and assessed for groundedness, that is, how many instances of the code appeared in the data.

Tools such as Atlas.ti can be useful in this process, as data can be imported into a document that retains the individual delineators. This allows the researcher to electronically tag each instance of a code while ensuring that no respondent's answers are tagged with the same code twice, so that each instance of the code represents one individual response. Charmaz (1990) also used this approach in a study of patients with chronic illness, noting that a grounded theory approach helps researchers “find recurrent themes or issues in the data, then they need to follow up on them, which can, and often, does lead grounded theorists in unanticipated directions” (p. 1,162).

For this project, the first step was to upload the qualitative responses into Atlas.ti. As the researcher reviewed the responses, short descriptive codes, such as “layout” and “writing,” were attached to each response. One response represented one participant, and a response could have multiple codes attached, as some students wrote very detailed responses while others provided only one or two words. An initial review of the responses showed several common themes, which were then used as codes. The responses were read through five times, with each pass refining the codes further. Codes were reviewed based on grounded theory methods, in particular groundedness, meaning how many instances of a particular code appeared in the data (Strauss and Corbin, 1998). While a code could be generated by a single response, that code would have only one instance and therefore low groundedness. Likewise, one student could generate multiple codes; however, unless those codes were reflected by other students, their groundedness would be low.
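The counting rule described above (a code counts at most once per respondent, and groundedness is the number of respondents tagged with it) can be expressed in a few lines. This Python sketch is a hypothetical illustration of that rule using made-up codes; it does not use or reproduce Atlas.ti, and the `min_groundedness` cutoff is an assumed parameter rather than a threshold reported by the authors.

```python
from collections import Counter


def groundedness(coded_responses):
    """Count distinct respondents tagged with each code.

    `coded_responses` maps a respondent ID to the codes attached to that
    respondent's answer. Converting to a set means a code repeated within
    one response still counts once, so no single participant can inflate it.
    """
    counts = Counter()
    for codes in coded_responses.values():
        counts.update(set(codes))
    return counts


def grounded_codes(coded_responses, min_groundedness=2):
    """Keep codes mentioned by at least `min_groundedness` respondents (assumed cutoff)."""
    counts = groundedness(coded_responses)
    return {code: k for code, k in counts.items() if k >= min_groundedness}


if __name__ == "__main__":
    responses = {  # hypothetical coded answers, one entry per participant
        "p01": ["bolded words", "layout", "bolded words"],
        "p02": ["bolded words"],
        "p03": ["writing", "layout"],
        "p04": ["font"],
    }
    print(grounded_codes(responses))
```

Here respondent p01 mentions “bolded words” twice but contributes only one instance, and codes raised by a single respondent fall below the cutoff.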

Only the most highly grounded codes were included in the analysis in order to prevent one individual from disproportionately affecting the results. The first round of coding produced 49 initial codes (see Strauss and Corbin, 1998, for guidelines). The second round of coding focused on finding similarities between codes to see if any could be combined or if new codes should be added to replace previous codes. The end result was 31 codes.

A total of 357 quotations were reviewed; more than one third of them (132) were accounted for by the five most grounded codes, and more than half (200) by the ten most grounded codes (see Table 2). The final codes were then grouped into themes, resulting in four themes ranked by groundedness (see Table 3). Two themes relate to the most helpful aspects of the texts and two relate to the least helpful aspects. The number of references to each theme by textbook type is also shown in Table 3. Highly grounded codes appeared dozens of times, meaning that dozens of students mentioned them, whereas less grounded codes appeared only a few times.

One of the four major themes identified in the open-ended questions was “Clear layout of content helped students parse and better understand the material.” Among open textbook participants, there were 50 references to this theme, and among commercial textbook participants, there were 59. Students overwhelmingly found bolded words to be the most helpful feature of both texts, with 32 (open) and 38 (commercial) individual participants reporting that bolded words were the most helpful aspect, whereas only 6 participants said they were unhelpful. Typical comments from open textbook participants included “The way the text was broken up with the use of pictures/columns of text. I find it difficult when textbooks are all text with minimal breakage.” Typical comments from commercial textbook participants included “That the paragraphs were fairly short, so I didn't space out too often reading a long section of text,” and “Use of headers, color, and definitions.”

The second most grounded theme was “Visual layout was unhelpful due to poor page layout, inconsistent use of font and font size, irrelevant images, and density of text.” Among open textbook participants, there were 60 references to this theme and among commercial textbook participants, there were 52 references. Students had complaints about the font and layout for both textbooks. Students using the commercial textbook reported “Bubbles on the sides; Different fonts & sizes,” as being distracting. Students using the open textbook reported “When there are extra boxes or quotes on the side of the page; those distract me quite a bit.”

The third most grounded theme for users of both textbook types was “Use of clear, simple, non-academic language made the text accessible to students.” Among open textbook participants, there were 28 references to this theme, and among commercial textbook participants, there were 36 references. In this theme, language was very much tied to context: “Real life examples and having multiple ways of explaining” and “The highlighted words and the examples to help explain them” were noted as being helpful among students using open textbooks. Students using commercial textbooks noted “It was helpful that the topics mentioned were straight forward and not trying to explain it scientifically for a simple topic.”

The final theme was that the “Content was too academic, wordy, statistical, and lacked sufficient examples to make it accessible to everyone.” There were 23 references to this theme among open textbook participants and 23 among commercial textbook participants. In this theme, students described whether they found the text language to be a hindrance to their learning. Examples included “Long walls of text with small or no breaks,” “The extra fluff that was in there lead me to become disinterested,” and “Big words (could simplify),” all from users of the commercial text. Students complained not only that some of the language was too academic, but also that too many words were used when fewer would have sufficed. For example, one student using the commercial textbook wrote, “Additional info is nice but ….some pruning of the text would help.” This sentiment was reflected in comments by students using both types of text. Overall, students' comments regarding useful and distracting features remained consistent regardless of which textbook type they used.

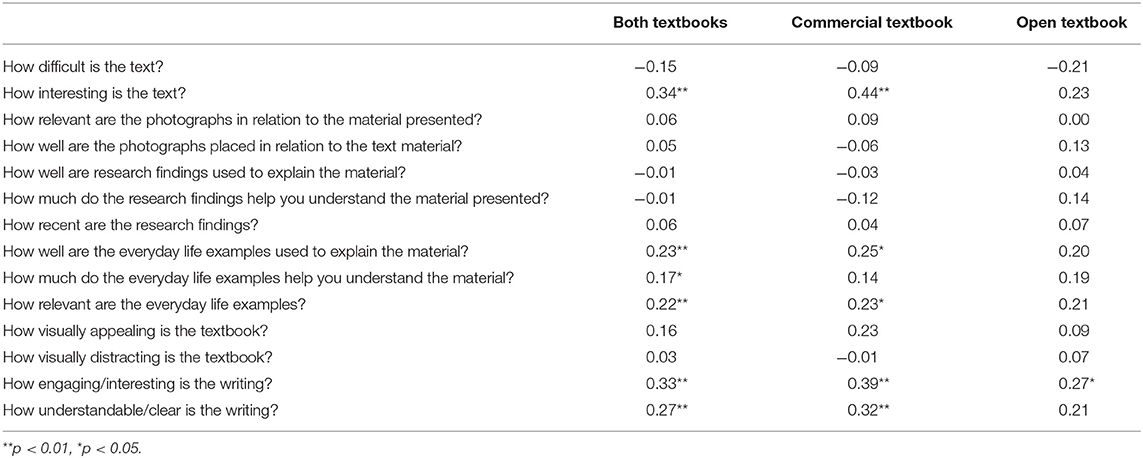

To answer the third research question, associations between student learning performance and perceptions of textbook quality were examined for each textbook type separately and for both types combined (see Table 4). Combining the textbook groups provided a global picture of how perceptions of textbook quality were related to learning performance, as measured by assessment scores. In contrast, analyses by textbook type allowed for investigation of whether textbook type influenced the relationship between perceptions of textbook quality and learning performance. With the groups combined, assessment scores were positively correlated with perceptions of textbook quality, including how interesting the text was, how well everyday life examples explained the material, the helpfulness of everyday life examples, the relevance of everyday life examples, how engaging the writing was, and how understandable/clear the writing was. For the commercial textbook, the same positive correlations were found, except that the correlation for how much everyday life examples helped with understanding the material was not significant. For the open textbook, the only significant correlation was with how engaging the writing was. There were no significant correlations between perceptions of research findings and assessment performance. Similarly, there were no significant correlations between perceptions of visuals, such as use of photographs and visual appeal, and assessment performance, nor between perceived difficulty and assessment performance.

Table 4. Correlations of learning performance (number correct on assessment) with perceptions of textbook quality by textbook type, separately and combined.
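The correlational analysis described above (by textbook type and combined) can be illustrated with a short Python sketch. It assumes Pearson's r, since this section does not name the coefficient, uses only the standard library, and runs on a handful of made-up records; the field names `book`, `score`, and `engaging` are hypothetical, and the numbers are not the study's data. Significance testing is omitted; `scipy.stats.pearsonr`, for instance, returns both r and a two-sided p-value.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return sxy / sqrt(sxx * syy)


def correlations_by_group(records, score_key, rating_key, group_key):
    """Correlate score with rating within each group and across all records."""
    groups = {}
    for rec in records:
        groups.setdefault(rec[group_key], []).append(rec)
    result = {}
    for name, recs in groups.items():
        result[name] = pearson_r([r[score_key] for r in recs],
                                 [r[rating_key] for r in recs])
    # Pooling both textbook groups gives the "combined" coefficient.
    result["combined"] = pearson_r([r[score_key] for r in records],
                                   [r[rating_key] for r in records])
    return result


# Hypothetical toy records (not the study's data).
records = [
    {"book": "open", "score": 6, "engaging": 3},
    {"book": "open", "score": 8, "engaging": 4},
    {"book": "open", "score": 7, "engaging": 5},
    {"book": "commercial", "score": 5, "engaging": 2},
    {"book": "commercial", "score": 9, "engaging": 5},
    {"book": "commercial", "score": 7, "engaging": 4},
]

if __name__ == "__main__":
    for group, r in correlations_by_group(records, "score", "engaging", "book").items():
        print(f"{group}: r = {r:.2f}")
```

Computing the within-group and pooled coefficients from the same records makes it easy to see how a correlation can be significant when groups are combined yet weaker within one group, as reported for the open textbook.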

The purpose of this experiment was to test the effects of textbook type (commercial or open) on student learning and perceptions of textbook quality. The textbooks did not differ significantly in their effects on student learning. Student perceptions of the textbooks did differ in several respects, with some favoring the commercial textbook (e.g., visual appeal, engagement) and some favoring the open textbook (e.g., relevance of research findings, timeliness of research findings). However, there were no statistically significant differences between textbooks in student perceptions of items relating to everyday life examples and difficulty.

The lack of difference in learning performance by textbook type is consistent with previous quasi-experimental research comparing open textbooks with commercial textbooks (e.g., Hendricks et al., 2017; Clinton, 2018; Jhangiani et al., 2018; Lawrence and Lester, 2018; see Hilton, 2016, for a systematic review; see Clinton and Khan, 2019, for a meta-analysis). However, a key difference is that in this experiment, every participant was certain to have access to the textbook excerpt. In previous quasi-experimental work, it was possible that some students did not have access to the commercial textbook. Differences in access to the materials therefore could not have affected learning from the textbook in this experiment. In addition, the assessment was standardized across conditions, which allows for better comparisons between the textbooks. The similar results across textbook types on a standardized measure contrast with Gurung's (2017) finding that students enrolled in courses with open textbooks performed less well on a standardized measure than did students in courses with commercial textbooks. One possible explanation for this discrepancy is that the current experiment involved students from the same campus population, whereas Gurung (2017) compared students at different institutions and, consequently, from different populations.

The findings for perceptions of textbook quality varied considerably across items. Generally, visual aspects of the textbook, namely appeal and use of photographs, were rated higher for the commercial textbook than for the open textbook. This converges with previous faculty reviews reporting that the graphics in open textbooks were not as good as those in their commercial counterparts (Jung et al., 2017). However, because of their licensing, open textbooks are typically customizable (Wiley et al., 2014). This means that open textbooks can be revised and remixed to the instructor's liking (Hilton et al., 2012). Instructors can also assign their students to add and develop graphics, which could help them both better understand the concepts and develop design skills (Atenas et al., 2015).

The commercial textbook was also rated higher on engagement and clarity of writing, both of which were positively correlated with learning performance. Clear writing was also identified as important for learning in the open-ended responses. For the open textbook, how well research findings explained the material and the timeliness of research findings were rated higher than for the commercial textbook. One of the touted benefits of open textbooks is the ease of updating them (Kimmons, 2015; Lashley et al., 2017), which may facilitate the inclusion of more recent research. Nevertheless, items regarding research were not correlated with learning performance. Overall, the findings on perceptions indicate that the commercial textbook may place more emphasis on visual design and the open textbook more emphasis on research findings.

The open-ended responses about what was helpful or unhelpful for learning revealed few differences between textbook types. Students indicated that bolded words were helpful and pictures were distracting in both the open and commercial textbooks. Although the layouts of the textbooks were quite different, students reported disliking the layouts of both. However, the reasons for the dislike differed: for the open textbook, students stated that the font was too small and that too much information was packed densely onto each page, whereas for the commercial textbook, the varied fonts and the bubbles containing extra information were considered unhelpful.

Although only one open and one commercial textbook were used in this experiment, the findings regarding perceptions can inform textbook design. Namely, visual appeal, especially the relevance and placement of visuals, is an area in which open textbooks could be developed. Visual appeal was not reliably predictive of learning from the text; nevertheless, better visuals could support two aspects of perceived quality that were related to learning from the text: engaging writing and understandable writing. Well-designed visual representations, such as diagrams, could help students better understand and be more engaged with written texts (Mayer, 2014a,b; Clinton et al., 2016). That said, the visuals in textbooks should be helpful and relevant for learning; the pictures were noted as unhelpful for both the open and commercial textbooks. In both books, the pictures showed examples of families that were likely intended to contextualize the information and add decoration, but these visuals were not necessarily designed to help students understand the information.

Based on both the quantitative and qualitative findings from this study, writing quality is an important aspect of textbook design. Perceptions of writing quality were positively correlated with learning performance, and students frequently noted how the writing helped or hindered their learning. Students found clear, straightforward language helpful for their learning. They also appreciated examples, although perceptions of examples were not consistently correlated with learning performance across textbook types. In contrast, students noted that academic jargon, dense statistics, and irrelevant information were unhelpful for their learning.

The differences in writing quality noted between the commercial and open textbook excerpts could be related to differences in the readability measures. Based on the Flesch reading ease scores, the writing of the commercial textbook excerpt was simpler than that of the open textbook excerpt. In addition, the commercial textbook used less passive voice than did the open textbook, and passive voice is more difficult to understand than active voice (Just and Carpenter, 1987). Given these differences, it is unsurprising that the writing was rated as more understandable for the commercial textbook excerpt than for the open textbook excerpt. These issues of readability may be important to examine given that previous work has noted that the writing in an open textbook was more difficult than that of a commercial textbook for the same course (Jhangiani et al., 2018). In addition, the commercial textbook used more pronouns, especially second-person pronouns (e.g., “you”). Second-person pronouns have been found to increase learner engagement, possibly because they make the material more personal (Mayer et al., 2004; Kartal, 2010; Zander et al., 2015). This could be related to the higher ratings for the commercial textbook on how engaging and interesting the writing was. Because ratings of how understandable and how engaging the writing was were both positively correlated with assessment performance, writing quality may be of particular importance for textbook design.
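The Flesch reading ease formula referred to above (Kincaid et al., 1975) is 206.835 − 1.015 × (words ÷ sentences) − 84.6 × (syllables ÷ words); higher scores indicate easier text. The Python sketch below implements it alongside a simple second-person pronoun rate. Its sentence splitter, word pattern, and vowel-group syllable counter are simplifying assumptions, so it will not reproduce the Coh-Metrix values in the study's supplementary material; it only illustrates what the two measures count. All function names are hypothetical.

```python
import re

# Crude heuristics (assumptions): sentences end at ., !, or ?; a word is a run of
# letters with an optional apostrophe suffix; syllables are approximated by vowel groups.
SENTENCE_END = re.compile(r"[.!?]+")
WORD = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)?")
VOWEL_GROUP = re.compile(r"[aeiouy]+")
SECOND_PERSON = {"you", "your", "yours", "yourself", "yourselves"}


def count_syllables(word):
    """Approximate syllables as vowel groups, discounting a silent final 'e'."""
    w = word.lower()
    n = len(VOWEL_GROUP.findall(w))
    if w.endswith("e") and not w.endswith("le") and n > 1:
        n -= 1
    return max(n, 1)


def tokenize(text):
    """Split text into non-empty sentences and words."""
    sentences = [s for s in SENTENCE_END.split(text) if s.strip()]
    words = WORD.findall(text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    return sentences, words


def flesch_reading_ease(text):
    """206.835 - 1.015 * (words/sentences) - 84.6 * (syllables/words)."""
    sentences, words = tokenize(text)
    syllables = sum(count_syllables(w) for w in words)
    return (206.835
            - 1.015 * (len(words) / len(sentences))
            - 84.6 * (syllables / len(words)))


def second_person_rate(text):
    """Second-person pronouns per 1,000 words."""
    _, words = tokenize(text)
    hits = sum(1 for w in words if w.lower() in SECOND_PERSON)
    return 1000 * hits / len(words)


if __name__ == "__main__":
    easy = "You can read this. It is short."
    hard = "Institutional considerations necessitate comprehensive deliberation."
    print(round(flesch_reading_ease(easy), 1), round(flesch_reading_ease(hard), 1))
    print(second_person_rate(easy))
```

Because the formula penalizes long sentences and polysyllabic words, a passage with short sentences and everyday vocabulary scores higher, which is the sense in which one excerpt's writing can be called "simpler" than another's.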

Certain limitations of this experiment should be noted. First, the learning measure was brief and assessed content limited to a short excerpt from the textbooks. Therefore, the learning involved was much less comprehensive than that in a semester-long course. Furthermore, only one open and one commercial textbook from a single discipline were analyzed, and all participants were native speakers of English from one campus. For this reason, generalizations about all open and commercial textbooks and all students cannot appropriately be made based on the findings of this study.

While this study may lack a degree of generalizability, it can serve as a roadmap for future research. True experimentation in this area has historically been difficult due to the nature of OER and their use, which leaves research in this field open to a number of confounds (Griggs and Jackson, 2017). However, this experimental model could be replicated across grade, education, language, and ability levels to control for the confounding influence of perceptions based on cost. Structured as a time-series assessment, such replication could gather data throughout a semester or a year, or even follow a cohort of students through their program. The possibilities for further development of experimental OER research are numerous.

Another limitation in this study that could be addressed in future experimental OER research is the lack of a prior knowledge assessment. This study did not include a pretest for two reasons. One was that the students in this study could be assumed to lack a background in the topic because one of the participation criteria was not having taken a sociology course before. The second was concern that a pretest could prime participants to focus on certain information in the textbook excerpts and inflate results, a phenomenon known as the pretest sensitization effect (Willson and Putnam, 1982; Willson and Kim, 2010). This could potentially be addressed in a future study using a two-by-two design in which some participants take a pretest and some do not (see All et al., 2017, for an example of this design).
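A two-by-two design of the kind described above crosses textbook type with whether a pretest is given. As a rough sketch under stated assumptions, the Python below assigns participants to the four cells in balanced random order and computes, for each textbook, the difference in mean posttest scores between pretested and non-pretested participants; a positive gap would be one possible sign of pretest sensitization. The function names are hypothetical, and a full analysis would test the textbook × pretest interaction (for example, with a factorial ANOVA) rather than rely on raw mean differences.

```python
import random
from itertools import product
from statistics import mean

TEXTBOOKS = ("open", "commercial")
PRETEST = (True, False)  # True = participant takes the pretest


def assign_two_by_two(participant_ids, seed=None):
    """Randomly assign participants to the 2 x 2 cells, keeping cell sizes balanced."""
    cells = list(product(TEXTBOOKS, PRETEST))
    # Cycle through the cells so sizes differ by at most one, then shuffle the order.
    labels = [cells[i % len(cells)] for i in range(len(participant_ids))]
    random.Random(seed).shuffle(labels)
    return dict(zip(participant_ids, labels))


def cell_counts(assignment):
    """Count how many participants landed in each (textbook, pretest) cell."""
    counts = {}
    for cell in assignment.values():
        counts[cell] = counts.get(cell, 0) + 1
    return counts


def sensitization_gap(assignment, posttest):
    """Per textbook: mean posttest (pretested) minus mean posttest (not pretested).

    Each of the four cells needs at least one participant with a posttest score.
    """
    gaps = {}
    for book in TEXTBOOKS:
        pre = [posttest[p] for p, (b, had_pre) in assignment.items()
               if b == book and had_pre]
        no_pre = [posttest[p] for p, (b, had_pre) in assignment.items()
                  if b == book and not had_pre]
        gaps[book] = mean(pre) - mean(no_pre)
    return gaps


if __name__ == "__main__":
    groups = assign_two_by_two(list(range(144)), seed=2019)
    print(cell_counts(groups))
```

With 144 participants, the sample size reported for this study, each of the four cells would receive 36 participants, so the design halves the per-condition sample relative to a simple two-group comparison.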

For both textbook excerpts, difficulty was rated rather low. Although the means and standard deviations did not suggest ceiling effects on the assessment, future research could examine more difficult texts. It is possible that the challenges of reading more difficult texts would yield differences between textbook types.
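The text does not spell out how ceiling effects were judged from the means and standard deviations. One heuristic sometimes used, adopted here purely as an assumption, flags a possible ceiling when the mean plus one standard deviation reaches the maximum possible score; the share of participants at the maximum is another simple indicator. A minimal Python sketch, in which the `ceiling_check` helper, the 10-point maximum, and the example scores are all hypothetical:

```python
from statistics import mean, stdev


def ceiling_check(scores, max_score, k=1.0):
    """Summarize possible ceiling effects on a test with a known maximum score.

    Flags a possible ceiling when mean + k * SD >= max_score (an assumed rule of
    thumb) and reports the proportion of participants at the maximum.
    """
    if len(scores) < 2:
        raise ValueError("need at least two scores to estimate a standard deviation")
    m = mean(scores)
    sd = stdev(scores)  # sample standard deviation (n - 1 denominator)
    return {
        "mean": m,
        "sd": sd,
        "share_at_max": sum(1 for s in scores if s == max_score) / len(scores),
        "possible_ceiling": m + k * sd >= max_score,
    }


if __name__ == "__main__":
    # Hypothetical scores on a hypothetical 10-item assessment.
    print(ceiling_check([6, 7, 5, 8, 6, 9, 7], max_score=10))
```

The threshold `k` is exposed as a parameter because the choice of one standard deviation is a convention rather than a fixed rule.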

Open textbooks have been developed in response to the rising prices of commercial textbooks. This experiment allowed for a comparison of learning and perceptions between an open and a commercial textbook. Based on the findings, learning did not differ between textbooks, which adds to the wealth of quasi-experimental evidence indicating that open textbooks are generally not detrimental to learning. Given that open textbooks reduce the financial burden of a college education, the absence of a difference in learning is noteworthy. Nevertheless, perceptions of textbook quality varied, which points to areas in which open textbooks could be developed. Instructors may use these findings to make evidence-based decisions about course materials.

All datasets generated for this study are included in the manuscript/Supplementary Files.

The studies involving human participants were reviewed and approved by University of North Dakota Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

VC and EL designed the materials and analyzed quantitative data. BR analyzed the qualitative data.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Chia Lin Chang, Brennon Davis, and Tessa Isabel are thanked for their assistance on this project.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2019.00110/full#supplementary-material

Supplementary Table 1. Coh-Metrix Output.

Supplementary Table 2. Dataset.

Alfassi, M. (2004). Reading to learn: effects of combined strategy instruction on high school students. J. Educ. Res. 97, 171–185. doi: 10.3200/JOER.97.4.171-185

All, A., Plovie, B., Castellar, E. P. N., and Van Looy, J. (2017). Pre-test influences on the effectiveness of digital-game based learning: a case study of a fire safety game. Comput. Educ. 114, 24–37. doi: 10.1016/j.compedu.2017.05.018

Allen, G., Guzman-Alvarez, A., Smith, A., Gamage, A., Molinaro, M., and Larsen, D. S. (2015). Evaluating the effectiveness of the open-access ChemWiki resource as a replacement for traditional general chemistry textbooks. Chem. Educ. Res. Pract. 16, 939–948. doi: 10.1039/C5RP00084J

Atenas, J., Havemann, L., and Priego, E. (2015). Open data as open educational resources: towards transversal skills and global citizenship. Open Prax. 7, 377–389. doi: 10.5944/openpraxis.7.4.233

Brown, J. I., Fishco, V. V., and Hanna, G. (1993). The Nelson–Denny reading Test. Chicago, IL: Riverside.

Bryant, B. R., Patton, J. R., and Dunn, C. (1991). Scholastic Abilities Test for Adults. Austin, TX: Pro-Ed.

Charmaz, K. (1990). ‘Discovering' chronic illness: using grounded theory. Soc. Sci. Med. 30, 1161–1172. doi: 10.1016/0277-9536(90)90256-R

Chung, S., Espin, C. A., and Stevenson, C. E. (2018). CBM Maze-Scores as indicators of reading level and growth for seventh-grade students. Read. Writ. 31, 627–648. doi: 10.1007/s11145-017-9803-8

Clinton, V. (2018). Savings without sacrifices: a case study of open-source textbook adoption. Open Learn. 33, 177–189. doi: 10.1080/02680513.2018.1486184

Clinton, V. (2019). Cost, outcomes, use, and perceptions of Open Educational Resources in psychology: a narrative review of the literature. Psychol. Learn. Teach. 18, 4–20. doi: 10.1177/1475725718799511

Clinton, V., Alibali, M. W., and Nathan, M. J. (2016). Learning about posterior probability: do diagrams and elaborative interrogations help? J. Exp. Educ. 84, 579–599. doi: 10.1080/00220973.2015.1048847

Clinton, V., and Khan, S. (2019). Efficacy of open textbook adoption on learning performance and course withdrawal rates: a meta-analysis. AERA Open 5, 1–20. doi: 10.1177/2332858419872212

Cole, J. S., and Gonyea, R. M. (2010). Accuracy of self-reported SAT and ACT test scores: implications for research. Res. High. Educ. 51, 305–319. doi: 10.1007/s11162-009-9160-9

Colvard, N., Watson, C. E., and Park, H. (2018). The impact of open educational resources on various student success metrics. Int. J. Teach. Learn. High. Educ. 30, 262–276. Retrieved from https://eric.ed.gov/?id=EJ1184998

Cuttler, C. (2019). Students' use and perceptions of the relevance and quality of open textbooks compared to traditional textbooks in online and traditional classroom environments. Psychol. Learn. Teach. 18, 65–83. doi: 10.1177/1475725718811300

de Vlieger, P., Jacob, B., and Stange, K. (2016). Measuring instructor effectiveness in higher education (Working Paper No. 22998). Cambridge, MA: National Bureau of Economic Research.

Draaijer, S., Schoonenboom, J., Beishuizen, J., and Schuwirth, L. (2016). Supporting divergent and convergent production of test items for teachers in higher education. Thinking Skills Creativity 20, 1–16. doi: 10.1016/j.tsc.2015.12.003

Duke, N., Bennett-Armistead, S., and Roberts, E. (2003). Filling the nonfiction void: why we should bring nonfiction into the early-grade classroom. Am. Educ. 27, 30–34, 46. Retrieved from https://digitalcommons.library.umaine.edu/cgi/viewcontent.cgi?article=1000&context=erl_facpub

Fletcher, G., Schaffhauser, D., and Levin, D. (2012). Out of print: Reimagining the K-12 Textbook in a Digital Age. Washington, DC: State Educational Technology Directors Association (SETDA).

Florida Virtual Campus (2016). 2016 Florida Student Textbook Survey. Tallahassee, FL: Office of Distance Learning & Student Services.

French, M., Taverna, F., Neumann, M., Paulo Kushnir, L., Harlow, J., Harrison, D., et al. (2015). Textbook use in the sciences and its relation to course performance. Coll. Teach. 63, 171–177. doi: 10.1080/87567555.2015.1057099

Gierl, M. J., Bulut, O., Guo, Q., and Zhang, X. (2017). Developing, analyzing, and using distractors for multiple-choice tests in education: a comprehensive review. Rev. Educ. Res. 87, 1082–1116. doi: 10.3102/0034654317726529

Graesser, A. C., and McNamara, D. S. (2011). Computational analyses of multilevel discourse comprehension. Top. Cogn. Sci. 3, 371–398. doi: 10.1111/j.1756-8765.2010.01081.x

Graesser, A. C., McNamara, D. S., Louwerse, M. M., and Cai, Z. (2004). Coh-Metrix: analysis of text on cohesion and language. Behav. Res. Methods Instrum. Comput. 36, 193–202. doi: 10.3758/BF03195564

Grewe, K., and Davis, W. (2017). The impact of enrollment in an OER course on student learning outcomes. Int. Rev. Res. Open Distrib. Learn. 18. doi: 10.19173/irrodl.v18i4.2986

Griffiths, H., Keierns, N., Strayer, E., Sadler, T., Cody-Rydzewski, S., Scaramuzzo, G., et al. (2015). Introduction to Sociology, 2nd Edn. Houston, TX: Open Stax.

Griggs, R. A., and Jackson, S. L. (2017). Studying open versus traditional textbook effects on students' course performance: confounds abound. Teach. Psychol. 44, 306–312. doi: 10.1177/0098628317727641

Grimaldi, P., Basu Mallick, D., Waters, A., and Baraniuk, R. (2019). Do open educational resources improve student learning? Implications of the access hypothesis. PLoS ONE 14:e0212508. doi: 10.1371/journal.pone.0212508

Grissett, J., and Huffman, C. (2019). An open versus traditional psychology textbook: student performance, perceptions, and use. Psychol. Learn. Teach. 18, 21–35. doi: 10.1177/1475725718810181

Gurung, R. A. (2017). Predicting learning: comparing an open educational resource and standard textbooks. Scholarsh. Teach. Learn. Psychol. 3, 233–248. doi: 10.1037/stl0000092

Gurung, R. A., Landrum, R. E., and Daniel, D. B. (2012). Textbook use and learning: a North American perspective. Psychol. Learn. Teach. 11, 87–98. doi: 10.2304/plat.2012.11.1.87

Gurung, R. A., and Martin, R. C. (2011). Predicting textbook reading: the textbook assessment and usage scale. Teach. Psychol. 38, 22–28. doi: 10.1177/0098628310390913

Haladyna, T. M., Downing, S. M., and Rodriguez, M. C. (2002). A review of multiple-choice item-writing guidelines for classroom assessment. Appl. Meas. Educ. 15, 309–333. doi: 10.1207/S15324818AME1503_5

Hebert, M. (2016). An examination of reading comprehension and reading rate in university students (Unpublished dissertation). Edmonton, AB: University of Alberta.

Heiner, C. E., Banet, A. I., and Wieman, C. (2014). Preparing students for class: how to get 80% of students reading the textbook before class. Am. J. Phys. 82, 989–996. doi: 10.1119/1.4895008

Hendricks, C., Reinsberg, S. A., and Rieger, G. W. (2017). The adoption of an open textbook in a large physics course: an analysis of cost, outcomes, use, and perceptions. Int. Rev. Res. Open Distrib. Learn. 18. doi: 10.19173/irrodl.v18i4.3006

Hilton, J. (2016). Open educational resources and college textbook choices: a review of research on efficacy and perceptions. Educ. Technol. Res. Dev. 64, 573–590. doi: 10.1007/s11423-016-9434-9

Hilton, J. (2019). Open educational resources, student efficacy, and user perceptions: a synthesis of research published between 2015 and 2018. Educ. Technol. Res. Dev. 2019, 1–24. doi: 10.1007/s11423-019-09700-4

Hilton, J. L. III., Gaudet, D., Clark, P., Robinson, J., and Wiley, D. (2013). The adoption of open educational resources by one community college math department. Int. Rev. Res. Open Distrib. Learn. 14. doi: 10.19173/irrodl.v14i4.1523

Hilton, J. L. III., Lutz, N., and Wiley, D. (2012). Examining the reuse of open textbooks. Int. Rev. Res. Open Distrib. Learn. 13, 45–58. doi: 10.19173/irrodl.v13i2.1137

Illowsky, B. S., Hilton, J. III., Whiting, J., and Ackerman, J. D. (2016). Examining student perception of an open statistics book. Open Prax. 8, 265–276. doi: 10.5944/openpraxis.8.3.304

Jhangiani, R. S., Dastur, F. N., Le Grand, R., and Penner, K. (2018). As good or better than commercial textbooks: students' perceptions and outcomes from using open digital and open print textbooks. Can. J. Scholarsh. Teach. Learn. 9, 1–22. doi: 10.5206/cjsotl-rcacea.2018.1.5

Junco, R., and Clem, C. (2015). Predicting course outcomes with digital textbook usage data. Internet Higher Educ. 27, 54–63. doi: 10.1016/j.iheduc.2015.06.001

Jung, E., Bauer, C., and Heaps, A. (2017). Higher education faculty perceptions of open textbook adoption. Int. Rev. Res. Open Distrib. Learn. 18. doi: 10.19173/irrodl.v18i4.3120

Just, M. A., and Carpenter, P. A. (1987). The Psychology of Reading and Language Comprehension. Boston, MA: Allyn & Bacon.

Kartal, G. (2010). Does language matter in multimedia learning? Personalization principle revisited. J. Educ. Psychol. 102, 615–624. doi: 10.1037/a0019345

Kimmons, R. (2015). OER quality and adaptation in K-12: comparing teacher evaluations of copyright-restricted, open, and open/adapted textbooks. Int. Rev. Res. Open Distrib. Learn. 16. doi: 10.19173/irrodl.v16i5.2341

Kincaid, J. P., Fishburne, R. P., Rogers, R. L., and Chissom, B. S. (1975). Derivation of New Readability Formulas (Automated Readability Index, Fog Count, and Flesch Reading Ease Formula) for Navy Enlisted Personnel (Research Branch Report 8–75). Millington, TN: Naval Technical Training Command, Research Branch. Retrieved from: https://stars.library.ucf.edu/istlibrary/56/

Knight, B. A. (2015). Teachers' use of textbooks in the digital age. Cogent Educ. 2:1015812. doi: 10.1080/2331186X.2015.1015812

Landrum, R. E., Gurung, R. A., and Spann, N. (2012). Assessments of textbook usage and the relationship to student course performance. Coll. Teach. 60, 17–24. doi: 10.1080/87567555.2011.609573

Lashley, J., Cummings-Sauls, R., Bennett, A. B., and Lindshield, B. L. (2017). Cultivating textbook alternatives from the ground up: one public university's sustainable model for open and alternative educational resource proliferation. Int. Rev. Res. Open Distrib. Learn. 18. doi: 10.19173/irrodl.v18i4.3010

Lawrence, C., and Lester, J. (2018). Evaluating the effectiveness of adopting open educational resources in an introductory American government course. J. Polit. Sci. Educ. 14, 555–566. doi: 10.1080/15512169.2017.1422739

Maggioni, L., Fox, E., and Alexander, P. A. (2015). “Beliefs about reading, text, and learning from text,” in International Handbook of Research on Teacher's Beliefs, eds H. Fives, and M. G. Gill (London: Routledge), 353–356.

Mayer, R. E. (2014a). Incorporating motivation into multimedia learning. Learn. Instr. 29, 171–173. doi: 10.1016/j.learninstruc.2013.04.003

Mayer, R. E. (2014b). “Cognitive theory of multimedia learning,” in Cambridge Handbook of Multimedia Learning, 2nd Edn, ed R. E. Mayer (New York, NY: Cambridge University Press), 43–71.

Mayer, R. E., Fennell, S., Farmer, L., and Campbell, J. (2004). A personalization effect in multimedia learning: students learn better when words are in conversational style rather than formal style. J. Educ. Psychol. 96:389. doi: 10.1037/0022-0663.96.2.389

McCormick, J., Hafner, A. L., and Saint-Germain, M. (2013). From high school to college: teachers and students assess the impact of an expository reading and writing course on college readiness. J. Educ. Res. Pract. 3, 30–49. doi: 10.5590/JERAP.2013.03.1.03

McNeish, D. (2018). Thanks coefficient alpha, we'll take it from here. Psychol. Methods 23, 412–433. doi: 10.1037/met0000144

Medley-Rath, S. (2018). Does the type of textbook matter? Results of a study of free electronic reading materials at a community college. Community Coll. J. Res. Pract. 42, 908–918. doi: 10.1080/10668926.2017.1389316

Miles, M. B., and Huberman, A. M. (1994). Qualitative Data Analysis: An Expanded Sourcebook. Thousand Oaks, CA: Sage.

Muijselaar, M. M. L., Kendeou, P., de Jong, P. F., and van den Broek, P. W. (2017). What does the CBM-maze test measure? Sci. Stud. Reading 21, 120–132. doi: 10.1080/10888438.2016.1263994

Ozuru, Y., Dempsey, K., and McNamara, D. S. (2009). Prior knowledge, reading skill, and text cohesion in the comprehension of science texts. Learn. Instr. 19, 228–242. doi: 10.1016/j.learninstruc.2008.04.003

Penuel, W. R., and Frank, K. A. (2015). “Modes of inquiry in educational psychology and learning sciences research,” in Handbook of Educational Psychology (Routledge), 30–42.

Perry, M. (2015). The New Era of the $400 College Textbook, Which is Part of the Unsustainable Higher Education Bubble. American Enterprise Institute Ideas Blog Post. Retrieved from: https://www.aei.org/publication/the-new-era-of-the-400-college-textbook-which-is-part-of-theunsustainable-higher-education-bubble/

Peters, G.-J. Y. (2014). The alpha and the omega of scale reliability and validity: why and how to abandon Cronbach's alpha and the route towards more comprehensive assessment of scale quality. Eur. Health Psychol. 16, 56–69. doi: 10.31234/osf.io/h47fv

Revelle, W. (2018). Package “psych”. Retrieved from: http://personality-project.org/r/psych/psych-manual.pdf

Saint-Mont, U. (2015). Randomization does not help much, comparability does. PLoS ONE 10:e0132102. doi: 10.1371/journal.pone.0132102

Sanchez, E., and Buddin, R. (2015). “How accurate are self-reported high school courses, course grades, and grade point average,” in ACT Working Paper Series No. WP-2015–03 (Iowa City, IA: American College Testing Program).

Seaton, D. T., Kortemeyer, G., Bergner, Y., Rayyan, S., and Pritchard, D. E. (2014). Analyzing the impact of course structure on electronic textbook use in blended introductory physics courses. Am. J. Phys. 82, 1186–1197. doi: 10.1119/1.4901189

Senack, E., and Donaghue, R. (2016). Covering the Cost. Student PIRGs. Retrieved from: https://studentpirgs.org/2016/02/03/covering-cost/

Shin, J., and McMaster, K. (2019). Relations between CBM (oral reading and maze) and reading comprehension on state achievement tests: a meta-analysis. J. Sch. Psychol. 73, 131–149. doi: 10.1016/j.jsp.2019.03.005

Skinner, D., and Howes, B. (2013). The required textbook-friend or foe? Dealing with the dilemma. J. Coll. Teach. Learn. 10:133. doi: 10.19030/tlc.v10i2.7753

Smith, L., Mao, S., and Deshpande, A. (2016). “Talking across worlds”: classist microaggressions and higher education. J. Poverty 20, 127–151. doi: 10.1080/10875549.2015.1094764

Starcher, K., and Proffitt, D. (2011). Encouraging students to read: what professors are (and aren't) doing about it. Int. J. Teach. Learn. High. Educ. 23, 396–407. Retrieved from https://eric.ed.gov/?id=EJ946166

Strauss, A., and Corbin, J. (1998). Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. Thousand Oaks, CA: Sage Publications.

U.S. Bureau of Labor Statistics (2016). College Tuition and Fees Increase 63 Percent Since January 2006. The Economics Daily: U.S. Bureau of Labor Statistics. Retrieved from: http://www.bls.gov/opub/ted/2016/college-tuition-and-fees-increase-63-percent-since-january-2006.htm

U.S. Public Interest Research Group Education Fund Student Public Interest Research Groups (USPIRG). (2014). Fixing the Broken Textbook Market. Washington, DC. Retrieved from: http://www.uspirg.org/reports/usp/fixing-brokentextbook-market

Voss, J. F., and Silfies, L. N. (1996). Learning from history text: the interaction of knowledge and comprehension skill with text structure. Cogn. Instr. 14, 45–68. doi: 10.1207/s1532690xci1401_2

What Works Clearinghouse (2017). What Works Clearinghouse Standards Handbook Version 4.0. Retrieved from: https://ies.ed.gov/ncee/wwc/Docs/referenceresources/wwc_standards_handbook_v4.pdf

Wiley, D., Bliss, T. J., and McEwen, M. (2014). “Open educational resources: a review of the literature,” in Handbook of Research on Educational Communications and Technology, eds J. Spector, M. Merrill, J. Elen, and M. Bishop (New York, NY: Springer), 781–789.

Williams, B. (1994). Patient satisfaction: a valid concept? Soc. Sci. Med. 38, 509–516. doi: 10.1016/0277-9536(94)90247-X

Willson, V. L., and Kim, E. S. (2010). “Pretest sensitization,” in Encyclopedia of Research Design, ed N. Salkind. Thousand Oaks, CA: Sage.

Willson, V. L., and Putnam, R. R. (1982). A meta-analysis of pretest sensitization effects in experimental design. Am. Educ. Res. J. 19, 249–258. doi: 10.3102/00028312019002249

Keywords: college students, textbook adoption, open educational resources (OER), randomized controlled experiment, student perceptions

Citation: Clinton V, Legerski E and Rhodes B (2019) Comparing Student Learning From and Perceptions of Open and Commercial Textbook Excerpts: A Randomized Experiment. Front. Educ. 4:110. doi: 10.3389/feduc.2019.00110

Received: 02 August 2019; Accepted: 23 September 2019;

Published: 15 October 2019.

Edited by:

Xiaoxun Sun, Australian Council for Educational Research, Australia

Reviewed by:

Liang-Cheng Zhang, Australian Council for Educational Research, Australia

Copyright © 2019 Clinton, Legerski and Rhodes. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Virginia Clinton, virginia.clinton@und.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
